Abstract

Bayesian inference is a common method for estimating the parameters of dynamical systems. Despite its prevalent use for this purpose, there is a need for a formalized and detailed methodology. This paper presents a comprehensive methodology for dynamical system parameter estimation using Bayesian inference. It covers the choice of data distributions, Markov Chain Monte Carlo (MCMC) sampling, credible intervals for parameters, and prediction intervals for solutions. A logistic growth example is given to illustrate the methodology.

Keywords: Bayesian, Inference, Model fitting, Data, Dynamical system, Mathematical model

1. Introduction

Bayesian inference is a common method for performing parameter estimation for dynamical systems (Ghasemi et al., 2011; Higham & Husmeier, 2013; Ma & Berndsen, 2014; Periwal et al., 2008; Vanlier, Tiemann, Hilbers, & van Riel, 2012). Despite its popularity and the availability of useful computational manuals, there is a need for a formalized and comprehensive methodology.

The methods described in this paper assume that the behaviors of the dynamical system of interest have been mathematically analyzed and that the solutions of the dynamical system are well-behaved. Additionally, if a numerical scheme is used to solve the dynamical system, it is assumed that the scheme is stable. The methodology is presented from a mathematical biology perspective and focuses on systems of ordinary differential equations (ODEs); however, it can be applied to other areas of applied mathematics and to other differential equation systems, such as partial differential equations (PDEs). This paper provides a formalized methodology for dynamical system parameter estimation using Bayesian inference. It covers the choice of data distributions, Markov Chain Monte Carlo (MCMC) sampling, credible intervals for parameters, and prediction intervals for solutions. The methodology is illustrated with a logistic growth example.

2. Dynamical system

Assume that the dynamical system of interest can be described by the following autonomous ODE system (1), written as a vector differential equation:

$$\mathbf{x}' = \mathbf{f}(\mathbf{x}) \tag{1}$$

where $\mathbf{x} \in \mathbb{R}^n$ and $\mathbf{f}: \mathbb{R}^n \to \mathbb{R}^n$, with the vector of initial conditions $\mathbf{x}(0) = \mathbf{x}_0$.

It is assumed that the unique solution vector, $\mathbf{x}(t)$, of system (1) exists and can be obtained either explicitly or using numerical approximation. If a numerical approximation method is used, it is assumed that the numerical approximation scheme is stable.

All the parameters in system (1) will be denoted by the vector $\theta$. If the initial conditions will also be estimated, then let the initial conditions be contained in the vector $\theta$ as well.

The dependence of the unique solution vector on both time, $t$, and the vector of parameters, $\theta$, will be emphasized and the unique solution vector will be denoted as $\mathbf{x}(t, \theta)$.

3. Data

Suppose there are $m$ time series data sets. It is important to ensure that the correct ODE model solution or combination of ODE model solutions is fit to the $k$th time series data set ($k = 1, 2, \ldots, m$).

Sometimes a data set is scaled differently than the model solutions, or the data set can be described by a sum of the ODE model solutions. To include these situations, we can use a linear combination of the ODE model solutions, $\mathbf{c}_k \cdot \mathbf{x}(t, \theta)$, to fit to the $k$th time series data set. (The simpler case, where only one specific ODE model solution, $x_j(t, \theta)$, is to be fit to the $k$th time series data set, is included in the linear combination with $c_{k,j} = 1$ and the other constants equal to zero.) If the nonzero vector of constants, $\mathbf{c}_k$, will be estimated, then let $\mathbf{c}_k$, for $k = 1, 2, \ldots, m$, be contained in the vector $\theta$.

Also, if the $k$th data set can be described by a nonlinear combination of the ODE model solutions, then, similarly, let any estimated nonzero vector of constants, $\mathbf{c}_k$, be contained in the vector $\theta$.

So, in general, we fit the function $g_k(\mathbf{x}(t, \theta), \mathbf{c}_k)$ to the $k$th data set.

4. Distribution of data over time

The distribution of the observations over time for each data set must be chosen before fitting system (1) to the data. The following sections will describe the Gaussian, Poisson, Negative Binomial, and other distribution options.

4.1. Gaussian distribution

Let $Y$ be a random variable from the Gaussian distribution with parameters $\mu$ and $\sigma^2$, $Y \sim N(\mu, \sigma^2)$. The formulation of the Gaussian distribution is given by the following continuous probability density function (pdf) (Bain & Engelhardt, 1987):

$$f(y; \mu, \sigma) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(y - \mu)^2}{2\sigma^2}\right) \tag{2}$$

The mean, $E[Y]$, of the Gaussian distribution is given by $\mu$ and the variance, $\mathrm{Var}[Y]$, of this distribution is given by $\sigma^2$.

Assume that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the Gaussian distribution:

$$P(y_{k,i}) = \frac{1}{\sigma_k\sqrt{2\pi}} \exp\left(-\frac{(y_{k,i} - \mu_{k,i})^2}{2\sigma_k^2}\right) \tag{3}$$

where the mean, $\mu_{k,i}$, changes depending on the time, $t_{k,i}$, and the variance, $\sigma_k^2$, is specific to the $k$th data set.

Given our assumption of fitting the function of the ODE model solutions and any necessary constants, $g_k(\mathbf{x}(t, \theta), \mathbf{c}_k)$, to the $k$th time series data set, we set

$$\mu_{k,i} = g_k(\mathbf{x}(t_{k,i}, \theta), \mathbf{c}_k) \tag{4}$$

Equation (4) can be thought of as a type of link function. In statistics, for generalized linear models (GLMs), a link function is defined as the function that transforms the mean of a distribution to a linear regression model (Montgomery, Peck, & Vining, 2006). Equation (4) equates the mean of the Gaussian distribution to the ODE model solutions.

4.2. Poisson distribution

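Let $Y$ be a random variable from the Poisson distribution with parameter $\mu$, $Y \sim \mathrm{Pois}(\mu)$. The formulation of the Poisson distribution is given by the following discrete pdf (Bain & Engelhardt, 1987):

$$f(y; \mu) = \frac{e^{-\mu}\mu^{y}}{y!} \tag{5}$$

where $y = 0, 1, 2, \ldots$ and $\mu > 0$.

The mean, $E[Y]$, of the Poisson distribution is given by $\mu$. For the Poisson distribution, the variance is equal to the mean, $\mathrm{Var}[Y] = E[Y] = \mu$.

Assume that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the Poisson distribution:

$$P(y_{k,i}) = \frac{e^{-\mu_{k,i}}\mu_{k,i}^{y_{k,i}}}{y_{k,i}!} \tag{6}$$

where the mean, $\mu_{k,i}$, changes depending on the time, $t_{k,i}$. Hence, the variance, which equals $\mu_{k,i}$, also changes over time.

Again, we will use equation (4) to equate the mean, $\mu_{k,i}$, to the ODE model solutions.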

The Poisson distribution is used for count data of rare events. The fact that the variance is dependent on the mean is particularly useful since in practice when observing count data over time the count data generally expresses more variability at higher values than at lower values (Bolker, 2007). The restriction that the variance is strictly equal to the mean is commonly violated for many types of count data. Count data where the variance is larger than the mean is called overdispersed. The negative binomial distribution can be used for count data with overdispersion.

4.3. Negative binomial distribution

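Let $Y$ be a random variable from the negative binomial distribution with parameters $r$ and $p$, $Y \sim \mathrm{NB}(r, p)$. The formulation of the negative binomial distribution is given by the following discrete pdf (Linden & Mantyniemi, 2011):

$$f(y; r, p) = \frac{\Gamma(y + r)}{y!\,\Gamma(r)}\, p^{r} (1 - p)^{y} \tag{7}$$

where $y = 0, 1, 2, \ldots$, $r > 0$, and $0 < p < 1$.

The interpretation of this formulation of the negative binomial distribution is that $y$ is the number of failures before the $r$th success and $p$ is the probability of success per trial (Linden & Mantyniemi, 2011).

The mean, $E[Y]$, of the negative binomial distribution is given by $\mu = r(1 - p)/p$ and the variance, $\mathrm{Var}[Y]$, of this distribution is given by $r(1 - p)/p^2 = \mu/p$.

For count data, the negative binomial distribution can be interpreted as the mean number of counts with the variance overdispersed, since $\mathrm{Var}[Y] = \mu/p > \mu$ for $0 < p < 1$ (Bolker, 2007).

Assume that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the negative binomial distribution:

$$P(y_{k,i}) = \frac{\Gamma(y_{k,i} + r_{k,i})}{y_{k,i}!\,\Gamma(r_{k,i})}\, p_k^{\,r_{k,i}} (1 - p_k)^{y_{k,i}}, \qquad r_{k,i} = \frac{\mu_{k,i}\, p_k}{1 - p_k} \tag{8}$$

where the mean, $\mu_{k,i}$, changes depending on the time, $t_{k,i}$, and $p_k$ is specific to the $k$th data set. Hence, the variance, $\mu_{k,i}/p_k$, also changes over time.

As before, we will use equation (4) to equate the mean, $\mu_{k,i}$, to the ODE model solutions.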
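The mean and variance relationships for the negative binomial distribution can be checked numerically. The following is a minimal sketch, assuming the distribution is parameterized by its mean (written `mu`) and the success probability `p`, so that the shape parameter is `mu * p / (1 - p)`. The function names and the test values `mu = 20`, `p = 0.25` are illustrative assumptions, not taken from the paper.

```python
import math


def neg_binomial_log_pmf(y, mu, p):
    """Log pmf of a negative binomial with mean mu and variance mu / p.

    The shape parameter r = mu * p / (1 - p) is chosen so that
    r * (1 - p) / p equals mu.
    """
    if not (0.0 < p < 1.0) or mu <= 0.0 or y < 0:
        return -math.inf
    r = mu * p / (1.0 - p)
    return (math.lgamma(y + r) - math.lgamma(y + 1) - math.lgamma(r)
            + r * math.log(p) + y * math.log(1.0 - p))


def moments(mu, p, y_max=2000):
    """Sum the pmf over 0..y_max to recover (total mass, mean, variance)."""
    probs = [math.exp(neg_binomial_log_pmf(y, mu, p)) for y in range(y_max + 1)]
    total = sum(probs)
    mean = sum(y * q for y, q in enumerate(probs))
    var = sum((y - mean) ** 2 * q for y, q in enumerate(probs))
    return total, mean, var


# Illustrative values (assumed): the variance should come out as mu / p = 80.
total, mean, var = moments(mu=20.0, p=0.25)
```

Working on the log scale with `math.lgamma` avoids overflow in the gamma functions when the counts are large.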

4.4. Other distributions

It is seen from sections 4.1, 4.2, and 4.3 that, in general, if the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and the probability of observing $y_{k,i}$ is given by a distribution with pdf $f$ and mean $\mu_{k,i}$, then equation (4) is used to equate the mean, $\mu_{k,i}$, to the ODE model solutions.

5. Likelihood function

In a dynamical system, the dependency of solutions on each other is built into the mathematical model itself. Assuming that the mathematical model correctly describes the data sets of interest, the data sets can be considered independent from each other. With m independent time series data sets, there will be m likelihood functions associated with each of the independent data sets and the combined likelihood function is given by

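$$L(\theta) = C \prod_{k=1}^{m} P(y_{k,1}, y_{k,2}, \ldots, y_{k,n_k} \mid \theta) \tag{9}$$

where $\theta$ is the vector of parameters to estimate, $P(y_{k,1}, \ldots, y_{k,n_k} \mid \theta)$ is the probability of the observations in the $k$th data set, and $C$ is any positive constant not depending on $\theta$ used to simplify the likelihood function (Kalbfleisch, 1979).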

5.1. Gaussian probability model for m data sets and combined likelihood function

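Assume, for $k = 1, 2, \ldots, m$, that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the Gaussian distribution in equation (3), where the mean, $\mu_{k,i}$, changes depending on the time, $t_{k,i}$, and the variance, $\sigma_k^2$, is specific to the $k$th data set. Then the probability of the observed data is given by

$$P(y_{k,1}, \ldots, y_{k,n_k} \mid \theta) = \prod_{i=1}^{n_k} \frac{1}{\sigma_k\sqrt{2\pi}} \exp\left(-\frac{(y_{k,i} - \mu_{k,i})^2}{2\sigma_k^2}\right) \tag{10}$$

where equation (4) is used to equate the mean, $\mu_{k,i}$, to the ODE model solutions and $\sigma_k > 0$.

The Gaussian probability model is convenient for fitting, since even poor initial guesses of the vector of parameters, $\theta$, still produce a nonzero probability.

The combined likelihood function is given by

$$L(\theta) = \prod_{k=1}^{m} \prod_{i=1}^{n_k} \frac{1}{\sigma_k} \exp\left(-\frac{(y_{k,i} - \mu_{k,i})^2}{2\sigma_k^2}\right) \tag{11}$$

where $C = (2\pi)^{\frac{1}{2}\sum_{k=1}^{m} n_k}$ simplifies the likelihood function. Because $C$ is positive and does not depend on $\theta$, the value of $\theta$ that maximizes the joint probability of the data will also maximize $L(\theta)$ (Kalbfleisch, 1979).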
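The combined Gaussian likelihood is usually evaluated on the log scale to avoid numerical underflow when many observations are multiplied together. The following is a minimal sketch, assuming the model means have already been computed from the ODE solution via equation (4) and are passed in as plain lists. The function name and the small data values are hypothetical.

```python
import math


def gaussian_log_likelihood(datasets, means, sigmas):
    """Log of the combined Gaussian likelihood, constant factor dropped.

    datasets: list of m lists of observations (one list per data set)
    means:    list of m lists of model means, aligned with datasets
    sigmas:   list of m standard deviations, one per data set
    """
    total = 0.0
    for ys, mus, sigma in zip(datasets, means, sigmas):
        if sigma <= 0.0:
            return -math.inf  # outside the parameter space
        for y, mu in zip(ys, mus):
            # log of (1 / sigma) * exp(-(y - mu)^2 / (2 sigma^2))
            total += -math.log(sigma) - (y - mu) ** 2 / (2.0 * sigma ** 2)
    return total


# Hypothetical example with m = 2 data sets.
data = [[1.0, 2.0], [0.5]]
perfect_fit = gaussian_log_likelihood(data, [[1.0, 2.0], [0.5]], [1.0, 2.0])
poor_fit = gaussian_log_likelihood(data, [[0.0, 0.0], [0.0]], [1.0, 2.0])
```

A poor guess for the means lowers the log-likelihood but keeps it finite, which is the property noted above for the Gaussian model.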

5.2. Poisson probability model for m data sets and combined likelihood function

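Assume, for $k = 1, 2, \ldots, m$, that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the Poisson distribution in equation (6), where the mean, $\mu_{k,i}$ (and hence the variance, which equals $\mu_{k,i}$), changes depending on the time, $t_{k,i}$. Then the probability of the observed counts is given by

$$P(y_{k,1}, \ldots, y_{k,n_k} \mid \theta) = \prod_{i=1}^{n_k} \frac{e^{-\mu_{k,i}}\mu_{k,i}^{y_{k,i}}}{y_{k,i}!} \tag{12}$$

where equation (4) is used to equate the mean, $\mu_{k,i}$, to the ODE model solutions and $\mu_{k,i} > 0$.

The combined likelihood function is given by

$$L(\theta) = \prod_{k=1}^{m} \prod_{i=1}^{n_k} e^{-\mu_{k,i}}\mu_{k,i}^{y_{k,i}} \tag{13}$$

where $C = \prod_{k=1}^{m} \prod_{i=1}^{n_k} y_{k,i}!$ simplifies the likelihood function.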

5.3. Negative binomial probability model for m data sets and combined likelihood function

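Assume, for $k = 1, 2, \ldots, m$, that the $k$th time series data set is given by observations $y_{k,1}, y_{k,2}, \ldots, y_{k,n_k}$ with corresponding times $t_{k,1}, t_{k,2}, \ldots, t_{k,n_k}$, and that the probability of observing $y_{k,i}$ is given by the negative binomial distribution in equation (8), where the mean, $\mu_{k,i}$ (and hence the variance, $\mu_{k,i}/p_k$), changes depending on the time, $t_{k,i}$. Then the probability of the observed counts is given by

$$P(y_{k,1}, \ldots, y_{k,n_k} \mid \theta) = \prod_{i=1}^{n_k} \frac{\Gamma(y_{k,i} + r_{k,i})}{y_{k,i}!\,\Gamma(r_{k,i})}\, p_k^{\,r_{k,i}} (1 - p_k)^{y_{k,i}} \tag{14}$$

where $r_{k,i} = \mu_{k,i}\, p_k/(1 - p_k)$, equation (4) is used to equate the mean, $\mu_{k,i}$, to the ODE model solutions, and $0 < p_k < 1$.

The combined likelihood function is given by

$$L(\theta) = \prod_{k=1}^{m} \prod_{i=1}^{n_k} \frac{\Gamma(y_{k,i} + r_{k,i})}{\Gamma(r_{k,i})}\, p_k^{\,r_{k,i}} (1 - p_k)^{y_{k,i}} \tag{15}$$

where $C = \prod_{k=1}^{m} \prod_{i=1}^{n_k} y_{k,i}!$ simplifies the likelihood function.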

6. Bayesian framework

The Bayesian framework is set up by first assuming a probability model for the observed data $D$ given a vector of unknown parameters $\theta$, which is $P(D \mid \theta)$. Then it is assumed that $\theta$ is randomly distributed from the prior distribution $P(\theta)$. Statistical inference for $\theta$ is based on the posterior distribution, $P(\theta \mid D)$. Using Bayes' theorem we have

$$P(\theta \mid D) = \frac{P(D \mid \theta)\, P(\theta)}{\int_{\Omega} P(D \mid \theta)\, P(\theta)\, d\theta} \tag{16}$$

where $\Omega$ is the parameter space of $\theta$ and $P(D \mid \theta)$ is the likelihood function. $P(D) = \int_{\Omega} P(D \mid \theta)\, P(\theta)\, d\theta$ is called the prior predictive distribution and it is the normalizing constant of the posterior distribution (Chen, Shao, & Ibrahim, 2000). The unnormalized posterior distribution is given by $P(D \mid \theta)\, P(\theta)$.

The Bayesian framework is well suited to statistical inference in mathematical biology, since prior information about the unknown parameters is generally available in the literature.

6.1. Prior distribution

In biological applications there may exist literature regarding an appropriate prior distribution for a parameter of interest. However, in many cases, only a general range is known from the literature about a parameter of interest and the uniform distribution is chosen as the prior distribution for the parameter of interest.

7. Markov Chain Monte Carlo algorithms

Markov Chain Monte Carlo (MCMC) algorithms are designed to sample and to fully explore the parameter space where the unnormalized posterior distribution is positive (Lynch, 2007). The MCMC algorithms involve a process where a new vector of parameter values, $\theta_i$, is sampled from the posterior distribution, $P(\theta \mid D)$, based on the previous vector of parameter values, $\theta_{i-1}$. A successful MCMC algorithm results in a sample path (also called a chain or walker) that has arrived at a stationary process and covers the domain of the target unnormalized posterior distribution.

7.1. Metropolis-Hastings algorithm

The Metropolis-Hastings algorithm is one of the classic MCMC algorithms (Chen et al., 2000):

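A starting point, $\theta_0$, is selected.

For every iteration $i = 1, 2, \ldots, N$:

randomly select a proposal for $\theta_i$, $\theta^*$, from the proposal distribution $q(\theta^* \mid \theta_{i-1})$;

the proposal for $\theta_i$ is accepted with probability $\alpha = \min\left\{1, \dfrac{\pi(\theta^*)\, q(\theta_{i-1} \mid \theta^*)}{\pi(\theta_{i-1})\, q(\theta^* \mid \theta_{i-1})}\right\}$;

draw a random sample $u$ from $U(0, 1)$;

if $u \le \alpha$, the proposal is accepted and $\theta_i = \theta^*$.

If not, $\theta_i = \theta_{i-1}$,

where $\pi(\theta) = P(D \mid \theta)\, P(\theta)$ is the unnormalized posterior distribution.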

7.1.1. Random-walk Metropolis-Hastings algorithm

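If a symmetric proposal distribution is chosen in the Metropolis-Hastings algorithm, then the proposal distribution randomly perturbs the current position of the vector of unknown parameters, $\theta_{i-1}$, and these algorithms are called Random-Walk Metropolis-Hastings algorithms (Lynch, 2007).

A symmetric proposal distribution has the property that $q(\theta^* \mid \theta_{i-1}) = q(\theta_{i-1} \mid \theta^*)$, and this simplifies the acceptance probability to $\alpha = \min\left\{1, \pi(\theta^*)/\pi(\theta_{i-1})\right\}$, where $\pi$ is the unnormalized posterior distribution.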
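The random-walk variant can be sketched in a few lines. The following is a minimal illustration, assuming a Gaussian proposal centered on the current position and a log-scale unnormalized posterior supplied by the user. The function names, the step size, and the standard normal target are assumptions made for the example only.

```python
import math
import random


def random_walk_metropolis(log_post, theta0, n_iter, step, rng):
    """Sample from exp(log_post) using a symmetric Gaussian proposal.

    log_post(theta0) must be finite. Returns the chain and acceptance rate.
    """
    current = list(theta0)
    current_lp = log_post(current)
    chain = [list(current)]
    n_accepted = 0
    for _ in range(n_iter):
        # Symmetric proposal: perturb every coordinate of the current position.
        proposal = [x + rng.gauss(0.0, step) for x in current]
        proposal_lp = log_post(proposal)
        # u <= min(1, ratio) is equivalent to log(u) <= log(ratio).
        log_u = math.log(1.0 - rng.random())  # 1 - random() lies in (0, 1]
        if proposal_lp > -math.inf and log_u <= proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
            n_accepted += 1
        chain.append(list(current))
    return chain, n_accepted / n_iter


def standard_normal_log_post(theta):
    """Assumed toy target: unnormalized log density of N(0, 1)."""
    return -0.5 * theta[0] ** 2


chain, acceptance_rate = random_walk_metropolis(
    standard_normal_log_post, [0.0], 20000, 2.0, random.Random(1))
draws = [th[0] for th in chain[2000:]]  # discard the first 2000 as burn-in
sample_mean = sum(draws) / len(draws)
sample_var = sum((x - sample_mean) ** 2 for x in draws) / len(draws)
```

Comparing on the log scale avoids overflow or underflow when the posterior values are extremely small, which is common for likelihoods built from many observations.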

7.2. Affine invariant ensemble Markov Chain Monte Carlo algorithm

The affine invariant ensemble MCMC algorithm has been reported to perform better than the Metropolis-Hastings algorithm and other MCMC algorithms (Goodman & Weare, 2010). The algorithm uses $K$ walkers and the positions of the walkers are updated based on the present positions of the $K$ walkers (Weikun, 2015, pp. 1–8). The following is the affine invariant ensemble MCMC algorithm:

A starting point, $\theta_{k,0}$, is selected for each of the $K$ walkers, $k = 1, 2, \ldots, K$.

For every iteration $i = 1, 2, \ldots, N$:

For $k = 1, 2, \ldots, K$:

randomly select a walker $j$ from the $K$ walkers such that $j \ne k$, and let $\theta_j$ be its present position;

randomly choose $z$ from the distribution $g(z) \propto 1/\sqrt{z}$, $z \in [1/a, a]$;

the proposal for $\theta_{k,i}$ is $\theta^* = \theta_j + z(\theta_{k,i-1} - \theta_j)$ (Stretch Move);

the proposal for $\theta_{k,i}$ is accepted with probability $\alpha = \min\left\{1, z^{d-1}\, \pi(\theta^*)/\pi(\theta_{k,i-1})\right\}$;

draw a random sample $u$ from $U(0, 1)$. If $u \le \alpha$, the proposal is accepted and $\theta_{k,i} = \theta^*$. If not, $\theta_{k,i} = \theta_{k,i-1}$,

where $\pi(\theta)$ is the unnormalized posterior distribution, $d$ is the number of parameters in $\theta$, $a > 1$ is adjusted to improve performance, and $g(z)$ satisfies the symmetry condition $g(1/z) = z\, g(z)$.

The equation $\theta^* = \theta_j + z(\theta_{k,i-1} - \theta_j)$ is the equation of a line parallel to the vector $\theta_{k,i-1} - \theta_j$. By randomly choosing $z$, the stretch move in the algorithm moves $\theta_{k,i-1}$ to a vector position, $\theta^*$, a certain distance up or down the line. Then the vector proposal, $\theta^*$, is either accepted or rejected based on the acceptance probability, $\alpha$.

The set of samples from each of the $K$ walkers will converge to the unnormalized posterior distribution, $\pi(\theta)$. After running the method, the sets of samples from the $K$ walkers can be pooled together to form a larger sample of $K N$ samples from the unnormalized posterior distribution. Since the samples from the first iterations are generally far away from the highest density of the unnormalized posterior distribution, the first iterations are usually deleted from each of the $K$ walkers; the deletion of the first iterations is called burn-in. Let $H$ be the number of pooled samples after the burn-in is completed.
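The stretch move can be sketched as follows. This is a minimal illustration of the ensemble update of Goodman & Weare (2010), not a tuned implementation. The stretch factor is drawn by inverting the cumulative distribution of a density proportional to one over the square root of z on the interval from 1/a to a. The function names, the choice a = 2, the number of walkers, and the two-dimensional Gaussian target are assumptions for the example.

```python
import math
import random


def stretch_move_sampler(log_post, start, n_iter, a, rng):
    """Affine invariant ensemble sampler using the stretch move.

    start: list of K >= 2 starting positions, each with finite log_post.
    Returns one chain (list of positions) per walker.
    """
    n_walkers, dim = len(start), len(start[0])
    pos = [list(w) for w in start]
    log_ps = [log_post(w) for w in pos]
    chains = [[list(w)] for w in pos]
    for _ in range(n_iter):
        for k in range(n_walkers):
            # Pick a different walker j uniformly at random.
            j = rng.randrange(n_walkers - 1)
            if j >= k:
                j += 1
            # Inverse-CDF draw of z from g(z) ~ 1/sqrt(z) on [1/a, a].
            z = ((a - 1.0) * rng.random() + 1.0) ** 2 / a
            proposal = [pos[j][i] + z * (pos[k][i] - pos[j][i])
                        for i in range(dim)]
            lp = log_post(proposal)
            log_alpha = (dim - 1) * math.log(z) + lp - log_ps[k]
            if lp > -math.inf and math.log(1.0 - rng.random()) <= log_alpha:
                pos[k], log_ps[k] = proposal, lp
            chains[k].append(list(pos[k]))
    return chains


def toy_log_post(theta):
    """Assumed toy target: independent N(0, 1) and N(0, 4) components."""
    return -0.5 * (theta[0] ** 2 + theta[1] ** 2 / 4.0)


rng = random.Random(7)
start = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(10)]
chains = stretch_move_sampler(toy_log_post, start, 3000, 2.0, rng)
pooled = [th for chain in chains for th in chain[500:]]  # burn-in of 500
mean0 = sum(th[0] for th in pooled) / len(pooled)
var0 = sum((th[0] - mean0) ** 2 for th in pooled) / len(pooled)
mean1 = sum(th[1] for th in pooled) / len(pooled)
var1 = sum((th[1] - mean1) ** 2 for th in pooled) / len(pooled)
```

Each walker is updated in turn using the present positions of the others, so no proposal scale has to be tuned for each parameter separately.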

8. Diagnostics

The samples from the MCMC provide a sample path. It is important to diagnose whether this sample path produces a sample from the target unnormalized posterior distribution, $\pi(\theta) = P(D \mid \theta)\, P(\theta)$; in other words, whether the sample path converges to the target unnormalized posterior distribution. From the plot of the sample path, it is vital to find that the sample path has arrived at a stationary process and that it covers the domain of the target unnormalized posterior distribution.

The sample path for each parameter should be plotted. Ideally, the sample path for each parameter oscillates very fast and displays no apparent trend; this indicates that the sample path has arrived at a stationary process. By observing the marginal posterior distribution, $P(\theta_j \mid D)$, for each parameter $\theta_j$, it should be observed that the sample path covers the domain of the target unnormalized posterior distribution.

A formalized test of the convergence of the MCMC sampling to the estimated unnormalized posterior distribution for each parameter is found by using a general univariate comparison method (Gelman & Brooks, 1998). The general univariate comparison method takes the length of the empirical interval for the pooled samples, $S$, and divides this length by the average of the lengths of the empirical intervals for each of the $K$ walkers, $s_k$, to obtain the potential scale reduction factor, $\eta$ (Gelman & Brooks, 1998):

$$\eta = \frac{S}{\frac{1}{K}\sum_{k=1}^{K} s_k} \tag{17}$$

When the potential scale reduction factor, $\eta$, is close to 1 for all the estimated parameters, this indicates that the MCMC sampling converged to the estimated posterior distribution for each parameter.
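The interval-based potential scale reduction factor can be computed directly from the walkers' samples for one parameter. The following is a minimal sketch, assuming a 95% empirical interval and linear interpolation between order statistics; both choices, the function names, and the toy chains are assumptions for illustration.

```python
def quantile(sorted_xs, q):
    """Linearly interpolated quantile of an already sorted list."""
    pos = q * (len(sorted_xs) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_xs) - 1)
    frac = pos - lo
    return sorted_xs[lo] * (1.0 - frac) + sorted_xs[hi] * frac


def interval_length(samples, level=0.95):
    """Length of the central empirical interval at the given level."""
    xs = sorted(samples)
    tail = (1.0 - level) / 2.0
    return quantile(xs, 1.0 - tail) - quantile(xs, tail)


def potential_scale_reduction(walker_samples, level=0.95):
    """Pooled interval length divided by the mean per-walker interval length."""
    pooled = [x for samples in walker_samples for x in samples]
    within = [interval_length(s, level) for s in walker_samples]
    return interval_length(pooled, level) / (sum(within) / len(within))


# Toy chains (assumed): two walkers covering the same range, then two
# walkers stuck in different regions of the parameter space.
agreeing = [list(range(101)), list(range(101))]
disagreeing = [list(range(101)), list(range(100, 201))]
eta_agreeing = potential_scale_reduction(agreeing)
eta_disagreeing = potential_scale_reduction(disagreeing)
```

When the walkers explore different regions, the pooled interval is much longer than the individual intervals and the factor rises well above 1.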

9. Credible intervals for parameters

For a unimodal, symmetric marginal posterior distribution, $P(\theta_j \mid D)$, for a parameter $\theta_j$ in $\theta$, the 95% credible interval for $\theta_j$ is given by the 2.5 and 97.5 percentiles of the marginal posterior distribution of $\theta_j$ (Chen et al., 2000).
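In practice the percentiles are taken from the pooled MCMC samples of each parameter. The following is a minimal sketch using the Python standard library, where the list `pooled` is a hypothetical stand-in for the pooled post-burn-in samples of a single parameter.

```python
import statistics


def credible_interval_95(samples):
    """2.5 and 97.5 percentiles of the samples for a single parameter."""
    # n=40 yields 39 cut points at 2.5%, 5%, ..., 97.5%.
    cuts = statistics.quantiles(samples, n=40)
    return cuts[0], cuts[-1]


# Hypothetical stand-in for pooled samples of one parameter.
pooled = list(range(1, 1000))
lower, upper = credible_interval_95(pooled)
```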

9.1. Non-uniqueness

Non-uniqueness occurs when there is more than one solution vector that explains the data, $D$, equally well.

When there is non-uniqueness, the marginal posterior distribution, $P(\theta_j \mid D)$, for $\theta_j$ is constant over an interval and the credible interval for $\theta_j$ is given by the upper and lower limits of the interval (Chen et al., 2000).

The credible intervals resulting from non-uniqueness are still very beneficial since they are often more specific than the initial prior distributions specified for the parameters.

10. Posterior predictive distribution

Let $\tilde{\mathbf{y}} = (\tilde{y}_1, \tilde{y}_2, \ldots, \tilde{y}_m)$ be future responses of interest for the $m$ data sets. The posterior predictive distribution of $\tilde{\mathbf{y}}$ is given by

$$P(\tilde{\mathbf{y}} \mid D) = \int_{\Omega} P(\tilde{\mathbf{y}} \mid \theta)\, P(\theta \mid D)\, d\theta \tag{18}$$

where $P(\theta \mid D)$ is the posterior distribution and $P(\tilde{\mathbf{y}} \mid \theta)$ is the same probability model for the data specified in the Bayesian framework (16).

The posterior predictive distribution is generated as follows.

For each pooled sample $\theta_h$, $h = 1, 2, \ldots, H$:

randomly sample $\tilde{\mathbf{y}}_h$ from the probability distribution specified for the data at $\theta_h$,

where $H$ is the number of samples from the unnormalized posterior distribution.

The 95% prediction intervals for each data set are found by determining the 2.5 and 97.5 percentiles of the posterior predictive distribution at each time $t$.

The posterior predictive mean is found by taking the mean of the posterior predictive distribution at each time $t$.
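The sampling procedure for the posterior predictive distribution can be sketched as below. This is a minimal illustration under stated assumptions: the data model is Gaussian, the model mean is a straight line in time, and the posterior samples are simulated stand-ins for real MCMC output. All function names and numerical values are hypothetical.

```python
import random


def quantile(sorted_xs, q):
    """Linearly interpolated quantile of an already sorted list."""
    pos = q * (len(sorted_xs) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_xs) - 1)
    frac = pos - lo
    return sorted_xs[lo] * (1.0 - frac) + sorted_xs[hi] * frac


def posterior_predictive(model_mean, draw_obs, posterior_samples, times, rng):
    """Return {t: (2.5 percentile, predictive mean, 97.5 percentile)}."""
    draws = {t: [] for t in times}
    for theta in posterior_samples:      # one pass per pooled sample
        for t in times:
            mean = model_mean(t, theta)  # mean tied to the model solution
            draws[t].append(draw_obs(mean, theta, rng))
    summary = {}
    for t in times:
        xs = sorted(draws[t])
        summary[t] = (quantile(xs, 0.025), sum(xs) / len(xs),
                      quantile(xs, 0.975))
    return summary


def line_mean(t, theta):
    """Assumed toy model: mean = slope * t, with theta = (slope, sigma)."""
    return theta[0] * t


def gaussian_draw(mean, theta, rng):
    """Assumed data model: Gaussian noise with standard deviation theta[1]."""
    return rng.gauss(mean, theta[1])


rng = random.Random(3)
# Simulated stand-in for H = 4000 pooled posterior samples of (slope, sigma).
samples = [(rng.gauss(2.0, 0.05), 1.0) for _ in range(4000)]
bands = posterior_predictive(line_mean, gaussian_draw, samples, [0.0, 5.0], rng)
low5, mean5, high5 = bands[5.0]
```

The resulting bands combine parameter uncertainty (the spread of the posterior samples) with observation noise (the draw from the data distribution), which is why prediction intervals are wider than intervals for the model solution alone.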

11. An example: logistic growth

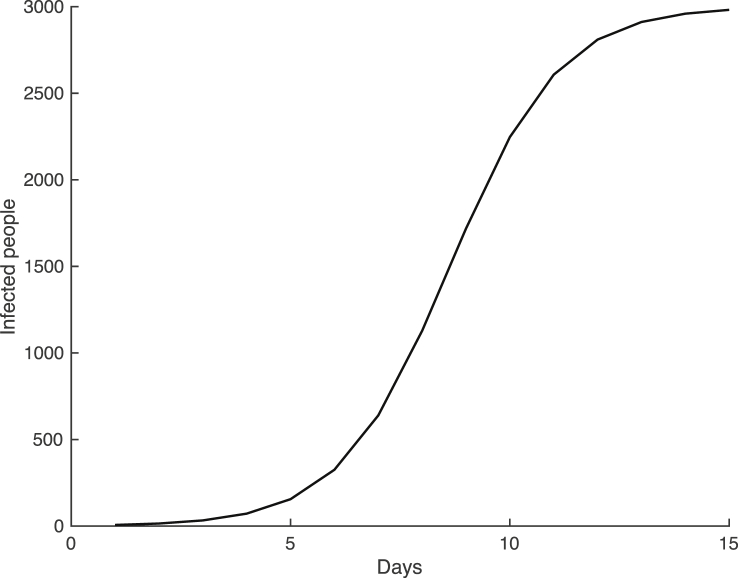

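Assume there are three people infected with a virus in an isolated town of 3000 people. Furthermore, assume that the true model for the first 15 days of the virus across the population is plotted in Fig. 1 and given by the following differential equation

$$\frac{dx}{dt} = r\, x \left(1 - \frac{x}{N}\right) \tag{19}$$

where $x(t)$ is the number of infected people at time $t$ (in days), $x(0) = x_0 = 3$, $N = 3000$, and $r$ is the growth rate.

Fig. 1.

The true logistic growth model for the spread of a viral infection in the small town, with the true values of $x_0$, $r$, and $N$.

Now, this differential equation (19) can be solved analytically and we obtain the logistic equation

$$x(t) = \frac{N x_0}{x_0 + (N - x_0)\, e^{-rt}} \tag{20}$$

where $x_0 = x(0)$.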
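The closed-form logistic solution can be checked against a direct numerical integration of the differential equation. The following is a minimal sketch using a classical fourth-order Runge-Kutta scheme. The initial value 3 and the carrying capacity 3000 come from the example above, while the growth rate `R_ASSUMED = 0.5` is an assumed value chosen only for illustration and is not the paper's true rate.

```python
import math


def logistic_solution(t, x0, r, n_cap):
    """Closed-form solution of dx/dt = r x (1 - x / n_cap), x(0) = x0."""
    return n_cap * x0 / (x0 + (n_cap - x0) * math.exp(-r * t))


def rk4_logistic(x0, r, n_cap, t_end, dt):
    """Integrate the logistic equation with the classical RK4 scheme."""
    def rhs(x):
        return r * x * (1.0 - x / n_cap)

    x = x0
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(x)
        k2 = rhs(x + 0.5 * dt * k1)
        k3 = rhs(x + 0.5 * dt * k2)
        k4 = rhs(x + dt * k3)
        x += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x


X0, N_TOWN = 3.0, 3000.0  # from the example: 3 infected, town of 3000
R_ASSUMED = 0.5           # assumed growth rate, for illustration only

analytic = logistic_solution(15.0, X0, R_ASSUMED, N_TOWN)
numeric = rk4_logistic(X0, R_ASSUMED, N_TOWN, 15.0, 0.01)
```

Agreement between the two values gives some confidence that both the closed form and the numerical scheme are implemented correctly before either is used inside a likelihood.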

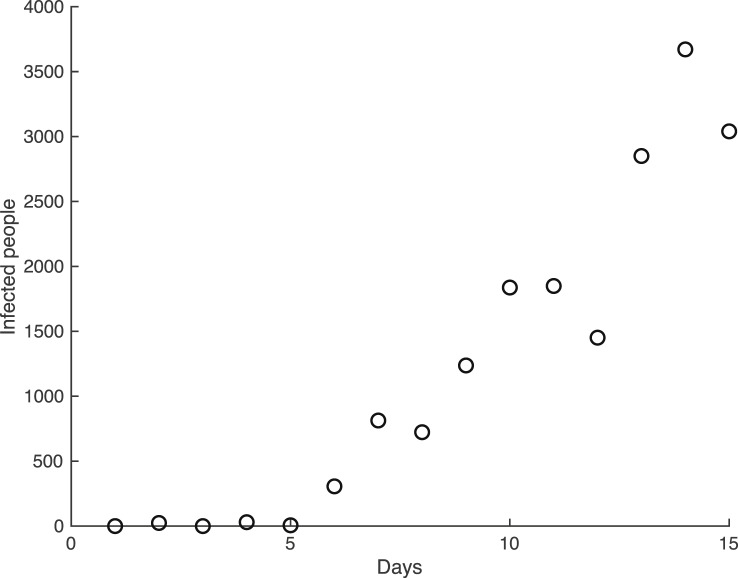

Now, assume that the town collects count data for the number of people infected with the virus. We will generate this observed data by randomly sampling from the negative binomial distribution with mean given by (20), evaluated at the true values of $x_0$, $r$, and $N$, and variance given by the mean divided by $p$, where $p$ is chosen as 0.005. The generated observed data for the first 15 days of the virus across the population is plotted in Fig. 2.

Fig. 2.

The generated data for the spread of a viral infection in the small town.

Now, we will use Bayesian inference to determine the following unknown vector of parameters: $\theta = (x_0, r, N, p)$.

In this scenario, equation (4) is $\mu_i = x(t_i, \theta)$, with $x$ given by the logistic equation (20), and the negative binomial distribution (8) is chosen to describe the observed data.

The following uniform prior distributions are chosen for the parameters:

with distribution

r with distribution

N with distribution

p with distribution .

The affine invariant ensemble MCMC algorithm is used with iterations and walkers. The potential scale reduction factor, η, for each parameter:

for

for r

for N

for p.

All potential scale reduction factors are close to 1 and this indicates that the algorithm converged to the posterior distribution.

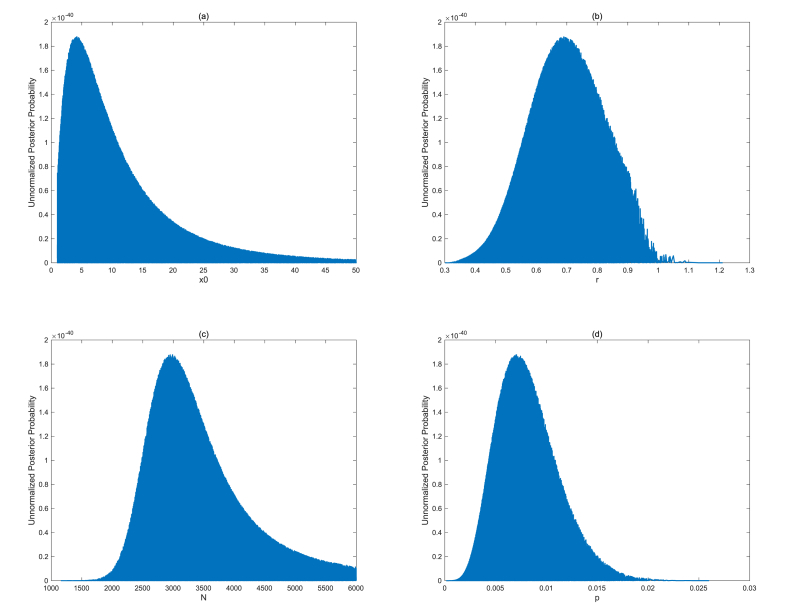

The marginal unnormalized posterior distribution for each parameter is plotted in Fig. 3. The estimated parameters with 95% credible intervals are the following:

$x_0$ is estimated to be 4.13 (1.68, 19.58),

r is estimated to be 0.690 (0.474, 0.834),

N is estimated to be (, ), and

p is estimated to be 0.0070 (0.0032, 0.0111).

Fig. 3.

Marginal unnormalized posterior distribution for (a) $x_0$, (b) $r$, (c) $N$, and (d) $p$.

The true parameter values for $x_0$, $r$, $N$, and $p$ all lie within the 95% credible intervals.

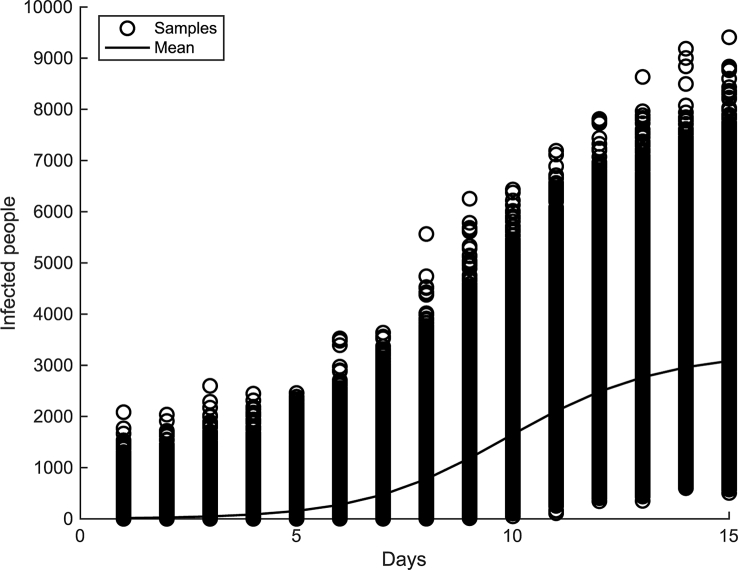

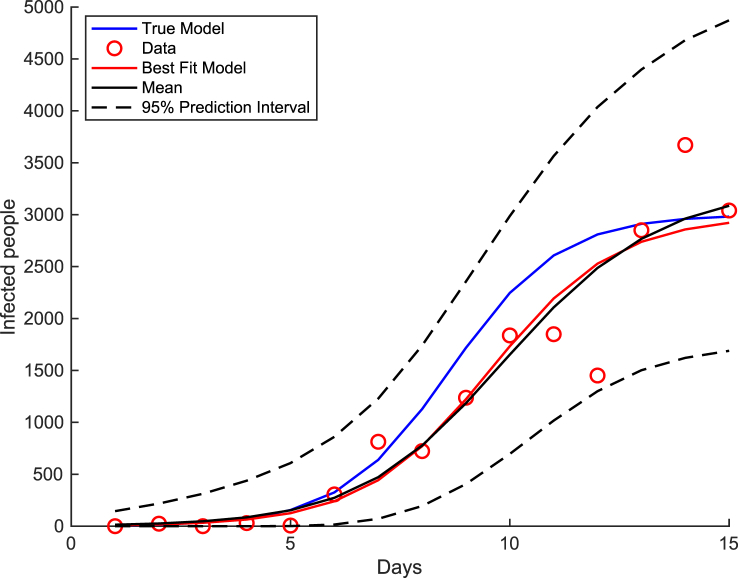

Samples from the posterior predictive distribution and the posterior predictive mean are displayed in Fig. 4. The true model, best fit model (model with the highest unnormalized posterior probability), and posterior predictive mean are compared in Fig. 5. It is seen that the best fit model (red curve) lies very close to the posterior predictive mean (black curve) and is near the true model (blue curve). It is observed that the true model (blue curve) and all of the generated data (red circles) lie within the 95% prediction intervals (dashed black curves).

Fig. 4.

Posterior predictive distribution with the posterior predictive mean.

Fig. 5.

Best fit and true model for the spread of a viral infection in the small town with 95% prediction interval.

Declaration of competing interest

I wish to confirm that there are no known conflicts of interest associated with these lecture notes.

Handling Editor: Dr. J Wu

Footnotes

Peer review under responsibility of KeAi Communications Co., Ltd.

References

- Bain L.J., Engelhardt M. (1987). Introduction to probability and mathematical statistics (2nd ed.). Brooks/Cole.

- Bolker B. (2007). Ecological models and data in R. Princeton University Press, Princeton, New Jersey.

- Chen M., Shao Q., Ibrahim J.G. (2000). Monte Carlo methods in Bayesian computation. Springer-Verlag, New York.

- Gelman A., Brooks S.P. (1998). General methods for monitoring convergence of iterative simulations. J. Comput. Graph. Stat., 7(4), 434–455.

- Ghasemi O., Lindsey M.L., Yang T., Nguyen N., Huang Y., Jin Y.-F. (2011). Bayesian parameter estimation for nonlinear modelling of biological pathways. BMC Syst. Biol., 5(Suppl 3), S9. doi: 10.1186/1752-0509-5-S3-S9.

- Goodman J., Weare J. (2010). Ensemble samplers with affine invariance. Comm. App. Math. Comp. Sci., 5(1), 65–80.

- Higham C.F., Husmeier D. (2013). A Bayesian approach for parameter estimation in the extended clock gene circuit of Arabidopsis thaliana. BMC Bioinformatics, 14(Suppl 10), S3. doi: 10.1186/1471-2105-14-S10-S3.

- Kalbfleisch J.G. (1979). Probability and statistical inference, Vol. 2: Statistical inference. Springer-Verlag New York, Inc.

- Linden A., Mantyniemi S. (2011). Using the negative binomial distribution to model overdispersion in ecological count data. Ecology, 92(7), 1414–1421. doi: 10.1890/10-1831.1.

- Lynch S.M. (2007). Introduction to applied Bayesian statistics and estimation for social scientists. Springer, New York.

- Ma Y.Z., Berndsen A. (2014). How to combine correlated data sets - a Bayesian hyperparameter matrix method. Astron. Comput., 5, 45–56.

- Montgomery D.C., Peck E.A., Vining G.G. (2006). Introduction to linear regression analysis. John Wiley & Sons, Inc., Hoboken, New Jersey.

- Periwal V., Chow C.C., Bergman R.N., Ricks M., Vega G.L., Sumner A.E. (2008). Evaluation of quantitative models of the effect of insulin on lipolysis and glucose disposal. Am. J. Physiol. Regul. Integr. Comp. Physiol., 295, R1089–R1096. doi: 10.1152/ajpregu.90426.2008.

- Vanlier J., Tiemann C.A., Hilbers P.A.J., van Riel N.A.W. (2012). A Bayesian approach to targeted experiment design. Bioinformatics, 28(8), 1136–1142. doi: 10.1093/bioinformatics/bts092.

- Weikun C. (2015). A parallel implementation of MCMC.