Abstract

BACKGROUND

As current augmented-reality (AR) smart glasses are self-contained, powerful computers that project 3-dimensional holograms that can maintain their position in physical space, they could theoretically be used as a low-cost, stand-alone neuronavigation system.

OBJECTIVE

To determine feasibility and accuracy of holographic neuronavigation (HN) using AR smart glasses.

METHODS

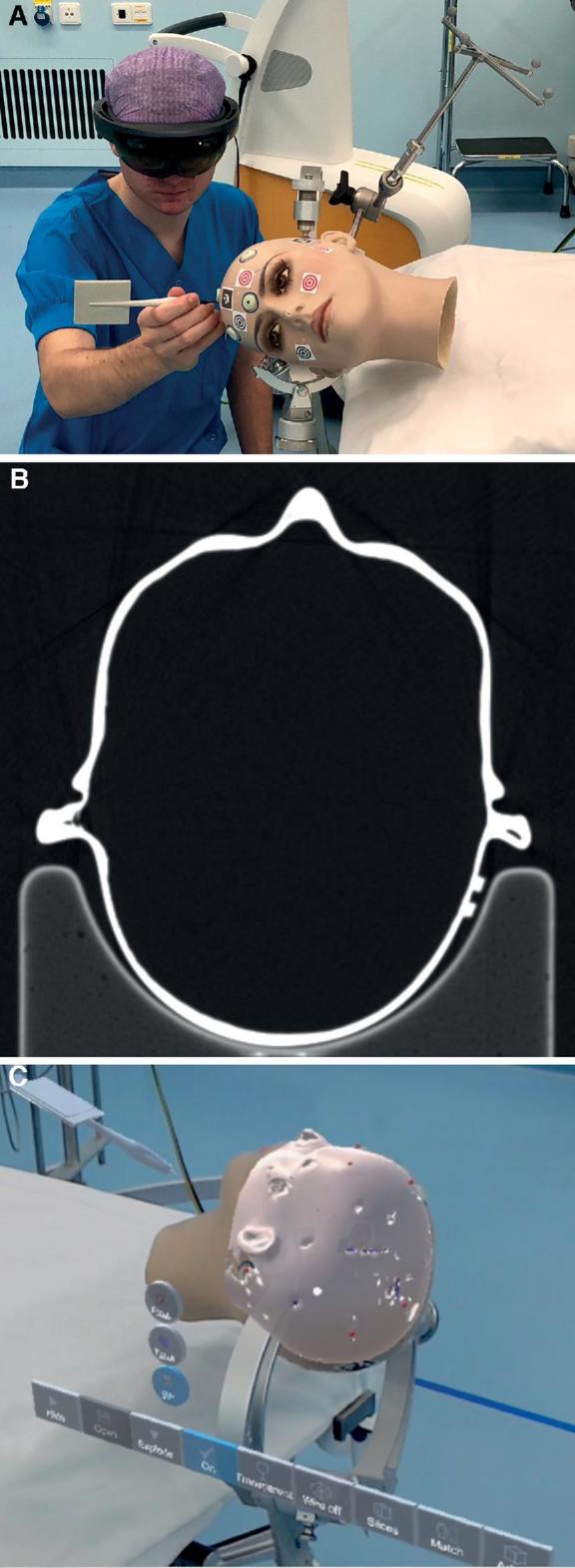

We programmed a fully functioning neuronavigation system on commercially available smart glasses (HoloLens®, Microsoft, Redmond, Washington) and tested its accuracy and feasibility in the operating room. The fiducial registration error (FRE) was measured for both HN and conventional neuronavigation (CN) (Brainlab, Munich, Germany) by using point-based registration on a plastic head model. Subsequently, we measured HN and CN FRE in 3 patients.

RESULTS

A stereoscopic view of the holograms was successfully achieved in all experiments. In plastic head measurements, the mean HN FRE was 7.2 ± 1.8 mm compared to the mean CN FRE of 1.9 ± 0.45 mm (mean difference, CN minus HN: –5.3 mm; 95% confidence interval [CI]: –6.7 to –3.9). In the 3 patients, the mean HN FRE was 4.4 ± 2.5 mm compared to the mean CN FRE of 3.6 ± 0.5 mm (mean difference, CN minus HN: –0.8 mm; 95% CI: –4.6 to 3.0).

CONCLUSION

Owing to the potential benefits and promising results, we believe that HN could eventually find application in operating rooms. However, several improvements will have to be made before the device can be used in clinical practice.

Keywords: Augmented reality, Navigation, Surgery

ABBREVIATIONS

- AR: augmented reality
- CI: confidence interval
- CN: conventional neuronavigation
- CT: computed tomography
- FRE: fiducial registration error
- HN: holographic neuronavigation
- MRI: magnetic resonance imaging
- RMS: root-mean-square
- TRE: target registration error

Augmented reality (AR) is defined as the projection of a computer-generated image overlay (such as a hologram) onto the real environment. Smart glasses are AR devices through which both virtual objects and reality can be observed. In general, AR is a promising technique in medicine. First, AR can be used to explain diseases to patients in detail. For example, a tumor can be projected together with its anatomical environment as a 3-dimensional hologram in the patient's body, in a phantom, or in open space. Second, the technique can be used for teaching.1-3 For example, anatomy or certain surgical approaches can be appreciated more easily when projected with AR. For neurosurgery, AR has some additional theoretical possibilities. Current AR smart glasses are self-contained, powerful computers that project holograms that accurately maintain their position in physical space. Therefore, they could theoretically enable an intuitive, relatively low-cost, stand-alone neuronavigation system. AR smart glasses can be controlled without breaking sterility by means of gesture recognition, allowing full intraoperative use. Stereoscopic visualization of the 3-dimensional anatomical location of critical structures, together with the corresponding navigation accuracy, continuously projected in the surgeon's field of view, may offer benefits over a separate side screen.

To explore these possibilities, we programmed a fully functional, stand-alone neuronavigation application on commercially available smart glasses (HoloLens®, Microsoft, Redmond, Washington). In this study, we evaluate the feasibility and accuracy of holographic neuronavigation (HN) in the clinical setting compared to conventional neuronavigation (CN).

METHODS

Fiducial registration error (FRE) is defined as the root-mean-square (RMS) of the distances between corresponding skin fiducials in the 2 point clouds after registration.4 We performed 10 FRE measurements on a plastic head with both HN and CN to test the feasibility of the system. Subsequently, we performed 3 FRE measurements with HN and CN in 3 patients.
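Written as a formula (our notation, not the authors'), for N fiducials with hologram (virtual) coordinates v_i, tapped physical coordinates p_i, and the rigid transform (rotation R, translation t) estimated by the registration, the definition above reads:

$$
\mathrm{FRE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left\lVert R\,\mathbf{v}_i + \mathbf{t} - \mathbf{p}_i \right\rVert^{2}}
$$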

Plastic Head

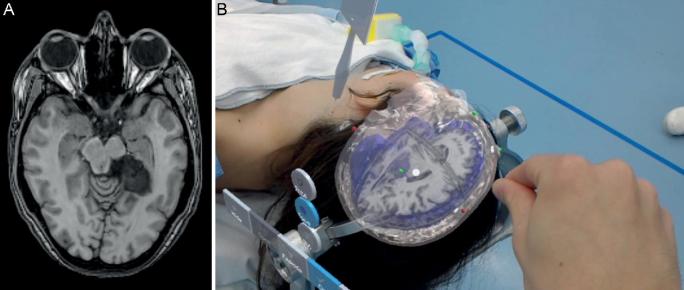

Eight skin fiducials (IZI Medical Products, Maryland) were placed at standard locations on a plastic mannequin head: 4 in a diamond-shaped formation on the forehead and 3 on the posterior skull. A standard navigation computed tomography (CT) scan with 0.5-mm slices was performed (Figure 1).

FIGURE 1.

A, Point matching to install holographic navigation on plastic head. B, CT scan of the plastic head. C, Picture through the smart glasses showing the matched hologram over the plastic head.

Patients

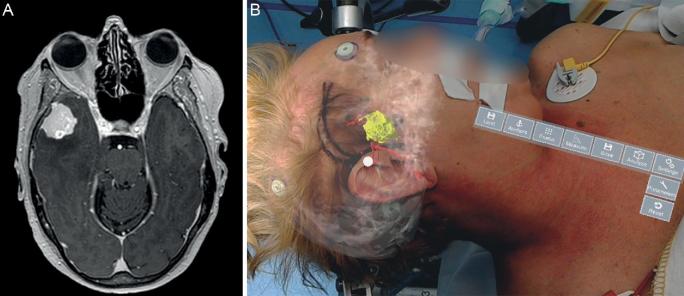

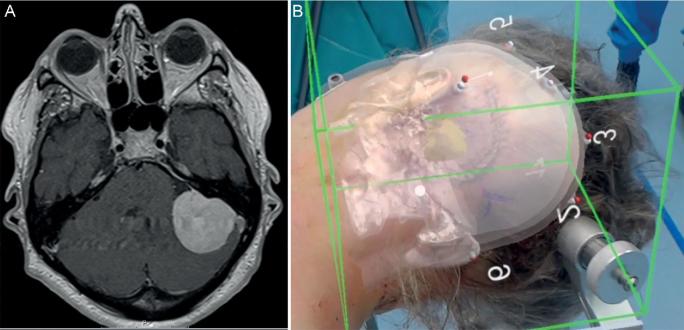

The first patient underwent surgery for a left-sided mesiotemporal epidermoid cyst, the second for a right-sided sphenoid meningioma, and the third for a left-sided vestibular schwannoma. Standard preoperative navigation magnetic resonance imaging (MRI) scans were obtained after standard fiducial placement (Figures 2-4). Before surgery, all patients gave informed consent for the HN measurements. For the remainder of each procedure, CN was used as the primary navigation method. The Institutional Medical Ethical Board approved this pilot study.

FIGURE 2.

A, MRI of patient 1 showing a left-sided mesiotemporal epidermoid cyst. B, Hologram of patient 1, including MRI.

FIGURE 3.

A, MRI of patient 2 showing a right-sided meningioma. B, Hologram of patient 2 showing tumor and vasculature.

FIGURE 4.

A, MRI of patient 3 showing a left-sided vestibular schwannoma. B, Hologram of patient 3 showing the tumor.

Hologram Creation

Using the open source software programs InVesalius (Information Technology Center Renato Archer, Campinas, São Paulo, Brazil) and MeshLab (Institute for Computer Science and Technologies, Pisa, Italy), we created 3-dimensional surface models from the navigation scan DICOM images. For the plastic head, we created a single-surface model. For the 3 patients, we rendered surface models of the skin, skull, brain, relevant blood vessels, and intracranial pathology, and we attached the relevant MRI images. Subsequently, these models were converted into an HN-compatible hologram using the cross-platform game engine Unity (Unity Technologies, San Francisco, California). We identified, located, and numbered the fiducial points, creating a virtual point cloud for each model.

We uploaded the surface models, including the point clouds, to the smart glasses. For this purpose, we programmed a dedicated application in C# and C++ using Unity, the Vuforia image-recognition library (PTC, Needham, Massachusetts), and Microsoft Visual Studio (Microsoft, Redmond, Washington). This navigation application automatically rendered the hologram at the correct scale, together with the first (virtual) point cloud and its fiducial numbers.

Point Matching

The surface mesh created by the HoloLens is too coarse for surface registration algorithms.5 Manual placement has the major disadvantage of visual misinterpretation: holograms can appear to project accurately over the patient from one perspective, whereas moving around the hologram reveals very inaccurate placement from other perspectives.5 Therefore, we used manual fiducial matching with an iterative closest point algorithm. In this method, the fiducial markers define 2 point clouds, and a point-matching procedure performed with the HN application matches the virtual hologram to the real subject (Figure 1; Video, Supplemental Digital Content). FRE was measured automatically by the HN application.

For point acquisition, we 3D printed a pointer with a marker (Figure 5A). The HN application recognizes this pointer and projects a virtual pointer directly over the real pointer once it is placed in the field of view (Figure 5B). We always used the same pointer for these experiments and performed 1 registration per fiducial per measurement. Although 3D printing is highly accurate, slight variability in pointer length can occur, for example, because of minor damage or wear and tear. This variability can be corrected with the calibration option in our software, which ensures that the digital pointer tip is always at exactly the same location as the physical pointer tip. The center of each fiducial was then tapped with the pointer. When the tip of the virtual tool was positioned at the desired spot, a voice command (“Point”) added a point at this exact location, thus creating a second point cloud. After all points had been tapped, the first and second point clouds were matched by pressing a virtual “match” button, which activates the iterative closest point algorithm. We programmed an automatic FRE calculation that is projected in the heads-up display directly after the hologram has been matched to the subject.
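
The matching step can be illustrated with a short sketch. Because the fiducials are numbered, the correspondence between the virtual and the tapped point cloud is known, and each iteration of an iterative closest point algorithm then reduces to a closed-form, least-squares rigid registration of paired points. The Python code below shows that core step using Horn's unit-quaternion method (a standard technique we swapped in for illustration), followed by the FRE calculation. It is a sketch under these assumptions, not the authors' C#/C++ implementation; all function names and the example coordinates are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix = List[List[float]]


def jacobi_eigen(a: Matrix, sweeps: int = 50) -> Tuple[List[float], Matrix]:
    """Eigen-decomposition of a small symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, V); column k of V is the unit eigenvector for eigenvalue k.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(i + 1, n))
        scale = sum(a[i][j] ** 2 for i in range(n) for j in range(n))
        if off <= 1e-30 * scale:  # off-diagonal part is negligible: converged
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J: update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A: update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V J: accumulate eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def register_paired_points(virtual: Sequence[Point], physical: Sequence[Point]) -> Tuple[Matrix, List[float]]:
    """Least-squares rigid transform (R, t) with R @ virtual[i] + t ~= physical[i] (Horn's method)."""
    n = len(virtual)
    if n != len(physical) or n < 3:
        raise ValueError("need at least 3 corresponding, non-collinear points")
    cv = [sum(p[k] for p in virtual) / n for k in range(3)]
    cp = [sum(q[k] for q in physical) / n for k in range(3)]
    # Cross-covariance of the centred clouds: m[i][j] = sum over points of v'_i * p'_j
    m = [[0.0] * 3 for _ in range(3)]
    for p, q in zip(virtual, physical):
        for i in range(3):
            for j in range(3):
                m[i][j] += (p[i] - cv[i]) * (q[j] - cp[j])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = m
    # Horn's symmetric 4x4 matrix; its top eigenvector is the optimal unit quaternion.
    nmat = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    vals, vecs = jacobi_eigen(nmat)
    best = max(range(4), key=lambda k: vals[k])
    w, x, y, z = (vecs[r][best] for r in range(4))
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    rot = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    t = [cp[i] - sum(rot[i][j] * cv[j] for j in range(3)) for i in range(3)]
    return rot, t


def apply_transform(rot: Matrix, t: Sequence[float], p: Point) -> Point:
    """Map a point from hologram (virtual) space to physical space: R p + t."""
    x, y, z = (sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def fiducial_registration_error(virtual, physical, rot, t) -> float:
    """RMS distance between corresponding fiducials after registration."""
    sq = [math.dist(apply_transform(rot, t, v), p) ** 2 for v, p in zip(virtual, physical)]
    return math.sqrt(sum(sq) / len(sq))


if __name__ == "__main__":
    # Hypothetical fiducial coordinates in mm (illustrative only, not study data).
    virtual = [(0.0, 80.0, 40.0), (30.0, 70.0, 60.0), (-30.0, 70.0, 60.0),
               (0.0, 60.0, 80.0), (0.0, -90.0, 30.0)]
    # Simulated "tapped" points: the same fiducials rotated 90 degrees about z and shifted.
    physical = [(-y + 10.0, x - 5.0, z + 2.0) for x, y, z in virtual]
    rot, t = register_paired_points(virtual, physical)
    print(f"FRE = {fiducial_registration_error(virtual, physical, rot, t):.3f} mm")
```

Because the simulated physical points are an exact rigid copy of the virtual ones, the script should report an FRE close to 0 mm; with real tapped points, pointer and hologram errors make the FRE nonzero.
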
In the AR application, the transparency of the individual hologram layers can then be customized to optimize the visibility of relevant structures (Video, Supplemental Digital Content). Subsequently, CN (Brainlab, Munich, Germany) was installed using the same fiducials and point matching with the system's standard optical instruments. CN FRE was calculated from the per-fiducial errors reported by the system software. CN was used in all patients for the rest of the procedure.

FIGURE 5.

A, Pointer for point matching. B, Holographic pointer over real pointer.

RESULTS

A stereoscopic view and subsequent matching of the plastic head hologram (Figure 1) and the 3 patient holograms (Figures 2-4) were successfully achieved. Hologram manipulation and operation using hand gestures were possible from any angle and distance. The software occasionally showed short frame freezes: during 60 min (12 measurements of 5 min each) of intensive use, we recorded 6 frame freezes in total. These frame freezes had a mean duration of 1.2 s (range, 0.4-1.8 s) and did not affect hologram location or cause unintentional hologram movement. The holograms showed minor drift (range, 1-2 mm) when the observer moved around them.

In the 10 plastic head measurements, the mean HN FRE was 7.2 ± 1.8 mm compared to the mean CN FRE of 1.9 ± 0.45 mm (mean difference, CN minus HN: –5.3 mm; 95% CI: –6.7 to –3.9). In the 3 patients, the mean HN FRE was 4.4 ± 2.5 mm compared to the mean CN FRE of 3.6 ± 0.5 mm (mean difference, CN minus HN: –0.8 mm; 95% CI: –4.6 to 3.0).
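The reported mean differences are consistent with subtracting the mean HN FRE from the mean CN FRE, so a negative value means that HN was less accurate than CN:

$$
\Delta = \overline{\mathrm{FRE}}_{\mathrm{CN}} - \overline{\mathrm{FRE}}_{\mathrm{HN}}, \qquad
\Delta_{\text{plastic head}} = 1.9 - 7.2 = -5.3\ \text{mm}, \qquad
\Delta_{\text{patients}} = 3.6 - 4.4 = -0.8\ \text{mm}
$$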

DISCUSSION

In this study, we analyzed the accuracy of holographic neuronavigation implemented on the HoloLens with open source software (HN) and compared it with CN. The AR system described here has the functionality of a fully working, stand-alone neuronavigation system. A major benefit compared to currently available neuronavigation techniques is that the location and 3-dimensional anatomy of surgical targets are continuously and stereoscopically projected in the surgeon's field of view, instead of being displayed on a separate 2-dimensional side screen. The placement error of the holograms can be assessed directly by looking at the patient and the matched hologram, because the hologram location is continuously visualized in relation to the patient. However, the measured HN accuracy in the plastic head experiments was relatively low compared to CN. This is caused by the use of CT for these measurements: CN recognizes fiducials on CT better than our HN application does and therefore achieves more accurate point cloud matching on CT. Fiducial recognition on MRI is equally difficult for CN and HN; therefore, the accuracy measurements in patients, which were based on MRI scans, differed less between HN and CN. Despite the relatively low number of measurements, we can conclude that HN accuracy is not yet sufficient for clinical use and that several improvements are needed. Based on our current methods of accuracy measurement, HN FRE should be under 2 mm for CT and under 4 mm for MRI to be noninferior to CN.

Current Literature

To assess the results of previous studies on the accuracy of HN using the HoloLens, we conducted a literature search in PubMed (November 15, 2018). The single search term “HoloLens” (All Fields) yielded 39 articles, 4 of which addressed HoloLens-guided navigation accuracy. These 4 articles all used different methods of accuracy measurement. Kuhlemann et al5 measured accuracy by calculating the RMS error of landmark matching using a pointer tool on a phantom model, a method similar to the calculation of FRE. This study reported an overall RMS of 4.3 ± 0.7 mm. McJunkin et al6 measured target registration error (TRE) on a 3D-printed model. This study reported a mean TRE of 5.8 ± 0.5 mm. Incekara et al7 measured accuracy by marking tumor centers on patient skin using both AR and CN. The mean distance between marked centers was 4 (interquartile range, 0-8) mm. Meulstee et al8 determined the accuracy of a HoloLens integrated in an image-guided surgery system. The authors measured a mean Euclidean distance between a plastic and a virtual model of 2.3 ± 0.5 mm. In conclusion, although different techniques were used in these studies and in our study, and only small numbers of measurements were performed, the accuracy of our application is broadly within the range reported in these 4 publications. None of these studies reported results that we would regard as clinically acceptable.

Distinguishing Aspects

The current study differs from earlier studies in several respects. We are the first to describe a fully functioning, stand-alone neuronavigation application programmed on the HoloLens or on any other commercially available AR device. Custom-built devices are often expensive; moreover, they are difficult to replicate, certify, and make broadly available. Our application allows extensive manipulation of the hologram, including moving, rotating, resizing, separating different tissues, making different tissues transparent, and overlaying CT or MRI slices. We also incorporated holographic point matching, a feature that has not been described before. Manual placement has the major disadvantage of visual misinterpretation: holograms can appear to project accurately over the patient from one perspective, whereas moving around the hologram reveals very inaccurate placement from other perspectives; manual placement is therefore less accurate.

Potential Improvements

Our initial results are promising; however, some improvements are needed.

First, the mean FRE observed in the plastic head experiments is too high to be clinically acceptable. To increase accuracy, the matching process has to be improved, and developers of next-generation smart glasses should eliminate holographic drift. Second, a correction for object movement is needed. CN follows the object because the point cloud is matched to a visual marker that is fixed to the object (the “reference arc”). HN hologram placement, in contrast, is currently based on 3-dimensional surface scanning of the room. Therefore, with the current HN method, the hologram has to be repositioned manually whenever the operating table is moved or objects in the room move significantly. This is not clinically acceptable. We aim to develop markers placed in the operative field that will accurately keep the hologram on the matched volume. Third, a method for automated, standardized TRE measurement should be programmed into the system and incorporated in the measurement results. TRE is defined as the displacement of a specific chosen point (for example, an anatomical point rather than a fiducial used for registration) in virtual space compared to physical space. Currently, because of software and hardware restrictions, HN TRE cannot be measured automatically with sufficient precision. A previous study that attempted to measure HN TRE used manual methods.9 However, the measurement inaccuracy and bias associated with that technique are unacceptable for standardized neurosurgical validation. Future studies should focus on automated TRE measurements on representative neuroanatomical models.
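To make the TRE definition concrete, the following minimal Python sketch assumes that the registration is expressed as a rigid transform (rotation matrix and translation vector) mapping hologram coordinates to physical coordinates; the function names and example values are hypothetical and do not describe the authors' software. The key difference from FRE is that the target must not be one of the fiducials used to compute the transform.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def apply_transform(rot: Sequence[Sequence[float]], t: Sequence[float], p: Point) -> Point:
    """Map a point from hologram (virtual) space to physical space: R p + t."""
    x, y, z = (sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def target_registration_error(rot, t, target_virtual: Point, target_physical: Point) -> float:
    """Distance between where the registration places a target and where it really is.

    The target should NOT be one of the fiducials used to compute (rot, t).
    """
    return math.dist(apply_transform(rot, t, target_virtual), target_physical)


if __name__ == "__main__":
    # Hypothetical example: a registration that is off by a pure (1, 2, 2) mm translation.
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    tre = target_registration_error(identity, (1.0, 2.0, 2.0), (10.0, 0.0, 0.0), (10.0, 0.0, 0.0))
    print(f"TRE = {tre:.1f} mm")
```

In this toy case the script prints `TRE = 3.0 mm`, the length of the (1, 2, 2) mm offset. In a real validation, the hard part is not this arithmetic but locating the physical target precisely, which is the measurement problem described above.
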

Our application does not account for brain shift; nor does any other current system. However, the HN system described here has the potential to do so, because the hologram can be manipulated during surgery. In the future, surface scanning of the operative field combined with intraoperative tracking by smart glasses will probably facilitate a solution to this long-standing problem.

CONCLUSION

In conclusion, the accuracy of holographic navigation using commercially available smart glasses is currently not within clinically acceptable levels. However, the results are promising. We believe that it is possible to reach clinically acceptable levels of accuracy in the near future and to overcome the problems described above. Owing to the potential benefits of this technique over current navigation techniques, AR neuronavigation offers great potential for further development and will ultimately find application in operating rooms.

Disclosures

The authors have no personal, financial, or institutional interest in any of the drugs, materials, or devices described in this article.

Supplementary Material

Supplemental Digital Content. Video. Matching of the plastic head with the hologram is shown. Subsequently, patients 1, 2, and 3 are shown in the operating room with a matched hologram, including possible interactions with the hologram.

REFERENCES

1. Barsom EZ, Graafland M, Schijven MP. Systematic review on the effectiveness of augmented reality applications in medical training. Surg Endosc. 2016;30(10):4174-4183.

2. Kugelmann D, Stratmann L, Nuhlen N, et al. An augmented reality magic mirror as additive teaching device for gross anatomy. Ann Anat. 2018;215:71-77.

3. Pelargos PE, Nagasawa DT, Lagman C, et al. Utilizing virtual and augmented reality for educational and clinical enhancements in neurosurgery. J Clin Neurosci. 2017;35:1-4.

4. Shamir RR, Joskowicz L. Geometrical analysis of registration errors in point-based rigid-body registration using invariants. Med Image Anal. 2011;15(1):85-95.

5. Kuhlemann I, Kleemann M, Jauer P, Schweikard A, Ernst F. Towards X-ray free endovascular interventions using HoloLens for on-line holographic visualisation. Healthc Technol Lett. 2017;4(5):184-187.

6. McJunkin JL, Jiramongkolchai P, Chung W, et al. Development of a mixed reality platform for lateral skull base anatomy. Otol Neurotol. 2018;39(10):e1137-e1142.

7. Incekara F, Smits M, Dirven C, Vincent A. Clinical feasibility of a wearable mixed-reality device in neurosurgery. World Neurosurg. 2018;118:e422-e427.

8. Meulstee JW, Nijsink J, Schreurs R, et al. Toward holographic-guided surgery. Surg Innov. 2019;26(1):86-94.

9. Maruyama K, Watanabe E, Kin T, et al. Smart glasses for neurosurgical navigation by augmented reality. Oper Neurosurg. 2018;15(5):551-556.
