Abstract

Introduction

Reproducibility is critical to diagnostic accuracy and treatment implementation. Concurrent with clinical reproducibility, research reproducibility establishes whether the use of identical study materials and methodologies in replication efforts permits researchers to arrive at similar results and conclusions. Little is known, however, about the reproducibility practices that nephrology researchers actually implement. In this study, we address this gap by evaluating the nephrology literature for common indicators of transparent and reproducible research.

Methods

We searched the National Library of Medicine catalog to identify 36 MEDLINE-indexed, English-language nephrology journals. We randomly sampled 300 publications published between January 1, 2014, and December 31, 2018.

Results

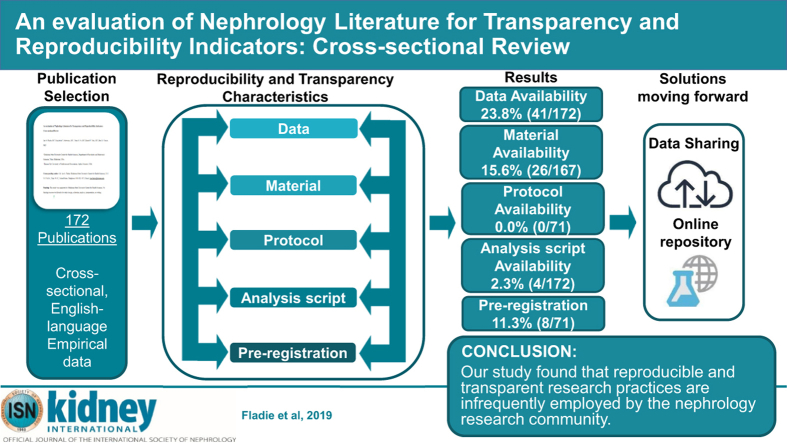

Our search yielded 28,835 publications, of which we randomly sampled 300. Of the 300 publications, 152 (50.7%) were publicly available, whereas 143 (47.7%) were restricted behind a paywall and 5 (1.7%) were inaccessible. Of the remaining 295 publications, 123 were excluded because they lacked the empirical data necessary for reproducibility. Of the 172 publications with empirical data, 43 (25.0%) reported data availability statements and 4 (2.3%) reported analysis scripts. Of the 71 publications analyzed for preregistration and protocol availability, 0 (0.0%) provided links to a protocol and 8 (11.3%) were preregistered.

Conclusion

Our study found that reproducible and transparent research practices are infrequently used by the nephrology research community. Greater efforts should be made by both funders and journals. In doing so, an open science culture may eventually become the norm rather than the exception.

Keywords: data availability, evidence-based science, reproducibility, transparency

Graphical abstract


Reproducibility is critical to diagnostic accuracy and treatment implementation. In nephrology, a substantial body of literature is devoted to establishing the reproducibility of diagnostic tests or procedures. Examples include an evaluation of the reproducibility of the Banff classification for surveillance renal allograft biopsies among pathologists across transplant centers,1 a novel analytic technique for renal blood oxygenation level–dependent magnetic resonance imaging,2 and a food frequency questionnaire among patients with chronic kidney disease.3 This form of reproducibility is important clinically, as such studies establish our confidence in tests or procedures for applications to patient care.

Concurrent with clinical reproducibility, research reproducibility establishes whether use of identical study materials and methodologies in replication efforts permits researchers to arrive at similar results and conclusions. In other cases, reproducibility may mean attempts to reanalyze study data to determine whether the same results can be obtained. The National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK) supports the National Institutes of Health’s (NIH) rigor and reproducibility initiative, which was created to foster greater reproducibility of studies funded by taxpayer dollars. The NIDDK also sponsors dkNET,4 a portal for the dissemination of research protocols and data sets as well as tools and training to promote compliance with the NIH initiative for rigorous and reproducible research.5,6 Further, the NIDDK directly supports reproducible research through grant funding. As one example, the NIDDK has cosponsored a funding opportunity with other NIH institutes and centers to develop novel, reliable, and cost-effective methods to standardize and increase the utility and reproducibility of human induced pluripotent stem cells. The NIDDK has specifically tasked researchers to develop these stem cells for the replacement of endocrine cells, disease modeling, treatments for diabetic wounds, and reversal of diabetic neuropathy.7 Research into stem cells has provided significant medical advancements and has the opportunity to demonstrate the importance of reliable and reproducible clinical and basic science research.

Although efforts have been made with various stakeholders to foster reproducible research, little is known about the practices actually implemented by researchers involved in nephrology research. In this study, we address this gap by evaluating nephrology literature for common indicators of transparent and reproducible research. By assessing the current state of affairs, we can identify areas of greatest need and establish baseline data for subsequent investigations.

Methods

Study Design

Our cross-sectional study used a methodology similar to that of Hardwicke et al.,8 with our own modifications, to evaluate indicators of reproducibility and transparency. Because this study did not involve human subjects, it was not subject to institutional review board approval. When applicable, the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were used.9 The following materials can be accessed on Open Science Framework (https://osf.io/n4yh5/): protocols, raw data, training recording, and additional material.

Journal and Publication Selection

Nephrology journals were identified by DT on June 5, 2019, by searching the National Library of Medicine catalog with the subject term tag “Nephrology[ST]”. To meet the inclusion criteria, journals had to be MEDLINE indexed, full text, and published in English. Journals also needed an electronic International Standard Serial Number (ISSN) or, if an electronic ISSN was not available, a linking ISSN (https://osf.io/tck6m/). DT searched PubMed using the list of ISSNs to retrieve articles published from January 1, 2014, through December 31, 2018. A total of 300 publications were then randomly selected for analysis (https://osf.io/mzj45/). A complete list of journals with publications included in our study can be found in Appendix S1.

Data Extraction Training

On June 10, 2019, DJT led an in-person training session for the investigators (TMA, IAF, and NHV) on how to extract data. The session covered data extraction, the study design, and the protocol. As a worked example of the process, TMA, IAF, and NHV extracted data from 2 publications and reconciled discrepancies after extraction. At the end of the training session, the investigators applied the same procedure to the next 10 publications to ensure that the process was standardized and reliable. Starting on July 11, TMA, IAF, and NHV extracted data from the remaining 289 publications in a duplicate, blinded fashion. On completion of data extraction, the investigators met to reconcile discrepancies. DJT was available to arbitrate when the investigators could not reach a consensus. The training session was recorded and made available online to investigators for reference (https://osf.io/tf7nw/).
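The reconciliation step described above can be illustrated with a short sketch. It is not the study's actual tooling (the investigators used a Google form and met in person). The field names and publication identifiers below are hypothetical, chosen only to show how two independent extractions might be compared to list the items that need discussion.

```python
from typing import Dict, List, Optional, Tuple

# One extraction record per publication: field name -> recorded answer.
# Field names here are hypothetical, not the study's actual form fields.
Record = Dict[str, str]
Discrepancy = Tuple[str, str, Optional[str], Optional[str]]


def find_discrepancies(first: Dict[str, Record],
                       second: Dict[str, Record]) -> List[Discrepancy]:
    """List every (publication, field) on which two extractors disagree."""
    discrepancies: List[Discrepancy] = []
    # Only publications extracted by both investigators can be compared.
    for pub_id in sorted(first.keys() & second.keys()):
        a, b = first[pub_id], second[pub_id]
        for field in sorted(a.keys() | b.keys()):
            if a.get(field) != b.get(field):
                discrepancies.append((pub_id, field, a.get(field), b.get(field)))
    return discrepancies


# Illustrative data only.
extractor_1 = {"pub_001": {"data_statement": "yes", "preregistered": "no"}}
extractor_2 = {"pub_001": {"data_statement": "no", "preregistered": "no"}}

for item in find_discrepancies(extractor_1, extractor_2):
    print(item)
```

Each listed item would then be resolved by discussion, with unresolved items passed to the arbitrator.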

Data Extraction

We used a Google form similar to the form used by Hardwicke et al.,8 with modifications (https://osf.io/3nfa5/). Our form added the following: 5-year impact factor, impact factor for the most recent year listed, additional study design options (cohort studies, case series, secondary analyses, chart reviews, and cross-sectional studies), and additional funding options (university, hospital, public, private/industry, or nonprofit). The Google form prompted investigators to assess the overall reporting of transparency and reproducibility characteristics. The items extracted depended on the study design of the publication. Publications without any empirical data were excluded because they cannot provide reproducibility-related characteristics. Extraction was modified for the following study designs: systematic reviews, meta-analyses, case studies, and case series. Systematic reviews and meta-analyses generally do not involve study materials, so we excluded them from the evaluation of material availability. Case studies and case series contain empirical data but are generally not described in enough detail to be reproduced in subsequent publications, and they were not expected to contain reproducibility characteristics.10

Open Access Article Availability

We used a systematic approach to determine whether publications were openly available to the public. First, we searched for each publication by title and DOI using the Open Access Button (https://openaccessbutton.org/). If the Open Access Button failed to identify the publication online or reported an error, we then searched PubMed and Google to identify any other forms of public availability. If both steps failed to locate the full text, the publication was classified as paywall restricted and not available through open access.
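The stepwise rule above can be written as a small decision function. This is only a sketch of the classification logic. The function name and boolean inputs are assumptions for illustration, and the searches themselves were performed manually by the investigators.

```python
def classify_access(found_via_open_access_button: bool,
                    found_via_pubmed_or_google: bool) -> str:
    """Apply the two-step open access rule to one publication."""
    # Step 1: the Open Access Button search is tried first.
    if found_via_open_access_button:
        return "open access (Open Access Button)"
    # Step 2: fall back to PubMed and Google.
    if found_via_pubmed_or_google:
        return "open access (other means)"
    # Neither step located the full text.
    return "paywall restricted"


print(classify_access(False, True))
```

The order of the checks matters: a publication found in step 1 is never re-examined in step 2, which mirrors the two open access rows reported in Table 2.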

Replication Attempts and Use in Research Synthesis

Using the publication title and the DOI, we searched Web of Science (https://webofknowledge.com) for the following: (i) the number of times a publication was cited by a systematic review/meta-analysis, and (ii) the number of times a publication was cited by a validity/replication study.

Statistical Analysis

We used Microsoft Excel (Microsoft, Redmond, WA) functions to calculate descriptive statistics, including percentages, fractions, and confidence intervals. Excel was also used to draw the random sample: each publication was assigned a random number, the spreadsheet was sorted by that number, and the first 300 publications were taken as the sample.
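The sampling procedure described above (assign a random number, sort, keep the first 300) can be reproduced outside a spreadsheet. The following is a minimal sketch, not the authors' workflow. The placeholder identifiers and the fixed seed are assumptions added so that the draw can be repeated exactly.

```python
import random
from typing import List, Sequence


def sample_publications(publication_ids: Sequence[str],
                        sample_size: int = 300,
                        seed: int = 2019) -> List[str]:
    """Assign each publication a random number, sort, keep the first rows."""
    rng = random.Random(seed)  # fixed seed is an assumption, for repeatability
    keyed = [(rng.random(), pub_id) for pub_id in publication_ids]
    keyed.sort()  # sort by the random number, as in the spreadsheet
    return [pub_id for _, pub_id in keyed[:sample_size]]


# Placeholder identifiers standing in for the 28,835 search results.
population = [f"pub_{i:05d}" for i in range(28835)]
sample = sample_publications(population)
print(len(sample), len(set(sample)))
```

Sorting on independent random keys is equivalent to sampling without replacement, so no publication can be drawn twice.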

Results

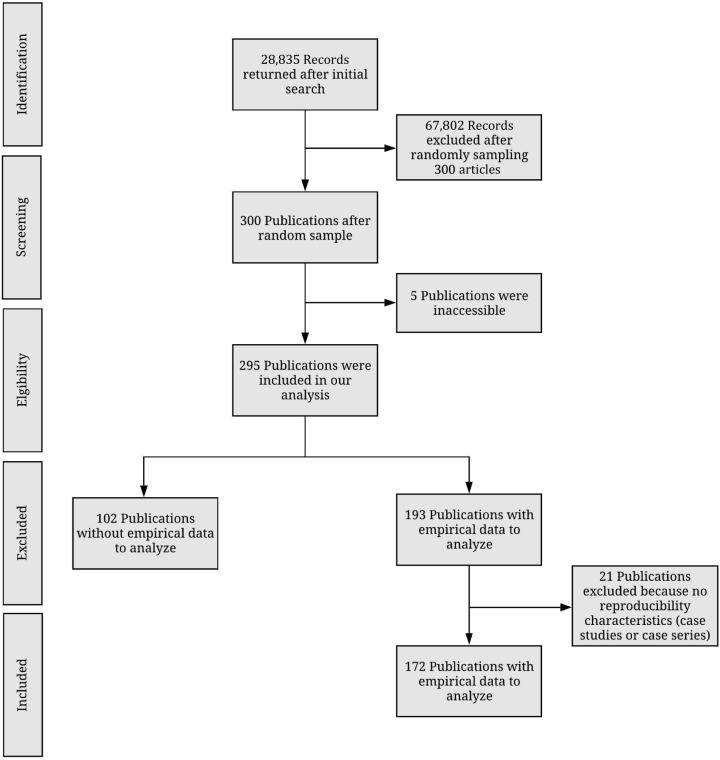

We identified 36 nephrology journals that met our inclusion criteria. Our search yielded 28,835 publications within our time frame. We randomly sampled 300 publications for data extraction. Of the 300 publications, 295 were accessible and contained information to be analyzed. Five publications were inaccessible and were therefore excluded. Of the remaining 295 publications, 123 lacked empirical data to be analyzed, including 21 that were case studies or case series; these were excluded from our final analysis. Our final analysis included 172 publications with empirical data (Figure 1). Table 1 provides additional information on each indicator used to assess reproducibility and transparency.

Figure 1.

Flow diagram of included and excluded studies in nephrology journals.

Table 1.

Analyzed components of each publication

| Study design | Indicator | Significance in reproducibility of research |
|---|---|---|
| Publication accessibility | | |
| All included studies (n = 295) | Publication open access (Using the Open Access Button or PubMed was the publication available to the public? Is the full text accessible through paywalls requirements?) | Publications that are open access are more accessible, thus making it easier to be reproduced. Given that a publication is restricted behind paywall, this potentially limits investigators from obtaining essential information necessary for reproducing similar trial designs. |
| Funding source | | |
| All included studies (n = 295) | Funding statement (Do the authors disclose the source of funding? If a funding statement was received, do the authors provide the name of the funding source?) | Funding sources can influence research and impose biases. Authors can mitigate potential bias by disclosing relationships with the funding agency. |
| Financial conflicts of interest | | |
| All included studies (n = 295) | Conflicts of interest statements (Do the authors provide a conflict of interest statement?) | Conflict of interest statements aid in providing transparent research. The disclosure of potential conflicts that may pertain to the conduction of the study inform readers about potential biases that might have influenced the conduction of the study. |
| Protocol availability | | |
| Empirical studies<sup>a</sup> (n = 71) | Protocol statement (Is there a statement provided indicating the use of a protocol for conduction of the research project?) | Full protocols are essential for the reproducibility of a study by providing a step-by-step outline of the study’s methodology. |
| | Components (If the protocol is accessible, what aspects of the protocol were made available for interpretation?) | |
| Data availability | | |
| Empirical studies<sup>b</sup> (n = 172) | Data statement (Is there a statement provided that guides readers to the data collected in the publication?) | Raw data aids in reproducibility by allowing readers and other investigators an opportunity to synthesize and verify significant findings. Given that a study provides all raw data and is reproduced with similar findings, the results of the study that was reproduced would become more robust because similar studies have found similar results. |
| | Availability (If the data are accessible, how were the data made accessible, e.g., on request from author or in supplementary material or journal?) | |
| | Components (Do the investigators provide all the raw data collected?) | |
| | Organization (Are the data provided in a clean and easy-to-interpret manner?) | |
| Analysis scripts availability | | |
| Empirical studies<sup>b</sup> (n = 172) | Analysis script statement (Is there a statement providing a link to the analysis script used in the publication?) | Analysis scripts aid in reproducibility by providing a detailed description of how the data analysis was conducted and how the data were interpreted. |
| | Availability (If the analysis script is available, how was it made available and is it accessible, e.g., on request from author or in supplementary material or journal?) | |
| Registration availability | | |
| Empirical studies<sup>a</sup> (n = 71) | Prospective registration statement (Is there a registration statement or number?) | Preregistration improves both transparency and reproducibility of the publication by providing documentation of the publication before data extraction and analysis. Preregistration helps to mitigate selective reporting bias, publication bias, and p-hacking. |
| | Registration availability (Where was the publication registered, e.g., at Clinicaltrials.gov?) | |
| | Accessibility (Is the registration accessible?) | |
| | Components (If the registration is accessible, what aspects of the manuscript were registered?) | |
| Material availability | | |
| Empirical studies<sup>c</sup> (n = 167) | Materials statement (Is there a statement providing a link to the materials used in the publication?) | Materials aid in reproducibility by ensuring the resources used in conducting the manuscript retain the same properties as different material could produce different outcomes. |
| | Material availability (If the materials are available, how were they made available and are they accessible, e.g., on request from author or in supplementary material or journal?) | |
| Evidence synthesis | | |
| Empirical studies<sup>c</sup> (n = 167) | Meta-analysis, systematic review, replication citations (Is the publication cited by any of the following: meta-analyses, systematic reviews, or replication studies?) | Systematic reviews and meta-analyses compile published evidence to evaluate strengths and limitations within the area of focus. Given that the rigor of systematic reviews and meta-analyses are highly regarded in the medical community, publications incorporated into these studies should be reproducible. |

Components analyzed varied by study type. Additional details can be found at: https://osf.io/tck6m/.

<sup>a</sup> Clinical trials, cohort, case control, and meta-analyses and systematic reviews were included in these categories because they are commonly registered on a trial registry.

<sup>b</sup> Empirical studies include clinical trials, cohort, case series, case reports, case control, secondary analyses, chart review, commentaries (with data analysis), laboratory, and cross-sectional study designs.

<sup>c</sup> Case reports, case series, commentaries with analysis, meta-analysis or systematic review, and nonempirical studies were excluded from this category, as these characteristics were not typically reported within these study designs.

Study Characteristics

The most common empirical study designs in our analysis were cohort studies (46 of 295; 15.6%) and laboratory studies (46 of 295; 15.6%). Among the 295 publications, the impact factor could not be found for 19. The median 5-year impact factor was 3.232 (interquartile range, 2.053–7.065), and 182 (of 295; 61.7%) of the publications appeared in US journals. The largest share of corresponding authors was from the United States (97 of 295; 32.9%). Human participants were the most common study population (145 of 295; 49.2%). Additional sample characteristics can be found in Supplementary Table S1.

Transparency Related Characteristics

Each of the 295 accessible publications was analyzed for transparency characteristics: open access availability, conflict of interest statements, and funding statements. Of these publications, 152 (51.5%) were openly accessible to the public, and the remaining 143 (48.5%) were restricted behind a paywall. Of the 295 publications, 253 (85.8%) provided conflict of interest statements; in most cases (188 of 295; 63.7%), the statement declared that none of the authors had a conflict of interest. Approximately one-fifth of publications were publicly funded (63 of 295; 21.3%), whereas hospitals (2 of 295; 0.7%) contributed the least to funding. Furthermore, 19 (of 295; 6.4%) publications reported that no funding was received to conduct the study. Additional transparency characteristics can be found in Table 2.

Table 2.

Reproducibility indicators of analyzed nephrology articles

| Characteristics | n (%) | [95% CI] |
|---|---|---|
| Funding (n = 295) | | |
| University | 7 (2.4) | [0.7–4.1] |
| Hospital | 2 (0.7) | [0.3–1.6] |
| Public | 63 (21.3) | [21.4–16.7] |
| Private/Industry | 19 (6.4) | [3.7–9.2] |
| Nonprofit | 3 (1.0) | [0.1–2.2] |
| No statement listed | 129 (43.7) | [38.1–49.3] |
| No funding received | 19 (6.4) | [3.7–9.2] |
| Mixed funding received | 53 (18.0) | [13.6–22.3] |
| Conflict of interest statement (n = 295) | | |
| Statement provided 1 or more conflicts of interest | 65 (22.0) | [17.3–26.7] |
| Statement provided no conflict of interest | 188 (63.7) | [58.3–69.2] |
| No conflict of interest statement provided | 42 (14.2) | [10.3–18.2] |
| Data availability (n = 172) | | |
| Statement was provided saying some data were available | 41 (23.8) | [19.0–28.7] |
| Statement was provided saying no data were available | 2 (1.2) | [0.1–2.4] |
| No data availability statement provided | 129 (75.0) | [70.1–79.9] |
| Material availability (n = 167) | | |
| Statement provided saying some materials were available | 26 (15.6) | [11.5–19.7] |
| Statement provided saying some materials were not available | 1 (0.6) | [0.3–1.5] |
| No materials availability statement provided | 140 (83.8) | [79.7–89.0] |
| Analysis script availability (n = 172) | | |
| Statement provided saying an analysis script was available | 4 (2.3) | [0.6–4.0] |
| Statement provided saying analysis scripts were not available | 1 (0.6) | [0.3–1.4] |
| No analysis script availability statement was provided | 167 (97.1) | [95.2–99.0] |
| Replication studies (n = 172) | | |
| Replication study | 0 (0.0) | [0.0–0.0] |
| No statement was provided stating the study was a replicated publication | 172 (100.0) | [100.0–100.0] |
| Cited by systematic review/meta-analysis (n = 167) | | |
| Not cited | 167 (100.0) | [100.0–100.0] |
| Cited a single time | 0 (0.0) | [0.0–0.0] |
| Cited 1 to 5 times | 1 (0.6) | [0.0–1.8] |
| Cited more than 5 times | 2 (1.2) | [0.0–2.8] |
| Cited by a replication study (n = 167) | | |
| Not cited | 167 (100) | [100.0–100.0] |
| Cited a single time | 0 (0.0) | [0.0–0.0] |
| Protocol availability (n = 71) | | |
| Protocol was made available | 0 (0.0) | [0.0–0.0] |
| No protocol was made available | 71 (100.0) | [100.0–100.0] |
| Preregistration (n = 71) | | |
| Statement provided saying publication was preregistered | 8 (11.3) | [3.9–18.6] |
| Statement provided saying publication was not preregistered | 0 (0.0) | [0.0–0.0] |
| There is no preregistration statement provided in the publication | 63 (88.7) | [81.4–96.1] |
| Open access (n = 295) | | |
| Yes, the publication was found via Open Access Button | 148 (49.3) | [4.4–55.0] |
| Yes, the publication was found via other means | 4 (1.3) | [0.0–2.6] |
| The publication could not access through paywall | 143 (48.5) | [42.0–53.3] |

CI, confidence interval.
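The article does not state which interval method was used in Excel. As a hedged illustration only, the sketch below assumes a normal-approximation (Wald) interval for a proportion, which is a common spreadsheet calculation; the function name and the choice of z = 1.96 are assumptions, not details taken from the study.

```python
import math
from typing import Tuple


def wald_interval(count: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation 95% CI for a proportion, returned in percent."""
    p = count / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    # Clamp to the valid range of a proportion before converting to percent.
    lower = max(0.0, p - half_width) * 100
    upper = min(1.0, p + half_width) * 100
    return lower, upper


low, high = wald_interval(63, 295)  # publicly funded publications
print(f"{low:.1f} to {high:.1f}")
```

Under this assumption, 63 of 295 gives an interval of roughly 16.7% to 26.0%, and a count of 0 gives a zero-width interval. The Wald method is known to behave poorly for proportions near 0 or 1, so alternatives such as the Wilson interval may be preferable for the sparse rows.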

Reproducibility-Related Characteristics

A total of 172 publications were analyzed for data, analysis script, and material availability statements. Of these 172 publications, 43 (25.0%) provided a statement regarding the data used in the study. Few of these publications made data accessible that contained all the raw data used. Analysis scripts were the least reported reproducibility characteristic, with only 4 (2.3%) publications containing a statement. For the analysis of materials and evidence synthesis, meta-analyses (n = 4) and commentaries with analysis (n = 1) were excluded because they lack materials necessary for reproducibility. Of the remaining 167 publications, the majority did not report a material availability statement (140 of 167; 83.8%).

Preregistered studies aid in providing documentation of methods, protocols, analysis scripts, and hypotheses before data extraction. To measure preregistration and protocol availability characteristics, we excluded laboratory publications (n = 46), chart reviews (n = 16), observational studies (n = 59), and publications with multiple study designs (n = 1) because preregistration on a trial database is not required to conduct these types of studies. Among the 71 publications eligible for preregistration, of which 17 were clinical trials, 8 (of 71; 11.3%), all clinical trials, were preregistered. Of the preregistered publications, 7 contained information regarding the methods. None of the meta-analyses and systematic reviews among the 71 publications were preregistered through PROSPERO, and no cohort studies were preregistered on a trial database such as ClinicalTrials.gov. None of the 71 publications, regardless of study design, provided a protocol for how the study was conducted. Detailed reproducibility indicator descriptions can be found in Tables 2 and 3.
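The denominators used in this section (295, 172, 167, and 71) can be cross-checked with simple arithmetic. The sketch below is an illustration rather than part of the original analysis. It assumes the study-type counts reported in Table 3 and the design groupings named in the Table 1 footnotes, and the dictionary keys are labels chosen here for readability.

```python
# Study-type counts as reported in Table 3 (n = 295).
study_types = {
    "no empirical data": 102, "meta-analysis": 4,
    "commentary with analysis": 1, "clinical trial": 17,
    "case study": 18, "case series": 3, "cohort": 46,
    "chart review": 16, "case control": 4, "data survey": 8,
    "laboratory": 46, "multiple": 1, "other": 29,
}

accessible = sum(study_types.values())

# Publications with empirical data: drop nonempirical work, case studies,
# and case series.
empirical = accessible - sum(
    study_types[k] for k in ("no empirical data", "case study", "case series")
)

# Materials and evidence synthesis: also drop meta-analyses and
# commentaries with analysis.
materials = empirical - study_types["meta-analysis"] \
    - study_types["commentary with analysis"]

# Preregistration and protocols: designs commonly registered on a registry.
preregistration = sum(
    study_types[k]
    for k in ("clinical trial", "cohort", "case control", "meta-analysis")
)

print(accessible, empirical, materials, preregistration)
```

A check of this kind makes it easy to confirm that each percentage in Tables 2 and 3 is computed against the intended denominator.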

Table 3.

Additional reproducibility characteristics

| Characteristics | n (%) |
|---|---|
| Type of study (n = 295) | |
| No empirical | 102 (34.6) |
| Meta-analysis | 4 (1.4) |
| Commentary with analysis | 1 (0.3) |
| Clinical trial | 17 (5.8) |
| Case study | 18 (6.1) |
| Case series | 3 (1.0) |
| Cohort | 46 (15.6) |
| Chart review | 16 (5.4) |
| Case control | 4 (1.4) |
| Data survey | 8 (2.7) |
| Laboratory | 46 (15.6) |
| Multiple | 1 (0.3) |
| Other<sup>a</sup> | 29 (9.8) |
| Material availability statement (n = 26) | |
| Personal or institutional | 5 (19.2) |
| Hosted by the journal | 18 (69.2) |
| Online third party | 1 (3.9) |
| On request | 2 (7.7) |
| Material was downloaded and accessible | 17 (65.4) |
| Material could not be downloaded and was not accessible | 9 (34.6) |
| Data availability statement (n = 41) | |
| Personal or institutional | 4 (9.8) |
| Supplementary information hosted by the journal | 31 (75.6) |
| Online third party | 0 (0.0) |
| On request | 6 (14.6) |
| Data could be accessed and downloaded | 31 (75.6) |
| Data could not be accessed and downloaded | 10 (24.4) |
| Documented data with all raw material (n = 31) | |
| Data files clearly documented | 31 (100.0) |
| Data files not clearly documented | 0 (0.0) |
| Data files contain all raw data used for study conduction | 11 (35.5) |
| Data files do not contain all raw data used for study conduction | 20 (64.5) |
| Preregistration statement listed (n = 8) | |
| Preregistration could be accessed | 7 (87.5) |
| Preregistration could not be accessed | 1 (12.5) |
| Preregistered on clinicaltrials.gov | 5 (62.5) |
| Other<sup>b</sup> | 3 (37.5) |
| Documented within preregistration (n = 8) | |
| Hypothesis was included and documented within the preregistration | 2 (25.0) |
| Methods was included and documented within the preregistration | 7 (87.5) |
| Analysis plan was included and documented within the preregistration | 0 (0.0) |
| Protocol available (n = 4) | |
| Hypothesis was included within the protocol | 0 (0.0) |
| Methods was included within the protocol | 0 (0.0) |
| Analysis plan was included within the protocol | 0 (0.0) |
| Analysis script available (n = 4) | |
| Personal or institutional | 0 (0.0) |
| Supplementary information hosted by the journal | 4 (100.0) |
| Online third party | 0 (0.0) |
| On request | 0 (0.0) |

ISRCTN, International Standard Randomised Controlled Trial Number.

<sup>a</sup> Includes cross-sectional (n = 18), secondary analysis (n = 11).

<sup>b</sup> Includes Australian New Zealand Clinical Trials Registry (n = 1), Iranian Registry of Clinical Trials (n = 1), ISRCTN Registry (n = 1).

Evidence Synthesis

Of the 167 publications included in our analysis, most were not cited by either a meta-analysis or a systematic review (140 of 167; 83.8%). Furthermore, none of the publications was a replication of a previously published study.

Discussion

Results from our study indicate that transparent and reproducible research practices are uncommon in current nephrology research. Few studies in our sample provided access to study protocols, materials, analysis scripts, or study data. These results mirror a broad investigation of 441 publications in biomedical sciences, in which only 1 provided access to study protocols, 0 provided raw data, and only 4 were replication studies.11 In this study, we highlight 2 important indicators of transparency and reproducibility that were exceptionally deficient in our sample. In discussing these indicators, we outline solutions for both funders and journals where possible, and we describe current efforts under way to promote such practices.

First, data sharing is high yield for establishing the analytic reproducibility of a previous study. Few investigators in our sample made data publicly accessible, which hampers such efforts. From a funding perspective, various institutes within the NIH have established data repositories for institute-funded investigations. Some institutes have been more dedicated to these efforts than others. The National Institute of Allergy and Infectious Diseases, for example, supports 8 data repositories, including Immune Tolerance Network (ITN) TrialShare, which makes data and analysis code underlying ITN-published manuscripts publicly available with the goal of promoting transparency, reproducibility, and scientific collaboration.12 The NIDDK funds a central repository that comprises a biorepository, which gathers, stores, and distributes biological samples from studies, and a data repository, which stores data for all NIDDK grant research projects.13 This central repository is important for the NIDDK because its data sharing policy sets timelines, which vary by study design, for depositing data. The policy further explains that all data will eventually become publicly available to increase their use and analysis in subsequent studies.14,15 Furthermore, some private foundations, such as the Bill and Melinda Gates Foundation, require data sharing.16 Because funders are able to impose such requirements, they have great influence on whether and how study data are made available. From a journal perspective, we selected the 3 journals with the highest h5-index rankings in Google Scholar’s urology and nephrology category (after excluding urology journals) to provide the basis for discussion. 
The Journal of the American Society of Nephrology (JASN) subscribes to the ICMJE Data Sharing policy for clinical trials.17 All manuscripts of clinical trials must submit a data sharing plan according to ICMJE standards.18 Data from systems-level analyses (such as genomics, metabolomics, and proteomics) must be deposited in appropriate publicly accessible archiving sites. Furthermore, journals such as Kidney International (KI) require authors to provide “Data Availability Statements” within their manuscripts.19 The American Journal of Kidney Diseases (AJKD) requires all clinical trials to provide a data sharing statement. The instructions for authors note that, “at this stage AJKD does not have a particular data sharing expectation.”20 Although this is a limited sample, the differences among these journals show that policies vary among the very entities that arbitrate what and how nephrology research is published. We recommend that nephrology journals consider moving beyond indifference and adopt stricter policies. Although dissenting views exist,21 we believe that data sharing ultimately serves the best interests of the public, whose tax dollars fund research, and of trial participants, who subject themselves to potentially harmful interventions for the public good and want their data to count.22,23 Certainly, data sharing issues can be complex, such as cases in which patient confidentiality must be maintained, and these issues have been addressed by the Findability, Accessibility, Interoperability, and Reusability (FAIR) principles.24

Second, study authors seldom provided protocols for the publications in our sample. Detailed protocols are necessary for subsequent investigators to reproduce an original study and for readers to evaluate any deviations that occurred following protocol development. The Health and Human Services Department issued a “final rule” for clinical trials registration and results information submission. This rule specifies how data collected and analyzed during the trial should be reported to ClinicalTrials.gov. Specific to protocols, the rule requires “submission of the full version of the protocol and the statistical analysis plan (if a separate document)” as part of clinical trial results information.25 Thus, federal statutes require protocol sharing for some clinical trials. Building on our comparison of nephrology journals, we evaluated the guidance for submitting authors concerning protocol publication. We could not locate any information in the KI or JASN author guidelines regarding protocol submission, except in the case of brief reports.17,19 The AJKD requires that clinical trials include a study protocol with dated changes for the confidential review process, but leaves protocol publication to the author’s discretion.20 Thus, a comparison of current journal requirements for publishing research protocols suggests, at most, that the decision to publish a protocol may be left to the individual authors. When protocols are required at the time of submission, only peer reviewers and editors have access to them. When protocols remain unpublished, studies cannot be fully reproduced or critically inspected. 
On many occasions, methods sections within published reports are too concise to truly understand whether the research methodology was robust or whether critical errors were made over the course of the study.26 Protocols, which can easily be provided as supplementary documents on journal Web sites or deposited to platforms such as Open Science Framework (https://osf.io/), are necessary to fill in these gaps. Another platform, Protocols.io,27 has been developed specifically for the publication of research protocols. We also note that protocols are most often associated with clinical trials; however, we suggest that protocols are also necessary for other study types, such as observational studies. Protocols, for example, can carefully prespecify a priori which confounding factors are to be included in regression models. Absent publication of protocols, it is not possible to know whether model adjustments were made post hoc.

Strengths and Limitations

Our study had several strengths, including a random sample drawn from a broad swath of the nephrology literature. This sampling methodology increases the likelihood that our results are generalizable to the nephrology research community as a whole. Data extraction by 2 investigators in a blinded, duplicate fashion is the gold standard methodology in systematic reviews and is endorsed by the Cochrane Collaboration. We also made our study protocol, materials, and data publicly available to foster greater transparency and reproducibility. Regarding limitations, our sample is restricted to nephrology journals that are indexed in PubMed and published in English, and we surveyed publications over a fixed time period. These considerations should be taken into account when interpreting and generalizing our study’s findings, as nephrology-related studies also could be published in general medicine journals.
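
A reproducible random draw of the kind described above can be sketched as follows. This is a hedged illustration rather than a record of the tool actually used: the seed value, the function name `draw_sample`, and the use of integer identifiers in place of real publication records are all assumptions. Only the counts (28,835 search results and 300 sampled publications) come from the study.

```python
import random


def draw_sample(record_ids, sample_size, seed):
    """Draw a simple random sample without replacement.

    A dedicated, seeded generator makes the draw repeatable by anyone
    who has the same ordered list of identifiers and the same seed.
    """
    rng = random.Random(seed)
    return rng.sample(record_ids, sample_size)


# Placeholder identifiers standing in for the 28,835 search results
record_ids = list(range(1, 28_835 + 1))

# The seed is an arbitrary, hypothetical value chosen for this sketch
sample = draw_sample(record_ids, 300, seed=2019)
print(len(sample), "publications sampled")
```

Reporting the seed and the ordered list of identifiers alongside the protocol would allow other investigators to regenerate the same sample.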

In conclusion, our study found that reproducible and transparent research practices are infrequently used by the nephrology research community. Greater efforts should be made by both funders and journals, the 2 entities with the greatest ability to influence change. In doing so, an open science culture may eventually become the norm rather than the exception.

Disclosure

All the authors declared no competing interests.

Acknowledgments

This study was funded by the 2019 Presidential Research Fellowship Mentor–Mentee Program at Oklahoma State University Center for Health Sciences.

Supplemental Material

Table S1. Sample characteristics of analyzed nephrology publications.

Appendix S1. Included journals from a random sample of publications.

References

- 1. Veronese F.V., Manfro R.C., Roman F.R. Reproducibility of the Banff classification in subclinical kidney transplant rejection. Clin Transplant. 2005;19:518–521. doi: 10.1111/j.1399-0012.2005.00377.x.

- 2. Piskunowicz M., Hofmann L., Zuercher E. A new technique with high reproducibility to estimate renal oxygenation using BOLD-MRI in chronic kidney disease. Magn Reson Imaging. 2015;33:253–261. doi: 10.1016/j.mri.2014.12.002.

- 3. Affret A., Wagner S., El Fatouhi D. Validity and reproducibility of a short food frequency questionnaire among patients with chronic kidney disease. BMC Nephrol. 2017;18:297. doi: 10.1186/s12882-017-0695-2.

- 4. dkNET | Introduction. Available at: https://dknet.org/about/product_info. Accessed August 16, 2019.

- 5. dkNET | NIH Policy Rigor Reproducibility. Available at: https://dknet.org/about/NIH-Policy-Rigor-Reproducibility. Accessed August 16, 2019.

- 6. Enhancing Reproducibility through Rigor and Transparency | grants.nih.gov. Available at: https://grants.nih.gov/policy/reproducibility/index.htm. Accessed August 16, 2019.

- 7. RFA-GM-19-001: Methods to Improve Reproducibility of Human iPSC Derivation, Growth and Differentiation (SBIR) (R44 Clinical Trial Not Allowed). Available at: https://grants.nih.gov/grants/guide/rfa-files/RFA-GM-19-001.html. Accessed August 16, 2019.

- 8. Hardwicke TE, Wallach JD, Kidwell M, et al. An empirical assessment of transparency and reproducibility-related research practices in the social sciences (2014–2017). Available at: 10.31222/osf.io/6uhg5. Accessed August 16, 2019.

- 9. Liberati A., Altman D.G., Tetzlaff J. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1–e34. doi: 10.1016/j.jclinepi.2009.06.006.

- 10. Wallach J.D., Boyack K.W., Ioannidis J.P.A. Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLoS Biol. 2018;16 doi: 10.1371/journal.pbio.2006930.

- 11. Iqbal S.A., Wallach J.D., Khoury M.J. Reproducible research practices and transparency across the biomedical literature. PLoS Biol. 2016;14 doi: 10.1371/journal.pbio.1002333.

- 12. Sign In: /home. Available at: https://www.itntrialshare.org/login/home/login.view?returnUrl=%2Fproject%2Fhome%2Fstart.view%3F. Accessed August 16, 2019.

- 13. Hornik K, Others. The r FAQ. 2002. Available at: https://repository.niddk.nih.gov/faq/. Accessed August 16, 2019.

- 14. Rasooly R.S., Akolkar B., Spain L.M. The National Institute of Diabetes and Digestive and Kidney Diseases Central Repositories: a valuable resource for nephrology research. Clin J Am Soc Nephrol. 2015;10:710–715. doi: 10.2215/CJN.06570714.

- 15. Policies for Clinical Researchers | NIDDK. National Institute of Diabetes and Digestive and Kidney Diseases. Available at: https://www.niddk.nih.gov/research-funding/human-subjects-research/policies-clinical-researchers. Accessed August 16, 2019.

- 16. Open Access Policy. Bill & Melinda Gates Foundation. Available at: https://www.gatesfoundation.org/How-We-Work/General-Information/Open-Access-Policy/Page-2. Accessed August 16, 2019.

- 17. Author Resources | American Society of Nephrology. Available at: https://jasn.asnjournals.org/content/authors/ifora. Accessed August 16, 2019.

- 18. Taichman D.B., Sahni P., Pinborg A. Data sharing statements for clinical trials: a requirement of the International Committee of Medical Journal Editors. Ethiop J Health Sci. 2017;27:315–318.

- 19. Elsevier. Guide for authors - Kidney International - ISSN 0085-2538. Available at: https://www.elsevier.com/journals/kidney-international/0085-2538/guide-for-authors. Accessed December 17, 2019.

- 20. AJKD Information for Authors & Journal Policies. Available at: https://sites.google.com/site/ajkdinfoforauthors/. Accessed August 16, 2019.

- 21. Longo D.L., Drazen J.M. Data sharing. N Engl J Med. 2016;374:276–277. doi: 10.1056/NEJMe1516564.

- 22. Alliance - Resolution to Share Legacy Clinical Trial Data. Available at: https://www.allianceforclinicaltrialsinoncology.org/main/public/standard.xhtml?path=%2FPublic%2FPAC-Resolution. Accessed August 16, 2019.

- 23. Aitken M., de St Jorre J., Pagliari C. Public responses to the sharing and linkage of health data for research purposes: a systematic review and thematic synthesis of qualitative studies. BMC Med Ethics. 2016;17:73. doi: 10.1186/s12910-016-0153-x.

- 24. Wilkinson M.D., Dumontier M., Aalbersberg I.J.J. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data. 2016;3:160018. doi: 10.1038/sdata.2016.18.

- 25. Health and Human Services Department. Clinical trials registration and results information submission. Fed Regist. 2016;81:64981–65157.

- 26. Steward O., Popovich P.G., Dietrich W.D. Replication and reproducibility in spinal cord injury research. Exp Neurol. 2012;233:597–605. doi: 10.1016/j.expneurol.2011.06.017.

- 27. About protocols.io. Available at: https://www.protocols.io/about. Accessed August 16, 2019.
