Abstract

Securing extramural grant funding and publishing in peer-reviewed journals are key indicators of success for many investigators in academic settings. As a result, these expectations are also sources of stress for investigators and trainees considering such careers. As competition over grant funding, costs of conducting research, and diffusion of effort across multiple demands increase, the need to submit high quality applications and publications is paramount. For over three decades, the Center for AIDS Prevention Studies at the University of California, San Francisco has refined an internal, pre-submission, peer-review program to improve the quality and potential success of products before external submission. In this article, the rationale and practical elements of the system are detailed, and recent satisfaction reports, grant submission outcomes, and plans for ongoing tracking of the success rates of products reviewed are discussed. The program includes both early-stage concept reviews of ideas in their formative state and full product reviews of near-final drafts. Recent evaluation data indicate high levels of reviewee satisfaction with multiple domains of the process, including scheduling the review sessions, preparedness and expertise of the reviewers, and overall quality of the review. Outcome data from reviews conducted over a recent 12-month period demonstrate subsequent funding of 44% of proposals reviewed through the program, a success rate that surpasses the National Institutes of Health funding success rates for the same time period. Suggestions for the sustainability of the program and for its adoption at other institutions and settings less dependent on extramural funding are provided.

For investigators in academic settings, the expectation to successfully compete for extramural grant funding is often one of the most stressful aspects of their positions.1 The need to acquire funding has joined or exceeded the “publish or perish” stature of authoring peer-reviewed articles and books as an element of basic survival in academic positions, and grant funding is often a top criterion for promotion. The pressure is compounded during times of shrinking pay lines, reduced funding for education, increased costs of conducting research, and distribution of investigator effort across multiple funding sources and professional demands.2,3 While these forces are particularly pronounced in soft-money settings (i.e., positions dependent upon extramural funding), similar dynamics play out in tenured and other hard-money settings, in which the successful recruitment of research grant funding is a factor in buying out teaching, administrative, or clinical time and is often an important consideration in promotion and tenure decisions.

The increased competition for funding heightens the importance of submitting the highest quality grant applications to maximize potential success. Submitting sloppy or hastily prepared proposals or manuscripts can result in rejection or poor review scores that are difficult to recover from, even when care is taken in subsequent revision. Moreover, such a product can reflect poorly on the institution from which it is submitted. Even when investigators are careful in preparing proposals, they can still overlook potential scientific issues in their applications. Funding bodies recommend having others review applications before submitting,4 citing the harm that errors (small and large), missing information, and inconsistencies can inflict on the chances of success. In this article, we describe a sustained program of internal peer review designed to improve the quality and impact of grant proposals and manuscripts prior to their submission.

History of the Program

Over the past three decades, the Center for AIDS Prevention Studies (CAPS), a National Institute of Mental Health (NIMH) funded research center at the University of California, San Francisco, has supported innovative research on the social and behavioral factors affecting HIV prevention and care. A central service for CAPS investigators, cultivated by senior leadership, has been a structured program to provide internal pre-submission review of scientific products such as grant applications and journal manuscripts (and other products such as abstracts for scientific conferences), to maximize the strengths of each product and increase its likelihood of success (i.e., funding or acceptance for publication). This internal program is a key mechanism to support investigators in their efforts to propose and conduct rigorous, high-impact science. On average, the center conducts approximately 40 review sessions per year. There are publications describing analogous programs in place at other institutions,5,6 and there are likely others that have not been documented in the literature. More common is the publishing of tips for effective grant writing,7–12 and overviews of broader grant-writing training programs with a range of components, including structured didactic instruction on elements of grant writing,13,14 the use of writing coaches,15 and the sponsoring of writing groups.16 Where there are components involving mock reviews,6,17 they are either not well-detailed and thus not easily replicated or, as in one case, designed for academic instruction rather than increasing the likelihood of funding success.18 In summary, while there is evidence of parallel approaches to the program described here, to our knowledge there is a lack of detailed documentation of such initiatives that offer a firm, clear structure that can be replicated in other settings.

Structure of the Program

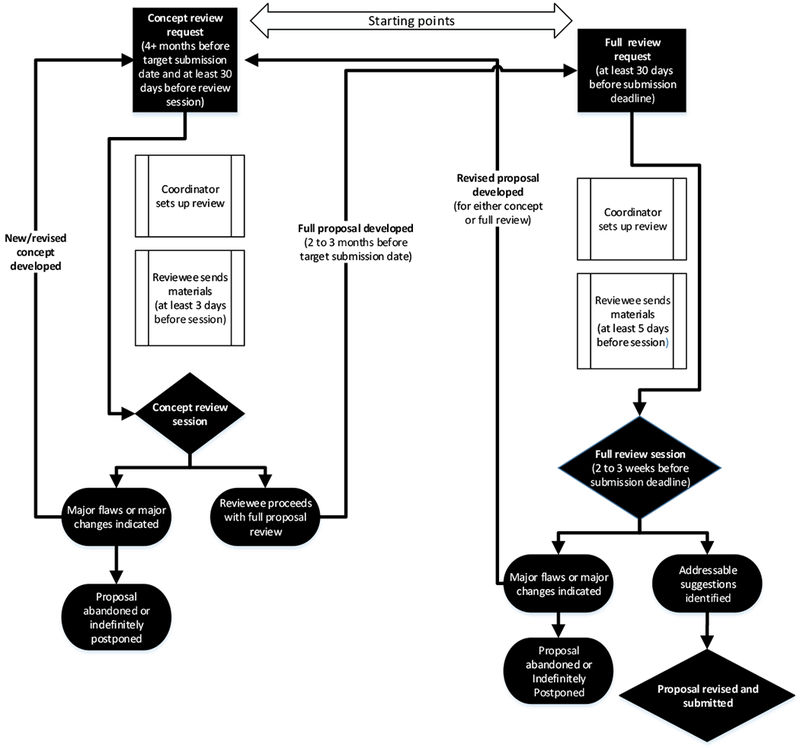

Within the CAPS program, internal peer reviews are organized for funding proposals, manuscripts, conference presentations, and other written materials prior to their submission or presentation. While peer reviews of many products (e.g., manuscripts, conference abstracts, presentations, surveys) are offered, the preponderance of the reviews that investigators request (>90%) are for research funding applications, and approximately 60% of proposals submitted from investigators in the home division of this program undergo pre-submission review. Our focus in this article is on the program as it primarily relates to reviewing funding applications; the process of reviewing other products is modified accordingly (e.g., responding to manuscript reviews; identification of alternative target journals for manuscript submissions). See Figure 1 for an illustration of the process, which is described below.

Figure 1.

Internal peer review process designed to improve the quality and potential success of scholarly research before external submission for funding or acceptance, as implemented at the Center for AIDS Prevention Studies of University of California, San Francisco.

Types of reviews

There are generally two types of reviews, depending on the developmental stage of the product being written: concept reviews and full reviews. Reviews of summary statements from prior application submissions are also conducted, which are similar to concept reviews, but focus on developing plans to respond to extramural reviewer comments in anticipation of resubmission.

Concept reviews.

This type of review is done as early as possible in the developmental process (at least 4 months before the target submission date) and focuses on an early draft of the study aims, with preliminary ideas about significance, innovation, and study design. For a career development award application, the concept review includes a 2–3 page outline of career development aims, research aims, and proposed mentoring team. These foundational features of the project are reviewed and discussed (the structure of the review session is described below) during the concept review stage so that major issues can be identified early in the process, making it easier for the investigator to make substantive changes before fully developing an application. Early-career investigators are required by the Division of Prevention Science, the administrative home for CAPS in the Department of Medicine (and others are strongly encouraged), to conduct an early concept review of each grant application idea. Anecdotally, investigators are more open to course corrections when the proposal is in the earlier stages rather than when a full draft proposal has been completed. In the latter scenario, reviewees can be resistant to major changes to their work, even when those changes are critical to correcting important problems. Concept reviews have occasionally resulted in the recognition of a “fatal flaw” in the research idea or design, and investigators are faced with the need to move on to another idea. Concept reviews also provide a timely opportunity to link the investigator to institutional resources, including consultations on biostatistics, qualitative methods, community engagement approaches, and measurement issues, or to other potential co-investigators who possess expertise that is identified as missing during the concept review. Closer to the submission date, a full proposal review is scheduled.

Full product reviews.

Approximately 2 to 4 weeks before submission to the funding agency, a full product review is conducted, which focuses on the fully developed proposal so that reviewers can comprehensively identify strengths and weaknesses. These sessions are scheduled late enough in the process to have a near-final draft, but with enough time remaining before the deadline to allow revision according to feedback. In these reviews, the reviewers will often follow the review criteria of the target funding agency or journal; for National Institutes of Health (NIH) funding applications, for example, reviewers comment on significance, innovation, investigators, and approach (or analogous criteria for career development awards). It is important to note that these reviews focus on substance rather than style. Therefore, while some reviewers may correct typos and grammatical errors, reviewees are advised that this review does not take the place of careful proofreading and editing, which should be done by someone other than the reviewee on a subsequent, closer-to-final version of the product.

Requesting a review

The process begins when an investigator submits a request for review to the peer review coordinator via an online survey using Qualtrics (Qualtrics, Provo, UT), which queries basic details (working title, whether concept or full review, type of product being reviewed) and asks the reviewee for a list of potential reviewers. In addition, there are questions to solicit other information helpful in setting up the review, such as availability (List 1). The request is ideally initiated at least 30 days before the desired date of review. At the time of the request, the materials to be reviewed are typically not ready for review. Often, scheduling the review session can serve as a motivator to structure writing schedules, such that the reviewee is accountable to be ready in time for the review.

List 1.

Information in Online Peer Review Request Form, From a Pre-Submission Review Program Designed to Improve the Quality and Potential Success of Scholarly Research Before External Submission for Funding or Acceptance, as Implemented at the Center for AIDS Prevention Studies of University of California, San Francisco

Reviewee name, contact information, title, and affiliation

Type of product being reviewed (grant application, journal manuscript, etc.)

Working title of product

Collaborators, co-authors (who should not be invited to review)

Collaborators or co-authors who should be present for the review

Potential dates and times for the review (ideally between 2 and 4 weeks of request)

3–5 potential reviewers (if known)

Specific expertise requested of reviewers

Openness to a member of the Community Advisory Board to participate or observe

Openness to a trainee to observe

In addition to these compulsory elements of the review request, this is also an opportunity to link to other optional services available through the center. To provide community input, a member of the center’s Community Advisory Board may be invited to serve as an additional reviewer. Similarly, a postdoctoral trainee may be invited to sit in on the session to gain exposure to the research grant/manuscript writing and review processes. Reviewees are queried about openness to these optional additional participants during the review request process. This survey also serves as an opportunity to offer investigators additional consultations on research methods or ethics or, if applicable, intervention development. If a reviewee indicates interest in these services, an email is automatically sent to the relevant consultant so that they can reach out to the reviewee directly.

Setting up the review session

Once the request is submitted, the review coordinator reaches out to potential reviewers, prioritizing individuals identified by the reviewee. The coordinator consults a list of investigators and their expertise in order to match the content of the application with the expertise of potential reviewers. Ideally, there are 3 reviewers and a chair, who is an established investigator who may also serve as one of the reviewers. Collaborators or mentors who are involved or otherwise closely familiar with the product are not considered to be acceptable reviewers, as the review is likely to be more productive when conducted by fresh eyes. Effort is made to recruit reviewers with content knowledge (e.g., of the target population and topic) and relevant methodological expertise (e.g., qualitative, randomized controlled designs, eHealth methods). Reviewers are expected to provide written comments, and it is strongly preferred to have reviewers participate in real time (either in person or by video/phone conferencing) rather than sending written comments only. Written comments are acceptable in cases where the reviewer cannot participate in real time, but this is suboptimal because it eliminates the opportunity for discussion and cross-fertilization of ideas during the discussion phase of the review session. Although there is not a formal policy regarding the number of written-only reviews, if more than one reviewer cannot be present (in person or via phone or video), the review is typically rescheduled or other reviewers are sought.

Before the review session.

After reviewers are identified and the time and date of the review is set, the coordinator sends all parties a confirmation that includes a document detailing the peer review guidelines. The next step is for the reviewee to send out relevant documents to the reviewers and chair as follows:

The reviewee should reach out to reviewers as soon as the review is scheduled to let them know exactly when they should expect to receive the review documents (which should be in line with the timelines detailed below), allowing reviewers to plan their review around their existing obligations. Any changes in this timeline should be accompanied by clear communication from the reviewee. Inherent in these communications should be expressions of appreciation of reviewers’ time and willingness to review the materials.

If the review is a concept review that does not require the review of a full-length document (e.g., not a full grant proposal or full-length manuscript), materials should be sent to reviewers a minimum of 3 business days prior to the session. If it is a full product review, materials should be sent a minimum of 5 business days prior to the session.

Materials provided to reviewers should include the document(s) being reviewed, a memorandum with specific questions the reviewee would like addressed, and any relevant supplemental material (e.g., funding announcement).

The coordinator monitors that reviewers receive documents according to the timeline developed for that review.
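The lead times above (3 business days for a concept review, 5 for a full product review) can be turned into a concrete send-by date. The following is a minimal sketch, not part of the program's actual tooling: the function name `latest_send_date` and the dictionary `LEAD_BUSINESS_DAYS` are hypothetical, and it assumes that business days are Monday through Friday, that holidays are ignored, and that the day of the session itself does not count toward the lead time.

```python
from datetime import date, timedelta

# Minimum lead times from the guidelines, in business days before the session.
# The dictionary keys are illustrative labels, not terms used by any real system.
LEAD_BUSINESS_DAYS = {"concept": 3, "full": 5}


def latest_send_date(session_date: date, review_type: str) -> date:
    """Return the latest date on which materials should reach reviewers.

    Assumption: business days are Monday-Friday and holidays are not considered.
    """
    remaining = LEAD_BUSINESS_DAYS[review_type]
    current = session_date
    while remaining > 0:
        current -= timedelta(days=1)
        if current.weekday() < 5:  # Monday is 0, Friday is 4
            remaining -= 1
    return current


if __name__ == "__main__":
    session = date(2019, 9, 19)  # a Thursday, chosen only as an example
    for kind in ("concept", "full"):
        print(kind, latest_send_date(session, kind).isoformat())
```

A coordinator could run such a calculation when the session is scheduled and include the resulting date in the confirmation message, so that reviewers know when to expect the documents.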

During the session.

The session is generally scheduled for 1 hour and starts with the chair facilitating an introduction of all participants (if some are not known to each other), followed by an overview of the intent and structure of the session. The chair reminds all participants of the confidential nature of the review; reviewees are often sharing their formative ideas, which they do not want distributed beyond the members of the review session. Therefore, reviewers are reminded to destroy review materials following the review and to refrain from discussing the review or the ideas discussed within it with unrelated parties. The chair confirms whether each reviewer will be sharing written comments, which takes pressure off the reviewee to take notes during the session. Similarly, the reviewee may ask to audio record the session so that no comments or discussion points are lost. Ideally, reviewers will send their written comments and will agree to be recorded so that the reviewee can focus on listening and integrating feedback rather than taking notes. Sometimes reviewees bring someone else from their team to write notes during the review session.

The chair then invites the reviewee to give a brief overview (2–3 minutes) of the review product and reiterate any specific queries for reviewers. To keep the session on time and to make sure all reviewers have time to give their input, a structure is carefully followed: the chair asks for a single reviewer to go first, and that person spends about 5–8 minutes providing an appraisal of the product. Rather than articulating every comment, reviewers are asked to provide a marked-up version of the document to the reviewee so that the reviewer can focus on the larger issues during the review. Therefore, the reviewer is asked to provide the following: 1–3 strengths of the work, 1–3 major issues or concerns, and some specific recommendations for addressing the concerns. The chair guides the feedback session such that it does not become a back-and-forth between reviewer and reviewee, but more closely resembles a study section meeting in which the reviewee does not have the luxury of explaining things that were not clear in the document. Thus, the reviewee is asked to resist the natural urge to interject explanations, objections, or questions during this part of the review. The chair will discourage such exchanges unless they are required to correct gross misunderstandings that would affect the quality of the review. When the first reviewer is finished, the chair thanks the person and invites the next reviewer to give their review. By design, there is no discussion between the presentations of each reviewer. Rather, the discussion is deferred until all reviewers have given their input so that there can be an integration of feedback and time to examine patterns and reconcile discrepant feedback that may emerge between reviewers.

The chair serves as timekeeper so that no reviewers exceed their allotted time, allowing sufficient time at the end for discussion and brainstorming. During the discussion phase, reviewers can comment on their colleagues’ observations, and the reviewee can ask questions of reviewers. Often, the chair guides this discussion to circle back to the reviewee’s a priori stated requests to see whether those have been sufficiently addressed. In wrapping up the session, the chair summarizes the key points of feedback and discussion and, along with the reviewee, thanks the reviewers. The chair might also inquire as to whether reviewers are willing to have the reviewee follow up with them one-on-one as they revise their product. (See List 2 for a summary of guidelines set for reviewees, reviewers, and chairs.)

List 2.

Guidelines for Conduct Before and During Peer Review Sessions, From a Pre-Submission Review Program Designed to Improve the Quality and Potential Success of Scholarly Research Before External Submission for Funding or Acceptance, as Implemented at the Center for AIDS Prevention Studies of University of California, San Francisco

Reviewees

Request review early

Provide documents on time

Be open to feedback (do not be defensive)

Listen

Show appreciation and respect for reviewers’ time

Don’t interrupt reviewers during session

Send thank you email following session

Agree to review others’ work in the future

Reviewers

Agree to review whenever feasible

Provide written comments to reviewee

Only hit highlights of major strengths and weaknesses during session

Be honest but productive and encouraging when giving feedback

Offer solutions to problems raised where you have them

Aim to improve the product rather than merely evaluate it

Agree to be audio recorded during the session

Invite reviewee to follow up with you following the review session

Chairs

Set a tone of respect, collaboration, and collegiality for the session

Adhere to time limits to structure the review

Discourage cross-talk during the review phase of the session

Structure discussion phase of the session around reconciling discrepant feedback and brainstorming of solutions

Ensure reviewee has opportunity to ask questions after all reviewers have presented

End with a summary of key issues

Thank reviewers

After the session.

Soon after the review, the reviewee should send a thank-you email to the reviewers and the chair for their input. The peer review session can be intense, as the reviewee will likely receive a range of criticisms of their ideas. There can be conflicting feedback between (or even within) reviewers, and there may be feedback with which the reviewee does not agree. Reviewees are not required to incorporate every recommendation from each reviewer; instead, the session is meant to provide a range of perspectives and input, although not all input may ultimately improve the final product. However, the review session may reveal major flaws with the proposed work, in which case drastic modifications, or even complete new directions, may be indicated. In most cases, a radical change is not needed, but rather the feedback may point to a need for further justification or detail somewhere in the document that may inoculate against that critique in the subsequent actual review. In some cases, the reviewee will postpone submission to have more time to integrate feedback, and may then schedule an additional peer review before submitting. Reviewees are encouraged to check in with mentors, collaborators, or other colleagues, who may or may not have been present during the review session, to process the feedback and come up with a plan. For our research center, the review session is also used as an opportunity to alert the leadership if a particular product (typically a grant application) has serious problems, such as ethical issues, overlap with other projects/proposals, or gross underdevelopment. The review chair reports such issues to the department leadership, who must subsequently sign off on the application so that a plan for remediation or delay of the submission can be considered.

Elements of a Successful Program

A successful internal peer review program requires commitment on the part of the institution and its constituent stakeholders. From a practical standpoint, it is essential to have an organized (and ideally charismatic) coordinator to handle the logistical elements, not the least demanding of which are the tasks of identifying willing reviewers and coordinating schedules. The program at CAPS relies upon 20% effort of a staff-level science coordinator whose remaining effort is dedicated to other aspects of the research center. In addition, the center funds 20% of a faculty coordinator, whose effort beyond the peer-review program also includes overseeing mentoring and other developmental programs within the center. In other settings where such designated funding may not exist, institutional support (e.g., from the department chair, dean, or chancellor) for these roles is critical to appropriately support such a program.

The program described here provides reviews primarily for PhDs and MDs, but there has been active involvement from researchers from other backgrounds, including those with PharmDs and doctorates in nursing. The program is open to investigators from CAPS and from affiliated programs and centers. Reviewees are more often early-career investigators (i.e., fellows or assistant professors), as they are required to have their applications reviewed through the program. Mid- and senior-level investigators are encouraged to have their applications reviewed, but are not required. Reviewers come from similar backgrounds, with leadership of the center making an effort to have a mix of established and early career investigators serving as reviewers for all sessions. We recognize that not all institutions have a large group of experienced investigators available in-house to do reviews, and recommend that systems be developed to incentivize outside reviewers to participate via phone or video. It is critical that all reviewers are familiarized with the culture, intent, and guidelines of the review process.

Fundamentally, there must be a culture that prioritizes collaboration over competition and sharing over territoriality, such that people are willing to share their works in progress, and lend their time, expertise, and encouragement so that others may succeed. Ideally, investigators will see the program as an asset to themselves as well as the institution and feel committed to its success. As with service on NIH study sections or for scholarly journals, investigators also recognize the contribution that their participation in peer review makes to science and knowledge. This means that experienced scientists, when asked to review, say yes more often than not, and that they approach the review in a constructive, nurturing manner. Likewise, support and enthusiasm for the program should be present at all levels, with a particular need for leadership to set expectations of participation and to eagerly engage as both reviewers and reviewees. A system with an atmosphere characterized by a sense of intrusion and lack of appreciation will likely breed resentment, and hostility or hazing may emerge in the review setting. Similarly, procedures should be in place to spread the review load across all qualified reviewers so that over-taxed reviewers do not burn out.

Various strategies should also be put in place to incentivize participation in a program. It is important that reviewers experience value and reward through their participation. In a healthy atmosphere of collaboration, participation as a reviewer will provide its own intrinsic, altruistic reward. Participation in the process can also help ignite new collaborations between previously disconnected participants; new ideas are often sparked well beyond those contained within the specific topic area of the product being reviewed. Participating in this type of intramural, pre-submission review process can also help prepare early-career investigators for “real world” reviewer roles at the NIH, National Science Foundation, or other funding bodies and better understand how experienced reviewers approach reviews. To encourage investigators to donate their time to the process, the center has issued guidance for claiming peer review service in faculty CVs and for reporting these efforts as mentoring, informal teaching, and university service, all of which are valued in the academic promotions process. Recently, an award for outstanding service to peer review has been instituted at CAPS, for which investigators can be nominated by their peers and recognized for their service. Other incentives may be considered, such as monetary compensation for review or teaching release time for sustained and substantial review service.

Evaluation of the Program

Monitoring outcomes of the peer review program is important, but challenging. While it is logical that any pre-submission critique and revision would make a submission stronger than had that review not been provided, it is difficult to test empirically. Those who participate in the peer review program have good success relative to funding averages, but it is impossible to know whether those whose work was reviewed through the program would have been successful regardless. Those who participate eagerly as reviewees in the program tend to be more open to feedback and are more inclined to plan ahead, if only to avail themselves of the review process. The program also does not track specific input from reviewers nor the degree to which the reviewees revise according to that feedback, as such input is voluminous and sometimes disparate across reviews, and reviewees are not required to accept all feedback. Likewise, monitoring outcomes in funding success can be challenging because many applications require multiple submissions and, hence, additional reviews and evolutions in scope, aims, titles, and funding mechanism, which makes outcome ascertainment difficult, but not impossible.

Although the program at CAPS has been in place for several decades and has accumulated a wealth of anecdotal evidence, efforts have been undertaken recently to systematically track outcomes of the products reviewed. This includes monitoring funding success and publication outcomes and collecting confidential satisfaction ratings of all elements of the program and recommendations for improvement. From 2016 to 2018, reviewees from 80 review sessions rated the following domains either excellent or good (on a 5-point scale of excellent, good, fair, poor, very poor): scheduling the peer review (80 reviewees, 100%), expertise of reviewers (80, 100%), preparedness of reviewers (78, 98%), and overall quality of the review session (78, 98%).

In terms of funding outcomes from proposals that have gone through the program, results have been ascertained for reviews that occurred during the 2016–2017 academic year; this period was selected so that there has been sufficient time for follow-up to occur (e.g., proposal submissions, funding determinations, resubmissions). During that 1-year period, 46 review sessions were conducted, of which 40 were for competing funding applications. Of those 40 proposal reviews, 27 (68%) were for unique products whereas 13 (32%) were for repeat reviews of revised applications that had been previously reviewed during the same period. Of the 27 unique applications reviewed, 4 (15%) showed no subsequent evidence of having been submitted for funding, 11 (41%) were submitted but without any verification of funding, and 12 (44%) were documented as funded. This 44% funding success rate is encouraging in the context of published success rates at the NIH for the same time period. For NIH research project grants in FY2017, the success rate was 18.7%, and it was between 31.5% and 34% for K award applications.19 The success rate of applications having gone through the CAPS internal review system is more than double the rate for NIH research project applications and higher than the success rates for K awards (of note, there were only two K award applications reviewed during this period, but they were both subsequently funded). Over time, more longitudinal data will be available to document the outcomes of grant proposals and manuscripts undergoing review in the system.
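The arithmetic behind this comparison can be checked with a few lines of Python. This is a minimal sketch that uses only the counts and the NIH research project grant rate quoted in the paragraph above; the variable and function names are illustrative, and percentages are printed to one decimal place rather than rounded to whole numbers.

```python
def pct(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100 * part / whole


# Counts reported for the 2016-2017 academic year.
unique_apps = 27
outcomes = {
    "no evidence of submission": 4,
    "submitted, funding not verified": 11,
    "documented as funded": 12,
}
# The three outcome categories should account for every unique application.
assert sum(outcomes.values()) == unique_apps

for label, count in outcomes.items():
    print(f"{label}: {count}/{unique_apps} = {pct(count, unique_apps):.1f}%")

# Success rate using all unique reviewed applications as the denominator.
funded_rate = pct(outcomes["documented as funded"], unique_apps)

# NIH research project grant success rate for FY2017, as cited in the text.
nih_rpg_rate = 18.7
ratio = funded_rate / nih_rpg_rate
print(f"Program rate is {ratio:.2f} times the NIH research project grant rate")

# Alternative denominator: only applications known to have been submitted.
submitted = unique_apps - outcomes["no evidence of submission"]
funded_of_submitted = pct(outcomes["documented as funded"], submitted)
print(f"Funded among known submissions: {funded_of_submitted:.1f}%")
```

Note that the 44% figure is computed over all 27 unique reviewed applications, including the 4 with no evidence of submission, so it is a conservative denominator; the last calculation shows the proportion when only the 23 known submissions are counted.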

In Sum

Submitting grant applications takes administrative effort and often involves the dedication of numerous investigators and administrative and research staff,20,21 and it often represents an investment via a temporary diversion of time and attention from investigators’ other duties, such as teaching, administrative work, and clinical care. It can also come at a cost to quality of life and family/relationship stability, as the pressure to acquire funding takes time and motivation away from non-work priorities.22 When a proposal is submitted with conceptual weaknesses, poorly justified elements, typographical or grammatical errors, or otherwise preventable problems, time and resources can be wasted, and otherwise promising ideas may go unrealized. The CAPS program has been adopted by other units at University of California, San Francisco and beyond, with collaborators and other visiting faculty having implemented versions of it at their home institutions. Our overall goal in sharing our experience is to facilitate the further adoption, adaptation, and implementation of this well-established and valued program. The internal peer review program described here offers a structured and sustainable approach to improving the quality of grant and manuscript submissions, thereby increasing investigators’ likelihood of success.

Acknowledgments:

The authors would like to thank the academic community whose participation in the program is vital to its success. The authors also thank Dr. Susan Folkman for her leadership and vision in setting up the original iteration of the center’s peer review program.

Funding/Support: The program described in this article is supported by grant number P30MH062246 from the National Institute of Mental Health (NIMH) of the National Institutes of Health (NIH).

Footnotes

Ethical approval: Reported as not applicable.

Other disclosures: None reported.

Disclaimers: None reported.

Previous presentations: None reported.

Data: All data are from the authors’ program and institution.

Contributor Information

Mallory O. Johnson, Department of Medicine, University of California, San Francisco, San Francisco, California.

Torsten B. Neilands, Department of Medicine, University of California, San Francisco, San Francisco, California.

Susan M. Kegeles, Department of Medicine, University of California, San Francisco, San Francisco, California.

Stuart Gaffney, Department of Medicine, University of California, San Francisco, San Francisco, California.

Marguerita A. Lightfoot, Department of Medicine, University of California, San Francisco, San Francisco, California.

References

- 1. Daniels RJ. A generation at risk: Young investigators and the future of the biomedical workforce. Proc Natl Acad Sci U S A. 2015;112:313–318.

- 2. Gallup GG, Svare BB. Hijacked by an External Funding Mentality. Inside Higher Ed. 2016. https://www.insidehighered.com/views/2016/07/25/undesirable-consequences-growing-pressure-faculty-get-grants-essay Accessed September 13, 2019.

- 3. Norris J. The Crisis in Extramural Funding. American Association of University Professors; 2011. https://www.aaup.org/article/crisis-extramural-funding#.XXwoIWzsZPY Accessed September 13, 2019.

- 4. National Institutes of Health (NIH). Grants and Funding. NIH Central Resource for Grants and Funding Information; Write Your Application. https://grants.nih.gov/grants/how-to-apply-application-guide/format-and-write/write-your-application.htm Accessed September 13, 2019.

- 5. Kulage KM, Larson EL. Intramural pilot funding and internal grant reviews increase research capacity at a school of nursing. Nurs Outlook. 2018;66:11–17.

- 6. Kupfer DJ, Murphree AN, Pilkonis PA, et al. Using peer review to improve research and promote collaboration. Acad Psychiatry. 2014;38:5–10.

- 7. Botham CM, Arribere JA, Brubaker SW, Beier KT. Ten simple rules for writing a career development award proposal. PLoS Comput Biol. 2017;13:e1005863.

- 8. Chung KC, Shauver MJ. Fundamental principles of writing a successful grant proposal. J Hand Surg Am. 2008;33:566–572.

- 9. Gomez-Cambronero J, Allen LA, Cathcart MK, et al. Writing a first grant proposal. Nat Immunol. 2012;13:105–108.

- 10. Munger R. Seeing your proposal through the reviewer’s eyes. Emerg Med Serv. 2002;31:79–84.

- 11. Pullen RL Jr., Mueller SS. How to prepare a successful grant proposal. Nurse Pract. 2010;35:14–15.

- 12. Wisdom JP, Riley H, Myers N. Recommendations for writing successful grant proposals: An information synthesis. Acad Med. 2015;90:1720–1725.

- 13. Brownson RC, Colditz GA, Dobbins M, et al. Concocting that magic elixir: Successful grant application writing in dissemination and implementation research. Clin Transl Sci. 2015;8:710–716.

- 14. Monte AA, Libby AM. Introduction to the specific aims page of a grant proposal. Acad Emerg Med. 2018;25:1042–1047.

- 15. Jones HP, McGee R, Weber-Main AM, et al. Enhancing research careers: An example of a U.S. national diversity-focused, grant-writing training and coaching experiment. BMC Proc. 2017;11(Suppl 12):16.

- 16. Wiebe NG, Maticka-Tyndale E. More and better grant proposals? The evaluation of a grant-writing group at a mid-sized Canadian university. J Res Admin. 2017;48:67–92.

- 17. Winckler E, Brown J, Lebailly S, et al. A novel program trains community-academic teams to build research and partnership capacity. Clin Transl Sci. 2013;6:214–221.

- 18. Itagaki H. The use of mock NSF-type grant proposals and blind peer review as the capstone assignment in upper-level neurobiology and cell biology courses. J Undergrad Neurosci Educ. 2013;12:A75–A84.

- 19. National Institutes of Health (NIH). Research Portfolio Online Reporting Tools (RePORT). Research Project Success Rates by Type and Activity for 2017. https://report.nih.gov/success_rates/Success_ByActivity.cfm Accessed September 13, 2019.

- 20. Herbert DL, Barnett AG, Clarke P, Graves N. On the time spent preparing grant proposals: An observational study of Australian researchers. BMJ Open. 2013;3:e002800.

- 21. von Hippel T, von Hippel C. To apply or not to apply: A survey analysis of grant writing costs and benefits. PLoS One. 2015;10:e0118494.

- 22. Barnett A, Herbert D. The personal cost of applying for research grants. The Guardian. December 7, 2014.