Abstract

What is universal about music, and what varies? We built a corpus of ethnographic text on musical behavior from a representative sample of the world’s societies, and a discography of audio recordings. The ethnographic corpus reveals that music appears in every society observed; that music varies along three dimensions (formality, arousal, religiosity), more within societies than across them; and that music is associated with certain behavioral contexts such as infant care, healing, dance, and love. The discography, analyzed through machine summaries, amateur and expert listener ratings, and manual transcriptions, reveals that acoustic features of songs predict their primary behavioral context; that tonality is widespread, perhaps universal; that music varies in rhythmic and melodic complexity; and that melodies and rhythms found worldwide follow power laws.

One sentence summary:

Ethnographic text and audio recordings map out universals and variation in world music.

At least since Henry Wadsworth Longfellow declared in 1835 that “music is the universal language of mankind” (1), the conventional wisdom among many authors, scholars, and scientists is that music is a human universal, with profound similarities across societies (2). On this understanding, musicality is embedded in the biology of Homo sapiens (3), whether as one or more evolutionary adaptations for music (4, 5), the byproducts of adaptations for auditory perception, motor control, language, and affect (6–9), or some amalgam.

Music certainly is widespread (10–12), ancient (13), and appealing to almost everyone (14). Yet claims that it is universal or has universal features are commonly made without citation (e.g., (15–17)), and those with the greatest expertise on the topic are skeptical. With a few exceptions (18), most music scholars suggest there are few if any universals in music (19–23). They point to variability in the interpretations of a given piece of music (24–26), the importance of natural and social environments in shaping music (27–29), the diverse forms of music that can share similar behavioral functions (30), and the methodological difficulty of comparing the music of different societies (12, 31, 32). Given these criticisms, along with a history of some scholars using comparative work to advance erroneous claims of cultural or racial superiority (33), the common view among music scholars today (34, 35) is summarized by the ethnomusicologist George List: “The only universal aspect of music seems to be that most people make it. … I could provide pages of examples of the non-universality of music. This is hardly worth the trouble.” (36)

Are there, in fact, meaningful universals in music? No one doubts that music varies across cultures, but diversity in behavior can shroud regularities emerging from common underlying psychological mechanisms. Beginning with Noam Chomsky’s hypothesis that the world’s languages conform to an abstract Universal Grammar (37, 38), many anthropologists, psychologists, and cognitive scientists have shown that behavioral patterns once considered arbitrary cultural products may exhibit deeper, abstract similarities across societies emerging from universal features of human nature. These include religion (39–41), mate preferences (42), kinship systems (43), social relationships (44, 45), morality (46, 47), violence and warfare (48–50), and political and economic beliefs (51, 52).

Music may be another example, though it is perennially difficult to study. A recent analysis of the Garland Encyclopedia of World Music revealed that certain features, such as the use of words, chest voice, and an isochronous beat, appear in a majority of songs within each of nine world regions (53). But the corpus was sampled opportunistically, which made generalizations to all of humanity impossible; the musical features were ambiguous, leading to poor interrater reliability; and the analysis studied only the forms of the societies’ music, not the behavioral contexts in which it is performed, leaving open key questions about functions of music and their connection to its forms.

Music perception experiments have begun to address some of these issues. In one, internet users reliably discriminated dance songs, healing songs, and lullabies sampled from 86 mostly small-scale societies (54); in another, listeners from the Mafa of Cameroon rated “happy”, “sad”, and “fearful” examples of Western music somewhat similarly to Canadian listeners, despite having had limited exposure to Western music (55); in a third, Americans and Kreung listeners from a rural Cambodian village were asked to create music that sounded “angry”, “happy”, “peaceful”, “sad”, or “scared”, and generated similar melodies to one another (56). These studies suggest that the form of music is systematically related to its affective and behavioral effects in similar ways across cultures. But they can only provide provisional clues on which aspects of music, if any, are universal, because the societies, genres, contexts, and judges are highly limited, and because they too contain little information about music’s behavioral contexts across cultures.

A proper evaluation of claims of universality and variation requires a natural history of music: a systematic analysis of the features of musical behavior and musical forms across cultures, using scientific standards of objectivity, representativeness, quantification of variability, and controls for data integrity. We take up this challenge here. We focus on vocal music (hereafter, song) rather than instrumental music (cf. (57)), because it does not depend on technology, has well-defined physical correlates (i.e., pitched vocalizations; 19), and has been the primary focus of biological explanations for music (4, 5).

Leveraging more than a century of research from anthropology and ethnomusicology, we built two corpora, which collectively we call the Natural History of Song. The NHS Ethnography is a corpus of descriptions of song performances, including their context, lyrics, people present, and other details, systematically assembled from the ethnographic record to representatively sample diversity across societies. The NHS Discography is a corpus of field recordings of performances of four kinds of song — dance, healing, love, and lullaby — from an approximately representative sample of human societies, mostly small-scale. We use the corpora to test five sets of hypotheses about universality and variability in musical behavior and musical forms.

First, we test whether music is universal by examining the ethnographies of 315 societies, and then a geographically stratified pseudorandom sample of them.

Second, we assess how the behaviors associated with song differ among societies. We reduce the high-dimensional NHS Ethnography annotations to a small number of dimensions of variation while addressing challenges in the analysis of ethnographic data, such as selective nonreporting. This allows us to assess how the variation in musical behavior across societies compares with the variation within a single society.

Third, we test which behaviors are universally or commonly associated with song. We catalogue 20 common but untested hypotheses about these associations, such as religious activity, dance, and infant care (4, 5, 40, 54, 58–60), and test them after adjusting for sampling error and ethnographer bias, problems which have bedeviled prior tests.

Fourth, we analyze the musical features of songs themselves, as documented in the NHS Discography. We derived four representations of each song, including blind human ratings and machine summaries. We then applied machine classifiers to these representations to test whether the musical features of a song predict its association with particular behavioral contexts.

Finally, in exploratory analyses we assess the prevalence of tonality in the world’s songs, show that variation in their annotations falls along a small number of dimensions, and plot the statistical distributions of melodic and rhythmic patterns in them.

All data and materials are publicly available at http://osf.io/jmv3q. We also encourage readers to view and listen to the corpora interactively via the plots available at http://themusiclab.org/nhsplots.

Music appears in all measured human societies

Is music universal? We first addressed this question by examining the eHRAF World Cultures database (61, 62), developed and maintained by the Human Relations Area Files organization. It includes high-quality ethnographic documents from 315 societies, subject-indexed by paragraph. We searched for text that was tagged as including music (instrumental or vocal) or that contained at least one keyword identifying vocal music (e.g., “singers”).

Music was widespread: the eHRAF ethnographies describe music in 309 of the 315 societies. Moreover, the remaining 6 (the Turkmen, Dominican, Hazara, Pamir, Tajik, and Ghorbat peoples) do in fact have music, according to primary ethnographic documents available outside the database (63–68). Thus music is present in 100% of a large sample of societies, consistent with the claims of writers and scholars since Longfellow (1, 4, 5, 10, 12, 53, 54, 58–60, 69–73). Given these data, and assuming that the sample of human societies is representative, the Bayesian 95% posterior credible interval for the population proportion of human societies that have music, with a uniform prior, is [0.994, 1].

To examine what about music is universal and how music varies worldwide, we built the NHS Ethnography (Fig. 1 and SI Text 1.1), a corpus of 4,709 descriptions of song performances drawn from the Probability Sample File (74–76). This is a ~45 million-word subset of the 315-society database, comprising 60 traditionally-living societies that were drawn pseudorandomly from each of Murdock’s 60 cultural clusters (62), covering 30 distinct geographical regions and selected to be historically mostly independent of one another. Because the corpus representatively samples from the world’s societies, it has been used to test cross-cultural regularities in many domains (46, 77–83), and these regularities may be generalized (with appropriate caution) to all societies.

Fig. 1. Design of NHS Ethnography.

The illustration depicts the sequence from acts of singing to the ethnography corpus. (A) People produce songs in conjunction with other behavior, which scholars observe and describe in text. These ethnographies are published in books, reports, and journal articles and then compiled, translated, catalogued, and digitized by the Human Relations Area Files organization. We conduct searches of the online eHRAF corpus for all descriptions of songs in the 60 societies of the Probability Sample File (B) and annotate them with a variety of behavioral features. The raw text, annotations, and metadata together form the NHS Ethnography. Codebooks listing all available data are in Tables S1–S6; a listing of societies and locations from which texts were gathered is in Table S12.

The NHS Ethnography, it turns out, includes examples of songs in all 60 societies. Moreover, each society has songs with words as opposed to just humming or nonsense syllables (which are reported in 22 societies). Because the societies were sampled independently of whether or not their people were known to produce music, in contrast to prior cross-cultural studies (10, 53, 54), the presence of music in each one, recognized by the anthropologists who embedded themselves in the society and wrote their authoritative ethnographies, constitutes the clearest evidence supporting the claim that song is a human universal. Readers interested in the nature of the ethnographers’ reports, which bear on what constitutes “music” in each society (cf. (27)), are encouraged to consult the interactive NHS Ethnography Explorer at http://themusiclab.org/nhsplots.

Musical behavior worldwide varies along three dimensions

How do we reconcile the discovery that song is universal with the research from ethnomusicology showing radical variability? We propose that the music of a society is not a fixed inventory of cultural behaviors but the products of underlying psychological faculties which make certain kinds of sound feel appropriate to certain social and emotional circumstances. These include entraining the body to acoustic and motoric rhythms, analyzing harmonically complex sounds, segregating and grouping sounds into perceptual streams (6, 7), parsing the prosody of speech, responding to emotional calls, and detecting ecologically salient sounds (8, 9). These faculties may interact with others that specifically evolved for music (4, 5). Musical idioms differ in which acoustic features they employ and which emotions they engage, but they all draw from a common suite of psychological responses to sound.

If so, what should be universal about music is not specific melodies or rhythms but clusters of correlated behaviors, such as slow soothing lullabies sung by a mother to a child or lively rhythmic songs sung in public by a group of dancers. We thus asked how musical behavior varies worldwide and how the variation within societies compares to the variation between them.

Reducing the dimensionality of variation in musical behavior

To determine whether the wide variation in the annotations of the behavioral context of songs in the database (detailed in SI Text 1.1) falls along a smaller number of dimensions capturing the principal ways that musical behavior varies worldwide, we used an extension of Bayesian principal components analysis (84), which, in addition to reducing dimensionality, handles missing data in a principled way, and provides a credible interval for each observation’s coordinates in the resulting space. Each observation is a “song event”, namely, a description in the NHS Ethnography of a song performance, a characterization of how a society uses songs, or both.

We found that three latent dimensions is the optimum number, explaining 26.6% of variability in NHS Ethnography annotations. Fig. 2 depicts the space and highlights examples from excerpts in the corpus; an interactive version is available at http://themusiclab.org/nhsplots. Details of the model are presented in SI Text 2.1, including the dimension selection procedure, model diagnostics, a test of robustness, and tests of the potential influence of ethnographer characteristics on model results. To interpret the space, we examined annotations that load highly on each dimension, and to validate this interpretation, we searched for examples at extreme locations and examined their content. (Loadings are presented in Tables S13–S15; a selection of extreme examples is given in Table S16.)

Fig. 2. Patterns of variation in the NHS Ethnography.

The figure depicts a projection of a subset of the NHS Ethnography onto three principal components (A). Each point represents the posterior mean location of an excerpt, with points colored by which of four types (identified by a broad search for matching keywords and annotations) it falls into: dance (blue), lullaby (green), healing (red), or love (yellow). The geometric centroids of each song type are represented by the diamonds. Excerpts that do not match any single search are not plotted, but can be viewed in the interactive version of this figure at http://themusiclab.org/nhsplots, along with all text and metadata. Selected examples of each song type are presented here (highlighted circles and B, C, D, E). Density plots (F, G, H) show the differences between song types on each dimension. Criteria for classifying song types from the raw text and annotations are presented in Table S17.

The first dimension (accounting for 15.5% of the variance, including error noise) captures variability in the Formality of a song: excerpts high along this dimension describe ceremonial events involving adults, large audiences, and instruments; excerpts low on it describe informal events with small audiences and children. The second dimension (accounting for 6.2%) captures variability in Arousal: excerpts high along this dimension describe lively events with many singers, large audiences, and dancing; excerpts low on it describe calmer events involving fewer people and less overt affect, such as people singing to themselves. The third dimension (4.9%) distinguishes Religious events from secular ones: passages high along the dimension describe shamanic ceremonies, possession, and funerary songs; passages low on it describe communal events without spiritual content, such as community celebrations.

To validate whether this dimensional space captured behaviorally relevant differences among songs, we tested whether we could reliably recover clusters for four distinctive, easily identifiable, and regularly occurring song types: dance, lullaby, healing, and love (54). We searched the NHS Ethnography for excerpts that match at least one of the four types using keyword searches and human annotations (Table S17).

While each song type can appear throughout the space, clear structure is observable (Fig. 2): the excerpts falling into each type cluster together. On average, dance songs (1089 excerpts) occupy the high-Formality, high-Arousal, low-Religiosity region. Healing songs (289 excerpts) cluster in the high-Formality, high-Arousal, high-Religiosity region. Love songs (354 excerpts) cluster in the low-Formality, low-Arousal, low-Religiosity region. Lullabies (156 excerpts) are the sparsest category (but see SI Text 2.1.5), and are located mostly in the low-Formality and low-Arousal regions. An additional 2821 excerpts matched either more than one category or none of the four.

To specify the coherence of these clusters formally rather than just visually, we asked what proportion of song events are closer to the centroid of their own type’s location than to any other type (SI Text 2.1.6). Overall, 64.7% of the songs were located closest to the centroid of their own type; under a null hypothesis that song type is unrelated to location, simulated by randomly shuffling the song labels, only 23.2% would do so (p < .001 according to a permutation test). This result was statistically significant for three of the four song types (dance: 66.2%; healing: 74.0%; love: 63.6%; ps < .001) though not for lullabies (39.7%, p = .92). The matrix showing how many songs of each type were near each centroid is in Table S18. Note that these analyses eliminated variables with high missingness; a validation model that analyzed the entire corpus yielded similar dimensional structure and clustering (Figs. S1–S2 and SI Text 2.1.5).
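As a rough illustration of the centroid test described above, the following Python sketch computes the share of points lying closest to the centroid of their own label and compares it with a label-shuffling null. The function names, the toy three-dimensional coordinates, and the use of Euclidean distance are assumptions made for illustration; the procedure actually used is specified in SI Text 2.1.6 and may differ in detail.

```python
import math
import random


def centroids(points, labels):
    """Return the mean coordinate vector for each label."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, x in enumerate(point):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def own_centroid_rate(points, labels):
    """Share of points whose nearest centroid carries their own label."""
    cents = centroids(points, labels)
    hits = 0
    for point, label in zip(points, labels):
        nearest = min(cents, key=lambda lab: math.dist(point, cents[lab]))
        hits += nearest == label
    return hits / len(points)


def permutation_test(points, labels, n_perm=200, seed=0):
    """Compare the observed rate with rates obtained after shuffling labels."""
    rng = random.Random(seed)
    observed = own_centroid_rate(points, labels)
    shuffled = list(labels)
    null_rates = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        null_rates.append(own_centroid_rate(points, shuffled))
    exceed = sum(rate >= observed for rate in null_rates)
    p_value = (exceed + 1) / (n_perm + 1)  # add-one correction avoids p = 0
    return observed, sum(null_rates) / n_perm, p_value


# Toy data (hypothetical): two well-separated clusters in a 3-D space.
toy_rng = random.Random(1)
points = [tuple(toy_rng.gauss(0.0, 1.0) for _ in range(3)) for _ in range(30)]
points += [tuple(toy_rng.gauss(5.0, 1.0) for _ in range(3)) for _ in range(30)]
labels = ["dance"] * 30 + ["lullaby"] * 30

observed, null_mean, p_value = permutation_test(points, labels)
print(f"observed={observed:.3f} null_mean={null_mean:.3f} p={p_value:.3f}")
```

In this sketch the centroids are recomputed after every shuffle, so the null rate reflects how well arbitrary groupings of the same points would appear to cluster.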

The range of musical behavior is similar across societies

We next examined whether this pattern of variation applies within all societies. Do all societies take advantage of the full spectrum of possibilities made available by the neural, cognitive, and cultural systems that underlie music? Alternatively, is there only a single, prototypical song type that is found in all societies, perhaps reflecting the evolutionary origin of music (love songs, say, if music evolved as a courtship display; or lullabies, if it evolved as an adaptation to infant care), with the other types haphazardly distributed or absent altogether, depending on whether the society extended the prototype through cultural evolution? As a third alternative, do societies fall into discrete typologies, such as a Dance Culture, or a Lullaby Culture? As still another alternative, do they occupy sectors of the space, so that there are societies with only arousing songs, or only religious ones, or ones whose songs are equally formal and vary only by arousal, or vice versa? The data in Fig. 2, which pool song events across societies, cannot answer such questions.

We estimated the variance of each society’s scores on each dimension, aggregated across all ethnographies from that society. This revealed that the distributions of each society’s observed musical behaviors are remarkably similar (Fig. 3), such that a song with “average formality”, “average arousal”, or “average religiosity” could appear in any society we studied. This finding is supported by comparing the global average along each dimension to each society’s mean and standard deviation, which summarizes how unusual the average song event would appear to members of that society. We found that in every society, a song event at the global mean would not appear out of place: the global mean always falls within the 95% confidence interval of every society’s distribution (Fig. S3). These results do not appear to be driven by any bias stemming from ethnographer characteristics such as sex or academic field (Fig. S4 and SI Text 2.1.7), nor are they artifacts of a society being related to other societies in the sample by region, subregion, language family, subsistence type, or location in the Old versus New World (Fig. S5 and SI Text 2.1.8).

Fig. 3. Society-wise variation in musical behavior.

Density plots for each society showing the distributions of musical performances on each of the three principal components (Formality, Arousal, Religiosity). Distributions are based on posterior samples aggregated from corresponding ethnographic observations. Societies are ordered by the number of available documents in the NHS Ethnography (the number of documents per society is displayed in parentheses). Distributions are color-coded based on their mean distance from the global mean (in z-scores; redder distributions are farther from 0). While some societies’ means differ significantly from the global mean, the mean of each society’s distribution is within 1.96 standard deviations of the global mean of 0. One society (Tzeltal) is not plotted, because it has insufficient observations for a density plot. Asterisks denote society-level mean differences from the global mean. *p < .05; **p < .01; ***p < .001

We also applied a comparison that is common in studies of genetic diversity (85) and that has been performed in a recent cultural-phylogenetic study of music (86). It revealed that typical within-society variation is approximately six times larger than between-society variation. Specifically, the ratios of within- to between-society variances were 5.58 [4.11, 6.95] for Formality; 6.39 [4.72, 8.34] for Arousal; and 6.21 [4.47, 7.94] for Religiosity (95% Bayesian credible intervals in brackets). Moreover, none of the 180 mean values for the 60 societies over the 3 dimensions deviated from the global mean by more than 1.96 times the standard deviation of that society (Fig. S3 and SI Text 2.1.9).
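To make the two quantities in this comparison concrete, here is a minimal Python sketch of a within- to between-society variance ratio and of the check that the global mean lies within 1.96 standard deviations of each society's mean. It uses simple moment-based estimates on made-up scores; the society names, the data, and both function names are hypothetical, and the reported values come from a Bayesian analysis (SI Text 2.1.9) that this sketch does not reproduce.

```python
from statistics import mean, pstdev, pvariance


def within_between_ratio(scores_by_society):
    """Average within-society variance divided by variance of society means."""
    within = mean(pvariance(scores) for scores in scores_by_society.values())
    society_means = [mean(scores) for scores in scores_by_society.values()]
    between = pvariance(society_means)
    return within / between


def global_mean_within_band(scores_by_society, z=1.96):
    """For each society, is the global mean within z SDs of that society's mean?"""
    pooled = [x for scores in scores_by_society.values() for x in scores]
    global_mean = mean(pooled)
    return {
        name: abs(mean(scores) - global_mean) <= z * pstdev(scores)
        for name, scores in scores_by_society.items()
    }


# Hypothetical scores on a single dimension for three made-up societies.
scores = {
    "A": [-1.0, 0.0, 1.0, 2.0],
    "B": [-2.0, -1.0, 0.0, 1.0],
    "C": [-1.5, -0.5, 0.5, 1.5],
}

ratio = within_between_ratio(scores)
band = global_mean_within_band(scores)
print(ratio, band)
```

A ratio well above 1 indicates that scores differ more among songs within a society than society averages differ from one another.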

These findings demonstrate global regularities in musical behavior, but they also reveal that behaviors vary quantitatively across societies — consistent with the longstanding conclusions of ethnomusicologists. For instance, the Kanuri’s musical behaviors are estimated to be less formal than those of any other society, whereas the Akan’s are estimated to be the most religious (in both cases, significantly different from the global mean on average). Some ethnomusicologists have attempted to explain such diversity, noting, for example, that more formal song performances tend to be found in more socially rigid societies (10).

Despite this variation, a song event of average formality would appear unremarkable in the Kanuri’s distribution of songs, as would a song event of average religiosity in the Akan. Overall, we find that for each dimension, approximately one-third of all societies’ means significantly differed from the global mean, and approximately half differed from the global mean on at least one dimension (Fig. 3). But despite variability in the societies’ means on each dimension, their distributions overlap substantially with one another and with the global mean. Moreover, even the outliers in Fig. 3 appear to represent not genuine idiosyncrasy in some cultures but sampling error: the societies that differ more from the global mean on some dimension are those with sparser documentation in the ethnographic record (Fig. S6 and SI Text 2.1.10). To ensure that these results are not artifacts of the statistical techniques employed, we applied them to a structurally analogous dataset whose latent dimensions are expected to vary across countries, namely climate features (since temperature, for instance, is related to elevation, which certainly is not universal); the results were entirely different from what we found when analyzing the NHS Ethnography (Figs. S7–S8 and SI Text 2.1.11).

The results suggest that societies’ musical behaviors are largely similar to one another, such that the variability within a society exceeds the variability between them (all societies have more soothing songs, such as lullabies; more rousing songs, such as dance tunes; more stirring songs, such as prayers; and other recognizable kinds of musical performance), and that the appearance of uniqueness in the ethnographic record may reflect under-reporting.

Associations between song and behavior, corrected for bias

Ethnographic descriptions of behavior are subject to several forms of selective nonreporting: ethnographers may omit certain kinds of information because of their academic interests (e.g., the author focuses on farming and not shamanism), implicit or explicit biases (e.g., the author reports less information about the elderly), lack of knowledge (e.g., the author is unaware of food taboos), or inaccessibility (e.g., the author wants to report on infant care but is not granted access to infants). We cannot distinguish among these causes, but we can discern patterns of omission in the NHS Ethnography. For example, we find that when the singer’s age is reported, they are likely to be young, but when the singer’s age is not reported, other cues are statistically present (such as the fact that a song is ceremonial) which suggest that they are old. Such correlations, between the absence of certain values of one variable and the reporting of particular values of others, were aggregated into a model of missingness (SI Text 2.1.12) that forms part of the Bayesian principal component analysis reported in the previous sections.

This allows us to test hypotheses about the contexts with which music is strongly associated worldwide, while accounting for reporting biases. We compared the frequency with which a particular behavior appears in text describing song with the estimated frequency with which it appears across the board, in all the text written by that ethnographer about that society, which can be treated as the null distribution for that behavior. If a behavior is systematically associated with song, then its frequency in the NHS Ethnography should exceed its frequency in that null distribution, which we estimate by randomly drawing the same number of passages from the same documents (full model details are in SI Text 2.2).
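The comparison against a simulated null can be sketched in Python as follows. This is a simplified illustration under stated assumptions: passages are represented as sets of tags, each document contributes as many random draws as it has song passages, and the document, counts, and function names are all hypothetical. The full model, including its handling of ethnographer-level frequencies, is described in SI Text 2.2.

```python
import random


def simulate_null_counts(documents, n_song_by_doc, is_behavior, n_sim=500, seed=0):
    """Count behavior passages in random draws matched in size to the song passages."""
    rng = random.Random(seed)
    null_counts = []
    for _ in range(n_sim):
        total = 0
        for doc_id, n_song in n_song_by_doc.items():
            drawn = rng.sample(documents[doc_id], n_song)  # without replacement
            total += sum(1 for passage in drawn if is_behavior(passage))
        null_counts.append(total)
    return null_counts


def summarize(observed, null_counts):
    """Return the null mean, an approximate 95% interval, and a one-sided p-value."""
    ordered = sorted(null_counts)
    n = len(ordered)
    lower = ordered[int(0.025 * (n - 1))]
    upper = ordered[int(0.975 * (n - 1))]
    p_value = (sum(c >= observed for c in ordered) + 1) / (n + 1)
    return sum(ordered) / n, (lower, upper), p_value


# Hypothetical document: 100 passages, 10 of which carry the DANCE tag.
documents = {"doc1": [{"DANCE"}] * 10 + [{"OTHER"}] * 90}
n_song_by_doc = {"doc1": 20}   # the document has 20 song-related passages
observed_song_count = 12       # 12 of those 20 are tagged DANCE (made up)

null_counts = simulate_null_counts(
    documents, n_song_by_doc, lambda tags: "DANCE" in tags
)
null_mean, null_interval, p_value = summarize(observed_song_count, null_counts)
print(null_mean, null_interval, p_value)
```

Drawing from the same documents holds each ethnographer's general reporting habits fixed, so an excess in song passages is not explained simply by an author who writes often about a given behavior.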

We generated a list of 20 hypotheses about universal or widespread contexts for music (Table 1) from published work in anthropology, ethnomusicology, and cognitive science (4, 5, 54, 58–60, 83), together with a survey of nearly 1000 scholars which solicited opinions about which behaviors might be universally linked to music (SI Text 1.4.1). We then designed two sets of criteria for determining whether a given passage of ethnography represented a given behavior in this list. The first used human-annotated identifiers, capitalizing on the fact that every paragraph in the Probability Sample File comes tagged with one of more than 750 identifiers from the Outline of Cultural Materials (OCM), such as MOURNING, INFANT CARE, or WARFARE.

Table 1. Cross-cultural associations between song and other behaviors.

We tested 20 hypothesized associations between song and other behaviors by comparing the frequency of a behavior in song-related passages to that in comparably-sized samples of text from the same sources that are not about song. Behavior was identified with two methods: topic annotations from the Outline of Cultural Materials (“OCM identifiers”), and automatic detection of related keywords (“WordNet seed words”; see Table S19). Significance tests compared the frequencies in the passages in the full Probability Sample File containing song-related keywords (“Song freq.”) with the frequencies in a simulated null distribution of passages randomly selected from the same documents (“Null freq.”).

| Hypothesis | OCM identifier(s) | Song freq. (OCM) | Null freq. (OCM) | WordNet seed word(s) | Song freq. (WordNet) | Null freq. (WordNet) |
|---|---|---|---|---|---|---|
| Dance | DANCE | 1499*** | 431 [397, 467] | dance | 11145*** | 3283 [3105, 3468] |
| Infancy | INFANT CARE | 63* | 44 [33, 57] | infant, baby, cradle, lullaby | 688** | 561 [491, 631] |
| Healing | MAGICAL AND MENTAL THERAPY; SHAMANS AND PSYCHOTHERAPISTS; MEDICAL THERAPY; MEDICAL CARE | 1651*** | 1063 [1004, 1123] | heal, shaman, sick, cure | 3983*** | 2466 [2317, 2619] |
| Religious activity | SHAMANS AND PSYCHOTHERAPISTS; RELIGIOUS EXPERIENCE; PRAYERS AND SACRIFICES; PURIFICATION AND ATONEMENT; ECSTATIC RELIGIOUS PRACTICES; REVELATION AND DIVINATION; RITUAL | 3209*** | 2212 [2130, 2295] | religious, spiritual, ritual | 8644*** | 5521 [5307, 5741] |
| Play | GAMES; CHILDHOOD ACTIVITIES | 377*** | 277 [250, 304] | play, game, child, toy | 4130*** | 2732 [2577, 2890] |
| Procession | SPECTACLES; NUPTIALS | 371*** | 213 [188, 240] | wedding, parade, march, procession, funeral, coronation | 2648*** | 1495 [1409, 1583] |
| Mourning | BURIAL PRACTICES AND FUNERALS; MOURNING; SPECIAL BURIAL PRACTICES AND FUNERALS | 924*** | 517 [476, 557] | mourn, death, funeral | 3784*** | 2511 [2373, 2655] |
| Ritual | RITUAL | 187*** | 99 [81, 117] | ritual, ceremony | 8520** | 5138 [4941, 5343] |
| Entertainment | SPECTACLES | 44*** | 20 [12, 29] | entertain, spectacle | 744*** | 290 [256, 327] |
| Children | CHILDHOOD ACTIVITIES | 178*** | 108 [90, 126] | child | 4351*** | 3471 [3304, 3647] |
| Mood/emotions | DRIVES AND EMOTIONS | 219*** | 138 [118, 159] | mood, emotion, emotive | 796*** | 669 [607, 731] |
| Work | LABOR AND LEISURE | 137*** | 60 [47, 75] | work, labor | 3500** | 3223 [3071, 3378] |
| Storytelling | VERBAL ARTS; LITERATURE | 736*** | 537 [506, 567] | story, history, myth | 2792*** | 2115 [1994, 2239] |
| Greeting visitors | VISITING AND HOSPITALITY | 360*** | 172 [148, 196] | visit, greet, welcome | 1611*** | 1084 [1008, 1162] |
| War | WARFARE | 264 | 283 [253, 311] | war, battle, raid | 3154*** | 2254 [2122, 2389] |
| Praise | STATUS, ROLE, AND PRESTIGE | 385 | 355 [322, 388] | praise, admire, acclaim | 481*** | 302 [267, 339] |
| Love | ARRANGING A MARRIAGE | 158 | 140 [119, 162] | love, courtship | 1625*** | 804 [734, 876] |
| Group bonding | SOCIAL RELATIONSHIPS AND GROUPS | 141 | 163 [141, 187] | bond, cohesion | 1582*** | 1424 [1344, 1508] |
| Marriage/weddings | NUPTIALS | 327*** | 193 [169, 218] | marriage, wedding | 2011 | 2256 [2108, 2410] |
| Art/creation | n/a | n/a | n/a | art, creation | 905*** | 694 [630, 757] |

***p < .001; **p < .01; *p < .05, using adjusted p-values (88); 95% intervals for the null distribution are in brackets.

The second was needed because some hypotheses corresponded only loosely to the OCM identifiers (e.g., “love songs” is only a partial fit to ARRANGING A MARRIAGE, and not an exact fit to any other identifier), and still others fit no identifier at all (e.g., “music perceived as art or as a creation” (51)). So we designed a method that examined the text directly. Starting with a small set of seed words associated with each hypothesis (e.g., “religious”, “spiritual”, and “ritual”, for the hypothesis that music is associated with religious activity), we used the WordNet lexical database (87) to automatically generate lists of conceptually related terms (e.g., “rite” and “sacred”). We manually filtered the lists to remove irrelevant words and homonyms and add relevant keywords that may have been missed, then conducted word stemming to fill out plurals and other grammatical variants (full lists are in Table S19). Each method has limitations: automated dictionary methods can erroneously flag a passage which contains a word that is ambiguous, whereas the human-coded OCM identifiers may miss a relevant passage, misinterpret the original ethnography, or paint with too broad a brush, applying a tag to a whole paragraph or to several pages of text. Where the two methods converge, support for a hypothesis is particularly convincing.
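The keyword-matching step can be illustrated with a short Python sketch. It covers only the final matching stage: the stem list is hard-coded from the examples given in the text (the WordNet expansion and manual filtering are not reproduced), and the prefix-based regular expression is a crude stand-in for word stemming rather than the method actually used. The function names and sample passages are hypothetical.

```python
import re


def build_matcher(stems):
    """Compile a pattern matching any word that begins with one of the stems."""
    alternatives = "|".join(re.escape(stem) for stem in stems)
    return re.compile(r"\b(?:" + alternatives + r")[a-z]*\b", re.IGNORECASE)


def count_matching(passages, matcher):
    """Number of passages containing at least one matching word."""
    return sum(1 for text in passages if matcher.search(text))


# Stems loosely based on the seed and related words mentioned in the text.
religious = build_matcher(["religio", "spiritual", "ritual", "rite", "sacred"])

# Hypothetical passages, for illustration only.
passages = [
    "The rites were performed at dawn.",
    "A sacred song opened the ceremony.",
    "He began to write a letter.",
    "The spirit of the festival was cheerful.",
]

n_religious = count_matching(passages, religious)
print(n_religious)
```

Because the pattern anchors each stem at a word boundary, "write" does not match "rite"; words with more than one sense would still be flagged, which is the limitation of dictionary methods noted above.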

After controlling for ethnographer bias via the method described above, and adjusting the p-values for multiple hypotheses (88), we find support from both methods for 14 of the 20 hypothesized associations between music and a behavioral context, and support from one method for the remaining 6 (Table 1). To verify that these results specifically confirmed the hypotheses, rather than reflecting some other nonrandom patterning in this dataset, we re-ran the analyses on a set of additional OCM identifiers matched in frequency to the ones used above (the selection procedure is described in SI Text 2.2.2). They covered a broad swath of topics, including DOMESTICATED ANIMALS, POLYGAMY, and LEGAL NORMS, that were not hypothesized to be related to song (the full list is in Table S20). We find that only one of them appeared more frequently in song-related paragraphs than in the simulated null distribution (CEREAL AGRICULTURE; see Table S20 for full results). This contrasts sharply with the associations reported in Table 1, suggesting that they represent bona fide regularities in the behavioral contexts of music.

Universality of musical forms

We now turn to the NHS Discography to examine the musical content of songs in four behavioral contexts (dance, lullaby, healing, and love; Fig. 4A), selected because each appears in the NHS Ethnography, is widespread in traditional cultures (59), and exhibits shared features across societies (54). Using predetermined criteria based on liner notes and supporting ethnographic text (Table S21), and seeking recordings of each type from each of the 30 geographic regions, we found 118 songs of the 120 possibilities (4 contexts by 30 regions) from 86 societies (Fig. 4B). This coverage underscores the universality of these four types; indeed, in the two possibilities we failed to find (healing songs from Scandinavia and from the British Isles), documentary evidence shows that both existed (89, 90) but were rare by the early 1900s, when collecting field recordings in remote areas became feasible.

Fig. 4. Design of the NHS Discography.

The illustration depicts the sequence from acts of singing to the audio discography. (A) People produce songs, which scholars record. We aggregate and analyze the recordings via four methods: automatic music information retrieval, annotations from expert listeners, annotations from naive listeners, and staff notation transcriptions (from which annotations are automatically generated). The raw audio, four types of annotations, transcriptions, and metadata together form the NHS Discography. The locations of the 86 societies represented are plotted in (B), with points colored by the song type in each recording (dance in blue, healing in red, love in yellow, lullaby in green). Codebooks listing all available data are in Tables S1 and S7–S11; a listing of societies and locations from which recordings were gathered is in Table S22.

The data describing each song comprised (a) machine summaries of the raw audio using automatic music information retrieval techniques, particularly the audio’s spectral features (e.g., mean brightness and roughness, variability of spectral entropy; SI Text 1.2.1); (b) general impressions of musical features (e.g., whether its emotional valence was happy or sad) by untrained listeners recruited online from the United States and India (SI Text 1.2.2); (c) ratings of additional music-theoretic features (e.g., high-level rhythmic grouping structure), similar in concept to previous rating-scale approaches to analyzing world music (10, 53), from a group of experts, namely 30 musicians that included PhD ethnomusicologists and music theorists (SI Text 1.2.3); and (d) detailed manual transcriptions, also by expert musicians, of musical features (e.g., note density of sung pitches; SI Text 1.2.4). To ensure that classifications were driven only by the content of the music, we excluded any variables that carried explicit or implicit information about the context (54), such as the number of singers audible on a recording and a coding of polyphony (which indicates the same thing implicitly). This exclusion could be complete only in the manual transcriptions, which are restricted to data on vocalizations; the music information retrieval and naive listener data are practically inseparable from contextual information, and the expert listener ratings contain at least a small amount, since despite being told to ignore the context, the experts could still hear some of it, such as accompanying instruments. Details about variable exclusion are in SI Text 2.3.1.

Listeners accurately identify the behavioral contexts of songs

In a previous study, people listened to recordings from the NHS Discography and rated their confidence in each of six possible behavioral contexts (e.g., “used to soothe a baby”). On average, the listeners successfully inferred a song’s behavioral context from its musical forms: the songs that were actually used to soothe a baby (i.e., lullabies) were rated highest as “used to soothe a baby”; dance songs were rated highly as “used for dancing”, and so on (54).

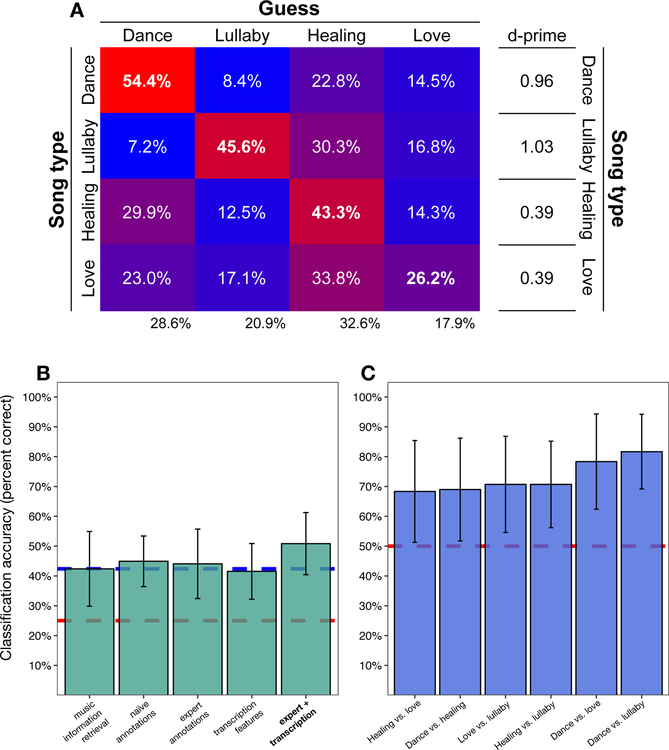

We ran a massive conceptual replication (details in SI Text 1.4.2) where 29,357 visitors to the citizen-science website http://themusiclab.org listened to songs drawn at random from the NHS Discography and were asked to guess what kind of song they were listening to from among 4 alternatives (yielding 185,832 ratings, i.e., 118 songs rated about 1,500 times each). Participants also reported their musical skill level and degree of familiarity with world music. Listeners guessed the behavioral contexts with a level of accuracy (42.4%) that is well above chance (25%), showing that the acoustic properties of a song performance reflect its behavioral context in ways that span human cultures.

The confusion matrix (Fig. 5A) shows that listeners identified dance songs most accurately (54.4%), followed by lullabies (45.6%), healing songs (43.3%), and love songs (26.2%), all significantly above chance (ps < .001). Dance songs and lullabies were the least likely to be confused with each other, presumably because of their many contrasting features, such as tempo (a possibility we examine below; see Table 2). The column marginals suggest that the raters were biased toward identifying recordings as healing songs (32.6%, above their actual proportion of 25%), and away from identifying them as love songs (17.9%), possibly because healing songs are less familiar to Westernized listeners and they were overcompensating in identifying examples. As in previous research (54), love songs were least reliably identified, despite their ubiquity in Western popular music, possibly because they span a wide range of styles (compare Love Me Tender to Burning Love, to take just one artist). Nonetheless, d-prime scores (Fig. 5A), which capture the sensitivity to a signal independently of response bias, show that all behavioral contexts were identified at a rate higher than chance (d’ = 0).
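
As an illustration of why d-prime separates sensitivity from response bias, here is a minimal sketch using the textbook yes/no formula, d′ = z(hit rate) − z(false-alarm rate). The exact computation behind Fig. 5A may differ; the function name `d_prime` and the false-alarm rate in the example are assumptions, and only the 54.4% hit rate for dance songs comes from the text.

```python
from statistics import NormalDist

def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index: z(hit rate) minus z(false-alarm rate).

    Both rates must lie strictly between 0 and 1, because the inverse
    normal CDF is undefined at the endpoints.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)

# A listener who answers "dance" equally often for dance and non-dance
# songs has no sensitivity, however often they give that answer.
no_sensitivity = d_prime(0.5, 0.5)

# Hit rate for dance songs from the text (54.4%), paired with a
# HYPOTHETICAL false-alarm rate of 15% for illustration only.
dance_example = d_prime(0.544, 0.15)
```

Because a bias toward one response raises the hit rate and the false-alarm rate together, it largely cancels in the difference, which is why the bias toward "healing" responses does not by itself inflate d′.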

Fig. 5. Form and function in song.

In a massive online experiment (N = 29,357), listeners categorized dance songs, lullabies, healing songs, and love songs at rates higher than the chance level of 25% (A), but their responses to love songs were by far the most ambiguous (the heatmap shows average percent correct, color coded from lowest magnitude, in blue, to highest magnitude, in red). Note that the marginals (below the heatmap) are not evenly distributed across behavioral contexts: listeners guessed “healing” most often and “love” least often despite the equal number of each in the materials. The d-prime scores estimate listeners’ sensitivity to the song-type signal independent of this response bias. Categorical classification of the behavioral contexts of songs (B), using each of the four representations in the NHS Discography, is substantially above the chance performance level of 25% (dotted red line) and is indistinguishable from the performance of human listeners, 42.4% (dotted blue line). The classifier that combines expert annotations with transcription features (the two representations that best ignore background sounds and other context) performs at 50.8% correct, above the level of human listeners. (C) Binary classifiers that use the expert annotation + transcription feature representations to distinguish pairs of behavioral contexts (e.g., dance from love songs, as opposed to the 4-way classification in B) perform above the chance level of 50% (dotted red line). Error bars represent 95% confidence intervals from corrected resampled t-tests (95).

Table 2. Features of songs that distinguish between behavioral contexts.

The table reports the predictive influence of musical features in the NHS Discography in distinguishing song types across cultures, ordered by their overall influence across all behavioral contexts. The classifiers used the average rating for each feature across 30 annotators. The coefficients are from a penalized logistic regression with standardized features and are selected for inclusion using a lasso for variable selection. For brevity, we only present the subset of features with notable influence on a pairwise comparison (coefficients greater than 0.1). Changes in the values of the coefficients produce changes in the predicted log-odds ratio, so the values in the table can be interpreted as in a logistic regression.

Columns 3–8 give the coefficient for each pairwise comparison.

| Musical feature | Definition | Dance (−) vs. Lullaby (+) | Dance (−) vs. Love (+) | Healing (−) vs. Lullaby (+) | Love (−) vs. Lullaby (+) | Dance (−) vs. Healing (+) | Healing (−) vs. Love (+) |
|---|---|---|---|---|---|---|---|

| Accent | The differentiation of musical pulses, usually by volume or emphasis of articulation. A fluid, gentle song will have few accents and a correspondingly low value. | −0.64 | −0.24 | −0.85 | −0.41 | . | −0.34 |

| Tempo | The rate of salient rhythmic pulses, measured in beats per minute; the perceived speed of the music. A fast song will have a high value. | −0.65 | −0.51 | . | . | −0.76 | . |

| Quality of pitch collection | Major versus minor key. In Western music, a key usually has a “minor” quality if its third note is three semitones from the tonic. This variable was derived from annotators’ qualitative categorization of the pitch collection, which we then dichotomized into Major (0) or Minor (1). | . | 0.26 | 0.44 | . | −0.37 | 0.35 |

| Consistency of macro-meter | Meter refers to salient repetitive patterns of accent within a stream of pulses. A micro-meter refers to the low-level pattern of accents; a macro-meter refers to repetitive patterns of micro-meter groups. This variable refers to the consistency of the macro-meter, in an ordinal scale, from “No macro-meter” (1) to “Totally clear macro-meter” (6). A song with a highly variable macro-meter will have a low value. | −0.44 | −0.49 | . | . | −0.46 | . |

| Number of common intervals | Variability in interval sizes, measured by the number of different melodic interval sizes that constitute more than 9% of the song’s intervals. A song with a large number of different melodic interval sizes will have a high value. | . | 0.58 | . | . | . | 0.62 |

| Pitch range | The musical distance between the extremes of pitch in a melody, measured in semitones. A song that includes very high and very low pitches will have a high value. | . | . | . | −0.49 | . | . |

| Stepwise motion | Stepwise motion refers to melodic strings of consecutive notes (1 or 2 semitones apart), without skips or leaps. This variable consists of the fraction of all intervals in a song that are 1 or 2 semitones in size. A song with many melodic leaps will have a low value. | . | . | . | . | 0.61 | −0.20 |

| Tension/release | The degree to which the passage is perceived to build and release tension via changes in melodic contour, harmonic progression, rhythm, motivic development, accent, or instrumentation. If so, the song is annotated with a value of 1. | . | 0.27 | . | . | . | 0.27 |

| Average melodic interval size | The average of all interval sizes between successive melodic pitches, measured in semitones on a 12-tone equal temperament scale, rather than in absolute frequencies. A melody with many wide leaps between pitches will have a high value. | . | −0.46 | . | . | . | . |

| Average note duration | The mean of all note durations; a song predominated by short notes will have a low value. | . | . | . | . | . | −0.49 |

| Triple micro-meter | A low-level pattern of accents that groups together pulses in threes. | . | . | . | . | −0.23 | . |

| Predominance of most-common pitch class | Variety versus monotony of the melody, measured by the ratio of the proportion of occurrences of the second-most-common pitch (collapsing across octaves) to the proportion of occurrences of the most common pitch; monotonous melodies will have low values. | . | . | . | . | −0.48 | . |

| Rhythmic variation | Variety versus monotony of the rhythm, judged subjectively and dichotomously. Repetitive songs have a low value. | . | . | . | . | 0.42 | . |

| Tempo variation | Changes in tempo: a song that is perceived to speed up or slow down is annotated with a value of 1 | . | . | . | . | . | −0.27 |

| Ornamentation | Complex melodic variation or “decoration” of a perceived underlying musical structure. A song perceived as having ornamentation is annotated with a value of 1. | . | 0.25 | . | . | . | . |

| Pitch class variation | A pitch class is the group of pitches that sound equivalent at different octaves, such as all the Cs, not just Middle C. This variable, another indicator of melodic variety, counts the number of pitch classes that appear at least once in the song. | . | . | −0.25 | . | . | . |

| Triple macro-meter | If a melody arranges micro-meter groups into larger phrases of three, like a waltz, it is annotated with a value of 1. | . | . | 0.14 | . | . | . |

| Predominance of most-common interval | Variability among pitch intervals, measured as the fraction of all intervals that are the most common interval size. A song with little variability in interval sizes will have a high value. | . | . | . | . | 0.12 | . |
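
To make the log-odds interpretation of Table 2 concrete, the following sketch converts a coefficient into an odds multiplier. It assumes, as the caption states, that features are standardized, so a one-unit change is one standard deviation. The helper name `odds_multiplier` is hypothetical, and because the coefficients are penalized they are shrunk toward zero, so the resulting multipliers are best read as rough guides.

```python
from math import exp

def odds_multiplier(coefficient, sd_change=1.0):
    """Factor by which the odds of the (+) context change when a
    standardized feature increases by `sd_change` standard deviations."""
    return exp(coefficient * sd_change)

# Tempo, Dance (-) vs. Lullaby (+): coefficient -0.65 from Table 2.
tempo_dance_vs_lullaby = odds_multiplier(-0.65)

# Number of common intervals, Healing (-) vs. Love (+): coefficient 0.62.
intervals_healing_vs_love = odds_multiplier(0.62)
```

Under these assumptions, a song one standard deviation faster than average has roughly half the odds of being a lullaby rather than a dance song (a multiplier of about 0.52), with the other features held fixed.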

Are accurate identifications of the contexts of culturally unfamiliar songs restricted to listeners with musical training or exposure to world music? In a regression analysis, we found that participants’ categorization accuracy was statistically related to their self-reported musical skill (F(4,16245) = 2.57, p = .036) and their familiarity with world music (F(3,16167) = 36.9, p < .001; statistics from linear probability models), but with small effect sizes: the largest difference was a 4.7 percentage point advantage for participants who reported they were “somewhat familiar with traditional music” relative to those who reported that they had never heard it, and a 1.3 percentage point advantage for participants who reported that they have “a lot of skill” relative to “no skill at all.” Moreover, when limiting the dataset to listeners with “no skill at all” or listeners who had “never heard traditional music”, mean accuracy was almost identical to the overall cohort. These findings suggest that while musical experience enhances the ability to detect the behavioral contexts of songs from unfamiliar cultures, it is not necessary.

Quantitative representations of musical forms accurately predict behavioral contexts of song

If listeners can accurately identify the behavioral contexts of songs from unfamiliar cultures, there must be acoustic features that universally tend to be associated with these contexts. To identify them, we evaluated the relationship between a song’s musical forms (measured in four ways; see SI Text 1.2.5 and (12, 31, 32, 91–93) for discussion of how difficult it is to represent music quantitatively) and its behavioral context using a cross-validation procedure that determined whether the pattern of correlation between musical forms and context computed from a subset of the regions could be generalized to predict a song’s context in the other regions (as opposed to being overfitted to arbitrary correlations within that subsample). Specifically, we trained a LASSO-regularized categorical logistic regression classifier (94) on the behavioral context of all the songs in 29 of the 30 regions in NHS Discography, and used it to predict the context of the unseen songs in the 30th. We ran this procedure 30 times, omitting a different region each time (SI Text 2.3.2 and Table S23). We compared the accuracy of these predictions to two baselines: pure chance (25%), and the accuracy of listeners in the massive online experiment (see above) when guessing the behavioral context from among four alternatives (42.4%).
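
The leave-one-region-out procedure can be sketched as follows. To keep the example self-contained, a simple nearest-centroid classifier is swapped in for the LASSO-regularized categorical logistic regression used in the paper; only the cross-validation loop mirrors the description above. All names and the toy data are hypothetical.

```python
from collections import defaultdict

def fit_centroids(rows):
    """rows: list of (features, label) pairs. Returns {label: mean feature vector}."""
    sums, counts = {}, defaultdict(int)
    for features, label in rows:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def leave_one_region_out_accuracy(songs):
    """Train on all regions but one, test on the held-out region, and repeat."""
    regions = sorted({s["region"] for s in songs})
    correct = 0
    for held_out in regions:
        train = [(s["features"], s["context"])
                 for s in songs if s["region"] != held_out]
        test = [s for s in songs if s["region"] == held_out]
        centroids = fit_centroids(train)
        correct += sum(predict(centroids, s["features"]) == s["context"]
                       for s in test)
    return correct / len(songs)

# Toy data: three regions, two contexts, one standardized "tempo" feature.
toy_songs = [
    {"region": region, "features": [tempo], "context": context}
    for region, shift in (("A", 0.0), ("B", 0.1), ("C", -0.1))
    for context, tempo in (("dance", 1.0 + shift), ("lullaby", -1.0 + shift))
]
toy_accuracy = leave_one_region_out_accuracy(toy_songs)
```

Holding out whole regions, rather than random songs, is what guards against a classifier that merely memorizes regional idiosyncrasies: every prediction is made for a region the model has never seen.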

We found that with each of the four representations, the musical forms of a song can predict its behavioral context (Fig. 5B) at rates comparable to those of the human listeners in the online experiment. This finding was not attributable to information in the recordings other than the singing, which could be problematic if, for example, the presence of a musical instrument on a recording indicated that it is likelier to be a dance song than a lullaby (54), artificially improving classification. Representations with the least extraneous influence (the expert annotators and the summary features extracted from transcriptions) had no lower classification accuracy than the other representations. And a classifier run on combined expert and transcription data had the best performance of all, 50.8% (95% CI [40.4%, 61.3%], computed by corrected resampled t-test (95)), well exceeding that of human ratings.
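
For readers unfamiliar with the corrected resampled t-test, the sketch below shows one common form of the interval, in which the variance of per-fold scores is inflated by a factor of (1/k + n_test/n_train) to account for the overlap between training sets. Whether this matches the exact computation in (95) is an assumption; the function name, the fold scores, and the fold sizes are hypothetical, and the critical t value is supplied by the caller because the standard library has no t distribution.

```python
from math import sqrt
from statistics import mean, variance

def corrected_resampled_ci(fold_scores, n_train, n_test, t_crit):
    """Confidence interval for mean cross-validated accuracy.

    fold_scores: accuracy in each of the k held-out folds
    n_train, n_test: number of songs used for training and testing per fold
    t_crit: critical t value for k - 1 degrees of freedom
    """
    k = len(fold_scores)
    center = mean(fold_scores)
    # Naive variance/k is replaced by (1/k + n_test/n_train) * variance.
    standard_error = sqrt((1.0 / k + n_test / n_train) * variance(fold_scores))
    return center - t_crit * standard_error, center + t_crit * standard_error

# HYPOTHETICAL scores for 30 folds of about 4 songs each (114 train, 4 test);
# 2.045 is the two-sided 95% critical value of t with 29 degrees of freedom.
scores = [0.5, 0.25, 0.75] * 10
low, high = corrected_resampled_ci(scores, n_train=114, n_test=4, t_crit=2.045)
```

The correction widens the interval relative to a naive t-test, which treats folds as independent even though their training sets share most of their songs.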

To ensure that this accuracy did not merely consist of patterns in one society predicting patterns in historically or geographically related ones, we repeated the analyses, cross-validating across groupings of societies, including superordinate world region (e.g., “Asia”), subsistence type (e.g., “hunter-gatherers”), and Old versus New World. In many cases, the classifier performed comparably to the main model (Table S24), though low power in some cases (i.e., training on less than half the corpus) substantially reduced precision.

In sum, the acoustic form of vocal music predicts its behavioral contexts worldwide (54), at least in the contexts of dance, lullaby, healing, and love: all classifiers performed above chance and within 1.96 standard errors of the performance of human listeners.

The musical features characterizing the behavioral contexts of songs across societies

Showing that the musical features of songs predict their behavioral context provides no information about which musical features those are. To help identify them, we determined how well the combined expert + transcription data distinguished between specific pairs of behavioral contexts rather than among all four, using a simplified form of the classifiers described above, which not only distinguished the contexts but also identified the most reliable predictors of each contrast, without overfitting (96). This can reveal whether tempo, for example, helps distinguish dance songs from lullabies while failing to distinguish lullabies from love songs.

Performance once again significantly exceeded chance (in this case, 50%) for all 6 comparisons (ps < .05; Fig. 5C). Table 2 lays out the musical features that drive these successful predictions and thereby characterize the four song types across cultures. Some are consistent with common sense; for instance, dance songs differ from lullabies in tempo, accent, and the consistency of their macro-meter (i.e., the superordinate grouping of rhythmic notes). Other distinguishers are subtler: the most common interval of a song occurs a smaller proportion of the time in a dance song than in a healing song, suggesting that dance songs are more melodically variable than healing songs (for explanations of musical terminology, see Table 2). Similarly, it is unsurprising that lullabies and love songs are more difficult to distinguish than lullabies and dance songs (97); nonetheless, they may be distinguished by two features: the strength of metrical accents and the size of the pitch range (both larger in love songs).

In sum, four common song categories, distinguished by their contexts and goals, tend to have distinctive musical qualities worldwide. These results suggest that universal features of human psychology bias people to produce and enjoy songs with certain kinds of rhythmic or melodic patterning that naturally go with certain moods, desires, and themes. These patterns do not consist of concrete acoustic features, such as a specific melody or rhythm, but rather of relational properties like accent, meter, and interval structure.

Of course, classification accuracy that is twice the level of chance still falls well short of perfect prediction, showing that many aspects of music cannot be manifestations of universal psychological reactions. Though musical features can predict differences between songs from these four behavioral contexts, a given song may be sung in a particular context for other reasons, including its lyrics, its history, the style and instrumentation of its performance, its association with mythical or religious themes, and constraints of the culture’s musical idiom. And while we have shown that Western listeners, who have been exposed to a vast range of musical styles and idioms, can distinguish the behavioral contexts of songs from non-Western societies, we do not know whether non-Western listeners can do the same. To reinforce the hypothesis of universal associations between musical form and context, similar methods should be tested with non-Western listeners.

Explorations of the structure of musical forms

The NHS Discography may be used to explore world music in many other ways. We present three exploratory analyses here, mindful of the limitation that they may apply only to the four genres the corpus includes.

Signatures of tonality appear in all societies studied

A basic feature of many styles of music is tonality, in which a melody is composed of a fixed set of discrete tones (perceived pitches, as opposed to actual pitches, a distinction dating to Aristoxenus’s Elementa Harmonica; (98)), and some tones are psychologically dependent on others, with one tone felt to be central or stable (99–101). This tone (more accurately, perceived pitch class, embracing all the tones one or more octaves apart) is called the tonal center or tonic, and listeners characterize it as a reference point, point of stability, basis tone, “home”, or tone that the melody “is built around” and where it “should end.” For example, the tonal center of Row your boat is found in each of the “row”s, the last “merrily”, and the song’s last note, “dream.”

While tonality has been studied in a few non-Western societies (102, 103), its cross-cultural distribution is unknown. Indeed, the ethnomusicologists who responded to our survey (SI Text 1.4.1) were split over whether the music of all societies should be expected to have a tonal center: 48% responded “probably not universal” or “definitely not universal.” The issue is important because a tonal system is a likely prerequisite for analyzing music, in all its diversity, as the product of an abstract musical grammar (73). Tonality also motivates the hypothesis that melody is rooted in the brain’s analysis of harmonically complex tones (104). In this theory, a melody can be considered a set of “serialized overtones,” the harmonically related frequencies ordinarily superimposed in the rich tone produced by an elongated resonator such as the human vocal tract. In tonal melodies, the tonic corresponds to the fundamental frequency of the disassembled complex tone, and listeners tend to favor tones in the same pitch class as harmonics of the fundamental (105).

To explore tonality in the NHS Discography, we analyzed the expert listener annotations and the transcriptions (SI Text 2.4.1). Each of the 30 expert listeners was asked, for each song, whether or not they heard at least one tonal center, defined subjectively as above. The results were unambiguous: 97.8% of ratings were in the affirmative. More than two-thirds of songs were rated as “tonal” by all thirty expert listeners, and 113 of the 118 were rated as tonal by over 90% of them. The song with the most ambiguous tonality (the Kwakwaka’wakw healing song) still had a majority of raters respond in the affirmative (60%).

If listeners heard a tonal center, they were asked to name its pitch class. Here too, listeners were highly consistent: there was either widespread agreement on a single tonal center or the responses fell into two or three tonal centers (Fig. 6A; the distributions of tonality ratings for all 118 songs are in Fig. S10). We measured multimodality of the ratings using Hartigan’s dip test (106). In the 73 songs that the test classified as unimodal, 85.3% of ratings were in agreement with the modal pitch class. In the remaining 45 songs, 81.7% of ratings were in agreement with the two most popular pitch classes, and 90.4% were in agreement with the three most popular. The expert listeners included 6 PhD ethnomusicologists and 6 PhD music theorists; when restricting the ratings to this group alone, the levels of consistency were comparable.

Fig. 6. Signatures of tonality in the NHS Discography.

Histograms (A) representing the ratings of tonal centers in all 118 songs, by thirty expert listeners, show two main findings. First, most songs’ distributions are unimodal, such that most listeners agreed on a single tonal center (represented by the value 0). Second, when listeners disagree, they are multimodal, with the most popular second mode (in absolute distance) 5 semitones away from the overall mode, a perfect fourth. The music notation is provided as a hypothetical example only, with C as a reference tonal center; note that the ratings of tonal centers could be at any pitch level. The scatterplot (B) shows the correspondence between modal ratings of expert listeners with the first-rank predictions from the Krumhansl-Schmuckler key-finding algorithm. Points are jittered to avoid overlap. Note that pitch classes are circular (i.e., C is one semitone away from C# and from B) but the plot is not; distances on the axes of (B) should be interpreted accordingly.

In songs where the ratings were multimodally distributed, the modal tones were often hierarchically related; for instance, ratings for the Ojibwa healing song were evenly split between B (pitch class 11) and E (pitch class 4), which are a perfect fourth (5 semitones) apart. The most common intervals between the two modal tones were the perfect fourth (in 15 songs), a half-step (1 semitone, in 9 songs), a whole step (2 semitones, in 8 songs), a major third (4 semitones, in 7 songs), and a minor third (3 semitones, in 6 songs).
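
Because pitch classes are circular, the distance between two modal tones has to wrap around the octave. A minimal sketch of that arithmetic follows, with a hypothetical helper name and pitch classes numbered so that C = 0, as in the example above.

```python
def pitch_class_distance(a, b):
    """Smallest number of semitones between two pitch classes (0-11),
    measured around the circle in either direction."""
    d = abs(a - b) % 12
    return min(d, 12 - d)

# B (pitch class 11) and E (pitch class 4), as in the Ojibwa healing song.
ojibwa_distance = pitch_class_distance(11, 4)
```

Note that this absolute distance treats a perfect fourth and its inversion, the perfect fifth, as the same 5-semitone separation.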

We cannot know which features of the recordings our listeners were responding to in attributing a tonal center to a song, nor whether their attributions depended on expertise that ordinary listeners lack. We thus sought converging, objective evidence for the prevalence of tonality in the world’s music by submitting NHS Discography transcriptions to the Krumhansl-Schmuckler key-finding algorithm (107). This algorithm sums the durations of the tones in a piece of music for each pitch class and correlates this vector with each of a family of candidate vectors, one for each key, consisting of the relative centralities of those pitch classes in that key. The algorithm’s first guess (i.e., the key corresponding to the most highly correlated vector) matched the expert listeners’ ratings of the tonal center 85.6% of the time (measured via a weighted average of its hit rate for the most common expert rating when the ratings were unimodal and either of the two most common ratings when they were multimodal). When we relaxed the criterion for a match to the algorithm’s first- and second-ranked guesses, it matched the listeners’ ratings on 94.1% of songs; adding its third-ranked estimate resulted in matches 97.5% of the time, and adding the fourth resulted in matches 98.3% of the time (all ps < .0001 above the chance level of 9.1%, using a permutation test; see SI Text 2.4.1). These results provide convergent evidence for the presence of tonality in the NHS Discography songs (Fig. 6B).
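
The key-finding step can be sketched as follows. This is a minimal illustration of the Krumhansl-Schmuckler idea, using the widely published Krumhansl-Kessler major and minor profiles; the note representation (MIDI pitch, duration), the function names, and the example melody are assumptions, and the analysis reported here may differ in its details (see SI Text 2.4.1).

```python
from math import sqrt

# Krumhansl-Kessler key profiles, indexed by semitones above the tonic.
MAJOR = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)

def rank_keys(notes):
    """notes: list of (midi_pitch, duration) pairs.

    Returns (correlation, tonic_pitch_class, mode) tuples, best match first.
    """
    durations = [0.0] * 12
    for pitch, duration in notes:
        durations[pitch % 12] += duration  # total duration per pitch class
    scored = []
    for tonic in range(12):
        for mode, profile in (("major", MAJOR), ("minor", MINOR)):
            # Rotate the profile so the tonic weight lands on pitch class `tonic`.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            scored.append((pearson(durations, rotated), tonic, mode))
    return sorted(scored, reverse=True)

# HYPOTHETICAL melody: an ascending C major scale with long C and G notes.
scale = [(60, 2), (62, 1), (64, 1), (65, 1), (67, 2), (69, 1), (71, 1), (72, 2)]
best_correlation, best_tonic, best_mode = rank_keys(scale)[0]
```

Taking the first one, two, three, or four entries of the ranked list corresponds to the progressively relaxed matching criteria described above.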

These conclusions are limited in several ways. First, they are based on songs from only four behavioral contexts, omitting others such as mourning, storytelling, play, war, and celebration. Second, the transcriptions were created manually, and could have been influenced by the musical ears and knowledge of the expert transcribers. (Current music information retrieval algorithms are not robust enough to transcribe melodies accurately, especially from noisy field recordings, but improved ones could address this issue.) The same limitation may apply to the ratings of our expert listeners. Finally, the findings do not show how the people from the societies in which NHS Discography songs were recorded hear the tonality in their own music. To test the universality of tonality perception, one would need to conduct field experiments in diverse populations.

Music varies along two dimensions of complexity

To examine patterns of variation among the songs in the NHS Discography, we applied the same kind of Bayesian principal-components analysis used for the NHS Ethnography to the combination of expert annotations and transcription features (i.e., the representations that focus most on the singing, excluding context). The results yielded two dimensions, which together explain 23.9% of the variability in musical features. The first, which we call Melodic Complexity, accounts for 13.1% of the variance (including error noise); heavily-loading variables included the number of common intervals, pitch range, and ornamentation (all positively) and the predominance of the most-common pitch class, the predominance of the most-common interval, and the distance between the most-common intervals (all negatively, see Table S25). The second, which we call Rhythmic Complexity, accounts for 10.8% of the variance; heavily-loading variables included tempo, note density, syncopation, accent, and consistency of macro-meter (all positively); and the average note duration and duration of melodic arcs (all negatively; see Table S26). The interpretation of the dimensions is further supported in Fig. 7, which shows excerpts of transcriptions at the extremes of each dimension; an interactive version is at http://themusiclab.org/nhsplots.

Fig. 7. Dimensions of musical variation in the NHS Discography.

A Bayesian principal components analysis reduction of expert annotations and transcription features (the representations least contaminated by contextual features) shows that these measurements fall along two dimensions (A) that may be interpreted as rhythmic complexity and melodic complexity. Histograms for each dimension (B, C) show the differences — or lack thereof — between behavioral contexts. In (D-G) we highlight excerpts of transcriptions from songs at extremes from each of the four quadrants, to validate the dimension reduction visually. The two songs at the high-rhythmic-complexity quadrants are dance songs (in blue), while the two songs at the low-rhythmic-complexity quadrants are lullabies (in green). Healing songs are depicted in red and love songs in yellow. Readers may listen to excerpts from all songs in the corpus at http://osf.io/jmv3q; an interactive version of this plot is available at http://themusiclab.org/nhsplots.

In contrast to the NHS Ethnography, the principal-components space for the NHS Discography does not distinguish the four behavioral contexts of songs in the corpus. We found that only 39.8% of songs matched their nearest centroid (overall p = .063 from a permutation test; dance: 56.7%, p = .12; healing: 7.14%, p > .99; love: 43.3%, p = .62; lullaby: 50.0%, p = .37; a confusion matrix is in Table S27). Similarly, k-means clustering on the principal components space, asserting k = 4 (because there are 4 known clusters) failed to reliably capture any of the behavioral contexts. Finally, given the lack of predictive accuracy of songs’ location in the 2D space, we explored each dimension’s predictive accuracy individually, using t-tests of each context against the other three, adjusted for multiple comparisons (88). Melodic complexity did not predict context (dance: p = .79; healing: p = .96, love: p = .13; lullaby: p = .35), though rhythmic complexity did distinguish dance songs (which were more rhythmically complex, p = .01) and lullabies (which were less rhythmically complex, p = .03) from other songs; it did not distinguish healing or love songs (ps > .99). When we adjusted these analyses to account for across-region variability, the results were comparable (SI Text 2.4.2). Thus, while musical content systematically varies in two ways across cultures, this variation is mostly unrelated to the behavioral contexts of the songs, perhaps because complexity captures distinctions that are salient to music analysts but not strongly evocative of particular moods or themes among the singers and listeners themselves.

Melodic and rhythmic bigrams are distributed according to power laws

Many phenomena in the social and biological sciences are characterized by Zipf’s law (108), in which the probability of an event is inversely proportional to its rank in frequency, an example of a power law distribution (in the Zipfian case, the exponent is 1). Power law distributions (as opposed to, say, geometric and normal distributions) have two key properties: a small number of highly frequent events account for the majority of observations, and there are a large number of individually improbable events, whose probability falls off slowly in a thick tail (109).
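In symbols, writing r for the frequency rank of an event and s for the exponent (notation introduced here for illustration), a power law over ranks can be stated as follows:

```latex
P(r) \propto r^{-s}, \quad s > 0,
\qquad\text{so that}\qquad
\log P(r) = -s \log r + \text{const}.
% Zipfian case: s = 1, i.e. P(r) \propto 1/r.
```

The second expression shows why such distributions appear as approximately straight lines when both axes are logarithmic.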

In language, for example, a few words appear with very high frequency, such as pronouns, while a great many are rare, such as the names of species of trees, but any sample will nevertheless tend to contain several rare words (110). A similar pattern is found in the distribution of colors among paintings in a given period of art history (111). In music, Zipf’s law has been observed in the melodic intervals of Bach, Chopin, Debussy, Mendelssohn, Mozart, and Schoenberg (112–116); in the loudness and pitch fluctuations in Scott Joplin piano rags (117); in the harmonies (118–120) and rhythms of classical music (121); and, as Zipf himself noted, in melodies composed by Mozart, Chopin, Irving Berlin, and Jerome Kern (108).

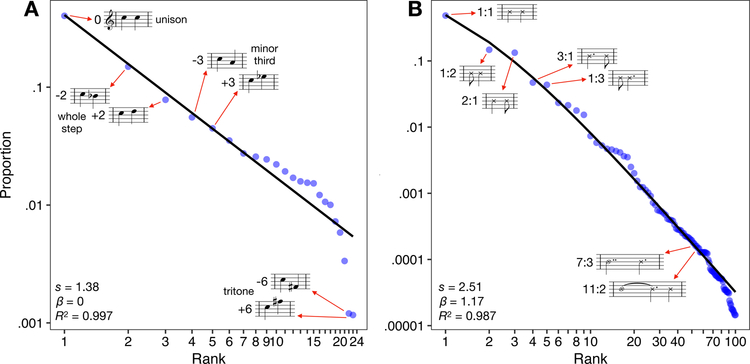

We tested whether the presence of power law distributions is a property of music worldwide by tallying relative melodic bigrams (the number of semitones separating each pair of successive notes) and relative rhythmic bigrams (the ratio of the durations of each pair of successive notes) for all NHS Discography transcriptions (see SI Text 2.4.3 for details). The bigrams overlapped, with the second note of one bigram comprising the first note of the next.
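The tallying step can be sketched in a few lines of Python. The sketch makes three assumptions that are not confirmed here: pitches are given as MIDI note numbers, intervals are unsigned, and each rhythmic ratio is taken as the first duration over the second. The procedure actually used is described in SI Text 2.4.3.

```python
from collections import Counter
from fractions import Fraction

def melodic_bigrams(pitches):
    """Unsigned semitone distance between each pair of successive notes."""
    return [abs(b - a) for a, b in zip(pitches, pitches[1:])]

def rhythmic_bigrams(durations):
    """Duration ratio (first over second) of each pair of successive notes.

    Durations must be integers (e.g. ticks) or Fractions, not floats.
    """
    return [Fraction(a, b) for a, b in zip(durations, durations[1:])]

def relative_frequencies(bigrams):
    """Bigram values with their share of all bigrams, most frequent first."""
    counts = Counter(bigrams)
    total = sum(counts.values())
    return [(value, count / total) for value, count in counts.most_common()]
```

Because `zip(seq, seq[1:])` pairs every note with its successor, the bigrams overlap as described above, and a song of n notes yields n − 1 bigrams of each kind.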

We found that both the melodic and rhythmic bigram distributions followed power laws (Fig. 8), and this finding held worldwide: the fit between the observed bigrams and the best-fitting power function was high within each region (melodic bigrams: median R² = 0.97, range 0.92–0.99; rhythmic bigrams: median R² = 0.98, range 0.88–0.99). The most prevalent bigrams were the simplest. Among the melodic bigrams (Fig. 8A), three small intervals (unison, major 2nd, and minor 3rd) accounted for 73% of the bigrams; the tritone (6 semitones) was the rarest, accounting for only 0.2%. The prevalence of these bigrams is significant: using only unisons, major 2nds, and minor 3rds, one can construct any melody in a pentatonic scale, a scale found in many cultures (122). Among the rhythmic bigrams (Fig. 8B), three patterns with simple integer ratios (1:1, 2:1, and 3:1) accounted for 86% of observed bigrams, while a large and eclectic group of ratios (e.g., 7:3, 11:2) accounted for fewer than 1%. The distribution is thus consistent with earlier findings that rhythmic patterns with simple integer ratios appear to be universal (123). The full lists of bigrams, with their cumulative frequencies, are in Tables S28–S29.
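One simple way to quantify how closely a rank-frequency distribution follows a power function is a least-squares line in log-log space. The Python sketch below illustrates that idea only. The fitting procedure and the space in which the reported fit statistics were computed are not specified here, so both are assumptions, as is the function name.

```python
import numpy as np

def power_law_fit(frequencies):
    """Fit freq ≈ c * rank**(-s) by least squares on log-log axes.

    Returns (s, c, r_squared); all frequencies must be strictly positive.
    """
    freqs = np.sort(np.asarray(frequencies, dtype=float))[::-1]
    ranks = np.arange(1, len(freqs) + 1)
    log_r, log_f = np.log(ranks), np.log(freqs)
    slope, intercept = np.polyfit(log_r, log_f, 1)
    residuals = log_f - (slope * log_r + intercept)
    ss_res = float((residuals ** 2).sum())
    ss_tot = float(((log_f - log_f.mean()) ** 2).sum())
    return -slope, float(np.exp(intercept)), 1.0 - ss_res / ss_tot
```

Applied per region to the relative frequencies of the bigrams, a function of this kind returns an exponent estimate and a goodness-of-fit value comparable in spirit to those reported above.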

Fig. 8. The distributions of melodic and rhythmic patterns in the NHS Discography follow power laws.

We computed relative melodic (A) and rhythmic (B) bigrams and examined their distributions in the corpus. Both distributions followed a power law; the parameter estimates in the inset correspond to those from the generalized Zipf-Mandelbrot law, where s refers to the exponent of the power law and β refers to the Mandelbrot offset. Note that in both plots, the axes are on logarithmic scales. The full lists of bigrams are in Tables S28–S29.
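For reference, the Zipf-Mandelbrot law is commonly written as below, with r the frequency rank of a bigram and N the number of distinct bigrams. This is the standard textbook form; the parametrization behind the figure insets may differ in detail, so the expression should be read as an assumption.

```latex
P(r) = \frac{(r + \beta)^{-s}}{\sum_{k=1}^{N} (k + \beta)^{-s}},
\qquad r = 1, \dots, N.
% Setting \beta = 0 recovers the plain power law P(r) \propto r^{-s}.
```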

These results suggest that power law distributions in music are a human universal (at least in the four genres studied here), with songs dominated by small melodic intervals and simple rhythmic ratios and enriched with many rare but larger and more complex ones. Since the specification of a power law is sensitive to sampling error in the tail of the distribution (124), and since many generative processes can give rise to a power-law distribution (125), we cannot identify a single explanation. Among the possibilities are that control of the vocal tract is biased toward small jumps in pitch that minimize effort, that auditory analysis is biased toward tracking similar sounds that are likely to be emitted by a single sound-maker, that composers tend to add notes to a melody that are similar to ones already contained in it, and that human aesthetic reactions are engaged by stimuli that are power-law distributed, which makes them neither too monotonous nor too chaotic (116, 126, 127) — “inevitable and yet surprising”, as the music of Bach has been described (128).

A new science of music

The challenge in understanding music has always been to reconcile its universality with its diversity. Even Longfellow, who declared music to be mankind’s universal language, celebrated the many forms it could take: “The peasant of the North…sings the traditionary ballad to his children…the muleteer of Spain carols with the early lark…The vintager of Sicily has his evening hymn; the fisherman of Naples his boat-song; the gondolier of Venice his midnight serenade” (1). Conversely, even an ethnomusicologist skeptical of universals in music conceded that “most people make it” (36). Music is universal but clearly takes on different forms in different cultures.

To go beyond these unexceptionable observations and understand exactly what is universal about music, while circumventing the biases inherent in opportunistic observations, we assembled databases which combine the empirical richness of the ethnographic and musicological record with the tools of computational social science.

The findings allow the following conclusions. Music exists in every society, varies more within than between societies, and has acoustic features that are systematically (albeit probabilistically) related to the behaviors of singers and listeners. At the same time, music is not a fixed biological response with a prototypical adaptive function such as mating, group bonding, or infant care: it varies substantially in melodic and rhythmic complexity and is produced worldwide in at least fourteen behavioral contexts that vary in formality, arousal, and religiosity. But music does appear to be tied to identifiable perceptual, cognitive, and affective faculties, including language (all societies put words to their songs), motor control (people in all societies dance), auditory analysis (all musical systems have some signatures of tonality), and aesthetics (their melodies and rhythms are balanced between monotony and chaos).

Methods Summary

To build the NHS Ethnography, we extracted descriptions of singing from the Probability Sample File by searching the database for text that was tagged with the topic MUSIC and that included at least one of ten keywords that singled out vocal music (e.g., “singers”, “song”, “lullaby”; see SI Text 1.1). This search yielded 4,709 descriptions of singing (490,615 words) drawn from 493 documents (median 49 descriptions per society). We manually annotated each description with 66 variables to comprehensively capture the behaviors reported by ethnographers (e.g., age of the singer, duration of the song). We also attached metadata about each paragraph (e.g., document publication data; tagged non-musical topics) using a matching algorithm that located the source paragraphs from which the description of the song was extracted. Full details on corpus construction are in SI Text 1.1, annotation types are listed in Tables S1–S6, and a listing of societies and locations is in Table S12.

Song events from all the societies were aggregated into a single dataset, without indicators of the society they came from. The range of possible missing values was filled in using a Markov chain Monte Carlo procedure which assumes that their absence reflects conditionally random omission with probabilities related to the features that the ethnographer did record, such as the age and sex of the singer or the size of the audience (SI Text 2.1). For the dimensionality reduction, we used an optimal singular value thresholding criterion (129) to determine the number of dimensions to analyze, which we then interpreted by three techniques: examining annotations that load highly on each dimension; searching for examples at extreme locations in the space and examining their content; and testing whether known song types formed distinct clusters in the latent space (e.g., dance songs vs. healing songs; see Main Text and Fig. 2).
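The thresholding step can be illustrated with a short Python sketch. It assumes that the criterion cited as (129) is the Gavish-Donoho hard threshold for an unknown noise level, and it applies that threshold to a plain singular value decomposition rather than to the Bayesian model described in SI Text 2.1. The function name and the input layout are hypothetical.

```python
import numpy as np

def hard_threshold_rank(matrix):
    """Count singular values above the Gavish-Donoho hard threshold.

    Assumes the noise level is unknown, so the threshold is a multiple of
    the median singular value. Returns (number_of_dimensions, threshold).
    """
    rows, cols = matrix.shape
    aspect = min(rows, cols) / max(rows, cols)  # aspect ratio in (0, 1]
    # Polynomial approximation to the optimal coefficient given by
    # Gavish and Donoho for the unknown-noise case.
    omega = 0.56 * aspect**3 - 0.95 * aspect**2 + 1.82 * aspect + 1.43
    singular_values = np.linalg.svd(matrix, compute_uv=False)
    threshold = omega * np.median(singular_values)
    return int((singular_values > threshold).sum()), float(threshold)
```

Singular values above the threshold are treated as signal and the rest as noise, which gives a data-driven count of dimensions to retain and interpret.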

To build the NHS Discography, and to ensure that the sample of recordings from each genre is representative of human societies, we located field recordings of dance songs, lullabies, healing songs, and love songs using a geographic stratification approach similar to that of the NHS Ethnography, namely, by drawing one recording representing each behavioral context from each of 30 regions. We chose songs according to predetermined criteria (Table S21), studying recordings’ liner notes and the supporting ethnographic text without listening to the recordings. When more than one suitable recording was available, we selected one at random. Details on corpus construction are in SI Text 1.2, annotation types are listed in Tables S1 and S7–S11, and a listing of societies and locations is in Table S22.
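The selection rule (one recording per behavioral context per region, chosen at random when several qualify) can be sketched as follows. The data layout and function name are hypothetical, and the screening against the predetermined criteria is assumed to have been done beforehand.

```python
import random

def pick_recordings(candidates, seed=0):
    """Choose one recording at random for every (region, context) cell.

    `candidates` maps (region, context) -> list of recording identifiers
    that already satisfy the inclusion criteria (hypothetical layout).
    """
    rng = random.Random(seed)
    chosen = {}
    for cell in sorted(candidates):
        options = candidates[cell]
        if options:  # cells with no suitable recording stay empty
            chosen[cell] = rng.choice(options)
    return chosen
```

Fixing the seed makes the random choice reproducible, and iterating over the cells in sorted order keeps the result independent of how the candidate lists were assembled.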

For analyses of the universality of musical forms, we studied each of the four representations individually (machine summaries, naïve listener ratings, expert listener ratings, and features extracted from manual transcriptions), along with a combination of the expert listener and manual transcription data, which excluded many “contextual” features of the audio recordings (e.g., the sound of an infant crying during a lullaby). For the explorations of the structure of musical forms, we studied the manual transcriptions of songs and also used the Bayesian principal components analysis technique (described above) on the combined expert + transcription data summarizing NHS Discography songs.

Both the NHS Ethnography and NHS Discography can be explored interactively at http://themusiclab.org/nhsplots.

Supplementary Material

Acknowledgments

We thank the hundreds of anthropologists and ethnomusicologists whose work forms the source material for all our analyses; the countless people whose music those scholars reported on; and the research assistants who contributed to the creation of the Natural History of Song corpora and to this research, here listed alphabetically: Z. Ahmad, P. Ammirante, R. Beaudoin, J. Bellissimo, A. Bergson, M. Bertolo, M. Bertuccelli, A. Bitran, S. Bourdaghs, J. Brown, L. Chen, C. Colletti, L. Crowe, K. Czachorowski, L. Dinetan, K. Emery, D. Fratina, E. Galm, S. Gomez, Y-H. Hung, C. Jones, S. Joseph, J. Kangatharan, A. Keomurjian, H. J. Kim, S. Lakin, M. Laroussini, T. Lee, H. Lee-Rubin, C. Leff, K. Lopez, K. Luk, E. Lustig, V. Malawey, C. McMann, M. Montagnese, P. Moro, N. Okwelogu, T. Ozawa, C. Palfy, J. Palmer, A. Paz, L. Poeppel, A. Ratajska, E. Regan, A. Reid, R. Sagar, P. Savage, G. Shank, S. Sharp, E. Sierra, D. Tamaroff, I. Tan, C. Tripoli, K. Tutrone, A. Wang, M. Weigel, J. Weiner, R. Weissman, A. Xiao, F. Xing, K. Yong, H. York, and J. Youngers. We also thank C. Ember and M. Fischer for providing additional data from the Human Relations Area Files, and for their assistance using those data; S. Adams, P. Laurence, P. O’Brien, A. Wilson, the staff at the Archive of World Music at Loeb Music Library (Harvard University), and M. Graf and the staff at the Archives of Traditional Music (Indiana University) for assistance with locating and digitizing audio recordings; D. Niles, S. Wadley, and H. Wild for contributing recordings from their personal collections; S. Collins for producing the NHS Ethnography validity annotations; M. Walter for assistance with digital processing of transcriptions; J. Hulbert and R. Clarida for assistance with copyright issues and materials sharing; V. Kuchinov for developing the interactive visualizations; S. Deviche for contributing illustrations; and the Dana Foundation, whose program “Arts and Cognition” led in part to the development of this research. Last, we thank A. Rehding, G. Bryant, E. Hagen, H. Gardner, E. Spelke, M. Tenzer, G. King, J. Nemirow, J. Kagan, and A. Martin for their feedback, ideas, and intellectual support of this work.

Funding: This work was supported by the Harvard Data Science Initiative (S.A.M.); the National Institutes of Health Director’s Early Independence Award DP5OD024566 (S.A.M.); the Harvard Graduate School of Education/Harvard University Presidential Scholarship (S.A.M.); the Harvard University Department of Psychology (S.A.M. and M.M.K.); a Harvard University Mind/Brain/Behavior Interfaculty Initiative Graduate Student Award (S.A.M. and M.S.); the National Science Foundation Graduate Research Fellowship Program (M.S.); the Microsoft Research postdoctoral fellowship program (D.K.); the Washington University Faculty of Arts & Sciences Dean’s Office (C.L.); the Columbia University Center for Science and Society (N.J.); the Natural Sciences and Engineering Research Council of Canada (T.J.O); Fonds de Recherche du Québec Société et Culture (T.J.O); and ANR Labex IAST (L.G.).

Footnotes

Competing interests: The authors declare no competing interests.