Abstract

Most animals need to move, and motion will generally break camouflage. In many instances, most of the visual field of a predator does not fall within a high-resolution area of the retina and so, when an undetected prey moves, that motion will often be in peripheral vision. We investigate how prey can exploit this, through different patterns of movement, to reduce the accuracy with which a predator can locate a cryptic prey item when it subsequently orients towards it. The same logic applies to a prey species trying to localize a predatory threat. Using human participants as surrogate predators, tasked with localizing a target on peripherally viewed computer screens, we quantify the effects of movement (duration and speed) and target pattern. We show that, while motion is certainly detrimental to camouflage, should movement be necessary, some behaviours and surface patterns reduce that cost. Our data indicate that the phenotype that minimizes localization accuracy is unpatterned, has the mean luminance of the background, does not use a startle display prior to movement, and makes short (below saccadic latency), fast movements.

Keywords: motion camouflage, defensive coloration, visual search, peripheral vision, position perception

1. Introduction

If motion breaks camouflage [1,2], then exploring the determinants of detection of a single moving target in central vision can be considered trivial. However, the peripheral visual field is generally a region of diminished resolution [3], so detecting motion there does not guarantee successful targeting of prey that subsequently stops and resumes crypsis. Localization of a camouflaged target in the periphery is arguably a more ecologically valid characterization of the early stages of predation than detection within central vision: there is a low probability that a predator will be looking directly at a concealed prey item at the moment it starts to move and, by the time attention is focused on the prey, it may have stopped moving and returned to a static camouflaged state. The same holds true for prey trying to locate a stalking predator.

Previous research on camouflage has focused predominantly upon the effectiveness of strategies in the absence of motion [4–7] (although see [8]). Camouflage operates by exploiting a predator's perceptual system, making detection difficult (e.g. by reducing the signal at the stage of lower-level visual processing), and/or manipulating a predator's cognitive mechanisms so that identification is difficult (acting at a higher level of information processing) [6,7,9]. Movement, a salient cue, allows an observer to segregate an object from the background through relative motion information [10,11]. Movement appears to be incompatible with camouflage, resulting in the general consensus that motion breaks camouflage [1,2,8]. However, an organism must often move, whether to get to a point of refuge, a feeding site or a mating prospect.

Here, using human observers, we investigate a common situation in which predators are foraging but have yet to detect a prey item, or a prey item is vigilant in the face of predation risk: the target is most likely to be detected, via its motion, in the predator's peripheral visual field, with attention subsequently brought to bear on it [12]. Localizing and responding to a stimulus in the periphery is complicated by the need to take into account cortical transmission and processing delays, as well as those associated with the preparation and execution of motor actions [13]. Studies on humans suggest that the perceived position of a moving target is predicted via motion extrapolation, and that localization is affected by the time it takes the observer to move their eyes towards the target (i.e. the saccadic latency) [13]. Many species use saccades alongside fixations to perceive their environment; typically these are eye saccades, but they can also be head saccades, in the case of birds, or body saccades, in the case of insects [14]. Furthermore, many species have a region of the visual field with a high concentration of cone photoreceptors (e.g. area centralis [14]; see also table 3, p. 187 of [15]), giving good visual acuity; as eccentricity from this region increases, photoreceptor density, and thus acuity, decreases. Among other things, the fixate–saccade strategy allows an organism to direct the higher-resolution region of its visual field towards an object [14]. What prey movement strategies might minimize the probability of localization, and does surface patterning affect this? Here, we focus on two key parameters of transient movement (duration and speed) and their interaction with surface pattern. In addition, we included a flash manipulation, in which a highly conspicuous display occurs before target movement. Some otherwise cryptic insects reveal conspicuous underwings when they fly. These displays are usually considered to startle a predator or to interfere with identification [16–19] when the predator has already detected the prey and is initiating an attack. Here, we explore a different possible advantage that arises when prey movement occurs in peripheral vision: gaze may be ‘anchored’ upon the initial location by a highly salient but transient display, and subsequent movement masked by a flash-lag effect [20] or sensory overload [21]. Rather than exploring how effectively motion camouflage strategies impede capture, as in motion dazzle experiments [22–28], we explore the effects of the phenotype on localization.

2. Material and methods

(a). Set-up

The control program was written in Matlab (The MathWorks Inc., Natick, MA, USA) with the Psychophysics Toolbox extensions [29–31]. The experiment used two gamma-corrected 21.5″ iiyama ProLite B2280HS monitors (Iiyama, Hoofddorp, Netherlands), with a refresh rate of 60 Hz, a resolution of 1920 × 1080 pixels and a mean luminance of 64 cd m−2, controlled by an iMac (Apple, CA, USA). The screens were positioned so that the centre of each was 50 cm from the participant and at an angle of 65° from a fixation cross on a third, central screen, which was not gamma corrected. At 50 cm, each pixel subtended 1.7 arcmin.
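The 1.7 arcmin pixel subtense follows from the monitor geometry. The sketch below is a minimal check, assuming a 16:9 panel whose active width is set by the nominal 21.5″ diagonal, square pixels and a horizontal resolution of 1920 pixels. The function name and these geometric assumptions are ours and are not taken from the original control program.

```python
import math


def arcmin_per_pixel(diagonal_in: float, aspect_w: int, aspect_h: int,
                     h_pixels: int, distance_cm: float) -> float:
    """Visual angle (arcmin) subtended by one pixel at the centre of a flat screen."""
    diag_cm = diagonal_in * 2.54
    # Active width of the panel from its diagonal and aspect ratio (assumption)
    width_cm = diag_cm * aspect_w / math.hypot(aspect_w, aspect_h)
    pixel_cm = width_cm / h_pixels          # assumes square pixels
    # Angle subtended by a pixel centred on the line of sight
    angle_rad = 2.0 * math.atan(pixel_cm / (2.0 * distance_cm))
    return math.degrees(angle_rad) * 60.0


print(f"{arcmin_per_pixel(21.5, 16, 9, 1920, 50.0):.2f} arcmin per pixel")
```

Under these assumptions the function returns approximately 1.70 arcmin. Dividing the same width into 1200 pixels would instead give roughly 2.7 arcmin per pixel.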

During each trial, the participant was shown a square target (48 × 48 pixels), which appeared, moved and then disappeared. Targets could appear on either the left or the right screen (the central screen only displayed the fixation cross). The target's movement was determined by a combination of two movement factors (duration and speed), a pattern factor (figure 1) and a flash factor (see below for details). Within each trial, the target moved on a background generated by a 1/f function [32], representing a generic textured background to which visual systems are hypothesized to be adapted [33]. Spectral analysis of natural scenes shows that Fourier amplitude is approximately inversely proportional to spatial frequency, f; hence the term 1/f [33]. The background was generated afresh on every trial. After a random latency (drawn from a uniform distribution from 1 to 3 s, in 0.5 s increments), the target appeared in the centre of one of the two screens at random (probability 0.5), and then moved in a random direction (discrete uniform distribution in the range 1–360°) in a manner determined by the factorial combination of factors described below. The target then disappeared, the non-target screen turned plain grey and the cursor appeared in the centre of the target screen, which retained its 1/f background. In this way, it was unambiguous to the participant on which screen the target had moved; the task was to localize where it had stopped.
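The generator cited above [32] is a routine for AR1 spatial data. To illustrate what a 1/f texture is, the sketch below swaps in a generic technique: it sums sinusoidal components with random phases, giving each an amplitude of 1/f. The function name, the RMS contrast of 0.2 and the small image size are hypothetical choices. Only the mean luminance of 64 cd m−2 comes from the set-up described here.

```python
import math
import random
from typing import List, Optional


def one_over_f_texture(n: int = 32, mean_lum: float = 64.0,
                       rms_contrast: float = 0.2,
                       seed: Optional[int] = None) -> List[List[float]]:
    """Synthesize an n x n texture whose Fourier amplitude falls off as 1/f.

    Each spatial-frequency component (u, v), in cycles per image, receives
    amplitude 1/f with f = sqrt(u**2 + v**2) and a uniformly random phase.
    Only one half of the frequency plane is summed, so the image is real-valued.
    """
    rng = random.Random(seed)
    half = n // 2
    img = [[0.0] * n for _ in range(n)]
    for u in range(0, half + 1):
        for v in range(-half + 1, half + 1):
            if u == 0 and v <= 0:
                continue  # skip the DC term and the conjugate half of the u = 0 column
            f = math.hypot(u, v)
            if f > half:
                continue  # circular band limit at the Nyquist frequency
            amp = 1.0 / f
            phase = rng.uniform(0.0, 2.0 * math.pi)
            for y in range(n):
                for x in range(n):
                    img[y][x] += amp * math.cos(2.0 * math.pi * (u * x + v * y) / n + phase)
    # Rescale to the requested mean luminance and RMS contrast (sd / mean)
    pixels = [p for row in img for p in row]
    mu = sum(pixels) / len(pixels)
    sd = math.sqrt(sum((p - mu) ** 2 for p in pixels) / len(pixels))
    scale = rms_contrast * mean_lum / sd
    return [[mean_lum + (p - mu) * scale for p in row] for row in img]


background = one_over_f_texture(n=32, seed=1)
```

The cost of direct summation grows as n⁴, so this approach is only practical for small images. A full-screen background would more sensibly be built with an inverse FFT (e.g. `numpy.fft.ifft2`).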

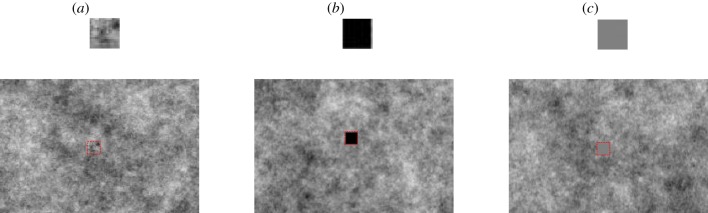

Figure 1.

The target patterning used: (a) background matching, created using a 1/f function; (b) black; (c) grey (mean luminance). Below each target is an example of how the target would appear on a background. A red outline has been added to highlight the position of the target on the background (not present during the experiment).

Duration of movement (duration) had three levels designed to bracket saccadic latency for our human observers [34]: 100, 200 and 400 ms. Speed had three levels designed to provide a range of velocities (relatively slower and relatively faster) around data on movement speeds of Zootoca vivipara [35]: 10, 20 and 35 deg s−1. A speed of 35, rather than 40 deg s−1, was chosen so that targets always remained on the screen. Patterning had three levels (figure 1): black (Black; luminance = 0 cd m−2), grey (meanLum; luminance = 64 cd m−2) and background matching (BG; 1/f function, luminance = 66 cd m−2). The background-matching target was generated with the same algorithm as the background. Finally, the target could flash briefly prior to movement (maximum luminance = 113 cd m−2). This flash factor had three levels: a flash of 80 ms, a flash of 50 ms or no flash. The flash was designed to simulate a startle display [16] and was added prior to movement to explore its putative effect on masking the target's end location.
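Simple arithmetic can check the choice of 35 rather than 40 deg s−1. This minimal sketch assumes that the 1080-pixel screen height is the binding limit for a target that starts at the screen centre and may move in any direction. It also assumes the 1.7 arcmin pixel subtense, a 48-pixel target and a small-angle conversion from degrees to pixels. The helper names are hypothetical.

```python
FRAME_RATE_HZ = 60.0
ARCMIN_PER_PIXEL = 1.7      # pixel subtense from the set-up
TARGET_SIZE_PX = 48
SCREEN_HEIGHT_PX = 1080     # shorter screen dimension (assumed binding limit)


def movement_summary(speed_deg_s: float, duration_ms: float) -> dict:
    """Frame count, per-frame step and total path length (pixels) for one speed x duration cell."""
    frames = round(duration_ms / 1000.0 * FRAME_RATE_HZ)
    path_deg = speed_deg_s * duration_ms / 1000.0
    path_px = path_deg * 60.0 / ARCMIN_PER_PIXEL   # small-angle deg -> pixel conversion
    return {"frames": frames, "step_px": path_px / frames, "path_px": path_px}


def stays_on_screen(speed_deg_s: float, duration_ms: float) -> bool:
    """True if a target starting at screen centre stays fully on screen in any direction."""
    path_px = movement_summary(speed_deg_s, duration_ms)["path_px"]
    return path_px + TARGET_SIZE_PX / 2.0 <= SCREEN_HEIGHT_PX / 2.0


# 40 deg/s is not an experimental level; it is included only for comparison
for speed in (10, 20, 35, 40):
    for duration in (100, 200, 400):
        s = movement_summary(speed, duration)
        print(f"{speed:>2} deg/s, {duration} ms: {s['frames']:>2} frames, "
              f"{s['path_px']:6.1f} px, on screen: {stays_on_screen(speed, duration)}")
```

Under these assumptions, a 35 deg s−1, 400 ms movement covers about 494 pixels, which keeps the target's edge within the 540-pixel half-height. A 40 deg s−1 movement of the same duration would carry the target about 565 pixels from the centre and off the screen. At 60 Hz, the three durations correspond to 6, 12 and 24 frames.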

(b). Task

After the target had finished moving and disappeared, participants used the mouse to click an on-screen cursor (a red circle of 8-pixel radius) on the target's estimated final location. The locations of the centre of the target and of the cursor were recorded every frame. On each trial, localization error was computed as the pixel distance between the centre of the target at its final location and the centre of the cursor where it was clicked. The response time, measured from the moment the target started moving until the participant clicked, was also recorded for each trial. Each participant completed six practice trials followed by 162 test trials, broken into three blocks of 54. Each participant therefore experienced all 81 factor combinations (3 × 3 × 3 × 3) once on each screen. Participants were free to take a break between blocks but, in practice, seldom paused for more than a few seconds. The order of conditions was randomized independently for every participant. All trials were completed with the room lights off and with headphones on (to minimize distractions). There were 18 unpaid participants (10 female, aged 18–28), with normal or corrected-to-normal vision, who were naive to the aims of the experiment. Ethical approval was obtained from the Faculty of Science Research Ethics Committee of the University of Bristol. All participants were briefed and gave their informed written consent, in accordance with the Declaration of Helsinki.
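The trial structure and the error measure can be sketched as follows. This sketch assumes that all 162 trials (81 factor combinations × 2 screens) were shuffled together and then cut into three blocks of 54. The original program may have constrained the order differently, and the names are illustrative.

```python
import itertools
import math
import random

DURATIONS_MS = (100, 200, 400)
SPEEDS_DEG_S = (10, 20, 35)
PATTERNS = ("Black", "meanLum", "BG")
FLASH_MS = (0, 50, 80)          # 0 = no flash
SCREENS = ("left", "right")


def make_trial_blocks(seed=None, n_blocks=3):
    """Shuffle the full factorial design (81 combinations x 2 screens = 162 trials)
    and split it into equal blocks."""
    trials = list(itertools.product(DURATIONS_MS, SPEEDS_DEG_S, PATTERNS, FLASH_MS, SCREENS))
    random.Random(seed).shuffle(trials)      # independent order per participant (seed)
    size = len(trials) // n_blocks
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]


def localization_error_px(target_xy, click_xy):
    """Euclidean distance (pixels) between the target's final centre and the clicked cursor centre."""
    return math.dist(target_xy, click_xy)


blocks = make_trial_blocks(seed=1)
print(len(blocks), [len(b) for b in blocks])            # 3 [54, 54, 54]
print(localization_error_px((960, 540), (1110, 540)))   # 150.0
```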

(c). Statistical analyses

Statistical analyses were performed in R (R Foundation for Statistical Computing, www.R-project.org). Both pixel error (error) and response time (RT) were approximately lognormally distributed, and so were log10-transformed prior to fitting linear mixed models (function lmer in the lme4 package [36]). Participant was fitted as a random effect, with fixed effects of speed, duration, pattern, screen and flash. Initially, all fixed main effects and their interactions were fitted, followed by backwards stepwise elimination of non-significant terms (based on likelihood ratio tests), starting with the highest-order interactions (see electronic supplementary material). Within-factor effects were explored using Tukey-type p-values (R package multcomp [37]).
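Each elimination step compares a model with and without one term. This minimal stdlib sketch of the likelihood ratio test assumes that the two log-likelihoods come from nested models fitted by maximum likelihood. It also assumes that the degrees of freedom equal the number of parameters dropped. `chi2_sf` is a hypothetical helper, not part of lme4: it evaluates the χ² upper tail for integer d.f. using the recurrence for the regularized incomplete gamma function.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-squared variable with integer df.

    Uses Q(a, y), the regularized upper incomplete gamma function with a = df/2
    and y = x/2, starting from Q(1/2, y) = erfc(sqrt(y)) or Q(1, y) = exp(-y) and
    applying Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))
    while a < df / 2.0:
        q += y ** a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def likelihood_ratio_test(loglik_full: float, loglik_reduced: float, df_diff: int):
    """Return (chi-squared statistic, p-value) for two nested models."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)
```

Applied to statistics reported in the Results, such as `chi2_sf(7.44, 2)` or `chi2_sf(11.00, 4)`, the helper returns values that agree with the reported p-values to rounding.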

3. Results

Four extremely short response times (under 0.3 s), all from one participant, were outliers (more than 5 standard deviations below the mean on the log-transformed scale, whereas the next lowest value was 1.5 s.d. below it); these were considered to be premature, accidental mouse clicks. Five data points were also considered to be response errors because the mouse click was off the target screen (possible because the mouse could be moved to the central and non-target screens). These nine values comprised only 0.3% of the data and were removed. Localization error is the primary response variable; a detailed analysis of response times can be found in the electronic supplementary material.
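The outlier screen can be expressed as a z-score rule on the log10 scale. This minimal sketch assumes that the mean and standard deviation are pooled over all trials, because the text does not state whether they were computed per participant. The function names are hypothetical.

```python
import math
import statistics


def log10_z_scores(values):
    """z-scores of positive values after a log10 transform (sample SD)."""
    logs = [math.log10(v) for v in values]
    mu = statistics.mean(logs)
    sd = statistics.stdev(logs)
    return [(x - mu) / sd for x in logs]


def flag_low_outliers(values, z_cut=5.0):
    """Indices of values lying more than z_cut SDs below the mean on the log10 scale."""
    return [i for i, z in enumerate(log10_z_scores(values)) if z < -z_cut]


n_trials = 18 * 162    # participants x test trials
print(n_trials, f"{100 * 9 / n_trials:.2f}% removed")   # 2916 0.31% removed
```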

For localization error, the final model showed significant main effects of the flash factor (χ2 = 7.44, d.f. = 2, p = 0.0242) and screen side (χ2 = 5.84, d.f. = 1, p = 0.0157) on localization accuracy, with no interactions between these and other factors (figure 2 and electronic supplementary material). Tukey-type pair-wise tests indicated that no flash produced a significantly larger error than a 50 ms flash (z = 2.388, p = 0.0446) and a similar, but non-significant, difference from an 80 ms flash (z = 2.325, p = 0.0523); 50 ms and 80 ms flashes did not differ significantly (z = 0.063, p = 0.9978). The effects of the flash factor are shown in figure 2. The main effect of screen reflected a slightly (2.7%) lower localization error on the right screen, a bias that could be attributed to eye preference [38].
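Because error was log10-transformed before modelling, differences between fitted means correspond to ratios on the pixel scale (ratios of geometric means). The sketch below converts between a log10-scale difference and a percentage change. The helper names are hypothetical. A 2.7% reduction, as reported for the right screen, corresponds to a log10 difference of about −0.0119.

```python
import math


def percent_change(delta_log10: float) -> float:
    """Percentage change on the pixel scale implied by a difference of delta_log10
    between two fitted means on the log10 scale (a ratio of geometric means)."""
    return (10.0 ** delta_log10 - 1.0) * 100.0


def log10_difference(percent: float) -> float:
    """Inverse of percent_change: the log10-scale difference for a given % change."""
    return math.log10(1.0 + percent / 100.0)


delta = log10_difference(-2.7)   # 2.7% lower error on the right screen
print(f"log10 difference: {delta:.4f}; back-transformed: {percent_change(delta):.1f}%")
```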

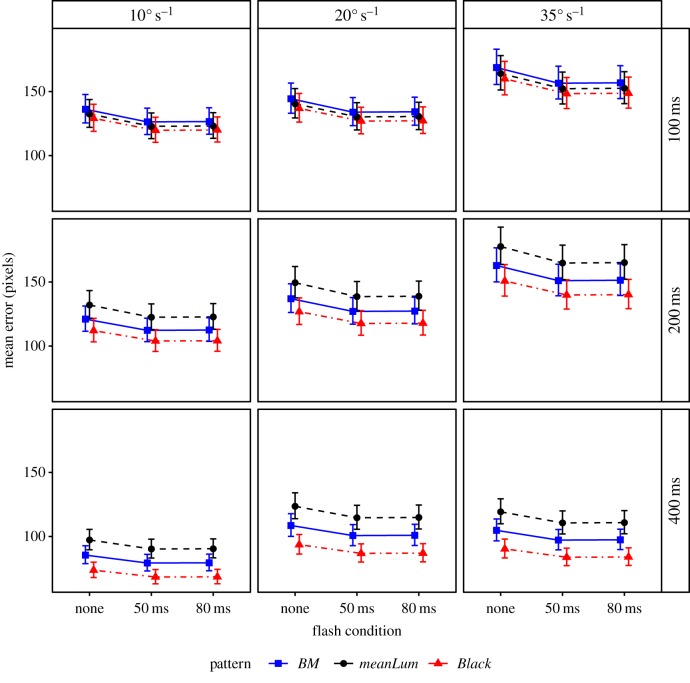

Figure 2.

The mean error in participants' localization of a moving target under different movement and patterning conditions, with 95% confidence intervals based on the fitted model (n = 18 participants). Different combinations of movement and patterning conditions are shown in separate panels. The phenotype that produced the largest error has mean luminance, does not use a flash and makes short, fast movements. Because the target is 48 pixels wide, this phenotype is missed by more than three body lengths.

Additionally, the model showed significant interactions between the duration and the speed of movement (χ2 = 11.00, d.f. = 4, p = 0.0266), and between the duration of movement and the pattern on the target (χ2 = 11.24, d.f. = 4, p = 0.0240). To understand these interactions, the data were split by duration and the effects of speed and pattern were assessed at each level. At the shortest duration, 100 ms, there was no significant effect of pattern (figure 2; χ2 = 1.30, d.f. = 2, p = 0.5219), but at 200 ms there was (χ2 = 10.75, d.f. = 2, p = 0.0046), with mean luminance having the greatest error, significantly greater than black (z = 3.28, p = 0.0030) but not background matching (z = 1.75, p = 0.1872). Black and background matching did not differ (z = 1.52, p = 0.2802). At 400 ms, there was also a significant effect of pattern (χ2 = 19.39, d.f. = 2, p < 0.0001), with mean luminance again having the greatest error, significantly greater than black (z = 4.41, p < 0.0001) but not background matching (z = 2.047, p = 0.1013). Background matching also had a greater error than black (z = 2.371, p = 0.0467).

Regarding the interaction between duration and speed, at 100 ms there was a significant effect of speed (χ2 = 22.39, d.f. = 2, p < 0.0001), with a greater error for 35 deg s−1 than for 10 or 20 deg s−1 (z = 4.60, p < 0.0001 and z = 3.34, p = 0.0024, respectively); 10 and 20 deg s−1 did not differ (z = 1.26, p = 0.4155). At 200 ms, there was also a significant effect of speed (χ2 = 34.69, d.f. = 2, p < 0.0001), and error increased progressively with speed (figure 2; 10 versus 20 deg s−1: z = 2.47, p = 0.0364; 20 versus 35 deg s−1: z = 3.44, p = 0.0017; 10 versus 35 deg s−1: z = 5.91, p < 0.0001). At 400 ms, there was also a significant effect of speed (χ2 = 16.93, d.f. = 2, p = 0.0002), with a greater error for 20 and 35 deg s−1 than for 10 deg s−1 (z = 3.83, p < 0.0001 and z = 3.25, p = 0.0033, respectively); 20 and 35 deg s−1 did not differ (z = 0.57, p = 0.8355).

Modelling of response time indicated a significant interaction between pattern and flash when the stimulus moved for 100 ms, with pattern having a significant effect only in the no-flash condition (electronic supplementary material). Specifically, mean-luminance targets had longer response times than background-matching or black targets, which did not differ. At 200 ms, there was a significant effect of flash, with the no-flash condition having a longer response time than the flash conditions. At 400 ms, there was a significant effect of speed, with response time increasing with speed.

4. Discussion

Unless already detected and fixated, a prey item seeking to avoid a predator, or a predator seeking to approach prey undetected, is likely to be moving within the peripheral visual field. Our data indicate that, to minimize the accuracy with which it is localized, such a moving target should move briefly and quickly and should be unpatterned, with a luminance similar to the mean of the background. A first-order stimulus is defined by luminance differences between target and background, whereas a second-order stimulus is defined by a difference in some other property (e.g. contrast or pattern). Matching the mean luminance of target and background pushes the stimulus towards being second order, and such stimuli are well known to be far weaker than their first-order counterparts (e.g. [39,40]). A conspicuous flash, such as a startle display, prior to movement does not anchor the predator's saccade to the initial location. In fact, it is detrimental: if motion is preceded by a flash, localization errors are slightly lower and, for short motion durations, response times are considerably shorter. In all treatments, the direction of the target's motion was usually judged fairly accurately, but participants overshot its stopping place (electronic supplementary material). For the most difficult targets, the overshoot exceeded three body lengths (figure 2; a 150+ pixel error for a target 48 pixels wide). This sort of biased error is frequently observed in motion estimation tasks and is known as representational momentum [13,41]. In our experiment, greater speed led to greater overshoot, particularly for short-duration movements (figure 2).
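Overshoot can be quantified by splitting each localization error into a component along the direction of travel and a component across it. This is a hypothetical sketch and not necessarily the analysis in the electronic supplementary material. It assumes that start, end and click positions share the same pixel coordinates, and it expresses error in body lengths using the 48-pixel target width.

```python
import math


def decompose_error(start_xy, end_xy, click_xy):
    """Split a localization error into along-track and cross-track components (pixels).

    along > 0: the click lies beyond the stopping point (overshoot);
    along < 0: undershoot. across is the signed perpendicular offset
    (positive = to the left of travel in a y-up frame; the sign flips
    in y-down screen coordinates).
    """
    mx, my = end_xy[0] - start_xy[0], end_xy[1] - start_xy[1]
    norm = math.hypot(mx, my)
    if norm == 0:
        raise ValueError("start and end coincide; direction of travel is undefined")
    ux, uy = mx / norm, my / norm                               # unit vector of travel
    ex, ey = click_xy[0] - end_xy[0], click_xy[1] - end_xy[1]   # error vector
    along = ex * ux + ey * uy                                   # projection onto travel direction
    across = ux * ey - uy * ex                                  # 2-D cross product
    return along, across


def body_lengths(error_px, target_width_px=48):
    """Express an error in target widths (48 pixels in this experiment)."""
    return error_px / target_width_px


along, across = decompose_error((960, 540), (1060, 540), (1210, 550))
print(along, across, body_lengths(along))
```

For example, a click 150 pixels beyond the stopping point along the motion path is an overshoot of about 3.1 body lengths.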

Brief movement was the best strategy for increasing localization error, with the greatest errors occurring when the duration was shorter than saccadic latency (100–200 ms) [15,34,42–45]. Little information is gathered while the eyes are saccading [46], so stopping before a viewer has had time to complete a saccade and fixate is advantageous. Given that the fixate–saccade strategy is ubiquitous, this suggests that the intermittent motion observed in many animals [35,47–54] could instead (or additionally) serve to reduce a predator's ability to localize prey [35,52]. Such motion is often attributed to the benefits of image stabilization for the prey species itself [35,52,53,55]. Avery et al. [35] have shown that, in the lizard Zootoca vivipara, normal movement occurs in bursts whose durations broadly correspond to human saccadic latency, at speeds corresponding approximately to 20 deg s−1. In organisms that are successful at stationary camouflage, can change colour [56] or have different appearances through a ‘flicker-fusion’ effect [57], saltatory locomotion could be particularly advantageous. In our experiment, the phenotype that induced the greatest localization error was plain, with the mean luminance of the background, rather than background-matching in pattern. Cuttlefish that are camouflaged when stationary have been observed to change to a plain colour when moving [56], consistent with what we would predict from our results. For short (100 ms) movements, the pattern of the target had no effect on localization error (figure 2). Even so, in the absence of an alerting flash, mean-luminance targets moving for this duration took far longer to localize.

Our data show that moving quickly is more effective at reducing localization accuracy [24]. This seems counter to the typically slow movements used by military operatives [58,59] and stalking predators [60], and could suggest an alternative: darting between periods of stationary camouflage or between refuges/protective cover. There is a significant interaction between movement duration and speed, with speeds above 20 deg s−1 giving no additional benefit for 400 ms movements. However, this could be an artefact of targets nearing the screen edge in the fast/long-duration treatment combinations, which would have constrained the extent of overestimation.

A flash before movement does not ‘anchor’ the viewer's fixation upon the target's starting point. Instead, the flash appears to cue the viewer to direct attention towards the target and to prime them for the motion that follows. This could accelerate the saccade that brings the target into central vision [61]. This finding seems at odds with multiple accounts in the literature that ascribe a startle effect to highly salient patterns [62–66]. Such patterns are proposed to operate by overloading the predator's perceptual mechanisms with sensory information, so that a prey animal can escape [21]. However, in the current study the target appeared in peripheral vision, away from the focus of attention, so a startle effect would be unlikely. Our results also do not support the idea that motion, and subsequent localization, is masked by a flash-lag effect. This is likely to be because motion continued beyond the flash-lag processing time; to be effective, flashing should coincide with the cessation of movement [17,18].

The response time data support the conclusions drawn from localization error: targets that make short movements, have mean-luminance patterning and give no flash prior to movement take longer to localize. Target speed had a limited effect on response time when durations were short, but response time increased progressively with target speed when movement lasted longer (400 ms), indicating increased uncertainty even when the moving target was in central vision.

While motion is certainly detrimental to camouflage [1,2], should movement be necessary, some behaviours and surface colour patterns reduce that cost [56]. Within the parameters of our experiment, the phenotype that minimizes localization accuracy is unpatterned, has the mean luminance of the background, does not use a startle display (no flash) prior to movement, and makes short (below saccadic latency), fast movements. It is possible that predator attention is drawn to the first instance of movement and that predators subsequently sit and wait for additional movement. However, this presupposes that the predator was able to recognize the source of movement as potential prey, which may not be the case. Additionally, it may not benefit the predator to sit and wait for subsequent movement from an uncertain source; continuing to search the environment actively may be more profitable. Furthermore, we must consider how noisy environments can be (e.g. foliage in the wind) and the impact that this may have on localization of a moving target [8]. This experiment highlights the importance of addressing ecological questions while taking into account the perceptual differences between regions of the visual field. While there are almost certainly quantitative differences across species, we would expect the qualitative effects to be similar. Given the ubiquity of the fixate–saccade strategy [14], and the distribution of photoreceptors that results in a high-resolution region surrounded by an area where resolution drops with increasing eccentricity, we could expect these results to occur in many other species. So, while the speed and mechanism of gaze shifts (eye, head or body movement) will no doubt differ between humans and other species, the pattern of results should hold generally. In particular, limited information is acquired during a viewer's gaze shift. To reduce the probability of being located accurately, an animal should therefore move and stop before it can be fixated. While moving, it should also limit the visual information available, using coloration that approximates the mean luminance of the background and lacks patterning. It would be very difficult to carry out similar experiments with non-human subjects; we chose humans because this allowed us to be very specific about what we required our observers to do and what we measured. Our results show that the ability of a (model) predator to localize a target presented in peripheral vision is influenced by different components of movement (duration and speed) and target pattern: motion does not always break camouflage.

Supplementary Material

Acknowledgements

We are grateful to everyone in CamoLab (www.camolab.com) for discussions and advice. We also thank all the participants involved, who were not financially remunerated. We thank the three anonymous referees and editors for their helpful comments.

Ethics

Participants gave their informed written consent in accordance with the Declaration of Helsinki, and the Ethical Committee of the Faculty of Science, University of Bristol, approved the experiment.

Data accessibility

All data are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.x0k6djhf8 [67].

Authors' contributions

All authors conceived and designed the experiment; I.E.S. and N.E.S.S. programmed the experiment; I.E.S. carried out the experiment; I.E.S. and I.C.C. analysed the data; I.E.S. wrote the first draft of the manuscript with subsequent contributions by all authors.

Competing interests

The authors declare no competing interests.

Funding

This research was supported by the Engineering and Physical Sciences Research Council UK, grant no. EP/M006905/1 to N.E.S.S., I.C.C. and R. J. Baddeley.

References

- 1.Hall JR, Cuthill IC, Baddeley R, Shohet AJ, Scott-Samuel NE. 2013. Camouflage, detection and identification of moving targets. Proc. R. Soc. B 280, 1758 ( 10.1098/rspb.2013.0064) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ioannou CC, Krause J. 2009. Interactions between background matching and motion during visual detection can explain why cryptic animals keep still. Biol. Lett. 5, 191–193. ( 10.1098/rsbl.2008.0758) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Rosenholtz R. 2016. Capabilities and limitations of peripheral vision. Annu. Rev. Vis. Sci. 2, 437–457. ( 10.1146/annurev-vision-082114-035733) [DOI] [PubMed] [Google Scholar]

- 4.Stevens M, Ruxton GD. 2019. The key role of behaviour in animal camouflage. Biol. Rev. 94, 116–134. ( 10.1111/brv.12438) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Cuthill IC. 2019. Camouflage. J. Zool. 308, 75–92. ( 10.1111/jzo.12682) [DOI] [Google Scholar]

- 6.Merilaita S, Scott-Samuel NE, Cuthill IC. 2017. How camouflage works. Phil. Trans. R. Soc. B 372, 1724 ( 10.1098/rstb.2016.0341) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Stevens M, Merilaita S. 2011. Animal camouflage: mechanisms and function. New York, NY: Cambridge University Press; ( 10.1017/CBO9780511852053) [DOI] [Google Scholar]

- 8.Cuthill IC, Matchette SR, Scott-Samuel NE. 2019. Camouflage in a dynamic world. Curr. Opin. Behav. Sci. 30, 109–115. ( 10.1016/j.cobeha.2019.07.007) [DOI] [Google Scholar]

- 9.Osorio D, Cuthill IC. 2015. Camouflage and perceptual organization in the animal kingdom. In The Oxford handbook of perceptual organisation (ed. Wagemans J.), pp. 843–862. Oxford, UK: Oxford University Press; ( 10.1093/oxfordhb/9780199686858.013.044) [DOI] [Google Scholar]

- 10.Regan D. 2000. Human perception of objects: early visual processing of spatial form defined by luminance, color, texture, motion, and binocular disparity. Sunderland, MA: Sinauer Associates. [Google Scholar]

- 11.Rushton SK, Bradshaw MF, Warren PA. 2007. The pop out of scene-relative object movement against retinal motion due to self-movement. Cognition 105, 237–245. ( 10.1016/j.cognition.2006.09.004) [DOI] [PubMed] [Google Scholar]

- 12.Carrasco M. 2011. Visual attention: the past 25 years. Vision Res. 51, 1484–1525. ( 10.1016/j.visres.2011.04.012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.van Heusden E, Rolfs M, Cavanagh P, Hogendoorn H. 2018. Motion extrapolation for eye movements predicts perceived motion-induced position shifts. J. Neurosci. 38, 8243–8250. ( 10.1523/JNEUROSCI.0736-18.2018) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Land M. 2019. Eye movements in man and other animals. Vision Res. 162, 1–7. ( 10.1016/j.visres.2019.06.004) [DOI] [PubMed] [Google Scholar]

- 15.Walls GL. 1942. The vertebrate eye and its adaptive radiation. Bloomfield Hills, MI: Cranbrook Institute of Science; ( 10.5962/bhl.title.7369) [DOI] [Google Scholar]

- 16.Umbers KD, Lehtonen J, Mappes J. 2015. Deimatic displays. Curr. Biol. 25, R58–R59. ( 10.1016/j.cub.2014.11.011) [DOI] [PubMed] [Google Scholar]

- 17.Murali G. 2018. Now you see me, now you don't: dynamic flash coloration as an antipredator strategy in motion. Anim. Behav. 142, 207–220. ( 10.1016/j.anbehav.2018.06.017) [DOI] [Google Scholar]

- 18.Loeffler-Henry K, Kang C, Yip Y, Caro T, Sherratt TN. 2018. Flash behavior increases prey survival. Behav. Ecol. 29, 528–533. ( 10.1093/beheco/ary030) [DOI] [Google Scholar]

- 19.Hailman JP. 1977. Optical signals: animal communication and light. Bloomington, IN: Indiana University Press. [Google Scholar]

- 20.Nijhawan R. 1994. Motion extrapolation in catching. Nature 370, 256–257. ( 10.1038/370256b0) [DOI] [PubMed] [Google Scholar]

- 21.Stevens M. 2016. Cheats and deceits: how animals and plants exploit and mislead, 1st edn Oxford, UK: Oxford University Press. [Google Scholar]

- 22.Hughes AE, Troscianko J, Stevens M. 2014. Motion dazzle and the effects of target patterning on capture success. BMC Evol. Biol. 14, 201 ( 10.1186/s12862-014-0201-4) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Hogan BG, Cuthill IC, Scott-Samuel NE. 2016. Dazzle camouflage, target tracking, and the confusion effect. Behav. Ecol. 27, 1547–1551. ( 10.1093/beheco/arw081) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Stevens M, Yule DH, Ruxton GD. 2008. Dazzle coloration and prey movement. Proc. R. Soc. B 275, 2639–2643. ( 10.1098/rspb.2008.0877) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Stevens M, Searle WTL, Seymour JE, Marshall KL, Ruxton GD. 2011. Motion dazzle and camouflage as distinct anti-predator defenses. BMC Biol. 9, 81 ( 10.1186/1741-7007-9-81) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Scott-Samuel NE, Baddeley R, Palmer CE, Cuthill IC. 2011. Dazzle camouflage affects speed perception. PLoS ONE 6, e20233 ( 10.1371/journal.pone.0020233) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Hämäläinen L, Valkonen J, Mappes J, Rojas B. 2015. Visual illusions in predator–prey interactions: birds find moving patterned prey harder to catch. Anim. Cogn. 18, 1059–1068. ( 10.1007/s10071-015-0874-0) [DOI] [PubMed] [Google Scholar]

- 28.von Helversen B, Schooler LJ, Czienskowski U. 2013. Are stripes beneficial? Dazzle camouflage influences perceived speed and hit rates. PLoS ONE 8, e61173 ( 10.1371/journal.pone.0061173) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Brainard DH. 1997. The psychophysics toolbox. Spat. Vis. 10, 433–436. ( 10.1163/156856897X00357) [DOI] [PubMed] [Google Scholar]

- 30.Kleiner M, Brainard D, Pelli D. 2007. What's new in Psychtoolbox-3? Perception 36 (Suppl.), 14. [Google Scholar]

- 31.Pelli DG. 1997. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442. ( 10.1163/156856897X00366) [DOI] [PubMed] [Google Scholar]

- 32.Yearsley J. 2004. Generate AR1 spatial data. See http://wwwmathworkscom/matlabcentral/fileexchange/5099-generate-ar1-spatial-data.

- 33.Olshausen BA, Field DJ. 1996. Natural image statistics and efficient coding. Network: Comput. Neural Syst. 7, 333–339. ( 10.1088/0954-898X_7_2_014) [DOI] [PubMed] [Google Scholar]

- 34.Gilchrist I. 2011. Saccades. In The Oxford handbook of eye movements (eds Liversedge S, Gilchrist I, Everling S), pp. 85–94. New York, NY: Oxford University Press. [Google Scholar]

- 35.Avery RA, Mueller CF, Smith JA, Bond DJ. 1987. The movement patterns of lacertid lizards: speed, gait and pauses in Lacerta vivipara. J. Zool. 211, 47–63. ( 10.1111/j.1469-7998.1987.tb07452.x) [DOI] [Google Scholar]

- 36. Bates D, Maechler M, Bolker B, Walker S. 2015. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. (doi:10.18637/jss.v067.i01)

- 37. Bretz F, Westfall P, Hothorn T. 2010. Multiple comparisons using R, 1st edn. New York, NY: Chapman and Hall/CRC.

- 38. Ehrenstein WH, Arnold-Schulz-Gahmen BE, Jaschinski W. 2005. Eye preference within the context of binocular functions. Graefes Arch. Clin. Exp. Ophthalmol. 243, 926–932. (doi:10.1007/s00417-005-1128-7)

- 39. Smith A, Ledgeway T. 1998. Sensitivity to second-order motion as a function of temporal frequency and eccentricity. Vision Res. 38, 403–410. (doi:10.1016/S0042-6989(97)00134-X)

- 40. Scott-Samuel NE, Georgeson MA. 1999. Does early non-linearity account for second-order motion? Vision Res. 39, 2853–2865. (doi:10.1016/S0042-6989(98)00316-2)

- 41. Freyd JJ, Finke RA. 1984. Representational momentum. J. Exp. Psychol. Learn. Mem. Cogn. 10, 126–132. (doi:10.1037/0278-7393.10.1.126)

- 42. Yarbus A. 1967. Movements of the eyes. London, UK: Pion.

- 43. Land MF, Nilsson D-E. 2012. Animal eyes, 2nd edn. New York, NY: Oxford University Press. (doi:10.1093/acprof:oso/9780199581139.001.0001)

- 44. Land MF. 1999. Motion and vision: why animals move their eyes. J. Comp. Physiol. A 185, 341–352. (doi:10.1007/s003590050393)

- 45. Land MF. 2011. Oculomotor behaviour in vertebrates and invertebrates. In The Oxford handbook of eye movements (eds Liversedge S, Gilchrist I, Everling S), pp. 3–15. New York, NY: Oxford University Press.

- 46. Matin E. 1974. Saccadic suppression: a review and an analysis. Psychol. Bull. 81, 899–917. (doi:10.1037/h0037368)

- 47. Weihs D. 1974. Energetic advantages of burst swimming of fish. J. Theor. Biol. 48, 215–229. (doi:10.1016/0022-5193(74)90192-1)

- 48. Fleishman LJ. 1985. Cryptic movement in the vine snake Oxybelis aeneus. Copeia 1985, 242–245. (doi:10.2307/1444822)

- 49. Rayner J. 1985. Bounding and undulating flight in birds. J. Theor. Biol. 117, 47–77. (doi:10.1016/S0022-5193(85)80164-8)

- 50. Jackson RR, Olphen AV. 1992. Prey-capture techniques and prey preferences of Chrysilla, Natta and Siler, ant-eating jumping spiders (Araneae, Salticidae) from Kenya and Sri Lanka. J. Zool. 227, 163–170. (doi:10.1111/j.1469-7998.1992.tb04351.x)

- 51. Buskey EJ, Coulter C, Strom S. 1993. Locomotory patterns of microzooplankton: potential effects on food selectivity of larval fish. Bull. Mar. Sci. 53, 29–43.

- 52. Kramer DL, McLaughlin RL. 2001. The behavioral ecology of intermittent locomotion. Am. Zool. 41, 137–153. (doi:10.1093/icb/41.2.137)

- 53. McAdam AG, Kramer DL. 1998. Vigilance as a benefit of intermittent locomotion in small mammals. Anim. Behav. 55, 109–117. (doi:10.1006/anbe.1997.0592)

- 54. Bian X, Elgar MA, Peters RA. 2015. The swaying behavior of Extatosoma tiaratum: motion camouflage in a stick insect? Behav. Ecol. 27, 83–92. (doi:10.1093/beheco/arv125)

- 55. Miller PL. 1979. A possible sensory function for the stop–go patterns of running in phorid flies. Physiol. Entomol. 4, 361–370. (doi:10.1111/j.1365-3032.1979.tb00628.x)

- 56. Zylinski S, Osorio D, Shohet A. 2009. Cuttlefish camouflage: context-dependent body pattern use during motion. Proc. R. Soc. B 276, 3963–3969. (doi:10.1098/rspb.2009.1083)

- 57. Umeton D, Read JC, Rowe C. 2017. Unravelling the illusion of flicker fusion. Biol. Lett. 13, 2. (doi:10.1098/rsbl.2016.0831)

- 58. Brunyé TT, Martis SB, Kirejczyk JA, Rock K. 2019. Camouflage pattern features interact with movement speed to determine human target detectability. Appl. Ergon. 77, 50–57. (doi:10.1016/j.apergo.2019.01.004)

- 59. Brunyé TT, Martis SB, Horner C, Kirejczyk JA, Rock K. 2018. Visual salience and biological motion interact to determine camouflaged target detectability. Appl. Ergon. 73, 1–6. (doi:10.1016/j.apergo.2018.05.016)

- 60. Curio E. 1976. The ethology of predation, 1st edn. Berlin, Germany: Springer. (doi:10.1007/978-3-642-81028-2_1)

- 61. Ludwig CJ. 2011. Saccadic decision-making. In The Oxford handbook of eye movements (eds Liversedge S, Gilchrist I, Everling S), pp. 425–438. New York, NY: Oxford University Press.

- 62. Vallin A, Jakobsson S, Lind J, Wiklund C. 2006. Crypsis versus intimidation—anti-predation defence in three closely related butterflies. Behav. Ecol. Sociobiol. 59, 455–459. (doi:10.1007/s00265-005-0069-9)

- 63. Vallin A, Jakobsson S, Lind J, Wiklund C. 2005. Prey survival by predator intimidation: an experimental study of peacock butterfly defence against blue tits. Proc. R. Soc. B 272, 1203–1207. (doi:10.1098/rspb.2004.3034)

- 64. Langridge KV. 2009. Cuttlefish use startle displays, but not against large predators. Anim. Behav. 77, 847–856. (doi:10.1016/j.anbehav.2008.11.023)

- 65. Langridge KV, Broom M, Osorio D. 2007. Selective signalling by cuttlefish to predators. Curr. Biol. 17, R1044–R1045. (doi:10.1016/j.cub.2007.10.028)

- 66. Olofsson M, Eriksson S, Jakobsson S, Wiklund C. 2012. Deimatic display in the European swallowtail butterfly as a secondary defence against attacks from great tits. PLoS ONE 7, e47092. (doi:10.1371/journal.pone.0047092)

- 67. Smart IE, Cuthill IC, Scott-Samuel NE. 2020. Data from: In the corner of the eye: camouflaging motion in the peripheral visual field. Dryad Digital Repository. (doi:10.5061/dryad.x0k6djhf8)

Data Availability Statement

All data are from the Dryad Digital Repository: https://doi.org/10.5061/dryad.x0k6djhf8 [67].