Abstract

Radiomics, artificial intelligence, and deep learning figure amongst recent buzzwords in current medical imaging research and technological development. Analysis of medical big data in assessment and follow-up of personalised treatments has also become a major research topic in the area of precision medicine. In this review, current research trends in radiomics are analysed, from handcrafted radiomics feature extraction and statistical analysis to deep learning. Radiomics algorithms now include genomics and immunomics data to improve patient stratification and prediction of treatment response. Several applications have already shown conclusive results demonstrating the potential of adding other “omics” data to existing imaging features. We also discuss further challenges of data harmonisation and management infrastructure to shed light on the much-needed integration of radiomics and all other “omics” into clinical workflows. In particular, we point to the emerging paradigm shift in the implementation of big data infrastructures to facilitate databank growth, data extraction and the development of expert software tools. Secured access, sharing, and integration of all health data, called “holomics”, will accelerate the revolution of personalised medicine and oncology as well as expand the role of imaging specialists.

Keywords: Artificial intelligence, Holomics, Machine learning, Precision medicine, Radiomics

Key points

Since 2012, radiomics algorithms have focused on lesion characterisation and response prediction in oncology.

Research advances are expected in deep learning, data harmonisation, and communication.

Radiogenomics and radioimmunomics, alone or in combination with other data, improve prediction accuracy.

Radiomics is a component of all omics data (holomics) for personalised decision-making.

This major evolution relies heavily on information technology and medical imaging specialists.

Background

Radiomics is now a field of intense research after 7 years of exponential growth in publications. Radiomics offers the prospect of augmenting human perception through agnostic analyses [1] and even artificial intelligence, with a computer-assisted increase in productivity. Naturally, this trend has been met with great enthusiasm and even greater scepticism. As the field matures, numerous initiatives have led to the development of a growing number of software solutions, libraries, and algorithms.

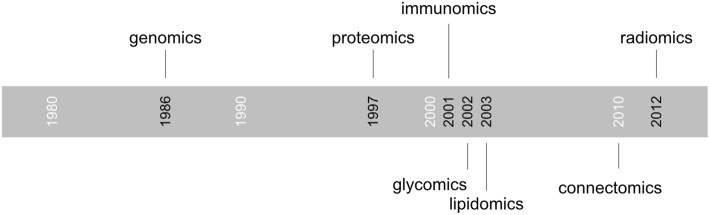

Radiomics is of course not the first “omics” field, and genomics can be traced back to the late 1980s [2], followed by many others (Fig. 1). The suffix “omics” has long been used in life sciences to describe the techniques and data in a specific research field. In the case of radiomics, this suffix reflects the intent to comprehensively use the data provided in the medical images with mathematical and statistical approaches. As radiomics attempts to make its way into the clinical workflow, the use of imaging data alone is becoming insufficient. While there is no formal proof that clinical decisions based on the combination of imaging and other “omics” data have an impact on clinical outcome, there is no reason to limit the scope of algorithms. This approach is similar to clinical practice, where all available data about a particular patient and previous knowledge from other cases are aggregated in the decision process. Radiomics-based algorithms may hence combine genomics, immunomics or other clinical data to improve diagnostic accuracy.

Fig. 1.

Timeline of the first occurrence of selected “omics” terms

In an ambitious paradigm shift, radiomics may further be integrated as a component of a comprehensive software system for clinical decision support combining all “omics” and clinical data into a “holomics” approach (“holo” meaning “whole” in classical Greek), similarly to systems biology in experimental research [3, 4].

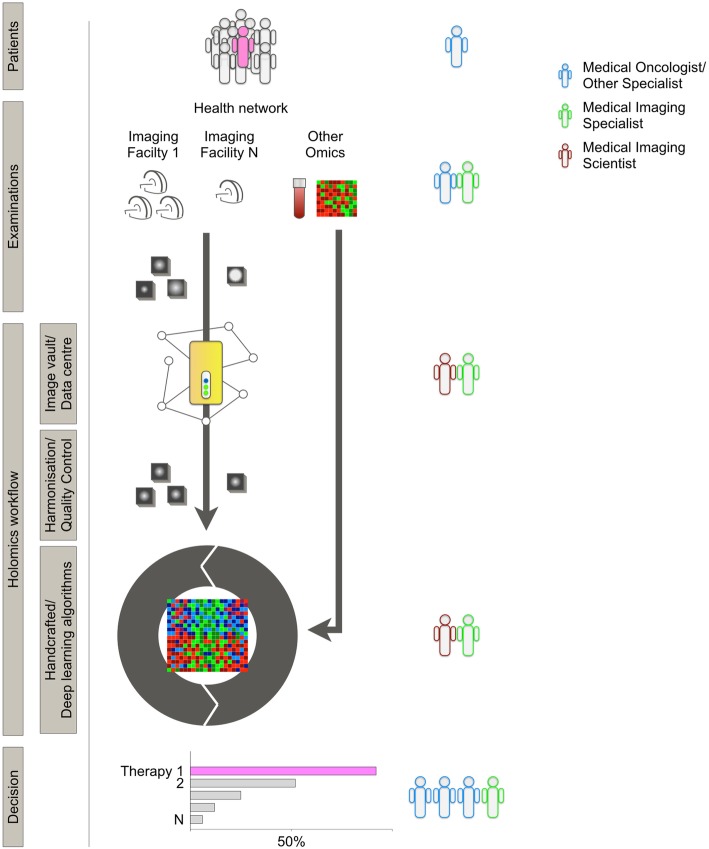

Widespread adoption of radiomics in the clinical decision process has been elusive despite a crucial need for prediction of treatment response and therapy management in the era of personalised medicine. With the development of large health networks and personalised health initiatives, the clinical data flow is rapidly expanding beyond what individual physicians can handle. Through their growing expertise in complex algorithms, big data management, and data harmonisation, medical and information technology imaging specialists are now key for the integration of radiomics and holomics in the clinical workflow of hospitals and health networks (Fig. 2).

Fig. 2.

Illustration of the different elements of a model of integration of radiomics in a holomics-based clinical workflow. Patients: constant accumulation of patient data is used in a dynamic model. Examinations: images are produced with many protocols, machines, and facilities. Other "omics" data from blood tests, tumour samples, or clinical data are aggregated. Image vault/data centre: a collaborative, open-source, open data storage infrastructure guarantees secured ownership of data and facilitates software development. Harmonisation/quality control: as for other omics, radiomics can only reach clinical practice and feed algorithms with harmonisation and quality control at each step. Decision: predictive information (rather than prognostic only) is provided to tumour boards or other specialist boards to support decision-making.

This review summarises current research on radiomics with a focus on standardisation strategies developed to ensure reproducibility between studies, machines and centres. It also discusses biobanks and personalised medicine initiatives in order to illustrate the steps required for the integration of radiomics into holomics and, ultimately, into clinical workflows.

Research trends in radiomics

Publication trend

Radiomics aims to capture the informative content hidden in medical images, overcoming the limitations of the human eye and human cognitive patterns: the rationale is that medical images (anatomical, functional, or metabolic) can carry information about the physiological response to cancer and therapy stress [1]. The wealth of relevant information provided by medical images is key in decision-making and follow-up of treatment response. The ability to extract more quantitative data from medical images will reinforce the position of medical imaging in clinical decision-making and patient management.

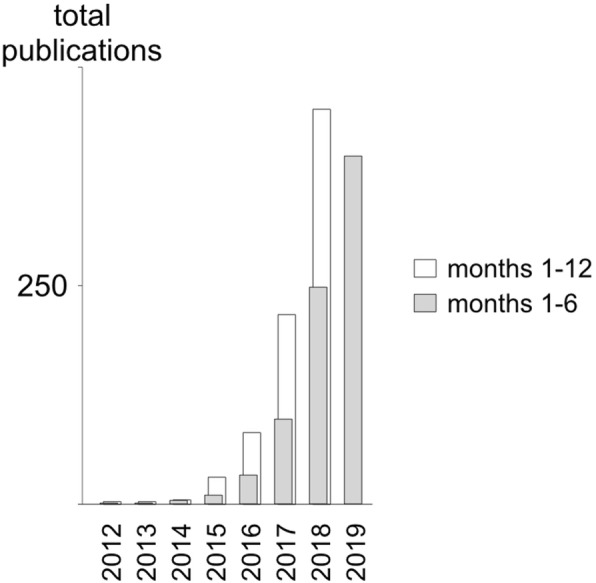

Medical and information technology imaging specialists have produced a number of algorithms, statistical analyses, and models, fuelling an exponential growth in the number of publications since 2012 (Fig. 3, Additional file 1: Table S1). These have gradually become more complex and study more diverse pathologies, modalities, and applications.

Fig. 3.

Timeline of the occurrence of radiomics publications from PubMed title and abstract search shows exponential growth since 2012 with a slowdown in early 2019

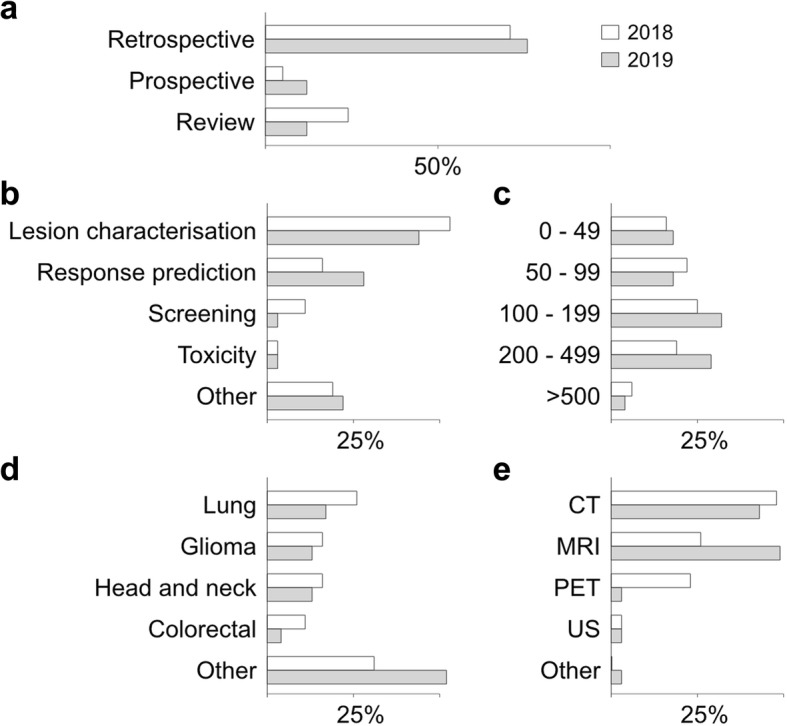

As radiomics makes its way into clinical workflows, large or even dynamically growing prospective patient cohorts are required to reach the level of robustness and precision needed for adoption by clinicians (Fig. 4). To better understand these challenges, it is important to grasp the workflow of radiomics, as well as the variety of algorithms and analytical models currently under development.

Fig. 4.

Research trends in radiomics from a sample of the first 40 radiomics papers of 2018 (white) and 2019 (grey) show a shift towards more diverse applications and larger cohorts of patients (see Additional file 1: Table S1 for PubMed query syntax). a Trial type. b Study aim. c Total size of cohort. d Disease type. e Imaging modality. CT Computed tomography, MRI Magnetic resonance imaging, PET Positron emission tomography, US Ultrasound

Handcrafted radiomics techniques in radiomics research

The current state-of-the-art approach in radiomics relies on a relatively conventional image analysis workflow, which is referred to as “handcrafted” radiomics based on four successive processing tasks [5]: (1) image acquisition/reconstruction, (2) image segmentation, (3) feature extraction and quantification, and (4) feature selection/statistical analysis. In handcrafted radiomics, image features are known and are selected depending on their correlation with clinical outcome.

Image acquisition (step 1) and image segmentation (step 2) have been a lesser focus of radiomics publications. However, the risk of bias and potential lack of reproducibility of algorithms across different machines, protocols, and sites are well recognised [6–9] and standardisation issues are further discussed in the next section.

Feature extraction (step 3) in handcrafted radiomics relies on several established techniques based, for example, on image morphology, histograms, textures, wavelets, or fractals, with their application in original works reviewed in detail by Avanzo et al. [10]. In the same review, the authors showed that statistical analysis/feature selection (step 4) has not reached a disease-specific or technical consensus on feature selection strategies, predictive models and performance estimation techniques. Linear regression models were shown to be the most frequently used, which can be explained by simpler models providing graphical results (nomograms) [11], and a similar trend is noted in a 2019 sample of publications analysed (Additional file 1: Table S2). Avanzo et al. [10] further identified limitations of radiomics related to the size of the cohorts, and issues of standardisation and benchmarking, which are discussed in the next paragraphs.
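To make the histogram-based family of step 3 concrete, the following is a minimal sketch of first-order feature extraction from the voxel intensities of a segmented region. The function name `first_order_features`, the fixed bin width of 25 and the intensity values are illustrative assumptions and do not come from any of the cited studies or from a particular radiomics library.

```python
import math
from collections import Counter


def first_order_features(voxels, bin_width=25.0):
    """Histogram-based (first-order) features of the voxel intensities in a region."""
    n = len(voxels)
    mean = sum(voxels) / n
    variance = sum((v - mean) ** 2 for v in voxels) / n
    std = math.sqrt(variance)
    # Skewness is left at 0 for a perfectly flat region to avoid dividing by zero.
    skewness = 0.0 if std == 0 else sum((v - mean) ** 3 for v in voxels) / (n * std ** 3)
    # Discretise intensities into fixed-width bins before computing the entropy.
    bins = Counter(math.floor(v / bin_width) for v in voxels)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())
    return {
        "mean": mean,
        "variance": variance,
        "skewness": skewness,
        "entropy": entropy,
        "minimum": min(voxels),
        "maximum": max(voxels),
    }


# Hypothetical intensities inside a segmented lesion (arbitrary units).
roi = [10.0, 20.0, 30.0, 40.0, 60.0, 80.0, 110.0, 130.0]
features = first_order_features(roi)
for name, value in features.items():
    print(f"{name}: {value:.3f}")
```

The choice of bin width changes the entropy value, which is one concrete reason why discretisation settings have to be reported alongside any feature value.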

The emerging role of deep learning

Deep learning radiomics (DLR) can be applied to any aspect of the workflow described above. Many studies use DLR to either automatically identify or extract features (step 3). In other instances, a classical handcrafted radiomics approach extracts features so that a DLR algorithm can select them (step 4). Single artificial neural networks (ANN) can perform both tasks [5].

DLR relies on a subtype of machine learning built on ANNs with a large number of interconnected layers. Given a training set, such networks can autonomously build image filters and extract image features for classification without the need to pre-determine (handcraft) them. In the field of medical imaging, several applications have already been successfully designed, for example, in detecting lung nodules [12] or in building computed tomography images from magnetic resonance images for the purpose of positron-emission tomography (PET) attenuation correction [13].

These multi-layer ANN are often convolutional (cANN) or recurrent (rANN) and, after training, produce image filters and features in a much higher number than other techniques. The major challenge of deep learning algorithms in radiomics is however the need for a higher number of observations (patients) than in many handcrafted radiomics studies.

Once trained, however, a deep learning algorithm can be extremely fast and accurate [5]. Recently, Xu et al. [14] developed a model based on a cANN and a rANN for overall survival prognosis in non-small cell lung cancer using a seed point tumour localisation instead of a classical segmented contour. The authors analysed how the tumour evolved over time, exploiting several successive computed tomography scans acquired at different time points (pre-treatment and 1, 3, and 6 months after radiation therapy). Algorithms that can include multiple imaging time points are a key step in bringing radiomics to the bedside and into personalised oncology data streams.

Protocol standardisation in radiomics feature extraction

A fundamental aspect of radiomics is the capability of extracting quantitative image features (QIFs). QIFs are measures computed directly from the voxel values (intensity) and their spatial organisation (shape and texture). When compared to human-based visual analysis and semantic (i.e., text) features, the main advantage of QIFs is to yield more objective and reproducible image analysis. However, this advantage comes with the following two challenges.

First, small changes in voxel values can lead to strong variation in values of QIFs, which in turn can modify the output of the associated predictive model and lead to inadequate classification.

Second, the definition, implementation and semantic grouping of QIFs can vary between scanners, studies, and software, which hinders the interoperability of QIFs and their interpretation [15]. These aspects are of particular importance for the integration of radiomics in clinical workflows. Both individual patients and patient cohorts may be managed in multiple centres and may benefit from medical imaging from different scanners and different imaging protocols.

The impact of these two challenges and the potential solutions to address them are hence of particular interest.

Standardisation of feature definition

The very first aspect of standardisation is to establish a common reference for the definition of every QIF. Second, it must be ensured that distinct software implementations of the same QIF provide the same measure for a given input. To address these two aspects, the Image Biomarker Standardisation Initiative (IBSI) made a remarkable and exhaustive effort, in which the QIF definitions are organised in a reference manual [6]. In addition, the IBSI defined a systematic benchmarking of radiomics software using synthetic and patient-based reference images. This allowed consensus to be reached for most QIFs, but often only after several iterations of discussion, debugging and re-implementation, showcasing the importance of the initiative. The description and benchmarking were not limited to QIFs but also covered image pre-processing and interpolation steps. Because most radiomics software developers and researchers have joined the IBSI since September 2016, it will have a significant impact on the reproducibility of future radiomics studies and the interoperability of QIF extraction algorithms.

Impact of imaging protocol on QIFs: towards standardisation and invariance

Imaging protocols relate to image acquisition and reconstruction and depend on many factors including disease, anatomical region, manufacturer, and user preferences. Expert image readers are able to quickly adapt to changes in image quality and reconstruction parameters. However, because QIFs are computed from raw voxel values, changing acquisition protocols will have a direct influence on the extracted quantitative measures. This can be simply illustrated with the maximum standardised uptake value (SUVmax), which measures the maximum uptake in PET imaging and was identified in many radiomics studies as an important biomarker [16, 17]. SUVmax will vary significantly based on the post-reconstruction smoothing of PET images (e.g., Gaussian filtering), which deteriorates the generalisation performance of a SUVmax-based radiomics model between imaging centres using different reconstruction protocols. Although achieving consensus protocols across all imaging departments and manufacturers is still unrealistic today, systematic studies of their impact on feature values are crucial to determine the deployment success of radiomics-based clinical models.
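The dependence of SUVmax on post-reconstruction smoothing can be reproduced with a toy example. The sketch below applies Gaussian filters of increasing width to a one-dimensional uptake profile and reads off the maximum each time. The profile values, the kernel truncation at three standard deviations and the edge handling are assumptions chosen for illustration, not the settings of any clinical reconstruction.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalised 1D Gaussian weights for offsets -radius..radius (in voxels)."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(profile, sigma):
    """Gaussian smoothing of a 1D profile, replicating the edge values."""
    radius = max(1, math.ceil(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    n = len(profile)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index at the borders
            acc += w * profile[j]
        smoothed.append(acc)
    return smoothed


# Hypothetical SUV profile through a small lesion with a sharp peak.
profile = [1.0, 1.0, 1.2, 2.5, 8.0, 3.0, 1.1, 1.0, 1.0]
suv_max_raw = max(profile)
suv_max_smoothed = {sigma: max(smooth(profile, sigma)) for sigma in (0.5, 1.0, 2.0)}

print(f"no smoothing: SUVmax = {suv_max_raw:.2f}")
for sigma, value in suv_max_smoothed.items():
    print(f"sigma = {sigma} voxels: SUVmax = {value:.2f}")
```

The same lesion yields a progressively lower SUVmax as the filter widens, so a model threshold tuned on images from one centre would not transfer unchanged to a centre that smooths more heavily.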

Traverso et al. [18] reviewed 41 studies focusing on repeatability and reproducibility of QIFs. Repeatability is defined as QIF stability when imaging the same subject (or phantom) over time and is often associated with the test-retest methodology [19]. Reproducibility addresses the stability of QIFs across imaging conditions (protocol, manufacturer) and is therefore more general than repeatability. The survey revealed that although no consensus could be found regarding the most repeatable and reproducible features, intensity (also called first-order) features were in general more reproducible than shape and textural QIFs.
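One common way to quantify repeatability in a test-retest design is the within-subject coefficient of variation, computed from paired measurements of the same feature. The sketch below is a minimal illustration under that assumption. The feature values are invented so that the intensity feature is more stable than the texture feature, and they are not taken from the studies reviewed by Traverso et al.

```python
import math


def within_subject_cv(test, retest):
    """Within-subject coefficient of variation for paired test-retest values."""
    n = len(test)
    # For two measurements per subject, the within-subject variance is d^2 / 2.
    within_sd = math.sqrt(sum((a - b) ** 2 for a, b in zip(test, retest)) / (2 * n))
    grand_mean = (sum(test) + sum(retest)) / (2 * n)
    return within_sd / grand_mean


# Hypothetical values for four subjects scanned twice.
intensity_test, intensity_retest = [100.0, 120.0, 90.0, 110.0], [102.0, 118.0, 91.0, 109.0]
texture_test, texture_retest = [0.40, 0.55, 0.30, 0.60], [0.50, 0.45, 0.38, 0.48]

cv_intensity = within_subject_cv(intensity_test, intensity_retest)
cv_texture = within_subject_cv(texture_test, texture_retest)
print(f"intensity feature: wCV = {cv_intensity:.3f}")
print(f"texture feature:   wCV = {cv_texture:.3f}")
```

A lower value indicates a more repeatable feature. Reproducibility across protocols or manufacturers would require the same comparison between imaging conditions rather than between repeated scans.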

As a further step towards reproducibility, several efforts focused on developing QIF transformations to achieve invariance to imaging protocols. A notable example is the use of the ComBat method (initially developed in the context of genomics to remove machine and time variability in microarray data using Bayesian statistics [20]) to successfully standardise PET radiomics [21]. Neural networks and deep learning techniques such as generative adversarial networks were used in [22] to learn QIF transforms that make the features independent of scanner manufacturers and protocols. The transforms were learnt based on the Credence Cartridge Radiomics (CCR) physical phantom and the associated dataset resulting from scanning under various imaging conditions. This radiomics-specific imaging phantom is made of 10 cartridges filled with various materials leading to a wide range of values of radiomics features [23].
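The idea behind batch harmonisation can be sketched with a plain location-scale adjustment: each scanner's feature values are shifted and rescaled to a common mean and spread. This is a deliberately simplified stand-in and not the ComBat estimator itself, which additionally uses empirical Bayes shrinkage of the batch parameters and can preserve biological covariates. The function name, scanner labels and values are assumptions made for illustration.

```python
import statistics


def harmonise(values_by_scanner):
    """Align each scanner's mean and spread to pooled reference values."""
    all_values = [v for values in values_by_scanner.values() for v in values]
    grand_mean = statistics.fmean(all_values)
    # Pooled within-scanner spread: residuals around each scanner's own mean.
    residuals = []
    for values in values_by_scanner.values():
        scanner_mean = statistics.fmean(values)
        residuals.extend(v - scanner_mean for v in values)
    pooled_sd = statistics.pstdev(residuals)
    harmonised = {}
    for scanner, values in values_by_scanner.items():
        scanner_mean = statistics.fmean(values)
        scanner_sd = statistics.pstdev(values)  # assumed non-zero for every scanner
        harmonised[scanner] = [
            grand_mean + pooled_sd * (v - scanner_mean) / scanner_sd for v in values
        ]
    return harmonised


# Hypothetical values of one feature measured on two scanners.
raw = {"scanner_A": [1.0, 2.0, 3.0], "scanner_B": [10.0, 14.0, 18.0]}
adjusted = harmonise(raw)
for scanner, values in adjusted.items():
    print(scanner, [round(v, 3) for v in values])
```

After adjustment both scanners share the same mean and standard deviation for this feature, while the ordering of values within each scanner is unchanged.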

Integration of omics data with radiomics algorithms

While a definitive proof that combining all omics information can result in better precision in predicting clinical outcome is not easily achievable, it is important to note that this approach is commonly used in patient management: physicians gather available information from various diagnostic and examination techniques prior to devising a specific patient management plan.

Similarly, radiomics has evolved from an initial focus on lesion identification (such as malignant versus non-malignant lesions) and prognosis (i.e., assessing disease but not therapy). In more recent algorithms, genomic or immunomic features are used to address treatment selection or response (i.e., predictive rather than prognostic) and to improve prediction of clinical outcomes (such as overall survival or toxicity) [24, 25]. These approaches can be described specifically as radiogenomics and radioimmunomics, respectively. Furthermore, the term holomics is used to refer to more ambitious strategies where radiomics is one component of all “omics” axes used for clinical management in precision medicine strategies [26].

Radiogenomics

The pioneering work of Gevaert et al. in 2012 opened the field of radiogenomics by studying the correlation of gene clusters with radiomics features in 26 cases of non-small cell lung cancer [27]. The following year, Aerts et al. published one of the earliest radiomics studies correlated with genetic information [28]. In this milestone publication, the inclusion of more than 1,000 patients with lung or head and neck cancer led to a four-feature prognostic score. Interestingly, the prognostic capability of the signature was maintained between tumour subtypes and in particular regardless of the human papillomavirus (HPV) status of head and neck tumours. Gene-set enrichment analysis was used to correlate (and not predict) each feature with gene expression, inferring for example a relationship between tumour heterogeneity on imaging and cellular proliferation.

More recently, Digumarthy et al. showed that the combination of clinical, imaging and radiomics features can separate epidermal growth factor receptor mutant from wild-type lung adenocarcinoma with high accuracy [29]. The technical survey published in 2017 by Incoronato et al. explored the status of radiomics research on the correlation and/or prediction of genomic characteristics in 55 original research publications [25]. Incoronato et al. showed that correlation models were twice as frequent as prediction models and that they relied heavily on statistical methods rather than machine learning, with a majority of canonical statistical tests (Wilcoxon, chi-squared, Fisher’s test, Kruskal-Wallis), correlation techniques (Spearman, Pearson) and regression-based techniques (linear regression, logistic regression, etc.). Deep learning approaches have also shown successes in predicting gene expression and mutation status in gliomas [30–32].

This field remains in constant expansion and further reviews such as Pinker et al. and El Naqa et al. analyse the current state of the art in multiple cancers combining radiomics with single nucleotide polymorphisms, copy number variations and gene expression amongst others [33, 34].

Radioimmunomics

Immune checkpoint modulation and other immunotherapies have significantly increased therapeutic options in oncology and have led to a need for better predictive tools for therapy management decisions and better response follow-up [35]. The recent field of radioimmunomics is expected to develop further in the future. In current publications, the work of Sun et al. is of particular interest. The team developed a predictive radiomics model focusing on immunomics (CD8 cell infiltration in solid tumours) and subsequent response to immune checkpoint therapy (anti-PD-1 or anti-PD-L1) [36]. Interestingly, this retrospective work on four cohorts of more than 100 patients each did not restrict itself to a single tumour histology but used databases of patients with solid tumours. It used computed tomography images to build a radiomics biomarker of CD8 cell infiltration (using transcription of CD8B ribonucleic acid in biopsy samples), which showed predictive power for response to immunotherapy. This handcrafted radiomics study was based on machine learning (not deep learning) using a linear elastic-net model with 78 radiomics and six additional features (peak voltage and five binary location variables).
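For readers unfamiliar with the elastic net, its general textbook form for a linear model with a squared-error loss is given below. This is the standard formulation and not necessarily the exact parameterisation used by Sun et al. Here $x_i$ would be the vector of the 84 input features (78 radiomics and six additional features) for patient $i$, $y_i$ the target value, and $\lambda$ and $\alpha$ tuning parameters, all introduced here for illustration.

```latex
\hat{\beta}_0, \hat{\beta}
  = \arg\min_{\beta_0,\,\beta}\;
    \frac{1}{2n} \sum_{i=1}^{n} \left( y_i - \beta_0 - x_i^{\top}\beta \right)^2
    + \lambda \left( \alpha \,\lVert \beta \rVert_1
    + \frac{1-\alpha}{2} \,\lVert \beta \rVert_2^2 \right),
  \qquad \lambda \ge 0,\; 0 \le \alpha \le 1
```

The $\ell_1$ term ($\alpha = 1$ gives the lasso) sets some coefficients exactly to zero and thereby selects features, while the $\ell_2$ term ($\alpha = 0$ gives ridge regression) stabilises the estimate when features are strongly correlated, as radiomics features often are.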

Data heterogeneity in holomics

The challenge of data heterogeneity becomes critical when combining radiomics approaches with genomic or immunomic data or when building holomics workflows. The data contained in an imaging study (voxel values, protocols, series, etc.) are neither numerical (i.e., a single continuous number) nor categorical (i.e., positive versus negative, low versus moderate versus high). Many radiomics strategies are based on statistical methods in order to reduce the dimensionality of image data by focusing on individual continuous numerical variables (i.e., the features themselves). In deep learning, popular techniques such as convolutional ANN (cANN) are able to take the whole image as input, exploit the informative content of the pixel/voxel proximity, and automatically build representative image features and filters that improve the signal-to-noise ratio. However, cANN have been developed specifically for images, and incorporating individual numerical or categorical variables (ECG signals, lab tests, pathology results) adds further complexity. More recently, ensemble learning has been introduced to combine several predictors that use different data types [37, 38]. Ensemble learning is widely adopted in other domains (e.g., information retrieval, where a meta-predictor is built by voting on different predictors [39]).
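The voting scheme mentioned above can be sketched as a meta-predictor that collects one vote per data type and returns the majority class. The three predictors below, their thresholds and the patient record are entirely hypothetical and carry no clinical meaning. In practice each would be a trained model rather than a fixed rule.

```python
from collections import Counter


def imaging_predictor(patient):
    """Vote from a continuous radiomics feature (hypothetical threshold)."""
    return "responder" if patient["texture_entropy"] < 4.0 else "non-responder"


def lab_predictor(patient):
    """Vote from a numerical laboratory value (hypothetical threshold)."""
    return "responder" if patient["crp_mg_per_l"] < 10.0 else "non-responder"


def pathology_predictor(patient):
    """Vote from a categorical pathology result."""
    return "responder" if patient["pdl1_status"] == "positive" else "non-responder"


def majority_vote(patient, predictors):
    """Meta-predictor: the class receiving the most votes wins."""
    votes = Counter(predict(patient) for predict in predictors)
    return votes.most_common(1)[0][0]


predictors = [imaging_predictor, lab_predictor, pathology_predictor]
patient = {"texture_entropy": 3.2, "crp_mg_per_l": 12.0, "pdl1_status": "positive"}
decision = majority_vote(patient, predictors)
print(decision)
```

Using an odd number of predictors for a two-class problem avoids ties. Each predictor only ever sees the data type it was built for, which is what lets the ensemble sidestep the heterogeneity problem.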

Big data challenges for precision medicine

Challenges of health and imaging biobanks

The development of imaging biobanks and cloud-based data storage services has radically changed the way in which communication and data management are handled. Online storage of medical images is not new; several vendors have offered such services for decades as part of their commercial solutions [39, 40]. A major recent change is the emergence of widely accessible and attractive services at a very low cost. These developments face more hurdles in medical applications, due to strict regulations and guidelines for patient confidentiality and data security.

The wealth of data acquired in clinical routine these days is overwhelming and has not yet been fully grasped; medical images are no exception.

A crucial factor limiting the development of radiomics on large and open cohorts, which is required for application in clinical workflows, is the lack of an open and collaborative infrastructure that continuously collects structured and well-documented imaging data [41]. Restrictive regulatory constraints and data protection rules further prevent the usage and exploitation of medical data without formal patient approval. Overall, the walls between medical institutions, medical records, and all clinical data repositories have remained despite multiple initiatives from individual institutions, professional societies, and private corporations to facilitate access to the data. Data extraction and anonymisation for open-access databanks is indeed still very limited [42].

Recent years have seen the development of international consortia and networks to facilitate sample collection and distribution and to allow access to precious biomaterials and medical data from patients [43]. Such repositories should provide open access to carefully curated and annotated data. These initiatives however face many challenges that have long been recognised and theorised [44, 45]. These works identified the key restrictions to the development of an open repository to harbour radiomics tools and computer-aided personalised medicine.

Despite these initiatives, many hurdles remain. Data collection is complex due to institutional, infrastructure and regulatory issues. Further, ownership of data by the source institution and the patient is not guaranteed from data collection to utilisation, in particular as data itself may be considered the source of value. This issue is a major obstacle to reaching broad acceptance and clinical application. We hypothesise that it may be circumvented by adopting open source and open data architectures, as these systems benefit from wide acceptance. Finally, as databases grow, software tools that incorporate new data need to be developed, in particular based on deep learning approaches within environments that facilitate software development.

Initiatives for precision medicine

In parallel, there is a significant attempt by national authorities and private initiatives to channel the amount of data to better predict disease occurrence and behaviour, guide therapy management, and predict outcome.

Amongst these, the National Institutes of Health (NIH) has notably launched the “All of Us” research programme, which aims to accumulate data on over a million participants. Further research initiatives in the USA towards precision medicine in oncology have been developed in the past decade by the National Cancer Institute (NCI). The NCI initiative has designated cancer imaging as one of its four principal axes, alongside translational research, genomics and immunomics.

Switzerland has launched a nationwide initiative for the support and development of personalised medicine called the “Swiss Personalized Health Network” [46], aimed at the harmonisation and deployment of large networks of information technology infrastructures and data repositories for future support of artificial intelligence, computer-aided medical decisions and personalised patient management. Notably, within this initiative, a global ethics approval adopted by each Swiss university hospital is being implemented [47].

Amongst several initiatives currently underway to sustain the development of such networks, a new platform is being specifically designed for hosting and sharing medical imaging data for the implementation of machine learning and radiomics tools. This open platform, called KHEOPS [48], offers a flexible solution for storing, sharing and viewing medical images. KHEOPS is an open-source project with the source code available on GitHub. It provides a framework for secure and flexible management of extensive collections of digital images in an open and scalable infrastructure for storage, communication, wide distribution, processing, and analysis of these data. As an intermediate step to circumvent data ownership and privacy issues, initiatives to federate research centres around multiple data sources and data types (including images) are already established, such as VATE [49] and EuroCAT [50]. As opposed to KHEOPS, these are based on distributed learning, where each institution maintains its own databank and shares only aggregated data.
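The principle of sharing only aggregates can be illustrated with a minimal sketch in which each institution reports a count, a sum and a sum of squares for one feature, and a coordinator derives the pooled mean and variance without ever seeing patient-level values. The function names and numbers are assumptions for illustration. Real distributed learning platforms typically exchange model parameters or updates and add security layers that are not shown here.

```python
def local_summary(values):
    """Computed inside each institution; only these aggregates leave the site."""
    return {
        "n": len(values),
        "sum": sum(values),
        "sum_sq": sum(v * v for v in values),
    }


def pooled_mean_variance(summaries):
    """Computed by the coordinator from the aggregates alone."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["sum"] for s in summaries)
    total_sq = sum(s["sum_sq"] for s in summaries)
    mean = total / n
    variance = total_sq / n - mean ** 2  # population variance
    return mean, variance


# Hypothetical patient-level feature values that never leave each site.
site_a = [2.0, 4.0]
site_b = [6.0, 8.0, 10.0]

summaries = [local_summary(site_a), local_summary(site_b)]
mean, variance = pooled_mean_variance(summaries)
print(f"pooled mean = {mean}, pooled variance = {variance}")
```

The result is identical to what a central database holding all five values would give, which is the property that makes this family of approaches attractive when data cannot be moved.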

Conclusions

With the development of radiomics as a mainstream research field, major efforts have been undertaken in the development of new algorithms. In recent years, radiogenomics, radioimmunomics, or other clinical data have been used to increase predictive capacity and accuracy. Concerted efforts and initiatives aiming for data harmonisation between imaging protocols, scanners and imaging devices as well as quality control of algorithms being used for feature extraction are also well underway.

As the field has now matured, the shift from individual algorithms engineered (handcrafted) from finite datasets (i.e., patient cohorts) to open tools that dynamically integrate the constant inflow of medical data is paramount. However, reaching this goal has so far remained elusive, despite many initiatives from institutions, professional societies, manufacturers, and major information technology and Internet corporations.

The time has hence come for a paradigm shift. The imaging and clinical databases can only thrive if data extraction itself is facilitated and if institutional and patient ownership is extended to every stage of data indexing, storage, and analysis. Further, mirroring the dynamic nature that these databases should adopt, the development of radiomics and other software tools has to be facilitated. The whole environment must embrace full web application programming interfaces and dematerialised data storage, and provide persistent and secure data ownership. We believe that such an infrastructure can be achieved with the confidence that open source and open data technologies offer.

It will provide the backbone for thriving, evolving and collaborative radiomics and holomics tools to ultimately reach clinical workflows. The medical imaging community has the opportunity and responsibility to lead these developments so that precision medicine, based on comprehensive holomics computer-assisted expert systems, can bring about a revolution in medical practice and patient management.

Supplementary information

Additional file 1: Table S1. Detailed results and syntax of PubMed “radiomics” search. Table S2. Occurrence and category of statistic techniques in radiomics from an arbitrary sample of the 40 first research papers referenced in PubMed in 2019.

Acknowledgements

We thank Dr. M. Vallati and Prof. M. Leimgruber for reviewing the manuscript.

Abbreviations

- ANN: Artificial neural network
- cANN: Convolutional artificial neural network
- CD8: Cluster of differentiation 8 (CD8 cells, cytotoxic lymphocytes)
- DLR: Deep learning radiomics
- PD-1: Programmed death 1
- PD-L1: Programmed death-ligand 1
- PET: Positron emission tomography
- QIF: Quantitative image features
- rANN: Recurrent artificial neural network
- SUVmax: Maximum standardised uptake value

Authors’ contributions

RG and AL wrote the manuscript and collected PubMed data, figures, and references. AD contributed with the protocol harmonisation paragraph. OR and OS contributed to the Big Data challenges for precision medicine paragraph. All authors read and approved the final manuscript.

Funding

This work is partly supported by the Swiss National Science Foundation for AD (205320 179069).

Availability of data and materials

Not applicable

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s41747-019-0143-0.

References

- 1. Lambin P, Rios-Velazquez E, Leijenaar R, et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer. 2012;48:441–446. doi: 10.1016/j.ejca.2011.11.036.

- 2. Yadav SP. The wholeness in suffix -omics, -omes, and the word om. J Biomol Tech. 2007;18:277.

- 3. Djordjevic M, Rodic A, Graovac S. From biophysics to ‘omics and systems biology. Eur Biophys J. 2019;48:413–424. doi: 10.1007/s00249-019-01366-3.

- 4. Kitano H. Computational systems biology. Nature. 2002;420:206–210. doi: 10.1038/nature01254.

- 5. Afshar P, Mohammadi A, Plataniotis KN, Oikonomou A, Benali H. From handcrafted to deep-learning-based cancer radiomics: challenges and opportunities. IEEE Signal Process Mag. 2019;36:132–160. doi: 10.1109/MSP.2019.2900993.

- 6. Zwanenburg A, Leger S, Vallières M, Löck S (2016) Image biomarker standardisation initiative. Available via arxiv: CoRR abs/1612.07003, https://arxiv.org/abs/1612.07003. Accessed 20 May 2019

- 7. Midya A, Chakraborty J, Gönen M, Do RKG, Simpson AL. Influence of CT acquisition and reconstruction parameters on radiomic feature reproducibility. J Med Imaging. 2018;5:011020. doi: 10.1117/1.JMI.5.1.011020.

- 8. Mori M, Benedetti G, Partelli S, et al. CT radiomic features of pancreatic neuroendocrine neoplasms (panNEN) are robust against delineation uncertainty. Phys Med. 2019;57:41–46. doi: 10.1016/j.ejmp.2018.12.005.

- 9. Owens CA, Peterson CB, Tang C, et al. Lung tumor segmentation methods: impact on the uncertainty of radiomics features for non-small cell lung cancer. PLoS One. 2018;13:e0205003. doi: 10.1371/journal.pone.0205003.

- 10. Avanzo M, Stancanello J, El Naqa I. Beyond imaging: the promise of radiomics. Phys Med. 2017;38:122–139. doi: 10.1016/j.ejmp.2017.05.071.

- 11. Zhang Z, Kattan MW. Drawing nomograms with R: applications to categorical outcome and survival data. Ann Transl Med. 2017;5:211. doi: 10.21037/atm.2017.04.01.

- 12. Ali I, Hart GR, Gunabushanam G, et al. Lung nodule detection via deep reinforcement learning. Front Oncol. 2018;8:108. doi: 10.3389/fonc.2018.00108.

- 13. Han X. MR-based synthetic CT generation using a deep convolutional neural network method. Med Phys. 2017;44:1408–1419. doi: 10.1002/mp.12155.

- 14. Xu Y, Hosny A, Zeleznik R, et al. Deep learning predicts lung cancer treatment response from serial medical imaging. Clin Cancer Res. 2019;25:3266–3275. doi: 10.1158/1078-0432.

- 15. Vallières M, Zwanenburg A, Badic B, Cheze Le Rest C, Visvikis D, Hatt M. Responsible radiomics research for faster clinical translation. J Nucl Med. 2017;59:189–193. doi: 10.2967/jnumed.117.200501.

- 16. Nakamura K, Hongo A, Kodama J, Hiramatsu Y. The measurement of SUVmax of the primary tumor is predictive of prognosis for patients with endometrial cancer. Gynecol Oncol. 2011;123:82–87. doi: 10.1016/j.ygyno.2011.06.026.

- 17. Berghmans T, Dusart M, Paesmans M, et al. Primary tumor standardized uptake value (SUVmax) measured on fluorodeoxyglucose positron emission tomography (FDG-PET) is of prognostic value for survival in non-small cell lung cancer (NSCLC): a systematic review and meta-analysis (MA) by the European Lung Cancer Working Party for the IASLC Lung Cancer Staging Project. J Thorac Oncol. 2008;3:6–12. doi: 10.1097/JTO.0b013e31815e6d6b.

- 18. Traverso A, Wee L, Dekker A, Gillies R. Repeatability and reproducibility of radiomic features: a systematic review. Int J Radiat Oncol Biol Phys. 2018;102:1143–1158. doi: 10.1016/j.ijrobp.2018.05.053.

- 19. Zhao B, James LP, Moskowitz CS, et al. Evaluating variability in tumor measurements from same-day repeat CT scans of patients with non–small cell lung cancer. Radiology. 2009;252:263–272. doi: 10.1148/radiol.2522081593.

- 20. Johnson WE, Li C, Rabinovic A. Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics. 2007;8:118–127. doi: 10.1093/biostatistics/kxj037.

- 21. Orlhac F, Boughdad S, Philippe C, et al. A post-reconstruction harmonization method for multicenter radiomic studies in PET. J Nucl Med. 2018;59:1321–1328. doi: 10.2967/jnumed.117.199935.

- 22. Andrearczyk V, Depeursinge A, Mueller H (2019) Learning cross-protocol radiomics and deep feature standardization from CT images of texture phantoms. In: Bak PR, Chen P-H (eds) Proc. SPIE 10954, Medical Imaging 2019: Imaging Informatics for Healthcare, Research, and Applications, 109540I. doi: 10.1117/12.2512683.

- 23. Mackin D, Fave X, Zhang L, et al. Measuring computed tomography scanner variability of radiomics features. Invest Radiol. 2015;50:757–765. doi: 10.1097/RLI.0000000000000180.

- 24. Emaminejad N, Qian W, Guan Y. Fusion of quantitative image and genomic biomarkers to improve prognosis assessment of early stage lung cancer patients. IEEE Trans Biomed Eng. 2015;63:1034–1043. doi: 10.1109/TBME.2015.2477688.

- 25. Leithner D, Horvat JV, Ochoa-Albiztegui RE, et al. Imaging and the completion of the omics paradigm in breast cancer. Radiologe. 2018;58:7–13. doi: 10.1007/s00117-018-0409-1.

- 26. Gevaert O, Xu J, Hoang CD, et al. Non-small cell lung cancer: identifying prognostic imaging biomarkers by leveraging public gene expression microarray data--methods and preliminary results. Radiology. 2012;264:387–396. doi: 10.1148/radiol.12111607.

- 27. Aerts HJ, Velazquez ER, Leijenaar RT, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun. 2014;5:4006. doi: 10.1038/ncomms5006.

- 28. Digumarthy SR, Padole AM, Gullo RL, Sequist LV, Kalra MK. Can CT radiomic analysis in NSCLC predict histology and EGFR mutation status? Medicine (Baltimore). 2019;98:e13963. doi: 10.1097/MD.0000000000013963.

- 29. Incoronato M, Aiello M, Infante T, Cavaliere C, Grimaldi A, Mirabelli P, Monti S, Salvatore M. Radiogenomic analysis of oncological data: a technical survey. Int J Mol Sci. 2017;18:805. doi: 10.3390/ijms18040805.

- 30. Smedley NF, Hsu W. Using deep neural networks for radiogenomic analysis. Proc IEEE Int Symp Biomed Imaging. 2018;2018:1529–1533. doi: 10.1109/ISBI.2018.8363864.

- 31. Chang K, Bai HX, Zhou H, et al. Residual convolutional neural network for the determination of IDH status in low- and high-grade gliomas from MR imaging. Clin Cancer Res. 2018;24:1073–1081. doi: 10.1158/1078-0432.CCR-17-2236.

- 32. Chang P, Grinband J, Weinberg BD, et al. Deep-learning convolutional neural networks accurately classify genetic mutations in gliomas. AJNR Am J Neuroradiol. 2018;39:1201–1207. doi: 10.3174/ajnr.A5667.

- 33. Pinker K, Shitano F, Sala E, et al. Background, current role, and potential applications of radiogenomics. J Magn Reson Imaging. 2018;47:604–620. doi: 10.1002/jmri.25870.

- 34. El Naqa I, Kerns SL, Coates J, et al. Radiogenomics and radiotherapy response modeling. Phys Med Biol. 2017;62:R179–R206. doi: 10.1088/1361-6560/aa7c55.

- 35. Havel JJ, Chowell D, Chan TA. The evolving landscape of biomarkers for checkpoint inhibitor immunotherapy. Nat Rev Cancer. 2019;19:133–150. doi: 10.1038/s41568-019-0116-x.

- 36. Sun R, Limkin EJ, Vakalopoulou M, et al. A radiomics approach to assess tumour-infiltrating CD8 cells and response to anti-PD-1 or anti-PD-L1 immunotherapy: an imaging biomarker, retrospective multicohort study. Lancet Oncol. 2018;19:1180–1191. doi: 10.1016/S1470-2045(18)30413-3.

- 37. Paul R, Hall L, Goldgof D, Schabath M, Gillies R (2018) Predicting nodule malignancy using a CNN ensemble approach. Proc Int Jt Conf Neural Netw. doi: 10.1109/IJCNN.2018.8489345.

- 38. Ramella S, Fiore M, Greco C, et al. A radiomic approach for adaptive radiotherapy in non-small cell lung cancer patients. PLoS One. 2018;13:e0207455. doi: 10.1371/journal.pone.0207455.

- 39. Opitz D, Maclin R. Popular ensemble methods: an empirical study. J Artif Intell Res. 1999;11:169–198. doi: 10.1613/jair.614.

- 40. Neri E, Regge D. Imaging biobanks in oncology: European perspective. Future Oncol. 2017;13:433–441. doi: 10.2217/fon-2016-0239.

- 41. Toga AW. Neuroimage databases: the good, the bad and the ugly. Nat Rev Neurosci. 2002;3:302–309. doi: 10.1038/nrn782.

- 42. Kohli MD, Summers RM, Geis JR. Medical image data and datasets in the era of machine learning-whitepaper from the 2016 C-MIMI meeting dataset session. J Digit Imaging. 2017;30:392–399. doi: 10.1007/s10278-017-9976-3.

- 43. European Society of Radiology (ESR) (2015) ESR position paper on Imaging Biobanks. Insights Imaging 6:403–410. doi: 10.1007/s13244-015-0409-x.

- 44. Morris MA, Saboury B, Burkett B, Gao J, Siegel EL. Reinventing radiology: big data and the future of medical imaging. J Thorac Imaging. 2018;33:4–16. doi: 10.1097/RTI.0000000000000311.

- 45. Chan S, Siegel EL. Will machine learning end the viability of radiology as a thriving medical specialty? Br J Radiol. 2019;92:20180416. doi: 10.1259/bjr.20180416.

- 46. https://www.sphn.ch. Accessed May 2019

- 47. https://www.unimedsuisse.ch/fr/projets/consentment-general. Accessed May 2019

- 48. www.kheops.online. Accessed May 2019

- 49. Meldolesi E, Van Soest J, Alitto AR, et al. VATE: VAlidation of high TEchnology based on large database analysis by learning machine. Colorectal Cancer. 2014;3:435–450. doi: 10.2217/crc.14.34.

- 50. Deist TM, Jochems A, van Soest J, et al. Infrastructure and distributed learning methodology for privacy-preserving multi-centric rapid learning health care: euroCAT. Clin Transl Radiat Oncol. 2017;4:24–31. doi: 10.1016/j.ctro.2016.12.004.
