Abstract

Following a request from EFSA, the Panel on Plant Protection Products and their Residues (PPR) developed an opinion on the state of the art of Toxicokinetic/Toxicodynamic (TKTD) models and their use in prospective environmental risk assessment (ERA) for pesticides and aquatic organisms. TKTD models are species‐ and compound‐specific and can be used to predict (sub)lethal effects of pesticides under untested (time‐variable) exposure conditions. Three different types of TKTD models are described, viz., (i) the ‘General Unified Threshold models of Survival’ (GUTS), (ii) those based on the Dynamic Energy Budget theory (DEBtox models), and (iii) models for primary producers. All these TKTD models follow the principle that the processes influencing internal exposure of an organism (TK) are separated from the processes that lead to damage and effects/mortality (TD). GUTS models can be used to predict survival rate under untested exposure conditions. DEBtox models explore the effects of toxicants on growth and reproduction over time, even over the entire life cycle. TKTD models for primary producers and pesticides have been developed for algae, Lemna and Myriophyllum. For the calibration of all TKTD models, toxicity data on standard test species and/or additional species can be used. For validation, substance‐ and species‐specific data sets from independent refined‐exposure experiments are required. Based on the current state of the art (e.g. lack of documented and evaluated examples), the DEBtox modelling approach is currently limited to research applications. However, its great potential for future use in prospective ERA for pesticides is recognised. The GUTS model and the Lemna model are considered ready to be used in risk assessment.

Keywords: Toxicokinetic/Toxicodynamic models, aquatic organisms, prospective risk assessment, time‐variable exposure, model calibration, model validation, model evaluation

Summary

In 2008, the Panel on Plant Protection Products and their Residues (PPR Panel) was tasked by the European Food Safety Authority (EFSA) with the revision of the Guidance Document on Aquatic Ecotoxicology under Council Directive 91/414/EEC (SANCO/3268/2001 rev.4 (final), 17 October 2002).1 As a third deliverable of this mandate, the PPR Panel is asked to develop a Scientific Opinion describing the state of the art of Toxicokinetic/Toxicodynamic (TKTD) models for aquatic organisms and prospective environmental risk assessment (ERA) for pesticides with the main focus on: (i) regulatory questions that can be addressed by TKTD modelling, (ii) available TKTD models for aquatic organisms, (iii) model parameters that need to be included and checked in evaluating the acceptability of regulatory relevant TKTD models, and (iv) selection of the species to be modelled.

Chapter 2 presents the underlying concepts, terminology, application domains and complexity levels of three different classes of TKTD models intended to be used in risk assessment, viz., (i) the ‘General Unified Threshold models of Survival’ (GUTS), (ii) toxicity models derived from the Dynamic Energy Budget theory (DEBtox models), and (iii) models for primary producers. All TKTD models follow the principle that the processes influencing internal exposure of an organism, summarised under Toxicokinetics (TK), are separated from the processes that lead to damage and effects/mortality, summarised by the term Toxicodynamics (TD).

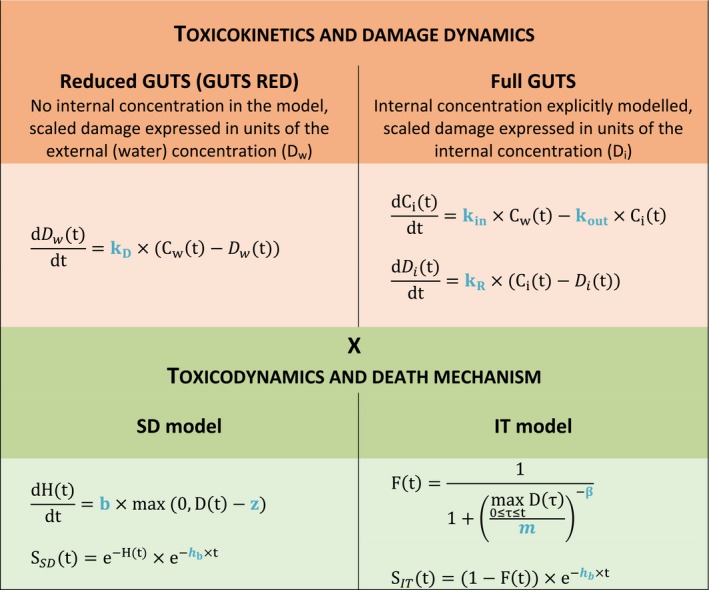

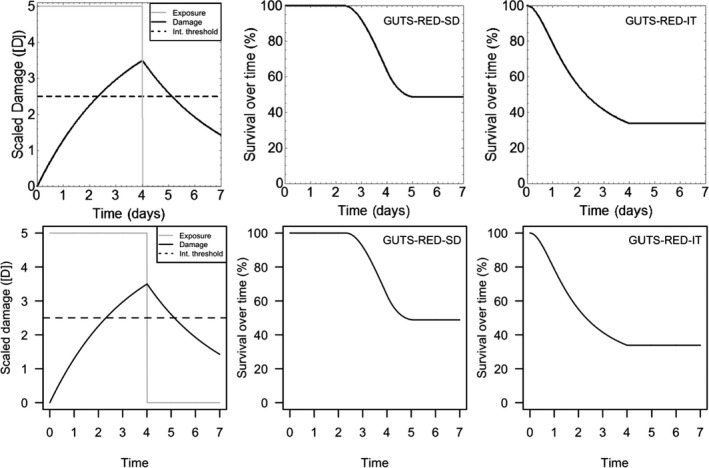

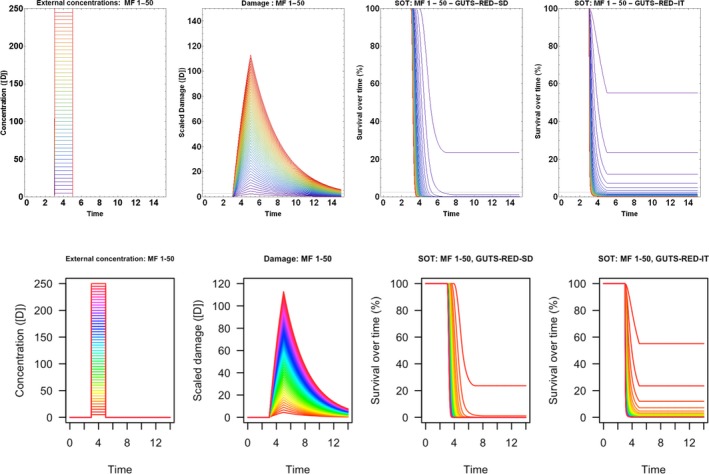

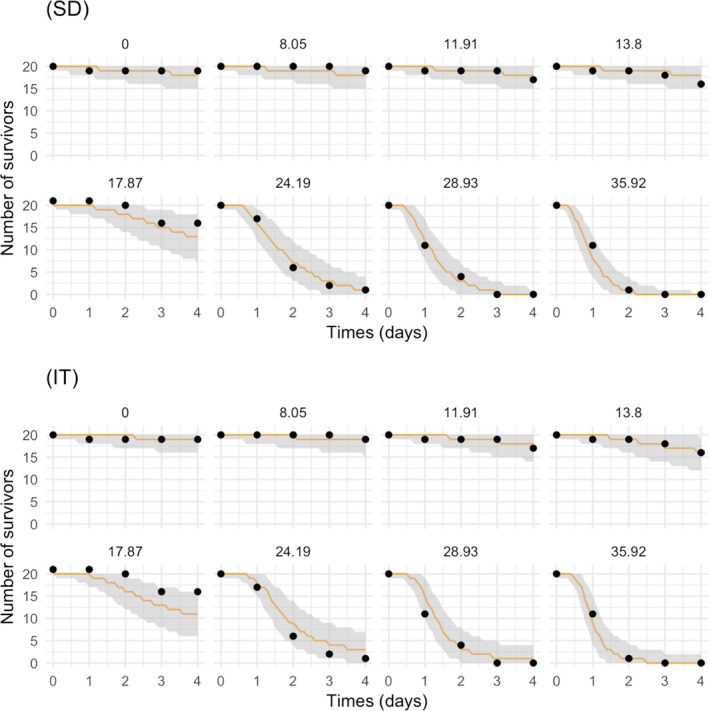

The ultimate aim of GUTS is to predict survival of individuals (as influenced by mortality and/or immobility) under untested time‐variable or constant exposure conditions. The GUTS modelling framework connects the external concentration with a so‐called damage dynamic, which is in turn connected to a hazard resulting in simulated mortality/immobility when an internal damage threshold is exceeded. Within this framework, two reduced versions of GUTS are available: GUTS‐RED‐SD based on the assumption of Stochastic Death (SD) and GUTS‐RED‐IT based on the assumption of Individual Tolerance (IT).
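To make the chain "external concentration, damage, hazard, survival" concrete, the two reduced GUTS variants can be sketched as below. This is a minimal sketch, not the verified R or Mathematica implementation referred to in chapter 4. It assumes the commonly used scaled‐damage formulation dD/dt = kd·(C(t) − D), an exposure profile that is piecewise constant over steps of length dt, and a log‐logistic threshold distribution (median m, shape beta) for GUTS‐RED‐IT. The function names and all parameter values are hypothetical illustrations.

```python
import math

def damage_series(conc, dt, kd):
    """Scaled damage D(t) with dD/dt = kd * (C(t) - D), exact for piecewise-constant C."""
    d, out = 0.0, []
    decay = math.exp(-kd * dt)
    for c in conc:
        d = c + (d - c) * decay  # exact update over one step with constant C
        out.append(d)
    return out

def survival_sd(conc, dt, kd, b, z, hb):
    """GUTS-RED-SD: hazard = b * max(0, D - z) + hb; S(t) = exp(-cumulative hazard)."""
    cum_h, surv = 0.0, []
    for d in damage_series(conc, dt, kd):
        cum_h += (b * max(0.0, d - z) + hb) * dt  # rectangle rule on end-of-step damage
        surv.append(math.exp(-cum_h))
    return surv

def survival_it(conc, dt, kd, m, beta, hb):
    """GUTS-RED-IT: log-logistic thresholds applied to the running maximum of D."""
    d_max, surv = 0.0, []
    for i, d in enumerate(damage_series(conc, dt, kd)):
        d_max = max(d_max, d)
        # fraction of individuals whose tolerance threshold has been exceeded so far
        f = 0.0 if d_max <= 0.0 else 1.0 / (1.0 + (d_max / m) ** (-beta))
        surv.append(math.exp(-hb * (i + 1) * dt) * (1.0 - f))
    return surv

# Hypothetical pulse: 2 days at 10 (concentration units), then 8 days of clean water.
profile = [10.0] * 20 + [0.0] * 80
s_sd = survival_sd(profile, 0.1, kd=0.5, b=0.1, z=2.0, hb=0.01)
s_it = survival_it(profile, 0.1, kd=0.5, m=5.0, beta=3.0, hb=0.01)
```

The contrast between the two assumptions shows up after the pulse: under IT, mortality beyond background stops once damage starts to decline, whereas under SD individuals keep dying as long as damage stays above the threshold z.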

DEBtox modelling is the application of the Dynamic Energy Budget (DEB) theory to deal with effects of toxic chemicals on life‐history traits (sublethal endpoints). DEBtox models incorporate a dynamic energy budget part for growth and reproduction endpoints at the individual level. Therefore, DEBtox models consist of two parts, (i) the DEB or ‘physiological’ part that describes the physiological energy flows and (ii) the part that accounts for uptake and effects of chemicals, named ‘TKTD part’.
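The split between a physiological (DEB) part and a TKTD part can be illustrated with a deliberately simplified toy model. This is a hypothetical sketch and not the cadmium/Daphnia magna model of chapter 5. It assumes a scaled damage compartment without dilution by growth, a stress factor s = b·max(0, D − z), a single mode of action (reduced assimilation, scaled food level of 1), and von Bertalanffy growth towards a stressed ultimate length, integrated with forward Euler. The function name and all parameter values are illustrative assumptions.

```python
import math

def debtox_length(conc, dt, kd, z, b, r_b, l_max, l0):
    """Toy DEBtox-style body length under a (time-variable) exposure profile.

    TKTD part:  dD/dt = kd * (C - D); stress s = b * max(0, D - z)
    DEB part:   dL/dt = r_b * ((1 - s) * l_max - L), no shrinking allowed
    """
    d, length, out = 0.0, l0, []
    decay = math.exp(-kd * dt)
    for c in conc:
        d = c + (d - c) * decay                  # exact damage step for constant C
        s = b * max(0.0, d - z)                  # stress above the no-effect threshold
        f_eff = max(0.0, 1.0 - s)                # assimilation reduced by the stress
        growth = r_b * (f_eff * l_max - length)  # growth towards the stressed ultimate length
        length += max(0.0, growth) * dt          # forward Euler step
        out.append(length)
    return out

# Hypothetical 21-day test: control versus constant exposure at 5 concentration units.
control = debtox_length([0.0] * 210, 0.1, kd=0.5, z=2.0, b=0.1, r_b=0.1, l_max=4.0, l0=0.8)
exposed = debtox_length([5.0] * 210, 0.1, kd=0.5, z=2.0, b=0.1, r_b=0.1, l_max=4.0, l0=0.8)
```

Full DEBtox models also track reproduction and maturity and may use other physiological modes of action; the point here is only that the TKTD part feeds a stress signal into the energy budget rather than acting on the endpoint directly.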

The third class of TKTD models presented here has been developed for primary producers. For primary producers, the main endpoint measured in toxicity tests is not survival but growth. For that reason, the assessment of toxic effects on algae and vascular plants needs a submodel addressing growth as a baseline, and a connected TKTD part.

Chapter 3 deals with the problem formulation step that sets the scene for the use of TKTD models within the risk assessment. TKTD models are species‐ and substance‐specific. They may either focus on standard test species (Tier‐2C1) or also incorporate relevant additional species (Tier‐2C2). If risks are triggered in Tier‐1 (standard test species approach) and exposure is likely to be shorter than in standard tests, the development of TKTD models for standard test species is the most straightforward option. If Tier‐2A (geometric mean/weight‐of‐evidence approach) or Tier‐2B (species sensitivity distribution approach) information is also available, the development of TKTD models for a wider array of species may be the way forward to refine the risk assessment. Validated TKTD models for these species may be an option to evaluate specific risks, using available field‐exposure profiles, by calculating exposure profile‐specific LPx/EPx values (i.e. the multiplication factor that, applied to an entire specific exposure profile, causes x% lethality or effect), informed by an appropriate aquatic exposure assessment. Exposure profile‐specific LPx/EPx values can be used in the Tier‐2C risk assessment by applying the same rules and extrapolation techniques (statistical analysis and assessment factors) as used in experimental Tier‐1 (standard test species approach), Tier‐2A (geometric mean/weight‐of‐evidence approach) and Tier‐2B (species sensitivity distribution approach).
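Because an LPx is a multiplication factor rather than a concentration, it has to be found by searching for the factor that, applied to the whole profile, yields x% effect. The sketch below does this by bisection. It assumes a GUTS‐RED‐SD survival model (re‐stated compactly so the block runs on its own), effect expressed relative to the control (background mortality only), and an effect that increases monotonically with the multiplication factor. The function names, the search bounds and the parameter values are hypothetical.

```python
import math

def survival_end_sd(conc, dt, kd, b, z, hb):
    """End-of-profile survival under GUTS-RED-SD (exact damage step, rectangle-rule hazard)."""
    d, cum_h, decay = 0.0, 0.0, math.exp(-kd * dt)
    for c in conc:
        d = c + (d - c) * decay
        cum_h += (b * max(0.0, d - z) + hb) * dt
    return math.exp(-cum_h)

def lp_x(conc, dt, params, x=0.5, hi=1e4, tol=1e-8):
    """Multiplication factor MF such that MF * profile causes a fraction x of effect vs. control."""
    control = survival_end_sd([0.0] * len(conc), dt, *params)

    def effect(mf):
        scaled = [mf * c for c in conc]
        return 1.0 - survival_end_sd(scaled, dt, *params) / control

    if effect(hi) < x:
        raise ValueError("x% effect not reached within the search range")
    lo = 0.0
    while hi - lo > tol * max(1.0, hi):  # bisection on the monotone effect curve
        mid = 0.5 * (lo + hi)
        if effect(mid) < x:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical profile (2 days at 3 units, then 8 days clean) and parameters (kd, b, z, hb).
params = (0.5, 0.1, 2.0, 0.01)
profile = [3.0] * 20 + [0.0] * 80
lp50 = lp_x(profile, 0.1, params, x=0.5)
lp10 = lp_x(profile, 0.1, params, x=0.1)
```

An LP50 above 1 would indicate that the profile must be multiplied before half of the individuals are affected; the same search works for any effect model for which the effect is monotone in the multiplication factor.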

The GUTS model framework is considered to be an appropriate approach to use in the acute risk assessment scheme for aquatic invertebrates, fish and aquatic stages of amphibians. In the chronic risk assessment, it is only appropriate to use a validated GUTS model if the critical endpoint is mortality/immobility, which is not often the case. If a sublethal endpoint is the most critical in the chronic lower‐tier assessment for aquatic animals, the dynamic energy budget modelling framework combined with a TKTD part (DEBtox) is the appropriate approach to select in the refined risk assessment. TKTD models developed for primary producers may be used in the chronic risk assessment scheme with a focus on inhibition of growth rate and/or yield. Note that experimental tests and TKTD model assessments for algae and fast‐growing macrophytes like Lemna to some extent assess population‐level effects, since in the course of the test reproduction occurs.

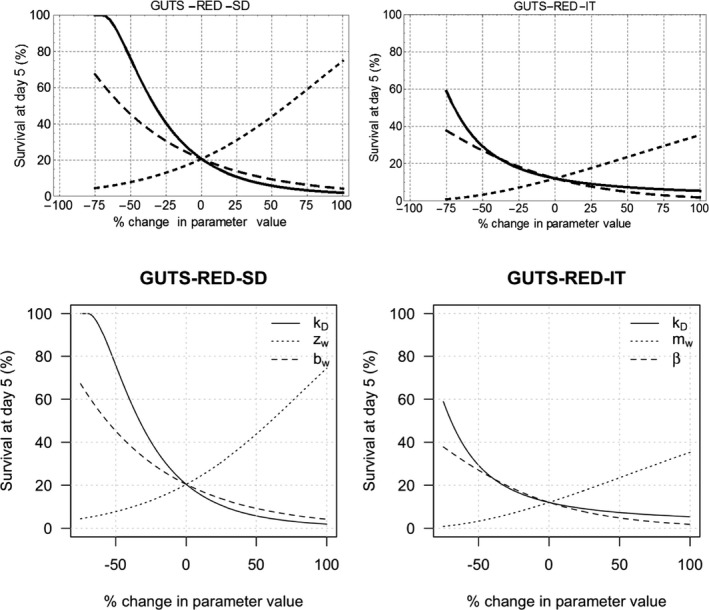

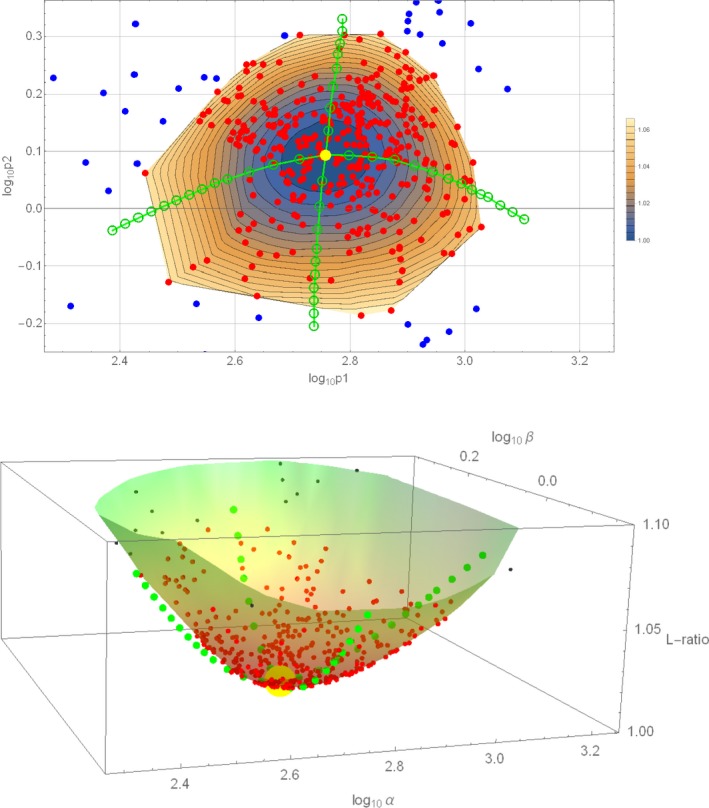

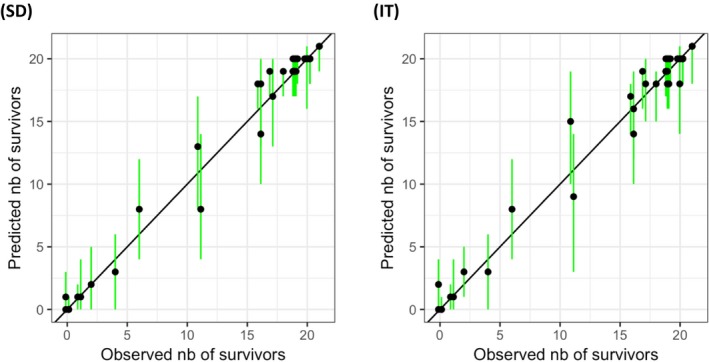

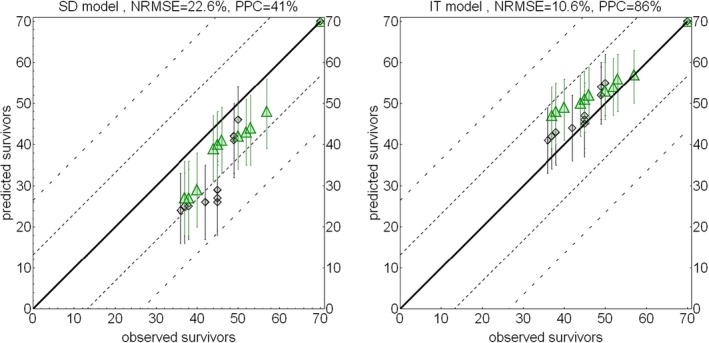

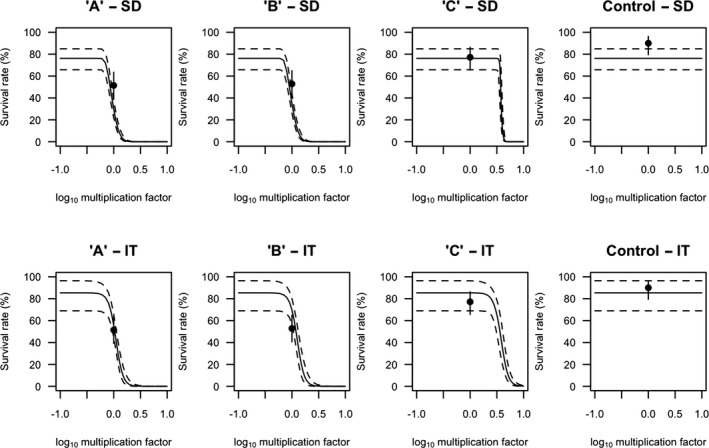

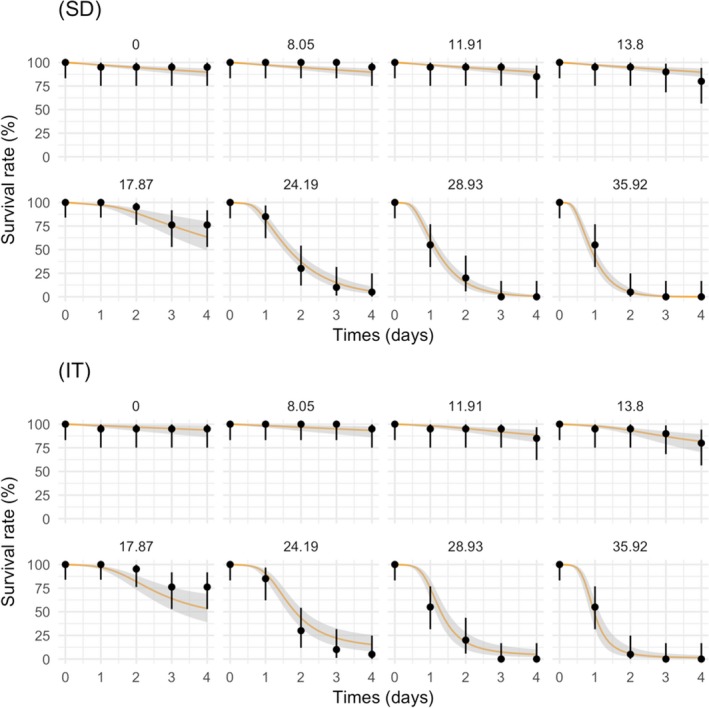

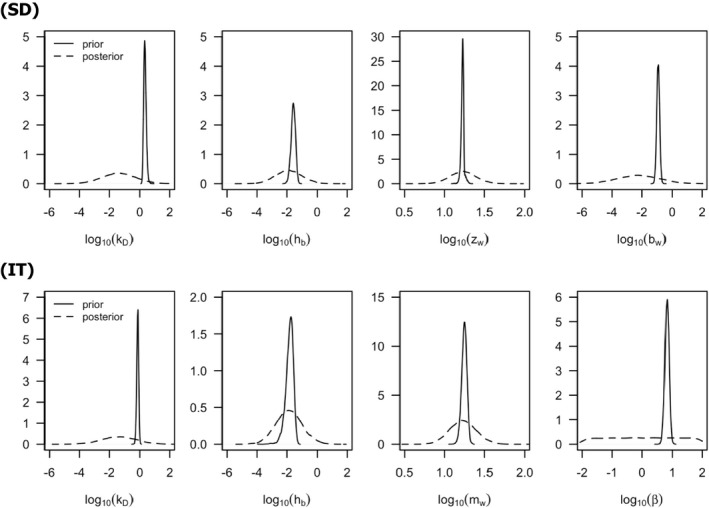

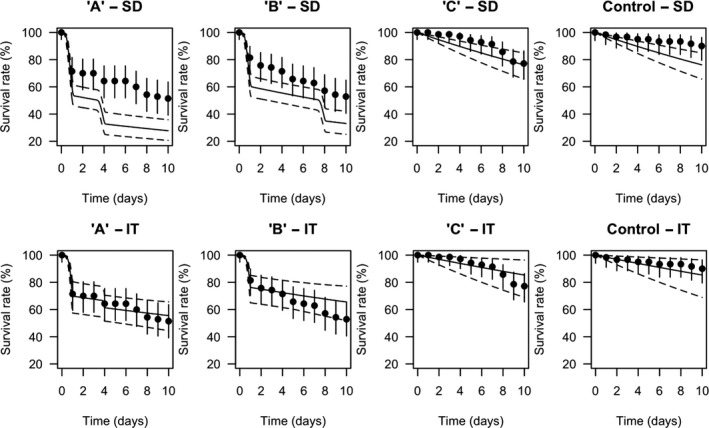

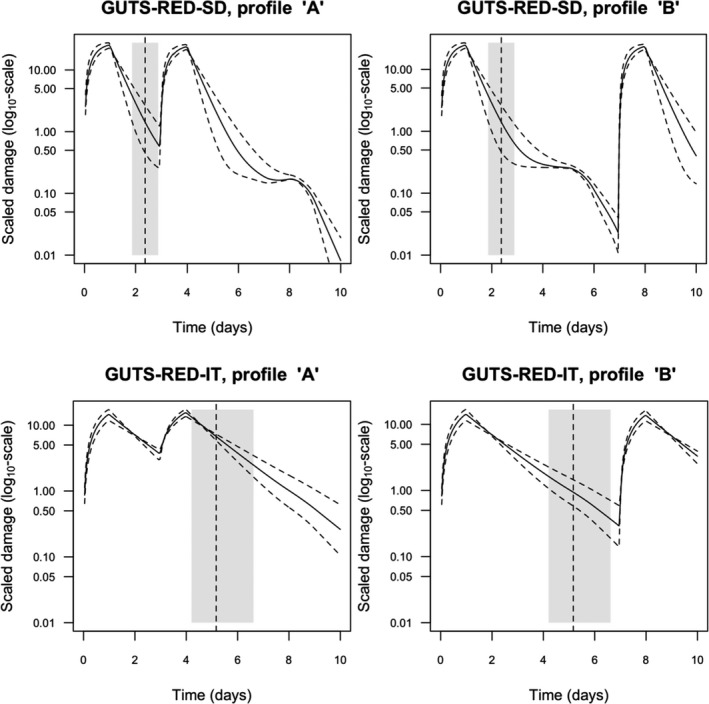

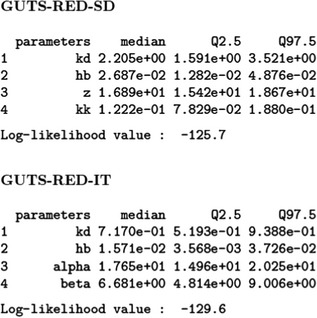

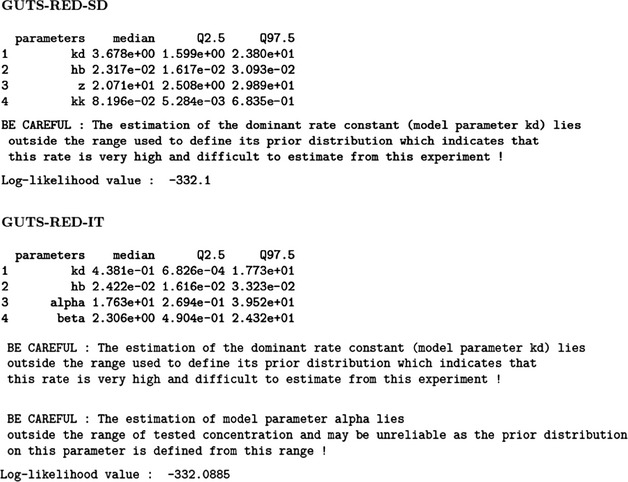

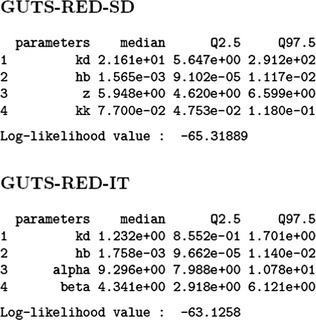

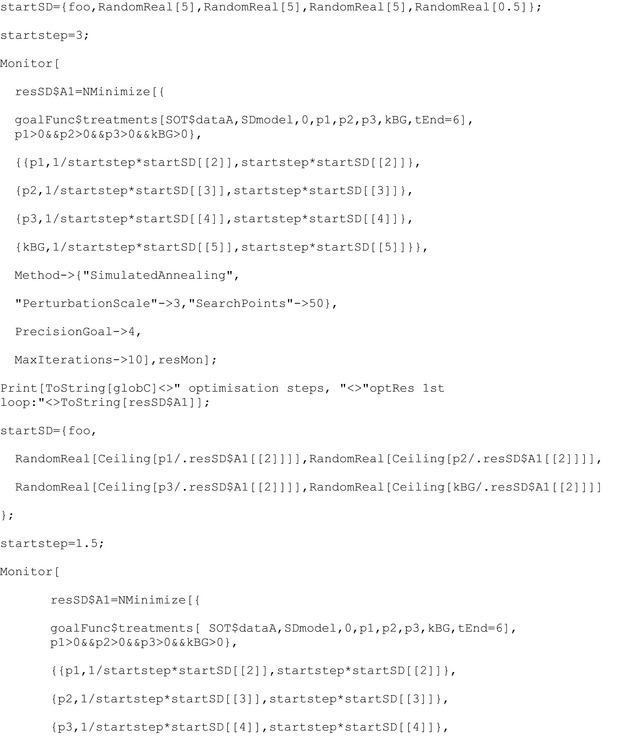

Chapter 4 deals with the GUTS framework. This framework is considered ready for use in aquatic ERA, since a sufficient number of application examples and validation exercises for aquatic species and pesticides have been published in the scientific literature, and user‐friendly modelling tools are available. Consequently, this chapter provides detailed information on testing, calibration, validation and application of the GUTS modelling framework. Documentation of the formal GUTS model, and of the verification of two example implementations of the GUTS model equations in different programming languages (R and Mathematica), is presented. In addition, sensitivity analyses of both implementations are described, and an introduction to GUTS parameter estimation in both the Bayesian and the frequentist approaches is presented. The uncertainty related to the stochasticity of the survival process in small groups of individuals is discussed, and the numerical approximation of parameter confidence/credible limits is described. A checklist for the evaluation of parameter estimation in GUTS model applications is given. Descriptions of relevant GUTS modelling output are also given. Approaches to propagate the stochasticity of survival in combination with parameter uncertainty to predictions of survival over time and to LPx/EPx values are presented, allowing the calculation of corresponding confidence/credible limits. The validation of GUTS models is discussed, including requirements for the validation data sets. Qualitative and quantitative model performance criteria that appear most suitable for GUTS, and for TKTD modelling in general, are suggested, including the posterior prediction check (PPC), the Normalised Root Mean Square Error (NRMSE) and the survival‐probability prediction error (SPPE). Finally, chapter 4 gives an example of the calibration, validation and application of the GUTS framework for risk assessment.
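The three performance criteria named above can be computed from observed and predicted numbers of survivors as sketched below. The sketch assumes the following definitions, which should be checked against the exact formulas given in chapter 4: NRMSE as the root mean square error divided by the mean of the observations; SPPE as the difference between observed and predicted survivors at the end of the experiment, expressed as a percentage of the initial number of individuals; and PPC as the fraction of observations that fall inside their 95% prediction intervals. The function names and data are hypothetical.

```python
import math

def nrmse(observed, predicted):
    """Root mean square error of predicted vs. observed survivors, divided by the mean observation."""
    n = len(observed)
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return rmse / (sum(observed) / n)

def sppe(n_initial, observed_end, predicted_end):
    """Survival-probability prediction error (%) at the end of the experiment."""
    return 100.0 * (observed_end - predicted_end) / n_initial

def ppc_fraction(observed, lower, upper):
    """Fraction of observations inside their 95% prediction intervals (posterior prediction check)."""
    inside = sum(1 for o, lo, hi in zip(observed, lower, upper) if lo <= o <= hi)
    return inside / len(observed)

# Hypothetical validation treatment: 20 individuals, survivors counted on 4 occasions.
obs = [20, 18, 15, 12]
pred = [20, 17, 14, 13]
print(nrmse(obs, pred), sppe(20, obs[-1], pred[-1]),
      ppc_fraction(obs, [19, 15, 13, 13], [20, 20, 17, 16]))
```

With these definitions a negative SPPE means that the model predicted more survivors than were observed, i.e. it underestimated mortality at the end of the test.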

Appendices A–D provide the GUTS model implementation details in Mathematica and R (Appendix A), additional results from the application example with the GUTS‐RED models (Appendix B) and supporting information on the GUTS‐RED exercise, including the log‐logistic distribution (Appendices C and D). Source codes of the GUTS implementations in Mathematica and R are available in Appendix E.

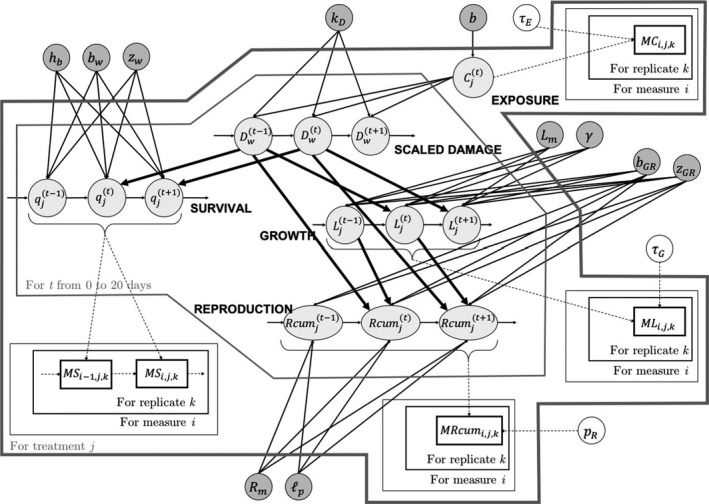

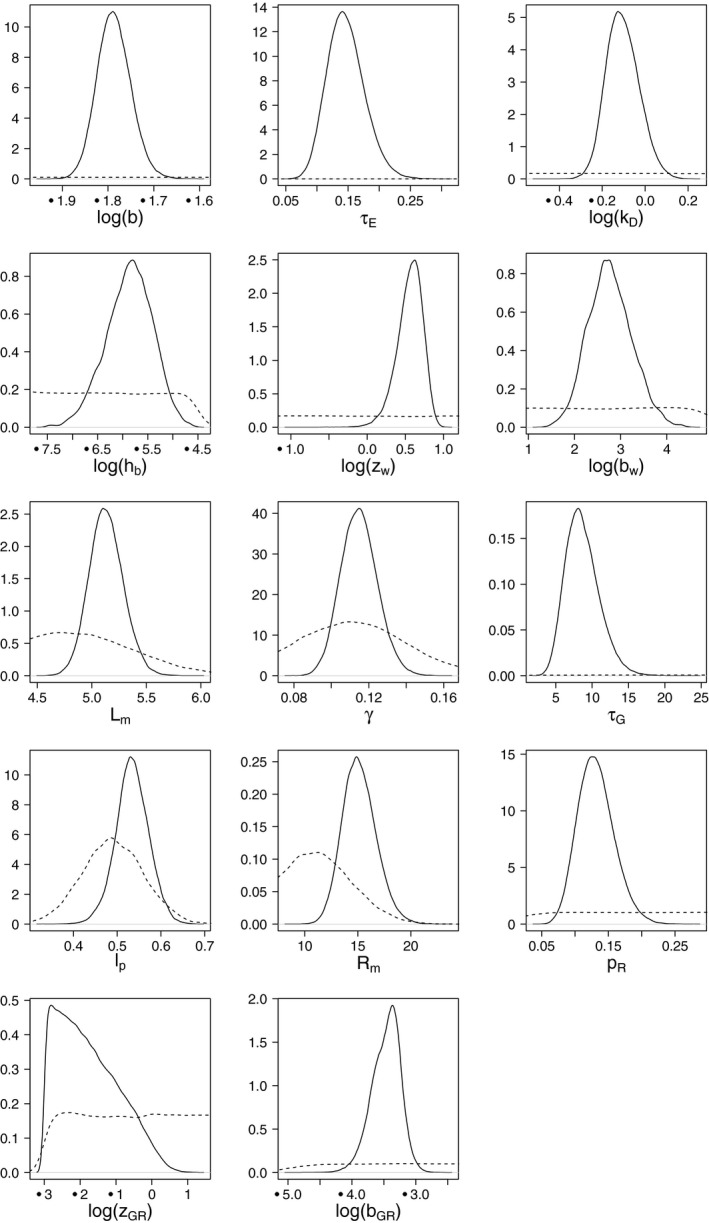

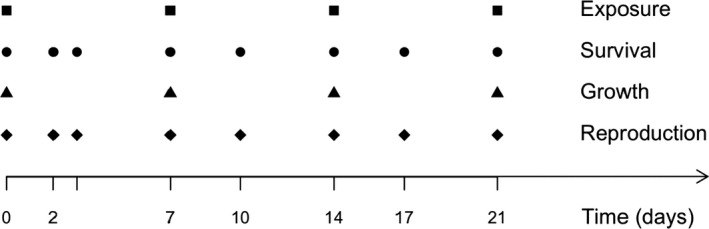

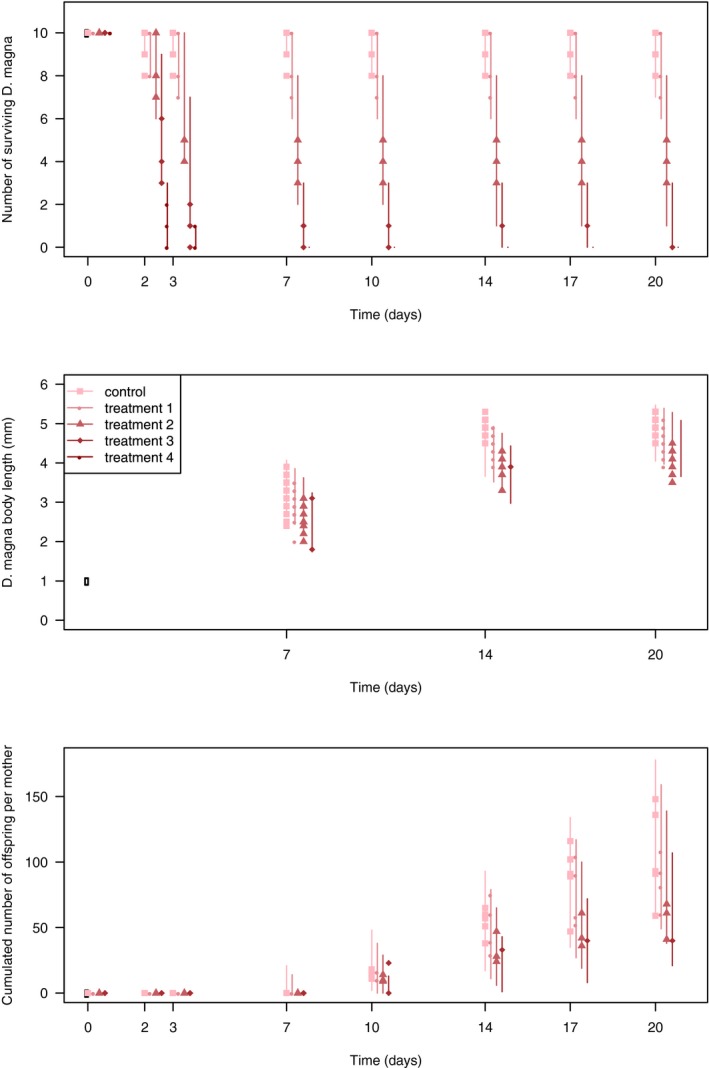

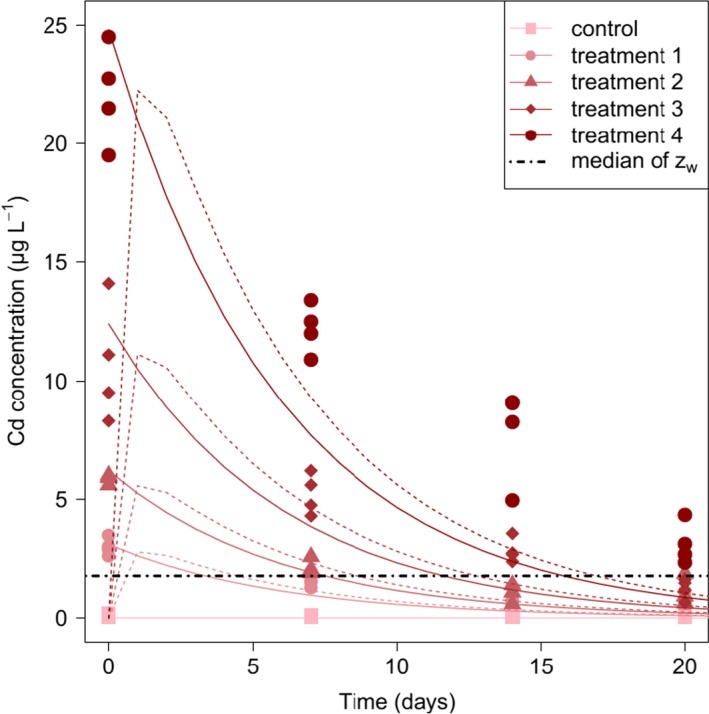

Chapter 5 deals with the documentation, implementation, parameter estimation and output of DEBtox modelling, as illustrated with a case study on lethal and sublethal effects of time‐variable exposure to cadmium for Daphnia magna. This case study was selected because sufficiently calibrated and validated DEBtox models for pesticides and aquatic organisms, including raw data and programming source code that would allow all calculations to be re‐run, were not yet available in the open literature. This lack of published examples of DEBtox models for pesticides and aquatic organisms, together with the fact that no user‐friendly DEBtox modelling tools are currently available, leads to the conclusion that these models are not yet ready for use in aquatic risk assessment for pesticides. Nevertheless, the DEBtox modelling approach is recognised as an important research tool with great potential for future use in prospective ERA for pesticides. The DEBtox model described in chapter 5 for cadmium and Daphnia magna illustrates the potential of the DEBtox modelling framework to deal with several kinds of data, namely survival, growth and reproduction data together with bioaccumulation data. It also demonstrates the feasibility of estimating all DEBtox parameters from simple toxicity test data within a Bayesian framework.

In Appendix A.2.2, a short overview of the DEBtox model implementation in R is given. The respective R code is available in an archive in Appendix E.

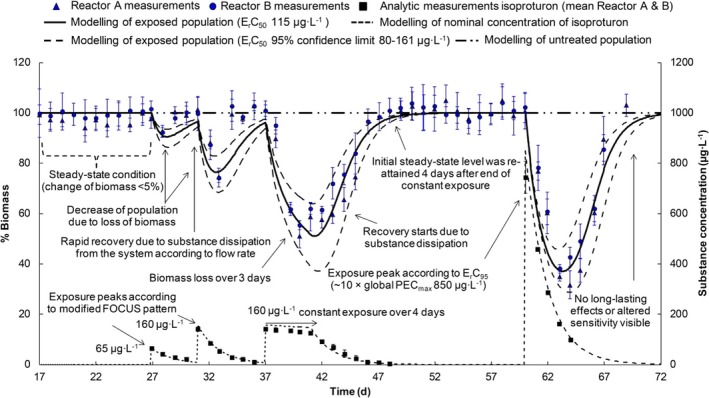

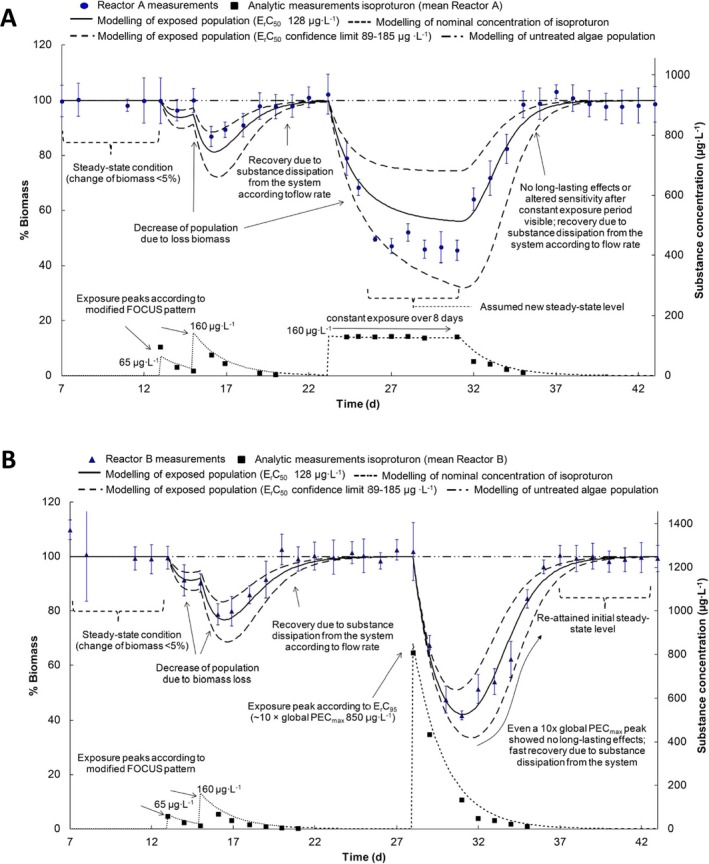

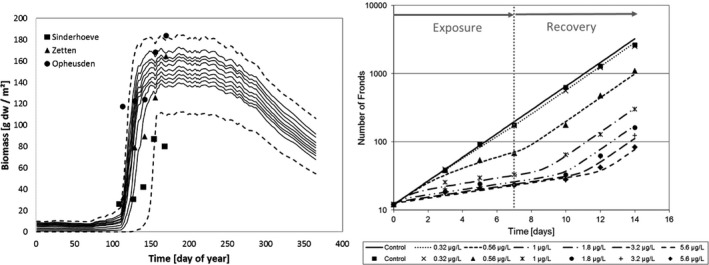

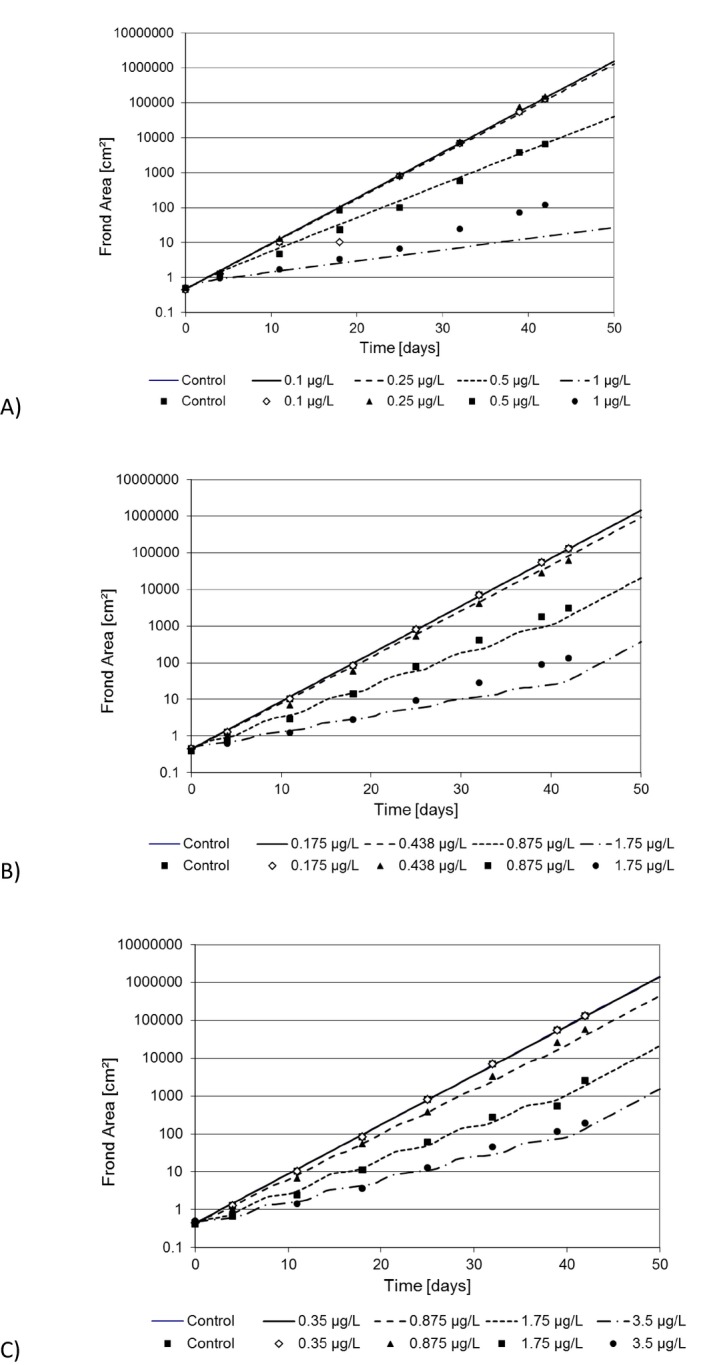

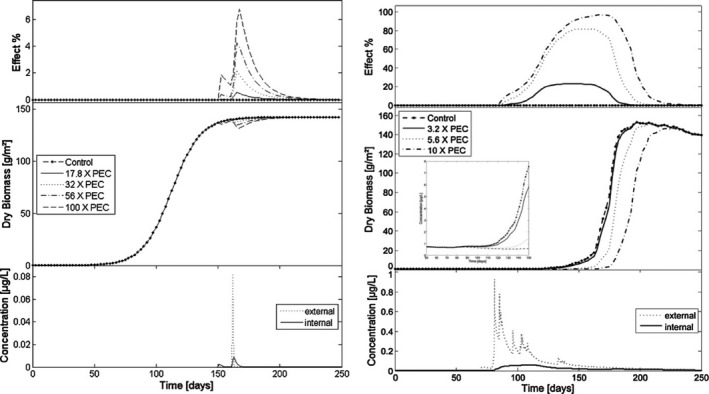

Chapter 6 evaluates the models currently available for primary producers, which all rely on a submodel for growth driven by a range of external inputs such as temperature, irradiance, and nutrient and carbon availability. The effect of the pesticide (TKTD part) on the net growth rate is described by a dose–response relationship, linking either external concentrations (in the algae models) or scaled or measured internal concentrations to the inhibition of the growth rate. All experiments and model tests to date have been done under fixed growth conditions, as is the case for standard algae, Lemna and Myriophyllum tests. This was done because the focus has been on evaluating the ability of the models to predict effects under time‐variable exposure scenarios, using predicted exposure profiles (e.g. FOCUS step 3 or 4) and Tier‐1 toxicity data as a starting point. The growth parts of all models, however, have the potential to incorporate changes in temperature, irradiance, and nutrient and carbon availability in future applications.
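The coupling of a growth submodel with a dose–response relationship on the growth rate can be sketched as follows. This is a hypothetical minimal example and not any of the published algae, Lemna or Myriophyllum models. It assumes exponential growth under fixed growth conditions (as in the tests described above), a log‐logistic inhibition of the growth rate (parameters ec50 and slope), and, optionally, a scaled internal concentration with first‐order kinetics dCi/dt = ke·(Cw − Ci) instead of the external concentration as the driver. Function names and parameter values are illustrative assumptions.

```python
import math

def inhibition(c, ec50, slope):
    """Log-logistic fraction of growth-rate inhibition (0 = no effect, 1 = full inhibition)."""
    return 0.0 if c <= 0.0 else 1.0 / (1.0 + (ec50 / c) ** slope)

def simulate_growth(conc, dt, mu_max, ec50, slope, n0=1.0, ke=None):
    """Biomass (e.g. frond number or cell density) under a time-variable exposure profile.

    If ke is None, the external concentration drives the inhibition directly (as in the
    algae models); otherwise a scaled internal concentration dCi/dt = ke * (Cw - Ci) is used.
    """
    n, ci, out = n0, 0.0, []
    for cw in conc:
        if ke is None:
            driver = cw
        else:
            ci = cw + (ci - cw) * math.exp(-ke * dt)  # exact step for constant Cw
            driver = ci
        mu = mu_max * (1.0 - inhibition(driver, ec50, slope))
        n *= math.exp(mu * dt)  # exponential growth under fixed growth conditions
        out.append(n)
    return out

# Hypothetical 7-day test with a constant exposure equal to the EC50 of the growth rate.
control = simulate_growth([0.0] * 70, 0.1, mu_max=0.4, ec50=1.0, slope=2.0)
external = simulate_growth([1.0] * 70, 0.1, mu_max=0.4, ec50=1.0, slope=2.0)
internal = simulate_growth([1.0] * 70, 0.1, mu_max=0.4, ec50=1.0, slope=2.0, ke=0.5)
```

Driving the inhibition with a lagged internal concentration delays both the onset of effects and the recovery after exposure ends, which is why the Lemna and Myriophyllum calibration tests described below are prolonged with a recovery phase.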

TKTD models to describe effects of time‐variable exposures have been developed for two algal species and one PSII‐inhibiting herbicide. The largest drawback for implementing the algae models in pesticide risk assessment is that the flow‐through experimental setup used for model calibration/validation, which simulates long‐term variable exposure of fast‐growing algal populations to pesticides, has not yet been standardised, nor has its robustness been ring‐tested. The current experimental setup of refined exposure tests for algae and the algae models are considered important research tools but are probably not yet mature enough to be used for risk‐assessment purposes.

Lemna is the most thoroughly tested macrophyte species, with a calibrated and validated model documented for a sulfonyl‐urea compound. A Lemna TKTD model can be calibrated with data from the already standardised OECD Lemna test, as long as pesticide concentrations and growth are monitored several times during the exposure phase and the test is prolonged with a one‐week recovery period. Growth can be most easily and non‐destructively monitored by measuring surface area or frond number on a daily basis. If properly documented, the published Lemna model can be the basis for a compound‐specific Lemna model to evaluate the effects of field‐exposure profiles in Tier‐2C, particularly if Lemna is the only standard test species that triggers a potential risk in the Tier‐1 assessment. The published Myriophyllum modelling approach is not yet as well developed, calibrated, validated and documented as that for Lemna. Developing a model for Myriophyllum is complicated, as this macrophyte also has a root compartment (in the sediment) where the growth conditions (redox potential, pH, nutrient and gas availabilities, sorptive surfaces, etc.), and therefore the bioavailability of pesticides, are very different from the conditions in the shoot compartment (water column). In addition, Myriophyllum grows submerged, which makes inorganic carbon availability in the water column a complicated affair compared with Lemna, for which access to CO2 through the atmosphere is constant and unlimited. Due to the complexity of the Myriophyllum system and the relative novelty of the published modelling approach, the available Myriophyllum model has not yet been extensively tested, and its model code is not yet publicly accessible. OECD guidelines for conducting tests with Myriophyllum are available.
In order to optimise the use of experimental data from such standardised Myriophyllum tests for model calibration, however, it is necessary that the tests are prolonged with a recovery phase in clean water and that growth is monitored over time (non‐destructively as shoot numbers and length). Although the published Myriophyllum modelling approach may be a good basis to further develop TKTD models for rooted submerged macrophytes, it currently is considered not yet fit‐for‐purpose in prospective ERA for pesticides. The currently available Myriophyllum model needs further documentation, calibration and validation.

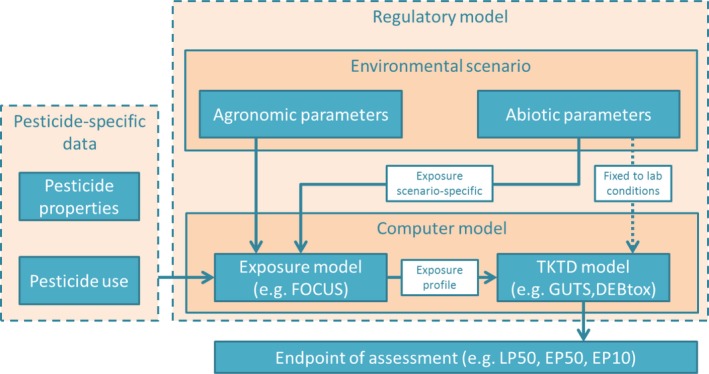

Chapter 7 describes how TKTD models submitted in dossiers can be evaluated by regulatory authorities. Annexes A–C provide checklists for the evaluation of GUTS models, DEBtox models and models for primary producers, respectively. The chapter expands on the information provided by the EFSA Opinion on Good Modelling Practice in the context of mechanistic effect models for risk assessment (EFSA PPR Panel, 2014). It mainly focuses on GUTS models but also provides considerations required for DEBtox and primary producer models. The chapter covers all stages of the modelling cycle and the documentation of the model use. For GUTS models, the basic model structure is always fixed; consequently, several stages of the modelling cycle have been covered in this Opinion and do not need to be evaluated again for each use. This includes the conceptual model and the formal model. For parameter estimation in each application, all experimental data used to calibrate and validate the model should be evaluated to ensure they are of sufficient quality. The computer model can be evaluated using a combination of the ring‐test data set, a set of default scenarios and testing against an independent implementation. The regulatory model also needs to be evaluated. The environmental scenario may be covered by using standard exposure models (FOCUS), leaving the parameter estimation as the key area to evaluate. The evaluation of model analysis (sensitivity and uncertainty analysis and validation) is also described. The final stage is the evaluation of the model use, which includes information about tools available to the evaluators to check the modelling.

For DEBtox models, the evaluation of the DEB (physiological) part of the model is separated from the evaluation of the TKTD part of the model. Chapter 7 focusses on the TKTD part and starts with the assumption that the DEB part has been evaluated and accepted before it is used for a regulatory risk assessment.

For primary producer models, as with DEBtox models, the evaluation of the physiological part of the model is separated from the evaluation of the TKTD part of the model. For Lemna, this has been covered in this Opinion. For other primary producers, the evaluation of the physiological part of the model needs to be completed before use in regulatory risk assessment.

Documentation of any TKTD model application should be done following Annex D.

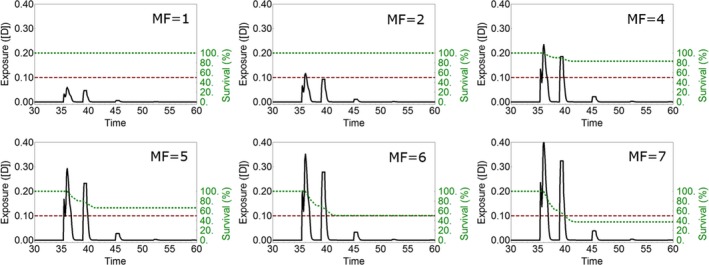

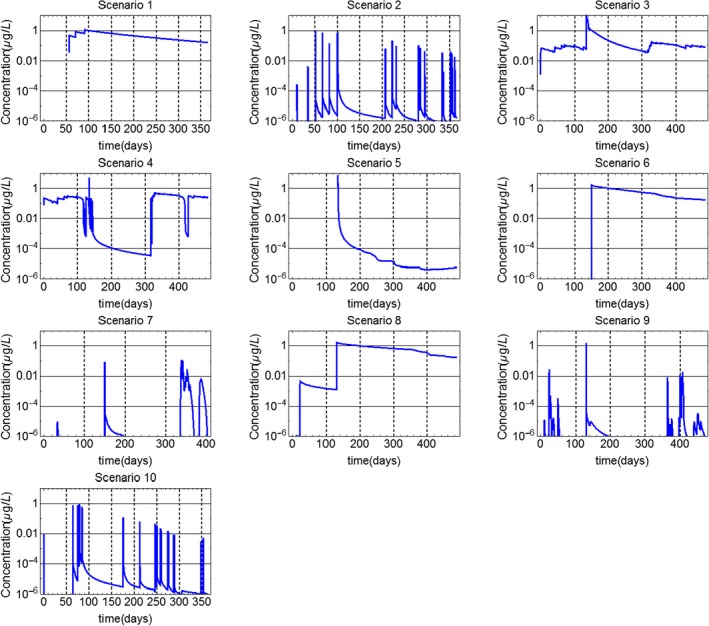

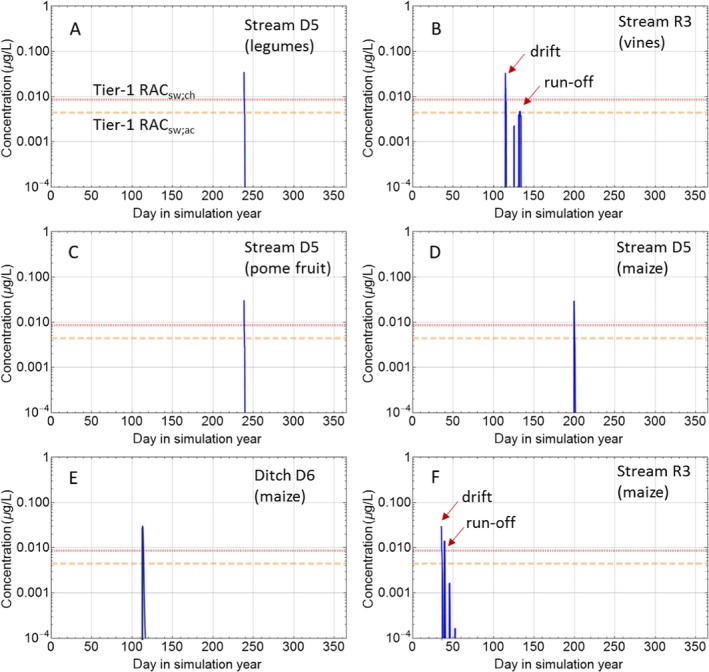

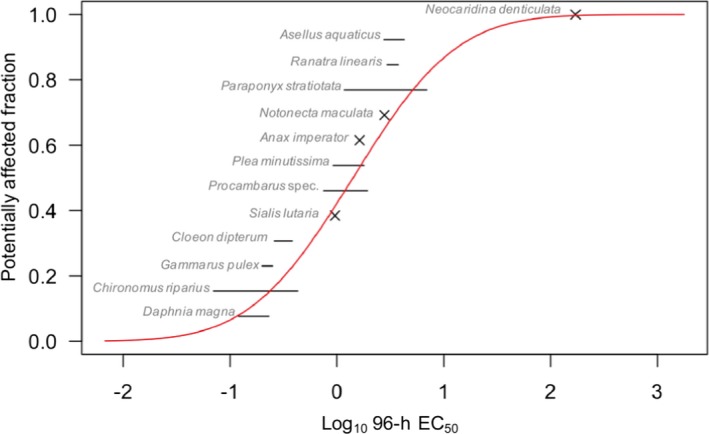

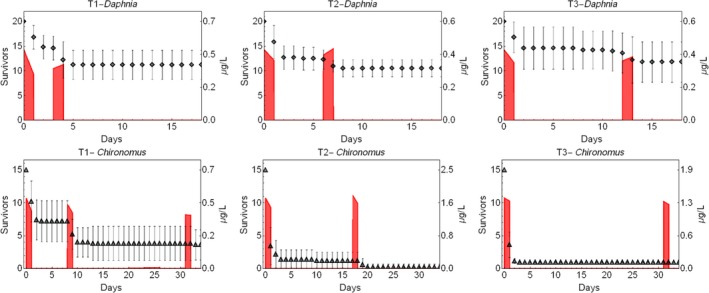

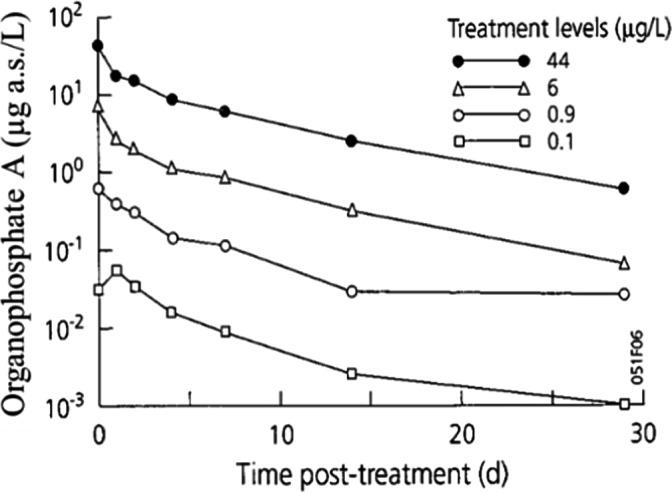

Chapter 8 illustrates the possible use of validated TKTD models as tools in the Tier‐2C risk assessment for plant protection products. The important steps that need to be considered when conducting an ERA by means of validated TKTD models are described. The description of the approach is followed by an example data set for an organophosphorus insecticide. This case study aims to explore how GUTS modelling can be used as a Tier‐2C approach in acute ERA in combination with step 3 or step 4 FOCUSsw exposure profiles. In addition, this case study aims to compare the outcome of the experimental effect assessment tiers (standard test species approach, geometric mean approach, species sensitivity distribution approach, model ecosystem approach) with results of GUTS modelling to put the Tier‐2C approach into perspective.

Chapter 9 concludes that, based on the current state of the art (e.g. lack of documented and evaluated examples), the DEBtox modelling approach is currently limited to research applications. However, its great potential for future use in prospective ERA for pesticides is recognised. The GUTS model and the Lemna model are considered ready to be used in risk assessment.

Two examples on the evaluation of existing TKTD models (one for GUTS and one for DEBtox) used in the context of PPP authorisation are reported in Appendices F and G.

Comments received by the Pesticide Steering Network and related replies are reported in Appendix H.

Guide to the reader: the main topic concerns the implementation of modelling techniques for prospective ERA; hence it sits at the interface of different areas of expertise. Taking this into account, the document was structured to allow readers to focus on the sections linked to their specific expertise.

Chapters 1, 2 and 3 provide a general context: after presenting the scope of the Scientific Opinion, general principles behind TKTD models are described and the scene for the use of the TKTD models within the risk assessment for aquatic organisms is set. Therefore, these chapters are recommended for getting a complete picture of this document.

Chapters 4, 5 and 6 focus on the description of specific TKTD models. As such, these chapters contain rather technical concepts and explanations, particularly addressing modellers, and may be difficult for readers without modelling experience. Understanding of the technical details included in this part, however, is not critical for reading and understanding the following chapters.

Chapters 7 and 8 illustrate in detail how TKTD models can be used in the PPP ERA context, particularly addressing risk assessors. Evaluation criteria for modelling applications are also given in chapter 7. Hence, it is recommended that this part is also carefully considered by modellers providing elaborations for the risk assessment.

Checklists for the evaluation of TKTD models are given in Annexes A–C. A model summary for the model documentation is included in Annex D.

1. Introduction

1.1. Background and Terms of Reference as provided by the requestor

In 2008 the Panel on Plant Protection Products and their Residues (PPR) was tasked by EFSA with the revision of the Guidance Document on Aquatic Ecotoxicology under Council Directive 91/414/EEC (SANCO/3268/2001 rev.4 (final), 17 October 2002) (European Commission, 2002). As a third deliverable of this mandate, the PPR Panel is asked to develop a Scientific Opinion describing the state of the art of Toxicokinetic‐Toxicodynamic (TKTD) effect models for aquatic organisms with a focus on the following aspects:

Regulatory questions that can be addressed by TKTD modelling

Available TKTD models for aquatic organisms

Model parameters that need to be included in relevant TKTD models and that need to be checked in evaluating the acceptability of effect models

Selection of the species to be modelled.

In 2013, the EFSA Panel on Plant Protection Products and their Residues published the document “Guidance on tiered risk assessment for plant protection products for aquatic organisms in edge‐of‐field surface waters” as a first deliverable within the EFSA mandate for the revision of the former Guidance Document on Aquatic Ecotoxicology. This document (EFSA PPR Panel, 2013) focuses on experimental approaches within the tiered effect assessment scheme for typical (pelagic) water organisms, already indicating how mechanistic effect models could be used within the tiered approach. As a second deliverable, the document “Scientific Opinion on the effect assessment for pesticides on sediment organisms in edge‐of‐field surface water” was published in 2015 (EFSA PPR Panel, 2015). This document focuses on experimental effect assessment procedures for typical sediment‐dwelling organisms and exposure to pesticides via the sediment compartment. Initially, it was emphasised that the third deliverable would focus on mechanistic effect models as tools for the prospective effect assessment procedures for aquatic organisms.

Although different types of mechanistic effect models with a focus on different levels of biological organisation are described in the scientific literature (e.g. individual‐level models, population‐level models, community‐level models, landscape/watershed‐level models), this Scientific Opinion (SO) predominantly deals with TKTD models as Tier‐2 tools in the aquatic risk assessment for pesticides. These relatively simple, mechanistic effect models are considered to be in a stage of development that might soon enable their appropriate use in the prospective environmental risk assessment for pesticides, particularly to predict potential risks of time‐variable exposures on aquatic organisms. This is of relevance since in most edge‐of‐field surface waters time‐variable exposures are more often the rule than the exception.

A consultation with Member States of the Pesticides Steering Network was held in March 2018. Comments and related replies are reported in Appendix H.

1.2. Scope of the opinion and restrictions

This SO describes the state‐of‐the‐art of TKTD models developed for aquatic organisms and exposure to pesticides in aquatic ecosystems with a focus on prospective environmental risk assessment (ERA) within the context of the regulatory framework underlying the authorisation of plant protection products in the EU. Within this context, TKTD models developed for specific pesticides and specific species of water organisms – such as fish, amphibians, invertebrates, algae and vascular plants – may be useful regulatory tools in the linking of exposure to effects in edge‐of‐field surface waters.

Where appropriate, in this SO the concepts and Tier‐1 and Tier‐2 experimental approaches already developed by EFSA PPR Panel (2013) are considered and aligned with the proposals on the regulatory use of TKTD models as tools in Tier‐2C ERA. This SO describes the potential use of TKTD models as Tier‐2C tools of the acute and chronic ERA schemes for pesticides and water organisms in edge‐of‐field surface waters. In Tier‐2, the TKTD models developed for aquatic animals focus on individual‐level responses, in particular to refine the risks of time‐variable exposure to pesticides. Although TKTD models may play a role in higher‐tier ERAs as well, the coupling of TKTD models with population‐level models for aquatic invertebrates and vertebrates is not the topic of this SO. In the chronic risk assessment for algae and macrophytes like Lemna, however, a clear distinction between individual‐level and population‐level effects in Tier‐1 and Tier‐2 assessments cannot be made. Consequently, in TKTD models for these primary producers, the effects of time‐variable exposures on individuals cannot be fully separated from population‐level effects as influenced by intraspecific competition between individuals in the experimental test systems whose results are used to calibrate and validate the TKTD models. TKTD models may be used to refine the risk assessment if experimental effect assessment approaches in Tier‐1 (based on standard test species) and Tier‐2 (based on standard and additional test species), in combination with an appropriate exposure assessment, trigger potential risks. The current exposure assessment for active substance approval is based on FOCUS exposure scenarios and models (2001, 2006, 2007a,b). At present, the FOCUS exposure assessment framework is under review to repair deficiencies in the current methodology.
One of the main actions foreseen by the mandate is that, at steps 3 and 4, a series of 20 annual exposure profiles should be delivered for the edge‐of‐field surface waters of concern instead of a single‐year exposure profile. It is assumed that active substance concentrations in surface waters after pesticide application will continue to be predicted using the FOCUS surface water (FOCUSsw) methodology until updated or new methods become available to replace the existing tools. FOCUSsw is used for approval of active substances at EU level. It is also used in some Member States for product authorisation, although other exposure assessment procedures may also be used there. In principle, the TKTD modelling approaches described in this SO can also be used to predict risks to aquatic organisms when linked to exposure profiles based on Member State‐specific exposure assessment scenarios and models.

In addition, the recommendations of the ‘Opinion on good modelling practice in the context of mechanistic effect models for risk assessment’ (EFSA PPR Panel, 2014) are, where appropriate, taken on board and operationalised for TKTD models.

Besides the regulatory use of TKTD models in the context of refined risk assessment for aquatic organisms, TKTD modelling can also enable exploration of the effects of time‐variable exposure on species with trait assemblages that cannot be (easily) tested under laboratory conditions, e.g. by extrapolating features from species with fast life cycles (typical of species tested in the laboratory) to species with lower metabolic rates and longer life cycles that may become exposed more frequently. However, these more fundamental research applications of TKTD models are outside the scope of this Opinion.

2. Concepts and examples for TKTD modelling approaches

Current acute and chronic lower‐tier risk assessments for Plant Protection Products (PPPs) in edge‐of‐field surface waters (EFSA PPR Panel, 2013) rely on the quantification of treatment‐related responses from protocol tests (e.g. OECD) with standard test species or comparable toxicity tests with additional test species. According to Commission Regulations (EU) No 283/2013 and No 284/2013, the L(E)C10, L(E)C20 and L(E)C50 values derived from these tests have to be reported. However, in chronic assessments for aquatic animals, no observed effect concentration (NOEC) values may be used if valid EC10 values are not reported (predominantly old studies). In a tiered approach, lower‐tier assessments (i.e. Tier‐1 as described in Section 3) aim to be more conservative than higher‐tier assessments. For example, a more or less constant exposure is maintained in the standard laboratory test, and pre‐defined assessment factors (AF) laid down in the uniform principles (Regulation (EU) No 546/2011) are applied in order to take into account a number of uncertainties, e.g. intra‐ and interspecies variability, interlaboratory variability and extrapolation from laboratory to field. Toxicity estimates (e.g. LC/EC values), however, are time‐dependent in that they usually differ between exposure durations. In edge‐of‐field surface waters, time‐variable exposure is the rule rather than the exception (as indicated by monitoring data or modelled concentration dynamics of pesticides; see e.g. Brock et al., 2010). Consequently, if a risk is triggered in the conservative Tier‐1 approach, a refined risk assessment can be performed by considering realistic time‐variable exposure regimes. As outlined in the Aquatic Guidance Document (EFSA PPR Panel, 2013), this can be addressed experimentally or by modelling, e.g. by using TKTD modelling approaches.
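The time‐dependence of LC/EC values follows directly from TKTD reasoning. As an illustration, assume a GUTS‐RED‐IT model with scaled damage dD/dt = kd·(C − D), starting from D = 0 under constant exposure C, and a log‐logistic threshold distribution with median m and shape beta. Then D(t) = C·(1 − exp(−kd·t)), and setting the background‐corrected effect equal to x gives LCx(t) = m·(x/(1 − x))^(1/beta) / (1 − exp(−kd·t)). The hypothetical function below evaluates this closed form; the parameter values are illustrative only.

```python
import math

def lcx_it(t, x, kd, m, beta):
    """Background-corrected LCx at time t for GUTS-RED-IT under constant exposure from D(0) = 0."""
    if t <= 0.0:
        raise ValueError("t must be positive")
    if not 0.0 < x < 1.0:
        raise ValueError("x must lie strictly between 0 and 1")
    d_needed = m * (x / (1.0 - x)) ** (1.0 / beta)  # damage at which a fraction x of thresholds is exceeded
    return d_needed / (1.0 - math.exp(-kd * t))     # external concentration reaching that damage at time t

# Hypothetical parameters: LC50 after 1, 2, 4 and 21 days of constant exposure.
lc50 = [lcx_it(t, 0.5, kd=0.5, m=5.0, beta=3.0) for t in (1.0, 2.0, 4.0, 21.0)]
```

The LC50 decreases with exposure duration and approaches the incipient value m for long exposures. For GUTS‐RED‐SD no comparable closed form exists in general, and LCx(t) has to be found numerically.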

TKTD modelling approaches – as outlined in more detail below – provide a modelling approach of intermediate complexity (Jager, 2017), which ranks between the simple statistical (LC/EC50) models, and fully detailed approaches focusing on the molecular level (Van Straalen, 2003). TKTD models can be used in aquatic risk assessment to link results of laboratory toxicity data to predicted (time‐variable) exposure profiles. These models may also be used to explore the changes of toxicity in time, and to explore the processes underlying the variations between species and toxicants and how they depend on environmental conditions. Some TKTD models can potentially explain links between life‐history traits, as well as explore effects of toxicants over the entire life cycle (e.g. DEBtox toxicity models derived from the Dynamic Energy Budget (DEB) theory). TKTD models for survival can, as has been recently shown (e.g. Nyman et al., 2012; Baudrot et al., 2018b; Focks et al., 2018), be parameterised based on standard, single‐species toxicity tests. These models may still provide relevant information at the individual level when extrapolating beyond the boundaries of tested conditions in terms of exposure. The parameters being used in TKTD models remain as species‐ and compound‐specific as possible and they can usually be interpreted in a physical or a biological way (see Section 2.2 for more details). In this way, parameters can be used in a process‐based context, aiding the understanding of the response of organisms to toxicants (Jager, 2017).

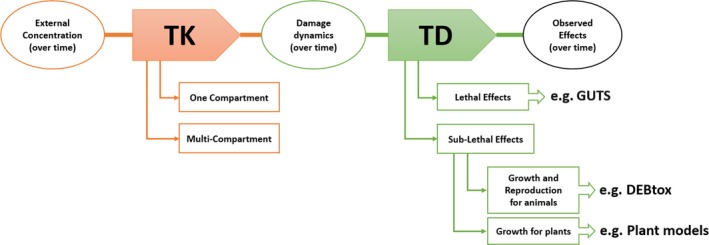

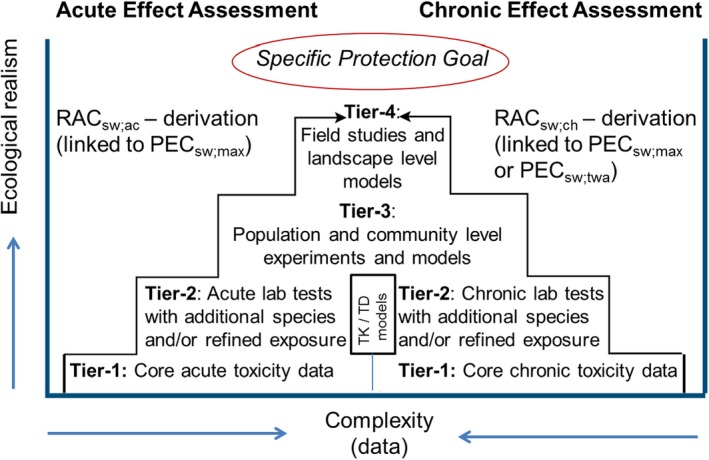

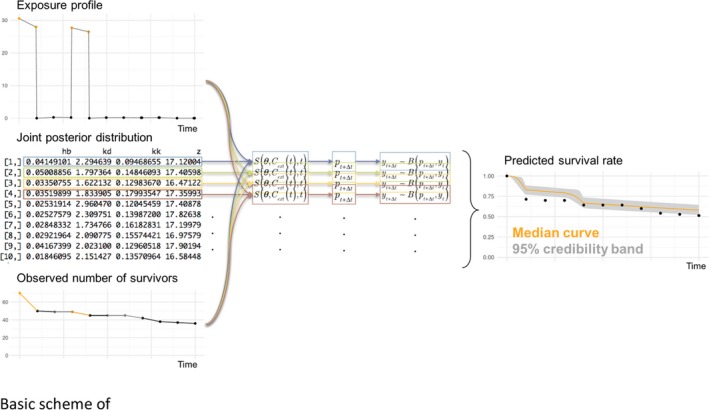

In the following sections, some classes of TKTD models are explained in more detail with respect to their terminology, background and application domains, their relationships to each other, and the intrinsic or explicit complexity levels they account for (Figure 1). After a more detailed explanation of ‘Toxicokinetic modelling for uptake and internal dynamics of chemicals’ (Section 2.1), the following three sections are dedicated to: (i) the ‘General Unified Threshold models of Survival’ (GUTS) framework for the analysis of lethal effects (Section 2.2), (ii) toxicity models derived from the Dynamic Energy Budget theory (DEBtox models) (Section 2.3), and (iii) models for primary producers (Section 2.4). An overview of the strengths and weaknesses of TKTD models is given in Table 1.

Figure 1.

Schematic presentation of the concepts behind toxicokinetic (TK)/toxicodynamic (TD) models; GUTS stands for the General Unified Threshold model of Survival, while DEBtox stands for toxicity models derived from the Dynamic Energy Budget (DEB) theory. The damage‐dynamics concept is explained in Figure 2 and related text

Table 1.

Introduction to strengths and weaknesses of TKTD models

| Model class | Strengths | Weaknesses |
|---|---|---|
| TKTD models (applicable to GUTS, DEBtox and models for primary producers) | | |
| GUTS | | |
| DEBtox | | |
| Primary producers models | | |

2.1. Toxicokinetic modelling for uptake and internal dynamics of chemicals

TKTD models follow one general principle: the processes that influence internal exposure of individual organisms, summarised under toxicokinetics (TK), are separated from the processes that lead to their damage and mortality, summarised by the term toxicodynamics (TD) (Figure 1). In general terms, TK processes correspond to what the organism does to the chemical substance, while TD processes correspond to what the chemical substance does to the organism. More precisely, TK describes the absorption, distribution, metabolism and elimination of hazardous substances by an organism. In aquatic systems, the main routes of uptake from the water phase are the body surface and internal or external gills. Food can also be a relevant uptake route. Transport across biological membranes can be passive (e.g. diffusion) or active (e.g. via membrane transporters). Inside the organism, TK processes relate to the internal partitioning of chemicals between aqueous and lipid‐dominated parts or between different organs. TK models provide estimates of internal exposure concentrations, which can relate to the whole organism or to single organs. The level of detail of the TK model often depends on the purpose of the study, but also on practicalities such as the size of the organism and of the organs in question.

Specific aspects of TK, with emphasis on internal compartmentalisation, were discussed extensively in a recent review (Grech et al., 2017). TK models are categorised into compartment models and physiologically based TK (PBTK) models. While single organs and blood flow are explicitly considered in PBTK models, one‐ or multi‐compartment models use a generic simplification of the organism. For aquatic invertebrates, the one‐compartment model is the most commonly used. One‐compartment models assume concentration‐driven transfer of the chemical from an external compartment into an internal compartment, where it is homogeneously distributed.
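The one‐compartment model can be written as a single first‐order equation, dCi/dt = kin·Cw(t) − kout·Ci(t), where Cw is the external (water) concentration and Ci the internal concentration. The Python sketch below is illustrative only: it assumes that Cw is constant within each time step, so the equation can be solved exactly step by step, and the function name, rate constants and exposure profile are hypothetical rather than taken from any cited model.

```python
import math


def one_compartment_tk(cw_profile, dt, k_in, k_out, ci0=0.0):
    """Internal concentration Ci for a one-compartment TK model.

    Solves dCi/dt = k_in * Cw - k_out * Ci exactly within each time step,
    assuming the external concentration Cw is constant over each step of length dt.
    Returns Ci at t = 0, dt, 2*dt, ... (one more value than cw_profile has entries).
    """
    if k_out <= 0:
        raise ValueError("k_out must be positive")
    decay = math.exp(-k_out * dt)  # fraction of the distance to equilibrium left after one step
    ci = ci0
    trajectory = [ci]
    for cw in cw_profile:
        ci_eq = (k_in / k_out) * cw  # equilibrium Ci for this Cw; k_in/k_out acts as a BCF
        ci = ci_eq + (ci - ci_eq) * decay
        trajectory.append(ci)
    return trajectory


# Hypothetical 2-day pulse of 1 ug/L followed by 3 days in clean water (dt = 0.1 d)
profile = [1.0] * 20 + [0.0] * 30
ci = one_compartment_tk(profile, dt=0.1, k_in=2.0, k_out=0.5)
print(round(ci[20], 3), round(ci[-1], 3))  # peak at the end of the pulse, then depuration
```

Solving each step exactly, rather than with a simple Euler scheme, avoids sensitivity to the step size for the piecewise‐constant profiles that exposure models typically deliver.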

Transformation of molecules within the compartment can play an important role in the link from TK to effects, as chemicals are metabolised either into inactive, excretable products (detoxification) or into active or reactive metabolites causing (toxic) effects.

TD processes are related to internal concentrations of a toxicant within individual organisms. Implicitly, biological effects are caused by the toxicant at the molecular scale, where the molecules interfere with one or more biochemical pathways. In TKTD models, these interactions are lumped into only a few equations, which have to handle the variability of TD processes within species. In particular, these equations have to provide enough degrees of freedom to capture the dynamics of responses or effects over time; this is the most important aspect in the current modelling context, since the aim is to understand how toxic effects change over time under time‐variable exposure profiles.

2.2. Toxicodynamic models for survival

Historically, TKTD models for survival were developed and applied in a tailor‐made way for each research question. Over the years, this led to a variety of different TKTD models, some of them redundant or conflicting. This conglomeration of TKTD modelling approaches made it difficult to communicate model applications and results, and hampered the assimilation of TKTD models into ecotoxicological research and risk assessment. The publication of Jager et al. (2011), which aimed at unifying the existing unrelated approaches and clarifying their underlying assumptions, greatly facilitated the application of TKTD modelling for survival. In that paper, the General Unified Threshold models of Survival (GUTS) theory was defined and its application developed. The biggest achievement of the theory was probably the mathematical unification of almost all existing tailor‐made approaches under the GUTS umbrella. Recently, an update of the GUTS modelling approach was published, which works out the modelling of survival in more detail and provides examples and ring‐test results; the update also suggests a slightly changed terminology, while the underlying model assumptions and equations remain unchanged (Jager and Ashauer, 2018). In this scientific opinion, the updated terminology suggested by Jager and Ashauer (2018) is used, which refers to ‘scaled damage dynamics’ rather than to the previously used concept of ‘dose metrics’.

The ultimate aim of GUTS is to predict survival under untested exposure conditions such as time‐variable exposures, which are more likely to occur in the environment than the constant exposure levels used in Tier‐1 testing. This predictive capability is useful for risk assessment because it may not always be possible to test realistic time‐variable exposure profiles under laboratory conditions, e.g. for species with a long life cycle. In addition, exposure modelling can easily generate hundreds of potentially environmentally relevant exposure profiles, which would require excessive resources (e.g. number of test animals) if tested in the laboratory.

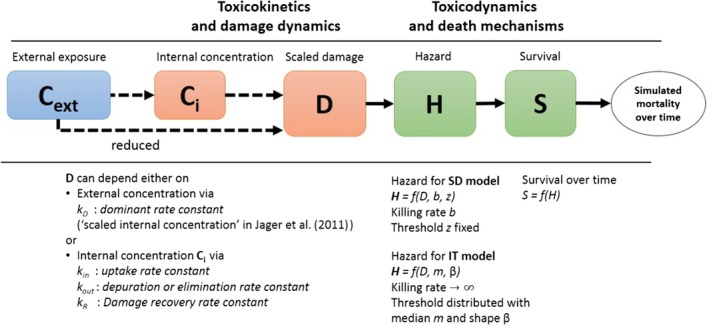

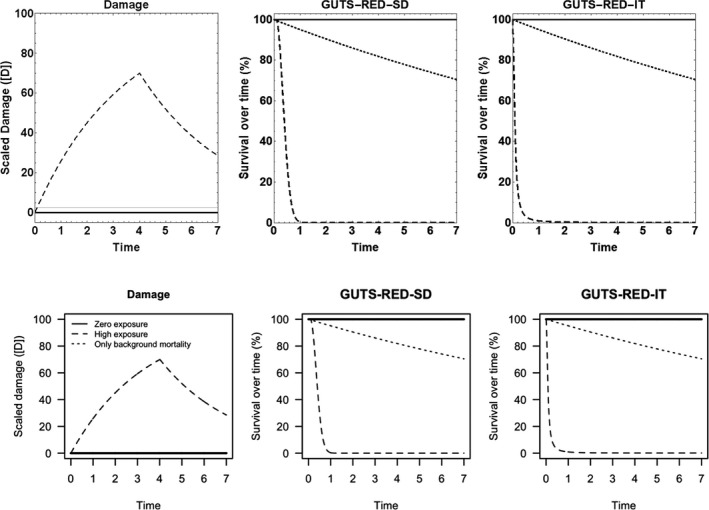

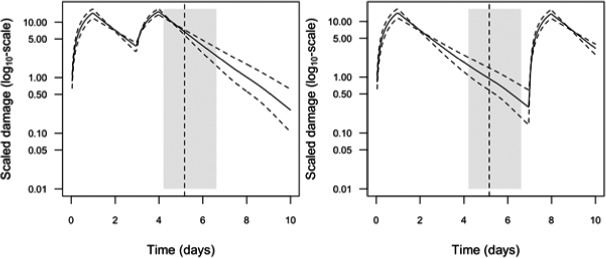

All GUTS versions have in common that they connect the external concentration to so‐called damage dynamics (see below and Jager and Ashauer (2018) for a definition), which are in turn connected to a hazard that results in simulated mortality when an internal damage threshold is exceeded (Figure 2). The unification of TKTD models for survival was made possible by defining two categories of assumptions about the death process in the TD part of GUTS, the Stochastic Death (SD) and the Individual Tolerance (IT) hypotheses, which together encompass the other published modelling approaches for survival.

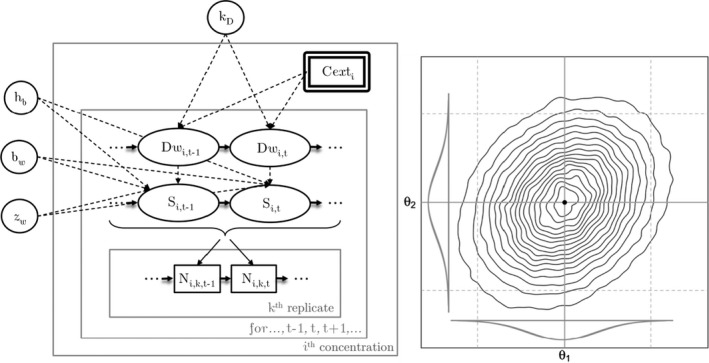

Figure 2.

Overview of state variables in the General Unified Threshold model of Survival (GUTS) framework. The toxicokinetic part of the GUTS theory translates an external concentration into the dynamics of an individual damage state, either in full detail or in simplified form (reduced model). In the toxicodynamic part of the model, two death mechanisms are distinguished: SD for Stochastic Death and IT for Individual Tolerance; see text for more details

For models from the SD category, the threshold parameter for lethal effects is fixed and identical for all individuals of a group, and a so‐called killing rate links the probability of a mortality event to the amount by which the scaled damage exceeds the threshold. Hence, death is modelled as a stochastic (random) process whose probability increases as the scaled damage rises above the threshold.
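Under the SD assumption, the hazard rate can be written as h(t) = kk·max(0, D(t) − z) + hb, with killing rate kk, threshold z and background hazard hb (symbols as commonly used in GUTS publications), and survival follows as S(t) = exp(−∫h dt). The sketch below assumes the scaled damage D(t) is already available on a regular time grid and approximates the integral with the trapezoidal rule; the function and parameter values are hypothetical illustrations, not a validated GUTS implementation.

```python
import math


def survival_sd(damage, dt, k_k, z, h_b=0.0):
    """Survival probability under the GUTS stochastic-death (SD) assumption.

    damage: scaled damage D at t = 0, dt, 2*dt, ...
    Hazard h(t) = k_k * max(0, D(t) - z) + h_b; survival S(t) = exp(-integral of h).
    The integral is approximated with the trapezoidal rule.
    """
    hazard = [k_k * max(0.0, d - z) + h_b for d in damage]
    cumulative_hazard = 0.0
    survival = [1.0]
    for h_prev, h_next in zip(hazard, hazard[1:]):
        cumulative_hazard += 0.5 * (h_prev + h_next) * dt
        survival.append(math.exp(-cumulative_hazard))
    return survival


# Hypothetical: damage held 1 unit above the threshold z for 4 days (dt = 0.1 d)
s = survival_sd([3.0] * 41, dt=0.1, k_k=0.2, z=2.0)
print(round(s[-1], 4))  # 0.4493, i.e. exp(-0.2 * 1.0 * 4)
```

As long as the damage stays below z, only background mortality occurs; above z, every individual faces the same, damage‐dependent risk of dying.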

For models from the IT category, thresholds for effects are distributed among the individuals of a group (i.e. sensitivity varies between individuals), and once an individual's threshold is exceeded, that individual dies immediately; in model terms, the killing rate is set to infinity.
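Under the IT assumption, an individual dies as soon as the scaled damage exceeds its own threshold, so survival depends on the highest damage reached so far. A frequently used choice is a log‐logistic threshold distribution with median m and shape β, giving S(t) = (1 − F(max D)) · exp(−hb·t). The sketch below assumes that choice and a damage trajectory on a regular time grid; the function names and values are hypothetical.

```python
import math


def loglogistic_cdf(x, m, beta):
    """Fraction of individuals whose threshold lies below x (median m, shape beta)."""
    if x <= 0.0:
        return 0.0
    return 1.0 / (1.0 + (x / m) ** (-beta))


def survival_it(damage, dt, m, beta, h_b=0.0):
    """Survival probability under the GUTS individual-tolerance (IT) assumption.

    An individual dies as soon as the scaled damage exceeds its own threshold, so
    survival depends on the highest damage reached so far, not on the current value.
    """
    survival = []
    max_damage = 0.0
    for i, d in enumerate(damage):
        max_damage = max(max_damage, d)
        background = math.exp(-h_b * i * dt)  # background mortality up to t = i * dt
        survival.append((1.0 - loglogistic_cdf(max_damage, m, beta)) * background)
    return survival


# Hypothetical: damage rises to the median threshold and then declines again
s = survival_it([0.0, 1.0, 2.0, 1.0, 0.0], dt=1.0, m=2.0, beta=3.0)
print(s)  # survival drops to 0.5 at the damage peak and stays there
```

Unlike under SD, no further mortality occurs once the damage declines below its previous maximum, because the individuals sensitive to that level have already died.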

Both models are unified within the ‘combined GUTS’ model, in which a distributed threshold is combined with a killing rate that is variable between individuals (Jager et al., 2011; Ashauer et al., 2016; Jager and Ashauer, 2018). It is assumed that all TK and TD model parameters (see Figure 2) are constant throughout the exposure profile, i.e. no effects of exposure on TK and TD dynamics are considered beyond those already captured in the calibration experiments. Therefore, phenomena such as increasing sensitivity or developing tolerance are not taken into account. The possibility that damage remaining below the lethal threshold induces faster responses to a subsequent pulse is accounted for by the scaled damage concept (see Figure 2).

Scaled damage (D in Figure 2) is the internal damage state of an organism after taking the external toxicant concentration, the uptake rate, elimination rate and potentially any damage recovery into account. The scaled damage concept translates external exposure into TD processes and finally into mortality. It links the given external concentration dynamics to the time course of the internal hazard. An ‘internal concentration’ (Ci in Figure 2) can only be considered explicitly in the model when measured internal concentrations are available or clear indications in the observed survival over time are given. The link of external concentrations to the scaled damage via a combined dominant rate constant kD leads to the ‘reduced GUTS’, which was called ‘scaled internal concentration’ in the old dose metric terminology (Jager et al., 2011). It is most often used when no measurements of internal concentrations are experimentally available as in the case of standard survival tests (Figure 2). Parameter kD can then be dominated by either elimination or by damage recovery; Chapter 4 gives the corresponding equations and details about kD estimation.
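In the reduced GUTS, the scaled damage follows dD/dt = kD·(Cw(t) − D(t)), so D has the units of the external concentration and relaxes towards it at the dominant rate constant kD. The sketch below, which assumes Cw is constant within each time step and uses hypothetical values, illustrates how a small kD lets damage carry over between two pulses, so that the second pulse produces a higher damage peak. The resulting trajectory would then be passed to an SD or IT death mechanism.

```python
import math


def scaled_damage(cw_profile, dt, k_d, d0=0.0):
    """Scaled damage D for the reduced GUTS model, dD/dt = k_d * (Cw - D).

    D has the units of the external concentration Cw. The equation is solved
    exactly within each step, assuming Cw is constant over each step of length dt.
    """
    decay = math.exp(-k_d * dt)
    d = d0
    trajectory = [d]
    for cw in cw_profile:
        d = cw + (d - cw) * decay  # D relaxes towards the current external concentration
        trajectory.append(d)
    return trajectory


# Hypothetical: two identical 1-day pulses separated by 2 days in clean water (dt = 0.1 d)
pulse, gap = [10.0] * 10, [0.0] * 20
profile = pulse + gap + pulse
slow = scaled_damage(profile, 0.1, k_d=0.3)  # slow elimination/repair: damage carries over
fast = scaled_damage(profile, 0.1, k_d=3.0)  # fast elimination/repair: D closely tracks Cw
print(round(slow[10], 2), round(slow[-1], 2))  # the second peak is clearly higher
print(round(fast[10], 2), round(fast[-1], 2))  # both peaks are almost identical
```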

‘Damage’ is a rather abstract concept here and its level cannot be measured experimentally, but the concept still allows for further mechanistic considerations. In principle, chemicals that have entered the organism are expected to cause some damage by interfering with biochemical pathways. Organisms can repair the damage at a certain rate, but if the damage exceeds a certain threshold, the organism dies. The degree to which the dynamics of internal concentrations can explain the pattern of mortality observed over time can vary. As one possibility, damage repair can be so fast that elimination of the chemical from the organism controls the scaled damage and hence the mortality rate. Fast damage repair in this case does not mean that there are no effects, because mortality will occur instantaneously as long as the scaled damage is high. If, however, the damage caused by the chemical is repaired so slowly that organisms keep dying after the chemical has been eliminated, the dynamics of the internal concentrations can be accounted for in the model to capture that delay in time. For chemicals known to bind irreversibly to their enzymatic target site, very low or zero depuration or repair rates are expected. However, depending on the enzyme turnover of the species, depuration or recovery could still take place for such irreversibly binding compounds through synthesis of fresh, unbound enzymes, provided no further internal exposure occurs.

When working with small invertebrates or in some cases with fish, internal concentrations are usually measured on the whole organism level, giving an average concentration across organs and cellular compartments. Hence, measured or modelled internal concentrations might not directly reflect the concentration at a specific molecular target site, but in the GUTS modelling it is assumed that modelled internal concentrations are at least proportional to the concentration at the target sites.

Over‐parameterisation of the model can lead to multiple problems, e.g. imprecise and inaccurate parameter estimates. To avoid this, it is recommended to include the internal concentration only if measured internal concentrations are available in a data set. If internal concentrations are not explicitly modelled (‘scaled internal concentrations’ in the ‘old’ terminology), the rate constant of the scaled damage (kD) describes the rate‐limiting process, either chemical elimination/detoxification by the organism or damage repair. When calibrating a GUTS model without the internal concentration as a state variable, it is assumed that survival data over time contain sufficient information to calibrate the dominant rate constant, plus the parameters for the death mechanism (SD or IT) that link the scaled damage to effects on survival. The term ‘scaled damage’ reflects the fact that absolute ‘damage’ values cannot in general be determined, whereas it is possible to assume that the scaled damage is proportional to the true (but unknown) damage; the scaled damage has the dimension of the external concentration. Using the scaled damage without explicit internal concentrations can give equal or better fits to observed survival data than using internal concentrations, even when measured internal concentrations are available. This has been shown when testing GUTS against observed survival under untested, time‐variable exposures (Nyman et al., 2012). In such cases, the simpler model is preferable, following the principle of parsimony.

The choice of the specific GUTS variant is not only a conceptual decision, but has direct implications for the number of parameters and hence for the degrees of freedom in parameter estimation. Despite the existence of a theoretical framework, modelling TKTD processes with GUTS requires some experience and careful consideration of the possible choices and degrees of freedom. The advantage of the GUTS framework is the clear definition of its concepts, mathematical equations and terminology, which eases the documentation of any GUTS model application.

Model parameters of GUTS models can be interpreted in a process‐based context, even though they are not defined on a purely mechanistic basis. For example, the uptake rate constant kin has a process‐related interpretation: it quantifies the influx of chemicals into organisms, but in GUTS the uptake rate is not associated with specific physical processes such as membrane transport and may lump together more than one process. This intermediate complexity is chosen because purely mechanistic parameters are usually not available for many compounds. Nevertheless, the chosen level of detail enables GUTS parameters to be determined from relatively simple data sets. GUTS parameters are related to dynamic processes such as uptake, elimination, death and/or physiological recovery. Hence, they can potentially be related quantitatively to biological traits such as body size or breathing mode, or to chemical properties such as log Kow or water solubility (Rubach et al., 2011). Understanding these relationships could make TKTD modelling a useful tool for understanding chemical toxicity across species and chemical modes of action. Based on such quantitative relationships, extrapolation between species and across chemicals could in theory be possible, although this is not applicable in risk assessment given the current state of knowledge.

More details about aspects of the GUTS models will be described and discussed in later sections of the document, including model calibration (parameter estimation), model testing and validation, deterministic and probabilistic model predictions, the domain of applicability and regulatory questions.

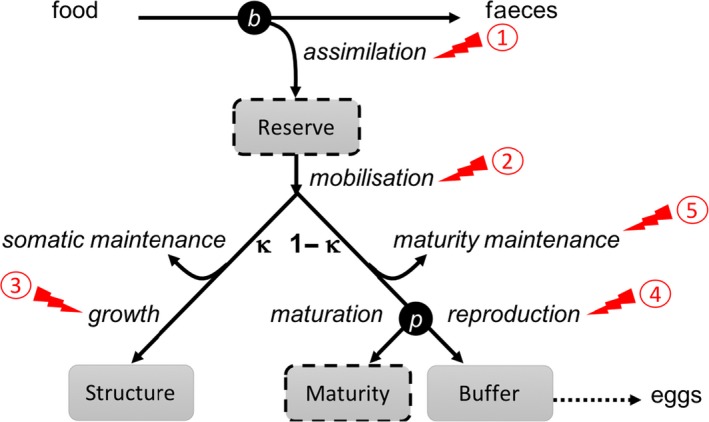

2.3. Toxicodynamic models for effects on growth and reproduction

The Dynamic Energy Budget (DEB) theory was originally proposed by Kooijman, who started its development in 1979 (see, for example, Kooijman and Troost, 2007; Kooijman, 2010, where DEB theory was used to investigate the toxicity of chemicals to daphnids and several fish species). Since then, DEB theory has become widespread and has found applications in many fields of environmental science. DEB theory is based on the principle that all living organisms take up resources from the environment and convert them into energy to fuel their entire life cycle (from egg to death), covering maintenance, development, growth and reproduction. In performing these activities, organisms must obey the conservation laws for mass and energy. DEB theory thus proposes a comprehensive set of rules that specifies how organisms acquire energy and allocate it to the various processes. In particular, it is assumed that a fixed fraction (kappa, κ) of the mobilised energy is allocated to somatic maintenance and growth, while the rest (1 − κ) is allocated to maturity maintenance, maturation and reproduction; this is called the kappa‐rule, a core concept of DEB theory (Figure 3). A conceptual introduction to DEB theory can be found in Jager (2017), while an extensive and more mathematical description is provided in Kooijman (2010).
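The kappa‐rule is, in essence, a bookkeeping rule for the mobilised energy flux. The sketch below illustrates it for a single point in time, assuming the mobilised flux and the somatic maintenance costs are already known; it pays somatic maintenance first (maintenance takes priority over growth in the standard DEB model) and ignores starvation rules. The function name and numbers are hypothetical.

```python
def kappa_allocation(p_c, kappa, p_s):
    """Allocate a mobilised energy flux p_c following the DEB kappa-rule.

    kappa * p_c goes to the soma: somatic maintenance p_s is paid first and the
    remainder fuels structural growth. (1 - kappa) * p_c goes to maturity
    maintenance, maturation and reproduction. Starvation rules are ignored here.
    """
    if not 0.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between 0 and 1")
    to_soma = kappa * p_c
    return {
        "somatic_maintenance": min(p_s, to_soma),
        "growth": max(0.0, to_soma - p_s),  # maintenance has priority over growth
        "maturity_and_reproduction": (1.0 - kappa) * p_c,
    }


# Hypothetical fluxes in arbitrary energy units per day
print(kappa_allocation(p_c=100.0, kappa=0.8, p_s=50.0))
```

Because κ is fixed, a toxicant that raises maintenance costs reduces growth first, while the (1 − κ) branch is unaffected until maturity maintenance or reproduction costs change.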

Figure 3.

Schematic energy allocation according to the standard DEB model (adapted from Jager, 2017): ‘b’ stands for birth and ‘p’ for puberty. The reserve compartment and, consequently, the maturity compartment (boxes with dashed borders) may be omitted in a DEBkiss model (reserve‐less DEB). Red arrows stand for the five different DEB modes of action due to toxic stress that can be described with DEBtox models; see text and references above for more explanation on kappa (κ)

When focusing on egg‐laying ectothermic animals, a standard DEB model exists (Figure 3), which assumes that animals do not change their shape when growing (therefore, it does not cover processes such as metamorphosis) and that animals feed on one food source of constant composition. The ‘Add‐my‐Pet’ (AmP) database is a collection of parameters of the standard DEB model, as well as of its different variants, for more than 1,000 species, including numerous aquatic species. Conceptual overviews of DEB theory are given in Jager et al. (2014) and Baas et al. (2018).

From the formulation of the standard DEB model, a simplification for standard laboratory toxicity tests has been derived; it is based on the following assumptions: size is a perfect proxy for maturity; size at puberty remains constant; costs per egg are constant and thus not affected by the reserve status of the mother; egg costs can only be affected by a direct chemical stress on the overhead costs; and the reserve is always in steady‐state with the food density in the environment. Under this framework, the kappa (κ) rule should not be affected by a toxicant. A reserve‐less DEB model (called ‘DEBkiss’) has recently been published (Jager et al., 2013; Jager and Ravagnan, 2016), treating biomass as a single compartment, thus leading to a simplified version of the standard DEB model making the interpretation of toxicity test data easier. However, the exclusion of the reserve compartment together with the maturation state variable (Figure 3) makes DEBkiss mainly applicable to small invertebrates that feed almost continuously.

DEBtox is the application of DEB theory to the effects of toxic chemicals on life‐history traits. The original DEBtox version was published by Kooijman and Bedaux (1996); Billoir et al. (2008) and Jager and Zimmer (2012) published updated derivations. Observing effects of chemical substances on life‐history traits necessarily implies that one or more metabolic processes within an organism, and consequently its acquisition or use of energy, are affected by the toxicant. In that sense, energy‐budget modelling provides a convenient way of quantitatively assessing effects on sublethal endpoints or life‐history traits without going into biological details or into details of the stressor itself. DEBtox models differ from other TKTD models in that their TD part incorporates a dynamic energy budget for growth and reproduction endpoints at the individual level. Internal chemical exposure has an impact on the energy balance and translates into modified DEB rates. On this basis, the DEB part describing the physiological energy flows is considered the physiological part of a DEBtox model (as used in later sections of this SO), while the part that accounts for uptake and effects of chemicals is named the TKTD part.

One of the core assumptions of DEBtox models is the existence of an internal toxicant threshold below which no measurable effect on a specified endpoint can be detected at any time; this is the so‐called no‐effect concentration (NEC). The value of the NEC depends on the chemical/species combination and can be modified by other stressors (e.g. other chemicals, abiotic factors), but in a DEBtox framework, the NEC is a time‐independent parameter. Although toxicity in experimental testing is related to a certain exposure duration, such a time‐independent definition of the NEC is of particular interest from an ERA perspective.

DEB processes can be linked to external exposure via the scaled damage concept, which is often implemented as a basic ‘linear‐with‐threshold’ relationship: the DEB model parameter equals its control value until the internal concentration reaches the relevant NEC, and then changes linearly with the internal concentration. Simulated internal concentrations can account for constant as well as time‐variable external concentrations. DEBtox models are flexible enough to describe several modes of action via specific sets of DEBtox equations, depending on the target process affected by the toxicant. Stated simply, in DEBtox, the toxicant can affect either the acquisition or the use of energy. In more detail, typical effect targets are:

Energy acquisition (assimilation, Figure 3);

Energy use from reserves (mobilisation);

Energy spent on growth;

Energy spent for reproduction; or

Allocation between somatic maintenance and reproduction.
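The ‘linear‐with‐threshold’ relationship behind these targets can be sketched as a stress level s = max(0, Ci − NEC)/cT, where cT is a tolerance concentration (the inverse of the slope). Depending on the target, the affected DEB parameter is multiplied by (1 + s) for costs that increase (e.g. maintenance, growth or reproduction costs) or by (1 − s) for processes that decrease (e.g. assimilation). The formulation and names below are illustrative assumptions that loosely follow common DEBtox practice, not a specific published parameterisation.

```python
def stress_level(ci, nec, c_t):
    """Linear-with-threshold stress: zero up to the NEC, then linear in Ci.

    c_t is a tolerance concentration (1 / slope); s = max(0, Ci - NEC) / c_t.
    """
    return max(0.0, ci - nec) / c_t


def stressed_parameter(control_value, ci, nec, c_t, increases=True):
    """DEB parameter value under toxic stress.

    increases=True: costs that rise with stress (e.g. maintenance, growth or
    reproduction costs). increases=False: rates that are reduced (e.g.
    assimilation), floored at zero.
    """
    s = stress_level(ci, nec, c_t)
    if increases:
        return control_value * (1.0 + s)
    return control_value * max(0.0, 1.0 - s)


# Hypothetical: NEC = 2 and tolerance concentration = 4 (units of Ci); assimilation target
for ci in (1.0, 2.0, 4.0, 10.0):
    print(ci, stressed_parameter(1.0, ci, nec=2.0, c_t=4.0, increases=False))
```

Because the NEC enters only as a threshold on Ci, the same time‐independent parameter applies to constant and to time‐variable exposure; the time dependence of the effect comes from the TK part.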

It should be kept in mind that the definition of ‘mode of action’ in a DEB context (DEBMoA, as stated by Baas et al., 2018) is not the same as the definition of ‘mode of action’ in a general toxicity context. In a DEB context, the mode of action is formulated mathematically as a change in the physiological processes accounted for in a DEB model. Hence, chemicals with different biochemical modes of action, as defined by molecular target sites for, e.g., pesticides, may exhibit a similar DEB mode of action when evaluating how the pesticide affects the DEB model parameters.

Even though some tools are already available to perform parameter estimation (e.g. the DEBtoxM and BYOM packages for Matlab, or custom scripts in R), DEBtox models in their current form require advanced statistical skills to be fitted to experimental data. In addition, the data sets that would be needed to provide best estimates of all DEB model parameters are not yet standardised. Nevertheless, DEBtox modelling approaches have great potential to explore toxicity far beyond classical dose‐effect approaches. A recent paper by Baas et al. (2018) explains in detail the potential of DEB models for assessing chemical toxicity to individuals from an ERA perspective.

2.4. Models for algae and aquatic macrophytes

2.4.1. How are algae and plants different from other higher organisms?

Algae and plants are different from other higher organisms in one important aspect: they can photosynthesise and thereby create their own energy, rather than having to ingest it through food. The rate at which algae and plants photosynthesise depends on a range of environmental factors, of which irradiance, inorganic carbon, nutrient availability and temperature are among the most important. This means that quantifying energy input in a plant system is more challenging than for an organism whose maximum energy uptake depends basically only on its size and food availability, and where the nutritional quality of the food is expected to match the demands of the organism. Algae and plants need to balance their uptake of inorganic carbon, nitrogen, phosphorus and micronutrients separately to obtain the optimal tissue composition. In addition, plants may have different compartments playing different roles. In rooted aquatic macrophytes, for example, only shoots photosynthesise, while roots play an important role in the uptake of nutrients such as nitrogen and phosphorus. Other nutrients, such as potassium, are primarily taken up by the shoot (Barko and Smart, 1981).

Apart from roots and shoots being physiologically different, the environmental medium where they grow (water vs sediment) also differs immensely in terms of light, inorganic carbon, nutrient and particularly oxygen availability. This means that availabilities of different chemicals will also differ between water and sediment. Hence, if toxicity of chemicals towards plants is to be considered via uptake from both water and sediment, plant models need to account for the respective compartments (i.e. leaves/shoots and roots). For the time being, the transport of toxic chemicals between water and sediment in risk assessment is addressed with fate modelling alone. Scientifically sound and functional interfaces between fate and exposure models and effect models could help here in the future to unify such tightly connected processes. Algae and floating macrophytes get all nutrients and toxicants through the water phase; hence, for these organisms it may suffice to use one‐compartment models.

Another important difference between most heterotrophic organisms used for toxicity testing and algae and plants is that many plants, particularly aquatic macrophytes, are clonal, so a large part of their reproduction is vegetative. Microalgae reproduce almost exclusively by cell division; macroalgae, which are not addressed here, can have more complex life cycles. Allocation to sexual reproduction in aquatic macrophytes may take place depending on species and growth conditions, but allocation to storage organs (e.g. root stocks and vegetative propagules) that enable the plant to survive winters or dry periods may be equally or even more important in terms of resource allocation. Hence, resource allocation patterns may be more complex in higher plants than in heterotrophic organisms. For the purpose of macrophyte TKTD modelling, macrophytes are therefore considered as ‘one individual’ showing either unconstrained (exponential) growth over a limited time period or density‐dependent growth. Choosing density‐dependent growth means using saturation growth curves, similar to those used to describe the growth of heterotrophic organisms. Sexual reproduction and allocation to storage organs are not explicitly considered in the macrophyte growth models at this stage of plant modelling.
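The difference between unconstrained and density‐dependent growth can be illustrated with the logistic equation, dB/dt = r·B·(1 − B/K), used here only as the simplest example of a saturation growth curve with carrying capacity K; the published macrophyte models may use other formulations. Parameter values are hypothetical.

```python
import math


def exponential_growth(b0, r, t):
    """Unconstrained growth: B(t) = B0 * exp(r * t)."""
    return b0 * math.exp(r * t)


def logistic_growth(b0, r, k, t):
    """Density-dependent growth: analytical solution of dB/dt = r * B * (1 - B / k)."""
    return k / (1.0 + (k / b0 - 1.0) * math.exp(-r * t))


# Hypothetical: initial biomass 1 g/m2, relative growth rate 0.3 per day,
# carrying capacity 100 g/m2
for day in (0, 7, 14, 28):
    print(day, round(exponential_growth(1.0, 0.3, day), 1), round(logistic_growth(1.0, 0.3, 100.0, day), 1))
```

Both curves coincide while B is far below K, which is why unconstrained growth is only acceptable for a limited time period.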

With respect to the analysis of toxic effects, the most obvious difference between primary producers (e.g. algae and vascular plants) and animals (e.g. invertebrates, fish and amphibians) is that for primary producers the main parameter measured is growth (OECD, 2006, 2011a, 2014a,b).

While for the analysis of mortality on invertebrates or vertebrates like fish, the survival of the individual is the normal case (or null model), this does not hold for algae and vascular plants, because they do not simply ‘die’ under exposure to a toxic chemical, but their growth dynamics can decrease or eventually completely stop. Certainly, algae and vascular plants can also die after being intoxicated, but this mortality is a process that takes much longer and is much more challenging to detect than for macroinvertebrates or fish. For microalgae, which are typically used for toxicity assessment, population density is usually measured. Hence, mortality of individual cells is difficult to distinguish from background mortality and from the reproduction of growing cells. For that reason, the assessment of toxic effects on algae and vascular plants needs growth models as a baseline or null model.

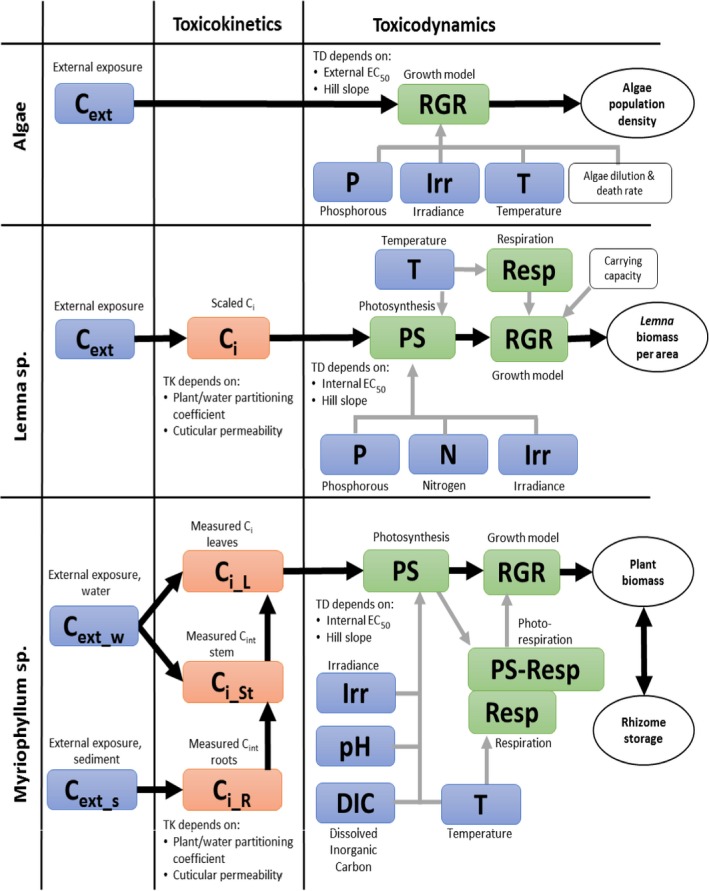

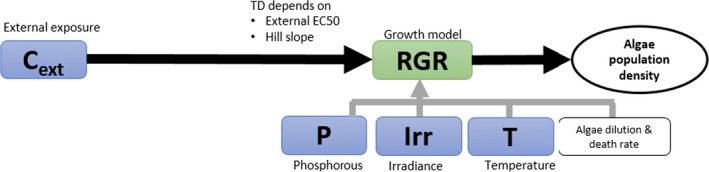

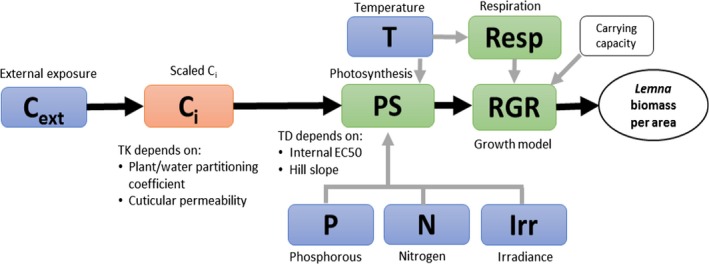

The next two sections will give an overview of how the TKTD concepts can be used on growth models for algae and the aquatic macrophyte species typically used in environmental risk assessment. An overview of the models is given in Figure 4.

Figure 4.

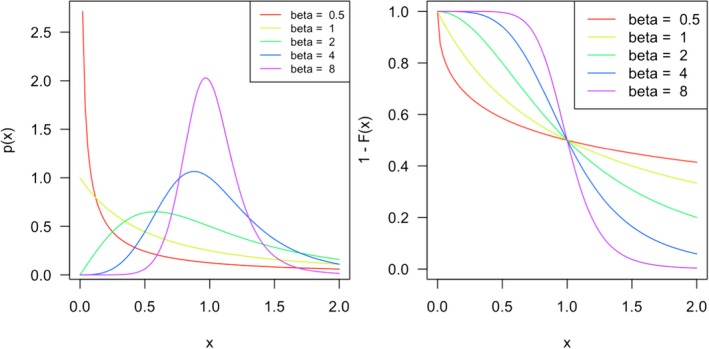

Schematic representation of the algae, Lemna spp. and Myriophyllum spp. models presented in Weber et al. (2012); Schmitt et al. (2013); Heine et al. (2014, 2015, 2016a). External factors affecting chemical uptake or growth are given in blue, internal chemical concentrations in orange and the different rate constants affecting biomass growth of the plants or algae in the respective models in green. White squares denote other factors included in the models, such as the medium dilution rate specific to the flow‐through system used in the algae test and the density‐dependent growth incorporated in the Lemna model. Model endpoints are given in white circles. The output of the growth model is the relative growth rate (RGR), which is affected by the internal concentrations via a concentration–response relationship defined by the concentration decreasing growth by 50% (EC50) and the slope parameter of the curve (Hill slope). These two parameters are equivalent to m and β, which describe the relationship between internal concentrations or damage and hazard in the IT version of GUTS (see Figure 2)

2.4.2. The TKTD concept used on pelagic microalgae

The algal species most routinely tested for risk‐assessment purposes of plant protection products is the pelagic microalga Raphidocelis subcapitata (formerly known as Pseudokirchneriella subcapitata or Selenastrum capricornutum). Models have been developed for both static (Copin and Chevre, 2015; Copin et al., 2016) and flow‐through growth systems (Weber et al., 2012), the latter being the most elaborate model. Flow‐through systems are the only way to test effects of variable exposure on microalgal growth without filtering or centrifuging the algae to separate them from the medium, while keeping them in an exponential growth phase over a prolonged time span of days to weeks. Because the design of the flow‐through system defines the growth conditions of the algae, it is an inherent part of the model. In a flow‐through system, new growth medium is continuously added to replace medium containing algae. In this way, the algae can be kept in a constant growth phase, with the maximal density determined by the growth conditions (irradiance, temperature, nutrient and carbon availability) and the flow‐through rate of the medium. Because it is based on a flow‐through system, the model can be used to simulate multiple peak/pulsed exposures, such as those from the FOCUS exposure profiles in a ‘stream scenario’. This is therefore the only algae model evaluated here. Unlike the macrophyte models, the algae model of Weber et al. (2012) contains no TK section and does not distinguish between temperature effects on photosynthesis and respiration. Instead, the external chemical concentration affects the relative growth rate of the population directly, as do temperature, nutrients (only phosphorus is considered) and irradiance (Weber et al., 2012). The algae model is pictured together with the two macrophyte models for comparison in Figure 4.
As the model lacks a TK part, it is debatable whether it can be categorised as a true TKTD model.
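The structure described above, in which the external concentration acts directly on the relative growth rate and the dilution rate of the flow‐through system is part of the model, can be illustrated with a deliberately simplified chemostat‐type sketch. It is not the model of Weber et al. (2012): temperature, phosphorus and irradiance effects are lumped into a single hypothetical maximum growth rate `mu_max`, the density limit `capacity` is a hypothetical stand‐in for resource limitation, and all function names, parameter values and the pulse profile are illustrative assumptions.

```python
def rgr(conc, mu_max, ec50, hill):
    """Relative growth rate (1/d) inhibited by the EXTERNAL concentration
    through a log-logistic curve (EC50 and Hill slope); no TK step."""
    if conc <= 0.0:
        return mu_max
    return mu_max / (1.0 + (conc / ec50) ** hill)


def pulse_profile(t, pulses):
    """Hypothetical exposure profile: sum of rectangular (start, end, conc) pulses."""
    return sum(c for start, end, c in pulses if start <= t < end)


def simulate(pulses, t_end, n0=1.0, mu_max=1.2, ec50=10.0, hill=2.0,
             dilution=0.5, capacity=100.0, dt=0.001):
    """Forward-Euler integration of
        dN/dt = RGR(C(t)) * (1 - N/K) * N - D * N
    where D is the dilution (flow-through) rate of the medium and K a
    hypothetical density limit. Returns the algal density N at t_end."""
    n = n0
    for i in range(int(round(t_end / dt))):
        c = pulse_profile(i * dt, pulses)
        growth = rgr(c, mu_max, ec50, hill) * (1.0 - n / capacity) * n
        n = max(n + (growth - dilution * n) * dt, 0.0)
    return n


# Unexposed plateau: K * (1 - D / mu_max) = 100 * (1 - 0.5 / 1.2), about 58.3
baseline = simulate([], 30.0)
# A 5-day pulse at 10 times the EC50, followed by 25 days of recovery
pulsed = simulate([(10.0, 15.0, 100.0)], 40.0)
```

Because the medium dilution removes algae continuously, a pulse that pushes the RGR below the dilution rate causes the density to decline; once exposure ends, the population recovers towards the pre‐exposure plateau.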

2.4.3. The TKTD concept used on aquatic macrophytes

Presently, two models are available that integrate macrophyte growth models with the TKTD principle: the Lemna model by Schmitt et al. (2013) and the Myriophyllum model by Heine et al. (2014, 2015), which was further extended by Hommen et al. (2016). The two macrophyte species are the standard test species representing an aquatic (floating) monocot (Lemna spp.) and a dicot (Myriophyllum spp.) that is rooted in the sediment. Having both a monocot and a dicot in the test battery is important, as some herbicides act selectively against one of these plant groups. A rooted species is also useful for addressing the bioavailability and toxicity of sediment‐bound pesticides. In addition, the two species represent a floating and a submerged rooted phenotype, as well as different life cycles (fast‐ vs. more slowly growing species). In both the Lemna and Myriophyllum models, internal toxicant concentrations are modelled based on a plant/water partitioning coefficient, corresponding to a bioconcentration factor, and a measure of cuticular permeability that determines the uptake rate. Uptake by roots is not considered at present but could be implemented in the future if needed. The internal concentrations are then directly related to growth via a log‐logistic concentration–effect model that determines the percentage inhibition of net photosynthesis. Plant growth is the net result of photosynthesis and respiration, processes that are affected to different extents by, e.g., temperature and are therefore modelled separately.
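The TK and TD steps described above can be sketched as follows. The uptake equation dCi/dt = P × (A/V) × (Cw − Ci/Kpw), with P the cuticular permeability, A/V the surface‐to‐volume ratio and Kpw the plant/water partition coefficient, is an assumed generic formulation chosen for illustration; it is not claimed to reproduce the published Lemna or Myriophyllum equations (growth dilution, for example, is ignored). Function names and parameter values are hypothetical.

```python
import math


def tk_step(ci, cw, perm, area_to_volume, kpw, dt):
    """Exact update of the internal concentration over dt for a constant
    water concentration cw, assuming
        dCi/dt = P * (A/V) * (Cw - Ci / Kpw).
    Kpw (plant/water partition coefficient) plays the role of a BCF."""
    k_elim = perm * area_to_volume / kpw   # effective rate constant (1/d)
    c_eq = kpw * cw                        # equilibrium internal concentration
    return c_eq + (ci - c_eq) * math.exp(-k_elim * dt)


def photosynthesis_inhibition(ci, ec50_int, hill):
    """Fraction of net photosynthesis inhibited (log-logistic in Ci)."""
    if ci <= 0.0:
        return 0.0
    return 1.0 / (1.0 + (ec50_int / ci) ** hill)


# Hypothetical parameters: P = 0.05, A/V = 200, Kpw = 20, constant Cw = 1.
ci = 0.0
for _ in range(500):                       # 50 days in 0.1-day steps
    ci = tk_step(ci, 1.0, 0.05, 200.0, 20.0, 0.1)
inhibition = photosynthesis_inhibition(ci, ec50_int=20.0, hill=2.0)
```

At equilibrium the internal concentration approaches Kpw × Cw, which is why the partition coefficient corresponds to a bioconcentration factor, while the permeability and surface‐to‐volume ratio only set how fast that equilibrium is reached.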

The main difference between the two models on the TK side is that the Lemna model works with scaled internal concentrations (described in Section 2.2), whereas the Myriophyllum model works with measured internal concentrations.
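The practical difference between scaled and measured internal concentrations can be illustrated with a minimal sketch. It assumes the common one‐compartment form dCi*/dt = kD × (Cw(t) − Ci*), in which the scaled internal concentration Ci* carries the units of the external concentration and only the dominant rate constant kD needs to be estimated from effect data, so no internal measurements are required. The function name, the piecewise‐constant exposure and the parameter values are hypothetical.

```python
import math


def scaled_internal(profile, k_d, dt):
    """Scaled internal concentration Ci* for a piecewise-constant external
    profile sampled every dt, assuming dCi*/dt = k_d * (Cw - Ci*).
    Ci* carries the units of the external concentration."""
    decay = math.exp(-k_d * dt)
    ci, out = 0.0, []
    for cw in profile:
        ci = cw + (ci - cw) * decay        # exact solution over one step
        out.append(ci)
    return out


# 2-day pulse of 10 (arbitrary units) followed by 4 days of clean water
dt, k_d = 0.1, 0.5
profile = [10.0] * 20 + [0.0] * 40
ci_star = scaled_internal(profile, k_d, dt)
```

Here Ci* peaks at the end of the pulse at about 63% of the external concentration (since kD × 2 d = 1) and declines afterwards. With measured internal concentrations, by contrast, uptake and elimination parameters are fitted to chemical analyses of plant tissue.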

The largest difference between the two models on the TD side is the growth model. Lemna is the simpler plant: (1) it is floating, so the sediment compartment is not an issue and the distinction between roots and fronds can be ignored; (2) it obtains its inorganic carbon mainly from CO2 in the air, which, compared with the forms and availability of inorganic carbon in water, is always available and most likely not limiting for photosynthesis; and (3) it reproduces mainly vegetatively, so allocation to reproductive or storage organs is of little importance. Assuming non‐limiting nutrient supplies, which will typically be the case in edge‐of‐field surface waters affected by agriculture, photosynthetic rates in the model depend only on irradiance and temperature. The influence of plant density on irradiance is not explicitly considered in the current models, but is considered indirectly through the carrying capacity. Parameters quantifying the impact of irradiance and temperature on growth can be obtained under laboratory conditions, and environmentally relevant time series of these factors can be obtained from most meteorological stations. The Lemna model does not focus on a single plant; instead, plant‐biomass growth (photosynthesis minus respiration) per area is modelled. When the plants/fronds reach a certain density, they start to shade each other and limit each other's access to inorganic carbon and nutrients, so growth decreases and biomass reaches a maximum plateau. This density dependence of areal biomass growth has been built into the model to enable modelling of Lemna growth dynamics over a season with time‐variable exposure. The growth rate input to the model can be based on the increase in frond number or surface area over time, subsequently converted to biomass units, as these surface‐area‐specific parameters can be monitored easily and non‐destructively over time.
Converting surface‐area‐ or frond‐number‐specific growth rates to biomass, however, ignores potential differences in surface‐to‐biomass ratios as a function of growth conditions.
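A minimal sketch of areal biomass growth as described above, with photosynthesis and respiration responding separately to temperature, the toxicant inhibiting photosynthesis only, and density dependence entering through a carrying capacity. The saturating light response, the Q10 temperature factors and all parameter values are hypothetical assumptions for illustration and are not the equations or values of Schmitt et al. (2013).

```python
def lemna_growth_rate(biomass, temp, irradiance, inhibition,
                      p_max=0.5, i_half=50.0, q10_p=1.8,
                      r_ref=0.05, q10_r=2.3, t_ref=20.0, capacity=200.0):
    """Areal biomass growth rate (hypothetical units: g/m2/d)."""
    theta = (temp - t_ref) / 10.0
    # Hypothetical saturating light response and Q10 temperature factor;
    # photosynthesis and respiration get different Q10 values so that
    # temperature affects them to different extents.
    photo = p_max * irradiance / (irradiance + i_half) * q10_p ** theta
    photo *= (1.0 - inhibition)            # toxicant inhibits photosynthesis only
    resp = r_ref * q10_r ** theta
    # Density dependence via a carrying capacity (self-shading, resource limitation)
    return (photo - resp) * (1.0 - biomass / capacity) * biomass


def simulate_lemna(days, b0=10.0, temp=20.0, irradiance=100.0,
                   inhibition=0.0, dt=0.01):
    """Forward-Euler integration under constant conditions."""
    b = b0
    for _ in range(int(round(days / dt))):
        b += lemna_growth_rate(b, temp, irradiance, inhibition) * dt
    return b


plateau = simulate_lemna(100.0)            # approaches the carrying capacity
```

Separating the two processes means that a temperature change can alter net growth even when irradiance is constant, while the carrying capacity produces the maximum biomass plateau without modelling shading explicitly.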

Myriophyllum spp. are more complex plants; hence, the growth model is more complex than the Lemna model. Because Myriophyllum is rooted, the growth model needs at least root, stem and shoot compartments, which are explicitly introduced in Heine et al. (2014) and modelled in Heine et al. (2015, 2016a). In an extended Myriophyllum model (Hommen et al., 2016), rhizomes, which are energy storage organs, are also included so that growth and allocation patterns can be modelled over longer timescales, e.g. a whole season, which was not possible with the original model by Heine et al. (2014, 2016a). In the original growth model (Heine et al., 2014), photosynthesis takes place in the leaves, and biomass is allocated to leaves, stems and roots in the fixed proportions 55:35:10%, an average based on literature data (Jiang et al., 2008). Chemicals can be taken up by leaves, stems and roots using the same cuticular permeability constant for all three tissues but different surface‐to‐volume ratios. In principle, this allows uptake from the sediment compartment to be modelled in addition to uptake from the water compartment, but sediment uptake has not yet been implemented in the published Myriophyllum models. Transport between plant parts is not well described in the literature. A rate constant for the xylem transport of chemicals is given in the supplementary material of Heine et al. (2016a), but no reference for the value is given. Phloem transport is not considered, although it could be quantified in proportion to the amount of photosynthates allocated from the leaves to support stem and root growth. Hence, although a growth model with different plant compartments has been built for Myriophyllum, further development and improvement are still needed.
The use of these models in risk assessment could benefit from the detailed analysis of uptake and transport of organic contaminants in Myriophyllum by Diepens et al. (2014), in which uptake and transport processes were analysed experimentally and also modelled dynamically. In addition, general knowledge on water and carbon flows in aquatic plants (Pedersen and Sand‐Jensen, 1993; Best and Boyd, 1999), combined with knowledge on the physicochemical properties of the pesticide, could be used to improve the predictability of internal pesticide movements in Myriophyllum.
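The fixed allocation and the tissue‐specific uptake described above can be sketched as follows. Only the 55:35:10 split and the idea of one shared permeability combined with tissue‐specific surface‐to‐volume ratios come from the text; the uptake formulation dC/dt = k × (Cw − C/Kpw) with k = P × (A/V), the surface‐to‐volume values and the function names are hypothetical assumptions.

```python
import math

# Fixed allocation of new biomass in the original growth model
# (leaves:stems:roots = 55:35:10).
ALLOCATION = {"leaves": 0.55, "stems": 0.35, "roots": 0.10}


def allocate(new_biomass):
    """Split newly produced biomass over the plant compartments."""
    return {organ: share * new_biomass for organ, share in ALLOCATION.items()}


def uptake_rate_constants(permeability, surface_to_volume):
    """Same cuticular permeability for every tissue; only the
    surface-to-volume ratio differs. k = P * (A/V), in 1/time."""
    return {organ: permeability * sv for organ, sv in surface_to_volume.items()}


def half_time_to_equilibrium(k_uptake, kpw):
    """Half-time of equilibration, assuming dC/dt = k * (Cw - C / Kpw)."""
    return math.log(2.0) * kpw / k_uptake


# Hypothetical surface-to-volume ratios and partition coefficient
surface_to_volume = {"leaves": 400.0, "stems": 150.0, "roots": 250.0}
k = uptake_rate_constants(0.01, surface_to_volume)
half_times = {organ: half_time_to_equilibrium(kk, 20.0) for organ, kk in k.items()}
```

Under these assumptions, thin leaves with a high surface‐to‐volume ratio equilibrate fastest, while stems lag behind; transport between compartments (xylem, phloem) is not represented.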

When it comes to inorganic carbon availability, Myriophyllum is also in a very different position from Lemna. Unlike in air, the availability of inorganic carbon and other gaseous compounds in water is strongly limited by their solubility and by their diffusion towards the leaves. In addition, the form in which inorganic carbon occurs in water (e.g. CO2 or HCO3−) is strongly pH dependent. Water pH is affected by both plant nutrient uptake and photosynthetic rates, which vary over the day; hence, pH can easily vary between 6 and 10 in a pond during a single day, and reach even more extreme values within dense macrophyte stands. The varying carbon availability and its effect on photosynthetic rate are incorporated in the Myriophyllum model, which is why both dissolved inorganic carbon (DIC) and pH are required as input parameters. As Myriophyllum has access to nutrients from the sediment, a non‐limiting nutrient supply is also assumed in this model.
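Why both DIC and pH are needed can be shown with standard carbonate equilibrium chemistry: the fraction of DIC present as free CO2 follows from pH and the two acid dissociation constants of carbonic acid. The pKa values below are approximate freshwater values at 25 °C and are an assumption (in reality they depend on temperature and ionic strength); the sketch does not reproduce how the Myriophyllum model links carbon speciation to photosynthesis.

```python
import math

PKA1 = 6.35   # CO2(aq)/HCO3-, approximate, 25 degC, fresh water (assumption)
PKA2 = 10.33  # HCO3-/CO3 2-, approximate, 25 degC, fresh water (assumption)


def carbonate_fractions(ph, pka1=PKA1, pka2=PKA2):
    """Fractions of dissolved inorganic carbon present as CO2, HCO3- and CO3 2-."""
    h = 10.0 ** (-ph)
    k1, k2 = 10.0 ** (-pka1), 10.0 ** (-pka2)
    denom = h * h + k1 * h + k1 * k2
    return {"CO2": h * h / denom,
            "HCO3-": k1 * h / denom,
            "CO3--": k1 * k2 / denom}


def free_co2(dic, ph):
    """Free CO2 concentration, in the same units as the DIC input."""
    return dic * carbonate_fractions(ph)["CO2"]


morning = carbonate_fractions(6.0)     # roughly 70% of DIC as CO2
afternoon = carbonate_fractions(10.0)  # well below 0.1% of DIC as CO2
```

For the same DIC, the diel pH swing from 6 to 10 mentioned above thus changes the free CO2 available to the leaves by several orders of magnitude, so DIC alone is not sufficient to describe carbon availability.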

Like Lemna, Myriophyllum reproduces vegetatively, but it also flowers and produces seeds once or twice a year and allocates energy to rhizomes (Best and Boyd, 1999). In the extended model version (Hommen et al., 2016), rhizomes are included, but it is not made explicit which principles determine allocation to them. Allocation of energy to flowers and seeds has not been incorporated (Hommen et al., 2016), as it is very minor (Best and Boyd, 1999). The typical ‘die‐off’ after sexual reproduction described by Best and Boyd (1999) is, however, incorporated in Hommen et al. (2016). Unlike the Lemna model, the Myriophyllum model does not incorporate density dependence of growth when plants form closed stands and thereby shade their lower leaves. This could be added, as maximum leaf area indices for macrophyte populations are available in the literature. Hommen et al. (2016) incorporated a ‘death rate’ in the Myriophyllum model, but its mathematical formulation is not made explicit; it is therefore not considered further in this opinion.

3. Problem definition/formulation

3.1. Introduction

The EFSA Opinion on good modelling practice in the context of mechanistic effect models for risk assessment (EFSA PPR Panel, 2014) says:

‘The problem formulation sets the scene for the use of the model within the risk assessment. It therefore needs to clearly explain how the modelling fits into the risk assessment and how it can be used to address protection goals’ and ‘The problem formulation needs to address the context in which the model will be used, to specify the question(s) that should be answered with the model, the outputs required to answer the question(s), the domain of applicability of the model, including the extent of acceptable extrapolations, and the availability of knowledge’.

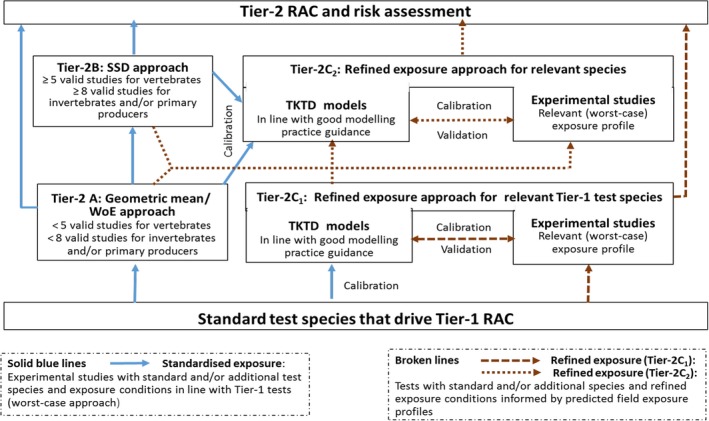

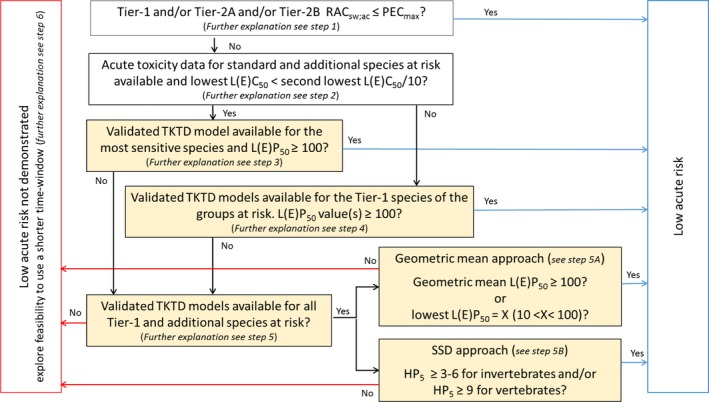

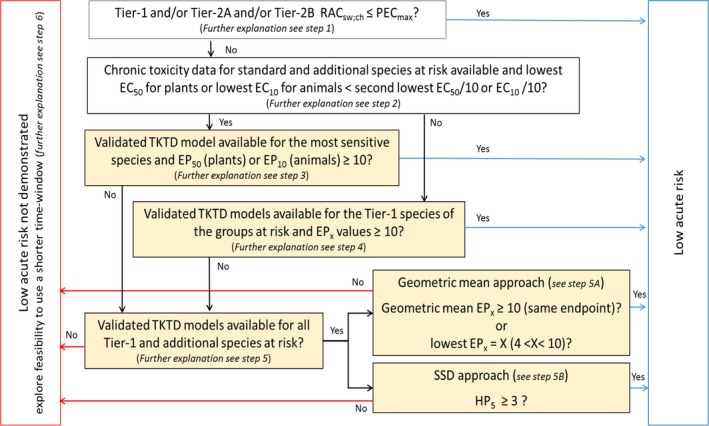

The EFSA Aquatic Guidance Document (EFSA PPR Panel, 2013) states that TKTD models may be used in the Tier‐2 effect assessment procedure to extend the risk assessment of PPPs based on laboratory single‐species tests with standard and additional test species (Figure 5).

Figure 5.

Schematic presentation of the tiered approach within the acute (left part) and chronic (right part) effect assessment for PPPs. For each PPP, both the acute and chronic effects/risks have to be assessed. The Tier‐1 and Tier‐2 effect assessments are based on single species laboratory toxicity tests, but to better address risks of time‐variable exposures the Tier‐2 assessment may be complemented with toxicokinetic/toxicodynamic (TKTD) models. Tier‐3 (population and community level experiments and models) and Tier‐4 (field studies and landscape level models) may concern a combination of experimental data and modelling to assess population and/or community level responses (e.g. recovery; indirect effects) at relevant spatiotemporal scales. All models included in such a tiered approach need to be properly tested and fulfil required quality criteria. RAC: Regulatory Acceptable Concentration; sw: surface water; ac: acute; ch: chronic; PEC: Predicted Environmental Concentration; twa: time‐weighted average

The different Tier‐2 approaches described in the Aquatic Guidance Document (EFSA PPR Panel, 2013) are presented schematically in Figure 6. For convenience, the refined exposure approach (experiments and models) is indicated as Tier‐2C1 when it concerns standard test species and as Tier‐2C2 when relevant additional test species are involved.

Figure 6.

Schematic presentation of the different Tier‐2 approaches in the aquatic effect/risk assessment for PPPs. The different types of arrows indicate different approaches (solid blue lines refer to tests with standardised exposures; broken lines refer to tests with refined exposures). Further details on the geometric mean and species sensitivity distribution (SSD) approaches can be found in Chapter 8 of the Aquatic Guidance Document, while information on experimental refined exposure tests is given in Chapter 9 of the Aquatic Guidance Document (EFSA PPR Panel, 2013). Suggestions on how to apply a Weight‐of‐Evidence (WoE) approach in Tier‐2 effect assessment when toxicity data for a limited number of additional species are available can be found in Section 8.2.2 of the Scientific Opinion on sediment effect assessment (EFSA PPR Panel, 2015)

Single‐species toxicity tests with aquatic organisms that are used in Tier‐1 (standard test species approach), Tier‐2A (geometric mean/weight‐of‐evidence (WoE) approach) and Tier‐2B (species sensitivity distribution (SSD) approach) assessments address concentration–response relationships based on standardised exposure conditions as laid down in test protocols. Toxicity estimates (e.g. EC50, EC10 and NOEC values) from these tests need to be expressed in terms of nominal (or initially measured) concentrations if the measured concentrations deviate by less than 20% from the nominal (or initially measured) concentrations during the test, and otherwise in terms of mean concentrations (i.e. geometric mean or time‐weighted average concentrations) appropriately measured during the test. Consequently, these tests aim to assess the effects of a more or less constant exposure. In the field, however, time‐variable exposure regimes of PPPs in edge‐of‐field surface waters may be the rule rather than the exception. To estimate toxicity under the more realistic time‐variable exposure profiles predicted in the prospective exposure assessment, both refined exposure experiments and TKTD models may be used, focusing either on standard test species (Tier‐2C1) or also on relevant additional species (Tier‐2C2) (see Figure 6). The Aquatic Guidance Document (EFSA PPR Panel, 2013) states that, for proper use in regulatory risk assessment, experimental refined exposure studies should be based on realistic worst‐case exposure profiles informed by the height, width and frequency of toxicologically dependent pulses in the relevant predicted (modelled) field exposure profiles. The realistic worst‐case exposure regimes selected for the refined exposure test thus represent those predicted exposure profiles that are likely to trigger the highest risks. This also implies that the results of refined exposure tests are case‐specific.
The possible implementation of another use pattern of the PPP under evaluation (e.g. due to mitigation measures) may result in a change in the relevant exposure profiles to be assessed. The main advantage of TKTD models is that they may be used as a regulatory tool to assess multiple exposure profiles.
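The rule for expressing toxicity estimates in standard tests (nominal or initially measured concentrations if measured concentrations stay within 20% of them, otherwise a mean measured concentration) can be written as a small decision function. The trapezoidal interpolation between sampling times used for the time‐weighted average, the function names and the example data are assumptions for illustration; other interpolation schemes are possible.

```python
import math


def within_20_percent(reference, measured):
    """True if every measured concentration deviates < 20% from the reference
    (nominal or initially measured) concentration."""
    return all(abs(m - reference) / reference < 0.20 for m in measured)


def time_weighted_average(times, conc):
    """TWA concentration, assuming linear change between sampling times."""
    area = sum((t1 - t0) * (c0 + c1) / 2.0
               for t0, t1, c0, c1 in zip(times, times[1:], conc, conc[1:]))
    return area / (times[-1] - times[0])


def geometric_mean(values):
    return math.exp(sum(math.log(v) for v in values) / len(values))


def exposure_basis(reference, times, measured, mean="twa"):
    """Return (basis, concentration) used to express a toxicity estimate."""
    if within_20_percent(reference, measured):
        return "nominal", reference
    if mean == "geomean":
        return "geomean", geometric_mean(measured)
    return "twa", time_weighted_average(times, measured)


# Hypothetical 4-day test with nominal 10 and sampling on days 0, 2 and 4
stable = exposure_basis(10.0, [0.0, 2.0, 4.0], [10.5, 9.0, 8.5])
declining = exposure_basis(10.0, [0.0, 2.0, 4.0], [10.0, 6.0, 4.0])
```

A single constant number per treatment is adequate only when exposure is roughly constant; for the time‐variable profiles discussed here, the full time series is needed, which is what refined exposure tests and TKTD models address.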

TKTD models used as tools in Tier‐2 assessments need to be calibrated. For this, Tier‐1, Tier‐2A and/or Tier‐2B toxicity data sets, as well as dedicated refined exposure tests with the selected species of concern, can be used. In addition, substance‐ and species‐specific data sets derived from independent refined‐exposure experiments are required for TKTD model validation (see Figure 6). These validation experiments could be informed by the predicted field‐exposure profiles for the worst‐case intended agricultural use of the substance. However, simulating the worst‐case exposure profile should not be considered a prerequisite for validation purposes. The validation experiment should nevertheless include at least two different profiles with at least two pulses each. For each pulse, at least three concentrations should be tested, leading to low, medium and strong effects (see Sections 7 and 4.1.4.5 for more details). These recommendations address phenomena related to the dynamics between internal and external exposure concentrations and possible repair of effects. To address phenomena related to toxicological dependence/independence, the individual depuration and repair time (DRT95) should be calculated and considered when timing the pulses: one profile should have a no‐exposure interval shorter than the DRT95, the other a no‐exposure interval clearly longer than the DRT95 (see Section 4.1.4.5 for more details). If DRT95 values are longer than can be realised in the duration of validation experiments, or even exceed the lifetime of the species considered, the second exposure profile may be defined independently of the DRT95.
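The DRT95 check for a validation design can be sketched under one stated assumption: after exposure stops, scaled damage declines first‐order with the dominant rate constant kD, so it falls to 5% of its value after t = ln(20)/kD. The factor `margin` is a hypothetical way of operationalising "clearly longer than the DRT95" and is not a value from this opinion; function names are likewise illustrative.

```python
import math


def drt95(k_d):
    """Time for damage to drop to 5% after exposure stops, assuming
    dD/dt = -k_d * D, i.e. exp(-k_d * t) = 0.05, so t = ln(20) / k_d."""
    return math.log(20.0) / k_d


def validation_design_ok(short_gap, long_gap, k_d, margin=1.5):
    """Check the no-exposure intervals of two validation profiles:
    one shorter than DRT95, the other longer than margin * DRT95.
    margin is a hypothetical threshold for 'clearly longer'."""
    t95 = drt95(k_d)
    return short_gap < t95 and long_gap > margin * t95


# Hypothetical dominant rate constant of 0.5 per day gives DRT95 of about 6 days
t95 = drt95(0.5)
design_a = validation_design_ok(2.0, 12.0, k_d=0.5)   # accepted
design_b = validation_design_ok(2.0, 6.5, k_d=0.5)    # second gap not clearly longer
```

For slowly depurating or repairing species, `drt95` may return a value longer than any feasible experiment; in that case, as stated above, the second profile may be defined independently of the DRT95.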