Abstract

EFSA requested the Scientific Committee to develop a guidance document on the use of the weight of evidence approach in scientific assessments for use in all areas under EFSA's remit. The guidance document addresses the use of weight of evidence approaches in scientific assessments using both qualitative and quantitative approaches. Several case studies covering the various areas under EFSA's remit are annexed to the guidance document to illustrate the applicability of the proposed approach. Weight of evidence assessment is defined in this guidance as a process in which evidence is integrated to determine the relative support for possible answers to a question. This document considers the weight of evidence assessment as comprising three basic steps: (1) assembling the evidence into lines of evidence of similar type, (2) weighing the evidence, (3) integrating the evidence. The present document identifies reliability, relevance and consistency as three basic considerations for weighing evidence.

Keywords: risk assessment, weight of evidence, biological relevance, uncertainty, lines of evidence

Short abstract

This publication is linked to the following EFSA Supporting Publications article: http://onlinelibrary.wiley.com/doi/10.2903/sp.efsa.2017.EN-1295/full

Summary

The European Food Safety Authority (EFSA) requested the Scientific Committee (SC) to develop a guidance document on the use of the weight of evidence approach in scientific assessments for use in all areas under EFSA's remit.

The guidance document addresses the use of weight of evidence approaches in scientific assessments using both qualitative and quantitative approaches. Several case studies covering the various areas under EFSA's remit are annexed to the guidance document to illustrate the applicability of the proposed approach.

In developing the guidance, the Working Group (WG) of the SC took into account other EFSA activities and related European and international activities to ensure consistency and harmonisation of methodologies, to provide an international dimension to the guidance and to avoid duplication of work.

This guidance document is intended to guide EFSA Panels and staff on the use of the weight of evidence approach in scientific assessments. It provides a flexible framework that is applicable to all areas within EFSA's remit, within which assessors can apply those methods which most appropriately fit the purpose of their individual assessment.

Weight of evidence assessment is defined in this guidance as a process in which evidence is integrated to determine the relative support for possible answers to a question. This document considers the weight of evidence assessment as comprising three basic steps: (1) assembling the evidence into lines of evidence of similar type, (2) weighing the evidence, (3) integrating the evidence.

The present document identifies reliability, relevance and consistency as three basic considerations for weighing evidence. They are defined in terms of their contributions to the weight of evidence assessment: Reliability is the extent to which the information comprising a piece or line of evidence is correct. Relevance is the contribution a piece or line of evidence would make to answering a specified question, if the information comprising it were fully reliable. Consistency is the extent to which the contributions of different pieces or lines of evidence to answering the specified question are compatible.

While no specific methods are prescribed, a list of criteria for comparing weight of evidence methods is provided to assist in evaluating the relative strengths and weaknesses of the different methods. The criteria do not necessarily have equal importance: their relative importance may be considered on a case‐by‐case basis when planning each weight of evidence assessment.

All EFSA scientific assessments must include consideration of uncertainties, reporting clearly and unambiguously what sources of uncertainty have been identified and what their impact on the assessment outcome is.

Reporting should be consistent with EFSA's general principles regarding transparency and reporting. In a weight of evidence assessment, this should include justifying the choice of methods used, documenting all steps of the procedure in sufficient detail for them to be repeated, and making clear where and how expert judgement has been used. Reporting should also include referencing and, if appropriate, listing or summarising all evidence considered, identifying any evidence that was excluded; detailed reporting of the conclusions; and sufficient information on intermediate results for readers to understand how the conclusions were reached.

EFSA Panels and Units are encouraged to review their existing approaches to weight of evidence assessment in the light of the guidance document, and to consider in particular:

Whether all pertinent aspects of reliability, relevance and consistency are addressed,

How to ensure the transparency of weight of evidence assessments,

Whether to carry out case studies to assess if additional methods described in the guidance would add value to their scientific assessments.

1. Introduction

1.1. Background and Terms of Reference as provided by EFSA

EFSA's Science Strategy 2012–2016 has identified four strategic objectives: (1) further develop excellence of EFSA's scientific advice, (2) optimise the use of risk assessment capacity in the European Union (EU), (3) develop and harmonise methodologies and approaches to assess risks associated with the food chain, (4) strengthen the scientific evidence for risk assessment and risk monitoring. In this context, the harmonisation and development of new methodologies for risk assessment and other scientific assessments is of critical importance to deliver EFSA's science strategy. For this purpose, a number of projects have recently started at the European Food Safety Authority (EFSA) to address individual and cross‐cutting methodological issues within the whole scientific assessment landscape. The Assessment and Methodological Support Unit (AMU) of EFSA has started a project (PROMETHEUS) with the objective of supporting the coordination and consistency of all EFSA projects that aim at developing or refining methodological approaches. Such an umbrella project will provide a definition of the guiding principles for evidence‐based assessments and a collection of available approaches, and will identify areas where methods or tools are needed to fulfil such guiding principles.1

In July 2013, the Scientific Committee (SC) of EFSA published an opinion on ‘priority topics for the development of risk assessment guidance by EFSA's SC’ which used a number of criteria to make recommendations for the preparation of new or the revision of existing guidance documents as follows:

Cross‐Panel relevance

Critical importance, including urgency, of the topic to be addressed for several Panels

Topic not being addressed by an individual Panel

Sufficient information available to develop meaningful guidance

International dimension.

From this prioritisation exercise, the SC opinion identified three priority topics for 2014: uncertainty analysis, biological relevance, and the use of the weight of evidence approach in scientific assessments (EFSA Scientific Committee, 2013).

The latter is the subject of this project. The WHO has defined weight of evidence as ‘a process in which all of the evidence considered relevant for a risk assessment is evaluated and weighted’ (WHO, 2009). The SC of EFSA used the WHO definition and pointed out that evidence can be derived from several sources, such as white literature (peer‐reviewed scientific publications), grey literature (reports on websites of governmental, non‐governmental and intergovernmental agencies, etc.) and black literature (confidential reports). To increase transparency in risk and other scientific assessment processes, it is important to provide a methodology to select, weigh and integrate the evidence in a systematic, consistent and transparent way, to reach the final conclusions and to identify related uncertainties (SCENIHR, 2012; EFSA Scientific Committee, 2013).

The SC of EFSA also noted that part of the overall weighing of the evidence deals with evaluations of equivalent or similar questions performed by other international bodies, and that EFSA should judge the adequacy of such evaluations before taking them into account. This is particularly helpful when the available information is so extensive that a single evaluation cannot judge each individual study, report or publication on its own. Systematic reviews (SRs) may also be very useful; however, EFSA should transparently evaluate the adequacy of the process, the pertinence to the risk assessment, the nature of the question and the inclusion and exclusion criteria before taking SRs into account (EFSA Scientific Committee, 2013).

Taking chemical risk assessment as an example, the weight of evidence approach requires expert judgement of distinct lines of evidence (in vivo, in vitro, in silico, population studies, modelled and measured exposure data, etc.), which may come from studies conducted according to official guidelines (e.g. OECD) or from non‐standardised methodologies. In this context, data from all sources and categories of literature should be considered for the risk assessment processes, as appropriate, and evaluated to determine their quality and relevance. These considerations should then be reflected in the relative weight given to the evidence in the scientific assessment and transparently taken into account in the overall evaluation of uncertainty (EFSA Scientific Committee, 2013). It is therefore proposed that the SC of EFSA develop guidance on the use of the weight of evidence approach in scientific assessments.

Terms of Reference

EFSA requests the SC to develop a guidance document on the use of the weight of evidence approach in scientific assessments for use in all areas under EFSA's remit.

The guidance document should address the use of the weight of evidence in scientific assessments using both qualitative and quantitative approaches. Several case studies covering the various areas under EFSA's remit should be annexed in the guidance document to illustrate the proposed approaches.

In developing the guidance, the Working Group (WG) of the SC should take into account other EFSA activities and related European and international activities to ensure consistency and harmonisation of methodologies, to provide an international dimension to the guidance and avoid duplication of the work.

In line with EFSA's policy on openness and transparency, EFSA will publish a draft version of the guidance document for public consultation to invite comments from the scientific community and stakeholders. After publication, the guidance document and the results of the public consultation should be presented at an international event.

1.2. Interpretation of the Terms of Reference

In the context of risk assessment, various formal definitions of, and synonyms for, the phrase ‘weight of evidence’ or ‘evidence synthesis’ have been offered by IPCS (2004), US EPA (2000), WHO/FAO, the US National Research Council's Committee on Improving Risk Analysis Approaches, SCHER, SCENIHR and SCCS (2013).

When addressing the mandate, the SC acknowledged that the issue of weight of evidence approaches in risk assessment encompasses aspects related to the reliability of the various pieces of evidence used in the assessment.

In order for the guidance document to address the use of the weight of evidence approaches in scientific assessments using both qualitative and quantitative approaches, a list of the available approaches used globally has been provided together with several case studies from various areas under EFSA's remit to illustrate the proposed approaches.

In developing the guidance, the WG of the SC has taken into account other EFSA activities and related European and international activities to ensure consistency and harmonisation of methodologies, to provide an international dimension to the guidance and avoid duplication of the work.

In particular:

relevant guidance published by the SC on related subjects (transparency in risk assessment, uncertainty in exposure assessment, and statistical significance and biological relevance; EFSA, 2006, 2009, 2011), and the latest draft guidance documents on uncertainty and biological relevance, which are being developed concomitantly.

the guidance on uncertainty analysis in risk assessment. It deals specifically with reporting and analysing uncertainties using qualitative and quantitative methods for all work within EFSA's remit. The overlaps with the weight of evidence approach should be carefully taken into account by both WGs to ensure that the weight of evidence and uncertainty guidance documents are consistent, use harmonised methodologies, and do not duplicate the work.

the guidance on biological relevance for all areas of work within EFSA's remit. It deals specifically with criteria to evaluate biological relevance in scientific assessments and the overlaps with the weight of evidence approach should be carefully taken into account by both WGs to ensure consistency, the use of harmonised methodologies, and to avoid duplication.

the current work within EFSA on the approach to evidence‐based risk assessment to ensure consistency, the use of harmonised methodologies, and to avoid duplication of the work.

The guidance was also expected to take into account other European and international activities:

The work of the European Commission's non‐food scientific committees and other agencies on the weight of evidence approach, seeking their participation, where appropriate, in the development of harmonised guidance.

Examples include best practices in weight of evidence methodologies, the use of SRs in risk assessment, the WHO application of the weight of evidence approach in relation to the mode of action framework, and other related international developments (EFSA, 2010a; Meek et al., 2014; Perkins et al., 2015; OECD, 2016; Wittwehr et al., 2016).

1.3. Aim and scope of the document

Weighing the evidence is an inherent part of every scientific assessment performed by EFSA. Experts review all available data and come to conclusions based on an assessment of their overall confidence in the results of all reviewed studies. However, the approaches and methods used in conducting such a ‘non‐formalised’, inherent weighing of the evidence are mostly not spelled out.

The aim of this guidance document is to provide a general framework for considering and documenting the approaches used to weigh the evidence when answering the main question of each scientific assessment, or the subsidiary questions that need to be answered to provide, together, an overall answer. The document further indicates, in general terms, the types of qualitative and quantitative approaches available to weigh and integrate evidence, and lists individual methodologies with pointers to where details of these can be found. Finally, the document provides suggestions for conducting and reporting weight of evidence assessments.

The document does not attempt to prescribe approaches or methods to be used, nor does it provide a comprehensive description of all methods that can be used.

1.4. Relation to other relevant EFSA guidance documents

The guidance on the use of the weight of evidence approaches builds on the conceptual approach for scientific assessments as described in PROMETHEUS (EFSA, 2015b), which describes the overall process for dealing with data and evidence.

Transparent reporting of all assumptions and methods used, including expert judgement, is necessary to ensure that the assessment process leading to the conclusions is fully comprehensible.

‘Open EFSA’ aspires both to improve the overall quality of the available information and data used for its scientific outputs and to comply with normative and societal expectations of openness and transparency (EFSA, 2009, 2014a,b,c,d). In line with this, EFSA is publishing three separate but closely related guidance documents to guide its expert Panels for use in their scientific assessments (EFSA, 2015a–c). These documents address three key elements of the scientific assessment: the analyses of Uncertainty, Weight of Evidence assessment and Biological Relevance.

The first document provides guidance on how to identify, characterise, document and explain all types of uncertainty arising within an individual assessment for all areas of EFSA's remit. Rather than prescribing which specific methods should be used, it provides a harmonised and flexible framework within which the qualitative and quantitative methods it describes may be selected according to the needs of each assessment.

This document on weight of evidence assessment provides a general framework for considering and documenting the approach used to evaluate and weigh the assembled evidence when answering the main question of each scientific assessment, or the subsidiary questions that need to be answered to provide, together, an overall answer. This includes assessing the relevance, reliability and consistency of the evidence. The document further indicates the types of qualitative and quantitative methods that can be used to weigh and integrate evidence and points to where details of the listed individual methods can be found. The weight of evidence approach incorporates elements of uncertainty analysis: the part of the uncertainty that is addressed by the weight of evidence assessment does not need to be reanalysed in the overall uncertainty analysis, but may be added to it.

The third document provides a general framework for addressing the question of biological relevance at various stages of the assessment: the collection, identification and appraisal of relevant data for the specific assessment question to be answered. It identifies generic issues related to biological relevance in the appraisal of pieces of evidence and, in particular, specific criteria to consider when deciding whether or not an observed effect is biologically relevant, i.e. whether it shows an adverse or a positive health effect. A decision tree is developed to aid the collection, identification and appraisal of relevant data for the specific assessment question. The reliability of the various pieces of evidence, and how they should be integrated with other pieces of evidence, are addressed in the weight of evidence guidance document.

EFSA will continue to strengthen links between the three distinct but related topics to ensure the transparency and consistency of its various scientific outputs while keeping them fit for purpose.

1.5. Audience and degree of obligation

This Guidance is aimed at all those contributing to EFSA assessments and provides a harmonised, but flexible framework that is applicable to all areas of EFSA's work and all types of scientific assessment, including risk assessment. In line with improving transparency, the SC considers the application of this guidance to be unconditional for EFSA. Each assessment must clearly and unambiguously document:

what evidence was considered and how it was assembled into lines of evidence;

how the evidence was weighed and integrated including consideration of reliability, relevance and consistency;

the conclusion on the weight of evidence question in terms of the range and probability of possible answers. This can be expressed qualitatively or quantitatively, but should be quantified if possible when it directly addresses the Terms of Reference for the assessment.

The document provides guidance on the general principles of the weight of evidence approach but assessors have the flexibility to choose appropriate methods, and the degree of refinement in applying them.

2. General framework and principles for weight of evidence assessment

This section provides a general framework and principles for weight of evidence assessment, including definitions of key concepts. Many scientific assessments involve weighing of evidence, although this may be implicit rather than explicit and is only sometimes described as ‘weight of evidence assessment’. The aim of this guidance is to make weight of evidence assessment more explicit and transparent, and to provide a general framework of principles and approaches which is applicable to all areas of EFSA's work. Account is taken of approaches already used by EFSA, by other EU and international organisations, and in the scientific literature.

2.1. Weight of evidence assessment and lines of evidence

WHO (2009) has defined weight of evidence assessment as ‘a process in which all of the evidence considered relevant for a risk assessment is evaluated and weighted’. A recent review by ANSES (2016) defines weight of evidence assessment as ‘the structured synthesis of lines of evidence, possibly of varying quality, to determine the extent of support for hypotheses’. Definitions and descriptions from a selection of other relevant publications are presented in Annex B, and reflect similar concepts to those of WHO and ANSES. The core of most definitions is that weight of evidence assessment is a process for integrating evidence to arrive at conclusions.

In practice, weighing2 of evidence may occur when estimating quantities, as well as when assessing hypotheses, and both are relevant to EFSA's work. Therefore, this document uses a broader definition, as follows:

Weight of evidence assessment is a process in which evidence is integrated to determine the relative support for possible answers to a scientific question.

The term ‘weight of evidence’ on its own refers to the extent to which evidence supports possible answers to a scientific question. This is what is assessed in a weight of evidence assessment, and it can be expressed qualitatively or quantitatively (discussed further in Section 2.3, below).

It is often useful to organise evidence into groups or categories, which are often referred to as lines of evidence. ANSES (2016) defines a line of evidence as ‘a set of relevant information of similar type grouped to assess a hypothesis’. Rooney et al. (2014) use the variant ‘streams of evidence’ which they describe as referring specifically to human data, animal data, and ‘other relevant data (including mechanistic or in vitro studies)’. This document simplifies the ANSES (2016) definition, replacing ‘relevant information’ with ‘evidence’ and reducing the emphasis on hypotheses, because weight of evidence assessment may be applied to quantities as well as hypotheses, as mentioned above. This results in the following general definition, which is compatible with other uses of the same term in the literature (see Annex B):

A line of evidence is a set of evidence of similar type.

Various terms have been used to refer to distinct elements of information within a line of evidence, including ‘studies’ and ‘pieces of evidence’. Piece of evidence is a more general term, as it could refer to a study (or to one of multiple outcomes of a study), or to other types of information including expert knowledge, experience, a model or even a single observation. In some cases, a line of evidence may comprise only a single piece of evidence.

Pieces of evidence may show varying degrees of similarity. There is no fixed rule on how much similarity is required within the same line of evidence. This is for the assessor(s) to decide, and depends on what they find useful for the purpose of the scientific assessment. For example, in some assessments, it might be sufficient to treat all human studies as a single line of evidence, whereas in other assessments it might be helpful to treat different types of human studies as separate lines of evidence.

The definition for line of evidence is broadly worded to accommodate different ways in which lines of evidence may contribute to answering a question. Different lines of evidence for the same question may be standalone, in the sense that each line of evidence offers an answer to the question without needing to be combined with other lines of evidence. It is important to distinguish these from complementary lines of evidence, which can only answer the question when they are combined. Multiple experiments measuring the same parameter are examples of standalone lines of evidence, whereas data on hazard and exposure are complementary lines of evidence for risk assessment because both are necessary and must be combined to assess risk. The distinction between complementary and standalone lines of evidence is important because it has practical implications in weight of evidence assessment (see Section 4). Note that a single question may be addressed by a combination of standalone and complementary lines of evidence.
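As an illustration of why the distinction matters in practice, the sketch below (Python, with invented numbers) contrasts the two cases. Standalone lines, each estimating the same parameter, can be combined by fixed‐effect inverse‐variance pooling, which is one common technique among several and is not prescribed by this guidance. Complementary lines, such as an exposure estimate and a health‐based reference value, only answer a risk question once combined, here as a simple exposure‐to‐reference ratio. The names `standalone`, `pool_inverse_variance` and `risk_ratio`, and all values, are hypothetical.

```python
import math
from typing import Dict, Tuple

# Hypothetical standalone lines: independent estimates of the SAME parameter,
# each given as (estimate, standard error), e.g. a half-life in days.
standalone: Dict[str, Tuple[float, float]] = {
    "experiment_1": (10.0, 2.0),
    "experiment_2": (12.0, 1.0),
    "experiment_3": (11.0, 2.0),
}


def pool_inverse_variance(lines: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Fixed-effect inverse-variance pooling of standalone lines.

    Each line could answer the question on its own; pooling simply gives
    more weight to the more precise lines.
    """
    weights = {name: 1.0 / se ** 2 for name, (_, se) in lines.items()}
    total = sum(weights.values())
    pooled = sum(weights[name] * est for name, (est, _) in lines.items()) / total
    return pooled, math.sqrt(1.0 / total)


def risk_ratio(exposure: float, reference_value: float) -> float:
    """Complementary lines: neither exposure nor hazard answers a risk question
    alone, so they are combined, here as exposure / health-based reference value
    (both assumed to be in the same units, e.g. mg/kg bw per day)."""
    return exposure / reference_value


print(pool_inverse_variance(standalone))  # pooled estimate and its standard error
print(risk_ratio(exposure=0.5, reference_value=2.0))  # exposure is a quarter of the reference
```

Note that removing any one standalone line still leaves an answer, whereas removing either complementary input leaves no answer at all, which is the practical difference the text describes.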

Assessors often refer to ‘data gaps’, where types or lines of evidence that would have been useful are lacking. These are easier to detect in regulatory assessments, where lists of required data are established in legislation or guidance. Although weight of evidence assessment is described in terms of evaluating the available evidence, it also takes account of data gaps, because when a particular type of evidence is absent, the contribution it could have made is also absent. How much this affects the assessment depends on the extent to which the available evidence can itself answer the question, or substitute for what is missing (e.g. by read‐across) (see also Section 4).

2.2. When to use weight of evidence approaches

In general, the purpose of weight of evidence assessment is to answer a scientific question, as implied in the preceding section. EFSA assessments address questions posed by their Terms of Reference. In some cases, a question in the Terms of Reference may be addressed directly, but in other cases, it is beneficial to divide the primary question into two or more subsidiary questions (EFSA, 2015b).

Weighing of evidence is involved, either explicitly or implicitly, wherever more than one piece of evidence is used to answer a question. Weight of evidence assessment is not needed for scientific questions where no integration of evidence is required.

Thus, a single scientific assessment may comprise one or many questions and none, some or all of those questions may require weight of evidence assessment.

Clarifying the questions posed by the Terms of Reference, deciding whether and how to subdivide them, and deciding whether they require weight of evidence assessment are part of the first stage of scientific assessment, often referred to as problem formulation. This may show that a question is relatively simple and can be addressed directly by a straightforward assessment. In many assessments, however, questions need to be subdivided to yield more directly answerable questions. In this manner, a hierarchy or tree of questions may be established. Assessment then starts at the bottom of the hierarchy: for each question at that level, the evidence is divided into lines of evidence, as far as is helpful, and then assessed, weighed and integrated to answer the question. Integration continues upwards through the question hierarchy following similar principles, until full integration is reached to answer the main question defined by the problem formulation.
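A question hierarchy of this kind can be represented as a tree and integrated from the bottom up. The minimal sketch below assumes, for illustration only, that each bottom‐level question has already been answered by weighing its own lines of evidence, and that its answer is expressed as a probability. The combination rule at each higher node (here a product, which assumes both subquestions must be answered ‘yes’ and are independent) is a hypothetical choice that assessors would need to justify case by case; `Question` and `integrate_upwards` are illustrative names.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Question:
    """A node in a hypothetical question hierarchy set up at problem formulation."""
    text: str
    subquestions: List["Question"] = field(default_factory=list)
    # Leaf questions only: probability of a 'yes' answer, assumed to come from
    # weighing and integrating that question's own lines of evidence.
    leaf_probability: Optional[float] = None
    # Higher-level questions only: rule for combining the subquestion answers.
    combine: Optional[Callable[[List[float]], float]] = None


def integrate_upwards(question: Question) -> float:
    """Answer the bottom of the hierarchy first, then integrate upwards."""
    if not question.subquestions:
        if question.leaf_probability is None:
            raise ValueError(f"No answer for leaf question: {question.text}")
        return question.leaf_probability
    answers = [integrate_upwards(sub) for sub in question.subquestions]
    return question.combine(answers)


# Hypothetical example: a risk question split into hazard and exposure subquestions.
main = Question(
    "Does substance X pose a risk to consumers?",
    subquestions=[
        Question("Is X hazardous at relevant doses?", leaf_probability=0.8),
        Question("Does exposure reach those doses?", leaf_probability=0.5),
    ],
    combine=math.prod,  # assumes independence and that both must be 'yes'
)
print(integrate_upwards(main))
```

The recursion mirrors the text: no higher‐level question is answered until all of its subquestions have been.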

In some cases, the Terms of Reference for an assessment pose open questions, for example, to review the state of science on a particular topic. These assessments also require weight of evidence assessment approaches, because their conclusions generally derive from weighing and integrating evidence.

2.3. Weight of evidence conclusions

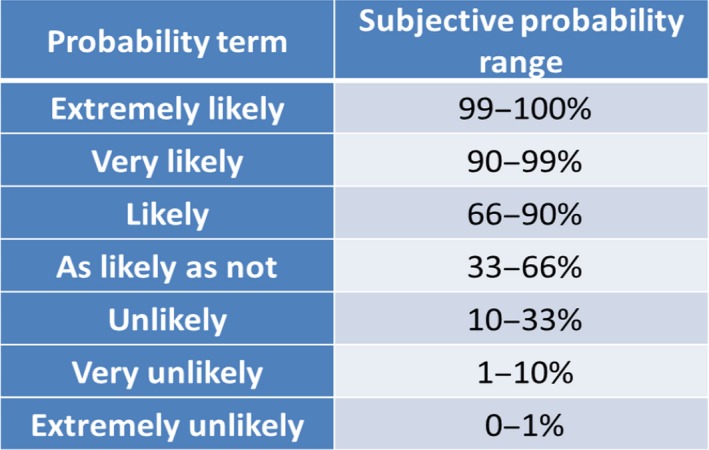

As implied in the definition above, the purpose of weighing evidence is to assess the relative support for possible answers to a scientific question. In some cases, it may be concluded that the evidence supports only one answer, with complete certainty. More usually, multiple answers remain possible, with differing levels of support. In such cases, the conclusion should state the range of answers that remain possible. It should not be reduced to a single answer unless a threshold level of support for conclusions has been agreed with decision‐makers, because setting such a threshold involves risk management considerations.

When weight of evidence assessment directly addresses the conclusion of a scientific assessment, its output will be part of the response to the Terms of Reference for the assessment. In general, decision‐makers need to know the range of possible answers to their questions, and how probable they are, because this may have important implications for decision‐making (EFSA, 2016a,b,c). Furthermore, it is important to express this quantitatively when possible, to avoid the ambiguity of qualitative expression (EFSA, 2012, 2016a,b,c).

When weight of evidence assessment addresses an intermediate question in a larger assessment, the possible answers and their relative support need to be taken into account in subsequent steps of the assessment. In these cases, relative support may be expressed either qualitatively or quantitatively, depending on what is convenient for use in the subsequent steps. Qualitative and quantitative approaches are discussed further in Section 3.
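The reporting rule described in this section can be sketched as follows, assuming the relative support for each possible answer has already been expressed as a number (for example a probability). The function name, the answers and the values are hypothetical. Normalising the support and returning every answer that is not ruled out keeps the full range visible; collapsing to a single answer happens only when a threshold, which is a risk management choice, is passed in explicitly.

```python
from typing import Dict, List, Optional


def summarise_conclusion(
    support: Dict[str, float],
    agreed_threshold: Optional[float] = None,
) -> List[str]:
    """Return the answers that remain possible, most supported first.

    The list is reduced to a single answer only when a threshold level of
    support has been agreed with decision-makers and the best answer meets it.
    """
    total = sum(support.values())
    probabilities = {answer: s / total for answer, s in support.items()}
    ranked = sorted(probabilities, key=probabilities.get, reverse=True)
    if agreed_threshold is not None and probabilities[ranked[0]] >= agreed_threshold:
        return ranked[:1]
    # Keep every answer with non-zero support: none of these is ruled out.
    return [answer for answer in ranked if probabilities[answer] > 0]


# Hypothetical relative support for three possible answers.
support = {"no effect": 0.15, "low effect": 0.60, "high effect": 0.25}
print(summarise_conclusion(support))                        # full range
print(summarise_conclusion(support, agreed_threshold=0.5))  # single answer
```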

2.4. Steps in weight of evidence assessment

This document considers the weight of evidence assessment as comprising three basic steps:

Assembling the evidence,

Weighing the evidence,

Integrating the evidence.

This corresponds to the three basic steps distinguished by Suter and Cormier (2011, their Figure A.1; see also Suter, 2016). The first step involves searching for and selecting evidence that is relevant for answering the question at hand, and deciding whether and how to group it into lines of evidence. The second step involves detailed evaluation and weighing of the evidence. In the third step, the evidence is integrated to arrive at conclusions, which involves weighing the relative support for possible answers to the question.
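To make the three steps concrete, the toy pipeline below separates them into three functions. It is a sketch under strong simplifying assumptions, not a method endorsed by this guidance: pieces of evidence are grouped by a single type label, each piece is weighted by the product of hypothetical 0 to 1 reliability and relevance scores, and integration reports the weighted share of evidence supporting the hypothesis. In a real assessment, weights would rest on documented expert judgement or a formal method (see Section 3), and consistency between lines would also be considered. All names and scores are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Piece:
    """One piece of evidence; field names and 0-1 scores are hypothetical."""
    name: str
    evidence_type: str   # used to group pieces into lines, e.g. "human", "animal"
    supports: bool       # does it support the hypothesis being assessed?
    reliability: float   # assessor's judgement, 0 (none) to 1 (fully reliable)
    relevance: float     # assessor's judgement, 0 (none) to 1 (fully relevant)


def assemble(pieces: List[Piece]) -> Dict[str, List[Piece]]:
    """Step 1: group the selected evidence into lines of evidence of similar type."""
    lines: Dict[str, List[Piece]] = defaultdict(list)
    for piece in pieces:
        lines[piece.evidence_type].append(piece)
    return dict(lines)


def weigh(piece: Piece) -> float:
    """Step 2: weight of one piece (multiplying the two scores is an assumption)."""
    return piece.reliability * piece.relevance


def integrate_lines(lines: Dict[str, List[Piece]]) -> float:
    """Step 3: relative support for the hypothesis, as the share of total weight
    carried by supporting evidence (one simple rule; consistency is not modelled)."""
    all_pieces = [p for line in lines.values() for p in line]
    total = sum(weigh(p) for p in all_pieces)
    in_favour = sum(weigh(p) for p in all_pieces if p.supports)
    return in_favour / total


evidence = [
    Piece("cohort_study", "human", True, reliability=0.5, relevance=1.0),
    Piece("rat_90_day", "animal", True, reliability=1.0, relevance=0.5),
    Piece("mouse_28_day", "animal", False, reliability=0.5, relevance=0.5),
]
lines = assemble(evidence)
print(sorted(lines), integrate_lines(lines))
```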

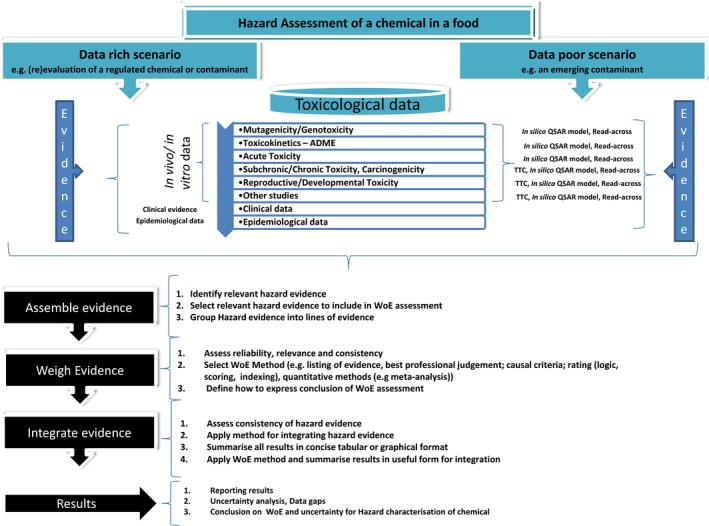

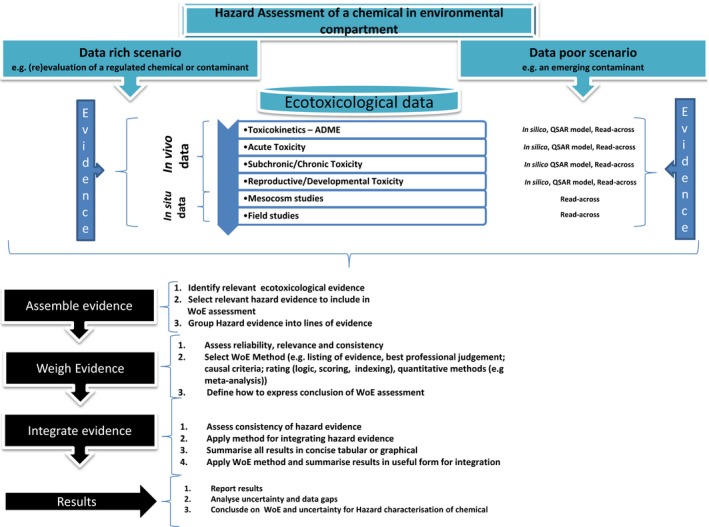

Figure A.1.

Generic decision tree for hazard identification and hazard characterisation of chemicals in food in data rich and data poor situations

Practical guidance for the three basic steps is provided in Section 4. Relevant considerations to be taken into account in the weighing and integrating steps are discussed in Section 2.5, while qualitative and quantitative methods for assessing those considerations are discussed in Section 3.

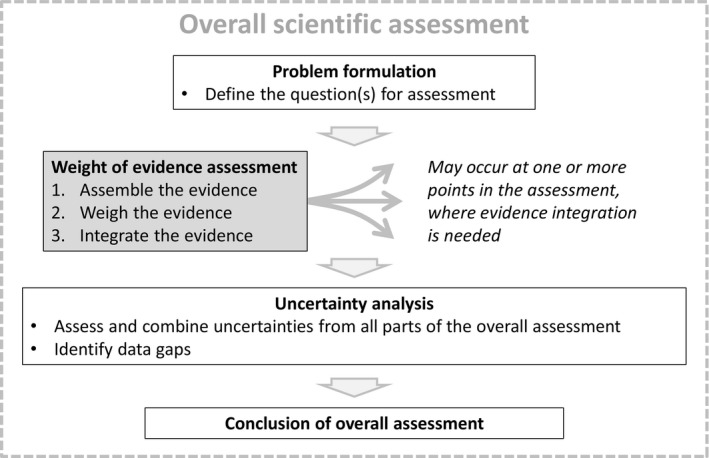

The three steps of weight of evidence assessment, described above, may occur at one or more points in the course of a scientific assessment, wherever integration of evidence is required, as illustrated in Figure 1. The question to be addressed by each weight of evidence assessment is defined by problem formulation, which is a preceding step in the scientific assessment as a whole. The output of weight of evidence assessment feeds either directly or indirectly into the overall conclusion of the scientific assessment. Although weight of evidence assessment itself addresses some of the uncertainty affecting the scientific assessment (see below), a separate step of uncertainty analysis is still needed to take account of any other uncertainties affecting the overall assessment. Some assessments will also include a step of sensitivity analysis or influence analysis, to identify which evidence and uncertainties have most influence on the conclusion.

Figure 1.

Diagrammatic illustration of weight of evidence assessment as a 3‐step process which may occur at one or more points in the course of a scientific assessment
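One simple form of the influence analysis mentioned above is to repeat the integration with each line of evidence left out in turn and see how far the conclusion moves. The sketch below assumes, purely for illustration, that the lines of evidence are standalone estimates of one quantity combined by inverse‐variance weighting; the data and the function names `pooled_estimate` and `leave_one_out_influence` are hypothetical, and other integration methods could be perturbed in the same way.

```python
from typing import Dict, Tuple

# Hypothetical lines of evidence: (estimate, standard error) of one quantity.
Lines = Dict[str, Tuple[float, float]]


def pooled_estimate(lines: Lines) -> float:
    """Inverse-variance weighted mean of the estimates."""
    weights = {name: 1.0 / se ** 2 for name, (_, se) in lines.items()}
    weighted_sum = sum(weights[name] * est for name, (est, _) in lines.items())
    return weighted_sum / sum(weights.values())


def leave_one_out_influence(lines: Lines) -> Dict[str, float]:
    """Shift in the pooled conclusion when each line is omitted in turn;
    larger absolute shifts indicate more influential evidence."""
    full = pooled_estimate(lines)
    return {
        name: pooled_estimate({n: v for n, v in lines.items() if n != name}) - full
        for name in lines
    }


lines = {"study_a": (10.0, 1.0), "study_b": (10.0, 1.0), "study_c": (16.0, 1.0)}
influence = leave_one_out_influence(lines)
print(max(influence, key=lambda name: abs(influence[name])))  # → study_c
```

Here the outlying line shifts the pooled value by 2 units when omitted, twice as much as either of the other two, so it would be flagged as the most influential evidence.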

Any part of the overall assessment may be refined iteratively, when necessary, by returning from later steps to earlier steps, depending on which steps it is most useful to refine.

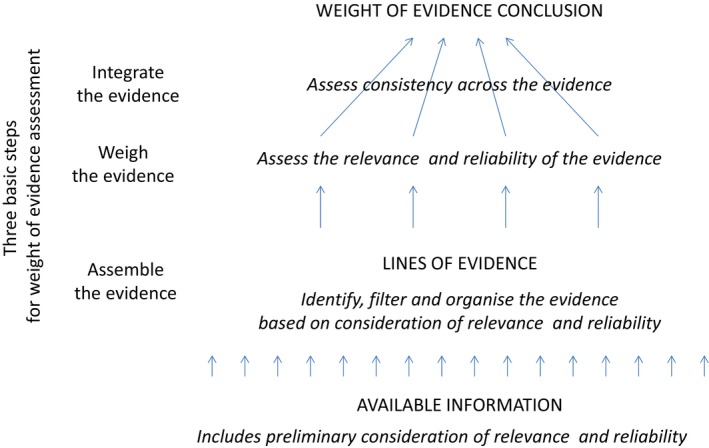

2.5. Key considerations for weighing evidence

Reliability, relevance and consistency are mentioned in many publications on weight of evidence assessment (see Annex B). These can be seen as three basic considerations in the weight of evidence assessment: the quality of the evidence (reliability), how applicable it is to the question of interest (relevance) and how consistent it is with other evidence for the same question (consistency). How these three concepts relate to one another, to the three basic steps of weight of evidence assessment and to the weight of evidence conclusion is illustrated in Figure 2. Note that relevance and reliability may be considered in both the first and second steps. First, relevance is considered when identifying evidence, and both relevance and reliability may be considered when selecting which of the identified evidence to include in the assessment (sometimes referred to as screening or filtering). However, the selected evidence will still vary in both relevance and reliability, and this is taken into account in the second step, when weighing the evidence.

Figure 2.

Relationship of relevance (including biological relevance), reliability and consistency to the three basic steps of weight of evidence assessment and to the conclusion for a weight of evidence question

The present document defines reliability, relevance and consistency, in terms of their contributions to the weight of evidence assessment, as illustrated in Figure 2:

Reliability is the extent to which the information comprising a piece or line of evidence is correct, i.e. how closely it represents the quantity, characteristic or event that it refers to. This includes both accuracy (degree of systematic error or bias) and precision (degree of random error).

Relevance is the contribution a piece or line of evidence would make to answering a specified question, if the information comprising the evidence were fully reliable. In other words, it reflects how close the quantity, characteristic or event that the evidence represents is to the quantity, characteristic or event that is required in the assessment. This includes biological relevance (EFSA, 2017) as well as relevance based on other considerations, e.g. temporal, spatial or chemical.

Consistency is the extent to which the contributions of different pieces or lines of evidence to answering the specified question are compatible.

These definitions are compatible with those found in other publications relating to weight of evidence assessment (e.g. ECHA, 2010; SCENIHR, 2012; Vermeire et al., 2013). Different types of ‘contribution’ are discussed further below.

Other publications mention additional considerations relevant to weight of evidence assessment including, for example, quality, applicability, coherence, risk of bias, specificity, biological concordance or plausibility, biological gradient and many others. Some of these are synonyms for reliability, relevance or consistency, some refer to combinations of reliability and relevance, and others refer to specific types of reliability, relevance or consistency that are important for particular areas of assessment (see Annex B). For example, in some approaches for assessing reliability, emphasis is placed on particular aspects (e.g. conformance to GLP) with less consideration of other key aspects of reliability that should also be assessed, such as bias (Moermond et al., 2017). The reasons for giving particular emphasis to reliability, relevance and consistency are that they are generic considerations, applicable to every type of assessment, with defined relationships to the three basic steps of weight of evidence assessment, and a defined relationship to the weight of evidence conclusion, as illustrated in Figure 2. This makes them useful to assessors as a conceptual framework for identifying specific considerations relevant to particular assessments, and for assessing how they combine to determine the weight of evidence conclusion. How this can be applied in practice is discussed further in Section 4.

2.6. Relation of weight of evidence assessment and uncertainty

Weight of evidence assessment and uncertainty are closely related. For example, SCENIHR (2012) state that ‘strength of evidence is inversely related to the degree of uncertainty’, while Suter and Cormier (2011) state that ‘the weight of the body of evidence, based on the combined weights of individual pieces of evidence, may be used to express confidence or uncertainty in the results’.

Weight of evidence assessment is defined above as a process which determines the relative support for possible answers to a scientific question. EFSA (2016a,b,c) defines uncertainty as ‘a general term referring to all types of limitations in available knowledge that affect the range and probability of possible answers to an assessment question’. Answers may refer to alternative hypotheses or estimates, and probability is one way of expressing relative support for possible answers. Thus, the weight of evidence conclusion for a question and the uncertainty of the answer can be expressed in identical form: the range of possible answers and their relative degree of support or probability. This is explicit in meta‐analysis, an evidence integration method producing conclusions in the form of estimates with confidence intervals, which express uncertainty.
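The point that a meta‐analytic conclusion is simultaneously a weight of evidence conclusion and an expression of uncertainty can be illustrated with a minimal sketch of fixed‐effect, inverse‐variance pooling. The study estimates and standard errors are hypothetical, and the normal approximation used for the 95% interval is an assumption of this example rather than a recommendation of this guidance.

```python
import math


def fixed_effect_meta(estimates, std_errors, z=1.959964):
    """Pool study estimates by inverse-variance weighting.

    Returns (pooled, lower, upper): the pooled estimate and an approximate
    95% confidence interval under a normal approximation.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    # More precise studies (smaller standard error) receive more weight.
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled - z * pooled_se, pooled + z * pooled_se


# Hypothetical effect estimates from three studies of the same quantity.
est, lo, hi = fixed_effect_meta([0.30, 0.50, 0.40], [0.10, 0.20, 0.10])
print(f"pooled = {est:.3f}, 95% CI = ({lo:.3f}, {hi:.3f})")
```

The pooled value expresses the integrated answer, while the interval expresses the relative support for a range of possible answers, which is the sense in which the two can take identical form.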

However, expression of uncertainty for the conclusion of the scientific assessment as a whole should additionally include any uncertainties associated with the weight of evidence process itself. This may include uncertainties regarding, for example, the selection of evidence, assessment of reliability, relevance and consistency, and choice of weight of evidence methods. These should be taken into account by uncertainty analysis following the weight of evidence assessment (as indicated in Figure 1 above), and may modify the range and probability of answers to some degree.

Consistent with this, each of the three basic considerations in the weight of evidence assessment may be expressed in terms of uncertainty:

the reliability of a piece or line of evidence can be expressed as the uncertainty of that evidence itself (i.e. how different the evidence might be if the information comprising it were correct);

the relevance of a piece or line of evidence can be expressed as the uncertainty that would be associated with extrapolating from fully reliable information, of the type provided by that evidence, to its contribution to answering the weight of evidence question;

limitations in consistency between pieces or lines of evidence add to uncertainty about the answer to the weight of evidence question. In principle, different pieces or lines of evidence for the same question should be consistent, if allowance is made for their reliability and relevance (this is discussed further in Section 4.4).
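The three uncertainty interpretations above can be sketched numerically under a strong simplifying assumption: that the uncertainty due to limited reliability and the uncertainty of extrapolation (relevance) for each line of evidence can be expressed as independent variances that simply add. The class and function names, the variance values and the use of Cochran's Q as a consistency check are all hypothetical choices for illustration, not methods prescribed here.

```python
from dataclasses import dataclass


@dataclass
class LineOfEvidence:
    name: str
    estimate: float         # what the evidence indicates for the question
    reliability_var: float  # uncertainty of the evidence itself
    relevance_var: float    # uncertainty of extrapolating it to the question

    @property
    def total_var(self) -> float:
        # Hypothetical simplification: independent variances add.
        return self.reliability_var + self.relevance_var


def integrate(lines):
    """Inverse-variance integration plus Cochran's Q as a consistency check."""
    weights = [1.0 / ln.total_var for ln in lines]
    pooled = sum(w * ln.estimate for w, ln in zip(weights, lines)) / sum(weights)
    # Q much larger than len(lines) - 1 flags inconsistency that the stated
    # reliability and relevance uncertainties do not explain.
    q = sum(w * (ln.estimate - pooled) ** 2 for w, ln in zip(weights, lines))
    return pooled, q


lines = [
    LineOfEvidence("in vivo", 2.0, reliability_var=0.25, relevance_var=0.0),
    LineOfEvidence("in vitro", 3.0, reliability_var=0.05, relevance_var=0.20),
]
pooled, q = integrate(lines)
```

In this example the in vitro line is more reliable but less relevant, so both lines end up with the same total uncertainty and equal weight.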

Thus weight of evidence assessment could be regarded as contributing to uncertainty analysis, addressing the part of uncertainty that relates to limitations in reliability, relevance and consistency of the evidence. Uncertainty which is addressed in the weight of evidence assessment does not need to be re‐analysed in the uncertainty analysis, but may be added to (see Section 4.5).

Probability is not the only way to express relative support or uncertainty. It can also be expressed qualitatively, and this is essential for any uncertainties or aspects of weight of evidence assessment that cannot be quantified (see Section 5.10 of EFSA, 2016a,b,c). As explained above and by EFSA (2016a,b,c), expressing the probability of possible answers is important for the conclusions of a scientific assessment, but need not apply to earlier steps in the weight of evidence process.

2.7. Relation of weight of evidence assessment and variability

It is important also to consider how the weight of evidence assessment relates to variability. Variability is defined by EFSA (2016a,b,c) as ‘heterogeneity of values over time, space or different members of a population, including stochastic variability and controllable variability’. Note that ‘values’ could refer to values on a quantitative scale, or to alternative qualitative descriptors, and that ‘population’ is not restricted to biological populations but may also refer to other entities (e.g. variability in temperature at different points in time and space).

Variability is often important in a scientific assessment; e.g. variability in the occurrence of chemicals in food and in food consumption is important in chemical exposure assessment. This needs to be dealt with when defining the questions for assessment, so that they refer to specific descriptors or summaries of the variable quantity, such as the average or 95th percentile exposure. If weight of evidence assessment were used as part of the exposure assessment, for example to integrate occurrence data from different countries, the reliability, relevance and consistency of the different pieces or lines of evidence regarding the variability of occurrence would be assessed. Thus, variability can be the subject of a weight of evidence assessment, rather than a contributor to it.
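To show what it means for a question to refer to a summary of a variable quantity, the sketch below computes individual exposures and then reports their mean and 95th percentile. All data are hypothetical, and the nearest‐rank percentile is only one of several conventions; it is chosen here for simplicity.

```python
import math
import statistics


def percentile_nearest_rank(values, p):
    """Nearest-rank percentile (one of several conventions; an assumption here)."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical per-person data: occurrence (mg/kg food),
# consumption (kg food/day) and body weight (kg).
occurrence = [0.10, 0.20, 0.15, 0.30, 0.05, 0.25, 0.12, 0.18, 0.22, 0.40]
consumption = [0.5, 0.3, 0.4, 0.2, 0.6, 0.3, 0.5, 0.4, 0.3, 0.2]
body_weight = [70, 60, 80, 65, 75, 55, 90, 70, 62, 68]

# Exposure varies between individuals (mg/kg bw per day).
exposure = [c * q / bw for c, q, bw in zip(occurrence, consumption, body_weight)]

# The assessment question targets specific summaries of that variability.
mean_exposure = statistics.mean(exposure)
p95_exposure = percentile_nearest_rank(exposure, 95)
```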

Variability in data is a combination of real variability of the quantity being measured (e.g. between individuals) and variability of the measurement process (measurement error, a form of uncertainty). Variability in data due to measurement error should be taken into account when assessing reliability. Variability between results reported by different studies might reflect differences in the reliability of those studies or differences in their relevance for the assessment. Such differences may lead to apparent inconsistencies in data that need to be considered when integrating evidence (Section 4.4).
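The distinction between real variability and measurement error can be sketched with a simple method‐of‐moments decomposition. The sketch assumes that measurement errors are independent of the true values and have a known variance (for example, estimated from replicate analyses); the data and error variance are hypothetical.

```python
import statistics


def true_variance(observed, measurement_error_var):
    """Estimate real variability: observed variance = true variance + error variance.

    Assumes independent errors with known variance; the result is truncated at
    zero because a negative variance estimate is not meaningful.
    """
    return max(statistics.variance(observed) - measurement_error_var, 0.0)


observed = [4.1, 5.3, 3.8, 6.0, 4.9, 5.6]  # hypothetical measured values
error_var = 0.2                              # hypothetical, from replicate measurements

total = statistics.variance(observed)        # real variability plus measurement error
real = true_variance(observed, error_var)    # part attributable to real variability
```

Only the measurement error component is treated as a matter of reliability; the remaining variance describes the quantity itself.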

3. Overview of qualitative and quantitative methods for weight of evidence assessment

3.1. Examples of weight of evidence approaches

3.1.1. Classification of weight of evidence approaches

Several reviews of weight of evidence approaches have been published, notably by Chapman et al. (2002), Weed (2005), Linkov et al. (2009), Lorenz et al. (2013) and ANSES (2016).

Instead of conducting another review of weight of evidence approaches, the SC scrutinised the existing reviews to identify approach(es) which could be useful for classifying weight of evidence methods suitable for ecotoxicological assessments. A classification proposed by Linkov et al. (2009) has been taken as a starting point (with modifications, see below) as it covers a broad range of methods with contrasting levels of complexity; it enables methods to be grouped according to whether they are qualitative and/or quantitative; and it can also capture weight of evidence methods identified in the more recent reviews. It is important to stress that the classification here is illustrative rather than prescriptive. Linkov et al. (2009) distinguish the following types of weight of evidence assessment:

Listing evidence: Presentation of individual lines of evidence without attempt at integration

Best professional judgement: Qualitative integration of multiple lines of evidence

Causal criteria: A criteria‐based methodology for determining cause and effect relationships

Logic: Standardised evaluation of individual lines of evidence based on qualitative logic models

Scoring: Quantitative integration of multiple lines of evidence using simple weighting or ranking

Indexing: Integration of lines of evidence into a single measure based on empirical models

Quantification: Integrated assessment using formal decision analysis and statistical methods.

In the Linkov et al. (2009) classification system, approaches are grouped by the degree to which they are quantitative. The least quantitative approaches are categorised as ‘Listing evidence’, while the most quantitative ones fall within the category ‘Quantification’ and are based on statistical approaches or on formal decision‐analytical tools. Other categories correspond to intermediate situations.

The current guidance does not formally distinguish between the categories ‘listing evidence’ and ‘best professional judgement’. Linkov et al. (2009) suggest that approaches described as ‘listing evidence’ do not attempt to integrate lines of evidence together. Rather, lines of evidence ‘are simply presented, although the assessor will at times make claims that the weight of evidence assessment points to specific conclusions’ (Linkov et al., 2009). In ‘listing evidence’, it is implicit that integration of evidence must take place in order to reach conclusions, although the integration may not be based on a formal method. ‘Listing evidence’ is therefore considered here as being synonymous with ‘best professional judgement’ rather than being a separate category.

In addition, the current guidance groups together the Linkov et al. (2009) categories ‘Logic’, ‘Scoring’ and ‘Indexing’ as they are approaches involving rating which share many common characteristics. These three categories are considered here as a single category named ‘Rating’. Thus, the SC considers four categories of weight of evidence assessment methods: best professional judgement, causal criteria, rating and quantification. Examples of these approaches are listed below for each category. Some of them cover all three basic steps of the general weight of evidence process, while others are more specific and focus on one or two steps. Approaches restricted to problem formulation were considered as outside the scope of this guidance, and are not included.
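The regrouping described above can be written down as a simple lookup table. This is only a compact restatement of the text (with categories kept in order of increasing quantification); the variable names are hypothetical.

```python
# Linkov et al. (2009) categories mapped onto the four categories used in this
# guidance, listed from least to most quantitative.
LINKOV_TO_GUIDANCE = {
    "Listing evidence": "Best professional judgement",
    "Best professional judgement": "Best professional judgement",
    "Causal criteria": "Causal criteria",
    "Logic": "Rating",
    "Scoring": "Rating",
    "Indexing": "Rating",
    "Quantification": "Quantification",
}

# dict preserves insertion order, so this keeps the ordering above.
GUIDANCE_CATEGORIES = list(dict.fromkeys(LINKOV_TO_GUIDANCE.values()))

# Categories referred to collectively as qualitative approaches.
QUALITATIVE = {"Best professional judgement", "Causal criteria"}
```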

The SC does not aim to cover here all existing approaches, but rather to give a brief overview of different types of approaches and to provide key references. Examples are briefly presented in the following sections (note that these are included only to illustrate the categories and are not intended as examples of best practice). Categories described in Sections 3.1.2–3.1.3 (best professional judgement and causal criteria) are collectively referred to in this guidance as qualitative approaches. Several criteria are then presented in Section 3.2 to help risk assessors to choose among weight of evidence approaches. These approaches have been used in different EFSA scientific assessments (see for example Annex C). Note that assessments may use a combination of more than one of these categories.

3.1.2. Category ‘Best professional judgement’

In this category, no formal method is used for evidence integration. Instead, the listed pieces of evidence are used to form a conclusion by professional opinion via a discussion of the findings. The approaches of this category simply list pieces of evidence in text or in tables. The origin of the evidence depends on the approach used for assembling evidence, for example:

Several methods of evidence synthesis which do not involve quantitative integration could be classified under this category. These include extensive literature searches (EFSA, 2010a; Higgins and Green, 2011), systematic maps (CEE, 2016; James et al., 2016), and non‐quantitative systematic reviews (i.e. those lacking a quantitative data synthesis step) (EFSA, 2010a; Higgins and Green, 2011).

Evidence can be directly provided by applicants in reports and/or datasets rather than being selected by risk assessors.

Clear documentation of the discussion is important to ensure transparency in how decisions were reached. Several examples are given in the review of Linkov et al. (2009), notably Staples et al. (2004).

3.1.3. Category ‘Causal criteria’

Approaches of this category provide a structure based on explicit criteria to evaluate relationships between cause and effect from one or several lines of evidence. The Bradford Hill considerations (Hill, 1965) are widely used, especially in epidemiology. They are often seen as the minimal conditions needed to establish a causal relationship between two items, and are frequently used in epidemiological studies to assess the extent of supporting evidence on causality. They are also used, in modified form, for weight of evidence assessment in mode of action analysis (Meek et al., 2014). Several variants have been proposed in the literature. For example, Becker et al. (2015) proposed a template based on modified Bradford Hill considerations for weight of evidence assessment of adverse outcome pathways.

3.1.4. Category ‘Rating’

This category includes a variety of frameworks that involve rating of evidence. Examples include GRADE (Guyatt et al., 2011), WHO‐IARC (IARC, 2006), WCRF/AICR (2007), OSHA (2016) and the guidance used to produce NTP Monographs (NTP, 2015; OHAT, 2015). Guidance to assess and integrate evidence is based on several factors, often derived from the Bradford Hill considerations. However, ‘Rating’ goes beyond the approaches of the category ‘Causal criteria’ in that more guidance is provided for appraising and integrating evidence.

These approaches usually relate to the second step of the weight of evidence process, including the appraisal of individual studies and rating confidence in the individual lines of evidence (e.g. ‘high confidence’, ‘sufficient evidence’). Some of them also provide matrix‐based tools for integrating lines of evidence to reach hazard identification conclusions (e.g. WHO‐IARC; OHAT; OSHA, 2016). None of these approaches uses formal probabilistic techniques, but it is possible to combine application of the structured framework guidance with a more quantitative presentation of conclusions.
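The idea of a matrix for integrating rated lines of evidence can be sketched as a lookup table. The four confidence levels and every entry in the matrix below are hypothetical and do not reproduce the matrices of WHO‐IARC, OHAT, OSHA or any other framework.

```python
# Hypothetical ordinal scale for confidence in a line of evidence.
LEVELS = ["very low", "low", "moderate", "high"]

# Hypothetical integration matrix: rows give the confidence in line A,
# columns the confidence in line B; entries are the integrated conclusion.
MATRIX = [
    ["weak", "weak", "limited", "limited"],
    ["weak", "limited", "limited", "moderate"],
    ["limited", "limited", "moderate", "strong"],
    ["limited", "moderate", "strong", "strong"],
]


def integrate_ratings(line_a: str, line_b: str) -> str:
    """Look up the integrated conclusion for two rated lines of evidence."""
    return MATRIX[LEVELS.index(line_a)][LEVELS.index(line_b)]
```

A real framework would also need rules for the direction of each line (supporting or opposing), which this sketch omits.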

Some of these approaches (e.g. GRADE) are designed to be flexible for use in a variety of disciplines and able to be applied under different time and resource constraints in situations corresponding to different levels of urgency (Thayer and Schünemann, 2016).

In EFSA, the scheme presented in the ‘Guidance on a harmonised framework for pest risk assessment and the identification and evaluation of pest risk management options’ (EFSA, 2010a) belongs to this category.

3.1.5. Category ‘Quantification’

This category covers a large diversity of approaches that can be used to integrate evidence into lines of evidence and/or to integrate different lines of evidence in order to reach a general conclusion.

This category includes standard statistical models such as fixed‐effect and random‐effect linear and generalised linear models. These are commonly used for meta‐analysis. A typical application is the estimation of mean effect sizes, which can be interpreted as summary estimates of a quantity based on statistical integration of evidence from multiple primary studies. These statistical models may also be used for meta‐regression to explain the variability between studies as a function of explanatory variables, for example, population characteristics or study quality issues. They are able to describe uncertainties through confidence intervals and probability distributions. Other types of statistical methods (e.g. Bayesian methods) are also useful for synthesising multiple sources of evidence.
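As one concrete instance of a random‐effect model, the sketch below implements the DerSimonian–Laird estimator, a common (but not the only) way of estimating between‐study variance in meta‐analysis. The input values in the usage lines are hypothetical.

```python
import math


def random_effects_dl(estimates, std_errors):
    """DerSimonian-Laird random-effects meta-analysis.

    Returns (pooled, se, tau2): the pooled mean effect, its standard error
    and the estimated between-study variance.
    """
    k = len(estimates)
    w = [1.0 / s ** 2 for s in std_errors]
    fixed = sum(wi * y for wi, y in zip(w, estimates)) / sum(w)
    # Cochran's Q measures dispersion of study results around the fixed-effect mean.
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, estimates))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Each study's weight now includes the between-study variance.
    w_star = [1.0 / (s ** 2 + tau2) for s in std_errors]
    pooled = sum(wi * y for wi, y in zip(w_star, estimates)) / sum(w_star)
    return pooled, math.sqrt(1.0 / sum(w_star)), tau2


# Hypothetical, clearly heterogeneous study results.
pooled, se, tau2 = random_effects_dl([0.0, 1.0, 2.0], [0.1, 0.1, 0.1])
```

When the studies agree closely, `tau2` falls to zero and the result coincides with the fixed‐effect estimate.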

In addition to statistical methods, other approaches have been proposed, especially machine learning, in silico tools and multicriteria analysis. Linkov et al. (2015) consider that multicriteria decision analysis can be used as a proxy for the Bayesian approach to weight of evidence assessment when model formulation is restricted by data limitations.
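A minimal additive multicriteria sketch is shown below. It treats possible answers to the question as the alternatives and lines of evidence as the criteria, with weights standing for judged reliability and relevance. The criteria, weights and scores are hypothetical, and the simple weighted sum is only one of many MCDA techniques.

```python
def weighted_sum(weights, scores):
    """Simple additive MCDA: normalised sum of weight * score per alternative."""
    total_w = sum(weights.values())
    return {
        alternative: sum(weights[c] * s for c, s in per_criterion.items()) / total_w
        for alternative, per_criterion in scores.items()
    }


# Hypothetical weights for three lines of evidence.
weights = {"field survey": 0.5, "lab toxicity": 0.3, "modelling": 0.2}

# Hypothetical scores (0-1) for how strongly each line supports each answer.
scores = {
    "effect": {"field survey": 0.8, "lab toxicity": 0.9, "modelling": 0.4},
    "no effect": {"field survey": 0.2, "lab toxicity": 0.1, "modelling": 0.6},
}
result = weighted_sum(weights, scores)
```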

When a quantitative model is used for weight of evidence assessment, several authors recommend performing a sensitivity analysis to study the stability of the main conclusions to the model assumptions, e.g. to the model equations, or to the parameter values (Borenstein et al., 2009; Linkov et al., 2011).
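A simple form of such sensitivity analysis is leave‐one‐out re‐estimation, in which each study is omitted in turn to see how much the conclusion depends on it. The sketch uses fixed‐effect pooling and hypothetical data; other sensitivity analyses (e.g. varying model equations or priors) follow the same idea.

```python
def pooled_fixed(estimates, std_errors):
    """Fixed-effect (inverse-variance) pooled estimate."""
    w = [1.0 / s ** 2 for s in std_errors]
    return sum(wi * y for wi, y in zip(w, estimates)) / sum(w)


def leave_one_out(estimates, std_errors):
    """Re-estimate the pooled effect with each study omitted in turn."""
    results = []
    for i in range(len(estimates)):
        est = estimates[:i] + estimates[i + 1:]
        se = std_errors[:i] + std_errors[i + 1:]
        results.append(pooled_fixed(est, se))
    return results


estimates = [0.30, 0.50, 0.40, 1.20]   # hypothetical; the last study looks discrepant
std_errors = [0.10, 0.20, 0.10, 0.30]
full = pooled_fixed(estimates, std_errors)
loo = leave_one_out(estimates, std_errors)
```

A large shift when a single study is dropped indicates that the conclusion is sensitive to that study.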

Examples of quantitative approaches and several key references are listed below:

Statistical methods for integrating data provided by several studies sharing similar characteristics (classic fixed‐effect and random‐effect models used in meta‐analysis, and Bayesian hierarchical models). Many textbooks and methodological papers are available on these methods, for example Borenstein et al. (2009) on classic techniques, and Sutton and Abrams (2001) and Higgins et al. (2015) on Bayesian methods in the context of meta‐analysis.

Statistical methods for integrating different types of studies in order to allow decisions based on all available evidence and to analyse uncertainty (Small, 2008; Turner et al., 2009; Gosling et al., 2013).

Quantitative expert judgement including multicriteria decision analysis for integrating different types of studies (Linkov et al., 2011, 2015).

Machine learning techniques (Li and Ngom, 2015).

In silico tools including QSAR, PBTK‐TD (ECHA, 2016).
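To illustrate how Bayesian methods express relative support for possible answers, the sketch below updates prior probabilities for two competing answers with two lines of evidence using Bayes' theorem. The prior, the likelihoods and the assumption that the lines are conditionally independent are all hypothetical.

```python
def posterior(prior, likelihoods):
    """Update prior probabilities of competing answers with several lines of evidence.

    prior: {answer: P(answer)}
    likelihoods: list of {answer: P(evidence | answer)}, assumed conditionally
    independent given the answer.
    """
    post = dict(prior)
    for lik in likelihoods:
        post = {h: p * lik[h] for h, p in post.items()}
        total = sum(post.values())
        post = {h: p / total for h, p in post.items()}  # renormalise
    return post


prior = {"hazard": 0.5, "no hazard": 0.5}
evidence = [
    {"hazard": 0.8, "no hazard": 0.3},  # hypothetical line of evidence 1
    {"hazard": 0.6, "no hazard": 0.4},  # hypothetical line of evidence 2
]
result = posterior(prior, evidence)
```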

3.2. Choosing weight of evidence methods

A challenge when planning a weight of evidence assessment is to determine which assessment method(s) to select, given the variety of different methods available. A single easy‐to‐use weight of evidence method that covers all the basic steps of the weight of evidence process and enables transparent quantification of uncertainty may not be available. A pragmatic approach is therefore recommended for identifying the most suitable method, or combination of methods, for the weight of evidence assessment. A list of criteria for comparing weight of evidence methods is suggested in Table 1, to assist in evaluating the relative strengths and weaknesses of the different methods. This list is not exhaustive but is based on discussions during the development of the current guidance; the criteria have not been formally tested and are not intended to be prescriptive or mandatory, but rather to aid the justification and selection of methods. The criteria may also be helpful for transparently recording and reporting the decision‐making process used for weight of evidence method selection, in keeping with EFSA's requirement for transparency in the conduct and reporting of scientific assessments (EFSA, 2006).

Table 1.

Criteria for assessing the relative strengths and weaknesses of weight of evidence methods

| Criterion | Key considerations |
|---|---|
| Time needed | Weight of evidence methods that can be conducted quickly would be preferable where urgent weight of evidence assessments are required. However, rapid methods risk sacrificing scientific rigour. When estimating the time required for a particular weight of evidence method, consideration should be given to the availability of expertise, since this could influence the time required for a weight of evidence assessment |
| Amount and nature of the evidence | The amount of evidence and its type (e.g. experimental, expert knowledge, surveys, qualitative, quantitative or combination) may affect which methods of weight of evidence assessment could be applied: the choice among these would then be determined by other criteria in this table. The similarity of the available studies may also be relevant, e.g. in deciding whether meta‐analysis is an option |
| Availability of guidance | Guidance on the weight of evidence method should be readily available in the public domain and, ideally, should be endorsed, e.g. through peer‐review and/or wide acceptance. Guidance should document the rationale of the method, the full process, and how to interpret the results. Ideally, access to help and support facilities should be readily available (e.g. any relevant tools such as tutorials, software programs or modules). Availability of guidance is important both for the conduct and the critical appraisal of weight of evidence assessments. Weight of evidence methods that lack adequate guidance would rate poorly on this criterion |
| Expertise needed | Weight of evidence methods are likely to vary in the level of technical skill required to conduct them. Some quantitative methods, for example, may require specific skills in statistics and/or programming. The expertise requirement should be considered in relation to availability of expertise and tools and, if necessary, whether available resources would support the outsourcing of expertise or provision of training. The level of expertise required has implications both for the conduct and the critical appraisal of weight of evidence assessments |
| Transparency and reproducibility | Transparency and reproducibility are fundamental principles required by EFSA in its scientific assessments. Transparency should apply to all parts of the weight of evidence method, meaning that it should be possible to follow clearly how the input data for the assessment are analysed to produce the conclusions. Reproducibility is defined such that consistent results should be expected if the same method were to be repeated using the same input data (but note that results are unlikely to be identical, dependent on the degree to which expert opinion is involved) |
| Variability and uncertainty | Weight of evidence methods should, ideally, explicitly report and analyse both variability and uncertainty at all steps of the assessment, and propagate them appropriately through the assessment. Quantitative expression of variability and uncertainty is preferable to qualitative expression. Careful consideration may be needed to ensure that the weight of evidence method can include all relevant sources of variability and uncertainty |
| Ease of understanding for assessors and risk managers | Weight of evidence methods are likely to vary in how easy they are to understand by non‐specialists, and this may be related to the expertise needed, as well as the availability of adequate guidance. It will be beneficial if the principles of the methods chosen can be readily understood by assessors and risk managers |

As with any type of evidence synthesis, weight of evidence methods face a potential trade‐off between what would be ideal in terms of resource requirement (i.e. rapid, cheap methods) and scientific rigour (i.e. methods that transparently display uncertainty at all steps of the weight of evidence process). Careful consideration will be needed early on in the planning process (in problem formulation) to ensure that adequate resources (time, staff expertise) are available to achieve the desired level of scientific rigour. In EFSA weight of evidence assessments which have prespecified and fixed resources, the criteria in Table 1 could be used to judge the optimal scientific rigour that could be achieved within the available resources. Alternatively, if resource availability for a weight of evidence assessment is negotiable, or if a weight of evidence assessment is at a preliminary scoping phase, these criteria may be helpful for estimating the resource needs for the assessment.

The criteria in Table 1 should be considered together by assessors when planning weight of evidence assessment. This is because the strengths and weaknesses of weight of evidence methods are multidimensional, and individual criteria alone may not be able to capture important trade‐offs, e.g. between resource availability and scientific rigour. Note that the criteria do not necessarily have equal importance: their relative importance may be discussed on a case‐by‐case basis when planning each weight of evidence assessment. The criteria in Table 1 are not exhaustive. Other criteria which may be useful include the strength and scope of the theoretical basis for a method and the extent to which the output of the method is in a form which can be tested.

4. Practical guidance for conducting weight of evidence assessment

This section contains practical guidance for applying weight of evidence approaches within EFSA scientific assessments. Assessors should choose the specific approaches that are best suited to the needs, time/resources available and context of their assessments.

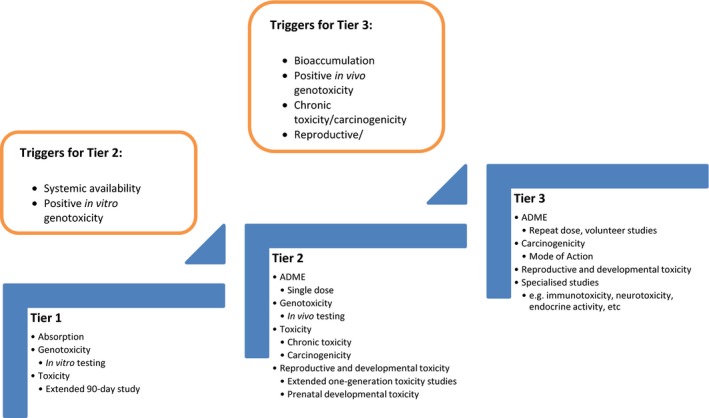

Three types of assessment are distinguished, which require different approaches:

assessments where the approach to integrating evidence is fully specified in a standardised assessment procedure;

case‐specific assessments, where there is no pre‐specified procedure and assessors need to choose and apply approaches on a case‐by‐case basis;

emergency procedures, where the choice of approach is constrained by unusually severe limitations on time and resources.

Standardised assessment procedures have been established in many areas of EFSA's work, especially for regulated products. Standardised procedures are generally defined in documents, e.g. EU regulations or EFSA guidance documents. They specify what questions should be addressed, what evidence is required, and what methods of assessment should be applied to it. They generally include standardised elements that are assumed to provide adequate cover for uncertainty (EFSA, 2016a,b,c).

Where a standard assessment involves questions that require integration of evidence, the methods for doing this will be specified in the standard procedure. For example, in human health risk assessment of chemicals in food, the outcome may often be based on one of the available studies, which is considered to provide the highest level of protection for the consumer. While not generally thought of as weight of evidence, this is a procedure for integration which, after considering all the evidence, in effect gives all the weight to a single study (sometimes referred to as the critical study).
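The critical‐study procedure can be viewed as an extreme weighting scheme in which one study receives all the weight. The sketch below assumes, for illustration only, that the most protective study is the one with the lowest point of departure; the study names and values are hypothetical.

```python
def critical_study_weights(points_of_departure):
    """Give all weight to the study with the lowest point of departure.

    Assumes (for illustration) that the lowest point of departure gives the
    highest level of protection for the consumer.
    """
    critical = min(points_of_departure, key=points_of_departure.get)
    return {study: (1.0 if study == critical else 0.0) for study in points_of_departure}


# Hypothetical points of departure (mg/kg bw per day).
pods = {"90-day rat": 50.0, "2-year rat": 10.0, "developmental rabbit": 25.0}
weights = critical_study_weights(pods)
```

All studies are considered, but the weights make the integration rule explicit: one study determines the outcome.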

In assessments following a standardised procedure, the default approach should be to integrate evidence using the methods as specified by the procedure. If the methods are specified in detail, they may be sufficient to conduct the assessment without further guidance; where the methods are not fully specified, the assessor may benefit from the guidance in Sections 4.1–4.6. If an assessment that would normally be addressed by a standard procedure includes weight of evidence issues that are not adequately addressed by the standard procedure, a case‐specific approach will be needed for that part of the assessment, following the guidance in Sections 4.1–4.6.

In case‐specific assessments, for which there is no standard procedure, evidence integration will need to be conducted case‐by‐case, following the guidance in Sections 4.1–4.6.

Emergency assessments are required in situations where there are exceptional limitations on time and resources. If an emergency assessment involves scientific questions that require integration of evidence, assessors should first consider whether any standard procedure exists that can be applied within the time and resources available. If not, then the assessor should conduct a case‐specific assessment, choosing options from Sections 4.1–4.6 that are compatible with the time and resources available.
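The decision rules for the three types of assessment can be summarised as a small function. The enum, function name and returned wording are hypothetical; the single boolean flag stands for the judgement of whether a standard procedure adequately covers the weight of evidence issues (and, in an emergency, fits the available time and resources).

```python
from enum import Enum


class AssessmentType(Enum):
    STANDARDISED = "standardised"
    CASE_SPECIFIC = "case-specific"
    EMERGENCY = "emergency"


def choose_integration_approach(kind: AssessmentType, standard_procedure_applies: bool) -> str:
    """Hypothetical encoding of the rules for choosing how to integrate evidence."""
    if kind is AssessmentType.CASE_SPECIFIC:
        return "case-specific: follow Sections 4.1-4.6"
    if standard_procedure_applies:
        return "standard procedure: use its specified integration methods"
    if kind is AssessmentType.EMERGENCY:
        return "case-specific: choose options from Sections 4.1-4.6 that fit the time and resources available"
    # Standardised assessment with weight of evidence issues the procedure does not cover.
    return "case-specific for the uncovered part: follow Sections 4.1-4.6"
```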

In some cases, the literature available for an assessment includes previous reviews or assessments of a similar question, which themselves involve weight of evidence assessment. Ideally, assessors should access and evaluate the original evidence used in the previous assessments, rather than treating the outcomes of previous assessments as evidence per se. However, when time and resources are too limited to access all the original evidence, then it may be justifiable to make use of the previous assessments in the new assessment. If this is done, it will be essential to take account of any differences between the questions addressed in the previous and new assessments, and of any differences or shortcomings in the criteria and assessment methodology that were used, and to document these considerations transparently.

4.1. Define the questions for weight of evidence assessment

A single assessment may comprise one or more scientific questions and none, some or all of those questions may require weight of evidence assessment. Interpreting the questions posed by the Terms of Reference, and deciding whether to subdivide them, is part of the first stage of scientific assessment, often referred to as problem formulation. General guidance on problem formulation for EFSA's scientific assessments is provided in other documents (EFSA, 2006, 2015a,b), and the need to ensure questions are well‐defined is further discussed by EFSA (2016a,b,c). Weight of evidence assessment does not involve any additional requirements or considerations for specifying the questions for assessment, so the reader is referred to the documents cited above for details.

Problem formulation also includes planning the strategy and methods for assessment (EFSA, 2015a,b). As part of this, the assessors should identify which of the questions in the assessment will require integration of evidence and therefore the use of weight of evidence approaches.

The output of problem formulation should therefore include a list of the questions. Each question that requires weight of evidence assessment should then be addressed by applying the basic steps described in the three following subsections. When the assessment involves a hierarchy of questions, start with the questions at the lowest level of the hierarchy, as the conclusions of these will inform lines of evidence for higher questions. It is sometimes necessary to return to and revise the problem formulation later, if additional questions are identified in the course of the assessment.
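The rule of starting at the lowest level of a hierarchy of questions amounts to a topological ordering: each question is addressed only after the sub‐questions whose conclusions feed into it. The Python sketch below illustrates this under stated assumptions; the question labels and the `QUESTION_HIERARCHY` mapping are hypothetical, and the ordering is done by the standard-library `graphlib` module (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical question hierarchy: each question maps to the set of
# sub-questions whose conclusions form lines of evidence for it.
QUESTION_HIERARCHY: dict[str, set[str]] = {
    "Q_risk": {"Q_exposure", "Q_hazard"},
    "Q_hazard": {"Q_genotoxicity", "Q_repeat_dose_toxicity"},
    "Q_exposure": set(),
    "Q_genotoxicity": set(),
    "Q_repeat_dose_toxicity": set(),
}


def assessment_order(hierarchy: dict[str, set[str]]) -> list[str]:
    """Order questions so that every sub-question comes before the questions
    that depend on it, i.e. the lowest level of the hierarchy first.

    Raises graphlib.CycleError if the hierarchy contains a cycle."""
    return list(TopologicalSorter(hierarchy).static_order())


if __name__ == "__main__":
    for question in assessment_order(QUESTION_HIERARCHY):
        print(question)
```

If a new question is identified during the assessment, prompting a return to problem formulation, it can be added to the mapping and the order recomputed.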

4.2. Assemble the evidence

This is the first of the three basic steps of weight of evidence assessment for an individual question (see Section 2.4). Guidance for this and the following two steps is provided as a series of numbered (sub)steps, which may be considered in sequence. For every step, assessors should choose approaches that are appropriate to the needs and context of the assessment in hand, including any limitations on time and resources.

1. Identify potentially relevant evidence. In some EFSA assessments, the evidence to be used is defined by regulations or guidance, and/or submitted by applicants. This applies especially in standard assessment procedures. When data gaps (absence of required data) are identified, it may be possible to mitigate their effect using other evidence, for example by read‐across, if this is permitted by the relevant regulations or guidance. In non‐standard (case‐specific) assessments, the assessors define the strategy and criteria for identifying and accessing potentially relevant evidence. Procedures for this, with varying degrees of formality, are described in EFSA guidance on extensive literature searching and systematic review (e.g. EFSA, 2010a, 2015b), which is designed to increase coverage and reduce potential biases in evidence gathering.

2. Select evidence to include in the weight of evidence assessment. In principle, all evidence identified as potentially relevant in step 1 should be taken into account, but limitations on time and resources may require the assessment to focus primarily on the most relevant and/or most reliable evidence. This subset of evidence may be identified by filtering or screening using appropriate criteria for relevance and/or reliability (EFSA, 2010b, 2015b). Evidence that was considered potentially relevant but not included should be retained separately, for example as a list of references or archive of documents, so that the impact of excluding it can be considered as part of uncertainty analysis (below).

3. Group the evidence into lines of evidence, i.e. subsets of evidence which the assessors find useful to distinguish when conducting the assessment. There are no fixed rules for how to form lines of evidence, but it may be helpful to distinguish those which are standalone and those that are complementary (Section 2.1). If the lines of evidence are complementary, they may be grouped according to the contribution they make to answering the question (e.g. exposure, hazard, etc.). Standalone lines of evidence may comprise evidence on the same aspect of the assessment but generated by different methods (e.g. different study types), with different subjects (e.g. species, chemicals, etc.) and in different conditions. This will tend to group evidence that has similar relevance and/or reliability. The lines of evidence and the rationale for constructing them should be documented, identifying which are standalone and which are complementary.
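Selecting (screening) the evidence and grouping it into lines of evidence can be sketched as simple data handling, assuming each piece of evidence has already been given screening scores for relevance and reliability. In the Python sketch below, the `Piece` record, the 1 to 3 scores, the thresholds and the grouping key (the aspect of the question addressed, plus the study type) are all illustrative assumptions, not criteria taken from EFSA guidance.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Piece:
    ref: str
    aspect: str       # contribution to the question, e.g. "exposure", "hazard"
    study_type: str   # method or subject, e.g. "rat study"
    relevance: int    # hypothetical screening score, 1 (low) to 3 (high)
    reliability: int  # hypothetical screening score, 1 (low) to 3 (high)


def screen(pieces, min_relevance=2, min_reliability=2):
    """Keep pieces meeting both thresholds; retain the rest separately so the
    impact of excluding them can be considered in uncertainty analysis."""
    included, excluded = [], []
    for p in pieces:
        if p.relevance >= min_relevance and p.reliability >= min_reliability:
            included.append(p)
        else:
            excluded.append(p)
    return included, excluded


def group_into_lines(pieces):
    """Group pieces into lines of evidence keyed by (aspect, study type).
    Lines sharing an aspect are treated as standalone alternatives for that
    aspect; lines on different aspects are treated as complementary."""
    lines = defaultdict(list)
    for p in pieces:
        lines[(p.aspect, p.study_type)].append(p.ref)
    return dict(lines)


if __name__ == "__main__":
    candidates = [
        Piece("S1", "hazard", "rat study", 3, 3),
        Piece("S2", "hazard", "rat study", 3, 2),
        Piece("S3", "hazard", "mouse study", 2, 3),
        Piece("S4", "hazard", "rat study", 1, 3),
        Piece("S5", "exposure", "consumption survey", 3, 2),
    ]
    included, excluded = screen(candidates)
    print("excluded (retained for uncertainty analysis):", [p.ref for p in excluded])
    for key, refs in group_into_lines(included).items():
        print(key, refs)
```

Treating lines on the same aspect as standalone and lines on different aspects as complementary is a simplification; in practice the classification should follow the conceptual model for the question in hand and be documented with its rationale.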

4.3. Weigh the evidence

Section 3 presents four broad categories of methods for weight of evidence assessment (best professional judgement, causal criteria, rating and quantitative methods), together with suggestions for choosing between them. Assessors should first consider the possibility of using quantitative methods, because an appropriate and well‐conducted quantitative analysis will generally be more rigorous than other methods. For example, when it is possible and appropriate to combine multiple studies by meta‐analysis, this will be more rigorous than integrating them by expert judgement. However, there are two important caveats. First, quantitative methods may not be appropriate for various reasons, e.g. they may not suit the nature, quantity or heterogeneity of the evidence to be integrated, or may not be practical within the time and resources available. Second, quantitative methods may not address all the considerations that are relevant for weighing the evidence. For example, common approaches to meta‐analysis capture only those aspects of reliability and consistency that are represented in the variability of the data, although some forms of meta‐analysis can also take account of relevance and additional aspects of reliability (e.g. Turner et al., 2009).
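To illustrate the contrast drawn above, the Python sketch below computes a standard fixed‐effect (inverse‐variance) meta‐analysis, whose weights reflect only the sampling variance of each study, and then a simplified bias‐adjusted version loosely following the idea in Turner et al. (2009): expert judgements about each study's bias are expressed as a mean and a standard deviation, each estimate is shifted by the mean bias, and its variance is inflated by the bias variance. This is only an additive‐bias sketch under those assumptions; the published method is more elaborate. The function names, study values and elicited biases are hypothetical.

```python
import math


def pooled_estimate(estimates, variances):
    """Inverse-variance weighted pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))


def fixed_effect_meta(estimates, std_errors):
    """Standard fixed-effect meta-analysis: weights reflect sampling variance only."""
    return pooled_estimate(estimates, [se**2 for se in std_errors])


def bias_adjusted_meta(estimates, std_errors, bias_means, bias_sds):
    """Simplified additive bias adjustment: shift each estimate by the
    expert-elicited mean bias and add the bias variance to its variance,
    so studies judged less reliable or less relevant receive less weight."""
    adjusted = [y - b for y, b in zip(estimates, bias_means)]
    variances = [se**2 + sb**2 for se, sb in zip(std_errors, bias_sds)]
    return pooled_estimate(adjusted, variances)


if __name__ == "__main__":
    y = [0.2, 0.4]   # hypothetical log-scale effect estimates
    se = [0.1, 0.1]  # their standard errors
    est, err = fixed_effect_meta(y, se)
    print(f"fixed effect:  {est:.3f} (95% CI {est - 1.96 * err:.3f} to {est + 1.96 * err:.3f})")
    # Hypothetical elicited bias: study 2 judged less relevant (bias SD 0.3).
    est, err = bias_adjusted_meta(y, se, bias_means=[0.0, 0.0], bias_sds=[0.0, 0.3])
    print(f"bias-adjusted: {est:.3f} (SE {err:.3f})")
```

With these hypothetical inputs, down‐weighting the second study moves the pooled estimate towards the first. The sketch also shows that judgements about relevance and reliability enter a meta‐analysis only if they are explicitly encoded.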

Therefore, the approach proposed below is to check first whether quantitative methods are practical and appropriate, and then to complement them with qualitative methods (from the other categories) to ensure that all relevant considerations are addressed. When quantitative methods are not practical or appropriate, only qualitative methods can be used. Before choosing methods, however, assessors should decide what considerations are relevant for weighing the evidence, as this may have implications for the choice of methods.

When there are data gaps, due to the absence of data that are normally required, the weight that those data would have contributed will be missing, and this will be taken into account when the available evidence is integrated (see Section 4.4).


1. Decide what considerations are relevant for weighing the evidence. The general considerations for weighing evidence are reliability, relevance and consistency, as explained and defined in Section 2.5. Assessors may choose to work with these three basic considerations, or use more specific criteria appropriate to their area of work, especially if these have already been established in guidance or the scientific literature. If using pre‐established criteria, assessors should check that they cover all aspects of reliability, relevance and consistency that are relevant for the assessment in hand, and define any additional criteria that are needed.

Reliability is the extent to which the information comprising a piece or line of evidence is correct. It may be assessed by considering the uncertainty of the evidence, i.e. how different the evidence might be if all the information comprising it were correct. Everything that contributes to that uncertainty should be included when assessing reliability.


Relevance is the contribution a piece or line of evidence would make to answering a specified weight of evidence question, if the information comprising the evidence were fully reliable. Everything that contributes to the need for extrapolation, and to its uncertainty, should be included when assessing relevance.

For a standalone line of evidence, consideration of relevance involves thinking about how well that evidence would answer the question if the information comprising it were fully reliable: how much extrapolation is needed between the subjects and conditions to which the evidence relates and those relevant for the question, and how uncertain is that extrapolation?

For a complementary line of evidence, consideration of relevance involves identifying what that evidence contributes to the conceptual model or argument for answering the question, and considering what extrapolation is required to provide that contribution.

Consistency should be considered when integrating the evidence (see Section 4.4).


2. Decide on the method(s) to be used for weighing and integrating the evidence, referring to the categories and criteria in Section 3. Some of the methods for weighing evidence also perform the integration step (e.g. meta‐analysis), or limit the choice of methods for integration, so both steps should be considered when choosing between methods. The choice of methods may also be affected by whether the lines of evidence are standalone or complementary. For example, meta‐analysis can be used to integrate standalone lines of evidence, whereas integrating complementary lines of evidence quantitatively requires a model of the relationships between the lines of evidence and the answer to the question (e.g. the relationships between exposure, hazard and risk).

Consider whether quantitative methods are practical and appropriate for the needs and context of the assessment. If it is decided to use a quantitative method, identify which aspects of reliability, relevance and consistency it will address, and which it will not.

Choose one or more qualitative methods to address those aspects of reliability, relevance and consistency that are not treated quantitatively. This could include methods from one or more of the non‐quantitative categories presented in Section 3.

Check that the chosen methods (quantitative and/or qualitative) address all pertinent aspects of reliability, relevance and consistency (identified in step 1 above).

If more than one method is chosen for weighing evidence, consider whether their results can be combined directly when integrating the evidence. Some methods are capable of incorporating the outputs of other methods: e.g. Doi (2014) has developed methods for incorporating quality scores into meta‐analysis, while Turner et al. (2009) have proposed methods for incorporating quantitative expert judgements about the effects of study limitations (which may include reliability and relevance) into meta‐analysis. Such methods may be used, if they are appropriate and practical for the needs and context of the assessment.

3. Apply the chosen methods for weighing the evidence and summarise the results in a form that is helpful for integration. Weighing will often be conducted at the level of individual pieces of evidence. Alternatively, pieces of evidence within the same line of evidence could be weighed collectively. The latter option may be quicker when time is limited, but it requires an implicit integration of the pieces within the line of evidence, so it is less transparent and may be more challenging for the assessors to perform (because it requires weighing and integrating simultaneously). When more than one method of weighing is used (e.g. a quantitative method combined with a qualitative method), it is recommended to present the results together in a concise tabular or graphical summary. For example, estimates and confidence intervals from quantitative methods can be plotted on a graph alongside symbols or text showing the results of qualitative methods (e.g. EFSA, 2015a, 2016a). This provides a useful overview of the evidence, which may help the assessors in the integration step and also others who read the finished assessment.
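When quantitative and qualitative results need to be presented together, even a plain‐text table can serve as the concise summary recommended above. The Python sketch below shows one hypothetical layout: each row holds a label for the piece of evidence, an estimate with its 95% confidence interval, and qualitative reliability and relevance ratings. The function name, column names and rating words are assumptions for illustration.

```python
def summary_table(rows):
    """Format quantitative results (estimate and 95% CI) alongside qualitative
    ratings as a plain-text table.

    Each row is (label, estimate, ci_low, ci_high, reliability, relevance)."""
    header = f"{'Evidence':<10}{'Estimate (95% CI)':<24}{'Reliability':<13}{'Relevance'}"
    lines = [header, "-" * len(header)]
    for label, est, low, high, reliability, relevance in rows:
        ci = f"{est:.2f} ({low:.2f} to {high:.2f})"
        lines.append(f"{label:<10}{ci:<24}{reliability:<13}{relevance}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical results for three pieces of evidence.
    print(summary_table([
        ("Study 1", 0.20, 0.00, 0.40, "high", "high"),
        ("Study 2", 0.40, 0.20, 0.60, "medium", "low"),
        ("Study 3", 0.90, 0.61, 1.19, "low", "medium"),
    ]))
```

A graphical version (estimates and intervals plotted with rating symbols beside them) conveys the same information; the tabular form is simply the easiest to embed in a written assessment.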

4.4. Integrate the evidence

In this step, the evidence is integrated to arrive at the conclusion, taking account of the reliability and relevance of the evidence, assessed in the preceding step, and also the consistency of the evidence. To reach a conclusion on the weight of evidence question, integration is necessary both within and between lines of evidence. When there are data gaps, due to the absence of data that are normally required, the absence of the weight those data would have contributed will be reflected in the outcome of the integration process. When appropriate, the effect of this may be mitigated by the contributions of other evidence (e.g. read‐across), or taken into account by use of assessment factors (which should themselves be evidence‐based).
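For complementary lines of evidence, integration between lines needs a model of how they combine. As a minimal sketch, assume the exposure line yields a lognormal distribution for exposure and the hazard line a lognormal distribution for a reference value, each summarised by a median and a geometric standard deviation (GSD); the question "does exposure exceed the reference value?" can then be answered by Monte Carlo simulation. The function names, distributional assumptions and inputs below are hypothetical and are not a model prescribed by this guidance; the closed‐form result for this lognormal case is included as a check.

```python
import math
import random
from statistics import NormalDist


def exceedance_probability_mc(exposure_median, exposure_gsd,
                              reference_median, reference_gsd,
                              n=100_000, seed=1):
    """Monte Carlo estimate of P(exposure > reference value), combining an
    exposure line and a hazard line of evidence, each assumed lognormal."""
    rng = random.Random(seed)
    mu_e, sigma_e = math.log(exposure_median), math.log(exposure_gsd)
    mu_r, sigma_r = math.log(reference_median), math.log(reference_gsd)
    exceed = sum(
        rng.lognormvariate(mu_e, sigma_e) > rng.lognormvariate(mu_r, sigma_r)
        for _ in range(n)
    )
    return exceed / n


def exceedance_probability_exact(exposure_median, exposure_gsd,
                                 reference_median, reference_gsd):
    """Closed form for the same model: ln(E) - ln(R) is normally distributed."""
    mean = math.log(exposure_median) - math.log(reference_median)
    sd = math.hypot(math.log(exposure_gsd), math.log(reference_gsd))
    if sd == 0:
        return 1.0 if mean > 0 else 0.0
    return NormalDist().cdf(mean / sd)


if __name__ == "__main__":
    # Hypothetical inputs: median exposure 0.5, median reference value 2.0
    # (same units), both with a geometric standard deviation of 2.
    args = (0.5, 2.0, 2.0, 2.0)
    print(f"Monte Carlo: {exceedance_probability_mc(*args):.3f}")
    print(f"analytical:  {exceedance_probability_exact(*args):.3f}")
```

Because the model is explicit, the role of each line of evidence is open to inspection. A real assessment would also need to justify the distributions, consider uncertainty in their parameters, and allow for any dependency between the two lines (the sketch assumes they are independent).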

1. Consider the conceptual model for integrating the evidence. Integration always involves a conceptual model, even if this is not made explicit. Integrating standalone lines of evidence requires a conceptual model of how evidence of differing weight is combined. Integrating complementary lines of evidence additionally requires a conceptual model of the contributions made by the different lines of evidence and how they combine to answer the question. In both cases, it is important to take account of any dependencies between different pieces and/or lines of evidence. Dependencies can have an important impact on how evidence should be integrated (see point 3 below). Assessors may find it helpful to make the conceptual model explicit, e.g. as a flow chart or list of logical steps. This should help assessors to take appropriate account of the relationships and dependencies between pieces and lines of evidence, and between the evidence and the question being assessed, both when the integration of evidence is done by expert judgement and when it is done using a quantitative model. Making the conceptual model explicit also contributes importantly to the transparency of the assessment.

2. Assess the consistency of the evidence. Consistency is the extent to which the contributions of different pieces or lines of evidence are compatible (Section 2.5). Limitations in consistency arise in part from limitations in the relevance and reliability of different pieces or lines of evidence. If a question is well‐defined, only a single correct answer should be possible, and any apparent inconsistencies in the evidence should be explicable in terms of differences in reliability and/or relevance. Assessors should not, however, simply conclude that inconsistent evidence is unreliable or irrelevant. Rather, assessors should consider whether, after taking differences in reliability and relevance into account, the pieces or lines of evidence still appear inconsistent. If so, this may imply the presence of additional limitations in relevance and reliability, beyond those already taken into account, or limitations in the conceptual model for integrating the evidence. Alternatively, it may imply there is more than one possible answer to the question, in which case the question may need to be more precisely defined or split into two or more separate questions. Any remaining inconsistency should be considered as part of the uncertainty affecting the weight of evidence conclusion.
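Part of the consistency check can be quantified when the pieces of evidence are comparable numerical estimates. The Python sketch below computes Cochran's Q and the I² statistic, widely used measures of how much study estimates vary beyond what sampling error alone would explain. Such statistics reflect only the variability in the data; whether any remaining inconsistency is explained by differences in reliability or relevance, by the conceptual model, or by an insufficiently defined question still requires the assessors' judgement. The function name and inputs are hypothetical.

```python
def heterogeneity(estimates, std_errors):
    """Return Cochran's Q and I^2 for a set of study estimates.

    Q is the inverse-variance weighted sum of squared deviations from the
    fixed-effect pooled estimate; I^2 = max(0, (Q - df) / Q) is the share of
    total variation attributed to heterogeneity rather than sampling error."""
    if len(estimates) < 2:
        raise ValueError("need at least two estimates")
    weights = [1.0 / se**2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2


if __name__ == "__main__":
    # Hypothetical log-scale estimates and standard errors from three studies.
    q, i2 = heterogeneity([0.2, 0.4, 0.9], [0.1, 0.1, 0.15])
    print(f"Q = {q:.2f}, I^2 = {i2:.0%}")
```

A high I² signals that the estimates disagree more than chance would explain, which is the starting point, not the end, of the assessment of consistency described above.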