ABSTRACT

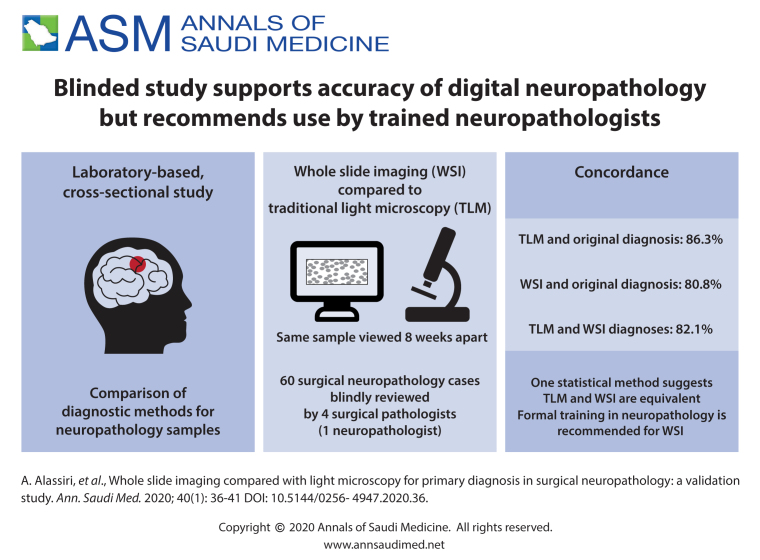

BACKGROUND:

Digital pathology practice is rapidly gaining popularity among practicing anatomic pathologists. Acceptance is higher among the newer generation of pathologists who are willing to adapt to this new diagnostic method due to the advantages offered by whole slide imaging (WSI) compared to traditional light microscopy (TLM). We performed this validation study because we plan to implement the WSI system for diagnostic services.

OBJECTIVES:

Determine the feasibility of using digital pathology for diagnostic services by assessing the equivalency of WSI and TLM.

DESIGN:

A laboratory-based cross-sectional study.

SETTING:

Central laboratory at a tertiary health care center.

MATERIALS AND METHODS:

Four practicing surgical pathologists participated in this study. Each pathologist blindly reviewed 60 surgical neuropathology cases with a minimum 8-week washout period between the two diagnostic modalities (WSI vs. TLM). Intraobserver concordance rates between each modality (WSI and TLM) and the original diagnosis were calculated.

MAIN OUTCOME MEASURES:

Overall intraobserver concordance rates between each diagnostic method (WSI and TLM) and original diagnosis.

SAMPLE SIZE:

60 in-house surgical neuropathology cases.

RESULTS:

The overall intraobserver concordance rate between TLM and original diagnosis was 86.3% (range 76.7%-91.7%) versus 80.8% for WSI (range 68.3%-88.3%). These findings suggest that TLM is superior, but the Fleiss kappa statistic indicated that the two methods are equivalent, although the kappa (K) values were low.

CONCLUSION:

WSI is not inferior to light microscopy and is feasible for primary diagnosis in surgical neuropathology. However, to ensure the best results, only formally trained neuropathologists should handle the digital neuropathology service.

LIMITATIONS:

Only one diagnostic slide per case was reviewed rather than the whole set of slides, the sample size was relatively small, and the number of participating neuropathologists was insufficient.

CONFLICT OF INTEREST:

None.

INTRODUCTION

Digitizing glass slides has revolutionized the practice of anatomical pathology, improving diagnostic accuracy, turnaround time, and outreach service coverage. Using slide scanners, glass slides are converted into digital slides that are rapidly accessible to the pathologist. This technology potentially enables neuropathologists to remotely provide an opinion during crucial intraoperative consultations.1 Digital pathology has the potential to deliver efficient and accurate clinical diagnosis to accommodate the rising need for neuropathological services in underprivileged and remote areas. As a new practice, digital pathology may be an ideal solution for providing neuropathology consultation to laboratories that lack such expertise because of limited resources. Digitally scanned whole slide images may enable the neuropathologist to provide high-quality and fast primary or secondary diagnoses and improve patient care. Saudi Arabia has only ten formally trained and certified neuropathologists. Digital pathology, despite its challenges, may overcome this shortage by supporting the deployment of a nationwide digital neuropathology service.

Neuropathology frozen sections are stressful to general pathologists. Therefore, a digital pathology solution may be helpful. Fallon et al have shown a high correlation between frozen section slides and WSI with 52 ovarian frozen section diagnoses.1 This accuracy rate may be similar for neuropathology frozen sections. Several studies concluded that WSI as a diagnostic modality is not inferior to TLM.2,5-21 Validation of the digital pathology system is required to ensure laboratory compliance with accrediting organizations such as the Joint Commission and the College of American Pathologists (CAP) and to assure the accuracy of diagnosis. Validation studies have shown a high concordance rate between WSI and TLM in different subspecialties of anatomical pathology.6,8-10,12,13,15,18 Digital pathology is already being used in some parts of the world (e.g. United States of America, Canada and parts of Europe) for intraoperative consultations, primary/secondary diagnosis, multidisciplinary meetings, and educational purposes.22-25 Furthermore, the recent approval of WSI for primary diagnosis by the US Food and Drug Administration (FDA) should encourage the adoption of digital pathology for clinical purposes globally. The FDA considers WSI systems to consist of two subsystems: (1) the image acquisition component (ie, the scanner) and (2) the workstation (ie, the image viewing software, computer, and display). The FDA also considers this entire digital pathology system to be a closed unit, precluding the substitution of unapproved components.26

The current CAP guidelines recommend that all pathology departments adopting WSI for diagnosis should conduct their own validation. This is done by evaluation of at least 60 specimens to assess intraobserver variations in diagnoses between digital slides and glass slides, with a washout period of at least 2 weeks.27 In this study, we investigate the feasibility of WSI for diagnostic surgical neuropathology services at our center in Saudi Arabia.

MATERIALS AND METHODS

This study was conducted in the Department of Pathology and Laboratory Medicine at King Abdulaziz Medical City in Riyadh, Saudi Arabia. The primary goal was to validate the technology using the guideline published by the College of American Pathologists Pathology and Laboratory Quality Center.27 The study protocol was approved by the Institutional Review Board at King Abdullah International Medical Research Center (KAIMRC).

Sixty recent surgical neuropathology cases were retrieved from the archives of our anatomic pathology division. The accuracy of the original diagnoses was ensured because, per departmental policy, the malignant cases were co-signed by two board-certified neuropathologists, while the benign lesions were considered straightforward and were also diagnosed by a neuropathologist. Instead of scanning all slides for each case, the first author reviewed all slides and selected one high-quality diagnostic slide from each case; this simplified the methodology and helped secure participation from the limited number of study candidates. Additionally, the one-diagnostic-slide approach has been used in several studies.2-4 The 60 selected cases covered a broad range of surgical neuropathological entities encountered at our institution. The original surgical pathology numbers were replaced by a study identifier (case number). The 60 diagnostic hematoxylin and eosin-stained slides were scanned at 40× using the Aperio scanner (ScanScope AT Turbo).

Four practicing surgical pathologists were carefully selected to participate in this study. One is board-certified in neuropathology, while the others either had considerable exposure to neuropathology services during their residency training (6 months or more) or had sufficient expertise as senior pathologists (25 to 40 years of general practice that included neuropathology cases).

Two pathologists at a time were given either a set of digital or glass slides independently as well as the clinical information available at the time of the original diagnosis. After a washout period of at least 8 weeks, the sets of slides were switched between the two pathologists. The same process was followed with the two remaining pathologists. For each pathologist, the glass slides were reviewed using their own light microscope and the digital slides were reviewed using a high-resolution large monitor.

Diagnostic accuracy was based on whether a study diagnosis differed in a clinically significant way from the original diagnosis. In keeping with widely accepted definitions, a major discordance was defined as a difference in diagnosis that would be associated with a change in patient management or outcome, and a minor discordance as a difference in diagnosis that had little or no clinical significance and would not be associated with a change in patient management. Statistical analysis was performed using SAS version 9.4 through the Department of Biostatistics and Bioinformatics at KAIMRC.
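The three-way classification described above can be expressed as a small decision rule. The following Python sketch is illustrative only and is not the procedure used in the study (the analysis was performed in SAS); the names `Discordance`, `classify`, and `tally` are hypothetical. The example counts correspond to Pathologist 1's TLM reads as reported in Table 1.

```python
from collections import Counter
from enum import Enum


class Discordance(Enum):
    CONCORDANT = "concordant"
    MINOR = "minor discordance"
    MAJOR = "major discordance"


def classify(diagnosis_differs: bool, changes_management: bool) -> Discordance:
    """Apply the definitions above to a single read.

    A read is concordant when the study diagnosis matches the original;
    otherwise it is major if the difference would change patient
    management and minor if it would not.
    """
    if not diagnosis_differs:
        return Discordance.CONCORDANT
    return Discordance.MAJOR if changes_management else Discordance.MINOR


def tally(reads):
    """Return {category: (count, percent)} for (differs, changes) pairs."""
    counts = Counter(classify(differs, changes) for differs, changes in reads)
    total = len(reads)
    return {cat: (counts[cat], 100 * counts[cat] / total) for cat in Discordance}


# Pathologist 1, TLM (Table 1): 53 concordant, 3 minor, 4 major.
reads = [(False, False)] * 53 + [(True, False)] * 3 + [(True, True)] * 4
summary = tally(reads)
for category, (count, percent) in summary.items():
    print(f"{category.value}: {count} ({percent:.1f}%)")
```

Encoding each read as a pair of yes/no judgments keeps the clinical decision (would management change?) separate from the bookkeeping, which is the only part the code automates.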

RESULTS

The overall intraobserver concordance rate between the original and TLM diagnoses was 86.3% (range 76.7%–91.7%) (Table 1), while the rate was slightly lower (80.8%, range 68.3%–88.3%) using the WSI diagnostic method (Table 2), a difference of approximately 6 percentage points in favor of TLM. When generalized estimating equations (GEE) were used to assess which of the two methods was superior, the resulting odds ratio of 1.5 indicated 50% greater odds of concordance with TLM. The overall intraobserver concordance rate between TLM and WSI was 82.1% (Table 3).

Table 1.

Intraobserver concordance rates between TLM and original diagnoses by pathologist.

| | Pathologist 1 | Pathologist 2 | Pathologist 3 | Pathologist 4 | Total (%) |
|---|---|---|---|---|---|
| Concordant | 53 (88.3) | 46 (76.7) | 55 (91.7) | 53 (88.3) | 86.3 |
| Minor discordance | 3 (5) | 4 (6.7) | 4 (6.7) | 5 (8.3) | 6.7 |
| Major discordance | 4 (6.7) | 10 (16.7) | 1 (1.7) | 2 (3.3) | 7.1 |

Data are number (%).

Table 2.

Intraobserver concordance rates between WSI and original diagnoses by pathologist.

| | Pathologist 1 | Pathologist 2 | Pathologist 3 | Pathologist 4 | Total (%) |
|---|---|---|---|---|---|
| Concordant | 49 (81.7) | 41 (68.3) | 51 (85) | 53 (88.3) | 80.8 |
| Minor discordance | 2 (3.3) | 8 (13.3) | 7 (11.7) | 7 (11.7) | 10 |
| Major discordance | 9 (15) | 11 (18.3) | 2 (3.3) | 0 | 9.2 |

Data are number (%).

Table 3.

Intraobserver concordance rates between TLM and WSI diagnoses by pathologist.

| | Pathologist 1 | Pathologist 2 | Pathologist 3 | Pathologist 4 | Total (%) |
|---|---|---|---|---|---|
| Concordant | 49 (81.7) | 43 (71.7) | 52 (86.7) | 53 (88.3) | 82.1 |
| Minor discordance | 2 (3.3) | 7 (11.7) | 5 (8.3) | 5 (8.3) | 7.9 |
| Major discordance | 9 (15) | 10 (16.7) | 3 (5) | 2 (3.3) | 10 |

Data are number (%).
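The pooled concordance rates and the direction of the reported odds ratio can be checked against the counts in Tables 1 and 2. The Python sketch below is a rough arithmetic check, not the study's analysis: it computes a crude (unadjusted) odds ratio from pooled proportions, whereas the reported estimate came from a GEE model in SAS that accounts for repeated reads by the same pathologist. The function names are hypothetical.

```python
# Concordant reads per pathologist, transcribed from Tables 1 and 2.
TLM_CONCORDANT = [53, 46, 55, 53]
WSI_CONCORDANT = [49, 41, 51, 53]
CASES_PER_PATHOLOGIST = 60


def pooled_rate(concordant_counts, cases_per_reader=CASES_PER_PATHOLOGIST):
    """Pooled concordance proportion across all readers."""
    total_reads = cases_per_reader * len(concordant_counts)
    return sum(concordant_counts) / total_reads


def crude_odds_ratio(rate_a, rate_b):
    """Unadjusted odds ratio of concordance for method A versus method B."""
    odds_a = rate_a / (1 - rate_a)
    odds_b = rate_b / (1 - rate_b)
    return odds_a / odds_b


tlm_rate = pooled_rate(TLM_CONCORDANT)  # 207 of 240 reads
wsi_rate = pooled_rate(WSI_CONCORDANT)  # 194 of 240 reads
odds_ratio = crude_odds_ratio(tlm_rate, wsi_rate)

print(f"TLM concordance: {tlm_rate:.4f}")
print(f"WSI concordance: {wsi_rate:.4f}")
print(f"Difference (percentage points): {100 * (tlm_rate - wsi_rate):.2f}")
print(f"Crude odds ratio, TLM vs WSI: {odds_ratio:.2f}")
```

The crude odds ratio is close to the reported value of 1.5, which is expected when the model contains only the method term; a GEE fit would additionally supply a confidence interval that reflects within-pathologist correlation.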

Because TLM appeared slightly superior to WSI, we generated the Fleiss kappa statistic for multi-rater agreement for each method, using bootstrapping with five-subject replacement repeated 1000 times. In each bootstrap sample, a Fleiss kappa statistic was generated for each method, and an average kappa (K) was calculated. The result showed an almost equal level of intraobserver agreement between TLM, with a K value (mean and standard deviation) of 0.14 (0.07), and WSI, with a K value of 0.20 (0.06). The difference in the standard error between the two kappa means was reported as nonsignificant (P=.007), which indicates equivalency between the two methods even though the K values were low. The original diagnosis for each of the 60 cases is shown in Table 4.
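For readers unfamiliar with the approach, a bootstrapped Fleiss kappa can be sketched as follows. This is a minimal Python illustration under stated assumptions, not a reproduction of the SAS analysis: the per-case ratings are not published, so `toy_ratings` is invented data; the resampling scheme (whole cases drawn with replacement, same number of cases per resample) is an assumption; and `fleiss_kappa` and `bootstrap_kappa` are hypothetical helper names.

```python
import math
import random
import statistics
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of cases, each a list of category labels
    (one label per rater; every case must have the same number of raters)."""
    n_subjects = len(ratings)
    n_raters = len(ratings[0])
    category_totals = Counter()
    agreement_sum = 0.0
    for subject in ratings:
        counts = Counter(subject)
        category_totals.update(counts)
        # Proportion of rater pairs that agree on this case.
        agreeing_pairs = sum(c * (c - 1) for c in counts.values())
        agreement_sum += agreeing_pairs / (n_raters * (n_raters - 1))
    observed = agreement_sum / n_subjects
    total_ratings = n_subjects * n_raters
    expected = sum((c / total_ratings) ** 2 for c in category_totals.values())
    if expected >= 1.0:
        return float("nan")  # kappa is undefined when only one category is used
    return (observed - expected) / (1.0 - expected)


def bootstrap_kappa(ratings, n_resamples=1000, seed=0):
    """Mean and standard deviation of kappa over case-level resamples."""
    rng = random.Random(seed)
    kappas = []
    for _ in range(n_resamples):
        sample = [rng.choice(ratings) for _ in ratings]
        kappa = fleiss_kappa(sample)
        if not math.isnan(kappa):  # skip resamples where kappa is undefined
            kappas.append(kappa)
    return statistics.mean(kappas), statistics.stdev(kappas)


# Invented example: six cases, each rated by four pathologists.
toy_ratings = [
    ["concordant", "concordant", "concordant", "concordant"],
    ["concordant", "minor", "concordant", "concordant"],
    ["major", "major", "concordant", "major"],
    ["concordant", "concordant", "minor", "concordant"],
    ["minor", "minor", "minor", "concordant"],
    ["concordant", "major", "concordant", "concordant"],
]
mean_kappa, sd_kappa = bootstrap_kappa(toy_ratings)
print(f"Bootstrap kappa: mean {mean_kappa:.2f}, SD {sd_kappa:.2f}")
```

Resampling whole cases rather than individual ratings keeps each case's four ratings together, so the agreement structure within a case is preserved in every resample.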

Table 4.

Original diagnoses for the 60 cases.

| Case # | Original diagnosis |
|---|---|
| 1 | Vasculitis with fibrinoid necrosis |
| 2 | Meningioma (psammomatous) |
| 3 | Metastatic renal cell carcinoma |
| 4 | Medulloblastoma (desmoplastic/nodular) |
| 5 | Lymphoma (PTLD c/w DLBCL) |
| 6 | Meningioma (brain invasive) |
| 7 | Ependymoma |
| 8 | Pituitary adenoma |
| 9 | Colloid cyst |
| 10 | Anaplastic ependymoma |
| 11 | Choroid plexus papilloma |
| 12 | Chordoma |
| 13 | Medulloblastoma (large cell/anaplastic) |
| 14 | Craniopharyngioma |
| 15 | Meningioma (microcystic) |
| 16 | Medulloblastoma (classic) |
| 17 | Metastatic colonic adenocarcinoma |
| 18 | Hemangioblastoma |
| 19 | Myxopapillary ependymoma |
| 20 | Diffuse gliomas (oligodendroglioma) |
| 21 | Schwannoma |
| 22 | Caseating granuloma (tuberculoma) |
| 23 | Cavernoma |
| 24 | Pilocytic astrocytoma |
| 25 | Diffuse gliomas (astrocytoma) |
| 26 | Glioblastoma |
| 27 | Meningioma |
| 28 | Brain abscess |
| 29 | Germinoma |
| 30 | Anaplastic meningioma |
| 31 | Lymphoma (DLBCL) |
| 32 | Pilocytic astrocytoma |
| 33 | Craniopharyngioma |
| 34 | Pineoblastoma |
| 35 | Langerhans cell histiocytosis |
| 36 | Hemangiopericytoma |
| 37 | Dysembryoplastic neuroepithelial tumor |
| 38 | Pituitary adenoma |
| 39 | Glioblastoma (giant cell) |
| 40 | Central neurocytoma |
| 41 | Anaplastic ependymoma |
| 42 | Spinal cord schistosomiasis |
| 43 | Diffuse glioma (oligodendroglioma) |
| 44 | Central neurocytoma (atypical) |
| 45 | Meningioma (microcystic) |
| 46 | Meningioma (chordoid) |
| 47 | Diffuse glioma (oligodendroglioma) |
| 48 | Metastatic melanoma |
| 49 | Metastatic choriocarcinoma |
| 50 | Meningioma (chordoid) |
| 51 | Glioblastoma (gliosarcoma) |
| 52 | Meningioma (secretory) |
| 53 | Medulloblastoma (large cell/anaplastic) |
| 54 | Embryonal tumor with multilayered rosettes (ependymoblastoma) |
| 55 | Craniopharyngioma |
| 56 | Occipital encephalocele |
| 57 | Glioblastoma |
| 58 | Hemangioblastoma |
| 59 | Meningocele |
| 60 | Diffuse glioma (oligodendroglioma) |

PTLD=Posttransplant lymphoproliferative disorder; DLBCL=Diffuse large B cell lymphoma.

DISCUSSION

Following the current CAP guidelines and using comprehensive statistics, we confirmed the validity of using digital pathology for the practice of surgical neuropathology. This finding supports the results of a relatively recent systematic review and the multicenter blinded randomized study by Mukhopadhyay et al.20 Intraobserver concordance rates ranged from 68.3% to 88.3% for WSI and from 76.7% to 91.7% for TLM; the highest WSI rate was achieved by pathologist 4 (the neuropathologist), who also scored high for TLM. In our study, the total major discordance rate for WSI was 2.1 percentage points higher than that for TLM (9.2% vs 7.1%), a larger difference than the 0.4% reported by Mukhopadhyay et al.20 Interestingly, pathologist 4, a board-certified neuropathologist, had no major discordances with WSI. None of the participating pathologists had received prior training in the use of WSI for primary diagnosis.

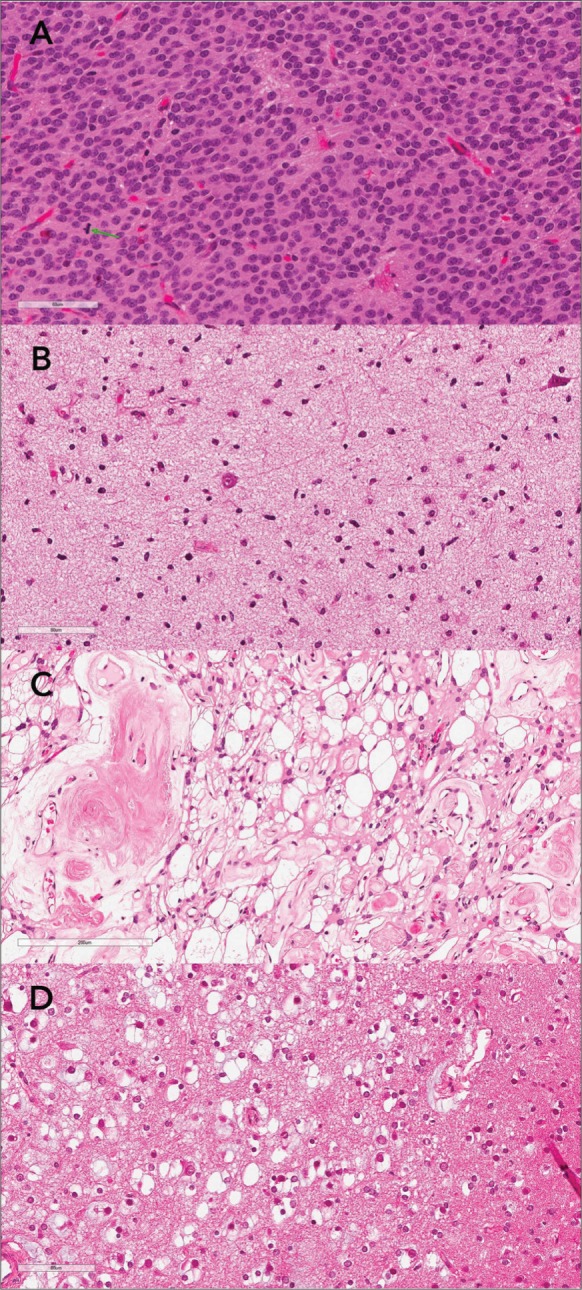

The literature related to digital pathology in the context of surgical neuropathology is scarce. Pekmezci et al identified two potential pitfalls (identification of mitoses and recognition of nuclear details). These pitfalls could limit the utility of WSI for accurate diagnosis and grading of tumors in surgical neuropathology.2 However, our study did not substantiate the pitfalls reported by Pekmezci et al. After careful review of the digitized slides, we found that all discordant cases were attributable to misinterpretation, as the image quality was good enough to appreciate the cytological details and to recognize mitotic figures (Figure 1A-D).

Figure 1.

Whole slide images of four discrepant cases. A. Atypical central neurocytoma showing one readily recognized mitotic figure (green arrow). B. Diffuse astrocytoma misdiagnosed as gliosis. C. Microcystic/angiomatous meningioma misdiagnosed as clear cell meningioma. D. Dysembryoplastic neuroepithelial tumor (DNET) with several floating neurons misdiagnosed as oligodendroglioma.

Our study evaluated the accuracy of both diagnostic modalities, but not their efficiency in terms of interpretative time for digital images versus glass slides. Mills et al investigated the efficiency of digital pathology compared to glass slide interpretation. Reading pathologists recorded assessment times for each modality. Initially, the digital read was slower than microscopy, but by the end of their study, digital read times were comparable to the optical times.28 The strengths of our study include the blinded design, the inclusion of an extended washout period, and the participation of four pathologists with variable expertise, one of whom is a neuropathologist. In terms of limitations, we acknowledge the small sample size, the provision of only one diagnostic slide instead of the whole set, and the lack of a sufficient number of participating neuropathologists. The lack of access to the patient's records or ancillary testing results was compensated for by the provision of the diagnostic H&E slides with the accompanying brief clinical histories.

WSI as a diagnostic modality is not inferior to TLM, and a gradual transition to digital pathology is possible with close monitoring and sufficient training. The pre-analytical phase should be well controlled to produce high-quality H&E slides. However, to ensure the best results, only formally trained neuropathologists should handle the digital neuropathology service.

Funding Statement

None.

REFERENCES

- 1. Fallon Margaret A., Wilbur David C., Prasad Manju. Ovarian frozen section diagnosis: use of whole-slide imaging shows excellent correlation between virtual slide and original interpretations in a large series of cases. Arch Pathol Lab Med 2010;134:1020-23.

- 2. Pekmezci Melike, Uysal Sanem Pinar, Orhan Yelda, Tihan Tarik, Lee Han Sung. Pitfalls in the use of whole slide imaging for the diagnosis of central nervous system tumors: A pilot study in surgical neuropathology. J Pathol Inform 2016;7:25.

- 3. Cai G, Parwani AV, Khalbuss WE, Yu J, Monaco SE, Jukic DM, et al. Cytological Evaluation of Image-Guided Fine Needle Aspiration Biopsies via Robotic Microscopy: A Validation Study. J Pathol Inform 2010;26:4.

- 4. House JC, Henderson-Jackson EB, Johnson JO, Lloyd MC, Dhillon J, Ahmad N, et al. Diagnostic digital cytopathology: Are we ready yet? J Pathol Inform 2013;29:28.

- 5. Lowe Amanda, Chlipala Elizabeth, Elin Jesus, Kawano Yoshihiro, Long Richard E., Tillman Debbie. Validation of digital pathology in a healthcare environment. Conference: Digital Pathology Association, San Diego, CA 2011.

- 6. Camparo P, Egevad L, Algaba F, Berney DM, Boccon-Gibod L, Comperat E, et al. Utility of whole slide imaging and virtual microscopy in prostate pathology. APMIS 2012;120:298-304.

- 7. Bauer Thomas W., Schoenfield Lynn, Slaw Renee J., Yerian Lisa, Sun Zhiyuan, Henricks Walter H. Validation of whole slide imaging for primary diagnosis in surgical pathology. Arch Pathol Lab Med 2012;137:518-24.

- 8. Al-Janabi S, Huisman A, Vink A, Leguit RJ, Offerhaus GJ, ten Kate FJ, et al. Whole slide images for primary diagnostics of gastrointestinal tract pathology: a feasibility study. Hum Pathol 2012;43:702-7.

- 9. Al-Janabi S, Huisman A, Willems SM, Van Diest PJ. Digital slide images for primary diagnostics in breast pathology: a feasibility study. Hum Pathol 2012;43:2318-25.

- 10. van der Post RS, van der Laak JA, Sturm B, Clarijs R, Schaafsma HE, van Krieken JH, Nap M. The evaluation of colon biopsies using virtual microscopy is reliable. Histopathology 2013;63:114-21.

- 11. Buck Thomas P., Dilorio Rebecca, Havrilla Lauren, O'Neill Dennis G. Validation of a whole slide imaging system for primary diagnosis in surgical pathology: A community hospital experience. J Pathol Inform 2014;5:43.

- 12. Campbell WS, Hinrichs SH, Lele SM, Baker JJ, Lazenby AJ, Talmon GA, Smith LM, et al. Whole slide imaging diagnostic concordance with light microscopy for breast needle biopsies. Hum Pathol 2014;45:1713-21.

- 13. Ordi J, Castillo P, Saco A, Del Pino M, Ordi O, Rodríguez-Carunchio L, Ramirez J. Validation of whole slide imaging in the primary diagnosis of gynaecological pathology in a University Hospital. J Clin Pathol 2015;68:33-9.

- 14. Thrall MJ, Wimmer JL, Schwartz MR. Validation of multiple whole slide imaging scanners based on the guideline from the College of American Pathologists Pathology and Laboratory Quality Center. Arch Pathol Lab Med 2015;139:656-64.

- 15. Arnold MA, Chenever E, Baker PB, Boue DR, Fung B, Hammond S, et al. The College of American Pathologists guidelines for whole slide imaging validation are feasible for pediatric pathology: a pediatric pathology practice experience. Pediatr Dev Pathol 2015;18:109-16.

- 16. Houghton Joseph P, Ervine Aaron J, Kenny Sarah L, Kelly Paul J, Napier Seamus S, McCluggage W Glenn, et al. Concordance between digital pathology and light microscopy in general surgical pathology: a pilot study of 100 cases. J Clin Pathol 2014;67:1052-55.

- 17. Snead DR, Tsang YW, Meskiri A, Kimani PK, Crossman R, Rajpoot NM, et al. Validation of digital pathology imaging for primary histopathological diagnosis. Histopathology 2016;68:1063-72.

- 18. Shah KK, Lehman JS, Gibson LE, Lohse CM, Comfere NI, Wieland CN. Validation of diagnostic accuracy with whole-slide imaging compared with glass slide review in dermatopathology. J Am Acad Dermatol 2016;75:1229-37.

- 19. Saco A, Ramírez J, Rakislova N, Mira A, Ordi J. Validation of Whole-Slide Imaging for Histopathological Diagnosis: Current State. Pathobiology 2016;83:89-98.

- 20. Mukhopadhyay S, Feldman, Abels E, Ashfaq R, Beltaifa S, Cacciabeve NG, et al. Whole Slide Imaging Versus Microscopy for Primary Diagnosis in Surgical Pathology: A Multicenter Blinded Randomized Noninferiority Study of 1992 Cases (Pivotal Study). Am J Surg Pathol 2018;42:39-52.

- 21. Mills AM, Gradecki SE, Horton BJ, Blackwell R, Moskaluk CA, Mandell JW, et al. Diagnostic Efficiency in Digital Pathology: A Comparison of Optical Versus Digital Assessment in 510 Surgical Pathology Cases. Am J Surg Pathol 2018;42:53-9.

- 22. Evans AJ, Salama ME, Henricks WH, Pantanowitz L. Implementation of Whole Slide Imaging for Clinical Purposes: Issues to Consider From the Perspective of Early Adopters. Arch Pathol Lab Med 2017;141:944-59.

- 23. Tetu B, Evans A. Canadian Licensure for the Use of Digital Pathology for Routine Diagnoses. Arch Pathol Lab Med 2014;138:302-4.

- 24. Thorstenson S, Molin J, Lundstrom C. Implementation of large-scale routine diagnostics using whole slide imaging in Sweden: Digital pathology experiences 2006-2013. J Pathol Inform 2014;5:14.

- 25. Stathonikos N, Veta M, Huisman A, van Diest PJ. Going fully digital: Perspective of a Dutch academic pathology lab. J Pathol Inform 2013;4:15.

- 26. Evans AJ, Bauer TW, Bui MM, Cornish TC, Duncan H, Glassy EF, et al. US Food and Drug Administration Approval of Whole Slide Imaging for Primary Diagnosis: A Key Milestone Is Reached and New Questions Are Raised. Arch Pathol Lab Med 2018;142:1383-87.

- 27. Pantanowitz L, Sinard JH, Henricks WH, Fatheree LA, Carter AB, Contis L, et al. Validating whole slide imaging for diagnostic purposes in pathology: guideline from the College of American Pathologists Pathology and Laboratory Quality Center. Arch Pathol Lab Med 2013;137:1710-22.

- 28. Mills AM, Gradecki SE, Horton BJ, Blackwell R, Moskaluk CA, Mandell JW, et al. Diagnostic Efficiency in Digital Pathology: A Comparison of Optical Versus Digital Assessment in 510 Surgical Pathology Cases. Am J Surg Pathol 2018;42:53-9.