Abstract

Background

Otolaryngology–head and neck surgery is in the first wave of residency training programs in Canada to adopt Competence by Design (CBD), a model of competency-based medical education. CBD is built on frequent, low-stakes assessments and requires an increase in the number of feedback interactions. The University of Toronto otolaryngology–head and neck surgery residents piloted the CBD model but were completing only 1 assessment every 4 weeks, which was insufficient to support CBD.

Objective

This project aimed to increase assessment completion to once per resident per week using quality improvement methodology.

Methods

Stakeholder engagement activities had residents and faculty characterize barriers to assessment completion. Brief electronic assessment forms were completed by faculty on residents' personal mobile devices in face-to-face encounters, and the number completed per resident was tracked for 10 months during the 2016–2017 pilot year. Response to the intervention was analyzed using statistical process control charts.

Results

The first bundled intervention—a rule set dictating which clinical instance should be assessed, combined with a weekly reminder implemented for 10 weeks—was unsuccessful in increasing the frequency of assessments. The second intervention was a leaderboard, designed on an audit-and-feedback system, which sent weekly comparison e-mails of each resident's completion rate to all residents and the program director. The leaderboard demonstrated significant improvement from baseline over 10 weeks, increasing the assessment completion rate from 0.22 to 2.87 assessments per resident per week.

Conclusions

A resident-designed audit-and-feedback leaderboard system improved the frequency of CBD assessment completion.

What was known and gap

Otolaryngology–head and neck surgery was in the first wave of Canadian residency training programs to adopt Competence by Design, but a pilot program struggled to complete enough assessments per resident to support the new educational model.

What is new

A resident-designed audit-and-feedback leaderboard system aimed to increase assessment completion to once per resident per week.

Limitations

Quality improvement methodology focused on addressing barriers specific to this program, potentially limiting generalizability. Researchers collected data on their own behavior, introducing the potential for subject bias.

Bottom line

A leaderboard of entrustable professional activity completion designed on an audit-and-feedback system significantly increased the frequency of entrustable professional activity assessments per resident per week.

Introduction

Otolaryngology–head and neck surgery (OHNS) is part of the initial wave of specialties adopting Competence by Design (CBD), the new competency-based medical education model of the Royal College of Physicians and Surgeons of Canada (Royal College).1 Residents progress through stages of residency based on assessments of specific skill sets (entrustable professional activities [EPAs]).2 In OHNS, EPAs for postgraduate year 1 (PGY-1) residents include procedural skills such as management of epistaxis and nontechnical skills such as preoperative planning. An assessment of an EPA is used to evaluate a resident's performance during a single clinical encounter. The difficulty and complexity of the EPAs increase as residents progress through their discipline's training.1

CBD is built on frequent, low-stakes assessments with an emphasis on high-quality feedback.1 These assessments are based on well-defined EPAs, which also support more specific and actionable feedback for residents; the goal is to give trainees more coaching as they learn, identify and correct areas of deficiency earlier, and provide a more comprehensive data set of a resident's performance.3 Compared with the traditional mid- and end-of-rotation assessments, the most dramatic shift in the day-to-day interactions between assessors and learners is the large increase in the number of formalized feedback interactions required by CBD. The Royal College did not specify a required number of assessments, and there was no consensus among programs about how many assessments should be completed per EPA. With over 60 unique EPAs in the OHNS curriculum, completing an EPA weekly would give residents the opportunity to collect a few assessments per EPA by the end of residency, which was a reasonable target to support CBD.
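As a rough check on the weekly target, the arithmetic can be written out. The figures below are assumptions for illustration only: a 5-year residency (PGY-1 through PGY-5), 52 weeks per year with one assessment completed every week, and exactly 60 EPAs (the curriculum has "over 60", so the true figure per EPA would be somewhat lower).

```latex
\[
\frac{5\ \text{years} \times 52\ \text{weeks/year} \times 1\ \text{assessment/week}}{60\ \text{EPAs}}
  = \frac{260}{60} \approx 4.3\ \text{assessments per EPA}
\]
```

Under these assumptions a weekly assessment yields roughly 4 assessments per EPA over the course of training, consistent with "a few" per EPA.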

In 2016, a PGY-1 OHNS cohort at the University of Toronto piloted the CBD model prior to its implementation nationally. Formative assessments were collected for a limited number of EPAs (Box 1). However, insufficient numbers of assessments (approximately 1 assessment per resident per month) were collected to support the CBD model. The cohort aimed to increase the number of EPAs completed to 1 per resident per week by the end of the pilot year.4

Box 1 Assessments Completed Through New Competence by Design Tool

Assessment and preoperative planning

Assessment and management of epistaxis

Initial management: emergent case

Procedural skills assessment

Chart documentation assessment

Teaching or rounds assessment

Oral examination

Methods

This study took place in a large urban institution and included all 5 PGY-1 residents among the 27 residents in the program. While these 5 residents were collecting EPA assessments, the PGY-2 through PGY-5 cohorts remained on the traditional educational model and were excluded from the study. EPA assessments were completed on personal mobile devices through an electronic platform designed at the University of Toronto. The EPA interactions were typically initiated by residents and consisted of face-to-face encounters lasting approximately 5 minutes.

This resident-led project used the Model for Improvement to guide a series of interventions in the 2016–2017 academic year.5 Key steps in the model include conducting problem characterization activities, defining measurements to track outcomes, testing and refining the intervention using plan-do-study-act (PDSA) cycles, and evaluating the impact using statistical process control.5

Problem Characterization

During resident-led stakeholder engagement activities, residents and faculty were asked to characterize barriers to EPA completion. The barriers identified pertained to the following: (1) time constraints for the faculty or learner; (2) personal discomfort in initiating feedback interactions; (3) lack of resident engagement because the EPAs were not mandatory; (4) lack of staff engagement in a new assessment process; (5) the tendency to avoid seeking EPAs when the clinical instance was incomplete or the resident performed suboptimally; and (6) participants forgetting about EPAs in situations that would have been appropriate for assessments.

First PDSA Cycle

The design of the initial intervention stemmed from the barriers identified during stakeholder engagement. The initial intervention assigned a resident to remind the rest of the cohort on a weekly basis to complete EPAs. This was bundled with a peer-enforced rule that the first consult of the week would be the clinical instance used for EPA assessment. This intervention had 4 aims drawn from the problem characterization: (1) increase engagement among the residents in CBD; (2) remove the choice about which instance was “good enough” to seek evaluation; (3) remind residents to complete EPAs; and (4) have EPA completion valued as mandatory rather than as part of an optional pilot.

Second PDSA Cycle

After an unsuccessful initial intervention, the residents held another planning session to discuss the lessons learned from the initial PDSA and refine the intervention. The initial intervention had issues with fidelity as reminders were inconsistently delivered, and the intervention did not adequately address the specific barriers identified previously. A positive factor from the first intervention was how it fostered resident cohesiveness and a desire to complete EPAs to avoid letting each other down. Another untapped motivation identified was the spirit of competition among residents.

The second intervention created an audit-and-feedback leaderboard system. Each resident and the program director were e-mailed a non-anonymized comparison table showing their EPAs completed to date by category and the number of EPAs completed that week. The residents agreed to have their names attached to scores rather than comparing results anonymously to increase the motivational impact of the leaderboard. The program director's office extracted the data from the electronic tool; it took approximately 10 minutes of administrative time weekly to compile and send the leaderboard data. The second intervention was designed to (1) foster resident engagement; (2) promote self-reflection on level of performance; (3) draw on resident cohesiveness; (4) draw on interresident competitiveness; (5) make EPA completion valued as mandatory rather than an optional pilot; and (6) provide a weekly reminder.
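The weekly compilation step described above can be sketched in a few lines of Python. This is a minimal illustration, not the program's actual tooling: the record layout `(resident, category, week)`, the function name `build_leaderboard`, and the `roster` parameter are all assumptions, since the export format of the electronic tool is not described.

```python
from collections import Counter, defaultdict


def build_leaderboard(records, roster, current_week):
    """Build non-anonymized leaderboard rows from assessment records.

    records: iterable of (resident, category, week) tuples (hypothetical layout).
    roster: names of all residents, so those with zero assessments still appear.
    current_week: week number used for the "completed this week" column.
    """
    totals = defaultdict(Counter)  # resident -> category -> count to date
    this_week = Counter()          # resident -> count in the current week
    for resident, category, week in records:
        totals[resident][category] += 1
        if week == current_week:
            this_week[resident] += 1

    rows = []
    for resident in roster:
        by_category = dict(totals[resident])
        rows.append({
            "resident": resident,
            "by_category": by_category,
            "total": sum(by_category.values()),
            "this_week": this_week[resident],
        })
    # Highest total first; ties broken alphabetically for a stable ordering.
    rows.sort(key=lambda row: (-row["total"], row["resident"]))
    return rows


# Hypothetical example data (not taken from the study).
example_records = [
    ("Resident A", "Assessment and management of epistaxis", 33),
    ("Resident A", "Oral examination", 34),
    ("Resident B", "Assessment and management of epistaxis", 34),
]
example_rows = build_leaderboard(
    example_records,
    roster=["Resident A", "Resident B", "Resident C"],
    current_week=34,
)
```

Passing the full roster keeps residents with no assessments on the table, which matters for an audit-and-feedback design where every participant's rate is meant to be visible.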

Ethics review was not required by the University Health Network for this quality improvement measurement of existing educational assessments.

Data Analysis

The outcome measure was the number of assessments completed per week. Results were analyzed using u-charts, a specific type of statistical process control chart, created using quality improvement (QI) macros (KnowWare International Inc, Denver, CO). In a control chart, the control line reflects the mean, and the upper control limit represents 3 standard deviations above the mean. The control chart rules that define significance in health care processes are listed in Box 2.
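For readers unfamiliar with u-charts, the following Python sketch shows the standard textbook calculation of the control line and 3-standard-deviation limits when the number of residents on service changes from week to week. It is not the QI Macros implementation used in this study, the function and variable names are hypothetical, and its output is not expected to reproduce the control limits reported here, which depend on how the software counts resident-weeks.

```python
import math


def u_chart_limits(counts, units):
    """Return (center_line, limits) for a u-chart.

    counts: assessments completed in each week.
    units: residents on service in each week (the "area of opportunity").
    limits: list of (lower, upper) 3-standard-deviation limits, one per week.
    """
    if len(counts) != len(units):
        raise ValueError("counts and units must have the same length")
    if any(n <= 0 for n in units):
        raise ValueError("each week needs at least one resident on service")

    # Center line: total assessments divided by total resident-weeks.
    u_bar = sum(counts) / sum(units)

    limits = []
    for n in units:
        # Standard deviation of a rate based on n units, assuming Poisson counts.
        sigma = math.sqrt(u_bar / n)
        lower = max(0.0, u_bar - 3 * sigma)  # a rate cannot be negative
        upper = u_bar + 3 * sigma
        limits.append((lower, upper))
    return u_bar, limits


# Hypothetical weekly data (not taken from the study).
example_counts = [1, 0, 2, 1]
example_units = [5, 5, 4, 1]
example_center, example_limits = u_chart_limits(example_counts, example_units)
```

Because the standard deviation is proportional to one over the square root of the number of residents, weeks with fewer residents on service have wider limits.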

Box 2 Control Chart Rules of Trend Significance

One point above the upper control limit

2 out of 3 consecutive points more than 2 standard deviations above or below the mean

8 points in a row above or below control line

6 points in a row increasing or decreasing in value

15 points in a row within 1 standard deviation of the mean
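The rules in Box 2 can be checked programmatically. The sketch below is one possible reading of them in Python, under stated assumptions: each rule is evaluated over consecutive points, the 2-of-3 rule requires both points to fall on the same side of the mean, and each week has its own standard deviation (as in a u-chart with varying numbers of residents). The function name and signal labels are hypothetical.

```python
def special_cause_signals(rates, center, sigmas):
    """Return {rule_name: [index of the last point in each window that fires]}.

    rates: observed rate for each week.
    center: control line (mean rate).
    sigmas: standard deviation for each week (all must be positive).
    """
    if len(rates) != len(sigmas):
        raise ValueError("rates and sigmas must have the same length")
    if any(s <= 0 for s in sigmas):
        raise ValueError("standard deviations must be positive")

    # Distance of each point from the control line in standard deviations.
    z = [(r - center) / s for r, s in zip(rates, sigmas)]
    signals = {
        "beyond_ucl": [],
        "two_of_three_beyond_2sd": [],
        "eight_one_side": [],
        "six_trending": [],
        "fifteen_within_1sd": [],
    }
    for i in range(len(z)):
        if z[i] > 3:
            signals["beyond_ucl"].append(i)
        if i >= 2:
            window = z[i - 2:i + 1]
            if sum(v > 2 for v in window) >= 2 or sum(v < -2 for v in window) >= 2:
                signals["two_of_three_beyond_2sd"].append(i)
        if i >= 7:
            window = z[i - 7:i + 1]
            if all(v > 0 for v in window) or all(v < 0 for v in window):
                signals["eight_one_side"].append(i)
        if i >= 5:
            # 6 points in a row means 5 successive differences of the same sign.
            window = rates[i - 5:i + 1]
            diffs = [b - a for a, b in zip(window, window[1:])]
            if all(d > 0 for d in diffs) or all(d < 0 for d in diffs):
                signals["six_trending"].append(i)
        if i >= 14:
            if all(abs(v) < 1 for v in z[i - 14:i + 1]):
                signals["fifteen_within_1sd"].append(i)
    return signals
```

Reporting the index of the last point in each window makes it easy to mark on the chart the week at which a signal is first confirmed.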

Results

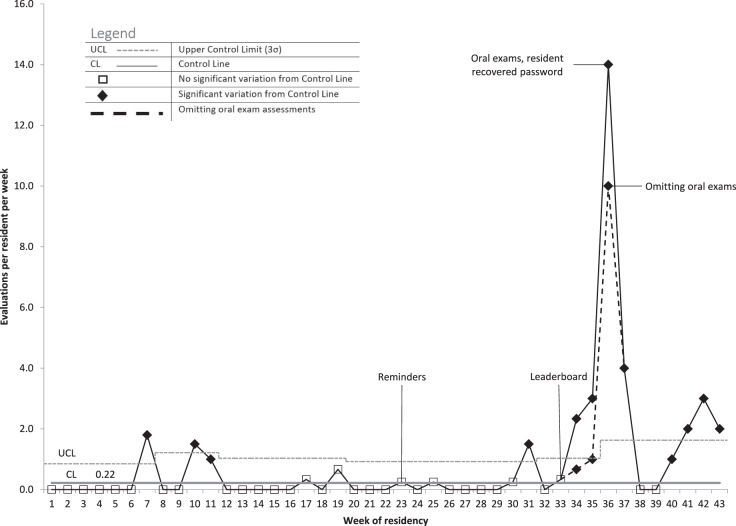

The initial 22 weeks prior to any intervention represent the baseline data set. The baseline rate of completion was 0.22 EPAs completed per resident per week, as indicated by the control line (Figure). The upper control limit varied from week to week because the standard deviation depends on the number of residents on OHNS rotations that week; it ranged from 0.55 (5 residents on OHNS) to 1.10 (1 resident on OHNS). The spike of assessments in week 7, which corresponded to the last week of the first rotation and brought an associated flurry of assessments, was statistically a special cause variation. This pattern was believed to reflect normal assessment-seeking behavior, so it was included in the baseline rate of EPA completion. Special cause variation was also observed in weeks 10 and 11, but no explanatory factors were identified on analysis.

Figure. Entrustable Professional Activity Completion Rate U-Chart

After the first intervention (week 23), there was no change in the rate of EPA completion. The u-chart (Figure) demonstrates 1 point of special cause variation (week 31), which corresponded to a scheduled workshop for this project and is therefore unlikely to be attributable to the intervention.

The leaderboard intervention (implemented during week 33) demonstrated an immediate increase in the number of EPAs completed (Figure). The average completion rate after implementation was 2.87 assessments per resident per week. Weeks 34 to 36 are somewhat anomalous because oral examinations added an extra 15 completed assessments. The dashed trendline shows the trend in EPA completion reanalyzed with those oral examinations omitted; statistically significant improvement was still seen, with a mean of 1.65 per resident per week. A technical barrier with the EPA collection tool was also rectified: a resident who had lost their password to the tool regained access and subsequently completed a backlog of EPAs in week 36.

Discussion

The OHNS PGY-1 cohort led a QI initiative to increase the number of EPA assessments to support CBD and found that a reminder system was unsuccessful. The leaderboard intervention significantly increased the number of completed EPAs above the target goal.

The initial reminder-based system, while simple to implement, is one of the lowest-level interventions in terms of expected effectiveness.6 The refined second leaderboard intervention provided the same frequency of reminder but with a multifaceted increase in persuasiveness. A strength of the intervention was that it encompassed several of the previously identified motivators influencing feedback-seeking behavior—discussions among the residents revealed that individuals were not uniformly motivated by the same aspects of the intervention. Some thought the involvement of the program director increased their motivation the most, while others were more motivated to improve their position on the leaderboard.

The EPA completion rate significantly increased over the baseline level in 8 of the 10 weeks after the leaderboard was implemented. The 2 weeks that had zero assessments followed the 2 weeks of highest EPA completion, which may indicate assessment fatigue among the residents or faculty after the initial success. The recovery to a statistically improved EPA completion rate in the last 4 weeks (without altering the leaderboard) suggests that the leaderboard can have a sustained impact.

Other studies using improved electronic tools and QI methodology have reported improvements in adherence to new resident assessment models.7–10 Our baseline rate is similar to that of other preintervention cohorts, and our relative gain exceeds that reported in other studies implementing improvements to increase the frequency of feedback.9–11 The locally tailored solution we developed was more effective for our department but is perhaps less generalizable than the interventions reported in the larger studies.9,10

Potential limitations of the study include the instability of the data at baseline, which decreases some statistical confidence in the results. However, the baseline was still unacceptably low, and the improvement measured was temporally related to the second intervention. The fact that the researchers were collecting data on their own behavior introduces the potential for subject bias. Despite this, we believe the engagement of residents in QI projects around CBD assessments is crucial for the success and sustainability of these initiatives. This study examined a limited number of EPAs in a single PGY cohort. It is not known how much assessment fatigue program participants and faculty may experience once all 5 PGY cohorts migrate to the CBD model and are simultaneously seeking an increased number of assessments. The effectiveness seen with our leaderboard intervention may not be generalizable to this situation.

As this study measured the frequency of EPA completion, a key next step is to measure the quality of assessments and ensure that quality is maintained as quantity increases. A process measure of fidelity to evaluate the effectiveness of the leaderboard—for example, tracking how many residents actually read the e-mail per week—is also an important next step. This project targeted human factors, but additional QI interventions could focus on system-level changes. Improving faculty and program readiness, streamlining technical challenges with the tool, scheduling feedback sessions, and decreasing the total number of competencies are potentially effective system-level strategies.

Conclusions

Residents in a large OHNS program created and implemented solutions to increase EPA completion using QI methodology. A leaderboard of EPA completion designed on an audit-and-feedback system significantly increased the frequency of EPA completion from 0.22 to 2.87 assessments per resident per week.

Footnotes

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

This work was previously presented at the International Conference on Residency Education, Halifax, Nova Scotia, Canada, October 18–20, 2018.

References

- 1. Royal College of Physicians and Surgeons of Canada. Competence by design. 2014. http://www.royalcollege.ca/rcsite/cbd/competence-by-design-cbd-e. Accessed December 6, 2019.

- 2. Frank JR, Snell LS, ten Cate O, Holmboe ES, Carraccio C, Swing SR, et al. Competency-based medical education: theory to practice. Med Teach. 2010;32(8):638–645. doi: 10.3109/0142159X.2010.501190.

- 3. ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–547. doi: 10.1097/ACM.0b013e31805559c7.

- 4. Equator Network. SQUIRE 2.0 Standards for quality improvement reporting excellence: revised publication guidelines from a detailed consensus process. doi: 10.4037/ajcc2015455. http://www.equator-network.org/reporting-guidelines/squire. Accessed December 6, 2019.

- 5. Langley G, Moen R, Nolan T, Norman C, Provost L. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. 2nd ed. San Francisco, CA: Jossey-Bass; 2009.

- 6. ISMP Medication Safety Alert! Inst Safe Medicat Pract Newsl. 1999. https://www.ismp.org/resources/medication-error-prevention-toolbox. Accessed December 6, 2019.

- 7. Yarris LM, Jones D, Kornegay JG, Hansen M. The milestones passport: a learner-centered application of the milestone framework to prompt real-time feedback in the emergency department. J Grad Med Educ. 2014;6(3):555–560. doi: 10.4300/jgme-d-13-00409.1.

- 8. Donoff MG. Field notes: assisting achievement and documenting competence. Can Fam Physician. 2009;55(12):1260–1262. e100–e102.

- 9. Cooney CM, Cooney DS, Bello RJ, Bojovic B, Redett RJ, Lifchez SD. Comprehensive observations of resident evolution: a novel method for assessing procedure-based residency training. Plast Reconstr Surg. 2016;137(2):673–678. doi: 10.1097/01.prs.0000475797.69478.0e.

- 10. Anderson CI, Basson MD, Ali M, Davis AT, Osmer RL, McLeod MK, et al. Comprehensive multicenter graduate surgical education initiative incorporating entrustable professional activities, continuous quality improvement cycles, and a web-based platform to enhance teaching and learning. J Am Coll Surg. 2018;227(1):64–76. doi: 10.1016/j.jamcollsurg.2018.02.014.

- 11. Emke AR, Park YS, Srinivasan S, Tekian A. Workplace-based assessments using pediatric critical care entrustable professional activities. J Grad Med Educ. 2019;11(4):430–438. doi: 10.4300/jgme-d-18-01006.1.