Abstract

Background

Historically, medically trained experts have served as judges to establish a minimum passing standard (MPS) for mastery learning. As mastery learning expands from procedure-based skills to patient-centered domains, such as communication, there is an opportunity to incorporate patients as judges in setting the MPS.

Objective

We described our process of incorporating patients as judges to set the MPS and compared the MPS set by patients and emergency medicine residency program directors (PDs).

Methods

Patient and physician panels were convened to determine an MPS for a 21-item Uncertainty Communication Checklist. The MPS for both panels were independently calculated using the Mastery Angoff method. Mean scores on individual checklist items with corresponding 95% confidence intervals were also calculated for both panels and differences analyzed using a t test.

Results

Of 240 eligible patients and 42 eligible PDs, 25 patients and 13 PDs (26% and 65% cooperation rates, respectively) completed MPS-setting procedures. The patient-generated MPS was 84.0% (range 45.2–96.2, SD 10.2) and the physician-generated MPS was 88.2% (range 79.7–98.1, SD 5.5). The overall MPS, calculated as an average of these 2 results, was 86.1% (range 45.2–98.1, SD 9.0), or 19 of 21 checklist items.

Conclusions

Patients are able to serve as judges to establish an MPS using the Mastery Angoff method for a task performed by resident physicians. The patient-established MPS was nearly identical to that generated by a panel of residency PDs, indicating similar expectations of proficiency for residents to achieve skill “mastery.”

What was known and gap

With applications of mastery learning expanding to teaching communication and other patient-centered care skills, there is an opportunity to incorporate patients as experts when setting the MPS.

What is new

A process of incorporating patients as judges to set the MPS for the Uncertainty Communication Checklist, using the Mastery Angoff method, and a comparison of the MPS set by patients and emergency medicine residency program directors.

Limitations

Patients were English-speaking and recruited from 2 institutions, reducing diversity and limiting generalizability. The total number of physician judges represented a small percentage of training programs, and additional judges may have changed the ultimate MPS.

Bottom line

Utilizing patient judges on a panel to establish an MPS, using the Mastery Angoff method, for a diagnostic uncertainty communication mastery learning curriculum for resident physicians is feasible.

Introduction

Mastery learning is a form of competency-based medical education developed on the premise that all learners can achieve high levels of performance on a clinical task if given enough time to learn and practice.1,2 Mastery learning is an effective educational approach with documented improved outcomes across many domains, including procedural skills,3 operative interventions,4,5 and difficult conversations.6,7 Additionally, programs implemented using a mastery learning approach have demonstrated improved patient care outcomes and significant cost savings,8,9 suggesting that this learning approach can improve individual and population health.10

There are 7 key components of the mastery learning bundle: (1) baseline testing; (2) clear learning objectives; (3) engagement in educational activities focused on reaching the objectives; (4) a set minimum passing standard (MPS); (5) formative testing; (6) advancement if test achievement is at or above the passing standard; and (7) continued practice until mastery (and the MPS) is reached.11 The establishment of a predetermined, objective, defensible MPS is critical to determining when a learner has achieved mastery.

The first step in setting an MPS is selection of both the method of standard setting and the panel of judges. Historically, the Angoff and Hofstee methods12 were used for standard setting in mastery learning curricula. Experts subsequently proposed new methods to better align with the goals of mastery learning.13,14 The Mastery Angoff method14 has become a favored approach to establishing the MPS in the context of a mastery learning curriculum. Using this approach, judges are asked to consider the performance of a trainee who is ready and well-prepared for the next stage of training, practice, or learning, as opposed to the “borderline trainee” who is considered when using the traditional Angoff method.14 When comparing several different approaches to establish an MPS, one study found the Mastery Angoff method produced a much more stringent MPS, which aligns with the theoretical construct of mastery learning of ensuring that all learners are able to achieve a high level of performance in a mastery learning curriculum.15 Once the method is selected, judges are recruited. Guiding principles for judge selection include content expertise, appropriate knowledge of the learner group, willingness to follow instructions in the standard-setting process, and willingness to minimize bias.12

While mastery learning has been used extensively to teach clinical skills, these skills have focused primarily on procedures; thus, medically trained experts often establish the MPS.16–20 With applications of mastery learning expanding to teaching communication and other patient-centered care skills, there is an opportunity to incorporate patients as experts when setting the MPS. The value of obtaining patient feedback to assess the adequacy of resident communication is highlighted in research that engaged patients to provide feedback on communication skills to surgical residents.21,22 Furthermore, patient satisfaction questions regarding resident performance have been added to Press Ganey surveys.23

Two prior studies incorporated patients for standard setting.24,25 In the first, patients determined the MPS for procedures performed by patients and/or caregivers (left ventricular assist device [LVAD] battery changes, controller changes, and dressing changes).24 In the second, the authors compared both physician and patient responses to inform ultimate determination of an MPS for a communication survey.25 However, to date, patients have yet to be used as judges to set an MPS for physician performance on a task using the Mastery Angoff method.

The first goal of this project is to describe the process of utilizing patients and emergency medicine (EM) residency program directors (PDs) as judges to set the MPS for an uncertainty communication mastery learning curriculum. The second goal is to compare the MPS generated by the 2 different judge panels using the Mastery Angoff method.

Methods

This work is part of a larger project to develop and test a simulation-based mastery learning curriculum to teach EM residents to have more effective discharge conversations for patients with diagnostic uncertainty. We developed a 21-item Uncertainty Communication Checklist26 for use in our simulation-based training and assessment of these physicians' communication skills.

We recruited 2 separate panels, 1 of patients and 1 of physicians, to serve as judges for the standard-setting process. The number of judges for each panel was based on previously published recommendations.12

Inclusion criteria for patients to participate as standard-setting judges were being an English-speaking adult (≥ 18 years) with a recent emergency department (ED) visit within either the Thomas Jefferson University (TJU) Health System or Northwestern Memorial (NM) Hospital that resulted in discharge with a symptom-based diagnosis (eg, abdominal pain). Patient exclusion criteria included being admitted to the hospital as a result of their most recent ED visit; undergoing medical clearance for a detox center or any involuntary court or magistrate order; being in police custody or currently incarcerated; having 4 or more visits to the ED within the month preceding the study recruitment period; having a major communication barrier such as visual, hearing, or cognitive impairment (determined by 6-item screener)27 that would compromise their ability to give written informed consent; or being unwilling or unable to comply with study protocol requirements, as determined by research personnel's best judgment.

An electronic health record report was generated at both health systems to identify potentially eligible patients. Two study physicians independently reviewed the report to identify patients discharged with a symptom-based diagnosis and created a randomly ordered recruitment list.28 Trained research personnel contacted patients by telephone to explain the study and further assess eligibility. Interested and eligible patients were invited to participate in focus groups.

The inclusion criterion for physicians was serving as a current or former PD or associate PD for an accredited EM residency program. An initial recruitment e-mail was sent to 28 individuals selected by the study team through our professional network to balance program geography (Northeast, Midwest, South, West), PD gender, and 3- versus 4-year program designation. As the initial PD response led to a predominance of respondents from the Northeast and from 4-year programs, we sent a second recruitment e-mail to an additional 14 PDs from 3-year programs in the 3 underrepresented geographic areas to balance recruitment, resulting in the final sample.

Patient Standard Setting

During May 2018, we conducted patient standard setting in groups of 5 to 8 patients. The standard-setting session included: (1) discuss the goals of the standard-setting process using the Mastery Angoff method14; (2) define mastery learning; (3) review principles of mastery learning; (4) present and review the Uncertainty Communication Checklist; and (5) complete the standard-setting activity. The same presentation slides were used to lead each group. Two study investigators at each site served as moderators. For the standard-setting activity, each judge was asked to consider a “well-prepared learner,” defined as a resident physician who had completed the uncertainty communication curriculum and would be ready to perform this task safely and appropriately without supervision. Judges then were asked to individually estimate the percentage of learners who, after completing a curriculum designed to teach the elements of the checklist, would perform each item correctly. To ensure participant comprehension of the standard-setting methodology, for the first few items, judges estimated this percentage individually and then shared their estimation and rationale with the group. Each judge independently completed the remaining checklist items; the group discussed items for which any judge had questions. Judges recorded written estimates and submitted them to the research team upon session completion.

PD Standard Setting

Each standard-setting session for the PDs occurred between April and June 2018 using Zoom video conference software. Participants viewed the presentation slides as the moderator reviewed the material. PDs completed the standard-setting activity as described above for patients and submitted their scores electronically using Qualtrics (Qualtrics LLC, Provo, UT).

Mean scores and corresponding 95% confidence intervals on individual checklist items were calculated for patient and physician participants. Mean differences for individual checklist items were analyzed using a t test. The MPS was calculated separately for patient and physician cohorts; the final MPS for the Uncertainty Communication Checklist represents the mean score from all of the judges (each individual equally weighted).29
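As an illustration of the arithmetic behind a panel-level MPS, the following is a minimal sketch, assuming that each judge's MPS is the mean of that judge's item-level percentage estimates and that the panel MPS is the mean across judges with equal weighting. The ratings and the function names `judge_mps` and `panel_mps` are hypothetical and are not study data.

```python
from statistics import mean


def judge_mps(item_estimates):
    """Return one judge's MPS: the mean of their per-item estimates (0-100)."""
    return mean(item_estimates)


def panel_mps(panel):
    """Return the panel MPS: the mean of judge-level MPS values, equally weighted."""
    return mean([judge_mps(judge) for judge in panel])


# Hypothetical ratings (NOT study data): 3 judges x 4 checklist items.
# Each value is the estimated percentage of well-prepared learners
# who would perform that item correctly.
example_panel = [
    [90, 80, 85, 95],
    [70, 75, 80, 85],
    [100, 90, 95, 95],
]

per_judge = [judge_mps(judge) for judge in example_panel]
print(per_judge)                            # [87.5, 77.5, 95.0]
print(round(panel_mps(example_panel), 1))   # 86.7
```

Because every judge rates the same number of items, averaging the judge-level values gives the same result as averaging all item-level estimates in the panel at once.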

The Institutional Review Boards of both Northwestern University (NU) and Thomas Jefferson University approved this study.

Results

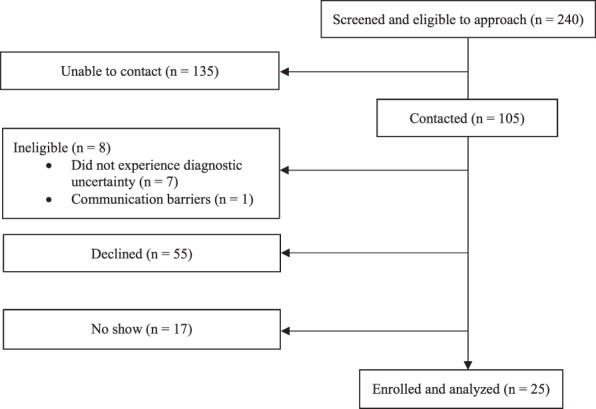

Two hundred forty patients who met inclusion criteria were screened; 105 were able to be contacted, of whom 8 were ineligible and 55 declined participation. Twenty-five patients (26% cooperation rate) agreed to participate as judges in 1 of 4 standard-setting focus groups (2 at TJU, 2 at NU) occurring from May 22 to May 31, 2018 (Figure). Each session included 5 to 8 judges and lasted 90 minutes. We approached 42 PDs, with 20 indicating potential interest, and ultimately 13 PDs (65% cooperation rate) participated as judges in 1 of 4 standard-setting video conferences from April 3 to June 26, 2018. Each session included 2 to 4 judges and lasted 60 minutes. See Tables 1 and 2 for patient and PD judge characteristics, respectively.

Figure.

Recruitment and Screening Process to Enroll Patients as Judges
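The reported cooperation rates can be reproduced with simple arithmetic. The sketch below assumes the denominators are the patients who were contacted and not found ineligible (105 − 8 = 97) and the PDs who indicated potential interest (20); these denominators are an inference from the reported percentages, not a definition stated in the text, and the function name `cooperation_rate` is hypothetical.

```python
def cooperation_rate(participants, contacted_eligible):
    """Percentage of contacted, eligible individuals who participated."""
    return 100 * participants / contacted_eligible


# Assumed denominators (inferred, not stated explicitly in the text):
# patients: 105 contacted minus 8 ineligible; PDs: 20 indicating interest.
patient_rate = cooperation_rate(25, 105 - 8)
pd_rate = cooperation_rate(13, 20)

print(round(patient_rate), round(pd_rate))  # 26 65
```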

Table 1.

Patient Judge Panel Demographics (n = 25)

| Demographics | n (%) |

| Age, mean (range), SD | 44.8 (36–50), 19.5 |

| Race | |

| White | 8 (32) |

| Black | 12 (48) |

| Asian | 2 (8) |

| Other | 2 (8) |

| Ethnicity | |

| Hispanic | 3 (12) |

| Non-Hispanic | 22 (88) |

| Female | 11 (44) |

| Marital status | |

| Married, or in domestic partnership | 7 (28) |

| Single (never married) | 15 (60) |

| Widowed | 2 (8) |

| Divorced | 1 (4) |

| Speaks English as primary language | 23 (92) |

| Household size, mean (SD) | 2.5a (1.4) |

| Household income | |

| < $10K | 4 (16) |

| $10–$24K | 4 (16) |

| $25–$49K | 4 (16) |

| $50–$99K | 4 (16) |

| > $100K | 4 (16) |

| Educational attainment | |

| Less than high school | 1 (4) |

| High school graduate | 10 (40) |

| College degree | 10 (40) |

| Postgraduate degree | 3 (12) |

| Has health insurance | 23 (92) |

| Literacy screening questions | |

| “Never” needs help reading medical instructions | 10 (40) |

| “Always” feels confident filling out medical forms | 10 (40) |

| “Never” has difficulty understanding written information from a health care provider | 10 (40) |

| Patient-identified health status | |

| 1: excellent | 1 (4) |

| 2: very good | 6 (24) |

| 3: good | 9 (36) |

| 4: fair | 7 (28) |

| 5: poor | 1 (4) |

| Has primary care physician | 20 (80) |

| Health care utilization, mean (range) | |

| No. of hospital admissions | 0.7a (0–4) |

| No. of emergency department or urgent care visits | 1.9a (0–5) |

| No. of physician office visits | 8.0a (0–100) |

a At least 1 participant declined to answer.

Table 2.

Program Director Demographics (n = 13)

| Demographics | n (%) |

| Age, mean (range), SD | 42.8 (36–52), 5.2 |

| Race | |

| White | 11 (85) |

| Black | 1 (8) |

| Asian | 1 (8) |

| Ethnicity | |

| Hispanic | 1 (8) |

| Non-Hispanic | 12 (92) |

| Sex | |

| Male | 5 (39) |

| Female | 8 (62) |

| Training program PD attended | |

| 3-year | 3 (23) |

| 4-year | 10 (77) |

| Specialtya | |

| Emergency medicine | 13 (100) |

| Internal medicine | 1 (8) |

| Toxicology | 1 (8) |

| Board certificationsa | |

| Emergency medicine | 13 (100) |

| Internal medicine | 1 (8) |

| Toxicology | 1 (8) |

| Training program PD directs, n (%) | |

| 3-year | 4 (31) |

| 4-year | 9 (69) |

| Years in practice since residency, mean (range), SD | 3.3 (1–8), 2.6 |

| Hospital setting | |

| Urban | 11 (85) |

| Suburban | 2 (15) |

| Hospital geographic location | |

| Northeast | 6 (46) |

| Midwest | 3 (23) |

| South | 2 (15) |

| West | 2 (15) |

Abbreviation: PD, program director.

a Program directors listed more than 1 specialty and board certification.

Patients assigned higher scores on 5 items, and physicians assigned higher scores on the remaining 16 items. Only 3 of 21 items had statistically significant differences between patient and physician raters (Table 3).

Table 3.

Comparison of Standard Setting Between Scoring Groups

| Uncertainty Communication Checklist26 | Patient, Mean (95% CI) | Program Director, Mean (95% CI) | Mean Difference (95% CI of Mean Difference) |

| 1. Explain to the patient that they are being discharged | 86.9 (79.8–93.9) | 95.8 (93.4–98.3) | 8.9 (-0.8–18.7) |

| 2. Ask if there is anyone else that the patient wishes to have included in this conversation in person and/or by telephone | 65.7 (56.9–74.6) | 85.4 (78.2–92.5) | 19.7 (6.6–32.7)a |

| 3. Clearly state that either “life-threatening” or “dangerous” conditions have not been found | 86.4 (78.9–93.8) | 91.4 (87.7–95.0) | 5.0 (-5.4–15.5) |

| 4. Discuss diagnoses that were considered (using both medical and lay terminology) | 81.0 (75.0–87.0) | 84.5 (76.6–92.3) | 3.5 (-6.3–13.2) |

| 5. Communicate relevant results of tests to the patient (normal or abnormal) | 84.4 (79.3–89.4) | 84.6 (79.6–89.6) | 0.2 (-7.4–7.9) |

| 6. Ask patient if there are any questions about testing and/or results | 88.9 (81.8–96.1) | 93.8 (91.0–96.6) | 4.9 (-5.1–14.9) |

| 7. Ask if patient was expecting anything else to be done during their encounter; if yes, address reasons not done | 60.0 (51.1–68.9) | 82.3 (76.7–87.9) | 22.3 (9.5–35.1)a |

| 8. Discuss possible alternate or working diagnoses | 73.9 (65.7–82.0) | 86.8 (80.3–93.2) | 12.9 (0.9–24.9)a |

| 9. Clearly state that there is not a confirmed explanation (diagnosis) for what the patient has been experiencing | 81.7 (73.5–89.9) | 89.6 (84.0–95.2) | 7.9 (-3.9–19.8) |

| 10. Validate the patient's symptoms | 81.0 (74.3–87.7) | 81.2 (73.2–89.1) | 0.2 (-10.4–10.7) |

| 11. Discuss that the ED role is to identify conditions that require immediate attention | 79.4 (70.4–88.3) | 88.8 (85.1–92.6) | 9.4 (-3.1–22.0) |

| 12. Normalize leaving the ED with uncertainty | 84.7 (79.6–89.8) | 87.7 (82.8–92.6) | 3.0 (-4.7–10.7) |

| 13. Suggest realistic expectations/trajectory for symptoms | 86.7 (81.8–91.6) | 83.1 (77.8–88.4) | -3.6 (-11.2–3.9) |

| 14. Discuss next tests that are needed, if any | 87.8 (80.2–95.4) | 88.1 (82.6–93.5) | 0.3 (-10.8–11.3) |

| 15. Discuss who to see next and in what time frame | 91.9 (87.2–96.6) | 86.5 (80.9–92.1) | -5.4 (-12.8–2.1) |

| 16. Discuss a plan for managing symptoms at home | 89.6 (84.5–94.6) | 90.8 (86.3–95.2) | 1.2 (-6.3–8.8) |

| 17. Discuss any medication changes | 90.2 (83.9–96.4) | 86.6 (79.6–93.4) | -3.6 (-13.3–6.1) |

| 18. Ask patient if there are any questions and/or anticipated problems related to next steps (self-care and future medical care) after discharge | 88.6 (83.1–94.1) | 85.2 (78.2–92.2) | -3.4 (-12.2–5.5) |

| 19. Discuss what symptoms should prompt immediate return to the ED | 90.6 (84.6–96.5) | 90.6 (86.9–94.4) | 0.0 (-8.5–8.6) |

| 20. Make eye contact | 89.4 (82.9–95.9) | 95.0 (91.9–98.0) | 5.6 (-3.5–14.7) |

| 21. Ask patient if there are any other questions or concerns | 95.8 (92.9–98.7) | 93.7 (91.0–96.4) | -2.1 (-6.5–2.2) |

Abbreviations: CI, confidence interval; ED, emergency department.

a P < .05.
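For a two-sided test at the .05 level, a difference is statistically significant exactly when the 95% CI of the mean difference excludes zero. The following minimal sketch applies that check to the CI bounds transcribed from Table 3; the variable names are hypothetical, and the check is only a reading aid, not the analysis code used in the study.

```python
# (item number, lower bound, upper bound) of the 95% CI of the mean
# difference, transcribed from Table 3.
ci_of_difference = [
    (1, -0.8, 18.7), (2, 6.6, 32.7), (3, -5.4, 15.5), (4, -6.3, 13.2),
    (5, -7.4, 7.9), (6, -5.1, 14.9), (7, 9.5, 35.1), (8, 0.9, 24.9),
    (9, -3.9, 19.8), (10, -10.4, 10.7), (11, -3.1, 22.0), (12, -4.7, 10.7),
    (13, -11.2, 3.9), (14, -10.8, 11.3), (15, -12.8, 2.1), (16, -6.3, 8.8),
    (17, -13.3, 6.1), (18, -12.2, 5.5), (19, -8.5, 8.6), (20, -3.5, 14.7),
    (21, -6.5, 2.2),
]

# An interval excludes zero when both bounds lie on the same side of zero.
significant_items = [
    item for item, lower, upper in ci_of_difference
    if lower > 0 or upper < 0
]

print(significant_items)  # [2, 7, 8]
```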

The patient judges set an MPS of 84.0% (range 45.2–96.2, SD 10.2); PD judges set an MPS of 88.2% (range 79.7–98.1, SD 5.5). The impact of the difference in the MPS between the 2 judge groups is 1 item: the patient MPS equated to a passing score of 18 out of 21 items correct, whereas the physician MPS equated to a passing score of 19 out of 21 items correct. The overall MPS, calculated as an average of these 2 results, was 86.1% (range 45.2–98.1, SD 9.0), equating to performing 19 of 21 items correctly.
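The conversion from a percentage MPS to a number of checklist items can be sketched as follows. The sketch assumes the passing score is the percentage of 21 items rounded up to the next whole item; that rounding rule is an assumption, chosen because it reproduces the item counts reported above, and the function name `items_required` is hypothetical.

```python
import math

N_ITEMS = 21  # items on the Uncertainty Communication Checklist


def items_required(mps_percent, n_items=N_ITEMS):
    """Smallest whole number of items meeting the MPS (assumed: round up)."""
    return math.ceil(mps_percent / 100 * n_items)


patient_mps = 84.0
pd_mps = 88.2
# Overall MPS as reported: the average of the 2 panel results.
overall_mps = round((patient_mps + pd_mps) / 2, 1)

print(overall_mps)                  # 86.1
print(items_required(patient_mps))  # 18  (17.64 rounded up)
print(items_required(pd_mps))       # 19  (18.52 rounded up)
print(items_required(overall_mps))  # 19  (18.08 rounded up)
```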

Discussion

We demonstrated that patients are able to serve as judges for a task performed by physicians using the Mastery Angoff method. Further, in this study, patients established an MPS that is nearly identical to that generated by a panel of residency PDs.

For any given checklist, there is no gold standard for the MPS. Therefore, we cannot infer if patient- or physician-generated scores are “better” or “more accurate” on our Uncertainty Communication Checklist. Instead, we can only comment on the similarities or differences among judges' scores. We found the difference between scores generated by patients and physicians to result in only 1 more item needing to be performed correctly for the more stringent physician-generated score. This high level of agreement reflects the importance that both patients and physicians place on communication, particularly in the context of uncertainty at the time of ED discharge.

We observed a wider range of scores among patients, represented by a standard deviation of 10.2 versus 5.5 among PDs. One outlier patient was the primary driver of the difference in score range. Although statistically an outlier, the team believed that the low percentage estimations of this patient were not due to misunderstanding of the standard-setting task, but rather to their true beliefs about what could be expected of the residents achieving mastery (based on negative prior experiences). Therefore, we retained all scores for the calculations as they represented the patient perspective. Notably, removal of the scores from the patient outlier would not have altered the MPS.

Statistically significant differences between patient and PD scores were identified on only 3 of the 21 Uncertainty Communication Checklist items (items 2, 7, 8). Based on participant feedback during the standard-setting process, we believe several explanations exist for these differences. Patients reflected on their own experiences in the emergency setting, which had never included such interactions (eg, items 2 and 7). While it might seem that patients would frequently experience a discussion of alternate diagnoses (item 8), in an era imbued with fear of giving the “wrong” answer, physicians may not routinely share this information unless specifically guided. PDs, however, commented that while their residents did not engage in these communication tasks routinely, the tasks were concrete items that a well-prepared resident would be able to achieve if instructed properly.

With this work, we sought to extend patient involvement in communication skills assessment by demonstrating the feasibility of engaging patients as judges in mastery learning standard setting for topics in which they have appropriate content expertise. This study extends the reach of 2 previous studies that utilized patients as judges to establish an MPS,24,25 and is the first to integrate patient perspectives through their participation as judges in the context of mastery learning for the assessment of physician performance.

Our findings are similar to those of Barsuk et al,24 which reported a difference in an MPS set by patients and physicians of only 1 checklist item. However, the Wayne et al study25 reported a much larger difference between patient and physician standards. Such differences may be due to the standard-setting approach used. The Wayne et al study used the traditional Angoff method of standard setting, which asks judges to conceptualize a “borderline” learner.25 In contrast, our study used the Mastery Angoff method, asking judges to consider a “well-prepared” learner. Patients may be less familiar than physicians with what would constitute a “borderline” learner, yet better able to conceptualize a “well-prepared” learner, which could explain the similarity in scores. Further, in the Wayne et al study, judges were provided baseline performance data, which has been shown to influence standard-setting scores.30,31 In our study, the focus on “mastery” and the lack of provision of baseline data may have removed the influence of previous personal experience (for both patients and PDs) and served to create a shared mental model among all judges of the ideal masterful performance of the skill.

Our methodology incorporated use of a video conference to facilitate conduct of the standard-setting sessions, which allowed for a panel of judges spanning the geographical and programmatic variation inherent in EM training programs, potentially decreasing their bias. Such broad representation may increase the generalizability of the MPS for this checklist beyond a single institution.

Limitations of this study include generalizability; although physician judges were recruited nationally, patients were English-speaking and recruited from 2 institutions, which reduces diversity. The total number of physician judges represents a small percentage (18%) of overall EM training programs. It is possible that additional judges may have changed the ultimate MPS.

As this study used only one approach for setting the MPS, future studies comparing standard-setting approaches with patient judges may elucidate differences among these approaches.

Conclusions

This study demonstrated that using patient judges on a panel to establish an MPS for the Uncertainty Communication Checklist, using the Mastery Angoff method, for a diagnostic uncertainty communication mastery learning curriculum for resident physicians is feasible. In addition, patient and expert physician judges arrived at a nearly identical MPS.

Footnotes

Funding: This project was supported by grant number R18HS025651 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Conflict of interest: The authors declare they have no competing interests.

This work was previously presented as a poster at the Council of Residency Directors in Emergency Medicine Academic Assembly, Seattle, Washington, March 31–April 3, 2019.

The authors would like to thank all of the physicians and patients for serving as judges.

References

- 1. McGaghie WC, Miller GE, Sajid A, Telder TV. Competency-based curriculum development in medical education: an introduction. Public Health Pap. 1978;68:11–91.

- 2. McGaghie WC. Mastery learning: it is time for medical education to join the 21st century. Acad Med. 2015;90(11):1438–1441. doi: 10.1097/ACM.0000000000000911.

- 3. McGaghie WC, Issenberg SB, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375–385. doi: 10.1111/medu.12391.

- 4. Ritter EM, Taylor ZA, Wolf KR, Franklin BR, Placek SB, Korndorffer JR Jr, et al. Simulation-based mastery learning for endoscopy using the endoscopy training system: a strategy to improve endoscopic skills and prepare for the fundamentals of endoscopic surgery (FES) manual skills exam. Surg Endosc. 2018;32(1):413–420. doi: 10.1007/s00464-017-5697-4.

- 5. Teitelbaum EN, Soper NJ, Santos BF, Rooney DM, Patel P, Nagle AP, et al. A simulator-based resident curriculum for laparoscopic common bile duct exploration. Surgery. 2014;156:880–893. doi: 10.1016/j.surg.2014.06.020.

- 6. Sharma R, Szmuilowicz E, Ogunseitan A, Montalvo J, O'Leary K, Wayne DB. Evaluation of a mastery learning intervention on hospitalists' code status discussion skills. J Pain Symptom Manage. 2017;53(6):1066–1070. doi: 10.1016/j.jpainsymman.2016.12.341.

- 7. Vermylen JH, Wood GJ, Cohen ER, Barsuk JH, McGaghie WC, Wayne DB. Development of a simulation-based mastery learning curriculum for breaking bad news. J Pain Symptom Manage. 2019;57(3):682–687. doi: 10.1016/j.jpainsymman.2018.11.012.

- 8. Cohen ER, Feinglass J, Barsuk JH, Barnard C, O'Donnell A, McGaghie WC, et al. Cost savings from reduced catheter-related bloodstream infection after simulation-based education for residents in a medical intensive care unit. Simul Healthc. 2010;5(2):98–102. doi: 10.1097/SIH.0b013e3181bc8304.

- 9. Barsuk JH, Cohen ER, Feinglass J, Kozmic SE, McGaghie WC, Ganger D, et al. Cost savings of performing paracentesis procedures at the bedside after simulation-based education. Simul Healthc. 2014;9(5):312–318. doi: 10.1097/SIH.0000000000000040.

- 10. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Medical education featuring mastery learning with deliberate practice can lead to better health for individuals and populations. Acad Med. 2011;86(11):e8–e9. doi: 10.1097/ACM.0b013e3182308d37.

- 11. McGaghie WC, Siddall VJ, Mazmanian PE, Myers J. American College of Chest Physicians Health and Science Policy Committee. Lessons for continuing medical education from simulation research in undergraduate and graduate medical education: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(3 suppl):62–68. doi: 10.1378/chest.08-2521.

- 12. Downing SM, Tekian A, Yudkowsky R. Procedures for establishing defensible absolute passing scores on performance examinations in health professions education. Teach Learn Med. 2006;18(1):50–57. doi: 10.1207/s15328015tlm1801_11.

- 13. Yudkowsky R, Tumuluru S, Casey P, Herlich N, Ledonne C. A patient safety approach to setting pass/fail standards for basic procedural skills checklists. Simul Healthc. 2014;9(5):277–282. doi: 10.1097/SIH.0000000000000044.

- 14. Yudkowsky R, Park YS, Lineberry M, Knox A, Ritter EM. Setting mastery learning standards. Acad Med. 2015;90(11):1495–1500. doi: 10.1097/ACM.0000000000000887.

- 15. Barsuk JH, Cohen ER, Wayne DB, McGaghie WC, Yudkowsky R. A comparison of approaches for mastery learning standard setting. Acad Med. 2018;93(7):1079–1084. doi: 10.1097/ACM.0000000000002182.

- 16. Wayne DB, Fudala MJ, Butter J, Siddall VJ, Feinglass J, Wade LD, et al. Comparison of two standard-setting methods for advanced cardiac life support training. Acad Med. 2005;80(10 suppl):63–66. doi: 10.1097/00001888-200510001-00018.

- 17. Wayne DB, Butter J, Cohen ER, McGaghie WC. Setting defensible standards for cardiac auscultation skills in medical students. Acad Med. 2009;84(10 suppl):94–96. doi: 10.1097/ACM.0b013e3181b38e8c.

- 18. Barsuk JH, Cohen ER, Vozenilek JA, O'Connor LM, McGaghie WC, Wayne DB. Simulation-based education with mastery learning improves paracentesis skills. J Grad Med Educ. 2012;4(1):23–27. doi: 10.4300/JGME-D-11-00161.1.

- 19. Barsuk JH, Cohen ER, Caprio T, McGaghie WC, Simuni T, Wayne DB. Simulation-based education with mastery learning improves residents' lumbar puncture skills. Neurology. 2012;79(2):132–137. doi: 10.1212/WNL.0b013e31825dd39d.

- 20. Butter J, McGaghie WC, Cohen ER, Kaye M, Wayne DB. Simulation-based mastery learning improves cardiac auscultation skills in medical students. J Gen Intern Med. 2010;25(8):780–785. doi: 10.1007/s11606-010-1309-x.

- 21. Stausmire JM, Cashen CP, Myerholtz L, Buderer N. Measuring general surgery residents' communication skills from the patient's perspective using the Communication Assessment Tool (CAT). J Surg Educ. 2015;72(1):108–116. doi: 10.1016/j.jsurg.2014.06.021.

- 22. Newcomb AB, Liu C, Trickey AW, Lita E, Dort J. Patient perspectives of surgical residents' communication: do skills improve over time with a communication curriculum? J Surg Educ. 2018;75(6):e142–e149. doi: 10.1016/j.jsurg.2018.06.015.

- 23. Lang SC, Weygandt PL, Darling T, Gravenor S, Evans JJ, Schmidt MJ, et al. Measuring the correlation between emergency medicine resident and attending physician patient satisfaction scores using Press Ganey. AEM Educ Train. 2017;1(3):179–184. doi: 10.1002/aet2.10039.

- 24. Barsuk JH, Harap RS, Cohen ER, Cameron KA, Grady KL, Wilcox JE, et al. The effect of judge selection on standard setting using the Mastery Angoff method during development of a ventricular assist device self-care curriculum. Clinical Sim Nursing. 2019;27:39–47. doi: 10.1016/j.ecns.2018.10.005.

- 25. Wayne DB, Cohen E, Makoul G, McGaghie WC. The impact of judge selection on standard setting for a patient survey of physician communication skills. Acad Med. 2008;83(10 suppl):17–20. doi: 10.1097/ACM.0b013e318183e7bd.

- 26. Rising KL, Powell RE, Cameron KA, Salzman DH, Papanagnou D, Doty AMB, et al. Development of the Uncertainty Communication Checklist: a patient-centered approach. Acad Med. In press.

- 27. Callahan CM, Unverzagt FW, Hui SL, Perkins AJ, Hendrie HC. Six-item screener to identify cognitive impairment among potential subjects for clinical research. Med Care. 2002;40(9):771–781. doi: 10.1097/00005650-200209000-00007.

- 28. Slovis BH, McCarthy DM, Nord G, Doty AM, Piserchia K, Rising KL. Identifying emergency department symptom-based diagnoses with the unified medical language system. West J Emerg Med. 2019;20(6):910–917. doi: 10.5811/westjem.2019.8.44230.

- 29. Angoff WH. Scales, norms, and equivalent scores. In: Thorndike RL, editor. Educational Measurement. 2nd ed. Washington, DC: American Council on Education; 1971. pp. 508–600.

- 30. Wayne DB, Barsuk JH, Cohen E, McGaghie WC. Do baseline data influence standard setting for a clinical skills examination? Acad Med. 2007;80(10 suppl):105–108. doi: 10.1097/ACM.0b013e318141f464.

- 31. Prenner SB, McGaghie WC, Chuzi S, Cantey E, Didwania A, Barsuk JH. Effect of trainee performance data on standard setting judgments using the Mastery Angoff method. J Grad Med Educ. 2018;10(3):301–305. doi: 10.4300/JGME-D-17-00781.1.