Abstract

Objective

The Uniform Data Set 3.0 (UDS 3.0) neuropsychological battery is a recently published battery intended for clinical research with older adult populations. While normative data for the core measures have been published, several additional discrepancy and derived scores can also be calculated. We present normative data for Trail Making Test (TMT) A & B discrepancy and ratio scores, semantic and phonemic fluency discrepancy scores, Craft Story percent retention score, Benson Figure percent retention score, difference between verbal and visual percent retention, and an error index.

Method

Cross-sectional data from 1803 English-speaking, cognitively normal control participants were obtained from the NACC central data repository.

Results

Descriptive information for derived indices is presented. Demographic variables, most commonly age, demonstrated small but significant associations with the measures. Regression values were used to create a normative calculator, made available in a downloadable supplement. Statistically abnormal values (i.e., raw scores corresponding to the 5th, 10th, 90th, and 95th percentiles) were calculated to assist in practical application of normative findings to individual cases. Preliminary validity of the indices is demonstrated by a case study and group comparisons between samples of individuals with Alzheimer's disease (AD; N = 81) and dementia with Lewy bodies (DLB; N = 100).

Conclusions

Clinically useful normative data for these derived indices from the UDS 3.0 neuropsychological battery are presented to help researchers and clinicians interpret these scores while accounting for demographic factors. Preliminary validity data are presented as well, along with limitations and future directions.

Keywords: Normative data, Uniform data set, Sex, Age, Education

Introduction

The National Alzheimer’s Coordinating Center (NACC) developed the neuropsychological battery of the Uniform Data Set (UDS) in 2005, with the intent to measure cognitive performance with a core set of measures in Alzheimer’s disease (AD) and related conditions (Weintraub et al., 2018). Beginning in 2015, a new version of the UDS battery, version 3.0, was released and can be accessed at https://www.alz.washington.edu/WEB/forms_uds.html. The development of the UDS 3.0 neuropsychological battery has been described elsewhere (Weintraub et al., 2018) and is summarized only briefly here. The stated goal of the revision was to create a core battery of cognitive tasks that covered domains relevant for common neurodegenerative conditions and was practical for use with older adults and individuals with dementia, while simultaneously addressing potential licensing and other restrictions on sharing tests and data. A committee of neuropsychology experts evaluated candidate instruments and reached a conclusion on measures to be included in the 3.0 revision.

This version contains tests that will be familiar to the practicing neuropsychologist. Verbal episodic memory is assessed with the Craft story memory task, which requires participants to recall a short story over short and long delays (Craft et al., 1996). Visuoconstruction and visual recall are assessed by the Benson figure copy and recall task (Possin et al., 2011), which is akin to a modified and simplified version of the Rey figure (Rey, 1941). Confrontation naming is assessed by the multilingual naming test (Ivanova et al., 2013). A non-proprietary version of digit span forwards and backwards is included. The trail making test (TMT), parts A and B (Partington and Leiter, 1949), semantic fluency for animals and vegetables, and phonemic verbal fluency for the letters F and L round out the battery (Weintraub et al., 2018).

Already, an impressive amount of work has been done with this battery. First, detailed scoring procedures, forms, and administrative materials are freely available online at https://www.alz.washington.edu/WEB/researcher_home.html and are available for use with appropriate permissions. These standardized methods of administering and scoring instruments could help alleviate some of the drift in scoring and allocating errors that are common in other instruments (see, e.g., Lopez et al., 2003; Bortnik et al., 2013). Furthermore, normative data from 3602 cognitively intact older adults were published recently, including a normative calculator available online, allowing for demographically corrected scores to be easily calculated for an individual participant (Weintraub et al., 2018). A “cross walk” comparison of these new measures and their legacy formats from the UDS 2.0 has been published as well (Monsell et al., 2016) to allow for comparisons of scores from previous versions of the tests.

Most important to the current study, the data collected from this ongoing and expanding project have been made available online to interested researchers at https://www.alz.washington.edu/NONMEMBER/QUERY/datareqnew.html. In the current climate where normative data for neuropsychological tests are frequently proprietary and not available for further analyses, query, or development of more specific applications, this degree of transparency and accessibility is refreshing. Although of course caveats about potential under-recruitment of individuals from minority samples and caution about the representativeness of data drawn from older adults who present for cognitive research are applicable to these data, these tools and their development have great potential for advancing our knowledge of the neuropsychology of aging and neurodegenerative disease by providing a common language to describe cognition.

Building upon this core of measures and scores, additional indices are commonly used by clinicians and researchers and can be derived from the battery. The current paper focuses on several such indices: trail-making discrepancy scores, verbal fluency discrepancy scores, memory retention indices, and an error index. Understanding and using these additional metrics can provide a more nuanced interpretation of results, with potential for greater diagnostic efficiency and more accurate assessment of patient strengths and weaknesses.

Trail-Making Discrepancy Scores

Despite the frequency of its use as an executive functioning measure, the TMT relies upon a host of other abilities, including graphomotor speed and visual scanning (Jarvis & Barth, 1994; Rabin et al., 2005; Spreen & Strauss, 1998). As a result, some authors (Gaudino et al., 1995; Lamberty et al., 1994) have suggested that the difference score between part A and part B might be more useful in interpreting TMT performance if the intent is to derive a relatively isolated measure of shifting/executive functioning. Age-stratified normative data for such ratio and discrepancy scores have been presented previously (Tombaugh et al., 1998), and recently published normative values are available for an Australian sample (Senior et al., 2018); however, the applicability of these findings in a modern US population and the impact of demographic factors (e.g., gender and education) on performance are not well researched (Drane et al., 2002).

Verbal Fluency Discrepancy

In a similar vein, verbal fluency tests can provide information to neuropsychologists regarding language and executive functioning (Lezak, 1995). These tasks are easily administered, brief, and sensitive to a host of central nervous system disorders (Cottingham & Hawkins, 2010). In clinical practice, phonemic and semantic verbal fluency tasks are typically employed. Phonemic verbal fluency requires the engagement of several cognitive processes, such as working memory and cognitive flexibility, in addition to general lexical knowledge (Cohen et al., 1999). In contrast, semantic fluency involves a more structured search through conceptual knowledge, with lessened executive demands (Baldo et al., 2006). Discrepancies in phonemic and semantic fluency may be of diagnostic value (Spreen & Strauss, 1998) inasmuch as the differences between scores may reflect different sources of dysfunction. For example, early in the course of AD, there is typically a relatively large weakness in semantic fluency relative to phonemic fluency (Canning et al., 2004; Gladsjo et al., 1999; Spreen et al., 2006).

Seminal work creating normative values for these fluency discrepancies was presented by Gladsjo and colleagues (1999) for F-A-S versus animal fluency. More recently F versus animal fluency normative data have been published (Vaughan et al., 2016). In the UDS, two trials of phonemic (F and L) and semantic (animals and vegetables) measures are administered, avoiding some of the reliability issues inherent to using a single letter or category to establish a discrepancy, as well as allowing for a more even comparison between trials. To date though, no normative data have been published on the discrepancies between these forms of the task.

Percent Retention Indices

With episodic memory, percent retention scores may provide a more sensitive measure of memory dysfunction than raw acquisition or recall (Clark et al., 2010). Percent retention scores have been shown to be helpful for differentiating AD from other dementia etiologies, such as frontotemporal dementia (Wicklund et al., 2007) and dementia with Lewy bodies (DLB; Ferman et al., 2006). Furthermore, Clark, Hobson, and O’Bryant (2010) examined differences in percent retention rates on the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) memory subtests in clinical cases and controls. Thirty-seven percent of patients in the clinical group obtained scores within the range of normal functioning on the list recall subtests but were classified as impaired when retention rates were examined (Duff et al., 2005). In a similar vein, Duff, Hobson, Beglinger, and O’Bryant (2010) evaluated the diagnostic accuracy of RBANS retention scores among normal controls, individuals with amnestic mild cognitive impairment, and individuals with AD dementia. Retention scores performed better than or comparable to traditional indices in determining clinical status. These findings suggest that memory percent retention scores may be valuable additions to normative data on memory measures.

Errors on Executive Measures

Although overall quantitative measures of performance, like total words produced on a fluency task or time to complete a trail making test, are commonly employed in research and clinical practice, errors made while performing these measures may provide additional and clinically useful data. Studies on cognitively healthy individuals with genetic risk for AD have shown a heightened vulnerability for qualitative error commission on executive function tests (Wetter et al., 2005) and unevenly distributed performance on verbal fluency tasks (Houston et al., 2005). Errors on trail-making, rather than time to completion, were more strongly correlated with right hemisphere lesions in a group with focal brain injuries (Kopp et al., 2015). In addition, those with a history of traumatic brain injury tend to perseverate more on verbal fluency measures, a feature thought to be reflective of working memory dysfunction (Fischer-Baum et al., 2016). All told, analyzing errors along with traditional quantitative performances may improve detection of central nervous system dysfunction (Hankee et al., 2013).

The Potential Clinical Utility of Discrepancy Scores

The clinical utility of derived and discrepancy scores in actual practice has been explored in only a few studies, and more work is needed to demonstrate their value in research and clinical settings. One relevant example is the study by Clark, Hobson, and O’Bryant (2010), which evaluated percent memory retention on the RBANS and demonstrated that a high percentage of patients were classified as impaired when examining percent retention rates but were in the range of normal functioning when using the traditional metrics. In the aforementioned study by Duff and colleagues (2010), percent retention scores performed better than or comparable to traditional indices in determining clinical status. In a similar vein, a study by Jacobson, Delis, Bondi, and Salmon (2002) highlighted the importance of considering alternative methods of defining cognitive changes, such as evaluating cognitive discrepancy scores, in people at risk for AD. Findings from studies such as these suggest that normative data for discrepancy scores may be beneficial in research and clinical practice, though clearly more work is needed.

The Current Study

The goal of the current study was to develop normative data for each of these discrepancies and derived indices within the UDS 3.0. There were three major aims: to describe trail making A and B discrepancies, semantic and phonemic verbal fluency discrepancies, percent retention indices, verbal/visual memory discrepancies, and errors in a national sample of neurologically normal adults; to examine the relationship of these indices with age, education, and gender; and to provide clinically useful normative data of these same indices to aid researchers and clinicians in their interpretation. We demonstrate the potential utility of these constructions with a case example and comparison of DLB and AD. This research is of consequence given the potential of the UDS 3.0 neuropsychological battery to further cognitive aging and neurodegenerative research and the clinical utility of the derived indices.

Materials and Methods

Inclusion/Exclusion Criteria

Data from the current study were derived from the NACC central data repository. Informed consent was obtained from participants at data collection; all current analyses were performed on deidentified data made available to researchers by the NACC. Procedures at individual sites were conducted in compliance with appropriate laws, guidelines, and institutional review. Data from 35 Alzheimer’s Disease Centers were included. Characteristics of individual NACC sites vary from location to location, but the sites tend to be academic centers with a focus on aging and dementia. For the current data set, the number of participants per location ranged from 1 to 212, with an average of 51 participants derived from each center. The data request included all individuals who completed a Montreal Cognitive Assessment and the UDS 3.0 battery on their initial visit from March 2015 until the current data request in February 2018. To define the normative sample, we followed the rationale presented by Weintraub and colleagues (2018). First, we included individuals who had a Clinical Dementia Rating (Hughes et al., 1982) scale score of 0 and were categorized according to clinical consensus as being a normal control participant at their initial visit. This step left 2182 cases for review. This number differs from the approximately 3600 cases presented in Weintraub and colleagues’ (2018) normative paper, as individuals in the Weintraub study could have completed the UDS 3.0 neuropsychological battery at any clinic visit, whereas our data were restricted to those who were diagnosed as normal and completed the 3.0 battery on their initial clinical visit. This approach was taken to avoid potential confounds of practice effects from repeated testing. Next, because of our emphasis on several language measures, we excluded 66 individuals who reported a primary language other than English. Finally, we excluded list-wise individuals missing cognitive data relevant to the current study, so as to create a uniform normative sample for all indices of interest, leaving a total of 1803 individuals available for the current analysis.

To demonstrate the potential utility of these measures, a separate group of patients with a primary diagnosis of DLB (N = 100) and a matched AD group (N = 81) were evaluated using these derived measures. Details on this sample and the analytic plan are reported separately in the Results section.

Measures and Calculations of Derived Scores

As noted in the introduction, the measures of the UDS 3.0 neuropsychological battery are well described in the relevant publications (Monsell et al., 2016; Weintraub et al., 2018) and in online documentation. As such, we will not restate a description of the tests here and instead focus on the derived variables, which are the focus of the current study.

TMT discrepancy and ratio scores

We calculated two different trail-making performance discrepancies. The first is a simple difference score (TMTB time to complete − TMTA time to complete; we will refer to this as TMT difference score). The second, which we will refer to as TMT ratio score, is the time to complete TMTB divided by the time to complete TMTA. These scores are thought to provide indices of set switching abilities that control for differences in psychomotor speed.
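As a minimal sketch of the two calculations just described, the Python snippet below computes both indices from completion times in seconds. The function name and the example times are illustrative assumptions and are not part of the UDS materials; rejecting a non-positive TMT A time is likewise our own guard, not a documented scoring rule.

```python
def tmt_discrepancy_scores(tmt_a_seconds, tmt_b_seconds):
    """Return (difference, ratio) for TMT completion times given in seconds."""
    if tmt_a_seconds <= 0:
        # Assumption: a ratio is undefined without a positive TMT A time.
        raise ValueError("TMT A time must be positive to form a ratio")
    difference = tmt_b_seconds - tmt_a_seconds  # TMT difference score (B - A)
    ratio = tmt_b_seconds / tmt_a_seconds       # TMT ratio score (B / A)
    return difference, ratio


# Hypothetical participant: 28 s on part A and 71 s on part B.
difference, ratio = tmt_discrepancy_scores(28.0, 71.0)
print(f"difference = {difference:.1f} s, ratio = {ratio:.2f}")
```

Because the ratio divides by the TMT A time, it rescales the part B cost by baseline speed, whereas the difference score keeps the result in seconds.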

Semantic and phonemic fluency discrepancy

We derived the fluency discrepancy score by first adding all the correct words generated in 1 min to animal and vegetable categories, then subtracting the sum of correct words generated to F and L cues to produce a raw difference score. A positive value indicates more words generated to semantic cues, a negative score indicates more words produced to phonemic cues, and a score of zero would indicate equivalent performance on these tasks.
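The arithmetic above can be sketched in a few lines of Python. The function and argument names are hypothetical, and the example counts are invented for illustration.

```python
def fluency_discrepancy(animals, vegetables, f_words, l_words):
    """Semantic total minus phonemic total, using correct words per 1-min trial."""
    semantic_total = animals + vegetables  # animals + vegetables
    phonemic_total = f_words + l_words     # F + L
    return semantic_total - phonemic_total


# Hypothetical participant: positive result means more words to semantic cues.
example_discrepancy = fluency_discrepancy(animals=21, vegetables=15, f_words=14, l_words=14)
print(example_discrepancy)
```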

Craft story percent retention score

Story percent retention was calculated by dividing the number of verbatim correct story units generated on the delayed recall trial (out of 44) by the number of correct verbatim story units produced immediately after presentation. The subsequent ratio was multiplied by 100 to allow for expression in percentage form. Percent retention could be higher than 100 for individuals who recalled more details at delay than immediately after presentation.

Benson figure percent retention score

Figure percent retention was calculated in the same way, by dividing the number of points earned on the delayed recall of the Benson figure (out of 17) by the score on the copy trial of the Benson figure (out of 17). The resulting ratio was multiplied by 100 to allow for expression in percentage form. Scores could range from 0% to more than 100% if the individual happened to have better recall of the figure at delay than their initial copy.

Differences between verbal and visual retention

The verbal versus visual retention index was calculated as the simple difference: story percent retention − figure percent retention. A positive score on this index would indicate better retention for the story than the figure, a negative index would indicate better retention of the figure than story, and a score near zero would indicate no difference between percent retention for these trials.
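The three retention indices can be illustrated with a short Python sketch. The helper name and the example scores are assumptions made for illustration; the source does not say how a baseline score of zero was handled, so the sketch simply refuses to compute a percentage in that case.

```python
def percent_retention(delayed_score, baseline_score):
    """Delayed score as a percentage of the baseline (immediate or copy) score."""
    if baseline_score <= 0:
        # Assumption: retention is undefined when nothing was produced at baseline.
        raise ValueError("baseline score must be positive")
    return 100.0 * delayed_score / baseline_score


# Hypothetical participant.
story_retention = percent_retention(delayed_score=19, baseline_score=22)   # Craft story verbatim units
figure_retention = percent_retention(delayed_score=12, baseline_score=16)  # Benson delayed recall vs. copy
verbal_visual_difference = story_retention - figure_retention              # positive: story retained better

print(f"{story_retention:.1f}% {figure_retention:.1f}% {verbal_visual_difference:.1f}")
```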

Trail making and phonemic fluency error index

As noted above, errors are systematically coded in the UDS for phonemic fluency and trail making instruments. Rule violation errors would account for non-F and non-L words on verbal fluency, as well as prohibited words, including names of people or places and numbers. Repetition errors would account for any word said more than once. Errors on the TMT A&B would account for any commission errors made. We created an error index by summing together the number of rule violations and repetition errors from the phonemic fluency task with commission errors from trail making A and B. Higher scores indicate more total errors and may represent an index of qualitative executive deficits. We also present descriptive data on errors from the constituent measures.
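A minimal Python sketch of the summation described above follows. The argument names are hypothetical labels for the six constituent error counts and do not correspond to actual NACC variable names.

```python
def error_index(f_rule_violations, f_repetitions,
                l_rule_violations, l_repetitions,
                tmt_a_commissions, tmt_b_commissions):
    """Sum phonemic fluency errors (F and L trials) and TMT commission errors."""
    phonemic_errors = (f_rule_violations + f_repetitions
                       + l_rule_violations + l_repetitions)
    trail_errors = tmt_a_commissions + tmt_b_commissions
    return phonemic_errors + trail_errors


# Hypothetical participant: one repetition on each letter and one TMT B error.
example_error_index = error_index(0, 1, 0, 1, 0, 1)
print(example_error_index)
```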

Statistical Analyses

All statistical analyses were conducted in JASP (JASP Team, 2018) and Stata 13.0 (StataCorp, 2013). Our analytic plan was parallel to that presented in Weintraub et al. (2018): for the total sample, demographic data are initially presented and calculated. For age, the number of individuals in the <60, 60–69, 70–79, and 80+ years age brackets are grouped together in keeping with descriptive information typically presented in older adult normative studies and Weintraub et al. Education is not only presented as a continuous variable but also broken out into less than or equal to 12 years of education (high school or less), 13–15 years (some college), 16 years (bachelor’s equivalent), or 17+ years (at least some graduate school). After presenting raw descriptive information for all the variables and derived indices, the distribution of scores was examined via histogram. Next, linear regressions adjusting for age, sex, and education were run for each derived variable, with data presented in tabular form.

Regression diagnostics were conducted as follows (Field, 2013). First, we checked for multicollinearity using variance inflation factor (VIF) and tolerance statistics, specifically evaluating whether the VIF was greater than 10 or less than 1 or whether tolerance values were below 0.2. Case-wise standardized residuals greater than 3 SDs were evaluated. Given the large sample size, outliers in more than 2% of cases (about 40 cases) would trigger additional case-level evaluations. Finally, we visually inspected residual-versus-predicted plots, q–q plots, and histograms and normal probability plots of the residuals.

To ease the application of these data, a normative Excel spreadsheet is provided, based on the previously published normative calculator template by Shirk and colleagues (2011). Data on statistically abnormal values are also presented to assist in practical interpretation of scores.
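One common way a regression-based normative calculator works is to compare an observed score with the score predicted from demographics and to scale the difference by the residual standard deviation. The Python sketch below illustrates that general idea only; it is not a reproduction of the supplementary spreadsheet. The slopes are passed in by the caller, and the intercept and residual SD used in the example are hypothetical placeholders because they are not reported in the text.

```python
def demographically_adjusted_z(observed, age, female, education,
                               intercept, b_age, b_sex, b_education, residual_sd):
    """z = (observed - predicted) / residual SD, with sex coded 1 = female, 0 = male."""
    predicted = (intercept
                 + b_age * age
                 + b_sex * (1 if female else 0)
                 + b_education * education)
    return (observed - predicted) / residual_sd


# Hypothetical 70-year-old woman with 16 years of education and a fluency
# discrepancy of 0. The intercept (11.0) and residual SD (8.5) are invented
# placeholders for illustration and must not be used clinically.
z_example = demographically_adjusted_z(
    observed=0, age=70, female=True, education=16,
    intercept=11.0, b_age=-0.075, b_sex=1.764, b_education=0.055,
    residual_sd=8.5,
)
print(round(z_example, 2))
```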

Appropriate parametric tests (t-tests) and chi-square tests were used in comparisons of the DLB and AD groups, as presented below.

Results

Calculation of Derived Scores and Results from Regression Analyses

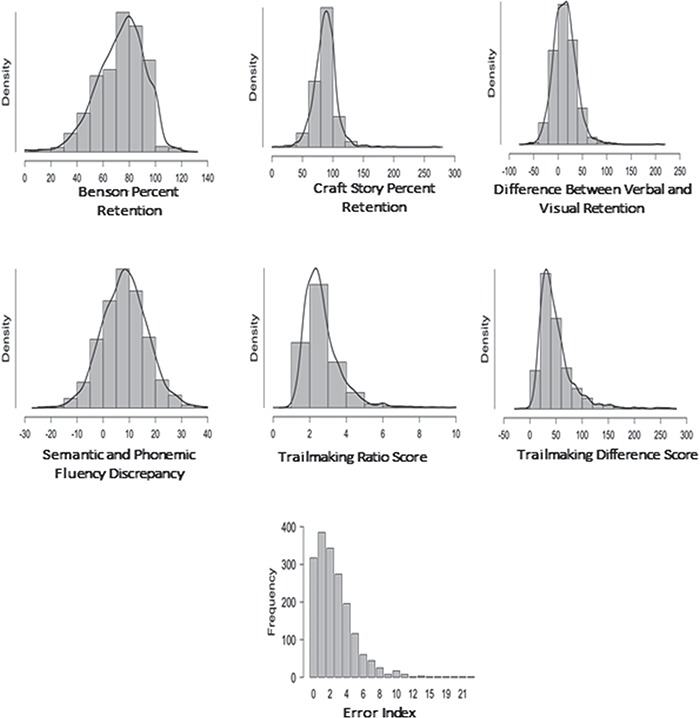

Demographic information for the sample is presented in Table 1. Participants represented a predominantly female, White/Caucasian, and well-educated group. Raw scores for the parent cognitive measures and derived indices are presented in Table 2. Derived variables tended to be roughly normal in distribution (Fig. 1), with the exception of the error index, which was strongly positively skewed (few errors overall in the normal sample, with a long tail), and, to a lesser extent, the TMT indices.

Table 1.

Demographic breakdown of the sample

| Demographic variable | Category | Frequency | Percent |
|---|---|---|---|
| Gender | Male | 631 | 35 |
| | Female | 1172 | 65 |
| Age (years) | <60 | 275 | 15.3 |
| | 60–69 | 690 | 38.3 |
| | 70–79 | 653 | 36.2 |
| | 80+ | 185 | 10.3 |
| Race | White | 1403 | 77.8 |
| | Black | 311 | 17.2 |
| | American Indian or Alaskan Native | 31 | 1.7 |
| | Native Hawaiian or other Pacific Islander | 2 | 0.1 |
| | Asian | 39 | 2.2 |
| | Other | 7 | 0.4 |
| | Unknown | 10 | 0.6 |
| Education (years) | ≤12 | 173 | 9.6 |
| | 13–15 | 326 | 18.1 |
| | 16 | 484 | 26.8 |
| | 17+ | 802 | 44.5 |
| | Missing | 18 | 1.0 |

Note: N = 1803.

Table 2.

Summary statistics for all core and derived variables

| Neuropsychological test and derived scores | M | SD | Minimum | Maximum | 25th Percentile | 50th Percentile | 75th Percentile |
|---|---|---|---|---|---|---|---|
| Trail making test part A, time (s) | 31.02 | 12.24 | 9.00 | 148.00 | 23.00 | 28.00 | 36.00 |
| Trail making test part B, time (s) | 80.26 | 39.19 | 24.00 | 300.00 | 55.00 | 71.00 | 93.00 |
| **Trail making test, raw difference score (s)** | 49.25 | 33.49 | −15.00 | 266.00 | 28.00 | 41.00 | 60.00 |
| **Trail making test, ratio score** | 2.67 | 1.01 | 0.80 | 9.96 | 2.00 | 2.46 | 3.06 |
| Animals, in 60 s | 21.66 | 5.65 | 7.00 | 49.00 | 18.00 | 21.00 | 25.00 |
| Vegetables, in 60 s | 14.95 | 4.07 | 1.00 | 31.00 | 12.00 | 15.00 | 18.00 |
| Phonemic fluency, F trial | 14.59 | 4.42 | 1.00 | 35.00 | 12.00 | 14.00 | 17.00 |
| Phonemic fluency, L trial | 13.80 | 4.25 | 3.00 | 30.00 | 11.00 | 14.00 | 17.00 |
| **Difference between semantic and phonemic fluency** | 8.32 | 8.64 | −22.00 | 38.00 | 2.00 | 8.00 | 14.00 |
| Craft story, immediate verbatim units | 21.81 | 6.49 | 2.00 | 40.00 | 17.00 | 22.00 | 26.00 |
| Craft story, delayed verbatim units | 19.02 | 6.55 | 1.00 | 40.00 | 14.00 | 19.00 | 24.00 |
| **Percentage of Craft story retained** | 87.47 | 18.26 | 6.25 | 266.70 | 76.92 | 88.00 | 97.22 |
| Benson figure, copy trial | 15.64 | 1.25 | 5.00 | 17.00 | 15.00 | 16.00 | 17.00 |
| Benson figure, delayed trial | 11.51 | 2.92 | 0.00 | 17.00 | 10.00 | 12.00 | 14.00 |
| **Percentage of Benson figure retained** | 73.60 | 18.03 | 0.00 | 121.40 | 62.50 | 75.00 | 87.50 |
| **Difference between percentage of Craft story and Benson figure retained** | 13.87 | 23.42 | −63.33 | 204.20 | −1.92 | 12.73 | 27.75 |
| Trail making test part A commission errors | 0.16 | 0.70 | 0.00 | 21.00 | 0.00 | 0.00 | 0.00 |
| Trail making test part B commission errors | 0.57 | 1.09 | 0.00 | 19.00 | 0.00 | 0.00 | 1.00 |
| F words repeated | 0.60 | 0.97 | 0.00 | 10.00 | 0.00 | 0.00 | 1.00 |
| Non-F words and rule violations | 0.36 | 0.87 | 0.00 | 15.00 | 0.00 | 0.00 | 0.00 |
| L words repeated | 0.63 | 0.92 | 0.00 | 6.00 | 0.00 | 0.00 | 1.00 |
| Non-L words and rule violations | 0.26 | 0.64 | 0.00 | 9.00 | 0.00 | 0.00 | 0.00 |
| **Error index** | 2.56 | 2.42 | 0.00 | 23.00 | 1.00 | 2.00 | 4.00 |

Note: N = 1803 for all analyses; values in boldface indicate the derived measures that were the focus of this study.

Fig. 1.

Distribution plots for scores from the derived indices.

To assess the impact of demographic variables on derived indices, a series of regression equations were run with age, sex, and education entered as predictors. The coefficients for these equations are presented in Table 3, as they are the foundation of the normative spreadsheet provided in the Supplementary material online.

Table 3.

Multivariate linear regression coefficients for sex, age, and education predicting derived variables

| Neuropsychological test and derived scores | Age coefficient (SE) | Sexᵇ coefficient (SE) | Education coefficient (SE) | R² | F |
|---|---|---|---|---|---|
| Trail making test, raw difference score (s)ᵃ | **0.018 (0.001)** | 0.011 (0.028) | **−0.061 (0.005)** | 0.154 | 108.1* |
| Trail making test, ratio scoreᵃ | **0.002 (0.000)** | −0.0034 (0.016) | **0.023 (0.003)** | 0.183 | 20.56* |
| Difference between semantic and phonemic verbal fluency | **−0.075 (0.020)** | **1.764 (0.427)** | 0.055 (0.082) | 0.017 | 10.45* |
| Percentage of Craft story retained | **−0.160 (0.041)** | 1.071 (0.891) | 0.249 (0.170) | 0.01 | 6.245* |
| Percentage of Benson figure retained | **−0.395 (0.040)** | **−2.261 (0.869)** | **0.593 (0.166)** | 0.064 | 40.51* |
| Difference between percentage of Craft story and Benson figure retained | **0.235 (0.052)** | **3.332 (1.148)** | −0.344 (0.219) | 0.016 | 10.75* |
| Error index | **0.035 (0.005)** | −0.221 (0.117) | **−0.055 (0.022)** | 0.027 | 17.27* |

Note: Bolded values indicate a significant predictor at p < .05. *p < .01. ᵃDependent variable was transformed with the natural log. ᵇSex is coded such that 1 is female and 0 is male.

For trail making raw score differences, demographic variables explained 13.5% of the variance, R2 = .135, F(3, 1781) = 92.71, p < .001. Age and education were the significant predictors, as noted in Table 3. In contrast, when the ratio score was calculated, the overall model remained significant but explained only 3% of the variance, R2 = .033, F(3, 1781) = 20.29, p < .001. Again, age and education emerged as significant predictors of this value. In performing regression diagnostics though, evaluation of the q–q plots suggested distributional peculiarities. As noted in Figure 1, this may have been related to the distributional shape of the TMT variables themselves. To account for this, we re-ran the analysis using the natural log of the trail making ratio score, which essentially normalized the plots. Details from these regressions are in Table 3 and are automatically accounted for in the scoring spreadsheet (Supplementary material online).

For differences between semantic and phonemic fluency, demographic factors explained less than 2% of the variance overall, though the relationship was statistically significant, R2 = .017, F(3, 1781) = 10.45, p < .001, with age and sex contributing to this association.

For the percent retention indices, small but statistically significant relationships were observed. Demographic variables explained approximately 1% of the variance in Craft story percent retention (R2 = .01, F[3, 1781] = 6.245, p < .001; age was the only significant predictor) and about 6% of the variance in Benson figure retention (R2 = .064, F[3, 1781] = 40.51, p < .001; all three demographic variables were significantly related). Similarly, less than 2% of the variance in differences in percent retention was explained by demographic variables, R2 = .018, F(3, 1781) = 23.04, p < .001, with age and sex contributing significantly to this relationship.

Finally, for the error index, demographic variables made a small, but statistically significant, contribution to the overall score, R2 = .028, F(3, 1781) = 17.27, p < .001, with age and education the significant contributors. As might be expected given examination of the variable distribution in Figure 1, the error score was non-normally distributed, with subsequent regression residual plots raising concern for nonlinearity. Various transformations were attempted [e.g., ln(x + 1)] but did not yield a consistently better result. The results are presented for completeness' sake, but given limits on their generalizability to other samples, it may be preferable to use the percentile cutpoints from the sample as a whole to identify abnormal scores.

In addition to the general descriptive statistics and regression values presented in Tables 2 and 3, Table 4 summarizes statistically abnormal values and interpretative considerations for each of the derived scores. This information is provided on the basis of raw scores for each derived score in reference to the total sample. The purpose of this information is to provide set cutpoints for determining abnormality without reference to more specific norm-adjusted scores, given the relatively modest adjustment in scores for some measures and the limited statistical utility of adjusted non-normal scores for the error index.

Table 4.

Statistically abnormal performances on derived scores

| Neuropsychological test and derived scores | Low values (5th percentile/10th percentile) | High values (95th percentile/90th percentile) | Interpretative considerations |
|---|---|---|---|
| Trail making test, raw difference score | 16/20 | 110/88 | Low values indicate abnormally little interference from the additional demands of TMTB; higher values suggest marked declines with the inclusion of the switching condition from TMTB. |
| Trail making test, ratio score | 1.53/1.69 | 4.51/3.89 | |
| Difference between semantic and phonemic verbal fluency | −5/−3 | 22/19 | Low scores suggest a statistically abnormal lack of benefit from semantic cueing; high values suggest abnormally large advantage for semantic fluency. |
| Percentage of Craft story retained | 58.33/66.67 | 114.3/106.3 | Low scores indicate statistically abnormal retention; high scores indicate infrequently high retention. |
| Percentage of Benson figure retained | 41.18/50.00 | 100/94.12 | |
| Difference between percentage of Craft story and Benson figure retained | −21.86/−14.15 | 52.43/42.04 | Low scores suggest unusually greater retention of visual information than verbal information; high scores suggest unusually greater retention of verbal than visual information. |
| Error index | 0/0 | 7/5 | Low scores represent error free performance, which is unusual even in normal control participants; high scores suggest abnormally elevated error rates. |

Note: “Low” and “high values” refer to raw score values for the derived indices.
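To show how such cutpoints might be applied to an individual raw score, the Python sketch below labels a score against the four percentile values for one index. The function name, the label wording, and the choice to treat a score exactly at a cutpoint as falling in the flagged band are our assumptions; the example uses the Table 4 cutpoints for the semantic versus phonemic fluency difference.

```python
def classify_against_cutpoints(score, p5, p10, p90, p95):
    """Label a raw derived score relative to sample percentile cutpoints."""
    # Assumption: a score equal to a cutpoint is counted as inside that band.
    if score <= p5:
        return "at or below 5th percentile"
    if score <= p10:
        return "at or below 10th percentile"
    if score >= p95:
        return "at or above 95th percentile"
    if score >= p90:
        return "at or above 90th percentile"
    return "between 10th and 90th percentile"


# Fluency discrepancy cutpoints from Table 4: 5th = -5, 10th = -3, 90th = 19, 95th = 22.
fluency_cutpoints = dict(p5=-5, p10=-3, p90=19, p95=22)
for raw_score in (-6, 10, 20):
    print(raw_score, classify_against_cutpoints(raw_score, **fluency_cutpoints))
```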

Exploring the Utility of Derived Measures in DLB and AD

To illustrate the potential utility of these indices for research and clinical practice, we present initial data from an ongoing project evaluating patterns of performance on cognitive screening instruments in two frequently encountered clinical conditions: those with primary diagnoses of AD (N = 81) and those with a principal diagnosis of DLB (N = 100), also derived from the NACC data repository. The AD group was selected using a constrained randomization procedure from a broader group of individuals with AD to have similar demographic characteristics to the DLB group. These characteristics included an oversampling of male participants (81.5% in the AD group, 83% in the DLB group) given the higher prevalence of DLB in male participants (Savica et al., 2013), similar education status (average 16 years in both groups), and similar dementia severity as assessed by the Clinical Dementia Rating scale.

Memory performances differed significantly between the two groups, with individuals with DLB showing better recall for the Craft story [demographically corrected mean Z score of −1.21 (1.17) vs. −1.95 (.97); t(173) = −4.44, p < .001] and Benson figure [demographically corrected mean Z score of −1.68 (1.36) vs. −2.19 (1.3); t(163) = −2.45, p = .02]. When performances were evaluated at the level of the individual, 53% (n = 53) of individuals with DLB demonstrated low scores (below −1.0 Z relative to demographically controlled performance) for Craft Story memory, with 83% (n = 68) of those with AD demonstrating scores in this range. This pattern is consistent with the view that individuals with DLB and AD may have different sources of memory problems, with more consolidation issues in AD versus encoding/free retrieval problems in DLB (Metzler-Baddeley, 2007). Indeed, when only those individuals with impaired free recall of the Craft story were considered, nearly half (26/53) of those with DLB showed normal percent retention of the story using our derived indices, versus only 34% (23/68) with normal percent retention in AD, a difference in distribution that trended toward (though did not achieve) statistical significance [χ2(1, n = 121) = 2.87, p = .09].
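The reported test statistic can be checked from the counts given above. The sketch below assumes a Pearson chi-square test without continuity correction (the text does not state which variant was used); the function name is hypothetical.

```python
import math


def pearson_chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: DLB, AD. Columns: normal percent retention, low percent retention.
chi2, p = pearson_chi_square_2x2(26, 53 - 26, 23, 68 - 23)
print(f"chi2 = {chi2:.2f}, p = {p:.2f}")  # chi2 = 2.87, p = 0.09
```

Under this assumption the counts reproduce the values in the text, which suggests that no continuity correction was applied.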

Furthermore, individuals with DLB suffer from disproportionate difficulties with visuospatial skills early in the disease course relative to those with AD (Metzler-Baddeley, 2007). It could thus be hypothesized that individuals with DLB would show better recall for stories than for visual figures because of differences in how well the information could be encoded. Indeed, this pattern was observed in the current data: using the percent retention discrepancy score, individuals with DLB demonstrated better retention of the story than the figure, whereas individuals with AD performed equally poorly on both [t(156) = −2.39, p = .01].

In terms of verbal fluency discrepancies, the groups were comparable in terms of the overall magnitude of discrepancy between semantic and phonemic fluency [t(171) = 0.19, p = .84]. However, in the subset of individuals who showed normal phonemic and semantic verbal fluency measures (≥−1.0 Z for demographically corrected scores; AD N = 19, DLB N = 21), more individuals with DLB showed a semantic > phonemic advantage of at least 1 standard deviation than individuals with AD (χ2 = 3.74, p = .05), consistent with the semantic deficit hypothesis of AD. In this way, fluency discrepancy scores may help to reveal subtle cognitive abnormalities even when parent measures are within the normal limits.

Turning to trail making part B, a one-tailed t-test suggested that individuals with DLB performed worse than their counterparts with AD [t(141) = 1.6441, p = .0492]. While this may reflect underlying switching/executive dysfunction in DLB, it is important to control for the influence of psychomotor slowing in DLB, given comorbid parkinsonism in most cases. Demographically corrected trail making difference scores provide just such a control: the groups did not differ in terms of the discrepancy scores [t(140) = −0.99, p = .32] despite a difference in the parent measure (TMTB). This suggests that differences in TMTB without this correction may be more related to underlying slowing, as the difference is attenuated after comparison to TMTA. In a similar vein, rates of errors committed on TMTB and fluency measures did not differ between the groups [t(139) = 1.16, p = .25]. Overall, looking at derived measures helps test the hypothesis that individuals with DLB may have executive dysfunction on this limited battery similar to that seen in those with AD.

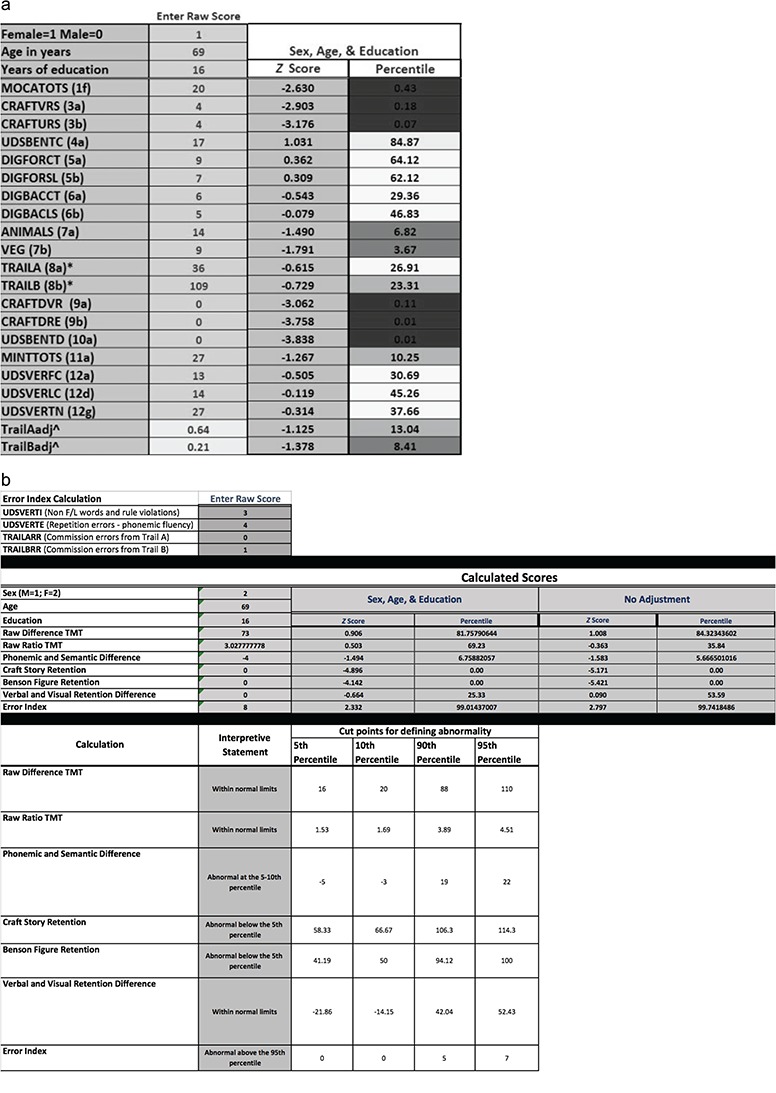

Application to an Individual Case

Next, we turn to the potential utility of this data to be applied at the individual level. To demonstrate the utility of this normative data, deidentified values were entered into the calculator for a 69-year-old female patient with 16 years of education who was diagnosed with mild AD dementia at an outpatient clinic. Although, at first blush, she appeared cognitively intact at interview due to excellent conversational skills, it soon became clear that she could recall little to no detail regarding recent autobiographical events. Her husband reported that he had observed an insidious onset of primary memory problems over the past several years, with gradually progressive worsening of symptoms over time. Although the patient continued to manage finances by relying on detailed notes and ledgers to aid memory, she was no longer driving for fear of getting lost and appeared quite confused about her medications (i.e., could not name medications or reasons for taking them). Basic activities of daily living, such as toileting, bathing, and dressing, were reportedly intact.

Testing via the standard UDS 3.0 battery revealed evidence of impaired immediate recall, delayed recall, and semantic fluency, borderline confrontation naming, and otherwise intact performances (see Fig. 2a; performance descriptors taken from Schoenberg & Rum, 2017). The insidious onset and course, as well as the amnestic profile with milder language deficits, are common in AD (Weintraub et al., 2012).

Fig. 2.

(a) Deidentified sample data from the UDS 3.0 standard battery. MOCATOTS, Montreal Cognitive Assessment total score; CRAFTVRS, craft story verbatim scoring; CRAFTURS, craft story paraphrase scoring; UDSBENTC, Benson figure copy; DIGFORCT, Number Span Forward score; DIGFORSL, Number Span Forward longest span; DIGBACCT, Number Span Backward score; DIGBACLS, Number Span Backward longest span; ANIMALS, animal naming score; VEG, vegetable naming score; TRAILA, Trails A time; TRAILB, Trails B time; CRAFTDVR, craft story delayed recall verbatim scoring; craft story delayed recall paraphrase scoring; UDSBENTD, Benson figure delayed recall; MINTTOTS, multilingual naming test; UDSVERFC, letter fluency score for F; UDSVERLC, letter fluency score for L; UDSVERTN, total letter fluency score; TrailAadj^, trails A adjusted score; TrailBadj^, trails B adjusted score. (b) Deidentified sample data utilizing the supplemental normative spreadsheet from an outpatient with mild Alzheimer’s disease dementia.

Findings from derived indices complement this case conceptualization (see Fig. 2b). For example, her 0% retention scores for delayed recall are clearly impaired, providing further evidence of amnesia. Additionally, her semantic versus phonemic verbal fluency discrepancy indicated a statistically unlikely difference in performances on these tasks, with greater impairment on the semantic trial. Such a discrepancy has been found to be characteristic of individuals with AD (Canning et al., 2004; Gladsjo et al., 1999; Strauss et al., 2006), providing further evidence for the stated diagnosis.

Review of the derived index scores yields additional important insights. As can be seen in Figure 2b, demographic correction had little effect on the interpretation of the indices, consistent with regression results. In other words, the patient’s scores fell within the same qualitative descriptor range (Schoenberg & Rum, 2017), regardless of demographic correction. Additionally, one can readily develop new research questions based on the clinical data. For example, this patient committed an abnormally high number of errors on executive tests. Based on this observation, we initiated a study to explore the prevalence of errors among individuals with AD and whether error rates reliably distinguish AD from other disorders (Kiselica & Benge, 2019). Independent research labs will no doubt complete novel and clinically important research using the derived indices.

Discussion

The current study described a number of commonly used derived and discrepancy scores from neuropsychological measures in the UDS 3.0 neuropsychological battery, evaluated their relationship with age, education, and gender in a large sample, and provided methods of evaluating an individual case’s relative standing on these indices versus a normative sample.

Current Findings in Comparison to Prior Studies

As always, caution is warranted in treating individuals who present for cognitive research studies as representative of the normal aging population. Comparing the current findings with other normative studies is therefore important for determining whether the results diverge from prior work.

No clear consensus has emerged about the optimum methodology for comparing and describing trail making discrepancies, and various methodologies have been proposed (Strauss et al., 2006). In the current study, we found mean raw score differences of 49.25 s between A and B, and a mean ratio of B to A scores of 2.67. This magnitude is slightly higher than that reported in Tombaugh, Rees, and McIntyre (1998), where a similar ratio (adjusted for the percentage formatting) ranged from 2.34 in 55–59 year olds to 2.61 in 85–89 year olds. Part of this discrepancy may be attributable to variations in administration instructions. In the UDS TMTB instructions, for example, there is a 300-s time limit, and when errors are made, the individual is not reminded of whether the next answer should be a letter or number. As instruction sets for TMTB vary from lab to lab, it is possible that differences in administration result in changes in discrepancies.
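The two trail making indices discussed above can be sketched as follows. The sketch assumes the difference score is Part B time minus Part A time and the ratio score is Part B time divided by Part A time, which is consistent with ratio values above 1; the function name and the example times are hypothetical.

```python
def tmt_derived_scores(tmt_a_seconds: float, tmt_b_seconds: float) -> tuple[float, float]:
    """Return (difference, ratio) for Trail Making Test completion times in seconds."""
    if tmt_a_seconds <= 0:
        raise ValueError("Part A time must be positive to form a ratio")
    difference = tmt_b_seconds - tmt_a_seconds  # assumed definition: B minus A
    ratio = tmt_b_seconds / tmt_a_seconds       # assumed definition: B divided by A
    return difference, ratio


# Hypothetical times: 30 s on Part A and 80 s on Part B.
difference, ratio = tmt_derived_scores(30.0, 80.0)
print(f"difference = {difference:.0f} s, ratio = {ratio:.2f}")
```

Note that a sample's mean ratio is the average of individual ratios, so it need not equal the ratio of the mean Part B time to the mean Part A time.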

Fluency discrepancy scores have been reported previously from a variety of stimulus letters/semantic categories and via discrepancies in normative values versus raw scores. This variability makes direct comparisons of the current results difficult. For example, Gladsjo and colleagues (1999) reported discrepancies between demographically corrected T scores for the parent measures, and a similar methodology was employed in the Delis–Kaplan Executive Function System (D-KEFS) normative data (Delis et al., 2001) with age-adjusted scaled scores. On the other hand, a recently published study (Vaughan et al., 2016) from the Irish Longitudinal Study on Aging using raw scores found that the average discrepancy between words generated to the “animal” cue and the letter F cue was 9.18 words (SD = 6.89). This is roughly similar in magnitude to the average discrepancy we observed using animals/vegetables and F/L as our cues (M = 8.32, SD = 8.64). The relative similarity of this finding across samples suggests that the semantic advantage in verbal fluency is a relatively robust finding. Indeed, in the normative sample the absence of such a finding (e.g., a phonemic advantage of three to five words) was observed infrequently, representing a finding at the 5th to 10th percentile in the normative sample.
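As a rough consistency check on the reported mean and standard deviation, the sketch below uses a normal approximation to estimate where a phonemic advantage would fall. The normal approximation is an assumption of this example only; the percentiles reported in this article are empirical, so small differences are expected.

```python
from statistics import NormalDist

# Mean and SD of the semantic minus phonemic discrepancy reported in the text.
discrepancy = NormalDist(mu=8.32, sigma=8.64)

# Approximate 5th and 10th percentile raw discrepancies under normality.
approx_p5 = discrepancy.inv_cdf(0.05)
approx_p10 = discrepancy.inv_cdf(0.10)

# Approximate percentile rank of a four-word phonemic advantage (discrepancy of -4).
rank_of_minus_4 = discrepancy.cdf(-4.0)

print(f"5th percentile ~ {approx_p5:.1f}, 10th percentile ~ {approx_p10:.1f}")
print(f"percentile rank of -4 ~ {100 * rank_of_minus_4:.1f}")
```

The approximate cutoffs (about −5.9 and −2.8 words) sit close to the empirical 5th and 10th percentile values, and a four-word phonemic advantage falls between the two, in line with the statement above.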

Percent retention values reported in the current study are of a single trial administration of the Craft story and copy of the Benson figure, two tasks with prior experimental use but relatively limited psychometric study to date. As such, direct comparisons to previous psychometric studies of these instruments are unavailable. With that caveat in mind, the RBANS, which also includes a figure copy and short story memory (albeit with two administrations of the story), provides an illustrative comparison (Schoenberg et al., 2008). In that study, the authors describe average percent retention of the RBANS figure of 70%–79% in the 65–75-year age group down to 61%–75% in the 80–94-year age group. The present study revealed retention of 75% of the Benson figure at delay, which appears broadly consistent with the RBANS findings. Because the RBANS story is read to participants twice, percent retention is not directly reported; the reported values are the delayed recall raw score divided by the sum of the two immediate recall trials. This calculation led to a mean story retention value of 0.50 (SD = .15). Extrapolating, this finding suggests that mean story retention rates in percentage form would range between 70% and 100% in the majority of participants (−1 SD to an artificial cap of 100% as used in this article). Thus, the currently reported retention rate of 87% for the single administration of the Craft story seems within the range of expectation as well. The potential of percent retention scores to better capture the type of memory difficulty present in clinical populations is highlighted in our DLB/AD sample, where individuals with DLB who had impaired scores more frequently showed normal percent retention, suggesting that difficulties with encoding of new information may artificially lower memory scores in some of these patients. More work is needed, though, to determine the neuroanatomical and clinical significance of using retention versus free recall scores.
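The extrapolation from the RBANS values can be made explicit. The sketch below assumes percent retention is delayed recall divided by immediate recall, multiplied by 100, and it offers one reading of the extrapolation: a ratio computed against the sum of two immediate trials is doubled to express it against the average of those trials. Both function names and the raw scores in the first example are hypothetical.

```python
def percent_retention(immediate: float, delayed: float) -> float:
    """Delayed recall as a percentage of immediate recall (assumed definition)."""
    if immediate <= 0:
        raise ValueError("percent retention is undefined when immediate recall is zero")
    return 100.0 * delayed / immediate


def summed_trial_ratio_to_percent(ratio_vs_summed_trials: float) -> float:
    """Rescale delayed / (trial 1 + trial 2) to delayed / mean(trial 1, trial 2), in percent."""
    return 100.0 * 2.0 * ratio_vs_summed_trials


# Hypothetical raw scores: 20 units recalled immediately, 15 at delay.
example_retention = percent_retention(immediate=20, delayed=15)

# RBANS story values cited in the text: mean ratio 0.50, SD 0.15.
mean_ratio, sd_ratio = 0.50, 0.15
upper = summed_trial_ratio_to_percent(mean_ratio)
lower = summed_trial_ratio_to_percent(mean_ratio - sd_ratio)

print(f"example retention = {example_retention:.0f}%")
print(f"extrapolated range = {lower:.0f}% to {upper:.0f}%")
```

Under this reading, the mean ratio corresponds to 100% and one standard deviation below the mean corresponds to 70%, matching the range quoted above.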

Verbal and visual memory discrepancies are now commonly reported in batteries, such as the Wechsler Memory Scale and Memory Assessment Scale (Wechsler, 1984; Williams, 1991). However, detailed descriptions of the magnitude of discrepancy or nonnormatively corrected values are lacking. That being said, in the current study, it seems that overall percent retention of the Craft story (87.47%, SD = 18.26) was greater than overall percent retention of the Benson figure (73.6%, SD = 13.87). Whether this difference represents a discrepancy in difficulty of the stimuli, the influence of greater top–down control demands of figure copy (Possin et al., 2011), or the impact of other variables remains to be explored. In the past, abnormal visual/verbal discrepancies have been found in individuals with neurodegenerative disease, such as AD (Murayama et al., 2016), and future work will be needed to determine the utility of this observed score in cases of lateralized cerebral dysfunction. However, our results in the DLB versus AD sample reveal that individuals with DLB do have a harder time retaining the Benson figure relative to verbal memory, consistent with an underlying visual disturbance in most cases of DLB.

Errors in verbal fluency and trail making have not been included in many commonly utilized normative databases (Heaton et al., 2004; Schretlen et al., 2010), although they are available in some measures, such as the D-KEFS. The current study indicated that error-laden performances on these measures are rare in a normative sample; in clinical populations where such errors are more common (Houston et al., 2005), additional work will be needed to explore their diagnostic utility and other correlates. In our DLB and AD samples, errors were much more common than in the normative sample and occurred at similar rates in the two groups.

The Impact of Demographic Factors on Derived Indices

Prior research suggests that derived index scores are less affected by demographic factors than their parent measures. For example, Gladsjo and colleagues (1999) found that age, education, gender, and ethnicity were not related to the magnitude of discrepancy between phonemic and semantic verbal fluency measures. However, their discrepancy analysis utilized the difference between already demographically adjusted parent measures. In the aforementioned Vaughan, Coen, Kenny, and Lawlor (2016) study, the semantic fluency advantage was affected by age, such that each increase in age of 10 years was associated with a one word decrease in semantic fluency advantage. However, overall demographics only explained about 2% of the variance in discrepancy scores. In the current study, age and education again did emerge as statistically significant predictors of verbal fluency discrepancy but explained less than 2% of the variance in the data. In a similar vein, prior research has yielded variable impacts of factors, such as age and education, on trail making discrepancy scores. Tombaugh and colleagues (1998) noted increases in the discrepancy with age and smaller discrepancies with increasing education. However, Mrazik, Millis, and Drane (2010) did not note education to have a significant relationship with discrepancy scores. The current results suggest that the influence of demographics depends in part on the methodology employed to calculate the discrepancy, with a greater impact of demographic variables (about 13.5%) for simple discrepancy scores, but only about 3% of variance in ratio scores explained by demographic factors.

More generally, the results revealed that demographics had a limited impact on the derived indices. In the available spreadsheet, demographically adjusted normative scores are provided if readers find that information useful for certain situations. However, given the relative robustness of these findings, simple percentile definitions of abnormality would likely suffice in most applications, and these values are presented as well in Table 4.
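For readers who want to see the general logic of a regression-based demographic adjustment, a minimal sketch follows. It assumes the common approach of dividing the difference between observed and predicted scores by the residual standard deviation of the regression. Every coefficient below is a made-up placeholder, not a value from the supplemental spreadsheet, and the class name and sex coding are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionNorm:
    """Linear normative model for one derived index (all values are placeholders)."""
    intercept: float
    b_age: float
    b_education: float
    b_sex: float        # coefficient for sex coded 0/1; the coding is an assumption
    residual_sd: float  # standard deviation of the regression residuals

    def predicted(self, age: float, education: float, sex: int) -> float:
        return (self.intercept + self.b_age * age
                + self.b_education * education + self.b_sex * sex)

    def z_score(self, raw: float, age: float, education: float, sex: int) -> float:
        """Observed minus demographically predicted score, in residual SD units."""
        return (raw - self.predicted(age, education, sex)) / self.residual_sd


# Placeholder coefficients chosen only to make the arithmetic easy to follow.
fluency_norm = RegressionNorm(intercept=10.0, b_age=-0.1, b_education=0.25,
                              b_sex=0.0, residual_sd=8.0)

# Hypothetical raw discrepancy of -3 for a 69-year-old with 16 years of education.
z = fluency_norm.z_score(raw=-3.0, age=69, education=16, sex=1)
print(f"demographically adjusted z = {z:.2f}")
```

When demographic coefficients are small, as reported here, the adjusted score differs little from one based on the sample mean and standard deviation alone, which is why simple percentile cutoffs may suffice in most applications.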

Limitations and Future Directions

Numerous factors should be considered when interpreting the current data. At the core, the use of normative data in older adult samples assumes a coherent definition of normal. Debate remains about whether to exclude individuals with common health comorbidities of aging, or even to utilize robust norms, which present normative data only on individuals who do not go on to exhibit signs of neurodegenerative disorders over time (Harrington et al., 2017). The current study presents cross-sectional data on individuals who were clinically normal as of an initial visit to a clinic and thus could theoretically include individuals too early in their neurodegenerative course to be detectable with clinical measures. However, an advantage of the UDS and NACC database is that longitudinal data are available for many participants, and robust norms can be calculated and made available to the research community as the database grows. Limits are also noted on the sampling of older minority adults in the current sample. Although undersampling of such populations is a difficulty noted in many older adult normative samples, this limitation does imply that care should be taken in extrapolating these findings to individuals of different cultural, linguistic, ethnic, and educational backgrounds. To this end, attention is drawn to the relatively high educational level of this sample, with under-representation of those with limited educational attainment (i.e., in the current sample, 9.6% or N = 173 have a high school degree or less education). Direct comparison of this distribution to other commonly used normative systems is somewhat difficult, as cross tabs specifically for education in older adults are not always reported. Two examples will highlight this discrepancy. The commonly utilized expanded Halstead–Reitan norms (Heaton et al., 2004) report that their Caucasian sample (N = 634) had 100 individuals with fewer than 12 years of education, though it is unclear how many of these were older adults, as only 121 from this sample overall were over the age of 64. The WMS-IV normative sample included 500 older adults (100 in each of 5 age bands over the age of 65 years), with an educational distribution designed to mirror census survey data (i.e., roughly 30% with college educations and roughly 20% with high school or less education). Thus, the current sample greatly expands on the total N of older adults analyzed and, we suspect, includes a total N of older adults with limited education comparable to that of other samples, although they make up a smaller proportion of the sample overall. In turn, this oversamples highly educated older adults. Within our laboratory, UDS measures are frequently paired with other measures of memory and other cognitive constructs, allowing for a cross check of normative scores with other sources of data. Ultimately, though, more detailed work will be needed on evaluating the impact of education on the variables overall and attempting to diversify samples in aging research more broadly.

A further mention is made concerning the need to focus more on the clinical utility of derived and discrepancy scores in future studies. Our preliminary data at the level of individual cases and a small group of DLB and AD cases highlight the potential utility of derived indices for uncovering patterns in retention and executive functioning that might be obscured using parent measures alone. However, more work is needed to determine the optimum use of these measures and scenarios where they might be particularly useful. Ideally, creating relatively comprehensive statistical comparisons of intra- and inter-test findings will enable individuals to better capture cognitive strengths and weaknesses and more accurately characterize neurodegenerative diseases.

Supplementary Material

Funding

The NACC database is funded by National Institute on Aging/National Institutes of Health (U01 AG016976). NACC data are contributed by the National Institute on Aging-funded ADCs: P30 AG019610 (PI Eric Reiman, MD), P30 AG013846 (PI: Neil Kowall, MD), P50 AG008702 (PI: Scott Small, MD), P50 AG025688 (PI: Allan Levey, MD, PhD), P50 AG047266 (PI: Todd Golde, MD, PhD), P30 AG010133 (PI: Andrew Saykin, PsyD), P50 AG005146 (PI: Marilyn Albert, PhD), P50 AG005134 (PI: Bradley Hyman, MD, PhD), P50 AG016574 (PI: Ronald Petersen, MD, PhD), P50 AG005138 (PI: Mary Sano, PhD), P30 AG008051 (PI: Thomas Wisniewski, MD), P30 AG013854 (PI: M. Marsel Mesulam, MD), P30 AG008017 (PI: Jeffrey Kaye, MD), P30 AG010161 (PI: David Bennett, MD), P50 AG047366 (PI: Victor Henderson, MD, MS), P30 AG010129 (PI: Charles DeCarli, MD), P50 AG016573 (PI: Frank LaFerla, PhD), P50 AG005131 (PI: James Brewer, MD, PhD), P50 AG023501 (PI: Bruce Miller, MD), P30 AG035982 (PI: Russell Swerdlow, MD), P30 AG028383 (PI: Linda Van Eldik, PhD), P30 AG053760 (PI: Henry Paulson, MD, PhD), P30 AG010124 (PI: John Trojanowski, MD, PhD), P50 AG005133 (PI: Oscar Lopez, MD), P50 AG005142 (PI: Helena Chui, MD), P30 AG012300 (PI: Roger Rosenberg, MD), P30 AG049638 (PI: Suzanne Craft, PhD), P50 AG005136 (PI: Thomas Grabowski, MD), P50 AG033514 (PI: Sanjay Asthana, MD, FRCP), P50 AG005681 (PI: John Morris, MD), and P50 AG047270 (PI: Stephen Strittmatter, MD, PhD).

Conflict of Interest

None declared.

References

- Baldo J. V., Schwartz S., Wilkins D., & Dronkers N. F. (2006). Role of frontal versus temporal cortex in verbal fluency as revealed by voxel-based lesion symptom mapping. Journal of the International Neuropsychological Society, 12 (6), 896–900. [DOI] [PubMed] [Google Scholar]

- Bortnik K. E., Boone K. B., Wen J., Lu P., Mitrushina M., Razani J., et al. (2013). Survey results regarding use of the Boston naming test: Houston, we have a problem. Journal of Clinical and Experimental Neuropsychology, 35 (8), 857–866. [DOI] [PubMed] [Google Scholar]

- Canning S. J., Leach L., Stuss D., Ngo L., & Black S. E. (2004). Diagnostic utility of abbreviated fluency measures in Alzheimer disease and vascular dementia. Neurology, 62, 556–562. [DOI] [PubMed] [Google Scholar]

- Clark J. H., Hobson V. L., & Obryant S. E. (2010). Diagnostic accuracy of percent retention scores on RBANS verbal memory subtests for the diagnosis of Alzheimer’s disease and mild cognitive impairment. Archives of Clinical Neuropsychology, 25 (4), 318–326. [DOI] [PubMed] [Google Scholar]

- Cohen M. J., Morgan A. M., Vaughn M., Riccio C. A., & Hall J. (1999). Verbal fluency in children: Developmental issues and differential validity in distinguishing children with attention-deficit hyperactivity disorder and two subtypes of dyslexia. Archives of Clinical Neuropsychology, 14 (5), 433–443. [PubMed] [Google Scholar]

- Cottingham M. E., & Hawkins K. A. (2010). Verbal fluency deficits co-occur with memory deficits in geriatric patients at risk for dementia: Implications for the concept of mild cognitive impairment. Behavioural Neurology, 22 (3–4), 73–79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Craft S., Newcomer J., Kanne S., Dagogo-Jack S., Cryer P., Sheline Y. et al. (1996). Memory improvement following induced hyperinsulinemia in Alzheimer’s disease. Neurobiology of Aging, 17, 123–130. [DOI] [PubMed] [Google Scholar]

- Delis D. C., Kaplan E., & Kramer J. H. (2001). Delis–Kaplan executive function system (D-KEFS). New York, NY: Pearson. [Google Scholar]

- Drane D. L., Yuspeh R. L., Huthwaite J. S., & Klingler L. K. (2002). Demographic characteristics and normative observations for derived-trail making test indices. Cognitive and Behavioral Neurology, 15 (1), 39–43. [PubMed] [Google Scholar]

- Duff K., Beglinger L., Schoenberg M., Patton D., Mold J., & Scott J. (2005). Test-retest stability and practice effects of the RBANS in a community dwelling elderly sample. Journal of Clinical and Experimental Neuropsychology, 27 (5), 565–575. [DOI] [PubMed] [Google Scholar]

- Duff K., Hobson V. L., Beglinger L. J., & Obryant S. E. (2010). Diagnostic accuracy of the RBANS in mild cognitive impairment: Limitations on assessing milder impairments. Archives of Clinical Neuropsychology, 25 (5), 429–441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ferman T. J., Smith G. E., Boeve B. F., Graff-Radford N. R., Lucas J. A., Knopman D. S. et al. (2006). Neuropsychological differentiation of dementia with Lewy bodies from normal aging and Alzheimer’s disease. The Clinical Neuropsychologist, 20, 623–626. [DOI] [PubMed] [Google Scholar]

- Field A. (2013). Discovering statistics using IBM SPSS statistics. Washington, DC: Sage Publishing. [Google Scholar]

- Fischer-Baum S., Miozzo M., Laiacona M., & Capitani E. (2016). Perseveration during verbal fluency in traumatic brain injury reflects impairments in working memory. Neuropsychology, 30 (7), 791. [DOI] [PubMed] [Google Scholar]

- Gaudino E. A., Geisler M. W., & Squires N. K. (1995). Construct validity in the trail making test: What makes part B harder? Journal of Clinical and Experimental Neuropsychology, 17, 529–535. [DOI] [PubMed] [Google Scholar]

- Gladsjo J. A., Schuman C. C., Evans J. D., Peavy G. M., Miller S. W., & Heaton R. K. (1999). Norms for letter and category fluency: Demographic corrections for age, education, and ethnicity. Assessment, 6 (2), 147–178. [DOI] [PubMed] [Google Scholar]

- Hankee L. D., Preis S. R., Beiser A. S., Devine S. A., Liu Y., Seshadri S. et al. (2013). Qualitative neuropsychological measures: Normative data on executive functioning tests from the Framingham offspring study. Experimental Aging Research, 39 (5), 515–535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harrington K. D., Lim Y. Y., Ames D., Hassenstab J., Rainey-Smith S., Robertson J. et al. (2017). Using robust normative data to investigate the neuropsychology of cognitive aging. Archives of Clinical Neuropsychology, 32 (2), 142–154. [DOI] [PubMed] [Google Scholar]

- Heaton R. K., Grant I., Matthews C. G., Fastenau P. S., & Adams K. M. (1996). Heaton, Grant, and Matthews' comprehensive norms: An overzealous attempt. Journal of Clinical and Experimental Neuropsychology, 18 (3), 444–448. [Google Scholar]

- Heaton R. K., Miller S. W., Taylor M. J., & Grant I. (2004). Revised comprehensive norms for an expanded Halstead–Reitan battery: Demographically adjusted neuropsychological norms for African American and Caucasian adults. Lutz, FL: Psychological Assessment Resources. [Google Scholar]

- Houston W. S., Delis D. C., Lansing A., Jacobson M. W., Cobell K. R., Salmon D. P. et al. (2005). Executive function asymmetry in older adults genetically at-risk for Alzheimer’s disease: Verbal fluency versus design fluency. Journal of the International Neuropsychological Society, 11 (7), 863–870. [DOI] [PubMed] [Google Scholar]

- Hughes C., Berg L., Danziger W., Coben L., & Martin R. (1982). A new clinical scale for the staging of dementia. British Journal of Psychiatry, 140 (6), 566–572. [DOI] [PubMed] [Google Scholar]

- Ivanova I., Salmon D. P., & Gollan T. H. (2013). The multilingual naming test in Alzheimer’s disease: Clues to the origin of naming impairments. Journal of the International Neuropsychological Society, 19, 272–283. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jacobson M. W., Delis D. C., Bondi M. W., & Salmon D. P. (2002). Do neuropsychological tests detect preclinical Alzheimer's disease: Individual-test versus cognitive-discrepancy score analyses. Neuropsychology, 16 (2), 132. [DOI] [PubMed] [Google Scholar]

- JASP Team (2018). JASP (Version 0.9). Retrieved from https://jasp-stats.org/download/.

- Jarvis P. E., & Barth J. T. (1994). The Halstead Reitan neuropsychological battery: A guide to interpretation and clinical applications. Odessa, FL: Psychological Assessment Resources. [Google Scholar]

- Kiselica A. M., & Benge J. F. (2019, February). Quantitative and qualitative features of executive dysfunction in frontotemporal and Alzheimer’s dementia. Poster session presented at the Annual Convention of the International Neuropsychological Society. New York, NY. [Google Scholar]

- Kopp B., Rösser N., Tabeling S., Stürenburg H. J., de Haan B., Karnath H. O. et al. (2015). Errors on the trail making test are associated with right hemispheric frontal lobe damage in stroke patients. Behavioural Neurology, doi: 10.1155/2015/309235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lamberty G. J., Putnam S. H., Chatel D. M., & Bieliauskas L. A. (1994). Derived trail making test indices: A preliminary report. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 7 (3), 230–234. [Google Scholar]

- Lezak M. D. (1995). Neuropsychological assessment (3rd ed.). Oxford, UK: Oxford University Press. [Google Scholar]

- Lopez M. N., Arias G. P., Hunter M. A., Charter R. A., & Scott R. R. (2003). Boston naming test: Problems with administration and scoring. Psychological Reports, 92 (2), 468–472. [DOI] [PubMed] [Google Scholar]

- Metzler-Baddeley C. (2007). A review of cognitive impairments in dementia with Lewy bodies relative to Alzheimer's disease and Parkinson's disease with dementia. Cortex, 43 (5), 583–600. [DOI] [PubMed] [Google Scholar]

- Monsell S. E., Dodge H. H., Zhou X. H., Bu Y., Besser L. M., Mock C. et al. (2016). Results from the NACC uniform data set neuropsychological battery: Crosswalk study results. Alzheimer Disease and Associated Disorders, 30 (2), 134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mrazik M., Millis S., & Drane D. L. (2010). The oral trail making test: Effects of age and concurrent validity. Archives of Clinical Neuropsychology, 25 (3), 236–243. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murayama N., Ota K., Kasanuki K., Kondo D., Fujishiro H., Fukase Y. et al. (2016). Cognitive dysfunction in patients with very mild Alzheimer's disease and amnestic mild cognitive impairment showing hemispheric asymmetries of hypometabolism on 18F-FDG PET. International Journal of Geriatric Psychiatry, 31 (1), 41–48. [DOI] [PubMed] [Google Scholar]

- Partington J. E., & Leiter R. G. (1949). Partington’s pathway test. The Psychological Service Center Bulletin, 1, 9–20. [Google Scholar]

- Possin K. L., Laluz V. R., Alcantar O. Z., Miller B. L., & Kramer J. H. (2011). Distinct neuroanatomical substrates and cognitive mechanisms of figure copy performance in Alzheimer's disease and behavioral variant frontotemporal dementia. Neuropsychologia, 49 (1), 43–48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rabin L., Barr W., & Burton L. (2005). Assessment practices of clinical neuropsychologists in the United States and Canada: A survey of INS, NAN, and APA division 40 members. Archives of Clinical Neuropsychology, 20 (1), 33–65. [DOI] [PubMed] [Google Scholar]

- Rey A. (1941). L'examen psychologique dans les cas d'encephalopathie traumatique. Archrues de Psychologre, 28, 286–340. [Google Scholar]

- Savica R., Grossardt B. R., Bower J. H., Boeve B. F., Ahlskog J. E., & Rocca W. A. (2013). Incidence of dementia with Lewy bodies and Parkinson disease dementia. JAMA Neurology, 70 (11), 1396–1402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schoenberg M. R., Duff K., Beglinger L. J., Moser D. J., Bayless J. D., Mold J. et al. (2008). Retention rates on RBANS memory subtests in elderly adults. Journal of Geriatric Psychiatry and Neurology, 21 (1), 26–33. [DOI] [PubMed] [Google Scholar]

- Schoenberg M. R., & Rum R. S. (2017). Towards reporting standards for neuropsychological study results: A proposal to minimize communication errors with standardized qualitative descriptors for normalized test scores. Clinical Neurology and Neurosurgery, 162, 72–79. [DOI] [PubMed] [Google Scholar]

- Schretlen D. J., Testa S. M., & Pearlson G. D. (2010). Calibrated neuropsychological normative system professional manual. Lutz, FL: Psychological Assessment Resources. [Google Scholar]

- Senior G., Piovesana A., & Beaumont P. (2018). Discrepancy analysis and Australian norms for the trail making test. The Clinical Neuropsychologist, 32 (3), 510–523. [DOI] [PubMed] [Google Scholar]

- Shirk S. D., Mitchell M. B., Shaughnessy L. W., Sherman J. C., Locascio J. J., Weintraub S. et al. (2011). A web-based normative calculator for the uniform data set (UDS) neuropsychological test battery. Alzheimer's Research & Therapy, 3 (6), 32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Strauss E., Sherman E. M., & Spreen O. (2006). A compendium of neuropsychological tests: Administration, norms, and commentary. Oxford: Oxford University Press. [Google Scholar]

- Spreen O., & Strauss E. (1998). A compendium of neuropsychological tests (pp. 213–218). New York, NY: Oxford University Press. [Google Scholar]

- StataCorp (2013). Stata Statistical Software: Release 13. College Station, TX: StataCorp. [Google Scholar]

- Tombaugh T. N., Rees L., & McIntyre N. (1998). Normative data for the trail making test. Personal communication cited in Spreen and Strauss. A compendium of neuropsychological tests: Administration, norms and commentary (2nd ed.). New York, NY: Oxford University Press. [Google Scholar]

- Vaughan R. M., Coen R. F., Kenny R., & Lawlor B. A. (2016). Preservation of the semantic verbal fluency advantage in a large population-based sample: Normative data from the TILDA study. Journal of the International Neuropsychological Society, 22 (5), 570–576. [DOI] [PubMed] [Google Scholar]

- Wechsler D. (1984). WMS-R: Wechsler memory scale-revised manual. New York, NY: Psychological Corporation. [Google Scholar]

- Wechsler D. (2009). WMS-IV technical and interpretive manual. San Antonio, TX: Pearson. [Google Scholar]

- Weintraub S., Besser L., Dodge H. H., Teylan M., Ferris S., Goldstein F. C. et al. (2018). Version 3 of the Alzheimer disease Centers’ neuropsychological test battery in the uniform data set (UDS). Alzheimer Disease and Associated Disorders, 32 (1), 10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Weintraub S., Wicklund A. H., & Salmon D. P. (2012). The neuropsychological profile of Alzheimer disease. Cold Spring Harbor Perspectives in Medicine, 2 (4), a006171. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wetter S. R., Delis D. C., Houston W. S., Jacobson M. W., Lansing A., Cobell K. et al. (2005). Deficits in inhibition and flexibility are associated with the APOE-E4 allele in nondemented older adults. Journal of Clinical and Experimental Neuropsychology, 27 (8), 943–952. [DOI] [PubMed] [Google Scholar]

- Wicklund A. H., Rademaker A., Johnson N., Weitner B. B., & Weintraub S. (2007). Rate of cognitive change measured by neuropsychologic test performance in 3 distinct dementia syndromes. Alzheimer Disease & Associated Disorders, 21 (4), S70–S79. doi: 10.1097/wad.0b013e31815bf8a5. [DOI] [PubMed] [Google Scholar]

- Williams J. M. (1991). Memory Assessment Scales. Odessa, FL: Psychological Assessment Resources. [Google Scholar]

Associated Data
