Abstract

Background

Increasingly, drug and device clinical trials are tracking activity levels and other quality of life indices as endpoints for therapeutic efficacy. Trials have traditionally required subject visits to the clinic that are artificial, activity-intensive, and infrequent, which makes it difficult to detect trends and events between visits. Thus, there is an unmet need for wearable sensors that produce clinical-quality, medical-grade physiological data from subjects in the home. The current study was designed to validate the BioStamp nPoint® system (MC10 Inc., Lexington, MA, USA), a new technology designed to meet this need.

Objective

To evaluate the accuracy, performance, and ease of use of an end-to-end system called the BioStamp nPoint. The system consists of an investigator portal for trial design and data review; conformal, low-profile wearable biosensors that adhere to the skin; a companion technology for wireless data transfer to a proprietary cloud; and algorithms for analyzing physiological, biometric, and contextual data for clinical research.

Methods

A prospective, nonrandomized clinical trial was conducted on 30 healthy adult volunteers over the course of two continuous days and nights. Supervised and unsupervised study activities enabled performance validation in clinical and remote (simulated “at home”) environments. System outputs for heart rate (HR), heart rate variability (HRV) (including root mean square of successive differences [RMSSD] and low frequency/high frequency ratio), activity classification during prescribed activities (lying, sitting, standing, walking, stationary biking, and sleep), step count during walking, posture characterization, and sleep metrics including onset/wake times, sleep duration, and respiration rate (RR) during sleep were evaluated. Outputs were compared to FDA-cleared comparator devices for HR, HRV, and RR and to ground truth investigator observations for activity and posture classifications, step count, and sleep events.

Results

Thirty participants (77% male, 23% female; mean age 35.9 ± 10.1 years; mean BMI 28.1 ± 3.6) were enrolled in the study. The BioStamp nPoint system accurately measured HR and HRV (correlations: HR = 0.957, HRV RMSSD = 0.965, HRV ratio = 0.861) when compared to Actiheart™. The system accurately monitored RR (mean absolute error [MAE] = 1.3 breaths/min) during sleep when compared to a Capnostream35™ end-tidal CO2 monitor. When compared with investigator observations, the system classified activities and posture with high accuracy (agreement = 98.7 and 92.9%, respectively) and accurately measured step count (MAE = 14.7 steps, < 3% of actual steps during a 6-min walk) and sleep events (MAE: sleep onset = 6.8 min, wake = 11.5 min, sleep duration = 13.7 min). Participants indicated “good” to “excellent” usability (average System Usability Scale score of 81.3) and preferred the BioStamp nPoint system over both the Actiheart (87%) and Capnostream (97%) devices.

Conclusions

The present study validated the BioStamp nPoint system's performance and ease of use against FDA-cleared comparator devices and investigator observations in both clinical and remote (simulated home) environments.

Keywords: Conformal wearable sensor, Biometrics, Cardiac monitoring, Respiration, Sleep, Actigraphy, Remote monitoring

Introduction

Clinical trials are continuously evolving and incorporating new technologies that allow for increased efficiency and insight into the status and progress of the participant. Increasingly, “efficacy” and “outcome” are being viewed in terms of quality of life metrics, including activity levels, sleep quality, vital signs, and patient-reported outcomes.

Recent studies have shown that data gathered from participants in the home environment differ significantly from brief, intermittent assessments made in controlled clinical environments. Data from the home setting have the potential to better represent disease status, progression, and response to therapy [1].

“Wearable” health-monitoring devices have been developed to extend disease monitoring beyond infrequent clinic visits and subjective assessments to provide continuous and objective data. Physiological and activity monitoring have revealed important insights across many therapeutic areas, including neurodegenerative diseases [2], oncology [3], orthopedics [4], cardiology [5, 6, 7, 8], pulmonary disease [9], behavioral science [10], and sleep medicine (e.g., periodic limb movements, respiration) [11].

Currently available wearable devices, however, lack the combination of subject comfort and clinical data quality [11]. Often their form factor, size, and wear locations impede continuous wear, interfere with daily activities, make them conspicuous, and compromise sleep. Recent studies have shown that lightweight, conformal sensors provide an alternative in studies focused on Huntington disease [12], multiple sclerosis [13], physical rehabilitation [14], and cardiac monitoring [15]. Here, we present a validation study conducted with a novel wireless remote monitoring system (BioStamp nPoint®; MC10 Inc., Lexington, MA, USA) that includes lightweight, conformal, multimodal biosensors to capture continuous physiological data in simulated home and clinical settings. The study was conducted to validate data quality, algorithm performance and accuracy, participant preference, and general ease of use.

Materials and Methods

Device Description

The BioStamp nPoint system, the next-generation BioStamp™ system [16, 17, 18], comprises an investigator web portal, conformal wearable sensors (BioStamp), a docking station (Link Hub), a mobile smartphone containing proprietary software (Link App for communication and data upload), two-sided adhesives, and an adhesive alignment-assist applicator. The system is designed to gather raw physiological data from accelerometers, gyroscopes, and biopotential electrodes integrated into each sensor (see online supplementary Fig. 1; www.karger.com/doi/10.1159/000493642). These raw data are processed by proprietary algorithms to provide standard clinical metrics: heart rate (HR), heart rate variability (HRV) (by root mean square of successive differences [RMSSD] and HRV low frequency/high frequency ratio), activity classification, step count during walking, posture classification, sleep onset and wake times, respiration rate (RR) during sleep, and total sleep duration. The algorithms are based on seminal work in the public domain [19], recently published research, and proprietary machine learning [17]. Raw data stored on the sensors are transferred through the Link Hub to a secure Medical Device Data System, where they are analyzed by the nPoint algorithms and displayed in the Investigator Portal (Fig. 1).
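The cardiac metrics rest on QRS detection, which the text attributes partly to public-domain work [19] (Pan and Tompkins) and partly to proprietary methods that are not described. Purely as an illustration, the sketch below implements a heavily simplified version of the Pan-Tompkins stages (five-point derivative, squaring, moving-window integration) followed by a fixed threshold with a refractory period. The published algorithm's band-pass filter and adaptive dual thresholds are omitted, and the function names, threshold fraction, and window lengths are hypothetical choices, not nPoint parameters. The 250-Hz default matches the electrode sampling rate reported for the chest sensor.

```python
import numpy as np


def detect_qrs(ecg, fs=250.0, window_s=0.150, refractory_s=0.200, threshold_frac=0.5):
    """Simplified Pan-Tompkins-style QRS detector (hypothetical sketch).

    Returns sample indices of integrated-signal maxima, which approximate QRS
    locations. Band-pass filtering and adaptive thresholds are omitted.
    """
    x = np.asarray(ecg, dtype=float)

    # Five-point derivative: (1/8T)[-x(n-2) - 2x(n-1) + 2x(n+1) + x(n+2)]
    deriv = np.zeros_like(x)
    deriv[2:-2] = (-x[:-4] - 2 * x[1:-3] + 2 * x[3:-1] + x[4:]) * (fs / 8.0)

    # Squaring makes all values positive and emphasizes steep QRS slopes.
    squared = deriv ** 2

    # Moving-window integration over roughly one QRS width.
    width = max(1, int(round(window_s * fs)))
    integrated = np.convolve(squared, np.ones(width) / width, mode="same")

    # Fixed threshold (fraction of the global maximum) plus a refractory period.
    threshold = threshold_frac * integrated.max()
    refractory = int(round(refractory_s * fs))
    above = integrated > threshold

    peaks = []
    i, n = 0, len(integrated)
    while i < n:
        if not above[i]:
            i += 1
            continue
        j = i
        while j < n and above[j]:
            j += 1  # advance to the end of this supra-threshold run
        peak = i + int(np.argmax(integrated[i:j]))
        if not peaks or peak - peaks[-1] >= refractory:
            peaks.append(peak)
        i = j
    return np.array(peaks, dtype=int)


def mean_heart_rate_bpm(peak_indices, fs=250.0):
    """Mean heart rate from successive detected beats."""
    rr_s = np.diff(peak_indices) / fs
    return 60.0 / rr_s.mean()
```

In practice such a detector would run on filtered ECG, and the interbeat intervals it yields would feed the HR and HRV calculations.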

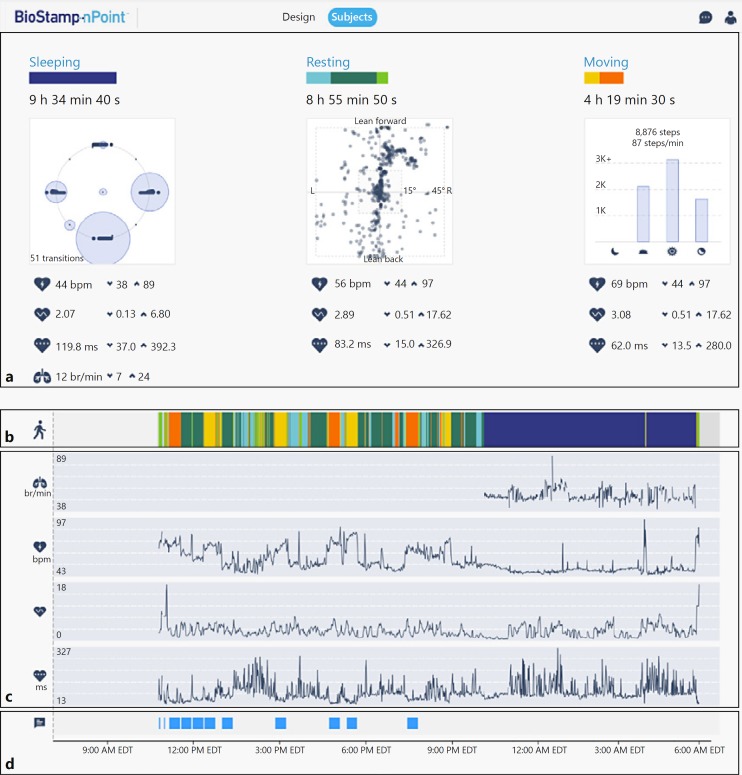

Fig. 1.

Investigator portal dashboard displaying representative examples of participant data from one day of wear including daily summary outputs associated with sleeping, resting, and moving activities (a), longitudinal view of color-coded actigraphy during sensor wear (b), RR, HR, HRV ratio, and HRV RMSSD during sleep (c), and instances of annotated study activities (d). Graphs for HR, HRV ratio, and HRV RMSSD are auto-scaled for display purposes and for representing data for an approximate 24-h recording. HR, heart rate; HRV, heart rate variability; RMSSD, root mean square of successive differences; RR, respiration rate.

Study Overview

The present study was performed in part to support a Premarket Notification to the FDA. Comparator devices were selected to reproduce the validation previously published for the predicate device [20].

The study was a prospective, nonrandomized clinical investigation in healthy volunteers who were physically and cognitively able to perform activities of daily living without assistance, as assessed by the investigator. After providing informed consent, participants were enrolled for two continuous days and nights at the clinical site, allowing for supervised (days 0–1, “in-clinic”) and unsupervised (days 1–2, “remote”) study segments. The study objectives were to evaluate the system's ability to calculate HR, HRV RMSSD, HRV ratio, and RR during sleep against FDA-cleared comparator devices (Fig. 2). Additionally, algorithmic outputs for step count, activity classification, posture classification, and sleep parameters were compared to ground truth investigator observations. The ease of use of the system for research participants was also assessed.

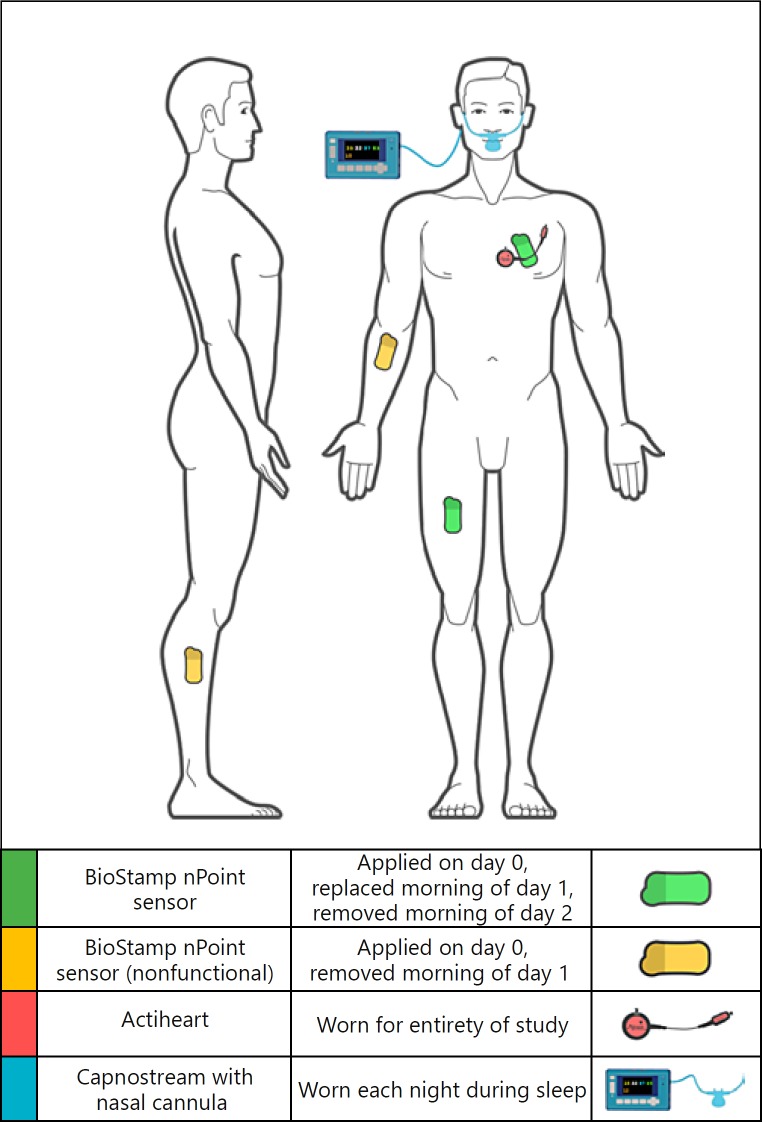

Fig. 2.

Anatomical locations for the investigational device and comparators. BioStamp sensors were applied to the chest (lead II orientation) and thigh locations required for algorithmic outputs (green, n = 2), and nonrecording sensors (yellow, n = 2) were applied to the forearm and shank, representing alternate locations for raw data collection. Actiheart was applied to the chest, proximal to the chest BioStamp sensor. A Capnostream cannula was worn in the nostrils during sleep.

Actiheart™ (CamNtech, Boerne, TX, USA) was used as the comparator device for HR and HRV, and the Capnostream35™ Portable Respiratory Monitor (Medtronic, Minneapolis, MN, USA) was used to compare RR. A manual counter was used as ground truth for step counting. Independent investigator observation provided ground truth data for activity and posture classifications.

BioStamp sensors were applied either by clinical staff (day 0) or by the study participant (day 1) to the left precordium (lead II orientation; accelerometer: 31.25 Hz, ±16 g; electrodes: 250 Hz) and the anterior thigh (accelerometer: 31.25 Hz, ±16 g), the locations required for system algorithmic outputs, and to the shank and forearm to assess sensor adherence. The Actiheart comparator device was applied to the upper chest on day 0 and worn continuously throughout the study.

Supervised activities were designed to test the system's ability to classify participant actigraphy (lying, sitting, standing, walking, and “other” tested via stationary biking), classify posture (lying: supine, left, right, prone; sitting/standing: upright, leaning left, leaning right, leaning forward, leaning back), and to count steps while walking. On day 1, clinical staff interfaced with the mobile Link App to record participant activities performed in random order, blinding the system to the ground truth. On day 2, participants interacted with the Link App to independently complete a prescribed set of activities (sitting, walking, or stationary biking for 22 min each). In the sleep laboratory, participants were instrumented with a nasal cannula connected to a Capnostream end-tidal CO2 monitor and instructed to go to sleep at a predetermined time. Sleep laboratory staff monitored participants during sleep, documenting sleep and wake times, as well as times the participant awakened or arose during the night. Data collected from the two nights were used to test the system's ability to detect sleep onset and wake times, classify sleep, and calculate RR during sleep.
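Posture labels like those listed above are often derived from the direction of gravity measured by a trunk-worn accelerometer during quiet periods. The paper does not describe how nPoint classifies posture (its activity and posture models draw partly on proprietary machine learning [17]), so the following is only a hypothetical rule-based sketch. It assumes a chest-sensor axis convention (x toward the head, y toward the participant's left, z out of the chest), a static reading in units of g, and illustrative angle thresholds. Separating sitting from standing would additionally require the thigh sensor and is not attempted here.

```python
import math


def classify_chest_posture(ax, ay, az, lean_deg=20.0, lying_deg=60.0):
    """Hypothetical posture rule from a static chest accelerometer reading (g).

    Assumed axes: x superior (toward head), y toward the participant's left,
    z anterior (out of the chest). At rest, the axis pointing up reads about +1 g.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0:
        raise ValueError("zero acceleration vector")
    # Tilt of the trunk's long axis away from vertical, in degrees.
    tilt = math.degrees(math.acos(max(-1.0, min(1.0, ax / norm))))

    if tilt >= lying_deg:
        # Lying: the dominant transverse axis shows which side faces up.
        if abs(az) >= abs(ay):
            return "supine" if az > 0 else "prone"
        # ay > 0 means the left side faces up, i.e. lying on the right side.
        return "lying right" if ay > 0 else "lying left"

    if tilt < lean_deg:
        return "upright"

    # Sitting or standing but tilted: the dominant transverse axis gives the lean.
    if abs(az) >= abs(ay):
        return "leaning back" if az > 0 else "leaning forward"
    return "leaning right" if ay > 0 else "leaning left"
```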

The clinical site's amenities allowed participants to engage in everyday indoor activities such as watching movies or television, lounging, playing games, and eating meals, simulating a home environment. Participants' ability to set up and use the BioStamp nPoint system without assistance was evaluated by clinical staff during the unsupervised portion of the study. Participants completed a System Usability Scale (SUS) [21] survey and evaluated their experience and device preferences.
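The SUS [21] is a ten-item questionnaire answered on a 1–5 agreement scale. The sketch below applies the standard Brooke scoring rule: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0–100 score. A study-level figure such as the reported average is then the mean of the individual scores. The function names are illustrative.

```python
def sus_score(responses):
    """Score one completed System Usability Scale questionnaire (0-100)."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS requires ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def mean_sus(all_responses):
    """Average SUS score across participants."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

Mapping a score onto adjective ratings such as “good” or “excellent” follows Bangor et al. [27] and is not reproduced here.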

Data Alignment and Sampling

After data collection, BioStamp nPoint system and comparator data streams were time-aligned and assessed for signal quality. Measurements meeting each device's intrinsic signal quality standards were then paired for comparison. For each algorithmic endpoint, a random sample of qualified data pairs was drawn for each participant. The resulting dataset was provided to an independent third party for statistical analysis and clinical study report generation. Adhesion data and subject surveys were analyzed descriptively by the sponsor.
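The alignment and sampling steps described above can be sketched as follows. This is a hypothetical illustration: the paper does not specify the pairing tolerance, how each device's signal-quality check is represented, or the per-participant sample size, so the tuple layout, the 1-s tolerance, and the fixed random seed are assumptions. Each investigational sample is matched to the nearest comparator sample in time and kept only when both samples pass their own quality flag and lie within the tolerance; a fixed-size random subset is then drawn per participant.

```python
import bisect
import random


def pair_measurements(device, comparator, tolerance_s=1.0):
    """Pair device samples with the nearest-in-time comparator sample.

    device, comparator: lists of (timestamp_s, value, passes_quality) tuples,
    each sorted by timestamp. Returns (timestamp_s, device_value, comparator_value).
    """
    comp_times = [t for t, _, _ in comparator]
    pairs = []
    for t, value, ok in device:
        if not ok:
            continue  # device sample failed its own quality check
        k = bisect.bisect_left(comp_times, t)
        # The nearest comparator sample sits at index k-1 or k.
        candidates = [j for j in (k - 1, k) if 0 <= j < len(comparator)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(comp_times[j] - t))
        c_time, c_value, c_ok = comparator[best]
        if c_ok and abs(c_time - t) <= tolerance_s:
            pairs.append((t, value, c_value))
    return pairs


def sample_pairs(pairs_by_participant, n_per_participant, seed=0):
    """Draw up to n qualified pairs per participant, reproducibly."""
    rng = random.Random(seed)
    return {
        pid: rng.sample(pairs, min(n_per_participant, len(pairs)))
        for pid, pairs in pairs_by_participant.items()
    }
```

Using only the nearest comparator sample keeps the sketch simple; a stricter scheme might also reject pairs when a second comparator sample lies almost as close in time.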

Statistical Analysis

All clinical efficacy endpoints were obtained by comparing the BioStamp nPoint system to investigator-observed ground truth and to data obtained from the comparator devices. The analysis used widely accepted measures of agreement. Mean absolute error (MAE), root-mean-square error (RMSE), Lin's concordance correlation coefficient, and Deming regression parameters were calculated for quantitative comparisons, and scatter and Bland-Altman plots were produced. Deming regression was used because both the system and the comparators are subject to measurement error.
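The paper does not publish its analysis code, and the statistical analysis plan was prepared by an independent party. The minimal numpy sketch below shows how the agreement measures named above are conventionally computed for one set of paired values; it is an illustration, not the study's implementation. Assumptions: the comparator is treated as the reference (x) and the device as y; Deming regression uses an error-variance ratio delta = 1, the orthogonal case named in the figure captions; and the Bland-Altman limits use the conventional bias ± 1.96 SD, since the paper does not state its multiplier.

```python
import numpy as np


def agreement_stats(reference, device, delta=1.0):
    """Agreement between a comparator (reference) and the investigational device.

    delta is the assumed ratio of device to reference error variances for
    Deming regression; delta = 1 gives orthogonal regression.
    """
    x = np.asarray(reference, dtype=float)
    y = np.asarray(device, dtype=float)
    diff = y - x

    mae = float(np.mean(np.abs(diff)))
    rmse = float(np.sqrt(np.mean(diff ** 2)))

    # Population (1/n) moments, as in Lin's concordance correlation coefficient.
    mx, my = x.mean(), y.mean()
    sxx = np.mean((x - mx) ** 2)
    syy = np.mean((y - my) ** 2)
    sxy = np.mean((x - mx) * (y - my))
    ccc = float(2 * sxy / (sxx + syy + (mx - my) ** 2))

    # Deming regression slope and intercept (undefined when sxy == 0).
    if sxy == 0:
        slope = intercept = float("nan")
    else:
        d = syy - delta * sxx
        slope = float((d + np.sqrt(d ** 2 + 4 * delta * sxy ** 2)) / (2 * sxy))
        intercept = float(my - slope * mx)

    # Bland-Altman bias and conventional 95% limits of agreement.
    bias = float(diff.mean())
    sd = float(diff.std(ddof=1))
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)

    return {"mae": mae, "rmse": rmse, "ccc": ccc,
            "deming_slope": slope, "deming_intercept": intercept,
            "bias": bias, "loa": loa}
```

Unlike ordinary least squares, the Deming fit does not treat the comparator as error-free, which is the rationale the text gives for using it.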

Results

Study Population

The population was selected to be consistent with previous device performance validation studies [20] (K152139). Forty-four subjects were recruited, of whom 30 were enrolled: 77% male, 23% female, mean age of 35.9 ± 10.1 years (range 21–52), and mean BMI of 28.1 ± 3.6 (range 20.1–34.1). There were no withdrawals, and all participants completed all scheduled activities. All possible data were obtained from the BioStamp and Capnostream devices. Five BioStamp sensors deadhered before or during a night of sleep, so data from those nights could not be analyzed. Because of a single Actiheart malfunction, only 29 of 30 Actiheart datasets were obtained. There was one adverse event (nasal congestion), which was unrelated to the investigational device.


Multimodal sensor data were captured from 30 participants using the BioStamp nPoint system. Table 1 and Figure 3 summarize the physiological measurements and results for all participants. MAE and concordance correlation coefficient were used to evaluate all quantitative measurements. For qualitative comparisons, tables of agreement were constructed with percent agreement and uncertainty coefficients computed. The uncertainty coefficient was computed for the true state given the BioStamp classification.
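For the categorical outputs, percent agreement and the uncertainty coefficient of the true state given the BioStamp classification (Theil's U) can be computed directly from paired labels, as in the sketch below. U(true | device) = [H(true) − H(true | device)] / H(true) is the fraction of the entropy of the observed state that is explained by the device label: 1 for perfect agreement and 0 when the device label carries no information. The function names are illustrative; the paper's own tables of agreement were produced by the independent statisticians.

```python
import math
from collections import Counter


def percent_agreement(true_labels, predicted):
    """Percentage of epochs where the device label matches ground truth."""
    matches = sum(t == p for t, p in zip(true_labels, predicted))
    return 100.0 * matches / len(true_labels)


def uncertainty_coefficient(true_labels, predicted):
    """Theil's U(true | predicted) = (H(true) - H(true | predicted)) / H(true)."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, predicted))
    true_counts = Counter(true_labels)
    pred_counts = Counter(predicted)
    h_true = -sum((c / n) * math.log(c / n) for c in true_counts.values())
    # H(true | pred) = -sum over cells of p(t, p) * log(p(t, p) / p(p))
    h_cond = -sum((c / n) * math.log(c / pred_counts[p]) for (t, p), c in joint.items())
    return (h_true - h_cond) / h_true if h_true > 0 else float("nan")
```

For example, 888 matching labels out of 900 gives a percent agreement of 98.67%, the figure reported for activity classification.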

Table 1.

Study efficacy endpoints, comparator device used for associated endpoint data collection, and statistical agreement between investigational device and comparator on an overall and activity grouped basis

| Efficacy endpoint | Comparator device | Overall observed range | Overall comparison (sleep, resting, moving) | Sleep comparison | Resting comparison | Moving comparison |
|---|---|---|---|---|---|---|
| HR | Actiheart | 38–136 bpm | corr. = 0.957, MAE = 2.0±5.1 bpm | corr. = 0.865, MAE = 1.9±5.3 bpm | corr. = 0.947, MAE = 1.9±4.0 bpm | corr. = 0.900, MAE = 2.3±5.9 bpm |
| HRV (RMSSD) | Actiheart | 2.32–747.24 ms | corr. = 0.965, MAE = 5.056±24.907 ms | corr. = 0.939, MAE = 10.09±38.20 ms | corr. = 0.977, MAE = 3.41±18.13 ms | corr. = 0.996, MAE = 1.67±6.23 ms |
| HRV ratio (LF/HF) | Actiheart | 0.2–30.5 | corr. = 0.861, MAE = 1.09±1.82 | corr. = 0.845, MAE = 0.67±1.44 | corr. = 0.825, MAE = 1.34±2.06 | corr. = 0.892, MAE = 1.27±1.84 |
| RR | Capnostream35 | 7.2–25.4 breaths/min (BioStamp); 3–24 breaths/min (Capnostream) | N/A | corr. = 0.697, MAE = 1.3±2.1 breaths/min | N/A | N/A |
| Step count | Independent observer with manual counter | 467–716 steps/6 min | N/A | N/A | N/A | Walking only: corr. = 0.950, MAE = 14.7±11.9 steps, error = 2.5% (average ground truth = 598.7 steps) |

The HRV ratio for sleep was calculated using all qualified data pairs. corr., correlation; HF, high frequency; HR, heart rate; HRV, heart rate variability; LF, low frequency; MAE, mean absolute error; RMSSD, root mean square of successive differences; RR, respiration rate.

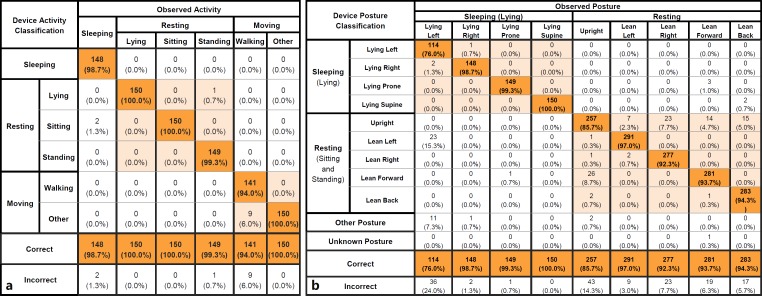

Fig. 3.

Activity (a; sleeping, resting, moving activities) and posture (b; sleeping, resting postures) tables of agreement between investigational device classification and independent observation. Activity grouping is derived from sleeping (sleep activity only), resting (lying, sitting, and standing), and moving (walking and other) activities. Aggregate of all activity classifications resulted in a percent agreement of 98.7% (sleeping activity 98.7%, resting activities 100.0%, and moving activities 100.0%). Aggregate of all posture classifications resulted in a percent agreement of 92.9% (sleeping postures 94.0% and resting postures 99.5%). Activity classifications compared against independent observer ground truth activity.

Endpoint Analysis

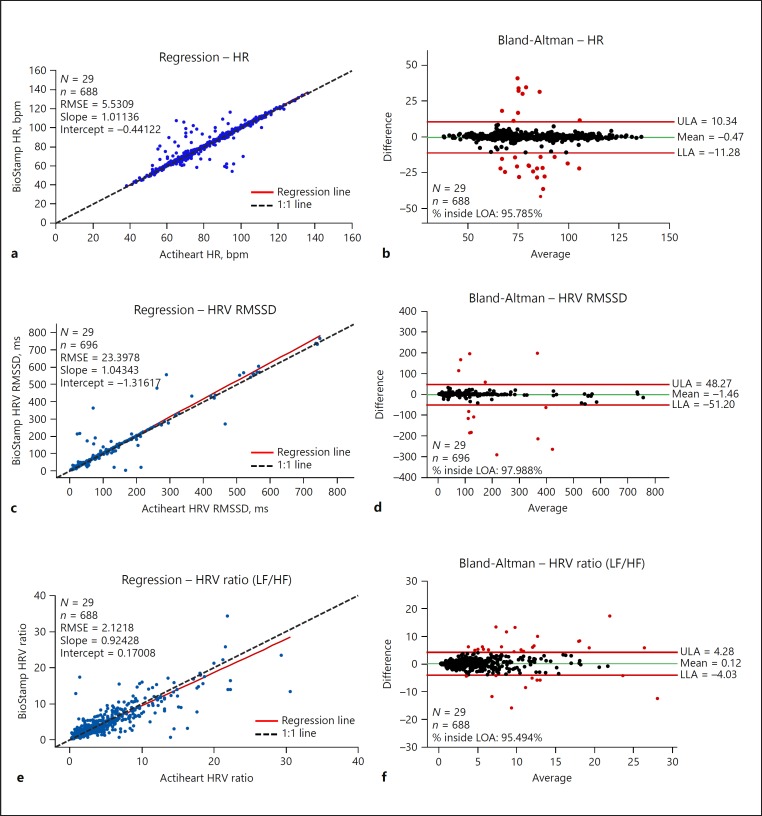

System measurements for HR (bpm), HRV RMSSD (ms), and HRV ratio were calculated from interbeat intervals [19, 22] derived from the Actiheart device (Fig. 4), so that algorithmic performance could be compared on identical raw data. Data from 29 of 30 participants were analyzed (one Actiheart dataset was irretrievable). HR ranged from 38 to 136 bpm (both BioStamp and Actiheart); the concordance correlation coefficient was > 0.95 for HR and HRV RMSSD and 0.86 for HRV ratio. The Deming regression line was nearly coincident with the line of identity for all three parameters. Additionally, MAE values (HR = 2.0 bpm, HRV RMSSD = 5.1 ms, and HRV ratio = 1.1) indicated high agreement between device measurements. MAEs may improve further when the nPoint algorithm processes its own calculated interbeat intervals and the investigator selects one of the higher digital sampling rates the system offers.

Fig. 4.

HR and HRV: Deming (orthogonal) regression plots, with population (N), qualified data pairs (n), RMSE, intercept, and slope, and Bland-Altman plots of the randomly sampled qualified data pairs encompassing sleeping, resting, and moving activities for HR (a, b), HRV RMSSD (c, d), and HRV ratio (e, f). All HR parameter outputs were compared against Actiheart HR parameter measurements (sampling rate 128 Hz). Interbeat intervals from the Actiheart device were processed by the BioStamp nPoint HRV algorithms so that nPoint HRV measurements were derived from identical interbeat interval input. HF, high frequency; HR, heart rate; HRV, heart rate variability; LF, low frequency; LLA, lower limit of agreement; LOA, line of agreement; RMSE, root-mean-square error; RMSSD, root mean square of successive differences; ULA, upper limit of agreement.
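The interbeat-interval metrics in Fig. 4 can be sketched as follows. Mean HR and RMSSD follow their standard definitions. For the LF/HF ratio, the sketch assumes the conventional Task Force frequency bands (LF 0.04–0.15 Hz, HF 0.15–0.40 Hz), linear resampling of the tachogram to 4 Hz, and a Hann-windowed FFT periodogram. These spectral choices are assumptions made for illustration; the nPoint HRV algorithm is proprietary and may differ in resampling, windowing, and detrending.

```python
import numpy as np


def mean_hr_bpm(ibi_ms):
    """Mean heart rate from interbeat intervals in milliseconds."""
    return 60000.0 / float(np.mean(ibi_ms))


def rmssd_ms(ibi_ms):
    """Root mean square of successive interbeat-interval differences (ms)."""
    diffs = np.diff(np.asarray(ibi_ms, dtype=float))
    return float(np.sqrt(np.mean(diffs ** 2)))


def lf_hf_ratio(ibi_ms, fs_resample=4.0, lf_band=(0.04, 0.15), hf_band=(0.15, 0.40)):
    """LF/HF power ratio from an interbeat-interval series (assumed method)."""
    ibi = np.asarray(ibi_ms, dtype=float)
    beat_times_s = np.cumsum(ibi) / 1000.0
    # Resample the irregularly spaced tachogram onto a uniform time grid.
    grid = np.arange(beat_times_s[0], beat_times_s[-1], 1.0 / fs_resample)
    tachogram = np.interp(grid, beat_times_s, ibi)
    tachogram -= tachogram.mean()
    # Hann-windowed periodogram; constant scale factors cancel in the ratio.
    power = np.abs(np.fft.rfft(tachogram * np.hanning(len(tachogram)))) ** 2
    freqs = np.fft.rfftfreq(len(tachogram), d=1.0 / fs_resample)
    lf = power[(freqs >= lf_band[0]) & (freqs < lf_band[1])].sum()
    hf = power[(freqs >= hf_band[0]) & (freqs < hf_band[1])].sum()
    return float(lf / hf)
```

Because both band powers come from the same periodogram, normalization constants cancel and only the relative distribution of variance between the two bands matters.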

BioStamp activity and posture classification were analyzed using tables of agreement (Fig. 3). The system correctly classified activity for 98.67% (888 of 900) of tested activities, with an uncertainty coefficient of 0.968. The system correctly classified participant posture for 92.86% (1,950 of 2,100) of tested lying, sitting, and standing postures, with an uncertainty coefficient of 0.879.

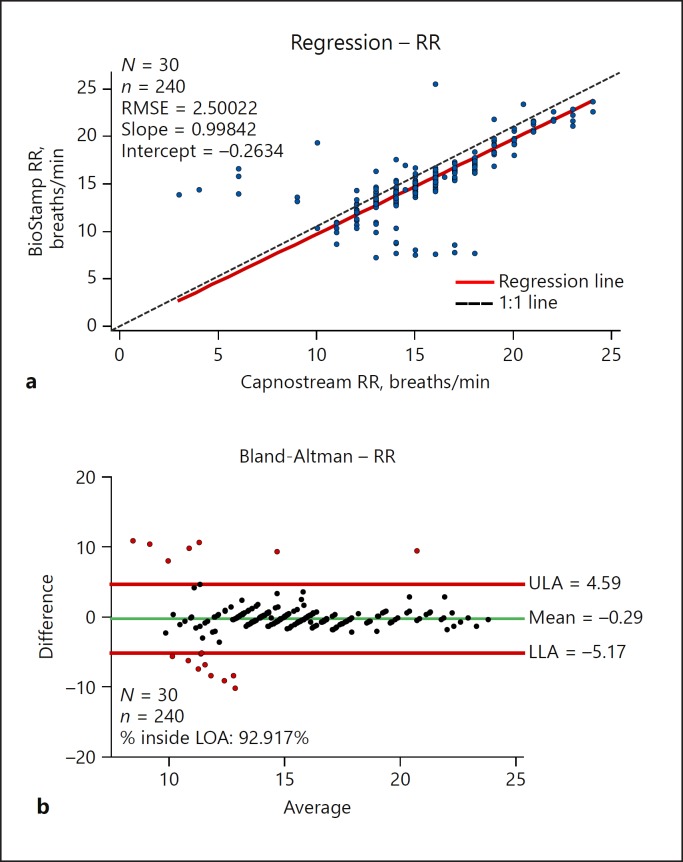

RR was calculated during sleep using accelerometer data from the BioStamp chest sensor [23] and was compared with the rates measured by the Capnostream device. Overall, the Deming regression line for RR was very close to the line of identity (Fig. 5), and the MAE and RMSE (1.3 and 2.50 breaths/min, respectively) demonstrated agreement between simultaneous measurements. Analyzed RRs ranged from 7.2 to 25.4 breaths/min (BioStamp) and from 3 to 24 breaths/min (Capnostream). The stepwise pattern of the comparator measurements in Figure 5 reflects the limited resolution of the comparator's RR output (Capnostream = 0.5 breaths/min) relative to the raw RR values output by the nPoint system.

Fig. 5.

RR: Deming (orthogonal) regression plot (a), with population (N), qualified data pairs (n), RMSE, intercept, and slope, and Bland-Altman plot (b) of the randomly sampled qualified data pairs. RR measurements were compared against Capnostream35 capnography RR measurements (sampling rate 20 Hz). LLA, lower limit of agreement; LOA, line of agreement; RMSE, root-mean-square error; RR, respiration rate; ULA, upper limit of agreement.
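As a simple stand-in for accelerometer-derived respiration (the cited approach [23] and the nPoint implementation are more involved), the sketch below takes one chest-accelerometer axis, removes its mean (the static gravity component), and reports the dominant spectral peak within an assumed breathing band of 0.1–0.7 Hz (6–42 breaths/min). The axis choice, band limits, and window length are hypothetical; the 31.25-Hz default matches the accelerometer sampling rate reported for the chest sensor. The frequency resolution of such an estimate is 1/(window length), about 0.94 breaths/min for a 64-s window.

```python
import numpy as np


def respiration_rate_bpm(accel_axis_g, fs=31.25, band_hz=(0.1, 0.7)):
    """Dominant in-band frequency of one accelerometer axis, in breaths/min."""
    x = np.asarray(accel_axis_g, dtype=float)
    x = x - x.mean()  # remove the static gravity component
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= band_hz[0]) & (freqs <= band_hz[1])
    peak_hz = freqs[in_band][np.argmax(spectrum[in_band])]
    return 60.0 * float(peak_hz)
```

A spectral-peak estimate like this is quantized to the FFT bin spacing, in the same way that the comparator's output is quantized to its display resolution.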

The BioStamp nPoint system records step count when a subject is classified as “walking” (correctly classified 94% of the time). Participants performed a 6-min walk at their own pace on a treadmill, and the system calculated step count with an error < 3% of actual steps taken (MAE = 14.72 steps, average ground truth count = 598.7 steps).
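The walking step count can be illustrated with a simple peak counter; this is a hypothetical sketch, not the nPoint algorithm. It assumes an accelerometer-magnitude signal with one prominent peak per step, counts local maxima above an illustrative threshold that are separated by a minimum interval, and exposes a steps_per_peak factor because a sensor on one thigh may show only one dominant swing per stride (two steps). The helper at the end reproduces the reported relative error: an MAE of 14.72 steps against an average ground truth of 598.7 steps is about 2.5%.

```python
import numpy as np


def count_steps(accel_mag_g, fs=31.25, threshold_g=0.1, min_interval_s=0.25, steps_per_peak=1):
    """Count steps as thresholded local maxima with a minimum spacing."""
    x = np.asarray(accel_mag_g, dtype=float)
    x = x - x.mean()  # remove the static (gravity) offset
    min_gap = int(round(min_interval_s * fs))
    peaks = []
    for i in range(1, len(x) - 1):
        is_local_max = x[i] >= x[i - 1] and x[i] > x[i + 1]
        if is_local_max and x[i] > threshold_g:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return len(peaks) * steps_per_peak


def relative_error_percent(mae_steps, mean_true_steps):
    """MAE expressed as a percentage of the mean ground-truth count."""
    return 100.0 * mae_steps / mean_true_steps
```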

The system's activity-based sleep onset and wake time detection [24, 25, 26] demonstrated alignment with ground truth recorded by independent observers. Sleep onset (participant instructed to go to sleep) MAE was 6.8 ± 8.43 min, wake time MAE was 11.5 ± 28.5 min, and sleep duration MAE was 13.7 ± 22.7 min.
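Activity-based sleep/wake scoring of the kind cited [24, 25, 26] classifies each epoch from a weighted sum of activity counts in a window around it. The sketch below uses the weights commonly quoted for a 1-min-epoch model of Cole et al. [24]: 404, 598, 326, 441, 1408, 508, and 350 on the epochs from four before to two after the scored epoch, scaled by 0.00001, with sleep scored when the result is below 1. Those constants were fitted to a specific wrist actigraph's count scale, so they are illustrative only and are not the nPoint parameters. Sleep onset and wake are then taken as the start of the first, and the end of the last, run of at least min_run consecutive sleep epochs; min_run = 10 and zero-padding at the recording edges are hypothetical choices.

```python
import numpy as np

# Weights for epochs A(-4) ... A(+2) and scale factor as commonly quoted for a
# 1-min-epoch Cole-Kripke model; device-specific and illustrative only.
CK_WEIGHTS = np.array([404, 598, 326, 441, 1408, 508, 350], dtype=float)
CK_SCALE = 0.00001


def score_sleep(counts, weights=CK_WEIGHTS, scale=CK_SCALE):
    """Return a boolean array: True where a 1-min epoch is scored as sleep."""
    a = np.asarray(counts, dtype=float)
    padded = np.concatenate([np.zeros(4), a, np.zeros(2)])  # zero-pad the edges
    d = np.array([scale * np.dot(weights, padded[i:i + 7]) for i in range(len(a))])
    return d < 1.0


def sleep_onset_and_wake(is_sleep, min_run=10):
    """Epoch index of sleep onset and of waking (end of the last qualifying run)."""
    onset = wake = None
    run = 0
    for i, asleep in enumerate(is_sleep):
        run = run + 1 if asleep else 0
        if run >= min_run:
            if onset is None:
                onset = i - min_run + 1
            wake = i + 1
    return onset, wake
```

With 1-min epochs, the returned indices convert directly to minutes, so onset and wake errors against observer-recorded times reduce to absolute differences whose mean gives the MAE.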

BioStamp nPoint sensor adhesion was visually assessed by independent observers. Acceptable adhesion (> 75% of the sensor adhered at the end of wear) was observed at the chest and thigh locations mandated for algorithm analytics for 91.7% of sensor wears (110 of 120); below this threshold, electrode contact may become unreliable. All but one adhesion failure occurred at an investigational location not required for algorithmic output. Subject-facing surveys, including a SUS evaluation, and independent observer assessments were used to characterize participants' ability to use the system. The system received a rating of “good” to “excellent,” with an average SUS score of 81.3 [27]. Additionally, participants preferred the BioStamp sensors to both the Actiheart (87%, 26 of 30) and Capnostream (97%, 29 of 30) devices.

Discussion and Conclusion

The study (NCT03257189; reported within K173510) was intentionally limited to healthy volunteers and did not directly test individuals with disabilities, recognizable conditions, or specific disease states. Generalizability to impaired populations will require further validation. Other limitations include combining data from the supervised and unsupervised settings for analysis without comparing the two datasets, although the results suggest that data from both settings were comparable. We also recognize that usability data from the investigator's perspective would have been valuable; only participants were formally surveyed because the investigators had been thoroughly trained on, and were experienced with, the system before the trial began.

Earlier generations (BioStamp RC™ and investigational prototypes) of the technology have been used in academic research, including motor assessments in Parkinson disease [28], gait assessment in multiple sclerosis [13], posture classification in Huntington disease [12], postural sway in multiple sclerosis [29], and periodic leg movements in sleep (unpublished data). Additional independent research with BioStamp technology has examined ambulatory vectorcardiography [30], spasticity [31], and more granular activities of daily living (unpublished data). This is the first report of clinical validation of the new nPoint system.

Recently, wearable biosensing in some form has become nearly ubiquitous, predominantly through the adoption of consumer health and wellness devices [32, 33, 34]. Regulated medical devices have been adopted more slowly, especially in the outpatient environment. Many of these devices are still best used in the clinic or laboratory; some rely on complex and expensive visual tracking technology or lack the form factor and comfort needed for continuous outpatient wear. Because of limited available technology, a lack of validated digital endpoints, and financial drivers, clinicians still assess study participant status episodically, even during rigorous clinical trials. Unfortunately, these assessments are too often rater-dependent and are performed in an artificial environment [12]. Gathering objective, clinically meaningful data from study participants in their own environment between clinic assessments, and perhaps reducing the frequency and intensity of those assessments, is one promise of well-designed wearable devices.

In a 2016 survey of medical product industry professionals [35], more than 60% stated that they had already used digital health technology in clinical trials, and more than 97% intended to use digital technology in future trials. Currently, over 100 industry-sponsored clinical trials registered on clinicaltrials.gov use actigraphy endpoints, and more than 8,000 examine some form of quality of life endpoint. The nPoint system was designed to meet the growing demand for such endpoints.

The BioStamp nPoint system has the potential to enhance clinical trials and patient management by providing objective longitudinal measures of treatment response through remote monitoring, data trends, and quality of life metrics. This pivotal study demonstrated the nPoint system's ability to accurately characterize posture, activity, vital signs, and sleep metrics, with results equivalent to those of currently available devices. The multimodal and conformal nature of the sensors facilitates the collection of physiological data from ambulatory subjects in the home setting. The technology is designed to support evolving clinical trial designs and endpoints, and the present study supports the nPoint system's accuracy and usability for that purpose.

Statement of Ethics

The study protocol was reviewed and approved by the Aspire IRB (Santee, CA, USA), a WIRB-Copernicus Group company.

Disclosure Statement

A subset of the authors are employees of MC10 Inc., the study sponsor and funding source. R. Ghaffari is a cofounder of MC10 Inc. CTI Clinical Trial and Consulting Services and STATKING Clinical Services were paid consultants to MC10 Inc.

Funding Sources

The study was funded by MC10 Inc.

Author Contributions

E. Sen-Gupta and D.E. Wright contributed to the study design, conducted study visits, analyzed and interpreted the data, and wrote the manuscript. J.W. Caccese contributed to the study design, the data alignment, and the writing of the manuscript. J.A. Wright Jr. and A.H. Combs contributed to the study design and the writing of the manuscript. E. Jortberg, V. Bhatkar, and M. Ceruolo contributed to the initial study design and system development. R. Ghaffari contributed to the writing of the manuscript. D.L. Clason contributed to data analysis. J.P. Maynard was the lead investigator for the study and its implementation. All authors reviewed the manuscript and approved the final revision.

Supplementary Material

Supplementary data

Acknowledgment

MC10 Inc. would like to thank former employees Briana Morey, Kirsten Seagers, Jake Phillips, Julia Spinelli, and Ariel Dowling, PhD, who contributed to the early stages of study design and development. CTI Clinical Trial and Consulting Services (Cincinnati, OH, USA) provided the site facility and employed the lead investigator (J.P. Maynard, MD). STATKING Clinical Services (Fairfield, OH, USA) designed the statistical analysis plan and employed the biostatisticians, including D.L. Clason, PhD, who authored the clinical study report.

References

- 1.Tomasic I, Tomasic N, Trobec R, Krpan M, Kelava T. Continuous remote monitoring of COPD patients-justification and explanation of the requirements and a survey of the available technologies. Med Biol Eng Comput. 2018 Apr;56((4)):547–69. doi: 10.1007/s11517-018-1798-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Patel S, Lorincz K, Hughes R, Huggins N, Growdon J, Standaert D, et al. Monitoring motor fluctuations in patients with Parkinson's disease using wearable sensors. IEEE Trans Inf Technol Biomed. 2009 Nov;13((6)):864–73. doi: 10.1109/TITB.2009.2033471. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Gresham G, Schrack J, Gresham LM, Shinde AM, Hendifar AE, Tuli R, et al. Wearable activity monitors in oncology trials: current use of an emerging technology. Contemp Clin Trials. 2018 Jan;64:13–21. doi: 10.1016/j.cct.2017.11.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Mizner RL, Petterson SC, Clements KE, Zeni JA, Jr, Irrgang JJ, Snyder-Mackler L. Measuring functional improvement after total knee arthroplasty requires both performance-based and patient-report assessments: a longitudinal analysis of outcomes. J Arthroplasty. 2011 Aug;26((5)):728–37. doi: 10.1016/j.arth.2010.06.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Bergemann T. Use of Accelerometer Data to Evaluate Physical Activity as a Surrogate Endpoint in Heart Failure Clinical Trials. In: Medtronic, ed. ASA Biopharmaceutical Section Regulatory-Industry Statistics Workshop. American Statistical Association. 2017 [Google Scholar]

- 6.Butler J, Hamo CE, Udelson JE, Pitt B, Yancy C, Shah SJ, et al. Exploring New Endpoints for Patients With Heart Failure With Preserved Ejection Fraction. Circ Heart Fail. 2016 Nov;9((11)):9. doi: 10.1161/CIRCHEARTFAILURE.116.003358. [DOI] [PubMed] [Google Scholar]

- 7.Conraads VM, Spruit MA, Braunschweig F, Cowie MR, Tavazzi L, Borggrefe M, et al. Physical activity measured with implanted devices predicts patient outcome in chronic heart failure. Circ Heart Fail. 2014 Mar;7((2)):279–87. doi: 10.1161/CIRCHEARTFAILURE.113.000883. [DOI] [PubMed] [Google Scholar]

- 8.Snipelisky D, Kelly J, Levine JA, Koepp GA, Anstrom KJ, McNulty SE, et al. Accelerometer-Measured Daily Activity in Heart Failure With Preserved Ejection Fraction: Clinical Correlates and Association With Standard Heart Failure Severity Indices. Circ Heart Fail. 2017 Jun;10((6)):e003878. doi: 10.1161/CIRCHEARTFAILURE.117.003878. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Moy ML, Teylan M, Weston NA, Gagnon DR, Garshick E. Daily step count predicts acute exacerbations in a US cohort with COPD. PLoS One. 2013 Apr;8((4)):e60400. doi: 10.1371/journal.pone.0060400. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Hickie IB, Naismith SL, Robillard R, Scott EM, Hermens DF. Manipulating the sleep-wake cycle and circadian rhythms to improve clinical management of major depression. BMC Med. 2013 Mar;11((1)):79. doi: 10.1186/1741-7015-11-79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Kelly JM, Strecker RE, Bianchi MT. Recent developments in home sleep-monitoring devices. ISRN Neurol. 2012;2012:768794. doi: 10.5402/2012/768794. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Adams JL, Dinesh K, Xiong M, Tarolli CG, Sharma S, Sheth N, et al. Multiple Wearable Sensors in Parkinson and Huntington Disease Individuals: A Pilot Study in Clinic and at Home. Digit Biomark. 2017;1((1)):52–63. doi: 10.1159/000479018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Moon Y, McGinnis RS, Seagers K, Motl RW, Sheth N, Wright JA, Jr, et al. Monitoring gait in multiple sclerosis with novel wearable motion sensors. PLoS One. 2017 Feb;12((2)):e0171346. doi: 10.1371/journal.pone.0171346. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.McGinnis RS, Patel S, Silva I, et al. Skin mounted accelerometer system for measuring knee range of motion. 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2016 16-Aug;2016:p. 5298–5302. doi: 10.1109/EMBC.2016.7591923. [DOI] [PubMed] [Google Scholar]

- 15.Schultz K, Lui G, McElhinney D, et al. Abstract 13416: The Days of the Holter Monitor Are Numbered: Extended Continuous Rhythm Monitoring Detects More Clinically Significant Arrhythmias in Adults With Congenital Heart Disease. Circulation. 2016;134:A13416–A. [Google Scholar]

- 16.Patel S, McGinnis RS, Silva I, et al. A wearable computing platform for developing cloud-based machine learning models for health monitoring applications. 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2016 16-Aug;2016:p. 5997–6001. doi: 10.1109/EMBC.2016.7592095. [DOI] [PubMed] [Google Scholar]

- 17.McGinnis RS, DiCristofaro S, Mahadevan N, et al. Longitudinal Posture and Activity Tracking in the Home Enabled by Machine Learning and a Conformal, Wearable Sensor System. Summer Biomechanics, Bioengineering, and Biotransport Conference (SB3C2017) Tucson, AZ, USA2017 [Google Scholar]

- 18.McGinnis RS, Mahadevan N, Moon Y, Seagers K, Sheth N, Wright JA, Jr, et al. A machine learning approach for gait speed estimation using skin-mounted wearable sensors: from healthy controls to individuals with multiple sclerosis. PLoS One. 2017 Jun;12((6)):e0178366. doi: 10.1371/journal.pone.0178366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Pan J, Tompkins WJ. A real-time QRS detection algorithm. IEEE Trans Biomed Eng. 1985 Mar;32(3):230–6. doi: 10.1109/TBME.1985.325532.

- 20. Chan AM, Selvaraj N, Ferdosi N, Narasimhan R. Wireless patch sensor for remote monitoring of heart rate, respiration, activity, and falls. Conf Proc IEEE Eng Med Biol Soc. 2013;2013:6115–6118. doi: 10.1109/EMBC.2013.6610948.

- 21. Brooke J. SUS: A quick and dirty usability scale. In: Usability Evaluation in Industry. London: Taylor and Francis; 1996.

- 22. Clifford GD, Azuaje F, McSharry P. Advanced Methods and Tools for ECG Data Analysis. Artech House, Inc; 2006.

- 23. Bates A, Ling MJ, Mann J, Arvind DK. Respiratory Rate and Flow Waveform Estimation from Tri-axial Accelerometer Data. International Conference on Body Sensor Networks. 2010. p. 144–150.

- 24. Cole RJ, Kripke DF, Gruen W, Mullaney DJ, Gillin JC. Automatic sleep/wake identification from wrist activity. Sleep. 1992 Oct;15(5):461–9. doi: 10.1093/sleep/15.5.461.

- 25. Jean-Louis G, Kripke DF, Cole RJ, Assmus JD, Langer RD. Sleep detection with an accelerometer actigraph: comparisons with polysomnography. Physiol Behav. 2001 Jan;72(1-2):21–8. doi: 10.1016/s0031-9384(00)00355-3.

- 26. Webster JB, Kripke DF, Messin S, Mullaney DJ, Wyborney G. An activity-based sleep monitor system for ambulatory use. Sleep. 1982;5(4):389–99. doi: 10.1093/sleep/5.4.389.

- 27. Bangor A, Kortum P, Miller J. Determining What Individual SUS Scores Mean: Adding an Adjective Rating Scale. J Usability Stud. 2009;4:114–23.

- 28. Boroojerdi B, Claes K, Ghaffari R, et al. Clinical feasibility of a wearable, conformable, sensor patch to monitor motor symptoms in Parkinson's disease. 21st International Congress of Parkinson's Disease and Movement Disorders; 2017; Vancouver, BC, Canada. International Parkinson and Movement Disorder Society (MDS).

- 29. Sun R, Moon Y, McGinnis RS, Seagers K, Motl RW, Sheth N, et al. Assessment of Postural Sway in Individuals with Multiple Sclerosis Using a Novel Wearable Inertial Sensor. Digit Biomark. 2018;2(1):1–10. doi: 10.1159/000485958.

- 30. Kabir MM, Perez-Alday EA, Thomas J, Sedaghat G, Tereshchenko LG. Optimal configuration of adhesive ECG patches suitable for long-term monitoring of a vectorcardiogram. J Electrocardiol. 2017 May-Jun;50(3):342–8. doi: 10.1016/j.jelectrocard.2016.12.005.

- 31. Lonini L, Shawen N, Ghaffari R, Rogers J, Jayaraman A. Automatic detection of spasticity from flexible wearable sensors. In: Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers; 2017; Maui, Hawaii. ACM; p. 133–6.

- 32. Bonato P. Wearable sensors/systems and their impact on biomedical engineering. IEEE Eng Med Biol Mag. 2003 May-Jun;22(3):18–20. doi: 10.1109/memb.2003.1213622.

- 33. Pantelopoulos A, Bourbakis NG. A Survey on Wearable Sensor-Based Systems for Health Monitoring and Prognosis. IEEE Trans Syst Man Cybern C. 2010;40(1):1–12.

- 34. Dorsey ER, Topol EJ. State of Telehealth. N Engl J Med. 2016 Jul;375(2):154–61. doi: 10.1056/NEJMra1601705.

- 35. Insights on Digital Health Technology Survey 2016: How Digital Health Devices and Data Impact Clinical Trials [e-book]. Validic; 2016.

Supplementary Materials

Supplementary data