Abstract

Comparison of graph structure is a ubiquitous task in data analysis and machine learning, with diverse applications in fields such as neuroscience, cyber security, social network analysis, and bioinformatics, among others. Discovery and comparison of structures such as modular communities, rich clubs, hubs, and trees yield insight into the generative mechanisms and functional properties of the graph. Often, two graphs are compared via a pairwise distance measure, with a small distance indicating structural similarity and vice versa. Common choices include spectral distances and distances based on node affinities. However, there has as yet been no comparative study of the efficacy of these distance measures in discerning between common graph topologies at different structural scales. In this work, we compare commonly used graph metrics and distance measures, and demonstrate their ability to discern between common topological features found in both random graph models and real-world networks. We put forward a multi-scale picture of graph structure wherein we study the effect of global and local structures on changes in distance measures. We make recommendations on the applicability of different distance measures to the analysis of empirical graph data based on this multi-scale view. Finally, we introduce the Python library NetComp that implements the graph distances used in this work.

1 Introduction

In the era of big data, comparison and matching are ubiquitous tasks. A graph is a particular type of data structure that records the interactions between some collection of agents. These objects are sometimes referred to as “complex networks”; we use the mathematician’s term “graph” throughout the paper. This type of data structure encodes the connections between objects, rather than directly recording the properties of those objects. The interconnectedness of the objects in graph data precludes many common statistical techniques used to analyze tabular datasets. The need for new analytical techniques for visualizing, comparing, and understanding graph data has given rise to a rich field of study [1–3].

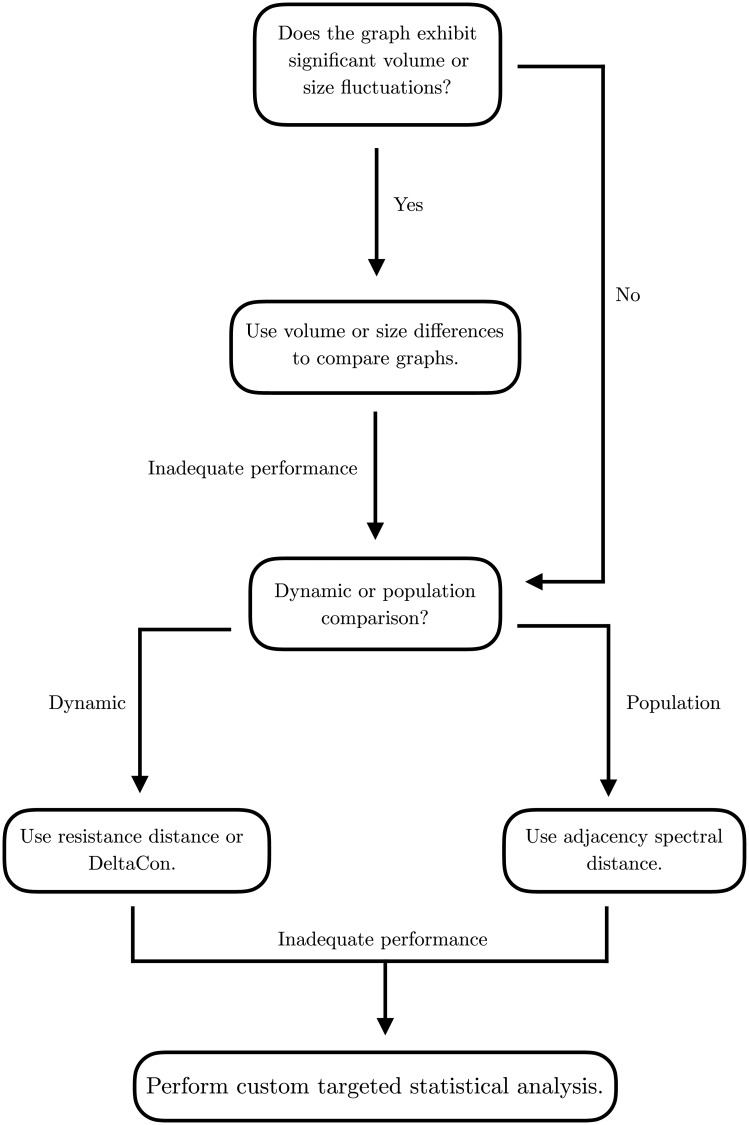

In this work, we focus on tools for pairwise comparison of graphs. Examples of applications include the two-sample test problem and the change-point detection problem. In the former, we compare two populations of graphs using a distance statistic, and we test experimentally whether both populations could have been generated by the same probability distribution. In the latter, we consider a dynamic network formed by a time series of graphs, and the goal is to detect significant changes between adjacent time steps using a distance [4]. Both problems require the ability to compare two graphs effectively. However, the utility of any given comparison method varies with the type of information the user is looking for: one may care primarily about large-scale graph features, such as community structure or the existence of highly connected “hubs”, or one may be focused on smaller-scale structure, such as local connectivity (i.e., the degree of a vertex) or the prevalence of substructures such as triangles.

Existing surveys of graph distances are limited to observational datasets (e.g., [5] and references therein). While authors try to choose datasets that are exemplars of certain classes of networks (e.g., social, biological, or computer networks), it is difficult to generalize these studies to other datasets.

In this paper, we take a different approach. We consider existing ensembles of random graphs as prototypical examples of certain graph structures, which are the building blocks of existing real-world networks. We therefore propose to study the ability of various distances to compare two samples randomly drawn from distinct ensembles of graphs. Our investigation is concerned with the relationship between the families of graph ensembles, the structural features characteristic of these ensembles, and the sensitivity of the distances to these characteristic structural features.

Of the myriad techniques proposed for graph comparison [6], far fewer remain once one imposes the practical requirement that the algorithm run in a reasonable amount of time on large graphs. Graph data frequently consist of $10^4$ to $10^8$ vertices, so algorithms whose complexity scales quadratically with the size of the graph quickly become infeasible. In this work, we restrict our attention to approaches whose calculation time scales linearly or near-linearly with the number of vertices for sparse graphs. We recall that a graph is sparse if the number of edges grows linearly (up to a logarithmic factor) with the number of nodes.

In the past 40 years, many random graph models have been developed that emulate certain features found in real-world graphs [7, 8]. A rigorous probabilistic study of the application of graph distances to these random models is difficult because the models are often defined in terms of a generative process rather than a distribution over the space of possible graphs. As such, researchers often restrict their attention to very small, deterministic graphs (see e.g., [9]) or to very simple random models, such as that proposed by Erdős and Rényi [10]. Even in these simple cases, rigorous probabilistic analysis can be prohibitively difficult. We propose instead a numerical approach where we sample from random graph distributions and observe the empirical performance of various distance measures.

Throughout the work, we examine the observed results through a lens of global versus local graph structure. Examples of global structure include community structure and the existence of well-connected vertices (often referred to as “hubs”). Examples of local structure include the median degree in the graph, or the density of substructures such as triangles. Our results demonstrate that some distances are particularly tuned towards observing global structure, while others naturally observe multiple scales. In both empirical and numerical experiments, we use this multi-scale interpretation to understand why the distances perform the way they do on a given model, or on given empirical graph data.

The paper is structured as follows: in Section 2.2, we introduce the distances used, and establish the state of knowledge regarding each. In Section 2.4, we describe the random graph ensembles that are used to evaluate the various distances. We discuss their structural features, and their respective value as prototypical models for real networks. In Section 2.5, we describe three real networks that we use to further study the performance of the distances. The reader who is already familiar with the graph models and distances discussed can skip to Section 2.6 to find the description of the contrast statistic that we use to compare graph populations. Experimental results are briefly described in Sections 3.1 and 3.2, and a detailed discussion is provided in Sections 4.1 and 4.2. Finally, the Conclusion section summarizes the work and our recommendations. In Section 7, we introduce and discuss NetComp, the Python package that implements the distances used to compare the graphs throughout the paper.

2 Methods

2.1 Notation

We must first introduce the notation used throughout the paper. It is standard wherever possible.

We denote by G = (V, E, W) a graph with vertex set V = {1, …, n} and edge set E ⊆ V × V. The function $W: E \to \mathbb{R}_+$ assigns to each edge (i, j) ∈ E a positive weight that we denote $w_{i,j}$. We call n = |V| the size of the graph, and denote by $m = |E|$ the number of edges. For i ∈ V and j ∈ V, we say i ∼ j if (i, j) ∈ E. The matrix A, called the adjacency matrix, is defined as

$$A_{i,j} = \begin{cases} w_{i,j} & \text{if } i \sim j, \\ 0 & \text{otherwise.} \end{cases}$$

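The degree $d_i$ of a vertex i is defined as $d_i = \sum_{j \sim i} w_{i,j}$. The degree matrix D is the diagonal matrix of degrees, so $D_{i,i} = d_i$ and $D_{i,j} = 0$ for i ≠ j. The combinatorial Laplacian matrix (or just Laplacian) of G is given by $L = D - A$. The normalized Laplacian is defined as $\mathcal{L} = D^{-1/2} L D^{-1/2}$, where the diagonal matrix $D^{-1/2}$ is given by

$$\left(D^{-1/2}\right)_{i,i} = \begin{cases} 1/\sqrt{d_i} & \text{if } d_i > 0, \\ 0 & \text{otherwise.} \end{cases}$$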

We refer to A, L, and $\mathcal{L}$ as matrix representations of G. These are not the only useful matrix representations of a graph, although they are among the most common. For a more diverse catalog of representations, see [11].
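As a concrete illustration of these definitions, the following minimal sketch (assuming NumPy, an undirected graph, and 0-based vertex labels; the helper name `matrix_representations` is hypothetical and not part of NetComp) assembles A, D, L, and the normalized Laplacian from a weighted edge list. Isolated vertices are given a zero entry in $D^{-1/2}$, an assumed convention that makes the corresponding row of the normalized Laplacian vanish.

```python
import numpy as np


def matrix_representations(n, weighted_edges):
    """Return (A, D, L, L_norm) for an undirected weighted graph.

    weighted_edges is an iterable of (i, j, w) triples with 0-based labels.
    """
    A = np.zeros((n, n))
    for i, j, w in weighted_edges:
        A[i, j] = A[j, i] = w              # undirected: symmetric adjacency
    degrees = A.sum(axis=1)                # d_i = sum of incident edge weights
    D = np.diag(degrees)
    L = D - A                              # combinatorial Laplacian
    # D^{-1/2}, with zero entries for isolated vertices (assumed convention)
    inv_sqrt = np.zeros(n)
    nonzero = degrees > 0
    inv_sqrt[nonzero] = 1.0 / np.sqrt(degrees[nonzero])
    D_inv_sqrt = np.diag(inv_sqrt)
    L_norm = D_inv_sqrt @ L @ D_inv_sqrt   # normalized Laplacian
    return A, D, L, L_norm


# A weighted triangle on vertices 0, 1, 2 plus the isolated vertex 3.
A, D, L, L_norm = matrix_representations(4, [(0, 1, 1.0), (1, 2, 2.0), (0, 2, 0.5)])
print(np.round(np.linalg.eigvalsh(L_norm), 3))
```

Dense matrices keep the sketch short; for the large sparse graphs targeted in this paper, one would store these matrices in a sparse format instead.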

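The spectrum of a matrix is the sorted sequence of its eigenvalues. Whether the sequence is ascending or descending depends on the matrix in question. We denote the kth eigenvalue of the adjacency matrix by $\lambda_k^A$, where we order the eigenvalues in descending order,

$$\lambda_1^A \geq \lambda_2^A \geq \cdots \geq \lambda_n^A. \tag{1}$$

We denote by $\lambda_k^L$ the kth eigenvalue of the Laplacian matrix, and we order these eigenvalues in ascending order, so that

$$\lambda_1^L \leq \lambda_2^L \leq \cdots \leq \lambda_n^L. \tag{2}$$

We similarly denote the kth eigenvalue of the normalized Laplacian by $\lambda_k^{\mathcal{L}}$, with

$$\lambda_1^{\mathcal{L}} \leq \lambda_2^{\mathcal{L}} \leq \cdots \leq \lambda_n^{\mathcal{L}}, \tag{3}$$

and we denote by $\phi_k$ the corresponding eigenvector.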

The significance of this convention is that the index k of an eigenvalue $\lambda_k$ always encodes the frequency of the corresponding eigenvector. To wit, the eigenvector associated with either $\lambda_k^A$ or $\lambda_k^L$ experiences about k oscillations on the graph, and has at most k nodal domains [12].
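The link between the index k and the frequency of the eigenvector can be checked numerically on a path graph, where the Laplacian eigenvectors are discrete cosines. In the minimal sketch below (NumPy assumed), the eigenvector associated with $\lambda_k^L$, counting k from 1, changes sign exactly k − 1 times along the path. We use 8 vertices because, for that size, no eigenvector has an entry that is exactly zero, which keeps the sign-change count unambiguous.

```python
import numpy as np

n = 8
# Adjacency and Laplacian of the path graph 0 - 1 - ... - (n - 1)
A = np.zeros((n, n))
for j in range(n - 1):
    A[j, j + 1] = A[j + 1, j] = 1.0
L = np.diag(A.sum(axis=1)) - A

# eigh returns eigenvalues in ascending order, matching the Laplacian convention
eigvals, eigvecs = np.linalg.eigh(L)


def sign_changes(vec):
    """Number of consecutive vertex pairs along the path where vec changes sign."""
    s = np.sign(vec)
    return int(np.sum(s[:-1] * s[1:] < 0))


changes = [sign_changes(eigvecs[:, k]) for k in range(n)]
print(changes)   # [0, 1, 2, 3, 4, 5, 6, 7]
```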

Two graphs G and G′ are isomorphic if and only if there exists a bijection between their vertex sets under which the two edge sets are equal; we write G ≅ G′. If we denote by A and A′ the adjacency matrices of G and G′ respectively, then G ≅ G′ if and only if there exists a permutation matrix P such that $A' = P^T A P$.

We say that a distance d requires node correspondence when there exist graphs G, G′, and H such that G ≅ G′ but d(G, H) ≠ d(G′, H). Intuitively, a distance requires node correspondence when one must know some meaningful mapping between the vertex sets of the graphs under comparison.
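The definition can be illustrated numerically. The minimal sketch below (NumPy assumed; all function names are hypothetical) relabels the vertices of a random graph G with a permutation matrix P, producing an isomorphic copy with adjacency matrix $P^T A P$. A distance computed from the sorted adjacency eigenvalues gives the same value against a third graph H for both copies, whereas the entry-wise difference of adjacency matrices generally does not, because it depends on how the vertices are labelled.

```python
import numpy as np

rng = np.random.default_rng(0)


def random_graph(n, p, rng):
    """Symmetric 0/1 adjacency matrix of a G(n, p) sample without self-loops."""
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(float)


def adjacency_spectral_distance(A1, A2):
    """l2 distance between adjacency spectra (no node correspondence needed)."""
    l1 = np.linalg.eigvalsh(A1)[::-1]   # descending order
    l2 = np.linalg.eigvalsh(A2)[::-1]
    return np.linalg.norm(l1 - l2)


def entrywise_distance(A1, A2):
    """Entry-wise l1 difference of adjacency matrices (depends on labelling)."""
    return np.abs(A1 - A2).sum()


n = 20
G = random_graph(n, 0.3, rng)
H = random_graph(n, 0.3, rng)
P = np.eye(n)[rng.permutation(n)]   # random permutation matrix
G_relabelled = P.T @ G @ P          # isomorphic copy of G

print(adjacency_spectral_distance(G, H), adjacency_spectral_distance(G_relabelled, H))
print(entrywise_distance(G, H), entrywise_distance(G_relabelled, H))
```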

2.2 Graph distance measures

Let us begin by introducing the distances that we study in this paper, and discussing the state of the knowledge for each. We have chosen both standard and cutting-edge distances, with the requirement that the algorithms be computable in a reasonable amount of time on large, sparse graphs. In practice, this means that the distances must scale linearly or near-linearly in the size of the graph.

We refer to these tools as “distance measures,” as many of them do not satisfy the technical requirements of a metric. Although all are symmetric, they may fail one or more of the other requirements of a mathematical metric. This can be very problematic if one hopes to perform rigorous analysis on these distances, but in practice it is not significant. Consider the requirement of identity of indiscernibles, under which d(G, G′) = 0 if and only if G = G′. We rarely encounter two graphs where d(G, G′) = 0; we are more frequently concerned with an approximate form of this statement, in which we wish to deduce that G is similar to G′ from the fact that d(G, G′) is small.

The distance measures we study divide naturally into two categories, which we now describe. These categories are not exhaustive; many distance measures (including one we employ in the experiments) do not fit neatly into either category. Akoglu et al. [6], whose focus is anomaly detection, provide an alternative taxonomy; our taxonomy refines a particular group of methods they refer to as “feature-based”.

2.2.1 Spectral distances

Let us first discuss spectral distances. We briefly review the necessary background; see [11] for a good introduction to spectral methods used in graph comparison.

We first define the adjacency spectral distance; the Laplacian and normalized Laplacian spectral distances are defined similarly. Let G and G′ be graphs of size n, with adjacency spectra $\lambda^A$ and $\lambda^{A'}$, respectively. The adjacency spectral distance between the two graphs is defined as

$$d_A(G, G') = \sqrt{\sum_{i=1}^{n} \left(\lambda_i^A - \lambda_i^{A'}\right)^2},$$

which is just the distance between the two spectra in the ℓ2 metric. We could use any ℓp metric here, for p ∈ [0, ∞]. The choice of p is informed by how much one wishes to emphasize outliers: in the limiting case p = 0, the metric returns the measure of the set over which the two vectors differ, and when p = ∞ only the largest element-wise difference between the two vectors is returned. Note that for 0 < p < 1 the ℓp distances are not true metrics (in particular, they fail the triangle inequality), but they may still provide valuable information. For a more detailed discussion of ℓp norms, see [13].

The Laplacian and normalized Laplacian spectral distances $d_L$ and $d_{\mathcal{L}}$ are defined in exactly the same way. In general, one can define a spectral distance for any matrix representation of a graph; for results on more than just the three we analyze here, see [11]. We note that spectral distances do not require node correspondence.
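A dense sketch of the three spectral distances follows, assuming NumPy and graphs of equal size given as symmetric adjacency matrices; the names `spectrum` and `spectral_distance` are illustrative, not NetComp's API. The adjacency spectrum is sorted in descending order and the Laplacian spectra in ascending order, and the ℓp norm is delegated to `np.linalg.norm`, whose `ord` argument accepts any p, including `np.inf`.

```python
import numpy as np


def spectrum(A, kind="adjacency"):
    """Sorted spectrum of a matrix representation of the graph with adjacency A.

    Adjacency eigenvalues are returned in descending order; Laplacian and
    normalized Laplacian eigenvalues in ascending order.
    """
    if kind == "adjacency":
        return np.linalg.eigvalsh(A)[::-1]
    degrees = A.sum(axis=1)
    L = np.diag(degrees) - A
    if kind == "laplacian":
        return np.linalg.eigvalsh(L)
    if kind == "normalized":
        inv_sqrt = np.zeros(A.shape[0])              # zero for isolated vertices
        inv_sqrt[degrees > 0] = degrees[degrees > 0] ** -0.5
        return np.linalg.eigvalsh(inv_sqrt[:, None] * L * inv_sqrt[None, :])
    raise ValueError(f"unknown kind: {kind!r}")


def spectral_distance(A1, A2, kind="adjacency", p=2):
    """l_p distance between the spectra of two graphs of the same size."""
    return np.linalg.norm(spectrum(A1, kind) - spectrum(A2, kind), ord=p)


triangle = np.ones((3, 3)) - np.eye(3)
path = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
for kind in ("adjacency", "laplacian", "normalized"):
    print(kind, spectral_distance(triangle, path, kind))
```

With `ord=0`, `np.linalg.norm` counts the entries that are exactly nonzero, so in floating point a tolerance on the differences would usually be needed before using p = 0.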

An important property of the normalized Laplacian spectral distance is that it can be used to compare graphs of different sizes (see e.g., [14]).

In practice, it is often the case that only the first k eigenvalues are compared, where k ≪ n. We still refer to such truncated spectral distances as spectral distances. When using spectral distances, it is important to keep in mind that the adjacency spectral distance compares the largest k eigenvalues, whereas the Laplacian spectral distances compare the smallest k eigenvalues. Comparison using the first k eigenvalues for small k allows one to focus on the community structure of the graph while ignoring its local structure [15]. Including eigenvalues of higher index (larger k) allows one to discern local as well as global features. This flexibility allows the user to target the particular scale at which she wishes to study the graph, and is a significant advantage of the spectral distances.
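For large sparse graphs one would not compute the full spectrum. A minimal sketch of the truncated variant, assuming SciPy's Lanczos-based `scipy.sparse.linalg.eigsh`, requests only the k largest adjacency eigenvalues (`which="LA"`) or the k smallest Laplacian eigenvalues (`which="SA"`); the function names are hypothetical. For very large graphs, other solver settings (for example shift-invert mode for the smallest eigenvalues) may converge faster; the simpler call is kept here.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.csgraph import laplacian
from scipy.sparse.linalg import eigsh


def first_k_eigenvalues(A, k, kind="adjacency"):
    """The k largest adjacency eigenvalues (descending) or the k smallest
    Laplacian eigenvalues (ascending) of a sparse symmetric graph."""
    A = sp.csr_matrix(A, dtype=float)
    if kind == "adjacency":
        vals = eigsh(A, k=k, which="LA", return_eigenvectors=False)
        return np.sort(vals)[::-1]
    vals = eigsh(laplacian(A), k=k, which="SA", return_eigenvectors=False)
    return np.sort(vals)


def truncated_spectral_distance(A1, A2, k, kind="adjacency"):
    """l2 distance between the first k eigenvalues of two graphs."""
    return np.linalg.norm(first_k_eigenvalues(A1, k, kind) - first_k_eigenvalues(A2, k, kind))


rng = np.random.default_rng(1)


def random_adjacency(n, p):
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(float)


A1, A2 = random_adjacency(40, 0.3), random_adjacency(40, 0.3)
print(truncated_spectral_distance(A1, A2, k=5))
print(truncated_spectral_distance(A1, A2, k=5, kind="laplacian"))
```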

The three spectral distances used here are not true metrics, because there exist graphs G and G′ that are cospectral but not isomorphic. That is to say, adjacency cospectrality occurs when $\lambda_i^A = \lambda_i^{A'}$ for all i = 1, …, n, so that $d_A(G, G') = 0$, even though G ≇ G′. Similar notions of cospectrality exist for all matrix representations; graphs that are cospectral with respect to one matrix representation are not necessarily cospectral with respect to other representations.
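A classical example makes this concrete: the star $K_{1,4}$ and the disjoint union of the 4-cycle with an isolated vertex are not isomorphic (only the first is connected), yet both have adjacency spectrum {2, 0, 0, 0, −2}. Their Laplacian spectra, {0, 1, 1, 1, 5} and {0, 0, 2, 2, 4}, do differ, illustrating that cospectrality depends on the matrix representation. The check below is a minimal sketch assuming NumPy.

```python
import numpy as np

# Star K_{1,4}: vertex 0 joined to vertices 1..4.
star = np.zeros((5, 5))
star[0, 1:] = star[1:, 0] = 1.0

# 4-cycle on vertices 0..3 plus the isolated vertex 4.
cycle_plus_vertex = np.zeros((5, 5))
for i in range(4):
    j = (i + 1) % 4
    cycle_plus_vertex[i, j] = cycle_plus_vertex[j, i] = 1.0


def adjacency_spectrum(A):
    return np.linalg.eigvalsh(A)[::-1]                      # descending


def laplacian_spectrum(A):
    return np.linalg.eigvalsh(np.diag(A.sum(axis=1)) - A)   # ascending


print(np.round(adjacency_spectrum(star), 6), np.round(adjacency_spectrum(cycle_plus_vertex), 6))
print(np.round(laplacian_spectrum(star), 6), np.round(laplacian_spectrum(cycle_plus_vertex), 6))
```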

Little is known about cospectrality, save for some computational results on small graphs [16] and trees [11]. Schwenk proved that a sufficiently large tree nearly always has a cospectral counterpart [17]. This result was recently extended to include a wide variety of random trees [18]. However, results such as these are not of great import to us; the graphs examined are large enough that we do not encounter cospectrality in our numerical experiments. A more troubling failure mode of the spectral distances would be when the distance between two graphs is very small, but the two graphs have important topological distinctions. In the Discussion (Section 4), we provide further insight into the effect of topological changes on the spectra of some of the random graph models we study.

The consideration above raises the question of how local changes affect the overall spectral properties of a graph. Some limited computational studies have been done in this direction. For example, Farkas et al. [19] study the transition of the adjacency spectrum of a small-world graph as the disorder parameter increases. As one might expect, the authors in [19] observe the spectral density transition from a highly discontinuous density (which occurs when the disorder is zero and the graph is a ring-like lattice) to Wigner’s famous semicircular shape [20] (which occurs when the disorder is maximized, so that the graph is roughly equivalent to an uncorrelated random graph).

From an analytical standpoint, certain results in random matrix theory inform our understanding of fluctuations of eigenvalues of the uncorrelated random graph (see Section 2.4 for a definition). These results hold asymptotically as we consider the kth eigenvalue of a graph of size n, where k = αn for α ∈ (0, 1]. In this case, O’Rourke [21] has shown that the eigenvalue λk is asymptotically normal with asymptotic variance $\sigma^2(\lambda_k) = C(\alpha) \log n / n$. An expression for the constant C(α) is provided; see Remark 8 in [21] for the detailed statement of the theorem. This result can provide a heuristic for spectral fluctuations in some random graphs, but when the structure of these graphs diverges significantly from that of the uncorrelated random graph, results such as these become less informative.

Another common question is that of interpretation of the spectrum of a given matrix representation of a graph. How are we to understand the shape of the empirical distribution of eigenvalues? Specifically, one might study the overall shape of the spectral density, or the value of individual eigenvalues separated from the bulk. Can we interpret the eigenvalues that separate from this bulk in a meaningful way? The answer to this question depends, of course, on the matrix representation in question. Let us focus first on the Laplacian matrix L, whose interpretation is the clearest.

The first eigenvalue of L is always $\lambda_1^L = 0$, with the eigenvector being the vector of all ones, $\mathbf{1} = (1, \ldots, 1)^T$. It is a well-known result that the multiplicity of the zero eigenvalue is the number of connected components of the graph, i.e., if $\lambda_1^L = \cdots = \lambda_k^L = 0$ and $\lambda_{k+1}^L > 0$, then there are precisely k connected components of the graph [22]. Furthermore, in such a case, the first k eigenvectors can be chosen to be the indicator functions of the components. There exists a relaxed version of this result: if the first k eigenvalues are very small (in a sense properly defined), then the graph can be strongly partitioned into k clusters (see [15] for the rigorous formulation of the result). This result justifies the use of the Laplacian in spectral clustering algorithms, and can help us understand the interplay between the presence of small eigenvalues and the presence of communities in the ensembles of random graphs studied in Section 3.1.1.
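The relationship between the zero eigenvalue and connectivity is easy to verify numerically. The minimal sketch below (NumPy and SciPy assumed; `zero_eigenvalue_multiplicity` is a hypothetical helper) counts Laplacian eigenvalues below a small tolerance, since in floating point the zero eigenvalues are only approximately zero, and compares the count with `scipy.sparse.csgraph.connected_components`.

```python
import numpy as np
from scipy.sparse.csgraph import connected_components


def zero_eigenvalue_multiplicity(A, tol=1e-9):
    """Number of Laplacian eigenvalues below tol (numerically zero)."""
    L = np.diag(A.sum(axis=1)) - A
    return int(np.sum(np.linalg.eigvalsh(L) < tol))


# Three components: a triangle {0, 1, 2}, an edge {3, 4} and an isolated vertex 5.
A = np.zeros((6, 6))
for i, j in [(0, 1), (1, 2), (0, 2), (3, 4)]:
    A[i, j] = A[j, i] = 1.0

n_components, labels = connected_components(A, directed=False)
print(zero_eigenvalue_multiplicity(A), n_components)   # 3 3
```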

The eigenvalues of the Laplacian can be interpreted as vibrational frequencies in a manner similar to the eigenvalues of the continuous Laplacian operator ∇². To understand this analogy, consider the graph as embedded in a plane, with each vertex representing an oscillator of mass one and each edge a spring with elasticity one. Then, for small oscillations perpendicular to the plane, the Laplacian matrix is precisely the coupling matrix for this system, and the eigenvalues give the squares of the normal mode frequencies, $\lambda_k^L = \omega_k^2$. For a more thorough discussion of this interpretation of the Laplacian, see [23].

Maas [24] suggests a similar interpretation of the spectrum of the adjacency matrix A. Consider the graph as a network of oscillators, embedded in a plane as previously discussed. Additionally, suppose that each vertex is connected (by edges with elasticity one) to enough external, fixed points that the graph becomes regular with degree d. The frequencies of the normal modes of this structure are then related to the eigenvalues of A via $\omega_k^2 = d - \lambda_k^A$. If the graph is already regular with degree d, then this interpretation is consistent with the previous one, since the eigenvalues of L = dI − A are just $\lambda_k^L = d - \lambda_k^A$.
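For a d-regular graph the two interpretations line up index by index: since L = dI − A, sorting the adjacency eigenvalues in descending order and the Laplacian eigenvalues in ascending order gives $\lambda_k^L = d - \lambda_k^A$ for every k, and the normal-mode frequencies are $\omega_k = \sqrt{\lambda_k^L}$. The minimal sketch below checks this on the cycle $C_7$, assuming NumPy.

```python
import numpy as np

n, d = 7, 2
# Cycle graph C_7, which is 2-regular.
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0
L = d * np.eye(n) - A          # equals D - A because every degree is d

adj_desc = np.linalg.eigvalsh(A)[::-1]        # lambda_1^A >= ... >= lambda_n^A
lap_asc = np.linalg.eigvalsh(L)               # lambda_1^L <= ... <= lambda_n^L
omega = np.sqrt(np.clip(lap_asc, 0.0, None))  # normal-mode frequencies

print(np.allclose(lap_asc, d - adj_desc))
```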

2.2.2 Matrix distances

The second class of distances we discuss, called matrix distances, consists of direct comparison of the structure of pairwise affinities between vertices in a graph (see [9] for a detailed discussion of matrix distances). These affinities are frequently organized into matrices, and the matrices can then be compared, often via an entry-wise ℓp norm. Matrix distances all require node correspondence.

We have discussed spectral methods for measuring distances between two graphs; to introduce the matrix distances, we begin by focusing on methods for measuring distances on a graph, that is to say, the distance δ(v, w) between two vertices v, w ∈ V. Examples of such distances include the shortest path distance [25], the effective graph resistance [26], and variations on random-walk distances [27]. Of those listed above, the shortest path distance is the oldest and the most thoroughly studied; in fact, it is so ubiquitous that “graph distance” is frequently used synonymously with shortest path distance [28].

There are important differences between the distances δ that we might choose. The shortest path distance considers only a single path between two vertices. In comparison, the effective graph resistance takes into account all possible paths between the vertices, and so measures not only the length, but the robustness of the communication between the vertices.
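A small example shows the difference. Between the endpoints of a path on three vertices, and between opposite corners of a 4-cycle, the shortest path distance is 2 in both cases; but the 4-cycle offers two independent routes, so its effective resistance is halved: 2 for the path versus 1 for the cycle. The minimal sketch below assumes NumPy and SciPy and computes resistances from the Moore-Penrose pseudoinverse $L^+$ of the Laplacian, using the standard identity $R(u, v) = L^+_{u,u} + L^+_{v,v} - 2L^+_{u,v}$ for connected graphs; the helper names are hypothetical.

```python
import numpy as np
from scipy.sparse.csgraph import shortest_path


def effective_resistance(A):
    """Effective resistances of a connected graph: R[u, v] = L+[u,u] + L+[v,v] - 2 L+[u,v]."""
    L = np.diag(A.sum(axis=1)) - A
    L_pinv = np.linalg.pinv(L)
    d = np.diag(L_pinv)
    return d[:, None] + d[None, :] - 2 * L_pinv


def graph_from_edges(n, edges):
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    return A


path = graph_from_edges(3, [(0, 1), (1, 2)])                     # one route from 0 to 2
square = graph_from_edges(4, [(0, 1), (1, 2), (2, 3), (3, 0)])   # two routes from 0 to 2

for name, A in [("path", path), ("square", square)]:
    hops = shortest_path(A, directed=False, unweighted=True)[0, 2]
    print(name, hops, round(effective_resistance(A)[0, 2], 6))
```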

How do these distances on a graph help us compute distances between graphs? Let us denote by $\delta: V \times V \to \mathbb{R}$ a generic distance on a graph. We need assume very little about this function besides it being real-valued; in particular, it need not be symmetric, and we can even allow δ(v, v) ≠ 0. When we say “distance” we implicitly assume that smaller values imply greater similarity; however, we can also carry out this approach with a “similarity score”, in which larger values imply greater similarity. Recalling that the vertices v ∈ V = {1, …, n} are labelled with natural numbers, we can then construct a matrix of pairwise distances M via $M_{v,w} = \delta(v, w)$. The idea behind what we refer to as matrix distances is that this matrix M carries important structural information about the graph.

Consider two graphs G = (V, E) and G′ = (V, E′) defined on the same vertex set. Given a graph distance δ(⋅, ⋅), let M and M′ be the matrices of pairwise distances between vertices in the graphs G and G′ respectively. We define the distance d induced by δ between G and G′ as follows,

$$d(G, G') = \left\| M - M' \right\|, \tag{4}$$

where ‖ ⋅ ‖ is a norm we are free to choose. In principle, we could use metrics, or even similarity functions here, at the risk of the function d losing some desirable properties.

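Let us elucidate a specific example of such a distance; in particular, we show how the edit distance conforms to this description. Let δ be defined as

$$\delta(i, j) = \begin{cases} w_{i,j} & \text{if } i \sim j, \\ 0 & \text{otherwise.} \end{cases} \tag{5}$$

Then the matrix M is just the adjacency matrix A. If we use the entry-wise ℓ1 norm

$$\|M\| = \sum_{i,j=1}^{n} \left|M_{i,j}\right|, \tag{6}$$

then we call the resulting distance the edit distance.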
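A minimal sketch of this construction, assuming NumPy and dense adjacency matrices on a common vertex set (the function names are hypothetical): the matrix distance is the entry-wise ℓ1 norm of the difference, and the edit distance is that norm applied to the adjacency matrices. For an undirected graph each added or removed edge appears twice in the symmetric matrix, so a single edge change contributes 2.

```python
import numpy as np


def matrix_distance(M1, M2):
    """Entry-wise l1 norm of M1 - M2."""
    return np.abs(M1 - M2).sum()


def edit_distance(A1, A2):
    # With delta(i, j) equal to the edge weight (0 if no edge), M is the adjacency matrix.
    return matrix_distance(A1, A2)


# Path 0 - 1 - 2 - 3, and the same graph with the extra edge (0, 3).
A1 = np.zeros((4, 4))
for i, j in [(0, 1), (1, 2), (2, 3)]:
    A1[i, j] = A1[j, i] = 1.0
A2 = A1.copy()
A2[0, 3] = A2[3, 0] = 1.0

print(edit_distance(A1, A2))   # 2.0: the added edge appears at (0, 3) and (3, 0)
```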

Of course, the usefulness of such a distance is directly dependent on how well the matrix M reflects the topological structure of the graph. The edit distance focuses by definition on local structure; it can only see changes at the level of edge perturbations. If significant volume changes are happening in the graph, then the edit distance detects these changes, as do other matrix distances.

To compensate for such trivial first-order changes (changes in volume), we match the expected volume of the models under comparison (see Section 3.1). We can then study whether the distances can detect structural changes.

We also implement the resistance-perturbation distance, first discussed in [9]. This distance takes the effective graph resistance R(u, v), defined in [26], as the measure of vertex affinity, which results in a (symmetric) matrix of pairwise resistances R for each graph. The resistance-perturbation distance (or just resistance distance) compares the matrices R and R′ of the two graphs in the entry-wise ℓ1 norm given in Eq (6).
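The resistance distance can be sketched in the same way, assuming NumPy, connected graphs on a common vertex set, and resistances obtained from the Laplacian pseudoinverse; the function names are hypothetical, and for large graphs one would use the approximate algorithms mentioned in Section 2.3 rather than a dense pseudoinverse. Comparing the path 0 − 1 − 2 − 3 with the 4-cycle obtained by adding the edge (0, 3), the resistances change by 0.25 on each of the three shared edges, by 2.25 between vertices 0 and 3, and by 1 on each of the two remaining pairs: a total of 5 over unordered pairs, or 10 when, as in Eq (6), the sum runs over all ordered pairs.

```python
import numpy as np


def resistance_matrix(A):
    """Effective resistances R[u, v] of a connected graph, from the pseudoinverse of L."""
    L = np.diag(A.sum(axis=1)) - A
    L_pinv = np.linalg.pinv(L)
    d = np.diag(L_pinv)
    return d[:, None] + d[None, :] - 2 * L_pinv


def resistance_distance(A1, A2):
    """Entry-wise l1 distance between the resistance matrices of two graphs
    defined on the same, corresponding vertex set."""
    return np.abs(resistance_matrix(A1) - resistance_matrix(A2)).sum()


def graph_from_edges(n, edges):
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    return A


path = graph_from_edges(4, [(0, 1), (1, 2), (2, 3)])
cycle = graph_from_edges(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
print(resistance_distance(path, cycle))
```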

Unlike the edit distance, the resistance distance is designed to detect changes in connectivity between graphs. A recent work [29] discusses the efficacy of the resistance distance in detecting community changes.

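Finally, we study DeltaCon, a distance based on the fast belief propagation method of measuring node affinities [30]. To compare graphs, this method uses the fast belief propagation matrix

$$S = \left[I + \epsilon^2 D - \epsilon A\right]^{-1}, \tag{7}$$

where ε > 0 is a small parameter, and compares the two representations S and S′ via the Matusita difference,

$$d(G, G') = \sqrt{\sum_{i,j=1}^{n} \left(\sqrt{S_{i,j}} - \sqrt{S'_{i,j}}\right)^2}. \tag{8}$$

Note that the matrix S can be rewritten as a matrix power series,

$$S = \sum_{k=0}^{\infty} \left(\epsilon A - \epsilon^2 D\right)^k = I + \epsilon A + \epsilon^2 \left(A^2 - D\right) + O(\epsilon^3), \tag{9}$$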

and so takes into account the influence of neighboring vertices in a weighted manner, where neighbors separated by paths of length k have weight of order $\epsilon^k$. Fast belief propagation is designed to model the diffusion of information throughout a graph [31], and so should in theory be able to perceive both global and local structures. Although empirical tests are performed in [30], no direct comparison to other modern methods is presented.
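A dense sketch of DeltaCon as described above follows, assuming NumPy; the function names are hypothetical, and the default ε = 1/(1 + maximum degree), shared by both graphs, is an assumption of this sketch. With that choice, $I + \epsilon^2 D - \epsilon A$ is strictly diagonally dominant with nonpositive off-diagonal entries, so its inverse S has nonnegative entries and the square roots in the Matusita difference are well defined. The last lines check the second-order expansion of S numerically: for small ε the remainder is of order ε³. A practical implementation for large graphs would avoid forming the dense inverse.

```python
import numpy as np


def fabp_matrix(A, eps):
    """Fast belief propagation matrix S = [I + eps^2 D - eps A]^{-1} (dense)."""
    n = A.shape[0]
    D = np.diag(A.sum(axis=1))
    return np.linalg.inv(np.eye(n) + eps**2 * D - eps * A)


def deltacon(A1, A2, eps=None):
    """Matusita difference between the FaBP matrices of two graphs."""
    if eps is None:
        # Assumed default: eps = 1 / (1 + largest degree over both graphs).
        max_degree = max(A1.sum(axis=1).max(), A2.sum(axis=1).max())
        eps = 1.0 / (1.0 + max_degree)
    # For the default eps, S is entry-wise nonnegative; clipping removes round-off only.
    S1 = np.clip(fabp_matrix(A1, eps), 0.0, None)
    S2 = np.clip(fabp_matrix(A2, eps), 0.0, None)
    return np.sqrt(np.sum((np.sqrt(S1) - np.sqrt(S2)) ** 2))


def graph_from_edges(n, edges):
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    return A


path = graph_from_edges(4, [(0, 1), (1, 2), (2, 3)])
cycle = graph_from_edges(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
print(deltacon(path, cycle))

# Second-order expansion check: S - [I + eps A + eps^2 (A^2 - D)] is O(eps^3).
eps_small = 1e-3
S = fabp_matrix(cycle, eps_small)
D = np.diag(cycle.sum(axis=1))
second_order = np.eye(4) + eps_small * cycle + eps_small**2 * (cycle @ cycle - D)
print(np.abs(S - second_order).max())
```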

2.2.3 Feature-based distances

These two categories do not cover all possible methods of graph comparison. The computer science literature explores various other methods (e.g., see [6], Section 3.2 for a comprehensive review), and other disciplines that apply graph-based techniques often have their own idiosyncratic methods for comparing graphs extracted from data.

One possible method for comparing graphs is to look at specific “features” of the graph, such as the degree distribution, betweenness centrality distribution, diameter, number of triangles, number of k-cliques, etc. For graph features that are vector-valued (such as the degree distribution), one might also consider the vector as an empirical distribution and take as graph features the sample moments (or quantiles, or other summary statistics). A feature-based distance is a distance that uses comparison of such features to compare graphs.

Of course, in a general sense, all methods discussed so far are feature based; however, in the special case where the features occur as values over the space V × V of possible node pairings, we choose to refer to them more specifically as matrix distances. Similarly, if the feature in question is the spectrum of a particular matrix realization of the graph, we call the method a spectral distance.

In [32], a feature-based distance called NetSimile is proposed, which focuses on local and egonet-based features (e.g., degree, volume of egonet as fraction of maximum possible volume, etc.). If we are using k features, the method constructs a feature-vertex matrix of size k × n. This feature matrix is then reduced to a “signature vector” (a process the authors in [32] call “aggregation”) that consists of the mean, median, standard deviation, skewness, and kurtosis of each feature. These signature vectors are then compared in order to obtain a measure of distance between graphs.
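The NetSimile pipeline can be sketched in a simplified form. The code below (NumPy assumed; all names hypothetical) uses only three per-vertex features, namely the degree, the clustering coefficient, and the average neighbor degree, for unweighted graphs; it aggregates each with the five statistics listed above and compares the resulting signature vectors with the Canberra distance. The reduced feature set and the choice of the Canberra distance are assumptions of this sketch. Because the signature depends only on the multiset of feature values, the distance does not require node correspondence.

```python
import numpy as np


def node_features(A):
    """Per-vertex features of an unweighted graph: degree, clustering
    coefficient and average neighbour degree, as an (n, 3) array."""
    deg = A.sum(axis=1)
    closed_walks = np.diag(A @ A @ A)   # twice the number of triangles at each vertex
    pairs = deg * (deg - 1)
    clustering = np.divide(closed_walks, pairs, out=np.zeros_like(deg), where=pairs > 0)
    avg_nbr_deg = np.divide(A @ deg, deg, out=np.zeros_like(deg), where=deg > 0)
    return np.column_stack([deg, clustering, avg_nbr_deg])


def summarize(x):
    """Mean, median, standard deviation, skewness and excess kurtosis of x."""
    mean, std = x.mean(), x.std()
    if std > 0:
        z = (x - mean) / std
        skew, kurt = np.mean(z**3), np.mean(z**4) - 3.0
    else:
        skew = kurt = 0.0
    return [mean, np.median(x), std, skew, kurt]


def signature(A):
    """Concatenate the five summary statistics of every feature column."""
    return np.array([s for column in node_features(A).T for s in summarize(column)])


def canberra(u, v):
    """Canberra distance, treating 0/0 terms as 0."""
    num, den = np.abs(u - v), np.abs(u) + np.abs(v)
    return float(np.sum(np.divide(num, den, out=np.zeros_like(num), where=den > 0)))


def netsimile_like(A1, A2):
    return canberra(signature(A1), signature(A2))


rng = np.random.default_rng(3)


def random_graph(n, p):
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(float)


G, H = random_graph(30, 0.2), random_graph(30, 0.5)
perm = rng.permutation(30)
G_perm = G[np.ix_(perm, perm)]   # same graph with relabelled vertices
print(netsimile_like(G, G_perm), netsimile_like(G, H))
```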

In the neuroscience literature in particular, feature-based methods for comparing graphs are popular [33, 34]. In [35], the authors use graph features such as modularity, shortest path distance, clustering coefficient, and global efficiency to compare functional connectivity networks of patients with and without schizophrenia. Statistics of these features for the control and experiment groups are aggregated and compared using standard statistical techniques.

We implement NetSimile as a prototypical feature-based method. It is worth noting that the general approach could be extended in almost any direction; any number of features could be used (which could take on scalar, vector, or matrix values) and the aggregation step can include or omit any number of summary statistics on the features, or can be omitted entirely. We implement the method as it is originally proposed, with the caveat that calculation of many of these features is not appropriate for large graphs, as they cannot be computed in linear or near-linear time. A scalable modification of NetSimile would utilize features that can be calculated (at least approximately) in linear or near-linear time.

2.2.4 Learning graph kernels

Given the diversity of structural features in graphs, and the difficulty of designing by hand the set of features that optimizes the graph embedding, several researchers have recently proposed learning the embedding from massive datasets of existing networks. Such algorithms learn an embedding [36] from a set of graphs into Euclidean space, and then compute a notion of similarity between the embedded graphs (e.g., [37–39] and references therein). The learned metric can be tailored to a specific application (e.g., [39–45]).

All these approaches rely on the extension of convolutional neural networks to non-Euclidean structures, such as manifolds and graphs (e.g., [46–49] and references therein). The core scientific question becomes: how does one implement the convolution units in the network? Two families of methods have been proposed. The first performs the convolution in the spectral domain [50], defined by the eigenspace of the graph Laplacian; such data-dependent convolutions can be computed either directly in the spectral domain or in the spatial domain, using polynomials of the Laplacian [51]. The second family consists of purely “in-the-graph” methods, where the convolution is implemented using an aggregation process (e.g., [42, 52, 53] and references therein).
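The equivalence between the two ways of applying a spectral filter can be checked directly. If the filter is a polynomial $g(\lambda) = \sum_k \theta_k \lambda^k$ in the Laplacian eigenvalues, and $L = U \Lambda U^T$, then $U g(\Lambda) U^T x = \sum_k \theta_k L^k x$, so the filter can be applied in the spatial domain with repeated matrix-vector products and no eigendecomposition. The minimal sketch below assumes NumPy; the coefficients `theta` are hypothetical, and it illustrates the identity only, not any particular published architecture.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 6
upper = np.triu(rng.random((n, n)) < 0.5, k=1)
A = (upper | upper.T).astype(float)
L = np.diag(A.sum(axis=1)) - A
x = rng.standard_normal(n)                 # a signal on the vertices
theta = np.array([0.5, -0.3, 0.05])        # hypothetical filter coefficients

# Spatial domain: y = sum_k theta_k L^k x, using only products with L.
y_spatial = np.zeros(n)
Lk_x = x.copy()
for t in theta:
    y_spatial += t * Lk_x
    Lk_x = L @ Lk_x

# Spectral domain: y = U g(Lambda) U^T x with g(lambda) = sum_k theta_k lambda^k.
lam, U = np.linalg.eigh(L)
g = sum(t * lam**k for k, t in enumerate(theta))
y_spectral = U @ (g * (U.T @ x))

print(np.allclose(y_spatial, y_spectral))
```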

Graph kernels [54] are typically not injective (two graphs can be perfectly similar without being the same), and rarely satisfy the triangle inequality. There have been some recent attempts at identifying the classes of kernels that are injective [55, 56]. The question can be rephrased in terms of how expressive the embedding from the space of graphs to Euclidean space is, i.e., how often two distinct graphs are mapped to the same point [57]. The authors in [55, 56] have proved that graph neural networks are at most as expressive as the Weisfeiler-Lehman graph isomorphism test: if two graphs are mapped to distinct points by the embedding, then the Weisfeiler-Lehman test would also consider these graphs to be distinct (non-isomorphic).
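The limits of this expressiveness can be seen with a minimal sketch of one-dimensional Weisfeiler-Lehman color refinement (pure Python; the function names are hypothetical). Each vertex's color is repeatedly replaced by a label for the pair (current color, sorted colors of its neighbors), with labels shared between the two graphs so that color histograms are comparable. Two disjoint triangles and the 6-cycle are not isomorphic, yet every vertex in both has degree 2, so refinement never separates them; by the result quoted above, neither can a graph neural network whose expressiveness is bounded by the Weisfeiler-Lehman test.

```python
from collections import Counter


def wl_color_histograms(graphs, n_iter):
    """Run 1-WL color refinement jointly on several graphs.

    graphs: list of dicts mapping each vertex to a list of its neighbours.
    Returns, for each iteration, the list of color histograms (one per graph).
    """
    colors = [{v: 0 for v in g} for g in graphs]   # start from a uniform color
    history = []
    for _ in range(n_iter):
        palette = {}   # shared across graphs so that labels are comparable
        new_colors = []
        for g, col in zip(graphs, colors):
            new = {}
            for v in g:
                key = (col[v], tuple(sorted(col[u] for u in g[v])))
                new[v] = palette.setdefault(key, len(palette))
            new_colors.append(new)
        colors = new_colors
        history.append([Counter(c.values()) for c in colors])
    return history


def wl_distinguishes(g1, g2):
    """True if color refinement tells the two graphs apart."""
    n_iter = max(len(g1), len(g2))
    return any(h1 != h2 for h1, h2 in wl_color_histograms([g1, g2], n_iter))


two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
path4 = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
star4 = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}

print(wl_distinguishes(two_triangles, hexagon))   # False: WL cannot tell them apart
print(wl_distinguishes(path4, star4))             # True: degree histograms differ
```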

2.2.5 Comparing graphs of different sizes

The distance measures described in the previous paragraphs are defined for two graphs of the same size. In practice, one often needs to compare graphs of different sizes. Inspired by the rich connections between graph theory and geometry, one can define a notion of distance between any two graphs by extending the notion of distance between metric spaces [58]. The construction proceeds as follows: each graph is represented as a metric space, wherein the metric is simply the shortest path distance on the graph. Two graphs are equivalent if there exists an isometry between the graphs, represented as metric spaces. Finally, one can define a distance between two graphs G1 and G2 (or rather between the two classes of graphs isometric to G1 and G2 respectively) by considering standard notions of distances between isometry classes of metric spaces [59]. Examples of such distances include the Gromov-Hausdorff distance [59], the Kantorovich-Rubinstein distance, and the Wasserstein distance [60]; the latter two require that the metric spaces be equipped with probability measures. The Gromov-Hausdorff distance computes the infimum of the Hausdorff distance between the isometric embeddings of two metric spaces into a common one. In plain English, this distance measures the residual error after trying to “optimally align” two metric spaces using deformations of these spaces that preserve distances (isometries). Because the search for the optimal alignment (embedding) is over such a vast space of functions, the Gromov-Hausdorff distance does not lend itself to practical applications (but see [61]).

On the other hand, the Wasserstein-Kantorovich-Rubinstein distance, also known as the “Earth Mover’s distance” in the engineering literature, has been used extensively in probability and pattern recognition (e.g., [62–64] and references therein). The Wasserstein distance can be interpreted as the cost of transporting a measure defined on one metric space to a second measure defined on a second metric space; the cost increases with the distance between the metric spaces and with the proportion of the measure that needs to be transported. These concepts have only recently been applied to measuring distances between graphs. Given a graph G, one can associate a measure on the graph (e.g., defined by a histogram of the degrees [65, 66], a Gaussian measure with a covariance matrix given by the pseudo-inverse of the graph Laplacian [67], or a uniform measure on the graph [68]), and a notion of cost between nodes (e.g., the Bures distance [67], or the shortest path distance between two nodes [68], assuming the node correspondence between the graphs has been established).
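A minimal example of the degree-histogram approach, assuming SciPy's `scipy.stats.wasserstein_distance` (the 1-Wasserstein distance between one-dimensional empirical distributions); the helper name is hypothetical. The path on three vertices has degrees (1, 2, 1) and the triangle has degrees (2, 2, 2), so two thirds of the probability mass must move from degree 1 to degree 2, giving a distance of 2/3. Since only the degree distributions are compared, graphs of different sizes can be compared as well.

```python
import numpy as np
from scipy.stats import wasserstein_distance


def degree_sequence(n, edges):
    """Degrees of an undirected, unweighted graph given by an edge list."""
    deg = np.zeros(n)
    for i, j in edges:
        deg[i] += 1
        deg[j] += 1
    return deg


path3 = degree_sequence(3, [(0, 1), (1, 2)])                   # degrees 1, 2, 1
triangle = degree_sequence(3, [(0, 1), (1, 2), (0, 2)])        # degrees 2, 2, 2
star5 = degree_sequence(5, [(0, 1), (0, 2), (0, 3), (0, 4)])   # a larger graph

print(wasserstein_distance(path3, triangle))
print(wasserstein_distance(path3, star5))   # graph sizes may differ
```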

The computational complexity of estimating the Wasserstein distance remains prohibitively high for large graphs: the cost is $O(mn^2 + m^2 n)$, where m is the number of edges and n is the number of nodes. A closed-form expression of the Wasserstein distance can be derived when the measure on each graph is a Gaussian measure [67]. In this case the Wasserstein distance is the Bures distance between their respective covariance matrices. This computation is further simplified when the covariance matrices are diagonal, since the Bures distance then reduces to the Hellinger distance (e.g., [69, 70] and references therein). The rich connection between distances between metric spaces, optimal transport, and metrics on the cone of positive semidefinite matrices is beyond the scope of the current study; it will certainly provide interesting avenues for future studies.
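The closed form for zero-mean Gaussian measures can be sketched as follows, assuming NumPy: the squared 2-Wasserstein distance between $\mathcal{N}(0, \Sigma_1)$ and $\mathcal{N}(0, \Sigma_2)$ is $\operatorname{tr}\Sigma_1 + \operatorname{tr}\Sigma_2 - 2\operatorname{tr}\left(\Sigma_1^{1/2}\Sigma_2\Sigma_1^{1/2}\right)^{1/2}$, the squared Bures distance. For diagonal covariances with diagonals a and b, it reduces to $\sum_i (\sqrt{a_i} - \sqrt{b_i})^2$, a Hellinger-type expression. The covariance matrices below are generic examples (in [67] they are derived from the pseudo-inverse of the graph Laplacian), and the function names are hypothetical.

```python
import numpy as np


def psd_sqrt(S):
    """Square root of a symmetric positive semidefinite matrix via eigh."""
    w, V = np.linalg.eigh(S)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T


def gaussian_w2_squared(S1, S2):
    """Squared 2-Wasserstein (squared Bures) distance between N(0, S1) and N(0, S2)."""
    root1 = psd_sqrt(S1)
    cross = psd_sqrt(root1 @ S2 @ root1)
    return np.trace(S1) + np.trace(S2) - 2.0 * np.trace(cross)


a = np.array([1.0, 4.0, 0.25])
b = np.array([9.0, 1.0, 0.25])
general = gaussian_w2_squared(np.diag(a), np.diag(b))
diagonal = np.sum((np.sqrt(a) - np.sqrt(b)) ** 2)   # Hellinger-type closed form
print(general, diagonal)                             # both equal 5 up to round-off

rng = np.random.default_rng(4)
B = rng.standard_normal((4, 4))
S = B @ B.T + np.eye(4)                              # a generic covariance matrix
print(gaussian_w2_squared(S, S))                     # zero up to round-off
```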

The relevance of the current study to this burgeoning research area stems from the exploration of the relationship between the structural features characteristic of several graph ensembles and the sensitivity of the distances to these features. The distributions associated with these features can then be used to define a probability measure associated with a given graph (e.g., [71] where the distribution of hitting times is used to characterize a functional brain connectivity network).

2.3 Computational efficiency

2.3.1 Algorithmic complexity

In many interesting graph analysis scenarios, the graphs to be analyzed have millions or even billions of vertices. For example, the social network defined by Facebook users had over 2.3 billion vertices as of 2018. In scenarios such as these, any algorithm of complexity $O(n^2)$ becomes infeasible; although in principle the constant hidden in $O(n^2)$ could be small enough to make up for the $n^2$ term, in practice this is not the case. This motivates the requirement that algorithms be of near-linear complexity. When the complexity of a distance depends on the graph volume m, we assume that the graph is sparse, so that m grows linearly (up to a logarithmic factor) with the size n.

To make this requirement precise, we say an algorithm is linear if it is $O(n)$, and near-linear if it is $O(n a_n)$, where $a_n$ is asymptotically bounded by a polynomial in $\log n$. We use the $O(\cdot)$ notation in the standard way; for a more thorough discussion of algorithmic complexity, including definitions of the Landau notations, see [72].

Table 1 displays the algorithmic complexity of each distance measure we compare. We assume that factors such as graph weights and quality of approximation are held constant, which leads to simpler expressions than those in the cited references. Spectral distances have equivalent complexity, since they all amount to performing an eigendecomposition of a symmetric real matrix. For DeltaCon and the resistance distance, there are approximate algorithms as well as exact algorithms; we list the complexity of both. Although we use the exact versions in the experiments, in practice the approximate versions would likely be used if the graphs to be compared are large.

Table 1. Distance measures and complexity.

The size of the (larger) graph is n; the number of edges is m. For the spectral decomposition, k denotes the number of principal eigenvalues to be computed.

Of particular interest are highly parallelizable randomized algorithms, which allow for extremely efficient matrix decompositions. In [73], the authors review many such algorithms and discuss, in particular, their applicability to computing principal eigenvalues. The complexity listed in Table 1 for the spectral distances is based on their simplified analysis of Krylov subspace methods, which expresses the cost in terms of k, n, and $T_{\text{mult}}$, the cost of a matrix-vector multiplication with the input matrix. Since the input matrices are sparse, $T_{\text{mult}} = O(m)$, and the resulting complexity is the one reported in Table 1. Although the eigensolver uses the implicitly restarted Arnoldi method, a randomized algorithm could lead to a significant increase in efficiency when decomposing large matrices.
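As a rough illustration of why only k principal eigenvalues need to be computed, the sketch below uses SciPy's `scipy.sparse.linalg.eigsh` (a wrapper around ARPACK) to compare the k largest adjacency eigenvalues of two graphs in the Euclidean norm. It is an assumption-laden sketch rather than the NetComp implementation: the choice of the largest algebraic eigenvalues, the Euclidean norm, and the helper names `top_k_eigenvalues`, `adjacency_spectral_distance`, and `random_sparse_graph` are ours.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh


def top_k_eigenvalues(A, k):
    """k largest (algebraic) eigenvalues of a sparse symmetric matrix, descending.

    The iterative solver only touches A through matrix-vector products,
    each costing O(nnz(A)) = O(m) for a sparse graph.
    """
    vals = eigsh(A, k=k, which="LA", return_eigenvectors=False)
    return np.sort(vals)[::-1]


def adjacency_spectral_distance(A1, A2, k):
    """Euclidean distance between the k principal adjacency eigenvalues."""
    return float(np.linalg.norm(top_k_eigenvalues(A1, k) - top_k_eigenvalues(A2, k)))


def random_sparse_graph(n, p, seed):
    """Hypothetical helper: sparse adjacency matrix of a G(n, p) sample.

    Uses a dense n x n draw for simplicity; large graphs would need an
    edge-list sampler instead.
    """
    rng = np.random.default_rng(seed)
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return sp.csr_matrix((upper | upper.T).astype(float))


A1 = random_sparse_graph(300, 0.05, seed=1)
A2 = random_sparse_graph(300, 0.05, seed=2)
print(adjacency_spectral_distance(A1, A2, k=10))
```

The same routine applies to a Laplacian or normalized Laplacian matrix built from the adjacency matrix; which end of the spectrum to compare for those matrices is a modelling choice that this sketch does not fix.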

2.3.2 Comparison of runtimes on small graphs

In Section 3.1, we perform the experiments on small graphs, consisting of only 1,000 nodes.

In applications, the graphs under comparison can range from hundreds to billions of nodes. We focus on smaller graphs primarily so that computing the distances remains tractable even on a small personal computer.

Of course, the time it takes to calculate a given distance depends heavily on the implementation of that distance. The runtimes reported below use the implementations in NetComp [75]. These implementations are not highly optimized; spectral calculations rely on the standard spectral solvers that come with scipy, a standard computational package in Python, which leverage sparse data structures when available.

For the resistance distance and DeltaCon, there is both an exact form and a faster approximate form (Table 1 lists the complexity of each). We use the exact forms in our calculations, and these are the forms implemented in NetComp [75]. For DeltaCon, the approximate form is implemented in MATLAB, and the code is available on the author’s website, http://web.eecs.umich.edu/~dkoutra/. For the resistance distance, the authors of [9] have released a MATLAB implementation of the approximate resistance distance, available on GitHub at https://github.com/natemonnig/Resistance-Perturbation-Distance. We hope to include Python implementations of these fast approximate distances in NetComp in the near future.
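To make the "exact form" concrete, the following sketch computes an exact resistance distance through a dense Laplacian pseudo-inverse, whose cost is cubic in n. It assumes the distance is the entrywise p-norm of the difference between the two effective-resistance matrices (p = 2 is an illustrative default), that both graphs are connected, and that they share an aligned node set. This is not the NetComp or MATLAB code referenced above, and the function names are hypothetical.

```python
import numpy as np


def effective_resistance(A):
    """Effective-resistance matrix of a connected graph with adjacency A.

    R[i, j] = Lp[i, i] + Lp[j, j] - 2 Lp[i, j], with Lp the pseudo-inverse
    of L = D - A. The dense pseudo-inverse costs O(n^3).
    """
    A = np.asarray(A, dtype=float)
    Lp = np.linalg.pinv(np.diag(A.sum(axis=1)) - A)
    d = np.diag(Lp)
    return d[:, None] + d[None, :] - 2.0 * Lp


def resistance_distance(A1, A2, p=2):
    """Entrywise p-norm of R1 - R2 (aligned node sets, connected graphs)."""
    diff = np.abs(effective_resistance(A1) - effective_resistance(A2))
    return float((diff ** p).sum() ** (1.0 / p))


path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
triangle = np.ones((3, 3)) - np.eye(3)
print(resistance_distance(path, triangle))
```

For the 3-vertex path the end-to-end resistance is 2, while every pair in the triangle has resistance 2/3, so the example compares two small graphs whose resistance matrices can be checked by hand.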

Table 2 shows the results of our runtime experiments: the mean and standard deviation of the runtime of each distance.

Table 2. Runtimes for various distance measures, for graphs of size n = 100, 300, and 1,000.

Each distance is calculated N = 500 times. Each sample generates two Erdős-Rényi random graphs with parameter p = 0.15, and times the calculation of the distance between the two graphs. All distances are implemented in the NetComp library, which can be found on GitHub at [75].

| Distance Measure | Computational Time (n = 100) | Computational Time (n = 300) | Computational Time (n = 1,000) |
|---|---|---|---|
| Edit Distance | 8.2 × 10⁻⁵ ± 4.5 × 10⁻⁵ | 5.7 × 10⁻⁴ ± 9.9 × 10⁻⁴ | 4.2 × 10⁻³ ± 1.5 × 10⁻³ |
| DeltaCon | 3.1 × 10⁻³ ± 7.4 × 10⁻⁴ | 1.4 × 10⁻² ± 6.6 × 10⁻³ | 8.8 × 10⁻² ± 6.4 × 10⁻³ |
| Resistance Dist. | 7.5 × 10⁻³ ± 1.4 × 10⁻³ | 8.8 × 10⁻² ± 5.4 × 10⁻² | 5.9 × 10⁻¹ ± 4.3 × 10⁻² |
| Spectral (Adjacency) | 1.1 × 10⁻² ± 1.1 × 10⁻³ | 1.5 × 10⁻¹ ± 8.9 × 10⁻³ | 1.3 ± 5.5 × 10⁻¹ |
| Spectral (Laplacian) | 1.2 × 10⁻² ± 4.7 × 10⁻³ | 1.5 × 10⁻¹ ± 9.8 × 10⁻³ | 1.3 ± 1.6 × 10⁻¹ |
| Spectral (Normalized Laplacian) | 1.5 × 10⁻² ± 9.4 × 10⁻⁴ | 1.6 × 10⁻¹ ± 6.6 × 10⁻³ | 1.4 ± 3.7 × 10⁻¹ |
| NetSimile | 2.3 × 10⁻¹ ± 6.3 × 10⁻² | 5.5 × 10⁻¹ ± 1.1 × 10⁻² | 2.5 ± 1.8 × 10⁻¹ |

These are computed on small graphs, of size n = 100, 300, and 1,000.

As one might expect, the edit distance is by far the most efficient, as it is simply a difference between, and summation over, two sparse matrices. NetSimile is notably slow in our experiments. This is due to an inefficient implementation: most of the work of calculating the various metrics used by NetSimile is done with NetworkX, a common network analysis library in Python. Although NetworkX is simple and clear to work with, it is not designed for maximal efficiency or scalability, as the above experiments illustrate.
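To give a feel for the protocol behind Table 2 (each sample draws two fresh Erdős-Rényi graphs with p = 0.15 and times only the distance computation), here is a minimal timing harness wrapped around an edit distance. It assumes the edit distance is the entrywise L1 norm of A1 − A2, which counts each differing undirected edge twice. The harness and helper names are hypothetical, this is not the NetComp code used for Table 2, and absolute timings will depend on hardware and library versions.

```python
import time

import numpy as np
import scipy.sparse as sp


def edit_distance(A1, A2):
    """Sum of absolute entrywise differences of two sparse adjacency matrices.

    For undirected graphs each differing edge is counted twice.
    """
    return float(abs(A1 - A2).sum())


def sample_gnp(n, p, rng):
    """Sparse adjacency matrix of a G(n, p) sample (dense draw, for simplicity)."""
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return sp.csr_matrix((upper | upper.T).astype(float))


def time_distance(dist, n, p=0.15, n_samples=500, seed=0):
    """Mean and standard deviation (in seconds) of the time to compute dist.

    Graph generation is excluded from the timing; only dist(A1, A2) is timed.
    """
    rng = np.random.default_rng(seed)
    times = []
    for _ in range(n_samples):
        A1, A2 = sample_gnp(n, p, rng), sample_gnp(n, p, rng)
        start = time.perf_counter()
        dist(A1, A2)
        times.append(time.perf_counter() - start)
    return float(np.mean(times)), float(np.std(times))


mean, std = time_distance(edit_distance, n=100, n_samples=50)
print(f"edit distance, n = 100: {mean:.1e} ± {std:.1e} s")
```

Any other distance that accepts two sparse adjacency matrices can be passed as `dist`, which keeps the comparison between distances on an equal footing.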

We believe it is valuable for the user to get a rough estimate of the efficiency of the easily-available implementations of the distances discussed in this work. However, much more efficient implementations are possible for each given distance; these implementations must be carefully designed to be optimal for the particular use-case. A thorough empirical comparison of the runtimes of optimized implementations of each of these distances would be very illuminating, but would require considerable care in order to be done equitably, and is well beyond the scope of this work.

2.4 Random graph models

Random graph models have long been used as a method for understanding topological properties of real-world graph data. The uncorrelated random graph model of Erdős and Rényi [10] is the simplest model, and provides a null model akin to white noise. This probabilistic model has been analysed thoroughly [76]. Unfortunately, its uniform topology does not accurately reflect empirical graph data. The stochastic blockmodel extends the uncorrelated random graph with explicit community structure, reflected in the distribution of edge density.

Models such as preferential attachment [7] and the Watts-Strogatz model [8] have been designed to mimic properties of observed graphs. Very little can be said about these models analytically, and thus much of what is understood about them is computational. The two-dimensional square lattice is a quintessential example of a highly structured and regular graph.

Finally, we restrict the present study to unlabelled and undirected graphs, with no self-loops. Although directed graphs are of great practical importance [77], the mathematical analysis of directed graphs is far more complex.

Most of the models in this work are sampled via the Python package NetworkX [78]; details of implementation can be found in the source code of the same. Some of the models we use are most clearly defined via their associated probability distribution, while others are best described by a generative mechanism. We introduce the models roughly in order of complexity.

2.4.1 The uncorrelated random graph

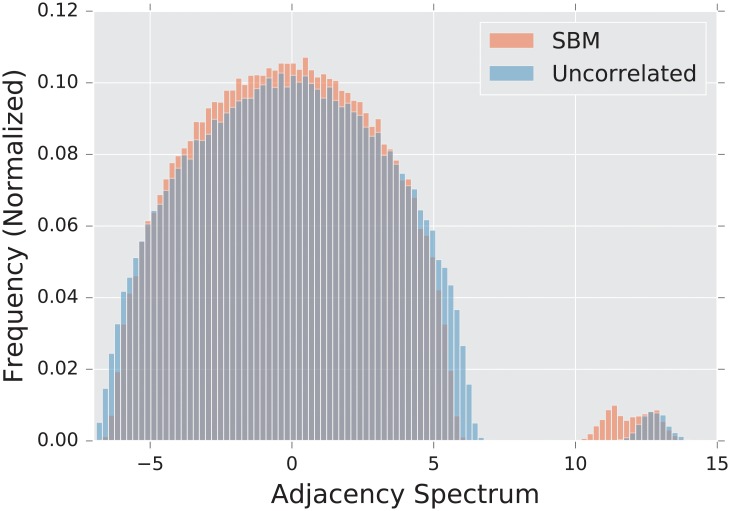

The uncorrelated Erdős-Rényi random graph is a random graph in which each edge exists with probability p, independently of all other edges. We denote this distribution of graphs by G(n, p). The spectral density of the adjacency eigenvalues λA forms a semicircle, first described by Wigner [20], of radius $2\sqrt{np(1-p)}$, albeit with a single eigenvalue separate from the semicircular bulk [19].
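A quick numerical check of this picture: sample one G(n, p) adjacency matrix with NumPy and compare its extreme eigenvalues with the semicircle radius $2\sqrt{np(1-p)}$ and with the mean degree, near which the isolated eigenvalue lies. The values n = 500 and p = 0.15 are arbitrary illustrative choices, and `gnp_adjacency` is a hypothetical helper.

```python
import numpy as np


def gnp_adjacency(n, p, seed=0):
    """Dense adjacency matrix of a G(n, p) sample (no self-loops)."""
    rng = np.random.default_rng(seed)
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(float)


n, p = 500, 0.15
eigs = np.sort(np.linalg.eigvalsh(gnp_adjacency(n, p)))[::-1]
bulk_radius = 2.0 * np.sqrt(n * p * (1 - p))

# The largest eigenvalue sits near the mean degree (about n * p), far outside
# the bulk; the remaining eigenvalues lie roughly in [-bulk_radius, bulk_radius].
print(f"largest eigenvalue: {eigs[0]:.1f} (n * p = {n * p:.1f})")
print(f"second eigenvalue:  {eigs[1]:.1f} (bulk radius = {bulk_radius:.1f})")
print(f"smallest eigenvalue: {eigs[-1]:.1f}")
```

At finite n the bulk edges fluctuate slightly around the asymptotic radius, so agreement should be expected only approximately.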

We employ the uncorrelated random graph as the null model in many experiments. It is, in some sense, a “structureless” model; more specifically, the statistical properties of every edge and every vertex in the graph are exactly the same. This model fails to reproduce many of the properties observed in empirical networks, which motivates the use of alternative graph models.

2.4.2 The stochastic blockmodel

One important property of real world networks is community structure. Vertices often form densely connected communities, with the connection between communities being sparse, or non-existent. This motivates the use of the stochastic blockmodel. In this model, the vertex set can be partitioned into two non-overlapping sets C1 and C2 referred to as “communities”,

$$V = C_1 \cup C_2, \qquad C_1 \cap C_2 = \emptyset. \tag{10}$$

Each edge e = (i, j) exists independently with probability p if i and j are in the same community, and q if i and j are in distinct communities. In this work, we use “balanced” communities, whose sizes are equal (up to one vertex in either direction).
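The two-community model just described can be sampled directly with NumPy, as in the minimal sketch below. The assignment of the first ⌈n/2⌉ vertices to C1 is an arbitrary labelling choice, and `sample_two_block_sbm` is a hypothetical name.

```python
import numpy as np


def sample_two_block_sbm(n, p, q, seed=0):
    """Two-community stochastic blockmodel with balanced communities.

    Vertices 0..ceil(n/2)-1 form C1 and the rest form C2, so the community
    sizes differ by at most one. Edge (i, j) is present independently with
    probability p if i and j share a community, and q otherwise.
    Returns the adjacency matrix and the community labels (0 or 1).
    """
    rng = np.random.default_rng(seed)
    labels = np.zeros(n, dtype=int)
    labels[(n + 1) // 2:] = 1
    same = labels[:, None] == labels[None, :]
    probs = np.where(same, p, q)
    upper = np.triu(rng.random((n, n)) < probs, k=1)  # one draw per pair
    A = (upper | upper.T).astype(int)
    return A, labels


A, labels = sample_two_block_sbm(400, 0.3, 0.05, seed=1)
print(A.sum() // 2, "edges")
```

Setting q = p recovers an uncorrelated random graph G(n, p), which is what makes the model a natural alternative to that null model.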

The stochastic blockmodel is a prime example of a model that exhibits global structure without any meaningful local structure. In this case, the global structure is the partitioned nature of the graph as a whole. On a fine scale, the graph looks like an uncorrelated random graph. We use the model to determine which distances are most effective at discerning global (and in particular, community) structure.

The stochastic blockmodel is at the cutting edge of rigorous probabilistic analysis of random graphs. Abbe et al. [79] have recently established a sharp threshold for exact community recovery, showing in exactly which regimes of p and q it is possible to detect the communities and assign the correct label to each node.

Generalizations of this model exist in which there are K communities of arbitrary size. Furthermore, each community need not have the same parameter p, and each community pair need not have the same parameter q.

2.4.3 Preferential attachment models

Another often-studied feature of real world networks is the degree distribution. In practice, the distribution is estimated using a histogram.

The degree distribution of an uncorrelated random graph is binomial, so its tails decay at least exponentially for large graphs (as n → ∞). However, in real-world graphs such as computer networks, human neural networks, and social networks, the measured degree distribution has a power-law tail, $P(d) \propto d^{-\gamma}$ [7], where γ ∈ [2, 3]. Such distributions are often referred to as “scale-free”.

The preferential attachment model is a scale-free random graph model. Although first described by Yule in 1925 [80], the model did not achieve its current popularity until the work of Barabási and Albert in 1999 [7].

The model has two parameters, l and n. The latter is the size of the graph, and the former controls the density of the graph. We require that 1 ≤ l < n. The generative procedure for sampling from this distribution is as follows. Begin by initializing a star graph with l + 1 vertices, with vertex l + 1 having degree l and all others having degree 1. Then, for each l + 1 < i ≤ n, add a vertex i and randomly attach it to l distinct vertices already present in the graph, where the probability of i attaching to v is proportional to the degree of v. We stop once the graph contains n vertices.
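The generative procedure above translates almost line by line into plain Python. This is a minimal sketch under stated assumptions: vertices are 0-indexed (the star's centre is vertex l), and the l distinct targets are obtained by rejection, redrawing a degree-proportional target whenever it repeats an earlier one. `preferential_attachment` is a hypothetical name, not the NetworkX function used in our experiments.

```python
import random


def preferential_attachment(n, l, seed=None):
    """Sample a preferential attachment graph following the procedure above.

    Vertices are 0-indexed: the initial star has centre l and leaves 0..l-1.
    Each new vertex attaches to l distinct existing vertices, each draw being
    proportional to current degree. Returns undirected edges (u, v), u < v.
    """
    if not 1 <= l < n:
        raise ValueError("require 1 <= l < n")
    rng = random.Random(seed)
    edges = {(leaf, l) for leaf in range(l)}
    # Each vertex appears in `pool` once per incident edge, so a uniform draw
    # from the pool picks a vertex with probability proportional to its degree.
    pool = [v for e in edges for v in e]
    for new in range(l + 1, n):
        targets = set()
        while len(targets) < l:
            # Redraw on repeats so that the l targets are distinct (no multi-edges).
            targets.add(rng.choice(pool))
        for t in targets:
            edges.add((t, new))
            pool.extend((t, new))
    return edges


E = preferential_attachment(200, 3, seed=0)
print(len(E), "edges")  # l * (n - l) = 591
```

The star contributes l edges and each of the remaining n − l − 1 vertices contributes l more, so every sample has exactly l(n − l) edges.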

The constructive description of the model does not lend itself to simple analysis, and so less is known analytically about the preferential attachment model than about the uncorrelated random graph or the stochastic blockmodel (but see [81, 82] for some basic properties of this model). There are few results about the spectra of the various matrices. In [83], the authors prove that if λ1 ≥ … ≥ λk are the k largest eigenvalues of the adjacency matrix, and d1 ≥ … ≥ dk are the k largest degrees, then

$$\lambda_i = \big(1 \pm o(1)\big)\sqrt{d_i}, \qquad i = 1, \dots, k. \tag{11}$$

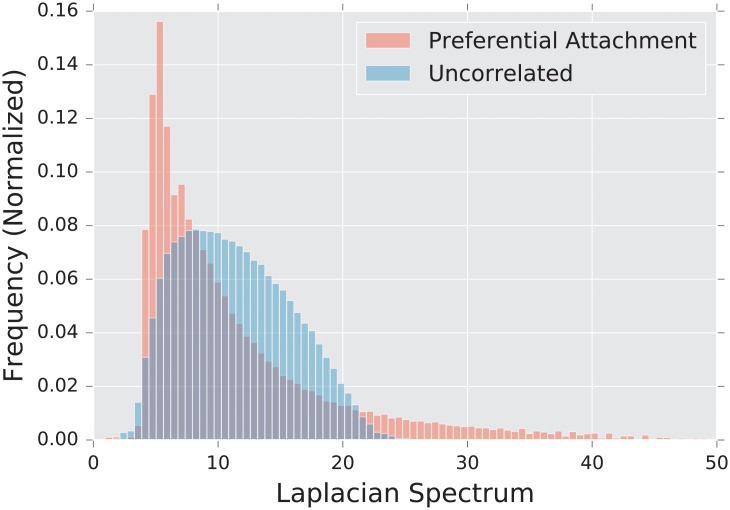

These results are proven for a model with a slightly different generative procedure; we do not find that they yield a particularly good approximation in our experiments, which are conducted at the relatively small size n = 100. In [19], the authors demonstrate numerically that the adjacency spectrum exhibits a triangular peak with power-law tails.
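One way to see how far the asymptotic eigenvalue-degree relation is from holding at small n is to compute the ratios λi/√di directly; asymptotically they should approach one. The sketch below uses NetworkX's `barabasi_albert_graph` for brevity (its `m` argument plays the role of l). Its procedure may differ in details from the one described above and from the model analysed in [83], and l = 3 and the seeds are arbitrary. The first ratio can never fall below one, since λ1 ≥ √d1 holds for every graph: the star around the highest-degree vertex is a subgraph.

```python
import networkx as nx
import numpy as np


def eigen_degree_ratios(G, k):
    """lambda_i / sqrt(d_i) for the k largest adjacency eigenvalues and degrees."""
    A = nx.to_numpy_array(G)
    lam = np.sort(np.linalg.eigvalsh(A))[::-1][:k]
    deg = np.sort(A.sum(axis=1))[::-1][:k]
    return lam / np.sqrt(deg)


for n in (100, 1000):
    G = nx.barabasi_albert_graph(n, 3, seed=42)
    print(n, np.round(eigen_degree_ratios(G, 3), 2))
```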

Having a high degree makes a vertex more likely to attract more connections, so the graph quickly develops strongly connected “hubs,” or vertices with very high degree, which are not found in the Erdős-Rényi model. This impacts both the global and the local structure of the graph. Hubs are by definition global structures, as they touch a significant portion of the rest of the graph, shortening path lengths and increasing connectivity throughout the graph. On the local scale, vertices tend to connect predominantly to the highest-degree vertices rather than to one another, generating a tree-like topology. This particular topology yields a signature in the tail of the spectrum.

2.4.4 The Watts-Strogatz model

Many real-world graphs exhibit the so-called “small world phenomenon,” where the expected shortest path length between two vertices chosen uniformly at random grows logarithmically with the size of the graph. Watts and Strogatz [8] constructed a random graph model that exhibits this behavior, along with a high clustering coefficient not seen in an uncorrelated random graph. The (global) clustering coefficient is defined as three times the number of triangles divided by the number of connected triplets of vertices in the graph, so that it equals one for a complete graph. The Watts-Strogatz model [8] is designed to be the simplest random graph that has high local clustering and a small average shortest path distance between vertices.
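The clustering coefficient can be computed from the adjacency matrix alone: trace(A³) counts each triangle six times, and Σi di(di − 1)/2 counts connected triplets by their centre vertex, so the coefficient equals trace(A³)/Σi di(di − 1). The NumPy sketch below is a dense, illustrative implementation (the function name is ours) that is only suitable for small graphs.

```python
import numpy as np


def global_clustering(A):
    """Global clustering coefficient (transitivity) of a simple graph.

    3 * triangles / triplets = (trace(A^3) / 2) / (sum_i d_i (d_i - 1) / 2).
    """
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)
    twice_triplets = float((deg * (deg - 1)).sum())
    if twice_triplets == 0:
        return 0.0  # no connected triplets, e.g. a matching
    return float(np.trace(A @ A @ A)) / twice_triplets


# Ring lattice with n = 10 vertices, each joined to its 2 nearest neighbours
# on either side (k = 4): every vertex sees 3 of the 6 possible edges among
# its neighbours, so the coefficient is 0.5.
n = 10
ring = np.zeros((n, n))
for i in range(n):
    for s in (1, 2):
        ring[i, (i + s) % n] = ring[(i + s) % n, i] = 1
print(global_clustering(ring))
```

The ring lattice in the example is the p = 0 starting point of the Watts-Strogatz model, whose high clustering the rewiring then progressively destroys.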

Like the preferential attachment model, this graph is most easily described via a generative mechanism. The algorithm proceeds as follows. Let n be the size of the desired graph, let 0 ≤ p ≤ 1, and let k be an even integer, with k < n. We begin with a ring lattice, which is a graph where each vertex is attached to its k nearest neighbors, k/2 on each side. We then randomly rewire edges (effectively creating shortcuts) as follows. With probability p, each edge (i, j) (where i < j) is replaced by the edge (i, l), where l is chosen uniformly at random. The target l is chosen so that i ≠ l and i is not connected to l at the time of rewiring. We stop once all edges have been iterated through. We add an additional stipulation that the graph must be connected. If the algorithm terminates with a disconnected graph, then we restart the algorithm and generate a new graph.
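The rewiring procedure, including the restart-until-connected rule, can be sketched in plain Python as follows. It is a literal, unoptimized reading of the description above: only the original lattice edges are visited, and each edge is rewired at most once. The function names are hypothetical, and the rewiring target is called `target` rather than l to avoid confusion with the digit 1. NetworkX's own generator may differ in iteration order and other details.

```python
import random
from collections import deque


def ring_lattice_edges(n, k):
    """Ring lattice: each vertex joined to its k nearest neighbours, k/2 per side."""
    return {tuple(sorted((i, (i + s) % n))) for i in range(n) for s in range(1, k // 2 + 1)}


def is_connected(n, edges):
    """Breadth-first search from vertex 0."""
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    seen, queue = {0}, deque([0])
    while queue:
        for w in adj[queue.popleft()] - seen:
            seen.add(w)
            queue.append(w)
    return len(seen) == n


def watts_strogatz(n, k, p, seed=None, max_tries=100):
    """Connected Watts-Strogatz sample following the procedure in the text.

    Each lattice edge (i, j), i < j, is replaced with probability p by
    (i, target), where target != i is drawn uniformly among vertices not
    adjacent to i at that moment. Restarts if the result is disconnected.
    """
    if k % 2 or not 0 < k < n:
        raise ValueError("k must be even with 0 < k < n")
    rng = random.Random(seed)
    for _ in range(max_tries):
        edges = ring_lattice_edges(n, k)
        adj = {v: set() for v in range(n)}
        for u, v in edges:
            adj[u].add(v)
            adj[v].add(u)
        for i, j in sorted(edges):  # snapshot: only original edges are visited
            if rng.random() >= p:
                continue
            candidates = [v for v in range(n) if v != i and v not in adj[i]]
            if not candidates:
                continue
            target = rng.choice(candidates)
            edges.remove((i, j))
            adj[i].discard(j)
            adj[j].discard(i)
            edges.add((min(i, target), max(i, target)))
            adj[i].add(target)
            adj[target].add(i)
        if is_connected(n, edges):
            return edges
    raise RuntimeError("no connected sample found; increase max_tries")


E = watts_strogatz(100, 6, 0.2, seed=1)
print(len(E), "edges")  # rewiring preserves n * k / 2 = 300 edges
```

Because each rewiring removes one edge and adds an edge that did not exist before, the edge count nk/2 is preserved, and p = 0 returns the ring lattice unchanged.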

As mentioned before, the topological features that are significant in this graph are the high local clustering and the short expected distance between vertices. Of course, these quantities depend on the parameter p; as p → 1, the Watts-Strogatz model approaches an uncorrelated random graph. Similarly, as p → 1 the adjacency spectral density transitions from the tangle of sharp maxima typical of a ring-lattice graph to the smooth semicircle of the uncorrelated random graph [19]. Unlike the models above, this model exhibits primarily local structure. Indeed, we observe that the most significant differences lie in the tail of the adjacency spectrum, which can be directly linked to the number of triangles in the graph [19]. On the large scale, however, this graph looks much like the uncorrelated random graph, in that it exhibits neither communities nor high-degree vertices.

This model fails to produce the scale-free behavior observed in many real world networks. Although the preferential attachment model reproduces this scale-free behavior, it fails to reproduce the high local clustering that is frequently observed, and so we should think of neither model as fully replicating the properties of observed graphs.

2.4.5 The configuration model

The above three models are designed to mimic certain properties of real-world networks. In some cases, however, we may wish to create a random graph with a prescribed degree sequence. That is to say, we seek a distribution that assigns equal probability to each graph, conditioned upon the graph having a given degree sequence. The simplest model that attains this result is the configuration model [84]. Recently, Zhang et al. [85] have derived an asymptotic expression for the adjacency spectrum of a configuration model, which is exact in the limit of large graph size and large mean degree.

Inconveniently, this model is not guaranteed to generate a simple graph; the resulting graph can have self-edges, or multiple edges between two vertices. In 2010, Bayati et al. [86] described an algorithm that samples (approximately) uniformly from the space of simple graphs with a given degree sequence. In [86] the authors prove that the distribution is asymptotically uniform, but they do not prove results for finite graph sizes (see [87] for a more detailed analysis). We use this algorithm even though it does not sample the desired distribution in a truly uniform manner; the guarantee that the resulting graph is simple outweighs this drawback.
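In NetworkX, the two options discussed above correspond, to our understanding, to `nx.configuration_model` (a multigraph that may contain self-loops and parallel edges) and `nx.random_degree_sequence_graph` (a simple graph produced by the Bayati-Kim-Saberi sequential algorithm, which raises an exception if it fails after `tries` attempts). The sketch below wraps the latter as a degree-matched null model. The wrapper name, the `tries` value, and the preferential-attachment example parameters are illustrative assumptions.

```python
import networkx as nx


def degree_matched_null(G, seed=None, tries=100):
    """Simple random graph with the same degree sequence as G.

    Relies on nx.random_degree_sequence_graph (Bayati-Kim-Saberi sampler),
    which raises an exception if no graph is produced within `tries` attempts.
    """
    degrees = [d for _, d in G.degree()]
    return nx.random_degree_sequence_graph(degrees, seed=seed, tries=tries)


# For contrast, the classical configuration model returns a multigraph that
# may contain self-loops and parallel edges.
degrees = [d for _, d in nx.barabasi_albert_graph(100, 3, seed=0).degree()]
M = nx.configuration_model(degrees, seed=0)
print("self-loops:", nx.number_of_selfloops(M))
print("excess multi-edges:", M.number_of_edges() - nx.Graph(M).number_of_edges())
```

For heavy-tailed degree sequences the sequential sampler can fail on some attempts, which is why the retry budget is exposed as a parameter.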

We refer to graphs sampled in this way as configuration model graphs. The significance of this class of graphs stems from the fact that we can use them to control for the degree sequence when comparing graphs; they are used as a null model, similar to the uncorrelated random graph, but they can be tuned to share some structure (notably, the power-law degree distribution of preferential attachment) with the graphs to which they are compared.

2.4.6 Lattice graphs

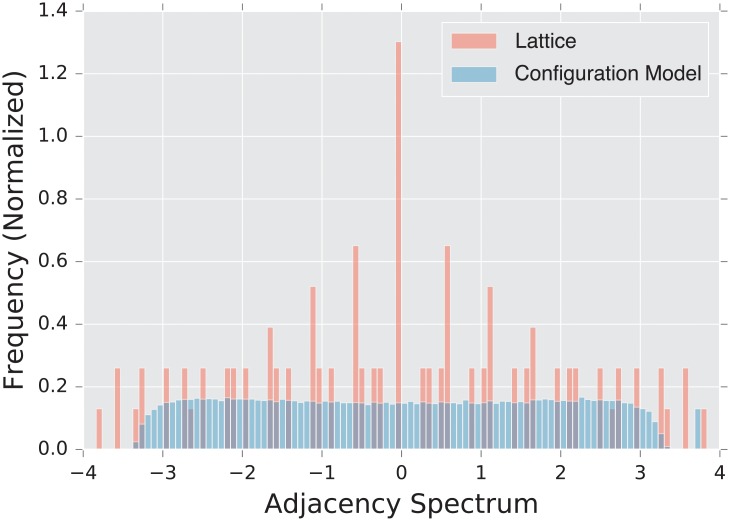

We use a 2-dimensional x by y rectangular lattice as a prototypical example of a highly regular graph. This regularity is reflected by the discrete nature of the lattice’s spectrum, which can be seen in Fig 1. The planar structure of the lattice allows for an intuitive understanding of the eigenvalues, as they approximate the vibrational frequencies of a two-dimensional surface.

Fig 1. Spectral densities λA of the adjacency matrix for a lattice graph and a degree matched configuration model.

Densities are built from an ensemble of 1,000 graphs generated using the parameters described in Subsection 3.1.5.

This is a particularly strong flavor of local structure, as it is not subject to the noise present in random graph models. This aspect allows us to probe the functioning of our distances when they are exposed to graphs with a high amount of inherent structure and very low noise.
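The discreteness of the lattice spectrum can be made explicit. An x by y grid is the Cartesian product of two paths, so its adjacency eigenvalues are exactly 2cos(πa/(x+1)) + 2cos(πb/(y+1)) for a = 1, …, x and b = 1, …, y. The NumPy sketch below (with illustrative helper names) builds the grid as a Kronecker sum and checks this closed form numerically.

```python
import numpy as np


def path_adjacency(n):
    """Adjacency matrix of the path graph on n vertices."""
    return np.eye(n, k=1) + np.eye(n, k=-1)


def grid_adjacency(x, y):
    """x-by-y grid as the Cartesian product of two paths (Kronecker sum)."""
    return np.kron(path_adjacency(x), np.eye(y)) + np.kron(np.eye(x), path_adjacency(y))


def grid_eigenvalues(x, y):
    """Closed form 2cos(pi a/(x+1)) + 2cos(pi b/(y+1)), a = 1..x, b = 1..y, sorted."""
    a = np.arange(1, x + 1)[:, None]
    b = np.arange(1, y + 1)[None, :]
    vals = 2 * np.cos(np.pi * a / (x + 1)) + 2 * np.cos(np.pi * b / (y + 1))
    return np.sort(vals.ravel())


x, y = 30, 20
numeric = np.sort(np.linalg.eigvalsh(grid_adjacency(x, y)))
print(np.allclose(numeric, grid_eigenvalues(x, y)))  # True
```

Each eigenvalue is a sum of two "frequencies", one per axis, which is the discrete analogue of the vibrational modes of a rectangular membrane mentioned above.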

2.4.7 Exponential random graph models

A popular random graph model is the exponential random graph model, or ERGM for short. Although they are popular and enjoy simple interpretability, we do not use ERGMs in our experiments. Unlike some of our other models that are described by their generative mechanisms, these are described directly via the probability of observing a given graph G.

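Let $g_i(G)$ be scalar graph statistics (e.g., size, volume, or number of triangles) and let $\theta_i$ be corresponding coefficients, for i = 1, …, K. Then, the ERGM assigns to each graph the probability [88]

$$P(G) = \frac{1}{Z(\theta)} \exp\Big(\sum_{i=1}^{K} \theta_i\, g_i(G)\Big),$$

where the normalizing constant $Z(\theta)$ is the sum of the exponential term over all graphs under consideration.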

This distribution can be sampled via a Gibbs sampling technique, a process that is outlined in detail in [88]. ERGMs show great promise in terms of flexibility and interpretability; one can seemingly tune the distribution towards or away from any given graph metric, including mean clustering, average path length, or even decay of the degree distribution.
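A minimal single-site Gibbs sampler for an ERGM with two statistics, the number of edges and the number of triangles, is sketched below. It is a simplified illustration, not the procedure of [88]. At each step a vertex pair is drawn uniformly and its edge is resampled from its conditional distribution given the rest of the graph, P(edge | rest) = 1/(1 + exp(−δ)). Here δ is the change in Σ θi gi(G) caused by adding the edge: θ_edges for the edge plus θ_triangles for each common neighbour. The function and parameter names are hypothetical.

```python
import numpy as np


def ergm_gibbs(n, theta_edges, theta_triangles, n_steps, seed=0):
    """Gibbs sampler for P(G) ~ exp(theta_edges * edges + theta_triangles * triangles).

    Starts from the empty graph and returns a boolean adjacency matrix.
    """
    rng = np.random.default_rng(seed)
    A = np.zeros((n, n), dtype=bool)
    for _ in range(n_steps):
        i, j = rng.choice(n, size=2, replace=False)
        # Adding (i, j) creates one edge and one triangle per common neighbour.
        common = np.count_nonzero(A[i] & A[j])
        delta = theta_edges + theta_triangles * common
        present = rng.random() < 1.0 / (1.0 + np.exp(-delta))
        A[i, j] = A[j, i] = present
    return A


# With theta_triangles = 0 the edges are independent, and the stationary
# distribution is G(n, p) with p = 1 / (1 + exp(-theta_edges)).
p = 0.2
A = ergm_gibbs(30, np.log(p / (1 - p)), 0.0, 20000, seed=3)
print(A[np.triu_indices(30, k=1)].mean())
```

Raising `theta_triangles` in this sketch is one way to explore the coupling between triangle count and graph volume discussed in the next paragraph.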

However, our experience attempting to utilize ERGMs led us away from this approach. When sampling from ERGMs, we were unable to control properties individually to our satisfaction. We found that attempts to increase the number of triangles in a graph increased the graph volume; when we subsequently used the ERGM parameters to de-emphasize graph volume, the sampled graphs had an empirical distribution very similar to an uncorrelated random graph.

2.4.8 Graph neural networks

Each of the graph ensembles described in the previous sections represents the quintessential exemplar of a certain graph structure (e.g., degree distribution, clustering coefficient, shortest path distance, community structure). Each ensemble can be thought of as an atomic building block that can be used to understand complex real-world networks. For instance, it is shown in [89] that any sufficiently large graph behaves approximately like a stochastic blockmodel. These ensembles are also amenable to rigorous mathematical analysis, so one can analyze how changes in graph geometry and topology influence the graph distances.

As explained in Section 2.2.4, there have been some very recent attempts at generating random realizations of graphs by learning the structure of massive datasets of existing networks (e.g., [90–92]). These algorithms offer an implicit representation of a set of graphs, by discovering an optimal neural network that can generate new graphs with similar structures. In contrast to the prototypical random graph ensembles, the current theoretical understanding of graph neural networks is very limited: there are no results on the structural properties of these models (but see [93] for an estimate of the complexity of a graph convolutional network, in terms of number of nodes and number of hidden units, required to learn graph moments).

A systematic study of the sensitivity of graph distances on graph neural networks is clearly needed. Such a study would provide information that would complement theoretical results that elucidate how expressive such graph models can be [55, 56]. Unfortunately, such experiments clearly go beyond the scope of the current manuscript.

2.5 Real world networks

Random graph models are often designed to simulate a single important feature of real world networks, such as clustering in the Watts-Strogatz model or the high-degree vertices of the preferential attachment model. In real networks, these factors coexist in an often unpredictable configuration, along with significant amounts of noise. Although the above analysis of the efficacy of various distances on random graph scenarios can help inform and guide our intuition, to truly understand their utility we must also look at how they perform when applied to empirical graph data.

In this study, we evaluate the performance of the aforementioned distances in two scenarios. First, we study change point detection for two time-varying networks: a dynamic social-contact graph, collected via RFID tags in a French primary school [94], and a time series of emails exchanged between 986 members of a large European research institution [95] over a period of 803 days.

Secondly, we investigate the two-sample test problem in neuroscience: given two populations of functional brain connectivity networks, we compute a statistic to test whether both populations are generated by the same probability distribution, that of the controls (null hypothesis), or whether one population is significantly different from the other. Specifically, we compare the functional connectivity of subjects with a diagnosis of autism spectrum disorder [96] with that of a population of controls.

2.5.1 Primary school face to face contact

Some of the most well-known empirical network datasets reflect social connective structure between individuals, often in online social network platforms such as Facebook and Twitter. These networks exhibit structural features such as communities and highly connected vertices, and can undergo significant structural changes as they evolve in time. Examples of such structural changes include the merging of communities, or the emergence of a single user as a connective hub between disparate regions of the graph.

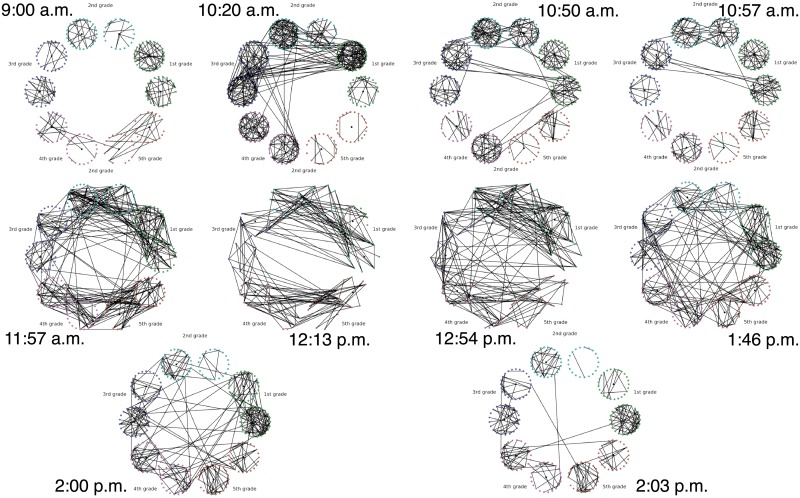

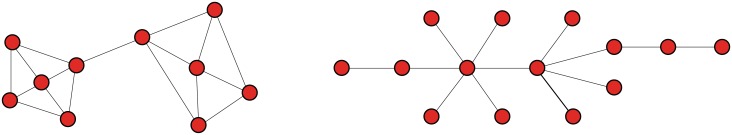

Description of the Experiment. The data are part of a study of face to face contact between primary school students [94]. Briefly, RFID tags were used to record face-to-face contact between students in a primary school in Lyon, France in October, 2009. Events punctuate the school day of the children (see Table 3), and lead to fundamental topological changes in the contact network (see Fig 2). The school is composed of ten classes: each of the five grades (1 to 5) is divided into two classes (see Fig 2).

Table 3. Events that punctuate the school day.

| Time | Event |
|---|---|
| 10:30 a.m.–11:00 a.m. | Morning Recess |
| 12:00 p.m.–1:00 p.m. | First Lunch Period |
| 1:00 p.m.–2:00 p.m. | Second Lunch Period |
| 3:30 p.m.–4:00 p.m. | Afternoon Recess |

Fig 2. Top to bottom, left to right: Snapshots of the face-to-face contact network at times (shown next to each graph) surrounding significant topological changes.

The construction of the dynamic graph proceeds as follows: time series of edges that correspond to face-to-face contacts describe the dynamics of the pairwise interactions between students. We divide the school day into N = 150 time intervals of Δt ≈ 200 s. We denote by ti = 0, Δt, …, (N − 1)Δt the corresponding temporal grid. For each ti we construct an undirected unweighted graph G(ti), where the n = 232 nodes correspond to the 232 students in the 10 classes, and an edge is present between two students u and v if they were in contact (according to the RFID tags) during the time interval [ti−1, ti).
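The binning step can be sketched in a few lines of Python. The sketch assumes that contacts are available as (t, u, v) triples with t in seconds. The layout of the released data file is not described here, so the parsing would need to be adapted, and `bin_contacts` is a hypothetical helper. Bin i covers [t0 + iΔt, t0 + (i + 1)Δt), that is, the interval the text indexes by its right endpoint.

```python
from collections import defaultdict


def bin_contacts(contacts, dt, n_bins, t0=0.0):
    """Group timestamped contacts into a sequence of undirected edge sets.

    contacts: iterable of (t, u, v) with t in seconds (assumed format).
    Bin i collects contacts with t0 + i*dt <= t < t0 + (i+1)*dt; repeated
    contacts within a bin yield a single unweighted edge (u, v) with u < v.
    """
    bins = defaultdict(set)
    for t, u, v in contacts:
        i = int((t - t0) // dt)
        if 0 <= i < n_bins and u != v:
            bins[i].add((min(u, v), max(u, v)))
    return [bins[i] for i in range(n_bins)]


contacts = [(5, 1, 2), (10, 2, 1), (250, 3, 4), (401, 1, 3), (999, 1, 2)]
print(bin_contacts(contacts, dt=200, n_bins=3))
```

Contacts falling outside the N bins (here the one at t = 999 s) are simply discarded, and empty bins produce empty edge sets so that the sequence always has N graphs.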

For the purpose of this work, we think of each class as a community of connected students; classes are weakly connected to one another (e.g., see Fig 2 at times 9:00 a.m. and 2:03 p.m.). During the school day, events such as lunchtime and recess trigger significant increases in the number of links between the communities, and disrupt the community structure; see Fig 2 at times 11:57 a.m. and 1:46 p.m.

2.5.2 European Union Emails

Description of the Data. The data were obtained from the Stanford Large Network Dataset Collection [97]. The network was generated using anonymized emails exchanged between 986 members of a large European research institution [95]. There are 986 nodes that correspond to distinct individuals sending and receiving emails. To reduce the variability in the data, we aggregate the emails exchanged every week, and perform an analysis at the week level. An edge was created between nodes i and j if both i sent at least one email to j and j sent at least one email to i during that week. The timeline starts on October 1, 2003 [95]. The graph distances were computed between the weekly graphs thus created.
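The weekly aggregation with its reciprocity rule can be sketched as follows. The sketch assumes each message is a (sender, recipient, t) triple with t in seconds since the start of the record. This is an assumption about the file format that should be checked against the dataset documentation, and the function name is hypothetical.

```python
from collections import defaultdict

SECONDS_PER_WEEK = 7 * 24 * 3600


def weekly_reciprocal_graphs(messages, n_weeks):
    """Weekly undirected graphs that keep only reciprocated email pairs.

    messages: iterable of (sender, recipient, t), t in seconds (assumed format).
    Edge {i, j} is present in week w iff i emailed j and j emailed i at least
    once during that week.
    """
    sent = defaultdict(set)  # week -> set of directed (sender, recipient) pairs
    for s, r, t in messages:
        w = int(t // SECONDS_PER_WEEK)
        if s != r and 0 <= w < n_weeks:
            sent[w].add((s, r))
    graphs = []
    for w in range(n_weeks):
        directed = sent[w]
        graphs.append({(min(s, r), max(s, r)) for s, r in directed if (r, s) in directed})
    return graphs


W = SECONDS_PER_WEEK
msgs = [(1, 2, 10), (2, 1, 20), (3, 4, 30), (3, 4, W + 5), (4, 3, W + 9), (5, 6, 2 * W)]
print(weekly_reciprocal_graphs(msgs, n_weeks=3))
```

A one-way message, such as 3 → 4 in the first week of the toy data, does not create an edge; the pair only appears in the week in which both directions occur.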

2.5.3 Functional brain connectivity

Graph theoretical analysis of the connective structure of the human brain is a popular research topic, and has benefited from our growing ability to analyze network topology [98–100]. In these graph representations of the brain, the vertices are physical regions of the brain, and the edges indicate the connectivity between two regions. The connective structure of the brain is examined either at the “structural” level, in which edges represent anatomical connection between two regions, or at the “functional” level, in which an edge connects regions whose activation patterns are in some sense similar. Developmental and mental disorders such as autism spectrum disorder [101] and schizophrenia [102] have been shown to have structural correlates in the graph representations of the brains of those affected. In this study we focus on autism spectrum disorder, or ASD.

Description of the Data. The Autism Brain Imaging Data Exchange [96, 103], or ABIDE, is an aggregation of brain-imaging data sets from laboratories around the world that study ASD [96]. The data that we focus on are measurements of the activity level in various regions of the brain, obtained via functional magnetic resonance imaging (fMRI).

After preprocessing, the data are checked for quality. Of the original 1114 subjects (521 ASD and 593 typically developing, TD), only 871 pass this quality-assurance step. The data of these subjects are then spatially aggregated via the Automated Anatomical Labelling (AAL) atlas, which reduces the spatial data to 116 time series, one per atlas region.

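To construct a graph from these time series, the pairwise Pearson correlation is calculated to measure similarity. If we let u and v denote two regions in the AAL atlas and let ρ(u, v) denote the Pearson correlation between the corresponding time series, the simplest way to build a graph is to assign weights w(u, v) = |ρ(u, v)|. We exclude low correlations, as these are often spurious and not informative as to the structure of the underlying network, and define the thresholded weights

$$w_\tau(u, v) = \begin{cases} |\rho(u, v)| & \text{if } |\rho(u, v)| \ge \tau, \\ 0 & \text{otherwise,} \end{cases}$$

where τ is a correlation threshold.

Finally, we also construct an unweighted graph according to

$$a_\tau(u, v) = \begin{cases} 1 & \text{if } |\rho(u, v)| \ge \tau, \\ 0 & \text{otherwise.} \end{cases}$$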

We will compare both binary and weighted connectomes, generated for multiple thresholds. This will allow us to be confident that our results are not artifacts of poorly chosen parameters in our definition of the connectome graph.
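The construction of weighted and binary connectomes from regional time series can be sketched with NumPy. The sketch assumes the rule "keep |ρ| when |ρ| ≥ τ" and removes self-loops. The threshold values used in our experiments are not restated here, and `connectomes` is a hypothetical name.

```python
import numpy as np


def connectomes(timeseries, tau):
    """Thresholded weighted and binary connectomes from regional time series.

    timeseries: array of shape (T, R), one column per atlas region
    (R = 116 for the AAL atlas). Assumed rule: keep |rho| when |rho| >= tau.
    A region with a constant time series would produce NaN correlations.
    """
    rho = np.corrcoef(timeseries, rowvar=False)  # (R, R) Pearson correlations
    rho = (rho + rho.T) / 2.0  # enforce exact symmetry despite round-off
    w = np.abs(rho)
    w[w < tau] = 0.0  # discard weak, likely spurious correlations
    np.fill_diagonal(w, 0.0)  # no self-loops
    a = (w > 0).astype(int)
    return w, a


rng = np.random.default_rng(0)
X = rng.standard_normal((200, 10))  # 200 time points, 10 synthetic regions
X[:, 1] = X[:, 0] + 0.1 * rng.standard_normal(200)  # regions 0 and 1 co-activate
w, a = connectomes(X, tau=0.5)
print(a.sum() // 2, "edges")
```

Sweeping `tau` over several values and repeating the downstream analysis is a direct way to check that conclusions do not hinge on one threshold.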

2.6 Evaluation protocol: The distance contrast

2.6.1 The distance contrast

The experiments are designed to mimic a scenario in which a practitioner is attempting to determine whether a given graph belongs to a population or is an outlier relative to that population.

Specifically, let us denote by $\mathcal{G}_0$ and $\mathcal{G}_1$ two graph populations, which we refer to as the null and alternative populations, respectively. For each distance measure d, let $D_0$ be the distribution of distances $d(G_0, G_0')$, where $G_0$ and $G_0'$ are both drawn from $\mathcal{G}_0$. Similarly, let $D_1$ be the distribution of distances $d(G_0, G_1)$, where $G_0$ is drawn from $\mathcal{G}_0$ and $G_1$ is drawn from $\mathcal{G}_1$.

The statistic $D_0$ characterizes the natural variability of the graph population $\mathcal{G}_0$, as seen through the lens formed by the distance d. Similarly, the statistic $D_1$ reveals how distant, according to the distance d, the two graph populations $\mathcal{G}_0$ and $\mathcal{G}_1$ are. If the distributions of $D_0$ and $D_1$ are well separated, then d is effective at differentiating the null population from the alternative population.

To that end, we normalize the statistics of $D_1$ by those of $D_0$ in order to compare them. In particular, let μi be the sample mean of $D_i$, and let σi be the sample standard deviation, for i ∈ {0, 1}. We define the following (normalized) contrast [104] between D0 and D1, whose samples are calculated via

$$\hat{c} = \frac{d_1 - \mu_0}{\sigma_0}, \qquad d_1 \sim D_1. \tag{12}$$

This studentized distance contrast can also be related to the Wald test statistic [104]

| (13) |

If the empirical distribution of the contrast is well separated from zero, that is, if samples of D1 typically exceed the sample mean μ0 by considerably more than the standard deviation σ0, then the distance effectively separates the null and alternative populations.
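The evaluation protocol can be sketched generically: draw independent null pairs to estimate μ0 and σ0, draw (null, alternative) pairs for D1, and standardize. The sketch assumes the contrast samples are (d − μ0)/σ0 for d drawn from D1, consistent with the description above. The samplers and distance are passed in as functions, and all names are hypothetical.

```python
import numpy as np


def distance_contrast(sample_null, sample_alt, dist, n_pairs=500, seed=0):
    """Samples of the studentized contrast (d(G0, G1) - mu0) / sigma0.

    sample_null(rng) and sample_alt(rng) each return one graph (or any object
    accepted by dist). D0 uses independent null pairs; D1 uses
    (null, alternative) pairs.
    """
    rng = np.random.default_rng(seed)
    d0 = np.array([dist(sample_null(rng), sample_null(rng)) for _ in range(n_pairs)])
    d1 = np.array([dist(sample_null(rng), sample_alt(rng)) for _ in range(n_pairs)])
    mu0, sigma0 = d0.mean(), d0.std(ddof=1)
    return (d1 - mu0) / sigma0


# Toy check with scalar "graphs": a large shift gives contrasts far from zero,
# while identical populations give contrasts centred near zero.
null = lambda rng: rng.normal(0.0, 1.0)
alt = lambda rng: rng.normal(10.0, 1.0)
gap = lambda x, y: abs(x - y)
print(distance_contrast(null, alt, gap, seed=1).mean())
print(distance_contrast(null, null, gap, seed=2).mean())
```

For graphs, `sample_null` and `sample_alt` would return, for example, adjacency matrices drawn from two of the random graph models above, and `dist` would be any of the distances under comparison.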

2.6.2 Comparisons of the random graph ensembles

Table 4 describes the various experiments. Each model is compared against a null model; the null model can be sampled either from the Erdős-Rényi model, or from a configuration model. The latter makes it possible to match the degree distribution of the model being tested against that of the null model. We compare the distance contrast in (12) between each model and the null model using all the distances. When appropriate, we also report the performance of the spectral distances for various k. Table 4 also displays the structural feature that is being evaluated for a particular experiment.

Table 4. Table of comparisons performed, and the important structural features therein.

G(n, p) indicates the Erdős-Rényi uncorrelated random graph, SBM is the stochastic blockmodel, PA is the preferential attachment model, CM is the degree matched configuration model, and WS is the Watts-Strogatz model.

| Section | Null | Alternative | Structural Difference |
|---|---|---|---|
| Stochastic Block Model | G(n, p) | SBM | Community structure |
| Preferential Attachment | G(n, p) | PA | High-degree vertices |
| Preferential Attachment vs Configuration Model | CM | PA | Structure not in the degree sequence |
| Watts-Strogatz | G(n, p) | WS | Local structure |
| Lattice Graph | G(n, p) | Lattice | Extreme local structure |

2.6.3 Comparisons of the real world networks

Primary School Face to Face Contact and European Union Emails. We quantify temporal changes in the graph topology using the various distance measures. For each distance measure d, we define the temporal difference between consecutive graphs in the time series,

$$\Delta d(t) = d(G_t, G_{t+1}).$$

To help compare these distances with one another, we normalize each by its sample mean $\langle \Delta d \rangle$, and we define the normalized temporal difference,

$$\widetilde{\Delta d}(t) = \frac{\Delta d(t)}{\langle \Delta d \rangle}.$$
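A minimal sketch of the normalized temporal difference for a sequence of adjacency matrices. The function names are hypothetical, the edit distance is taken here as the number of edges that differ between two undirected graphs, and pairing consecutive snapshots as d(G_t, G_{t+1}) is an assumption about the indexing convention.

```python
import numpy as np

def edit_distance(a, b):
    # Number of differing edges between two undirected graphs given as
    # symmetric 0/1 adjacency matrices (each edge appears twice, hence / 2).
    return np.abs(a - b).sum() / 2

def normalized_temporal_differences(snapshots, dist=edit_distance):
    """Distances between consecutive snapshots, divided by their sample mean."""
    diffs = np.array([dist(snapshots[t], snapshots[t + 1])
                      for t in range(len(snapshots) - 1)], dtype=float)
    return diffs / diffs.mean()

empty = np.zeros((3, 3))
one_edge = empty.copy()
one_edge[0, 1] = one_edge[1, 0] = 1
out = normalized_temporal_differences([empty, one_edge, one_edge])
```

Any of the distances in this paper can be passed as `dist`, provided it accepts two adjacency matrices of the same size.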

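Functional Brain Connectivity. We define $\mathcal{G}_1$ to be the set of connectomes computed from the ASD subjects, and $\mathcal{G}_0$ the set of connectomes from the control population (null model). The evaluation proceeds as described in Section 2.6. The distance contrast between the two populations is evaluated using the statistic defined in (12),

$$\frac{d(G_0, G_1) - \mu_0}{\sigma_0}, \qquad G_0 \in \mathcal{G}_0,\; G_1 \in \mathcal{G}_1, \tag{14}$$

where μ0 and σ0 are the sample mean and standard deviation of the distances between pairs of control connectomes.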

3 Results

3.1 Random graph ensembles

For each experiment described in Table 4, we generate 50 samples of D0 and D1, where each sample compares two graphs of size n = 1,000 unless otherwise specified.

The graphs are always connected: the sampler discards a draw from a random graph distribution if the resulting graph is disconnected. In other words, we draw from the distribution defined by the model, conditioned on the graph being connected.
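Conditioning on connectivity amounts to rejection sampling. The helper below is a hypothetical sketch that works with any networkx generator accepting a `seed` argument; passing one shared `random.Random` instance makes successive draws differ, whereas a fixed integer seed would reproduce the same graph on every attempt.

```python
import random
import networkx as nx

def sample_connected(generator, max_tries=1000, **params):
    """Draw from a random graph model conditioned on connectivity (rejection sampling).

    generator: callable returning a networkx graph, e.g. nx.gnp_random_graph.
    params: keyword arguments forwarded to the generator (hypothetical helper).
    """
    for _ in range(max_tries):
        g = generator(**params)
        if nx.is_connected(g):
            return g          # accept the first connected draw
    raise RuntimeError("no connected sample within max_tries")

rng = random.Random(0)        # shared RNG so successive draws differ
g = sample_connected(nx.gnp_random_graph, n=1000, p=0.02, seed=rng)
```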

The small size of the graphs allows us to use larger sample sizes: although fast approximate algorithms are available for all of the matrix distances we use, we run the slower, often O(n³), exact algorithms for the experiments, so larger graphs would be prohibitively slow to work with. In all the experiments, we choose parameter values so that the expected volumes of the two models under comparison are equal.

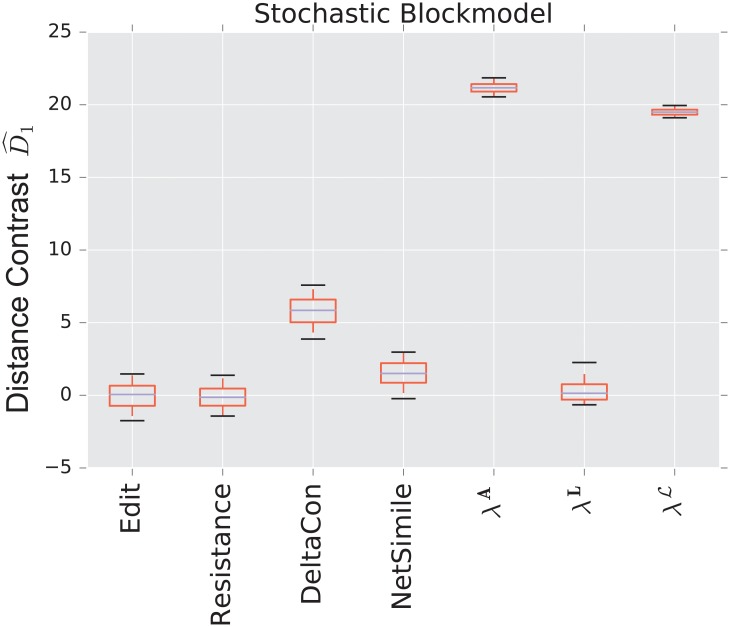

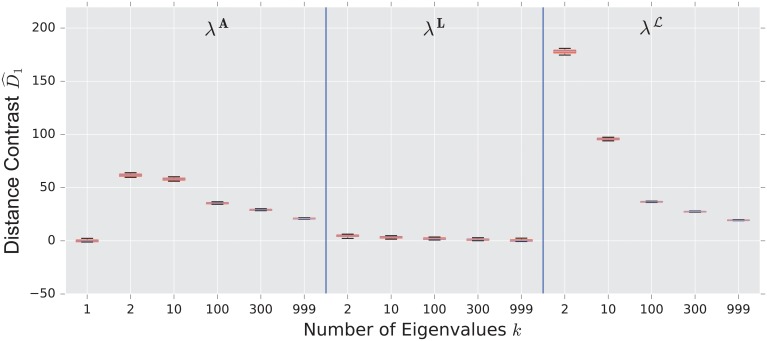

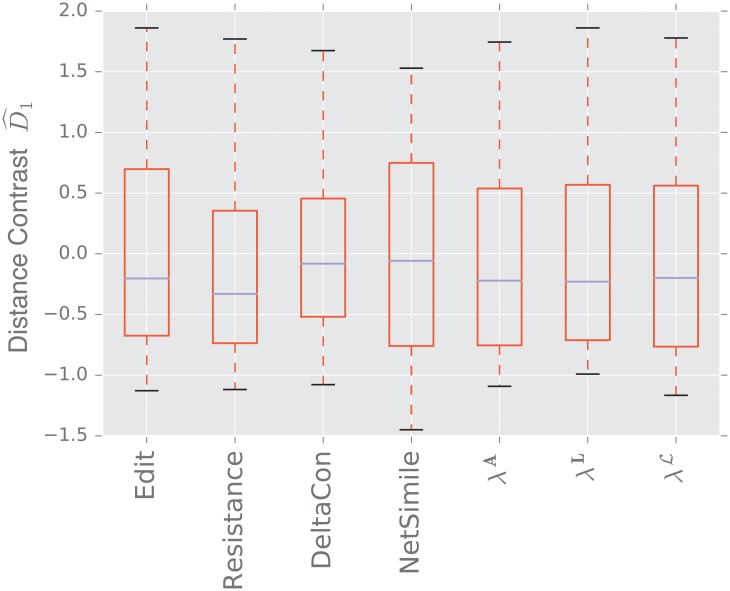

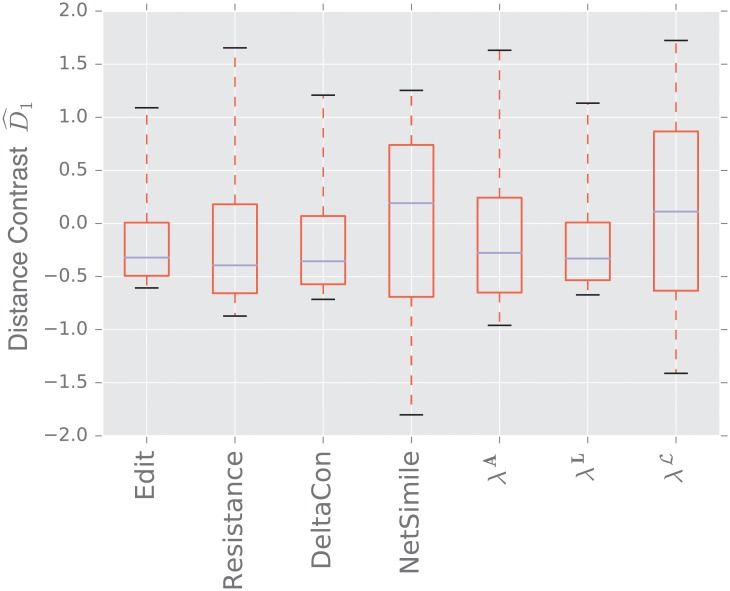

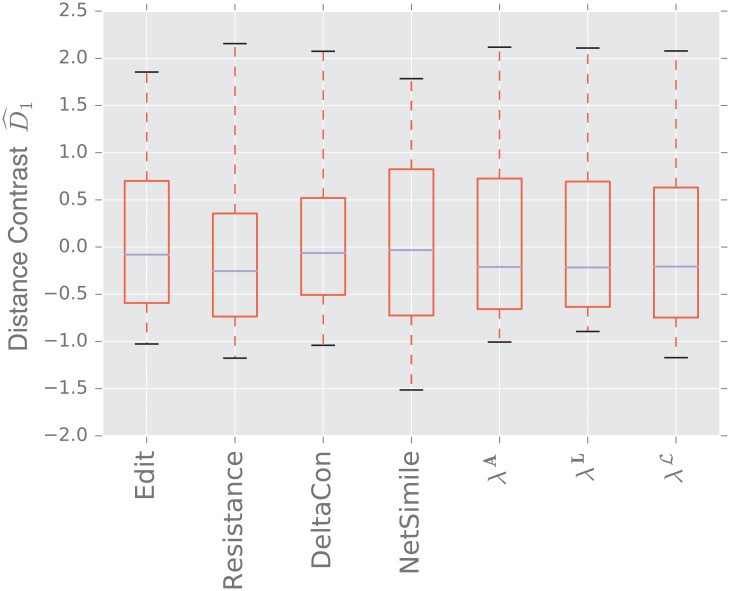

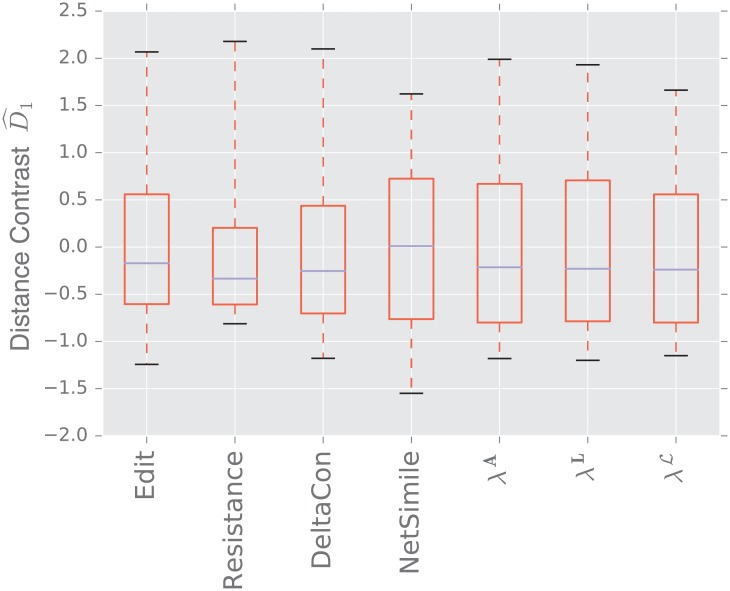

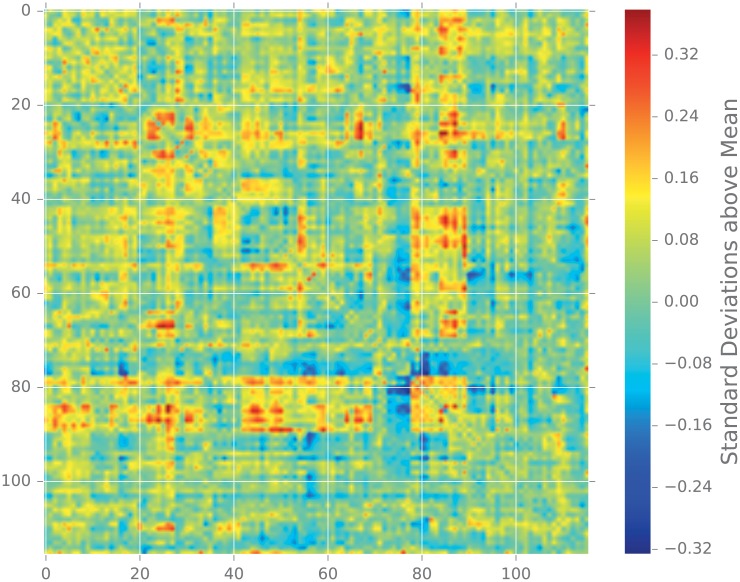

For each experiment, we display the performance of all the distances in a single figure (e.g., see Fig 3). Boxes extend from the lower to the upper quartile, with a center line at the median; whiskers extend from the 5th to the 95th percentile. We also display the contrast of the spectral distances for the three matrices (adjacency, combinatorial Laplacian, and normalized Laplacian) as a function of the number of eigenvalues used to compute the distance (e.g., see Fig 4).
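To make the role of the number k of eigenvalues concrete, here is a minimal sketch of a spectral distance that compares the first k eigenvalues of two graphs of equal size. The ordering is an assumption consistent with the discussion in this section (adjacency eigenvalues in decreasing order, Laplacian eigenvalues in increasing order); the Euclidean norm and the helper names are also assumptions, not the NetComp API.

```python
import numpy as np
import networkx as nx

def spectrum(g, kind="adjacency"):
    """Eigenvalues of the chosen matrix: adjacency descending, Laplacians ascending."""
    a = nx.to_numpy_array(g, nodelist=sorted(g.nodes()))
    deg = a.sum(axis=1)
    if kind == "adjacency":
        m = a
    elif kind == "laplacian":
        m = np.diag(deg) - a                          # combinatorial Laplacian L = D - A
    elif kind == "normalized_laplacian":
        s = 1.0 / np.sqrt(deg)                        # assumes no isolated vertices
        m = np.eye(len(deg)) - s[:, None] * a * s[None, :]
    else:
        raise ValueError(f"unknown matrix kind: {kind}")
    eig = np.linalg.eigvalsh(m)                       # ascending order
    return eig[::-1] if kind == "adjacency" else eig

def lambda_distance(g1, g2, k, kind="adjacency"):
    """Euclidean distance between the first k eigenvalues of two graphs of equal size."""
    return float(np.linalg.norm(spectrum(g1, kind)[:k] - spectrum(g2, kind)[:k]))
```

Evaluating the contrast of `lambda_distance` for each k in turn gives curves of the kind shown in the spectral-distance figures.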

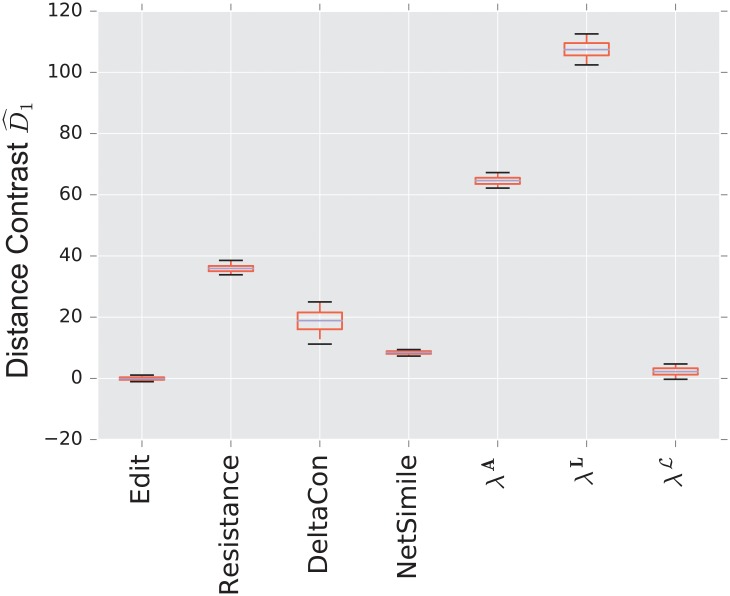

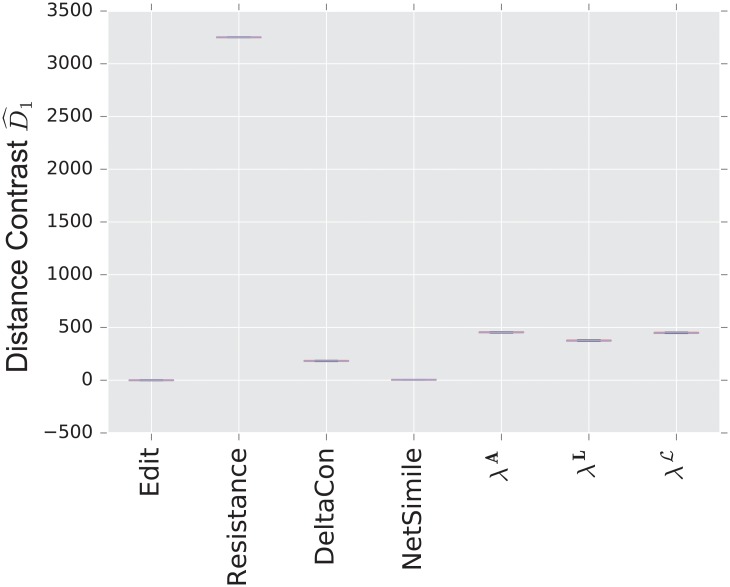

Fig 3. Distance contrast between the stochastic blockmodel and the uncorrelated random graph model (null model).

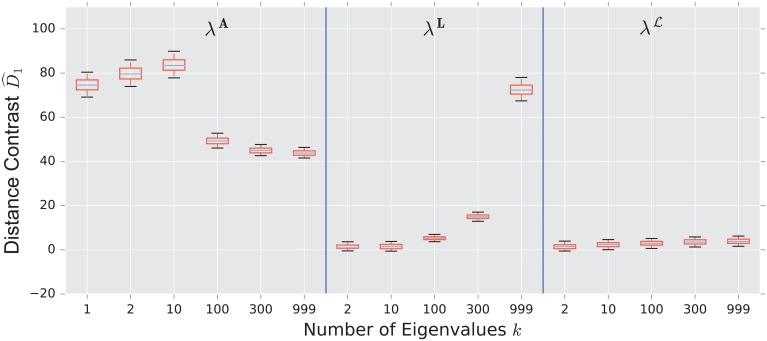

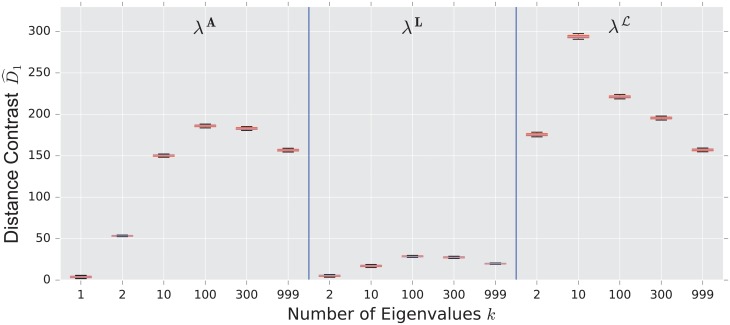

Fig 4. Contrast of the spectral distances for the three matrices (from left to right: adjacency, combinatorial Laplacian, and normalized Laplacian) between the stochastic blockmodel and the uncorrelated random graph model (null model).

3.1.1 Stochastic blockmodel

Fig 3 displays the comparison between a stochastic blockmodel and an uncorrelated random graph model (null model). The edge density p = 0.12 of the uncorrelated random graph is chosen so that graphs are connected with high probability; with this choice, the empirical probability of generating a disconnected uncorrelated random graph is ∼0.02%. Section 3.1.2 describes in more detail why this exact value is chosen.

The stochastic blockmodel is composed of two communities of equal size, n/2 = 500. Stochastic blockmodel experiments are run with in-community parameter p = 1.9 × 0.02, and cross-community parameter q = 0.1 × 0.02. Thus, the in-community connectivity is denser than the cross-community connectivity by a factor of p/q = 19.
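The two-community blockmodel can be sampled with networkx's `stochastic_block_model`. The sketch uses the parameters quoted above; with the default node labeling, nodes 0 to n/2 − 1 form the first community and the rest form the second, which is what the edge count below relies on.

```python
import networkx as nx

n = 1000
p_in, p_out = 1.9 * 0.02, 0.1 * 0.02      # in-community and cross-community probabilities
sizes = [n // 2, n // 2]                  # two communities of 500 vertices each
probs = [[p_in, p_out], [p_out, p_in]]
g = nx.stochastic_block_model(sizes, probs, seed=0)

# Nodes 0..499 form block 0 and nodes 500..999 form block 1.
within = sum(1 for u, v in g.edges() if (u < n // 2) == (v < n // 2))
cross = g.number_of_edges() - within
ratio = p_in / p_out                      # approximately 19
```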

Since we have matched the volume of the graphs, the edit distance fails to distinguish the two models. Among the matrix distances, DeltaCon separates the two models most reliably. The adjacency and normalized Laplacian distances perform well. The resistance perturbation distance and the non-normalized Laplacian distance fail to distinguish the two models.

As Fig 4 confirms, the performance of the adjacency distance is driven primarily by differences in the second eigenvalue of the adjacency matrix, and including further eigenvalues adds no benefit. The normalized Laplacian distance also draws most of its benefit from the second eigenvalue but, unlike the adjacency distance, its performance decreases as more eigenvalues are included.

3.1.2 Preferential attachment vs uncorrelated

Fig 5 shows the results of comparing a preferential attachment graph to an uncorrelated random graph. The preferential attachment graph is quite dense, with l = 6. Since the number of edges in this model is always |E| = l(n − l), we determine the parameter p for the uncorrelated graph via

$$p = \frac{l(n - l)}{\binom{n}{2}} = \frac{2\,l(n - l)}{n(n - 1)},$$

so that both graphs have the same expected volume.
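The volume-matching rule can be checked numerically. Setting the expected edge count of G(n, p), p·n(n − 1)/2, equal to a target edge count gives p; the sketch applies it to the preferential attachment model (l(n − l) edges) and to the Watts-Strogatz model (nk/2 edges, assuming, as in networkx's generator, that rewiring moves edges without changing their number). The function name is hypothetical.

```python
from math import comb

def matched_density(num_edges, n):
    """Edge probability p such that G(n, p) has num_edges edges in expectation."""
    return num_edges / comb(n, 2)

n = 1000
l = 6                                     # edges added per new vertex (preferential attachment)
p_pa = matched_density(l * (n - l), n)    # |E| = l(n - l)
k = 20                                    # Watts-Strogatz ring degree
p_ws = matched_density(n * k // 2, n)     # ring lattice has nk/2 edges

print(f"{p_pa:.6f}")                      # 0.011940
print(f"{p_ws:.6f}")                      # 0.020020
```

The Watts-Strogatz value, 10,000/499,500 ≈ 0.020020, agrees with the value quoted for that experiment in Section 3.1.4.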

Fig 5. Distance contrast between the preferential attachment and the uncorrelated random graph model (null model).

Again, because both graphs have the same number of edges (with high probability), the edit distance fails to distinguish the two models. The resistance distance shows mediocre performance, although 0 is outside the 95% confidence interval. DeltaCon exhibits extremely high variability, although it has the highest median of the matrix distances.

The combinatorial Laplacian distance outperforms all others, while the normalized Laplacian distance does not separate the two models at all. Fig 6 shows that the very fine scale eigenvalues of the combinatorial Laplacian (large index) are needed to differentiate the two models. Conversely, the discriminating eigenvalues of the adjacency matrix are those of smallest index: the first eigenvalue alone captures much of the contrast, and the distance contrast (12) stays more or less constant as k increases (see Fig 6).

Fig 6. Contrast of the spectral distances for the three matrices (from left to right: adjacency, combinatorial Laplacian, and normalized Laplacian) between the preferential attachment and the uncorrelated random graph model (null model).

3.1.3 Preferential attachment vs configuration model

To further explore the distinctive features of the preferential attachment graphs, we now change the null model. Instead of a volume matched uncorrelated random graph model, we use a degree matched configuration model as the null model (the volume is then matched automatically, since the number of edges is half the sum of the degrees). This experiment allows us to search for structure in the preferential attachment model that is not prescribed by the degree distribution.
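A degree-matched null can be approximated with degree-preserving edge swaps. This is a substitute technique, not necessarily how the configuration-model samples in this paper were drawn: networkx's `configuration_model` returns a multigraph that may contain self-loops and parallel edges, whereas `double_edge_swap` keeps the graph simple and every vertex degree fixed. The helper name and the number of swaps per edge are arbitrary assumptions.

```python
import networkx as nx

def degree_matched_null(g, swaps_per_edge=10, seed=None):
    """Randomize g while preserving every vertex degree (hypothetical helper).

    Stand-in for a configuration-model draw: the result is a simple graph with
    the same degree sequence, and therefore the same number of edges (volume).
    """
    h = g.copy()
    m = h.number_of_edges()
    nswap = swaps_per_edge * m
    nx.double_edge_swap(h, nswap=nswap, max_tries=100 * nswap, seed=seed)
    return h

pa = nx.barabasi_albert_graph(1000, 6, seed=0)
null = degree_matched_null(pa, seed=1)
```

Edge swaps can disconnect the graph, so a sampler that conditions on connectivity would still need to reject disconnected draws.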

An intriguing result emerges: not a single distance can differentiate between a preferential attachment graph and a randomized graph with the same degree distribution (see Fig 7).

Fig 7. Distance contrast between the preferential attachment model and the degree matched configuration model (null model).

The spectral distance based on the eigenvalues λL of the combinatorial Laplacian, which yields the strongest contrast when comparing the preferential attachment model to the uncorrelated random graph model, is now ineffective. This experiment suggests that all significant structural features of the preferential attachment model are prescribed by its degree distribution.

3.1.4 Watts-Strogatz

The Watts-Strogatz experiments used n = 1,000 vertices, k = 20 nearest neighbors, and rewiring probability β = 0.1; the edge density of the uncorrelated null model was p = 0.020020… (calculated so that the volumes match).

The Watts-Strogatz model is sparse; since we match the number of edges, the uncorrelated random graph has a low value of p and is very likely disconnected. This is only a significant problem for the resistance distance, which is undefined for disconnected graphs. To remedy this, we use an extension of the resistance distance, called the renormalized resistance distance, which is developed and analyzed in [29]. This is the only experiment that requires this variant of the resistance distance.
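For reference, the effective resistance matrix underlying the resistance distance can be computed from the Moore-Penrose pseudoinverse of the Laplacian, using R(u, v) = L⁺(u, u) + L⁺(v, v) − 2L⁺(u, v). The sketch refuses disconnected inputs, which is exactly the case that motivates the renormalized variant from [29]; that variant is not implemented here, and the entrywise ℓ1 norm used to compare two resistance matrices is an assumption.

```python
import numpy as np
import networkx as nx

def effective_resistance(g):
    """Effective resistance matrix of a connected graph via the Laplacian pseudoinverse."""
    if not nx.is_connected(g):
        # Resistance between different components is infinite, so R is undefined.
        raise ValueError("graph is disconnected")
    lap = nx.laplacian_matrix(g, nodelist=sorted(g.nodes())).toarray().astype(float)
    lp = np.linalg.pinv(lap)
    diag = np.diag(lp)
    return diag[:, None] + diag[None, :] - 2 * lp

def resistance_distance(g1, g2):
    """Entrywise l1 difference of effective resistance matrices (assumed form)."""
    return float(np.abs(effective_resistance(g1) - effective_resistance(g2)).sum())

R = effective_resistance(nx.path_graph(3))   # endpoints of a 3-vertex path: R = 2
```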

Fig 8 shows that the spectral distances based on the adjacency and normalized Laplacian matrices are the strongest performers. Among the matrix distances, DeltaCon strongly outperforms the resistance distance. The resistance distance here shows a negative median, which indicates smaller distances between populations than within the null population. This is likely due to the many (randomly partitioned) disconnected components within this particular null model, which inflate the distances generated by the renormalized resistance distance. It is notable that, contrary to the comparison in Section 3.1.2, the normalized Laplacian outperforms the combinatorial Laplacian.

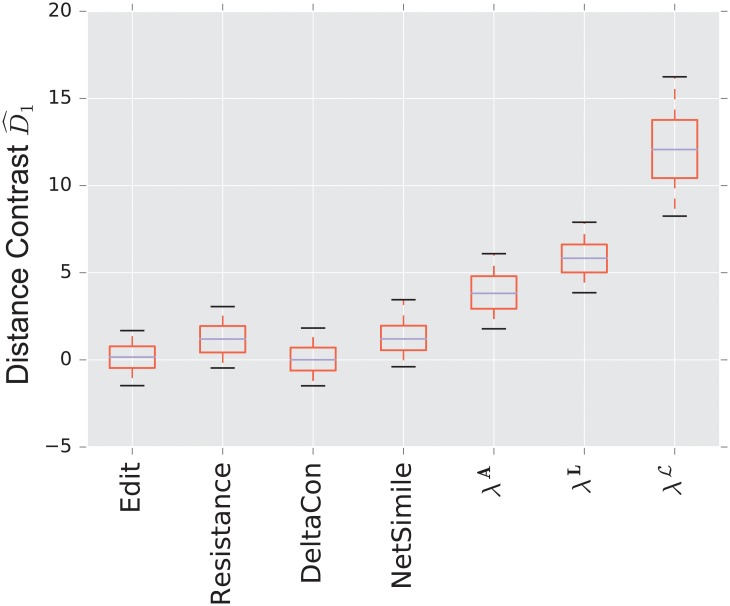

Fig 8. Distance contrast between a small-world graph and the degree matched configuration model (null model).

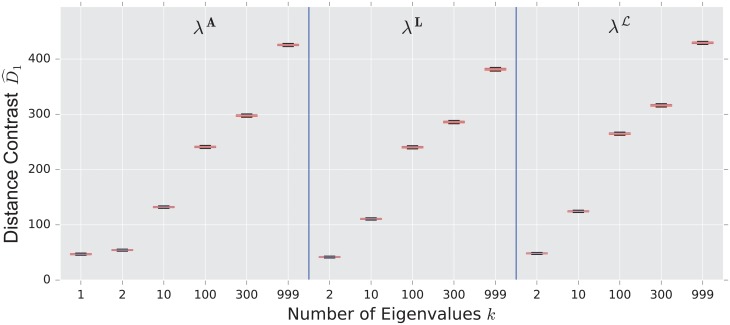

Fig 9 displays the results for the spectral distances over a wide range of k. This figure illustrates that both coarse scales and fine scales (large k) are necessary to yield the optimal contrast between the two models.

Fig 9. Contrast of the spectral distances for the three matrices (from left to right: adjacency, combinatorial Laplacian, and normalized Laplacian) between the small-world graph and the degree matched configuration model (null model).

3.1.5 Lattice graph

The final experiment compares a lattice graph to a configuration model graph with the same degree distribution.

The lattice graphs are 100 × 10, giving a total of 1,000 vertices.

The lattice here is highly structured, while the configuration model graph is quite similar to an uncorrelated random graph; both the deterministic degree distribution of the lattice and the binomial distribution of the uncorrelated random graph are highly concentrated around their respective means.

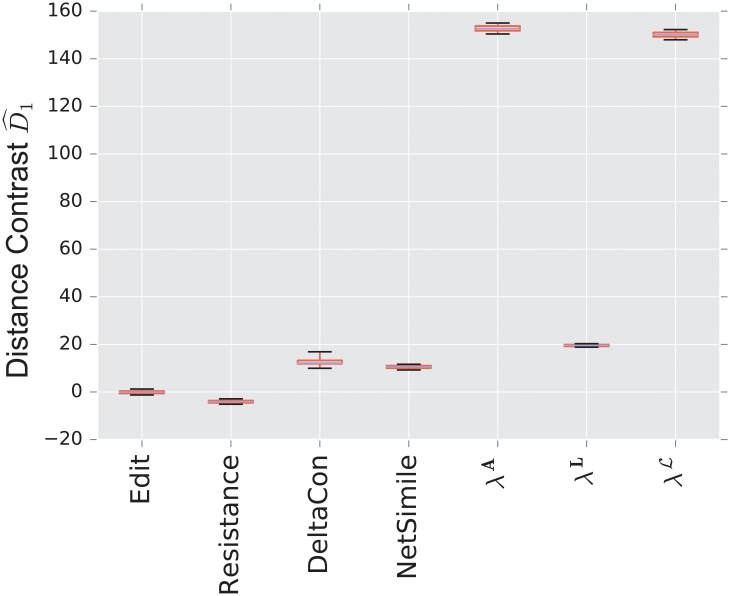

Fig 10 shows that, for some of the distances, the scaled distances in this experiment are about an order of magnitude higher than in the other experiments; because the lattice is such an extreme example of regularity, many of the distances easily discern between these two models. The resistance distance has the highest performance, while the spectral distances all perform equally well. Note that for a d-regular graph, L = dI − A and the normalized Laplacian equals I − A/d, so the eigenvalues of A, L, and the normalized Laplacian are all equivalent up to an overall scaling and shift; we would therefore expect near-identical performance for graphs that are nearly regular.
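The shift-and-scale relation is easy to verify numerically on a graph that is exactly regular. The sketch uses a 10 × 10 periodic grid (a torus, in which every vertex has degree 4) rather than the non-periodic lattice of the experiment, whose boundary vertices have lower degree and which is therefore only nearly regular.

```python
import numpy as np
import networkx as nx

# 10 x 10 periodic grid (torus): every vertex has degree d = 4.
g = nx.grid_2d_graph(10, 10, periodic=True)
d = 4

# All three matrices use the same default node order, g.nodes().
a = nx.adjacency_matrix(g).toarray().astype(float)
lap = nx.laplacian_matrix(g).toarray().astype(float)
nlap = nx.normalized_laplacian_matrix(g).toarray()

eig_a = np.linalg.eigvalsh(a)      # ascending order
eig_l = np.linalg.eigvalsh(lap)
eig_n = np.linalg.eigvalsh(nlap)

# Affine relations for a d-regular graph: lambda_L = d - lambda_A, lambda_norm = 1 - lambda_A / d.
print(np.allclose(eig_l, np.sort(d - eig_a)))       # True
print(np.allclose(eig_n, np.sort(1 - eig_a / d)))   # True
```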

Fig 10. Distance contrast between the 10 × 100 two-dimensional lattice graph and the degree matched configuration model (null model).

The spectral distances need all the scales (i.e., all the eigenvalues) to discern between the lattice and the configuration model (see Fig 11). This phenomenon, also observed for the Watts-Strogatz model (see Section 3.1.4), points to the importance of local structure in the topology of the lattice graph.

Fig 11. Contrast of the spectral distances for the three matrices (from left to right: adjacency, combinatorial Laplacian, and normalized Laplacian) between the 10 × 100 two-dimensional lattice graph and the degree matched configuration model (null model).

3.2 Real world networks

3.2.1 Primary school face to face contact

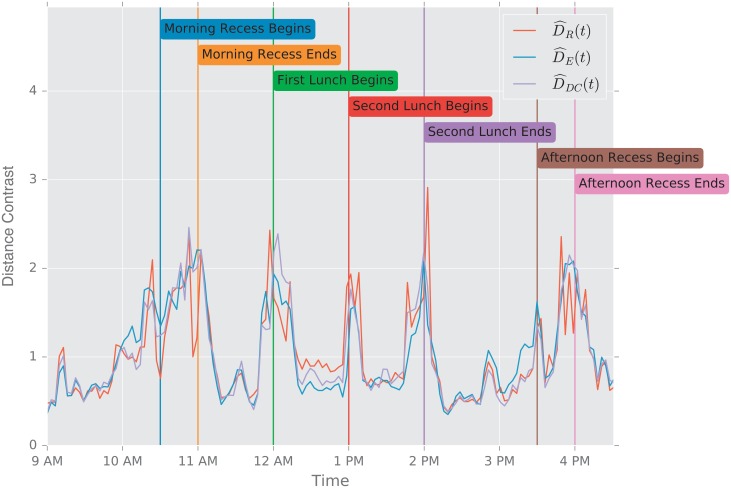

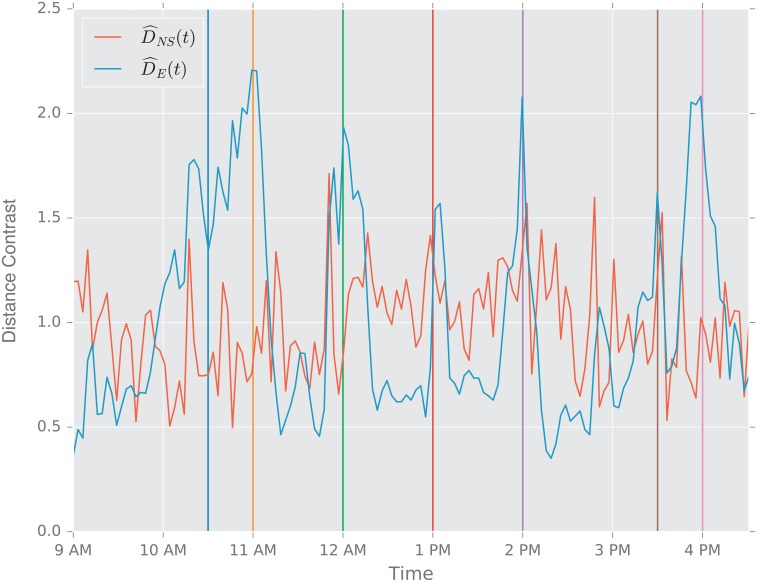

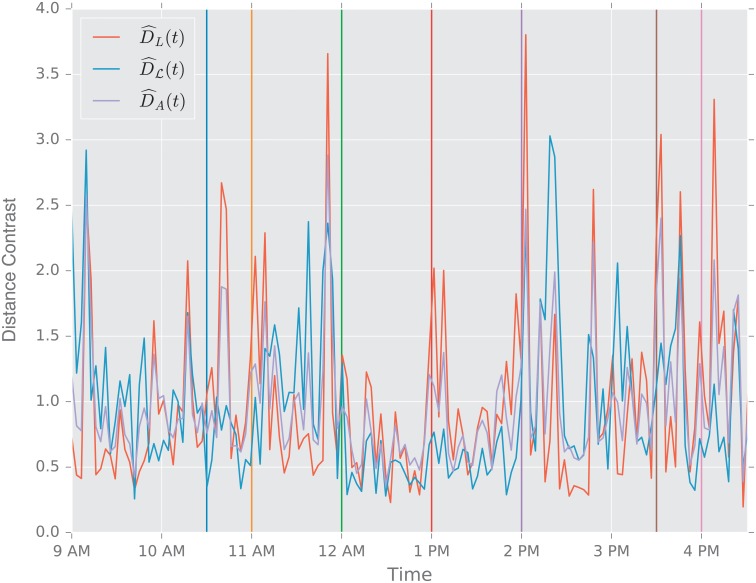

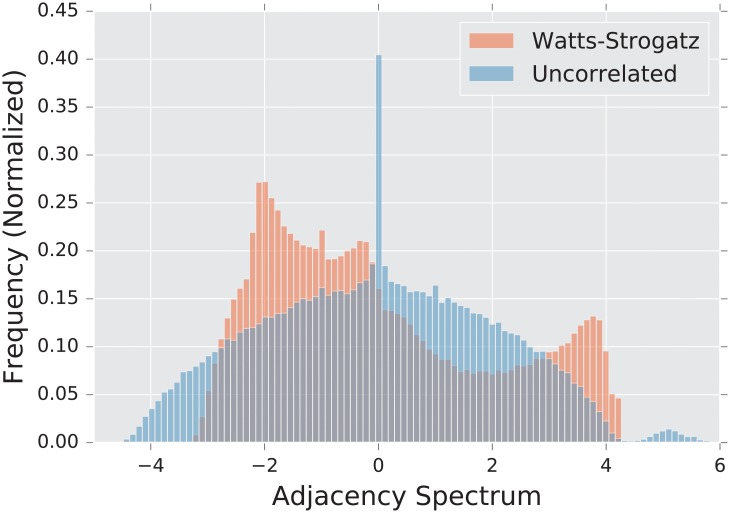

Fig 12 displays the normalized temporal differences for the resistance distance, the edit distance, and the DeltaCon distance. All the matrix distances detect the significant changes in the topology of the contact network that are driven by the hidden events (the school schedule) during the day (see Fig 2). Indeed, the main structural changes that the graph undergoes are transitions into and out of a strong ten-community structure that reflects the classrooms of the school. For example, the adjacency matrix begins as (mostly) block-diagonal at 9 AM, has significant off-diagonal elements by morning recess at 10:20 AM, and is no longer (block) diagonally dominant by the lunch period at 12 PM.

Fig 12. Primary school data set: Normalized temporal differences for the resistance distance, edit distance, and DeltaCon distance.

A persistent random variability of the very fine scale connectivity (e.g., edges that come and go within a community) is superimposed on the large scale structural changes. Unlike the matrix distances (displayed in Fig 12), NetSimile is significantly affected by these random fluctuations (see Fig 13).

Fig 13. Primary school data set: Normalized temporal differences for the NetSimile distance and the edit distance.

The stochastic variability in the connectivity appreciably influences the high frequency (fine scale) eigenvalues. Consequently, the spectral distances, which are computed using all the eigenvalues, yield very noisy normalized temporal differences (see Fig 14), making it difficult to detect the significant changes in the graph topology triggered by the school schedule.

Fig 14. Primary school data set: Normalized temporal differences for the three spectral distances: combinatorial Laplacian, normalized Laplacian, and adjacency.

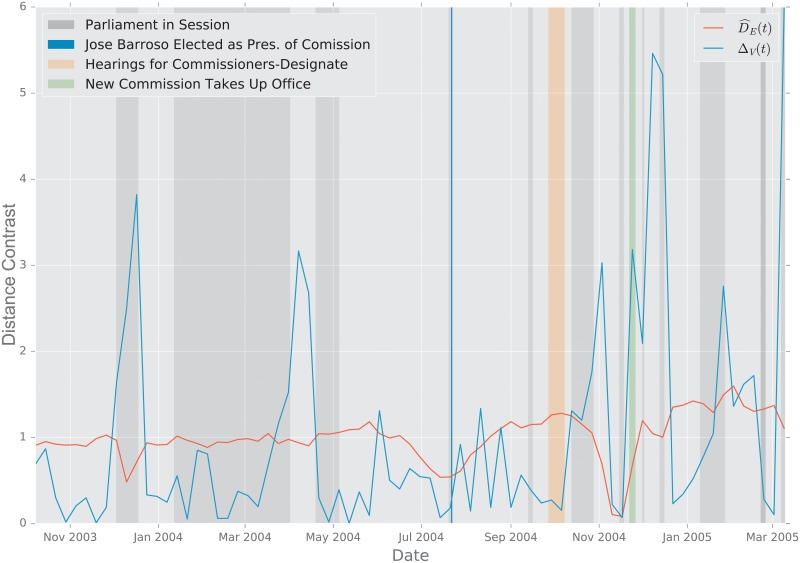

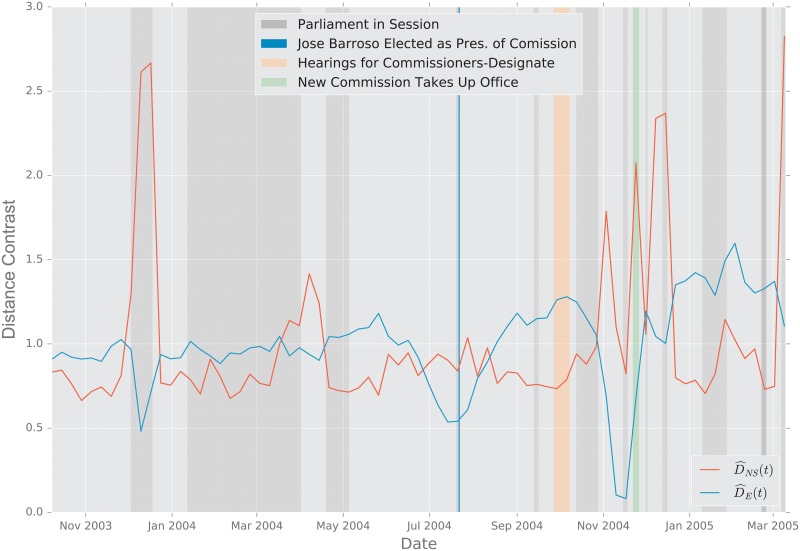

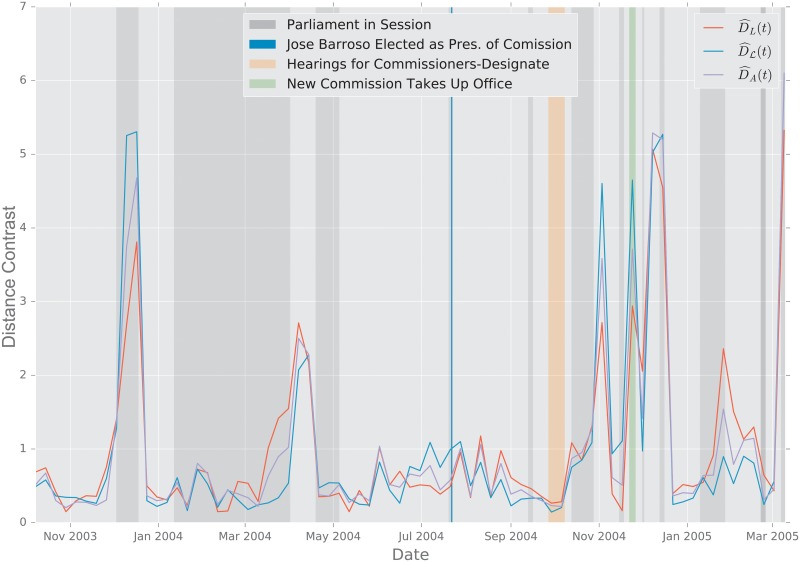

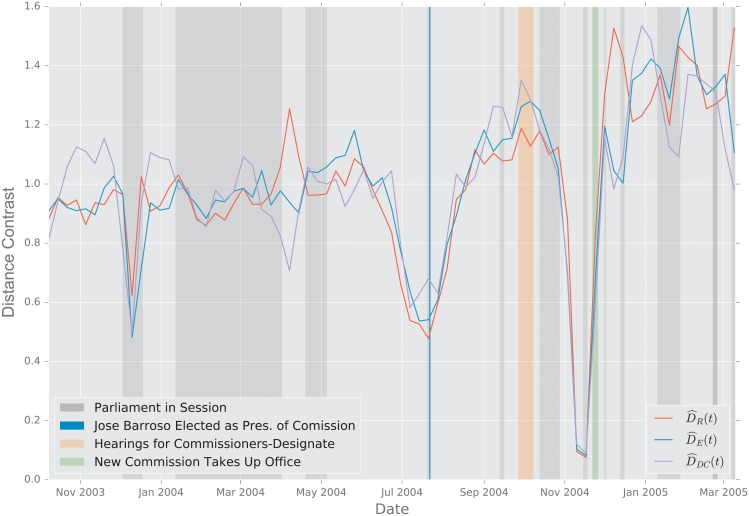

3.2.2 European Union Emails