Abstract.

Planning of radiotherapy involves accurate segmentation of a large number of organs at risk (OAR), i.e., organs for which irradiation doses should be minimized to avoid important side effects of the therapy. We propose a deep learning method for segmentation of OAR inside the head, from magnetic resonance images (MRIs). Our system performs segmentation of eight structures: eye, lens, optic nerve, optic chiasm, pituitary gland, hippocampus, brainstem, and brain. We propose an efficient algorithm to train neural networks for an end-to-end segmentation of multiple and nonexclusive classes, addressing problems related to computational costs and missing ground truth segmentations for a subset of classes. We enforce anatomical consistency of the result in a postprocessing step. In particular, we introduce a graph-based algorithm for segmentation of the optic nerves, enforcing the connectivity between the eyes and the optic chiasm. We report cross-validated quantitative results on a database of 44 contrast-enhanced T1-weighted MRIs with provided segmentations of the considered OAR, which were originally used for radiotherapy planning. In addition, the segmentations produced by our model on an independent test set of 50 MRIs were evaluated by an experienced radiotherapist in order to qualitatively assess their accuracy. The mean distances between produced segmentations and the ground truth ranged from 0.1 to 0.7 mm across different organs. A vast majority (96%) of the produced segmentations were found acceptable for radiotherapy planning.

Keywords: segmentation, organs at risk, radiotherapy, convolutional neural networks, magnetic resonance image

1. Introduction and Related Work

Malignant tumors of the central nervous system cause more than 200,000 deaths per year worldwide.1 Many brain cancers are treated with radiotherapy, often combined with other types of treatment, in particular, surgery and chemotherapy. Radiotherapy planning requires segmentation of target volumes (visible tumor mass and regions likely to contain tumor cells) and anatomical structures that are susceptible to damage by ionizing radiation exposure during treatment. The segmented volumes are used for computation of optimal irradiation doses, with the objective of maximizing irradiation of cancer cells while minimizing damage to neighboring healthy structures, called organs at risk (OAR). Magnetic resonance images (MRIs)2 are commonly used for imaging of tumors and organs in the head. In this work, we address the challenging problem of multiclass segmentation of organs in MRI of the brain.

Delineation of OAR is currently performed manually by experienced clinicians. Due to the large number of structures to be accurately segmented, the segmentation process usually takes several hours per patient. Manual segmentation therefore represents a very high cost and may delay the beginning of the therapy. Moreover, high intraobserver and interobserver variability is observed.3 Automatic methods for segmentation of OAR are therefore of particular interest. We can distinguish two main types of approaches proposed in the literature.

The first type of methods corresponds to atlas-based approaches.4–6 The input image is typically registered to one7,8 or several9,10 annotated images, from which the segmentation is extrapolated. When multiple atlases are used, the candidate segmentations may be combined, for instance, by voting strategies10 or by the STAPLE algorithm.11 An important advantage of atlas-based methods is to produce anatomically consistent results. However, their main drawback is their limited generalization capacity. The important variability between cases results not only from the natural anatomical differences between patients but also from pathological factors. In particular, healthy organs are deformed by growing tumors, which may appear at different locations and which are typically not present in atlases. Some organs may even be missing because of surgeries undergone previously by the patient.

The second group of approaches is based on a discriminative classification of voxels with machine learning models such as random forests12–14 or convolutional neural networks (CNNs).15 These discriminative methods are less constrained than atlas-based approaches and may, therefore, better adapt to the diversity of cases. However, in general, voxelwise classifiers may produce results that are inconsistent in terms of shapes and locations of organs.

OAR in the head have complex shapes and are surrounded by other structures sharing similar voxel intensities in MRI. Moreover, there are large differences related to the acquisition of MRIs, especially when images come from different medical centers. In order to segment organs from MRI, complex and abstract information therefore has to be extracted. CNNs are suitable for this task, as they have the ability to automatically learn complex and relevant image features. In this work, we propose a system based on CNNs for multiclass segmentation of OAR in brain MRI.

In this work, we assume nonexclusive classes, i.e., that one voxel may belong to zero or several classes (Fig. 1). This is in contrast with the majority of segmentation models, which assign one unique label to each voxel following the format of public segmentation challenges such as BRATS.16 However, some works addressing OAR segmentation consider nonexclusive classes17–20 similar to our work. An important difficulty in training machine learning models for multiclass OAR segmentation is the varying availability of ground truth segmentations of different classes among patients, depending on clinical needs. Although some organs, such as the optic nerve, are systematically segmented during radiotherapy planning, annotations of other structures may be available for only a subset of patients. One solution to this problem is to independently train one model per class, as was proposed in some recent deep learning works.19–21 A limitation of this approach, however, is the need to perform a time-consuming training for every class, while the number of classes of interest may be large. In this work, we propose a loss function and an algorithm to train neural networks for end-to-end multiclass segmentation, taking into account the problem of missing annotations. To the best of our knowledge, the only deep learning method for end-to-end multiclass OAR segmentation that addresses this issue is the one proposed22 for the segmentation of head and neck OAR in CT scans.

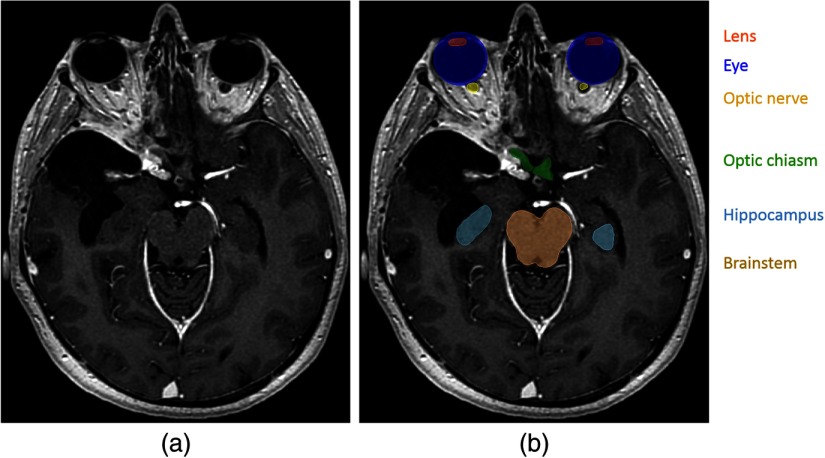

Fig. 1.

Segmentation of OAR in radiotherapy planning. (a) T1-weighted MRI acquired after injection of a gadolinium-based contrast agent. (b) Manual annotations of several OAR. In contrast to standard segmentation problems, one voxel may belong to zero or several classes (for instance, the eye and the lens).

The network architecture used in our work is a modified version of 2-D U-Net.23 As computation of gradients of the loss function by backpropagation represents high computational costs and GPU memory load, large segmentation CNNs often cannot be trained on entire MRIs or CT scans (representing several millions of voxels). Input images are often downsampled18,24 to allow large 3-D CNNs to capture information from distant regions, i.e., to have a long-range 3-D receptive field. In this work, we have chosen a 2-D architecture to limit the memory load while capturing a long-range spatial context without the need to downsample input images. In particular, downsampling of inputs may affect segmentation of small structures such as the optic nerve or the lens.

Even if most of the proposed deep learning methods for OAR segmentation do not apply anatomical constraints on the output of neural networks, some approaches include shape priors in models. For instance, Tong et al.25 proposed to learn latent representations of shapes of organs by a stacked autoencoder and to use these learned representations in the loss function of a segmentation network, in order to compare the shape of the output with the shape of the ground truth. References 26 and 27 proposed to adapt triangulated meshes representing organ boundaries to medical images and to use neural networks for regression of distances between centers of triangles and organ boundaries. This type of approach may, therefore, be seen as atlas-based with the use of deep learning for boundary detection.

Spatial relations between anatomical structures in the head could be explicitly modeled using, for instance, graph-based representations as it was proposed in model-based methods.28,29 However, inclusion of constraints related to connectivity and relative positions of organs in loss functions of CNNs is not trivial due to considerable computational costs. In order to apply such constraints, a neural network would have to segment large regions of the input images during the training phase. Segmentation of large 3-D volumes containing different anatomical structures requires a considerable amount of the GPU memory, as outputs of all layers of the network are stored in the GPU during computation of gradients of the loss function. Moreover, penalization of anatomical inconsistencies during the training does not guarantee anatomically consistent results at the test phase. To the best of our knowledge, none of the proposed deep learning methods explicitly enforces consistency of OAR segmentation in terms of relative positions of organs. However, some methods define regions of interest of organs, for instance by registering the image to a set of atlases.19

In our work, we enforce some anatomical constraints in a postprocessing stage, starting from the segmentation produced by majority voting of 2-D CNNs processing the image by axial, coronal, and sagittal slices. In particular, we propose an anatomically consistent segmentation of the optic nerves, with an approach based on the search of the shortest path in a graph, using outputs of neural networks to define weights of edges in the graph. Application of anatomical constraints in postprocessing modules rather than in the deep learning model is mainly motivated by computational costs of CNNs but also by their “black box” aspect.

We consider eight classes of interest, corresponding to anatomical structures systematically segmented during radiotherapy planning for brain cancers: eye, lens, optic nerve, optic chiasm, pituitary gland, hippocampus, brainstem, and brain (including cerebrum, cerebellum, and brainstem). The anatomical structures composed of left and right components (eye, lens, optic nerve, and hippocampus) are seen as one entity by the neural network but are separated in the postprocessing step.

Most of the proposed deep learning methods for segmentation of OAR were applied on CT scans in the context of head and neck cancers,30 i.e., cancers of the upper parts of respiratory and digestive systems (mouth, larynx, and throat). To the best of our knowledge, the only deep learning method for segmentation of OAR in MRIs of the brain is the one proposed in Ref. 27 (MRI T1 and T2).

Our method is tested on a set of contrast-enhanced T1-weighted MRIs acquired in the Centre Antoine Lacassagne in Nice (France). First, our method is quantitatively evaluated on a set of 44 MRIs with provided segmentation of different anatomical structures. Segmentation performances are measured by three different metrics: Dice score, Hausdorff distance, and the mean distance between the output and the ground truth. Then the segmentations produced by our method on a different set of 50 MRIs are qualitatively evaluated by an experienced radiotherapist. Our system was able to produce segmentations with an accuracy level that was found acceptable for radiotherapy planning in a large majority of cases (96%). The mean distances between the output segmentation and the ground truth for different organs were between 0.1 and 0.7 mm.

2. Methods

2.1. Deep Learning Model

2.1.1. Network architecture

The architecture used in our work is a modified version of 2-D U-Net,23 which is composed of an encoding part and a decoding part. The encoding part is a sequence of convolutional and max-pooling layers. The number of feature maps is doubled after each pooling, taking advantage of their reduced dimensions. The decoding part is composed of convolutional and upsampling layers. Feature maps of the encoding part are concatenated in the decoding part in order to combine low-level and high-level features and to ease the flow of gradients during the optimization process. The final convolutional layer (the segmentation layer) of the standard U-Net has two feature maps, representing pixelwise classification scores of the class 0 (“background”) and the class 1. During training, these two final feature maps are normalized by the softmax function.

We adapt this architecture to our problem of multiclass segmentation with nonexclusive classes, where each pixel may belong to zero or several classes. In the following, $K$ denotes the number of classes (in our experiments, $K = 8$) and the classes are numbered from 1 to $K$. In our model, each class has its dedicated binary segmentation layer (Fig. 2), composed of two feature maps corresponding to pixelwise scores of the class and of the background. Each segmentation layer takes as input the output of the second-to-last convolutional layer of U-Net. We use batch normalization31 in all convolutional layers of the network, except the segmentation layers.
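To make the "one two-map layer per class" idea concrete, here is a minimal NumPy sketch of the output stage only. It assumes that each segmentation layer acts as a 1x1 convolution (a per-pixel linear map) on the shared features; the kernel size and the names `multi_head_probs`, `weights`, and `biases` are hypothetical. The key point is that the softmax is taken over the two maps of each head, never across classes, so a pixel can be positive for several classes at once.

```python
import numpy as np

def multi_head_probs(features, weights, biases):
    """Per-class foreground probabilities from K independent two-map heads.

    features: (H, W, F) output of the shared second-to-last layer.
    weights:  (K, F, 2) one 1x1-convolution kernel per class (assumption).
    biases:   (K, 2) one bias pair per class.
    Returns (K, H, W): probability that each pixel belongs to each class.
    """
    # Scores of every head: (K, H, W, 2); index 0 = background, 1 = class.
    scores = np.einsum("hwf,kfc->khwc", features, weights) + biases[:, None, None, :]
    # Softmax over the two maps of each head only (numerically stabilized).
    scores = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(scores)
    return exp[..., 1] / exp.sum(axis=-1)
```

Because the heads are independent, the probabilities of different classes at one pixel need not sum to 1, which is exactly what nonexclusive classes such as the eye and the lens require.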

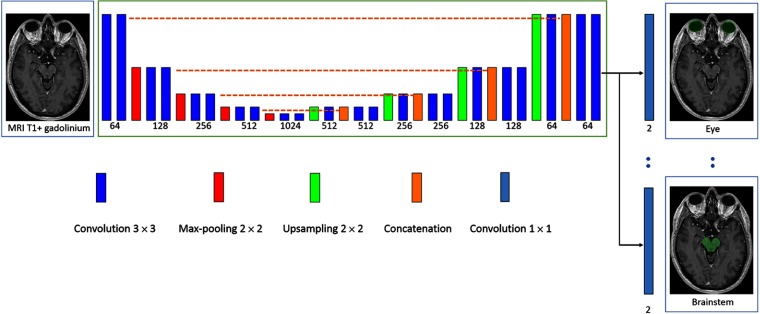

Fig. 2.

Architecture of our model. The rectangles represent layers and their height represents the sampling factor (increasing with max-poolings, decreasing with upsamplings). The numbers of feature maps are specified below the layers. The proposed model is a modified version of U-Net, having one segmentation layer per class in order to perform an end-to-end multiclass segmentation with nonexclusive classes.

2.1.2. Training of the model

Our loss function and training scheme were designed to deal with class imbalance and the problem of missing annotations (for a given image, the ground truth is available only for a subset of classes). In a given training image $I$, each pixel has three possible labels for the class $c$: 0 (negative), 1 (positive), or $-1$ (unknown). If the ground truth segmentation of the class $c$ is unavailable for the image $I$, all pixels are labeled as unknown for the class $c$ by default. However, missing annotations may be partially reconstructed from segmentations of other classes. For example, if the segmentation of the “lens” class is not available but the “eye” class is segmented, all pixels outside the eye may be labeled as negative for the lens.

Given a training batch $S$ of images and the estimated parameters $\theta$ of the network, the segmentation layer of the class $c$ is penalized by the following loss function, which can be seen as a pixelwise cross-entropy with adaptive weights. Let us denote by $N_c^{0}$, $N_c^{1}$, and $N_c^{-1}$ the numbers of pixels labeled, respectively, 0, 1, and $-1$ for the class $c$ in the training batch. The weight $w_{k,i}^{c}$ of the pixel $i$ of the image $k$ has three possible values, according to the label of the pixel. If the label is unknown, then $w_{k,i}^{c} = 0$. If its label is 1, then $w_{k,i}^{c} = t / N_c^{1}$, where $t \in (0, 1)$ is a fixed hyperparameter, which we call the “target weight.” If the pixel is labeled 0, then $w_{k,i}^{c} = (1 - t) / N_c^{0}$. The hyperparameter $t$ therefore controls the relative weight of positive and negative pixels of the class $c$ (positive pixels have a total weight of $t$ and negative pixels have a total weight of $1 - t$). This type of weighting strategy has been used in our previous work32 to counter the problem of class imbalance. The loss function of the segmentation layer of the class $c$ is defined by $\mathrm{Loss}_c(\theta) = -\sum_{k \in S} \sum_{i} w_{k,i}^{c} \log p_{k,i}^{c}(\theta)$, where $p_{k,i}^{c}(\theta)$ is the softmax score given by the network for the ground truth label of the pixel $i$ of the image $k$.

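The loss function of the model is a convex combination of the losses of all $K$ segmentation layers: $\mathrm{Loss}(\theta) = \sum_{c=1}^{K} \alpha_c \, \mathrm{Loss}_c(\theta)$, with $\alpha_c \ge 0$ and $\sum_{c=1}^{K} \alpha_c = 1$.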
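The weighted cross-entropy and its convex combination can be sketched in NumPy as follows, assuming that unknown pixels are encoded as `-1` and that each head outputs the softmax score of its class map. The function names and the `eps` clipping constant are illustrative choices, not the authors' implementation.

```python
import numpy as np

UNKNOWN = -1  # label of pixels whose ground truth is missing for this class

def class_loss(probs_fg, labels, t, eps=1e-12):
    """Weighted cross-entropy of one segmentation layer over a batch.

    probs_fg: (N, H, W) softmax score of the class map for every pixel.
    labels:   (N, H, W) ints in {0, 1, UNKNOWN} for this class.
    t:        target weight, i.e. total weight of the positive pixels.
    """
    pos, neg = labels == 1, labels == 0
    n1, n0 = pos.sum(), neg.sum()
    if n1 == 0 or n0 == 0:
        raise ValueError("the batch must contain positive and negative pixels")
    w = np.zeros(labels.shape)  # unknown pixels keep a zero weight
    w[pos] = t / n1
    w[neg] = (1.0 - t) / n0
    # Softmax score of the ground truth label: p for positives, 1 - p otherwise.
    p_true = np.where(pos, probs_fg, 1.0 - probs_fg)
    return -np.sum(w * np.log(np.clip(p_true, eps, 1.0)))

def total_loss(per_class_probs, per_class_labels, t, alphas):
    """Convex combination of the per-class losses."""
    alphas = np.asarray(alphas, dtype=float)
    if np.any(alphas < 0) or not np.isclose(alphas.sum(), 1.0):
        raise ValueError("alphas must be non-negative and sum to 1")
    return sum(a * class_loss(p, l, t)
               for a, p, l in zip(alphas, per_class_probs, per_class_labels))
```

The `ValueError` for a class without positive pixels mirrors why the batch sampling strategy described next is needed: $t / N_c^{1}$ is undefined when $N_c^{1} = 0$.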

We propose a sampling strategy to construct training batches so that there are positive and negative pixels for each of the classes in each training batch.

For each image of the training database, we precompute bounding boxes of all classes with provided segmentations. For bilateral classes such as the eyes, there are generally two bounding boxes per image corresponding to left and right components, unless one of the components is missing (e.g., an organ removed by surgery). The precomputed bounding boxes are used during the training in order to sample patches containing positive pixels of different classes.

At the beginning of the training, for each class $c$, we construct a list $L_c$ of training images with provided ground truth segmentation of the class $c$. To sample a 2-D patch that is likely to contain positive pixels of the class $c$, we randomly choose an image from $L_c$ and a random point from the bounding box (or two bounding boxes if the class has left and right components) of the class $c$ in the chosen image. Once the point is chosen, a 2-D patch centered on this point is extracted from the image and the segmentations of all available classes are read. In the following, we refer to this procedure as extracting a patch centered on the class $c$.

We assume that the number of images in each training batch ($n$) is larger than the number of classes $K$, in order to be able to sample at least one image/patch centered on each of the classes. Each training batch is constructed as follows. The first $K$ images of the batch are centered, respectively, on each of the $K$ classes. At this stage, the batch is likely to contain positive and negative pixels of each class. The remaining $n - K$ images may be chosen randomly or be centered on larger classes. In our case, $n = 10$, $K = 8$, and the last $n - K = 2$ images are centered on the largest class we segment, the brain, whose bounding box occupies almost the entire volume of the head.
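The bounding-box sampling and batch composition can be sketched as below. This is a minimal sketch under stated assumptions: classes are indexed from 0 here (the text numbers them from 1), a bilateral class is handled by picking one of its boxes at random, and patch extraction itself (cropping and border handling) is omitted. All function names and the `boxes` dictionary layout are hypothetical.

```python
import numpy as np

def bounding_box(mask):
    """Inclusive (min, max) voxel indices of a non-empty binary mask."""
    idx = np.argwhere(mask)
    return idx.min(axis=0), idx.max(axis=0)

def sample_center(boxes, rng):
    """Random point inside one of the boxes of a class (one box, or left/right)."""
    lo, hi = boxes[rng.integers(len(boxes))]
    return tuple(int(rng.integers(l, h + 1)) for l, h in zip(lo, hi))

def build_batch(class_lists, boxes, n, largest_class, rng):
    """Choose (image_id, center) pairs for one batch of n patches.

    class_lists[c]: ids of the images with ground truth for class c.
    boxes[(image_id, c)]: precomputed bounding boxes of class c in that image.
    The first K patches are centered on classes 0..K-1 in turn; the remaining
    n - K patches are centered on `largest_class` (the brain in the text).
    """
    K = len(class_lists)
    if n < K:
        raise ValueError("the batch size must be at least the number of classes")
    batch = []
    for c in list(range(K)) + [largest_class] * (n - K):
        image_id = class_lists[c][rng.integers(len(class_lists[c]))]
        batch.append((image_id, sample_center(boxes[(image_id, c)], rng)))
    return batch
```

Precomputing the boxes once keeps each batch construction cheap, since only a few random integers are drawn per patch.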

As the model is trained for multiclass segmentation with nonexclusive classes, several binary segmentation maps have to be read for each input image in each iteration of the training. If the ground truth segmentations are not optimally stored in memory, these reading operations may considerably slow down the training. The ground truth label of a given pixel can be represented by one bit (0 or 1). However, to store binary segmentation masks in commonly used formats such as HDF5,33 each label would have to be represented by at least one byte. We therefore propose to store multiclass segmentations in a specifically encoded format, where each bit represents the label of a given class $c$. A binary segmentation mask of the class $c$ is retrieved by a bitwise AND operation between the encoded multiclass segmentation and the code of the class, corresponding to a power of 2.
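A minimal sketch of this bit packing with NumPy, assuming at most 8 classes (so that one `uint8` value per voxel holds all labels) and 0-based class indices, so that class $c$ uses the code $2^c$. The function names are hypothetical, and how the packed array is written to HDF5 is left out.

```python
import numpy as np

def encode(masks):
    """Pack K <= 8 binary masks into one uint8 array; class c uses bit c."""
    masks = np.asarray(masks, dtype=bool)
    if masks.shape[0] > 8:
        raise ValueError("use a wider integer type for more than 8 classes")
    encoded = np.zeros(masks.shape[1:], dtype=np.uint8)
    for c, m in enumerate(masks):
        # Add the code 2**c of class c wherever its mask is positive.
        encoded |= m.astype(np.uint8) * np.uint8(1 << c)
    return encoded

def decode(encoded, c):
    """Binary mask of class c via a bitwise AND with its code 2**c."""
    return (encoded & np.uint8(1 << c)) != 0
```

With the eight classes of this work, one byte per voxel stores all labels, instead of one byte per voxel and per class.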

The size of extracted 2-D patches should be chosen according to the capacities of the GPU. In our experiments, the training batches were composed of 10 patches of size . Given that in our network we use unpadded operations (convolutions, max-poolings, etc.), the dimensions of the outputs of segmentation layers are considerably smaller.

The model is trained with a variant of stochastic gradient descent with momentum presented in our previous work.32 The main characteristic of this algorithm is that gradients are computed over several batches in each iteration of the training, in order to use many training examples despite GPU memory limitations.
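The details of the variant from ref. 32 are not given here, so the following is only a generic NumPy sketch of the idea it describes: classical (heavy-ball) momentum where each update uses the average gradient of several batches, processed one at a time so that only one batch must fit in memory. `grad_fn`, `next_batch`, and the hyperparameter values are hypothetical.

```python
import numpy as np

def train(grad_fn, theta, next_batch, n_iters, batches_per_iter, lr=0.1, momentum=0.9):
    """SGD with momentum; each update averages the gradients of several batches.

    grad_fn(theta, batch) -> gradient of the loss on one batch (shape of theta).
    next_batch() -> the next training batch.
    """
    velocity = np.zeros_like(theta)
    for _ in range(n_iters):
        grad = np.zeros_like(theta)
        for _ in range(batches_per_iter):
            grad += grad_fn(theta, next_batch())  # one batch in memory at a time
        grad /= batches_per_iter
        velocity = momentum * velocity - lr * grad
        theta = theta + velocity
    return theta
```

On a toy quadratic loss $\tfrac{1}{2}\lVert\theta - b\rVert^2$ with two alternating batches, the iterates converge to the mean of the two targets, since each update sees both batches.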

2.2. Postprocessing and Enforcing Anatomical Consistency

Fully convolutional neural networks such as our model produce segmentations by individually classifying every voxel based on intensities of voxels within the corresponding receptive field. Such classification is performed by extracting powerful and automatically learned image features. However, as this classification is performed on a voxel by voxel basis, there is no guarantee of obtaining an anatomically consistent result, especially when the number of training images is limited. In particular, CNNs do not explicitly take into account aspects such as relative positions of different structures or adjacency of voxels belonging to the same structure. Including constraints related to these aspects in loss functions of neural networks or conceiving architectures that produce anatomically consistent results is difficult, in particular because of computational costs (need to simultaneously segment large 3-D regions of input images). We propose, therefore, to improve consistency of segmentations in a postprocessing step. We also separate left and right components of classes such as the eye, as these components are considered separately for radiotherapy planning.

We combine, by majority voting, segmentations produced by three networks trained, respectively, on axial, coronal, and sagittal slices. The goal of this combination is to take into account the three dimensions and to improve the robustness of the method. We subsequently apply a few rules described in the following, in order to correct some observed inconsistencies.
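Because the classes are not mutually exclusive, the vote can be taken independently for every class and voxel. A minimal NumPy sketch, assuming the three networks' slice-wise outputs have already been reassembled into volumes of identical shape (the function name is hypothetical):

```python
import numpy as np

def majority_vote(axial, coronal, sagittal):
    """A voxel is positive for a class if at least 2 of the 3 networks agree.

    Each input: boolean array (K, X, Y, Z) of per-class predictions.
    """
    votes = axial.astype(np.uint8) + coronal.astype(np.uint8) + sagittal.astype(np.uint8)
    return votes >= 2
```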

2.2.1. Segmentation of the brain

The brain (including the cerebrum, the cerebellum, and the brainstem) is the largest class to be segmented. For various reasons, some voxels within this structure may be inconsistently classified as negative by the networks, which appears as “holes” in the segmentation or as unrealistically sharp borders. We therefore propose a procedure that we call triplanar hole-filling (Fig. 3). For each axial, coronal, and sagittal plane of the 3-D segmentation, we compute the connected components of the background (negative voxels) and we remove the components that are not connected to the border of the plane (changing their label from 0 to 1). The reason for applying this procedure in 2-D is that some holes may easily be connected to the outside of the class in 3-D, in which case a 3-D hole-filling would leave them unfilled.
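A minimal sketch of triplanar hole-filling with SciPy: `scipy.ndimage.binary_fill_holes` applied to a 2-D slice fills exactly the background components that do not touch the slice border. Applying the three passes one after another, and the mapping of array axes to axial, coronal, and sagittal planes, are assumptions of this sketch.

```python
import numpy as np
from scipy import ndimage as ndi

def triplanar_fill(mask):
    """Fill 2-D holes of a 3-D binary mask along each of the three axes."""
    out = mask.astype(bool).copy()
    for axis in range(3):
        slices = np.moveaxis(out, axis, 0)  # a view: writes go into `out`
        for i in range(slices.shape[0]):
            slices[i] = ndi.binary_fill_holes(slices[i])
    return out
```

For example, a solid cube pierced by a straight tunnel is not filled by 3-D hole-filling (the tunnel reaches the outside), but every slice across the tunnel shows a closed hole, which the triplanar version fills.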

Fig. 3.

(a) Example of “holes” in the original output segmentation (left image) on a test example. (b) Segmentation obtained after our postprocessing (triplanar hole-filling).

The bounding box of the brain segmentation is subsequently used to separate the left and right components of bilateral classes. Note that the head of the patient may appear at different locations in the image, depending on acquisition conditions and the preprocessing performed. For a given class expected to have left and right components (eye, lens, optic nerve, and hippocampus), the barycenter of each connected component is computed. In order to decide which side a connected component belongs to, the $x$ coordinate (right–left) of its barycenter is compared with the minimum and maximum $x$ coordinates of the bounding box of the brain.
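One way to implement this comparison is sketched below with SciPy: a component is assigned to the side whose brain bounding-box bound is closer to its barycenter, which is equivalent to comparing with the box midpoint. The midpoint rule, the choice of array axis 0 as the right–left axis, and the neutral "low"/"high" naming (which one is the patient's left depends on the image orientation) are assumptions.

```python
import numpy as np
from scipy import ndimage as ndi

def split_left_right(mask, brain_mask, x_axis=0):
    """Split a bilateral class into components on the low-x and high-x sides."""
    xs = np.nonzero(brain_mask)[x_axis]
    mid = 0.5 * (xs.min() + xs.max())  # midpoint of the brain bounding box
    labels, n = ndi.label(mask)
    low = np.zeros(mask.shape, dtype=bool)
    high = np.zeros(mask.shape, dtype=bool)
    if n == 0:
        return low, high
    centers = ndi.center_of_mass(mask.astype(float), labels, list(range(1, n + 1)))
    for comp, center in enumerate(centers, start=1):
        side = low if center[x_axis] < mid else high
        side[labels == comp] = True
    return low, high
```

Using the brain bounding box rather than the image center makes the decision robust to the head not being centered in the field of view.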

2.2.2. Segmentation of the visual system

We propose an anatomically consistent segmentation of the visual system (eyes, lenses, optic nerves, and chiasm), starting from the segmentations predicted by neural networks.

The eye is probably the least challenging organ for automatic segmentation, as it has a simple spherical shape. However, false positives are possible, especially in cases where an eye has been removed by surgery, which may lead to false positives within the orbit. We therefore remove connected components of the eye segmentation whose volume is below an expected minimum value, which is set to .
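Removing components below a minimum volume is a standard labeling operation; a SciPy sketch follows. The threshold `min_voxels` is a hypothetical parameter: the expected minimum eye volume would first be converted to a voxel count by dividing it by the voxel volume.

```python
import numpy as np
from scipy import ndimage as ndi

def remove_small_components(mask, min_voxels):
    """Drop connected components with fewer than `min_voxels` voxels."""
    labels, n = ndi.label(mask)
    sizes = np.bincount(labels.ravel(), minlength=n + 1)
    keep = sizes >= min_voxels
    keep[0] = False  # label 0 is the background
    return keep[labels]
```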

We constrain the segmentation of the lenses to be inside the eyes, i.e., we assign the label 0 to all voxels outside the predicted masks of the eyes. The segmentation of the optic chiasm is obtained by taking the largest connected component of the segmentation predicted by the networks. We distinguish the left and right sides of the chiasm in order to compute landmarks for the segmentation of the two optic nerves, as described in the following.
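Both rules amount to simple mask operations. A SciPy sketch, with hypothetical function names:

```python
import numpy as np
from scipy import ndimage as ndi

def largest_component(mask):
    """Keep only the largest connected component (an empty mask stays empty)."""
    labels, n = ndi.label(mask)
    if n == 0:
        return np.zeros(mask.shape, dtype=bool)
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # ignore the background
    return labels == sizes.argmax()

def constrain_visual_system(eye, lens, chiasm):
    """Lenses are kept only inside the eyes; the chiasm is its largest component."""
    return lens & eye, largest_component(chiasm)
```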

Segmentation of the optic nerve in MR images is particularly challenging as the nerve is thin and may have an appearance similar to neighboring structures at some locations. However, it has a rather regular shape, which can be seen as a tube connecting an eye and the optic chiasm. The nerve is generally clearly visible at some locations, particularly close to the eye. A human expert is able to track the trajectory of the nerve to distinguish it from neighboring structures at more difficult locations. Based on this observation, we propose a graph-based algorithm for segmentation of the optic nerves in order to guarantee connectivity between the eyes and the optic chiasm and to decrease the number of false positives. The algorithm is based on the search of the shortest path between two nodes in a graph. Outputs of neural networks are used to define weights of the edges in the graph. The different steps of the algorithm (applied separately for left and right nerves) are described below.

First, we detect landmarks corresponding to the two endpoints of an optic nerve based on the initial segmentation of the visual system produced by neural networks (Fig. 4). The first landmark of the left optic nerve is the barycenter of points initially predicted as the left optic nerve and which are closest to the left eye. The second landmark is similarly computed but searching points of the left side of the optic chiasm, which are the closest to the initial prediction of the left optic nerve. We take the barycenter of several points (in our experiments ) in order to obtain a point that is more likely to be close to the centerline of the nerve. If the detected chiasm landmarks for the two optic nerves are abnormally close, the procedure is applied only for one nerve, connecting the landmark with the closest eye.
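Each landmark is the barycenter of the voxels of one set that are closest to another set. A sketch using SciPy's k-d tree, where `m` stands for the number of points averaged (its value is not specified here) and the function name is hypothetical:

```python
import numpy as np
from scipy.spatial import cKDTree

def landmark(points, reference, m):
    """Barycenter of the m voxels of `points` closest to the set `reference`.

    points, reference: (N, 3) and (M, 3) voxel coordinates, for example
    np.argwhere(left_nerve_mask) and np.argwhere(left_eye_mask).
    """
    dist, _ = cKDTree(reference).query(points)  # distance to the nearest reference voxel
    closest = np.argsort(dist, kind="stable")[:m]
    return points[closest].mean(axis=0)
```

For the nerve–chiasm landmark, `points` would be the voxels of the corresponding side of the chiasm and `reference` the initial nerve prediction.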

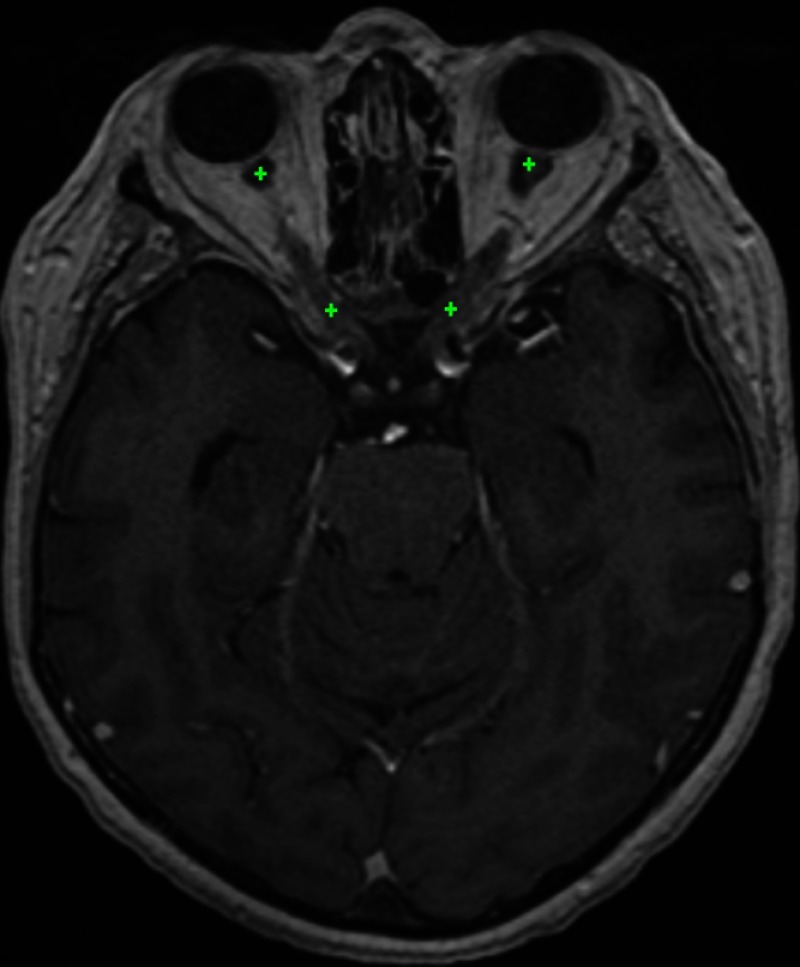

Fig. 4.

Approximate position of the optic nerve landmarks (displayed on the same axial slice) found by the system on a test example. For each of the optic nerves, the graph-based algorithm ensures the connectivity between the two landmarks.

Before applying the graph-based algorithm, we refine the initial segmentation of the optic nerves based on voxel intensities (specific to each image). In fact, the optic nerves are surrounded by fat, which appears hyperintense on MR T1-weighted images and can be rather easily distinguished from the optic nerve. We compute an approximate range of intensities of voxels of the fat by computing the 98% quantile of a small volume surrounding the eye–nerve landmark. Voxels whose intensities are above 80% of this value are classified negative for the optic nerve in order to eliminate common false positives.
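The intensity-based refinement can be sketched as below. The cube half-width `half_size` is a hypothetical parameter (the size of the "small volume" is not specified), while the 98% quantile and the 80% fraction come from the text.

```python
import numpy as np

def remove_fat(image, nerve_mask, landmark, half_size, quantile=0.98, fraction=0.8):
    """Relabel as negative the bright (fat-like) voxels of the nerve prediction."""
    center = np.round(np.asarray(landmark)).astype(int)
    lo = np.maximum(center - half_size, 0)
    hi = np.minimum(center + half_size + 1, image.shape)
    region = image[tuple(slice(l, h) for l, h in zip(lo, hi))]
    fat_level = np.quantile(region, quantile)  # approximate fat intensity
    return nerve_mask & (image <= fraction * fat_level)
```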

Given the two computed landmarks and the refined initial segmentation, we estimate the centerline of the optic nerve (Fig. 5) by computing the shortest path in an oriented graph. The nodes of the graph correspond to voxels within a region of interest (a cuboid containing the two landmarks) that are reachable from the starting point. The connectivity of nodes is defined by adjacency of voxels with increasing $y$ coordinate, i.e., the children of the node $(x, y, z)$ are the nodes $(x', y + 1, z')$ with $|x' - x| \le 1$ and $|z' - z| \le 1$. We therefore assume that the $y$ coordinate of the centerline strictly increases toward the second landmark (from anterior to posterior).

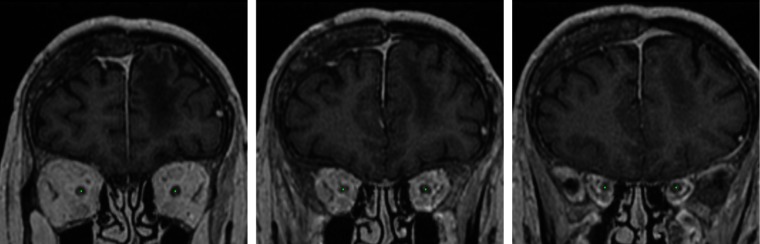

Fig. 5.

The centerlines of the optic nerves computed by our system on a test example (displayed on three different coronal slices). We assume one point of the centerline for each coronal slice between the two landmarks of the optic nerve.

Each node $v$ of the graph has an associated cost based on three criteria (listed by decreasing importance):

- Label $\hat{y}(v)$ initially assigned to the voxel $v$. A strong penalty is applied to voxels predicted as negative, in order to force the centerline to pass through points initially predicted as positive. The associated cost is $C_1(v) = \lambda_1$ if $\hat{y}(v) = 0$ and $C_1(v) = 0$ otherwise, where $\lambda_1$ is a fixed number controlling the importance of this cost.

- If the predicted label is positive, the distance $d(v)$ to the closest point classified as negative. The penalty is inversely proportional to this distance, to give priority to points that are far from the predicted borders of the optic nerve (preference for central points). This cost is expressed by $C_2(v) = 1 / d(v)$ if $d(v) \le R$ and $C_2(v) = 0$ otherwise, where $R$ is the radius of a search zone around the voxel $v$. As the visible nerve is larger close to the eye, $R$ varies with the $y$ coordinate (interpolation between $R_{\mathrm{eye}}$ and $R_{\mathrm{chiasm}}$, expressed in number of voxels).

- Distance to the target point $v_t$ (i.e., the nerve–chiasm landmark). The penalty is proportional to this distance in order to force the centerline to go directly toward the target point if the other criteria do not give priority to some points. In particular, when one part of the optic nerve has not been initially detected (negative voxels), the line should go in the direction of the target point. The associated cost is $C_3(v) = \lambda_3 \lVert v - v_t \rVert$, where $\lambda_3$ controls the importance of this cost. We fixed $\lambda_3$ to a small value, to make this cost negligible compared with the previous criteria.
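The three cost terms can be computed for a whole region of interest at once. This sketch follows our reading of the criteria above: $d(v)$ is obtained with `scipy.ndimage.distance_transform_edt`, which gives, for each positive voxel, the Euclidean distance to the nearest negative voxel; $R(y)$ is interpolated linearly (the interpolation type is an assumption); and all numeric parameters are hypothetical.

```python
import numpy as np
from scipy import ndimage as ndi

def node_costs(pred, target, r_eye, r_chiasm, y_eye, y_chiasm, lam1, lam3):
    """Node cost C(v) = C1(v) + C2(v) + C3(v) on a region of interest.

    pred: boolean (X, Y, Z) refined nerve prediction; axis 1 is y (anterior-posterior).
    target: (x, y, z) nerve-chiasm landmark. Requires y_eye < y_chiasm.
    """
    pred = pred.astype(bool)
    # C1: strong penalty for voxels predicted as negative.
    c1 = np.where(pred, 0.0, lam1)
    # C2: inverse distance to the closest negative voxel, only when one lies
    # within the search radius R(y); positive voxels have d >= 1.
    d = ndi.distance_transform_edt(pred)
    radius = np.interp(np.arange(pred.shape[1]), [y_eye, y_chiasm], [r_eye, r_chiasm])
    near_border = pred & (d <= radius[None, :, None])
    c2 = np.where(near_border, 1.0 / np.maximum(d, 1.0), 0.0)
    # C3: small penalty proportional to the Euclidean distance to the target.
    grid = np.indices(pred.shape)
    c3 = lam3 * np.sqrt(sum((grid[i] - target[i]) ** 2 for i in range(3)))
    return c1 + c2 + c3
```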

The cost of the node $v$ is the sum of the three components: $C(v) = C_1(v) + C_2(v) + C_3(v)$. The introduced cost determines the weights of edges in the graph. A directed edge between the points $u$ and $v$ has the weight $C(v)$. The shortest path between the nodes corresponding to the two endpoints of the optic nerve is computed by Dijkstra’s algorithm.34,35 The start point is the eye–nerve landmark, as the optic nerve is generally clearly visible close to the eye. To the best of our knowledge, our approach is the first to combine deep learning with the search for the shortest path in a graph for segmentation of tubular anatomical structures. However, the idea of computing optimal distances for segmentation of tubular structures appears in interactive level-set methods.36–38 The objective of these methods is to find a geodesic between two points in the image chosen by the user. The Eikonal equation is constructed based on voxel intensities and contrasts, and the problem is solved by fast marching,39 similar to Dijkstra’s algorithm. Methods based only on image intensities may be difficult to apply to the segmentation of the optic nerves in MRI due, for instance, to noise in the images and local inhomogeneity of intensities within the optic nerve.
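The centerline search can be sketched with Python's `heapq` as a standard Dijkstra over the oriented graph described above: children of $(x, y, z)$ are $(x \pm 1 \text{ or } x, y + 1, z \pm 1 \text{ or } z)$, and entering a node $v$ costs $C(v)$. The cost volume is assumed to be already cropped to the region of interest, and the function name is hypothetical.

```python
import heapq

def centerline(cost, start, goal):
    """Shortest path from `start` to `goal` with y strictly increasing.

    cost: (X, Y, Z) array of non-negative node costs; edge u -> v weighs cost[v].
    Returns the path (one voxel per coronal slice) or None if goal is unreachable.
    """
    start = tuple(int(c) for c in start)
    goal = tuple(int(c) for c in goal)
    dist, parent, done = {start: 0.0}, {}, set()
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == goal:  # rebuild the path by following parents back to start
            path = [u]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return path[::-1]
        x, y, z = u
        if y >= goal[1]:
            continue  # children would lie beyond the target slice
        for dx in (-1, 0, 1):
            for dz in (-1, 0, 1):
                v = (x + dx, y + 1, z + dz)
                if not all(0 <= c < s for c, s in zip(v, cost.shape)):
                    continue
                nd = d + float(cost[v])
                if nd < dist.get(v, float("inf")):
                    dist[v], parent[v] = nd, u
                    heapq.heappush(heap, (nd, v))
    return None
```

Since $y$ strictly increases along every edge, the graph is acyclic, and a slice-by-slice dynamic program would find the same path; Dijkstra's algorithm is used here to match the text.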

The final segmentation of the optic nerve is constructed from the centerline. As the optic nerve has a variable thickness, around each point $p$ of the centerline we consider two spherical volumes $S_1(p)$ and $S_2(p)$ with associated radii $r_1$ and $r_2$. All voxels within $S_1(p)$ are classified positive (optic nerve). Voxels of $S_2(p)$ that are not within $S_1(p)$ are classified positive only if they were positive in the original segmentation. The radius $r_1$ is fixed, and $r_2$ corresponds to the radius $R$ defined previously (large close to the eye, smaller close to the optic chiasm).
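Rebuilding the nerve around the centerline with two spheres per point can be sketched as follows. `r1` and the function `r2_of_y` (returning $R$ for a given $y$) are hypothetical parameters, and computing distances over the whole array for each point assumes the volume is cropped to a small region of interest.

```python
import numpy as np

def nerve_from_centerline(path, original, r1, r2_of_y):
    """Voxels within r1 of the centerline are positive; voxels within r2 but
    outside r1 stay positive only if the original segmentation was positive."""
    coords = np.indices(original.shape)
    out = np.zeros(original.shape, dtype=bool)
    for p in path:
        d2 = sum((coords[i] - p[i]) ** 2 for i in range(3))  # squared distances
        inner = d2 <= r1 ** 2
        outer = d2 <= r2_of_y(p[1]) ** 2
        out |= inner | (outer & original)
    return out
```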

Finally, we apply mathematical morphology40 to reduce false positives corresponding to structures that are “attached” to the optic nerve and have a similar appearance. As these false positives are often connected to the correct segmentation by thin segments (Fig. 6), we apply the morphological opening with three 1-D structuring elements of size 2 in the three directions and we take the largest connected component.
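A SciPy sketch of this cleanup. The text does not say how the three openings are combined; here they are applied in sequence along the three axes, which is an assumption. An opening with a two-voxel line removes any voxel that has no positive neighbor along that axis, which cuts one-voxel-thick bridges.

```python
import numpy as np
from scipy import ndimage as ndi

def clean_nerve(mask):
    """Openings with 1-D structuring elements of size 2, then largest component."""
    out = mask.astype(bool)
    for shape in ((2, 1, 1), (1, 2, 1), (1, 1, 2)):
        out = ndi.binary_opening(out, structure=np.ones(shape, dtype=bool))
    labels, n = ndi.label(out)
    if n == 0:
        return out
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # ignore the background
    return labels == sizes.argmax()
```

For instance, a thin one-voxel line linking the nerve to an attached false positive is removed by the first opening, and taking the largest component then discards the detached false positive.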

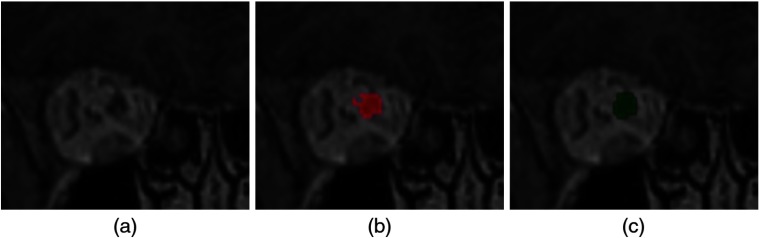

Fig. 6.

Use of mathematical morphology for reduction of false positives. (a) A coronal patch centered on an optic nerve. (b) Result obtained by the system on a test example without using mathematical morphology. (c) Result obtained after application of morphological opening followed by taking the largest connected component.

3. Experiments

3.1. Data and Preprocessing

We constructed a database of contrast-enhanced T1 MRIs acquired in the Centre Antoine Lacassagne (Nice, France), which is one of the three cancer centers in France equipped with proton therapy41 systems.

The database contains 44 MRIs with provided segmentations of OAR and 50 nonannotated MRIs. The annotated images are used for training and cross-validated quantitative evaluation. For each scan, the ground truth segmentation was provided only for a subset of classes. The numbers of available segmentations for each class are reported in Table 1. The images without annotations are used for qualitative evaluation by a radiotherapist, as described in Sec. 3.4.

Table 1.

Numbers of provided ground truth segmentations for different classes (in the database of 44 MRIs).

| Class | Number of segmentations |
|---|---|
| Hippocampus | 39 |
| Brainstem | 39 |
| Eye | 41 |
| Lens | 34 |
| Optic nerves | 40 |
| Optic chiasm | 41 |
| Pituitary gland | 29 |
| Brain | 37 |

The images were originally provided in Dicom format42 and were heterogeneous in terms of image intensities and geometrical properties such as size, spatial resolution, and the visible part of the head. The ground truth segmentations were the ones used for routine radiotherapy planning and were provided in Dicom RT-Struct files,43 representing coordinates of polygons corresponding to contours of anatomical structures.

In order to use these images, we performed the following preprocessing steps. We used 3-D Slicer44 and its extension SlicerRT45 to generate 3-D volumes (in “nrrd” format) from Dicom slices and to generate binary label masks from RT-Struct files. All images were resampled to the same spatial resolution (isotropic in axial slices, 0.9 spacing between slices) and then resized to fixed dimensions with 200 axial slices. The 200 axial slices start from the top of the head, i.e., if an image originally has more than 200 axial slices, the bottom slices (close to the neck) are ignored. In practice, the input images generally had around 150 axial slices, so the remaining bottom slices were filled with zeros. To approximately normalize the image intensities, we first compute the maximum of the image, which is likely to be reached by a voxel in fat or in contrast-enhanced blood vessels. All voxel values are then divided by this maximum and multiplied by a fixed constant.
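
The slice cropping/padding and the intensity normalization can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: axis 0 is taken to be the axial axis with index 0 at the top of the head, and the value of the fixed constant (`scale`) is hypothetical. Resampling and in-plane resizing are omitted.

```python
import numpy as np


def normalize_by_max(volume, scale=100.0):
    """Divide by the image maximum and multiply by a fixed constant.

    `scale` is a hypothetical value; the constant used in the study is not given here.
    """
    vmax = float(volume.max())
    if vmax <= 0:
        return volume.astype(np.float32)
    return volume * (scale / vmax)


def crop_or_pad_axial(volume, n_slices=200):
    """Keep the n_slices uppermost axial slices, zero-padding at the bottom.

    Assumes axis 0 is axial and index 0 is the top of the head.
    """
    out = np.zeros((n_slices,) + volume.shape[1:], dtype=np.float32)
    k = min(n_slices, volume.shape[0])
    out[:k] = volume[:k]   # slices beyond n_slices (close to the neck) are dropped
    return out
```

A volume with 150 axial slices thus becomes a 200-slice volume whose last 50 slices are zeros, while a 250-slice volume loses its 50 bottom slices.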

3.2. Metrics for Quantitative Evaluation

To quantitatively evaluate our system, we perform fivefold cross-validation on the set of 44 annotated MRIs. In each fold, 80% of the database is used for training and 20% for testing. For each class of interest, two results are reported. First, we report results obtained with our model trained on axial slices (denoted “U-Net multiclass, axial” in the following), i.e., the raw output of the neural network without postprocessing. Then we report results obtained after majority voting and postprocessing (denoted “final result” in the following).
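
A fivefold split of the annotated cases could be generated as in the sketch below. The random shuffling and the seed are assumptions, as the text does not state how cases were assigned to folds; the function name is hypothetical.

```python
import numpy as np


def kfold_splits(n_cases, n_folds=5, seed=0):
    """Yield (train_idx, test_idx); each case appears in exactly one test fold."""
    # Random assignment of cases to folds is an assumption of this sketch.
    order = np.random.default_rng(seed).permutation(n_cases)
    folds = np.array_split(order, n_folds)
    for k in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train, folds[k]
```

With 44 cases, `np.array_split` produces test folds of 9, 9, 9, 9, and 8 cases, i.e., approximately the 80%/20% split described above.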

The first metric we use is the Dice score, which measures the voxelwise overlap between the output and the ground truth segmentation. An important limitation of this metric is that it gives the same importance to very close and very distant mismatches. As the ground truth is often uncertain and noisy close to the boundaries of structures, and boundary voxels make up a larger fraction of small structures, the Dice scores are generally considerably lower for small structures. This is why, in addition to raw Dice scores, we also report results (Dice score, sensitivity, and specificity) obtained when a margin of one voxel is allowed, i.e., ignoring mismatches on the borders of the ground truth. Under this tolerance, a false positive on a voxel directly adjacent to the ground truth segmentation is ignored, i.e., it is counted neither as a false positive nor as a true positive. Similarly, a false negative (nondetection) on the border of the ground truth is ignored.

The second metric is the undirected Hausdorff distance, expressed in millimeters (point coordinates are expressed in physical units). The Hausdorff distance measures the length of the farthest mismatch between the output and the ground truth (false positive or false negative). It is therefore useful to assess the consistency of the result, i.e., the presence of very distant mismatches. However, it only measures the maximal distance, so a single misclassified voxel is sufficient to increase the Hausdorff distance considerably.

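Therefore, we also measure the mean distance between the output segmentation $S$ and the ground truth $G$, defined as follows:

$$
d_{\mathrm{mean}}(S, G) = \frac{1}{2}\left(\frac{1}{|S|}\sum_{x \in S}\min_{y \in G} d(x, y) + \frac{1}{|G|}\sum_{y \in G}\min_{x \in S} d(x, y)\right), \tag{1}
$$

where $d$ is the Euclidean distance.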
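
A brute-force NumPy sketch of both distance metrics, assuming that the mean distance of Eq. (1) averages, over all voxels of each mask, the distance to the nearest voxel of the other mask, and then takes the mean of the two directions. Coordinates are converted to millimeters with a voxel `spacing` argument; the function names are hypothetical. The pairwise distance matrix has |S|×|G| entries, so for full-size volumes a Euclidean distance transform (e.g., SciPy's `scipy.ndimage.distance_transform_edt`) is the usual alternative.

```python
import numpy as np


def _coords_mm(mask, spacing):
    """Physical coordinates (mm) of the positive voxels of `mask`, one row per voxel."""
    return np.argwhere(mask) * np.asarray(spacing, dtype=float)


def _nearest_distances(a, b):
    """For every point of `a`, the Euclidean distance to its closest point in `b`."""
    diff = a[:, None, :] - b[None, :, :]          # brute force over |a| x |b| pairs
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)


def hausdorff_and_mean_distance(seg, gt, spacing=(1.0, 1.0, 1.0)):
    """Undirected Hausdorff distance and symmetric mean distance, both in mm.

    Assumes both masks are non-empty. The symmetric voxel-averaged form of the
    mean distance is an assumption of this sketch.
    """
    s = _coords_mm(np.asarray(seg, dtype=bool), spacing)
    g = _coords_mm(np.asarray(gt, dtype=bool), spacing)
    d_sg = _nearest_distances(s, g)   # output voxels -> ground truth
    d_gs = _nearest_distances(g, s)   # ground-truth voxels -> output
    hausdorff = max(d_sg.max(), d_gs.max())
    mean_distance = 0.5 * (d_sg.mean() + d_gs.mean())
    return float(hausdorff), float(mean_distance)
```

The example below illustrates the contrast between the two metrics: a single distant false positive sets the Hausdorff distance, whereas the mean distance dilutes it over all voxels.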

3.3. Quantitative Results

The mean distances between the produced segmentations and the ground truth ranged from 0.08 mm (for the brain) to 0.69 mm (for the pituitary gland), as reported in Table 5. The results vary across organs, depending on their size, the number of ground truth segmentations available for training, and the overall complexity of the segmentation task.

Table 5.

Mean distances in millimeters (fivefold cross-validation).

| Class | U-Net multiclass, axial | Final result |
|---|---|---|
| Hippocampus | 0.97 | 0.66 |
| Brainstem | 0.26 | 0.26 |
| Eye | 0.35 | 0.11 |
| Lens | 1.29 | 0.63 |
| Optic nerves and chiasm | 1.09 | 0.48 |
| Pituitary gland | 2.45 | 0.69 |
| Brain | 0.07 | 0.08 |

The Dice scores are generally higher for large anatomical structures such as the brain and the brainstem. One reason is that the borders of the ground truth are often very uncertain, which penalizes smaller classes in the quantitative evaluation: in large classes, the border region is small compared to the entire volume of the class, so mismatches on the borders do not cause large drops of the metric. The highest Dice score was obtained for the brain (96.8). The lowest performance was obtained for the pituitary gland (mean Dice score of 58.0 and mean distance of 0.69 mm between the output and the ground truth). Segmentation of the pituitary gland is particularly challenging, as it is small and difficult to differentiate from surrounding structures. Moreover, the pituitary gland was the class with the fewest training examples (29 annotated cases, i.e., around 23 training cases in each of the five folds).

To account for the uncertain borders of the ground truth, we also report Dice scores, sensitivity, and specificity that ignore mismatches on the borders of the ground truth, as described in Sec. 3.2. As most mismatches between the outputs and the ground truth lie on the noisy borders of organs, there is a considerable difference between the raw Dice score (Table 2) and the Dice score with a one-voxel tolerance (Table 3).

Table 2.

Mean Dice scores (fivefold cross-validation) obtained on a set of 44 MRIs. “Final result” denotes the result obtained after majority voting and postprocessing.

| Class | U-Net multiclass, axial | Final result |
|---|---|---|
| Hippocampus | 69.2 | 71.4 |
| Brainstem | 88.1 | 88.6 |
| Eye | 88.3 | 89.6 |
| Lens | 55.8 | 58.8 |
| Optic nerves and chiasm | 63.9 | 67.4 |
| Pituitary gland | 53.6 | 58.0 |
| Brain | 96.5 | 96.8 |

Table 3.

Mean Dice score (fivefold cross-validation), sensitivity, and specificity with tolerance to one voxel (ignoring mismatches on the borders due to the uncertainty of the ground truth).

| Class | Dice score | Sensitivity | Specificity |
|---|---|---|---|
| Hippocampus | 88.2 | 92.7 | 85.0 |
| Brainstem | 95.1 | 95.5 | 95.6 |
| Eye | 97.5 | 98.3 | 96.8 |
| Lens | 82.1 | 88.2 | 78.4 |
| Optic nerves and chiasm | 91.1 | 96.2 | 87.1 |
| Pituitary gland | 79.7 | 83.3 | 77.5 |
| Brain | 98.6 | 98.0 | 99.4 |

Conversely, the measured Hausdorff distances (Table 4) tend to be higher for large classes. The highest mean Hausdorff distance is observed for the brain, for which it is almost 1 cm (9.8 mm).

Table 4.

Hausdorff distances in millimeters (fivefold cross-validation). “Final result” denotes the result obtained after majority voting and postprocessing.

| Class | U-Net multiclass, axial | Final result |
|---|---|---|
| Hippocampus | 42.1 | 6.9 |
| Brainstem | 45.5 | 7.8 |
| Eye | 75.9 | 3.0 |
| Lens | 31.0 | 3.7 |
| Optic nerves and chiasm | 76.7 | 6.3 |
| Pituitary gland | 52.5 | 4.6 |
| Brain | 30.4 | 9.8 |

Combining the three neural networks (trained on axial, coronal, and sagittal slices, respectively) by majority voting improved almost all metrics. The improvements were particularly large for the Hausdorff distance (Table 4) and the mean distance (Table 5). We observe that majority voting removes almost all distant false positives and yields more robust results than the raw output of a single neural network. The results were further improved by the subsequent postprocessing steps.
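
Because the classes are nonexclusive, the vote can be taken independently for each class and voxel. A minimal sketch, assuming the three predictions have already been mapped back to a common voxel grid and binarized (the binarization step is not shown); the function name is hypothetical, and the arrays may carry a leading class axis since the operation is elementwise.

```python
import numpy as np


def majority_vote(axial, coronal, sagittal):
    """Voxelwise 2-out-of-3 vote between binary masks defined on the same grid."""
    votes = (np.asarray(axial, dtype=np.uint8)
             + np.asarray(coronal, dtype=np.uint8)
             + np.asarray(sagittal, dtype=np.uint8))
    return votes >= 2
```

A voxel predicted by only one of the three networks, such as an isolated distant false positive, is therefore discarded.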

The postprocessing of the eyes consisted of setting a lower bound on the physical volume of the output segmentation. This simple procedure removed false positives and decreased the mean Hausdorff distance from 12.2 mm (result of the majority voting) to 3 mm.
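
One way to implement such a lower bound is sketched below. We read it as discarding connected components whose physical volume (voxel count times voxel volume) falls below a threshold, in line with the “thresholding on the size of connected components” mentioned in the conclusion; this interpretation, the 6-neighbor connectivity, the function names, and any threshold value are assumptions.

```python
import numpy as np
from collections import deque


def flood(mask, seeds):
    """Voxels of `mask` reachable from `seeds` through face-adjacent voxels."""
    reached = np.zeros(mask.shape, dtype=bool)
    queue = deque()
    for s in seeds:
        s = tuple(int(c) for c in s)
        if mask[s] and not reached[s]:
            reached[s] = True
            queue.append(s)
    while queue:
        p = queue.popleft()
        for axis in range(mask.ndim):
            for step in (-1, 1):
                q = list(p)
                q[axis] += step
                q = tuple(q)
                if 0 <= q[axis] < mask.shape[axis] and mask[q] and not reached[q]:
                    reached[q] = True
                    queue.append(q)
    return reached


def remove_small_components(mask, spacing, min_volume_mm3):
    """Drop 6-connected components whose volume (mm^3) is below min_volume_mm3.

    The threshold value is hypothetical; it is not given in the text.
    """
    remaining = np.asarray(mask, dtype=bool).copy()
    kept = np.zeros_like(remaining)
    voxel_volume = float(np.prod(spacing))   # mm^3 per voxel
    while remaining.any():
        component = flood(remaining, [np.argwhere(remaining)[0]])
        if component.sum() * voxel_volume >= min_volume_mm3:
            kept |= component
        remaining &= ~component
    return kept
```

Expressing the threshold in cubic millimeters rather than voxels keeps it meaningful across images with different spacings.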

The postprocessing of the optic nerves reduced the number of false positives and enforced connectivity between the eyes and the chiasm, as described in Sec. 2.2.2. False positives are removed when they are too far from the centerline, when they are hyperintense in T1-weighted MRI (fat surrounding the eyes), or when they are disconnected from the main connected component after the morphological opening that removes thin segments. For the optic nerves and chiasm, the Dice score with one-voxel tolerance increased from 89.6 (result of the majority voting) to 91.1 (after postprocessing), and the raw Dice score increased from 66.3 to 67.4.

The postprocessing of the brain consisted of taking the largest connected component and filling the “holes” of the segmentation in axial, coronal, and sagittal planes. As these holes are usually small compared to the whole volume of the class (which occupies a large part of the image), the metrics vary only slightly: the Dice score increased from 96.7 to 96.8, and the Hausdorff distance decreased from 10.2 to 9.8 mm.
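
The two brain postprocessing steps can be sketched as follows. The sketch assumes 6-neighbor connectivity in 3-D, 4-neighbor connectivity for 2-D hole filling, and a hole defined as a background region of a slice that does not touch the slice border; the function names are hypothetical.

```python
import numpy as np
from collections import deque


def flood(mask, seeds):
    """Pixels/voxels of `mask` reachable from `seeds` through face-adjacent steps."""
    reached = np.zeros(mask.shape, dtype=bool)
    queue = deque()
    for s in seeds:
        s = tuple(int(c) for c in s)
        if mask[s] and not reached[s]:
            reached[s] = True
            queue.append(s)
    while queue:
        p = queue.popleft()
        for axis in range(mask.ndim):
            for step in (-1, 1):
                q = list(p)
                q[axis] += step
                q = tuple(q)
                if 0 <= q[axis] < mask.shape[axis] and mask[q] and not reached[q]:
                    reached[q] = True
                    queue.append(q)
    return reached


def largest_component(mask):
    """Keep only the largest connected component of a binary mask."""
    remaining = np.asarray(mask, dtype=bool).copy()
    best = np.zeros_like(remaining)
    while remaining.any():
        component = flood(remaining, [np.argwhere(remaining)[0]])
        if component.sum() > best.sum():
            best = component
        remaining &= ~component
    return best


def fill_holes_2d(plane):
    """Fill background regions of a 2-D slice that do not touch the slice border."""
    h, w = plane.shape
    border = [(i, j) for i in range(h) for j in (0, w - 1)]
    border += [(i, j) for i in (0, h - 1) for j in range(w)]
    outside = flood(~plane, border)   # background reachable from the border
    return plane | ~outside


def postprocess_brain(mask):
    """Largest component, then 2-D hole filling along each of the three axes."""
    out = largest_component(mask)
    for axis in range(out.ndim):
        view = np.moveaxis(out, axis, 0)   # a view: writes go through to `out`
        for k in range(view.shape[0]):
            view[k] = fill_holes_2d(view[k])
    return out
```

Filling holes slice by slice in each plane, rather than in 3-D, also closes cavities that are open in one direction but enclosed in another.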

To the best of our knowledge, the only deep learning work on segmentation of OAR in MRI is the one proposed in Ref. 27, which reported cross-validated results (mean distances in mm) on a set of 16 MRIs. The authors used model-based segmentation46 combined with a neural network for detecting the boundaries of anatomical structures. The results they reported for the anatomical structures we also segment are 0.608 mm for the brainstem, 0.563 mm for the eyes, 0.268 mm for the lenses, and 0.41 mm for the optic nerves and chiasm. Overall, the ranges of mean distances are therefore comparable to ours, although the two evaluations were performed on different datasets.

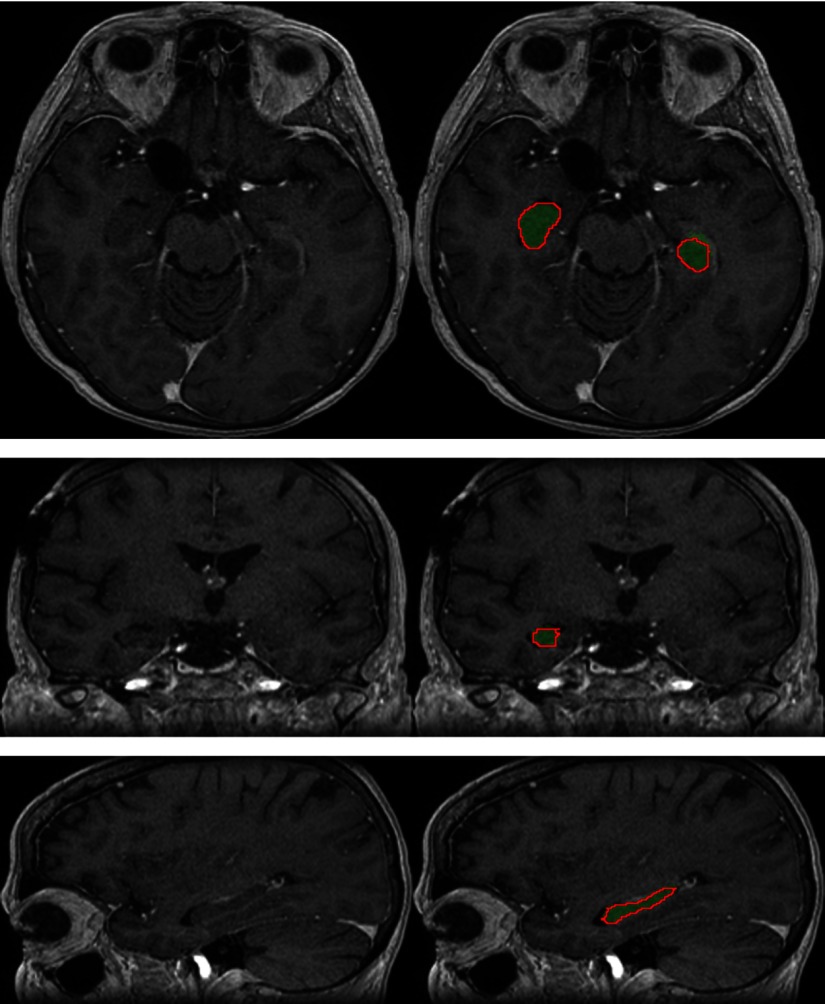

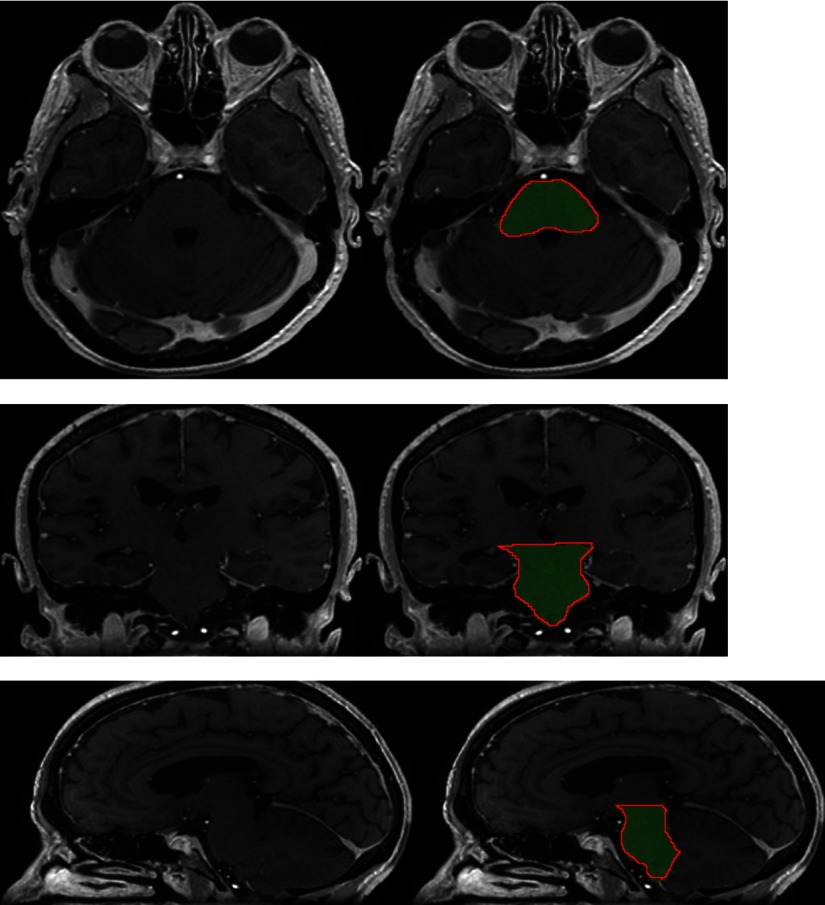

Examples of output segmentations (compared with the ground truth) for the hippocampus, the brainstem, and the optic nerves are displayed in Figs. 7–9.

Fig. 7.

Segmentation of the hippocampus produced by our system on a test example (three orthogonal slices passing through the same point). The output segmentation is represented by the green region, and the ground truth annotation is represented by the red contour.

Fig. 8.

Segmentation of the brainstem produced by our system on a test example (three orthogonal slices passing through the same point). The output segmentation is represented by the green region, and the ground truth annotation is represented by the red contour.

Fig. 9.

Segmentation of the optic nerves produced by our system on a test example (three orthogonal slices passing through the same point). The output segmentation is represented by the green region, and the ground truth annotation is represented by the red contour.

3.4. Qualitative Evaluation by a Radiotherapist

The segmentations produced by our system on a set of 50 nonannotated MRIs were qualitatively evaluated by an experienced radiotherapist to assess their accuracy and utility for radiotherapy planning. For each of the 50 patients, the radiotherapist evaluated the segmentations produced by our system for 12 anatomical structures (counting the left and right components of bilateral classes separately), i.e., 600 segmentations in total. The results were evaluated visually: no comparative dosimetric planning was performed for corrected and uncorrected segmentations. The segmentations were displayed with 3-D Slicer.44 Each of the 600 segmentations was assigned to one of the following categories.

- Accept. The radiotherapist would keep the segmentation for radiotherapy planning without any changes.
- Accept, minor modifications. The segmentation is still acceptable for radiotherapy planning, i.e., some minor errors are observed, but leaving them uncorrected should not affect the dose distribution after the addition of safety margins to the target volumes and OAR: planning target volumes (PTV) and planning OAR volumes (PRV).
- Accept, major modifications. The segmentation must be corrected, i.e., the differences in the resulting PTV and PRV before and after correction would have an important impact on the dose distribution (even if only a few voxels are misclassified). The segmentation is, however, still good enough to be kept, i.e., performing the necessary modifications is less time-consuming than segmenting the structure from scratch.
- Reject. The segmentation has failed, and keeping it would not save time compared to manually segmenting the structure from scratch.
- Not assigned. The structure is absent (e.g., organ removed by surgery) or invisible in the image because of a tumor.

The results are summarized in Table 6. In total, 73% of the segmentations were assigned to the category “accept,” i.e., they would be kept for radiotherapy planning without any modifications. Approximately 23% of the segmentations were assigned to the second category, i.e., acceptable for radiotherapy planning but with a recommendation to perform some minor corrections, usually on the extremities of organs. The system therefore produced satisfactory segmentations in a large majority of cases. It was able to delineate organs correctly despite considerable difficulties such as the presence of tumors and the resulting mass effects, motion artifacts in MRI, varying head orientations, and anatomical modifications resulting from previous surgeries undergone by the patient (removed tissues).

Table 6.

Clinical evaluation by a radiotherapist on 50 test cases.

| Structure | Accept | Accept, minor corrections | Accept, major corrections | Reject | N/A |
|---|---|---|---|---|---|
| Hippocampus left | 39/50 | 8 | 1 | 2 | 0 |
| Hippocampus right | 45/50 | 5 | 0 | 0 | 0 |
| Brainstem | 22/50 | 26 | 1 | 1 | 0 |
| Eye left | 48/50 | 2 | 0 | 0 | 0 |
| Eye right | 45/50 | 4 | 1 | 0 | 0 |
| Lens left | 39/50 | 7 | 4 | 0 | 0 |
| Lens right | 42/50 | 6 | 2 | 0 | 0 |
| Optic nerve left | 44/50 | 6 | 0 | 0 | 0 |
| Optic nerve right | 40/50 | 10 | 0 | 0 | 0 |
| Optic chiasm | 19/50 | 26 | 4 | 1 | 0 |
| Pituitary gland | 19/50 | 25 | 3 | 0 | 3 |
| Brain | 36/50 | 14 | 0 | 0 | 0 |
| Total | 438/600 | 139 | 16 | 4 | 3 |
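
The percentages quoted in this section, and the 96% figure given in the abstract, follow directly from the totals row of Table 6 when the “accept” and “accept, minor corrections” categories are both counted as acceptable for planning, as the category definitions above indicate. A short check of this arithmetic:

```python
# Totals row of Table 6 (600 evaluated segmentations).
totals = {"accept": 438, "minor": 139, "major": 16, "reject": 4, "not_assigned": 3}
n = sum(totals.values())
share = {category: 100.0 * count / n for category, count in totals.items()}
# "Accept" and "accept, minor corrections" are both acceptable for planning.
acceptable = share["accept"] + share["minor"]
print(n, round(share["accept"]), round(share["minor"]), round(acceptable))
# prints: 600 73 23 96
```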

Segmentations of the eyes had the highest rate of immediate acceptance: 93 out of 100 segmentations were assigned to the accept category. The only segmentation that required a major modification was a case with a lesion inside the eye, possibly polypoidal choroidal vasculopathy. The lesion was not classified by the system as part of the eye, and therefore, one part of the eye was not detected. The minor modifications recommended for other cases generally consisted of correcting a few nondetected voxels on the border of the eye (top or bottom axial slices) or a few false positives on the anterior part of the orbit.

All segmentations of the optic nerves were found acceptable for radiotherapy planning: 84 out of 100 segmentations were assigned to the accept category, and the remaining 16 required only minor corrections. Most of the minor errors were nondetections of a few voxels at the extremity of the optic nerve close to the eye (e.g., on the top axial slice). There was also at least one case of false positives on the neighboring arteries, close to the optic chiasm.

Although the metrics for the lenses in the quantitative evaluation were considerably lower than for other structures, most of their segmentations on the set of 50 MRIs were found satisfactory by the radiotherapist. Minor corrections were required in 13 out of 100 cases and major corrections in 6 cases. Most of the problems were nondetections, observed for instance in cases where the patient looks to the side and the system does not detect one side of the lens. The lenses are very small structures, and their visibility is strongly affected by motion artifacts in MRI.

For the optic chiasm, corrections were more frequently required but were usually minor: 19 out of 50 cases were assigned to the accept category, and 26 cases required minor corrections. The minor errors were often false positives on the hypothalamus (the same issue was observed in the ground truth used to train the model) and sometimes on arteries neighboring the chiasm. The major corrections (four cases) were mainly nondetections of a small part of the beginning of an optic nerve. Even if only a small number of voxels is not detected (false negatives), such corrections are necessary, as excessive irradiation of one part of the optic nerve could make the entire nerve dysfunctional.47 One segmentation was rejected due to nondetection of one part of the chiasm. This error appeared in a challenging case where the anatomy of the patient was modified by a strong mass effect caused by a tumor.

Similar performance was obtained for the pituitary gland, located below the optic chiasm. Most of the required minor (25 cases) and major (3 cases) corrections correspond to nondetections, typically on the one or two lowermost slices. In at least 2 cases, a few false negatives were observed on the pituitary stalk (also observed in some ground truth segmentations used for training), which is the connection between the pituitary gland and the hypothalamus.

Although segmentation of the hippocampus is difficult (low contrast with neighboring structures), it had one of the highest acceptance rates in our evaluation, with 84 segmentations in the accept category. However, it is also the only structure for which more than one segmentation was rejected. The two rejected segmentations correspond to cases where a large tumoral mass had grown near the hippocampus, causing edema with a similar intensity in T1-weighted MRI. Moreover, these tumors had a large necrotic core, which may be confused with a ventricle by the system. In the other cases, the required corrections (mostly minor) usually correspond to false positives (in particular on the amygdalae, which neighbor the hippocampi and have a similar intensity in T1-weighted MRI) or to some nondetections on the extremities of the hippocampus.

For the brainstem, 48 out of 50 segmentations were found acceptable for radiotherapy planning, although approximately half of them required minor modifications. The required corrections (false positives or nondetections) were almost exclusively on the uppermost axial slices (typically 2 slices), which correspond to the top extremity of the brainstem. The only rejected segmentation corresponds to a case where a tumor adjacent to the brainstem was mistakenly included in the segmentation (false positives).

Finally, all segmentations of the brain (which occupies a large part of the head) were found acceptable for radiotherapy planning, although almost one third of them required minor corrections. The recommended corrections include, for instance, nondetections close to the cribriform plate (between the eyes) and false positives on bones.

Notably, the only two structures for which all segmentations were found acceptable for radiotherapy planning (without any major correction) are the optic nerves and the brain, both of which received a specific postprocessing.

4. Conclusion and Future Work

In this work, we proposed a CNN-based method for segmentation of OAR from MR images in the context of neuro-oncology. The method was evaluated on clinical data.

First, we proposed a deep learning model and a training algorithm for segmentation of multiple and nonexclusive anatomical structures. The proposed methodology addresses problems related to computational costs and the variable availability of ground truth segmentations of the different anatomical structures (unsegmented classes). The neural network used in our method is a modified version of U-Net. The network is trained separately for segmentation in axial, coronal, and sagittal slices. The three versions of the network are combined by majority voting.

Second, we proposed procedures to enforce anatomical consistency of the result in a postprocessing stage. In particular, we proposed a graph-based algorithm for segmentation of the optic nerves, which are among the most difficult anatomical structures to segment automatically. The proposed postprocessing steps proved particularly effective in the qualitative evaluation by a radiotherapist: all segmentations of the optic nerves were found acceptable for radiotherapy planning.

The method was evaluated quantitatively on a set of 44 annotated MRIs, with fivefold cross-validation and using several metrics. The segmentations produced by our system on a set of 50 nonannotated MRIs were qualitatively evaluated by an experienced radiotherapist. Despite the limited size of the training database (44 annotated MRIs) and the different challenges of the segmentation tasks (in particular, presence of tumors), a large majority of the output segmentations were found sufficiently accurate to be used for computation of irradiation doses in radiotherapy.

An important step of future work is to adapt the method to multimodal data. Often, several types of images are acquired during radiotherapy planning for one patient, including CT scans and different MR sequences (T1, T2, FLAIR). Including different imaging modalities could improve segmentation of several structures, but it also brings new challenges related, for instance, to intermodality registration and to training models on cases with missing modalities.

In our work, we used a variant of 2-D U-Net to limit the GPU memory load. 2-D CNNs have, however, the drawback of ignoring one spatial dimension. An interesting alternative would be to use 3-D CNNs with anisotropic receptive fields, such as the model proposed in Ref. 48.

As discussed in Sec. 3, using voxelwise overlap as the metric to compare the output segmentation with the ground truth has limitations, in particular due to the uncertain borders of the ground truth. An interesting direction for future work would be to use a loss function penalizing distances between the output and the ground truth. However, computing mean distances may be computationally expensive, as it requires computing distances for a large number of pairs of coordinates.

Although the proposed postprocessing modules (hole filling, thresholding on the size of connected components, inclusion of one class in another, and the graph-based algorithm to find centerlines) have been applied to specific anatomical structures, they can be adapted for segmentation of other structures. In particular, our graph-based algorithm could be used to compute centerlines of other tubular structures, such as blood vessels. The proposed postprocessing steps are not specific to MRI, except for the intensity-based refinement of the optic nerve segmentation (hyperintensity of the fat surrounding the optic nerve in T1-weighted MRI).

As for other segmentation tasks in medical imaging, availability of annotated training data is an important problem. Methods able to exploit weaker forms of annotations (bounding boxes and slice-level labels) for training segmentation models are therefore of interest. In particular, methods combining weakly annotated and fully annotated training images were recently proposed in Refs. 49 and 50. As our system produced accurate segmentations in a large majority of cases and the few errors observed were mainly on the boundaries of organs, the system could be used to generate bounding boxes (subsequently verified by a human), which could then be used to train segmentation models able to exploit this type of annotation.

Another important direction of future work is to combine segmentation of OAR with segmentation of radiotherapy target volumes. In particular, a wide variety of tumor segmentation methods32,48,51–53 have been proposed in recent years. Deep learning could also be used for computation of irradiation doses54 in radiotherapy planning.

Acknowledgments

Pawel Mlynarski was funded by the Microsoft Research-Inria Joint Centre, France. This work was supported by the Inria Sophia Antipolis—Méditerranée, “NEF” computation cluster.

Biographies

Pawel Mlynarski is a PhD student at Inria Sophia Antipolis, France. His research addresses problems of automatic analysis of medical images in oncology. His thesis focuses on segmentation of brain tumors and organs at risk in the context of radiotherapy planning. He obtained his master’s degree in applied mathematics from the École Normale Supérieure (Cachan, France).

Hervé Delingette is a research director at Inria, director of an academy of excellence at Université Côte d’Azur, and member of the board of the MICCAI Society. He also holds a chair at the new AI institute 3IA Côte d’Azur. He received his engineering and PhD degrees from the École Centrale Paris. His research focuses on various aspects of artificial intelligence in medical image analysis, computational physiology, and surgery simulation.

Hamza Alghamdi is a radiation oncologist at the Centre Antoine Lacassagne (Nice, France).

Pierre-Yves Bondiau defended his medical doctoral thesis in 1995 on automatic eye reconstruction software. His scientific thesis, on automatic segmentation for radiotherapy treatment, was carried out at Inria Sophia Antipolis, France. He then implemented the first radiosurgery robot and started the high-energy proton device project at the Antoine Lacassagne Anticancer Center, Nice, France. He is currently involved in the application of AI to cancer treatment.

Nicholas Ayache is research director at Inria, head of the Epione research team dedicated to e-patients for e-medicine, and scientific director of the new AI institute 3IA Côte d’Azur. He is a member of the French Academy of Sciences and the Academy of Surgery. His current research focuses on AI methods to improve diagnosis, prognosis, and therapy from medical images and clinical, biological, behavioral, and environmental data available on the patient.

Disclosures

The authors have no conflicts of interest to disclose.

Contributor Information

Pawel Mlynarski, Email: pawel.mlynarski@inria.fr.

Hervé Delingette, Email: herve.delingette@inria.fr.

Hamza Alghamdi, Email: hamza.alghamdi@nice.unicancer.fr.

Pierre-Yves Bondiau, Email: pierre-yves.bondiau@nice.unicancer.fr.

Nicholas Ayache, Email: nicholas.ayache@inria.fr.

References

- 1.Vos T., et al. , “Global, regional, and national incidence, prevalence, and years lived with disability for 310 diseases and injuries, 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015,” Lancet 388(10053), 1545–1602 (2016). 10.1016/S0140-6736(16)31678-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Bauer S., et al. , “A survey of MRI-based medical image analysis for brain tumor studies,” Phys. Med. Biol. 58(13), R97 (2013). 10.1088/0031-9155/58/13/R97 [DOI] [PubMed] [Google Scholar]

- 3.Brouwer C. L., et al. , “3D variation in delineation of head and neck organs at risk,” Radiat. Oncol. 7(1), 32 (2012). 10.1186/1748-717X-7-32 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Ciardo D., et al. , “Atlas-based segmentation in breast cancer radiotherapy: evaluation of specific and generic-purpose atlases,” Breast 32, 44–52 (2017). 10.1016/j.breast.2016.12.010 [DOI] [PubMed] [Google Scholar]

- 5.Bondiau P.-Y., et al. , “Atlas-based automatic segmentation of MR images: validation study on the brainstem in radiotherapy context,” Int. J. Radiat. Oncol. Biol. Phys. 61(1), 289–298 (2005). 10.1016/j.ijrobp.2004.08.055 [DOI] [PubMed] [Google Scholar]

- 6.Alchatzidis S., Sotiras A., Paragios N., “Local atlas selection for discrete multi-atlas segmentation,” in IEEE 12th Int. Symp. Biomed. Imaging (ISBI), IEEE, pp. 363–367 (2015). 10.1109/ISBI.2015.7163888 [DOI] [Google Scholar]

- 7.Commowick O., Grégoire V., Malandain G., “Atlas-based delineation of lymph node levels in head and neck computed tomography images,” Radiother. Oncol. 87(2), 281–289 (2008). 10.1016/j.radonc.2008.01.018 [DOI] [PubMed] [Google Scholar]

- 8.Commowick O., Warfield S. K., Malandain G., “Using Frankenstein’s creature paradigm to build a patient specific atlas,” Lect. Notes Comput. Sci. 5762, 993–1000 (2009). 10.1007/978-3-642-04271-3_120 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ramus L., Malandain G., “Assessing selection methods in the context of multi-atlas based segmentation,” in IEEE Int. Symp. Biomed. Imaging: From Nano to Macro, IEEE, pp. 1321–1324 (2010). 10.1109/ISBI.2010.5490240 [DOI] [Google Scholar]

- 10.Ramus L., Malandain G., “Multi-atlas based segmentation: application to the head and neck region for radiotherapy planning,” in MICCAI Workshop Med. Image Anal. Clin.-A Grand Challenge, pp. 281–288 (2010). [Google Scholar]

- 11.Warfield S. K., Zou K. H., Wells W. M., “Simultaneous truth and performance level estimation (STAPLE): an algorithm for the validation of image segmentation,” IEEE Trans. Med. Imaging 23(7), 903–921 (2004). 10.1109/TMI.2004.828354 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Criminisi A., et al. , “Regression forests for efficient anatomy detection and localization in CT studies,” Lect. Notes Comput. Sci. 6533, 106–117 (2010). 10.1007/978-3-642-18421-5_11 [DOI] [Google Scholar]

- 13.Criminisi A., et al. , “Regression forests for efficient anatomy detection and localization in computed tomography scans,” Med. Image Anal. 17(8), 1293–1303 (2013). 10.1016/j.media.2013.01.001 [DOI] [PubMed] [Google Scholar]

- 14.Gauriau R., et al. , “Multi-organ localization with cascaded global-to-local regression and shape prior,” Med. Image Anal. 23(1), 70–83 (2015). 10.1016/j.media.2015.04.007 [DOI] [PubMed] [Google Scholar]

- 15.LeCun Y., Bengio Y., “Convolutional networks for images, speech, and time series,” Handb. Brain Theory Neural Networks 3361(10), 1995 (1995). [Google Scholar]

- 16.Menze B. H., et al. , “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Trans. Med. Imaging 34(10), 1993–2024 (2015). 10.1109/TMI.2014.2377694 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Nikolov S., et al. , “Deep learning to achieve clinically applicable segmentation of head and neck anatomy for radiotherapy,” arXiv:1809.04430 (2018). [DOI] [PMC free article] [PubMed]

- 18.Wang Y., et al. , “Organ at risk segmentation in head and neck ct images using a two-stage segmentation framework based on 3D U-Net,” IEEE Access 7, 144591–144602 (2019). [Google Scholar]

- 19.Larsson M., Zhang Y., Kahl F., “Robust abdominal organ segmentation using regional convolutional neural networks,” Appl. Soft Comput. 70, 465–471 (2018). 10.1016/j.asoc.2018.05.038 [DOI] [Google Scholar]

- 20.Ibragimov B., Xing L., “Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks,” Med. Phys. 44(2), 547–557 (2017). 10.1002/mp.12045 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Men K., et al. , “More accurate and efficient segmentation of organs-at-risk in radiotherapy with convolutional neural networks cascades,” Med. Phys. 46(1), 286–292 (2019). 10.1002/mp.13296 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Zhu W., et al. , “AnatomyNet: deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy,” Med. Phys. 46(2), 576–589 (2019). 10.1002/mp.2019.46.issue-2 [DOI] [PubMed] [Google Scholar]

- 23.Ronneberger O., Fischer P., Brox T., “U-Net: convolutional networks for biomedical image segmentation,” Lect. Notes Comput. Sci. 9351, 234–241, (2015). 10.1007/978-3-319-24574-4_28 [DOI] [Google Scholar]

- 24.Roth H. R., et al. , “Hierarchical 3D fully convolutional networks for multi-organ segmentation,” arXiv:1704.06382 (2017).

- 25.Tong N., et al. , “Fully automatic multi-organ segmentation for head and neck cancer radiotherapy using shape representation model constrained fully convolutional neural networks,” Med. Phys. 45(10), 4558–4567 (2018). 10.1002/mp.13147 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Brosch T., et al., “Deep learning-based boundary detection for model-based segmentation with application to MR prostate segmentation,” Lect. Notes Comput. Sci. 11073, 515–522 (2018). 10.1007/978-3-030-00937-3_59

- 27. Orasanu E., et al., “Organ-at-risk segmentation in brain MRI using model-based segmentation: benefits of deep learning-based boundary detectors,” in Int. Workshop on Shape in Med. Imaging, Springer, pp. 291–299 (2018).

- 28. Bloch I., et al., “Fusion of spatial relationships for guiding recognition, example of brain structure recognition in 3D MRI,” Pattern Recognit. Lett. 26(4), 449–457 (2005). 10.1016/j.patrec.2004.08.009

- 29. Fouquier G., Atif J., Bloch I., “Sequential model-based segmentation and recognition of image structures driven by visual features and spatial relations,” Comput. Vision Image Understanding 116(1), 146–165 (2012). 10.1016/j.cviu.2011.09.004

- 30. Argiris A., et al., “Head and neck cancer,” Lancet 371(9625), 1695–1709 (2008). 10.1016/S0140-6736(08)60728-X

- 31. Ioffe S., Szegedy C., “Batch normalization: accelerating deep network training by reducing internal covariate shift,” arXiv:1502.03167 (2015).

- 32. Mlynarski P., et al., “3D convolutional neural networks for tumor segmentation using long-range 2D context,” Comput. Med. Imaging Graphics 73, 60–72 (2019). 10.1016/j.compmedimag.2019.02.001

- 33. Folk M., et al., “An overview of the HDF5 technology suite and its applications,” in Proc. EDBT/ICDT 2011 Workshop Array Databases, ACM, pp. 36–47 (2011). 10.1145/1966895.1966900

- 34. Cormen T. H., et al., Introduction to Algorithms, MIT Press (2009).

- 35. Zhan F. B., Noon C. E., “Shortest path algorithms: an evaluation using real road networks,” Transp. Sci. 32(1), 65–73 (1998). 10.1287/trsc.32.1.65

- 36. Deschamps T., Cohen L. D., “Fast extraction of minimal paths in 3D images and applications to virtual endoscopy,” Med. Image Anal. 5(4), 281–299 (2001). 10.1016/S1361-8415(01)00046-9

- 37. Cohen L. D., Kimmel R., “Global minimum for active contour models: a minimal path approach,” Int. J. Comput. Vision 24(1), 57–78 (1997). 10.1023/A:1007922224810

- 38. Benmansour F., Cohen L. D., “Tubular structure segmentation based on minimal path method and anisotropic enhancement,” Int. J. Comput. Vision 92(2), 192–210 (2011). 10.1007/s11263-010-0331-0

- 39. Sethian J. A., “Fast marching methods,” SIAM Rev. 41(2), 199–235 (1999). 10.1137/S0036144598347059

- 40. Zana F., Klein J.-C., “Segmentation of vessel-like patterns using mathematical morphology and curvature evaluation,” IEEE Trans. Image Process. 10(7), 1010–1019 (2001). 10.1109/83.931095

- 41. Levin W., et al., “Proton beam therapy,” Br. J. Cancer 93(8), 849–854 (2005). 10.1038/sj.bjc.6602754

- 42. Mildenberger P., Eichelberg M., Martin E., “Introduction to the DICOM standard,” Eur. Radiol. 12(4), 920–927 (2002). 10.1007/s003300101100

- 43. Law M. Y., Liu B., “DICOM-RT and its utilization in radiation therapy,” Radiographics 29(3), 655–667 (2009). 10.1148/rg.293075172

- 44. Fedorov A., et al., “3D Slicer as an image computing platform for the quantitative imaging network,” Magn. Reson. Imaging 30(9), 1323–1341 (2012). 10.1016/j.mri.2012.05.001

- 45. Pinter C., et al., “SlicerRT: radiation therapy research toolkit for 3D Slicer,” Med. Phys. 39(10), 6332–6338 (2012). 10.1118/1.4754659

- 46. Ecabert O., et al., “Automatic model-based segmentation of the heart in CT images,” IEEE Trans. Med. Imaging 27(9), 1189–1201 (2008). 10.1109/TMI.2008.918330

- 47. Källman P., Ågren A., Brahme A., “Tumour and normal tissue responses to fractionated non-uniform dose delivery,” Int. J. Radiat. Biol. 62(2), 249–262 (1992). 10.1080/09553009214552071

- 48. Wang G., et al., “Automatic brain tumor segmentation using cascaded anisotropic convolutional neural networks,” Lect. Notes Comput. Sci. 10670, 178–190 (2017). 10.1007/978-3-319-75238-9_16

- 49. Mlynarski P., et al., “Deep learning with mixed supervision for brain tumor segmentation,” J. Med. Imaging 6(3), 034002 (2019).

- 50. Shah M. P., Merchant S., Awate S. P., “MS-Net: mixed-supervision fully-convolutional networks for full-resolution segmentation,” Lect. Notes Comput. Sci. 11073, 379–387 (2018). 10.1007/978-3-030-00937-3_44

- 51. Myronenko A., “3D MRI brain tumor segmentation using autoencoder regularization,” Lect. Notes Comput. Sci. 11384, 311–320 (2018). 10.1007/978-3-030-11726-9_28

- 52. Kamnitsas K., et al., “Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation,” Med. Image Anal. 36, 61–78 (2017). 10.1016/j.media.2016.10.004

- 53. Parisot S., et al., “Concurrent tumor segmentation and registration with uncertainty-based sparse non-uniform graphs,” Med. Image Anal. 18(4), 647–659 (2014). 10.1016/j.media.2014.02.006

- 54. Andres E. A., et al., “PO-1002 pseudo computed tomography generation using 3D deep learning-application to brain radiotherapy,” Radiother. Oncol. 133, S553 (2019). 10.1016/S0167-8140(19)31422-7