Abstract

Magneto- and electro-encephalography (MEG/EEG) non-invasively record human brain activity with millisecond resolution, providing reliable markers of healthy and disease states. The difficulty of relating these macroscopic signals to underlying cellular- and circuit-level generators is a limitation that constrains using MEG/EEG to reveal novel principles of information processing or to translate findings into new therapies for neuropathology. To address this problem, we built the Human Neocortical Neurosolver (HNN, https://hnn.brown.edu) software. HNN has a graphical user interface designed to help researchers and clinicians interpret the neural origins of MEG/EEG. HNN’s core is a neocortical circuit model that accounts for biophysical origins of electrical currents generating MEG/EEG. Data can be directly compared to simulated signals, and parameters can be easily manipulated to develop and test hypotheses on a signal’s origin. Tutorials teach users to simulate commonly measured signals, including event related potentials and brain rhythms. HNN’s ability to associate signals across scales makes it a unique tool for translational neuroscience research.

Research organism: Human

eLife digest

Neurons carry information in the form of electrical signals. Each of these signals is too weak to detect on its own. But the combined signals from large groups of neurons can be detected using techniques called EEG and MEG. Sensors on or near the scalp detect changes in the electrical activity of groups of neurons from one millisecond to the next. These recordings can also reveal changes in brain activity due to disease.

But how do EEG/MEG signals relate to the activity of neural circuits? While neuroscientists can rarely record electrical activity from inside the human brain, it is much easier to do so in other animals. Computer models can then compare these recordings from animals to the signals in human EEG/MEG to infer how the activity of neural circuits is changing. But building and interpreting these models requires advanced skills in mathematics and programming, which not all researchers possess.

Neymotin et al. have therefore developed a user-friendly software platform that can help translate human EEG/MEG recordings into circuit-level activity. Known as the Human Neocortical Neurosolver, or HNN for short, the open-source tool enables users to develop and test hypotheses on the neural origin of EEG/MEG signals. The model simulates the electrical activity of cells in the outer layers of the human brain, the neocortex. By feeding human EEG/MEG data into the model, researchers can predict patterns of circuit-level activity that might have given rise to the EEG/MEG data. The HNN software includes tutorials and example datasets for commonly measured signals, including brain rhythms. It is free to use and can be installed on all major computer platforms or run online.

HNN will help researchers and clinicians who wish to identify the neural origins of EEG/MEG signals in the healthy or diseased brain. Likewise, it will be useful to researchers studying brain activity in animals, who want to know how their findings might relate to human EEG/MEG signals. As HNN is suitable for users without training in computational neuroscience, it offers an accessible tool for discoveries in translational neuroscience.

Introduction

Modern neuroscience is in the midst of a revolution in understanding the cellular and genetic substrates of healthy brain dynamics and disease due to advances in cellular- and circuit-level approaches in animal models, for example two-photon imaging and optogenetics. However, the translation of new discoveries to human neuroscience is significantly lacking (Badre et al., 2015; Sahin et al., 2018). To understand human disease, and more generally the human condition, we must study humans. To date, EEG and MEG are the only noninvasive methods to study electrical neural activity in humans with fine temporal resolution. Although EEG/MEG provide biomarkers of almost all healthy and abnormal brain dynamics, these so-called ‘macro-scale’ techniques are difficult to interpret in terms of the underlying cellular- and circuit-level events. As such, there is a need for a translator that can bridge the ‘micro-scale’ animal data with the ‘macro-scale’ human recordings in a principled way. This is the ideal problem for computational neural modeling, where the model can have specificity at different scales.

To address this need, we developed the Human Neocortical Neurosolver (HNN), a modeling tool designed to provide researchers and clinicians an easy-to-use software platform to develop and test hypotheses regarding the neural origin of their data. The foundation of the HNN software is a neocortical model that accounts for the biophysical origin of macroscale extracranial EEG/MEG recordings with enough detail to translate to the underlying cellular- and network-level activity. HNN’s graphical user interface (GUI) provides users with an interactive tool to interpret the neural underpinnings of EEG/MEG data and changes in these signals with behavior or neuropathology.

HNN’s underlying model represents a canonical neocortical circuit based on generalizable features of cortical circuitry, with individual pyramidal neurons and interneurons arranged across the cortical layers, and layer-specific input pathways that relay spiking information from other parts of the brain, which are not explicitly modeled. Based on known electromagnetic biophysics underlying macroscale EEG/MEG signals (Jones, 2015), the elementary current generators of EEG/MEG (current dipoles) are simulated from the intracellular current flow in the long and spatially-aligned pyramidal neuron dendrites (Hämäläinen et al., 1993; Ikeda et al., 2005; Jones, 2015; Murakami et al., 2003; Murakami and Okada, 2006; Okada et al., 1997). This unique construction produces equal units between the model output and source-localized data (ampere-meters, Am) allowing one-to-one comparison between model and data to guide interpretation.

The extracranial macroscale nature of EEG/MEG limits the space of signals that are typically observed and studied. The majority of studies focus on quantification of event related potentials (ERPs) and low-frequency brain rhythms (<100 Hz), and there are commonalities in these signals across tasks and species (Buzsáki et al., 2013; Shin et al., 2017). HNN’s underlying mathematical model has been successfully applied to interpret the mechanisms and meaning of these common signals, including sensory evoked responses and oscillations in the alpha (7–14 Hz), beta (15–29 Hz) and gamma bands (30–80 Hz) (Jones et al., 2009; Jones et al., 2007; Lee and Jones, 2013; Sherman et al., 2016; Ziegler et al., 2010), and changes with perception (Jones et al., 2007) and aging (Ziegler et al., 2010). The model has also been used to study the impact of non-invasive brain stimulation on circuit dynamics measured with EEG (Sliva et al., 2018), and to constrain more reduced ‘neural mass models’ of laminar activity (Pinotsis et al., 2017). In the clinical domain, HNN’s model has also been applied to study MEG-measured circuit deficits in autism (Khan et al., 2015).

Despite these examples of use, the complexity of the original model and code hindered use by the general community. The innovation in the new HNN software is the construction of an intuitive graphical user interface to interact with the model without any coding. We offer several free and publicly-available resources to assist the broad EEG/MEG community in using the software and applying the model to their studies. These resources include an example workflow, several tutorials (based on the prior studies cited above) to study ERPs and oscillations, and community-sharing resources.

HNN’s GUI is designed so that researchers can simultaneously view the model's net current dipole output and microscale features (including layer-specific responses, individual cell spiking activity, and somatic voltages) in both the time and frequency domains. HNN is constructed to be a hypothesis development and testing tool to produce circuit-level predictions that can then be directly tested and informed by invasive recordings and/or other imaging modalities. This level of scalability provides a unique tool for translational neuroscience research.

In this paper, we outline the biophysical and physiological background information that is the basis of the development of HNN, give an overview of tutorials and available data and parameter sets to simulate ERPs and low-frequency oscillations in the alpha, beta, and gamma range, and describe current distribution and online resources (https://hnn.brown.edu). We discuss the differences between HNN and other EEG/MEG modeling software packages, as well as limitations and future directions.

Results

Background information on the generation of EEG/MEG signals and uniqueness of HNN

Primary currents and the relation to forward and inverse modeling

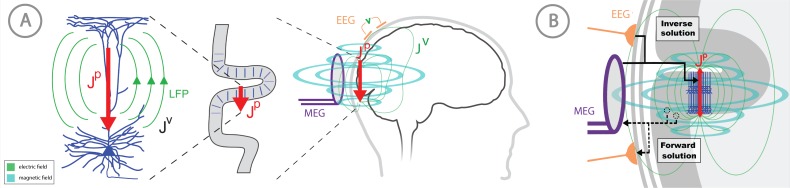

The concepts of electromagnetic biophysics are succinctly discussed, for example in Hämäläinen et al. (1993) and Hari and Ilmoniemi (1986). Here, we briefly review the basic framework of forward and inverse modeling and how they relate to HNN (Figure 1).

Figure 1. Overview of the biophysical origin of MEG/EEG signals and the relationship between HNN and forward/inverse modeling.

(A) HNN bridges the ‘macroscale’ extracranial EEG/MEG recordings to the underlying cellular- and circuit-level activity by simulating the primary electrical currents (Jp) underlying EEG/MEG, which are generated by the postsynaptic, intracellular current flow in the long and spatially-aligned dendrites of a large population of synchronously-activated pyramidal neurons. (B) A zoomed-in representation of the relationship between HNN and EEG/MEG forward and inverse modeling. Inverse modeling estimates the location, timecourse and orientation of the primary currents (Jp), and HNN simulates the neural activity creating Jp at the microscopic scale. Adapted from Jones (2015).

MEG/EEG signals are created by electrical currents in the brain. The signals are recorded by sensors at least a centimeter from the actual current sources. Given this configuration, a macroscopic scale is employed for the distribution of electrical conductivity. The actual non-ohmic equivalent current sources of activity and the passive ohmic currents are then referred to as primary (Jp) and volume (Jv) currents, respectively. As depicted in Figure 1, both MEG and EEG are ultimately generated by the primary currents. The primary currents set up a potential distribution (V) that extends through the brain tissue, the cerebrospinal fluid (CSF), the skull, and the scalp, where it is measured as EEG; the passive Jv is proportional to the electric field (the negative gradient of V) and the electrical conductivity (σ). MEG, in general, is generated by both Jp and Jv. The total current is the sum of Jp and Jv: J = Jp + Jv, whence Jp = J – Jv = J + σ∇V. In this definition, the conductivity is considered on a macroscopic scale, omitting the cellular level details. The primary current is the ‘battery’ of the circuit and is nonzero at the active sites in the brain. Furthermore, the direction of the primary current is determined by the cellular level geometry of the active cells. By locating the primary currents from MEG/EEG we locate the sites of activity.
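The relations in this paragraph can be collected in one place. The block below restates them in LaTeX, writing the electric field as E = −∇V (the quasi-static form, which the text implies but does not name) and making the dependence on position r explicit as a notational assumption:

```latex
\begin{align}
\mathbf{J}(\mathbf{r}) &= \mathbf{J}_p(\mathbf{r}) + \mathbf{J}_v(\mathbf{r}), \\
\mathbf{J}_v(\mathbf{r}) &= \sigma(\mathbf{r})\,\mathbf{E}(\mathbf{r}) = -\sigma(\mathbf{r})\,\nabla V(\mathbf{r}), \\
\mathbf{J}_p(\mathbf{r}) &= \mathbf{J}(\mathbf{r}) - \mathbf{J}_v(\mathbf{r}) = \mathbf{J}(\mathbf{r}) + \sigma(\mathbf{r})\,\nabla V(\mathbf{r}).
\end{align}
```

The sign in the last line follows directly from substituting the second line into the first.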

The task of computing EEG and MEG given Jp is commonly called forward modeling, and it is governed by Maxwell’s equations. However, in the geometry of the head, the integral effect of the volume currents to the magnetic field can be relatively easily taken into account and, therefore, modeling of MEG is in general more straightforward than the precise calculation of the electric potentials measured in EEG. Specifically, a first order approximation, the spherically symmetric conductor model (Sarvas, 1987), can often be used (Tarkiainen et al., 2003). In this case, all components of the magnetic field can be computed from an analytical formula which is independent of the value of the electrical conductivity as a function of the distance from the center of the sphere (Sarvas, 1987). In a more complex case with realistically shaped conductivity compartments, the skull and the scalp can be replaced by a perfect insulator (Hämäläinen and Sarvas, 1987; Hämäläinen and Sarvas, 1989).

The availability of these forward models opens up the possibility to estimate the locations (r) and time course of the primary current activity, Jp = Jp(r,t), from MEG and EEG sensor data, that is, inverse modeling or source estimation. However, this inverse problem is fundamentally ill-posed, and constraints are needed to render the solution unique. The different source estimation methods, such as current dipole fitting, minimum-norm estimates, sparse source estimation methods, and beamformer approaches, differ in their capability to approximate the extent of the source activity and in their localization accuracy; there are presently several open-source software packages for source estimation, for example Gramfort et al. (2013). All of these methods are capable of inferring both the location and direction of the neural currents and their time courses. Importantly, due to physiological considerations, the appropriate elementary primary current source in all of these methods is a current dipole, with units of current × distance, that is, Am (ampere × meter).
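As one illustration of how a constraint can render the solution unique, the minimum-norm estimate can be written in a common textbook form. The symbols below (gain matrix G from the forward model, sensor data y, sensor noise n with covariance C, regularization parameter λ, and an identity source covariance) are notation introduced here for illustration and are not taken from the text above:

```latex
\begin{align}
\mathbf{y}(t) &= \mathbf{G}\,\mathbf{j}(t) + \mathbf{n}(t), \\
\hat{\mathbf{j}}(t) &= \mathbf{G}^{\top}\left(\mathbf{G}\mathbf{G}^{\top} + \lambda^{2}\mathbf{C}\right)^{-1}\mathbf{y}(t).
\end{align}
```

Here each element of j(t) is a dipole amplitude in Am at one candidate source location. Among source distributions that fit the data comparably well, this estimate favors the one with the smallest L2 norm; the other methods named above impose different constraints.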

Thanks to the consistent orientation of the apical dendrites of the pyramidal cells in the cortex, this primary current is oriented normal to the cortical mantle and its direction corresponds to the intracellular current flow (Okada et al., 1997; Ikeda et al., 2005; Murakami and Okada, 2006). When source estimation is used in combination with geometrical models of the cortex constructed from anatomical MRI, the current direction can be related to the direction of the outer normal of the cortex: one is thus able to tell whether the estimated current is flowing outwards or inwards at a particular cortical site at a particular point in time. As such, the direction of the current flow can be related to the orientation of the pyramidal neuron apical dendrites and interpreted as current flowing from soma to apical tuft (up the dendrites) or from apical tuft to soma (down the dendrites); see Figure 1.
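The sign convention described above can be sketched in a few lines of Python. This is a minimal illustration, not part of HNN or of any source estimation package: the function name and argument layout are hypothetical, and it assumes the apical dendrites point along the outward cortical normal.

```python
def flow_direction(dipole_am, outward_normal):
    """Classify the current-flow direction of an estimated dipole.

    dipole_am: (x, y, z) dipole moment in Am at one cortical site.
    outward_normal: (x, y, z) outward cortical surface normal (any length).
    Assumes apical dendrites are aligned with the outward normal.
    """
    # Project the dipole onto the outward normal; only the sign matters here.
    projection = sum(d * n for d, n in zip(dipole_am, outward_normal))
    if projection > 0:
        return "up the dendrites (soma to apical tuft)"
    if projection < 0:
        return "down the dendrites (apical tuft to soma)"
    return "tangential (no component along the normal)"


# Hypothetical example: a 2 nAm dipole pointing out of the cortex.
direction = flow_direction((0.0, 0.0, 2e-9), (0.0, 0.0, 1.0))
```

In practice the outward normal would come from the MRI-derived cortical surface used for source estimation.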

Inferring the neural origin of the primary currents with HNN

The focus of HNN is to study how Jp is generated by the assembly of neurons in the brain at the microscopic scale. Currently, the process of estimating the primary current sources with inverse methods, or calculating the forward solution from Jp to the measured sensor level signal, is separate from HNN. A future direction is to integrate the top-down source estimation software with our bottom-up HNN model for all-in-one source estimation and circuit interpretation (see Discussion).

HNN’s underlying neural model contains elements that can simulate the primary current dipoles (Jp) creating EEG/MEG signals in a biophysically principled manner (Figure 1). Specifically, HNN simulates the primary current from a canonical model of a layered neocortical column via the net intracellular electrical current flow in the pyramidal neuron dendrites in a direction parallel to the apical dendrites (see red arrow in Figures 1 and 2, and further discussion in Materials and methods) (Hämäläinen et al., 1993; Ikeda et al., 2005; Jones, 2015; Murakami et al., 2003; Murakami and Okada, 2006; Okada et al., 1997). With this construction, the units of measure produced by the model are the same as those estimated from source localization methods, namely, ampere-meters (Am), enabling one-to-one comparison of results. This construction is unique compared to other EEG/MEG modeling software (see Discussion). A necessary step in comparing model results with source-localized signals is an understanding of the direction of the estimated net current in or out of the cortex, which corresponds to current flow down or up the pyramidal neuron dendrites, respectively, as discussed above. Estimation of current flow orientation at any point in time is an option in most inverse solution software that helps guide the neural interpretation, as does prior knowledge of the relay of sensory information in the cortex, see further discussion in the Tutorials part of the Results section.
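To make the unit bookkeeping concrete, the sketch below computes a net current dipole from intracellular (axial) currents along a single idealized apical dendrite. It is a simplified illustration under stated assumptions, not HNN's implementation: the function name, the compartment lists, and the example values are all hypothetical, and each segment length is taken to be already projected onto the apical axis.

```python
def dipole_moment_nAm(axial_currents_nA, segment_lengths_um):
    """Net current dipole along the apical axis, in nAm.

    axial_currents_nA: intracellular current in each dendritic segment (nA),
        positive when flowing toward the apical tuft.
    segment_lengths_um: length of each segment along the apical axis (um).
    """
    if len(axial_currents_nA) != len(segment_lengths_um):
        raise ValueError("one length is required per axial current")
    # nA * um = 1e-9 A * 1e-6 m = 1e-15 Am (femtoampere-meters).
    moment_fAm = sum(i * l for i, l in zip(axial_currents_nA, segment_lengths_um))
    # Convert fAm to nAm so the result is comparable with source-localized data.
    return moment_fAm * 1e-6


# Hypothetical three-segment dendrite.
example = dipole_moment_nAm([0.5, 0.3, 0.1], [100.0, 200.0, 300.0])
```

The result for one cell is many orders of magnitude below the nAm scale of recorded signals, which is why the net dipole is summed over a large, spatially aligned population.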

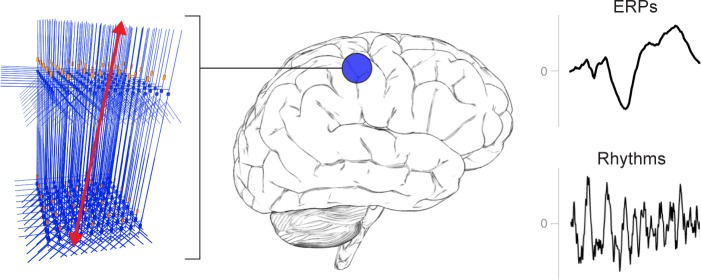

Figure 2. A schematic illustration of a canonical patch of neocortex that is represented by HNN’s underlying neural model.

(Left) 3D visualization of HNN’s model (pyramidal neurons drawn in blue, interneurons drawn in orange). (Right) Commonly measured EEG/MEG signals (ERPs and low frequency rhythms) from a single brain area that can be studied with HNN.

By keeping model output in close agreement with the data, HNN’s underlying model has led to new and generative predictions on the origin of sensory evoked responses and low-frequency rhythms, and on the changes in these signals across experimental conditions (Jones et al., 2009; Jones et al., 2007; Khan et al., 2015; Lee and Jones, 2013; Sherman et al., 2016; Sliva et al., 2018; Ziegler et al., 2010) described further below. The macro- to micro-scale nature of the HNN software is designed to develop and test hypotheses that can be directly validated with invasive recordings or other imaging modalities (see further discussion in tutorial on alpha and beta rhythms).

HNN is currently constructed to dissect the cell and network contributions to signals from one source-localized region of interest. Specifically, the HNN GUI is designed to simulate sensory evoked responses and low-frequency brain rhythms from a single region, based on the local network dynamics and the layer-specific thalamo-cortical and cortico-cortical inputs that contribute to the local activity. As such, HNN’s underlying neocortical network represents a scalable patch of neocortex containing canonical features of neocortical circuitry (Figure 2). Ongoing expansions will include the ability to import other user-defined cell types and circuit models into HNN, and to simulate LFP and sensor level signals as well as the interactions among multiple neocortical areas (see Discussion). Of note, users can still benefit from our software if they are working with data directly from EEG/MEG sensors rather than source-localized signals. The primary currents are the foundation of the sensor signal and, as such, can have similar activity profiles (e.g., compare source-localized tactile evoked response in Figure 4 and sensor-level response in Figure 5).

Overview of HNN’s default canonical neocortical column template network

Neocortical column structure

Here, we give an overview of the main features that are important to understand in order to begin exploring the origin of macroscale evoked responses and brain rhythms, and we provide details on how these features are implemented in HNN’s template model. Further details can be found in the Materials and methods section, in our prior publications (e.g., Jones et al., 2009), and on our website https://hnn.brown.edu.

Given that the primary electrical current that generates EEG/MEG signals comes from synchronous activity in pyramidal neuron (PN) dendrites across a large population, there are several key features of neocortical circuitry that are essential to consider when simulating these currents. While there are known differences in microscale circuitry across cortical areas and species, many features of neocortical circuits are remarkably similar. We assume these conserved features are minimally sufficient to account for the generation of evoked responses and brain rhythms measured with EEG/MEG, and we have harnessed this generalization into HNN’s foundational model, with success in simulating many of these signals using the same template model (see Introduction). These canonical features include:

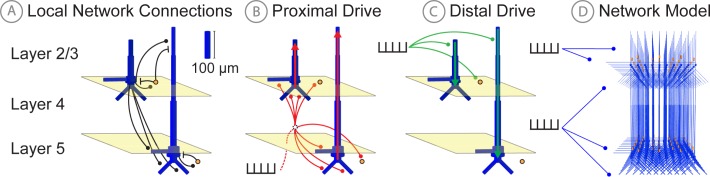

(I) A 3-layered structure with pyramidal neurons in the supragranular and infragranular layers whose dendrites span across the layers and are synaptically coupled to inhibitory interneurons in a 3-to-1 ratio of pyramidal to inhibitory cells (Figure 3A). Of note, cells in the granular layer are not explicitly included in the template circuit. This initial design choice was based on the fact that macroscale current dipoles are dominated by PN activity in supragranular and infragranular layers. Thalamic input to granular layers is presumed to propagate directly to basal and oblique dendrites of PN in the supragranular and infragranular layers. In the model, the thalamic input synapses directly onto these dendrites.

Figure 3. Schematic illustrations of HNN’s underlying neocortical network model.

(A) Local Network Connectivity: GABAergic (GABAA/GABAB; lines) and glutamatergic (AMPA/NMDA; circles) synaptic connectivity between single-compartment inhibitory neurons (orange circles) and multi-compartment layer 2/3 and layer 5 pyramidal neurons (blue neurons). Excitatory to excitatory connections not shown, see Materials and methods. (B) Exogenous proximal drive representing lemniscal thalamic drive to cortex. User defined trains or bursts of action potentials (see tutorials described in text) are simulated and activate post-synaptic excitatory synapses on the basal and oblique dendrites of layer 2/3 and layer 5 pyramidal neurons as well as the somata of layer 2/3 and layer 5 interneurons. These excitatory synaptic inputs drive current flow up the dendrites towards supragranular layers (red arrows). (C) Exogenous distal drive representing cortical-cortical inputs or non-lemniscal thalamic drive that synapses directly into the supragranular layer. User defined trains of action potentials are simulated and activate post-synaptic excitatory synapses on the distal apical dendrites of layer 5 and layer 2/3 pyramidal neurons as well as the somata of layer 2/3 interneurons. These excitatory synaptic inputs push the current flow down towards the infragranular layers (green arrows). (D) The full network contains a scalable number of pyramidal neurons in layer 2/3 and layer 5 in a 3-to-1 ratio with inhibitory interneurons, activated by user defined layer specific proximal and distal drive (see Materials and methods for full details).

The number of cells in the network is adjustable in the Local Network Parameters window via the Cells tab, while maintaining a 3-to-1 pyramidal to inhibitory interneuron ratio in each layer. The connectivity pattern is fixed, but the synaptic weights between cell types can be adjusted in the Local Network menu and the Synaptic Gains menu. Macroscale EEG/MEG signals are generated by the synchronous activity in large populations of PNs. Evoked responses are typically on the order of 10–100 nAm, and are estimated to be generated by the synchronous spiking activity of approximately tens of thousands of pyramidal neurons. Low-frequency oscillations are larger in magnitude, on the order of 100–1000 nAm, and are estimated to be generated by the subthreshold activity of approximately a million pyramidal neurons (Jones et al., 2009; Jones et al., 2007; Murakami and Okada, 2006). While HNN is constructed with the ability to adjust local network size, the magnitude of these signals can also be conveniently matched by applying a scaling factor to the model output, providing an estimate of the number of neurons that contributed to the signal.

(II) Exogenous driving input through two known layer-specific pathways. One type of input represents excitatory synaptic drive that comes from the lemniscal thalamus and contacts the cortex in the granular layers, which then propagates to the proximal PN dendrites in the supragranular and infragranular layers and somata of the inhibitory neurons; this input is referred to as proximal drive (Figure 3B). The other input represents excitatory synaptic drive from higher-order cortex or non-specific thalamic nuclei that synapses directly into the supragranular layers and contacts the distal PN dendrites and somata of the inhibitory neurons; this input is referred to as distal drive (Figure 3C). The networks that provide proximal and distal input to the local circuit (e.g., thalamus and higher order cortex) are not explicitly modelled, but rather these inputs are represented by simulated trains of action potentials that activate excitatory post-synaptic receptors in the local network. The temporal profile of these action potentials is adjustable depending on the simulation experiment and can be represented as single spikes, bursts of input, or rhythmic bursts of input. There are several ways to change the pattern of action potential drive through different buttons built into the HNN GUI: Evoked Inputs, Rhythmic Proximal Inputs, and Rhythmic Distal Inputs. The dialog boxes that open with these buttons allow creation and adjustment of patterns of evoked response drive or rhythmic drive to the network (see tutorials described in Results section for further details).

(III) Exogenous drive to the network can also be generated as excitatory synaptic drives following a Poisson process to the somata of chosen cell classes or as tonic input simulated as a somatic current clamp with a fixed current injection. The timing and duration of these drives is adjustable.
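The three kinds of exogenous drive listed above (rhythmic bursts of spikes, Poisson synaptic drive, and tonic current injection) can be sketched as simple event generators. This is a hedged illustration using only the Python standard library; the function names, parameters, and the 10 ms intra-burst spacing are assumptions made here and are not HNN's parameter names or defaults.

```python
import random


def poisson_spike_times(rate_hz, t_start_ms, t_stop_ms, rng):
    """Homogeneous Poisson spike times drawn from exponential intervals."""
    times = []
    if rate_hz <= 0:
        return times
    rate_per_ms = rate_hz / 1000.0
    t = t_start_ms + rng.expovariate(rate_per_ms)
    while t < t_stop_ms:
        times.append(t)
        t += rng.expovariate(rate_per_ms)
    return times


def rhythmic_burst_times(freq_hz, t_start_ms, t_stop_ms, jitter_sd_ms,
                         spikes_per_burst, rng, intra_burst_ms=10.0):
    """Bursts repeating at freq_hz, each burst onset jittered (Gaussian)."""
    period_ms = 1000.0 / freq_hz
    times = []
    n = 0
    while t_start_ms + n * period_ms < t_stop_ms:
        onset = rng.gauss(t_start_ms + n * period_ms, jitter_sd_ms)
        for k in range(spikes_per_burst):
            t = onset + k * intra_burst_ms
            if t_start_ms <= t < t_stop_ms:
                times.append(t)
        n += 1
    return sorted(times)


def tonic_current_nA(t_ms, t_on_ms, t_off_ms, amplitude_nA):
    """Fixed somatic current injection, active only in [t_on_ms, t_off_ms)."""
    return amplitude_nA if t_on_ms <= t_ms < t_off_ms else 0.0


rng = random.Random(0)  # seeded so a simulation experiment is repeatable
noise = poisson_spike_times(50.0, 0.0, 1000.0, rng)
alpha_bursts = rhythmic_burst_times(10.0, 0.0, 300.0, 0.0, 2, rng)
```

In a full simulation each generated event time would activate excitatory synapses at the proximal or distal targets described above; that step depends on the cell models and is not shown.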

Further details of the biophysics and morphology of the cells and of the architecture of the local synaptic connectivity profiles in the template network can be found in the Materials and methods section. As the use of our software grows, we anticipate other cells and network configurations will be made available as template models to work with via open source sharing (see Discussion).
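The scaling-factor idea described above, matching the magnitude of the model output to the data and reading off an estimate of the number of contributing neurons, can be sketched as follows. Choosing the factor by least squares is an assumption made here for illustration, as are the function names and example numbers; the model and data are assumed to be in the same units and sampled at the same time points.

```python
def best_scale(model, data):
    """Least-squares scalar s that minimizes sum((s * model - data) ** 2)."""
    numerator = sum(m * d for m, d in zip(model, data))
    denominator = sum(m * m for m in model)
    if denominator == 0:
        raise ValueError("model waveform is identically zero")
    return numerator / denominator


def estimated_contributing_neurons(scale, n_model_pyramidal):
    """Scale factor times the number of pyramidal neurons in the model."""
    return scale * n_model_pyramidal


# Hypothetical waveforms (nAm) and a hypothetical model with 200 pyramidal cells.
model_dipole = [0.0, 1.0, 2.0, 1.0]
recorded_dipole = [0.0, 3000.0, 6000.0, 3000.0]
scale = best_scale(model_dipole, recorded_dipole)
n_neurons = estimated_contributing_neurons(scale, 200)
```

The estimate is only as good as the assumption that the recorded signal is a scaled copy of the model output, so it should be read as an order-of-magnitude figure.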

Parameter tuning in HNN’s template network model

HNN’s template model is a large-scale model simulated with thousands of differential equations and parameters, making the parameter optimization process challenging. The process for tuning this canonical model and constraining the space of parameters to investigate the origin of ERPs and low-frequency oscillations was as follows. First, the individual cell morphologies and physiologies were constrained so individual cells produced realistic spiking patterns to somatic injected current (detailed in Materials and methods). Second, the local connectivity within and among cortical layers was constructed based on a large body of literature from animal studies (detailed in Materials and methods). All of these equations and parameters were then fixed, and the only parameters that were originally tuned to simulate ERPs and oscillations were the timing and the strength of the exogenous drive to the local network. This drive represented our ‘simulation experiment’ and was based on our hypotheses on the origin of these signals motivated by literature and on matching model output to features of the data (see tutorials described in Results). The HNN GUI was constructed assuming ERPs and low-frequency oscillations depend on layer-specific exogenous drives to the network. The simulation experiment workflow and tutorials described below are in large part based on ‘activating the network’ by defining the characteristics of this layer-specific drive. Default parameter sets are provided as a starting point from which the underlying parameters can be interactively manipulated using the GUI, and additional exogenous driving inputs can be created or removed.

Automated parameter optimization is also available in HNN and is specifically designed to accurately reproduce features of an ERP waveform based on the temporal spacing and strength of the exogenous driving inputs assumed to generate the ERP. Before taking advantage of HNN’s automated parameter optimization, we strongly encourage users to begin by understanding our ERP tutorial and by hand-tuning parameters using one of our default parameter sets to get an initial representation of the recorded data. The identification of an appropriate number of driving inputs and their approximate timings and strengths serves as a starting point for the optimization procedure (described in the ERP Model Optimization section below). Hand tuning of parameters and visualizing the resultant changes in the GUI will enable users to understand how specific parameter changes impact features of the current dipole waveform.

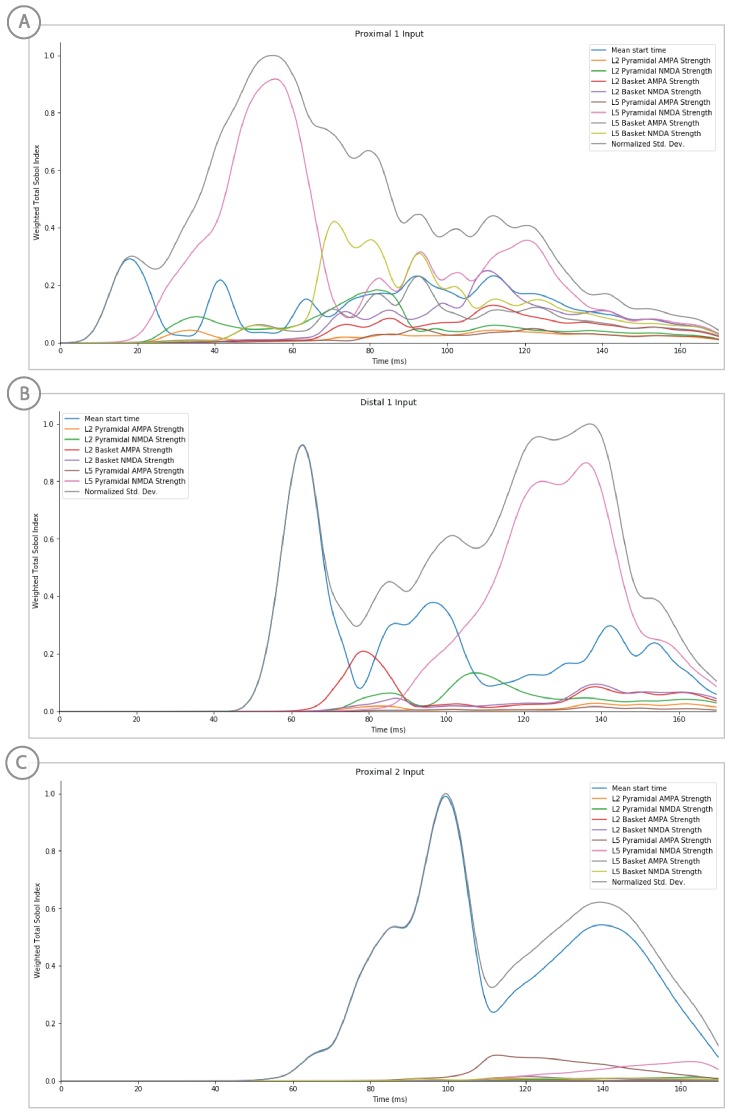

Importantly, the biophysical constraints on the origin of the current dipole signal (discussed above) will dictate the output of the model and necessarily limit the space of parameter adjustments that can accurately account for the recorded data. The same principle underlies the fact that a limited space of signals is typically studied at the macroscale (ERPs and low-frequency oscillations). A parameter sensitivity analysis on perturbations around the default ERP parameter sets confirmed that a subset of the parameters have the strongest influence on features of the ERP waveform (see Supplementary Materials). Insights from GUI-interactive hand tuning and sensitivity analyses can help narrow the number of parameters to include in the subsequent optimization procedure and greatly decrease the number of simulations required for optimization.

HNN GUI overview and interactive simulation experiment workflow

The HNN GUI is designed to allow researchers to link macro-scale EEG/MEG recordings to the underlying cellular- and network-level generators. Currently available visualizations include a direct comparison of simulated electrical sources to recorded data with calculated goodness of fit estimates, layer-specific current dipole activity, individual cell spiking activity, and individual cell somatic voltages (Figure 4B–D). Results can be visualized in both the time and frequency domain. Based on its biophysically detailed design, the output of HNN’s model and recorded source-localized data have the same units of measure (Am). By closely matching the output of the model to recorded data in an interactive manner, users can test and develop hypotheses on the cell and network origin of their signals.

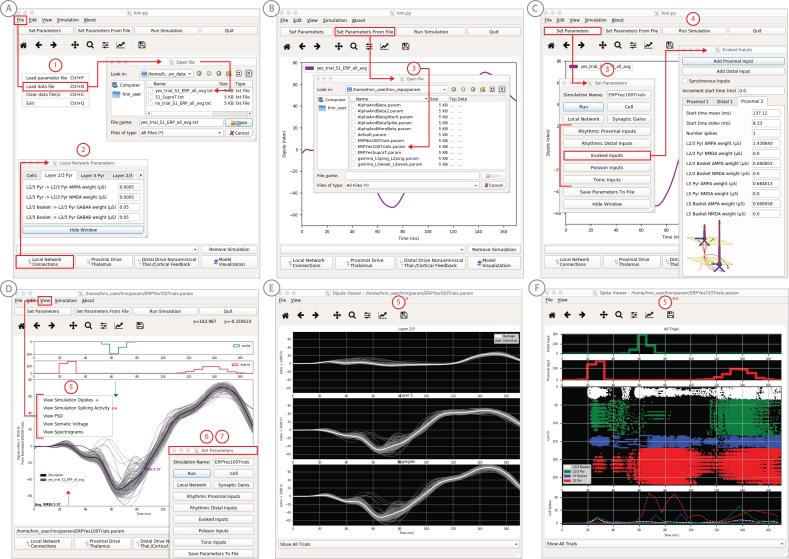

Figure 4. An example workflow showing how HNN can be used to link the macroscale current dipole signal to the underlying cell and circuit activity.

The example shown is for a perceptual threshold level tactile evoked response (50% detected) from SI (Jones et al., 2007; see ERP Tutorial text for details). (A) Steps 1 and 2: load data and define the local network structure. (B) Step 3: activate the local network, starting with a predefined parameter set; shown here for the parameter set for perceptual threshold-level evoked response (ERPYes100Trials.param). (C) Steps 3 and 4: adjust the evoked input parameters according to user defined hypotheses and simulation experiment, and run the simulation. (D) Step 5: visualize model output; the net current dipole will be displayed in the main GUI window and microcircuit details, including layer-specific responses, cell membrane voltages, and spiking profiles (E and F) are shown by choosing them from the View pull down menu. Parameters can be adjusted to hypothesized circuit changes under different experimental conditions (e.g. see Figure 5).

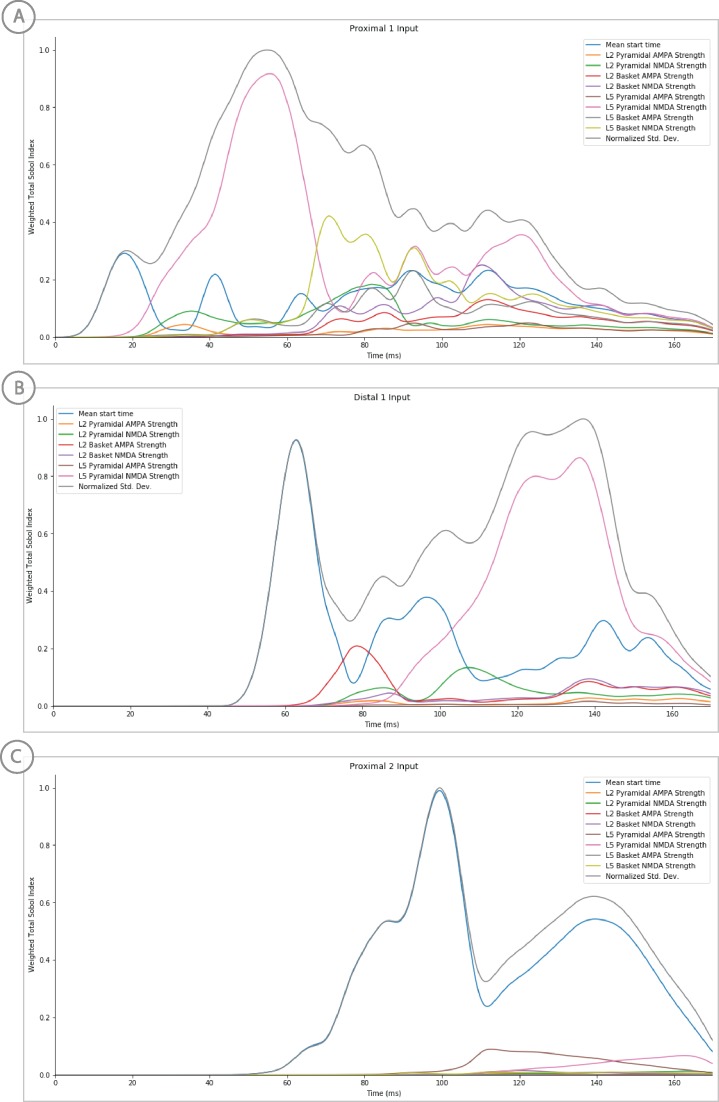

Figure 4—figure supplement 1. Sensitivity analysis results of the perceptual threshold level evoked response example showing the relative contribution of each input’s parameters on variance.

The process for simulating evoked responses or brain rhythms from a single region of interest is to first define the network structure and then to ‘activate’ the network with exogenous driving input based on your hypotheses and simulation experiment. HNN’s template model provides the initial network structure. The choice of ‘activation’ to the network depends on the simulation experiment. The GUI design is motivated by our prior published studies and was built specifically to simulate sensory evoked responses, spontaneous rhythms, or a combination of the two (Jones et al., 2009; Jones et al., 2007; Khan et al., 2015; Lee and Jones, 2013; Sherman et al., 2016; Sliva et al., 2018; Ziegler et al., 2010). The tutorials described in the Results section below detail examples of how to ‘activate’ the network to simulate sensory evoked responses and spontaneous rhythms. Here, we outline a typical simulation experiment workflow.

In practice, users apply the following interactive workflow, as in Figure 4 and detailed further in the tutorials with an example tactile evoked response from somatosensory cortex (data from Jones et al., 2007).

(Step 1) Load EEG/MEG data (blue). This step is optional.

(Step 2) Define the cortical column network structure. The default template network is automatically loaded when HNN starts. Default parameters describing the local network can be adjusted by clicking the Set Parameters button on the GUI and then Local Network Parameters, or directly from the Local Network Parameters button on the GUI (Figure 4A).

(Step 3) ‘Activate’ the local network by defining layer-specific, exogenous driving inputs (Figure 3B,C). The drive represents input to the local circuit from thalamus and/or other cortical areas and can be in the form of (i) spike trains (single spikes or bursts of rhythmic input) that activate post-synaptic targets in the local network, (ii) current clamps (tonic drive), or (iii) noisy (Poisson) synaptic drive. The choice of input parameters depends on your hypotheses and ‘simulation experiment’. In the example simulation, predefined evoked response parameters were loaded in via the Set Parameters From File button and choosing the file ‘ERPYes100Trials.param’; this is also the default evoked response parameter set loaded when starting HNN (Figure 4B). The Evoked Input parameters are then viewed in the Set Parameters dialog box under Evoked Inputs (Figure 4C). The Evoked Inputs parameters are described further in the tutorials below.

(Step 4) Run simulation and directly compare model output (black) and data (blue) with goodness of fit calculations (root mean squared error, RMSE, between data and averaged simulation) (Figure 4D).

(Step 5) Visualize microcircuit details, including layer-specific responses, cell membrane voltages, and spiking profiles by choosing from the View pull down menu (Figure 4D,E,F).

(Step 6) Adjust parameters through the Set Parameters dialog box to develop and test predictions on the circuit mechanisms that provide the best fit to the data. With any parameter adjustment, the change in the dipole signal can be viewed and compared with the prior simulation to infer how specific parameters impact the current dipole waveform. Prior simulations can be maintained in the GUI or removed. For ERPs, automatic parameter optimization can be iteratively applied to tune the parameters of the exogenous driving inputs to find those that provide the best initial fit between the simulated dipole waveform and the EEG/MEG data (see further details below).

(Step 7) To infer circuit differences across experimental conditions, once a fit to one condition is found, adjustments to relevant cell and network parameters can be made (guided by user-defined hypotheses), and the simulation can be re-run to see if predicted changes account for the observed differences in the data. A list of the GUI-adjustable parameters in the model can be found in the ‘Tour of the GUI’ section of the tutorials on our website. HNN’s GUI was designed so that users could easily find the adjustable parameters from buttons and pull down menus on the main GUI leading to dialogue boxes with explanatory labels.
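The goodness-of-fit measure in Step 4 can be illustrated with a minimal Python sketch. It assumes the recorded and simulated dipoles have already been sampled on the same time base; the function names `average_trials` and `rmse` and the toy values are hypothetical and do not represent HNN's internal code.

```python
import math


def average_trials(trials):
    """Average equal-length simulated dipole waveforms across trials."""
    n_trials = len(trials)
    return [sum(samples) / n_trials for samples in zip(*trials)]


def rmse(data, simulation):
    """Root mean squared error between two equal-length waveforms."""
    if len(data) != len(simulation):
        raise ValueError("waveforms must share the same time base")
    squared = [(d - s) ** 2 for d, s in zip(data, simulation)]
    return math.sqrt(sum(squared) / len(squared))


# Toy values for illustration only (not recorded or simulated data).
toy_data = [0.0, 1.0, -2.0, 0.5]
toy_trials = [[0.0, 2.0, -2.0, 0.5], [0.0, 2.0, -2.0, 0.5]]
toy_fit = rmse(toy_data, average_trials(toy_trials))
```

A lower value indicates a closer match between the averaged simulation and the data, which is the quantity a user would track while adjusting parameters in Step 6.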

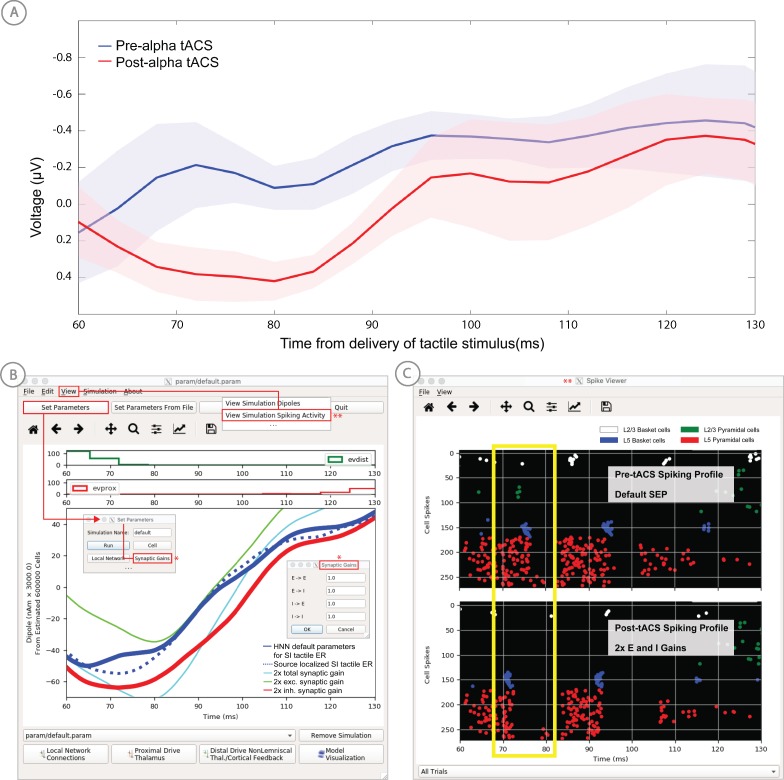

As a specific example on how to use HNN as a hypothesis testing tool, we have used HNN to evaluate hypothesized changes in EEG-measured neural circuit dynamics with non-invasive brain stimulation (Figure 5). We measured somatosensory evoked responses from brief threshold-level taps to the middle finger tip before and after 10 min of ~10 Hz transcranial alternating current stimulation (tACS) over contralateral somatosensory cortex (see Sliva et al., 2018 for details). The magnitude of an early peak near ~70 ms in the tactile evoked response increased after the tACS session (Figure 5, top left). Based on prior literature, we hypothesized that the observed difference was due to changes in synaptic efficacy in the local network induced by the tACS (Kronberg et al., 2017; Rahman et al., 2017). To test this hypothesis, we first used HNN to simulate the pre-tACS evoked responses, following the evoked response tutorial in our software (see Tutorial below). Once the pre-tACS condition was accounted for, we then adjusted the synaptic gain between the excitatory and inhibitory cells in the network using the HNN GUI and re-simulated the tactile evoked responses. We tested several possible gain changes between the populations. HNN showed that a two-fold increase in synaptic strength of the inhibitory connections, as opposed to an increase in the excitatory connections or in total synaptic efficacy, could best account for the observed differences in the data (compare blue and red curves in Figure 5). By viewing the cell spiking profiles in each condition (Figure 5, bottom right), HNN further predicted that the increase in the magnitude of the ~70 ms peak coincided with increased firing in the inhibitory neuron population and decreased firing in the excitatory pyramidal neurons in the post-tACS compared to the pre-tACS window. These detailed predictions can guide further experiments and follow-up testing in animal models or with other human imaging experiments. Follow-up testing of model derived predictions is described further in the alpha/beta tutorial below.

Figure 5. Application of HNN to test alternative hypotheses on the circuit level impact of tACS on the somatosensory tactile evoked response (adapted from Sliva et al., 2018).

(A) The early tactile evoked response from above somatosensory cortex before and after 10 min of 10 Hz alternating current stimulation over SI shows that the ~70 ms peak is more prominent in the post-tACS condition. Note that the timing of this peak in the sensor level signal is analogous to the 70 ms peak in the source localized signal in Figure 4B, since the tactile stimulation was the same in both studies and the early signal from SI is similar both at the source and sensor level. (B) HNN was applied to investigate the impact of several possible tACS induced changes in local synaptic efficacy and identify which could account for the observed evoked response data. The parameters in HNN were first adjusted to account for the pre-tACS response using the default HNN parameter set (solid blue line). The synaptic gains between the different cell types were then adjusted through the Set Parameters dialog box to predict that 2x gain in the local inhibitory synaptic weights best accounted for the post-tACS evoked response. (C) Simultaneous viewing of the cell spiking activity further predicted that there is less pyramidal neuron spiking at 70 ms post-tACS, despite the more prominent 70 ms current dipole peak.

Tutorials on ERPs and low-frequency oscillations

HNN’s tutorials are designed to teach users how to simulate the most commonly studied EEG/MEG signals, including sensory evoked responses and low-frequency oscillations (alpha, beta, and gamma rhythms) by walking users through the workflow we applied in our prior studies of these signals. The data and parameter sets used in these studies are distributed with the software, and the interactive GUI design was motivated by this workflow. In completing each tutorial, users will have a sense of the basic structure of the GUI and the process for manipulating relevant parameters and viewing results. From there, users can begin to develop and test hypotheses on the origin of their own data. Below we give a basic overview of each tutorial. The HNN website (https://hnn.brown.edu) provides additional information and example exercises for further exploration.

Sensory evoked responses

We have applied HNN to study the neural origin of tactile evoked responses localized with inverse methods to primary somatosensory cortex from MEG data (Jones et al., 2007). In this study, the tactile evoked response was elicited from a brief perceptual threshold level tap (stimulus strength maintained at 50% detection) to the contralateral middle finger tip during a tactile detection experiment (experimental details in Jones et al., 2009 and Jones et al., 2007). The average tactile evoked response during detected trials is shown in Figure 4. The data from this study are distributed with the HNN installation.

Following the workflow described above, the process for reproducing these results in HNN is as follows.

Steps 1 and 2

Load the evoked response data distributed with HNN, ‘yes_trial_SI_ERP_all_avg.txt’. The data shown in Figure 4B will be displayed. Adjust parameters defining the automatically loaded default local network, if desired.

Step 3

‘Activate’ the local network. In prior publications, we showed that this tactile evoked response could be reproduced in HNN by ‘activating’ the network with a sequence of layer-specific proximal and distal spike train drive to the local network, which is distributed with HNN in the file ‘ERPYes100Trials.param’.

The sequence described below was motivated by intracranial recordings in non-human primates, which guided the initial hypothesis testing in the model. Additionally, we established with inverse methods that at the prominent ~70 ms negative peak (Figure 4D), the orientation of the current was into the cortex (e.g., down the pyramidal neuron dendrites), consistent with prior intracranial recordings (see Jones et al., 2007). As such, in this example, negative current dipole values correspond to current flow down the dendrites, and positive values up the dendrites. In sensory cortex, the earliest evoked response peak corresponds to excitatory synaptic input from the lemniscal thalamus that leads to current flow out of the cortex (e.g., up the dendrites). This earliest evoked response in somatosensory cortex occurs at ~25 ms. The corresponding current dipole positive peak is small for the threshold tactile response in Figure 4D, but clearly visible in Figure 11 for a suprathreshold (100% detection) level tactile response.

The drive sequence that accurately reproduced the tactile evoked response consisted of ‘feedforward’/proximal input at ~25 ms post stimulus, followed by ‘feedback’/distal input at ~60 ms, followed by a subsequent ‘feedforward’/proximal input at ~125 ms (Gaussian distribution of input times on each simulated trial, Figure 4C). This ‘activation’ of the network generated spiking activity and a pattern of intracellular dendritic current flow in the pyramidal neuron dendrites in the local network to reproduce the current dipole waveform, many features of which fell naturally out of the local network dynamics (details in Jones et al., 2007). This sequence can be interpreted as initial ‘feedforward’ input from the lemniscal thalamus followed by ‘feedback’ input from higher-order cortex or non-lemniscal thalamus, followed by a re-emergent lemniscal thalamic drive. A similar sequence of information flow likely applies to most sensory evoked signals. The inputs are distinguished with red and green arrows (corresponding to proximal and distal input, respectively) in the main GUI window. The number, timing, and strength (post-synaptic conductance) of the driving spikes were manually adjusted in the model until a close representation of the data was found (see section on parameter tuning above). To account for some variability across trials, the exact time of the driving spikes for each input was chosen from a Gaussian distribution with a mean and standard deviation (see Evoked Inputs dialog box, Figure 4C, and green and red histograms on the top of the GUI in Figure 4D). The gray curves in Figure 4D show 25 trials of the simulation (decreased from 100 trials in the Set Parameters, Run dialog box) and the black curve is the average across simulations. The top of the GUI window displays histograms of the temporal profile of the spiking activity providing the sequence of proximal (red) and distal (green) synaptic input to the local network across the 25 trials.
Note, a scaling factor was applied to the net dipole output to match the magnitude of the recorded ERP data. This scaling factor is chosen from the Set Parameters, Run dialog box, and is shown as 3000 on the y-axis of the main GUI window in Figure 4D. The scaling factor is also used to predict the number of pyramidal neurons contributing to the observed signal. In this case, since there are 100 pyramidal neurons in each of layers 2/3 and 5, that amounts to 600,000 neurons (200 neurons × 3000 scaling factor) contributing to the evoked response, consistent with the experimental literature (described in Jones et al., 2009 and Jones et al., 2007).
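The arithmetic behind this prediction is simple enough to write out. The following Python sketch assumes the template network's 100 pyramidal neurons in each of layers 2/3 and 5, as stated above; the function name `predicted_pyramidal_neurons` is hypothetical and is not part of HNN.

```python
def predicted_pyramidal_neurons(scaling_factor, pn_per_layer=100, n_layers=2):
    """Number of pyramidal neurons implied by a dipole scaling factor.

    The model contains pn_per_layer pyramidal neurons in each of n_layers
    layers, so the scaled dipole corresponds to
    scaling_factor * pn_per_layer * n_layers cells.
    """
    return scaling_factor * pn_per_layer * n_layers


# Scaling factor shown on the y-axis of the evoked response example.
erp_neurons = predicted_pyramidal_neurons(3000)
```

The same relation applies to any other scaling factor chosen in the Set Parameters, Run dialog box.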

Based on the assumption that sensory evoked responses will be generated by a layer-specific sequence of drive to the local network similar to that described above, HNN’s GUI was designed for users to begin simulating evoked responses by starting with the aforementioned default sequence of drive that is defined when starting HNN and by loading in the parameter set from the ‘ERPYes100Trials.param’ file, as described above. The Evoked Inputs dialog box (Figure 4C) shows the parameters of the proximal and distal drive (number, timing, and strength) used to produce the evoked response in Figure 4D. Here, there were two proximal drives and one distal drive to the network. These parameters were found by first hand tuning the inputs to get a close representation of the data and then running the parameter optimization procedure described below.
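To make the trial-to-trial variability of the evoked drive concrete, the following Python sketch draws one input time per drive per trial from a Gaussian distribution. The mean times follow the proximal, distal, proximal sequence described above (~25, ~60, and ~125 ms); the standard deviations, the names `EVOKED_DRIVES` and `sample_drive_times`, and the seed are hypothetical placeholders rather than values from ‘ERPYes100Trials.param’.

```python
import random

# (drive type, mean input time in ms, standard deviation in ms).
# Means follow the sequence in the text; the standard deviations are
# hypothetical and chosen only for illustration.
EVOKED_DRIVES = [
    ("proximal", 25.0, 2.5),
    ("distal", 60.0, 4.0),
    ("proximal", 125.0, 10.0),
]


def sample_drive_times(n_trials, seed=0):
    """Draw one Gaussian-distributed input time per drive for each trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        trial = [(kind, rng.gauss(mean, sd)) for kind, mean, sd in EVOKED_DRIVES]
        trials.append(trial)
    return trials


# 25 trials, matching the number of simulated trials shown in Figure 4D.
drive_times = sample_drive_times(25)
first_proximal_mean = sum(trial[0][1] for trial in drive_times) / len(drive_times)
```

Plotting a histogram of these sampled times for each drive would give a picture comparable in spirit to the red and green histograms at the top of the GUI.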

Step 4

The evoked response shown in Figure 4 is reproduced by clicking the ‘Run Simulation’ button at the top of the GUI, and the RMSE of the goodness of fit to the data is automatically calculated and displayed. Additional network features can also be visualized through pull down menus (Step 5).

Evoked response parameters can now be adjusted, and additional inputs can be created or removed to account for the user-defined ‘simulation experiment’ and hypothesis testing goals (Step 6). With each parameter change, a new parameter file will be saved by renaming the simulation under ‘Simulation Name’ in the ‘Set Parameters’ dialog box (see Figure 4C). From here, other cell or network parameters can be adjusted to compare across conditions (Step 7).

Alpha and beta rhythms

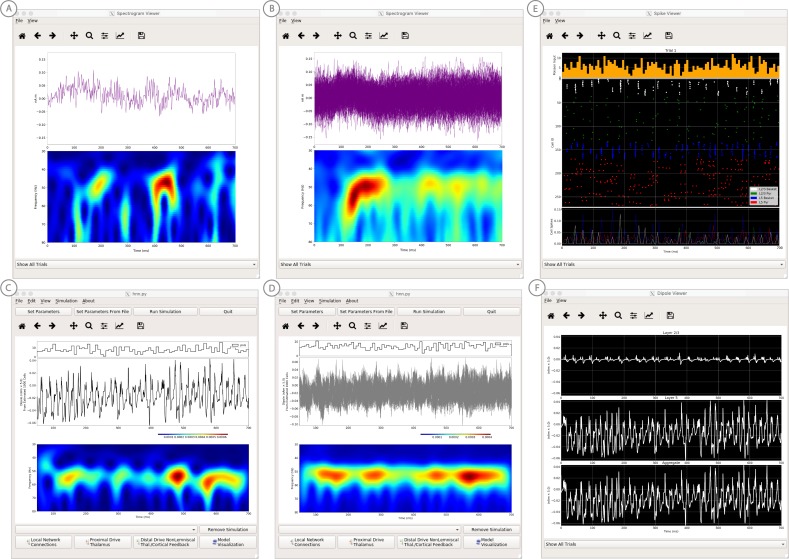

We have applied HNN to study the neural origin of spontaneous rhythms localized to the primary somatosensory cortex from MEG data. This rhythm is often referred to as the mu-rhythm, and it contains a complex of alpha (7–14 Hz) and beta (15–29 Hz) frequency components (Jones et al., 2009). A 1 s time-frequency spectrogram of the spontaneous unaveraged SI rhythm from this study is shown in Figure 6A. This data is distributed on the HNN website (‘SI_ongoing.txt’), and contains 1000 1 s epochs of spontaneous data (100 trials each from 10 subjects). The data is plotted in HNN through the ‘View → View Spectrograms’ menu item, followed by ‘Load Data’ and then selecting the ‘SI_ongoing.txt’ file. Note that it may take a few minutes to calculate the wavelet transforms for all 1000 1 s trials included. Next, select an individual trial (e.g. trial 32) from the drop-down menu. The dipole waveform from a single 1 s epoch will then be shown at the top.

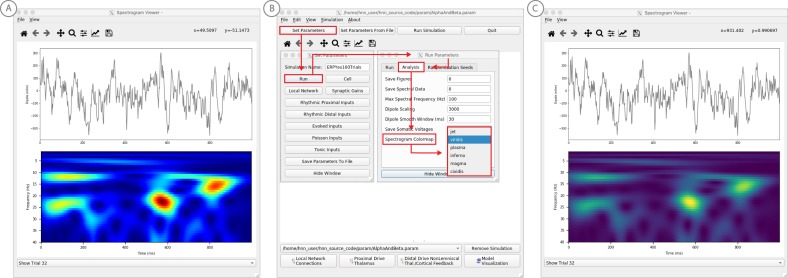

Figure 6. Example spontaneous data from a current dipole source in SI showing transient alpha (~7–14 Hz) and beta (~15–29 Hz) components (data as in Jones et al., 2009).

The data file (‘SI_ongoing.txt’) used to generate these outputs is provided with HNN and plotted through the ‘View → View Spectrograms’ menu item, followed by ‘Load Data’, and then selecting the file. (A) The spectrogram viewer with the default ‘jet’ colormap. (B) The configuration option to change the colormap to other perceptually uniform colormaps, where the lightness value increases monotonically. (C) The spectrogram viewer updated with the perceptually uniform ‘viridis’ colormap.

The corresponding time-frequency spectrogram is automatically calculated and displayed at the bottom, as seen in Figure 6A. The default colormap indicating spectral power is the ‘jet’ scheme with blue representing low power and red representing high power. This is the same colormap used in the spectrograms of prior publications using the HNN model for studying low-frequency oscillations. However, HNN allows the user to plot the spectrogram using different standardized colormaps that are perceptually uniform, meaning they have a lightness value that increases monotonically (Pauli, 1976). The configuration option for changing the colormap and the colormap options are shown in Figure 6B. The spectrogram resulting from choosing the perceptually uniform ‘viridis’ colormap is shown in Figure 6C. Note that updating the spectrogram colormap requires repeating the ‘View → View Spectrograms’ menu selection.

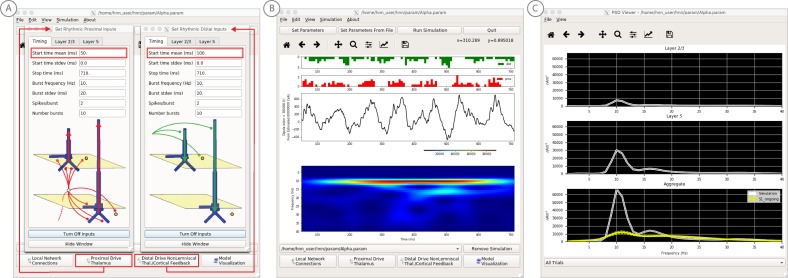

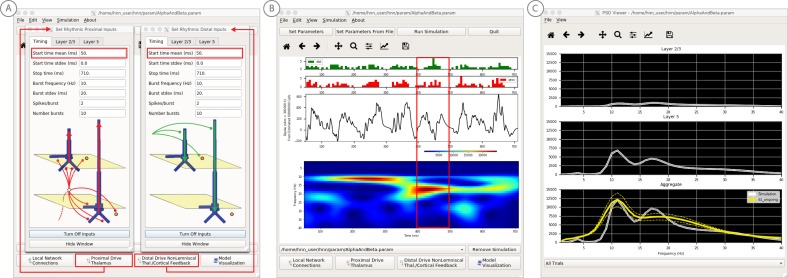

Figure 7. An example workflow for simulating alpha frequency rhythm (Jones et al., 2009; Ziegler et al., 2010; see Alpha and Beta Rhythms Tutorial text for details).

(A) Here we are using the default HNN network configuration and not directly comparing the waveform to data, so begin with Step 3: activate the local network. Motivated by prior studies (see text), in this example alpha rhythms were simulated by driving the network with ~10 Hz bursts (presumed to be generated by thalamus) to the local network through proximal and distal projection pathways. The parameter set describing these bursts is provided in the Alpha.param file and loaded through the Set Parameters From File button. Adjustable burst drive parameters are shown and here were set with a 50 ms delay between the ~10 Hz proximal and distal drive (red boxes). (B) Step 4: running the simulation with the ‘Run Simulation’ button shows that a continuous alpha rhythm emerged in the current dipole signal (middle dipole time trace; bottom time-frequency representation). Green and red histograms at the top display the defined distal and proximal burst drive patterns, respectively. (C) Step 5: additional network features, including layer specific power spectral density plots as shown, can be visualized through the ‘View’ pull down menu, and compared to data (here compared to the spontaneous SI data shown in Figure 6A). Features of the burst drive can be adjusted (panel A) and corresponding changes in the current dipole signals studied (Steps 6 and 7, see Figure 8).

Notice that this rhythm contains brief bouts of alpha or beta activity that will occur at different times in different trials due to the spontaneous, non-stationary nature of the signals. Such non-time-locked oscillations are often referred to as spontaneous and/or ‘induced’ rhythms. When the non-negative spectrograms are averaged across trials, the intermittent bouts of high power alpha and beta activity accumulate without cancellation, and bands of alpha and beta activity appear continuous in the spectrogram (data not shown, see Jones, 2016; Jones et al., 2007) and will create peaks at alpha and beta in a power spectral density (Figure 8C). Since the alpha and beta components of this rhythm are not time locked across trials, it is difficult to directly compare the waveform of the recorded data with model output. Rather, to assess the goodness of fit of the model, we compared features of the simulated rhythm to the data (see Jones et al., 2009), including peaks in the power spectral density, as described below. Since we cannot directly compare the waveform of this rhythm with the model output, rather than first loading the data, we begin this tutorial with Step 3, ‘activating’ the network, using the default local network defined when starting HNN.
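The contrast between averaging waveforms and averaging non-negative power can be illustrated with a small Python sketch. It is a deliberately simplified stand-in: each ‘trial’ is a continuous 10 Hz sinusoid with a random phase rather than a transient burst, and squared amplitude is used in place of the wavelet-based spectrogram. The function name `random_phase_trials` and all parameter values are hypothetical.

```python
import math
import random


def random_phase_trials(n_trials=200, freq_hz=10.0, n_samples=1000, fs=1000.0, seed=0):
    """Generate unit-amplitude sinusoids whose phase differs on every trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        trials.append(
            [math.sin(2.0 * math.pi * freq_hz * i / fs + phase) for i in range(n_samples)]
        )
    return trials


trials = random_phase_trials()
n_trials = len(trials)

# Averaging the signed waveforms: non-time-locked oscillations largely cancel.
mean_waveform = [sum(samples) / n_trials for samples in zip(*trials)]
peak_of_mean_waveform = max(abs(v) for v in mean_waveform)

# Averaging non-negative power: the oscillation accumulates without cancellation.
mean_power = sum(sum(v * v for v in trial) / len(trial) for trial in trials) / n_trials
```

In this toy case the averaged waveform is much smaller than the unit amplitude of any single trial, whereas the averaged power stays at the single-trial level, which is the reason features such as spectral peaks, rather than waveforms, are compared for spontaneous rhythms.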

Figure 8. An example workflow for simulating transient alpha and beta frequency rhythm as in the spontaneous SI rhythms shown in Figure 6A (Jones et al., 2009; Ziegler et al., 2010; see Alpha and Beta Rhythms Tutorial text for details).

(A) Here we are using the default HNN network configuration and not directly comparing the waveform to data, so begin with Step 3: activate the local network. In this example, a beta component emerged when the parameters of two ~10 Hz bursts to the local network through proximal and distal projection pathways, as described in Figure 7, were adjusted so that on average they arrived to the network at the same time (see red boxes). This parameter set is provided in the ‘AlphaAndBeta.param’ file. (B) Step 4: running the simulation with the ‘Run Simulation’ button shows that intermittent and transient alpha and beta rhythms emerge in the current dipole signal (middle dipole time trace; bottom time-frequency representation). Green and red histograms at the top display the defined distal and proximal burst drive patterns, respectively. Due to the stochastic nature of the bursts, on some cycles of the drive, the distal burst was simultaneous with the proximal burst and strong enough to push current flow down the dendrites to create a beta event (see red box). This model derived prediction reproduced several features of the data, including alpha and beta peaks in the corresponding PSD that were more closely matched to the recorded data (C). Model predictions were subsequently validated with invasive recordings in mice and monkeys (Sherman et al., 2016; see further discussion in text).

Step 3

‘Activate’ the local network. In prior publications, we have simulated non-time-locked spontaneous low-frequency alpha and beta rhythms through patterns of rhythmic drive (repeated bursts of spikes) through proximal and distal projection pathways. These patterns of drive were again motivated by literature and by tuning the parameters to match features of the model output to the recorded data (see Jones et al., 2009; Sherman et al., 2016).

We begin by describing the process for simulating a pure alpha frequency rhythm only, and we then describe how a novel prediction for the origin of beta events emerged (Sherman et al., 2016). Motivated by a long history of research showing alpha rhythms in neocortex rely on ~10 Hz bursting in the thalamus, we tested the hypothesis that ~10 Hz bursts of drive through proximal and distal projection pathways (representing lemniscal and non-lemniscal thalamic drive) could reproduce an alpha rhythm in the local circuit. The burst statistics (number of spikes and inter-burst interval chosen from a Gaussian distribution), strength of the input (post-synaptic conductance), and delay between the proximal and distal input were manually adjusted until a pure alpha rhythm sharing features of the data was found. We showed that when ~10 Hz bursts of proximal and distal drives are subthreshold and arrive to the local network in anti-phase (~50 ms delay) a pure alpha rhythm emerges (Jones et al., 2009; Ziegler et al., 2010).

The parameters of this drive are distributed with HNN in the file ‘Alpha.param’, loaded through the Set Parameters From File button and viewed in the Set Parameters dialog box under Rhythmic Proximal and Rhythmic Distal inputs (Figure 7A). Note that the mean start times of the ~10 Hz Rhythmic Proximal and Rhythmic Distal Inputs are offset by 50 ms. The HNN GUI in Figure 7B displays the simulated current dipole output from this drive (middle), the histogram of the proximal and distal driving spike trains (top), and the corresponding time-frequency domain response (bottom). This GUI window is automatically constructed when rhythmic inputs are given to the network, and HNN is designed to easily define rhythmic input to the network via the Set Parameters dialog box. A scaling factor was also applied to this signal (via Set Parameters, Run dialog box) and is shown as 300,000 on the y-axis of the main GUI window example in Figure 7B. The 300,000 scaling factor predicts that 60,000,000 PNs (300,000 × 200 PNs) contribute to the measured signal.
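The timing relationship between the two rhythmic drives can be sketched in Python. This is a simplified illustration, not HNN's drive generator: bursts are placed on a regular ~10 Hz grid with optional Gaussian jitter around each grid point, whereas the text describes inter-burst intervals drawn from a Gaussian distribution. The function name `burst_start_times`, the start times, and the stop time are hypothetical.

```python
import random


def burst_start_times(start_mean_ms, freq_hz=10.0, stop_ms=1000.0, jitter_sd_ms=0.0, seed=0):
    """Burst onset times on a regular grid, optionally jittered (Gaussian)."""
    rng = random.Random(seed)
    period_ms = 1000.0 / freq_hz  # 100 ms for a 10 Hz drive
    times = []
    t = start_mean_ms
    while t < stop_ms:
        times.append(rng.gauss(t, jitter_sd_ms))
        t += period_ms
    return times


# Hypothetical mean start times chosen so the drives are offset by 50 ms,
# i.e. half of the 100 ms period (anti-phase), as in the alpha example.
proximal = burst_start_times(start_mean_ms=50.0)
distal_antiphase = burst_start_times(start_mean_ms=100.0)

# Setting the mean delay to 0 ms makes the two drives arrive together on average.
distal_in_phase = burst_start_times(start_mean_ms=50.0)
```

Passing a nonzero `jitter_sd_ms` (with different seeds for the two drives) makes the relative timing vary from cycle to cycle, so that only some cycles have nearly simultaneous proximal and distal bursts.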

Step 4

The alpha rhythm shown in Figure 7B is reproduced by clicking the ‘Run Simulation’ button at the top of the GUI. Additional network features, including power-spectral density plots, can also be visualized through the pull down menus (Step 5).

Steps 6 and 7

Rhythmic input parameters can be adjusted to account for the user defined ‘simulation experiment’ and hypothesis testing goals.

The goal in our prior study was to reproduce the alpha/beta complex of the SI mu-rhythm. By hand tuning the parameters to match the output of the model to several features of the recorded data, including symmetric amplitude modulation around zero and PSD plots as shown in Figures 7 and 8 (see further feature matching in Jones et al., 2009 and Sherman et al., 2016), we arrived at the hypothesis that brief bouts of beta activity (‘beta events’) non-time locked to alpha events could be generated by decreasing the mean delay between the proximal and distal drive to 0 ms and increasing the strength of the distal drive relative to the proximal drive. This parameter set is also distributed with HNN (‘AlphaAndBeta.param’) and viewed in Figure 8A. With this mechanism, beta events emerged on cycles when the two stochastic drives hit the network simultaneously and when the distal drive was strong enough to break the upward flowing current and create a prominent ~50 ms downward deflection (see red box in Figure 8B). The stronger the distal drive, the more prominent the beta activity (data not shown, see Sherman et al., 2016). This beta event hypothesis was purely model derived and was based on matching several features of the SI mu rhythm between the model output and data (detailed in Jones et al., 2009 and Sherman et al., 2016).

Importantly, due to the non-time locked nature of this spontaneous rhythm, the waveform cannot be directly compared by overlaying the waveform of the model and recorded oscillations as in the evoked response example (e.g. Figure 4). However, one can quantify features of the oscillation and compare to recorded data (Jones et al., 2009; Sherman et al., 2016). One such feature is the amplitude of the oscillation waveform, where a scaling factor can be applied to the model to predict how many cells are needed to produce a waveform amplitude on the same order as the recorded data, as described in Step 3 above. Additionally, the PSD from the model and data can be directly compared. This can be viewed in the HNN GUI through the ‘View PSD’ pull down menu (see Figure 4, Step 5), where this data (‘SI_ongoing.txt’, provided with HNN) can be automatically compared to the model output in the PSD window (Figure 8C).

The model derived predictions on mechanisms underlying alpha and beta were motivated by literature and further refined by tuning the parameters to match the output of the model with various features of the recorded data. While the mechanisms of the alpha rhythm described above were motivated by literature showing cortical alpha rhythms arise in part from alpha frequency drive from the thalamus and supported by animal studies (Hughes and Crunelli, 2005; for example, see Figure 2 in Bollimunta et al., 2011), the beta event hypothesis was novel. The level of circuit detail in the model led to specific predictions on the laminar profile of synaptic activity occurring during beta events that could be directly tested with invasive recordings in animal models. One specific prediction was that the orientation of the current during the prominent ~50 ms deflection defining a beta event (red box, Figure 8B) was down the pyramidal neuron dendrites (e.g. into the cortex). This prediction, along with several others, was subsequently tested and validated with laminar recordings in both mice and monkeys, where it was also confirmed that features of beta events are conserved across species and recording modalities (Sherman et al., 2016; Shin et al., 2017).

Gamma rhythms

Gamma rhythms can encompass a wide band of frequencies from 30 to 150 Hz. Here, we will focus on the generation of so-called ‘low gamma’ rhythms in the 30–80 Hz range. It has been well established through experiments and computational modeling that these rhythms can emerge in local spiking networks through excitatory and inhibitory cell interactions where synaptic time constants set the frequency of the oscillation, while broadband or ‘high gamma’ rhythms reflect spiking activity in the network that creates sharp waveform deflections (Lee and Jones, 2013). The period of the low gamma oscillation is determined by the time constant of decay of GABAA-mediated inhibitory currents (Buzsáki and Wang, 2012; Cardin et al., 2009; Vierling-Claassen et al., 2010), a mechanism that has been referred to as pyramidal-interneuron gamma (PING). In normal regimes, the decay time constant of GABAA-mediated synapses (~25 ms) bounds oscillations to the low gamma frequency band (~40 Hz). In general, PING rhythms are initiated by ‘excitation’ to the excitatory (PN) cells, and this initial excitation causes PN spiking that, in turn, synaptically activates a spiking population of inhibitory (I) cells. These (I) cells then inhibit the PN cells, preventing further PN activity until the PN cells can overcome the effects of the inhibition ~25 ms later. The pattern is repeated, creating a gamma frequency oscillation (~40 Hz).
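The link between the inhibitory decay time and the oscillation frequency can be written as a one-line calculation. The Python sketch below assumes, as a rough approximation of the description above, that one PING cycle lasts about one GABAA decay time constant; the function name `ping_frequency_hz` is hypothetical, and the relation is a back-of-the-envelope estimate rather than a result computed by HNN.

```python
def ping_frequency_hz(gaba_a_decay_ms):
    """Approximate PING frequency if each cycle lasts one inhibitory decay time."""
    return 1000.0 / gaba_a_decay_ms


# A ~25 ms decay time constant corresponds to a ~40 Hz rhythm.
low_gamma_hz = ping_frequency_hz(25.0)
```

Under this approximation, shortening the decay time constant raises the predicted frequency and lengthening it lowers the frequency, which is one way to reason about parameter changes before running a simulation.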

We have applied HNN to determine if features in the current dipole signal could distinguish PING-mediated gamma from other possible mechanisms such as exogenous rhythmic drive or spiking activity that creates ‘high gamma’ oscillations (Lee and Jones, 2013). Here, we describe the process for generating gamma rhythms via the canonical PING mechanisms in HNN. First, to demonstrate the basic mechanisms of PING, we simulate a robust large amplitude and nearly continuous gamma rhythm (Steps 3–5, Figure 9). Second, we adjust the simulation parameters in order to directly compare to experimental data, where on single trials induced gamma rhythms are smaller in amplitude and less continuous emerging as transient bursts of activity (Pantazis et al., 2018) (Steps 6 and 7, Figure 10). Both parameter sets for creating the examples below are distributed with the software.

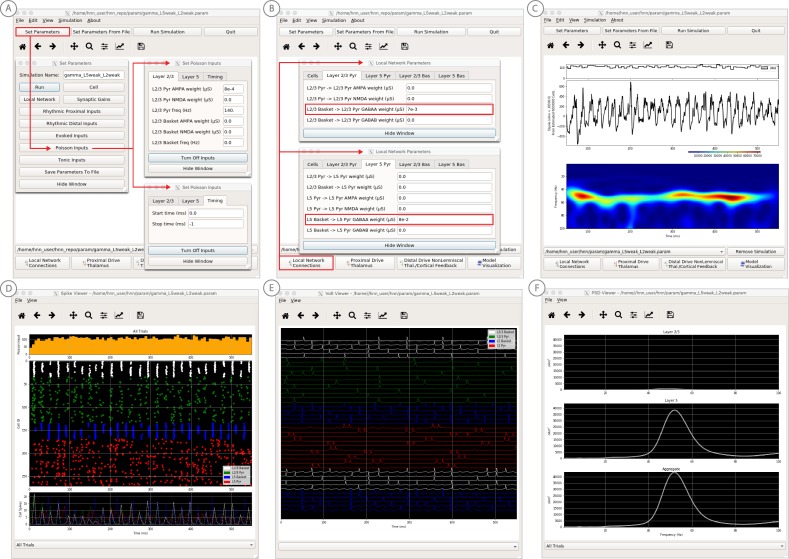

Figure 9. An example workflow for simulating canonical pyramidal-interneuron gamma (PING) rhythms (Lee and Jones, 2013; see Gamma Rhythms Tutorial text for details).

(A) Here we are using the default HNN network configuration (with some parameter adjustments as shown in panel B) and we are not directly comparing the waveform to data, so begin with Step 3: activate the local network. Motivated by prior studies on PING mechanisms (see text), in this example PING rhythms were simulated by driving the pyramidal neuron somas with noisy excitatory synaptic input following a Poisson process. The parameters defining this noisy drive are viewed and adjusted through the Set Parameters button as shown; see text and Materials and methods for parameter details. The parameter set for this example is provided in the ‘gamma_L5weak_L2weak.param’ file. In this example, all synaptic connections within the network are turned off (synaptic weight = 0), except for reciprocal connections between the excitatory (AMPA only) and inhibitory (GABAA only) cells within the same layer. The local network connectivity can be viewed and adjusted through the Set Network Connection button or pull down menu, as shown in (B). (C) Step 4: running the simulation with the ‘Run Simulation’ button shows that a ~50 Hz gamma rhythm is produced in the current dipole signal (middle dipole time trace; bottom time-frequency representation). The black histogram at the top displays the noisy excitatory drive to the network. (D–F) Step 5: additional network features, including cell spiking responses, somatic voltages, and layer specific power spectral density plots as shown, can be visualized through the ‘View’ pull down menu.

Figure 10. An example simulation describing how parameter adjustments to the canonical PING mechanism can account for source localized visually-induced gamma rhythms recorded with MEG.

(A) An example single-trial current dipole waveform generated from a stationary square-wave grating, presented from 0 to 700 ms. MEG data was source localized to the pericalcarine region of the visual cortex (top). The corresponding time-frequency representation (bottom) shows transient bursts of induced gamma activity. Data is as in Pantazis et al. (2018) and described further in text. (B) Analogous waveforms from 100 trials overlaid (top) and corresponding averaged time-frequency spectrograms (bottom). Averaging in the spectral domain creates the appearance of a more continuous oscillation. (C) Parameters used to simulate the canonical PING rhythm in Figure 9 were adjusted (Steps 6 and 7) to test the hypothesis that a reduced rate of Poisson synaptic input to the pyramidal neurons could account for the bursty nature of the observed gamma data. The parameter set for this example is provided in the ‘gamma_L5weak_L2weak_bursty.param’ file. In this example, the Poisson input rates to the Layer 2/3 and Layer 5 cells were reduced to 4 Hz and 5 Hz, respectively, and the NMDA synaptic inputs were set to a small positive weight (dialog box not shown). Running the simulation will display a histogram of the Poisson drive (top), the current dipole waveform (middle), and the corresponding time-frequency spectrogram (bottom). With this parameter adjustment, ~50 Hz bursty gamma activity is reproduced. A scaling factor of 5 was applied to the net current dipole to produce a signal amplitude comparable to the data (<0.1 nAm), suggesting that only ~1000 pyramidal neurons contributed to the recorded signal. (D) Poisson input over 100 simulations (top), current dipole waveforms overlaid (middle), and the corresponding spectrogram average (bottom). Similar to the recorded data, averaging in the spectral domain creates the appearance of a more continuous oscillation. (E–F) Microcircuit details, including cell spiking responses and layer-specific current dipoles, show that Layer 5 activity dominates the net current dipole signal; see text for further description.

To demonstrate the robust PING mechanism and its expression at the level of a current dipole, we begin this tutorial with Step 3, ‘activating’ the network using a slightly altered local network configuration as described below.

Step 3

‘Activate’ the local network by loading in the parameter set defining the local network and initial input parameters ‘gamma_L5weak_L2weak.param’. In this example, the input was noisy excitatory synaptic drive to the pyramidal neurons. Additionally, all synaptic connections within the network are turned off (synaptic weight = 0) except for reciprocal connections between the excitatory (AMPA only) and inhibitory (GABAA only) cells within the same layer. This is not biologically realistic, but was done for illustration purposes and to prevent pyramidal-to-pyramidal interactions from disrupting the gamma rhythm. To view the local network connections, click the ‘local network’ button in the Set Parameters dialog box. Figure 9B shows the corresponding dialog box where the values of adjustable parameters are displayed. Notice that the L2/3 and L5 cells are not connected to each other, the inhibitory conductance weights within layers are stronger than the excitatory conductances, and there are also strong inhibitory-to-inhibitory (i.e., basket-to-basket) connections. This strong autonomous inhibition will cause synchrony among the basket cells, and hence strong inhibition onto the PNs.

To reproduce the ~50 Hz gamma oscillation described by the PING mechanism above, we drove the pyramidal neuron somas in L2/3 and L5 with noisy excitatory AMPA synaptic input, distributed in time as a Poisson process with a rate of 140 Hz. This noisy input can be viewed in the ‘Set Parameters’ menu by clicking on the ‘Poisson Inputs’ button (see Figure 9A). Setting the stop time of the Poisson drive to −1, under the Timing tab, keeps it active throughout the simulation duration.
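As a minimal sketch of what a Poisson-distributed drive looks like, the following Python snippet draws event times for a single input stream at 140 Hz. It is not HNN code: the function name `poisson_drive_times`, its arguments, and the 500 ms duration are hypothetical and chosen for illustration only. The one convention taken from the text is that a stop time of −1 means the drive stays active until the end of the simulation.

```python
import random


def poisson_drive_times(rate_hz, t_start_ms, t_stop_ms, sim_duration_ms, seed=None):
    """Return increasing event times (ms) of a homogeneous Poisson process.

    Hypothetical helper, not part of HNN. A stop time of -1 is read as
    "active until the end of the simulation", as described in the text.
    """
    rng = random.Random(seed)
    if t_stop_ms == -1:
        t_stop_ms = sim_duration_ms
    rate_per_ms = rate_hz / 1000.0  # convert Hz to events per millisecond
    times = []
    t = t_start_ms
    while True:
        # Inter-event intervals of a Poisson process are exponentially distributed.
        t += rng.expovariate(rate_per_ms)
        if t >= t_stop_ms:
            break
        times.append(t)
    return times


if __name__ == "__main__":
    duration_ms = 500.0  # illustrative duration, not taken from the paper
    events = poisson_drive_times(140.0, 0.0, -1, duration_ms, seed=0)
    print(len(events), "events, empirical rate (Hz):", 1000.0 * len(events) / duration_ms)
```

Drawing exponential inter-event intervals is one standard way to sample a Poisson process; how HNN generates its input times internally is not specified in this section.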

Step 4

The gamma rhythm shown in Figure 9C is reproduced by clicking the ‘Run Simulation’ button at the top of the main HNN GUI. The top panel shows a histogram of Poisson distributed times of input to the pyramidal neurons, the middle panel the net current dipole across the entire network, and the bottom the corresponding time-frequency spectrogram showing strong gamma band activity. Additional network features, including spiking activity in each cell in the population (Figure 9D), somatic voltages (Figure 9E), and PSD plots for each layer and the entire network (Figure 9F), can also be visualized through the ‘View’ pull down menu (Step 5). Notice the PING mechanisms described above in the spiking activity of the cells (Figure 9D), where in each layer the excitatory pyramidal neurons fire before the inhibitory basket cells. The line plots, which show spike counts over time, also demonstrate rhythmicity. The pyramidal neurons are firing periodically but with lower synchrony due to the Poisson drive (black histogram at the top), which creates randomized spike times across the populations (once the inhibition sufficiently wears off). Notice also that the power in the gamma band is much smaller in Layer 2/3 than in Layer 5 (Figure 9F). This reflects, in part, the fact that the L2/3 PNs are shorter than the L5 PNs, and hence the L2/3 cells produce smaller current dipole moments that can be masked by activity in Layer 5. This example was constructed to describe features in the current dipole signal that could distinguish PING-mediated gamma rhythms but has not been directly compared to recorded data (see Lee and Jones, 2013 for further discussion).

Steps 6 and 7

Next, we describe how parameter adjustments to the canonical PING mechanism above can account for induced gamma rhythms recorded with MEG in a previously published study (Pantazis et al., 2018). In this study, subjects were presented with the following visual stimuli: stationary square-wave Cartesian gratings with vertical orientation and black/white maximum contrast with a frequency of 3 cycles/degree. These stimuli create non-time-locked induced gamma rhythms in the visual cortex, a reliable phenomenon observed in many studies. The data represents time-domain activity from sources localized in the pericalcarine region of the visual cortex from 0 to 700 ms after the presentation of the stimulus (Figure 10A,B), and is located in HNN’s data/gamma_tutorial folder.

To view the data in HNN, click on HNN’s ‘View’ pull down menu, then click on ‘View Spectrograms’. Then from the Spectrogram Viewer, click on the ‘File’ pull down menu, click ‘Load Data’, and select data/gamma_tutorial/100_trials.txt. Figure 10A shows a single trial of the source-localized current dipole signal, with corresponding wavelet-transform spectrogram below. Here, visual gratings were provided from time = 0–700 ms. On single non-averaged trials, the visual stimulus creates non-time-locked induced gamma rhythms at ~50 Hz that are not continuous but transient in time and ‘burst-like’, with each burst lasting ~50–100 ms. HNN’s Spectrogram Viewer automatically averages the wavelet spectrograms across trials when the data is loaded (Figure 10B). To view a single trial as in Figure 10A, use the drop-down menu at the bottom of the Spectrogram Viewer to select a single trial. The averaged spectrogram from 100 trials makes the oscillation appear nearly continuous in the 40–50 Hz gamma band (Figure 10B).

We hypothesized that in order to change the nearly continuous gamma oscillation from HNN’s canonical PING model (Figure 9) into a bursty, lower-amplitude gamma rhythm on single trials, the noisy excitatory AMPA synaptic input to the pyramidal neurons that creates the PING rhythm, as described in Step 3, would need to be reduced. We tested this hypothesis by reducing the rate of the Poisson synaptic inputs to L2/3 and L5 pyramidal neurons, here from 140 Hz to 4 Hz and 5 Hz, respectively. To see the parameter values used, load the ‘gamma_L5weak_L2weak_bursty.param’ file, then click on ‘Set Parameters’ and ‘Poisson Inputs’ (parameter values not shown in Figure 10). Note that in addition to the change in the rate of drive, the NMDA synaptic inputs now have a small positive weight (previously zero), which was required to compensate for the reduced AMPA activation of the pyramidal neurons.
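To give a rough sense of how large this rate reduction is, the sketch below computes two quantities for one Poisson input stream at each rate: the expected number of events in a 700 ms window, and the probability that no event arrives within one ~20 ms gamma cycle. The window length, the cycle length, and the helper names are assumptions made for illustration. The calculation is a back-of-the-envelope aid only; in the model, burstiness emerges from network dynamics rather than from input statistics alone.

```python
import math


def expected_events(rate_hz, window_ms):
    """Expected number of Poisson events in a window of the given length."""
    return rate_hz * window_ms / 1000.0


def prob_no_event(rate_hz, window_ms):
    """Probability that a Poisson process produces no event in the window."""
    return math.exp(-expected_events(rate_hz, window_ms))


WINDOW_MS = 700.0  # assumption: matches the 0-700 ms stimulus window in the text
CYCLE_MS = 20.0    # assumption: one cycle of a ~50 Hz rhythm

if __name__ == "__main__":
    for label, rate_hz in [("canonical PING", 140.0),
                           ("bursty, L2/3", 4.0),
                           ("bursty, L5", 5.0)]:
        print(label,
              "| expected events:", round(expected_events(rate_hz, WINDOW_MS), 2),
              "| P(no event in one cycle):", round(prob_no_event(rate_hz, CYCLE_MS), 3))
```

Under these assumptions, a 140 Hz stream is silent for a full gamma cycle only about 6% of the time, whereas a 4 Hz or 5 Hz stream is silent in more than 90% of cycles.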

Click on ‘Run Simulation’ to produce the results shown in Figure 10C. The top panel of Figure 10C shows the Poisson inputs, which have a noticeably lower rate than the default PING simulation. The current dipole time-course is shown in the middle panel. The corresponding wavelet spectrogram in the bottom panel shows intermittent gamma bursts recurring with high power, similar to the features seen in the experiment. Note that in this data set the amplitude of the current dipole signal is small, <0.1 nAm. As such, a small dipole scaling factor of 5 was applied to the output of HNN to compare to the amplitude of the experimental data. This predicts that a highly localized population of ~1000 pyramidal neurons contributes to the recorded gamma signal.
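The arithmetic behind the ~1000 neuron estimate can be sketched as follows. The network size used here (100 pyramidal neurons in each of two layers) is an assumption about HNN's default model that is not stated in this section, and the helper name is hypothetical. The reasoning also assumes that the net dipole scales linearly with the number of synchronously contributing pyramidal neurons.

```python
# Assumption: default HNN network size, not stated in this section.
PYRAMIDAL_PER_LAYER = 100
N_PYRAMIDAL_LAYERS = 2  # Layer 2/3 and Layer 5


def implied_population(scaling_factor,
                       model_cells=PYRAMIDAL_PER_LAYER * N_PYRAMIDAL_LAYERS):
    """Pyramidal neuron count implied by a dipole scaling factor.

    Assumes dipole amplitude grows linearly with the number of
    synchronously active pyramidal neurons.
    """
    return scaling_factor * model_cells


if __name__ == "__main__":
    print("Implied pyramidal neurons:", implied_population(5))
```

With a scaling factor of 5 and 200 model pyramidal neurons, this gives 1000, matching the estimate in the text.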

To see the effects of averaging across trials in HNN, click on ‘Set Parameters’ and ‘Run’, enter 100 trials, and then click on ‘Run Simulation’. Output as shown in Figure 10D will be produced. The individual current dipole waveforms do not have consistent phase across trials (Figure 10D middle). However, averaging the spectrograms across trials produces a more continuous band of gamma oscillation throughout the simulation duration, similar to the effects observed in the experiment.
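The effect of averaging in the spectral domain can be illustrated with synthetic data. The sketch below does not use HNN or the MEG data: it generates trials containing short 50 Hz bursts with random onset and phase, computes power at 50 Hz with a complex Morlet wavelet, and compares single-trial power, trial-averaged power, and the power of the trial-averaged waveform. The sampling rate, burst count, noise level, and function names are all illustrative assumptions.

```python
import cmath
import math
import random

FS = 500.0        # sampling rate in Hz (illustrative)
DURATION_S = 0.7  # 0-700 ms window, as in the text
FREQ = 50.0       # gamma frequency in Hz


def bursty_trial(rng, n_bursts=2):
    """One synthetic trial: weak noise plus 50-100 ms bursts at random onset and phase."""
    n = round(FS * DURATION_S)
    x = [rng.gauss(0.0, 0.05) for _ in range(n)]
    for _ in range(n_bursts):
        onset = rng.uniform(0.0, DURATION_S - 0.1)
        length = rng.uniform(0.05, 0.1)
        phase = rng.uniform(0.0, 2.0 * math.pi)
        for i in range(n):
            t = i / FS
            if onset <= t < onset + length:
                x[i] += math.sin(2.0 * math.pi * FREQ * t + phase)
    return x


def morlet_power(x, freq=FREQ, n_cycles=5.0):
    """Power over time at a single frequency using a complex Morlet wavelet."""
    sigma_t = n_cycles / (2.0 * math.pi * freq)
    half = int(3.0 * sigma_t * FS)
    kernel = [cmath.exp(2j * math.pi * freq * (k / FS))
              * math.exp(-((k / FS) ** 2) / (2.0 * sigma_t ** 2))
              for k in range(-half, half + 1)]
    n = len(x)
    power = []
    for i in range(n):
        acc = 0j
        for j, w in enumerate(kernel):
            idx = i + j - half
            if 0 <= idx < n:
                acc += x[idx] * w.conjugate()
        power.append(abs(acc) ** 2)
    return power


def coeff_var(values):
    """Coefficient of variation over time: high for bursty, low for continuous power."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return math.sqrt(var) / mean


def run_demo(n_trials=100, seed=0):
    rng = random.Random(seed)
    trials = [bursty_trial(rng) for _ in range(n_trials)]
    powers = [morlet_power(x) for x in trials]
    n = len(trials[0])
    avg_power = [sum(p[i] for p in powers) / n_trials for i in range(n)]
    avg_wave = [sum(x[i] for x in trials) / n_trials for i in range(n)]
    return {
        "cv_single": sum(coeff_var(p) for p in powers) / n_trials,
        "cv_avg": coeff_var(avg_power),
        "mean_avg_power": sum(avg_power) / n,
        "mean_power_of_avg_wave": sum(morlet_power(avg_wave)) / n,
    }


if __name__ == "__main__":
    for name, value in run_demo().items():
        print(name, value)
```

In this toy setting, the trial-averaged power varies less over time than single-trial power does, which is the "more continuous" appearance described above. Averaging the waveforms first largely cancels the bursts, because their phase is not consistent across trials.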

We can now use HNN to take a closer look at the underlying neuronal spiking activity contributing to the observed dipole signal, as shown in Figure 10E. The firing rates of L2/3 pyramidal neurons (green) and L5 pyramidal neurons (red) are substantially lower and sparser than in the continuous PING simulation shown in Figure 9. This lower pyramidal neuron activation leads to fewer, or no, basket interneurons firing on any given gamma cycle (in Figure 10E, the white and blue points have fewer participating interneurons and do not always occur). This lower interneuron activity produces lower-amplitude bouts of feedback inhibition, which are sometimes nearly absent, and thus creates transient bursty gamma activity. Note that the firing rates of L2/3 pyramidal neurons are lower than those of L5 pyramidal neurons, consistent with experimental data (Naka et al., 2019; Schiemann et al., 2015) and previous data-driven modeling (Dura-Bernal et al., 2019; Neymotin et al., 2016). Layer-specific current dipole responses in Figure 10F confirm that, due to their dendritic length, L5 pyramidal neurons are once again the major contributors to the aggregate dipole signal. This example is presented as a proof of principle of the method to begin to study the cell and circuit origin of source localized gamma band data with HNN. The circuit-level hypotheses have not yet been published elsewhere or investigated in further detail.

ERP model optimization

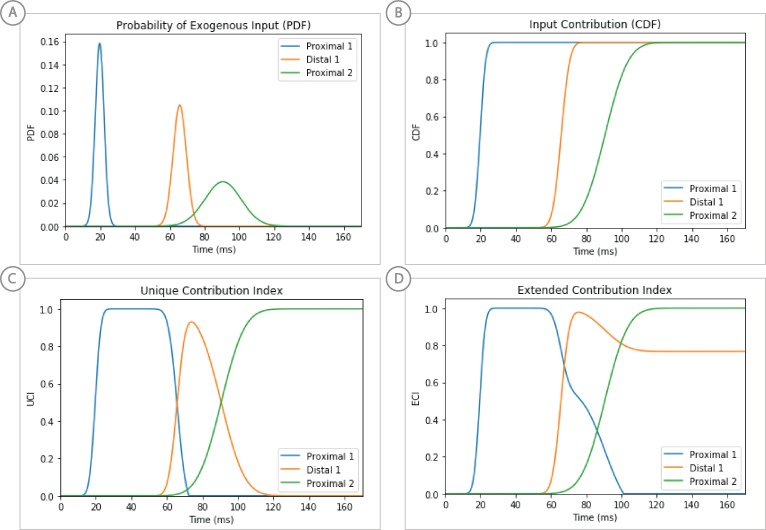

To ease the process of narrowing in on parameter values representing a user’s hypothesized model, we have added a model optimization tool to HNN. Currently, this tool automatically estimates parameter values that minimize the error between model output and features of ERP waveforms from experiments. Parameter estimation is a computationally demanding task for any large-scale model. To reduce this complexity, we have leveraged insight into the key parameters essential to ERP generation, along with a parameter sensitivity analysis, to create an optimization procedure that reduces the computational demand to a level that can be satisfied by a common multi-core laptop.
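The fits in Figure 11 are reported as a root-mean-square error (RMSE) between the data and the simulated waveform. A minimal sketch of that metric is shown below. The function name and the toy waveforms are illustrative, and whether HNN's optimizer minimizes exactly this unweighted form is not stated here (the figure supplement refers to a weighted scoring).

```python
import math


def rmse(data, model):
    """Root-mean-square error between two equally sampled waveforms."""
    if len(data) != len(model):
        raise ValueError("waveforms must have the same number of samples")
    if not data:
        raise ValueError("waveforms must not be empty")
    squared_error = sum((d - m) ** 2 for d, m in zip(data, model))
    return math.sqrt(squared_error / len(data))


if __name__ == "__main__":
    # Toy waveforms (arbitrary units), not taken from the paper.
    data = [0.0, 10.0, -20.0, 30.0, 5.0]
    model = [0.0, 12.0, -15.0, 22.0, 5.0]
    print("RMSE:", rmse(data, model))
```

In practice the data and the simulation must first be sampled on the same time base, which this sketch takes for granted.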

Two primary insights guided development of the optimization tool. First, the exogenous proximal and distal driving inputs are the essential parameters to tune first in order to obtain an accurate initial representation of an ERP waveform. Thus, the model optimization is currently designed to estimate the parameters of these driving inputs, defined by their synaptic connection strengths and the Gaussian distribution of their timing (see dialog box in Figure 11B). Second, in optimizing the parameters of the evoked response simulations to reproduce ERP data distributed with HNN (e.g. see ERP tutorial), we performed sensitivity analyses that estimated the relative contribution of each parameter to model uncertainty, where a low contribution indicated that a parameter could be fixed in the model and excluded from the estimation process to decrease compute time (see Supplementary Materials).
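The idea of tuning one driving input at a time can be sketched with a toy model. Everything below is a stand-in: the "simulation" is a sum of signed Gaussian bumps rather than HNN's biophysical network, the search is a small grid search rather than HNN's optimizer, and all names and parameter values are hypothetical. The sketch only illustrates the sequential structure, in which each drive's timing and strength are adjusted in turn while the others are held fixed.

```python
import math


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def toy_waveform(drives, times_ms):
    """Sum of signed Gaussian bumps; a stand-in for a simulated ERP, not HNN's model."""
    return [sum(d["weight"] * math.exp(-((t - d["mean_ms"]) ** 2) / (2.0 * d["std_ms"] ** 2))
                for d in drives)
            for t in times_ms]


def optimize_stepwise(drives, target, times_ms, mean_offsets, weight_scales):
    """Tune each drive in sequence by grid search, keeping the others fixed."""
    drives = [dict(d) for d in drives]
    history = [rmse(target, toy_waveform(drives, times_ms))]
    for k in range(len(drives)):
        base = dict(drives[k])
        best, best_err = dict(base), history[-1]  # current setting is the fallback
        for offset in mean_offsets:
            for scale in weight_scales:
                drives[k] = dict(base,
                                 mean_ms=base["mean_ms"] + offset,
                                 weight=base["weight"] * scale)
                err = rmse(target, toy_waveform(drives, times_ms))
                if err < best_err:
                    best, best_err = dict(drives[k]), err
        drives[k] = best
        history.append(best_err)
    return drives, history


TIMES_MS = list(range(0, 171))
# Hypothetical "true" drives; in this toy, proximal drives are positive and distal negative.
TRUE_DRIVES = [
    {"mean_ms": 25.0, "std_ms": 5.0, "weight": 1.0},
    {"mean_ms": 65.0, "std_ms": 8.0, "weight": -1.5},
    {"mean_ms": 135.0, "std_ms": 10.0, "weight": 2.0},
]
INITIAL_DRIVES = [
    {"mean_ms": 30.0, "std_ms": 5.0, "weight": 0.5},
    {"mean_ms": 60.0, "std_ms": 8.0, "weight": -0.75},
    {"mean_ms": 130.0, "std_ms": 10.0, "weight": 1.0},
]

if __name__ == "__main__":
    target = toy_waveform(TRUE_DRIVES, TIMES_MS)
    fitted, history = optimize_stepwise(INITIAL_DRIVES, target, TIMES_MS,
                                        mean_offsets=[-5.0, 0.0, 5.0],
                                        weight_scales=[0.5, 1.0, 2.0])
    print("RMSE after each step:", [round(e, 4) for e in history])
```

Because each step keeps the current setting as a fallback, the error in this sketch can only stay the same or decrease from one step to the next, which mirrors the intermediate and final fits shown in Figure 11C and D.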

Figure 11. Example of the ERP parameter optimization procedure for a suprathreshold tactile evoked response.

(A) Source localized SI data from a suprathreshold tactile evoked response (100% detection; purple) is shown overlaid with the corresponding HNN evoked response (black) using the threshold level evoked response parameter set detailed in Figure 4 as the initial parameter set. The RMSE between the data and the model is initially high at 30.53. (B) To improve the fit to the data, a procedure for sequentially optimizing the strengths of the proximal and distal drive inputs generating the evoked response can be run. The ‘Configure Optimization’ option is available under the ‘Simulation’ pull down menu. A dialog box allows users to choose and set a range over free optimization parameters; see text for details. (C) The GUI displays an intermediate fit after the first optimization step, specific to the first proximal drive. (D) The final fit is displayed once the optimization is complete. Here, the simulation from the optimized parameter set for the suprathreshold evoked response is shown in gray with an improved RMSE of 15.54 compared to 30.53 for the initial model. See Figure 11—figure supplements 1 and 2 for a further description of the optimization routine and parameter sensitivity analysis.

Figure 11—figure supplement 1. Weighted scoring of the stepwise optimization procedure for suprathreshold level evoked response example, see text for details.

Figure 11—figure supplement 2. Sensitivity analysis results of the suprathreshold level evoked response example showing the relative contribution of each input’s parameters on variance.