Abstract

Muscular activity recording is of high basic science and clinical relevance and is typically achieved using electromyography (EMG). While providing detailed information about the state of a specific muscle, this technique has limitations such as the need for a priori assumptions about electrode placement and difficulty with recording muscular activity patterns from extended body areas at once. For head and face muscle activity, the present work aimed to overcome these restrictions by exploiting magnetoencephalography (MEG) as a whole head myographic recorder (head magnetomyography, hMMG). This is in contrast to common MEG studies, which treat muscular activity as artifact in electromagnetic brain activity. In a first proof‐of‐concept step, participants imitated emotional facial expressions performed by a model. Exploiting source projection algorithms, we were able to reconstruct muscular activity, showing spatial activation patterns in accord with the hypothesized muscular contractions. Going one step further, participants passively observed affective pictures with negative, neutral, or positive valence. Applying multivariate pattern analysis to the reconstructed hMMG signal, we were able to decode above chance the valence category of the presented pictures. Underlining the potential of hMMG, a searchlight analysis revealed that generally neglected neck muscles exhibit information on stimulus valence. Results confirm the utility of hMMG as a whole head electromyographic recorder to quantify muscular activation patterns including muscular regions that are typically not recorded with EMG. This key advantage beyond conventional EMG has substantial scientific and clinical potential.

Keywords: affective neuroscience, electromyography, EMG, Emotion, Magnetomyography, MEG

Short abstract

We present an innovative method called head magnetomyography (hMMG), which exploits magnetoencephalography (MEG) as a whole head electromyographic (EMG) recorder. Differently from the typical EMG recording, which needs an a priori selection of the placement of the electrodes, hMMG is able to detect muscular activity from many regions of the face and head simultaneously, including typically overlooked muscles. Our data show that hMMG can readily serve researchers in the emotion field and hold further scientific as well as clinical promise.

1. INTRODUCTION

Our body allows for expression of a rich set of emotional states intrinsic to social interaction (Darwin, 1872; Nummenmaa, Glerean, Hari, & Hietanen, 2014; Nummenmaa, Hari, Hietanen, & Glerean, 2018). Especially facial muscles have a fundamental role in communicating emotion and mood, that is, expressing and, from the observer point of view, perceiving related bodily signals. To investigate emotional expression, studies in this field often exploit a technique of muscular recording called surface electromyography (EMG; Fridlund & Cacioppo, 1986; Larsen, Norris, & Cacioppo, 2003). When expressing a specific emotional state, specialized muscles in the face contract, resulting in differentiated activity patterns of facial muscles. In order to start a muscle contraction, motor neurons release acetylcholine at the neuromuscular junction leading to muscle fiber action potential, which in turn causes the fiber to contract. Typically, EMG uses a bipolar arrangement to record the voltage difference between two electrodes placed along the muscle fiber direction. Given its low cost and relative ease of use, EMG is the gold standard for noninvasive recordings of muscular electrical activity. While yielding signals at relatively high signal‐to‐noise levels for a specific muscle, a limitation is that, in order to record different muscles, many electrodes are needed. Naturally spatial and practical limits are reached fairly quickly (commonly not more than 5–6 muscles are investigated), forcing the researchers to a priori selection. Further, some muscles are very difficult to record via surface EMG (e.g., inner neck). These issues limit the clinical use of the surface and (more invasive) needle EMG, since indeed some muscles (such as longus colli in chronic neck pain syndromes; Falla, Jull, O’Leary, & Dall’Alba, 2006) are difficult to record with these techniques. In addition, the use of conventional techniques can be highly unpleasant in pain conditions such as allodynia or hyperalgesia (Coutaux, Adam, Willer, & Le Bars, 2005).

In an attempt to overcome these issues, we introduce a novel application of whole head magnetoencephalography (MEG) to record and reconstruct myographic activity from the head at once. MEG uses superconductive sensors (SQUIDs) to record magnetic potentials primarily produced by postsynaptic currents. Given the resemblance between functioning principles of neurons and muscle fiber contraction, MEG also records electromagnetic signals originating from muscles. In fact, in typical cognitive neuroscience experiments, muscular activity is visible even in MEG raw signals. Such muscular signals are normally regarded as noise that needs to be removed from the neural data. In the present work, we treat muscular activity as signal since MEG has distinct advantages with respect to the aforementioned EMG‐related issues: (a) no electrodes and no a priori locations are needed since MEG records from the entire head at once, and (b) ideally, it might also record deep muscle activity, which (using inverse models) could be separated from more superficial ones.

Fifty years ago, researchers had already attempted to record magnetic fields generated from muscular contractions: David Cohen was the first, using one SQUID, to record activity from forearm and hand muscles; he called the technique “magnetomyography” (MMG, Cohen & Givler, 1972). Nowadays, MMG is used to investigate uterine contractions using arrays of SQUIDs specifically implemented for this purposes (Escalona‐Vargas, Oliphant, Siegel, & Eswaran, 2019; Eswaran, Preissl, Murphy, Wilson, & Lowery, 2005) and muscular activity from the heart (magnetocardiography, Fenici, Brisinda, & Meloni, 2005).

In contrast to previous research, the present work focuses on exploiting existing MEG devices to record muscular activity from face and head and to localize, taking advantage of source reconstruction algorithms, the magnetic activity generated by a variety of face and head muscles at the same time.

In order to make first steps into expanding the application of MMG into the affective neuroscience, we implemented a proof of principle that illustrates some of these potential advantages: in a first step, using an imitation task, we demonstrate that the multiple muscular sources contributing to the MEG signal are distinctly localizable on the participants’ face. In a second step, we apply this novel approach to a more typical experimental environment by asking participants to passively watch emotion‐eliciting pictures. Validating the approach, we replicate a classical finding in the emotion literature, namely, that m. corrugator supercilii activity correlates negatively with stimulus valence (Cacioppo, Petty, Losch, & Kim, 1986; Lang, Greenwald, Bradley, & Hamm, 1993). Going beyond this replication, we use classification algorithms to predict the valence category of the observed picture from the reconstructed muscular signals, pointing to the importance of neck muscles, which has not been considered in studies using conventional EMG. This underscores the promise of this approach as a tool for future scientific discoveries as well as a potential clinical tool. From this point on, we refer to our implementation of MEG muscular recording as head magnetomyography (hMMG).

2. METHOD

2.1. Participants

The experiment took place in the MEG Lab of the University of Salzburg located at the Christian Doppler Clinics (Salzburg). The protocol was approved by the Ethics Committee of the University of Salzburg. Twenty‐two healthy participants (15 female, mean age 24.8 ± 3.6) were tested, after having signed an informed consent. All participants had normal or corrected‐to‐normal vision and declared no knowledge of any neurological or psychiatric disorder. Participants received either a monetary reimbursement (10 € per hour) or course credit for their participation. In total, the experimental session lasted about 2 hr.

2.2. Data collection procedure

Participants were asked to remove any metal objects from their body. Considering the features of the MEG signal we were interested in (gamma to high gamma frequencies, see Section 2.3.6), participants wearing metallic braces were not excluded since the noise produced by braces on the MEG sensors does not affect the frequency bands of interest for the subsequent analyses.

Participants’ head shape was digitized by means of a Polhemus tracker (3Space FASTRAK, Polhemus, Colchester, VT); at least 300 points were tracked on participants’ head, including five head‐position (HPI) coils and three anatomical landmarks. For each participant, in addition to the standard head points, the nose shape and position of the eyebrows were also tracked. On a subset of participants (10), three EMG electrode pairs were placed in correspondence of left m. corrugator supercilii, right m. frontalis, and right m. zygomaticus major (Figure 2a). Participants were then seated in the MEG device in an electromagnetically shielded room (AK3b, Vacuumschmelze, Hanau, Germany). The MEG lab in Salzburg is equipped with a 306‐channel Elekta Triux whole head MEG device (102 triplets composed of one magnetometer and two orthogonally placed planar gradiometers). Signal from MEG sensors was sampled at 2,000 Hz, with the acquisition filters set to 0.1 Hz high‐pass and 660 Hz low‐pass. The head position inside the MEG helmet was localized by means of HPI coils, which were energized throughout the whole experiment.

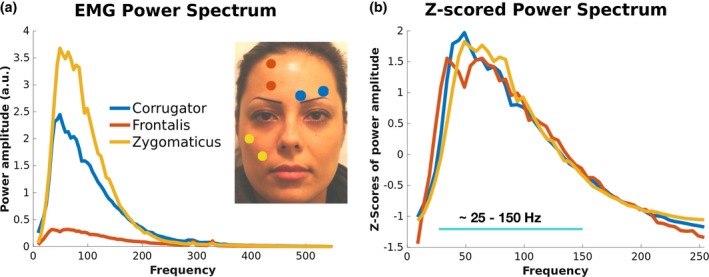

Figure 2.

Location and power spectra for the 3 EMG channels. (a) Average power spectrum across participants of the 3 EMG channels representing the power during the imitation conditions that are best associated with their contraction: left m. corrugator supercilii during disgust imitation, m. frontalis during fear imitation, m. zygomaticus major during joy imitation. (b) Z‐score transformation of individual EMG power spectra shows that the channels have very similar spectral components. The band containing dominant frequencies, between 25 and 150 Hz, has been used in the following analyses

Visual stimuli were presented by means of a projector (PROPixx, VPixx Saint‐Bruno, QC, Canada) back‐projecting the images on a semitransparent screen.

2.3. Imitation task: Materials and methods

2.3.1. Stimuli

We selected five pictures from the Radboud Face Database (RaFD, Langner et al., 2010) data set of an actress producing five emotional expressions: joy, disgust, fear, sadness, neutral (Figure 1a, top). Each imitation task session was composed of 175 trials, 35 for each emotion.

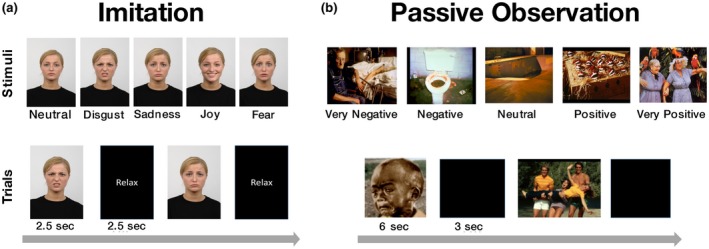

Figure 1.

Stimuli and procedure in the imitation and passive observation tasks. Imitation (a) Top: Pictures showing an actress performing five different emotional facial expressions. Bottom: Time line of two trials: pictures were presented for 2.5 s, followed by a “Relax” label again for 2.5 s. (175 pictures, 35 per expression). Participants were asked to imitate the facial expressions observed and to relax their face during the relax label. Passive observation (b) Top: pictures showing exemplars of the five valence categories. Bottom: Time line of two trials: pictures were presented for 6 s each, followed by a blank screen for 3 s (100 pictures, 20 per valence category)

2.3.2. Procedure

The presentation of a picture lasted 2.5 s on the screen, followed by 2.5 s of black screen on which a white “Relax” label was presented (Figure 1a, bottom). The order of the emotion conditions was randomized across participants. Their task was to imitate the emotional expression observed in each trial. Before going inside the MEG scanner, participants were trained outside the shielded room to produce the facial contraction pattern that best approximated the one observed in the pictures. Some participants reported that it was sometimes difficult for them to imitate the facial expressions, especially for fear and sadness pictures. Acquired data were stored on a local server.

2.3.3. EMG power spectrum

EMG power spectrum was computed for the three channels recorded from participants wearing EMG electrodes. The signal was band‐pass filtered between 9 and 550 Hz, and then it underwent a time‐frequency analysis from 9 to 550 (5‐Hz step, 0.3‐s sliding time window, Hanning taper); the resulting time‐frequency data were averaged between 0.5 and 2.5 s after picture presentation, yielding a single value for each frequency tested. Eventually, we performed a time‐frequency analysis, averaged across time. As a final step, trimmed mean at 20% across trials (10% trimming for each side) was computed at each frequency to detect the average power spectrum across participants. A Z‐score transformation was applied to the power spectra separately for each channel from 9 to 250 Hz. This analysis, as well as showing the distribution of power spectra at different muscles, also guided us in selecting a frequency band optimal for the following analyses.

2.3.4. Sensor to source space projection

We conducted two types of analysis for the imitation task, the hMMG‐EMG correlation and the hMMG analysis of emotional facial expressions. Both were conducted in source space, projecting the sensor data onto a set of virtual sensors. FieldTrip toolbox (version updated to November 15, 2018) and custom‐made MATLAB functions were used to analyze the data (Oostenveld, Fries, Maris, & Schoffelen, 2011).

As a first step, we epoched all trials from 0.5 to 2.5 s after picture presentation onset. Data were checked only for broken channels but not for physiologically driven artifacts, given that participants wearing braces were also included in the experiment. Data were band‐pass filtered depending on the type of analysis conducted; details on filtering procedures are described in the hMMG‐EMG correlation (Section 2.3.5) and the hMMG analysis of emotional facial expressions (Section 2.3.6).

After filtering, sensor data were projected onto source space, separately for each condition, to obtain virtual sensors by multiplying the spatial filters with the single trial data. We obtained structural MRIs of 10 participants’ heads. For the others, we used an MRI model downloaded from the FieldTrip toolbox website (ftp:/ftp.fieldtriptoolbox.org/pub/fieldtrip/tutorial/Subject01.zip); we manually (interactively) morphed the MRI shape in order to fit each participant's head shape.

Then, the morphed head MRIs were segmented into surface (“scalp” tissue) and interior (“brain” tissue). In order to create a volume conduction model, the geometry of the head was determined using a triangulated surface mesh made of 5,000 vertices for each compartment. Finally, we used the FieldTrip implementation of the volume conduction algorithm (singleshell option) proposed by Nolte (2003).

To create virtual sensors, we scanned the scalp using steps of 1 cm between voxels and then subtracted the brain volume from it, in order to delete voxels falling into brain tissue. The inner border of the scalp was extended inside by 2 cm (i.e., setting: cfg.inwardshift = 1), producing a scan grid of 4,735 voxels.

Once a volume conduction model and a source model had been constructed, the lead field was estimated for each point of the grid, and the covariance matrix between MEG sensors was calculated. We applied the linear constrained minimum variance (LCMV) beamformer algorithm onto the data (Van Veen, van Drongelen, Yuchtman, & Suzuki, 1997) to generate a spatial filter, mapping virtual sensors to MEG sensor data for each trial. At the end of this procedure, we obtained the time course of the activity of 4,735 virtual sensors on the surface of the head. For displaying the surface plots, we used the MRI of one of the authors (G.B.).

2.3.5. hMMG‐EMG correlation

Given that projecting data from each single frequency (from 9 to 550 Hz) into source space would have been computationally very demanding, for each participant wearing EMG electrodes we band‐pass filtered the data into nine frequency bands (i.e., 9–25, 25–45, 45–70, 70–100, 100–160, 160–200, 200–250, 250–350, 450–550 Hz) before projecting them onto source space (Dalal et al., 2008). The band‐pass filtering has been applied in order to optimize the beamformer reconstruction to the frequencies of interest. To determine which one of the virtual sensors correlates best with each EMG channel, we linearly correlated (Pearson), separately for each participant, for each condition, and for each frequency band, the time course of each virtual sensor with the time course of each EMG channel, ending up with a matrix of correlation values for each frequency band with Number of Trials × Virtual Sensors × Number of EMG Channels as dimensions (35 × 4,735 × 3). Finally, we averaged the correlation values across trials using a trimmed mean at 20%. Since we were not interested in the direction of the correlation, we computed the absolute value of the obtained matrix.

For each participant and frequency band, absolute correlation values were sorted from the lowest to the highest value. Subsequently, the 2.5% of the virtual sensors showing the highest absolute correlation values were selected. At the group level, we computed a map in which every virtual sensor represents the number of participants having that virtual sensor among the 2.5% highest absolute correlation value. Only a subset of condition‐EMG pairings was analyzed because of their theoretical correspondence (Cacioppo et al., 1986): disgust‐corrugator, fear‐frontalis, joy‐zygomaticus.

To show the distribution of correlations across frequency bands, we computed a correlation spectrum for each frequency band. We computed this correlation spectrum on the voxels showing the highest count and the highest count minus one on the hMMG‐EEG correlation maps, separately for each condition (e.g., if maximum count for one condition was 9, then all the channels having 9 or 8 counts were selected). As a final step, separately for each condition, we averaged the power of the selected voxels, obtaining one power value for each condition and each frequency band.

2.3.6. hMMG analysis of emotional facial expressions

Differently from the filtering strategy adopted for the hMMG‐EMG correlation analysis, we band‐pass filtered the MEG signal from 25 to 150 Hz, being the frequency range in which the EMG power spectrum showed the highest activity and best correlated, on average, with the correspondent MEG sensors (see Sections 3.1.1 and 3.1.2).

A time‐frequency analysis of the virtual sensors was computed on the whole band‐passed signal (from 25 to 150 Hz, in steps of 5 Hz, mtmconvol method option of the ft_frequencyanalysis FieldTrip function) and on the time window from −0.5 to 2.5 s after the picture presentation (taper = Hanning, sliding window 0.3 s). The result of this procedure was a three‐dimensional matrix (Virtual Sensor × Frequency Range × Time). Data were averaged across frequencies, and the baseline time window was averaged between −0.5 and 0 s, while the target time window was averaged between 0.5 and 2.5 s; in this way, we obtained for each participant two vectors of virtual sensors containing a single power value. In order to avoid any possible artifactual effect due to the estimation of different spatial filters for different conditions, the baseline time data were used to normalize the data in the time window of interest (db baseline from ft_freqbaseline function).

Statistical analyses were performed comparing at a group level each of the active emotions (joy, fear, disgust, sadness) against the neutral one. Then, all combinations involving active emotions were compared with each other (p < .05, two‐tailed). For presentation purposes, we thresholded pictures at t ≥ |5|.

2.4. Passive observation task: Materials and methods

2.4.1. Stimuli

We selected 100 pictures from the International Affective Picture System (IAPS) data set (Bradley & Lang, 2007). The IAPS database provides normative ratings on three dimensions: valence, dominance, and arousal. Based on the valence feature (lowest valence 1.45, highest valence 8.2), they were divided into five categories with 20 pictures, respectively: very negative (median valence rating 1.79 ± median absolute deviation 0.14), negative (2.45 ± 0.17), neutral (3.76 ± 0.40), positive (5.18 ± 0.58), and very positive (7.31 ± 0.40).

2.4.2. Procedure

Each picture was presented on the screen for 6 s, followed by a black screen of 3 s (Figure 1b, bottom). Order of pictures was randomized across participants. Participants were asked to pay attention to the pictures and were told that, at the end of the experiment, they would be asked some questions about the stimuli.

2.4.3. Valence‐contraction correlation

Data preprocessing followed almost identical steps as the hMMG analysis of emotional facial expressions, with two notable differences: (a) Spatial filters were estimated using all available trials, that is, including trials from all conditions. In this case, baseline correction was not necessary, given that the spatial filter applied was identical for all trials. (b) Data were epoched from −7 to 7 s with respect to picture presentation onset.

Concerning the analyses strategy, we used for consistency the same approach used to analyze imitation task data (see Sections 2.3.3 and 2.3.6). However, given that for the current task we expected effects likely smaller in magnitude and less temporally aligned to the onset of the pictures, we averaged the data over a longer period of time; accordingly, data included in the time window −7 to −1 s were averaged both along the time and frequency domain (mean frequency = 87.5 Hz) to form the prestimulus time window. The same averaging was applied between 1 and 7 s after stimulus presentation onset (note that spontaneous facial expression typically exceeds the stimulus offset) to form the poststimulus time window.

The result of these data processing steps is a two‐dimensional matrix of virtual channels containing two power values, one from prestimulus and the other from poststimulus time periods.

At first, we conducted a more conventional analysis with the scope of highlighting head muscles correlating with picture valences. On the poststimulus data set, we linearly correlated (Pearson) the valence of each picture with the averaged amplitude of the 25–150 Hz frequency window for each virtual sensor, thus obtaining a correlation value for each virtual sensor for each participant.

To test the statistical significance of the correlation values, we used a nonparametric randomization procedure in order to control for multiple comparisons over sensors (Maris & Oostenveld, 2007) implemented in FieldTrip.

We compared the obtained values against 0 correlation (settings: cfg.method = “montecarlo”; cfg.clusterthreshold = “nonparametric‐individual”; cfg.clusterstatistics = “max”; cfg.numrandomization = 5,000, 15.5 neighbors on average; method = “distance,” neighbor distance = 1.5 cm).

Statistical threshold was set at alpha = .05, one‐tailed, since we focused only on negative correlations (i.e., the lower the pleasantness of the picture, the higher the muscle contraction). Our results are in agreement with the literature, showing negative linear correlation between regions close to m. corrugator supercilii and hedonic picture ratings (Cacioppo et al., 1986).

2.4.4. Valence classification based on hMMG activity

A linear discriminant analysis (LDA) was used to classify the data adopting a cross‐validation approach provided by the CoSMoMVPA MATLAB toolbox (Oosterhof, Connolly, & Haxby, 2016) both on the prestimulus and on the poststimulus periods. We conducted a k‐fold (k = 10) cross‐validation, with 90 pictures used as the training set for the classifier and 10 as the test set. The accuracies resulting from the 10 cross‐validation procedures were averaged, providing one accuracy value for each of the time periods for each participant.

Two paired‐sample t tests against chance level accuracy (0.2) were performed on the accuracy of the prestimulus and the poststimulus time windows at group level.

2.4.5. Searchlight classification

In order to identify areas on the surface of the head carrying most of the information about the picture valence category, a searchlight virtual sensor pattern analysis procedure was carried out on the poststimulus time window. The searchlight procedure follows similar steps as the previous classification analysis but with the difference that, instead of considering the whole set of virtual sensors as a feature for the classifier, it takes each virtual sensor along with its neighbors (eight on average) as features (called the searchlight), to perform the cross‐validation analysis as before and then repeats the same procedure for the next virtual sensor (Kriegeskorte, Goebel, & Bandettini, 2006). Thus, differently from the hMMG analysis of emotional facial expressions, which produced one value of accuracy per participant, the result of the searchlight procedure provides a value of accuracy for each virtual sensor, reflecting the amount of information contained in each virtual sensor and its immediate neighbors.

To statistically evaluate the results of the searchlight classification procedure, we used a threshold‐free cluster estimation (Smith & Nichols, 2009) algorithm implemented in CoSMoMVPA (E = 0.5, H = 2). We compared, at group level, the accuracy of each searchlight against the chance value of 0.2.

3. RESULTS

The experimental session was divided into two parts, passive observation and imitation tasks (Figure 1). While experimentally the two tasks were run in the above‐described order, with the aim of not directing participants’ attention to their own facial expressions during the passive observation task, we will describe results in the reverse order (i.e., first imitation followed by passive observation task).

3.1. Imitation task

In the imitation task, participants (N = 22) were asked to imitate five facial expressions (joy, disgust, fear, sadness, neutral) performed by an actress on the screen (RaFD; Langner et al., 2010, Figure 1a). For comparison purposes with conventional EMG, 10 participants wore electrodes in correspondence of left m. corrugator supercilii, right m. frontalis, and right m. zygomaticus major (Figure 2a). The general purpose of the imitation task was to provide an important quality check that the signal recorded from facial muscles was well reconstructed in the hypothesized face locations.

3.1.1. EMG power analysis

Being the gold standard in muscular signal recording, as a first step, a spectral characterization of the muscular activity picked up by EMG was performed in order to inform the subsequent MEG source localization analysis. The resulting power spectrum shows strongest signal in the 25–150 Hz range, peaking between 35 and 45 Hz (Figure 2a). The highest overall power is reached by zygomaticus major and corrugator supercilii, followed by frontalis muscles, which show the lowest absolute power. However, following a Z‐score transformation of the power spectra of each muscle, the channels show virtually identical spectral power distribution. Based on the Z‐scored EMG power spectra, we selected a frequency band ranging between 25 and 150 Hz to be used in all of the following analyses.

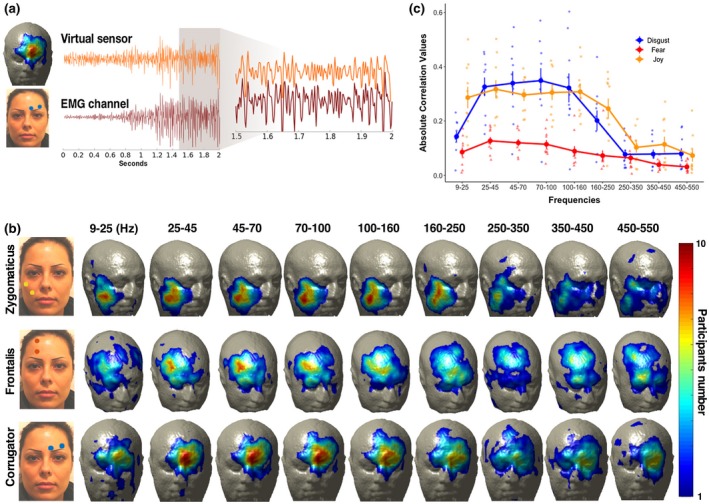

3.1.2. hMMG‐EMG correlation

If hMMG is to be used as a tool to record muscular activity localized on the face, we would expect a strong correlation at relevant locations with signals recorded using conventional EMG. To test this, we conducted a correlation analysis between band‐passed filtered hMMG and EMG time courses; an example of such band‐passed time series is provided in Figure 3a. EMG signal from m. corrugator supercilii and hMMG signal were correlated during imitation of disgust, while EMG from m. frontalis, and zygomaticus major, respectively, were correlated with hMMG during fear and joy imitation. We selected the 2.5% (approximately 118 out of 4,735 voxels) of the highest correlation values for each participant, in each condition and frequency band. Figure 3b shows the number of counts representing the number of participants having a virtual sensor among the highest 2.5% in correlation values for different frequency bands. The results show that overall the location of the highest count number matches the a priori EMG electrode placement, being in close proximity to the position of the targeted muscles.

Figure 3.

(a) Example trial hMMG versus EMG time course. The two traces represent EMG (m. corrugator supercilii) and a correspondent hMMG virtual sensor during a single contraction; magnification shows the similarity of the two traces during the time window from 1.5 to 2 s after picture presentation. MEG data have been band‐pass filtered in the 25–150 Hz range before being submitted to LCMV beamformer. (b) hMMG‐EMG localization. hMMG‐EMG absolute correlation values have been computed separately for each participant wearing EMG electrodes. The upper row shows the number of times in which a virtual sensor was among the 2.5% with the highest correlation during disgust imitation. The middle and the bottom rows show the same metric during fear and joy imitation. Correlations were computed after time series had been band‐pass filtered in nine different frequency bands. (c) hMMG‐EMG average correlation. The absolute correlation values have been averaged from virtual sensors containing the highest 2.5% absolute correlation values for the highest number of participants down to the highest number minus one, separately for each condition, after a 25–150 Hz band‐pass filtering (e.g., if maximum count in the correlation after 25–150 Hz band‐pass filtering for one condition was 9, then all the channels having 9 or 8 counts were selected). After the relevant voxels were obtained, means and standard error of the correlation per each frequency band were calculated. Dots represent single subject correlation data

After having localized the voxels that consistently showed the highest correlations with the EMG signal, we wanted to measure in absolute terms the average magnitude of the correlations. Figure 3c shows the distribution of the average hMMG‐EMG correlation values across frequency bands by considering virtual sensors showing the highest count and highest count minus one, separately for each muscle (e.g., if maximum count for one condition was 9, then all the channels having 9 or 8 counts were selected, and their correlation values averaged). For all channels recorded, the highest correlation values lay approximately between 25 and 150 Hz, in line with the EMG power analysis results. Within that frequency range, zygomaticus and corrugator muscles had the highest correlation values, between 0.296 and 0.317 and between 0.322 and 0.349, respectively. The frontalis muscle has overall smaller correlation values compared to the other two muscles, ranging between 0.127 and 0.09 in the 25–150 Hz band. Overall, the results of this analysis established a strong similarity between hMMG‐based muscular recordings and standard EMG.

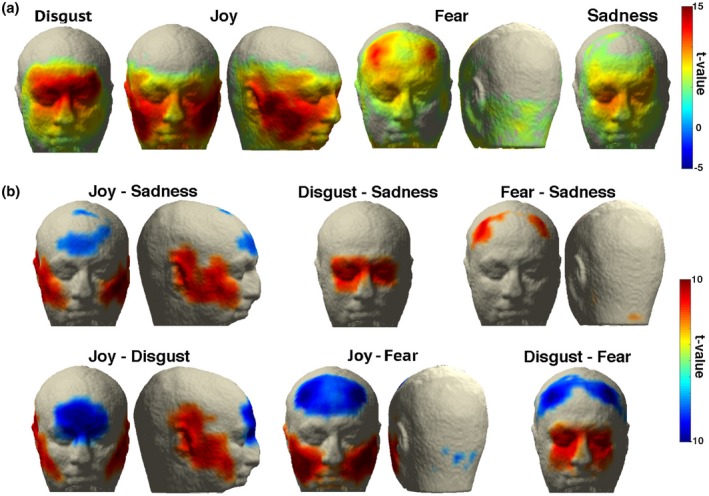

3.1.3. hMMG analysis of emotional facial expressions

To illustrate the potential of our approach to measure facial muscular activity, we performed statistical contrasts of the hMMG signals in order to obtain topographic differences between emotional facial expressions. In a first step, we contrasted emotional expressions to the neutral expression in order to test whether commonly reported muscle groups can be identified. As Figure 4a shows, the expected muscular activity characterizing each emotion was detected; joy imitation showed peaks at inferior‐lateral locations on the face, in proximity to m. zygomaticus minor, m. zygomaticus major, and m. risorius, while disgust and fear imitation showed peaks in correspondence to lower (m. corrugator supercilii, m. procerus) and higher forehead (m. frontalis), respectively. Notably, other portions of the head, outside those predicted, were activated for joy and fear imitation; areas close to the ears showed a peak of activity in joy imitation, possibly pointing to anterior auricularis muscle contractions, while in fear imitation, activity in correspondence to neck muscles was detected. Comparisons between active emotions (Figure 4b) are in line with observations shown in Figure 4a and highlight specific areas of muscular activation differences between emotion expressions.

Figure 4.

(a) hMMG of the comparisons of emotion versus neutral expression. (b) hMMG of the comparisons of emotion expressions against each other. Virtual sensors showing t values above |5| are displayed

These results prove that hMMG is suited for localizing muscular activity during facial expressions, but, most importantly, they highlight the advantage of whole head recordings against the classical a priori electrode selection, revealing that unexpected or overlooked muscles contributed to the expression patterns.

3.2. Passive observation task

During imitation, muscular activity is particularly intense, so the usefulness of hMMG in a setting with more subtle muscle activity still needs to be established. This was the purpose of the passive observation task, where participants (N = 17) were asked to attentively observe a series of emotion‐inducing pictures selected from the IAPS database ranging from very negative to very positive valence ratings (Bradley & Lang, 2007).

We conducted a valence‐contraction correlation analysis in which, for each participant, the amount of activity of each virtual sensor was correlated with the normative valence rating of the pictures presented (Figure 5a), followed by a classification analysis based on hMMG activity (Figure 5b) and a searchlight classification (Figure 5c).

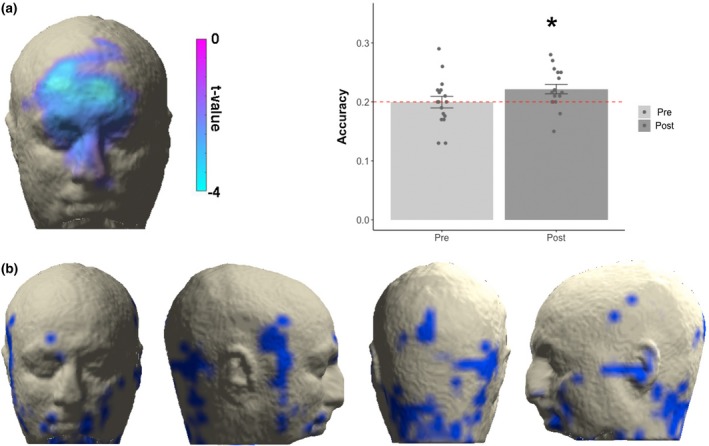

Figure 5.

(a) Valence‐contraction correlation: the only statistically significant cluster was found in the region including bilaterally the corrugator muscles but also regions higher on the z axis, a picture resembling the joy‐disgust comparisons but also including areas in proximity to the medialis pars of frontalis muscles. The lateral bar represents the values of the t test against 0 correlation. (b) Accuracy values on the whole head voxel: average classification accuracies in the prestimulus and the poststimulus period. Error bars represent standard error of the mean. Dots represent single subject accuracies for each time period. (c) Searchlight classification results: A hub of information is provided within the neck region. Only voxels showing p < .005 are represented

3.2.1. Valence‐contraction correlation

To validate hMMG, it is important to illustrate that it is sensitive to established effects. To this end, we aimed to replicate a well‐established result from studies using conventional EMG (Cacioppo et al., 1986; Lang et al., 1993; Larsen et al., 2003), showing that amplitude of m. corrugator supercilii contractions is negatively correlated with the valence of the perceived stimulus. Our cluster‐based statistical procedure confirmed this finding, yielding one negative cluster of voxels (p < .05 one‐tailed) located medially on the upper face, including m. nasalis, corrugator supercilii bilaterally, and m. frontalis pars medialis bilaterally (Figure 5c), confirming the similarity between the classical EMG results and hMMG recordings.

3.2.2. Valence classification based on hMMG activity

Classical data from the literature, as the valence‐contraction correlation, extracted information from the channels one at a time. hMMG instead, comprising multiple voxels, is suited to take advantage of classifiers to characterize informative multidimensional patterns of activity. To test if hMMG activity patterns could predict the valence of the observed pictures, we sorted the images, dividing them into five valence classes, and applied a LDA classifier. The analysis yielded, for the poststimulus time window, an accuracy level above chance level (0.2), indicating that valence can be classified significantly above chance (0.222; t(16) = 2.70, p = .016, Figure 5b). For the prestimulus period, accuracy not significantly different from chance was detected (0.199; t(16) = −0.05, p = .962). Since the previous analysis used all grid points simultaneously to classify valence category, no spatial information was obtained. For this purpose, we used a searchlight approach, yielding a spatial distribution of accuracy. This analysis showed that valence categories are distinguishable above chance in different regions of the head, including lower and lateral portion of the face and, notably, neck regions (Figure 5c).

4. DISCUSSION

Given its ease of application, usefulness, and low cost, EMG is the gold standard of muscular recording. As any other technique, however, EMG suffers from limitations; here, we focused especially on one of them, namely, the need for a priori selection of the electrodes to be placed on the participants’ face. In order to overcome this limitation, we used conventional whole head MEG as a large‐scale EMG recorder, providing MMG recordings of the whole head at once.

4.1. hMMG provides similar information as EMG with high spatial coverage

As a first step, to test the hypothesis that hMMG is capable of measuring and locating muscular activity at circumscribed regions, we calculated the correlation between the EMG and hMMG for facial expressions of joy, disgust, and fear. Clearly, if the MEG source space reconstruction of head muscular activity was spatially unspecific, then a dispersed pattern of hMMG‐EMG correlation would have emerged with no consistent peak locations. Instead, we were able to find clear peaks of correlations among participants, located in correspondence to the muscles we expected to be correlated with the EMG activity. Second, we showed that the correlation between EMG and hMMG time courses was modulated by the frequency range of the initial band‐pass filtering of the traces and that correlation followed a trend, across filtering frequency bands, that resembled the one found on the EMG power spectrum. Indeed, the highest correlation values were found within the band corresponding to the 25‒150 Hz frequency range, analogous to the pattern found in the power spectrum of the EMG signal. If the two signals were unrelated, no pattern would have been found across frequency bands. Instead, our results clearly point to a relationship between EMG and hMMG signals. Third, we showed that the patterns of muscular activity detected by the hMMG across the imitation task are highly compatible with expected facial expression patterns, with the addition of unpredicted activations, as shown for the case of m. auricularis and neck muscles—an outcome that highlights the advantage of having a large‐scale muscle recorder compared to an a priori and limited channel selection. A possible concern is the lack of consistent activation of the mouth area, since it usually provides critical information regarding the emotion experienced. One possibility is that signals from the mouth were not optimally captured due to the poor sensor coverage in proximity of the mouth area. However, most of the changes in mouth shape are due to muscles that pull the mouth edges, like the m. zygomaticus (both major and minor) or m. depressor anguli oris, for example; these muscles, however, are not located inside the mouth but in its proximity (Netter, 2019). The mouth itself contains a muscle called m. orbicularis oris that allows, for example, whistling or kissing, which were not performed in this study. Nevertheless, it cannot be excluded that signal from the mouth was not recorded due to the poor sensor coverage in proximity of the mouth area.

Overall, these findings illustrate that the hMMG can be used to reconstruct muscular information at circumscribed locations from the entire head. The latter aspect is the central added value of hMMG over or in conjunction with conventional EMG. Our results also indicate muscular activity in head regions that are challenging to detect using classical bipolar EMG (e.g., due to presence of hair) such as auricularis muscles in proximity of the ears or occipitalis muscles on the back of the head.

4.2. hMMG detects subtle muscular activity patterns during affective picture viewing

While the application of hMMG might potentially expand beyond basic research, our study already shows the benefit for affective neuroscience research. In order to show consistency between hMMG and conventional EMG results in this domain, we correlated the activity at each voxel with the valence of the pictures observed, aiming to reproduce the well‐known result of a negative linear correlation between valence and m. corrugator supercilii activity (Cacioppo et al., 1986; Lang et al., 1993; Larsen et al., 2003). A significant negative correlation was observed comprising m. corrugator supercilii bilaterally, extending to upper fibers, possibly in the pars medialis of the frontalis muscle. Considering the Facial Action Coding System as an atlas for facial muscle contraction description, this pattern resembles a mix of activity in action‐unit 1 and 4 (inner brow raiser and brow lowerer, Ekman & Friesen, 1978), suggesting that the view of negative‐valence pictures went along with sadder emotional states. To exploit the advantage of having multiple voxels recorded at one time, we applied a LDA to the hMMG data aiming to predict the valence category of the presented emotion‐inducing pictures. Using whole head patterns enabled the above chance classification of a presented image's valence category. As a last step, the use of hMMG in combination with a searchlight analysis uncovered informative muscle activity patterns in the neck region, previously overlooked when studying the relationship between emotional experience and muscle contraction. It is worth noting that, differently from EMG, no electrodes need to be attached to the participants’ face when using hMMG; this characteristic avoids the interaction between electrodes and skin, which might alter proprioceptive feedback and thus influence emotional facial expression. In addition, it might draw participants’ attention to their face and thus reduce spontaneity of facial expression. Overall, this set of promising results show how hMMG can be readily utilized as a neuroscientific tool for affective science, to significantly exceed the amount of information that can be acquired using conventional EMG alone.

4.3. Limitations and caveats

The correlation analysis between EMG and hMMG provided intermediate values, with the highest average correlation of around 0.3. This value reached up to 0.6 for some participants, but overall it is clear that the hMMG does not model the EMG time series perfectly. Several reasons for this discrepancy exist: (a) EMG spatial selectivity might have been influenced by cross talk, the phenomenon by which the EMG signal, aimed to record only one muscle, is contaminated by the activity of surrounding muscles. In hMMG, muscular activity is estimated at exact point sources using an adaptive spatial filter. (b) The signal‐to‐noise ratio is higher for the EMG compared to the virtual sensor traces as suggested by the precontraction period in Figure 3a. Some contributing reasons could be vicinity of EMG electrodes to the source or the measurement via a bipolar montage. (c) We used a template MRI for almost half of the participants, which could have reduced average signal‐to‐noise ratio for hMMG. (d) While we could have chosen to correlate the envelopes of hMMG and EMG time series, we opted for the stricter comparison by directly correlating the raw time series. (e) To project sensor data into source space, we used the LCMV beamformer algorithm, which is frequently used to capture distributed brain activity. Application of alternative methods such as minimum norm estimation (MNE) are, of course, also possible and may lead to better results in some circumstances. In the context of our study, the use of MNE led to very similar patterns for the imitation task (see online supporting information, Figure S1). (f) Performing emotional expressions was not easy for many participants since they were only briefly trained to perform those expressions before the experiment started; this problem might have resulted in nonoptimal contractions throughout the imitation, leading to the production of poor muscular signal, which might have reduced correlation values.

Related to the last point, it is obvious that some expressions were performed better than others: the sadness map does not correspond entirely to the pattern of activity hypothesized a priori, which would have been characterized by m. frontalis (pars medialis), plus m. corrugator supercilii activity. To a weaker extent, this also applies to fear expressions. While, as a first thought, this lack of correspondence might be attributed to intrinsic limitations of the hMMG technique, the most likely explanation can be found in the participants’ difficulty in imitating emotional expressions. As a consequence, this difficulty should not only be reflected in the hMMG but also in the EMG signal; indeed, for example, the average EMG activity recorded on the frontalis muscle in fear expressions was approximately one fifth of the one recorded from the m. corrugator, so clearly differences like these might emerge also in the hMMG signal.

It is also worth mentioning that source space activity maps for the imitation task as well as statistical maps have been created based on only 35 samples per emotion, which is a small number compared to classical experimental MEG brain designs.

Altogether, these considerations suggest that conventional EMG is advised whenever the relevant muscle is precisely known in advance and confounding due to proprioceptive feedback and emotion self‐awareness is not of concern. In all other cases, hMMG is a powerful alternative or addition. hMMG is at the beginning of its development, which leaves space for important technical improvements that might provide a more fine‐tuned identification of muscular activity patterns and signal‐to‐noise ratio. For example, muscle fibers have been modeled as current dipoles (but also as tripoles and quadrupoles; Barbero, Merletti, & Rainoldi, 2012; Fuglevand, Winter, Patla, & Stashuk, 1992; McGill, 2004); since fibers run roughly parallel in most of the face muscles, we will exploit in future both the orientation of the magnetic dipoles produced by muscles to better separate close muscle fibers (e.g., as m. masseter and m. risorius) and also the a priori information from muscular atlases.

4.4. Conclusions and future perspectives

This study demonstrates that hMMG is a powerful method to monitor whole head muscular activity, yielding some advantages over classical EMG. In addition, our data show the potential of hMMG in psychological experiments, by replicating and extending established findings in emotion research; in this context, hMMG can be readily used as a stand‐alone muscular recording technique. Beyond the illustrated possibilities, hMMG holds promise as a clinical screening tool; for example, using hMMG as an adjunct to electroneurography might provide a deeper characterization of facial palsies, identifying more easily the muscular groups affected by such pathologies (electrical stimulation inside the MEG has been shown to be feasible by our group; Neuling et al., 2015). hMMG might also become an alternative to monitoring the innervation process of implanted muscle flaps after facial animation procedures (Bianchi, Copelli, Ferrari, Ferri, & Sesenna, 2010); normally, this monitoring is performed by implanting a needle for EMG recording inside the innervated flap, which is uncomfortable especially for children. Another advantage over EMG, which in a clinical context could become critical, is that hMMG might record activity of muscles that are difficult to reach with standard bipolar surface or even needle recordings (e.g., stapedius muscle inside the inner ear, the extraocular muscles, muscles involved in swallowing or located inside the larynx, and inner neck muscles such as m. longus colli). However, given the inexpensive and easy application of EMG, in many cases hMMG may be assisted by EMG, combining the strengths of both approaches. Overall, we believe that hMMG is likely to gain prominence as a muscular recording technique, expanding its advantages not only to the affective sciences domain but also to applied contexts such as the clinical one.

Supporting information

Figure S1. (a) hMMG of the comparisons emotion vs. Neutral expression using MNE source reconstruction. (b) hMMG of the comparisons of emotion expressions against each other. Virtual sensors showing t‐values above |5| are displayed

ACKNOWLEDGMENTS

The first author was supported by Lise Meitner FWF grant M 2171‐BBL. We would like to thank Manfred Seifter and Viola Heberger for their help in collecting the data.

Barchiesi G, Demarchi G, Wilhelm FH, Hauswald A, Sanchez G, Weisz N. Head magnetomyography (hMMG): A novel approach to monitor face and whole head muscular activity. Psychophysiology. 2020;57:e13507 10.1111/psyp.13507

REFERENCES

- Barbero, M. , Merletti, R. , & Rainoldi, A. (2012). Atlas of muscle innervation zones. Milan: Springer; 10.1007/978-88-470-2463-2 [DOI] [Google Scholar]

- Bianchi, B. , Copelli, C. , Ferrari, S. , Ferri, A. , & Sesenna, E. (2010). Facial animation in patients with Moebius and Moebius‐like syndromes. International Journal of Oral and Maxillofacial Surgery, 39(11), 1066–1073. 10.1016/j.ijom.2010.06.020 [DOI] [PubMed] [Google Scholar]

- Bradley, M. M. , & Lang, P. J. (2007). The International Affective Picture System (IAPS) in the study of emotion and attention In Handbook of emotion elicitation and assessment (pp. 29–46). Oxford: Oxford University Press. [Google Scholar]

- Cacioppo, J. T. , Petty, R. E. , Losch, M. E. , & Kim, H. S. (1986). Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. Journal of Personality and Social Psychology, 50(2), 260–268. 10.1037/0022-3514.50.2.260 [DOI] [PubMed] [Google Scholar]

- Cohen, D. , & Givler, E. (1972). Magnetomyography: Magnetic fields around the human body produced by skeletal muscles. Applied Physics Letters, 21(3), 114–116. 10.1063/1.1654294 [DOI] [Google Scholar]

- Coutaux, A. , Adam, F. , Willer, J.‐C. , & Le Bars, D. (2005). Hyperalgesia and allodynia: Peripheral mechanisms. Joint Bone Spine, 72(5), 359–371. 10.1016/j.jbspin.2004.01.010 [DOI] [PubMed] [Google Scholar]

- Dalal, S. S. , Guggisberg, A. G. , Edwards, E. , Sekihara, K. , Findlay, A. M. , Canolty, R. T. , … Nagarajan, S. S. (2008). Five‐dimensional neuroimaging: Localization of the time–frequency dynamics of cortical activity. NeuroImage, 40(4), 1686–1700. 10.1016/j.neuroimage.2008.01.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Darwin, C. (1872). The expression of the emotions in man and animals. London, UK: John Murray. [Google Scholar]

- Ekman, P. , & Friesen, W. (1978). Facial action coding system: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press. [Google Scholar]

- Escalona‐Vargas, D. , Oliphant, S. , Siegel, E. R. , & Eswaran, H. (2019). Characterizing pelvic floor muscles activities using magnetomyography. Neurourology and Urodynamics, 38(1), 151–157. 10.1002/nau.23870 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eswaran, H. , Preissl, H. , Murphy, P. , Wilson, J. D. , & Lowery, C. L. (2005). Spatial‐temporal analysis of uterine smooth muscle activity recorded during pregnancy. 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, 6665–6667. 10.1109/IEMBS.2005.1616031 [DOI] [PubMed] [Google Scholar]

- Falla, D. , Jull, G. , O’Leary, S. , & Dall’Alba, P. (2006). Further evaluation of an EMG technique for assessment of the deep cervical flexor muscles. Journal of Electromyography and Kinesiology, 16(6), 621–628. 10.1016/j.jelekin.2005.10.003 [DOI] [PubMed] [Google Scholar]

- Fenici, R. , Brisinda, D. , & Meloni, A. M. (2005). Clinical application of magnetocardiography. Expert Review of Molecular Diagnostics, 5(3), 291–313. 10.1586/14737159.5.3.291 [DOI] [PubMed] [Google Scholar]

- Fridlund, A. J. , & Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology, 23(5), 567–589. 10.1111/j.1469-8986.1986.tb00676.x [DOI] [PubMed] [Google Scholar]

- Fuglevand, A. J. , Winter, D. A. , Patla, A. E. , & Stashuk, D. (1992). Detection of motor unit action potentials with surface electrodes: Influence of electrode size and spacing. Biological Cybernetics, 67(2), 143–153. 10.1007/BF00201021 [DOI] [PubMed] [Google Scholar]

- Kriegeskorte, N. , Goebel, R. , & Bandettini, P. (2006). Information‐based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America, 103(10), 3863–3868. 10.1073/pnas.0600244103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lang, P. J. , Greenwald, M. K. , Bradley, M. M. , & Hamm, A. O. (1993). Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology, 30(3), 261–273. 10.1111/j.1469-8986.1993.tb03352.x [DOI] [PubMed] [Google Scholar]

- Langner, O. , Dotsch, R. , Bijlstra, G. , Wigboldus, D. H. J. , Hawk, S. T. , & van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition and Emotion, 24(8), 1377–1388. 10.1080/02699930903485076 [DOI] [Google Scholar]

- Larsen, J. T. , Norris, C. J. , & Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology, 40(5), 776–785. 10.1111/1469-8986.00078 [DOI] [PubMed] [Google Scholar]

- Maris, E. , & Oostenveld, R. (2007). Nonparametric statistical testing of EEG‐ and MEG‐data. Journal of Neuroscience Methods, 164(1), 177–190. 10.1016/j.jneumeth.2007.03.024 [DOI] [PubMed] [Google Scholar]

- McGill, K. C. (2004). Surface electromyogram signal modelling. Medical and Biological Engineering and Computing, 42(4), 446–454. 10.1007/BF02350985 [DOI] [PubMed] [Google Scholar]

- Netter, F. H. (2019). Atlas of human anatomy (7th ed.). Philadelphia, PA: Elsevier. [Google Scholar]

- Neuling, T. , Ruhnau, P. , Fuscà, M. , Demarchi, G. , Herrmann, C. S. , & Weisz, N. (2015). Friends, not foes: Magnetoencephalography as a tool to uncover brain dynamics during transcranial alternating current stimulation. NeuroImage, 118, 406–413. 10.1016/j.neuroimage.2015.06.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nolte, G. (2003). The magnetic lead field theorem in the quasi-static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Physics in Medicine & Biology, 48(22), 3637. [DOI] [PubMed] [Google Scholar]

- Nummenmaa, L. , Glerean, E. , Hari, R. , & Hietanen, J. K. (2014). Bodily maps of emotions. Proceedings of the National Academy of Sciences, 111(2), 646–651. 10.1073/pnas.1321664111 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nummenmaa, L. , Hari, R. , Hietanen, J. K. , & Glerean, E. (2018). Maps of subjective feelings. Proceedings of the National Academy of Sciences, 115(37), 9198–9203. 10.1073/pnas.1807390115 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oostenveld, R. , Fries, P. , Maris, E. , & Schoffelen, J.‐M. (2011). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 2011, 1568–1569. 10.1155/2011/156869 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oosterhof, N. N. , Connolly, A. C. , & Haxby, J. V. (2016). CoSMoMVPA: Multi‐modal multivariate pattern analysis of neuroimaging data in Matlab/GNU octave. Frontiers in Neuroinformatics, 10, 1–27. 10.3389/fninf.2016.00027 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Smith, S. M. , & Nichols, T. E. (2009). Threshold‐free cluster enhancement: Addressing problems of smoothing, threshold dependence and localisation in cluster inference. NeuroImage, 44(1), 83–98. 10.1016/j.neuroimage.2008.03.061 [DOI] [PubMed] [Google Scholar]

- Van Veen, B. D. , van Drongelen, W. , Yuchtman, M. , & Suzuki, A. (1997). Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Transactions on Biomedical Engineering, 44(9), 867–880. 10.1109/10.623056 [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Figure S1. (a) hMMG of the comparisons emotion vs. Neutral expression using MNE source reconstruction. (b) hMMG of the comparisons of emotion expressions against each other. Virtual sensors showing t‐values above |5| are displayed