Abstract

A longstanding challenge facing MD-PhD students and other dual-degree medical trainees is the loss of clinical knowledge that occurs during the non-medical phases of training. Academic medical institutions nationwide have developed continued clinical training and exposure to maintain clinical competence; however, quantitative assessment of their usefulness remains largely unexplored. The current study therefore sought to both implement and optimize an online game platform to support MD-PhD students throughout their research training. Sixty-three current MD-PhD students completing the PhD research phase of training were enrolled in an institutionally developed online game platform for 2 preliminary and 4 competition rounds of 3–4 weeks each. During preliminary game rounds, we found that participation, though initially high, declined precipitously over the course of each round, with 37 students participating to some extent. Daily reminders were implemented in subsequent rounds, which markedly improved player participation. Participation in competition rounds averaged more than 35% of enrolled students (23/63) per round, with a trend toward improved scores over the course of PhD training. Both player participation and progress through the research phase of the MD-PhD program correlated positively with game performance and therefore with knowledge retention and/or acquisition. Coupled with positive survey-based feedback from participants, our data suggest that gamification is an effective tool for MD-PhD programs to combat loss of clinical knowledge during research training.

Electronic supplementary material

The online version of this article (10.1007/s40670-019-00725-1) contains supplementary material, which is available to authorized users.

Keywords: Gamification, Spaced repetition, Knowledge decay, MD-PhD, Formative assessment

Introduction

For over 60 years, physician-scientist education has equipped dual-degree seeking (MD-PhD) trainees with the clinical skills, leadership training, and scientific insights crucial to successful careers in academic medicine [1]. However, with these advantages come distinct challenges faced by aspiring physician-scientists throughout their training, including transitioning between phases of training and the long 7–9-year duration of training. These challenges have diminished enthusiasm among prospective applicants to physician-scientist development pipelines [2]. This observation is especially worrisome in light of the imminent shortage of faculty who possess both clinical and scientific expertise [3]. Therefore, physician-scientist education constitutes an area warranting educational reinforcement.

The traditional MD-PhD training program begins with 2 years of pre-clinical education, in which trainees learn alongside their MD colleagues. After successfully passing Step 1 of the United States Medical Licensing Exam (USMLE), MD-PhD students depart from their clinical training to begin the PhD research phase of their training, a period of undefined length typically ranging 3–5 years. MD-PhD students then return to clinic after having spent considerable time away from their medical training (Fig. 1a). Owing to a rapid expansion of medical knowledge [4], the decayed clinical knowledge that remains from their initial training is wholly inadequate for satisfactory performance during the final 2 years of clinical training [5]. Therefore, repeated exposure to clinical knowledge and contexts throughout research training would greatly advance physician-scientist education [6–8].

Fig. 1.

Game participants and structure. a MD-PhD training pipeline, illustrating the accrual (teal color) and loss (white) of medical knowledge throughout the various stages of training. b In addition to answering questions correctly (+ 5 points per question), players were able to earn additional points either by consistently answering daily questions correctly in a row (“streak badges”), or by consistently answering questions simply by daily participation (“marathon badges”)

Named for the eponymous Japanese concept meaning “continuous improvement,” the Kaizen Education platform (Kaizen) was developed at the University of Alabama at Birmingham for use by internal medicine residents to hone their medical knowledge in an entertaining and engaging way [9]. Kaizen software delivers questions in the form of a game, employing both intrinsic (learning, personal challenge, socialization) and extrinsic (leaderboards, points, levels, badges, etc.) motivators to stimulate individual and team-based medical knowledge advancement. Although previous studies have examined the impact of online spaced-learning games to reinforce medical knowledge among general medical students (reviewed by McCoy et al.) [14] and nursing student populations [16], the usefulness of gamification to promote medical knowledge retention among dual-degree students has yet to be explored.

The current study therefore sought to determine whether an updated, team-oriented version of the Kaizen medical knowledge retention platform could serve as an academic support mechanism to maintain clinical knowledge in a cohort of MD-PhD students throughout their PhD research years.

Methods

Study Subjects

The study cohort consisted of trainees from the NIH-supported Medical Scientist Training Program (MSTP) at the University of Alabama at Birmingham (UAB). The MSTP curriculum at UAB consists of three phases: the preclinical phase (2 years), the PhD research phase (usually 3–4 years), and the clinical phase (14–21 months) (Fig. 1a). Students in the preclinical phase take the first-year graduate school curriculum in conjunction with the first 2 years of medical school while rotating through up to three labs, permitting in-depth research experiences in different laboratories prior to selecting a laboratory for their PhD dissertation research.

After the first 2 years of classwork and laboratory rotations, students take the United States Medical Licensing Examination (USMLE Step 1) and select the laboratory in which they will complete their dissertation research. It is during the PhD phase that continuing clinical education (CCE) activities are organized as part of a required MSTP course. This course is administered in-part by a student committee designed to develop and maintain clinical skills and knowledge during the PhD research phase. Following the fulfillment of all PhD program requirements and the defense of their dissertations, students return to the medical curriculum to resume their clinical training with the same clerkships, acting internships, and electives normally taken by third- and fourth-year medical students.

Game Structure

Our study employed the pre-existing Kaizen software, which was originally described by Nevin et al. [15] and recently used for nursing education [16]. Briefly, MD-PhD students (n = 63) received an email at the beginning of each round containing a registration link. Upon registration, participants were given the opportunity to create a unique username to maintain anonymity in public rankings, if they desired.

Each round consisted of validated, faculty-generated questions selected from a pre-existing database representing 22 categories. Owing to the diverse nature of clinical knowledge assessment in medical training, multiple-choice questions ranged in style from vignette-based USMLE format to short fact-based questions across a variety of medical specialties (See Table 1 for a breakdown of daily competition questions by discipline). All questions were written by University of Alabama School of Medicine faculty for residents in the internal medicine, emergency medicine, otolaryngology, and general surgery programs, as well as professional school trainees in the Center for Clinical and Translational Science Training Academy and the graduate nursing programs at UAB. Examples of competition questions have been included in the supplemental materials (Additional File 1). Furthermore, detailed answers (50 words) were provided following each answer, providing students with formative real-time feedback.

Table 1.

Breakdown of questions by discipline

| Category | Count |
|---|---|
| Cardiology | 8 |
| Dermatology | 5 |
| Emergency Medicine | 13 |
| Endocrinology | 7 |
| Gastroenterology | 2 |
| General Medicine/Critical care | 10 |
| General surgery | 3 |
| Hematology | 2 |
| Infectious disease | 16 |
| Medical Genetics/Pediatrics | 7 |
| Musculoskeletal Disease/Orthopedics | 7 |
| Nephrology | 8 |
| Neurology | 5 |
| Oncology | 8 |
| Otolaryngology | 3 |
| Pharmacology | 6 |
| Pulmonary medicine | 6 |
| Psychiatry | 5 |
| Rheumatology/Immunology | 5 |
| Urology | 2 |
| Women’s Health/OB-GYN | 10 |
| Miscellaneous | 4 |

Total question count does not add up to the number of daily questions posed because some questions belong to multiple categories

Consultation of learning materials during gameplay was designated as an honor code violation, thus heavily discouraging players from using outside resources when answering questions. Initially, questions during the first preliminary round were administered with a short (30 s to 1 min) time limit depending on the length of the question stem. However, these time limits were later removed due to negative player feedback (see “Results”). Questions not answered on the day of their release could be revisited at the trainee’s convenience, although badges worth extra points incentivized trainees to answer each question on the same day that it was released, thereby encouraging regular daily response (see the description of badges below). Students competed against one another as individuals but were also divided into eight predetermined teams (balanced by year in the program but otherwise randomly assigned) to encourage team competition. Two students serving on the MSTP’s CCE committee also served as Game Masters for the game rounds described below. Their duties included assigning questions, managing the Kaizen-MSTP online platform, and communicating with participants by way of email and text message updates.

Upon logging in to the Kaizen-MSTP platform, players could view a leaderboard showing individual participants and teams ranked by score, participation level, and badges acquired. This leaderboard was automatically updated by the Kaizen software in real time. Five points were awarded for each correct answer, while virtual badges worth additional points were awarded on the following bases: daily “streak” badges were awarded for correctly answering multiple questions in a row in intervals of three, five, and ten questions (worth 9, 15, and 30 points, respectively); and “marathon” badges were awarded for consistent daily participation in intervals of 4, 8, and 12 days in a row (worth 10, 20, and 30 points, respectively) (Fig. 1b). Weekly emails were sent to inform participants of individual and team standings, and daily text messages were sent to remind students of daily questions and to motivate participation (see “Preliminary Rounds” under “Results”).
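The scoring rules described above can be sketched in a few lines of Python. This is a minimal illustration and not the Kaizen implementation: the function name `round_score`, the input encoding, and the reset behavior (a missed day ends a marathon run but leaves the answer streak untouched, and each badge is granted once when a run first reaches its threshold) are assumptions that the text does not specify.

```python
# Point values taken from the text; everything else is an illustrative assumption.
POINTS_PER_CORRECT = 5
STREAK_BADGES = {3: 9, 5: 15, 10: 30}     # consecutive correct answers -> bonus points
MARATHON_BADGES = {4: 10, 8: 20, 12: 30}  # consecutive days answered -> bonus points


def round_score(days):
    """Score one player's round.

    days: one entry per day, where None means the question was not answered
    that day, True means answered correctly, and False means answered incorrectly.
    """
    score = 0
    streak = 0    # current run of correct answers
    marathon = 0  # current run of days with any answer
    for answer in days:
        if answer is None:
            marathon = 0  # assumption: a missed day ends the marathon run only
            continue
        marathon += 1
        score += MARATHON_BADGES.get(marathon, 0)
        if answer:
            streak += 1
            score += POINTS_PER_CORRECT + STREAK_BADGES.get(streak, 0)
        else:
            streak = 0  # an incorrect answer ends the streak
    return score


# Four correct answers on four consecutive days:
# 4 x 5 points + 9 (three-question streak) + 10 (four-day marathon) = 39
print(round_score([True, True, True, True]))
```

Under these assumptions, a player who answers all 12 questions of a 12-day stretch correctly would earn 60 points for answers plus every streak and marathon badge, which shows how heavily the badge scheme rewards daily engagement relative to accuracy alone.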

Game Rounds and Competition

We first conducted two preliminary rounds to troubleshoot various aspects of the game protocol. Participant feedback was solicited both informally and by way of an anonymous survey administered following the first preliminary round. In response to this feedback, we modified several game variables, including the duration of game rounds, the days of the week on which questions were released, the number and point-value of badges awarded in-game, the timing of weekly update emails, and the exclusion of time limits on game questions. These variables were adjusted to maximize trainee participation and overall player satisfaction in participation (see “Results”).

Following these two preliminary rounds, we next administered four competition rounds: round 1 (27 days), round 2 (29 days), round 3 (29 days), and round 4 (29 days). Each round consisted of 1 question per day, including weekends. Following each round, a subset of four questions was selected from the preceding round for repetition in all subsequent competition rounds, thus ensuring that a chosen subset of questions could be repeated at least once and at most three times across all rounds (n = 8 ± 4). Questions were selected for repetition on the basis of discrimination index and a moderate level of difficulty, where correct response rate 50%. At the conclusion of each round, the three individuals with the highest individual scores received a small prize, while the three teams with the highest team scores received plaques and recognition at the MSTP’s annual retreat.
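To make the two selection criteria concrete, the sketch below computes a difficulty value (proportion of correct responses) and a discrimination index for each question. The text does not state which discrimination index was used, so the classical upper-minus-lower group index is assumed here; the function name `item_statistics`, the `group_fraction` parameter, and the example response matrix are hypothetical.

```python
def item_statistics(matrix, group_fraction=0.27):
    """Return (difficulty, discrimination) for each question.

    matrix: one row per player, one column per question, 1 = correct, 0 = incorrect.
    Assumes every player answered every question.
    """
    n_players = len(matrix)
    n_items = len(matrix[0])
    totals = [sum(row) for row in matrix]
    # Rank players by total score, then take the bottom and top groups.
    order = sorted(range(n_players), key=lambda i: totals[i])
    k = max(1, round(n_players * group_fraction))
    lower, upper = order[:k], order[-k:]
    stats = []
    for j in range(n_items):
        difficulty = sum(row[j] for row in matrix) / n_players
        p_upper = sum(matrix[i][j] for i in upper) / k
        p_lower = sum(matrix[i][j] for i in lower) / k
        stats.append((difficulty, p_upper - p_lower))
    return stats


# Hypothetical responses from six players to three questions.
responses = [
    [1, 1, 0],
    [1, 1, 1],
    [1, 0, 0],
    [0, 0, 1],
    [1, 1, 0],
    [0, 0, 0],
]
for number, (difficulty, discrimination) in enumerate(
    item_statistics(responses, group_fraction=0.5), start=1
):
    print(f"Q{number}: difficulty={difficulty:.2f}, discrimination={discrimination:.2f}")
```

In this toy data set the second question is answered correctly by half of the players and separates the stronger from the weaker players completely, which is the profile that the selection criteria described above would favor.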

Data Analysis

The Kaizen software automatically recorded all game data, including player identification, trainee level, team affiliation, points and badges earned, and response accuracy. Data were exported from the Kaizen platform and analyzed using the R statistical programming language (v3.5.2) with the following statistical tests. Player engagement and attrition during preliminary round 1 were assessed by two-tailed Student’s t test (Fig. 2b). Score as a function of participation was modeled using linear regression analysis (Fig. 2c). Player engagement and attrition for each round were measured by linear regression analysis of the number of players answering each daily question (Fig. 3). Analysis of covariance (ANCOVA) was then applied to the resulting linear equations to test for significant differences in player attrition between rounds.
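The attrition measure described above amounts to the slope of a least-squares line fitted to the number of players answering each daily question. The study performed its analysis in R; the Python sketch below only illustrates the calculation, and the function names and daily counts are hypothetical. It does not reproduce the ANCOVA significance test used to compare slopes between rounds.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / math.sqrt(sxx * syy)


# Hypothetical counts of players answering the question on days 1 to 4.
days = [1, 2, 3, 4]
players = [30, 28, 26, 24]
slope, intercept = fit_line(days, players)
print(slope, intercept)  # a negative slope indicates attrition (players lost per day)
```

A slope closer to zero in a later round would correspond to the reduced attrition reported after reminders were introduced, while `pearson_r` illustrates the kind of correlation coefficient reported for score versus participation.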

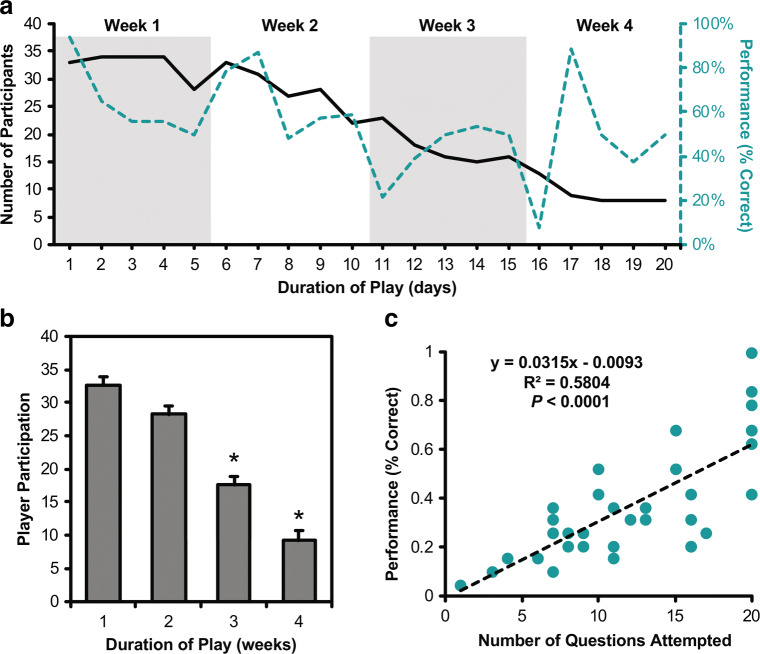

Fig. 2.

Game usage and performance for preliminary round 1. a The number of participants throughout the duration of play along with the fraction of players selecting the correct answer. b Average participation by week, demonstrating statistically significant differences in participation by week 3 (p < 0.05). c The overall score of each participant plotted as a function of overall participation as determined by the number of questions answered

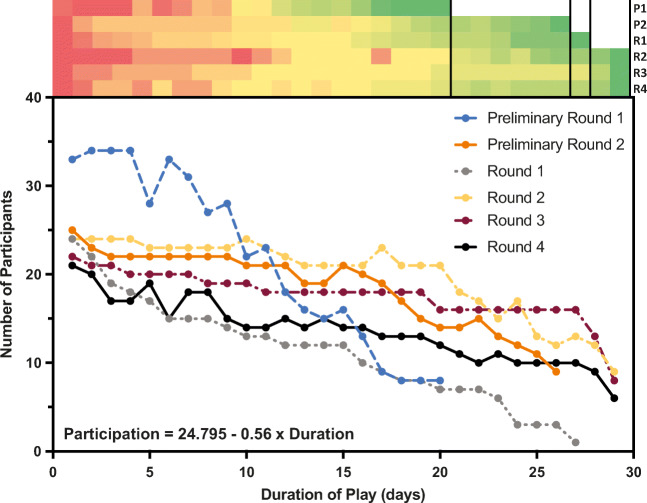

Fig. 3.

Aggregate game usage across all rounds. The number of participants answering questions plotted as a function of game duration across all rounds. The heatmap represents each round’s participation per question (scaled to each round of play; red = more participation, green = less participation) and visualizes player attrition as each round progressed. Aggregate usage is significant at p < 0.0001 based on one-way ANOVA. Changes implemented between P1 (blue) and P2 (orange) appear to have resulted in decreased player attrition throughout subsequent rounds (F = 148.292, p < 0.0001) based on linear regression analysis followed by analysis of covariance (ANCOVA)

Differences in overall student performance compared to the number of times a question was repeated in subsequent rounds (Fig. 4a) as well as the number of years spent in the research phase of the MSTP (Fig. 4c) were determined by one-way analysis of variance (ANOVA). Helpfulness measures and qualitative feedback were solicited from players by way of an anonymous online survey following the administration of preliminary round 1 (see Additional File 2), while participants were invited to submit informal feedback to game coordinators at any time via email.
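For readers unfamiliar with the test, a one-way ANOVA compares the variance between group means with the variance within groups. The sketch below computes the F statistic and its degrees of freedom using only the Python standard library; the function name and the example groups are hypothetical, and the p-value (which requires the F distribution) is deliberately left out.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of observations."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for three groups, e.g., questions seen once, twice, or three times.
f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(f_stat, df_b, df_w)
```

A large F value relative to its degrees of freedom indicates that at least one group mean differs from the others; identifying which groups differ requires a post-hoc comparison.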

Fig. 4.

Player performance in competition rounds. a Correct scores on repeated questions (n = 8 ± 4) based on the frequency of repetition (black), demonstrating significantly increased scores. Student participation (gray) as a function of competition round, failing to demonstrate a significant difference*. b The performance of participants answering questions was plotted as a function of the number of questions attempted, with a trendline drawn to represent the standardization of average score over the number of questions attempted (λ = 0.1). c Player performance (mean ± SEM) plotted by year in the graduate program (n = 5 ± 2)

Results

Participant Characteristics

Thirty-seven of the 63 invited students (58.73%) participated in Kaizen-MSTP at any level (answering at least one question), with an average participation of 23 students per competition round. Representative proportions of male (24, 64.9%) and female (13, 35.1%) students participated relative to the academic cohorts undertaking MD-PhD training (34 ± 10% female) (Table 2). Although students from all phases of the MSTP were invited to participate, our primary research question concerned only those players who were engaged in the research phase of the program.

Table 2.

Study population and participation in competition rounds. Asterisk indicates students who were not in their research years and so were not included in all analyses

| Population | Players | Performance |
|---|---|---|
| n | 37 | 63 ± 22% |
| Male | 24 (64.9%) | 63 ± 22% |
| Female | 13 (35.1%) | 66 ± 23% |
| Year in program | | |
| *MS1-2 | 5 (13.5%) | 51.3 ± 15% |
| GS1 | 6 (16.2%) | 51.3 ± 23% |
| GS2 | 5 (13.5%) | 66.9 ± 19% |
| GS3 | 7 (18.9%) | 68.4 ± 31% |
| GS4 | 4 (10.8%) | 69.1 ± 19% |
| GS5 | 5 (13.5%) | 69.2 ± 30% |
| *MS3-4 | 5 (13.5%) | 66.7 ± 12% |
| Area of research | | |
| Biomedical engineering | 2 (5.4%) | 50.0% |
| Biostatistics | 1 (2.7%) | 54.9% |
| Cancer biology | 5 (13.5%) | 70.5% |
| Cell, molecular, and developmental biology | 2 (5.4%) | 74.0% |
| Genetics, genomics, and bioinformatics | 3 (8.1%) | 62.3% |
| Immunology | 7 (18.9%) | 60.8% |
| Neuroscience | 6 (16.2%) | 69.3% |
| Pathobiology and molecular medicine | 6 (16.2%) | 69.2% |
| Undeclared | 5 (13.5%) | 67.9% |

Preliminary Rounds

Analysis of preliminary round 1 revealed an increasing level of player attrition as the round progressed (Fig. 2a & b), with significantly fewer students answering questions by weeks 3 (two-tailed t test, p < 0.0001) and 4 (p < 0.0001). However, analysis of player participation revealed a significant positive correlation between degree of participation (as number of questions answered) and overall performance (Pearson coefficient r = 0.7618, p < 0.0001).

Informal feedback from multiple players suggested that while participation in Kaizen-MSTP was not a time-consuming commitment, students had difficulty remembering to answer daily questions in light of their busy schedules. In response to this feedback, we implemented a voluntary, daily text message alert service in which players could elect to receive daily reminders. We also increased the game coordinators’ communication with participants by way of weekly emails updating players on the previous week’s changes in rankings as well as any new badges earned. Previously, new game questions had been released only on week days (Mondays through Fridays); however, in response to decreased player engagement between weeks, and with the hope of habituating player participation, we altered the game procedure such that questions were released daily for the duration of the round, including weekends.

When asked how participation in Kaizen-MSTP had affected their level of medical knowledge, 50% of survey respondents perceived that their medical knowledge had “increased” as a result of their participation, while the remaining 50% indicated that their medical knowledge had “not changed” (see Additional File 2). When asked, “What other comments do you have regarding Kaizen-MSTP as an educational tool?” open-ended responses were entirely positive, with participants describing the game as a “useful and fun tool” with “a lot of potential.” Consistent with the aims of this project, one participant highlighted that it “encourages thought processes I do not otherwise use during my normal daily activities as a graduate student.”

Competition Rounds

Following the changes implemented between preliminary rounds 1 and 2, participation levels in the competition rounds resembled those of preliminary round 2, becoming significantly more stable and exhibiting significantly less player attrition (one-way ANOVA, p < 0.01; Fig. 3). Specifically, linear regression analysis followed by analysis of covariance (ANCOVA) indicated that the changes implemented between P1 (blue) and P2 (orange) resulted in decreased player attrition across all subsequent competition rounds (F = 148.292, p < 0.0001).

In general, average scores on repeated questions improved with increasing repetitions while active participation remained steady with approximately 23 of the program’s 63 enrolled trainees remaining engaged in the majority of competition rounds. We found significant differences between average scores depending on how many times a question was repeated (F 4, 19.47; p < 0.0001), with post-hoc analysis revealing significant improvement between the round in which a question was first introduced and the first, second, and third repetitions, as well as significant improvement between the first and third repetitions (Fig. 4a).

We next asked whether overall player performance could be associated with increased participation or with the player’s year in the PhD research phase. When aggregate player performance was plotted against participation (defined as the total number of questions attempted), we found that the variability in student performance decreased markedly with increasing participation and that the minimum average score trended upward (Fig. 4b). Additionally, analysis of student performance revealed an upward, though not statistically significant, trend in performance from GS1 to GS2 (F 5, 1.749; p = 0.1509), followed by a maintenance of performance throughout the remaining years of graduate research training (Table 2, Fig. 4c).

Discussion

While dedicated research experiences provide invaluable scientific training for medical students and residents, multiple studies have suggested that these experiences come at a corresponding cost of medical knowledge and clinical skills [5, 12, 13]. Indeed, medical students’ retention of knowledge follows a pattern similar to that found in other disciplines, a phenomenon which can be understood in the context of the broader educational theory as “knowledge decay” [14]. Attempts to ameliorate this problem have shown that repetitive testing and spaced-repetition learning can be employed to combat knowledge decay in student and resident populations [15, 16], but the medical education community has yet to consider gamification and spaced-repetition testing in the context of MD-PhD students, a population for whom the interlude between medical studies and the reinstitution of clinical duties can last several years.

MD-PhD students frequently cite program transition points, especially the return to clinical clerkships after extended PhD research, as a source of anxiety [17]. Preparing for this transition is difficult, however, as students are engaged in full-time dissertation research. Therefore, the UAB MSTP has developed a continuing clinical education course during this phase of training which consists of regular patient interactions, shadowing, and case conferences.

In this study, we describe an innovative approach to the continuing clinical education of MD-PhD students during their PhD research years. Specifically, we modified an existing, web-based medical knowledge competition platform (Kaizen) in order to incorporate gamification and spaced-repetition learning into an existing continuing clinical education curriculum. We found correlative evidence suggesting that participation in Kaizen-MSTP improved maintenance, and possibly even accrual, of medical knowledge throughout the research years. Our data further suggest that gamification is an educational support mechanism that confers benefit in proportion to the degree of use, similar to the effects observed in other learners [8]. These preliminary findings, while exciting, require further investigation to define the exact nature and extent of benefit relative to students choosing not to participate.

The improvement in player attrition and daily participation following game optimization underscores the need for managers of game-like educational platforms to acquire continual feedback from participants regarding their specific needs. Although initial gains in participation are likely motivated by the novelty of any resource (as seen in preliminary round 1), maintaining player engagement requires an awareness of both the benefits and challenges facing a student-user population, and this awareness should inform game design and optimization. In the case of this study, iterative testing of the modified Kaizen-MSTP platform via preliminary rounds revealed that participants were busy individuals managing multiple responsibilities. Such challenges are likely universal to student populations, supporting the implementation of reminders for all methods of formative assessment and spaced-repetition learning.

Medical knowledge learned by students early in their training is often quickly forgotten, even in traditional medical student populations [18]; therefore, a commensurate decay of medical knowledge for MD-PhD students engaged in long-term research is not unexpected. Fortunately, spaced repetition has been shown to improve retention of clinical knowledge for medical students in randomized, controlled trials [18, 19].

Despite the relatively small sample sizes of repeated questions (n = 8 ± 4) and student population (n = 63), our findings suggest that routine engagement in game-based medical knowledge assessments may promote the maintenance of medical knowledge throughout MD-PhD research training in proportion to the degree of student participation. Furthermore, we provide preliminary support that spaced repetition may increase the retention of tested content; however, follow-up studies are necessary to determine whether overall performance on content areas is improved, or whether gains are simply based on the specific recall of question stems. The long-term effects of participation in Kaizen-MSTP or similar knowledge games remain untested and are the focus of ongoing follow-up studies, which aim to compare participation with clinical outcomes such as clerkship grades, USMLE Step 2 CK scores, match rates, and subjective assessments of anxiety and confidence upon reintroduction to the clinical setting.

Among the observations made relative to each academic cohort was a trend toward improved performance with increasing time spent in graduate training. In contrast to the classical deterioration of medical knowledge throughout non-medical research training, students who were more advanced in their PhD research years appeared to perform better than their underclassmen (Fig. 4c). Although many potential confounders exist to explain this pattern, it may reflect increased use or effort on the part of students in anticipation of their return to clinical training. However, further studies using cross-institutional data are needed to characterize this observation.

Finally, the positive relationship identified between the number of questions a player attempted and that player’s overall performance (expressed as a percentage of correct answers) suggests that participation alone may confer some value by familiarizing students with the lines of inquiry and reasoning skills used in clinically oriented questions (Figs. 2c and 4b).

Although we believe that the current study furthers our understanding of continuing clinical education, several limitations should be considered when interpreting the results. The major limitations of our study include the small study population and its provenance from a single institution. It is possible that a larger sample size and/or a longer period of data collection could alter our results. As previously addressed, players were not monitored to limit their use of external resources when answering the questions. While the distribution of scores suggests that consultation of outside resources was not widespread in the competition rounds, it nonetheless remains a possibility for any expanded implementation of a game using these parameters. Lastly, we provide some demographic and usage data from participants of this study, but the lack of ethical approval to obtain demographic and academic characteristics of non-participants precluded our analysis of the clinical readiness of participants relative to non-participants. Future approval would enable us to determine the differential effects of Kaizen-MSTP utilization relative to those who opted out.

Conclusions

We report a study in which a modified version of the Kaizen learning platform was used to “gamify” the maintenance of clinical education and optimize MD-PhD student participation during their PhD research years. Despite the incipient nature of this program, encouraging trends and positive feedback from our pilot study suggest that gamification and spaced repetition learning may be useful ways to encourage medical study, and may promote the retention of clinical knowledge. It is our goal that Kaizen-MSTP will continue as an engaging means of facilitating regular review of clinical medicine in a format that can be easily incorporated into the daily schedule of MD-PhD students.

Abbreviations

- ANCOVA: Analysis of Covariance
- ANOVA: Analysis of Variance
- CCE: Continuing Clinical Education
- MSTP: Medical Scientist Training Program
- UAB: University of Alabama at Birmingham
- USMLE: United States Medical Licensing Examination

Funding Information

This project was supported by a Teaching Innovation & Development Grant from the UAB Center for Teaching and Learning and the UAB Medical Scientist Training Program T32GM008361.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no competing interests.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the University of Alabama at Birmingham and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Footnotes

Mark E. Pepin and William M. Webb contributed equally.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Alamri Y. The combined medical/PhD degree: a global survey of physician-scientist training programmes. Clin Med (Lond). 2016;16(3):215–18. doi: 10.7861/clinmedicine.16-3-215.

- 2. Amaral F, Almeida Troncon EL. Retention of knowledge and clinical skills by medical students: a prospective, longitudinal, one-year study using basic pediatric cardiology as a model. Open Med Educ J. 2013;6:48–54. doi: 10.2174/1876519X01306010048.

- 3. Bills JL, Davidson M, Dermody TS. Effectiveness of a clinical intervention for MD/PhD students re-entering medical school. Teach Learn Med. 2013;25(1):77–83. doi: 10.1080/10401334.2012.741539.

- 4. Chrzanowski S. Transitions within MD-PhD programs: reassurance for students. Med Sci Educ. 2017;27:835–7. doi: 10.1007/s40670-017-0472-7.

- 5. Custers EJ. Long-term retention of basic science knowledge: a review study. Adv Health Sci Educ Theory Pract. 2010;15(1):109–28. doi: 10.1007/s10459-008-9101-y.

- 6. D’Angelo AD, D’Angelo JD, Rogers DA, Pugh CM. Faculty perceptions of resident skills decay during dedicated research fellowships. Am J Surg. 2017.

- 7. Dugdale A, Alexander H. Knowledge growth in medical education. Acad Med. 2001;76(7):669–70. doi: 10.1097/00001888-200107000-00002.

- 8. Goldberg C, Insel PA. Preparing MD-PhD students for clinical rotations: navigating the interface between PhD and MD training. Acad Med. 2013;88(6):745–7. doi: 10.1097/ACM.0b013e31828ffeeb.

- 9. Greb AE, Brennan S, McParlane L, Page R, Bridge PD. Retention of medical genetics knowledge and skills by medical students. Genet Med. 2009;11(5):365–70. doi: 10.1097/GIM.0b013e31819c6b2d.

- 10. Harding CV, Akabas MH, Andersen OS. History and outcomes of 50 years of Physician-Scientist Training in Medical Scientist Training Programs. Acad Med. 2017;92(10):1390–8. doi: 10.1097/ACM.0000000000001779.

- 11. Kerfoot BP, DeWolf WC, Masser BA, Church PA, Federman DD. Spaced education improves the retention of clinical knowledge by medical students: a randomised controlled trial. Med Educ. 2007;41(1):23–31. doi: 10.1111/j.1365-2929.2006.02644.x.

- 12. Kerfoot BP, Fu Y, Baker H, Connelly D, Ritchey ML, Genega EM. Online spaced education generates transfer and improves long-term retention of diagnostic skills: a randomized controlled trial. J Am Coll Surg. 2010;211(3):331–337 e331. doi: 10.1016/j.jamcollsurg.2010.04.023.

- 13. Matos J, Petri CR, Mukamal KJ, Vanka A. Spaced education in medical residents: an electronic intervention to improve competency and retention of medical knowledge. PLoS One. 2017;12(7):e0181418. doi: 10.1371/journal.pone.0181418.

- 14. McCoy L, Lewis JH, Dalton D. Gamification and multimedia for medical education: a landscape review. J Am Osteopath Assoc. 2016;116(1):22–34. doi: 10.7556/jaoa.2016.003.

- 15. Nevin CR, Westfall AO, Rodriguez JM, Dempsey DM, Cherrington A, Roy B, Patel M, Willig JH. Gamification as a tool for enhancing graduate medical education. Postgrad Med J. 2014;90(1070):685–93. doi: 10.1136/postgradmedj-2013-132486.

- 16. Roche CC, Wingo NP, Willig JH. Kaizen: An innovative team learning experience for first-semester nursing students. J Nurs Educ. 2017;56(2):124. doi: 10.3928/01484834-20170123-11.

- 17. Schmidmaier R, Ebersbach R, Schiller M, Hege I, Holzer M, Fischer MR. Using electronic flashcards to promote learning in medical students: retesting versus restudying. Med Educ. 2011;45(11):1101–10. doi: 10.1111/j.1365-2923.2011.04043.x.

- 18. Swartz TH, Lin JJ. A clinical refresher course for medical scientist trainees. Med Teach. 2014;36(6):475–9. doi: 10.3109/0142159X.2014.886767.

- 19. Williams CS, Iness AN, Baron RM, Ajijola OA, Hu PJ, Vyas JM, Baiocchi R, Adami AJ, Lever JM, Klein PS, Demer L, Madaio M, Geraci M, Brass LF, Blanchard M, Salata R, Zaidi M. Training the physician-scientist: views from program directors and aspiring young investigators. JCI Insight. 2018;3(23):e125651. doi: 10.1172/jci.insight.125651.
