Abstract

We approach the C. elegans connectome as an information processing network that receives input from about 90 sensory neurons, processes that information through a highly recurrent network of about 80 interneurons, and produces a coordinated output from about 120 motor neurons that control the nematode’s muscles. We focus on the feedforward flow of information from sensory neurons to motor neurons, and apply a recently developed network analysis framework referred to as the “hourglass effect”. The analysis reveals that this feedforward flow traverses a small core (“hourglass waist”) that consists of 10-15 interneurons. These are mostly the same interneurons that were previously shown (using a different analytical approach) to constitute the “rich-club” of the C. elegans connectome. This result is robust to the methodology that separates the feedforward from the feedback flow of information. The set of core interneurons remains mostly the same when we consider only chemical synapses or the combination of chemical synapses and gap junctions. The hourglass organization of the connectome suggests that C. elegans has some similarities with encoder-decoder artificial neural networks in which the input is first compressed and integrated in a low-dimensional latent space that encodes the given data in a more efficient manner, followed by a decoding network through which intermediate-level sub-functions are combined in different ways to compute the correlated outputs of the network. The core neurons at the hourglass waist represent the information bottleneck of the system, balancing the representation accuracy and compactness (complexity) of the given sensory information.

Author summary

The C. elegans nematode is the only species for which the complete wiring diagram (“connectome”) of its neural system has been mapped. The connectome provides architectural constraints that limit the scope of possible functions of a neural system. In this work, we identify one such architectural constraint: the C. elegans connectome includes a small set (10-15) of neurons that compress and integrate the information provided by the much larger set of sensory neurons. These intermediate-level neurons encode a few sub-functions that are combined and re-used in different ways to activate the circuits of motor neurons, which drive all higher-level complex functions of the organism such as feeding or locomotion. We refer to this encoding-decoding structure as “hourglass architecture” and identify the core neurons at the “waist” of the hourglass. We also discuss the similarities between this property of the C. elegans connectome and artificial neural networks. The hourglass architecture opens a new way to think about, and experiment with, intermediate-level neurons between input and output neural circuits.

Introduction

Natural, technological and information-processing complex systems are often hierarchically modular [1, 2, 3, 4]. A modular system consists of smaller sub-systems (modules) that, at least in principle, can function independently of whether or how they are connected to other modules: each module receives inputs from the environment or from other modules to perform a certain function [5, 6, 7]. Modular systems are often also hierarchical, meaning that simpler modules are embedded in, or reused by, modules of higher complexity [8, 9, 10, 11]. It has been shown that both modularity and hierarchy can emerge naturally as long as there is an underlying cost for the connections between different system units [12, 13].

In the technological world, modularity and hierarchy are often viewed as essential principles that provide benefits in terms of design effort (compared to “flat” or “monolithic” designs in which the entire system is a single module), development cost (design a module once, reuse it many times), and agility (upgrade, modify or replace modules without affecting the entire system) [14, 15, 16]. In the natural world, the benefits of modularity and hierarchy are often viewed in terms of evolvability (the ability to adapt and develop novel features can be accomplished with minor modifications in how existing modules are interconnected) [17, 18, 19] and robustness (the ability to maintain a certain function even when there are internal or external perturbations can be accomplished using existing modules in different ways) [20, 21, 22].

It has been observed across several disciplines that hierarchically modular systems are often structured in a way that resembles a bow-tie or hourglass (depending on whether that structure is viewed horizontally or vertically) [23, 24]. This structure enables the system to generate many outputs from many inputs through a relatively small number of intermediate modules, referred to as the “knot” of the bow-tie or the “waist” of the hourglass. The “hourglass effect” has been observed in systems of embryogenesis [25, 26], metabolism [27, 28], immunology [29, 30], signaling networks [31], vision and cognition [32, 33], deep neural networks [34], computer networking [35], manufacturing [36], as well as in the context of general core-periphery complex networks [37, 38]. The few intermediate modules in the hourglass waist are critical for the operation of the entire system, and so they are also more conserved during the evolution of the system compared to modules that are closer to inputs or outputs [39, 40, 35]. Note that the two terms, bow-tie and hourglass, have not always been interchangeable in the network science literature. In particular, the term bow-tie has been applied even to networks for which the knot includes a large fraction of the network’s nodes [41, 42].

In this paper, we apply the hourglass analysis framework of [23] to the C. elegans connectome [43]. The C. elegans connectome can be thought of as an information processing network that transforms stimuli received from the environment, through sensory neurons, into coordinated bodily activities (such as locomotion) controlled by motor neurons [43]. Between the sensory and motor neurons, there is a highly recurrent network of interneurons that gradually transforms the input information to output motor activity. An important challenge in applying the analysis framework of [23] to C. elegans is that the former assumes that the network from a given set of input nodes (sources) to a given set of output nodes (targets) is a Directed Acyclic Graph (DAG). On the contrary, the C. elegans connectome includes many nested feedback loops between all three types of neurons. For this reason, we extend the methods of [23] to networks that may include cycles as long as we are given a set of sources and a set of targets. The key idea is to identify the set of feedforward paths from each source towards targets, and to apply the hourglass analysis framework on the union of such paths, across all sources.

Our main result is that the C. elegans connectome exhibits the hourglass effect. This result is robust to the “routing methodology” that separates the feedforward from the feedback flow of information. Further, we observe the hourglass architecture when we consider just chemical synapses, or the combination of the latter with gap junctions. On the contrary, appropriately randomized networks do not exhibit the hourglass property. We also identify the neurons at the “waist” of the hourglass. Interestingly, they are mostly the same set of interneurons that were previously shown, using a different analytical methodology, to constitute the “rich-club” of the C. elegans connectome [44]. We explain that these two network architectures, hourglass and rich-club, are not equivalent—and in fact the hourglass property of the C. elegans connectome is maintained even if we rewire the connections between core neurons so that they do not form a rich-club. The fact that the core interneurons also form a rich-club suggests that they form an information processing bottleneck that integrates the compressed information from different sensory modalities, before driving any higher-level neural circuits.

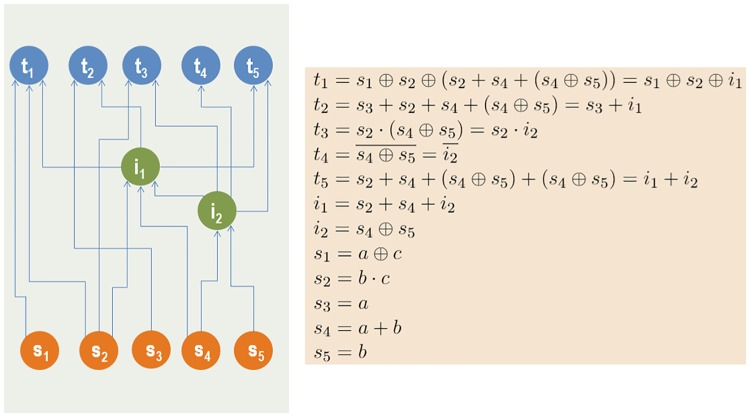

We explain the benefits of the hourglass architecture, in the context of neural information processing systems, using an encoder-decoder model that resembles recent architectures in artificial neural networks [34, 45]. The encoding component compresses the redundant stimuli provided by the sensory neurons into a low-dimensional latent feature space (represented by the core neurons at the hourglass waist) that encodes the source information in a more efficient manner. Then, the decoding component of the network combines those latent features, which represent intermediate-level sub-functions, in different ways to drive each output through the motor neurons. The toy-example of Fig 1 illustrates this idea using a Boolean circuit with five binary sources and five output functions.

Fig 1. A hypothetical Boolean system with five sources and five targets.

The sources are represented by orange nodes while the targets by blue nodes. Each target is a logic function of the sources. The sources are correlated, as shown by their logical expressions. A direct source-to-target computation would require 18 Boolean operations. Instead, we can compute the targets with only 9 operations if we first compute the two intermediate green nodes shown (3 operations) and then reuse those nodes to compute the targets (6 operations). This cost reduction is possible because there are correlations between the target functions. The two intermediate nodes, which represent the hourglass waist in this example, compress the information provided by the sources, computing sub-functions that are re-used at least twice in the targets. In this example the encoding part of the network is the set of connections between sources and intermediate nodes, while the decoding part is the set of connections between intermediate nodes and targets. In general, the encoder and decoder components can include additional nodes, creating a deeper hourglass architecture.

Methods

Connectome

The dataset we analyze describes the neural network of the hermaphrodite C. elegans, as reported in [43]. This connectome is a directed network between 279 neurons (the 282 non-pharyngeal neurons excluding VC6 and CANL/R, which are missing connectivity data). Neurons can be connected with two types of connections: chemical synapses and gap junctions (also called electrical synapses). The former are typically slower but stronger connections, and they transfer information in only one direction. The latter can be thought of as bi-directional connections.

The synaptic network (i.e., the network formed by only chemical synapses) consists of 2194 neural connections, created by 6393 chemical synapses. The weight of a connection is defined as the number of chemical synapses between the corresponding pair of neurons. The in-strength or out-strength of a neuron is defined as the sum of connection weights entering or leaving that neuron, respectively.

The complete network includes both chemical synapses and gap junctions. There are 514 pairs of neurons connected through gap junctions, creating the same number of bi-directional connections between those neurons. Unless mentioned otherwise, we analyze the synaptic network. In the Section “Including Gap Junctions: the Complete Network”, we extend the analysis to consider the complete network, asking whether there are any major differences when we also consider gap junctions.

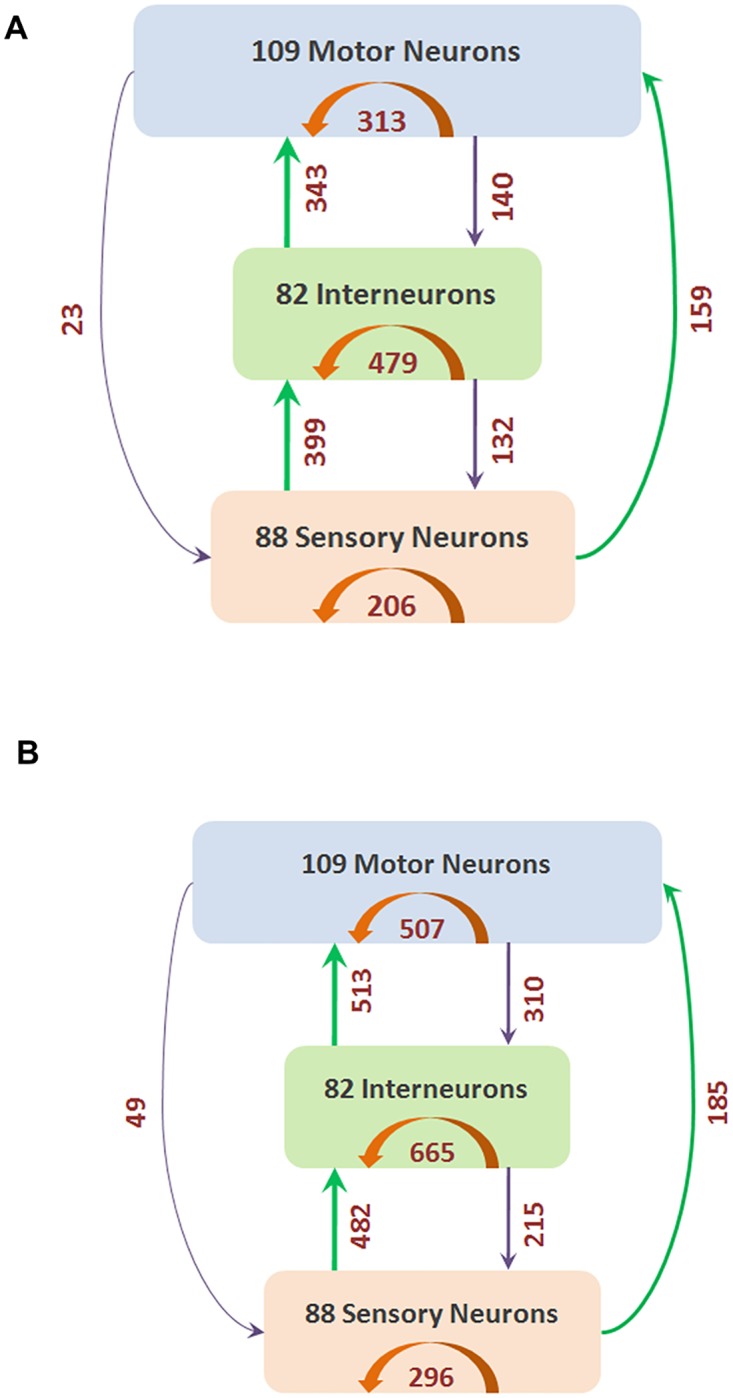

The C. elegans neurons can be classified as sensory (S), inter (I) and motor (M) neurons, based on their structure and function [46]. Sensory neurons transfer information from the external environment to the central nervous system (CNS). Motor neurons transfer information from the CNS to effector organs (e.g. glands or muscles). Interneurons process information within the CNS. The C. elegans connectome has 88 sensory neurons, 87 interneurons and 119 motor neurons. Some of these neurons however have a dual role: ten behave as S and M, two as S and I, and three as M and I. In our analysis, we consider the S-M and S-I dual-role neurons as sensory, and the M-I neurons as motor. Consequently, the final network consists of 88 sensory neurons, 82 interneurons, and 109 motor neurons.

We can think of C. elegans as an information processing system in which the feedforward flow of information, from sensory to motor neurons, transfers sensory cues from the environment to the CNS, processes those signals to extract actionable information, which is then used to drive the behavior/motion of the organism. This feedforward flow however is regulated by multiple feedback loops that transfer information in the opposite direction, as well as lateral connections between neurons of the same type.

The connections that we refer to as feedforward (FF) are those from S to I, I to M, and S to M neurons. In the opposite direction (i.e., from I to S, M to I, and M to S neurons) the connections are referred to as feedback (FB). Connections between neurons of the same type (i.e., S to S, I to I, and M to M neurons) are referred to as lateral (LT). In the synaptic network, there are 901 FF connections, 998 LT connections, and 295 FB connections. Fig 2 shows the breakdown of these connection types in the synaptic and complete networks.

Fig 2. Neurons separated into three classes (S, I and M) based on structure and function.

The number of connections between these three classes (and within each class) are shown with arrows (green for FF, orange for LT and purple for FB). (A) shows the synaptic network containing only chemical synapses, and (B) shows the complete network containing both chemical synapses and gap junctions.

The FB connection weights are often lower than FF and LT weights (see S1A Fig). Also, when considering neuron pairs that are reciprocally connected with both FF and FB connections, it is more likely that the FF connection is stronger than the corresponding FB connection (see S1B Fig). These observations suggest that the distinction between FF and FB connections has some neurophysiological significance.

If we focus on the strongest 5% of connections, relative to all chemical synapses, this set is dominated by feedforward S-to-I and I-to-M connections, as well as by lateral connections between I neurons and M neurons (see S1 Table). None of the top-5% connections is of the feedback type. This observation suggests that feedback connections are weaker; one reason may be that they are involved mostly with the control of feedforward circuits, acting as modulators rather than drivers.
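The connection taxonomy and the strength definitions above can be made concrete with a minimal sketch. The neuron names, class labels and synapse counts below are hypothetical toy data, not values from the connectome dataset.

```python
from collections import Counter

# Hypothetical toy data: neuron -> class label ("S", "I" or "M").
neuron_class = {"s1": "S", "i1": "I", "i2": "I", "m1": "M"}

# Hypothetical weighted connections: (pre, post) -> number of chemical synapses.
connections = {
    ("s1", "i1"): 5,
    ("i1", "i2"): 3,
    ("i2", "m1"): 7,
    ("m1", "i1"): 1,
    ("s1", "m1"): 2,
}

# Class pairs that count as feedforward, following the definition in the text.
FEEDFORWARD = {("S", "I"), ("I", "M"), ("S", "M")}


def connection_type(pre, post):
    """Label a connection as feedforward (FF), feedback (FB) or lateral (LT)."""
    a, b = neuron_class[pre], neuron_class[post]
    if a == b:
        return "LT"
    return "FF" if (a, b) in FEEDFORWARD else "FB"


type_counts = Counter(connection_type(u, v) for (u, v) in connections)

# In-strength and out-strength: sum of connection weights entering or leaving a neuron.
in_strength, out_strength = Counter(), Counter()
for (u, v), w in connections.items():
    out_strength[u] += w
    in_strength[v] += w
```

On real data, `neuron_class` would also have to encode the dual-role convention described earlier (S-M and S-I neurons treated as sensory, M-I neurons as motor).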

Feedforward paths from sensory to motor neurons

The “routing problem” in a communication network refers to the selection of an efficient path, or multiple paths, from each source node to each target. In neural networks, there is no established “routing algorithm” that can accurately describe or model how information propagates from a sensory neuron to a motor neuron. Whether a neuron will fire or not depends on how many of its pre-synaptic neurons fire, the timing of those events, the physical size and location of the synapses in the dendritic tree, and several other factors. There are some first principles, however, that we can rely on to identify plausible routing schemes [47, 48]. These schemes should be viewed only as phenomenological models—we do not claim that neurons actually choose activation paths based on the following algorithms.

First, neurons cannot form routes based on information about the complete network or through coordination with all neurons (as the routing algorithms used by the Internet or other technological systems do). Instead, whether a neuron fires or not should be a function of only locally available information. So, we cannot expect that neural circuits use optimal routes that minimize the path length (“shortest path routing”) or other path-level cost functions [49].

Second, evolution has most likely selected routing schemes that result in efficient (even though not necessarily optimal) neural communication. Consequently, we can reject routing schemes that exploit all possible paths between two neurons as many of those paths would be inefficient.

Third, for robustness and resilience reasons, it is likely that multiple paths are used to transfer information from each sensory neuron to a motor neuron—schemes that only select a single path would be too fragile.

Fourth, given the low firing reliability of neurons, it is unlikely that a sensory neuron can communicate effectively with a motor neuron through a long chain of intermediate neurons. There should be a limit on the length of any plausible neural path [50].

Putting the previous four principles together, we are led to the following hypothesis: a sensory neuron S communicates with a motor neuron T through multiple paths that may be suboptimal but not much longer than the shortest path length from S to T.

Given this broad hypothesis, we identify several plausible routing schemes—and then examine whether our results are robust to the selection of a specific routing scheme.

To help choose reasonable parameter values for the various routing schemes we consider, we first examine the length and number of shortest paths from each sensory neuron S to each motor neuron M. S2A Fig shows the distribution of the length of these paths, measured in “hops” (i.e., connections between neurons). Almost all shortest paths from S to M neurons are 2 to 4 hops long. So, if the shortest path from a sensory to a motor neuron is, say, 3 hops, the second and fourth principles suggest that we may also consider slightly longer paths, say 4 or 5 hops long.

S2B Fig shows the likelihood that an (S,M) pair is connected through x shortest paths. Note that only 4% of (S,M) pairs are not connected by any path, about 32% of (S,M) pairs are connected through only one shortest path, while the rest are connected with multiple shortest paths.

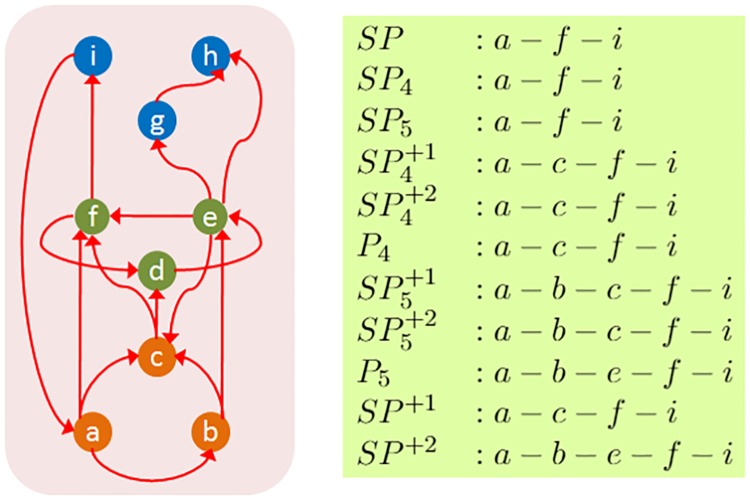

The various routing schemes we consider in the rest of the paper are (see Fig 3):

Fig 3. A representative path for each routing scheme when the source is a and the target is i.

The orange nodes represent sensory neurons, the green nodes interneurons, and the blue nodes motor neurons.

“SP”: As a reference point, SP refers to the selection of only shortest paths from a sensory neuron s to a motor neuron t.

“SP4”: The subset of SP including paths that are at most 4 hops.

“SP5”: The subset of SP including paths that are at most 5 hops.

“SP+1”: The paths in SP together with all paths that are one hop longer than the shortest path from s to t.

“SP+2”: The paths in SP together with all paths that are one or two hops longer than the shortest path from s to t.

“SP4+1”: The subset of SP+1 including paths that are at most 4 hops.

“SP5+1”: The subset of SP+1 including paths that are at most 5 hops.

“SP4+2”: The subset of SP+2 including paths that are at most 4 hops.

“SP5+2”: The subset of SP+2 including paths that are at most 5 hops.

“P4”: All paths from s to t that are at most 4 hops long.

“P5”: All paths from s to t that are at most 5 hops long.

The last two routing schemes (P4 and P5) are not variations of shortest path routing; they are based on the notion of diffusion-based routing. In the latter, information propagates from a source towards a sink selecting among all possible connections either randomly (e.g., random-walk based models) [51] or based on a threshold function (e.g., a neuron fires if at least a certain fraction of its pre-synaptic neurons fire) [52].
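The routing schemes listed above can be sketched with a single path-enumeration routine. This is a minimal illustration, assuming that paths are simple (no neuron is visited twice) and that the network is given as an adjacency dictionary; the function names, the `extra` and `cap` parameters, and the toy network are hypothetical and not taken from the original analysis code.

```python
from collections import deque


def shortest_len(adj, s, t):
    """Hop count of a shortest path from s to t, or None if t is unreachable."""
    dist = {s: 0}
    queue = deque([s])
    while queue:
        u = queue.popleft()
        if u == t:
            return dist[u]
        for v in adj.get(u, ()):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return None


def simple_paths(adj, s, t, max_hops):
    """All simple paths from s to t that use at most max_hops connections."""
    paths = []

    def extend(path):
        u = path[-1]
        if u == t:
            paths.append(tuple(path))
            return
        if len(path) - 1 == max_hops:
            return
        for v in adj.get(u, ()):
            if v not in path:
                path.append(v)
                extend(path)
                path.pop()

    extend([s])
    return paths


def route(adj, s, t, extra=None, cap=None):
    """extra=k selects SP+k (k=0 is SP); cap limits the hop count; extra=None with cap=h gives Ph."""
    if extra is None and cap is None:
        raise ValueError("give extra (SP+k), cap (hop limit), or both")
    d = shortest_len(adj, s, t)
    if d is None:
        return []
    limit = cap if extra is None else d + extra
    if cap is not None:
        limit = min(limit, cap)
    return simple_paths(adj, s, t, limit)


# Hypothetical toy network: a is the source and i is the target.
adj = {"a": ["b", "c"], "b": ["d", "c"], "c": ["d"], "d": ["i"]}
sp = route(adj, "a", "i", extra=0)          # SP: shortest paths only
sp_plus1 = route(adj, "a", "i", extra=1)    # SP+1: up to one extra hop
sp4 = route(adj, "a", "i", extra=0, cap=4)  # SP4: shortest paths of at most 4 hops
p4 = route(adj, "a", "i", cap=4)            # P4: all paths of at most 4 hops
```

In this toy network the two shortest paths have 3 hops, and SP+1 adds the single 4-hop path through b, c and d. Exhaustive enumeration grows quickly with the hop limit, which is consistent with the small limits (4 or 5 hops) used in the schemes above.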

Path centrality metric and τ-core selection

After utilizing one of the previous routing schemes to compute all paths from a sensory neuron to a motor neuron, we analyze these “source-target” paths based on the hourglass framework developed in [23]. The objective of this analysis is to examine whether there is a small set of nodes that almost all source-target paths traverse. In other words, the hourglass analysis examines whether there is a small set of neurons that forms a bottleneck in the flow of information from sensory neurons towards motor neurons.

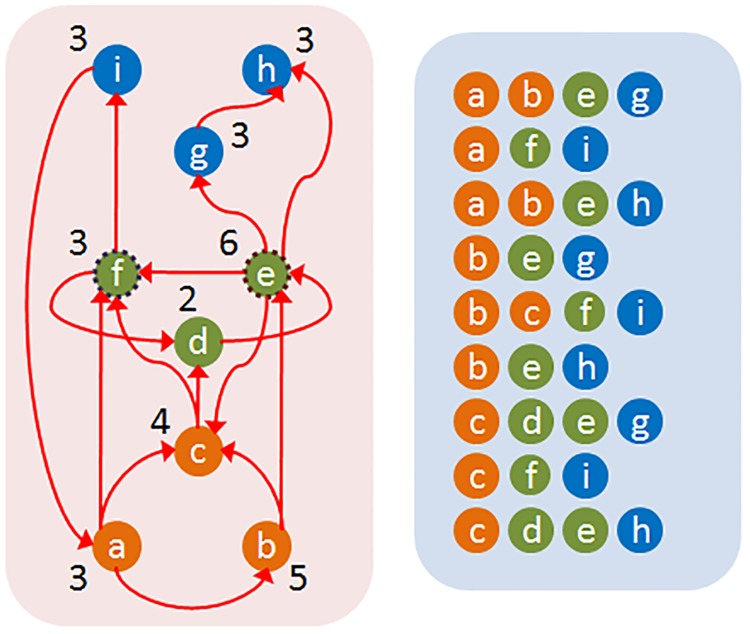

The path centrality P(v) of a node v is defined as the number of source-target paths that traverse v. This metric has also been referred to as the stress of a node [53]. Fig 4 illustrates the path centrality of each node in a small network. Just for this example, the paths have been computed based on the shortest path (SP) routing algorithm; any other routing scheme could have been used instead.

Fig 4. The path centrality of each node (shown at the left) based on the set of shortest paths SP (shown at the right).

For τ = 90%, a possible core is the two neurons {e, f}.

The path centrality metric is more general than betweenness or closeness centrality that are only applicable to shortest paths. Katz centrality does not distinguish between terminal and intermediate nodes and it penalizes longer paths. Metrics such as degree, strength, PageRank or eigenvector centrality are heavily dependent on the local connectivity of nodes rather than on the paths that traverse each node.

Given a set of source-target paths, the next step of the analysis is to compute the τ-Core, i.e., the smallest subset of nodes that can collectively cover a fraction τ of the given set of paths. The fraction τ is referred to as the path coverage threshold and it is meant to ignore a small fraction of paths that may be incorrect or invalid. Computing the τ-Core is an NP-Complete problem [23], and so we solve it with the following greedy heuristic (see [23] for an approximation bound):

- Initially, the core set is empty.

- In each iteration:

  - Compute the path centrality of all remaining nodes.

  - Include the node with maximum path centrality in the core set and remove all paths that traverse this node from the given set of paths.

- The algorithm terminates when we have covered at least a fraction τ of the given set of paths.
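The greedy heuristic can be sketched in a few lines. This is a minimal illustration, assuming each path is a tuple of node names; the function name, the alphabetical tie-breaking rule, and the toy path set are hypothetical choices made for this example.

```python
from collections import Counter


def greedy_core(paths, tau):
    """Greedy approximation of the tau-core for a list of paths (tuples of nodes)."""
    remaining = list(paths)
    needed = tau * len(remaining)  # number of paths that must be covered
    covered = 0
    core = []
    while remaining and covered < needed:
        # Path centrality over the paths that are not covered yet.
        centrality = Counter(v for p in remaining for v in set(p))
        # Ties are broken alphabetically (an arbitrary, illustrative choice).
        best = max(sorted(centrality), key=centrality.get)
        core.append(best)
        covered += centrality[best]
        remaining = [p for p in remaining if best not in p]
    return core


# Hypothetical toy path set: sources a, b, c; intermediates e, f; targets g, h, i.
paths = [
    ("a", "e", "g"), ("a", "e", "h"), ("b", "e", "g"), ("b", "e", "h"),
    ("b", "f", "i"), ("c", "f", "h"), ("c", "f", "i"),
]
core_90 = greedy_core(paths, 0.9)
core_50 = greedy_core(paths, 0.5)
```

Here node e lies on four of the seven paths and f on the other three, so the 90% core is {e, f} while a 50% threshold is already met by e alone.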

Fig 4 illustrates the core of a small network based on the shortest path routing mechanism, for τ = 90%.

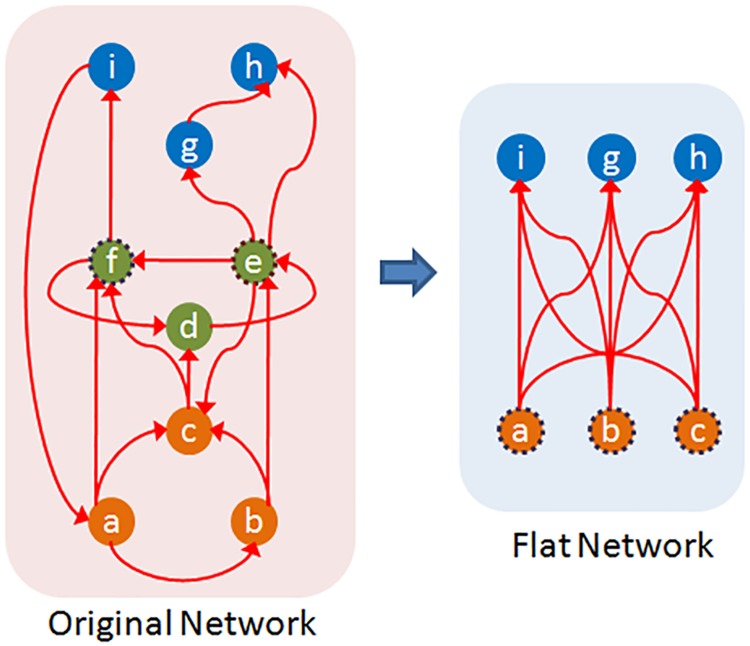

Hourglass score

Informally, the hourglass property of a network can be defined as having a small core, even when the path coverage threshold τ is close to one. To make the previous definition more precise, we can compare the core size C(τ) of the given network G with the core size of a derived network that maintains the same set of source-target dependencies as G but that is not an hourglass by construction.

To do so, we create a flat dependency network Gf from G as follows:

Gf has the same set of source and target nodes as G but it does not have any intermediate nodes.

For every ST-path from a source s to a target t in G, we add a direct connection from s to t in Gf. If there are w connections from s to t in Gf, they can be replaced with a single connection of weight w.

Note that Gf preserves the source-target dependencies of G: each target in Gf is constructed based on the same set of “source ingredients” as in G. Additionally, the number of ST-paths in the original dependency network is equal to the number of paths in the weighted flat network (a connection of weight w counts as w paths). However, the paths in Gf are direct, without forming any intermediate modules that could be reused across different targets. So, by construction, the flat network Gf cannot have the hourglass property.

Suppose that Cf(τ) represents the core size of the flat network Gf. The core of Gf can include a combination of sources and targets, and it cannot be larger than either the set of sources or the set of targets. Additionally, the core of the flat network is larger than or equal to the core of the original network (because the core of the flat network also covers at least a fraction τ of the ST-paths of the original network, whereas the core of the original network may be smaller because it can also include intermediate nodes together with sources or targets). So,

$$C(\tau) \le C_f(\tau) \le \min\{|S|, |T|\} \tag{1}$$

To quantify the extent at which G exhibits the hourglass effect, we define the Hourglass Score, or H-score, as follows:

$$H(\tau) = 1 - \frac{C(\tau)}{C_f(\tau)} \tag{2}$$

Clearly, 0 ≤ H(τ) < 1. The H-score of G is approximately one if the core size of the original network is negligible compared to the core size of the corresponding flat network. Fig 5 illustrates the definition of this metric.

Fig 5.

When the path coverage threshold is τ = 90%, a core for the original network (left) is the set {e, f}. The weight of a connection in the flat network (right) represents the number of ST-paths between the corresponding source-target pair in the original network. All connections of this flat network have unit weight. The core of the flat network for the same τ consists of three nodes ({a, b, c} or {i, g, h}). The H-score of the original network is 1 − 2/3 ≈ 0.33.

An ideal hourglass-like network would have a single intermediate node that is traversed by every single ST-path (i.e., C(1) = 1), and a large number of sources and targets none of which originates or terminates, respectively, a large fraction of ST-paths (i.e., a large value of Cf(1)). The H-score of this network would be approximately equal to one.
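The H-score computation can be sketched as follows. This is a minimal illustration, assuming a non-empty list of paths given as tuples, a greedy core computation with alphabetical tie-breaking, and the H-score taken as one minus the ratio of the original core size to the flat core size; the function names and the toy path set are hypothetical and do not reproduce the network of Fig 5.

```python
from collections import Counter


def core_size(paths, tau):
    """Size of the greedy tau-core for a list of paths (tuples of nodes)."""
    remaining = list(paths)
    needed = tau * len(remaining)
    covered, size = 0, 0
    while remaining and covered < needed:
        centrality = Counter(v for p in remaining for v in set(p))
        best = max(sorted(centrality), key=centrality.get)  # alphabetical tie-break
        covered += centrality[best]
        remaining = [p for p in remaining if best not in p]
        size += 1
    return size


def h_score(paths, tau):
    """H-score: one minus the ratio of the original core size to the flat core size."""
    # Flat network: every ST-path becomes a direct source-to-target connection.
    # Keeping one entry per path is equivalent to using connection weights.
    flat = [(p[0], p[-1]) for p in paths]
    return 1 - core_size(paths, tau) / core_size(flat, tau)


# Hypothetical toy path set: sources a, b, c; intermediates e, f; targets g, h, i.
paths = [
    ("a", "e", "g"), ("a", "e", "h"), ("b", "e", "g"), ("b", "e", "h"),
    ("b", "f", "i"), ("c", "f", "h"), ("c", "f", "i"),
]
score = h_score(paths, 0.9)
```

For this toy set the original core has two nodes and the flat core has three, so the score is 1 − 2/3. A single waist node shared by all nine paths between three sources and three targets would instead give 1 − 1/3.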

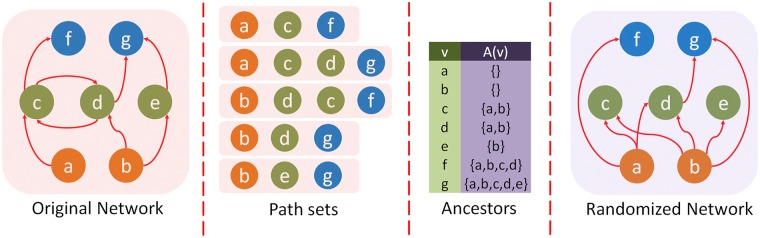

Randomization method

We examine the statistical significance of the observed hourglass score in a given network G using an ensemble of randomized networks {Gr}. The latter are constructed so that they preserve some key properties of G: the number of nodes and connections, the in-degree of each node, and the partial ordering between nodes (explained next). The randomization reassigns connections between pairs of nodes and changes the out-degree of nodes, as described below.

Suppose we are given G and a set of paths P from sources to targets. If there is a path in which node v appears after node u and there is no path in which u appears after v, we say that u is an ancestor of v and write u ∈ A(v). For a pair of nodes (u, v), we can have one of the following cases: (1) u is an ancestor of v, (2) v is an ancestor of u, (3) both u and v depend on each other, and (4) u and v do not depend on each other. We aim to preserve the partial ordering of nodes, as follows:

if u is not an ancestor of v in G, then it cannot be that u becomes an ancestor of v in a randomized network,

the set of ancestors of v in a randomized network is a subset of the set of ancestors A(v) in G.

The construction of randomized networks proceeds as follows: for each node v in the original network, we first remove all incoming connections. We then randomly pick in-degree(v) distinct nodes from A(v) and add connections from them to v. If in-degree(v) > |A(v)|, we connect every node in A(v) to v and then add in-degree(v) − |A(v)| additional connections (“multi-connections”) from randomly selected nodes in A(v) to v. The randomization mechanism is illustrated in Fig 6. It should be mentioned that there are several other randomization methods, preserving different network features [54]. None of them, however, preserves the partial ordering between nodes, which is an essential feature of a network in which a set of input-output dependency paths captures how information flows from sources to targets.

Fig 6. For the network and source-target paths given in the left two figures, we first compute the ancestors A(v) of each node v (shown in the third figure from left).

In the randomized network (shown at the right), we preserve the in-degree of each node v and randomly select incoming connections from the set A(v).
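The randomization procedure can be sketched as follows. This is a minimal illustration, assuming connections are given as a list of (pre, post) pairs and paths as tuples of nodes; the function names and the toy network are hypothetical. The text does not say what happens when a node has incoming connections but no eligible ancestor, so the sketch keeps that node's original inputs, which is an assumption.

```python
import random
from collections import Counter


def ancestors(paths):
    """A(v): nodes that precede v in some path and never follow v in any path."""
    before = set()  # (u, v) means u appears before v in at least one path
    for p in paths:
        for i, u in enumerate(p):
            for v in p[i + 1:]:
                before.add((u, v))
    anc = {}
    for u, v in before:
        if (v, u) not in before:
            anc.setdefault(v, set()).add(u)
    return anc


def randomize(edges, paths, rng):
    """Rewire incoming connections of every node while preserving its in-degree."""
    anc = ancestors(paths)
    in_degree = Counter(v for _, v in edges)
    rewired = []
    for v, d in in_degree.items():
        pool = sorted(anc.get(v, ()))
        if not pool:
            # Assumption: with no eligible ancestor, keep v's original inputs.
            rewired += [(u, w) for u, w in edges if w == v]
            continue
        chosen = rng.sample(pool, min(d, len(pool)))
        # Multi-connections when in-degree(v) exceeds |A(v)|.
        chosen += [rng.choice(pool) for _ in range(d - len(chosen))]
        rewired += [(u, v) for u in chosen]
    return rewired


# Hypothetical toy network and the source-target paths it carries.
edges = [("a", "e"), ("b", "e"), ("b", "f"), ("c", "f"),
         ("e", "g"), ("e", "h"), ("f", "h"), ("f", "i")]
paths = [
    ("a", "e", "g"), ("a", "e", "h"), ("b", "e", "g"), ("b", "e", "h"),
    ("b", "f", "i"), ("c", "f", "h"), ("c", "f", "i"),
]
randomized = randomize(edges, paths, random.Random(0))
```

Passing an explicit `random.Random` instance makes an ensemble reproducible: each member of the ensemble can be generated from a different seed.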

Location metric

We also associate a location with each node to capture its relative position in the feedforward network between sources and targets. One way to place intermediate nodes between sources and targets is to consider the number of paths PS(v) from sources (excluding v if it is a source itself) to v as a proxy for v’s complexity and the number of paths PT(v) from v to targets (excluding v if it is a target itself) as a proxy for v’s generality. Nodes with zero in-degree (which cover most sources) have the lowest complexity value (equal to 0), while nodes with zero out-degree (which cover most targets) have the lowest generality value (equal to 0). The following equation defines a location metric based on PS(v) and PT(v),

$$L(v) = \frac{P_S(v)}{P_S(v) + P_T(v)} \tag{3}$$

L(v) varies between 0 (for zero in-degree sources) and 1 (for zero out-degree targets). If there is a small number of paths from sources to a node v (low complexity) but a large number of paths from v to targets (high generality), v’s role in the network is more similar to sources than targets, and so its location should be closer to 0 than 1. The opposite is true for nodes that have high complexity but low generality.
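The location metric can be sketched as follows, assuming it is the ratio of complexity to the sum of complexity and generality, which matches the stated endpoints (0 for zero in-degree sources, 1 for zero out-degree targets). The sketch also assumes that the number of paths from sources to v can be counted as the number of distinct path prefixes ending at v, and the number of paths from v to targets as the number of distinct suffixes starting at v. The function name and the toy path set are hypothetical.

```python
def location(paths):
    """Location of every node: complexity / (complexity + generality)."""
    prefixes, suffixes = {}, {}
    for p in paths:
        for i, v in enumerate(p):
            if i > 0:  # a path from a source to v (v itself excluded as a source)
                prefixes.setdefault(v, set()).add(p[: i + 1])
            if i < len(p) - 1:  # a path from v to a target (v excluded as a target)
                suffixes.setdefault(v, set()).add(p[i:])
    loc = {}
    for v in set(prefixes) | set(suffixes):
        complexity = len(prefixes.get(v, ()))
        generality = len(suffixes.get(v, ()))
        loc[v] = complexity / (complexity + generality)
    return loc


# Hypothetical toy path set: sources a, b, c; intermediates e, f; targets g, h, i.
paths = [
    ("a", "e", "g"), ("a", "e", "h"), ("b", "e", "g"), ("b", "e", "h"),
    ("b", "f", "i"), ("c", "f", "h"), ("c", "f", "i"),
]
loc = location(paths)
```

In this toy set the sources get location 0, the targets get location 1, and both intermediate nodes sit at 0.5 because each is reached by two source paths and reaches two targets.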

Encoder-decoder architecture

Returning to the illustration of Fig 1, the number of paths from the set of sources S to a specific target t is denoted by PS(t), and is equal to the number of source literals in the mathematical expression for t. If a Boolean expression involves n literals, it requires n − 1 Boolean operations. So, PS(t) can be thought of as the cost for computing t from S.

More generally, even if a feedforward network does not represent Boolean expressions, we can think of the number of paths in PS(t) as a cost metric for “computing” the target t from the set of sources S: the larger PS(t) is, the more ways exist in which the information provided by the set of sources S affects the function of t.

Informally, an hourglass architecture is a network in which the information provided by a large set of sources S is first encoded (or compressed) into a small set Z of intermediate nodes at the “waist” of the hourglass. Then, the functions provided by the nodes in Z are decoded in computing the targets in T. Additionally, in an hourglass architecture there should be relatively few paths that bypass Z.

The question we focus on here is: how does an hourglass architecture decrease the cost of computing a set of targets T from a set of sources S, and how large is that decrease in the case of C. elegans?

Let CS(T) be the cumulative cost for computing the set of targets T from the set of sources S:

$$C_S(T) = \sum_{t \in T} P_S(t) \tag{4}$$

Given a set of intermediate nodes Z, we can produce the targets T in a two-step process: first, compute each node in Z from the sources S, and then compute each target in T from the set of intermediate nodes Z. There may be some source-to-target paths however that bypass the nodes in Z—we need to consider the cost of those “bypass-Z” paths as an extra term that depends on the selection of Z. So, the cost CS,Z(T) of computing T from S given Z is:

$$C_{S,Z}(T) = \sum_{z \in Z} P_S(z) + \sum_{t \in T} P_Z(t) + \sum_{t \in T} P_{S,b}(t) \tag{5}$$

where the first summation term is the cost of computing Z from sources, the second is the cost of computing targets from Z, and the third is the cost of bypass-Z paths.

The encoding-decoding gain ΦZ, defined below, quantifies how significant is the cost reduction provided by such an encoder-decoder architecture,

$$\Phi_Z = \frac{C_S(T)}{C_{S,Z}(T)} \tag{6}$$

If ΦZ ≤ 1, the intermediate nodes do not offer any cost reduction. On the other extreme, if there is a single intermediate node z that depends on all n sources and all m targets depend only on z, then ΦZ gets its maximum value, nm/(n + m).

To illustrate, consider a three-layer network with n sources, k intermediate nodes and m targets, in which every intermediate node depends on every source, and every target depends on every intermediate node and on nothing else. Suppose that the set Z consists of k′ ≤ k of the intermediate nodes. It is easy to see that:

$$\Phi_Z = \frac{n k m}{k'(n + m) + (k - k')\, n m} \tag{7}$$

If n > 2 and m > 2, we have that n + m < nm, meaning that ΦZ is maximized (equal to nm/(n + m)) when Z includes all k intermediate nodes (k = k′).

On the other hand, if the network includes k+ additional intermediate nodes that only connect to one source and one target, the maximum value of ΦZ results when the set Z includes only the k densely connected nodes and leaves the k+ nodes in the bypass paths:

$$\Phi_Z = \frac{n k m + k^{+}}{k (n + m) + k^{+}} \tag{8}$$

Returning to the network of Fig 1, the direct cost CS(T) is ∑t∈T PS(t) = 6+5+3+2+6 = 22. The cost of constructing the nodes in Z from sources is ∑z∈Z PS(z) = 4+2 = 6, the cost of constructing targets from Z nodes is ∑t∈T PZ(t) = 1+1+1+1+2 = 6, while the cost of bypass-Z paths is ∑t∈T PS,b(t) = 2+1+1+0+0 = 4. So, the encoding-decoding gain is 22/16 = 1.375 while its maximum possible value is 25/10 = 2.5.
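The arithmetic of this example can be checked with a short sketch. The per-node path counts are the ones quoted above for Fig 1; the function names are hypothetical. The three-layer helper is derived from the description of the fully connected three-layer network given earlier (each target has n·k paths from the sources, each node in Z costs n to build and is used once by each of the m targets, and each of the k − k′ nodes outside Z contributes n bypass paths per target), so it should be read as an illustration of that reasoning under those assumptions.

```python
def gain(direct, encode, decode, bypass):
    """Encoding-decoding gain: direct cost divided by the cost through Z plus bypass paths."""
    return sum(direct) / (sum(encode) + sum(decode) + sum(bypass))


# Path counts quoted in the text for the network of Fig 1.
phi_fig1 = gain(
    direct=[6, 5, 3, 2, 6],  # P_S(t) for the five targets
    encode=[4, 2],           # P_S(z) for the two intermediate nodes
    decode=[1, 1, 1, 1, 2],  # P_Z(t) for the five targets
    bypass=[2, 1, 1, 0, 0],  # bypass-Z paths for the five targets
)


def three_layer_gain(n, k, m, k_prime):
    """Gain for a fully connected n-k-m network when Z holds k_prime intermediate nodes."""
    direct = n * k * m
    through_z = k_prime * (n + m)     # build each node in Z, then use it in each target
    bypass = (k - k_prime) * n * m    # paths through intermediate nodes left out of Z
    return direct / (through_z + bypass)


phi_all = three_layer_gain(n=5, k=3, m=5, k_prime=3)   # Z holds every intermediate node
phi_some = three_layer_gain(n=5, k=3, m=5, k_prime=1)  # Z holds only one of them
```

With five sources and five targets, including all intermediate nodes in Z reaches the upper bound nm/(n + m) = 2.5, while leaving two of the three nodes in bypass paths lowers the gain to 1.25.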

Results

Hourglass analysis of feedforward paths

We defined earlier eleven different routing methods for computing paths from sensory to motor neurons in C. elegans. Table 1 shows some relevant properties for each of these path sets. The number of all possible pairs of sensory-motor neurons is about 9,500. About 90%-95% of these pairs are connected with any of the eleven path sets. Even with the smallest path set (SP4), there are typically multiple paths for every sensory-motor pair. The sensory-motor neural paths are typically short: the median is 3 hops meaning that there are typically two other neurons between a sensory and a motor neuron. The number of paths increases ten-fold when we allow one more hop than the shortest path (SP+1), and about eight-fold more when we allow a second extra hop (SP+2). Also, about 5%-10% of the connectome (after the removal of FB connections between the three different classes of neurons) are not traversed by any of these paths. This suggests that these connections are utilized only in feedback circuits between neurons of the same type (e.g., feedback between interneurons).

Table 1. Properties of the eleven path sets from sensory to motor neurons computed using the eleven routing methods we consider.

| Eleven sets of paths from sensory to motor neurons | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SP | SP4 | SP5 | P4 | P5 | SP+1 | SP+2 | ||||||

| Number of paths | 41,305 | 36,942 | 40,801 | 239,941 | 435,877 | 441,153 | 392,895 | 1,926,944 | 3,245,610 | 434,930 | 3,434,325 | |

| 10,50,90-percentile of path lengths | 3,3,5 | 3,3,4 | 3,3,4 | 3,4,4 | 3,4,4 | 3,4,4 | 3,4,5 | 4,5,5 | 4,5,5 | 3,4,5 | 4,5,6 | |

| Sensory-motor neuron pairs connected | 96% | 91% | 96% | 91% | 91% | 91% | 96% | 96% | 95% | 96% | 96% | |

| Utilized connections | Total | 90% | 90% | 90% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% |

| FF | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | |

| LT | 85% | 85% | 85% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | 95% | |

Given a set of feedforward paths from sensory to motor neurons, we now apply the hourglass analysis framework (see Section “Hourglass Score”). In particular, the goal is to compute the smallest set of neurons that can cover a percentage τ of all paths in the given path set. That set of neurons is referred to as the τ-Core.
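One way to approximate a τ-Core is a greedy heuristic: repeatedly pick the node that lies on the largest number of still-uncovered paths, until a fraction τ of all paths is covered. The sketch below is an illustration under that assumption; the exact procedure is the one defined in Section “Hourglass Score”, and the greedy result is not guaranteed to be the smallest possible set. The function name `greedy_tau_core` and the toy path list are hypothetical.

```python
from collections import Counter


def greedy_tau_core(paths, tau):
    """Greedily select nodes until at least a fraction tau of the paths is covered.

    paths: iterable of node sequences (source first, target last).
    Returns a list of (node, fraction of all paths newly covered by that node).
    """
    remaining = [tuple(p) for p in paths]
    total = len(remaining)
    core, covered = [], 0
    while remaining and covered < tau * total:
        # Number of still-uncovered paths that traverse each node.
        counts = Counter(node for p in remaining for node in set(p))
        # Ties are broken alphabetically so that the result is deterministic.
        best = max(sorted(counts), key=counts.get)
        core.append((best, counts[best] / total))
        covered += counts[best]
        remaining = [p for p in remaining if best not in p]
    return core


# Toy example (not connectome data): four paths share node "a", one goes through "b".
toy_paths = [
    ("s1", "a", "t1"), ("s1", "a", "t2"),
    ("s2", "a", "t1"), ("s2", "a", "t2"),
    ("s3", "b", "t3"),
]
print(greedy_tau_core(toy_paths, 0.8))  # [('a', 0.8)]
print(greedy_tau_core(toy_paths, 1.0))  # [('a', 0.8), ('b', 0.2)]
```

The per-node fractions returned here play the same role as the "fraction of paths that the corresponding neuron contributes to the core" reported per neuron in the tables of core neurons.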

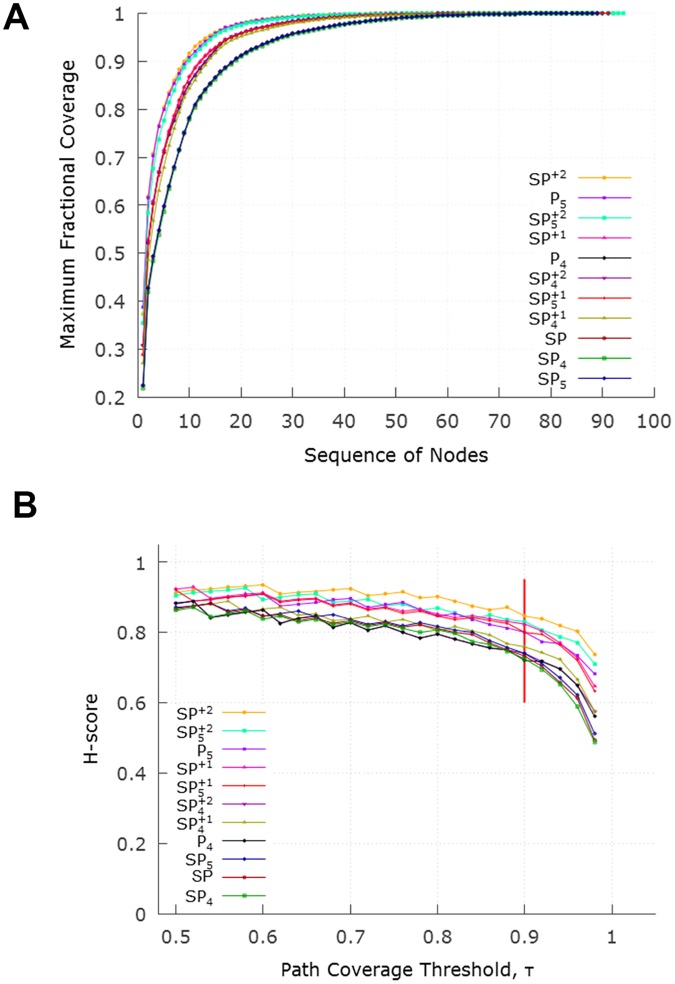

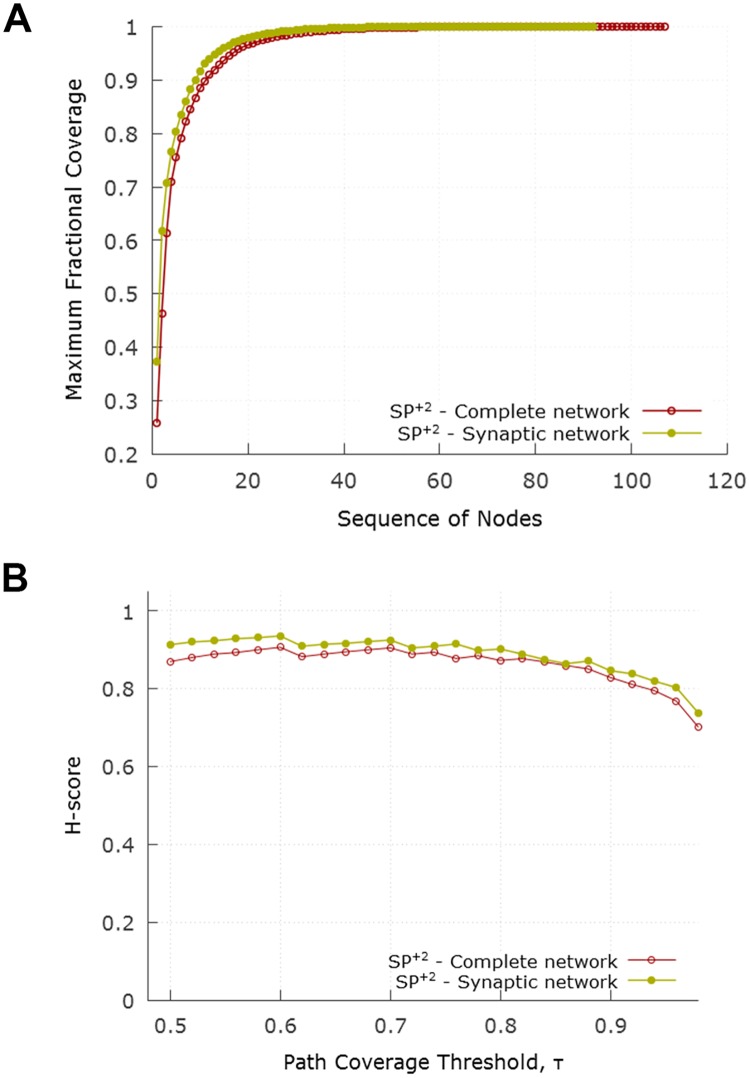

Fig 7A shows the cumulative path coverage as a function of the number of neurons in the τ-Core (increasing values of τ require a larger set of core neurons). All path sets have the same “sharp knee” property: almost all (80%-90%, depending on the routing method) of the feedforward paths traverse a small set of about 10 neurons. Routing methods that produce more paths (such as SP+2) tend to have a smaller τ-Core than more constrained routing methods (such as SP4).

Fig 7.

(A) Cumulative path coverage by the top-X core neurons for X = 1…100. Depending on the path selection method, the core size varies between 10 and 20 neurons when the path coverage threshold τ is 90%. For a given τ, routing methods that produce more paths (such as SP+2 or P5) result in a smaller core. (B) Effect of τ on the hourglass metric (H-score) for each path set. The ordering of the legend entries is the same as the top-down ordering of the curves.

Fig 7B examines the effect of the path coverage threshold τ on the hourglass metric (H-score). For all path sets, the H-score is close to one (its theoretical maximum value) as long as τ < 90%. This suggests an hourglass-like architecture, independent of which routing scheme has produced the set of feedforward paths.

Table 2 shows the sequence of core neurons (for τ = 90%) for each path set. The first 10-11 of those neurons appear in almost every path set. The remaining neurons appear in more constrained path sets (such as SP) and they only cover a small fraction of additional paths (1%-3%).

Table 2. The identified core neurons when the path coverage threshold is τ = 90% for each path set.

For each core neuron, we show the fraction of paths that the corresponding neuron contributes to the core. The neurons are ranked in decreasing order in terms of their contribution to the core (considering the SP set of paths), grouping bilateral neurons together. The last column shows the 11 “rich-club” neurons, as identified in [44].

| Core Neuron | SP | SP4 | SP5 | P4 | P5 | SP+1 | SP+2 | Rich-club | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AVAL | 0.25 | 0.24 | 0.25 | 0.24 | 0.24 | 0.24 | 0.26 | 0.25 | 0.25 | 0.26 | 0.27 | ✓ |

| AVAR | 0.23 | 0.22 | 0.23 | 0.30 | 0.34 | 0.34 | 0.32 | 0.40 | 0.43 | 0.32 | 0.41 | ✓ |

| AVBL | 0.08 | 0.07 | 0.08 | 0.10 | 0.09 | 0.09 | 0.09 | 0.10 | 0.10 | 0.09 | 0.10 | ✓ |

| AVBR | 0.04 | 0.04 | 0.04 | 0.04 | 0.03 | 0.03 | 0.04 | 0.03 | 0.03 | 0.04 | 0.03 | ✓ |

| AVEL | 0.06 | 0.06 | 0.06 | 0.05 | 0.04 | 0.04 | 0.05 | 0.04 | 0.03 | 0.05 | 0.03 | ✓ |

| AVER | 0.06 | 0.06 | 0.06 | 0.06 | 0.05 | 0.05 | 0.06 | 0.05 | 0.04 | 0.05 | 0.04 | ✓ |

| DVA | 0.05 | 0.05 | 0.04 | 0.02 | 0.03 | 0.03 | 0.03 | 0.03 | 0.02 | 0.04 | 0.01 | ✓ |

| PVCL | 0.05 | 0.05 | 0.05 | 0.07 | 0.07 | 0.07 | 0.07 | 0.07 | 0.07 | 0.06 | 0.06 | ✓ |

| PVCR | 0.03 | 0.03 | 0.03 | 0.02 | 0.02 | 0.02 | 0.02 | 0.03 | 0.04 | ✓ | ||

| AVDR | 0.04 | 0.04 | 0.04 | 0.04 | 0.03 | 0.03 | 0.03 | 0.02 | 0.02 | 0.03 | 0.01 | ✓ |

| AVDL | 0.02 | 0.02 | 0.02 | 0.02 | 0.01 | 0.01 | ✓ | |||||

| HSNR | 0.02 | 0.03 | 0.03 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 | 0.02 | |||

| HSNL | 0.01 | 0.01 | ||||||||||

| RIAL | 0.01 | 0.02 | 0.01 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 | |||

| RIAR | 0.01 | 0.02 | 0.01 | 0.01 | ||||||||

| RIMR | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.02 | ||||||

| RMGL | 0.01 | 0.01 | 0.01 | |||||||||

| PVR | 0.01 | 0.01 | 0.01 | |||||||||

| AIBR | 0.01 | 0.01 | 0.01 | |||||||||

| AIZL | 0.01 | |||||||||||

| H-score | 0.79 | 0.79 | 0.79 | 0.82 | 0.84 | 0.74 | 0.85 | 0.86 | 0.81 | 0.84 | 0.87 |

If we focus on those first 10-11 core neurons, we observe that they are included in the 90%-core of all path sets we consider (with few exceptions).

To simplify the presentation of the results, in the rest of this paper we will focus on the “SP+2” path set. This path set results in the largest number of paths and a core of 10 neurons when τ = 90%.

That set of core neurons includes bilateral pairs of interneurons (namely: AVA, AVB, PVC, AVE, and AVD)—the DVA stretch sensitive core neuron does not appear bilaterally. Seven of the core neurons are located in the head region (AVAR/L, AVBR/L, AVER/L, AVDR) and three are in the tail region (PVCR/L, DVA). The original ten core neurons contain nine command interneurons that play a pivotal role in forward and backward locomotion [44]. The other non-command interneuron of the core, DVA, is a proprioceptive interneuron modulating the locomotion circuit [44].

If we want to extend the set of core neurons slightly by covering τ = 95% of all paths instead of 90%, we need to add four more neurons into the core (HSNR, AVDL, RIAL, RIMR).

Comparison with rich-club effect

The existence of a set of densely interconnected nodes in the C. elegans connectome, termed the rich-club, has been previously established by Towlson et al. [44]. A rich-club is a subgraph of high-degree nodes that are much more densely interconnected with each other than what would be expected based only on their degrees [55]. In other words, the rich-club concept is based on the analysis of local connectivity in a network, rather than on the analysis of (shortest or other) network paths. Further, the rich-club analysis does not consider whether some nodes act as inputs (sensory neurons) or outputs (motor neurons) in the network. The hourglass analysis, on the other hand, analyzes the set of feedforward paths from inputs to outputs. So, these two methods are significantly different.

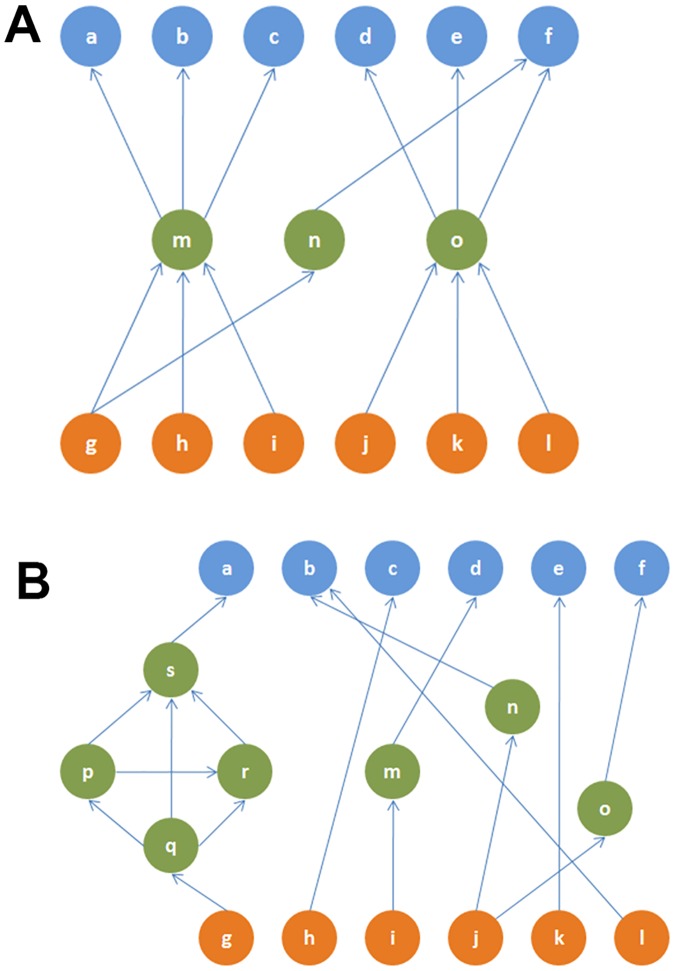

Are these two network properties, rich-club and hourglass effect, equivalent? We can see that this is not the case through simple counter-examples (see Fig 8).

Fig 8.

(A) A toy network in which two nodes (m and o) cover more than 90% of all source-target paths (H-score = 0.67). This network does not contain a rich-club. (B) A toy network that is not an hourglass (H-score = 0) but it has a rich-club (nodes p, q, r, s—the rich-club coefficient is 2.60 [56]).

An important observation, however, is that the core neurons that we identify through the hourglass analysis highly overlap with the rich-club neurons of [44]. The first ten core neurons identified by all routing methods we consider also appear in the eleven rich-club neurons reported in [44]. The AVDL interneuron is the 11th rich-club member but it appears in the hourglass core only in half of the routing methods we consider (for τ = 90%). The fact that two very different methods highlight almost the same set of interneurons as the most important in the system adds confidence in the results of both studies.

The fact that a small set of interneurons act as both the hourglass core and rich-club, even though these two network properties are qualitatively different, raises an interesting hypothesis about the functional role of these interneurons: In the hourglass network of Fig 8A, the core nodes m, n, o are not connected with each other—such an architecture can compress different input information streams but without integrating them. On the contrary, the core interneurons of C. elegans are densely interconnected and so they form an information processing bottleneck that integrates the compressed information from different sensory modalities, before driving any higher-level neural circuits.

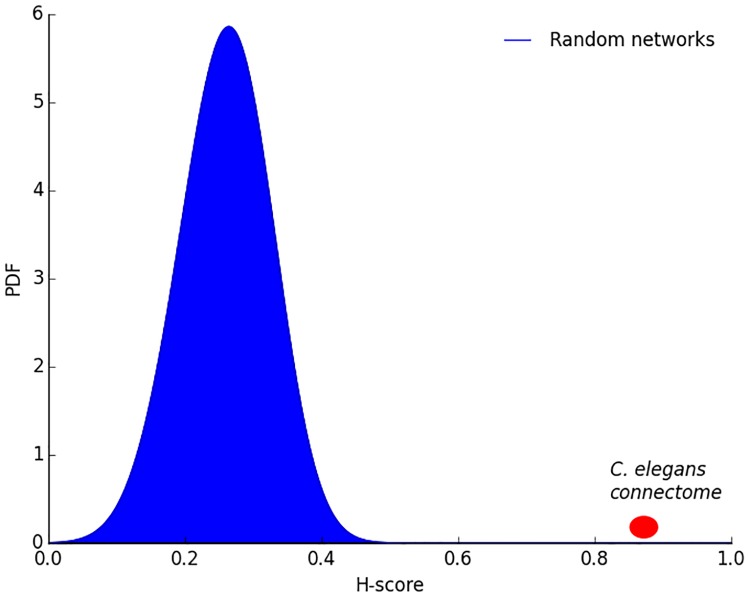

Comparison with randomized networks

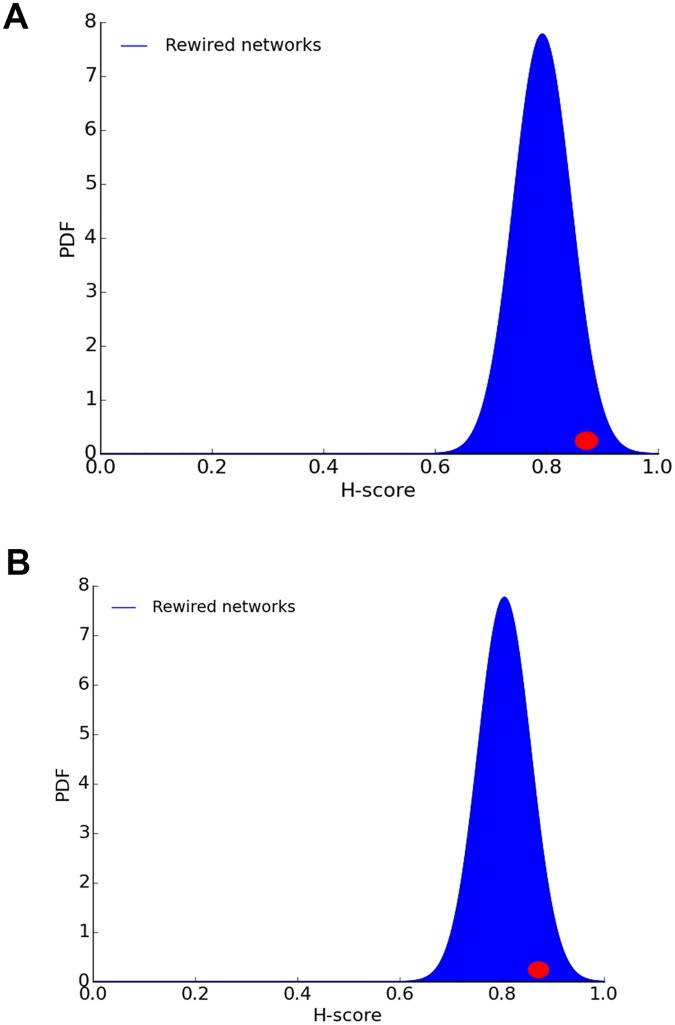

Is the hourglass effect a genuine property of the C. elegans connectome or would it also be present in similar but randomly connected networks? We generate 1000 random networks using the algorithm described in Section “Randomization Method”. The randomization process preserves the in-degree of each neuron and the hierarchical ordering between neurons (i.e., if neuron v depends on neuron u but u does not depend on v in the original connectome, it cannot be that u depends on v in a randomized network). Fig 9 shows the H-score distribution of the randomized networks. The H-score of the random networks is significantly lower than that of the original network (p < 10⁻³), suggesting that the hourglass effect we observe in the C. elegans connectome is not a statistical artifact.

Fig 9. Distribution of H-score for randomized networks in which we preserve the in-degree of each neuron and the hierarchical ordering between neurons.

The probability of observing the H-score value of the original network in randomized networks is less than 10⁻³.

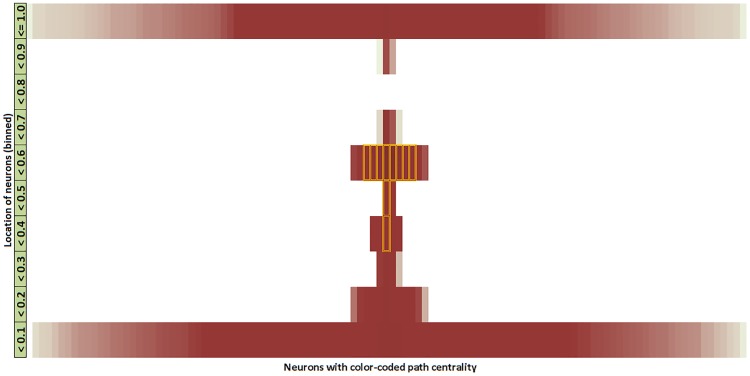

Is the hourglass effect a consequence of the dense connectivity between core neurons? The latter is the defining characteristic of rich-club neurons. Would we still observe the hourglass effect if the core neurons were not so densely interconnected with each other, forming a rich-club?

To answer this question, we perform a second randomization experiment in which every connection between two core neurons X and Y is rewired so that X connects instead to a randomly chosen neuron Z that is not in the set of core neurons. We experimented with two variations of this method: one in which Z is an interneuron and another in which Z can be any neuron, including sensory and motor neurons.
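The rewiring step can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes an unweighted edge list of (presynaptic, postsynaptic) pairs, avoids creating duplicate edges, and keeps an edge unchanged if no replacement target is available. These choices, the function name `rewire_core_edges` and the toy data are all assumptions made for the example. Passing only non-core interneurons as `candidates` would correspond to the first variation, and passing all non-core neurons to the second.

```python
import random


def rewire_core_edges(edges, core, candidates, seed=None):
    """Replace every core-to-core edge (x, y) with (x, z), z drawn from non-core candidates.

    edges: list of (presynaptic, postsynaptic) pairs.
    core: collection of core neurons.
    candidates: neurons that may serve as the new target z.
    """
    rng = random.Random(seed)
    core = set(core)
    pool = sorted(set(candidates) - core)  # replacement targets must lie outside the core
    existing = set(edges)
    rewired = []
    for x, y in edges:
        if x in core and y in core:
            # Assumption: do not create an edge that already exists.
            options = [z for z in pool if (x, z) not in existing]
            if options:
                z = rng.choice(options)
                existing.discard((x, y))
                existing.add((x, z))
                rewired.append((x, z))
                continue
        rewired.append((x, y))  # non-core edge, or no valid replacement found
    return rewired


# Toy example (not connectome data): A and B are "core", I and J are other interneurons.
toy_edges = [("A", "B"), ("B", "A"), ("S", "A"), ("A", "M"), ("I", "M")]
toy_rewired = rewire_core_edges(toy_edges, core={"A", "B"}, candidates={"I", "J"}, seed=0)
print(toy_rewired)
```

Because only the target of each core-to-core edge changes, the out-degree of every neuron is preserved in this sketch, while the in-degree of core neurons decreases.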

Both approaches fail to destroy the hourglass property. As shown in Fig 10, the H-score distribution of the randomized networks (100 instances) includes the H-score of the original network (0.87). This means that the hourglass property is not due to the dense connectivity between core neurons. When we remove the connections between core neurons, we reduce the number of core nodes that a typical sensory-to-motor path traverses—but it is still the case that almost all such paths traverse at least one core node, and this is what creates the hourglass property.

Fig 10. H-score distribution of randomized networks in which every connection X-Y between two core neurons X and Y is rewired.

In (A), Y is replaced with a randomly chosen interneuron Z that is not in the core. In (B), Y is replaced with a randomly chosen neuron Z (including sensory and motor neurons) that is not in the core. The red dot shows the H-score of the original connectome.

Hourglass organization based on location metric

The location metric associates each neuron v with a value between 0 and 1, depending on the number of paths from sensory neurons to v and from v to motor neurons.

Fig 11 shows the location of each neuron in a vertical orientation. Most sensory neurons have zero incoming connections (and thus no incoming paths), and so their location is 0. Similarly, most motor neurons have zero outgoing connections and so their location is 1. The location of the ten core neurons is shown with dotted rectangles; they are concentrated close to the middle of the location range, meaning that their number of paths from sensory neurons is roughly the same as their number of paths to motor neurons. This visualization has been produced with the SP+2 path set but other path sets give similar results.
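A location value with these properties can be computed from an explicit path set. The sketch below assumes the ratio (paths from sources to v) / (paths from sources to v + paths from v to targets), which gives 0 for a node with no incoming paths and 1 for a node with no outgoing paths. This formula is an assumption consistent with the description above, not a definition quoted from this section, and the function name `location_metric` and the toy paths are hypothetical.

```python
def location_metric(paths):
    """Map each node to a location in [0, 1] based on its incoming and outgoing sub-paths.

    paths: iterable of node sequences (source first, target last).
    """
    into, out_of = {}, {}
    for p in paths:
        p = tuple(p)
        for i, v in enumerate(p):
            into.setdefault(v, set())
            out_of.setdefault(v, set())
            if i > 0:
                into[v].add(p[: i + 1])   # distinct sub-path from a source to v
            if i < len(p) - 1:
                out_of[v].add(p[i:])      # distinct sub-path from v to a target
    return {
        v: len(into[v]) / (len(into[v]) + len(out_of[v]))
        for v in into
        if into[v] or out_of[v]           # skip isolated single-node "paths"
    }


# Toy example (not connectome data).
toy_location_paths = [
    ("s1", "a", "t1"), ("s1", "a", "t2"),
    ("s2", "a", "t1"), ("s2", "a", "t2"),
    ("s3", "b", "t3"),
]
toy_locations = location_metric(toy_location_paths)
print(toy_locations["s1"], toy_locations["a"], toy_locations["t1"])  # 0.0 0.5 1.0
```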

Fig 11. Visualization of the C. elegans connectome based on the location metric.

We discretize the location metric in 10 bins (each bin accounting for 1/10 of the 0–1 range). The path centrality of each node is represented by its color intensity (darker for higher path centrality). Nodes with higher centrality are placed closer to the vertical mid-line. The core nodes are marked with an orange outline. The location of all core neurons falls between 0.3-0.6, close to the middle of the hourglass.

C. elegans as an encoder-decoder architecture

We can think of C. elegans as an information processing system that transforms input information, collected and encoded by sensory neurons, to output information that is represented by the activity of motor neurons. The analysis of the previous sections has identified a number of core neurons that most of the sensory-to-motor neural pathways go through. The exact number of core neurons depends on the fraction τ of all sensory-to-motor paths covered by the core.

Suppose that a given set of core neurons forms the intermediate set Z, defined in Section “Encoder-Decoder Architecture”. We can then compute the number of paths PS(Z) from the set S of all sensory neurons to the neurons in Z as a proxy for the information processing cost of an encoding operation that transforms S to Z. Similarly, the number of paths PZ(T) from the neurons in Z to the set T of all motor neurons can be thought of as a proxy for the information processing cost of a decoding operation that transforms Z to T. We also need to consider any sensory-to-motor paths PS,b(T) that bypass the core neurons in Z; this is a proxy for the cost of any additional information processing that is specific to each motor neuron and that is not provided by the encoding-decoding function of Z.

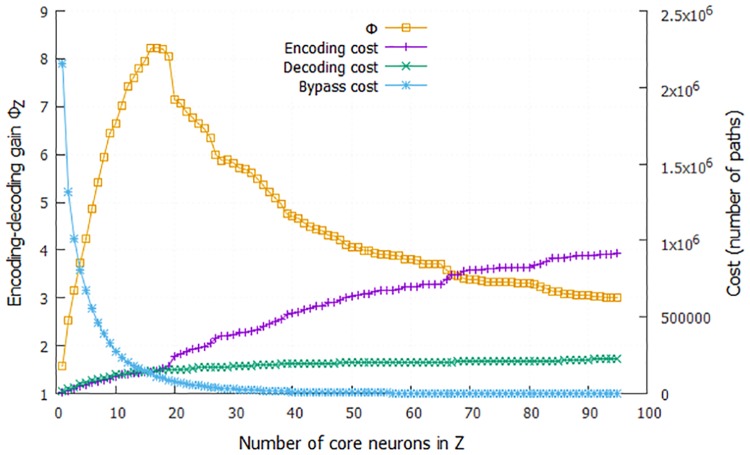

These three cost terms are shown in Fig 12 as we increase the number of core neurons included in Z (i.e., as we increase the threshold τ). The bypass-Z cost is the dominant cost term until we include about 15 neurons in Z. This suggests that the information provided by sensory neurons cannot be captured well with fewer neurons. On the other hand, the costs of the encoding and decoding operations (PS(Z) and PZ(T), respectively) increase with the number of neurons in Z, as expected.

Fig 12. The encoder-decoder gain ratio ΦZ as the number of core neurons in the encoding set Z increases (yellow curve).

The maximum value of ΦZ is about 8.2 when Z includes the first 16 core neurons. Based on the cost framework of Section “Encoder-Decoder Architecture”, this means that the hourglass organization of the C. elegans connectome reduces the sensory-to-motor information processing cost eight-fold. The figure also shows the three relevant cost terms: the cost of encoding the information provided by sensory neurons using neurons in Z (magenta), the cost of decoding that information to drive all motor neurons (green), and the cost of processing pathways that bypass the core (blue).

The encoder-decoder gain ratio ΦZ (see Eq 6) shows that the maximum cost reduction takes place when we consider the first 16 core neurons (which corresponds to τ = 95% for the SP+2 set of paths). In that case, the encoder-decoder architecture achieves an eight-fold decrease (ΦZ = 8.2) in terms of information processing cost relative to a hypothetical architecture in which the information processing cost of each motor neuron is computed separately, based on the number of paths from sensory neurons to that motor neuron.

An important question is whether the hourglass architecture achieves this cost reduction by increasing the path length between sensory and motor neurons (in terms of the number of neurons in each path). This trade-off between network efficiency (associated with the distribution of path lengths in a network) and network cost has received significant attention in network neuroscience [48, 57, 49]. Networks that minimize the length of every processing path connect every source to every target with a direct link—a costly design approach. On the other hand, networks that attempt to reduce the number of intermediate links typically need longer source-to-target paths (for the same reason that flying between two cities often requires one or more intermediate stops).

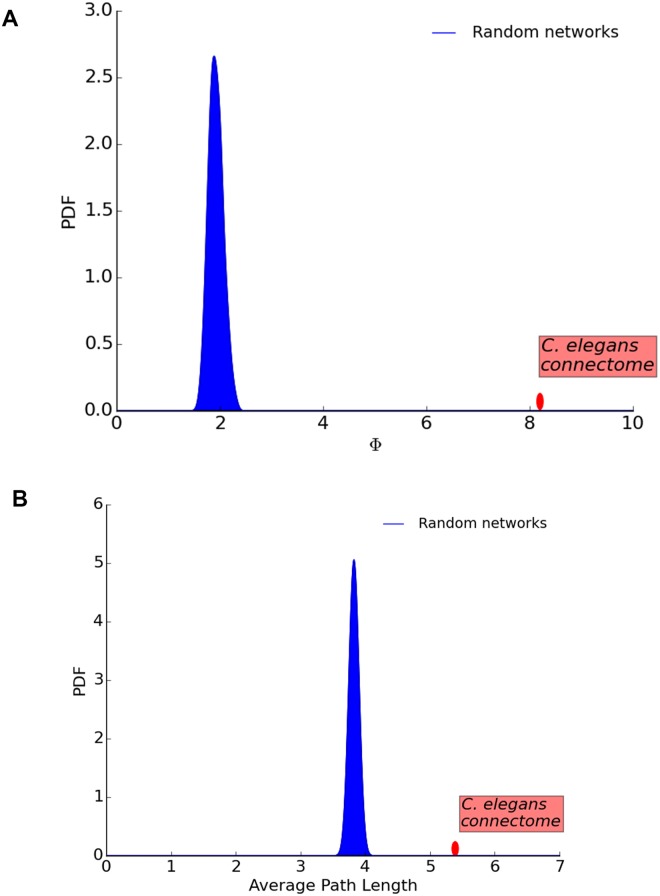

Here, we examine whether the hourglass architecture introduces a significant increase in the average path length from sensory to motor neurons relative to the ensemble of randomized networks. Recall that those networks do not follow the hourglass architecture (see Fig 9) but they maintain the in-degree of each neuron and the hierarchical ordering between neurons. Given that each neuron selects randomly its inputs from any neuron that is “lower” in the hierarchy (closer to the sensory neurons), we expect that such randomized non-hourglass networks will be more efficient (i.e., they will have shorter paths). In the extreme case that every motor neuron receives connections only from sensory neurons, the average path length will be minimized.

Fig 13 shows that the randomized networks are not as cost-efficient as the original C. elegans connectome: their encoder-decoder gain ratio is around 2, whereas that of the original network is 8.2. However, the randomized networks provide shorter paths. Their average sensory-to-motor path length is 3.9 hops, according to the SP+2 set of paths, while the same metric for the original connectome is 5.4 hops. In other words, the hourglass organization of the C. elegans connectome gains the reuse of intermediate-level neurons and connections at the price of a modest increase in the length of sensory-to-motor paths. We should mention that this reduction in path-length efficiency is smaller for other path sets; for instance, with the set of shortest paths (SP) the average path length of the original connectome is 3.4 hops while the randomized connectomes have a mean of 3.0.

Fig 13. The ensemble of randomized networks has a much lower encoding-decoding gain Φ than the original C. elegans connectome (A), but not a significantly lower average path length (B).

Including gap junctions: The complete network

Gap junctions provide a different type of connectivity between neurons than chemical synapses. Chemical synapses use neurotransmitters to transfer information from the presynaptic to the postsynaptic neuron, while gap junctions work by creating undirected electrical channels and they provide faster (but typically weaker) neuronal coupling. The directionality of the current flow in gap junctions cannot be detected from electron micrographs. Hence they are treated as bidirectional in the C. elegans connectome.

In this section, we consider both gap junctions and chemical synapses, forming what we refer to as the complete network. Gap junctions connect 253 (out of 279) neurons through 514 undirected connections (that we treat as 1028 directional connections). Recall that the number of (directed) chemical connections is 2194. 64% of the gap junction connections do not co-occur with chemical connections between the same pair of neurons, while 83% of the synaptic connections do not co-occur with gap junction connections. In other words, the inclusion of gap junctions changes significantly the connectivity between neurons.

The complete network has 1180 FF, 1468 LT and 574 FB connections. If we remove feedback connections, as we did for the synaptic network, we end up with a total of 2648 directed connections.

The inclusion of gap junctions also significantly increases the number of paths between sensory and motor neurons, independent of the routing method. If we focus on SP+2, the number of paths increases by a factor of 2.3 (to about 7.7 million).

Fig 14A shows the cumulative path coverage as a function of the number of nodes in the core. Fig 14B examines the effect of the path coverage threshold τ on the resulting H-score. Both curves are quite similar to the corresponding results for the synaptic network.

Fig 14. Hourglass analysis for complete network with the SP+2 set of paths.

(A) compares the cumulative path coverage of top core neurons between synaptic and complete network. (B) compares the progression of H-score between synaptic and complete network as τ varies.

With τ = 90%, the resulting core nodes are shown in Table 3. The H-score for the complete network is 0.83 (compared to 0.87 for the synaptic network).

Table 3. The identified 12 core neurons in the complete network.

The 10 neurons shown in italic were also the core of the synaptic network.

| Neuron | *AVAL* | *AVAR* | *AVBL* | *AVBR* | *AVEL* | *AVER* | *PVCL* | AIBR | *AVDR* | *DVA* | *PVCR* | VD01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fraction of new paths covered | 0.29 | 0.23 | 0.16 | 0.11 | 0.05 | 0.04 | 0.03 | 0.03 | 0.02 | 0.02 | 0.01 | 0.01 |

The two additional core neurons that appear in the hourglass waist of the complete network but not in the synaptic network are:

AIBR: related to locomotion, food and odor evoked behaviors, local search, lifespan and starvation response.

VD01: related to motor function (sinusoidal body movement, locomotion).

The encoder-decoder gain analysis for the complete network appears in S4 Fig. Qualitatively, the encoder-decoder gain ratio follows the same trend as in the network of only chemical synapses (see Fig 12), but the maximum value of ΦZ is slightly lower (7.4 instead of 8.2).

Discussion

In this section, we discuss in more detail prior studies that relate to the hourglass effect in C. elegans or more broadly in neuroscience. Varshney et al. [43] analyzed the structural properties of the C. elegans connectome and found that several central neurons (based on closeness centrality) play a key role in information processing. Among them are command interneurons such as AVA, AVB, AVE that are responsible for locomotion control. On the other hand, neurons such as DVA or ADE have high out-closeness centrality and are well positioned to propagate a signal to the rest of the network. Most of the “central” neurons in that study are also included in the hourglass core.

The modular organization of the C. elegans connectome has been discovered by Sohn et al. [58] through cluster analysis. Their analysis showed that communities correspond well to known functional circuits and it helped uncover the role of a few previously unknown neurons. They also identified a hierarchical organization among five key clusters that form a backbone for higher-order complex behaviors.

The fact that the rich-club interneurons are almost identical with the hourglass core, even though these two network properties are qualitatively different, suggests that these 10-15 neurons form an information processing bottleneck that does not simply compress but also integrates the information from different sensory modalities, before driving any higher-level neural circuits.

This hypothesis is also supported by the analysis of functional modules in the C. elegans connectome by Pan et al. [59], which showed that neurons in the same module are located close to each other and contribute to the same task. That study identified 23 connector hub neurons, i.e., high-connectivity neurons that connect to most or all functional modules. The eleven core neurons that we identified with the SP+2 paths also belong to that set of connector hubs. The fact that all hourglass core neurons are also connector hubs between functional modules supports the idea that these neurons integrate multimodal information, rather than simply compress the sensory information in a segregated manner. Note that the distinction between connector hubs, non-hub connectors, etc., depends on certain thresholds and so it is not surprising that some connector hubs such as AVKL or SMBVL do not appear in the hourglass core.

The posterior nervous system of the male C. elegans connectome was analyzed by Jarrell et al. [60] (recall that we analyze the hermaphrodite C. elegans connectome). One of their conclusions was that the nervous system has a mostly feedforward architecture that runs from sensory to motor neurons via interneurons. There is also some feedback circuitry in the nervous system and the actual physical output of the worm (i.e. motion etc.) feeds back to sensory neurons to allow closed-loop control. There are however many more feedforward loops (termed lateral connections in our analysis) that provide localized coordination most notably visible within interneurons. More recently, the same research group has mapped the complete connectome of the male nematode, focusing on its differences with the hermaphrodite [61].

Yan et al. have applied a controllability framework to analyze the C. elegans connectome, aiming to identify essential neurons for locomotion [62]. Some of those neurons also appear in the hourglass core (AVAL/R, AVBL/R, AVDL/R, PVCL/R)—but there are also several neurons (such as the six neurons of the DD class) that do not stand out in the hourglass analysis. This is not surprising given that the two studies ask very different questions: Yan et al. ask which neurons are essential to control every motor neuron or muscle, while we ask which neurons form a bottleneck in the feedforward flow of information from sensory to motor neurons.

The physical placement of neurons in C. elegans is thought to be optimized not exclusively for global minimum wiring but also for a variety of other factors, among which the minimization of pair-wise processing steps is important. For example, Kaiser and Hilgetag [49] showed that the total wiring length can be reduced by 48% by optimally placing the neurons. However, that would also significantly increase the number of processing nodes along shortest paths between components. Similar findings were reported by Chen et al. [57], who concluded that the placement of neurons does not globally minimize wiring length. These studies emphasize the notion of choosing shorter communication paths between neuron pairs and support our approach of choosing paths that are shortest, or close to shortest, in terms of processing steps.

The analysis by Csoma et al. [63] challenged the well-rooted notion of shortest-path-based communication routing in the human brain network. They collected empirical data through diffusion MRI and concluded that although a large number of paths conform to the shortest path assumption, a significant fraction (20-40%) are inflated by up to 4-5 hops.

Research by Avena-Koenigsberger et al. [47, 64] analyzed in depth the communication strategies in the human brain and also challenged the shortest path assumption. They discussed how the computation of shortest path routing is not feasible in the brain circuitry, and the shortest path routes would leave out around 80% of neural connections. They examined the spectrum of routing strategies hinging upon the amount of global information and communication required. At one end of the spectrum, there are random-walk routing mechanisms that are wasteful and often fail to achieve efficient routes but require no knowledge. On the other end there is shortest-path routing requiring global wiring knowledge at each neuron. As a more realistic choice, they studied the k-shortest path based approach (with k being 100). Their findings show that this strategy increases the utilization of connections. We have used a more relaxed constraint to choose paths between any two nodes by allowing all possible paths that are up to 2 hops longer than the shortest path between the corresponding pair.

Markov et al. have shown that the macaque cortical network includes a highly interconnected “bow-tie core” [42]. At first, this may seem relevant to the hourglass effect. We should note however that the network of Markov et al. considers 29 cortical regions and 17 of them are in the bow-tie core. On the contrary, a defining characteristic of the hourglass effect is that the number of core nodes at the waist is a small fraction of the total network size.

In some earlier studies, the hourglass effect is defined for layered networks, based on the number of nodes at each layer. A network is referred to as an hourglass if the width of the intermediate layers is much smaller relative to the width of the input and output layers [24, 35, 65]. In this work, we generalize the definition of the hourglass effect to include networks that do not have clearly defined layers and that include feedback or lateral connections.

What is the biological significance of the hourglass architecture in the C. elegans connectome? Is it just an interesting graph-theoretic property or does this architecture provide an adaptive advantage that could be selected by evolution?

First, it is important to set appropriate expectations for any study that analyzes the connectome attempting to learn something valuable about the underlying biology. It has been argued by several authors, including C. Bargmann and E. Marder [66], that mechanisms such as neuromodulators, parallel and antagonistic pathways and circuits, and complex neuronal dynamics can completely change the function of a given neural circuit. We believe that a connectome should be viewed as an architectural constraint that limits the scope of possible functions that a neural circuit can perform—rather than as the unique determinant of those functions.

The earlier C. elegans literature has attributed specific functions to the “command interneurons” or it has associated those interneurons with one or more functional circuits (for instance, see [67, 68]). The main contribution of our study is to propose a different way to think about the role of those interneurons in the C. elegans connectome: the interneurons between sensory and motor neurons can be thought of as forming an encoder-decoder network. This network reduces the intrinsic dimensionality of the low-level sensory information, and then integrates the compressed information from different sensory modalities to compute few intermediate-level sub-functions. The latter are then combined and re-used in higher-level behavioral circuits and tasks. Those few sub-functions are encoded in the activity of 10-15 core interneurons in the hourglass waist.

So, instead of trying to identify the function of each neuron in the connectome, or instead of focusing on individual functional circuits while ignoring all other behaviors and circuits, we can focus on that smaller set of 10-15 core interneurons and attempt, through a combination of experiments and modeling, to reverse engineer the sub-functions they “compute”. These sub-functions will probably be much simpler than the observable behaviors of the organism (e.g., escape response or social feeding); they can be viewed as re-usable functional modules. Then, for each of the observable behaviors of the organism, we can try to find out how that task is accomplished by combining those functional modules in different ways. We firmly believe that such a research agenda will be more tractable because it depends on a smaller number of components (10-15) that need to be “reverse engineered”, compared to the number of all neurons in C. elegans.

The core neurons at the hourglass waist create a “bottleneck” in the flow of information from sensory to motor neurons. Such bottleneck effects have been studied in the literature under different names. The most relevant such framework is the information bottleneck method, which is grounded in information theory: given a joint probability distribution between an input vector X and an output vector Y, the goal of that method is to compute an optimal intermediate-level representation T that is both compact (i.e., a highly compressed version of X) and able to predict Y accurately [69, 70]. It appears that the C. elegans connectome has evolved to “compute” such a compact and integrated intermediate-level representation of its sensory inputs, represented by the 10-15 core interneurons at the hourglass waist.
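
For reference, the trade-off between compactness and predictive accuracy can be written in the standard form of the information bottleneck objective. This is only a sketch of the general method, not a quantity computed in this study; the mutual information I(·;·), the trade-off parameter β, and the encoder distribution p(t|x) are notation assumed here beyond the X, Y, and T used in the text.

```latex
% Information bottleneck objective (standard formulation; notation assumed):
% choose the stochastic encoder p(t|x) that compresses X into T
% while retaining information about Y.
\min_{p(t \mid x)} \; \mathcal{L}\bigl[p(t \mid x)\bigr]
  \;=\; I(X;T) \;-\; \beta \, I(T;Y), \qquad \beta > 0,
% subject to the Markov chain T \leftrightarrow X \leftrightarrow Y,
% i.e., T depends on Y only through X.
```

A small β favors compression (low I(X;T)), whereas a large β favors prediction of Y (high I(T;Y)). In the analogy drawn above, X would correspond to the sensory input, Y to the motor output, and T to the activity of the core interneurons.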

Supporting information

(A) Weight distribution of FF, LT and FB connections. (B) Considering only pairs of neurons with reciprocal FF and FB connections, this histogram shows the difference of the FF weight minus the FB weight.

(TIFF)

(A) The length distribution for all shortest paths from sensory to motor neurons. Almost all shortest paths are shorter than 6 hops. (B) Distribution of the number of distinct shortest paths from a sensory neuron to a motor neuron. For about 50% of S-M pairs, there are more than two shortest paths.

(TIFF)

All paths for the routing scheme P4. The model network is the same one depicted in Fig 3.

(TIF)

The encoder-decoder gain ratio ΦZ for the combined network containing both chemical synapses and gap junctions (contrast with Fig 12). The maximum value of ΦZ is 7.4 when Z includes the first 20 core neurons. Recall that the maximum value of ΦZ in the network of chemical synapses is 8.2.

(TIF)

In the synaptic network, the top-5% strongest connections are dominated by FF connections from S neurons to I or M neurons, and by LT connections between I neurons and M neurons. On the other hand, none of the FB connections appear in this set.

(TIF)

Functional circuits associated with core neurons based on the C. elegans literature. The core neurons appear in several circuits, mostly related to spontaneous or planned movement. Many of the adaptive behaviors of the organism such as feeding, egg-laying, escape and navigation require a common set of underlying simpler tasks. Some of the circuits shown (e.g. thermotaxis, chemosensation, olfactory behavior) perform tasks that start with activity in some sensory neurons, followed by a locomotory response that is modulated by certain core interneurons.

(TIF)

Data Availability

All data and code to reproduce the results of this paper are available from: https://github.com/kmsabrin/hourglass-celegans.

Funding Statement

Since the submission of the original manuscript, the contact author CD has received funding from DARPA to complete this work, under the Lifelong Learning Machines (L2M) program of DARPA/MTO (https://www.darpa.mil/program/lifelong-learning-machines; Cooperative Agreement HR0011-18-2-0019). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Bassett DS, Gazzaniga MS. Understanding complexity in the human brain. Trends in cognitive sciences. 2011;15(5):200–209. doi:10.1016/j.tics.2011.03.006

- 2. Meunier D, Lambiotte R, Bullmore ET. Modular and hierarchically modular organization of brain networks. Frontiers in Neuroscience. 2010;4:200. doi:10.3389/fnins.2010.00200

- 3. Parnas DL, Clements PC, Weiss DM. The modular structure of complex systems. In: Proceedings of the 7th International Conference on Software Engineering. IEEE Press; 1984. p. 408–417.

- 4. Schilling MA. Toward a general modular systems theory and its application to interfirm product modularity. Academy of Management Review. 2000;25(2):312–334. doi:10.5465/amr.2000.3312918

- 5. Baldwin CY, Clark KB. Design Rules: The Power of Modularity. vol. 1. MIT Press, Cambridge; 2000.

- 6. Callebaut W, Rasskin-Gutman D. Modularity: Understanding the Development and Evolution of Natural Complex Systems. MIT Press, Cambridge; 2005.

- 7. Wagner GP, Pavlicev M, Cheverud JM. The road to modularity. Nature Reviews Genetics. 2007;8(12):921–931. doi:10.1038/nrg2267

- 8. Ravasz E, Barabási AL. Hierarchical organization in complex networks. Physical Review E. 2003;67(2):026112. doi:10.1103/PhysRevE.67.026112

- 9. Sales-Pardo M, Guimera R, Moreira AA, Amaral LAN. Extracting the hierarchical organization of complex systems. Proceedings of the National Academy of Sciences. 2007;104(39):15224–15229. doi:10.1073/pnas.0703740104

- 10. Simon HA. The architecture of complexity. Springer; 1991.

- 11. Yu H, Gerstein M. Genomic analysis of the hierarchical structure of regulatory networks. Proceedings of the National Academy of Sciences. 2006;103(40):14724–14731. doi:10.1073/pnas.0508637103

- 12. Clune J, Mouret JB, Lipson H. The evolutionary origins of modularity. Proceedings of the Royal Society of London B: Biological Sciences. 2013;280(1755):20122863. doi:10.1098/rspb.2012.2863

- 13. Mengistu H, Huizinga J, Mouret JB, Clune J. The evolutionary origins of hierarchy. PLoS computational biology. 2016;12(6):e1004829. doi:10.1371/journal.pcbi.1004829

- 14. Fortuna MA, Bonachela JA, Levin SA. Evolution of a modular software network. Proceedings of the National Academy of Sciences. 2011;108(50):19985–19989. doi:10.1073/pnas.1115960108

- 15. Huang CC, Kusiak A. Modularity in design of products and systems. Systems, Man and Cybernetics, Part A: Systems and Humans, IEEE Transactions on. 1998;28(1):66–77. doi:10.1109/3468.650323

- 16. Myers CR. Software systems as complex networks: Structure, function, and evolvability of software collaboration graphs. Physical Review E. 2003;68(4):046116. doi:10.1103/PhysRevE.68.046116

- 17. Kashtan N, Alon U. Spontaneous evolution of modularity and network motifs. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(39):13773–13778. doi:10.1073/pnas.0503610102

- 18. Kashtan N, Noor E, Alon U. Varying environments can speed up evolution. Proceedings of the National Academy of Sciences. 2007;104(34):13711–13716. doi:10.1073/pnas.0611630104

- 19. Lorenz DM, Jeng A, Deem MW. The emergence of modularity in biological systems. Physics of Life Reviews. 2011;8(2):129–160. doi:10.1016/j.plrev.2011.02.003

- 20. Kirsten H, Hogeweg P. Evolution of networks for body plan patterning; interplay of modularity, robustness and evolvability. PLoS Comput Biol. 2011;7(10):e1002208. doi:10.1371/journal.pcbi.1002208

- 21. Kitano H. Biological robustness. Nature Reviews Genetics. 2004;5(11):826–837. doi:10.1038/nrg1471

- 22. Stelling J, Sauer U, Szallasi Z, Doyle FJ, Doyle J. Robustness of cellular functions. Cell. 2004;118(6):675–685. doi:10.1016/j.cell.2004.09.008

- 23. Sabrin KM, Dovrolis C. The hourglass effect in hierarchical dependency networks. Network Science. 2017;5(4):490–528. doi:10.1017/nws.2017.22

- 24. Friedlander T, Mayo AE, Tlusty T, Alon U. Evolution of bow-tie architectures in biology. PLoS computational biology. 2015;11(3):e1004055. doi:10.1371/journal.pcbi.1004055

- 25. Casci T. Development: Hourglass theory gets molecular approval. Nature Reviews Genetics. 2011;12(2):76–76. doi:10.1038/nrg2940

- 26. Quint M, Drost HG, Gabel A, Ullrich KK, Bönn M, Grosse I. A transcriptomic hourglass in plant embryogenesis. Nature. 2012;490(7418):98–101. doi:10.1038/nature11394

- 27. Tanaka R, Csete M, Doyle J. Highly optimised global organisation of metabolic networks. IEE Proceedings-Systems Biology. 2005;152(4):179–184. doi:10.1049/ip-syb:20050042

- 28. Zhao J, Yu H, Luo JH, Cao ZW, Li YX. Hierarchical modularity of nested bow-ties in metabolic networks. BMC Bioinformatics. 2006;7(1):386. doi:10.1186/1471-2105-7-386

- 29. Beutler B. Inferences, questions and possibilities in Toll-like receptor signalling. Nature. 2004;430(6996):257–263. doi:10.1038/nature02761

- 30. Oda K, Kitano H. A comprehensive map of the toll-like receptor signaling network. Molecular Systems Biology. 2006;2(1).

- 31. Supper J, Spangenberg L, Planatscher H, Dräger A, Schröder A, Zell A. BowTieBuilder: modeling signal transduction pathways. BMC Systems Biology. 2009;3(1):1. doi:10.1186/1752-0509-3-67

- 32. Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature. 2005;435(7045):1102–1107. doi:10.1038/nature03687

- 33. Riesenhuber M, Poggio T. Hierarchical models of object recognition in cortex. Nature Neuroscience. 1999;2(11):1019–1025. doi:10.1038/14819

- 34. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. 2006;313(5786):504–507. doi:10.1126/science.1127647

- 35. Akhshabi S, Dovrolis C. The evolution of layered protocol stacks leads to an hourglass-shaped architecture. ACM SIGCOMM Computer Communication Review. 2011;41(4):206–217. doi:10.1145/2043164.2018460

- 36. Swaminathan JM, Smith SF, Sadeh NM. Modeling supply chain dynamics: A multiagent approach*. Decision Sciences. 1998;29(3):607–632. doi:10.1111/j.1540-5915.1998.tb01356.x

- 37. Csermely P, London A, Wu LY, Uzzi B. Structure and dynamics of core/periphery networks. Journal of Complex Networks. 2013;1(2):93–123. doi:10.1093/comnet/cnt016

- 38. Holme P. Core-periphery organization of complex networks. Physical Review E. 2005;72(4):046111. doi:10.1103/PhysRevE.72.046111

- 39. Csete M, Doyle J. Bow ties, metabolism and disease. TRENDS in Biotechnology. 2004;22(9):446–450. doi:10.1016/j.tibtech.2004.07.007

- 40. Domazet-Lošo T, Tautz D. A phylogenetically based transcriptome age index mirrors ontogenetic divergence patterns. Nature. 2010;468(7325):815–818. doi:10.1038/nature09632

- 41. Ma HW, Zeng AP. The connectivity structure, giant strong component and centrality of metabolic networks. Bioinformatics. 2003;19(11):1423–1430. doi:10.1093/bioinformatics/btg177

- 42. Markov NT, Ercsey-Ravasz M, Van Essen DC, Knoblauch K, Toroczkai Z, Kennedy H. Cortical high-density counterstream architectures. Science. 2013;342(6158):1238406. doi:10.1126/science.1238406

- 43. Varshney LR, Chen BL, Paniagua E, Hall DH, Chklovskii DB. Structural properties of the Caenorhabditis elegans neuronal network. PLoS computational biology. 2011;7(2):e1001066. doi:10.1371/journal.pcbi.1001066

- 44. Towlson EK, Vértes PE, Ahnert SE, Schafer WR, Bullmore ET. The rich club of the C. elegans neuronal connectome. Journal of Neuroscience. 2013;33(15):6380–6387. doi:10.1523/JNEUROSCI.3784-12.2013

- 45. Goodfellow I, Bengio Y, Courville A. Deep learning. MIT Press; 2016.

- 46. Reece JB, Urry LA, Cain ML, Wasserman SA, Minorsky PV, Jackson RB, et al. Campbell biology. Pearson; Boston; 2014.

- 47. Avena-Koenigsberger A, Misic B, Sporns O. Communication dynamics in complex brain networks. Nature Reviews Neuroscience. 2018;19(1):17. doi:10.1038/nrn.2017.149

- 48. Bullmore E, Sporns O. The economy of brain network organization. Nature Reviews Neuroscience. 2012;13(5):336. doi:10.1038/nrn3214

- 49. Kaiser M, Hilgetag CC. Nonoptimal component placement, but short processing paths, due to long-distance projections in neural systems. PLoS computational biology. 2006;2(7):e95. doi:10.1371/journal.pcbi.0020095

- 50. Raj A, Chen Yh. The wiring economy principle: connectivity determines anatomy in the human brain. PloS one. 2011;6(9):e14832. doi:10.1371/journal.pone.0014832

- 51. Abdelnour F, Voss HU, Raj A. Network diffusion accurately models the relationship between structural and functional brain connectivity networks. Neuroimage. 2014;90:335–347. doi:10.1016/j.neuroimage.2013.12.039

- 52. Mišić B, Betzel RF, Nematzadeh A, Goni J, Griffa A, Hagmann P, et al. Cooperative and competitive spreading dynamics on the human connectome. Neuron. 2015;86(6):1518–1529. doi:10.1016/j.neuron.2015.05.035

- 53. Ishakian V, Erdös D, Terzi E, Bestavros A. A Framework for the Evaluation and Management of Network Centrality. In: SDM. SIAM; 2012. p. 427–438.

- 54. Karrer B, Newman ME. Random graph models for directed acyclic networks. Physical Review E. 2009;80(4):046110. doi:10.1103/PhysRevE.80.046110