Abstract

Simultaneous consonance is a salient perceptual phenomenon corresponding to the perceived pleasantness of simultaneously sounding musical tones. Various competing theories of consonance have been proposed over the centuries, but recently a consensus has developed that simultaneous consonance is primarily driven by harmonicity perception. Here we question this view, substantiating our argument by critically reviewing historic consonance research from a broad variety of disciplines, reanalyzing consonance perception data from four previous behavioral studies representing more than 500 participants, and modeling three Western musical corpora representing more than 100,000 compositions. We conclude that simultaneous consonance is a composite phenomenon that derives in large part from three phenomena: interference, periodicity/harmonicity, and cultural familiarity. We formalize this conclusion with a computational model that predicts a musical chord’s simultaneous consonance from these three features, and release this model in an open-source R package, incon, alongside 15 other computational models also evaluated in this paper. We hope that this package will facilitate further psychological and musicological research into simultaneous consonance.

Keywords: composition, consonance, dissonance, music, perception

Simultaneous consonance is a salient perceptual phenomenon that arises from simultaneously sounding musical tones. Consonant tone combinations tend to be perceived as pleasant, stable, and positively valenced; dissonant combinations tend conversely to be perceived as unpleasant, unstable, and negatively valenced. The opposition between consonance and dissonance underlies much of Western music (e.g., Dahlhaus, 1990; Hindemith, 1945; Parncutt & Hair, 2011; Rameau, 1722; Schoenberg, 1978).1

Many psychological explanations for simultaneous consonance have been proposed over the centuries, including amplitude fluctuation (Vassilakis, 2001), masking of neighboring partials (Huron, 2001), cultural familiarity (Johnson-Laird, Kang, & Leong, 2012), vocal similarity (Bowling, Purves, & Gill, 2018), fusion of chord tones (Stumpf, 1890), combination tones (Hindemith, 1945), and spectral evenness (Cook, 2009). Recently, however, a consensus is developing that consonance primarily derives from a chord’s harmonicity (Bidelman & Krishnan, 2009; Bowling & Purves, 2015; Cousineau, McDermott, & Peretz, 2012; Lots & Stone, 2008; McDermott, Lehr, & Oxenham, 2010; Stolzenburg, 2015), with this effect potentially being moderated by musical exposure (McDermott et al., 2010; McDermott, Schultz, Undurraga, & Godoy, 2016).

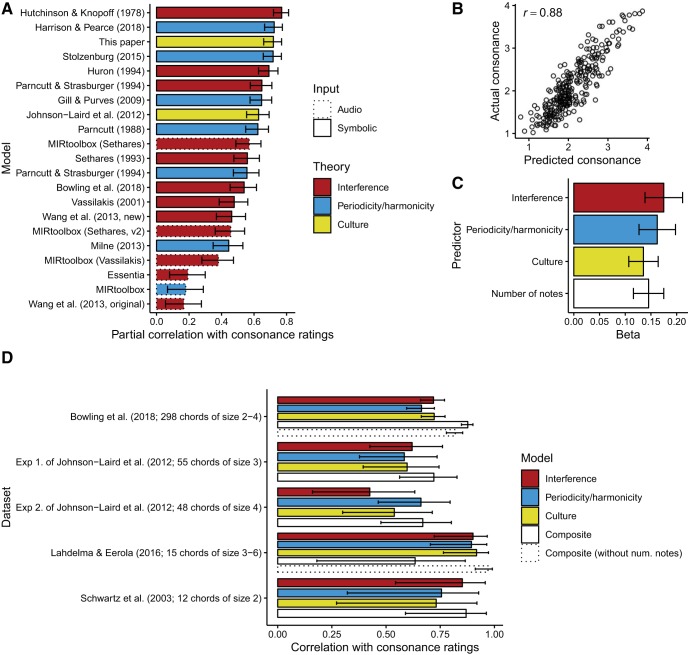

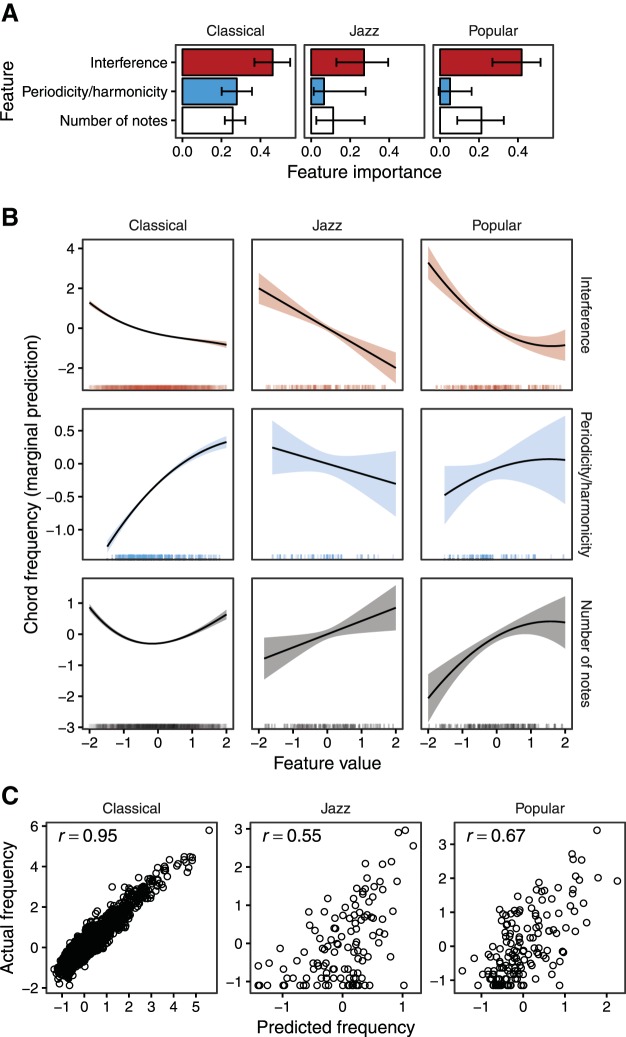

Here we question whether harmonicity is truly sufficient to explain simultaneous consonance perception. First, we critically review historic consonance research from a broad variety of disciplines, including psychoacoustics, cognitive psychology, animal behavior, computational musicology, and ethnomusicology. Second, we reanalyze consonance perception data from four previous studies representing more than 500 participants (Bowling et al., 2018; Johnson-Laird et al., 2012; Lahdelma & Eerola, 2016; Schwartz, Howe, & Purves, 2003). Third, we model chord prevalences in three large musical corpora representing more than 100,000 compositions (Broze & Shanahan, 2013; Burgoyne, 2011; Viro, 2011). On the basis of these analyses, we estimate the degree to which different psychological mechanisms contribute to consonance perception in Western listeners.

Computational modeling is a critical part of our approach. We review the state of the art in consonance modeling, empirically evaluate 20 of these models, and use these models to test competing theories of consonance. Our work results in two new consonance models: a corpus-based cultural familiarity model, and a composite model of consonance perception that captures interference between partials, harmonicity, and cultural familiarity. We release these new models in an accompanying R package, incon, alongside new implementations of 14 other models from the literature (see Software for details). In doing so, we hope to facilitate future consonance research in both psychology and empirical musicology.

Musical Terminology

Western music is traditionally notated as collections of atomic musical elements termed notes, which are organized along two dimensions: pitch and time. In performance, these notes are translated into physical sounds termed tones, whose pitch and timing reflect the specifications in the musical score. Pitch is the psychological correlate of a waveform’s oscillation frequency, with slow oscillations sounding “low” and fast oscillations sounding “high.”

Western listeners are particularly sensitive to pitch intervals, the perceptual correlate of frequency ratios. Correspondingly, a key principle in Western music is transposition invariance, the idea that a musical object (e.g., a melody) retains its perceptual identity when its pitches are all shifted (transposed) by the same interval.
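The relation between frequency ratios and intervals can be made concrete with a short Python sketch (the function name is our own). It assumes 12-tone equal-tempered semitones, 12 per 2:1 ratio, as the unit of interval size; because only the ratio enters the formula, transposing both tones by the same factor leaves the interval unchanged.

```python
import math

def interval_in_semitones(f_low: float, f_high: float) -> float:
    """Interval from f_low up to f_high, in 12-tone equal-tempered semitones."""
    # Interval size depends only on the frequency ratio: 12 semitones per doubling.
    return 12 * math.log2(f_high / f_low)

# The same 3:2 ratio (a just perfect fifth) starting from two different frequencies
print(round(interval_in_semitones(220.0, 330.0), 2))  # → 7.02
print(round(interval_in_semitones(440.0, 660.0), 2))  # → 7.02
```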

A particularly important interval is the octave, which approximates a 2:1 frequency ratio.2 Western listeners perceive a fundamental equivalence between pitches separated by octaves. Correspondingly, a pitch class is defined as an equivalence class of pitches under octave transposition. The pitch-class interval between two pitch classes is then defined as the smallest possible ascending interval between two pitches belonging to the respective pitch classes.
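A minimal sketch of octave equivalence, assuming 12-tone equal temperament and MIDI note numbers (60 = middle C) as the pitch representation; both function names are hypothetical. Taking the difference modulo 12 yields the smallest ascending interval between the two pitch classes, as defined above.

```python
def pitch_class(midi_pitch: int) -> int:
    # Octave equivalence: pitches 12 semitones apart share a pitch class.
    return midi_pitch % 12

def pitch_class_interval(pc_from: int, pc_to: int) -> int:
    # Smallest ascending interval from a pitch in pc_from to a pitch in pc_to.
    # Python's % always returns a non-negative result here.
    return (pc_to - pc_from) % 12

print(pitch_class(60), pitch_class(72))                         # → 0 0
print(pitch_class_interval(pitch_class(67), pitch_class(60)))   # → 5 (G up to C)
```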

In Western music theory, a chord may be defined as a collection of notes that are sounded simultaneously as tones. The lowest of these notes is termed the bass note. Chords may be named according to their size: For example, the terms dyad, triad, and tetrad denote chords comprising two, three, and four notes respectively. Chords may also be classified according to how their constituent notes are represented: (a) Pitch sets represent notes as absolute pitches; (b) Pitch-class sets represent notes as pitch classes; and (c) Chord types represent notes as intervals from the bass note.
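The three chord representations can be illustrated with the following sketch, again assuming MIDI note numbers; the function is hypothetical, and here chord types are taken as semitone intervals above the bass (an octave-reduced variant would take these intervals modulo 12). Transposing a chord changes its pitch set and pitch-class set but not its chord type.

```python
def chord_representations(midi_pitches):
    """Return (pitch set, pitch-class set, chord type) for a chord of MIDI pitches."""
    pitch_set = sorted(set(midi_pitches))
    bass = pitch_set[0]  # the lowest note
    pitch_class_set = sorted({p % 12 for p in pitch_set})
    chord_type = [p - bass for p in pitch_set]  # semitones above the bass
    return pitch_set, pitch_class_set, chord_type

# C major triad (C4, E4, G4) and the same chord transposed up a whole tone (D4, F#4, A4)
print(chord_representations([60, 64, 67]))  # → ([60, 64, 67], [0, 4, 7], [0, 4, 7])
print(chord_representations([62, 66, 69]))  # → ([62, 66, 69], [2, 6, 9], [0, 4, 7])
```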

This paper is about the simultaneous consonance of musical chords. A collection of notes is said to be consonant if the notes “sound well together,” and conversely dissonant if the notes “sound poorly together.” In its broadest definitions, consonance is associated with many different musical concepts, including diatonicism, centricism, stability, tension, similarity, and distance (Parncutt & Hair, 2011). For psychological studies, however, it is often useful to provide a stricter operationalization of consonance, and so researchers commonly define consonance to their participants as the pleasantness, beauty, or attractiveness of a chord (e.g., Bowling & Purves, 2015; Bowling et al., 2018; Cousineau et al., 2012; McDermott et al., 2010, 2016).

In this paper we use the term “simultaneous” to restrict consideration to the notes within the chord, as opposed to sequential relationships between the chord and its musical context. Simultaneous and sequential consonance are sometimes termed vertical and horizontal consonance respectively, by analogy with the physical layout of the Western musical score (Parncutt & Hair, 2011). These kinds of chordal consonance may also be distinguished from “melodic” consonance, which refers to the intervals of a melody. For the remainder of this paper, the term “consonance” will be taken to imply “simultaneous consonance” unless specified otherwise.

Consonance and dissonance are often treated as two ends of a continuous scale, but some researchers treat the two as distinct phenomena (e.g., Parncutt & Hair, 2011). Under such formulations, consonance is typically treated as the perceptual correlate of harmonicity, and dissonance as the perceptual correlate of roughness (see Consonance Theories). Here we avoid this approach, and instead treat consonance and dissonance as antonyms.

Consonance Theories

Here we review current theories of consonance perception. We pay particular attention to three classes of theories—periodicity/harmonicity, interference between partials, and culture—that we consider to be particularly well-supported by the empirical literature. We also discuss several related theories, including vocal similarity, fusion, and combination tones.

Periodicity/Harmonicity

Human vocalizations are characterized by repetitive structure termed periodicity. This periodicity has several perceptual correlates, of which the most prominent is pitch. Broadly speaking, pitch corresponds to the waveform’s repetition rate, or fundamental frequency: Faster repetition corresponds to higher pitch.

Sound can be represented either in the time domain or in the frequency domain. In the time domain, periodicity manifests as repetitive waveform structure. In the frequency domain, periodicity manifests as harmonicity, a phenomenon where the sound’s frequency components are all integer multiples of the fundamental frequency.3 These integer-multiple frequencies are termed harmonics; a sound comprising a full set of integer multiples is termed a harmonic series. Each periodic sound constitutes a (possibly incomplete) harmonic series rooted on its fundamental frequency; conversely, every harmonic series (incomplete or complete) is periodic in its fundamental frequency. Harmonicity and periodicity are therefore essentially equivalent phenomena, and we will denote both by writing “periodicity/harmonicity.”
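The claim that every (possibly incomplete) harmonic series is periodic in its fundamental frequency can be checked numerically. The sketch below assumes zero-phase, unit-amplitude sinusoids and an arbitrary 110 Hz fundamental; shifting the waveform by one fundamental period reproduces it even though the fundamental itself is absent, whereas shifting by half a period does not.

```python
import numpy as np

def harmonic_tone(f0, harmonics, t):
    # Sum of unit-amplitude sinusoids at the given integer multiples of f0.
    return sum(np.sin(2 * np.pi * n * f0 * t) for n in harmonics)

f0 = 110.0
period = 1.0 / f0
t = np.linspace(0.0, 0.05, 1000)

# Incomplete harmonic series: harmonics 2, 3, and 5 only (no energy at f0 itself)
x = harmonic_tone(f0, [2, 3, 5], t)
x_shifted = harmonic_tone(f0, [2, 3, 5], t + period)
x_half = harmonic_tone(f0, [2, 3, 5], t + period / 2)

print(np.allclose(x, x_shifted))  # → True: the waveform repeats every 1/f0 seconds
print(np.allclose(x, x_half))     # → False: odd harmonics flip sign after half a period
```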

Humans rely on periodicity/harmonicity analysis to understand the natural environment and to communicate with others (e.g., Oxenham, 2018), but the precise mechanisms of this analysis remain unclear. The primary extant theories are time-domain autocorrelation theories and frequency-domain pattern-matching theories (de Cheveigné, 2005). Autocorrelation theories state that listeners detect periodicity by computing the signal’s correlation with a delayed version of itself as a function of delay time; peaks in the autocorrelation function correspond to potential fundamental frequencies (Balaguer-Ballester, Denham, & Meddis, 2008; Bernstein & Oxenham, 2005; Cariani, 1999; Cariani & Delgutte, 1996; de Cheveigné, 1998; Ebeling, 2008; Langner, 1997; Licklider, 1951; Meddis & Hewitt, 1991a, 1991b; Meddis & O’Mard, 1997; Slaney & Lyon, 1990; Wightman, 1973). Pattern-matching theories instead state that listeners infer fundamental frequencies by detecting harmonic patterns in the frequency domain (Bilsen, 1977; Cohen, Grossberg, & Wyse, 1995; Duifhuis, Willems, & Sluyter, 1982; Goldstein, 1973; Shamma & Klein, 2000; Terhardt, 1974; Terhardt, Stoll, & Seewann, 1982b). Both of these explanations have resisted definitive falsification, and it is possible that both mechanisms contribute to periodicity/harmonicity detection (de Cheveigné, 2005).
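To make the autocorrelation idea concrete, the following sketch estimates the fundamental frequency of a tone whose 200 Hz fundamental is physically absent (only harmonics 2 to 4 are present). It is a deliberately minimal illustration, not a reimplementation of any cited model: published autocorrelation models add peripheral auditory processing that this sketch omits, and the search range and signal parameters are our own assumptions.

```python
import numpy as np

def autocorrelation_f0(x, sample_rate, f_min=50.0, f_max=1000.0):
    """Toy pitch estimate: the lag with the highest unnormalized autocorrelation
    within the lag range corresponding to f_min..f_max."""
    n = len(x)
    min_lag = int(sample_rate / f_max)
    max_lag = int(sample_rate / f_min)
    lags = np.arange(min_lag, max_lag + 1)
    # r[lag] = sum_n x[n] * x[n + lag]; longer lags sum fewer terms.
    r = np.array([np.dot(x[: n - lag], x[lag:]) for lag in lags])
    best_lag = lags[np.argmax(r)]
    return sample_rate / best_lag

sr = 16000
t = np.arange(int(0.25 * sr)) / sr
# Harmonics 2-4 of 200 Hz; the 200 Hz component itself is missing.
x = sum(np.sin(2 * np.pi * k * 200.0 * t) for k in (2, 3, 4))
print(autocorrelation_f0(x, sr))  # → 200.0
```

Because the unnormalized sum covers fewer overlapping samples as the lag grows, the shortest of several equally good periods wins; this crude tie-breaking is one of several simplifications relative to published models.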

The prototypically consonant intervals of Western music tend to exhibit high periodicity/harmonicity. For example, octaves are typically performed as complex tones that approximate 2:1 frequency ratios, where every cycle of the lower-frequency waveform approximately coincides with a cycle of the higher-frequency waveform. The combined waveform therefore repeats approximately with a fundamental frequency equal to that of the lowest tone, which is as high a fundamental frequency as we could expect when combining two complex tones; we can therefore say that the octave has maximal periodicity. In contrast, the dissonant tritone cannot be easily approximated by a simple frequency ratio, and so its fundamental frequency (approximate or otherwise) must be much lower than that of the lowest tone. We therefore say that the tritone has relatively low periodicity.
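The periodicity argument can be made exact for idealized intervals. Assuming two harmonic complex tones whose fundamentals stand in an exact ratio p/q (in lowest terms), both fundamentals are integer multiples of f_low/q, so the combined waveform repeats at that frequency. The sketch below (function name hypothetical) applies this to an octave, a perfect fifth, and the just-intonation tritone 45:32; performed intervals only approximate such ratios, and an equal-tempered tritone (√2:1) has no exact common fundamental at all.

```python
from fractions import Fraction

def combined_fundamental(f_low, ratio):
    """Fundamental of two harmonic tones at f_low and f_low * ratio, where
    ratio = p/q is exact. Both tones are integer multiples of f_low / q."""
    ratio = Fraction(ratio)  # Fraction normalizes p/q to lowest terms
    return f_low / ratio.denominator

f_low = 220.0
for name, ratio in [("octave", Fraction(2, 1)),
                    ("perfect fifth", Fraction(3, 2)),
                    ("just tritone", Fraction(45, 32))]:
    print(name, combined_fundamental(f_low, ratio))
# → octave 220.0
# → perfect fifth 110.0
# → just tritone 6.875
```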

It has correspondingly been proposed that periodicity/harmonicity determines consonance perception (Bidelman & Heinz, 2011; Boomsliter & Creel, 1961; Bowling & Purves, 2015; Bowling et al., 2018; Cousineau et al., 2012; Ebeling, 2008; Heffernan & Longtin, 2009; Lee, Skoe, Kraus, & Ashley, 2015; Lots & Stone, 2008; McDermott et al., 2010; Milne et al., 2016; Nordmark & Fahlén, 1988; Patterson, 1986; Spagnolo, Ushakov, & Dubkov, 2013; Stolzenburg, 2015; Terhardt, 1974; Ushakov, Dubkov, & Spagnolo, 2010).4 The nature of this potential relationship depends in large part on the unresolved issue of whether listeners detect periodicity/harmonicity using autocorrelation or pattern-matching (de Cheveigné, 2005), as well as other subtleties of auditory processing such as masking (Parncutt, 1989; Parncutt & Strasburger, 1994), octave invariance (Harrison & Pearce, 2018; Milne et al., 2016; Parncutt, 1988; Parncutt, Reisinger, Fuchs, & Kaiser, 2018), and nonlinear signal transformation (Lee et al., 2015; Stolzenburg, 2017). It is also unclear precisely how consonance develops from the results of periodicity/harmonicity detection; competing theories suggest that consonance is determined by the inferred fundamental frequency (Boomsliter & Creel, 1961; Stolzenburg, 2015), the absolute degree of harmonic template fit at the fundamental frequency (Bowling et al., 2018; Gill & Purves, 2009; Milne et al., 2016; Parncutt, 1989; Parncutt & Strasburger, 1994), the degree of template fit at the fundamental frequency relative to that at other candidate fundamental frequencies (Parncutt, 1988; Parncutt et al., 2018), or the degree of template fit as aggregated over all candidate fundamental frequencies (Harrison & Pearce, 2018). 
This variety of hypotheses is reflected in a diversity of computational models of musical periodicity/harmonicity perception (Ebeling, 2008; Gill & Purves, 2009; Harrison & Pearce, 2018; Lartillot, Toiviainen, & Eerola, 2008; Milne et al., 2016; Parncutt, 1988, 1989; Parncutt & Strasburger, 1994; Spagnolo et al., 2013; Stolzenburg, 2015). So far these models have only received limited empirical comparison (e.g., Stolzenburg, 2015).
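For contrast with autocorrelation, a toy harmonic sieve illustrates frequency-domain pattern matching and the notion of template fit. It is an assumption-laden sketch, not any of the cited models: a partial counts as fitting if it lies within 0.2% of a harmonic of the candidate fundamental, candidates lie on a 1 Hz grid, and ties between a fundamental and its subharmonics are broken by taking the highest candidate.

```python
import numpy as np

def template_fit(partials, f0, tol=0.002):
    """Fraction of partials within a relative tolerance of some harmonic of f0."""
    partials = np.asarray(partials, dtype=float)
    n = np.maximum(np.round(partials / f0), 1)  # nearest harmonic number
    return float(np.mean(np.abs(partials - n * f0) / partials < tol))

def pattern_match_f0(partials, candidates):
    fits = np.array([template_fit(partials, f) for f in candidates])
    # Subharmonics (f0/2, f0/3, ...) fit equally well, so this toy rule
    # picks the highest of the best-fitting candidates.
    best = candidates[fits == fits.max()]
    return float(best.max()), float(fits.max())

candidates = np.arange(50.0, 501.0)  # 1 Hz grid of candidate fundamentals
print(pattern_match_f0([400.0, 600.0, 800.0], candidates))  # → (200.0, 1.0)
```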

It is clear why periodicity/harmonicity should be salient to human listeners: Periodicity/harmonicity detection is crucial for auditory scene analysis and for natural speech understanding (e.g., Oxenham, 2018). It is less clear why periodicity/harmonicity should be positively valenced, and hence associated with consonance. One possibility is that long-term exposure to vocal sounds (Schwartz et al., 2003) or Western music (McDermott et al., 2016) induces familiarity with periodicity/harmonicity, in turn engendering liking through the mere exposure effect (Zajonc, 2001). A second possibility is that the ecological importance of interpreting human vocalizations creates a selective pressure to perceive these vocalizations as attractive (Bowling et al., 2018).

Interference Between Partials

Musical chords can typically be modeled as complex tones, superpositions of finite numbers of sinusoidal pure tones termed partials. Each partial is characterized by a frequency and an amplitude. It has been argued that neighboring partials can interact to produce interference effects, which are subsequently perceived as dissonance (Dillon, 2013; Helmholtz, 1863; Hutchinson & Knopoff, 1978; Kameoka & Kuriyagawa, 1969a, 1969b; Mashinter, 2006; Plomp & Levelt, 1965; Sethares, 1993; Vassilakis, 2001).

Pure-tone interference has two potential sources: beating and masking. Beating develops from the following mathematical identity for the addition of two equal-amplitude sinusoids:

$$\sin(2\pi f_1 t) + \sin(2\pi f_2 t) = 2\cos(\pi \delta t)\,\sin(2\pi \bar{f} t) \qquad (1)$$

where f1, f2 are the frequencies of the original sinusoids (f1 > f2), f̄ = (f1 + f2)/2, δ = f1 − f2, and t denotes time. For sufficiently large frequency differences, listeners perceive the left-hand side of Equation 1, corresponding to two separate pure tones at frequencies f1, f2. For sufficiently small frequency differences, listeners perceive the right-hand side of Equation 1, corresponding to a tone of intermediate frequency f̄ modulated by a sinusoid of frequency δ/2. This modulation is perceived as amplitude fluctuation with frequency equal to the modulating sinusoid’s zero-crossing rate, f1 − f2. Slow amplitude fluctuation (c. 0.1–5 Hz) is perceived as a not unpleasant oscillation in loudness, but fast amplitude fluctuation (c. 20–30 Hz) takes on a harsh quality described as roughness. This roughness is thought to contribute to dissonance perception.
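Written out, the identity behind Equation 1 is the sum-to-product rule sin(2πf1t) + sin(2πf2t) = 2cos(πδt)sin(2πf̄t), with f̄ = (f1 + f2)/2 and δ = f1 − f2. The sketch below checks it numerically for two illustrative pure tones at 444 and 440 Hz (our choice) and counts the zero crossings of the modulating cosine over one second, which match the beat rate f1 − f2 = 4 Hz.

```python
import numpy as np

f1, f2 = 444.0, 440.0      # f1 > f2
delta = f1 - f2            # 4 Hz
f_bar = (f1 + f2) / 2      # 442 Hz
t = np.linspace(0.0, 1.0, 48000)

lhs = np.sin(2 * np.pi * f1 * t) + np.sin(2 * np.pi * f2 * t)
rhs = 2 * np.cos(np.pi * delta * t) * np.sin(2 * np.pi * f_bar * t)
print(np.allclose(lhs, rhs))  # → True

# The modulator cos(pi * delta * t) has frequency delta / 2 but crosses zero
# delta times per second; each crossing is a dip in loudness.
modulator = np.cos(np.pi * delta * t)
crossings = np.count_nonzero(np.diff(np.sign(modulator)))
print(crossings)  # → 4
```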

Masking describes situations where one sound obstructs the perception of another sound (e.g., Patterson & Green, 2012; Scharf, 1971). Masking in general is a complex phenomenon, but the mutual masking of pairs of pure tones can be approximated by straightforward mathematical models (Parncutt, 1989; Parncutt & Strasburger, 1994; Terhardt, Stoll, & Seewann, 1982a; Wang, Shen, Guo, Tang, & Hamade, 2013). These models embody long-established principles that masking increases with smaller frequency differences and with higher sound pressure level.

Beating and masking are both closely linked with the notion of critical bands. The notion of critical bands comes from modeling the cochlea as a series of overlapping bandpass filters, areas that are preferentially excited by spectral components within a certain frequency range (Zwicker, Flottorp, & Stevens, 1957). Beating typically only arises from spectral components localized to the same critical band (Daniel & Weber, 1997). The mutual masking of pure tones approximates a linear function of the number of critical bands separating them (termed critical-band distance), with additional masking occurring from pure tones within the same critical band that are unresolved by the auditory system (Terhardt et al., 1982a).

Beating and masking effects are both considerably stronger when two tones are presented diotically (to the same ear) rather than dichotically (to different ears; Buus, 1997; Grose, Buss, & Hall, 2012). This indicates that these phenomena depend, in large part, on physical interactions in the inner ear.

There is a long tradition of research relating beating to consonance, mostly founded on the work of Helmholtz (1863; Aures, 1985a, cited in Daniel & Weber, 1997; Hutchinson & Knopoff, 1978; Kameoka & Kuriyagawa, 1969a, 1969b; Mashinter, 2006; Parncutt et al., 2018; Plomp & Levelt, 1965; Sethares, 1993; Vassilakis, 2001).5 The general principle shared by this work is that dissonance develops from the accumulation of roughness deriving from the beating of neighboring partials.

In contrast, the literature linking masking to consonance is relatively sparse. Huron (2001, 2002) suggests that masking induces dissonance because it reflects a compromised sensitivity to the auditory environment, with analogies in visual processing such as occlusion or glare. Aures (1984; cited in Parncutt, 1989) and Parncutt (1989; Parncutt & Strasburger, 1994) also state that consonance decreases as masking increases. Unfortunately, these ideas have yet to receive much empirical validation; a difficulty is that beating and masking tend to happen in similar situations, making them difficult to disambiguate (Huron, 2001).

The kind of beating that elicits dissonance is achieved by small, but not too small, frequency differences between partials. With very small frequency differences, the beating becomes too slow to elicit dissonance (Hutchinson & Knopoff, 1978; Kameoka & Kuriyagawa, 1969a; Plomp & Levelt, 1965). The kind of masking that elicits dissonance is presumably also maximized by small, but not too small, frequency differences between partials. For moderately small frequency differences, the auditory system tries to resolve two partials, but finds it difficult on account of mutual masking, with this difficulty eliciting negative valence (Huron, 2001, 2002). For very small frequency differences, the auditory system only perceives one partial, which becomes purer as the two acoustic partials converge on the same frequency.
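The "small, but not too small" shape is often modeled with Sethares's (1993) parameterization of Plomp and Levelt's (1965) data: a difference of two exponentials in frequency difference, scaled so that the curve widens (in hertz) as the lower frequency increases. The constants below are the values commonly quoted for that parameterization; treat them, and the min-amplitude weighting, as assumptions to check against the original sources. With these values the curve is zero at unison, peaks roughly 26 Hz above a 440 Hz partial, and decays for larger differences.

```python
import math

# Commonly quoted constants for Sethares's (1993) Plomp-Levelt fit (assumed here).
D_STAR, S1, S2, B1, B2 = 0.24, 0.0207, 18.96, 3.51, 5.75

def pair_dissonance(f_a, amp_a, f_b, amp_b):
    f_lo, f_hi = sorted((f_a, f_b))
    s = D_STAR / (S1 * f_lo + S2)   # widens the curve at higher frequencies
    x = s * (f_hi - f_lo)
    return min(amp_a, amp_b) * (math.exp(-B1 * x) - math.exp(-B2 * x))

diffs = [0.5 * k for k in range(0, 401)]  # 0-200 Hz in 0.5 Hz steps
curve = [pair_dissonance(440.0, 1.0, 440.0 + d, 1.0) for d in diffs]
peak = diffs[curve.index(max(curve))]
print(peak)  # roughly 26 Hz with these constants
```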

Musical sonorities can often be treated as combinations of harmonic complex tones, complex tones whose spectral frequencies follow a harmonic series. The interference experienced by a combination of harmonic complex tones depends on the fundamental frequencies of the complex tones. A particularly important factor is the ratio of these fundamental frequencies. Certain ratios, in particular the simple-integer ratios approximated by prototypically consonant musical chords, tend to produce partials that either completely coincide or are widely spaced, hence minimizing interference.
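Summing such pairwise interference over all partials of two harmonic complex tones shows how simple ratios minimize interference. The sketch uses a commonly quoted parameterization of the Plomp-Levelt curve (Sethares, 1993), whose constants should be treated as assumptions, and, as an illustrative choice of ours, models each tone with six harmonics whose amplitudes fall by a factor of 0.88 per harmonic. For the 3:2 fifth, several partials coincide and the rest are widely spaced; for the equal-tempered tritone (√2:1), several partials fall within a few tens of hertz of each other, giving a markedly higher total.

```python
import math
from itertools import combinations

D_STAR, S1, S2, B1, B2 = 0.24, 0.0207, 18.96, 3.51, 5.75  # assumed constants

def pair_dissonance(f_a, amp_a, f_b, amp_b):
    f_lo, f_hi = sorted((f_a, f_b))
    x = D_STAR / (S1 * f_lo + S2) * (f_hi - f_lo)
    return min(amp_a, amp_b) * (math.exp(-B1 * x) - math.exp(-B2 * x))

def harmonic_partials(f0, n_harmonics=6, rolloff=0.88):
    # Idealized harmonic complex tone with geometrically decaying amplitudes.
    return [(k * f0, rolloff ** (k - 1)) for k in range(1, n_harmonics + 1)]

def chord_dissonance(fundamentals):
    partials = [p for f0 in fundamentals for p in harmonic_partials(f0)]
    return sum(pair_dissonance(fa, aa, fb, ab)
               for (fa, aa), (fb, ab) in combinations(partials, 2))

c4 = 261.63
fifth = chord_dissonance([c4, c4 * 3 / 2])
tritone = chord_dissonance([c4, c4 * 2 ** 0.5])
print(fifth < tritone)  # → True
```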

Interference between partials also depends on pitch height. A given frequency ratio occupies less critical-band distance as absolute frequency decreases, typically resulting in increased interference. This mechanism potentially explains why the same musical interval (e.g., the major third, 5:4) can sound consonant in high registers and dissonant in low registers.
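A short calculation illustrates this pitch-height effect. The sketch assumes a widely used analytical approximation of the critical-band (Bark) scale due to Zwicker and Terhardt, which is our choice rather than necessarily the scale used by the models cited here, and measures how many Bark a 5:4 major third spans when its lower tone is at 100 Hz versus 1000 Hz.

```python
import math

def hz_to_bark(f_hz):
    # Zwicker & Terhardt's analytical approximation of critical-band rate (assumed).
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)

def bark_span(f_low, ratio):
    """Critical-band distance covered by an interval with the given frequency ratio."""
    return hz_to_bark(f_low * ratio) - hz_to_bark(f_low)

major_third = 5 / 4
low = bark_span(100.0, major_third)    # about a quarter of a Bark
high = bark_span(1000.0, major_third)  # about 1.5 Bark
print(low < high)  # → True
```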

It is currently unusual to distinguish beating and masking theories of consonance, as we have done above. Most previous work solely discusses beating and its psychological correlate, roughness (e.g., Cousineau et al., 2012; McDermott et al., 2010, 2016; Parncutt & Hair, 2011; Parncutt et al., 2018; Terhardt, 1984). However, we contend that the existing evidence does little to differentiate beating and masking theories, and that it would be premature to discard the latter in favor of the former. Moreover, we show later in this paper that computational models that address beating explicitly (e.g., Wang et al., 2013) seem to predict consonance worse than generic models of interference between partials (e.g., Hutchinson & Knopoff, 1978; Sethares, 1993; Vassilakis, 2001). For now, therefore, it seems wise to contemplate both beating and masking as potential contributors to consonance.

Culture

Consonance may also be determined by a listener’s cultural background (Arthurs, Beeston, & Timmers, 2018; Guernsey, 1928; Johnson-Laird et al., 2012; Lundin, 1947; McDermott et al., 2016; McLachlan, Marco, Light, & Wilson, 2013; Omigie, Dellacherie, & Samson, 2017; Parncutt, 2006b; Parncutt & Hair, 2011). Several mechanisms for this effect are possible. Through the mere exposure effect (Zajonc, 2001), exposure to common chords in a musical style might induce familiarity and hence liking. Through classical conditioning, the co-occurrence of certain musical features (e.g., interference) with external features (e.g., the violent lyrics in death metal music, Olsen, Thompson, & Giblin, 2018) might also induce aesthetic responses to these musical features.

It remains unclear which musical features might become consonant through familiarity. One possibility is that listeners become familiar with acoustic phenomena such as periodicity/harmonicity (McDermott et al., 2016). A second possibility is that listeners internalize Western tonal structures such as diatonic scales (Johnson-Laird et al., 2012). Alternatively, listeners might develop a granular familiarity with specific musical chords (McLachlan et al., 2013).
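The last of these hypotheses, granular familiarity with specific chords, suggests estimating familiarity from how often each chord occurs in a corpus. The sketch below is a generic illustration under our own assumptions (log relative frequency with additive smoothing, chords represented as pitch-class sets); it is not the cultural familiarity model introduced in this paper, and the toy corpus is invented for illustration rather than drawn from real data.

```python
import math
from collections import Counter

def familiarity_scores(corpus_chords, smoothing=1.0):
    """Return a function giving the smoothed log relative frequency of a chord."""
    counts = Counter(corpus_chords)
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen chords
    def score(chord):
        return math.log((counts[chord] + smoothing) / (total + smoothing * vocab))
    return score

# Hypothetical toy corpus of pitch-class sets (invented counts, not real data)
toy_corpus = [(0, 4, 7)] * 50 + [(0, 3, 7)] * 30 + [(0, 4, 7, 10)] * 15 + [(0, 4, 8)] * 5
familiarity = familiarity_scores(toy_corpus)
print(familiarity((0, 4, 7)) > familiarity((0, 4, 8)) > familiarity((0, 1, 2)))  # → True
```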

Other Theories

Vocal similarity

Vocal similarity theories hold that consonance derives from acoustic similarity to human vocalizations (e.g., Bowling & Purves, 2015; Bowling et al., 2018; Schwartz et al., 2003). A key feature of human vocalizations is periodicity/harmonicity, leading some researchers to operationalize vocal similarity as the latter (Gill & Purves, 2009). In such cases, vocal similarity theories may be considered a subset of periodicity/harmonicity theories. However, Bowling et al. (2018) additionally operationalize vocal similarity as the absence of frequency intervals smaller than 50 Hz, arguing that such intervals are rarely found in human vocalizations. Indeed, such intervals are negatively associated with consonance; however, this phenomenon can also be explained by interference minimization. To our knowledge, no studies have shown that vocal similarity contributes to consonance through paths other than periodicity/harmonicity and interference. We therefore do not evaluate vocal similarity separately from interference and periodicity/harmonicity.
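Bowling et al.'s (2018) small-interval criterion can be sketched by counting pairs of partials that lie closer than 50 Hz. For illustration we assume idealized harmonic tones with ten harmonics each and do not count coincident partials; both choices are ours. The exact 3:2 fifth on 200 Hz produces no such pairs, whereas the equal-tempered tritone produces four, and these are the same closely spaced partials that interference models penalize.

```python
from itertools import combinations

def small_interval_count(fundamentals, n_harmonics=10, threshold_hz=50.0):
    """Count pairs of partials (idealized harmonic tones) closer than threshold_hz."""
    partials = sorted(k * f0 for f0 in fundamentals for k in range(1, n_harmonics + 1))
    # Pairs come out in ascending order, so b >= a; coincident partials are skipped.
    return sum(1 for a, b in combinations(partials, 2) if 1e-9 < b - a < threshold_hz)

print(small_interval_count([200.0, 300.0]),
      small_interval_count([200.0, 200.0 * 2 ** 0.5]))  # → 0 4
```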

Fusion

Stumpf (1890, 1898) proposed that consonance derives from fusion, the perceptual merging of multiple harmonic complex tones. The substance of this hypothesis depends on the precise definition of fusion. Some researchers have operationalized fusion as perceptual indiscriminability, that is, an inability to identify the constituent tones of a sonority (DeWitt & Crowder, 1987; McLachlan et al., 2013). This was encouraged by Stumpf’s early experiments investigating how often listeners erroneously judged tone pairs as single tones (DeWitt & Crowder, 1987; Schneider, 1997). Subsequently, however, Stumpf wrote that fusion should not be interpreted as indiscriminability but rather as the formation of a coherent whole, with the sophisticated listener being able to attend to individual chord components at will (Schneider, 1997). Stumpf later wrote that he was unsure whether fusion truly caused consonance; instead, he suggested that fusion and consonance might both stem from harmonicity recognition (Plomp & Levelt, 1965; Schneider, 1997).

Following Stumpf, several subsequent studies have investigated the relationship between fusion and consonance, but with mixed findings. Guernsey (1928) and DeWitt and Crowder (1987) tested fusion by playing participants different dyads and asking how many tones these chords contained. In both studies, prototypically consonant musical intervals (octaves, perfect fifths) were most likely to be confused for single tones, supporting a link between consonance and fusion. McLachlan et al. (2013) instead tested fusion with a pitch-matching task, where each trial cycled between a target chord and a probe tone, and participants were instructed to manipulate the probe tone until it matched a specified chord tone (lowest, middle, or highest). Pitch-matching accuracy increased for prototypically consonant chords, suggesting (contrary to Stumpf’s claims) that consonance was inversely related to fusion. It is difficult to conclude much about Stumpf’s claims from these studies, partly because different studies have yielded contradictory results, and partly because none of these studies tested for causal effects of fusion on consonance, as opposed to consonance and fusion both being driven by a common factor of periodicity/harmonicity.

Combination tones

Combination tones are additional spectral components introduced by nonlinear sound transmission in the ear’s physical apparatus (e.g., Parncutt, 1989; Smoorenburg, 1972; Wever, Bray, & Lawrence, 1940). For example, two pure tones of frequencies f1 and f2 (f1 < f2) can elicit combination tones including the simple difference tone (f = f2 − f1) and the cubic difference tone (f = 2f1 − f2; Parncutt, 1989; Smoorenburg, 1972).
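The two difference tones follow directly from these formulas. The sketch (function name hypothetical) also shows why combination tones were once thought to reinforce the fundamental: for the second and third harmonics of a 200 Hz tone, both difference tones fall exactly at 200 Hz.

```python
def combination_tones(f1, f2):
    """Simple (f2 - f1) and cubic (2*f1 - f2) difference tones, for f1 < f2."""
    if not f1 < f2:
        raise ValueError("expects f1 < f2")
    return {"simple_difference": f2 - f1, "cubic_difference": 2 * f1 - f2}

print(combination_tones(1000.0, 1200.0))
# → {'simple_difference': 200.0, 'cubic_difference': 800.0}
print(combination_tones(400.0, 600.0))
# → {'simple_difference': 200.0, 'cubic_difference': 200.0}
```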

Combination tones were once argued to be an important mechanism for pitch perception, reinforcing a complex tone’s fundamental frequency and causing it to be perceived even when not acoustically present (e.g., Fletcher, 1924; see Parncutt, 1989). Combination tones were also argued to have important implications for music perception, explaining phenomena such as chord roots and perceptual consonance (Hindemith, 1945; Krueger, 1910; Tartini, 1754, cited in Parncutt, 1989). However, subsequent research showed that the missing fundamental persisted even when the difference tone was removed by acoustic cancellation (Schouten, 1938, described in Plomp, 1965), and that, in any case, difference tones are usually too quiet to be audible for typical speech and music listening (Plomp, 1965). We therefore do not consider combination tones further.

Loudness and sharpness

Aures (1985a, 1985b) describes four aspects of sensory consonance: tonalness, roughness, loudness, and sharpness. Tonalness is a synonym for periodicity/harmonicity, already discussed as an important potential contributor to consonance. Roughness is an aspect of interference, also an important potential contributor to consonance. Loudness is the perceptual correlate of a sound’s energy content; sharpness describes the energy content of high spectral frequencies. Historically, loudness and sharpness have received little attention in the study of musical consonance, perhaps because music theorists and psychologists have primarily been interested in the consonance of transposition-invariant and loudness-invariant structures such as pitch-class sets, for which loudness and sharpness are undefined. We do not consider these phenomena further.

Evenness

The constituent notes of a musical chord can be represented as points on a pitch line or a pitch-class circle (e.g., Tymoczko, 2016). The evenness of the resulting distribution can be characterized in various ways, including the difference in successive interval sizes (Cook, 2009, 2017; Cook & Fujisawa, 2006), the difference between the largest and smallest interval sizes (Parncutt et al., 2018), and the standard deviation of interval sizes (Parncutt et al., 2018). In the case of Cook’s (2009, 2017; Cook & Fujisawa, 2006) models, each chord note is expanded into a harmonic complex tone, and pitch distances are computed between the resulting partials; in the other cases, pitch distances are computed between fundamental frequencies, presumably as inferred through periodicity/harmonicity detection.

Evenness may contribute negatively to consonance. When a chord contains multiple intervals of the same size, these intervals may become confusable and impede perceptual organization, hence decreasing consonance (Cook, 2009, 2017; Cook & Fujisawa, 2006; Meyer, 1956). For example, a major triad in pitch-class space contains the intervals of a major third, a minor third, and a perfect fourth, and each note of the triad participates in a unique pair of these intervals, one connecting it to the note above, and one connecting it to the note below. In contrast, an augmented triad contains only intervals of a major third, and so each note participates in an identical pair of intervals. Correspondingly, the individual notes of the augmented triad may be considered less distinctive than those of the major triad.
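The interval-based evenness measures can be illustrated on the pitch-class circle. The sketch assumes pitch-class sets given as integers 0 to 11 and computes the successive intervals around the circle, their range (largest minus smallest), and their population standard deviation; since Parncutt et al.'s (2018) measures are computed between fundamental frequencies, this pitch-class version is a simplification of ours. The major triad yields intervals of 4, 3, and 5 semitones, whereas the augmented triad is perfectly even.

```python
import statistics

def pc_circle_intervals(pitch_classes):
    """Intervals between successive pitch classes around the 12-step circle."""
    pcs = sorted({p % 12 for p in pitch_classes})
    n = len(pcs)
    # "or 12" handles a single pitch class, whose circular interval is the octave.
    return [((pcs[(i + 1) % n] - pcs[i]) % 12) or 12 for i in range(n)]

def evenness_measures(pitch_classes):
    iv = pc_circle_intervals(pitch_classes)
    return {"intervals": iv, "range": max(iv) - min(iv), "sd": statistics.pstdev(iv)}

print(evenness_measures([0, 4, 7]))  # major triad: intervals [4, 3, 5], range 2
print(evenness_measures([0, 4, 8]))  # augmented triad: intervals [4, 4, 4], range 0, sd 0.0
```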

Evenness may also contribute positively, but indirectly, to consonance. Spacing harmonics evenly on a critical-band scale typically reduces interference, thereby increasing consonance (see, e.g., Huron & Sellmer, 1992; Plomp & Levelt, 1965). Evenness also facilitates efficient voice leading, and therefore may contribute positively to sequential consonance (Parncutt et al., 2018; Tymoczko, 2011).

Evenness is an interesting potential contributor to consonance, but so far it has received little empirical testing. We do not consider it to be sufficiently well-supported to include in this paper’s analyses, but we encourage future empirical research on the topic.

Current Evidence

Evidence for disambiguating different theories of consonance perception can be organized into three broad categories: stimulus effects, listener effects, and composition effects. We review each of these categories in turn, and summarize our conclusions in Table 1.

Table 1. Summarized Evidence for the Mechanisms Underlying Western Consonance Perception.

| Evidence | Interference | Periodicity/harmonicity | Culture |
|---|---|---|---|
| Stimulus effects | |||
| Tone spectra | ✓ | ||
| Pitch height | ✓ | ||
| Dichotic presentation | ✠ | ||
| Familiarity | (✓) | ||
| Chord structure | (✓) | (✓) | (✓) |
| →This paper: Perceptual analyses | ✓ | ✓ | (✓) |
| Listener effects | |||
| Western listeners | (✗) | ✓ | |
| Congenital amusia | ✓ | ||
| Non-Western listeners | ✓ | ||
| Infants | (✠) | ||
| Animals | (✠) | ||
| Composition effects | |||
| Musical scales | ✓ | ||
| Manipulation of interference | ✓ | ✓ | |
| Chord spacing (Western music) | ✓ | ||
| Chord prevalences (Western music) | (✓) | (✓) | |
| →This paper: Corpus analyses | ✓ | ✓ | |

Note. Each row identifies a section in Current Evidence. “✓” denotes evidence that a mechanism contributes to Western consonance perception. “✗” denotes evidence that a mechanism is not relevant to Western consonance perception. “✠” denotes evidence that a mechanism is insufficient to explain Western consonance perception. Parentheses indicate tentative evidence; blank spaces indicate a lack of evidence.

Stimulus Effects

We begin by discussing stimulus effects, ways in which consonance perception varies as a function of the stimulus.

Tone spectra

A chord’s consonance depends on the spectral content of its tones. With harmonic tone spectra, peak consonance is observed when the fundamental frequencies are related by simple frequency ratios (e.g., Stolzenburg, 2015). With pure tone spectra, these peaks at integer ratios disappear, at least for musically untrained listeners (Kaestner, 1909; Plomp & Levelt, 1965). With inharmonic tone spectra, the peaks at integer ratios are replaced by peaks at ratios determined by the inharmonic spectra (Geary, 1980; Pierce, 1966; Sethares, 2005).6 The consonance of harmonic tone combinations can also be increased by selectively deleting harmonics responsible for interference (Vos, 1986), though Nordmark and Fahlén (1988) report limited success with this technique.

Interference theories clearly predict these effects of tone spectra on consonance (for harmonic and pure tones, see Plomp & Levelt, 1965; for inharmonic tones, see Sethares, 1993, 2005). In contrast, neither periodicity/harmonicity nor cultural theories clearly predict these phenomena. This suggests that interference does indeed contribute toward consonance perception.
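Interference predictions of this kind are usually computed by summing a Plomp-Levelt-style roughness curve over every pair of partials. The sketch below uses the exponential parameterization popularized by Sethares. The constants are given as we recall them from Sethares's published work and should be treated as assumptions, and the overall scale factor is omitted. The spectra are arbitrary illustrative choices: six harmonics with amplitudes decaying by a factor of 0.88.

```python
import math

# Constants of the exponential Plomp-Levelt parameterization, as we recall them
# from Sethares (1993, 2005); treat as assumptions. Overall scale factor omitted.
D_STAR, S1, S2 = 0.24, 0.0207, 18.96
B1, B2 = 3.51, 5.75


def pair_dissonance(f1, a1, f2, a2):
    """Roughness contributed by two pure partials (frequencies in Hz, linear amplitudes)."""
    s = D_STAR / (S1 * min(f1, f2) + S2)  # rescales the curve to the local critical band
    x = s * abs(f2 - f1)
    return a1 * a2 * (math.exp(-B1 * x) - math.exp(-B2 * x))


def harmonic_tone(f0, n_partials=6, rolloff=0.88):
    """(frequency, amplitude) partials of an idealized harmonic tone."""
    return [(f0 * k, rolloff ** (k - 1)) for k in range(1, n_partials + 1)]


def total_dissonance(partials):
    """Sum the pairwise roughness over every pair of partials in a spectrum."""
    return sum(
        pair_dissonance(f1, a1, f2, a2)
        for i, (f1, a1) in enumerate(partials)
        for (f2, a2) in partials[i + 1:]
    )


def dyad_dissonance(f0, ratio, **tone_kwargs):
    """Dissonance of two tones whose fundamentals are f0 and f0 * ratio."""
    lower = harmonic_tone(f0, **tone_kwargs)
    upper = harmonic_tone(f0 * ratio, **tone_kwargs)
    return total_dissonance(lower + upper)


for r in (1.46, 1.5, 1.55):
    harmonic = dyad_dissonance(261.63, r)
    pure = dyad_dissonance(261.63, r, n_partials=1)
    print(r, round(harmonic, 4), round(pure, 4))
```

With harmonic tones, the just fifth (3:2) sits in a local dissonance minimum and scores lower than ratios of 1.46 or 1.55. With pure tones (`n_partials=1`), this dip disappears, and dissonance simply falls as the interval widens beyond the roughness peak.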

Pitch height

A given frequency ratio typically sounds less consonant when it occurs at low frequencies (Plomp & Levelt, 1965). Interference theories predict this phenomenon by relating consonance to pitch distance on a critical-bandwidth scale: A given ratio corresponds to a smaller critical-bandwidth distance at lower frequencies (Plomp & Levelt, 1965). In contrast, neither periodicity/harmonicity nor cultural theories predict this sensitivity to pitch height.
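The critical-band argument can be checked numerically. Plomp and Levelt worked with a critical-bandwidth scale. As a stand-in, the sketch below uses the later equivalent-rectangular-bandwidth (ERB) number scale of Glasberg and Moore (1990). This choice of scale is our substitution, not the scale used in the cited work.

```python
import math


def erb_number(f_hz):
    """ERB-number (in Cams) of a frequency, using Glasberg and Moore's (1990) formula."""
    return 21.4 * math.log10(4.37 * f_hz / 1000 + 1)


def erb_distance(f_low, ratio):
    """Distance on the ERB-number scale spanned by an interval with the given ratio."""
    return erb_number(f_low * ratio) - erb_number(f_low)


for f in (100, 250, 500, 1000):
    # A just major third (5:4) placed at several registers.
    print(f, round(erb_distance(f, 5 / 4), 2))
```

The same 5:4 ratio spans roughly 0.7 Cams above 100 Hz but roughly 1.7 Cams above 1000 Hz. In the low register, the two tones therefore lie closer together relative to auditory filter widths.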

Dichotic presentation

Interference between partials is thought to take place primarily within the inner ear. Correspondingly, the interference of a given pair of pure tones can be essentially eliminated by dichotic presentation, where each tone is presented to a separate ear. Periodicity/harmonicity detection, meanwhile, is thought to be a central process that combines information from both ears (Cramer & Huggins, 1958; Houtsma & Goldstein, 1972). Correspondingly, the contribution of periodicity/harmonicity detection to consonance perception should be unaffected by dichotic presentation.

Bidelman and Krishnan (2009) report consonance judgments for dichotically presented pairs of complex tones. Broadly speaking, participants continued to differentiate prototypically consonant and dissonant intervals, suggesting that interference is insufficient to explain consonance. Unexpectedly, however, the tritone and perfect fourth received fairly similar consonance ratings. This finding needs to be explored further.

Subsequent studies have investigated the effect of dichotic presentation on consonance judgments for pairs of pure tones (Cousineau et al., 2012; McDermott et al., 2010, 2016). These studies show that dichotic presentation reliably increases the consonance of small pitch intervals, in particular major and minor seconds, as predicted by interference theories. This would appear to support interference theories of consonance, though it is unclear whether these effects generalize to the complex tone spectra of real musical instruments.

Familiarity

McLachlan et al. (2013, Experiment 2) trained nonmusicians to perform a pitch-matching task on two-note chords. After training, participants judged chords from the training set as more consonant than novel chords. These results could be interpreted as evidence that consonance is positively influenced by exposure, consistent with the mere exposure effect, and supporting a cultural theory of consonance. However, the generalizability of this effect has yet to be confirmed.

Chord structure

Western listeners consider certain chords (e.g., the major triad) to be more consonant than others (e.g., the augmented triad). It is possible to test competing theories of consonance by operationalizing the theories as computational models and testing their ability to predict consonance judgments.

Unfortunately, studies using this approach have identified conflicting explanations for consonance:

1. Interference (Hutchinson & Knopoff, 1978);
2. Interference and additional unknown factors (Vassilakis, 2001);
3. Interference and cultural knowledge (Johnson-Laird et al., 2012);
4. Periodicity/harmonicity (Stolzenburg, 2015);
5. Periodicity/harmonicity and interference (Marin, Forde, Gingras, & Stewart, 2015);
6. Interference and sharpness (Lahdelma & Eerola, 2016);
7. Vocal similarity (Bowling et al., 2018).

These contradictions may often be attributed to methodological problems:

1. Different studies test different theories, and rarely test more than two theories simultaneously.
2. Stimulus sets are often too small to support reliable inferences.7
3. Stolzenburg (2015) evaluates models using pairwise correlations, implicitly assuming that only one mechanism (e.g., periodicity/harmonicity or interference) determines consonance. Multiple regression would be necessary to capture multiple simultaneous mechanisms.
4. The stimulus set of Marin et al. (2015) comprises 12 dyads, each transposed four times; the dependencies between transpositions of the same dyad are not accounted for in the linear regressions, inflating the Type I error rate.
5. Johnson-Laird et al. (2012) do not report coefficients or p values for their fitted regression models; they do report hierarchical regression statistics, but these statistics do not test their primary research question, namely whether interference and cultural knowledge simultaneously contribute to consonance.
6. The audio-based periodicity/harmonicity model used by Lahdelma and Eerola (2016) fails when applied to complex stimuli such as chords (see the Perceptual Analyses section).
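The point about pairwise correlations can be illustrated with a toy, noise-free example. The data below are synthetic and purely hypothetical. x1 and x2 stand in for an interference measure and a periodicity/harmonicity measure, and the simulated consonance ratings depend on both. Pairwise correlations make each predictor look only partly adequate, whereas multiple regression recovers both contributions at once.

```python
import numpy as np

# Hypothetical, noise-free toy data: two orthogonal, zero-mean predictors standing in
# for an interference measure (x1) and a periodicity/harmonicity measure (x2).
x1 = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
x2 = np.array([1, 1, -1, -1, 1, 1, -1, -1], dtype=float)
y = 1.0 * x1 + 0.5 * x2  # simulated "consonance" driven by both mechanisms

# Pairwise correlations: each predictor on its own explains only part of y.
r1 = np.corrcoef(x1, y)[0, 1]
r2 = np.corrcoef(x2, y)[0, 1]

# Multiple regression (intercept + both predictors) recovers both coefficients.
X = np.column_stack([np.ones_like(x1), x1, x2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

print(round(r1, 3), round(r2, 3))  # roughly 0.894 and 0.447
print(np.round(coef, 3))           # intercept, x1 weight, x2 weight
```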

These methodological problems and contradictory findings make it difficult to generalize from this literature.

Listener Effects

We now discuss listener effects, ways in which consonance perception varies as a function of the listener.

Western listeners

McDermott et al. (2010) tested competing theories of consonance perception using an individual-differences approach. They constructed three psychometric measures, testing: (a) Interference preferences, operationalized by playing listeners pure-tone dyads and subtracting preference ratings for dichotic presentation (one tone in each ear) from ratings for diotic presentation (both tones in both ears); (b) Periodicity/harmonicity preferences, operationalized by playing listeners subsets of a harmonic complex tone and subtracting preference ratings for the original version from ratings for a version with perturbed harmonics; (c) Consonance preferences, operationalized by playing listeners 14 musical chords and subtracting preference ratings for the globally least-preferred chords from those for the globally most-preferred chords.

Consonance preferences correlated with periodicity/harmonicity preferences but not with interference preferences. This suggests that consonance may be driven by periodicity/harmonicity, not interference. However, these findings must be considered preliminary given the limited construct validation of the three psychometric measures. Future work must examine whether these measures generalize to a wider range of stimulus manipulations and response paradigms.

Congenital amusia

Congenital amusia is a lifelong cognitive disorder characterized by difficulties in performing simple musical tasks (Ayotte, Peretz, & Hyde, 2002; Stewart, 2011). Using the individual-differences tests of McDermott et al. (2010) (see the Western listeners section), Cousineau et al. (2012) found that amusics exhibited no aversion to traditionally dissonant chords, normal aversion to interference, and an inability to detect periodicity/harmonicity. Because the aversion to interference did not transfer to dissonant chords, Cousineau et al. (2012) concluded that interference is irrelevant to consonance perception. However, Marin et al. (2015) subsequently identified small but reliable preferences for consonance in amusics, and showed with regression analyses that these preferences were driven by interference, whereas nonamusic preferences were driven by both interference and periodicity/harmonicity. This discrepancy between Cousineau et al. (2012) and Marin et al. (2015) needs further investigation.

Non-Western listeners

Cross-cultural research into consonance perception has identified high similarity between the consonance judgments of Western and Japanese listeners (Butler & Daston, 1968), but low similarity between Western and Indian listeners (Maher, 1976), and between Westerners and native Amazonians from the Tsimane’ society (McDermott et al., 2016). Exploring these differences further, McDermott et al. (2016) found that Tsimane’ and Western listeners shared an aversion to interference and an ability to perceive periodicity/harmonicity, but, unlike Western listeners, the Tsimane’ had no preference for periodicity/harmonicity.

These results suggest that cultural exposure significantly affects consonance perception. The results of McDermott et al. (2016) additionally suggest that this effect of cultural exposure may be mediated by changes in preference for periodicity/harmonicity.

Infants

Consonance perception has been demonstrated in toddlers (Di Stefano et al., 2017), 6-month-old infants (Crowder, Reznick, & Rosenkrantz, 1991; Trainor & Heinmiller, 1998), 4-month-old infants (Trainor, Tsang, & Cheung, 2002; Zentner & Kagan, 1998), 2-month-old infants (Trainor et al., 2002), and newborn infants (Masataka, 2006; Perani et al., 2010; Virtala, Huotilainen, Partanen, Fellman, & Tervaniemi, 2013). Masataka (2006) additionally found preserved consonance perception in newborn infants with deaf parents. These results suggest that consonance perception does not solely depend on cultural exposure.

A related question is whether infants prefer consonance to dissonance. Looking-time paradigms address this question, testing whether infants preferentially look at consonant or dissonant sound sources (Crowder et al., 1991; Masataka, 2006; Plantinga & Trehub, 2014; Trainor & Heinmiller, 1998; Trainor et al., 2002; Zentner & Kagan, 1998). With the exception of Plantinga and Trehub (2014), these studies each report detecting consonance preferences in infants. However, Plantinga and Trehub (2014) failed to replicate several of these results, and additionally question the validity of looking-time paradigms, noting that looking times may be confounded by features such as familiarity and comprehensibility. These problems may partly be overcome by physical play-based paradigms (e.g., Di Stefano et al., 2017), but such paradigms are unfortunately only applicable to older infants.

In conclusion, young infants seem to perceive some aspects of consonance, but it is unclear whether they prefer consonance to dissonance. These conclusions provide tentative evidence that consonance perception is not solely cultural.

Animals

Animal studies could theoretically provide compelling evidence for noncultural theories of consonance. If animals were to display sensitivity or preference for consonance despite zero prior musical exposure, this would indicate that consonance could not be fully explained by cultural learning.

Most studies of consonance perception in animals fall into two categories: discrimination studies and preference studies (see Toro & Crespo-Bojorque, 2017 for a review). Discrimination studies investigate whether animals can be taught to discriminate consonance from dissonance in unfamiliar sounds. Preference studies investigate whether animals prefer consonance to dissonance.

Discrimination studies have identified consonance discrimination in several nonhuman species, but methodological issues limit interpretation of their findings. Experiment 5 of Hulse, Bernard, and Braaten (1995) suggests that starlings may be able to discriminate consonance from dissonance, but their stimulus set contains just four chords. Experiment 2 of Izumi (2000) suggests that Japanese monkeys may be able to discriminate consonance from dissonance, but this study likewise relies on just four chords at different transpositions. Watanabe, Uozumi, and Tanaka (2005) claim to show consonance discrimination in Java sparrows, but the sparrows’ discriminations can also be explained by interval-size judgments.8 Conversely, studies of pigeons (Brooks & Cook, 2010) and rats (Crespo-Bojorque & Toro, 2015) have failed to show evidence of consonance discrimination (but see also Borchgrevink, 1975).9

Preference studies have identified consonance preferences in several nonhuman animals. Using stimuli from a previous infant consonance study (Zentner & Kagan, 1998), Chiandetti and Vallortigara (2011) found that newly hatched domestic chicks spent more time near consonant sound sources than dissonant sound sources. Sugimoto et al. (2010) gave an infant chimpanzee the ability to select between consonant and dissonant two-part melodies, and found that the chimpanzee preferentially selected consonant melodies. However, these studies have yet to be replicated, and both rely on borderline p values (p = .03). Other studies have failed to demonstrate consonance preferences in Campbell’s monkeys (Koda et al., 2013) or cotton-top tamarins (McDermott & Hauser, 2004).

These animal studies provide an important alternative perspective on consonance perception. However, recurring problems with these studies include small stimulus sets, small sample sizes, and a lack of replication studies. Future work should address these problems.

Composition Effects

Here we consider how compositional practice may provide evidence for the psychological mechanisms underlying consonance perception.

Musical scales

A scale divides an octave into a set of pitch classes that can subsequently be used to generate musical material. Scales vary cross-culturally, but certain cross-cultural similarities between scales suggest common perceptual biases.

Gill and Purves (2009) argue that scale construction is biased toward harmonicity maximization, and explain harmonicity maximization as a preference for vocal-like sounds. They introduce a computational model of harmonicity, which successfully recovers several important scales in Arabic, Chinese, Indian, and Western music. However, they do not test competing consonance models, and admit that their results may also be explained by interference minimization.

Gamelan music and Thai classical music may help distinguish periodicity/harmonicity from interference. Both traditions use inharmonic scales whose structures seemingly reflect the inharmonic spectra of their percussion instruments (Sethares, 2005). Sethares provides computational analyses relating these scales to interference minimization; periodicity/harmonicity, meanwhile, offers no obvious explanation for these scales.10 These findings suggest that interference contributes cross-culturally to consonance perception.

Manipulation of interference

Western listeners typically perceive interference as unpleasant, but various other musical cultures actively promote it. Interference is a key feature of the Middle Eastern mijwiz, an instrument comprising two blown pipes whose relative tunings are manipulated to induce varying levels of interference (Vassilakis, 2005). Interference is also promoted in the vocal practice of beat diaphony, or Schwebungsdiaphonie, where two simultaneous voice parts sing in close intervals such as seconds. Beat diaphony can be found in various musical traditions, including music from Lithuania (Ambrazevičius, 2017; Vyčinienė, 2002), Papua New Guinea (Florian, 1981), and Bosnia (Vassilakis, 2005). In contrast to Western listeners, individuals from these traditions seem to perceive the resulting sonorities as consonant (Florian, 1981). These cross-cultural differences indicate that the aesthetic valence of interference is, at least in part, culturally determined.

Chord spacing (Western music)

In Western music, chords seem to be spaced to minimize interference, most noticeably by avoiding small intervals in lower registers but permitting them in higher registers (Huron & Sellmer, 1992; McGowan, 2011; Plomp & Levelt, 1965). Periodicity/harmonicity theories of consonance provide no clear explanation for this phenomenon.

Chord prevalences (Western music)

Many theorists have argued that consonance played an integral role in determining Western compositional practice (e.g., Dahlhaus, 1990; Hindemith, 1945; Rameau, 1722). If so, it should be possible to test competing theories of consonance by examining their ability to predict compositional practice.

Huron (1991) analyzed prevalences of different intervals within 30 polyphonic keyboard works by J. S. Bach, and concluded that they reflected dual concerns of minimizing interference and minimizing tonal fusion. Huron argued that interference was minimized on account of its negative aesthetic valence, whereas tonal fusion was minimized to maintain perceptual independence of the different voices.

Parncutt et al. (2018) tabulated chord types in seven centuries of vocal polyphony, and related their occurrence rates to several formal models of diatonicity, interference, periodicity/harmonicity, and evenness. Most models correlated significantly with chord occurrence rates, with fairly stable coefficient estimates across centuries. These results suggest that multiple psychological mechanisms contribute to consonance.

However, these findings must be treated as tentative, for the following reasons: (a) The parameter estimates have low precision due to the small sample sizes (12 dyads in Huron, 1991; 19 triads in Parncutt et al., 2018)11; (b) The pairwise correlations reported in Parncutt et al. (2018) cannot capture effects of multiple concurrent mechanisms (e.g., periodicity/harmonicity and interference).

Discussion

Table 1 summarizes the evidence contributed by these diverse studies. We now use this evidence to reevaluate some claims in the recent literature.

Role of periodicity/harmonicity

Recent work has claimed that consonance is primarily determined by periodicity/harmonicity, with the role of periodicity/harmonicity potentially moderated by musical background (Cousineau et al., 2012; McDermott et al., 2010, 2016). In our view, a significant contribution of periodicity/harmonicity to consonance is indeed supported by the present literature, in particular by individual-differences research and congenital amusia research (see Table 1). A moderating effect of musical background also seems likely, on the basis of cross-cultural variation in music perception and composition. However, quantitative descriptions of these effects are missing: It is unclear what proportion of consonance may be explained by periodicity/harmonicity, and it is unclear how sensitive consonance is to cultural exposure.

Role of interference

Recent work has also claimed that consonance is independent of interference (Bowling & Purves, 2015; Bowling et al., 2018; Cousineau et al., 2012; McDermott et al., 2010, 2016). In our view, the wider literature is inconsistent with this claim (see Table 1). The main evidence against interference comes from the individual-differences study of McDermott et al. (2010), but this evidence is counterbalanced by several positive arguments for interference, including studies of tone spectra, pitch height, chord voicing in Western music, scale tunings in Gamelan music and Thai classical music, and cross-cultural manipulation of interference for expressive effect.

Role of culture

Cross-cultural studies of music perception and composition make it clear that culture contributes to consonance perception (see Table 1). The mechanisms of this effect remain unclear, however: Some argue that Western listeners internalize codified conventions of Western harmony (Johnson-Laird et al., 2012), whereas others argue that Westerners simply learn aesthetic preferences for periodicity/harmonicity (McDermott et al., 2016). These competing explanations have yet to be tested.

Conclusions

We conclude that consonance perception in Western listeners is likely to be driven by multiple psychological mechanisms, including interference, periodicity/harmonicity, and cultural background (see Table 1). This conclusion is at odds with recent claims that interference does not contribute to consonance perception (Cousineau et al., 2012; McDermott et al., 2010, 2016). In the rest of this paper, we therefore examine our proposition empirically, computationally modeling large datasets of consonance judgments and music compositions.

Computational Models

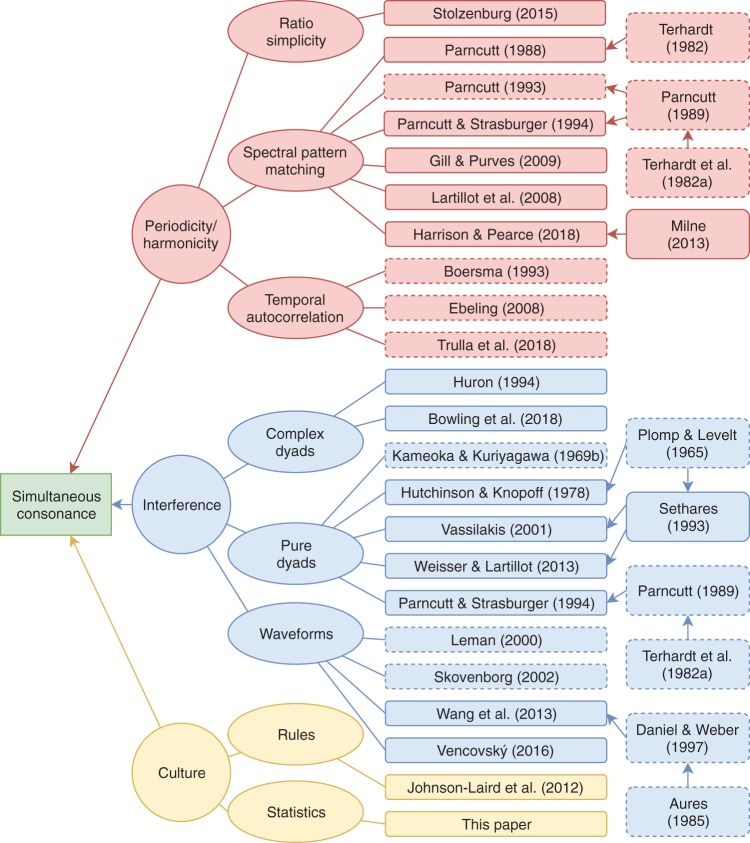

We begin by reviewing prominent computational models of consonance from the literature, organizing them by psychological theory and by modeling approach (see Figure 1).

Figure 1. Consonance models organized by psychological theory and modeling approach. Dashed borders indicate models not evaluated in our empirical analyses. Arrows denote model revisions.

Periodicity/Harmonicity: Ratio Simplicity

Chords tend to be more periodic when their constituent tones are related by simple frequency ratios. Ratio simplicity can therefore provide a proxy for periodicity/harmonicity. Previous research has formalized ratio simplicity in various ways, with the resulting measures predicting the consonance of just-tuned chords fairly well (e.g., Euler, 1739; Geer, Levelt, & Plomp, 1962; Levelt, van de Geer, & Plomp, 1966; Schellenberg & Trehub, 1994).12 Unfortunately, these measures generally fail to predict consonance for chords that are not just-tuned. A particular problem is disproportionate sensitivity to small tuning deviations: For example, an octave stretched by 0.001% still sounds consonant, despite corresponding to a very complex frequency ratio (200,002:100,000). However, Stolzenburg (2015) provides an effective solution to this problem by introducing a preprocessing step in which each note is adjusted to maximize ratio simplicity with respect to the bass note. These adjustments are not permitted to change the interval size by more than 1.1%; Stolzenburg argues that such adjustments are reasonable given human perceptual inaccuracies in pitch discrimination. Once each chord frequency has been expressed as a fractional multiple of the bass frequency, ratio simplicity is computed as the lowest common multiple of the fractions’ denominators. Stolzenburg terms this expression relative periodicity, and notes that, assuming harmonic tones, relative periodicity corresponds to the chord’s overall period length divided by the bass tone’s period length. Relative periodicity values are then postprocessed with logarithmic transformation and smoothing to produce the final model output (see Stolzenburg, 2015, for details).
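Below is a minimal sketch of the relative-periodicity computation (Python 3.9+). It rests on three assumptions. Frequencies are given as floats. The 1.1% limit is read as a relative tolerance on each frequency ratio. The simplest admissible fraction is found by scanning denominators in increasing order, and Stolzenburg's own search procedure may differ in detail. The logarithmic transformation and smoothing steps are omitted, and the function names are hypothetical.

```python
from fractions import Fraction
from math import lcm


def simplest_ratio(x, tol=0.011, max_den=10_000):
    """Smallest-denominator fraction p/q with |p/q - x| <= tol * x (assumed tolerance rule)."""
    for q in range(1, max_den + 1):
        p = round(x * q)  # the best numerator for this denominator
        if p > 0 and abs(p / q - x) <= tol * x:
            return Fraction(p, q)
    raise ValueError("no fraction within tolerance")


def relative_periodicity(freqs, tol=0.011):
    """LCM of the denominators of each tone's simplified ratio to the bass."""
    bass = min(freqs)
    denominators = [simplest_ratio(f / bass, tol).denominator for f in freqs]
    return lcm(*denominators)


major = [2 ** (k / 12) for k in (0, 4, 7)]  # equal-tempered major triad
minor = [2 ** (k / 12) for k in (0, 3, 7)]  # equal-tempered minor triad
stretched_octave = [1.0, 2.0 * 1.00001]     # octave stretched by 0.001%

print(relative_periodicity(major))             # 4
print(relative_periodicity(minor))             # 10
print(relative_periodicity(stretched_octave))  # 1
print(Fraction(200_002, 100_000).denominator)  # 50000 without the tolerance step
```

The tolerance step is what rescues the stretched octave. Taken literally, its ratio has a denominator of 50,000, but within 1.1% it collapses to 2:1.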

Periodicity/Harmonicity: Spectral Pattern Matching

Spectral pattern-matching models of consonance follow directly from spectral pattern-matching theories of pitch perception (see the Consonance Theories section). These models operate in the frequency domain, searching for spectral patterns characteristic of periodic sounds.

Terhardt (1982); Parncutt (1988)

Terhardt (1982) and Parncutt (1988) both frame consonance in terms of chord-root perception. In Western music theory, the chord root is a pitch class summarizing a chord’s tonal content, which (according to Terhardt and Parncutt) arises through pattern-matching processes of pitch perception. Consonance arises when a chord has a clear root; dissonance arises from root ambiguity.

Both Terhardt’s (1982) and Parncutt’s (1988) models use harmonic templates quantized to the Western 12-tone scale, with the templates represented as octave-invariant pitch class sets. Each pitch class receives a numeric weight, quantifying how well the chord’s pitch classes align with a harmonic template rooted on that pitch class. These weights preferentially reward coincidence with primary harmonics such as the octave, perfect fifth, and major third.13 The chord root is estimated as the pitch class with the greatest weight; root ambiguity is then operationalized by dividing the total weight by the maximum weight. According to Terhardt and Parncutt, root ambiguity should then negatively predict consonance.
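The root-ambiguity computation can be sketched in a few lines. The root-support weights below are hypothetical placeholders. They follow the qualitative ordering described above, rewarding the octave most, then the perfect fifth and the major third, but they are not the published values; consult Terhardt (1982) and Parncutt (1988) for those.

```python
# Hypothetical root-support weights: interval above the candidate root
# (semitones, mod 12) -> weight. Illustrative placeholders only.
ROOT_SUPPORT = {0: 10, 7: 5, 4: 3, 10: 2, 2: 1}


def root_weights(pc_set):
    """Weight for each of the 12 candidate roots (pitch classes 0 to 11)."""
    pcs = {pc % 12 for pc in pc_set}
    return [sum(ROOT_SUPPORT.get((pc - root) % 12, 0) for pc in pcs)
            for root in range(12)]


def estimated_root(pc_set):
    """Candidate root with the greatest weight."""
    w = root_weights(pc_set)
    return w.index(max(w))


def root_ambiguity(pc_set):
    """Total weight divided by maximum weight; higher means a less clear root."""
    w = root_weights(pc_set)
    return sum(w) / max(w)


print(estimated_root({0, 4, 7}), root_ambiguity({0, 4, 7}))  # 0 3.5
print(round(root_ambiguity({0, 4, 8}), 3))                   # augmented triad
```

With these placeholder weights, the major triad has root C (pitch class 0) and an ambiguity of 3.5. The augmented triad scores about 4.85, indicating a less clear root. In this formulation, every pitch class contributes the same total weight across the 12 candidate roots. For chords with the same number of distinct pitch classes, the measure therefore depends only on the maximum weight.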

Parncutt (1989); Parncutt and Strasburger (1994)

Parncutt’s (1989) model constitutes a musical revision of Terhardt et al.’s (1982a) pitch perception algorithm. Parncutt and Strasburger’s (1994) model, in turn, represents a slightly updated version of Parncutt’s (1989) model.

Like Parncutt’s (1988) model, Parncutt’s (1989) model formulates consonance in terms of pattern-matching pitch perception. As in Parncutt (1988), the algorithm works by sweeping a harmonic template across an acoustic spectrum, seeking locations where the template coincides well with the acoustic input; consonance is elicited when the location of best fit is unambiguous. However, Parncutt’s (1989) algorithm differs from Parncutt (1988) in several important ways: (a) Chord notes are expanded into their implied harmonics; (b) Psychoacoustic phenomena such as hearing thresholds, masking, and audibility saturation are explicitly modeled; (c) The pattern-matching process is no longer octave-invariant.

Parncutt (1989) proposes two derived measures for predicting consonance: pure tonalness and complex tonalness.14 Pure tonalness describes the extent to which the input spectral components are audible, after accounting for hearing thresholds and masking. Complex tonalness describes the audibility of the strongest virtual pitch percept. The former may be considered an interference model, the latter a periodicity/harmonicity model.

Parncutt and Strasburger (1994) describe an updated version of Parncutt’s (1989) algorithm. The underlying principles are the same, but certain psychoacoustic details differ, such as the calculation of pure-tone audibility thresholds and the calculation of pure-tone height. We evaluate this updated version here.

Parncutt (1993) presents a related algorithm for modeling the perception of octave-spaced tones (also known as Shepard tones). Because octave-spaced tones are uncommon in Western music, we do not evaluate the model here.

Gill and Purves (2009)

Gill and Purves (2009) present a pattern-matching periodicity/harmonicity model which they apply to various two-note chords. They assume just tuning, which allows them to compute each chord’s fundamental frequency as the greatest common divisor of the two tones’ frequencies. They then construct a hypothetical harmonic complex tone rooted on this fundamental frequency, and calculate what proportion of this tone’s harmonics are contained within the spectrum of the original chord. This proportion forms their periodicity/harmonicity measure. This approach has been shown to generalize well to three- and four-note chords (Bowling et al., 2018). However, the model’s cognitive validity is limited by the fact that, unlike human listeners, it is very sensitive to small deviations from just tuning or harmonic tone spectra.
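The harmonicity computation can be sketched with integer frequencies standing in for just-tuned ratios (e.g., [2, 3] for a perfect fifth) and idealized tones with unlimited harmonics. Such a chord spectrum repeats every lowest-common-multiple period, so counting over one period gives the limiting proportion. This counting scheme and the function name are our assumptions, not Gill and Purves's code. The last example shows the sensitivity noted above: Nudging the fifth to 301:200 (about 6 cents sharp) collapses the score.

```python
from functools import reduce
from math import gcd, lcm


def gill_purves_harmonicity(freqs):
    """Proportion of the harmonics of the chord's fundamental (GCD of the integer
    frequencies) that coincide with a harmonic of at least one chord tone."""
    f0 = reduce(gcd, freqs)
    period = lcm(*freqs)  # the combined spectrum's pattern repeats at this frequency
    n = period // f0      # number of fundamental harmonics in one repetition
    present = sum(1 for k in range(1, n + 1)
                  if any((k * f0) % f == 0 for f in freqs))
    return present / n


print(gill_purves_harmonicity([1, 2]))      # octave: 1.0
print(gill_purves_harmonicity([2, 3]))      # perfect fifth: 4/6
print(gill_purves_harmonicity([4, 5]))      # major third: 0.4
print(gill_purves_harmonicity([200, 301]))  # slightly sharp fifth: about 0.008
```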

Peeters et al. (2011); Bogdanov et al. (2013); Lartillot et al. (2008)

Several prominent audio analysis toolboxes—the Timbre Toolbox (Peeters et al., 2011), Essentia (Bogdanov et al., 2013), and MIRtoolbox (Lartillot et al., 2008)—contain inharmonicity measures. Here we examine their relevance for consonance modeling.

The inharmonicity measure in the Timbre Toolbox (Peeters et al., 2011) initially seems relevant for consonance modeling, being calculated by summing each partial’s deviation from harmonicity. However, the algorithm’s preprocessing stages are clearly designed for single tones rather than tone combinations. Each input spectrum is preprocessed to a harmonic spectrum, slightly deformed by optional stretching; this may be a reasonable approximation for single tones, but it is inappropriate for tone combinations. We therefore do not consider this model further.

Essentia (Bogdanov et al., 2013) contains an inharmonicity measure defined similarly to the Timbre Toolbox (Peeters et al., 2011). As with the Timbre Toolbox, this feature is clearly intended for single tones rather than tone combinations, and so we do not consider it further.

MIRtoolbox (Lartillot et al., 2008) contains a more flexible inharmonicity measure. First, the fundamental frequency is estimated using autocorrelation and peak-picking; inharmonicity is then estimated by applying a sawtooth filter to the spectrum, with troughs corresponding to integer multiples of the fundamental frequency, and then integrating the result. This measure seems more likely to capture inharmonicity in musical chords, and indeed it has recently been used in consonance perception research (Lahdelma & Eerola, 2016). However, systematic validations of this measure are lacking.

Milne (2013); Harrison and Pearce (2018)

Milne (2013) presents a periodicity/harmonicity model that operates on pitch-class spectra (see also Milne et al., 2016). The model takes a pitch-class set as input, and expands all tones to idealized harmonic spectra. These spectra are superposed additively, and then blurred by convolution with a Gaussian distribution, mimicking perceptual uncertainty in pitch processing. The algorithm then sweeps a harmonic template over the combined spectrum, calculating the cosine similarity between the template and the combined spectrum as a function of the template’s fundamental frequency. The frequency eliciting the maximal cosine similarity is identified as the fundamental frequency, and the resulting cosine similarity is taken as the periodicity/harmonicity estimate.

Harrison and Pearce (2018) suggest that picking just one fundamental frequency may be inappropriate for larger chords, where listeners may instead infer several candidate fundamental frequencies. They therefore treat the cosine-similarity profile as a probability distribution, and define periodicity/harmonicity as the Kullback-Leibler divergence to this distribution from a uniform distribution. The resulting measure can be interpreted as the information-theoretic uncertainty of the pitch-estimation process.
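A simplified sketch of both measures follows, using NumPy. The parameter values are assumptions for illustration, not Milne's published settings: 1-cent bins over one octave, 12 harmonics, amplitude roll-off rho = 0.75, and Gaussian smoothing with sigma = 6.83 cents. The harmonic template is simply the smoothed spectrum of a single tone on pitch class 0. The Kullback-Leibler divergence from the uniform distribution is reported in bits.

```python
import numpy as np

N_BINS = 1200  # one octave at 1-cent resolution (assumed)


def pc_spectrum(pcs, n_harmonics=12, rho=0.75, sigma=6.83):
    """Smoothed pitch-class spectrum of harmonic tones on pitch classes `pcs`.
    Parameter values are illustrative placeholders."""
    spec = np.zeros(N_BINS)
    for pc in pcs:
        for n in range(1, n_harmonics + 1):
            pos = int(round(100 * pc + 1200 * np.log2(n))) % N_BINS
            spec[pos] += n ** -rho  # harmonic amplitude roll-off
    dist = np.minimum(np.arange(N_BINS), N_BINS - np.arange(N_BINS))
    kernel = np.exp(-0.5 * (dist / sigma) ** 2)  # circular Gaussian blur
    return np.real(np.fft.ifft(np.fft.fft(spec) * np.fft.fft(kernel)))


TEMPLATE = pc_spectrum([0])  # harmonic template rooted on bin 0


def cosine_profile(pcs):
    """Cosine similarity between chord spectrum and template rooted at each bin."""
    chord = pc_spectrum(pcs)
    norms = np.linalg.norm(chord) * np.linalg.norm(TEMPLATE)
    return np.array([chord @ np.roll(TEMPLATE, s) for s in range(N_BINS)]) / norms


def milne_harmonicity(pcs):
    """Maximum cosine similarity (Milne-style estimate)."""
    return float(cosine_profile(pcs).max())


def kl_harmonicity(pcs):
    """KL divergence (bits) of the normalized profile from a uniform distribution."""
    p = np.clip(cosine_profile(pcs), 0, None)
    p = p / p.sum()
    p = p[p > 0]
    return float(np.sum(p * np.log2(p * N_BINS)))


for chord in ([0, 4, 7], [0, 4, 8]):
    print(chord, round(milne_harmonicity(chord), 3), round(kl_harmonicity(chord), 3))
```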

Periodicity/Harmonicity: Temporal Autocorrelation

Temporal autocorrelation models of consonance follow directly from autocorrelation theories of pitch perception (see Consonance Theories). These models operate in the time domain, looking for time lags at which the signal correlates with itself: High autocorrelation implies periodicity and hence consonance.

Boersma (1993)

Boersma’s (1993) autocorrelation algorithm can be found in the popular phonetics software Praat. The algorithm tracks the fundamental frequency of an acoustic input over time, and operationalizes periodicity as the harmonics-to-noise ratio, the proportion of power contained within the signal’s periodic component. Marin et al. (2015) found that this algorithm had some power to predict the relative consonance of different dyads. However, the details of the algorithm lack psychological realism, having been designed to solve an engineering problem rather than to simulate human perception. This limits the algorithm’s appeal as a consonance model.

Ebeling (2008)

Ebeling’s (2008) autocorrelation model estimates the consonance of pure-tone intervals. Incoming pure tones are represented as sequences of discrete pulses, reflecting the neuronal rate coding of the peripheral auditory system. These pulse sequences are additively superposed to form a composite pulse sequence, for which the autocorrelation function is computed. The generalized coincidence function is then computed by integrating the squared autocorrelation function over a finite positive range of time lags. Applied to pure tones, the generalized coincidence function recovers the traditional hierarchy of intervallic consonance, and mimics listeners in being tolerant to slight mistunings. Ebeling presents this as a positive result, but it is inconsistent with Plomp and Levelt’s (1965) observation that, after accounting for musical training, pure tones do not exhibit the traditional hierarchy of intervallic consonance. It remains unclear whether the model would successfully generalize to larger chords or to complex tones.

Trulla, Stefano, and Giuliani (2018)

Trulla et al.’s (2018) model uses recurrence quantification analysis to model the consonance of pure-tone intervals. Recurrence quantification analysis performs a similar function to autocorrelation analysis, identifying time lags at which waveform segments repeat themselves. Trulla et al. (2018) use this technique to quantify the amount of repetition within a waveform, and show that repetition is maximized by traditionally consonant frequency ratios, such as the just-tuned perfect fifth (3:2). The algorithm constitutes an interesting new approach to periodicity/harmonicity detection, but one with little cognitive or neuroscientific support. As with Ebeling (2008), it is also unclear how well the algorithm generalizes to larger chords or to different tone spectra, and the validation suffers from the same problems described above for Ebeling’s model.
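Recurrence quantification analysis offers several measures; the simplest, the recurrence rate, is sketched below in Python to illustrate the general idea. We do not know which measures or parameters Trulla et al. (2018) used, so the embedding dimension, delay, radius, and function names here are hypothetical. The waveform is delay-embedded, and the recurrence rate is the fraction of pairs of embedded vectors that lie within a fixed radius of one another.

```python
import numpy as np


def delay_embed(x, dim, delay):
    """Stack time-delayed copies of x into vectors of length dim."""
    n = len(x) - (dim - 1) * delay
    return np.stack([x[i * delay: i * delay + n] for i in range(dim)], axis=1)


def recurrence_rate(x, dim=3, delay=10, radius=0.2):
    """Fraction of distinct embedded-vector pairs closer than `radius`."""
    x = np.asarray(x, dtype=float)
    x = (x - x.mean()) / x.std()  # radius is in units of the signal's std
    v = delay_embed(x, dim, delay)
    dist = np.linalg.norm(v[:, None, :] - v[None, :, :], axis=-1)
    n = len(v)
    hits = np.count_nonzero(dist < radius) - n  # drop the trivial diagonal
    return hits / (n * (n - 1))


fs = 8000
t = np.arange(800) / fs  # 100 ms
sine = np.sin(2 * np.pi * 200 * t)
noise = np.random.default_rng(1).normal(size=len(t))
fifth = np.sin(2 * np.pi * 200 * t) + np.sin(2 * np.pi * 300 * t)
irrational = np.sin(2 * np.pi * 200 * t) + np.sin(2 * np.pi * 200 * np.sqrt(2) * t)
print(recurrence_rate(sine), recurrence_rate(noise))
print(recurrence_rate(fifth), recurrence_rate(irrational))
```

A strictly periodic waveform revisits the same embedded points once per period, so its recurrence rate should far exceed that of white noise; the last line compares a just fifth with an interval whose frequency ratio is irrational, without presupposing the outcome.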

Summary

Autocorrelation is an important candidate mechanism for consonance perception. However, autocorrelation consonance models have yet to be successfully generalized outside simple tone spectra and two-note intervals. We therefore do not evaluate these models in the present work, but we look forward to future research in this area (see, e.g., Tabas et al., 2017).

Interference: Complex Dyads

Complex-dyad models of interference search chords for complex dyads known to elicit interference. These models are typically hand-computable, making them well-suited to quick consonance estimation.

Huron (1994)

Huron (1994) presents a measure termed aggregate dyadic consonance, which characterizes the consonance of a pitch-class set by summing consonance ratings for each pitch-class interval present in the set. These consonance ratings are derived by aggregating perceptual data from previous literature.

Huron (1994) originally used aggregate dyadic consonance to quantify a scale’s ability to generate consonant intervals. Parncutt et al. (2018) subsequently applied the model to musical chords, and interpreted the output as an interference measure. The validity of this approach rests on the assumption that interference is additively generated by pairwise interactions between spectral components; a similar assumption is made by pure-dyad interference models (see the Interference: Pure Dyads section). A further assumption is that Huron’s dyadic consonance ratings solely reflect interference, not (e.g.) periodicity/harmonicity; this assumption is arguably problematic, especially given recent claims that dyadic consonance is driven by periodicity/harmonicity, not interference (McDermott et al., 2010; Stolzenburg, 2015).
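Aggregate dyadic consonance reduces to a weighted sum over a pitch-class set’s interval-class vector, as in the following Python sketch. The ratings below are hypothetical placeholders chosen only to make the example run; they are not Huron’s (1994) published values, which should be substituted for any real use.

```python
from itertools import combinations

# HYPOTHETICAL placeholder ratings for interval classes 1..6
# (m2/M7, M2/m7, m3/M6, M3/m6, P4/P5, tritone); not Huron's (1994) values.
PLACEHOLDER_RATINGS = (-1.0, -0.5, 0.5, 0.5, 1.0, -0.5)


def interval_class_vector(pitch_classes):
    """Count each interval class (1-6) among all pairs of distinct pitch classes."""
    pcs = sorted({p % 12 for p in pitch_classes})
    vector = [0] * 6
    for a, b in combinations(pcs, 2):
        ic = min((b - a) % 12, (a - b) % 12)
        vector[ic - 1] += 1
    return vector


def aggregate_dyadic_consonance(pitch_classes, ratings=PLACEHOLDER_RATINGS):
    """Sum per-interval-class ratings, weighted by how often each class occurs."""
    icv = interval_class_vector(pitch_classes)
    return sum(count * rating for count, rating in zip(icv, ratings))


print(interval_class_vector({0, 4, 7}))        # [0, 0, 1, 1, 1, 0]
print(aggregate_dyadic_consonance({0, 4, 7}))  # 2.0 with the placeholder ratings
```

Because the measure only sees the interval-class vector, it inherits the additivity assumption discussed above: each pair of pitch classes contributes independently of the others.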

Bowling et al. (2018)

Bowling et al. (2018) primarily explain consonance in terms of periodicity/harmonicity, but also identify dissonance with chords containing pitches separated by less than 50 Hz. They argue that such intervals are uncommon in human vocalizations, and therefore elicit dissonance. We categorize this proposed effect under interference, in line with Parncutt et al.’s (2018) argument that these small intervals (in particular minor and major seconds) are strongly associated with interference.
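The 50 Hz criterion can be checked directly. The Python sketch below assumes, for illustration, that the criterion applies to the equal-tempered fundamental frequencies of the chord’s notes (MIDI numbering, A4 = 440 Hz); the function names are our own.

```python
def midi_to_hz(midi):
    """Equal-tempered frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((midi - 69) / 12.0)


def has_sub_50hz_interval(midi_notes, threshold_hz=50.0):
    """True if any two fundamentals lie closer together than threshold_hz."""
    freqs = sorted(midi_to_hz(m) for m in midi_notes)
    # In a sorted list, the closest pair is always an adjacent pair.
    return any(hi - lo < threshold_hz for lo, hi in zip(freqs, freqs[1:]))


print(has_sub_50hz_interval([60, 62]))      # C4-D4: about 32 Hz apart -> True
print(has_sub_50hz_interval([72, 74]))      # C5-D5: about 64 Hz apart -> False
print(has_sub_50hz_interval([48, 52, 55]))  # C3 major triad: C3-E3 about 34 Hz -> True
```

Because the threshold is absolute rather than proportional, the same chord type can pass in one register and fail in another, as the C3 major triad shows.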

Interference: Pure Dyads

Pure-dyad interference models work by decomposing chords into their pure-tone components, and accumulating interference contributions from each pair of pure tones.

Plomp and Levelt (1965); Kameoka and Kuriyagawa (1969b)

Plomp and Levelt (1965) and Kameoka and Kuriyagawa (1969b) concurrently established an influential methodology for consonance modeling: Use perceptual experiments to characterize the consonance of pure-tone dyads, and estimate the dissonance of complex sonorities by summing contributions from each pure dyad. However, their original models are rarely used today, having been supplanted by later work.

Hutchinson and Knopoff (1978)

Hutchinson and Knopoff (1978) describe a pure-dyad interference model in the line of Plomp and Levelt (1965). Unlike Plomp and Levelt, Hutchinson and Knopoff sum dissonance contributions over all harmonics, rather than just neighboring harmonics. The original model is not fully algebraic, relying on a graphically depicted mapping between interval size and pure-dyad dissonance; a useful modification is the algebraic approximation introduced by Bigand, Parncutt, and Lerdahl (1996), which we adopt here (see also Mashinter, 2006).
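The following Python sketch shows the overall shape of a Hutchinson and Knopoff (1978) style calculation: every pair of partials contributes the product of its amplitudes times a pure-dyad dissonance value, and the total is normalized by the summed squared amplitudes. For the pure-dyad curve we use the form in which the Bigand et al. (1996) approximation is usually quoted, g(y) = (4y e^(1 − 4y))^2 for y < 1.2 and 0 otherwise, where y is the frequency difference in critical bandwidths and the critical bandwidth is 1.72 f^0.65 at the mean frequency f. These constants, the ten harmonics, and the 1/n amplitude rolloff should be treated as assumptions and checked against the original sources.

```python
import numpy as np


def harmonic_spectrum(midi, n_harmonics=10):
    """Frequencies and 1/n amplitudes of an idealized harmonic complex tone."""
    f0 = 440.0 * 2.0 ** ((midi - 69) / 12.0)
    n = np.arange(1, n_harmonics + 1)
    return f0 * n, 1.0 / n


def pure_dyad_dissonance(f1, f2):
    """Dissonance of two pure tones as a function of their distance in critical bandwidths."""
    cbw = 1.72 * ((f1 + f2) / 2.0) ** 0.65
    y = np.abs(f2 - f1) / cbw
    g = (4.0 * y * np.exp(1.0 - 4.0 * y)) ** 2  # peaks at 1 when y = 0.25
    return np.where(y < 1.2, g, 0.0)


def hutchinson_knopoff(midi_notes, n_harmonics=10):
    """Amplitude-weighted sum over all pairs of partials, normalized by total power."""
    spectra = [harmonic_spectrum(m, n_harmonics) for m in midi_notes]
    freqs = np.concatenate([f for f, _ in spectra])
    amps = np.concatenate([a for _, a in spectra])
    i, j = np.triu_indices(len(freqs), k=1)  # every pair of partials once
    numerator = np.sum(amps[i] * amps[j] * pure_dyad_dissonance(freqs[i], freqs[j]))
    return float(numerator / np.sum(amps ** 2))


print(hutchinson_knopoff([60, 61]))  # minor second
print(hutchinson_knopoff([60, 67]))  # perfect fifth
```

Summing over all pairs of partials, rather than only neighboring ones, is what distinguishes this scheme from Plomp and Levelt’s original procedure.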

Hutchinson and Knopoff (1978) originally applied their model only to complex-tone dyads. They later extended it to complex-tone triads (Hutchinson & Knopoff, 1979), and for computational efficiency introduced an approximation decomposing the interference of a triad into the contributions of its constituent complex-tone dyads (see previous discussion of Huron, 1994). With modern computers, this approximation is unnecessary and hence rarely used.

Sethares (1993); Vassilakis (2001); Weisser and Lartillot (2013)

Several subsequent studies have preserved the general methodology of Hutchinson and Knopoff (1978) while introducing various technical changes. Sethares (1993) reformulated the equations linking pure-dyad consonance to interval size and pitch height. Vassilakis (2001) and Weisser and Lartillot (2013) subsequently modified Sethares’s (1993) model, reformulating the relationship between pure-dyad consonance and pure-tone amplitude. These modifications generally seem principled, but the resulting models have received little systematic validation.
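The shared pure-dyad curve and one amplitude reformulation can be sketched as follows in Python. The curve is a difference of two exponentials in the frequency separation, rescaled according to the lower frequency; the constants are those commonly quoted for Sethares (1993) and should be verified before reuse. The amplitude term follows Vassilakis’s (2001) formulation as we understand it; Weisser and Lartillot’s (2013) variant is not shown.

```python
import math

# Constants as commonly quoted for Sethares's (1993) curve; verify before reuse.
B1, B2 = 3.5, 5.75
D_STAR, S1, S2 = 0.24, 0.0207, 18.96


def sethares_curve(f1, f2):
    """Pure-dyad roughness as a function of frequency difference and register."""
    f_min = min(f1, f2)
    x = abs(f2 - f1)
    s = D_STAR / (S1 * f_min + S2)  # smaller s at higher f_min: the peak moves to wider separations
    return math.exp(-B1 * s * x) - math.exp(-B2 * s * x)


def vassilakis_dyad(f1, a1, f2, a2):
    """Amplitude-dependent weighting in the style of Vassilakis (2001), as we read it."""
    a_max, a_min = max(a1, a2), min(a1, a2)
    if a_max == 0:
        return 0.0
    x = a_max * a_min                 # overall amplitude level
    y = 2.0 * a_min / (a_max + a_min)  # amplitude balance (1 when equal)
    return x ** 0.1 * 0.5 * y ** 3.11 * sethares_curve(f1, f2)


print(sethares_curve(440.0, 465.0), sethares_curve(440.0, 540.0))
print(vassilakis_dyad(440.0, 1.0, 465.0, 1.0), vassilakis_dyad(440.0, 1.0, 465.0, 0.5))
```

The amplitude balance term is what makes unequal-amplitude dyads less rough than equal-amplitude ones at the same frequency separation.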

Parncutt (1989); Parncutt and Strasburger (1994)

As discussed above (see the Periodicity/Harmonicity: Spectral Pattern Matching section), the pure tonalness measure of Parncutt (1989) and the pure sonorousness measure of Parncutt and Strasburger (1994) may be categorized as interference models. Unlike other pure-dyad interference models, these models address masking, not beating.

Interference: Waveforms

Dyadic models present a rather simplified account of interference, and struggle to capture certain psychoacoustic phenomena such as effects of phase (e.g., Pressnitzer & McAdams, 1999) and waveform envelope shape (e.g., Vencovský, 2016) on roughness. The following models achieve a more detailed account of interference by modeling the waveform directly.

Leman (2000)

Leman’s (2000) synchronization index model measures beating energy within roughness-eliciting frequency ranges. The analysis begins with Immerseel and Martens’s (1992) model of the peripheral auditory system, which simulates the frequency response of the outer and middle ear, the frequency analysis of the cochlea, hair-cell transduction from mechanical vibrations to neural impulses, and transmission by the auditory nerve. Particularly important is the half-wave rectification that takes place in hair-cell transduction, which physically instantiates beating frequencies within the Fourier spectrum. Leman’s model then filters the neural transmissions according to their propensity to elicit roughness, and calculates the energy of the resulting spectrum as a roughness estimate. Leman illustrates model outputs for several amplitude-modulated tones, and for two-note chords synthesized with harmonic complex tones. The initial results seem promising, but we are unaware of any studies systematically fine-tuning or validating the model.
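The role of half-wave rectification can be demonstrated without a full auditory model. In the Python sketch below, two pure tones 50 Hz apart have no spectral energy at 50 Hz; after half-wave rectification (a crude stand-in for hair-cell transduction, not Leman’s or Immerseel and Martens’s model), a 50 Hz component appears in the Fourier spectrum.

```python
import numpy as np

fs = 16000
t = np.arange(fs) / fs  # exactly 1 s, so every frequency here falls on a 1 Hz FFT bin
dyad = np.sin(2 * np.pi * 1000 * t) + np.sin(2 * np.pi * 1050 * t)  # beats at 50 Hz


def amplitude_at(signal, freq_hz, fs):
    """Amplitude of the sinusoidal component at freq_hz (assumes an exact FFT bin)."""
    spectrum = np.abs(np.fft.rfft(signal)) * 2.0 / len(signal)
    return spectrum[int(round(freq_hz * len(signal) / fs))]


rectified = np.maximum(dyad, 0.0)  # half-wave rectification
print(amplitude_at(dyad, 50, fs))       # ~0: the linear sum has no 50 Hz component
print(amplitude_at(rectified, 50, fs))  # clearly nonzero: a 50 Hz component appears
```

The beating is audible in the linear sum as an envelope fluctuation, but only the nonlinearity converts it into spectral energy at the beat rate, where a subsequent roughness filter can pick it up.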

Skovenborg and Nielsen (2002)

Skovenborg and Nielsen’s (2002) model is conceptually similar to Leman’s (2000) model. The key differences are simulating the peripheral auditory system using the HUTear MATLAB toolbox (Härmä & Palomäki, 1999), rather than Immerseel and Martens’s (1992) model, and adopting different definitions of roughness-eliciting frequency ranges. The authors provide some illustrations of the model’s application to two-tone intervals of pure and complex tones. The model recovers some established perceptual phenomena, such as the dissonance elicited by small intervals, but also exhibits some undesirable behavior, such as multiple consonance peaks for pure-tone intervals, and oversensitivity to slight mistunings for complex-tone intervals. We are unaware of further work developing this model.

Aures (1985c); Daniel and Weber (1997); Wang et al. (2013)

Aures (1985c) describes a roughness model that has been successively developed by Daniel and Weber (1997) and Wang et al. (2013). Here we describe the model as implemented in Wang et al. (2013). Like Leman (2000) and Skovenborg and Nielsen (2002), the model begins by simulating the frequency response of the outer and middle ear, and the frequency analysis of the cochlea. Unlike Leman (2000) and Skovenborg and Nielsen (2002), the model does not simulate hair-cell transduction or transmission by the auditory nerve. Instead, the model comprises the following steps: (a) Extract the waveform envelope at each cochlear filter; (b) Filter the waveform envelopes to retain the beating frequencies most associated with roughness; (c) For each filter, compute the modulation index, summarizing beating magnitude as a proportion of the total signal; (d) Multiply each filter’s modulation index by a phase impact factor, capturing signal correlations between adjacent filters, with higher correlations yielding higher roughness; (e) Multiply by a filter-specific weighting factor, reflecting the fact that some cochlear filters contribute more than others to the perception of roughness; (f) Square the result and sum over cochlear filters.
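Steps (a) to (f) can be sketched in Python as follows. The sketch skips the ear and cochlear filtering stages by taking precomputed filter outputs as input, and several details are our own simplifications rather than Wang et al.’s (2013) choices: a brick-wall 20 to 300 Hz envelope filter, an RMS-based modulation index, a phase impact factor formed from clipped correlations with neighboring channels, and caller-supplied channel weights.

```python
import numpy as np


def envelope(x):
    """(a) Hilbert envelope: magnitude of the FFT-based analytic signal."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))


def keep_band(x, fs, lo, hi):
    """(b) Zero all FFT bins outside [lo, hi] Hz (a crude brick-wall band-pass)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), 1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def correlation(a, b):
    """Pearson correlation, treating (near-)constant signals as uncorrelated."""
    if a.std() < 1e-12 or b.std() < 1e-12:
        return 0.0
    return float(np.corrcoef(a, b)[0, 1])


def roughness(channels, fs, weights, lo=20.0, hi=300.0):
    """channels: (n_channels, n_samples) cochlear-filter outputs, assumed precomputed."""
    envs = np.array([envelope(c) for c in channels])
    beats = np.array([keep_band(e, fs, lo, hi) for e in envs])
    # (c) modulation index: beating RMS relative to the envelope's mean level
    m = np.sqrt(np.mean(beats ** 2, axis=1)) / np.maximum(envs.mean(axis=1), 1e-12)
    # (d) phase impact factor: product of non-negative correlations with neighbors
    k = np.ones(len(channels))
    for i in range(len(channels)):
        for j in (i - 1, i + 1):
            if 0 <= j < len(channels):
                k[i] *= max(correlation(beats[i], beats[j]), 0.0)
    # (e) channel weighting, then (f) square and sum over channels
    return float(np.sum((np.asarray(weights) * m * k) ** 2))


fs = 16000
t = np.arange(fs) / fs
carrier = np.sin(2 * np.pi * 1000 * t)
am = (1.0 + np.sin(2 * np.pi * 70 * t)) * carrier  # fully modulated at 70 Hz
w = np.ones(2)  # hypothetical equal weights
print(roughness(np.vstack([carrier, carrier]), fs, w))  # steady tone
print(roughness(np.vstack([am, am]), fs, w))            # beating tone
```

With two identical channels, the unmodulated carrier yields zero roughness, whereas the fully modulated carrier yields approximately 1.0 under these simplifications.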

Unlike the models of Leman (2000) and Skovenborg and Nielsen (2002), these three models are presented alongside objective perceptual validations. However, these validations are generally restricted to relatively artificial and nonmusical stimuli.

Vencovský (2016)

Like the models of Leman (2000), Skovenborg and Nielsen (2002), and Wang et al. (2013), Vencovský’s (2016) model begins with a sophisticated model of the peripheral auditory system. The model of Meddis (2011) is used for the outer ear, middle ear, inner hair cells, and auditory nerve; the model of Nobili, Vetešník, Turicchia, and Mammano (2003) is used for the basilar membrane and cochlear fluid. The output is a neuronal signal for each cochlear filter.

Roughness is then estimated from the neuronal signal’s envelope, or beating pattern. Previous models estimate roughness from the amplitude of the beating pattern; Vencovský’s (2016) model additionally accounts for the beating pattern’s shape. Consider a single oscillation of the beating pattern; according to Vencovský’s (2016) model, highest roughness is achieved when the difference between minimal and maximal amplitudes is large, and when the progression from minimal to maximal amplitudes (but not necessarily vice versa) is fast. Similar to previous models (Daniel & Weber, 1997; Wang et al., 2013), Vencovský’s (2016) model also normalizes roughness contributions by overall signal amplitudes, and decreases roughness when signals from adjacent cochlear channels are uncorrelated.

Vencovský (2016) validates the model on perceptual data from various types of artificial stimuli, including two-tone intervals of harmonic complex tones, and finds that the model performs fairly well. It is unclear how well the model generalizes to more complex musical stimuli.

Culture

Cultural aspects of consonance perception have been emphasized by many researchers (see Consonance Theories), but we are only aware of one preexisting computational model instantiating these ideas: that of Johnson-Laird et al. (2012).

Johnson-Laird et al. (2012)

Johnson-Laird et al. (2012) provide a rule-based model of consonance perception in Western listeners. The model comprises three rules, organized in decreasing order of importance:

1. Chords consistent with a major scale are more consonant than chords only consistent with a minor scale, which are in turn more consonant than chords not consistent with either;
2. Chords are more consonant if they (a) contain a major triad and (b) all chord notes are consistent with a major scale containing that triad;
3. Chords are more consonant if they can be represented as a series of pitch classes each separated by intervals of a third, optionally including one interval of a fifth.

Unlike most other consonance models, this model does not return numeric scores, but instead ranks chords in order of their consonance. Ranking is achieved as follows: Apply the rules one at a time, in decreasing order of importance, and stop when a rule identifies one chord as more consonant than the other. This provides an estimate of cultural consonance.

Johnson-Laird et al. (2012) suggest that Western consonance perception depends both on culture and on roughness. They capture this idea with their dual-process model, which adds an extra rule to the cultural consonance algorithm, applied only when chords cannot be distinguished on the cultural consonance criteria. This rule predicts that chords are more consonant if they exhibit lower roughness. The authors operationalize roughness using the model of Hutchinson and Knopoff (1978).
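The three rules, the lexicographic ranking procedure, and the dual-process tie-breaker can be sketched in Python as below. Two interpretive choices are ours and may differ from Johnson-Laird et al.’s (2012) implementation: "minor scale" is read as the harmonic and ascending melodic minor (the natural minor shares its pitch classes with a major scale), and rule 3 is read as an ordering of the pitch classes whose successive ascending intervals are thirds, with at most one fifth. The roughness function passed to the dual-process variant is a hypothetical placeholder for a model such as Hutchinson and Knopoff’s.

```python
from itertools import permutations

MAJOR = (0, 2, 4, 5, 7, 9, 11)
# Assumption: "minor scale" = harmonic minor or ascending melodic minor.
HARMONIC_MINOR = (0, 2, 3, 5, 7, 8, 11)
MELODIC_MINOR = (0, 2, 3, 5, 7, 9, 11)


def transpositions(scale):
    return [frozenset((p + t) % 12 for p in scale) for t in range(12)]


MAJOR_SCALES = transpositions(MAJOR)
MINOR_SCALES = transpositions(HARMONIC_MINOR) + transpositions(MELODIC_MINOR)


def rule1(pcs):
    """2 = fits a major scale, 1 = fits only a minor scale, 0 = fits neither."""
    if any(pcs <= s for s in MAJOR_SCALES):
        return 2
    if any(pcs <= s for s in MINOR_SCALES):
        return 1
    return 0


def rule2(pcs):
    """Contains a major triad, and all notes fit a major scale containing that triad."""
    for root in range(12):
        triad = frozenset({root, (root + 4) % 12, (root + 7) % 12})
        if triad <= pcs and any(pcs <= s for s in MAJOR_SCALES if triad <= s):
            return True
    return False


def rule3(pcs):
    """Some ordering is a chain of thirds (3 or 4 semitones), with at most one fifth."""
    for order in permutations(sorted(pcs)):
        steps = [(b - a) % 12 for a, b in zip(order, order[1:])]
        if all(s in (3, 4, 7) for s in steps) and steps.count(7) <= 1:
            return True
    return False


def consonance_key(midi_notes, roughness=None):
    """Tuple compared lexicographically: earlier rules dominate later ones."""
    pcs = frozenset(n % 12 for n in midi_notes)
    key = (rule1(pcs), rule2(pcs), rule3(pcs))
    if roughness is not None:  # dual-process tie-breaker: lower roughness wins
        key += (-roughness(midi_notes),)
    return key


def rank(chords, roughness=None):
    """Chords ordered from most to least consonant."""
    return sorted(chords, key=lambda c: consonance_key(c, roughness), reverse=True)


print(rank([(60, 61, 62), (60, 63, 66), (60, 64, 67)]))
```

Encoding each chord as a tuple of rule outcomes lets Python’s lexicographic tuple comparison apply the rules in decreasing order of importance, consulting a later rule only when all earlier ones tie.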

The resulting model predicts chordal consonance rather effectively (Johnson-Laird et al., 2012; Stolzenburg, 2015). However, a problem with this model is that the rules are hand-coded on the basis of expert knowledge. The rules could represent cultural knowledge learned through exposure, but they could also be post hoc rationalizations of perceptual phenomena. This motivates us to introduce an alternative corpus-based model, described below.

A corpus-based model of cultural familiarity