Abstract

Infants are responsive to and show a preference for human vocalizations from very early in development. While previous studies have provided a strong foundation of understanding regarding areas of the infant brain that respond preferentially to social vs. non-social sounds, how the infant brain responds to sounds of varying social significance over time, and how this relates to behavior, is less well understood. The current study uniquely examined longitudinal brain responses to social sounds of differing social-communicative value in infants at 3 and 6 months of age using functional near-infrared spectroscopy (fNIRS). At 3 months, infants showed similar patterns of widespread activation in bilateral temporal cortices to communicative and non-communicative human non-speech vocalizations, whereas by 6 months infants showed more similar, and more focal, responses to social sounds that carried greater social value (infant-directed speech and human non-speech communicative sounds). In addition, we found that brain activity at 3 months of age related to later brain activity and receptive language abilities as measured at 6 months. These findings suggest areas of consistency and change in auditory social perception between 3 and 6 months of age.

Keywords: fNIRS, Infancy, Social perception, Brain development, Auditory stimuli

1. Introduction

Infants are responsive to auditory information in the social environment, in particular human voices, from very early in development. Fetuses have been shown to change their behavior in response to their mother’s voice by the third trimester of pregnancy (Marx and Nagy, 2015), and newborns show a behavioral preference for their mother’s voice at birth (DeCasper and Fifer, 1980). This preferential attention to social sounds may have significant downstream relevance for the development of social and language skills. While functional magnetic resonance imaging (fMRI) studies of infants during natural sleep have contributed important information regarding the neural underpinnings of auditory social perception (e.g., Blasi et al., 2011; Shultz et al., 2014), functional near-infrared spectroscopy (fNIRS) has several advantages for use with infants that complement fMRI in localizing brain responses to auditory social stimuli (McDonald and Perdue, 2018). These advantages include the relative silence and accessibility of fNIRS systems, along with the ability to assess brain responses while infants are awake (Lloyd-Fox et al., 2010).

One of the more frequently studied types of social sounds in fNIRS investigations of infant brain responses to auditory social stimuli is human non-speech vocalizations, such as laughing and coughing. Lloyd-Fox and colleagues have conducted a series of studies using fNIRS to contrast infant frontal-temporal responses to non-speech vocalizations and to various non-social but familiar environmental sounds (e.g., running water). In an investigation of 4- to 7-month-old infants, robust activation was observed in right and left frontal-temporal regions in response to both social and non-social sounds, as compared to a visual baseline (Lloyd-Fox et al., 2012). An anterior portion of the superior temporal sulcus (STS) region, however, selectively responded to the vocal rather than the non-vocal condition. This selective effect was stronger in the left hemisphere and strengthened in both hemispheres with age: younger infants responded more strongly to the non-vocal condition in some fNIRS channels, whereas older infants showed increased activation only to the vocal sounds. These stimuli were further investigated in a semi-longitudinal study of a Gambian cohort ranging in age from 0 to 24 months (Lloyd-Fox et al., 2017). Findings were largely consistent with Lloyd-Fox et al. (2012), although fNIRS data were collected only in the right hemisphere due to practical constraints related to imaging in a remote location. Younger infants showed more right posterior temporal activation in response to the non-social stimuli, while infants at older ages (9–24 months) typically responded more strongly to the auditory social stimuli in more anterior portions of the temporal cortex. Grossmann et al. (2010a) also investigated infant responses to vocal (speech and non-speech) and non-vocal sounds in bilateral temporal regions at 4 and 7 months of age.
Consistent with these findings (Lloyd-Fox et al., 2017; Lloyd-Fox et al., 2012), the 7-month-olds showed increased activation to the vocal stimuli, relative to the non-vocal sounds, in the left and right posterior temporal cortex, while the 4-month-olds responded more strongly to the non-vocal than to the vocal sounds. Somewhat in contrast with Lloyd-Fox et al. (2012), Blasi et al. (2011) found a right-hemisphere bias in the temporal cortex in response to vocal vs. non-vocal sounds in 3- to 7-month-old infants using fMRI during natural sleep.

Studies of infant speech processing reveal differences in brain responses depending on the prosodic properties of speech and on infants’ familiarity with the speech sounds. Brain responses to infant-directed speech (IDS), which is slower, higher in pitch, and more variable in pitch than adult-directed speech (ADS), have been another area of interest in studies of infant brain development. Infants show a behavioral preference for IDS within the first months of life, as evidenced by increased attention to IDS vs. ADS in a habituation-dishabituation procedure (Pegg et al., 1992). In an fNIRS study of infants ranging from 4 to 13 months of age, increased activation was found in response to IDS vs. ADS in left and right temporal areas (Naoi et al., 2012). Studies contrasting infant responses to non-native and native speech over the first year of life suggest that there may be a left-lateralized response to speech from an infant’s native language from early in life (Peña et al., 2003; Vannasing et al., 2016; although see May et al., 2011), while non-native language is processed in right-lateralized (Vannasing et al., 2016) or bilateral temporal areas shortly after birth (Sato et al., 2012). With increased exposure over the first year of life, infant responses to their native language may become increasingly left-lateralized, while non-native speech is increasingly processed in the right hemisphere (Minagawa-Kawai et al., 2011a). Shultz et al. (2014) uniquely examined brain responses to a wide variety of sounds in young infants during natural sleep using fMRI, including non-native IDS and ADS, in comparison to biological non-speech sounds (e.g., human vocalizations, walking sounds, macaque calls). They found evidence of a left-lateralized response to speech vs. biological non-speech sounds in infants at 1 to 4 months of age. An age-related decrease in left temporal cortex response to biological non-speech sounds was observed, while the response to speech was sustained from 1 to 4 months of age.

Although much of the previous fNIRS literature has focused upon temporal cortex response to social sounds, the prefrontal cortex (PFC) may also play a role in processing vocalizations that are perceived to be more self-relevant. At 5–6 months of age, areas of the PFC have shown increased activation when infants hear their own (vs. another) name being called (Grossmann et al., 2010b) and their name spoken by their mother (vs. a stranger; Imafuku et al., 2014). Additionally, infants who showed a stronger response in the dorsal medial PFC to the own mother condition were also more likely to show a behavioral preference for their own mother’s voice as measured by a head-turn preference paradigm (Imafuku et al., 2014).

While previous studies have provided a strong foundation of understanding regarding speech processing and areas of the infant brain that respond preferentially to social vs. non-social sounds, how the infant brain differentially responds to sounds of varying social importance over time is less well understood. Likewise, the degree to which early differences in brain responses to social sounds are relevant for language and social development later in life has rarely been studied, particularly in fNIRS research (although see Kuhl, 2010 for a broad review). Building on the work of Shultz et al. (2014), the current study longitudinally examined brain responses to different types of human vocalizations in infants at 3 and 6 months of age using fNIRS. Specifically, we compared patterns of infant brain activation in bilateral frontal-temporal (3 and 6 months) and prefrontal (6 months) cortices in response to human sounds that varied in social-communicative value: human non-communicative vocalizations (e.g., cough), human communicative vocalizations (e.g., laugh), and infant-directed speech. As in Shultz et al. (2014), we used an unfamiliar language (Japanese) so that responses to speech would reflect its prosodic elements rather than processing of language content. We also leveraged our longitudinal design to examine whether early brain responses to speech sounds predicted later receptive language ability. We sought to answer the following three research questions: 1) Which areas of the right and left frontal-temporal and prefrontal cortices respond to different types of social sounds in infants at 3 and 6 months of age? 2) Does brain activation to social sounds at 3 months relate to brain activation to social sounds at 6 months? 3) Do individual differences in brain response to speech sounds (IDS) predict receptive language ability at 6 months?

2. Materials and methods

2.1. Participants

A total of 42 infants were recruited for the current study (see Table 1 for participant information). Infants were primarily recruited through the Newborn Nursery at Yale-New Haven Hospital. All families received a brochure and contact form in their discharge paperwork and some families were informed verbally about the study by nursing staff or a research associate during a weekly class on the unit. Infants were also recruited via word of mouth. Entry into the study was allowed at the 3- or 6-month time point. Exclusion criteria included: premature birth (< 37 weeks), low birth weight (< 2500 g), as well as any major vision or hearing problems or major medical issues. All parents provided written informed consent prior to participation. The study was approved and overseen by the Yale Human Investigations Committee.

Table 1.

Participant information. Paternal education was not reported for two children from single-parent families. Developmental abilities were measured with the Mullen Scales of Early Learning (MSEL); the MSEL Early Learning Composite standard score (M = 100, SD = 15) is presented.

| Variable | Value |
|---|---|
| Gender (% male) | 61.9% |
| Race/ethnicity (% non-Caucasian) | 33.3% |
| Maternal education (% college +) | 71.5% |
| Paternal education (% college +) | 72.5% |
| Developmental abilities M (SD) | 103.56 (11.77) |
| Age (months/days) at 3-month visit M (SD) | 3.15 (.32) / 94.53 (9.47) |
| Age (months/days) at 6-month visit M (SD) | 6.24 (.41) / 187.05 (12.35) |

Of the 42 enrolled infants, 37 (88%) were enrolled at 3 months and 5 (12%) at 6 months. At 3 months, infant head circumference measurements ranged from 38 to 43 cm (M = 41.22, SD = 1.27) and ear-to-ear (across forehead) measurements from 19 to 24 cm (M = 21.89, SD = 1.15). At 6 months, infant head circumference measurements ranged from 41 to 46 cm (M = 43.88, SD = 1.34) and ear-to-ear (across forehead) measurements from 21 to 25.5 cm (M = 23.35, SD = 1.00). One infant missed the 6-month time point due to illness. We retained a high proportion of fNIRS data at each age. At 3 months, fNIRS data collection was attempted with 37 infants. Of these infants, 35 (95%) were included in analyses. Two were excluded due to poor quality data associated with excessive hair (n = 1) and movement (n = 1) leading to large shifts of the fNIRS headgear. At 6 months, fNIRS data collection was attempted with 41 infants. Of these infants, 30 (73%) were included in analyses of both the frontal and lateral arrays, and 33 (80%) in analyses of frontal and/or lateral arrays. Eleven infants were excluded from frontal and/or lateral analyses due to excessive hair (n = 2), excessive motion or noisy data (n = 2), poor headgear placement (n = 1), large headgear shifting (n = 2), poor fit/discomfort from frontal optodes (n = 2), and experimenter error (n = 2). Infants with and without usable fNIRS data did not differ in terms of demographics or developmental abilities (ps > .11).

2.2. Procedure

Families visited the lab when their infants were approximately 3 months (range = 2.60–4.17 months; 78–125 days) and 6 months of age (range = 5.60–7.63 months; 168–229 days; see Table 1). Infants were comfortably seated on their parent’s lap across from a screen in a dimly lit room. After a research associate took head measurements, the fNIRS headgear was placed on the infant’s head. A research associate then instructed parents to hold their child quietly while he/she listened to different types of sounds. Parents were asked to help keep their infant still and the infant’s hands away from the fNIRS headgear, and to quietly soothe their infant if he/she became upset. If an infant could not be calmed, the task was suspended and, when appropriate, a second run was conducted. Infants were video recorded during the task for quality control purposes. A developmental assessment was completed by a trained clinician following fNIRS data collection at the 6-month visit.

2.3. Task and stimuli

The auditory stimuli used in the current study were previously described in an fMRI study of young infants during natural sleep (see Shultz et al., 2014 and Shultz et al., 2012 for further details on stimulus development). All sounds consisted of short sound tokens produced by adult female native Japanese-speaking actors. Sounds were equalized for mean intensity and played at a consistent volume within and across participants. The fMRI task was adapted for fNIRS measurement of awake infants by (1) reducing the overall length of the task (10-s vs. 20-s blocks, 3 vs. 7 conditions) and (2) pairing the sounds with a video of colorful moving (non-social) stimuli (e.g., spinning pinwheels, lava lamps), played continuously throughout the task, to maintain infants’ attention. The following conditions were selected to facilitate contrast of different types of social sounds: human non-communicative sounds (H-NonC), human communicative sounds (H-Comm), and infant-directed speech (IDS).

The IDS condition consisted of short speech tokens of female voices speaking Japanese in a high-pitched, infant-friendly vocal tone (none of the participating infants had significant exposure to Japanese). The H-Comm condition consisted of a series of human non-speech sounds, produced by the same actors, that carry some communicative value, including laughter, disgust, inquiry, agreement, and disagreement. The H-NonC condition consisted of a series of human non-speech sounds without social-communicative value, again produced by the same actors: coughing, throat clearing, yawning, hiccupping, and sneezing.

Each of the three conditions was played in five 10-s blocks, interleaved with 12-s silent baseline periods. Each block was composed of 5–7 individual sounds separated by 600–1000 ms of silence. Sounds and conditions were played in pseudorandom order; the same token and the same condition were never played twice in a row. At least three usable trials per condition were required for inclusion in analyses (all trials were available for 89% of the sample at 3 months and 91% at 6 months). Stimuli were presented using E-Prime 2.0 (Psychology Software Tools, 2012).
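The block-level constraints above (five 10-s blocks per condition, 12-s silences, no condition repeated back to back) can be sketched in Python as follows. This is a minimal illustration, not the E-Prime script used in the study: the function names are hypothetical, rejection sampling is just one simple way to enforce the no-repeat rule, and the timing assumes that every block is preceded by its own 12-s baseline. Token order within blocks is not modeled.

```python
import random

CONDITIONS = ("IDS", "H-Comm", "H-NonC")
BLOCKS_PER_CONDITION = 5
BLOCK_S = 10.0      # duration of one sound block (s)
BASELINE_S = 12.0   # silence before each block (s); assumed run layout


def block_order(seed=None, max_tries=10_000):
    """Shuffle five blocks of each condition until no condition occurs
    twice in a row (simple rejection sampling)."""
    rng = random.Random(seed)
    blocks = [c for c in CONDITIONS for _ in range(BLOCKS_PER_CONDITION)]
    for _ in range(max_tries):
        rng.shuffle(blocks)
        if all(a != b for a, b in zip(blocks, blocks[1:])):
            return list(blocks)
    raise RuntimeError("no valid order found; increase max_tries")


def block_onsets(order):
    """Return (onset_s, condition) pairs; with a 12-s silence before each
    10-s block, consecutive onsets are 22 s apart."""
    onsets = []
    t = 0.0
    for cond in order:
        t += BASELINE_S
        onsets.append((t, cond))
        t += BLOCK_S
    return onsets


order = block_order(seed=2024)
print(order)
print(block_onsets(order)[:3])
```

Rejection sampling is cheap here: roughly 1% of random shuffles of 15 blocks satisfy the constraint, so a valid order is typically found within a few hundred attempts.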

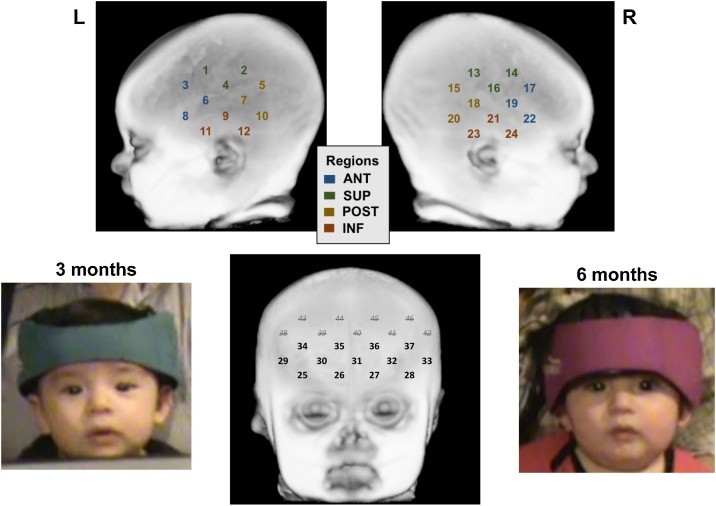

2.4. fNIRS data acquisition and preprocessing

fNIRS data were collected with a Hitachi ETG-4000 system (Tokyo, Japan), using specialized neonate/infant optodes. Optodes emitted near-infrared light at 695 and 830 nm onto the infants’ scalps, and the intensity of the attenuated light exiting the scalp was detected by corresponding optodes. fNIRS data were sampled every 100 ms (10 Hz). At both ages, two 3 × 3 optode sets were centered above the infants’ left and right ears. At 6 months only, an additional 3 × 5 optode set was centered on the forehead above the nasion; at 3 months, the smaller size of the infants’ heads prevented a proper fit, so it was not possible to consistently collect quality data using the frontal array with our optode set. See Fig. 1 for depictions and images of the optode setup. Each 3 × 3 optode set consisted of 5 emitters and 4 detectors placed 2 cm apart, forming 12 channels per hemisphere (24 total). The 3 × 5 optode set consisted of 8 emitters and 7 detectors, also placed 2 cm apart, for an additional 22 channels. Given poor optical coupling of the top row of frontal optodes, the top two rows of frontal channels (channels 38–46) were removed from analyses, leaving 13 frontal channels. The optode sets were affixed with Velcro to a soft headband, which allowed the fit to be customized for each infant (see Fig. 1). Data were analyzed at the channel level and within regions based on spatial location (anterior, superior, posterior, inferior; mean of 3 channels each; see Fig. 1).
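The emitter, detector, and channel counts above follow from the probe geometry if one assumes, as a sketch, that emitters and detectors alternate in a checkerboard and that each channel is one horizontally or vertically adjacent emitter-detector pair (2 cm apart). The helper names below are hypothetical, and the code does not reproduce the Hitachi channel numbering; it only checks the counts, including the 13 frontal channels that remain once every channel using a top-row optode is dropped.

```python
def checkerboard_probe(rows, cols):
    """Return (emitters, detectors, channels) for a rows x cols optode grid
    in which emitters and detectors alternate like a checkerboard. A channel
    is any horizontally or vertically adjacent (emitter, detector) pair."""
    emitters = [(r, c) for r in range(rows) for c in range(cols) if (r + c) % 2 == 0]
    detectors = [(r, c) for r in range(rows) for c in range(cols) if (r + c) % 2 == 1]
    channels = []
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:   # horizontal neighbor (always opposite type)
                channels.append(((r, c), (r, c + 1)))
            if r + 1 < rows:   # vertical neighbor (always opposite type)
                channels.append(((r, c), (r + 1, c)))
    return emitters, detectors, channels


def drop_optode_row(channels, row):
    """Remove every channel that uses an optode in the given grid row."""
    return [ch for ch in channels if ch[0][0] != row and ch[1][0] != row]


lat_e, lat_d, lat_ch = checkerboard_probe(3, 3)
fr_e, fr_d, fr_ch = checkerboard_probe(3, 5)
print(len(lat_e), len(lat_d), len(lat_ch))   # 5 4 12
print(len(fr_e), len(fr_d), len(fr_ch))      # 8 7 22
print(len(drop_optode_row(fr_ch, row=0)))    # 13
```

Under this assumed layout, the channels touching the top optode row are the 4 horizontal pairs within that row plus the 5 vertical pairs linking it to the middle row, i.e., the 9 removed channels.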

Fig. 1.

Infant fNIRS optode configuration and headgear on an infant at 3 and 6 months old.

This image provides an approximate mapping of the placement of the fNIRS channels, but does not represent precisely where they are placed on an infant’s head (6-month template displayed). Regions: ANT = Anterior. SUP = Superior. POST = Posterior. INF = Inferior.

fNIRS data were pre-processed using in-house MATLAB (The MathWorks, Inc., Natick, MA) scripts and HOMER2 (MGH-Martinos Center for Biomedical Imaging, Boston, MA; Huppert et al., 2009), a MATLAB-based software package. Raw data were exported from the Hitachi system as .csv files and converted into .nirs files. Next, channels were excluded as artifactual if the signal magnitude was > 98% or < 2% of the total signal range for more than 5 s during the recording period. Infants with > 25% channel loss (within the lateral or frontal array) were excluded from analyses. At 3 months, 24 of the 35 infants (69%) with usable data retained all 24 channels, and 11 of the 35 (31%) were missing < 25% of the channels (2 infants with > 25% channel loss removed). For the 6-month lateral array data, 26 of the 33 infants (79%) with usable data retained all 24 channels, and 7 of the 33 (21%) were missing < 25% of the channels (4 infants with > 25% channel loss removed). For the 6-month frontal array data, 31 of the 33 infants (94%) with usable data retained all 13 included channels, and 2 of the 33 (6%) were missing < 25% of the channels (1 infant with > 25% channel loss removed).
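The channel- and infant-level exclusion rules above can be sketched as follows. This is not the in-house MATLAB script; it rests on two assumptions: each channel’s raw intensity has already been rescaled to a fraction (0 to 1) of the total signal range, and “more than 5 s” means one contiguous run of more than 50 samples at 10 Hz. Function names are hypothetical.

```python
FS_HZ = 10               # 100-ms sampling interval
MAX_OUT_OF_BAND_S = 5.0  # longest tolerated run outside the 2-98 % band
MAX_CHANNEL_LOSS = 0.25  # infants losing more than this fraction are excluded


def longest_run(flags):
    """Length of the longest run of consecutive True values."""
    best = run = 0
    for flag in flags:
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def is_bad_channel(signal, lo=0.02, hi=0.98, fs=FS_HZ, max_s=MAX_OUT_OF_BAND_S):
    """Flag a channel whose range-normalized intensity stays above `hi` or
    below `lo` for more than `max_s` seconds in a row."""
    out_of_band = [x > hi or x < lo for x in signal]
    return longest_run(out_of_band) > max_s * fs


def keep_infant(array_signals):
    """Keep an infant only if at most 25 % of an array's channels are bad."""
    n_bad = sum(is_bad_channel(sig) for sig in array_signals)
    return n_bad / len(array_signals) <= MAX_CHANNEL_LOSS


good = [0.5] * 100
saturated = [0.5] * 20 + [0.99] * 51 + [0.5] * 29   # 5.1 s near the ceiling
print(is_bad_channel(good), is_bad_channel(saturated))   # False True
print(keep_infant([saturated] * 7 + [good] * 17))          # False (7/24 > 25 %)
```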

After finalizing the sample via these quality control measures, the following pre-processing steps were conducted in HOMER2. The detected attenuated light intensities were converted to optical density units, and wavelet motion correction (interquartile range = 0.5) was applied (Behrendt et al., 2018). Data were filtered using a 0.05–0.50 Hz band-pass filter and baseline corrected using the 2 s prior to trial onset, as is common practice (Funane et al., 2014; Ravicz et al., 2015; Watanabe et al., 2008). The filtered data were then used to calculate changes in concentration of oxygenated hemoglobin (HbO), deoxygenated hemoglobin (HbR), and total hemoglobin according to the modified Beer-Lambert Law (Delpy et al., 1988), with an assumed differential pathlength factor of 5 (Duncan et al., 1995).
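The modified Beer-Lambert step can be written as a 2 × 2 linear system per channel: at each wavelength, ΔOD = (ε_HbO · ΔHbO + ε_HbR · ΔHbR) · d · DPF, with d = 2 cm and DPF = 5 as above. The sketch below solves that system by Cramer’s rule. It is not HOMER2’s implementation, and the extinction coefficients are deliberately passed in as an argument: real tabulated values (and whether they assume base-10 or natural logarithms, which must match `optical_density`) have to come from a reference table. The numbers in the usage example are placeholders, not physiological values.

```python
import math

SOURCE_DETECTOR_CM = 2.0  # optode spacing reported above
DPF = 5.0                 # assumed differential pathlength factor


def optical_density(intensity, reference_intensity):
    """Change in optical density (base-10 convention) relative to a reference."""
    return -math.log10(intensity / reference_intensity)


def mbll(d_od_1, d_od_2, eps, d=SOURCE_DETECTOR_CM, dpf=DPF):
    """Solve the modified Beer-Lambert system for (dHbO, dHbR, dHbT).

    eps = ((eHbO_1, eHbR_1), (eHbO_2, eHbR_2)) holds extinction coefficients
    at wavelengths 1 and 2; their units set the units of the result.
    """
    (a, b), (c, e) = eps
    det = a * e - b * c
    if det == 0:
        raise ValueError("extinction matrix is singular")
    y1 = d_od_1 / (d * dpf)   # absorption change per unit effective path
    y2 = d_od_2 / (d * dpf)
    d_hbo = (e * y1 - b * y2) / det
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo, d_hbr, d_hbo + d_hbr   # total Hb = HbO + HbR


# Placeholder coefficients chosen only to make the example invertible.
eps_demo = ((0.3, 2.0), (1.0, 0.8))
true_hbo, true_hbr = 0.01, -0.004
od_1 = (0.3 * true_hbo + 2.0 * true_hbr) * SOURCE_DETECTOR_CM * DPF
od_2 = (1.0 * true_hbo + 0.8 * true_hbr) * SOURCE_DETECTOR_CM * DPF
print(mbll(od_1, od_2, eps_demo))   # recovers approximately (0.01, -0.004, 0.006)
```

Because the conversion is linear in ΔOD, baseline subtraction can be applied before or after it with the same result.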

2.5. Behavioral data

The Mullen Scales of Early Learning (MSEL; Mullen, 1995) was collected at 6 months of age. The MSEL is a norm-referenced, examiner-administered measure of developmental abilities for infancy to early childhood. The MSEL yields standardized scores for five subdomains, Gross Motor, Visual Reception, Fine Motor, Receptive Language, and Expressive Language, as well as an Early Learning Composite (ELC) score based upon the latter four subtests. For descriptive purposes, we report MSEL data for the sample in Table 1. We utilized the Receptive Language t-score to assess possible correlations with fNIRS data. This subtest was selected because the items measured at this age were expected to be most closely related to the fNIRS task (e.g., listening/responding to social sounds). We did not include the Expressive Language subscale as it lacks variability at this age.

2.6. Analytic plan

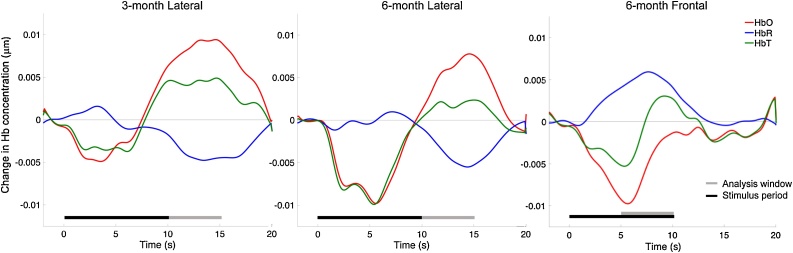

Statistical analyses were conducted primarily with SPSS 24.0 (IBM Corporation, Armonk, NY). Time windows of interest were selected based upon visual inspection of grand-averaged activation patterns across conditions to capture peak activation for each array (Lloyd-Fox et al., 2017; see Fig. 2). We sought to identify the classic hemodynamic response showing an increase in HbO and corresponding decrease in HbR when selecting time windows. Accordingly, 10–15 s post-stimulus presentation onset was selected for the lateral arrays. Since there was no classic hemodynamic response in the frontal channels, 5–10 s post-stimulus presentation was selected to capture an earlier decrease in HbO and corresponding increase in HbR in order to best represent the data. Given the difference in the responses measured by the lateral and frontal arrays, we considered these areas separately in our analyses and interpretation of findings.
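The trial-level measure described above (2-s pre-onset baseline from Section 2.4, then the mean concentration change in a fixed post-onset window) can be sketched as below. The window bounds come from the text; treating each window as half-open, [start, end), and averaging trials with equal weight are assumptions of this sketch, and the function names are hypothetical.

```python
FS_HZ = 10                       # 100-ms samples
LATERAL_WINDOW_S = (10.0, 15.0)  # s after stimulus onset
FRONTAL_WINDOW_S = (5.0, 10.0)


def window_mean(trace, onset_idx, window_s, baseline_s=2.0, fs=FS_HZ):
    """Subtract the mean of the `baseline_s` seconds before onset, then
    average the corrected trace over [start, end) seconds after onset."""
    b0 = onset_idx - round(baseline_s * fs)
    if b0 < 0:
        raise ValueError("not enough samples before onset")
    baseline = sum(trace[b0:onset_idx]) / (onset_idx - b0)
    start = onset_idx + round(window_s[0] * fs)
    end = onset_idx + round(window_s[1] * fs)
    if end > len(trace):
        raise ValueError("window extends past the end of the recording")
    segment = trace[start:end]
    return sum(x - baseline for x in segment) / len(segment)


def condition_mean(trace, onset_indices, window_s):
    """Equal-weight average of the per-trial window means for one condition."""
    values = [window_mean(trace, i, window_s) for i in onset_indices]
    return sum(values) / len(values)


ramp = [float(i) for i in range(300)]            # toy signal rising 1 unit per sample
print(window_mean(ramp, 100, LATERAL_WINDOW_S))  # 135.0
print(window_mean(ramp, 100, FRONTAL_WINDOW_S))  # 85.0
```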

Fig. 2.

Grand average waveforms by age and region. Hb = Hemoglobin. HbO = Oxygenated hemoglobin. HbR = Deoxygenated hemoglobin. HbT = Total hemoglobin.

We first conducted one-sample t-tests for these time windows for each channel at each age to determine whether changes in mean HbO and HbR concentration significantly differed from 0, indicating activation to the auditory stimuli. False Discovery Rate (FDR) was used to correct for multiple comparisons within array (frontal, lateral), condition (IDS, H-Comm, H-NonC), age (3, 6 months), and chromophore (HbO, HbR; Benjamini and Hochberg, 1995). Next, we conducted a series of four 2 (age) x 2 (hemisphere) x 3 (condition) repeated measures ANOVA models within each region (anterior, superior, posterior, inferior) to examine differences between conditions and over time (only HbO examined given its higher signal-to-noise ratio; Lloyd-Fox et al., 2010).
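The FDR correction corresponds to the Benjamini-Hochberg step-up procedure. A minimal stdlib sketch that returns adjusted p-values in their original order follows. We assume here that each family is one array × condition × age × chromophore set of channel p-values (e.g., the 24 lateral channels for HbO to IDS at 3 months), which is our reading of the grouping described above. In practice, `statsmodels.stats.multitest.multipletests(pvals, method="fdr_bh")` returns the same adjusted values.

```python
def benjamini_hochberg(pvals):
    """Return Benjamini-Hochberg adjusted p-values in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity of q-values.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


p = [0.01, 0.04, 0.03, 0.005]
q = benjamini_hochberg(p)
significant = [qi < 0.05 for qi in q]
print([round(qi, 3) for qi in q], significant)
```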

We then assessed longitudinal associations using bivariate Pearson’s correlations between HbO concentrations across 3 and 6 months. We first averaged HbO concentrations for left and right lateral channels (12 each; for infants missing channels, averaged across available “good” channels) for each condition, then conducted correlations across ages within and between conditions. Significant hemisphere-level correlations were then followed up with region-level analyses to assess the specific regions at 3 months that accounted for correlations with 6-month data. Finally, we conducted a multiple regression analysis predicting 6-month MSEL Receptive Language scores based upon channels showing significant activation to IDS at 3 and 6 months. MSEL nonverbal scores (average of Visual Reception and Fine Motor t-scores) were also added to the model to control for differences in nonverbal cognitive level.
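The hemisphere-level longitudinal correlation can be sketched as follows: average HbO over each hemisphere’s usable channels, pair infants who have data at both ages, and compute Pearson’s r. The statistics were run in SPSS; this sketch only illustrates the computation. Representing excluded channels as `None` and keying infants by hypothetical IDs are assumptions, and p-values (which need the t distribution) are omitted.

```python
import math


def hemisphere_mean(channel_values):
    """Mean HbO across a hemisphere's channels, skipping channels removed
    during quality control (represented here as None)."""
    good = [v for v in channel_values if v is not None]
    if not good:
        raise ValueError("no usable channels")
    return sum(good) / len(good)


def paired(by_id_3m, by_id_6m):
    """Keep only infants with data at both ages; inputs map ID -> value."""
    ids = sorted(set(by_id_3m) & set(by_id_6m))
    return [by_id_3m[i] for i in ids], [by_id_6m[i] for i in ids]


def pearson_r(x, y):
    """Bivariate Pearson correlation coefficient."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical infant IDs and values, for illustration only.
left_3m = {"inf01": 0.010, "inf02": 0.004, "inf03": 0.007, "inf04": 0.012}
left_6m = {"inf01": 0.006, "inf02": 0.001, "inf03": 0.005}
x, y = paired(left_3m, left_6m)
print(round(pearson_r(x, y), 3))
```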

3. Results

3.1. Which areas of the right and left frontal-temporal and prefrontal cortices activate to different types of social sounds in infants at 3 and 6 months of age?

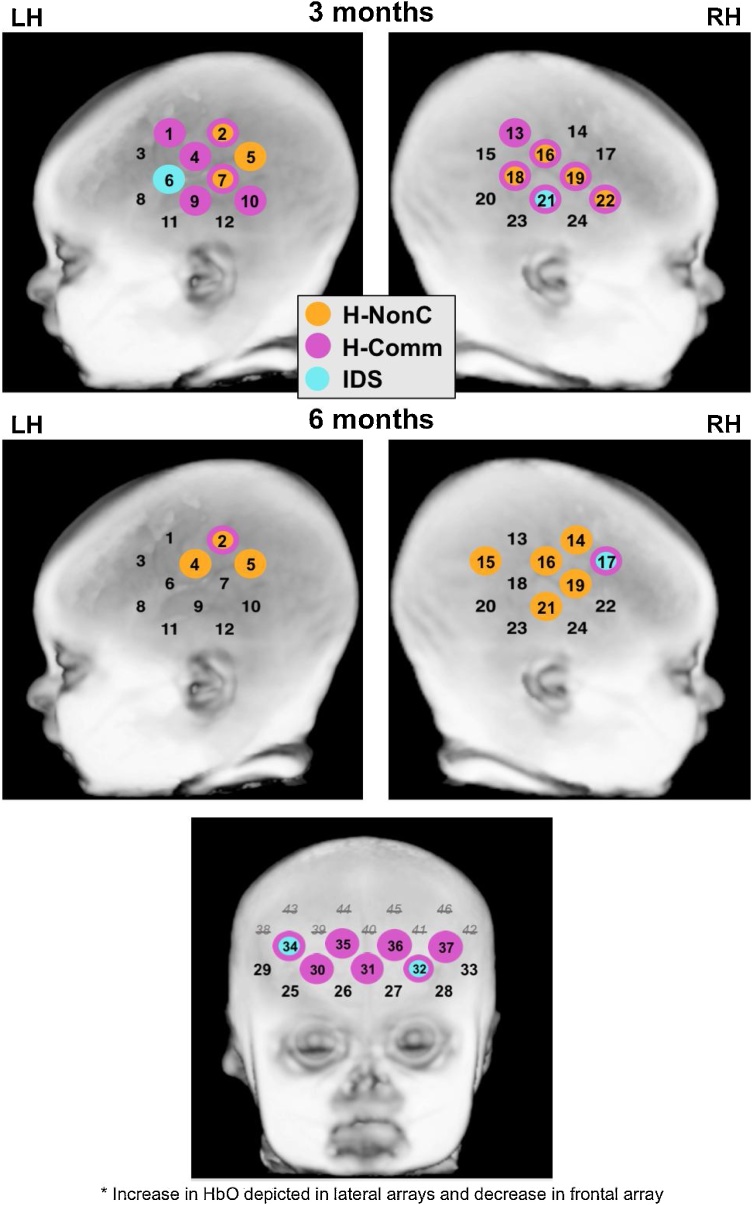

See Table 2 and Fig. 3 for specific information on channels with significant HbO and/or HbR activation within each age and condition. At 3 months, widespread activation was observed in bilateral frontal-temporal cortices in response to the H-NonC and H-Comm conditions. For the H-NonC condition, three channels in the superior posterior portion of the left lateral array and four channels primarily in the middle portion of the right lateral array showed significant increases in mean HbO (relative to baseline). Likewise, significant decreases in mean HbR were observed in seven left frontal-temporal and eight right frontal-temporal channels; these channels largely overlapped with the significant HbO channels. For H-Comm, six channels located primarily in the middle-posterior portions of the left lateral array and another six in the middle-posterior portions of the right lateral array showed significant increases in mean HbO (relative to baseline). Similarly, significant decreases in mean HbR were found in six left frontal-temporal and three right frontal-temporal channels that largely overlapped with HbO responses. In contrast, more focal activation was observed bilaterally in response to the IDS condition. Specifically, one channel in the anterior portion of the left lateral array and one in the middle-inferior portion of the right lateral array showed significant increases in mean HbO, and an adjacent channel in the right lateral array had a significant decrease in mean HbR (relative to baseline).

Table 2.

Significant activation by channel in HbO and HbR across conditions at 3 and 6 months. Only channels that differed significantly from 0 in one-sample t-tests (FDR-corrected p < .05) are reported. Ch = Channel. HbO = Oxygenated hemoglobin. HbR = Deoxygenated hemoglobin. H-NonC = Human non-communicative sounds, H-Comm = Human communicative sounds, IDS = Infant-directed speech.

3 months (frontal array not measured at this age)

| Left HbO Ch | t | p | Left HbR Ch | t | p | Right HbO Ch | t | p | Right HbR Ch | t | p |
|---|---|---|---|---|---|---|---|---|---|---|---|
| H-NonC vs. silent baseline | | | | | | | | | | | |
| 2 | 3.57 | .005 | 2 | −2.55 | .026 | 16 | 3.85 | .005 | 13 | −2.88 | .015 |
| 5 | 4.31 | <.001 | 4 | −2.96 | .014 | 18 | 3.35 | .007 | 16 | −3.30 | .005 |
| 7 | 3.83 | .005 | 5 | −2.81 | .016 | 19 | 4.52 | <.001 | 18 | −3.37 | .005 |
| | | | 6 | −4.07 | <.001 | 22 | 3.28 | .007 | 19 | −4.78 | <.001 |
| | | | 7 | −3.36 | .005 | | | | 20 | −2.67 | .022 |
| | | | 8 | −2.62 | .022 | | | | 21 | −4.61 | <.001 |
| | | | 9 | −4.59 | <.001 | | | | 22 | −4.86 | <.001 |
| | | | | | | | | | 24 | −3.97 | <.001 |
| H-Comm vs. silent baseline | | | | | | | | | | | |
| 1 | 3.17 | .009 | 4 | −3.48 | .005 | 13 | 2.58 | .030 | 18 | −3.13 | .014 |
| 2 | 3.38 | .008 | 6 | −2.99 | .020 | 16 | 3.75 | .006 | 19 | −3.58 | .005 |
| 4 | 4.62 | <.001 | 7 | −5.21 | <.001 | 18 | 3.07 | .010 | 21 | −4.33 | <.001 |
| 7 | 3.72 | .006 | 8 | −4.38 | <.001 | 19 | 3.39 | .008 | | | |
| 9 | 3.23 | .009 | 9 | −3.36 | .008 | 21 | 3.59 | .006 | | | |
| 10 | 2.82 | .017 | 11 | −2.50 | .045 | 22 | 2.84 | .017 | | | |
| IDS vs. silent baseline | | | | | | | | | | | |
| 6 | 3.16 | .030 | | | | 21 | 3.40 | .036 | 19 | −3.38 | .048 |

6 months

| Left HbO Ch | t | p | Left HbR Ch | t | p | Right HbO Ch | t | p | Right HbR Ch | t | p | Frontal HbO Ch | t | p | Frontal HbR Ch | t | p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H-NonC vs. silent baseline | | | | | | | | | | | | | | | | | |
| 2 | 2.61 | .048 | 1 | −3.70 | .006 | 14 | 2.56 | .048 | 13 | −2.89 | .024 | | | | | | |
| 4 | 2.93 | .036 | 2 | −2.82 | .024 | 15 | 3.61 | .010 | 14 | −3.77 | .006 | | | | | | |
| 5 | 2.58 | .048 | 3 | −2.56 | .032 | 16 | 3.54 | .010 | 16 | −4.68 | <.001 | | | | | | |
| | | | 4 | −2.71 | .026 | 19 | 2.55 | .048 | 19 | −4.09 | <.001 | | | | | | |
| | | | 6 | −2.34 | .048 | 21 | 3.08 | .030 | 22 | −2.41 | .044 | | | | | | |
| | | | 7 | −3.20 | .014 | | | | | | | | | | | | |
| | | | 9 | −2.80 | .024 | | | | | | | | | | | | |
| | | | 11 | −3.02 | .020 | | | | | | | | | | | | |
| H-Comm vs. silent baseline | | | | | | | | | | | | | | | | | |
| 2 | 3.10 | .048 | | | | 17 | 4.57 | <.001 | 19 | −3.53 | .024 | 30 | −3.10 | .010 | 28 | 2.46 | .043 |
| | | | | | | | | | | | | 31 | −3.61 | .007 | 32 | 2.45 | .043 |
| | | | | | | | | | | | | 32 | −4.08 | <.001 | 33 | 3.70 | .003 |
| | | | | | | | | | | | | 34 | −3.02 | <.01 | 34 | 4.36 | <.001 |
| | | | | | | | | | | | | 35 | −2.65 | <.02 | 36 | 4.77 | <.001 |
| | | | | | | | | | | | | 36 | −2.98 | <.01 | 37 | 3.64 | .003 |
| | | | | | | | | | | | | 37 | −2.73 | .02 | | | |
| IDS vs. silent baseline | | | | | | | | | | | | | | | | | |
| | | | | | | 17 | 3.53 | .020 | | | | 32 | −3.31 | .020 | 28 | 2.72 | .026 |
| | | | | | | | | | | | | 34 | −3.23 | .020 | 29 | 3.26 | .013 |
| | | | | | | | | | | | | | | | 32 | 2.96 | .020 |
| | | | | | | | | | | | | | | | 33 | 3.23 | .013 |
| | | | | | | | | | | | | | | | 34 | 3.18 | .013 |
| | | | | | | | | | | | | | | | 35 | 2.42 | .041 |
| | | | | | | | | | | | | | | | 37 | 2.59 | .030 |

Fig. 3.

Channels with significant change in HbO across condition and time. Results based upon one-sample t-tests (FDR-corrected p < .05). Channels with significant increase in HbO at 10–15 s post-stimulus presentation depicted for left and right lateral arrays. Channels with significant decrease in HbO at 5–10 s post-stimulus depicted for frontal array (frontal data not collected at 3 months). LH = Left hemisphere. RH = Right hemisphere. H-NonC = Human non-communicative sounds. H-Comm = Human communicative sounds. IDS = Infant-directed speech.

At 6 months, we again observed widespread activation in bilateral temporal cortices in response to the H-NonC condition, with three channels in the superior posterior portion of the left lateral array and five channels primarily in the middle portion of the right lateral array showing significant increases in mean HbO (relative to baseline). Significant decreases in mean HbR were also observed in eight left lateral and five right lateral channels, which mostly overlapped with or were adjacent to significant HbO channels. In response to the H-Comm and IDS conditions, more focal activation was observed. For H-Comm, one channel in the superior posterior portion of the left lateral array and one channel in the anterior portion of the right lateral array showed significant increases in mean HbO (relative to baseline), and a significant decrease in mean HbR was found in an adjacent right anterior channel. In the IDS condition, only one channel in the anterior portion of the right lateral array, which also responded to H-Comm, showed a significant increase in mean HbO (relative to baseline).

We also examined prefrontal cortex responses to social sounds at 6 months. None of the frontal channels differed significantly from baseline for the H-NonC condition. For the H-Comm condition, however, seven channels spanning left and right portions of the frontal array showed significant early decreases in mean HbO, and six channels had a significant increase in mean HbR (relative to baseline). Similarly, for IDS, two channels in the left and right portions of the frontal array showed significant early decreases in mean HbO, and six channels had a significant increase in mean HbR (relative to baseline).

Repeated measures ANOVAs revealed both similarities and differences between conditions and over time, and were largely consistent with the first-level analyses. Within the anterior region (LH: CHs 3, 6, 8; RH: CHs 17, 19, 22), a main effect of hemisphere was found, F(1, 24) = 5.75, p = .025, partial η² = .19, indicating that the right anterior region (EMM = .009, SE = .002) responded more strongly across conditions than the left (EMM = .004, SE = .002; all other ps ≥ .17). For the superior region (LH: CHs 1, 2, 4; RH: CHs 13, 14, 16), there were no significant main or interaction effects (ps ≥ .13).

The posterior region (LH: CHs 5, 7, 10; RH: CHs 15, 18, 20) showed significant main effects of age, F(1, 19) = 4.82, p = .041, partial η2 = .20, and condition, F(2, 38) = 6.53, p = .004, partial η2 = .26, and a marginally significant age × hemisphere interaction, F(1, 19) = 3.51, p = .076, partial η2 = .16 (all other ps ≥ .22). Across conditions, the posterior region responded more strongly at 3 months (EMM = .012, SE = .004) than at 6 months (EMM = .004, SE = .003). Across both ages, it responded more strongly to the H-NonC condition (EMM = .012, SE = .003) than to the other conditions (H-Comm: EMM = .008, SE = .003; IDS: EMM = .005, SE = .004). Additionally, across conditions, the left posterior region (EMM = .015, SE = .009) tended to respond more strongly than the right (EMM = .009, SE = .004) at 3 months, whereas the left (EMM = .004, SE = .003) and right (EMM = .005, SE = .003) posterior regions tended to respond similarly at 6 months.
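As a check on the statistics reported for the posterior region, the p-value and partial η2 of an F test can be recovered from F and its degrees of freedom. The minimal Python sketch below uses the standard library only, and all function names are hypothetical. It relies on two standard identities. The first is partial η2 = F·df_effect / (F·df_effect + df_error). The second expresses the upper-tail F probability as a regularized incomplete beta function, evaluated here with a Numerical Recipes-style continued fraction rather than a statistics library. Condition has three levels (H-NonC, H-Comm, IDS), so its effect carries 2 numerator degrees of freedom. Assuming no sphericity correction, and given 19 error degrees of freedom for the two-level age effect, the condition effect would then have 2 × 19 = 38 error degrees of freedom.

```python
import math


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


def _beta_continued_fraction(a: float, b: float, x: float,
                             max_iter: int = 300, eps: float = 1e-12) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((a - 1.0 + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_incomplete_beta(a: float, b: float, x: float) -> float:
    """I_x(a, b), using the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) for faster convergence."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def f_test_p_value(f: float, df_effect: int, df_error: int) -> float:
    """Upper-tail p-value P(F > f) for an F(df_effect, df_error) distribution."""
    x = df_error / (df_error + df_effect * f)
    return regularized_incomplete_beta(df_error / 2.0, df_effect / 2.0, x)


if __name__ == "__main__":
    # Condition effect in the posterior region, evaluated under two df assumptions.
    for df1, df2 in [(2, 38), (1, 19)]:
        p = f_test_p_value(6.53, df1, df2)
        eta = partial_eta_squared(6.53, df1, df2)
        print(f"F({df1}, {df2}) = 6.53: p = {p:.4f}, partial eta^2 = {eta:.2f}")
```

With these helpers, F = 6.53 on (2, 38) degrees of freedom gives p ≈ .004, in line with the reported value. The same F on (1, 19) degrees of freedom would give a p-value between .01 and .02. Partial η2 cannot distinguish the two cases, because scaling both degrees of freedom by the same factor leaves it unchanged.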

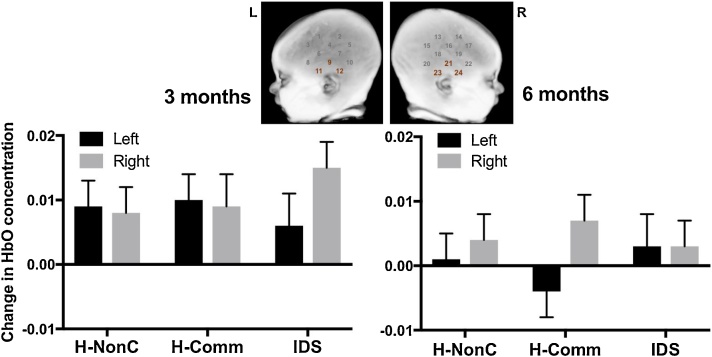

In the inferior region (LH: CHs 9, 11, 12; RH: CHs 21, 23, 24), there was a main effect of age, F(1, 25) = 4.44, p = .045, partial η2 = .15, and a three-way interaction between age, hemisphere, and condition, F(2, 50) = 4.09, p = .023, partial η2 = .14 (all other ps ≥ .20). HbO responses in the inferior region were generally higher at 3 months (EMM = .009, SE = .004) than at 6 months (EMM = .002, SE = .003), although the pattern of change differed by condition and hemisphere. For the H-NonC and H-Comm conditions, infants responded similarly across the left and right inferior regions at 3 months, whereas at 6 months a right-hemisphere bias was evident for both conditions, particularly H-Comm (see Fig. 4). For IDS, infants responded more strongly in the right than in the left inferior region at 3 months, but responded similarly across the left and right inferior regions at 6 months (see Fig. 4).

Fig. 4.

Depiction of the age × hemisphere × condition interaction (p = .023) in the inferior region of the lateral arrays. HbO = Oxygenated hemoglobin. H-NonC = Human non-communicative vocalizations. H-Comm = Human communicative vocalizations. IDS = Infant-directed speech.

3.2. Do brain activation patterns to social sounds at 3 months relate to brain activation patterns to social sounds at 6 months?

We also examined whether mean HbO responses in the lateral arrays at 3 months predicted brain responses at 6 months. Overall, results of these analyses suggested that greater response to the H-Comm condition at 3 months predicted greater response to IDS at 6 months. Specifically, higher HbO concentrations in response to H-Comm in the left hemisphere at 3 months correlated with higher HbO response to IDS in the left, r(29) = .49, p = .006, and right hemisphere, r(29) = .41, p = .026, at 6 months. Additionally, higher HbO response to H-Comm in the right frontal-temporal cortex at 3 months correlated with higher left hemisphere response to IDS at 6 months, r(29) = .36, p = .049. Follow-up analyses revealed that left inferior region responses to H-Comm at 3 months primarily accounted for correlations with IDS in the left, r(27) = .66, p < .001, and right hemispheres, r(27) = .53, p = .004 (other ps ≥ .08). Correlations between 3-month right hemisphere H-Comm response and 6-month left hemisphere IDS response were primarily accounted for by the right posterior, r(24) = .46, p = .020, and inferior regions, r(26) = .47, p = .013. None of the other hemisphere-level correlations between 3- and 6-month social sounds reached significance (ps ≥ .06).
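The correlations above are reported in the form r(df). For a Pearson correlation, df = n − 2. On that reading, r(29) implies 31 infants contributing data at both ages, and r(27) implies 29. The test of r against zero uses t = r√df / √(1 − r²) on the same degrees of freedom. The short Python sketch below makes this conversion explicit. It assumes these standard Pearson-correlation identities apply to the reported values. The helper names are hypothetical, and the listed r and df values are copied from the text. The sketch does not attempt to reproduce the reported p-values.

```python
import math


def n_from_correlation_df(df: int) -> int:
    """Sample size implied by a Pearson correlation reported as r(df), with df = n - 2."""
    return df + 2


def correlation_t_statistic(r: float, df: int) -> float:
    """t statistic for testing a Pearson r against zero: t = r * sqrt(df) / sqrt(1 - r^2)."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt(df) / math.sqrt(1.0 - r * r)


if __name__ == "__main__":
    # (label, r, df) values copied from the hemisphere- and region-level results above.
    reported = [
        ("3-mo left H-Comm vs 6-mo left IDS", 0.49, 29),
        ("3-mo left H-Comm vs 6-mo right IDS", 0.41, 29),
        ("3-mo right H-Comm vs 6-mo left IDS", 0.36, 29),
        ("3-mo left inferior H-Comm vs 6-mo left IDS", 0.66, 27),
    ]
    for label, r, df in reported:
        n = n_from_correlation_df(df)
        t = correlation_t_statistic(r, df)
        print(f"{label}: n = {n}, t({df}) = {t:.2f}")
```

The differing df values across the follow-up analyses, r(24) to r(29), indicate that the number of infants with usable data varied by region.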

3.3. Do brain responses to IDS at 3 and 6 months predict individual differences in receptive language at 6 months?

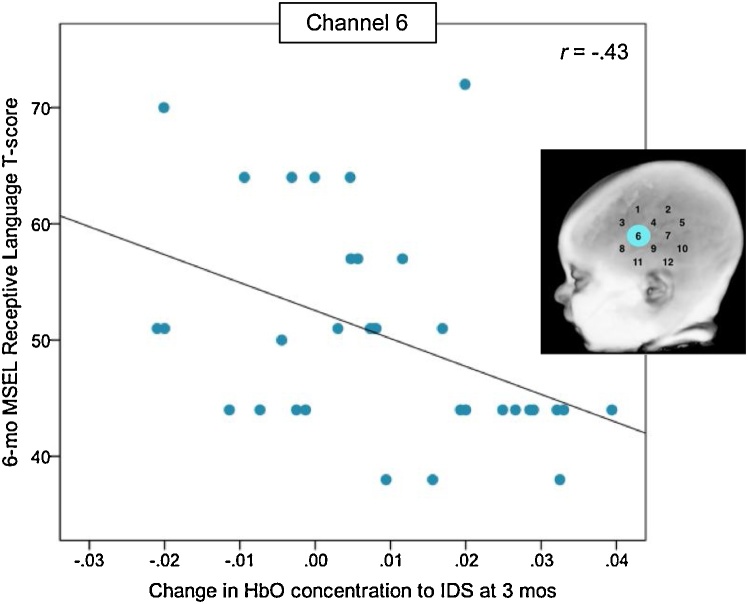

Finally, we examined whether the channels that showed significant activation to IDS in left and right frontal-temporal cortices at 3 months (CH6 [HbO], CH19 [HbR], CH21 [HbO]) or 6 months (CH17 [HbO]) predicted variation in receptive language⁴, as measured by the MSEL, at 6 months. Unexpectedly, greater response to IDS in a channel in the left anterior region (CH6) at 3 months negatively predicted 6-month MSEL receptive language scores, t(26) = -2.76, p = .012, β = -.53 (see Fig. 5), above and beyond the variability due to MSEL nonverbal cognition, t(26) = 1.26, p = .223. None of the other tested channels significantly predicted receptive language scores within the regression model (ps ≥ .42).
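The phrase "above and beyond" nonverbal cognition refers to a coefficient estimated with the covariate in the model. In the simplest case of one brain predictor plus one covariate, the standardized OLS coefficients have a closed form in terms of the three pairwise correlations. That form shows how the covariate's overlap with both the predictor and the outcome is partialled out. The study's regression model appears to include additional channels, so the Python sketch below only illustrates the idea and does not reconstruct the reported β = -.53. The function name and the example correlations are hypothetical.

```python
def standardized_betas_two_predictors(r_y1: float, r_y2: float,
                                      r_12: float) -> tuple[float, float]:
    """Standardized OLS coefficients for y ~ x1 + x2 from pairwise Pearson correlations.

    beta_1 = (r_y1 - r_y2 * r_12) / (1 - r_12**2)
    beta_2 = (r_y2 - r_y1 * r_12) / (1 - r_12**2)
    """
    denom = 1.0 - r_12 * r_12
    if denom <= 0.0:
        raise ValueError("predictors are perfectly collinear (|r_12| = 1)")
    beta_1 = (r_y1 - r_y2 * r_12) / denom
    beta_2 = (r_y2 - r_y1 * r_12) / denom
    return beta_1, beta_2


if __name__ == "__main__":
    # Hypothetical correlations, NOT values from this study:
    # y = receptive language, x1 = 3-month IDS channel response, x2 = nonverbal cognition.
    beta_channel, beta_covariate = standardized_betas_two_predictors(-0.45, 0.20, 0.15)
    print(f"beta (channel) = {beta_channel:.2f}, beta (covariate) = {beta_covariate:.2f}")
```

When the two predictors are uncorrelated (r_12 = 0), each β equals its zero-order correlation with the outcome. The adjustment matters only to the extent that the brain measure and the covariate overlap.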

Fig. 5.

Negative correlation between HbO response to IDS at 3 months and receptive language score at 6 months. MSEL = Mullen Scales of Early Learning. HbO = Oxygenated hemoglobin. IDS = Infant-directed speech.

4. Discussion

The current study longitudinally examined infant brain responses to social sounds of varying social-communicative value at 3 and 6 months of age.

4.1. Frontal-temporal cortex response to social sounds

Widespread bilateral activation in frontal-temporal cortices was observed when 3-month-old infants listened to human non-speech vocalizations (both communicative and non-communicative), whereas a bilateral but decidedly more focal response was observed when these infants listened to infant-directed speech. At 6 months of age, however, patterns of brain activation were more similar, and more focal, across the two conditions presenting sounds with greater presumed social-communicative value (IDS and H-Comm). In contrast, the response to non-communicative vocalizations (H-NonC) was relatively similar at 3 and 6 months of age. Over time, age-related decreases in HbO response were observed primarily within the posterior and inferior regions of the lateral arrays. Within the inferior region, bilateral activation to the non-speech vocalizations (H-NonC and H-Comm) at 3 months shifted to a right-dominant response by 6 months, whereas the response to IDS was initially right-dominant and became more bilateral by 6 months of age.

Direct comparisons revealed relatively subtle differences between conditions. Somewhat in contrast with Shultz et al. (2012), an adult fMRI study using the same stimuli, the posterior region of the lateral array, which may correspond to the posterior superior temporal sulcus (pSTS), responded more strongly to non-communicative vocalizations than to the other types of social sounds. Although the H-NonC sounds are not presumed to carry communicative value per se, they may have carried strong social salience for the infants, unlike for the adults in Shultz et al. (2012). As suggested by Blasi et al. (2011), non-communicative vocalizations such as coughing and sneezing may be quite familiar to infants and important because they signal that an adult is nearby. In contrast, features of the communicative and speech sounds other than social salience, such as speech-related, prosodic, or emotional content, may have led to increased processing in other brain regions (including regions not measured in this study). Shultz et al. (2012) identified differences between the vocal conditions used in the current study in perceived communicativeness, emotional content, and valence, as well as in acoustic properties such as pitch, intensity, and harmonicity. Although including these variables did not significantly change their results (with the exception of emotional content, which overlapped significantly with communicativeness; Shultz et al., 2012), sound properties other than communicativeness may also have contributed to the different patterns of response in the current study.

Together, these results suggest that between 3 and 6 months, as infants gain experience and become more active contributors to social interactions, they may begin to differentiate more strongly between non-speech vocalizations that carry less social-communicative value (e.g., cough, sneeze) and those that are a more frequent component of social interactions and communicate important emotional information (e.g., laugh, sigh, disgust sound). The reduced response to social sounds over time was somewhat unexpected given previous work suggesting increased sensitivity to vocal sounds in bilateral temporal cortices (e.g., Grossmann et al., 2010a; Lloyd-Fox et al., 2012). This difference may be explained in part by the lack of a non-vocal control condition in the present study. The more focal and somewhat reduced responses to the more socially communicative sounds (H-Comm and IDS) observed during this period may reflect increased processing efficiency or specialization of particular brain regions for social information processing.

Human communicative vocalizations also emerged as a predictor of later response to infant-directed speech. Infants who responded more strongly to the H-Comm condition in both the left and right temporal cortices at 3 months of age went on to respond more strongly to the IDS condition at 6 months. These correlations were mainly attributable to responses to these non-speech social sounds in the inferior and posterior regions of the lateral arrays, which may correspond to the STS/temporo-parietal junction area. Together with previous research demonstrating the importance of these areas to social brain development (e.g., Hakuno et al., 2018; Yang et al., 2015), these findings suggest that a general sensitivity and responsiveness to social sounds in these infants may first emerge in response to non-speech vocalizations.

With regard to speech sounds, infants’ brains showed a more limited response at both ages. At 3 months, activation was observed in one anterior channel in the left lateral array and in another, slightly more inferior and posterior, channel in the right temporal cortex. At 6 months, activation in response to these speech sounds was observed solely in the right hemisphere. Interestingly, the right-hemisphere channels that responded to IDS at both 3 and 6 months of age also responded to H-Comm, suggesting a sensitivity to social vocalizations more generally rather than a speech-specific response. These findings may reflect our use of an unfamiliar language, which allowed us to focus on the prosodic elements rather than the content of the speech. During this period, the left hemisphere may become more specialized for processing language, while the right hemisphere processes the more prosodic and emotional aspects of speech (Minagawa-Kawai et al., 2011a, b). It is thus possible that adult-directed speech, which is faster and has less varied intonation than IDS, would have elicited increased left inferior frontal-temporal activation at 6 months had it been included in the current study.

In some ways, the current findings contrast with those of Shultz et al. (2014), who found a left-lateralized response to speech vs. biological non-speech sounds (including human non-speech vocalizations). This apparent inconsistency could be due to several factors. These include differences in age range (1–4 months vs. 3 & 6 months), mode of measurement (fMRI vs. fNIRS), infant state (asleep vs. awake), and the type and content of the contrasts (infant- & adult-directed speech/varied biological non-speech sounds vs. infant-directed speech/silent baseline). Interestingly, a similar difference in lateralization despite comparable vocal stimuli was observed between Lloyd-Fox et al. (2012; fNIRS, awake infants) and Blasi et al. (2011; fMRI, asleep infants). This suggests that the imaging method and the state of the infants may influence how the information is processed. In other ways, however, our findings are quite consistent with those of Shultz et al. (2014): both studies found decreases in response to non-speech sounds and relatively similar patterns of response to non-native speech with age. Together, these findings suggest that the left temporal cortex may first become more specialized for speech over the first several months of life, followed by increased specialization for an infant’s primary language by around 6 months of age.

A channel corresponding to the left inferior frontal gyrus, an area of the brain implicated in language development (e.g., Altvater-Mackensen and Grossmann, 2016), showed evidence of activation at 3 months in response to the IDS condition, but not the non-speech conditions; this activation was not observed at 6 months. Paradoxically, a stronger response in this channel at 3 months was associated with lower receptive language abilities at 6 months, as measured by a standardized developmental test. Although this was somewhat unexpected, the finding may again be related to the use of a non-native speech sample. A weaker response to non-native speech in this area may indicate that infants are becoming attuned to their native language more quickly, and therefore show stronger emerging receptive language skills at 6 months. It will be important to test this hypothesis more directly using a native vs. non-native paradigm, and to confirm whether these findings persist as infants age and their receptive language abilities become more variable.

4.2. Prefrontal cortex response to social sounds

We also examined infant brain responses to social sounds in the frontal cortex at 6 months of age. Our results were unusual in that we observed decreases in HbO and increases in HbR while infants were presented with the auditory stimuli. These negative responses were observed only during the IDS and H-Comm conditions; no frontal channels showed significant changes in response to the H-NonC condition. The lack of a positive response to social sounds was somewhat surprising given previous work (e.g., Altvater-Mackensen and Grossmann, 2016; Naoi et al., 2012). As Grossmann (2015) suggests, self-relevance and joint engagement seem to be key elements of prefrontal cortex responses to social stimuli. Previous studies included familiar voices and a familiar language (Naoi et al., 2012), and paired sounds with visual social stimuli that included eye gaze directed at the infant (Altvater-Mackensen and Grossmann, 2016). In our study, the infants may not have experienced the stimuli as particularly self-relevant, despite the use of infant-directed speech, given the non-native language sample and the lack of corresponding visual signals. This makes direct comparison with previous work difficult. Firm conclusions are also difficult to draw because our fNIRS measurement did not cover the whole brain. Still, the stark difference between the patterns of response to the human communicative and non-communicative vocalizations is intriguing.

Previous fNIRS studies have similarly found negative responses to stimuli in the infant frontal cortex (e.g., Ravicz et al., 2015; Xu et al., 2017). As suggested by Ravicz et al. (2015), this pattern of decreased HbO and increased HbR may indicate a decrease in neural activity relative to baseline (de-activation), or it may be a sign of increased activation in a nearby brain area. More specifically, the negative response in the prefrontal cortex may represent an attenuation of the default mode network when externally focused attention is required, as posited by Xu et al. (2017). Furthermore, it has been suggested that non-canonical or inverted hemodynamic responses may more generally reflect differences in stimulus complexity or familiarity (Issard and Gervain, 2018). In this study, by contrast, we found that differences in the shape of the hemodynamic response function depended primarily on brain region. The different stimulus categories generally elicited similar responses, while the shape of the response to the same stimulus differed between frontal and temporal brain regions.

4.3. Limitations

We took a different approach than previous work by contrasting the pattern of brain responses to differing types of social sounds. While this allowed for a unique study focus, interpretation of our findings is limited by the exclusion of important contrast conditions. To allow for feasible and successful data collection with an infant population, we focused on three conditions that were of the most interest to compare. Ideally, however, we would have also included native language speech and non-vocal auditory control conditions. Given the lack of a native speech condition, it is unclear to what degree the current results reflect speech processing vs. the processing of social sounds more generally. As demonstrated in Shultz et al. (2012), there are also sound features that differ among our three conditions in addition to presumed social communicative value, including valence and acoustic features (e.g., intensity, pitch), that may have accounted for a portion of the differences among the conditions.

Additionally, although fNIRS importantly allows the examination of localized brain function in awake infants, our optodes, as is common in fNIRS studies, did not provide full head coverage, which necessitated a focus on a limited number of brain regions. This, together with the limited depth sensitivity and spatial localization of fNIRS, precludes a full understanding of the brain regions involved in processing the auditory social information presented in this study. Although our sample size was comparable to, or even slightly larger than, those of previous fNIRS studies, we may have been underpowered to detect more subtle differences between conditions, as well as higher-order interactions within the ANOVA models. With regard to behavioral data, although the MSEL is a widely used measure of early development, it may not have been ideally suited to identifying more fine-grained differences in early language skills, particularly in the expressive domain. Future studies should use more specialized behavioral measures of early expressive and receptive language development.

4.4. Conclusions and future directions

This study uniquely examined longitudinal brain responses to different types of social sounds in infants at 3 and 6 months of age. We found that at 3 months, infants showed similar patterns of widespread activation in bilateral temporal cortices to human non-speech vocalizations, while by 6 months infants showed more similar, and focal, responses to social sounds that carried increased social value. In addition, we found that brain activity at 3 months predicted later brain activity and receptive language skills as measured at 6 months.

An important next step to this study would be to examine responses to differing social sounds in infants who are at risk for atypical social development, such as autism spectrum disorder (ASD). Recent research in infants with familial risk for ASD suggests that by 6 months of age, high-risk infants with ASD outcomes show reduced brain responses to vocal sounds and increased responses to non-vocal sounds (Lloyd-Fox et al., 2018), highlighting the clinical relevance of studying social brain development in the first year of life. Ultimately, a better understanding of the typical development of social information processing may allow for a stronger basis upon which to assess deviations from the typical course.

Conflict of interest

The authors have no conflicts of interest to report.

Acknowledgements

This research and the work of the first author (NMM) were supported by the National Institute of Mental Health (F32 MH108283-01). KLP was supported by the National Institute of Mental Health (4R01 MH078829) and the Bill and Melinda Gates Foundation (OPP1111625). We thank the following members of the research team for their important contributions to participant recruitment and data collection efforts: Amy Ahn, Megan Braconnier, Harlan Fichtenholtz, Karen Lob, Courtney Paisley, and Carla Wall. We are particularly grateful for the families who took the time to participate in this study.

Footnotes

Based on 6-month data.

Corresponding analyses with the 6-month MSEL Expressive Language t-score revealed no significant associations.

Contributor Information

Nicole M. McDonald, Email: nmcdonald@mednet.ucla.edu.

Katherine L. Perdue, Email: katherine.perdue@childrens.harvard.edu.

Jeffrey Eilbott, Email: jeffrey.eilbott@yale.edu.

Jaspreet Loyal, Email: jaspreet.loyal@yale.edu.

Frederick Shic, Email: fshic@uw.edu.

Kevin A. Pelphrey, Email: kevin.pelphrey@virginia.edu.

References

- Altvater-Mackensen N., Grossmann T. The role of left inferior frontal cortex during audiovisual speech perception in infants. Neuroimage. 2016;133:14–20. doi: 10.1016/j.neuroimage.2016.02.061.

- Behrendt H.F., Firk C., Nelson C.A., Perdue K.L. Motion correction for infant functional near-infrared spectroscopy with an application to live interaction data. Neurophotonics. 2018;5(1). doi: 10.1117/1.NPh.5.1.015004.

- Benjamini Y., Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B. 1995;57(1):289–300.

- Blasi A., Mercure E., Lloyd-Fox S., Thomson A., Brammer M., Sauter D. Early specialization for voice and emotion processing in the infant brain. Curr. Biol. 2011;21(14):1220–1224. doi: 10.1016/j.cub.2011.06.009.

- DeCasper A.J., Fifer W.P. Of human bonding: newborns prefer their mothers’ voices. Science. 1980;208(4448):1174–1176. doi: 10.1126/science.7375928.

- Delpy D.T., Cope M., van der Zee P., Arridge S., Wray S., Wyatt J. Estimation of optical pathlength through tissue from direct time of flight measurement. Phys. Med. Biol. 1988;33(12):1433–1442. doi: 10.1088/0031-9155/33/12/008.

- Duncan A., Meek J.H., Clemence M., Elwell C.E., Tyszczuk L., Cope M., Delpy D.T. Optical pathlength measurements on adult head, calf and forearm and the head of the newborn infant using phase resolved optical spectroscopy. Phys. Med. Biol. 1995;40(2):295–304. doi: 10.1088/0031-9155/40/2/007.

- Funane T., Homae F., Watanabe H., Kiguchi M., Taga G. Greater contribution of cerebral than extracerebral hemodynamics to near-infrared spectroscopy signals for functional activation and resting-state connectivity in infants. Neurophotonics. 2014;1(2). doi: 10.1117/1.NPh.1.2.025003.

- Grossmann T. The development of social brain functions in infancy. Psychol. Bull. 2015;141(6):1266–1287. doi: 10.1037/bul0000002.

- Grossmann T., Oberecker R., Koch S.P., Friederici A.D. The developmental origins of voice processing in the human brain. Neuron. 2010;65(6):852–858. doi: 10.1016/j.neuron.2010.03.001.

- Grossmann T., Parise E., Friederici A.D. The detection of communicative signals directed at the self in infant prefrontal cortex. Front. Hum. Neurosci. 2010;4:201. doi: 10.3389/fnhum.2010.00201.

- Hakuno Y., Pirazzoli L., Blasi A., Johnson M.H., Lloyd-Fox S. Optical imaging during toddlerhood: brain responses during naturalistic social interactions. Neurophotonics. 2018;5(1):011020. doi: 10.1117/1.NPh.5.1.011020.

- Huppert T.J., Diamond S.G., Franceschini M.A., Boas D.A. HomER: a review of time-series analysis methods for near-infrared spectroscopy of the brain. Appl. Opt. 2009;48(10):D280–D298. doi: 10.1364/ao.48.00d280.

- Imafuku M., Hakuno Y., Uchida-Ota M., Yamamoto J., Minagawa Y. "Mom called me!" Behavioral and prefrontal responses of infants to self-names spoken by their mothers. Neuroimage. 2014;103:476–484. doi: 10.1016/j.neuroimage.2014.08.034.

- Issard C., Gervain J. Variability of the hemodynamic response in infants: Influence of experimental design and stimulus complexity. Dev. Cogn. Neurosci. 2018. doi: 10.1016/j.dcn.2018.01.009.

- Kuhl P.K. Brain mechanisms in early language acquisition. Neuron. 2010;67(5):713–727. doi: 10.1016/j.neuron.2010.08.038.

- Lloyd-Fox S., Blasi A., Elwell C.E. Illuminating the developing brain: the past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 2010;34(3):269–284. doi: 10.1016/j.neubiorev.2009.07.008.

- Lloyd-Fox S., Blasi A., Mercure E., Elwell C.E., Johnson M.H. The emergence of cerebral specialization for the human voice over the first months of life. Soc. Neurosci. 2012;7(3):317–330. doi: 10.1080/17470919.2011.614696.

- Lloyd-Fox S., Begus K., Halliday D., Pirazzoli L., Blasi A., Papademetriou M. Cortical specialisation to social stimuli from the first days to the second year of life: a rural Gambian cohort. Dev. Cogn. Neurosci. 2017;25:92–104. doi: 10.1016/j.dcn.2016.11.005.

- Lloyd-Fox S., Blasi A., Pasco G., Gliga T., Jones E.J.H., Murphy D.G.M., Johnson M.H. Cortical responses before 6 months of life associate with later autism. Eur. J. Neurosci. 2018;47(6):736–749. doi: 10.1111/ejn.13757.

- Marx V., Nagy E. Fetal behavioural responses to maternal voice and touch. PLoS One. 2015;10(6). doi: 10.1371/journal.pone.0129118.

- May L., Byers-Heinlein K., Gervain J., Werker J.F. Language and the newborn brain: does prenatal language experience shape the neonate neural response to speech? Front. Psychol. 2011;2:222. doi: 10.3389/fpsyg.2011.00222.

- McDonald N.M., Perdue K.L. The infant brain in the social world: moving toward interactive social neuroscience with functional near-infrared spectroscopy. Neurosci. Biobehav. Rev. 2018;87:38–49. doi: 10.1016/j.neubiorev.2018.01.007.

- Minagawa-Kawai Y., Cristia A., Dupoux E. Cerebral lateralization and early speech acquisition: a developmental scenario. Dev. Cogn. Neurosci. 2011;1(3):217–232. doi: 10.1016/j.dcn.2011.03.005.

- Minagawa-Kawai Y., van der Lely H., Ramus F., Sato Y., Mazuka R., Dupoux E. Optical brain imaging reveals general auditory and language-specific processing in early infant development. Cereb. Cortex. 2011;21(2):254–261. doi: 10.1093/cercor/bhq082.

- Mullen E. Mullen Scales of Early Learning. Circle Pines: American Guidance Service, Inc.; 1995.

- Naoi N., Minagawa-Kawai Y., Kobayashi A., Takeuchi K., Nakamura K., Yamamoto J., Kojima S. Cerebral responses to infant-directed speech and the effect of talker familiarity. Neuroimage. 2012;59(2):1735–1744. doi: 10.1016/j.neuroimage.2011.07.093.

- Pegg J.E., Werker J.F., McLeod P.J. Preference for infant-directed over adult-directed speech: evidence from 7-week-old infants. Infant Behav. Dev. 1992;15(3):325–345.

- Peña M., Maki A., Kovacic D., Dehaene-Lambertz G., Koizumi H., Bouquet F., Mehler J. Sounds and silence: an optical topography study of language recognition at birth. Proc. Natl. Acad. Sci. 2003;100(20):11702–11705. doi: 10.1073/pnas.1934290100.

- Psychology Software Tools, Inc. E-Prime 2.0. 2012. Retrieved from http://www.pstnet.com.

- Ravicz M.M., Perdue K.L., Westerlund A., Vanderwert R.E., Nelson C.A. Infants’ neural responses to facial emotion in the prefrontal cortex are correlated with temperament: a functional near-infrared spectroscopy study. Front. Psychol. 2015;6:922. doi: 10.3389/fpsyg.2015.00922.

- Sato H., Hirabayashi Y., Tsubokura H., Kanai M., Ashida T., Konishi I., Maki A. Cerebral hemodynamics in newborn infants exposed to speech sounds: a whole-head optical topography study. Hum. Brain Mapp. 2012;33(9):2092–2103. doi: 10.1002/hbm.21350.

- Shultz S., Vouloumanos A., Pelphrey K. The superior temporal sulcus differentiates communicative and noncommunicative auditory signals. J. Cogn. Neurosci. 2012;24(5):1224–1232. doi: 10.1162/jocn_a_00208.

- Shultz S., Vouloumanos A., Bennett R.H., Pelphrey K. Neural specialization for speech in the first months of life. Dev. Sci. 2014;17(5):766–774. doi: 10.1111/desc.12151.

- Vannasing P., Florea O., Gonzalez-Frankenberger B., Tremblay J., Paquette N., Safi D. Distinct hemispheric specializations for native and non-native languages in one-day-old newborns identified by fNIRS. Neuropsychologia. 2016;84:63–69. doi: 10.1016/j.neuropsychologia.2016.01.038.

- Watanabe H., Homae F., Nakano T., Taga G. Functional activation in diverse regions of the developing brain of human infants. Neuroimage. 2008;43(2):346–357. doi: 10.1016/j.neuroimage.2008.07.014.

- Xu M., Hoshino E., Yatabe K., Matsuda S., Sato H., Maki A., Minagawa Y. Prefrontal Function Engaging in External-Focused Attention in 5- to 6-Month-Old Infants: A Suggestion for Default Mode Network. Front. Hum. Neurosci. 2017;10. doi: 10.3389/fnhum.2016.00676.

- Yang D.Y., Rosenblau G., Keifer C., Pelphrey K.A. An integrative neural model of social perception, action observation, and theory of mind. Neurosci. Biobehav. Rev. 2015;51:263–275. doi: 10.1016/j.neubiorev.2015.01.020.