Abstract.

Significance: Functional near-infrared spectroscopy (fNIRS) has become an important research tool in studying human brains. Accurate quantification of brain activities via fNIRS relies upon solving computational models that simulate the transport of photons through complex anatomy.

Aim: We aim to highlight the importance of accurate anatomical modeling in the context of fNIRS and propose a robust method for creating high-quality brain/full-head tetrahedral mesh models for neuroimaging analysis.

Approach: We have developed a surface-based brain meshing pipeline that can produce significantly better brain mesh models, compared to conventional meshing techniques. It can convert segmented volumetric brain scans into multilayered surfaces and tetrahedral mesh models, with typical processing times of only a few minutes and broad utilities, such as in Monte Carlo or finite-element-based photon simulations for fNIRS studies.

Results: A variety of high-quality brain mesh models have been successfully generated by processing publicly available brain atlases. In addition, we compare three brain anatomical models (the voxel-based brain segmentation, the tetrahedral brain mesh, and the layered-slab brain model) and demonstrate noticeable discrepancies in brain partial pathlengths when using approximated brain anatomies, reaching up to 23% with the voxelated brain and ranging from 36% to 166% with the layered-slab brain.

Conclusion: The generation and utility of high-quality brain meshes can lead to more accurate brain quantification in fNIRS studies. Our open-source meshing toolboxes “Brain2Mesh” and “Iso2Mesh” are freely available at http://mcx.space/brain2mesh.

Keywords: functional near-infrared spectroscopy, tetrahedral mesh generation, brain atlas, Monte Carlo method

1. Introduction

Functional near-infrared spectroscopy (fNIRS) has played an increasingly important role in functional neuroimaging.1 Using light in the red and near-infrared range, the hemodynamic response of the brain is probed through careful placement of sources and detectors on the scalp surface at multiple wavelengths. Relative changes in oxygenated (HbO2) and deoxygenated hemoglobin (HbR) concentrations, as a result of neural activities, lead to variations in light intensities at the detectors that are used to infer the locations of these activities. The accuracy of this inference depends greatly on an accurate representation of not only the complex human brain anatomy but also the surrounding tissues that affect the migration of photons from the sources to the detectors. Using the anatomical scans of the patient’s head or a resembling atlas, Monte Carlo (MC) simulations are often used in conjunction with tissue optical properties to approximate the photon pathlengths that are used to reconstruct the changes in HbR and HbO2. While much simplified brain models, such as planar2,3 or spherical layers,4 as well as approximated photon propagation models, such as the diffusion approximation (DA),5 have been widely utilized by the research community, their limitations are recognized by a number of studies.3,6 In addition, modeling brain anatomy accurately also plays important roles in other quantitative neuroimaging modalities, such as electroencephalography (EEG)7 and magnetoencephalography.8

Whereas a voxelated brain representation has been dominantly used for acquiring and storing a three-dimensional (3-D) neuroanatomical volume, the terraced boundary shape in a voxelated space has difficulty in representing a smooth and curved boundary that typically delineates human tissues, resulting in a loss of accuracy, especially when modeling complex cortical surfaces with limited resolution. In addition, the uniform grid structure of the voxel space also demands a large number of cells in order to store brain anatomy without losing spatial details. This can cause prohibitive memory allocation and runtime in applications where solving sophisticated numerical models is necessary. Another approach—octree—uses nested voxel refinement near curved boundaries. This improves memory efficiency significantly but still suffers from terraced mesh boundaries.9–11

Mesh-based brain/head models made of triangular surfaces or tetrahedral elements have advantages in both improved boundary accuracy and high flexibility compared to voxelated domains. Mesh-based models are not only the most common choice in computer graphics and 3-D visualization of brain structures, but they are also the primary format for finite element analysis (FEA) and image reconstructions in many neuroimaging studies.12,13 In fNIRS, tetrahedral meshes have been reported in several studies to model light propagation and recover brain hemodynamic activation using the finite-element14,15 or mesh-based Monte Carlo (MMC) method.16,17 Despite its importance for quantitative fNIRS analysis, the available mesh-based brain models remain limited6,18,19 in part due to the difficulties in generating accurate brain tetrahedral meshes.

The importance of creating high-quality brain mesh models is not limited to fNIRS. EEG relies on surface and volumetric head/brain meshes to quantitatively estimate the brain cortical activities.20 The effects of transcranial magnetic stimulation and transcranial direct current stimulation (tDCS) can also be simulated on realistic mesh models to evaluate brain damage13,21 or to measure tDCS effects on major brain disorders.22 In addition, FEA of brain tissue deformation using mesh models can assist neurosurgeons in the study of traumatic brain injuries or surgical planning.23

On the other hand, while it has been generally agreed that mesh-based light transport models are more accurate than those using a voxel-based domain, there has not been a systematic study to investigate such differences and their impact on fNIRS. The shape differences between a voxelated and a mesh-based boundary not only influence how photons get absorbed by tissue, thus altering fluence distributions, but also greatly impact photon reflection/transmission characteristics near a tissue/air boundary. Such errors could also be amplified by the presence of low-scattering/low-absorption tissue, such as cerebrospinal fluid (CSF) in the brain, and result in inaccurate estimations in fNIRS. Quantifying the impact of brain anatomical models on light modeling using the state-of-the-art voxel and MMC simulators could greatly help the community design more efficient study protocols and instruments.

Despite the broad awareness of anatomical model differences, fNIRS studies utilizing mesh brain models are quite limited, largely due to the challenges of creating brain meshes and the lack of publicly available meshing tools. A large portion of these studies rely on previously created meshes24 or use general-purpose tetrahedral mesh generators that are not optimized for meshing the brain. For example, a voxel conforming mesh generation approach,25,26 the marching cubes algorithm,27,28 and the “Cleaver” software29 can achieve good surface accuracy but at the cost of highly dense elements near the boundaries due to the octree-like refinement. A general-purpose 3-D mesh generation pipeline was proposed alongside an open-source meshing software, BioMesh3D.30 This approach makes use of physics-based optimizations to obtain high-quality multimaterial feature-preserving tetrahedral mesh models but at the expense of lengthy runtimes, on the order of several hours. In another Delaunay-based meshing pipeline provided in the Computational Geometry Algorithms Library (CGAL), also supported in “Iso2Mesh”31 in 2009 and NIRFAST in 2013,32 the tetrahedral mesh is generated from a random point set that is iteratively refined.33,34 This procedure is relatively fast, parallelizable, and robust. However, nonsmooth boundaries are often observed between tissue regions (example shown below). Commercially available tools, such as Mimics (Materialise, Leuven, Belgium) and ScanIP (Simpleware, Exeter, UK), offer integrated interfaces for image segmentation and mesh generation but often require manual mesh editing. A streamlined high-quality mesh-processing pipeline from neuroanatomical scans remains a challenge.

Meshing tools specifically optimized for brain mesh generation are very limited. In 2010, we reported a surface-based brain meshing approach.16 In 2013, a similar approach was reported35 in the form of an automated meshing pipeline, “mri2mesh,” which incorporates FreeSurfer surface models and the scalp, skull, and CSF segmentations from FMRIB Software Library (FSL) with the assistance of a surface decoupling step.36 Although mri2mesh yielded smooth boundaries and high-quality tetrahedral elements, the reported meshing times are on the order of 3 to 4 h, in addition to the time for segmentation. Moreover, mri2mesh can only process FreeSurfer and FSL outputs, limiting its integration with other segmentation tools.

In this work, we address two challenges in model-based neuroimaging analysis. First, we report a fully automated, surface-based brain/full head 3-D meshing pipeline “Brain2Mesh”—a specialized wrapper built upon our widely adopted mesh generation toolbox, Iso2Mesh, dedicated toward high-quality brain mesh generation. A major difference separating this work from the more conventional CGAL-based volumetric meshing approach32,33 is the use of surface-based meshing workflow. This allows it to produce brain mesh models with significantly higher quality. It is also much more flexible, processing data conveniently from multilabel (discrete) or probabilistic (continuous) segmentations and surface models of the brain. Second, this work quantitatively demonstrates that by utilizing an accurate mesh-based brain representation, one can potentially improve the accuracy in fNIRS data analysis. We analyze the errors in both fluence and photon partial pathlengths in the MC simulation outputs comparing between a layered-slab, a voxel-based, and a mesh-based brain model.

In the remainder of this paper, we first detail the brain mesh generation pipeline that we have developed to create high-quality brain mesh models. In Sec. 3, we show a variety of examples of the brain/full head meshes created using different types of neuroanatomical data inputs, including an open-source brain mesh library created based on the recently published Neurodevelopmental Magnetic Resonance Imaging (MRI) Database.37 In addition, we perform MMC simulations using the produced mesh model and compare the results with those from voxelated and layered-slab brain models. We highlight the modeling errors of using a voxelated model in both fluence and partial pathlength in comparison with a mesh-based brain.

2. Material and Methods

2.1. High-Quality Three-Dimensional Brain Mesh Generation Pipeline

2.1.1. Brain segmentation

The brain anatomical modeling pipeline reported in this work starts from a presegmented brain. Here, we want to particularly highlight that brain segmentation is outside the scope of this paper. As noted below, there is an array of dedicated brain segmentation tools, extensively developed and validated over the past several decades. Advanced statistical and template-based segmentation methods have been investigated and rigorously implemented in these tools. It is not in our interests to develop a new segmentation method but to convert these presegmented anatomies into accurate meshes for subsequent computational modeling.

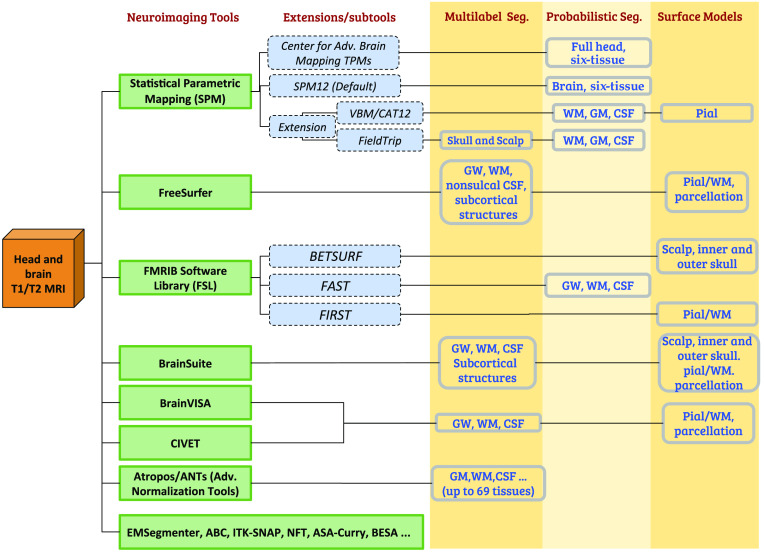

A diagram summarizing the common pathways in segmenting a neuroanatomical scan using popular neuroimaging analysis tools is shown in Fig. 1. In most cases, a tissue probability or multilabel volume is obtained for the white matter (WM), gray matter (GM), and CSF. Some segmentation tools, such as Statistical Parametric Mapping (SPM), allow the use of a matching T2-weighted MRI to improve the CSF segmentation. Utilities such as the FSL brain extraction tool and SPM can provide additional information on the scalp and skull. In this work, we primarily focus on six tissue types: WM, GM, CSF, skull, scalp, and air cavities. Additional classes of tissue (e.g., dura, vessels, fatty tissues, skin, and muscle) are also available in some segmentation outputs and can be incorporated into our mesh generation pipeline. However, one should be aware that including more tissue classes may result in increased node numbers and surface complexity, including disconnected surface components.

Fig. 1.

Segmentation pathways from anatomical head and brain MRI scans. The common neuroimaging tools/extensions (left) and the corresponding outputs (right, shaded) are listed.

2.1.2. Segmentation preprocessing

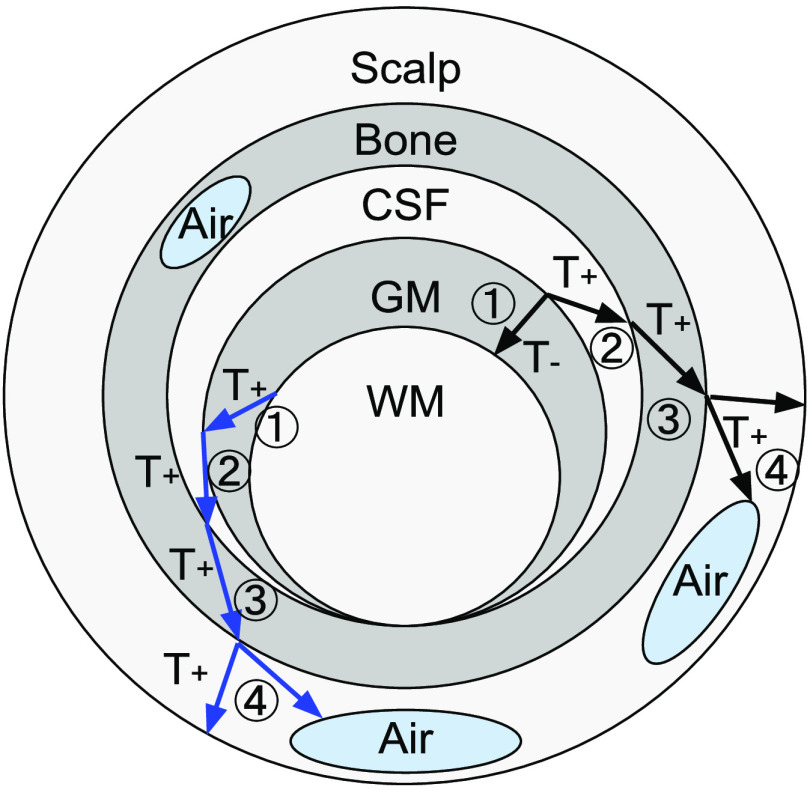

The segmented brain or full-head volume, represented by either a multilabel 3-D mask or a set of probability maps (in floating-point 0 to 1 values), is preprocessed to ensure a layered tissue model—i.e., the WM, GM, CSF, skull, and scalp are incrementally enclosed by or share a common boundary with the later tissue layers; the scalp surface is the outermost layer and the WM is the innermost layer. In previous publications, two adjacent layers must be separated by a nonzero gap.35,38 In this work, we have overcome this limitation by initially inserting a small gap between successive layers and then applying a postprocessing step to recover the merged boundaries. Moreover, we also consider air cavities, which can be located between two tissue surfaces, for example, inside or outside skull surfaces. In Fig. 2, the workflow to create different brain tissue boundaries is outlined.

Fig. 2.

Illustration of the layered tissue model and the segmentation preprocessing workflow. Multiple air cavities are allowed. An arrow represents a thinning or thickening operation between two adjacent regions. Two sample pathways are indicated, shown by black and blue arrows, respectively. The circled numbers indicate the processing order. The gaps inserted between layers can be removed in the postprocessing step to recover shared boundaries (such as the CSF/GM/WM surfaces here).

To avoid intersecting triangles in the generated multilayered surface model, we first insert a small gap between adjacent brain tissue layers (only in the regions where tissues have shared boundaries). This is achieved using either a 3-D image “thickening” or “thinning” operator in the segmented volume. In the case of a thickening operator ($T^{+}$), the outer layer tissue segmentation ($P_o$) is modified as

$$P_o \leftarrow T^{+}(P_o, P_i) = \max\left[P_o,\ \mathcal{D}_s(P_i)\right], \tag{1}$$

where $P_i$ and $P_o$ represent the inner and outer tissue probabilistic segmentations, respectively; $\mathcal{D}_s$ denotes a “max-filter,” i.e., a volumetric dilation operator defined by replacing each voxel with the maximum value in a cubic neighboring region with a half-edge length of $s$, i.e.,

$$\mathcal{D}_s(P)(x,y,z) = \max_{|i|,|j|,|k| \le s} P(x+i,\ y+j,\ z+k). \tag{2}$$

Similarly, a thinning operator ($T^{-}$) is defined as

$$P_i \leftarrow T^{-}(P_i, P_o) = \min\left[P_i,\ \mathcal{E}_w(P_o)\right], \tag{3}$$

where $\mathcal{E}_w$ is an “erosion” operator of width $w$, defined by a “min-filter” as

$$\mathcal{E}_w(P)(x,y,z) = \min_{|i|,|j|,|k| \le w} P(x+i,\ y+j,\ z+k). \tag{4}$$

The $T^{-}$ operator effectively shrinks the inner layer mask at the intersecting regions with the outer layer. We note here that the above $T^{+}$ and $T^{-}$ operators work for both probabilistic and binary segmentations. We also highlight that the above operators only alter tissue segmentations in the regions where the inner tissue boundaries “merge” or intersect with the outer boundaries. Such regions only account for a small fraction of the brain tissue boundaries generated from realistic data. An optional postprocessing step is applied to “relabel” the elements in the expanded regions to recover the shared tissue boundaries (see below).
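The thickening and thinning operations described above can be illustrated with a short NumPy sketch. It is not the Brain2Mesh implementation: the function names (`cube_filter`, `thicken`, `thin`) are hypothetical, the neighborhood is a cube of a given half-edge length in voxels, and each mask is assumed to describe the whole region enclosed by a tissue boundary, so that the outer mask already contains the inner one.

```python
import numpy as np

def cube_filter(vol, half_edge, reduce_fn):
    """Max- or min-filter over a cubic neighborhood with the given half-edge length."""
    padded = np.pad(vol, half_edge, mode="edge")  # replicate border voxels
    nx, ny, nz = vol.shape
    out = vol.copy()
    width = 2 * half_edge + 1
    # Combine all shifted copies of the volume inside the cubic neighborhood
    for i in range(width):
        for j in range(width):
            for k in range(width):
                out = reduce_fn(out, padded[i:i + nx, j:j + ny, k:k + nz])
    return out

def thicken(p_outer, p_inner, half_edge=1):
    """Grow the outer layer so that it encloses the dilated inner layer."""
    return np.maximum(p_outer, cube_filter(p_inner, half_edge, np.maximum))

def thin(p_inner, p_outer, half_edge=1):
    """Shrink the inner layer so that it stays inside the eroded outer layer."""
    return np.minimum(p_inner, cube_filter(p_outer, half_edge, np.minimum))

# Toy case (assumption): inner and outer regions coincide, i.e., a fully shared boundary
inner = np.zeros((7, 7, 7))
inner[2:5, 2:5, 2:5] = 1.0
outer = inner.copy()

outer_thick = thicken(outer, inner)  # outer region grows by one voxel on every side
inner_thin = thin(inner, outer)      # inner region shrinks by one voxel on every side
```

In this toy case, either operator alone opens a one-voxel gap between the two boundaries. Where the layers are already separated by at least the filter width, both operators leave the masks unchanged.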

2.1.3. Tissue surface extraction and surface-mesh processing

If the input data are given in the form of a multilabeled volume or tissue probability maps (see Fig. 1), the next step of the mesh generation is to create a triangular surface mesh for each tissue layer. This is achieved using the “ε-sample” algorithm in the CGAL Surface Mesh Generation library.39 For each extracted tissue surface, independent mesh quality and density criteria can be defined. In general, a surface mesh extracted from a probabilistic segmentation (grayscale) is smoother than the one derived from a binary segmentation. In addition, we also provide three surface smoothing algorithms, including the Laplacian, Laplacian+HC, and low-pass filters.40 Moreover, a surface Boolean operation based on a customized Cork 3-D surface Boolean library41 is used to avoid surface intersections.

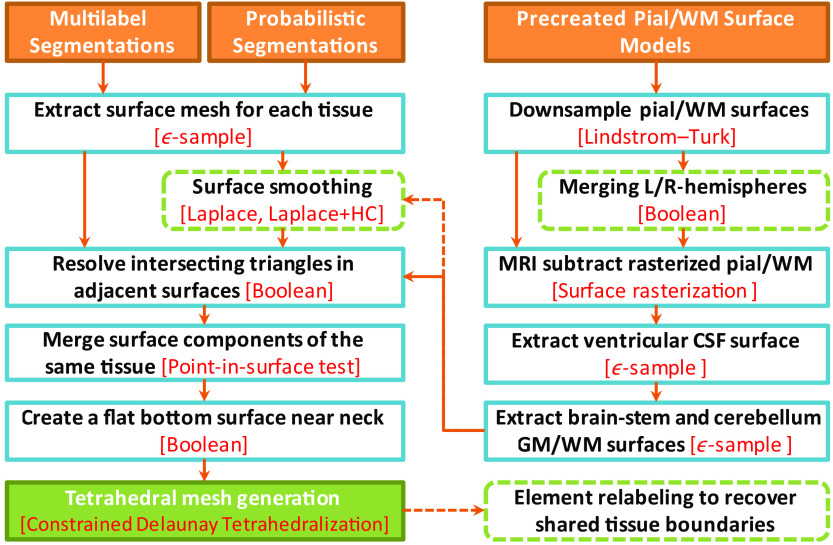

If the segmentation tool directly outputs the surface meshes of GM and WM, such as FreeSurfer, additional surface preprocessing is often required. For example, the FreeSurfer GM/WM surfaces are typically very dense. A surface simplification step using the Lindstrom–Turk algorithm42,43 is applied to decimate the surface nodes. In another example, if the pial and WM surfaces contain a separate surface for each brain hemisphere, a merge operation is applied to combine them into one closed surface. In addition, the FreeSurfer and FSL pial/WM surfaces do not cover the cerebellum and brainstem regions. To add those two anatomical regions, we first rasterize the pial/WM surfaces and then subtract those from the GM/WM probabilistic segmentations. From the subtracted probability maps, we extract the brainstem and cerebellum WM and GM surfaces and subsequently merge these meshes with the cerebral GM/WM surfaces. A diagram summarizing our surface-based brain mesh generation pipeline is shown in Fig. 3.

Fig. 3.

Processing steps for a surface-based mesh generation workflow. The left side shows the steps for processing tissue probability maps and multilabel volumes, and the right side shows additional steps to incorporate precreated pial and WM surfaces. The specific algorithm used in each step is indicated in red, and dashed boxes and arrows indicate optional processing steps.

2.1.4. Volumetric mesh generation and postprocessing

With the above-derived combined multilayer head surface model, a full head tetrahedral mesh can finally be generated using a constrained Delaunay tetrahedralization algorithm, provided by the open-source meshing utility TetGen.44 The output mesh quality and density are fully controlled by a set of user-defined meshing criteria. An un-normalized radius-edge ratio lower bound (see below) can be specified to control the overall quality of the tetrahedral elements. In addition, one can set an upper bound for the tetrahedral element volume globally for the entire mesh or for a particular tissue label. Spatially varying mesh density is also supported via a user-defined “sizing field.”44 After tessellation, each enclosed region in a multilayered brain surface model is filled by tetrahedral elements and is assigned a unique region label to distinguish different tissue types.

If the small gaps inserted by the aforementioned thickening and thinning operations are not desired, an optional “relabeling” step is performed to recover the originally merged tissue boundaries. To do this, we use the centroids of the tetrahedral mesh elements in each unique region to determine the innermost surface that encloses this region, based on which we can retag these elements using the correct tissue labels.
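The centroid-based relabeling idea can be sketched as follows. Note that this sketch swaps in a simpler test than the one described above: instead of finding the innermost enclosing surface, it looks up each element centroid in the original multilabel volume. The function name `relabel_by_centroid` and the convention that voxel (i, j, k) occupies the cube [i, i+1) × [j, j+1) × [k, k+1) are assumptions made for illustration.

```python
import numpy as np

def relabel_by_centroid(nodes, elems, label_vol):
    """Assign each tetrahedron the label of the voxel that contains its centroid."""
    centroids = nodes[elems].mean(axis=1)         # (n_elem, 3) element centroids
    idx = np.floor(centroids).astype(int)         # voxel index containing each centroid
    idx = np.clip(idx, 0, np.array(label_vol.shape) - 1)  # keep indices inside the volume
    return label_vol[idx[:, 0], idx[:, 1], idx[:, 2]]

# Toy multilabel volume (assumption): label 1 for x < 2, label 2 for x >= 2
label_vol = np.zeros((4, 4, 4), dtype=int)
label_vol[:2] = 1
label_vol[2:] = 2

# Two tetrahedra, one on each side of the label boundary
nodes = np.array([
    [0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
    [3.0, 0.0, 0.0], [4.0, 0.0, 0.0], [3.0, 1.0, 0.0], [3.0, 0.0, 1.0],
])
elems = np.array([[0, 1, 2, 3], [4, 5, 6, 7]])

new_labels = relabel_by_centroid(nodes, elems, label_vol)
```

A voxel lookup of this kind is only as accurate as the voxel grid near thin structures, which is one reason a surface-enclosure test may be preferable in practice.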

2.2. Assessing Impact of Anatomical Models in Light Transport Modeling

The ability to generate high-quality brain mesh models, together with an in-depth understanding of the state-of-the-art voxel and MMC photon transport modeling tools, allows us to investigate the impact of brain anatomical models on fNIRS data analysis. Here, we are interested in quantitatively comparing various brain anatomical models in terms of light transport modeling and quantifying their differences in the optical parameters essential to fNIRS measurements. The three brain anatomical models that we are evaluating include (1) mesh-based brain models,6,16 (2) voxel-based brain segmentations,45 and (3) simple layered-slab brain models often found in literature.3,46 We apply our widely adopted and cross-validated MC light transport simulators: MMC16,17 for mesh- and layered-slab brain simulations, and Monte Carlo eXtreme (MCX)31,47 for voxel-based brain simulations. Furthermore, a number of publications applied the DA to fNIRS modeling by utilizing an approximated scattering coefficient for CSF,5,48 as it has very low scattering. It is beneficial to the community to understand the errors caused by such model approximation.

To ensure that the mesh model is “equivalent” to the original segmentation, we calculate, for each tissue, the volumetric ratio between the volume enclosed by the meshed tissue boundary and that derived from the corresponding segmentation. A value close to 1 suggests excellent volume conservation. From all MC simulations, we compute important light transport parameters, such as the average partial pathlengths49 in the GM/WM regions (in millimeters), the fraction of the total pathlength that falls in the brain, as well as the optical fluence spatial distributions. In addition, we also compute the percentage fractions of the total energy deposition in the brain regions using both mesh and voxel-based simulations. Such a parameter is strongly relevant to photobiomodulation (PBM) applications.50
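As an illustration of the volume-conservation check, the sketch below sums tetrahedral element volumes for one tissue label and divides by the volume of the matching segmentation. The function names are hypothetical, and the segmentation volume is assumed to be the count of voxels at or above a 0.5 probability threshold, which the text does not specify.

```python
import numpy as np

def tet_volumes(nodes, elems):
    """Volume of each tetrahedron from the scalar triple product of its edge vectors."""
    a, b, c, d = (nodes[elems[:, i]] for i in range(4))
    return np.abs(np.einsum("ij,ij->i", np.cross(b - a, c - a), d - a)) / 6.0

def volume_ratio(nodes, elems, elem_labels, label, seg_prob,
                 threshold=0.5, voxel_volume=1.0):
    """Ratio of meshed tissue volume to segmented tissue volume for one label."""
    mesh_volume = tet_volumes(nodes, elems)[elem_labels == label].sum()
    seg_volume = np.count_nonzero(seg_prob >= threshold) * voxel_volume
    return mesh_volume / seg_volume

# Toy case: a 2 x 2 x 2-voxel cube meshed exactly by five tetrahedra
nodes = np.array([[x, y, z] for z in (0.0, 2.0) for y in (0.0, 2.0) for x in (0.0, 2.0)])
elems = np.array([
    [0, 3, 5, 6],  # central tetrahedron
    [1, 0, 3, 5],  # four corner tetrahedra
    [2, 0, 3, 6],
    [4, 0, 5, 6],
    [7, 3, 5, 6],
])
elem_labels = np.ones(len(elems), dtype=int)
seg_prob = np.ones((2, 2, 2))

ratio = volume_ratio(nodes, elems, elem_labels, 1, seg_prob)
```

For this exact tessellation the ratio is 1. For a smoothed surface extracted from real data, small deviations from 1 would be expected.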

3. Results and Discussion

In the following sections, we first showcase the robustness and flexibility of our aforementioned brain meshing pipeline by processing a wide range of complex brain anatomical scans. Various pathways of our meshing pipeline are validated using publicly available brain atlas datasets, including the Neurodevelopmental MRI database.37,51 In addition, we use a sample full-head mesh generated from the MRI database and report the differences in key optical parameters obtained by performing 3-D mesh-, voxel-, and layered-domain MC transport simulations at a range of source–detector (SD) separations. All computational times were benchmarked on an Intel i7-8700K processor using a single thread.

3.1. High-Quality Tetrahedral Meshes of Human Head and Brain Models

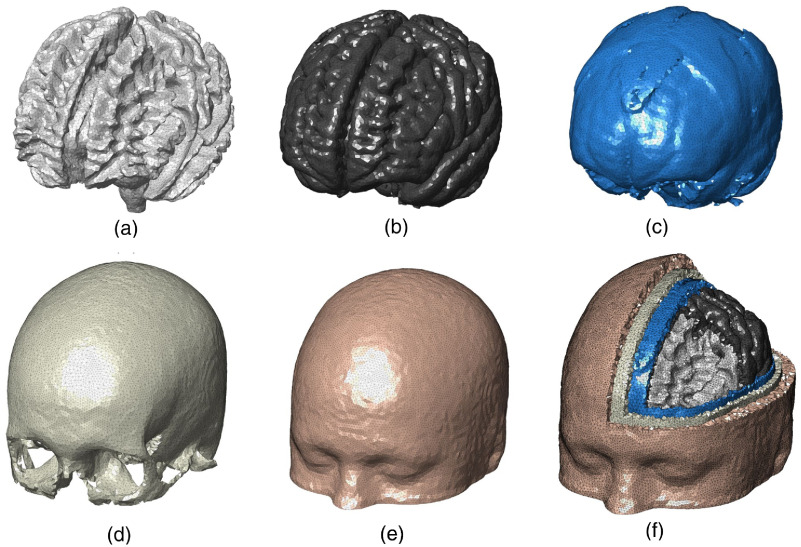

In Fig. 4, a sample full-head mesh model is generated from an SPM segmentation with tissue priors from the Laboratory for Research in Neuroimaging.52 The generated mesh contains 181,026 nodes and 1,060,051 tetrahedral elements. A T1-weighted MRI scan of an average head for the 40 to 44 years old age group from the University of South Carolina (USC) Neurodevelopmental MRI database (in the following sections, we will use “USC age group” to refer to an atlas from this database; for example, the atlas used in this example is USC 40 to 44) was used as the input. The segmentation yields five tissue classes that are used in the mesh generation: WM, GM, CSF, skull, and scalp.

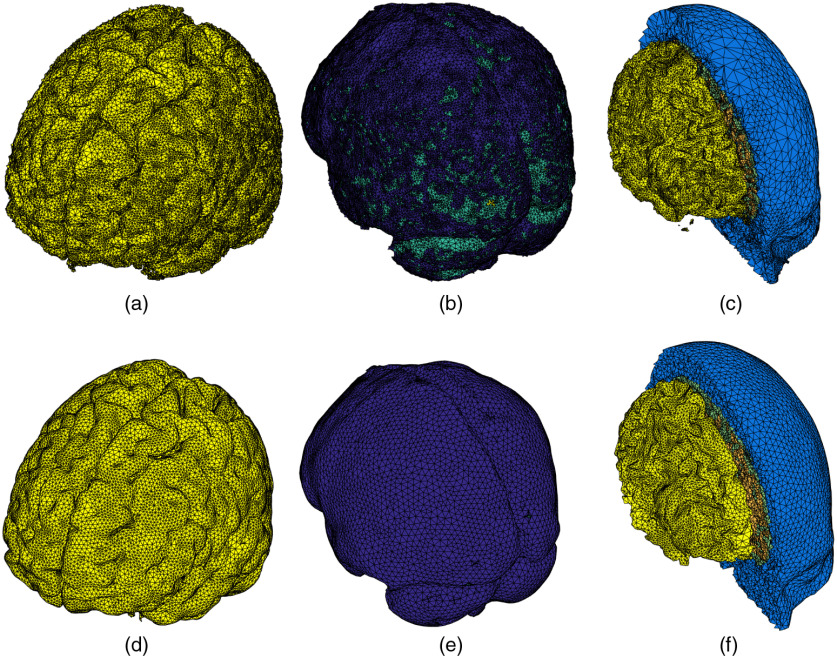

Fig. 4.

A five-layered full head tetrahedral mesh derived from an atlas head of the USC 40 to 44 atlas. It contains (a) WM, (b) GM, (c) CSF, (d) skull, and (e) scalp layers. (f) A cross-cut view of the tetrahedral mesh is shown.

The mesh density for each tissue surface and volumetric region is fully customizable by setting the following three parameters:

- Maximum Delaunay sphere radius: The maximum radius of the Delaunay sphere39 (in voxel unit) that bounds each of the triangles in a given surface mesh. For example, setting this bound to 2 requires that the circumscribed sphere of each surface triangle in the generated mesh must have a radius less than 2 (voxel length). A smaller value is generally needed when meshing objects with sharp features.

- Maximum element volume: The maximum tetrahedral element volume44 (in cubic voxel-length unit). For example, setting this bound to 4 means no tetrahedral element in the generated mesh can exceed 4 cubic voxels in volume.

- Radius-to-edge ratio bound: The lower bound of the radius-to-edge ratio44 (measuring mesh quality), where the ratio is defined by $R_i / l_i$, $R_i$ is the radius of the circumsphere of the $i$’th tetrahedron, and $l_i$ is the shortest edge length of that tetrahedron.
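The radius-to-edge ratio in the last item can be computed for a single tetrahedron as sketched below. This is an illustrative calculation only, not code from TetGen or Brain2Mesh, and the function name `radius_edge_ratio` is hypothetical. The circumcenter is found by solving the linear system that makes it equidistant from all four vertices.

```python
import itertools
import math
import numpy as np

def radius_edge_ratio(vertices):
    """Circumsphere radius divided by the shortest edge length of one tetrahedron."""
    p = np.asarray(vertices, dtype=float)   # (4, 3) vertex coordinates
    a = p[1:] - p[0]                        # edge vectors from the first vertex
    # The circumcenter offset x from p[0] satisfies a_k . x = |a_k|^2 / 2 for each edge
    rhs = 0.5 * np.sum(a * a, axis=1)
    center_offset = np.linalg.solve(a, rhs)
    radius = np.linalg.norm(center_offset)
    shortest_edge = min(
        np.linalg.norm(p[i] - p[j]) for i, j in itertools.combinations(range(4), 2)
    )
    return radius / shortest_edge

# A regular tetrahedron gives sqrt(6)/4, the smallest ratio any tetrahedron can have
regular = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
ratio_regular = radius_edge_ratio(regular)

# A corner ("right-angled") tetrahedron is less well shaped and gives a larger ratio
corner = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
ratio_corner = radius_edge_ratio(corner)
```

Well-shaped elements have small ratios, so a quality bound on this quantity limits how elongated an element may be. One caveat is that flat "sliver" elements can still have a small ratio, which is why a second quality metric is often reported alongside it.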

Each surface of the brain tissue layer is extracted using a layer-specific maximum Delaunay sphere radius: for pial and WM, 2 mm for CSF, 2.5 mm for skull, and 3.5 mm for scalp. The maximum element volume was defined as , and the radius-to-edge ratio bound was set to 1.414. The probability threshold for surface extraction was set to 0.5 for each of the tissues. The entire mesh takes 53.47 s to generate using a single CPU thread. The volumetric ratios computed from the generated mesh layers for WM, GM, CSF, skull, and scalp are 0.9924, 0.9989, 0.9921, 1.0088, and 1.0037, respectively. The excellent match of the volumes is a strong indication that our meshing pipeline preserves the tissue shapes accurately.

The tissue surfaces shown in Fig. 4 are visually smooth with an average Joe–Liu quality metric53 of 0.74, indicating excellent element shape quality. Overall, no degenerated element is found. The element volumes in Fig. 4 are well distributed (not shown) with only a small portion of relatively small elements. The significant improvement in mesh quality is demonstrated in the side-by-side comparison shown in Fig. 5. Here we compare the full head meshes created using the conventional CGAL-based direct meshing approach32 and the results from our surface-based meshing pipeline. As shown in Fig. 5(a)–5(c), there are several notable limitations from the conventional approach, namely (1) the tissue boundaries are not smooth (also evident from Fig. 2 in Ref. 32), (2) it has difficulty processing thin-layered tissue such as CSF [see Fig. 5(b)], and (3) it can produce small isolated “islands” [see Fig. 5(c)] due to the noise present in the volumetric image. In comparison, our surface-based meshing pipeline produces smooth and well-shaped surfaces with correct topological order. We also want to highlight that the CGAL-generated mesh contains over 268,000 nodes, whereas the mesh from our approach has only 158,211 nodes.

Fig. 5.

Comparison between (a)–(c) conventional CGAL-based volumetric meshing and (d)–(f) the new surface-based meshing approaches. From left to right, we show sample meshes for (a), (d) GM; (b), (e) CSF; and (c), (f) WM/scalp.

In Fig. 6, we show that users can generate tetrahedral meshes of different densities, by conveniently setting and the sizing-field () parameters. The set values are and , shown in Fig. 6(b), and and shown in Fig. 6(c). In the case of Fig. 6(c), we also reduced to 1.4 (in voxel length unit) for WM and GM, 1.7 for CSF, 2.0 for skull, and 2.5 for scalp.

Fig. 6.

Demonstration of mesh density control. (a) The mesh contains 181,026 nodes, 1,060,051 elements, with a runtime of 53.47 s. (b) The mesh includes 499,134 nodes, 3,009,706 elements, with runtime 76.03 s, and (c) the mesh has 1,023,739 nodes, 6,220,187 elements, and 135 s runtime. The five layers of brain tissues are, in order from outer to inner: scalp (apricot), skull (light-yellow), CSF (blue), GM (gray), and WM (white).

3.2. Hybrid Meshing Pipeline Combining Volumetric Segmentations with Tissue Surface Models

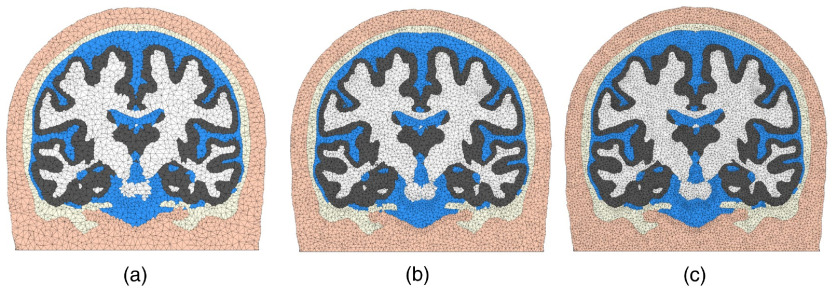

In this subsection, we demonstrate our “hybrid” meshing pathway (described in the right half of Fig. 3). This approach combines tissue surfaces extracted from probabilistic segmentations with the pial and WM surfaces generated by dedicated neuroanatomical analysis tools, such as FreeSurfer and FSL. In the case of FreeSurfer, the raw pial and WM surfaces are very dense. A mesh simplification algorithm is performed to “downsample” the surface to the desired density. In Fig. 7, we characterize the trade-offs between mesh size and the surface error54 at various resampling ratios of the dense FreeSurfer-generated pial surface for the USC 30 to 34 atlas.43 The surface error is computed as the Euclidean distance (in millimeters) between each node of the downsampled surface and the closest node on the original surface.54 As shown in Fig. 7, at resampling ratio values below 0.1 (i.e., decimating over 90% of the edges), both gyri and sulci show a surface error above 0.5 mm. When the resampling ratio value is increased to 0.15, the observed error at the gyri becomes minimal. Only a few regions show errors above 1 mm. A resampling ratio of 0.2 is selected in this example, giving a mean surface error below 0.2 mm.

Fig. 7.

Box plots of surface errors as a function of resampling ratio (percentage of edges that are preserved) when downsampling a FreeSurfer-generated pial surface. Spatial distributions of the errors are shown as insets.
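The node-to-closest-node surface error used in this comparison can be sketched with a brute-force NumPy calculation. The function name `surface_error` and the toy coordinates are illustrative assumptions. For surfaces with hundreds of thousands of nodes, a spatial search structure such as a k-d tree would be the more practical choice, since the version below forms the full pairwise distance matrix.

```python
import numpy as np

def surface_error(down_nodes, orig_nodes):
    """Distance from each downsampled node to the closest node on the original surface."""
    diff = down_nodes[:, None, :] - orig_nodes[None, :, :]  # all pairwise offsets
    dist = np.sqrt((diff ** 2).sum(axis=2))                 # (n_down, n_orig) distances
    return dist.min(axis=1)

# Toy node sets in millimeters (assumption): one node is kept, one is displaced by 0.3 mm
orig_nodes = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
down_nodes = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.3]])

errors = surface_error(down_nodes, orig_nodes)
mean_error = errors.mean()
```

Summary statistics of `errors` (median, quartiles, maximum) are the quantities a box plot of this kind would display for each resampling ratio.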

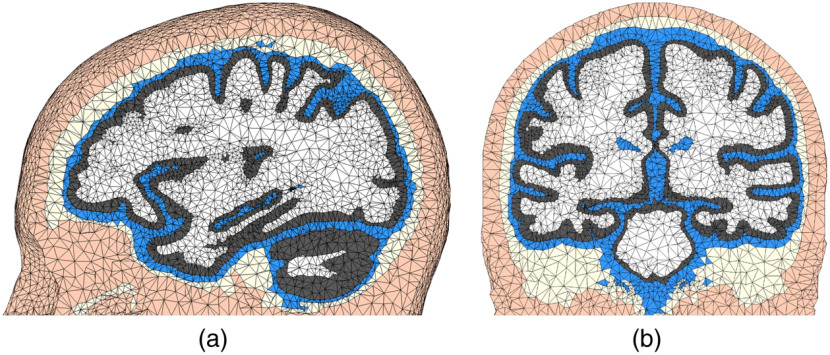

As we illustrate in Fig. 3, a number of additional steps are taken to process the FreeSurfer pial/WM surfaces. These include the merging of the left- and right-hemisphere surfaces, the addition of the CSF ventricles, and the addition of the cerebellum and brainstem using SPM segmentations. The final mesh, as shown in Fig. 8, contains 150,999 nodes and 917,212 elements. The mesh generation time was 158.44 s. This meshing time is significantly lower than the reported 3 to 4 h required for creating a similar mesh using mri2mesh.35 While not shown here, this hybrid workflow also accepts probabilistic segmentations produced by FSL or pial/WM surfaces created by BrainSuite and similar combinations.

Fig. 8.

Tetrahedral mesh generated from a hybrid meshing pathway combining FreeSurfer surfaces with SPM segmentation outputs for the USC 30 to 34 atlas. The (a) sagittal and (b) coronal views are shown. The tissue layers include scalp (apricot), skull (light-yellow), CSF (blue), GM (gray), and WM (white).

3.3. Brain Mesh Library Generated from Public Brain Databases

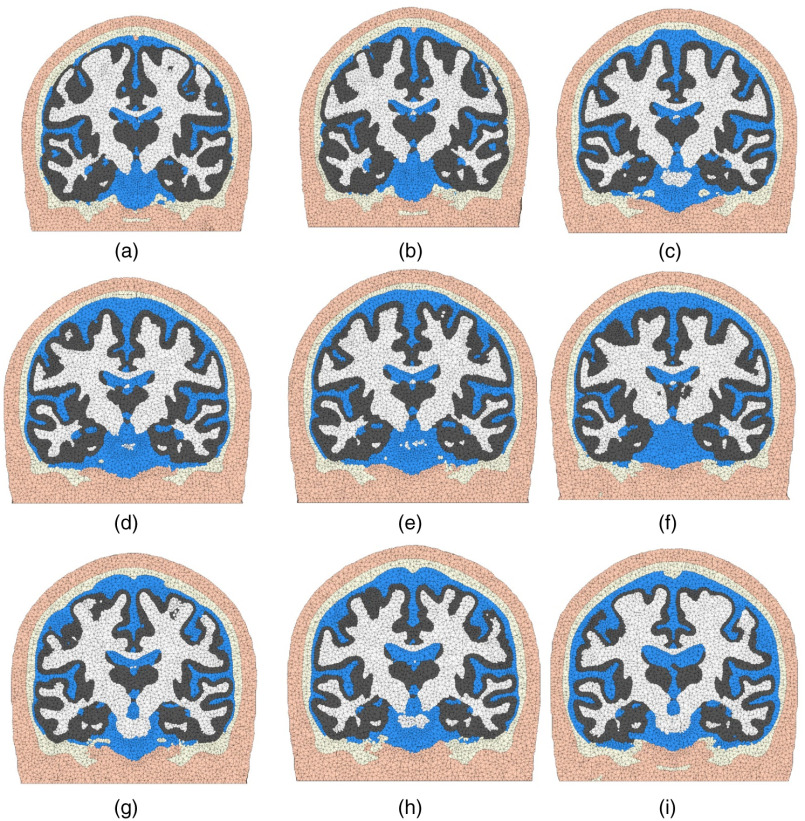

To test the robustness of our meshing workflow described above, we successfully processed many of the publicly available brain segmentation datasets, including the BrainWeb database55 and the recently published Neurodevelopmental MRI database.37 For the BrainWeb atlas database, we created the corresponding mesh models directly from the available brain segmentations. For neurodevelopmental atlases, the WM and GM segmentations provided as part of the database were used. However, the CSF and skull segmentations were not directly included by the database because they are generally more difficult to create. For these missing tissues, separate segmentations for CSF and skull were created using SPM. In addition, the scalp surface was extracted from the raw MRI image using an intensity thresholding approach followed by three iterations of Laplacian+HC smoothing.40 In Fig. 9, we show nine sample USC atlas brain meshes derived from adult and adolescent scans. In all processed MRI scans, the proposed meshing workflow worked smoothly. The average processing time is less than a minute per mesh when the voxel resolution is and about 3 min per mesh when the resolution is . It is important to note that the CSF and skull segmentations in Fig. 9 have not been validated and are shown only for illustration purposes.

Fig. 9.

Illustrative brain mesh examples (coronal views) produced using the Neurodevelopmental MRI Database, including (a) 16 years, (b) 17.5 years, (c) 25 to 29 years, (d) 30 to 34 years, (e) 35 to 39 years, (f) 40 to 44 years, (g) 50 to 54 years, (h) 60 to 64 years, and (i) 70 to 74 years old. The tissue layers include scalp (apricot), skull (light-yellow), CSF (blue), GM (gray), and WM (white).

3.4. Comparing Mesh-, Voxel-, and Layer-Based Brain Models in Light Transport Simulations

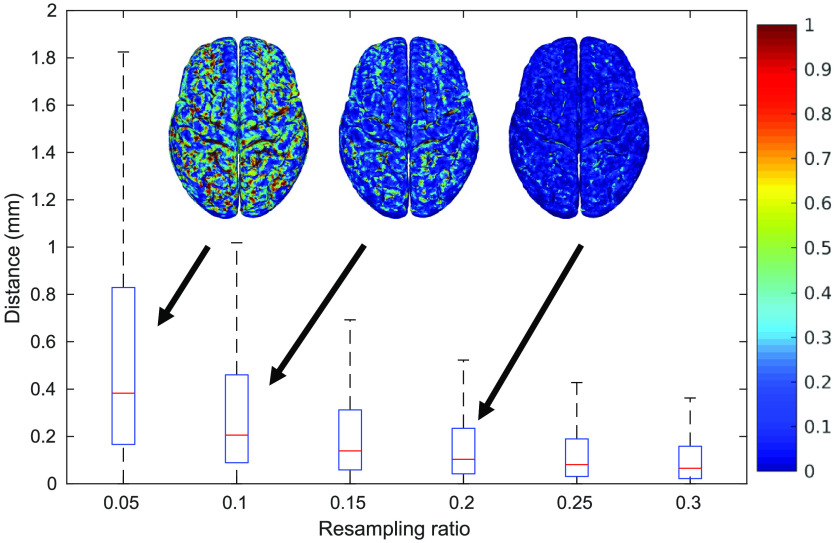

Next, we demonstrate the impact of different brain anatomical models, particularly between the mesh, voxel, and layered-slab brain representations and highlight their discrepancies in optical parameters estimated from 3-D MC light transport simulations. Here we use our in-house dual-grid mesh-based Monte Carlo (DMMC) simulator17 for mesh- and layer-based MC simulations and MCX31 for voxel-based simulation. An MRI brain atlas (19.5 year group51) was selected for this comparison, although our methods are readily applicable to other brain models. The SPM segmentation ( with resolution) of the selected atlas and the generated tetrahedron mesh from this segmentation are used for this comparison.

In this case, a tetrahedral mesh with 442,035 nodes and 2,596,064 elements is used for the DMMC simulations. The mesh was created using our aforementioned meshing pipeline with maximum volume size and maximum Delaunay sphere radii for all tissue layers. In comparison, a much simplified layered-slab brain model is made of slabs of the same five tissue layers with the layer thicknesses calculated based on the mesh model: scalp: 7.25 mm, skull: 4.00 mm, CSF: 2.73 mm, and GM: 3.29 mm. WM tissue fills the remaining space. To minimize boundary effect, the layered-slab brain model has a dimension of .
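As a minimal sketch of how the layered-slab geometry above can be encoded, the following Python snippet accumulates the four quoted layer thicknesses into boundary depths and labels the tissue at a given depth. The function names and the convention that depth is measured from the slab surface at z = 0 are assumptions made for illustration; they are not taken from DMMC or Brain2Mesh.

```python
from itertools import accumulate

# Layer thicknesses (mm) quoted in the text; WM fills the remaining depth.
LAYER_THICKNESS_MM = [
    ("scalp", 7.25),
    ("skull", 4.00),
    ("CSF", 2.73),
    ("GM", 3.29),
]


def layer_boundaries(layers):
    """Return (name, depth of lower boundary in mm) for each finite layer."""
    names = [name for name, _ in layers]
    depths = list(accumulate(thickness for _, thickness in layers))
    return list(zip(names, depths))


def tissue_at_depth(depth_mm, layers=LAYER_THICKNESS_MM, filler="WM"):
    """Label the tissue found at depth_mm below the slab surface (z = 0)."""
    if depth_mm < 0:
        raise ValueError("depth must be non-negative")
    for name, lower in layer_boundaries(layers):
        if depth_mm < lower:
            return name
    return filler  # everything below the GM layer is treated as WM


if __name__ == "__main__":
    for name, lower in layer_boundaries(LAYER_THICKNESS_MM):
        print(f"{name:>5s} layer ends at {lower:.2f} mm")
    print("tissue at 12 mm depth:", tissue_at_depth(12.0))
```

With these thicknesses, the GM/WM interface lies about 17.3 mm below the surface, which gives a sense of how deep photons must travel before reaching WM in the slab model.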

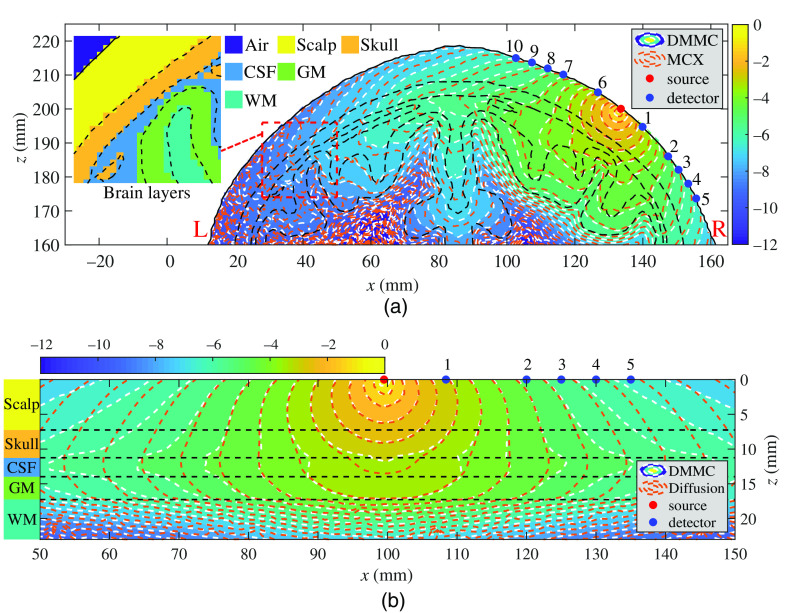

For the two anatomically derived (voxel and mesh) brain simulations, an inward-pointing pencil beam source is placed at an EEG 10-5 landmark,56 “C4h,” selected using the “Mesh2EEG” toolbox.57 Within the same coronal plane, five 1.5-mm-radius detectors are placed on either side of the source along the scalp, at geodesic distances of 8.4, 20, 25, 30, and 35 mm from the source [see Fig. 10(a)]; these separations are based on typical fNIRS system settings.18,58 Similarly, for the simulations with the layered-slab brain model, a downward-pointing pencil beam source is placed at (99.5, 100, 0) mm. A similar set of detectors is placed on only one side of the source because of symmetry [see Fig. 10(b)].
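The text does not describe how the geodesic distances were evaluated. As a simplified 2-D illustration only, the sketch below walks along a polyline that stands in for the scalp contour in the coronal plane and returns the points reached after each requested arc length. The function name, the polyline representation, and the 90-mm arc used in the demonstration are all hypothetical; on a real head model the distance would be measured on the triangulated scalp surface.

```python
import math


def place_along_contour(points, start_index, distances_mm):
    """Walk along a 2-D polyline from points[start_index] and return the
    positions reached after each arc length in distances_mm."""
    targets = sorted(distances_mm)
    placed = []
    travelled = 0.0
    t = 0
    for i in range(start_index, len(points) - 1):
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        seg = math.hypot(x1 - x0, y1 - y0)
        # Place every target whose arc length falls inside this segment.
        while t < len(targets) and travelled + seg >= targets[t]:
            f = (targets[t] - travelled) / seg if seg > 0 else 0.0
            placed.append((x0 + f * (x1 - x0), y0 + f * (y1 - y0)))
            t += 1
        travelled += seg
    if t < len(targets):
        raise ValueError("contour is shorter than the requested distance")
    return placed


if __name__ == "__main__":
    # Hypothetical scalp contour: a finely sampled arc of radius 90 mm.
    radius = 90.0
    contour = [
        (radius * math.sin(math.radians(a / 10)), radius * math.cos(math.radians(a / 10)))
        for a in range(0, 600)
    ]
    for pos in place_along_contour(contour, 0, [8.4, 20, 25, 30, 35]):
        print(f"detector at ({pos[0]:.2f}, {pos[1]:.2f}) mm")
```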

Fig. 10.

Comparisons of fluence distributions in an MRI brain atlas (19.5 years) using three different brain models: (a) MC fluence maps using anatomically derived mesh (computed using DMMC) and voxel (computed using MCX) brain representations, and (b) fluence maps computed using the MC and DA in a simple layered-slab brain model. Contour plots, in log-10 scale, are shown along the coronal planes with each brain tissue layer labeled and delineated by black dashed lines. In (a), the “L” and “R” markings (red) indicate the left brain and the right brain, respectively. The comparisons between the mesh and voxel tissue boundaries are shown in the inset of (a).

The 3-D MC photon simulations are performed on all three brain models: DMMC is used for both the mesh-based and the layered-slab brain models, and MCX is used for the voxel-based brain model. The output fluence distributions along the SD plane are compared in Fig. 10(a). From these MC simulations, we also report the average partial pathlengths in the brain regions (), the average total pathlengths (TPL), and their percentage ratios in Table 1. We also computed the percentage fraction of the energy deposited in the GM region with respect to the total simulated energy. In addition to MC-based photon modeling, we applied the DA, frequently seen in the literature,5 to the layered-slab brain model and compared the results with those derived from the MC method in Fig. 10(b). To solve the diffusion equation, we used our in-house diffusion solver, Redbird.59 The reduced scattering coefficient of the CSF region is set to as suggested in Refs. 5 and 48. The Redbird solution agrees closely with that from NIRFAST15 (not shown).
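The text does not spell out how the average pathlengths are obtained from the detected photons. One common approach in MC photon transport, assumed here for illustration, weights each detected photon by its surviving weight exp(−Σ μa,j·L_j) and averages the per-tissue path lengths with those weights. The function name, the data layout (one list of per-tissue path lengths per detected photon), and the toy numbers are hypothetical and do not reflect the output format of DMMC or MCX.

```python
import math


def mean_pathlengths(partial_paths, mua):
    """Weighted mean partial pathlength per tissue and mean total pathlength.

    partial_paths: one list per detected photon, holding the path length (mm)
                   travelled in each tissue type.
    mua:           absorption coefficient (1/mm) of each tissue type.
    """
    n_tissue = len(mua)
    weight_sum = 0.0
    weighted = [0.0] * n_tissue
    for paths in partial_paths:
        # Surviving photon weight after absorption along its whole path.
        w = math.exp(-sum(m * p for m, p in zip(mua, paths)))
        weight_sum += w
        for j in range(n_tissue):
            weighted[j] += w * paths[j]
    if weight_sum == 0.0:
        raise ValueError("no detected photon weight")
    ppl = [v / weight_sum for v in weighted]
    return ppl, sum(ppl)


if __name__ == "__main__":
    # Toy data: two tissues (superficial, brain) and three detected photons.
    photons = [[30.0, 0.0], [80.0, 2.0], [120.0, 9.0]]
    ppl, tpl = mean_pathlengths(photons, mua=[0.02, 0.02])
    print(f"brain partial pathlength: {ppl[1]:.2f} mm")
    print(f"total pathlength:         {tpl:.2f} mm")
    print(f"percentage ratio:         {100.0 * ppl[1] / tpl:.2f} %")
```

The percentage ratio printed at the end corresponds to the third quantity reported per model in Table 1, namely the brain partial pathlength divided by the TPL.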

Table 1.

Comparison of key optical parameters derived from MC simulations from an MRI brain atlas (19.5 years): for each detector, we compare the average photon partial pathlengths in the brain region (), total-pathlengths (TPL), and their percentage ratios () derived from mesh-based (DMMC), voxel-based (MCX), and layered-slab (DMMC) brain representations at various source–detector (SD) separations.

| Det. | SD (mm) | Mesh (DMMC): brain partial pathlength (mm) | Mesh (DMMC): TPL (mm) | Mesh (DMMC): ratio (%) | Voxel (MCX): brain partial pathlength (mm) | Voxel (MCX): TPL (mm) | Voxel (MCX): ratio (%) | Layered slab (DMMC): brain partial pathlength (mm) | Layered slab (DMMC): TPL (mm) | Layered slab (DMMC): ratio (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 8.4 | 0.05 | 34.37 | 0.14 | 0.06 | 33.91 | 0.17 | 0.13 | 36.52 | 0.35 |
| 2 | 20 | 1.55 | 92.96 | 1.66 | 1.90 | 93.53 | 2.03 | 3.03 | 101.9 | 2.97 |
| 3 | 25 | 4.33 | 122.4 | 3.54 | 5.33 | 123.6 | 4.31 | 7.05 | 133.4 | 5.28 |
| 4 | 30 | 8.74 | 150.1 | 5.82 | 9.73 | 150.1 | 6.48 | 12.5 | 163.5 | 7.64 |
| 5 | 35 | 12.8 | 169.6 | 7.56 | 14.4 | 170.5 | 8.44 | 17.4 | 186.5 | 9.34 |
| 6 | 8.4 | 0.04 | 36.06 | 0.11 | 0.05 | 36.10 | 0.14 | n/a | n/a | n/a |
| 7 | 20 | 1.20 | 95.47 | 1.26 | 1.38 | 97.16 | 1.42 | n/a | n/a | n/a |
| 8 | 25 | 3.48 | 125.5 | 2.78 | 3.70 | 126.4 | 2.92 | n/a | n/a | n/a |
| 9 | 30 | 7.77 | 157.0 | 4.95 | 7.90 | 158.0 | 5.00 | n/a | n/a | n/a |
| 10 | 35 | 12.2 | 179.5 | 6.78 | 12.0 | 180.1 | 6.68 | n/a | n/a | n/a |

In Fig. 10(a), the fluence contour plots produced by MCX (orange dashed-line) and DMMC (white dashed-line) agree excellently in the vicinity of the source, whereas noticeable discrepancies are observed farther away from the source. We believe this is the combined result of (1) photon energy deposition variations due to the small disagreement between a terraced tissue boundary and the smooth surface boundary, and (2) the distinct photon reflection behaviors between a voxel- and a mesh-based surface due to the differences in the orientations of surface facets. The effect of the first cause is largely depicted by the deviation between the two solutions in the depth direction near the source. Such difference is particularly prominent near highly curved boundaries or near boundaries with high absorption/scattering contrasts, such as the CSF region beneath detectors #7, #8, and #9 in this plot. The effect of the second cause is highlighted by the growing discrepancy when moving away from the source along the scalp layer, for example, in the scalp region to the left of detector #10. Overall, the second source of error is noticeably more prominent than the mismatch resulting from the first cause. This observation is further supported by disabling the refractive index mismatch calculations in both of our simulations (results not shown): the error along the scalp surface was largely removed, but the deviations in the deep-brain regions remained.
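To illustrate the second mechanism, the sketch below evaluates the unpolarized Fresnel reflectance for the same photon direction against two facet normals: an axis-aligned voxel face and a smooth-surface facet tilted by 30 deg. The refractive indices (1.37 for tissue, 1.0 for air), the tilt angle, and the photon direction are assumed values chosen for illustration and are not taken from the simulations reported here.

```python
import math


def fresnel_reflectance(n1, n2, cos_i):
    """Unpolarized Fresnel reflectance for light travelling from n1 into n2.

    cos_i is the cosine of the incidence angle measured from the facet normal.
    """
    sin_t_sq = (n1 / n2) ** 2 * (1.0 - cos_i ** 2)
    if sin_t_sq >= 1.0:
        return 1.0  # total internal reflection
    cos_t = math.sqrt(1.0 - sin_t_sq)
    r_s = ((n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)) ** 2
    r_p = ((n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)) ** 2
    return 0.5 * (r_s + r_p)


def unit(theta_deg):
    """Unit vector in the x-z plane at theta_deg from the +z axis."""
    t = math.radians(theta_deg)
    return (math.sin(t), 0.0, math.cos(t))


def compare_facets(photon_deg, tilt_deg, n_tissue=1.37, n_air=1.0):
    """Reflectance seen by one photon at a voxel face and at a tilted facet."""
    photon = unit(photon_deg)
    voxel_normal = (0.0, 0.0, 1.0)   # axis-aligned voxel face
    smooth_normal = unit(tilt_deg)   # facet of the smooth mesh surface
    cos_voxel = abs(sum(p * n for p, n in zip(photon, voxel_normal)))
    cos_smooth = abs(sum(p * n for p, n in zip(photon, smooth_normal)))
    return (
        fresnel_reflectance(n_tissue, n_air, cos_voxel),
        fresnel_reflectance(n_tissue, n_air, cos_smooth),
    )


if __name__ == "__main__":
    r_voxel, r_smooth = compare_facets(photon_deg=50.0, tilt_deg=30.0)
    print(f"reflectance at voxel face:   {r_voxel:.3f}")
    print(f"reflectance at tilted facet: {r_smooth:.3f}")
```

In this constructed case the photon meets the voxel face at 50 deg, beyond the critical angle of roughly 47 deg for the assumed indices, and is totally internally reflected, whereas it meets the tilted facet at 20 deg and is mostly transmitted. Such orientation-dependent outcomes are consistent with the reflection-related discrepancy along the scalp described above.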

In Fig. 10(b), the DA (orange dashed-line) and MC (white dashed-line) produce well-matched fluence contour plots near the source but show significant differences in the regions distal to the source. The differences are particularly noticeable within the CSF and GM regions and above 30-mm SD separation on the scalp surface. We believe this is largely due to the error introduced by the approximated CSF reduced scattering coefficient.48

To further quantify the differences caused by different brain representations and their impact on fNIRS brain measurements, in Table 1, we also compare several key photon parameters derived from MC simulations. Here we use the parameters derived from MMC as the reference, because MC solutions are typically used as the gold standard and mesh-based shape representations are known to be more accurate than voxelated domains.16 We observe that simulations using voxel-based and layered-slab brain models tend to overestimate the brain partial pathlength compared to the mesh model. For detectors #6 to #10, the voxel-based simulation gives a 21% overestimation at the shortest SD separation (8.4 mm). This discrepancy shrinks to below 2% in magnitude at the two largest separations (30 and 35 mm), judging from the values in Table 1. The TPL values are less susceptible to anatomical model accuracy, with percentage differences between 0.1% and 1.8%. As a result, the difference in the percentage ratio is largely modulated by that of the brain partial pathlength, ranging between −1.5% and 21%. However, for detectors #1 to #5, located over a different brain region where the superficial layers are shallower than those under detectors #6 to #10, more pronounced overestimations of the brain partial pathlength are observed for all SD separations, ranging from 12% to 23%, resulting in a percentage-ratio difference between 12% and 24%. Similarly, compared to the mesh-based model, the layered-slab brain MC simulations report significant overestimation of the brain partial pathlength (36% to 166%, with the highest difference at the shortest separation) and of the TPL (6.3% to 10%), resulting in significant variation in the percentage ratio: 151% at the shortest SD separation and 78% to 24% for the four longer separations. Furthermore, we have also computed the percentage fraction of the energy deposition within the GM. This fraction is 1.69% when using the mesh-based brain model and 1.42% when using the voxel-based brain model, a 16% reduction in brain energy deposition. This result could have implications for many PBM applications.50
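The percentage differences discussed above can be approximately reproduced from the rounded entries of Table 1. The sketch below is a worked arithmetic check, with the mesh-based values as the reference; the variable and function names are chosen for illustration. Because the table entries are rounded, particularly at the 8.4-mm separation where the brain partial pathlength has a single significant digit, the recomputed values at short separations can differ from the percentages quoted in the text.

```python
# Rounded values copied from Table 1, keyed by detector number:
# (brain partial pathlength in mm, total pathlength in mm).
MESH = {1: (0.05, 34.37), 2: (1.55, 92.96), 3: (4.33, 122.4),
        4: (8.74, 150.1), 5: (12.8, 169.6), 6: (0.04, 36.06),
        7: (1.20, 95.47), 8: (3.48, 125.5), 9: (7.77, 157.0),
        10: (12.2, 179.5)}
VOXEL = {1: (0.06, 33.91), 2: (1.90, 93.53), 3: (5.33, 123.6),
         4: (9.73, 150.1), 5: (14.4, 170.5), 6: (0.05, 36.10),
         7: (1.38, 97.16), 8: (3.70, 126.4), 9: (7.90, 158.0),
         10: (12.0, 180.1)}
SLAB = {1: (0.13, 36.52), 2: (3.03, 101.9), 3: (7.05, 133.4),
        4: (12.5, 163.5), 5: (17.4, 186.5)}


def percent_diff(value, reference):
    """Signed percentage difference of value relative to reference."""
    return 100.0 * (value - reference) / reference


def relative_differences(model, reference):
    """Per-detector percentage differences in partial and total pathlength."""
    return {
        det: (percent_diff(model[det][0], reference[det][0]),
              percent_diff(model[det][1], reference[det][1]))
        for det in sorted(model) if det in reference
    }


if __name__ == "__main__":
    for label, model in (("voxel", VOXEL), ("slab", SLAB)):
        for det, (d_ppl, d_tpl) in relative_differences(model, MESH).items():
            print(f"{label:>5s} det #{det:<2d} partial: {d_ppl:+7.1f}%  "
                  f"total: {d_tpl:+5.1f}%")
    # Energy deposition in GM: 1.69% (mesh) versus 1.42% (voxel).
    print(f"GM energy deposition change: {percent_diff(1.42, 1.69):+.0f}%")
```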

4. Conclusion

In this work, we address the increasing need for accurate and high-quality brain/head anatomical models that arises in fNIRS and many other neuroimaging modalities for brain function quantification, image reconstruction, multiphysics modeling, and visualization. Combined with advances in light transport simulators,16,17,47 our proposed brain mesh generation pipeline enables the fNIRS research community to utilize more accurate anatomical representations of the human brain to improve quantification accuracy and make atlas-based as well as subject-specific fNIRS analysis more feasible. This also gives us an opportunity to systematically investigate how neuroanatomical models, ranging from the simple layered-slab brain model to voxel-based and mesh-based models, impact the estimates of optical parameters that are essential to fNIRS imaging.

Specifically, we first described a fast and robust brain mesh generation algorithm and demonstrated that our MATLAB-based open-source toolbox, Brain2Mesh, can produce high-quality brain and full-head tetrahedral meshes from multilabel or probabilistic segmentations with full automation. The ability to create tissue boundaries from grayscale probabilistic maps and to incorporate detailed surface models from FreeSurfer/FSL ensures smoothness and high accuracy in representing brain tissue boundaries. The output meshes generally exhibit excellent shape quality without requiring an excessive number of small elements, as is the case with many existing mesh generators. For most of the included examples, the processing time ranges from one to a few minutes using only a single CPU thread. This is dramatically faster than most previously published brain meshing tools.30,35,60 Moreover, the entire meshing pipeline was developed based on our open-source meshing toolbox, Iso2Mesh, and other open-source meshing utilities such as CGAL, TetGen, and Cork. This ensures excellent accessibility of this tool to the community. In addition to developing this brain mesh generation toolkit, we have also produced a set of high-quality brain atlas mesh models, including the widely used BrainWeb, Colin27, and MNI atlases. We believe these ready-to-use brain/full-head models will be valuable resources for the broad neuroimaging community.

Another important aspect of this study is that we demonstrate how tissue boundary representations, especially layered-, voxel- and mesh-based anatomical models, could impact light transport simulations in fNIRS data analysis. While the modeling error caused by voxelization in MC simulations has been previously reported,61 we believe this is the first time such discrepancy has been quantified, particularly in the context of brain imaging, enabled by our unique access to high-quality brain meshes and highly accurate MMC simulation tools. We believe such findings could provide guidance for advancing fNIRS toward improved accuracy and broad utility. Our open-source meshing software and the brain mesh library are freely available at Ref. 62.

Acknowledgments

The authors acknowledge funding support from the National Institutes of Health under Grant Nos. R01-GM114365, R01-CA0204443, and R01-EB026998. They also thank Dr. Hang Si for his valuable input on the use of TetGen, and Dr. John Richards and Dr. Katherine Perdue for instructive conversations on brain segmentation.

Biographies

Anh Phong Tran is a PhD student in chemical engineering and MS student in electrical and computer engineering at Northeastern University in Boston, Massachusetts. He received his BS degree in chemical engineering from Tufts University in 2013. His current research interests include optics, medical imaging, control theory, and systems biology.

Shijie Yan is a doctoral candidate at Northeastern University. He received his BE degree from Southeast University, China, in 2013 and MS degree from Northeastern University in 2017. His research interests include Monte Carlo photon transport simulation algorithms, parallel computing, and GPU programming and optimization.

Qianqian Fang, PhD, is currently an assistant professor in the Bioengineering Department of Northeastern University, Boston, USA. He received his PhD from Dartmouth College in 2005. He then joined Massachusetts General Hospital and became an instructor of radiology in 2009 and an assistant professor of radiology in 2012, before he joined Northeastern University in 2015. His research interests include translational medical imaging devices, multimodal imaging, image reconstruction algorithms, and high-performance computing tools to facilitate the development of next-generation imaging platforms.

Disclosures

No conflicts of interest, financial or otherwise, are declared by the authors.

Contributor Information

Anh Phong Tran, Email: tran.anh@HUSKY.NEU.EDU.

Shijie Yan, Email: yan.shiji@husky.neu.edu.

Qianqian Fang, Email: q.fang@neu.edu.

References

- 1. Ferrari M., Quaresima V., “A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application,” NeuroImage 63, 921–935 (2012). doi:10.1016/j.neuroimage.2012.03.049

- 2. Pucci O., Toronov V., Lawrence K. S., “Measurement of the optical properties of a two-layer model of the human head using broadband near-infrared spectroscopy,” Appl. Opt. 49, 6324–6332 (2010). doi:10.1364/AO.49.006324

- 3. Selb J., et al., “Comparison of a layered slab and an atlas head model for Monte Carlo fitting of time-domain near-infrared spectroscopy data of the adult head,” J. Biomed. Opt. 19, 016010 (2014). doi:10.1117/1.JBO.19.1.016010

- 4. Shang Y., Yu G., “A Nth-order linear algorithm for extracting diffuse correlation spectroscopy blood flow indices in heterogeneous tissues,” Appl. Phys. Lett. 105, 133702 (2014). doi:10.1063/1.4896992

- 5. Eggebrecht A. T., et al., “Mapping distributed brain function and networks with diffuse optical tomography,” Nat. Photonics 8, 448–454 (2014). doi:10.1038/nphoton.2014.107

- 6. Perdue K. L., Fang Q., Diamond S. G., “Quantitative assessment of diffuse optical tomography sensitivity to the cerebral cortex using a whole-head probe,” Phys. Med. Biol. 57, 2857–2872 (2012). doi:10.1088/0031-9155/57/10/2857

- 7. Hallez H., et al., “Review on solving the forward problem in EEG source analysis,” J. NeuroEng. Rehabil. 4, 46 (2007). doi:10.1186/1743-0003-4-46

- 8. Wolters C., et al., “Influence of tissue conductivity anisotropy on EEG/MEG field and return current computation in a realistic head model: a simulation and visualization study using high-resolution finite element modeling,” NeuroImage 30, 813–826 (2006). doi:10.1016/j.neuroimage.2005.10.014

- 9. Frey P. J., George P.-L., Mesh Generation: Application to Finite Elements, ISTE Publishing Company (2007).

- 10. Owen S. J., “A survey of unstructured mesh generation technology,” in IMR, pp. 239–267 (1998).

- 11. Weatherill N., Soni B., Thompson J., Handbook of Grid Generation, CRC Press, Boca Raton (1998).

- 12. Tadel F., et al., “Brainstorm: a user-friendly application for MEG/EEG analysis,” Intell. Neurosci. 2011, 1–13 (2011). doi:10.1155/2011/879716

- 13. Horn A., et al., “Connectivity predicts deep brain stimulation outcome in Parkinson disease,” Ann. Neurol. 82, 67–78 (2017). doi:10.1002/ana.24974

- 14. Joshi A., et al., “Radiative transport-based frequency-domain fluorescence tomography,” Phys. Med. Biol. 53, 2069–2088 (2008). doi:10.1088/0031-9155/53/8/005

- 15. Dehghani H., et al., “Near infrared optical tomography using NIRFAST: algorithm for numerical model and image reconstruction,” Commun. Numer. Methods Eng. 25(6), 711–732 (2009). doi:10.1002/cnm.1162

- 16. Fang Q., “Mesh-based Monte Carlo method using fast ray-tracing in Plücker coordinates,” Biomed. Opt. Express 1, 165 (2010). doi:10.1364/BOE.1.000165

- 17. Yan S., Tran A. P., Fang Q., “Dual-grid mesh-based Monte Carlo algorithm for efficient photon transport simulations in complex three-dimensional media,” J. Biomed. Opt. 24, 020503 (2019). doi:10.1117/1.JBO.24.2.020503

- 18. Brigadoi S., et al., “A 4D neonatal head model for diffuse optical imaging of pre-term to term infants,” NeuroImage 100, 385–394 (2014). doi:10.1016/j.neuroimage.2014.06.028

- 19. Watté R., et al., “Modeling the propagation of light in realistic tissue structures with MMC-fpf: a meshed Monte Carlo method with free phase function,” Opt. Express 23(13), 17467–17486 (2015). doi:10.1364/OE.23.017467

- 20. Ramon C., Schimpf P. H., Haueisen J., “Influence of head models on EEG simulations and inverse source localizations,” Biomed. Eng. Online 5(1), 10 (2006). doi:10.1186/1475-925X-5-10

- 21. Yang K. H., et al., “Modeling of the brain for injury prevention,” in Neural Tissue Biomechanics, Bilston L., Ed., pp. 69–120, Springer, Berlin, Heidelberg (2011).

- 22. Rampersad S. M., et al., “Simulating transcranial direct current stimulation with a detailed anisotropic human head model,” IEEE Trans. Neural Syst. Rehabil. Eng. 22, 441–452 (2014). doi:10.1109/TNSRE.2014.2308997

- 23. Warfield S. K., et al., “Real-time registration of volumetric brain MRI by biomechanical simulation of deformation during image guided neurosurgery,” Comput. Visualization Sci. 5, 3–11 (2002). doi:10.1007/s00791-002-0083-7

- 24. Cassidy J., et al., “High-performance, robustly verified Monte Carlo simulation with FullMonte,” J. Biomed. Opt. 23, 085001 (2018). doi:10.1117/1.JBO.23.8.085001

- 25. Lederman C., et al., “The generation of tetrahedral mesh models for neuroanatomical MRI,” NeuroImage 55, 153–164 (2011). doi:10.1016/j.neuroimage.2010.11.013

- 26. Montenegro R., et al., “An automatic strategy for adaptive tetrahedral mesh generation,” Appl. Numer. Math. 59, 2203–2217 (2009). doi:10.1016/j.apnum.2008.12.010

- 27. Lorensen W. E., Cline H. E., “Marching cubes: a high resolution 3D surface construction algorithm,” in Proc. 14th Annu. Conf. Comput. Graphics and Interact. Tech., ACM Press (1987).

- 28. Wu Z., Sullivan J. M., “Multiple material marching cubes algorithm,” Int. J. Numer. Methods Eng. 58(2), 189–207 (2003). doi:10.1002/nme.775

- 29. Bronson J. R., Levine J. A., Whitaker R. T., “Lattice cleaving: conforming tetrahedral meshes of multimaterial domains with bounded quality,” in Proc. 21st Int. Meshing Roundtable, Jiao X., Weill J.-C., Eds., pp. 191–209, Springer, Berlin, Heidelberg (2013).

- 30. Callahan M., et al., “A meshing pipeline for biomedical computing,” Eng. Comput. 25, 115–130 (2009). doi:10.1007/s00366-008-0106-1

- 31. Fang Q., Boas D. A., “Tetrahedral mesh generation from volumetric binary and grayscale images,” in IEEE Int. Symp. Biomed. Imaging: From Nano to Macro, IEEE (2009). doi:10.1109/ISBI.2009.5193259

- 32. Jermyn M., et al., “Fast segmentation and high-quality three-dimensional volume mesh creation from medical images for diffuse optical tomography,” J. Biomed. Opt. 18, 086007 (2013). doi:10.1117/1.JBO.18.8.086007

- 33. Boltcheva D., Yvinec M., Boissonnat J.-D., “Mesh generation from 3D multi-material images,” Lect. Notes Comput. Sci. 5762, 283–290 (2009). doi:10.1007/978-3-642-04271-3_35

- 34. Pons J. P., et al., “High-quality consistent meshing of multi-label datasets,” Lect. Notes Comput. Sci. 4584, 198–210 (2007). doi:10.1007/978-3-540-73273-0_17

- 35. Windhoff M., Opitz A., Thielscher A., “Electric field calculations in brain stimulation based on finite elements: an optimized processing pipeline for the generation and usage of accurate individual head models,” Hum. Brain Mapping 34, 923–935 (2013). doi:10.1002/hbm.21479

- 36. Attene M., “A lightweight approach to repairing digitized polygon meshes,” Visual Comput. 26, 1393–1406 (2010). doi:10.1007/s00371-010-0416-3

- 37. Fillmore P. T., Phillips-Meek M. C., Richards J. E., “Age-specific MRI brain and head templates for healthy adults from 20 through 89 years of age,” Front. Aging Neurosci. 7, 44 (2015). doi:10.3389/fnagi.2015.00044

- 38. Burguet J., Gadi N., Bloch I., “Realistic models of children heads from 3D-MRI segmentation and tetrahedral mesh construction,” in Proc. 2nd Int. Symp. 3D Data Process. Visualization and Transm., IEEE (2004). doi:10.1109/TDPVT.2004.1335298

- 39. Boissonnat J.-D., Oudot S., “Provably good sampling and meshing of surfaces,” Graphical Models 67, 405–451 (2005). doi:10.1016/j.gmod.2005.01.004

- 40. Bade R., Haase J., Preim B., “Comparison of fundamental mesh smoothing algorithms for medical surface models,” in Simulation und Visualisierung, Vol. 6, pp. 289–304, Citeseer (2006).

- 41. Bernstein G., “Cork: a 3D Boolean/CSG Library,” https://github.com/gilbo/cork (accessed August 2016).

- 42. Lindstrom P., Turk G., “Fast and memory efficient polygonal simplification,” in Proc. of the Conf. on Visualization’98, IEEE, pp. 279–286 (1998). doi:10.1109/VISUAL.1998.745314

- 43. Garland M., Heckbert P. S., “Surface simplification using quadric error metrics,” in Proc. 24th Annu. Conf. Comput. Graphics and Interact. Tech., ACM Press (1997).

- 44. Si H., “TetGen, a Delaunay-based quality tetrahedral mesh generator,” ACM Trans. Math. Software 41, 1–36 (2015). doi:10.1145/2732672

- 45. Cooper R., et al., “Validating atlas-guided DOT: a comparison of diffuse optical tomography informed by atlas and subject-specific anatomies,” NeuroImage 62, 1999–2006 (2012). doi:10.1016/j.neuroimage.2012.05.031

- 46. Zhang Y., et al., “Influence of extracerebral layers on estimates of optical properties with continuous wave near infrared spectroscopy: analysis based on multi-layered brain tissue architecture and Monte Carlo simulation,” Comput. Assisted Surg. 24(Suppl. 1), 144–150 (2019). doi:10.1080/24699322.2018.1557902

- 47. Yu L., et al., “Scalable and massively parallel Monte Carlo photon transport simulations for heterogeneous computing platforms,” J. Biomed. Opt. 23, 010504 (2018). doi:10.1117/1.JBO.23.1.010504

- 48. Custo A., et al., “Effective scattering coefficient of the cerebral spinal fluid in adult head models for diffuse optical imaging,” Appl. Opt. 45, 4747–4755 (2006). doi:10.1364/AO.45.004747

- 49. Strangman G., Franceschini M. A., Boas D. A., “Factors affecting the accuracy of near-infrared spectroscopy concentration calculations for focal changes in oxygenation parameters,” NeuroImage 18(4), 865–879 (2003). doi:10.1016/S1053-8119(03)00021-1

- 50. Cassano P., et al., “Selective photobiomodulation for emotion regulation: model-based dosimetry study,” Neurophotonics 6, 015004 (2019). doi:10.1117/1.NPh.6.1.015004

- 51. Sanchez C. E., Richards J. E., Almli C. R., “Age-specific MRI templates for pediatric neuroimaging,” Dev. Neuropsychol. 37, 379–399 (2012). doi:10.1080/87565641.2012.688900

- 52. Lorio S., et al., “New tissue priors for improved automated classification of subcortical brain structures on MRI,” NeuroImage 130, 157–166 (2016). doi:10.1016/j.neuroimage.2016.01.062

- 53. Liu A., Joe B., “Relationship between tetrahedron shape measures,” BIT 34, 268–287 (1994). doi:10.1007/BF01955874

- 54. Cignoni P., Rocchini C., Scopigno R., “Metro: measuring error on simplified surfaces,” Comput. Graphics Forum 17(2), 167–174 (1998). doi:10.1111/cgf.1998.17.issue-2

- 55. Aubert-Broche B., et al., “Twenty new digital brain phantoms for creation of validation image data bases,” IEEE Trans. Med. Imaging 25, 1410–1416 (2006). doi:10.1109/TMI.2006.883453

- 56. Jurcak V., Tsuzuki D., Dan I., “10/20, 10/10, and 10/5 systems revisited: their validity as relative head-surface-based positioning systems,” NeuroImage 34(4), 1600–1611 (2007). doi:10.1016/j.neuroimage.2006.09.024

- 57. Giacometti P., Perdue K. L., Diamond S. G., “Algorithm to find high density EEG scalp coordinates and analysis of their correspondence to structural and functional regions of the brain,” J. Neurosci. Methods 229, 84–96 (2014). doi:10.1016/j.jneumeth.2014.04.020

- 58. Kamran M. A., Mannan M. M. N., Jeong M. Y., “Cortical signal analysis and advances in functional near-infrared spectroscopy signal: a review,” Front. Hum. Neurosci. 10, 261 (2016). doi:10.3389/fnhum.2016.00261

- 59. Fang Q., et al., “A multi-modality image reconstruction platform for diffuse optical tomography,” in Biomed. Opt., BMD24, Optical Society of America (2008).

- 60. Huang Y., et al., “Automated MRI segmentation for individualized modeling of current flow in the human head,” J. Neural Eng. 10, 066004 (2013). doi:10.1088/1741-2560/10/6/066004

- 61. Binzoni T., et al., “Light transport in tissue by 3D Monte Carlo: influence of boundary voxelization,” Comput. Meth. Programs Biomed. 89, 14–23 (2008). doi:10.1016/j.cmpb.2007.10.008

- 62. Fang Q., Tran A. P., “Brain2Mesh: a one-liner brain mesh generator,” http://mcx.space/brain2mesh (accessed February 2020).