Abstract

Early diagnosis is the most important determinant of oral and oropharyngeal squamous cell carcinoma (OPSCC) outcomes, yet most of these cancers are detected late, when outcomes are poor. Typically, nonspecialists such as dentists screen for oral cancer risk, and then they refer high-risk patients to specialists for biopsy-based diagnosis. Because the clinical appearance of oral mucosal lesions is not an adequate indicator of their diagnosis, status, or risk level, this initial triage process is inaccurate, with poor sensitivity and specificity. The objective of this study is to provide an overview of emerging optical imaging modalities and novel artificial intelligence–based approaches, as well as to evaluate their individual and combined utility and implications for improving oral cancer detection and outcomes. The principles of image-based approaches to detecting oral cancer are placed within the context of clinical needs and parameters. A brief overview of artificial intelligence approaches and algorithms is presented, and studies that use these 2 approaches singly and together are cited and evaluated. In recent years, a range of novel imaging modalities has been investigated for their applicability to improving oral cancer outcomes, yet none of them have found widespread adoption or significantly affected clinical practice or outcomes. Artificial intelligence approaches are beginning to have considerable impact in improving diagnostic accuracy in some fields of medicine, but to date, only limited studies apply to oral cancer. These studies demonstrate that artificial intelligence approaches combined with imaging can have considerable impact on oral cancer outcomes, with applications ranging from low-cost screening with smartphone-based probes to algorithm-guided detection of oral lesion heterogeneity and margins using optical coherence tomography. 
Combined imaging and artificial intelligence approaches can improve oral cancer outcomes through improved detection and diagnosis.

Keywords: oral neoplasms, screening, diagnosis, machine intelligence, dentists, medicine

Introduction

Early diagnosis is the most important determinant of oral and oropharyngeal squamous cell carcinoma (OPSCC) outcomes, yet most of these cancers are detected late, when outcomes are poor. In this article, novel, combined imaging and artificial intelligence (AI)–based approaches to screen for, diagnose, and map OPSCC are discussed.

Clinical Need

OPSCC is the sixth most common cancer in the United States, with approximately 52,000 new cases and 11,000 related deaths annually (American Cancer Society 2018). The 5-y survival rate for US patients with localized disease at diagnosis is 83%, but it is only 32% for those whose cancer has metastasized (Llewellyn et al. 2001). Therefore, early detection is essential to ensure the best possible outcomes. Worldwide, there are over 640,000 new cases of OPSCC each year (Llewellyn et al. 2001), with approximately two-thirds in low- and middle-income countries (LMICs) (Llewellyn et al. 2001). Tobacco and alcohol use are considered the primary causes of OPSCC (Shopland 1995). The rapid increase in the number of human papillomavirus (HPV)–related cancers in some countries, especially in Europe and North America, poses an additional challenge (Chaturvedi et al. 2011). HPV-related cancers are often initially asymptomatic and remain undetected until they reach an advanced stage. Currently, more than two-thirds of all OPSCCs are detected after metastasis, and morbidity and mortality are among the highest of any major cancer.

Current Practice

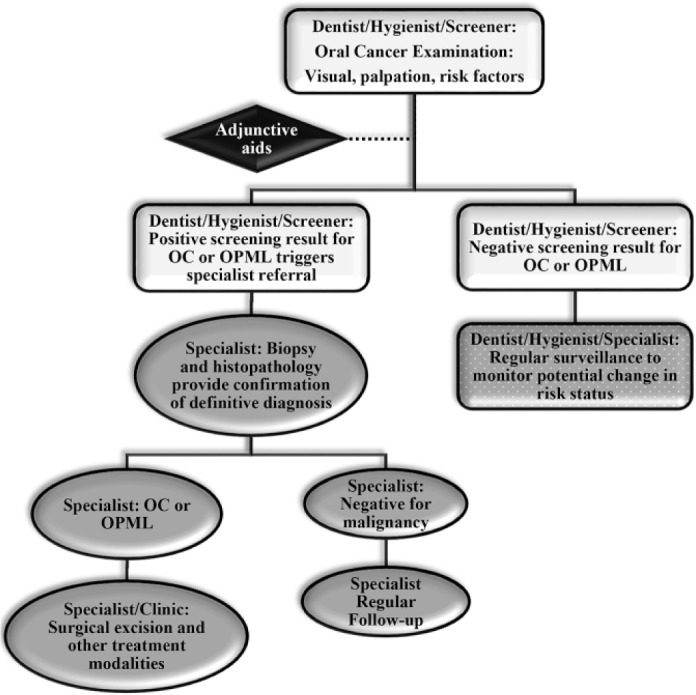

Over 60% of adults visit a dentist each year (National Center for Health Statistics 2016), making routine dental care an ideal setting to improve early detection of OPSCC. Indeed, OPSCC is detected primarily by dentists, who refer patients with suspect lesions to specialists for diagnosis and treatment (Alston et al. 2014; American Academy of Oral Medicine 2019). A noninvasive visual and tactile oral mucosal examination with risk factor assessment for oral diseases including OPSCC is part of the standard visit by oral health care providers and is recommended for all patients (American Academy of Oral Medicine 2019). A positive screening outcome leads to referral to a specialist for biopsy and histopathological analysis. Figure 1 depicts a schematic of the conventional pathway to care. As dentists typically represent the first step in the pathway to care, it is critical that they are effective in their “gatekeeper” role. However, studies have identified gaps in dentists’ knowledge and practice in recognizing and diagnosing oral potentially malignant lesions (OPMLs) and OPSCC. There is a need to raise awareness and institute continuing education programs for general dentists on this topic. Moreover, there is a lack of knowledge and awareness among the general population, which serves as a barrier to seeking early diagnosis (Laronde et al. 2014; Hashim et al. 2019; Webster et al. 2019). In current clinical practice, due to lack of knowledge and reliance on subjective analyses of clinical features, screening accuracy by dentists remains poor (Sardella et al. 2007; Epstein et al. 2012; Yang et al. 2018; Grafton-Clarke et al. 2019; Ries et al. 2019).

Figure 1.

Schematic of the diagnostic process for oral cancer and precancers with typical decision-making junctures and their impact on the process of care. OC, oral cancer; OPML, oral potentially malignant lesion. By courtesy of and with permission from Dr. Diana Messadi.

Given that more than two-thirds of all OPSCCs are detected after metastasis, improving early detection provides the most effective means of improving OPSCC outcomes. There exist 2 major barriers to early OPSCC detection: the poor accuracy of existing approaches and the need for surgical biopsies to establish a diagnosis.

Most Oral Mucosal Lesions Identified during Routine Dental Visits Are Benign Confounders

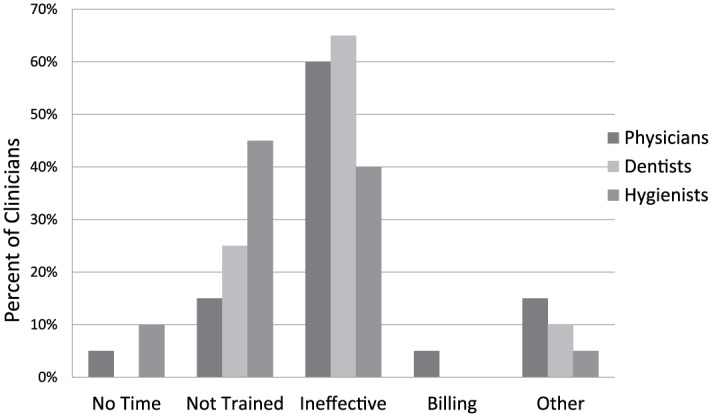

Diagnosing oral lesions is challenging due to a reliance on subjective analyses of clinical features such as color, texture, and consistency; therefore, subtle lesions can pass undetected. Benign oral lesions are difficult to distinguish from dysplasia and early stage cancer (Epstein et al. 2012; Cleveland and Robison 2013; Grafton-Clarke et al. 2019). Therefore, it is not surprising that screening accuracy in dental offices varies considerably. In 1 study, dentists discriminated OPSCC from benign confounders with 57.8% sensitivity and 53% specificity; a recent meta-analysis concluded that dentists achieve only 31% specificity in distinguishing OPSCC and OPMLs from benign lesions. A third study found that only 40% of referring dentists’ diagnoses coincided with the specialist diagnosis, and it was reported that dentists’ delay in referring to a specialist is the primary cause of poor OPSCC outcomes, with dentists on average recalling the patient for 2 or 3 additional visits before specialist referral (Mehrotra and Gupta 2011; Epstein et al. 2012; Grafton-Clarke et al. 2019). The rapid increase in the number of HPV-related OPSCCs in some countries poses an additional challenge (Amarasinghe et al. 2010; Tong et al. 2015). A recent survey of 130 clinicians found that 89% cited “ineffective” (65%) or “inexpert” (24%) as a primary barrier to effective OPSCC screening (Fig. 2) (Wilder-Smith, unpublished data, 2019).

Figure 2.

Primary barrier to effective oropharyngeal squamous cell carcinoma screening: survey of 130 California clinicians.

Biopsies Are Required for Definitive Diagnosis, but These Are Invasive, Resource Intensive, Do Not Provide Immediate Results, and Often Result in Underdiagnosis Due to Sampling Bias

Biopsy is mandatory for diagnosing OPMLs and OPSCC. Auxiliary methods are needed to assist the clinician in identifying the need for biopsy and in selecting appropriate sites to avoid false-negative results. Most dentists are reluctant to perform biopsies because of unfamiliarity with technique and uncertainty in choosing sites (Wan and Savage 2010). Selecting biopsy sites relies on visual examination, yet this is an unreliable criterion for selecting sampling sites (Wan and Savage 2010). Lesion heterogeneity can result in histological diagnosis at the biopsy site that differs from the adjacent, unsampled area. Thus, the histopathological information that provides the diagnosis does not necessarily indicate risk levels of the entire lesion. Moreover, because 3-dimensional mapping of lesions is currently impossible, point-based histopathological sampling guides presurgical treatment planning. Further intraoperative sampling and histopathology are then required during surgery to ensure complete removal of all pathological tissues and an adequate excision margin. Intraoperative histopathology considerably increases surgical risk, duration, and cost without providing confirmation that the entire continuous surgical margin is indeed clear. Finally, treatment-related changes in the appearance and feel of the intraoral tissues hinder monitoring for recurrence of neoplasia using customary visual exam and palpation. Consequently, recurrences are not detected sufficiently early to minimize long-term morbidity and mortality.

Principles of Successful Technology Innovation Translation

The success of biomedical innovation is defined by a host of factors beyond efficacy. No matter how accurate the information provided by a novel device, if it does not translate directly into clinical decision-making guidance and improved outcomes, the device will have little impact.

Technology Should Be Designed to Meet End Users’ Decision-Making Needs

The complexity and diversity of information that can be gathered by cutting-edge biomedical technologies is immense. However, any information that is generated by a device that does not add value in the sense of improving decision making or outcomes adds unnecessary cost and wastes precious clinical time (Litscher 2014). For example, if a device is intended to assist dentists with managing OPMLs, it should quantify any change in lesion status from one visit to the next in a manner that assists the dentist in recognizing increased risk levels and in determining whether to refer the patient to a specialist. A device that does less is not meeting its purpose; a device that goes beyond this is equally unsuccessful as it provides greater cost and complexity without improving outcomes.

Technology Should Be Calibrated Specifically to Meet the End Users’ Parameters for Cost, Skills, Training, Time, and Space

A device will only become a part of the end user’s clinical routine if it fits into the office workflow and its cost is commensurate with compensation and frequency of use (Slavkin 2017). For example, a device intended for OPSCC screening in a community setting must be very low cost, robust, and portable. Its user interface should be simple, be intuitive, and produce only the input and output parameters that are directly linked to improving patient outcomes—in this case, identifying OPSCC risk and connecting the patient with the pathway to care.

Imaging Oral and Oropharyngeal Squamous Cell Carcinoma

It is possible to image many phenomena related to the presence, progression, or regression of neoplasia. Such biomarkers include metabolic rates, oxygenation, blood flow, spatial and structural characteristics of tissue architecture, biochemical pathways, and cell viability (O’Connor et al. 2015). Imaging-based approaches to screening, early detection, and surveillance of OPSCC are attractive because they allow for immediate, nonsurgical interrogation of the oral tissues. Because it is completely noninvasive, imaging can be repeated as needed. Yet, overall, existing guidelines do not recommend for community screeners or dentists the use of currently available imaging-based adjuncts such as autofluorescence imaging (Macey et al. 2015; Lingen et al. 2017), in part due to low accuracy in distinguishing OPSCC/OPMLs from benign confounders, as well as challenges in interpreting images. However, other recent works have validated the use of autofluorescence within the context of population screening and recommend it as an adjunct method to conventional oral examination to detect OPMLs and OPSCC (Simonato et al. 2017; Farah et al. 2019; Simonato et al. 2019; Tiwari et al. 2019; Tomo et al. 2019).

Several innovative high-resolution imaging approaches have also been investigated that are specifically designed for use by specialists. These devices have frequently performed well in clinical tests; nevertheless, none have been adopted for routine use in specialty practice. Their failure to bridge the gap to clinical adoption is more about overall logistics and effect on outcomes than diagnostic performance. Optical coherence tomography (OCT) provides a good illustration for this observation.

OCT was introduced as an imaging technique in 1991. It has been compared to ultrasound imaging. Both technologies employ backscattered signals reflected from within the tissue to reconstruct structural images, with OCT measuring light rather than sound. The resulting OCT image is a 2-dimensional representation of the optical reflection within a tissue sample at near histologic resolution. These images can be stacked to generate 3-dimensional reconstructions of the target tissue. Images are acquired using a flexible fiber-optic probe, which is placed on the tissue surface to generate real-time surface and subsurface images of tissue microanatomy and cellular structure. OCT can image to a depth of approximately 2 to 3 mm in oral mucosa.

Several OCT systems have received Food and Drug Administration (FDA) approval for clinical use, and OCT is an essential imaging modality in ophthalmology. Multiple studies have investigated the diagnostic utility of OCT to detect and diagnose oral premalignancy and malignancy, with reported diagnostic sensitivities and specificities typically ranging from 80% to 90% and from 85% to 98%, respectively (Doi 2007; Tsai et al. 2008; Wilder-Smith et al. 2009; Sunny et al. 2016; Tran et al. 2016; Munir et al. 2019). However, despite excellent diagnostic performance, OCT has overall not been adopted as a clinical tool for OPSCC diagnosis for several reasons: 1) images are difficult to interpret; 2) the device is large, heavy, and fragile; 3) the operating software and user interfaces are daunting; and 4) the cost is high. In a recent project, innovative engineering techniques were used to reduce the size, fragility, and cost of OCT technology. A prototype OCT system was constructed at 10% of the cost of typical existing commercial systems (Sunny et al. 2016; Tran et al. 2016). Despite excellent diagnostic accuracy and an improved user interface, clinicians who tested the device stated that the need to learn to read and interpret the images continued to present a significant barrier to clinical adoption of the system.

Artificial Intelligence

Artificial intelligence techniques are gaining attention as a means of improving image-based diagnosis (Munir et al. 2019). Machine learning and deep learning are 2 subsets of AI that, although the terms are sometimes used interchangeably, differ in important ways. Briefly, machine learning algorithms typically require accurately categorized input data, whereas deep learning networks rely on layers of artificial neural networks to generate their own categories by identifying edges (differences) when exposed to a huge number of data points. Therefore, although both of these subsets of AI are “intelligent,” deep learning requires much more data than a traditional machine learning algorithm, whereas machine learning performs better with smaller data sets that are clearly labeled or structured with regard to a gold standard or specific criteria of interest.

Both approaches are used for intelligent image analysis, depending on the application and data sets that are available. Manual interpretation of medical images is very time-consuming, requires considerable specialist expertise, and is prone to inaccuracy. For this reason, in the early 1980s, computer-aided diagnosis (CAD) systems were developed to improve the efficiency of medical image interpretation (Bengio et al. 2013). Feature extraction was the key step in early efforts to automate medical diagnosis. Next, AI techniques were developed and progressively improved to overcome some of the early weaknesses in feature extraction techniques (Bengio et al. 2013; LeCun et al. 2015). For example, the development and refinement of convolutional neural networks dramatically improved automated cancer detection (Xu et al. 2015). Briefly, there are 3 main steps to applying AI to medical imaging: preprocessing, image segmentation, and postprocessing.

Preprocessing

To overcome the noise contained in raw images, unwanted image information must be removed. Many filters can be applied to remove optical noise. At the preprocessing stage, contrast is also adjusted, for example, to improve differentiation and delineation between different structures or between healthy and pathological structures. Removing unwanted content matters because, for instance, hairs in a skin image that also contains a lesion may cause the lesion to be misclassified.
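The two preprocessing operations mentioned above, noise filtering and contrast adjustment, can be sketched in a few lines. This is a minimal illustration only: the 3 × 3 median filter and linear contrast stretch are common generic choices rather than the methods of any study cited here, and the function names and the small intensity grid are hypothetical.

```python
from statistics import median


def median_filter_3x3(image):
    """Replace each pixel with the median of its 3x3 neighbourhood.

    Pixels beyond the border are taken from the nearest edge pixel.
    """
    rows, cols = len(image), len(image[0])
    filtered = []
    for r in range(rows):
        new_row = []
        for c in range(cols):
            window = [
                image[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
            ]
            new_row.append(median(window))
        filtered.append(new_row)
    return filtered


def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly rescale intensities so that they span out_min..out_max."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[out_min + (p - lo) * scale for p in row] for row in image]


# Hypothetical grey-level image; 255 is an isolated noise spike.
noisy = [
    [100, 102, 101, 140],
    [101, 255, 100, 141],
    [99, 100, 102, 142],
    [100, 101, 103, 143],
]
denoised = median_filter_3x3(noisy)      # the spike at [1][1] becomes 101
enhanced = stretch_contrast(denoised)    # intensities now span 0..255
```

A median filter is used here because it suppresses isolated outliers while preserving edges better than simple averaging; other filters may suit other noise types.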

Cutting-edge imaging approaches that map multiple levels and types of biomarkers are well suited to addressing OPSCC. However, the large volumes of complex data generated by these devices are poorly compatible with the diagnostic needs and workflow parameters of the settings in which OPSCC is detected, diagnosed, and managed. Deep learning excels at recognizing complex patterns in images and thus offers an important means of transforming image interpretation from a qualitative subjective task with unclear cutoffs and no decision-making guidance to a process that is quantifiable, reproducible, and customized to providing only the information needed for decision making. It is this unique capability that primarily powers the impact of AI on clinical outcomes. Moreover, AI can quantify subtle variations that are not detectable by the human eye. It can also combine multifactorial data streams into powerful integrated diagnostic and predictive systems spanning divergent data streams from sources such as images, genomics, pathology, electronic health records, and even social networks. A recent survey of the literature reported a 15% to 20% improvement in the accuracy of cancer prediction outcomes in clinical practice using AI techniques (Kourou et al. 2014).

To date, there are few publications on the application of these techniques to imaging in the oral cavity. In 1 recent study, the performance of a deep learning algorithm for detecting oral cancer from hyperspectral images of patients with oral cancer was evaluated (Jeyaraj and Samuel Nadar 2019). The investigators reported a classification accuracy of 94.5% for differentiating between images of malignant and healthy oral tissues. Similar results were described both in a recent animal study (Lu et al. 2018) and in another project that imaged human tissue specimens (Fei et al. 2017). In another study, deep learning techniques were applied to confocal laser endomicroscopy to analyze cell structure as a means of detecting OPSCC. A mean diagnostic accuracy of 88.3% (sensitivity 86.6%, specificity 90%) was reported (Aubreville et al. 2017).

Image Segmentation

This process recognizes and delineates the region of interest. For cancer imaging, pathological areas of the lesion are distinguished from nonpathological sites. While segmentation can be divided into 4 main classes, there exist a host of different approaches to this process, and often hybrid models combining multiple techniques have been used to improve accuracy.
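As an illustration of delineating a region of interest, the sketch below applies intensity thresholding followed by connected-region labeling. Thresholding is only one simple family of segmentation techniques and is not necessarily what the studies discussed in this article used; the function name, the threshold value, and the sample intensities are assumptions made for the example.

```python
from collections import deque


def segment_regions(image, threshold):
    """Label 4-connected regions of pixels brighter than `threshold`.

    Returns (label_map, region_count); label 0 marks background.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] <= threshold or labels[r][c]:
                continue
            # New unlabeled foreground pixel: grow a region from it.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and image[ny][nx] > threshold
                            and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Hypothetical scan with one 2x2 bright area and one isolated bright pixel.
scan = [
    [10, 12, 11, 10, 10],
    [11, 90, 95, 10, 12],
    [10, 92, 94, 11, 10],
    [12, 10, 11, 10, 88],
]
label_map, n_regions = segment_regions(scan, threshold=50)  # n_regions == 2
```

In practice a fixed threshold is rarely sufficient on its own, which is one reason hybrid models that combine several techniques are used.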

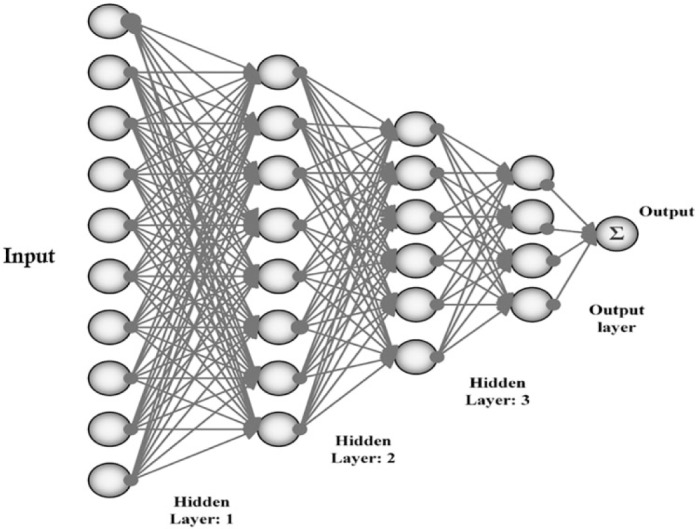

Postprocessing

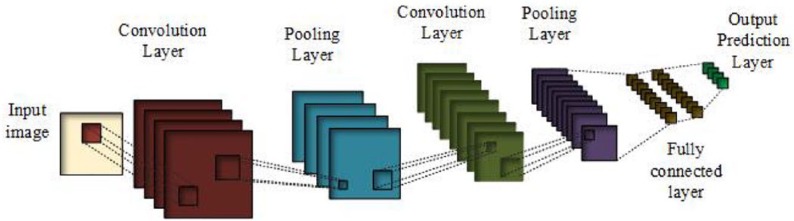

Multiple postprocessing methods exist, whose primary function is to target and extract information on features of interest such as islands, borders, and regions that share the same defined properties (Lee and Landgrebe 1993; Sikorski 2004; Zhou et al. 2009). Many different postprocessing techniques are being applied to medical imaging, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), multiscale convolutional neural network (M-CNN), and multi-instance learning convolutional neural network (MIL-CNN). Neural networks can perform complex computational tasks because of the nonlinear processing capabilities of their neurons. A general schematic of an artificial neural network is shown in Figure 3. Briefly, training data such as images of OPSCC with matching histopathological diagnosis and risk factors are input into the neurons to train the network to recognize specific features. Then, network performance is tested using additional data sets, and the generated output is matched with the gold-standard diagnosis (such as histopathology). An error signal is generated in all the cases where the outputs do not match the gold-standard criterion. This error signal propagates in the backward direction. Weightings for the specific features used by the neurons are adjusted for error reduction. This processing is repeated to minimize error while avoiding overfitting the data. The number of studies investigating the use of these approaches for diagnosing and mapping different types of cancers is increasing exponentially, especially for breast, lung, brain, and skin cancer (Xu et al. 2015).

Figure 3.

Simplified schematic of artificial neural networks (ANNs). Source: Munir K, Elahi H, Ayub A, Frezza F, Rizzi A. Cancer diagnosis using deep learning: a bibliographic review. Cancers (Basel). 2019;11(9):E1235.
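The error-driven weight adjustment described above can be illustrated with the simplest possible case, a single sigmoid neuron trained by gradient descent. This is a sketch under stated assumptions, not the architecture of any cited study: a real network has many layers through which the error signal is propagated, and the two numeric "features" per sample, the labels, and all function names here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that a sample belongs to the positive class."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def mean_loss(weights, bias, samples):
    """Mean cross-entropy between predictions and gold-standard labels."""
    eps = 1e-12
    total = 0.0
    for features, label in samples:
        p = predict(weights, bias, features)
        total -= label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps)
    return total / len(samples)


def train(samples, epochs=500, learning_rate=0.5):
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for features, label in samples:
            # Error signal: mismatch between output and gold standard.
            error = predict(weights, bias, features) - label
            # Adjust each weighting in the direction that reduces the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical normalized image features; label 1 = pathological, 0 = healthy.
training = [([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.3], 0),
            ([0.8, 0.9], 1), ([0.9, 0.7], 1), ([0.7, 0.8], 1)]
held_out = [([0.15, 0.25], 0), ([0.85, 0.8], 1)]

weights, bias = train(training)
loss_before = mean_loss([0.0, 0.0], 0.0, training)
loss_after = mean_loss(weights, bias, training)
held_out_predictions = [round(predict(weights, bias, f)) for f, _ in held_out]
```

Evaluating on `held_out` samples that were never used for training is the basic safeguard against overfitting: a model that performs well only on its training data has memorized rather than generalized.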

CNNs are one form of deep learning that is frequently applied to medical imaging tasks. These systems function using a direct feed-forward trajectory as shown in Figure 4. Here, the signal is processed directly without any backward loops or cycles. The pooling steps of the CNN summarize neighboring pixels and propagate these summarized characteristics in the output (subsampling) to make the representation invariant to small translations of the input. CNNs were used to develop the algorithms described below.

Figure 4.

General convolutional neural networks (CNNs). Source: Munir K, Elahi H, Ayub A, Frezza F, Rizzi A. Cancer diagnosis using deep learning: a bibliographic review. Cancers (Basel). 2019;11(9):E1235.
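The pooling step can be made concrete with a short sketch of 2 × 2 max pooling, one common pooling variant (the text does not specify which variant the cited systems use). The function name and the small feature maps are hypothetical. The example shows that moving a feature by one pixel leaves the pooled output unchanged, provided the feature stays within the same pooling window.

```python
def max_pool_2x2(feature_map):
    """Summarize each non-overlapping 2x2 block by its maximum value."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


original = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
    [0, 0, 0, 0],
]
# Same two features, each moved by one pixel inside its own 2x2 block.
shifted = [
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
]

pooled_original = max_pool_2x2(original)   # [[9, 0], [0, 5]]
pooled_shifted = max_pool_2x2(shifted)     # [[9, 0], [0, 5]]
```

The invariance is local: a shift that carries a feature across a window boundary does change the pooled output, which is why stacked convolution and pooling layers are needed for tolerance to larger displacements.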

Applying AI to Imaging to Improve OPSCC Outcomes

AI is able to automate processes that combine complex variables with different levels of weighting into an analytic pathway whose outcome provides guidance for clinical decision making. For example, micromorphological features can be combined with disease risk factors, geographical data, and differing gradients of signal intensity or varying voxel-by-voxel signal patterns to generate risk assessments for specific conditions.

Below we present 2 scenarios that demonstrate the application of these approaches to increasingly complex situations: 1) OPSCC screening and diagnosis and 2) intraoperative mapping of OPSCC heterogeneity and margins.

Oral Cancer Screening Using a Simple Smartphone Probe with Deep Learning Algorithm

In their landmark randomized clinical trial, Sankaranarayanan et al. demonstrated for the first time that effective screening by community health workers using only visual examination combined with risk factors can almost halve OPSCC-related mortality in high-risk groups (Sankaranarayanan et al. 2005; Subramanian et al. 2009). They also demonstrated that this intervention is cost-effective with considerable cost per life-year saved in the high-risk group (Sankaranarayanan et al. 2005; Subramanian et al. 2009). However, this outcome was quite different from those of other major OPSCC screening studies, which typically determined no impact on OPSCC outcomes such as mortality, morbidity, and cost (Kuriakose 2018). The accuracy and effectiveness of the screening process was identified as the primary and predominant determinant factor for the unique success of the Sankaranarayanan group in improving long-term OPSCC outcomes (Kuriakose 2018). The researchers had invested an extraordinary amount of time, innovation, and effort into intensive, detailed, frequently repeated training and standardization of their field staff for their screening activity, which went far beyond standard practice, and these measures were each implemented at an intensive level that would be difficult to maintain or upscale. However, deep learning techniques are ideally suited to achieving a similar or better level of screening efficacy and accuracy while overcoming the challenging need for highly skilled, trained, and constantly retrained screeners.

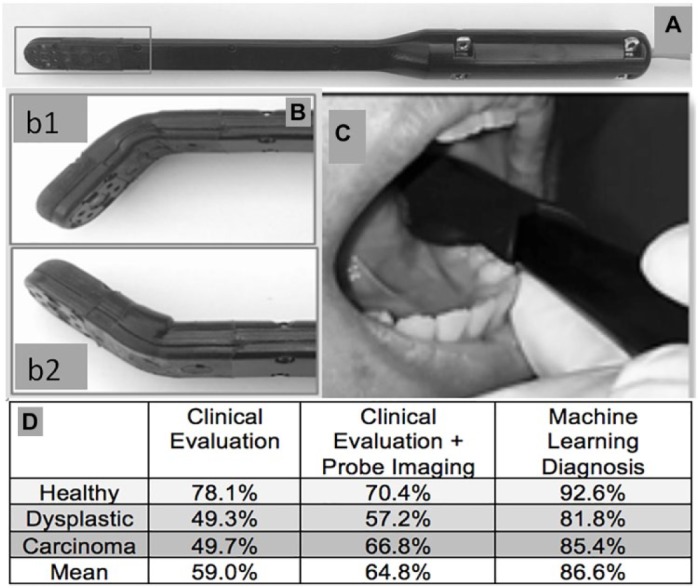

In a recent multistage, multicenter study, a very low-cost point-of-care deep learning–supported smartphone-based oral cancer probe was developed specifically for screeners in high-risk populations in remote regions with limited infrastructure (Firmalino et al. 2018; Song et al. 2018; Uthoff, Song, Birur, et al. 2018; Uthoff, Song, Sunny, et al. 2018). The probe is designed to access all areas of the oral cavity, including sites that are often inaccessible to conventional approaches and that carry high risk of HPV-related lesions (Fig. 5). The probe’s autofluorescence and polarization images are combined with OPSCC risk factor tabulation (habits, signs, and symptoms) for analysis by a proprietary deep learning–based algorithm to generate a screening output that provides triage guidance to the screener.

Figure 5.

Probe design and performance. (A) Fourth-generation oropharyngeal squamous cell carcinoma probe prototype. (B) Soft bendable probe tip (b1) extends intraoral reach (b2). (C) Screening accuracy of community health workers using conventional exam (left-hand side), community health workers using conventional exam and probe image (center), and machine learning algorithm (right-hand side).

The deep learning algorithm was initially trained using 1,000 data sets of images, risk factors, and matching histopathological diagnoses. It was then tested and refined using an additional 300 data sets from our database. In the first clinical study in 92 subjects with oral lesions, the initial screening algorithm performed well, with an agreement with standard-of-care diagnosis of 80.6% (Uthoff, Song, Birur, et al. 2018). After additional training, the algorithm was able to classify intraoral lesions with sensitivities, specificities, positive predictive values, and negative predictive values ranging from 81% to 95%. In another study, screening accuracy approximated 85%, whereas conventional screening accuracy by community health workers ranged from 30% to 60% (Cleveland and Robison 2013; Uthoff, Song, Birur, et al. 2018; Uthoff, Song, Sunny, et al. 2018). Figure 5 shows the results of a recent field study in which community health workers screened 292 individuals at high risk of OPSCC in 3 ways: 1) by conventional clinical examination and risk factor tabulation, 2) by combining conventional clinical examination and risk factor tabulation with their visual assessment of the OPSCC probe image, and 3) using the deep learning diagnostic algorithm. Interestingly, mean screening accuracy improved only marginally, from 59% to 64.8%, when the pretrained screener “read” the probe image in addition to conventional screening. Using the same information as the screener, the deep learning algorithm improved mean diagnostic accuracy from 59% to 86.6% (Wilder-Smith, unpublished data, 2019).
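For readers less familiar with the performance measures quoted above, the following sketch shows how sensitivity, specificity, positive and negative predictive values, and accuracy are derived from a 2 × 2 comparison of screening output against the histopathological gold standard. The counts are invented purely for illustration and do not come from any of the cited studies; the function name is likewise hypothetical.

```python
def screening_metrics(tp, fp, tn, fn):
    """Standard diagnostic accuracy measures from confusion-matrix counts.

    tp/fn: diseased cases flagged / missed by screening.
    tn/fp: healthy cases cleared / wrongly flagged by screening.
    """
    return {
        "sensitivity": tp / (tp + fn),          # diseased cases detected
        "specificity": tn / (tn + fp),          # healthy cases cleared
        "ppv": tp / (tp + fp),                  # flagged cases truly diseased
        "npv": tn / (tn + fn),                  # cleared cases truly healthy
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts for 100 screened lesions.
metrics = screening_metrics(tp=45, fp=10, tn=40, fn=5)
# sensitivity 0.90, specificity 0.80, accuracy 0.85
```

Note that predictive values, unlike sensitivity and specificity, depend on how common disease is in the screened population, so they do not transfer directly between high-risk and general populations.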

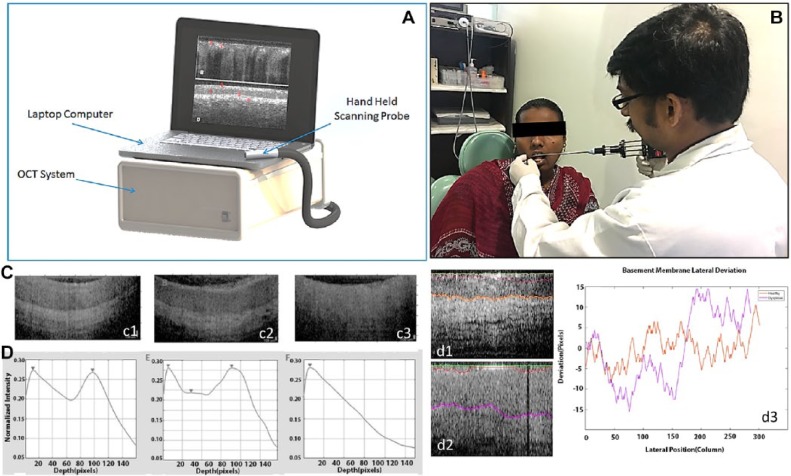

Diagnosing, Mapping Heterogeneity, and Margins Using OCT with Machine Learning and Deep Learning Algorithm

Adding a diagnostic algorithm to an OCT system overcomes the need to train users to read the OCT images. In a recent study using a prototype low-cost OCT system (Fig. 6), investigators developed and tested an automated diagnostic algorithm linked to an image-processing app and user interface (Heidari et al. 2019). After preprocessing, OCT images were classified as normal, dysplastic, or malignant through a 2-step automated decision tree. Initially, OCT images were categorized into 2 groups: nonmalignant (normal and dysplastic) versus malignant through a comparison of optical tissue stratification. Subsequently, the “nonmalignant” group was broken down into either “healthy” or “dysplastic” by observing changes in the linearity of the basement membrane. The automated cancer screening platform differentiated between healthy versus dysplastic versus malignant tissues with a sensitivity of 87% and a specificity of 83% versus the histopathological gold standard (Heidari et al. 2019).

Figure 6.

Optical coherence tomography (OCT) device, use, and algorithm. (A) Low-cost, robust prototype OCT system. (B) Imaging with high-resolution probe in low-resource setting. (C) OCT images and matching depth resolved intensity maps for healthy (C1), dysplastic (C2), and malignant (C3) oral mucosa. (D) Segmented OCT images of healthy (D1) and dysplastic (D2) oral tissues with graph showing lateral deviation versus layer average thickness of the epithelium-lamina propria boundary (D3).
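The 2-step decision logic described for the OCT algorithm can be sketched as follows. This is a schematic illustration only and not the published implementation: the two numeric inputs, their direction (lower values taken to indicate abnormality), the cutoff values, and the function name are all assumptions introduced for the example.

```python
def classify_oct_image(stratification_score, membrane_linearity,
                       stratification_cutoff=0.5, linearity_cutoff=0.8):
    """Two-step decision tree: malignant vs nonmalignant, then healthy vs dysplastic.

    Both scores are hypothetical values in [0, 1]; the cutoffs are placeholders,
    not values reported in the cited study.
    """
    # Step 1: assumed loss of optical tissue stratification marks malignancy.
    if stratification_score < stratification_cutoff:
        return "malignant"
    # Step 2: within the nonmalignant group, an assumed loss of
    # basement membrane linearity marks dysplasia.
    if membrane_linearity < linearity_cutoff:
        return "dysplastic"
    return "healthy"


results = [
    classify_oct_image(0.2, 0.9),   # "malignant"
    classify_oct_image(0.7, 0.6),   # "dysplastic"
    classify_oct_image(0.9, 0.95),  # "healthy"
]
```

A cascade of this kind keeps each decision tied to a single interpretable image feature, which makes the output easier to audit than that of an end-to-end classifier.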

To test the ability of the system to map tumor heterogeneity and margins, intraoperative images from 125 sites in 14 patients with histopathologically confirmed OPSCC were captured from multiple zones within and adjacent to the tumor area. The AI diagnosis was compared with the clinical and pathologic diagnosis for each site imaged. The spatially resolved diagnostic accuracy of the system was 92.2% versus histopathology, with 100% sensitivity and specificity for detecting malignancy within the clinically delineated tumor and tumor margin areas. For dysplastic lesions, the AI algorithm showed a location-specific sensitivity of 92.5%, specificity of 68.8%, and a moderate concordance with histopathological diagnosis (κ = 0.59). In addition, the AI algorithm significantly differentiated squamous cell carcinoma (SCC) from dysplasia (P ≤ 0.005) and dysplasia from non-dysplastic lesions (P ≤ 0.05) (Sunny et al. 2019).
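The concordance statistic κ reported above (Cohen's kappa) corrects observed agreement for the agreement expected by chance. A minimal sketch of the calculation is given below; the confusion matrix is hypothetical and is not the data from the cited study, and the function name is an assumption.

```python
def cohens_kappa(confusion):
    """Cohen's kappa from a square confusion matrix.

    confusion[i][j] = number of sites rated category i by one method
    (e.g., the algorithm) and category j by the other (e.g., histopathology).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n)) / total
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion)
        for i in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical 2-category comparison of 50 imaged sites.
kappa = cohens_kappa([[20, 5], [10, 15]])   # approximately 0.40
```

Here the two methods agree on 70% of sites, but because 50% agreement would be expected by chance alone, κ is only about 0.40.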

Conclusion

Novel imaging technologies provide information on a wide range of biomarkers of neoplasia. However, the unaided human mind is unable to process and interpret these data sets without computational assistance. AI approaches can rapidly analyze complex images to provide decision-making guidance. Additional studies are needed to identify optimal imaging approaches for each clinical need and to finalize the configuration and clinical guidance outcomes of AI-based algorithms.

Author Contributions

B. Ilhan, contributed to conception and data analysis, drafted and critically revised the manuscript; K. Lin, contributed to data acquisition and interpretation, drafted the manuscript; P. Guneri, contributed to conception, data acquisition, and interpretation, critically revised the manuscript; P. Wilder-Smith, contributed to conception, design, data acquisition, interpretation, and analysis, drafted and critically revised the manuscript. All authors gave final approval and agree to be accountable for all aspects of the work.

Supplemental Material

Supplemental material, DS_10.1177_0022034520902128 for Improving Oral Cancer Outcomes with Imaging and Artificial Intelligence by B. Ilhan, K. Lin, P. Guneri and P. Wilder-Smith in Journal of Dental Research

Footnotes

This work was supported by Laser Microbeam and Medical Program National Institutes of Health (NIH)/National Institute of Biomedical Imaging and Bioengineering (NIBIB) grant P41EB05890, NIH/NIBIB grant UH2EB022623, and NIH/National Cancer Institute (NCI) grant P30CA062203.

The authors declare no potential conflicts of interest with respect to the authorship and/or publication of this article.

A supplemental appendix to this article is available online.

References

- Alston PA, Knapp J, Luomanen JC. 2014. Who will tend the dental safety net? J Calif Dent Assoc. 42(2):112–118. [PubMed] [Google Scholar]

- Amarasinghe HK, Usgodaarachchi US, Johnson NW, Lalloo R, Warnakulasuriya S. 2010. Public awareness of oral cancer, of oral potentially malignant disorders and of their risk factors in some rural populations in Sri Lanka. Community Dent Oral Epidemiol. 38(6):540–548. [DOI] [PubMed] [Google Scholar]

- American Academy of Oral Medicine (AAOM). 2019. Subject: oral cancer screening [accessed 2019 June 4]. https://www.aaom.com/clinical-practice-statement-oral-cancer-screening

- American Cancer Society (ACS). 2018. Cancer facts and figures. Atlanta (GA): ACS. [Google Scholar]

- Aubreville M, Knipfer C, Oetter N, Jaremenko C, Rodner E, Denzler J, Bohr C, Neumann H, Stelzle F, Maier A. 2017. Automatic classification of cancerous tissue in laserendomicroscopy images of the oral cavity using deep learning. Sci Rep. 7(1):11979. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bengio Y, Courville A, Vincent P. 2013. Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell. 35(8):1798–1828. [DOI] [PubMed] [Google Scholar]

- Chaturvedi AK, Engels EA, Pfeiffer RM, Hernandez BY, Xiao W, Kim E, Jiang B, Goodman MT, Sibug-Saber M, Cozen W, et al. 2011. Human papillomavirus and rising oropharyngeal cancer incidence in the United States. J Clin Oncol. 29(32):4294–4301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cleveland JL, Robison VA. 2013. Clinical oral examinations may not be predictive of dysplasia or oral squamous cell carcinoma. J Evid Based Dent Pract. 13(4):151–154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Doi K. 2007. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput Med Imaging Graph. 31(4–5):198–211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein JB, Güneri P, Boyacioglu H, Abt E. 2012. The limitations of the clinical oral examination in detecting dysplastic oral lesions and oral squamous cell carcinoma. J Am Dent Assoc. 143(12):1332–1342. [DOI] [PubMed] [Google Scholar]

- Farah CS, Dost F, Do L. 2019. Usefulness of optical fluorescence imaging in identification and triaging of oral potentially malignant disorders: a study of VELscope in the LESIONS programme. J Oral Pathol Med. 48(7):581–587. [DOI] [PubMed] [Google Scholar]

- Fei B, Lu G, Wang X, Zhang H, Little JV, Patel MR, Griffith CC, El-Diery MW, Chen AY. 2017. Label-free reflectance hyperspectral imaging for tumor margin assessment: a pilot study on surgical specimens of cancer patients. J Biomed Opt. 22(8):1–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Firmalino V, Anbarani A, Islip D, Song B, Uthoff R, Takesh T, Liang R, Wilder-Smith P. 2018. First clinical results: optical smartphone-based oral cancer screening. Paper presented at: 38th American Society for Laser Surgery and Medicine Annual Meeting; April 11–15, 2018; Dallas, TX. [Google Scholar]

- Grafton-Clarke C, Chen KW, Wilcock J. 2019. Diagnosis and referral delays in primary care for oral squamous cell cancer: a systematic review. Br J Gen Pract. 69(679):e112–e126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hashim D, Genden E, Posner M, Hashibe M, Boffetta P. 2019. Head and neck cancer prevention: from primary prevention to impact of clinicians on reducing burden. Ann Oncol. 30(5):744–756. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heidari E, Sunny S, James BL, Lam TM, Tran AV, Yu J, Ramanjinappa RD, Uma K, Birur PN, Suresh A, et al. 2019. Optical coherence tomography as an oral cancer screening adjunct in a low resource settings. IEEE J Sel Top Quant. 25(1):1–8. [Google Scholar]

- Jeyaraj PR, Samuel Nadar ER. 2019. Computer-assisted medical image classification for early diagnosis of oral cancer employing deep learning algorithm. J Cancer Res Clin Oncol. 145(4):829–837. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kourou K, Exarchos TP, Exarchos KP, Karamouzis MV, Fotiadis DI. 2014. Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J. 13:8–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuriakose MA. 2018. Strategies to improve oral cancer outcome in high-prevalent, low-resource setting. J Head Neck Physicians Surg. 6(2):63–68. [Google Scholar]

- Laronde DM, Williams PM, Hislop TG, Poh C, Ng S, Zhang L, Rosin MP. 2014. Decision making on detection and triage of oral mucosa lesions in community dental practices: screening decisions and referral. Community Dent Oral Epidemiol. 42(4):375–384. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LeCun Y, Bengio Y, Hinton G. 2015. Deep learning. Nature. 521(7553):436–444. [DOI] [PubMed] [Google Scholar]

- Lee C, Landgrebe DA. 1993. Feature extraction based on decision boundaries. IEEE Trans Pattern Anal Mach Intell. 15(4):388–400. [Google Scholar]

- Lingen MW, Abt E, Agrawal N, Chaturvedi AK, Cohen E, D’Souza G, Gurenlian J, Kalmar JR, Kerr AR, Lambert PM, et al. 2017. Evidence-based clinical practice guideline for the evaluation of potentially malignant disorders in the oral cavity: a report of the American Dental Association. J Am Dent Assoc. 148(10):712–727. [DOI] [PubMed] [Google Scholar]

- Litscher G. 2014. Innovation in medicine. Medicines (Basel). 1(1):1–2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Llewellyn CD, Johnson NW, Warnakulasuriya KA. 2001. Risk factors for squamous cell carcinoma of the oral cavity in young people—a comprehensive literature review. Oral Oncol. 37(5):401–418. [DOI] [PubMed] [Google Scholar]

- Lu G, Wang D, Qin X, Muller S, Wang X, Chen AY, Chen ZG, Fei B. 2018. Detection and delineation of squamous neoplasia with hyperspectral imaging in a mouse model of tongue carcinogenesis. J Biophotonics. 11(3):1–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Macey R, Walsh T, Brocklehurst P, Kerr AR, Liu JL, Lingen MW, Ogden GR, Warnakulasuriya S, Scully C. 2015. Diagnostic tests for oral cancer and potentially malignant disorders in patients presenting with clinically evident lesions. Cochrane Database Syst Rev. 29(5):CD010276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mehrotra R, Gupta D. 2011. Exciting new advances in oral cancer diagnosis: avenues to early detection. Head Neck Oncol. 3:33. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Munir K, Elahi H, Ayub A, Frezza F, Rizzi A. 2019. Cancer diagnosis using deep learning: a bibliographic review. Cancers (Basel). 11(9):1–36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- National Center for Health Statistics (NCHS). 2016. Health, United States, 2015: with special feature on racial and ethnic health disparities. Hyattsville (MD): National Center for Health Statistics. Report No. 2016-1232. [PubMed] [Google Scholar]

- O’Connor JP, Rose CJ, Waterton JC, Carano RA, Parker GJ, Jackson A. 2015. Imaging intratumor heterogeneity: role in therapy response, resistance, and clinical outcome. Clin Cancer Res. 21(2):249–257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ries LAG, Eisner MP, Kosary CL, Hankey BF, Miller BA, Clegg L, Mariotto A, Feuer EJ, Edwards BK. SEER Cancer Statistics Review, 1975–2002, National Cancer Institute [accessed 2019 Jun 10]. https://seer.cancer.gov/csr/1975_2002/

- Sankaranarayanan R, Ramadas K, Thomas G, Muwonge R, Thara S, Mathew B, Rajan B; Trivandrum Oral Cancer Screening Study Group. 2005. Effect of screening on oral cancer mortality in Kerala, India: a cluster-randomised controlled trial. Lancet. 365(9475):1927–1933. [DOI] [PubMed] [Google Scholar]

- Sardella A, Demarosi F, Lodi G, Canegallo L, Rimondini L, Carrassi A. 2007. Accuracy of referrals to a specialist oral medicine unit by general medical and dental practitioners and the educational implications. J Dent Educ. 71(4):487–491. [PubMed] [Google Scholar]

- Shopland DR. 1995. Tobacco use and its contribution to early cancer mortality with a special emphasis on cigarette smoking. Environ Health Perspect. 103(Suppl 8):131–142. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sikorski J. 2004. Identification of malignant melanoma by wavelet analysis. In: Student/Faculty Research Day. New York: CSIS, Pace University. [Google Scholar]

- Simonato LE, Tomo S, Miyahara GI, Navarro RS, Villaverde AGJB. 2017. Fluorescence visualization efficacy for detecting oral lesions more prone to be dysplastic and potentially malignant disorders: a pilot study. Photodiagnosis Photodyn Ther. 17:1–4. [DOI] [PubMed] [Google Scholar]

- Simonato LE, Tomo S, Navarro RS, Villaverde AGJB. 2019. Fluorescence visualization improves the detection of oral, potentially malignant, disorders in population screening. Photodiagnosis Photodyn Ther. 27:74–78. [DOI] [PubMed] [Google Scholar]

- Slavkin HC. 2017. The impact of research on the future of dental education: how research and innovation shape dental education and the dental profession. J Dent Educ. 81(9):eS108–eS127. [DOI] [PubMed] [Google Scholar]

- Song S, Sunny S, Uthoff RD, Patrick S, Suresh A, Kolur T, Keerthi G, Anbarani A, Wilder-Smith P, Kuriakose MA, et al. 2018. Automatic classification of dual-modality, smartphone-based oral dysplasia and malignancy images using deep learning. Biomed Opt Express. 9(11):5318–5329. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Subramanian S, Sankaranarayanan R, Bapat B, Somanathan T, Thomas G, Mathew B, Vinoda J, Ramadas K. 2009. Cost-effectiveness of oral cancer screening: results from a cluster randomized controlled trial in India. Bull World Health Organ. 87(3):200–206. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sunny SP, Agarwal S, James BL, Heidari E, Muralidharan A, Yadav V, Pillai V, Shetty V, Chen Z, Hedne N, et al. 2019. Intra-operative point-of-procedure delineation of oral cancer margins using optical coherence tomography. Oral Oncol. 92:12–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sunny SP, Heidari AE, James BL, Ravindra DR, Subhashini AR, Keerthi G, Shubha G, Uma K, Kumar S, Mani S, et al. 2016. Field screening for oral cancer using optical coherence tomography. Paper presented at: American Head and Neck Society (AHNS) 9th International Conference on Head and Neck Cancer; July 16–20, 2016; Seattle, WA. [Google Scholar]

- Tiwari L, Kujan O, Farah CS. 2019. Optical fluorescence imaging in oral cancer and potentially malignant disorders: a systematic review. Oral Dis [epub ahead of print 27 Feb 2019]. doi: 10.1111/odi.13071 [DOI] [PubMed] [Google Scholar]

- Tomo S, Miyahara GI, Simonato LE. 2019. History and future perspectives for the use of fluorescence visualization to detect oral squamous cell carcinoma and oral potentially malignant disorders. Photodiagnosis Photodyn Ther. 28:308–317. [DOI] [PubMed] [Google Scholar]

- Tong EK, Fagan P, Cooper L, Canto M, Carroll W, Foster-Bey J, Hébert JR, Lopez-Class M, Ma GX, Nez Henderson P, et al. 2015. Working to eliminate cancer health disparities from tobacco: a review of the National Cancer Institute’s Community Networks Program. Nicotine Tob Res. 17(8):908–923. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tran AV, Lam T, Heidari E, Sunny SP, James BL, Kuriakose MA, Chen Z, Birur PN, Wilder-Smith P. 2016. Evaluating imaging markers for oral cancer using optical coherence tomography. Lasers Surg Med. 48(4): Late Breaking Abstracts #LB29. [Google Scholar]

- Tsai MT, Lee HC, Lu CW, Wang YM, Lee CK, Yang CC, Chiang CP. 2008. Delineation of an oral cancer lesion with swept-source optical coherence tomography. J Biomed Opt. 13(4):044012. [DOI] [PubMed] [Google Scholar]

- Uthoff RD, Song B, Birur P, Kuriakose MA, Sunny S, Suresh A, Patrick S, Anbarani A, Spires O, Wilder-Smith P, et al. 2018. Development of a dual-modality, dual-view smartphone-based imaging system for oral cancer detection. Proceedings of SPIE 10486, Design and Quality for Biomedical Technologies XI. 10486. doi: 10.1117/12.2296435. [DOI] [Google Scholar]

- Uthoff RD, Song B, Sunny S, Patrick S, Suresh A, Kolur T, Keerthi G, Spires O, Anbarani A, Wilder-Smith P, et al. 2018. Point-of-care, smartphone-based, dual-modality, dual-view, oral cancer screening device with neural network classification for low-resource communities. PLoS One. 13(12):e0207493. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wan A, Savage N. 2010. Biopsy and diagnostic histopathology in dental practice in Brisbane: usage patterns and perceptions of usefulness. Aust Dent J. 55(2):162–169. [DOI] [PubMed] [Google Scholar]

- Webster JD, Batstone M, Farah CS. 2019. Missed opportunities for oral cancer screening in Australia. J Oral Pathol Med. 48(7):595–603. [DOI] [PubMed] [Google Scholar]

- Wilder-Smith P, Lee K, Guo S, Zhang J, Osann K, Chen Z, Messadi D. 2009. In vivo diagnosis of oral dysplasia and malignancy using optical coherence tomography: preliminary studies in 50 patients. Lasers Surg Med. 41(5):353–357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xu B, Wang N, Chen T, Li M. 2015. Empirical evaluation of rectified activations in convolutional network. arXiv. https://arxiv.org/abs/1505.00853 [Google Scholar]

- Yang EC, Tan MT, Schwarz RA, Richards-Kortum RR, Gillenwater AM, Vigneswaran N. 2018. Noninvasive diagnostic adjuncts for the evaluation of potentially premalignant oral epithelial lesions: current limitations and future directions. Oral Surg Oral Med Oral Pathol Oral Radiol. 125(6):670–681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou H, Chen M, Rehg JM. 2009. Dermoscopic interest point detector and descriptor. Paper presented at: Proceedings of the 6th IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI’09); 2009 Oct 11; Boston, MA. http://www.howardzzh.com/research/publication/2009.ISBI/2009.ISBI.Zhou.DIP.pdf [Google Scholar]
