Abstract

Background

Breast cancer is a collection of multiple tissue pathologies, each with a distinct molecular signature that correlates with patient prognosis and response to therapy. Accurately differentiating between breast cancer sub-types is an important part of clinical decision-making. Although this problem has been addressed using machine learning methods in the past, there remains unexplained heterogeneity within the established sub-types that cannot be resolved by the commonly used classification algorithms.

Methods

In this paper, we propose a novel deep learning architecture, called DeepTRIAGE (Deep learning for the TRactable Individualised Analysis of Gene Expression), which uses an attention mechanism to obtain personalised biomarker scores that describe how important each gene is in predicting the cancer sub-type for each sample. We then perform a principal component analysis of these biomarker scores to visualise the sample heterogeneity, and use a linear model to test whether the major principal axes associate with known clinical phenotypes.

Results

Our model not only classifies cancer sub-types with good accuracy, but simultaneously assigns each patient their own set of interpretable and individualised biomarker scores. These personalised scores describe how important each feature is in the classification of any patient, and can be analysed post-hoc to generate new hypotheses about latent heterogeneity.

Conclusions

We apply the DeepTRIAGE framework to classify the gene expression signatures of luminal A and luminal B breast cancer sub-types, and illustrate its use for genes as well as the GO and KEGG gene sets. Using DeepTRIAGE, we calculate personalised biomarker scores that describe the most important features for classifying an individual patient as luminal A or luminal B. In doing so, DeepTRIAGE simultaneously reveals heterogeneity within the luminal A biomarker scores that significantly associate with tumour stage, placing all luminal samples along a continuum of severity.

Keywords: Breast cancer, Precision medicine, TCGA, Deep learning

Background

Breast cancer is a collection of multiple tissue pathologies with a joint genetic and environmental aetiology, and is a leading cause of death among women worldwide. During the progression of cancer, inherited or acquired mutations in the DNA change the sequence (or amount) of the messenger RNA (mRNA) produced by the cell, thereby changing the structure (or amount) of functional protein. As such, mRNA can serve as a useful proxy for evaluating the functional state of a cell, with its abundance being easily measured by microarray or high-throughput RNA sequencing (RNA-Seq). Indeed, mRNA abundance has already been used as a biomarker for cancer diagnosis and classification [1, 2], cancer sub-type classification [3, 4], and for clustering gene expression signatures [5]. For a comprehensive comparison of the supervised and unsupervised methods used with gene expression data, see [6].

Despite advancements in the field, mRNA-based classifiers still present unique challenges. First, these data-sets contain many more features (10,000s) than samples (100s), a p≫n problem that is usually addressed by feature selection or feature engineering [7, 8]. Second, it is often difficult to interpret mRNA-based classifiers because the predictive genetic features do not necessarily make sense to biologist experts without explicit contextualisation. Third, the routine use of discriminative methods (e.g., support vector machines [9] or random forests [8, 10]) only provide information with regard to the importance of a feature for an entire class. For cancer data, this means that these methods cannot suggest the importance of a feature for a specific patient, but instead only provide such information at the level of cancer type or sub-type. This is important given that a substantial amount of heterogeneity remains unaddressed within cancer sub-types [4, 11].

In this paper, we propose a deep learning method for the stratification of clinical samples that not only offers interpretability through feature importance at the level of the cancer sub-type, but also at the level of the individual patient. As such, our method offers a finer level of interpretation than existing methods by capturing the heterogeneity of samples within each sub-type. To achieve this goal, we use an attention mechanism, a deep learning technique first proposed for machine translation and automatic image captioning [12, 13]. Attention allows salient features to come dynamically to the forefront for each patient as needed. As a result, the global knowledge of the model, obtained from the discriminating classes, is enhanced by the local knowledge that each patient provides. In other words, the attention mechanism offers an insight into the model’s decision-making process by revealing a set of individualised importance scores that describe how important each feature is for the classification of that patient. Further analysis of these importance scores reveals valuable insights into sub-type heterogeneity that are not directly apparent in the unattended data.

Machine learning techniques have been successfully applied to gene expression data for decades. More recently, deep learning, especially unsupervised deep learning, has influenced several approaches to gene expression analysis. These unsupervised models have been used to learn meaningful abstractions of biology from unlabelled gene expression data. For example, [14] extracted biological insights from the Pseudomonas aeruginosa gene expression compendium using shallow auto-encoders. Similarly, stacked auto-encoders have been adopted to capture a hierarchical latent space from yeast gene expression data [15], showing that the first layer correctly captures yeast transcription factors, while deeper layers conform to the existing knowledge of biological processes. More related to our work, it has been shown that shallow denoising auto-encoders are capable of extracting clinical information and molecular signatures from the gene expression data of patients with breast cancer [16]. Later, [17] coupled representations obtained from stacked denoising auto-encoders with a traditional classifier to achieve discriminatory power, applying it to classify cancerous samples from healthy ones.

Although the classification of cancer is an important task, breast cancer is not a monolithic entity. It is comprised of distinct molecular sub-types, each with a distinct molecular signature that correlate with patient prognosis and response to therapy [18]. This is especially true when considering the luminal sub-types: luminal A cancers have a much better prognosis than luminal B cancers and can be treated with endocrine therapy alone [18]. Yet, luminal A remains one of the most diverse cancer sub-types in terms of its molecular signature and severity [19]. As a case study, we focus our analysis on the difficult problem of classifying breast cancer sub-types based on gene expression signatures, and approach it using an end-to-end supervised deep learning model. Using publicly available data from The Cancer Genome Atlas (TCGA), we develop and apply a novel deep learning architecture, called DeepTRIAGE (Deep learning for the TRactable Individualised Analysis of Gene Expression). The DeepTRIAGE architecture achieves two key outcomes.

First, our architecture extends the attention mechanism to model data where the number of features is much larger than the number of observations.

Second, our architecture facilitates a new interpretation of feature importance by providing individualised patient-level importance scores. These patient-level importance scores can be analysed directly using multivariate methods to reveal and describe latent intra-class heterogeneity.

Taken together, our work establishes a computational framework for calculating interpretable and individualised biomarker scores that can accurately classify luminal sub-types, while simultaneously revealing and describing intra-class heterogeneity. Using DeepTRIAGE, we calculate personalised biomarker scores that describe the most important features for classifying an individual patient as luminal A or luminal B. In doing so, DeepTRIAGE simultaneously reveals heterogeneity within the luminal A biomarker scores that significantly associate with tumour stage, placing all luminal samples along a continuum of severity.

Methods

Data acquisition

We retrieved the unnormalised gene-level RNA-Seq data for the TCGA breast cancer (BRCA) cohort [20] using the TCGAbiolinks package in Bioconductor [21]. After filtering any genes with zero counts across all samples, we performed an effective library size normalisation of the count data using DESeq2 [22]. To retrieve luminal A (LumA) and luminal B (LumB) sub-type status for the TCGA BRCA samples, we downloaded the “PAM50” labels from the supplementary data of [19]. With 1148 PAM50 labels retrieved, we excluded patients that had more than one tumour sample sequenced. This left us with 528 LumA and 201 LumB samples. Of these, 176 LumA and 67 LumB samples were set aside as a test set.

Engineering annotation-level expression from genes

To reduce the dimensionality of the raw feature space, we transformed raw features from “gene expression space” into an “annotation space”. For this, we elected to use the Gene Ontology (GO) Biological Process and Kyoto Encyclopedia of Genes and Genomes (KEGG) annotation databases. Pathways with less than ten associated genes were removed before estimating pathway-level expression by taking the sum of counts for all genes in each pathway, as described and validated in [23]. This results in 3942 GO features and 302 KEGG features that are then used for model training.

Model architecture

Gene expression data-sets usually have many more features than samples. Using a standard deep learning architecture with such data leads to a parameter explosion in the model that can cause over-fitting and reduce generalizability. Instead, our model aims to (i) reduce the number of free parameters of the model, (ii) encode global knowledge in the data by finding discriminatory features at the cancer sub-type level (akin to a logistic regression), and (iii) encode local information provided by each individual patient using an attention mechanism. These innovations make the attention mechanism tractable for high-dimensional data.

The deepTRIAGE model

Let d be the dimension of the raw feature space and let xj=[xj1,…,xjd] be the representation of sample j in this space. Our goal is to train a binary classifier that learns whether sample j belongs to the LumA class (yj=1) or the LumB class (yj=0).

LetEd×m be an embedding matrix and let ei=[ei1,…,eim] be the embedding vector for feature i∈{1:d}. Using Eq. 1, we define , the m-dimensional embedded vector of feature i for sample j:

| 1 |

where fe, parametrised by Θe, is the function that captures the relationship between the scalar value xji and the embedding vector ei. Note that the same embedding matrix is used for all samples.

Now, we can define a new representation for sample j using its embedded vectors, as shown in Eq. 2:

| 2 |

where is a p-dimensional representation of the raw feature data, is parametrised by Θx, and βji is a scalar value denoting the individualised importance that feature i has in the classification of sample j. Note that m≪d and usually p≪m. In other words, the dimensionality of the embedding space is much smaller than the dimensionality of the raw feature space, and the dimensionality of the final representation is smaller than the dimensionality of the embedding space, allowing the attention mechanism to work successfully for such high-dimensional data. By viewing Eq. 2 in a deep learning framework, one can interpret βji as the attention of sample j to feature i and compute it using Equations 3, 4, and 5:

| 3 |

| 4 |

| 5 |

where is parametrised by Θα. Equation 5 is simply a normalisation to ensure that the attention weights sum to one for each sample.

Now, given the fixed size representation of sample j as , we can train the binary classifier fy for LumA vs. LumB classification:

| 6 |

where Θyis the set of parameters for fy.

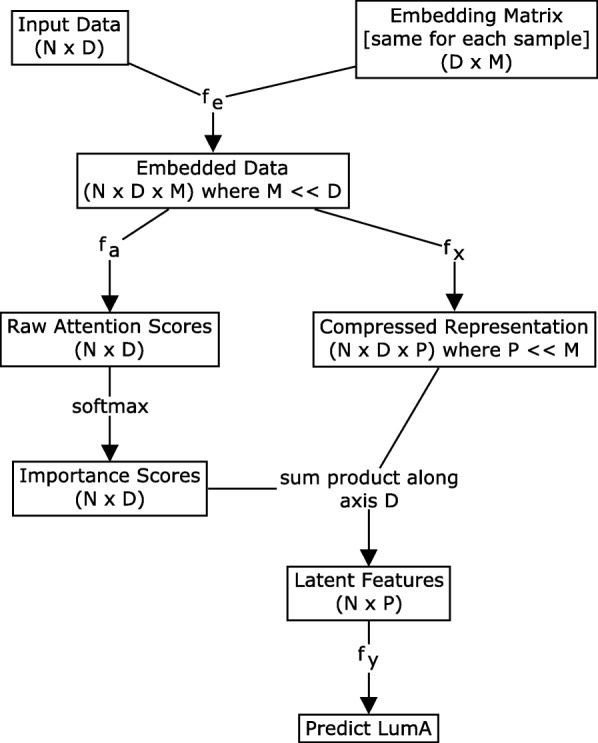

Given the embedding matrix E, we have defined our model through Equations 1–6. Figure 1 shows a schematic overview of the DeepTRIAGE model architecture. In the next section, we discuss how we learn the parameters of this model.

Fig. 1.

This figure shows a schematic overview of the DeepTRIAGE model architecture

Remark 1

There are different approaches to constructing the embedding matrix E. For instance: end-to-end learning with an unsupervised component added to the model, estimation using auto-encoders, or dimensionality reduction using PCA. We chose to use random vectors because it has been shown that their performance is comparable with the aforementioned techniques [24,25]. Therefore, ei is an m-dimensional random vector.

Remark 2

There are many ways to compute the attention weights. We used a definition inspired by the concept of self-attention which means that the attention to a feature is only influenced by that feature [26].

Learning model parameters

In the previous section, we defined our model through Equations 1–6. Now we discuss how to specify its components {fe,fx,fα,fy} and how to learn their parameters {Θe,Θx,Θα,Θy}. Since we want to learn the model end-to-end, we choose these components to be differentiable.

In order to compute , we capture the relationship between the feature value xji and the embedding vector ei via multiplicative interaction using Eq. 7. Therefore, Θe is a null set. One could, however, choose a more complex function.

| 7 |

We choose fx and fα to be two feed-forward neural networks with weights Θx and Θα respectively. See Equations 8 and 9:

| 8 |

| 9 |

where both can be thought of as a non-linear transform; and .

Given , any differentiable classifier can be placed on top to predict the cancer sub-type (Eq. 6). We use a feed-forward network with a sigmoid activation function in the last layer to calculate the probability of sample j belonging to a sub-type:

| 10 |

where Θy represents the weights of this network. To limit the model complexity, we choose fx to be a single-layer neural network with tanh nonlinearity, fα to be a network with one hidden layer and tanh nonlinearity, and fy to be a network with one hidden layer, batch normalisation and ReLu nonlinearity. Dropout with p=0.5 is also applied to these three functions. Again, one can use more complex functions as long as they are differentiable.

Since all components are fully differentiable, the entire model can be learnt by minimising the log-loss function employing automatic differentiation and gradient-based methods. In this case, we used the Adam optimiser [27].

Analysis of importance scores

What we have described so far focuses on the discriminatory mechanism of our model. When viewed from the top, our proposed model is capable of separating cancer sub-types, like many other classification algorithms. However, one important distinction is that our model also generates an individualised importance score for each feature at the sample-level. This aspect is highly useful as it opens new opportunities for post-classification analyses of individual patients, making our method both hypothesis-testing and hypothesis-generating.

Given βj=[βj1,…,βjd], where βji is the individualised importance score for sample j and feature i, we can construct an importance score matrixB by stacking βj for all samples.

To detect emerging patterns within the individualised importance scores, we perform non-negative matrix factorisation (NMF) and principal component analysis (PCA) of the importance score matrix B. As a point of reference, we also perform an ordination of the raw feature space from “Engineering annotation-level expression from genes” section. Note that all individualised per-sample importance scores were calculated on the withheld test set.

Results and discussion

GINS1 drives luminal sub-type classification in test set

Table 1 shows the performance of the DeepTRIAGE model for luminal sub-type classification according to a single test set. When applying this model to Ensembl gene expression features, we obtain personalised biomarker scores that describe how important each gene is in predicting the cancer sub-type for each sample. The objective of DeepTRIAGE is to improve interpretability, not accuracy. Yet, this method appears to perform marginally better for the given test set.

Table 1.

This table shows the F1-score performance of the DeepTRIAGE attention model for luminal sub-type classification according to a single test set

| Logistic Regression | Linear SVM | DeepTRIAGE | |

|---|---|---|---|

| GO (BP) annotations | 0.87 | 0.89 | 0.90 |

| KEGG annotations | 0.86 | 0.84 | 0.87 |

| Ensembl genes | 0.85 | 0.85 | 0.87 |

We benchmark its performance as compared to a logistic regression and support vector machine (SVM), using both gene and gene set annotation features. From this, we see that our model, which adds a level of interpretability at the individual level, does not sacrifice classification accuracy. The objective of DeepTRIAGE is to improve interpretability, not accuracy. Yet, this method appears to perform marginally better for the given test set

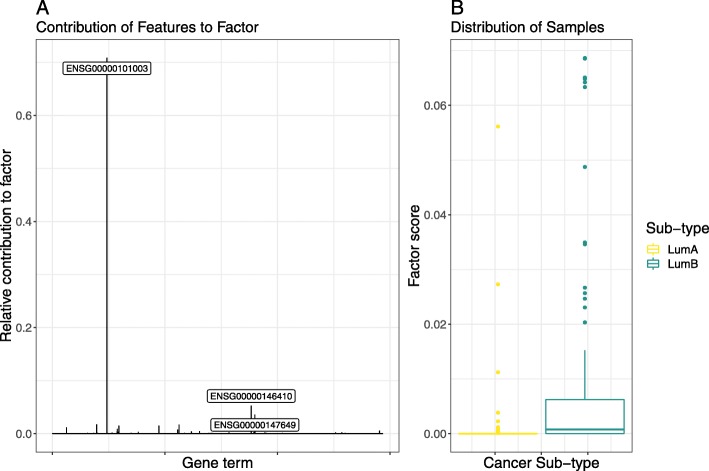

We can interpret the resultant importance score matrix directly using multivariate methods. Figure 2 shows the NMF factor which best discriminates between the breast cancer sub-types. Here, we see that a single gene, GINS1 (ENSG00000101003), contributes most to this factor. This gene has a role in the initiation of DNA replication, and has been associated with worse outcomes for both luminal A and luminal B sub-types [28]. Interestingly, this is not a PAM50 gene, suggesting that our model does not merely re-discover the PAM50 signature. We posit that the model performance, along with this biologically plausible result, validates its use for gene expression data.

Fig. 2.

This figure presents the results of non-negative matrix factorisation applied to the importance score matrix computed from Ensemble gene expression data using DeepTRIAGE. Shown here is the factor which best discriminates between the two breast cancer sub-types. a shows the relative contribution of each gene term to the most discriminative factor, with the top 3 components labelled explicitly. b shows a box plot of the distribution of all samples across the composite factor score. This figure is produced using the test set only

Kinetochore organisation associates with tumour severity within and between luminal sub-types

To reduce the number of features and to facilitate the interpretation of feature importance, we transformed the gene-level expression matrix into an annotation-level expression matrix using the Gene Ontology (GO) annotation set (cf. “Engineering annotation-level expression from genes” section). Table 1 shows that GO annotation features perform as well as gene features for all models. Although annotation features do not improve performance, they do improve the interpretability of the model by representing the data in a way that reflects domain-specific knowledge [29]. By applying DeepTRIAGE to the GO features, we obtain personalised biomarker scores that describe how important each GO term is in predicting the cancer sub-type for each sample.

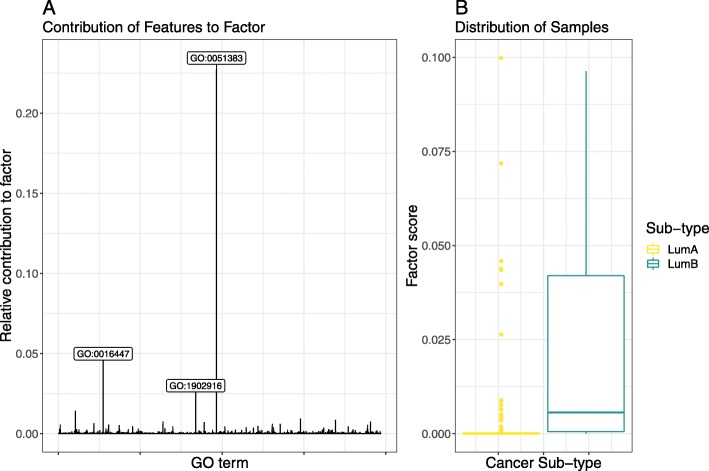

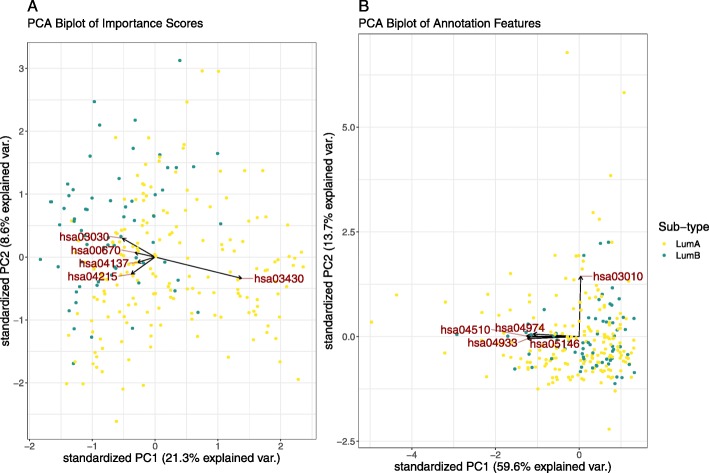

Figure 3 shows the most discriminative NMF factor of the GO-based importance score matrix. The left panel shows the relative contribution of each term to this factor, while the right panel shows the distribution of samples with regard to this factor. From this, we see that a single factor cleanly delineates the luminal A samples from the luminal B samples, and is comprised mostly by the GO:0051383 (kinetochore organisation) gene set. Figure 4 shows a PCA of the same importance score matrix, along with a biplot of the 5 most variable GO terms, offering another perspective into the structure of the importance score matrix.

Fig. 3.

This figure presents the results of non-negative matrix factorisation applied to the GO-based importance score matrix. Shown here is the factor which best discriminates between the two breast cancer sub-types. a shows the relative contribution of each GO term to the most discriminative factor, with the top 3 components labelled explicitly. b shows a box plot of the distribution of all samples across the composite factor score. This figure is produced using the test set only

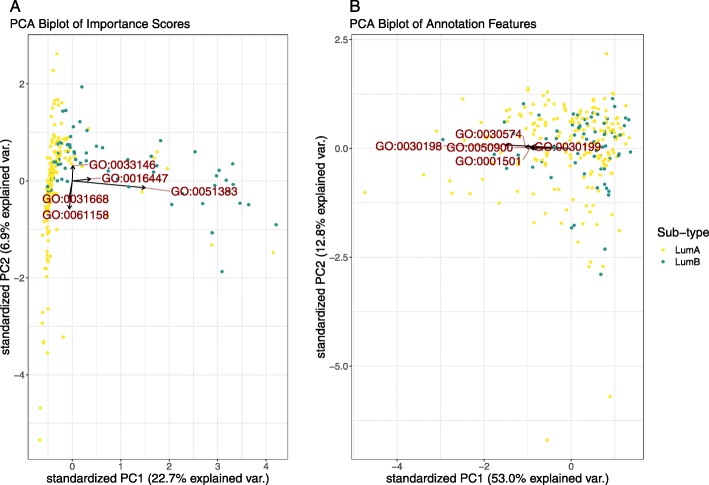

Fig. 4.

This figure shows a PCA biplot of the GO-based importance score matrix (a) and the GO annotation features (b), with the top 5 most variable terms labelled explicitly. For the importance scores, we see that the first principal axis describes much of the variance between the breast cancer sub-types, while the second principal axis describes much of the variance within the luminal A sub-type. By super-imposing the features as arrows, we can see which annotations best describe the origin of this variance. This level of structure is not evident when looking at the PCA biplot of the annotation feature space. This figure is produced using the test set only

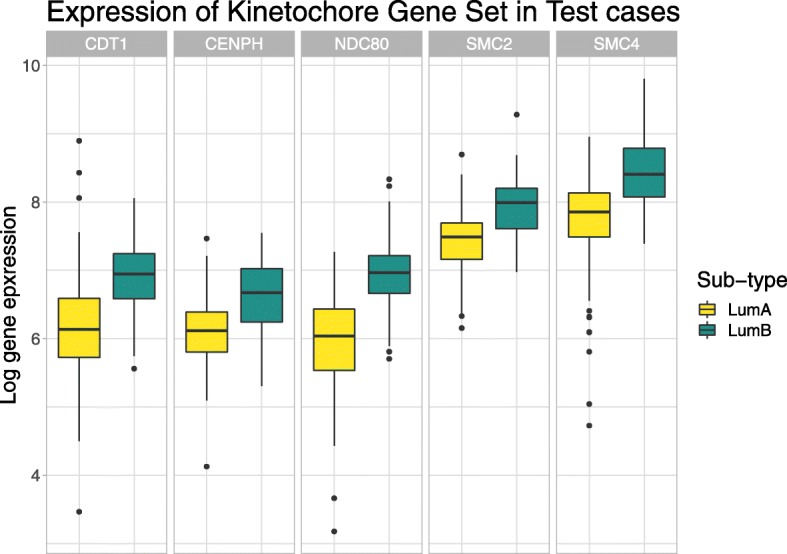

Both visualisations show that the kinetochore organisation gene set can meaningfully discriminate between the luminal A and luminal B cancer sub-types. This gene set contains 5 members: SMC4, NDC80, SMC2, CENPH, and CDT1. Figure 5 shows the expression of these genes in the test data, showing that the prioritised gene set contains genes with significant mean differences between the two sub-types (p-value < 0.01). Interestingly, only one of these (NDC80) is a member of the PAM50 gene set used to define the luminal A and B sub-types. The kinetochore organisation gene set is involved in the assembly and disassembly of the chromosome centromere, an attachment point for spindle microtubules during cell division. The dysregulation of this gene set would be expected to associate with luminal sub-typing because centromere instability drives genomic instability, and luminal B cancers are more unstable than luminal A cancers (as evidenced by Ki-67 staining [30] and tumour severity). Indeed, NDC80 and CENPH dysregulation has already been associated with worse breast cancer outcomes, with luminal A exhibiting less centromere and kinetochore dysregulation in general [31].

Fig. 5.

This figure shows the test set gene expression for 5 genes found within the GO:0051383 (kinetochore) gene set. Here, we see that all 5 genes are up-regulated in luminal B samples. This is relevant because our attention model prioritised this gene set when looking for feature importance within the breast cancer test set

However, the real added value of our attention model is that it projects all samples according to a distribution of importance scores, implicitly revealing and describing heterogeneity within the cancer sub-types. While Fig. 4 shows how GO:0051383 distinguishes between the luminal sub-types, it also shows how GO:0031668 (cellular response to extra-cellular stimulus) and GO:0061158 (3’-UTR-mediated mRNA destabilisation) explain much variance within the luminal A group. These axes are not arbitrary. A linear model predicting each PCA axis as a function of the tumour (T), node (N), and metastasis (M) stage (as nominal factors) among the luminal A samples only, reveals that small values in the first axis (PC1) significantly associate with the lower T stages, while large values significantly associate with the N2 stage (p<0.05). Meanwhile, large values in the second axis (PC2) significantly associate with the T4 stage (p<0.05). This suggests that the luminal A samples which are closest to luminal B samples in the PCA tend to be worse tumours. This is consistent with the literature which describes luminal B cancer as a more severe disease [18], as well as Netanely et al’s observation that luminal cancers exist along a phenotypic continuum of severity [19]. Thus, our method provides a biological explanation for some of the variance associated with the diagnostically-relevant differences in luminal sub-types. This level of resolution is not provided by the other machine learning algorithms used for RNA-Seq data, and is not evident in the ordination of the unattended GO annotation features (see Fig. 4b).

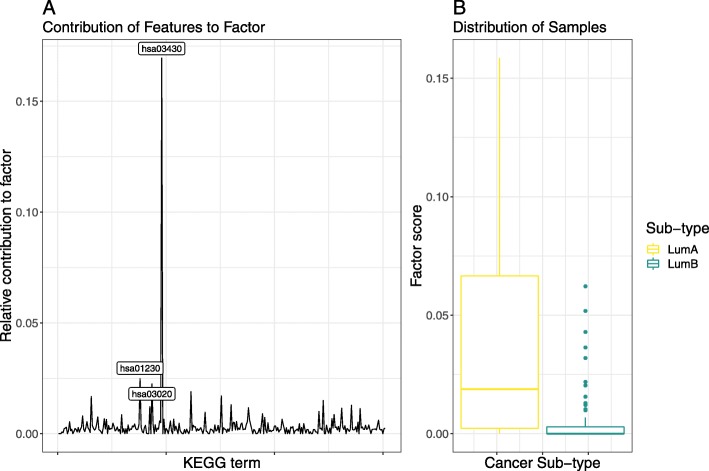

DNA mismatch repair associates with tumour severity within and between luminal sub-types

We repeated the same analysis above using the Kyoto Encyclopedia of Genes and Genomes (KEGG) annotation set which organises genes according to canonical functional pathways (cf. “Engineering annotation-level expression from genes” section). Like with GO annotations, the DeepTRIAGE model performed well with KEGG annotations (see Table 1). By applying DeepTRIAGE to the KEGG features, we obtain personalised biomarker scores that describe how important each KEGG term is for the classification of each patient.

The NMF and PCA ordination of the KEGG-based importance scores both show that hsa03430 (DNA mismatch repair) explains much of the inter-group variability (see Fig. 6 and Fig. 7). This is expected to separate luminal A and B sub-types because errors in the DNA mismatch repair mechanism allow mutations to propagate, resulting in a more aggressive cancer. Yet, the PCA biplot shows that there exists a large amount of intra-class heterogeneity that is not explained by this pathway. Along this axis, we see a contribution by hsa04670 (Leukocyte transendothelial migration) and hsa04215 (Apoptosis), both relevant to tumour progression and metastasis. Again, these axes are not arbitrary. A linear model predicting each PCA axis as a function of the tumour (T), node (N), and metastasis (M) stage (as nominal factors) among the luminal A samples only, reveals that small values in both axes (PC1 and PC2) significantly associate with the T1 stage (p<0.05). This suggests that the heterogeneity uncovered by the DeepTRIAGE architecture places patients along a diagnostically-relevant continuum of tumour severity. Again, this level of resolution is not provided by other machine learning algorithms and is not evident in the ordination of the unattended annotation-level data (see Figure 7b).

Fig. 6.

This figure presents the results of a non-negative matrix factorisation applied to the KEGG-based importance score matrix. Shown here is the factor which best discriminates between the two breast cancer sub-types. a shows the relative contribution of each KEGG term to the most discriminative factor, with the top 3 components labelled explicitly. b shows a box plot of the distribution of all samples across the composite factor score. This figure is produced using the test set only

Fig. 7.

This figure shows a PCA biplot of the KEGG-based importance scores (a) and the KEGG annotation features (b), with the top 5 most variable terms labelled explicitly. For the importance scores, we see that the first principal axis describes much of the variance between the breast cancer sub-types, while the second principal axis describes much of the variance within the luminal A sub-type. By super-imposing the features as arrows, we can see which annotations best describe the origin of this variance. This level of structure is not evident when looking at the PCA biplot of the annotation feature space. This figure is produced using the test set only

Conclusions

Breast cancer is a complex heterogeneous disorder with many distinct molecular sub-types. The luminal breast cancer class, comprised of the luminal A and luminal B intrinsic sub-types, varies in disease severity, prognosis, and treatment response [18], and has been described as existing along a vast phenotypic continuum of severity [19]. Stratifying individual cancerous samples along this severity continuum could inform clinical decision-making and generate new research hypotheses. In this manuscript, we propose the DeepTRIAGE architecture as a general solution to the classification and stratification of biological samples using gene expression data. To the best of our knowledge, this work showcases the first application of the attention mechanism to the classification of high-dimensional gene expression data.

In developing DeepTRIAGE, we also innovate the attention mechanism so that it extends to high-dimensional data where there are many more features than samples. Using DeepTRIAGE, we show that the attention mechanism can not only classify cancer sub-types with good accuracy, but can also provide individualised biomarker scores that reveal and describe the heterogeneity within and between cancer sub-types. While commonly used feature selection methods prioritise features at the population-level during training, our attention mechanism prioritises features at the sample-level during testing. By applying DeepTRIAGE to the gene expression signatures of luminal breast cancer samples, we identify canonical cancer pathways that differentiate between the cancer sub-types and explain the variation within them, and find that some of this intra-class variation associates with tumour severity.

Acknowledgements

Not applicable.

About this supplement

This article has been published as part of BMC Medical Genomics Volume 13 Supplement 3, 2020: Proceedings of the Joint International GIW & ABACBS-2019 Conference: medical genomics (part 2). The full contents of the supplement are available online at https://bmcmedgenomics.biomedcentral.com/articles/supplements/volume-13-supplement-3.

Abbreviations

- GO

Gene ontology

- KEGG

Kyoto encyclopedia of genes and genomes

- LumA

Luminal A sub-type

- LumB

Luminal B sub-type

- NMF

Non-negative matrix factorisation

- PCA

Principal components analysis

- TCGA

The cancer genome atlas

Authors’ contributions

AB developed and applied the architecture. TPQ performed the multivariate analyses and reviewed the cancer literature. AB and TPQ drafted the manuscript. SCL prepared the data and supported key analyses. TT and SV supervised the project. All authors designed the project, revised early drafts, and approved the manuscript.

Funding

Publication costs have been funded by university funds.

Availability of data and material

All models are freely available from https://github.com/adham/BiomarkerAttend. A copy of the importance scores matrices and the follow-up analysis scripts are available from https://zenodo.org/record/3381655.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Adham Beykikhoshk and Thomas P. Quinn contributed equally to this work.

References

- 1.Golub TR, Slonim DK, Tamayo P, Huard C, Gaasenbeek M, Mesirov JP, Coller H, Loh ML, Downing JR, Caligiuri MA, Bloomfield CD, Lander ES. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science (New York, NY) 1999;286(5439):531–7. doi: 10.1126/science.286.5439.531. [DOI] [PubMed] [Google Scholar]

- 2.Bair E, Tibshirani R. Machine Learning Methods Applied to DNA Microarray Data Can Improve the Diagnosis of Cancer. SIGKDD Explor Newsl. 2003;5(2):48–55. doi: 10.1145/980972.980980. [DOI] [Google Scholar]

- 3.Sørlie T, Perou CM, Tibshirani R, Aas T, Geisler S, Johnsen H, Hastie T, Eisen MB, van de Rijn M, Jeffrey SS, Thorsen T, Quist H, Matese JC, Brown PO, Botstein D, Lønning PE, Børresen-Dale A-L. Gene expression patterns of breast carcinomas distinguish tumor subclasses with clinical implications. Proc Nat Acad Sci. 2001;98(19):10869–74. doi: 10.1073/pnas.191367098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Parker JS, Mullins M, Cheang MCU, Leung S, Voduc D, Vickery T, Davies S, Fauron C, He X, Hu Z, Quackenbush JF, Stijleman IJ, Palazzo J, Marron Js, Nobel AB, Mardis E, Nielsen TO, Ellis MJ, Perou CM, Bernard PS. Supervised Risk Predictor of Breast Cancer Based on Intrinsic Subtypes. J Clin Oncol. 2009;27(8):1160–7. doi: 10.1200/JCO.2008.18.1370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Ben-Dor A, Shamir R, Yakhini Z. Clustering Gene Expression Patterns. J Comput Biol. 1999;6(3-4):281–97. doi: 10.1089/106652799318274. [DOI] [PubMed] [Google Scholar]

- 6.Pirooznia M, Yang JY, Yang MQ, Deng Y. A comparative study of different machine learning methods on microarray gene expression data. BMC Genomics. 2008;9(Suppl 1):S13. doi: 10.1186/1471-2164-9-S1-S13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Saeys Y, Inza I, Larrañaga PL. A review of feature selection techniques in bioinformatics. Bioinformatics. 2007;23(19):2507–17. doi: 10.1093/bioinformatics/btm344. [DOI] [PubMed] [Google Scholar]

- 8.Kursa MB. Robustness of Random Forest-based gene selection methods. BMC Bioinformatics. 2014;15(1):8. doi: 10.1186/1471-2105-15-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Vanitha CDA, Devaraj D, Venkatesulu M. Gene Expression Data Classification Using Support Vector Machine and Mutual Information-based Gene Selection. Procedia Comput Sci. 2015;47:13–21. doi: 10.1016/j.procs.2015.03.178. [DOI] [Google Scholar]

- 10.Cai Z, Xu D, Zhang Q, Zhang J, Ngai S-M, Shao J. Classification of lung cancer using ensemble-based feature selection and machine learning methods. Mol BioSystems. 2015;11(3):791–800. doi: 10.1039/C4MB00659C. [DOI] [PubMed] [Google Scholar]

- 11.Mayer IA, Abramson VG, Lehmann BD, Pietenpol JA. New strategies for triple-negative breast cancer–deciphering the heterogeneity. Clin Cancer Res Off J Am Assoc Cancer Res. 2014;20(4):782–90. doi: 10.1158/1078-0432.CCR-13-0583. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Bahdanau D, Cho K, Bengio Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv:1409.0473. 2014.

- 13.Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhudinov R, Zemel R, Bengio Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In: International Conference on Machine Learning: 2015. p. 2048–57.

- 14.Tan J, Hammond JH, Hogan DA, Greene CS. ADAGE-Based Integration of Publicly Available Pseudomonas aeruginosa Gene Expression Data with Denoising Autoencoders Illuminates Microbe-Host Interactions. mSystems. 2016;1:1. doi: 10.1128/mSystems.00025-15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Chen L, Cai C, Chen V, Lu X. Learning a hierarchical representation of the yeast transcriptomic machinery using an autoencoder model. BMC Bioinformatics. 2016;17(1):S9. doi: 10.1186/s12859-015-0852-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tan J, Ung M, Cheng C, Greene CS. Unsupervised feature construction and knowledge extraction from genome-wide assays of breast cancer with denoising autoencoders. Pac Symp Biocomput. 2015;20:132–43. [PMC free article] [PubMed] [Google Scholar]

- 17.Danaee P, Ghaeini R, Hendrix DA. A deep learning approach for cancer detection and relevant gene identification. In: Biocomputing 2017. WORLD SCIENTIFIC: 2016. p. 219–29. [DOI] [PMC free article] [PubMed]

- 18.Dai X, Li T, Bai Z, Yang Y, Liu X, Zhan J, Shi B. Breast cancer intrinsic subtype classification, clinical use and future trends. Am J Cancer Res. 2015;5(10):2929–43. [PMC free article] [PubMed] [Google Scholar]

- 19.Netanely D, Avraham A, Ben-Baruch A, Evron E, Shamir R. Expression and methylation patterns partition luminal-A breast tumors into distinct prognostic subgroups. Breast Cancer Res. 2016;18(1):74. doi: 10.1186/s13058-016-0724-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Weinstein JN, Collisson EA, Mills GB, Shaw KM, Ozenberger BA, Ellrott K, Shmulevich I, Sander C, Stuart JM. The Cancer Genome Atlas Pan-Cancer Analysis Project. Nat Genet. 2013;45(10):1113–20. doi: 10.1038/ng.2764. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Colaprico A, Silva TC, Olsen C, Garofano L, Cava C, Garolini D, Sabedot TS, Malta TM, Pagnotta SM, Castiglioni I, Ceccarelli M, Bontempi G, Noushmehr H. TCGAbiolinks: an R/Bioconductor package for integrative analysis of TCGA data. Nucleic Acids Res. 2016;44(8):e71. doi: 10.1093/nar/gkv1507. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Anders S, Huber W. Differential expression analysis for sequence count data. Genome Biol. 2010;11:R106. doi: 10.1186/gb-2010-11-10-r106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Quinn TP, Lee SC, Venkatesh S, Nguyen T. Improving the classification of neuropsychiatric conditions using gene ontology terms as features. Am J Med Genet B Neuropsychiatr Genet. 2019;180(7):508–18. doi: 10.1002/ajmg.b.32727. [DOI] [PubMed] [Google Scholar]

- 24.Bingham E, Mannila H. Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’01. New York: ACM; 2001. Random Projection in Dimensionality Reduction: Applications to Image and Text Data. [Google Scholar]

- 25.Romero A, Luc Carrier PL, Erraqabi A, Sylvain T, Auvolat A, Dejoie E, Legault M-A, Dubé M-P, Hussin JG, Bengio Y. Diet Networks: Thin Parameters for Fat Genomics. arXiv: 1611.09340.

- 26.Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, N Gomez AN, Kaiser Ł, Polosukhin I. Attention is All you Need In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R, editors. Advances in Neural Information Processing Systems 30. Curran Associates, Inc.: 2017. p. 5998–6008.

- 27.Kingma DP, Adam JB. A Method for Stochastic Optimization. arXiv: 1412.6980. 2014.

- 28.Nieto-Jiménez C, Alcaraz-Sanabria A, Páez R, Pérez-Peña J, Corrales-Sánchez V, Pandiella A, Ocaña A. DNA-damage related genes and clinical outcome in hormone receptor positive breast cancer. Oncotarget. 2017;8(38):62834–41. doi: 10.18632/oncotarget.10886. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Subramanian A, Tamayo P, Mootha VK, Mukherjee S, Ebert BL, Gillette MA, Paulovich A, Pomeroy SL, Golub TR, Lander ES, Mesirov JP. Gene set enrichment analysis: A knowledge-based approach for interpreting genome-wide expression profiles. Proc Nat Acad Sci. 2005;102(43):15545–50. doi: 10.1073/pnas.0506580102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Inic Z, Zegarac M, Inic M, Markovic I, Kozomara Z, Djurisic I, Inic I, Pupic G, Jancic S. Difference between Luminal A and Luminal B Subtypes According to Ki-67, Tumor Size, and Progesterone Receptor Negativity Providing Prognostic Information. Clin Med Insights Oncol. 2014;8:107–11. doi: 10.4137/CMO.S18006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Zhang W, Mao J-H, Zhu W, Jain AK, Liu K, Brown JB, Karpen GH. Centromere and kinetochore gene misexpression predicts cancer patient survival and response to radiotherapy and chemotherapy. Nat Commun. 2016;7:12619. doi: 10.1038/ncomms12619. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All models are freely available from https://github.com/adham/BiomarkerAttend. A copy of the importance scores matrices and the follow-up analysis scripts are available from https://zenodo.org/record/3381655.