Abstract

Introduction: Several measures to assess family planning service quality (FPQ) exist, yet there is limited evidence on their association with contraceptive discontinuation. Using data from the Measurement, Learning & Evaluation (MLE) Project, this study investigates the association between FPQ and discontinuation-while-in-need in five cities in Kenya. Two measures of FPQ are examined – the Method Information Index (MII) and a comprehensive service delivery point (SDP) assessment rooted in the Bruce Framework for FPQ.

Methods: Three models were constructed: two to assess MII reported in household interviews (as an ordinal and binary variable) among 1,033 FP users, and one for facility-level quality domains among 938 FP users who could be linked to a facility type included in the SDP assessment. Cox proportional hazards ratios were estimated where the event of interest was discontinuation-while-in-need. Facility-level FPQ domains were identified using exploratory factor analysis (EFA) using SDP assessment data from 124 facilities.

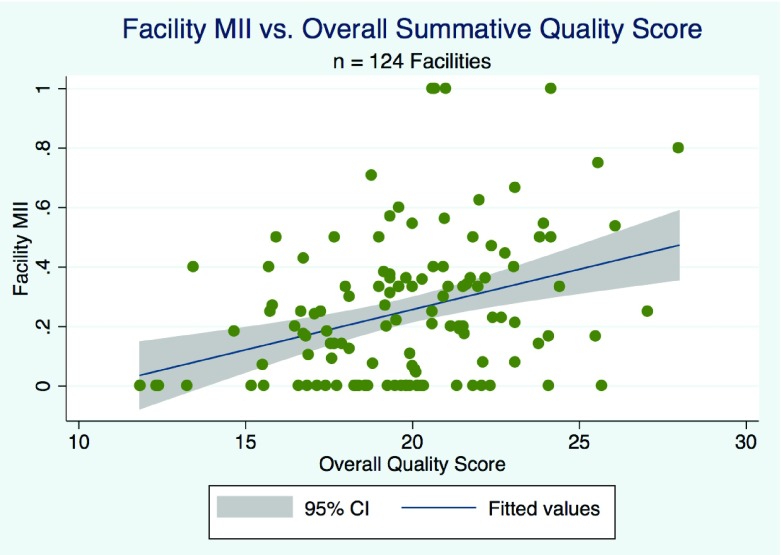

Results: A woman’s likelihood of discontinuation-while-in-need was approximately halved whether she was informed of one aspect of MII (HR: 0.45, p < 0.05) or all three (HR: 0.51, p < 0.01), versus receiving no information, when MII was assessed as an ordinal variable. Six facility-level quality domains were identified in EFA. Higher scores in information exchange; privacy, autonomy & dignity; and technical competence were associated with a reduced risk of discontinuation-while-in-need (p < 0.05). Facility-level MII was correlated with overall facility quality (R = 0.3197, p < 0.05).

Conclusions: The MII has potential as an actionable metric for FPQ monitoring at the health facility level. Furthermore, family planning facilities and programs should emphasize information provision and client-centered approaches to care alongside technical competence in the provision of FP care.

Keywords: Contraceptive discontinuation, Family planning, quality, measurement, Kenya

Introduction

Despite global advances in access to family planning services, 214 million women of reproductive age in developing regions of the world still experience an unmet need for modern contraception 1. Contraceptive discontinuation is understood to be a driver of unmet need for family planning. An analysis of Demographic and Health Survey (DHS) data collected in 34 countries between 2005 and 2010 demonstrated that modern method discontinuation while in need, which occurs when a woman who wishes to avoid pregnancy stops using her modern method of contraception, accounted for over one third of total estimated unmet need 2. There is evidence to suggest that the quality of family planning (FP) services can affect continued contraceptive use 3–6. Contraceptive discontinuation is therefore a key measurable outcome for quality improvement strategies in FP service provision. Identifying which aspects of structural and process quality are correlated with contraceptive method discontinuation can, in turn, provide insights that are actionable for health systems implementers now, before poor-quality service delivery manifests as unmet need.

The assessment of quality of care (QoC) in family planning programs has been largely guided by the Bruce family planning QoC framework for the past several decades. The framework articulates six fundamental domains of quality: choice of methods, information given to clients, technical competence of providers, interpersonal relations, mechanisms for follow-up and having an appropriate constellation of services 7. A number of measurement tools exist which include indicators that seek to capture key elements of FP service quality; yet, questions remain as to the utility of existing tools for performance benchmarking and strategic decision making 8. The broadly endorsed Bruce framework is applied inconsistently among researchers and programs seeking to understand and improve quality of care at the facility level, and limited guidance exists as to how to analyze resulting data once collected. A recent review of quality assessment tools for FP programs in LMICs identified 20 comprehensive tools for the assessment of clinical quality of care 9. Some are well aligned with the Bruce framework. For example, the Quick Investigation of Quality (QIQ) was developed by MEASURE Evaluation in 2000 as a tool that would balance the feasibility of data collection with the reliability of the resulting data. The QIQ indicators align with 5 out of the 6 domains of FP service quality defined in the Bruce Framework: choice of methods, information provision, technical competence, interpersonal relations, and mechanisms for follow-up 10. Meanwhile, large scale facility surveys, such as the Service Availability and Readiness Assessment (SARA) used by WHO for health facility assessment in LMICs, typically limit data collection to facility audits which assess infrastructure and readiness for choice only 11. Indeed, these structural aspects of quality are among the easiest and least expensive to measure.

When data to measure the domains of the Bruce framework are collected inconsistently, programs that wish to summarize and compare findings on FP QoC face an analytical challenge. Some programs have tracked changes over time in certain indicators, while other studies have used various methods to create indices to measure all or some of the elements in the Bruce framework; in either case, indicators may be chosen from existing data collection tools based on the feasibility of data collection, or custom indicators may be developed anew. Where no standardized set of indicators exists, but instruments are designed to capture certain domains of FP QoC, data reduction techniques can be useful to enhance comparability and simplify analyses. For example, the authors of a 2014 DHS Analytical Study to assess the quality of care in FP, antenatal, and sick child services in several countries used principal components analysis (PCA) to create indices corresponding to structure, process, and client satisfaction 12. Elsewhere, factor analysis has been used to reduce indicators into variables representing different domains of quality corresponding to the Bruce framework 13. Exploratory Factor Analysis (EFA) is particularly useful in situations where multiple latent variables are likely to be the source of variation in a set of indicators, as is the case in the measurement of QoC. EFA can help to determine how many latent variables underlie a set of indicators and, like PCA, provides a means of data reduction for exploring the relationship between QoC and outcomes of interest 14.

Another measure used to assess FP service quality is the Method Information Index (MII). The MII is distinct from, but related to, the comprehensive assessment tools derived from the Bruce framework: it aims to capture information similar to what would be measured in the Information Provision domain of the Bruce framework, but measures it from the client perspective. Specifically, the MII assesses FP counseling quality through three questions, asked of women in regard to the family planning visit where they received their contraceptive method: “Were you informed of potential side effects of the method?”, “Were you informed on what to do if you experienced side effects?”, and “Were you told about other methods of family planning apart from your current method?” The MII is one of 18 core indicators tracked by the Family Planning 2020 (FP2020) global partnership, which formed in 2012 following the London Summit on Family Planning to help reduce unmet need in the world’s poorest countries 15. It has also been used in population-based surveys, such as the Performance Monitoring and Accountability 2020 (PMA2020) surveys or the DHS Women’s questionnaire, to report on the quality of FP counseling at the national level. In these surveys, the MII is calculated as the percentage of women who respond ‘yes’ to all three questions 16, 17.

The MII captures information exchange during the counseling session and a woman’s understanding of having received that information, giving it the potential to approximate the overall quality of FP services. The simplicity and versatility of the MII make it an appealing choice for programs and clinics seeking to routinely measure program performance. It can be aggregated and reported at multiple levels, from the clinic to national-level estimates, and it places the client perspective at the center of quality measurement. Furthermore, it is collected and analyzed consistently where tools applying the full Bruce framework are not. However, it captures only one aspect of the Bruce framework (“information given to clients”) and, as with more comprehensive measures, there is limited evidence to show how well MII predicts outcomes of interest, such as contraceptive discontinuation, when measured at the individual level or when applied programmatically.

Recent (2014) Demographic and Health Survey data from Kenya, the site of this study, indicate that more than half (58%) of women are using contraception, up from 45.5% in 2008–09, and that knowledge of modern contraceptive methods among women and men age 15 to 49 is nearly universal; yet, 18% of currently married women have an unmet need for family planning services. Of family planning users, 31% discontinued their method within 12 months, 11% due to side effects and health concerns 18. With regard to MII in Kenya, 60% of current users of modern contraceptive methods in 2014 reported that they were informed about potential side effects of their method, 52% were told what to do if they experienced side effects, and 79% were given information about other methods 18.

The present study is a secondary analysis of data collected in select urban sites of Kenya as part of the Measurement, Learning & Evaluation (MLE) Project, implemented by the Carolina Population Center at UNC Chapel Hill. The MLE Project was the evaluation component of the Urban Reproductive Health Initiative (URHI), which operated in Kenya, Nigeria, India, and Senegal from 2010 to 2015. In Kenya, the URHI project, called Tupange, operated in five urban areas: Nairobi, Mombasa, Kisumu, Machakos, and Kakamega. The data used come from baseline health facility surveys and from household surveys of women that were conducted in 2010 to 2011 (baseline) and again in 2014 to 2015 (endline) in these five urban areas. Two methods for assessing QoC in FP are incorporated into the surveys: the MII, and a modified version of the Quick Investigation of Quality (QIQ). The QIQ has historically used client exit interviews, facility audits, and provider observation to assess a short list of quality of care indicators at the health facility level which align with the 5 domains of the Bruce Framework mentioned previously. Facility readiness is assessed through the facility audit, while the exit interview collects information related to clients’ experience of care at the health facility 10. The MLE survey instruments incorporate provider surveys in place of provider observation to assess technical competence in clinical procedures and counseling skills. The MLE facility audit contains additional items to assess the sixth domain of the Bruce framework: constellation of services 19.

In this study, we leverage the existing data to examine the relationship between FP service quality and modern contraceptive discontinuation-while-in-need using two QoC measures, and explore the feasibility of these measures in the context of quality monitoring at the service delivery point.

Methods

Data sources and survey design

The MLE project was initiated in 2009 ahead of the implementation of the Tupange project in Nairobi, Mombasa, Kisumu, Kakamega, and Machakos. Data collection activities included a household survey and a service delivery point (SDP) assessment. The survey and sampling protocol are described below for the data included in the present analysis.

The baseline household survey was conducted with a representative sample of women in the intervention cities from September to November 2010. The household sample was drawn through a two-stage cluster sampling design in which clusters were identified from the most recent Population and Housing Census (2009) and randomly selected in each urban area; a random sample of 30 households was then selected from each cluster. Across the five intervention cities, a total of 13,140 households were selected for interviews. Women aged 15 to 49 who were residents or household visitors were eligible to be interviewed 20. A follow-up household survey was conducted at endline in 2014/2015, wherein eligible women were tracked using contact information collected at baseline. Household surveys collected information on a variety of topics, including demographic information and household characteristics, current and past FP use and sources of FP. At endline, 5-year reproductive health calendars were also collected 21.

Baseline SDP surveys took place from August 2011 to November 2011. In Nairobi and Mombasa, all public facilities and all URHI/Tupange facilities were selected; private facilities that were identified as sources of FP by women in baseline household surveys were also selected for surveys. In Kisumu, Kakamega and Machakos, all public and private facilities offering sexual and reproductive health services were included. In total, 279 facilities were surveyed. The SDP survey incorporated a health facility audit, health care provider surveys and client exit interviews. Client exit interviews were only conducted in facilities that reported offering reproductive health services routinely. Women aged 15 to 49 were approached for an interview as they exited reproductive health or child health departments. Interviewers aimed to reach 40 women per facility, half family planning clients and half clients of any other reproductive health service 19. Survey tools used are available as Extended data 22.

Inclusion criteria

To assess service quality at the facility level, data are used only from facilities that reported offering FP services, and where provider interviews, client exit interviews, and a facility audit were conducted at baseline in 2011 (n = 124).

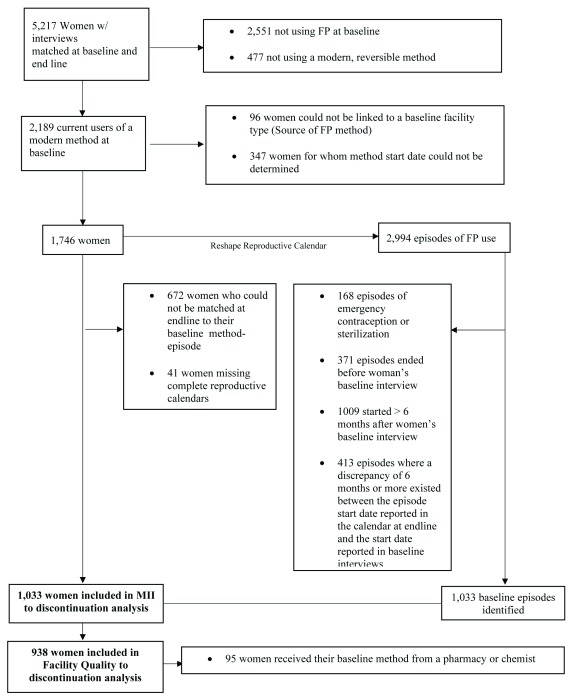

To assess contraceptive discontinuation, individual-level data are used from matched baseline (2010/2011) and endline (2014/2015) household surveys of women. Women who were not using a modern, reversible contraceptive method at baseline were excluded, as were women for whom the method start date could not be determined and women who did not report, or did not know, where they received their method. For the remaining women in the sample, 5-year retrospective reproductive calendars were examined to identify the episode of contraceptive use reported by the women at baseline. Women whose baseline episode could not be identified in the reproductive calendar within 6 months of the method start date reported at baseline were excluded from the analysis. Although pharmacies are recognized as part of the private health sector in Kenya, women who received their method from a pharmacy or chemist (n = 95) were excluded from models assessing the relationship between facility-level measures of quality and discontinuation but included in models assessing the relationship between woman-reported MII and discontinuation. This is because no pharmacies were included in the SDP survey; therefore, facility-level measures of quality as assessed in this study are not reflective of QoC in a pharmacy setting. Figure 1 illustrates how women were selected for inclusion in the present analyses (n = 1,033 and n = 938). Chi-square statistics were calculated to examine demographic differences between current FP users who were included in and excluded from the analyses (Table 1).

Figure 1. Participant Diagram.

Table 1. Demographic characteristics and baseline contraceptive method use of women aged 15 to 49 in urban Kenya included in models assessing the relationship between the Method Information Index (MII) and discontinuation-while-in-need.

Of the 2,189 users of modern, reversible contraceptive methods at baseline, 1,033 were included in the MII to discontinuation model and 1,156 current FP users were excluded. (Note: an additional 95 women were excluded from the facility quality to discontinuation model because they reported receiving their method from a pharmacy or chemist.)

| | Women included in analysis (N = 1,033) | | Current FP users excluded from analysis (N = 1,156)¹ | |
|---|---|---|---|---|
| Demographic Characteristics | N | % | N | % |
| Age | | | | |
| 15 to 24 | 263 | 25.5% | 342 | 29.6% |
| 25 to 34 | 535 | 51.8% | 508 | 43.9% |
| 35 to 49 | 235 | 22.8% | 306 | 26.5% |
| Education | | | | |
| No Education | 13 | 1.3% | 25 | 2.2% |
| Incomplete Primary | 155 | 15.0% | 184 | 15.9% |
| Complete Primary | 327 | 31.7% | 350 | 30.3% |
| Secondary plus | 538 | 52.1% | 597 | 51.6% |
| Wealth² | | | | |
| Quintile 1 | 255 | 24.7% | 275 | 23.8% |
| Quintile 2 | 221 | 21.4% | 254 | 22.0% |
| Quintile 3 | 184 | 17.8% | 229 | 19.8% |
| Quintile 4 | 222 | 21.5% | 251 | 21.7% |
| Quintile 5 | 151 | 14.6% | 147 | 12.7% |
| City | | | | |
| Nairobi | 256 | 24.8% | 342 | 29.6% |
| Mombasa | 109 | 10.6% | 168 | 14.5% |
| Kisumu | 154 | 14.9% | 235 | 20.3% |
| Machakos | 313 | 30.3% | 227 | 19.6% |
| Kakamega | 201 | 19.5% | 184 | 15.9% |
| Marital Status | | | | |
| Never married | 60 | 5.8% | 187 | 16.2% |
| Married or living together | 902 | 87.3% | 856 | 74.1% |
| Other | 71 | 6.9% | 113 | 9.8% |
| Parity | | | | |
| No births | 7 | 0.7% | 47 | 4.1% |
| One birth | 135 | 13.1% | 174 | 15.1% |
| Two births | 358 | 34.7% | 306 | 26.5% |
| Three births | 268 | 25.9% | 291 | 25.2% |
| Four or more births | 265 | 25.7% | 338 | 29.2% |

¹ Current users of family planning (FP) were excluded from analysis if they matched any of the following criteria: 1) start date of baseline method could not be determined; 2) woman could not be linked to facility type as the source of her method at baseline; 3) complete reproductive calendar information was not available for the woman at endline; or 4) the woman’s baseline episode of contraceptive use could not be identified in her reproductive calendar within 6 months of the start date she reported at baseline.

² Wealth quintiles are calculated across the 5 cities.

Statistical analysis

Linking women to facility level measures of quality

To examine the relationship between facility-level measures of quality and discontinuation, facilities were categorized as public hospitals, public facilities, private hospitals and private facilities. Public facilities included government health centers, government dispensaries and other public facilities. Private facilities included private clinics, nursing/maternity homes, faith-based home/health centers, other NGO clinics, and other private facilities. In baseline household interviews, women reported the facility type where they received their method, and those facility types were condensed into 4 categories to align with the SDP analysis. Because women could not be linked to the specific facility where they received their baseline contraceptive method, quality variables were aggregated into categories according to city and facility type, which necessitates the assumption that women attended a facility within the city where they lived. Whereas facility audits distinguished between private hospitals and private clinics, the household survey combined private and faith-based hospitals and clinics into two categories: ‘Private hospital/clinic’ and ‘Faith-based hospital/clinic’. Women who reported receiving their method from one of these categories were linked to measures of quality at private hospitals, except in Kakamega, where no private hospitals were included in the SDP assessment; women there were linked to measures of quality at private facilities.
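The linking rule described above can be illustrated with a minimal Python sketch. It is not the authors' code: the category labels, the function names, and the quality scores in the lookup table are hypothetical, and the sketch assumes that any source other than the two combined private categories has already been condensed to one of the four facility categories.

```python
# Hypothetical summative quality scores keyed by (city, facility category).
# The numbers are made up for illustration only.
QUALITY_BY_CITY_AND_TYPE = {
    ("Nairobi", "private hospital"): 21.0,
    ("Kakamega", "private facility"): 18.5,
}

# Household-survey source categories that combine hospitals and clinics.
COMBINED_PRIVATE_SOURCES = {"Private hospital/clinic", "Faith-based hospital/clinic"}


def link_category(city, reported_source):
    """Map a woman's reported source to the facility category used for linking."""
    if reported_source in COMBINED_PRIVATE_SOURCES:
        # No private hospitals were surveyed in Kakamega, so women there
        # are linked to private facilities instead.
        return "private facility" if city == "Kakamega" else "private hospital"
    # Assumption: other sources are already one of the four categories.
    return reported_source


def linked_quality(city, reported_source, scores=QUALITY_BY_CITY_AND_TYPE):
    """Look up the aggregated quality score for a woman's city and facility category."""
    return scores.get((city, link_category(city, reported_source)))
```

Because linkage is by city and facility category rather than by individual facility, every woman in the same cell receives the same quality values.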

Discontinuation analyses

Three models were constructed to determine the relationship between measures of FP service quality and discontinuation: two to assess MII reported at baseline in household interviews (as an ordinal variable, and as a binary variable), and one for summative domains of quality at the facility level. Women who indicated that they discontinued their method due to side effects, health concerns, method failure, issues related to access or disapproval of their partner and who did not switch to a new modern method were considered to have discontinued while in need. Women who indicated that they wanted to become pregnant, were no longer sexually active, or who switched to a different modern method were censored. Time to discontinuation was measured in months. Observation was right-censored at 36 months for all women. For all discontinuation models, Cox proportional hazards models were used to estimate hazard ratios, where the event of interest was discontinuation while in need. The following variables were screened for inclusion as covariates in the final adjusted models: age, marital status, parity, education, wealth, facility type, and method type (long-term method or short-term method) at baseline. In models to assess the relationship between MII and discontinuation, pharmacies were categorized as ‘Private Facilities’ within the facility type variable. Correlations between potential covariates were examined. All independent variables and covariates were checked to ensure that the proportional hazards assumption was met. The final models of woman-level and facility-level measures of quality related to discontinuation were adjusted for covariates that were significant at p < 0.1 when examined individually in a Cox proportional hazards model. We accounted for intragroup correlation in the facility-level discontinuation analysis by using a shared frailty model 24. Facility-level quality domains were assessed first individually, and then together.
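As an illustration of the event and censoring definitions above, the following Python sketch converts one episode of use into the (time, event) pair that a Cox model expects. The reason labels and function name are hypothetical, and treating an episode that reaches exactly 36 months as censored is an assumption of this sketch rather than a rule stated in the text.

```python
# Reasons that count as discontinuation while in need (labels are illustrative).
IN_NEED_REASONS = {
    "side effects",
    "health concerns",
    "method failure",
    "access",
    "partner disapproval",
}
CENSOR_MONTHS = 36  # administrative right-censoring point


def survival_record(months_used, reason=None, switched_to_modern=False):
    """Return (time_in_months, event) for one baseline episode.

    event = 1 means discontinuation while in need; event = 0 means censored.
    reason=None means the woman was still using the method when last observed.
    """
    if reason is None or months_used >= CENSOR_MONTHS:
        # Still using, or episode reached the 36-month window (assumed cut-off).
        return (min(months_used, CENSOR_MONTHS), 0)
    if reason in IN_NEED_REASONS and not switched_to_modern:
        return (months_used, 1)
    # Wanted pregnancy, no longer sexually active, or switched methods.
    return (months_used, 0)
```

For example, `survival_record(10, "side effects")` yields an event at 10 months, whereas the same episode with `switched_to_modern=True` is censored at 10 months.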

Independent variables

Calculation of Woman MII. Each woman was assigned an MII score of 0 to 3 by summing her responses to the three MII questions (0 for no, 1 for yes), asked during baseline household interviews in reference to her current method: “Were you told by a health or family planning worker about side effects or problems you might have using this family planning method?”, “Were you told by a health or family planning worker about what to do if you experienced side effects or problems with this method?”, “Were you told by a health or family planning worker about other methods of family planning (beside the one you are currently using)?” Woman MII was examined in discontinuation analyses as an ordinal variable (0 to 3) and as a binary variable (3 vs. less than 3).
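The scoring rule can be written out as a short Python sketch; the function and argument names are illustrative and do not come from the survey instruments.

```python
def mii_score(told_side_effects, told_what_to_do, told_other_methods):
    """Ordinal MII: number of 'yes' answers (0 to 3) to the three questions."""
    answers = (told_side_effects, told_what_to_do, told_other_methods)
    return sum(1 for answer in answers if answer)


def mii_binary(score):
    """Binary MII: 1 if all three questions were answered 'yes', else 0."""
    return 1 if score == 3 else 0
```

A woman who was told about side effects and about other methods, but not what to do about side effects, would score 2 on the ordinal variable and 0 on the binary variable.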

Facility quality variables. Exploratory factor analysis (EFA) was used to identify domains of family planning service quality at the facility level. To incorporate data from provider interviews, a single representative provider survey was chosen for each facility based on highest cadre and most years worked at the facility. Client exit interview variables were incorporated into the EFA as continuous variables (0 to 1) corresponding to the proportion of clients at the facility who indicated an affirmative response to each question. Efforts were made to reduce the number of variables entered into the EFA model in order to improve the subject-to-item ratio (N:p) 25. Variables were combined into composite variables aligned with the list of 25 QIQ indicators where feasible, and converted to binary variables or standardized to continuous variables (0 to 1) 10. Variables pertaining to basic infrastructure (water, electricity and toilet facilities) were combined into a binary variable and assigned a value of 1 if the facility had all three characteristics. To generate a standardized method-mix score, facilities received one point each for having available: one permanent method, one long-acting reversible contraception (LARC) method (implant or intrauterine device (IUD)), one short-term hormonal method (pill, emergency contraception (EC), injectable), and one barrier method. The number of family planning methods offered was summed (range: 0 to 12) for each facility, and then standardized to 0 to 1. Checklist items not included in the list of 25 QIQ indicators, such as those pertaining to infection prevention equipment, were standardized to a continuous variable (0 to 1) according to the proportion of items achieved within the checklist. Other facility audit variables were coded as binary variables. The final N:p ratio was 124:38, or 3.3:1.
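A minimal Python sketch of the method-mix scoring follows. The permanent and barrier method names are assumptions (the text names only the LARC and short-term hormonal examples), and dividing by the number of categories and by 12 is one plausible reading of "standardized to 0 to 1", not a confirmed detail of the analysis.

```python
# Method categories; permanent and barrier method names are assumed for illustration.
METHOD_CATEGORIES = {
    "permanent": {"female sterilization", "male sterilization"},
    "larc": {"implant", "iud"},
    "short_term_hormonal": {"pill", "emergency contraception", "injectable"},
    "barrier": {"male condom", "female condom"},
}


def method_mix_score(available_methods):
    """One point per category with at least one method available, scaled to 0-1."""
    available = set(available_methods)
    points = sum(1 for methods in METHOD_CATEGORIES.values() if available & methods)
    return points / len(METHOD_CATEGORIES)


def standardized_method_count(n_offered, max_methods=12):
    """Number of FP methods offered (0 to 12), rescaled to 0-1."""
    return n_offered / max_methods


# Subject-to-item ratio reported in the text: 124 facilities, 38 variables.
n_to_p = round(124 / 38, 1)
```

A facility stocking only implants and pills would cover two of the four categories and so receive a method-mix score of 0.5 under these assumptions.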

The EFA was an iterative process. All variables were entered into the factor analysis model using the principal factors method. The number of factors was determined based on the resulting scree plot, and by restricting the analysis to factors with eigenvalues > 1 25. An orthogonal varimax factor rotation was applied in order to produce uncorrelated factors. Factor analysis was repeated until all variables loading with a uniqueness > 0.8 were excluded, and those remaining loaded on to at least one factor at 0.3 or higher. Variables were assigned to the factor where they loaded the highest. Cronbach’s alpha was examined for each factor to understand the internal consistency of the items contained within 14. Summative quality domain variables were generated for each facility by adding up the values of the variables that loaded into each factor.
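For readers less familiar with the internal-consistency check, the sketch below computes Cronbach's alpha and a summative domain score in plain Python. It is a generic textbook implementation using sample variances, with hypothetical function names; it is not taken from the study's analysis code, which may have been run in a statistical package.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns.

    items: list of k lists, each holding one variable's values across facilities.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of row totals)
    """
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


def summative_domain_score(facility_values):
    """Summative domain score: sum of a facility's values on the domain's variables."""
    return sum(facility_values)
```

Two items that move in perfect lockstep across facilities give an alpha of 1, while unrelated items push alpha toward 0.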

Facility level quality to MII analysis

To better understand the relationship between these two measures of FP service quality that could be collected at the facility level, a facility-level measure of MII was calculated for each of the 124 facilities included in the quality analysis. Facility MII was calculated as the percentage (%) of FP clients who responded ‘yes’ to all three MII questions in exit interviews conducted during the baseline SDP survey. Spearman correlations were run between facility-level MII and each summative quality domain among the sample of facilities included in the analysis. Additionally, a scatter plot fitted with a regression line and 95% CI was examined for facility-level MII vs. overall quality scores.
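The two calculations described here can be sketched in plain Python as follows. The function names and data layout are illustrative assumptions; the Spearman coefficient is computed as the Pearson correlation of average ranks, which is the standard definition when ties are present.

```python
def facility_mii(client_responses):
    """Percent of FP exit-interview clients answering 'yes' to all three MII questions.

    client_responses: list of (q1, q2, q3) booleans, one tuple per client.
    """
    all_yes = sum(1 for answers in client_responses if all(answers))
    return 100.0 * all_yes / len(client_responses)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation between two equal-length sequences."""
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    spread_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    return covariance / (spread_x * spread_y)
```

In use, `spearman` would be called once per quality domain, pairing each facility's MII percentage with its summative score on that domain.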

Results

Analytical sample

A total of 124 facilities were included in the present analysis. Table 2 describes the distribution and characteristics of those facilities. Approximately 53% were public hospitals or other public facilities; 47% were private hospitals or other private facilities. The largest share of facilities, approximately 35%, was located in Nairobi. Of 5,217 women with matched baseline and endline interviews, 1,033 women were ultimately included in the MII to discontinuation analysis and 938 were included in the facility quality to discontinuation analysis. The resulting analytical samples consisted of 1,033 and 938 baseline episodes of contraceptive use (one per woman), respectively. Table 1 describes the demographic characteristics of current FP users (n = 2,189) who were included in (n = 1,033) and excluded from (n = 1,156) the MII to discontinuation analysis. There were no significant differences in age (p = 0.82), education (p = 0.49), wealth (p = 0.45), or parity (p = 0.59) between current FP users who were included in and excluded from the analyses; however, current FP users who were excluded from the analysis were more likely to have never married (p < 0.05). The salient features of the episode of contraceptive method use at baseline among women included in the analyses can be found in Table 3. Most women received their method from a public hospital (44.9% and 49.5%, respectively) or private hospital (23.3% and 25.7%, respectively, per Table 3). The majority of women used injectables (59.2% and 62.5%, respectively) or oral contraceptives (19.0% and 14.7%, respectively) as their contraceptive method. Within 3 years, 20.9% and 21.1% of baseline FP users in the two analytical samples, respectively, discontinued use of their method; 5.2% and 4.9% switched to a different method; and 73.9% and 74.0% continued use of their method throughout the 3-year window.

Table 2. Distribution and facility type of 124 high-volume facilities included in the quality analysis.

| City | Nairobi | | Mombasa | | Kisumu | | Machakos | | Kakamega | | Total | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Facility Type | n | % | n | % | n | % | n | % | n | % | n | % |
| Public Hospital | 4 | 3.2 | 3 | 2.4 | 2 | 1.6 | 1 | 0.8 | 1 | 0.8 | 11 | 8.9 |
| Other Public Facility | 27 | 21.8 | 11 | 8.9 | 12 | 9.7 | 2 | 1.6 | 3 | 2.4 | 55 | 44.4 |
| Private Hospital | 1 | 0.8 | 2 | 1.6 | 4 | 3.2 | 1 | 0.8 | 0 | 0.0 | 8 | 6.5 |
| Other Private Facility | 11 | 8.9 | 9 | 7.3 | 12 | 9.7 | 11 | 8.9 | 7 | 5.7 | 50 | 40.3 |
| Total | 43 | 34.7 | 25 | 20.2 | 30 | 24.2 | 15 | 12.1 | 11 | 8.9 | 124 | 100.0 |

Table 3. Characteristics of baseline contraceptive use for women included in models assessing the relationship between method discontinuation and the Method Information Index (MII) or Facility Quality, respectively.

| | MII to Discontinuation Models (N = 1,033) | | Facility Quality to Discontinuation Models (N = 938) | |
|---|---|---|---|---|
| Baseline Contraceptive Use | n | % | n | % |
| Method | | | | |
| Implant | 120 | 11.6% | 119 | 12.7% |
| IUD | 73 | 7.1% | 70 | 7.5% |
| Injectable | 611 | 59.2% | 586 | 62.5% |
| Oral Contraceptive | 196 | 19.0% | 138 | 14.7% |
| Condoms | 33 | 3.2% | 25 | 2.7% |
| Episode Type (3YR)¹ | | | | |
| Discontinuer | 216 | 20.9% | 198 | 21.1% |
| Switcher | 54 | 5.2% | 46 | 4.9% |
| Continuer | 763 | 73.9% | 684 | 74.0% |
| Source of FP Method | | | | |
| Public Hospital | 464 | 44.9% | 464 | 49.5% |
| Other Public Facility | 191 | 18.5% | 191 | 20.4% |
| Private Hospital | 241 | 23.3% | 241 | 25.7% |
| Other Private Facility | 137 | 13.7% | 42 | 4.5% |
| MII Score | | | | |
| 0 | 162 | 15.7% | 140 | 14.9% |
| 1 | 217 | 21.0% | 184 | 19.6% |
| 2 | 81 | 7.9% | 77 | 8.2% |
| 3 | 573 | 55.5% | 537 | 57.3% |
| MII by Question | | | | |
| Informed of side effects | 669 | 64.8% | 627 | 66.9% |
| Told how to resolve problems | 611 | 59.2% | 572 | 62.0% |
| Informed of other methods | 818 | 79.2% | 750 | 80.0% |

¹ Classification of the baseline episode at 3 years. Discontinuers are women who discontinued their method within 3 years for any reason without switching to a new method, including discontinuation while in need, discontinuation while not in need, and method failure.

IUD – intrauterine device, FP – Family planning

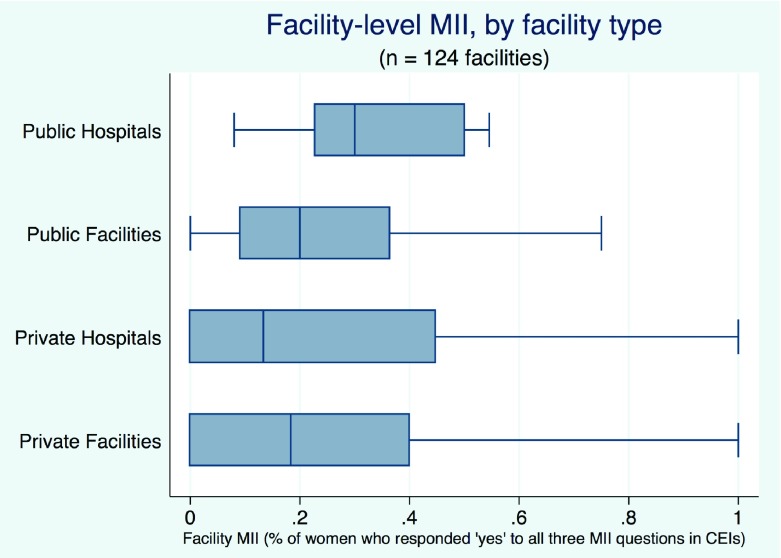

The distribution of women’s MII scores is presented at the bottom of Table 3. In baseline household interviews, 15.7% of the 1,033 women included in the MII to discontinuation analysis reported never having been informed of any of the three elements of the MII in regard to their current method. More than half of the sample (55.5%) answered ‘Yes’ to all three questions. There were no significant differences in the distribution of MII scores (p = 0.26) or the frequency with which women answered yes to all three MII questions (p = 0.33) between the full analytical sample and the reduced sample used in the facility quality to discontinuation analysis. Figure 2 presents overall facility-level MII, by facility type, for the 124 facilities included in analysis.

Figure 2. Distribution of facility-level Method Information Index (MII) scores, by facility type.

Quality analysis

The following six domains of quality were identified in EFA of 38 facility-level variables within the sample of 124 facilities: client satisfaction; readiness for choice and management support; infrastructure and equipment; privacy, autonomy, and dignity; information exchange; and technical competence (Table 4). The actual range and distribution of summative facility quality scores across the 124 facilities included in the analysis are described in Table 5. Overall quality scores ranged from 11.8 to 27.9 (possible range 0 to 30), with an average overall score of 19.6.

Table 4. Results of exploratory factor analysis (EFA) of 38 facility-level quality variables from 124 high-volume facilities.

| Domain (α) | Variable | Factor Loading |
|---|---|---|
| Client Satisfaction (0.79) | Proportion of clients who felt their wait time was at least reasonable | 0.3001 |
| | Proportion of clients who felt they were treated very well by other staff at facility | 0.7048 |
| | Proportion of clients who felt they were treated very well by the providers at the facility | 0.9318 |
| | Proportion of clients for whom the provider demonstrated good counseling skills (composite) | 0.8251 |
| Readiness for Choice & Management Support (0.60) | External supervisory visits occur at least quarterly, or at least 4 times in the past year | 0.2324 |
| | Written guidelines/protocols for family planning (FP) services in place | 0.6144 |
| | Written guidelines/protocols for integration of FP and HIV services in place | 0.4794 |
| | Written guidelines or tools to screen patients for pregnancy in place | 0.4593 |
| | Audits or reports are conducted at least quarterly | 0.3722 |
| | Standardized Method Mix (Available) Score | 0.4223 |
| | Total # FP Methods Offered | 0.7050 |
| Infrastructure & Equipment (0.68) | Facility has all equipment and supplies necessary to deliver methods offered | 0.5542 |
| | Private examination room | 0.5507 |
| | Facility infection prevention equipment (out of those surveyed) | 0.6936 |
| | Facility has basic infrastructure (electricity, running water, toilet facilities) | 0.3388 |
| Privacy, Autonomy & Dignity (0.55) | Proportion of clients who felt that they had visual privacy | 0.5243 |
| | Proportion of clients who felt that they had audio privacy | 0.6869 |
| | Proportion of clients who felt comfortable asking questions | 0.6177 |
| Information Exchange (0.63) | Proportion of clients who felt the information they received during their visit was at least enough | 0.3609 |
| | Proportion of clients who discussed side effects with provider at facility | 0.6406 |
| | Proportion of clients who discussed what to do when they have problems with the provider at the facility | 0.5891 |
| Technical Competence (0.65) | Provider provides information about different methods | 0.2806 |
| | Provider has ever received any in-service training on providing methods of family planning? | 0.3021 |
| | Provider keeps personal/financial records - a client record card/form | 0.3658 |
| | Provider helps a client select a suitable method | 0.3919 |
| | Provider explains the way to use the selected method | 0.5465 |
| | Provider explains the side effects of the selected method to clients | 0.3862 |
| | Provider explains specific medical reasons to return for follow up | 0.2859 |
| | Provider the client's family planning preferences | 0.3413 |
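The α reported beside each domain in Table 4 is assumed here to be Cronbach's alpha, the usual internal-consistency statistic; this section does not state that explicitly. Under that assumption, a minimal sketch of the calculation is shown below. The item scores are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items, each a list with one score per facility.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of observations")
    # Total score per facility is the sum across items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance(totals)  # must be non-zero
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Invented scores for three items measured at five facilities.
toy_items = [
    [0.2, 0.5, 0.7, 0.9, 0.4],
    [0.3, 0.4, 0.8, 0.9, 0.5],
    [0.1, 0.6, 0.6, 0.8, 0.3],
]
toy_alpha = cronbach_alpha(toy_items)
```

Items that move together inflate the variance of the total score relative to the sum of item variances, which pushes alpha toward 1.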

Table 5. Range and distribution of summative facility quality domain scores, summarized by city and by facility type.

| Category | n | Client Satisfaction: range | Client Satisfaction: mean | Client Satisfaction: sd | Readiness for Choice & Management Support: range | Readiness for Choice & Management Support: mean | Readiness for Choice & Management Support: sd | Infrastructure & Equipment: range | Infrastructure & Equipment: mean | Infrastructure & Equipment: sd | Privacy, Autonomy & Dignity: range | Privacy, Autonomy & Dignity: mean | Privacy, Autonomy & Dignity: sd | Information Provision: range | Information Provision: mean | Information Provision: sd | Technical Competence: range | Technical Competence: mean | Technical Competence: sd | Overall Quality Score: range | Overall Quality Score: mean | Overall Quality Score: sd |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All | 124 | 0 - 4 | 1.6 | 0.8 | 1 - 7 | 4.6 | 1.6 | 0.5 - 4 | 2.9 | 1 | 1 - 3 | 2.9 | 0.4 | 0.5 - 4 | 2.7 | 0.7 | 0 - 8 | 5 | 1.8 | 11.8 - 27.9 | 19.6 | 3.1 |
| City | | | | | | | | | | | | | | | | | | | | | | |
| Nairobi | 43 | 0.7 - 3.3 | 1.3 | 0.5 | 2.3 - 7 | 5.2 | 1.2 | 1.2 - 4 | 3.0 | 0.9 | 2 - 3 | 2.77 | 0.3 | 1.9 - 3.8 | 2.9 | 0.5 | 0 - 8 | 5.0 | 1.8 | 14.6 - 27.0 | 20.6 | 2.5 |
| Mombasa | 25 | 0.5 - 3.5 | 1.9 | 0.7 | 1.6 - 6.9 | 4.9 | 1.5 | 0.7 - 4 | 2.7 | 1.0 | 2.2 - 3 | 2.79 | 0.3 | 1.7 - 3.5 | 2.4 | 0.5 | 1 - 8 | 4.3 | 1.8 | 12.3 - 24.1 | 18.9 | 2.8 |
| Kisumu | 30 | 0 - 3.5 | 1.4 | 0.8 | 1 - 6.8 | 4.2 | 1.6 | 0.5 - 4 | 2.6 | 1.2 | 1 - 3 | 2.58 | 0.5 | 0.5 - 4 | 2.6 | 0.9 | 0 - 8 | 5.4 | 2.0 | 11.8 - 27.9 | 18.9 | 3.9 |
| Machakos | 15 | 0.8 - 4 | 2.2 | 0.9 | 1 - 6.8 | 3.3 | 1.9 | 2 - 4 | 3.1 | 0.8 | 2.6 - 3 | 2.94 | 0.1 | 1.5 - 4 | 2.6 | 0.7 | 3 - 8 | 5.5 | 1.4 | 15.2 - 25.7 | 19.5 | 2.6 |
| Kakamega | 11 | 0.9 - 4 | 2.3 | 1.0 | 3.2 - 6.2 | 4.6 | 0.9 | 1.2 - 4 | 3.2 | 1.1 | 1.7 - 3 | 2.74 | 0.4 | 1.6 - 4 | 2.9 | 0.8 | 4 - 7 | 5.1 | 1.0 | 15.5 - 24.4 | 20.9 | 3.0 |
| Facility Type | | | | | | | | | | | | | | | | | | | | | | |
| Public Hospitals | 11 | 0.7 - 2.3 | 1.5 | 0.5 | 4.4 - 7 | 6 | 0.8 | 2.6 - 4 | 3.6 | 0.6 | 2.2 - 3 | 2.7 | 0.3 | 1.9 - 3.3 | 2.8 | 0.5 | 0 - 8 | 5.9 | 2.3 | 17.6 - 25.6 | 22.7 | 2.6 |
| Public Facilities | 55 | 0 - 3.5 | 1.5 | 0.6 | 1.7 - 6.6 | 4.7 | 1.4 | 0.5 - 4 | 2.5 | 1.0 | 1 - 3 | 2.7 | 0.4 | 0.5 - 3.8 | 2.5 | 0.6 | 1 - 8 | 4.9 | 1.8 | 11.8 - 25.6 | 18.7 | 2.7 |
| Private Hospitals | 8 | 0.8 - 2.7 | 1.5 | 0.7 | 3.2 - 6.5 | 5.4 | 1.1 | 2.8 - 4 | 3.7 | 0.6 | 2.4 - 3 | 2.9 | 0.2 | 2 - 4 | 2.9 | 0.8 | 3 - 8 | 4.6 | 1.7 | 17.2 - 25.7 | 21.0 | 2.4 |
| Private Facilities | 50 | 0.5 - 4 | 2.0 | 0.9 | 1 - 7 | 4.2 | 1.7 | 0.9 - 4 | 3.1 | 1.0 | 1.5 - 3 | 2.8 | 0.3 | 0.5 - 4 | 2.9 | 0.8 | 0 - 8 | 5.1 | 1.6 | 11.8 - 27.9 | 20.1 | 3.2 |

Note: Summative quality domain scores are summarized here for descriptive purposes by city and by facility type; however, scores were applied to women in the discontinuation analysis according to city-facility type categories (e.g., public hospitals in Nairobi).
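The linkage described in the note above can be sketched as a lookup keyed on city and facility type. This is an illustrative sketch only: the field names and values are hypothetical, and the use of a simple mean within each city-facility type category is an assumption rather than a confirmed detail of the authors' procedure.

```python
from collections import defaultdict
from statistics import mean


def category_means(facilities):
    """Average a facility-level score within each (city, facility type) category."""
    grouped = defaultdict(list)
    for facility in facilities:
        grouped[(facility["city"], facility["facility_type"])].append(facility["score"])
    return {key: mean(scores) for key, scores in grouped.items()}


def link_women(women, means):
    """Attach the category-level score to each woman; drop women with no matching category."""
    linked = []
    for woman in women:
        key = (woman["city"], woman["facility_type"])
        if key in means:
            linked.append({**woman, "facility_score": means[key]})
    return linked


# Hypothetical facilities and respondents for illustration only.
facilities = [
    {"city": "Nairobi", "facility_type": "Public Hospital", "score": 22.0},
    {"city": "Nairobi", "facility_type": "Public Hospital", "score": 24.0},
    {"city": "Kisumu", "facility_type": "Private Facility", "score": 18.0},
]
women = [
    {"id": 1, "city": "Nairobi", "facility_type": "Public Hospital"},
    {"id": 2, "city": "Mombasa", "facility_type": "Private Hospital"},
]
means = category_means(facilities)
linked = link_women(women, means)
```

Women whose city and facility type have no assessed facilities cannot be linked, which mirrors the way the facility-quality sample is smaller than the full analytical sample.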

Facility level MII to facility level quality

At the facility level, MII, measured as the percentage of women who responded ‘yes’ to all three MII questions in client exit interviews, was significantly correlated with the domains of infrastructure & equipment (R = 0.2354, p < 0.05) and information provision (R = 0.6818, p < 0.05) (Table 6). Facility-level MII was also correlated with overall summative quality scores (R = 0.3197, p < 0.05), and the positive association was examined in a two-way plot fitted with a regression line and 95% CI (Figure 3).

Table 6. Correlation between facility-level Method Information Index (MII) scores and domains of family planning quality derived in exploratory factor analysis (EFA) (n = 124 facilities).

| Domain | Spearman’s rho | p |

|---|---|---|

| Client Satisfaction | 0.067 | 0.457 |

| Readiness for Choice & Management Support | 0.127 | 0.159 |

| Infrastructure & Equipment | 0.235 | 0.009 |

| Privacy, Autonomy & Dignity | -0.071 | 0.431 |

| Information Provision | 0.682 | < 0.001 |

| Technical Competence | 0.016 | 0.863 |

| Overall Quality | 0.320 | 0.003 |

Figure 3. A scatter plot fitted with a regression line and 95% CI for facility-level Method Information Index (MII) scores vs. overall summative quality scores at 124 high-volume facilities.
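Table 6 reports Spearman's rho, which is the Pearson correlation of the ranked values. The following standard-library sketch shows that calculation on invented data. It is not the authors' code, and the toy values are not study data.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented facility-level MII shares and overall quality scores.
toy_mii = [0.2, 0.5, 0.9, 0.4]
toy_quality = [15.0, 20.0, 27.0, 18.0]
toy_rho = spearman_rho(toy_mii, toy_quality)
```

Because only ranks enter the calculation, the statistic captures monotonic association and is not affected by the different scales of the MII share and the summative quality score.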

Discontinuation analysis

Among the 216 women who stopped using their method within the 3-year period, 210 discontinued while in need (20.3% of the 1,033 women included in the MII-to-discontinuation analysis). The results of the discontinuation analysis are presented as crude and adjusted hazard ratios in Table 7 and Table 8. All adjusted models control for age, method type, and facility type.

Table 7. Unadjusted and adjusted hazard ratios for 3-year discontinuation of modern contraception while-in-need, by Method Information Index (MII) score reported by woman at baseline; two models presented for binary and ordinal variables (n = 1,033 women).

| Variable | Unadjusted HR | Unadjusted p | Unadjusted 95% CI | Adjusted¹ HR | Adjusted¹ p | Adjusted¹ 95% CI |
|---|---|---|---|---|---|---|
| Method Information Index (Binary) | | | | | | |
| Answered ‘No’ to at least one question (ref) | | | | | | |
| Answered ‘Yes’ to all 3 questions | 0.76 | 0.180 | [0.50, 1.14] | 0.79 | 0.257 | [0.52, 1.19] |
| Method Information Index (Ordinal) | | | | | | |
| MII - 0 (Answered ‘No’ to all 3) (ref) | | | | | | |
| MII - 1 | 0.45 | 0.012 | [0.24, 0.84] | 0.45 | 0.014 | [0.24, 0.85] |
| MII - 2 | 0.45 | 0.075 | [0.19, 1.09] | 0.51 | 0.142 | [0.21, 1.25] |
| MII - 3 | 0.48 | 0.003 | [0.23, 0.78] | 0.51 | 0.009 | [0.31, 0.84] |

¹ Adjusted for woman’s age at baseline, facility type, and method type (long-term vs. short-term method)

Table 8. Unadjusted and adjusted hazard ratios for 3-year discontinuation-while-in-need of modern contraception by facility-level measures of quality (n = 938 women).

| Variable | Unadjusted¹ HR | Unadjusted¹ p | Unadjusted¹ 95% CI | Adjusted¹,² HR | Adjusted¹,² p | Adjusted¹,² 95% CI |
|---|---|---|---|---|---|---|
| Privacy, Autonomy & Dignity | 0.07 | <0.001 | [0.03, 0.14] | 0.12 | <0.001 | [0.07, 0.19] |
| Technical Competence | 0.81 | 0.006 | [0.69, 0.94] | 0.64 | <0.001 | [0.59, 0.68] |
| Client Satisfaction | 2.80 | <0.001 | [1.59, 4.91] | 4.71 | <0.001 | [3.68, 6.04] |
| Information Provision | 1.05 | 0.874 | [0.56, 1.99] | 0.66 | 0.016 | [0.46, 0.93] |
| Infrastructure & Equipment | 0.89 | 0.592 | [0.57, 1.38] | 0.98 | 0.928 | [0.76, 1.28] |
| Readiness for Choice & Management Support | 1.44 | 0.001 | [1.15, 1.80] | 1.39 | 0.056 | [0.99, 1.94] |

¹ Intragroup correlation was accounted for using a shared frailty model

² Adjusted for woman’s age at baseline, facility type, and method type (long-term vs. short-term method)

Women who responded ‘yes’ to all three MII questions about their current contraceptive method in baseline household interviews were no less likely to discontinue their method while in need than women who responded ‘yes’ to fewer than three (p > 0.05). However, when MII was examined as an ordinal variable in the discontinuation analysis, a woman’s likelihood of discontinuation while in need was reduced by approximately 50% whether she reported being informed of just one aspect of MII (HR: 0.45, p < 0.05) or of all three (HR: 0.51, p < 0.01), compared with women who received no information (Table 7). Facility-level measures of quality derived through EFA were also significantly associated with discontinuation (Table 8). Higher scores in the domains of privacy, autonomy & dignity (p < 0.01), technical competence (p < 0.01), and information exchange (p < 0.05) were associated with a reduced risk of discontinuation while in need, whereas higher scores in client satisfaction were associated with an increased risk of discontinuation (p < 0.01).
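The "approximately 50%" reading of the hazard ratios above follows from the identity percent change in hazard = (HR − 1) × 100. The sketch below applies it to the adjusted hazard ratios reported in Tables 7 and 8. The helper name is hypothetical, and the result describes a change in the instantaneous hazard, not in cumulative risk.

```python
def percent_change_in_hazard(hazard_ratio):
    """Convert a hazard ratio to a percent change in hazard relative to the reference group."""
    if hazard_ratio <= 0:
        raise ValueError("a hazard ratio must be positive")
    return (hazard_ratio - 1.0) * 100.0


# Adjusted hazard ratios copied from Table 7 (MII) and Table 8 (client satisfaction).
adjusted_hrs = {
    "MII - 1 vs. MII - 0": 0.45,
    "MII - 3 vs. MII - 0": 0.51,
    "Client Satisfaction": 4.71,
}

for label, hr in adjusted_hrs.items():
    # Negative values are reductions in hazard; positive values are increases.
    print(f"{label}: HR {hr:.2f} -> {percent_change_in_hazard(hr):+.0f}% hazard")
```

An HR of 0.45 therefore corresponds to a 55% lower hazard and an HR of 0.51 to a 49% lower hazard, which is why both are summarized as roughly halving the likelihood of discontinuation.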

Discussion

In this study of women and facilities in urban Kenya, two measures of FP service quality were assessed for their association with contraceptive discontinuation: a QIQ-based facility quality assessment tool and the Method Information Index (MII). Women who reported receiving higher quality FP counseling according to their MII score were significantly less likely to discontinue their method over the next three years compared to women with an MII score of zero. In fact, a woman’s likelihood of discontinuation while in need was cut in half whether she reported being informed of just one aspect of MII or informed of all three versus receiving no information at all.

The analysis of the comprehensive facility-based QoC assessment tool was more complex. Using EFA, we identified six domains of FP service quality captured by the assessment tool. Four of these domains (client satisfaction, readiness for choice & management support, information exchange, and technical competence) closely align with the domains that the QIQ indicators are intended to capture; however, two additional domains of quality emerged in this setting: privacy, autonomy & dignity, and infrastructure & equipment. Items in the assessment tool that may have captured mechanisms for follow-up and/or constellation of services were not sufficiently correlated to be identified as domains of quality in this analysis. Of the six domains identified, three (privacy, autonomy & dignity; technical competence; and information exchange) were significantly associated with a decreased risk of contraceptive discontinuation. Additionally, overall facility quality, as measured by the summative domains derived in EFA, was correlated with facility-level measures of MII.

The knowledge contributions of this study are manifold. First, programs operating in urban Kenya that wish to reduce contraceptive discontinuation among their clients might refer to these preliminary findings in their decision making. When women receive, or understand themselves to have received, the information captured in the MII, they are less likely to discontinue their method. Thus, programs may choose to prioritize provision of full information on other methods and side effects in all contraceptive counseling that takes place at a facility, and could expect some reduction in each client’s risk of discontinuing her method as a result. Additionally, efforts to improve privacy, autonomy & dignity in FP service provision and to hire and train competent providers may strengthen contraceptive continuation among clients. These results echo findings from other settings where current method use or discontinuation was an outcome of interest. For example, interventions to train providers in evidence-based medicine or to improve provider competencies in areas such as contraceptive counseling and client management have been shown to improve contraceptive continuation in a variety of settings 26–28. Similarly, a 2002 study conducted in Egypt found that interactions between providers and family planning clients that could be characterized as ‘client-centered’ rather than ‘physician-centered’ were associated with higher rates of method continuation 7 months later 29.

As such, these findings may contribute to efforts to develop standardized, actionable measures for QoC in FP. The framework established by Bruce in 1990 ushered in a client-focused approach to family planning services by defining quality services as those in which clients are free to choose a method, are empowered to continue using the method, and are provided with avenues to seek help with or change their method. The framework achieved this paradigm shift by emphasizing choice, information provision, and mechanisms for follow-up as key tenets of quality, and by establishing interpersonal relationships, which are vital for facilitating those tenets, as a domain of quality in itself. However, the Bruce framework does not explicitly address issues of privacy, autonomy or dignity, nor are these issues explicitly addressed in many of the existing studies linking client-centered or person-centered approaches to care to FP outcomes 29–31. For example, in the 2002 study in Egypt referenced above, ‘client-centered’ models of communication were identified as those with a high proportion of solidarity statements (versus disagreement statements), but issues of dignity, respect or privacy were not explicitly measured. In the present study, the domain most closely aligned with client-centered approaches to care contains explicit indicators of client comfort and privacy: ‘proportion of clients who reported they were comfortable asking questions’, ‘proportion of clients who had visual privacy’, and ‘proportion of clients who had auditory privacy’. The QIQ was based on the Bruce framework and does not contain all of the aspects now considered important to client-centered care; yet the measures that are present came together in EFA to form a domain independent of the other domains of quality, even if that domain is not a comprehensive measure of client-centered or person-centered care. Given these findings, and an understanding of the rights of patients to dignified and respectful FP care, we agree with others who have suggested that more comprehensive and explicit measures of client-centeredness should be developed and incorporated into family planning quality measures 32, 33.

In this study, structural aspects of quality, such as infrastructure, equipment and facility readiness, were not associated with contraceptive discontinuation. Overall, these domains were more homogeneous across facilities. This may suggest that indicators of structural quality, while generally easier and less expensive to measure, are better suited to assessing facilities against a minimum acceptable level of quality, whereas process measures are more appropriate for identifying which aspects of a facility’s or program’s performance are linked to better outcomes.

The appeal of using the MII over more comprehensive measures of FP QoC for routine quality monitoring at the facility or program level is clear: it contains only three questions, it is easily assessed and analyzed, it can be aggregated for upward reporting and measurement, and it allows for benchmarking against national standards. Therefore, to better understand the utility of MII at the facility level, we examined the correlation between facility-level MII and other facility-level measures of quality. Facility-level MII was not correlated with all of the quality domains that were associated with a decreased risk of discontinuation among women. As expected, it was correlated with the information exchange domain, but not with privacy, autonomy & dignity or with technical competence. It was, however, correlated with overall facility quality and with scores in the infrastructure & equipment domain. The client exit interviews (CEI) included in the SDP assessment in the MLE study are a good fit in the context of this comprehensive assessment tool, but were not designed to be representative of FP clients at any given facility, which informed our decision to exclude facility-level MII from the discontinuation analyses. Nonetheless, two findings stand out: a woman’s understanding of having received any MII information is associated with a lower likelihood of discontinuing her method, and facility-level MII is correlated with measures of overall quality. Given these findings, more research is needed to determine how the measurement of MII can be refined at the facility level so that it provides facilities and program managers with information that is actionable for improving outcomes.

Limitations of this study include the size of the analytical sample (n = 1,033), which represents less than half of the 2,189 women interviewed at baseline and endline who were identified as current users of a modern reversible contraceptive method in 2010. The women who were dropped would have been eligible for inclusion in the analysis had it been possible to link them to the type of facility where they had received their method, to determine their method start date, and to identify their baseline episode of contraceptive use within the reproductive health calendar collected at endline. The final samples are much smaller than the original representative sample of women, and the generalizability of these results may be limited; however, the demographic characteristics of current FP users included in and excluded from the analysis were found to be comparable (Table 1). We were unable to identify the exact facility where each woman received her FP method; thus, this study linked each woman to a measure of average facility quality based on her location and the type of facility where she received her method, assuming that women seek services within the city where they reside, which may be inaccurate in some cases. Additionally, quality measures aggregated at the facility type and city level may not be representative of a woman’s individual experience of care. Together, these limitations may have attenuated any existing associations between the other domains of facility quality identified in our study and contraceptive discontinuation; alternatively, no such associations may exist. The reason for the association between the client satisfaction domain and an increased risk of discontinuation is unclear, but we do not interpret it to mean that higher rates of client satisfaction will lead to higher rates of discontinuation among women. There was no single variable within the domain that could be identified as the main driver of this finding. The domain includes only client-reported measures (of wait time, being treated well by staff and providers, and of experiencing good counseling skills during the visit). This may be an indication of the limited reliability of client-reported measures, especially where women are approached and interviewed as they are exiting a facility and may be reluctant to provide negative feedback regarding staff and providers; however, given our mathematical approach to the quality analysis, we recognize that the face validity of the items in this domain may be poor. The items held together mathematically in factor analysis, but they may represent some construct other than ‘client satisfaction’.

Conclusion

Our study found that woman-reported MII and facility-level measures of information provision, technical competence, and privacy, autonomy & dignity were each positively associated with contraceptive method continuation over 3 years. The findings suggest that family planning facilities and programs should emphasize information provision and client-centered approaches to care alongside technical competence in the provision of FP care. More work is needed to determine how the measurement of MII can be refined at the facility level as an actionable metric for improving outcomes. Furthermore, comprehensive and explicit measures of client-centeredness that incorporate aspects of privacy, autonomy & dignity should be emphasized and operationalized as standard measures of FP QoC are advanced.

Ethical considerations and consent

The study protocol and tools were approved by the Institutional Review Board at the University of North Carolina at Chapel Hill and the Kenya Medical Research Institute Ethical Review Committee. All household-level participants and client exit interview participants provided verbal consent while providers provided written consent.

Data availability

Underlying data

The data used in this study are available upon request. Please see the Carolina Population Center Data Portal for the Measurement, Learning & Evaluation project at https://data.cpc.unc.edu/projects/14/view to request the data.

Data are available for download after an approval of a restricted use application, which involves signing a data use agreement and providing brief information about intent of use (investigator information, research team information, and statement of purpose). The endline Women’s data is restricted to matched women only.

This project contains the following underlying data:

Baseline Service Delivery Point Survey (2010–11)

SDP Facility Audit

SDP Client exit interviews

SDP Provider survey

Baseline Household Survey (2010–11)

Women’s data

Endline Household Survey (2014–15)

Women’s data

Extended data

Harvard Dataverse: MLE Kenya Survey Tools. https://doi.org/10.15139/S3/XAJYU1

This project contains the following Extended data:

Kenya Baseline Woman Questionnaire.pdf (Baseline household woman survey available in English and Swahili)

Kenya Baseline SDP Exit Interview (Eng-Swa).pdf (Exit interview survey available in English, Swahili, Kamba and Luo)

Kenya Baseline SDP Facility Audit.pdf (Baseline service delivery point survey)

Kenya Baseline SDP Service Provider Survey.pdf (Provider survey available in English)

Kenya Endline Woman Quesionnaire.pdf (Endline household woman survey available in English and Swahili)

Data are available under the terms of the Creative Commons Zero "No rights reserved" data waiver (CC0 1.0 Public domain dedication).

Acknowledgements

We would like to thank the Kenya Medical Research Institute – Research, Care and Training Program, the Tupange program (led by Jhpiego), and the Kenya National Bureau of Statistics for their support with data collection.

Funding Statement

This work was supported by the Bill and Melinda Gates Foundation through a grant to the Measurement, Learning & Evaluation Project [OPP52037] and International Conference on Family Planning [OPP1181398]. The contents of this paper are solely the responsibility of the authors and do not necessarily represent the official views of the funder.

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 1; peer review: 2 approved with reservations]

References

- 1. Guttmacher Institute: Adding It Up: The Costs and Benefits of Investing in Sexual and Reproductive Health 2017, fact sheet. 2017.

- 2. Jain AK, Obare F, RamaRao S, et al.: Reducing unmet need by supporting women with met need. Int Perspect Sex Reprod Health. 2013;39(3):133–141. doi:10.1363/3913313

- 3. Ali MM: Quality of care and contraceptive pill discontinuation in rural Egypt. J Biosoc Sci. 2001;33(2):161–172. doi:10.1017/S0021932001001614

- 4. Arends-Kuenning M, Kessy FL: The impact of demand factors, quality of care and access to facilities on contraceptive use in Tanzania. J Biosoc Sci. 2007;39(1):1–26. doi:10.1017/S0021932005001045

- 5. Mensch B, Arends-Kuenning M, Jain A: The impact of the quality of family planning services on contraceptive use in Peru. Stud Fam Plann. 1996;27(2):59–75. doi:10.2307/2138134

- 6. RamaRao S, Lacuesta M, Costello M, et al.: The link between quality of care and contraceptive use. Int Fam Plan Perspect. 2003;29(2):76–83.

- 7. Bruce J: Fundamental elements of the quality of care: a simple framework. Stud Fam Plann. 1990;21(2):61–91. doi:10.2307/1966669

- 8. Tumlinson K: Measuring quality of care: A review of previously used methodologies and indicators. 2018;29.

- 9. Sprockett A: Review of quality assessment tools for family planning programmes in low- and middle-income countries. Health Policy Plan. 2017;32(2):292–302. doi:10.1093/heapol/czw123

- 10. MEASURE Evaluation: Quick Investigation of Quality (QIQ): A User’s Guide for Monitoring Quality of Care in Family Planning. 2001.

- 11. WHO: Service Availability and Readiness Assessment (SARA): An annual monitoring system for service delivery. 2013.

- 12. Wang W, Do M, Hembling J, et al.: Assessing the Quality of Care in Family Planning, Antenatal, and Sick Child Services at Health Facilities in Kenya, Namibia, and Senegal. Rockville, Maryland, USA: ICF International; 2014.

- 13. Tumlinson K, Pence BW, Curtis SL, et al.: Quality of Care and Contraceptive Use in Urban Kenya. Int Perspect Sex Reprod Health. 2015;41(2):69–79. doi:10.1363/4106915

- 14. DeVellis R: Scale Development Theory and Applications. Second Edition. Thousand Oaks, California: Sage Publications, Inc; 2003;26.

- 15. Brown W, Druce N, Bunting J, et al.: Developing the “120 by 20” goal for the Global FP2020 initiative. Stud Fam Plann. 2014;45(1):73–84. doi:10.1111/j.1728-4465.2014.00377.x

- 16. DHS Model Questionnaire with Commentary - Phase 4 (1997 - 2003). 2001. Accessed January 2, 2019.

- 17. Glossary of Family Planning Indicators | PMA2020. Accessed March 19, 2019.

- 18. Kenya National Bureau of Statistics, Ministry of Health, National AIDS Control Council, et al.: Kenya Demographic and Health Survey 2014. 2015.

- 19. MLE, Tupange, KEMRI: Report of the Baseline Service Delivery Point Survey for the Kenya Urban Reproductive Health Initiative (Tupange). 2011.

- 20. MLE, Tupange, KNBS: Report of the Baseline Household Survey for the Kenya Urban Reproductive Health Initiative (Tupange). 2011.

- 21. Measurement, Learning & Evaluation (MLE) Project: Measurement, Learning & Evaluation of the Kenya Urban Reproductive Health Initiative (Tupange): Kenya, Endline Household Survey 2014. [TWP 3 - 2015]. 2015.

- 22. Measurement, Learning & Evaluation Project: MLE Kenya Survey Tools. UNC Dataverse, V1, 2017. doi:10.15139/S3/XAJYU1

- 23. StataCorp: Stata Statistical Software: Release 14. College Station, TX: StataCorp LP.

- 24. Wienke A: Frailty Models in Survival Analysis. New York: Chapman and Hall/CRC; 2011. doi:10.1201/9781420073911

- 25. Costello A, Osborne J: Best Practices in Exploratory Factor Analysis: Four Recommendations for Getting the Most From Your Analysis. Pract Assess Res Eval. 2005;10(7).

- 26. Baumgartner JN, Morroni C, Mlobeli RD, et al.: Impact of a provider job aid intervention on injectable contraceptive continuation in South Africa. Stud Fam Plann. 2012;43(4):305–314. doi:10.1111/j.1728-4465.2012.00328.x

- 27. Blackstone SR, Nwaozuru U, Iwelunmor J: Factors Influencing Contraceptive Use in Sub-Saharan Africa: A Systematic Review. Int Q Community Health Educ. 2017;37(2):79–91. doi:10.1177/0272684X16685254

- 28. Liu J, Shen J, Diamond-Smith N: Predictors of DMPA-SC continuation among urban Nigerian women: the influence of counseling quality and side effects. Contraception. 2018;98(5):430–437. doi:10.1016/j.contraception.2018.04.015

- 29. Abdel-Tawab N, Roter D: The relevance of client-centered communication to family planning settings in developing countries: lessons from the Egyptian experience. Soc Sci Med. 2002;54(9):1357–1368. doi:10.1016/S0277-9536(01)00101-0

- 30. Costello M, Lacuesta M, RamaRao S, et al.: A client-centered approach to family planning: the Davao project. Stud Fam Plann. 2001;32(4):302–314. doi:10.1111/j.1728-4465.2001.00302.x

- 31. Dehlendorf C, Henderson JT, Vittinghoff E, et al.: Association of the quality of interpersonal care during family planning counseling with contraceptive use. Am J Obstet Gynecol. 2016;215(1):78.e1–78.e9. doi:10.1016/j.ajog.2016.01.173

- 32. Jain AK, Hardee K: Revising the FP Quality of Care Framework in the Context of Rights-based Family Planning. Stud Fam Plann. 2018;49(2):171–179. doi:10.1111/sifp.12052

- 33. Sudhinaraset M, Afulani P, Diamond-Smith N, et al.: Development of a Person-Centered Family Planning Scale in India and Kenya. Stud Fam Plann. 2018;49(3):237–258. doi:10.1111/sifp.12069