Abstract

We introduce the basic elements of a spatio-angular theory of fluorescence microscopy, providing a unified framework for analyzing systems that image single fluorescent dipoles and ensembles of overlapping dipoles that label biological molecules. We model an aplanatic microscope imaging an ensemble of fluorescent dipoles as a linear Hilbert-space operator, and we show that the operator takes a particularly convenient form when expressed in a basis of complex exponentials and spherical harmonics—a form we call the dipole spatio-angular transfer function. We discuss the implications of our analysis for all quantitative fluorescence microscopy studies and lay out a path towards a complete theory.

1. Introduction

Fluorescence microscopes are widely used in the biological sciences for imaging fluorescent molecules that label specific proteins and biologically important molecules. While most fluorescence microscopy experiments are designed to measure only the spatial distribution of fluorophores, a growing number of experiments seek to measure both the spatial and angular distributions of fluorophores by the use of polarizers [1–5] or point spread function engineering [6,7].

Meanwhile, single-molecule localization microscopy (SMLM) experiments use spatially sparse fluorescent samples to localize single molecules with precision that surpasses the diffraction limit. Noise limits the precision of this localization [8,9], and several studies have shown that model mismatch (e.g. ignoring the effects of vector optics, dipole orientation [10], and dipole rotation [11]) can introduce localization bias as well. Therefore, the most precise and accurate SMLM experiments must use an appropriate model and jointly estimate both the position and orientation of each fluorophore. Several studies have successfully used vector optics and dipole models to estimate the position and orientation of single molecules [12–16], and there is growing interest in designing optical systems for measuring the position, orientation, and rotational dynamics of single molecules [6,7,17–19].

While many studies have focused on improving imaging models for spatially sparse fluorescent samples, we consider the more general case and aim to improve imaging models for arbitrary samples including those containing ensembles of fluorophores within a resolvable volume. In particular, we examine the effects of two widely used approximations in fluorescence microscopy—the monopole approximation and the scalar approximation.

We use the term monopole to refer to a model of a fluorescent object that treats it as an isotropic absorber/emitter. Although the term monopole approximation is not in widespread use, we think it accurately describes the way many models of fluorescence microscopy treat fluorescent objects, and we use the term to distinguish the monopole model from more realistic dipole and higher-order models. Despite their use in models, electromagnetic monopole absorbers/emitters do not exist in nature. All physical fluorescent objects absorb and emit radiation with dipole or higher-order moments, and these moments are always oriented in space. For a classical mental model of fluorescent objects, we imagine each dipole as a small oriented antenna where electrons are constrained to move along a single direction.

All fluorescence microscopy models that use an optical point spread function or an optical transfer function to describe the propagation of light through the microscope implicitly make the monopole approximation. The optical point spread function is the irradiance response of an optical system to an isotropic point source, so it cannot model the response due to an anisotropic dipole radiator. In this work we define monopole and dipole transfer functions that describe the mapping between fluorescent emissions and the measured irradiance. Although optical systems are an essential part of microscopes, fluorescence microscopists are interested in measuring the properties of fluorophores (not optics), so the monopole and dipole transfer functions are more directly useful than the optical transfer function for the problems that fluorescence microscopists are interested in solving.

While the monopole approximation applies to the fluorescent object, the scalar approximation applies to the fields that propagate through the microscope. Modeling the electric fields in a region requires a three-dimensional vector field, but if the electric fields are random or completely parallel to one another, a scalar field is sufficient, and we can replace the vector-valued electric field, E, with a scalar-valued field, U.

The scalar approximation is often made together with the monopole approximation. For example, the Born-Wolf model [20] and the Gibson-Lanni model [21] make both the monopole and scalar approximations when applied to fluorescence microscopes. However, some models make the monopole approximation but not the scalar approximation. For example, the Richards-Wolf model [22] considers the role of vector-valued fields in the optical system, but it is an optical model, so the monopole approximation is implicitly assumed when it is applied to fluorescence microscopes.

This work lies at the intersection of three subfields of fluorescence microscopy: (1) spatial ensemble imaging where each resolvable volume contains many fluorophores and the goal is to find the concentration of fluorophores as a function of position in the sample, (2) spatio-angular ensemble imaging where each resolvable volume contains many fluorophores and the goal is to find the concentration and average orientation of fluorophores as a function of position in the sample, and (3) SMLM imaging where fluorophores are sparse in the sample and the goal is to find the position and orientation of each fluorophore. We briefly review how these three subfields use the monopole and scalar approximations.

The large majority of fluorescence microscopes are used to image ensembles of fluorophores, and most existing modeling techniques make use of the monopole approximation, the scalar approximation, or both. As discussed above, the Gibson-Lanni model, the Born-Wolf model, and the Richards-Wolf model are approximate when applied to fluorescence microscopy data because they only model monopole emitters. Deconvolution algorithms that use these models may make biased estimates of fluorophore concentrations since they ignore the dipole excitation and emission of fluorophores.

A small but growing group of microscopists is interested in measuring the orientation and position of ensembles of fluorophores [1–5]. These techniques typically use polarizers to make multiple measurements of the same object with different polarizer orientations, then they use a model of the dipole excitation and emission processes [23] to recover the orientation of fluorophores using pixel-wise arithmetic. Although these studies do not adopt the scalar or monopole approximations for the angular part of the problem, they adopt both approximations when they consider the spatial part of the problem. Existing works either ignore the spatial reconstruction problem [1–4] or assume that the spatial and angular reconstruction problems can be solved sequentially [5].

The most precise experiments in SMLM imaging do not adopt the scalar or monopole approximations. Many works have applied dipole models with vector optics, and several works have considered the effects of rotational and spatial diffusion on the images of single molecules [7, 24–27]. We will see that the dipole transfer functions are useful tools for incorporating angular and spatial diffusion into SMLM simulations and reconstructions.

In the present work we begin to place these three subfields on a common theoretical footing. First, in section 2, we consider arbitrary fluorescence imaging models and lay out a plan for developing a model for spatio-angular imaging. In section 3 we review the familiar monopole imaging model, and in section 4 we extend the model to dipoles. Finally, in section 5 we discuss the results and their broader implications.

In this paper we focus on modeling a single-view fluorescence microscope with neither polarized excitation nor detection. In future papers of this series we will extend our models to include polarized excitation, polarized detection, and multi-view microscopes. Additionally, we have restricted this paper to the forward problem—the mapping between a known object and the data. In future papers we will consider the inverse problem, and the singular value decomposition (SVD) will play a central role.

2. Theory

We begin our analysis with the abstract Hilbert space formalism of Barrett and Myers [28, ch. 1.3]. Our first task is to formulate the imaging process as a mapping between two Hilbert spaces $\mathcal{H}: \mathbb{U} \to \mathbb{V}$, where $\mathbb{U}$ is a set that contains all possible objects, $\mathbb{V}$ is a set that contains all (possibly noise-corrupted) datasets, and $\mathcal{H}$ is a model of the instrument that maps between these two spaces. We denote (possibly infinite-dimensional) Hilbert-space vectors in $\mathbb{U}$ with $\mathbf{f}$, Hilbert-space vectors in $\mathbb{V}$ with $\mathbf{g}$, and the mapping between the spaces with

$$\mathbf{g} = \mathcal{H}\mathbf{f}. \tag{1}$$

Throughout this work we will use the letters g, h, and f with varying fonts, capitalizations, and arguments to represent the data, the instrument, and the object, respectively.

Once we have identified the spaces $\mathbb{U}$ and $\mathbb{V}$, we can start expressing the mapping between the spaces in a specific object-space and data-space basis. In most cases the easiest mapping to find uses a delta-function basis: we expand object and data space into delta functions, then express the mapping as an integral transform. After finding this mapping we can start to investigate the same mapping in different bases.
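As a minimal illustration of this point of view, the sketch below discretizes a hypothetical one-dimensional, periodic, shift-invariant instrument. In a delta-function basis the instrument becomes a matrix `H` with `g = H @ f`; changing to a complex-exponential basis with the unitary discrete Fourier transform matrix `W` turns the same mapping into a diagonal matrix. The grid size, the Gaussian blur, and the periodic boundary are assumptions chosen only for illustration.

```python
import numpy as np

# Hypothetical 1D periodic instrument sampled on n points (illustration only).
n = 64
x = np.arange(n)
psf = np.exp(-0.5 * (np.minimum(x, n - x) / 2.0) ** 2)  # periodic Gaussian blur
psf /= psf.sum()  # unit total power

# Delta-function basis: column j is the data produced by a unit source at j,
# so H[i, j] = psf[(i - j) mod n] and the mapping is g = H @ f.
H = np.stack([np.roll(psf, j) for j in range(n)], axis=1)

# Change to a complex-exponential basis with the unitary DFT matrix W.
W = np.fft.fft(np.eye(n), norm="ortho")
H_fourier = W @ H @ W.conj().T

f = np.random.default_rng(0).random(n)  # arbitrary object
g = H @ f  # the integral transform evaluated in the delta basis
```

The diagonal entries of `H_fourier` are the discrete Fourier transform of `psf`, which previews the transfer functions introduced in the sections that follow.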

The above discussion is quite abstract, but it is a powerful point of view that will enable us to unify the analysis of spatio-angular fluorescence imaging. In section 3 we will demonstrate the formalism by examining a familiar monopole imaging model, and we will demonstrate the mapping between object and data space in two different bases. In section 4 we will extend the monopole imaging model to dipoles and examine the mapping in four different bases.

3. Monopole imaging

We start by modeling the imaging process for a field of dynamic fluorescent monopoles. We have split our model into two pieces: (1) the dynamics of excitation, decay, and diffusion processes, and (2) the emission and detection processes. This section treads familiar ground, but it serves to establish the concepts and notation that will be necessary when we extend to the dipole case.

3.1. Monopole dynamics

We begin by considering a spatial distribution of monopoles that can occupy just two states: a ground state and an excited state. We use $n^{(g)}(\boldsymbol{\mathfrak{r}}_o, t)$ to represent the number of monopoles in the ground state at position $\boldsymbol{\mathfrak{r}}_o$ per unit volume at time $t$. Similarly, we use $n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t)$ to represent the number of monopoles in the excited state.

Next, we describe all of the transitions between states. If we excite the monopoles with an illumination pattern that is uniform in space and time, then the rate of excitation will be the product of an excitation constant $\kappa^{(\text{ex})}$ and the number of monopoles in the ground state. Similarly, the rate at which monopoles decay from the excited state to the ground state will be the product of a decay constant $\kappa^{(d)}$ and the number of monopoles in the excited state (assuming that stimulated emission is negligible).

Finally, we describe the effect of diffusion within each state. If the diffusion is homogeneous (independent of position) and isotropic (independent of direction), then the rate of change of the spatial distribution will be a diffusion constant $D_R$ multiplied by the Laplacian of the spatial distribution of monopoles at that point.

Bringing the effects of excitation, decay, and diffusion together yields a pair of coupled partial differential equations

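$$\frac{\partial}{\partial t} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, t) = -\kappa^{(\text{ex})} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, t) + \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t) + D_R \nabla^2_{\mathbb{R}^3} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, t), \tag{2}$$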

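$$\frac{\partial}{\partial t} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t) = \kappa^{(\text{ex})} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, t) - \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t) + D_R \nabla^2_{\mathbb{R}^3} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t). \tag{3}$$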

Eqs. (2) and (3) serve as a minimal model of monopole dynamics, and these equations can be generalized to model a broad class of fluorescence imaging techniques. For example, space- and time-varying excitation patterns are useful for modeling structured illumination microscopes (SIM); space- and time-varying decay constants are of interest in fluorescence lifetime imaging microscopy (FLIM); space-, time-, and direction-varying (tensor-valued) diffusion coefficients are of interest in diffusion imaging; and extra states and transitions can be added to model single-molecule techniques, stimulated emission depletion (STED) techniques, Förster resonance energy transfer (FRET), and photobleaching.
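To make the minimal model concrete, the sketch below integrates Eqs. (2) and (3) with an explicit Euler scheme. It assumes one spatial dimension, periodic boundaries, constant excitation and decay constants, and a step size small enough for stability; the function name `simulate_two_state` and all parameter values are hypothetical. Because excitation and decay only move monopoles between states and diffusion only moves them in space, the total number of monopoles is conserved, and (with equal diffusion in both states) the excited fraction relaxes toward $\kappa^{(\text{ex})}/(\kappa^{(\text{ex})} + \kappa^{(d)})$ at every point.

```python
import numpy as np

def simulate_two_state(n_g, n_e, k_ex, k_d, D_R, dx, dt, steps):
    """Explicit-Euler sketch of the two-state model on a periodic 1D grid.

    Assumptions (not from the text): one spatial dimension, periodic
    boundaries, uniform rate constants, and a stable time step
    (D_R*dt/dx**2 <= 0.5 and (k_ex + k_d)*dt < 1).
    """
    n_g = np.asarray(n_g, dtype=float).copy()
    n_e = np.asarray(n_e, dtype=float).copy()
    for _ in range(steps):
        # Second-order finite-difference Laplacian with periodic wrap-around.
        lap_g = (np.roll(n_g, 1) - 2 * n_g + np.roll(n_g, -1)) / dx**2
        lap_e = (np.roll(n_e, 1) - 2 * n_e + np.roll(n_e, -1)) / dx**2
        flow = k_ex * n_g - k_d * n_e  # net ground -> excited transitions
        n_g, n_e = (n_g - dt * flow + dt * D_R * lap_g,
                    n_e + dt * flow + dt * D_R * lap_e)
    return n_g, n_e

x = np.arange(100) * 0.1
n_g0 = 1 + 0.5 * np.sin(2 * np.pi * x / 10.0)  # everything starts in the ground state
n_g, n_e = simulate_two_state(n_g0, np.zeros_like(n_g0), k_ex=1.0, k_d=4.0,
                              D_R=0.1, dx=0.1, dt=0.01, steps=2000)
```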

3.2. Monopole detection

Next, we model the monopole emission and detection processes independent of the underlying dynamics. In our simple two-state model we can only measure the photons radiated during transitions from the excited state to the ground state, which occur at a rate $\kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t)$. If we expose a detector for a period $t_e$, then the most we can hope to recover from our data without knowing more about the dynamics of the system is the quantity

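$$f(\boldsymbol{\mathfrak{r}}_o) = \int_0^{t_e} dt\, \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, t). \tag{4}$$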

The function $f(\boldsymbol{\mathfrak{r}}_o)$ represents the number of monopole emissions during the exposure time from position $\boldsymbol{\mathfrak{r}}_o$ per unit volume. A complete name for $f(\boldsymbol{\mathfrak{r}}_o)$ would be the time-integrated monopole emission density rate, but for brevity we will refer to it as the monopole emission density.
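For a spatially uniform sample that starts in the ground state and is excited continuously from $t = 0$, the diffusion terms vanish and Eq. (4) has a closed form. Writing $n_0$ for the total monopole density, $n_e(t)$ for the excited-state density, and $k = \kappa^{(\text{ex})} + \kappa^{(d)}$ (notation for this sketch only), the excited density is $n_e(t) = (\kappa^{(\text{ex})} n_0/k)(1 - e^{-kt})$, so $f = (\kappa^{(d)}\kappa^{(\text{ex})} n_0/k)\,[t_e - (1 - e^{-k t_e})/k]$. The sketch below checks that expression against a trapezoid-rule evaluation of Eq. (4); the helper names and numbers are hypothetical.

```python
import numpy as np

def monopole_emission_density(n0, k_ex, k_d, t_e):
    """Closed-form time-integrated emission density for a uniform sample
    that starts in the ground state under constant excitation (assumption)."""
    k = k_ex + k_d
    return k_d * k_ex * n0 / k * (t_e - (1 - np.exp(-k * t_e)) / k)

def excited_population(t, n0, k_ex, k_d):
    """Solution of dn_e/dt = k_ex*(n0 - n_e) - k_d*n_e with n_e(0) = 0."""
    k = k_ex + k_d
    return k_ex * n0 / k * (1 - np.exp(-k * t))

# Trapezoid-rule version of the exposure integral for comparison.
t = np.linspace(0.0, 2.0, 200_001)
integrand = 4.0 * excited_population(t, n0=1.0, k_ex=1.0, k_d=4.0)  # k_d * n_e(t)
numeric = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(t))
closed = monopole_emission_density(1.0, 1.0, 4.0, 2.0)
```

For long exposures the bracket approaches $t_e$, so the emission density grows linearly with exposure time once the populations reach steady state.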

We identify the set of all possible monopole emission densities as our object space, $\mathbb{U} = \mathbb{L}_2(\mathbb{R}^3)$, the set of square-integrable functions in three-dimensional space. If we measure the irradiance on a two-dimensional detector, then data space is $\mathbb{V} = \mathbb{L}_2(\mathbb{R}^2)$, and the data can be represented by a function $g'(\mathbf{r}_d')$ called the irradiance: the power received by a surface per unit area at position $\mathbf{r}_d'$. Note that we have adopted a slightly unusual convention of using primes to denote unscaled coordinates. Later in this section we will introduce unprimed scaled coordinates that we will use throughout the rest of the paper.

A reasonable starting point is to assume that the relationship between the object and the data is linear. This assumption holds in many fluorescence microscopes because fluorophores emit incoherently, so a scaled sum of emissions results in a scaled sum of the irradiance patterns created by the individual emissions.

If the mapping is linear, we can write the irradiance as a weighted integral over the monopole emission density

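$$g'(\mathbf{r}_d') = \int_{\mathbb{R}^3} d\boldsymbol{\mathfrak{r}}_o\, h'(\mathbf{r}_d', \boldsymbol{\mathfrak{r}}_o)\, f(\boldsymbol{\mathfrak{r}}_o), \tag{5}$$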

where $h'(\mathbf{r}_d', \boldsymbol{\mathfrak{r}}_o)$ is the irradiance at two-dimensional position $\mathbf{r}_d'$ (roman font) created by a point source at three-dimensional position $\boldsymbol{\mathfrak{r}}_o$ (gothic font).

Next, we assume that the optical system is aplanatic: Abbe’s sine condition is satisfied and on-axis points are imaged without aberration. Abbe’s sine condition guarantees that off-axis points are imaged without spherical aberration or coma [29, ch. 1], so the imaging system can be modeled within the field of view of the optical system as a transverse magnifier with shift-invariant blur. We split the three-dimensional object coordinate $\boldsymbol{\mathfrak{r}}_o$ into a two-dimensional transverse coordinate $\mathbf{r}_o$ and a one-dimensional axial coordinate $z_o$ to write the forward model as

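$$g'(\mathbf{r}_d') = \int_{\mathbb{R}} dz_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, h'(\mathbf{r}_d' - m\mathbf{r}_o, z_o)\, f(\mathbf{r}_o, z_o), \tag{6}$$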

where m is a transverse magnification factor. Note that non-paraxial imaging systems that satisfy Abbe’s sine condition cannot simultaneously satisfy the Herschel condition when m ≠ 1 [30, 31], so non-paraxial magnifying imaging systems cannot be shift-invariant in three dimensions. For paraxial imaging systems the Abbe sine and Herschel conditions are approximately the same, and the imaging system can be described as approximately shift invariant in three dimensions.

We can simplify our analysis by changing coordinates and writing Eq. (6) as a transverse convolution [28, ch. 7.2.7]. We define a demagnified detector coordinate $\mathbf{r}_d = \mathbf{r}_d'/m$ and a normalization factor $N = \int_{\mathbb{R}^2} d\mathbf{r}_d'\, h'(\mathbf{r}_d', 0)$ that corresponds to the total power incident on the detector plane due to an in-focus point source at the origin, where $\mathbf{r}_o = \mathbf{0}$ and $z_o = 0$. We use these scaling factors to define the monopole point spread function as

$$h(\mathbf{r}_d, z_o) = \frac{m^2}{N}\, h'(m\mathbf{r}_d, z_o), \tag{7}$$

and the scaled irradiance as

$$g(\mathbf{r}_d) = \frac{m^2}{N}\, g'(m\mathbf{r}_d). \tag{8}$$

With these definitions we can express the mapping between the object and the data as a familiar transverse convolution

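$$g(\mathbf{r}_d) = \int_{\mathbb{R}} dz_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, h(\mathbf{r}_d - \mathbf{r}_o, z_o)\, f(\mathbf{r}_o, z_o). \tag{9}$$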

We have chosen to normalize the monopole point spread function so that

$$\int_{\mathbb{R}^2} d\mathbf{r}_d\, h(\mathbf{r}_d, 0) = 1. \tag{10}$$

The monopole point spread function corresponds to a measurable irradiance, so it is always real and nonnegative.
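A discrete version of Eq. (9) replaces the integrals with sums over pixels and axial planes, so the image is a weighted sum of shifted point spread functions. The sketch below assumes unit pixels, periodic boundaries, three axial planes, and a hypothetical Gaussian defocus model `toy_psf`; none of these describe a real microscope. Each plane of the toy point spread function is nonnegative with unit total power, which is the discrete analogue of Eq. (10) for the in-focus plane.

```python
import numpy as np

n = 32
z_planes = np.array([-1.0, 0.0, 1.0])  # hypothetical axial sample planes
yy, xx = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
dy, dx = np.minimum(yy, n - yy), np.minimum(xx, n - xx)  # periodic distances

def toy_psf(z, sigma0=1.5, z_r=1.0):
    """Hypothetical defocus model: a Gaussian blob that widens with |z|."""
    s = sigma0 * np.sqrt(1 + (z / z_r) ** 2)
    p = np.exp(-(dx**2 + dy**2) / (2 * s**2))
    return p / p.sum()  # unit power in every plane

h = np.stack([toy_psf(z) for z in z_planes])  # h[k] samples h(r_d, z_k)

def forward_delta_basis(f, h):
    """Discrete Eq. (9): sum of shifted PSFs weighted by the object."""
    g = np.zeros(h.shape[1:])
    for k in range(f.shape[0]):
        for (iy, ix), val in np.ndenumerate(f[k]):
            if val != 0:
                g += val * np.roll(h[k], (iy, ix), axis=(0, 1))
    return g

f = np.zeros((len(z_planes), n, n))
f[1, 5, 7] = 2.0   # in-focus point source
f[2, 20, 3] = 1.0  # defocused point source
g = forward_delta_basis(f, h)
```

The double loop is deliberately literal; it is only practical because the object is sparse, which is the motivation for the Fourier-basis form that follows.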

The mapping between the object and the data in a linear shift-invariant imaging system takes a particularly simple form in a complex exponential (i.e. Fourier) basis. If we apply the Fourier convolution theorem to Eq. (9) we find that

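$$G(\boldsymbol{\nu}) = \int_{\mathbb{R}} dz_o\, H(\boldsymbol{\nu}, z_o)\, F(\boldsymbol{\nu}, z_o), \tag{11}$$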

where we define the scaled irradiance spectrum as

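$$G(\boldsymbol{\nu}) = \int_{\mathbb{R}^2} d\mathbf{r}_d\, g(\mathbf{r}_d)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}, \tag{12}$$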

the monopole transfer function as

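$$H(\boldsymbol{\nu}, z_o) = \int_{\mathbb{R}^2} d\mathbf{r}_d\, h(\mathbf{r}_d, z_o)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}, \tag{13}$$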

and the monopole spectrum as

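$$F(\boldsymbol{\nu}, z_o) = \int_{\mathbb{R}^2} d\mathbf{r}_o\, f(\mathbf{r}_o, z_o)\, e^{-2\pi i \mathbf{r}_o \cdot \boldsymbol{\nu}}. \tag{14}$$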

The monopole point spread function is normalized and real, so we know that the monopole transfer function is normalized, H(0, 0) = 1, and conjugate symmetric, H(−ν, 0) = H*(ν, 0), where z* denotes the complex conjugate of z.
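These properties are easy to check numerically on a periodic grid, where `numpy.fft.fft2` uses the same $e^{-2\pi i \mathbf{r}\cdot\boldsymbol{\nu}}$ sign convention as Eq. (13). The sketch below uses a random nonnegative array as a stand-in for an in-focus point spread function (an assumption, not a physical PSF) and compares the Fourier-basis product of Eq. (11) with the delta-basis sum of Eq. (9) for a single in-focus plane.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 32
h0 = rng.random((n, n))  # hypothetical real, nonnegative in-focus PSF
h0 /= h0.sum()           # discrete version of Eq. (10)

H0 = np.fft.fft2(h0)     # discrete Eq. (13) for the in-focus plane

# H(-nu) on the periodic frequency grid: index k -> (-k) mod n on both axes.
H0_neg = np.roll(np.flip(H0, axis=(0, 1)), 1, axis=(0, 1))

# Eq. (11) for one in-focus plane versus the delta-basis sum of Eq. (9).
f0 = rng.random((n, n))
g_fourier = np.fft.ifft2(H0 * np.fft.fft2(f0)).real
g_delta = sum(val * np.roll(h0, idx, axis=(0, 1)) for idx, val in np.ndenumerate(f0))
```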

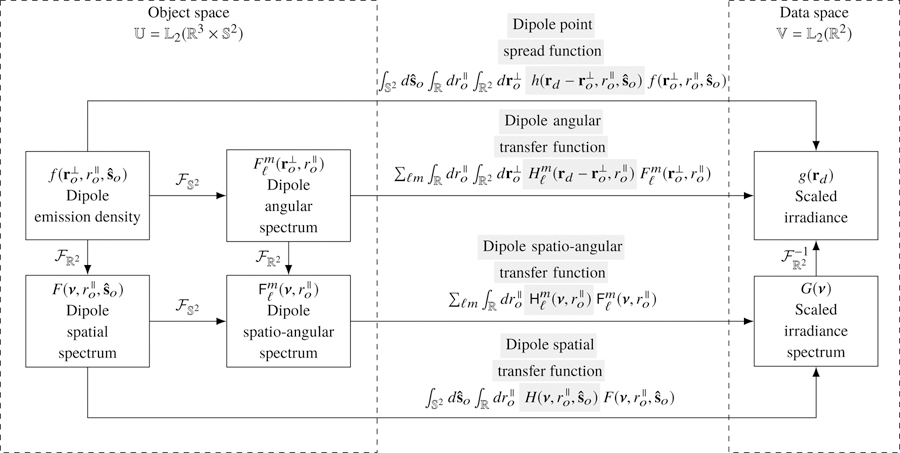

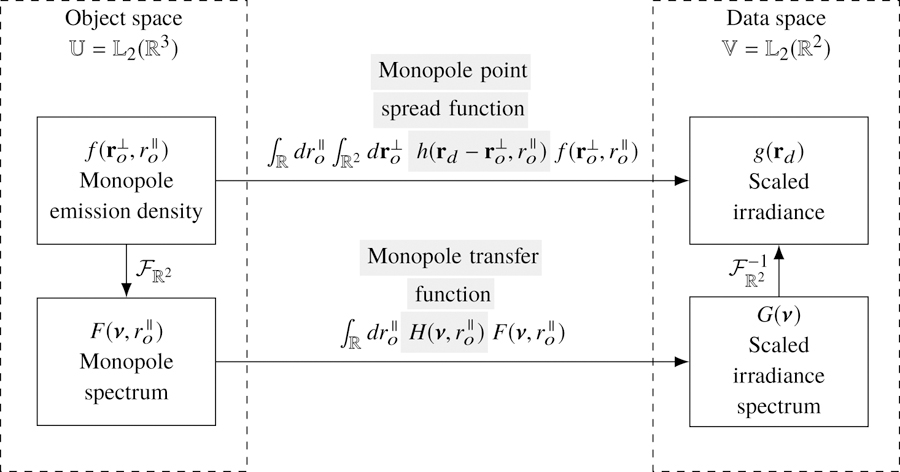

Notice that Eqs. (9) and (11) are expressions of the same mapping between object and data space in different bases. Figure 1 summarizes the relationship between object and data space in both bases.

Fig. 1.

The mapping between the object and data space of a monopole fluorescence microscope can be computed in two different bases: a delta function basis and a complex exponential basis. The change of basis can be computed with a two-dimensional Fourier transform denoted $\mathcal{F}_{\mathbb{R}^2}$. Gray highlighting indicates which part of each expression is being named.

We have been careful to use the term monopole transfer function instead of the commonly-used term optical transfer function. We reserve the term optical transfer function for optical systems—the optical transfer function maps between an input irradiance spectrum and an output irradiance spectrum in an optical system. We can use optical transfer functions to model the propagation of light through a microscope, but ultimately we are always interested in the object, not the light emitted by the object. We will find the distinction between the optical transfer function and the object transfer function to be especially valuable when we consider dipoles in section 4.

3.3. Monopole coherent transfer functions

Although the Fourier transform can be used to calculate the monopole transfer function directly from the monopole point spread function, there is a well-known alternative that exploits coherent transfer functions. The key idea is that the monopole point spread function can always be written as the absolute square of a scalar-valued monopole coherent spread function, $c(\mathbf{r}_d, z_o)$, defined by

$$h(\mathbf{r}_d, z_o) = |c(\mathbf{r}_d, z_o)|^2. \tag{15}$$

Physically, the monopole coherent spread function corresponds to the scalar-valued electromagnetic field on the detector with appropriate scaling.

We can plug Eq. (15) into Eq. (13) and use the autocorrelation theorem to rewrite the monopole transfer function as

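$$H(\boldsymbol{\nu}, z_o) = \int_{\mathbb{R}^2} d\boldsymbol{\tau}\, C(\boldsymbol{\tau}, z_o)\, C^*(\boldsymbol{\tau} - \boldsymbol{\nu}, z_o), \tag{16}$$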

where we have introduced the monopole coherent transfer function as the two-dimensional Fourier transform of the monopole coherent spread function:

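$$C(\boldsymbol{\nu}, z_o) = \int_{\mathbb{R}^2} d\mathbf{r}_d\, c(\mathbf{r}_d, z_o)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}. \tag{17}$$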

Physically, the monopole coherent transfer function corresponds to the scalar-valued field in a Fourier plane of the detector with appropriate scaling.

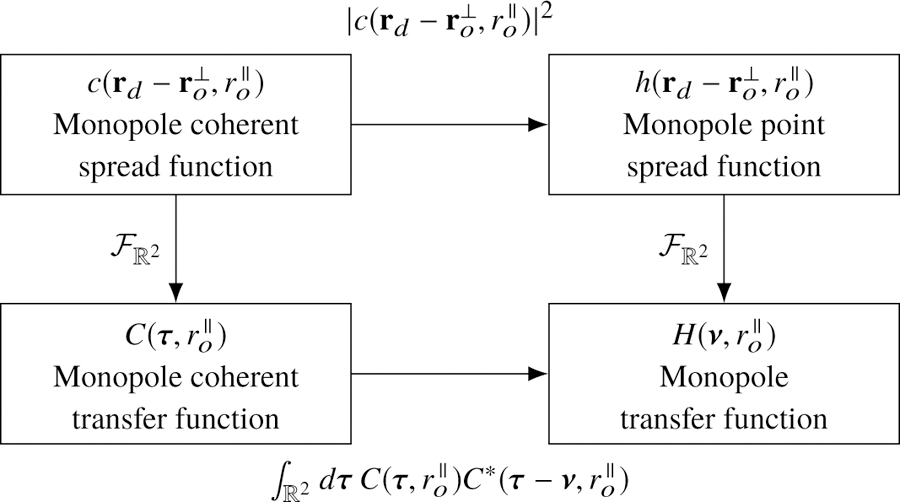

The coherent transfer function provides a valuable shortcut for analyzing microscopes since it is often straightforward to calculate the field in a Fourier plane of the detector. A typical approach for calculating the transfer functions is to (1) calculate the field in a Fourier plane of the detector, (2) scale the field to find the monopole coherent transfer function, then (3) use the relationships in Fig. 2 to calculate the other transfer functions.
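The sketch below follows steps (1) to (3) for a hypothetical scalar system whose monopole coherent transfer function is a uniform circular pupil on a periodic frequency grid (the grid size and pupil radius are assumptions). It computes the monopole transfer function twice, once as the Fourier transform of the squared magnitude of the coherent spread function and once as the autocorrelation in Eq. (16), and checks that the result vanishes beyond twice the pupil radius. On a discrete grid `numpy.fft.fft2(h)` equals the autocorrelation divided by the number of samples, so both results are normalized by their zero-frequency values.

```python
import numpy as np

n, rho = 32, 6  # grid size and pupil radius in frequency samples (hypothetical)
k = np.minimum(np.arange(n), n - np.arange(n))  # periodic |frequency index|
KY, KX = np.meshgrid(k, k, indexing="ij")
C = ((KX**2 + KY**2) <= rho**2).astype(complex)  # scalar circular pupil

c = np.fft.ifft2(C)   # coherent spread function
h = np.abs(c) ** 2    # Eq. (15)
H_direct = np.fft.fft2(h) / np.fft.fft2(h)[0, 0]  # Eq. (13), normalized

# Eq. (16) on the periodic grid: sum over tau of C(tau) * conj(C(tau - nu)).
A = np.empty((n, n), dtype=complex)
for iy in range(n):
    for ix in range(n):
        A[iy, ix] = np.sum(C * np.conj(np.roll(C, (iy, ix), axis=(0, 1))))
H_autocorr = A / A[0, 0]
```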

Fig. 2.

The monopole transfer functions are related by a two-dimensional Fourier transform (right column). The coherent monopole transfer functions (left column) can be used to simplify the calculation of the remaining transfer functions.

4. Dipole imaging

Now we consider a microscope imaging a field of dipoles by recording the irradiance on a two-dimensional detector. Similar to the monopole case, we split our model into two pieces: a dynamics model and an imaging model.

4.1. Dipole dynamics

We consider a distribution of dipoles that can occupy a ground state and an excited state. A function that assigns a real number to each point in space $\boldsymbol{\mathfrak{r}}_o$ and time $t$ is not enough to specify a dynamic field of dipoles because the dipoles can have different orientations. Therefore, we introduce an orientation coordinate $\hat{\mathbf{s}}_o \in \mathbb{S}^2$ and use the function $n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$ to represent the number of dipoles in the ground state at position $\boldsymbol{\mathfrak{r}}_o$ per unit volume oriented along $\hat{\mathbf{s}}_o$ per steradian at time $t$. Similarly, we use $n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$ to represent the number of dipoles in the excited state.

We can model the transitions between states in the same way that we did with monopoles. If we excite the dipoles with an illumination pattern that is uniform in space, orientation, and time, then the rate of excitation will be $\kappa^{(\text{ex})} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$. Similarly, the rate of decay from the excited state to the ground state will be $\kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$ (assuming that stimulated emission is negligible).

We can model homogeneous and isotropic spatial diffusion in the same way that we did for monopoles: the rate of change of the spatial distribution will be a spatial diffusion coefficient $D_R$ multiplied by the Laplacian of the dipole distribution at that point. Similarly, if angular diffusion is homogeneous (independent of orientation $\hat{\mathbf{s}}_o$) and isotropic (independent of the direction of angular diffusion), then the rate of change of the angular distribution will be an angular diffusion coefficient $D_S$ multiplied by the spherical Laplacian of the dipole distribution at that orientation. If we choose a set of spherical coordinates where $\theta$ is the inclination angle and $\phi$ is the azimuthal angle, then the spherical Laplacian takes the form $\nabla^2_{\mathbb{S}^2} = \frac{1}{\sin\theta}\frac{\partial}{\partial\theta}\left(\sin\theta\frac{\partial}{\partial\theta}\right) + \frac{1}{\sin^2\theta}\frac{\partial^2}{\partial\phi^2}$.
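The spherical harmonics are eigenfunctions of the spherical Laplacian (written $\nabla^2_{\mathbb{S}^2}$ here), with $\nabla^2_{\mathbb{S}^2} Y_\ell^m = -\ell(\ell+1) Y_\ell^m$, so under pure angular diffusion each degree-$\ell$ component decays as $e^{-D_S \ell(\ell+1) t}$. The sketch below checks the eigenvalue relation with a central-difference approximation of the operator in the coordinates above. The function `spherical_laplacian`, the step size, and the sample points (kept away from the poles, where the coordinate form is singular) are choices made for this illustration.

```python
import numpy as np

def spherical_laplacian(func, theta, phi, eps=1e-3):
    """Central-difference approximation of the spherical Laplacian of func(theta, phi)."""
    def d_theta(t):
        return (func(t + eps, phi) - func(t - eps, phi)) / (2 * eps)
    # (1/sin) d/dtheta (sin * d/dtheta), using nested central differences.
    theta_part = (np.sin(theta + eps) * d_theta(theta + eps)
                  - np.sin(theta - eps) * d_theta(theta - eps)) / (2 * eps * np.sin(theta))
    # (1/sin^2) d^2/dphi^2
    phi_part = (func(theta, phi + eps) - 2 * func(theta, phi)
                + func(theta, phi - eps)) / (eps * np.sin(theta)) ** 2
    return theta_part + phi_part

# Unnormalized spherical harmonics as (ell, function of theta and phi).
tests = [
    (1, lambda t, p: np.cos(t)),
    (1, lambda t, p: np.sin(t) * np.cos(p)),
    (2, lambda t, p: 3 * np.cos(t) ** 2 - 1),
    (2, lambda t, p: np.sin(t) ** 2 * np.cos(2 * p)),
]
theta = np.linspace(0.3, 2.8, 7)
phi = np.linspace(0.0, 2 * np.pi, 7)
```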

Bringing everything together yields a pair of coupled partial differential equations

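$$\frac{\partial}{\partial t} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) = -\kappa^{(\text{ex})} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) + \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) + \left(D_R \nabla^2_{\mathbb{R}^3} + D_S \nabla^2_{\mathbb{S}^2}\right) n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t), \tag{18}$$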

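$$\frac{\partial}{\partial t} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) = \kappa^{(\text{ex})} n^{(g)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) - \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t) + \left(D_R \nabla^2_{\mathbb{R}^3} + D_S \nabla^2_{\mathbb{S}^2}\right) n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t). \tag{19}$$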

Variants of Eqs. (18) and (19) can be used to model a wide range of more realistic dynamics. For example, a space-, orientation-, and time-varying excitation pattern can be used to model spatio-angular structured illumination patterns. Specifically, an orientation-dependent excitation pattern can be used to model selective excitation with polarized light. There is also the possibility of modeling space-, orientation-, and time-varying decay constants and diffusion constants.

Finally, we note that many fluorescent molecules have excitation and emission dipole moments that are not collinear. We can model these types of molecules by choosing the orientation coordinate to be along the emission dipole moment, then accounting for the excitation moment in the excitation rate function $\kappa^{(\text{ex})}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$.

4.2. Dipole detection

Next, we model the dipole emission and detection processes independent of the underlying dynamics. We can only measure photons radiated during transitions from the excited state to the ground state, which occur at rate $\kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t)$. If we expose a detector for a period $t_e$, then the most we can hope to recover from our data without knowing more about the underlying dynamics is the quantity

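$$f(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o) = \int_0^{t_e} dt\, \kappa^{(d)} n^{(e)}(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o, t). \tag{20}$$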

The function $f(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o)$ represents the number of dipole emissions during the exposure time from position $\boldsymbol{\mathfrak{r}}_o$ per unit volume and orientation $\hat{\mathbf{s}}_o$ per unit steradian. A complete name for $f(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o)$ would be the time-integrated dipole emission density rate, but for brevity we will refer to it as the dipole emission density. We identify the set of all possible dipole emission densities as our object space, $\mathbb{U} = \mathbb{L}_2(\mathbb{R}^3 \times \mathbb{S}^2)$, the set of square-integrable functions on the product space of a volume and a two-dimensional spherical surface (the usual spherical surface $\mathbb{S}^2$ embedded in $\mathbb{R}^3$). To visualize functions in object space we imagine a sphere at every point in a three-dimensional volume with a scalar value assigned to every surface point on each sphere.

Predicting the dipole emission density for a specific experiment requires (1) a model of the underlying dynamics (a variant of Eqs. (18) and (19)), (2) a set of initial/boundary conditions, (3) a solution of the coupled partial differential equations (either an analytic or a numerical solution), then (4) the computation of the dipole emission density using Eq. (20). In Appendix B we demonstrate this procedure for an experiment with an instantaneous excitation pulse.
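A minimal sketch of this procedure is possible under strong simplifying assumptions. Assume an instantaneous pulse at $t = 0$, no excitation afterwards, and an unbounded sample; then the excitation term drops out of the excited-state equation, and because complex exponentials and spherical harmonics are eigenfunctions of the two Laplacians, each spatial-frequency, spherical-harmonic component $c$ of the excited-state density obeys $dc/dt = -\lambda c$ with $\lambda = \kappa^{(d)} + D_R (2\pi|\boldsymbol{\nu}|)^2 + D_S \ell(\ell+1)$. Eq. (20) then multiplies each initial component by $\kappa^{(d)}(1 - e^{-\lambda t_e})/\lambda$. This is an illustration in the spirit of Appendix B, not a reproduction of it; `emission_gain` and the parameter values are hypothetical.

```python
import numpy as np

def emission_gain(ell, k_d, D_S, t_e, D_R=0.0, nu=0.0):
    """Factor that maps the excited-state coefficient at t = 0 (spatial
    frequency nu, spherical-harmonic degree ell) to its emission-density
    coefficient. Assumes an instantaneous pulse and no later excitation."""
    rate = k_d + D_R * (2 * np.pi * nu) ** 2 + D_S * ell * (ell + 1)
    return k_d * (1 - np.exp(-rate * t_e)) / rate

ells = np.arange(0, 9, 2)  # even degrees only (inversion symmetry)
gains = emission_gain(ells, k_d=1.0, D_S=0.5, t_e=10.0)
```

Higher spatial frequencies and higher degrees $\ell$ are attenuated more strongly, which is one way to see why diffusing samples tend to be sparse in a basis of complex exponentials and spherical harmonics.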

Similar to the monopole case, we model the mapping between the object and the irradiance as an integral transform

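$$g'(\mathbf{r}_d') = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}^3} d\boldsymbol{\mathfrak{r}}_o\, h'(\mathbf{r}_d', \boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o)\, f(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o), \tag{21}$$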

where $h'(\mathbf{r}_d', \boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o)$ is the irradiance at position $\mathbf{r}_d'$ created by a point source at $\boldsymbol{\mathfrak{r}}_o$ with orientation $\hat{\mathbf{s}}_o$. Notice that we have considered all possible orientations and integrated over the sphere $\mathbb{S}^2$. The dipole emission density is always symmetric under angular inversion, $f(\boldsymbol{\mathfrak{r}}_o, \hat{\mathbf{s}}_o) = f(\boldsymbol{\mathfrak{r}}_o, -\hat{\mathbf{s}}_o)$, so we could have chosen to integrate over a hemisphere and adjusted the definition of the dipole emission density by a factor of two. For convenience we will continue to integrate over the complete sphere. We note that all functions in this work with $\hat{\mathbf{s}}_o$ as an independent variable are symmetric under angular inversion.

If the optical system is aplanatic, then we can split the three-dimensional object coordinate $\boldsymbol{\mathfrak{r}}_o$ into a transverse coordinate $\mathbf{r}_o$ and an axial coordinate $z_o$ and write the forward model as

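$$g'(\mathbf{r}_d') = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}} dz_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, h'(\mathbf{r}_d' - m\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o)\, f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o). \tag{22}$$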

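We define the same demagnified detector coordinate $\mathbf{r}_d = \mathbf{r}_d'/m$ and a new normalization factor $N = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}^2} d\mathbf{r}_d'\, h'(\mathbf{r}_d', 0, \hat{\mathbf{s}}_o)$ that corresponds to the total power incident on the detector due to an in-focus spatial point source with an angularly uniform distribution of dipoles, $f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o) = \delta(\mathbf{r}_o)\,\delta(z_o)$. We use these scaling factors to define the dipole point spread function as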

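$$h(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o) = \frac{m^2}{N}\, h'(m\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o), \tag{23}$$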

and the scaled irradiance as

$$g(\mathbf{r}_d) = \frac{m^2}{N}\, g'(m\mathbf{r}_d). \tag{24}$$

With these definitions we can express the mapping between the object and the data as

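$$g(\mathbf{r}_d) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}} dz_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, h(\mathbf{r}_d - \mathbf{r}_o, z_o, \hat{\mathbf{s}}_o)\, f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o). \tag{25}$$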

Equation (25) is a key result because it represents the mapping between object space and data space in a delta function basis. The integrals in Eq. (25) would be extremely expensive to compute for an arbitrary object, but the integrals simplify to an efficient sum if the object is spatially and angularly sparse. For example, Eq. (25) would reduce to a superposition of dipole point spread functions if the object consisted of immobile dipole emitters.
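For immobile dipoles the emission density is a sum of spatial and angular delta functions, and Eq. (25) collapses to a weighted sum of shifted dipole point spread functions, one per emitter. The sketch below uses a hypothetical in-focus toy PSF `toy_dipole_psf` (a Gaussian spot weighted by $s_x^2 + s_y^2$ plus a ring weighted by $s_z^2$) that is quadratic in the orientation, symmetric under $\hat{\mathbf{s}} \to -\hat{\mathbf{s}}$, and normalized over the sphere and the detector plane; it is not derived from vector optics. Unit pixels and periodic boundaries are further assumptions.

```python
import numpy as np

n = 32
yy, xx = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
dy, dx = np.minimum(yy, n - yy), np.minimum(xx, n - xx)
r2 = dx**2 + dy**2
G = np.exp(-r2 / 4.0); G /= G.sum()        # hypothetical spot (transverse dipoles)
R = r2 * np.exp(-r2 / 4.0); R /= R.sum()   # hypothetical ring (axial dipoles)

def toy_dipole_psf(s):
    """Hypothetical in-focus dipole PSF; NOT a physical model. Because the
    sphere integrals of sx^2 + sy^2 and sz^2 are 8*pi/3 and 4*pi/3, the
    1/(4*pi) factor makes the sphere-and-plane integral equal 1."""
    sx, sy, sz = np.asarray(s, dtype=float) / np.linalg.norm(s)
    return ((sx**2 + sy**2) * G + sz**2 * R) / (4 * np.pi)

def image_immobile_dipoles(positions, orientations, weights):
    """Eq. (25) for in-focus emitters: f = sum_k w_k delta(r - r_k) delta(s - s_k)."""
    g = np.zeros((n, n))
    for pos, s, w in zip(positions, orientations, weights):
        g += w * np.roll(toy_dipole_psf(s), pos, axis=(0, 1))
    return g

g = image_immobile_dipoles([(4, 4), (16, 20)], [(1, 0, 0), (0, 0.6, 0.8)], [1.0, 2.0])
```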

Similar to the monopole case, we have chosen to normalize the dipole point spread function so that

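$$\int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}^2} d\mathbf{r}_d\, h(\mathbf{r}_d, 0, \hat{\mathbf{s}}_o) = 1. \tag{26}$$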

The dipole point spread function is a measurable irradiance, so it is real and nonnegative.

4.3. Dipole spatial transfer function

We can make our first change of basis by applying the Fourier-convolution theorem to Eq. (25), which yields

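$$G(\boldsymbol{\nu}) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}} dz_o\, H(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o)\, F(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o), \tag{27}$$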

where we define the dipole spatial transfer function as

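$$H(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o) = \int_{\mathbb{R}^2} d\mathbf{r}_d\, h(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}, \tag{28}$$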

and the dipole spatial spectrum as

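$$F(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o) = \int_{\mathbb{R}^2} d\mathbf{r}_o\, f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o)\, e^{-2\pi i \mathbf{r}_o \cdot \boldsymbol{\nu}}. \tag{29}$$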

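Since the dipole point spread function is normalized and real, we know that the dipole spatial transfer function is normalized, $\int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o\, H(\mathbf{0}, 0, \hat{\mathbf{s}}_o) = 1$, and conjugate symmetric, $H(-\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o) = H^*(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o)$.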

This basis is efficient for simulating and analyzing objects that are angularly sparse and spatially dense; e.g. rod-like structures that contain fluorophores in a fixed orientation, or rotationally fixed fluorophores that are undergoing spatial diffusion.
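As an example of the first case, the sketch below images a hypothetical in-focus rod along $x$ whose dipoles all share one orientation $\hat{\mathbf{s}}_0$. Because the object is a single angular delta function, the angular integral in Eq. (27) reduces to one product per spatial frequency: the spatial transfer function at $\hat{\mathbf{s}}_0$ times the rod's spatial spectrum. That product is checked against the delta-basis sum of Eq. (25), together with conjugate symmetry on the periodic grid. The toy PSF, the rod, and the orientation are assumptions made for illustration.

```python
import numpy as np

n = 32
yy, xx = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
dy, dx = np.minimum(yy, n - yy), np.minimum(xx, n - xx)
r2 = dx**2 + dy**2
G = np.exp(-r2 / 4.0); G /= G.sum()        # hypothetical spot
R = r2 * np.exp(-r2 / 4.0); R /= R.sum()   # hypothetical ring

def toy_dipole_psf(s):
    """Hypothetical in-focus dipole PSF (not a physical model)."""
    sx, sy, sz = np.asarray(s, dtype=float) / np.linalg.norm(s)
    return ((sx**2 + sy**2) * G + sz**2 * R) / (4 * np.pi)

# Angularly sparse object: f(r_o, 0, s) = rod(r_o) * delta(s - s0).
s0 = (0.6, 0.0, 0.8)
rod = np.zeros((n, n)); rod[16, 8:24] = 1.0
psf_s0 = toy_dipole_psf(s0)

H_s0 = np.fft.fft2(psf_s0)                               # discrete Eq. (28) at s0
g_fourier = np.fft.ifft2(H_s0 * np.fft.fft2(rod)).real   # Eq. (27), single angular term
g_delta = sum(val * np.roll(psf_s0, idx, axis=(0, 1))
              for idx, val in np.ndenumerate(rod) if val)  # Eq. (25)

H_s0_neg = np.roll(np.flip(H_s0, axis=(0, 1)), 1, axis=(0, 1))  # H(-nu) on the grid
```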

4.4. Dipole angular transfer function

The spherical harmonics are another set of convenient basis functions: they play the same role for functions on the sphere that complex exponentials play for spatial transfer functions (see Appendix A for an introduction to the spherical harmonics). We can change basis from spherical delta functions to spherical harmonics by applying the generalized Plancherel theorem for spherical functions

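$$\int_{\mathbb{S}^2} d\hat{\mathbf{s}}\, a(\hat{\mathbf{s}})\, b^*(\hat{\mathbf{s}}) = \sum_{\ell=0}^{\infty} \sum_{m=-\ell}^{\ell} A_\ell^m \left(B_\ell^m\right)^*, \tag{30}$$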

where $a$ and $b$ are arbitrary square-integrable functions on the sphere, and $A_\ell^m$ and $B_\ell^m$ are their spherical Fourier transforms defined by

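$$A_\ell^m = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}\, a(\hat{\mathbf{s}})\, Y_\ell^{m*}(\hat{\mathbf{s}}), \qquad B_\ell^m = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}\, b(\hat{\mathbf{s}})\, Y_\ell^{m*}(\hat{\mathbf{s}}), \tag{31}$$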

and $Y_\ell^m$ are the spherical harmonic functions defined in Appendix A. Equation (30) expresses the fact that scalar products are invariant under a change of basis [28, Eq. 3.78]. The left-hand side of Eq. (30) is the scalar product of functions in a delta function basis and the right-hand side is the scalar product of functions in a spherical harmonic function basis. Applying Eq. (30) to Eq. (25) yields

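$$g(\mathbf{r}_d) = \sum_{\ell=0}^{\infty} \sum_{m=-\ell}^{\ell} \int_{\mathbb{R}} dz_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, H_\ell^m(\mathbf{r}_d - \mathbf{r}_o, z_o)\, F_\ell^{m*}(\mathbf{r}_o, z_o), \tag{32}$$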

where we have defined the dipole angular transfer function as

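$$H_\ell^m(\mathbf{r}_d, z_o) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o\, h(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o)\, Y_\ell^{m*}(\hat{\mathbf{s}}_o), \tag{33}$$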

and the dipole angular spectrum as

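$$F_\ell^m(\mathbf{r}_o, z_o) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o\, f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o)\, Y_\ell^{m*}(\hat{\mathbf{s}}_o). \tag{34}$$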

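Since the dipole point spread function is normalized and real, we know that the dipole angular transfer function is normalized, $\int_{\mathbb{R}^2} d\mathbf{r}_d\, H_0^0(\mathbf{r}_d, 0) = 1/\sqrt{4\pi}$, and conjugate symmetric, $H_\ell^{-m}(\mathbf{r}_d, z_o) = (-1)^m H_\ell^{m*}(\mathbf{r}_d, z_o)$ (for complex spherical harmonics with the Condon–Shortley phase).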

This basis is efficient for simulating and analyzing objects that are spatially sparse and angularly dense; e.g. single fluorophores that are undergoing angular diffusion, or many fluorophores that are within a resolvable volume with varying orientations.
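The sketch below checks Eq. (30) numerically and computes the angular transfer function at a single detector pixel. It uses real-valued orthonormal spherical harmonics up to $\ell = 2$, for which complex conjugation of the basis functions has no effect (Appendix A may use a different but equivalent convention), a Gauss-Legendre rule in $\cos\theta$ combined with a uniform rule in $\phi$, and a hypothetical pixel value $h(\hat{\mathbf{s}}) = (1 - s_z^2)p + s_z^2 q$. Because that function is even in $\hat{\mathbf{s}}$ and depends only on $s_z$, only the $(\ell, m) = (0, 0)$ and $(2, 0)$ coefficients survive.

```python
import numpy as np

# Quadrature on the sphere: Gauss-Legendre in cos(theta), uniform in phi.
zq, wq = np.polynomial.legendre.leggauss(10)
n_phi = 20
phi = 2 * np.pi * np.arange(n_phi) / n_phi
Z, PHI = np.meshgrid(zq, phi, indexing="ij")
w = np.outer(wq, np.full(n_phi, 2 * np.pi / n_phi)).ravel()
sz = Z.ravel()
sx = np.sqrt(1 - sz**2) * np.cos(PHI.ravel())
sy = np.sqrt(1 - sz**2) * np.sin(PHI.ravel())

def real_sh_l2(x, y, z):
    """Real orthonormal spherical harmonics for ell <= 2 (9 rows), ordered
    (0,0), (1,-1), (1,0), (1,1), (2,-2), (2,-1), (2,0), (2,1), (2,2)."""
    c0, c1, c2 = 0.5 / np.sqrt(np.pi), np.sqrt(3 / (4 * np.pi)), 0.5 * np.sqrt(15 / np.pi)
    return np.stack([np.full_like(z, c0), c1 * y, c1 * z, c1 * x,
                     c2 * x * y, c2 * y * z, 0.25 * np.sqrt(5 / np.pi) * (3 * z**2 - 1),
                     c2 * x * z, 0.5 * c2 * (x**2 - y**2)])

Y = real_sh_l2(sx, sy, sz)
gram = (Y * w) @ Y.T  # should be the 9x9 identity matrix

def sft(values):
    """Spherical Fourier transform by quadrature (Y is real here)."""
    return (Y * w) @ values

# Plancherel check for two band-limited real functions.
rng = np.random.default_rng(2)
alpha, beta = rng.normal(size=9), rng.normal(size=9)
a, b = alpha @ Y, beta @ Y
lhs = np.sum(w * a * b)
rhs = sft(a) @ sft(b)

# Angular transfer function at one pixel for made-up values p and q.
p, q = 0.7, 0.2
H_lm = sft((1 - sz**2) * p + sz**2 * q)
```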

4.5. Spatio-angular dipole transfer function

We can arrive at our final basis in two ways: by applying the generalized Plancherel theorem for spherical functions to Eq. (27) or by applying the Fourier convolution theorem to Eq. (32). We follow the first path and find that

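$$G(\boldsymbol{\nu}) = \sum_{\ell=0}^{\infty} \sum_{m=-\ell}^{\ell} \int_{\mathbb{R}} dz_o\, \mathsf{H}_\ell^m(\boldsymbol{\nu}, z_o)\, \mathsf{F}_\ell^{m*}(-\boldsymbol{\nu}, z_o), \tag{35}$$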

where we have defined the dipole spatio-angular transfer function as

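$$\mathsf{H}_\ell^m(\boldsymbol{\nu}, z_o) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}^2} d\mathbf{r}_d\, h(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o)\, Y_\ell^{m*}(\hat{\mathbf{s}}_o)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}, \tag{36}$$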

and the dipole spatio-angular spectrum as

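$$\mathsf{F}_\ell^m(\boldsymbol{\nu}, z_o) = \int_{\mathbb{S}^2} d\hat{\mathbf{s}}_o \int_{\mathbb{R}^2} d\mathbf{r}_o\, f(\mathbf{r}_o, z_o, \hat{\mathbf{s}}_o)\, Y_\ell^{m*}(\hat{\mathbf{s}}_o)\, e^{-2\pi i \mathbf{r}_o \cdot \boldsymbol{\nu}}. \tag{37}$$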

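Since the dipole point spread function is normalized and real, we know that the dipole spatio-angular transfer function is normalized, $\mathsf{H}_0^0(\mathbf{0}, 0) = 1/\sqrt{4\pi}$, and conjugate symmetric, $\mathsf{H}_\ell^{-m}(-\boldsymbol{\nu}, z_o) = (-1)^m \mathsf{H}_\ell^{m*}(\boldsymbol{\nu}, z_o)$ (for complex spherical harmonics with the Condon–Shortley phase).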

This basis is efficient for simulating and analyzing arbitrary samples because it exploits the band limit of the imaging system. We note that most single molecule imaging experiments are efficiently described in this basis because of the effects of spatial and rotational diffusion—see Appendix B for an example.
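The band limit can be seen with a toy model. If the dipole point spread function is quadratic in $\hat{\mathbf{s}}_o$, as the hypothetical toy PSF below is, then its spatial transfer function contains only $\ell = 0$ and $\ell = 2$ spherical harmonics, so only the $\ell \le 2$ terms of the spatio-angular sum contribute even when the object has finer angular structure. The sketch computes the image spectrum twice: by quadrature over orientations, as in Eq. (27), and with the nine spatio-angular coefficients up to $\ell = 2$. It assumes real orthonormal spherical harmonics and a single in-focus plane; with real basis functions and a real object, the conjugated, frequency-reversed spectrum equals the spectrum itself, so the code multiplies the two coefficient arrays directly. The blob widths and the object are made up.

```python
import numpy as np

n = 16  # hypothetical detector grid (unit pixels, periodic)
yy, xx = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
dy, dx = np.minimum(yy, n - yy), np.minimum(xx, n - xx)

def blob(ax, ay):
    """Normalized anisotropic Gaussian spot (hypothetical)."""
    b = np.exp(-(dx / ax) ** 2 - (dy / ay) ** 2)
    return b / b.sum()

# Toy PSF (not a physical model): h = (sx^2 Gx + sy^2 Gy + sz^2 Gz) / (4 pi)
FGx, FGy, FGz = (np.fft.fft2(blob(*a)) for a in [(2.0, 1.2), (1.2, 2.0), (1.8, 1.8)])

# Quadrature nodes on the sphere: Gauss-Legendre in cos(theta), uniform in phi.
zq, wq = np.polynomial.legendre.leggauss(12)
n_phi = 24
Z, PHI = np.meshgrid(zq, 2 * np.pi * np.arange(n_phi) / n_phi, indexing="ij")
w = np.outer(wq, np.full(n_phi, 2 * np.pi / n_phi)).ravel()
sz = Z.ravel()
sx = np.sqrt(1 - sz**2) * np.cos(PHI.ravel())
sy = np.sqrt(1 - sz**2) * np.sin(PHI.ravel())

# Real orthonormal spherical harmonics for ell <= 2 (rows ordered by ell, then m).
c0, c1, c2 = 0.5 / np.sqrt(np.pi), np.sqrt(3 / (4 * np.pi)), 0.5 * np.sqrt(15 / np.pi)
Y = np.stack([np.full_like(sz, c0), c1 * sy, c1 * sz, c1 * sx,
              c2 * sx * sy, c2 * sy * sz, 0.25 * np.sqrt(5 / np.pi) * (3 * sz**2 - 1),
              c2 * sx * sz, 0.5 * c2 * (sx**2 - sy**2)])

def on_sphere(a):
    """Broadcast per-orientation values against (n, n) spectra."""
    return a[:, None, None]

# Spatial transfer function H(nu, 0, s) at every quadrature node.
H = (on_sphere(sx**2) * FGx + on_sphere(sy**2) * FGy + on_sphere(sz**2) * FGz) / (4 * np.pi)

# Object spectrum F(nu, 0, s) with angular content beyond ell = 2.
rng = np.random.default_rng(3)
FA, FB, FC = (np.fft.fft2(rng.random((n, n))) for _ in range(3))
F = FA + on_sphere(sx**4) * FB + on_sphere(sz**6) * FC

G_quadrature = np.einsum("k,kij,kij->ij", w, H, F)  # angular integral by quadrature
H_sa = np.einsum("k,lk,kij->lij", w, Y, H)          # spatio-angular TF, ell <= 2
F_sa = np.einsum("k,lk,kij->lij", w, Y, F)          # spatio-angular spectrum, ell <= 2
G_spatio_angular = np.sum(H_sa * F_sa, axis=0)     # sum truncated at ell = 2
```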

Figure 3 summarizes the relationships between the four bases that we can use to compute the image of a field of dipoles. We reiterate that all four bases may be useful depending on the sample.

Fig. 3.

The mapping between the object space and data space of a dipole imaging system can be computed in four different bases: a delta function basis, a complex-exponential/angular-delta basis, a spatial-delta/spherical-harmonic basis, and a complex-exponential/spherical-harmonic basis. The changes of basis can be computed with the two-dimensional Fourier transform, denoted $\mathcal{F}_{\mathbb{R}^2}$, and the spherical Fourier transform, denoted $\mathcal{F}_{\mathbb{S}^2}$. Gray highlighting indicates which part of each expression is being named.

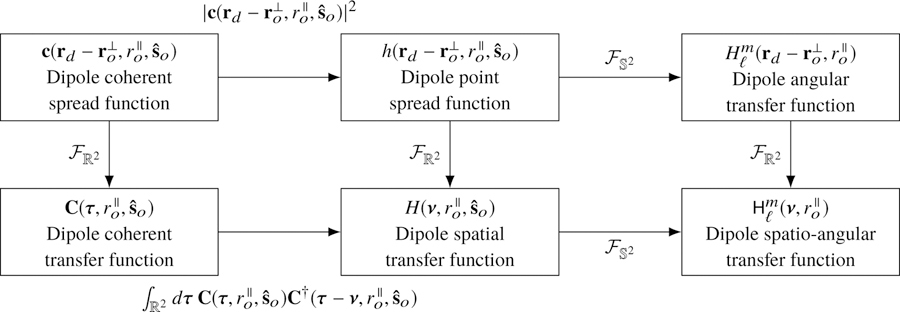

4.6. Dipole coherent transfer functions

Similar to the monopole case, there is an efficient way to calculate the transfer functions using coherent transfer functions. The dipole point spread function can always be written as the squared magnitude of a vector-valued function, $\mathbf{c}(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o)$, called the dipole coherent spread function:

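$$h(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o) = \left\| \mathbf{c}(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o) \right\|^2. \tag{38}$$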

Physically, the dipole coherent spread function corresponds to the vector-valued electric field on the detector with appropriate scaling. We need a vector-valued coherent transfer function since the polarization of the field plays a significant role in dipole imaging, so the dipole point spread function cannot be written as an absolute square of a scalar-valued function.

We can plug Eq. (38) into Eq. (28) and use the autocorrelation theorem to rewrite the dipole spatial transfer function as

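$$H(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o) = \int_{\mathbb{R}^2} d\boldsymbol{\tau}\, \mathbf{C}(\boldsymbol{\tau}, z_o, \hat{\mathbf{s}}_o) \cdot \mathbf{C}^*(\boldsymbol{\tau} - \boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o), \tag{39}$$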

where we have introduced the dipole coherent transfer function as the two-dimensional Fourier transform of the dipole coherent spread function:

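$$\mathbf{C}(\boldsymbol{\nu}, z_o, \hat{\mathbf{s}}_o) = \int_{\mathbb{R}^2} d\mathbf{r}_d\, \mathbf{c}(\mathbf{r}_d, z_o, \hat{\mathbf{s}}_o)\, e^{-2\pi i \mathbf{r}_d \cdot \boldsymbol{\nu}}. \tag{40}$$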

Physically, the dipole coherent transfer function corresponds to the vector-valued electric field created by a dipole oriented along $\hat{\mathbf{s}}_o$ in a Fourier plane of the detector, with appropriate scaling. Similar to the monopole case, we can calculate the dipole-orientation-dependent fields in a Fourier plane of the detector, scale appropriately to find the dipole coherent transfer function, then use the relationships in Fig. 4 to calculate the other transfer functions. Finally, we note that the dipole coherent transfer function is identical (up to scaling factors) to what Agrawal et al. call the Green’s tensor [6] and to Novotny and Hecht’s dyadic point spread function multiplied by the dipole moment vector [32].
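The vector version of the autocorrelation can be checked the same way as in the monopole case. The sketch below invents a two-component coherent transfer function that is linear in the dipole orientation (transverse moments couple uniformly across a circular pupil, and the axial moment couples in proportion to the pupil coordinate); it is a hypothetical stand-in, not a vector-optics calculation. It computes the point spread function as the squared norm of the inverse transform, then compares its Fourier transform with the sum of component-wise autocorrelations from Eq. (39). The $1/n^2$ factor comes from NumPy's unnormalized discrete transforms.

```python
import numpy as np

n, rho, alpha = 32, 6, 0.1  # grid, pupil radius, axial-coupling strength (hypothetical)
kk = np.arange(n)
k = np.where(kk < n // 2, kk, kk - n)  # signed frequency indices
KY, KX = np.meshgrid(k, k, indexing="ij")
pupil = (KX**2 + KY**2) <= rho**2

def toy_coherent_tf(s):
    """Hypothetical 2-component dipole coherent transfer function C(nu, s).
    It is linear in s, so h(s) = h(-s) as required for dipoles."""
    sx, sy, sz = s
    return np.stack([pupil * (sx + alpha * KX * sz),
                     pupil * (sy + alpha * KY * sz)]).astype(complex)

s = np.array([0.48, 0.36, 0.8])  # unit orientation
C = toy_coherent_tf(s)
c = np.fft.ifft2(C, axes=(-2, -1))  # dipole coherent spread function
h = np.sum(np.abs(c) ** 2, axis=0)  # squared norm of the vector field
H_direct = np.fft.fft2(h)           # spatial transfer function

# Eq. (39): sum over field components of each component's autocorrelation.
A = np.empty((n, n), dtype=complex)
for iy in range(n):
    for ix in range(n):
        A[iy, ix] = np.sum(C * np.conj(np.roll(C, (iy, ix), axis=(-2, -1))))
H_autocorr = A / n**2

h_flipped = np.sum(np.abs(np.fft.ifft2(toy_coherent_tf(-s), axes=(-2, -1))) ** 2, axis=0)
```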

Fig. 4.

There is one transfer function for each set of object-space basis functions, and these transfer functions are related by two-dimensional and spherical Fourier transforms—see center and right columns. There is an additional pair of coherent transfer functions that are useful for calculating the transfer functions—see left column.

5. Discussion

5.1. When are alternative bases useful?

Describing a sample f, an imaging system (the operator that maps f to g), or data g in a basis other than a delta-function basis is useful when the signal is sparse or band-limited in the new basis. Expressing a signal in a sparser basis can improve our intuition about the signal and reduce computation time.
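A minimal illustration of the sparsity argument, using a hypothetical one-dimensional signal rather than a microscope sample: a constant plus a single cosine needs one nonzero value per sample in a delta-function basis, but only three nonzero coefficients in a basis of complex exponentials. The grid size, frequency, and tolerance are arbitrary choices.

```python
import numpy as np

N = 64
n = np.arange(N)
# Hypothetical 1D signal: a constant offset plus one spatial frequency.
f = 2.0 + np.cos(2 * np.pi * 5 * n / N)

F = np.fft.fft(f)  # coefficients in a basis of complex exponentials

tol = 1e-9
dense_count = np.count_nonzero(np.abs(f) > tol)   # nonzero samples (delta basis)
sparse_count = np.count_nonzero(np.abs(F) > tol)  # nonzero Fourier coefficients

print(dense_count, sparse_count)
```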

Throughout this work we have emphasized examples of samples that are sparse in alternative bases. For example, dipoles that are undergoing spatial and angular diffusion give rise to dipole emission densities that are sparse in a basis of complex exponentials and spherical harmonics. In the second of this pair of papers we describe how a common imaging system—a single-view aplanatic microscope—can be described sparsely in the same basis, and this will lead us to a natural definition of the angular band-limit of the imaging system that augments the widely known spatial band-limit.

5.2. Alternatives to the spherical harmonics

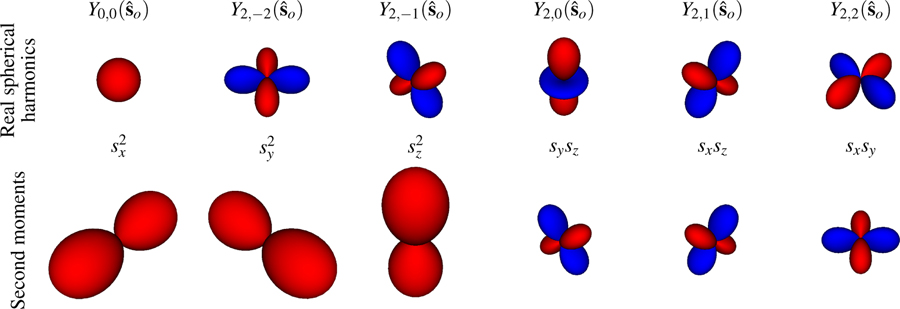

Throughout this work we have used the spherical harmonic functions as a basis for functions on the sphere, but other basis functions can be advantageous in some cases. Several works [7, 15, 17, 19, 27, 33] have used the second moments of the Cartesian unit vectors in orientation space (or simply second moments) as basis functions for the sphere because they arise naturally when computing the dipole point spread function. These works replace the dipole angular transfer function with an alternative that uses the second moments as basis functions, so the forward model can be written as

| (41) |

where

| (42) |

| (43) |

and the second moments are the six squares and pairwise products of the Cartesian components of the unit orientation vector. This formulation is convenient because it can exploit the spatial sparsity of the sample, the angular sparsity of diffusing samples in the basis of second moments, and the angular band-limit of common imaging systems. Additionally, the second-moment approach does not require expanding the dipole point spread function onto spherical harmonics.
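To make the basis concrete, the sketch below computes the six second moments for two hypothetical ensembles: a single dipole fixed along z, and a freely rotating ensemble approximated by random orientations drawn uniformly on the sphere. These are sample averages over assumed orientation lists, not the forward model of Eq. (41); the sample size and seed are arbitrary.

```python
import numpy as np

def second_moments(s):
    """Six second moments of an ensemble of unit orientation vectors.

    s has shape (M, 3). Returns the ensemble averages of
    (sx^2, sy^2, sz^2, sx*sy, sx*sz, sy*sz).
    """
    sx, sy, sz = s.T
    return np.array([
        np.mean(sx * sx), np.mean(sy * sy), np.mean(sz * sz),
        np.mean(sx * sy), np.mean(sx * sz), np.mean(sy * sz),
    ])

# A single dipole fixed along z: only the sz^2 moment is nonzero.
fixed = np.array([[0.0, 0.0, 1.0]])
fixed_moments = second_moments(fixed)

# Hypothetical freely rotating ensemble: orientations uniform on the sphere.
rng = np.random.default_rng(1)
v = rng.normal(size=(200_000, 3))
iso = v / np.linalg.norm(v, axis=1, keepdims=True)
iso_moments = second_moments(iso)  # close to [1/3, 1/3, 1/3, 0, 0, 0]

print(fixed_moments)
print(iso_moments)
```

The isotropic ensemble is already sparse here (three equal nonzero moments); in the spherical harmonic basis it reduces further, to the single ℓ = 0 coefficient.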

The spherical harmonics provide the same conveniences as the second moments: samples undergoing angular diffusion can be described efficiently using the spherical harmonics, and common imaging systems have an angular band-limit. Additionally, the spherical harmonics provide several potential advantages over the second moments. First, the spherical harmonics form a complete basis for functions on the sphere, while the second moments span a much smaller function space. Descoteaux et al. show that the even-order spherical harmonics up to order ℓ and the degree-ℓ homogeneous polynomials restricted to the sphere span the same (ℓ + 1)(ℓ + 2)/2-dimensional function space [34]. Therefore, the spherical harmonic basis can be extended by adding the next order of spherical harmonics to the existing set. Meanwhile, extending the basis of homogeneous polynomials without redundant basis vectors requires a completely new set of functions, because on the sphere (where x² + y² + z² = 1) the degree-(ℓ + 2) polynomials already contain every degree-ℓ polynomial. Second, the spherical harmonics are orthogonal, which will allow us to deploy invaluable tools from linear algebra (linear subspaces, rank, SVD, etc.) to analyze and compare microscope designs. Finally, using the spherical harmonics provides access to a set of fast algorithms. The naive expansion of an arbitrary discretized N-point spherical function onto spherical harmonics (or second moments) requires a dense matrix multiplication, while pioneering work by Driscoll and Healy [35] showed that the forward discrete spherical harmonic transform and its inverse can be computed with asymptotically faster algorithms. To our knowledge no similarly fast algorithms exist for expansion onto the higher-order moments.
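The dimension count and the nesting argument can be checked numerically. The sketch below is an illustration, not the construction used in [34]: it evaluates all degree-ℓ monomials x^a y^b z^c at random points on the sphere, compares the rank of the resulting matrix with the number of even-order spherical harmonics up to order ℓ, and confirms that appending the degree-(ℓ − 2) monomials does not increase the rank. The point count and seed are arbitrary assumptions.

```python
import numpy as np

def monomial_exponents(degree):
    """Exponent triples (a, b, c) with a + b + c == degree, i.e. x^a y^b z^c."""
    return [(a, b, degree - a - b)
            for a in range(degree + 1) for b in range(degree + 1 - a)]

def sample_sphere(m, seed=0):
    """m random unit vectors; any generic point set on the sphere would do."""
    v = np.random.default_rng(seed).normal(size=(m, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def monomial_matrix(points, exponents):
    """Columns are the monomials evaluated at the sample points."""
    x, y, z = points.T
    return np.stack([x**a * y**b * z**c for a, b, c in exponents], axis=1)

def even_sh_dimension(l):
    """Number of spherical harmonics of even order k <= l: sum of (2k + 1)."""
    return sum(2 * k + 1 for k in range(0, l + 1, 2))

pts = sample_sphere(500)

# Rank of the degree-l monomials on the sphere vs. the even-order SH count.
for l in (0, 2, 4, 6):
    A = monomial_matrix(pts, monomial_exponents(l))
    print(l, np.linalg.matrix_rank(A), even_sh_dimension(l), (l + 1) * (l + 2) // 2)

# Degree-(l - 2) monomials add nothing new on the sphere.
for l in (2, 4):
    A = monomial_matrix(pts, monomial_exponents(l))
    B = np.hstack([A, monomial_matrix(pts, monomial_exponents(l - 2))])
    print(l, np.linalg.matrix_rank(A), np.linalg.matrix_rank(B))
```

Each row of the first loop should show three equal counts, and each row of the second loop two equal ranks.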

Zhang et al. [7] have used the second moments to optimize a phase mask that creates a tri-spot point spread function. The authors formulate a system model as a 6×6 matrix that maps the six second moments that describe a rotating single molecule to the six intensity measurements from each lobe and polarization of the tri-spot point spread function. They show that the measurement matrices for existing phase mask designs are rank 4 or 5, and they implement a new rank 6 design that can measure all six second moments. The spherical harmonics can be used to similar effect in this problem by rewriting the system matrix in a basis of spherical harmonics instead of second moments. Additionally, the spherical harmonics form an orthogonal basis, so optimizing the system will be slightly easier (for example, the Fisher information matrix will be diagonal in a spherical harmonic basis).
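Rewriting a system matrix in another basis is an invertible change of coordinates, so it cannot change the rank. The sketch below assumes a hypothetical random rank-4 6×6 matrix (not one of the tri-spot matrices of [7]) acting on second-moment coefficients, builds the change of basis to the real ℓ = 0 and ℓ = 2 spherical harmonics numerically from a common Cartesian convention (an assumption that may differ from Eq. (44) in signs), and confirms that the rank and the predicted measurements are unchanged.

```python
import numpy as np

def second_moment_basis(s):
    """Columns sx^2, sy^2, sz^2, sx*sy, sx*sz, sy*sz at unit vectors s (M x 3)."""
    x, y, z = s.T
    return np.stack([x * x, y * y, z * z, x * y, x * z, y * z], axis=1)

def real_sh_l02(s):
    """Real harmonics y_00, y_2-2, y_2-1, y_20, y_21, y_22 in Cartesian form.

    Assumes a common real convention; other sign or ordering choices would
    change T below but not any rank.
    """
    x, y, z = s.T
    a = 0.5 * np.sqrt(15 / np.pi)
    return np.stack([
        np.full_like(x, 0.5 / np.sqrt(np.pi)),
        a * x * y,
        a * y * z,
        0.25 * np.sqrt(5 / np.pi) * (3 * z * z - 1),
        a * x * z,
        0.25 * np.sqrt(15 / np.pi) * (x * x - y * y),
    ], axis=1)

rng = np.random.default_rng(2)
v = rng.normal(size=(400, 3))
s = v / np.linalg.norm(v, axis=1, keepdims=True)

# Each second moment is an exact combination of the y's, so the
# least-squares fit M = Y @ T has zero residual up to rounding.
M, Y = second_moment_basis(s), real_sh_l02(s)
T, *_ = np.linalg.lstsq(Y, M, rcond=None)

# Hypothetical rank-4 system matrix acting on second-moment coefficients m.
H_moments = rng.normal(size=(6, 4)) @ rng.normal(size=(4, 6))

# If f = M @ m, then f = Y @ (T @ m): the SH coefficients are c = T @ m,
# and the same measurements are produced by H_sh @ c.
H_sh = H_moments @ np.linalg.inv(T)

m = rng.normal(size=6)
print(np.allclose(H_moments @ m, H_sh @ (T @ m)))
print(np.linalg.matrix_rank(H_moments), np.linalg.matrix_rank(H_sh))
```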

Backer et al. [36] have used the transverse second moments to design a polarized-excitation imaging system and reconstruction algorithm that can recover the transverse distribution of fluorescent molecules. Similarly, Zhanghao et al. [5] have used the circular harmonics to design a polarized structured illumination imaging system and reconstruction scheme that can recover the transverse distribution of fluorescent molecules. The transverse second moments and the circular harmonics share the same relationship as the three-dimensional second moments and the spherical harmonics—each pair spans the same function space but only the circular/spherical harmonics are orthogonal. The transverse second moments and the circular harmonics are useful design tools for restricting the number of parameters to estimate, but these approaches artificially restrict the reconstructed dipole distributions to the transverse plane. Finally, we note that the relationship between 2D and 3D estimation problems is slightly simpler in a second moment basis than a spherical/circular harmonic basis. In a second moment basis the six second moments that describe the 3D problem can be truncated to the three second moments that describe the 2D problem. Meanwhile, the spherical harmonics that describe the 3D problem require a matrix multiplication (not a truncation) to map to the circular harmonics.

The diffusion magnetic resonance imaging community uses both the second moments (or second-order tensor) basis functions [37] and the spherical harmonic basis functions [38]. Descoteaux et al. have provided an explicit invertible transformation matrix to convert between the second moments and the zeroth- and second-order spherical harmonics [34]. Since the transformation between these two bases is invertible, functions on the sphere can be described with equal accuracy in either basis.
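For reference, one explicit form of this transformation follows from elementary identities. It assumes a common real convention in which y_{0,0} = 1/(2√π), y_{2,0} = (1/4)√(5/π)(3s_z² − 1), y_{2,2} = (1/4)√(15/π)(s_x² − s_y²), and y_{2,−2}, y_{2,−1}, y_{2,1} equal (1/2)√(15/π) times s_x s_y, s_y s_z, and s_x s_z respectively; signs may differ under other conventions, including Eq. (44), and [34] states the transformation in its own notation.

```latex
\begin{aligned}
s_x^2 &= \tfrac{2\sqrt{\pi}}{3}\, y_{0,0} - \tfrac{2}{3}\sqrt{\tfrac{\pi}{5}}\, y_{2,0} + 2\sqrt{\tfrac{\pi}{15}}\, y_{2,2}, \\
s_y^2 &= \tfrac{2\sqrt{\pi}}{3}\, y_{0,0} - \tfrac{2}{3}\sqrt{\tfrac{\pi}{5}}\, y_{2,0} - 2\sqrt{\tfrac{\pi}{15}}\, y_{2,2}, \\
s_z^2 &= \tfrac{2\sqrt{\pi}}{3}\, y_{0,0} + \tfrac{4}{3}\sqrt{\tfrac{\pi}{5}}\, y_{2,0}, \\
s_x s_y &= 2\sqrt{\tfrac{\pi}{15}}\, y_{2,-2}, \qquad
s_y s_z = 2\sqrt{\tfrac{\pi}{15}}\, y_{2,-1}, \qquad
s_x s_z = 2\sqrt{\tfrac{\pi}{15}}\, y_{2,1}.
\end{aligned}
```

The 6×6 coefficient matrix is invertible; for example, summing the first three rows and using s_x² + s_y² + s_z² = 1 gives y_{0,0} = (s_x² + s_y² + s_z²)/(2√π).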

Another difference between the spherical harmonics and the second moments is that the spherical harmonics are complex-valued functions while the second moments are real-valued. Complex-valued spherical harmonics are convenient for mathematical manipulations, but we can also work with real-valued spherical harmonics for cheaper numerical manipulations or for improving our intuition. One possible definition of real-valued spherical harmonics is

| (44) |

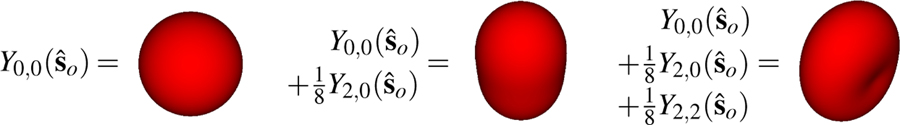

where the pair of lower indices implies that these are real-valued spherical harmonic functions. In Fig. 5 we directly compare the real-valued spherical harmonic functions to the second moment functions.
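Since Eq. (44) is one of several conventions, the sketch below shows one common way to build real harmonics from complex ones, as an assumption rather than a transcription of Eq. (44): √2 (−1)^m times the real part for m > 0, √2 (−1)^m times the imaginary part of the |m| harmonic for m < 0, and the m = 0 harmonic unchanged. It then checks that the ℓ = 2 results match the closed-form Cartesian expressions that correspond to the bottom-row second moments of Fig. 5.

```python
import numpy as np
from math import factorial
from numpy.polynomial import Legendre

def sph_harm_complex(l, m, theta, phi):
    """Complex Y_l^m with the Condon-Shortley phase (theta polar, phi azimuthal)."""
    if m < 0:
        # Y_l^{-|m|} = (-1)^|m| conj(Y_l^{|m|}); (-1)**m equals (-1)**|m|.
        return (-1) ** m * np.conj(sph_harm_complex(l, -m, theta, phi))
    x = np.cos(theta)
    plm = (-1) ** m * (1 - x**2) ** (m / 2) * Legendre.basis(l).deriv(m)(x)
    norm = np.sqrt((2 * l + 1) / (4 * np.pi) * factorial(l - m) / factorial(l + m))
    return norm * plm * np.exp(1j * m * phi)

def sph_harm_real(l, m, theta, phi):
    """One common real convention (an assumption; Eq. (44) may differ in signs)."""
    if m > 0:
        return np.sqrt(2) * (-1) ** m * sph_harm_complex(l, m, theta, phi).real
    if m < 0:
        return np.sqrt(2) * (-1) ** m * sph_harm_complex(l, -m, theta, phi).imag
    return sph_harm_complex(l, 0, theta, phi).real

rng = np.random.default_rng(3)
theta = np.arccos(rng.uniform(-1, 1, 100))
phi = rng.uniform(0, 2 * np.pi, 100)
x, y, z = np.sin(theta) * np.cos(phi), np.sin(theta) * np.sin(phi), np.cos(theta)

# Closed-form Cartesian versions of the l = 2 real harmonics.
a = 0.5 * np.sqrt(15 / np.pi)
cartesian = {
    -2: a * x * y,
    -1: a * y * z,
    0: 0.25 * np.sqrt(5 / np.pi) * (3 * z**2 - 1),
    1: a * x * z,
    2: 0.25 * np.sqrt(15 / np.pi) * (x**2 - y**2),
}
matches = {m: np.allclose(sph_harm_real(2, m, theta, phi), ref)
           for m, ref in cartesian.items()}
print(matches)
```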

Fig. 5.

Top row: Real spherical harmonic basis functions for ℓ = 0 and ℓ = 2. Bottom row: Second moments of the Cartesian unit vectors in orientation space. The radius of each glyph indicates the value of the function along that direction. Red surfaces indicate positive values and blue surfaces indicate negative values. The two sets of basis functions span the same function space, but only the real spherical harmonics form an orthogonal basis.

5.3. Towards spatio-angular reconstructions

We have focused on modeling the mapping between the object and the data in this paper, but ultimately we are interested in reconstructing the object from the data. Considering in-focus objects and applying the monopole approximation simplifies the reconstruction problem because object space and data space are both spaces of square-integrable functions on the plane, so we can directly apply regularized inverse filters and maximum likelihood methods. The complete three-dimensional dipole model expands object space to functions of three-dimensional position and orientation, so the inverse problem becomes much more challenging. In future work we will use the singular value decomposition to find inverse filters, and we will consider using polarizers and multiple views to increase the size of data space.
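For the in-focus monopole case the forward model is a convolution, so a regularized inverse filter acts frequency by frequency. The sketch below uses one common choice, a Tikhonov-style filter conj(H)/(|H|² + λ), in one dimension for brevity; the Gaussian transfer function, the test signal, the noiseless data, and λ are all hypothetical. Larger λ suppresses frequencies where |H| is small, trading resolution for robustness to noise.

```python
import numpy as np

N = 128
n = np.arange(N)
truth = np.cos(2 * np.pi * 3 * n / N) + np.cos(2 * np.pi * 10 * n / N)

# Hypothetical Gaussian transfer function on the integer DFT frequency grid.
freq = np.fft.fftfreq(N) * N        # 0, 1, ..., N/2 - 1, -N/2, ..., -1
H = np.exp(-(freq / 6.0) ** 2)

data = np.fft.ifft(H * np.fft.fft(truth)).real   # noiseless blurred data

def tikhonov_inverse(data, H, lam):
    """Regularized inverse filter conj(H) / (|H|^2 + lam), applied in Fourier space."""
    G = np.conj(H) / (np.abs(H) ** 2 + lam)
    return np.fft.ifft(G * np.fft.fft(data)).real

estimate = tikhonov_inverse(data, H, lam=1e-4)

blur_err = np.linalg.norm(data - truth)
recon_err = np.linalg.norm(estimate - truth)
print(blur_err, recon_err)
```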

6. Conclusions

Many models of fluorescence microscopes use the monopole and scalar approximations, but complete models need to consider dipole and vector optics effects. In this work we have introduced several transfer functions that simplify the mapping between the dipole emission density and the irradiance pattern on the detector. In future papers of this series we will calculate these transfer functions for specific instruments and use the results to simulate and analyze data collected by these instruments. Also, we note that polarized excitation and/or polarization analysis of the fluorescence emission plays an extremely important role in measuring/imaging the orientation distributions of dipoles. Although we have not included the effects of polarizers in this paper, we plan to extend our work in this direction in upcoming papers of this series.

Acknowledgments

Funding

National Institutes of Health (NIH) (R01GM114274, R01EB017293).

We thank Kyle Myers, Harrison Barrett, Scott Carney, Luke Pfister, Jerome Mertz, Sjoerd Stallinga, Mikael Backlund, Matthew Lew, Min Guo, Yicong Wu, Shalin Mehta, Abhishek Kumar, Peter Basser, Marc Levoy, Michael Broxton, Gordon Wetzstein, Hayato Ikoma, Laura Waller, Ren Ng, Tomomi Tani, Michael Shribak, Mai Tran, Amitabh Verma, Xiaochuan Pan, Emil Sidky, Chien-Min Kao, Phillip Vargas, Dimple Modgil, Sean Rose, Corey Smith, Scott Trinkle, and Jianhua Gong for valuable discussions during the development of this work. TC was supported by a University of Chicago Biological Sciences Division Graduate Fellowship, and PL was supported by a Marine Biological Laboratory Whitman Center Fellowship. Support for this work was provided by the Intramural Research Programs of the National Institute of Biomedical Imaging and Bioengineering.

A. Spherical harmonics and the spherical Fourier transform

The spherical harmonic function of degree ℓ and order −ℓ ≤ m ≤ ℓ is defined as [39]

| (45) |

where the associated Legendre polynomials, which include the Condon-Shortley phase, are

| (46) |

and Pℓ(x) are the Legendre polynomials defined by the recurrence

| (47) |

| (48) |

| (49) |
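The recurrence can be implemented directly. The sketch below assumes that Eqs. (47) to (49) are the standard Bonnet recurrence (P₀ = 1, P₁ = x, (ℓ + 1)P_{ℓ+1} = (2ℓ + 1)xP_ℓ − ℓP_{ℓ−1}) and that the associated Legendre polynomials follow the usual derivative formula with the Condon-Shortley factor (−1)^m. It compares the recurrence with NumPy's Legendre evaluation and checks a few low-order closed forms.

```python
import numpy as np
from numpy.polynomial import Legendre, legendre

def legendre_by_recurrence(l_max, x):
    """[P_0(x), ..., P_lmax(x)] from P_0 = 1, P_1 = x and
    (l + 1) P_{l+1}(x) = (2l + 1) x P_l(x) - l P_{l-1}(x)."""
    x = np.asarray(x, dtype=float)
    P = [np.ones_like(x), x.copy()]
    for l in range(1, l_max):
        P.append(((2 * l + 1) * x * P[l] - l * P[l - 1]) / (l + 1))
    return P[: l_max + 1]

def assoc_legendre(l, m, x):
    """P_l^m(x) = (-1)^m (1 - x^2)^(m/2) d^m P_l / dx^m for 0 <= m <= l."""
    x = np.asarray(x, dtype=float)
    return (-1) ** m * (1 - x**2) ** (m / 2) * Legendre.basis(l).deriv(m)(x)

x = np.linspace(-1, 1, 201)
P = legendre_by_recurrence(6, x)

# The recurrence agrees with NumPy's Legendre series evaluation.
recurrence_ok = all(np.allclose(P[l], legendre.legval(x, [0] * l + [1]))
                    for l in range(7))

# Low-order closed forms, including the Condon-Shortley sign.
closed_forms_ok = (np.allclose(assoc_legendre(1, 1, x), -np.sqrt(1 - x**2))
                   and np.allclose(assoc_legendre(2, 1, x), -3 * x * np.sqrt(1 - x**2))
                   and np.allclose(assoc_legendre(2, 2, x), 3 * (1 - x**2)))
print(recurrence_ok, closed_forms_ok)
```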

The spherical harmonics are orthonormal, which means that

| (50) |

where δ denotes the Kronecker delta. The spherical harmonics form a complete basis for the square-integrable functions on the sphere, so an arbitrary square-integrable spherical function can be expanded into a weighted sum of spherical harmonic functions

| (51) |

We can find the spherical harmonic coefficients of a given function using Fourier’s trick: multiply both sides by the complex conjugate of a spherical harmonic, integrate over the sphere, and exploit orthonormality to find that

| (52) |

The coefficients are called the spherical Fourier transform of a spherical function.
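Orthonormality and the coefficient formula can both be checked with numerical quadrature. The sketch below assumes that Eq. (45) uses the standard normalization √((2ℓ + 1)(ℓ − m)!/(4π(ℓ + m)!)). Its grid (Gauss-Legendre nodes in cos ϑ and uniform samples in φ) integrates products of harmonics up to order 4 exactly; it is one convenient choice for this check, not the sampling scheme of Driscoll and Healy [35]. The test function and its weights are hypothetical.

```python
import numpy as np
from math import factorial
from numpy.polynomial import Legendre
from numpy.polynomial.legendre import leggauss

def sph_harm(l, m, theta, phi):
    """Complex Y_l^m with the Condon-Shortley phase (theta polar, phi azimuthal)."""
    if m < 0:
        return (-1) ** m * np.conj(sph_harm(l, -m, theta, phi))
    x = np.cos(theta)
    plm = (-1) ** m * (1 - x**2) ** (m / 2) * Legendre.basis(l).deriv(m)(x)
    norm = np.sqrt((2 * l + 1) / (4 * np.pi) * factorial(l - m) / factorial(l + m))
    return norm * plm * np.exp(1j * m * phi)

# Quadrature grid, exact for products of harmonics with l, l' <= L_MAX.
L_MAX = 4
x_nodes, w_nodes = leggauss(L_MAX + 1)
n_phi = 2 * L_MAX + 1
theta = np.arccos(x_nodes)[:, None]
phi = (2 * np.pi * np.arange(n_phi) / n_phi)[None, :]
weights = w_nodes[:, None] * (2 * np.pi / n_phi)   # broadcasts over phi

def integrate(values):
    """Approximate the integral over the sphere of values sampled on the grid."""
    return np.sum(values * weights)

index = [(l, m) for l in range(L_MAX + 1) for m in range(-l, l + 1)]
Y = {lm: sph_harm(*lm, theta, phi) for lm in index}

# Orthonormality: the Gram matrix should be the identity.
gram = np.array([[integrate(Y[a] * np.conj(Y[b])) for b in index] for a in index])

# Spherical Fourier transform of a hypothetical function with known coefficients.
f = 1.0 * Y[(0, 0)] + 0.5 * Y[(2, 0)] + 0.25 * (Y[(2, 2)] + Y[(2, -2)])
coeffs = {lm: integrate(f * np.conj(Y[lm])) for lm in index}
f_rebuilt = sum(coeffs[lm] * Y[lm] for lm in index)   # re-expand the function

print(np.allclose(gram, np.eye(len(index))), np.allclose(f_rebuilt, f))
```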

We can build an intuition for the spherical harmonics by building several spherical functions from their spherical harmonic components (see Fig. 6). We can build a constant function on the sphere with the ℓ = 0, m = 0 spherical harmonic. The ℓ = 2, m = 0 spherical harmonic is independent of the azimuthal angle φ, so it is rotationally symmetric about the ϑ = 0 axis (see the fourth column of the top row of Fig. 5). Y2,0(ϑ, φ) is proportional to 3cos²ϑ − 1, so it is negative for 54.7° < ϑ < 125.3°, zero at ϑ ≈ 54.7° and ϑ ≈ 125.3°, and positive otherwise. The weighted sum of these two spherical harmonics results in a rotationally symmetric function that is relatively large near the ϑ = 0 axis (the poles) and relatively small near ϑ = 90° (the equator). The real-valued ℓ = 2, m = 2 spherical harmonic (see Eq. (44)) depends on both the polar angle ϑ and the azimuthal angle φ, so it is not rotationally symmetric (see the sixth column of the top row of Fig. 5). The weighted sum of these three spherical harmonics results in a function without rotational symmetry that is relatively large near the ϑ = 0 axis (the poles) and along one transverse axis, and relatively small along the perpendicular transverse axis. By adding spherical harmonics with suitable weights, any square-integrable spherical function can be built (see Eq. (51)).
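The numbers in this paragraph can be reproduced with closed-form ℓ = 0 and ℓ = 2 harmonics. The sketch below assumes a common real convention for the ℓ = 2, m = 2 harmonic (proportional to sin²ϑ cos 2φ), and its weights (0.5 and 0.2) are hypothetical rather than the ones used to draw Fig. 6.

```python
import numpy as np

# Closed-form real harmonics (common convention, assumed).
def y00(theta, phi):
    return 0.5 / np.sqrt(np.pi) + 0 * theta * phi

def y20(theta, phi):
    return 0.25 * np.sqrt(5 / np.pi) * (3 * np.cos(theta) ** 2 - 1) + 0 * phi

def y22(theta, phi):
    return 0.25 * np.sqrt(15 / np.pi) * np.sin(theta) ** 2 * np.cos(2 * phi)

# Nodal cone of y20, where 3 cos^2(theta) = 1.
node = np.degrees(np.arccos(1 / np.sqrt(3)))

def f_sym(theta, phi):      # hypothetical weights: rotationally symmetric sum
    return y00(theta, phi) + 0.5 * y20(theta, phi)

def f_asym(theta, phi):     # hypothetical weights: adds the m = 2 term
    return f_sym(theta, phi) + 0.2 * y22(theta, phi)

pole, equator = 0.0, np.pi / 2
print(round(node, 1), round(180 - node, 1))
print(f_sym(pole, 0.0) > f_sym(equator, 0.0))              # poles vs. equator
print(f_asym(equator, 0.0) > f_asym(equator, np.pi / 2))   # x axis vs. y axis
```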

Fig. 6.

Square-integrable functions on the sphere can be written as a weighted sum of spherical harmonics. The ℓ = 0, m = 0 spherical harmonic can represent a constant function (left), m = 0 spherical harmonics can represent rotationally symmetric functions (center), and m ≠ 0 spherical harmonics can represent non-rotationally symmetric functions (right).

B. Spatio-angular dynamics

In this appendix we demonstrate the calculation of the dipole emission density from a simple underlying dynamic model. Our analysis is related to Stallinga’s [18], but here we consider an ensemble of dipoles diffusing both spatially and rotationally with homogeneous and isotropic diffusion coefficients. We start by considering a sample with all of its fluorescent molecules in the ground state. At t = 0 an instantaneous pulse excites a spatio-angular distribution of dipoles, so the initial condition is a known function of position and orientation. As time evolves, we assume the fluorescent molecules decay at a constant rate κ(d), diffuse spatially with a constant coefficient DR, and diffuse angularly with a constant coefficient DS, so the dynamics are governed by the partial differential equation

| (53) |

As written this equation is difficult to solve, but in a basis of complex exponentials and spherical harmonics it reduces to a set of decoupled ordinary differential equations, one for each spatio-angular frequency component. We start by expanding the excited-state distribution into its frequency components using

| (54) |

Plugging this expansion into Eq. (53) we find that

| (55) |

We can simplify this equation using the eigenfunctions and eigenvalues of the spatial and spherical Laplacian operators

| (56) |

| (57) |

to find that

| (58) |

Comparing both sides we find that

| (59) |

The solution of this equation is given by

| (60) |

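where the effective decay rate of each spatio-angular frequency component is the sum of the fluorescence decay rate and the spatial and angular diffusion terms in Eq. (59).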

Eq. (60) shows that as time evolves the initial spectrum decays as molecules return to the ground state, and that the high spatial- and angular-frequency components decay faster than the low-frequency components. In other words, we can interpret diffusion as a low-pass filtering operation applied to the spatio-angular spectrum.
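A worked version of these steps is sketched below in illustrative notation that may differ from Eqs. (53) to (60). It assumes the excited-state density is written n(r, ŝ, t) with spectrum N_ℓ^m(ν, t), that spatial frequencies ν enter through exp(i2πν·r), and that the spherical Laplacian has eigenvalue −ℓ(ℓ + 1) on each spherical harmonic; Γ_ℓ(ν) is a name introduced here for the combined decay rate.

```latex
\begin{aligned}
\frac{\partial n}{\partial t} &= -\kappa^{(d)} n + D_R \nabla^2_{\mathbf{r}} n + D_S \nabla^2_{\hat{\mathbf{s}}} n, \\
n(\mathbf{r}, \hat{\mathbf{s}}, t) &= \sum_{\ell=0}^{\infty}\sum_{m=-\ell}^{\ell} \int d\boldsymbol{\nu}\, N_{\ell}^{m}(\boldsymbol{\nu}, t)\, e^{i2\pi\boldsymbol{\nu}\cdot\mathbf{r}}\, Y_{\ell}^{m}(\hat{\mathbf{s}}), \\
\nabla^2_{\mathbf{r}}\, e^{i2\pi\boldsymbol{\nu}\cdot\mathbf{r}} &= -4\pi^2|\boldsymbol{\nu}|^2\, e^{i2\pi\boldsymbol{\nu}\cdot\mathbf{r}}, \qquad
\nabla^2_{\hat{\mathbf{s}}}\, Y_{\ell}^{m} = -\ell(\ell+1)\, Y_{\ell}^{m}, \\
\frac{d N_{\ell}^{m}}{dt} &= -\Gamma_{\ell}(\boldsymbol{\nu})\, N_{\ell}^{m}, \qquad
\Gamma_{\ell}(\boldsymbol{\nu}) = \kappa^{(d)} + 4\pi^2 D_R |\boldsymbol{\nu}|^2 + D_S\, \ell(\ell+1), \\
N_{\ell}^{m}(\boldsymbol{\nu}, t) &= N_{\ell}^{m}(\boldsymbol{\nu}, 0)\, e^{-\Gamma_{\ell}(\boldsymbol{\nu})\, t}.
\end{aligned}
```

Because Γ_ℓ(ν) grows with both |ν| and ℓ, the last line is the low-pass filtering described above.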

Eq. (60) gives the excited-state spectrum, but we can only detect photons emitted as molecules decay to the ground state during the exposure time te. In other words, we can only measure the quantity

| (61) |
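To see the filtering numerically, the sketch below assumes, as a simplification, that the detected signal for each spatio-angular component is proportional to the time integral of its excited-state amplitude over the exposure, so each component is weighted by (1 − exp(−Γt_e))/Γ; constant prefactors such as a radiative rate are dropped. The decay-rate form and all parameter values are hypothetical and dimensionless (time in units of the fluorescence lifetime).

```python
import numpy as np

def decay_rate(nu, l, kappa, D_R, D_S):
    """Assumed decay rate of one component: kappa + 4 pi^2 D_R |nu|^2 + D_S l (l + 1)."""
    return kappa + 4 * np.pi**2 * D_R * np.abs(nu) ** 2 + D_S * l * (l + 1)

def exposure_weight(nu, l, kappa, D_R, D_S, t_e):
    """Integral of exp(-Gamma t) over 0 <= t <= t_e, i.e. (1 - exp(-Gamma t_e)) / Gamma,
    computed stably with expm1."""
    gamma = decay_rate(nu, l, kappa, D_R, D_S)
    return -np.expm1(-gamma * t_e) / gamma

# Hypothetical, dimensionless parameters.
kappa, D_R, D_S, t_e = 1.0, 0.01, 0.1, 10.0
w00 = exposure_weight(0.0, 0, kappa, D_R, D_S, t_e)

# Relative weight of each angular order l at zero spatial frequency.
angular = [exposure_weight(0.0, l, kappa, D_R, D_S, t_e) / w00 for l in (0, 2, 4, 6)]
# Relative weight of increasing spatial frequencies for l = 0.
spatial = [exposure_weight(nu, 0, kappa, D_R, D_S, t_e) / w00 for nu in (0.0, 1.0, 2.0)]
print(np.round(angular, 3), np.round(spatial, 3))
```

Both lists decrease monotonically, which is the low-pass behavior of Eq. (60) carried through the exposure.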

The main conclusion of this section is that spatial and angular diffusion can be efficiently described in a basis of complex exponentials and spherical harmonics. Although we have only considered homogeneous and isotropic diffusion with constant diffusion coefficients, the complex exponentials and spherical harmonics can be used to simplify the analysis of inhomogeneous and anisotropic diffusion as well. Stallinga has used the spherical harmonics to model angular diffusion in rotationally symmetric potentials [18], and his approach can be generalized to arbitrary spatial and angular diffusion potentials.

Footnotes

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References

1. Vrabioiu AM and Mitchison TJ, “Structural insights into yeast septin organization from polarized fluorescence microscopy,” Nature 443, 466–469 (2006).

2. Mattheyses AL, Kampmann M, Atkinson CE, and Simon SM, “Fluorescence anisotropy reveals order and disorder of protein domains in the nuclear pore complex,” Biophys. J 99, 1706–1717 (2010).

3. Mehta SB, McQuilken M, La Rivière PJ, Occhipinti P, Verma A, Oldenbourg R, Gladfelter AS, and Tani T, “Dissection of molecular assembly dynamics by tracking orientation and position of single molecules in live cells,” Proc. Natl. Acad. Sci. U.S.A 113, E6352–E6361 (2016).

4. McQuilken M, Jentzsch MS, Verma A, Mehta SB, Oldenbourg R, and Gladfelter AS, “Analysis of septin reorganization at cytokinesis using polarized fluorescence microscopy,” Front. Cell Dev. Biol 5, 42 (2017).

5. Zhanghao K, Chen X, Liu W, Li M, Shan C, Wang X, Zhao K, Lai A, Xie H, Dai Q, and Xi P, “Structured illumination in spatial-orientational hyperspace,” https://arxiv.org/abs/1712.05092.

6. Agrawal A, Quirin S, Grover G, and Piestun R, “Limits of 3D dipole localization and orientation estimation for single-molecule imaging: towards Green’s tensor engineering,” Opt. Express 20, 26667–26680 (2012).

7. Zhang O, Lu J, Ding T, and Lew MD, “Imaging the three-dimensional orientation and rotational mobility of fluorescent emitters using the tri-spot point spread function,” Appl. Phys. Lett 113, 031103 (2018).

8. Foreman MR and Török P, “Fundamental limits in single-molecule orientation measurements,” New J. Phys 13, 093013 (2011).

9. Chao J, Ward ES, and Ober RJ, “Fisher information theory for parameter estimation in single molecule microscopy: tutorial,” J. Opt. Soc. Am. A 33, B36–B57 (2016).

10. Backlund MP, Lew MD, Backer AS, Sahl SJ, and Moerner WE, “The role of molecular dipole orientation in single-molecule fluorescence microscopy and implications for super-resolution imaging,” ChemPhysChem 15, 587–599 (2014).

11. Lew MD, Backlund MP, and Moerner WE, “Rotational mobility of single molecules affects localization accuracy in super-resolution fluorescence microscopy,” Nano Lett 13, 3967–3972 (2013).

12. Böhmer M and Enderlein J, “Orientation imaging of single molecules by wide-field epifluorescence microscopy,” J. Opt. Soc. Am. B 20, 554–559 (2003).

13. Lieb MA, Zavislan JM, and Novotny L, “Single-molecule orientations determined by direct emission pattern imaging,” J. Opt. Soc. Am. B 21, 1210–1215 (2004).

14. Toprak E, Enderlein J, Syed S, McKinney SA, Petschek RG, Ha T, Goldman YE, and Selvin PR, “Defocused orientation and position imaging (DOPI) of myosin V,” Proc. Natl. Acad. Sci. U.S.A 103, 6495–6499 (2006).

15. Aguet F, Geissbühler S, Märki I, Lasser T, and Unser M, “Super-resolution orientation estimation and localization of fluorescent dipoles using 3-D steerable filters,” Opt. Express 17, 6829–6848 (2009).

16. Mortensen KI, Churchman LS, Spudich JA, and Flyvbjerg H, “Optimized localization analysis for single-molecule tracking and super-resolution microscopy,” Nat. Methods 7, 377–381 (2010).

17. Backer AS and Moerner WE, “Extending single-molecule microscopy using optical Fourier processing,” J. Phys. Chem. B 118, 8313–8329 (2014).

18. Stallinga S, “Effect of rotational diffusion in an orientational potential well on the point spread function of electric dipole emitters,” J. Opt. Soc. Am. A 32, 213–223 (2015).

19. Zhang O and Lew MD, “Fundamental limits on measuring the rotational constraint of single molecules using fluorescence microscopy,” https://arxiv.org/abs/1811.09017.

20. Born M and Wolf E, Principles of Optics: Electromagnetic Theory of Propagation, Interference and Diffraction of Light (Elsevier Science Limited, 1980).

21. Gibson SF and Lanni F, “Diffraction by a circular aperture as a model for three-dimensional optical microscopy,” J. Opt. Soc. Am. A 6, 1357–1367 (1989).

22. Richards B and Wolf E, “Electromagnetic diffraction in optical systems, II. structure of the image field in an aplanatic system,” Proc. Royal Soc. Lond. A: Math. Phys. Eng. Sci 253, 358–379 (1959).

23. Fourkas JT, “Rapid determination of the three-dimensional orientation of single molecules,” Opt. Lett 26, 211–213 (2001).

24. Ruiter AGT, Veerman JA, Garcia-Parajo MF, and van Hulst NF, “Single molecule rotational and translational diffusion observed by near-field scanning optical microscopy,” J. Phys. Chem. A 101, 7318–7323 (1997).

25. Forkey JN, Quinlan ME, Alexander Shaw M, Corrie JET, and Goldman YE, “Three-dimensional structural dynamics of myosin V by single-molecule fluorescence polarization,” Nature 422, 399–404 (2003).

26. Bhattacharya S, Sharma DK, Saurabh S, De S, Sain A, Nandi A, and Chowdhury A, “Plasticization of poly(vinylpyrrolidone) thin films under ambient humidity: Insight from single-molecule tracer diffusion dynamics,” J. Phys. Chem. B 117, 7771–7782 (2013).

27. Backer AS and Moerner WE, “Determining the rotational mobility of a single molecule from a single image: a numerical study,” Opt. Express 23, 4255–4276 (2015).

28. Barrett H and Myers K, Foundations of Image Science (Wiley-Interscience, 2004).

29. Mansuripur M, Classical Optics and Its Applications (Cambridge University Press, 2009).

30. Gu M, Advanced Optical Imaging Theory, Springer Series in Optical Sciences (Springer, 2000).

31. Botcherby E, Juškaitis R, Booth M, and Wilson T, “An optical technique for remote focusing in microscopy,” Opt. Commun 281, 880–887 (2008).

32. Novotny L and Hecht B, Principles of Nano-Optics (Cambridge University Press, 2006).

33. Brasselet S, “Polarization-resolved nonlinear microscopy: application to structural molecular and biological imaging,” Adv. Opt. Photon 3, 205 (2011).

34. Descoteaux M, Angelino E, Fitzgibbons S, and Deriche R, “Apparent diffusion coefficients from high angular resolution diffusion imaging: estimation and applications,” Magn. Reson. Medicine 56, 395–410 (2006).

35. Driscoll J and Healy D, “Computing Fourier transforms and convolutions on the 2-sphere,” Adv. Appl. Math 15, 202–250 (1994).

36. Backer AS, Lee MY, and Moerner WE, “Enhanced DNA imaging using super-resolution microscopy and simultaneous single-molecule orientation measurements,” Optica 3, 659–666 (2016).

37. Basser P, Mattiello J, and LeBihan D, “MR diffusion tensor spectroscopy and imaging,” Biophys. J 66, 259–267 (1994).

38. Tournier J-D, Calamante F, Gadian DG, and Connelly A, “Direct estimation of the fiber orientation density function from diffusion-weighted MRI data using spherical deconvolution,” NeuroImage 23, 1176–1185 (2004).

39. Schaeffer N, “Efficient spherical harmonic transforms aimed at pseudospectral numerical simulations,” Geochem. Geophys. Geosystems 14, 751–758 (2013).