Keywords: encoding, episodic memory, hippocampus

Abstract

The hallmark of episodic memory is recollecting multiple perceptual details tied to a specific spatial-temporal context. To remember an event, it is therefore necessary to integrate such details into a coherent representation during initial encoding. Here we tested how the brain encodes and binds multiple, distinct kinds of features in parallel, and how this process evolves over time during the event itself. We analyzed data from 27 human subjects (16 females, 11 males) who learned a series of objects uniquely associated with a color, a panoramic scene location, and an emotional sound while fMRI data were collected. By modeling how brain activity relates to memory for upcoming or just-viewed information, we were able to test how the neural signatures of individual features as well as the integrated event changed over the course of encoding. We observed a striking dissociation between early and late encoding processes: left inferior frontal and visuo-perceptual signals at the onset of an event tracked the amount of detail subsequently recalled and were dissociable based on distinct remembered features. In contrast, memory-related brain activity shifted to the left hippocampus toward the end of an event, which was particularly sensitive to binding item color and sound associations with spatial information. These results provide evidence of early, simultaneous feature-specific neural responses during episodic encoding that predict later remembering and suggest that the hippocampus integrates these features into a coherent experience at an event transition.

SIGNIFICANCE STATEMENT Understanding and remembering complex experiences are crucial for many socio-cognitive abilities, including being able to navigate our environment, predict the future, and share experiences with others. Probing the neural mechanisms by which features become bound into meaningful episodes is a vital part of understanding how we view and reconstruct the rich detail of our environment. By testing memory for multimodal events, our findings show a functional dissociation between early encoding processes that engage lateral frontal and sensory regions to successfully encode event features, and later encoding processes that recruit hippocampus to bind these features together. These results highlight the importance of considering the temporal dynamics of encoding processes supporting multimodal event representations.

Introduction

Our ongoing perceptual experience of the world includes a stream of disparate, multimodal features unfolding in parallel. Memory-related increases in brain activity during encoding are often found in visuo-perceptual brain regions (Spaniol et al., 2009; Kim, 2011), emphasizing that stronger, more precise representations of perceptual information support memory formation. For instance, comparing encoding of different kinds of information, such as words and pictures, reveals category-selective patterns of sensory activity that predict subsequent recollection of these individual features (Gottlieb et al., 2010; Duarte et al., 2011; Park and Rugg, 2011). Yet natural experience not only involves representing different perceptual features, but crucially, it requires us to encode them simultaneously. Moreover, we later remember this information as a unified event. In this study, we investigated how perceptual features are uniquely represented during encoding and the neural operations that bind them together.

Studies addressing these issues have largely examined memory for simple paired associations studied as short “events.” This research involves presenting subjects with pairs of features within the same trial, such as a location, person, emotion, or color. By predicting subsequent memory separately for each component feature (Uncapher et al., 2006; Staresina and Davachi, 2008; Gottlieb et al., 2012; Ritchey et al., 2019) or contrasting events with overlapping or nonoverlapping feature types (Horner et al., 2015), these studies take a step toward understanding how the brain represents and encodes distinct kinds of information in parallel. Moreover, some regions, most notably the hippocampus, show a preference for binding pairs of features during encoding rather than promoting memory for either detail alone (Uncapher et al., 2006; Horner et al., 2015). However, restricting encoding trials to bimodal associations leaves it unclear how distinct feature signals of more complex experiences are simultaneously distinguished, encoded, and integrated as the event unfolds.

The hippocampus is considered to be crucial for binding elements of our experience with contextual information (Davachi, 2006; Diana et al., 2007; Eichenbaum et al., 2007; Ranganath, 2010), although less is known about how exactly the hippocampus organizes multimodal information. Its involvement might be tied to total memory content, including details and their associations, during event encoding (Addis and McAndrews, 2006; Staresina and Davachi, 2008; Park and Rugg, 2011), such that activity tracks the amount of information subsequently recalled (Qin et al., 2011) regardless of its content or relational structure. Alternatively, the hippocampus could be specifically recruited to organize our environment in a structured way, perhaps around a spatial framework (Horner et al., 2015; Deuker et al., 2016; Zeidman and Maguire, 2016). It is also important to distinguish the role of the hippocampus in multimodal event encoding from that of other brain regions that show encoding effects related to organization and integration, such as left inferior frontal gyrus (L IFG) (Ranganath et al., 2004; Addis and McAndrews, 2006; Staresina and Davachi, 2006; Park and Rugg, 2011).

Here, we present the encoding data from our prior work (Cooper and Ritchey, 2019), in which participants encoded and reconstructed complex events. In line with past research (Horner and Burgess, 2013; Joensen et al., 2020), we previously showed that successful recall of the event associations in our task (an object with a color, scene location, and sound) exhibits a dependent structure (Cooper and Ritchey, 2019). In the current analyses, we leverage the multimodal aspect of this paradigm to test how features are prioritized and integrated during encoding. To preview the results, we found that visuo-perceptual and left inferior frontal regions supported feature-specific and binding processes, respectively. Surprisingly, however, hippocampal activity at the onset of events did not correlate with subsequent memory. Based on recent findings that hippocampal activity is particularly enhanced at the end of an event (Ben-Yakov et al., 2014; Ben-Yakov and Henson, 2018), we further investigated the temporal dynamics of event encoding. Specifically, we contrasted the neural processes supporting encoding of upcoming versus just-studied information, testing whether hippocampal signals at an event transition act to bind episodic features in memory.

Materials and Methods

Portions of this dataset have been previously reported (Cooper and Ritchey, 2019). Whereas the previous paper was focused on functional connectivity analyses of the retrieval phase, the current paper reports on univariate activation analyses of the encoding phase. Methods for MRI data collection, the task procedure, and behavioral analyses have been detailed previously (Cooper and Ritchey, 2019), and so are summarized here.

Experimental design and statistical analysis

Participants.

Twenty-seven participants took part in the current experiment (16 females, 11 males). All participants were 18–35 years of age (mean = 21.7 years, SD = 3.58 years) and did not have a history of any psychiatric or neurological disorders. Seven additional subjects took part but were excluded from data analyses: 2 participants did not complete the experiment (1 due to anxiety and the other due to excessive movement in the MRI scanner), 4 additional participants had chance-level performance on the memory task, and 1 subject was excluded after data quality checks revealed 3 of 6 encoding functional runs exceeded our motion criteria. Informed consent was obtained from all participants before the experiment, and participants were reimbursed for their time. Procedures were approved by the Boston College Institutional Review Board.

Paradigm.

Participants were presented with a series of 144 unique object “events” in the MRI scanner, 24 per scan run. Each object was presented in a color from a continuous CIE L*a*b* color spectrum, at a scene location within 1 of 6 panoramic environments, and together with 1 of 12 sounds (6 emotionally negative and 6 neutral). All sounds contained natural, easily recognizable content and were 6 s in duration, matching the time each event was displayed during encoding. Events were separated by a 1 s fixation. Participants were instructed to integrate the object and its associated features into a meaningful event, but no response was required (see Fig. 1A). The assignment of features to objects and the presentation order of events were randomized for each subject. This memory encoding phase is the focus of all fMRI analyses presented here (for results from the retrieval phase, see Cooper and Ritchey, 2019). After encoding 24 events in each run, subjects were tested on their memory for the features associated with each object, using the objects, presented in grayscale, as memory cues. On each retrieval trial, participants first tried to bring all of the features to mind for 4 s; they were then prompted to report whether the object had been paired with a negative or a neutral sound (2 s) and to reconstruct the object's color and spatial location (6 s each). For the sound feature, participants also reported their confidence in their decision (“maybe” or “sure”); for the visual features, they adjusted the object's color and moved it around the panorama to recreate its visual appearance as precisely as possible in 360-degree space.
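The trial timing above can be made concrete with a small worked sketch. It assumes, although the text does not say so, that the first event of a run begins at t = 0 s and that each event is followed directly by its 1 s fixation; the constant and function names are hypothetical. Under those assumptions, the onset and offset of the same trial fall 6 s apart, offset N and onset N + 1 fall 1 s apart, and covering all 24 events plus 6 s of trailing data at TR = 1.5 s takes 116 volumes, consistent with the 116 encoding TRs retained during preprocessing.

```python
import math

# Hypothetical constants; the values are taken from the Methods text.
EVENTS_PER_RUN = 24      # 144 events spread over 6 runs
EVENT_DURATION_S = 6.0   # each event (and its sound) lasts 6 s
FIXATION_S = 1.0         # fixation separating consecutive events
TR_S = 1.5               # EPI repetition time (1500 ms)
TAIL_S = 6.0             # data retained after the final event ends


def encoding_schedule(n_events=EVENTS_PER_RUN):
    """(onset, offset) pairs in seconds, assuming the first onset is at t = 0."""
    step = EVENT_DURATION_S + FIXATION_S
    return [(k * step, k * step + EVENT_DURATION_S) for k in range(n_events)]


def trs_needed(schedule, tr=TR_S, tail=TAIL_S):
    """Number of volumes needed to cover every event plus the trailing window."""
    last_offset = schedule[-1][1]
    return math.ceil((last_offset + tail) / tr)


schedule = encoding_schedule()
print(schedule[0], schedule[-1])   # (0.0, 6.0) (161.0, 167.0)
print(trs_needed(schedule))        # 116
```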

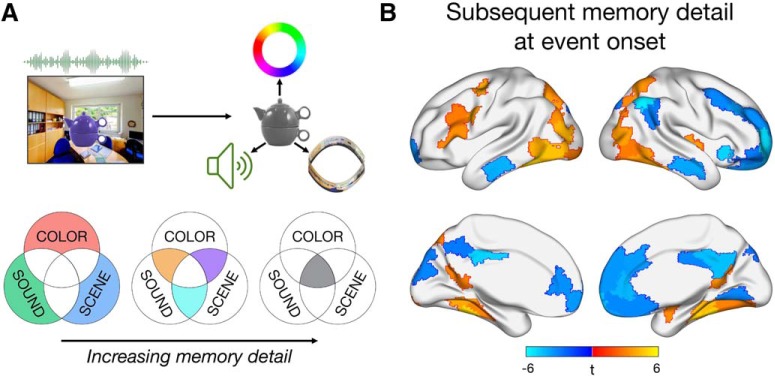

Figure 1.

Task design and neural correlates of subsequent memory detail at the onset of each multifeature event. A, During encoding, participants learned a series of events presented for 6 s, each uniquely associating an object to a color, sound, and scene location. For subsequent memory analyses, each event was coded by its level of memory detail, specified by the number of features (0–3) that were successfully recollected. B, Significant relationships (p < 0.05, FDR-corrected) between activity at event onset and the number of features subsequently recalled. All voxels within a functionally homogeneous region, defined based on a whole-brain parcellation, are color-coded by the region's t statistic.

fMRI data acquisition.

MRI scanning was performed using a 3-T Siemens Prisma MRI scanner at the Harvard Center for Brain Science, with a 32-channel head coil. Structural MRI images were obtained using a T1-weighted (T1w) multiecho MPRAGE protocol (FOV = 256 mm, 1 mm isotropic voxels, 176 sagittal slices with interleaved acquisition, TR = 2530 ms, TE = 1.69/3.55/5.41/7.27 ms, flip angle = 7°, phase encoding: anterior–posterior, parallel imaging = GRAPPA, acceleration factor = 2). Functional images were acquired using a whole-brain multiband EPI sequence (FOV = 208 mm, 2 mm isotropic voxels, 69 slices with interleaved acquisition, TR = 1500 ms, TE = 28 ms, flip angle = 75°, phase encoding: anterior–posterior, parallel imaging = GRAPPA, acceleration factor = 2), for a total of 466 TRs encompassing encoding and retrieval trials per scan run. Fieldmap scans were acquired to correct the EPI images for signal distortion (TR = 314 ms, TE = 4.45/6.91 ms, flip angle = 55°).

Behavioral data processing.

As previously reported (Cooper and Ritchey, 2019), participants' responses to the object color and scene location questions were measured in terms of error, the difference between the target (encoded) feature value and the reported feature value in 360-degree space. Responses to the sound question were scored in terms of accuracy and confidence. Each encoding event was characterized by its pattern of memory success (correct vs incorrect subsequent retrieval) for each of these features. We a priori chose a more conservative criterion for “correct” memory retrieval than in our previous analysis of these data (Cooper and Ritchey, 2019), both to ensure that we included only trials associated with confident and precise subsequent recollection and to balance the distribution of trials more evenly across different combinations of remembered features. Specifically, the sound feature was considered correctly recalled when the participant correctly identified the associated sound as negative or neutral and reported this memory with high confidence. For the color and scene location features, we first fitted a mixture model (Zhang and Luck, 2008; Bays et al., 2009) to the aggregate group error data, separately for each feature. The mixture model includes a uniform distribution to estimate the proportion of responses that reflect guessing, as well as a circular Gaussian (von Mises) distribution to estimate the proportion of responses that reflect successful remembering. We classified a color or scene response as correct if its error had at least a 75% probability of belonging to the von Mises rather than the uniform distribution, resulting in a threshold of ±42 degrees for color and ±24 degrees for scene memory. Although participant-level models could capture variation in memory precision between subjects (with participant-specific thresholds for “correct”/“incorrect” memory), we chose to define memory based on group-level data. Modeling at the subject level may produce variable threshold estimates because of differences in model fit rather than differences in memory per se. Moreover, trials classified as “correct” or “incorrect” would not be directly comparable across subjects. In line with our prior work (Richter et al., 2016; Cooper et al., 2017; Cooper and Ritchey, 2019), we therefore judged a threshold for correct memory based on group data to be most appropriate.
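The classification rule above can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes errors are centered on the target (von Mises mean of 0), and the guess rate and concentration (κ) values used below are placeholders; in the study they would come from fitting the mixture to the aggregate group errors, a step not shown here. Requiring the posterior probability of the von Mises component to be at least p reduces to cos(error) ≥ ln[p · g · I0(κ) / ((1 − p)(1 − g))] / κ, which the function solves for the largest admissible |error|.

```python
import math


def bessel_i0(kappa, tol=1e-12):
    """Modified Bessel function of the first kind, order 0, via its power series."""
    total, term, k = 1.0, 1.0, 0
    while term > tol * total:
        k += 1
        term *= (kappa / 2.0) ** 2 / (k * k)
        total += term
    return total


def posterior_remembered(error_deg, guess_rate, kappa):
    """P(error came from the von Mises 'remembered' component), for 0 < guess_rate < 1."""
    e = math.radians(error_deg)
    vm = math.exp(kappa * math.cos(e)) / (2 * math.pi * bessel_i0(kappa))
    uniform = 1.0 / (2 * math.pi)
    remembered = (1 - guess_rate) * vm
    return remembered / (remembered + guess_rate * uniform)


def correct_threshold_deg(guess_rate, kappa, p=0.75):
    """Largest |error| in degrees whose posterior of 'remembered' is at least p."""
    odds = p / (1 - p)
    c = math.log(odds * guess_rate * bessel_i0(kappa) / (1 - guess_rate)) / kappa
    if c <= -1:
        return 180.0   # every error meets the criterion
    if c >= 1:
        return 0.0     # no error meets the criterion
    return math.degrees(math.acos(c))


# Placeholder parameters, not the study's fitted values.
g, kappa = 0.3, 5.0
cut = correct_threshold_deg(g, kappa)
print(round(cut, 1))
print(posterior_remembered(cut, g, kappa))  # approximately 0.75 by construction
```

A higher guess rate or a broader von Mises component (smaller κ) shifts the cut toward smaller errors, which is why the color and scene thresholds can differ.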

For each encoding event, a composite measure of memory detail was calculated as the number of features subsequently recalled (0–3). Of note, this is a slightly different composite measure of memory than we have used previously (Cooper and Ritchey, 2019), where we accounted for variations in confidence and precision within “successful” recall. However, we have already restricted our measure of successful memory for each feature to a confident and precise judgment; and, in our previous behavioral analyses, it was successful recall but not the precision of recall that showed a dependent structure in memory.
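A per-trial scoring sketch for the composite measure follows. The ±42° and ±24° thresholds and the high-confidence rule for sound come from the text. The function and argument names are hypothetical, and treating an error exactly at the threshold as correct is an assumption.

```python
COLOR_THRESHOLD_DEG = 42.0   # from the group mixture model (color)
SCENE_THRESHOLD_DEG = 24.0   # from the group mixture model (scene location)


def circular_error(target_deg, response_deg):
    """Signed response error wrapped into [-180, 180) degrees."""
    return (response_deg - target_deg + 180.0) % 360.0 - 180.0


def score_trial(color_target, color_resp, scene_target, scene_resp,
                sound_correct, sound_confident):
    """Return per-feature success flags and the 0-3 memory detail score."""
    color_ok = abs(circular_error(color_target, color_resp)) <= COLOR_THRESHOLD_DEG
    scene_ok = abs(circular_error(scene_target, scene_resp)) <= SCENE_THRESHOLD_DEG
    sound_ok = sound_correct and sound_confident   # only "sure" responses count
    features = {"color": color_ok, "sound": sound_ok, "scene": scene_ok}
    detail = sum(features.values())                # number of features recalled
    return features, detail


# Color error of 30 degrees (correct), scene error of 30 degrees (incorrect),
# confident correct sound judgment: memory detail of 2.
print(score_trial(350, 20, 100, 130, True, True))
```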

fMRI data preprocessing.

All data preprocessing was performed using FMRIPrep version 1.0.3 (RRID:SCR_016216) (Esteban et al., 2019) with the default processing steps. Each T1w volume was corrected for intensity nonuniformity and skull-stripped. Spatial normalization to the ICBM 152 Nonlinear Asymmetrical template version 2009c was performed through nonlinear registration, using brain-extracted versions of both the T1w volume and template. All analyses reported here use images in MNI space. Brain tissue segmentation of CSF, white matter, and gray matter was performed on the brain-extracted T1w image. Functional data were slice-timing corrected, motion-corrected, and corrected for field distortion. This was followed by coregistration to the corresponding T1w using boundary-based registration with 9 degrees of freedom. Six principal components of a combined CSF and white matter signal were extracted using aCompCor (Behzadi et al., 2007) for use as nuisance regressors. Framewise displacement was also calculated for each functional run. For further details of the pipeline, including the software packages used by FMRIPrep for each preprocessing step, see the online documentation (https://fmriprep.readthedocs.io/en/1.0.3/index.html). After preprocessing, the first 116 TRs were selected from each run, capturing all encoding trials and extending 6 s beyond completion of the final trial. Encoding runs were checked for motion and were excluded from data analyses if >20% of TRs exceeded a framewise displacement of 0.3 mm. Participants were retained for analyses if at least 4 of 6 runs passed these criteria. One subject was excluded as a result of 3 runs having high motion, but no other subject had runs removed because of motion. Four participants completed only 5 of the 6 scan runs, 3 as a result of exiting the scanner early and 1 due to a technical problem with the sound system during the first run.
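The run- and participant-level motion rules above can be expressed as a short sketch. The thresholds come from the text. Two assumptions are not stated there: that framewise displacement is evaluated over the 116 retained encoding TRs, and that the input is a plain list of floats per run. A NaN value, as fMRIPrep reports for the first volume, compares False and is not counted as a high-motion TR. The function names are hypothetical.

```python
FD_LIMIT_MM = 0.3         # framewise displacement limit per TR
MAX_BAD_FRACTION = 0.20   # a run fails if more than 20% of TRs exceed the limit
MIN_GOOD_RUNS = 4         # participants need at least 4 usable runs
N_ENCODING_TRS = 116      # TRs retained per run for encoding analyses


def run_passes(fd_series):
    """True if no more than 20% of the retained TRs exceed 0.3 mm FD."""
    fd = fd_series[:N_ENCODING_TRS]
    n_bad = sum(1 for value in fd if value > FD_LIMIT_MM)  # NaN is never > limit
    return n_bad / len(fd) <= MAX_BAD_FRACTION


def keep_subject(runs_fd):
    """Return (retain participant?, indices of runs that pass the motion rule)."""
    good_runs = [i for i, fd in enumerate(runs_fd) if run_passes(fd)]
    return len(good_runs) >= MIN_GOOD_RUNS, good_runs
```

For example, a participant with three failing runs out of six keeps only three runs and is excluded, matching the single motion-based exclusion reported above.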

Whole-brain parcellation.

To test which regions across the whole brain were sensitive to subsequent memory effects, we divided the cortex into 200 functionally homogeneous regions using a recent cortical parcellation (Schaefer et al., 2018) (https://github.com/ThomasYeoLab/CBIG). Four medial temporal regions were added to this whole-brain atlas: the hippocampus and the amygdala, each split into separate left and right hemisphere regions, for a total of 204 regions. The hippocampus was obtained from a probabilistic medial temporal lobe atlas (Ritchey et al., 2015) (https://neurovault.org/collections/3731/), and the amygdala was taken from the Harvard-Oxford subcortical atlas, consistent with our prior methods (Cooper and Ritchey, 2019). We chose a whole-brain parcellation rather than a more traditional voxel-based approach for two reasons: (1) to use what we know about anatomical and functional similarities across the brain to maximize statistical power; and (2) to allow clearer conclusions about the function of discretely defined brain regions. Although parcellation-based averaging has the potential to miss task-specific variations in activity within larger regions, we believe that a fine-grained parcellation, combined with multiple-comparisons correction, may be an optimal approach for whole-brain analyses that extend beyond hypothesis-driven functional ROIs. The results of all whole-brain analyses were displayed using BrainNet Viewer (RRID:SCR_009446) (Xia et al., 2013), where, for visualization purposes, every voxel within a region was assigned the same t statistic, calculated from an analysis of that region's mean activity.
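The two parcel operations described here, averaging voxels within each region and painting a region-level statistic back onto every voxel for display, can be sketched as follows. This is illustrative only. Flat Python lists stand in for a volumetric image. The label convention (0 = outside the atlas, 1 to 204 = regions) and the function names are assumptions.

```python
from collections import defaultdict


def parcel_means(voxel_values, voxel_labels):
    """Average voxel values within each parcel label (label 0 = outside the atlas)."""
    sums, counts = defaultdict(float), defaultdict(int)
    for value, label in zip(voxel_values, voxel_labels):
        if label == 0:
            continue
        sums[label] += value
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def paint_parcels(parcel_stats, voxel_labels):
    """Give every voxel its parcel's statistic (0.0 outside the atlas or if untested)."""
    return [parcel_stats.get(label, 0.0) if label != 0 else 0.0
            for label in voxel_labels]
```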

Single-trial analyses.

All analyses were run using single-trial estimates of activity during encoding. Single-trial betas were computed using SPM12 (RRID:SCR_007037), implementing the least-squares-separate method (Mumford et al., 2012), in which a separate GLM is constructed for each encoding trial. In each single-trial GLM, the first regressor corresponds to the trial of interest and a second regressor codes for all other encoding trials in that functional run. Functional data were denoised by including framewise displacement, 6 aCompCor principal components, and 6 motion parameters as nuisance regressors. Spike regressors were also included to exclude data points with high motion (>0.6 mm). Single-trial models were constructed using either (1) a stick function at stimulus onset or (2) a stick function at stimulus offset, with each type of regressor convolved with the canonical HRF. Mean single-trial β series were extracted by averaging across voxels within each of the 204 regions per subject. Analyses of single-trial betas were conducted in R version 3.5.1 (RRID:SCR_001905) using RStudio (RStudio Team, 2016).
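The least-squares-separate logic can be sketched in pure Python. This is not the authors' SPM12 pipeline. It omits the nuisance and spike regressors and high-pass filtering. It also uses a double-gamma HRF modeled on SPM's canonical defaults (peak shape 6, undershoot shape 16, undershoot ratio 1/6) rather than SPM's own implementation, and all helper names are hypothetical. For each trial, it fits a three-column GLM (trial of interest, all other trials, intercept) and keeps the first coefficient.

```python
import math

TR_S = 1.5


def gamma_pdf(t, shape):
    """Gamma density with unit scale; 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return math.exp((shape - 1) * math.log(t) - t - math.lgamma(shape))


def double_gamma_hrf(t):
    """Double-gamma HRF approximating SPM's canonical shape."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def stick_regressor(event_times, n_scans, tr=TR_S):
    """Stick functions at event_times convolved with the HRF, sampled at each scan."""
    return [sum(double_gamma_hrf(n * tr - e) for e in event_times)
            for n in range(n_scans)]


def solve(a, b):
    """Solve a small linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lss_betas(bold, event_times, tr=TR_S):
    """One GLM per trial: [trial of interest, all other trials, intercept]."""
    n_scans = len(bold)
    betas = []
    for j, t_j in enumerate(event_times):
        others = [e for k, e in enumerate(event_times) if k != j]
        cols = [stick_regressor([t_j], n_scans, tr),
                stick_regressor(others, n_scans, tr),
                [1.0] * n_scans]
        xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
        xty = [sum(p * y for p, y in zip(ci, bold)) for ci in cols]
        betas.append(solve(xtx, xty)[0])   # keep the trial-of-interest estimate
    return betas
```

Passing event onsets yields the onset-locked model; passing `[on + 6.0 for on in onsets]` gives the offset-locked variant.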

Before any analyses, data were cleaned by z scoring the single-trial β values within each region per subject and removing trials >4 SD from the mean in any region. A total of 108 (2.85%) and 113 (2.98%) of 3792 trials were removed for analyses of event onsets and offsets, respectively. Event onsets were used to test the relationship between activity and memory for the upcoming event, whereas event offsets were used to test the relationship between activity and memory for the preceding event. All statistical tests quantify the relationship between each region's encoding β series and subsequent memory using linear mixed-effects models (lme4; RRID:SCR_015654) (Bates et al., 2015), fitted using restricted maximum likelihood. In all models, subject was included as a random effect with a random intercept and random slopes for all fixed effects. This “maximal” approach guards against an inflated Type I error rate (Barr et al., 2013) but often leads to convergence failures as the random-effects variance-covariance structure becomes more complex (Matuschek et al., 2017). To address this problem, random-effect correlations were constrained to zero in all models (Barr et al., 2013; Matuschek et al., 2017) using the formula lmer(Y ~ X1 + X2 … + (1 + X1 + X2 … || Subject)). To avoid singular fits, the random-effects structure of models with more than two fixed effects was additionally simplified using the “step” function of the lmerTest package (RRID:SCR_015656) (Kuznetsova et al., 2017), which iteratively removes random slopes that do not improve the model fit. Each fixed effect was tested against zero using lmerTest, which implements t tests with Satterthwaite's approximation. This method estimates the effective degrees of freedom, which can differ across effects depending on the variance and on whether the random slope for the corresponding fixed effect was retained in the model. All p values reported were FDR-corrected (α = 0.05) for multiple comparisons across all 204 brain regions within each type of mixed-effects model.
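The two data-handling steps that bracket the mixed-effects fits, trial-level outlier removal before modeling and FDR correction across regions afterward, can be sketched as follows. This Python sketch stands in for the authors' R scripts and is not a copy of them. It assumes sample-SD z scores that are not recomputed after trials are dropped. It also assumes the Benjamini–Hochberg procedure, since the text says only "FDR-corrected"; in R, p.adjust with method = "BH" (for which "fdr" is an alias) gives the same values. The function names are hypothetical.

```python
import statistics


def zscore(values):
    """Standardize using the sample mean and sample SD."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def clean_subject(betas_by_region, limit=4.0):
    """betas_by_region maps region -> single-trial betas for one subject.

    Z-scores each region's series, then drops any trial whose |z| exceeds
    `limit` in at least one region. Returns (z-scored data, kept trial indices).
    """
    z = {region: zscore(series) for region, series in betas_by_region.items()}
    n_trials = len(next(iter(z.values())))
    keep = [t for t in range(n_trials)
            if all(abs(series[t]) <= limit for series in z.values())]
    return {region: [series[t] for t in keep] for region, series in z.items()}, keep


def fdr_bh(p_values):
    """Benjamini-Hochberg adjusted p values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for position, i in enumerate(order):
        rank = m - position               # 1-based rank in ascending order
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

In the study, `fdr_bh` would be applied to the 204 regional p values from one type of model at a time.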

Code accessibility

Single-trial encoding data for all subjects and brain regions as well as R scripts to run the analyses described here have been made freely available through GitHub (http://www.thememolab.org/paper-bindingfmri/). This repository also contains extended data in the form of csv and nifti files for the results of all whole-brain linear mixed-effects analyses.

Results

Neural correlates of subsequent episodic detail are sensitive to distinct memory features

We first sought to test where across the brain activity at the onset of a multifeature event was sensitive to the amount of detail recalled (Fig. 1B). Activity of each brain region was predicted from an objective measure of memory detail coding each trial according to whether 0, 1, 2, or 3 features were subsequently remembered. Significant memory-related increases in activity were found in a number of visuo-perceptual brain regions, including bilateral occipital and ventral visual regions, bilateral parahippocampal cortex (PHC) and retrosplenial cortex (RSC), as well as right amygdala and superior temporal cortex. L IFG, frequently associated with successful associative encoding, also positively tracked the amount of episodic detail later recalled. In contrast, activity of default network regions, including bilateral medial prefrontal cortex (mPFC), posterior cingulate, and middle temporal gyrus, was negatively associated with increasing subsequent memory detail. Thus, left lateral frontal and visuo-perceptual regions appear to prioritize event features at the onset of encoding to support a detailed memory representation.

We next wanted to determine which of these regions were associated with subsequent memory in a feature-specific way. That is, to what extent does encoding a multifeature event involve the parallel activation of feature-specific patterns? To this end, mixed-effects models were used to identify patterns of event-onset activity that were uniquely predicted by subsequent memory for each individual feature (color, sound, and scene perspective), with each feature predictor binary-coded according to whether memory was subsequently correct or incorrect. Of note, remembering any one feature in our paradigm involves an association of that feature with an object. Therefore, this analysis identifies regions sensitive to cued recall of different types of event content rather than to recognition of single features.

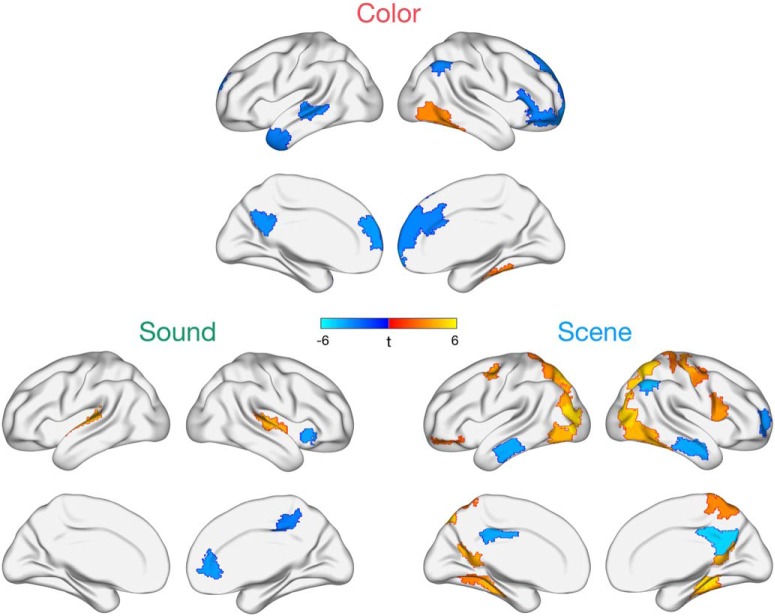

Pronounced feature-specific effects emerged in terms of positive subsequent memory correlates (Fig. 2). Specifically, successful encoding of the object's color was associated with enhanced activity of right ventral visual cortical areas, whereas subsequent memory for the sound was positively related to activity in bilateral superior temporal (auditory) cortex. Neural correlates of successful scene encoding were more widespread, with positive changes in activity observed in bilateral dorsal medial parietal and occipital cortex, as well as bilateral retrosplenial and parahippocampal cortex, frequently reported to be sensitive to specific representations of spatial locations and perspectives (e.g., Epstein, 2008; Robertson et al., 2016; Robin et al., 2018; Berens et al., 2019). Thus, we were able to detect unique patterns of brain activity that positively tracked different kinds of episodic features encoded simultaneously, with the most widespread effects for encoding scene details. Notably, L IFG, which tracked overall memory detail, did not significantly support memory for any individual feature. There were also signs of feature-specific negative subsequent memory effects (Fig. 2), predominantly localized to the default network. Given evidence of distinct default network subsystems (Buckner and DiNicola, 2019), future work should consider the possible content-specificity of negative encoding effects.

Figure 2.

Regions whose activity at the onset of each event is uniquely predicted by subsequent color, sound, or scene memory while these features are encoded in parallel. Task-positive effects show mostly distinct patterns across the three features. Effects shown are FDR-corrected for multiple comparisons at p < 0.05, and all voxels within a region are color-coded by the region's t statistic.

Memory-related neural activity shifts over the time course of encoding

The previous analysis showed that there are partially distinct, parallel patterns of encoding activity that support memory for individual features. Yet a fundamental aspect of episodic memory is the binding of multimodal features into a coherent event representation. Although left lateral frontal cortex activity correlated with memory detail and thus may facilitate integration, hippocampal activity was, surprisingly, unrelated to the number of features recalled (|βs| < 0.02, |ts| < 0.26, corrected ps > 0.91) or to memory for any feature alone (|βs| < 0.04, |ts| < 0.50, corrected ps > 0.81). Hippocampal subsequent memory effects have been frequently (although not universally) reported in event-related paradigms (Kim, 2011), specifically for binding multiple episodic features (Horner et al., 2015), and most models of episodic memory assume that the hippocampus supports this binding process. In light of evidence that hippocampal activity may be particularly sensitive to the end of an event (Ben-Yakov et al., 2014; Ben-Yakov and Henson, 2018), we reasoned that some encoding-related brain regions might be important for prioritizing features early on (predicting memory for the upcoming event), whereas other regions, such as the hippocampus, might be engaged later to integrate just-viewed information into a memory trace.

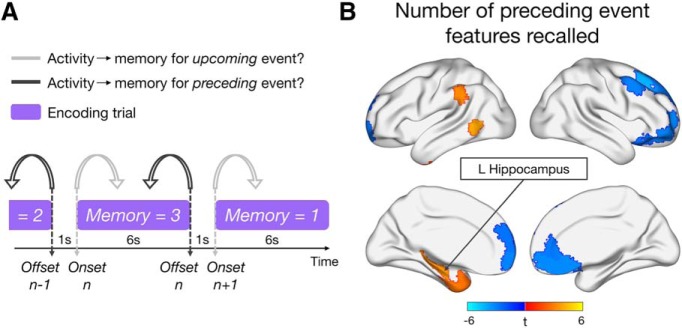

To test this hypothesis, we next examined whether the pattern of subsequent memory effects across the brain would shift over the course of encoding. Specifically, whereas the previous set of analyses focused exclusively on how onset activity was related to memory for the upcoming event, we now considered how activity at the offset of each event (6 s after onset) was related to subsequent memory for the just-viewed information (Fig. 3A). Overall, fewer regions exhibited sensitivity to memory detail for the preceding event (Fig. 3B), although the sensitivity of some default network regions to memory detail was sustained over encoding, with an overlapping subset of mPFC regions showing a negative relationship between activity and subsequent memory for both upcoming and just-viewed event information. A transition of subsequent memory effects was particularly apparent for left inferior frontal and feature-selective regions, whose sensitivity to memory detail did not persist across the full course of encoding. Of particular interest, activity at event offset in the left hippocampus (L HIPP) was positively related to the number of event details subsequently remembered (left: β = 0.14, t(23.9) = 3.50, corrected p = 0.024; right: β = 0.11, t(25.1) = 2.40, corrected p = 0.099), reflecting the emergence of a hippocampal memory effect at the end of an encoding trial. This effect appeared to be relatively specific to the period after the event of interest: a control analysis modeling the entire trial as a 6 s block (onset to offset) did not reveal any significant hippocampal subsequent memory effects (|βs| < 0.03, |ts| < 1.72, corrected ps > 0.19). Positive correlates of memory detail for the preceding event were also seen in left inferior parietal cortex, middle temporal gyrus, and temporal pole, but, in contrast to the hippocampus, these effects were also present throughout the entire encoding trial (βs > 0.026, ts > 3.21, corrected ps < 0.02). Whole-brain results of the control analysis modeling each trial as a 6 s block can be found in our GitHub repository.

Figure 3.

Analysis approach and neural correlates of preceding memory detail. A, Schematic of the different models predicting brain activity at event onset (light gray) or offset (dark gray) with subsequent memory for the upcoming or preceding event, respectively. B, Brain regions whose activity at event offset is significantly (p < 0.05, FDR-corrected) predicted by the number of details (0–3) subsequently recalled from the preceding event. All voxels within a region are color-coded by the region's t statistic.

Because this study was not originally designed to examine offset responses, it is important to consider the timing of event offsets relative to other trial components. Onsets and offsets for a given trial were placed 6 s apart, which allowed us to relate each of them separately to that trial's memory score. Accordingly, within each ROI per subject, the mean correlation between activity at trial N onset and trial N offset (6 s apart) was low (mean r = 0.14, SE = 0.01). In contrast, because of the fixed 1 s ITI between trials, the offset and onset β estimates of neighboring trials (offset N and onset N + 1) were highly correlated (mean r = 0.88, SE = 0.02), as expected given the slow BOLD response. Importantly, however, these time points were associated with different memory scores (Fig. 3A), and control analyses showed that memory scores for neighboring trials were largely independent of one another (total memory detail: mean r = 0.10, SE = 0.03; color: mean r = 0.01, SE = 0.02; sound: mean r = 0.11, SE = 0.04; scene location: mean r = 0.06, SE = 0.02). Moreover, to rule out the possibility that even this weak correlation between memory on adjacent trials influenced our results, we reran the analyses predicting onset N or offset N activity from memory detail on trial N while including memory detail on the neighboring trial (N − 1 or N + 1, respectively) as a covariate. The pattern of results did not change: L IFG tracked memory for the upcoming event (βs > 0.21, ts > 3.04, corrected ps < 0.048), and L HIPP tracked memory for the preceding event (β = 0.13, t(22.8) = 3.44, corrected p = 0.025). Finally, to further confirm that our results reflect differences in activity related to memory for the upcoming versus preceding trial, rather than a fine distinction between offset N and onset N + 1, we ran an analysis focused on activity during the 1 s ITI, thereby disregarding the offset-onset distinction altogether. The results closely matched those of the onset- and offset-based analyses reported above. Thus, we remain agnostic as to the specific time point at an event transition that triggers memory-related activity for the upcoming or preceding event. Full results of all control analyses detailed above can be found in our GitHub repository. Taken together, these results indicate a temporal transformation in the neural correlates of subsequent memory detail over the course of encoding a multimodal event.
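The contrast between the low onset N/offset N correlation and the high offset N/onset N + 1 correlation follows from how much the predicted hemodynamic responses of two brief events overlap. The sketch below is an idealized, noise-free illustration, not the GLM used in this study: it assumes a canonical double-gamma HRF with the commonly used SPM-style defaults (shape parameters 6 and 16, unit scale, undershoot ratio 1/6), treats each time point as an instantaneous event, and uses hypothetical helper names. It compares predicted responses to events 1 s apart (offset N and onset N + 1) with events 6 s apart (onset N and offset N).

```python
import math

import numpy as np


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Assumed canonical double-gamma HRF (SPM-style defaults, unit scale) at times t (s)."""
    t = np.asarray(t, dtype=float)

    def gamma_pdf(x, k):
        # Gamma density with shape k and scale 1; zero for x <= 0 (before the event).
        out = np.zeros_like(x)
        pos = x > 0
        out[pos] = x[pos] ** (k - 1) * np.exp(-x[pos]) / math.gamma(k)
        return out

    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def predicted_bold(event_time, total=60.0, dt=0.1):
    """Predicted response to a brief (stick) event at event_time, sampled every dt s."""
    t = np.arange(0.0, total, dt)
    return double_gamma_hrf(t - event_time)


def regressor_correlation(separation, dt=0.1):
    """Pearson correlation between predicted responses to two events `separation` s apart."""
    a = predicted_bold(10.0, dt=dt)
    b = predicted_bold(10.0 + separation, dt=dt)
    return float(np.corrcoef(a, b)[0, 1])


r_iti = regressor_correlation(1.0)    # offset N vs onset N + 1 (1 s ITI)
r_trial = regressor_correlation(6.0)  # onset N vs offset N (6 s apart)
print(f"1 s apart: r = {r_iti:.2f}; 6 s apart: r = {r_trial:.2f}")
```

Under these assumptions, the 1 s separation yields a much higher correlation than the 6 s separation. Because correlated regressors tend to produce correlated β estimates, this is consistent with the pattern reported above, although the exact values depend on the HRF, TR, and model specification.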

Hippocampal signals integrate episodic features with scene information

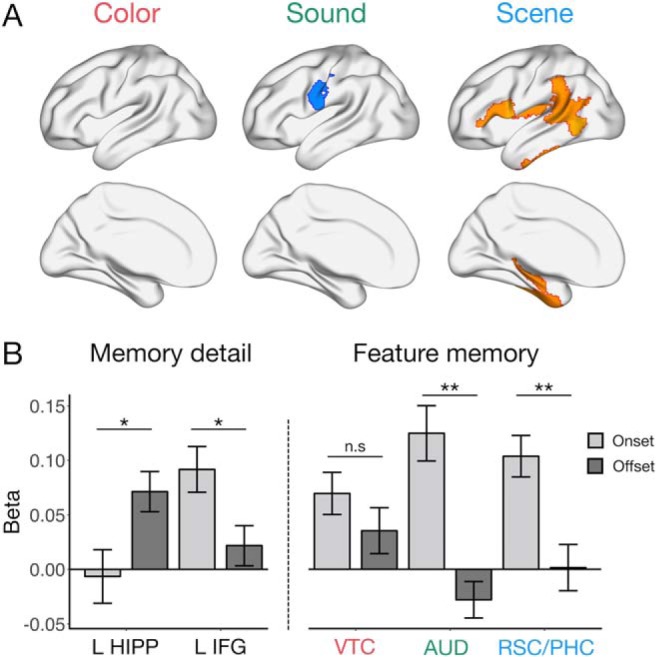

To complement the onset-related analyses, we next tested the unique influence of subsequent memory for each feature (color, sound, and scene) on brain activity at the end of each event. Although no regions showed significant increases in activity with subsequent color or sound memory, several regions positively tracked subsequent scene memory. These effects did not appear in the RSC/PHC and occipital regions identified in the onset analyses; rather, activity in L HIPP, bilateral lateral PFC, and left temporoparietal junction and temporal pole tracked scene encoding at the end of the event (Fig. 4A). As with overall memory detail, a feature-specific analysis modeling each trial as a 6 s block further revealed that the positive relationship between hippocampal activity and memory for the preceding scene was not sustained across the entire encoding trial (|βs| < 0.03, |ts| < 1.11, corrected ps > 0.48; whole-brain results can be found in our GitHub repository).

Figure 4.

Feature-specific patterns relating activity to memory for the preceding event, and follow-up ROI visualization. A, Feature-specific memory correlates at the offset of each event. Effects shown are significant at p < 0.05 (FDR-corrected). B, Visualization of the change in memory-related activity from the beginning (onset) to end (offset) of each event of interest, for subsequent memory detail (left) in L HIPP and L IFG, and for subsequent color, sound, and scene memory (right) in VTC, AUD, and RSC/PHC, respectively. Bars represent fixed effect estimates of memory-related activity. Error bars indicate SEM. **p < 0.005. *p < 0.05. n.s. = not significant.

To visualize the transformation of memory-related activity over encoding, we focused on five regions: L HIPP and L IFG, selected because their activity significantly tracked subsequent memory detail for just-viewed and upcoming event information, respectively, as well as ventral temporal cortex (VTC), auditory cortex (AUD), and RSC/PHC, whose onset activity tracked color, sound, and scene encoding, respectively. Here, we predicted memory on trial N from two regressors, the standardized activity at onset N and at offset N, which allowed us to compare their unique effects while controlling for the weak correlation in activity between the beginning and end of a trial (Fig. 4B). The relationship between activity and memory detail was significantly greater at event offset than onset in L HIPP (t(52.5) = 2.48, p = 0.016), whereas the opposite was true in L IFG (t(64.6) = −2.42, p = 0.019). AUD and RSC/PHC also showed significantly greater sensitivity to later sound (t(49.8) = −4.82, p < 0.001) and scene memory (t(64.1) = −3.43, p = 0.001), respectively, at event onset than offset, but this pattern was not significant for VTC and color encoding (t(63.8) = −1.13, p = 0.26). Therefore, memory-related onset signals in lateral frontal and visuo-perceptual regions give way, by the end of an event, to a hippocampal signal that is sensitive to later scene recollection and to the amount of information recalled.
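The logic of the two-regressor comparison can be sketched as a regression of memory on z-scored onset and offset activity. This is a simplified, single-subject illustration using ordinary least squares on synthetic data: the simulated effect sizes and the helper names are hypothetical, and the fixed-effect estimates reported above come from models fit across subjects, which this sketch does not reproduce (no random effects, no degrees-of-freedom approximation).

```python
import numpy as np


def zscore(x):
    """Standardize a vector to mean 0 and SD 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def onset_offset_effects(memory, onset_activity, offset_activity):
    """Regress memory on z-scored onset and offset activity with OLS.

    Returns the onset slope, the offset slope, and their difference
    (offset minus onset). Because both predictors enter the same model,
    each slope reflects the unique association of that time point with memory.
    """
    X = np.column_stack([
        np.ones(len(memory)),     # intercept
        zscore(onset_activity),   # activity at onset N
        zscore(offset_activity),  # activity at offset N
    ])
    coef, *_ = np.linalg.lstsq(X, np.asarray(memory, dtype=float), rcond=None)
    b_onset, b_offset = coef[1], coef[2]
    return b_onset, b_offset, b_offset - b_onset


# Synthetic (hypothetical) data: onset and offset activity weakly correlated
# (r of about 0.14), with memory depending more on offset than onset activity.
rng = np.random.default_rng(0)
n = 5000
onset = rng.standard_normal(n)
offset = 0.14 * onset + np.sqrt(1 - 0.14**2) * rng.standard_normal(n)
memory = 0.1 * onset + 0.3 * offset + rng.standard_normal(n)

b_on, b_off, diff = onset_offset_effects(memory, onset, offset)
print(f"onset beta = {b_on:.2f}, offset beta = {b_off:.2f}, difference = {diff:.2f}")
```

Entering both predictors in one model is what makes each slope a unique effect; fitting onset and offset activity in separate models would let their shared variance contribute to both estimates.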

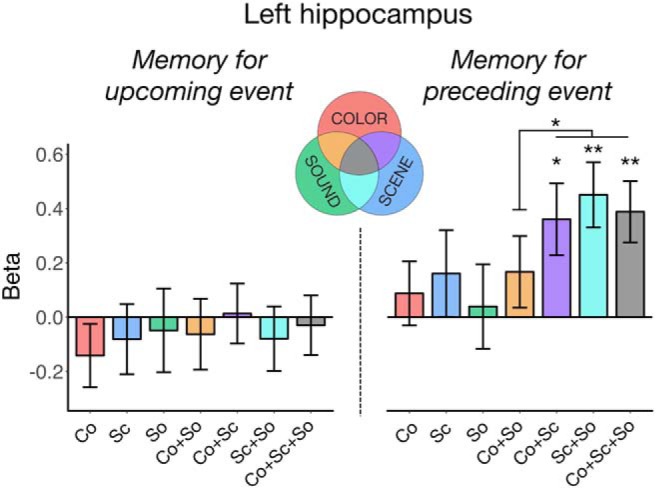

The observed pattern of hippocampal activity raises two possibilities about its memory-related function: (1) it may promote memory for the spatial context at an event transition regardless of other episodic features; or (2) it could reflect a binding signal, integrating event features with spatial information after the individual features have been processed. To distinguish these explanations, we predicted mean L HIPP activity from every combination of recalled features (7 regressors: each feature remembered alone, each possible pair of features remembered together with the third forgotten, or all three features remembered) and tested whether hippocampal offset activity was greater when space was remembered with color, sound, or both, than when space was recalled in the absence of other information or when color and sound were recalled in the absence of spatial information (Fig. 5). Indeed, hippocampal activity after an event increased (relative to an implicit baseline of no features recalled) only when scene information was successfully remembered in conjunction with color, sound, or both features (ts > 2.72, ps < 0.009), and not when the scene was the only feature subsequently recalled (t(30.4) = 1.00, p = 0.32) or when color and sound were remembered without the associated scene (t(3562.5) = 1.26, p = 0.21). Moreover, remembering a conjunction of features that included a scene recruited the hippocampus more than recalling the color and sound without the associated scene (t(1285.2) = 1.99, p = 0.046), but the difference between the scene-alone and scene + other feature(s) fixed effects was not significant (t(22.4) = 1.62, p = 0.12).
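The seven-condition coding can be made concrete with a small helper that maps a trial's recall outcome for color, sound, and scene onto mutually exclusive indicator regressors, leaving trials with nothing recalled as the implicit baseline (all indicators 0). This is a hypothetical sketch of the design coding only: the condition labels follow the Figure 5 abbreviations (Co, So, Sc), and the function and variable names are illustrative rather than taken from the analysis code.

```python
from itertools import product

FEATURES = ("Co", "So", "Sc")  # color, sound, scene (Figure 5 abbreviations)

# The seven non-empty feature combinations, e.g. "Co", "Co+Sc", "Co+So+Sc".
CONDITIONS = [
    "+".join(f for f, keep in zip(FEATURES, mask) if keep)
    for mask in product((False, True), repeat=3)
    if any(mask)
]

# Conditions in which the scene was recalled together with at least one other feature.
SCENE_CONJUNCTIONS = [c for c in CONDITIONS if "Sc" in c and "+" in c]


def conjunction_indicators(color, sound, scene):
    """Return a dict of 0/1 indicators, one per condition.

    Exactly one indicator is 1 when at least one feature was recalled;
    all are 0 when nothing was recalled (the implicit baseline).
    """
    recalled = "+".join(f for f, hit in zip(FEATURES, (color, sound, scene)) if hit)
    return {cond: int(cond == recalled) for cond in CONDITIONS}


row = conjunction_indicators(color=True, sound=False, scene=True)
print(row)  # only "Co+Sc" is 1
```

Coding the conditions as mutually exclusive indicators means each coefficient estimates activity for that exact combination relative to trials with nothing recalled, so contrasts such as scene alone versus scene plus other features compare fitted coefficients directly.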

Figure 5.

L HIPP activity based on all possible feature conjunctions in memory, relative to an implicit baseline of no features recalled. Hippocampal activity at event onset (left) was not sensitive to memory for any combination of features in the upcoming event. In contrast, hippocampal activity at event offset (right) was increased when spatial information was successfully encoded with other episodic features in the preceding event. Co, color; Sc, scene; So, sound. Bars represent fixed effect β estimates of activity in each condition. Error bars indicate SEM. **p < 0.005. *p < 0.05.

In an exploratory analysis, we used the same model to determine whether this pattern of end-of-event hippocampal encoding activity varied between anterior (head) and posterior (body and tail) segments, defined using the medial temporal lobe probabilistic atlas (Ritchey et al., 2015). Although the activity of both segments increased when more than one feature was recalled (anterior: β = 0.25, t(2264.3) = 2.58, p = 0.010; posterior: β = 0.23, t(798.2) = 2.41, p = 0.016), selective sensitivity to binding with scenes was characteristic only of the anterior hippocampus (anterior: β = 0.37, t(2476.6) = 2.57, p = 0.010; posterior: β = 0.04, t(1092.2) = 0.29, p = 0.77). Hence, hippocampal encoding activity, particularly in the anterior hippocampus, may act to bind just-viewed features into a coherent spatial event.

Discussion

This study examined the neural correlates of distinct features encoded in parallel within a complex event, and how those features are integrated into a coherent representation. First, we found that L IFG and visuo-perceptual regions were sensitive to the amount of detail encoded at the onset of each event. Whereas IFG selectively tracked the number of associations recalled, activity in visuo-perceptual areas showed unique variance predicted by subsequent memory for color, sound, and scene features. In contrast, modeling activity at event offset revealed a shift in the positive neural correlates of successful encoding. Specifically, activity in L HIPP at the end of each event was predicted by the amount of detail later recalled as well as by scene memory. Probing specific feature combinations revealed that these hippocampal effects were driven by binding color and sound information with an associated scene.

Our findings of feature-specific encoding effects demonstrate that multimodal details of complex episodes can be decomposed in the brain, even when encoded simultaneously. The specific pattern of our results converges with previous univariate and multivariate decoding studies that used these types of features, including color (Uncapher et al., 2006; Favila et al., 2018), sounds (Gottlieb et al., 2012), and scenes (Park and Chun, 2009; Morgan et al., 2011; Staresina et al., 2011), to study perception and encoding. We additionally showed that these visuo-perceptual neural correlates were only present early in encoding, which is consistent with relatively early subsequent memory effects in ventral visual areas shown using intracranial EEG (Long and Kahana, 2015). Therefore, while the strength of sensory signals predicts upcoming feature-specific encoding, the importance of this sensory activity for memory generally decreases over the course of an event. Moreover, by modeling the unique variance of each feature, we found that scenes showed the most prominent subsequent memory effects. One explanation of this finding is that spatial information serves as the dominant framework for integrating other features within memory (Hassabis and Maguire, 2007; Mullally and Maguire, 2014; Horner et al., 2016; Robin et al., 2016; Bicanski and Burgess, 2018; Robin, 2018). Unlike in many previous studies, however, memory for spatial information in our task was based on an exact viewpoint rather than the overall environment, and it is an open question whether spatial organization extends to precise as well as gist-like contexts. An alternative explanation is that scenes may have been the most salient feature in our task, given that they contain many other objects and visual details. Thus, despite previous work highlighting the prioritization of spatial information in events, it is difficult to completely disentangle this account from the amount of information contained within spatial contexts.

In addition to the early, transient profile of feature-specific encoding effects, L IFG also showed an early relationship to total memory detail that diminished over time. This pattern supports the proposal that IFG processes goal-relevant information and initiates an organizational framework (Blumenfeld and Ranganath, 2007; Blumenfeld et al., 2011) to support integration of perceptual features. In our paradigm, participants were asked to generate a story linking the object associations together, so early IFG activity may help to generate the meaningful semantic associations necessary to support feature binding (Gabrieli et al., 1998). Interestingly, and in contrast to much prior literature, we did not find a similar relationship between hippocampal onset activity and later encoding success. Instead, we observed a shift whereby left hippocampal activity at the completion of an event significantly predicted the number of just-viewed features recalled. Collectively, these findings suggest that both hippocampus and L IFG contribute to the integration of event components (Staresina and Davachi, 2006; Zeithamova and Preston, 2010) but reveal that their memory signals are temporally dissociable. As such, while L IFG may control organizational strategies, hippocampus may act upon this organizational structure to bind an item with its context. Previous research supports this distinction, showing that IFG generates associations, whereas hippocampus is sensitive to an existing relational structure (Addis and McAndrews, 2006), with the former tracking subsequent memory confidence and the latter the number of details remembered (Qin et al., 2011; Mayes et al., 2019). A related dissociation suggests that IFG may actively control encoding processes, whereas hippocampal signals are driven by the inherent memorability of a scene's perceptual features (Bainbridge et al., 2017). Furthermore, intracranial EEG work has demonstrated that encoding activity in IFG precedes that in hippocampus (Long and Kahana, 2015), providing further evidence for their distinct functional and temporal roles in integration during memory encoding.

The absence of hippocampal correlates of subsequent memory early in encoding is particularly interesting in light of inconsistencies in the literature. Although hippocampal encoding effects have often been reported (Spaniol et al., 2009; Kim, 2011), many individual studies have not found them (e.g., Sommer et al., 2005; Haskins et al., 2008; Cooper et al., 2017; Tibon et al., 2019). While variability in hippocampal encoding effects might be attributed to differences in memory strength and trial selection (Henson, 2005), the current results suggest an additional explanation: such inconsistencies might be related to the duration of the encoding event used in fMRI analyses. Most event-related fMRI tasks use short trials, often 2–3 s, and analyze activity locked to trial onset, meaning that memory correlates at different times within an event are virtually indistinguishable. Meanwhile, studies using longer, naturalistic events have consistently shown increased hippocampal activity at the end of an event (Ben-Yakov et al., 2014; Ben-Yakov and Henson, 2018), which correlates with later memory reinstatement both neurally and behaviorally (DuBrow and Davachi, 2016; Baldassano et al., 2017). The hippocampal offset signal is thought to reflect a binding operation based on event discontinuities, linking together all the features that just co-occurred within the same spatial-temporal context before transitioning to a new environment (Staresina and Davachi, 2009), which may be facilitated by rapid reinstatement of the previous event at a boundary (Silva et al., 2019). Evidence that earlier visuo-perceptual activity predicts a hippocampal event boundary response within continuous experience (Baldassano et al., 2017) supports this proposal and complements the temporal shift from sensory memory correlates to hippocampal memory effects observed here.

To our knowledge, this is the first study to explicitly test the hypothesis that hippocampal activity at the end of an event reflects binding per se; previous studies have not separately tested memory for distinct kinds of episodic features. Our paradigm allowed us to tease apart which features and conjunctions influenced this signal most: left hippocampal activity was selectively enhanced on trials in which spatial information was successfully remembered and integrated with color or sound features, or both. This neural finding corroborates previous explanations of the hippocampal offset effect (Cohen et al., 2015; Ben-Yakov and Henson, 2018) as well as theoretical accounts that hippocampus binds information within a contextual framework (Davachi, 2006; Ranganath, 2010; Eichenbaum, 2017). Moreover, evidence of an end-of-event hippocampal binding signal is also present in other event-related tasks: when learning overlapping associations across trials, hippocampal activity predicts subsequent memory after exposure to component event associations (Horner et al., 2015) and binds previously studied associations based on shared context (Zeithamova and Preston, 2010; Zeithamova et al., 2012), suggesting that a prominent function of the hippocampus is the integration of previously encoded event features. While binding appeared to be a common function along the hippocampal long axis, we additionally found that successful encoding of feature conjunctions involving scenes specifically was a more prominent feature of anterior hippocampus, which is frequently associated with scene perception and construction (Zeidman and Maguire, 2016).

When interpreting the results of this study, it is important to consider some limitations of the current approach. Although our events were longer than those in most event-related encoding studies, 6 s is still relatively short, and we were therefore unable to map out the full time course of memory-related signals over a longer period. This partly reflects the fact that, even though participants were encouraged to generate a story to encode the events, the features were static rather than evolving over time. This design enabled us to measure memory for distinct event features, but future studies would benefit from using longer videos while controlling features that can later be tested. Relatedly, because event duration was not jittered, we cannot definitively assign memory-related effects to the start and end of events. For example, it is possible, though unlikely, that hippocampal signals have a slower HRF and thus actually reflect an earlier memory-related response. Future studies should therefore use events of variable duration to test the generalizability of the current findings.

In conclusion, we demonstrated that feature-specific neural responses are dissociable and reflect early encoding processes. Integration of these features into a coherent episode is likely supported by complementary lateral frontal and hippocampal signals, with early L IFG operations possibly providing a meaningful structure, and later hippocampal operations integrating these associations within a contextual framework. This multifaceted process thus promotes subsequent episodic retrieval, helping us to mentally reconstruct the rich detail of our environment.

Footnotes

This work was supported by National Institutes of Health Grant R00MH103401 to M.R. This work was performed at the Harvard Center for Brain Science, involving the use of instrumentation supported by the National Institutes of Health Shared Instrumentation Grant Program (Grant S10OD020039). We thank Max Bluestone, Rosalie Samide, and Emily Iannazzi for assistance with data collection.

The authors declare no competing financial interests.

References

- Addis DR, McAndrews MP (2006) Prefrontal and hippocampal contributions to the generation and binding of semantic associations during successful encoding. Neuroimage 33:1194–1206. 10.1016/j.neuroimage.2006.07.039

- Bainbridge WA, Dilks DD, Oliva A (2017) Memorability: a stimulus-driven perceptual neural signature distinctive from memory. Neuroimage 149:141–152. 10.1016/j.neuroimage.2017.01.063

- Baldassano C, Chen J, Zadbood A, Pillow JW, Hasson U, Norman KA (2017) Discovering event structure in continuous narrative perception and memory. Neuron 95:709–721.e5. 10.1016/j.neuron.2017.06.041

- Barr DJ, Levy R, Scheepers C, Tily HJ (2013) Random effects structure for confirmatory hypothesis testing: keep it maximal. J Mem Lang 68:255–278. 10.1016/j.jml.2012.11.001

- Bates D, Mächler M, Bolker B, Walker S (2015) Fitting linear mixed-effects models using lme4. J Stat Softw 67:1–48. [Google Scholar]

- Bays PM, Catalao RF, Husain M (2009) The precision of visual working memory is set by allocation of a shared resource. J Vis 9:7.1–11. 10.1167/9.10.7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behzadi Y, Restom K, Liau J, Liu TT (2007) A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. Neuroimage 37:90–101. 10.1016/j.neuroimage.2007.04.042 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ben-Yakov A, Henson RN (2018) The hippocampal film-editor: sensitivity and specificity to event boundaries in continuous experience. J Neurosci 38:10057–10068. 10.1523/JNEUROSCI.0524-18.2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ben-Yakov A, Rubinson M, Dudai Y (2014) Shifting gears in hippocampus: temporal dissociation between familiarity and novelty signatures in a single event. J Neurosci 34:12973–12981. 10.1523/JNEUROSCI.1892-14.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berens SC, Joensen BH, Horner AJ (2019) Tracking the emergence of location-based spatial representations in human scene-selective cortex. bioRxiv 547976:1–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bicanski A, Burgess N (2018) A neural-level model of spatial memory and imagery. Elife 7:e33752. 10.7554/eLife.33752 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blumenfeld RS, Ranganath C (2007) Prefrontal cortex and long-term memory encoding: an integrative review of findings from neuropsychology and neuroimaging. Neuroscientist 13:280–291. 10.1177/1073858407299290 [DOI] [PubMed] [Google Scholar]

- Blumenfeld RS, Parks CM, Yonelinas AP, Ranganath C (2011) Putting the pieces together: the role of dorsolateral prefrontal cortex in relational memory encoding. J Cogn Neurosci 23:257–265. 10.1162/jocn.2010.21459 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckner RL, DiNicola LM (2019) The brain's default network: updated anatomy, physiology and evolving insights. Nat Rev Neurosci 20:593–608. 10.1038/s41583-019-0212-7 [DOI] [PubMed] [Google Scholar]

- Cohen N, Pell L, Edelson MG, Ben-Yakov A, Pine A, Dudai Y (2015) Peri-encoding predictors of memory encoding and consolidation. Neurosci Biobehav Rev 50:128–142. 10.1016/j.neubiorev.2014.11.002 [DOI] [PubMed] [Google Scholar]

- Cooper RA, Ritchey M (2019) Cortico-hippocampal network connections support the multidimensional quality of episodic memory. Elife 8:e45591. 10.7554/eLife.45591 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cooper RA, Richter FR, Bays PM, Plaisted-Grant KC, Baron-Cohen S, Simons JS (2017) Reduced hippocampal functional connectivity during episodic memory retrieval in autism. Cereb Cortex 27:888–902. 10.1093/cercor/bhw417 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davachi L. (2006) Item, context and relational episodic encoding in humans. Curr Opin Neurobiol 16:693–700. 10.1016/j.conb.2006.10.012 [DOI] [PubMed] [Google Scholar]

- Deuker L, Bellmund JL, Navarro Schröder T, Doeller CF (2016) An event map of memory space in the hippocampus. Elife 5:e16534. 10.7554/eLife.16534 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Diana RA, Yonelinas AP, Ranganath C (2007) Imaging recollection and familiarity in the medial temporal lobe: a three-component model. Trends Cogn Sci 11:379–386. 10.1016/j.tics.2007.08.001 [DOI] [PubMed] [Google Scholar]

- Duarte A, Henson RN, Graham KS (2011) Stimulus content and the neural correlates of source memory. Brain Res 1373:110–123. 10.1016/j.brainres.2010.11.086 [DOI] [PMC free article] [PubMed] [Google Scholar]

- DuBrow S, Davachi L (2016) Temporal binding within and across events. Neurobiol Learn Mem 134:107–114. 10.1016/j.nlm.2016.07.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eichenbaum H. (2017) Memory: organization and control. Annu Rev Psychol 68:19–45. 10.1146/annurev-psych-010416-044131 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eichenbaum H, Yonelinas AP, Ranganath C (2007) The medial temporal lobe and recognition memory. Annu Rev Neurosci 30:123–152. 10.1146/annurev.neuro.30.051606.094328 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein RA. (2008) Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci 12:388–396. 10.1016/j.tics.2008.07.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Esteban O, Markiewicz CJ, Blair RW, Moodie CA, Isik AI, Erramuzpe A, Kent JD, Goncalves M, DuPre E, Snyder M, Oya H, Ghosh SS, Wright J, Durnez J, Poldrack RA, Gorgolewski KJ (2019) fMRIPrep: a robust preprocessing pipeline for functional MRI. Nat Methods 16:111–116. 10.1038/s41592-018-0235-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Favila SE, Samide R, Sweigart SC, Kuhl BA (2018) Parietal representations of stimulus features are amplified during memory retrieval and flexibly aligned with top-down goals. J Neurosci 38:7809–7821. 10.1523/JNEUROSCI.0564-18.2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gabrieli JD, Poldrack RA, Desmond JE (1998) The role of left prefrontal cortex in language and memory. Proc Natl Acad Sci U S A 95:906–913. 10.1073/pnas.95.3.906 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gottlieb LJ, Uncapher MR, Rugg MD (2010) Dissociation of the neural correlates of visual and auditory contextual encoding. Neuropsychologia 48:137–144. 10.1016/j.neuropsychologia.2009.08.019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gottlieb LJ, Wong J, de Chastelaine M, Rugg MD (2012) Neural correlates of the encoding of multimodal contextual features. Learn Mem 19:605–614. 10.1101/lm.027631.112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haskins AL, Yonelinas AP, Quamme JR, Ranganath C (2008) Perirhinal cortex supports encoding and familiarity-based recognition of novel associations. Neuron 59:554–560. 10.1016/j.neuron.2008.07.035 [DOI] [PubMed] [Google Scholar]

- Hassabis D, Maguire EA (2007) Deconstructing episodic memory with construction. Trends Cogn Sci 11:299–306. 10.1016/j.tics.2007.05.001 [DOI] [PubMed] [Google Scholar]

- Henson R. (2005) A mini-review of fMRI studies of human medial temporal lobe activity associated with recognition memory. Q J Exp Psychol B 58:340–360. 10.1080/02724990444000113 [DOI] [PubMed] [Google Scholar]

- Horner AJ, Burgess N (2013) The associative structure of memory for multi-element events. J Exp Psychol Gen 142:1370–1383. 10.1037/a0033626 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Horner AJ, Bisby JA, Bush D, Lin WJ, Burgess N (2015) Evidence for holistic episodic recollection via hippocampal pattern completion. Nat Commun 6:7462. 10.1038/ncomms8462 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Horner AJ, Bisby JA, Wang A, Bogus K, Burgess N (2016) The role of spatial boundaries in shaping long-term event representations. Cognition 154:151–164. 10.1016/j.cognition.2016.05.013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Joensen BH, Gaskell MG, Horner AJ (2020) United we fall: all-or-none forgetting of complex episodic events. J Exp Psychol Gen 149:230–248. 10.1037/xge0000648 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim H. (2011) Neural activity that predicts subsequent memory and forgetting: a meta-analysis of 74 fMRI studies. Neuroimage 54:2446–2461. 10.1016/j.neuroimage.2010.09.045 [DOI] [PubMed] [Google Scholar]

- Kuznetsova A, Brockhoff P, Christensen R (2017) lmerTest package: tests in linear mixed effects models. J Stat Softw 82:1–26. [Google Scholar]

- Long NM, Kahana MJ (2015) Successful memory formation is driven by contextual encoding in the core memory network. Neuroimage 119:332–337. 10.1016/j.neuroimage.2015.06.073 [DOI] [PubMed] [Google Scholar]

- Matuschek H, Kliegl R, Vasishth S, Baayen H, Bates D (2017) Balancing type I error and power in linear mixed models. J Mem Lang 94:305–315. 10.1016/j.jml.2017.01.001 [DOI] [Google Scholar]

- Mayes AR, Montaldi D, Roper A, Migo EM, Gholipour T, Kafkas A (2019) Amount, not strength of recollection, drives hippocampal activity: a problem for apparent word familiarity-related hippocampal activation. Hippocampus 29:46–59. 10.1002/hipo.23031 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morgan LK, Macevoy SP, Aguirre GK, Epstein RA (2011) Distances between real-world locations are represented in the human hippocampus. J Neurosci 31:1238–1245. 10.1523/JNEUROSCI.4667-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mullally SL, Maguire EA (2014) Memory, imagination, and predicting the future: a common brain mechanism? Neuroscientist 20:220–234. 10.1177/1073858413495091 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mumford JA, Turner BO, Ashby FG, Poldrack RA (2012) Deconvolving BOLD activation in event-related designs for multivoxel pattern classification analyses. Neuroimage 59:2636–2643. 10.1016/j.neuroimage.2011.08.076 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Park H, Rugg MD (2011) Neural correlates of encoding within- and across-domain inter-item associations. J Cogn Neurosci 23:2533–2543. 10.1162/jocn.2011.21611 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Park S, Chun MM (2009) Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. Neuroimage 47:1747–1756. 10.1016/j.neuroimage.2009.04.058 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Qin S, van Marle HJ, Hermans EJ, Fernández G (2011) Subjective sense of memory strength and the objective amount of information accurately remembered are related to distinct neural correlates at encoding. J Neurosci 31:8920–8927. 10.1523/JNEUROSCI.2587-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ranganath C. (2010) Binding items and contexts: the cognitive neuroscience of episodic memory. Curr Dir Psychol Sci 19:131–137. 10.1177/0963721410368805 [DOI] [Google Scholar]

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D'Esposito M (2004) Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia 42:2–13. 10.1016/j.neuropsychologia.2003.07.006 [DOI] [PubMed] [Google Scholar]

- Richter FR, Cooper RA, Bays PM, Simons JS (2016) Distinct neural mechanisms underlie the success, precision, and vividness of episodic memory. Elife 5:e18260. 10.7554/eLife.18260 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ritchey M, Montchal ME, Yonelinas AP, Ranganath C (2015) Delay-dependent contributions of medial temporal lobe regions to episodic memory retrieval. Elife 4:e05025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ritchey M, Wang SF, Yonelinas AP, Ranganath C (2019) Dissociable medial temporal pathways for encoding emotional item and context information. Neuropsychologia 124:66–78. 10.1016/j.neuropsychologia.2018.12.015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robertson CE, Hermann KL, Mynick A, Kravitz DJ, Kanwisher N (2016) Neural representations integrate the current field of view with the remembered 360° panorama in scene-selective cortex. Curr Biol 26:2463–2468. 10.1016/j.cub.2016.07.002 [DOI] [PubMed] [Google Scholar]

- Robin J. (2018) Spatial scaffold effects in event memory and imagination. Wiley Interdiscip Rev Cogn Sci 9:e1462. 10.1002/wcs.1462 [DOI] [PubMed] [Google Scholar]

- Robin J, Wynn J, Moscovitch M (2016) The spatial scaffold: the effects of spatial context on memory for events. J Exp Psychol Learn Mem Cogn 42:308–315. 10.1037/xlm0000167 [DOI] [PubMed] [Google Scholar]

- Robin J, Buchsbaum BR, Moscovitch M (2018) The primacy of spatial context in the neural representation of events. J Neurosci 38:2755–2765. 10.1523/JNEUROSCI.1638-17.2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- RStudio Team (2016) RStudio: integrated development for R. Boston: RStudio; Available at http://www.rstudio.com/. [Google Scholar]

- Schaefer A, Kong R, Gordon EM, Laumann TO, Zuo XN, Holmes AJ, Eickhoff SB, Yeo BTT (2018) Local-global parcellation of the human cerebral cortex from intrinsic functional connectivity MRI. Cereb Cortex 28:3095–3114. 10.1093/cercor/bhx179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Silva M, Baldassano C, Fuentemilla L (2019) Rapid memory reactivation at movie event boundaries promotes episodic encoding. J Neurosci 39:8538–8548. 10.1523/JNEUROSCI.0360-19.2019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sommer T, Rose M, Weiller C, Büchel C (2005) Contributions of occipital, parietal and parahippocampal cortex to encoding of object-location associations. Neuropsychologia 43:732–743. 10.1016/j.neuropsychologia.2004.08.002 [DOI] [PubMed] [Google Scholar]

- Spaniol J, Davidson PS, Kim AS, Han H, Moscovitch M, Grady CL (2009) Event-related fMRI studies of episodic encoding and retrieval: meta-analyses using activation likelihood estimation. Neuropsychologia 47:1765–1779. 10.1016/j.neuropsychologia.2009.02.028 [DOI] [PubMed] [Google Scholar]

- Staresina BP, Davachi L (2006) Differential encoding mechanisms for subsequent associative recognition and free recall. J Neurosci 26:9162–9172. 10.1523/JNEUROSCI.2877-06.2006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Staresina BP, Davachi L (2008) Selective and shared contributions of the hippocampus and perirhinal cortex to episodic item and associative encoding. J Cogn Neurosci 20:1478–1489. 10.1162/jocn.2008.20104 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Staresina BP, Davachi L (2009) Mind the gap: binding experiences across space and time in the human hippocampus. Neuron 63:267–276. 10.1016/j.neuron.2009.06.024

- Staresina BP, Duncan KD, Davachi L (2011) Perirhinal and parahippocampal cortices differentially contribute to later recollection of object- and scene-related event details. J Neurosci 31:8739–8747. 10.1523/JNEUROSCI.4978-10.2011

- Tibon R, Fuhrmann D, Levy DA, Simons JS, Henson RN (2019) Multimodal integration and vividness in the angular gyrus during episodic encoding and retrieval. J Neurosci 39:4365–4374. 10.1523/JNEUROSCI.2102-18.2018

- Uncapher MR, Otten LJ, Rugg MD (2006) Episodic encoding is more than the sum of its parts: an fMRI investigation of multifeatural contextual encoding. Neuron 52:547–556. 10.1016/j.neuron.2006.08.011

- Xia M, Wang J, He Y (2013) BrainNet viewer: a network visualization tool for human brain connectomics. PLoS One 8:e68910. 10.1371/journal.pone.0068910

- Zeidman P, Maguire EA (2016) Anterior hippocampus: the anatomy of perception, imagination and episodic memory. Nat Rev Neurosci 17:173–182. 10.1038/nrn.2015.24

- Zeithamova D, Preston AR (2010) Flexible memories: differential roles for medial temporal lobe and prefrontal cortex in cross-episode binding. J Neurosci 30:14676–14684. 10.1523/JNEUROSCI.3250-10.2010

- Zeithamova D, Dominick AL, Preston AR (2012) Hippocampal and ventral medial prefrontal activation during retrieval-mediated learning supports novel inference. Neuron 75:168–179. 10.1016/j.neuron.2012.05.010

- Zhang W, Luck SJ (2008) Discrete fixed-resolution representations in visual working memory. Nature 453:233–235. 10.1038/nature06860