Abstract

Background

Reproducibility is a cornerstone of scientific advancement; however, many published works may lack the core components needed for study reproducibility.

Aims

In this study, we evaluate the state of transparency and reproducibility in the field of psychiatry using specific indicators as proxies for these practices.

Methods

An increasing number of publications have investigated indicators of reproducibility, including research by Hardwicke et al, on which we based the methodology for our observational, cross-sectional study. From a random sample of 300 publications published over 5 years in PubMed-indexed psychiatry journals, two researchers extracted data in a duplicate, blinded fashion using a piloted Google form. The publications were examined for indicators of reproducibility and transparency, including the availability of materials, data, protocols and analysis scripts; open access; conflict of interest and funding statements; and online preregistration.

Results

This study ultimately evaluated 296 randomly selected publications from journals with a median 5-year impact factor of 3.20. Only 107 were publicly available online. Most primary authors originated from the USA, UK and the Netherlands. The top three publication types were cohort studies, surveys and clinical trials. Regarding indicators of reproducibility, 17 publications gave access to the necessary materials, four provided a protocol availability statement and one contained the raw data required to reproduce its outcomes. One publication stated that its analysis script was available on request. Thirteen publications stated that they were registered in online repositories; of these, four, ten and eight included their hypothesis, methods and analysis plan, respectively. Conflict of interest was addressed by 177 publications and reported by 31. Of the 185 publications with a funding statement, 153 were funded and 32 were unfunded.

Conclusions

Psychiatry research currently has substantial room to improve adherence to reproducibility and transparency practices. This study presents a reference point for the state of reproducibility and transparency in the psychiatry literature, and future assessments are recommended to evaluate and encourage progress.

Keywords: retrospective studies, sample size, sampling studies, research design

Introduction

Reproducibility is a cornerstone of scientific advancement;1 however, many published works lack the core components needed for reproducibility and transparency. These barriers to reproducibility have had serious immediate and long-term consequences for psychiatry, including poor credibility, reliability and accessibility.2 Fortunately, methods to improve reproducibility are practical and applicable to many research designs. For example, preregistration of studies provides public access to the protocol and analysis plan. Such practices promote independent verification of results2 and successful replication,2 3 and preregistration in particular hedges against outcome switching.4 In psychology, the Open Science Collaboration’s Reproducibility Project attempted to replicate the findings of 100 experimental and correlational studies published in three leading psychology journals. The researchers found that 97% of the original reports had statistically significant results, whereas only 37% of the replications did.5 With regard to outcome switching, a recent survey of 154 researchers investigating electrical brain stimulation found that fewer than half had been able to replicate previous study findings. These researchers also admitted to selective reporting of study outcomes (41%), adjusting statistical analyses to alter results (43%) and adjusting their own statistical measurements to support certain outcomes.6 Leveraging good statistical practices and methods that promote reproducibility, such as preregistration, is necessary to protect against similar selective reporting.7

Considerable progress has been made in promoting and endorsing reproducible and transparent research practices in psychology. For example, the Center for Open Science, the Berkeley Institute for Transparency in the Social Sciences and the Society for the Improvement of Psychological Science have all worked to establish a culture of transparency and a system of reproducible research practices. However, mental health researchers, including psychiatry researchers, have not kept pace with their psychology counterparts.8 A few editorials have sought to raise awareness of reproducibility and transparency in the psychiatric literature.7–9 For example, The Lancet Psychiatry published an editorial addressing various aspects of reproducibility, such as increasing the availability of materials, protocols, analysis scripts and raw data in online repositories. The author argued that few barriers in psychiatry would prevent researchers from depositing study materials in a public repository, and countered the commonly held position that raw patient data should not be made available, noting that appropriate de-identification can render the information anonymous.8 A second editorial, published in JAMA Psychiatry,7 argued for more robust statistical analysis and decision making to improve the reproducibility of psychiatry studies. Its author discussed several statistical considerations, including the effects of violated statistical assumptions on validity and study power, the likelihood of spurious findings in small samples, a priori covariate selection, effect size reporting and cross-validation. These efforts are good first steps toward raising awareness of the problem, which over 1000 scientists described as a ‘reproducibility crisis’ in a recent Nature survey;10 however, further measures are needed.

A top-down approach to evaluating transparency and reproducibility would provide valuable information about the current state of the psychiatry literature. In our study, we examined a random sample of publications from the psychiatric literature to evaluate specific indicators of reproducibility and transparency within the field. Our results may be used both to identify current strengths and limitations and to serve as baseline data for subsequent investigations.

Methods

We conducted an observational, cross-sectional study based on the methodology of Hardwicke et al.2 Our study is reported in accordance with guidelines for meta-epidemiological methodology research.11 Protocols, materials and other pertinent information are available on the Open Science Framework (https://osf.io/n4yh5/). This study was not subject to institutional review board oversight because it did not involve human participants.

Journal and study selection

We used the National Library of Medicine (NLM) catalogue to search for all journals with the subject terms tag Psychiatry[ST]. DT performed this search on May 29, 2019. Journals were included if they were published in English and indexed in MEDLINE. The list of eligible journals was then extracted from the NLM catalogue, along with each journal’s electronic ISSN (or linking ISSN if the electronic ISSN was unavailable). The resulting ISSN search string was used to search PubMed for all publications between January 1, 2014, and December 31, 2018. DT then compiled a random sample of 300 publications from the selected journals.
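The search and sampling steps can be sketched in Python. This is a minimal illustration, not the authors’ procedure: the ISSNs and PMIDs below are hypothetical placeholders, the `[is]` (ISSN) and `[dp]` (publication date) tags are standard PubMed field tags, and the fixed random seed is our assumption for making the draw repeatable. The source does not say how the random sample was drawn.

```python
import random


def build_pubmed_query(issns, start="2014/01/01", end="2018/12/31"):
    """Combine journal ISSNs with OR and restrict to a publication-date range."""
    # [is] searches the ISSN field; [dp] searches the date of publication.
    journal_clause = " OR ".join(f"{issn}[is]" for issn in issns)
    date_clause = f'"{start}"[dp] : "{end}"[dp]'
    return f"({journal_clause}) AND ({date_clause})"


def draw_sample(pmids, k=300, seed=2019):
    """Draw k distinct PMIDs without replacement; the seed (hypothetical) fixes the draw."""
    rng = random.Random(seed)
    # Sorting first makes the result independent of the input order.
    return rng.sample(sorted(pmids), k)


# Hypothetical ISSNs and stand-in PMIDs, for illustration only.
query = build_pubmed_query(["0000-0000", "1111-1111"])
print(query)
sample = draw_sample(range(1, 90282), k=300)
print(len(sample), len(set(sample)))
```

Recording the seed alongside the query string would let another team regenerate the same sample from the same search results.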

Extraction training

Prior to data extraction, two investigators (CES and JZP) completed a full day of training to ensure inter-rater reliability. The training included a review of the study design, protocol and extraction form, and the identification of relevant information in two publications selected by DT. The two investigators were given three articles from which to extract data as examples, and after extraction the pair reconciled all differences. The training session was recorded and posted online for reference (https://osf.io/jczx5/). Before extracting data from all studies, the two investigators extracted data from the first 10 publications on their specialty list and resolved any discrepancies by discussion.

Data extraction

Two investigators (CES, JZP) extracted data from the 300 publications in a duplicate and blinded fashion. Following extraction, the pair held a final consensus meeting to resolve disagreements. A third investigator (DT) was available for adjudication, but this was not needed. A pilot-tested Google form was created based on the study by Hardwicke et al,2 with additions. This form included the indicators of reproducibility and transparency (https://osf.io/3nfa5/) and items related to study characteristics. The assessment was built on the key indicators in Hardwicke et al, together with other indicators relevant to promoting transparent, collaborative and reproducible research. The indicators examined were public accessibility, funding, conflict of interest, citation frequency and any statements about protocol, materials and data availability and preregistration; specific counts and percentages are given in online supplementary table A. Further explanation of the frequency, value and relevance of these indicators is provided in online supplementary table B. The data extracted varied by study design, and studies without empirical data were excluded (eg, editorials, commentaries without reanalysis, simulations, news, reviews and poems). We also expanded the study design options to include cohort studies, case series, secondary analyses, chart reviews and cross-sectional studies. Finally, we used the following funding categories: university, hospital, public, private/industry and non-profit.
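Duplicate, blinded extraction implies a reconciliation step in which the two extractors’ answers are compared item by item before the consensus meeting. The source does not describe how disagreements were identified. The sketch below assumes that each extractor’s form responses have been exported to a dictionary keyed by a hypothetical publication ID, with hypothetical field names.

```python
def find_disagreements(extractor_a, extractor_b):
    """Return (publication_id, field, value_a, value_b) for every mismatched item.

    Each argument maps a publication ID to a dict of form fields and extracted values.
    A field missing from one extractor's record is reported with the value None.
    """
    disagreements = []
    for pub_id in sorted(set(extractor_a) | set(extractor_b)):
        record_a = extractor_a.get(pub_id, {})
        record_b = extractor_b.get(pub_id, {})
        for field in sorted(set(record_a) | set(record_b)):
            value_a = record_a.get(field)
            value_b = record_b.get(field)
            if value_a != value_b:
                disagreements.append((pub_id, field, value_a, value_b))
    return disagreements


# Hypothetical records for two publications, one disagreement.
a = {"pub1": {"data_statement": "yes", "preregistered": "no"},
     "pub2": {"data_statement": "no", "preregistered": "no"}}
b = {"pub1": {"data_statement": "yes", "preregistered": "yes"},
     "pub2": {"data_statement": "no", "preregistered": "no"}}
for row in find_disagreements(a, b):
    print(row)  # ('pub1', 'preregistered', 'no', 'yes')
```

The resulting list is the agenda for the consensus meeting; an empty list means the two extractions already agree.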


Open access availability

We searched Open Access Button (https://openaccessbutton.org) to assess whether studies were available through open access. If Open Access Button could not locate the article, two investigators (CES, JZP) searched Google or PubMed using the publication title and DOI to determine whether a full-text version was publicly available.
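The two-step check is an ordered fallback, and its outcome maps onto the three open access categories reported in table 1. The sketch below does not call Open Access Button or any search engine. It assumes that the investigators’ findings have been recorded as two hypothetical flags, `found_by_oab` and `found_by_search`. The second flag is `None` when the fallback search was never needed.

```python
from typing import Optional


def classify_access(found_by_oab: bool, found_by_search: Optional[bool]) -> str:
    """Map the two-step open access search onto the table 1 categories."""
    if found_by_oab:
        # Step 1 succeeded, so the fallback search is not needed.
        return "Yes, found via Open Access Button"
    if found_by_search:
        # Step 2: a title/DOI search on Google or PubMed found a free full text.
        return "Yes, found via other means"
    # Neither route found a publicly available version.
    return "Could not access through paywall"


print(classify_access(False, True))  # Yes, found via other means
```

Tallying the returned labels over all publications, for example with `collections.Counter`, gives the per-category counts.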

Replication and evidence synthesis

We used Web of Science (https://www.webofknowledge.com/) to determine whether the studies in our sample were replication studies or had been included in systematic reviews. Web of Science allowed us to determine the number and type of other studies citing each publication we examined. Articles unavailable on Web of Science were located and examined via PubMed or other resources. We classified a publication as a replication study if the research was conducted to replicate aspects of a prior study’s design or findings. To identify citing systematic reviews and meta-analyses, we reviewed each publication that had cited the studies in our sample, using Web of Science’s citation listing feature. This process was performed in the same manner as the data extraction described previously.
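Counting the citing systematic reviews and meta-analyses for each publication and grouping the counts into bands can be sketched as follows. The band boundaries (0, 1, 2 to 5, more than 5) are our assumption. The four counts reported in table 2 sum to the 201 publications analysed, so the bands should not overlap. The per-publication counts would come from the manual review of Web of Science citation listings, and the values below are hypothetical.

```python
from collections import Counter


def citation_band(n_reviews: int) -> str:
    """Bin the number of citing systematic reviews or meta-analyses for one publication."""
    if n_reviews < 0:
        raise ValueError("citation count cannot be negative")
    if n_reviews == 0:
        return "No citations"
    if n_reviews == 1:
        return "A single citation"
    if n_reviews <= 5:
        return "Two to five citations"
    return "Greater than five citations"


def tabulate(counts):
    """Count publications per band from per-publication citation tallies."""
    return Counter(citation_band(n) for n in counts)


# Hypothetical per-publication counts of citing systematic reviews.
print(tabulate([0, 0, 1, 3, 7]))
```

Publications in any band other than "No citations" are those cited in at least one systematic review or meta-analysis.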

Statistical analysis

We report descriptive statistics for each category along with 95% CIs of proportions, calculated using Microsoft Excel.
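The source states only that intervals were computed in Microsoft Excel and does not name the method. A minimal sketch, assuming the normal-approximation (Wald) interval p ± 1.96 × sqrt(p(1 − p)/n) with p = x/n, is shown below. The open access intervals in table 1 (eg, 95 of 296, giving 26.8 to 37.4) appear consistent with this formula using n = 296. Near 0% or 100% the Wald interval can cross the bounds, so the sketch clips it to [0, 1]. A Wilson interval is a common alternative for small counts.

```python
import math
from typing import Tuple


def wald_ci(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation (Wald) CI for a proportion, clipped to [0, 1]."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


low, high = wald_ci(95, 296)
print(f"{100 * low:.1f} to {100 * high:.1f}")  # 26.8 to 37.4
```

The denominator `n` should be the number of publications actually assessed for that indicator.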

Results

Sample characteristics

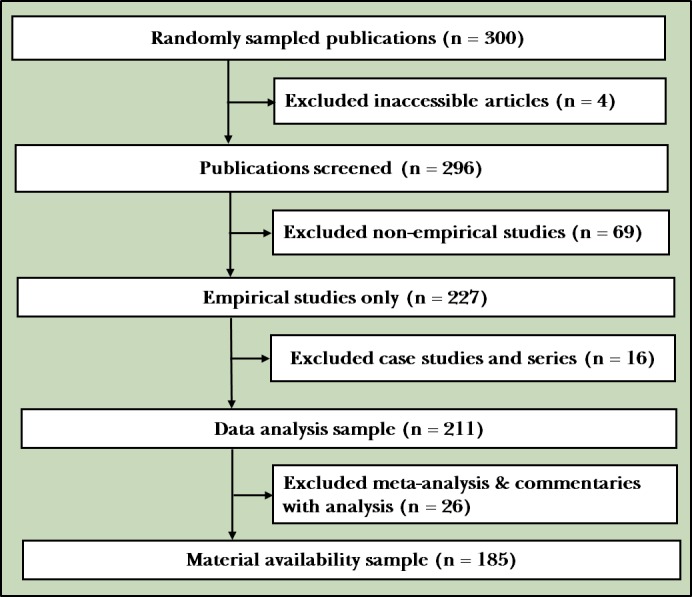

Our search of the NLM catalogue identified 346 journals, of which 158 met the inclusion criteria (figure 1). The median 5-year impact factor for these journals was 3.20 (IQR: 2.2–4.2). Our PubMed search initially returned 407 656 records, which fell to 90 281 after we applied the date limits. From these records, 300 psychiatry research publications were randomly selected. Four were inaccessible, yielding a final sample of 296 publications. Most of the 296 publications had a primary author from the USA (155, 52%), UK (86, 29%) or the Netherlands (32, 11%). The three most common publication types were cohort studies (46, 16%), surveys (45, 15%) and clinical trials (36, 12%). With regard to accessibility, 107 (36%) of the 296 publications were publicly available, whereas the remaining 189 (64%) were available only behind a paywall. Other sample characteristics are displayed in table 1 and online supplementary table A.

Figure 1.

PRISMA diagram: selection process for publications from PubMed-indexed psychiatry journals.

Table 1.

Publication characteristics

| Characteristic | Variable | N (%) | 95% CI |
|---|---|---|---|
| Test subjects (n=296) | Animals | 5 (1.69) | — |
| | Humans | 189 (63.85) | — |
| | Both | 0 (0) | — |
| | Neither | 102 (34.46) | — |
| Country of journal publication (n=296) | USA | 130 (43.92) | — |
| | UK | 19 (6.42) | — |
| | Australia | 16 (5.41) | — |
| | Netherlands | 15 (5.07) | — |
| | Germany | 15 (5.07) | — |
| | Canada | 12 (4.05) | — |
| | China | 11 (3.72) | — |
| | Italy | 8 (2.7) | — |
| | France | 6 (2.03) | — |
| | Spain | 5 (1.69) | — |
| | Portugal | 5 (1.69) | — |
| | Israel | 5 (1.69) | — |
| | Brazil | 5 (1.69) | — |
| | Unclear | 3 (1.01) | — |
| | Other | 41 (13.85) | — |
| Country of corresponding author (n=296) | USA | 155 (52.36) | — |
| | UK | 86 (29.05) | — |
| | Netherlands | 32 (10.81) | — |
| | Ireland | 8 (2.7) | — |
| | Germany | 5 (1.69) | — |
| | Switzerland | 3 (1.01) | — |
| | France | 3 (1.01) | — |
| | Canada | 1 (0.34) | — |
| | Brazil | 1 (0.34) | — |
| | Australia | 1 (0.34) | — |
| | Unclear | 1 (0.34) | — |
| Open access (n=296) | Yes, found via Open Access Button | 95 (32.1) | 26.8–37.4 |
| | Yes, found via other means | 12 (4.1) | 1.8–6.3 |
| | Could not access through paywall | 189 (63.9) | 58.4–69.3 |
| 5-year impact factor (n=296) | Median | 3.2 | — |
| | First quartile | 2.2 | — |
| | Third quartile | 4.2 | — |
| | IQR | 2.2–4.2 | — |
| Most recent impact factor year (n=296) | 2014 | 0 | — |
| | 2015 | 0 | — |
| | 2016 | 0 | — |
| | 2017 | 270 | — |
| | 2018 | 0 | — |
| | Other | 30 | — |
| Most recent impact factor (n=296) | Median | 3 | — |
| | First quartile | 1.9 | — |
| | Third quartile | 4 | — |
| | IQR | 1.9–4.0 | — |

Reproducibility factors

Factors for reproducibility include the availability of materials, data, protocols and analysis scripts, and preregistration (see online supplementary table B for the relevance and value of each factor). Of the 296 publications, 185 were analysed for a materials availability statement, and 211 were analysed for data availability, protocol availability, analysis script availability and preregistration statements (figure 1). These differences resulted from excluding particular study designs from certain analyses; for example, case studies were excluded from the preregistration analysis. Of the 185 publications analysed for a materials availability statement, 22 (12% (95% CI: 8.2% to 16%)) had such a statement, yet only 17 provided an accessible materials document (table 2). Only 14 (6.6% (95% CI: 3.8% to 9.5%)) of the 211 publications provided a data availability statement, and just one study included all the raw data necessary to reproduce its findings. Only four of the 211 publications (1.9% (95% CI: 0.4% to 3.4%)) provided a protocol availability statement, and a single publication (0.47% (95% CI: 0% to 1.3%)) stated that its analysis script was available on request (table 2). Of the 211 publications for which preregistration was analysed, only 13 (6.2% (95% CI: 3.4% to 8.9%)) stated that the study was registered in a publicly accessible repository (table 2). All 13 were accessible; four (31% (95% CI: 26% to 36%)) included their hypothesis, 10 (77% (95% CI: 72% to 82%)) included their methods and eight (62% (95% CI: 56% to 67%)) included their analysis plan.

Table 2.

Characteristics of reproducibility in psychiatry studies

| Characteristic | Variable | N (%) | 95% CI |
|---|---|---|---|
| Funding* (n=296) | University | 14 (4.73) | — |
| | Hospital | 2 (0.68) | — |
| | Public | 72 (24.3) | — |
| | Private/industry | 16 (5.41) | — |
| | Non-profit | 3 (1.01) | — |
| | Mixed | 46 (15.54) | — |
| | No statement listed | 111 (37.5) | — |
| | No funding received | 32 (10.81) | — |
| Conflict of interest statement (n=296) | Statement, one or more conflicts of interest | 31 (10.47) | 7 to 13.9 |
| | Statement, no conflict of interest | 146 (49.32) | 43.7 to 55 |
| | No conflict of interest statement | 119 (40.2) | 34.7 to 45.8 |
| Data availability (n=211) | Statement, some data are available | 14 (6.64) | 3.8 to 9.5 |
| | Statement, data are not available | 0 (0) | 0 to 0 |
| | No data availability statement | 197 (93.36) | 90.5 to 96.2 |
| Materials availability (n=185) | Statement, some materials are available | 22 (11.89) | 8.2 to 15.6 |
| | Statement, materials are not available | 0 (0) | 0 to 0 |
| | No materials availability statement | 163 (88.11) | 84.4 to 91.8 |
| Protocol availability (n=211) | Full protocol | 4 (1.90) | 0.4 to 3.4 |
| | No protocol | 207 (98.10) | 96.6 to 99.6 |
| Analysis scripts (n=211) | Statement, some analysis scripts are available | 1 (0.47) | 0 to 1.3 |
| | Statement, analysis scripts are not available | 0 (0) | 0 to 0 |
| | No analysis script availability statement | 210 (99.53) | 98.7 to 100 |
| Replication studies (n=211) | Reports a replication study | 4 (1.90) | 0.4 to 3.4 |
| | Does not report a replication study | 207 (98.10) | 96.6 to 99.6 |
| Cited by meta-analysis or systematic review† (n=201) | No citations | 119 (59.20) | 53.6 to 64.8 |
| | A single citation | 39 (19.40) | 14.9 to 23.9 |
| | Two to five citations | 39 (19.40) | 14.9 to 23.9 |
| | Greater than five citations | 4 (2.00) | 0.4 to 3.6 |
| Preregistration (n=211) | Statement, study was preregistered | 13 (6.16) | 3.4 to 8.9 |
| | Statement, study was not preregistered | 0 (0) | 0 to 0 |
| | No preregistration statement | 198 (93.84) | 91.1 to 96.6 |

*Some studies included multiple funding sources.

†No studies were explicitly excluded from the systematic reviews that cited the original article.

Conflict of interest and funding

All 296 publications were included in the conflict of interest and funding source analyses (figure 1). Of these, 177 (60% (95% CI: 41% to 69%)) included a conflict of interest statement, and 31 (10%) reported a conflict of interest. With regard to funding, 185 (63% (95% CI: 43% to 82%)) of the 296 articles had a funding statement. Of the 296 publications, 153 (52% (95% CI: 36% to 68%)) were funded and 32 (11% (95% CI: 7.3% to 14%)) did not receive funding. Public sources were the most common single funding category (72, 24%). Additional results are presented in table 2.

Replication and evidence synthesis

Of the 296 publications, 211 were assessed for whether they were replication studies, and 201 were assessed for whether they had been cited in a meta-analysis or systematic review (figure 1). Four (1.9% (95% CI: 0.4% to 3.4%)) were identified as replication studies, and 82 (41% (95% CI: 30% to 51%)) were cited in at least one systematic review or meta-analysis (table 2).

Discussion

Main findings

Our results indicate that most publications in the psychiatry literature lack the materials, raw data and detailed protocols needed to be easily reproducible. These findings are concerning, given the critical need for reproducible and transparent scientific research. In this section, we outline several issues of concern and offer suggestions to narrow this gap between research standards and current practice.

To begin, we found that only 13 publications included a statement about preregistration. Preregistration allows independent evaluation of the consistency between the registered plan and what was actually done in the study. Selective reporting bias (upgrading, downgrading, removing or adding study outcomes based on statistically significant findings) is particularly problematic, and comparing preregistration documents with published reports enables independent researchers to determine whether this form of bias has likely occurred. Multiple studies indicate that selective reporting bias is a pervasive problem in the medical literature,12–16 including psychotherapy trials.17 Scott et al evaluated selective outcome reporting in clinical trials published in The American Journal of Psychiatry, Archives of General Psychiatry/JAMA Psychiatry, Biological Psychiatry, Journal of the American Academy of Child and Adolescent Psychiatry and The Journal of Clinical Psychiatry,18 and found that 28% of trials in their sample showed evidence of selective outcome reporting. As another example, the COMPare project evaluated trials published in leading general medical journals for selective outcome reporting, and its members then wrote letters to the editor requesting clarification of discrepant endpoints. To date, they have identified 354 outcomes that were not reported and 357 outcomes that were silently added across 67 trials.19 Addressing this problem requires stricter adherence to preregistration. For example, although the Food and Drug Administration Amendments Act codified into law that all applicable clinical trials should be prospectively registered before trial commencement, penalties for non-compliant investigators have never been enforced.20 Because this safeguard is already in place, greater enforcement is likely a viable first step toward improvement.

Additionally, the International Committee of Medical Journal Editors (ICMJE) requires journals that follow its recommendations to make prospective trial registration a precondition for publishing any clinical trial.21 However, studies have found that journals do not always enforce registration policies.22 Given that journals are gatekeepers of scientific knowledge and advancement, we advocate that journals adopt mechanisms to enforce their policies. Additional training is also warranted for junior researchers and students who may not be aware of the problems caused by failing to preregister studies. Responsible conduct of research courses are required for trainees in fellowships and training programmes funded by the National Institutes of Health. For more established faculty, universities offer modules on research ethics, human participant protections, data management, informed consent and anonymity. Such courses could incorporate training on preregistration, transparency and reproducibility. Academic conferences offer another avenue for training all parties in open research practices.

Transparency of the methodological process, data collection and data analyses increases the credibility of study findings.8 Access to the complete protocols and materials used to perform a study is therefore imperative for replication attempts. This need is illustrated by the Reproducibility Project: Cancer Biology, which attempted to reproduce 50 landmark studies after two drug companies raised concerns about the replication of cancer study findings.23 Replication of 32 of the 50 studies was abandoned, in large part because the necessary methodological details were not available in the published papers or from the original researchers.5 In addition, a review of 441 biomedical publications from 2000 to 2014 found that only one study provided a full protocol and none made all of their raw data available.24 Given the marked deficiency in materials availability in psychiatry, the field may benefit from adopting ideas from other disciplines. For example, the American Journal of Political Science requires authors of manuscripts accepted for publication to provide sufficient materials to enable other researchers to verify all analytic results reported in the narrative and supporting documents.25 The journal also requires these materials to be verified to confirm that the analytic results of each study are reproducible, and verification of both quantitative and qualitative analyses is carried out at universities. Following verification, the university staff release the final data for public access, after which final publication can occur.

Strengths

With regard to strengths, this study included a random sample of the psychiatry literature drawn from a large selection of journals. We used extensive training to ensure inter-rater reliability between investigators. Data were extracted in a duplicate, blinded fashion with joint reconciliation to minimise human error. Double data extraction is the gold standard in systematic reviews and is recommended by the Cochrane Handbook for Systematic Reviews of Interventions.26 Furthermore, all relevant study materials have been made available to ensure transparency and reproducibility.

Limitations

We acknowledge that although our sample size was 50% greater than that of Hardwicke et al,2 it still represents only a small fraction of the published literature. In addition, indicators of reproducibility and transparency have not yet been fully established; we applied factors previously identified in the social sciences to psychiatry. Our findings should also be interpreted in light of our sample, which included only MEDLINE-indexed journals and studies published during a set time period. Indicators might differ in other journals or outside this timeframe.

Implications

In conclusion, we stress the importance of adopting transparent and reproducible research practices. If the public loses trust in science, that distrust could extend to clinical practice.27 Lack of transparency is a recognised issue;1 addressing it requires reforming our current practices. This study presents a reference point for the state of reproducibility and transparency in the psychiatry literature, and future assessments are recommended to evaluate progress.

Biography

Caroline E. Sherry is a second-year medical student at Oklahoma State University Center for Health Sciences. She graduated from the University of Notre Dame in 2018 with a double major in Science and Theology and a minor in the science and practice of humanistic, compassionate medicine. Caroline joined the Vassar Research team as a first-year medical student, enjoyed dedicated summer research time and is looking forward to the opportunity to engage in research on rotations.

Footnotes

Presented at: OSU-CHS Research Conference 2020

Contributors: All authors contributed substantially to the planning, conduct and reporting of the work described in the article, including, but not limited to, study design, data acquisition, data analysis, manuscript drafting and final manuscript approval. CES and JZP collaborated on the extraction, validation, organisation, analysis and interpretation of all data. CES was also responsible for team organisation and for manuscript formatting, revision and submission. DT designed the methods, compiled the publication list, led data extraction training and assisted with data interpretation and manuscript editing. BKC and AP contributed to data interpretation and to writing the introduction and discussion sections. MV provided advisement and leadership in data interpretation, scientific writing and manuscript editing.

Funding: This study was funded through the 2019 Presidential Research Fellowship Mentor–Mentee Program at Oklahoma State University Center for Health Sciences.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: All protocols, materials and other pertinent information are available on Open Science Framework (https://osf.io/n4yh5/). Comprehensive results are accessible online in Supplementary Tables A and B.

References

- 1. Rigor and reproducibility [Internet]. National Institutes of Health (NIH). Available: https://www.nih.gov/research-training/rigor-reproducibility [Accessed 26 Jun 2019].

- 2. Hardwicke TE, Wallach JD, Kidwell M, et al. An empirical assessment of transparency and reproducibility-related research practices in the social sciences (2014-2017) [Internet]. 2019.

- 3. Turner EH, Matthews AM, Linardatos E, et al. Selective publication of antidepressant trials and its influence on apparent efficacy. N Engl J Med 2008;358:252–60. doi:10.1056/NEJMsa065779

- 4. Falk Delgado A, Falk Delgado A. Outcome switching in randomized controlled oncology trials reporting on surrogate endpoints: a cross-sectional analysis. Sci Rep 2017;7:9206. doi:10.1038/s41598-017-09553-y

- 5. Open Science Collaboration. Estimating the reproducibility of psychological science. Science 2015;349:aac4716. doi:10.1126/science.aac4716

- 6. Héroux ME, Loo CK, Taylor JL, et al. Questionable science and reproducibility in electrical brain stimulation research. PLoS One 2017;12:e0175635. doi:10.1371/journal.pone.0175635

- 7. Blackford JU. Leveraging statistical methods to improve validity and reproducibility of research findings. JAMA Psychiatry 2017;74:119–20. doi:10.1001/jamapsychiatry.2016.3730

- 8. Bell V. Open science in mental health research. Lancet Psychiatry 2017;4:525–6. doi:10.1016/S2215-0366(17)30244-4

- 9. Jain S, Kuppili PP, Pattanayak RD, et al. Ethics in psychiatric research: issues and recommendations. Indian J Psychol Med 2017;39:558–65. doi:10.4103/IJPSYM.IJPSYM_131_17

- 10. Baker M. 1,500 scientists lift the lid on reproducibility. Nature 2016;533:452–4. doi:10.1038/533452a

- 11. Murad MH, Wang Z. Guidelines for reporting meta-epidemiological methodology research. Evid Based Med 2017;22:139–42. doi:10.1136/ebmed-2017-110713

- 12. Howard B, Scott JT, Blubaugh M, et al. Systematic review: outcome reporting bias is a problem in high impact factor neurology journals. PLoS One 2017;12:e0180986. doi:10.1371/journal.pone.0180986

- 13. Wayant C, Scheckel C, Hicks C, et al. Evidence of selective reporting bias in hematology journals: a systematic review. PLoS One 2017;12:e0178379. doi:10.1371/journal.pone.0178379

- 14. Rankin J, Ross A, Baker J, et al. Selective outcome reporting in obesity clinical trials: a cross-sectional review. Clin Obes 2017;7:245–54. doi:10.1111/cob.12199

- 15. Aggarwal R, Oremus M. Selective outcome reporting is present in randomized controlled trials in lung cancer immunotherapies. J Clin Epidemiol 2019;106:145–6. doi:10.1016/j.jclinepi.2018.10.010

- 16. Mathieu S, Boutron I, Moher D, et al. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA 2009;302:977–84. doi:10.1001/jama.2009.1242

- 17. Bradley HA, Rucklidge JJ, Mulder RT. A systematic review of trial registration and selective outcome reporting in psychotherapy randomized controlled trials. Acta Psychiatr Scand 2017;135:65–77. doi:10.1111/acps.12647

- 18. Scott A, Rucklidge JJ, Mulder RT. Is mandatory prospective trial registration working to prevent publication of unregistered trials and selective outcome reporting? An observational study of five psychiatry journals that mandate prospective clinical trial registration. PLoS One 2015;10:e0133718. doi:10.1371/journal.pone.0133718

- 19. Goldacre B, Drysdale H, Dale A, et al. COMPare: a prospective cohort study correcting and monitoring 58 misreported trials in real time. Trials 2019;20:118. doi:10.1186/s13063-019-3173-2

- 20. U.S. Food and Drug Administration. Food and Drug Administration Amendments Act (FDAAA) of 2007. 2007.

- 21. Laine C, De Angelis C, Delamothe T, et al. Clinical trial registration: looking back and moving ahead. Ann Intern Med 2007;147:275–7. doi:10.7326/0003-4819-147-4-200708210-00166

- 22. Sims MT, Sanchez ZC, Herrington JM, et al. Shoulder arthroplasty trials are infrequently registered: a systematic review of trials. PLoS One 2016;11:e0164984. doi:10.1371/journal.pone.0164984

- 23. Errington TM, Iorns E, Gunn W, et al. An open investigation of the reproducibility of cancer biology research. eLife 2014;3. doi:10.7554/eLife.04333

- 24. Wallach JD, Boyack KW, Ioannidis JPA. Reproducible research practices, transparency, and open access data in the biomedical literature, 2015-2017. PLoS Biol 2018;16:e2006930. doi:10.1371/journal.pbio.2006930

- 25. AJPS verification policy [Internet]. American Journal of Political Science, 2019. Available: https://ajps.org/ajps-verification-policy/ [Accessed 27 Jun 2019].

- 26. Higgins JPT, Green S. Cochrane handbook for systematic reviews of interventions. John Wiley & Sons, 2011.

- 27. Birkhäuer J, Gaab J, Kossowsky J, et al. Trust in the health care professional and health outcome: a meta-analysis. PLoS One 2017;12:e0170988. doi:10.1371/journal.pone.0170988

Supplementary Materials

gpsych-2019-100149supp001.pdf (57.5KB, pdf)