Abstract

Theories of embodied cognition propose that we recognize tools in part by reactivating sensorimotor representations of tool use in a process of simulation. If motor simulations play a causal role in tool recognition, then performing a concurrent motor task should differentially modulate recognition of experienced vs. non-experienced tools. We sought to test the hypothesis that an incompatible concurrent motor task modulates conceptual processing of learned vs. non-learned objects by directly manipulating the embodied experience of participants. We trained one group to use a set of novel, 3-D printed tools under the pretense that they were preparing for an archeological expedition to Mars (manipulation group); we trained a second group to report declarative information about how the tools are stored (spatial group). With this design, familiarity and visual attention to different object parts were similar for both groups, though their qualitative interactions differed. After learning, participants made familiarity judgments of auditorily presented tool names while performing a concurrent motor task or simply sitting at rest. We showed that familiarity judgments were facilitated by motor state-dependence; specifically, in the manipulation group, familiarity was facilitated by a concurrent motor task, whereas in the spatial group familiarity was facilitated while sitting at rest. These results are the first to directly show that manipulation experience differentially modulates conceptual processing of familiar vs. unfamiliar objects, suggesting that embodied representations contribute to recognizing tools.

Introduction

After suffering brain damage to relatively specific regions of the parietal or frontal lobe, a neuropsychological patient who is shown a hammer may immediately, without prompting, reach for it and start swinging. This ‘utilization behavior’, first described by Lhermitte (1983; see also Iaccarino, Chieffi, & Iavarone, 2014), suggests that our knowledge of how to use familiar tools can be elicited by the mere sight of the tool. Importantly, this behavior appears to occur in the absence of an intention to act, pointing to the importance of motor activity as an essential component of our understanding of familiar tools. Neuroimaging research has shown that frontoparietal areas implicated in organizing reaching and grasping towards objects are activated in response to pictures of tools but not to houses, faces, or animals (e.g. Chao & Martin, 2000). It is these areas that are often damaged in cases of utilization behavior (see Iaccarino, Chieffi, & Iavarone, 2014). Importantly, these frontoparietal regions are activated in response to tools even when they are observed but not explicitly used (see Martin, 2007; Rugg & Thompson-Schill, 2013). These phenomena suggest that motor-related information is activated to support cognition.

These types of findings have prompted theorists to posit that cognition is partially grounded in simulations of action (see Meyer & Damasio, 2009; Allport, 1985; Barsalou, 2008). According to this framework, to know that an object is a hammer requires reactivating, by way of simulation, our sensory and motor experiences of a hammer. Such a mechanism would help explain why, when inhibitory mechanisms are disrupted after brain damage, merely seeing a hammer can elicit hammering actions; that is, simulations of action are no longer inhibited and result in actual performance. This notion is also consistent with the general idea that memory is state-dependent; that is, reinstating learning states appears to improve memory performance (see Smith & Vela, 2001, for a meta-analysis). However, the grounding framework goes beyond previous research in state-dependence (which focuses on reinstating environmental or physiological states) in suggesting that the state of the motor system may also be crucial for memory (see also Meyer & Damasio, 2009).

One of the most significant criticisms leveled against grounded theories of cognition is that the frontoparietal activity observed in brain imaging studies may not be a simulation at all but may simply reflect association, that is, a cascade of activation from the neural regions devoted to representing conceptual knowledge to the regions responsible for explicitly executing actions (see Mahon & Caramazza, 2008). According to this criticism, all motor-related activation occurs after abstract conceptual processing has occurred and is, therefore, not functionally involved in knowledge (or is perhaps merely incidental to conceptual processing). Thus, in determining the role of motor activity in cognition, an important question arises: are motor activations functionally involved in tool identification? If motoric activations play a functional role during cognitive tasks involving tools, then activating the motor system should modulate performance in some way. To put it another way, cognitive performance should be state-dependent with respect to the motor system. For instance, if identifying a tool (which we experience with an active motor system) requires re-activation of motor information, then overtly producing motor actions should modulate the identification of tools.

A few studies support the notion that the state of the motor system can influence object identification. For instance, viewing images of hands primes the identification of tools affording compatible postures, a finding suggesting that images of hands activate the motor system in a way that facilitates tool identification (see Helbig et al., 2010). In contrast, however, overtly producing a motoric action can impair processing of tools. For instance, performing a paddy-cake task (i.e. a repetitive hand clapping pattern) selectively interfered with making abstract/concrete judgments of manipulable words compared to less manipulable words (Yee et al., 2013). Similarly, Witt et al. (2010) had participants squeeze a ball while naming images of animals and tools and showed that ball squeezing interfered with naming tools but not animals. To further complicate things, sometimes motor activation is irrelevant to performance. For instance, Pecher (2013) found that motor interference did not disrupt working memory performance more for manipulable than non-manipulable objects (see also Quak et al., 2014). Additionally, concurrent motor tasks can affect naming of non-manipulable objects if they are oriented in different ways in the picture plane (Matheson et al., 2014). These results suggest at best that a concurrent motor task may affect some forms of processing, but to date it is unclear what the conditions are that lead to such facilitatory or inhibitory effects.

A strong test of the functional role of motor activity in tool identification is needed to further develop grounded theories of cognition. In ecological contexts, people vary in their experience with manipulable objects, and manipulable objects tend to share certain characteristics. This makes it difficult to control what types of experiences people have, and almost impossible to find people who have no manipulation experience with a particular class of object. To address this issue, we devised a training protocol that strongly controlled the amount, type, and quality of motor experience individuals had while learning about a novel set of tools. Participants were trained and tested over the course of 4 days. One group of participants learned how to use novel tools by manipulating them (i.e. manipulation information); a control group learned declarative knowledge about the storage of tools (i.e. spatial information). In a test of the functional role of motor activity in tool processing, we had participants make familiarity judgments of auditorily presented tool names. For half the trials, participants performed a concurrent ‘paddy-cake’ motor pattern with their hands; for the other half, their hands remained still. We predicted a type of state-dependent learning (i.e. motor compatibility effect). We reasoned that both groups should show a familiarity effect and respond more efficiently to the tools they learned about (i.e. familiarity facilitates tool identification; Salmon et al., 2014). However, if motor activations are functionally involved in familiarity judgments, then familiarity judgments should be state-dependent, and this state-dependence should benefit performance. Specifically, we reasoned that motor activation should facilitate familiarity judgments in the manipulation group. 
Conversely, motor activation should not influence judgments in the spatial group; in fact, motor state-dependence would predict that a resting motor system should facilitate familiarity judgments in this group, since they were not motorically active during learning. Further, because we analyzed data from both the first and last sessions, we were able to explore how these effects evolve as experience accumulates. Currently, it is unknown how practice modulates the role of the motor system in tool identification. Previous state-dependent motor-learning studies have shown that the reinstatement of the learning state has a greater influence early in training (Ruitenberg et al., 2012). Because of this, we hypothesized that motor compatibility may be especially important early in learning (i.e. day 1) vs. later in learning (i.e. day 4). Overall, evidence for a motor compatibility effect, especially on day 1, would provide strong evidence that motor activation plays a functional role in tool processing and demonstrate that the activity of the motor system can serve as a facilitatory state for tool identification.

Methods

Participants

Twenty-eight participants in each group (manipulation group: 27 right-handed, 12 males, mean age = 22.4 years; spatial group: 25 right-handed, 12 males, mean age = 23.2 years) were recruited from the University of Pennsylvania community through online ads. Participants were monetarily compensated for their time. All participants provided consent, and the study was approved by the Institutional Review Board at the University of Pennsylvania.

Stimuli

Tools

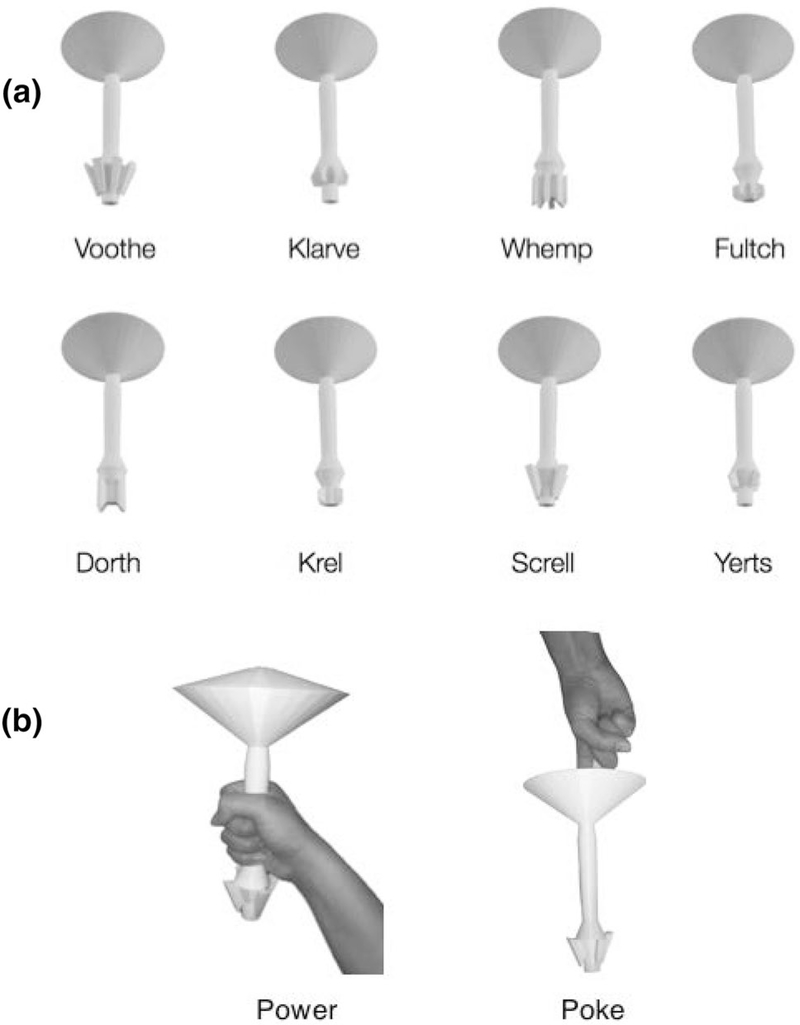

3-D tools were modeled in Blender (v 2.71, https://www.blender.org). Each tool was modeled with an elongated mid-section that could be held with a power grip. Each object also included a hole in the flat, rounded end that could be manipulated with a poke gesture. Each tool differed in the shape of its ‘functional end’, defined by different arrangements of appendages. The appendages were created by parametrically varying three binary variables: the number of appendages was set at either 4 or 8; the position of the appendages was either high or low on the handle; and the angles of the faces were either obtuse or acute. Crossing these variables (2 × 2 × 2) resulted in eight unique functional ends. Models were 3-D printed locally using a Dimension Elite printer (Stratasys, MN) with a 0.010″ layer resolution and opaque white ABSplus thermoplastic as the material. Each object was 6.3″ wide (at the flat rounded end) and was either 9.8″ or 10.1″ long. See Fig. 1.
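The crossing of the three appendage variables can be sketched as follows. This is only an illustration of the combinatorics; the label strings and the function name are placeholders chosen here and are not identifiers from the original Blender models.

```python
from itertools import product

# The three binary design variables described in the text. The label strings
# are illustrative placeholders, not names taken from the original models.
APPENDAGE_COUNTS = (4, 8)
APPENDAGE_POSITIONS = ("high", "low")
FACE_ANGLES = ("obtuse", "acute")


def enumerate_functional_ends():
    """Return every combination of the three binary variables."""
    return [
        {"count": count, "position": position, "angle": angle}
        for count, position, angle in product(
            APPENDAGE_COUNTS, APPENDAGE_POSITIONS, FACE_ANGLES
        )
    ]


functional_ends = enumerate_functional_ends()
print(len(functional_ends))  # 2 * 2 * 2 = 8 functional ends
```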

Fig. 1.

a Images of the 3-D printed tools used in the present experiment. Eight unique identities were defined by eight visually distinct ‘functional ends’. b Examples of the manual interactions with the objects. The objects could be manipulated using a power hand posture (along the main shaft of the object) or a poke hand posture (through a small hole in the flat bulbous end).

Visual stimuli

Images of each object were created using a Canon PowerShot SX120 IS. Each object was placed on a table under a mix of natural and artificial light. The object was shot from approximately 24″ above at an angle of approximately 35°. This produced images with an ecologically valid distribution of light and with an ecologically plausible viewing perspective. Each object was shot oriented at 0°, 45°, 90°, 270°, and 315°.

Raw camera images were edited using GIMP photo editing software (v 2.8.10, https://www.gimp.org). Using the edge select tool, each object was cut from the original image and pasted onto a canvas (3648 × 2736 pixels) with a solid white background. Each image was grayscaled using the default GIMP function. Finally, using MATLAB (R2014a, MathWorks, CA), images were resized (365 × 274 pixels).

Though images were shot with a mix of natural and artificial light to improve the ecological validity of the light’s distribution, we equated the mean luminance of the stimuli using the SHINE toolbox (Willenbockel et al., 2010). This suite of MATLAB functions scales the mean luminance between images. This ensures equal luminance across the different types of objects despite the different numbers, sizes, and shapes of the faces at the functional end.
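The idea of equating mean luminance can be illustrated with a minimal sketch. This is not the SHINE implementation (which is a MATLAB toolbox and may use a different procedure); it simply shifts each grayscale image toward the grand mean, and the function names and toy pixel values are assumptions made for illustration.

```python
def mean_luminance(image):
    """Mean pixel value of a grayscale image given as a list of rows."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def equate_mean_luminance(images):
    """Shift every image so that its mean equals the grand mean of the set.

    Values are clipped to the 0-255 range, so the means match only
    approximately whenever clipping occurs.
    """
    target = sum(mean_luminance(img) for img in images) / len(images)
    equated = []
    for img in images:
        offset = target - mean_luminance(img)
        equated.append(
            [[min(255.0, max(0.0, p + offset)) for p in row] for row in img]
        )
    return equated


# Two tiny made-up "images" with different mean luminance (25 and 125).
dark = [[10, 20], [30, 40]]
bright = [[110, 120], [130, 140]]
matched = equate_mean_luminance([dark, bright])
```

After the shift, both toy images have the same mean while the pixel-to-pixel differences within each image are preserved.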

Apparatus

Experimental tasks were completed using E-Prime 2.0 (Psychology Software Tools, PA) running with Windows 7 on a Dell Latitude E7440 laptop computer (14-inch screen). Participants were fitted with a pair of Sennheiser HD headphones.

During training, for the manipulation group, we used a small basswood cornice box (3.25 × 12 × 9″) filled with white play-sand to act as the mock Martian surface in which the tools were used. For the spatial group, we used two of the same boxes, this time empty so the objects could be placed in them, one located on the floor and the other on the desk.

Procedure

Design

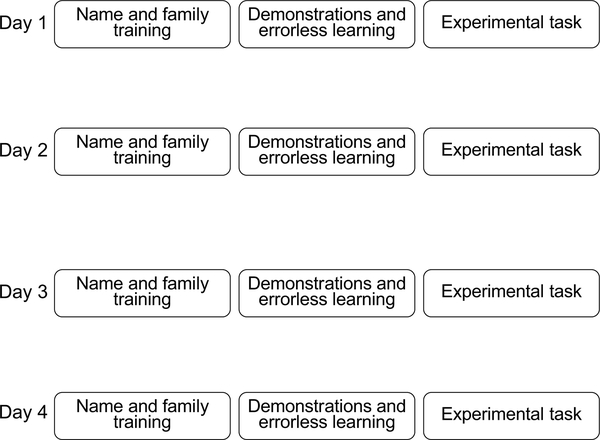

Experimental sessions occurred on four separate days. The general procedure is represented in Fig. 2.

Fig. 2.

Schematic representation of the training and experimental phases for each of the 4 days. Note that participants were assigned to the manipulation or spatial groups after name and family training on day 1. Demonstrations and errorless learning sessions were conducted according to group assignment described in text (i.e. manipulation training or spatial training). All participants completed the same experimental task on days 1–4

We attempted to run participants on consecutive days, but a small number had at least a 1-day delay between two of their sessions (6/28 in the manipulation group, 7/28 in the spatial group; range of interval = 0–4). Note that, along with the experimental task of the present study, participants performed a separate experimental task (randomly preceding or following the one reported here); further, on a fifth day (after the last experimental session reported here), 16 participants in each group completed an fMRI task. These additional experiments were designed to address different theoretical questions and are not reported here. On each day, participants first completed name and family training tasks. Then, led by the experimenter, they completed their demonstrations and errorless learning training. Finally, they completed the experimental task. Below we describe each phase in detail.

The names of the tools were taken from the novel objects used by Hsu et al. (2011), including Voothe, Whemp, Krel, Screll, Dorth, Fultch, Yerts, and Klarve. Importantly, for every group of four subjects, we mapped names, families, and the manipulation/spatial information onto the eight objects in a pseudorandom way. This was done to ensure that any effects arising in our behavioral tasks were not the consequence of a particular name–family–action/spatial information pairing. We created a number of unique mappings and assigned four participants to each mapping, because this allowed us to counterbalance the button presses for each task. See Table 1 for an example of mappings.

Table 1.

Example of name, family, and action/spatial information mapping

| Object | Assigned tool name | Assigned family name | Assigned actions: Manip. group | Assigned locations: Spatial group |
|---|---|---|---|---|
| Object a | Voothe | Hinch | Power, shave | Upright, shelf |
| Object b | Krel | Hinch | Poke, shave | Side, shelf |
| Object c | Whemp | Hinch | Power, drill | Upright, floor |
| Object d | Yerts | Hinch | Poke, drill | Side, floor |
| Object e | Dorth | Thull | Poke, shave | Side, shelf |
| Object f | Klarve | Thull | Power, shave | Upright, shelf |
| Object g | Screll | Thull | Poke, drill | Side, floor |
| Object h | Fultch | Thull | Power, drill | Upright, floor |

Four participants received this mapping. For other groups of four subjects, the names, as well as the actions/locations, were pseudorandomly reassigned to create a novel mapping. Overall, each object was associated with a unique combination of name, family, and action/spatial information
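One way such mappings could be generated is sketched below for the manipulation group. The tool and family names are those given in the text; everything else (the function names, the seed, the object labels, and the assumption that each family receives every grip × motion combination exactly once, as in the example mapping in Table 1) is hypothetical, and the sketch does not enforce that mappings differ from one another.

```python
import random

NAMES = ["Voothe", "Whemp", "Krel", "Screll", "Dorth", "Fultch", "Yerts", "Klarve"]
FAMILIES = ["Hinch", "Thull"]
GRIPS = ["power", "poke"]
MOTIONS = ["shave", "drill"]
OBJECTS = ["a", "b", "c", "d", "e", "f", "g", "h"]


def make_mapping(rng):
    """Pseudorandomly pair each object with a name, family, grip, and motion."""
    names = NAMES[:]
    objects = OBJECTS[:]
    rng.shuffle(names)
    rng.shuffle(objects)
    mapping = {}
    for fam_index, family in enumerate(FAMILIES):
        # Assumption: each family gets every grip x motion combination once.
        combos = [(grip, motion) for grip in GRIPS for motion in MOTIONS]
        rng.shuffle(combos)
        family_objects = objects[fam_index * 4:(fam_index + 1) * 4]
        for obj, (grip, motion) in zip(family_objects, combos):
            mapping[obj] = {
                "name": names.pop(),
                "family": family,
                "grip": grip,
                "motion": motion,
            }
    return mapping


def assign_participants(n_participants, seed=0):
    """Give each consecutive group of four participants the same mapping."""
    rng = random.Random(seed)
    mappings = [make_mapping(rng) for _ in range(n_participants // 4)]
    return {p: mappings[p // 4] for p in range(n_participants)}


# 28 participants per group would yield seven mappings of four participants each.
assignments = assign_participants(28)
```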

Name and family training for both groups

Name and family training procedures were adopted from the Greebles paradigm originally used by Gauthier and Tarr (1997). To learn the names and families of each object, participants performed separate study-test recognition memory tasks. For name training, in the study phase, participants saw a fixation cross for 500 ms followed by an object–name pair for 2000 ms. Object images were presented upright (i.e. 0°) at approximately 5.5° of visual angle, and were paired with a name presented in Courier New 18 font. Within each study phase, each object–name pair was shown once. Participants were instructed to say the name out loud and to simply remember the object and its name. Following the study phase, their recognition memory was tested immediately. On each trial, participants saw an object–name pair; the name was paired either correctly or incorrectly (i.e. the object–name pair was either a correct match or an incorrect match). They were instructed to press the ‘z’ or ‘m’ button (mapping counterbalanced) to indicate whether the name matched the pictured object. Object–name pairs remained on the screen until participants made a response. Each object was presented twice (once matched correctly and once incorrectly) for a total of 16 trials. At the end of the study-test block, accuracy was reported to the participant on the screen. Participants completed this sequence of study-test blocks until they achieved 100% performance for five consecutive blocks (or until, due to time constraints, the experimenter moved the session ahead; sometimes this occurred because the participant reported hitting the wrong button accidentally, and in these cases performance was on average > 95% for the last ten blocks). The goal was to ensure that participants were consistently identifying correct object–name pairs before they engaged in the demonstrations and errorless learning phase.
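The test-block structure and the stopping criterion described above can be sketched as follows. The task itself was run in E-Prime; this Python version is only illustrative, the function names are hypothetical, and the way a mismatching name was chosen is not stated in the text, so drawing it at random from the other names is an assumption.

```python
import random


def build_test_trials(object_names, rng):
    """One matched and one mismatched trial per object (16 trials for 8 objects)."""
    trials = []
    for obj, correct in object_names.items():
        # Assumption: the mismatching name is drawn at random from the other names.
        wrong = rng.choice([n for n in object_names.values() if n != correct])
        trials.append({"object": obj, "name": correct, "match": True})
        trials.append({"object": obj, "name": wrong, "match": False})
    rng.shuffle(trials)
    return trials


def reached_criterion(block_accuracies, n_consecutive=5):
    """True once the most recent n_consecutive blocks were all 100% correct."""
    if len(block_accuracies) < n_consecutive:
        return False
    return all(acc == 1.0 for acc in block_accuracies[-n_consecutive:])


example_names = {
    "a": "Voothe", "b": "Krel", "c": "Whemp", "d": "Yerts",
    "e": "Dorth", "f": "Klarve", "g": "Screll", "h": "Fultch",
}
example_trials = build_test_trials(example_names, random.Random(0))
```

A single error resets the run of perfect blocks, so a participant who scores 15/16 on one block needs five further perfect blocks to reach criterion.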

Once participants reached criterion in the name training phase, they completed the family training phase. The family training phase was identical to the name training phase, though rather than names, family labels were paired with the images. Here, the names Thull and Hinch were used as family names, generated in the same way as the objects’ proper names. Again, participants performed study-test blocks until they achieved 100% performance for five consecutive blocks. Note that across all 4 days, name training always preceded family training.

Demonstrations and errorless learning for the manipulation group

After completing the name and family training phase on day 1, participants were handed a training memo explaining that they had been selected to join an archeological dig on humanity’s first mission to Mars in a search for ancient alien artifacts. The memo highlighted three features of the tools they were to be using. First, it explained that some of the tools were used for digging in the Martian surface to locate objects and some of them were used to excavate and clear off artifacts once they were uncovered. Importantly, they were verbally told that the family names corresponded to these two functions; for example, the Hinch family of tools was specialized digging tools and the Thull family tools were used to uncover artifacts and excavate the artifacts from the surface. Thus, each family was associated with a different function.1 Second, the memo stated that spacesuit constraints limited how the tools could be used: the tools had to be used with either a drilling motion or a sweeping motion to accomplish their functions. Finally, it was explained that, because the tools would potentially uncover delicate artifacts, they had to be handled with care with either a power grasp or a finger poke. In this way, participants were explicitly alerted to the variables we manipulated in our design though they were not told why we were manipulating them.

Participants then completed the demonstrations and errorless learning phase. In the manipulation group, participants were shown how to use the objects in a small box of faux Martian sand that sat on the table. On each day, this phase had two parts. In the demonstration phase, the experimenter presented the participant with the object. They were asked to verbally name it, verbally give the family label, and verbally describe the ultimate function of the tool (i.e. is it used for digging or excavating?). The experimenter then demonstrated the tool’s use while the participant observed (e.g. the experimenter used a poke and a sweeping motion with the tool in the sandbox). After demonstrating the tool’s use, the experimenter placed the tool on the table in front of the participant, who was instructed to imitate the experimenter (e.g. copy the experimenter’s poke and sweeping motion with the tool). After a predetermined number of demonstration rounds (see below), participants completed the errorless learning phase. It was during this phase that the participant completed feedback trials. On each trial, an object was placed on the table in front of the participant. Again, they were instructed to verbally give the name, family label, and describe its use. In this phase, however, participants were instructed to demonstrate the object’s use to the experimenter (without the benefit of a demonstration). If the participant began the action incorrectly, the experimenter intervened on that trial, demonstrated the correct use, and immediately placed it back in front of the participant to repeat the trial. If the participant demonstrated the correct use, the experimenter administered a verbal reward.

Within each round, participants saw each object once (for a total of eight trials). In sessions 1 and 2, there were three rounds of demonstrations followed by two rounds of errorless learning. In session 3, there were two rounds of demonstrations followed by three rounds of errorless learning. In session 4, there were no demonstrations and three rounds of errorless learning. The goal of these sessions was to have participants effortlessly demonstrate how to use the objects by the end of the fourth day, relying less and less on demonstrations (i.e. until they could demonstrate each object’s use with no demonstrations). Accuracy was scored as ‘1’ for each round of errorless learning that was completed without errors, for a possible total of ten (i.e. a score of ten indicates that the participant made no errors during errorless learning across all 4 days).
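The training schedule and the maximum accuracy score follow directly from the round counts given above; the short sketch below makes the arithmetic explicit. The variable and function names are hypothetical.

```python
# (demonstration rounds, errorless-learning rounds) per session, as described
# in the text.
SCHEDULE = {1: (3, 2), 2: (3, 2), 3: (2, 3), 4: (0, 3)}
OBJECTS_PER_ROUND = 8  # each object is presented once per round


def max_accuracy_score(schedule):
    """One point per errorless-learning round completed without errors."""
    return sum(errorless for _, errorless in schedule.values())


def trials_per_session(schedule):
    """Total object presentations (demonstration plus errorless) per session."""
    return {
        session: (demos + errorless) * OBJECTS_PER_ROUND
        for session, (demos, errorless) in schedule.items()
    }


print(max_accuracy_score(SCHEDULE))  # 2 + 2 + 3 + 3 = 10
print(trials_per_session(SCHEDULE))  # {1: 40, 2: 40, 3: 40, 4: 24}
```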

Demonstrations and errorless learning for the spatial group

The spatial group’s training proceeded similarly to that of the manipulation group, with the exception that they did not handle the objects or learn actions; rather, this group learned information about how the objects were to be stored (i.e. spatial information). Participants in this group received a similar memo about the archeological dig on Mars, though they were told they would not be directly responsible for the dig but would have to give verbal instructions to members on the team about how to store the tools while they were not in use. Again, the memo described three features of the tools. First, participants were told that each family needed to be stored in different locations on the planet (i.e. the Hinches were to be stored at locations at the poles of the planet and Thulls at the equator). Second, they were told that, given the constraints of the space vehicle they would be travelling in on Mars, there were two boxes that the tools were stored in. They were told that one of these boxes was located at their hip level in the truck (and therefore the cornice box was placed on the desk in the testing room) and the other was located at their feet in the truck (and therefore the box was on the floor of the testing room). Third, they were told that each tool would be stored either on its side (i.e. lying down with the elongated shaft parallel to the table) or vertically (i.e. standing on its end, with the elongated shaft perpendicular to the table).

For the demonstration phase, the experimenter showed the object to the participant by holding it in view for them (each object was held in the same way by the experimenter using their index fingers at the top and bottom of the object). Participants were instructed to verbally provide the name, family label, and the environment the objects were to be stored in. Next, the experimenter placed the object in its appropriate storage container and participants were instructed to give a verbal label describing its location (e.g. it is stored on the floor, upright; it is stored at hip level on its side). For the errorless learning phase, the experimenter again held the object in view but now waited for the participant to give an appropriate instruction (e.g. that is stored on the floor, upright). If the participant began giving an incorrect instruction, the experimenter interrupted the participant and demonstrated the appropriate storage and waited for the participant to verbally correct themselves; if the participant gave the correct instruction the experimenter administered a verbal reward. The number of rounds of demonstrations vs. errorless learning was as in the manipulation group. Thus, the exposure (in terms of duration and number of trials) to the objects was the same for the two groups.

Experimental task

The experimental task was adapted from Yee et al. (2013). In this task, participants heard (through headphones) auditorily presented object names, half of which were the names of tools from the tool-set they were learning about, and half that were unfamiliar names. They were instructed to confirm, by way of verbal response, whether the object was a part of their set or not (i.e. recognition). Verbal responses were used in place of button presses so that participants could continuously execute the paddy-cake task during each trial. They performed ten blocks of trials in which they heard eight familiar and eight non-familiar tool names in random order (i.e. objects were familiar in 50% of the trials). On half the blocks (either the first half or the second half, randomly selected) they performed a paddy-cake task. In this task, participants were instructed to perform a bi-manual pattern in which they started with four fingers of each hand on the edge of the desk, then clapped with their whole hands, then placed two fingers of each hand on the desk, then clapped with their whole hands, and repeated this sequence for the duration of the block (at approximately 120 beats per min). Each block lasted about 2 min. The experimenter monitored paddy-cake performance and provided cues if participants began making errors (e.g. forgetting to alternate between two and four fingers). For the other half of the experiment, participants were instructed to place their palms on the table in front of them and to not fidget their fingers (i.e. no motor sequencing). Short breaks were provided in between blocks.

The trial sequence was as follows: on each trial a fixation appeared (for a random duration between 500 and 1500 ms). The fixation turned into a question mark for 3000 ms as subjects heard the auditory stimulus and provided a verbal response. Participants were told to respond as quickly and accurately as possible and to respond within 3000 ms. For familiar tools subjects responded with the word “tic” and for unfamiliar tools subjects responded with the word “tab” (counterbalanced). We used pseudowords with strong and similar consonants as the first phoneme to allow for accurate coding of vocal reaction times.
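The block and trial structure of the experimental task can be summarized in a short sketch. The task was implemented in E-Prime; the Python below is illustrative only. The unfamiliar names are placeholders (the actual items are not listed here), and sampling the fixation duration uniformly in whole milliseconds, as well as reusing the same unfamiliar names in every block, are assumptions.

```python
import random

N_BLOCKS = 10
FAMILIAR = ["Voothe", "Whemp", "Krel", "Screll", "Dorth", "Fultch", "Yerts", "Klarve"]
# Placeholder labels; the actual unfamiliar names are not given in the text.
UNFAMILIAR = [f"unfamiliar_{i}" for i in range(1, 9)]


def build_session(familiar_names, unfamiliar_names, rng):
    """Ten blocks of 16 trials; the motor task fills one half of the blocks."""
    motor_first = rng.random() < 0.5  # first or second half, chosen at random
    blocks = []
    for b in range(N_BLOCKS):
        in_first_half = b < N_BLOCKS // 2
        state = "concurrent" if in_first_half == motor_first else "rest"
        items = [(n, True) for n in familiar_names]
        items += [(n, False) for n in unfamiliar_names]
        rng.shuffle(items)
        trials = [
            {
                "name": name,
                "familiar": familiar,
                "fixation_ms": rng.randint(500, 1500),  # jittered fixation
                "response_window_ms": 3000,
            }
            for name, familiar in items
        ]
        blocks.append({"block": b + 1, "state": state, "trials": trials})
    return blocks


session = build_session(FAMILIAR, UNFAMILIAR, random.Random(0))
```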

Results

Analytical strategy

Data were coded by hand by the experimenter offline using audio manipulation software. Only data from day 1 and day 4 were coded. The experimenter remained blind to the group of each data file, but was aware of the day and the motor task (as the microphone recorded the paddy-cake sounds). Accuracy was high across all conditions and not amenable to statistical testing (M = 0.997, SD = 0.05). Reaction time (RT) results were analyzed using linear mixed effects models and a model selection procedure to explore whether higher order interactions were present in the data. Reaction times less than 200 ms or greater than 3000 ms were excluded. Additionally, RTs greater than 2.5 standard deviations were excluded on a by-subject basis (2.7% removed). Our design was a 2 (state: concurrent vs. non-concurrent motor task) × 2 (group: manipulation vs. spatial) × 4 (day: 1–4) × 2 (familiarity: familiar vs. unfamiliar) factorial. To test our main hypothesis (a state × group × day × familiarity interaction), we fitted a basic model with state (concurrent vs. non-concurrent) and group (manipulation vs. spatial) as fixed effects factors, and included subject as a random intercept. We started with this model because it is well established that dual tasks reduce performance (see Pashler, 1994; also Leone et al., 2017), and because group was one of our main variables of interest, though we did not expect any main effects of group because learning was equated between them by the end of testing. We compared this to a second model that added day (day 1 vs. day 4) as a fixed effect to explore the effects of training. Finally, we compared this to the most complex model, which added familiarity (familiar vs. unfamiliar) to address our main theoretical question about whether the familiarity effect was modulated by our other manipulations. Models were fitted in R (v. 3.3.3) using the lme4 package (v. 1.1–13; Bates et al., 2015). 
We compared models using the anova() function, and report χ² and Akaike Information Criterion (AIC) values of the model comparison. We report estimated F and p values for each of the fixed effects terms using lmerTest (v. 2.0–33; Kuznetsova et al., 2015). Further, for post hoc testing, we used the lsmeans package (v. 2.26–3; Lenth, 2016) with FDR correction (see Benjamini & Hochberg, 1995) for comparisons of all estimated means.
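The RT exclusion rules can be sketched as follows. The analysis itself was carried out in R; this Python version is only illustrative. Applying the absolute cutoffs before the standard-deviation cutoff, and measuring the 2.5 SD criterion in both directions from each subject's own mean, are assumptions, since the text does not spell out these details. The function name and toy data are hypothetical.

```python
from statistics import mean, stdev


def trim_rts(rts_by_subject, low=200, high=3000, sd_cutoff=2.5):
    """Apply absolute RT cutoffs, then a per-subject standard-deviation cutoff."""
    trimmed = {}
    for subject, rts in rts_by_subject.items():
        # Step 1: drop RTs below 200 ms or above 3000 ms.
        in_range = [rt for rt in rts if low <= rt <= high]
        if len(in_range) < 2:
            trimmed[subject] = in_range
            continue
        # Step 2 (assumed): drop RTs more than sd_cutoff SDs from the
        # subject's own mean, computed on the remaining trials.
        m, sd = mean(in_range), stdev(in_range)
        trimmed[subject] = [rt for rt in in_range if abs(rt - m) <= sd_cutoff * sd]
    return trimmed


# Toy data: 150 and 3200 fail the absolute cutoffs; 2900 fails the SD cutoff.
example = {"s1": [150, 3200] + list(range(600, 800, 20)) + [2900]}
cleaned = trim_rts(example)
```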

Model fitting

The model selection procedure determined that model (3) (i.e. with all fixed effects) was the best fitting model, χ²(16) = 42.9, p < .001, AIC = 226,159 compared to AIC = 226,186 for model (2) and AIC = 229,340 for model (1). The fixed effects, derived from the anova() function with the lmerTest package in R, are shown in Table 2.

Table 2.

ANOVA table of fixed effects for the best fitting model

| Effect | SS | MS error | Numerator DF | Denominator DF | F | p |
|---|---|---|---|---|---|---|
| Group | 33010.0 | 33010.0 | 1 | 54 | 0.8 | 0.380 |
| State | 72654260.0 | 72654260.0 | 1 | 16,715 | 1721.2 | < 0.001 |
| Day | 136736011.0 | 136736011.0 | 1 | 16,715 | 3239.3 | < 0.001 |
| Familiarity | 617796.0 | 617796.0 | 1 | 16,715 | 14.6 | < 0.001 |
| Group × state | 29652.0 | 29652.0 | 1 | 16,715 | 0.7 | 0.402 |
| Group × day | 4311255.0 | 4311255.0 | 1 | 16,715 | 102.1 | < 0.001 |
| State × day | 6722640.0 | 6722640.0 | 1 | 16,715 | 159.3 | < 0.001 |
| Group × familiarity | 22999.0 | 22999.0 | 1 | 16,715 | 0.5 | 0.460 |
| State × familiarity | 33445.0 | 33445.0 | 1 | 16,715 | 0.8 | 0.373 |
| Day × familiarity | 781965.0 | 781965.0 | 1 | 16,715 | 18.5 | < 0.001 |
| Group × state × day | 775174.0 | 775174.0 | 1 | 16,715 | 18.4 | < 0.001 |
| Group × state × familiarity | 157986.0 | 157986.0 | 1 | 16,715 | 3.7 | 0.053 |
| Group × day × familiarity | 29258.0 | 29258.0 | 1 | 16,715 | 0.7 | 0.405 |
| State × day × familiarity | 104834.0 | 104834.0 | 1 | 16,715 | 2.5 | 0.115 |
| Group × state × day × familiarity | 86283.0 | 86283.0 | 1 | 16,715 | 2.0 | 0.153 |

Theoretically relevant interaction is highlighted in bold

There were a number of expected results. First, the dual task impaired performance: participants were slower during the concurrent paddy-cake task (mean RT difference of concurrent vs. non-concurrent = 131.9 ms, SE = 3.2, p < .001). Second, performance improved over the course of training, with faster responses on day 4 (mean RT difference of day 1 vs. day 4 = 181 ms, SE = 3.2, p < .001). Finally, participants were faster at identifying the familiar names (mean RT difference of familiar vs. unfamiliar = −12.16 ms, SE = 3.2, p = .0001), demonstrating an overall familiarity effect.

Day interacted with a number of variables. First, there was a day × group interaction, because the overall improvement in performance was larger in the manipulation group (mean RT difference of day 1 vs. day 4 = 213.2 ms, SE = 4.5, p < .001) than in the spatial group (mean RT difference of day 1 vs. day 4 = 148.9 ms, SE = 4.5, p < .001). The state × day interaction arose because, overall, the dual task impaired performance more on day 1 (mean RT difference of concurrent vs. non-concurrent = 172.1 ms, SE = 4.6, p < .001) than on day 4 (mean RT difference of concurrent vs. non-concurrent = 91.8 ms, SE = 4.4, p < .001), consistent with previous research (Ruitenberg et al., 2012). A day × familiarity interaction arose because the familiarity effect was present on day 1 (mean RT of unfamiliar minus familiar = 25.8 ms, SE = 4.6, p < .001) but not on day 4 (mean RT of unfamiliar minus familiar = 1.52 ms, SE = 4.4, p = .731).

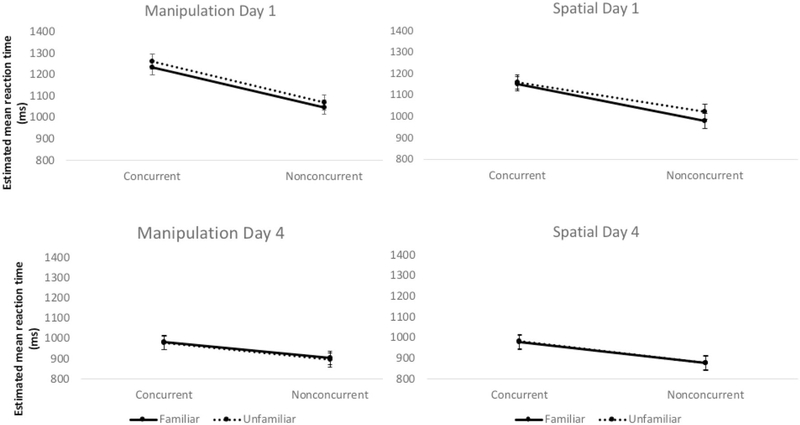

The most theoretically important interaction was the group × state × familiarity interaction, which approached significance (F = 3.7, p = .053; Table 2). This interaction shows that the familiarity effect was different for the two groups and depended on the state of the motor system. The manipulation group showed a familiarity effect when performing the concurrent motor task (mean RT difference of unfamiliar vs. familiar = 13.1 ms, SE = 6.4, p = .05) but not the non-concurrent task (mean RT difference of unfamiliar vs. familiar = 6.5 ms, SE = 6.4, p = .3); conversely, the spatial group showed a familiarity effect during the non-concurrent task (mean RT difference of unfamiliar vs. familiar = 23.5 ms, SE = 6.3, p = .0002) but not the concurrent task (mean RT difference of unfamiliar vs. familiar = 5.5 ms, SE = 6.4, p = .39). Note that, while the trend was for this effect to be more prominent on day 1, the four-way interaction did not reach significance (see Fig. 3 for a plot of the four-way interaction).

Fig. 3.

Estimated mean RTs derived from the most complex model, which included all fixed effect factors. Note that the group × state × familiarity interaction described in the text collapses across the day factor shown here. Error bars represent the standard error of the estimate.

Discussion

In the present study, we trained one group of participants to use a set of novel tools (manipulation group) and another to verbally report declarative information about how they are stored (spatial group). In a test of the functional role of motor activation in familiarity judgments, we had participants identify auditorily presented familiar vs. unfamiliar object names while they performed a concurrent motor task or remained at rest. Though the effects were of a small magnitude, we showed that the state of the motor system during learning facilitated familiarity judgments during our test. Specifically, the manipulation group, who actively manipulated the tools at study, showed a familiarity effect when performing a concurrent motor task at test; conversely, the spatial group, who remained at rest at study, showed a familiarity effect when they were not performing a motor task at test.

Overall, our findings reveal a novel motor system state-dependence effect in tool identification. For both groups, the familiarity advantage depended on compatibility between the state of the motor system at learning and at test. Our effect was facilitatory, much like that observed in previous priming studies (e.g. Helbig et al., 2010; see also Helbig et al., 2006). Importantly, previous research has shown that viewing images of hands primes identification of objects that afford a compatible grasp; more recent neuroimaging studies have revealed (a) priming-related activity in primary motor, somatosensory, and premotor areas, and (b) that this activity occurs within about 200 ms of the stimulus, well before later activations in ventral visual areas normally implicated in object identification (Sim et al., 2015). This supports the notion that motor activation may occur with a time-course that promotes priming.

Abundant evidence shows that identifying manipulable objects facilitates motor-related activity (e.g. Kiefer et al., 2007; Weisberg, van Turennout, & Martin, 2007), but to date it is unclear whether the reverse is also true: can motor activations facilitate identification? Importantly, although we show motor system state-dependent facilitation, many studies have shown interference. For instance, Downing-Doucet and Guérard (2014) had participants engage in a concurrent motor task while performing a working memory task. They showed that participants were better at recalling tools that afforded dissimilar actions than similar actions when they were not performing a concurrent motor task, but this advantage disappeared during the concurrent motor task; the authors suggested that in this case motor activations no longer contribute to performance. Such discrepancies in the literature are accumulating (e.g. Guérard & Lagacé, 2014; see also Zeelenberg & Pecher, 2016, for a review of concurrent motor effects on object processing). One possibility that future research should investigate is that the strength (measured as specificity or duration) of the motor activity may be non-linearly related to performance. For instance, in the visual priming literature, the nature of the prime determines the pattern of priming (e.g. a longer visual prime is more effective than a short one; see Carr et al., 1982). By extension, if motor activity does indeed functionally contribute to identification and other types of conceptual tasks, then the strength of the motor task may also determine the pattern of facilitation/inhibition. That is, we hypothesize that when the motor task is weak or unspecified (as it was in our case), facilitation is likely; conversely, if the motor task is too strong or too specific, interference may result. This hypothesis echoes that of Naish et al. (2014) with respect to action observation.
Specifically, after reviewing a large body of literature exploring corticospinal excitability during action observation, the authors proposed that observing a human action induces a simulated motor response in the observer that begins quickly (at about 90 ms) but is unspecified (i.e. not specific to particular muscle groups or actions) and is likely facilitatory; it is later (at about 200 ms) that this response is more specific, reflected in the potentiation of specific muscle groups; at this stage, the activity may become inhibitory (see also Naish & Obhi, 2015). The authors also propose that this modulation is affected by factors such as the observer’s intention to act and the affordances of the object. Future research should investigate this possibility not only in action observation, but in more conceptual object processing tasks as well. Our results suggest that the paddy-cake task we used here may be unspecified enough to induce early facilitatory effects on object identification.

In interpreting facilitatory or inhibitory effects of motor activity on cognitive performance, another important consideration is the nature of the task performed. Specifically, the task that observers perform (e.g. categorization vs. naming) likely modulates the functional relationship of motor activity to cognitive performance. For instance, ratings of object manipulability are positively associated with naming times but negatively with categorization times (Salmon, Matheson & McMullen, 2014). This suggests further that motor resonance is not automatic, but is called upon in different ways to support performance in some tasks but not others (see also Campbell & Cunnington, 2017, for a review in action observation). One hypothesis is that, while we showed a facilitatory effect for familiarity judgments, motor compatibility may impair more specific tasks such as naming.

Importantly, though the four-way interaction did not reach significance itself, day did interact with a number of variables. The trend suggests that familiarity effects are more prominent on day 1. This is consistent with Guérard, Guerrette, and Rowe (2015) who showed that a concurrent motor task interfered with short-term memory for manipulable object pairs but not long-term memory. Our results suggest that the effects of the concurrent motor task are more prevalent after only brief exposure. Future research should also explore this possibility.

Finally, while most previous studies have discussed concurrent motor tasks in terms of motor simulations, our results suggest the intriguing possibility that motor simulation may be a part of the more general phenomenon of state-dependent learning. Indeed, previous research shows that reinstating physiological or environmental states at the time of test benefits learning performance (Smith & Vela, 2001). Here we show that the state of the motor system (i.e. producing action vs. sitting still) can act as a state that facilitates familiarity judgments of objects learned in a compatible state. In this way, simulation may be considered a part of the broader phenomenon in which states (physiological, environmental, motor, etc.) may be reinstated in ways that support cognitive performance (e.g. Badets et al., 2016). This suggests that the concept of simulation may be closely related to other concepts that are often discussed in accounting for these findings, including predictive coding, associative learning, and neural reuse (see Anderson, 2010).

Acknowledgements

This research was supported by NIH R01DC015359 awarded to STS. Special thanks to Dr. David Kraemer for helpful discussions of the data and Nicole C. White for helpful feedback on an earlier version of the manuscript.

Funding This study was funded by NIH R01DC015359.

Footnotes

Compliance with ethical standards

Conflict of interest Heath Matheson declares he has no conflict of interest. Ariana Familiar declares she has no conflict of interest. Sharon Thompson-Schill declares she has no conflict of interest.

Ethical approval All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent Informed consent was obtained from all individual participants included in the study.

Electronic supplementary material The online version of this article (https://doi.org/10.1007/s00426-018-0997-4) contains supplementary material, which is available to authorized users.

The pseudorandomized assignment of actions and family names was done to ensure that there was no clear relationship between the function of a tool (i.e. a ‘digging’ tool) and any given set of actions or hand postures (i.e. we wanted to avoid having all ‘digging’ tools be associated with a particular action like sweeping for example).

References

- Allport DA (1985). Distributed memory, modular subsystems and dysphasia. Current perspectives in dysphasia, 32, 60.

- Anderson ML (2010). Neural reuse: A fundamental organizational principle of the brain. Behavioral and brain sciences, 33(4), 245–266.

- Badets A, Koch I, & Philipp AM (2016). A review of ideomotor approaches to perception, cognition, action, and language: Advancing a cultural recycling hypothesis. Psychological Research Psychologische Forschung, 80(1), 1–15.

- Barsalou LW (2008). Grounded cognition. Annual Review Psychology, 59, 617–645.

- Bates D, Maechler M, Bolker B, & Walker S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48.

- Benjamini Y, & Hochberg Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the royal statistical society. Series B (Methodological), 57(1), 289–300.

- Campbell ME, & Cunnington R. (2017). More than an imitation game: Top-down modulation of the human mirror system. Neuroscience and Biobehavioral Reviews, 75, 195–202.

- Carr TH, McCauley C, Sperber RD, & Parmelee CM (1982). Words, pictures, and priming: on semantic activation, conscious identification, and the automaticity of information processing. Journal of Experimental Psychology Human Perception and Performance, 8(6), 757.

- Chao LL, & Martin A. (2000). Representation of manipulable man-made objects in the dorsal stream. Neuroimage, 12(4), 478–484.

- Downing-Doucet F, & Guérard K. (2014). A motor similarity effect in object memory. Psychonomic Bulletin and Review, 21(4), 1033–1040.

- Gauthier I, & Tarr MJ (1997). Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Research, 37(12), 1673–1682.

- Guérard K, Guerrette MC, & Rowe VP (2015). The role of motor affordances in immediate and long-term retention of objects. Acta Psychologica, 162, 69–75.

- Guérard K, & Lagacé S. (2014). A motor isolation effect: When object manipulability modulates recall performance. The Quarterly Journal of Experimental Psychology, 67(12), 2439–2454.

- Helbig HB, Graf M, & Kiefer M. (2006). The role of action representations in visual object recognition. Experimental Brain Research, 174(2), 221–228.

- Helbig HB, Steinwender J, Graf M, & Kiefer M. (2010). Action observation can prime visual object recognition. Experimental Brain Research, 200(3–4), 251–258.

- Hsu NS, Kraemer DJ, Oliver RT, Schlichting ML, & Thompson-Schill SL (2011). Color, context, and cognitive style: Variations in color knowledge retrieval as a function of task and subject variables. Journal of Cognitive Neuroscience, 23(9), 2544–2557.

- Iaccarino L, Chieffi S, & Iavarone A. (2014). Utilization behavior: What is known and what has to be known? Behavioural Neurology, 2014, 297128. 10.1155/2014/297128

- Kiefer M, Sim EJ, Liebich S, Hauk O, & Tanaka J. (2007). Experience-dependent plasticity of conceptual representations in human sensory-motor areas. Journal of Cognitive Neuroscience, 19(3), 525–542.

- Kuznetsova A, Brockhoff PB, & Christensen RHB (2015). lmerTest: tests in linear mixed effects models. R package version 2.0–20. Vienna: R Foundation for Statistical Computing.

- Lenth RV (2016). Least-squares means: The R package lsmeans. Journal of Statistical Software, 69(1), 1–3.

- Leone C, Feys P, Moumdjian L, D’Amico E, Zappia M, & Francesco P. (2017). Cognitive-motor dual-task interference: a systematic review of neural correlates. Neuroscience and Biobehavioral Reviews, 75, 348–360.

- Lhermitte F. (1983). ‘Utilization behaviour’ and its relation to lesions of the frontal lobes. Brain, 106(2), 237–255.

- Mahon BZ, & Caramazza A. (2008). A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. Journal of Physiology Paris, 102(1), 59–70.

- Martin A. (2007). The representation of object concepts in the brain. Annual Review of Psychology, 58, 25–45.

- Matheson HE, White NC, & McMullen PA (2014). A test of the embodied simulation theory of object perception: Potentiation of responses to artifacts and animals. Psychological Research, 78(4), 465–482.

- Meyer K, & Damasio A. (2009). Convergence and divergence in a neural architecture for recognition and memory. Trends in Neurosciences, 32(7), 376–382.

- Naish KR, Houston-Price C, Bremner AJ, & Holmes NP (2014). Effects of action observation on corticospinal excitability: Muscle specificity, direction, and timing of the mirror response. Neuropsychologia, 64, 331–348.

- Naish KR, & Obhi SS (2015). Timing and specificity of early changes in motor excitability during movement observation. Experimental Brain Research, 233(6), 1867–1874.

- Pashler H. (1994). Dual-task interference in simple tasks: Data and theory. Psychological Bulletin, 116(2), 220.

- Pecher D. (2013). No role for motor affordances in visual working memory. Journal of Experimental Psychology Learning Memory and Cognition, 39(1), 2.

- Quak M, Pecher D, & Zeelenberg R. (2014). Effects of motor congruence on visual working memory. Attention Perception and Psychophysics, 76(7), 2063–2070.

- Rugg MD, & Thompson-Schill SL (2013). Moving forward with fMRI data. Perspectives on Psychological Science, 8(1), 84–87.

- Ruitenberg MF, De Kleine E, Van der Lubbe RH, Verwey WB, & Abrahamse EL (2012). Context-dependent motor skill and the role of practice. Psychological Research, 76(6), 812–820.

- Salmon JP, Matheson HE, & McMullen PA (2014). Slow categorization but fast naming for photographs of manipulable objects. Visual Cognition, 22(2), 141–172.

- Sim EJ, Helbig HB, Graf M, & Kiefer M. (2015). When action observation facilitates visual perception: activation in visuo-motor areas contributes to object recognition. Cerebral Cortex, 25(9), 2907–2918.

- Smith SM, & Vela E. (2001). Environmental context-dependent memory: A review and meta-analysis. Psychonomic Bulletin and Review, 8(2), 203–220.

- Weisberg J, Van Turennout M, & Martin A. (2006). A neural system for learning about object function. Cerebral Cortex, 17(3), 513–521.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, & Tanaka JW (2010). Controlling low-level image properties: the SHINE toolbox. Behavior Research Methods, 42(3), 671–684.

- Witt JK, Kemmerer D, Linkenauger SA, & Culham J. (2010). A functional role for motor simulation in identifying tools. Psychological Science, 21(9), 1215–1219.

- Yee E, Chrysikou EG, Hoffman E, & Thompson-Schill SL (2013). Manual experience shapes object representations. Psychological Science, 24(6), 909–919.

- Zeelenberg R, & Pecher D. (2016). The role of motor action in memory for objects and words. In Ross B. (Ed.), The Psychology of Learning and Motivation (vol. 64, pp. 161–193). Cambridge, MA: Academic Press.
