Abstract

Immersive virtual reality can expose humans to novel training and sensory environments, but motor training with virtual reality has not been able to improve motor performance as much as motor training in real-world conditions. An advantage of immersive virtual reality that has not been fully leveraged is that it can introduce transient visual perturbations on top of the visual environment being displayed. The goal of this study was to determine whether transient visual perturbations introduced in immersive virtual reality modify electrocortical activity and behavioral outcomes in human subjects practicing a novel balancing task during walking. We studied three groups of healthy young adults (5 male and 5 female for each) while they learned a balance beam walking task for 30 min under different conditions. Two groups trained while wearing a virtual reality headset, and one of those groups also had half-second visual rotation perturbations lasting ~10% of the training time. The third group trained without virtual reality. We recorded high-density electroencephalography (EEG) and movement kinematics. We hypothesized that virtual reality training with perturbations would increase electrocortical activity and improve balance performance compared with virtual reality training without perturbations. Our results confirmed the hypothesis. Brief visual perturbations induced increased theta spectral power and decreased alpha spectral power in parietal and occipital regions and improved balance performance in posttesting. Our findings indicate that transient visual perturbations during immersive virtual reality training can boost short-term motor learning by inducing a cognitive change, minimizing the negative effects of virtual reality on motor training.

NEW & NOTEWORTHY We found that transient visual perturbations in virtual reality during balance training can boost short-term motor learning by inducing a cognitive change, overcoming the negative effects of immersive virtual reality. As a result, subjects who trained in immersive virtual reality with visual perturbations showed performance improvements equivalent to those of subjects who trained in real-world conditions. Visual perturbations elicited cortical responses in occipital and parietal regions and may have improved the brain’s ability to adapt to variations in sensory input.

Keywords: adaptive generalization, balance training, electroencephalography, motor learning, virtual reality

INTRODUCTION

Virtual reality has become a popular paradigm for training due to its ability to expose users to novel training and sensory environments (Adamovich et al. 2009). Virtual reality use can make a user feel present in a virtual world (Sanchez-Vives and Slater 2005) and can improve clinical outcomes, such as reducing pain in patients with burns (Hoffman et al. 2008, 2014). Virtual reality has also been shown to improve walking speed and gait stability (Mirelman et al. 2011; Darekar et al. 2015), leading to its use in rehabilitation of mobility and balance control (Booth et al. 2014; Darekar et al. 2015). Recently, affordable virtual reality head-mounted displays have been able to provide unprecedented immersion into novel virtual experiences. These new headsets have driven interest in adapting virtual reality further into other clinical and research settings.

Despite the promise of such immersive experiences, virtual reality head-mounted displays have been shown to decrease gait performance compared with unaltered viewing (Epure et al. 2014; Robert et al. 2016). Numerous studies have examined the possibility of using virtual reality to improve rehabilitation and gait (Booth et al. 2013; Corbetta et al. 2015; Darekar et al. 2015; Palacios-Navarro et al. 2016), but few of these studies used head-mounted displays to provide fully immersive virtual reality. Among those that measured gait stability, all reported worsened stability when subjects used the virtual reality head-mounted display (Calogiuri et al. 2017; Epure et al. 2014; Kawamura and Kijima 2016; Kelly et al. 2008; Lott et al. 2003; Robert et al. 2016; Soffel et al. 2016). Two studies found improvements in timed up-and-go performance in stroke patients trained in virtual reality compared with conventional training (Jung et al. 2012; Kang et al. 2012), but the head-mounted displays in these studies were similar to spectacles placed away from the eyes, leaving users with peripheral vision. Another study found improved step length and walking speed in stroke patients performing obstacle walking in immersive virtual reality (Jaffe et al. 2004). This comparison may have been influenced by the control group performing overground walking while the virtual reality group performed treadmill walking. We could find no studies in the literature showing that training with virtual reality head-mounted displays was as good as or better than training in real-world conditions. Despite these limitations, virtual reality head-mounted displays are the best available solution for controlling a user’s entire field of view while walking. There is a need to overcome the performance impairments associated with training in immersive virtual reality so that it can provide novel experiences equivalent to real-world training.

One potential way to improve balance training outcomes is to perturb the sensory input required for balance control. This approach partly relies on the specificity of learning hypothesis, which holds that humans rely on the optimal sources of sensory information available during a task. These optimal sources of sensory information can dynamically change based on sensory perturbations (Assländer and Peterka 2014), such as less emphasis on proprioception during balance when vision is available and reliable (Bernier et al. 2005; Proteau et al. 1992). Transient perturbations of optical flow during stance in healthy adults have been shown to increase electroencephalography (EEG) parietal theta power. Studies have used virtual reality to expose subjects to visual rotations up to 45° (Chiarovano et al. 2015; Wright 2014), similar to prism goggle studies (Fortis et al. 2011; Martin et al. 1996). However, the effects of such visual rotations are usually temporary. In contrast, repeated exposure to visuomotor perturbations during lower limb cursor control has been shown to reduce tracking errors and decrease response time (Luu et al. 2017a). Using the control over the user’s visual field provided by a virtual reality head-mounted display, it may be possible to provide similar repeated perturbations to the user’s visual input during dynamic balance, leading to improved short-term motor learning.

The purpose of this study was to determine whether a transient visual perturbation, presented as visual rotations in virtual reality, can boost motor training outcomes and potentially overcome the negative effects of immersive virtual reality. We studied young healthy subjects performing short-term motor training on a balance beam (Domingo and Ferris 2009, 2010). We used a virtual reality headset to present brief visual perturbations. We chose visual perturbations because they induce large effects on mediolateral stability (Franz et al. 2016), making them relevant to a beam-walking task. We compared these results with virtual reality without perturbations and unaltered viewing without virtual reality (i.e., real-world conditions) to determine whether the sensory perturbation boosted short-term motor learning and overcame the negative training effects from virtual reality. We hypothesized that brief visual perturbations in virtual reality would significantly improve balance performance compared with virtual reality training without perturbations. To provide insight into the neural mechanisms for changes in balance performance, we used high-density EEG with independent component analysis (ICA) and source localization (Makeig et al. 1996; Sipp et al. 2013). We hypothesized that EEG theta spectral power in parietal and occipital sensorimotor integration areas would increase following perturbation onset due to conflicting visuomotor information and impaired visual working memory.

MATERIALS AND METHODS

Subjects.

We recruited 32 healthy young adults for this study. All subjects identified themselves as right-hand and right-foot dominant, with normal or corrected vision. We screened subjects for any orthopedic, cardiac, or neurological conditions and injuries. All subjects provided written informed consent. Our protocol was approved by the University of Michigan Health Sciences and Behavioral Sciences Institutional Review Board for the protection of human subjects.

Before each experiment, we screened subjects for motion sickness in virtual reality. Subjects stood in place during a 5-min activity while wearing a virtual reality headset (Oculus Rift DK2; Oculus Virtual Reality, Irvine, CA). Subjects walked and jumped around a virtual environment using body gestures tracked by a Microsoft Kinect v.2 (Microsoft, Redmond, WA). We intentionally included a disconnect between real and virtual movements to be more disorienting than our main testing protocol. Subjects participated in the main experiment if both the subject and experimenter agreed that they did not exhibit any symptoms of motion sickness. Two potential subjects exhibited motion sickness symptoms and did not perform the experiment; 30 subjects passed this screening [15 men and 15 women, age 22.6 ± 4.5 yr (mean ± SD)].

Experiment design.

Subjects walked at a fixed speed of 0.22 m/s on a 2.5-cm wide × 2.5-cm tall treadmill-mounted balance beam, similar to those in previous studies (Domingo and Ferris 2009; Sipp et al. 2013). We based this speed on pilot data recorded at a range of walking speeds. The 0.22-m/s speed was the lowest speed setting on the treadmill. We placed subjects in a body support harness for safety, with extended support straps to allow unrestricted mediolateral movement. All subjects performed 30 min of training, separated into three 10-min trials. Subjects also performed a 3-min pretest before training and a 3-min posttest after training. Subjects took breaks as needed between training trials, with a 5-min break between the end of training and the posttest. While beam-walking, subjects crossed their arms and walked heel to toe, with their feet aligned along the direction of the beam. We gave subjects specific instructions to look straight ahead and to avoid looking at their feet. If subjects stepped off the beam, they were instructed to step off with both feet and walk on the treadmill for ~5 s. When on the balance beam, subjects were instructed to move only their hips side to side to balance, avoiding rotations about their body’s longitudinal axis. Our instructions followed previous studies (Domingo and Ferris 2009, 2010).

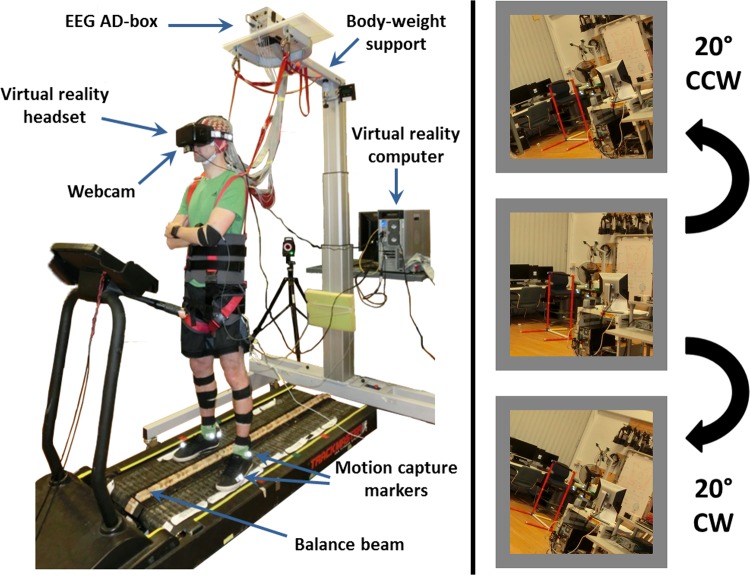

We randomly assigned subjects to one of three groups during training: 1) virtual reality with perturbations, 2) virtual reality without perturbations, and 3) unaltered view. Each group consisted of 10 subjects with equal numbers of men and women. Subjects in all groups performed the same physical beam-walking task. Group 1 subjects wore a virtual reality headset with a webcam (Logitech C930e; Logitech, Lausanne, Switzerland) mounted underneath the front, providing the subject with a pass-through view of what was in front of them. For this group, the webcam view rotated 20° clockwise or counterclockwise for 0.5 s when both feet were placed on the beam, referred to as a visual perturbation. We used brief perturbations because continuous feedback can lead to dependence on that feedback and therefore to less general learning (Schmidt et al. 1989; Schmidt 1991). We added a random delay (up to 1 s) to this visual perturbation to make it more difficult to anticipate. We rendered the virtual environment with Unity 5 software (Unity Technologies, San Francisco, CA) using an NVIDIA Titan X graphics card (NVIDIA, Santa Clara, CA) to avoid slowdowns in the virtual reality presentation. Group 2 subjects wore the same virtual reality headset and webcam but without any visual perturbations. Group 3 subjects had unaltered vision (no virtual reality headset). We included groups 2 and 3 to establish the negative effect of our virtual reality setup (without perturbations) on balance performance relative to training without a virtual reality headset. All groups performed the pretest and posttest with unaltered vision. Figure 1 shows the setup for a subject in one of the virtual reality groups, along with example visual perturbations in each direction.
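The perturbation timing rule can be sketched as follows. This is a hypothetical Python illustration, not the Unity implementation used in the study; it assumes that the delay is drawn uniformly between 0 and 1 s, that clockwise and counterclockwise rotations are equally likely, and that positive angles denote counterclockwise rotation. The class and function names are ours.

```python
import random
from dataclasses import dataclass

@dataclass
class Perturbation:
    onset_s: float      # time at which the rotated view appears
    duration_s: float   # how long the view stays rotated
    angle_deg: float    # +20 = counterclockwise, -20 = clockwise (assumed sign convention)

def schedule_perturbation(both_feet_on_beam_s, rng, angle_deg=20.0,
                          duration_s=0.5, max_delay_s=1.0):
    """Schedule one visual perturbation after both feet are detected on the beam.

    A random delay (assumed uniform in [0, max_delay_s]) makes the onset harder
    to anticipate; the rotation direction is assumed to be a fair coin flip."""
    delay = rng.uniform(0.0, max_delay_s)
    direction = rng.choice((-1.0, 1.0))
    return Perturbation(onset_s=both_feet_on_beam_s + delay,
                        duration_s=duration_s,
                        angle_deg=direction * angle_deg)

# Hypothetical times (s) at which both feet were detected on the beam.
rng = random.Random(0)
events = [schedule_perturbation(t, rng) for t in (3.0, 7.5, 12.2)]
for e in events:
    print(e)
```

Seeding the generator makes a session's perturbation sequence reproducible while still unpredictable to the subject.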

Fig. 1.

Subject setup and example visual perturbations. Typical subject setup for the virtual reality groups is shown (left). All 3 groups walked on the same physical beam. For the virtual reality training with perturbations group, each subject’s field of view in virtual reality was randomly rotated 20° counterclockwise (CCW; top right) or clockwise (CW; bottom right). The perturbation rotations are shown on an example image similar to the subject’s view during the experiment (right). Perturbations lasted for 0.5 s and occurred only when subjects placed both feet on the balance beam.

Performance and physiological measures.

We recorded motion capture at the feet, sacrum, neck, and head by use of 16 reflective markers (Vicon, Los Angeles, CA) sampled at 100 Hz. We recorded EEG using a 136-channel BioSemi ActiView II system (BioSemi, Amsterdam, The Netherlands) sampled at 512 Hz. We placed one external electrode on the sternum to record heart rate, three external electrodes around the eyes to record eye movements, two external electrodes on the neck to record neck electromyography, and one external electrode on each mastoid. EEG electrode locations were measured using a Zebris ELPOS digitizer (Zebris Medical, Isny, Germany). We placed the AD-box and battery upside-down on top of the body weight support to maximize the reach of the cables, fastening the wires to the body weight support to avoid wires pulling out of the AD-box. Using Windows Input Simulator, we programmed different key presses on the keyboard of the virtual reality computer to correspond to visual perturbation onset and termination. This keyboard input was recorded and synchronized with the EEG data using Lab Streaming Layer (Delorme et al. 2011). We used a 0.5-Hz square pulse to synchronize the motion capture and EEG data. We also measured skin conductance by use of wrist sensors (Empatica E4; Empatica, Milan, Italy) as a proxy for sympathetic activity. Subjects in the virtual reality groups filled out surveys for motion sickness [Motion Sickness Assessment Questionnaire (Gianaros et al. 2001)] and presence [Witmer & Singer Presence Questionnaire (Witmer and Singer 1998) and Slater-Usoh-Steed Questionnaire (Slater et al. 1994)] after performing the experiment.
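One way to use the shared 0.5-Hz square pulse is to detect its rising edges in both recordings and fit a linear mapping between the two clocks, which absorbs both a constant offset and slow drift. The sketch below is a hypothetical illustration of that idea (the function names are ours), assuming both systems captured the same pulses in the same order, starting from the first rising edge.

```python
import numpy as np

def rising_edges(sync, fs, threshold=None):
    """Times (s) at which a square-wave sync signal first exceeds the threshold."""
    sync = np.asarray(sync, dtype=float)
    if threshold is None:
        threshold = 0.5 * (sync.min() + sync.max())  # midpoint between low and high levels
    above = sync > threshold
    idx = np.flatnonzero(~above[:-1] & above[1:]) + 1  # first sample above threshold
    return idx / fs

def fit_clock_mapping(edges_mocap, edges_eeg):
    """Least-squares fit of t_eeg = scale * t_mocap + offset from matched edges."""
    n = min(len(edges_mocap), len(edges_eeg))
    scale, offset = np.polyfit(edges_mocap[:n], edges_eeg[:n], deg=1)
    return scale, offset

# Hypothetical example: the EEG recording started 1.0 s before motion capture.
t_mocap = np.arange(0, 20, 1 / 100)   # motion capture at 100 Hz
t_eeg = np.arange(0, 21, 1 / 512)     # EEG at 512 Hz
sync_mocap = (t_mocap >= 0.3) & ((t_mocap - 0.3) % 2.0 < 1.0)  # 0.5-Hz square pulse
sync_eeg = (t_eeg >= 1.3) & ((t_eeg - 1.3) % 2.0 < 1.0)
scale, offset = fit_clock_mapping(rising_edges(sync_mocap, 100),
                                  rising_edges(sync_eeg, 512))
print(round(scale, 4), round(offset, 2))
```

Fitting a line over all edges, rather than using only the first edge, keeps edge-timing quantization (one sample at either rate) from dominating the estimate.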

To assess behavioral performance, we recorded the number of times each subject stepped off the beam and the total time spent on the beam. We combined these measures into failures per minute, computed as the number of step-offs divided by the total time in minutes spent on the beam (Domingo and Ferris 2009, 2010). Failures per minute decrease when subjects spend more time on the beam and have fewer step-offs. To assess pretest-to-posttest changes in performance, we calculated the percent change in failures per minute as the posttest value minus the pretest, divided by the pretest value, and multiplied by 100. A negative percent change in testing performance indicated that a subject’s balance performance improved in the posttest compared with the pretest. We also calculated failures per minute during training to determine if balance performance differed across groups during training. On the basis of our beam-walking performance results, we concentrated further analysis solely on the two virtual reality groups, which reduced confounding factors in our comparisons.
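The failures-per-minute metric and its pretest-to-posttest percent change reduce to two small formulas. The Python sketch below uses hypothetical numbers, and the function names are ours. Note that the percent change is undefined if a subject had zero failures in the pretest.

```python
def failures_per_minute(n_step_offs: int, time_on_beam_s: float) -> float:
    """Number of step-offs divided by total time on the beam in minutes."""
    if time_on_beam_s <= 0:
        raise ValueError("time on beam must be positive")
    return n_step_offs / (time_on_beam_s / 60.0)

def percent_change(pre: float, post: float) -> float:
    """(post - pre) / pre * 100; negative values indicate improved balance."""
    return (post - pre) / pre * 100.0

# Hypothetical subject: 12 step-offs in 150 s on the beam before training,
# 6 step-offs in 160 s on the beam after training.
pre = failures_per_minute(12, 150.0)    # 4.8 failures/min
post = failures_per_minute(6, 160.0)    # 2.25 failures/min
print(pre, post, percent_change(pre, post))
```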

We used mean heart rate and electrodermal activity to assess physical exertion across groups during training. Electrodermal activity reflects sympathetic activity, making it a good indicator of physical exertion (Critchley 2002). We processed heart rate data recorded during training using the Cleanline EEGLAB plug-in (Mullen 2014) and a band-pass filter between 5 and 20 Hz. Kubios HRV software identified the R-peaks, which correspond to heartbeats (Tarvainen et al. 2014). We manually corrected incorrectly labeled R-peaks and used the mean heart rate calculated by Kubios. We processed the electrodermal activity using deconvolution in Ledalab with default parameters to separate out the transient component of the signal (Benedek and Kaernbach 2010a, 2010b). We counted the number of changes in the deconvolved transient signal greater than 0.05 μS (Schmidt and Walach 2000) during training and divided this count by the training duration in minutes.
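The study identified R-peaks with Kubios HRV and corrected them manually. As a rough, hypothetical stand-in, the sketch below applies the same 5 to 20 Hz band-pass and a simple peak picker (`scipy.signal.find_peaks`) to estimate mean heart rate. The filter order, the 0.3-s minimum R-R interval, the relative height threshold, and positive R-wave polarity are our assumptions; real ECG would still need the manual correction step described above.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks

def mean_heart_rate(ecg, fs, band=(5.0, 20.0), min_rr_s=0.3, rel_height=0.5):
    """Estimate mean heart rate (beats/min) from a single ECG channel.

    Band-pass filters the signal (zero phase), takes R-peaks as local maxima
    above rel_height * max that are at least min_rr_s apart, and converts the
    mean R-R interval to beats per minute."""
    b, a = butter(2, band, btype="bandpass", fs=fs)
    filtered = filtfilt(b, a, np.asarray(ecg, dtype=float))
    peaks, _ = find_peaks(filtered, distance=int(min_rr_s * fs),
                          height=rel_height * filtered.max())
    rr_s = np.diff(peaks) / fs          # R-R intervals in seconds
    return 60.0 / rr_s.mean(), peaks

# Hypothetical check: Gaussian "R waves" every 0.6 s (100 beats/min) at 512 Hz.
fs = 512
t = np.arange(0, 60, 1 / fs)
beats = np.arange(0.3, 60, 0.6)
ecg = np.exp(-0.5 * ((t[:, None] - beats[None, :]) / 0.01) ** 2).sum(axis=1)
hr, peaks = mean_heart_rate(ecg, fs)
print(round(hr, 1), len(peaks))
```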

Motion capture marker trajectories were cleaned in Vicon Nexus and further processed in Visual3D (C-Motion, Germantown, MD). We low-pass filtered the data at 6 Hz and determined foot contacts by finding the peaks and troughs of the foot markers in the anterior/posterior direction of movement on the treadmill (Zeni et al. 2008). We manually adjusted incorrectly labeled foot contacts. We determined whether the foot contact was on or off the beam by manually thresholding the foot marker data in the mediolateral direction of movement. The beam location varied slightly for each subject, necessitating a manual threshold. In addition to foot contacts, we calculated the mediolateral standard deviation of markers at the head and sacrum as a measure of movement variability. For both markers, we calculated the average standard deviation during training, along with the percent change in standard deviation from the first to last 3 min of training. We also calculated the percent change in standard deviation from pretest to posttest. We used marker values only from when the subject was on the balance beam for calculating standard deviation.
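The kinematic steps above can be sketched in Python with SciPy. This is a simplified, hypothetical illustration rather than the Visual3D workflow used in the study. The fourth-order Butterworth filter, the 0.5-s minimum interval between contacts, the assumption that anterior is positive (so peaks mark heel strikes and troughs mark toe-offs), and the beam half-width used for the on/off-beam threshold are all our assumptions.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks

def lowpass(x, cutoff_hz=6.0, fs=100.0, order=4):
    """Zero-phase Butterworth low-pass filter (order 4 is an assumption)."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    return filtfilt(b, a, np.asarray(x, dtype=float))

def foot_contacts(foot_ap, fs=100.0, min_interval_s=0.5):
    """Return (heel_strike_idx, toe_off_idx) from an anterior/posterior foot trajectory.

    Assumes anterior is positive, so peaks mark heel strikes and troughs mark toe-offs."""
    ap = lowpass(foot_ap, fs=fs)
    distance = int(min_interval_s * fs)
    heel_strikes, _ = find_peaks(ap, distance=distance)
    toe_offs, _ = find_peaks(-ap, distance=distance)
    return heel_strikes, toe_offs

def on_beam(foot_ml, beam_center, half_width):
    """Manual mediolateral threshold: True where the foot marker lies over the beam."""
    return np.abs(np.asarray(foot_ml, dtype=float) - beam_center) <= half_width

def ml_sd(marker_ml, on_beam_mask):
    """Mediolateral standard deviation using only samples recorded while on the beam."""
    x = np.asarray(marker_ml, dtype=float)
    return x[np.asarray(on_beam_mask, dtype=bool)].std()

def pct_change(before, after):
    """Percent change, e.g., first vs. last 3 min of training or pretest vs. posttest."""
    return (after - before) / before * 100.0

# Hypothetical foot trajectory: one stride every 2 s for 20 s at 100 Hz.
t = np.arange(0, 20, 0.01)
heel_strikes, toe_offs = foot_contacts(np.sin(np.pi * t))
print(len(heel_strikes), len(toe_offs))
```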

EEG data and analysis.

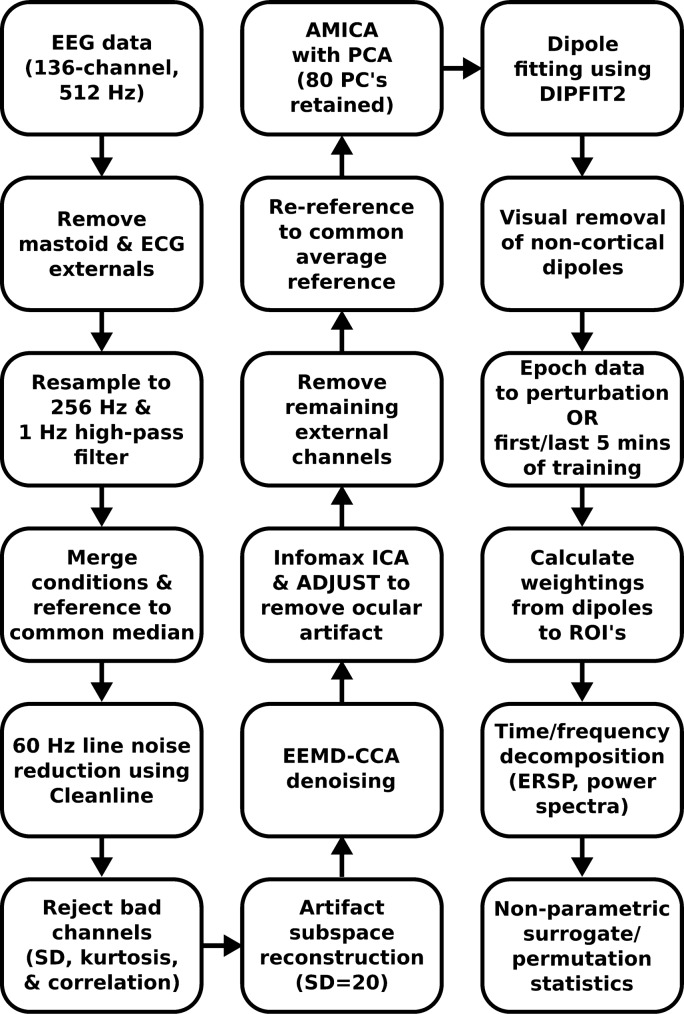

EEG data were processed using custom scripts in EEGLAB (Delorme and Makeig 2004). Our complete EEG processing pipeline is shown in Fig. 2. We downsampled the data to 256 Hz, high-pass filtered the EEG data at 1 Hz, merged all conditions, and referenced all channels to a common median reference to reduce the impact of potential outlier channels. We used Cleanline to reduce 60-Hz electrical noise. We rejected channels that had an abnormally high standard deviation relative to all other electrodes, had a kurtosis more than five standard deviations above the mean, or were uncorrelated with other channels for more than 1% of the time (Kline et al. 2015; Oliveira et al. 2017). Including the five eye and neck muscle external channels, we retained 116 channels on average (range: 100–127).
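The three channel-rejection criteria can be sketched as below. This is a hypothetical Python approximation, not the EEGLAB code used in the study. The median/MAD z-scores, the standard deviation cutoff, the 1-s windows, and the 0.4 correlation threshold are our assumptions; only the 5-SD kurtosis criterion and the 1% time criterion come from the text.

```python
import numpy as np
from scipy.stats import kurtosis

def robust_z(x):
    """Median/MAD z-score, so a single bad channel cannot inflate the spread."""
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    mad = 1.4826 * np.median(np.abs(x - med))
    return (x - med) / (mad if mad > 0 else 1e-12)

def reject_channels(eeg, fs, sd_z=5.0, kurt_z=5.0, corr_thresh=0.4,
                    max_bad_frac=0.01, win_s=1.0):
    """Return sorted indices of channels flagged by any of three criteria.

    eeg: channels x samples array."""
    n_ch, n_samp = eeg.shape
    bad = set(np.flatnonzero(robust_z(eeg.std(axis=1)) > sd_z))        # high SD
    bad |= set(np.flatnonzero(robust_z(kurtosis(eeg, axis=1)) > kurt_z))  # high kurtosis

    # Fraction of windows in which a channel's best correlation with any
    # other channel falls below corr_thresh.
    win = int(win_s * fs)
    n_win = n_samp // win
    low_corr = np.zeros(n_ch)
    for w in range(n_win):
        r = np.abs(np.corrcoef(eeg[:, w * win:(w + 1) * win]))
        np.fill_diagonal(r, 0.0)
        low_corr += r.max(axis=1) < corr_thresh
    bad |= set(np.flatnonzero(low_corr / n_win > max_bad_frac))
    return sorted(int(i) for i in bad)

# Hypothetical data: 7 channels share a 10-Hz rhythm; channel 7 is large, unrelated noise.
rng = np.random.default_rng(0)
fs = 256
t = np.arange(20 * fs) / fs
eeg = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal((8, t.size))
eeg[7] = 5.0 * rng.standard_normal(t.size)
bad = reject_channels(eeg, fs)
print(bad)
```

Robust z-scores matter here: with only a handful of channels, an ordinary mean/SD z-score of a single outlier is bounded by (n − 1)/√n and can never reach 5.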

Fig. 2.

EEG processing flow chart. A flowchart of the EEG processing pipeline is shown, including all preprocessing and cleaning methods. All methods were performed in EEGLAB using custom Matlab scripts.

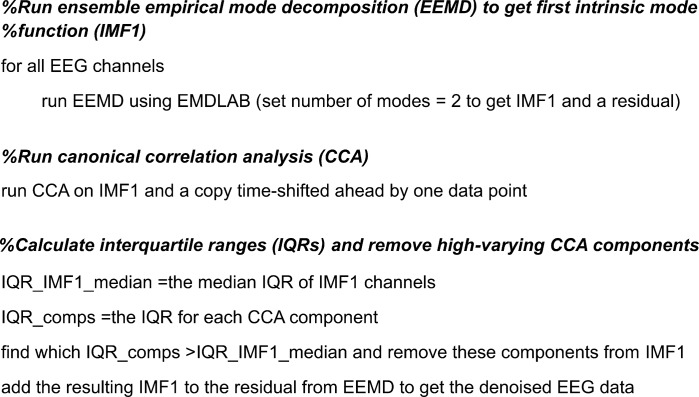

Following channel rejection, we used multiple denoising procedures to reduce prominent artifacts in the data. First, we used artifact subspace reconstruction (Mullen et al. 2015) with a standard deviation cutoff of 20 to remove large artifacts. Next, we developed a high-frequency denoising method based on two signal processing methods, ensemble empirical mode decomposition (EEMD) (Wu and Huang 2009) and canonical correlation analysis (CCA) (Hotelling 1936), which have been combined before (Roy et al. 2017). Our EEMD-CCA method performed selective low-pass filtering, specifically targeting large high-frequency activity with low autocorrelation such as muscle activity and line noise. With EMDLAB (Al-Subari et al. 2015), we used EEMD to extract the high-frequency content of each channel, called the first intrinsic mode function (IMF1) (Huang et al. 1998). Unlike standard filters, EEMD filtering is data driven and does not assume that the data are stationary. In our data, IMF1 mostly contained frequencies above 40 Hz. We assumed that IMF1 primarily contained nonbrain activity, such as line noise and muscle artifact. This assumption aligns with previous uses of EEMD denoising in which IMF1 is completely removed as artifact (Boudraa and Cexus 2007; Safieddine et al. 2012). Instead of removing IMF1 completely, we ran CCA on IMF1 and a copy of IMF1 time-lagged by one data point (Friman et al. 2002). This separates sources of activity based on autocorrelation, because muscle activity is expected to have low autocorrelation (Chen et al. 2014). We then removed all CCA components from IMF1 whose variance exceeded the median interquartile range of IMF1. This targets high-frequency data that are well clustered by CCA, similar to previous EEG methods to reduce muscle artifact (Bai et al. 2016; Safieddine et al. 2012). The data rank is not altered by removing CCA components because they are removed only from the first IMF of the signal, similar to typical frequency filtering. We have included pseudocode for this method in Fig. 3 to facilitate reproducibility. After EEMD-CCA denoising, we ran extended Infomax ICA (Lee et al. 1999) and used the ADJUST plug-in to automatically remove eye artifacts (Mognon et al. 2011). Our data included external channels around the eyes to potentially improve eye artifact removal. We then removed the external channels from the data.
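The CCA step of EEMD-CCA can be sketched in Python as follows, assuming IMF1 has already been extracted for every channel (e.g., with EMDLAB). The sketch computes CCA between IMF1 and its one-sample-lagged copy, so that components are ordered by lag-1 autocorrelation. It then zeroes out components whose variance exceeds the threshold and projects the rest back to the channels; the cleaned IMF1 would then be added back to the remaining IMFs. The text does not specify which variance is compared with the median interquartile range, so we assume the mean back-projected variance per channel. The function name is hypothetical.

```python
import numpy as np

def _inv_sqrt(c):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(c)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T

def cca_denoise_imf1(imf1, threshold=None):
    """Remove large, weakly autocorrelated CCA components from IMF1.

    imf1: channels x samples. Returns (cleaned_imf1, removed_idx, canonical_corrs)."""
    mean = imf1.mean(axis=1, keepdims=True)
    x = imf1 - mean
    x_now, x_lag = x[:, 1:], x[:, :-1]           # signal and its 1-sample-lagged copy
    n = x_now.shape[1]
    c_xx, c_yy = x_now @ x_now.T / n, x_lag @ x_lag.T / n
    c_xy = x_now @ x_lag.T / n

    # CCA via SVD of the whitened cross-covariance; singular values are the
    # canonical (lag-1 auto-)correlations, sorted from high to low.
    wx = _inv_sqrt(c_xx)
    u, rho, _ = np.linalg.svd(wx @ c_xy @ _inv_sqrt(c_yy))
    unmix = (wx @ u).T                           # rows: spatial filters
    mix = np.linalg.inv(unmix)                   # columns: component topographies
    sources = unmix @ x                          # components over the full recording

    # Assumed variance measure: mean variance each component adds per channel.
    back_var = (mix ** 2).mean(axis=0) * sources.var(axis=1)
    if threshold is None:
        q75, q25 = np.percentile(x, [75, 25], axis=1)
        threshold = np.median(q75 - q25)         # median IQR of IMF1 across channels
    removed = np.flatnonzero(back_var > threshold)
    sources[removed] = 0.0
    return mix @ sources + mean, removed, rho

# Hypothetical IMF1: two autocorrelated rhythms plus large white noise, 3 channels.
rng = np.random.default_rng(1)
t = np.arange(5000) / 256.0
src = np.vstack([np.sin(2 * np.pi * 3 * t),
                 np.sin(2 * np.pi * 7 * t + 0.5),
                 10 * rng.standard_normal(t.size)])
mixing = np.array([[1.0, 0.5, 0.2], [0.3, 1.0, 0.4], [0.6, 0.2, 1.0]])
imf1 = mixing @ src
cleaned, removed, rho = cca_denoise_imf1(imf1)
print(removed, np.round(rho, 3))
```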

Fig. 3.

Ensemble empirical mode decomposition and canonical correlation analysis (EEMD-CCA) pseudocode. IMF1, first intrinsic mode function. The computational steps used to perform high-frequency denoising with a combined EEMD-CCA approach are described.

We rereferenced the remaining channels to a common average and interpolated the rejected channels to maintain a consistent montage across the head. Next, we ran adaptive mixture independent component analysis (AMICA 15) (Palmer et al. 2006, 2008), first using principal component analysis to reduce the data to 80 principal components so that the data entering AMICA were full rank. AMICA also rejected unlikely data frames.
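Reducing the data before ICA matters because average referencing and channel interpolation each remove a dimension from the data. A minimal NumPy sketch (the function name and the numerical-rank tolerance are our assumptions) that checks the numerical rank and projects onto the leading principal components:

```python
import numpy as np

def pca_reduce(eeg, n_components, rel_tol=1e-10):
    """Project channels x samples EEG onto its leading principal components.

    Raises ValueError if more components are requested than the numerical rank."""
    x = eeg - eeg.mean(axis=1, keepdims=True)
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    rank = int(np.sum(s > rel_tol * s[0]))
    if n_components > rank:
        raise ValueError(f"requested {n_components} components, data rank is {rank}")
    basis = u[:, :n_components]          # channels x components
    return basis.T @ x, basis

# Hypothetical example: 10 channels, one "interpolated" as the mean of two others,
# then re-referenced to the common average; each step removes one dimension here.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((10, 1000))
eeg[9] = 0.5 * (eeg[0] + eeg[1])
eeg -= eeg.mean(axis=0, keepdims=True)
reduced, basis = pca_reduce(eeg, 8)      # 8 is the most this example supports
print(reduced.shape)
```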

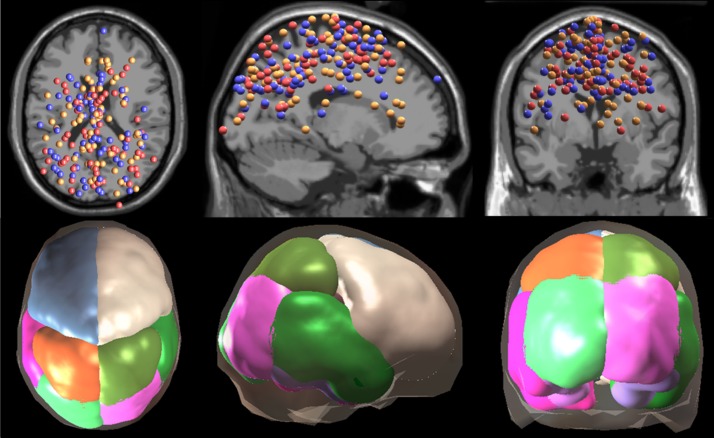

Following ICA, we performed dipole fitting (DIPFIT2) and retained dipoles explaining greater than 85% of the scalp variance (Oostenveld and Oostendorp 2002). We removed noncortical independent components based on visual inspection of power spectrum and dipole location. We then combined the remaining dipoles for each group (67 for virtual reality training with perturbations and 63 for virtual reality training without perturbations) and projected each dipole measure into predefined regions of interest (Bigdely-Shamlo et al. 2013). We split the brain into 11 general regions of interest according to the LONI LPBA40 atlas (Shattuck et al. 2008): left/right occipital, left/right parietal, left/right frontal, left/right fusiform, left/right temporal, and cingulate (Fig. 4). We excluded results from temporal and fusiform regions because few dipoles were located in these areas. Instead of weighting each dipole measure in a region equally, measure projection performs a weighted average based on each dipole’s location within a region, using a three-dimensional Gaussian (14.2 mm full-width half-maximum) to spatially smear each dipole. We chose this method so that we compared the same predefined regions between two groups of subjects.

Fig. 4.

EEG source localization and regions of interest. EEG source localizations are shown in blue for virtual reality training with perturbations, red for virtual reality training without perturbations, and orange for unaltered view (n = 10 for each group). Dipole locations (top) and regions of interest (bottom) are shown in transverse, sagittal, and coronal views (left to right). We retained 7 regions of interest: left frontal (blue), right frontal (tan), left parietal (orange), right parietal (olive green), left occipital (light green), right occipital (purple), and cingulate (pink; shown in small head plots).

For each region of interest, dipole weights were normalized to sum to 1 to create weighted averages of measures. Before this normalization, each dipole’s weights sum to 1 across all locations. We summed the unnormalized weights within each region of interest as an estimate of dipole density (Bigdely-Shamlo et al. 2013). For virtual reality training with perturbations, dipole density in each region was: 14.5 (left frontal), 6.4 (right frontal), 3.7 (left parietal), 4.7 (right parietal), 4.7 (left occipital), 2.9 (right occipital), and 3.7 (cingulate). For virtual reality training without perturbations, dipole density in each region was: 9.7 (left frontal), 7.4 (right frontal), 6.0 (left parietal), 6.1 (right parietal), 3.6 (left occipital), 4.9 (right occipital), and 1.5 (cingulate).
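Measure projection’s Gaussian weighting and the dipole-density estimate can be sketched as below. This is a simplified, hypothetical stand-in for the measure projection toolbox. It assumes a labeled voxel grid in millimeters in which every voxel belongs to one region, and it converts the 14.2-mm full-width half-maximum to a standard deviation via σ = FWHM / (2√(2 ln 2)) ≈ 6.03 mm.

```python
import numpy as np

FWHM_MM = 14.2
SIGMA_MM = FWHM_MM / (2.0 * np.sqrt(2.0 * np.log(2.0)))   # about 6.03 mm

def region_weights(dipoles_mm, voxels_mm, voxel_region, n_regions, sigma=SIGMA_MM):
    """(n_dipoles x n_regions) weights; each dipole's Gaussian sums to 1 over all voxels."""
    d2 = ((dipoles_mm[:, None, :] - voxels_mm[None, :, :]) ** 2).sum(axis=2)
    g = np.exp(-0.5 * d2 / sigma ** 2)
    g /= g.sum(axis=1, keepdims=True)            # smear each dipole into a unit mass
    w = np.zeros((len(dipoles_mm), n_regions))
    for r in range(n_regions):
        w[:, r] = g[:, voxel_region == r].sum(axis=1)
    return w

def project_measure(measures, weights):
    """Dipole density, normalized weights, and weighted-average measure per region.

    measures: n_dipoles x n_features (e.g., one power spectrum per dipole)."""
    density = weights.sum(axis=0)                # unnormalized weight per region
    normalized = weights / density               # each region's weights sum to 1
    return density, normalized, normalized.T @ measures

# Hypothetical 2-region grid split at x = 0, with three dipoles.
xs = np.arange(-30, 31, 3.0)
grid = np.array(np.meshgrid(xs, xs, xs, indexing="ij")).reshape(3, -1).T
region = (grid[:, 0] > 0).astype(int)
dipoles = np.array([[-10.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
w = region_weights(dipoles, grid, region, n_regions=2)
density, normalized, projected = project_measure(np.full((3, 1), 5.0), w)
print(np.round(w, 3), np.round(density, 3))
```

Because each dipole contributes a total weight of 1, the region densities sum to the number of dipoles when the grid covers every labeled location.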

To assess perturbation-evoked electrocortical activity, we computed time-frequency activity averaged across epochs, known as event-related spectral perturbation (ERSP), for the virtual reality training with perturbations group (n = 10). We split the EEG data during training into epochs from −0.5 to 1.5 s, with visual perturbation onset at 0 s. We separated epochs by perturbation direction, resulting in 121 ± 18 clockwise and 103 ± 17 counterclockwise epochs for each subject (mean ± SD). We took the median instead of the mean across epochs to avoid any single epoch disproportionately affecting the resulting ERSP. Average baseline activity from −0.5 to 0 s before perturbation onset was subtracted. We computed the ERSP for each dipole in the virtual reality training with perturbations group and projected it to the seven regions of interest.
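The median-based ERSP with baseline subtraction can be sketched as follows. The sketch assumes that per-epoch spectral power has already been computed (e.g., with wavelets) and converts it to decibels before taking the median. The function name is hypothetical, and the significance bootstrap is not shown.

```python
import numpy as np

def ersp_median(power, times, baseline=(-0.5, 0.0)):
    """Median ERSP across epochs, relative to the mean pre-onset baseline.

    power: epochs x freqs x times array of spectral power (linear units).
    Returns a freqs x times array in dB."""
    log_power = 10.0 * np.log10(power)
    median_tf = np.median(log_power, axis=0)      # robust to single-epoch outliers
    in_base = (times >= baseline[0]) & (times < baseline[1])
    base = median_tf[:, in_base].mean(axis=1, keepdims=True)
    return median_tf - base

# Hypothetical epochs: a 10-dB power increase from 0.2 s, plus one extreme epoch.
times = np.linspace(-0.5, 1.5, 201)
power = np.ones((20, 3, times.size))
power[:, :, times >= 0.2] = 10.0
power[0] = 1e6                                    # outlier epoch (60 dB everywhere)
ersp = ersp_median(power, times)
print(ersp[:, -1])
```

With the mean instead of the median, the single outlier epoch would shift every time-frequency bin by several decibels.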

We compared electrocortical activity between the virtual reality groups using spectral power during the first and last 5 min of training (referred to as early training and late training, respectively). For each 5-min period, we analyzed spectral power only while subjects were on the beam. The power spectra for each dipole were projected to the seven regions of interest using the same measure projection weights as for the ERSPs. Because differences in total power could be attributed to intersubject recording and processing differences, we normalized all four conditions (virtual reality with perturbations early/late and virtual reality without perturbations early/late) to their common average total power.
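The normalization to a common average total power admits more than one reading. The sketch below assumes that each condition’s spectrum is rescaled so that its total power (the sum over frequency bins) equals the mean total power across the four conditions, which preserves each spectrum’s shape. The condition names, values, and function name are hypothetical.

```python
import numpy as np

def normalize_to_common_total(spectra):
    """Rescale each condition's spectrum to the mean total power across conditions.

    spectra: dict mapping condition name -> 1-D power spectrum (linear units,
    identical frequency bins for every condition)."""
    totals = {name: float(np.sum(p)) for name, p in spectra.items()}
    common = np.mean(list(totals.values()))
    return {name: np.asarray(p) * (common / totals[name]) for name, p in spectra.items()}

spectra = {
    "pert_early": np.array([1.0, 2.0, 3.0]),
    "pert_late": np.array([2.0, 2.0, 2.0]),
    "no_pert_early": np.array([4.0, 4.0, 4.0]),
    "no_pert_late": np.array([1.0, 1.0, 4.0]),
}
normalized = normalize_to_common_total(spectra)   # common total = (6 + 6 + 12 + 6) / 4
print({k: v.round(3).tolist() for k, v in normalized.items()})
```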

Although motion artifact is a concern when collecting EEG (Castermans et al. 2014; Kline et al. 2015; Snyder et al. 2015), we mitigated its effects by having subjects walk at a slow speed (0.22 m/s) and by analyzing visual perturbation events, for which any head motion in response to the stimulus would be delayed relative to its presentation. Based on prior studies analyzing motion artifact during human walking (Kline et al. 2015; Nathan and Contreras-Vidal 2015; Snyder et al. 2015), any effects of head motion on EEG results should have been negligible. To verify this, we computed the average mediolateral sacrum and head marker motion from our motion capture data, time-locked to the visual perturbation. These trajectories were baseline-subtracted using the 1.5 s preceding perturbation onset. Because of the way we ran the experiment, perturbation events were triggered for all three groups during training: the virtual reality with perturbations group received a 20° rotation, the virtual reality without perturbations group received a 0° rotation (pseudo-perturbation), and the unaltered view group was not wearing the headset. This gave us comparable events across groups for determining how much sacrum and head displacement was caused by the 20° perturbation in the virtual reality with perturbations group.
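The time-locked marker averages can be sketched as below, assuming marker positions sampled at 100 Hz and perturbation onsets given as sample indices. The 1.5-s post-onset window and the function name are our assumptions; the 1.5-s baseline follows the text.

```python
import numpy as np

def event_locked_average(marker_ml, event_samples, fs=100.0, pre_s=1.5, post_s=1.5):
    """Average mediolateral marker displacement around perturbation onsets.

    Each epoch is baseline-subtracted using its mean over the pre-onset window;
    events whose window would run past either end of the recording are skipped.
    Returns (times_s, mean_displacement, n_epochs_used)."""
    x = np.asarray(marker_ml, dtype=float)
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    epochs = []
    for s in event_samples:
        if s - pre < 0 or s + post > x.size:
            continue
        seg = x[s - pre:s + post]
        epochs.append(seg - seg[:pre].mean())
    times = np.arange(-pre, post) / fs
    return times, np.mean(epochs, axis=0), len(epochs)

# Hypothetical trace: a 0.5-s sway of 1 unit after each onset; the event at
# sample 50 is too close to the start and is skipped.
marker = np.zeros(1000)
for s in (200, 500, 800):
    marker[s:s + 50] = 1.0
times, avg, n = event_locked_average(marker, [50, 200, 500, 800])
print(n, avg[150], avg[210])
```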

Statistical analysis.

We performed statistical tests of behavioral performance across all groups with ANOVA followed by pairwise t-tests with Bonferroni correction (P < 0.05). These statistical tests were performed using R (R Core Team 2017). In addition, we tested whether failures per minute during the pretest and posttest were stable to ensure that the performance improvement values we calculated were accurate. We used the heel strikes on and off the beam to create a “running total” failures per minute metric across each 3-min condition. We normalized each subject’s data by subtracting the mean and dividing by the standard deviation to reduce intersubject variability within groups. For each group, and separately for the pretest and posttest, we then took the average running total and performed an augmented Dickey-Fuller test using R. The null hypothesis of this test is that the time series is nonstationary (has a unit root), so a significant result indicates that the data are stationary over time.
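The reported t statistics have 27 degrees of freedom (30 subjects in 3 groups), which is consistent with pairwise t-tests that pool the standard deviation across all groups. A Python sketch of that computation is shown below. Its p-values should correspond to those of R’s `pairwise.t.test(x, g, p.adjust.method = "bonferroni")` for unpaired data. The function name is hypothetical, and the group values are made up.

```python
import itertools
import numpy as np
from scipy import stats

def pairwise_t_pooled(groups):
    """Pairwise t-tests with a pooled SD and Bonferroni-adjusted p-values.

    groups: dict name -> 1-D values. Returns {(name_i, name_j): (t, df, p_bonf)}."""
    names = list(groups)
    data = [np.asarray(groups[n], dtype=float) for n in names]
    df = sum(len(d) for d in data) - len(data)        # N - k, as in the ANOVA
    # Pooled within-group variance (the ANOVA mean square error).
    s2 = sum(((d - d.mean()) ** 2).sum() for d in data) / df
    pairs = list(itertools.combinations(range(len(data)), 2))
    results = {}
    for i, j in pairs:
        se = np.sqrt(s2 * (1 / len(data[i]) + 1 / len(data[j])))
        t = (data[i].mean() - data[j].mean()) / se
        p = 2 * stats.t.sf(abs(t), df)                # two-sided p-value
        results[(names[i], names[j])] = (t, df, min(1.0, p * len(pairs)))
    return results

groups = {"a": [1, 2, 3], "b": [2, 3, 4], "c": [5, 6, 7]}   # hypothetical data
print(stats.f_oneway(*groups.values()))
for pair, (t, df, p) in pairwise_t_pooled(groups).items():
    print(pair, round(t, 3), df, round(p, 4))
```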

For EEG ERSPs, time-frequency activity was bootstrapped 200 times to determine significance (P < 0.05). We used dipoles in each region with normalized weights above 0.05 to create the bootstrap distribution, so that only dipoles that noticeably contributed to a region were used. Nonsignificant differences were set to 0. Because the amount of time on the beam varied across subjects, we randomly selected 85 half-second time bins on the beam for each subject from the last 5 min of training. As for the ERSPs, each time bin was projected to a region and normalized to average total power. We averaged these projected power spectra within predefined frequency bands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–50 Hz). We used 2×2 nonparametric ANOVAs with 2,000 permutations to test for main effects of group (virtual reality with perturbations vs. virtual reality without perturbations) and training period (early vs. late) on the power spectra. Further pairwise comparisons were performed using a Wilcoxon rank sum test (P < 0.05) (Gibbons and Chakraborti 2011), with false discovery rate correction for multiple comparisons.
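Band averaging and the follow-up pairwise comparisons can be sketched as below: a rank-sum test per band, followed by Benjamini-Hochberg false discovery rate correction. This is a hypothetical illustration that assumes half-open band edges (for example, 4 Hz counts as theta) and uses randomly generated stand-in spectra. The permutation ANOVA step is not shown.

```python
import numpy as np
from scipy.stats import ranksums

BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 50)}

def band_average(freqs, spectra):
    """Average spectra (n_bins x n_freqs) within each band, [low, high) in Hz."""
    return {name: spectra[:, (freqs >= lo) & (freqs < hi)].mean(axis=1)
            for name, (lo, hi) in BANDS.items()}

def fdr_bh(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean 'significant' mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= alpha * np.arange(1, m + 1) / m
    k = np.flatnonzero(passed).max() + 1 if passed.any() else 0
    significant = np.zeros(m, dtype=bool)
    significant[order[:k]] = True                 # all p-values up to the largest passing rank
    return significant

# Hypothetical comparison: 85 on-beam time bins per condition, 1-50 Hz spectra.
rng = np.random.default_rng(0)
freqs = np.arange(1, 51)
cond_a = band_average(freqs, rng.gamma(2.0, size=(85, 50)))
cond_b = band_average(freqs, rng.gamma(2.5, size=(85, 50)))
pvals = [ranksums(cond_a[b], cond_b[b]).pvalue for b in BANDS]
print(dict(zip(BANDS, fdr_bh(pvals))))
```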

RESULTS

Beam walking performance.

The percent change in beam-walking performance differed significantly across groups [F(2,27) = 14.2, P = 6.10e-5, ANOVA; Table 1]. Subjects who trained in virtual reality without perturbations improved significantly less than subjects who trained in virtual reality with perturbations [t(27) = 4.50, P = 3.50e-4, pairwise t-test with Bonferroni correction] or with unaltered view [t(27) = 4.73, P = 1.90e-4, pairwise t-test with Bonferroni correction]. The improvement in beam-walking performance after virtual reality training with perturbations (mean 42%, SD 17%) was more than four times that after virtual reality training without perturbations (mean 9%, SD 20%). We found no significant difference in beam-walking performance improvement between unaltered view and virtual reality training with perturbations [t(27) = 0.23, P = 1.0, pairwise t-test with Bonferroni correction]. In addition, the augmented Dickey-Fuller test was significant for all groups in both the pretest and posttest (P < 0.02), indicating that pretest and posttest failures per minute can reasonably be assumed to be stationary for all three groups.

Table 1.

Training failures per minute and percent change

| Measure | VR with Perturbations | VR without Perturbations | Unaltered View |
|---|---|---|---|
| Testing failures change (%) | −42.4 (16.7) | −9.1 (20.4)* | −44.2 (12.5) |
| Training failures (min⁻¹) | 32.6 (9.3) | 32.6 (10.0) | 14.9 (5.7)* |

Values are means (SD) for the 3 viewing groups (n = 10 for each group). Testing failures change is the percent change in failures per minute from pretest to posttest; a negative percent change indicates that subjects improved balance performance in the posttest compared with the pretest. In this row, the asterisk denotes significantly less improvement in the virtual reality (VR) training without perturbations group than in the other 2 groups. Training failures are the average failures per minute during training; in this row, the asterisk denotes significantly fewer failures per minute during training with unaltered view than in the other 2 groups. Significant pairwise comparisons were identified using post hoc t-tests with Bonferroni correction following a significant ANOVA (P < 0.05 for both).

We also found that the number of errors made during the entire training period differed across groups [F(2,27) = 14.69, P = 4.802e-5, ANOVA; Table 1]. Subjects made significantly fewer errors during training with unaltered view than during virtual reality training with perturbations [t(27) = 4.70, P = 2.10e-4, paired t-test with Bonferroni correction] or virtual reality training without perturbations [t(27) = 4.69, P = 2.10e-4, paired t-test with Bonferroni correction]. We found no significant difference between the two virtual reality conditions [t(27) = 0.002, P = 1.0, paired t-test with Bonferroni correction].

Behavioral and physiological measures.

We found no significant differences in survey or physiological measures across groups (Table 2). The two virtual reality groups did not differ significantly in motion sickness [F(1,18) = 0.064, P = 0.803, ANOVA] or in either presence assessment [F(1,18) = 0.005, P = 0.942, and F(1,18) = 0.055, P = 0.817, ANOVA, respectively]. In addition, heart rate and electrodermal activity during training were not significantly different across the three groups [F(2,27) = 0.053, P = 0.949, and F(2,27) = 0.085, P = 0.919, ANOVA, respectively].
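The degrees of freedom reported here follow directly from the design: a one-way between-subjects ANOVA with k groups and N subjects has (k − 1, N − k) degrees of freedom, so three groups of 10 give F(2,27) and the two virtual reality groups alone give F(1,18). The sketch below computes the F statistic in plain Python for illustration; the function name is hypothetical, and it is not claimed to be the software used in the study.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(values)
    # Between-group sum of squares: group size times squared deviation of the group mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: deviation of each value from its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy example with three groups of three values each.
print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # (27.0, 2, 6)
```

A P value would then come from the upper tail of the F distribution with those degrees of freedom (for example, SciPy's `scipy.stats.f.sf(F, df_between, df_within)`).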

Table 2.

Surveys and physiological measures during training

| Measure | VR with Perturbations | VR without Perturbations | Unaltered View |
|---|---|---|---|
| Motion Sickness | 24.3% (17.3%) | 22.2% (19.6%) | — |
| Presence #1 (WS) | 64.9% (8.7%) | 65.2% (9.6%) | — |
| Presence #2 (SUS) | 55.2% (18.1%) | 57.0% (16.1%) | — |
| Heart rate (beats/min) | 105.15 (18.74) | 104.98 (9.02) | 103.29 (12.99) |
| Electrodermal activity (counts/min) | 42.76 (9.42) | 41.73 (17.62) | 40.03 (16.45) |

Average values for motion sickness (Motion Sickness Assessment Questionnaire) and presence questionnaires, along with average heart rate and electrodermal activity during training for both virtual reality (VR) groups and unaltered view (n = 10 for each group). Standard deviations are in parentheses. Presence #1 refers to Witmer & Singer Presence Questionnaire; presence #2 refers to Slater-Usoh-Steed Questionnaire. We found no significant differences across groups for these measures (ANOVA P > 0.05).

Head and sacrum motion variability were mostly similar between the virtual reality groups, but head motion variability increased over the course of training with perturbations (Table 3). Percent change in head marker standard deviation during training differed significantly across groups [F(2,26) = 5.441, P = 0.011, ANOVA], with a significantly greater percent change during virtual reality training with perturbations compared with training with unaltered view [t(26) = 0.807, P = 0.012, paired t-test with Bonferroni correction] but not compared with virtual reality training without perturbations [t(26) = 2.401, P = 0.071, paired t-test with Bonferroni correction]. In other words, the perturbations increased head movement as training progressed. We also tested the correlation, across groups, between performance improvement and the magnitude of change in head movement during training. The correlation was positive but not significant (r = 0.32, P = 0.094), suggesting that larger changes in head motion during training may be associated with greater performance improvement; more subjects would likely be needed to establish whether this relationship is significant. The percent change in head marker standard deviation from pretest to posttest did not significantly differ across groups [F(2,26) = 0.504, P = 0.610, ANOVA] or between the virtual reality groups [F(1,18) = 0.126, P = 0.727, ANOVA]. The overall average head marker standard deviation during training differed significantly across groups [F(2,26) = 3.965, P = 0.031, ANOVA], with significantly greater head motion during training with unaltered view compared with virtual reality training with perturbations [t(26) = 2.570, P = 0.049, paired t-test with Bonferroni correction] but not compared with virtual reality training without perturbations [t(26) = 2.332, P = 0.083, paired t-test with Bonferroni correction]. No other significant differences were found.

For the sacrum marker, we found no significant differences across groups in percent change during training [F(2,26) = 2.462, P = 0.105, ANOVA], percent change during testing [F(2,26) = 1.570, P = 0.226, ANOVA], or overall average during training [F(2,26) = 2.471, P = 0.104, ANOVA]. Overall, we found few noncognitive differences between the virtual reality groups.
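The head-motion/performance relationship above is a Pearson correlation. A minimal plain-Python sketch follows, assuming one value per subject for each variable; the input values are hypothetical, not study data. It also shows the standard t statistic for testing r against zero, t = r√((n − 2)/(1 − r²)) with n − 2 degrees of freedom, which is what a P value such as 0.094 would be derived from.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (n - 2 degrees of freedom) for testing whether r differs from 0.

    Undefined for |r| == 1 (division by zero).
    """
    return r * sqrt((n - 2) / (1 - r ** 2))


# Hypothetical per-subject values: change in head-motion SD during training (%)
# and pretest-to-posttest performance improvement (%).
head_change = [5.0, -2.0, 10.0, 0.0, 20.0, 3.0]
improvement = [30.0, 10.0, 35.0, 25.0, 40.0, 15.0]
r = pearson_r(head_change, improvement)
print(round(r, 2), round(r_to_t(r, len(head_change)), 2))
```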

Table 3.

Motion capture marker standard deviation

| Marker | Measure | VR with Perturbations | VR without Perturbations | Unaltered View |
|---|---|---|---|---|
| Head | Train (% change)* | 16.1% (19.7%) | −0.9% (6.4%) | −6.8% (18.2%) |
| Head | Test (% change) | 2.7% (29.1%) | −5.3% (22.8%) | 6.8% (28.3%) |
| Head | Train (cm) | 5.8 (1.1) | 6.0 (0.8) | 7.4 (2.1) |
| Sacrum | Train (% change) | 3.9% (8.7%) | 0.7% (9.5%) | −5.7% (10.3%) |
| Sacrum | Test (% change) | −8.5% (20.4%) | 6.7% (28.0%) | −8.2% (15.8%) |
| Sacrum | Train (cm) | 5.1 (0.8) | 5.2 (0.8) | 4.5 (0.6) |

Average head and sacrum marker standard deviations during training, along with percent change during training and testing, for both virtual reality (VR) groups and unaltered view (n = 10 for each group). Standard deviations are in parentheses. Head marker percent change during training significantly differed between groups, denoted with an asterisk (post hoc t-test with Bonferroni correction following significant ANOVA; P < 0.05). We found no other significant differences.
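To make the "percent change" rows concrete, here is a small sketch of the percent change in marker standard deviation between two windows of a mediolateral trajectory. It assumes, hypothetically, that "percent change during training" compares an early and a late training window; the text does not specify the windows, and the function names and values are illustrative only.

```python
from statistics import stdev


def marker_sd(positions_cm):
    """Sample standard deviation of a mediolateral marker trajectory (cm)."""
    return stdev(positions_cm)


def sd_percent_change(early_cm, late_cm):
    """Percent change in marker SD from an early window to a late window."""
    sd_early = marker_sd(early_cm)
    sd_late = marker_sd(late_cm)
    return 100.0 * (sd_late - sd_early) / sd_early


# Hypothetical mediolateral head positions (cm) from early and late training.
early = [0.0, 2.0, 0.0, 2.0]
late = [0.0, 4.0, 0.0, 4.0]
print(round(sd_percent_change(early, late), 1))  # 100.0
```

Positive values, as in the head-marker row for training with perturbations, mean the trajectory became more variable later in training.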

EEG during perturbations.

Parietal and occipital areas showed the strongest EEG activity immediately following perturbation onset and termination (Fig. 5). In these areas, we found synchronization in the delta (1–4 Hz), theta (4–8 Hz), and alpha (8–13 Hz) EEG bands, which was followed by beta (13–30 Hz) desynchronization. Time-frequency activity was similar for both perturbation directions across all regions. The cingulate region showed delta and theta synchronization after perturbation onset, followed by delta, alpha, and gamma (30–50 Hz) synchronization after perturbation termination. Frontal areas showed little activity except for delta synchronization in the right frontal area during clockwise perturbations.

Fig. 5.

Event-related spectral perturbation (ERSP) plots for virtual reality perturbation. EEG ERSPs are shown for the 7 regions of interest, averaged across perturbations during training in the virtual reality training with perturbations group (n = 10). Perturbation onset occurred at 0 s, and perturbation termination at 0.5 s. Perturbations were split by rotation direction: counterclockwise (CCW) or clockwise (CW). Nonsignificant ERSP power was set to 0 (bootstrap statistics, P > 0.05). We subtracted the spectral power during the 0.5 s before perturbation onset as baseline activity.

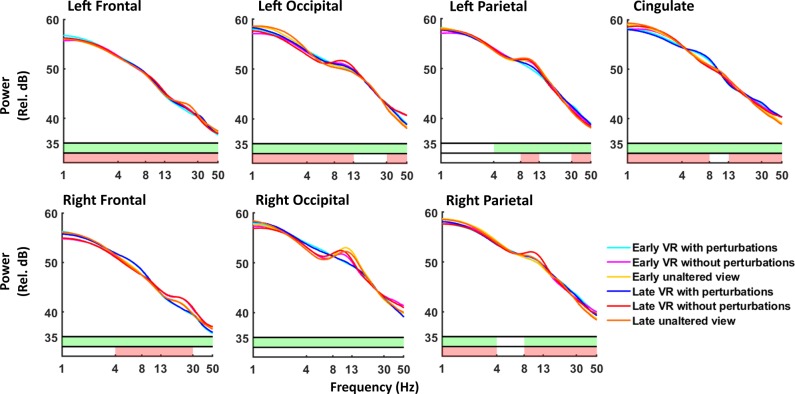
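The Fig. 5 processing steps (baseline subtraction over the 0.5 s before onset, then zeroing nonsignificant values) can be sketched as follows. This assumes log-power (dB) ERSPs, the usual convention in EEGLAB-style time-frequency analysis, and takes the P values as given rather than reproducing the bootstrap; the function names are hypothetical.

```python
import math


def ersp_db(tf_power, times_s, baseline_s=(-0.5, 0.0)):
    """Convert time-frequency power to dB and subtract each frequency's baseline mean.

    tf_power: one row per frequency, one linear power value per time point.
    times_s: time of each column relative to perturbation onset (s).
    """
    lo, hi = baseline_s
    base_cols = [i for i, t in enumerate(times_s) if lo <= t < hi]
    if not base_cols:
        raise ValueError("no time points fall inside the baseline window")
    ersp = []
    for row in tf_power:
        row_db = [10.0 * math.log10(p) for p in row]
        base = sum(row_db[i] for i in base_cols) / len(base_cols)
        ersp.append([v - base for v in row_db])
    return ersp


def mask_nonsignificant(ersp, p_values, alpha=0.05):
    """Set ERSP values with P > alpha to 0."""
    return [[v if p <= alpha else 0.0 for v, p in zip(row, p_row)]
            for row, p_row in zip(ersp, p_values)]


times = [-0.5, -0.25, 0.0, 0.25]
power = [[1.0, 1.0, 10.0, 100.0],   # power rises after onset
         [10.0, 10.0, 10.0, 10.0]]  # flat: no change from baseline
ersp = ersp_db(power, times)
print(ersp)  # [[0.0, 0.0, 10.0, 20.0], [0.0, 0.0, 0.0, 0.0]]
```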

Power spectra between conditions.

We found increased theta spectral power during virtual reality with perturbations training across multiple cortical regions compared with the virtual reality without perturbations and unaltered view training groups (Fig. 6). Theta power was significantly higher during virtual reality with perturbations than in the other two conditions in left/right frontal, left/right occipital, and cingulate regions (pairwise P < 0.002 for all). These significant differences were maintained for both early and late training, except for a nonsignificant difference in the left frontal region between early virtual reality with perturbations training and early unaltered view training (P = 0.075). Theta power in the left occipital region also decreased significantly from early to late training in all three groups (perturbations: P = 0.009; no perturbations: P = 0.001; unaltered view: P = 0.006).
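As a simplified way to summarize a spectrum by the frequency bands defined in the Results, the sketch below averages power within each band. Note that the study compared spectra bin by bin from 1 to 50 Hz rather than as band averages; the half-open band edges [lo, hi) and the example spectrum are assumptions for illustration (so, for instance, 50 Hz itself falls outside gamma here).

```python
# Band edges (Hz) as defined in the Results; intervals are treated as half-open [lo, hi).
BANDS_HZ = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 50.0),
}


def band_mean_power(freqs_hz, psd, band):
    """Mean spectral power across frequency bins inside one named band."""
    lo, hi = BANDS_HZ[band]
    vals = [p for f, p in zip(freqs_hz, psd) if lo <= f < hi]
    if not vals:
        raise ValueError(f"no frequency bins fall inside the {band} band")
    return sum(vals) / len(vals)


# Hypothetical 1-Hz-resolution spectrum from 1 to 50 Hz with elevated theta power.
freqs = [float(f) for f in range(1, 51)]
psd = [2.0 if 4.0 <= f < 8.0 else 1.0 for f in freqs]
print(band_mean_power(freqs, psd, "theta"), band_mean_power(freqs, psd, "alpha"))  # 2.0 1.0
```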

Fig. 6.

Spectral power across groups. Average EEG spectral power is shown for the 7 regions of interest during the first (early) and last (late) 5 min of training for both virtual reality training groups and the unaltered view group (n = 10 for each group). Average spectral power is shown for virtual reality with perturbations during early (cyan) and late (blue) training. For virtual reality without perturbations, early training is shown in magenta and late training in red. Early training with unaltered view is shown in yellow, and late training in orange. We plotted frequencies from 1 to 50 Hz. Shaded areas at the bottom denote significant differences in spectral power, compared across 85 randomly selected time bins for each subject with a nonparametric 3×2 ANOVA using permutation statistics. Green shading corresponds to a significant main effect of group (virtual reality with perturbation vs. virtual reality without perturbation vs. unaltered viewing). Red shading corresponds to a significant main effect of training (early vs. late).

Alpha spectral power was reduced primarily during training with perturbations, and it increased as training progressed in all three groups. Compared with virtual reality training without perturbations, alpha power was lower during virtual reality training with perturbations and during unaltered view training in left frontal, left occipital, and right parietal regions (pairwise P < 0.001 for all). This alpha power decrease occurred for both early and late training. Alpha power was also significantly lower during virtual reality training with perturbations than during virtual reality training without perturbations in right occipital and left parietal regions for both early and late training (pairwise P < 0.001 for all). In contrast, alpha power in the cingulate region was significantly higher during late virtual reality training without perturbations than during late training in both other groups (pairwise P < 0.001 for both). In addition, alpha power increased significantly from early to late training for all three groups in left occipital and left/right parietal regions (pairwise P < 0.003 for all).

Beta and gamma spectral power also differed across groups in most cortical regions. Gamma power was significantly lower during virtual reality training with perturbations than during virtual reality training without perturbations in left and right occipital areas (pairwise P < 0.001 for all), and this difference persisted through early and late training. The virtual reality with perturbations group also showed significantly higher gamma power in left/right parietal and cingulate regions than the other two groups for both early and late training (pairwise P < 0.003 for all). Beta power was significantly lower in the right frontal region during virtual reality training with perturbations than in both other groups (pairwise P < 0.001 for all). Gamma power in left/right parietal regions also decreased significantly from early to late training in all three groups (pairwise P < 0.003 for all).

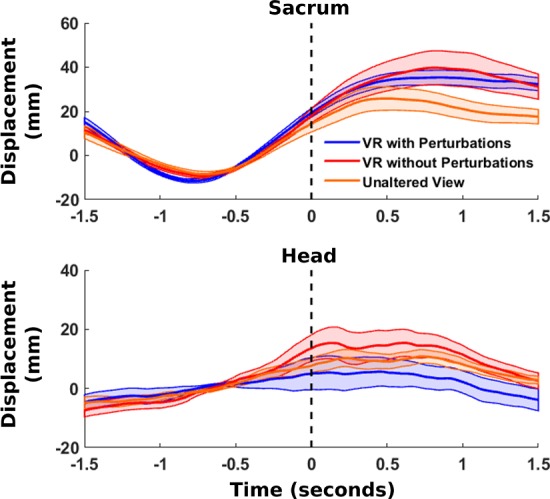
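The shading in Fig. 6 rests on permutation statistics. As a simplified illustration only, the sketch below is a two-group, single-outcome permutation test of a difference in means; it does not reproduce the study's 3×2 nonparametric ANOVA, and the function name, number of permutations, and example values are hypothetical.

```python
import random


def permutation_p_value(a, b, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Group labels are shuffled n_perm times; the P value is the fraction of
    shuffles whose absolute mean difference is at least the observed one,
    with +1 in numerator and denominator so it is never exactly 0.
    """
    rng = random.Random(seed)
    n_a = len(a)
    n_b = len(b)
    observed = abs(sum(a) / n_a - sum(b) / n_b)
    pooled = list(a) + list(b)
    n_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            n_extreme += 1
    return (n_extreme + 1) / (n_perm + 1)


# Hypothetical theta power values (dB) in one frequency bin for two groups.
with_perturbations = [3.1, 2.8, 3.5, 3.0, 2.9, 3.3, 3.6, 2.7]
without_perturbations = [2.2, 2.5, 2.0, 2.4, 2.6, 2.1, 2.3, 2.7]
print(permutation_p_value(with_perturbations, without_perturbations))
```

Shuffling the pooled values approximates the null distribution in which group labels are exchangeable, which is why no normality assumption is needed.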

Perturbation-evoked body and head displacement.

In comparing head and sacrum mediolateral displacement time-locked to perturbation onset, we found large, slow deviations preceding perturbation onset in the virtual reality with perturbations group (Fig. 7). Similar deviations were also seen in the virtual reality without perturbations and unaltered viewing groups, suggesting that they were primarily due to movements while balancing. Subjects in the virtual reality with perturbations group received a 20° rotation perturbation, whereas the other two groups received a 0° pseudo-perturbation. In all groups, perturbation onset occurred shortly after subjects had both feet on the beam, which may explain why head and sacrum displacements were similar across groups. In addition, head and sacrum displacements were nearly identical during early and late training for all groups. These results suggest that the visual perturbation did not induce consistent head motion artifacts in our EEG results.

Fig. 7.

Perturbation-evoked head and sacrum motion. Average mediolateral motion capture marker trajectories are shown for the sacrum (top) and head (bottom) during training for virtual reality with perturbations (blue), virtual reality without perturbations (red), and unaltered view (orange), time-locked to perturbation onset (n = 10 for each group). For the virtual reality without perturbations and unaltered view groups, a 0° pseudo-perturbation was used. Motion capture trajectories were baseline-corrected relative to the 1.5 s preceding perturbation onset.
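The Fig. 7 processing (epoching marker trajectories around each perturbation onset, subtracting the mean of the preceding 1.5 s, then averaging across epochs) can be sketched as below. The sampling rate, the post-onset window length, and the function names are hypothetical; the text does not state them here.

```python
def epoch_baseline(signal, onset_idx, fs_hz, pre_s=1.5, post_s=1.0):
    """Cut one epoch around onset_idx and subtract the mean of the pre-onset window."""
    n_pre = round(pre_s * fs_hz)
    n_post = round(post_s * fs_hz)
    if onset_idx - n_pre < 0 or onset_idx + n_post > len(signal):
        raise ValueError("epoch window extends beyond the recording")
    epoch = signal[onset_idx - n_pre:onset_idx + n_post]
    baseline = sum(epoch[:n_pre]) / n_pre
    return [x - baseline for x in epoch]


def average_epochs(signal, onsets, fs_hz, **window):
    """Average baseline-corrected epochs sample by sample across all onsets."""
    epochs = [epoch_baseline(signal, o, fs_hz, **window) for o in onsets]
    return [sum(samples) / len(samples) for samples in zip(*epochs)]


# Hypothetical mediolateral positions (cm) sampled at 2 Hz for readability.
signal = [5, 5, 5, 5, 7, 9, 5, 5]
print(epoch_baseline(signal, 3, 2.0))       # [0.0, 0.0, 0.0, 0.0, 2.0]
print(average_epochs(signal, [3, 4], 2.0))  # [0.0, 0.0, 0.0, 1.0, 3.0]
```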

DISCUSSION

Virtual reality training with perturbations resulted in pretest-to-posttest improvement equivalent to that with unaltered viewing without virtual reality. Improvement in both groups was significantly greater than with virtual reality training without perturbations, indicating that the visual rotation perturbations helped subjects overcome the negative effects of virtual reality. Similar training errors and physiological results between the virtual reality groups suggest that the perturbations primarily induced a cognitive change. As hypothesized, we found strong perturbation-evoked parietal and occipital activity, but little evidence of tuning to perturbation direction. Subjects training in virtual reality with perturbations showed increased theta power in the cingulate, occipital, and frontal cortical regions compared with unaltered viewing and virtual reality training without perturbations. Training with perturbations also decreased alpha power across multiple cortical regions compared with virtual reality training without perturbations. One interpretation of these findings is that our visual perturbations primed cortical areas to facilitate short-term motor learning equivalent to training without immersive virtual reality.

Behavioral and physiological measures.

We found similar beam walking improvement between virtual reality training with perturbations and unaltered viewing, indicating that the brief visual perturbations helped subjects overcome the negative effects of virtual reality. Both virtual reality training with perturbations and unaltered viewing produced significantly greater improvement than virtual reality training without perturbations. Greater improvement with perturbation training agrees with similar research using unexpected mechanical perturbations during walking to improve balance control (Kurz et al. 2016). Comparing the two virtual reality groups, we found no evidence that the perturbations affected the number of training errors, subjects’ experience of virtual reality, or physical exertion. Although the perturbations may induce sensorimotor adaptation similar to that induced by prism glasses (Nemanich and Earhart 2015), the resulting improvement is unlikely to be an after-effect and more likely reflects changes in short-term motor learning. Based on the average number of perturbations during training (224), perturbations occurred approximately every 8 s. Each perturbation lasted half a second, leaving subjects with an unperturbed view for ~93.8% of training. Long-lasting improvements might arise from repeated exposure to the perturbation (Bastian 2008; Krakauer et al. 2005); perturbations were presented randomly in clockwise and counterclockwise directions to avoid directional dependencies. Repeatedly adapting to shifting visual input can improve subjects’ ability to adapt to novel visual perturbations (Welch et al. 1993). Such adaptive generalization, also called learning to learn, has been seen during upper limb movements (Seidler 2004; Shadmehr and Moussavi 2000) and gait training (Batson et al. 2011; Mulavara et al. 2009), with the anterior cingulate thought to be involved (Seidler 2010). Similar virtual reality visual perturbations have improved responses to falls in older adults (Parijat et al. 2015), although our paradigm induced more catastrophic errors whenever a perturbation occurred. Previous research has shown the importance of catastrophic errors, such as step-offs or falls, for improving stability (Domingo and Ferris 2009).
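The timing figures above follow from simple arithmetic. The sketch below assumes the 30-min training period stated in the Abstract and the reported average of 224 half-second perturbations.

```python
training_s = 30 * 60    # 30-min training period (from the Abstract)
n_perturbations = 224   # average number of perturbations reported above
duration_s = 0.5        # each perturbation lasts half a second

mean_interval_s = training_s / n_perturbations                  # time between onsets
perturbed_fraction = n_perturbations * duration_s / training_s  # 112 s of 1800 s
unperturbed_pct = 100 * (1 - perturbed_fraction)

print(f"{mean_interval_s:.2f} s")   # 8.04 s
print(f"{unperturbed_pct:.1f}%")    # 93.8%
```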

In addition to differences in beam walking improvement, training with perturbations increased mediolateral head motion variability as training progressed compared with the unaltered viewing group. Control of the head during stance perturbations is initiated by proprioceptive and vestibular signals (Allum et al. 1997; Héroux et al. 2015). This increase in head movement may reflect altered sensory processing of the visual perturbation during training. However, the large increase in head movement during training did not translate into increased head movement from pretest to posttest. Notably, the unaltered view group had higher head motion variability than the virtual reality groups during training, and a larger change in head motion during training may be related to greater balance performance improvement, although this correlation was not significant. This may reflect the importance of training head motion during balance beam walking. We found cognitive differences primarily due to the visual perturbations, in agreement with visuomotor perturbation research during upper-limb movements (Anglin et al. 2017).

EEG differences.

EEG showed robust time-frequency activity following perturbation onset and termination in the parietal and occipital areas. Unpredictable perturbations during stance induce large EEG event-related potentials (Adkin et al. 2006), with decreased amplitude when attention is diverted to a secondary task (Quant et al. 2004). We saw little activity in frontal areas in response to visual perturbations, whereas frontal areas typically respond strongly to mechanical perturbations (Maki and McIlroy 2007; Mihara et al. 2008). The exception was right frontal delta synchronization to clockwise perturbations only. Our delta band results must be interpreted cautiously because the delta band lies close to our 1-Hz high-pass filter cutoff. In occipital and parietal areas, we found similar theta, alpha, and beta synchronization following perturbation onset and termination, which matches previous research (Varghese et al. 2014). The subsequent beta desynchronization aligns with research indicating that beta desynchronization occurs when stability is important (Bruijn et al. 2015). Although we found no differences between perturbation directions, we noticed larger responses in right parietal and occipital areas, which may reflect increased right hemisphere involvement in position sensing (Iandolo et al. 2018). Also, theta and alpha synchronization occurred in the cingulate region only after perturbation onset. Several studies suggest that the anterior cingulate functions as an error monitor (Anguera et al. 2009; Carter et al. 1998; Kerns 2004; Miltner et al. 2003), and our results showing increased activity during visuomotor conflict appear to support this claim. We also found increased gamma activity in occipital, parietal, and cingulate regions following perturbation onset and termination, which may indicate increased cognitive processing during instability (Sipp et al. 2013; Slobounov et al. 2009).

Power spectral analysis revealed significantly increased theta power for the virtual reality with perturbations group in frontal, occipital, and cingulate regions, likely related to differences in sensorimotor processing. Increased theta power is consistent with Slobounov et al. (2009), who localized theta activity to the anterior cingulate. Balance beam walking increases frontal theta power compared with walking on a treadmill (Sipp et al. 2013), and increased theta power also corresponds to more challenging balance tasks (Hülsdünker et al. 2015; Youssofzadeh et al. 2016). Our increase in theta power may indicate improved sensorimotor processing during balancing and may be of interest for future studies with motor training interventions.

We also found widespread decreases in alpha power for the virtual reality with perturbations group compared with virtual reality training without perturbations, likely indicating more active cortical processing. The unaltered view group also had significantly lower alpha power in multiple regions than the virtual reality without perturbations group, suggesting that the higher alpha power in that group may reflect impaired cortical processing due to virtual reality use. Regions with significantly decreased alpha power also showed significantly higher alpha power during late training than during early training, which may indicate decreased mental engagement toward the end of training. Alpha activity decreases when walking compared with standing (Presacco et al. 2011; Youssofzadeh et al. 2016), when walking in interactive virtual reality compared with walking while viewing a black screen (Wagner et al. 2014), and when walking with closed-loop brain-computer interface control of a virtual avatar compared with no closed-loop control (Luu et al. 2017b). Centro-parietal alpha suppression has also been associated with improved stance stability (Del Percio et al. 2007). Increased alpha power has been found in high fall-risk older adults compared with a low fall-risk group during perturbed balance (Chang et al. 2016), which is consistent with our finding that the groups with greater balance improvement showed reduced alpha power.

We also found significant differences in beta and gamma power across multiple regions, suggesting differences in active cortical processing during training (Başar et al. 2001). Beta power decreased in the right frontal region during training with perturbations compared with the other two groups, agreeing with research showing decreased beta power under more challenging balance conditions (Bruijn et al. 2015). This reinforces the idea that the perturbations may pose a greater challenge to stability than training without them. Training with perturbations also increased parietal gamma power, which may indicate greater instability (Slobounov et al. 2009). The significant decrease in parietal gamma power from early to late training may indicate improved stability in all groups as a result of training. However, gamma power results should be interpreted with caution because of possible neck muscle contamination, especially in the occipital regions. EEG differences across groups are unlikely to have been driven by perturbation-induced artifacts, given the similar mediolateral head movements around perturbation onset (Fig. 7).

Limitations of virtual reality setup.

Motor performance improved much less during virtual reality training without perturbations than during virtual reality training with perturbations or training with unaltered view (Table 1). As expected from the literature, virtual reality use appears to impair short-term motor learning compared with not using virtual reality at all. One contributing factor may be field of view. Our virtual reality headset had a 100° field of view, whereas the human field of view is at least 180° (Walker et al. 1976). Peripheral vision is important for maintaining stability (Amblard and Carblanc 1980; Assaiante et al. 1989), so a reduced field of view could have impaired balance performance. Peripheral optic flow while wearing a headset can greatly reduce head and body sway during stance (Horiuchi et al. 2017). Another possible factor is the refresh rate of the webcam (30 Hz) and headset (75 Hz), which can affect unipedal stance stability (Kawamura and Kijima 2016). However, stability in virtual reality may remain impaired even when field of view and refresh rate are controlled (Kelly et al. 2008), so further research is needed. See Steinicke et al. (2013) for further discussion.
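For perspective on the refresh rates above, the interval between successive frames is the reciprocal of the refresh rate. This is only arithmetic on the quoted rates; it makes no claim about the end-to-end latency of the study's setup.

```python
def frame_period_ms(rate_hz):
    """Time between successive frames (ms) for a given refresh rate (Hz)."""
    return 1000.0 / rate_hz


for name, rate in (("webcam", 30.0), ("headset", 75.0)):
    print(f"{name}: {frame_period_ms(rate):.1f} ms per frame")
# webcam: 33.3 ms per frame
# headset: 13.3 ms per frame
```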

Perturbation timing also appears to affect improvement. During pilot testing, we randomized perturbations to occur throughout training, which actually led to worse performance for the virtual reality perturbations group (mean change in failures per minute: 30.7%, SD: 59.0%, n = 3) than for the virtual reality without perturbations group (mean: −16.4%, SD: 24.9%, n = 3); positive values indicate more failures at posttest. Timing perturbations to consistently occur shortly after subjects stepped onto the beam appears to have maximized their impact. Future studies should also consider larger sample sizes per group and test for longer learning and retention effects across days.

Conclusions.

We used a novel visual rotation perturbation paradigm to overcome the negative effects of immersive virtual reality during a beam walking balance task. These perturbations induced distinct electrocortical responses in parietal, occipital, and cingulate areas, arising from conflicting sensory inputs during balance. Power spectra differences between virtual reality training groups showed increased theta power and decreased alpha power across multiple cortical regions during training with perturbations compared with virtual reality training without perturbations, indicating that the perturbations changed cortical activity. The beam walking improvements we found could not be explained as adaptation after-effects, suggesting short-term motor learning improvements in balance control. Such perturbation training may be useful in future immersive virtual reality paradigms to minimize the negative effects of virtual reality on short-term motor learning.

GRANTS

This research was sponsored by a Graduate Research Fellowship Program grant from the National Science Foundation (DGE 1256260) and by the Army Research Laboratory under Cooperative Agreement No. W911NF-10-2-0022 (Cognition and Neuroergonomics Collaborative Technology Alliance).

DISCLAIMERS

The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or the United States Government.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

S.M.P. and D.P.F. conceived and designed research; S.M.P. and E.R. performed experiments; S.M.P. and E.R. analyzed data; S.M.P. and D.P.F. interpreted results of experiments; S.M.P. prepared figures; S.M.P. drafted manuscript; S.M.P. and D.P.F. edited and revised manuscript; S.M.P., E.R., and D.P.F. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Zachary Ohs for assistance with processing the motion capture data. We also thank members of the Human Neuromechanics Laboratory for their input regarding the experiment design and data processing.

REFERENCES

- Adamovich SV, Fluet GG, Tunik E, Merians AS. Sensorimotor training in virtual reality: a review. NeuroRehabilitation 25: 29–44, 2009. doi: 10.3233/NRE-2009-0497.

- Adkin AL, Quant S, Maki BE, McIlroy WE. Cortical responses associated with predictable and unpredictable compensatory balance reactions. Exp Brain Res 172: 85–93, 2006. doi: 10.1007/s00221-005-0310-9.

- Al-Subari K, Al-Baddai S, Tomé AM, Goldhacker M, Faltermeier R, Lang EW. EMDLAB: A toolbox for analysis of single-trial EEG dynamics using empirical mode decomposition. J Neurosci Methods 253: 193–205, 2015. doi: 10.1016/j.jneumeth.2015.06.020.

- Allum JH, Gresty M, Keshner E, Shupert C. The control of head movements during human balance corrections. J Vestib Res 7: 189–218, 1997. doi: 10.1016/S0957-4271(97)00029-3.

- Amblard B, Carblanc A. Role of foveal and peripheral visual information in maintenance of postural equilibrium in man. Percept Mot Skills 51: 903–912, 1980. doi: 10.2466/pms.1980.51.3.903.

- Anglin JM, Sugiyama T, Liew S-L. Visuomotor adaptation in head-mounted virtual reality versus conventional training. Sci Rep 7: 45469, 2017. doi: 10.1038/srep45469.

- Anguera JA, Seidler RD, Gehring WJ. Changes in performance monitoring during sensorimotor adaptation. J Neurophysiol 102: 1868–1879, 2009. doi: 10.1152/jn.00063.2009.

- Assaiante C, Marchand AR, Amblard B. Discrete visual samples may control locomotor equilibrium and foot positioning in man. J Mot Behav 21: 72–91, 1989. doi: 10.1080/00222895.1989.10735466.

- Assländer L, Peterka RJ. Sensory reweighting dynamics in human postural control. J Neurophysiol 111: 1852–1864, 2014. doi: 10.1152/jn.00669.2013.

- Bai Y, Wan X, Zeng K, Ni Y, Qiu L, Li X. Reduction hybrid artifacts of EMG-EOG in electroencephalography evoked by prefrontal transcranial magnetic stimulation. J Neural Eng 13: 066016, 2016. doi: 10.1088/1741-2560/13/6/066016.

- Başar E, Başar-Eroglu C, Karakaş S, Schürmann M. Gamma, alpha, delta, and theta oscillations govern cognitive processes. Int J Psychophysiol 39: 241–248, 2001. doi: 10.1016/S0167-8760(00)00145-8.

- Bastian AJ. Understanding sensorimotor adaptation and learning for rehabilitation. Curr Opin Neurol 21: 628–633, 2008. doi: 10.1097/WCO.0b013e328315a293.

- Batson CD, Brady RA, Peters BT, Ploutz-Snyder RJ, Mulavara AP, Cohen HS, Bloomberg JJ. Gait training improves performance in healthy adults exposed to novel sensory discordant conditions. Exp Brain Res 209: 515–524, 2011. doi: 10.1007/s00221-011-2574-6.

- Benedek M, Kaernbach C. A continuous measure of phasic electrodermal activity. J Neurosci Methods 190: 80–91, 2010a. doi: 10.1016/j.jneumeth.2010.04.028.

- Benedek M, Kaernbach C. Decomposition of skin conductance data by means of nonnegative deconvolution. Psychophysiology 47: 647–658, 2010b.

- Bernier P-M, Chua R, Franks IM. Is proprioception calibrated during visually guided movements? Exp Brain Res 167: 292–296, 2005. doi: 10.1007/s00221-005-0063-5.

- Bigdely-Shamlo N, Mullen T, Kreutz-Delgado K, Makeig S. Measure projection analysis: a probabilistic approach to EEG source comparison and multi-subject inference. Neuroimage 72: 287–303, 2013. doi: 10.1016/j.neuroimage.2013.01.040.

- Booth V, Masud T, Connell L, Bath-Hextall F. The effectiveness of virtual reality interventions in improving balance in adults with impaired balance compared with standard or no treatment: a systematic review and meta-analysis. Clin Rehabil 28: 419–431, 2014. doi: 10.1177/0269215513509389.

- Boudraa A-O, Cexus J-C. EMD-based signal filtering. IEEE Trans Instrum Meas 56: 2196–2202, 2007. doi: 10.1109/TIM.2007.907967.

- Bruijn SM, Van Dieën JH, Daffertshofer A. Beta activity in the premotor cortex is increased during stabilized as compared to normal walking. Front Hum Neurosci 9: 593, 2015. doi: 10.3389/fnhum.2015.00593.

- Calogiuri G, Litleskare S, Fagerheim KA, Rydgren TL, Brambilla E, Thurston M. Experiencing nature through immersive virtual environments: environmental perceptions, physical engagement, and affective responses during a simulated nature walk. Front Psychol 8: 2321, 2018. doi: 10.3389/fpsyg.2017.02321.

- Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD. Anterior cingulate cortex, error detection, and the online monitoring of performance. Science 280: 747–749, 1998. doi: 10.1126/science.280.5364.747.

- Castermans T, Duvinage M, Cheron G, Dutoit T. About the cortical origin of the low-delta and high-gamma rhythms observed in EEG signals during treadmill walking. Neurosci Lett 561: 166–170, 2014. doi: 10.1016/j.neulet.2013.12.059.

- Chang C-J, Yang T-F, Yang S-W, Chern J-S. Cortical modulation of motor control biofeedback among the elderly with high fall risk during a posture perturbation task with augmented reality. Front Aging Neurosci 8: 80, 2016. doi: 10.3389/fnagi.2016.00080.

- Chen X, He C, Peng H. Removal of muscle artifacts from single-channel EEG based on ensemble empirical mode decomposition and multiset canonical correlation analysis. J Appl Math 2014: 1–10, 2014.

- Chiarovano E, de Waele C, MacDougall HG, Rogers SJ, Burgess AM, Curthoys IS. Maintaining balance when looking at a virtual reality three-dimensional display of a field of moving dots or at a virtual reality scene. Front Neurol 6: 164, 2015. doi: 10.3389/fneur.2015.00164.

- Corbetta D, Imeri F, Gatti R. Rehabilitation that incorporates virtual reality is more effective than standard rehabilitation for improving walking speed, balance and mobility after stroke: a systematic review. J Physiother 61: 117–124, 2015. doi: 10.1016/j.jphys.2015.05.017.

- Critchley HD. Electrodermal responses: what happens in the brain. Neuroscientist 8: 132–142, 2002. doi: 10.1177/107385840200800209.

- Darekar A, McFadyen BJ, Lamontagne A, Fung J. Efficacy of virtual reality-based intervention on balance and mobility disorders post-stroke: a scoping review. J Neuroeng Rehabil 12: 46, 2015. doi: 10.1186/s12984-015-0035-3.

- Del Percio C, Brancucci A, Bergami F, Marzano N, Fiore A, Di Ciolo E, Aschieri P, Lino A, Vecchio F, Iacoboni M, Gallamini M, Babiloni C, Eusebi F. Cortical alpha rhythms are correlated with body sway during quiet open-eyes standing in athletes: a high-resolution EEG study. Neuroimage 36: 822–829, 2007. doi: 10.1016/j.neuroimage.2007.02.054.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods 134: 9–21, 2004. doi: 10.1016/j.jneumeth.2003.10.009.

- Delorme A, Mullen T, Kothe C, Akalin Acar Z, Bigdely-Shamlo N, Vankov A, Makeig S. EEGLAB, SIFT, NFT, BCILAB, and ERICA: new tools for advanced EEG processing. Comput Intell Neurosci 2011: 130714, 2011. doi: 10.1155/2011/130714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Domingo A, Ferris DP. Effects of physical guidance on short-term learning of walking on a narrow beam. Gait Posture 30: 464–468, 2009. doi: 10.1016/j.gaitpost.2009.07.114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Domingo A, Ferris DP. The effects of error augmentation on learning to walk on a narrow balance beam. Exp Brain Res 206: 359–370, 2010. doi: 10.1007/s00221-010-2409-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epure P, Gheorghe C, Nissen T, Toader L-O, Nicolae A, Nielsen SSM, Christensen DJR, Brooks AL, Petersson E. Effect of the Oculus Rift head mounted display on postural stability. Proceedings of the 10th International Conference on Disability, Virtual Reality and Associated Technologies, edited by Sharkey P, Pareto L, Broeren J, Rydmark M. Reading, UK: University of Reading Press, 2014, p. 119–127.

- Fortis P, Goedert KM, Barrett AM. Prism adaptation differently affects motor-intentional and perceptual-attentional biases in healthy individuals. Neuropsychologia 49: 2718–2727, 2011. doi: 10.1016/j.neuropsychologia.2011.05.020.

- Franz JR, Francis C, Allen M, Thelen DG. Visuomotor entrainment and the frequency-dependent response of walking balance to perturbations. IEEE Trans Neural Syst Rehabil Eng 25: 1132–1142, 2017. doi: 10.1109/TNSRE.2016.2603340.

- Friman O, Borga M, Lundberg P, Knutsson H. Exploratory fMRI analysis by autocorrelation maximization. Neuroimage 16: 454–464, 2002. doi: 10.1006/nimg.2002.1067.

- Gianaros PJ, Muth ER, Mordkoff JT, Levine ME, Stern RM. A questionnaire for the assessment of the multiple dimensions of motion sickness. Aviat Space Environ Med 72: 115–119, 2001.

- Gibbons JD, Chakraborti S. Nonparametric statistical inference. In: International Encyclopedia of Statistical Science, edited by Lovric M. Berlin: Springer, 2011, p. 977–979.

- Héroux ME, Law TCY, Fitzpatrick RC, Blouin J-S. Cross-modal calibration of vestibular afference for human balance. PLoS One 10: e0124532, 2015. doi: 10.1371/journal.pone.0124532.

- Hoffman HG, Meyer WJ III, Ramirez M, Roberts L, Seibel EJ, Atzori B, Sharar SR, Patterson DR. Feasibility of articulated arm mounted Oculus Rift Virtual Reality goggles for adjunctive pain control during occupational therapy in pediatric burn patients. Cyberpsychol Behav Soc Netw 17: 397–401, 2014. doi: 10.1089/cyber.2014.0058.

- Hoffman HG, Patterson DR, Seibel E, Soltani M, Jewett-Leahy L, Sharar SR. Virtual reality pain control during burn wound debridement in the hydrotank. Clin J Pain 24: 299–304, 2008. doi: 10.1097/AJP.0b013e318164d2cc.

- Horiuchi K, Ishihara M, Imanaka K. The essential role of optical flow in the peripheral visual field for stable quiet standing: evidence from the use of a head-mounted display. PLoS One 12: e0184552, 2017. doi: 10.1371/journal.pone.0184552.

- Hotelling H. Relations between two sets of variates. Biometrika 28: 321–377, 1936. doi: 10.1093/biomet/28.3-4.321.

- Huang NE, Shen Z, Long SR, Wu MC, Shih HH, Zheng Q, Yen N-C, Tung CC, Liu HH. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc Royal Soc Math Phys Eng Sci 454: 903–995, 1998. doi: 10.1098/rspa.1998.0193.

- Hülsdünker T, Mierau A, Neeb C, Kleinöder H, Strüder HK. Cortical processes associated with continuous balance control as revealed by EEG spectral power. Neurosci Lett 592: 1–5, 2015. doi: 10.1016/j.neulet.2015.02.049.

- Iandolo R, Bellini A, Saiote C, Marre I, Bommarito G, Oesingmann N, Fleysher L, Mancardi GL, Casadio M, Inglese M. Neural correlates of lower limbs proprioception: an fMRI study of foot position matching. Hum Brain Mapp 39: 1929–1944, 2018. doi: 10.1002/hbm.23972.

- Jaffe DL, Brown DA, Pierson-Carey CD, Buckley EL, Lew HL. Stepping over obstacles to improve walking in individuals with poststroke hemiplegia. J Rehabil Res Dev 41, 3A: 283–292, 2004. doi: 10.1682/JRRD.2004.03.0283.

- Jung J, Yu J, Kang H. Effects of virtual reality treadmill training on balance and balance self-efficacy in stroke patients with a history of falling. J Phys Ther Sci 24: 1133–1136, 2012. doi: 10.1589/jpts.24.1133.

- Kang H-K, Kim Y, Chung Y, Hwang S. Effects of treadmill training with optic flow on balance and gait in individuals following stroke: randomized controlled trials. Clin Rehabil 26: 246–255, 2012. doi: 10.1177/0269215511419383.

- Kawamura S, Kijima R. Effect of head mounted display latency on human stability during quiescent standing on one foot. 2016 IEEE Virtual Reality (VR). Greenville, SC, March 19–23, 2016, p. 199–200.

- Kelly JW, Riecke B, Loomis JM, Beall AC. Visual control of posture in real and virtual environments. Percept Psychophys 70: 158–165, 2008. doi: 10.3758/PP.70.1.158.

- Kerns JG, Cohen JD, MacDonald AW III, Cho RY, Stenger VA, Carter CS. Anterior cingulate conflict monitoring and adjustments in control. Science 303: 1023–1026, 2004. doi: 10.1126/science.1089910.

- Kline JE, Huang HJ, Snyder KL, Ferris DP. Isolating gait-related movement artifacts in electroencephalography during human walking. J Neural Eng 12: 046022, 2015. doi: 10.1088/1741-2560/12/4/046022.

- Krakauer JW, Ghez C, Ghilardi MF. Adaptation to visuomotor transformations: consolidation, interference, and forgetting. J Neurosci 25: 473–478, 2005. doi: 10.1523/JNEUROSCI.4218-04.2005.

- Kurz I, Gimmon Y, Shapiro A, Debi R, Snir Y, Melzer I. Unexpected perturbations training improves balance control and voluntary stepping times in older adults - a double blind randomized control trial. BMC Geriatr 16: 58, 2016. doi: 10.1186/s12877-016-0223-4.

- Lee TW, Girolami M, Sejnowski TJ. Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Comput 11: 417–441, 1999. doi: 10.1162/089976699300016719.

- Lott A, Bisson E, Lajoie Y, McComas J, Sveistrup H. The effect of two types of virtual reality on voluntary center of pressure displacement. Cyberpsychol Behav 6: 477–485, 2003. doi: 10.1089/109493103769710505.

- Luu TP, He Y, Nakagome S, Nathan K, Brown S, Gorges J, Contreras-Vidal JL. Multi-trial gait adaptation of healthy individuals during visual kinematic perturbations. Front Hum Neurosci 11: 320, 2017a. doi: 10.3389/fnhum.2017.00320.

- Luu TP, Nakagome S, He Y, Contreras-Vidal JL. Real-time EEG-based brain-computer interface to a virtual avatar enhances cortical involvement in human treadmill walking. Sci Rep 7: 8895, 2017b. doi: 10.1038/s41598-017-09187-0.

- Makeig S, Bell AJ, Jung T-P, Sejnowski TJ. Independent component analysis of electroencephalographic data. In: Advances in Neural Information Processing Systems 8, edited by Touretzky DS, Mozer MC, Hasselmo ME. Cambridge, MA: MIT Press, 1996, p. 145–151.

- Maki BE, McIlroy WE. Cognitive demands and cortical control of human balance-recovery reactions. J Neural Transm (Vienna) 114: 1279–1296, 2007. doi: 10.1007/s00702-007-0764-y.

- Martin TA, Keating JG, Goodkin HP, Bastian AJ, Thach WT. Throwing while looking through prisms. I. Focal olivocerebellar lesions impair adaptation. Brain 119: 1183–1198, 1996. doi: 10.1093/brain/119.4.1183.

- Mihara M, Miyai I, Hatakenaka M, Kubota K, Sakoda S. Role of the prefrontal cortex in human balance control. Neuroimage 43: 329–336, 2008. doi: 10.1016/j.neuroimage.2008.07.029.

- Miltner WHR, Lemke U, Weiss T, Holroyd C, Scheffers MK, Coles MGH. Implementation of error-processing in the human anterior cingulate cortex: a source analysis of the magnetic equivalent of the error-related negativity. Biol Psychol 64: 157–166, 2003. doi: 10.1016/S0301-0511(03)00107-8.

- Mirelman A, Maidan I, Herman T, Deutsch JE, Giladi N, Hausdorff JM. Virtual reality for gait training: can it induce motor learning to enhance complex walking and reduce fall risk in patients with Parkinson’s disease? J Gerontol A Biol Sci Med Sci 66: 234–240, 2011. doi: 10.1093/gerona/glq201.

- Mognon A, Jovicich J, Bruzzone L, Buiatti M. ADJUST: an automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology 48: 229–240, 2011. doi: 10.1111/j.1469-8986.2010.01061.x.

- Mulavara AP, Cohen HS, Bloomberg JJ. Critical features of training that facilitate adaptive generalization of over ground locomotion. Gait Posture 29: 242–248, 2009. doi: 10.1016/j.gaitpost.2008.08.012.

- Mullen T, Kothe C, Chi YM, Ojeda A, Kerth T, Makeig S, Cauwenberghs G, Jung T-P. Real-time modeling and 3D visualization of source dynamics and connectivity using wearable EEG. Conf Proc IEEE Eng Med Biol Soc 2015: 2184–2187, 2015.

- Mullen TR. The Dynamic Brain: Modeling Neural Dynamics and Interactions from Human Electrophysiological Recordings (PhD thesis). La Jolla, CA: University of California San Diego, 2014.

- Nathan K, Contreras-Vidal JL. Negligible motion artifacts in scalp electroencephalography (EEG) during treadmill walking. Front Hum Neurosci 9: 708, 2016. doi: 10.3389/fnhum.2015.00708.

- Nemanich ST, Earhart GM. How do age and nature of the motor task influence visuomotor adaptation? Gait Posture 42: 564–568, 2015. doi: 10.1016/j.gaitpost.2015.09.001.

- Oliveira AS, Schlink BR, Hairston WD, König P, Ferris DP. Restricted vision increases sensorimotor cortex involvement in human walking. J Neurophysiol 118: 1943–1951, 2017. doi: 10.1152/jn.00926.2016.

- Oostenveld R, Oostendorp TF. Validating the boundary element method for forward and inverse EEG computations in the presence of a hole in the skull. Hum Brain Mapp 17: 179–192, 2002. doi: 10.1002/hbm.10061.

- Palacios-Navarro G, Albiol-Pérez S, García-Magariño García I. Effects of sensory cueing in virtual motor rehabilitation. A review. J Biomed Inform 60: 49–57, 2016. doi: 10.1016/j.jbi.2016.01.006.

- Palmer JA, Kreutz-Delgado K, Makeig S. Super-Gaussian mixture source model for ICA. In: Lecture Notes in Computer Science. Heidelberg, Germany: Springer, 2006, p. 854–861.

- Palmer JA, Makeig S, Kreutz-Delgado K, Rao BD. Newton method for the ICA mixture model. 2008 IEEE International Conference on Acoustics, Speech and Signal Processing. Las Vegas, NV, 31 March–4 April 2008. doi: 10.1109/ICASSP.2008.4517982.

- Parijat P, Lockhart TE, Liu J. Effects of perturbation-based slip training using a virtual reality environment on slip-induced falls. Ann Biomed Eng 43: 958–967, 2015. doi: 10.1007/s10439-014-1128-z.

- Presacco A, Goodman R, Forrester L, Contreras-Vidal JL. Neural decoding of treadmill walking from noninvasive electroencephalographic signals. J Neurophysiol 106: 1875–1887, 2011. doi: 10.1152/jn.00104.2011.