Abstract

Background:

In recent years, biomedical devices have proven able to target a range of neurological disorders. Given the rapid ageing of the population and the increase in disabling diseases affecting the central nervous system, there is a growing demand for biomedical devices of immediate clinical use. However, to achieve useful therapeutic results, these tools require a multidisciplinary approach and a continuous dialogue between neuroscience and engineering, a field known as neuroengineering. This is because understanding how to read and perturb the neural code is fundamental to producing a significant clinical outcome.

Results:

In this review, we first highlight the importance of developing novel neurotechnological devices for brain repair and the major challenges expected in the coming years. We describe the different types of brain repair strategies being developed in basic and clinical research and provide a brief overview of recent advances in artificial intelligence that have the potential to improve the devices themselves. We conclude by providing our perspective on their implementation in humans and the ethical issues that can arise.

Conclusions:

Neuroengineering approaches promise to be at the core of future developments for clinical applications in brain repair, where the boundary between biology and artificial intelligence will become increasingly less pronounced.

Keywords: Artificial intelligence, bioethics, brain disorder, closed loop, neuroprosthetics

Introduction

At the beginning of the third millennium, we are witnessing the birth of a new era in biology, in which the issues related to biology and medicine are approached in a radically different way from the past (Palsson, 2000). Instead of the reductionist approach that dominated biological sciences during the latter half of the 20th century, an integrative approach is now adopted, thanks to the continuous interplay between biology and technology (Fields, 2001).

New multidisciplinary fields are emerging in every branch of biology. As underlined by Fields (2001), technology provides the tools and biology the problems. Among the many possible marriages between distant fields, one is advancing more than others, in terms of both scientific outcomes and technology transfer: Neuroengineering. The term Neuroengineering is defined in various ways, depending on the perspective of the people developing and using it. In general, we can define Neuroengineering as the discipline that develops instruments allowing a dialogue with a neuronal system (i.e. in vitro neuronal cultures, ex vivo brain tissue, the nervous system of live experimental animals or of humans). Neuroengineering products (which include some types of neurotechnologies, see below for a definition) encompass the technical and computational tools that allow communication with the nervous system, such as measuring, analysing or manipulating (by different types of stimulation) the electrical activity of neuronal cells. Neurotechnologies are, for example, devices aimed at reading/writing the electrical activity, tools designed to understand the neural code (Horch and Dhillon, 2004) and platforms allowing interaction with the nervous system, in order to alter its activity or to control external devices, including in pathological conditions (Bronzino et al., 2007).

In this review, we first introduce why it is important to develop novel neurotechnologies for brain repair. Second, we describe the logic underlying the function of artificial devices for therapeutic purposes. We then illustrate the different types of neurotechnologies being developed in basic and clinical research and provide a brief overview of recent advances in artificial intelligence (AI), with the possibility of combining the two worlds. We conclude by providing our perspective on their implementation on humans and the possible implications for dual use.

Why Neuroengineering?

Brain matters: the global burden of disease of neurological disorders

A recent report by the World Health Organization (WHO) pointed out that neurological disorders (including neuropsychiatric conditions) constitute 6.3% of the global burden of disease (GBD; Chin and Vora, 2014; WHO, 2006). With the highest GBD in Europe (11.2%), neurological disorders represent the most disabling clinical condition, exceeding HIV, malignant neoplasms, ischaemic heart disease, and respiratory and digestive diseases. Among neurological disorders, more than half of the burden in disability-adjusted life years (DALYs) is constituted by cerebrovascular diseases, such as stroke (55%), followed by Alzheimer’s disease and other dementias (12%), migraine (7.9%) and epilepsy (7%) – see Table 1.

Table 1.

Top diseases in the GBD at the European level.

| Disease | Share of neurological burden (DALYs) | Description |
|---|---|---|
| Stroke | 55% | Stroke is an ischemic insult to the brain, leading to death of the nervous tissue. The effectiveness of acute stroke care is constrained by a narrow time window (3–6 h post onset) and, in practice, only 10–20% of patients can receive immediate intervention. Stroke is the leading cause of adult disability worldwide and is the fifth most frequent cause of death in the world and the second in Europe (Nichols et al., 2012). |
| Dementia | 12% | Dementias are characterised by progressive cognitive decline, the etiopathogenesis of which is rarely clarified. The lack of targeted treatments for dementias accounts for the fatal progression of these neurological syndromes (Rossor et al., 2010). |
| Migraine | 7.9% | Migraine is a highly prevalent disorder characterised by attacks of moderate to severe throbbing headaches that are often unilateral in location, worsened by physical activity and associated with nausea and/or vomiting, photophobia and phonophobia (Marmura et al., 2015). |
| Epilepsy | 7% | Epilepsy can be defined as recurrent abnormal brain activity causing transient uncontrolled sensorimotor disturbances, with or without loss of consciousness (seizures). Epileptic syndromes are particularly relevant in the context of neuroengineering for brain repair, in light of the brain damage consequent to the repeated seizures, the high incidence of cognitive and psychiatric comorbidities and the significant social stigma (Fiest et al., 2014). |

GBD: global burden of disease.

The number of people affected by stroke and dementia is likely to increase in the coming years because of the rapidly ageing population, whereas migraine and epilepsy affect people at any age and may be so severe as to compromise the patient’s ability to work. Therefore, enhancing recovery of cognitive, sensory and motor functions in these conditions has become a global priority in healthcare.

From the scenario depicted above, it is clear that brain disorders represent one of the biggest challenges for healthcare and society and that the lack of effective treatments demands innovative interventions.

The challenge

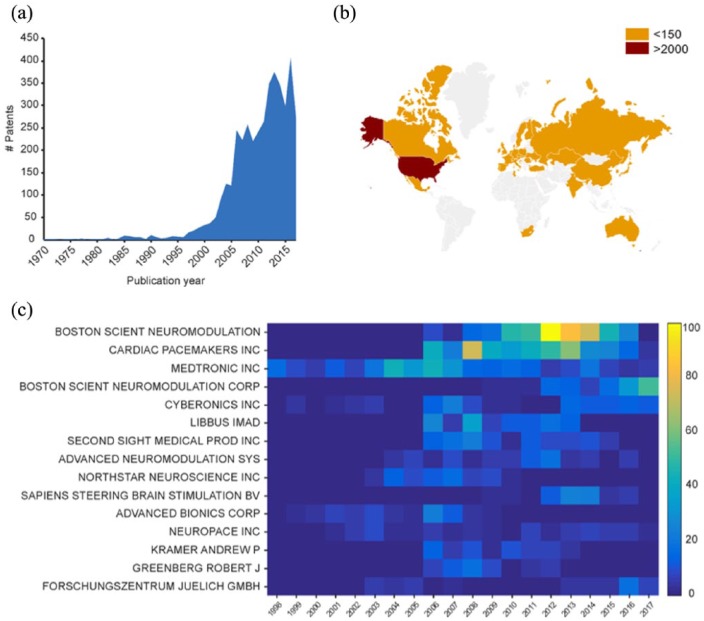

Since the pioneering work in the late 1960s and early 1970s by Eberhard Fetz (Fetz and Finocchio, 1971; Fetz, 1969) and at the end of the 1990s by the groups of Andrew Schwartz and Miguel Nicolelis (Nicolelis, 2001, 2003; Taylor et al., 2002), we have experienced an exponential development of neuroengineering tools for brain repair, such as brain modulators, brain–machine interfaces (BMIs) and brain prostheses. For biomedical engineers, these technologies can be the key to innovative treatments for brain disorders, as witnessed by several initiatives in the United States (Brain Research through Advancing Innovative Neurotechnologies - BRAIN), in Europe (Human Brain Project - HBP) and in Japan (Brain Mapping by Integrated Neurotechnologies for Disease Studies - Brain/MINDS). The interest in novel technologies stems from the possibility of using targeted electrical stimulation delivered by smart microfabricated devices to replace pharmaceutical interventions, an approach recently termed electroceuticals in major journals (Famm et al., 2013; Reardon, 2014). The number of patents related to brain stimulation published in recent decades confirms the interest in this field (Figure 1(a)). The United States is by far the most prolific nation in terms of patents published in this field (Figure 1(b)). Large companies such as Medtronic (http://www.medtronic.com/) and Boston Scientific Neuromodulation Corp. (http://www.bostonscientific.com) are constantly looking for new devices able to provide a useful therapy for neuropathologies like epilepsy, Parkinson’s disease, chronic pain and stroke (Figure 1(c)). Furthermore, the growing interest in brain repair strategies is giving rise to new companies such as Elon Musk’s most recent initiative Neuralink (https://www.neuralink.com/), Bryan Johnson’s initiative Kernel (https://kernel.co/) and Galvani Bioelectronics, a joint venture between Verily and GlaxoSmithKline (http://www.galvani.bio/). These companies were created to accelerate the development of this new market.
Thus, the 21st century is becoming the era of visionary brain repair strategies lying at the edge between neuroscience and engineering, in which the bi-directional dialogue between the brain and machine is at the core of cutting-edge neuroengineering.

Figure 1.

Patent analysis in neuroengineering. (a) Number of published patents per year from 1970 to 2017. (b) Map showing the countries where the patents were filed. The United States leads with 3073 patents followed by Germany with 147, Netherlands with 131, Switzerland with 127 and China with 82. The remaining nations are below 65. (c) Number of published patents per year (from 1998 to 2017) by the 15 most active applicants. Patent data were collected using the Patent Inspiration database (http://www.patentinspiration.com/) based on the DOCDB database from the European Patent Office (EPO). DOCDB database contains bibliographic data from over 102 countries. Bibliographic data include titles, abstracts, applicants, inventors, citations, literature citations, code classifications and family info. The database is updated on a weekly basis. Patents were searched from January 1970 to October 2017 using the following Boolean search strategy: (‘neurostimulation’ OR ‘neural stimulation’ OR ‘brain stimulation’) in title or abstract.

The achievement of a truly useful bi-directional interface with the central nervous system (CNS) requires overcoming a set of different challenges (Thakor, 2013). Understanding the neural code is a priority, and the problem is usually split into the decoding problem (i.e. reading the code from the CNS) and the encoding problem (i.e. writing the code back into the CNS). In this regard, giant steps have been taken in recent decades, but there is still much room for improvement (Jun et al., 2017; Panzeri et al., 2017; Stanley, 2013). From the technical point of view, one pressing issue is the resolution of the recorded signal, which is linked to the invasiveness of the recording system (Lee et al., 2013; Nunez and Srinivasan, 2006). A non-invasive recording technique like electroencephalography (EEG) has low spatial resolution and a low signal-to-noise ratio but poses no clinical risk (Chaudhary et al., 2016). On the other hand, invasive recordings offer higher spatial resolution and signal-to-noise ratio but at the cost of higher clinical risks (Baranauskas, 2014; Tehovnik et al., 2013).

Similar issues concern the techniques used to stimulate the CNS, which range from non-invasive methods, such as non-invasive brain stimulation (NIBS; Hummel and Cohen, 2005), to more invasive procedures, such as intracortical stimulating electrodes (Tehovnik et al., 2006).

Another concern regards the algorithms mediating the interaction between the nervous tissue and an artificial device: the control policy may exhibit different levels of sophistication, from simple decoding techniques up to smarter techniques that leverage machine learning and AI (Andersen et al., 2014; Pohlmeyer et al., 2014; Shenoy and Carmena, 2014; Wissel et al., 2013). The development of these control algorithms for clinical practice undoubtedly benefits the treatment of brain disorders, including neurological and neuropsychiatric conditions.

We will show in the next paragraphs that motor disorders (like Parkinson’s disease - PD), epilepsy and depression are current target diseases for neuroengineering applications.

Advances in Neuroengineering

Architecture and control algorithms: some definitions

Neurotechnological devices operate through architecture and control strategies which deserve a brief description in order to better classify what has been developed in the course of the years. Bearing in mind that no approach is better than another in an absolute sense, but each serves a specific purpose, we define these strategies according to their operating input/output (I/O) function and use the logic underlying that function (hereafter, the algorithm) to classify a system’s performance, from the lowest to the highest sophistication.

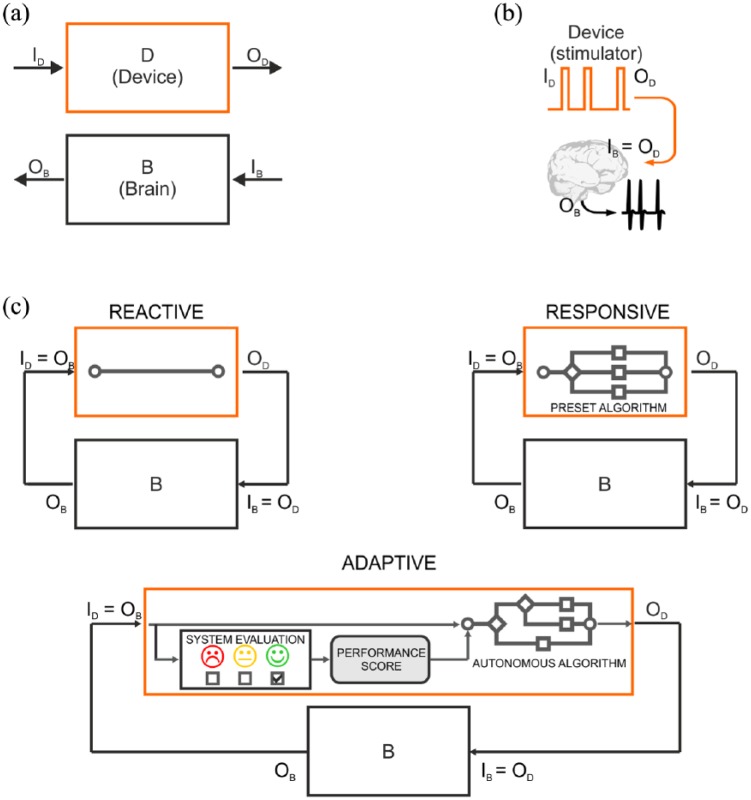

The first distinction should be made between open-loop and closed-loop architectures (Greenwald et al., 2016; Wright et al., 2016), both involving two systems – a device (D) and a brain (B) – characterized by their specific I/O functions (ID/OD for the device and IB/OB for the brain; Figure 2(a)). In open-loop systems (Figure 2(b)), the output of the device (OD) consists of a stimulus (e.g. electrical pulse) which is directly delivered to the brain (IB = OD). The brain processes the incoming information (IB) and produces an output response (OB). The input to the device (ID) can be any function determining the features of the stimulation sequence; however, it is not modulated by any feedback from the brain.

Figure 2.

I/O control systems for neuroengineering. (a) Representation of the elements involved in a neurotechnological tool: an artificial device (D block) and a portion of the CNS (B block). (b) Open-loop architecture. The device is programmed to output a stimulus (device output – OD), which also represents the input to the brain (brain input – IB); in turn, the brain generates an output response (brain output – OB). The open-loop device cannot read brain electrical activity (black trace) and thus operates independently of it. (c) Control algorithms for closed-loop architecture. In closed-loop fashion, the brain output response (OB) is fed back to the device, thus serving as an input to the device (device input – ID) and determining the device output (OD). The reactive I/O system is capable of reading an input signal, but cannot interpret its meaning. The system’s output is predefined by the human, based on theoretical assumptions or on empirical trial-and-error refinement, and the feedback from the brain acts as a simple trigger. The responsive I/O system is provided with a number of choices, but their conditional application is predefined by the human based on previously acquired knowledge. The system interprets the feedback from the brain and selects the stimulus based on its content. The adaptive I/O system can independently choose the best output given a varying input. The system learns the best output strategy through the feedback provided by the performance evaluator. In this way, the system may evolve and deliver a different output upon subsequent presentation of the same input based on the learned strategy and on its past experience.

Closed-loop devices are based on feedback: the output of the brain (OB), consisting of the ongoing brain activity or its processed version, serves as the input for the device (ID = OB), which triggers the device operation. The output of the device (OD) is the input to the brain (IB = OD). This system generates an I/O loop, which continues indefinitely. With regard to the modus operandi of closed-loop devices, we use a hierarchical scheme to rank the sophistication of control algorithms and classify them as Reactive, Responsive and Adaptive (Figure 2(c)).
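The two architectures can be contrasted with a minimal sketch. Everything in it is an illustrative assumption rather than a model from the literature: the toy `brain` dynamics, the proportional `device` rule and the variable names (`o_d`, `o_b`, mirroring OD and OB) are hypothetical and only serve to show where the identities IB = OD and ID = OB enter the loop.

```python
from typing import Callable, List


def brain(i_b: float, state: float) -> float:
    """Toy B block: the output O_B is a leaky response to the input I_B.
    The 0.9 leak factor is an arbitrary illustrative value."""
    return 0.9 * state + i_b


def run_open_loop(program: List[float]) -> List[float]:
    """Open loop: the device output O_D follows a preset program (I_D)
    and is never modulated by the brain output O_B."""
    o_b, trace = 1.0, []
    for o_d in program:
        o_b = brain(i_b=o_d, state=o_b)  # I_B = O_D
        trace.append(o_b)
    return trace


def run_closed_loop(device: Callable[[float], float], steps: int) -> List[float]:
    """Closed loop: the brain output is fed back as the device input."""
    o_b, trace = 1.0, []
    for _ in range(steps):
        o_d = device(o_b)                # I_D = O_B
        o_b = brain(i_b=o_d, state=o_b)  # I_B = O_D
        trace.append(o_b)
    return trace


def proportional_device(i_d: float) -> float:
    """Hypothetical device rule: counteract the activity it reads."""
    return -0.5 * i_d


open_trace = run_open_loop([0.0] * 20)                    # no stimulation
closed_trace = run_closed_loop(proportional_device, 20)   # feedback stimulation
print(open_trace[-1], closed_trace[-1])
```

In this toy setting the closed-loop trace decays faster than the open-loop one, simply because the device output depends on what the brain is currently doing.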

Reactive

The algorithm is based on a single-input-single-output paradigm. A typical example is activity-dependent stimulation in which a stimulus is delivered with a fixed delay upon the detection of an electrophysiological event in the recorded brain area, that is, stimulation is phase-locked to the brain activity (Guggenmos et al., 2013; Jackson et al., 2006). The output parameters are fixed by the human operator, but the device is triggered by an input signal detected by the system. This is a basic I/O system, representing the simplest implementation of a control algorithm: it makes no autonomous choice and is simply instructed to react in a stereotypical fashion.
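A reactive policy of this kind can be sketched in a few lines. The event detector used here (an upward threshold crossing) and all parameter values are assumptions chosen for illustration; real systems detect spikes or other electrophysiological events with more elaborate methods.

```python
from typing import List


def reactive_stimulation(signal: List[float], threshold: float,
                         delay: int, amplitude: float) -> List[float]:
    """Return a stimulus train with one fixed-amplitude pulse placed
    `delay` samples after each upward threshold crossing of the signal.
    Threshold, delay and amplitude are all preset by the operator."""
    stimulus = [0.0] * len(signal)
    for t in range(1, len(signal)):
        crossed = signal[t - 1] < threshold <= signal[t]
        if crossed and t + delay < len(signal):
            stimulus[t + delay] = amplitude
    return stimulus


# Hypothetical recording with two events (samples 2 and 6)
recorded = [0.0, 0.2, 1.5, 0.3, 0.1, 0.0, 2.0, 0.4, 0.0, 0.0]
stim = reactive_stimulation(recorded, threshold=1.0, delay=2, amplitude=1.0)
print(stim)
```

The brain signal only decides when the pulse is delivered; what is delivered never changes.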

Responsive

The algorithm may process several inputs and respond with different outputs, but its operation is task oriented, contextual and conditional. The algorithm is provided with multiple built-in output options. For example, a stimulation algorithm may be instructed to modify its output frequency proportionally to the frequency of the recorded event(s) (Beverlin and Netoff, 2012; Morrell and Halpern, 2016). At any given time, the system follows a stimulation policy which is a function of the feedback it receives from the brain. In addition, the system contains a set of stimulation policies and decides, based on the context, which one to follow. All the stimulation policies and their activation rules are, however, fixed and predefined at design time.
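As a rough sketch of a responsive policy, the example below holds two stimulation policies and a selection rule, all fixed at design time. The policy names, the gain of 2, the 10 Hz cap and the 4 Hz context threshold are hypothetical values chosen only to make the structure visible.

```python
from typing import Callable, Dict


def proportional_policy(event_rate_hz: float) -> float:
    """Stimulation frequency proportional to the detected event rate."""
    return 2.0 * event_rate_hz


def capped_policy(event_rate_hz: float) -> float:
    """Same proportional rule, limited to a preset maximum frequency."""
    return min(2.0 * event_rate_hz, 10.0)


# The set of policies is built in and cannot change at run time.
POLICIES: Dict[str, Callable[[float], float]] = {
    "baseline": proportional_policy,
    "high_activity": capped_policy,
}


def select_policy(event_rate_hz: float) -> str:
    """Predefined activation rule: the context decides which policy applies."""
    return "high_activity" if event_rate_hz > 4.0 else "baseline"


def responsive_output(event_rate_hz: float) -> float:
    """Output frequency as a function of the feedback from the brain."""
    return POLICIES[select_policy(event_rate_hz)](event_rate_hz)


for rate in (1.0, 3.0, 8.0):
    print(rate, select_policy(rate), responsive_output(rate))
```

Unlike the reactive case, the content of the feedback shapes the output, yet nothing in the system is learned.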

Adaptive

The algorithm is similar to the previous one, but it implements an adaptive I/O response, in that it is capable of real-time self-adjustment according to past experience, a set of learning rules and performance evaluation or reward functions. The algorithm evolves in time using the acquired knowledge, rather than obeying predefined rules. The human operator only provides the knowledge of what to achieve, whereas the algorithm autonomously chooses the how to, exhibiting the highest sophistication. In machine learning, this paradigm is regarded as reinforcement learning (Russell and Norvig, 2010; Sutton and Barto, 1998), because the learning system never receives indication of the correct I/O relationship (as in supervised learning), but it has to autonomously discover the set of actions (in our case the I/O policy) that converge to the optimal solution, that is, maximise a given reward and minimise a given penalty (Orsborn et al., 2012; Panuccio et al., 2013; Pineau et al., 2009).
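To make the contrast with predefined rules concrete, here is a minimal sketch of learning from reward alone, using an epsilon-greedy multi-armed bandit, one of the simplest reinforcement learning settings. It is not the method of the studies cited above. The candidate frequencies, the `toy_reward` function (which pretends that 5 Hz is optimal) and all parameter values are assumptions for illustration; in a real device the reward would come from a performance evaluator reading brain activity.

```python
import random
from typing import Callable, List


def adaptive_controller(actions: List[float],
                        reward_fn: Callable[[float], float],
                        trials: int = 200, epsilon: float = 0.1,
                        seed: int = 0) -> List[float]:
    """Estimate the value of each action from reward feedback only.
    The controller is never told which action is correct."""
    rng = random.Random(seed)
    values = [0.0] * len(actions)
    counts = [0] * len(actions)
    for trial in range(trials):
        if trial < len(actions):
            choice = trial                          # try every option once
        elif rng.random() < epsilon:
            choice = rng.randrange(len(actions))    # explore
        else:
            choice = max(range(len(actions)), key=values.__getitem__)  # exploit
        reward = reward_fn(actions[choice])         # performance evaluation
        counts[choice] += 1
        # incremental running mean of the rewards seen for this action
        values[choice] += (reward - values[choice]) / counts[choice]
    return values


def toy_reward(freq_hz: float) -> float:
    """Hypothetical evaluator: the penalty grows with distance from 5 Hz."""
    return -abs(freq_hz - 5.0)


frequencies = [1.0, 2.0, 5.0, 10.0, 20.0]
values = adaptive_controller(frequencies, toy_reward)
best = frequencies[max(range(len(values)), key=values.__getitem__)]
print(best)
```

The operator specifies only the goal, encoded in the reward; the mapping from experience to the chosen stimulation frequency is discovered by the algorithm.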

Neuroengineering from bench to bedside

From the late 1960s to the 1980s, the seminal work of Fetz (1969) and the observations of Schmidt (1980) and Georgopoulos et al. (1986) revealed that specific movement trajectories activate specific ensembles of cortical motor neurons and thus the observation of these ensembles can help predict the desired direction of the movement. These studies laid the foundation for brain signal decoding and the development of neuroprosthetics. Other types of neuroprostheses are directed towards the restoration of sensory capabilities. The first examples in this context are the cochlear implant, which uses electrical stimulation to restore hearing in the profoundly deaf (Loeb et al., 1983), and the retinal implant, which translates visual information into stimulation patterns directed towards retinal neurons (Humayun, 2001). Nowadays, brain modulators, BMIs and brain prostheses are neurotechnological tools under intense preclinical investigation.
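The idea that movement direction can be predicted from the activity of a neuronal ensemble can be illustrated with a population-vector style decoder. This is a simplified sketch, not the exact analysis of the cited studies: it assumes idealised, noise-free cosine tuning, uniformly spaced preferred directions, and hypothetical values for the number of neurons, baseline rate and modulation depth.

```python
import math
from typing import List


def cosine_tuned_rates(direction: float, preferred: List[float],
                       baseline: float = 10.0, depth: float = 8.0) -> List[float]:
    """Firing rate of each neuron: highest when the movement direction
    matches the neuron's preferred direction (angles in radians)."""
    return [baseline + depth * math.cos(direction - p) for p in preferred]


def population_vector(rates: List[float], preferred: List[float],
                      baseline: float = 10.0) -> float:
    """Decode direction as the angle of the sum of preferred-direction
    unit vectors, each weighted by the neuron's rate above baseline."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x)


n_neurons = 8
preferred = [2 * math.pi * i / n_neurons for i in range(n_neurons)]
true_direction = math.radians(60)

rates = cosine_tuned_rates(true_direction, preferred)
decoded = population_vector(rates, preferred)
print(math.degrees(decoded))
```

With noise-free rates and evenly spread preferred directions the decoded angle matches the true one; with real recordings the estimate degrades with noise and uneven sampling of directions.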

Brain modulators

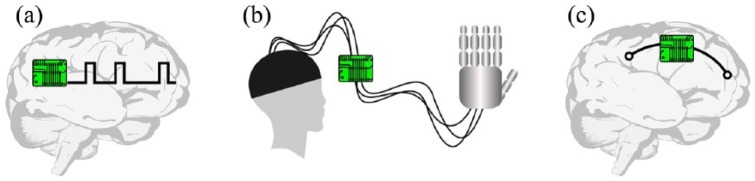

Brain modulators are devices that modulate brain patterns by means of externally applied current or magnetic fields, or by electrical stimulation of deep brain structures (deep brain stimulation - DBS). Here, we refer specifically to DBS devices, since they represent the majority of brain modulators. Typically, the development of these devices aims at treating movement disorders (e.g. PD; Collomb-Clerc and Welter, 2015; Duker and Espay, 2013), epilepsy (Laxpati et al., 2014) and psychiatric conditions (such as obsessive-compulsive disorder and major depression; Berlim et al., 2014; Holtzheimer et al., 2017). Current preclinical studies for the development of DBS devices implement both open-loop and closed-loop architectures, the latter deploying a variety of control algorithms, including reactive, responsive and adaptive stimulation policies (see Panuccio et al., 2016 for a comprehensive review). Well-known clinical applications of DBS are movement disorders and drug-refractory epilepsy. Approved brain modulators for movement disorders are open-loop devices delivering high-frequency electrical stimulation (Groiss et al., 2009; Figure 3(a)). With regard to epilepsy, the US Food and Drug Administration (FDA) has recently approved the NeuroPace® responsive neurostimulation (RNS) system as an adjunctive DBS therapy for drug-refractory epileptic patients (Bergey et al., 2015; Thomas and Jobst, 2015). This device operates in closed loop and implements a responsive algorithm that recognises abnormal brain activity and adjusts its electrical stimulation response accordingly. It should also be mentioned that a non-invasive strategy for electrically stimulating neurons at depth was recently developed (Grossman et al., 2017) and, although human trials have not yet been conducted, this technique holds great promise for PD patients.

Figure 3.

Neuroengineering devices for brain repair. (a) Brain modulator for DBS. The device is implanted into deep brain structures and may be based on open- or closed-loop architecture. (b) A BMI conveys the electrical activity of the recorded brain area to the robotic end effector. In this rendition, the established system is made up of the input brain area, the interface apparatus and the robotic hand. In this case, an open-loop strategy is implemented: visual feedback aids in movement planning and the required adjustments, which, in turn, influence the function of the interface apparatus. (c) A brain prosthesis is an artificial device implanted in the brain to replace brain activity or to reconnect disconnected brain areas.

BMIs

The term BMI refers to a device that interfaces the brain with a robotic end effector, like a robotic limb (Figure 3(b)). BMIs aim at restoring missing motor functions in patients stricken by a disabling neurological condition, brain injury or limb amputation. Following the pioneering demonstration of the feasibility of this approach (Chapin et al., 1999), BMIs have become increasingly sophisticated and have proved to be successfully applicable to paralysed humans (Ajiboye et al., 2017; Bouton et al., 2016; Hochberg et al., 2012). An increasing fraction of the BMI community is also devoted to the investigation of neural stimulation as a tool to provide subjects with an artificial channel of feedback information (Flesher et al., 2016; Micera et al., 2008; Raspopovic et al., 2014).

Brain prostheses

Brain prostheses are intended as artificial systems directly connected with the brain in order to replace a damaged area or bridge disconnected areas and regain the lost functionality (Figure 3(c)). For example, the device may be used to reconnect somatosensory and motor cortical areas in order to restore forelimb movement impaired by brain injury. A brain prosthesis implementing a closed-loop reactive policy was first presented by a group at the University of Kansas Medical Center (Guggenmos et al., 2013). Another promising example is the hippocampal memory prosthesis, in which the suitably processed neural activity of specific hippocampal areas can be used to manipulate and thus restore (through ad hoc electrical stimulation) cognitive mnemonic processes (Berger et al., 2011, 2012; Song et al., 2015; Song and Berger, 2014). The development of brain prostheses is still at the preclinical stage.

At the boundary between BMIs and brain prostheses lies the work of Courtine and co-workers (Wenger et al., 2014, 2016), who successfully demonstrated that spatiotemporal modulation of the spinal cord can restore locomotion in spinal cord–injured rodents. Indeed, it needs to be stressed that the boundaries between these three types of technology are not always clear-cut; rather, some overlaps can be identified. For example, brain modulators may be integrated into brain prostheses, since these devices must stimulate target brain areas in order to act as a bridge or as a replacement within the brain.

It is worth underlining that all the abovementioned neuroprosthetic tools are taking advantage of recent software and hardware progress in terms of the number of recording electrodes and related data processing (Jun et al., 2017; Mahmud et al., 2017; Mahmud and Vassanelli, 2016). Moreover, improved computational capabilities can be achieved thanks to innovative neuromorphic synthetic devices (Bonifazi et al., 2013; Chiolerio et al., 2017), including those based on memristor technology (Gupta et al., 2016). Technological progress also concerns the field of electrophysiological techniques. For example, optogenetics (Paz and Huguenard, 2015) and sonogenetics (Ibsen et al., 2015) allow, through light and sound, respectively, precise spatiotemporal control of cells and therefore the manipulation of specific brain circuits. These techniques can therefore be exploited in future neuroprosthetic devices, with important implications for the treatment of neurological disorders (Cheng et al., 2014).

Neuroengineering meets AI

Intelligent systems: an historical perspective

The foundation of AI as an academic discipline dates back to the Dartmouth Conference in 1956 (now regarded as the birth of AI (Russell and Norvig, 2010)), and the field has recently seen a resurgence of interest thanks to the remarkable success of deep learning. In the wake of this legacy, the adoption of AI in the treatment of neurological disorders promises to revolutionise the concept of brain repair strategies. Because virtually any system receiving an input is often loosely regarded as intelligent, we would like to provide a definition of an intelligent system following the one provided by the pioneers of AI.

The invention of the first general-purpose programmable digital computer, the Z3 by Konrad Zuse in 1941 (see Zuse (1993) for historical review), inspired the vision of building an electronic brain. In 1948, Norbert Wiener described computing machines (i.e. computers) that were able to improve their behaviour during a chess competition by analysing past performances (Rossi et al., 2016). These machines may be regarded as self-evolving: they are driven by a feedback mechanism based on the evaluation of previous failures/successes to adjust their behaviour in response to experience and performance scoring. In the same years, Gray Walter built the turtle robots, the first electronic autonomous robots (Walter, 1950a, 1950b). He demonstrated that a system composed of few elements produced unpredictable behaviour, which he defined as free will. These extraordinary scientific achievements wiped out the traditional certainty about machines as agents that ‘can do only what we tell them to do’ (as stated by Lady Ada Lovelace). This statement was formally rejected by Alan Turing in his seminal paper Computing Machinery and Intelligence (Turing and Copeland, 2004), in which he argued that the objection could be proven wrong by building a learning machine. He also explained that this was only possible if, instead of building a machine simulating the adult brain, we built a child machine having some hereditary material (predefined structure), subject to mutation (changes) and natural selection (judgement of the experimenter) and undergoing a learning process based on a reward/punishment mechanism (interestingly, this process is not too far from how modern deep reinforcement learning systems are built; see, for example, Mnih et al., 2015). Turing therefore defined intelligence as the ability to reach human levels of performance in a cognitive task (Turing Test).
In robotics, the field of embodied intelligence challenges the conventional view that sees intelligence as the ability to manipulate symbols and produce other symbols. Embodied intelligence focuses instead on the skills that allow biological systems to thrive in their environment: the ability to walk, catch prey, escape from threats and, above all, adapt (Brooks, 1990; Pfeifer and Scheier, 1999).

From this brief excursus, it emerges that autonomous behaviour (Walter’s free will), intended as the agent’s ability to make a decision in response to an external event, and evolution, intended as the agent’s ability to adapt to changes in the environmental stimuli so as to maximise a given reward, are both attributes of intelligence. According to this definition, the feature of detecting an electrical event generated by the brain without interpreting its meaning should not suffice for a system to earn the recognition of intelligence, whereas autonomous behaviour and evolution should be the appropriate prerequisites, because they imply the capability of interpreting a brain signal (the environment variable) so as to choose the most appropriate action (thrive in the environment).

Intelligent neurotechnologies

The scientific community has reached a general consensus that the establishment of a functional partnership between biological and artificial components, as implemented in closed-loop systems, is a crucial prerequisite to successfully achieve functional brain repair by means of neuroengineering (see, for example, the change in perspective with regard to open- versus closed-loop stimulators for PD and epilepsy (Rosin et al., 2011)). However, closed-loop architecture has progressively been equated with intelligent operation, to the point that the closed-loop paradigm is generally considered intelligent per se, regardless of the underlying control algorithm. Nonetheless, in most closed-loop systems (i.e. reactive and responsive as defined in the previous section), the artificial component is not set to autonomously evolve and adapt to the biological counterpart in order to achieve the desired functional outcome. Rather, it responds in a quite stereotypical fashion to the biological inputs it receives. Most of the current control algorithms cannot autonomously adapt their policy to the progression of brain patterns. Thus, the dialogue between biological tissue and an artificial device is necessary but definitely not sufficient to endow a system with truly intelligent performance (Vassanelli and Mahmud, 2016).

In order to provide a definition of intelligent Neuroengineering, we bring back to light the canonical formalism proposed decades ago by Russell and Norvig (2010). The authors distinguished in the first place between an agent and a rational agent, the latter being capable of autonomous (human-independent) behaviour. Then they stated that ‘A system is autonomous to the extent that its behavior is determined by its own experience. […] A truly autonomous intelligent agent should be able to operate successfully in a wide variety of environments, given sufficient time to adapt’.

Translating these canonical concepts to the design of intelligent neuroengineering, the conceived devices should exhibit the capability of dynamic adaptation to the flow of neuronal information that is continuously changing due to its reciprocal interaction with the artificial device. That is, a truly intelligent system should intrinsically exhibit (1) the ability to acquire information (can read input), (2) a set of choices (has options) and (3) autonomous decisional power (decides by itself). Only the synergistic combination of these three salient features will allow a system to accomplish its task in an intelligent manner, achieving rationality in its performance independent of human intervention. Such intelligent behaviour implies the pivotal role of a learning process based on feedback mechanisms, cost and reward functions.

Outstanding advances have recently led to self-adjusting neuroprostheses (Bouton et al., 2016) and adaptive algorithms for DBS technology to treat epileptic disorders (Panuccio et al., 2013; Pineau et al., 2009). Based on statistical machine learning techniques, these algorithms exhibit autonomous decisional power and evolution. They exceed the initial expectations of their designers, as they are capable of devising the best (mostly unexpected) intervention policy to achieve a specified goal, rather than picking the most appropriate predefined task from a look-up table (Elbasiouny, 2017).

The future of AI for neurotechnologies

Humans have been striving for decades to mimic the brain using computing machines. However, despite sporadic success in very specific applications (e.g. IBM Deep Blue beating the World Chess Champion in 1997), progress has been slow. Thanks to deep learning, however, AI has received growing attention in both academia and industry (LeCun et al., 2015). With the adoption of deep neural networks, machines today demonstrate stunning performance in tasks that were previously considered difficult, like automatic classification of images (see Russakovsky et al. (2015) for a review) and speech signals (Hinton et al., 2012). Deep learning has also been applied successfully to solve reinforcement learning problems with very large state spaces (perhaps the most popular examples are software that learns to play Atari games (Mnih et al., 2015) and the game of Go (Silver et al., 2016)).

Deep learning has strong potential for the development of neuroengineering devices, because the strength of deep networks lies in their ability to process complex signals (like images or sound) and provide a low-dimensional representation that retains task-dependent information but is much easier to manipulate. For this reason, it is reasonable to assume that deep learning could be employed as an efficient way to encode the complex signals that originate from the sensory system or from implanted arrays of electrodes (Mahmud et al., 2018).

The key ingredients that contribute to the success of deep learning are the availability of large datasets and of affordable hardware for massively parallel computing (typically graphics processing units, GPUs) that can train neural networks with a large number of layers and millions of parameters in a reasonable amount of time. Today, neither is a problem for conventional applications of deep learning: humans produce large amounts of data through smartphones, the Internet and social media, while powerful GPUs in datacenters are accessible through cloud computing. However, both may be stumbling blocks for the adoption of deep learning in neuroengineering devices.

For the use of deep learning in embedded systems, companies have started to develop dedicated hardware supporting the deployment of deep neural networks with real-time performance, low power consumption and a small footprint (NVIDIA Jetson TX2 https://www.nvidia.com/en-us/autonomous-machines/embedded-systems-dev-kits-modules/, ARM/MALI https://community.arm.com/graphics/f/discussions/165/deep-learning-inference-on-arm-mali-super-efficient-convolutional-neural-networks-implemented-on-arm-mali-based-socs, Intel’s Movidius https://developer.movidius.com/). This trend is likely to continue in the coming years, and it is reasonable to assume that affordable solutions supporting the deployment of deep learning systems in neuroengineering will become available.

Adopting data-driven techniques for training such devices could, however, be difficult, because it requires long periods of data acquisition from human patients. An emerging area of research in machine learning deals with this problem by leveraging existing knowledge when solving a new task (Gopalan et al., 2015). Similar techniques have been used for the classification of electromyography (EMG) signals (Gregori et al., 2017; Tommasi et al., 2013). The benefit of such techniques in the control of prosthetic devices is, however, debated (Gregori et al., 2017), and they may need to be developed further. A similar problem is being studied in robotics for training visual recognition systems from small datasets (Pasquale et al., 2016; Schwarz et al., 2015). In this case, the main idea is to rely on neural models that have been trained on a similar task for which a large dataset is available and to adapt the network to solve a novel task using much less data.
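The idea of adapting a model trained on a large dataset to a related task with few samples can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of any of the cited works: a plain logistic classifier stands in for a neural network, the two-dimensional Gaussian clusters produced by make_data are synthetic, and all function names and parameter values (transfer_demo, the cluster centres, the learning rate) are hypothetical.

```python
import math
import random


def predict(x, w, b):
    """Logistic model: probability that sample x belongs to class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    z = max(-30.0, min(30.0, z))  # clamp so math.exp stays in range
    return 1.0 / (1.0 + math.exp(-z))


def train(data, w, b, epochs, lr):
    """Stochastic gradient descent on the logistic loss, starting from (w, b)."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(x, w, b) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def accuracy(data, w, b):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum((predict(x, w, b) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


def make_data(rng, n_per_class, centres, spread=0.5):
    """Synthetic data: one Gaussian cluster per class (label = centre index)."""
    data = [((rng.gauss(cx, spread), rng.gauss(cy, spread)), label)
            for label, (cx, cy) in enumerate(centres)
            for _ in range(n_per_class)]
    rng.shuffle(data)
    return data


def transfer_demo(seed=0):
    rng = random.Random(seed)
    source_centres = [(-2.0, 0.0), (2.0, 0.0)]  # large source dataset
    target_centres = [(-1.5, 0.5), (2.5, 0.5)]  # related but shifted target task
    source = make_data(rng, 500, source_centres)
    target_few = make_data(rng, 5, target_centres)    # only 5 samples per class
    target_test = make_data(rng, 200, target_centres)

    # Step 1: train on the large source dataset, starting from zero weights.
    w_src, b_src = train(source, [0.0, 0.0], 0.0, epochs=5, lr=0.1)
    # Step 2: adapt to the target task, starting from the source weights.
    w_new, b_new = train(target_few, w_src, b_src, epochs=5, lr=0.1)
    return accuracy(target_test, w_new, b_new)
```

Calling transfer_demo() returns the accuracy of the adapted model on held-out target data. The design choice that matters is the initialisation in the second step: the target model starts from the source parameters rather than from scratch, so only a few target samples are needed to adjust it. Whether this helps in practice depends on how closely the two tasks are related.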

Ethical considerations

The rapid advancement of neurotechnologies for clinical use raises important ethical questions that urgently need to be addressed (Goering and Yuste, 2016; Greely et al., 2016; Ienca et al., 2017; Muller and Rotter, 2017), since these technologies may also be used to augment cognitive abilities or body performance (Roco and Bainbridge, 2003). Indeed, a PubMed search for ‘neurotechnology ethics’ returns almost 40 entries, of which the earliest dates back to 2004, while 16 papers were published in the last 2 years alone. This indicates that a growing number of psychologists and science philosophers are shifting their interests towards the ethical implications of these novel technologies.

Ethical considerations can be raised at different levels (Klein and Nam, 2016). A priority is represented by the concerns of end-users, who report ambivalent attitudes towards neurotechnology (Yuste et al., 2017). For example, patients affected by PD describe apprehensions regarding control over device function, authentic self, relationship effects and meaningful consent (Klein et al., 2016). In general, patients demand greater involvement in the development of the technology intended for their own use and rehabilitation, and also greater attention to the specific needs of their particular disease, given that different classes of patients have different requirements (Sullivan et al., 2017). It is all too common for a preclinical researcher never to have met a person with the disease they are working on, with the consequence that patients’ hopes and aspirations or the burden of the illness are not appreciated (Garden et al., 2016).

Another important area of ethical interest concerns the restoration versus augmentation principle with which neurotechnologies are conceived. For example, BMIs and brain prostheses were designed with the ultimate goal of restoring a missing function or enhancing a poor one following brain injury or neurological disorder (Berger et al., 2011, 2012; Hatsopoulos and Donoghue, 2009; Lebedev and Nicolelis, 2006; Mussa-Ivaldi and Miller, 2003; Soekadar et al., 2015; Tankus et al., 2014). However, while developed and investigated with this aim, these tools also offered researchers the possibility to further study the brain in both physiological and pathological conditions (Chaudhary et al., 2015; Oweiss and Badreldin, 2015; Soekadar et al., 2015). Moreover, by investigating strategies to potentiate the functions of a damaged brain, the possibility of changing, augmenting or controlling the functions of a healthy brain also emerged (Jarvis and Schultz, 2015). As an example, researchers funded by the US military are developing innovative AI-controlled brain implants based on closed-loop technology to treat severe mental illness and mood disorders that resist current therapies (Reardon, 2017). The devices are now being tested in humans, and the preliminary results were presented at the Society for Neuroscience Meeting in Washington DC (November 2017). This technique, developed with the aim of treating soldiers and veterans who have depression and post-traumatic brain disorders, raises thorny ethical concerns, since researchers can potentially access a person’s inner feelings in real time. Researchers working on these projects understand the importance of these complex issues (Roco and Bainbridge, 2003) and of discussing them with experts in neuroethics.

The Nuffield Council on Bioethics indicates that blending humans with machines may lead to the man as a ‘prosthetic god’, with both exciting and scary implications, and warns that these considerations have to be taken into account when designing neurotechnologies (Nuffield Council on Bioethics (NCB), 2013).

Conclusion

The long-standing effort of humans to build thinking computers and artificial replacement parts for the human body has landed on very fertile ground. Still, it is clear that machines may be successful at solving specific problems but are far from matching the capabilities of the human brain. As of today, advanced machine learning algorithms and neuromorphic engineering have not yet been established for clinical applications. Nonetheless, such remarkable approaches promise to be at the core of future neuroengineering for brain repair, where the boundary between biology and AI will become increasingly less pronounced.

Acknowledgments

The authors wish to thank Prof. Diego Ghezzi for useful inputs on the manuscript. G.P., M.S. and M.C. conceived the manuscript. G.P., M.S., I.C. and S.B. elaborated the core conceptual design. All authors have written and approved the final version of the manuscript. G.P. and M.S. contributed equally to this work.

Footnotes

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship and/or publication of this article.

Funding: G.P. was supported by the Marie Skłodowska-Curie Individual Fellowship Re.B.Us, Grant Agreement no. 660689, funded by the European Union under the framework programme Horizon 2020.

ORCID iD: Michela Chiappalone  https://orcid.org/0000-0003-1427-5147

References

- Ajiboye AB, Willett FR, Young DR, et al. (2017) Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: A proof-of-concept demonstration. Lancet 389(10081): 1821–1830.

- Andersen RA, Kellis S, Klaes C, et al. (2014) Toward more versatile and intuitive cortical brain-machine interfaces. Current Biology 24(18): R885–R897.

- Baranauskas G. (2014) What limits the performance of current invasive brain machine interfaces? Frontiers in Systems Neuroscience 8: 68.

- Berger TW, Hampson RE, Song D, et al. (2011) A cortical neural prosthesis for restoring and enhancing memory. Journal of Neural Engineering 8(4): 046017.

- Berger TW, Song D, Chan RH, et al. (2012) A hippocampal cognitive prosthesis: Multi-input, multi-output nonlinear modeling and VLSI implementation. IEEE Transactions on Neural Systems and Rehabilitation Engineering 20(2): 198–211.

- Bergey GK, Morrell MJ, Mizrahi EM, et al. (2015) Long-term treatment with responsive brain stimulation in adults with refractory partial seizures. Neurology 84(8): 810–817.

- Berlim MT, McGirr A, Van den Eynde F, et al. (2014) Effectiveness and acceptability of deep brain stimulation (DBS) of the subgenual cingulate cortex for treatment-resistant depression: A systematic review and exploratory meta-analysis. Journal of Affective Disorders 159: 31–38.

- Beverlin B, Netoff TI. (2012) Dynamic control of modeled tonic-clonic seizure states with closed-loop stimulation. Frontiers in Neural Circuits 6: 126.

- Bonifazi P, Difato F, Massobrio P, et al. (2013) In vitro large-scale experimental and theoretical studies for the realization of bi-directional brain-prostheses. Frontiers in Neural Circuits 7(40).

- Bouton CE, Shaikhouni A, Annetta NV, et al. (2016) Restoring cortical control of functional movement in a human with quadriplegia. Nature 533(7602): 247–250.

- Bronzino J, DiLorenzo D, Anderson T, et al. (2007) Neuroengineering. Boca Raton, FL: CRC Press.

- Brooks RA. (1990) Elephants don’t play chess. Robotics and Autonomous Systems 6(1–2): 3–15.

- Chapin JK, Moxon KA, Markowitz RS, et al. (1999) Real-time control of a robot arm using simultaneously recorded neurons in the motor cortex. Nature Neuroscience 2(7): 664–670.

- Chaudhary U, Birbaumer N, Curado MR. (2015) Brain-machine interface (BMI) in paralysis. Annals of Physical and Rehabilitation Medicine 58(1): 9–13.

- Chaudhary U, Birbaumer N, Ramos-Murguialday A. (2016) Brain-computer interfaces for communication and rehabilitation. Nature Reviews Neurology 12(9): 513–525.

- Cheng MY, Wang EH, Woodson WJ, et al. (2014) Optogenetic neuronal stimulation promotes functional recovery after stroke. Proceedings of the National Academy of Sciences of the United States of America 111(35): 12913–12918.

- Chin JH, Vora N. (2014) The global burden of neurologic diseases. Neurology 83(4): 349–351.

- Chiolerio A, Chiappalone M, Ariano P, et al. (2017) Coupling resistive switching devices with neurons: State of the art and perspectives. Frontiers in Neuroscience 11(70).

- Collomb-Clerc A, Welter ML. (2015) Effects of deep brain stimulation on balance and gait in patients with Parkinson’s disease: A systematic neurophysiological review. Neurophysiologie Clinique 45(4–5): 371–388.

- Duker AP, Espay AJ. (2013) Surgical treatment of Parkinson disease: Past, present, and future. Neurologic Clinics 31(3): 799–808.

- Elbasiouny S. (2017) Cross-disciplinary medical advances with neuroengineering: Challenges spur development of unique rehabilitative and therapeutic interventions. IEEE Pulse 8(5): 4–7.

- Famm K, Litt B, Tracey KJ, et al. (2013) Drug discovery: A jump-start for electroceuticals. Nature 496(7444): 159–161.

- Fetz EE. (1969) Operant conditioning of cortical unit activity. Science 163(3870): 955–958.

- Fetz EE, Finocchio DV. (1971) Operant conditioning of specific patterns of neural and muscular activity. Science 174(4007): 431–435.

- Fields S. (2001) The interplay of biology and technology. Proceedings of the National Academy of Sciences of the United States of America 98(18): 10051–10054.

- Fiest KM, Birbeck GL, Jacoby A, et al. (2014) Stigma in epilepsy. Current Neurology and Neuroscience Reports 14(5): 444.

- Flesher SN, Collinger JL, Foldes ST, et al. (2016) Intracortical microstimulation of human somatosensory cortex. Science Translational Medicine 8(361): 361ra141.

- Garden H, Bowman DM, Haesler S, et al. (2016) Neurotechnology and society: Strengthening responsible innovation in brain science. Neuron 92(3): 642–646.

- Georgopoulos AP, Schwartz AB, Kettner RE. (1986) Neuronal population coding of movement direction. Science 233(4771): 1416–1419.

- Goering S, Yuste R. (2016) On the necessity of ethical guidelines for novel neurotechnologies. Cell 167(4): 882–885.

- Gopalan R, Li R, Patel VM, et al. (2015) Domain adaptation for visual recognition. Foundations and Trends on Computer Graphics and Vision 8(4): 285–378.

- Greely HT, Ramos KM, Grady C. (2016) Neuroethics in the age of brain projects. Neuron 92(3): 637–641.

- Greenwald E, Masters MR, Thakor NV. (2016) Implantable neurotechnologies: Bidirectional neural interfaces – applications and VLSI circuit implementations. Medical & Biological Engineering & Computing 54(1): 1–17.

- Gregori V, Gijsberts A, Caputo B. (2017) Adaptive learning to speed-up control of prosthetic hands: A few things everybody should know. In: Proceedings of the 2017 international conference on rehabilitation robotics (ICORR), London, 17–20 July, pp. 1130–1135. New York: IEEE.

- Groiss SJ, Wojtecki L, Südmeyer M, et al. (2009) Deep brain stimulation in Parkinson’s disease. Therapeutic Advances in Neurological Disorders 2(4): 20–28.

- Grossman N, Bono D, Dedic N, et al. (2017) Noninvasive deep brain stimulation via temporally interfering electric fields. Cell 169: 1029–1041.

- Guggenmos DJ, Azin M, Barbay S, et al. (2013) Restoration of function after brain damage using a neural prosthesis. Proceedings of the National Academy of Sciences of the United States of America 110(52): 21177–21182.

- Gupta I, Serb A, Khiat A, et al. (2016) Real-time encoding and compression of neuronal spikes by metal-oxide memristors. Nature Communications 7: 12805.

- Hatsopoulos NG, Donoghue JP. (2009) The science of neural interface systems. Annual Review of Neuroscience 32: 249–266.

- Hinton G, Deng L, Yu D, et al. (2012) Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Processing Magazine 29(6): 82–97.

- Hochberg LR, Bacher D, Jarosiewicz B, et al. (2012) Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485(7398): 372–375.

- Holtzheimer PE, Husain MM, Lisanby SH, et al. (2017) Subcallosal cingulate deep brain stimulation for treatment-resistant depression: A multisite, randomised, sham-controlled trial. Lancet Psychiatry 4(11): 839–849.

- Horch KW, Dhillon GS. (2004) Neuroprosthetics: Theory and Practice. Singapore: World Scientific.

- Humayun MS. (2001) Intraocular retinal prosthesis. Transactions of the American Ophthalmological Society 99: 271–300.

- Hummel FC, Cohen LG. (2005) Drivers of brain plasticity. Current Opinion in Neurobiology 18(6): 667–674.

- Ibsen S, Tong A, Schutt C, et al. (2015) Sonogenetics is a non-invasive approach to activating neurons in Caenorhabditis elegans. Nature Communications 6: 8264.

- Ienca M, Kressig RW, Jotterand F, et al. (2017) Proactive ethical design for neuroengineering, assistive and rehabilitation technologies: The Cybathlon lesson. Journal of Neuroengineering and Rehabilitation 14(1): 115.

- Jackson A, Mavoori J, Fetz EE. (2006) Long-term motor cortex plasticity induced by an electronic neural implant. Nature 444(7115): 56–60.

- Jarvis S, Schultz SR. (2015) Prospects for optogenetic augmentation of brain function. Frontiers in Systems Neuroscience 9(157).

- Jun JJ, Steinmetz NA, Siegle JH, et al. (2017) Fully integrated silicon probes for high-density recording of neural activity. Nature 551(7679): 232–236.

- Klein E, Nam CS. (2016) Neuroethics and brain-computer interfaces (BCIs). Brain-Computer Interfaces 3(3): 123–125.

- Klein E, Goering S, Gagne J, et al. (2016) Brain-computer interface-based control of closed-loop brain stimulation: Attitudes and ethical considerations. Brain-Computer Interfaces 3(3): 140–148.

- Laxpati NG, Kasoff WS, Gross RE. (2014) Deep brain stimulation for the treatment of epilepsy: Circuits, targets, and trials. Neurotherapeutics 11(3): 508–526.

- Lebedev MA, Nicolelis MAL. (2006) Brain-machine interfaces: Past, present and future. Trends in Neurosciences 29(9): 536–546.

- LeCun Y, Bengio Y, Hinton G. (2015) Deep learning. Nature 521: 436–444.

- Lee B, Liu CY, Apuzzo ML. (2013) A primer on brain-machine interfaces, concepts, and technology: A key element in the future of functional neurorestoration. World Neurosurgery 79(3–4): 457–471.

- Loeb GE, Byers CL, Rebscher SJ, et al. (1983) Design and fabrication of an experimental cochlear prosthesis. Medical and Biological Engineering and Computing 21(3): 241–254.

- Mahmud M, Vassanelli S. (2016) Processing and analysis of multichannel extracellular neuronal signals: State-of-the-art and challenges. Frontiers in Neuroscience 10(248).

- Mahmud M, Cecchetto C, Maschietto M, et al. (2017) Towards high-resolution brain-chip interface and automated analysis of multichannel neuronal signals. In: Proceedings of the 2017 IEEE region 10 humanitarian technology conference R10-HTC, Dhaka, Bangladesh, 21–23 December, pp. 868–872. New York: IEEE.

- Mahmud M, Kaiser MS, Hussain A, et al. (2018) Applications of deep learning and reinforcement learning to biological data. IEEE Transactions on Neural Networks and Learning Systems. Epub ahead of print 31 January. DOI: 10.1109/TNNLS.2018.2790388.

- Marmura MJ, Silberstein SD, Schwedt TJ. (2015) The acute treatment of migraine in adults: The American Headache Society evidence assessment of migraine pharmacotherapies. Headache 55(1): 3–20.

- Micera S, Navarro X, Carpaneto J, et al. (2008) On the use of longitudinal intrafascicular peripheral interfaces for the control of cybernetic hand prostheses in amputees. IEEE Transactions on Neural Systems and Rehabilitation Engineering 16(5): 453–472.

- Mnih V, Kavukcuoglu K, Silver D, et al. (2015) Human-level control through deep reinforcement learning. Nature 518(7540): 529–533.

- Morrell MJ, Halpern C. (2016) Responsive direct brain stimulation for epilepsy. Neurosurgery Clinics of North America 27(1): 111–121.

- Muller O, Rotter S. (2017) Neurotechnology: Current developments and ethical issues. Frontiers in Systems Neuroscience 11(93).

- Mussa-Ivaldi FA, Miller LE. (2003) Brain-machine interfaces: Computational demands and clinical needs meet basic neuroscience. Trends in Neurosciences 26(6): 329–334.

- Nichols M, Townsend N, Scarborough P, et al. (2012) European Cardiovascular Disease Statistics. Brussels: European Society of Cardiology.

- Nicolelis MAL. (2001) Actions from thoughts. Nature 409(6818): 403–407.

- Nicolelis MAL. (2003) Brain-machine interfaces to restore motor function and probe neural circuits. Nature Reviews Neuroscience 4(5): 417–422.

- Nuffield Council on Bioethics (NCB) (2013) Novel Neurotechnologies: Intervening in the Brain. London: Nuffield Council on Bioethics.

- Nunez PL, Srinivasan R. (2006) Electric Fields of the Brain: The Neurophysics of EEG. Oxford: Oxford University Press.

- Orsborn AL, Dangi S, Moorman HG, et al. (2012) Closed-loop, decoder adaptation on intermediate time-scales facilitates rapid BMI performance improvements independent of decoder initialization conditions. IEEE Transactions on Neural Systems and Rehabilitation Engineering 20(4): 468–477.

- Oweiss KG, Badreldin IS. (2015) Neuroplasticity subserving the operation of brain-machine interfaces. Neurobiology of Disease 83: 161–171.

- Palsson B. (2000) The challenges of in silico biology. Nature Biotechnology 18(11): 1147–1150.

- Panuccio G, Guez A, Vincent R, et al. (2013) Adaptive control of epileptiform excitability in an in vitro model of limbic seizures. Experimental Neurology 241: 179–183.

- Panuccio G, Semprini M, Chiappalone M. (2016) Intelligent biohybrid systems for functional brain repair. New Horizons in Translational Medicine 3(3–4): 162–174.

- Panzeri S, Harvey CD, Piasini E, et al. (2017) Cracking the neural code for sensory perception by combining statistics, intervention, and behavior. Neuron 93(3): 491–507.

- Pasquale G, Ciliberto C, Rosasco L, et al. (2016) Object identification from few examples by improving the invariance of a deep convolutional neural network. In: Proceedings of the IEEE/RSJ international conference on intelligent robots and systems, Daejeon, Korea, 9–14 October, pp. 4904–4910. New York: IEEE.

- Paz JT, Huguenard JR. (2015) Optogenetics and epilepsy: Past, present and future. Epilepsy Currents 15(1): 34–38.

- Pfeifer R, Scheier C. (1999) Understanding Intelligence. New York: MIT Press.

- Pineau J, Guez A, Vincent R, et al. (2009) Treating epilepsy via adaptive neurostimulation: A reinforcement learning approach. International Journal of Neural Systems 19(4): 227–240.

- Pohlmeyer EA, Mahmoudi B, Geng S, et al. (2014) Using reinforcement learning to provide stable brain-machine interface control despite neural input reorganization. PLoS ONE 9(1): e87253.

- Raspopovic S, Capogrosso M, Petrini FM, et al. (2014) Restoring natural sensory feedback in real-time bidirectional hand prostheses. Science Translational Medicine 6(222): 222ra219.

- Reardon S. (2014) Electroceuticals spark interest. Nature 511(7507): 18.

- Reardon S. (2017) AI-controlled brain implants for mood disorders tested in people. Nature 551(7682): 549–550.

- Roco MC, Bainbridge WS. (2003) Converging Technologies for Improving Human Performance: Nanotechnology, Biotechnology, Information Technology and Cognitive Science. Dordrecht: Springer.

- Rosin B, Slovik M, Mitelman R, et al. (2011) Closed-loop deep brain stimulation is superior in ameliorating parkinsonism. Neuron 72(2): 370–384.

- Rossi PJ, Gunduz A, Judy J, et al. (2016) Proceedings of the third annual deep brain stimulation think tank: A review of emerging issues and technologies. Frontiers in Neuroscience 10(119).

- Rossor MN, Fox NC, Mummery CJ, et al. (2010) The diagnosis of young-onset dementia. Lancet Neurology 9(8): 793–806.

- Russakovsky O, Deng J, Su H, et al. (2015) ImageNet large scale visual recognition challenge. International Journal of Computer Vision 115(3): 211–252.

- Russell SJ, Norvig P. (2010) Artificial Intelligence: A Modern Approach. Upper Saddle River, NJ: Prentice Hall.

- Schmidt EM. (1980) Single neuron recording from motor cortex as a possible source of signals for control of external device. Annals of Biomedical Engineering 8(4–6): 339–349.

- Schwarz M, Schulz H, Behnke S. (2015) RGB-D object recognition and pose estimation based on pre-trained convolutional neural network features. In: Proceedings of the IEEE international conference on robotics and automation (ICRA), Seattle, WA, 26–30 May.

- Shenoy KV, Carmena JM. (2014) Combining decoder design and neural adaptation in brain-machine interfaces. Neuron 84(4): 665–680.

- Silver D, Huang A, Maddison CJ, et al. (2016) Mastering the game of Go with deep neural networks and tree search. Nature 529: 484–489.

- Soekadar SR, Birbaumer N, Slutzky MW, et al. (2015) Brain-machine interfaces in neurorehabilitation of stroke. Neurobiology of Disease 83: 172–179.

- Song D, Berger TW. (2014) Hippocampal memory prosthesis. In: Jaeger D, Jung R. (eds) Encyclopedia of Computational Neuroscience. Berlin: Springer.

- Song D, Robinson BS, Hampson RE, et al. (2015) Sparse generalized Volterra model of human hippocampal spike train transformation for memory prostheses. IEEE Engineering in Medicine and Biology Society 2015: 3961–3964.

- Stanley GB. (2013) Reading and writing the neural code. Nature Neuroscience 16: 259–263.

- Sullivan LS, Klein E, Brown T, et al. (2017) Keeping disability in mind: A case study in implantable brain-computer interface research. Science and Engineering Ethics 24(2): 479–504.

- Sutton RS, Barto AG. (1998) Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press.

- Tankus A, Fried I, Shoham S. (2014) Cognitive-motor brain-machine interfaces. Journal of Physiology Paris 108(1): 38–44.

- Taylor DM, Tillery SI, Schwartz AB. (2002) Direct cortical control of 3D neuroprosthetic devices. Science 296(5574): 1829–1832.

- Tehovnik EJ, Tolias AS, Sultan F, et al. (2006) Direct and indirect activation of cortical neurons by electrical microstimulation. Journal of Neurophysiology 96(2): 512–521.

- Tehovnik EJ, Woods LC, Slocum WM. (2013) Transfer of information by BMI. Neuroscience 255: 134–146.

- Thakor NV. (2013) Translating the brain-machine interface. Science Translational Medicine 5(210): 210–217.

- Thomas GP, Jobst BC. (2015) Critical review of the responsive neurostimulator system for epilepsy. Medical Devices 8: 405–411.

- Tommasi T, Orabona F, Castellini C, et al. (2013) Improving control of dexterous hand prostheses using adaptive learning. IEEE Transactions on Robotics 29(1): 207–219.

- Turing AM, Copeland BJ. (2004) The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, and Artificial Life, Plus the Secrets of Enigma. Oxford; New York: Clarendon Press; Oxford University Press.

- Vassanelli S, Mahmud M. (2016) Trends and challenges in neuroengineering: Toward ‘Intelligent’ neuroprostheses through brain – ‘Brain Inspired Systems’ communication. Frontiers in Neuroscience 10(438).

- Walter W. (1950a) An electro-mechanical animal. Dialectica 4(3): 206–213.

- Walter W. (1950b) An imitation of life. Scientific American 182(5): 42–54.

- Wenger N, Moraud EM, Gandar J, et al. (2016) Spatiotemporal neuromodulation therapies engaging muscle synergies improve motor control after spinal cord injury. Nature Medicine 22(2): 138–145.

- Wenger N, Moraud EM, Raspopovic S, et al. (2014) Closed-loop neuromodulation of spinal sensorimotor circuits controls refined locomotion after complete spinal cord injury. Science Translational Medicine 6(255): 255ra133.

- Wissel T, Pfeiffer T, Frysch R, et al. (2013) Hidden Markov model and support vector machine based decoding of finger movements using electrocorticography. Journal of Neural Engineering 10(5): 056020.

- World Health Organization (WHO) (2006) Neurological Disorders: Public Health Challenges. Geneva: WHO.

- Wright J, Macefield VG, van Schaik A, et al. (2016) A review of control strategies in closed-loop neuroprosthetic systems. Frontiers in Neuroscience 10(312).

- Yuste R, Goering S, Arcas BAY, et al. (2017) Four ethical priorities for neurotechnologies and AI. Nature 551(7679): 159–163.

- Zuse K. (1993) The Computer – My Life. Berlin: Springer.