Abstract

Background:

The prefrontal cortices play an essential role in cognitive-emotional and working memory processes through interactions with multiple brain regions.

Methods:

This article further develops a unified neural architecture that explains many recent and classical data about prefrontal function and makes testable predictions.

Results:

Prefrontal properties of desirability, availability, credit assignment, category learning, and feature-based attention are explained. These properties arise through interactions of orbitofrontal, ventrolateral prefrontal, and dorsolateral prefrontal cortices with the inferotemporal, perirhinal, and parahippocampal cortices; the ventral bank of the principal sulcus, ventral prearcuate gyrus, frontal eye fields, hippocampus, amygdala, basal ganglia, and hypothalamus; and visual cortical areas V1, V2, V3A, V4, middle temporal cortex, medial superior temporal area, lateral intraparietal cortex, and posterior parietal cortex. Model explanations also include how the value of visual objects and events is computed, which objects and events cause desired consequences and which may be ignored as predictively irrelevant, and how to plan and act to realise these consequences, including how to selectively filter expected versus unexpected events, leading to movements towards, and conscious perception of, expected events. Modelled processes include reinforcement learning and incentive motivational learning; object and spatial working memory dynamics; and category learning, including the learning of object categories, value categories, object-value categories, and sequence categories, or list chunks.

Conclusion:

This article hereby proposes a unified neural theory of prefrontal cortex and its functions.

Keywords: Category learning, cognitive-emotional interactions, working memory, reinforcement learning, incentive motivational learning, contextual cueing, inferotemporal cortex, orbitofrontal cortex, ventrolateral prefrontal cortex, dorsolateral prefrontal cortex, amygdala, basal ganglia, perirhinal cortex, parahippocampal cortex, frontal eye fields, ventral prearcuate gyrus, ventral bank of the principal sulcus

1. Introduction: towards a mechanistic theoretical understanding of prefrontal functions

1.1. Functional roles of orbitofrontal, ventrolateral, and dorsolateral prefrontal cortex

The prefrontal cortex (PFC) contributes to many of the higher cognitive, emotional, and decision-making processes that define human intelligence, while also controlling the release of goal-oriented actions towards valued goal objects. As noted in the Wikipedia article about PFC,

The most typical psychological term for functions carried out by the prefrontal cortex area is executive function. Executive function relates to abilities to differentiate among conflicting thoughts, determine good and bad, better and best, same and different, future consequences of current activities, working toward a defined goal, prediction of outcomes, expectation based on actions, and social ‘control’ (the ability to suppress urges that, if not suppressed, could lead to socially unacceptable outcomes).

Elliott et al. (2000) discussed how the PFC contributes to generating behaviours that are flexible and adaptive, notably in novel situations, and to suppressing actions that are no longer appropriate, thereby freeing humans and other primates from being forced to respond more reflexively to current sensory inputs. These authors also review the various terms that have been used to describe PFC functions, including planning (Luria, 1966), memory for the future (Ingvar, 1985), executive control (Baddeley, 1986), working memory (Goldman-Rakic, 1987), supervisory attention (Shallice, 1988), and top-down modulation of bottom-up processes (Frith and Dolan, 1997).

Miller and Cohen (2001) reviewed data that are consistent with these concepts, noting that these properties result

from the active maintenance of patterns of activity in the prefrontal cortex that represent goals and the means to achieve them. They provide bias signals to other brain structures whose net effect is to guide the flow of activity along neural pathways that establish the proper mappings between inputs, internal states, and outputs needed to perform a given task. (p. 167)

Said in yet another way, the PFC is involved with predicting future outcomes and enabling animals and humans to respond adaptively to them.

Wise (2008) espoused a similar view that he vividly summarised as follows: ‘The long list of functions often attributed to prefrontal cortex may all contribute to knowing what to do and what will happen when rare risks arise or outstanding opportunities knock’ (p. 599).

Even this brief heuristic summary of some of the multiple functions of the PFC illustrates the challenge facing any theorist who wishes to model this, or indeed any, part of the brain. The challenge is that various functionally distinct parts of the PFC are connected to each other in complex ways, in addition to being widely connected with multiple other brain regions. Broadly speaking, the dorsal PFC is interconnected with brain regions involved with attention, cognition, and action (Goldman-Rakic, 1988), whereas the ventral prefrontal cortex is interconnected with brain regions involved with emotion (Price, 1999). These facts do not, however, explain how these brain circuits give rise to these distinct psychological functions as emergent properties that arise from interactions among brain regions that work together as functional systems.

A critical question for any theorist of mind and brain is thus: How can the divide between brain mechanisms and psychological functions be bridged? How can this be done with sufficient mechanistic precision to explain and predict challenging interdisciplinary data? Section 1.3 summarises a well-established theoretical method whereby the emergent properties of brain mechanisms may be linked to the mental functions that they control.

Before summarising this theoretical method, Section 1.2 will review some of the prefrontal properties that recent experiments have reported and that will be explained in later sections using this theoretical method. In particular, many prefrontal cortical properties can be subsumed under two unifying mechanistic themes: cognitive-emotional dynamics and working memory dynamics. A macrocircuit of the brain regions that embody these processes in a unified predictive Adaptive Resonance Theory (pART), a model that herein explains and predicts many prefrontal cortical data, is shown in Figure 1.

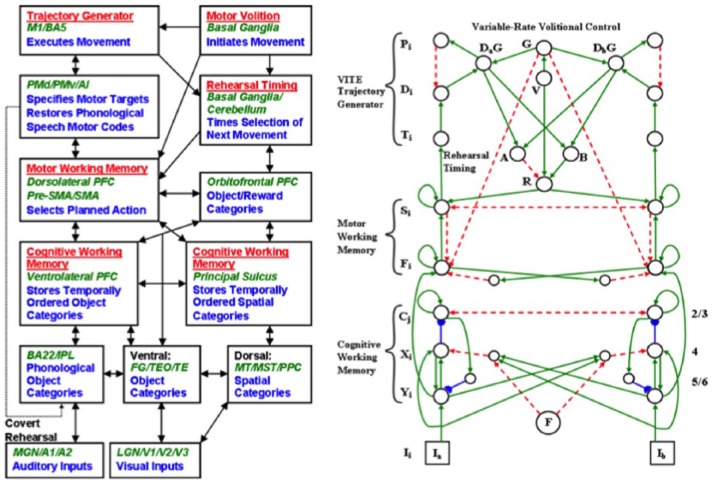

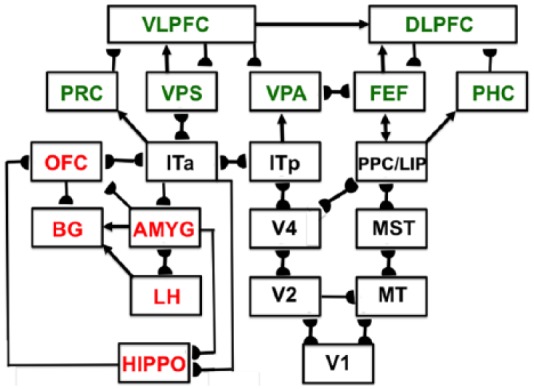

Figure 1.

Macrocircuit of the main brain regions, and connections between them, that are modelled in the unified predictive Adaptive Resonance Theory (pART) of cognitive-emotional and working memory dynamics that is described in this article. Abbreviations in red denote brain regions used in cognitive-emotional dynamics, whereas abbreviations in green denote brain regions used in working memory dynamics. Black abbreviations refer to brain regions that process visual data during visual perception and are used to learn visual object categories. Arrows denote non-adaptive excitatory synapses. Hemidiscs denote adaptive excitatory synapses. Many adaptive synapses are bidirectional, thereby supporting synchronous resonant dynamics among multiple cortical regions. The output signals from the basal ganglia that regulate reinforcement learning and gating of multiple cortical areas are not shown. Also not shown are output signals from cortical areas to motor responses. V1: striate, or primary, visual cortex; V2 and V4: areas of prestriate visual cortex; MT: middle temporal cortex; MST: medial superior temporal area; ITp: posterior inferotemporal cortex; ITa: anterior inferotemporal cortex; PPC: posterior parietal cortex; LIP: lateral intraparietal area; VPA: ventral prearcuate gyrus; FEF: frontal eye fields; PHC: parahippocampal cortex; DLPFC: dorsolateral prefrontal cortex; HIPPO: hippocampus; LH: lateral hypothalamus; BG: basal ganglia; AMYG: amygdala; OFC: orbitofrontal cortex; PRC: perirhinal cortex; VPS: ventral bank of the principal sulcus; VLPFC: ventrolateral prefrontal cortex. See text for further details.

Section 2 will summarise relevant data and models of cognitive-emotional dynamics and Section 3 will summarise relevant data and models of working memory dynamics. Cognitive-emotional dynamics, and models thereof, include how orbitofrontal cortex (OFC) interacts with brain regions like temporal cortex, amygdala, hippocampus, and cerebellum to regulate processes like category learning and adaptively timed motivated attention and action to acquire a valued goal. Working memory dynamics, and models thereof, include how sequences of events are temporarily stored in ventrolateral and dorsolateral prefrontal cortex, how these sequences are unitised, or chunked, into cognitive plans, and how interactions of prefrontal regions with other brain regions like perirhinal cortex (PRC), parahippocampal cortex (PHC), and basal ganglia (BG) enable predictions and actions to be chosen that are most likely to succeed based on sequences of previously rewarded experiences.

These sections will also compare and contrast the neural models that are used herein with other models in the literature and will make testable predictions to further clarify the brain mechanisms that underlie these data.

Section 4 will provide concluding remarks that highlight some of the article’s main themes.

A unified theoretical explanation with such ambitious goals will necessarily be presented in stages. The text will state key data, and outline model explanations of them, at the beginning of each section to help readers to frame subsequent, more detailed, explanations. The text will also attempt to provide a self-contained explanation of all the models that it uses. This explanation cannot be exhaustive because the theoretical and experimental literatures that fall within the scope of the models are vast. The exposition will nonetheless provide enough information for the reader to appreciate how the explanations work, and why they are compelling.

The exposition will also try to deal with another, even more basic, problem: How can any theory penetrate the complexity of a behaving brain? Indeed, a few facts, taken alone, can have multiple explanations. They provide insufficient guidance to rule out plausible, but incorrect, explanations. In contrast, the current theoretical method confronts hundreds of facts about a particular behavioural function, taken from the entire experimental literature on multiple organisational levels from behavioural to cellular, with all known modelling principles, mechanisms, microcircuits, and architectures. When this is done properly, every such fact, and every modelling hypothesis about it, is put under enormous ‘conceptual pressure’ that typically allows only one possible explanation to survive. The text tries to summarise enough of these constraints to clarify how and why the explanations work and to motivate readers to pursue further reading about topics that particularly interest them in related articles.

1.2. Prefrontal desirability, availability, credit assignment, and feature-based attention

These models help to understand and mechanistically explain recent data about the OFC, ventrolateral prefrontal cortex (VLPFC), and dorsolateral prefrontal cortex (DLPFC). The text will first focus upon a striking conclusion about the roles of OFC and VLPFC that was derived from behavioural experiments in monkeys that had received excitotoxic lesions of these brain regions. The conclusion is that OFC and VLPFC are involved in updating a predicted outcome’s desirability versus its availability, respectively (Rudebeck et al., 2017). Recent data have also claimed that DLPFC neurons encode a solution of the credit-assignment problem (Asaad et al., 2017). What the concepts of desirability, availability, and credit assignment mean will be operationally defined in the sections where these data are explained.

Some of the results on desirability that are reported by Rudebeck et al. (2017) were predicted, indeed simulated, by a well-established neural model of cognitive-emotional interactions, notably reinforcement learning and motivated attention, in Grossberg et al. (2008). Aspects of the role of VLPFC in updating availability are clarified by a well-established neural model of cognitive working memory and the learning of predictive cognitive plans, or list chunks (Cohen and Grossberg, 1986, 1987; Grossberg, 2017b; Grossberg and Pearson, 2008). This model has been further developed in Huang and Grossberg (2010) to quantitatively simulate data about sequential decisions during contextually cued visual search and in Silver et al. (2011) to simulate prefrontal cortical neurophysiological data about sequences of eye movement decisions. Data about credit assignment are explained by combining the model of cognitive working memory and list chunks with the model of reinforcement learning and motivated attention to show how chunks that lead to successful predictions are amplified, while those that do not are suppressed.

In Sections 2 and 3, the article will first summarise the above kinds of data that are explanatory targets of the article, then review the models that explain them, and go on to use these models to provide explanations and predictions of additional interdisciplinary data. It will then extend them in a consistent way to mechanistically and functionally explain data about prefrontal sources of feature-based attention in monkeys (Bichot et al., 2015) and humans (Baldauf and Desimone, 2014).

The PFC has been studied with multiple methods. Some articles have studied the PFC of humans with functional neuroimaging in normal subjects or clinical patients, while others have studied monkeys or rats with neurophysiological or anatomical methods. Important functional conclusions have also been derived by combining selective lesions with behavioural studies in monkeys. Recent studies have, however, also shown that different lesion methods can yield quite different results. For example, monkeys with selective excitotoxic lesions of the OFC, unlike monkeys who have received aspiration OFC lesions, are unimpaired in learning and reversing object choices based on reward feedback (Rudebeck et al., 2013). Neurotoxic lesions of the amygdala (Izquierdo and Murray, 2007) have also led to results that challenge earlier demonstrations using aspiration and radiofrequency lesions that the amygdala is needed for object reversal learning (Aggleton and Passingham, 1981; Jones and Mishkin, 1972; Spiegler and Mishkin, 1981).

Why do different lesion methods yield such different results? One main reason is that, unlike excitotoxic lesions, other lesion methods, including aspiration and radiofrequency lesions, may also damage fibres of passage to adjacent cortical areas. The fact that OFC activity has been reported during reversal learning (Fellows and Farah, 2003; Morrison et al., 2011; Rolls, 2000; Rolls et al., 1994) suggests that several neuronal regions and pathways may be involved in this behavioural competence.

This picture is complicated further by different definitions of the brain areas that constitute OFC and VLPFC. The conclusions above hold if OFC is understood to consist of areas 11, 13, and 14. If, however, area 12o is also included, which overlaps what Chau et al. (2015) call the lateral OFC (lOFC), then various properties that Rudebeck et al. (2017) would assign to VLPFC get attributed to OFC. Herein, the convention will be followed that OFC consists of areas 11, 13, and 14. Another caveat is that there appear to be species-specific variations. For example, unlike in Old World monkeys, excitotoxic lesions in New World monkeys such as marmosets (Roberts, 2006) can impair these animals on reversal tasks. These variations will not be analysed herein.

1.3. A theoretical method for linking brain to mind: method of minimal anatomies

One successful method for linking brain mechanisms to behavioural functions has been developed and applied during the past 60 years. This method has led to neural models that often anticipated psychological and neurobiological data about the PFC, among other brain regions. It continues to do so, as this article will illustrate.

A key theme of this theoretical ‘method of minimal anatomies’ is that one cannot derive a theory of an entire brain in one step. Rather, one does so incrementally in stages. This incremental theoretical method embodies a kind of design evolution whereby each model embodies a certain set of design principles and mechanisms that the evolutionary process has discovered whereby to cope with a given set of environmental challenges. Then, the model is refined, or unlumped, to embody an even larger set of design principles and mechanisms and thereby expands its explanatory and predictive power. This process of evolutionary unlumping continues unabated, leading to current models that can individually explain psychological, anatomical, neurophysiological, biophysical, and biochemical data about a given faculty of biological intelligence.

Using this method, the pART theory of cognitive-emotional and working memory dynamics has been discovered and incrementally elaborated over the past 60 years (Figure 1). The current exposition will emulate the theoretical method by first summarising the simplest models that can explain nontrivial amounts of prefrontal data, before unlumping them to explain even more data. Although, for expository reasons, multiple models will be mentioned, it needs to be understood that there is just one unified theory behind all the explanations that joins together cognitive-emotional dynamics and working memory dynamics.

The theoretical derivation always begins with behavioural data because brain evolution needs to achieve behavioural success. Starting with behavioural data enables models to be derived whose brain mechanisms have been shaped during evolution by behavioural success. Starting with a large database helps to rule out incorrect, but otherwise seemingly plausible, answers.

Such a derivation leads to the discovery of novel design principles and mechanisms with which to explain how an individual, behaving in real time, can generate the behavioural data as emergent properties. This conceptual leap from data to design is the art of modelling. Once derived, despite being based on psychological constraints, the minimal mathematical model that realises the behavioural design principles has always been interpretable in terms of brain mechanisms. Sixty years of modelling have hereby supported the hypothesis that brains look the way that they do because they embody computational designs whereby individuals autonomously adapt to changing environments in real time. The link from behaviour-to-principle-to-model-to-brain has, in addition, often disclosed unexpected functional roles of the derived brain mechanisms that are not clear from neural data alone.

A ‘minimal’ model is one for which if any of the model’s mechanisms is removed, then the surviving model can no longer explain a key set of previously explained data. Once a connection is made top-down from behaviour to brain by such a minimal model, mathematical and computational analysis discloses what data the minimal model, and its variations, can and cannot explain. Such an analysis focuses attention upon design principles that the current model does not yet embody. These new design principles and their mechanistic realisations are then consistently incorporated into the model by unlumping it to generate a more realistic model. If the model cannot be refined in this way, then that is strong evidence that the current model contains a serious error and must be discarded. The unified pART model that is discussed herein, which explains key functional processes in the brain regions depicted in Figure 1, has withstood multiple stages of unlumping.

2. Cognitive-emotional dynamics and the OFC

2.1. Orbitofrontal coding of desirability as probed by selective satiation

This section of the article summarises an explanation of how the desirability of an outcome is computed in our brains. The relevant data will first be reviewed before the model that can explain, indeed anticipated, them is summarised, along with other data explanations and predictions. An outline of the model’s explanation of desirability will first be given to frame the subsequent exposition of the model mechanisms that accomplish this.

As noted above, the experiments of Rudebeck et al. (2017) support the hypothesis that the OFC, but not the VLPFC, plays a necessary role in choices that are based on outcome desirability. In contrast, the VLPFC, but not the OFC, plays a necessary role in choices that are based on outcome availability. What desirability means is explained operationally by an experiment (their Experiment 2) that manipulates the subjective value of different food rewards with a stimulus-based reinforcer devaluation, or satiation, procedure that was earlier used by Málková et al. (1997), while keeping the probability and magnitude of reward stable. The monkeys in this experiment were trained with some conditioned stimulus (CS) objects that were associated with Food 1, and others associated with Food 2. The two foods acted like unconditioned stimuli (US) in the experiment. Following the selective satiation procedure, monkeys were presented with pairs of objects, one object associated with Food 1 and the other with Food 2. The effects of devaluation were measured by calculating how much monkeys shifted their choices towards objects associated with a higher value food, relative to baseline choices.

Both unoperated control monkeys and monkeys with excitotoxic VLPFC lesions could update and use the current value of food reward to guide their choices. In contrast, monkeys with excitotoxic OFC lesions chose stimuli that were associated with the sated food at a much higher rate. Various tests led unambiguously to the conclusion that this deficit in monkeys with OFC lesions arose from their inability to link objects with the current value of the food in guiding their choices.

Málková et al. (1997) had earlier used the same devaluation procedure in rhesus monkeys to test whether excitotoxic basolateral amygdala (AMYG) lesions lead to an inability to shift decisions based upon current food value. Their experiments followed up earlier work of Hatfield et al. (1996) and Holland and Straub (1979) in rats. The results on desirability that are reported by Rudebeck et al. (2017) on OFC lesions were earlier predicted and simulated by the MOTIVATOR (Matching Objects To Internal VAlues Triggers Option Revaluations) neural model in Grossberg et al. (2008) as part of an explanation of the Málková et al. (1997) results.

In particular, Grossberg et al. (2008) wrote the following as part of the caption of their Figure 8 that shows data and simulations about reaction time, choice behaviour, and reward value:

Choices made between the two CSs reflect preferences between the different food rewards. Devaluation of a US by food-specific satiety (FSS) shifts the choices of the animal away from cues associated with the devalued rewards (reprinted with permission from Málková et al., 1997). Málková et al. (1997) report the effects of basolateral amygdala lesions using a difference score. The difference score is calculated by measuring the percent of the trials in which the to-be-devalued food is chosen over other foods, before and after FSS. The ‘difference score’ reflects the difference between these two percentages … Using FEF activity to determine cue choice, the intact model (CTL) shows a similar shift in CS preference when the US associated with it is devalued by FSS. Food-specific satiety is implemented by lowering selected DRIVE inputs to the LH … The automatic shifting of visual cue preference when an associated US is devalued by FSS is lost after AMYG lesions (AX) and ORBl lesions (OX). [italics mine]
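The difference score described in this caption can be illustrated with a minimal sketch. The function names, the trial data, and the sign convention (baseline percentage minus post-satiation percentage, so that a larger positive score means a stronger shift away from the devalued food) are assumptions made here for illustration; they are not taken from Málková et al. (1997) or Grossberg et al. (2008).

```python
def percent_chosen(choices, food):
    """Percentage of trials on which `food` was chosen."""
    if not choices:
        raise ValueError("need at least one trial")
    return 100.0 * sum(c == food for c in choices) / len(choices)


def difference_score(baseline_choices, post_satiation_choices, devalued_food):
    """Drop, in percentage points, in choices of the to-be-devalued food.

    Assumed sign convention: baseline percentage minus post-satiation
    percentage, so an intact devaluation effect gives a positive score.
    """
    before = percent_chosen(baseline_choices, devalued_food)
    after = percent_chosen(post_satiation_choices, devalued_food)
    return before - after


# Hypothetical sessions of 10 trials each; "food1" is the food that is sated.
baseline = ["food1"] * 6 + ["food2"] * 4
post_fss = ["food1"] * 2 + ["food2"] * 8
score = difference_score(baseline, post_fss, "food1")
print(score)
```

Under this convention, a score near zero would correspond to the loss of the automatic preference shift that the caption attributes to AMYG and ORBl lesions.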

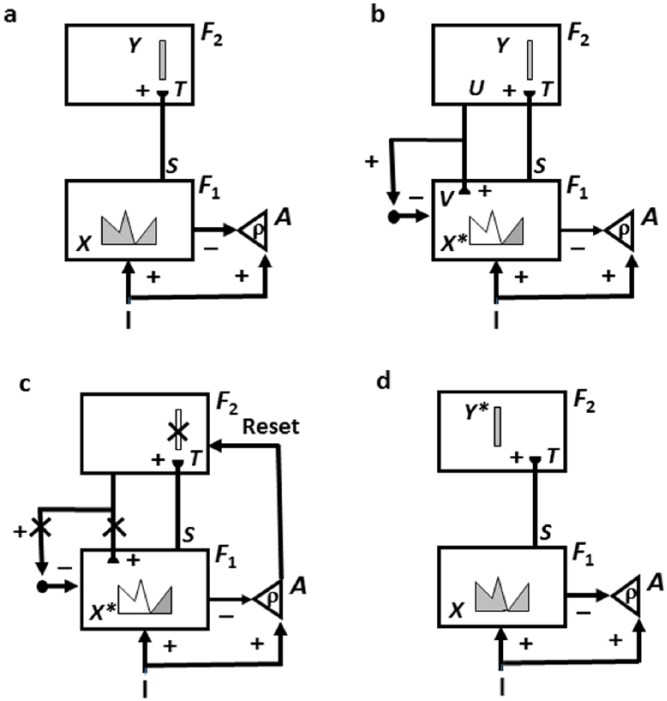

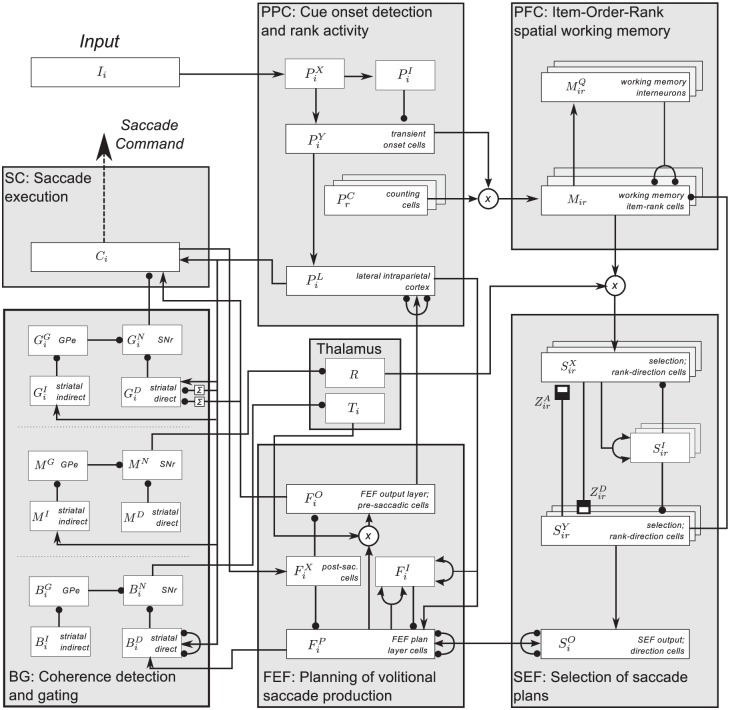

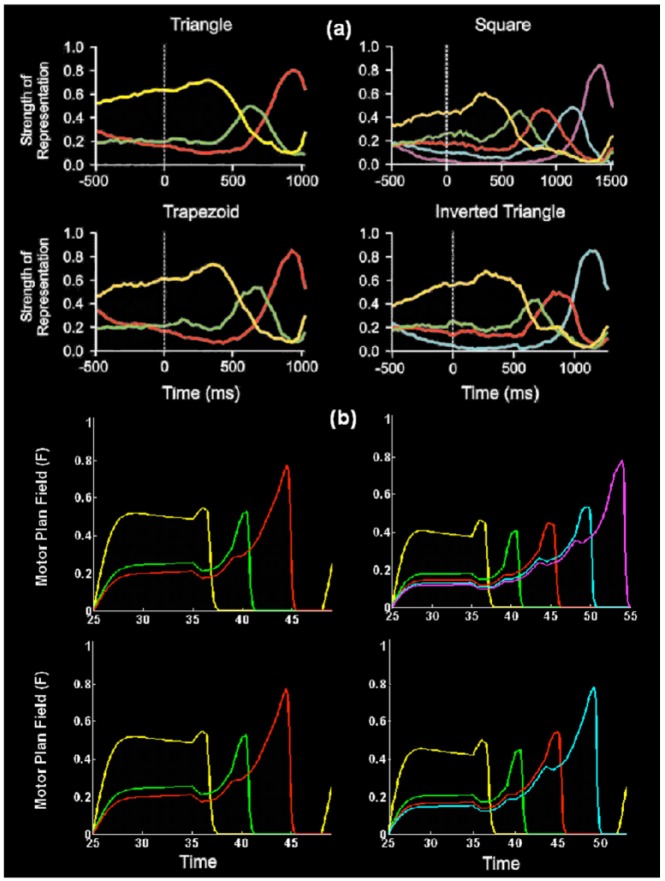

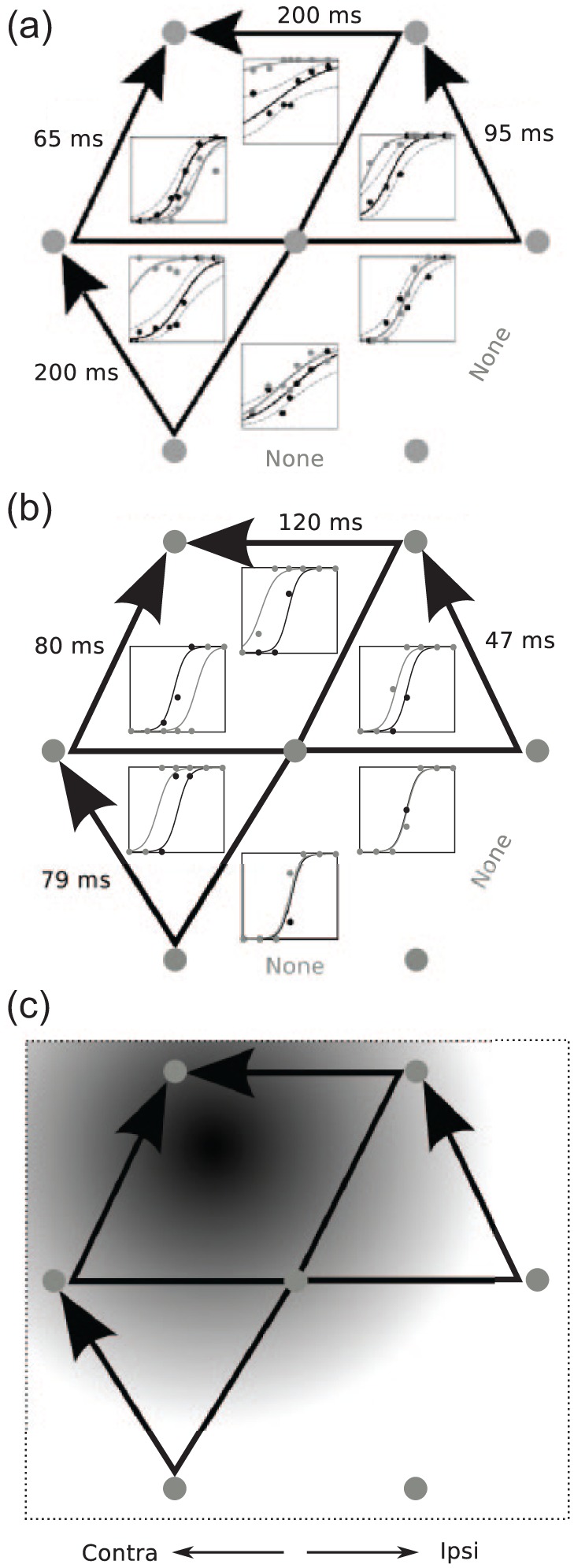

Figure 8.

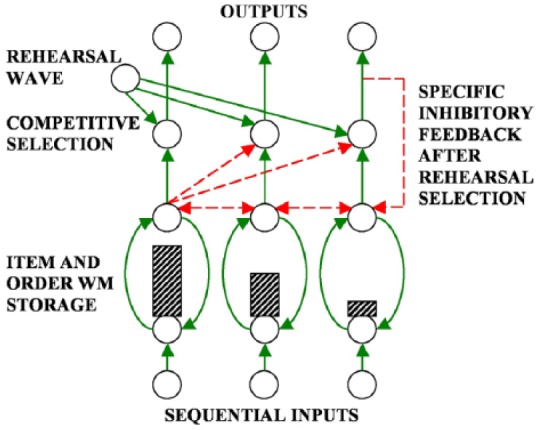

A temporal sequence of inputs creates a spatial pattern of activity across item chunks in an Item-and-Order working memory (height of hatched rectangles is proportional to cell activity). Relative activity level codes for item and order. A rehearsal wave allows item activations to compete before the maximally active item elicits an output signal and self-inhibits via feedback inhibition to prevent its perseverative performance. The process then repeats itself. Solid arrows denote excitatory connections. Dashed arrows denote inhibitory connections.

Source: Adapted from Grossberg (1978a).
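The storage and rehearsal cycle described in this caption can be sketched as a short loop. This is a toy illustration, not the published model equations: the geometric primacy gradient (earlier items stored with larger activity), the `decay` parameter, and the function names are assumptions, and the sketch does not handle repeated items.

```python
def store_sequence(items, decay=0.8):
    """Store a sequence as a spatial activity pattern across item chunks.

    Assumption: a primacy gradient, in which each later item is stored
    with activity `decay` times that of its predecessor.
    """
    return {item: decay ** position for position, item in enumerate(items)}


def recall(activities):
    """Read out items in order of decreasing stored activity."""
    remaining = dict(activities)
    performed = []
    while remaining:
        # Competition: the maximally active item elicits the output signal.
        winner = max(remaining, key=remaining.get)
        performed.append(winner)
        # Self-inhibition: the performed item is removed from the pattern,
        # which prevents its perseverative performance.
        del remaining[winner]
    return performed


pattern = store_sequence(["A", "B", "C"])
print(recall(pattern))
```

Because relative activity alone carries the order information in this sketch, any manipulation that reorders the stored activities would reorder the performed sequence.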

The devaluation procedure that was used by Málková et al. (1997) and Rudebeck et al. (2017) fed monkeys a large amount of Food 1 before testing their choice between Food 1 and an alternative food, Food 2, that had not been devalued. The MOTIVATOR model explains how devaluing Food 1 reduces the drive input (Figure 2(a)) from the lateral hypothalamus (LH) that is needed to activate its value category in the amygdala (AMYG), thereby reducing its ability to compete with the value category of Food 2. The value category of Food 2 can hereby win the competition between value categories, and release an incentive motivational signal to the OFC, which enables the OFC to choose Food 2 with increased probability. Either AMYG or OFC lesions eliminate this pathway to motivated choice of Food 2.
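The logic of this explanation can be made concrete with a deliberately simplified sketch. It is not the MOTIVATOR model: the multiplicative combination of reinforcer and drive inputs, the winner-take-all competition, the deterministic choice rule, and every name and number below are assumptions chosen only to illustrate the argument.

```python
def choose_cue(cue_salience, reinforcer_input, drive_input,
               amyg_intact=True, ofc_intact=True):
    """Return the food whose cue is chosen in a toy devaluation test."""
    foods = list(cue_salience)
    incentive = {food: 0.0 for food in foods}
    if amyg_intact and ofc_intact:
        # Value categories need converging reinforcer and drive inputs
        # (modelled here, by assumption, as a product).
        value = {f: reinforcer_input[f] * drive_input[f] for f in foods}
        # Competition between value categories: only the winner releases
        # an incentive motivational signal to its object-value category.
        winner = max(value, key=value.get)
        incentive[winner] = value[winner]
    # Without the AMYG-to-OFC pathway, choice reflects cue input alone.
    total = {f: cue_salience[f] + incentive[f] for f in foods}
    return max(total, key=total.get)


salience = {"food1": 0.55, "food2": 0.50}     # hypothetical cue strengths
reinforcer = {"food1": 1.0, "food2": 1.0}     # learned reinforcer inputs
hungry = {"food1": 1.0, "food2": 0.9}         # drive inputs before satiation
sated_on_food1 = {"food1": 0.2, "food2": 0.9}

print(choose_cue(salience, reinforcer, hungry))
print(choose_cue(salience, reinforcer, sated_on_food1))
print(choose_cue(salience, reinforcer, sated_on_food1, amyg_intact=False))
```

In this sketch the intact circuit shifts from the Food 1 cue to the Food 2 cue after satiation, whereas removing either the AMYG or the OFC stage leaves the choice insensitive to the drive inputs.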

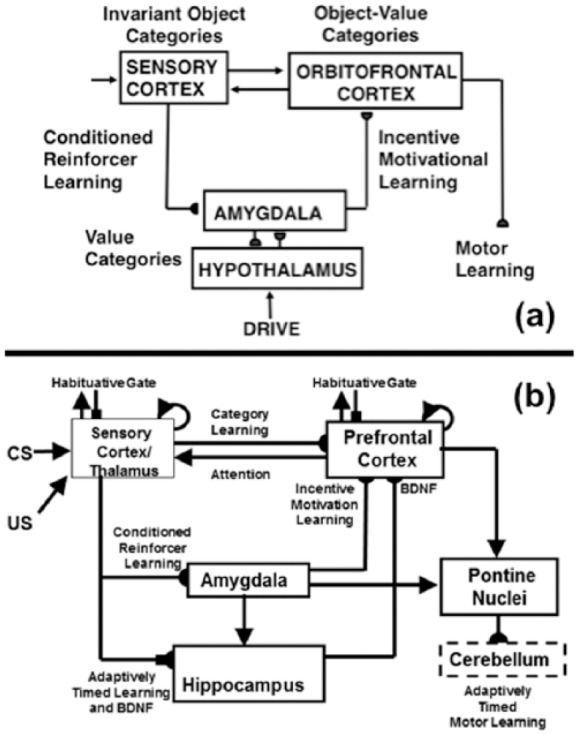

Figure 2.

(a) CogEM (Cognitive-Emotional-Motor) neural model circuits and their anatomical interpretation. CogEM models how invariant object categories in sensory cortex can activate value categories, also called drive representations, in the amygdala and hypothalamus, and object-value categories in the orbitofrontal cortex. Converging output signals from an object category and its value category are needed to vigorously fire the corresponding object-value category. Achieving such convergence from the amygdala requires prior conditioned reinforcer learning and incentive motivational learning. Activation of a value category also requires converging signals: from its object category and its internal drive input. When an object-value category fires, it can send positive feedback to its object category and attentionally enhance it with value-modulated activation. The motivationally enhanced object category can then better compete with other object categories via a recurrent competitive network (not shown) and draw attention to itself. Closing the feedback loop between object, value, and object-value categories causes a cognitive-emotional resonance that can induce a conscious percept of having a particular emotion, or feeling, towards the attended object, as well as knowing what it is. As this resonance develops, the object-value category can generate output signals that can activate cognitive expectations and action commands through other brain circuits. Adapted with permission from Grossberg and Seidman (2006). (b) The neurotrophic Spectrally Timed Adaptive Resonance Theory, or nSTART, model macrocircuit is a further development of the START model in which parallel and interconnected networks support both delay and trace conditioning. Connectivity from both the thalamus and the sensory cortex occurs to the amygdala and hippocampus. Sensory cortex interacts reciprocally with prefrontal cortex, specifically orbitofrontal cortex. 
Multiple types of learning and neurotrophic mechanisms of memory consolidation cooperate in these circuits to generate adaptively timed responses. Connections from the sensory cortex to the orbitofrontal cortex support category learning. Reciprocal connections from orbitofrontal cortex to sensory cortex support motivated attention. Habituative transmitter gates modulate excitatory conductances at all processing stages. Connections from sensory cortex to amygdala support conditioned reinforcer learning. Connections from amygdala to orbitofrontal cortex support incentive motivation learning. Hippocampal adaptively timed learning and brain-derived neurotrophic factor (BDNF) bridge temporal delays between CS offset and US onset during trace conditioning acquisition. BDNF also supports long-term memory consolidation within sensory cortex to hippocampal pathways and from hippocampal to orbitofrontal pathways. The pontine nuclei serve as a final common pathway for reading-out conditioned responses. Cerebellar dynamics are not simulated in nSTART. Arrowhead: excitatory synapse; hemidisc: adaptive weight; square: habituative transmitter gate; square followed by a hemidisc: habituative transmitter gate followed by an adaptive weight. Reprinted with permission from Franklin and Grossberg (2017).

In order to better understand how this decision process is proposed to work, the subsequent text will review three model properties: (1) how the brain can learn different value categories that can be selectively activated by different foods; (2) how internal drive inputs, notably satiety signals, interact with conditioned or unconditioned reinforcing sensory inputs before such sensory-drive combinations compete to determine an incentive motivational output; and (3) how conditioned reinforcer pathways can habituate due to frequent activation by a particular food and thus create progressively smaller inputs to their value categories. The loss of these factors due to an amygdala lesion may prevent an animal from shifting its visual cue preference away from a devalued food.
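Property (3) can be illustrated with a minimal simulation of a habituative gate. The equation form used here, in which the available transmitter z recovers towards 1 and is depleted at a rate proportional to the signal, is a form commonly used in this modelling tradition, but its use here, the parameter values, and the function name are assumptions for illustration only.

```python
def simulate_gate(signal, steps, dt=0.01, recovery=0.1, depletion=2.0):
    """Euler simulation of dz/dt = recovery*(1 - z) - depletion*signal*z.

    Returns the gated output signal*z at each time step. The input
    `signal` is held constant, mimicking frequent activation of one
    conditioned reinforcer pathway by a particular food.
    """
    z = 1.0  # transmitter starts fully accumulated
    gated = []
    for _ in range(steps):
        gated.append(signal * z)
        dz = recovery * (1.0 - z) - depletion * signal * z
        z += dt * dz
    return gated


outputs = simulate_gate(signal=1.0, steps=500)
print(outputs[0], outputs[-1])
```

With a sustained input, the gated output declines from its initial value towards the equilibrium recovery / (recovery + depletion * signal), which is one way that a frequently activated pathway could deliver progressively smaller inputs to its value category.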

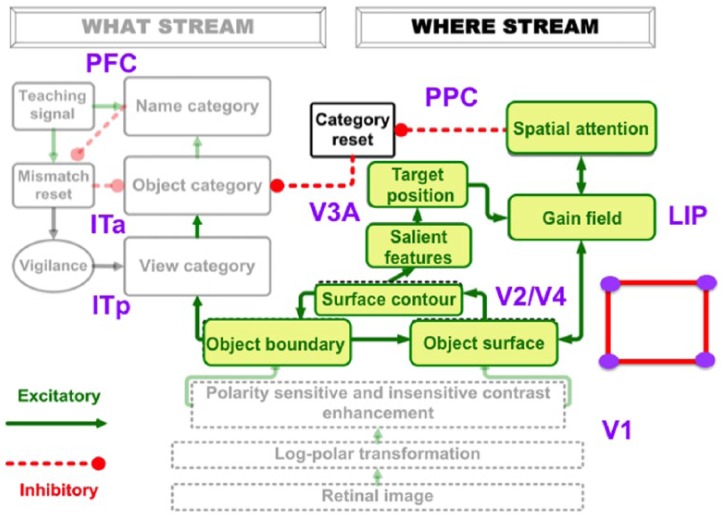

2.2. Reinforcement learning, motivated attention, resonance, and directed action

The Rudebeck et al. (2017) concept of desirability of predicted outcomes is related to earlier concepts such as the ‘somatic marker hypothesis’ which proposes that decision-making is a process that depends upon emotion (Bechara et al., 1999). The Cognitive-Emotional-Motor, or CogEM, model (Figure 2(a)) that will be described in this section, in several incrementally unlumped versions that include the MOTIVATOR model, provides a mechanistic neural explanation of some aspects of emotionally modulated decision-making by describing different properties of, and interactions between, sensory cortex, amygdala, and OFC in making these decisions (Baxter et al., 2000; Bechara et al., 1999; Schoenbaum et al., 2003; Tremblay and Schultz, 1999). CogEM also clarifies how the OFC contributes in this circuit to the expression of the incentive value of rewards and their sensitivity to reward devaluation (Gallagher et al., 1999), and how acquired value in OFC depends on input from basolateral amygdala (Schoenbaum et al., 2003).

These properties of the CogEM model arise as emergent, or interactive, properties of the neural mechanisms that regulate reinforcement learning among the sensory cortex, amygdala/hypothalamus, and OFC; the allocation of motivated attention to chosen options in the sensory and orbitofrontal cortices as a result of this learning; and the release of motivated actions by the OFC to acquire valued goal objects that can realise these options (Grossberg, 1971, 1972a, 1972b, 1982, 2000b; Grossberg and Levine, 1987; Grossberg and Schmajuk, 1987; Grossberg and Seidman, 2006).

In particular, as summarised in Figure 2(a), the CogEM model explains how invariant object categories, in sensory cortical regions like the anterior inferotemporal cortex (ITa), and object-value categories, in cortical regions like the OFC, interact with value categories, in subcortical emotional centres like amygdala and hypothalamus. These brain regions are linked by a feedback loop which, when activated for a sufficiently long time, can generate a cognitive-emotional resonance. Such a resonance can support conscious feelings while conditioned reinforcer learning pathways (from sensory cortex to amygdala; Gore et al., 2015) and incentive motivational learning pathways (from amygdala to OFC; Arana et al., 2003) focus motivated attention upon valued object and object-value representations. These attended object-value representations can, in turn, release commands to perform actions that are compatible with these feelings.

It should be noted immediately, however, that the CogEM circuit in Figure 2(a) cannot, by itself, maintain motivated attention during an adaptively timed interval that is sufficiently long to enable reinforcement learning to occur effectively in paradigms where rewards are delayed in time, as happens during trace conditioning and delayed match-to-sample, and to enable a conscious ‘feeling of what happens’ to emerge (Damasio, 1999). The hippocampus is needed to support both of these properties (Figure 2(b)), as Sections 2.5 and 2.8 will further discuss.

The next sections say more about these several types of categories and the learned interactions between them.

2.3. Object, value, and object-value categories

Three different types of learned representations are included in the CogEM circuit of Figure 2(a): invariant object categories respond selectively to objects that are seen from multiple views, positions, and distances from an observer. They are learned by inferotemporal (IT) cortical interactions between anterior IT (ITa), posterior IT (ITp), and prestriate cortical areas like V4 (Figure 1; Desimone, 1998; Gochin et al., 1991; Harries and Perrett, 1991; Mishkin, 1982; Mishkin et al., 1983; Seger and Miller, 2010; Ungerleider and Mishkin, 1982). How such invariant object categories may be learned as the eyes scan a scene is modelled by the ARTSCAN Search neural model that is discussed in Sections 3.28 and 3.29 (Cao et al., 2011; Chang et al., 2014; Fazl et al., 2009).

Value categories are sites of reinforcement learning that control different emotions and incentive motivational output signals. They occur in amygdala and hypothalamus in cells where reinforcing and homeostatic, or internal drive, inputs converge to generate emotional reactions and motivational decisions (Aggleton, 1993; Aggleton and Saunders, 2000; Barbas, 1995, 2007; Bower, 1981; Davis, 1994; Gloor et al., 1982; Halgren et al., 1978; LeDoux, 1993).

Object-value categories respond to converging signals from object categories and value categories. They are proposed to occur in OFC. The properties of object-value categories will be particularly discussed in this exposition. How they interact with representations in other brain regions is also an essential part of such an analysis.

Finally, motor representations (M) control discrete adaptive responses. They include multiple brain regions, including motor cortex and cerebellum (Evarts, 1973; Ito, 1984; Kalaska et al., 1989; Thompson, 1988). More complete models of the internal structure of motor representations and how they generate movement trajectories are described elsewhere (e.g. Bullock et al., 1998; Bullock and Grossberg, 1988; Cisek et al., 1998; Contreras-Vidal et al., 1997; Fiala et al., 1996; Gancarz and Grossberg, 1998, 1999) and can readily be incorporated into CogEM model extensions.

2.4. Conditioned reinforcer, incentive motivational, and motor learning: wanting

Three types of learning between these representations are shown in Figure 2(a). Conditioned reinforcer learning strengthens the pathway from an invariant object category to a value category. Incentive motivational learning strengthens the pathway from a value category to an object-value category. Motor learning enables the performance of an act aimed at acquiring a valued goal object.

Reinforcement learning, such as classical, or Pavlovian, conditioning (Kamin, 1968, 1969; Pavlov, 1927), occurs within conditioned reinforcer pathways (Figure 2(a)). A neutral event is called a conditioned stimulus (CS) when it is paired with an emotion-inducing, reflex-triggering event that is called an unconditioned stimulus (US). A CS can become a conditioned reinforcer when its object category is activated sufficiently often just before the value category is activated by an US. As a result of conditioned reinforcer learning, a CS can, on its own, subsequently activate a value category via this learned pathway. When this happens, the CS is called a conditioned reinforcer because it can trigger many of the same reinforcing and emotional effects as an US.

During classical conditioning, incentive motivational learning also occurs from the activated value category to the object-value category that corresponds to the CS. Incentive motivational learning enables an active value category to prime, or modulate, the object-value categories of all CSs that have consistently been correlated with it. It is the kind of learning that primes an individual to think about places to eat when feeling hungry.

Motor, or habit, learning enables the sensorimotor maps, vectors, and gains that are involved in sensory-motor control to be adaptively calibrated, thereby enabling a CS to read out correctly calibrated movements via its object-value category.

These conclusions about the CogEM model also hold for operant, or instrumental, conditioning, where rewards or punishments are delivered that are contingent upon particular behaviours (Skinner, 1938). In fact, the CogEM model was introduced to explain data about operant conditioning (Grossberg, 1971). Many reinforcement learning and motivated attentional mechanisms exploit shared neural circuits, even though the experimental paradigms and behaviours that activate these circuits may differ.

The incentive motivational and motor learning pathways contribute to the process that various researchers call wanting. As Berridge et al. (2009) note, ‘By “wanting” we mean incentive salience, a type of incentive motivation that promotes approach towards and consumption of rewards’ (p. 67). See also Smith et al. (2011) for a further discussion of the different brain substrates of pleasure and incentive salience.

2.5. Category learning and memory consolidation: effects of lesions

A fourth kind of learning, category learning, adapts the connections from thalamus to an object category in sensory cortex, and/or from an object category to an object-value category in OFC. This category learning process enables external objects and events in the world to selectively activate object and object-value categories. Category learning was not simulated in the original CogEM model (Figure 2(a)), which focused on reinforcement learning, motivated attention, and the release of actions towards valued goal objects. Category learning does play a key role in extensions of CogEM, such as the neurotrophic Spectrally Timed Adaptive Resonance Theory, or nSTART, model (Figure 2(b)) of Franklin and Grossberg (2017). nSTART augments CogEM to include category learning, as well as adaptively timed learning in the hippocampus that can bridge between CS and US stimuli that are separated in time by an interval that can be hundreds of milliseconds in duration, as can occur during trace conditioning and delayed non-match to sample. Interactions between these two processes, augmented by all the other processes of CogEM, enable nSTART to explain and simulate how memory consolidation of recognition categories may occur after conditioning ends. nSTART supports this explanation by mechanistically explaining and simulating data about the complex pattern of disruptions of memory consolidation that occur in response to early versus late lesions of thalamus, amygdala, hippocampus, and OFC.

2.6. Polyvalent constraints and competition interact to choose the most valued options

Both the CogEM and nSTART circuits in Figure 2 need to have two successive sensory processing stages, an invariant object category stage in the temporal cortex, and an object-value category stage in OFC, in order to ensure that the object-value category can release motivated behaviour most vigorously if both sensory inputs from temporal cortex and motivational inputs from the amygdala are simultaneously delivered to the object-value category. A polyvalent constraint on an object-value category means that it fires most vigorously when it receives input from its invariant object category and from a value category. In other words, an object-value category is amplified when the action that it controls is valued at that time. Only when an object-value category wins a competition with other object-value categories can it trigger an action. After learning occurs, a conditioned reinforcer can, by itself, satisfy this polyvalent constraint by sending a signal from its object category directly to its object-value category, and indirectly via the (conditioned reinforcer)-(incentive motivational) pathway (Figure 2). Converging pathways from sensory cortical areas and amygdala to OFC are well known anatomically (e.g. Barbas, 1995).

The firing of each value category in the amygdala/hypothalamus is also regulated by a combination of polyvalent constraints and competition. Here, the polyvalent constraint (Figure 2(a)) is realised by two converging inputs to each value category: a reinforcing input from an US or conditioned reinforcer CS via a conditioned reinforcer pathway and a sufficiently large internal drive input (e.g. hunger, satiety). Each value category can only become active enough to reliably win the competition with other value categories when it receives sufficiently big converging inputs, and only a winning value category can generate large incentive motivational signals to object-value categories. In particular, even if visual cues such as a familiar food generate strong conditioned reinforcer inputs to a value category, that value category cannot fire if its internal drive input is reduced by eating a lot, since then the hunger drive input will decrease and the satiety drive input will increase, thereby preventing the value category from winning the competition.

Because both the value categories and the object-value categories obey polyvalent constraints and compete to determine either incentive motivational or motor outputs, a CogEM circuit tends to choose options for action that are currently the most desired ones. These CogEM dynamics hereby clarify necessary conditions for the computation of desirability by the OFC.
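The interplay of polyvalent constraints and competition among value categories can be illustrated with a minimal sketch. It assumes, purely for illustration, that the polyvalent constraint is a multiplicative gate between reinforcing and drive inputs and that competition is a thresholded winner-take-all choice; the function names, category names, threshold, and all numbers are hypothetical and are not taken from the published CogEM simulations.

```python
# Illustrative sketch only: the multiplicative gate, the threshold, and all
# numbers below are assumptions, not parameters of the published CogEM model.

def value_category_activity(reinforcer_input, drive_input):
    """Polyvalent constraint: activity is large only if BOTH inputs are large."""
    return reinforcer_input * drive_input


def choose_winner(activities, threshold=0.2):
    """Winner-take-all competition; returns None if no category exceeds threshold."""
    name, best = max(activities.items(), key=lambda item: item[1])
    return name if best > threshold else None


def winning_value_category(reinforcer_inputs, drive_inputs):
    """Gate each category's reinforcing input by its drive, then let them compete."""
    activities = {
        name: value_category_activity(reinforcer_inputs[name], drive_inputs[name])
        for name in reinforcer_inputs
    }
    return choose_winner(activities)


# The familiar food cue delivers the same strong reinforcing input in both cases.
cues = {"food": 0.9, "water": 0.5}

hungry = {"food": 0.8, "water": 0.6}   # strong hunger drive
sated = {"food": 0.05, "water": 0.6}   # hunger drive reduced after eating a lot

winner_when_hungry = winning_value_category(cues, hungry)
winner_when_sated = winning_value_category(cues, sated)
```

Under these assumed values, the food value category wins when the hunger drive is high, whereas after satiation the same food cue can no longer win and the competing category is chosen instead.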

Many issues need to be discussed to better understand how these circuits work in practice. These issues include the following: Why is the amygdala called a value category? How does a value category differ from just an internal drive such as hunger? In particular, can value categories represent specific hungers that can be selectively devalued by eating a lot of a particular food? How is the hypothalamus involved in learning a value category? Finally, are there also pathways for expressing valued goals that do not require the amygdala? Such issues will be discussed as the exposition proceeds.

2.7. Predicting what happens next in a changing world: blocking and unblocking

In the real world, a foraging animal may be confronted with multiple possible sensory cues that predict different kinds of available foods. The case of choosing Food 1 or Food 2 in response to different CSs is a special case of this situation. To more completely understand how a choice of one option over another occurs, along with how its desirability is computed, requires an understanding of how attentional blocking and unblocking occur (Kamin, 1968, 1969; Pavlov, 1927). Blocking and unblocking experiments also clarify how humans and other animals discover what combinations of cues are causal and which are predictively irrelevant. Causal feature combinations tend to be attended and used to control subsequent actions. The following explanation of how this happens can be used to design ecologically more realistic neurobiological studies of desirability.

Blocking and unblocking experiments show that the discovery of true environmental causes is an incremental process. Unless sufficiently many actions based upon unblocked cue combinations are made, and their consequences used to match and mismatch learned expectations, an irrelevant cue can be erroneously thought to be predictive, much as superstitious behaviours may be learned and maintained (Skinner, 1948). Predictive errors hereby play a crucial role in shaping the learning of environmental causes, as popular recent books have noted; for example, Schulz (2010). Section 2.14 discusses how unblocking works in greater detail.

A food’s desirability in the real world, where there may be multiple possible food options to choose from, can only be computed if it is not blocked. For example, suppose that a buzzer sound (CS1) is paired with a food reinforcer (US1) until an animal salivates to this sound in anticipation of eating the food. On subsequent learning trials, suppose that, before the food occurs, the buzzer sounds at the same time that a perceptually equally salient light flashes (CS2). Under these circumstances, the flashing light does not become a source of conditioned salivation because it does not predict, indeed does not cause, any consequence that the buzzer sound alone did not already predict. In other words, the flashing light is predictively irrelevant and is thus attentionally blocked.

On the other hand, suppose that, whenever the flashing light occurs with the buzzer sound, the amount of subsequent food (US2) is much greater than when the buzzer sound occurred alone. Then, the animal does learn to anticipate food in response to the flashing light, with the usual salivation and expectation of subsequent food, because it causally predicts an increase in food. If, instead, the amount of food that is presented when the flashing light and buzzer occur together is much less than when the buzzer sound occurred alone, then a wave of frustration (Amsel, 1962, 1992), which is a negative reinforcer, may be experienced, even though some food, which is a positive reinforcer, has been presented. The process whereby the CS2 becomes predictively relevant is called attentional unblocking, and it may become a source of either a conditioned appetitive response or conditioned frustration, depending on whether the amount of food is more or less ample after the simultaneous cues than in response to the buzzer alone. Unexpected consequences can hereby lead to the discovery of new cue combinations that cause valued consequences. Attention can then be focused on the unblocked cues, which can then be associated with appropriate new responses.

Blocking and unblocking experiments show that humans and many other animals behave like minimal adaptive predictors who can learn to focus their attention upon events that causally predict important affective consequences, while ignoring other events, at least until an unexpected consequence occurs.
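The behavioural pattern of blocking and unblocking described above can be reproduced with a very small error-driven learning sketch. Note that this uses a Rescorla-Wagner-style shared prediction error, swapped in here only because it is compact; it is not the attentional CogEM mechanism, and it does not model frustration as such. The cue names, learning rate, trial counts, and reward magnitudes are assumed values.

```python
# Illustrative sketch only. This is a Rescorla-Wagner-style error-driven rule,
# swapped in for brevity; it is NOT the CogEM attentional mechanism, and the
# learning rate and reward magnitudes are assumed values.

def train(weights, cues, reward, trials, rate=0.2):
    """Update every presented cue from the shared prediction error."""
    for _ in range(trials):
        prediction = sum(weights[cue] for cue in cues)
        error = reward - prediction
        for cue in cues:
            weights[cue] += rate * error
    return weights


def run(compound_reward):
    """Phase 1: buzzer alone with food. Phase 2: buzzer plus light with food."""
    weights = {"buzzer": 0.0, "light": 0.0}
    train(weights, ["buzzer"], reward=1.0, trials=100)
    train(weights, ["buzzer", "light"], reward=compound_reward, trials=100)
    return weights


blocked = run(compound_reward=1.0)         # same amount of food as before
unblocked_up = run(compound_reward=2.0)    # more food than the buzzer predicted
unblocked_down = run(compound_reward=0.5)  # less food than the buzzer predicted
```

With these assumptions, the light acquires essentially no associative strength when the food is unchanged (blocking), a positive strength of about 0.5 when the food increases, and a negative strength of about -0.25 when the food decreases. The last case is only loosely analogous to the conditioned frustration discussed in the text.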

The same CogEM dynamics that enable the OFC to release actions aimed at acquiring desirable goal objects also carry out blocking. Blocking can be understood in the CogEM model as a result of how a cognitive-emotional resonance using the feedback pathways between temporal cortex, amygdala, and OFC (Figure 2) triggers competition among the representations in each of these brain regions to choose, and thereby attend to, the object that has the most salient combination of sensory input and motivational feedback from its value category.

For blocking to work properly, the cells in sensory cortex and OFC need to obey the membrane equations of neurophysiology, also called shunting interactions, and to compete with each other using recurrent, or feedback, inhibitory interactions. Such recurrent shunting on-centre off-surround networks tend to conserve, or normalise, the total activity that is shared among their active cells (Grossberg, 1973, 1980, 2013b). Thus, if the activity of one object category gets most amplified by a favourable combination of feedforward and feedback signalling, then the activities of the object categories with which it is competing will be inhibited, leading to attentional blocking. Grossberg and Levine (1987) simulated attentional blocking using these CogEM interactions. An explanation of unblocking requires additional mechanisms that will be described in Section 2.14.
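The normalisation property that underlies blocking can be sketched with the steady state of a shunting on-centre off-surround network. As a simplifying assumption, the sketch uses the feedforward form, whose equilibrium is x_i = B I_i / (A + sum of all I_k), rather than the recurrent networks used in the simulations cited above; the parameter names, the two-cell setup, and the fivefold feedback gain are hypothetical.

```python
# Illustrative sketch only: a feedforward shunting network stands in for the
# recurrent networks of the text, and the feedback gain is an assumed value.

def shunting_steady_state(inputs, decay=1.0, ceiling=1.0):
    """Equilibrium of dx_i/dt = -A*x_i + (B - x_i)*I_i - x_i*sum_{k != i} I_k."""
    total = sum(inputs)
    return [ceiling * value / (decay + total) for value in inputs]


# Two equally salient cues (buzzer, light) before any motivational feedback.
sensory = [1.0, 1.0]
baseline = shunting_steady_state(sensory)

# The buzzer's net input is amplified by learned motivational feedback
# (assumed gain of 5); the light receives no such amplification.
with_feedback = shunting_steady_state([sensory[0] * 5.0, sensory[1]])
```

Here each cell starts at one third of the ceiling activity. Once the buzzer is amplified, its activity rises to about 0.71 while the light falls to about 0.14, and the total activity remains below the ceiling, which is the sense in which the favoured category suppresses its competitors.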

It should be noted that blocking and unblocking experiments share some properties with the Asaad et al. (2017) experiments on credit assignment. Section 3.19 clarifies why this is so.

2.8. Cognitive-emotional resonances, feeling of what happens, and somatic markers

Previous articles review additional psychological and neurobiological data that the CogEM model has explained and predicted, and how it compares with other models of cognitive-emotional dynamics; for example, Grossberg (2013a, 2017b). All of these data explanations are relevant, moreover, to the computation of desirability.

One such comparison relates to the ability of the CogEM model to explain and predict clinical data. Damasio (1999) has derived from clinical data a heuristic version of the CogEM model and used it to describe cognitive-emotional resonances that support ‘the feeling of what happens’. Each processing stage in Damasio’s model (see his Figure 6.1) corresponds to a processing stage in the CogEM circuit of Figure 2(a). In particular, Damasio’s ‘map of object X’ corresponds to the sensory cortical stage where invariant object categories are represented. His ‘map of the proto-self’ becomes the value category and its multiple interactions. His ‘second-order map’ becomes the object-value category. And his ‘map of object X enhanced’ becomes the object category as it is attentively amplified by feedback from the object-value category. Feedback from an object-value category to its object category closes an excitatory feedback loop between object, value, and object-value categories. Persistent activity through this loop – maintained long enough with the help of adaptively timed feedback from sensory cortex to OFC via the hippocampus (Figure 2(b)) – enables the attended object to achieve emotional and motivational significance and to drive the choice of motivated decisions that can trigger context-appropriate actions towards valued goals.

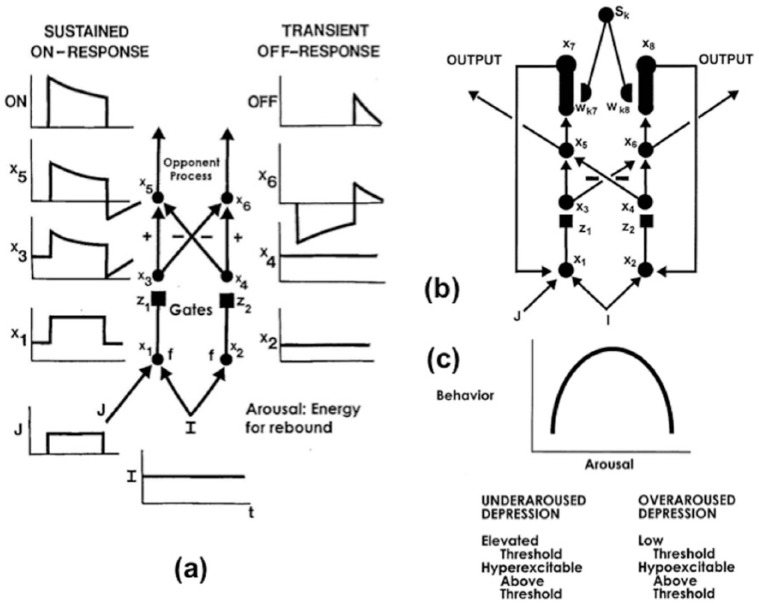

Figure 6.

(a) A gated dipole opponent process can generate habituative ON responses and transient OFF rebounds in response to the phasic onset and offset, respectively, of an input to the ON channel. These mechanisms are a phasic input to the ON channel that is turned on and off through time (input J), nonspecific arousal that is delivered equally to the ON and OFF pathways (input I), cell activities at each of the three processing stages in the ON and OFF pathways (variables xi for the six cells that are labelled with indices i = 1–6), activity-dependent habituative transmitters for the ON and OFF pathways (variables zi with i = 1 and 2, and denoted by the square synapses), competition across the ON and OFF pathways (pathways with plus and minus signs), and output thresholds that rectify the ON and OFF pathway output signals. The antagonistic rebound in response to offset of a phasic input, such as a fear-inducing shock to the ON pathway (variable J), is the transient OFF-response (e.g. relief) at the output stage of the OFF pathway. This rebound is energised by the tonic input I that equally arouses both the ON and the OFF pathways, even after the phasic input shuts off. The activities at the dipole’s several processing stages react to the phasic and tonic inputs in the following way: the ON and OFF cell activities x1 and x2 respond to the sum of tonic-plus-phasic ON pathway input I + J, and the tonic OFF pathway input I, respectively, before they generate output signals f(x1) and f(x2) to the next processing stage. Before they reach the next processing stage, these signals are multiplied, or gated, by the habituative transmitters z1 and z2, respectively. The gated output signals f(x1)z1 and f(x2)z2 excite the ON and OFF cell activities x3 and x4, respectively, at the next processing stage. The habituative transmitters convert the step-plus-baseline activity pattern x1 in the ON channel into the overshoot-habituation-undershoot-habituation pattern at activity x3. 
The baseline activity pattern x2 in the OFF channel is converted into the habituated baseline activity x4. Next, opponent competition occurs across the ON and OFF channels. As a result, the habituated baseline activity x4 in the OFF channel is subtracted from the ON activity x3 to yield x5. The overshoot and undershoot in x5 are now shifted down until they lie above and below the equilibrium activity zero, respectively, of x5. Then, activity x5 is thresholded to generate an ON output signal. This output signal has an initial overshoot of activity, followed by habituation. The negative activity in the undershoot of x5 is inhibited to zero by the output threshold. When the signs of excitation and inhibition are reversed in the OFF channel, the activity x6 is caused. Activity x6 is simply the flipped, or mirror, image of x5 with respect to the zero equilibrium activity. Positive (negative) activities in x5 become negative (positive) activities in x6. Thresholding x6 again cuts off negative activities, thereby allowing only the flipped undershoot to generate the OFF channel output. This rectified output is the transient antagonistic rebound. Grossberg (1972b) mathematically proved that a sudden increment in arousal can also trigger an antagonistic rebound. Since ‘novel events are arousing’, this property enables an unexpected event to trigger an antagonistic rebound. See Grossberg and Seidman (2006, Appendix A) for a review of this proof. In summary, an antagonistic rebound is due to interactions between a phasic input, tonic arousal, habituative transmitter gating, competition, and thresholding. (b) A READ (REcurrent Associative Dipole) circuit is a gated dipole with excitatory feedback, or recurrent, pathways between activities x7 and x1, and activities x8 and x2. Feedback enables the READ circuit to maintain a stable motivational baseline to support an ongoing motivated behaviour. 
A sensory representation Sk sends conditionable signals to the READ circuit that are multiplied, or gated, by conditioned reinforcer adaptive weights, or long-term memory (LTM) traces, wk7 and wk8 to the ON and OFF channels, respectively. Read-out of previously learned adaptive weights is dissociated from read-in of new values of the learned weights. This dissociation allows new weight learning to be generated by teaching signals from the ON or OFF channel that wins the opponent competition. The combination of recurrent feedback and associative dissociation enables the adaptive weights to avoid learning baseline noise, while they maintain in short-term memory the relative balance of ON and OFF channel conditioning during a motivated act, and preserve their learned conditioned reinforcer associations until they are disconfirmed by predictive mismatches if and when new learning contingencies are experienced. Reprinted with permission from Grossberg and Schmajuk (1987). (c) Gated dipole opponent processes exhibit an Inverted-U behavioural response as a function of arousal level, as explained in the text. Reprinted with permission from Grossberg and Seidman (2006).
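The gated dipole dynamics described in the caption of Figure 6(a) can be sketched numerically. The sketch below is a simplified illustration under stated assumptions: a linear signal function f(x) = x, first-stage activities that track their inputs instantaneously, Euler integration, and arbitrary parameter values. The function and parameter names are hypothetical, and the READ circuit feedback of Figure 6(b) is not included.

```python
# Illustrative sketch only: linear signal function, instantaneous cell
# activities, Euler integration, and all parameter values are assumptions.

def simulate_gated_dipole(arousal=1.0, phasic=1.0, on_start=5.0, on_stop=15.0,
                          t_end=30.0, dt=0.01, recovery=0.1, depletion=0.5):
    """Return times and the rectified ON and OFF channel outputs."""
    # Start both habituative transmitters at their equilibrium under tonic arousal.
    z1 = z2 = recovery / (recovery + depletion * arousal)
    times, on_out, off_out = [], [], []
    for step in range(int(round(t_end / dt))):
        t = step * dt
        j = phasic if on_start <= t < on_stop else 0.0
        x1 = arousal + j          # ON channel: tonic plus phasic input (I + J)
        x2 = arousal              # OFF channel: tonic input only (I)
        x3 = x1 * z1              # transmitter-gated ON signal
        x4 = x2 * z2              # transmitter-gated OFF signal
        x5 = x3 - x4              # opponent competition, ON side
        x6 = x4 - x3              # opponent competition, OFF side
        times.append(t)
        on_out.append(max(x5, 0.0))    # output threshold rectifies each channel
        off_out.append(max(x6, 0.0))
        # Transmitters accumulate towards 1 and are depleted by their signals.
        z1 += dt * (recovery * (1.0 - z1) - depletion * x1 * z1)
        z2 += dt * (recovery * (1.0 - z2) - depletion * x2 * z2)
    return times, on_out, off_out


times, on_out, off_out = simulate_gated_dipole()
```

With these assumed values, the ON output overshoots at input onset and then habituates, while the OFF output stays at zero until the phasic input shuts off, at which point a transient antagonistic rebound appears and then decays as the ON transmitter recovers.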

Such sustained activation through a positive feedback loop gives rise to a resonant brain state. In the present instance, it is called a cognitive-emotional resonance because it binds cognitive information in the object category to emotional information in the value category. A resonance is a dynamical state during which neuronal firings across a brain network are amplified and synchronised when they interact via excitatory feedback signals during a matching process that occurs between bottom-up and top-down pathways. Grossberg (2013a, 2017b) explains in detail how resonating cell activities focus attention upon a subset of cells, and how the brain can become conscious of attended events during a resonant state. It is called an adaptive resonance because the resonant state can trigger learning within the adaptive weights, or long-term memory (LTM) traces, that exist at the synapses of these pathways. In a cognitive-emotional resonance, these adaptive weights occur in conditioned reinforcer and incentive motivational pathways, among others. Several different types of adaptive resonances will be described below.

CogEM hereby embodies, and indeed anticipated, key concepts of the ‘somatic marker hypothesis’, which proposes that decision-making is a process that depends upon emotion, while also providing a mechanistic neural explanation and simulations (e.g. Grossberg et al., 2008) of various properties of amygdala and OFC in making these decisions (Baxter et al., 2000; Bechara et al., 1999; Schoenbaum et al., 2003).

CogEM has also proposed explanations of additional clinical data, such as the data about memory consolidation that were mentioned above (Franklin and Grossberg, 2017); data about Fragile X syndrome and some types of repetitive behaviours that are found in individuals with autism (Grossberg and Kishnan, 2018); and data about emotional, attentional, and executive deficits that are found in individuals with autism or schizophrenia (Grossberg, 2000b; Grossberg and Seidman, 2006).

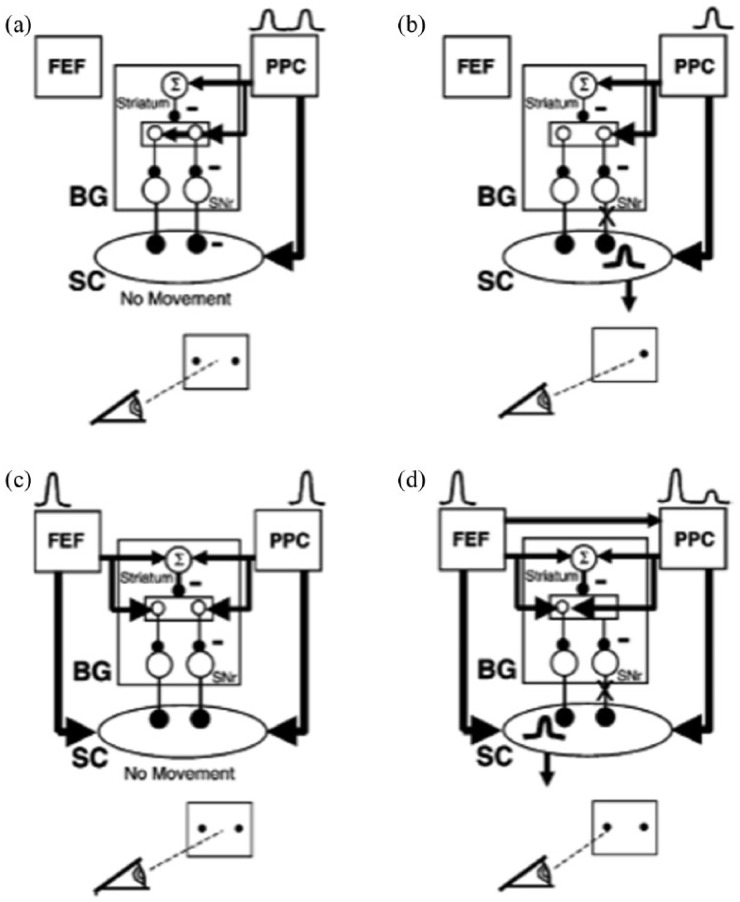

2.9. MOTIVATOR: amygdala and basal ganglia dynamics during conditioning

In order to mechanistically explain how devaluation works, and to answer the questions posed at the end of Section 2.6, the MOTIVATOR model, which unlumps the CogEM model, is needed (Dranias et al., 2008; Grossberg et al., 2008; Figure 3(a)). The MOTIVATOR model combines the CogEM model with a model of how the basal ganglia (BG) learns to respond to expected and unexpected rewards (Figure 4(a)–(c); Brown et al., 1999). Thus, in addition to clarifying how value categories are learned, the MOTIVATOR model begins to explain how the amygdala and the BG interact with one another and with the temporal and orbitofrontal cortices to focus motivated attention and trigger choices aimed at acquiring valued goal objects.

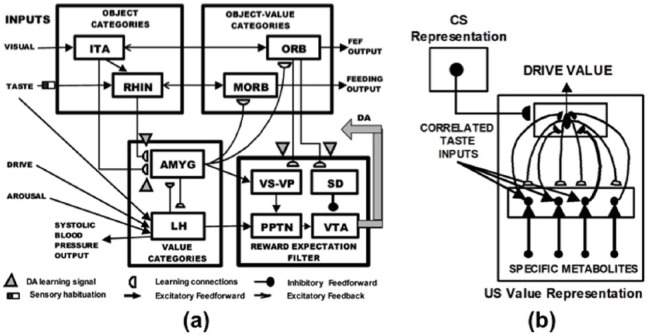

Figure 3.

(a) The MOTIVATOR neural model explains and simulates key computationally complementary functions of the amygdala and basal ganglia (SNc) during conditioning and learned performance. The basal ganglia generate Now Print signals in response to unexpected rewards. These signals modulate learning of new associations in many brain regions. The amygdala supports motivated attention to trigger actions that are expected to occur in response to conditioned or unconditioned stimuli. Object Categories represent visual or gustatory inputs in anterior inferotemporal (ITA) and rhinal (RHIN) cortices, respectively. Value Categories represent the value of anticipated outcomes on the basis of hunger and satiety inputs, in amygdala (AMYG) and lateral hypothalamus (LH). Object-Value Categories resolve the value of competing perceptual stimuli in medial (MORB) and lateral (ORB) orbitofrontal cortex. The Reward Expectation Filter detects the omission or delivery of rewards using a circuit that spans ventral striatum (VS), ventral pallidum (VP), striosomal delay (SD) cells in the ventral striatum, the pedunculopontine nucleus (PPTN) and midbrain dopaminergic neurons of the substantia nigra pars compacta/ventral tegmental area (SNc/VTA). The circuit that processes CS-related visual information (ITA, AMYG, ORB) operates in parallel with a circuit that processes US-related visual and gustatory information (RHIN, AMYG, MORB). (b) Reciprocal adaptive connections between lateral hypothalamus and amygdala enable amygdala cells to become learned value categories. The bottom region represents hypothalamic cells, which receive converging taste and metabolite inputs whereby they become taste-drive cells. Bottom-up signals from activity patterns across these cells activate competing value category, or US Value Representations, in the amygdala. 
A winning value category learns to respond selectively to specific combinations of taste-drive activity patterns and sends adaptive top-down priming signals back to the taste-drive cells that activated it. CS-activated conditioned reinforcer signals are also associatively linked to value categories. Adaptive connections end in (approximate) hemidiscs. See text for details.

Source: Adapted with permission from Dranias et al. (2008).

Figure 4.

(a) Model circuit for the control of dopaminergic Now Print signals in response to unexpected rewards. Cortical inputs (Ii), activated by conditioned stimuli, learn to excite the SNc via a multi-stage pathway from the ventral striatum (S) to the ventral pallidum and then on to the PPTN (P) and the SNc (D). The inputs Ii excite the ventral striatum via adaptive weights WiS, and the ventral striatum excites the PPTN via double inhibition through the ventral pallidum, with strength WSP. When the PPTN activity exceeds a threshold GP, it excites the SNc with strength WPD. The striosomes, which contain an adaptive spectral timing mechanism (xij, Gij, Yij, Zij), learn to generate adaptively timed signals that inhibit reward-related activation of the SNc. Primary reward signals (IR) from the lateral hypothalamus both excite the PPTN directly (with strength WRP) and act as training signals to the ventral striatum S (with strength WRS) that trains the weights WiS. Arrowheads denote excitatory pathways, circles denote inhibitory pathways, and hemidiscs denote synapses at which learning occurs. Thick pathways denote dopaminergic signals. Reprinted with permission from Brown et al. (1999). (b) Dopamine cell firing patterns: Left: data. Right: model simulation, showing model spikes and underlying membrane potential. (A) In naive monkeys, the dopamine cells fire a phasic burst when unpredicted primary reward R occurs, such as if the monkey unexpectedly receives a burst of apple juice. (B) As the animal learns to expect the apple juice that reliably follows a sensory cue (conditioned stimulus, CS) that precedes it by a fixed time interval, then the phasic dopamine burst disappears at the expected time of reward, and a new burst appears at the time of the reward-predicting CS. (C) After learning, if the animal fails to receive reward at the expected time, a phasic depression, or dip, in dopamine cell firing occurs. 
Thus, these cells reflect an adaptively timed expectation of reward that cancels the expected reward at the expected time. The data are reprinted with permission from Schultz et al. (1997). The model simulations are reprinted with permission from Brown et al. (1999). (c) Dopamine cell firing patterns: Left: data. Right: model simulation, showing model spikes and underlying membrane potential. (A) The dopamine cells learn to fire in response to the earliest consistent predictor of reward. When CS2 (instruction) consistently precedes the original CS (trigger) by a fixed interval, the dopamine cells learn to fire only in response to CS2. Data reprinted with permission from Schultz et al. (1993). (B) During training, the cell fires weakly in response to both the CS and reward. Data reprinted with permission from Ljungberg et al. (1992). (C) Temporal variability in reward occurrence: When reward is received later than predicted, a depression occurs at the time of predicted reward, followed by a phasic burst at the time of actual reward. (D) If reward occurs earlier than predicted, a phasic burst occurs at the time of actual reward. No depression follows since the CS is released from working memory. Data in C and D reprinted with permission from Hollerman and Schultz (1998). (E) When there is random variability in the timing of primary reward across trials (e.g. when the reward depends on an operant response to the CS), the striosomal cells produce a Mexican Hat depression on either side of the dopamine spike. Data reprinted with permission from Schultz et al. (1993). Model simulation reprinted with permission from Brown et al. (1999).

The BG need to be discussed along with the amygdala because they play an important role in both the cognitive-emotional and working memory learning that are relevant to this article’s explanatory goals (Figure 1). Indeed, the amygdala and BG seem to play computationally complementary roles (Grossberg, 2000a), with the BG triggering Now Print learning signals in response to unexpected rewards, and the amygdala learning to activate incentive motivational signals with which to help acquire expected rewards. A Now Print learning signal is a signal that is broadcast broadly to many brain regions where it can modulate learning at all of its recipient neurons (Grossberg, 1974; Harley, 2004; Livingston, 1967; McGaugh, 2003).

As in CogEM, the model amygdala and LH interact to calculate the expected current value of the subjective outcome that the CS predicts, constrained by the current state of deprivation or satiation. The amygdala then relays the expected value information to orbitofrontal cells (ORB in Figure 3(a) and OFC in Figure 1) that receive visual inputs from anterior inferotemporal cells (ITA; Amaral and Price, 1984; Ghashghaei and Barbas, 2002; Öngür and Price, 2000; Reep et al., 1996) and to medial orbitofrontal cells (MORB in Figure 3(a)) that receive gustatory inputs from rhinal cortex (RHIN) (Barbas, 1993, 2000; Barbas et al., 1999; Reep et al., 1996). The activations of these orbitofrontal cells code the expected subjective values of objects. These values guide behavioural choices.

The review of Levy and Glimcher (2012) discusses the OFC computation of expected subjective value from the perspective of neuroeconomic theory. Also of neuroeconomic interest is the Grossberg and Gutowski (1987) exposition of how the CogEM model explains and simulates data of Kahneman and Tversky (1979) about decision-making under risk, thereby explaining Prospect Theory axioms using neural designs that are essential for survival, as well as data that Prospect Theory cannot explain, such as data about preference reversals.

The model BG, or Reward Expectation Filter (Figures 3(a) and 4(a)), detects errors in CS-specific predictions of the value and timing of rewards (Ljungberg et al., 1992; Schultz, 1998; Schultz et al., 1992, 1993, 1995, 1997). Excitatory primary rewarding inputs from the LH reach the substantia nigra pars compacta (SNc) via the pedunculopontine nucleus (PPTN). The SNc also receives adaptively timed inhibitory inputs from model striosomes in the ventral striatum. Mismatches between these signals can trigger widespread dopaminergic burst and dip Now Print signals from cells in SNc (Figure 4(a)) and the ventral tegmental area (VTA in Figure 3(a)). Learning in cortical and striatal regions is strongly modulated by these Now Print signals, with dopamine bursts strengthening conditioned links and dopamine dips weakening them. In particular, such a dopaminergic Now Print signal modulates learning in the two pathways that are activated by a CS in Figure 4(a). After learning occurs, one pathway, via the ventral striatum to the SNc, enables the CS to activate the SNc and generate its own Now Print signals. The other pathway, via the striosomes to the SNc, enables the CS to inhibit responses to an expected US from the LH. This SNc circuit will be important in considering the kinds of reinforcement learning that are spared when amygdala and/or OFC are lesioned.
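
The burst-and-dip logic described above can be illustrated with a deliberately simplified sketch. It is not the Brown et al. (1999) model, which uses spiking dynamics and an adaptively timed spectrum of striosomal responses. Here the three inputs to the SNc are reduced to unit pulses on a discrete time axis, and the function name, parameters, and pulse amplitudes are all illustrative assumptions.

```python
def snc_response(n_steps, cs_time, reward_time, reward_delivered, learned):
    """Net phasic SNc signal per time step (0.0 stands for baseline firing).

    Toy assumption: every input is a unit pulse lasting one time step.
    """
    signal = []
    for t in range(n_steps):
        # Excitatory primary reward input (LH -> PPTN -> SNc).
        reward = 1.0 if (reward_delivered and t == reward_time) else 0.0
        # Learned excitatory CS pathway (ventral striatum -> SNc).
        cs_excitation = 1.0 if (learned and t == cs_time) else 0.0
        # Learned, adaptively timed inhibition (striosomes -> SNc),
        # assumed here to be perfectly timed to the expected reward.
        timed_inhibition = 1.0 if (learned and t == reward_time) else 0.0
        signal.append(reward + cs_excitation - timed_inhibition)
    return signal


# CS at step 2, reward expected at step 8 (hypothetical values).
naive = snc_response(10, 2, 8, reward_delivered=True, learned=False)
trained = snc_response(10, 2, 8, reward_delivered=True, learned=True)
omitted = snc_response(10, 2, 8, reward_delivered=False, learned=True)
```

Under these assumptions, the untrained circuit bursts at the time of reward, the trained circuit bursts at CS onset and stays at baseline when the expected reward arrives, and omitting an expected reward produces a dip at the expected reward time.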

MOTIVATOR was used to explain and simulate psychological and neurobiological data from tasks that examine food-specific satiety, Pavlovian conditioning, reinforcer devaluation, and simultaneous visual discrimination, while retaining the ability to simulate neurophysiological data about SNc. Model simulations successfully reproduced the neurophysiologically recorded dynamics of hypothalamic cell types, including signals that predict saccadic reaction times and CS-dependent changes in systolic blood pressure.

In order to more fully understand how food-specific satiation occurs, more needs to be said about how value categories in the amygdala are learned as a result of adaptive feedback interactions with the LH, and why, in order to properly regulate reinforcement learning and affective prediction, some of these pathways need to receive internal drive inputs and/or habituative reinforcing inputs. This explanation will be given in Section 2.11.

2.10. Temporal difference models of BG responses to unexpected rewards

The Reward Expectation Filter of the MOTIVATOR model was first published in Brown et al. (1999), who used it to explain and simulate many experiments about how SNc reacts to rewards whose amplitude or timing is unexpected. Several other models have attempted to describe the SNc cell behaviour using a temporal difference (TD) algorithm (Montague et al., 1996; Schultz et al., 1997; Suri and Schultz, 1998). These models suggest that the dopaminergic SNc cells compute a temporal derivative of predicted reward. In other words, they fire in response to the sum of the time-derivative of reward prediction and the actual reward received. These models have not been linked to structures in the brain that might compute the required signals. As Brown et al. (1999) have noted, the Suri and Schultz (1998) model has simulated some of the known dopamine cell data. However, their model can only learn a single fixed interstimulus interval (ISI) that corresponds to the longest-duration timed signal (xlm(t) in their model). If the ISI is shorter than this, dopamine bursts will strengthen all of the active stimulus representations predicting reward at the time of the dopamine burst or later. Thus, their model generates inhibitory reward predictions beyond the primary reward time, and predicts a lasting depression of dopamine firing subsequent to primary reward. Such a depression is not found in the data, which the Brown et al. (1999) model explains.
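
For reference, the TD signal described verbally above is commonly written in the following discrete-time form. This is the generic textbook expression, not necessarily the exact equation used in any of the cited models, and the symbols are assumptions introduced here: r(t) is the reward received at time t, \hat{V}(t) is the predicted future reward, and \gamma is a discount factor.

```latex
\delta(t) = r(t) + \gamma \hat{V}(t+1) - \hat{V}(t)
```

With \gamma = 1, the last two terms form a discrete time derivative of the reward prediction, so \delta(t) is the sum of that derivative and the actual reward, as stated in the text.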

In contrast to TD models that compute time derivatives immediately prior to dopamine cells, our model uses two distinct pathways: the ventral striatum and PPTN for initial excitatory reward prediction, and the striosomal cells for timed, inhibitory reward prediction. The fast excitation and delayed inhibition are thus computed by separate structures within the brain, rather than by a single temporal differentiator. This separation avoids the problem of the Suri and Schultz (1998) model by allowing transient rather than sustained signals to cancel the primary reward signal, thereby enabling precisely timed reward-cancelling signals to be trained, and preventing spurious sustained inhibitory signals to the dopamine cells.

2.11. Learning value categories for specific foods and effects of their removal

Figure 3(b) diagrams how the MOTIVATOR model conceptualises the learning of a value category as a result of reciprocal adaptive interactions between the LH and the amygdala (AMYG). This figure summarises how the model embodies a network that calculates the drive-modulated affective value of a food US (Cardinal et al., 2002); notably, how selective responses to different foods can be acquired. Animals have specific hungers that vary inversely with blood levels of metabolites such as sugar, salt, protein, and fat (Davidson et al., 1997). Similarly, the gustatory system has chemical sensitivities to tastes such as sweet, salty, umami, and fatty (Kondoh et al., 2000; Rolls et al., 1999). An AMYG value category (top level in Figure 3(b)) learns to respond to particular LH combinations of these metabolites and tastes (bottom level in Figure 3(b)) in a selective fashion, hence can represent specific hungers.

MOTIVATOR begins its computation of food-specific selectivity with the lower layer of the model’s LH cells in Figure 3(b). These cells perform pairwise multiplications, each involving a taste and its corresponding drive level. They are therefore called taste-drive cells. LH neurons such as glucose-sensitive neurons provide examples of LH cells that are both chemical- and taste-sensitive. Indeed, glucose-sensitive neurons are excited by low glucose levels, inhibited by high glucose levels, and respond to the taste of glucose with excitation (Karadi et al., 1992; Shimizu et al., 1984).

The activation pattern across all these taste-drive cells is projected via converging adaptive pathways to a higher cell layer and summed there by an AMYG value category cell that represents the current value of the specific food US. These cells are therefore also called US-value cells. Such food selective US-value representations can be learned from a competitive learning process (Grossberg, 1976a, 1978a) that associates distributed activation patterns at the taste-drive cells with compressed representations at the US-value cells that survive the competition at the AMYG processing level. The resulting US-value cells in the AMYG help to explain data about neurons in the AMYG that respond selectively to specific foods or associated stimuli in a manner that reflects the expected consumption value of the food (e.g. Nishijo et al., 1988a, 1988b).
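
The two stages just described, pairwise taste-drive multiplication followed by adaptively weighted summation at a US-value cell, can be sketched as follows. This is a minimal static illustration rather than the MOTIVATOR equations: it omits the competition among value categories and the learning of the weights. The channel names, the scaling of drives to the range 0 to 1, and the numerical values are all hypothetical.

```python
def taste_drive_activities(tastes, drives):
    """Each LH taste-drive cell multiplies a taste input by its matching drive level."""
    return {channel: tastes[channel] * drives[channel] for channel in tastes}


def us_value(taste_drive, weights):
    """An AMYG US-value cell sums the taste-drive pattern through adaptive weights."""
    return sum(weights[channel] * taste_drive[channel] for channel in weights)


# Hypothetical food that tastes mostly sweet and slightly salty.
food_tastes = {"sweet": 1.0, "salty": 0.2}
# Hypothetical weights of the value category that has learned this food.
food_weights = {"sweet": 1.0, "salty": 1.0}

# Drive levels are assumed to fall as the corresponding metabolite is replenished.
hungry_drives = {"sweet": 0.9, "salty": 0.5}
sated_on_sugar = {"sweet": 0.1, "salty": 0.5}

value_when_hungry = us_value(taste_drive_activities(food_tastes, hungry_drives), food_weights)
value_when_sated = us_value(taste_drive_activities(food_tastes, sated_on_sugar), food_weights)
```

In this sketch the same food yields a smaller US-value activation once the sugar drive has been reduced, which is the kind of drive-modulated selectivity that the text attributes to AMYG value categories.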

Figures 3(a) and (b) illustrate the hypothesis that a visual CS becomes a conditioned reinforcer by learning to activate a US-value representation in the AMYG during CS-US pairing protocols. Although the CS generates no gustatory inputs to the taste-drive cells and is not actually consumed, the model can use this CS-US association to compute the prospective value of the US, given current drives, during the period between CS onset and the delivery of the food US. The model can do this if the CS-activated US-value representation in the AMYG can, in turn, activate the taste-drive cells in the LH that have activated it in the past, when the US was being consumed.

This is accomplished, as depicted in Figure 3(a) and (b), by adaptive top-down pathways, or learned expectations, from the US-value cells in the AMYG to the taste-drive cells in the LH. The resultant bidirectional adaptive signalling between taste-drive LH cells and integrative US-value AMYG cells can prime the taste-value combinations that are expected in response to the conditioned reinforcer CS. Such reciprocal adaptive interactions have also been shown, as part of Adaptive Resonance Theory (ART), to be capable of stabilising category learning and memory, in whatever brain systems they occur (see Section 2.12; Carpenter and Grossberg, 1987, 1991; Grossberg, 1980, 2013a, 2017b). An ART circuit complements the bottom-up adaptive filter of a competitive learning model with adaptive top-down expectation signals, among other extensions. ART was introduced to overcome the catastrophic forgetting that occurs when only bottom-up learning occurs (Grossberg, 1976a, 1976b). Without top-down expectations to dynamically stabilise the learning of value categories, memory instability could become as great a problem in LH-AMYG dynamics as it would be in the learning of invariant object categories by the inferotemporal cortex (Figures 2 and 3).

These properties of AMYG value categories help to explain how an animal’s behaviour changes when its AMYG is lesioned. When the AMYG is lesioned, the ability to selectively respond to specific foods is eliminated. The reduced drive inputs of a satiated food will then not be able to cause a smaller activation of its AMYG value category. Also lost will be the competition among value categories that would determine the choice of a non-satiated food in a normal animal.

If a food was visually presented to a normal animal in order to satiate it, then both reduced internal drive and external cue inputs could contribute to the choice of a non-satiated food (Figures 2 and 3). In particular, as above, eating a lot of food would lead to shrinking appetitive inputs and growing satiety drive inputs to the LH. Seeing the food repeatedly during each eating event could also habituate the conditioned reinforcer inputs that activate the corresponding AMYG value category.

It should immediately be noted that not all responsiveness to reinforcing cues is eliminated by lesions of the AMYG. The BG can still be fully functional. As illustrated by Figure 4(a), LH inputs can still regulate learning of dopaminergic Now Print signals from the SNc to large parts of the brain, including the PFC, in response to unexpected rewards. As will be discussed in Section 3, these Now Print signals can support learning of representations that are sensitive to the probability of reward over a series of previous trials.

2.12. Another mismatch mechanism for correcting disconfirmed behaviour: ART

This BG mechanism for processing an unexpected reinforcing event is not the only way that unexpected events are processed by the brain. Another fundamental process is also at work in perceptual and cognitive processes that greatly influences how the PFC makes predictions that are sensitive to the probability of reward over a series of previous trials. This process enables humans and other primates to rapidly learn new facts without being forced to just as rapidly forget what they already know, even if no one tells them how the rules of each environment differ or change through time. When such forgetting does occur, it is often called catastrophic forgetting.

Grossberg (1980) called the problem whereby the brain learns quickly and stably without catastrophically forgetting its past knowledge the stability-plasticity dilemma. ART was introduced to explain how brains solve the stability-plasticity dilemma. Since its introduction in Grossberg (1976a, 1976b), ART has been incrementally developed into a cognitive and neural theory of how the brain autonomously learns to attend, recognise, and predict objects and events in a changing world, without experiencing catastrophic forgetting. ART currently has the broadest explanatory and predictive range of available cognitive and neural theories. See Grossberg (2013a, 2017b) for reviews.

ART prevents catastrophic forgetting by proposing how top-down expectations focus attention on salient combinations of cues, called critical feature patterns. When a good enough match occurs between a bottom-up input pattern and a top-down expectation, a synchronous resonant state emerges that embodies an attentional focus. Such a resonance is capable of driving fast learning that incorporates the attended critical feature pattern into the bottom-up adaptive filters that activate recognition categories, and the top-down expectations that are read out by them – hence the name adaptive resonance – while suppressing outliers that could have caused catastrophic forgetting.

In contrast, when a bottom-up input pattern represents an unexpected event, it can cause a big mismatch with the currently active top-down expectation. As illustrated in Figure 5, such a mismatch, or disconfirmed expectation, can trigger a burst of nonspecific arousal (‘novel events are arousing’). It is called nonspecific arousal because it affects all category representations equally, since the orienting system that triggers such an arousal burst has no information about which active categories caused the mismatch, and thus must be reset (Figure 5(c)). A nonspecific arousal burst can reset whatever categories caused the mismatch (Figure 5(d)) and initiate a search for a more predictive category. In this way, rapid reset of an object category can occur when it leads to disconfirmed behaviours, and a more predictive category can automatically be chosen in its stead.
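
The match, reset, and search cycle described above can be sketched in a few lines. The sketch assumes binary feature vectors and the ART 1 style choice and match rules of Carpenter and Grossberg (1987); the text itself does not commit to these particular rules. The names `vigilance` and `alpha`, and the example patterns, are illustrative assumptions, and learning after resonance is omitted.

```python
def art_search(input_pattern, prototypes, vigilance, alpha=0.5):
    """Return (resonating category index or None, list of categories that were reset)."""
    active = sum(input_pattern)

    def overlap(j):
        # Number of input features that the top-down expectation of category j confirms.
        return sum(i & w for i, w in zip(input_pattern, prototypes[j]))

    # Bottom-up choice: categories are tried in order of decreasing choice value.
    order = sorted(range(len(prototypes)),
                   key=lambda j: -overlap(j) / (alpha + sum(prototypes[j])))
    resets = []
    for j in order:
        if active and overlap(j) / active >= vigilance:
            return j, resets      # good enough match: resonance
        resets.append(j)          # mismatch: arousal burst resets category j
    return None, resets           # no existing category matches well enough


prototypes = [[1, 0, 0, 0, 0, 0],
              [1, 1, 1, 1, 0, 0]]
strict = art_search([1, 1, 0, 0, 0, 0], prototypes, vigilance=0.8)
lenient = art_search([1, 1, 0, 0, 0, 0], prototypes, vigilance=0.5)
novel = art_search([0, 0, 0, 0, 1, 1], prototypes, vigilance=0.8)
```

With the stricter matching criterion, the first category chosen by the bottom-up filter confirms only half of the input features, so it is reset and the search settles on the second category. With the more lenient criterion the first category resonates immediately. When no stored category matches, a full ART system would recruit an uncommitted category, a step that this sketch leaves out.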