Abstract

The mind and brain sciences began with consciousness as a central concern. But for much of the 20th century, ideological and methodological concerns relegated its empirical study to the margins. Since the 1990s, studying consciousness has regained a legitimacy and momentum befitting its status as the primary feature of our mental lives. Nowadays, consciousness science encompasses a rich interdisciplinary mixture drawing together philosophical, theoretical, computational, experimental, and clinical perspectives, with neuroscience its central discipline. Researchers have learned a great deal about the neural mechanisms underlying global states of consciousness, distinctions between conscious and unconscious perception, and self-consciousness. Further progress will depend on specifying closer explanatory mappings between (first-person subjective) phenomenological descriptions and (third-person objective) descriptions of (embodied and embedded) neuronal mechanisms. Such progress will help reframe our understanding of our place in nature and accelerate clinical approaches to a wide range of psychiatric and neurological disorders.

Keywords: Consciousness, neural correlates of consciousness, selfhood, volition, predictive coding

Introduction

The relationship between subjective conscious experience and its biophysical basis has always been a defining question for the mind and brain sciences. But, at various times since the beginnings of neuroscience as a discipline, the explicit study of consciousness has been either treated as fringe or excluded altogether. Looking back over the past 50 years, these extremes of attitude are well represented. Roger Sperry (1969), pioneer of split-brain studies and of what can now be called ‘consciousness science’, lamented that ‘[m]ost behavioral scientists today, and brain researchers in particular, have little use for consciousness’ (p. 532). Presciently, in the same article he highlighted the need for new technologies able to record the ‘pattern dynamics of brain activity’ in elucidating the neural basis of consciousness. Indeed, modern neuroimaging methods have had a transformative impact on consciousness science, as they have on cognitive neuroscience generally.

Informally, consciousness science over the last 50 years can be divided into two epochs. From the mid-1960s until around 1990, the fringe view held sway, though with several notable exceptions. Then, from the late 1980s and early 1990s, came first a trickle and more recently a deluge of research into the brain basis of consciousness, a transition catalysed by – among other things – the activities of certain high-profile scientists (e.g. the Nobel laureates Francis Crick and Gerald Edelman) and by the maturation of modern neuroimaging methods, as anticipated by Sperry.

Today, students of neuroscience – for the most part – feel able to declare (or deny) a primary interest in studying consciousness. There are academic societies, conferences going back more than 20 years, and scholarly journals dedicated to the topic. Above all, there is a growing body of empirical and theoretical work drawing ever closer connections between the properties of subjective experience and the operations of the densely complex neural circuits that, embodied in bodies and embedded in environments, together give rise to the apparent miracle of consciousness. We cannot yet know whether today’s students will find the solution to the ‘problem of consciousness’ or whether the problem as currently set forth is simply misconceived. Either way, there is much more to be discovered about the relations between the brain and consciousness, and with these discoveries will come new clinical approaches in neurology and psychiatry, as well as a new appreciation of our place as part of, and not apart from, the rest of nature.

Consciousness: 1960s until 1990

By the mid-1960s, the behaviourism that had dominated 20th-century psychology (especially in America) was in retreat. A new cognitive science was emerging that recognised the existence and importance of inner mental states in mediating between stimulus and response. But consciousness as an explanatory target was still largely off-limits: ‘We should ban the word “consciousness” for a decade or two’, as cognitive scientist George Miller put it in 1962. As late as 1989, Stuart Sutherland (1989) wrote in the International Dictionary of Psychology: ‘Consciousness is a fascinating but elusive phenomenon. It is impossible to specify what it is, what it does, or why it evolved. Nothing worth reading has been written on it’ (p. 95).

Whatever its merits at the time, Sutherland’s judgement seems harsh in retrospect. Although lacking a coherent organisation or much coordination, the neuroscience of consciousness in this period saw several substantial advances. These advances, admittedly, were mainly about establishing correspondences between brain regions or activity patterns and properties of conscious experience, rather than addressing fundamental questions such as why consciousness is part of the universe in the first place. Good examples come from neurology and neuropsychology, where surgical interventions and the study of neurological illnesses, injuries and lesions were revealing the essential dependency of particular aspects of consciousness on specific brain properties. Some of these early studies remain among the most thought-provoking even today.

The split-brain (more precisely: callosectomy) studies of Sperry and Michael Gazzaniga are a case in point. Their first experiments, on the World War II veteran W.J., revealed that each cerebral hemisphere could perceive a visual stimulus independently, with only the left hemisphere (in W.J.) being able to provide a verbal report (Gazzaniga et al., 1962). Follow-up studies found that the somatosensory system, the motor system, and many other perceptual and cognitive systems could be similarly ‘split’, while other systems – for example, emotion – remained intact (Gazzaniga, 2014). The extent to which a single cranium can house independent conscious subjects is still hotly debated (Gazzaniga, 2014; Pinto et al., 2017; Sasai et al., 2016), perhaps because the very idea challenges one of our most deeply held assumptions: that consciousness is necessarily unified.

The unity of consciousness was also challenged, more subtly, by psychosurgical lesions of the medial temporal lobe, which were carried out to alleviate intractable epilepsy. The case of Henry Molaison – patient H.M. – is well known to many. Following bilateral removal of the medial temporal lobe in 1953, including both hippocampi, H.M. was cured of his epilepsy but left with a profound anterograde (and substantial retrograde) amnesia (Scoville and Milner, 1957). In a series of studies, neuropsychologists Suzanne Corkin and Brenda Milner found that despite living in what Corkin (2013) called the ‘permanent present tense’, H.M. could learn new motor skills, had intact working and semantic memory, and was generally able to acquire a range of implicit (non-conscious) memories. Only his ability to acquire new explicit, conscious memories was affected. These findings not only charted a new topography of conscious and unconscious kinds of memory but also showed how our apparently unified sense of conscious selfhood – in which episodic memories play a key role – can fragment so that some aspects persist while others are lost.

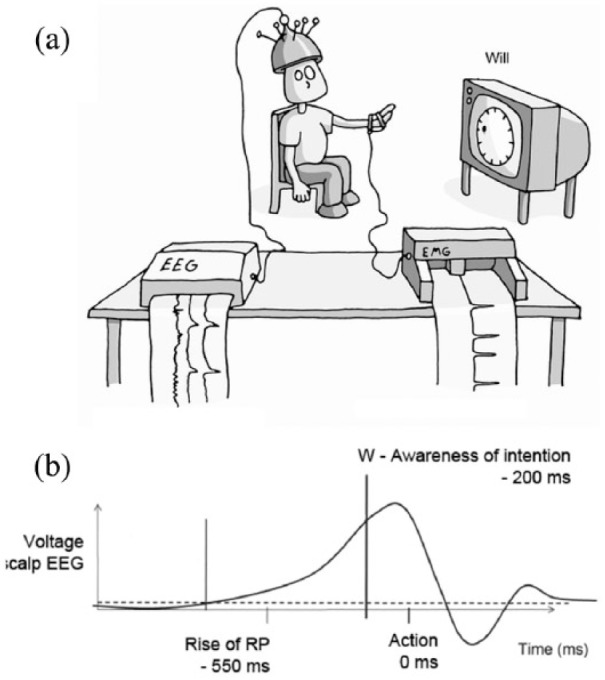

Another central feature of conscious selfhood is the experience of ‘free will’, or more precisely, experiences of volition (intentions to do this-or-that) and agency (being the cause of events). Here, the experiments of Benjamin Libet in the 1980s continue to inspire new research and to stoke controversy in equal measure. His studies, designed to measure the timing of conscious decisions to make voluntary movements, were based on a remarkably simple paradigm (Libet, 1982; see Figure 1(a)). Participants press a button at a time of their own choosing and then report the time they felt the ‘urge’ to move – their conscious intention – by noting the position of a dot on an oscilloscope screen.

Figure 1.

(a) The Libet Paradigm. The participant makes a voluntary action and reports the time they felt the ‘conscious urge’ to move, by noting the position of the dot on the screen. Brain signals are measured using EEG, and the timing of the actual movement is measured through electromyography (EMG) electrodes attached to the wrist. Courtesy of Jolyon Troscianko (http://www.jolyon.co.uk). (b) Schematised readiness potentials in the scalp EEG. Critically, the readiness potential begins to rise before the participant is aware of their decision to move.

Source: Jolyon Troscianko (http://www.jolyon.co.uk/illustrations/consciousness-a-very-short-introduction-2/; free for academic non-profit use).

Libet first recorded a previously described build-up of neural electrical activity prior to voluntary movement, the so-called ‘readiness potential’ (Kornhuber and Deecke, 1965; see Figure 1(b)). His key innovation was to show that this build-up started several hundred milliseconds before the participant was aware of their intention to move, challenging the assumption that the conscious ‘urge’ was the cause of the voluntary movement. This interpretation has been debated ever since, sparking many fascinating experiments (Haggard et al., 2002; Schurger et al., 2012). Libet himself was uncomfortable with the idea that conscious intentions were epiphenomenal, suggesting instead that the time between the conscious ‘urge’ and the actual movement was sufficient to allow a conscious ‘veto’ to take effect. Any conscious ‘veto’, however, is also likely to have identifiable neural precursors – so this in itself does not resolve Libet’s metaphysical quandary (Brass and Haggard, 2007). Perhaps the most convincing interpretation of these puzzling phenomena is that experiences of intention and agency label particular actions – and their consequences – as being self-generated rather than externally imposed, allowing the organism to learn and perhaps make better (voluntary) decisions in the future (Haggard, 2008).
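One influential alternative reading, due to Schurger et al. (2012), is that part of the readiness potential may reflect spontaneous neural fluctuations that are averaged time-locked to the moment a decision threshold happens to be crossed. The sketch below illustrates that idea with a leaky stochastic accumulator integrated by the Euler-Maruyama method. It is a minimal sketch under stated assumptions: the function names and all parameter values (`drift`, `leak`, `noise`, `threshold`, `dt`) are hypothetical and chosen for illustration, not the values fitted in the original paper.

```python
import random


def simulate_crossing(drift=0.1, leak=0.5, noise=0.1, threshold=0.3,
                      dt=0.001, max_steps=200_000, rng=None):
    """Simulate one trial of a leaky stochastic accumulator (hypothetical parameters).

    Returns the trajectory up to and including the first threshold crossing
    (the modelled 'movement onset'), or None if no crossing occurs.
    """
    if rng is None:
        rng = random.Random()
    x = 0.0
    trace = [x]
    sqrt_dt = dt ** 0.5
    for _ in range(max_steps):
        # Euler-Maruyama step: constant drift, leak towards zero, Gaussian noise
        x += (drift - leak * x) * dt + noise * sqrt_dt * rng.gauss(0.0, 1.0)
        trace.append(x)
        if x >= threshold:
            return trace
    return None


def back_average(traces, window):
    """Average the last `window` samples of each trace, i.e. time-locked to the crossing."""
    usable = [t[-window:] for t in traces if len(t) >= window]
    if not usable:
        raise ValueError("no trace is long enough for the requested window")
    return [sum(t[i] for t in usable) / len(usable) for i in range(window)]


if __name__ == "__main__":
    rng = random.Random(1)
    traces = [simulate_crossing(rng=rng) for _ in range(100)]
    traces = [t for t in traces if t is not None]
    avg = back_average(traces, window=1000)  # last 1 s at dt = 1 ms
    # The back-averaged trace ramps up towards threshold, resembling a readiness potential
    print(f"mean 1 s before crossing: {avg[0]:.3f}, at crossing: {avg[-1]:.3f}")
```

No single simulated trial contains a committed 'decision' that precedes the crossing; the ramp appears only because trials are aligned to the crossing before averaging, which is the selection effect this reading relies on.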

Consciousness: from the 1990s until the present

A convenient milestone for the rehabilitation of consciousness within neuroscience is Francis Crick and Christof Koch’s (1990) landmark paper, ‘Towards a neurobiological theory of consciousness’, which opens with the line: ‘It is remarkable that most of the work in both cognitive science and the neurosciences makes no reference to consciousness (or “awareness”)’, and which goes on to propose a specific theory of visual consciousness based on gamma-band (~40 Hz) oscillations.

Although this specific idea has now fallen from favour, a new industry rapidly developed, aimed at uncovering the so-called ‘neural correlates of consciousness’ (NCCs): ‘the minimal neuronal mechanisms jointly sufficient for any one conscious percept’ (Crick and Koch, 1990). The search for NCCs, boosted by the arrival of the now ubiquitous magnetic resonance imaging (MRI) scanner (as well as good old-fashioned EEG, and invasive neurophysiology in non-human primate studies), gave consciousness research a pragmatic spin. Instead of worrying at the so-called ‘hard problem’ of how conscious experiences could ever arise from ‘mere’ matter (Chalmers, 1996), neuroscientists could get on with looking for brain regions or processes that reliably correlated with particular conscious experiences, or with being conscious at all.

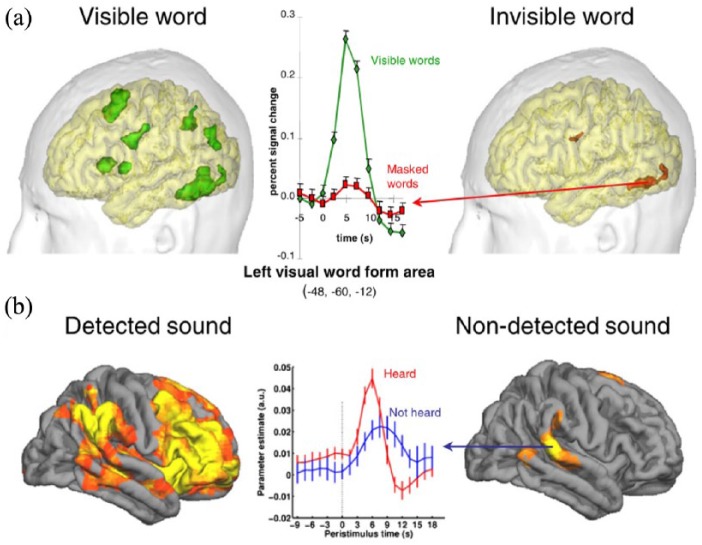

Over the past quarter-century, there has been considerable progress in identifying candidate NCCs (Koch et al., 2016; Metzinger, 2000; Odegaard et al., 2017), at least in specific contexts like visual or auditory awareness. A classic method has been to compare brain activity for ‘conscious’ and ‘unconscious’ conditions, while keeping sensory stimulation (and, as far as possible, everything else) constant. For example, in binocular rivalry, conscious perception alternates even though the sensory inputs (different images to each eye) remain the same. Early studies of the brain basis of binocular rivalry used implanted electrodes in monkeys trained to report which of two visual percepts was dominant. These studies found that neuronal responses in early visual areas – in particular V1 – tracked the physical stimulus rather than the percept, while neuronal responses in ‘higher’ areas – like inferotemporal cortex (IT) – tracked the percept rather than the physical stimulus (Leopold and Logothetis, 1996; Logothetis and Schall, 1989). Subsequent human neuroimaging studies, however, found that neuronal activity in primary visual cortex did correlate with perceptual dominance (Polonsky et al., 2000), and the debate continues as to whether the neuronal mechanisms underlying perceptual transitions lie early in the visual stream or in higher-order regions such as the parietal or frontal cortices (Blake et al., 2014).

In addition to rivalry experiments, so-called ‘masking’ paradigms have been widely used in consciousness science. These paradigms enable comparison of supraliminal versus subliminal stimulus presentations in a variety of perceptual modalities, with many studies implicating activation of the fronto-parietal network in reportable conscious perception (Dehaene and Changeux, 2011; Figure 2). These two examples stand for many others; see Boly et al. (2017) and Odegaard et al. (2017) for recent, and conflicting, reviews.
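The contrastive logic described above (same stimulus, different conscious report) can be sketched as a comparison of trial-wise responses sorted by report. The example below is a hypothetical illustration, assuming one scalar response per trial (for instance, a single electrode's amplitude) and a two-sided permutation test on the difference of condition means; the data are simulated, and a real NCC analysis would also need to handle many channels or voxels, multiple comparisons, and confounds such as the act of reporting itself.

```python
import random


def _mean(xs):
    return sum(xs) / len(xs)


def permutation_test(seen, unseen, n_permutations=5000, rng=None):
    """Two-sided permutation test on the difference in mean response (seen - unseen)."""
    if rng is None:
        rng = random.Random()
    observed = _mean(seen) - _mean(unseen)
    pooled = list(seen) + list(unseen)
    n_seen = len(seen)
    n_extreme = 0
    for _ in range(n_permutations):
        # Shuffling the report labels simulates the null hypothesis that
        # conscious report makes no difference to the response
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_seen]) - _mean(pooled[n_seen:])
        if abs(diff) >= abs(observed):
            n_extreme += 1
    # Add-one correction keeps the estimated p-value above zero
    p_value = (n_extreme + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    rng = random.Random(0)
    # Simulated, hypothetical single-trial amplitudes for physically identical stimuli
    seen = [rng.gauss(1.0, 1.0) for _ in range(40)]
    unseen = [rng.gauss(0.0, 1.0) for _ in range(40)]
    diff, p = permutation_test(seen, unseen, rng=rng)
    print(f"seen - unseen = {diff:.2f}, permutation p = {p:.4f}")
```

A permutation test is used here because it makes few distributional assumptions; it is one reasonable choice among several, not the method of any study cited above.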

Figure 2.

Conscious perception of (a) words or (b) sounds is often associated with widespread activation of the brain, whereas unconscious perception is associated with local activation in specialist processing areas. The data show functional MRI responses time-locked to stimulus presentation.

Source: Dehaene and Changeux (2011).

At the same time, a separate strand of research has focussed on transitions in conscious states, both reversible (e.g. sleep and anaesthesia (Massimini et al., 2005)) and following brain injury (e.g. coma and the vegetative state (Owen et al., 2009)). Here, the challenge is to identify the neural mechanisms that support being conscious at all, rather than those associated with being conscious of this or that. One difficulty is that global transitions of this kind affect the brain and body so broadly that it is hard to isolate the neural mechanisms underlying consciousness per se. A further difficulty lies in distinguishing so-called ‘enabling’ conditions from the neural mechanisms that actually support conscious states. For example, certain brainstem lesions can abolish consciousness permanently, but many believe that the brainstem merely enables conscious states, while the actual ‘generators’ of consciousness may lie elsewhere (Dehaene and Changeux, 2011; though see Merker, 2007).

New theories have accompanied these empirical developments. One of the most influential is Bernard Baars’ (1988) ‘global workspace’ theory, which proposes that modular and specialised processors compete for access to a ‘global workspace’. Mental states become conscious when they are ‘broadcast’ within the workspace so that they can influence other processes, including verbal report and motor action. More recent ‘neuronal’ versions of this theory associate the global workspace with highly interconnected fronto-parietal networks, linking conscious perception to a nonlinear ‘ignition’ of activity within these networks, a position in line with many neuroimaging studies (Dehaene and Changeux, 2011; Dehaene et al., 2003; Figure 2).
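The notion of a nonlinear, all-or-none ‘ignition’ can be illustrated with a deliberately minimal toy: a single rate unit with strong recurrent self-excitation, which makes it bistable. This is a hypothetical sketch, far simpler than the network simulations of Dehaene et al. (2003); the function names, the sigmoid nonlinearity, and every parameter (`w`, `theta`, `tau`, pulse timing) are assumptions in arbitrary units. A brief input pulse either fades away or tips the unit into a self-sustaining high-activity state that outlasts the stimulus.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def final_activity(pulse_amplitude, w=10.0, theta=5.0, tau=1.0, dt=0.01,
                   pulse_duration=2.0, total_time=20.0):
    """Drive a self-exciting rate unit with a brief input pulse; return its final rate.

    All parameters are hypothetical and in arbitrary units.
    """
    r = 0.0  # start at rest
    n_steps = int(round(total_time / dt))
    n_pulse = int(round(pulse_duration / dt))
    for step in range(n_steps):
        stimulus = pulse_amplitude if step < n_pulse else 0.0
        # Recurrent excitation w * r lets activity sustain itself once it is high enough
        r += (dt / tau) * (-r + sigmoid(w * r + stimulus - theta))
    return r


if __name__ == "__main__":
    for amplitude in [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]:
        print(f"pulse {amplitude:.1f} -> final rate {final_activity(amplitude):.3f}")
```

Graded input strengths map onto two sharply separated outcomes long after the pulse has ended, which is the qualitative signature the workspace account associates with conscious access; nothing in this toy speaks to where in the brain such dynamics occur.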

Workspace theories tend to interpret conscious perception in terms of ‘access’, in the sense that a percept is defined as conscious only if it is available for verbal (or other behavioural) report, as well as to other cognitive processes (memory, attention, and so on). An advantage of this view is that conscious status is readily assessable in experiments, since conscious content is by definition reportable. However, another common intuition is that perceptual or ‘phenomenal’ consciousness is ‘richer’ than we can report at any one time, since reportability is limited by (among other things) the constraints of memory. The distinction between phenomenal consciousness and access consciousness (Block, 2005) remains a productive source of new experiments and controversy (Tsuchiya et al., 2015).

The road ahead

These are exciting times in consciousness science, and there is only space here to gesture at some promising research directions.

In terms of conscious level, new theories and measures have emerged based on ‘neuronal complexity’ and ‘integrated information’ (Seth et al., 2011; Tononi et al., 2016). The basic idea is that conscious scenes are both highly integrated (each is experienced as a unified whole) and highly informative (each conscious scene is one among a vast repertoire of alternative possibilities), motivating the development of mathematical metrics that capture both properties together. Excitingly, some practical approximations to these measures are showing promise in quantifying ‘residual’ awareness after brain injury, without relying on overt behaviour (Casali et al., 2013).
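One ingredient of the index described by Casali et al. (2013) is Lempel-Ziv compression of a binarised pattern of cortical responses: a stereotyped pattern compresses well, whereas a differentiated one does not. The sketch below computes only that ingredient, the Lempel-Ziv (1976) phrase count of a binary string using the Kaspar-Schuster counting scheme, normalised by `log2(n) / n` so that long random sequences score close to 1. It is an assumption-laden simplification: it omits stimulation, source modelling and statistical binarisation, and the published index normalises by source entropy rather than assuming equiprobable symbols.

```python
import math
import random


def lz76_complexity(s):
    """Number of phrases in the Lempel-Ziv (1976) parsing of sequence s.

    Follows the Kaspar-Schuster (1987) counting scheme.
    """
    n = len(s)
    if n < 2:
        return n
    c, l, i, k, k_max = 1, 1, 0, 1, 1
    while True:
        if s[i + k - 1] == s[l + k - 1]:
            # The current phrase can still be copied from earlier in the sequence
            k += 1
            if l + k > n:
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:
                # No earlier copy found: close this phrase and start a new one
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c


def normalised_lz(bits):
    """LZ76 complexity normalised by log2(n) / n (assumes equiprobable binary symbols)."""
    n = len(bits)
    return lz76_complexity(bits) * math.log2(n) / n


if __name__ == "__main__":
    rng = random.Random(0)
    stereotyped = "01" * 500  # hypothetical, highly regular response pattern
    differentiated = "".join(rng.choice("01") for _ in range(1000))
    print(f"stereotyped: {normalised_lz(stereotyped):.3f}")
    print(f"differentiated: {normalised_lz(differentiated):.3f}")
```

Note that a purely random string also scores highly, so complexity on its own does not capture integration; the published approach relies on perturbation and source-level statistics for that part.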

Research on conscious content has continued to focus on brain regions or processes that distinguish conscious from unconscious perceptions. Addressing a recurring concern about the NCC approach, new experimental paradigms are refining our understanding of interactions between the neuronal mechanisms underlying conscious perception and those underlying behavioural report. So-called ‘no report’ paradigms, which infer perceptual transitions indirectly from (for example) automatic eye movements, are challenging the idea that frontal brain regions are constitutively involved in conscious perception (Frassle et al., 2014; though see Van Vugt et al., 2018, for evidence to the contrary). At the same time, the application of analysis methods such as signal detection theory (Green and Swets, 1966) is enabling researchers to draw more rigorous distinctions between objective and subjective aspects of perception, as well as to quantify individual differences in metacognition (cognition about cognition) that may be relevant to consciousness (Barrett et al., 2013; Fleming and Dolan, 2012).
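To make the signal detection distinction concrete: in a yes/no detection task, sensitivity is commonly computed as d' = z(H) - z(F) and response criterion as c = -[z(H) + z(F)] / 2, where H and F are the hit and false-alarm rates and z is the inverse of the standard normal CDF. The sketch below computes both from trial counts, adding 0.5 to each cell (one common convention, assumed here) to avoid infinite z-scores. For metacognition it uses a simple nonparametric index, the type-2 area under the ROC curve: the probability that a randomly chosen correct trial carries higher confidence than a randomly chosen error. The function names are hypothetical; model-based measures such as those analysed by Barrett et al. (2013) are more involved.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Type-1 sensitivity d' and criterion c from a yes/no detection task.

    Adds 0.5 to every cell (a log-linear correction) so that hit or
    false-alarm rates of exactly 0 or 1 do not give infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = _z(hit_rate) - _z(fa_rate)
    criterion = -0.5 * (_z(hit_rate) + _z(fa_rate))  # > 0 means a conservative bias
    return d_prime, criterion


def type2_auroc(confidence, correct):
    """Probability that a random correct trial has higher confidence than a random error.

    0.5 means confidence carries no information about accuracy; 1.0 means perfect separation.
    """
    conf_correct = [c for c, ok in zip(confidence, correct) if ok]
    conf_error = [c for c, ok in zip(confidence, correct) if not ok]
    if not conf_correct or not conf_error:
        raise ValueError("need at least one correct and one incorrect trial")
    score = 0.0
    for a in conf_correct:
        for b in conf_error:
            if a > b:
                score += 1.0
            elif a == b:
                score += 0.5  # ties count half
    return score / (len(conf_correct) * len(conf_error))


if __name__ == "__main__":
    # Hypothetical counts from 100 stimulus-present and 100 stimulus-absent trials
    d, c = sdt_measures(hits=70, misses=30, false_alarms=10, correct_rejections=90)
    print(f"d' = {d:.2f}, c = {c:.2f}")
    # Hypothetical confidence ratings (1-4) and accuracy on six trials
    print(type2_auroc([4, 3, 3, 2, 1, 2], [True, True, False, True, False, False]))
```

Separating d' from c is what lets experimenters distinguish a change in how well a stimulus is detected from a change in willingness to say ‘seen’, which is central to the objective/subjective distinction above.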

Theoretically, ‘predictive coding’ or ‘Bayesian brain’ approaches stand to advance our understanding of the neural basis of conscious perception. These approaches model perception as a process of (possibly Bayesian) inference on the hidden causes of the ambiguous and noisy signals that impinge on our sensory surfaces (Friston, 2009). Inverting some classical views on perception, top-down signals are proposed to convey perceptual predictions, while bottom-up signals convey only or primarily ‘prediction errors’: the discrepancy between what the brain expects and what it gets at each level of processing. This framework provides a powerful interpretation of some relatively old findings associating conscious perception with the integrity of top-down signalling (Pascual-Leone and Walsh, 2001) and is motivating new studies which explicitly manipulate perceptual expectations, examining how this alters conscious perception (De Lange et al., 2018). Excitingly, these ideas may provide a mechanistic understanding of abnormal perception in some clinical contexts, a good example being the positive symptoms (e.g. hallucinations) of psychosis (Fletcher and Frith, 2009; Powers et al., 2017; Teufel et al., 2015).
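The core of precision-weighted inference can be shown in the simplest possible case: one hidden cause, a Gaussian prior (the top-down prediction) and a Gaussian likelihood (the sensory signal). The posterior mean is then the prior mean plus the prediction error scaled by the relative precision (inverse variance) of the senses, and the same answer can be reached by iteratively descending on precision-weighted prediction errors. This is a toy sketch under those assumptions, with hypothetical function names and numbers; it is far simpler than the hierarchical schemes discussed by Friston (2009). Raising the prior precision pulls the estimate towards expectation, one way to read the idea that hallucinations may reflect an overweighting of perceptual priors (Powers et al., 2017).

```python
def posterior_mean(prior_mean, prior_precision, observation, sensory_precision):
    """Exact posterior mean for a Gaussian prior combined with a Gaussian likelihood."""
    # The weight on the prediction error is the relative precision of the senses
    gain = sensory_precision / (sensory_precision + prior_precision)
    return prior_mean + gain * (observation - prior_mean)


def infer_by_prediction_error(prior_mean, prior_precision, observation,
                              sensory_precision, rate=0.05, n_steps=2000):
    """Reach the same estimate by gradient descent on precision-weighted prediction errors.

    Converges when rate * (sensory_precision + prior_precision) < 2.
    """
    estimate = prior_mean  # start from the top-down prediction
    for _ in range(n_steps):
        sensory_error = observation - estimate  # bottom-up prediction error
        prior_error = estimate - prior_mean     # departure from the prior expectation
        estimate += rate * (sensory_precision * sensory_error
                            - prior_precision * prior_error)
    return estimate


if __name__ == "__main__":
    # Hypothetical numbers: the prior expects 0, the senses report 2
    for prior_precision in (0.25, 1.0, 4.0):
        exact = posterior_mean(0.0, prior_precision, 2.0, 1.0)
        iterative = infer_by_prediction_error(0.0, prior_precision, 2.0, 1.0)
        print(f"prior precision {prior_precision}: exact {exact:.3f}, iterative {iterative:.3f}")
```

The iterative version is included because predictive coding accounts describe perception as an ongoing process of error minimisation rather than a one-shot calculation; in this linear Gaussian case both routes agree.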

There is also increasing focus in consciousness research on experiences of selfhood, which encompass basic experiences of embodiment and body ownership (Blanke et al., 2015), experiences of volition and agency (Haggard, 2008), as well as ‘higher’ aspects of selfhood such as episodic memory and social perception. Here, new developments in virtual and augmented reality (Lenggenhager et al., 2007; Seth, 2013), as well as in characterising interoception (the sense of the body ‘from within’ (Critchley et al., 2004)) are heralding new insights into how our apparently unified experience of being a ‘self’ is constructed, on the fly, from many potentially distinguishable sub-processes – and how breakdowns in this constructive process may underlie a variety of psychiatric conditions.

From this vantage point in time, 50 years after the birth of the British Neuroscience Association, it is fair to say that the scientific study of consciousness has regained its rightful place as a central theme in the mind and brain sciences. A great deal is now known about how embodied and embedded brains shape and give rise to various aspects of conscious level, content, and self. Of course, much more remains to be discovered. Exciting new combinations of theory, experiment, and modelling are helping transform mere correlations into explanations that map from neural mechanism to phenomenology. Accompanying these developments are important clinical applications in neurology and psychiatry, as well as the deep challenges of investigating consciousness in infancy, in other non-human animals, and perhaps even in future machines. Whether or not the ‘hard’ problem of consciousness will yield to these and other developments, the history of the next 50 years will make for fascinating reading.

Footnotes

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: I am grateful to the Dr Mortimer and Theresa Sackler Foundation which supports the Sackler Centre for Consciousness Science. I also acknowledge support from the Wellcome Trust (Engagement Fellowship) and the Canadian Institute for Advanced Research, Azrieli Programme on Brain, Mind, and Consciousness.

References

- Baars BJ. (1988) A Cognitive Theory of Consciousness. New York: Cambridge University Press.

- Barrett AB, Dienes Z, Seth AK. (2013) Measures of metacognition on signal-detection theoretic models. Psychological Methods 18(4): 535–552.

- Blake R, Brascamp J, Heeger DJ. (2014) Can binocular rivalry reveal neural correlates of consciousness? Philosophical Transactions of the Royal Society of London: Series B, Biological Sciences 369(1641): 20130211.

- Blanke O, Slater M, Serino A. (2015) Behavioral, neural, and computational principles of bodily self-consciousness. Neuron 88(1): 145–166.

- Block N. (2005) Two neural correlates of consciousness. Trends in Cognitive Sciences 9(2): 46–52.

- Boly M, Massimini M, Tsuchiya N, et al. (2017) Are the neural correlates of consciousness in the front or in the back of the cerebral cortex? Clinical and neuroimaging evidence. Journal of Neuroscience 37(40): 9603–9613.

- Brass M, Haggard P. (2007) To do or not to do: The neural signature of self-control. Journal of Neuroscience 27(34): 9141–9145.

- Casali AG, Gosseries O, Rosanova M, et al. (2013) A theoretically based index of consciousness independent of sensory processing and behavior. Science Translational Medicine 5(198): ra105.

- Chalmers DJ. (1996) The Conscious Mind: In Search of a Fundamental Theory. New York: Oxford University Press.

- Corkin S. (2013) Permanent Present Tense: The Unforgettable Life of the Amnesic Patient, H. M. London: Allen Lane.

- Crick F, Koch C. (1990) Towards a neurobiological theory of consciousness. Seminars in the Neurosciences 2: 263–275.

- Critchley HD, Wiens S, Rotshtein P, et al. (2004) Neural systems supporting interoceptive awareness. Nature Neuroscience 7(2): 189–195.

- De Lange FP, Heilbron M, Kok P. (2018) How do expectations shape perception? Trends in Cognitive Sciences 22(9): 764–799.

- Dehaene S, Changeux JP. (2011) Experimental and theoretical approaches to conscious processing. Neuron 70(2): 200–227.

- Dehaene S, Sergent C, Changeux JP. (2003) A neuronal network model linking subjective reports and objective physiological data during conscious perception. Proceedings of the National Academy of Sciences of the United States of America 100(14): 8520–8525.

- Fleming SM, Dolan RJ. (2012) The neural basis of metacognitive ability. Philosophical Transactions of the Royal Society of London: Series B, Biological Sciences 367(1594): 1338–1349.

- Fletcher PC, Frith CD. (2009) Perceiving is believing: A Bayesian approach to explaining the positive symptoms of schizophrenia. Nature Reviews Neuroscience 10(1): 48–58.

- Frassle S, Sommer J, Jansen A, et al. (2014) Binocular rivalry: Frontal activity relates to introspection and action but not to perception. Journal of Neuroscience 34(5): 1738–1747.

- Friston KJ. (2009) The free-energy principle: A rough guide to the brain? Trends in Cognitive Sciences 13(7): 293–301.

- Gazzaniga MS. (2014) The split-brain: Rooting consciousness in biology. Proceedings of the National Academy of Sciences of the United States of America 111(51): 18093–18094.

- Gazzaniga MS, Bogen JE, Sperry RW. (1962) Some functional effects of sectioning the cerebral commissures in man. Proceedings of the National Academy of Sciences of the United States of America 48(10): 1765–1769.

- Green DM, Swets JA. (1966) Signal Detection Theory. New York: Wiley.

- Haggard P. (2008) Human volition: Towards a neuroscience of will. Nature Reviews Neuroscience 9(12): 934–946.

- Haggard P, Clark S, Kalogeras J. (2002) Voluntary action and conscious awareness. Nature Neuroscience 5(4): 382–385.

- Koch C, Massimini M, Boly M, et al. (2016) Neural correlates of consciousness: Progress and problems. Nature Reviews Neuroscience 17(5): 307–321.

- Kornhuber HH, Deecke L. (1965) Changes in the brain potential in voluntary movements and passive movements in man: Readiness potential and reafferent potentials. Pflügers Archiv für die gesamte Physiologie des Menschen und der Tiere 284: 1–17.

- Lenggenhager B, Tadi T, Metzinger T, et al. (2007) Video ergo sum: Manipulating bodily self-consciousness. Science 317(5841): 1096–1099.

- Leopold DA, Logothetis NK. (1996) Activity changes in early visual cortex reflect monkeys’ percepts during binocular rivalry. Nature 379(6565): 549–553.

- Libet B. (1982) Brain stimulation in the study of neuronal functions for conscious sensory experiences. Human Neurobiology 1(4): 235–242.

- Logothetis NK, Schall JD. (1989) Neuronal correlates of subjective visual perception. Science 245(4919): 761–763.

- Massimini M, Ferrarelli F, Huber R, et al. (2005) Breakdown of cortical effective connectivity during sleep. Science 309(5744): 2228–2232.

- Merker B. (2007) Consciousness without a cerebral cortex: A challenge for neuroscience and medicine. Behavioral and Brain Sciences 30(1): 63–81; discussion 81–134.

- Metzinger T. (ed.) (2000) Neural Correlates of Consciousness: Empirical and Conceptual Questions. Cambridge, MA: The MIT Press.

- Odegaard B, Knight RT, Lau H. (2017) Should a few null findings falsify prefrontal theories of conscious perception? Journal of Neuroscience 37(40): 9593–9602.

- Owen AM, Schiff ND, Laureys S. (2009) A new era of coma and consciousness science. Progress in Brain Research 177: 399–411.

- Pascual-Leone A, Walsh V. (2001) Fast backprojections from the motion to the primary visual area necessary for visual awareness. Science 292(5516): 510–512.

- Pinto Y, Neville DA, Otten M, et al. (2017) Split brain: Divided perception but undivided consciousness. Brain 140(5): 1231–1237.

- Polonsky A, Blake R, Braun J, et al. (2000) Neuronal activity in human primary visual cortex correlates with perception during binocular rivalry. Nature Neuroscience 3(11): 1153–1159.

- Powers AR, Mathys C, Corlett PR. (2017) Pavlovian conditioning-induced hallucinations result from overweighting of perceptual priors. Science 357(6351): 596–600.

- Sasai S, Boly M, Mensen A, et al. (2016) Functional split brain in a driving/listening paradigm. Proceedings of the National Academy of Sciences of the United States of America 113(50): 14444–14449.

- Schurger A, Sitt JD, Dehaene S. (2012) An accumulator model for spontaneous neural activity prior to self-initiated movement. Proceedings of the National Academy of Sciences of the United States of America 109(42): E2904–E2913.

- Scoville WB, Milner B. (1957) Loss of recent memory after bilateral hippocampal lesions. Journal of Neurology, Neurosurgery, and Psychiatry 20(1): 11–21.

- Seth AK. (2013) Interoceptive inference, emotion, and the embodied self. Trends in Cognitive Sciences 17(11): 565–573.

- Seth AK, Barrett AB, Barnett L. (2011) Causal density and integrated information as measures of conscious level. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 369(1952): 3748–3767.

- Sperry RW. (1969) A modified concept of consciousness. Psychological Review 76(6): 532–536.

- Sutherland S. (1989) The International Dictionary of Psychology. New York, NY: Crossroads Classic.

- Teufel C, Subramaniam N, Dobler V, et al. (2015) Shift toward prior knowledge confers a perceptual advantage in early psychosis and psychosis-prone healthy individuals. Proceedings of the National Academy of Sciences of the United States of America 112(43): 13401–13406.

- Tononi G, Boly M, Massimini M, et al. (2016) Integrated information theory: From consciousness to its physical substrate. Nature Reviews Neuroscience 17(7): 450–461.

- Tsuchiya N, Wilke M, Frassle S, et al. (2015) No-report paradigms: Extracting the true neural correlates of consciousness. Trends in Cognitive Sciences 19(12): 757–770.

- Van Vugt B, Dagnino B, Vartak D, et al. (2018) The threshold for conscious report: Signal loss and response bias in visual and frontal cortex. Science 360(6388): 537–542.