Abstract

Understanding how cognitive functions emerge from brain structure depends on quantifying how discrete regions are integrated within the broader cortical landscape. Recent work established that macroscale brain organization and function can be described in a compact manner with multivariate machine learning approaches that identify manifolds often described as cortical gradients. By quantifying topographic principles of macroscale organization, cortical gradients lend an analytical framework to study structural and functional brain organization across species, throughout development and aging, and its perturbations in disease. Here, we present BrainSpace, a Python/Matlab toolbox for (i) the identification of gradients, (ii) their alignment, and (iii) their visualization. Our toolbox furthermore allows for controlled association studies between gradients and other brain-level features, adjusted with respect to null models that account for spatial autocorrelation. Validation experiments demonstrate the usage and consistency of our tools for the analysis of functional and microstructural gradients across different spatial scales.

Subject terms: Cognitive neuroscience, Scientific community

Vos de Wael et al. developed an open-source tool called BrainSpace to quantify cortical gradients using structural or functional imaging data. Their toolbox enables gradient identification, comparison, visualization, and association with other brain features.

Introduction

Over the last century, neuroanatomical studies in humans and non-human animals have highlighted two complementary features of neural organization. On the one hand, studies have demarcated structurally homogeneous areas with specific connectivity profiles and, ultimately, distinct functional roles1–5. In parallel, neuroanatomists have established spatial trends that span across cortical areas, both in terms of their histological properties and their connectivity patterns6–9. Such characterizations of cortical areas by their placement in the broader cortical hierarchy have provided a foundation for understanding functions that emerge through cortical interactions.

Although much of the more recent work linking measures of neural processing (for example from functional magnetic resonance imaging, MRI) to cognition has focused on identifying discrete regions and modules and their specific functional roles10, recent conceptual and methodological developments have provided the data and methods that allow macroscale brain features to be mapped to low-dimensional manifold representations, also described as gradients11. Gradient analyses of connectivity data have been applied to diffusion MRI tractography data in specific brain regions12,13 as well as to neocortical, hippocampal, and cerebellar resting-state functional MRI connectivity maps11,14–20. Similar techniques have also been used to describe myelin-sensitive tissue measures and other morphological characteristics21–23, as well as in approaches based on combined network information aggregated from multiple features24. Other studies have used a similar framework to describe task-based neural patterns, using either meta-analytical co-activation mapping20 or large-scale functional MRI task data sets25. Gradients have also been successfully derived from non-imaging data registered to stereotaxic space, including hippocampal post-mortem gene expression information26 and 3D histology data22, to explore cellular and molecular signatures of neuroimaging and connectome measures. Core to these techniques is the computation of an affinity matrix that captures the inter-area similarity of a given feature, followed by the application of dimensionality reduction techniques to identify a gradual ordering of the input matrix in a lower-dimensional manifold space (Fig. 1).

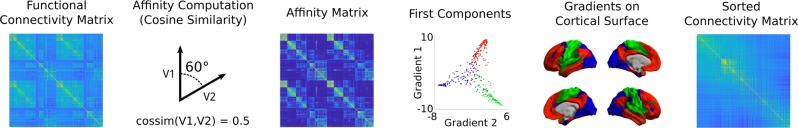

Fig. 1. A typical gradient identification workflow.

Starting from an input matrix (here, functional connectivity), we use a kernel function to build the affinity matrix (here capturing the connectivity of each seed region). This matrix is decomposed, often via linear rotations or non-linear manifold learning techniques, into a set of principal eigenvectors describing axes of largest variance. The scores of each seed on the first two axes are shown in the scatter plot, with colors denoting position in this 2D space. These colors may be projected back to the cortical surface, and the scores can be used to sort the input connectome.

The ability to describe brain-wide organizational principles in a single manifold offers the possibility to understand how the integrated nature of neural processing gives rise to function and dysfunction. Adopting a macroscale perspective on cortical organization has already provided insights into how cortex-wide patterns relate to cortical dynamics27 and high-level cognition25,28–30. Furthermore, several studies have leveraged gradients as an analytical framework to describe atypical macroscale brain organization across clinical conditions, for example by showing perturbations in functional connectome gradients in autism31 and schizophrenia32. Finally, comparisons of gradients across different imaging modalities have highlighted the extent to which structure directly constrains functional measures22, while consideration of gradients across species has highlighted how evolution has shaped more integrative features of the cortical landscape7,33–37.

The growth in our capacity to map whole-brain cortical gradients, coupled with the promise of a better understanding of how structure gives rise to function, highlights the need for a set of tools that support the analysis of neural manifolds in a compact and reproducible manner. The goal of this paper is to present an open-access set of easy-to-use tools that allow the identification, visualization, and analysis of macroscale gradients of brain organization. We hope this will provide a method for calculating cortical manifolds that facilitates their use in future empirical work, allows comparison between studies, and supports the replicability of results. To offer flexibility in implementation, we provide our toolbox in both Python and Matlab, two languages widely used in the neuroimaging and network neuroscience communities. Associated functions are freely available for download (http://github.com/MICA-MNI/BrainSpace) and complemented by expandable online documentation (http://brainspace.readthedocs.io). We anticipate that our toolbox will assist researchers interested in studying gradients of cortical organization, and propel further work that establishes the overarching principles through which structural and functional organization of human and non-human brains gives rise to key aspects of cognition.

Results

This section illustrates the usage of BrainSpace for gradient mapping and null model generation. Examples and evaluations are based on 217 subjects from the Human Connectome Project (HCP) dataset38, as in prior work20. Matlab code is presented in the main text of the paper; the corresponding Python code is available in the Supplementary Information.

Generating gradients

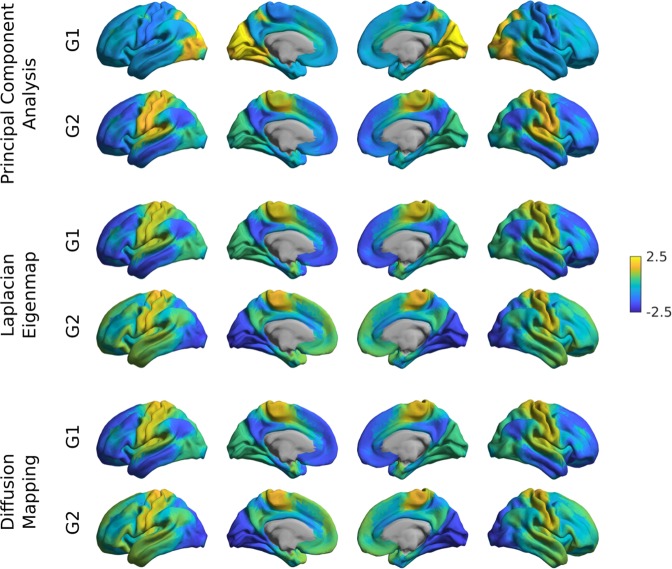

To illustrate the basic functionality of the toolbox, we computed gradients from resting-state functional MRI connectivity (FC). In short, the input matrix was thresholded row-wise to retain the top 10% of connections of each region, and a cosine similarity matrix was computed. Next, three different dimensionality reduction algorithms (principal component analysis (PCA), Laplacian eigenmaps (LE), and diffusion mapping (DM)) were applied, and their first and second gradients were plotted on the cortical surface (Fig. 2). The resulting gradients (Fig. 3) from all dimensionality reduction techniques resemble those published previously11, although for PCA the somatomotor-to-visual gradient explains more variance than the default mode-to-sensory gradient.
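The affinity construction described above (row-wise thresholding followed by cosine similarity) can be illustrated with a short NumPy sketch. It is not BrainSpace's implementation: the function names `threshold_rows` and `cosine_affinity` are hypothetical, the data are synthetic, ties at the threshold are broken arbitrarily, and negative similarities are set to zero, which is one of the options discussed in the Methods.

```python
import numpy as np


def threshold_rows(x, keep=0.10):
    """Keep the largest `keep` fraction of entries in each row; zero the rest."""
    x = np.asarray(x, dtype=float)
    n_keep = max(1, int(round(keep * x.shape[1])))
    # Column indices of the n_keep largest entries in every row (unordered).
    idx = np.argpartition(x, -n_keep, axis=1)[:, -n_keep:]
    rows = np.arange(x.shape[0])[:, None]
    out = np.zeros_like(x)
    out[rows, idx] = x[rows, idx]
    return out


def cosine_affinity(x):
    """Cosine similarity between all pairs of rows, with negatives set to zero."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    unit = x / np.where(norms == 0, 1.0, norms)  # leave all-zero rows at zero
    return np.clip(unit @ unit.T, 0.0, None)


# Synthetic example: 200 time points for 50 regions -> 50 x 50 FC matrix.
rng = np.random.default_rng(0)
timeseries = rng.standard_normal((200, 50))
fc = np.corrcoef(timeseries.T)
fc_sparse = threshold_rows(fc, keep=0.10)   # 5 entries kept per row
affinity = cosine_affinity(fc_sparse)
```

Because the thresholded matrix is generally no longer symmetric, computing a kernel between its rows is what yields a square, symmetric affinity matrix again.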

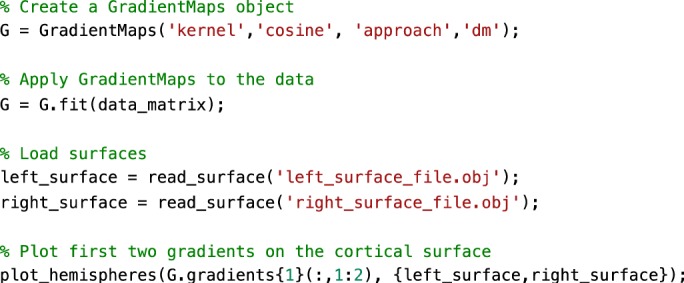

Fig. 2. Sample code 1.

A minimal Matlab example for plotting the first gradient of an input data matrix on the cortical surface. Equivalent Python code is provided in Supplementary Sample Code 1.

Fig. 3. Gradient construction with different dimensionality reduction techniques.

Gradient 1 (G1) and 2 (G2) of FC were computed using a cosine similarity affinity computation, followed by either PCA, LE, or DM. Gradients were z-scored before plotting.

Aligning gradients

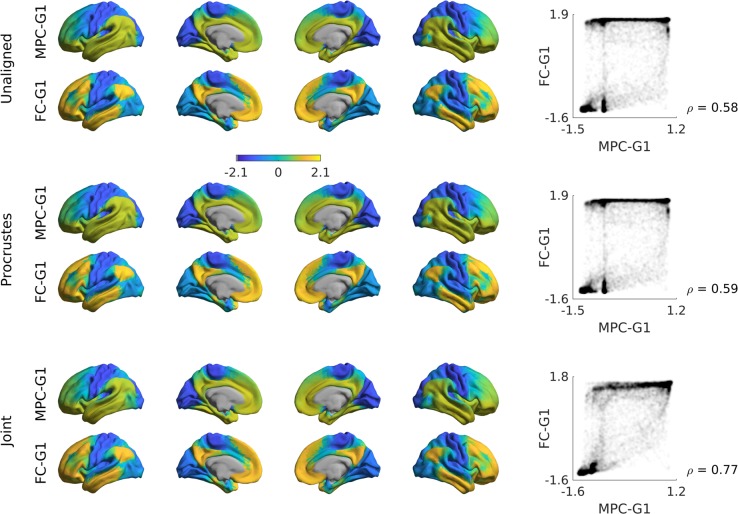

Gradient alignment across modalities. Based on the subjects present both in the FC dataset and in the validation group of ref. 22 (n = 70), we examined the correspondence between gradients computed from different modalities and evaluated how much gradient alignment increased this correspondence. The modalities evaluated are FC and microstructural profile covariance (MPC). We compared their gradients in unaligned form, after Procrustes alignment, and after joint embedding (Fig. 4, Fig. 5). As expected, gradient correspondence increased slightly following Procrustes alignment compared to unaligned gradients, and more markedly following joint embedding. Beyond maximizing correspondence, the choice between Procrustes alignment and joint embedding can depend on the specific application. Procrustes alignment preserves the overall shape of the individual gradients and can thus be preferable when comparing different gradients. Joint embedding, on the other hand, identifies a joint solution that maximizes their similarity, resulting in a gradient that may be more of a ‘hybrid’ of the input manifolds. Joint embedding is, thus, a technique to identify correspondence and to map from one space to another, and is conceptually related to widely used multivariate associative techniques such as canonical correlation analysis or partial least squares, which seek to maximize the linear associations between two multidimensional datasets39. Note that the computational cost of joint embedding is substantially higher, so Procrustes analysis may be preferable when computational resources are limited.

Fig. 4. Comparison of alignment methods across modalities.

Unaligned gradients 1 (top) of MPC and FC were derived using cosine similarity and diffusion mapping. Alignments using Procrustes analyses (middle) and joint embedding (bottom) are also shown. Smoothed scatter plots show correspondence between principal gradient values for FC and MPC across cortical nodes, indicating a moderate increase in Spearman correlation after joint embedding. Gradient values were z-scored before plotting.

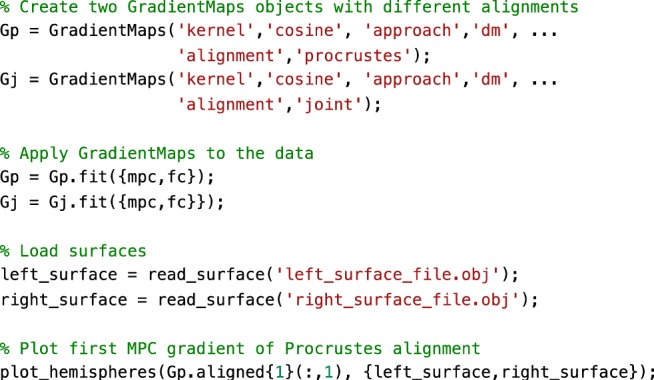

Fig. 5. Sample code 2.

A minimal Matlab example for creating and plotting gradients from different modalities, with different alignments. Equivalent Python code is provided in Supplementary Sample Code 2.

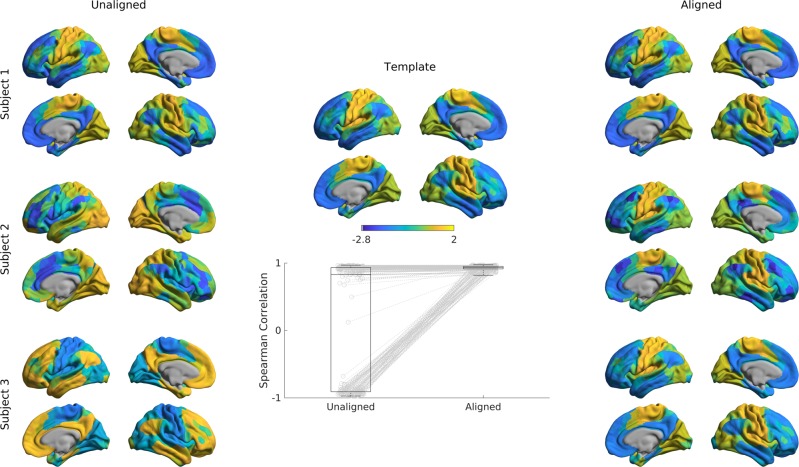

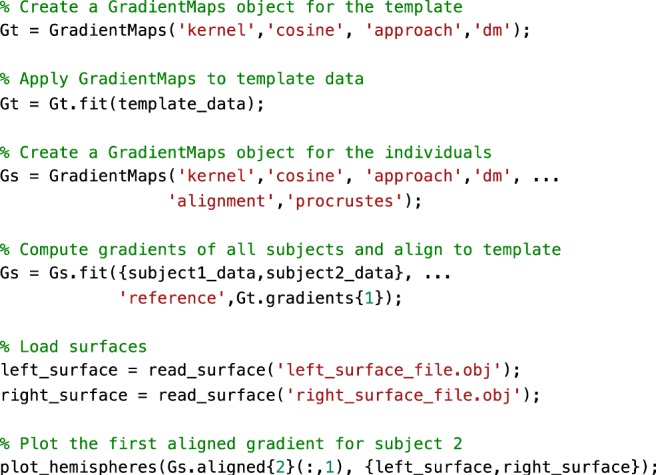

Gradient alignment across individuals. Researchers may also be interested in comparing gradient values between individuals31, for example to assess perturbations in FC gradients as a measure of brain network hierarchy. One possible approach is to first build a group-level gradient template, to which the individuals of both diagnostic groups are aligned using Procrustes rotation. The two groups can then be compared statistically at each vertex, for example using tools suitable for surface-based linear modeling40.

In the example below, we computed a template gradient from an out-of-sample dataset of 134 subjects from the HCP dataset (the validation cohort used in ref. 20). Next, we used Procrustes analysis to align each subject's gradients to the group-level template (Fig. 6, Fig. 7).

Fig. 6. Alignment of subjects to a template.

Gradients 1 of single subjects computed with the cosine similarity kernel and diffusion mapping (left) were aligned to an out-of-sample template (middle) using Procrustes analysis, creating aligned gradients (right). The box plot shows the Spearman correlations between each subject's gradient 1 values and the template gradient 1 across cortical nodes, both before and after Procrustes alignment. The central line of the box plot denotes the median, the edges denote the 25th and 75th percentiles, and the whiskers extend to the most extreme data points.

Fig. 7. Sample code 3.

A minimal Matlab example for aligning the gradients of two individuals to a template gradient. Equivalent Python code is provided in Supplementary Sample Code 3.

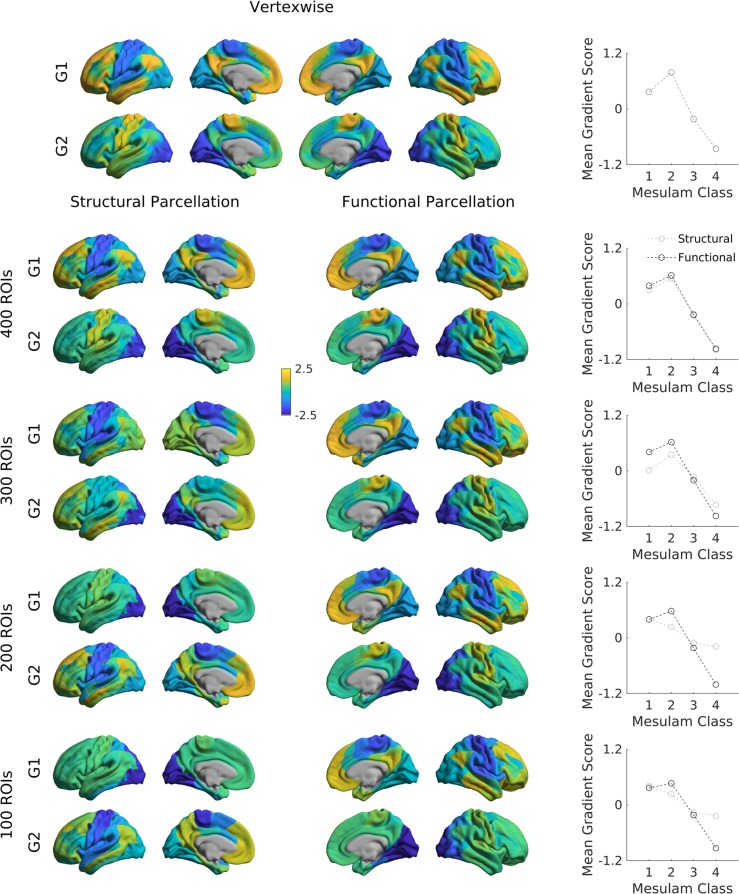

Gradients across different spatial scales. The gradients presented so far were all derived at the vertex level, which requires considerable computational resources. To reduce time and memory requirements and to make results comparable to parcellation-based studies, some users may prefer to derive gradients from parcellated data. To illustrate the effect of using different parcellations, we repeated the gradient identification and analysis across different spatial scales for both a structural and a functional parcellation. Specifically, we subdivided the Conte69 surface into 400, 300, 200, and 100 parcels based on both a clustering of a well-established anatomical atlas41 and a recently published local-global functional clustering42, and built FC gradients from these representations (Fig. 8). These parcellations and subsampling schemes are provided in the shared folder of the BrainSpace toolbox.
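One common way to obtain parcel-level input matrices is to average vertex-wise time series within each parcel before computing FC. The sketch below assumes a hypothetical integer label array that assigns one parcel ID to every vertex, uses synthetic data, and is not the subsampling code distributed with BrainSpace. Whether to average time series before computing connectivity, or to average a vertex-wise connectivity matrix afterwards, is a design choice the sketch does not settle.

```python
import numpy as np


def parcel_means(values, labels):
    """Average vertex-wise values within each parcel.

    values: array of shape (n_timepoints, n_vertices)
    labels: integer array of shape (n_vertices,), one parcel ID per vertex
    Returns the sorted parcel IDs and an (n_timepoints, n_parcels) array.
    """
    parcel_ids, inverse = np.unique(labels, return_inverse=True)
    # One-hot membership matrix of shape (n_vertices, n_parcels).
    membership = np.zeros((labels.size, parcel_ids.size))
    membership[np.arange(labels.size), inverse] = 1.0
    return parcel_ids, (values @ membership) / membership.sum(axis=0)


# Synthetic example: 1000 vertices grouped into 100 parcels of 10 vertices.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(100), 10)
vertex_ts = rng.standard_normal((300, 1000))
ids, parcel_ts = parcel_means(vertex_ts, labels)
parcel_fc = np.corrcoef(parcel_ts.T)   # 100 x 100 parcel-level FC
```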

Fig. 8. Functional gradients across spatial scales.

The cortex was subdivided into 100 (first row), 200 (second row), 300 (third row), and 400 (fourth row) regions of interest based on an anatomical (left) and a functional (right) parcellation. Displayed are gradients 1 (G1) and 2 (G2), each for one hemisphere only. Line plots show the average gradient score within each Mesulam class for the functional (dark gray) and structural (light gray) parcellations.

Overall, with increasing spatial resolution the FC gradients became more pronounced, and gradients derived from functional and structural parcellations became more similar. At scales of 200 nodes or fewer, putative functional boundaries may not be captured as reliably when using anatomically informed parcellations, resulting in more marked alterations in the overall shape of the gradients.

For further evaluation, we related the gradients at each spatial scale to Mesulam’s classic scheme of cortical laminar differentiation and hierarchy22,43. This analysis showed a clear correspondence between the first gradient and the Mesulam hierarchy for high-resolution data of 300 nodes or more, regardless of the parcellation scheme. While high correspondence was still seen for functional parcellations at lower granularity, it was markedly reduced when using structural parcellations. For researchers interested in using Mesulam’s parcellation, it is provided on Conte69 surfaces in the shared folder of the BrainSpace toolbox. We also provide the data required to reproduce the evaluated FC and MPC gradients across these different spatial scales. Such gradients can be used to stratify other imaging measures, including functional activation and connectivity patterns28,31,44, meta-analytical syntheses11,29, cortical thickness measures, or amyloid-beta PET uptake data45.

Null models

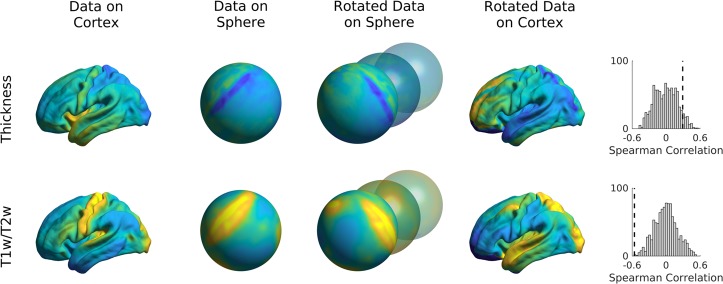

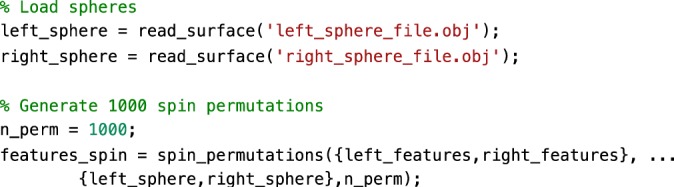

Here, we present an example to assess the significance of correlations between FC gradients and data from other modalities (in this example, cortical thickness and T1w/T2w image intensity). We present code (see Fig. 9) to generate previously proposed spin tests46, which preserve the spatial autocorrelation of the permuted features by rotating the feature data on the sphere. In our example (Fig. 10), the correlations between FC gradients and T1w/T2w intensity remained significant (two-tailed, p < 0.001) when compared against 1000 null models, whereas the correlations between FC gradients and cortical thickness were non-significant (two-tailed, p = 0.12).

Fig. 9. Sample code 4.

A minimal Matlab example for building null models based on spin tests. Equivalent Python code is provided in Supplementary Sample Code 4.

Fig. 10. Spin tests of cortical thickness and T1w/T2w intensity.

Data were rotated on the sphere 1000 times, and Spearman correlations between FC gradient 1 and the rotated data were computed. The histograms show the distributions of correlation coefficients, with dashed lines denoting the empirical correlation.
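The logic of a spin test can be sketched in NumPy as follows. This is a simplified, single-hemisphere illustration on synthetic data rather than BrainSpace's implementation: it assumes vertex coordinates on a spherical mesh (`sphere_xyz`), draws uniformly random 3D rotations, reassigns values by nearest rotated neighbour (so some values may be duplicated and others dropped), and uses one common convention for the two-tailed permutation p-value. All function names are hypothetical.

```python
import numpy as np


def random_rotation(rng):
    """Draw a 3D rotation matrix uniformly at random (QR of a Gaussian matrix)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))      # sign correction makes the draw uniform
    if np.linalg.det(q) < 0:         # turn a reflection into a proper rotation
        q[:, 0] = -q[:, 0]
    return q


def spin_permutations(sphere_xyz, values, n_rep, rng):
    """Rotate the sphere n_rep times and resample `values` by nearest neighbour."""
    xyz = sphere_xyz / np.linalg.norm(sphere_xyz, axis=1, keepdims=True)
    nulls = np.empty((n_rep, values.size))
    for k in range(n_rep):
        rotated = xyz @ random_rotation(rng).T
        # Each original vertex takes the value of the closest rotated vertex
        # (largest dot product on the unit sphere).
        nulls[k] = values[np.argmax(xyz @ rotated.T, axis=1)]
    return nulls


def spearman(a, b):
    """Spearman correlation as Pearson correlation of ranks (ties not averaged)."""
    ra = np.argsort(np.argsort(a))
    rb = np.argsort(np.argsort(b))
    return np.corrcoef(ra, rb)[0, 1]


def spin_test(sphere_xyz, gradient, feature, n_rep=1000, seed=0):
    rng = np.random.default_rng(seed)
    r_obs = spearman(gradient, feature)
    nulls = spin_permutations(sphere_xyz, feature, n_rep, rng)
    r_null = np.array([spearman(gradient, f) for f in nulls])
    # Two-tailed permutation p-value, counting the observed statistic once.
    p = (np.sum(np.abs(r_null) >= abs(r_obs)) + 1) / (n_rep + 1)
    return r_obs, r_null, p


# Synthetic example: 500 points on the unit sphere and two smooth maps.
rng = np.random.default_rng(1)
sphere = rng.standard_normal((500, 3))
sphere /= np.linalg.norm(sphere, axis=1, keepdims=True)
gradient = sphere[:, 2]
feature = sphere[:, 2] + 0.1 * rng.standard_normal(500)
r_obs, r_null, p_spin = spin_test(sphere, gradient, feature, n_rep=200)
```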

Test-retest stability

The HCP dataset includes four rs-fMRI scans per subject, acquired over two days. We leveraged this design to assess the test-retest stability of gradients at the group level. Specifically, we repeated the analysis of Fig. 2 separately for the data acquired on each day. Stability was very high for LE and DM (r > 0.99) and moderate-to-high for PCA (r ≈ 0.72) (Supplementary Fig. 1).

Discussion

While tools for unsupervised manifold identification and alignment are used extensively in data science across multiple research domains47, and while a few prior connectome-level studies have made their workflows openly accessible (see refs. 11,15,48), a unified software package that incorporates the major steps of gradient construction and evaluation for neuroimaging and connectome datasets has been lacking. BrainSpace fills this gap as a compact, open-access Matlab/Python toolbox for the identification and analysis of low-dimensional gradients for any given regional or connectome-level feature. As such, BrainSpace provides an entry point for researchers interested in studying gradients as windows into brain organization and function. BrainSpace is a simple and modular package accessible to beginners, yet expandable for advanced programmers. At its core is a simple object-oriented model, allowing for flexible computation of different (i) affinity matrices, (ii) dimensionality reduction techniques, (iii) alignment functions, and (iv) null models. We also supply precomputed gradients, a novel subparcellation of the Desikan-Killiany atlas, and a literature-based atlas of cortical laminar differentiation that we used in a recent study22,43.

As our main purpose was to provide an accessible introduction to the toolbox’s basic functionality, we focused on tutorial examples and several selected assessments that demonstrate more general aspects of gradient analyses. First, different dimensionality reduction techniques (i.e., PCA, LE, DM) produced relatively consistent FC gradients, at least when cosine similarity was used to compute the affinity matrix. Interactions between input data, affinity matrix kernels, and dimensionality reduction techniques may nevertheless occur, a topic worth exploring in future work. Second, FC gradients were relatively consistent across spatial scales for vertex-wise analyses and when parcellations with 300 nodes or more were used. However, we also observed an interaction between the type of input data and parcellation at lower spatial scales. In fact, lower-resolution structural parcellations might not capture fine-grained functional boundaries, specifically in heteromodal and paralimbic association cortices, which may be less constrained by underlying structural-morphological features22. It will be informative for future work to clarify how input modality (e.g., FC, MPC, or diffusion MRI), the choice of parcellation, and the spatial scale impact gradient analyses.

There are two broad ways through which the gradient method and the BrainSpace toolbox may improve our understanding of neural organization and its associated functions. One avenue is the identification of similarities and differences in gradients derived from different brain measures. To address associations between cortical microstructure and macroscale function, a previous study22 demonstrated that gradients derived from 3D histology and myelin-sensitive MRI measures show both similarities to and differences from those derived from resting-state functional connectivity analysis11. This raises the possibility that the gradient method may help quantify common and distinct influences on functional and structural brain organization and shed light on the neural basis of more flexible (i.e., less structurally constrained) aspects of human cognition22. Another way that gradients can inform our understanding of how functions emerge from the cortex is through the analysis of how macroscale patterns of organization change in disease. One recent study31, for example, demonstrated differences in the principal functional gradient, identified in ref. 11, between individuals with autism spectrum disorder and typically developing controls. In this way, manifold-derived gradient analyses hold the possibility to characterize how macroscale functional organization may become dysfunctional in atypical neurodevelopment. To achieve both goals, different gradients should be aligned, for example with Procrustes alignment, one of the methods supplied in BrainSpace. This allows researchers both to compare gradients from different modalities and to homogenize measures across subjects, while minimizing the changes to individual manifolds.

Our evaluations showed an increase in correspondence between individual subjects and the template manifold when Procrustes alignment was used, compared with unaligned gradients; this increase was mainly driven by trivial sign changes of specific gradients in a subgroup of subjects. As an alternative to Procrustes alignment, gradients can also be aligned via joint manifold alignment, often referred to as joint embedding37. The gradients provided by this approach can augment both cross-subject49 and cross-species analyses37. Of note, joint embedding generates a new manifold, whose solutions may differ slightly from the gradients computed individually.

When assessing the significance of correlations between gradients and other features of brain organization, there is increasing awareness that correlations should ideally be evaluated against null models with spatial autocorrelation similar to that of the original features. BrainSpace provides two approaches to build such null models: an adaptation of a previously released spin permutation test46 and Moran’s spectral randomization50. Gradients can also serve as a coordinate system to stratify cortical features that are not gradient-based per se. Examples include surface-based geodesic distance measures from sensory-motor regions to other regions of cortex11, task-based functional activation patterns and meta-analytical data11,28,29, as well as MRI-based cortical thickness and PET-derived amyloid-beta uptake measures45. As such, using manifolds as a new coordinate system34 may complement widely used parcellation approaches2,42,51, can be useful for the compact representation of findings, and may aid the interpretation and communication of results.
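Moran spectral randomization generates surrogate maps from the eigenvectors of a spatial weight matrix (Moran eigenvector maps). The sketch below is a simplified sign-flip variant written from that general idea, not BrainSpace's implementation: it projects the centred map onto the eigenvectors of the doubly centred weight matrix and flips the sign of each coefficient at random, which exactly preserves the mean, variance, and Moran's I of the original map. The inverse-distance weight matrix, the synthetic data, and all function names are assumptions for illustration; on a cortical surface, weights might instead be derived from the mesh or from geodesic distances.

```python
import numpy as np


def moran_basis(w):
    """Eigenvectors of the doubly centred weight matrix, minus the constant one."""
    n = w.shape[0]
    h = np.eye(n) - np.ones((n, n)) / n
    evals, evecs = np.linalg.eigh(h @ w @ h)
    # H W H maps the constant vector to zero; drop the eigenvector aligned with it.
    const = np.argmax(np.abs(evecs.sum(axis=0)))
    return np.delete(evals, const), np.delete(evecs, const, axis=1)


def moran_sign_flip_surrogates(x, w, n_rep, seed=0):
    """Surrogates obtained by randomly flipping the sign of each Moran coefficient."""
    rng = np.random.default_rng(seed)
    _, v = moran_basis(w)
    coef = v.T @ (x - x.mean())                 # projection onto Moran eigenvectors
    signs = rng.choice([-1.0, 1.0], size=(n_rep, coef.size))
    return x.mean() + (signs * coef) @ v.T


def morans_i(x, w):
    """Moran's I for map x and weights w (zero diagonal assumed)."""
    z = x - x.mean()
    return (x.size / w.sum()) * (z @ w @ z) / (z @ z)


# Synthetic example: 150 random 2D locations with inverse-distance weights.
rng = np.random.default_rng(0)
pts = rng.uniform(size=(150, 2))
dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
np.fill_diagonal(dist, np.inf)                  # gives zero self-weights below
w = 1.0 / dist
x = pts[:, 0] + 0.1 * rng.standard_normal(150)  # spatially smooth map
surrogates = moran_sign_flip_surrogates(x, w, n_rep=100)
```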

Although we have only illustrated the use of our toolbox with neocortical surface data, the gradient-based functionality of BrainSpace is not restricted to the neocortex and can also be used with other datasets (e.g., hippocampal measures, subcortical measures, and volumetric data). However, as of version 0.1, visualization is only available for surface meshes. Extending the visualization functionality of BrainSpace to support other cerebral structures and volumetric data is a promising line of future work.

Methods

Input data description

Our toolbox requires a real-valued input matrix. Let $X \in \mathbb{R}^{n \times p}$ be a matrix aggregating features of several seed regions. In other words, each seed is represented by a $p$-dimensional vector, $x_i$, built from the features of the $i$-th seed region, where $x_{ij}$ denotes the $j$-th feature of the $i$-th seed. In many neuroimaging applications, $X$ may represent a connectivity metric (e.g., resting-state functional MRI connectivity or diffusion MRI tractography-derived structural connectivity) between different seed and target brain regions. When seed and target regions are identical, the input matrix $X$ is square. Furthermore, if the connectivity measure used to build the matrix is non-directional, $X$ is also symmetric. If seeds and targets differ, for example when assessing connectivity patterns of a given region with the rest of the brain15,20, $n$ may differ from $p$, resulting in a non-square matrix. The dimensions and symmetry properties of the input matrix $X$ may interact with the dimensionality reduction procedures presented below. A simple strategy to make matrices square and symmetric is to use kernel functions, which are covered in the following section.

Affinities and kernel functions

Since we are interested in studying the relationships between the seed regions in terms of their features (e.g., connectivity with target regions), our toolbox provides several kernel functions to compute the relationship between every pair of seed regions and derive a non-negative, square, symmetric affinity matrix $A \in \mathbb{R}^{n \times n}$, where $A(i, j) = A(j, i)$ denotes the similarity or ‘affinity’ between seeds $i$ and $j$. Moreover, a square symmetric matrix is a requirement for the next step in our framework (i.e., dimensionality reduction). Note that when the input matrix $X$ is already square and symmetric (e.g., seed and target regions are the same), there may be no need to derive the affinity matrix, and $X$ can be used directly to perform dimensionality reduction. Accordingly, BrainSpace provides the option of skipping this step and using the input matrix as the affinity.

There are numerous kernels to compute affinity matrices. As of version 0.1, our toolbox implements the following: Gaussian, cosine similarity, normalized angle similarity, Pearson’s correlation coefficient, and Spearman rank order correlation. For simplicity, letting $x = x_i$ and $y = x_j$, these kernels can be expressed as follows:

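1. Gaussian kernel:

$$A(i,j) = \exp\left(-\gamma \lVert x - y \rVert^{2}\right),$$

where γ is the inverse kernel width and $\lVert \cdot \rVert$ denotes the $\ell_2$-norm.

2. Cosine similarity:

$$A(i,j) = \operatorname{cossim}(x, y) = \frac{x^{T} y}{\lVert x \rVert \, \lVert y \rVert},$$

where $\operatorname{cossim}(\cdot,\cdot)$ is the cosine similarity function and $T$ stands for transpose.

3. Normalized angle similarity:

$$A(i,j) = 1 - \frac{\cos^{-1}\left(\operatorname{cossim}(x, y)\right)}{\pi}.$$

4. Pearson correlation:

$$A(i,j) = \rho(x, y) = \frac{(x - \bar{x})^{T}(y - \bar{y})}{\lVert x - \bar{x} \rVert \, \lVert y - \bar{y} \rVert},$$

where ρ is the Pearson correlation coefficient, and $\bar{x}$ and $\bar{y}$ denote the means of $x$ and $y$, respectively.

5. Spearman rank order correlation:

$$A(i,j) = \rho(r_x, r_y),$$

where $r_x$ and $r_y$ denote the ranks of $x$ and $y$, respectively.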

Version 0.1 of BrainSpace thus includes kernels commonly used in the gradient literature as well as additional ones for experimentation. To our knowledge, no gradient paper has used Pearson or Spearman correlation. Note that if X is already row-wise demeaned, Pearson correlation amounts to cosine similarity. The Gaussian kernel is widely used in the machine learning community (for example in the context of Laplacian eigenmaps and support vector machines) and provides a simple approach to convert Euclidean distances between seeds into similarities. Cosine similarity (for an example application, see ref. 11) uses the cosine of the angle between feature vectors to describe their similarity. Notably, cosine similarity ranges from -1 to 1, so negative values need to be transformed to non-negative values. This motivated the inclusion of the normalized angle kernel (for an example application, see ref. 20), which circumvents negative similarities by mapping similarities to [0,1], with 1 denoting identical angles and 0 opposing angles. Cosine similarity and Pearson and Spearman correlation coefficients may produce negative values (i.e., values in [–1,1]) if feature vectors are negatively correlated. A simple mitigation is to set negative values to zero11. Additionally, BrainSpace provides an option for row-wise thresholding of the input matrix11,20,22,31. Each vector of the input matrix is thresholded at a given sparsity (e.g., by keeping the weights of the top 10% entries for each region). This procedure ensures that only strong, potentially less noisy, connections contribute to the manifold solution.

In addition to the aforementioned kernels, BrainSpace allows users to supply a custom kernel, or to skip this step and use the input matrix directly as the affinity matrix.
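The five kernels can be sketched in NumPy as follows, operating on the rows of an n × p feature matrix. This is an illustration of the formulas above rather than BrainSpace's code: function names are hypothetical, the Gaussian default γ = 1/p is an assumption, Spearman ranks are computed without averaging ties, and negative similarities are simply set to zero (one of the mitigations mentioned above).

```python
import numpy as np


def _unit_rows(x):
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.where(norms == 0, 1.0, norms)


def gaussian_kernel(x, gamma=None):
    """exp(-gamma * ||x_i - x_j||^2) for all pairs of rows."""
    sq = np.sum(x ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * x @ x.T, 0.0)
    if gamma is None:
        gamma = 1.0 / x.shape[1]   # assumed default: 1 / number of features
    return np.exp(-gamma * d2)


def cosine_kernel(x):
    u = _unit_rows(x)
    return u @ u.T


def normalized_angle_kernel(x):
    """1 - arccos(cossim) / pi, mapping similarities to [0, 1]."""
    return 1.0 - np.arccos(np.clip(cosine_kernel(x), -1.0, 1.0)) / np.pi


def pearson_kernel(x):
    """Pearson correlation = cosine similarity of row-wise demeaned vectors."""
    return cosine_kernel(x - x.mean(axis=1, keepdims=True))


def spearman_kernel(x):
    """Pearson correlation of within-row ranks (ties are not averaged)."""
    ranks = np.argsort(np.argsort(x, axis=1), axis=1).astype(float)
    return pearson_kernel(ranks)


rng = np.random.default_rng(0)
features = rng.standard_normal((20, 30))      # 20 seeds x 30 features
affinities = {
    "gaussian": gaussian_kernel(features),
    "cosine": np.clip(cosine_kernel(features), 0.0, None),    # negatives -> 0
    "normalized_angle": normalized_angle_kernel(features),
    "pearson": np.clip(pearson_kernel(features), 0.0, None),
    "spearman": np.clip(spearman_kernel(features), 0.0, None),
}
```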

Dimensionality reduction

In the input matrix, each seed $x_i$ is defined by a $p$-dimensional feature vector, where $p$ may denote hundreds of parcels or thousands of vertices/voxels. The aim of dimensionality reduction techniques is to find a meaningful underlying low-dimensional representation with $m \ll p$ dimensions, hidden in the high-dimensional ambient space. These methods can be grouped into linear and non-linear techniques. The former use a linear transformation to unravel the latent representation, while techniques in the second category use non-linear transformations. As of version 0.1, BrainSpace provides three of the most widely used dimensionality reduction techniques for macroscale gradient mapping: PCA for linear embedding, and LE and DM for non-linear dimensionality reduction.

- PCA is a linear approach that transforms the data to a low-dimensional space represented by a set of orthogonal components that explain maximal variance. Given a column-wise demeaned version of the input matrix, $X_d$, the low-dimensional representation is computed as follows:

$$E = U_m S_m,$$

where $U$ contains the left singular vectors and $S$ is a diagonal matrix of singular values obtained by factorizing the input matrix with singular value decomposition, $X_d = U S V^{T}$, and the subscript $m$ denotes keeping the first $m$ singular vectors and singular values. Although we present the singular value decomposition version here, PCA can also be performed via eigendecomposition of the covariance matrix of X.

- LE is a non-linear dimensionality reduction technique that uses the graph Laplacian of the affinity matrix $A$ to perform the embedding:

$$L = D - A,$$

where the degree matrix $D$ is a diagonal matrix defined as $D_{ii} = \sum_{j} A(i,j)$ and $L$ is the graph Laplacian matrix. Note that we can also work with its normalized version instead52. LE then proceeds to solve the generalized eigenvalue problem:

$$L g_k = \lambda_k D g_k,$$

where the eigenvectors $g_k$ corresponding to the $m$ smallest eigenvalues $\lambda_k$ (excluding the first eigenvalue, $\lambda_0 = 0$) are used to build the new low-dimensional representation:

$$E = [g_1, \ldots, g_m].$$

- DM also seeks a non-linear mapping of the data based on the diffusion operator $P_\alpha$, which is defined as follows:

$$P_\alpha = D_\alpha^{-1} W_\alpha, \qquad W_\alpha = D^{-\alpha} A D^{-\alpha},$$

where α ∈ [0,1] is the anisotropic diffusion parameter used by the diffusion operator, $W_\alpha$ is built by normalizing the affinity matrix according to the diffusion parameter, and $D_\alpha$ is the degree matrix derived from $W_\alpha$. When α = 0, the diffusion amounts to the normalized graph Laplacian on isotropic weights; for α = 1, it approximates the Laplace-Beltrami operator; and for α = 0.5, it approximates Fokker-Planck diffusion53. This parameter controls the influence of the density of sampling points on the manifold (α = 0, maximal influence; α = 1, no influence). In the gradient literature, the anisotropic diffusion hyper-parameter is commonly set to α = 0.5 (refs. 11,16,20), a choice that retains global relations between data points in the embedded space. Similar to LE, DM computes the eigenvalues and eigenvectors of the diffusion operator. However, in this case, the new representation is constructed from the scaled eigenvectors corresponding to the largest eigenvalues, after omitting the eigenvector with the largest eigenvalue ($\lambda_0 = 1$):

$$E = [\lambda_1^{t} g_1, \ldots, \lambda_m^{t} g_m],$$

where $t$ is the time parameter that represents the scale.
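The three techniques can be sketched in NumPy as follows, using the notation above. This is a minimal illustration under stated assumptions, not BrainSpace's implementation: function names are hypothetical, the generalized eigenvalue problem of LE and the eigendecomposition of $P_\alpha$ are both solved through an equivalent symmetric matrix, the DM time parameter $t$ is fixed by the caller (default 1), and eigenvector signs are arbitrary. The usage example embeds points sampled along a line, for which the first gradient of each method should vary monotonically with position.

```python
import numpy as np


def pca_embedding(x, m):
    """PCA scores U_m S_m of the column-wise demeaned input."""
    xd = x - x.mean(axis=0, keepdims=True)
    u, s, _ = np.linalg.svd(xd, full_matrices=False)
    return u[:, :m] * s[:m]


def laplacian_eigenmaps(a, m):
    """Solve L g = lambda D g and return the m eigenvectors after the trivial one."""
    d = a.sum(axis=1)
    lap = np.diag(d) - a
    # Symmetric form: D^-1/2 L D^-1/2 h = lambda h, with g = D^-1/2 h.
    d_isqrt = 1.0 / np.sqrt(d)
    evals, h = np.linalg.eigh(d_isqrt[:, None] * lap * d_isqrt[None, :])
    g = d_isqrt[:, None] * h                   # eigenvalues ascend; skip index 0
    return evals[1:m + 1], g[:, 1:m + 1]


def diffusion_maps(a, m, alpha=0.5, t=1):
    """Diffusion map with P_alpha = D_alpha^-1 W_alpha, W_alpha = D^-alpha A D^-alpha."""
    d = a.sum(axis=1)
    w = a / np.outer(d ** alpha, d ** alpha)
    d_alpha = w.sum(axis=1)
    # P_alpha is similar to the symmetric matrix D_alpha^-1/2 W_alpha D_alpha^-1/2.
    s_isqrt = 1.0 / np.sqrt(d_alpha)
    evals, h = np.linalg.eigh(s_isqrt[:, None] * w * s_isqrt[None, :])
    order = np.argsort(evals)[::-1]            # largest eigenvalues first
    evals, psi = evals[order], s_isqrt[:, None] * h[:, order]
    # Drop the trivial eigenvector (eigenvalue 1) and scale by lambda^t.
    return evals[1:m + 1], psi[:, 1:m + 1] * evals[1:m + 1] ** t


# Synthetic example: 60 points along a line with a tiny orthogonal jitter.
rng = np.random.default_rng(0)
pos = np.linspace(0.0, 1.0, 60)
coords = np.column_stack([pos, 0.01 * rng.standard_normal(60)])
sq_dist = ((coords[:, None, :] - coords[None, :, :]) ** 2).sum(axis=-1)
affinity = np.exp(-sq_dist / (2 * 0.1 ** 2))   # Gaussian affinity, width 0.1

g_pca = pca_embedding(coords, 1)
lam_le, g_le = laplacian_eigenmaps(affinity, 2)
lam_dm, g_dm = diffusion_maps(affinity, 2)
```

Solving through the symmetric form lets `numpy.linalg.eigh` be used, which returns real eigenvalues in ascending order; for large vertex-wise matrices, sparse eigensolvers might be preferable.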

All aforementioned dimensionality reduction approaches assume that our high-dimensional data lie on some low-dimensional manifold embedded in ambient space, which is typically the case with neuroimaging datasets. These techniques, therefore, provide a convenient approach to handle the curse of dimensionality inherent to neuroimaging data. Moreover, they facilitate comparison by recovering representations with the same number of dimensions even when source data are not comparable in ambient space (for example, when subjects have fMRI time series of different lengths). PCA, in particular, is able to discover the low-dimensional structure when the data lie on an approximately linear manifold, but performs poorly when there are non-linear relationships within the data. In such scenarios, LE and DM are more appropriate to discover the intrinsic geometric structure. From a technical point of view, the advantage of PCA over the non-linear approaches included in BrainSpace is that it provides a mapping from the high- to the low-dimensional space rather than simply producing the new low-dimensional representations. Hence, the choice between linear and non-linear dimensionality reduction is problem-dependent and may also be influenced by the nature of the data under study.

Alignment of gradients

Gradients computed separately for two or more datasets (e.g., patients vs controls, left vs right hippocampi) may not be directly comparable because of the sign ambiguity of eigenvectors and, in the case of eigenvalue multiplicity (i.e., repeated eigenvalues), different eigenvector orderings54. Aligning gradients improves their comparability and correspondence. However, we recommend visually inspecting the alignment results; if the manifold spaces are substantially different, alignment may not produce sensible output. In version 0.1 of the BrainSpace toolbox, gradients can be aligned using Procrustes analysis55 or implicitly by joint embedding.

Procrustes analysis

Given a source and a target representation, Procrustes analysis seeks an orthogonal linear transformation ψ that aligns the source to the target, such that the transformed source and the target are superimposed. Translation and scaling can also be accounted for by centering and normalizing the data before estimating the transformation. For multiple datasets, a generalized Procrustes analysis can be employed. Given the low-dimensional representations of N different datasets (i.e., of the input matrices X_k, k = 1, …, N), the procedure iteratively aligns all representations to a reference and updates the reference by averaging the aligned representations. In the first iteration, the reference can be chosen from the available representations, or an out-of-sample template can be provided (e.g., from a hold-out group).
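A minimal NumPy sketch of (generalized) Procrustes alignment, assuming each representation is an n × k array whose rows correspond across datasets. The orthogonal transformation is obtained from the singular value decomposition of sourceᵀ·target, the classical solution of the orthogonal Procrustes problem; centering and scaling are omitted for brevity. The function names are hypothetical and not BrainSpace's API.

```python
import numpy as np

def procrustes_rotation(source, target):
    """Orthogonal R minimizing ||source @ R - target|| (Frobenius norm)."""
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt

def generalized_procrustes(reps, reference=None, n_iter=10, tol=1e-5):
    """Align all representations to a reference, then update the reference to their mean."""
    reps = [np.asarray(r, dtype=float) for r in reps]
    # First iteration: use the first representation unless a template is supplied
    ref = reps[0] if reference is None else np.asarray(reference, dtype=float)
    for _ in range(n_iter):
        aligned = [r @ procrustes_rotation(r, ref) for r in reps]
        new_ref = np.mean(aligned, axis=0)
        shift = np.linalg.norm(new_ref - ref)
        ref = new_ref
        if shift < tol:
            break
    return aligned, ref

# Usage: three copies of one gradient space, two with flipped or mixed axes
rng = np.random.default_rng(0)
grads = rng.normal(size=(100, 3))
flip = np.diag([-1.0, 1.0, 1.0])                 # sign ambiguity of one eigenvector
rot, _ = np.linalg.qr(rng.normal(size=(3, 3)))   # arbitrary orthogonal mixing
aligned, ref = generalized_procrustes([grads, grads @ flip, grads @ rot])
```

Because sign flips are orthogonal transformations, this sketch also resolves the eigenvector sign ambiguity; a template from a hold-out group can be passed as `reference`.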

Joint embedding

Joint embedding is a dimensionality reduction technique that finds a common underlying representation of multiple datasets via simultaneous embedding37. The main challenge of this technique is to find a meaningful way to establish correspondences between the original datasets (i.e., the input matrices X_k). In version 0.1 of BrainSpace, joint alignment is implemented using spectral embedding and is available for LE and DM. The only difference from these methods is that, rather than using the individual affinity matrices, the embedding is based on the joint affinity matrix, built as:

where A_k is the intra-dataset affinity of the input matrix X_k and A_ij is the inter-dataset affinity between X_i and X_j. As of version 0.1, both sets of affinities are built using the same kernel. It is important to note, therefore, that joint embedding can only be used if the input matrices have the same features (e.g., identical target regions). After the embedding, the resulting shared representation is composed of N individual low-dimensional representations, such that for the k-th input matrix X_k ∈ ℝ^{n_k×m}, the corresponding representation has n_k rows, where n_k is the number of seeds (i.e., rows) of X_k.
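The joint affinity matrix places each intra-dataset affinity A_k on a diagonal block and each inter-dataset affinity A_ij on the off-diagonal block (i, j). The sketch below builds it by applying one kernel to the vertically stacked input matrices, which is why all X_k must share the same columns, embeds it with Laplacian eigenmaps, and splits the result back into one block of n_k rows per dataset. The kernel (cosine similarity with negative values set to zero) and the function names are assumptions for illustration; other kernels or DM could be substituted.

```python
import numpy as np

def cosine_affinity(X):
    """Hypothetical kernel: cosine similarity between rows, negative values set to 0."""
    Xn = X / np.linalg.norm(X, axis=1, keepdims=True)
    return np.clip(Xn @ Xn.T, 0.0, None)

def joint_embedding(matrices, n_components=2, kernel=cosine_affinity):
    """Sketch of joint embedding; returns one (n_k x n_components) block per dataset."""
    if len({m.shape[1] for m in matrices}) != 1:
        raise ValueError("input matrices must have the same features (columns)")
    sizes = [m.shape[0] for m in matrices]
    # One kernel on the stacked rows: diagonal blocks are the intra-dataset
    # affinities A_k, off-diagonal blocks the inter-dataset affinities A_ij
    joint = kernel(np.vstack(matrices))
    s = 1.0 / np.sqrt(joint.sum(axis=1))
    # Laplacian eigenmaps via the symmetric normalized Laplacian I - D^-1/2 A D^-1/2
    lap = np.eye(len(s)) - joint * np.outer(s, s)
    _, evecs = np.linalg.eigh(lap)                      # ascending eigenvalues
    emb = (evecs * s[:, None])[:, 1:n_components + 1]   # drop the trivial eigenvector
    # Split the shared representation back into the individual datasets
    return np.split(emb, np.cumsum(sizes)[:-1]), joint

# Usage: two datasets with different numbers of seeds but the same 8 target features
rng = np.random.default_rng(1)
X1, X2 = rng.normal(size=(20, 8)), rng.normal(size=(15, 8))
(Y1, Y2), joint = joint_embedding([X1, X2], n_components=3)
```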

Null models

Many researchers have compared gradients to other continuous brain markers, such as cortical thickness or estimates of cortical myelination. Given the spatial autocorrelation present in many modalities, applying linear regression or similar methods may yield biased test statistics, because neighboring observations are not independent. To circumvent this issue, we recommend comparing the observed test statistic to a null distribution of test statistics computed on surrogate data with similar spatial autocorrelation. To this end, we provide two methods: spin permutations46 and Moran spectral randomization (MSR)56,57. When the input data lie on a surface that is, or can be mapped to, a sphere, and the data cover most of that sphere, we recommend spin permutations. Otherwise, we recommend MSR. When performing a statistical test with multiple gradients as either predictor or response variables, we recommend randomizing the non-gradient variable, because randomizing the gradients themselves need not preserve the statistical independence between different eigenvectors.
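Comparing an observed statistic with the statistics obtained on surrogate maps can be sketched as follows. The surrogates stand for spatially autocorrelated null maps of the non-gradient variable (for example, produced by spin permutations or MSR). The Pearson correlation, the two-sided test, the (1 + count)/(1 + n) p-value convention and the function name are assumptions of this sketch.

```python
import numpy as np

def surrogate_pvalue(gradient, feature, feature_nulls):
    """Hypothetical helper: two-sided p-value of corr(gradient, feature) vs. surrogate maps."""
    r_obs = np.corrcoef(gradient, feature)[0, 1]
    r_null = np.array([np.corrcoef(gradient, s)[0, 1] for s in feature_nulls])
    # Count surrogates at least as extreme as the observed value; the +1 terms
    # count the observed map as one member of the null distribution
    n_extreme = np.sum(np.abs(r_null) >= np.abs(r_obs))
    return r_obs, (1 + n_extreme) / (1 + len(r_null))

# Usage: shuffled copies only exercise the function; they ignore spatial
# autocorrelation and are NOT a valid null for brain maps (use spin or MSR nulls)
rng = np.random.default_rng(0)
grad = np.linspace(0.0, 1.0, 200)
feat = grad + 0.1 * rng.normal(size=200)
nulls = np.array([rng.permutation(feat) for _ in range(99)])
r, p = surrogate_pvalue(grad, feat, nulls)
```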

Spin permutations

Spin permutation analysis leverages spherical representations of the cerebral cortex, such as those derived from FreeSurfer58 or CIVET59, to address the problem of spatial autocorrelation in statistical inference. Spin permutations estimate the null distribution by randomly rotating the spherical projections of the cortical surface while preserving the spatial relationships within the data46. Let V ∈ ℝ^{l×3} be the matrix of vertex coordinates on the sphere, where l is the number of vertices, and R ∈ ℝ^{3×3} a matrix representing a rotation about the three axes, uniformly sampled from all possible rotations60. The rotated sphere V_r is computed as follows:

V_r = VR

Samples of the null distribution are then created by assigning each vertex on V_r the data of its nearest neighbor on V.
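A minimal sketch of spin permutations, assuming V is an l × 3 NumPy array of unit-sphere coordinates and the data hold one value per vertex. Uniform random rotations are drawn here with a standard QR-based construction rather than the method of ref. 60, and nearest neighbors are found by brute force (on a unit sphere the closest vertex has the largest dot product); for full-resolution meshes a k-d tree would be preferable. The function names are hypothetical.

```python
import numpy as np

def random_rotation(rng):
    """3 x 3 rotation drawn uniformly from SO(3) with a QR-based construction."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))        # sign fix makes q uniform over orthogonal matrices
    if np.linalg.det(q) < 0:           # flip one axis to turn a reflection into a rotation
        q[:, 0] = -q[:, 0]
    return q

def spin_permutations(V, data, n_rep=100, seed=0):
    """Hypothetical helper: null maps of `data` from random rotations of V (l x 3, unit sphere)."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    nulls = np.empty((n_rep, len(data)), dtype=data.dtype)
    for k in range(n_rep):
        V_r = V @ random_rotation(rng)       # rotated sphere V_r = VR
        # Nearest vertex on V for each rotated vertex: on a unit sphere the
        # smallest Euclidean distance corresponds to the largest dot product
        nn = np.argmax(V_r @ V.T, axis=1)
        nulls[k] = data[nn]
    return nulls

# Usage: a smooth map (the z coordinate) sampled on 500 random points of the unit sphere
rng = np.random.default_rng(1)
V = rng.normal(size=(500, 3))
V /= np.linalg.norm(V, axis=1, keepdims=True)
nulls = spin_permutations(V, V[:, 2], n_rep=50)
R = random_rotation(np.random.default_rng(2))
```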

Moran spectral randomization

Borrowed from the ecology literature, MSR can also be used to generate random variables with identical or similar spatial autocorrelation (in terms of Moran’s I autocorrelation coefficient50). This approach requires building a spatial weight matrix that defines the relationships between the different locations. In our case, given a surface mesh with l vertices (i.e., locations), its topological information is used to build the spatial weight matrix L ∈ ℝ^{l×l}, such that L(i, j) > 0 if vertices i and j are neighbors, and L(i, j) = 0 otherwise. In BrainSpace, L is built using the inverse distance between each vertex and the vertices in its immediate neighborhood, although other neighborhoods and weighting schemes (e.g., binary or Gaussian weights) can also be incorporated. The computed L is doubly centered and its whole spectrum is obtained by eigendecomposition; the resulting eigenvectors, M ∈ ℝ^{l×(l−1)}, are the so-called Moran eigenvector maps. Note that eigenvectors with zero eigenvalue are dropped. One advantage of MSR is that it operates on the original cortical surfaces, thus avoiding potential distortions introduced by spherical mesh parameterization.
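The construction of the Moran eigenvector maps can be sketched in NumPy as follows, assuming the mesh is given as vertex coordinates plus a list of edges (pairs of neighboring vertex indices). Eigenvectors whose eigenvalue is numerically zero, judged with a relative tolerance `tol` (an assumption of this sketch), are dropped. The function names are hypothetical.

```python
import numpy as np

def inverse_distance_weights(coords, edges):
    """Spatial weight matrix L: L[i, j] = 1 / ||x_i - x_j|| for mesh neighbors, else 0."""
    L = np.zeros((len(coords), len(coords)))
    i, j = np.asarray(edges).T
    w = 1.0 / np.linalg.norm(coords[i] - coords[j], axis=1)
    L[i, j] = w
    L[j, i] = w                               # keep L symmetric
    return L

def moran_eigenvectors(L, tol=1e-10):
    """Eigendecompose the doubly centered L and drop zero-eigenvalue eigenvectors."""
    l = L.shape[0]
    H = np.eye(l) - np.ones((l, l)) / l       # centering matrix I - 11^T / l
    evals, evecs = np.linalg.eigh(H @ L @ H)  # full spectrum, orthonormal eigenvectors
    keep = np.abs(evals) > tol * np.abs(evals).max()
    return evals[keep], evecs[:, keep]

# Usage: a regular pentagon, i.e. a ring of 5 equally spaced vertices
theta = 2 * np.pi * np.arange(5) / 5
coords = np.column_stack([np.cos(theta), np.sin(theta), np.zeros(5)])
edges = [(k, (k + 1) % 5) for k in range(5)]
L = inverse_distance_weights(coords, edges)
lam, M = moran_eigenvectors(L)
```

On this ring only the constant vector has a zero eigenvalue, so l − 1 = 4 Moran eigenvector maps remain, each with zero mean.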

To generate null distributions, let u ∈ ℝ^l be an input feature vector defined on the vertices of our surface (e.g., cortical thickness), and r ∈ ℝ^{l−1} the correlation coefficients of u with each spatial eigenvector in M. MSR aims to find a randomized feature vector z that respects the autocorrelation observed in u as follows:

where ū and σ_u stand for the mean and standard deviation of u, respectively, and a ∈ ℝ^{l−1} is a vector of random coefficients. Three different methods exist for generating the vector a: singleton, pair, and triplet. As of version 0.1, only the singleton and pair procedures are supported in BrainSpace. Let v = Mr^T. In the singleton procedure, a is computed by randomizing the sign of each element in v (i.e., a_i = ±v_i). In the pair procedure, the elements of v are randomly changed in pairs: for a randomly chosen pair of elements (v_i, v_j), the corresponding entries a_i and a_j are updated using values randomly drawn from a uniform distribution. If the number of elements of v is odd, the singleton procedure is used for the remaining element.

Unlike the singleton procedure, the pair procedure generates null data that do not fully preserve the observed spatial autocorrelation. We therefore recommend the singleton procedure, unless the number of required randomizations exceeds 2^{l−1}, the maximum number of unique randomizations that the singleton procedure can produce (one sign choice for each of the l−1 coefficients).
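The singleton and pair procedures can be sketched as below, assuming M holds orthonormal Moran eigenvectors (for example, from `numpy.linalg.eigh` of the doubly centered weight matrix). Under that assumption the columns are rescaled to unit variance, so that z = ū + σ_u M a reproduces u exactly when a = r. Two further assumptions: the random signs and pair updates are applied to the correlation coefficients r themselves, which have the l − 1 entries that a requires; and the pair update redistributes each pair as (ρ cos φ, ρ sin φ), with ρ² = r_i² + r_j² and φ drawn uniformly from [0, 2π). This is one plausible form of the pair update, not necessarily the exact formula used by BrainSpace. The function name is hypothetical.

```python
import numpy as np

def msr_randomize(u, M, n_rep=100, procedure="singleton", seed=0):
    """Hypothetical helper: Moran spectral randomization of u given eigenvectors M (l x (l-1))."""
    if procedure not in ("singleton", "pair"):
        raise ValueError("procedure must be 'singleton' or 'pair'")
    rng = np.random.default_rng(seed)
    u = np.asarray(u, dtype=float)
    l = u.size
    Ms = M * np.sqrt(l)                    # zero-mean columns with unit (population) variance
    mu, sd = u.mean(), u.std()
    r = Ms.T @ ((u - mu) / sd) / l         # Pearson correlation of u with each eigenvector
    nulls = np.empty((n_rep, l))
    for k in range(n_rep):
        a = r * rng.choice([-1.0, 1.0], size=r.size)   # singleton: random signs
        if procedure == "pair":
            idx = rng.permutation(r.size)
            # Each pair keeps r_i^2 + r_j^2; an odd leftover keeps its singleton flip
            for i, j in zip(idx[0::2], idx[1::2]):
                rho, phi = np.hypot(r[i], r[j]), rng.uniform(0.0, 2.0 * np.pi)
                a[i], a[j] = rho * np.cos(phi), rho * np.sin(phi)
        nulls[k] = mu + sd * (Ms @ a)
    return nulls

# Usage on a toy ring of 5 vertices with unit weights
ring = np.roll(np.eye(5), 1, axis=1) + np.roll(np.eye(5), -1, axis=1)
H = np.eye(5) - 1.0 / 5                    # centering matrix I - 11^T / l
evals, evecs = np.linalg.eigh(H @ ring @ H)
M = evecs[:, np.abs(evals) > 1e-10]        # drop the zero-eigenvalue (constant) vector
u = np.array([2.0, 3.5, 1.0, 4.0, 2.5])
singleton_nulls = msr_randomize(u, M, n_rep=8, procedure="singleton")
pair_nulls = msr_randomize(u, M, n_rep=8, procedure="pair")
```

In this sketch both procedures keep the mean and standard deviation of u, while only the singleton procedure also keeps Moran's I exactly, in line with the recommendation to prefer it.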

Participants

All data were derived from the Human Connectome Project dataset38. For all figures showing results based only on FC, we included subjects processed for a prior study [n = 217 (122 women), mean ± SD age = 28.5 ± 3.7 y]20. In short, these were subjects who had fully completed all resting-state fMRI and anatomical scans and who had no familial relationships with other subjects in this group. Data shown for comparisons between FC and MPC were derived from the subjects available both in the prior dataset and in the data used by ref. 22 [n = 70 (41 women), mean ± SD age = 28.7 ± 3.9 y]. As part of the Human Connectome Project acquisitions, informed consent was obtained from all subjects and all procedures were approved by the Washington University institutional review board.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

Mr. Vos de Wael was funded by the Savoy Foundation for Epilepsy Research. Dr. Oualid Benkarim was funded by a Healthy Brains for Healthy Lives (HBHL) postdoctoral fellowship. Dr. Jessica Royer was supported by a Canadian Open Neuroscience Platform (CONP) fellowship. Dr. Smallwood was supported by the European Research Council (WANDERINGMINDS–ERC646927). Dr. Paquola was funded through a postdoctoral fellowship of the Transforming Autism Care Consortium (TACC) and the Fonds de la recherche du Québec–Santé (FRQ-S). Dr. Boris Bernhardt acknowledges research support from the Natural Sciences and Engineering Research Council of Canada (NSERC, Discovery-1304413), the Canadian Institutes of Health Research (CIHR, FDN-154298), the Azrieli Center for Autism Research of the Montreal Neurological Institute (ACAR), SickKids Foundation (NI17-039), and the Canada Research Chairs (CRC) Program. Data were provided, in part, by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

Author contributions

R.V., O.B., J.S. and B.B. developed the toolbox, designed the validations, and wrote the paper. S.L., D.M., J.R., S.T., S.V., C.P. and S.H. tested the toolbox and revised the paper. M.M., G.L., T.X. and B.M. revised and approved the paper.

Data availability

All data are freely provided by the Human Connectome Project38 and available from connectomeDB61 (https://db.humanconnectome.org/).

Code availability

Our toolbox is freely available at http://github.com/MICA-MNI/BrainSpace. The toolbox contains parallel Python and Matlab implementations with closely matched functionality; the Python implementation also incorporates a wrapper for VTK, which simplifies object creation and pipelining. Along with the code, the toolbox contains several surface models, parcellations at multiple scales, and example data to reproduce the evaluations presented in this tutorial. Additional documentation for both the Python and Matlab implementations is available at http://brainspace.readthedocs.io.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Reinder Vos de Wael, Oualid Benkarim, Jonathan Smallwood, Boris C. Bernhardt.

Supplementary information

Supplementary information is available for this paper at 10.1038/s42003-020-0794-7.

References

- 1. Flechsig PE. Anatomie des menschlichen Gehirns und Rückenmarks auf myelogenetischer Grundlage. Vol. 1. G. Thieme; 1920.

- 2. Glasser MF, et al. A multi-modal parcellation of human cerebral cortex. Nature. 2016;536:171. doi: 10.1038/nature18933.

- 3. Brodmann K. Vergleichende Lokalisationslehre der Grosshirnrinde in ihren Prinzipien dargestellt auf Grund des Zellenbaues. Barth; 1909.

- 4. Palomero-Gallagher N, Zilles K. Cortical layers: cyto-, myelo-, receptor- and synaptic architecture in human cortical areas. Neuroimage. 2019;197:716–741.

- 5. von Economo CF, Koskinas GN. Die Cytoarchitektonik der Hirnrinde des erwachsenen Menschen. J. Springer; 1925.

- 6. Dart RA. The dual structure of the neopallium: its history and significance. J. Anat. 1934;69:3.

- 7. Goulas A, Majka P, Rosa M, Hilgetag C. A blueprint of mammalian cortical connectomes. PLoS Biol. 2019;17:e2005346. doi: 10.1371/journal.pbio.2005346.

- 8. Pandya D, Petrides M, Cipolloni PB. Cerebral Cortex: Architecture, Connections and the Dual Origin Concept. Oxford University Press; 2015.

- 9. Sanides F. Comparative architectonics of the neocortex of mammals and their evolutionary interpretation. Ann. N. Y. Acad. Sci. 1969;167:404–423. doi: 10.1111/j.1749-6632.1969.tb20459.x.

- 10. Eickhoff SB, Yeo BT, Genon S. Imaging-based parcellations of the human brain. Nat. Rev. Neurosci. 2018;19:672–686. doi: 10.1038/s41583-018-0071-7.

- 11. Margulies DS, et al. Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc. Natl Acad. Sci. USA. 2016;113:12574–12579. doi: 10.1073/pnas.1608282113.

- 12. Bajada CJ, et al. A graded tractographic parcellation of the temporal lobe. NeuroImage. 2017;155:503–512. doi: 10.1016/j.neuroimage.2017.04.016.

- 13. Cerliani L, et al. Probabilistic tractography recovers a rostrocaudal trajectory of connectivity variability in the human insular cortex. Hum. Brain Mapp. 2012;33:2005–2034. doi: 10.1002/hbm.21338.

- 14. Guell X, Schmahmann JD, Gabrieli JDE, Ghosh SS. Functional gradients of the cerebellum. eLife. 2018;7:e36652. doi: 10.7554/eLife.36652.

- 15. Haak KV, Marquand AF, Beckmann CF. Connectopic mapping with resting-state fMRI. NeuroImage. 2018;170:83–94. doi: 10.1016/j.neuroimage.2017.06.075.

- 16. Larivière S, Vos de Wael R, Hong S-J, Paquola C, Tavakol S, Lowe AJ, Schrader DV, Bernhardt BC. Multiscale structure–function gradients in the neonatal connectome. Cereb. Cortex. 2019;30:47–58. doi: 10.1093/cercor/bhz069.

- 17. Marquand AF, Haak KV, Beckmann CF. Functional corticostriatal connection topographies predict goal directed behaviour in humans. Nat. Hum. Behav. 2017;1:0146. doi: 10.1038/s41562-017-0146.

- 18. Przeździk I, Faber M, Fernandez G, Beckmann CF, Haak KV. The functional organisation of the hippocampus along its long axis is gradual and predicts recollection. Cortex. 2019;119:324–335. doi: 10.1016/j.cortex.2019.04.015.

- 19. Tian Y, Zalesky A. Characterizing the functional connectivity diversity of the insula cortex: subregions, diversity curves and behavior. Neuroimage. 2018;183:716–733. doi: 10.1016/j.neuroimage.2018.08.055.

- 20. Vos de Wael R, et al. Anatomical and microstructural determinants of hippocampal subfield functional connectome embedding. Proc. Natl Acad. Sci. USA. 2018;115:10154–10159.

- 21. Huntenburg JM, et al. A systematic relationship between functional connectivity and intracortical myelin in the human cerebral cortex. Cereb. Cortex. 2017;27:981–997. doi: 10.1093/cercor/bhx030.

- 22. Paquola C, et al. Microstructural and functional gradients are increasingly dissociated in transmodal cortices. PLoS Biol. 2019;17:e3000284. doi: 10.1371/journal.pbio.3000284.

- 23. Wagstyl K, Ronan L, Goodyer IM, Fletcher PC. Cortical thickness gradients in structural hierarchies. Neuroimage. 2015;111:241–250. doi: 10.1016/j.neuroimage.2015.02.036.

- 24. Paquola C, et al. The cortical wiring scheme of hierarchical information processing. bioRxiv. 2020. Preprint at https://www.biorxiv.org/content/10.1101/2020.01.08.899583v1.

- 25. Shine J, et al. Human cognition involves the dynamic integration of neural activity and neuromodulatory systems. Nat. Neurosci. 2019;22:289–296. doi: 10.1038/s41593-018-0312-0.

- 26. Vogel JW, et al. A molecular gradient along the longitudinal axis of the human hippocampus informs large-scale behavioral systems. Nat. Commun. (in press).

- 27. Wang P, Kong R, Kong X, Liégeois R, Orban C, Deco G, van den Heuvel MP, Yeo BTT. Inversion of a large-scale circuit model reveals a cortical hierarchy in the dynamic resting human brain. Sci. Adv. 2019;5:eaat7854. doi: 10.1126/sciadv.aat7854.

- 28. Murphy C, et al. Distant from input: evidence of regions within the default mode network supporting perceptually-decoupled and conceptually-guided cognition. NeuroImage. 2018;171:393–401. doi: 10.1016/j.neuroimage.2018.01.017.

- 29. Murphy C, et al. Modes of operation: a topographic neural gradient supporting stimulus dependent and independent cognition. NeuroImage. 2019;186:487–496. doi: 10.1016/j.neuroimage.2018.11.009.

- 30. Sormaz M, et al. Default mode network can support the level of detail in experience during active task states. Proc. Natl Acad. Sci. USA. 2018;115:9318–9323. doi: 10.1073/pnas.1721259115.

- 31. Hong SJ, et al. Atypical functional connectome hierarchy in autism. Nat. Commun. 2019;10:1022. doi: 10.1038/s41467-019-08944-1.

- 32. Tian Y, Zalesky A, Bousman C, Everall I, Pantelis C. Insula functional connectivity in schizophrenia: subregions, gradients, and symptoms. Biol. Psychiatry Cogn. Neurosci. Neuroimaging. 2019;4:399–408. doi: 10.1016/j.bpsc.2018.12.003.

- 33. Fulcher BD, Murray JD, Zerbi V, Wang X-J. Multimodal gradients across mouse cortex. Proc. Natl Acad. Sci. USA. 2019;116:4689–4695. doi: 10.1073/pnas.1814144116.

- 34. Huntenburg JM, Bazin P-L, Margulies DS. Large-scale gradients in human cortical organization. Trends Cogn. Sci. 2018;22:21–31. doi: 10.1016/j.tics.2017.11.002.

- 35. Buckner RL, Krienen FM. The evolution of distributed association networks in the human brain. Trends Cogn. Sci. 2013;17:648–665. doi: 10.1016/j.tics.2013.09.017.

- 36. Buckner RL, Margulies D. Macroscale cortical organization and a default-like apex transmodal network in the marmoset monkey. Nat. Commun. 2019;10:1976. doi: 10.1038/s41467-019-09812-8.

- 37. Xu T, et al. Cross-species functional alignment reveals evolutionary hierarchy within the connectome. bioRxiv. 2019. Preprint at https://www.biorxiv.org/content/10.1101/692616v1.

- 38. Van Essen DC, et al. The WU-Minn Human Connectome Project: an overview. Neuroimage. 2013;80:62–79. doi: 10.1016/j.neuroimage.2013.05.041.

- 39. McIntosh AR, Misic B. Multivariate statistical analyses for neuroimaging data. Annu. Rev. Psychol. 2013;64:499–525. doi: 10.1146/annurev-psych-113011-143804.

- 40. Worsley KJ, et al. SurfStat: a Matlab toolbox for the statistical analysis of univariate and multivariate surface and volumetric data using linear mixed effects models and random field theory. Neuroimage. 2009;47:S102. doi: 10.1016/S1053-8119(09)70882-1.

- 41. Desikan RS, et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31:968–980. doi: 10.1016/j.neuroimage.2006.01.021.

- 42. Schaefer A, et al. Local-global parcellation of the human cerebral cortex from intrinsic functional connectivity MRI. Cereb. Cortex. 2017;28:3095–3114. doi: 10.1093/cercor/bhx179.

- 43. Mesulam MM. From sensation to cognition. Brain. 1998;121:1013–1052. doi: 10.1093/brain/121.6.1013.

- 44. Larivière S, et al. Functional connectome contractions in temporal lobe epilepsy. bioRxiv. 2019. Preprint at https://www.biorxiv.org/content/10.1101/756494v1.

- 45. Lowe AJ, et al. Targeting age-related differences in brain and cognition with multimodal imaging and connectome topography profiling. Hum. Brain Mapp. 2019;40:5213–5230. doi: 10.1002/hbm.24767.

- 46. Alexander-Bloch AF, Shou H, Liu S, Satterthwaite TD, Glahn DC, Shinohara RT, Vandekar SN, Raznahan A. On testing for spatial correspondence between maps of human brain structure and function. NeuroImage. 2018;178:540–551. doi: 10.1016/j.neuroimage.2018.05.070.

- 47. Pedregosa F, et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830.

- 48. Guell X, et al. LittleBrain: a gradient-based tool for the topographical interpretation of cerebellar neuroimaging findings. PLoS One. 2019;14:e0210028. doi: 10.1371/journal.pone.0210028.

- 49. Nenning K-H, et al. Diffeomorphic functional brain surface alignment: functional demons. NeuroImage. 2017;156:456–465. doi: 10.1016/j.neuroimage.2017.04.028.

- 50. Cliff AD, Ord JK. Spatial autocorrelation. London: Pion; 1973.

- 51. Yeo BTT, et al. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 2011;106:1125–1165. doi: 10.1152/jn.00338.2011.

- 52. Ng AY, Jordan MI, Weiss Y. On spectral clustering: analysis and an algorithm. Adv. Neural Inf. Process. Syst. 2001:849–856.

- 53. Coifman RR, Lafon S. Diffusion maps. Appl. Comput. Harmon. Anal. 2006;21:5–30. doi: 10.1016/j.acha.2006.04.006.

- 54. Lombaert H, Grady L, Polimeni JR, Cheriet F. FOCUSR: feature oriented correspondence using spectral regularization – a method for precise surface matching. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:2143–2160. doi: 10.1109/TPAMI.2012.276.

- 55. Langs G, Golland P, Ghosh SS. Predicting activation across individuals with resting-state functional connectivity based multi-atlas label fusion. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. 2015:313–320.

- 56. Dray S. A new perspective about Moran’s coefficient: spatial autocorrelation as a linear regression problem. Geographical Anal. 2011;43:127–141. doi: 10.1111/j.1538-4632.2011.00811.x.

- 57. Wagner HH, Dray S. Generating spatially constrained null models for irregularly spaced data using Moran spectral randomization methods. Methods Ecol. Evol. 2015;6:1169–1178. doi: 10.1111/2041-210X.12407.

- 58. Fischl B. FreeSurfer. Neuroimage. 2012;62:774–781. doi: 10.1016/j.neuroimage.2012.01.021.

- 59. Kim J, et al. Automated 3-D extraction and evaluation of the inner and outer cortical surfaces using a Laplacian map and partial volume effect classification. Neuroimage. 2005;27:210–221. doi: 10.1016/j.neuroimage.2005.03.036.

- 60. Blaser R, Fryzlewicz P. Random rotation ensembles. J. Mach. Learn. Res. 2016;17:126–151.

- 61. Hodge MR, et al. ConnectomeDB – sharing human brain connectivity data. Neuroimage. 2016;124:1102–1107. doi: 10.1016/j.neuroimage.2015.04.046.
