Abstract

Background: Reperfusion is the most effective acute treatment for ischemic stroke within a narrow therapeutic time window. Ambulance-based telestroke is a novel way to improve stroke diagnosis and timeliness of treatment. This study aims to (1) assess the usability of our ambulance-based telestroke platform and (2) identify strengths and limitations of the system from the users' perspective.

Materials and Methods: An ambulance was equipped with a mobile telemedicine system to perform remote stroke assessments. Scripted scenarios were performed by actors during transport and evaluated by physicians using the National Institutes of Health Stroke Scale (NIHSS). Scores obtained during transport were compared with the original scripted NIHSS scores. Participants completed the System Usability Scale (SUS), NASA Task Load Index (NASA TLX), an audio/video quality scale, and a modified Acceptability of Technology survey to assess perceptions and usability. In addition, interviews were conducted to evaluate users' experiences. Descriptive analysis was used for all surveys. Weighted kappa statistics were used to assess agreement in NIHSS scores.

Results: Ninety-one percent (59/65) of mobile scenarios were completed. Median completion time was 9 min (range 4–17 min). There was moderate inter-rater agreement between mobile and original scripted scenarios (weighted kappa = 0.46 [95% confidence interval 0.33–0.60, p = 0.0018]). The mean SUS score was 68.8 (standard deviation = 15.9). Usability scores and formative feedback varied among end-users, particularly regarding usability issues (e.g., audibility and equipment stability) and safety.

Conclusion: Before implementation of a mobile prehospital telestroke program, the use of combined clinical simulation and Plan-Do-Study-Act methodology can improve the quality and optimization of the telemedicine system.

Keywords: acute stroke, telemedicine, telestroke, mobile health, emergency medical services

Introduction

Reperfusion is the most effective acute treatment for ischemic stroke within a narrow therapeutic time window. Delays in treatment increase long-term disability and death, with a corresponding burden and cost. Several factors contribute to treatment delay, including prehospital stroke recognition, transport time, and limited access to neurological expertise. The prehospital setting is an untapped area with significant potential to address these factors, and mobile ambulance-based telestroke is one such novel solution. In 2009, an American Heart Association/American Stroke Association (AHA/ASA) policy statement hypothesized that bringing stroke expertise to an ambulance by videoconference could increase diagnostic accuracy and enable earlier resource mobilization and timelier treatment, but recommended further research because data were insufficient.1

Over the last decade, a few simulation studies of telestroke platforms using current technologies (e.g., 4G cellular networks, portable mobile devices, and advanced mobile videoconferencing platforms) have supported mobile telestroke as a technically feasible and reliable way to evaluate stroke patients in the prehospital setting.2–4 However, implementing and sustaining technology can be complicated by the intricacies of healthcare organizations, work practices, and physical environments, and little is known about integrating ambulance telestroke into prehospital workflow. In addition, usability and human factors play a significant role in the successful implementation and sustainability of e-health systems.5,6 Many view technology as an essential component in improving patient care and physician/patient interactions. Despite widespread agreement on its importance, its benefits have been slow to materialize because of difficulties with implementation, as illustrated by the high-profile healthcare IT implementation failures reported across the world. This underscores the need to understand the factors that influence implementation and how best to integrate e-health systems into existing workflows.

Clinical simulation, frequently used for skills training,7–10 also has a role in the design, evaluation, and testing of clinical information technology systems in new contexts.11 Such simulations have been used to evaluate human factors, usability, doctor/patient interaction, and workflow,6,12–14 and to identify potential issues before implementation in the real clinical setting.15 In this study, the primary objective was to develop an adoptable, user-friendly, mobile telestroke platform using in situ clinical simulation-based Plan-Do-Study-Act (PDSA) methodology. The secondary objective was to identify strengths and limitations of the mobile system from all end-users' perspectives.

Materials and Methods

Development of a User-Centered Mobile Telestroke Platform

Over a 24-month period, a multistakeholder team with representation from the Departments of Neurology and Emergency Medicine, Office of Telemedicine, Center of Human Simulation and Patient Safety, local Emergency Medical Services (EMS) agency, and a telemedicine developer company collaborated to develop a mobile prehospital telestroke system. Modifications to telemedicine equipment, teleconferencing software, and protocol were implemented based on the results of serial clinical simulation-based PDSA cycles and dry runs. We used data collected via direct observation notes, survey results, and informal interviews during each clinical simulation-based PDSA cycle to test changes to improve our mobile system.

Mobile Telestroke Platform—Premodifications

One ambulance was equipped with a mobile, bidirectional audio/video streaming telemedicine system to perform remote stroke assessments. The hardware component included a touch screen, computer, wireless keyboard, pan-tilt-zoom (PTZ) camera, high-quality speaker plus microphone, and a 4G/LTE mobile gateway. Each physician was equipped with either a Windows (with Verizon Hotspot) or an iOS (Verizon cellular) tablet-based endpoint unit for mobile telecommunication.

Users/Evaluators

Volunteers were identified from the intended end-user population (i.e., neurology physicians, neurology residents, standardized patients [SP], and EMS providers). All neurologists were National Institutes of Health Stroke Scale (NIHSS) certified. For the purpose of this study, SPs were considered end-users because patients will need to interact with the platform. Participants with different levels of telemedicine and stroke experience were included to evaluate ease of use among all potential users.

Clinical Simulation Usability Testing with PDSA Cycles

Ten stroke scenarios with various levels of stroke severity (NIHSS scores ranging from 0 to 19) and various stroke syndromes and mimics were developed for testing. The script consisted of the patient's age, time of symptom onset or last known well, medical history, medications, vital signs, clinical presentation, and NIHSS score. Each scenario was portrayed by an SP, who was transported by an EMS provider within a 10–15-mile radius of VCU Health along different routes; this radius was chosen because preliminary data revealed a 10-min average transport time for Richmond Ambulance Authority to VCU Health. All neurologists were blinded to the scripted scenarios performed by the SPs. During ambulance transport, the EMS provider initiated a teleconference call to the neurologist at VCU Health. Once connected, the neurologist prompted the EMS provider to assist in assessing the patient using the NIHSS. Bedside evaluations began with either a neurology attending or resident entering the clinical examination room.

The EMS provider and neurologist completed the following usability scales: System Usability Scale (SUS), NASA Task Load Index (NASA TLX), and a modified Acceptability of Technology survey after scenarios 1, 5, and 10 in PDSA cycles 1 and 2 and after completing 5 scenarios in PDSA cycle 3. All SPs completed the modified Acceptability of Technology survey. All users provided feedback on audiovisual (AV) quality after completion of each scenario using a 1–6 rating scale. Each scenario conducted at bedside during PDSA cycles 1 and 2 was evaluated by either a neurology attending or resident in real time, obtaining clinical data points and NIHSS scores (Table 1). All evaluations were documented independently and blinded to assessments conducted by previous evaluators.
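The paper reports SUS scores but does not describe how they were computed. The sketch below assumes the standard SUS scoring rule (ten items rated 1–5; odd-numbered items contribute rating − 1, even-numbered items contribute 5 − rating; the raw sum is multiplied by 2.5 to give a 0–100 score). The helper names `sus_score` and `mean_sus` are hypothetical and are not part of the study's SAS analysis.

```python
from __future__ import annotations


def sus_score(responses: list[int]) -> float:
    """Score one System Usability Scale questionnaire on the 0-100 scale.

    Assumes the standard SUS procedure: ten items in questionnaire order,
    each rated 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS ratings must be between 1 and 5")

    raw = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: contribute rating - 1.
            raw += rating - 1
        else:
            # Even-numbered items are negatively worded: contribute 5 - rating.
            raw += 5 - rating
    # The raw sum ranges 0-40; multiplying by 2.5 rescales it to 0-100.
    return raw * 2.5


def mean_sus(questionnaires: list[list[int]]) -> float:
    """Descriptive mean SUS score across respondents (hypothetical helper)."""
    if not questionnaires:
        raise ValueError("need at least one questionnaire")
    scores = [sus_score(q) for q in questionnaires]
    return sum(scores) / len(scores)


# A neutral respondent (all 3s) scores 50; the most favorable pattern scores 100.
print(sus_score([3] * 10))    # 50.0
print(sus_score([5, 1] * 5))  # 100.0
```

Averaging per-respondent scores, rather than averaging item ratings first, keeps each questionnaire's odd/even reversal intact before any summary statistic is taken.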

Table 1.

Description of Simulation Cycles, Evaluators, and Outcome Measures

| CYCLE | NO. OF STROKE SCENARIOS | LOCATION OF ASSESSMENTS | EVALUATORS | OUTCOME MEASURES |
|---|---|---|---|---|
| 1 | 10 | Remotely via mobile telemedicine × 2 | 2 vascular neurologists with telemedicine experience | NIHSS, SUS, NASA-TLX, AV Quality Scale, Modified Acceptability of Technology survey |
| | | Bedside × 2 | 1 vascular neurologist; 1 neurology resident | NIHSS |
| 2 | 10 | Remotely via mobile telemedicine × 2 | 2 vascular neurologists with telemedicine experience; 1 general neurologist with no telemedicine experience; 1 neurology resident; 1 neurology fellow | NIHSS, SUS, NASA-TLX, AV Quality Scale, Modified Acceptability of Technology survey |
| | | Bedside × 2 | 1 vascular neurologist; 1 neurology resident | NIHSS |
| 3 | 5 | Remotely via mobile telemedicine × 5 | 1 vascular neurologist with telemedicine experience; 1 general neurologist with telemedicine experience; 1 general neurologist with no telemedicine experience; 2 neurology residents | NIHSS, SUS, NASA-TLX, AV Quality Scale, Modified Acceptability of Technology survey |

AV, audio/video; NASA-TLX, National Aeronautics and Space Administration Task Load Index; NIHSS, National Institutes of Health Stroke Scale; SUS, System Usability Scale.

Data Analysis

Technical feasibility was defined as the ability to complete at least 80% of mobile teleconferencing encounters without prohibitive technical interruption. AV quality was rated on a 1–6 scale, with a score of ≤3 defined as good to excellent. Inter-rater agreement on the NIHSS was assessed using weighted kappa statistics and the percentage agreement by stroke severity range. We used descriptive statistics to summarize participant characteristics and survey scores. No statistical comparisons were made between simulations, as hypothesis testing was not the goal. Content analysis was conducted to identify (1) modifiable usability issues and navigational problems and (2) strengths and limitations of the mobile platform. All analyses were conducted with SAS software version 9.4 [Copyright (c) 2002–2012 by SAS Institute, Inc., Cary, NC].
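As a rough illustration of the predefined thresholds above, the following Python sketch applies them to raw counts and ratings. The function and constant names are hypothetical, the AV goal of more than 80% of ratings in the good-to-excellent band is taken from the Results, and the study itself performed its analyses in SAS.

```python
from __future__ import annotations

# Thresholds as described in the Data Analysis and Results text.
FEASIBILITY_MIN_SHARE = 0.80  # at least 80% of mobile encounters completed
GOOD_AV_MAX_RATING = 3        # on the 1-6 AV scale, <= 3 counts as good to excellent
AV_GOAL_SHARE = 0.80          # goal: more than 80% of ratings good to excellent


def is_technically_feasible(completed: int, attempted: int) -> bool:
    """True if encounters completed without prohibitive interruption reach 80%."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    return completed / attempted >= FEASIBILITY_MIN_SHARE


def share_good_av(ratings: list[int]) -> float:
    """Fraction of 1-6 AV quality ratings in the good-to-excellent band (<= 3)."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 1 <= r <= 6 for r in ratings):
        raise ValueError("AV ratings must be between 1 and 6")
    good = sum(1 for r in ratings if r <= GOOD_AV_MAX_RATING)
    return good / len(ratings)


def meets_av_goal(ratings: list[int]) -> bool:
    """True if strictly more than 80% of ratings are good to excellent."""
    return share_good_av(ratings) > AV_GOAL_SHARE


print(is_technically_feasible(59, 65))  # True (59/65 is about 91%)
```

Note the asymmetry copied from the text: feasibility uses "at least 80%" (`>=`), while the AV goal is stated as ">80%" (`>`), so a share of exactly 0.80 passes the first check but not the second.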

Results

End-User Perceptions

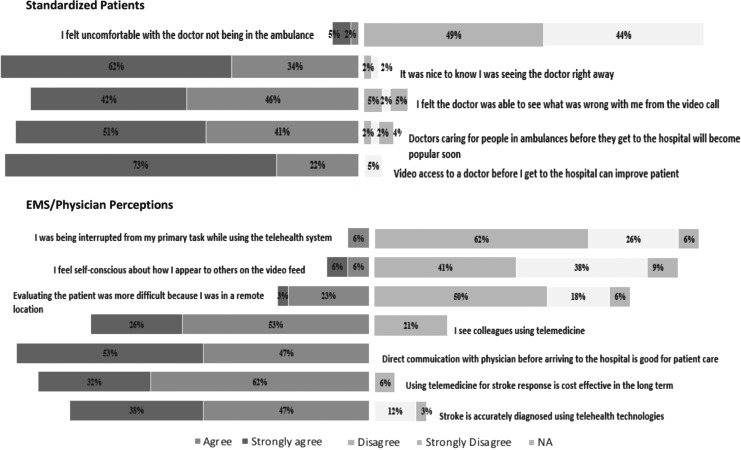

End-user participants included 3 volunteer EMS providers, 13 physicians, and 23 SPs. Of the EMS/physician group, 39% reported previous telemedicine experience, 92% reported previous stroke experience, and 85% reported previous video gaming experience. Of the SP group, 13% reported previous telemedicine experience, 61% reported previous video calling experience, and 57% reported previous video gaming experience. Most SPs viewed access to the physician via telemedicine in the back of the ambulance favorably. Only 7% of SPs felt uncomfortable with the doctor not being in the ambulance. Most healthcare providers (EMS/physicians) perceived the use of telestroke as cost-effective (94%) and useful for diagnosing stroke accurately (85%). Six percent of healthcare providers felt they were being interrupted from their primary task, and 12% felt self-conscious about how they appeared on the video feed (Fig. 1).

Fig. 1.

Percentage of end-users' responses concerning the use of mobile prehospital telestroke on a 5-point Likert scale. The first section represents the responses of standardized patients to five perception questions. The second section represents the responses of healthcare providers (EMS providers and physicians) to seven perception questions. EMS, Emergency Medical Services.

PDSA Cycles

Cycle 1

During cycle 1, 95% of mobile scenarios were completed; one was not completed due to time constraints. To test the ability to add a consultant to a call, the attending attempted to add a resident after initiating the call, which was successful in 68% of the mobile scenarios. The residents were using an iOS tablet, and six of the resident calls dropped due to poor compatibility of the videoconferencing application with the iOS tablet. The decision was made to use only Windows-based tablets and desktop devices. SPs reported difficulty seeing the physician due to backlighting on the physician's end. One patient commented “Please do not backlight the remote doctor. All we could see was her outline and we could not see facial features at all.” Field observation and video recordings revealed that the ambulance monitor needed to be repositioned (tilted toward the patient) for better viewing. Physicians also required training on the best positioning of the tablet and lighting. Instability of the ambulance monitor when the ambulance was in motion was another concern. One EMS provider commented “the screen flips around on turns and I have to stand to walk suddenly. I am unsecured.” Based on this feedback, the ambulance monitor's mounting arm was replaced and tightened.

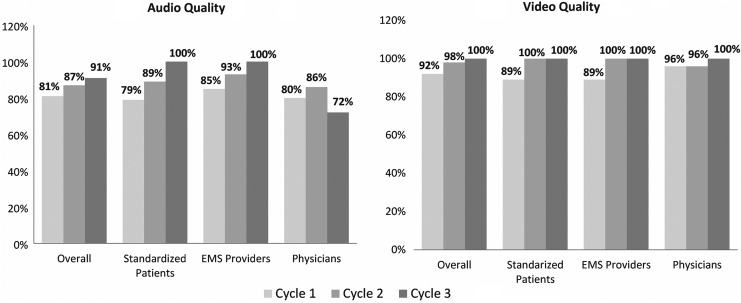

Overall, 81% and 92% of raters deemed audio and video quality, respectively, as “good” or “excellent” (Fig. 2). Ratings for both audio and video were similar between ambulance-based end-users (EMS providers and SPs) and hospital-based end-users (physicians). Despite reaching the predetermined goal (>80% of raters deeming AV quality good or excellent), multiple end-users commented on poor audio quality in the survey comment sections and informal interviews. One patient recalled “losing the doctor's voice when the gears/motor were revving. When the ambulance is moving, the engine noise masks the speaker. When the ambulance is idling it's quite easy to hear.” Field notes and recordings revealed that, because of the equipment placement, the EMS provider had to stand and walk over to the speaker/microphone to relay communication between the physician and patient. In addition, the physician was not using a headset during scenarios. One patient suggested the use of headsets in the back of the ambulance. Based on these findings, a Sennheiser© Digital Enhanced Cordless Telecommunication (DECT) system with two wireless headsets (for the EMS provider and patient) was installed in the ambulance, and Logitech© USB headsets with microphones were provided to physicians.

Fig. 2.

Percentage of average AV quality ratings of ≤3 (defined as good to excellent) for designated end-users (in-hospital and in-ambulance) and overall. The figure displays the averages of the AV quality ratings of ≤3 for each mobile simulation cycle. AV, audiovisual.

Physicians commented on difficulty maneuvering the camera. One physician stated “I had to repeatedly hit the arrows to maneuver the camera. So frustrating!!” In collaboration with the software developer, presets were developed for direct maneuvering of PTZ camera to head, eyes, arms, and legs. During transport, we experienced short freezing of AV transmission when traveling on the interstate highway. However, no scenario was aborted for technical reasons.

Cycle 2

During cycle 2, 75% of mobile scenarios were completed. One was not completed due to time constraints and four due to persistent drops and difficulties initiating calls with one of the tablet devices. A software update performed on the ambulance end resulted in incompatibility between the mobile and remote systems. In the middle of cycle 2, the neurologist switched to a compatible desktop device to complete the remaining scenarios. Because of the system update, the physicians were also unable to use the camera presets. A protocol was put in place to ensure that scheduled updates were performed on the ambulance and physician ends simultaneously. Adding a resident to the call was successful in all attempts. The Sennheiser© DECT system with wireless headsets was not used because it had not been properly charged before testing. Despite this operator failure, a slight overall improvement in audio quality was seen with the physicians' use of the Logitech© headsets. We continued to experience short freezes of AV transmission when traveling on the interstate highway; however, no scenario was aborted for technical reasons.

Based on a review of field notes from the first two cycles and comments from participants, study team members met with stakeholders to discuss next steps. A common concern of stakeholders was the cost and size of the equipment. The decision was made to redesign the telemedicine system to make it more portable, with a tablet-based touch screen monitor and a dual modem. After consultation with the EMS equipment manager, it was decided to further test the equipment in one of the operational (nondiesel) ambulances typically used for stroke care. The advanced-life-support ambulance used previously is much bigger and has a diesel engine, which produced additional background noise. Furthermore, the ambulance speaker/microphone was replaced and repositioned directly above the patient's head. The Sennheiser© DECT system with two wireless headsets was uninstalled to eliminate the additional work required to keep the headsets charged.

Cycle 3

During cycle 3, all mobile scenarios were completed without prohibitive technical interruption. Overall audio quality was deemed good to excellent by 91% of all end-users. All ambulance-based end-users deemed audio quality good to excellent, whereas the proportion among physician raters dropped to 72% (Fig. 2). Short freezes of AV transmission still occurred, but the episodes were shorter. In dry runs, physician-side audio quality and AV transmission improved further with the use of a dual modem on a private network. Table 2 summarizes the major actions and results of each PDSA cycle.

Table 2.

Plan-Do-Study-Act/Clinical Simulation Process

| CYCLE | PLAN | DO | STUDY | ACT |
|---|---|---|---|---|
| Six-month time period for telemedicine equipment research, installation of equipment, preparation of scenarios, training of actors, and training of the volunteer EMS provider and physicians. Multiple dry runs with stakeholders were conducted before and between simulations. | | | | |
| 1 | Develop a mobile telestroke system considering the needs of all end-users based on discussions with stakeholders. | Clinical simulation with trained actors | Poor audio quality, difficulty seeing physician, unstable monitor, connectivity difficulties, difficulty maneuvering camera | Use of headsets for all end-users; enlarge physician layout on monitor; provide additional physician training on best positioning and lighting; train EMS providers on proper positioning of monitor for patient evaluation; improve stability of mounting device; add presets to help physicians maneuver PTZ camera; use of dual modem to improve connectivity |
| 2 | During multiple dry runs and discussions with stakeholders, we agreed on the above-stated action plan of cycle 1 and implemented the changes. | Clinical simulation with trained actors | Poor but improved audio quality; Sennheiser DECT system with wireless headsets unusable due to uncharged batteries; presets unusable and technical difficulty with software secondary to a glitch in the software update before simulation; connectivity difficulties on interstate (I-95) | Headsets for physicians only; reposition ambulance speaker/microphone directly above patient's head; regularly scheduled simultaneous software updates; ensure presets can be used before additional testing; dual modem to improve connectivity on a private network; further software development to improve consistency of use; additional training |
| 3 | During multiple dry runs and discussions with stakeholders, we agreed on the above-stated action plan of cycle 2 and implemented the changes. | Clinical simulation with trained actors | Poor audio quality reported by physicians; intermittent short pauses in video; users recommended further training before live implementation | Reinstall Sennheiser DECT system with wireless headsets with a protocol in place to ensure proper charging; develop online training modules for both EMS providers and physicians |

DECT, Digital Enhanced Cordless Telecommunication; EMS, Emergency Medical Services; PTZ, pan-tilt-zoom.

Usability Testing

Between March 2016 and June 2017, 105 scenarios (65 mobile evaluations and 40 bedside evaluations) were conducted. Ninety-one percent (59/65) of mobile evaluations and all bedside evaluations were completed. Overall, comparison of NIHSS scores between mobile simulations and original scripted scenarios showed moderate agreement (weighted kappa = 0.46). Comparison of NIHSS scores between bedside simulations and original scripted scenarios also showed moderate agreement (weighted kappa = 0.62). Table 3 presents the percentage agreement by severity range and the weighted kappa for each cycle. The median time to complete each scenario was 9 min (range 4–17 min, standard deviation [SD] 3.7).
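Table 3 collapses NIHSS totals into five severity bands before comparing ratings. The sketch below computes percentage agreement and a weighted Cohen's kappa from such a contingency table. Two choices are our assumptions rather than details stated in the paper: the band cutoffs in the hypothetical `nihss_category` helper (0; 1–4; 5–15; 16–20; 21–42, a commonly used convention) and linear disagreement weights on the five bands. Under those assumptions, the cycle 1 mobile counts from Table 3 give percentage agreement 27/32 (about 84%) and a weighted kappa of about 0.75, consistent with the values reported there; the exact SAS procedure used is not described.

```python
from __future__ import annotations

# Severity bands in the row/column order used by Table 3.
BANDS = ["No symptoms", "Minor", "Moderate", "Moderate to severe", "Severe"]


def nihss_category(total: int) -> int:
    """Map an NIHSS total (0-42) to an index into BANDS.

    ASSUMPTION: cutoffs 0 / 1-4 / 5-15 / 16-20 / 21-42 are a common convention;
    the paper does not state which cutoffs it used.
    """
    if not 0 <= total <= 42:
        raise ValueError("NIHSS total must be between 0 and 42")
    if total == 0:
        return 0
    if total <= 4:
        return 1
    if total <= 15:
        return 2
    if total <= 20:
        return 3
    return 4


def percent_agreement(table: list[list[int]]) -> float:
    """Share of cases on the diagonal (same band in both ratings)."""
    n = sum(map(sum, table))
    return sum(table[i][i] for i in range(len(table))) / n


def weighted_kappa(table: list[list[int]], weights: str = "linear") -> float:
    """Cohen's weighted kappa for a k x k table of counts.

    Rows: one rating (e.g., the simulation); columns: the other (original script).
    Disagreement weight for cell (i, j) is |i - j| / (k - 1), squared if
    weights == "quadratic". ASSUMPTION: linear weights by default.
    """
    if weights not in ("linear", "quadratic"):
        raise ValueError("weights must be 'linear' or 'quadratic'")
    k = len(table)
    if k < 2 or any(len(row) != k for row in table):
        raise ValueError("table must be square with at least 2 categories")
    n = sum(map(sum, table))
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]

    observed = 0.0  # weighted proportion of observed disagreement
    expected = 0.0  # weighted disagreement expected by chance from the marginals
    for i in range(k):
        for j in range(k):
            w = abs(i - j) / (k - 1)
            if weights == "quadratic":
                w = w * w
            observed += w * table[i][j] / n
            expected += w * row_totals[i] * col_totals[j] / (n * n)
    if expected == 0:
        raise ValueError("kappa is undefined when chance disagreement is zero")
    return 1.0 - observed / expected


# Cycle 1, mobile counts from Table 3 (the Severe row is all zeros).
cycle1_mobile = [
    [0, 3, 0, 0, 0],
    [0, 3, 0, 0, 0],
    [0, 0, 23, 0, 0],
    [0, 0, 0, 1, 2],
    [0, 0, 0, 0, 0],
]
print(round(percent_agreement(cycle1_mobile), 2))  # 0.84
print(round(weighted_kappa(cycle1_mobile), 2))     # 0.75
```

Linear weights give partial credit that falls off evenly with the number of bands separating two ratings, so a Moderate versus Moderate-to-severe disagreement is penalized a quarter as much as a No-symptoms versus Severe one.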

Table 3.

Agreement in Assessment of NIHSS Stroke Total Scores between the Original Cases and Simulations

| SIMULATIONS | TOTAL | ORIGINAL: NO SYMPTOMS | ORIGINAL: MINOR | ORIGINAL: MODERATE | ORIGINAL: MODERATE TO SEVERE | ORIGINAL: SEVERE |
|---|---|---|---|---|---|---|
| Cycle 1, mobile | | | | | | |
| No symptoms | 3 | 0 | 3 | 0 | 0 | 0 |
| Minor | 3 | 0 | 3 | 0 | 0 | 0 |
| Moderate | 23 | 0 | 0 | 23 | 0 | 0 |
| Moderate to severe | 3 | 0 | 0 | 0 | 1 | 2 |
| Total | 32 | 0 | 6 | 23 | 1 | 2 |
| Total agreement = 84%, weighted kappa = 0.75 | | | | | | |
| Cycle 1, bedside | | | | | | |
| No symptoms | 2 | 0 | 2 | 0 | 0 | 0 |
| Minor | 2 | 0 | 2 | 0 | 0 | 0 |
| Moderate | 14 | 0 | 0 | 13 | 1 | 0 |
| Moderate to severe | 2 | 0 | 0 | 1 | 0 | 1 |
| Total | 20 | 0 | 4 | 14 | 1 | 1 |
| Total agreement = 75%, weighted kappa = 0.62 | | | | | | |
| Cycle 2, mobile | | | | | | |
| No symptoms | 2 | 0 | 1 | 1 | 0 | 0 |
| Minor | 2 | 0 | 2 | 0 | 0 | 0 |
| Moderate | 25 | 0 | 0 | 18 | 3 | 4 |
| Moderate to severe | 2 | 0 | 2 | 0 | 0 | 0 |
| Total | 31 | 0 | 5 | 19 | 3 | 4 |
| Total agreement = 65%, weighted kappa = 0.17 | | | | | | |
| Cycle 2, bedside | | | | | | |
| No symptoms | 2 | 1 | 1 | 0 | 0 | 0 |
| Minor | 2 | 0 | 1 | 1 | 0 | 0 |
| Moderate | 14 | 0 | 0 | 12 | 2 | 0 |
| Moderate to severe | 2 | 0 | 0 | 0 | 0 | 2 |
| Total | 20 | 1 | 2 | 13 | 2 | 2 |
| Total agreement = 70%, weighted kappa = 0.62 | | | | | | |
| Cycle 3, mobile | | | | | | |
| No symptoms | 5 | 1 | 2 | 2 | 0 | 0 |
| Minor | 0 | 0 | 0 | 0 | 0 | 0 |
| Moderate | 15 | 0 | 0 | 11 | 3 | 1 |
| Moderate to severe | 5 | 0 | 0 | 0 | 1 | 4 |
| Total | 25 | 1 | 2 | 13 | 4 | 5 |
| Total agreement = 52%, weighted kappa = 0.46 | | | | | | |

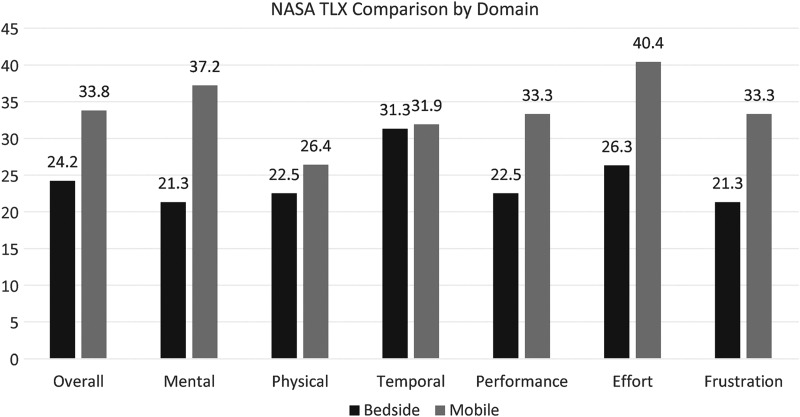

The overall SUS score was 68.8 (SD = 15.9). Table 4 presents the overall and end-user-specific SUS scores for cycles 1, 2, and 3. Comparing cycles 1 and 3, SUS scores improved slightly after modification of the mobile platform. The overall unweighted NASA TLX workload rating was higher for mobile evaluations than for bedside evaluations (Fig. 3). Overall, effort and mental demand had the highest mean workload scores.
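The "unweighted" NASA TLX rating mentioned above is usually computed as the raw TLX: the plain mean of the six subscale ratings, skipping the pairwise-comparison weighting step of the full instrument. The sketch below assumes that definition and 0–100 subscale ratings; the dictionary keys and function names are hypothetical labels for illustration, not the study's data format.

```python
from __future__ import annotations

from statistics import mean

# The six NASA TLX subscales (keys are hypothetical labels for this sketch).
TLX_DIMENSIONS = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted ("raw") TLX: plain mean of the six 0-100 subscale ratings.

    ASSUMPTION: performance is already oriented as on the standard rating
    sheet, where higher means worse (more workload).
    """
    missing = [d for d in TLX_DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean([ratings[d] for d in TLX_DIMENSIONS])


def dimension_means(responses: list[dict[str, float]]) -> dict[str, float]:
    """Mean rating per subscale across respondents, e.g., for a bar chart."""
    return {d: mean([r[d] for r in responses]) for d in TLX_DIMENSIONS}


example = {"mental": 60, "physical": 10, "temporal": 40,
           "performance": 20, "effort": 70, "frustration": 30}
print(round(raw_tlx(example), 1))  # 38.3
```

Comparing `dimension_means` for bedside and mobile responses is one way to see which dimensions, such as effort and mental demand, drive a difference in the overall score.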

Table 4.

Average Call Times and System Usability Scale Results

| | CYCLE 1 | CYCLE 2 | CYCLE 3 |
|---|---|---|---|
| Average call duration (range), SD | 12 min (8–16), SD 2.13 | 9 min (5–16), SD 3.41 | 7 min (4–16), SD 2.26 |
| System Usability Scale | | | |
| Overall | 68.8 | 59.5 | 75.4 |
| EMS providers | 73.0 | 87.5 | 83.8 |
| Physicians | 66.4 | 40.9 | 72 |

SD, standard deviation.

Fig. 3.

Overall unweighted workload and dimensions of workload averages of both bedside and mobile evaluations. NASA-TLX, National Aeronautics and Space Administration Task Load Index.

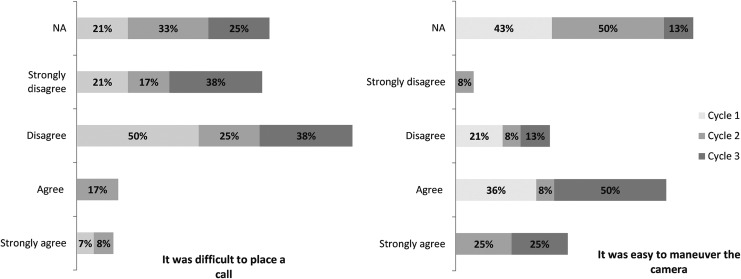

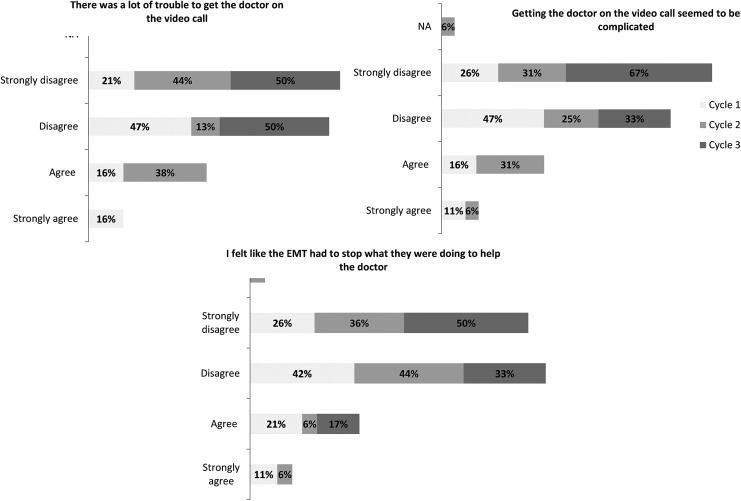

Seven percent of healthcare providers in cycle 1 and none in cycle 3 agreed or strongly agreed that it was difficult to place a call. Among healthcare providers, favorable responses on the ease of maneuvering the camera improved by 39% after modifications (Fig. 4). The percentage of SPs who agreed or strongly agreed that getting the doctor on the video call seemed complicated decreased from 27% to 0%. Likewise, the percentage of SPs who agreed or strongly agreed that there were difficulties getting the doctor on the video call decreased from 32% to 0%. When asked whether the EMS provider had to stop what he or she was doing to help the doctor, 68% disagreed or strongly disagreed in cycle 1 and 83% in cycle 3 (Fig. 5).

Fig. 4.

Percentage of healthcare providers' (EMS providers and physicians) responses to two of the modified acceptability of technology survey questions on a 5-point Likert scale. The figure displays the percentage of each response for all simulation cycles.

Fig. 5.

Percentage of standardized patients' responses to three of the modified acceptability of technology survey questions on a 5-point Likert scale. The figure displays the percentage of each response for all simulation cycles.

Discussion

In the early 2000s, Lamont et al. developed and piloted the first mobile prehospital telemedicine platform, using a 2G cellular network to transmit data in a store-and-forward manner. The results showed clinical value with high inter-rater reliability, but transmission was unstable.16,17 With robust advances in technology over the years, a number of pilot studies have evaluated the feasibility and reliability of mobile prehospital telestroke, with variable feasibility results.2–4,18–24 Feasibility appears to be improving as wireless networks, portable mobile devices, mobile videoconferencing applications, and high-speed modems evolve.

Most recent studies indicate that cellular wireless technologies are sufficient to support prehospital evaluation of potential stroke patients without major connectivity difficulties. In those studies, incomplete assessments were generally secondary to modifiable human/operational errors (e.g., unscheduled software updates, uncharged batteries, and physician use of a noncompatible device).2–4,23,24 This supports the need to examine not only the feasibility of the technology and patient outcomes, to establish potential benefits, but also the adequacy of infrastructure, resources, stakeholder engagement, adoption into workflow, and individuals' knowledge and beliefs, to determine the potential sustainability of technological systems in healthcare. In our study, 91% of the prehospital mobile evaluations were completed without any major technical failure. As in most recent studies, we found that human factors and workflow play a significant role in the overall success of mobile evaluations in the prehospital setting. We also found that usability scores and formative feedback varied between users, highlighting the importance of incorporating the perspectives of multiple end-users when evaluating telehealth technologies. End-users identified similar usability issues, such as poor audibility, unreliable connection, shifting of equipment during transport, and poor visibility during the assessment, although each user group identified unique issues within each theme. Additional, unanticipated organizational issues that needed to be addressed before implementation included adequate equipment/software training, integration of the protocol into current work practices, physician/EMS provider interaction, and patient safety. Equipment/software training and telemedicine experience were identified as important for effectively managing the system.

Our simulation-based PDSA evaluations led to both organizational and technical changes in the mobile telemedicine system. These modifications improved user satisfaction, AV quality, stakeholder and end-user acceptability, and patient safety, and increased our understanding of work practices. The findings informed protocol development and training manuals and fostered a better shared understanding of the workflow among all end-users. These results would likely not have been obtained had the evaluation not been conducted using high-fidelity clinical simulations.

The use of trained actors with no stroke experience and of physicians with varying stroke and telemedicine experience is a strength of this study, because it provided adequate representation of the heterogeneous population of end-users encountered in real acute stroke clinical practice. This study has several limitations. It was a prehospital simulation study without sirens or the other distractions associated with emergency prehospital care, and thus may not reflect the evaluation of emergency stroke care by EMS providers. In addition, the actors varied between simulations, and occasionally between runs, primarily because of scheduling conflicts. This created some inconsistencies between simulations of the same scenario, which the team identified after reviewing the recordings. Finally, this was a small pilot study that could not account for all possible variables affecting the performance of a prehospital mobile telestroke assessment.

Conclusions

Before implementation of and financial investment in a mobile prehospital telestroke program, the use of combined clinical simulation and PDSA methodology can improve the quality and optimization of the system. Our next step is to assess, in a real clinical setting, whether our ambulance-based telestroke system yields patient benefits that outweigh any possible risks, and to identify any additional factors that may hinder its implementation, reliability, and efficacy.

Acknowledgments

This study was supported by UL1TR000058 from the National Institutes of Health's National Center for Advancing Translational Sciences and National Center for Research Resources, the CCTR Endowment Fund of the Virginia Commonwealth University, and the Virginia Commonwealth University Dean's Enhancement Award. We are indebted to the participating stakeholders from the VCU Departments of Neurology and Emergency Medicine, Office of Telemedicine, Center of Human Simulation and Patient Safety, Richmond Ambulance Authority, and swyMED for their willingness to cooperate in this research study. We thank Rob Lawrence, Daniel Fellows, Wayne Harbour, Richard “Chip” Decker, Vladimir Lavrentyev, and Moshe Feldman for their significant contributions to this project. We also thank Alfred Brown for technical assistance and the VCU physicians, EMS providers, and actors for their willingness to participate in this research study.

Disclosure Statement

No competing financial interests exist.

References

- 1. Schwamm LH, Audebert HJ, Amarenco P, Chumbler NR, Frankel MR, George MG, Gorelick PB, Horton KB, Kaste M, Lackland DT, Levine SR, Meyer BC, Meyers PM, Patterson V, Stranne SK, White CJ. Recommendations for the implementation of telemedicine within stroke systems of care: A policy statement from the American Heart Association. Stroke 2009;40:2635–2660 [DOI] [PubMed] [Google Scholar]

- 2. Chapman Smith SN, Govindarajan P, Padrick MM, Lippman JM, McMurry TL, Resler BL, Keenan K, Gunnell BS, Mehndiratta P, Chee CY, Cahill EA, Dietiker C, Cattell-Gordon DC, Smith WS, Perina DG, Solenski NJ, Worrall BB, Southerland AM. A low-cost, tablet-based option for prehospital neurologic assessment: The iTREAT Study. Neurology 2016;87:19–26 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Espinoza AV, Van Hooff RJ, De Smedt A, Moens M, Yperzeele L, Nieboer K, Hubloue I, de Keyser J, Convents A, Tellez HF, Dupont A, Putman K, Brouns R. Development and pilot testing of 24/7 in-ambulance telemedicine for acute stroke: Prehospital Stroke Study at the Universitiar Ziekenhuis Brussel-Project. Cerebrovasc Dis 2016;42:15–22 [DOI] [PubMed] [Google Scholar]

- 4. Barrett KM, Pizzi MA, Kesari V, TerKonda SP, Maurico A, Silvers SM, Habash R, Brown BL, Tawk RG, Meschia JF, Wharen R, Freeman WD. Ambulance-based assessment of NIH stroke scale with telemedicine: A feasibility pilot study. J Telemed Telecare 2017;23:476–483

- 5. Karimi F, Poo DCC, Tan YM. Clinical information systems end user satisfaction: The expectations and needs congruencies effects. J Biomed Inform 2015;53:342–354

- 6. Bhattacherjee A, Premkumar G. Understanding changes in belief and attitude toward information technology usage: A theoretical model and longitudinal test. MIS Quarterly 2004;28:229–254

- 7. Okuda Y, Bryson EO, DeMaria S, Jacobson L, Quinones J, Shen B, Levine AI. The utility of simulation in medical education: What is the evidence? Mount Sinai J Med 2009;76:330–343

- 8. Stefan MS, Belforti RK, Langlois G, Rothberg MB. A simulation-based program to train medical residents to lead and perform advanced cardiovascular life support. Hosp Pract 2011;39:63–69

- 9. Spadaro S, Karbing DS, Fogagnolo A, Ragazzi R, Mojoli F, Astolfi L, Gioia A, Marangoni E, Rees SE, Volta CA. Simulation training for residents focused on mechanical ventilation: A randomized trial using mannequin-based versus computer-based simulation. Simul Healthc 2017;12:349–355

- 10. Robinson WP, Doucet DR, Simons JP, Wyman A, Aiello FA, Arous E, Schanzer A, Messina LM. An intensive vascular surgical skills and simulation course for vascular trainees improves procedural knowledge and self-rated procedural competence. J Vasc Surg 2017;65:907–915

- 11. Kumbruck C, Schneider MJ. Simulation studies: A new method of prospective technology assessment and design. Qual Life Res 1999;8:161–170

- 12. Ammenwerth E, Hackl WO, Binzer K, Christoffersen THE, Jensen S, Lawton K, Skjoet P, Nohr C. Simulation studies for the evaluation of health information technologies: Experiences and results. Health Inf Manag 2012;41:14–21

- 13. Kushniruk A, Nohr C, Jensen S, Borycki E. From usability testing to clinical simulations: Bringing context into the design and evaluation of usable and safe health information technologies. Yearb Med Inform 2013;8:78–85

- 14. Jensen S, Kushniruk AW, Nohr C. Clinical simulation: A method for development and evaluation of clinical information systems. J Biomed Inform 2015;54:65–76

- 15. Jensen S, Kushniruk A. Boundary objects in clinical simulation and design of eHealth. Health Informatics J 2016;22:248–264

- 16. LaMonte MP, Cullen J, Gagliano DM, Gunawardane RD, Hu PF, MacKenzie CF, Xiao Y. TeleBAT: Mobile telemedicine for the brain attack team. J Stroke Cerebrovasc Dis 2000;9:128–135

- 17. LaMonte MP, Xiao Y, Hu PF, Gagliano DM, Bahouth MN, Gunawardane RD, MacKenzie CF, Gaasch WR, Cullen J. Shortening time to stroke treatment using ambulance telemedicine: TeleBAT. J Stroke Cerebrovasc Dis 2004;13:148–154

- 18. Liman TG, Winter B, Waldschmidt C, Zerbe N, Hufnagl P, Audebert HJ, Endres M. Telestroke ambulances in prehospital stroke management: Concept and pilot feasibility study. Stroke 2012;43:2086–2090

- 19. Bergrath S, Reich A, Rossaint R, Rörtgen D, Gerber J, Fischermann H, Beckers S, Brokmann J, Schulz J, Leber C, Fitzner C, Skorning M. Feasibility of prehospital teleconsultation in acute stroke: A pilot study in clinical routine. PLoS One 2012;7:e36796

- 20. Van Hooff RJ, Cambron M, Van Dyck R, De Smedt A, Moens M, Espinoza AV, Van de Casseye R, Convents A, Hubloue I, De Keyser J, Brouns R. Prehospital unassisted assessment of stroke severity using telemedicine: A feasibility study. Stroke 2013;44:2907–2909

- 21. Wu TC, Nguyen C, Ankrom C, Yang J, Persse D, Vahidy F, Grotta JC, Savitz SI. Pre-hospital utility of rapid stroke evaluation using in-ambulance telemedicine (PURSUIT): A pilot feasibility study. Stroke 2014;45:2342–2347

- 22. Eadie L, Regan L, Mort A, Shannon H, Walker J, MacAden A, Wilson P. Telestroke assessment on the move: Prehospital streamlining of patient pathways. Stroke 2015;46:e38–e40

- 23. Lippman JM, Chapman Smith SN, McMurry TL, Sutton ZG, Gunnell BS, Cote J, Perina DG, Cattell-Gordon DC, Rheuban KS, Solenski NJ, Worrall BB, Southerland AM. Mobile telestroke during ambulance transport is feasible in a rural EMS setting: The iTREAT study. Telemed J E Health 2016;22:507–513

- 24. Itrat A, Taqui A, Cerejo R, Briggs F, Cho SM, Organek N, Reimer AP, Winners S, Rasmussen P, Hussain MS, Uchino K. Telemedicine in prehospital stroke evaluation and thrombolysis: Taking stroke treatment to the doorstep. JAMA Neurol 2016;73:162–168