Abstract

Background

The promise of Health Information Exchange (HIE) systems rests in their potential to provide clinicians and administrative staff rapid access to relevant patient data to support judgement and decision-making. However, HIE systems can have usability and technical issues and can fail to support user workflow.

Objective

Share the findings from a series of studies that address HIE system deficiencies for an Electronic Health Record (EHR) viewer which accesses multiple data sources.

Methods

A variety of methods were used, in a series of studies, to gain a better understanding of issues and their mitigation through use of promising EHR viewer features.

Results

The study series results are presented by theme. Together, the themes underscore the importance of users being able to distinguish between data that are available but missing due to connection or system errors, data that are omitted entirely because they are not available and data that are excluded due to filtered search criteria.

Conclusions

The principal findings from this study series led to improvement recommendations for the EHR viewer and identified areas that are ripe for further investigation and analysis.

Summary

What is already known?

Health Information Exchange (HIE) systems are becoming more common in the USA; however, there are many barriers still to be addressed.

What does this paper add?

A series of studies done within the Department of Veterans Affairs found that participants did not understand the difference between types of errors; participants essentially want to know whether or not patient information is available, regardless of the reason.

Messaging within HIE systems meant to communicate current status may be lost among all other messages.

An area ripe for further investigation and analysis is whether or not HIE users are able to recognise why data might be missing and understand the effect on the patient information presented.

Introduction

The implementation of Health Information Exchange (HIE) systems is becoming commonplace across the USA.1 The promise of HIE systems rests in their potential to provide clinicians and administrative staff rapid access to relevant patient data to support judgement and decision-making. Rather than patient data being siloed across different information repositories, HIE systems afford the opportunity to provide users a more holistic and complete view of the patient.

In many countries, such as Israel and England, local systems have been replaced or supplemented with a single, national system. In Israel, hospitals across the country have voluntarily adopted dbMotion and thereby established the Israeli National Health Record, which allows for information to be integrated on demand, often in less than 8 s.2 In 2002, England began work on the National Health Service (NHS) Care Records Service as a government-driven, national software solution.3 However, the authors of a longitudinal study argue that the initial plan for a top-down approach has come to incorporate elements more similar to the approach in the USA, where incentives are provided for compliance with standards.3

In the USA, there is reliance on HIEs for data sharing. Even within one integrated healthcare system, different instantiations of a single record system could impact record availability and views. For example, within the Veterans Health Administration (VHA), the complete records from other sites (over 130) cannot be accessed without a tool to supplement a specific site’s instance of the Electronic Health Record (EHR). Maintenance of some local control, as opposed to full standardisation, was one theme resulting from a study of the roll-out of the NHS Care Records Service in England.3 Some see the concept of HIEs as being more flexible over the long term.4

Despite the increasing adoption rates of HIE systems across the healthcare industry, the actual use of HIE systems by users remains minimal, with usage statistics reported to be less than 5% in some cases.1 A systematic review conducted by the Agency for Healthcare Research and Quality outlined many of the barriers that may explain the discrepancy between the availability of health information technology (HIT) and its uptake by users who have yet to embrace it.1 HIE systems can (1) be plagued by usability deficiencies (eg, inundating the user with information), (2) fail to support clinical workflow (eg, requiring multiple log-ins) and (3) have technical deficiencies such as slow speed. In addition, standards on data exchange for true interoperability are still emerging (eg, see Mandel et al 5).

The purpose of this paper is to share the findings from a series of studies, completed by the Department of Veterans Affairs (VA) VHA Human Factors Engineering (HFE) team, that address the intersection of these areas within a joint VA and Department of Defense (DoD) EHR viewer called the Joint Legacy Viewer (JLV). Briefly, JLV is an integrated, read-only EHR viewer that provides users with access to three data sources: (1) VHA, (2) DoD and (3) Veterans Health Information Exchange, also known as the Virtual Lifetime Electronic Record (VLER) Health Programme. VLER provides users with data when a veteran receives care from a participating non-VA/DoD community care provider. The three data sources in JLV are accessed to view the most health data available in a service member’s or veteran’s longitudinal health record, as both service members and veterans can receive care in VA or DoD facilities or within the community. This arrangement creates the highest interoperability possible between all three sources.

Community Partner Data is not integrated at the clinical domain level (labs, allergies, etc) at this time, due to performance issues. While all three are presented within JLV, Community Partner Data is in its own domain. JLV’s integrated view of disparate data sources is intended for VA and DoD staff; there is not currently a corresponding view for patients.

The immense scale of JLV, however, subjects its users to long load times as the application queries each of these data streams to retrieve patient data. Latency issues can result in non-responsive servers and users subsequently failing to receive patient data. This shortcoming makes it important for users to be able to distinguish between data that are available but missing due to connection errors, data that are omitted entirely because they are not available and data that are excluded due to filtered search criteria. Yet, conveying this type of information needs to be considered in the context of other types of data messaging (eg, patient safety alerts) to avoid overloading users with data and distracting from their primary task. While there is guidance on how best to provide informative feedback and construct error messages,6 7 there is the potential for these to be ignored because clinicians are inundated with alerts and warnings.8 9
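The three cases named above can be treated as distinct states rather than as one generic "no data" result. The following is a minimal Python sketch of that idea, not a description of how JLV is implemented; the names `Availability`, `SourceResult` and `classify`, and the fields they use, are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Availability(Enum):
    """Hypothetical states a viewer could report for one data source."""
    SHOWN = "data displayed"
    CONNECTION_ERROR = "data may exist but could not be retrieved"
    NOT_AVAILABLE = "source holds no data for this patient"
    FILTERED_OUT = "data exist but are excluded by the current filter"


@dataclass
class SourceResult:
    """Hypothetical outcome of querying one data source for one patient."""
    connected: bool            # False if the query failed or timed out
    records_found: int         # records returned before any user filter
    records_after_filter: int  # records remaining after the user filter


def classify(result: SourceResult) -> Availability:
    """Map a query outcome to the reason the user sees (or does not see) data."""
    if not result.connected:
        return Availability.CONNECTION_ERROR
    if result.records_found == 0:
        return Availability.NOT_AVAILABLE
    if result.records_after_filter == 0:
        return Availability.FILTERED_OUT
    return Availability.SHOWN
```

Checking the connection first reflects the point made above: when a source cannot be reached, the absence of records says nothing about whether data exist.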

This paper describes the studies and design methods employed to improve usability and technical performance and to support user workflow. Central to our investigation is the evaluation of language, messaging, information format and user interface (UI) features presented to users regarding the availability of data. Improvement recommendations and additional investigations are noted where these would move JLV closer to being an optimal HIE system.

Methods

Multiple methods were used to understand the complex assortment of system notifications and messages, examine the language and format of their content and determine more effective ways to convey their meanings to users.

Study design

Access to external data sources is highly varied and often done on a patient-by-patient basis, with the user typically searching for specific information based on each patient case. Therefore, we designed a variety of studies to understand the problem spaces and evaluate proposed features for JLV. The investigation series started with a heuristic evaluation (HE) by a Human Factors specialist, followed by additional studies using a range of techniques. Individual semistructured interviews, focus groups, UI consultations, wireframing and wireframe design walkthroughs were employed to elicit information related to the objectives of each study. Table 1 displays these methods, along with study objectives and type of participants.

Table 1.

Study series summary

| Methods | Study objectives | Participants |
| --- | --- | --- |
| Heuristic evaluation, coupled with virtual SME UI consultations (clinical, JLV and patient safety) | Provide rapid feedback to the development team on the design of their connection error and warning feature. | Three total SMEs (VHA) |
| Virtual SME interviews (semistructured); user focus group; wireframing; wireframe design walkthrough | Learn if users understand the concepts of system status and connection error and gather user perceptions on employing a graphical representation of connection errors. | Seven SME interviews (six VHA, one DoD); seven users in focus group (five VHA, two DoD) |
| Heuristic evaluation of wireframes; virtual SME interviews (semistructured) | Since all user types do not always require information from all three data sources (VA, DoD and VLER), learn whether a proposed solution to reduce lengthy load times is comprehensible by users and can be done safely and effectively (ie, giving users the ability to turn off select data sources, at their discretion, to decrease the amount of information requested, to achieve faster response times). | Seven SMEs (five VHA, one DoD, one VBA) |
| Wireframing; virtual SME UI consultations; virtual SME interviews (semistructured) | Identify and understand user requirements for a data source selection feature, create wireframe designs and consult with SMEs to ensure the technical feasibility and appropriateness for users. | Four SMEs (three VHA, one DoD) |
| Virtual wireframe design walkthroughs | Obtain feedback from users for the data source selection wireframes, created in the above study, and recommend improvements. | Nine users (three VHA, three DoD, three VBA) |
| Iterative wireframing; virtual interviews (semistructured) | Assist with the UI design to implement purpose of use privacy access controls for requesting and using community health partner digital data. | Nine SMEs (VHA) |

DoD, Department of Defense; JLV, Joint Legacy Viewer; SME, subject matter expert; UI, user interface; VBA, Veterans Benefits Administration; VHA, Veterans Health Administration.

The first two studies focused on communication of the status of the system sources of patient information and connection errors, while the next three studies were formulated to explore a resolution to a recurring criticism from users about long load times they experienced while waiting for JLV to render patient information from all system sources onto the screen. The remaining study involved planning for implementation of purpose of use (POU) requirements for requesting and using patient digital health information from community health partners.10

Participants

Throughout the study series, JLV subject matter experts (SMEs) were consulted to determine requirements and the practicality of various designs. Although we utilised convenience samples, we aimed to include a range of user types. The SMEs were JLV application usage experts, some with clinical roles and some with support roles such as programme manager, requirements analyst, trainer and help desk staff. Study participants from the field were primarily physicians and nursing staff who use JLV at VA and DoD hospitals and clinics. The clinical users in the field provided impartial comments and opinions. A total of 46 participants, comprising field users and SMEs, took part in one or more individual studies. Participants were identified and recruited by their management from VHA, DoD and the Veterans Benefits Administration (VBA). VBA participants primarily use JLV for administrative purposes to determine veterans’ eligibility for VHA health benefits. Since the JLV application is less than 5 years old, all participants had used it for less than 5 years.

Results

Results of the study series are presented by theme.

Connection error and warning feature

After the JLV connection error and warning feature was implemented in the field, initial feedback from users suggested that the warnings were distracting, added to alert fatigue and that the error message was confusing and difficult to read. As a result, the HFE team was asked to investigate these complaints and identify ways to improve the user experience. Results of the study confirmed that there were issues with the warning banners, including that they took up too much screen area (decreasing the available space for displaying patient information) and competed with other system messaging. The study outcome also included an inventory of the current messages related to information availability, along with the content and the context of each message that were presented to users.11

Data source selection

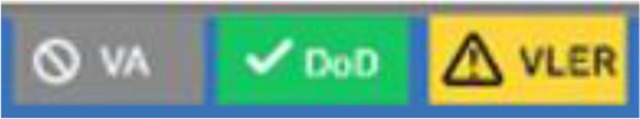

The outcome of the data source selection feasibility study provided an analysis of some proto-persona users of JLV: why they use it and what information they seek. Usage was documented for VHA staff (patient aligned care team nurse, primary care physician, emergency medicine physician), VBA staff (Veterans service representative, liaison compensation service representative) and DoD staff (paediatrician, occupational health physician). In addition, the results of the data source selection feasibility study indicated that the impact on patient safety was minimal if users could be informed that the data display was limited. However, this study and previous studies confirmed that clinical users have difficulty understanding when information displays are limited. Consequently, the HFE and Informatics Patient Safety study team looked holistically at messaging for data availability and the final results included a recommendation for the use of graphical indicators on the screen to depict the user’s selections of systems to query (VA, DoD, VLER) combined with a notification of each system’s connection status. The visual indicators were created by HFE based on expectations that they would be commonly recognised icons and colours for easy interpretation by the user and would be an improvement over inundating users with additional messages. A follow-up study was then conducted to obtain user feedback on the icons. Figure 1 shows the final recommendation for JLV in an example where the VA data source was not selected by the user and the DoD and VLER data sources were selected. The DoD data source connection was successful and the VLER data source encountered connection errors with at least one of its systems.

Figure 1.

Human Factors Engineering prototype example of source selection indicators combined with connection status notification for Department of Veterans Affairs (VA), Department of Defense (DoD) and Virtual Lifetime Electronic Record (VLER) systems.
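The combination of source selection and connection status described for Figure 1 can be sketched as a small data model. This is an illustrative Python sketch under stated assumptions, not the HFE prototype or JLV code: `ConnectionStatus`, `SourceIndicator`, `display_is_limited` and the label wording are hypothetical, and the sketch uses text labels where the prototype uses icons and colours.

```python
from dataclasses import dataclass
from enum import Enum


class ConnectionStatus(Enum):
    """Hypothetical connection states for one data source."""
    NOT_QUERIED = "not queried"
    CONNECTED = "connected"
    PARTIAL_ERROR = "connection error in at least one system"


@dataclass(frozen=True)
class SourceIndicator:
    """One on-screen indicator: the user's selection plus connection status."""
    name: str
    selected: bool
    status: ConnectionStatus

    def label(self) -> str:
        # An unselected source is reported as such, never as an error.
        if not self.selected:
            return f"{self.name}: not selected"
        return f"{self.name}: selected, {self.status.value}"


def display_is_limited(indicators: list[SourceIndicator]) -> bool:
    """True if any source is unselected or did not connect cleanly."""
    return any(
        not ind.selected or ind.status is not ConnectionStatus.CONNECTED
        for ind in indicators
    )


# The scenario described for Figure 1: VA not selected, DoD connected,
# VLER selected but with a connection error in at least one system.
figure_1_example = [
    SourceIndicator("VA", False, ConnectionStatus.NOT_QUERIED),
    SourceIndicator("DoD", True, ConnectionStatus.CONNECTED),
    SourceIndicator("VLER", True, ConnectionStatus.PARTIAL_ERROR),
]

for indicator in figure_1_example:
    print(indicator.label())
```

Keeping selection and status as separate fields lets the display distinguish "excluded by the user's choice" from "requested but not retrieved", which is the distinction the studies found users struggled with.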

Purpose of use

The results of the final study, POU, uncovered that users are likely to forget their POU setting (ie, the value that identifies the reason for requesting community health partner data) and are also likely to have trouble discerning when a change to the POU setting is appropriate. Clinical SMEs saw overlap in their workflow, believing they could have multiple purposes during the same patient-record session. In addition, clinical SMEs were surprised to learn that a lack of patient consent at the community facility can prevent some of the patient’s health information from being displayed in JLV.

Discussion

The design of the JLV user interface distinguishes network connection errors from system errors. A key finding from the studies was that participants did not understand the difference between these two types of errors and they essentially want to know whether, or not, patient information is available, regardless of the reason. In the event of connection errors or system errors, they want to know when the information display is limited, the type of information that is not available and when it will be obtainable, so they can make the treatment decisions necessary for their primary task: caring for the patient. In addition, it would be beneficial to inform users, by constructively suggesting a solution,12 when there is an action they can take to address an issue: for example, informing a JLV user to perform a screen refresh to display additional patient information as it becomes available or suggesting the user phone the help desk to get an estimated time when an interoperability issue will be resolved.

Because many messages that relate to information availability are currently in use, the proposition to add user ability to selectively choose data source(s) would increase notifications that may be lost in the existing messaging. By employing a visual design that cues the user to system information availability, the user is kept aware of status12 and is afforded the flexibility to refer to it as needed. Another solution aimed at avoiding overwhelming users with notifications and messages may be to adjust the salience of these according to a priority ranking by category. A theoretical framework for understanding and engineering persuasive interruptions outlines four types of beneficial interruptions for the user and correlates these with importance/urgency.13 Considering this framework, patient safety alerts have high urgency and might be delivered as an interruption to the user to gain his/her attention and action. In contrast, informational notifications such as a successful system connection would have a lower priority and be non-intrusive.
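The idea of adjusting salience according to a priority ranking by category can be illustrated with a short sketch. The Python below is a hedged example only: the three categories, their ordering and the names `Priority`, `Message`, `delivery_mode` and `order_for_display` are assumptions made for illustration, and they do not reproduce the four interruption types of the cited framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical ranking; a higher value means more urgent."""
    INFORMATIONAL = 1       # eg, a successful system connection
    DATA_AVAILABILITY = 2   # eg, a source could not be reached
    PATIENT_SAFETY = 3      # eg, a patient safety alert


@dataclass(frozen=True)
class Message:
    text: str
    priority: Priority


def delivery_mode(message: Message) -> str:
    """Only the highest-urgency category interrupts the user."""
    if message.priority is Priority.PATIENT_SAFETY:
        return "interrupt"
    return "passive"


def order_for_display(messages: list[Message]) -> list[Message]:
    """Most urgent first; equal priorities keep their arrival order."""
    return sorted(messages, key=lambda m: m.priority, reverse=True)
```

In this sketch, a data availability message is delivered passively, consistent with the recommendation that status be visible for reference rather than pushed at the user.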

Acknowledging the challenges to presenting a set of useful messages to JLV users, several simple heuristics were identified by our team to assist with achieving an effective design7:

- Messages should not obscure, or distract from, clinical decision support alerts and notifications.

- Messages should not appear to conflict with other system-state or error notifications.

- Messages should be distinct from functional feedback, such as ‘no data’ returned by a user-applied (or default) data filter.

- Messages should not use overly bright or bold colours that distract from the available data.

- Messages should not overwhelm users with technical details that are not actionable.

- Limit or consolidate the space needed to present messages so that, in the event of mass network outages, the interface is not dominated or overwhelmed by errors.
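The last heuristic, consolidating messages so that widespread outages do not dominate the interface, can be sketched as follows. This is a minimal Python illustration under assumptions: the function name `consolidate_connection_errors`, the `max_named` limit and the message wording are hypothetical and are not taken from JLV.

```python
from typing import Optional


def consolidate_connection_errors(
    failed_sources: list[str], max_named: int = 3
) -> Optional[str]:
    """Collapse many per-source failures into one short, actionable message.

    Returns None when nothing failed, so no banner is shown at all.
    """
    if not failed_sources:
        return None
    unique = sorted(set(failed_sources))  # drop duplicates, stable wording
    named = ", ".join(unique[:max_named])
    remaining = len(unique) - max_named
    if remaining > 0:
        named += f" and {remaining} more"
    # Plain language plus one action; no technical detail.
    return f"Some records could not be retrieved from: {named}. Refresh to try again."
```

For example, `consolidate_connection_errors(["VLER", "DoD", "VLER"])` yields a single line naming DoD and VLER once each, rather than three separate banners.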

Finally, while allowing non-clinical users the ability to adjust their POU settings is a straightforward and simple workflow which permits VA to provide a more detailed reason for the request of information from HIE partners, the workflow for Emergency Department staff and clinical staff who have more than one purpose for the information (such as treatment and operations) is more complicated. This area requires additional examination of workflow, policies and potential user instructions for applying POU in field work.

HIEs are a relatively new tool in the USA, and relevant policies and technologies are still evolving. While HIEs have some downsides, such as varied and unknown costs and the need for well-defined interoperability standards,4 they are the current model for making patient records more readily available in many countries. This work is a first step in highlighting some issues encountered with clear messaging within one tool that serves to share data for use at point of care. Although the results are focused on the impact of data source and interoperability messaging on HIT users within the DoD and VA, many of the findings and recommendations are broadly relevant to other healthcare institutions undergoing similar initiatives.

Limitations

This article aimed to point out the complexities for HIE and bring to light challenges for designing effective messaging patterns encountered within one system (JLV). Therefore, there are several limitations. This series of studies started as a discrete HE related to a connection error/warning feature and morphed into a series of studies, as the results from each led to additional questions. No studies included observational methods, which could yield different data related to user expectations and actual behaviours. Additional quantitative methods would have aided in data collection for the wireframe activities. However, these activities were viewed as formative at the time, and so ‘lighter weight’ methods were employed. Some JLV SMEs and users participated in more than one study as a convenience to facilitate scheduling and completion of studies in our operational setting.

Prototype screens were designed within the confines of a limited amount of available, realistic patient data. HFE practitioners’ understanding of JLV capabilities and representative patient data were limited to what was shown in demonstrations by JLV staff members. Prototypes were not prepared to fully test:

- Whether user participants understood that the patient information being displayed was limited due to the user’s data source selections and/or a more detailed filtering of patient information (eg, applying date ranges).

- Whether users felt overwhelmed by the number and various types of messages from multiple systems and data sources.

Conclusions

Data source and interoperability messaging are multifaceted, involving multiple IT systems, language and format of content, combined with user interface features. This is not unique to VA. The results and principal findings from this study series led us to improvement recommendations for JLV, including minimising the amount of data availability notifications and messages received by JLV users by employing graphical indicators on the screen to visually communicate the data source selections and their connection status. An area ripe for further investigation and analysis is whether, or not, HIE users are able to recognise when each of the following are being applied and understand the effect on the patient information shown: data that are available but missing due to connection errors, data that are omitted entirely because information is not available, data that are excluded due to non-selection of system source(s) and data that are excluded due to specific filtering criteria.

Acknowledgments

We would like to acknowledge Kurt Ruark, MBA, Jason J Saleem, PhD and Nancy Wilck for their support of this work.

Footnotes

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Hersh W, Totten A, Eden K, et al. Evidence Report/Technology Assessment No. 220. (Prepared by the Pacific Northwest Evidence-based Practice Center under Contract No. 290-2012-00014-I.) AHRQ Publication No. 15(16)-E002-EF. Rockville, MD: Agency for Healthcare Research and Quality, 2015.

- 2. Saiag E. The Israeli virtual National health record: a robust National health information infrastructure based on. In: Connecting medical informatics and Bio-informatics. Proceedings of MIE2005: the XIXth International Congress of the European Federation for Medical Informatics, IOS Press, 2005:427.

- 3. Robertson A, Cresswell K, Takian A, et al. Implementation and adoption of nationwide electronic health records in secondary care in England: qualitative analysis of interim results from a prospective national evaluation. BMJ 2010;341:c4564. doi:10.1136/bmj.c4564

- 4. Coiera E. Building a national health IT system from the middle out. J Am Med Inform Assoc 2009;16:271–3. doi:10.1197/jamia.M3183

- 5. Mandel JC, Kreda DA, Mandl KD, et al. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc 2016;23:899–908. doi:10.1093/jamia/ocv189

- 6. Zhang J, Johnson TR, Patel VL, et al. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform 2003;36:23–30. doi:10.1016/S1532-0464(03)00060-1

- 7. Shneiderman B, Plaisant C, Cohen M, et al. Designing the user interface: strategies for effective human-computer interaction. Pearson Education Inc, 2017.

- 8. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104–12. doi:10.1197/jamia.M1471

- 9. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47. doi:10.1197/jamia.M1809

- 10. US Department of Health and Human Services (HHS.gov). Understanding Some of HIPAA’s Permitted Uses and Disclosures, 2016. Available: https://www.hhs.gov/hipaa/for-professionals/privacy/guidance/permitted-uses/index.html

- 11. Dobre J, Carter T, Herout J, et al. Minimizing the Impact of Interoperability Errors on Clinicians. Proceedings of the International Symposium on human factors and ergonomics in health care. 7 Sage India: New Delhi, India: SAGE Publications, 2018: 64–8. doi:10.1177/2327857918071013

- 12. Nielsen J. 10 usability heuristics for user interface design. Nielsen Norman group, 1995. Available: https://www.nngroup.com/articles/ten-usability-heuristics/

- 13. Walji M, Brixe J, Johnson-Throop K. A theoretical framework to understand and engineer persuasive interruptions. Proceedings of the annual meeting of the cognitive science Society, 2004:26.