Abstract

Biological and machine pattern recognition systems face a common challenge: Given sensory data about an unknown pattern, classify the pattern by searching for the best match within a library of representations stored in memory. In many cases, the number of patterns to be discriminated and the richness of the raw data force recognition systems to internally represent memory and sensory information in a compressed format. However, these representations must preserve enough information to accommodate the variability and complexity of the environment, otherwise recognition will be unreliable. Thus, there is an intrinsic tradeoff between the amount of resources devoted to data representation and the complexity of the environment in which a recognition system may reliably operate.

In this paper, we describe a mathematical model for pattern recognition systems subject to resource constraints, and show how the aforementioned resource–complexity tradeoff can be characterized in terms of three rates related to the number of bits available for representing memory and sensory data, and the number of patterns populating a given statistical environment. We prove single-letter information-theoretic bounds governing the achievable rates, and investigate in detail two illustrative cases where the pattern data is either binary or Gaussian.

Index Terms: Distributed source coding, multiterminal information theory, pattern recognition

I. Introduction

PATTERN recognition is the problem of inferring the state of an environment from incoming and previously stored data. In real-world operating environments, the volume of raw data available often exceeds a recognition system’s resources for data storage and representation. Consequently, data stored in memory only partially summarizes the properties of patterns, and internal representations of incoming sensory data are likewise imperfect approximations. In other words, pattern recognition is frequently a problem of inference from compressed data. However, excessive compression precludes reliable recognition. This apparent tradeoff raises a fundamental question: In a given environment, what are the least amounts of memory data and sensory data consistent with reliable pattern recognition?

The paper is organized as follows. In Section II, we introduce the general problem informally. Relationships between the present work and other pattern recognition research are briefly described in Section III. In Section IV, we formalize our problem as that of determining which combinations of three key rates are achievable, that is, determining which rate combinations allow the theoretical possibility of reliable pattern recognition. These rates quantify the information available for representing memory and sensory data, and the number of distinct patterns which the recognition system can discriminate. Our main results are single-letter formulas providing inner and outer bounds on the set of achievable rates, presented in Section V. In Section VI, we consider some instructive special cases of the main results, and compare our results to those for the related problem of distributed source coding. In Section VII, we explore explicit formulas for the bounds in two special cases, binary and Gaussian. Section VIII contains concluding remarks. Proofs for most of the results are placed in the Appendices. The entire discussion is organized around the block diagram in Fig. 1.

Fig. 1.

Pattern recognition subject to data compression.

II. Informal Problem Statement

In this section, we use an imagined example to motivate the mathematical model studied in the later technical sections. Suppose that our pattern recognition system consists of a homunculus living inside the head of some animal. The homunculus has access to a video monitor which displays data captured by the animal’s retinas, and a set of index cards for storing information about the patterns in the environment relevant to survival, constituting a “memory.” The homunculus must identify each pattern by comparing viewed images with information stored in memory. These identifications are then used to guide the animal’s behavior. Let us consider which factors govern the difficulty of our homunculus’ task.

A. Pattern Rate

First, the number of patterns that must be discriminated, Mc, obviously cannot exceed the number of images registerable on the animal’s retinas, which depends in turn on the number of retinal photoreceptors and the number of distinct signaling states of each photoreceptor. Denoting the state of the retinas as Y = (Y1, Y2, …, Yn), where each Yi takes values in a finite alphabet $\mathcal{Y}$, the number of possible retinal images is $|\mathcal{Y}|^n$. In a very simple animal with n = 8 photoreceptors, each able only to distinguish “bright” (Y = 1) from “dark” (Y = 0) (so $|\mathcal{Y}| = 2$), the absolute upper limit on Mc would be $2^8 = 256$.

With higher resolution eyes (larger n) an exponential explosion in the number of possible images rapidly overwhelms memory and computational resources. In humans, for whom n ≈ 2 × 10^6 [1], [2], the number of possible images $|\mathcal{Y}|^n$ far exceeds estimates of the number of particles in the universe [3]. Fortunately, two features of real-world pattern recognition intervene: First, sensory data exhibits strong statistical structure, p(y), so that the vast majority of the possible images are never experienced.1 Second, much of the animal’s visual experience can be filtered out as irrelevant to survival. Thus, we express the number of patterns our homunculus must discriminate as $M_c = |\mathcal{Y}|^{nR_c}$, where $R_c$ is called the pattern rate, $0 \le R_c \le 1$, and generally $R_c \ll 1$. Equivalently, we can express Mc in binary units, as $M_c = 2^{nR_c}$, in which case $0 \le R_c \le \log|\mathcal{Y}|$.
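The counting argument above can be checked numerically. The following is a minimal sketch for illustration only: the helper names `num_images` and `log10_num_images` are ours, and the two-state alphabet used in the large-n case is an assumed lower bound rather than a figure taken from the text.

```python
from math import log10


def num_images(alphabet_size: int, n: int) -> int:
    """Exact number of distinct images, |Y|^n."""
    return alphabet_size ** n


def log10_num_images(alphabet_size: int, n: int) -> float:
    """Order of magnitude of |Y|^n, computed without building the huge integer."""
    return n * log10(alphabet_size)


# The simple animal from the text: 8 photoreceptors, two states each.
print(num_images(2, 8))

# Hypothetical lower bound: n = 2e6 receptors with only two states each.
# The result is the base-10 exponent of the image count.
print(log10_num_images(2, 2_000_000))
```

Even under the two-state assumption, the exponent is far larger than 80, a commonly quoted order of magnitude for particle-count estimates.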

B. Sensory Data Compression Rate

Our homunculus accesses sensory data indirectly through a video monitor that has limited display capacity. That is, whereas the retinas can be in up to $|\mathcal{Y}|^n$ distinct signaling states, the homunculus’ internal monitor can display at most $M_y = 2^{nR_y}$, where $R_y$ is thus the sensory data compression rate. Analogous data reductions arise in real recognition problems for various reasons, both computational (e.g., dimensionality reduction, sparsification, regularization, or other “feature extraction” operations), and economic (e.g., energy constraints, processing time constraints, storage limitations). We represent the transformation from retinal data Y to video data J by the action of an encoder ϕ, resulting in the displayed data J = ϕ(Y).

This sensory data compression step places another restriction on the number of discriminable patterns, so that, in general, $M_c \le M_y$; or $R_c \le R_y$.

C. Memory Data Compression Rate

The job of our homunculus is to recognize patterns. More formally, the homunculus must assign to each viewed image Y one of Mc class labels, which we take to be integers $w \in \{1, \ldots, M_c\}$. As pre-job training, we imagine the homunculus studies a set of labeled class prototypes or “templates,” T(w) = (X(w), w), $w = 1, \ldots, M_c$, each drawn from a distribution p(x), where each template has dimensionality identical to that of the sensory data, X(w) = (X1(w), …, Xn(w)).

The homunculus creates index cards, on which it writes the class labels and descriptive information about each class template. However, the number of cards and the amount of information per card are limited, allowing only a compressed summary of the available data. We represent the information memorized about a class M(w) as the output of an encoder f, i.e., M(w) = (I(w), w) = f(T(w)), where I(w) is the compressed description of X(w) and w is the memorized class label. The degree of compression is quantified by specifying either the number of index cards comprising the memory, Mx, or a compression rate (given in bits) $R_x = (1/n)\log M_x$.

As above, memory data compression restricts the number of discriminable patterns Mc, so that $M_c \le M_x$; or, in terms of rates, $R_c \le R_x$.
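The two counting restrictions of Sections II-B and II-C can be stated compactly in code. This is a minimal sketch: the function names `rate_bits` and `counting_bounds_hold` are hypothetical, and the check covers only these necessary conditions, not the sharper information-theoretic bounds developed later in the paper.

```python
from math import log2


def rate_bits(num_states: int, n: int) -> float:
    """Rate in bits per symbol for a system with `num_states` states and dimension n."""
    return log2(num_states) / n


def counting_bounds_hold(r_c: float, r_x: float, r_y: float) -> bool:
    """Necessary (not sufficient) conditions: the pattern rate cannot exceed
    either the memory rate or the sensory rate."""
    return r_c <= r_x and r_c <= r_y


# Illustrative numbers (assumed): n = 8, Mc = 16, Mx = 64, My = 32.
n = 8
r_c, r_x, r_y = rate_bits(16, n), rate_bits(64, n), rate_bits(32, n)
print(r_c, r_x, r_y, counting_bounds_hold(r_c, r_x, r_y))
```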

D. Image Formation and Testing

The “testing” phase of our homunculus-driven pattern recognition system involves two processes: image formation and recognition.

Image formation proceeds as follows. Nature selects a pattern class at random, then generates an image Y which is registered on the animal’s retinas. (The class label W is not observable by the homunculus.) We model the image formation process as the transmission of the class template X(w) through a random channel p(y|x). The retinal image Y thus represents a “signature” of the underlying pattern X(w), and the channel p(y|x) represents two types of difficulties intrinsic to most real-world pattern recognition problems: signature variation (differences in the sensory data generated on repeated viewings of the same underlying pattern); and signature ambiguities (distinct patterns may produce similar signatures).2

The homunculus receives the compressed sensory data J = ϕ(Y), compares it with the memory data $\{M(w)\}$, and finally reports the class label of the best match, Ŵ. The inference procedure g used to make these comparisons can reflect knowledge of the pattern source p(x) and image formation process p(y|x), but at the time of testing, it must be specified so as to depend only on the available data, i.e., g must be a function only of $\{M(w)\}$ and J, $\hat{W} = g(\{M(w)\}, J)$. We judge the homunculus’ performance by the probability of error Pe. We will consider the system reliable if for some acceptable ϵ it achieves Pe ≤ ϵ.
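The memorize-then-test loop described in this section can be simulated directly. The sketch below rests on assumptions that are not in the text: binary templates, a binary symmetric channel with flip probability `q`, a crude encoder that keeps only the first `keep` coordinates (standing in for both f and ϕ), and a nearest-match rule in Hamming distance for g. It is meant only to show how Pe reacts to compression, not to implement the codes analyzed later.

```python
import random


def hamming(a, b):
    """Number of positions in which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def simulate_recognition(n, num_classes, q, keep, trials, seed=0):
    """Estimate the error probability Pe of a toy recognition system."""
    rng = random.Random(seed)
    # Memorization phase: draw templates, store truncated descriptions with labels.
    templates = [[rng.randint(0, 1) for _ in range(n)] for _ in range(num_classes)]
    memory = [t[:keep] for t in templates]            # toy memory encoder f
    errors = 0
    for _ in range(trials):
        w = rng.randrange(num_classes)                # Nature selects a class
        y = [bit ^ (rng.random() < q) for bit in templates[w]]  # noisy image
        j = y[:keep]                                  # toy sensory encoder phi
        # Recognition g: label of the stored description closest to j.
        w_hat = min(range(num_classes), key=lambda c: hamming(memory[c], j))
        errors += (w_hat != w)
    return errors / trials


pe_full = simulate_recognition(n=64, num_classes=16, q=0.05, keep=64, trials=300)
pe_crushed = simulate_recognition(n=64, num_classes=16, q=0.05, keep=2, trials=300)
print(pe_full, pe_crushed)
```

With all 64 coordinates retained the estimated error is small, while keeping only 2 coordinates leaves far fewer distinguishable descriptions than classes, so most identifications fail.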

E. Interpretations of the Problem Formulation

We have now introduced the basic elements of our problem, which is to determine the rate combinations (Rx, Ry, Rc) compatible with the possibility of reliable pattern recognition systems, where a “reliable” system is one for which the probability of recognition error can be made arbitrarily small. To summarize, these basic elements are 1) a model for the underlying patterns, consisting of the number of patterns $M_c = 2^{nR_c}$, a set of class labels w ∈ {1, …, Mc}, and the class prototypes X(w) together with their generative model p(x); 2) a model of the channel connecting class prototypes to the sensory data p(y|x); and 3) budgets specifying the number of bits allowed for representing sensory data Ry and memory data Rx inside the system.

We pause here to consider a few different possible perspectives on the problem under study.

Optimization views.

From an optimization point of view, we can ask our central question in two different but equivalent ways: Given the pattern rate Rc, what are the least amounts of sensory and memory data Ry and Rx, needed for reliable pattern recognition? Alternatively, given fixed information budgets for memory and sensory data representation Rx and Ry, what is the maximum achievable pattern recognition rate Rc?

Regarding “n.”

Second, the problem has a different “feel” depending on whether one views the data dimensionality n as a fixed or an increasing parameter. In the preceding discussion, we have primarily taken the static view, in which there are a fixed number of patterns, or “states of nature” of interest, and the problem is to investigate how many memory states Mx and sensory states My are needed to recognize them reliably. Alternatively, we may regard n as a dynamic, increasing parameter. Biologically, allowing n to increase might correspond to studying a series of animals with increasingly better eyes and memory organs. In engineering applications, the increase might correspond to building a sequence of machines with progressively higher camera resolution and data storage capacities [6]. Obviously, if while increasing n we hold the bit-budgets Rx and Ry fixed, then the number of memory and sensory states available for data representation grows exponentially, $M_x = 2^{nR_x}$, $M_y = 2^{nR_y}$. Less obviously, the maximum number of discriminable patterns also grows exponentially,3 with a constant rate Rc, i.e., $M_c = 2^{nR_c}$. The “fixed n” and “increasing n” perspectives correspond to the familiar, complementary mathematical methods of proving a given inequality, respectively, either by the “adversarial” approach (given any ϵ, choose n large enough…); or the “asymptotic approach” (take the limit as n → ∞…).

An important final point regarding n is that, like many results in information theory, our results rely on asymptotic arguments. Thus, we prove the results valid only for “sufficiently large n,” depending in turn on an ϵ corresponding to the tolerable error rate. The needed magnitude of n for a given ϵ (i.e., the issue of error exponents) will depend on the application, and is an important open problem.

III. Related Work

A. Machine Learning Approaches

Pattern recognition is a central topic in machine learning [7]–[10]. The machine learning approach to pattern recognition centers around the following problem: Given a set of labeled sensory data, we wish to find a rule g that predicts the labels for future sensory data, i.e., if Y is in fact a signature of pattern class w ∈ {1, 2, …, Mc}, we want Pr(g(Y) ≠ W) ≤ ϵ for some acceptable ϵ > 0. Broadly speaking, two competing approaches dominate the literature. In the “generative modeling” approach, one attempts to estimate the distribution underlying the data p(w, y), and then to use the conditional distribution p(w | y) to infer w from Y, i.e., $g(Y) = \arg\max_w p(w \mid Y)$. Alternatively, in the “discriminative” approach, one attempts to learn the optimal decision region boundaries directly, without estimating p(w, y).

Our problem formulation resonates with the “generative modeling” approach, in that we allow the homunculus access to p(w, y).4 Informally, such knowledge might come from allowing a very large volume of training data. In that regime, however, the distinction between generative and discriminative approaches may become practically unimportant, as in many instances either approach can achieve asymptotically optimal performance.

In any case, in the present work we are not directly concerned with the problem of classifier learning. Rather, we investigate the conditions under which reliable classifiers can exist at all, regardless of how they are designed or learned; we describe performance bounds to which all pattern recognition systems are subject.

It is also worth pointing out the distinction between the machine learning concept of “Vapnik–Chervonenkis (VC) dimension” and Mc in the present work. Informally, the VC dimension is the number of distinct patterns that can be shattered by a given family of classifiers (see [10], [12] for a detailed description). As such, VC dimension is a measure of the complexity of the decision boundaries that can be fit with a given family of classifiers. In contrast, Mc in our work is the number of patterns or pattern classes that can be distinguished, with no constraints on the family of classifiers.

B. Related Work in Combined Data Compression and Inference

Neuroscientist Horace Barlow has argued for more than four decades that data compression is an essential principle underlying learning and intelligent behavior in animal brains (see, e.g., [13]–[17]). Barlow and many others have amassed substantial experimental evidence showing efficient data coding mechanisms at work in the sensory systems of diverse animals, including monkeys, cats, frogs, crickets, and flies [18]. More recently, data compression has been gaining appreciation as a mechanism for managing metabolic energy costs in neural systems [19].

In the engineering pattern recognition literature, data compression usually arises indirectly in the context of feature extraction, i.e., techniques for transforming raw data such that “irrelevant” data is discarded and the residual data is rendered into some advantageous format which facilitates storage and comparison, and is robust (“invariant”) with respect to signature variations [20], [21]. In the information theory literature, probably the first direct investigation of the interplay between data compression and statistical inference is due to Ahlswede and Csiszár [22]. In [23] Han and Amari reviewed work up through 1998 on rate-constrained inference problems, including hypothesis testing, pattern recognition, and parameter estimation. Recently, Ishwar et al. have studied the problem of joint classification and reconstruction of sensory data subject to a fidelity constraint, in the context of video coding [24], [25]. In contrast to the problem studied in this paper, in that work there is no data compression constraint on memory data. Work on practical algorithms for joint classification and data compression includes [26]–[28].

IV. Problem Statement

We now proceed to the formal presentation of the main results.

A. Notation

We adopt the following notational conventions. Random variables are denoted by capital letters (e.g., U), their values by lowercase letters (e.g., u), their alphabets by script capital letters (e.g., $\mathcal{U}$). Sequences of symbols are denoted either by boldface letters or with a superscript, e.g., $\mathbf{u} = u^n = (u_1, u_2, \ldots, u_n)$. The probability distribution for a random variable is denoted by $p_U(u)$, or simply p(u) when the implied subscript is clear from the context. Entropy, mutual information, and conditional mutual information are denoted in the usual ways, e.g., for random variables U, V, W, we write H(U), I(U; V), and I(U; V|W), respectively. All logarithms are understood to be base two, i.e., $\log = \log_2$. Finally, to express statements such as “X and Z are conditionally independent given Y,” i.e., p(x, y, z) = p(y) p(x | y) p(z | y), we write “X − Y − Z form a Markov chain,” or simply X − Y − Z.

B. Definitions and Assumptions

Definition 1: The environment for a pattern recognition system is a set of eight objects

where

, , are finite alphabets;

p(w), p(xy) = p(x)p(y|x), are probability distributions over , and , respectively;

is a set of pairs T(w) = (X(w), w) of random vectors X(w) drawn independent and identically distributed (i.i.d.) ~p(x), labeled by ;

Φ is a mapping from labels to vectors in , , Φ(w) = X(w).

We make the following simplifications:

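the distribution over class labels is uniform, $p(w) = 1/M_c$ for all $w \in \{1, \ldots, M_c\}$;

the pattern components are i.i.d., $p(\mathbf{x}) = \prod_{i=1}^{n} p(x_i)$;

the observation channel is memoryless, $p(\mathbf{y} \mid \mathbf{x}) = \prod_{i=1}^{n} p(y_i \mid x_i)$.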

Definition 2: An (Mc, Mx, My, n) pattern recognition code for an environment consists of three sets of integers

and three mappings

where denotes the result of applying f to the entries of

We call the pattern templates; f, the memory encoder; , the memorized data; ϕ, the sensory encoder; and g, the recognition function or classifier.

Definition 3: The operation of a pattern recognition system (“agent”) implementing a given (Mc, Mx, My, n) pattern recognition code (f, ϕ, g) for an environment is defined in terms of the following events.

Memorization phase:

The agent observes , and uses f to compute the memory data .

Access to is taken away, and thereafter the agent knows of only what is retained in .

Testing phase:

Nature selects an index W ~ p(w).

Nature encodes the pattern according to X(W) = Φ(W).

The pattern X(W) passes through the channel p(y|x), giving rise to an observable signal Y.

The agent computes J = ϕ(Y).

The agent infers W by computing .

With respect to the events just described, the probability of error for a code (f, ϕ, g) in is

and the average probability of error of the code is

Definition 4: The rate R = (Rc, Rx, Ry) of an (Mc, Mx, My, n) code is

$$R_c = \frac{1}{n}\log M_c, \qquad R_x = \frac{1}{n}\log M_x, \qquad R_y = \frac{1}{n}\log M_y,$$

where the units are bits-per-symbol.

Definition 5: A rate R = (Rc, Rx, Ry) is achievable in a recognition environment if for any ϵ > 0 and for n sufficiently large, there exists an (Mc, Mx, My, n) code (f, ϕ, g) with rates

such that , , and .

Definition 6: The achievable rate region for a recognition environment is the set of all achievable rates R = (Rc, Rx, Ry).

Our ultimate goal in an information-theoretic analysis of this problem is to characterize the achievable rate region $\mathcal{R}$ in a way that does not involve the unbounded parameter n, that is, to exhibit a single-letter characterization of $\mathcal{R}$.

V. Main results

In this section, we present inner and outer bounds on the achievable rate region $\mathcal{R}$. The bounds are expressed in terms of sets of “auxiliary” random variable pairs UV, defined below. In these definitions, U and V are assumed to take values in finite alphabets $\mathcal{U}$ and $\mathcal{V}$ and have a well-defined joint distribution with the “given” random variables XY. To each such pair of auxiliary random variables UV we associate a set of rates

$$\mathcal{R}_{UV} = \{R : R_x \ge I(X; U),\ R_y \ge I(Y; V),\ R_c \le R_x + R_y - I(XY; UV)\}.$$

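Next, define two sets of random variable pairs

$$\mathcal{P}_{in} = \{UV : U - X - Y,\ X - Y - V,\ U - (X, Y) - V\}$$

and

$$\mathcal{P}_{out} = \{UV : U - X - Y,\ X - Y - V\}.$$

We will also sometimes summarize the independence constraints in $\mathcal{P}_{in}$ as a single “long” Markov chain U − X − Y − V.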

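Next, define two additional sets of rates

$$\mathcal{R}_{in} = \bigcup_{UV \in \mathcal{P}_{in}} \mathcal{R}_{UV}, \qquad \mathcal{R}_{out} = \bigcup_{UV \in \mathcal{P}_{out}} \mathcal{R}_{UV},$$

and denote the convex hull of $\mathcal{R}_{in}$ by $\operatorname{conv}(\mathcal{R}_{in})$.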

Our main results are the following.

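Theorem 1 (Inner Bound):

$$\mathcal{R}_{in} \subseteq \mathcal{R}.$$

That is, every rate $R \in \mathcal{R}_{in}$ is achievable.

Theorem 2 (Better Inner Bound):

$$\operatorname{conv}(\mathcal{R}_{in}) \subseteq \mathcal{R}.$$

That is, every rate in the convex hull of $\mathcal{R}_{in}$ is achievable.

Theorem 3 (Outer Bound):

$$\mathcal{R} \subseteq \mathcal{R}_{out}.$$

That is, no rate $R \notin \mathcal{R}_{out}$ is achievable.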

Finally, to ensure computability, we include a cardinality bound.

Theorem 4: Regions and are unchanged if we restrict the cardinality of to

Theorem 4 is a simple consequence of the Support Lemma [29 (p. 310)]: we must have letters to ensure preservation of p(xy|uv), and three additional letters to satisfy the constraints on I(X; U), I(Y; V) and I(XY; UV).

Remark 1: If either X = U or Y = V, or both, then the outer bound collapses to the inner bound, since in this case the extra Markov condition U − (X, Y) − V in the definition of $\mathcal{P}_{in}$ is extraneous. For example, if U = X, then the condition is equivalent to I(U; V | XY) = I(X; V | XY) = 0, which is obviously true. Similar comments apply if U and V are any deterministic functions of X and Y, e.g., if V = γ(Y), then I(U; V | XY) = I(U; γ(Y) | XY) = 0.

Remark 2: The bounds $\mathcal{R}_{in}$ and $\mathcal{R}_{out}$ can be expressed in various ways. For example, it is not difficult to show that the following replacements for $\mathcal{R}_{UV}$ lead to the same sets of rates $\mathcal{R}_{in}$ and $\mathcal{R}_{out}$:

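$$\{R : R_x \ge I(X; U),\ R_y \ge I(Y; V),\ R_c \le I(U; V) - I(U; V \mid XY)\} \tag{1}$$

$$\{R : R_x \ge I(XY; U \mid V) + R_c,\ R_y \ge I(XY; V \mid U) + R_c,\ R_x + R_y \ge I(XY; UV) + R_c\} \tag{2}$$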

That is, if we define

where * stands for either “in” or “out,” then , and . These equivalencies are proved in Appendix E, and are used in Sections VI-B and VII.

Remark 3: In general, the inner bound of Theorem 1 is not a convex set, as evidenced by the examples studied in Section VII. Thus, its convex hull (Theorem 2) is in fact an improvement on it. The outer bound is a convex set, as shown in Appendix C.

The proofs for Theorems 2 and 3 appear in Appendices A and B. Theorem 1 follows immediately from Theorem 2. In sketch-form, the method we use to prove achievability (the inner bound), based on (1), is as follows. We represent the memory and sensory data using codewords U(i) and V(j) that are typical according to p(u) and p(v), respectively, and the recognition system stores a list of these codewords. Making Rx ≥ I(X; U) and Ry ≥ I(Y; V) provides enough U’s and V’s to “cover” $\mathcal{X}^n$ and $\mathcal{Y}^n$. During pretesting, the system matches each of the labeled template patterns (X(w), w), w = 1, …, Mc presented to it with a unique memory codeword, and attaches to this codeword the corresponding class label (with matching defined in the sense of joint typicality according to p(xu)). The resulting set of Mc “active,” labeled codewords constitutes the system’s memory. During subsequent testing, suppose Nature selects class w, generating sensory data Y ~ p(y|X(w)). The system receives the index J of the codeword for Y, and uses it to retrieve the sensory codeword V(J). The system can then narrow down the list of Mc active memory codewords by a factor of $2^{-nI(U; V)}$ using knowledge of p(uv).5 Thus, the correct memory vector U(w) can be uniquely identified so long as $2^{nR_c} \cdot 2^{-nI(U; V)} \le 1$, i.e., if Rc ≤ I(U; V).

It is also possible to prove the achievability result using a binning argument, which induces the set (2): Generate $2^{nI(X; U)}$ U’s and $2^{nI(Y; V)}$ V’s, and divide these equally among roughly $2^{nR_x}$ and $2^{nR_y}$ bins, respectively. A pattern X(w) is encoded in memory by searching for a bin containing a matching (jointly typical) codeword U(X), and the bins thus selected are each assigned the class label w of the pattern stored therein. Sensory data Y is encoded as the bin index of a matching codeword V(Y). This number of U’s and V’s is sufficient to ensure that any given pair X and Y will have a matching (jointly typical) U(X) and V(Y), and the Markov lemma ensures joint typicality of the quadruple (U(X), X, Y, V(Y)). Given encoded sensory data J = ϕ(Y), recognition is done by comparing the roughly $2^{n(I(Y; V) - R_y)}$ sensory codewords in bin J with the memory codewords in each of the $2^{nR_c}$ labeled memory bins, then reporting the class label assigned to the bin containing the matching memory codeword. No matches other than the correct one, (U(X), V(Y)), will be found provided the number of (U, V) comparisons grows exponentially with n at a rate less than I(U; V), that is, provided I(Y; V) − Ry + I(X; U) − Rx + Rc ≤ I(U; V), which simplifies to the rate-sum constraint Rx + Ry ≥ I(XY; UV) + Rc. The “side” constraints Rx ≥ I(XY; U|V) + Rc and Ry ≥ I(XY; V|U) + Rc then follow from requiring that each bin contain at least one codeword. The final inequality Rc ≤ I(U; V) follows from the first three.
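The simplification to the rate-sum constraint relies on the identity I(X; U) + I(Y; V) − I(U; V) = I(XY; UV), which holds when U − X − Y − V form a long Markov chain. The sketch below checks this numerically on one example. The binary alphabets, the binary symmetric test channels, and the parameters `q`, `a`, `b` are all assumptions made for illustration; the helper names are ours.

```python
from itertools import product
from math import log2


def flip_prob(s, t, eps):
    """Transition probability of a binary symmetric channel with flip probability eps."""
    return 1 - eps if s == t else eps


def long_chain_joint(q=0.1, a=0.2, b=0.3):
    """Joint p(u, x, y, v) = p(x) p(y|x) p(u|x) p(v|y), so that U - X - Y - V holds."""
    return {
        (u, x, y, v): 0.5 * flip_prob(x, y, q) * flip_prob(x, u, a) * flip_prob(y, v, b)
        for u, x, y, v in product((0, 1), repeat=4)
    }


def marginal(p, idx):
    """Marginal distribution over the coordinates listed in idx."""
    out = {}
    for key, val in p.items():
        sub = tuple(key[i] for i in idx)
        out[sub] = out.get(sub, 0.0) + val
    return out


def mutual_information(p, a_idx, b_idx):
    """I(A; B) in bits, where A and B are groups of coordinates of the joint p."""
    pa, pb, pab = marginal(p, a_idx), marginal(p, b_idx), marginal(p, a_idx + b_idx)
    total = 0.0
    for key, val in pab.items():
        if val > 0:
            ka, kb = key[:len(a_idx)], key[len(a_idx):]
            total += val * log2(val / (pa[ka] * pb[kb]))
    return total


U, X, Y, V = 0, 1, 2, 3  # coordinate positions in the joint distribution
p = long_chain_joint()
lhs = (mutual_information(p, (X,), (U,)) + mutual_information(p, (Y,), (V,))
       - mutual_information(p, (U,), (V,)))
rhs = mutual_information(p, (X, Y), (U, V))
print(lhs, rhs)  # the two values agree up to floating-point error
```

Without the long-chain condition the two sides differ by I(U; V | XY), which is the extra term appearing in (1).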

VI. Discussion of the Main Results

A. The Gap Between Bounds

In general, there is a gap between $\mathcal{R}_{in}$ and $\mathcal{R}_{out}$, so that $\mathcal{R}_{in} \subset \mathcal{R}_{out}$. This gap is due to the different constraints in the definitions of $\mathcal{P}_{in}$ and $\mathcal{P}_{out}$: Whereas distributions in $\mathcal{P}_{in}$ satisfy three independence constraints U − X − Y, X − Y − V, and U − (X, Y) − V (equivalently, the single “long chain” constraint U − X − Y − V), distributions in $\mathcal{P}_{out}$ need only satisfy the first two “short chain” constraints.

Further insight into the nature of the gap can be gained by attempting to construct by combining distributions from in various ways, and then considering whether the resulting distributions can be used to expand the achievable rate region.6 We consider two such constructions. In both, let be a finite random variable, independent of X and Y. Holding p(xy) fixed, to describe a pair of auxiliary random variables , with joint distribution p(xyuv), we need only to specify the marginal distribution p(uv | xy). Consider the following two sets:

| (3) |

| (4) |

In words, is the set of UV whose distributions p(uv | xy) can be constructed as “mixtures” of product marginals; and is the set of “convexifying” random variables (this terminology is explained below).7 In both of these sets it is possible to have dependencies between U and V given XY; i.e., in general p(uv | xy) ≠ p(u | x) p(v | y), hence, in general, , .

There is a gap similar to the one under discussion between the best known bounds for the distributed source coding problem (DSC), established by Berger and Tung (see Section VI-B). In both problems, the bounds are given in terms of sets with independence (Markov) constraints identical to those in and .8 Berger has suggested that in the DSC problem the gap is due to the fact that admits convex mixtures of product marginal distributions, whereas does not; i.e., in our notation, [31]. The inclusion is verified by checking U – X – Y and X – Y – V: Write

hence, U – X – Y; and a symmetric calculation shows X – Y – V. While clearly is a larger set than , it is unclear whether the admission of mixtures can account for all of the gap between and . That is, we know of no proof that . Moreover, we know of no way to use auxiliary random variables from in achievability arguments.

It is also straightforward to verify that the second set is contained in

(where the reasons are: (a) Q is independent of X and Y, and (b) ; hence, U – X – Y; and a symmetric calculation shows X − Y − V. has a form sometimes introduced in time-sharing arguments, as a means to convexify a given rate region. For example, for , we have

(where (a) is because Q is independent of X and Y) and similarly I(X; U) = Σqp(q)I(X; Uq), and I(Y; V) = Σqp(q)I(Y; Vq). It follows that the convex hull of may be represented as

| (5) |

In contrast to , auxiliary variables from can be used as the basis for standard achievability arguments, as we have done in the proof of Theorem 2 (see Appendix A). From this, we have the following logical statement:

| (6) |

Unfortunately, we have no proof that . Notwithstanding, in Subsection VII-A, we examine one case where it appears that does hold, giving grounds to conjecture that this equality may hold at least under special conditions.

B. Relationship With Distributed Source Coding

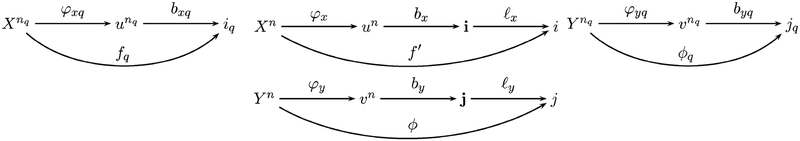

There are interesting connections between the results of Tung and Berger [32], [33] for the DSC problem and our results in Theorems 1 and 3. Briefly, the situation treated in the DSC problem is as follows (see Fig. 2). Two correlated sequences, X and Y, are encoded separately as i = f(X), j = ϕ(Y) and the decoder g must reproduce the original sequences subject to a fidelity constraint , where D = (Dx, Dy). The problem is to characterize, for any given distortion D, the set of achievable rates .

Fig. 2.

The distributed source coding problem.

The best known inner and outer bounds for the DSC problem can be expressed as follows.9 Let and be defined as above, and define two new sets incorporating the distortion constraint

where

Parallelling (1), also define the sets of rates

| (7) |

and

Then the Berger–Tung bounds for the DSC problem are and .

There are strong formal similarities between our bounds and the DSC bounds. Most importantly, the gap between bounds for both problems is due to the difference between the length-four constraint U − X − Y − V and the less stringent length-three constraints U − X – Y, X − Y − V. Further, note the formal similarity between the sets (7) and . To carry this comparison further, suppose in the problem under study that, in addition to recognizing patterns, we also wish to reproduce an estimate of the original signals subject to a fidelity constraint, as in the DSC problem.10 Denote the achievable rate region for this “joint recognition and recovery” problem by . Making this addition in fact adds little technical difficulty, and the resulting bounds can be expressed, not surprisingly, as and , where

Apparently, the pattern recognition problem can be construed as a kind of generalization of the DSC problem, with the added complication that the “decoder” receives with Y not one sequence X but $M_c$ such sequences X(1), …, X(Mc) and must first determine which is the appropriate one with which to jointly decode Y. This extra discrimination evidently requires that extra information be included at the encoders. This “rate excess” is the difference between the minimum encoding rates required for the DSC and pattern recognition problems.11 Comparing (2) with (7), this rate excess is the same at both encoders, and is equal to Rc. Thus, Rc can be interpreted as the number of extra bits needed at both encoders to decide which of the possible patterns X(w) the sensory data Y represents, beyond the information required to simply reproduce the pair Y, X(w) within the allowed distortion limits.

C. Degenerate Cases

We now briefly examine the degenerate cases where either X = U, or Y = V, or both. In these cases, I(U; V | XY) = 0. Hence, using (1), we see that the inner and outer bounds on $\mathcal{R}$ both reduce to the three inequalities Rx ≥ I(U; X), Ry ≥ I(V; Y), Rc ≤ I(U; V). Clearly, in these cases the bounds are tight, in that the inner and outer bounds are equal; there is no gap (see Remark 1). These degenerate cases have simple interpretations and are thus useful for building intuition about Theorems 1–3.

Sharp memory, sharp eyesight.

First, consider a system in which the budgets for memory and sensory representations are unrestricted, i.e., no compression is required. In this case, we can effectively treat the memories and sensory representations as veridical; i.e., we can set U = X and V = Y. The theorem constraints then become Rx ≥ I(X; X) = H(X), Ry ≥ I(Y; Y) = H(Y), and

$$R_c \le I(X; Y) \tag{8}$$

This result indicates that, in the absence of compression, the recognition problem is formally equivalent to the following classical communication problem: Transmit one of $M_c = 2^{nR_c}$ possible messages (patterns) to a receiver (the recognition module) [6]. In this case, the patterns can be thought of as random codewords stored without compression and available to the decoder; Shannon’s random coding for communication [30], [34] applies, yielding the mutual information I(X; Y) (see (8)) as the bound on Rc.

Sharp memory, poor eyesight.

Next, suppose that memory is effectively unlimited, so that we can put U = X, but sensory data may be compressed. In this case, we can readily rewrite the condition on Rc as

| Rc ≤ I(X; V) = I(X; Y) − I(X; Y | V) | (9) |

We check the extreme cases: If V is fully informative about Y, Y = ϕ−1(V), then I(X; Y | V) = H(Y | V) − H(Y | X, V) = 0, and we recover the case discussed above, Rc ≤ I(X; Y). For intermediate cases, where V is partially informative, the effect of V is to degrade the achievable performance of the system below that possible with “perfect senses,” and the reduction incurred is I(X; Y | V). In the extreme case that V is utterly uninformative (e.g., a constant V = 0, or otherwise independent of Y), I(X; Y | V) = I(X; Y), and we get Rc = 0; hence, the system is useless.

Poor memory, sharp eyesight.

In the case of limited memory but unrestricted resources for sensory data representation (V = Y), we get an expression symmetric with the previous case

| Rc ≤ I(U; Y) = I(X; Y) − I(X; Y | U) | (10) |

As before, if the memory is perfect (U = X), we get I(X; Y | U) = I(X; Y | X) = 0, recovering the channel coding constraint Rc ≤ I(X; Y); assuming useless memories (U = 0) yields Rc ≤ I(X; Y) − I(X; Y) = 0; and intermediate cases place the system between these extremes.
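The identity behind the “poor eyesight” case can be checked numerically. The following is a minimal sketch, assuming a binary source X ~ B(1/2), a binary-symmetric observation channel Y = X ⊕ W with W ~ B(q), and a sensory representation V = Y ⊕ Wy with Wy ~ B(qy). This choice of V and the helper names are illustrative assumptions, not part of the analysis above. It confirms that I(X; V) = I(X; Y) − I(X; Y | V) when X – Y – V is a Markov chain, and that the two extremes behave as described.

```python
import itertools
import math

def entropy(joint, idx):
    """Entropy (bits) of the marginal of `joint` on the coordinates in `idx`."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marginal.values() if p > 0)

def cond_mi(joint, a, b, c=()):
    """I(A; B | C) = H(AC) + H(BC) - H(ABC) - H(C); a, b, c are index tuples."""
    return (entropy(joint, a + c) + entropy(joint, b + c)
            - entropy(joint, a + b + c) - entropy(joint, c))

def bern(bit, p):
    """Probability that a Bernoulli(p) variable equals `bit`."""
    return p if bit else 1.0 - p

def joint_xyv(q, qy):
    """Joint pmf of (X, Y, V) for the assumed binary model."""
    joint = {}
    for x, w, wy in itertools.product((0, 1), repeat=3):
        y = x ^ w       # observation channel
        v = y ^ wy      # assumed (illustrative) sensory compression
        key = (x, y, v)
        joint[key] = joint.get(key, 0.0) + 0.5 * bern(w, q) * bern(wy, qy)
    return joint

X, Y, V = (0,), (1,), (2,)
for qy in (0.0, 0.1, 0.5):
    j = joint_xyv(q=0.2, qy=qy)
    lhs = cond_mi(j, X, V)
    rhs = cond_mi(j, X, Y) - cond_mi(j, X, Y, V)
    print(f"qy={qy}: I(X;V)={lhs:.6f}, I(X;Y)-I(X;Y|V)={rhs:.6f}")
```

With qy = 0 the sketch recovers the uncompressed bound I(X; Y), and with qy = 1/2 the bound collapses to zero.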

VII. Examples

In this section, we investigate the achievable rate regions for binary and Gaussian versions of our problem. For this purpose, it will be convenient to characterize the achievable rate region and its inner and outer bounds by their surfaces in the positive orthant. The surface of the achievable rate region can be expressed as

Similarly, by direct extension of Theorems 1 and 3, and using the representation of the inner and outer bounds based on (1), the surfaces of the inner and outer bounds are

| (11) |

| (12) |

where

The expression for the inner bound surface (11) reduces to

An alternative expression for the outer bound surface which will be used in Subsection VII-B, based on RUV (1), is

| (13) |

Finally, denote the convex hull of the inner and outer bound surfaces , .

In the specific cases studied in the following examples we seek to convert these implicit characterizations into explicit formulas not involving the optimization over and .

A. Binary Case

We first investigate the inner and outer bound surfaces for a case in which the template patterns and sensory data alphabets are binary, 𝒳 = 𝒴 = {0, 1}. Let the template patterns X = (X1,…,Xn) consist of n independent drawings from a uniform Bernoulli distribution Xi ~ B(1/2), i = 1…n, and let the sensory data Y = (Y1,…,Yn) be the output of a binary-symmetric channel with crossover probability q, where p(y | x) = (1 − q)δ(x, y) + q(1 − δ(x, y)); and δ(x, y) = 1 if x = y, and otherwise δ(x, y) = 0. Equivalently, we can represent Y as Y = X ⊕ W, where W ~ B(q) and is independent of X.

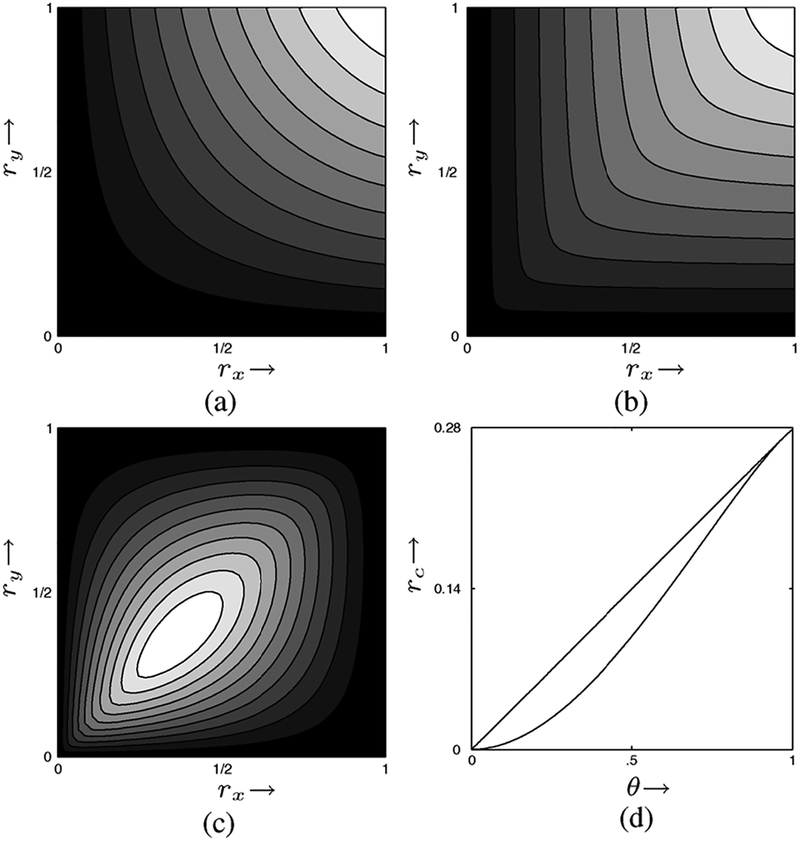

1). Numerical Results:

We have taken two approaches to studying the inner and outer bound surfaces for this binary case. First, we carried out the optimizations in (11) and (12) numerically, using a Monte Carlo method that densely and randomly sampled the sets of probability distributions p(uv | xy) associated with the inner and outer bounds.12 For each sample p(uv | xy), we calculated I(X; U), I(Y; V) and I(XY; UV); then, for each value of rx, ry ∈ [0, 1], the numerical estimate of rin(rx, ry) or rout(rx, ry) was the largest sample value of rx + ry – I(XY; UV) found by the Monte Carlo search. From here on, we denote the numerical surface estimates by and .
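A random search of this kind can be sketched as follows for the inner bound. This is a minimal illustration under stated assumptions, not the procedure actually used for Fig. 3: the test channels are drawn from a flat Dirichlet distribution, the alphabets of U and V are binary, the sampled values I(X; U) and I(Y; V) are required to lie below the targets rx and ry, and the objective is I(U; V), which for factorized channels p(u | x)p(v | y) equals I(X; U) + I(Y; V) − I(XY; UV). All function names are hypothetical.

```python
import numpy as np

def entropy_bits(p):
    """Shannon entropy (bits) of a pmf stored in an array of any shape."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def marginal(joint, keep):
    """Marginalize the joint pmf array onto the axes listed in `keep`."""
    drop = tuple(ax for ax in range(joint.ndim) if ax not in keep)
    return joint.sum(axis=drop) if drop else joint

def mutual_info(joint, axes_a, axes_b):
    """I(A; B) in bits, where A and B are disjoint groups of axes."""
    return (entropy_bits(marginal(joint, axes_a))
            + entropy_bits(marginal(joint, axes_b))
            - entropy_bits(marginal(joint, axes_a + axes_b)))

def sample_inner_point(p_xy, rng, nu=2, nv=2):
    """Draw random channels p(u|x), p(v|y); return (I(X;U), I(Y;V), I(U;V))."""
    p_u_given_x = rng.dirichlet(np.ones(nu), size=p_xy.shape[0])
    p_v_given_y = rng.dirichlet(np.ones(nv), size=p_xy.shape[1])
    joint = np.einsum("xy,xu,yv->xyuv", p_xy, p_u_given_x, p_v_given_y)
    return (mutual_info(joint, (0,), (2,)),
            mutual_info(joint, (1,), (3,)),
            mutual_info(joint, (2,), (3,)))

def estimate_r_in(q, rx, ry, n_samples=20000, seed=0):
    """Largest sampled I(U;V) among channels meeting both rate targets."""
    p_xy = 0.5 * np.array([[1 - q, q], [q, 1 - q]])
    rng = np.random.default_rng(seed)
    best = 0.0
    for _ in range(n_samples):
        ixu, iyv, iuv = sample_inner_point(p_xy, rng)
        if ixu <= rx and iyv <= ry:
            best = max(best, iuv)
    return best

print(estimate_r_in(q=0.2, rx=0.5, ry=0.5, n_samples=5000))
```

Flat Dirichlet sampling covers near-deterministic channels only sparsely, so estimates at high rates converge slowly; a denser or structured sampling scheme would be needed to reproduce smooth surface plots.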

The cardinality bound in Theorem 4 is not necessarily tight. Therefore, to assess the alphabet sizes required of 𝒰 and 𝒱 for the binary case, we performed our numerical experiments for increasing values of |𝒰| and |𝒱|. For the inner bound surface, we found |𝒰| = |𝒱| = 2 was sufficient: no further increase in the estimated surface was afforded by allowing |𝒰|, |𝒱| = 3, 4. For the outer bound surface, |𝒰| = |𝒱| = 3 was sufficient.

The surface plots from our numerical experiments are shown in Fig. 3. Fig. 4 shows representations of the distributions p(uv | xy) underlying 25 different points (rx, ry) for Fig. 3 (a) the inner and Fig. 3 (b) the outer bounds, in which probabilities are represented by the area of white squares.13, 14 The row–column format of the matrix p(uv | xy) is xy = 00, 01, 10, 11 moving down rows; moving across columns, for the inner bound with |𝒰| = |𝒱| = 2 the format is uv = 00, 01, 10, 11, whereas for the outer bound with |𝒰| = |𝒱| = 3, the column format is uv = 00, 01, 0e, 10, 11, 1e, e0, e1, ee. (The choice of e for the third letter of 𝒰 and 𝒱 is explained below.)

Fig. 3.

Contour plots of (a) the binary inner bound surface; (b) the outer bound surface; (c) the difference between the outer and inner bounds; and (d) plot of the inner and outer bound surfaces along a diagonal cut, (rx(θ), ry(θ)) = θ(1, 1), θ ∈ [0, 1]. In these plots q = 0.2.

Fig. 4.

Hinton diagrams of the maximizing probability distributions p(uv | xy) for 25 values of (rx, ry). (a) Distributions for the inner bound. (b) Distributions for the outer bound.

2). Conjectured Formulas:

Second, we guessed formulas for the inner and outer bound surfaces, which turned out to fit the numerical results just described. We first present the formulas, then discuss the motivations behind them.

Our formulas involve the following two functions. First, define

where

h(·) is the binary entropy function

“*” denotes binary convolution

and qx, qy ∈ [0, 1/2] to ensure that h(·) is invertible. Next, let s*(rx, ry) denote the upper concave envelope of s(rx, ry)

where ; and the supremum is over all combinations (θ, rx1, ry1, rx2, ry2) such that

and each variable in the optimization is restricted to the unit interval [0, 1]. As explained in Appendix F, in both this case and for the corresponding Gaussian formulas in the next section, the expression for this convex hull simplifies to

with the supremum over all combinations such that

Conjecture 1: For the binary case, the surfaces of the inner and outer bounds are

| rin(rx, ry) = s(rx, ry) | (14) |

| rout(rx, ry) = s*(rx, ry) | (15) |

and the surface of the achievable rate region is

| (16) |

3). Rationale for the Inner Bound (14):

The surfaces rin(rx, ry), rout(rx, ry) are specified in terms of probability distributions p(xyuv) = p(xy)p(uv | xy) that maximize (11) and (12). For rin(rx, ry), the distribution factorizes as p(uv | xy) = p(u | x)p(v | y), and a natural guess is that in the maximizing distribution both p(u | x) and p(v | y) are binary symmetric channels

or, equivalently, U = X ⊕ Wx, V = Y ⊕ Wy, where Wx ~ B(qx), Wy ~ B(qy), and qx, qy ∈ [0, 1/2]; see Fig. 5(a). For this choice of U and V we calculate

and likewise ry = 1 − h(qy). Then

Clearly, s(rx, ry) is a lower bound on rin(rx, ry), since: 1) U – Y − V, hence ; 2) ; and 3)

The converse, rin(rx, ry) ≤ s(rx, ry), is unproven, so the identification of rin(rx, ry) with s(rx, ry) remains a conjecture. Nevertheless, in our numerical optimization we found no points outside of this region for any choice of (rx, ry), and the distributions which emerge from our computer experiments (Fig. 4(a)) closely resemble the long binary-symmetric channel in the calculation of s(rx, ry). This provides strong experimental evidence supporting (14) in Conjecture 1.
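The value achieved by the binary-symmetric test channels can be evaluated directly. The sketch below assumes the construction just described, U = X ⊕ Wx and V = Y ⊕ Wy with rx = 1 − h(qx) and ry = 1 − h(qy). Under that construction U ⊕ V = Wx ⊕ W ⊕ Wy, so I(U; V) = 1 − h(qx * q * qy), where “*” is binary convolution. The function names and the bisection-based inverse of h are illustrative choices.

```python
import math

def h2(p):
    """Binary entropy function in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def h2_inv(y, tol=1e-12):
    """Inverse of h2 restricted to [0, 1/2], found by bisection."""
    lo, hi = 0.0, 0.5
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h2(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def conv(a, b):
    """Binary convolution a * b = a(1 - b) + (1 - a)b."""
    return a * (1 - b) + (1 - a) * b

def s_surface(rx, ry, q):
    """I(U; V) for BSC test channels meeting the rates rx, ry with equality."""
    qx = h2_inv(1 - rx)
    qy = h2_inv(1 - ry)
    return 1 - h2(conv(conv(qx, q), qy))

for rx, ry in [(1.0, 1.0), (0.5, 0.5), (0.0, 1.0)]:
    print(rx, ry, round(s_surface(rx, ry, q=0.2), 6))
```

At rx = ry = 1 the sketch returns 1 − h(q), the uncompressed bound I(X; Y), and it returns zero whenever either rate is zero.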

Fig. 5.

Binary-symmetric channel models for the inner and outer bounds. (a) Model for rin(rx, ry) with |𝒰| = |𝒱| = 2. (b) Model for rout(rx, ry) with |𝒰| = |𝒱| = 3. See text for explanation.

4). Rationale for the Outer Bound (15):

Clearly, s*(rx, ry) is a lower bound on rout, since, for all rx, ry ∈ [0, 1]

;

is convex, ⇒ ;

;

and together these imply rout(rx, ry) ≥ s*(rx, ry). Unfortunately, we do not have a proof of the converse, rout(rx, ry) ≤ s*(rx, ry), so the identification of rout(rx, ry) with s*(rx, ry) remains a conjecture. Nevertheless, empirically (i.e., according to our numerical experiments) the outer bound surface is identical to the convex hull of the inner bound surface. Moreover, empirically, the cardinalities required to construct the outer bound are |𝒰| = |𝒱| = 3.

We can provide an explicit construction of the conjectured outer bound surface and the probability distributions that achieve it as follows. The distributions in this construction also agree with those found empirically, shown in Fig. 4(b). Let 𝒰 = 𝒱 = {0, 1, e}. Consider the channel diagrammed in Fig. 5(b), which could be called a “synchronous erasure channel.” Here, U and V are generated by first passing X and Y through binary-symmetric channels, followed by an “erasure” event E ∈ {0, 1} in which both channel outputs are preserved with probability θ = Pr(E = 0), or both are erased (UV = ee) with probability 1 − θ. An explicit formula for this channel is

where

and

Equivalently, we can represent U and V as follows. Let W ~ B(q), Wx ~ B(qx), Wy ~ B(qy), E ~ B(θ) be Bernoulli random variables that are independent of each other and independent of X and Y, and define

where the multiplication by E is defined by

It is straightforward to verify . To check U – X − Y, write

where the last line follows from the independence of W, Wx and E from each other and X. A similar calculation shows X – Y – V. Finally, calculating the rate region surface associated with this choice of UV we get, first

where the last step follows from the previous calculations for the inner bound; and a similar calculation shows ry = θ(1 – h(qy)). Then, using U – X – Y, X – Y – V to write

we have (suppressing some detail)

Putting these together and canceling terms

Thus, we have constructed an explicit example which achieves s*(rx, ry) with |𝒰| = |𝒱| = 3.
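The rates produced by the synchronous erasure channel can be verified by brute-force enumeration. The sketch below is an illustration under stated assumptions: `theta` denotes the probability that both outputs are kept, the erased symbol is represented by the string 'e', and the helper names are hypothetical. It checks that I(X; U) = θ(1 − h(qx)), I(Y; V) = θ(1 − h(qy)), and that I(X; U) + I(Y; V) − I(XY; UV) = θ(1 − h(qx * q * qy)).

```python
import itertools
import math

def h2(p):
    """Binary entropy function in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def conv(a, b):
    """Binary convolution a * b."""
    return a * (1 - b) + (1 - a) * b

def bern(bit, p):
    """Probability that a Bernoulli(p) variable equals `bit`."""
    return p if bit else 1.0 - p

def entropy(joint, idx):
    """Entropy (bits) of the marginal on the coordinates in `idx`."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marginal.values() if p > 0)

def mi(joint, a, b):
    """I(A; B) in bits for index tuples a and b."""
    return entropy(joint, a) + entropy(joint, b) - entropy(joint, a + b)

def erasure_joint(q, qx, qy, theta):
    """Joint pmf of (X, Y, U, V); both outputs are kept with probability theta."""
    joint = {}
    for x, w, wx, wy, keep in itertools.product((0, 1), repeat=5):
        y = x ^ w
        u, v = (x ^ wx, y ^ wy) if keep else ("e", "e")
        p = (0.5 * bern(w, q) * bern(wx, qx) * bern(wy, qy)
             * (theta if keep else 1.0 - theta))
        joint[(x, y, u, v)] = joint.get((x, y, u, v), 0.0) + p
    return joint

q, qx, qy, theta = 0.2, 0.1, 0.05, 0.6
j = erasure_joint(q, qx, qy, theta)
rx = mi(j, (0,), (2,))
ry = mi(j, (1,), (3,))
rc = rx + ry - mi(j, (0, 1), (2, 3))
print(rx, theta * (1 - h2(qx)))
print(ry, theta * (1 - h2(qy)))
print(rc, theta * (1 - h2(conv(conv(qx, q), qy))))
```

Each printed pair should agree to numerical precision, which matches the scaling by θ that appears in the expressions for rx and ry above.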

B. Gaussian Case

We now consider a Gaussian version of our problem. Let X and Y be zero-mean Gaussian random variables with correlation coefficient ρxy. We propose explicit formulas for the surfaces of the inner and outer bounds for the Gaussian case, in terms of the following two functions. In both formulas, put

Note that these expressions determine the correlation coefficients ρxu and ρyv. Define

| (17) |

and

| (18) |

where

| (19) |

Conjecture 2: In the Gaussian case, the surfaces of the inner and outer bounds are

| (20) |

| (21) |

Fig. 6 shows plots of the inner and outer bounds and their difference, as well as the difference between the outer bound and the convex hull of the inner bound. Interestingly, unlike the binary case, for the Gaussian case the outer bound is not equal to the convex hull of the inner bound.

Fig. 6.

Contour plots of (a) the Gaussian inner bound surface; (b) outer bound surface; (c) difference between the outer bound and inner bounds; and (d) plot of surfaces for the inner bound, its convex hull, and the outer bound along a diagonal cut, (rx(θ), ry(θ)) = θ(1, 1), θ ∈ [0, 1]. In these plots ρxy = 0.8.

The following proof relies on some basic properties of the mutual information between Gaussian random variables, given as lemmas in Appendix G.

In the analysis that follows, we assume that the maximizing distributions are Gaussian. Under this assumption, we solve the inner and outer bounds. Except for this unproved assumption, the proof of the conjecture is complete.

Proof: (Conjecture 2, eq. (20)) As noted in Appendix G, mutual informations between jointly Gaussian random variables are completely determined by their correlation coefficients. For a length-4 Markov chain U – X – Y – V of jointly Gaussian random variables I(U; V | XY) = 0 and, applying Lemma 9 from Appendix G we have ρuv = ρxuρxyρyv, hence

This mutual information is maximized when the constraints I(X; U) ≤ rx, I(Y; V) ≤ ry are satisfied with equality, hence when ρxu and ρyv satisfy −(1/2) log(1 − ρxu²) = rx and −(1/2) log(1 − ρyv²) = ry.
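The inner bound calculation can be checked against a direct covariance computation. The sketch below assumes rates measured in bits, unit-variance variables, and a linear construction of the Markov chain U – X – Y – V from independent standard Gaussians. These are illustrative assumptions, and the function names are hypothetical. It confirms that meeting the rate constraints with equality gives ρuv = ρxuρxyρyv and I(U; V) = −(1/2) log2(1 − ρuv²).

```python
import math
import numpy as np

def rho_sq_from_rate(r):
    """Squared correlation that gives mutual information r (bits)."""
    return 1.0 - 2.0 ** (-2.0 * r)

def gaussian_inner_surface(rx, ry, rho_xy):
    """I(U; V) in bits when both rate constraints hold with equality."""
    rho_uv_sq = rho_sq_from_rate(rx) * rho_xy ** 2 * rho_sq_from_rate(ry)
    return -0.5 * math.log2(1.0 - rho_uv_sq)

def chain_covariance(rho_xu, rho_xy, rho_yv):
    """Covariance of (U, X, Y, V) built linearly from iid N(0, 1) inputs."""
    s = lambda r: math.sqrt(1.0 - r * r)
    # Columns are the independent inputs (X, Z0, Z1, Z2).
    a = np.array([
        [rho_xu, 0.0, s(rho_xu), 0.0],                               # U
        [1.0, 0.0, 0.0, 0.0],                                        # X
        [rho_xy, s(rho_xy), 0.0, 0.0],                               # Y
        [rho_yv * rho_xy, rho_yv * s(rho_xy), 0.0, s(rho_yv)],       # V
    ])
    return a @ a.T

def gaussian_mi(cov, idx_a, idx_b):
    """I(A; B) in bits for jointly Gaussian blocks, via determinants."""
    det = lambda idx: np.linalg.det(cov[np.ix_(idx, idx)])
    return 0.5 * math.log2(det(idx_a) * det(idx_b) / det(idx_a + idx_b))

rx, ry, rho_xy = 0.7, 0.4, 0.8
cov = chain_covariance(math.sqrt(rho_sq_from_rate(rx)), rho_xy,
                       math.sqrt(rho_sq_from_rate(ry)))
print(gaussian_mi(cov, [1], [0]), rx)                       # I(X; U)
print(gaussian_mi(cov, [2], [3]), ry)                       # I(Y; V)
print(gaussian_mi(cov, [0], [3]), gaussian_inner_surface(rx, ry, rho_xy))
```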

The following proof for the surface of the outer bound region uses the form of rout(rx, ry) given by (13). In this case, the optimization problem reduces to minimizing I(XY; UV) subject to the length-3 Markov constraints U – X – Y, X – Y – V.

Proof: (Conjecture 2, eq. (21)) Using Lemma 10 from Appendix G, we have

The left-hand matrix in this decomposition is Cxy,xy, denoted hereafter simply as C, and we denote the right-hand matrix by D. Then applying Lemma 8 from Appendix G yields

Substituting for the 2 × 2 matrices in this last expression and rearranging terms yields

where γ and β are defined in (19).

By assumption, ρxu and ρyv are fixed, so we optimize I(XY; UV) only with respect to ρuv. Setting ∂I(XY; UV)/∂ρuv = 0 and solving, we obtain that, if β > 2γ > 0, then the maximum is achieved at ρuv = ρ, where ρ is defined in (19).

To complete the proof we must show that β > 2γ > 0. Noting that β, γ > 0 and substituting, the desired inequality becomes

Subtracting from each side and factoring yields the equivalent inequality

To show that this holds for all ρxy, note that the maximum of the right-hand side is achieved by ρxy = 1, so that the inequality becomes

This inequality holds, since

VIII. Conclusion

We have presented an information-theoretic analysis of pattern recognition systems subject to data compression constraints. Our main results consist of fundamental bounds characterizing the minimum sensory and memory information budgets required for reliable pattern recognition, or, equivalently, the maximum number of patterns that can be discriminated on given sensory and memory data budgets.

As a starting point, we have focused on the case of unstructured data, in which patterns are representable as vectors with i.i.d. components, and the sensory data observation channel is memoryless. In recent years, there has been much theoretical and experimental work aimed at developing methods to render data into a format with independent (or approximately independent) components (see, e.g., [36]–[39]). Such methods have been especially successful in the study of “natural” signals, e.g., sounds and imagery in naturally occurring environments. Nevertheless, a decomposition into independent components is often impossible or only approximate, and it will be important in future work to extend our results to cover the case of correlated components and channels with memory.

We have focused on “reliable” pattern recognition systems, in the sense that the recognition error rate can be made arbitrarily close to zero. Nevertheless, in some applications it is of interest (or unavoidable) to allow less-than-perfect accuracy. This can be partly addressed by recasting the recognition problem as a “coarse-to-fine” search, where the system is given information in several successive stages, and at each stage is required only to partially recognize the pattern, i.e., to identify the pattern as belonging to a particular subclass, postponing definitive identification for the final stage. Extending our results to this successive refinement setting is relatively straightforward; see [40]. The more direct approach of explicitly allowing a strictly positive error rate is an open problem.

Much work remains to be done in designing practical pattern recognition systems that achieve the bounds described herein. One of the most challenging problems in this regard is the design of adequate statistical models of real-world signals. For examples of progress on this exciting front, see [11], [36], [41]–[48]. Another significant challenge is that of learning optimal classifiers from training data. In this connection, it will likely prove fruitful to explore connections between the present results and those established in machine learning theory; see, e.g., [7]–[10]. Another practical challenge is to build systems that make optimal use of time. Donald Geman and colleagues have been developing the theory of systems that reach their pattern recognition decisions with a minimum amount of computation [49]. It will be interesting to explore the relationship of this concept with our results concerning recognition using the minimum amount of information.

Open theoretical problems include the calculation of error exponents and, most importantly, the closing of the gap between our inner and outer bounds. As discussed in Section VI-B, the gap in our problem bears close resemblance to that in the distributed source coding problem. A solution to the distributed source coding problem would likely lead to a solution to ours, and vice versa.15

Acknowledgment

The authors gratefully acknowledge stimulating discussions with Michael DeVore, Naveen Singla, and Po-Hsiang Lai.

This work was supported by the Mathers Foundation and by the Office of Naval Research. The material in this paper was presented in part at an ONR PI meeting, Minneapolis, MN, May 2003; the Neural Information Processing Systems Workshop on Information Theory and Learning, Whistler, BC, Canada, December 2003; the IEEE International Symposium on Information Theory, Chicago, IL, June/July 2004; and the IEEE Information Theory Workshop, Punta del Este, Uruguay, March 2006.

Appendix A. Proof of the Inner Bound

In this section we prove the inner bound, Theorem 2. The proof relies on standard random coding arguments and properties of strongly jointly typical sets [30]. Given a joint distribution p(xyuv), the strongly jointly δ-typical set is defined by

where N(xyuv | xyuv) is the number of times the symbol combination xyuv occurs in the sequence xyuv. Likewise, we write the corresponding typical sets for singles, pairs, and triples of sequences. We will also use conditionally strongly jointly δ-typical sets, for example

The subscripts are omitted when context allows. We will also need the fact that for any positive numbers δ, ϵ > 0, fixed vector x, and large enough n

| (22) |

Proof: (Theorem 2) Suppose R is a point in the convex hull of the inner bound region. That is, , where p(q) is a probability distribution over some finite alphabet , and for each , .

We wish to show that for any ϵ > 0 and large enough n, there exists an (Mc, Mx, My, n) code (f, ϕ, g) with rates , , such that , , , and .

By definition, implies that for each there exist random variables Uq, Vq such that

and

for some values αxq, αyq, γq > 0 such that γq ≥ αxq + αyq.16 Now let

and

With these choices, we have , , .

Given

divide the sequences into segments with lengths nq = np(q), denoted , , i.e.,

Finally, we will use the additional notation: , and , .

We will construct the desired overall code (f, ϕ, g) with rate by first constructing encoders fq, ϕq with rates , for the component sequences , , then constructing a classifier g which acts on the combined outputs of the encoders.

Please refer to Fig. 7 for a summary of the notation introduced below.

Fig. 7.

Mappings for sequences , and concatenations (Xn, Yn).

1) Codebooks: For each , from pq(xyuv) compute the marginal distributions pq(u), pq(v). To serve as memory codewords, select Mxq length-nq vectors by sampling with replacement from a uniform distribution over the set . Assign each codeword a unique index . To serve as sensory codewords, similarly select Myq length-nq vectors from , and assign each an index . Denote the codebooks

2) Encoders: We define encoders fq and ϕq in terms of maps

as follows. Given any , search the codebook for a codeword such that . If this search is successful, set , , , where iq is the index of in . If the search fails, (arbitrarily) set so that φxq and bxq are defined for all of . In the same way, given any , search for a codeword such that . If successful, denote the index of the found codeword jq, and set , , . For search failure, set jq =1, so that φyq and byq are defined for all of .

Finally, define

Now, given vectors and , the encoders above each produce vectors , , and indices , . Denote the concatenations of these

|

Note that the vector of integers i ranges over different values i(i),i = 1…Mx. Let the map between the vectors i and the corresponding integers i be ℓx, i.e., if i = i(i), let ℓx(i) = i, and . Similarly, j ranges over values, j(j),j = 1…My and we define ℓy such that if j = j(j), then ℓy(j) = j and . Then we can specify encoders and for full length-n vectors xn and yn by

To finalize the construction of the memory encoder, for any given labeled template pattern t(w) = (xn, w), let be defined by

The rates of the encoders are , as verified by calculating

3) Memorization: Given a realization of the template patterns and the encoders f and ϕ defined above, compute the memory data .

4) Recognition Function: Given the stored memory data , we proceed to construct the classifier g as follows.

For each , given any pair of length nq vectors , , define a function that tests the pair for strong joint typicality

where 1(A) is the truth-indicator function: 1(A) = 1 if A is true, and 1(A) = 0 if A is false.

Now, given the sensory data j, compute its vector representation . For each , retrieve from the corresponding codeword . Similarly, for each memory , compute the corresponding vector , and from the memory codebook retrieve . Next, define a function that tests each in against the corresponding in , reporting a 1 if all compared pairs are jointly typical and zero otherwise

where denotes the length- all-ones vector.

We can now specify the recognition function g as follows. Given the encoded sensory data j, the recognition module searches for a unique such that rw′ (j) = 1. If this search is successful, set . Otherwise, if there is none or more than one such value, declare an error and (arbitrarily) set . Thus, we have defined , , as desired.
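The logic of the recognition function can be illustrated with a small sketch. It is a simplified stand-in for the construction above, under assumptions that should be read as such: a single segment is used rather than one test per segment q, strong joint typicality is taken to mean that every empirical pair frequency lies within δ of its probability (one common convention among several), and a failed search returns None instead of an arbitrary index. All names are hypothetical.

```python
from collections import Counter

def is_strongly_typical(u_seq, v_seq, pmf_uv, delta):
    """Test whether the pair of sequences is jointly typical for pmf_uv.

    pmf_uv maps symbol pairs (u, v) to probabilities. The pair is declared
    typical if no zero-probability pair occurs and every empirical pair
    frequency is within delta of its probability (assumed convention).
    """
    n = len(u_seq)
    counts = Counter(zip(u_seq, v_seq))
    if any(pmf_uv.get(pair, 0.0) == 0.0 for pair in counts):
        return False
    return all(abs(counts.get(pair, 0) / n - p) <= delta
               for pair, p in pmf_uv.items())

def recognize(memory_codewords, sensory_codeword, pmf_uv, delta):
    """Return the unique label whose memory codeword is jointly typical
    with the sensory codeword, or None if there is no such unique label."""
    matches = [w for w, u_seq in memory_codewords.items()
               if is_strongly_typical(u_seq, sensory_codeword, pmf_uv, delta)]
    return matches[0] if len(matches) == 1 else None

# Toy example: U and V agree with probability 0.8.
pmf_uv = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
v = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
memory = {
    1: [0, 0, 0, 0, 1, 1, 1, 1, 1, 0],   # agrees with v in 8 of 10 positions
    2: [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],   # disagrees with v everywhere
}
print(recognize(memory, v, pmf_uv, delta=0.05))
```

The two failure branches, no typical codeword and more than one, correspond to the situations in which the recognition module above declares an error.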

A. Performance Analysis

1). Error Events:

We analyze the probability of error for a given W = w, T(w) = (Xn(w), w), Yn. Denote the results of processing these with the components of the code (f, ϕ, g) above by M(w) = (I(w), w) = f(T(w)), J(w) = ϕ(Yn); , for each , and Rw = rw(J(w)). The following is an exhaustive list of possible errors.

First, in words, the possible errors are as follows:

the sensory data and pattern template are not jointly typical;

the pattern template is unencodable;

the sensory data is unencodable;

the codewords for the memory and sensory data are not jointly typical;

the sensory data is jointly typical with more than one memory codeword;

two different patterns are assigned the same memory codeword.

More formally, we express the error events thus: For events Ei, let , where Ac denotes the complement of A. For each

;

;

;

; and letting , i = 1…4

;

.

Each of these vanishes as n → ∞, for the following reasons:

P(E1(q)) → 0, by the strong asymptotic equipartition property (AEP);

P(E2(q)) → 0, because ;

P(E3(q)) → 0, because ;

P(E4(q)) → 0, because of the factorization pq(xyuv) = p(xy)pq(u | x)pq(v | y) and the Markov lemma.

- Regarding P(E5): Rewrite E5 as

Then

So, P(E5) → 0 as n → ∞ if. . This is indeed the case, since

where (a) is because γq ≥ αxq + αyq; and (b) follows from elementary properties of mutual information and from the factorization p(q)p(xy)p(u | xq)p(v | yq).

- Regarding P(E6): Denoting the components of the codeword for each memory by , rewrite event E6 as

Then

So P(E6) → 0 as n → ∞ if . This is indeed the case: From our preceding calculation for P(E5), we have ; and the assumed factorization of pq(xyuv) implies that the following is a Markov chain: Uq – X – Y − Vq. Hence, by the data processing inequality

This concludes the proof of the inner bound.

Appendix B. Proof of the Outer Bound

In this section we prove Theorem 3, which states the outer bound. In the proof let W be the test index, selected from a uniform distribution p(w) over the pattern indices ; let T = T(W) = (X, W) be the selected test pattern from the set of template patterns ; let M = M(W) = (I, W) = f(T) be the compressed, memorized form of T; let be the memorized data; let Y be the sensory data; let J = J(W) = ϕ(Y) be the encoded sensory data, and let be the inferred value of W. Note that M, are random variables through their dependence on X, W, Y, and . The mutual informations in the proof are calculated with respect to the joint distribution (and its marginals) over (W, , , X, Y, M, I, J, ). We can verify that this distribution is well defined by writing it out explicitly. Let 1(A) be the truth-indicator function: 1(A) = 1 if A is true, and 1(A) = 0 if A is false. Then

where

The independence relationships underlying the structure of this distribution are evident from the block diagram of Fig. 1.

Proof: (Theorem 3) Assume . Then there exists a sequence of (Mx, My, Mc, n) codes (f, ϕ, g), such that for any ϵ > 0

and . To show that , we must construct a pair of auxiliary random variables UV such that and .

We construct the desired pair UV in three steps: 1) We introduce a set of intermediate random variable pairs UiVi, i = 1,2,…,n, individually contained in ; 2) we derive mutual information inequalities for Rx, Ry, and Rc involving sums of the intermediate variables; 3) we convert the sum-inequalities into inequalities in the desired pair UV.

Step 1:

Let the intermediate auxiliary random variables be

for i = 1,2,…,n. Each pair is in . This is verified for the Ui by calculating

where the reasons for the lettered steps are (a) conditioning does not increase entropy, (b) the Yi are independent of all other variables given Xn, and (c) the pairs XiYi are i.i.d. Hence, Ui − Xi − Yi is a Markov chain. By a similar argument, Xi − Yi − Vi is also a Markov chain. Hence, for each i = 1,2,…,n.

Step 2:

First, for the sensory encoder rate

where (a) follows from J = ϕ(Yn).

Next, taking account of all Mc memorized patterns, for the memory encoder rate we have

where (a) is simply a matter of variable definitions and notation, (b) follows from the assumption that the Xn(W)’s all have the same distribution and are drawn independently of W, (c) follows from the definition of p(w), (d) follows from the definition of conditional entropy, (e) follows from and the telescoping property, and (f) follows from the definition of Ui. Hence, .

The lettered steps are justified as follows.

- By assumption, , where . Thus, applying Fano’s inequality yields

where ϵn → 0. The test index W and patterns are drawn independently, hence, .

- Writing , , we have

since the M(i) = (I(i), i) are independent of J for i ≠ W. To justify this step, we invoke the following two results, proved in Appendix D. Let A, α, B, β and γ be arbitrary discrete random variables.

Then we get the following.

Lemma 6:

with equality if and only if I(Aα; Bβ) = I(A; B).

Lemma 7: Let Zi = (γ; Ai−1), i =1,2,…,n where the Ai are i.i.d. Then

To apply Lemma 6, make the substitution (α, β, A, B) → (M, J, Xn, Yn). Then the condition for equality is satisfied

since (a) M = (I, W) = f(Xn, W), (b) J = ϕ(Yn), and (c) Yn only depends on W through Xn = Xn(W), so that H(Yn | Xn, W) = H(Yn | Xn). Thus, we have

| (23) |

Next, apply Lemma 7 three times with the substitutions

to obtain

Adding the first two expressions and subtracting the third yields

| (24) |

Combining (23) and (24) yields

as claimed.

Step 3:

For this step, we use the following lemma, proved in Appendix C as part of the demonstration that is convex.

Lemma 1: Suppose , i = 1,2,…,n. Then there exists such that

Applying Lemma 1 to the results of Steps 1 and 2, we obtain

where . With respect to this UV, by definition we have . Hence, , and the proof is complete. □

Appendix C. Convexity of the Outer Bound

In this appendix, we prove a slightly more general version of Lemma 1 from Appendix B, and use this result to show that the outer bound rate region is convex.

In the following, let be any finite alphabet, and assume that we have pairs XqYq for all which are i.i.d. ~ p(xy).

Lemma 2: Suppose for all , and let , be any discrete random variable independent of the pairs {XqYq}. Then there exists a pair of discrete random variables such that

Remark 4: Lemma 1 in Appendix B follows immediately from the above Lemma, by choosing and p(q) = 1/n for all .

Proof: As a candidate for the pair UV in the lemma, consider for the given Q (see (4)), i.e., U = (UQ,Q) and V = (VQ,Q). To verify that , we proceed to check that U − X − Y and X − Y − V are Markov chains.

By the assumption for each , we have I(Uq; Yq | Xq) = 0 and I(Vq; Xq | Yq) = 0. Hence

where in (a) we are able to drop the subscript Q on XQ and YQ because the Xq and Yq are i.i.d. and independent of Q; and similarly (b) is because I(Q;Y | X) = 0, due to the independence of Q and Y. By an analogous calculation, we also find I(V; X | Y) = 0. Hence, U − X − Y and X − Y − V, and as desired.

It remains to demonstrate the three equalities in the lemma. For the first, write

where, as above, (a) and (b) follow from the fact that the Xq are i.i.d. and independent of Q. Similar calculations yield

and

The convexity of follows readily from the preceding lemma.

Lemma 3: is convex. That is, let Rq be any set of rates such that for all , where is a finite alphabet, and let p(q) be any probability distribution over . Then .

Proof: Fix an arbitrary distribution p(q) and rates for all . By the definition of , for each rate Rq, there exists a pair such that . Consequently

As in the proof of Lemma 2, use these pairs to construct a new pair UV, by defining U = (UQ, Q), V = (VQ, Q). From the proof of Lemma 2, we know 1) that , and 2) the sums on the right-hand sides of the inequalities above can be replaced with expressions in U and V, yielding

which means that for the given UV. Hence, . Since p(q) and were arbitrary, we conclude that is convex. □
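The time-sharing construction U = (UQ, Q) used in Lemma 2 and Lemma 3 can be checked numerically for the memory rate. The sketch below assumes a finite alphabet for Q, with Q independent of X, and represents each Uq by a channel matrix p_q(u | x). It verifies that I(X; U) equals the p(q)-weighted average of the I(X; Uq). The function names and the example channels are illustrative.

```python
import numpy as np

def mi_from_joint(p_ab):
    """I(A; B) in bits from a two-dimensional joint pmf array."""
    pa = p_ab.sum(axis=1, keepdims=True)
    pb = p_ab.sum(axis=0, keepdims=True)
    mask = p_ab > 0
    return float((p_ab[mask] * np.log2(p_ab[mask] / (pa @ pb)[mask])).sum())

def time_sharing_check(p_x, p_q, channels):
    """Return (I(X; U), sum_q p(q) I(X; U_q)) for U = (U_Q, Q).

    channels[q][x, u] = p_q(u | x); Q is drawn independently of X.
    """
    per_q = [mi_from_joint(p_x[:, None] * ch) for ch in channels]
    average = float(np.dot(p_q, per_q))
    # Joint pmf of X and the pair (Q, U_Q): one block of columns per q.
    joint = np.concatenate(
        [pq * p_x[:, None] * ch for pq, ch in zip(p_q, channels)], axis=1)
    return mi_from_joint(joint), average

def bsc(eps):
    """Binary-symmetric channel matrix with crossover probability eps."""
    return np.array([[1 - eps, eps], [eps, 1 - eps]])

p_x = np.array([0.5, 0.5])
p_q = np.array([0.25, 0.75])
combined, averaged = time_sharing_check(p_x, p_q, [bsc(0.1), bsc(0.3)])
print(combined, averaged)
```

The two printed values coincide because I(X; UQ, Q) = I(X; Q) + I(X; UQ | Q) and the first term vanishes when Q is independent of X.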

Appendix D. Mixing Lemmas

In this appendix, we prove Lemmas 6 and 7, which are used in proving the outer bound.

Consider the elementary Shannon inequalities, stated in the following two lemmas. The variables A, B, α, β, γ, δ appearing in the lemmas denote arbitrary discrete random variables.

Lemma 4:

Proof:

Lemma 5:

Proof:

Lemma 6 follows directly from the preceding lemmas.

Lemma 6:

with equality if and only if I(A, α; B, β) = I(A; B).

Proof: Rearrange Lemma 5 to get

The lemma now follows readily from the preceding expression: We obtain equality in the lemma if (and only if) the term in brackets is zero. Otherwise, the bracketed term is nonnegative, since

where the inequality is due to the fact that conditioning does not increase entropy. □

Lemma 7: If Ui = (γ, Ai−1), then

Proof: In Lemma 4, put A = Ai, α = Ai−1. Note that U1 = γ. Hence, substituting and summing from 2 to n yields

Appendix E. Alternative Representations of the Inner and Outer Bounds

Here we show that the alternative representations of the inner and outer bound surfaces introduced in Remark 2 are in fact equivalent, i.e., , .

We show first that . Suppose . Then, using U − X − Y, X − Y − V we have

| (25) |

which implies

and similarly Ry ≥ I(XY; V | U) + Rc; and

We conclude that , hence . A symmetrical argument shows , proving .

Next, we show that and are identical. To this end, note that these sets correspond to regions in the positive orthant , and that two such regions are identical if they have the same surfaces. Following the presentation in Section VII, the surfaces of and are

where

Thus, the desired equivalence follows simply from the fact that at the surfaces, the inequalities defining each region become equalities.

The same line of argument as above of course also shows .

Appendix F. Simplification of Convex Hulls

In this appendix, we argue geometrically that the expressions for the convex hulls of the inner bound regions simplify to just one term in both the binary and Gaussian cases. To discuss both cases simultaneously, let us represent the surface of either inner bound by a positive-valued function . Here, is a square region

and M is a positive constant. In the binary case, f(r) = s(r), and ; in the Gaussian case, f(r) = S(r) and . Some important properties shared by both cases are that for all

where the subscripts denote partial derivatives.

Denote the convex hull of f(r) by c(r). Generically, the boundary of the convex hull is

where the maximum is over all triples (θ, r1, r2) such that , and . However, as argued next, for the cases under study this simplifies to

where r = θr′.

The convex hull of a surface can be characterized in terms of its tangent planes. Given any point (r′, f(r′)) on the surface, if its tangent plane lies entirely above the surface, then (r′, f(r′)) is on the convex hull. If the tangent plane cuts through the surface at one or more other points, or if the tangent plane lies below the surface, then (r′, f(r′)) is not on the convex hull. If the tangent plane intersects the surface at exactly two points, then both points are on the convex hull.

The tangent plane at an arbitrary point is the set of points satisfying

where the partial derivatives are evaluated at r′, i.e., fx = fx(r′), fy = fy(r′), and z′ = f(r′). The tangent plane intersects the z = 0 plane in a line. Setting z(r) = 0 and solving

Since fx, fy > 0, the slope m = −(fx/fy) is negative. This line intersects the positive orthant whenever the intercept b ≥ 0, in which case the tangent plane cuts through the surface, since f ≥ 0. Thus, the only points on the original surface f(x,y) that can be on the convex hull are those for which b ≤ 0.

Next, consider any path through the square region along a line segment y = αx, α > 0, starting from one of the “outer edges” of the region, where x = M or y = M, and consider what happens to the tangent plane’s line of intersection ℓ with the z = 0 plane as we move in along the path toward the origin (0, 0). Initially, the tangent planes lie entirely above the surface, and the intercept of ℓ is negative, b < 0. This intercept increases along the path until b = 0, at which point ℓ passes through (0, 0). Here, the tangent plane contains a line segment attached at one end to the point of tangency and at the other end to the point (r, f(r)) = (0, 0, 0); everywhere else, the tangent plane is above the surface. Continuing toward the origin, all other points along the path have tangent planes such that ℓ has a positive intercept b > 0; hence, these points are excluded from the convex hull.
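The sign change of the intercept along such a path can be checked numerically. The following sketch is only an illustration and is not part of the original analysis. It assumes M = 1 and uses f(x, y) = (1 − (1 − x)²)(1 − (1 − y)²), one of the example functions mentioned at the end of this appendix. The intercept is computed as b = (fx x′ + fy y′ − z′)/fy, which follows from setting z = 0 in the tangent-plane equation z = z′ + fx (x − x′) + fy (y − y′). All function names are hypothetical.

```python
def f(x, y):
    """Illustrative surface (1 - (1 - x)^2)(1 - (1 - y)^2) on the unit square."""
    return (1 - (1 - x) ** 2) * (1 - (1 - y) ** 2)


def grad_f(x, y):
    """Analytic partial derivatives (f_x, f_y) of the illustrative surface."""
    fx = 2 * (1 - x) * (1 - (1 - y) ** 2)
    fy = (1 - (1 - x) ** 2) * 2 * (1 - y)
    return fx, fy


def tangent_line_intercept(x0, y0):
    """Intercept b of the line where the tangent plane at (x0, y0) meets z = 0.

    Requires f_y(x0, y0) != 0, i.e. an interior point with y0 < 1.
    """
    fx, fy = grad_f(x0, y0)
    z0 = f(x0, y0)
    return (fx * x0 + fy * y0 - z0) / fy


if __name__ == "__main__":
    # Move inward along the diagonal path y = x (alpha = 1) toward the origin.
    for t in (0.9, 0.8, 2 / 3, 0.5, 0.3):
        print(f"t = {t:.3f}   b = {tangent_line_intercept(t, t):+.4f}")
```

Along the diagonal, the intercept for this particular function works out to b = t(2 − 3t)/(2 − 2t), so it is negative near the outer edge, reaches zero at t = 2/3, and is positive closer to the origin, which matches the qualitative behavior described above.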

These considerations imply that the convex hull c(r) is composed entirely of two kinds of points. First, points that coincide with the original surface, c(r) = θf(r) with θ = 1. These points occur at values of r = (x, y) “up and to the right” of (0, 0). Second, points along line segments connecting surface points (r′, f(r′)) “up and to the right” with the point (r, f(r)) = (0, 0, 0), that is, c(r) = θf(r′), where r = θr′ and θ ∈ [0, 1]. Hence, for all r in the square region, c(r) has the desired form.

Two more examples of functions that behave in the way just described are f(x, y) = (1 − (1 − x)²)(1 − (1 − y)²) and f(x, y) = xy, with .
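To make the one-term form concrete, the sketch below evaluates the maximum of θ f(r/θ) over θ for these two example functions by a simple grid search. This is an illustrative check under stated assumptions, not the authors' procedure: the domain is taken to be the unit square (M = 1), the grid search and all function names are hypothetical, and the constraint that r/θ stay inside the square is enforced by restricting θ to [max(x, y)/M, 1].

```python
import numpy as np


def f_poly(x, y):
    """Example surface (1 - (1 - x)^2)(1 - (1 - y)^2)."""
    return (1 - (1 - x) ** 2) * (1 - (1 - y) ** 2)


def f_prod(x, y):
    """Example surface x * y."""
    return x * y


def one_term_hull(f, x, y, M=1.0, num_theta=20001):
    """Grid-search the maximum of theta * f(x/theta, y/theta).

    theta ranges over [max(x, y)/M, 1] so that r' = r/theta stays in [0, M]^2.
    Assumes f(0, 0) = 0, which holds for both example surfaces.
    """
    theta_min = max(x, y) / M
    if theta_min == 0.0:
        return 0.0  # r is the origin; the hull value is f(0, 0) = 0
    thetas = np.linspace(theta_min, 1.0, num_theta)
    return float(np.max(thetas * f(x / thetas, y / thetas)))


if __name__ == "__main__":
    for (x, y) in [(0.5, 0.5), (0.8, 0.8), (0.3, 0.6)]:
        print((x, y),
              "f_poly:", f_poly(x, y), "hull:", one_term_hull(f_poly, x, y),
              "| f_prod:", f_prod(x, y), "hull:", one_term_hull(f_prod, x, y))
```

For f(x, y) = xy the expression θ f(r/θ) equals xy/θ, which is largest at the smallest admissible θ = max(x, y), so the one-term form evaluates to min(x, y). For the other example, the value at (0.5, 0.5) exceeds the surface value (the maximizing θ is 0.75, giving 16/27), while at (0.8, 0.8) the maximum is attained at θ = 1 and the hull coincides with the surface.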

Appendix G. Properties of Gaussian Mutual Information

Our analysis of the Gaussian pattern recognition problem relies on the following well-known results, stated below without proof.

Lemma 8: The mutual information between two Gaussian random vectors X and Y depends only on the matrices of correlation coefficients. Specifically

where

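In the special case Y = X + W, where X and W are independent Gaussian random variables with variances P and N, respectively, we have

$$I(X;Y) = -\tfrac{1}{2}\log\left(1 - \rho_{x,y}^{2}\right) = \tfrac{1}{2}\log\left(1 + \frac{P}{N}\right),$$

where the correlation coefficient $\rho_{x,y} = \sqrt{P/(P+N)}$.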
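As a quick numerical check of this scalar special case, the sketch below compares two standard expressions for the mutual information of Y = X + W: the form ½ log(1 + P/N) and the form −½ log(1 − ρ²) with ρ² = P/(P + N). Function names are illustrative, and natural logarithms are used, so values are in nats.

```python
import math


def correlation_additive_noise(P, N):
    """Correlation coefficient between X and Y = X + W (Var X = P, Var W = N)."""
    return math.sqrt(P / (P + N))


def mutual_information_from_rho(rho):
    """I(X;Y) in nats for jointly Gaussian scalars with correlation rho."""
    return -0.5 * math.log(1.0 - rho ** 2)


def mutual_information_snr(P, N):
    """I(X;Y) in nats written in terms of the signal-to-noise ratio P/N."""
    return 0.5 * math.log(1.0 + P / N)


if __name__ == "__main__":
    P, N = 4.0, 1.0
    rho = correlation_additive_noise(P, N)
    print("rho          =", rho)
    print("I via rho    =", mutual_information_from_rho(rho))
    print("I via P / N  =", mutual_information_snr(P, N))
```

The two forms agree because 1 − ρ² = N/(P + N).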

Lemma 9: If X, Y and Z are zero-mean Gaussian random vectors that form a Markov chain X − Y − Z, then

Note that for dimension one, X → Y → Z implies $\rho_{x,z} = \rho_{x,y}\,\rho_{y,z}$.
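The one-dimensional statement can be illustrated with a simple additive-noise chain. The sketch below is a hypothetical example, not taken from the paper: it builds the Markov chain X → Y → Z as Y = X + W1 and Z = Y + W2 with independent zero-mean Gaussian X, W1, W2 of variances P, N1, N2, computes the exact covariance matrix of (X, Y, Z), and compares the correlation coefficient of X and Z with the product of the correlation coefficients of (X, Y) and (Y, Z).

```python
import math

import numpy as np


def chain_covariance(P, N1, N2):
    """Exact covariance of (X, Y, Z) with Y = X + W1 and Z = Y + W2."""
    # Rows express X, Y, Z as linear combinations of the independent (X, W1, W2).
    A = np.array([[1.0, 0.0, 0.0],
                  [1.0, 1.0, 0.0],
                  [1.0, 1.0, 1.0]])
    return A @ np.diag([P, N1, N2]) @ A.T


def corr(cov, i, j):
    """Correlation coefficient between components i and j."""
    return cov[i, j] / math.sqrt(cov[i, i] * cov[j, j])


if __name__ == "__main__":
    cov = chain_covariance(P=4.0, N1=1.0, N2=3.0)
    rho_xy, rho_yz, rho_xz = corr(cov, 0, 1), corr(cov, 1, 2), corr(cov, 0, 2)
    print("rho_xy * rho_yz =", rho_xy * rho_yz)
    print("rho_xz          =", rho_xz)
```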

Lemma 10: Let X, Y, U, and V be jointly Gaussian random variables such that U − X − Y and X − Y − V are Markov chains. Then the matrix of correlation coefficients Cxy,uv decomposes as

This lemma follows immediately by using Lemma 9 to obtain the substitutions and .

Footnotes

Communicated by P. L. Bartlett, Associate Editor for Pattern Recognition, Statistical Learning and Inference.

If this were not the case, visual experience would be like watching television white noise.

Grenander [4] and Mumford [5] have argued that four “universal transformations” (noise and blur, superposition, domain warping, and interruptions) account for most of the ambiguity and variability in naturally occurring signals.

One should probably beware of the strange (and unnecessary) interpretation that, as we upgrade our camera (i.e., as we increase n), the number of patterns in the world consequently increases. More naturally, we may view the world as always presenting a practically unlimited number of patterns, while the number of patterns that can be taken advantage of by a system grows with increasing information processing resources.

In particular, our formulation is consistent with the “General Pattern Theory” framework of Grenander and colleagues, which has provided a basis for much of the generative modeling work in pattern recognition research [11].

This step relies on the long Markov chain U − X − Y − V and the Markov lemma.

Alternatively, one can search for ways to tighten the outer bound.

The notation is ours.

But see the footnote at the end of Section VIII.

This is related to the problem addressed in [24], [25], except that in that work there is no requirement that the memory data be compressed.

Similar comments are made in [24].

The optimization over distributions p(xyuv) reduces to a search over conditional distributions p(uv | xy) because p(xy) is fixed. Details of the optimization algorithm are given in [35].

These are called Hinton diagrams in the machine learning literature, after their inventor Geoffrey Hinton.

These distributions are not unique, as the mutual informations are unchanged under various reassignments of values of x, y, u, v, and consequent rearrangements of the entries of p(uv | xy); the distributions shown have been accordingly rearranged into a common format to facilitate comparison.

During the review process for this paper, Servetto indeed claimed a solution to the distributed source coding problem using a novel approach. Unfortunately, he suffered an untimely death on July 24, 2007, before finalizing his work. The most recent public draft of his paper on this topic is available on the arXiv (see [50]).

This last condition ensures Rcq ≤ Rxq + Ryq − I(XY; UqVq).

Contributor Information

M. Brandon Westover, Department of Neurology, Massachusetts General Hospital, Boston, MA 02114-2622 USA.

Joseph A. O’Sullivan, Department of Electrical Engineering, Washington University, St. Louis, MO 63130 USA.

References

- [1] Barlow HB, “Sensory mechanisms, the reduction of redundancy, and intelligence,” in Proc. Symp. Mechanization of Thought Processes, 1959, pp. 537–574.

- [2] Van Essen DC and Anderson CH, “Information processing strategies and pathways in the primate retina and visual cortex,” in An Introduction to Neural and Electronic Networks, Lau C, Zornetzer SF, and Davis JL, Eds. San Diego, CA: Academic, 1995, pp. 43–72.

- [3] Eddington AS, The Mathematical Theory of Relativity, 3rd ed. Cambridge, U.K.: Cambridge Univ. Press, 1963.

- [4] Grenander U, Elements of Pattern Theory, ser. Johns Hopkins Series in the Mathematical Sciences. Baltimore, MD: Johns Hopkins Univ. Press, 1996.

- [5] Mumford D, “Pattern theory: A unifying perspective,” in Perception as Bayesian Inference, Knill DC and Richards W, Eds. Cambridge, U.K.: Cambridge Univ. Press, 1996, ch. 1, pp. 25–62.

- [6] Schmid NA and O’Sullivan JA, “Performance prediction methodology for biometric systems using a large deviations approach,” IEEE Trans. Signal Process., vol. 52, no. 10, pp. 3036–3045, Oct. 2004.

- [7] Bishop CM, Pattern Recognition and Machine Learning. New York: Springer, 2006.

- [8] MacKay DJC, Information Theory, Inference, and Learning Algorithms. Cambridge, U.K.: Cambridge Univ. Press, 2003. [Online]. Available: http://www.inference.phy.cam.ac.uk/mackay/itila/

- [9] Kearns MJ and Vazirani UV, An Introduction to Computational Learning Theory. Cambridge, MA: MIT Press, 1994.

- [10] Vapnik VN, Statistical Learning Theory. New York: Wiley, 1998.

- [11] Grenander U and Miller M, Pattern Theory: From Representation to Inference. New York: Oxford Univ. Press, 2006.

- [12] Burges CJC, “A tutorial on support vector machines for pattern recognition,” Data Mining and Knowledge Discovery, vol. 2, no. 2, pp. 121–167, 1998.

- [13] Barlow HB, “The coding of sensory messages,” in Current Problems in Animal Behavior, Thorpe WH and Zangwill OL, Eds. Cambridge, U.K.: Cambridge Univ. Press, 1961.

- [14] Barlow HB, “Understanding natural vision,” in Physical and Biological Processing of Images, ser. Springer Series in Information Sciences, Braddick OJ and Sleigh AC, Eds. Berlin, Germany: Springer-Verlag, 1983, vol. 11, pp. 2–14.

- [15] Barlow H, “What is the computational goal of the neocortex?,” in Large Scale Neuronal Theories of the Brain, Koch C and Davis JL, Eds. Cambridge, MA: MIT Press, 1994, ch. 1, pp. 1–22.

- [16] Barlow H, “Redundancy reduction revisited,” Network: Comput. Neural Syst., vol. 12, no. 3, pp. 241–253, 2001.

- [17] Gardner-Medwin AR and Barlow HB, “The limits of counting accuracy in distributed neural representations,” Neural Comput., vol. 13, no. 3, pp. 477–504, 2001.

- [18] Rieke F, Warland D, de Ruyter van Steveninck R, and Bialek W, Spikes: Exploring the Neural Code. Cambridge, MA: MIT Press, 1996.

- [19] Lennie P, “The cost of cortical computation,” Curr. Biol., vol. 13, no. 6, pp. 493–497, 2003.

- [20] LeCun Y, Bottou L, Bengio Y, and Haffner P, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, Nov. 1998.

- [21] Jain AK, Duin RPW, and Mao J, “Statistical pattern recognition: A review,” IEEE Trans. Pattern Anal. Machine Intell., vol. 22, no. 1, pp. 4–37, Jan. 2000.

- [22] Ahlswede RF and Csiszár I, “Hypothesis testing with communication constraints,” IEEE Trans. Inf. Theory, vol. IT-32, no. 4, pp. 533–542, Jul. 1986.

- [23] Han TS and Amari SI, “Statistical inference under multiterminal data compression,” IEEE Trans. Inf. Theory, vol. 44, no. 6, pp. 2300–2324, Oct. 1998.

- [24] Ishwar P, Prabhakaran VM, and Ramchandran K, “Toward a theory for video coding using distributed compression principles,” in Proc. Int. Conf. Image Processing (ICIP 2), Barcelona, Spain, Sept. 2003, pp. 687–690.

- [25] Ishwar P, Prabhakaran VM, and Ramchandran K, “On joint classification and compression in a distributed source coding framework,” in Proc. IEEE Workshop on Statistical Signal Processing, St. Louis, MO, 2003, pp. 25–28.

- [26] Oehler KL and Gray RM, “Combining image compression and classification using vector quantization,” IEEE Trans. Pattern Anal. Machine Intell., vol. 17, no. 5, pp. 461–473, May 1995.

- [27] Hinton GE and Salakhutdinov RR, “Reducing the dimensionality of data with neural networks,” Science, vol. 313, no. 5786, pp. 504–507, Jul. 2006.

- [28] Dong Y, Chang S, and Carin L, “Rate-distortion bound for joint compression and classification with application to multi-aspect sensing,” IEEE Sensors J., vol. 5, no. 3, pp. 481–492, Jun. 2005.

- [29] Csiszár I and Körner J, Information Theory: Coding Theorems for Discrete Memoryless Systems. New York: Academic, 1981.

- [30] Cover TM and Thomas JA, Elements of Information Theory. New York: Wiley, 1990.

- [31] Berger T, “Multiterminal source coding,” in The Information Theory Approach to Communications, Longo G, Ed. New York: Springer-Verlag, 1977.

- [32] Tung SY, “Multiterminal Source Coding,” Ph.D. dissertation, Cornell Univ., Ithaca, NY, 1978.

- [33] Berger T, The Information Theory Approach to Communications. New York: Springer-Verlag, 1977, ch. Multiterminal Source Coding.

- [34] Shannon CE, “A mathematical theory of communication,” Bell Syst. Tech. J., vol. 27, pp. 379–423, 623–656, 1948.

- [35] Westover MB, “Image Representation and Pattern Recognition in Brains and Machines,” Ph.D. dissertation, Washington Univ., St. Louis, MO, 2004.

- [36] Olshausen BA and Field DJ, “Sparse coding with an overcomplete basis set: A strategy employed by V1?,” Vision Res., vol. 37, pp. 3311–3325, 1997.

- [37] Sejnowski TJ and Bell AJ, “The “independent components” of natural scenes are edge filters,” Vision Res., vol. 37, pp. 3327–3338, 1997.

- [38] Hyvärinen A, “Survey on independent component analysis,” Neural Comput. Surv., vol. 2, pp. 94–128, 1999.

- [39] Schwartz O and Simoncelli EP, “Natural signal statistics and sensory gain control,” Nature Neurosci., vol. 4, no. 8, pp. 819–825, Aug. 2001.

- [40] O’Sullivan JA, Singla N, and Westover MB, “Successive refinement for pattern recognition,” in Proc. IEEE Information Theory Workshop, Punta del Este, Uruguay, 2006, pp. 141–145.

- [41] Srivastava A, Lee AB, Simoncelli EP, and Zhu SC, “On advances in statistical modeling of natural images,” J. Math. Imaging and Vision, vol. 18, no. 1, pp. 17–33, Jan. 2003.

- [42] Lee AB, Mumford D, and Huang J, “Occlusion models for natural images: A statistical study of a scale-invariant dead leaves model,” Int. J. Comp. Vision, vol. 41, pp. 35–59, 2001.

- [43] Zhu SC, “Statistical modeling and conceptualization of visual patterns,” IEEE Trans. Pattern Anal. Machine Intell., vol. 25, no. 6, pp. 691–712, Jun. 2003.

- [44] Heeger DJ and Bergen JR, “Pyramid-based texture analysis/synthesis,” in Proc. 22nd Annu. Conf. Computer Graphics and Interactive Techniques, 1995, pp. 229–238, ACM Press.

- [45] Zhu SC, Wu YN, and Mumford D, “Filters, random fields and maximum entropy (FRAME): Toward a unified theory for texture modeling,” Int. J. Comp. Vision, vol. 27, no. 2, pp. 107–126, 1998.