Abstract

Aims:

This study describes when and how adolescents engage with their fast-moving and dynamic digital environment as they go about their daily lives. We illustrate a new approach – screenomics – for capturing, visualizing, and analyzing screenomes, the record of individuals’ day-to-day digital experiences.

Sample:

Over 500,000 smartphone screenshots provided by four Latino/Hispanic youth, age 14–15 years, from low-income, racial/ethnic minority neighborhoods.

Method:

Screenomes collected from smartphones for one to three months, as sequences of smartphone screenshots obtained every five seconds while the device is activated, are analyzed using computational machinery for processing images and text, machine learning algorithms, human-labeling, and qualitative inquiry.

Findings:

Adolescents’ digital lives differ substantially across persons, days, hours, and minutes. Screenomes highlight the extent of switching among multiple applications, and how each adolescent is exposed to different content at different times for different durations – with apps, food-related content, and sentiment as illustrative examples.

Implications:

We propose that the screenome provides the fine granularity of data needed to study individuals’ digital lives, to test existing theories about media use, and to generate new theory about the interplay between digital media and development.

Keywords: screenomics, screenome, smartphone, social media, adolescence, digital media, intensive longitudinal data, experience sampling

The data about adolescents’ digital lives suggest that media are important – probably as important as any other socialization source during this period of the life course (Calvert & Wilson, 2010; Gerwin et al., 2018; Twenge, 2017). Smartphones, in particular, are prized personal possessions that are used many hours per day to gather and share information, facilitate a variety of entertainment and peer communication behaviors, and interfere with homework and sleep (e.g., Pea et al., 2012; O’Keeffe & Clarke-Pearson, 2011; Strasburger, Hogan, & Mulligan, 2013; American Academy of Pediatrics, 2018). Surveys, the current de facto standard for describing digital life landscapes, regularly ask adolescents how they use media (Lenhart et al., 2015; Rideout & Robb, 2018). Generalizations in both the scientific literature and popular press are almost exclusively based on surveys of what adolescents remember and later report. Little is known about the details of what adolescents actually see and do with their screens, second-to-second, during use.

Adolescence is characterized by a variety of developmental tasks, including academic achievement in secondary school, engagement with peers, abidance of laws and moral rules of conduct, identity exploration and cohesion, and exploration of romantic relationships (McCormick, Kuo, & Masten, 2011). New behaviors and ways of interacting with the world emerge as adolescents adjust to the plethora of biological, physical, and social changes that accompany puberty and movement into adult roles (Hollenstein & Lougheed, 2013). A substantial body of research highlights how adolescents (and children and adults) engage digital media in the service of developmental tasks – for better or worse (Uhls, Ellison, & Subrahmanyam, 2017). Digital media is now used ubiquitously for formation and management of personal relationships, exploration of new domains and identities, and expression and development of autonomy from parents (Ko, Choi, Yang, Lee, & Lee, 2015; Padilla-Walker, Coyne, Fraser, Dyer, & Yorgason, 2012). Many of the developmental processes driving individuals through adolescence are available in, facilitated by, influenced by, or expressed in digital life (Coyne, Padilla-Walker, & Howard, 2013). Digitalization and representation of adolescents’ developmental tasks onto personal screens provide new opportunities for observing how those tasks manifest in daily life (Lundby, 2014; Subrahmanyam & Smahel, 2011).

Once on a screen, individuals’ engagement with others, information, and the world can be paused, restarted, reordered, and atomized in any manner a user sees fit. Individuals can build personalized threads of digital experience that cut instantly between applications, platforms, and devices. Digital experiences are curated in real time as individuals select what application to engage with, for how long, and to what purpose. The technology provides opportunity to play games, do school work, listen to music, have social relationships, watch videos, and more – all within seconds of each other. What adolescents (and young adults) actually consume and produce on their smartphone devices, though, has not been documented, in part because people’s self-reports of use are inaccurate (correlations between reported and actual use, rs < .50, agreement between reported and actual use, κs < .20; Boase & Ling, 2013; Gold et al., 2015; Mireku et al., 2018). In sum, although self-reports about use provide some information, there is much more to be learned about how smartphones and other digital media are actually being used by adolescents (and others) as they interact with, test, and negotiate their way into new identities, expectations, and roles.

Inspired by the challenge of obtaining accurate and detailed information about smartphone use, we report on a novel method for capturing everything that appears on an individual’s smartphone screen in real time and in situ. Our approach is to take screenshots continuously and frequently whenever the device is on. The resulting sequence of screenshots (and associated information) constitutes the individual’s “screenome” – the structure of which can then be examined to inform knowledge of when and how individuals engage with their fast-moving and dynamic digital environment (Reeves et al., 2019). We offer an analysis of four adolescents’ digital lives that demonstrates the value of the screenome and of screenomics for examining the diversity of digital content encountered, the generation of personal content, the sentiment expressed in digital selections, and how specific kinds of content (food as an illustrative example) may influence individuals’ behavior.

In-Situ Study of Digital Life

Although developmental and communication researchers are committed to describing and theorizing about the details of how adolescents use digital media, the measurement paradigms used for these descriptions do not actually observe how adolescents use their devices. Mostly, media use is assessed via self-reports about behavior over relatively long spans of time (e.g., how many hours did you spend on Snapchat this week? or how many minutes did you spend looking at food-related content yesterday?). Studies using experience sampling methods dive deeper. For example, George and colleagues (2018) followed 151 adolescents for a month, asking them each evening to estimate the amount of time they spent using social media, the Internet, texting, and the number of text messages they sent that day. Using a slightly more intensive sampling protocol, Borgogna and colleagues (2015) followed 158 adolescents for a week, and obtained three or four reports per day about whether the adolescents were or were not using electronic media (watching TV, computer/video games, working on a computer, IM/e-mail on computer, texting, listening to music, other). With similar intent, Rich and colleagues (2015) developed a multimodal method for measuring youth media exposure. In their paradigm, robust information about media exposures and influences was obtained by having youth recall their media use and estimate durations, complete time-use diaries, and periodically (four to seven times per day) take a 360-degree video pan of their current environment and respond to mobile device-based experience sampling questionnaires. While querying media use every few hours and tracking daily dosage provide for general inferences about individuals’ overall engagement with digital media, these designs obscure and/or misrepresent the actual time scale at which individuals’ media use proceeds (Reeves et al., 2019).

Consolidation of technology embedded into smartphone devices means that it is now possible to switch between radically different content on a single screen on the order of seconds – for example, when an individual switches from watching a cat video on YouTube to taking, editing, and sharing a selfie in Snapchat to searching for a restaurant with Google to texting a friend to arrange a time to meet. Self-reports of media exposure and behavior simply do not provide an accurate representation of digital lives that weave quickly – often within a few seconds – between different software, applications, locations, functionality, media, and content. In contrast, the screenome – made of screenshots taken frequently at short intervals – is a reconstruction of digital life that contains all the information (i.e., the specific words and pictures) and details about what people are actually observing, how they use software (e.g., writing, reading, watching, listening, gaming), and whether they are producing information or receiving it. The screenshots capture activity in all applications and all interfaces, regardless of whether researchers can establish connections to the sources of information via software logging or via relationships with businesses that own the logs (e.g., phone records, Facebook, and Google APIs). Sequences of screenshots (i.e., screenomes) provide the raw material and time-series records needed for fine-grained quantitative and qualitative analysis of fast-changing and highly idiosyncratic digital lives.

Fine-grained temporal granularity.

The high frequency of screenshot sampling allows for analysis at multiple time scales, from seconds to hours to days to months (Ram & Reeves, 2018). One may zoom in or out across time scales to examine specific behaviors of finite length, cyclic or acyclic patterns of behavior, or long-term trends in behavior that match the time scale relevant to theoretical questions (Aigner et al., 2008). The screenome includes data about particular moments in life, repeated exposures and behaviors, and the full sequence of contiguous, intermittent, and potentially interdependent digital experiences. Over the course of hours, for example, an individual’s screenome may contain a text message from a friend, a Google search sprinkled with advertisements about nearby restaurants, the geolocation of the restaurant chosen, the selfie texted during the meal, the emotional sentiments expressed and/or received before, during, and after the meal, as well as multiple strings of unrelated Snapchat and Instagram messages exchanged with other groups of friends that interrupt the restaurant episode. This is a new scale of data – rich information that allows for detailed description, examination, and theorizing about the ways adolescents actually use media (Shah, Cappella, & Neuman, 2015).

Person-specific (idiographic) approach.

The temporal density of the data also provides opportunities for truly idiographic analysis. Much of the research on media and adolescents is focused on identifying between-person variations in exposures and their correlates in large samples of adolescents (e.g., higher frequency of digital media use is associated with greater symptoms of attention-deficit/hyperactivity disorder, Ra et al., 2018). In contrast, the screenome shifts focus toward description and understanding of detailed person-specific sequences.

Developmental science has long championed and prioritized analysis of intraindividual change and dynamic processes, both of which require longitudinal data (Baltes & Nesselroade, 1979; Molenaar, 2004; Ram & Gerstorf, 2009). Applying the developmental paradigm to the study of day-to-day digital life, we invoke time series analysis of single individuals and models that describe how distinct individuals progress through their digital lives. The screenome is the only type of data that we know of that preserves the sequencing and content of all experiences represented on digital devices and opens them up for study and analysis of within-person behavior change. Complementing bioecological models of development that opened discovery of the complex interplay of the micro- and macro-processes that drive development (Bronfenbrenner & Morris, 2006), these new data open up a new realm – the dynamic digital environment – that is already influencing individual development, health, and well-being.

The Present Study

The goal of this study is to illustrate how our new method – screenomics – can be used to describe when and how individuals engage with digital media (Reeves et al., 2019). To date, there are no accurate or comprehensive descriptions of the ephemeral, cross-platform, and cross-application digital environments that adolescents encounter in situ, whether in ads, news, entertainment, or social relationships. We have developed an end-to-end system for capturing, transforming, storing, analyzing, and visualizing the screenomes of digital life – the record of individual life experiences represented as a sequence of screens that people view and interact with over time (Reeves et al., 2019). The system includes new software and analytics that collect screenshots from smartphones every five seconds, extract text and images from the screens, and automatically categorize and store screen information with associated metadata.

We propose that the screenome provides the fine granularity of data needed for study of adolescents’ digital lives. In this paper, using a combination of contemporary quantitative and ethnographic methods, we forward a new possibility for observing, describing, and interpreting adolescents’ daily media practices. Our inquiry focuses on four specific aspects of adolescents’ digital lives: mobile smartphone apps, production versus consumption of information, and, as an illustration, food-related content and the emotional content surrounding that content.

Importantly, our analysis of food-related content is forwarded as a single, illustrative exemplar of the kinds of knowledge that may be derived from screenomics. Many other content areas could be examined. We use food here as an exemplar because some of our participants were recruited after completing a prior weight-related study. From a research perspective, we highlight how known links between individuals’ media use and health behaviors might manifest in the screenomes of individuals for whom food-related content might be particularly salient, relevant, and consequential, and how detailed knowledge about food-related media exposure might inform understanding of adolescents’ daily food choices and later health (e.g., Blumberg et al., 2019; Borgogna et al., 2015; Folkvord & Van’t Riet, 2018; Vaterlaus, Patten, Roche, & Young, 2015). Altogether, the analyses are meant to illustrate the utility of the method for understanding the wider range of content, functions, and contexts that are infused in individuals’ digital lives.

Method

Participants & Procedure

Participants were four adolescents recruited from low-income, racial/ethnic minority neighborhoods near Stanford University, California. Some of the participants had participated in a prior study of weight gain prevention in Latino children, a population with high prevalence of obesity (Robinson et al., 2013). All four (2 female, 2 male) were Latino/Hispanic, age 14–15 years and had exclusive use of smartphones using the Android operating system. Screenomes were collected for between 34 and 105 days.

After expressing interest in the study, the adolescent and his/her parent/guardian were given additional information about the project, what we hoped to learn, what we would be collecting from their phones, and the potential risks and benefits of their participation. Consent forms were available in English and Spanish, with signed written consent required from parents/guardians and signed written assent from adolescents prior to participation. Participants were requested to keep the screenshot software on their phones for at least one month but informed they could end their participation at any time. The screenshot software was then loaded on the adolescent’s smartphone and the secure transmission connections to our servers tested. Participants were asked to use their devices as usual. In the background, screenshots of whatever appeared on the device’s screen (e.g., web browser, home-screen, e-mail program) were obtained every five seconds, whenever the device was activated. At periodic intervals that accommodated constraints in bandwidth and device memory, bundles of screenshots were automatically encrypted and transmitted to a secure research server. Data collection was designed to be fully unobtrusive. Other than the study enrollment meeting, no input was required from participants. Data were collected using a rigorous privacy protocol that is substantially and intentionally more controlled than many corporate data-use agreements, for example by not allowing data to be used for any other purposes or by any other parties. Our data collection and use follows a much more explicit data use and privacy agreement, and is restricted to a small set of university-based researchers. Screenshot images and associated metadata are stored and processed on a secure university-based server, viewed only by research staff trained and certified for conducting human subjects research. 
At the end of the collection period, the software was removed from the devices, and participants were thanked and given a $100 gift card.

In total, we observed 751.5 hours of in-situ device use during 212 person-days of data collection from four adolescents (total of 541,103 screenshots). The resulting screenshot sequences offer a rich record of how these adolescents interacted with digital media in their daily lives.
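Because screenshots were taken every five seconds while the screen was on, total screen time can be approximated directly from the screenshot count. The following minimal sketch assumes that each screenshot stands for exactly one five-second interval; the function name `screen_hours` is our own and purely illustrative.

```python
def screen_hours(n_screenshots: int, interval_s: float = 5.0) -> float:
    """Approximate screen-on time in hours, assuming one screenshot per sampling interval."""
    return n_screenshots * interval_s / 3600.0


# 541,103 screenshots sampled at one per five seconds
total = screen_hours(541_103)
print(round(total, 1))  # 751.5
```

The result agrees with the 751.5 hours of device use reported above.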

Data Preparation (Feature Extraction & Labeling)

Screenshot data were prepared for analysis using data processing pipelines analogous to those used in metagenomics, where DNA is extracted from raw samples, sequenced, inspected/cleaned, assembled, matched to template sequences, classified, and described. Whereas the objective in metagenomics is to describe the diversity of genetic material in an environmental sample, the objective in screenomics is to describe the diverse information inhabiting an individual’s digital screens.

Extraction of behaviorally and psychologically relevant data from the digital record is facilitated by a combination of qualitative analysis (in-depth, exploratory, open-ended qualitative analysis for emergent themes), human labeling (low-inference content coding to create training data for machine learning), and machine learning algorithms. In brief, each screenshot is “sequenced” using a custom-designed module wrapped around open-source tools for image and document processing. Screenshots are converted from RGB to grayscale, binarized to discriminate foreground from surrounding background, segmented into blocks of text and images, and passed through a recognition engine (Tesseract/OpenCV-based) that identifies text, faces, logos, and objects. The resulting ensemble of text snippets and image data (e.g., number of faces) is then compiled into Unicode text files, one for each screenshot, that are integrated with metadata within a document-based database that facilitates storage, retrieval (through a local API), and visualization.
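The first two preprocessing steps (grayscale conversion and binarization) can be illustrated in plain Python. This is a minimal sketch, not the study’s module: it assumes ITU-R BT.601 luma weights for the grayscale conversion (the weights OpenCV uses for RGB-to-gray) and substitutes Otsu’s method as one common way to choose a binarization threshold, since the thresholding rule actually used is not specified. It also assumes dark text on a light background, so dark pixels are marked as foreground. Segmentation and recognition are left to tools such as Tesseract.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def to_grayscale(rgb: List[List[Pixel]]) -> List[List[int]]:
    """Convert rows of RGB pixels to 0-255 gray levels using BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row] for row in rgb]


def otsu_threshold(gray: List[List[int]]) -> int:
    """Return the gray level that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for row in gray:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    weight_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no pixels at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is at or below t; no split left to evaluate
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(gray: List[List[int]], threshold: int) -> List[List[int]]:
    """Mark dark pixels (at or below the threshold) as foreground = 1, others as 0."""
    return [[1 if p <= threshold else 0 for p in row] for row in gray]


# Usage: a 2x2 "screenshot" of dark text pixels on a white background
screen = [[(20, 20, 20), (250, 250, 250)], [(250, 250, 250), (20, 20, 20)]]
gray = to_grayscale(screen)
t = otsu_threshold(gray)
mask = binarize(gray, t)
```

Otsu’s method is used here only because it needs no tuning; a locally adaptive threshold would likely cope better with screens that mix light and dark regions.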

Text is extracted from each screenshot using Optical Character Recognition methods (Chiatti et al., 2017) and is quantified in a variety of ways that inform us about media behavior and content. For example, word count, a simple description of quantity of text, takes a bag-of-words approach and is calculated for each screenshot as the number of unique “words” (defined as any string of characters separated by a space) that appeared on the screen. Word velocity, a description of how quickly the content is moving (e.g., when scrolling through text), is calculated as the difference between the bag-of-words obtained from two consecutive screenshots, specifically the number of unique new words that did not appear in the prior screenshot. As well, natural language processing (NLP) methods are used to interpret the text with respect to sentiment (described below) or other language characteristics (e.g., complexity). For example, a bag-of-words analysis of the text (LIWC, Pennebaker, Chung, Ireland, Gonzales, & Booth, 2007) provided 93 variables that indicated (through dictionary lookup) prevalence of first-person pronouns, social, health, and other types of words.
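The word count and word velocity definitions above translate directly into set operations on each screenshot’s bag-of-words. In this minimal sketch the function names are hypothetical; tokens are split on any whitespace (a slight generalization of “separated by a space”) and are case-sensitive; and, as an assumption, every word on the first screenshot of a sequence counts as new.

```python
from typing import Optional, Set


def bag_of_words(text: str) -> Set[str]:
    """Unique whitespace-separated tokens extracted (via OCR) from one screenshot."""
    return set(text.split())


def word_count(text: str) -> int:
    """Number of unique 'words' that appear on the screen."""
    return len(bag_of_words(text))


def word_velocity(prev_text: Optional[str], curr_text: str) -> int:
    """Number of unique words on the current screen that did not appear on the prior one."""
    previous = bag_of_words(prev_text) if prev_text is not None else set()
    return len(bag_of_words(curr_text) - previous)


# Usage: OCR text from three consecutive screenshots
ocr_sequence = ["see you at", "see you at lunch", "lunch is pizza today"]
counts = [word_count(text) for text in ocr_sequence]
velocities = [
    word_velocity(prev, curr)
    for prev, curr in zip([None] + ocr_sequence[:-1], ocr_sequence)
]
```

Because both measures work on sets, repeated words on a single screen do not inflate either count.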

Graphical features of each screenshot are also quantified in a variety of ways. Image complexity was computed as the across-pixel heterogeneity (i.e., “texture”), as quantified by the entropy of an image across 256 gray levels (Shannon, 1948). Image velocity, a description of visual flow, was then calculated for each screenshot as the change in image complexity from the prior screenshot. The velocity measure (formally, the first derivative of the image complexity time series) indicates how quickly the content is changing (e.g., by analogy, how quickly a video shot pans across a scene) and is known to influence viewers’ motivations and choices (Wang, Lang, & Busemeyer, 2011). Logos are identified using template matching methods (Culjak, Abram, Pribanic, Dzapo, & Cifrek, 2012) that determine the probability that the edges and shapes within a screenshot are the same as in a specific template (e.g., Facebook logo). Together, the textual and graphical features provide a quantitative description of each screenshot that was used in subsequent analysis.
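Image complexity and image velocity can be sketched as follows. The sketch assumes a base-2 logarithm (so complexity ranges from 0 to 8 bits for 256 gray levels) and a signed first difference for velocity. Neither the base nor the sign convention is stated in the text, and the function names are illustrative.

```python
import math
from collections import Counter
from typing import List, Sequence


def image_entropy(gray_pixels: Sequence[int]) -> float:
    """Shannon entropy (bits) of the distribution of 0-255 gray levels in one screenshot."""
    n = len(gray_pixels)
    counts = Counter(gray_pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def image_velocity(complexities: Sequence[float]) -> List[float]:
    """First difference of the complexity series: one value per screenshot after the first."""
    return [curr - prev for prev, curr in zip(complexities, complexities[1:])]


# Usage: a flat screen, a two-tone screen, and a screen using every gray level once
flat = image_entropy([0] * 4)                 # 0 bits: no texture
two_tone = image_entropy([0, 255, 0, 255])    # 1 bit
full_range = image_entropy(list(range(256)))  # 8 bits: maximal texture
velocity = image_velocity([flat, two_tone, full_range])
```

A positive velocity indicates the screen became more visually complex than the previous screenshot; taking absolute values would instead measure the magnitude of change regardless of direction.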

In parallel, selected subsets of the screenshots were used to identify and develop schemas that provided meaningful description of the media that appeared on the screen. For example, screenshots can be tagged with codes that map to specific behavioral taxonomies (e.g., emailing, browsing, shopping) and content categories (e.g., food, health). Manual labeling of large data sets often uses public crowd-sourcing platforms (e.g., Amazon Mechanical Turk; Buhrmester, Kwang, & Gosling, 2011), but the confidentiality and privacy protocols for the screenome require that labeling be done only by members of the research team who are authorized to view the raw data. Through a secure server, screenshot coders were presented with a series of screenshots (random selection of sequential chunks that provided coverage across participants and days), and they labeled the action and/or content depicted in each screenshot using multicategory response scales (e.g., Datavyu, 2014) or open-ended entry fields (NVivo, 2012). These annotations were then used both to describe individuals’ media use and as ground truth data to train and evaluate the performance of a collection of machine learning algorithms (e.g., random forests) that used the textual and graphical features (e.g., image complexity) to replicate the labeling and extend it to the remaining data.

Measures/Data Analysis

This report concentrates on four aspects of adolescents’ device use derived from the human-labeling and the textual and image features described above.

Applications.

To describe the applications that adolescents used, we manually labeled a subset of 10,664 screenshots with the name of the specific application appearing on the screen. Five labelers were tasked with generating ground truth data for the machine learning algorithms. This team’s labeling was evaluated using standard calculation of inter-rater reliability (κ > .90) on test data. Discrepancies were resolved through group discussion and look-up of potential categorization by focused search of web-based information until the matching application was identified. These ground-truth data were then used to train a machine learning algorithm to identify the application based on up to 121 textual and graphical features already extracted from each screenshot. After multiple iterations, we obtained a random forest with 600 trees (Hastie et al., 2001; Liaw & Wiener, 2002) that had an out-of-bag error rate of only 6.8%, and used that model to propagate the app labels to the rest of the data. Following previous research (Böhmer et al., 2011; Murnane et al., 2016), apps were further categorized by type using the developer-specified category associated with the app in the official Android Google Play marketplace. Thus, each screenshot was labeled with both the specific application name (e.g., Snapchat, Clock, Instagram, YouTube) and the more general application type. Represented types included Comics, Communication, Education, Games, Music & Audio, Photography, Social, Study (our data collection software), Tools, and Video Players & Editors.
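Inter-rater reliability of the kind reported above is commonly computed with Cohen’s kappa, which compares observed agreement with the agreement expected by chance from each rater’s label frequencies. The text does not say which kappa variant was used with five labelers, so this sketch shows two-rater Cohen’s kappa plus its average over all rater pairs (Light’s kappa) as one plausible option; Fleiss’ kappa would be another. Function names are illustrative.

```python
from collections import Counter
from itertools import combinations
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is undefined,
        # and returning 1.0 here is a convention.
        return 1.0
    return (observed - expected) / (1.0 - expected)


def mean_pairwise_kappa(raters: Sequence[Sequence[Hashable]]) -> float:
    """Average Cohen's kappa over all pairs of raters (Light's kappa)."""
    pairs = list(combinations(raters, 2))
    return sum(cohens_kappa(a, b) for a, b in pairs) / len(pairs)


# Usage: two labelers naming the app on four screenshots
labeler_1 = ["Snapchat", "Snapchat", "YouTube", "YouTube"]
labeler_2 = ["Snapchat", "YouTube", "YouTube", "YouTube"]
kappa = cohens_kappa(labeler_1, labeler_2)
```

Here the labelers agree on 75% of screenshots, but half of that agreement is expected by chance, so kappa is 0.5.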

Production/Consumption.

Screenshots capture moments when individuals are producing content; for example, when using the keyboard to type a text message. To identify such behavior, we manually labeled 27,000 screenshots (in our larger repository) with respect to whether the user was producing or consuming content. This ground-truth data was then used to train an extreme gradient boosting model (Chen et al., 2015; Friedman, 2001) that accurately classified screenshots as production or consumption. After tuning, through grid search of hyperparameters and 10-fold cross-validation, performance reached 99.2% accuracy. Using this model, all screenshots were labeled as an instance of production or consumption.
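The tuning procedure (grid search over hyperparameters, each candidate scored by 10-fold cross-validation) is independent of the particular model. To keep the example self-contained, the sketch below substitutes a k-nearest-neighbor classifier for extreme gradient boosting and tunes its single hyperparameter, k. The one-dimensional feature (imagined as the share of the screen occupied by an on-screen keyboard) and the synthetic data are hypothetical and are not the study’s features or results.

```python
import random
from typing import Dict, List, Sequence, Tuple

# (hypothetical keyboard-area feature, label: 1 = production, 0 = consumption)
Example = Tuple[float, int]


def knn_predict(train: Sequence[Example], x: float, k: int) -> int:
    """Majority vote among the k training examples closest to x (use odd k to avoid ties)."""
    nearest = sorted(train, key=lambda ex: abs(ex[0] - x))[:k]
    votes_for_production = sum(label for _, label in nearest)
    return 1 if 2 * votes_for_production > len(nearest) else 0


def k_fold_indices(n: int, n_folds: int, seed: int = 0) -> List[List[int]]:
    """Shuffle indices 0..n-1 once and deal them into n_folds roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::n_folds] for i in range(n_folds)]


def cv_accuracy(data: Sequence[Example], k: int, n_folds: int = 10) -> float:
    """Accuracy pooled over all held-out folds."""
    correct = 0
    for fold in k_fold_indices(len(data), n_folds):
        held_out = set(fold)
        train = [ex for i, ex in enumerate(data) if i not in held_out]
        correct += sum(knn_predict(train, data[i][0], k) == data[i][1] for i in fold)
    return correct / len(data)


def grid_search(
    data: Sequence[Example], grid: Sequence[int], n_folds: int = 10
) -> Tuple[int, Dict[int, float]]:
    """Score each candidate k by cross-validation; return the best (first wins ties)."""
    scores = {k: cv_accuracy(data, k, n_folds) for k in grid}
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Usage: synthetic, well-separated data (20 production and 20 consumption screenshots)
data = [(0.40 + 0.001 * i, 1) for i in range(20)] + [(0.001 * i, 0) for i in range(20)]
best_k, scores = grid_search(data, [1, 3, 5])
```

With real screenshot features the grid would typically span several hyperparameters at once (e.g., tree depth and learning rate for boosted trees), and each combination would be scored the same way.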

Food-related Content.

To illustrate the ability to explore media use more deeply within a single content area, we examined how food was represented in the screenome. Three methods were used. First, we developed a non-exhaustive dictionary of 60 words (and word stems) that included both formal food-related language used in nutrition literature (e.g., “carbohydrate”) and informal food-related language used in social media (e.g., “hangry”). Using a dictionary look-up method, we identified screenshots that contained any of these words. Second, a team of three labelers manually labeled a full day of screenshots (over 4,000) from Participant #3, who we noticed spent substantial time interacting with food-related content. Third, we engaged in qualitative analysis of those screenshots, and similar content that appeared in other participants’ screenomes, to describe how this food-related content was represented and situated in the digital media environment. Generally, we used a grounded theory approach wherein scholars with expertise in social processes, behavior change, media use, and pediatric health independently immersed themselves in the data by watching time-lapse videos of the screenshot sequences (i.e., “over the shoulder” viewing), cataloged examples of emergent themes, and met together to discuss the commonalities. Specifically, we followed the ‘constant comparison’ process outlined by Glaser and Strauss (Glaser, 1992; Glaser & Strauss, 1967; Strauss & Corbin, 1998). We identified, separately for each participant, all screenshots from one day that contained food-related content (whether in text, image, or advertisement) with the goal of generating theory and insight into the types of food-related content the adolescents engaged with over the course of the study. Screenshots were coded without a pre-existing coding frame.
Themes were developed as we moved back and forth between the identification of similarities and differences of screenshots within and across screenomes. Initial comparisons were related broadly to health and health behaviors, and then refined toward themes specific to food and food-related behaviors. Categories of content were coded to saturation in each screenome, continuing until no new categories of food-related content were evident.
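The dictionary look-up step can be sketched as matching each OCR token against whole words and word stems. Apart from “carbohydrate” and “hangry,” the entries below are hypothetical, because the study’s 60-term dictionary is not reproduced here. Treating a stem as a match for any token that begins with it is likewise an assumed convention.

```python
import re
from typing import List

# Hypothetical excerpt; the study's full dictionary is not reproduced.
FOOD_WORDS = {"carbohydrate", "hangry", "pizza", "lunch"}
FOOD_STEMS = ("snack", "burger", "calori")  # a stem matches any token that begins with it


def food_matches(text: str) -> List[str]:
    """Return the tokens in a screenshot's OCR text that hit the food dictionary."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t in FOOD_WORDS or t.startswith(FOOD_STEMS)]


def has_food_content(text: str) -> bool:
    """Flag a screenshot as food-related if any token matches a word or stem."""
    return bool(food_matches(text))


# Usage
hits = food_matches("So HANGRY, need snacks and Calories")
flagged = has_food_content("see you at practice")
```

Returning the matched tokens, not just a flag, lets coders audit which entries drive the matches and spot stems that over-match.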

Sentiment.

The text that appears in any given screenshot can be characterized with respect to emotional content or sentiment (Liu, 2015). Here, we used the modified dictionary look-up approach implemented in the sentimentr package in R (Rinker, 2018). This implementation draws from Jockers’ (2017) and Hu and Liu’s (2004) polarity lexicons to first identify and score polarized words (e.g., happy, sad), and then makes modifications based upon valence shifters (e.g., negations such as not happy). A sentiment score was calculated for each screenshot based on all the alphanumeric words extracted via OCR from each screenshot. Sentiment scores greater than zero indicate that the text in the screenshot had an overall positive valence, and scores less than zero indicate an overall negative valence.
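The core idea of lexicon-based scoring with valence shifters can be sketched as follows. This is a much-simplified stand-in, not sentimentr’s algorithm. The tiny lexicon and negator list are hypothetical. Only negation is handled, by flipping a word’s polarity once per negator in the few preceding words. Scaling the sum by the square root of the word count is modeled on sentimentr’s documented approach, but should be treated as an assumption here.

```python
import math
import re

# Hypothetical mini-lexicon; sentimentr relies on much larger lexicons and more shifter types.
POLARITY = {"happy": 1.0, "love": 1.0, "great": 0.75, "sad": -1.0, "hate": -1.0, "bad": -0.75}
NEGATORS = {"not", "never", "no", "don't", "isn't"}


def sentiment_score(text: str, window: int = 3) -> float:
    """Sum of word polarities, each flipped once per negator among the preceding
    `window` words, divided by the square root of the word count.
    Positive values indicate positive valence; negative values, negative valence."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    total = 0.0
    for i, token in enumerate(tokens):
        if token in POLARITY:
            preceding = tokens[max(0, i - window):i]
            n_negators = sum(t in NEGATORS for t in preceding)
            total += POLARITY[token] * (-1) ** n_negators
    return total / math.sqrt(len(tokens))


# Usage
positive = sentiment_score("I am so happy")
negated = sentiment_score("I am not happy")
```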

Altogether, our combined use of feature extraction tools (e.g., OCR of text, quantification of image complexity, logo detection), machine learning algorithms, human-labeling, and qualitative inquiry provided for rich idiographic description of the digital experiences of these adolescents’ everyday smartphone use.

Results

Four adolescents’ smartphone use was tracked for between one and three months during the beginning of the 2017–2018 school year (late August through early November).

Amount, Type, and Diversity of Smartphone Use

Amount of use.

Participant #1 was followed for 34 days of use, during which her smartphone screen was activated for between 25 seconds and 10.43 hours per day (Median = 3.59 hours, M = 3.67 hours, SD = 2.11 hours). Participant #2 was followed for 34 days, during which his smartphone screen was activated for between 15 seconds and 6.47 hours per day (Median = 0.34 hours, M = 1.11 hours, SD = 1.49 hours). Participant #3 was followed for 105 days, during which her smartphone screen was activated for between 31.83 minutes and 12.23 hours per day (Median = 4.58 hours, M = 4.68 hours, SD = 2.09 hours). Participant #4 was followed for 37 days, during which his smartphone screen was activated for between 11.92 minutes and 9.12 hours per day (Median = 2.39 hours, M = 2.65 hours, SD = 1.83 hours).
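Daily summaries of this kind can be derived from screenshot timestamps by counting screenshots per calendar day, converting counts to hours at five seconds per screenshot, and summarizing across days. In this sketch the function names and the synthetic timestamps are illustrative, and the sample standard deviation is assumed. Note that days with no screenshots at all do not appear in the per-day counts and would need to be added explicitly if they should count as zero-use days.

```python
import statistics
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, Iterable, List

INTERVAL_S = 5  # seconds of screen-on time represented by one screenshot (assumed)


def daily_hours(timestamps: Iterable[datetime]) -> Dict:
    """Screen-on hours per calendar day, counting each screenshot as one interval."""
    per_day = Counter(ts.date() for ts in timestamps)
    return {day: n * INTERVAL_S / 3600 for day, n in sorted(per_day.items())}


def summarize(hours: List[float]) -> Dict[str, float]:
    """Range, median, mean, and sample SD of daily screen time."""
    return {
        "min": min(hours),
        "max": max(hours),
        "median": statistics.median(hours),
        "mean": statistics.mean(hours),
        "sd": statistics.stdev(hours),
    }


# Synthetic example: 1, 2, and 3 hours of use on three consecutive days
start = datetime(2017, 9, 1, 8, 0, 0)
stamps = [
    start + timedelta(days=d, seconds=INTERVAL_S * i)
    for d, n in enumerate([720, 1440, 2160])
    for i in range(n)
]
summary = summarize(list(daily_hours(stamps).values()))
```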

Type and diversity of use.

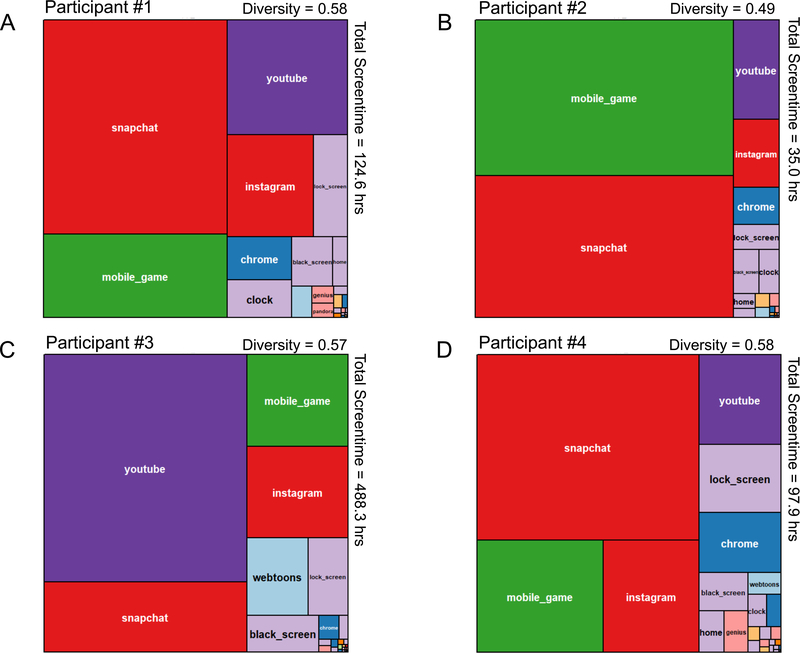

The type and diversity of applications used during the one to three months these adolescents were followed are shown in Figure 1. In each panel, the size of the tiles indicates the proportion of screen time spent with each specific application (e.g., YouTube, Snapchat), with color of the tile indicating the type/category of application as designated on Google Play (e.g., Video Players & Editors, Social).

Figure 1.

Treemap of four adolescents’ application use. In each panel, the size of the tiles indicates the proportion of total screen time spent with each specific app (e.g., YouTube, Snapchat). The color of the tile indicates the type/category of application (as designated on Google Play; e.g., Video Players & Editors, Social). For example, in Panel A the largest tile, labeled Snapchat, indicates that this Social application (dark red) is Participant #1’s most frequently used app. The next largest tile, labeled mobile_game, indicates the second most frequently used app, and so on.

As seen in Panel A of Figure 1, Participant #1 engaged with 26 distinct applications during her 124.6 hours of total screen time. The majority of her screen time was divided among Social apps (53.2% = 43.5% Snapchat + 9.7% Instagram), Games (16.9%), and Video Players & Editors (15.3% YouTube), with much smaller amounts of time spent with Tools (3.9% lock screen, 2.7% clock, 2.2% black screen) and Music & Audio applications (0.4% Genius, 0.4% Pandora). Diversity of activity (i.e., relative abundance across types; Koffer et al., 2018) across the ten application types was quantified using Shannon’s (1948) entropy metric (0 = use of one app type the entire time, 1 = equal use of all ten app types). For Participant #1, entropy was 0.58, indicating that phone time was split across multiple application types in a manner that was relatively unpredictable: with entropy = 0.0 indicating full certainty about which type of app the individual would be using and entropy = 1.0 indicating total uncertainty, a value of 0.58 indicates moderate uncertainty about which type of app Participant #1 would be using at any given moment. Participant #2, shown in Panel B, engaged with 22 distinct applications during 35.0 hours of total screen time. The majority of his screen time was divided among Games (44.4%), Social (43.9% = 40.5% Snapchat + 3.4% Instagram), and Video Players (5.1% YouTube) apps, with a smaller proportion of time spent with Tools (1.3% lock screen, 1.2% black screen, 1.0% clock). Diversity of engagement across app types was 0.49. Participant #3, shown in Panel C, engaged with 30 distinct applications during 488.3 hours of total screen time. The majority of her screen time was divided among Video Players (50.9% YouTube), Games (10.3%), Social (25.9% = 15.7% Snapchat + 10.2% Instagram), and Comics (5.2% webtoons), with a smaller proportion of time spent with Tools (3.4% lock screen, 3.0% black screen, 2.4% home screen). Diversity of engagement across app types was 0.57.
Finally, as shown in Panel D, Participant #4 engaged with 28 distinct applications during 97.9 hours of total screen time. The majority of his screen time, was divided among Social (57.3% = 45.4% Snapchat + 11.9% Instagram), Games (15.6%), and Video Players (8.2% YouTube), with smaller proportion of time spent with Tools (6.2% lockscreen, 2.1% blackscreen, 1.2% homescreen). Diversity of engagement across the 28 app types was 0.58.
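The diversity values above rest on a normalized Shannon entropy. The sketch below is a minimal illustration under stated assumptions, not the authors’ pipeline: it assumes entropy is computed over per-screenshot app-type labels (each screenshot standing for five seconds of screen time) and divided by the natural log of the number of app types K, which yields the 0-to-1 scale described for Participant #1. The function name `normalized_entropy` is hypothetical.

```python
import math
from collections import Counter
from typing import Iterable


def normalized_entropy(labels: Iterable[str], n_types: int) -> float:
    """Shannon entropy of the app-type distribution, scaled to [0, 1].

    labels:  one app-type label per screenshot (e.g., "Social", "Games");
             because screenshots are taken every 5 s, label counts are
             proportional to time spent with each type.
    n_types: number of possible app types K (e.g., 10); the result is
             divided by ln(K), so 0 = a single type used the whole time and
             1 = all K types used equally.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0 or n_types < 2:
        return 0.0
    proportions = [c / total for c in counts.values()]
    h = sum(-p * math.log(p) for p in proportions)
    return h / math.log(n_types)


# Hypothetical example: 60% Social, 25% Games, 15% Video Players & Editors.
labels = ["Social"] * 60 + ["Games"] * 25 + ["Video Players & Editors"] * 15
print(round(normalized_entropy(labels, n_types=10), 2))
```

Note that the normalizing constant depends on K: computing entropy over all distinct apps (e.g., 22 for Participant #2) rather than over app types would change the scale, so values are only comparable when K is held fixed.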

Looking across the four adolescents, a few patterns are notable. Screen time was generally concentrated on a few applications (the top four applications accounted for ≥ 50% of screen time). There was also frequent use of Social applications (primarily Snapchat and Instagram), YouTube, and Games, all of which were heavily oriented toward visual (rather than textual) content. Although there was substantial heterogeneity in these four adolescents’ total amount of daily use (averages ranging from 1.11 to 4.68 hours per day) and substantial differences in the specific applications they used, the relative abundance of use (i.e., diversity) across application types was similar (entropy of 0.49, 0.57, 0.58, and 0.58).
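The concentration statement (the top four applications accounting for at least half of screen time) is a simple top-k share. A minimal sketch, assuming per-app totals are already available (e.g., summed from five-second screenshots); the dictionary below uses made-up values, and `top_k_share` is a hypothetical helper.

```python
from typing import Dict


def top_k_share(minutes_by_app: Dict[str, float], k: int = 4) -> float:
    """Fraction of total screen time accounted for by the k most-used apps."""
    total = sum(minutes_by_app.values())
    if total == 0:
        return 0.0
    top_k = sorted(minutes_by_app.values(), reverse=True)[:k]
    return sum(top_k) / total


# Made-up totals (minutes), for illustration only.
usage = {"Snapchat": 300, "YouTube": 120, "Instagram": 60,
         "mobile_game": 90, "clock": 20, "Pandora": 10}
print(f"{top_k_share(usage):.0%} of screen time in the top 4 apps")
```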

Temporal Patterning of Use (Daily)

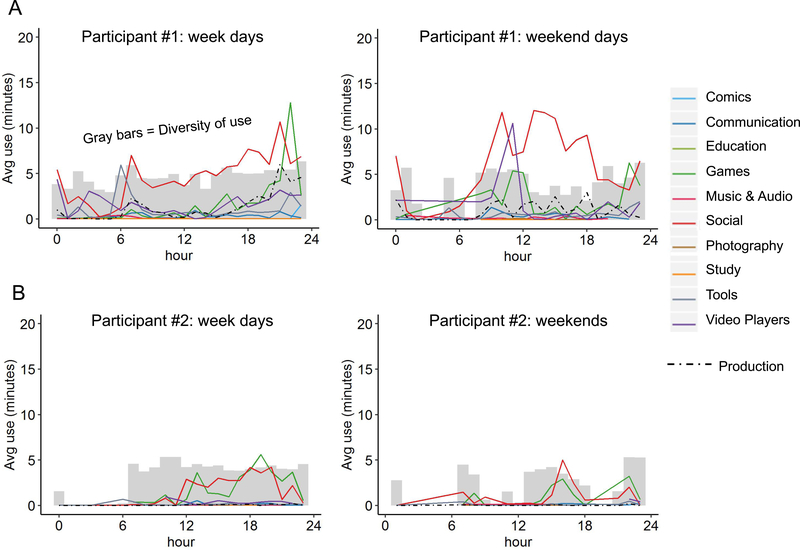

Delving deeper into how screen time was organized temporally across the day, Figures 2A to 2D illustrate, separately for week days (Monday – Friday) and weekend days (Saturday, Sunday), when different types of applications were used (average hourly use for each type indicated by a colored line) and how diversity of use (gray bars) changed throughout the day.

Figure 2A to 2D.

Temporal pattern of each participant’s app use, production, and entropy over 24 hours, shown separately for week days and weekend days. Colored lines in each panel indicate a single participant’s average amount of use of each type of application during each hour of the day, aggregated across all week days or weekend days. The dashed black line indicates the average amount of time (multiplied by five to improve readability) during each hour that the participant spent producing (versus consuming) content. Diversity of use, a measure of aggregate switching among app category types operationalized using Shannon’s entropy metric (multiplied by ten to improve readability; range 0 to 10), is indicated by the height of the gray bars.
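A sketch of the hour-of-day aggregation summarized in Figure 2, under stated assumptions: each screenshot is a `(datetime, category)` pair representing five seconds of use, and averages divide by the number of observed week days or weekend days in the window (including days with no use). The function name and input format are hypothetical. The gray entropy bars could be obtained by applying a normalized entropy to each hour’s category counts and multiplying by ten.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, Iterable, Tuple

SECONDS_PER_SCREENSHOT = 5  # one screenshot every 5 s while the screen is on


def day_type(d: date) -> str:
    # date.weekday(): Monday = 0 ... Sunday = 6
    return "weekend" if d.weekday() >= 5 else "weekday"


def hourly_profile(
    screenshots: Iterable[Tuple[datetime, str]],
    observed_dates: Iterable[date],
) -> Dict[str, Dict[int, Dict[str, float]]]:
    """Average minutes of use per hour of day, by app category and day type.

    Returns {"weekday" | "weekend": {hour: {category: avg_minutes}}}.
    Every screenshot's date must be one of observed_dates.
    """
    n_days = {"weekday": 0, "weekend": 0}
    for d in set(observed_dates):
        n_days[day_type(d)] += 1

    # counts[day type][hour][category] = number of screenshots
    counts = {t: defaultdict(lambda: defaultdict(int)) for t in n_days}
    for ts, category in screenshots:
        counts[day_type(ts.date())][ts.hour][category] += 1

    return {
        t: {
            hour: {
                cat: n * SECONDS_PER_SCREENSHOT / 60 / n_days[t]
                for cat, n in by_cat.items()
            }
            for hour, by_cat in by_hour.items()
        }
        for t, by_hour in counts.items()
    }
```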

For Participant #1 in Panel A, overall use on week days (average use within each hour across all week days) was generally low during the morning hours and increased steadily across the afternoon and into the evening. Peak use of Games apps (12.8 min/hr) was between 22:00 and 23:00 (green line). Engagement with Social apps (e.g., Snapchat) increased gradually but remained fairly consistent, rising from 3.4 to 10.7 min/hr between 7:00 and 23:59 (dark red line). Diversity of app use (gray bars in the background; range 0 to 10) was highest in the 23:00 hour and lowest in the 5:00 hour, an unusual hour in which her use was dominated by one Social application, Snapchat. Also notable is Participant #1’s use of Social (Snapchat) and Video Player (YouTube) apps in the very early morning, from 2:00 to 5:00. On weekend days, her preference for Social apps (dark red line) was similar, but overall use of Video Player apps (purple line) and Game apps (green line) was higher.

Participant #2 in Panel B had a very different pattern of use. His weekday use was much lower, and he generally did not engage with his smartphone between 0:00 and 6:00; only sporadic use of Tools apps (i.e., the alarm) appeared during this period (blip in purple line). After 12:00, however, there was consistent use of Social (2.6 min/hr on average, dark red line) and Games apps (2.6 min/hr on average, green line). From 8:00 onward, diversity of app use was fairly consistent (gray bars all near the same height). Use on weekend days followed a similar pattern, but overall use was lower.

Participant #3 in Panel C also showed an idiosyncratic use pattern. Even on week days, she spent time on Video Player applications (i.e., YouTube) throughout all waking hours (dark purple line), starting in the 7:00 hour, rising to a large peak in viewing at 20:00 (16.3 min/hr), and continuing through 1:00 of the next day. Although total time was dominated by Video Player and Social applications, diversity of app use was relatively stable (gray bars all near the same height) throughout the day. On weekend days, her preference for Social apps (dark red line) and Video Player apps (purple line) remained similar, but overall use was higher and was complemented by additional use of Game apps (green line).

Participant #4 in Panel D had very low activity between 0:00 and 7:00 on week days, but had steadily increasing use of Social (i.e., Snapchat) apps through the day (dark red line), with peak use in the evening during the 21:00 hour (14.0 min/hr). As with the other participants, his diversity of engagement with different app types was fairly consistent throughout the day (gray bars all near the same height). Weekend day use was similar, with higher overall use and some additional use of Game apps (green line).

Looking across the four adolescents, we note some differences in temporal patterns. App use habits differed, even during school hours, and especially in the evening hours. Although use of one or two application types peaked in the evening, there was surprising stability in the diversity of app types engaged within each hour of the day. This suggests that whenever they used their phones, these adolescents were switching among a heterogeneous collection of applications.

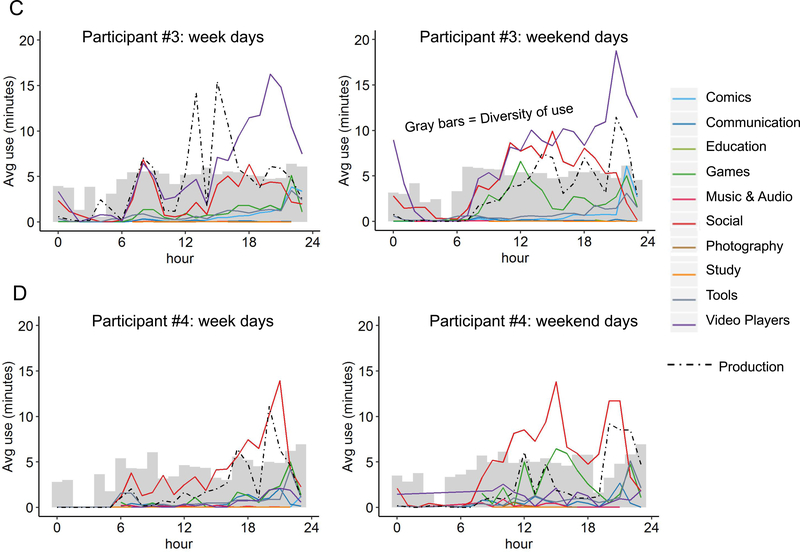

Fast Time-scale Patterning of Use (Hours, Minutes, Seconds)

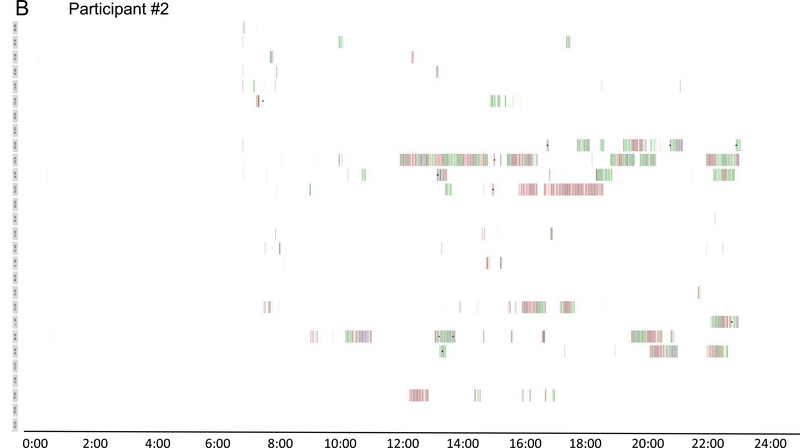

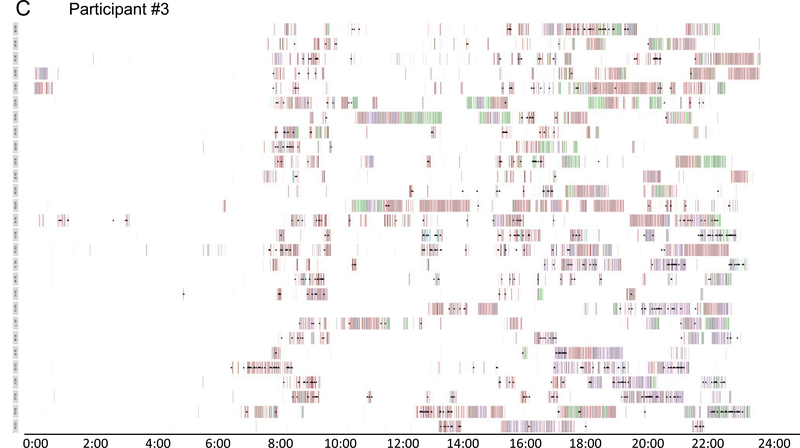

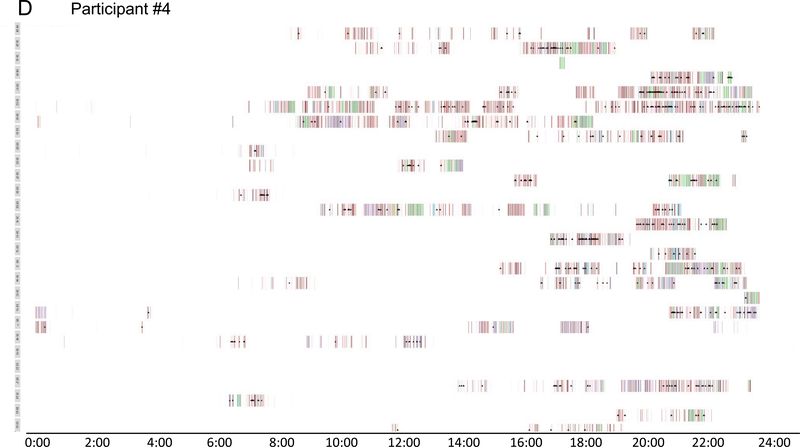

Zooming into faster time scales, we examined fine-grained detail about when, how often, and for how long each individual engaged with the different types of applications. Four weeks of each adolescent’s smartphone use are shown in Figures 3A to 3D. For each adolescent, each of the 28 rows is a new day, running from 00:00 on the left to 23:59 on the right. The colored vertical bars indicate whether the smartphone screen was on during each five-second interval of the 28 days, with the colors of the bars indicating the type of application (e.g., Communication, Social, Games) engaged at each moment. Black triangles indicate moments when the adolescent was producing content (discussed below).

Figures 3A to 3D.

Barcode plots of one month of device use for each of four adolescents. Within a plot, each of the 28 rows is a new day spanning from 00:00 on the left to 23:59 on the right. The colored vertical bars indicate whether the smartphone screen was on during each five second interval of the 28 days depicted, with the colors of the bars indicating the type of application (e.g., Communication, Social, Games) that was engaged at each moment. Black triangles indicate moments when the adolescent was producing content.
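Laying out a barcode plot amounts to mapping each screenshot’s timestamp to a row (day) and a column (five-second slot within the day; 24 × 60 × 60 / 5 = 17,280 slots). A minimal sketch, assuming screenshots are `(datetime, category)` pairs in local time; `barcode_grid` is a hypothetical helper, and the drawing step itself is left out.

```python
from datetime import date, datetime
from typing import Iterable, List, Optional, Tuple

INTERVAL_S = 5
SLOTS_PER_DAY = 24 * 60 * 60 // INTERVAL_S  # 17,280 five-second slots per day


def barcode_grid(
    screenshots: Iterable[Tuple[datetime, str]],
    first_day: date,
    n_days: int = 28,
) -> List[List[Optional[str]]]:
    """Arrange screenshots into an n_days x SLOTS_PER_DAY grid.

    grid[row][col] holds the app category shown in that 5 s slot of that day,
    or None when no screenshot exists (screen off). Screenshots outside the
    window are ignored.
    """
    grid: List[List[Optional[str]]] = [[None] * SLOTS_PER_DAY for _ in range(n_days)]
    for ts, category in screenshots:
        row = (ts.date() - first_day).days
        if not 0 <= row < n_days:
            continue
        seconds_since_midnight = ts.hour * 3600 + ts.minute * 60 + ts.second
        grid[row][seconds_since_midnight // INTERVAL_S] = category
    return grid
```

Each non-empty cell would then be drawn as a thin vertical bar in its category’s color; empty cells are left blank, which is what makes screen-off periods visible as gaps.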

These month-long screenomes provided an opportunity for fine-grained description and analysis of the temporal organization of each adolescent’s smartphone use. There were two levels of analysis: a phone session and a content segment within a session. A smartphone session was defined as the length of time that the smartphone screen was on continuously. Each time the screen was turned off for more than ten seconds, the next activation marked the beginning of a new session. Within each session, a segment was defined as the time spent with a specific application, with each segment beginning with a switch into an application (or screen on) and ending with a switch to a different application (or screen off). It is possible, and common, to have multiple segments of engagement with the same application during a single session (e.g., YouTube to Snapchat to YouTube to Instagram).
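The session and segment definitions translate into a single pass over time-ordered screenshots. In this minimal sketch the screen-off rule is approximated from gaps between consecutive screenshots: because screenshots arrive every 5 s while the screen is on, a gap longer than 5 + 10 = 15 s is treated as the screen having been off for more than ten seconds. That mapping, and the names `Session`, `Segment`, and `split_sessions`, are assumptions for illustration rather than the authors’ code.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Iterable, List, Optional, Tuple

INTERVAL_S = 5        # one screenshot every 5 s while the screen is on
OFF_THRESHOLD_S = 10  # screen off longer than this starts a new session


@dataclass
class Segment:
    app: str
    n_screenshots: int = 0


@dataclass
class Session:
    start: datetime
    segments: List[Segment] = field(default_factory=list)

    def duration_s(self) -> int:
        # Each screenshot stands for one 5 s interval, so a one-screenshot
        # session lasts 5 s (the shortest duration reported in the text).
        return INTERVAL_S * sum(seg.n_screenshots for seg in self.segments)


def split_sessions(screenshots: Iterable[Tuple[datetime, str]]) -> List[Session]:
    """Group (timestamp, app) screenshots into sessions and app segments."""
    sessions: List[Session] = []
    prev_ts: Optional[datetime] = None
    for ts, app in sorted(screenshots):
        gap = None if prev_ts is None else (ts - prev_ts).total_seconds()
        if gap is None or gap > INTERVAL_S + OFF_THRESHOLD_S:
            sessions.append(Session(start=ts))  # screen was off: new session
        current = sessions[-1]
        if not current.segments or current.segments[-1].app != app:
            current.segments.append(Segment(app))  # app switch: new segment
        current.segments[-1].n_screenshots += 1
        prev_ts = ts
    return sessions
```

Revisiting an app within a session (YouTube, then Snapchat, then YouTube) produces three segments, matching the example in the text.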

Participant #1 engaged with her phone in an average of 186 separate sessions each day. These sessions lasted anywhere from 5 seconds to 2.45 hours, with the average session lasting 1.19 minutes (Median = 15 seconds, SD = 4.45 minutes). Within a session there were, on average, 8.38 segments (Median = 3, SD = 14.83, Range = 1 to 139). Her longest segments occurred when she was engaged with Video Players applications (i.e., YouTube). Participant #2 engaged with his phone in an average of 26 separate sessions each day. His sessions lasted anywhere from 5 seconds to 2.00 hours, with the average session lasting 2.54 minutes (Median = 15 seconds, SD = 8.18 minutes). Within his sessions there were, on average, 31.97 segments (Median = 13, SD = 41.75, Range = 1 to 231), the lengthiest of which occurred when he was engaged with a Games application. Participant #3 engaged with her phone in an average of 332 separate sessions each day. These sessions lasted between 5 seconds and 2.17 hours, with the average session lasting 50 seconds (Median = 10 seconds, SD = 3.26 minutes). Within a session there were, on average, 5.02 segments (Median = 2, SD = 9.99, Range = 1 to 164), with the longest segments occurring in Video Players applications (i.e., YouTube). Participant #4 engaged with his phone in an average of 184 separate sessions each day. Sessions lasted between 5 seconds and 55.58 minutes, with the average session lasting 1.0 minute (Median = 15 seconds, SD = 2.02 minutes). Within a session there were, on average, 4.84 segments (Median = 2, SD = 6.91, Range = 1 to 89), the longest of which occurred when he was engaged with a Games application. All four participants were similar in that most of their shortest segments involved engagement with the lock screen.
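The gap between mean and median session length (e.g., 1.19 minutes versus 15 seconds for Participant #1) signals a strongly right-skewed distribution: many very short sessions plus a few long ones. A sketch of the per-participant summary, assuming session durations in seconds have already been computed; it uses Python’s `statistics` module, and the sample (n − 1) standard deviation is an assumption, since the text does not say which was used. `session_summary` is a hypothetical name.

```python
import statistics
from typing import Dict, Sequence


def session_summary(durations_s: Sequence[float], n_days: int) -> Dict[str, float]:
    """Summarize session durations (seconds) for one participant."""
    return {
        "sessions_per_day": len(durations_s) / n_days,
        "mean_s": statistics.mean(durations_s),
        "median_s": statistics.median(durations_s),
        "sd_s": statistics.stdev(durations_s),  # sample SD; needs >= 2 sessions
        "min_s": min(durations_s),
        "max_s": max(durations_s),
    }


# Made-up durations: many short sessions and one long one (right skew).
example = session_summary([5, 10, 15, 15, 20, 600], n_days=2)
print(example["mean_s"], example["median_s"])
```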

Within and across Figures 3A to 3D, the heterogeneity is striking. Patterns of use differ substantially across persons, days, and hours. Sessions differ in duration and content. The pervasive characteristic seen in all the screenomes is frequent and abundant switching – of the smartphone screen on and off throughout these 28 days, and among application types within a phone use session. Each adolescent was exposed to different things at different times – idiosyncratic life experiences (a hallmark of development) at high speed.

Production/Consumption

In addition to providing information about the type of application engaged at each moment, the fine-grained nature of the data (sampled every five seconds) allows examination of when each adolescent was producing screen content (e.g., composing texts in a social media application or entering information into a search bar or website form) or consuming screen content (e.g., scrolling through newsfeeds or watching video). This distinction is important given prior findings that passive consumption of social media is associated with lower subjective well-being and greater envy, both across persons and within persons (Krasnova, Wenninger, Widjaja, & Buxmann, 2013; Sagioglou & Greitemeyer, 2014; Verduyn et al., 2015). In Figures 3A to 3D, each moment of production is indicated by a black triangle. The plots make clear that only a small proportion of each adolescent’s screen time was spent in production mode. For example, Participant #1 spent only 2.6% of her screen time in production mode. The density of triangles seen in Figure 3 is summarized for the entire observation period, as the average minutes of production per hour of the day, by the dashed black line in Figure 2. As seen in Figure 2A, Participant #1’s production remained generally flat during the day with some upward trend in the evening, following a trajectory similar to her use of Social media (in this case, Snapchat). Participant #2 spent 0.3% of his screen time in production mode. Figure 2B shows his extremely low rate of production throughout the day (dashed black line buried along the bottom of the plot). Although he was primarily a consumer, when he did produce content he was using a Social application. Participant #3 spent 7.0% of her screen time in production mode. As seen in Figure 2C, her production was spread through much of the afternoon and evening, with peaks at typical lunch time, just after typical school hours, and in the 21:00 hour.
As seen in Figure 3C, Participant #3 was mostly producing content when using Social or Video Players & Editors apps. Participant #4 spent 6.4% of his screen time in production mode, with the greatest production occurring between 20:00 and 23:00 when he was engaged with Social applications (mostly Snapchat).
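The production percentages and the dashed production curve in Figure 2 can be derived from per-screenshot production labels. A minimal sketch, assuming each screenshot already carries a boolean `is_producing` label (how that label is assigned is not shown here) and pooling all days; applying it separately to week days and weekend days would mirror Figure 2. The default scale factor of five matches the figure caption’s “multiplied by five to improve readability”; the function name is hypothetical.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, Tuple


def production_summary(
    screenshots: Iterable[Tuple[datetime, bool]],
    n_days: int,
    interval_s: int = 5,
    display_scale: float = 5.0,
) -> Tuple[float, Dict[int, float]]:
    """Return (share of screen time spent producing, hourly production curve).

    The curve gives average minutes of production per hour of day across
    n_days, multiplied by display_scale for plotting.
    """
    total = 0
    produced_by_hour: Counter = Counter()
    for ts, is_producing in screenshots:
        total += 1
        if is_producing:
            produced_by_hour[ts.hour] += 1
    share = sum(produced_by_hour.values()) / total if total else 0.0
    curve = {
        hour: display_scale * produced_by_hour[hour] * interval_s / 60 / n_days
        for hour in range(24)
    }
    return share, curve
```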

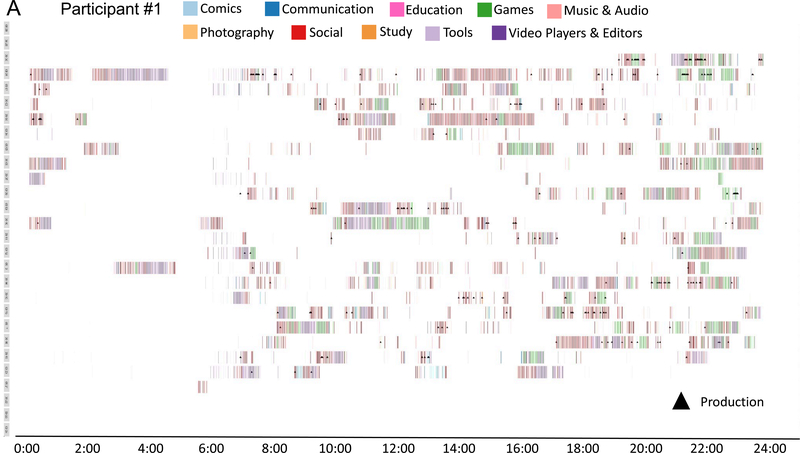

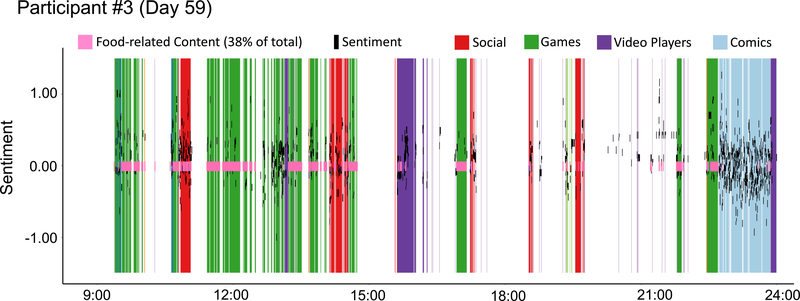

Food-Related Content

In addition to examining specific aspects of behavior (production/consumption), we can also focus on specific types of content that an individual is exposed to on any given day. For example, consider the one-day screenome (zoomed into the 9:00 to 24:00 hours) shown in Figure 4. Here, all the screenshots obtained from Participant #3 on one day (Day #59) were manually labeled for food-related content. In the figure, screenshots that contained any food-related text and/or images are indicated by bright pink marks in the center of the vertical lines. Of the 6.22 hours Participant #3 spent on her phone on this Saturday, 37% of screenshots contained food-related content. In line with her overall use pattern (Figure 2C), most of the food-related content was encountered between 9:00 and 15:00 while she was playing a Game (green) in which the player runs a restaurant (World Chef). Approached in an inductive, discovery mode, wherein the data prompt the formulation of new research questions, this portion of the data prompted additional quantitative and qualitative analysis of the food-related content.

Figure 4.

Barcode plot of one day for one participant. The colored vertical lines indicate whether the smartphone screen was on during each five-second interval from 00:00 on the left to 23:59 on the right. Color indicates the type of application (e.g., Communication, Social, Games) that was engaged at each moment. The black “sprinkles” indicate the sentiment of the words that appear on screens with text. Bright pink lines in the center of the figure indicate whether a given screen includes food-related content.

Quantitative assessment of food-related content and sentiment.

As noted earlier, we quantified the text that appeared in each screenshot with respect to sentiment (Liu, 2015). As seen in Figure 4, sentiment scores (black “sprinkles”) fluctuated substantially across screens. Some screens had more positive text and others more negative text, and many screens were neutral (sentiment = 0; not pictured in Figure 4). Results from a two-group ANOVA of this day’s screens indicated that text surrounding food-related content was significantly more positive in valence than text accompanying non-food content, F(1, 2803) = 17.73, p < .001. Average sentiment of text on screens with food-related content was 0.10 (SD = 0.22), while average sentiment of text on screens with non-food content was 0.05 (SD = 0.24), a small but potentially meaningful difference (Cohen’s d = 0.22). Notably, though, about a third of the screenshots did not contain text (e.g., the food-related content was only in the images), and when they did have text, we had only a general view of the content of that text (i.e., that it contained particular words in the sentiment lexicon). Thus, we sought to learn more about the kinds of food-related content that appeared in this and the other participants’ screenomes using a qualitative approach.
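For two groups, a one-way ANOVA is equivalent to a pooled-variance t test (F = t²), and the reported degrees of freedom indicate how many screens entered the comparison: df_within = N − 2 = 2803, so N = 2,805 text-bearing screens. A minimal sketch of both statistics in plain Python, assuming Cohen’s d uses the pooled within-group standard deviation (a common definition; the text does not specify which variant was used). A p-value would additionally require the F distribution’s survival function (for example, SciPy’s `scipy.stats.f.sf`). The function name is hypothetical; it would be called as `two_group_anova_and_d(food_scores, nonfood_scores)`, where both arguments are lists of per-screen sentiment scores.

```python
import math
import statistics
from typing import Sequence, Tuple


def two_group_anova_and_d(
    a: Sequence[float], b: Sequence[float]
) -> Tuple[float, Tuple[int, int], float]:
    """One-way ANOVA F for two groups plus Cohen's d (pooled SD).

    Returns (F, (df_between, df_within), d), with d = (mean_a - mean_b) / pooled SD.
    """
    n_a, n_b = len(a), len(b)
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    grand_mean = (sum(a) + sum(b)) / (n_a + n_b)

    ss_between = n_a * (mean_a - grand_mean) ** 2 + n_b * (mean_b - grand_mean) ** 2
    ss_within = sum((x - mean_a) ** 2 for x in a) + sum((x - mean_b) ** 2 for x in b)
    df_between, df_within = 1, n_a + n_b - 2

    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    d = (mean_a - mean_b) / math.sqrt(ms_within)  # pooled SD = sqrt(MS_within)
    return f_stat, (df_between, df_within), d
```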

Qualitative assessment of food-related content.

The quantitative description of food-related content prompted in-depth qualitative analysis of how food and drink manifested on these four adolescents’ smartphone screens. To illustrate the potential value of the screenome, we highlight a few themes that we considered informative for parents’ and health professionals’ understanding of how adolescents’ everyday media influence food-related behavior.

Images.

Sampling broadly from 751.5 hours of these adolescents’ smartphone use, we noted that images of sugary and caffeinated drinks were often shared on social media such as Snapchat and Instagram. Interestingly, these images tended to be pictures of popular branded drinks, including, for example, Starbucks Frappuccinos with whipped cream, cans of “Monster” energy drinks, and “jelly drinks.” The volume of shared food and drink images, in comparison to other types of images, underscored the significance of both consumption and specific branding. We observed that very few of the images of drinks posted on social media were of non-branded drinks, and few images or videos of non-branded drinks were viewed at all. This was not the case for food: many images and videos of food were viewed and shared on social media, but almost always without branding or packaging (e.g., a pizza slice, cakes on a plate, bowls of pasta). One user in particular spent the vast majority of her time viewing pictures of food and watching videos of people eating and preparing food. This female adolescent also followed many food groups on Instagram and frequently liked posts that contained images of food with apparently high sugar, fat, and calorie content, such as cakes, biscuits, and carbohydrate-laden meals. She received frequent notifications that new food videos had been posted, and the same vloggers often appeared in her screenome many times a day with different food videos, all suggesting that she purposively directed multiple food-related feeds into her media diet.

Texts.

Although there were many food-related videos and images in these adolescents’ media diets, they did not frequently communicate with others about food. Text messages such as “I’m hungry” or “can you pick me up a Starbucks” were observed, and there were a couple of references to body image/weight. For example, in a series of text exchanges, one adolescent accuses his friend of calling him fat to another friend, and is asked in return why he is “ashamed of being fat.” Another adolescent, communicating through Snapchat, asks someone why a friend “is so fat.” On the whole, however, there was little two-way communication per se about eating, viewing particular foods, or body image. In sum, (1) although these adolescents were not actively producing much food-related content, each individual’s digital environment was punctuated with food-related images and videos; (2) images of sugary and caffeinated drinks were often shared on social media; (3) in contrast to drinks, images and videos of food viewed and shared on social media rarely showed branding or packaging; (4) individuals may be purposively directing food-related content into their media diets; but (5) they do not frequently communicate with others about eating, viewing particular foods, or body image. Later we discuss how the newly accessible level of detail about individuals’ media diets provided by the screenome may inform both personalized intervention and policy.

Discussion

Research about adolescents and media, like most other literatures in social science, seeks to make accurate inferences about population-level phenomena. Inferences range from statements about how adolescents from different eras use media differently (Twenge, 2017) to gender differences in social media dependence (Pea et al., 2012) to multitasking effects that differ by cognitive abilities (Ophir, Nass, & Wagner, 2009). It is reasonable to seek characterizations of large groups of people because those generalizations provide efficient summaries of population-level use and the potential to inform population-level interventions. If groups of adolescents are similar, they can be understood and reached as a group. Our aim in this study was to advance a methodology that can be used to obtain a new kind of data: data that allow for a detailed description and analysis of individuals’ actual media use in a manner that acknowledges heterogeneity and supports person-level understanding and intervention.

The comparison of the information derived from Figures 2 and 3 illustrates how zooming in to (and aggregating across) different time scales leads to different conclusions. Figure 2 shows use by hour of day averaged across all available days. These plots illustrate how particular types of use tend to happen during similar or different times of day, but do not indicate whether use of multiple applications is simultaneous (“parallel” use, fast switching) or contiguous (“serial” engagement, slow switching). In contrast, Figure 3 zooms in and shows the content of individual screenshots, without aggregation. At this time scale, the screenshot-to-screenshot sequences support very different conclusions about how these adolescents use their phones and how quickly they switch among multiple applications. As these data show, individual adolescents’ media use can differ greatly in how any given adolescent spends any one of the hundreds of media sessions they have each day, let alone how they spend a digital month. Vast between- and within-person variability in use is evident even across just four participants, all of whom were similar in age, ethnicity, and socioeconomic status, were living in the same geographic area, and were primarily engaged with video games and social media.

Adolescence is known for its variability (e.g., storm and stress, see Hollenstein & Lougheed, 2013). The digitization and mediatization of so many life domains, and the ease with which individuals can access, order, switch, archive, and return to different experiences on their mobile devices, almost ensure that each person’s sequence of experiences is unique. Developmental systems and dynamic systems theories suggest that the ongoing “co-actions” or “dynamic transactions” occurring between persons and their environments drive development, with differences in the timing, duration, quantity, and quality of “proximal processes” producing interindividual differences (Bronfenbrenner & Morris, 2006; Gottlieb, 1992). Our screenome data suggest that these four adolescents’ everyday media use is full of unique exposures to unique content at unique times. Lifespan developmental theory suggests that idiosyncratic encounters with unique environments are “non-normative” influences that contribute to interindividual differences in development (Baltes et al., 2006). We now have a way to capture and study fine-grained sequences of each individual’s digital life, a massive amount of unique exposure sequences that can be used to test theory about both short-term behavioral change and long-term development.

From a methodological perspective, the uniqueness of individuals’ media use implies a shift toward person-specific idiographic approaches (see Molenaar, 2004), or at least that such analyses should supplement the typical reliance on group-level averaging. The variance around the averages is too large to leave unexplored or to treat as “noise.” We contend that as the digital world itself becomes more diverse, the relevance of the “average” will decline, and that we will need to seriously re-evaluate research investments in external vs. internal validity. Studies should not be judged as useful or misleading because they make inferences from 4 or 4,000 persons’ data, but should instead be considered in relation to the detail they provide about any given adolescent’s experiences. We hope to have illustrated here how media might be used to probe fast-moving dynamic systems and to develop new models of individual behavior and intervention, without the need to generalize across persons. Following the example set by genomics and the push toward personalized medicine, we put forward the idea that engagement with screenomics data might open the possibility of tracking personalized development.

Our findings align with research indicating that adolescents use a lot of social media and play games, and that much of their media content is heavily visual (filled with images and videos; Lenhart et al., 2015; Rideout, 2015). Our results additionally highlight how the hours these adolescents spent on their smartphones were organized temporally. The engagements with media were not contiguous. They were spread throughout the day (Figure 2) and interspersed with other activities (e.g., school). Our four participants engaged with a similar number of distinct applications (26, 22, 30, and 28) during, on average, 186, 26, 332, and 184 sessions per day. The lengths of segments within each session (engagement with a single application) ranged from five seconds (the limit of our sampling design) to maximums of between 1 and 2.5 hours. For each of these four adolescents, there were moments of atomization and fragmentation, as well as long periods of focused use. Proceeding inductively, we see a need for further investigation of how segments and sessions are organized and of the psychological and developmental implications of the within-person, within-day, within-hour heterogeneity.

We used both quantitative and qualitative methods to explore how food, as just one aspect of digital life, was represented in these adolescents’ screenomes. For example, our detailed analysis of a one-day screenome showed that food-related content was surrounded by, or contained, more positively valenced words. The qualitative analysis provided even more detail. Specifically, frame-by-frame observation of all four participants’ screenomes suggested that images of drinks were more likely to be branded, while images of food were more likely to be unbranded. Given that branding is known to have powerful influences on individuals’ tastes, expectations, and preferences, it is plausible that depth of exposure to drink-related content is intertwined with individuals’ consumption habits. In line with this possibility, our qualitative analysis also highlighted focused personal selection, or agency, in these adolescents’ engagement patterns (e.g., following particular YouTube channels) that might facilitate modeling of food-related behavior through vicarious engagement with similar others (Bandura, 1986). Coupled with additional data about actual eating behavior, the detailed data in the screenome might (after privacy concerns are addressed) open opportunities for (1) early detection of unhealthful eating behaviors, (2) individualized parent-child or professional-patient interventions, and (3) closed-loop, real-time, artificial intelligence-driven precision interventions for behavior change.

Examination of an individual’s screenome might identify the presence of new or increasing content about weight loss and unhealthy weight control strategies (e.g., fasting, purging, appetite suppressants, anorexia nervosa) – a screening approach that, because it spans all apps and genres of screen content, may have the sensitivity and specificity needed to refer individuals to appropriate resources. For example, adolescents might ask a parent or individual provider to review a targeted summary or dashboard derived from their screenome to help them develop context-specific strategies to reduce feelings of hunger through the day and achieve weight and behavioral goals. Specifically, identification of how particular food-related blogs, videos, or game play are related to actual eating and snacking would facilitate engagement with personalized behavioral prescriptions, including setting specific time or time-of-day limits or removing specific apps from their smartphone. Pushing a bit further, temporal analysis of the screenome may also facilitate identification of the specific content, contexts, and times (of day, day of week, etc.) that precede or accompany unhealthful eating behaviors (e.g., gaming sessions that are followed by placement of online orders for food delivery). These may be different for different adolescents or even for different days or settings within a single adolescent. If an adolescent has opted in for help in improving their diet, detection and eventually personalized prediction of high-risk behavior patterns could trigger just-in-time interventions (e.g., via text message) meant to interrupt and reduce consumption of unhealthful foods, promote healthful choices, and establish lower-risk behavior patterns. Iterative experimentation (A/B testing) could identify the most efficacious intervention for an individual person at a particular time and place, and in a particular combination, to fulfill the promise of precision health and well-being.

Both clinical and research settings are filled with calls for more and better person-specific data that can inform just-in-time interventions, for dashboards that provide summary information from intensive longitudinal data streams (such as might be obtained from monitors and wearables), and for interpretations that might eventually become part of practitioners’ regular workflows (Nahum-Shani et al., 2017; Schneiderman et al., 2016). Possible domains of interest stretch far beyond food-related content and health behaviors. Certainly, advertisers and media content providers (e.g., Netflix) are already making use of individuals’ media use patterns in hopes of altering their customers’ viewing and purchasing behaviors. As the science of screenomics and its associated dashboards are developed, this new level of detail may contribute to individuals’ ability to optimize their goals for health and well-being.

It is, of course, important to note some of the privacy issues surrounding the collection, study, and use of screenomes. The screenome contains substantial information, including information about behaviors that individuals consider private. All of the data summarized here were collected using a rigorous protocol designed to protect participants’ privacy. These data cannot be, and were not intended to be, shared with parents or health providers. New protocols, however, could be developed wherein participants explicitly consider how specific portions of their screenomes might be analyzed and interpreted in ways that inform and support their personal goals. Beyond the general data protection regulations already in place, issues surrounding how, when, and with what intent screenome information is shared with professionals, family members, or friends need to be explored further. Adolescence, in particular, is a developmental period in which children and parents are renegotiating autonomy and the monitoring of many behaviors, including screen time and social media sharing (e.g., how adolescents and parents attempt to regulate each other’s social media content; Shin & Kang, 2016). As the value of screenomics surfaces, further consideration and study of how the availability of the screenome can, should, and should not inform adolescents’, parents’, friends’, and providers’ subsequent behavior is necessary. Certainly, many of the same legal and ethical issues surrounding the collection and use of individuals’ genomes and other -omes will apply here as well (Caulfield & McGuire, 2012).

It is also important to note our own reflexivity as researchers learning about the screenome and to explicitly acknowledge our role in the research process. While there was no direct interaction between researchers and participants in this study, we are conscious of, and reflective about, the potential impact on participants of being ‘observed’ as part of a study run out of a prestigious university in an affluent metropolitan area. We acknowledge that this adolescent Latino/Hispanic population is understudied, in part because of ongoing cultural, geographic, and socioeconomic biases and barriers that influence research samples (Gatzke-Kopp, 2016). These adolescents’ choice to get involved and their actual involvement in the study may have affected their typical mobile phone use (e.g., they may have avoided particular apps or been more cautious in their digital interactions and engagement). In addition, we have considered that our own position as researchers may affect the psychological knowledge and data (qualitative and quantitative) produced in the research process (Langdridge, 2007). For example, our values, experiences, interests, beliefs, and, importantly for this study, cultural background and social and professional identities inevitably shape how we approached and analyzed the data (Berger, 2015). In this study, the researchers were female and male adults who considered themselves experts in social processes, behavior change, media use, and pediatric health, and who identified as White, Latino/Hispanic, American, Asian, and European. The analysis and conclusions are in part shaped by our own interests and backgrounds.

Limitations and Future Directions

We are both excited about the possibilities unfolding from our initial analysis of screenomes and cognizant of some limitations. First, we described screenomes provided by four Latino/Hispanic youth, age 14–15 years, from low-income, racial/ethnic minority neighborhoods. The sample and analysis do not support generalization of any results to the population or examination of group differences, nor are they meant to. The small sample size is in part intentional, meant to highlight the need for and value of intensive longitudinal data for describing what adolescents actually do on their phones and for understanding the idiosyncratic nature of media use in today’s world. Now that we have established viability and utility, future research can engage with larger and more representative samples without compromising or losing idiographic detail. Multilevel and related analytical frameworks that provide for statistically robust and simultaneous evaluation of intraindividual and interindividual differences will be particularly useful as more data become available. We note, however, that when typical group-based statistical analyses are employed, care should be taken in interpreting average behavior or average associations (e.g., fixed effects in multilevel regression models), which need not describe any individual participant. Our experience and knowledge of the literature suggest that recruitment and study of high-income White participants is normative. We are using this paper as an opportunity to provide an antidote by presenting data from Latino youth, without the need for comparison with the “majority group”, and with purposeful prioritization of individuality and interpretation by a multi-ethnic research team aware of the cultural context surrounding these adolescents’ digital lives.
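
To make concrete why an average association can misdescribe individuals, the sketch below contrasts a pooled slope with between-person and within-person slopes obtained by person-mean centering. It is a simplified stand-in for a multilevel model, not the analysis used in this study, and the three participants and their values are invented for illustration.

```python
from statistics import mean

# Hypothetical toy data (NOT study data): for each of three imaginary participants,
# x and y are two measures recorded on three occasions.
data = {
    "A": ([1, 2, 3], [3, 2, 1]),
    "B": ([4, 5, 6], [6, 5, 4]),
    "C": ([7, 8, 9], [9, 8, 7]),
}


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def decompose(data):
    """Return pooled, between-person, and within-person slopes."""
    # Pooled: every observation treated as independent, ignoring who produced it.
    pooled_x = [v for x, _ in data.values() for v in x]
    pooled_y = [v for _, y in data.values() for v in y]
    # Between-person: one point per person (their mean x, their mean y).
    means_x = [mean(x) for x, _ in data.values()]
    means_y = [mean(y) for _, y in data.values()]
    # Within-person: deviations from each person's own mean, pooled across persons.
    within_x = [v - mean(x) for x, _ in data.values() for v in x]
    within_y = [v - mean(y) for _, y in data.values() for v in y]
    return {
        "pooled": ols_slope(pooled_x, pooled_y),
        "between": ols_slope(means_x, means_y),
        "within": ols_slope(within_x, within_y),
    }


print(decompose(data))  # {'pooled': 0.8, 'between': 1.0, 'within': -1.0}
```

Here the pooled and between-person slopes are positive while every participant shows a negative within-person association. Models that separate these components (e.g., by entering person means and person-mean-centered predictors) avoid conflating them.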

Second, although we have all the content that appeared on the smartphone screen, we extracted only a relatively small set of features from each screenshot (e.g., image complexity), quantified only the sentiment of text using a single dictionary (and not, for example, the sentiment of images), and examined only one type of content (food-related images and text). The analysis pipeline and procedures developed here are being expanded (see also Reeves et al., 2019). Ongoing research is expanding the feature set (e.g., using natural language processing, object recognition, image clustering); broadening the perspectives from which the data are approached (e.g., cognitive costs of task switching, language of interpersonal communication) and the types of content examined (e.g., politics, finances, health); and examining how general and specific aspects of the screenome are related to and interact with individuals’ other time-invariant characteristics and time-varying behaviors. For example, researchers are beginning to examine how the frequency of engagement with particular sequences of content is related to individuals’ personality, socioeconomic context, and current location within their activity space. Similarly, screenome data can be supplemented with, and examined in relation to, self-report data or sensor data (e.g., from “wearables”). For example, when obtained alongside individuals’ self-reports of their ongoing perceptions, cognitions, and emotions (e.g., as obtained in experience sampling studies) or monitoring of behavioral and physiological parameters, the screenome will facilitate discovery of how the fast-moving content of individuals’ screens influences and is influenced by psychosocial, behavioral, and physiological events and experiences, in the moment and over time.
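
Single-dictionary sentiment scoring of screenshot text can be sketched as follows. The lexicon, the tokenizer, and the averaging rule are all assumptions made for illustration; this section does not specify which dictionary or scoring rule the study used.

```python
import re

# Hypothetical mini-lexicon for illustration only; NOT the study's dictionary.
LEXICON = {
    "love": 1.0, "great": 1.0, "happy": 1.0,
    "hate": -1.0, "bad": -1.0, "sad": -1.0,
}


def dictionary_sentiment(text, lexicon=LEXICON):
    """Mean valence of lexicon words found in `text`; None if no word matches."""
    # Lowercase and split into alphabetic tokens (apostrophes kept).
    tokens = re.findall(r"[a-z']+", text.lower())
    scores = [lexicon[t] for t in tokens if t in lexicon]
    if not scores:
        return None
    return sum(scores) / len(scores)


print(dictionary_sentiment("bad day but happy now"))  # 0.0
print(dictionary_sentiment("not happy"))  # 1.0 (negation is ignored)
print(dictionary_sentiment("see you at 5"))  # None
```

The last two calls show why a single dictionary is limiting: without negation handling, "not happy" scores as positive, and screenshots without matching words (or whose meaning is carried by images) receive no score at all.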

Third, we obtained screenshots every five seconds that the participants’ screens were in use during a 30- to 100-day span. While sampling screen content every five seconds provided unprecedented detail about actual smartphone use that is not available in any other study we know of, even this fine granularity misses some behaviors. Our “slow frame rate” sampling misses “quick-switches” that occur when, for instance, an individual moves through multiple social media platforms in rapid succession to check what’s new, or when they are engaged in synchronous text message conversations with multiple individuals simultaneously. Any visit shorter than the five-second interval can fall entirely between two consecutive screenshots. Although costly in terms of data transfer, storage, and computation, the study of some phenomena might require faster sampling that is closer to continuous video. Zooming out a few time scales, we also caution that these data were collected in Fall 2017 and must be interpreted with respect to the apps, games, fads, and memes that were available and circulating at that time. As our repository of screenomes grows, we foresee opportunities to study how changes in the larger media context influence and are influenced by individual-level, moment-to-moment media use.
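
The effect of the sampling interval on quick-switches can be illustrated with a small simulation. The timeline of app episodes below is invented, and the sketch assumes screenshots start exactly at t = 0; in practice whether a brief visit is captured also depends on where it falls relative to the screenshot schedule.

```python
import math

# Hypothetical 30-second stretch of foreground-app episodes (start_s, end_s, app).
# The timeline is invented for illustration; it is not study data.
EPISODES = [
    (0.0, 11.0, "messaging"),
    (11.0, 13.5, "social_A"),  # 2.5-second quick-switch visit
    (13.5, 14.5, "social_B"),  # 1-second quick-switch visit
    (14.5, 30.0, "video"),
]


def app_at(t, episodes):
    """Return the app on screen at time t (seconds), or None if none is active."""
    for start, end, app in episodes:
        if start <= t < end:
            return app
    return None


def missed_apps(episodes, interval, duration):
    """Apps that were on screen but never appear in screenshots taken every `interval` s."""
    n_shots = math.ceil(duration / interval)
    seen = {app_at(i * interval, episodes) for i in range(n_shots)}
    return {app for _, _, app in episodes} - seen


print(sorted(missed_apps(EPISODES, 5.0, 30.0)))  # ['social_A', 'social_B']
print(sorted(missed_apps(EPISODES, 1.0, 30.0)))  # []
```

At a five-second interval both brief social media visits fall between screenshots; at one second both are captured, at the cost of five times as many images.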

Finally, we note that although our analysis made use of a variety of contemporary quantitative and qualitative methods, it is descriptive. We have not yet used the intensive time-series data to build and test process models of either moment-to-moment behavior (e.g., task switching) or developmental change (e.g., influence of puberty on media use). The initial explorations here have, however, laid a foundation for future data collection and for analyses that use mathematical and logical models supporting statistical and qualitative inference about many individual-level and population-level phenomena. We look forward to the challenges and new insights yet to come. One of the main challenges we face is the need for, and cost (mostly in terms of time) of, human labeling. The machine learning algorithms we used here to identify the application in use in each screenshot were based on a subset of 10,664 screenshots that were labeled by our research team. Future work will eventually require more labeling. While many big-data applications use crowd-sourcing platforms such as Mechanical Turk to label data relatively cheaply, these platforms are not viable for labeling screenome data. Given the potentially sensitive and personal nature of the data, very strict protocols are in place to ensure that the data remain private. Thus, labeling and coding can be done only in secure settings by approved research staff. Our protocols underscore that the richness of the data comes with a social responsibility to protect the data contributors.

Conclusion

The goal of this study was to put forward a new source of data, the screenome, and a new method, screenomics, for studying how adolescents engage with their fast-moving and dynamic digital environment as they go about their daily lives, in situ. We demonstrated the ability to capture everything that appears on an adolescent’s smartphone screen every five seconds that the device is on, and to extract the fine granularity of information needed for both quantitative and qualitative study of adolescents’ digital lives. Our initial results suggest that the method and data can be used to describe what adolescents actually do on their smartphones, and will lead us to new perspectives on how individuals’ digital lives contribute to and change across the life course.

Acknowledgements:

Thanks very much to the study participants for providing a detailed glimpse of their daily lives for such an extended period of time. Authors’ contributions were supported by the Cyber Social Initiative at Stanford University (SPO#125124), the Stanford Child Health Research Institute, the Stanford University PHIND Center (Precision Health and Integrated Diagnostics), the Knight Foundation (G-2017-54227), the National Institutes of Health (UL1 TR002014, T32 AG049676, T32 LM012415), the National Science Foundation (I/UCRC award #1624727), and the Penn State Social Science Research Institute.

References

- Aigner W, Miksch S, Muller W, Schumann H, & Tominski C (2008). Visual methods for analyzing time-oriented data. IEEE Transactions on Visualization and Computer Graphics, 14(1), 47–60.

- Ajzen I (1985). Action control: From cognition to behavior. New York: Springer.

- Baltes PB, Lindenberger U, & Staudinger UM (2006). Life-span theory in developmental psychology. In Lerner RM (Ed.), Theoretical models of human development. Volume 1 of the Handbook of child psychology (6th ed., pp. 569–664). Editors-in-Chief: Damon W & Lerner RM. Hoboken, NJ: Wiley.

- Baltes PB, & Nesselroade JR (1979). History and rationales for longitudinal research. In Nesselroade JR & Baltes PB (Eds.), Longitudinal research in the study of behavior and development (pp. 1–39). New York, NY: Academic Press.

- Bandura A (1986). Social foundations of thought and action. Englewood Cliffs, NJ: Prentice-Hall.

- Berger R (2015). Now I see it, now I don’t: Researcher’s position and reflexivity in qualitative research. Qualitative Research, 15(2), 219–234.

- Blumberg FC, Deater-Deckard K, Calvert SL, Flynn RM, Green CS, Arnold D, & Brooks PJ (2019). Digital games as a context for children’s cognitive development: Research recommendations and policy considerations. Social Policy Report, 32(1), 1–33.

- Boase J, & Ling R (2013). Measuring mobile phone use: Self-report versus log data. Journal of Computer-Mediated Communication, 18, 508–519. doi: 10.1111/jcc4.12021

- Böhmer M, Hecht B, Schöning J, Krüger A, & Bauer G (2011, August). Falling asleep with Angry Birds, Facebook and Kindle: A large scale study on mobile application usage. In Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services (pp. 47–56). ACM.

- Borgogna N, Lockhart G, Grenard JL, Barrett T, Shiffman S, & Reynolds KD (2015). Ecological momentary assessment of urban adolescents’ technology use and cravings for unhealthy snacks and drinks: Differences by ethnicity and sex. Journal of the Academy of Nutrition and Dietetics, 115(5), 759–766.

- Bronfenbrenner U, & Morris PA (2006). The bioecological model of human development. In Lerner RM (Ed.), Theoretical models of human development. Volume 1 of the Handbook of child psychology (6th ed., pp. 793–828). Editors-in-Chief: Damon W & Lerner RM. Hoboken, NJ: Wiley.

- Buhrmester M, Kwang T, & Gosling SD (2011). Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science, 6(1), 3–5.

- Calvert SL, & Wilson BJ (Eds.). (2010). The handbook of children, media, and development (Vol. 10). John Wiley & Sons.

- Caulfield T, & McGuire AL (2012). Direct-to-consumer genetic testing: Perceptions, problems, and policy responses. Annual Review of Medicine, 63, 23–33.

- Chen T, He T, & Benesty M (2015). XGBoost: Extreme gradient boosting. R package version 0.4-2, 1–4.

- Chiatti A, Yang X, Brinberg M, Cho MJ, Gagneja A, Ram N, Reeves B, & Giles CL (2017). Text extraction from smartphone screenshots to archive in situ media behavior. In Proceedings of the Knowledge Capture Conference, December 2017, Austin, TX, USA. New York, NY: ACM Press.

- Common Sense Media. The common sense census: Media use by tweens and teens. Available at https://www.commonsensemedia.org/research/the-common-sense-census-media-use-by-tweens-and-teens

- Coyne SM, Padilla-Walker LM, & Howard E (2013). Emerging in a digital world: A decade review of media use, effects, and gratifications in emerging adulthood. Emerging Adulthood, 1(2), 125–137.

- Culjak I, Abram D, Pribanic T, Dzapo H, & Cifrek M (2012, May). A brief introduction to OpenCV. In MIPRO, 2012 Proceedings of the 35th International Convention (pp. 1725–1730). IEEE.

- Datavyu Team (2014). Datavyu: A video coding tool. Databrary Project, New York University. URL http://datavyu.org

- Folkvord F, & Van’t Riet J (2018). The persuasive effect of advergames promoting unhealthy foods among children: A meta-analysis. Appetite, 129, 245–251.

- Friedman JH (2001). Greedy function approximation: A gradient boosting machine. Annals of Statistics, 1189–1232.

- Gatzke-Kopp LM (2016). Diversity and representation: Key issues for psychophysiological science. Psychophysiology, 53(1), 3–13.

- George MJ, Russell MA, Piontak JR, & Odgers CL (2018). Concurrent and subsequent associations between daily digital technology use and high-risk adolescents’ mental health symptoms. Child Development, 89(1), 78–88.

- Gerwin RL, Kaliebe K, & Daigle M (2018). The interplay between digital media use and development. Child and Adolescent Psychiatric Clinics of North America, 27(2), 345–355.

- Glaser BG (1992). Basics of grounded theory analysis. Mill Valley, CA: Sociology Press.

- Glaser BG, & Strauss AL (1967). The discovery of grounded theory: Strategies for qualitative research. Chicago, IL: Aldine.

- Gold JE, Rauscher KJ, & Zhu M (2015). A validity study of self-reported daily texting frequency, cell phone characteristics, and texting styles among young adults. BMC Research Notes, 8(1), 120.

- Hastie T, Tibshirani R, & Friedman J (2001). The Elements of Statistical Learning. New York, NY, USA: Springer New York Inc.