Key Points

Question

How do patients perceive the use of artificial intelligence for skin cancer screening?

Findings

A qualitative study conducted at the Brigham and Women’s Hospital and the Dana-Farber Cancer Institute evaluated 48 patients, 33% with a history of melanoma, 33% with a history of nonmelanoma skin cancer only, and 33% with no history of skin cancer. While 75% of the patients stated that they would recommend artificial intelligence to friends and family members, 94% expressed the importance of symbiosis between humans and artificial intelligence.

Meaning

Patients appear to be receptive to the use of artificial intelligence for skin cancer screening if the integrity of the human physician-patient relationship is preserved.

Abstract

Importance

The use of artificial intelligence (AI) is expanding throughout the field of medicine. In dermatology, researchers are evaluating the potential for direct-to-patient and clinician decision-support AI tools to classify skin lesions. Although AI is poised to change how patients engage in health care, patient perspectives remain poorly understood.

Objective

To explore how patients conceptualize AI and perceive the use of AI for skin cancer screening.

Design, Setting, and Participants

A qualitative study using a grounded theory approach to semistructured interview analysis was conducted in general dermatology clinics at the Brigham and Women’s Hospital and melanoma clinics at the Dana-Farber Cancer Institute. Forty-eight patients were enrolled. Each interview was independently coded by 2 researchers with interrater reliability measurement; reconciled codes were used to assess code frequency. The study was conducted from May 6 to July 8, 2019.

Main Outcomes and Measures

Artificial intelligence concept, perceived benefits and risks of AI, strengths and weaknesses of AI, AI implementation, response to conflict between human and AI clinical decision-making, and recommendation for or against AI.

Results

Of 48 patients enrolled, 26 participants (54%) were women; mean (SD) age was 53.3 (21.7) years. Sixteen patients (33%) had a history of melanoma, 16 patients (33%) had a history of nonmelanoma skin cancer only, and 16 patients (33%) had no history of skin cancer. Twenty-four patients were interviewed about a direct-to-patient AI tool and 24 patients were interviewed about a clinician decision-support AI tool. Interrater reliability ratings for the 2 coding teams were κ = 0.94 and κ = 0.89. Patients primarily conceptualized AI in terms of cognition. Increased diagnostic speed (29 participants [60%]) and health care access (29 [60%]) were the most commonly perceived benefits of AI for skin cancer screening; increased patient anxiety was the most commonly perceived risk (19 [40%]). Patients perceived both more accurate diagnosis (33 [69%]) and less accurate diagnosis (41 [85%]) to be the greatest strength and weakness of AI, respectively. The dominant theme that emerged was the importance of symbiosis between humans and AI (45 [94%]). Seeking biopsy was the most common response to conflict between human and AI clinical decision-making (32 [67%]). Overall, 36 patients (75%) would recommend AI to family members and friends.

Conclusions and Relevance

In this qualitative study, patients appeared to be receptive to the use of AI for skin cancer screening if implemented in a manner that preserves the integrity of the human physician-patient relationship.

This qualitative study examines how the use of artificial intelligence as a screening tool is perceived by patients receiving care in a dermatology clinic.

Introduction

Artificial intelligence (AI) is a branch of computer science that focuses on the automation of intelligent behavior. Machine learning is a subfield of AI that uses data-driven techniques to uncover patterns and predict behavior.<sup>1,2</sup> Artificial intelligence tools are being explored across the field of medicine. In dermatology, researchers are evaluating the potential of machine learning to classify skin lesions using images from standard and dermoscopic cameras.<sup>3,4,5</sup> Direct-to-patient AI tools classify images obtained by patients outside of a clinical setting, and clinician decision-support AI tools classify images obtained by clinicians at the point of care.<sup>6</sup>

Artificial intelligence may significantly alter how patients engage in health care, and the medical literature in this field is rapidly expanding. For example, a recent issue of a journal focused on exploring benefits and risks of AI technology, ranging from gains in health care efficiency and quality to threats to patient privacy and confidentiality, informed consent, and autonomy.<sup>7</sup> However, our current understanding of how patients perceive AI and its application to health care lacks clarity and depth.

Our primary aims in this study were to explore how patients conceptualize AI and view the use of direct-to-patient and clinician decision-support AI tools for skin cancer screening. Specifically, we sought to elucidate perceived benefits and risks, strengths and weaknesses, implementation, response to conflict between human and AI clinical decision-making, and recommendation for or against AI. Our secondary aims were to identify which entities patients view as responsible for AI accuracy and data privacy.

Methods

Patients were prospectively enrolled from general dermatology clinics at the Brigham and Women's Hospital and melanoma clinics at the Dana-Farber Cancer Institute. The study was conducted from May 6 to July 8, 2019. Our initial enrollment target was 48 patients equally distributed between 3 cohorts: history of melanoma, history of nonmelanoma skin cancer only, and no history of skin cancer. Exclusion criteria were age younger than 18 years and impaired decision-making capacity. The institutional review board of Partners Healthcare approved this study. The participants provided informed verbal consent. There was no financial compensation. This study followed the Consolidated Criteria for Reporting Qualitative Research (COREQ) reporting guideline for qualitative studies.<sup>8</sup>

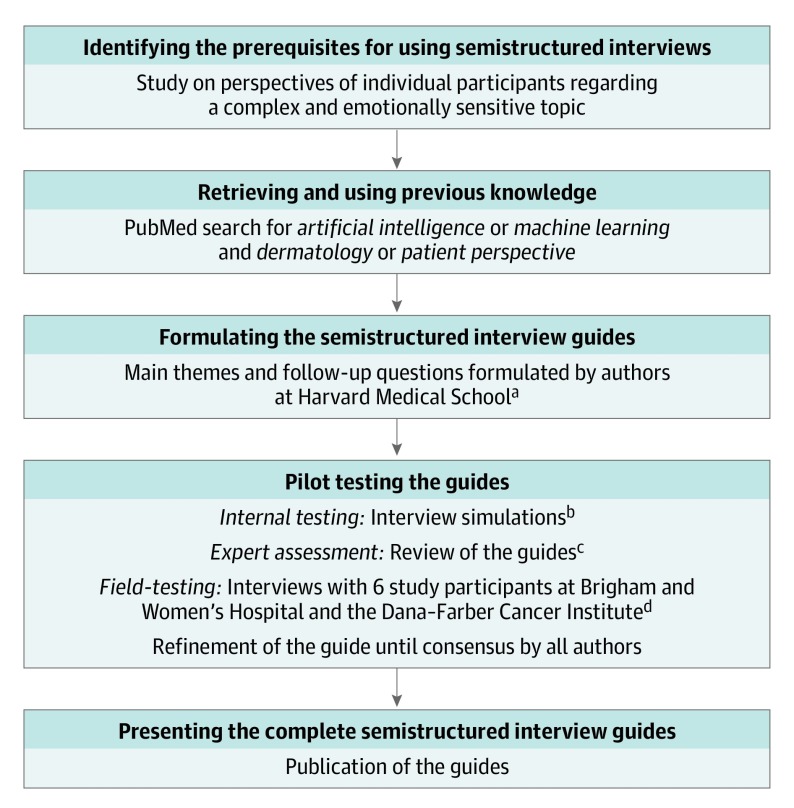

Development of the semistructured interview guides exploring direct-to-patient and clinician decision-support AI tools followed the 5-step process presented in a systematic methodologic review by Kallio et al (Figure 1).<sup>9</sup> First, we determined that our study fulfilled prerequisites for using semistructured interviews.<sup>10</sup> Second, we conducted a literature review. Third, 4 of us (C.A.N., L.M.P.-C., R.I.H., and A.M.) formulated the main themes and follow-up questions for the guides. Fourth, pilot testing occurred in 3 stages. During a training session for 4 of us (S.J.L., K.L., A.B.P., and E.T.) in the semistructured interview technique by 2 of us (C.A.N. and L.M.P.-C.), we tested the guides using interview simulations. After the first round of revisions, we sent the guides for review by subject matter experts on technology applications to dermatology (J.S.B. and J.M.K.) and sociology (A.V.M.). After the second round of revisions, we field tested the guides using 6 patient interviews, which resulted in no further revisions. Fifth, the complete guides are presented (eTable 1 and eTable 2 in the Supplement).

Figure 1. Flow Diagram for Development of the Semistructured Interview Guides.

<sup>a</sup> Formulated by Nelson, Pérez-Chada, Hartman, and Mostaghimi.

<sup>b</sup> Simulated by Nelson, Pérez-Chada, Li, Lo, Pournamdari, and Tkachenko.

<sup>c</sup> Reviewed by Barbieri, Ko, and Menon.

<sup>d</sup> Conducted by Li, Lo, Pournamdari, and Tkachenko.

Each patient was introduced to the study by their dermatologist (R.I.H. or A.M.). Additional information was provided by either a research assistant (S.J.L. or K.L.) or medical student (A.B.P. or E.T.). This individual, who had no previously established relationship with the patient, obtained verbal informed consent and conducted and recorded the interview. Half of the patients in each cohort were interviewed about a direct-to-patient AI tool and the other half were interviewed about a clinician decision-support AI tool. To minimize bias, we alternated the question order such that half of the patients in each cohort and for each AI tool were asked about benefits prior to risks and the other half were asked about risks prior to benefits. We used a standardized data collection instrument to abstract patient age, sex, race, ethnicity, and history of melanoma and/or nonmelanoma skin cancer from the electronic medical record. After each interview, we distributed a paper survey (eTable 3 in the Supplement) to collect data on patient ownership of electronic devices, usage of digital services, prior dermatology exposure, educational level, and total household income.
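The balanced allocation described above (3 cohorts, 2 AI tools, 2 question orders) can be sketched as a simple enumeration. This is only an illustration of the resulting design cells: the source does not describe how individual patients were assigned, and the names `COHORTS`, `TOOLS`, `ORDERS`, and `build_schedule` are hypothetical.

```python
from collections import Counter
from itertools import product

# Design factors taken from the study description.
COHORTS = ["melanoma", "NMSC only", "no skin cancer"]
TOOLS = ["direct-to-patient", "clinician decision-support"]
ORDERS = ["benefits first", "risks first"]

# 48 patients / (3 cohorts * 2 tools * 2 orders) = 4 per cell.
# Equal cell sizes follow from the "half ... and the other half" wording;
# the actual assignment procedure is an assumption here.
PATIENTS_PER_CELL = 4


def build_schedule():
    """Return one (cohort, tool, order) tuple per interview slot."""
    return [
        cell
        for cell in product(COHORTS, TOOLS, ORDERS)
        for _ in range(PATIENTS_PER_CELL)
    ]


schedule = build_schedule()
per_tool = Counter(tool for _, tool, _ in schedule)
per_cohort_tool = Counter((cohort, tool) for cohort, tool, _ in schedule)

print(len(schedule))
print(per_tool)
print(per_cohort_tool)
```

Each cohort-by-tool combination contains 8 interviews, which matches the per-group counts reported for history of skin cancer in Table 1.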

Statistical Analysis

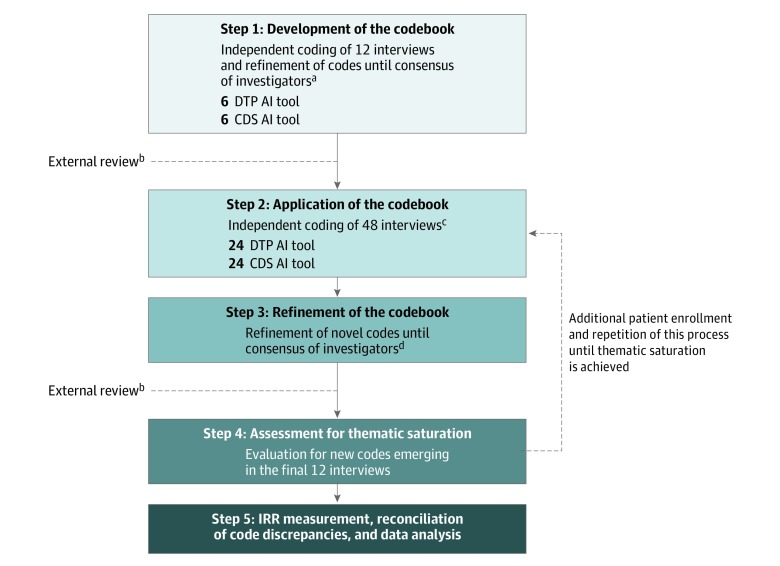

We used a grounded theory approach to develop the codebook (Figure 2). Four of us (C.A.N., L.M.P.-C., R.I.H., and A.M.) independently coded the first 12 interviews, and codes were refined until consensus was reached. The codebook was sent for expert review. After the first round of revisions, the codebook was distributed to 2 coding teams (A.C. and P.M. and S.J.L. and K.L.). Each team member independently coded 24 interviews, and novel codes were refined until consensus was reached among 8 of us (C.A.N., L.M.P.-C., A.C., S.J.L., K.L., P.M., R.I.H., and A.M.). The codebook was sent for expert review. After the second round of revisions, the codebook (eTable 4 in the Supplement) was distributed to each team. No novel codes were identified in the final 12 interviews, achieving thematic saturation. Team members independently finalized coding and reconciled discrepancies. Code frequencies were calculated based on reconciled codes.

Figure 2. Flow Diagram for Data Analysis.

AI indicates artificial intelligence; CDS, clinician decision-support; DTP, direct-to-patient; and IRR, interrater reliability.

<sup>a</sup> Coding by Nelson, Pérez-Chada, Hartman, and Mostaghimi.

<sup>b</sup> Reviewed by Barbieri, Ko, and Menon.

<sup>c</sup> Conducted independently by Creadore and Manjaly (24 interviews) and by Li and Lo (24 interviews).

<sup>d</sup> Consensus of Nelson, Pérez-Chada, Creadore, Li, Lo, Manjaly, Hartman, and Mostaghimi.
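The code-frequency step in the data analysis can be sketched as follows. This is a minimal illustration, not the NVivo workflow the study used: the toy interviews are invented, `code_frequencies` and `percent_half_up` are hypothetical names, and half-up rounding is an assumption inferred from reported values such as 3 of 24 being shown as 13%.

```python
import math
from collections import Counter


def percent_half_up(count, total):
    """Whole-number percentage with halves rounded up (12.5 -> 13).

    Python's built-in round() rounds halves to the nearest even number,
    which would give 12 here, so floor(x + 0.5) is used instead.
    """
    return math.floor(100 * count / total + 0.5)


def code_frequencies(coded_interviews, n_total):
    """Count how many interviews mention each code at least once.

    coded_interviews: iterable of collections of reconciled code labels,
    one collection per interview. Codes are not mutually exclusive, so
    the percentages may sum to more than 100.
    """
    counts = Counter()
    for codes in coded_interviews:
        counts.update(set(codes))  # each code counts once per interview
    return {code: (n, percent_half_up(n, n_total)) for code, n in counts.items()}


# Invented toy data: 4 interviews, each a set of reconciled codes.
interviews = [
    {"symbiosis", "credibility"},
    {"symbiosis"},
    {"symbiosis", "diagnostic tool"},
    {"credibility"},
]
freq = code_frequencies(interviews, n_total=len(interviews))
print(freq)
```

Counting each code once per interview matches the way the tables report the number of patients, rather than the number of mentions, for each code.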

Continuous variables were summarized with means and SDs. Categorical variables were reported as proportions and percentages. Interrater reliability was assessed using the Cohen κ coefficient. Qualitative and quantitative analyses were performed in NVivo, version 12.1 (QSR International).
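For readers unfamiliar with the Cohen κ coefficient, the textbook formula is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The sketch below applies it to two coders' labels. It is not the study's NVivo calculation, the example labels are invented, and `cohen_kappa` is a hypothetical helper name.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty item list.")
    n = len(rater_a)

    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal label proportions,
    # summed over all labels used by either rater.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / n**2

    if expected == 1:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Invented example: 1 = code applied to a passage, 0 = code not applied.
coder_1 = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
coder_2 = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]
kappa = cohen_kappa(coder_1, coder_2)
print(f"kappa = {kappa:.2f}")
```

In this toy example the coders agree on 9 of 10 items (p_o = 0.9) and chance agreement is 0.5, giving κ = 0.8.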

Results

All patients informed about the study consented to participate. A total of 48 patients were enrolled, and the mean (SD) interview duration was 22 (9) minutes. Patient characteristics are detailed in Table 1. The mean (SD) age was 53.3 (21.7) years; 26 participants (54%) were women. Most patients self-reported race and ethnicity as white (45 [94%]) and non-Hispanic (45 [94%]). According to the study design, 16 patients (33%) had a history of melanoma, 16 patients (33%) had a history of nonmelanoma skin cancer only, and 16 patients (33%) had no history of skin cancer. Forty-three patients (90%) reported ownership of at least 1 electronic device, most often a computer (39 [81%]) and/or smartphone (39 [81%]). Forty-six patients (96%) reported use of digital services and 43 patients (90%) reported use of digital services for health. Google was the most commonly used digital service in each category, reported by 44 patients (92%) for overall digital services and 39 patients (81%) for health. Forty-six patients (96%) reported a prior dermatology clinic visit. Patients had a high educational level (20 [42%] with a graduate or professional degree) and a high total household income (20 [42%] with ≥$150 000).

Table 1. Patient Characteristics.

| Characteristic | Direct-to-Patient AI Tool (n = 24), No. (%) | Clinician Decision-Support AI Tool (n = 24), No. (%) | Overall (N = 48), No. (%) |
|---|---|---|---|
| Age, mean (SD), y | 53.3 (21.7) | 54.7 (18.3) | 54.0 (19.9) |
| Sex | | | |
| Women | 16 (67) | 10 (42) | 26 (54) |
| Men | 8 (33) | 14 (58) | 22 (46) |
| Race | | | |
| White | 22 (92) | 23 (96) | 45 (94) |
| American Indian or Alaskan Native | 1 (4) | 1 (4) | 2 (4) |
| African American | 1 (4) | 0 | 1 (2) |
| Ethnicity | | | |
| Non-Hispanic | 22 (92) | 23 (96) | 45 (94) |
| Hispanic | 2 (8) | 1 (4) | 3 (6) |
| History of skin cancer<sup>a</sup> | | | |
| Melanoma | 8 (33) | 8 (33) | 16 (33) |
| NMSC only | 8 (33) | 8 (33) | 16 (33) |
| No history of skin cancer | 8 (33) | 8 (33) | 16 (33) |
| Ownership of electronic devices<sup>b</sup> | | | |
| Computer | 20 (83) | 19 (79) | 39 (81) |
| Smartphone | 21 (88) | 18 (75) | 39 (81) |
| Tablet | 13 (54) | 17 (71) | 30 (63) |
| Smart watch | 4 (17) | 5 (21) | 9 (19) |
| None of the above | 2 (8) | 3 (13) | 5 (10) |
| Usage of digital services<sup>b,c</sup> | | | |
| Google | 21 (88) | 23 (96) | 44 (92) |
| Videos (eg, YouTube) | 19 (79) | 20 (83) | 39 (81) |
| Wikipedia | 18 (75) | 20 (83) | 38 (79) |
| Messengers (eg, Android messages, iMessage, WhatsApp) | 18 (75) | 18 (75) | 36 (75) |
| | 20 (83) | 15 (63) | 35 (73) |
| Voice assistants (eg, Alexa, Google Assistant, Siri) | 12 (50) | 16 (67) | 28 (58) |
| | 13 (54) | 12 (50) | 25 (52) |
| Podcasts | 11 (46) | 14 (58) | 25 (52) |
| | 7 (29) | 8 (33) | 15 (31) |
| Snapchat | 8 (33) | 5 (21) | 13 (27) |
| None of the above | 1 (4) | 1 (4) | 2 (4) |
| Usage of digital services for health | | | |
| Google | 19 (79) | 20 (83) | 39 (81) |
| Wikipedia | 9 (38) | 9 (38) | 18 (38) |
| Videos (eg, YouTube) | 5 (21) | 3 (13) | 8 (17) |
| | 5 (21) | 0 | 5 (10) |
| Podcasts | 4 (17) | 1 (4) | 5 (10) |
| Voice assistants (eg, Alexa, Google Assistant, Siri) | 3 (13) | 2 (8) | 5 (10) |
| | 3 (13) | 0 | 3 (6) |
| Messengers (eg, Android messages, iMessage, WhatsApp) | 1 (4) | 0 | 1 (2) |
| Snapchat | 1 (4) | 0 | 1 (2) |
| | 1 (4) | 0 | 1 (2) |
| None of the above | 2 (8) | 3 (13) | 5 (10) |
| Prior dermatology visit | | | |
| Yes | 23 (96) | 23 (96) | 46 (96) |
| No | 1 (4) | 1 (4) | 2 (4) |
| Educational level<sup>a</sup> | | | |
| Less than high school | 0 | 1 (4) | 1 (2) |
| High school graduate or equivalent | 1 (4) | 4 (17) | 5 (10) |
| Some college, no degree | 1 (4) | 1 (4) | 2 (4) |
| Associate degree | 1 (4) | 2 (8) | 3 (6) |
| Bachelor degree | 11 (46) | 6 (25) | 17 (35) |
| Graduate or professional degree | 10 (42) | 10 (42) | 20 (42) |
| Total household income, $<sup>a</sup> | | | |
| <50 000 | 4 (17) | 2 (8) | 6 (13) |
| 50 000-99 999 | 5 (21) | 8 (33) | 13 (27) |
| 100 000-149 999 | 1 (4) | 4 (17) | 5 (10) |
| ≥150 000 | 12 (50) | 8 (33) | 20 (42) |
| Not applicable | 2 (8) | 2 (8) | 4 (8) |

Abbreviations: AI, artificial intelligence; NMSC, nonmelanoma skin cancer.

<sup>a</sup> The total percentage does not equal 100% owing to rounding.

<sup>b</sup> Categories are not mutually exclusive, and therefore the total percentage exceeds 100%.

<sup>c</sup> Used on at least a monthly basis.

The interrater reliability between the coding teams was κ = 0.94 (A.C. and P.M.) and κ = 0.89 (S.J.L. and K.L.), indicating almost perfect agreement.<sup>11</sup> Table 2 presents the overall frequency of each code along with frequency in the direct-to-patient and clinician decision-support interview groups.

Table 2. Code Frequencies.

| Code | Representative Quotation | Direct-to-Patient AI Tool (n = 24), No. (%) | Clinician Decision-Support AI Tool (n = 24), No. (%) | Overall (N = 48), No. (%) |
|---|---|---|---|---|
| AI Concept<sup>a</sup> | | | | |
| Cognition (subcodes: game playing, human cognitive support, intelligence superior to human, self-learning) | “Replacement of human observation and decision-making by machines” | 17 (71) | 19 (79) | 36 (75) |
| Machine (subcodes: computer, Google, inhuman, robot) | “It's an autonomous machine that can…problem-solve.” | 7 (29) | 4 (17) | 11 (23) |
| Modernity | “It's the wave of the future.” | 3 (13) | 8 (33) | 11 (23) |
| No AI concept | “I don't even know what is it…I never think about it.” | 6 (25) | 4 (17) | 10 (21) |
| Science fiction (subcode: outer space) | “The Terminator movie” | 2 (8) | 3 (13) | 5 (10) |
| Specialized vs generalized | “[Specialized AI] knows the rules for something like Go, like AlphaGo. [Generalized AI] could potentially teach itself in other areas.” | 2 (8) | 0 | 2 (4) |
| AI Benefits<sup>a</sup> | | | | |
| Increase diagnostic speed (subcodes: early skin cancer detection, lifesaving potential) | “If the app says, ‘You probably have melanoma—go see your doctor,’ they might actually get in there sooner…so it could be lifesaving.” | 20 (83) | 9 (38) | 29 (60) |
| Increase health care access (subcodes: increase labor efficiency, increase time for physician-patient interaction, remote diagnosis, unburden the health care system) | “[AI] could reach people who don't have great access to health care but may have an iPhone.” | 19 (79) | 10 (42) | 29 (60) |
| Reduce health care cost | “[AI] would save money because early detection of melanoma would save money down the road with treatments.” | 10 (42) | 7 (29) | 17 (35) |
| Reduce patient anxiety | “Putting people at ease much quicker than waiting, waiting, waiting, waiting, waiting.” | 12 (50) | 4 (17) | 16 (33) |
| Increase triage efficiency | “[AI] can very quickly do a kind of triage where using the wonders of the diagnostic machinery, there can be an initial determination that something is not quite right, and then I would want to have a doctor talk to me about that.” | 12 (50) | 2 (8) | 14 (29) |
| Reduce unnecessary biopsies | “[AI] could stop a lot of unnecessary biopsies.” | 2 (8) | 2 (8) | 4 (8) |
| Increase patient self-advocacy | “Making people responsible for their own medical care, being their own advocate.” | 2 (8) | 1 (4) | 3 (6) |
| Stimulate technology | “[AI] is a gateway to other technology opportunities.” | 2 (8) | 0 | 2 (4) |
| Patient gain of privacy | “You don't have to share your surprise and your grief with a practitioner.” | 1 (4) | 0 | 1 (2) |
| AI Risks<sup>a</sup> | | | | |
| Increase patient anxiety | “It's not always about the diagnosis…the act of delivering the diagnosis matters. So those people who are broken by the idea of getting something scary like cancer, where do they turn? They can call and make an appointment, but that's not going to help them feel better. They're going to be anxious.” | 12 (50) | 7 (29) | 19 (40) |
| Human loss of social interaction | “I love technology…but I really hate what's happened to our culture. It's become sterile and artificial and cold and people don't relate to each other. I'm still seeking the human component even if it's just emotional.” | 7 (29) | 11 (46) | 18 (38) |
| Patient loss of privacy | “The worst nightmare…is the company selling your medical data. All of a sudden you become a commodity. All your numbers are sold to every…consumer product company in the country…I think it should also be on them to have privacy guardrails in place.” | 11 (46) | 3 (13) | 14 (29) |
| Patient loss to follow-up | “I would be concerned…if people weren't followed up by a doctor because if they decided they had melanoma and decided they weren't going to get it treated, then nobody would know that they have it. And something like that would advance and of course, ultimately kill them…If people are self-diagnosing and not following up, then there could be a lot of danger in that.” | 8 (33) | 6 (25) | 14 (29) |
| Nefarious use of AI (subcodes: humans as “guinea pigs” for testing AI, human commoditization) | “But the companies that manufacture the AI, they're just thinking bottom line…so the compartmentalizing and the dehumanizing of making money ultimately is the downfall.” | 8 (33) | 3 (13) | 11 (23) |
| Human deskilling (subcode: increase human dependence on technology) | “Society has a problem of becoming too reliant on technology…If people become too dependent on technology you can't function without it.” | 3 (13) | 7 (29) | 10 (21) |
| Human loss of control over AI | “The moment this computer becomes self-aware we're in trouble.” | 3 (13) | 6 (25) | 9 (19) |
| Human job loss | “The growth in technology winds up taking the place of jobs… and I personally always prefer to deal with a human than some sort of robotic AI.” | 6 (25) | 2 (8) | 8 (17) |
| Reduce health care access (subcodes: reduce labor efficiency, burden the health care system) | “More lines at the dermatologist or longer wait times.” | 3 (13) | 3 (13) | 6 (13) |
| Risk of patient physical harm due to use of AI technology | “Are we going to find out one day using these cell phones are causing something else wrong with us, the wavelengths?” | 3 (13) | 2 (8) | 5 (10) |
| Increase health care cost | “As medical care becomes denser, where they do more things, the cost to society increases and increases and increases. So [AI] would have an unfortunate impact on the cost of health care.” | 2 (8) | 2 (8) | 4 (8) |
| Increase health care disparities (subcode: increase disparity in access to AI) | “Technological advances…tend to benefit people at a higher socioeconomic level than they do people who perhaps are most in need.” | 3 (13) | 1 (4) | 4 (8) |
| Reduce diagnostic speed | “[AI causes a] delay to care because [you’re not] accessing an actual provider. You can’t remove it or treat it.” | 1 (4) | 2 (8) | 3 (6) |
| Reduce trust in health care professionals | “[Patients] could start to not trust medical professionals.” | 1 (4) | 1 (4) | 2 (4) |
| Risk of patient physical harm due to self-treatment | “Maybe someone would take their care into their own hands if they have a diagnosis in hand that…could be potentially harmful.” | 1 (4) | 1 (4) | 2 (4) |
| AI Strengths<sup>a</sup> | | | | |
| More accurate diagnosis (subcodes: ability to draw on more data or experience than humans, ability to learn and evolve, ability to share data) | “[AI] has a huge database of what diagnosis A is supposed to look like as opposed to a human who only has their own life experiences.” | 14 (58) | 19 (79) | 33 (69) |
| Patient activation (subcodes: activate patient to seek out health information, activate patient to seek out health care) | “Rather than…pondering for weeks or months whether it's time to go see the doctor, it could be…an immediate indicator of, ‘Go see the doctor.’” | 15 (63) | 4 (17) | 19 (40) |
| More convenient diagnosis | “[AI] will help people that maybe don't have very easy access to care, not just because they're busy, but maybe because of where they live or the state of care in their country.” | 10 (42) | 4 (17) | 14 (29) |
| More consistent diagnosis | “Every physician may be different where [AI] can be a constant.” | 6 (25) | 7 (29) | 13 (27) |
| More objective diagnosis (subcodes: human distraction, human emotion, human impairment) | “[AI] can give objectivity when people have bad days.” | 4 (17) | 7 (29) | 11 (23) |
| Patient education | “With melanoma and all the research that's out there and a lot of discoveries recently, I think [AI] is very positive and anything that can keep increasing your awareness.” | 6 (25) | 5 (21) | 11 (23) |
| AI Weaknesses<sup>a</sup> | | | | |
| Less accurate diagnosis (subcodes: false-negative, false-positive, inaccurate or limited training set, lack of context [eg, patient history], lack of physical examination [eg, palpation, view from multiple angles], operator dependence) | “[AI] is not a substitute for [an] in-person exam. We're examining a photograph that you get with your phone in variable light. It's possible that you might get a false-positive or a false-negative.” | 19 (79) | 22 (92) | 41 (85) |
| Lack of verbal communication (subcodes: inability to answer patient follow-up questions, inability to discuss treatment options with patient, inability to educate patient, inability to reassure patient) | “There's something about the human interaction and the ability to answer questions and for the doctor to gauge the other individual's emotional experience. Because people can…be really anxious, sad, fearful, and the app's not going to be able to sense that.” | 16 (67) | 12 (50) | 28 (58) |
| Lack of emotion (subcodes: lack of compassion, lack of empathy) | “[AI] can't be compassionate. You can't write an algorithm to love somebody.” | 11 (46) | 9 (38) | 20 (42) |
| Lack of nonverbal communication (subcodes: lack of emotion perception, lack of eye contact, lack of physical contact) | “It's the interaction of the human touch and the human eye contact that's very important.” | 10 (42) | 9 (38) | 19 (40) |
| Lack of creativity | “Humans might be able to do better because in the long run…they may think in ways that [AI] hasn't.” | 3 (13) | 6 (25) | 9 (19) |
| Lack of social contract between AI and patient (subcode: lack of patient accountability) | “You don't have a relationship with this thing. Whereas a doctor, you actually have some form of relationship on a professional level.” | 3 (13) | 6 (25) | 9 (19) |
| Lack of total body skin examination | “The system in which I photograph something I'm worried about, would not take care of these melanomas that are not visible to the patient.” | 4 (17) | 2 (8) | 6 (13) |
| Limited to visual inspection | “[The] visit to the dermatologist for follow-up tests and biopsies…would be more accurate.” | 2 (8) | 0 | 2 (4) |
| Uniformity restricts patient choice of health care professional | “You get to pick and choose who you go to and who you feel comfortable with. With a computer, it is what it is.” | 0 | 1 (4) | 1 (2) |
| AI Implementation<sup>a</sup> | | | | |
| Symbiosis (subcodes: AI referral to physician, AI second opinion for physician) | “The problem comes from replacing a person with [AI]…if it's used in tandem, if it's used as a tool for a dermatologist as opposed to being used instead of a dermatologist.” | 22 (92) | 23 (96) | 45 (94) |
| Credibility | “I would probably need…feedback from a medical professional to…trust the app…because it's like a black box…Algorithms with databases behind them…can make errors.” | 16 (67) | 14 (58) | 30 (63) |
| Diagnostic tool (subcodes: dynamic tool, static tool) | “Maybe if a mole was changing, there would be a way to track that…If the doctor said, this is a watch and wait…maybe [AI] could…take pictures of it and feed that information to the doctor.” | 14 (58) | 11 (46) | 25 (52) |
| Setting is important (subcodes: health care institution: academic vs private; patient: age, intelligence, medical history) | “I would have to know that this application was set up by the dermatologist…or [my] medical group. I wouldn't want it to be in the hands of a private company.” | 10 (42) | 11 (46) | 21 (44) |
| Information (nondiagnostic) tool | “[AI] would be helping to aid in the decision-making. But the human would be actually making the decision after.” | 10 (42) | 8 (33) | 18 (38) |
| Challenges (subcodes: malpractice, misunderstanding of AI, need for ongoing regulation of AI, oversaturation of humans with technology) | “Unless you can guarantee under penalty of being sued out of existence that [AI] will be 100% accurate all the time, you need to…soften the presumption of accuracy.” | 6 (25) | 11 (46) | 17 (35) |
| Surgery | “Maybe in some aspects of surgery there may be a better control than the human hand, which may have a slight tremor.” | 4 (17) | 1 (4) | 5 (10) |
| Integration into electronic health record | “However well [AI] gets integrated with Partners…would be fantastic.” | 3 (13) | 1 (4) | 4 (8) |
| Response to Conflict Between Human and AI Clinical Decision-Making<sup>a</sup> | | | | |
| Seek a biopsy | “Let's get the biopsy and find out what the story is.” | 16 (67) | 16 (67) | 32 (67) |
| Trust the physician | “I would put more faith in the doctor.” | 13 (54) | 16 (67) | 29 (60) |
| Seek an opinion from another physician | “I would get another opinion from another human, another dermatologist.” | 11 (46) | 9 (38) | 20 (42) |
| Seek longitudinal follow-up from the same physician | “I would make another appointment with her or him to evaluate it at a later date…to see if it's changed.” | 7 (29) | 4 (17) | 11 (23) |
| Discontinue use of AI | “I probably would never use that app again.” | 4 (17) | 2 (8) | 6 (13) |
| Seek an opinion from another AI tool | “If there's more than 1 AI program that you could check on, you could do that.” | 2 (8) | 2 (8) | 4 (8) |
| Seek longitudinal follow-up from the same AI tool | “I might even use the app again to determine maybe it was the lighting.” | 1 (4) | 0 | 1 (2) |
| Seek an opinion from family member or friend | “The machine could say something, the doctor could say something, and…you could leave it up to your wife.” | 0 | 1 (4) | 1 (2) |
| Responsibility for AI Accuracy<sup>a</sup> | | | | |
| Technology company | “The people who have built the database and code the AI and develop the algorithm who continue to tweak it.” | 13 (54) | 12 (50) | 25 (52) |
| Physician | “Oh, it has to be the doctor.” | 8 (33) | 12 (50) | 20 (42) |
| Collective | “Everybody along the line should hold some responsibility for it.” | 6 (25) | 6 (25) | 12 (25) |
| Health care institution | “The clinic. The hospital.” | 4 (17) | 7 (29) | 11 (23) |
| Government | “[AI] should be government regulated. So maybe by the FDA?” | 5 (21) | 2 (8) | 7 (15) |
| Patient | “If you're seeking treatment, it's your responsibility.” | 3 (13) | 2 (8) | 4 (8) |
| Organized dermatology | “I think the American [Academy] of Dermatology should be responsible.” | 1 (4) | 1 (4) | 2 (4) |
| Unsure | “I don't know whose responsibility it would be.” | 1 (4) | 1 (4) | 2 (4) |
| Responsibility for AI Data Privacy<sup>a</sup> | | | | |
| Health care institution | “The institution, wherever you're being treated.” | 9 (38) | 16 (67) | 25 (52) |
| Technology company | “The AI manufacturer.” | 10 (42) | 9 (38) | 19 (40) |
| Government | “Data privacy laws.” | 5 (21) | 2 (8) | 7 (15) |
| Database manager | “The person who owns the software and the hardware that hold that information.” | 4 (17) | 2 (8) | 6 (13) |
| Physician | “The physician.” | 0 | 5 (21) | 5 (10) |
| Unsure | “It's a big gray area.” | 2 (8) | 1 (4) | 3 (6) |
| Collective | “Everybody's.” | 1 (4) | 1 (4) | 2 (4) |
| Patient | “The person using it.” | 1 (4) | 1 (4) | 2 (4) |
| Unnecessary | “If they want to use them in the future [to] say, ‘Here, this is what melanoma looks like’... I don't care.” | 1 (4) | 0 | 1 (2) |
| AI Recommendation | | | | |
| Recommended | “Yes. There's excellent utility for it.” | 17 (71) | 19 (79) | 36 (75) |
| Ambivalent | “Before I recommended, I'd have to experience it.” | 5 (21) | 4 (17) | 9 (19) |
| Not recommended | “No, I would tell them to see a doctor.” | 2 (8) | 1 (4) | 3 (6) |

Abbreviations: AI, artificial intelligence; FDA, US Food and Drug Administration.

<sup>a</sup> Categories are not mutually exclusive.

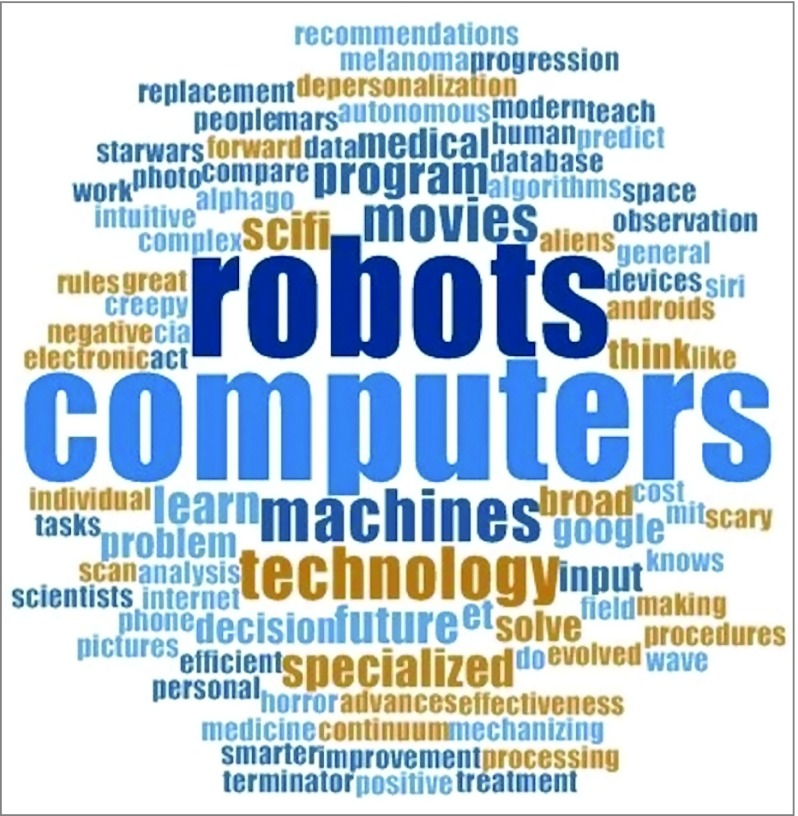

When patients were asked, “What comes to mind when you think about AI?”, the predominant theme that emerged was cognition (36 [75%]), such as game playing. Other themes included machine (11 [23%]) and modernity (11 [23%]). Some patients linked cognition and machine, for example, describing AI as the “replacement of human observation and decision-making by machines.” Figure 3 illustrates patient conceptions of AI in a word cloud. Ten patients (21%) had no concept of AI prior to the study.

Figure 3. Word Cloud Representing the Frequency of Terminology Used by Patients to Describe Artificial Intelligence.

The size of each word or phrase is proportionate to the frequency of its use by patients to describe artificial intelligence.

The most commonly perceived benefits of AI tools for skin cancer screening were increased diagnostic speed (29 [60%]) and health care access (29 [60%]). These benefits were identified more often by patients in the direct-to-patient compared with the clinician decision-support interview group. Patients associated increased diagnostic speed with early skin cancer detection and lifesaving potential. Increased health care access was multifaceted, deriving from gains in labor efficiency and time for physician-patient interaction, remote diagnosis, and unburdening of the health care system. One patient noted that AI “could reach people who don't have great access to health care but may have an iPhone.” Other perceived benefits included reduced health care cost (17 [35%]), reduced patient anxiety (16 [33%]), and increased triage efficiency (14 [29%]).

The greatest perceived risk of AI for skin cancer screening was increased patient anxiety (19 [40%]), identified more often by patients in the direct-to-patient interview group. One patient asked, “Those people who are broken by the idea of getting something scary like cancer, where do they turn? They can call and make an appointment, but that’s not going to help them feel better.” Other perceived risks included human loss of social interaction (18 [38%]), patient loss of privacy (14 [29%]), patient loss to follow-up (14 [29%]), nefarious use of AI (11 [23%]), and human deskilling (10 [21%]).

Patients perceived more accurate diagnosis (33 [69%]) as the greatest strength of AI compared with human skin cancer screening. This perception was based on the ability of AI to draw on more data or experience than humans, to learn and evolve, and to share data. One patient noted that AI “has a huge database of what diagnosis A is supposed to look like as opposed to a human who only has their own life experiences.” Another commonly perceived strength of AI was patient activation (19 [40%]) to seek both health information and health care. This strength was identified more often by patients in the direct-to-patient interview group. “Rather than…pondering for weeks or months whether it’s time to go see the doctor,” one patient noted that AI could be “an immediate indicator.” Other perceived strengths were more convenient diagnosis (14 [29%]), more consistent diagnosis (13 [27%]), more objective diagnosis (11 [23%]), and patient education (11 [23%]).

At the same time, patients perceived less accurate diagnosis (41 [85%]) as the greatest weakness of AI. This perception was based on the potential for false-negatives, false-positives, inaccurate or limited training set, lack of context, lack of physical examination, and operator dependence. “Examining a photograph that you get with your phone in variable light,” commented one patient, “is not a substitute for [an] in-person exam.” Other commonly perceived weaknesses of AI were lack of verbal communication (28 [58%]), lack of emotion (20 [42%]), and lack of nonverbal communication (19 [40%]). In the realm of verbal communication, patients called attention to the inability of AI to answer follow-up questions, discuss treatment options, and educate and reassure the patient. “People can…be really anxious, sad, fearful,” one patient commented, “and the app’s not going to be able to sense that.” In the realm of emotion, patients noted AI’s lack of compassion and empathy. One patient expressed, “You can’t write an algorithm to love somebody.” In the realm of nonverbal communication, patients called attention to AI’s lack of emotion perception, “eye contact,” and “human touch.”

The dominant theme in both interview groups was the importance of a symbiotic relationship between humans and AI (45 [94%]). Patients envisioned AI referring to a physician and providing a second opinion for a physician. “The problem comes from replacing a person with [AI],” which this patient described as a “tool for a dermatologist.” Credibility (30 [63%]) was another common theme that emerged. “I would probably need…feedback from a medical professional to…trust the app,” stated one patient, “because it’s like a black box…Algorithms with databases behind them…can make errors.” Patients perceived AI as both a dynamic and static diagnostic tool (25 [52%]). One patient suggested, “Maybe if a mole was changing, there would be a way to track that.” Patients also identified setting as important (21 [44%]) in terms of both the health care institution and the patient. “I would have to know that this application was set up by the dermatologist… or [my] medical group,” said one patient, “I wouldn’t want it to be in the hands of a private company.” Other themes included perception of AI as an information tool (18 [38%]) and implementation challenges, such as malpractice (17 [35%]).

The most common response in the event that human and AI reached conflicting diagnoses of melanoma and benign skin lesion was to seek a biopsy (32 [67%]). As one patient put it, “Let’s get the biopsy and find out what the story is.” The second most common response was to “put more faith in the doctor” (29 [60%]). The third most common response was to seek an opinion from another physician (20 [42%]). “I would get another opinion from another human,” a patient stated, “another dermatologist.” And the fourth most common response was to seek longitudinal follow-up from the same physician (11 [23%]).

When asked to identify entities responsible for AI accuracy, patients most often named the technology company (25 [52%]) and the physician (20 [42%]), followed by the collective (12 [25%]) and the health care institution (11 [23%]). When asked to identify entities responsible for AI data privacy, patients most often named the health care institution (25 [52%]) and the technology company (19 [40%]).

Overall, 36 patients (75%) would recommend the AI tool to family members and friends, 9 patients (19%) were ambivalent, and 3 patients (6%) would not recommend it. Specifically, 17 patients (71%) would recommend the direct-to-patient AI tool and 19 patients (79%) would recommend the clinician decision-support AI tool.
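As a worked check of the arithmetic, the recommendation percentages can be reproduced from the counts in the table. The following is a minimal Python sketch and was not part of the study's analysis; the names `COUNTS`, `GROUP_SIZE`, `pct`, and `summarize` are illustrative, and the group size of 24 per interview arm is taken from the enrollment figures reported in the Abstract.

```python
# Counts per response category: (direct-to-patient, clinician decision-support).
# Values are transcribed from the recommendation rows of the table.
COUNTS = {
    "Recommended": (17, 19),
    "Ambivalent": (5, 4),
    "Not recommended": (2, 1),
}

# Assumption: 24 patients per interview group, as stated in the Abstract.
GROUP_SIZE = 24


def pct(numerator, denominator):
    """Return a whole-number percentage."""
    return round(100 * numerator / denominator)


def summarize(counts, group_size=GROUP_SIZE):
    """Map each category to (direct %, decision-support %, overall %)."""
    summary = {}
    for label, (direct, support) in counts.items():
        summary[label] = (
            pct(direct, group_size),
            pct(support, group_size),
            pct(direct + support, 2 * group_size),
        )
    return summary


if __name__ == "__main__":
    for label, row in summarize(COUNTS).items():
        print(label, row)
```

Each group's counts sum to 24, so the three response categories in this part of the table partition the interviewees even though other coded categories overlap.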

Discussion

The Institute of Medicine included patient-centered care as 1 of 6 specific aims for improving the health system, “ensuring that patient values guide all clinical decisions.”12[p3] In an era of rapidly evolving technology, advancing our understanding of patient values is essential to optimize the quality of care.

The key finding of our study was that 75% of patients would recommend the use of AI for skin cancer screening to friends and family members; however, 94% expressed the importance of symbiosis between humans and AI. The term man-computer symbiosis was first used by Licklider13 to describe a form of teamwork in which humans provide strategic input while computers provide depth of analysis.14 Considering the use of AI for skin cancer screening, particularly the direct-to-patient tool, patients were enthusiastic about increased diagnostic speed (60%), increased health care access (60%), and patient activation (40%) but worried about increased patient anxiety (40%). Patients valued human verbal (58%) and nonverbal (40%) communication and emotion (42%). Rather than replacing a physician, patients envisioned AI referring to a physician and providing a second opinion for a physician. The significance of symbiosis to patients in this study suggests that they may be more receptive to augmented intelligence15 than to AI tools for skin cancer screening.

A second key finding of our study was the emphasis that patients placed on the accuracy of AI for skin cancer screening. Patients viewed AI as a diagnostic tool (52%) but perceived accuracy to be both its greatest strength (69%) and greatest weakness (85%). Although this finding may seem contradictory, patients had a nuanced perspective. Patients recognized the ability of AI to draw on more data or experience than humans, learn and evolve, and share data; however, they were concerned about the potential for false-negatives, false-positives, inaccurate or limited training set, lack of context, lack of physical examination, and operator dependence. This finding highlights the importance of validating the accuracy of AI tools for skin cancer screening in a manner that is transparent to patients prior to implementation.
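The apparent contradiction can be made concrete with a simple counting bound. The sketch below is illustrative and was not part of the study's analysis; it assumes that both code frequencies are counted over the same 48 patients and that codes are not mutually exclusive, as the table footnote indicates. The function name `overlap_bounds` is hypothetical.

```python
def overlap_bounds(count_a, count_b, total):
    """Bounds on how many people fall in both of two overlapping groups.

    Lower bound follows from inclusion-exclusion: the two groups together
    cannot exceed the total, so any excess must be shared.
    Upper bound is the size of the smaller group.
    """
    lower = max(0, count_a + count_b - total)
    upper = min(count_a, count_b)
    return lower, upper


# Counts reported in the Results: accuracy named as a strength by 33
# patients and as a weakness by 41 patients, out of 48 enrolled.
TOTAL_PATIENTS = 48
NAMED_STRENGTH = 33
NAMED_WEAKNESS = 41

if __name__ == "__main__":
    low, high = overlap_bounds(NAMED_STRENGTH, NAMED_WEAKNESS, TOTAL_PATIENTS)
    print(f"Patients naming both: between {low} and {high}")
```

Under these assumptions, at least 26 of the 48 patients (54%) must have named accuracy as both a strength and a weakness of AI, which is consistent with the nuanced perspective described above.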

A third key finding of this study was the heterogeneity of patient perspectives on AI. Patients primarily conceptualized AI in terms of cognition (75%), but their words revealed both positive and negative vantage points. A multiplicity of themes emerged on the use of AI for skin cancer screening, but few were identified by most patients. For example, when asked to identify entities responsible for AI accuracy and data privacy, most patients named the technology company (52%) and health care institution (52%), respectively. However, the list of entities was long and diverse. This finding suggests that patient perspectives on AI have not yet solidified, substantiated by the emergence of credibility (63%) and setting (44%) as common themes under implementation. The most common responses in the event that a human and AI reached conflicting diagnoses of melanoma and benign skin lesion were to seek a biopsy (67%), trust the physician (60%), and seek an opinion from another physician (42%). This finding suggests that physicians are likely to play an important role in shaping patient perspectives on the use of AI for skin cancer screening moving forward.

Contextualizing our results within the medical literature is limited by the paucity of data on how patients perceive AI for skin cancer screening. Dieng et al16 found that patients lack confidence in their ability to undertake skin self-examination after treatment for localized melanoma and that some patients are receptive to assistance from new digital technologies. In line with the symbiosis theme in our study, Tran et al17 found that most patients were ready to accept the use of AI for skin cancer screening after reading a vignette but only under human control. In addition, Tong and Sopory18 found that people’s intentions to use AI for skin cancer detection were influenced by messaging that they received about the risks and benefits of AI.

Limitations

Our results should be interpreted in the context of limitations regarding our study design. First, this was a qualitative study with a limited sample size. Because, to our knowledge, no prior study had elicited domains from patients regarding the use of AI for skin cancer screening, we adopted the semistructured interview approach with the goal of establishing a platform for future quantitative investigation. To ensure rigorous methods, we followed the COREQ checklist,8 developed the guides using an established framework,9 and measured interrater reliability.11 Expert reviewers were used to develop the guides and codebook. Second, the demographic characteristics of our patients may limit generalizability to other study populations. Future studies are essential to elucidate perspectives of patients with diverse racial, ethnic, and socioeconomic backgrounds and with varying levels of education and access to dermatologic care. This expansion is particularly important in light of concerns raised that AI tools may exacerbate health care disparities in dermatology.19 In addition, patients were interviewed about a hypothetical scenario involving an AI tool with which they lacked familiarity in practice.

Conclusions

Topol2[p44] opened a recent review article on the convergence of human and artificial intelligence by writing, “Over time, marked improvements in accuracy, productivity, and workflow will likely be actualized, but whether that will be used to improve the patient-doctor relationship or facilitate its erosion remains to be seen.” Our results indicate that most patients are receptive to the use of AI for skin cancer screening within the framework of human-AI symbiosis. Although additional research is required, the themes that emerged in this study have important implications across the house of medicine. Through patients’ eyes, augmented intelligence may improve health care quality but should be implemented in a manner that preserves the integrity of the human physician-patient relationship.

eTable 1. Direct-to-Patient Semi-Structured Interview Guide

eTable 2. Clinician-Decision-Support Semi-Structured Interview Guide

eTable 3. Survey

eTable 4. Codebook

References

- 1. Balthazar P, Harri P, Prater A, Safdar NM. Protecting your patients’ interests in the era of big data, artificial intelligence, and predictive analytics. J Am Coll Radiol. 2018;15(3, pt B):580-586. doi: 10.1016/j.jacr.2017.11.035

- 2. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44-56. doi: 10.1038/s41591-018-0300-7

- 3. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115-118. doi: 10.1038/nature21056

- 4. Haenssle HA, Fink C, Schneiderbauer R, et al; Reader Study Level-I and Level-II Groups. Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol. 2018;29(8):1836-1842. doi: 10.1093/annonc/mdy166

- 5. Han SS, Kim MS, Lim W, Park GH, Park I, Chang SE. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J Invest Dermatol. 2018;138(7):1529-1538. doi: 10.1016/j.jid.2018.01.028

- 6. Zakhem GA, Motosko CC, Ho RS. How should artificial intelligence screen for skin cancer and deliver diagnostic predictions to patients? JAMA Dermatol. 2018;154(12):1383-1384. doi: 10.1001/jamadermatol.2018.2714

- 7. Rigby MJ. Ethical dimensions of using artificial intelligence in health care. AMA J Ethics. 2019;21(2):E121-E124. doi: 10.1001/amajethics.2019.121

- 8. Tong A, Sainsbury P, Craig J. Consolidated Criteria for Reporting Qualitative Research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349-357. doi: 10.1093/intqhc/mzm042

- 9. Kallio H, Pietilä AM, Johnson M, Kangasniemi M. Systematic methodological review: developing a framework for a qualitative semi-structured interview guide. J Adv Nurs. 2016;72(12):2954-2965. doi: 10.1111/jan.13031

- 10. Gill P, Stewart K, Treasure E, Chadwick B. Methods of data collection in qualitative research: interviews and focus groups. Br Dent J. 2008;204(6):291-295. doi: 10.1038/bdj.2008.192

- 11. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-363.

- 12. Institute of Medicine Committee on Quality of Health Care. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- 13. Licklider JCR. Man-computer symbiosis. IRE Trans Hum Factors Electron. 1960;HFE-1:4-11. doi: 10.1109/THFE2.1960.4503259

- 14. Nelson CA, Kovarik CL, Barbieri JS. Human-computer symbiosis: enhancing dermatologic care while preserving the art of healing. Int J Dermatol. 2018;57(8):1015-1016. doi: 10.1111/ijd.14071

- 15. Kovarik C, Lee I, Ko J; Ad Hoc Task Force on Augmented Intelligence. Position statement on augmented intelligence (AuI). J Am Acad Dermatol. 2019;81(4):998-1000. doi: 10.1016/j.jaad.2019.06.032

- 16. Dieng M, Smit AK, Hersch J, et al. Patients’ views about skin self-examination after treatment for localized melanoma. JAMA Dermatol. 2019. doi: 10.1001/jamadermatol.2019.0434

- 17. Tran V-T, Riveros C, Ravaud P. Patients’ views of wearable devices and AI in healthcare: findings from the ComPaRe e-cohort. NPJ Digit Med. 2019;2:53. doi: 10.1038/s41746-019-0132-y

- 18. Tong ST, Sopory P. Does integral affect influence intentions to use artificial intelligence for skin cancer screening? a test of the affect heuristic. Psychol Health. 2019;34(7):828-849. doi: 10.1080/08870446.2019.1579330

- 19. Adamson AS, Smith A. Machine learning and health care disparities in dermatology. JAMA Dermatol. 2018;154(11):1247-1248. doi: 10.1001/jamadermatol.2018.2348
