Automatic Chemical Design is a framework for generating novel molecules with optimized properties.

Abstract

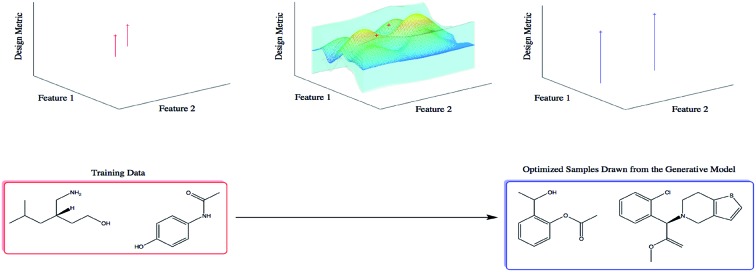

Automatic Chemical Design is a framework for generating novel molecules with optimized properties. The original scheme, featuring Bayesian optimization over the latent space of a variational autoencoder, suffers from the pathology that it tends to produce invalid molecular structures. First, we demonstrate empirically that this pathology arises when the Bayesian optimization scheme queries latent space points far away from the data on which the variational autoencoder has been trained. Second, by reformulating the search procedure as a constrained Bayesian optimization problem, we show that the effects of this pathology can be mitigated, yielding marked improvements in the validity of the generated molecules. We posit that constrained Bayesian optimization is a good approach for solving this kind of training set mismatch in many generative tasks involving Bayesian optimization over the latent space of a variational autoencoder.

Introduction

Machine learning in chemical design has shown promise along a number of fronts. In quantitative structure activity relationship (QSAR) modelling, deep learning models have achieved state-of-the-art results in molecular property prediction1–8 as well as property uncertainty quantification.9–12 Progress is also being made in the interpretability and explainability of machine learning solutions to chemical design, a subfield concerned with extracting chemical insight from learned models.13 The focus of this paper, however, will be on molecule generation: leveraging machine learning to propose novel molecules that optimize a target objective.

One existing approach for finding molecules that maximize an application-specific metric involves searching a large library of compounds, either physically or virtually.14,15 This has the disadvantage that the search is not open-ended; if the molecule is not contained in the library, the search won't find it.

A second method involves the use of genetic algorithms. In this approach, a known molecule acts as a seed and a local search is performed over a discrete space of molecules. Although these methods have enjoyed success in producing biologically active compounds, an approach featuring a search over an open-ended, continuous space would be beneficial. The use of geometrical cues such as gradients to guide the search in continuous space, in conjunction with advances in Bayesian optimization methodologies,16,17 could accelerate both drug14,18 and materials19,20 discovery by functioning as a high-throughput virtual screen that filters out unpromising candidates.

Recently, Gómez-Bombarelli et al.21 presented Automatic Chemical Design, a variational autoencoder (VAE) architecture capable of encoding continuous representations of molecules. In continuous latent space, gradient-based optimization is leveraged to find molecules that maximize a design metric.

Although a strong proof of concept, Automatic Chemical Design possesses a deficiency insofar as it fails to generate a high proportion of valid molecular structures. The authors hypothesize21 that molecules selected by Bayesian optimization lie in “dead regions” of the latent space far away from any data that the VAE has seen in training, yielding invalid structures when decoded.

The principal contribution of this paper is to present an approach based on constrained Bayesian optimization that generates a high proportion of valid sequences, thus solving the training set mismatch problem for VAE-based Bayesian optimization schemes.

Methods

SMILES representation

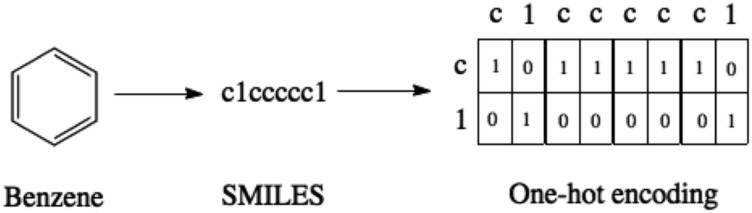

SMILES strings22 are a means of representing molecules as a character sequence. This text-based format facilitates the use of tools from natural language processing for applications such as chemical reaction prediction23–28 and chemical reaction classification.29 To make the SMILES representation compatible with the VAE architecture, each SMILES string is in turn converted to a sequence of one-hot vectors, one per character position, each indicating which character of the SMILES alphabet occupies that position, as illustrated in Fig. 1.

Fig. 1. The SMILES representation and one-hot encoding for benzene. For purposes of illustration, only the characters present in benzene are shown in the one-hot encoding. In practice there is a column for each character in the SMILES alphabet.
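The per-position one-hot encoding can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the alphabet is built only from the example string, every character is treated as its own token (multi-character atoms such as `Cl` would need a tokenizer), and right-padding with a space to a fixed length is an assumed convention rather than necessarily the preprocessing of ref. 21. The helper names are hypothetical.

```python
def build_alphabet(smiles_list, pad_char=" "):
    """Map every character seen in the data (plus a padding symbol) to a column index."""
    chars = sorted(set("".join(smiles_list)) | {pad_char})
    return {ch: i for i, ch in enumerate(chars)}


def one_hot(smiles, alphabet, max_len, pad_char=" "):
    """Return a max_len x len(alphabet) matrix: one row per string position,
    with a single 1 in the column of the character found at that position."""
    if len(smiles) > max_len:
        raise ValueError("SMILES string longer than max_len")
    padded = smiles.ljust(max_len, pad_char)
    matrix = [[0] * len(alphabet) for _ in range(max_len)]
    for pos, ch in enumerate(padded):
        matrix[pos][alphabet[ch]] = 1
    return matrix


benzene = "c1ccccc1"                      # an aromatic SMILES for benzene
alphabet = build_alphabet([benzene])      # {' ': 0, '1': 1, 'c': 2}
encoded = one_hot(benzene, alphabet, max_len=10)
print(len(encoded), len(encoded[0]))      # 10 3
print(encoded[0])                         # [0, 0, 1]  ('c' at position 0)
```

In a real pipeline the alphabet would be built once from the whole training set so that every molecule shares the same columns, as the caption of Fig. 1 notes.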

Variational autoencoders

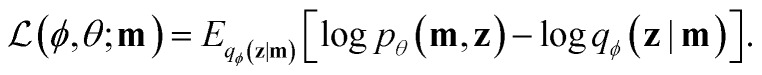

Variational autoencoders30,31 allow us to map molecules m to and from continuous values z in a latent space. The encoding z is interpreted as a latent variable in a probabilistic generative model over which there is a prior distribution p(z). The probabilistic decoder is defined by the likelihood function pθ(m|z). The posterior distribution pθ(z|m) is interpreted as the probabilistic encoder. The parameters of the likelihood pθ(m|z) as well as the parameters of the approximate posterior distribution qφ(z|m) are learned by maximizing the evidence lower bound (ELBO)

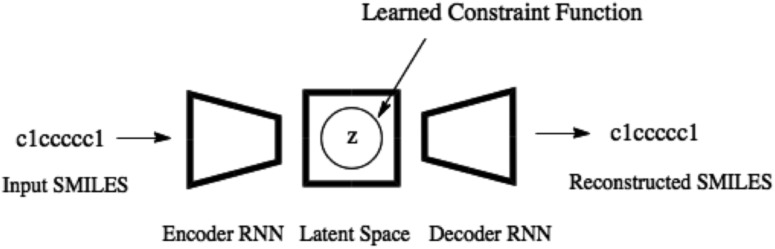
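For the common choice of a diagonal Gaussian approximate posterior qφ(z|m) = N(μ, diag(σ²)) and a standard normal prior p(z) = N(0, I), the KL term of the ELBO has a closed form, and the reconstruction term can be estimated with reparameterized samples z = μ + σε. The sketch below assumes exactly those choices and takes the decoder's log-likelihood log pθ(m|z) as a user-supplied function; `toy_log_lik` is a hypothetical stand-in, and this is not the training code of ref. 21.

```python
import math
import random


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL[N(mu, diag(exp(log_var))) || N(0, I)]."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I), keeping z a function of (mu, sigma)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def elbo_estimate(mu, log_var, log_likelihood, rng, n_samples=1):
    """Monte Carlo estimate of E_q[log p(m|z)] minus the analytic KL term."""
    recon = sum(log_likelihood(reparameterize(mu, log_var, rng)) for _ in range(n_samples))
    return recon / n_samples - kl_to_standard_normal(mu, log_var)


def toy_log_lik(z):
    """Hypothetical decoder log-likelihood that peaks at z = 0."""
    return -0.5 * sum(zi * zi for zi in z)


rng = random.Random(0)
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0 (q equals the prior)
print(elbo_estimate([0.5, -0.5], [-1.0, -1.0], toy_log_lik, rng, n_samples=100))
```

Maximizing this quantity with respect to both the encoder outputs (μ, log σ²) and the decoder parameters is what trains the VAE; in practice the arithmetic is done in an autodiff framework rather than plain Python.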

Variational autoencoders have been coupled with recurrent neural networks by ref. 32 to encode sentences into a continuous latent space. This approach is followed for the SMILES format both by ref. 21 and here. The SMILES variational autoencoder, together with our constraint function, is shown in Fig. 2.

Fig. 2. The SMILES variational autoencoder with the learned constraint function illustrated by a circular feasible region in the latent space.

The origin of dead regions in the latent space

The approach introduced in this paper aims to solve the problem of dead regions in the latent space of the VAE. It is first, however, important to understand the origin of these dead zones. Three ways in which a dead zone can arise are:

(1) Sampling locations that are very unlikely under the prior. This was noted in the original paper on variational autoencoders,30 where sampling was adjusted through the inverse cumulative distribution function of a Gaussian.

(2) A latent space dimensionality that is artificially high will yield dead zones in the manifold learned during training.33 This has been demonstrated to be the case empirically in ref. 34.

(3) Inhomogeneous training data; undersampled regions of the data space are liable to yield gaps in the latent space.
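Point (1) can be illustrated with the device described in ref. 30 for visualizing learned manifolds: instead of laying a uniform grid directly over latent coordinates, linearly spaced values in (0, 1) are pushed through the inverse cumulative distribution function of a standard Gaussian, so that grid points follow the prior mass and avoid regions that are vanishingly unlikely under p(z). The sketch below uses Python's standard-library `statistics.NormalDist`; the grid size and the ±5 comparison box are arbitrary choices for illustration.

```python
from statistics import NormalDist


def prior_aware_grid(n_points):
    """Map evenly spaced quantiles in (0, 1) to latent coordinates under N(0, 1).

    Quantiles sit at the midpoints of n_points equal bins, so the endpoints
    0 and 1 (which would map to -inf and +inf) are never used.
    """
    std_normal = NormalDist(mu=0.0, sigma=1.0)
    quantiles = [(i + 0.5) / n_points for i in range(n_points)]
    return [std_normal.inv_cdf(q) for q in quantiles]


def naive_grid(n_points, low=-5.0, high=5.0):
    """A uniform grid over a fixed box, for comparison."""
    step = (high - low) / (n_points - 1)
    return [low + i * step for i in range(n_points)]


prior_grid = prior_aware_grid(5)
print([round(z, 3) for z in prior_grid])  # [-1.282, -0.524, 0.0, 0.524, 1.282]

# The box grid places points at |z| = 5, where the prior density is tiny
# relative to its peak at z = 0.
density = NormalDist().pdf
print(density(naive_grid(5)[0]) / density(0.0))
```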

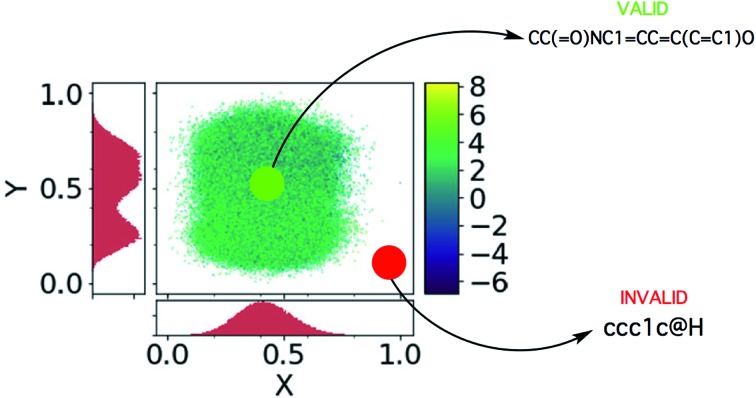

A schematic illustrating sampling from a dead zone, and the associated effect it has on the generated SMILES strings, is given in Fig. 3. In our case, the Bayesian optimization scheme is decoupled from the VAE and hence has no knowledge of the location of the learned manifold. In many instances the explorative behaviour in the acquisition phase of Bayesian optimization will drive the selection of invalid points lying far away from the learned manifold.
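One simple way to quantify how far a Bayesian optimization query lies from the learned manifold is its Euclidean distance to the nearest encoded training point. The sketch below is an illustrative diagnostic only, not a procedure used in this paper; the helper name is hypothetical and the toy 2-D arrays stand in for the 56-dimensional latent codes.

```python
import numpy as np


def nearest_training_distance(queries, train_latents):
    """For each query row, the Euclidean distance to the closest training latent point."""
    # Pairwise differences via broadcasting: shape (n_queries, n_train, dim).
    diffs = queries[:, None, :] - train_latents[None, :, :]
    sq_dists = np.sum(diffs ** 2, axis=-1)
    return np.sqrt(sq_dists.min(axis=1))


rng = np.random.default_rng(0)
train = rng.normal(size=(500, 2))          # toy "encoded training data", roughly N(0, I)
near = train[:5] + 0.01                    # queries just off the data
far = rng.uniform(-6, 6, size=(5, 2))      # box-uniform queries, like an explorative BO step
print(nearest_training_distance(near, train).max())
print(nearest_training_distance(far, train).mean())
```

Because the Bayesian optimization scheme only sees the objective, nothing in its acquisition penalizes large values of this distance; the constrained formulation introduced below supplies that missing signal through a learned feasibility probability instead of a hand-crafted distance.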

Fig. 3. The dead zones in the latent space, adapted from ref. 21. The x and y axes are the principal components computed by PCA. The colour bar gives the log P value of the encoded latent points and the histograms show the coordinate-projected density of the latent points. One may observe that the encoded molecules are not distributed uniformly across the box constituting the bounds of the latent space.

Objective functions for Bayesian optimization of molecules

Bayesian optimization is performed here in the latent space of the variational autoencoder in order to find molecules that score highly under a specified objective function. We assess molecular quality on the following objectives:

$$J_{\text{comp}}^{\log P}(z) = \log P(z) - \text{SA}(z) - \text{ring-penalty}(z),$$

$$J_{\text{comp}}^{\text{QED}}(z) = \text{QED}(z) - \text{SA}(z) - \text{ring-penalty}(z),$$

$$J^{\text{QED}}(z) = \text{QED}(z).$$

Here z denotes a molecule's latent representation, log P(z) is the logarithm of the water–octanol partition coefficient, QED(z) is the quantitative estimate of drug-likeness35 and SA(z) is the synthetic accessibility score.36 The ring penalty term is as featured in ref. 21. The “comp” subscript indicates that the objective function is a composite of standalone metrics.
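Given precomputed property values for a decoded molecule, the three objectives reduce to simple arithmetic, sketched below. The property values would normally come from a cheminformatics toolkit; here they are made-up numbers. The ring penalty is assumed to be the number of atoms by which the largest ring exceeds six (zero otherwise), a form used in several public implementations; ref. 21 is the authority on the exact definition. Some implementations also standardize each term with training-set statistics before summing, which this sketch omits.

```python
def ring_penalty(ring_sizes):
    """Assumed form: atoms by which the largest ring exceeds six (0 if none do)."""
    largest = max(ring_sizes, default=0)
    return max(0, largest - 6)


def j_comp_logp(logp, sa, ring_sizes):
    """J_comp^{log P} = log P - SA - ring-penalty."""
    return logp - sa - ring_penalty(ring_sizes)


def j_comp_qed(qed, sa, ring_sizes):
    """J_comp^{QED} = QED - SA - ring-penalty."""
    return qed - sa - ring_penalty(ring_sizes)


def j_qed(qed):
    """J^{QED} = QED."""
    return qed


# Made-up property values for two hypothetical molecules.
print(j_comp_logp(logp=2.5, sa=3.0, ring_sizes=[6, 8]))  # -2.5
print(j_comp_qed(qed=0.5, sa=2.0, ring_sizes=[6]))       # -1.5
```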

It is important to note that the first objective, a common metric of comparison in this area, is misspecified, as has been pointed out by ref. 37. From a chemical standpoint it is undesirable to maximize the log P score as is being done here. Rather, it is preferable to optimize log P to be in a range that is in accordance with the Lipinski rule of five.38 We use the penalized log P objective here because, regardless of its relevance for chemistry, it serves as a point of comparison against other methods.

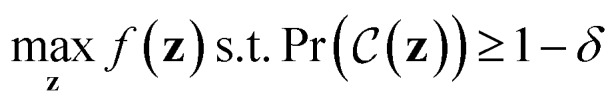

Constrained Bayesian optimization of molecules

We now describe our extension to the Bayesian optimization procedure followed by ref. 21. Expressed formally, the constrained optimization problem is

$$\max_{z} f(z) \quad \text{s.t.} \quad \Pr\big(C(z)\big) \geq 1 - \delta,$$

where f(z) is a black-box objective function, Pr(C(z)) denotes the probability that a Boolean constraint C(z) is satisfied and 1 − δ is some user-specified minimum confidence that the constraint is satisfied.39 The constraint is that a latent point must decode successfully a large fraction of the times decoding is attempted. The specific fractions used are provided in the results section. The black-box objective function is noisy because a single latent point may decode to multiple molecules when the model makes a mistake, obtaining different values under the objective. In practice, f(z) is one of the objectives described in Section 2.3.
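Because decoding is stochastic, whether a latent point satisfies the constraint can only be estimated by decoding it repeatedly. The sketch below assumes a hypothetical stochastic `toy_decode(z, rng)` and a hypothetical validity check `toy_is_valid` (a real pipeline would try to parse each string with a cheminformatics toolkit); both exist only so the example runs. It estimates the fraction of successful decodes and also returns the counts of every string produced, which shows why f(z) is noisy: the same z can yield different outputs.

```python
import random
from collections import Counter


def decode_success_rate(z, decode, is_valid, n_attempts, rng):
    """Fraction of n_attempts stochastic decodings of z that are valid,
    together with the counts of every string produced."""
    outputs = [decode(z, rng) for _ in range(n_attempts)]
    n_valid = sum(1 for s in outputs if is_valid(s))
    return n_valid / n_attempts, Counter(outputs)


def toy_decode(z, rng):
    """Hypothetical decoder: near the origin it usually returns ethanol,
    farther away it increasingly returns an unclosed-ring (invalid) string."""
    dist = sum(zi * zi for zi in z) ** 0.5
    p_good = max(0.0, 1.0 - dist / 5.0)
    return "CCO" if rng.random() < p_good else "C1CC"


def toy_is_valid(smiles):
    """Crude stand-in for a parser: ring-closure digits must come in pairs."""
    return smiles.count("1") % 2 == 0


rng = random.Random(0)
rate_near, _ = decode_success_rate([0.1, 0.0], toy_decode, toy_is_valid, 500, rng)
rate_far, counts = decode_success_rate([4.0, 3.0], toy_decode, toy_is_valid, 500, rng)
print(rate_near, rate_far)   # the far point has norm 5, so every toy decode is invalid
```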

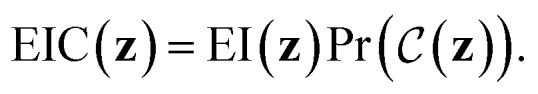

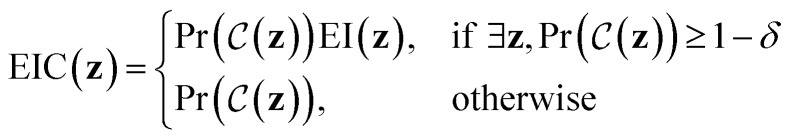

Expected improvement with constraints (EIC)

EIC may be thought of as expected improvement (EI),

$$\text{EI}(z) = \mathbb{E}_{f(z)}\big[\max(0, f(z) - \eta)\big],$$

that offers improvement only when a set of constraints is satisfied:40

$$\text{EIC}(z) = \text{EI}(z)\,\Pr\big(C(z)\big).$$

The incumbent solution η in EI(z) may be set in an analogous way to vanilla expected improvement41 as either:

(1) The best observation in which all constraints are observed to be satisfied.

(2) The minimum of the posterior mean such that all constraints are satisfied.

The latter approach is adopted for the experiments performed in this paper. If, at a given stage of the Bayesian optimization procedure, a feasible point has yet to be located, the form of acquisition function used is that defined by ref. 41,

$$\text{EIC}(z) = \begin{cases} \Pr\big(C(z)\big) & \text{if no feasible point has been found,} \\ \text{EI}(z)\,\Pr\big(C(z)\big) & \text{otherwise,} \end{cases}$$

with the intuition being that if the probabilistic constraint is violated everywhere, the acquisition function selects the point having the highest probability of lying within the feasible region. The algorithm ignores the objective until it has located the feasible region.
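Under a Gaussian posterior for f(z) with mean μ and standard deviation σ, EI has the familiar closed form EI = (μ − η)Φ(u) + σφ(u) with u = (μ − η)/σ, and EIC multiplies it by the constraint probability supplied by the classifier. The sketch below combines this with incumbent rule (2) and the no-feasible-point fallback over a finite set of candidate points. It is written for maximization, to match the form of EI above; the 1 − δ threshold used to decide which candidates count as feasible, the value δ = 0.05 and all function names are illustrative assumptions, not taken from the authors' code.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def expected_improvement(mu, sigma, eta):
    """Closed-form E[max(0, f - eta)] for f ~ N(mu, sigma^2) (maximization)."""
    if sigma <= 0.0:
        return max(0.0, mu - eta)
    u = (mu - eta) / sigma
    return (mu - eta) * _STD_NORMAL.cdf(u) + sigma * _STD_NORMAL.pdf(u)


def select_next(mus, sigmas, p_feasible, delta=0.05):
    """Index of the candidate maximizing EIC, or, if no candidate is confidently
    feasible, the one most likely to satisfy the constraint."""
    feasible = [i for i, p in enumerate(p_feasible) if p >= 1.0 - delta]
    if not feasible:
        # Fallback: ignore the objective and search for the feasible region.
        return max(range(len(p_feasible)), key=lambda i: p_feasible[i])
    # Incumbent: best posterior mean among candidates judged feasible.
    eta = max(mus[i] for i in feasible)
    eic = [expected_improvement(m, s, eta) * p
           for m, s, p in zip(mus, sigmas, p_feasible)]
    return max(range(len(eic)), key=lambda i: eic[i])


mus = [0.2, 1.0, 0.8]
sigmas = [0.1, 0.5, 1.5]
print(select_next(mus, sigmas, p_feasible=[0.99, 0.30, 0.97]))  # 2
print(select_next(mus, sigmas, p_feasible=[0.10, 0.40, 0.20]))  # 1 (fallback)
```

In the first call, candidate 1 has the highest posterior mean but only a 30% chance of decoding successfully, so the uncertain but likely feasible candidate 2 wins; in the second call no candidate is confidently feasible and the objective is ignored.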

Related work

The literature concerning generative models of molecules has exploded since the first work on the topic.21 Current methods feature molecular representations such as SMILES42–54 and graphs55–72 and employ reinforcement learning73–83 as well as generative adversarial networks84 for the generative process. These methods are well-summarized by a number of recent review articles.85–89 In terms of VAE-based approaches, two popular approaches for incorporating property information into the generative process are Bayesian optimization and conditional variational autoencoders (CVAEs).90 When generating molecules using CVAEs, the target data y is embedded into the latent space and conditional sampling is performed47,91 in place of a directed search via Bayesian optimization. In this work we focus solely on VAE-based Bayesian optimization schemes for molecule generation and so we do not benchmark model performance against the aforementioned methods. Principally, we are concerned with highlighting the issue of training set mismatch in VAE-based Bayesian optimization schemes and demonstrating the superior performance of a constrained Bayesian optimization approach.

Results and discussion

Experiment I

Drug design

In this section we first conduct an empirical test of the hypothesis from ref. 21 that the decoder's lack of efficiency is due to data point collection in “dead regions” of the latent space far from the data on which the VAE was trained. We use this information to construct a binary classification Bayesian Neural Network (BNN) to serve as a constraint function that outputs the probability of a latent point being valid, the details of which will be discussed in the section on labelling criteria. The BNN implementation is adapted from the MNIST digit classification network of ref. 92 and is trained using black-box alpha divergence minimization. Secondly, we compare the performance of our constrained Bayesian optimization implementation against the original model (baseline) in terms of the numbers of valid, realistic and drug-like molecules generated. We introduce the concept of a realistic molecule, i.e. one that is valid and has a SMILES length greater than 5, as a heuristic to gauge whether the decoder has been successful or not. Our definition of drug-like is that a molecule must pass 8 sets of structural alerts or functional group filters from the ChEMBL database.93 Thirdly, we compare the quality of the molecules produced by constrained Bayesian optimization with those of the baseline model. The code for all experiments has been made publicly available at https://github.com/Ryan-Rhys/Constrained-Bayesian-Optimisation-for-Automatic-Chemical-Design.

Implementation

The implementation details of the encoder-decoder network as well as the sparse GP for modelling the objective remain unchanged from ref. 21. For the constrained Bayesian optimization algorithm, the BNN is constructed with 2 hidden layers, each 100 units wide, with ReLU activation functions and a logistic output. Minibatch size is set to 1000 and the network is trained for 5 epochs with a learning rate of 0.0005. 20 iterations of parallel Bayesian optimization are performed using the Kriging-Believer algorithm94 in all cases. Data is collected in batches of 50. The same training set as ref. 21 is used, namely 249 456 drug-like molecules drawn at random from the ZINC database.95
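The Kriging-Believer heuristic94 builds a batch sequentially: pick the candidate that maximizes the acquisition, pretend its outcome equals the current posterior mean, condition the model on that fantasy observation, and repeat until the batch is full. The sketch below illustrates the idea on a 1-D toy problem with a small exact GP (RBF kernel, fixed hyperparameters, zero prior mean) and plain EI. It is not the sparse GP or the constrained acquisition used in the experiments; the kernel settings, toy objective and candidate grid are assumptions chosen for illustration.

```python
import numpy as np
from statistics import NormalDist

_N = NormalDist()


def rbf(a, b, lengthscale=0.3, variance=1.0):
    """Squared-exponential kernel between 1-D input arrays a and b."""
    return variance * np.exp(-0.5 * ((a[:, None] - b[None, :]) / lengthscale) ** 2)


def gp_posterior(x_train, y_train, x_test, noise=1e-4):
    """Exact GP posterior mean and standard deviation at x_test."""
    k_tt = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    k_ts = rbf(x_train, x_test)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_ts.T @ alpha
    v = np.linalg.solve(chol, k_ts)
    var = np.clip(rbf(x_test, x_test).diagonal() - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)


def expected_improvement(mean, std, eta):
    """Closed-form EI for maximization, evaluated element-wise."""
    u = (mean - eta) / std
    cdf = np.array([_N.cdf(x) for x in u])
    pdf = np.array([_N.pdf(x) for x in u])
    return (mean - eta) * cdf + std * pdf


def kriging_believer_batch(x_obs, y_obs, candidates, batch_size):
    """Select batch_size distinct candidates using Kriging-Believer fantasies."""
    x_fant, y_fant = x_obs.copy(), y_obs.copy()
    chosen = []
    for _ in range(batch_size):
        mean, std = gp_posterior(x_fant, y_fant, candidates)
        ei = expected_improvement(mean, std, eta=y_fant.max())
        if chosen:
            ei[chosen] = -np.inf           # never pick the same candidate twice
        best = int(np.argmax(ei))
        chosen.append(best)
        # "Believe" the model: add the posterior mean as a fake observation.
        x_fant = np.append(x_fant, candidates[best])
        y_fant = np.append(y_fant, mean[best])
    return candidates[chosen]


x_obs = np.array([0.1, 0.4, 0.9])
y_obs = np.sin(6 * x_obs)                  # toy objective values
grid = np.linspace(0.0, 1.0, 101)
batch = kriging_believer_batch(x_obs, y_obs, grid, batch_size=5)
print(batch)
```

The fantasy observation collapses the posterior variance at each chosen point, which pushes subsequent picks elsewhere without requiring the real objective to be evaluated until the whole batch has been selected.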

Diagnostic experiments and labelling criteria

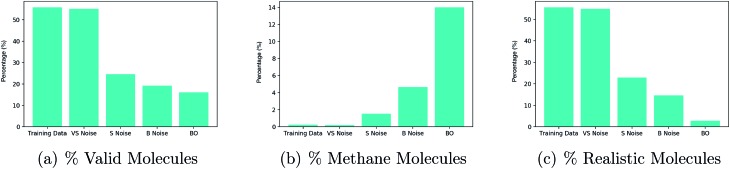

These experiments were designed to test the hypothesis that points collected by Bayesian optimization lie far away from the training data in latent space. In doing so, they also serve as labelling criteria for the data collected to train the BNN acting as the constraint function. The resulting observations are summarized in Fig. 4.

Fig. 4. Experiments on 5 disjoint sets comprising 50 latent points each. Very small (VS) noise points are training data latent points with approximately 1% noise added to their values, small (S) noise points have 10% noise added to their values and big (B) noise points have 50% noise added to their values. All latent points underwent 500 decode attempts and the results are averaged over the 50 points in each set. The percentage of decodings to: (a) valid molecules, (b) methane molecules and (c) realistic molecules.

There is a noticeable decrease in the percentage of valid molecules decoded as one moves further away from the training data in latent space. Points collected by Bayesian optimization do the worst in terms of the percentage of valid decodings, which suggests that these points lie farthest from the training data. The decoder over-generates methane molecules when far away from the data. One hypothesis for why this is the case is that methane is represented as ‘C’ in the SMILES syntax, and ‘C’ is by far the most common character in the training data. Hence, far away from the training data, combinations such as ‘C’ followed by a stop character may have high probability under the distribution over sequences learned by the decoder.

Given that methane has far too low a molecular weight to be a suitable drug candidate, a third plot, Fig. 4(c), shows the percentage of decoded molecules that are both valid and have a tangible molecular weight. The definition of a tangible molecular weight was interpreted somewhat arbitrarily as a SMILES length greater than 5. Henceforth, molecules that are both valid and have a SMILES length greater than 5 will be referred to as realistic. This definition serves the purpose of determining whether the decoder has been successful or not.

As a result of these diagnostic experiments, it was decided that the criteria for labelling latent points to initialize the binary classification neural network for the constraint would be the following: if the latent point decodes into realistic molecules in more than 20% of decode attempts, it should be classified as realistic and non-realistic otherwise.
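The labelling rule can be written as a small function over the strings obtained from repeated decoding of one latent point. This is a sketch: `is_valid` is a placeholder for a real SMILES parser check, and the parenthesis-balancing `toy_valid` below is a hypothetical stand-in that exists only so the example runs.

```python
def is_realistic(smiles, is_valid):
    """Realistic = valid and SMILES length greater than 5, as defined in the text."""
    return is_valid(smiles) and len(smiles) > 5


def label_latent_point(decodings, is_valid, threshold=0.20):
    """1 (realistic) if more than `threshold` of the decodings are realistic, else 0."""
    frac = sum(is_realistic(s, is_valid) for s in decodings) / len(decodings)
    return int(frac > threshold)


def toy_valid(smiles):
    """Hypothetical validity check: parentheses must balance."""
    return smiles.count("(") == smiles.count(")")


decodings = ["CCCCCO", "C", "C", "CC(C)CCO", "CC(CC", "C"]   # 2 of 6 realistic
print(label_latent_point(decodings, toy_valid))               # 1
print(label_latent_point(["C"] * 9 + ["CCCCCO"], toy_valid))  # 0 (only 10% realistic)
```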

Molecular validity

The BNN for the constraint was initialized with 117 440 positive class points and 117 440 negative class points. The positive points were obtained by running the training data through the decoder and assigning them positive labels if they satisfied the criteria outlined in the previous section. The negative class points were collected by decoding points sampled uniformly at random across the 56 latent dimensions of the design space. Each latent point undergoes 100 decode attempts and the most probable SMILES string is retained. $J_{\text{comp}}^{\log P}(z)$ is the choice of objective function. The raw validity percentages for constrained and unconstrained Bayesian optimization are given in Table 1.
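The negative-class sampling and the retention of a single string per latent point can be sketched as below. Two assumptions are made: the text does not state the bounds of the uniform sampling box (the toy example uses ±3 in every dimension), and “most probable SMILES string” is interpreted as the most frequent string among the decode attempts. `toy_decode` is a hypothetical stand-in for the VAE decoder.

```python
import random
from collections import Counter


def sample_uniform_box(lower, upper, n_points, rng):
    """Draw points uniformly at random inside the axis-aligned box [lower, upper]."""
    return [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n_points)]


def most_frequent_decoding(z, decode, n_attempts, rng):
    """Decode z n_attempts times and keep the string produced most often."""
    counts = Counter(decode(z, rng) for _ in range(n_attempts))
    return counts.most_common(1)[0][0]


def toy_decode(z, rng):
    """Hypothetical stochastic decoder that mostly emits methane."""
    return "C" if rng.random() < 0.7 else "CC(=O)O"


dim = 56
rng = random.Random(0)
lower, upper = [-3.0] * dim, [3.0] * dim          # assumed box bounds
negatives = sample_uniform_box(lower, upper, n_points=4, rng=rng)
print(len(negatives), len(negatives[0]))          # 4 56
print(most_frequent_decoding(negatives[0], toy_decode, 100, rng))
```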

Table 1. Percentage of valid molecules produced.

| Run | Baseline | Constrained |
|---|---|---|
| 1 | 29% | 94% |
| 2 | 51% | 97% |
| 3 | 12% | 90% |
| 4 | 37% | 93% |
| 5 | 49% | 86% |
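Averaging the five runs in Table 1 gives a compact summary of the validity gap. The snippet below only recomputes the mean and the standard error of the mean (sample standard deviation over runs divided by √5) from the table entries; it adds no new data.

```python
from math import sqrt
from statistics import mean, stdev

validity = {
    "Baseline": [29, 51, 12, 37, 49],
    "Constrained": [94, 97, 90, 93, 86],
}

for method, runs in validity.items():
    se = stdev(runs) / sqrt(len(runs))   # standard error of the mean over runs
    print(f"{method}: {mean(runs):.1f}% ± {se:.1f}%")
# Baseline: 35.6% ± 7.1%
# Constrained: 92.0% ± 1.9%
```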

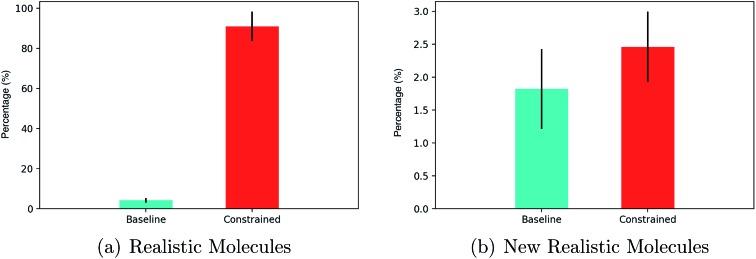

In terms of realistic molecules, the relative performance of constrained Bayesian optimization and unconstrained Bayesian optimization (baseline)21 is compared in Fig. 5(a).

Fig. 5. (a) The percentage of latent points decoded to realistic molecules. (b) The percentage of latent points decoded to unique, novel realistic molecules. The results are from 20 iterations of Bayesian optimization with batches of 50 data points collected at each iteration (1000 latent points decoded in total). The standard error is given for 5 separate train/test set splits of 90/10.

The results show that more than 80% of the latent points decoded by constrained Bayesian optimization produce realistic molecules, compared to less than 5% for unconstrained Bayesian optimization. One must, however, account for the fact that the constrained approach may be decoding multiple instances of the same novel molecule. Constrained and unconstrained Bayesian optimization are compared on the percentage of unique novel molecules produced in Fig. 5(b).
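The unique-novel metric of Fig. 5(b) can be sketched as a single pass over the decoded strings. This is an illustration under assumptions: molecules are compared as exact strings, so in practice every SMILES should first be canonicalized with a cheminformatics toolkit (otherwise two spellings of one molecule count as distinct), and the length-only `realistic` check below is a toy stand-in that skips the validity test.

```python
def unique_novel_percentage(decoded, training_set, realistic):
    """Percentage of decoded latent points whose molecule is realistic, absent from
    the training set, and not already produced by an earlier latent point."""
    seen = set()
    count = 0
    for smiles in decoded:
        if realistic(smiles) and smiles not in training_set and smiles not in seen:
            count += 1
        seen.add(smiles)
    return 100.0 * count / len(decoded)


decoded = ["CCCCCO", "CCCCCO", "CCCCCCN", "C", "CCCCCCCl"]
training = {"CCCCCCN"}
print(unique_novel_percentage(decoded, training, lambda s: len(s) > 5))  # 40.0
```

Here the duplicate "CCCCCO", the training-set molecule and methane are all excluded, leaving 2 of the 5 decoded points.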

One may observe that constrained Bayesian optimization outperforms unconstrained Bayesian optimization in terms of the generation of unique molecules, but not by a large margin. A manual inspection of the SMILES strings collected by the unconstrained optimization approach showed that there were many strings with lengths marginally larger than the cutoff point, which is suggestive of partially decoded molecules. We run a further test of drug-likeness on the unique novel molecules generated by both methods, requiring them to pass functional group filters comprising 8 sets of structural alerts from the ChEMBL database. The alerts consisted of the Pan Assay Interference Compounds (PAINS)96 alert set for nuisance compounds that elude usual reactivity, the NIH MLSMR alert set for excluded functionality filters, the Inpharmatica alert set for unwanted fragments, the Dundee alert set,97 the BMS alert set,98 the Pfizer Lint procedure alert set99 and the Glaxo Wellcome alert set.100 An additional screen requiring molecules to have a molecular weight between 150 and 500 daltons was also included. The results are given in Table 2.

Table 2. Percentage of novel generated molecules passing ChEMBL structural alerts.

| Baseline | Constrained |
|---|---|
| 6.6% | 35.7% |

In the next section we compare the quality of the novel molecules produced as judged by the scores from the black-box objective function.

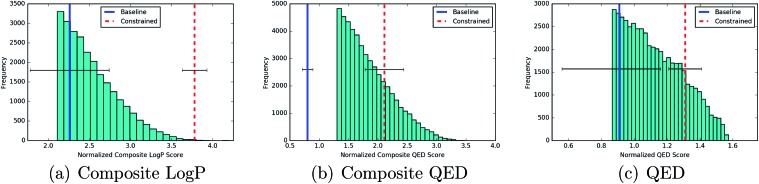

Molecular quality

The results of Fig. 6 indicate that constrained Bayesian optimization is able to generate higher quality molecules relative to unconstrained Bayesian optimization across the three drug-likeness metrics introduced in Section 2.3. Over the 5 independent runs, the constrained optimization procedure in every run produced new molecules ranked in the 100th percentile of the distribution over training set scores for the $J_{\text{comp}}^{\log P}(z)$ objective and over the 90th percentile for the remaining objectives. Table 3 gives the percentile that the averaged score of the new molecules found by each process occupies in the distribution over training set scores. The $J_{\text{comp}}^{\log P}(z)$ objective is included as a metric for the generative performance of the models; as noted previously, it is not a useful objective for the purposes of drug design.

Fig. 6. The best scores for new molecules generated from the baseline model (blue) and the model with constrained Bayesian optimization (red). The vertical lines show the best scores averaged over 5 separate train/test splits of 90/10. For reference, the histograms are presented against the backdrop of the top 10% of the training data in the case of composite log P and (non-composite) QED, and the top 20% of the training data in the case of composite QED.

Table 3. Percentile of the averaged new molecule score relative to the training data. The results of 5 separate train/test set splits of 90/10 are provided.

| Objective | Baseline | Constrained |
|---|---|---|
| log P composite | 36 ± 14 | 92 ± 4 |
| QED composite | 14 ± 3 | 72 ± 10 |
| QED | 11 ± 2 | 79 ± 4 |
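Table 3 reports where an averaged new-molecule score falls in the distribution of training-set scores. One common definition of that percentile, the share of training scores at or below the value, is sketched below; the exact convention behind the table (for example, the handling of ties) is not stated, so this is an assumption, and the scores are made-up toy values.

```python
from bisect import bisect_right


def percentile_of_score(score, training_scores):
    """Percentage of training-set scores less than or equal to `score`."""
    ordered = sorted(training_scores)
    return 100.0 * bisect_right(ordered, score) / len(ordered)


train_scores = [0.1 * i for i in range(100)]   # toy training distribution: 0.0 .. 9.9
new_run_best = [7.2, 9.95, 8.4]                # toy best scores from three runs
avg = sum(new_run_best) / len(new_run_best)    # about 8.52
print(percentile_of_score(avg, train_scores))  # 86.0
```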

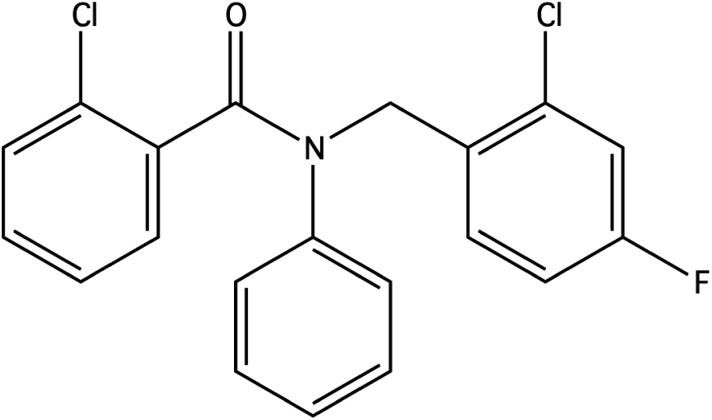

For the penalised log P objective function, scores for each run are presented in Table 4. The best score obtained from our constrained Bayesian optimization approach is compared against the scores reported by other methods in Table 5. The best molecule under the penalised log P objective obtained from our method is depicted in Fig. 7.

Table 4. Penalised log P objective scores with the best score obtained highlighted in bold.

| Run | Baseline | Constrained |
|---|---|---|
| 1 | 2.02 | **4.01** |
| 2 | 2.81 | 3.86 |
| 3 | 1.45 | 3.62 |
| 4 | 2.56 | 3.82 |
| 5 | 2.47 | 3.63 |

Table 5. Comparison of penalised log P objective function scores against other models. Note that the results are taken from the original works and as such don't constitute a direct performance comparison due to different run configurations.

Fig. 7. The best molecule obtained by constrained Bayesian optimization as judged by the penalised log P objective function score.

Experiment II

Combining molecule generation and property prediction

In order to show that the constrained Bayesian optimization approach is extensible beyond the realm of drug design, we trained the model on data from the Harvard Clean Energy Project19,20 to generate molecules optimized for power conversion efficiency (PCE). In the absence of ground truth values for the PCE of the novel molecules generated, we use the output of a neural network trained to predict PCE as a surrogate. As such, the predictive accuracy of the property prediction model will be a bottleneck for the quality of the generated molecules.

Implementation

A Bayesian neural network with 2 hidden layers and 50 ReLU units per layer was trained to predict the PCE of 200 000 molecules drawn at random from the Harvard Clean Energy Project dataset, using as input features 512-bit Morgan circular fingerprints101 with a radius of 2, computed using RDKit.102 While a larger radius may be appropriate for the prediction of PCE in order to represent conjugation, we are only interested in showing how a property predictor might be incorporated into the automatic chemical design framework and not in optimizing that predictor. The network was trained for 25 epochs with the ADAM optimizer103 using black box alpha divergence minimization with an alpha parameter of 5, a learning rate of 0.01, and a batch size of 500. The RMSE on the training set of 200 000 molecules is 0.681 and the RMSE on the test set of 25 000 molecules is 0.999.

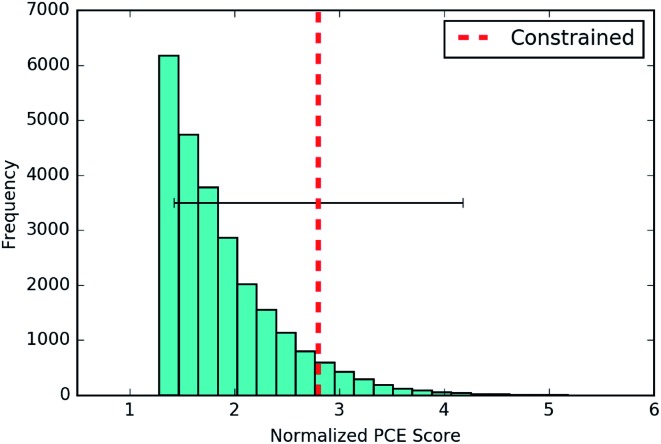

PCE scores

The results are given in Fig. 8. The averaged score of the new molecules generated lies above the 90th percentile in the distribution over training set scores. Given that the objective function in this instance was learned using a neural network, advances in predicting chemical properties from data104,105 are liable to yield concomitant improvements in the optimized molecules generated through this approach.

Fig. 8. The best scores for novel molecules generated by the constrained Bayesian optimization model optimizing for PCE. The results are averaged over 3 separate runs with train/test splits of 90/10. The PCE score is normalized to zero mean and unit variance by the empirical mean and variance of the training set.
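The normalization in Fig. 8 is a standard z-score computed from training-set statistics. A minimal sketch follows; it assumes the “empirical variance” is the population variance (dividing by n), and the PCE values are toy numbers.

```python
from statistics import fmean, pstdev


def normalize_scores(scores, train_scores):
    """Standardize scores with the empirical mean and standard deviation of the training set."""
    mu = fmean(train_scores)
    sigma = pstdev(train_scores)   # population standard deviation (divides by n)
    return [(s - mu) / sigma for s in scores]


train_pce = [1.0, 2.0, 3.0, 4.0, 5.0]   # toy PCE values
print(normalize_scores([3.0, 5.0], train_pce))
```

On this scale a score of 0 is the training-set average and a score of 1 lies one standard deviation above it, which makes results comparable across objectives with different units.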

Concluding remarks

The reformulation of the search procedure in the Automatic Chemical Design model as a constrained Bayesian optimization problem has led to concrete improvements on two fronts:

(1) Validity – the number of valid molecules produced by the constrained optimization procedure offers a marked improvement over the original model.

(2) Quality – for five independent train/test splits, the scores of the best molecules generated by the constrained optimization procedure consistently ranked above the 90th percentile of the distribution over training set scores for all objectives considered.

These improvements provide strong evidence that constrained Bayesian optimization is a good solution method for the training set mismatch pathology present in the unconstrained approach for molecule generation. More generally, we foresee that constrained Bayesian optimization is a workable solution to the training set mismatch problem in any VAE-based Bayesian optimization scheme. Our code is made publicly available at https://github.com/Ryan-Rhys/Constrained-Bayesian-Optimisation-for-Automatic-Chemical-Design. Further work could feature improvements to the constraint scheme106–111 as well as extensions to model heteroscedastic noise.112

In terms of objectives for molecule generation, recent work44,89,91,113,114 has featured a more targeted search for novel compounds. This represents a move towards more industrially-relevant objective functions for Bayesian optimization, which should ultimately replace the chemically misspecified objectives, such as the penalized log P score, identified both here and in ref. 37. In addition, efforts at benchmarking generative models of molecules115,116 should also serve to advance the field. Finally, in terms of improving parallel Bayesian optimization procedures in molecule generation applications, one point of consideration is the relative batch size of collected points compared to the dataset size used to initialize the surrogate model. We suspect that, in order to gain benefit from sequential sampling, the batch size should be on the same order of magnitude as the size of the initialization set, as this will induce the uncertainty estimates of the updated surrogate model to change in a tangible manner.

Conflicts of interest

There are no conflicts to declare.

Supplementary Material

Acknowledgments

The authors thank Jonathan Gordon for useful discussions on the interaction between the Bayesian optimization scheme and the latent space of the variational autoencoder.

Footnotes

†Electronic supplementary information (ESI) available: Additional experimental results validating the algorithm configuration on the toy Branin-Hoo function. See DOI: 10.1039/c9sc04026a

References

- Ryu S., Lim J., Hong S. H. and Kim W. Y., Deeply learning molecular structure-property relationships using attention-and gate-augmented graph convolutional network, arXiv preprint arXiv:1805.10988, 2018.

- Ryu J. Y., Kim H. U., Lee S. Y. Proc. Natl. Acad. Sci. U. S. A. 2018;115:E4304–E4311. doi: 10.1073/pnas.1803294115.

- Turcani L., Greenaway R. L., Jelfs K. E. Chem. Mater. 2018;31:714–727.

- Dey S., Luo H., Fokoue A., Hu J., Zhang P. BMC Bioinf. 2018;19:476. doi: 10.1186/s12859-018-2544-0.

- Coley C. W., Barzilay R., Green W. H., Jaakkola T. S., Jensen K. F. J. Chem. Inf. Model. 2017;57:1757–1772. doi: 10.1021/acs.jcim.6b00601.

- Gu G. H., Noh J., Kim I., Jung Y. J. Mater. Chem. A. 2019;7:17096–17117.

- Zeng M., Kumar J. N., Zeng Z., Savitha R., Chandrasekhar V. R. and Hippalgaonkar K., Graph convolutional neural networks for polymers property prediction, arXiv preprint arXiv:1811.06231, 2018.

- Coley C. W., Jin W., Rogers L., Jamison T. F., Jaakkola T. S., Green W. H., Barzilay R., Jensen K. F. Chem. Sci. 2019;10:370–377. doi: 10.1039/c8sc04228d.

- Cortés-Ciriano I., Bender A. J. Chem. Inf. Model. 2018;59:1269–1281. doi: 10.1021/acs.jcim.8b00542.

- Zhang Y., Lee A. A. Chem. Sci. 2019.

- Janet J. P., Duan C., Yang T., Nandy A., Kulik H. Chem. Sci. 2019. doi: 10.1039/c9sc02298h.

- Ryu S., Kwon Y. and Kim W. Y., Uncertainty quantification of molecular property prediction with Bayesian neural networks, arXiv preprint arXiv:1903.08375, 2019.

- McCloskey K., Taly A., Monti F., Brenner M. P., Colwell L. J. Proc. Natl. Acad. Sci. U. S. A. 2019;116:11624–11629. doi: 10.1073/pnas.1820657116.

- Pyzer-Knapp E. O., Suh C., Gómez-Bombarelli R., Aguilera-Iparraguirre J., Aspuru-Guzik A. Annu. Rev. Mater. Res. 2015;45:195–216.

- Playe B., Méthodes d’apprentissage statistique pour le criblage virtuel de médicament, PhD thesis, Paris Sciences et Lettres, 2019.

- Hernández-Lobato J. M., Requeima J., Pyzer-Knapp E. O. and Aspuru-Guzik A., Parallel and distributed Thompson sampling for large-scale accelerated exploration of chemical space, Proceedings of the 34th International Conference on Machine Learning, 2017, vol. 70, pp. 1470–1479.

- Pyzer-Knapp E. IBM J. Res. Dev. 2018;62:2–1.

- Gómez-Bombarelli R., Aguilera-Iparraguirre J., Hirzel T. D., Duvenaud D., Maclaurin D., Blood-Forsythe M. A., Chae H. S., Einzinger M., Ha D.-G., Wu T. Nat. Mater. 2016;15:1120–1127. doi: 10.1038/nmat4717.

- Hachmann J., Olivares-Amaya R., Atahan-Evrenk S., Amador-Bedolla C., Sánchez-Carrera R. S., Gold-Parker A., Vogt L., Brockway A. M., Aspuru-Guzik A. J. Phys. Chem. Lett. 2011;2:2241–2251.

- Hachmann J., Olivares-Amaya R., Jinich A., Appleton A. L., Blood-Forsythe M. A., Seress L. R., Roman-Salgado C., Trepte K., Atahan-Evrenk S., Er S. Energy Environ. Sci. 2014;7:698–704.

- Gómez-Bombarelli R., Wei J. N., Duvenaud D., Hernández-Lobato J. M., Sánchez-Lengeling B., Sheberla D., Aguilera-Iparraguirre J., Hirzel T. D., Adams R. P., Aspuru-Guzik A. ACS Cent. Sci. 2018;4:268–276. doi: 10.1021/acscentsci.7b00572.

- Weininger D. J. Chem. Inf. Comput. Sci. 1988;28:31–36. doi: 10.1021/ci950169+. [DOI] [PubMed] [Google Scholar]

- Schwaller P., Gaudin T., Lanyi D., Bekas C., Laino T. Chem. Sci. 2018;9:6091–6098. doi: 10.1039/c8sc02339e. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jin W., Coley C., Barzilay R. and Jaakkola T., Predicting Organic Reaction Outcomes with Weisfeiler–Lehman Network, Advances in Neural Information Processing Systems, 2017, pp 2604–2613. [Google Scholar]

- Coley C. W., Jin W., Rogers L., Jamison T. F., Jaakkola T. S., Green W. H., Barzilay R., Jensen K. F. Chem. Sci. 2019;10:370–377. doi: 10.1039/c8sc04228d. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schwaller P., Laino T., Gaudin T., Bolgar P., Bekas C. and Lee A. A., Molecular transformer for chemical reaction prediction and uncertainty estimation, arXiv preprint arXiv:1811.02633, 2018.

- Bradshaw J., Kusner M. J., Paige B., Segler M. H. and Hernández-Lobato J. M., A Generative Model of Electron Paths, International Conference on Learning Representations, 2019. [Google Scholar]

- Bradshaw J., Paige B., Kusner M. J., Segler M. H. and Hernández-Lobato J. M., A Model to Search for Synthesizable Molecules, arXiv preprint arXiv:1906.05221, 2019.

- Schwaller P., Vaucher A. C., Nair V. H. and Laino T., Data-Driven Chemical Reaction Classification with Attention-Based Neural Networks, ChemRxiv, 2019.

- Kingma D. P. and Welling M., Auto-Encoding Variational Bayes, International Conference on Learning Representations, 2014. [Google Scholar]

- Kingma D. P., Mohamed S., Rezende D. J. and Welling M., Semi-supervised learning with deep generative models, Advances in Neural Information Processing Systems, 2014, pp. 3581–3589. [Google Scholar]

- Bowman S. R., Vilnis L., Vinyals O., Dai A. M., Józefowicz R. and Bengio S., Generating Sentences from a Continuous Space, CoNLL, 2015.

- White T., Sampling Generative Networks, arXiv preprint arXiv:1609.04468, 2016.

- Makhzani A., Shlens J., Jaitly N., Goodfellow I. and Frey B., Adversarial autoencoders, arXiv preprint arXiv:1511.05644, 2015.

- Bickerton R. G., Paolini G. V., Besnard J., Muresan S., Hopkins A. L. Nat. Chem. 2012;4:90–98. doi: 10.1038/nchem.1243.

- Ertl P., Schuffenhauer A. J. Cheminf. 2009;1:8. doi: 10.1186/1758-2946-1-8.

- Griffiths R.-R., Schwaller P. and Lee A., Dataset Bias in the Natural Sciences: A Case Study in Chemical Reaction Prediction and Synthesis Design, ChemRxiv, 2018.

- Lipinski C. A., Lombardo F., Dominy B. W., Feeney P. J. Adv. Drug Delivery Rev. 1997;23:3–25. doi: 10.1016/s0169-409x(00)00129-0.

- Gelbart M. A., Snoek J. and Adams R. P., Bayesian optimization with unknown constraints, Proceedings of the Thirtieth Conference on Uncertainty in Artificial Intelligence, 2014, pp. 250–259.

- Schonlau M., Welch W. J. and Jones D. R., Global versus local search in constrained optimization of computer models, Lecture Notes - Monograph Series, 1998, pp. 11–25.

- Gelbart M. A., Constrained Bayesian Optimization and Applications, PhD thesis, Harvard University, 2015.

- Janz D., van der Westhuizen J., Paige B., Kusner M. and Lobato J. M. H., Learning a Generative Model for Validity in Complex Discrete Structures, International Conference on Learning Representations, 2018.

- Segler M. H., Kogej T., Tyrchan C., Waller M. P. ACS Cent. Sci. 2017 doi: 10.1021/acscentsci.7b00512.

- Blaschke T., Olivecrona M., Engkvist O., Bajorath J., Chen H. Mol. Inf. 2017 doi: 10.1002/minf.201700123.

- Skalic M., Jiménez J., Sabbadin D., De Fabritiis G. J. Chem. Inf. Model. 2019;59:1205–1214. doi: 10.1021/acs.jcim.8b00706.

- Ertl P., Lewis R., Martin E. J. and Polyakov V., In silico generation of novel, drug-like chemical matter using the LSTM neural network, arXiv preprint arXiv:1712.07449, Dec 20, 2017.

- Lim J., Ryu S., Kim J. W., Kim W. Y. J. Cheminf. 2018;10:31. doi: 10.1186/s13321-018-0286-7.

- Kang S., Cho K. J. Chem. Inf. Model. 2018;59:43–52. doi: 10.1021/acs.jcim.8b00263.

- Sattarov B., Baskin I. I., Horvath D., Marcou G., Bjerrum E. J., Varnek A. J. Chem. Inf. Model. 2019;59:1182–1196. doi: 10.1021/acs.jcim.8b00751.

- Gupta A., Müller A. T., Huisman B. J., Fuchs J. A., Schneider P., Schneider G. Mol. Inf. 2018;37:1700111.

- Harel S., Radinsky K. Mol. Pharm. 2018;15:4406–4416. doi: 10.1021/acs.molpharmaceut.8b00474.

- Yoshikawa N., Terayama K., Sumita M., Homma T., Oono K., Tsuda K. Chem. Lett. 2018;47:1431–1434.

- Bjerrum E., Sattarov B. Biomolecules. 2018;8:131. doi: 10.3390/biom8040131.

- Mohammadi S., O'Dowd B., Paulitz-Erdmann C. and Görlitz L., Penalized Variational Autoencoder for Molecular Design, ChemRxiv, 2019.

- Simonovsky M. and Komodakis N., GraphVAE: Towards Generation of Small Graphs Using Variational Autoencoders, Artificial Neural Networks and Machine Learning, 2018, pp. 412–422.

- Li Y., Vinyals O., Dyer C., Pascanu R. and Battaglia P., Learning deep generative models of graphs, arXiv preprint arXiv:1803.03324, 2018.

- Jin W., Barzilay R. and Jaakkola T., Junction Tree Variational Autoencoder for Molecular Graph Generation, International Conference on Machine Learning, 2018, pp. 2328–2337.

- De Cao N. and Kipf T., MolGAN: An implicit generative model for small molecular graphs, arXiv preprint arXiv:1805.11973, 2018.

- Kusner M. J., Paige B. and Hernández-Lobato J. M., Grammar Variational Autoencoder, International Conference on Machine Learning, 2017, pp. 1945–1954.

- Dai H., Tian Y., Dai B., Skiena S. and Song L., Syntax-Directed Variational Autoencoder for Structured Data, International Conference on Learning Representations, 2018.

- Samanta B., Abir D., Jana G., Chattaraj P. K., Ganguly N. and Rodriguez M. G., NeVAE: A deep generative model for molecular graphs, Proceedings of the AAAI Conference on Artificial Intelligence, 2019, pp. 1110–1117.

- Li Y., Zhang L., Liu Z. J. Cheminf. 2018;10:33.

- Kajino H., Molecular Hypergraph Grammar with Its Application to Molecular Optimization, International Conference on Machine Learning, 2019, pp. 3183–3191.

- Jin W., Yang K., Barzilay R. and Jaakkola T., Learning Multimodal Graph-to-Graph Translation for Molecule Optimization, International Conference on Learning Representations, 2019.

- Bresson X. and Laurent T., A Two-Step Graph Convolutional Decoder for Molecule Generation, arXiv, abs/1906.03412, 2019.

- Lim J., Hwang S.-Y., Kim S., Moon S. and Kim W. Y., Scaffold-based molecular design using graph generative model, arXiv preprint arXiv:1905.13639, 2019.

- Pölsterl S. and Wachinger C., Likelihood-Free Inference and Generation of Molecular Graphs, arXiv preprint arXiv:1905.10310, 2019.

- Krenn M., Häse F., Nigam A., Friederich P. and Aspuru-Guzik A., SELFIES: a robust representation of semantically constrained graphs with an example application in chemistry, arXiv preprint arXiv:1905.13741, 2019.

- Maziarka Ł., Pocha A., Kaczmarczyk J., Rataj K. and Warchoł M., Mol-CycleGAN: a generative model for molecular optimization, arXiv preprint arXiv:1902.02119, 2019.

- Madhawa K., Ishiguro K., Nakago K. and Abe M., GraphNVP: An Invertible Flow Model for Generating Molecular Graphs, arXiv preprint arXiv:1905.11600, 2019.

- Shen R. D., Automatic Chemical Design with Molecular Graph Variational Autoencoders, M.Sc. thesis, University of Cambridge, 2018.

- Korovina K., Xu S., Kandasamy K., Neiswanger W., Poczos B., Schneider J. and Xing E. P., ChemBO: Bayesian Optimization of Small Organic Molecules with Synthesizable Recommendations, arXiv e-prints, arXiv:1908.01425, 2019.

- Guimaraes G. L., Sanchez-Lengeling B., Farias P. L. C. and Aspuru-Guzik A., Objective-reinforced generative adversarial networks (ORGAN) for sequence generation models, arXiv preprint arXiv:1705.10843, May 30, 2017.

- Zhou Z., Kearnes S., Li L., Zare R. N., Riley P. Sci. Rep. 2019;9:10752. doi: 10.1038/s41598-019-47148-x.

- Putin E., Asadulaev A., Vanhaelen Q., Ivanenkov Y., Aladinskaya A. V., Aliper A., Zhavoronkov A. Mol. Pharm. 2018;15:4386–4397. doi: 10.1021/acs.molpharmaceut.7b01137.

- You J., Liu B., Ying Z., Pande V. and Leskovec J., Graph Convolutional Policy Network for Goal-Directed Molecular Graph Generation, Advances in Neural Information Processing Systems, 2018, vol. 31, pp. 6410–6421.

- Putin E., Asadulaev A., Ivanenkov Y., Aladinskiy V., Sanchez-Lengeling B., Aspuru-Guzik A., Zhavoronkov A. J. Chem. Inf. Model. 2018;58:1194–1204. doi: 10.1021/acs.jcim.7b00690.

- Yang X., Zhang J., Yoshizoe K., Terayama K., Tsuda K. Sci. Technol. Adv. Mater. 2017;18:972–976. doi: 10.1080/14686996.2017.1401424.

- Wei H., Olarte M. and Goh G. B., Multiple-objective Reinforcement Learning for Inverse Design and Identification, 2019.

- Ståhl N., Falkman G., Karlsson A., Mathiason G., Boström J. J. Chem. Inf. Model. 2019 doi: 10.1021/acs.jcim.9b00325.

- Kraev E., Grammars and reinforcement learning for molecule optimization, arXiv preprint arXiv:1811.11222, 2018.

- Olivecrona M., Blaschke T., Engkvist O., Chen H. J. Cheminf. 2017;9:48. doi: 10.1186/s13321-017-0235-x.

- Popova M., Shvets M., Oliva J. and Isayev O., MolecularRNN: Generating realistic molecular graphs with optimized properties, arXiv preprint arXiv:1905.13372, 2019.

- Prykhodko O., Johansson S., Kotsias P.-C., Bjerrum E. J., Engkvist O. and Chen H., A de novo molecular generation method using latent vector based generative adversarial network, ChemRxiv, 2019.

- Xue D., Gong Y., Yang Z., Chuai G., Qu S., Shen A., Yu J., Liu Q. Wiley Interdiscip. Rev.: Comput. Mol. Sci. 2019;9:e1395.

- Elton D. C., Boukouvalas Z., Fuge M. D., Chung P. W. Mol. Syst. Des. Eng. 2019;4:828–849.

- Schwalbe-Koda D. and Gómez-Bombarelli R., Generative Models for Automatic Chemical Design, arXiv preprint arXiv:1907.01632, 2019.

- Chang D. T., Probabilistic Generative Deep Learning for Molecular Design, arXiv preprint arXiv:1902.05148, 2019.

- Sanchez-Lengeling B., Aspuru-Guzik A. Science. 2018;361:360–365. doi: 10.1126/science.aat2663.

- Sohn K., Lee H. and Yan X., Learning structured output representation using deep conditional generative models, Advances in Neural Information Processing Systems, 2015, pp. 3483–3491.

- Polykovskiy D., Zhebrak A., Vetrov D., Ivanenkov Y., Aladinskiy V., Bozdaganyan M., Mamoshina P., Aliper A., Zhavoronkov A., Kadurin A. Mol. Pharm. 2018 doi: 10.1021/acs.molpharmaceut.8b00839.

- Hernández-Lobato J. M., Li Y., Rowland M., Bui T., Hernández-Lobato D. and Turner R. E., Black-Box Alpha Divergence Minimization, Proceedings of The 33rd International Conference on Machine Learning, New York, New York, USA, 2016, pp. 1511–1520.

- Gaulton A., Bellis L. J., Bento A. P., Chambers J., Davies M., Hersey A., Light Y., McGlinchey S., Michalovich D., Al-Lazikani B. Nucleic Acids Res. 2011;40:D1100–D1107. doi: 10.1093/nar/gkr777.

- Ginsbourger D., Le Riche R. and Carraro L., A multi-points criterion for deterministic parallel global optimization based on Gaussian processes, HAL preprint hal-00260579, 2008.

- Irwin J. J., Sterling T., Mysinger M. M., Bolstad E. S., Coleman R. G. J. Chem. Inf. Model. 2012;52:1757–1768. doi: 10.1021/ci3001277.

- Baell J. B., Holloway G. A. J. Med. Chem. 2010;53:2719–2740. doi: 10.1021/jm901137j.

- Brenk R., Schipani A., James D., Krasowski A., Gilbert I. H., Frearson J., Wyatt P. G. ChemMedChem. 2008;3:435–444. doi: 10.1002/cmdc.200700139.

- Pearce B. C., Sofia M. J., Good A. C., Drexler D. M., Stock D. A. J. Chem. Inf. Model. 2006;46:1060–1068. doi: 10.1021/ci050504m.

- Blake J. F. Med. Chem. 2005;1:649–655. doi: 10.2174/157340605774598081.

- Hann M., Hudson B., Lewell X., Lifely R., Miller L., Ramsden N. J. Chem. Inf. Comput. Sci. 1999;39:897–902. doi: 10.1021/ci990423o.

- Rogers D., Hahn M. J. Chem. Inf. Model. 2010;50:742–754. doi: 10.1021/ci100050t.

- Landrum G., RDKit: open-source cheminformatics software, 2016.

- Kingma D. and Ba J., Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980, 2014.

- Duvenaud D., Maclaurin D., Aguilera-Iparraguirre J., Gómez-Bombarelli R., Hirzel T., Aspuru-Guzik A. and Adams R. P., Convolutional Networks on Graphs for Learning Molecular Fingerprints, Proceedings of the 28th International Conference on Neural Information Processing Systems, 2015, pp. 2224–2232.

- Ramsundar B., Kearnes S. M., Riley P., Webster D., Konerding D. E. and Pande V. S., Massively multitask networks for drug discovery, arXiv preprint arXiv:1502.02072, Feb 6, 2015.

- Rainforth T., Le T. A., van de Meent J.-W., Osborne M. A. and Wood F., Bayesian optimization for probabilistic programs, Advances in Neural Information Processing Systems, 2016, pp. 280–288.

- Mahmood O. and Hernández-Lobato J. M., A COLD Approach to Generating Optimal Samples, arXiv preprint arXiv:1905.09885, 2019.

- Astudillo R. and Frazier P., Bayesian Optimization of Composite Functions, International Conference on Machine Learning, 2019, pp. 354–363.

- Häse F., Roch L. M., Kreisbeck C., Aspuru-Guzik A. ACS Cent. Sci. 2018;4:1134–1145. doi: 10.1021/acscentsci.8b00307.

- Moriconi R., Kumar K. and Deisenroth M. P., High-Dimensional Bayesian Optimization with Manifold Gaussian Processes, arXiv preprint arXiv:1902.10675, 2019.

- Bartz-Beielstein T., Zaefferer M. Appl. Soft Comput. 2017;55:154–167.

- Griffiths R.-R., Garcia-Ortegon M., Aldrick A. A. and Lee A. A., Achieving Robustness to Aleatoric Uncertainty with Heteroscedastic Bayesian Optimisation, arXiv preprint arXiv:1910.07779, 2019.

- Tabor D. P., Roch L. M., Saikin S. K., Kreisbeck C., Sheberla D., Montoya J. H., Dwaraknath S., Aykol M., Ortiz C., Tribukait H. Nat. Rev. Mater. 2018;3.

- Aumentado-Armstrong T., Latent Molecular Optimization for Targeted Therapeutic Design, arXiv preprint arXiv:1809.02032, 2018.

- Brown N., Fiscato M., Segler M. H., Vaucher A. C. J. Chem. Inf. Model. 2019;59:1096–1108. doi: 10.1021/acs.jcim.8b00839.

- Polykovskiy D., Zhebrak A., Sanchez-Lengeling B., Golovanov S., Tatanov O., Belyaev S., Kurbanov R., Artamonov A., Aladinskiy V., Veselov M., et al., Molecular Sets (MOSES): A Benchmarking Platform for Molecular Generation Models, arXiv, abs/1811.12823, 2018.
