A device combining soft, wireless wearable electronics with virtual reality offers portable therapeutics for eye disorders.

Abstract

Recent advancements in electronic packaging and image processing techniques have opened the possibility for optics-based portable eye tracking, but technical and safety hurdles limit its implementation in wearable applications. Here, we introduce a fully wearable, wireless soft electronic system that offers portable, highly sensitive tracking of eye movements (vergence) via the combination of skin-conformal sensors and a virtual reality system. Advances in material processing and printing technologies based on aerosol jet printing enable reliable manufacturing of skin-like sensors, while a flexible hybrid circuit based on elastomer and chip integration allows comfortable integration with a user’s head. Analytical and computational study of a data classification algorithm provides a highly accurate tool for real-time detection and classification of ocular motions. In vivo demonstration with 14 human subjects captures the potential of the wearable electronics as a portable therapy system, whose minimized form factor facilitates seamless interplay with traditional wearable hardware.

INTRODUCTION

Ocular disorders are becoming more prevalent in the smartphone era, as more people use electronic devices at close range for prolonged periods. This problem is of particular concern among younger users, who are exposed to excessive use of smart devices at an early age. One such ocular disease is convergence insufficiency (CI), which in the United States affects 1 to 6% of children below age 19, as well as adults, and 40 to 46% of military personnel with mild traumatic brain injury (1–3). Similar to CI, strabismus, an eye disorder commonly known as “crossed-eye,” affects approximately 4% of children and adults in the United States (4, 5). People with CI and strabismus show debilitating symptoms of headaches, blurred vision, fatigue, and attention loss (6). These symptoms contribute to a productivity cost of $1.2 billion attributed to computer-related visual complaints (7). These ocular diseases have numerous causes, ranging from sports-related concussions to genetic disorders, because even a simple eye movement reflects coordinated work among the brain, optic nerves, ocular muscles, and the eye itself (8, 9). However, the causes of most pediatric cases remain unknown, and no precise surgical or genetic approaches exist that target only the diseased tissues, which often limits clinical options to treatment plans based on ocular therapies (10). Numerous therapeutic techniques are available for CI, and the best overall method is office-based visual therapy (OBVT). Typical convergence orthoptics requires a patient to visit an optometrist’s office for 1 hour every week for 12 weeks of procedural therapy targeting two ocular feedback mechanisms: vergence and accommodation (7). Used in conjunction, the two therapies allow the eyes to converge on near-point and far-point objects.
Although OBVT combined with home-based pencil push-ups has shown success rates as high as 73%, the success rates of home-based vision therapy often diminish to just 33% over time (6, 11–13). Thus, an alternative method is required that reduces outpatient visits by providing reliable and effective home-based visual therapy. One possible solution is the use of virtual reality (VR) therapy systems, which have now become portable and affordable. With a VR system running on a smartphone, we can create “virtual therapies” that can be used anywhere, anytime. By integrating a vergence detection system, we can allow the user to carry out the therapy even without clinical supervision. Other stereoscopic displays are common in vision therapy, but a VR headset maintains binocular vision while eliminating head motion, which improves the precision of vergence measurements. Currently, the most common techniques for measuring vergence motions are video-oculography (VOG) and electrooculography (EOG). VOG uses a high-speed camera that is either stationary or attached to a pair of goggles and oriented toward the eye, while EOG uses a set of Ag/AgCl electrodes mounted on the skin to noninvasively record eye potentials. VOG has received research attention in the diagnosis of autism, Parkinson’s disease, and multiple system atrophy (14, 15). A few studies demonstrate the capability of VOG for CI assessments in vergence rehabilitation (12, 13) and for testing the reading capabilities of children (16). Unfortunately, because of its bulky hardware, conventional VOG remains limited for home-based applications and requires the user to visit an optometrist’s office equipped with a VOG system (17). Recent advancements in device packaging and image processing techniques have opened the possibility of incorporating eye tracking within a VR headset by integrating infrared cameras near the headset lenses, as demonstrated in a prior work (18).
While embedding eye tracking in a VR headset may appear simple and convenient, it has notable disadvantages. First, the accuracy of an image-based eye tracking system relies heavily on capturing clear images of the user’s pupil and eye. Thus, wide physiological variability, such as eyelashes and eyelids covering the pupil, can reduce successful pupil detection. For example, Schnipke and Todd (19) reported that only 37.5% of the participants in their study provided eye tracking data acceptable for the study. Second, even state-of-the-art eye tracking algorithms exhibit variable performance depending on the eye image library, with detection rates as low as 0.6 (20). Third, integrating eye tracking components with VR hardware requires hardware modification, which inevitably increases the system cost and ultimately discourages dissemination of the technology. Furthermore, the integrated hardware requires recalibration whenever the headset shifts and cannot monitor the eyes without the VR headset. Fourth, although near-infrared radiation is classified as nonionizing, biological effects beyond corneal and retinal burns must still be investigated for prolonged, repeated, near-distance, and directed ocular near-infrared exposure (21). In contrast, because EOG measures the naturally changing ocular potential, electrodes placed around the eyes allow highly sensitive detection (22) of eye movements (vergence). This approach avoids the concerns regarding physiological variability, image processing algorithms, hardware modification, and adverse biological effects and could enable a low-cost, portable eye monitoring solution (23). One prior work (24) shows the feasibility of EOG-based detection of eye vergence but lacks accurate classification of multidegree eye motions.
In addition, the typical sensor package, consisting of conductive gels, adhesives, and metal conductors, is uncomfortable when mounted on the sensitive tissues surrounding the eyes and is too bulky to conform to the nose. Kumar and Sharma (25) demonstrated the use of EOG for game control in VR, a potential hardware solution for this study, but their bulky hardware, cables, and electrodes do not provide the required portability. Xiao et al. (26) also implemented an EOG-controlled VR environment with a customized headset and light-emitting diode wall displays; however, the sophisticated setup also makes the solution unsuitable as a portable and affordable therapeutic tool. Moreover, these systems rely on detecting blinks and limited eye movements and lack the critical capability to detect multidegree vergence, which is essential for the diagnosis and therapy of eye disorders. Overall, conventional EOG settings are highly limited for seamless integration with a VR system, and existing VR-combined EOG solutions are not feasible for home-based ocular therapeutics. To address these issues, we introduce an “all-in-one” periocular wearable electronic platform that includes soft, skin-like sensors and wireless flexible electronics. The soft electronic system offers accurate, real-time detection and classification of multidegree eye vergence in a VR environment toward portable therapeutics of eye disorders. For electrode fabrication, we use aerosol jet printing (AJP), an emerging direct printing technique, to leverage its potential for scalable manufacturing that bypasses costly microfabrication processes. Several groups have used AJP to develop flexible electronic components and sensors (27–29), and only a few others have demonstrated its specific utility for wearable applications (30, 31).
In this study, we demonstrate the first implementation of AJP for fabricating highly stretchable, skin-like biopotential electrodes, supported by a comprehensive set of results encompassing process optimization, materials analysis, performance simulation, and experimental validation. Because of their exceptionally thin form factor, the printed electrodes conform seamlessly to the contoured surfaces of the nose and the areas surrounding the eyes and fit comfortably under a VR headset. The open-mesh electrode structure, designed by computational modeling, is highly flexible (180° with a radius of 1.5 mm) and stretchable (up to 100% biaxial strain), as verified by cyclic bending and loading tests. A highly flexible and stretchable circuit, encapsulated in an adhesive elastomer, can be attached to the back of the user’s neck without additional adhesives or tapes, providing portable, wireless, real-time recording of eye vergence via Bluetooth radio. When worn by a user, the EOG system enables accurate detection of eye movement while leaving the user’s facial regions free for comfortable VR use. Collectively, we present the first eye vergence study that uses wearable soft electronics combined with a VR environment to classify vergence for portable therapeutics of eye disorders. In vivo demonstration of the periocular wearable electronics with multiple human subjects establishes a standard protocol for EOG quantification with VR and a physical apparatus, which will be further investigated in future clinical studies of patients with eye disorders.

RESULTS

Overview of wireless, portable ocular wearable electronics with VR gear for vergence detection

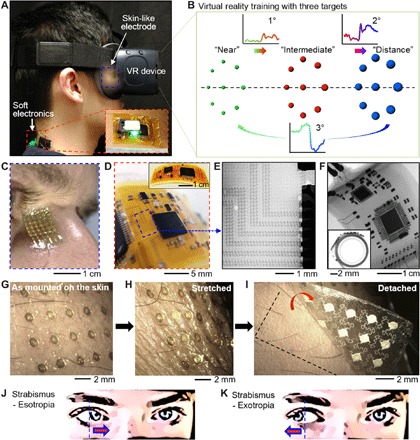

Ocular experts have deemed recording eye vergence via EOG difficult (32) because of limited signal sensitivity, which stems from the lower conformality and greater motion artifacts of conventional gel electrodes compared with soft, skin-like electrodes (22, 33, 34). In addition, a pragmatic experimental setup that can invoke a precise eye vergence response has been lacking. Therefore, we developed a VR-integrated eye therapy system and wearable ocular electronics (Fig. 1). The portable therapeutic system (Fig. 1A) incorporates a set of stretchable aerosol jet–printed electrodes, a VR device, and soft wireless electronics on the back of the user’s neck. The system offers highly sensitive, automatic detection of eye vergence via a data classification algorithm. The VR therapy environment (Fig. 1B) simulates continuous movements of multiple objects at three depths (near, intermediate, and distance), corresponding to 1°, 2°, and 3° of eye motion, which enables portable ocular therapeutics without a physical apparatus. Audio feedback in the system interface guides the user through the motions for high-fidelity, continuous signal acquisition for training and testing. The vergence recording performance of the VR system is compared with that of a physical apparatus (details of the setup appear in fig. S1A, and an example of vergence detection is shown in movie S1). We engineered nanomaterials to design an ultrathin, skin-like sensor for high-fidelity detection of slight eye movements via conformal lamination on the contoured areas around the eyes and nasal region (Fig. 1C). A scalable additive manufacturing method using AJP was used to fabricate the skin-wearable sensor, which was connected to a fully portable, miniaturized wireless circuit that is ultralight and highly flexible for gentle lamination on the back of the neck.
For optimization of sensor location and signal characterization, a commercial data acquisition system (BioRadio, Great Lakes NeuroTechnologies) was initially used (details of the two types of systems appear in fig. S1B and table S1). The soft and compliant electronic system is enabled by the systematic integration of lithography-based open-mesh interconnects on a polyimide (PI) film, bonding of small chip components, and encapsulation with an elastomeric membrane. Details of the fabrication methods and processes appear in Materials and Methods and fig. S2. The flexible electronic circuit consists of several modules that allow wireless, high-throughput EOG detection (fig. S3A). A monopole ceramic antenna is matched to the impedance of the Bluetooth radio (nRF52, Nordic Semiconductor) with a T-matching circuit; the radio communicates serially with a four-channel, 24-bit analog-to-digital converter (ADS1299, Texas Instruments) for high-resolution recording of EOG signals (Fig. 1D). The bias amplifier is configured for closed-loop gain of the signals. In the electronics, an array of 1-μm-thick, 18-μm-wide interconnects (Fig. 1E) ensures high mechanical flexibility by absorbing applied strain and isolating it from the chip components. The bending test in Fig. 1F captures the deformability of the system, even under 180° bending at a small radius of curvature (1 mm). The measured resistance change at the bending area between the two largest chips is less than 1%, indicating that the unit continues to function [details of the experimental setup and data are shown in fig. S3 (B to D)]. In addition, the ultrathin, dry electrode (thickness: 67 μm, including a backing elastomer) forms an intimate, conformal bond with the skin (Fig. 1, G and H) without gel or adhesive.
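The 24-bit conversion can be made concrete with a short sketch that turns one raw ADS1299 channel sample (three bytes, two’s complement, most significant byte first) into microvolts. The defaults are assumptions, not values stated for this circuit's firmware: the converter's internal 4.5 V reference and a programmable gain of 12 (the gain reported later for the wireless circuit). The function name is hypothetical.

```python
def ads1299_counts_to_uv(raw: bytes, vref: float = 4.5, gain: int = 12) -> float:
    """Convert one 24-bit ADS1299 channel sample to microvolts.

    Assumptions (hypothetical defaults, not from the paper's firmware):
    internal 4.5 V reference and a PGA gain of 12.
    """
    if len(raw) != 3:
        raise ValueError("expected exactly 3 bytes per channel sample")
    # Samples are 24-bit two's complement, most significant byte first.
    counts = int.from_bytes(raw, byteorder="big", signed=True)
    # LSB size: (2 * VREF / gain) / 2**24.
    lsb_volts = (2.0 * vref / gain) / 2**24
    return counts * lsb_volts * 1e6


# Hypothetical frame: one sample for each of the four channels.
frame = bytes.fromhex("000001" "FFFFFF" "7FFFFF" "800000")
samples_uv = [ads1299_counts_to_uv(frame[i:i + 3]) for i in range(0, len(frame), 3)]
print([round(v, 4) for v in samples_uv])
```

Under these assumptions, one count corresponds to (2 × 4.5 V / 12) / 2^24, or about 0.045 μV, far finer than the vergence signal resolution of tens of microvolts per degree reported for this system.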
The conformal lamination results from the elastomer’s extremely low modulus and high adhesion as well as the reduced bending stiffness of the electrode afforded by its open-mesh, serpentine geometric patterns (details of the conformal contact analysis appear in section S1) (33, 35). The open-mesh, serpentine structure can even accommodate dynamic skin deformation and surface hairs near the eyebrow for sensitive recording of EOG. The device is powered by a small rechargeable lithium-ion polymer battery that connects to the circuit via miniaturized magnetic terminals for easy battery integration. Photographs of the battery integration and magnetic terminals are shown in fig. S3 (E to H). In conjunction with a data classification algorithm for eye vergence, the ocular wearable electronic system described here aims to diagnose diseases such as strabismus, which is most commonly described by the direction of eye misalignment (Fig. 1, J and K). With the integration of a VR program, this portable system could serve as an effective therapeutic tool with many applications in eye disorder therapy.
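The thickness dependence behind this conformal contact can be illustrated with the textbook flexural rigidity of a thin, homogeneous elastic plate. It is offered for intuition only and is not necessarily the model used in section S1; here E is Young’s modulus, h the thickness, and ν Poisson’s ratio:

$$
D = \frac{E\,h^{3}}{12\left(1-\nu^{2}\right)}
$$

Because D scales with h³, halving the thickness reduces the bending stiffness eightfold; the open-mesh, serpentine layout is expected to lower the effective stiffness further by reducing the amount of solid material that must bend with the skin.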

Fig. 1. Overview of a soft, wireless periocular electronic system that integrates a VR environment for home-based therapeutics of eye disorders.

(A) A portable, wearable system for vergence detection via skin-like electrodes and a soft circuit, with relevant therapeutics in a VR system. The zoomed inset details the magnetically integrated battery power source. (B) VR therapy environment that simulates continuous movements of multiple objects at three depths (near, intermediate, and distance), corresponding to 1°, 2°, and 3° of eye motion. (C) Zoomed-in view of a skin-like electrode in conformal contact with the nose. (D) Highly flexible, soft electronic circuit mounted on the back of the neck. (E and F) X-ray images of the magnified mesh interconnects (E) and circuit flexion with a small bending radius (F). (G to I) Photos of an ultrathin, mesh-structured electrode mounted near the eyebrow. (J and K) Two types of eye misalignment due to a disorder, esotropia (inward eye turning) (J) and exotropia (outward deviation) (K), both of which can be detected by the wireless ocular wearable electronics. Photo credit: Saswat Mishra; photographer institution: Georgia Institute of Technology.

Characterization and fabrication of wearable skin-like electrodes via AJP

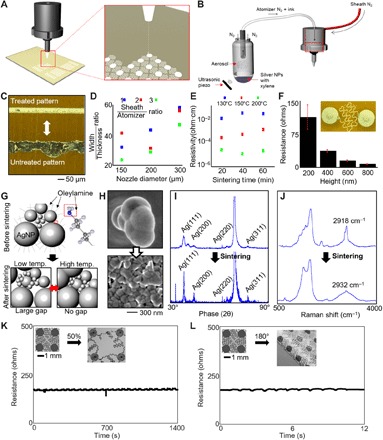

Highly sensitive detection of EOG signals requires low skin-electrode contact impedance and minimal motion artifacts. Although a conventional electrode offers low contact impedance owing to its electrolyte gel, the sensor’s rigidity, size, and adhesive limit its application to the sensitive and highly contoured regions of the nose and eyes. Here, we developed an additive manufacturing technique (Fig. 2) to produce an ultrathin, highly stretchable, comfortable dry electrode for EOG detection. As a potentially low-cost and scalable printing method (36), AJP allows direct printing of an open-mesh structure onto a soft membrane (Fig. 2A) without an expensive micro/nanofabrication facility (37). For successful printing, we conducted a parametric study to understand the material properties of silver nanoparticles (AgNPs) (UT Dots) along with controlled deposition through various nozzles of the AJP system (AJ200, Optomec; Fig. 2B). Before electrode fabrication, the wettability of printed lines was assessed by qualitatively determining the surface energy of a PI substrate. Plasma treatment along with platen heating was used to produce fine features (Fig. 2C). To find the optimal printed structure, we varied several key parameters, including the ratio of line width to thickness for each nozzle diameter at different focusing ratios and the sintering time at different temperatures (Fig. 2, D and E). The focusing ratio (Fig. 2D) is the ratio of sheath gas flow to atomizer gas flow: the sheath flow controls the material flow rate through the deposition head, and the atomizer flow controls the material leaving the ultrasonic atomizer bath. The highest focusing ratio yields the lowest width-to-thickness ratio, that is, a smaller width for a greater thickness. This yields lower resistance for finer traces, which is necessary for the ideal sensor geometry [trace width, 30 μm; overall area, 1 cm²; Fig. 2A; see also details in fig. S4 (A and B)].
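Why a lower width-to-thickness ratio helps can be seen from the resistance of a rectangular conductor, R = ρL/(wt). The sketch below fixes the 30 μm design width and varies thickness; the resistivity, path length, and thicknesses are hypothetical illustration values, not measurements from this study.

```python
def trace_resistance_ohm(resistivity_ohm_m: float, length_m: float,
                         width_m: float, thickness_m: float) -> float:
    """Resistance of a rectangular printed trace: R = rho * L / (w * t)."""
    return resistivity_ohm_m * length_m / (width_m * thickness_m)


# Hypothetical values for illustration only (not measured in this study).
RHO_SINTERED_AG = 3.0e-8  # ohm*m, assumed resistivity of sintered AgNP ink
TRACE_LENGTH = 0.01       # m, an assumed 1 cm conduction path
TRACE_WIDTH = 30e-6       # m, the 30 um trace width used in the design

for thickness_um in (0.5, 1.0, 2.0):  # assumed printed thicknesses
    r = trace_resistance_ohm(RHO_SINTERED_AG, TRACE_LENGTH,
                             TRACE_WIDTH, thickness_um * 1e-6)
    ratio = TRACE_WIDTH / (thickness_um * 1e-6)
    print(f"width/thickness = {ratio:5.1f} -> R = {r:5.1f} ohm")
```

At a fixed width, doubling the thickness halves both the width-to-thickness ratio and R, which is the trend that a high focusing ratio exploits for fine traces.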
A four-point probe system (S-302-A, Signatone) measures the resistivity of the printed ink sintered at different temperatures and for different times (Fig. 2E). As expected, multilayer printing of AgNPs lowers the resistance (Fig. 2F). Sintering at 200°C ensured good conductivity of the printed traces (Fig. 2G). Scanning electron microscopy (SEM) images (Fig. 2H) reveal AgNP agglomeration and densification, an indirect indication of the dissociation of the oleylamine binder that surrounds the NPs (see additional images in fig. S4C). In addition, x-ray diffraction shows various crystalline planes of AgNPs that become more evident after sintering because of increasing grain sizes, as demonstrated by the narrowing of the peaks in Fig. 2I. This change in crystallinity is due to the decomposition of the oleylamine capping agent (38). In Fig. 2J, Raman spectroscopy shows a narrow peak at 2918 cm−1 before sintering, which converts to a much broader peak centered at 2932 cm−1 after sintering. This indicates a decrease in CH₂ bonds in the capping agent and a small shift in the amine group (1400 to 1600 cm−1) (39). Fourier transform infrared (FTIR) analysis further verifies the dissociation of the binder: the FTIR transmittance spectra of the printed electrodes before and after sintering show the disappearance of the “NH stretch,” “NH bend,” and “C-N stretching” bands, confirming the dissociation of oleylamine (fig. S4D). Last, surface characterization using x-ray photoelectron spectroscopy indicates a decrease in the full width at half maximum of the carbon peak and an increase in that of the oxygen peak, mirroring the changes in elemental composition. The decrease in carbon and the increase in oxygen atomic percentages are due to the breakdown of the binder and increased oxidation of the AgNPs, respectively (details of the experimental results appear in fig. S4E).
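Three standard relations sit behind this paragraph: the thin-film four-point probe formula, the parallel-conductor view of stacked printed layers, and the Scherrer equation linking narrower diffraction peaks to larger crystallites. The sketch below states each under its usual idealizations (a thin film large compared with the probe spacing, identical layers conducting in parallel, and Cu Kα radiation with a shape factor of 0.9); these are assumptions, and the printed inputs are hypothetical.

```python
import math


def sheet_resistance_ohm_per_sq(voltage_v: float, current_a: float) -> float:
    """Collinear, equally spaced four-point probe on a thin film that is
    large compared with the probe spacing: Rs = (pi / ln 2) * V / I."""
    return (math.pi / math.log(2)) * voltage_v / current_a


def resistivity_ohm_m(voltage_v: float, current_a: float, thickness_m: float) -> float:
    """Film resistivity from sheet resistance: rho = Rs * t."""
    return sheet_resistance_ohm_per_sq(voltage_v, current_a) * thickness_m


def multilayer_resistance_ohm(single_layer_ohm: float, n_layers: int) -> float:
    """Idealized model: n identical printed layers conduct in parallel."""
    return single_layer_ohm / n_layers


def scherrer_size_nm(fwhm_deg: float, two_theta_deg: float,
                     wavelength_nm: float = 0.15406, k: float = 0.9) -> float:
    """Scherrer crystallite size D = K * lambda / (beta * cos(theta)),
    with beta the peak FWHM in radians and theta half the 2-theta position.
    Defaults assume Cu K-alpha radiation and a shape factor of 0.9."""
    beta = math.radians(fwhm_deg)
    theta = math.radians(two_theta_deg / 2.0)
    return k * wavelength_nm / (beta * math.cos(theta))


# Hypothetical inputs, for illustration only.
print(resistivity_ohm_m(voltage_v=1e-3, current_a=1e-3, thickness_m=1e-6))
print(multilayer_resistance_ohm(single_layer_ohm=40.0, n_layers=2))
# Ag (111) reflection near 2-theta = 38.1 deg (Cu K-alpha): a peak that
# narrows from 1.0 deg to 0.5 deg FWHM doubles the estimated crystallite size.
print(scherrer_size_nm(1.0, 38.1), scherrer_size_nm(0.5, 38.1))
```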
The mesh design of the electrode, supported by computational modeling, provides highly flexible and stretchable mechanics under multimodal strain. A set of experimental tests validates the mechanical stability of the sensor: the structure can be stretched up to 100% before a change in resistance is observed (fig. S5, A to D), cyclically stretched up to 50% without damage (Fig. 2K), and bent up to 180° with a very small radius of curvature (250 μm; Fig. 2L). Details of the mechanical stretching and bending apparatus and processes are shown in fig. S5 (E to H). After printing, the PI is dry-etched and the electrode is transferred onto an elastomeric membrane by dissolving a sacrificial layer, which prepares the sensor for mounting on the skin (details of the processes appear in fig. S5I). Last, the performance of the printed skin-like electrodes was compared with that of conventional gel electrodes (MVAP II Electrodes, MVAP Medical Supplies) by simultaneously applying one type of electrode on each side of a subject’s face, in a configuration that measures vertical eye movements. Skin-electrode contact impedances are measured by plugging each pair of electrodes (positioned above and below each eye) into a tester (Checktrode Model 1089 MK III, UFI) and recording the values at both the start and the end of the experimental session. A 1-hour session is chosen to represent the average duration of vergence therapeutic protocols. At the start of the session, the average impedances of the gel and skin-like electrodes are 7.6 and 8.4 kΩ, respectively. After 1 hour, the respective impedances change to 1.2 and 6.3 kΩ, indicating that both types of electrodes form excellent skin-electrode interfaces. Next, the subject performs a simple vertical eye movement protocol, looking up and down to generate EOG signals for signal-to-noise ratio (SNR) comparison.
The SNR analysis, performed by computing the log ratio of the root mean square (RMS) value of each EOG signal (up and down) to that of the baseline signal over 10 trials, is also carried out at the start and end of the 1-hour session. The SNR results show comparable performance and no meaningful change for either electrode type over the 1-hour period, although the skin-like electrodes maintain higher SNR values in all cases. Last, the electrodes’ sensitivity to movement artifacts is quantified by asking the subject to walk on a treadmill at 1.4 m/s for 1 min. The RMS values of the data from the gel and skin-like electrodes are 2.36 and 2.45 μV, respectively, suggesting that, in terms of movement artifacts, skin-like electrodes offer no practical disadvantage compared with gel adhesive electrodes. The details of the experimental setup and analysis results are included in fig. S6.
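The RMS-based SNR comparison can be sketched as follows. The text specifies only a “log ratio” of RMS values, so expressing it in decibels as 20·log10 (the usual factor for an amplitude ratio) is an assumption; a 10·log10 convention would halve every value. The same `rms` helper also yields the treadmill movement-artifact metric. The segments below are hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple


def rms(x: Sequence[float]) -> float:
    """Root mean square of a signal segment."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def snr_db(signal: Sequence[float], baseline: Sequence[float]) -> float:
    """SNR in dB from an RMS (amplitude) ratio: 20 * log10(RMS_s / RMS_b)."""
    return 20.0 * math.log10(rms(signal) / rms(baseline))


def mean_snr_db(trials: Iterable[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Average SNR over (EOG segment, baseline segment) pairs, e.g. 10 trials."""
    values = [snr_db(sig, base) for sig, base in trials]
    return sum(values) / len(values)


# Hypothetical segments (in microvolts) for two up/down trials.
trials = [([40.0, -38.0, 41.0], [2.0, -1.5, 1.8]),
          ([35.0, -36.0, 37.0], [1.9, -2.1, 1.7])]
print(round(mean_snr_db(trials), 1))
```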

Fig. 2. AJP parametric study supplemented by the characterization of deposited NP ink.

(A) AJP from the deposition head, with a close-up of deposition. (B) Atomization begins in the ultrasonic vial, and a carrier gas (N₂) carries the ink droplets through the tubing, the diffuser, and the deposition head, where a sheath gas focuses the particles into a narrow stream with a diameter of ~5 μm. (C) The platen is heated to ensure evaporation of the solvents, and surface treatment with a plasma cleaner enables clean lines (top); otherwise, the traces tend to form bubbles over a large area. (D) A high focusing ratio with a low width/thickness ratio enables lower resistivity. (E) Resistivity measured with a four-point probe system for various sintering temperatures and times. (F) Because the electrode resistance must ultimately be low, a two-point probe measurement shows the resistance across a fractal electrode in series. (G) AgNPs capped with oleylamine (a fatty amine), which prevents agglomeration, break down after low- and high-temperature sintering. The grain size of small NPs increases at low temperatures, and that of larger NPs increases at higher temperatures. (H) SEM images show the agglomeration and densification of the binder material after sintering. (I) X-ray diffraction analysis shows larger crystallization peaks after sintering and recrystallization along the (111), (200), and (311) crystal planes. The large peak between (220) and (311) represents the Si substrate. (J) Raman spectroscopy demonstrates the loss of carbon and hydrogen peaks near 2900 cm−1 due to binder dissociation. (K) Cyclic stretching (50% strain) of the electrode. (L) Cyclic bending (up to 180°) of the electrode.

Study of ocular vergence and classification accuracy based on sensor positions

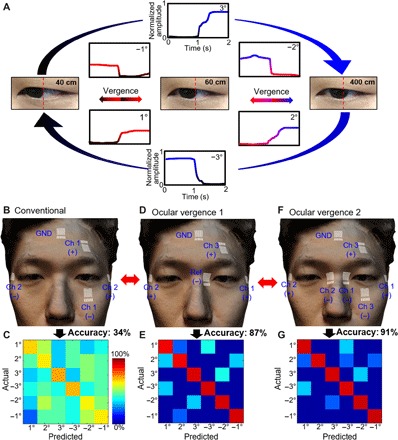

Recording ocular vergence via EOG requires careful optimization to maximize performance. We assessed eye vergence at a series of distances to establish a metric for classification. The distances that humans most commonly observe in daily life were the basis for the procedure in both the physical and virtual domains. The difference in the degree of eye motion between distances is the physical attribute that makes eye vergence classification possible. Figure 3A shows the individual converging and diverging ocular motions with the corresponding EOG signals. Diverging motions, for example from 40 cm to 60 and 400 cm, produce positive potentials as the degree of eye vergence increases from 1° to 3°, based on an interpupillary distance of 50 mm. Converging movements, from 400 cm to 60 and 40 cm (3° to 1°), produce an inverted, negative potential. Conventional EOG sensor positioning aims to record synchronized eye motions with a large range of potentials for gaze tracking (40, 41). The experimental setup in Fig. 3B resembles the conventional setting (two recording channels and one ground on the forehead) for typical vertical and horizontal eye movements. A subject wearing the soft sensors was asked to look at three objects located 40, 60, and 400 cm away. The classification accuracy of the eye vergence signals was only 34% (Fig. 3C) because of the combination of inconsistent potentials and opposing gaze motions. To preserve the signal quality of ocular vergence, we used three individual channels in a unipolar setup with a combined reference electrode positioned on the contour of the nose (ocular vergence 1; Fig. 3D), which resulted in an accuracy of 87% (Fig. 3E). Last, we assessed the accuracy of our electrode positioning after adding two more electrodes (ocular vergence 2; Fig. 3F), which yielded 91% accuracy (Fig. 3G) with the least deviation in the signals.
The classification accuracy for each sensor configuration is averaged over three human subjects (individual confusion matrices and the associated accuracy calculations are summarized in fig. S7). In addition, the ocular vergence 2 recording setup (Fig. 3F) shows a signal resolution as high as ~26 μV per degree, substantially higher than in our prior work (~11 μV per degree) (22). The finest vergence resolution we achieved with the Brock String program was 0.5° (fig. S8A). The increased sensitivity to vergence amplitude is due to the optimized positioning of the electrodes closer to the eyes, such as on the nose and the outer canthus. Moreover, the portable, wireless circuit applies a higher internal amplifier gain of 12.
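The accuracy figures above come from confusion matrices; a minimal sketch of that calculation, plus one plausible way to express resolution in μV per degree (a least-squares slope of EOG amplitude against vergence class), is shown below. The unweighted per-subject averaging, the slope-based resolution, and the example matrix are assumptions for illustration; the text does not state how either quantity was computed.

```python
from typing import List, Sequence

Matrix = List[List[int]]


def accuracy(confusion: Matrix) -> float:
    """Correctly classified windows (diagonal) divided by all windows."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def mean_accuracy(per_subject: List[Matrix]) -> float:
    """Unweighted mean of per-subject accuracies (an assumed averaging scheme)."""
    accs = [accuracy(m) for m in per_subject]
    return sum(accs) / len(accs)


def uv_per_degree(angles_deg: Sequence[float], amplitudes_uv: Sequence[float]) -> float:
    """Least-squares slope of EOG amplitude versus vergence angle (hypothetical metric)."""
    n = len(angles_deg)
    mx = sum(angles_deg) / n
    my = sum(amplitudes_uv) / n
    num = sum((x - mx) * (y - my) for x, y in zip(angles_deg, amplitudes_uv))
    den = sum((x - mx) ** 2 for x in angles_deg)
    return num / den


# Hypothetical 3x3 confusion matrix (rows: true 1°, 2°, 3°; columns: predicted).
example = [[9, 1, 0],
           [1, 8, 1],
           [0, 1, 9]]
print(accuracy(example))  # (9 + 8 + 9) / 30
```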

Fig. 3. Study of ocular vergence and classification accuracy based on sensor positions.

(A) Converging and diverging ocular motions and the corresponding EOG signals with three different targets, placed at 40, 60, and 400 cm away. (B and C) Sensor positioning that resembles the conventional setting (two recording channels and one ground). (D and E) Alternate positions for improved detection of ocular vergence, showing an enhanced accuracy of 87%. (F and G) Finalized sensor positions (ocular vergence 2) that include three channels and one ground, showing the highest accuracy of eye vergence. Photo credit: Saswat Mishra; photographer institution: Georgia Institute of Technology.

Optimization of vergence analysis via signal processing and feature extraction

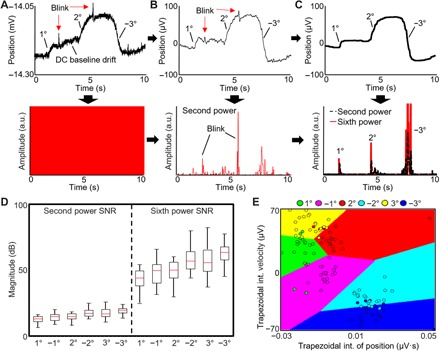

We assessed the variability of ocular vergence in both physical and VR domains to realize a fully portable vergence therapeutic program. The EOG signals acquired from vergence motions require mathematical translation using statistical analysis for quantitative comparison (Fig. 4). Before algorithm implementation, raw EOG signals (top graph in Fig. 4A) from the ocular wearable electronics are converted to derivative data (bottom graph in Fig. 4A). To suppress noise and baseline drift, a third-order Butterworth band-pass filter from 0.1 to 10 Hz is applied. The band-pass filter removes the drift and high-frequency noise, so the derivative is much cleaner and follows the vergence motions, as shown in Fig. 4B. The dataset shows that blinks are faster than vergence motions, which corrupts thresholding. A 500-point median filter removes most blinks, as shown in Fig. 4C. However, stronger blinks from certain subjects remain and are removed by thresholding an amplified derivative signal. Raising the derivative to the sixth power instead of the second widens the usable threshold range by increasing the SNR (Fig. 4D). The final step is to parse the data into a sliding window, compute features, and input them into the classifier. Figure 4E shows the decision boundary of the ensemble classifier in two dimensions of the feature set. A wrapper feature selection algorithm was applied to determine whether the use of 10 features was optimal for the recorded EOG signals. In addition to five features (definite integral, amplitude, velocity, signal mean, and wavelet energy) from our prior study (22), we studied other features that can be easily converted into C code with MATLAB Coder (see details in Materials and Methods). The wrapper feature selection shows that accuracy saturates, with a mean accuracy of 95% (fig. S8B).
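The preprocessing chain described above (band-pass, median filter, amplified derivative, threshold) can be sketched with SciPy. This is an illustrative reconstruction, not the authors’ MATLAB code: the 250 Hz sampling rate is a hypothetical choice, `medfilt` requires an odd kernel so 501 points stand in for the 500-point filter, the zero-phase `sosfiltfilt` is a design choice for offline processing, and the threshold rule in the usage lines is arbitrary.

```python
import numpy as np
from scipy.signal import butter, medfilt, sosfiltfilt

FS_HZ = 250.0  # hypothetical sampling rate; not stated in this section


def bandpass_and_deblink(eog: np.ndarray, fs: float = FS_HZ,
                         median_points: int = 501):
    """Third-order Butterworth band-pass (0.1-10 Hz), then a long median filter.

    scipy's medfilt needs an odd kernel, so 501 stands in for the
    500-point filter described in the text.
    """
    sos = butter(3, [0.1, 10.0], btype="bandpass", output="sos", fs=fs)
    bandpassed = sosfiltfilt(sos, eog)  # zero-phase: no added delay
    deblinked = medfilt(bandpassed, kernel_size=median_points)
    return bandpassed, deblinked


def amplified_derivative(x: np.ndarray, fs: float = FS_HZ,
                         power: int = 6, coeff: float = 1.0) -> np.ndarray:
    """Velocity estimate raised to a power (2 or 6 in Fig. 4) and scaled."""
    velocity = np.diff(x) * fs  # first difference approximates d/dt
    return coeff * np.abs(velocity) ** power


def detect_onsets(amplified: np.ndarray, threshold: float) -> np.ndarray:
    """Indices where the amplified derivative first rises above threshold."""
    above = amplified > threshold
    onsets = np.flatnonzero(above[1:] & ~above[:-1]) + 1
    if above.size and above[0]:
        onsets = np.concatenate(([0], onsets))
    return onsets


# Synthetic check: a vergence-like step at 5 s plus a 0.2 s blink-like pulse.
t = np.arange(int(20 * FS_HZ)) / FS_HZ
eog = np.where(t >= 5.0, 100.0, 0.0)
eog[3000:3050] += 300.0
_, clean = bandpass_and_deblink(eog)
amp = amplified_derivative(clean)
print(detect_onsets(amp, threshold=0.5 * amp.max()))
```

Because the median window (about 2 s here) is much longer than a blink, the short pulse is suppressed while the sustained vergence step survives, and the sixth power makes the step’s velocity peak stand far above the residual slope.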
Therefore, all 10 features were used in the classification methods with a 2-s sliding window. To achieve the highest accuracies in real time and in cross-validation, the classification algorithm requires the test subject to follow protocols that evoke an eye vergence response in two directions. The test subject followed a repeated sequence of motion, blink, motion, and blink. This procedure allows the data to be segmented into specific classes for facile classifier training by following directions 1 and 2 four times each (fig. S8C). The integration of the training procedure with filters, thresholds, and the ensemble classifier enables our high classification accuracies. Consequently, the presented combination of high-quality EOG measurement, integrated algorithm, and training procedure allows user-specific calibration of vergence classification, regardless of individual EOG variability arising from differences such as face size or shape. Multiple classifiers were tested in MATLAB’s Classification Learner app; k-nearest neighbor and support vector machine classifiers were inferior to the ensemble subspace discriminant classifier, which showed accuracies above 85% for subject 12 (see details in fig. S8D and section S2).
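A sketch of the 2-s sliding-window feature extraction follows, covering only the five features named in the text; the other five are not specified in this section and are omitted. Each feature definition is an assumption: trapezoidal integral, peak-to-peak amplitude, peak absolute velocity, mean, and single-level Haar detail energy (the wavelet family is not stated). The 0.5 s hop and the sampling rate in the usage lines are hypothetical.

```python
import numpy as np


def haar_detail_energy(w: np.ndarray) -> float:
    """Energy of single-level Haar detail coefficients (assumed wavelet)."""
    n = w.size - (w.size % 2)  # Haar pairs need an even length
    detail = (w[0:n:2] - w[1:n:2]) / np.sqrt(2.0)
    return float(np.sum(detail ** 2))


def window_features(w: np.ndarray, fs: float) -> np.ndarray:
    """Five of the ten features: integral, amplitude, velocity, mean, wavelet energy."""
    integral = float(np.sum(w[1:] + w[:-1]) / (2.0 * fs))  # trapezoidal rule
    amplitude = float(np.max(w) - np.min(w))  # peak-to-peak
    velocity = float(np.max(np.abs(np.diff(w))) * fs)  # peak |dx/dt|
    mean = float(np.mean(w))
    return np.array([integral, amplitude, velocity, mean, haar_detail_energy(w)])


def sliding_features(x: np.ndarray, fs: float, window_s: float = 2.0,
                     hop_s: float = 0.5) -> np.ndarray:
    """Stack features from 2 s windows; hop_s is a hypothetical step size."""
    win = int(round(window_s * fs))
    hop = int(round(hop_s * fs))
    if x.size < win:
        return np.empty((0, 5))
    rows = [window_features(x[s:s + win], fs)
            for s in range(0, x.size - win + 1, hop)]
    return np.vstack(rows)


fs = 250.0  # hypothetical sampling rate
x = np.sin(2 * np.pi * 0.25 * np.arange(int(10 * fs)) / fs)
features = sliding_features(x, fs)
print(features.shape)  # (17, 5)
```

Each row is one window’s feature vector, which would then be passed to the classifier; the ensemble subspace discriminant model itself is not reproduced here.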

Fig. 4. Optimization of vergence analysis via signal processing and feature extraction.

(A) Raw eye vergence signals (top) acquired by the wireless ocular wearable electronics in real time and the corresponding derivative signals (bottom). a.u., arbitrary units. (B) Preprocessed data with a band-pass filter and the corresponding derivative, raised to the second power. (C) Further processed data with a 500-point median filter and the corresponding derivative, raised to the sixth power. The derivatives are multiplied by a coefficient to increase the amplitude of the second- and sixth-order differential filters. (D) SNR comparison between the second- and sixth-order differential filters, showing the increased range of the sixth-order data. The sixth-order data are used for thresholding the vergence signals for real-time classification of the dataset. (E) Data from the sliding window are fed into the ensemble subspace classifier (shown by the decision boundaries of two dimensions of the feature set).

Comparison of ocular classification accuracy between VR and physical apparatus

This section summarizes the experimental comparison of ocular classification between the soft ocular wearable electronics with a VR headset (Fig. 1A) and a conventional setup with a physical target apparatus (fig. S1A). We analyzed and compared only the center-position data among the circular targets (Fig. 1B) because these represent the most accurate vergence motions, free of noise from gaze motions. We transferred our physical apparatus to the virtual domain by converting flat display screen images to fit human binocular vision. The VR headset enables us to capture ideal eye vergence motions because head motions are disabled and the stimulus is perfectly aligned with the user’s binocular vision. This is evident from the averaged signal and SD of the normalized position of the ideal datasets shown in Fig. 5 (A and B) [red line, average; gray shadow, deviation; different gain values were used (12 for VR and 1 for the physical system)]. The physical apparatus data show larger variation across trials than the VR headset data, as observed in fig. S9 (A and B). This reflects each user’s variability in observing the physical apparatus. We also compared the normalized peak velocities as a function of the normalized positions for both convergence and divergence in Fig. 5 (C and D) [additional datasets are summarized in fig. S9 (C and D)]. The amplitude changes and variation of all physical and VR datasets, obtained with a rise time algorithm, are shown in fig. S10 (A and B). In cross-validation, the VR-equipped soft ocular wearable electronics achieve a higher classification accuracy (91%) than the physical apparatus (80%), as shown in Fig. 5 (E and F). The ensemble classifier that we used intrinsically shows high variance in cross-validation assessments.
In real-time classification, the ensemble classifier yields about 83% accuracy for the VR environment and 80% for the physical apparatus. Real-time accuracy is higher with VR than with the physical apparatus because there is less variation between the opposing positive and negative potential changes of the two motions. Details of the classification accuracies in cross-validation and real time are summarized in fig. S10 (C to F) and tables S2 to S5.
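The normalized-position, peak-velocity, and rise-time quantities compared above can be illustrated with a short sketch. The rise time algorithm used in the study is not specified here, so this code assumes the common 10%-to-90% definition on a min-max normalized trace and flips falling (divergence-like) traces so that one function handles both directions. Function names and the sampling rate are illustrative assumptions.

```python
def normalize(x):
    """Min-max normalize a trace to the range [0, 1]."""
    lo, hi = min(x), max(x)
    span = hi - lo
    return [(v - lo) / span for v in x] if span else [0.0] * len(x)


def peak_velocity(x, fs):
    """Largest absolute first difference of the normalized trace, per second."""
    n = normalize(x)
    return max(abs(n[i + 1] - n[i]) * fs for i in range(len(n) - 1))


def rise_time(x, fs, lo=0.1, hi=0.9):
    """Seconds for the normalized response to travel from `lo` to `hi` of its range."""
    if x[-1] < x[0]:  # falling (e.g., divergence-like) trace: flip so it rises
        x = [-v for v in x]
    n = normalize(x)
    i_lo = next(i for i, v in enumerate(n) if v >= lo)
    i_hi = next(i for i, v in enumerate(n) if v >= hi)
    return (i_hi - i_lo) / fs


if __name__ == "__main__":
    fs = 100  # hypothetical sampling rate (Hz)
    ramp = [i / 100 for i in range(101)] + [1.0] * 50  # 1-s linear movement, then hold
    print(rise_time(ramp, fs), peak_velocity(ramp, fs))
```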

Fig. 5. Comparison of ocular classification accuracy between VR and physical apparatus.

(A and B) Representative normalized data of eye vergence (red line, average; gray shadow, deviation), recorded with a VR system (A) and a physical apparatus (B). The VR device uses a higher signal gain than the physical setup. (C and D) Normalized peak velocities according to the normalized positions for both convergence and divergence in the VR (C) and the physical setup (D). (E and F) Summarized comparison of averaged classification accuracy (total of six human subjects) based on cross-validation with the VR-equipped soft ocular wearable electronics (91% accuracy) (E) and physical apparatus (80% accuracy) (F).

VR-enabled vergence therapeutic system

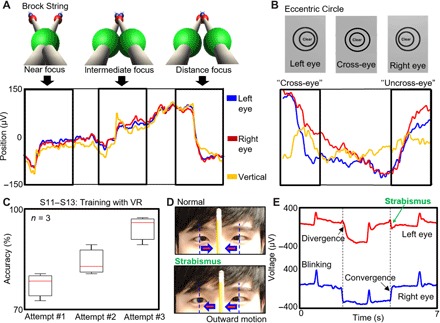

As the gold standard method, conventional home-based vergence therapy uses pencil push-ups in conjunction with OBVT (2). Adding a VR headset with a portable data recording system to home-based therapy would let a patient use the same therapeutic program both at an optometrist’s office and at home. To integrate with the developed ocular wearable electronics, we designed two ocular therapy programs in the Unity engine (unity3d.com). The first program lets a patient use a virtual “Brock String” (Fig. 6A), a 100-cm string with three beads (see the VR program in movie S2), originally designed to treat patients with strabismus (11). The string is offset 2 cm below the eyes and centered between them, with the three beads at varying distances. Three channels of soft sensors on the face (Fig. 3F) measure EOG signals as the subject fixates on each of the three beads (Fig. 6A). An example of a VR-based training apparatus is shown in movie S3. The second therapy program, referred to as “Eccentric Circle” (Fig. 6B), uses a set of two cards with concentric circles on each card and is also widely used in vergence therapy (11). The corresponding EOG signals show the user, wearing the VR headset, crossing his eyes to fuse the two cards into a single center card. The distance between the left and right cards can be increased to make this task more difficult. The lower velocity of the signal also reflects the difficulty of the eye-crossing motion. These programs can be used in addition to office therapy in CI treatment. Continuous use of the VR headset program (Fig. 6C) improved near point convergence in three human subjects with no strabismus issues. Users had difficulty converging in earlier tests (accuracy, ~75%), but accuracy improved over time (final accuracy, ~90%).
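To make the Brock String geometry concrete, the vergence demand of each bead follows from simple trigonometry: with the eyes separated by an interpupillary distance p and a bead at line-of-sight distance D, the total vergence angle is 2·arctan(p / 2D), or 100·p / D prism diopters summed over both eyes. The sketch below folds in the 2-cm vertical offset of the string. The 6.3-cm interpupillary distance and the example bead distances (picked from the near, intermediate, and distance ranges given in Materials and Methods) are assumptions for illustration.

```python
import math


def vergence_angle_deg(distance_cm, ipd_cm=6.3, drop_cm=2.0):
    """Total vergence angle (degrees) to fixate a bead centered between the eyes.

    The bead lies `distance_cm` ahead and `drop_cm` below eye level; `ipd_cm` is an
    assumed interpupillary distance.
    """
    line_of_sight = math.hypot(distance_cm, drop_cm)
    return math.degrees(2 * math.atan((ipd_cm / 2) / line_of_sight))


def vergence_prism_diopters(distance_cm, ipd_cm=6.3, drop_cm=2.0):
    """Same demand in prism diopters: 100 x tan(half-angle) per eye, summed over both eyes."""
    return 100 * ipd_cm / math.hypot(distance_cm, drop_cm)


if __name__ == "__main__":
    # Hypothetical bead distances from the near, intermediate, and distance ranges.
    for label, d in (("near", 30), ("intermediate", 60), ("distance", 90)):
        print(f"{label:>12} bead at {d:3d} cm: "
              f"{vergence_angle_deg(d):5.2f} deg, {vergence_prism_diopters(d):5.2f} PD")
```

Because the demand scales roughly as 1/D, moving the near bead closer raises the convergence load much faster than moving the far bead.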
In addition, we identified a subject who had difficulty holding near point convergence in the VR headset, a condition determined to be strabismus (exotropia) (Fig. 6D). This subject was also asked to perform pencil push-ups from 3 to 60 cm in the physical domain (Fig. 6D). The corresponding EOG signals upon convergence and divergence during the pencil push-ups appear in Fig. 6E. While the right eye signals (blue line) show the correct divergence and convergence positions, the left eye responds more slowly at convergence, followed by an exotropic deviation after the blink at the near position. Typically, a blink produces a rapid increase and decrease in potential, but in this case, the potential does not decrease after the blink. A divergence motion should increase the EOG potential (as in the right eye), but the signal drops to 100 μV, indicating that the left eye moved outward.

Fig. 6. VR-enabled vergence therapeutic system.

(A) Example of ocular therapy programs in the VR system: Brock String, a 100-cm string with three beads (top images), and measured eye vergence signals (bottom graph). (B) Program, named Eccentric Circle, that uses a set of two cards with concentric circles on each card (top images) and corresponding EOG signals (bottom graph). (C) Continuous use of the VR headset program, showing improved near point convergence in three human subjects with no strabismus issues. (D) Photos of strabismus exotropia, showing a subject who had difficulty holding near point convergence during pencil push-ups. (E) Corresponding EOG signals upon convergence and divergence during the pencil push-ups; the left eye shows a slower response at convergence, followed by an exotropic deviation after the blink at the near position. Photo credit: Saswat Mishra; photographer institution: Georgia Institute of Technology.

DISCUSSION

Collectively, this work introduced a fully portable, all-in-one, periocular wearable electronic system with wireless, real-time classification of eye vergence. The comprehensive study of soft and nanomaterials, stretchable mechanics, and processing optimization and characterization for aerosol jet–printed EOG electrodes enabled the first demonstration of highly stretchable and low-profile biopotential electrodes that allowed a comfortable, seamless integration with a therapeutic VR environment. Highly sensitive eye vergence detection was achieved by the combination of the skin-like printed EOG electrodes, optimized sensor placement, signal processing, and feature extraction strategies. With the therapeutic program–embedded VR system, users improved their visual training accuracy in an ordinary office setting. Through the in vivo demonstration, we showed that the soft periocular wearable electronics can accurately provide quantitative feedback of vergence motions, which is directly applicable to many patients with CI and strabismus. Overall, the VR-integrated wearable system demonstrated its potential to replace archaic home-based therapy protocols such as pencil push-ups.

While the current study focused on the development of the integrated wearable system and demonstration of its effectiveness for vergence monitoring in a healthy population, our future work will investigate home-based, long-term therapeutic use of the wearable system in patients with eye disorders. We anticipate that the quantitative detection of eye vergence can also be used for diagnosis of neurodegenerative diseases and childhood learning disorders, both topics of high-impact research that are bottlenecked by the lack of low-cost, easy-to-use methods for field experiments. For example, patients with Parkinson’s disease exhibit diplopia and CI, while patients with rarer diseases such as spinocerebellar ataxia types 3 and 6 can demonstrate divergence insufficiency and diplopia as well (42). Although these diseases are not yet fully treatable, quality of life can be improved if the ocular conditions are treated with therapy. Beyond disease diagnosis and treatment, the presented periocular system may serve as a unique and timely research tool for the prevention and maintenance of ocular health, a research topic of increasing interest because of the excessive use of smart devices.

MATERIALS AND METHODS

Fabrication of a soft, flexible electronic circuit

The portable, wearable flexible electronic circuit was fabricated to integrate a set of skin-like electrodes for wireless detection of eye vergence. The combination of thin-film transfer printing and hard-soft integration of an elastomer with miniaturized electronic components enabled the successful manufacturing of the flexible electronics (details of the device fabrication appear in section S3 and fig. S2). The ocular wearable electronic system includes multiple units: a set of skin-like sensors, signal filtering/amplification, Bluetooth low-energy wireless telemetry, and an antenna.

Fabrication of soft, skin-like, stretchable electrodes

We used aerosol jet printing (AJP) to design and manufacture the skin-like electrodes (details appear in section S3 and fig. S5I). This additive manufacturing method patterned AgNPs on glass slides spin-coated with poly(methyl methacrylate) (PMMA) and PI. Reactive ion etching then removed the exposed polymers to create stretchable mesh patterns. A flux was used to remove an oxidized layer on the patterned electrode. In the last step, the PMMA was dissolved in acetone and the electrode was transferred onto an elastomeric membrane with the aid of a water-soluble tape (ASWT-2, Aquasol).

In vivo experiment with human subjects

The eye vergence study involved 14 volunteers aged 18 to 40, and the study was conducted by following the approved Institutional Review Board protocol (no. H17212) at the Georgia Institute of Technology. Before the in vivo study, all subjects agreed to the study procedures and provided signed consent forms.

Vergence physical apparatus

An aluminum frame–based system was built to let a human subject perform natural eye vergence motions in the physical domain (fig. S1A and section S4). A 1/8″-thick glass plate was held upright by a thin, 3-foot threaded nylon rod screwed into a rail car. The rail car could be moved horizontally to any position along the 5-foot aluminum bar, mounted at a height of 5 feet. Each subject was asked to rest the head on an optometric mount for stability during the vergence test. In addition, a common activity recognition setup consisting of a smartphone, a monitor, and a television was placed on a table. The user was required to observe the same imagery on the screen at each of the three locations.

VR vergence training program

A portable VR headset running on a smartphone (Samsung Gear VR) was used for all training and therapy programs (section S4). The Unity engine simplified development of the VR applications by allowing items to be positioned accurately to mimic human binocular vision. We simulated our eye vergence physical apparatus on the VR display and disabled head-motion tracking to obtain idealized geometric positioning. The training procedure was prompted with audio feedback from the MATLAB application.

VR vergence therapy program

The eye therapy programs were chosen on the basis of eye vergence and accommodation therapy guidelines from the literature (43). Two home-based visual therapy techniques were reproduced: Brock String (phase 1) and Eccentric Circles (phase 2). Brock String involved three dots at variable distances to simulate near, intermediate, and distance positions. Each dot can be moved within the near (20 to 40 cm), intermediate (50 to 70 cm), and distance (80 to 100 cm) ranges. Eccentric Circles allowed the user to move the cards laterally outward and inward to adjust the difficulty of the cross-eye motion. This motion was controlled by the touchpad and buttons on the Samsung Gear VR.
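The difficulty control in Eccentric Circles can be reasoned about with basic geometry. If each eye fixates the opposite card (crossed fusion), cards whose centers are separated by s in a plane at distance D, viewed with interpupillary distance p, place the crossing point of the lines of sight at pD / (p + s) by similar triangles. Moving the cards outward (larger s) therefore pulls the fixation point closer and raises the convergence demand. This sketch assumes symmetric card placement, ignores accommodation, and uses a hypothetical 6.3-cm interpupillary distance; the function names are illustrative.

```python
import math


def crossed_fixation_distance(separation_cm, viewing_cm, ipd_cm=6.3):
    """Distance at which the lines of sight cross when each eye fixates the opposite card.

    Card centers are assumed at +/- separation_cm / 2 in a plane viewing_cm ahead,
    symmetric about the midline (similar triangles give p * D / (p + s)).
    """
    return ipd_cm * viewing_cm / (ipd_cm + separation_cm)


def crossed_convergence_deg(separation_cm, viewing_cm, ipd_cm=6.3):
    """Total convergence angle (degrees) needed to fuse the two cards by crossing the eyes."""
    x = crossed_fixation_distance(separation_cm, viewing_cm, ipd_cm)
    return math.degrees(2 * math.atan((ipd_cm / 2) / x))


if __name__ == "__main__":
    for s in (0.0, 3.0, 6.0, 9.0):  # hypothetical card separations (cm) viewed at 40 cm
        print(f"s = {s:3.1f} cm -> fixation at {crossed_fixation_distance(s, 40):5.1f} cm, "
              f"{crossed_convergence_deg(s, 40):5.2f} deg")
```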

Classification feature selection

The first feature (Eq. 1) is the cumulative trapezoidal integral of the filtered signal f(t), summed over each unit step from i to i + 1; the trapezoidal rule allows quick computation. The next feature (Eq. 2) is the variance of the filtered signal. The RMS (Eq. 3) is used in conjunction with the peak-to-RMS ratio (Eq. 4). The final feature is the ratio of the maximum to the minimum of the filtered window (Eq. 5).

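$$F_1 = \sum_{i=1}^{N-1} \frac{f(t_i) + f(t_{i+1})}{2}\,\left(t_{i+1} - t_i\right) \qquad (1)$$

$$F_2 = \frac{1}{N-1} \sum_{i=1}^{N} \left(f(t_i) - \bar{f}\right)^2 \qquad (2)$$

$$F_3 = \sqrt{\frac{1}{N} \sum_{i=1}^{N} f(t_i)^2} \qquad (3)$$

$$F_4 = \frac{\max_i \lvert f(t_i) \rvert}{F_3} \qquad (4)$$

$$F_5 = \frac{\max_i f(t_i)}{\min_i f(t_i)} \qquad (5)$$

where N is the number of samples in the window and $\bar{f} = \frac{1}{N}\sum_{i=1}^{N} f(t_i)$ is the window mean.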
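The five window features can be sketched in plain Python over sliding 2-s windows, assuming the filtered signal is available as a list of samples at a known rate. The 0.5-s step between windows, the example sampling rate, and the function names are assumptions, and the variance uses the sample (N - 1) denominator, which is itself an assumption about the original implementation.

```python
import math
from statistics import variance


def trapezoid_integral(f, dt):
    """Eq. 1: sum of trapezoid areas between consecutive samples spaced dt apart."""
    return sum((f[i] + f[i + 1]) * dt / 2 for i in range(len(f) - 1))


def window_features(f, dt):
    """Eqs. 1-5 for one window of filtered samples f (needs at least 2 samples)."""
    rms = math.sqrt(sum(v * v for v in f) / len(f))  # Eq. 3
    lowest = min(f)
    return {
        "integral": trapezoid_integral(f, dt),        # Eq. 1
        "variance": variance(f),                      # Eq. 2, N - 1 denominator
        "rms": rms,
        "peak_to_rms": max(abs(v) for v in f) / rms if rms else 0.0,  # Eq. 4
        # Eq. 5; a zero minimum is reported as infinity instead of raising an error
        "max_over_min": max(f) / lowest if lowest else math.inf,
    }


def sliding_windows(x, fs, window_s=2.0, step_s=0.5):
    """Yield (start_index, samples) for overlapping windows of window_s seconds."""
    n, step = int(window_s * fs), int(step_s * fs)
    for start in range(0, len(x) - n + 1, step):
        yield start, x[start:start + n]


if __name__ == "__main__":
    fs = 250  # hypothetical sampling rate (Hz)
    signal = [math.sin(2 * math.pi * 0.5 * k / fs) for k in range(10 * fs)]
    rows = [window_features(w, 1 / fs) for _, w in sliding_windows(signal, fs)]
    print(len(rows), rows[0])
```

Each dictionary corresponds to one row of the feature matrix passed to the classifier; the five features from the prior study would be appended to the same row.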

The choice of the ensemble classifier is supported by MATLAB’s Classification Learner app. This application assesses numerous classifiers by applying k-fold cross-validation using the aforementioned features and 60 additional features. The datasets from our in vivo test subjects indicated that two classifiers, quadratic support vector machine and ensemble subspace discriminant, were consistently more accurate than the others. The latter was consistently higher in accuracy across test subjects in cross-validation assessments. The ensemble classifier uses random subspaces with discriminant classification rather than nearest neighbor. Unlike the other ensemble classifiers, the random subspace ensemble does not use decision trees. It combines discriminant classifiers trained on subsets of the feature set, avoiding weak decision-tree learners, which yields its high accuracy. A custom feature selection script combining the ideas of wrapper and embedded methods was implemented around the ensemble classifier.
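The wrapper idea, in which each candidate feature subset is scored by the cross-validated accuracy of the classifier that will use it, can be sketched as greedy sequential forward selection. This is a generic illustration, not the authors' custom script: the wrapped model here is a simple nearest-centroid classifier evaluated by interleaved k-fold cross-validation (standing in for the ensemble), and the `min_gain` stopping rule is a hypothetical way to detect the accuracy saturation mentioned in the Results.

```python
def kfold_accuracy(X, y, features, k=5):
    """Interleaved k-fold CV accuracy of a nearest-centroid model restricted to `features`."""
    n, correct = len(X), 0
    for fold in range(k):
        test = set(range(fold, n, k))
        train = [i for i in range(n) if i not in test]
        centroids = {}
        for c in set(y[i] for i in train):
            rows = [X[i] for i in train if y[i] == c]
            centroids[c] = [sum(r[j] for r in rows) / len(rows) for j in features]
        for i in test:
            def dist(c):
                return sum((X[i][j] - m) ** 2 for j, m in zip(features, centroids[c]))
            correct += min(centroids, key=dist) == y[i]
    return correct / n


def forward_selection(n_features, score, min_gain=1e-3):
    """Greedy wrapper selection: repeatedly add the feature that most improves `score`.

    Stops when the best candidate improves the score by less than `min_gain`
    (accuracy saturation). Returns (selected feature indices, score history).
    """
    selected, history, best = [], [], 0.0
    remaining = list(range(n_features))
    while remaining:
        scores = {j: score(selected + [j]) for j in remaining}
        j = max(scores, key=scores.get)
        if scores[j] - best < min_gain:
            break
        selected.append(j)
        remaining.remove(j)
        best = scores[j]
        history.append(best)
    return selected, history


if __name__ == "__main__":
    # Hypothetical data: feature 0 separates the classes, feature 1 does not.
    X = ([[1 + 0.01 * i, (7 * i) % 5] for i in range(10)]
         + [[-1 - 0.01 * i, (3 * i) % 5] for i in range(10)])
    y = ["A"] * 10 + ["B"] * 10
    print(forward_selection(2, lambda s: kfold_accuracy(X, y, s)))
```

Because every candidate subset is scored by retraining the model, wrapper selection is more expensive than filter methods but directly optimizes the accuracy of the chosen classifier.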

Acknowledgments

We acknowledge support from the Institute for Electronics and Nanotechnology, a member of the National Nanotechnology Coordinated Infrastructure, which is supported by the NSF (grant ECCS-1542174). Funding: W.-H.Y. acknowledges funding support from the NextFlex funded by the Department of Defense; the Georgia Research Alliance based in Atlanta, GA; and the Nano-Material Technology Development Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT, and Future Planning (2016M3A7B4900044). K.J.Y. acknowledges support from the National Research Foundation of Korea (Grant Nos. NRF-2018M3A7B4071109 and NRF-2019R1A2C2086085) and Yonsei-KIST Convergence Research Program. Author contributions: S.M., Y.-S.K., and W.-H.Y. designed the research project. S.M., Y.-S.K., J.I., Y.-T.K., Y.L., M.M., H.-R.L., R.H., C.S.A., and W.-H.Y. performed research. S.M., Y.-S.K., J.I., K.J.Y., C.S.A., and W.-H.Y. analyzed the data. S.M., Y.-S.K., J.I., C.S.A., and W.-H.Y. wrote the paper. Competing interests: Georgia Institute of Technology has a pending patent application related to the work described here. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/6/11/eaay1729/DC1

Section S1. Conformal contact analysis for aerosol jet–printed electrodes

Section S2. Methods for cross-validation

Section S3. Fabrication and assembly process

Section S4. Vergence physical apparatus and VR system

Fig. S1. Apparatus for testing eye vergence motions.

Fig. S2. Fabrication and assembly processes for the flexible device and the skin-like electrodes.

Fig. S3. Circuit components, bending, and powering of the flexible device.

Fig. S4. Design and characterization of the AgNP electrodes.

Fig. S5. Stretching/bending properties of the skin-like electrodes fabricated by AJP.

Fig. S6. Comparison between Ag/AgCl gel electrodes and aerosol jet–printed skin-like electrodes.

Fig. S7. Electrode assessment for subjects 11 to 13.

Fig. S8. Sensitivity of the periocular wearable electronics.

Fig. S9. Performance differences between the periocular wearable electronics and the physical apparatus.

Fig. S10. Comparison of average amplitudes from vergence training and a summary of classification accuracies.

Table S1. Feature comparison between BioRadio and periocular wearable electronics.

Table S2. OV2 cross-validation accuracies of subjects 1 to 5 using the physical apparatus.

Table S3. OV1 cross-validation assessment of subjects 6 to 10 using the physical apparatus.

Table S4. OV2 real-time classification of test subjects 6 to 10 using the physical apparatus.

Table S5. OV2 real-time classification of test subjects 4, 8, and 9 using the physical apparatus.

Movie S1. An example of a real-time vergence detection with a physical apparatus.

Movie S2. An example of operation of a VR program—Brock String.

Movie S3. An example of a real-time VR-based training apparatus.

REFERENCES AND NOTES

- 1. Govindan M., Mohney B. G., Diehl N. N., Burke J. P., Incidence and types of childhood exotropia: A population-based study. Ophthalmology 112, 104–108 (2005).

- 2. Baek J.-Y., An J.-H., Choi J.-M., Park K.-S., Lee S.-H., Flexible polymeric dry electrodes for the long-term monitoring of ECG. Sens. Actuators A Phys. 143, 423–429 (2008).

- 3. Alvarez T. L., Kim E. H., Vicci V. R., Dhar S. K., Biswal B. B., Barrett A. M., Concurrent vision dysfunctions in convergence insufficiency with traumatic brain injury. Optom. Vis. Sci. 89, 1740–1751 (2012).

- 4. Donnelly U. M., Stewart N. M., Hollinger M., Prevalence and outcomes of childhood visual disorders. Ophthalmic Epidemiol. 12, 243–250 (2005).

- 5. Martinez-Thompson J. M., Diehl N. N., Holmes J. M., Mohney B. G., Incidence, types, and lifetime risk of adult-onset strabismus. Ophthalmology 121, 877–882 (2014).

- 6. Rouse M. W., Borsting E. J., Mitchell G. L., Scheiman M., Cotter S. A., Cooper J., Kulp M. T., London R., Wensveen J.; Convergence Insufficiency Treatment Trial (CITT) Investigator Group, Validity and reliability of the revised convergence insufficiency symptom survey in adults. Ophthalmic Physiol. Opt. 24, 384–390 (2004).

- 7. Cooper J., Jamal N., Convergence insufficiency—A major review. Optometry 23, 137–158 (2012).

- 8. Pearce K. L., Sufrinko A., Lau B. C., Henry L., Collins M. W., Kontos A. P., Near point of convergence after a sport-related concussion: Measurement reliability and relationship to neurocognitive impairment and symptoms. Am. J. Sports Med. 43, 3055–3061 (2015).

- 9. Engle E. C., The genetic basis of complex strabismus. Pediatr. Res. 59, 343–348 (2006).

- 10. Weinstock V. M., Weinstock D. J., Kraft S. P., Screening for childhood strabismus by primary care physicians. Can. Fam. Physician 44, 337–343 (1998).

- 11. Convergence Insufficiency Treatment Trial Study Group, Randomized clinical trial of treatments for symptomatic convergence insufficiency in children. Arch. Ophthalmol. 126, 1336–1349 (2008).

- 12. Hirota M., Kanda H., Endo T., Lohmann T. K., Miyoshi T., Morimoto T., Fujikado T., Relationship between reading performance and saccadic disconjugacy in patients with convergence insufficiency type intermittent exotropia. Jpn. J. Ophthalmol. 60, 326–332 (2016).

- 13. Kapoula Z., Morize A., Daniel F., Jonqua F., Orssaud C., Brémond-Gignac D., Objective evaluation of vergence disorders and a research-based novel method for vergence rehabilitation. Transl. Vis. Sci. Technol. 5, 8 (2016).

- 14. Chita-Tegmark M., Social attention in ASD: A review and meta-analysis of eye-tracking studies. Res. Dev. Disabil. 48, 79–93 (2016).

- 15. Gorges M., Maier M. N., Rosskopf J., Vintonyak O., Pinkhardt E. H., Ludolph A. C., Müller H.-P., Kassubek J., Regional microstructural damage and patterns of eye movement impairment: A DTI and video-oculography study in neurodegenerative parkinsonian syndromes. J. Neurol. 264, 1919–1928 (2017).

- 16. Gaertner C., Bucci M. P., Ajrezo L., Wiener-Vacher S., Binocular coordination of saccades during reading in children with clinically assessed poor vergence capabilities. Vision Res. 87, 22–29 (2013).

- 17. Farivar R., Michaud-Landry D., Construction and operation of a high-speed, high-precision eye tracker for tight stimulus synchronization and real-time gaze monitoring in human and animal subjects. Front. Syst. Neurosci. 10, 73 (2016).

- 18. Yaramothu C., d’Antonio-Bertagnolli J. V., Santos E. M., Crincoli P. C., Rajah J. V., Scheiman M., Alvarez T. L., Proceedings #37: Virtual eye rotation vision exercises (VERVE): A virtual reality vision therapy platform with eye tracking. Brain Stimul. 12, e107–e108 (2019).

- 19. S. K. Schnipke, M. W. Todd, in CHI'00 Extended Abstracts on Human Factors in Computing Systems, The Hague, Netherlands, 1 to 6 April 2000 (ACM, 2000).

- 20. Fuhl W., Tonsen M., Bulling A., Kasneci E., Pupil detection for head-mounted eye tracking in the wild: An evaluation of the state of the art. Mach. Vis. Appl. 27, 1275–1288 (2016).

- 21. Kourkoumelis N., Tzaphlidou M., Eye safety related to near infrared radiation exposure to biometric devices. Sci. World J. 11, 520–528 (2011).

- 22. Mishra S., Norton J. J. S., Lee Y., Lee D. S., Agee N., Chen Y., Chun Y., Yeo W.-H., Soft, conformal bioelectronics for a wireless human-wheelchair interface. Biosens. Bioelectron. 91, 796–803 (2017).

- 23. Stuart S., Hickey A., Galna B., Lord S., Rochester L., Godfrey A., iTrack: Instrumented mobile electrooculography (EOG) eye-tracking in older adults and Parkinson’s disease. Physiol. Meas. 38, N16–N31 (2017).

- 24. Morahan P., Meehan J. W., Patterson J., Hughes P. K., Ocular vergence measurement in projected and collimated simulator displays. Human Factors 40, 376–385 (1998).

- 25. D. Kumar, A. Sharma, Electrooculogram-based virtual reality game control using blink detection and gaze calibration, in 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21 to 24 September 2016 (IEEE, 2016), pp. 2358–2362.

- 26. Xiao J., Qu J., Li Y. Q., An Electrooculogram-based interaction method and its music-on-demand application in a virtual reality environment. IEEE Access 7, 22059–22070 (2019).

- 27. Jin S. L., Shaokai G. P., Liang W. R., Zhang C., Wang B., Working mechanisms of strain sensors utilizing aligned carbon nanotube network and aerosol jet printed electrodes. Carbon 73, 303–309 (2014).

- 28. Tu L., Yuan S., Zhang H., Wang P., Cui X., Wang J., Zhan Y.-Q., Zheng L.-R., Aerosol jet printed silver nanowire transparent electrode for flexible electronic application. J. Appl. Phys. 123, 174905 (2018).

- 29. Jing Q., Choi Y. S., Smith M., Ćatić N., Ou C., Kar-Narayan S., Aerosol-jet printed fine-featured triboelectric sensors for motion sensing. Adv. Mater. Technol. 4, 1800328 (2019).

- 30. Agarwala S., Goh G. L., Dinh Le T.-S., An J., Peh Z. K., Yeong W. Y., Kim Y.-J., Wearable bandage-based strain sensor for home healthcare: Combining 3D aerosol jet printing and laser sintering. ACS Sens. 4, 218–226 (2019).

- 31. Williams N. X., Noyce S., Cardenas J. A., Catenacci N., Wiley B. J., Franklin A. D., Silver nanowire inks for direct-write electronic tattoo applications. Nanoscale 11, 14294–14302 (2019).

- 32. Hung G. K., Semmlow J. L., Ciuffreda K. J., The near response: Modeling, instrumentation, and clinical applications. IEEE Trans. Biomed. Eng. 31, 910–919 (1984).

- 33. Jeong J.-W., Yeo W.-H., Akhtar A., Norton J. J. S., Kwack Y.-J., Li S., Jung S.-Y., Su Y., Lee W., Xia J., Cheng H., Huang Y., Choi W.-S., Bretl T., Rogers J. A., Materials and optimized designs for human-machine interfaces via epidermal electronics. Adv. Mater. 25, 6839–6846 (2013).

- 34. Herbert R., Kim J.-H., Kim Y. S., Lee H. M., Yeo W.-H., Soft material-enabled, flexible hybrid electronics for medicine, healthcare, and human-machine interfaces. Materials 11, 187 (2018).

- 35. Yeo W.-H., Kim Y.-S., Lee J., Ameen A., Shi L., Li M., Wang S., Ma R., Jin S. H., Kang Z., Huang Y., Rogers J. A., Multifunctional epidermal electronics printed directly onto the skin. Adv. Mater. 25, 2773–2778 (2013).

- 36. T. Blumenthal, V. Fratello, G. Nino, K. Ritala, Aerosol Jet® Printing Onto 3D and Flexible Substrates (Quest Integrated Inc., 2017).

- 37. Saengchairat N., Tran T., Chua C.-K., A review: Additive manufacturing for active electronic components. Virtual Phys. Prototyp. 12, 31–46 (2017).

- 38. Kim N. R., Lee Y. J., Lee C., Koo J., Lee H. M., Surface modification of oleylamine-capped Ag–Cu nanoparticles to fabricate low-temperature-sinterable Ag–Cu nanoink. Nanotechnology 27, 345706 (2016).

- 39. G. Socrates, Infrared and Raman Characteristic Group Frequencies (John Wiley & Sons, ed. 3, 2001), p. 362.

- 40. Ma J., Zhang Y., Cichocki A., Matsuno F., A novel EOG/EEG hybrid human–machine interface adopting eye movements and ERPs: Application to robot control. IEEE Trans. Biomed. Eng. 62, 876–889 (2015).

- 41. E. Moon, H. Park, J. Yura, D. Kim, Novel design of artificial eye using EOG (electrooculography), in 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10 to 12 April 2017 (IEEE, 2017), pp. 404–407.

- 42. Kang S. L., Shaikh A. G., Ghasia F. F., Vergence and strabismus in neurodegenerative disorders. Front. Neurol. 9, 299 (2018).

- 43. Kulp M., Mitchell G. L., Borsting E., Scheiman M., Cotter S., Rouse M., Tamkins S., Mohney B. G., Toole A., Reuter K.; Convergence Insufficiency Treatment Trial Study Group, Effectiveness of placebo therapy for maintaining masking in a clinical trial of vergence/accommodative therapy. Invest. Ophthalmol. Visual Sci. 50, 2560–2566 (2009).
