Abstract

Electroencephalography (EEG)-based brain–computer interfaces (BCIs) for motor rehabilitation aim to “close the loop” between attempted motor commands and sensory feedback by providing supplemental information when individuals successfully achieve specific brain patterns. Existing EEG-based BCIs use various displays to provide feedback, ranging from displays considered more immersive (e.g., head-mounted display virtual reality (HMD-VR)) to displays considered less immersive (e.g., computer screens). However, it is not clear whether more immersive displays improve neurofeedback performance and whether there are individual performance differences in HMD-VR versus screen-based neurofeedback. In this pilot study, we compared neurofeedback performance in HMD-VR versus a computer screen in 12 healthy individuals and examined whether individual differences on two measures (i.e., presence, embodiment) were related to neurofeedback performance in either environment. We found that, while participants’ performance on the BCI was similar between display conditions, the participants’ reported levels of embodiment were significantly different. Specifically, participants experienced higher levels of embodiment in HMD-VR compared to a computer screen. We further found that reported levels of embodiment positively correlated with neurofeedback performance only in HMD-VR. Overall, these preliminary results suggest that embodiment may relate to better performance on EEG-based BCIs and that HMD-VR may increase embodiment compared to computer screens.

Keywords: brain–computer interface, neurofeedback, immersive virtual reality, head-mounted display, electroencephalography, presence, embodiment

1. Introduction

Neurofeedback training produces beneficial changes in motor function and has been shown to be successful in motor rehabilitation for clinical populations, such as individuals with stroke [1]. Electroencephalography (EEG) can be used to measure brain activity used by brain–computer interfaces (BCI) to provide sensory feedback to reward specific brain activity patterns. This feedback can then be used to control a robotic or computerized device (e.g., movement of an object on a computer screen) to train individuals to control their own brain activity. BCIs designed for the rehabilitation of individuals with severe motor impairment attempt to “close the loop” between motor commands and sensory feedback by providing supplemental sensory information when individuals successfully establish specific brain patterns. Successfully closing the loop is achieved when individuals perceive the control of supplemental sensory information as their own (i.e., embodiment). Perceived embodiment can influence an individual’s sense of agency [2] where greater embodiment may result in a sense of increased control, but a lack of embodiment may result in a sense of decreased control or distress and lead to a distortion of capabilities [3].

Given that individuals with severe motor impairment cannot generate active volitional movement, a primary neurofeedback approach is to use imagined movement (i.e., motor imagery) to drive the BCI. Motor imagery (MI) is thought to engage areas that modulate movement execution [4,5,6]. MI has been shown to be an effective intervention for motor rehabilitation, especially when it is coupled with physical practice [7,8]. Previous work has shown that BCIs employing MI can produce clinically meaningful improvements in motor function in individuals with motor impairments [9,10,11,12,13,14]. These BCIs have used a variety of displays to provide feedback, ranging from devices that provide an immersive and compelling experience (e.g., projected limbs, robotic orthoses, or exoskeletons) [1,10,11,12] to devices that are considered less immersive (e.g., computer screens) [9,13]. Recently, BCIs have also begun to incorporate immersive virtual reality using a head-mounted display (HMD-VR) in order to provide a more immersive and realistic environment [15] and to provide more biologically relevant feedback [16]. However, it is not known whether HMD-VR improves neurofeedback performance compared to feedback provided on a screen. It is also unclear whether neurofeedback provided in HMD-VR increases embodiment, which in the context of HMD-VR can be defined as the perceptual ownership of a virtual body in a virtual space [17,18], compared to screen-based neurofeedback.

Studies have shown that HMD-VR facilitates the embodiment of a virtual body and that the observation of this virtual body in the first person perspective is enough to induce a strong feeling of embodiment for the virtual body’s actions [17,19,20,21,22]. In HMD-VR, individuals exhibit behaviors that match those of a digital self-representation, such as overestimating object sizes when an adult has been given a virtual child body [17] or exhibiting a reduction in implicit racial bias when given a body of a different race [23]. Initially coined the Proteus effect [19], this sense of embodiment that arises from viewing a virtual limb has the potential to alter one’s own neurophysiology and behavior. Regarding motor behavior, an increased level of embodiment has been shown to be related to increased sensorimotor rhythms (SMR) desynchronization [24]. In related work, observing the actions of virtual limbs in virtual reality has been shown to increase SMR desynchronization [25]. In addition, the immersive nature of HMD-VR has also been shown to increase an individual’s sense of presence in the virtual environment [24], which in the context of HMD-VR can be defined as the illusion of actually being present in the virtual environment [26]. It is also unclear whether neurofeedback provided in HMD-VR increases one’s feeling of presence compared to screen-based neurofeedback. Here we examined the role of both embodiment and presence on neurofeedback performance using HMD-VR versus a computer screen through the use of qualitative questionnaires previously used to measure these concepts [17,27,28].

We have created a hybrid brain–computer interface for individuals with severe motor impairments called REINVENT (Rehabilitation Environment using the Integration of Neuromuscular-based Virtual Enhancements for Neural Training), which provides brain (EEG) and/or muscle (electromyography (EMG)) neurofeedback in HMD-VR. Although we designed REINVENT as an EEG-based BCI device for individuals with severe motor impairments, in this pilot study, we first wanted to examine whether providing neurofeedback in HMD-VR improves neurofeedback performance compared to receiving the same neurofeedback on a computer screen in healthy adults. Furthermore, we wanted to examine whether there were differences in the levels of embodiment and presence induced by HMD-VR versus a computer screen and how individual differences in these features relate to neurofeedback performance in each environment. As embodiment and presence play an important role in increasing SMR desynchronization and HMD-VR induces high levels of embodiment and presence, we predicted that participants would show better neurofeedback performance in an HMD-VR environment compared to a computer screen, and that improved performance would be related to increased embodiment and presence.

2. Materials and Methods

2.1. Participants

Twelve healthy participants were recruited for this experiment (7 females/5 males; age: M = 24.4 years, SD = 2.7 years) where all participants underwent the same experimental design (see Section 2.3). Eligibility criteria included healthy, right handed individuals, and informed consent was obtained from all participants. Eight participants reported being naive to immersive virtual reality using a head-mounted display; the four participants with previous use of head-mounted displays reported using the device no more than four times. The experimental protocol was approved by the University of Southern California Health Sciences Campus Institutional Review Board and performed in accordance with the 1964 Declaration of Helsinki.

2.2. REINVENT Hardware, Software, Online Processing, and Data Integration

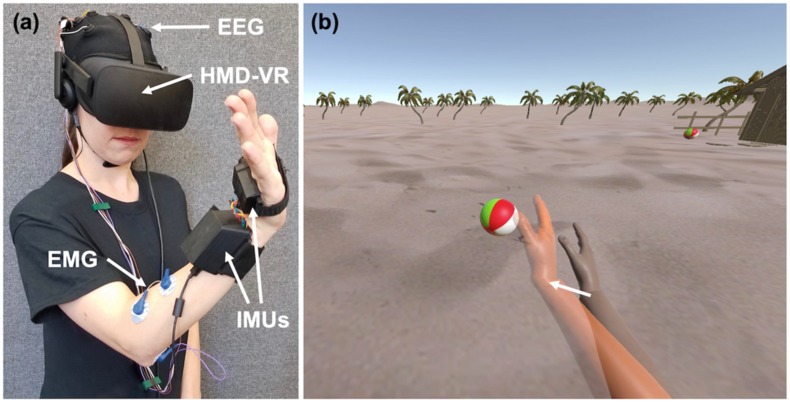

The REINVENT system (see Figure 1a for an example) is a brain–computer interface (BCI) composed of four main components: EEG, EMG, an inertial measurement unit (IMU), and a HMD-VR system [29]. While the current study only utilized the EEG and IMU components, we describe the entire system here.

Figure 1.

Example of the REINVENT system. (a) REINVENT hardware used here is composed of electroencephalography (EEG), electromyography (EMG), inertial measurement units (IMUs), and a head-mounted display virtual reality (HMD-VR) system. Written informed consent for the publication of this image was obtained from the individual depicted. (b) The environment participants observed on both a computer screen and in HMD-VR; arm movements are goal-oriented such that when the arm reaches a target position, it interacts with an object (e.g., hitting a beach ball). On EEG blocks (Screen, HMD-VR), participants would attempt to move their virtual arm (right arm) to the orange target arm (left arm) by thinking about movement. On the IMU block, the virtual arm would match participants actual arm movements.

2.2.1. Electroencephalography (EEG) and Electromyography (EMG)

The EEG/EMG component of REINVENT is composed of hardware from OpenBCI (www.openbci.com), a low-cost solution for measuring brain and muscle activity. The EEG component consists of reusable dry EEG electrodes, and the EMG component consists of snap electrode cables connected to mini disposable gel electrodes (Davis Medical Electronics, Inc.). Both EEG and EMG wires were connected to a 16-channel, 32-bit v3 processor (Cyton + Daisy Biosensing Open BCI Board) and sampled at 125 Hz. Twelve EEG locations based on the international 10-20 system [30] and concentrated over the prefrontal and motor cortex are used to record brain activity (F3, F4, C1, C2, C3, C4, CP1, CP2, CP5, CP6, P3, and P4); however, in the current study we were primarily interested in channels closest to the left motor network (i.e., C1, C3, and CP1). Ground and reference electrodes are located at the right and left earlobes, respectively. EMG is recorded from four electrodes placed on the wrist flexors and extensors on the muscle bellies of the right forearm, with a reference electrode on the bony prominence of the elbow. In the current experiment, muscle activity from EMG was collected during the experiment to examine EEG–EMG coherence, but as these analyses are beyond the scope of the current study, they are not included here.

Custom software is used to control the BCI and provide users with real-time neurofeedback of a virtual arm. The neurofeedback, composed of the sum desynchronization from C1, C3, and CP1, was used to drive the movement of a virtual right arm towards a target arm. EEG signals were recorded from electrodes of interest over the left motor cortex (i.e., C1, C3, and CP1, based on the international 10-20 system) with both ear lobes used as the ground and reference electrodes, and sent to the REINVENT software. Data processing occurred online. Individual channels were high-pass filtered using a second order Butterworth filter with a cutoff of 3 Hz, and a sliding window consisting of 125 incoming samples were fast Fourier transformed (FFT). Power was then computed between the frequency ranges of 8-24 Hz, capturing the broad activity in alpha and beta bands that may correspond to motor imagery (i.e., sensorimotor desynchronization). The virtual arm direction updated every second and moved towards the target in response to sensorimotor desynchronization, measured as a decrease in amplitude compared to the baseline recording of the left sensorimotor area (i.e., the combined C1, C3, and CP1).

2.2.2. Inertial Measurement Unit (IMU)

The IMU component of REINVENT is composed of two nine degrees of freedom IMUs, with one placed on the hand and the other placed on the wrist of the right arm. To foster a sense of embodiment between the participant and the virtual arm, the REINVENT system allows for the participant’s own arm movements to be recorded. Before beginning this experiment, the participant’s arm was passively moved by the experimenter, and the virtual representation of the arm was shown on the computer screen and in HMD-VR. In this way, a sensorimotor contingency was developed between the participant’s own arm and the virtual arm they were subsequently asked to control.

2.3. Experimental Design

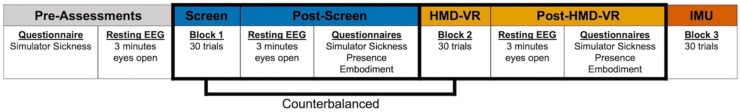

We used a within-subject experimental design where all participants underwent the same protocol (Figure 2). Prior to the experiment, participants underwent pre-assessments that included a simulator sickness questionnaire (see Section 2.6) and a resting EEG baseline recording. The resting EEG baseline recording lasted three minutes and was recorded while the HMD-VR was removed. For the duration of the recording, participants were instructed to keep their eyes open and fixed on a location at the center of the computer screen and asked to think about a stationary object and to stay as still as possible. The recording was used to provide the baseline EEG values for the experiment. Following the resting EEG baseline recoding, participants completed three blocks of 30 trials (90 trials in total) where each block was a separate condition. The conditions were (1) controlling the virtual arm with brain activity on the computer screen (Screen), (2) controlling the virtual arm with brain activity in a head-mounted display virtual reality (HMD-VR) system, and (3) controlling the virtual arm with actual arm movements in a head-mounted display (IMU). Participants completed the conditions in the following block order: Block 1 (Screen), Block 2 (HMD-VR), Block 3 (IMU), with Blocks 1 and 2 (Screen, HMD-VR) counterbalanced. In this experiment, the IMU condition strictly provided a control condition of real movement instead of neurofeedback; these data are briefly reported but not focused on in this paper.

Figure 2.

Experimental timeline. Prior to the experimental blocks, participants completed a questionnaire relating to simulator sickness and then completed a resting EEG recording for three minutes with eyes open. Participants then completed the three experimental blocks where the first two blocks were counterbalanced; during Blocks 1 and 2 (Screen, HMD-VR), participants were asked to think about movement in order to move their virtual arm to a virtual target arm on either a computer screen or in HMD-VR. After the Screen condition and after the HMD-VR condition, participants completed a resting EEG recording for three minutes with eyes open and then completed a series of questionnaires relating to simulator sickness, presence, and embodiment. During Block 3 (IMU), participants were asked to move their physical arm to a virtual target arm in HMD-VR, as a control condition.

Before starting the experimental conditions, participants were given instructions on how to control their virtual arm (i.e., “You will see two right arms. One is orange and that is the target arm that moves to different positions. The other is your arm. We want you to move it to match the target arm’s position. You can move your arm in two ways. First, you will complete 60 trials of moving the virtual arm with just your thoughts by thinking about moving; 30 of the trials will be on the computer screen, without the head-mounted display, and 30 trials will be with the head-mounted display. Then you will complete 30 trials of moving the virtual arm using your actual arm movements.”). Instructions were repeated at the start of each condition. For each EEG neurofeedback condition (Screen, HMD-VR), participants were instructed to stay as still as possible. After the completion of each EEG neurofeedback condition, a resting-EEG acquisition of three minutes was recorded while the HMD-VR was removed; participants were again instructed to keep their eyes open and fixed on the center of the screen for the duration of the recording. For the duration of the experiment, participants were seated and asked to rest their hands comfortably on a pillow placed across their lap.

2.4. Individual Trials

At the start of each trial, a target arm animated a wrist extension pose in one of three target positions. Once the target arm stopped moving, participants were instructed to move their virtual arm to match the position of the target arm given the current condition (i.e., in the case of the two EEG neurofeedback conditions (Screen, HMD-VR), and they were asked to think about moving; in the case of the IMU condition, they were asked to actually move their arm to the target location). During the EEG neurofeedback condition trials, the virtual hand incremented either forward or backward, as determined by the sum of the three channel EEG desynchronization compared to baseline. Most of the time, the EEG activity was significantly above or below the baseline; however, if the sensorimotor activity was hovering around the baseline, the arm would move back and forth. The duration of each trial was 15 seconds. If the target arm was reached within this time constraint, a successful auditory tone was played; however, if the target arm was not reached, then an unsuccessful auditory tone was played. At the completion of each trial, the target and virtual arms returned to their starting position.

2.5. Displays and Neurofeedback

For all conditions, participants observed the virtual arm from a first person perspective. For the HMD-VR and IMU conditions, we used the Oculus CV1, which includes positional and rotational tracking to display the stimuli. For the Screen condition, we used a 24.1 inch, 1920 × 1200 pixel resolution computer monitor (Hewlett-Packard) to display the stimuli. In both displays, participants observed a scene that included two virtual arms: (1) one virtual arm that represented the participant’s own arm and (2) a second virtual arm, colored in orange, that provided different target arm positions that participants were asked to move their own virtual arm towards (Figure 1b). For all conditions, the target arm generated wrist extension movements to different target locations; participants were either asked to either think about moving their arm to these locations (EEG neurofeedback conditions: Screen, HMD-VR) or actually move their arm to these locations (IMU condition). In the IMU condition of the experiment, participants were required to actually perform wrist extension movements to match the virtual arm in the HMD-VR display.

2.6. Subjective Questionnaires

Prior to the experiment, participants were given a series of standard questions about their baseline comfort levels (simulator sickness questionnaire; adapted from Kennedy et al. (1993)) [31]. After participants completed each EEG neurofeedback condition (Screen, HMD-VR), they were given the same simulator sickness questionnaire to examine changes following each block. Responses were reported on a 0 to 3-point scale, and questions were collapsed along three main features: Nausea, Oculomotor, and Disorientation. In addition, after completing both the Screen and HMD-VR conditions, participants were also asked questions pertaining to their overall sense of presence and embodiment in each respective environment. The Presence Questionnaire was adapted from Witmer and Singer (1998) [27] and revised by the UQO Cyberpsychology Lab (2004) and asked participants a series of questions to gauge their sense of presence in each environment. Responses were reported on a 1 to 7-point scale, and questions were collapsed along five main features: Realism, Possibility to Act, Quality of Interface, Possibility to Examine, and Self-Evaluation of Performance. The Presence Questionnaire is a validated questionnaire [32] that has been used in research using HMD-VR [33]. The Embodiment Questionnaire was adapted from Bailey et al. (2016) [28] and Banakou et al. (2013) [17] and asked participants a series of questions to gauge their sense of embodiment. Responses were reported on a 1 to 10-point scale and questions were averaged to generate an overall Embodiment feature. In addition, we also collapsed questions relating to either Self Embodiment or Spatial Embodiment to generate two embodiment sub-features. Self Embodiment describes the extent to which participants felt the virtual arm was an extension of their own arm, and Spatial Embodiment describes the extent to which participants felt that they were in the virtual environment. Table 1 includes individual questions asked on the Embodiment Questionnaire.

Table 1.

Individual questions on Embodiment Questionnaire.

| Type | Question | Referenced | Scoring Scale |

|---|---|---|---|

| Self | To what extent did you feel that the virtual arm was your own arm? | Own Arm | Not at all/Very much (1…10) |

| Self | How much did the virtual arm’s actions correspond with your commands? | Arms Actions | Not at all/Very much (1…10) |

| Self | To what extent did you feel if something happened to the virtual arm it felt like it was happening to you? | Happening to Arm | Not at all/Very much (1…10) |

| Self | How much control did you feel you had over the virtual arm in this virtual environment? | Amount of Arm Control | No control/Full control (1…10) |

| Self | How much did you feel that your virtual arm resembled your own (real) arm in terms of shape, skin tone or other visual features? | Resembled Arm | Not at all/Very much (1…10) |

| Self | Did the virtual arm seem bigger, smaller or about the same as what you would expect from your everyday experience? | Size of Arm | Smaller/Larger (1…10) |

| Spatial | To what extent did you feel like you were really located in the virtual environment? | Location | None/Completely (1…10) |

| Spatial | To what extent did you feel surrounded by the virtual environment? | Surrounded | None/Completely (1…10) |

| Spatial | To what extent did you feel that the virtual environment seemed like the real world? | Real World | None/Completely (1…10) |

| Spatial | To what extent did you feel like you could reach out and touch the objects in the virtual environment? | Reach Out and Touch | None/Completely (1…10) |

2.7. Analyses

2.7.1. Post-Hoc EEG Analysis on Activity During Task

In addition to the online processing (see Section 2.2), post-hoc EEG signals were processed offline using MATLAB® (R2017a, The MathWorks, MA, USA) with the EEGLAB toolbox [34]. After importing the data and channel information, a high-pass filter at 1 Hz was applied to remove the baseline drift followed by line-noise and harmonics removal at 60 Hz. Furthermore, bad channels were rejected, while any potential missing channels were interpolated before the re-referencing stage. Additionally, all channels were re-referenced to the average. Next, data epoching was performed by extracting the trials from the EEG neurofeedback conditions (Screen, HMD-VR) for each participant. Artifact rejection was performed using independent component analysis (ICA) over the epoched data and visual inspection of the time-series. Finally, the baseline data (180 seconds) were extracted from the resting-state session that occurred before the task.

For computing the average spectral power, Welch’s method for power spectral density (PSD) of the power spectrum [35] was used across the online frequency range (8–24 Hz) and for the alpha (8–12 Hz) and beta (13–24 Hz) bands. PSD was extracted from both the epoched motor-related data and the baseline. As there was only one movement detection with one degree of freedom, classifier training did not take place. We used the target location C3 to represent the primary motor cortex; thus, the band power was extracted over the C3 electrode location and calculated using the following formula:

| (1) |

Similarly, for analyzing the primary ipsilateral motor cortex, we used the target location C4 [36,37,38].

2.7.2. Statistical Analysis

All data used in statistical results can be found in the supplementary materials (supporting datasets). Statistical analysis for neurofeedback performance, subjective experience from questionnaires, and EEG activity during the task was analyzed using the statistical package R (Version 3.2.2) using R Studio (Version 1.1.423). For each variable, we checked for normality using a Shapiro–Wilk test. To assess statistical differences in performance, subjective experience, and average spectral power during the task between the two EEG conditions (Screen, HMD-VR), a paired t-test was performed on each measure found to be normally distributed, and a Mann–Whitney U test was performed on each measure found to not be normally distributed. Means (M), standard deviations (SD), and skewness are reported for each measure (Supplementary Table S1). To confirm that neurofeedback based on motor imagery was successfully used to increase performance, we ran a simple linear regression on neurofeedback performance based on PSD. Lastly, we examined the relationship between neurofeedback performance and responses from the Presence Questionnaire and the Embodiment Questionnaire using regression analysis. For both questionnaires, we first tested for normality using a Shapiro–Wilk normality test. For the Presence Questionnaire, we ran a multiple regression analysis on neurofeedback performance based on the five presence features for each condition (Screen, HMD-VR). For the Embodiment Questionnaire, we first ran a simple linear regression analysis on neurofeedback performance based on the overall Embodiment feature for each condition. Then, we ran a multiple regression analysis on neurofeedback performance based on the two embodiment sub-features (Self Embodiment and Spatial Embodiment) for each condition. For all regression analyses, adjusted R2 is reported. Finally, all participants completed the control IMU condition with 100% accuracy, and therefore this condition is not included in further analysis.

3. Results

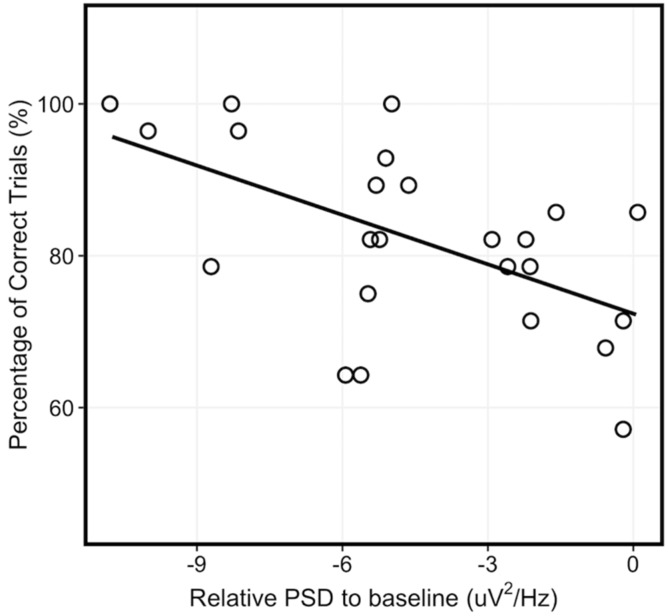

3.1. Relationship Between Power Spectral Density and Neurofeedback Performance

To confirm the relationship between PSD in the 8-24 Hz frequency range and the corresponding neurofeedback performance, we ran a simple linear regression of neurofeedback performance based on PSD across the combined EEG neurofeedback conditions (i.e., Screen and HMD-VR). We found a significant relationship between PSD and neurofeedback performance (Figure 3; F(1,22) = 9.328, p = 0.006; R2 = 0.266) where an increased sensorimotor desynchronization corresponded to better neurofeedback performance.

Figure 3.

Relationship between power spectral density and neurofeedback performance. There was a significant relationship between power spectral density (PSD) and neurofeedback performance across the EEG neurofeedback conditions (combined Screen and HMD-VR).

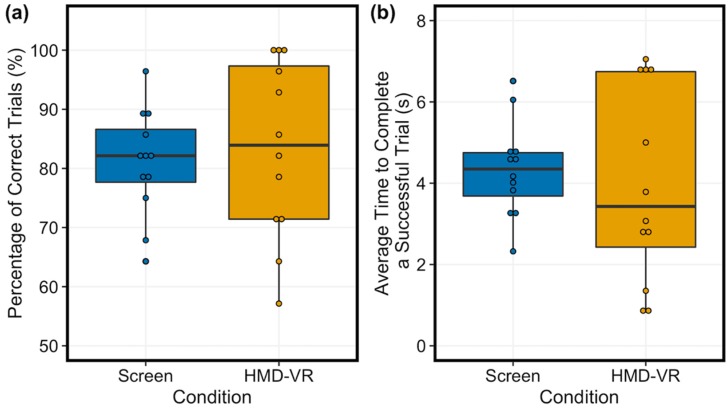

3.2. Comparison of Neurofeedback Performance and Time to Complete Successful Trials Between Screen and HMD-VR

The proportion of correct trials completed was similar between the two conditions (Figure 4a; t(11) = −0.46, p = 0.656, d = 0.19; Screen: M = 80.95%, SD = 9.1%, and HMD-VR: M = 83.33%, SD = 14.9%). These results suggest that participants seemed to perform similarly independent of whether neurofeedback was provided in HMD-VR or on a computer screen. In addition, the time to complete each of the successful trials was also similar between the two conditions (Figure 4b; t(11) = 0.54, p = 0.597, d = 0.19; Screen: M = 4.347 s, SD = 1.17 s, and HMD-VR: M = 3.996 s, SD = 2.41 s). These results suggest that when participants were able to increment the virtual arm towards the target with their brain activity, the efficiency of control was similar whether viewing the arm in the HMD-VR environment or on a computer screen. The distribution of the data was not significantly different from a normal distribution for neurofeedback performance and time to complete successful trials.

Figure 4.

Average performance on trials and time to complete successful trials between conditions. (a) The analysis showed no significant differences in performance between Screen (left, blue) and HMD-VR (right, yellow) conditions. (b) The analysis showed no significant differences in time on successful trials between Screen (left, blue) and HMD-VR (right, yellow) conditions.

3.3. Comparison of Power Spectral Density Between Screen and HMD-VR

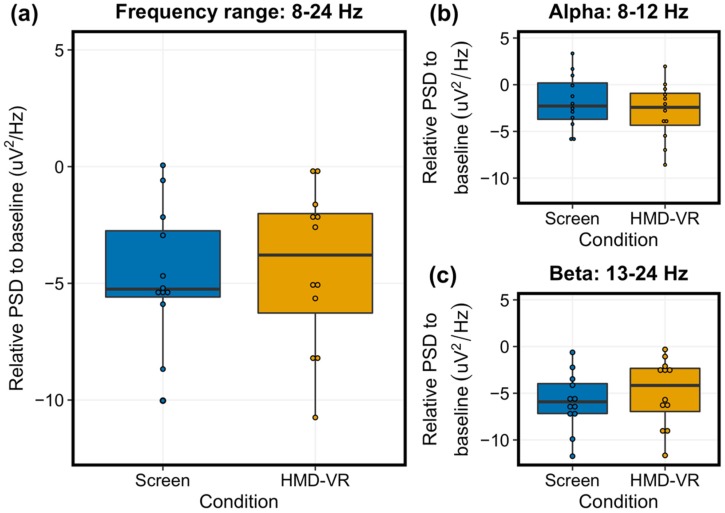

Similar to the neurofeedback performance results, we did not find significant differences in group-level PSD between the Screen and HMD-VR conditions across the 8–24 Hz frequency range (Figure 5a; t(11) = 0.475, p = 0.644, d = 0.12; Screen: M = −4.69, SD = 2.96, and HMD-VR: M = −4.32, SD = 3.41). We also explored alpha and beta bands separately and did not find significant differences in group-level PSD between the Screen and HMD-VR conditions in either band (alpha: Figure 5b, t(11) = 1.363, p = 0.200, d = 0.35, Screen: M = −1.84, SD = 2.90, and HMD-VR: M = −2.89, SD = 3.04; beta: Figure 5c, t(11) = −1.141, p = 0.278, d = 0.29, Screen: M = −5.88, SD = 3.08, and HMD-VR: M = −4.92, SD = 3.63). This further suggests that participants had similar levels of sensorimotor activity whether neurofeedback was provided in HMD-VR or on a computer screen. The distribution of the data was not significantly different from a normal distribution for each power spectral density variable. Additionally, we have included two supplementary figures reporting individual participant EEG activity in alpha and beta bands for both C3 (Supplementary Figure S1; contralateral to and controlling of the virtual hand) and C4 (Supplementary Figure S2; ipsilateral to the virtual hand) recordings.

Figure 5.

Average power spectral density during trials between conditions. (a) The relative group-level PSD for the target electrode C3, representing the left motor cortex (8-24 Hz) between the Screen (left, blue) and HMD-VR (right, yellow) conditions was not significantly different. (b) The relative group-level alpha between the Screen (left, blue) and HMD-VR (right, yellow) conditions was also not significantly different. (c) The relative group-level beta between the Screen (left, blue) and HMD-VR (right, yellow) conditions was also not significantly different.

3.4. Comparison of Simulator Sickness Between Screen and HMD-VR

We checked for normality for each feature obtained by the simulator sickness questionnaire (i.e., Nausea, Oculomotor, Disorientation). For two of the features, the distribution of the data was significantly different from a normal distribution; thus, we used a nonparametic Mann–Whitney U test for each of the comparisons.

We found no significant differences in reports of simulator sickness between the Screen (Nausea: M = 1.59, SD = 8.94; Oculomotor: M = 9.48, SD = 12.15; Disorientation: M = 4.64, SD = 17.13) and the HMD-VR (Nausea: M = 2.39, SD = 5.93; Oculomotor: M = 9.45, SD = 9.76; Disorientation: M = 3.48, SD = 8.65) conditions (Nausea: U = 66, p = 0.730, d = 0.10; Oculomotor: U = 68, p = 0.837, d = 0.00; Disorientation: U = 67.5, p = 0.745, d = 0.09). These results suggest that HMD-VR neurofeedback does not cause additional adverse effects beyond using a computer screen in healthy individuals.

3.5. Comparison of Presence and Embodiment Between Screen and HMD-VR

We checked for normality for each feature obtained by the Presence Questionnaire (i.e., Realism, Possibility to Act, Quality of Interface, Possibility to Examine, and Self-Evaluation of Performance) and Embodiment Questionnaire (i.e., Embodiment, Self Embodiment, Spatial Embodiment). For each feature, the distribution of the data was not significantly different from a normal distribution.

There was a significant difference in reports of embodiment between the two conditions (t(11) = −2.21, p = 0.049, d = 0.48; Screen: M = 4.68, SD = 1.27, and HMD-VR: M = 5.4, SD = 1.71) where individuals reported higher levels of Embodiment in the HMD-VR condition. We then examined the sub-features of embodiment and found a significant difference in reports of Spatial Embodiment between the two conditions (t(11) = −3.77, p = 0.003, d = 0.87; Screen: M = 3.60, SD = 2.04, and HMD-VR: M = 5.35, SD = 2.00) where individuals reported higher levels of Spatial Embodiment in the HMD-VR condition. However, there was no significant difference in reports of Self Embodiment between the two conditions (t(11) = −0.10, p = 0.922, d = 0.03; Screen: M = 5.39, SD = 1.17, HMD-VR: M = 5.43, SD = 1.76). These results suggest that neurofeedback presented in a first person perspective in HMD-VR may increase one’s feeling of embodiment compared to neurofeedback presented on a computer screen.

In addition, there were no significant differences between reports of presence in the two conditions (Realism: t(11) = −1.95, p = 0.078, d = 0.47, Screen: M = 30.00, SD = 6.35, HMD-VR: M = 33.00, SD = 6.40; Possibility to Act: t(11) = −1.37, p = 0.199, d = 0.44, Screen: M = 18.17, SD = 3.70, HMD-VR: M = 19.92, SD = 4.19; Quality of Interface: t(11) = − 0.62, p = 0.548, d = 0.19, Screen: M = 12.83, SD = 3.07, HMD-VR: M = 13.42, SD = 2.97; Possibility to Examine: t(11) = − 2.01, p = 0.070, d = 0.72, Screen: M = 13.17, SD = 2.59, HMD-VR: M = 14.92, SD = 2.27; Self-Evaluation of Performance: t(11) = −1.24, p = 0.241, d = 0.49, Screen: M = 10.0, SD = 1.95, HMD-VR: M = 11.00, SD = 2.13). This suggests that HMD-VR neurofeedback may specifically increase embodiment but not presence in healthy individuals.

3.6. Relationship Between Embodiment, Presence, and Neurofeedback Performance

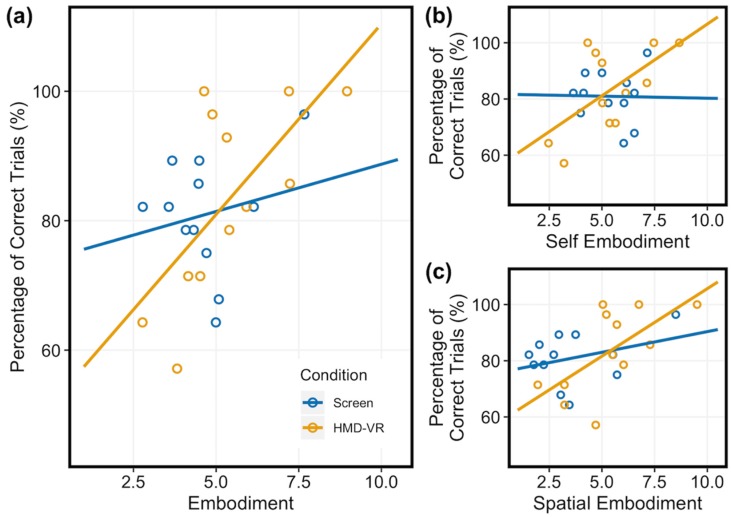

We next examined whether individual differences in embodiment related to neurofeedback performance for each condition. We ran a simple linear regression of neurofeedback performance based on the overall Embodiment feature. For the HMD-VR condition, we found a significant relationship between embodiment and neurofeedback performance (F(1,10) = 8.293, p = 0.016; R2 = 0.399). However, for the Screen condition, we did not find a significant relationship between embodiment and neurofeedback performance (F(1,10) = 0.434, p = 0.525; R2 = −0.054). These results suggest that level of embodiment is specifically related to neurofeedback performance only in HMD-VR and not on a computer screen (Figure 6a).

Figure 6.

Relationship between subjective experience and neurofeedback performance in Screen (blue) and HMD-VR (yellow). Participants reported their level of Embodiment on a scale from 1 to 10 (Table 1). (a) Embodiment: For the HMD-VR condition, embodiment was significantly related to performance. However, for the Screen condition, embodiment did not significantly relate to neurofeedback performance. (b) Self Embodiment and (c) Spatial Embodiment: For the HMD-VR condition, we found a near significant relationship between the two embodiment sub-features and neurofeedback performance. However, for the Screen condition, we did not find a significant relationship between the two embodiment sub-features and neurofeedback performance.

To better understand whether specific sub-features of embodiment also related to neurofeedback performance, we then examined if participants’ levels of self and spatial embodiment related to their neurofeedback performance for each condition (Screen, HMD-VR). We ran a multiple linear regression of neurofeedback performance based on the two embodiment sub-features (i.e., Self Embodiment, Spatial Embodiment). For the HMD-VR condition, we found a near significant relationship between the two embodiment sub-features and neurofeedback performance (F(2,9) = 3.858, p = 0.0617; R2 = 0.342). For the Screen condition, we did not find a significant relationship between the two embodiment sub-features and neurofeedback performance (F(2,9) = 0.706, p = 0.519; R2 = −0.056). These results further suggest that level of embodiment is specifically related to HMD-VR neurofeedback performance. Figure 6b,c shows regression lines for both Self Embodiment and Spatial Embodiment, respectively.

Although there were no differences in presence between the Screen and HMD-VR conditions, we also explored whether individual differences in presence related to neurofeedback performance for each condition (Screen, HMD-VR). We ran a multiple linear regression of neurofeedback performance based on the five presence features (i.e., Realism, Possibility to Act, Quality of Interface, Possibility to Examine, and Self-Evaluation of Performance). We did not find a significant relationship between the five presence features and neurofeedback performance for either the Screen or HMD-VR condition (HMD-VR: F(5,6) = 0.476, p = 0.452; R2 = 0.039; Screen: F(5,6) = 0.840, p = 0.567; R2 = −0.078). These results suggest that the level of presence does not seem to be significantly related to either HMD-VR or computer screen neurofeedback performance.

4. Discussion

The current pilot study examined whether neurofeedback from a motor-related brain–computer interface provided in HMD-VR could lead to better neurofeedback performance compared to the same feedback provided on a standard computer screen. In addition, differences in embodiment and presence between Screen and HMD-VR conditions were examined. Finally, we explored whether individual differences in embodiment and presence related to neurofeedback performance in each condition.

Overall, we found preliminary evidence that healthy participants showed similar levels of neurofeedback performance in both Screen and HMD-VR conditions; however, we found a trend for better performance in the HMD-VR condition. Additionally, participants reported greater embodiment in the HMD-VR versus Screen condition and higher reported levels of embodiment related to better neurofeedback performance in the HMD-VR condition only. These preliminary results suggest that HMD-VR-based neurofeedback may rely on an individual’s sense of embodiment for successful use and improved performance. This is in line with our theoretical framework, which is further detailed in [29,39], in which we propose that greater embodiment of a virtual avatar should lead to larger changes in neural activity in the direction of the desired feedback. These results suggest that greater embodiment of HMD-VR neurofeedback essentially augments the weight of the neurofeedback, creating a more effective and responsive closed-loop neurofeedback system. This has important implications for a number of more recent HMD-VR neurofeedback systems [16,40,41,42,43]. However, future studies should explore these findings with a larger sample size over a longer period of time.

4.1. Similar Neurofeedback Performance and Time to Complete Successful Trials Between a Computer Screen and HMD-VR

Regardless of condition (Screen, HMD-VR), we found that on average, individuals were able to accurately modulate their brain activity to successfully control a virtual arm on over 80 percent of trials. These results suggest that neurofeedback based on motor imagery, using biologically relevant stimuli, can occur either on a computer screen or in immersive virtual reality using a head-mounted display. However, as seen in Figure 4a,b, there is a trend towards better performance and faster time to complete a successful trial in the HMD-VR condition compared to the Screen condition, which may not allow for significance because of our limited dataset (further discussed in Section 4.7). This trend towards greater sensorimotor desynchronization can also be observed in the individual subject data (Supplementary Figures S1 and S2), with more individuals showing more sensorimotor activity for the HMD-VR condition than the Screen condition. Additionally, there is a larger range of interindividual variability in both performance and average time to complete a successful trial in the HMD-VR condition, suggesting that some individuals may benefit from HMD-VR compared to others. This suggestion is further supported by the correlation between performance and embodiment, in which we show that individuals who had greater embodiment had better performance in HMD-VR only (further discussed in Section 4.5). An important future question to examine is whether neurofeedback performance in a clinical population (e.g., individuals with stroke) also shows no differences between HMD-VR and computer screen conditions.

4.2. Similar Power Spectral Density Between a Computer Screen and HMD-VR

Similarly, regardless of condition (Screen, HMD-VR), we found that on average, individuals had similar levels of sensorimotor activity, as measured by PSD between 8-24 Hz and when divided into alpha and beta frequency bands. This was expected as the sensorimotor desynchronization used to calculate PSD was also used to drive the virtual arm in the task. However, similar to the performance results, we see a trend for greater desynchronization in the alpha band for the HMD-VR condition (Figure 5b). While we do not see a trend for greater desynchronization in the beta band for the HMD-VR condition (Figure 5c), these results may indicate a neurofeedback-based effect for the different displays, suggesting that feedback type may be able to alter brain activity. We also showed a significant relationship between PSD and neurofeedback performance, where increased desynchronization corresponded to increased performance.

4.3. Similar Simulator Sickness Between a Computer Screen and HMD-VR

As expected, regardless of condition (Screen, HMD-VR), we found that on average, individuals had similar reported levels of simulator sickness. These results were expected given that our experimental design did not involve factors found to result in adverse simulator sickness (e.g., high accelerated movements, jumping movements, long usage time, etc.) [44] and suggest that HMD-VR neurofeedback does not cause additional adverse effects beyond using a computer screen in healthy individuals.

4.4. A higher Level of Embodiment in HMD-VR Compared to a Computer Screen

After performing the neurofeedback task in each condition (Screen, HMD-VR), participants reported having higher levels of embodiment in HMD-VR compared to the computer screen. This is in agreement with previous research showing that HMD-VR is effective for inducing embodiment [21,45]. However, while it has been intuitively suggested that viewing a virtual body in HMD-VR should induce greater embodiment than viewing the same virtual body on a computer screen, to our knowledge, there has been little empirical evidence to demonstrate this. Here, we address this gap by providing evidence that HMD-VR does seem to in fact increase embodiment compared to a computer screen during a neurofeedback task.

4.5. Greater Embodiment is Related to Better Neurofeedback Performance in HMD-VR

In line with our hypothesis, we show that greater embodiment was positively related to better neurofeedback performance in HMD-VR. This uniqueness to HMD-VR could possibly be explained by an increased range of embodiment levels in the HMD-VR condition compared to the Screen condition. These results are consistent with previous research where embodiment has been shown to lead to neurophysiological and behavioral changes based on the virtual body’s characteristics, such as overestimating object distances after being given an elongated virtual arm in HMD-VR [20]. These results are also constant with previous research showing embodiment of a humanoid arm compared to a robotic arm improves motor imagery skills [46]. While our findings do not support causality, they are important because they suggest that embodiment may have the potential to improve an individual’s neurofeedback performance, and HMD-VR may be able to increase the level of embodiment of an individual, beyond that of a normal computer screen. This suggests that if individuals were to encounter a ceiling effect while controlling neurofeedback on a computer screen, they might be able to show greater improvements, beyond this ceiling, if they show greater embodiment in HMD-VR.

4.6. Future Clinical Implications

We designed REINVENT as an EEG-based BCI with HMD-VR neurofeedback for individuals with severe motor impairments, such as stroke. However, before exploring the effectiveness of this device in a population with severe motor impairments, we first examined whether providing neurofeedback in HMD-VR improves performance compared to receiving the same neurofeedback on a computer screen in healthy adults. Our findings suggest that increased embodiment may improve individuals’ neurofeedback performance, which could potentially improve patients’ recovery. Furthermore, our results suggest that HMD-VR may facilitate an increased level of embodiment, beyond what might be seen with traditional screen-based BCIs.

The findings in this pilot study are important considering that a patients’ perception of BCI control can have an effect on their own perceived capabilities [3]. Patients exhibiting a lack of embodiment may experience distress or a loss of control [3], which could then stifle recovery. Therefore, it is important to measure patients’ sense of embodiment in EEG-based BCI therapy to avoid feelings of estrangement. Future work might explore whether measures of embodiment, administered prior to HMD-VR neurofeedback training, could predict embodiment and neurofeedback performance. If so, these “pre-assessments” of embodiment potential could be used to predict and personalize EEG-based BCI therapy. As previous brain–computer interfaces have been shown to have a positive change on muscle and sensorimotor brain activity in post-stroke individuals, even when using screen-based environments [47], we anticipate that embodiment in HMD-VR may lead to even greater improvements. However, as these data are preliminary, more data are needed to explore this hypothesis.

4.7. Limitations

Our pilot study has several limitations. First was the limited sample size of 12 individuals and the limited number of trials collected per condition (i.e., 30 trials per condition). However, even with this limited sample, we were still able to extract the PSD, calculate relative PSD to baseline, and find a significant relationship between PSD and neurofeedback performance. However, this limited power may have resulted in the lack of significance seen between conditions in performance, PSD, and presence, where we see trends towards increased performance, greater sensorimotor desynchronization, and increased presence in the HMD-VR condition compared to the Screen condition, but these trends do not reach significance. Future research should explore this with greater power both in the number of participants and in the number of trials collected. Additionally, as our study was a within-subject experimental design, we were not able to examine differences in age and gender, which could influence EEG-based BCI performance. In addition to increasing the number of participants and trials collected, future studies should also consider a between-subjects experimental design.

A second limitation was the use of only eight channels of dry electrodes to collect sensorimotor activity and the broad frequency band used (8-24 Hz). Given that our system was initially designed to provide a low-cost rehabilitation intervention, we chose to drive the neurofeedback-based device with a limited number of dry electrodes as previous studies have found dry electrodes to be suitable for neurofeedback applications [15,48]. However, we recognize that the signal quality of these electrodes can be noisy, and even though we were able to successfully extract power spectral density, in future studies, we plan to use higher quality electrodes (e.g., active gel electrodes), which would also allow us to narrow the frequency band and personalize the feedback across individuals. In addition, although the low resolution from eight channels, primarily clustered around bilateral sensorimotor regions, facilitated a faster application of the EEG cap, it also limited our post-hoc analyses. Future research studies should utilize more channels for higher resolution. This would enable topographical analyses of whole brain activity during neurofeedback training as well as the ability to examine brain activity in non-motor regions as control regions.

A third limitation was the use of two separate questionnaires to examine presence and embodiment. Although presence and embodiment are often discussed separately, there are certainly overlapping features of the two constructs (e.g., greater embodiment often leads to greater presence). Future work may consider the use of standardized questionnaires that combine measures of presence and embodiment into a single questionnaire, such as the recently proposed questionnaire by Gonzalez-Franco and Peck, 2018 [49].

A fourth limitation is that here we studied only healthy individuals. This is notable as the effects observed may be smaller than those of a clinical population, who may have more room to improve. Specifically, the healthy individuals in our study showed, on average, 80% accuracy with the based BCI within a short time frame, which may reflect their intact sensorimotor control. However, individuals with severe motor impairments may start with lower scores and have greater room for improvement due to damage to these same networks. Future work may examine extended training with the HMD-VR environment to see if it is possible for individuals to improve beyond their current levels with greater time in the environment, as well as the effects of embodiment on EEG-based BCI performance in individuals with stroke, which may provide a greater range of abilities and thus greater potential effects with immersive virtual reality. Additionally, future work may examine whether EEG-based BCI training has an effect on real world movements and whether these effects are different between computer screen or HMD-VR environments. Future work should build upon these modest results and explore the effects of embodiment on HMD-VR neurofeedback performance with large samples and in clinical populations.

5. Conclusions

This preliminary work suggests that individuals have higher levels of embodiment when given immersive virtual reality-based neurofeedback compared to the neurofeedback displayed on a computer screen. Furthermore, this increased sense of embodiment in immersive virtual reality neurofeedback has the potential to improve neurofeedback performance in healthy individuals over their performance on a computer screen. HMD-VR may provide a unique medium for improving EEG-based BCI performance, especially in clinical settings related to motor recovery. Future work will explore ways to increase presence and embodiment in immersive virtual reality and examine these effects on motor rehabilitation in patients with severe motor impairment.

Acknowledgments

We thank David Saldana for assistance with recruitment and Catherine Finnegan for assistance with recruitment and initial pilot data collection.

Supplementary Materials

The following are available online at https://www.mdpi.com/1424-8220/20/4/1204/s1, Supplementary Table S1: Mean, SD, and skewness for all variables, Supplementary Figure S1: Individual participant EEG activity for C3, Supplementary Figure S2: Individual participant EEG activity for C4.

Author Contributions

Conceptualization, T.A.; E.S.; D.M.K. and S.-L.L.; Data curation, J.M.J.; Formal analysis, J.M.J.; A.V. and S.L.; Funding acquisition, T.A.; E.S.; D.M.K. and S.-L.L.; Investigation, J.M.J.; Methodology, J.M.J. and S.-L.L.; Project administration, J.M.J. and S.-L.L.; Software, R.P.S. and T.A.; Supervision, S.-L.L.; Validation, K.J., E.S. and D.M.K.; Visualization, J.M.J.; Writing—original draft, J.M.J.; Writing—review & editing, J.M.J., R.P.S., A.V., S.L., K.J., T.A., E.S., D.M.K. and S.-L.L. All authors have read and agree to the published version of the manuscript.

Funding

This research was funded by the American Heart Association (Grant #16IRG26960017) and the National Institutes of Health National Center for Medical Rehabilitation Research (Grant #K01HD091283).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Ramos-Murguialday A., Broetz D., Rea M., Läer L., Yilmaz Ö., Brasil F.L., Liberati G., Curado M.R., Garcia-Cossio E., Vyziotis A., et al. Brain-machine interface in chronic stroke rehabilitation: A controlled study. Ann. Neurol. 2013;74:100–108. doi: 10.1002/ana.23879. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Caspar E.A., Cleeremans A., Haggard P. The relationship between human agency and embodiment. Conscious. Cogn. 2015;33:226–236. doi: 10.1016/j.concog.2015.01.007. [DOI] [PubMed] [Google Scholar]

- 3.Gilbert F., Cook M., O’Brien T., Illes J. Embodiment and estrangement: Results from a first-in-human “intelligent BCI” trial. Sci. Eng. Ethics. 2019;25:83–96. doi: 10.1007/s11948-017-0001-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Dechent P., Merboldt K.-D., Frahm J. Is the human primary motor cortex involved in motor imagery? Cogn. Brian Res. 2004;19:138–144. doi: 10.1016/j.cogbrainres.2003.11.012. [DOI] [PubMed] [Google Scholar]

- 5.Naito E., Kochiyama T., Kitada R., Nakamura S., Matsumura M., Yonekura Y., Sadato N. Internally simulated movement sensations during motor imagery activate cortical motor areas and the cerebellum. J. Neurosci. 2002;22:3683–3691. doi: 10.1523/JNEUROSCI.22-09-03683.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Jackson P.L., Lafleur M.F., Malouin F., Richards C.L., Doyon J. Functional cerebral reorganization following motor sequence learning through mental practice with motor imagery. Neuroimage. 2003;20:1171–1180. doi: 10.1016/S1053-8119(03)00369-0. [DOI] [PubMed] [Google Scholar]

- 7.Guerra Z.F., Bellose L.C., Danielli Coelho De Morais Faria C., Lucchetti G. The effects of mental practice based on motor imagery for mobility recovery after subacute stroke: Protocol for a randomized controlled trial. Complement. Ther. Clin. Pract. 2018;33:36–42. doi: 10.1016/j.ctcp.2018.08.002. [DOI] [PubMed] [Google Scholar]

- 8.Carrasco D.G., Cantalapiedra J.A. Effectiveness of motor imagery or mental practice in functional recovery after stroke: A systematic review. Neurol. English Ed. 2016;31:43–52. doi: 10.1016/j.nrleng.2013.02.008. [DOI] [PubMed] [Google Scholar]

- 9.Ang K.K., Guan C., Phua K.S., Wang C., Zhou L., Tang K.Y., Ephraim Joseph G.J., Kuah C.W.K., Chua K.S.G. Brain-computer interface-based robotic end effector system for wrist and hand rehabilitation: Results of a three-armed randomized controlled trial for chronic stroke. Front. Neuroeng. 2014;7:30. doi: 10.3389/fneng.2014.00030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Pichiorri F., Morone G., Petti M., Toppi J., Pisotta I., Molinari M., Paolucci S., Inghilleri M., Astolfi L., Cincotti F., et al. Brain-computer interface boosts motor imagery practice during stroke recovery. Ann. Neurol. 2015;77:851–865. doi: 10.1002/ana.24390. [DOI] [PubMed] [Google Scholar]

- 11.Cincotti F., Pichiorri F., Aricò P., Aloise F., Leotta F., De Vico Fallani F., Millán J.d.R., Molinari M., Mattia D. EEG-based Brain-Computer Interface to support post-stroke motor rehabilitation of the upper limb; Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Diego, CA, USA. 28 August–1 September 2012; San Diego, CA, USA: IEEE; 2012. pp. 4112–4115. [DOI] [PubMed] [Google Scholar]

- 12.Frolov A.A., Mokienko O., Lyukmanov R., Biryukova E., Kotov S., Turbina L., Nadareyshvily G., Bushkova Y. Post-stroke rehabilitation training with a motor-imagery-based Brain-Computer Interface (BCI)-controlled hand exoskeleton: A randomized controlled multicenter trial. Front. Neurosci. 2017;11:400. doi: 10.3389/fnins.2017.00400. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Tung S.W., Guan C., Ang K.K., Phua K.S., Wang C., Zhao L., Teo W.P., Chew E. Motor imagery BCI for upper limb stroke rehabilitation: An evaluation of the EEG recordings using coherence analysis; Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Osaka, Japan. 3–7 July 2013; pp. 261–264. [DOI] [PubMed] [Google Scholar]

- 14.Biasiucci A., Leeb R., Iturrate I., Perdikis S., Al-Khodairy A., Corbet T., Schnider A., Schmidlin T., Zhang H., Bassolino M., et al. Brain-actuated functional electrical stimulation elicits lasting arm motor recovery after stroke. Nat. Commun. 2018;9:2421. doi: 10.1038/s41467-018-04673-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.McMahon M., Schukat M. A low-cost, open-source, BCI-VR game control development environment prototype for game based neurorehabilitation; Proceedings of the IEEE Games, Entertainment, Media Conference (GEM); Galway, Ireland. 15–17 August 2018; pp. 1–9. [Google Scholar]

- 16.Vourvopoulos A., Bermúdez i Badia S. Motor priming in virtual reality can augment motor-imagery training efficacy in restorative brain-computer interaction: A within-subject analysis. J. Neuroeng. Rehabil. 2016;13:69. doi: 10.1186/s12984-016-0173-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Banakou D., Groten R., Slater M. Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes. Proc. Natl. Acad. Sci. 2013;110:12846–12851. doi: 10.1073/pnas.1306779110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Kilteni K., Groten R., Slater M. The Sense of Embodiment in Virtual Reality. Presence Teleoperators Virtual Environ. 2012;21:373–387. doi: 10.1162/PRES_a_00124. [DOI] [Google Scholar]

- 19.Yee N., Bailenson J.N. The Proteus effect: The effect of transformed self-representation on behavior. Hum. Commun. Res. 2007;33:271–290. doi: 10.1111/j.1468-2958.2007.00299.x. [DOI] [Google Scholar]

- 20.Kilteni K., Normand J.-M., Sanchez-Vives M.V., Slater M. Extending body space in immersive virtual reality: A very long arm illusion. PLoS ONE. 2012;7:e40867. doi: 10.1371/journal.pone.0040867. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Osimo S.A., Pizarro R., Spanlang B., Slater M. Conversations between self and self as Sigmund Freud—A virtual body ownership paradigm for self counselling. Sci. Rep. 2015;5:13899. doi: 10.1038/srep13899. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Kilteni K., Bergstrom I., Slater M. Drumming in immersive virtual reality: The body shapes the way we play. IEEE Trans. Vis. Comput. Graph. 2013;19:597–605. doi: 10.1109/TVCG.2013.29. [DOI] [PubMed] [Google Scholar]

- 23.Banakou D., Hanumanthu P.D., Slater M. Virtual embodiment of white people in a black virtual body leads to a sustained reduction in their implicit racial bias. Front. Hum. Neurosci. 2016;10:601. doi: 10.3389/fnhum.2016.00601. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Vecchiato G., Tieri G., Jelic A., De Matteis F., Maglione A.G., Babiloni F. Electroencephalographic correlates of sensorimotor integration and embodiment during the appreciation of virtual architectural environments. Front. Psychol. 2015;6:1944. doi: 10.3389/fpsyg.2015.01944. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Pavone E.F., Tieri G., Rizza G., Tidoni E., Grisoni L., Aglioti S.M. Embodying others in immersive virtual reality: Electro-cortical signatures of monitoring the errors in the actions of an avatar seen from a first-person perspective. J. Neurosci. 2016;36:268–279. doi: 10.1523/JNEUROSCI.0494-15.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Slater M. Immersion and the illusion of presence in virtual reality. Br. J. Psychol. 2018;109:431–433. doi: 10.1111/bjop.12305. [DOI] [PubMed] [Google Scholar]

- 27.Witmer B.G., Singer M.J. Measuring presence in virtual environments: A presence questionnaire. Presence. 1998;7:225–240. doi: 10.1162/105474698565686. [DOI] [Google Scholar]

- 28.Bailey J.O., Bailenson J.N., Casasanto D. When does virtual embodiment change our minds? Presence. 2016;25:222–233. doi: 10.1162/PRES_a_00263. [DOI] [Google Scholar]

- 29.Spicer R., Anglin J.M., Krum D.M., Liew S.-L. REINVENT: A low-cost, virtual reality brain-computer interface for severe stroke upper limb motor recovery; Proceedings of the IEEE Virtual Reality; Los Angeles, CA, USA. 18–22 March 2017; pp. 385–386. [Google Scholar]

- 30.Klem G.H., Lüders H.O., Jasper H.H., Elger C. The ten-twenty electrode system of the International Federation. Electroencephalogr. Clin. Neurophysiol. 1999;52:3–6. [PubMed] [Google Scholar]

- 31.Kennedy R.S., Lane N.E., Berbaum K.S., Michael G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993;3:203–220. doi: 10.1207/s15327108ijap0303_3. [DOI] [Google Scholar]

- 32.Witmer B.G., Jerome C.J., Singer M.J. The factor structure of the Presence Questionnaire. Presence. 2005;14:298–312. doi: 10.1162/105474605323384654. [DOI] [Google Scholar]

- 33.Lee J., Kim M., Kim J. A study on immersion and VR sickness in walking interaction for immersive virtual reality applications. Symmetry. 2017;9:78. [Google Scholar]

- 34.Delorme A., Makeig S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- 35.Welch P.D. The use of fast Fourier transform for the estimation of power spectra: A method based on time averaging over short, modified periodograms. IEEE Trans. Audio Electroacoust. 1967;15:70–73. doi: 10.1109/TAU.1967.1161901. [DOI] [Google Scholar]

- 36.Neuper C., Wörtz M., Pfurtscheller G. ERD/ERS patterns reflecting sensorimotor activation and deactivation. Prog. Brain Res. 2006;159:211–222. doi: 10.1016/S0079-6123(06)59014-4. [DOI] [PubMed] [Google Scholar]

- 37.Pfurtscheller G. Spatiotemporal ERD/ERS patterns during voluntary movement and motor imagery. Suppl. Clin. Neurophysiol. 2000;53:196–198. doi: 10.1016/s1567-424x(09)70157-6. [DOI] [PubMed] [Google Scholar]

- 38.Pfurtscheller G., Neuper C. Motor imagery activates primary sensorimotor area in humans. Neurosci. Lett. 1997;239:65–68. doi: 10.1016/S0304-3940(97)00889-6. [DOI] [PubMed] [Google Scholar]

- 39.Vourvopoulos A., Pardo O.M., Lefebvre S., Neureither M., Saldana D., Jahng E., Liew S.L. Effects of a brain-computer interface with virtual reality (VR) neurofeedback: A pilot study in chronic stroke patients. Front. Hum. Neurosci. 2019;13:210. doi: 10.3389/fnhum.2019.00210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Vourvopoulos A., Jorge C., Abreu R., Figueiredo P., Fernandes J.C., Bermúdez i Badia S. Efficacy and brain imaging correlates of an immersive motor imagery BCI-driven VR system for upper limb motor rehabilitation: A clinical case report. Front. Hum. Neurosci. 2019;13:244. doi: 10.3389/fnhum.2019.00244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Blanco-Mora D.A., Almeida Y., Vieira C., Badia S.B.i. A study on EEG power and connectivity in a virtual reality bimanual rehabilitation training system; Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC); Bari, Italy. 6–9 October 2019; pp. 2818–2822. [Google Scholar]

- 42.Johnson N.N., Carey J., Edelman B.J., Doud A., Grande A., Lakshminarayan K., He B. Combined rTMS and virtual reality brain–computer interface training for motor recovery after stroke. J. Neural Eng. 2018;15:016009. doi: 10.1088/1741-2552/aa8ce3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Luu T.P., He Y., Brown S., Nakagame S., Contreras-Vidal J.L. Gait adaptation to visual kinematic perturbations using a real-time closed-loop brain-computer interface to a virtual reality avatar. J. Neural Eng. 2016;13:036006. doi: 10.1088/1741-2560/13/3/036006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Porcino T.M., Clua E., Trevisan D., Vasconcelos C.N., Valente L. Minimizing cyber sickness in head mounted display systems: Design guidelines and applications; Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH); Perth, WA, Australia. 2–4 April 2017; pp. 1–6. [Google Scholar]

- 45.Slater M., Sanchez-Vives M.V. Enhancing our lives with immersive virtual reality. Front. Robot. AI. 2016;3:74. doi: 10.3389/frobt.2016.00074. [DOI] [Google Scholar]

- 46.Alimardani M., Nishio S., Ishiguro H. The importance of visual feedback design in BCIs; from embodiment to motor imagery learning. PLoS ONE. 2016;11:e0161945. doi: 10.1371/journal.pone.0161945. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Ono T., Shindo K., Kawashima K., Ota N., Ito M., Ota T., Mukaino M., Fujiwara T., Kimura A., Liu M., et al. Brain-computer interface with somatosensory feedback improves functional recovery from severe hemiplegia due to chronic stroke. Front. Neuroeng. 2014;7 doi: 10.3389/fneng.2014.00019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Uktveris T., Jusas V. Development of a modular board for eeg signal acquisition. Sensors. 2018;18:2140. doi: 10.3390/s18072140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Gonzalez-Franco M., Peck T.C. Avatar embodiment. Towards a standardized questionnaire. Front. Robot. AI. 2018;5:74. doi: 10.3389/frobt.2018.00074. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.