Abstract

Purpose

To analyze and quantify the source of electronic health record (EHR) text documentation in ophthalmology progress notes.

Design

EHR documentation review and analysis.

Methods

Setting

A single academic ophthalmology department.

Study Population

A cohort study conducted between November 1, 2016 and December 31, 2018 using secondary EHR data, and a follow-up manual review of a random sample. The cohort study included 123,274 progress notes documented by 42 attending providers; these notes were for patients with the 5 most common primary ICD-10 parent codes for each provider. For the manual review, 120 notes from 8 providers were randomly sampled.

Main Outcome Measures

Characters or number of words in each note categorized by attribution source, author type, and time of creation.

Results

Imported text entry made up the majority of text in new and return patient notes: 2,978 characters (77%) and 3,612 characters (91%), respectively. Support staff members authored substantial portions of notes: 3,024 characters (68%) of new patient notes and 3,953 characters (83%) of return patient notes. Finally, providers completed large amounts of documentation after clinical visits: 135 words (35%) of new patient notes and 102 words (27%) of return patient notes.

Conclusions

EHR documentation consists largely of imported text, is often authored by support staff, and is often written after the end of a visit. These findings raise questions about documentation accuracy and utility, and may have implications for quality of care and patient-provider relationships.

While electronic health records (EHRs) were designed to improve safety, quality, and efficiency, their implementation has posed significant challenges to documentation and care.1–8 While EHRs clearly improve the legibility and accessibility of clinical information, evidence for the impact of EHRs on care quality, efficiency, and safety has been mixed with largely consistent findings that EHR use is associated with increased documentation time.9–14 Within ophthalmology, studies have similarly shown that EHR adoption has been associated with either a decrease or no change in outpatient clinic volumes, but increased documentation time.5,15 As the majority of ophthalmology practices have adopted EHRs, providers now self-report that productivity and practice revenue have decreased while practice costs have increased.16

Much of the efficiency loss is due to the time burden of EHR documentation which may be a result of poor EHR usability and the increased complexity of clinical documentation introduced by regulation and quality metrics.17–19 We recently showed that ophthalmologists at our institution spend 3.7 total hours per clinic day using the EHR, 1.6 of which are after the clinic, and that, a decade after EHR adoption, ophthalmologists spend more time documenting per patient than they did when the EHR was first adopted.20,21 Other studies outside ophthalmology have similarly found that physicians spend at least as much time on desk and EHR work as they spend in face-to-face interactions with patients.22–25

To save time documenting in the EHR, clinicians use import technologies such as copy-paste, copy-forward, and templated text with automated imports.26–29 While import technologies can improve documentation efficiency and standardization, their indiscriminate use can have negative consequences leading to inaccurate documentation, propagated errors, highly redundant text, and the inability to determine true authorship.26,28,30–32 The purpose of the progress note is to document important findings and clinical decision-making by the provider; however, the use of import technologies and multiple authors raises important concerns about whether documentation using EHRs is optimally supporting the practice of medicine.

While the use of import technologies within EHR notes has been established, the prevalence of import technology use within outpatient ophthalmology has not been addressed in current literature. The purpose of this study is to address this gap by measuring the amount of imported and copied text in progress notes, by author and by time of day, to characterize current EHR documentation habits within outpatient ophthalmology. Understanding this process is necessary to identify potential shortcomings and areas for improvement.

METHODS

This retrospective cohort study was approved by the Oregon Health & Science University (OHSU) institutional review board which granted a waiver of informed consent for analysis of coded EHR data, and adhered to the tenets of the Declaration of Helsinki.

Description of Study Institution

OHSU is a large academic medical center in Portland, Oregon. Casey Eye Institute, OHSU’s ophthalmology department, includes over 50 faculty providers, who perform over 130,000 annual outpatient examinations. The department provides primary eye care, includes all major ophthalmology subspecialties, and serves as a major referral center in the Pacific Northwest and nationally.

Over several years, an EHR system (EpicCare; Epic, Verona, WI) was implemented throughout OHSU. This vendor develops software for mid-size and large medical practices and is a market share leader among large hospitals. In 2006, all ophthalmologists at OHSU began using this EHR. All ambulatory practice management, clinical documentation, order entry, medication prescribing, and billing tasks are performed using components of the EHR. Ophthalmologists and their support staff at OHSU use the Kaleidoscope module (Epic, Verona, WI) for entering ophthalmic exam findings. These findings, together with subjective and other objective findings are summarized in the progress note along with the assessment and plan for the encounter.

Note Text Composition Data

We analyzed office visit notes from January 1, 2017 to December 31, 2018. We selected ophthalmologists with stable practices who regularly saw patients in each year of the study. Ophthalmologists were included in a given year if, in that year or a previous year, they saw patients in January and February, had over 200 office visits, and either had office visits in November and December or had over 200 office visits the following year. We selected the notes most representative of each provider’s practice by including only those from visits with one of that provider’s five most common ICD-10 parent diagnosis codes. We excluded notes that were likely written by trainees, using regular expression matching of note text in Python (Version 3.7, Python Software Foundation, https://www.python.org/) to identify notes signed by a resident or fellow.
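The trainee exclusion step can be illustrated with a minimal sketch. The study does not report the regular expressions it used, so the pattern below, the signature wording it looks for, and the function names are hypothetical and only show the general approach.

```python
import re
from typing import List

# Hypothetical pattern: the study's actual expressions are not reported.
# It looks for a signature line ("signed by ...") that also names a
# resident or fellow on the same line.
TRAINEE_SIGNATURE = re.compile(
    r"signed by[^\n]*\b(?:resident|fellow)\b",
    re.IGNORECASE,
)


def is_trainee_note(note_text: str) -> bool:
    """Return True if the note appears to be signed by a resident or fellow."""
    return TRAINEE_SIGNATURE.search(note_text) is not None


def exclude_trainee_notes(notes: List[str]) -> List[str]:
    """Keep only notes that do not match the trainee signature pattern."""
    return [note for note in notes if not is_trainee_note(note)]
```

Restricting the match to the signature line is a design choice made for this sketch: it avoids excluding notes that merely mention a fellow or resident elsewhere in the text.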

For all notes, data regarding authorship, attribution, and note text were gathered from OHSU’s clinical data warehouse (EpicCare; Epic, Verona, WI). These data reported the number of characters (as opposed to the number of words) in a progress note that were manually entered, copied, or generated from a template. Characters that were not manually entered were labeled Imported Text, and text was categorized as authored either by the provider or by other staff. For notes that were authored by both staff and providers, we analyzed the proportion of text entered by each author, along with the source of the text. To ensure we were capturing typical notes in this second analysis, notes were included only if the provider authored at least 30 notes with their staff. While we are only able to report character counts for note authorship and attribution in this large-scale analysis due to how our EHR tracks attribution, in Tables 1 and 2 we also report the average number of words in notes for Return and New visits, counted from full note text using R (version 3.5.0).33
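As a worked illustration of the categorization above, the sketch below combines template and copied character counts into Imported Text and expresses each category as a percentage of the note. The function and key names are hypothetical. Computing percentages from mean character counts is an assumption of this sketch; the study does not state whether percentages were derived this way or averaged per note.

```python
from typing import Dict


def composition_percent(manual: float, template: float, copied: float) -> Dict[str, int]:
    """Percent of note characters by source, rounded to whole percentages.

    Imported text is defined as everything not manually entered,
    that is, templated plus copied characters.
    """
    imported = template + copied
    total = manual + imported
    if total <= 0:
        raise ValueError("note must contain at least one character")
    return {
        "manual_pct": round(100 * manual / total),
        "imported_pct": round(100 * imported / total),
        "template_pct": round(100 * template / total),
        "copied_pct": round(100 * copied / total),
    }


# Mean character counts for new patient notes as reported in Table 1.
new_patient = composition_percent(manual=896, template=2778, copied=200)
```

With the new patient means from Table 1 (896 manual, 2778 template, 200 copied), this yields 23% manual and 77% imported, in line with the reported percentages.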

Table 1: Source of documentation in electronic health record notes for ophthalmology office visits based on automated text analysis of 123,274 notes from 42 ophthalmology providers.

Character counts are displayed for new and return patient progress notes, based on text source (manually-entered vs imported). Total imported entry includes: templated (smart phrases, outlines, macros, labs, medication list) and copied text. Templates can include imported text from the chart and previous notes. Comparisons were made between total manual and imported text using a paired t-test after data had been log-transformed to convert log-normal distributions to normal distributions.

| Encounter Type | N | Word Count, Mean ± SD | Text Source | Character Count, Mean ± SD (%) | P-Value |
|---|---|---|---|---|---|
| New Patient | 17,078 | 557 ± 295 | Total Manual Entry | 896 ± 893 (23) | <2.2e-16 |
| | | | Total Imported Entry | 2978 ± 1995 (77) | |
| | | | Template | 2778 ± 1752 (72) | |
| | | | Copied | 200 ± 750 (5) | |
| Return Patient | 106,196 | 582 ± 560 | Total Manual Entry | 355 ± 380 (9) | <2.2e-16 |
| | | | Total Imported Entry | 3612 ± 3831 (91) | |
| | | | Template | 2600 ± 1767 (66) | |
| | | | Copied | 1012 ± 3489 (25) | |

Table 2: Authorship in electronic health record notes for office visits based on automated text analysis of 84,271 notes from 41 ophthalmology providers.

Character counts are displayed for new and return patient progress notes, based on author and text source (manually-entered vs imported). Only notes with both provider and technician text were analyzed, and only providers with more than thirty notes with provider and technician text were included in the analysis. Comparisons were made between amount of text generated by providers and staff for each encounter type using a paired t-test after data had been log-transformed to convert log-normal distributions to normal distributions.

| Encounter Type | N | Word Count, Mean ± SD | Author | Text Source | Character Count, Mean ± SD (%) | P-Value |
|---|---|---|---|---|---|---|
| New Patient | 12,116 | 626 ± 293 | Provider | Total | 1404 ± 1658 (32) | <2.2e-16 |
| | | | | Manual | 573 ± 669 (13) | |
| | | | | Imported | 832 ± 1265 (19) | |
| | | | | Template | 754 ± 1134 (17) | |
| | | | | Copied | 78 ± 335 (2) | |
| | | | Staff | Total | 3024 ± 2335 (68) | |
| | | | | Manual | 502 ± 603 (11) | |
| | | | | Imported | 2521 ± 2009 (57) | |
| | | | | Template | 2343 ± 1816 (53) | |
| | | | | Copied | 129 ± 793 (3) | |
| Return Patient | 72,155 | 688 ± 637 | Provider | Total | 798 ± 1336 (17) | <2.2e-16 |
| | | | | Manual | 222 ± 258 (5) | |
| | | | | Imported | 576 ± 1279 (12) | |
| | | | | Template | 490 ± 927 (10) | |
| | | | | Copied | 86 ± 825 (2) | |
| | | | Staff | Total | 3953 ± 4410 (83) | |
| | | | | Manual | 200 ± 316 (4) | |
| | | | | Imported | 3754 ± 4341 (79) | |
| | | | | Template | 2432 ± 1842 (51) | |
| | | | | Copied | 1322 ± 4080 (28) | |

Manually Reviewed Cases

In addition to the large-scale data analysis, we conducted manual review of a representative selection of progress notes to gain further insight. We randomly sampled 120 progress notes: 5 new and 10 return notes from each of 8 providers (2 each from cornea, retina, neuro-ophthalmology, and comprehensive ophthalmology). Notes were sampled from the time period November 1, 2016 to October 31, 2017, and were again from visits with each provider’s five most common ICD-10 parent codes.

Analysis of Cases: Source, Authorship, and Timing

When manually reviewing notes, we used attribution data provided by the EHR in the chart review display of the progress note. The provided attribution filter (Epic; Verona, WI) displayed the author, text source, and time of creation for each block of text. We copied each text block into a spreadsheet and used R (version 3.5.0) to count words by authorship, source, and time of creation.33 Text was categorized as manual or imported, and as authored by a provider or by staff. With manual review, it was possible to identify the scribes for individual notes, and scribe text was attributed to the provider for purposes of this analysis. Text was categorized as Before Encounter (created before the patient checked in), During Encounter (created between patient check-in and checkout times), or After Encounter (created after the patient checkout time).
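The timing categories can be expressed as a short sketch. The study performed this step with a spreadsheet and R; the Python below is only an illustration, and the function names, the whitespace-based word count, and the treatment of text created exactly at check-in or checkout (counted here as During Encounter) are assumptions.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple


def classify_timing(created: datetime, check_in: datetime, check_out: datetime) -> str:
    """Assign a text block to Before, During, or After Encounter."""
    if created < check_in:
        return "Before Encounter"
    if created <= check_out:  # boundary handling is an assumption
        return "During Encounter"
    return "After Encounter"


def words_by_timing(
    blocks: Iterable[Tuple[str, datetime]],
    check_in: datetime,
    check_out: datetime,
) -> Counter:
    """Sum whitespace-delimited word counts per timing category.

    Each block is a (text, creation time) pair, as copied from the
    EHR attribution display.
    """
    counts: Counter = Counter()
    for text, created in blocks:
        counts[classify_timing(created, check_in, check_out)] += len(text.split())
    return counts
```

Dividing each category's total by the provider's total word count for the note would give percentages of the kind reported in the timing analysis.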

Statistical Analysis

Descriptive statistics were calculated using R (version 3.5.0).33 Since character counts and word counts were log-normally distributed, t-tests for differences between means of log-transformed data were performed in R to determine statistical significance.
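For readers unfamiliar with the approach, the sketch below computes the test statistic for a paired t-test on log-transformed values using only the Python standard library. It is an illustration rather than the study's R code: the function name is hypothetical, and it assumes all values are strictly positive, since the handling of zero counts before log transformation is not described.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_log_t_statistic(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for a paired t-test on log values.

    Assumes strictly positive inputs; zero counts would need separate
    handling, which is not specified in the text.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired samples of length >= 2")
    # Paired differences on the log scale.
    diffs = [math.log(a) - math.log(b) for a, b in zip(x, y)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1
```

A p-value would then come from the t distribution with the returned degrees of freedom; a statistics library function such as SciPy's `scipy.stats.ttest_rel`, applied to the log-transformed values, performs both steps.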

RESULTS

Characteristics of Data Set

Forty-two ophthalmologists authored a total of 182,915 visit notes during the study period. After applying exclusion criteria, there were 123,274 progress notes to analyze.

Source of Progress Note Text

Table 1 displays the source of data from progress notes. Imported text represented 2,978 ± 1,995 characters (77%) of new progress notes, and 3,612 ± 3,831 (91%) of return progress notes. Most imported text was templated text, making up 2,778 ± 1,752 characters (72%) of new progress notes and 2,600 ± 1,767 characters (66%) of return progress notes. More text was copied in return patient notes compared with new patient notes (1,012 ± 3,489 characters [25%] vs 200 ± 750 characters [5%]).

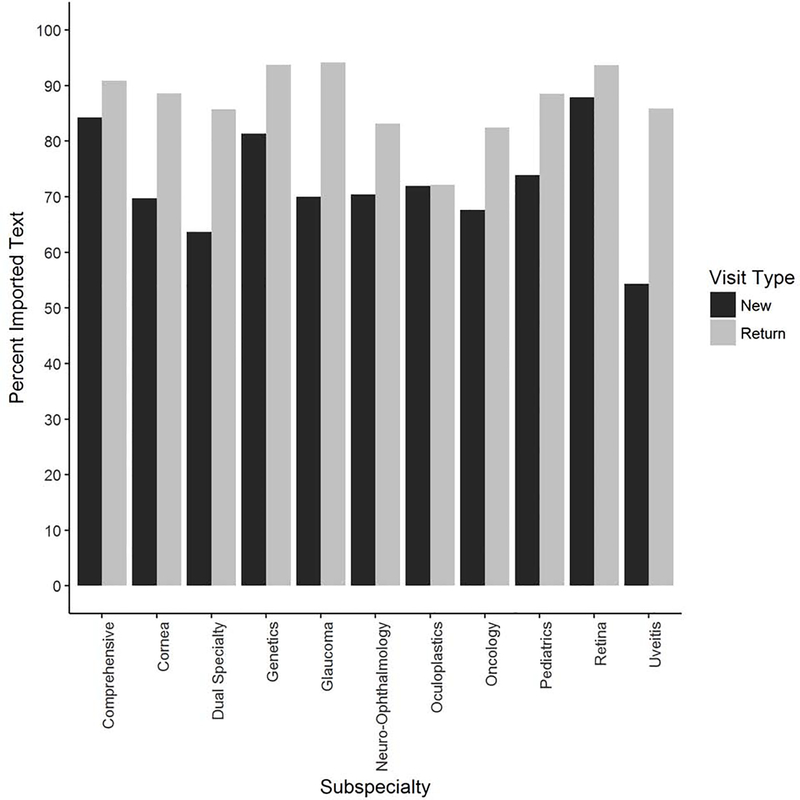

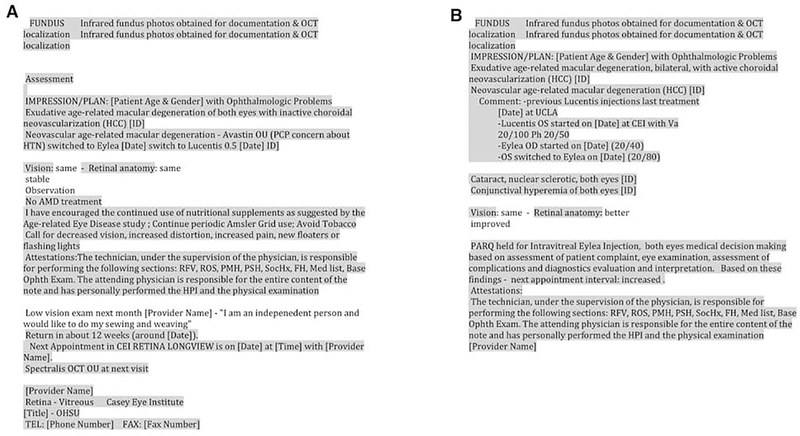

Figure 1 shows variation of percent imported text across subspecialties, with a minimum of 54% for new uveitis notes, and a maximum of 94% for return glaucoma notes. Figure 2 displays a representative example from two progress notes for different patients with the same diagnosis with imported text (i.e., copied or templated) highlighted.

Figure 1: Source of text in ophthalmology electronic health record notes.

Percentage of imported text is displayed for new and return patient visits by subspecialty. Imported entry included templated (smart phrases, outlines, macros, labs, medication list) or copied text. Templates may include imported text from the chart and previous notes.

Figure 2: Representative pages from two ophthalmology progress notes from different patients with the same diagnosis.

Imported text is highlighted in gray, and manually typed text is not highlighted.

Authorship

For the analysis of the proportion of notes authored by staff and ophthalmologists, there were 84,271 notes authored by 41 ophthalmologists along with their staff that met the inclusion criteria. Table 2 summarizes the analysis of text authorship. Providers authored 1,404 ± 1,658 characters (32%) of new patient note text and 798 ± 1,336 characters (17%) of return patient text. There were significant differences in the amount of text entered by providers and staff, with staff entering more text for both new (3,024 ± 2,335 characters, 68%, p<2.2e-16) and return patient visits (3,953 ± 4,410 characters, 83%, p<2.2e-16). On average, providers tended to have slightly more imported text than manually entered text for both new patient notes (832 ± 1,265 vs. 573 ± 669 characters) and return patient notes (576 ± 1,279 vs. 222 ± 258 characters). Technicians’ note text was predominantly imported for both new (2,521 ± 2,009 vs. 502 ± 603 characters) and return patients (3,754 ± 4,341 vs. 200 ± 316 characters).

Manual Review: Timing of Text Creation

Note composition and authorship of manually-reviewed notes largely reflected the results above. Imported text made up 482 ± 181 words (76%) of text in new patient notes and 628 ± 299 words (88%) in return patient notes (as compared to 77% and 91% in the large-scale analysis). For the 83 notes which had both provider- and staff-authored text, technicians wrote 350 ± 256 words (50%) of total text in new patient notes and 495 ± 312 words (65%) of total text in return patient notes (as opposed to 68% and 83% in the large-scale analysis).

Table 3 displays findings from more detailed analysis of provider EHR documentation practices during vs. after patient visits for the 119 manually reviewed notes with provider text. Large portions of note text were generated after the visit: 135 ± 182 words (35%) of new patient note text, and 101 ± 299 words (27%) of return patient note text, both of which were significantly greater than zero (p<2.2e-16).

Table 3: Timing of text creation by providers in electronic health record notes for ophthalmology office visits based on manual text analysis of 119 notes from 8 ophthalmology providers.

Mean word count is displayed by text time of creation and text source for new and return ophthalmology progress notes. Before encounter text was created before check-in time. During encounter text was created between check-in and checkout time. After encounter text was created after checkout time. Only notes where a provider generated text were analyzed. Comparisons were made between total text generated before, during, or after visits by encounter type and the hypothesis that zero text was entered during that time period using a one-sample t-test on data that had been log-transformed to convert log-normal distributions to normal distributions.

| Encounter Type | N | Timing | Text Source | Word Count, Mean ± SD (% Total) | P-Value |
|---|---|---|---|---|---|
| New Patient | 40 | Before | Total | 0 ± 0 (0) | NA |
| | | | Manual | 0 ± 0 (0) | |
| | | | Import | 0 ± 0 (0) | |
| | | | Template | 0 ± 0 (0) | |
| | | | Copied | 0 ± 0 (0) | |
| | | During | Total | 258 ± 251 (65) | 1.3e-13 |
| | | | Manual | 67 ± 75 (17) | |
| | | | Import | 192 ± 217 (49) | |
| | | | Template | 186 ± 211 (47) | |
| | | | Copied | 6 ± 19 (2) | |
| | | After | Total | 135 ± 182 (35) | 2.8e-11 |
| | | | Manual | 52 ± 64 (13) | |
| | | | Import | 83 ± 153 (21) | |
| | | | Template | 77 ± 151 (20) | |
| | | | Copied | 6 ± 24 (2) | |
| Return Patient | 79 | Before | Total | 24 ± 98 (7) | .024 |
| | | | Manual | 1 ± 4 (0) | |
| | | | Import | 23 ± 95 (6) | |
| | | | Template | 20 ± 79 (5) | |
| | | | Copied | 4 ± 27 (1) | |
| | | During | Total | 245 ± 235 (66) | 2.2e-16 |
| | | | Manual | 40 ± 37 (11) | |
| | | | Import | 204 ± 221 (55) | |
| | | | Template | 175 ± 195 (47) | |
| | | | Copied | 30 ± 67 (8) | |
| | | After | Total | 101 ± 299 (27) | 2.2e-16 |
| | | | Manual | 18 ± 23 (5) | |
| | | | Import | 83 ± 296 (22) | |
| | | | Template | 76 ± 296 (21) | |
| | | | Copied | 7 ± 23 (2) | |

DISCUSSION

Building on our prior work characterizing the time burden and redundancy of ophthalmic clinical documentation,20,21,31,34,35 this study presents three key findings: 1) the majority of EHR progress note text is copied or generated from pre-existing templates rather than manually entered; 2) staff enter a significant proportion of ophthalmology progress note text; and 3) a significant proportion of EHR text entered by providers is done after the patient leaves.

The first key finding is that imported text entry comprises the majority of clinical note text for both new and return patient notes, with most of the imported text coming from templates. Imported text comprised 77% of new patient notes and 91% of return patient notes (Table 1), which closely matches findings in internal medicine and orthopedics.28,36 While the percent of imported text varied by subspecialty, it comprised the majority of the note text in all subspecialties. Import technologies improve documentation efficiency, structure, and consistency, but may negatively impact note accuracy and patient safety.26,28,30,37 Specifically, templates and other import technologies may contribute to redundancy between notes (Figure 2). Import technologies may also lead to overly lengthy notes in which new and important clinical information is a small percentage of the note and difficult to identify.

Recent studies have shown that providers struggle to manage the amount of information available in the EHR.39,40 In clinical practice, reviewing notes with excessive templated text may contribute to increased time requirements.5,14 One survey found that 70% of physicians reported reviewing notes took more time with EHRs than with paper.41 In addition, reviewing notes largely comprised of imported text can be challenging for determining what actually happened during the clinical encounter, versus what was simply populated by import technology (comprehensive review of medications, detailed examinations, problem lists, etc.).8,42 This reliance on imported text is illustrated in Figure 2A, where the majority of the note, including the assessment and plan, is composed of imported text, making new information difficult to identify. Further, this imported text may propagate errors, particularly when large blocks of text are copied-forward.37

Although possible consequences of copy-pasted text are well documented,26 most imported text in our study was templated text (Table 1). While we were originally puzzled about where the small amount of copied text came from in New Patient notes, an informal post-hoc analysis of text copied into the 40 new patient notes included in our manual review revealed most of this copied text appeared to be from other structured parts of the patient record (e.g., medication lists, lab results) rather than other notes. The abundance of templated text may be driven by the use of EHR progress notes for assessment of quality and billing metrics, making it easier to enter text to meet regulations.5,18,20,30,43 While templated text may be more accurate than manual typing (e.g., importing a medication list), it may create other problems. For instance, one study found that providers use the medical record to create a “memory picture” of a returning patient.44 Use of templated text that results in similar notes for different patients (e.g., Figure 2B) may reduce the ability of the medical record to support this function, which negatively impacts the relationship between providers and individual patients. However, the same study noted that structure can be beneficial to the medical record.44 This suggests that templated text is useful for adding structure to the note, but not necessarily for generating large amounts of imported, similar text. The use of well-designed templates can create complete notes,45–47 but we believe excessive use of import technology can mask relevant data which might be more selectively reported when entered into notes manually. The use of non-progress note data fields for billing and quality metrics is one potential approach to restore the integrity and relevancy of the clinical progress note.

The second key finding is that staff enter a significant proportion of ophthalmology progress note text. In this study, staff wrote on average 68% of new patient notes, and 83% of return patient notes (Table 2). For the manual review, staff authored 50% of new patient notes and 65% of return patient notes. The large portions of notes authored by staff raise concerns about the ability to identify the author.48 In paper charts, authorship is often recognized by handwriting, but this is often not immediately apparent in EHRs. Providers commonly solve problems using “data-driven reasoning” accessing all available information to inform their decision making process, including authorship.49 EHR attribution data used to generate this study identifies authorship, but is often not available in real-time during patient visits, nor is it available for notes created in older versions of the EHR at our institution. It is also not available for electronically transferred notes from other EHR systems, or for printed or faxed copies of notes. Simple methods for identifying different authors, such as font color and type, may help provide greater transparency of note authorship.

Our final key finding is that providers continue to document notes after the clinic visit. Of the manually-reviewed text authored by providers, large portions (35% new, 27% return) were added after the visit (Table 3). This finding is consistent with a previously-published study reporting that providers spent 43% of time on the EHR outside of the clinical session, and with a previously-published study from our group showing that providers completed 1.6 hours out of 3.7 total EHR hours after clinic.5,20,25

Documentation after the patient encounter may result from the negative impact of EHRs on clinical efficiency, making it difficult to finish during the patient exam.5,14,50 Providers self-report that productivity and revenue have decreased after EHR implementation compared to paper notes.16 These efficiency and financial challenges coupled with documentation work following the clinic visit23,25 may negatively impact provider satisfaction and increase stress and burnout.23,40,51 Documentation following clinical visits may also have implications for patient safety due to inaccurate or incomplete recall, especially for exam findings or key discussion points. Our study showed that providers used similar amounts of both manual and imported text after the visit, suggesting that providers are still actively documenting after the visit rather than merely reviewing and electronically signing notes. Reliance on templated text to fill in details after-hours may produce notes that do not accurately represent what actually occurred during the clinical visit, and may lead to errors and medicolegal liability.8 The relationship between after-hours documentation and note accuracy warrants further study.

Interestingly, there was a wide range of documentation habits among the providers in our study, with some completing nearly all documentation during a clinic visit, and others completing the majority after the visit (data not shown). For example, among the providers with manually-reviewed study data, the provider that completed the highest percentage of documentation during the office visit also relied heavily on import technology (93% new patients, 95% return patients). Thus, while completion of charts in real-time appears achievable, it may require heavier use of imported text. Real-time completion of charts may also be limited by provider knowledge and efficiency with the EHR, length of patient examination and discussion, concerns for interference with the provider-patient relationship, and level of documentation detail required by certain subspecialties.5,20

We hope these study findings lend evidence to discussions of EHR documentation policy change. The Centers for Medicare and Medicaid Services (CMS) recently proposed payment changes to allow for lower documentation requirements, including focused history and examination components, and review of support staff documentation without re-entering it.52 While the financial consequences for such changes have yet to be determined, the adjustments in documentation requirements may allow for less reliance on lengthy templates, facilitate more transparency in authorship, and decrease documentation time.

This study had several limitations which future work could address. First, the study was retrospective and did not allow for causative conclusions. Second, both the large-scale analysis of ~125,000 progress notes and manual review of 120 notes occurred at a single academic study site, which may not be representative of all ophthalmologists in all settings. That said, our manual sample had largely similar note composition and authorship results to our larger data set, which suggests the sample was reflective of typical provider notes in this setting. Additional research is warranted to examine generalizability of study findings. This study did not account for scribes in the analysis, which warrants its own study. Third, this study was not designed to examine the utility or harm of different methods of inputting note text. Future studies are needed to test specific hypotheses, such as whether importing text enhances note structure but leads to greater rates of including outdated information. Finally, this study excluded office visits with trainees, which may have different patterns of documentation with respect to text source and time.36

Overall, these findings raise concerns about patterns of ophthalmology EHR documentation. The majority of text in new and return patient notes is created using imported text, which may lack accuracy and mask relevant data. Support staff author large portions of notes, but attribution data is often not readily visible. Providers complete large portions of documentation following clinical visits, which raises questions about accuracy of the note, efficiency of workflow, and quality of life for providers. We feel there are important opportunities for collaboration among ophthalmologists, system developers, informatics experts, and policymakers toward improving the utility of clinical documentation in EHRs.

This study analyzed and quantified the source of electronic health record (EHR) text documentation in ophthalmology progress notes using over 100,000 progress notes documented by 42 ophthalmologists. Results show that EHR documentation consists largely of imported text, is often authored by support staff, and is often written after the end of a visit. These findings raise questions about documentation accuracy and utility, and may have implications for quality of care and patient-provider relationships.

ACKNOWLEDGEMENTS

a. Funding/Support: Supported by unrestricted departmental funding from Research to Prevent Blindness, New York, NY; Supported by National Institutes of Health, Bethesda, MD [grant numbers R00LM12238, P30EY10572, and T15LM007088].

b. Financial Disclosures: MFC is an unpaid member of the Scientific Advisory Board for Clarity Medical Systems (Pleasanton, CA), a Consultant for Novartis (Basel, Switzerland), and an initial member of Inteleretina, LLC (Honolulu, HI). All other authors have no financial disclosures.


REFERENCES

- 1.Bates DW, Gawande AA. Improving Safety with Information Technology. N Engl J Med. 2003;348(25):2526–2534. [DOI] [PubMed] [Google Scholar]

- 2.Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Zheng K, Abraham J, Novak LL, Reynolds TL, Gettinger A. A Survey of the Literature on Unintended Consequences Associated with Health Information Technology: 2014–2015. Yearb Med Inform. 2016;(1):13–29. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Hanauer DA, Branford GL, Greenberg G, et al. Two-year longitudinal assessment of physicians’ perceptions after replacement of a longstanding homegrown electronic health record: does a J-curve of satisfaction really exist? J Am Med Inform Assoc. 2017;24(e1):e157–e165. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Chiang MF, Read-Brown S, Tu DC, et al. Evaluation of Electronic Health Record Implementation in Ophthalmology at an Academic Medical Center (An American Ophthalmological Society Thesis). Trans Am Ophthalmol Soc. 2013;111:70–92. [PMC free article] [PubMed] [Google Scholar]

- 6.Ehrlich JR, Michelotti M, Blachley TS, et al. A Two-Year Longitudinal Assessment of Ophthalmologists’ Perceptions after Implementing an Electronic Health Record System. Appl Clin Inform. 2016;7(4):930–945. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Pandit RR, Boland MV. The impact of an electronic health record transition on a glaucoma subspecialty practice. Ophthalmology. 2013;120(4):753–760. [DOI] [PubMed] [Google Scholar]

- 8.Sanders DS, Lattin DJ, Read-Brown S, et al. Electronic health record systems in ophthalmology: impact on clinical documentation. Ophthalmology. 2013;120(9):1745–1755. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. [DOI] [PubMed] [Google Scholar]

- 10. Goldzweig CL, Towfigh A, Maglione M, Shekelle PG. Costs and benefits of health information technology: new trends from the literature. Health Aff Proj Hope. 2009;28(2):w282–293.

- 11. Black AD, Car J, Pagliari C, et al. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med. 2011;8(1):e1000387.

- 12. Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff Proj Hope. 2011;30(3):464–471.

- 13. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med. 2014;160(1):48–54.

- 14. Baumann LA, Baker J, Elshaug AG. The impact of electronic health record systems on clinical documentation times: A systematic review. Health Policy Amst Neth. June 2018.

- 15. Singh RP, Bedi R, Li A, et al. The Practice Impact of Electronic Health Record System Implementation Within a Large Multispecialty Ophthalmic Practice. JAMA Ophthalmol. 2015;133(6):668–674.

- 16. Lim MC, Boland MV, McCannel CA, et al. Adoption of Electronic Health Records and Perceptions of Financial and Clinical Outcomes Among Ophthalmologists in the United States. JAMA Ophthalmol. December 2017.

- 17. Blumenthal D, Abrams M, Nuzum R. The Affordable Care Act at 5 Years. N Engl J Med. 2015;372(25):2451–2458.

- 18. Clough JD, McClellan M. Implementing MACRA: Implications for Physicians and for Physician Leadership. JAMA. 2016;315(22):2397–2398.

- 19. Cohen DJ, Dorr DA, Knierim K, et al. Primary Care Practices’ Abilities And Challenges In Using Electronic Health Record Data For Quality Improvement. Health Aff Proj Hope. 2018;37(4):635–643.

- 20. Read-Brown S, Hribar MR, Reznick LG, et al. Time Requirements for Electronic Health Record Use in an Academic Ophthalmology Center. JAMA Ophthalmol. 2017;135(11):1250–1257.

- 21. Goldstein IH, Hwang T, Gowrisankaran S, Bales R, Chiang MF, Hribar MR. Changes in Electronic Health Record Use Time and Documentation over the Course of a Decade. Ophthalmology. January 2019.

- 22. McDonald CJ, Callaghan FM, Weissman A, Goodwin RM, Mundkur M, Kuhn T. Use of internist’s free time by ambulatory care Electronic Medical Record systems. JAMA Intern Med. 2014;174(11):1860–1863.

- 23. Sinsky C, Colligan L, Li L, et al. Allocation of Physician Time in Ambulatory Practice: A Time and Motion Study in 4 Specialties. Ann Intern Med. 2016;165(11):753–760.

- 24. Tai-Seale M, Olson CW, Li J, et al. Electronic Health Record Logs Indicate That Physicians Split Time Evenly Between Seeing Patients And Desktop Medicine. Health Aff Proj Hope. 2017;36(4):655–662.

- 25. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: Primary Care Physician Workload Assessment Using EHR Event Log Data and Time-Motion Observations. Ann Fam Med. 2017;15(5):419–426.

- 26. Weis JM, Levy PC. Copy, Paste, and Cloned Notes in Electronic Health Records. Chest. 2014;145(3):632–638.

- 27. Lowry SZ, Ramaiah M, Prettyman SS, et al. Examining the Copy and Paste Function in the Use of Electronic Health Records. NIST Interagency/Internal Rep NISTIR 8166. January 2017. https://www.nist.gov/publications/examining-copy-and-paste-function-use-electronic-health-records. Accessed September 5, 2018.

- 28. Winn W, Shakir IA, Israel H, Cannada LK. The role of copy and paste function in orthopedic trauma progress notes. J Clin Orthop Trauma. 2017;8(1):76–81.

- 29. O’Donnell HC, Kaushal R, Barron Y, Callahan MA, Adelman RD, Siegler EL. Physicians’ attitudes towards copy and pasting in electronic note writing. J Gen Intern Med. 2009;24(1):63–68.

- 30. Siegler EL, Adelman R. Copy and paste: a remediable hazard of electronic health records. Am J Med. 2009;122(6):495–496.

- 31. Hribar MR, Rule A, Huang AE, et al. Redundancy of Progress Notes for Serial Office Visits. Ophthalmology. 2019;0(0).

- 32. Hirschtick RE. A piece of my mind. Copy-and-paste. JAMA. 2006;295(20):2335–2336.

- 33. R Core Team. The R Programming Language. Vienna, Austria: R Foundation for Statistical Computing; 2019. https://www.R-project.org/.

- 34. Goldstein IH, Hribar MR, Reznick LG, Chiang MF. Analysis of Total Time Requirements of Electronic Health Record Use by Ophthalmologists Using Secondary EHR Data. In: AMIA Annual Symposium Proceedings; 2018.

- 35. Huang AE, Hribar MR, Goldstein IH, et al. Clinical Documentation in Electronic Health Record Systems: Analysis of Similarity in Progress Notes from Consecutive Outpatient Ophthalmology Encounters. Ophthalmol Rev.

- 36. Wang MD, Khanna R, Najafi N. Characterizing the Source of Text in Electronic Health Record Progress Notes. JAMA Intern Med. 2017;177(8):1212–1213.

- 37. Silverstone DE, Lim MC, American Academy of Ophthalmology Medical Information Technology Committee. Ensuring information integrity in the electronic health record: the crisis and the challenge. Ophthalmology. 2014;121(2):435–437.

- 39. Rathert C, Porter TH, Mittler JN, Fleig-Palmer M. Seven years after Meaningful Use: Physicians’ and nurses’ experiences with electronic health records. Health Care Manage Rev. 2019;44(1):30–40.

- 40. Kroth PJ, Morioka-Douglas N, Veres S, et al. The electronic elephant in the room: Physicians and the electronic health record. JAMIA Open. 2018;1(1):49–56.

- 41. Jamoom EW, Heisey-Grove D, Yang N, Scanlon P. Physician Opinions about EHR Use by EHR Experience and by Whether the Practice had optimized its EHR Use. J Health Med Inform. 2016;7(4):1000240.

- 42. Embi PJ, Yackel TR, Logan JR, Bowen JL, Cooney TG, Gorman PN. Impacts of computerized physician documentation in a teaching hospital: perceptions of faculty and resident physicians. J Am Med Inform Assoc JAMIA. 2004;11(4):300–309.

- 43. Abelson R, Creswell J, Palmer G. Medicare Billing Rises at Hospitals With Electronic Records. The New York Times. https://www.nytimes.com/2012/09/22/business/medicare-billing-rises-at-hospitals-with-electronic-records.html. Published September 21, 2012. Accessed September 5, 2018.

- 44. Nygren E, Henriksson P. Reading the medical record. I. Analysis of physicians’ ways of reading the medical record. Comput Methods Programs Biomed. 1992;39(1–2):1–12.

- 45. Aylor M, Campbell EM, Winter C, Phillipi CA. Resident Notes in an Electronic Health Record: A Mixed-Methods Study Using a Standardized Intervention With Qualitative Analysis. Clin Pediatr (Phila). 2017;56(3):257–262.

- 46. Dean SM, Eickhoff JC, Bakel LA. The Effectiveness of a Bundled Intervention to Improve Resident Progress Notes in an Electronic Health Record (EHR). J Hosp Med. 2015;10(2):104–107.

- 47. Sharpe B, Bell J, Stewart E, et al. A Prescription for Note Bloat: An Effective Progress Note Template. J Hosp Med. 2018;13(6):378–382.

- 48. Krauss JC, Boonstra PS, Vantsevich AV, Friedman CP. Is the problem list in the eye of the beholder? An exploration of consistency across physicians. J Am Med Inform Assoc. 2016;23(5):859–865.

- 49. Patel VL, Arocha JF, Kaufman DR. A primer on aspects of cognition for medical informatics. J Am Med Inform Assoc JAMIA. 2001;8(4):324–343.

- 50. Chan P, Thyparampil PJ, Chiang MF. Accuracy and speed of electronic health record versus paper-based ophthalmic documentation strategies. Am J Ophthalmol. 2013;156(1):165–172.e2.

- 51. Babbott S, Manwell LB, Brown R, et al. Electronic medical records and physician stress in primary care: results from the MEMO Study. J Am Med Inform Assoc. 2014;21(e1):e100–106.

- 52. Centers for Medicare and Medicaid Services. Proposed Policy, Payment, and Quality Provisions Changes to the Medicare Physician Fee Schedule for Calendar Year 2020. cms.gov Newsroom. https://www.cms.gov/newsroom/fact-sheets/proposed-policy-payment-and-quality-provisions-changes-medicare-physician-fee-schedule-calendar-year-2. Published July 29, 2019. Accessed November 12, 2019.