Abstract

Tumor-free surgical margins are critical in breast-conserving surgery. In up to 38% of cases, however, patients undergo a second surgery because malignant cells are found at the margins of the excised resection specimen. Thus, advanced imaging tools are needed to ensure clear margins at the time of surgery. The objective of this study was to evaluate a random forest classifier that uses parameters derived from point-scanning label-free fluorescence lifetime imaging (FLIm) measurements of breast specimens to diagnose tumor at the resection margins, and to enable an intuitive visualization of the probabilistic classifier output on tissue specimens. FLIm data from fresh lumpectomy and mastectomy specimens from 18 patients were used in this study. Supervised training was based on a previously developed registration technique between autofluorescence imaging data and cross-sectional histology slides. A pathologist’s histology annotations provided the ground truth to distinguish between adipose, fibrous, and tumor tissue. The results demonstrate the ability of this approach to classify tumor with 89% sensitivity and 93% specificity and to rapidly (∼20 frames per second) overlay the probabilistic classifier output on excised breast specimens using an intuitive color scheme. Furthermore, we show an iterative imaging refinement that allows surgeons to switch between rapid scans with a customized, low spatial resolution to quickly cover the specimen and slower scans with enhanced resolution (400 μm per point measurement) in suspicious regions where more detail is required. In summary, this technique provides high diagnostic prediction accuracy, rapid acquisition, adaptive resolution, nondestructive probing, and facile interpretation of images, thus holding potential for clinical breast imaging based on label-free FLIm.

1. Introduction

Breast cancer is the most frequently diagnosed cancer and the leading cause of cancer death among females worldwide [1]. Breast-conserving therapy tends to be the preferred surgical procedure after an early breast cancer diagnosis. The surgeon attempts to excise the entire tumor volume including a surrounding layer (margin) of healthy tissue to minimize the risk of local recurrence. A major limiting factor for complete surgical resection is the surgeon’s ability to identify the complex tumor margins. On the one hand, excessive removal of normal tissue to ensure complete cancer removal can compromise the cosmetic outcome and impair functionality. On the other hand, insufficient removal of normal tissue can leave cancer behind. When margins are found to be positive, patients are recommended to undergo a second operation to achieve margins negative for malignancy, with the consequences of delayed initiation of subsequent therapy (e.g., radiation or chemotherapy) and excess healthcare resource utilization. Furthermore, numerous studies have demonstrated that re-excision is associated with a higher risk of recurrence [2,3].

Postoperative analysis of histopathological sections is common practice for surgical margin assessment. Preparation of the specimen involves fixing, sectioning, and staining with hematoxylin and eosin (H&E). Interpretation and treatment planning are then based on a microscopic assessment of the specimen. If the tumor extends to the surface (positive margin), re-excision is required. The surgeon correlates the histology findings with the excised specimen to find critical locations where tumor cells are close to the surface and to determine whether margin involvement is focal or extensive. However, correlating the histology with the excised specimen can be challenging and imprecise. Furthermore, standard histopathological assessment is time-consuming, causes stress for the patient, and carries potential risks of surgical infection, limiting the potential for rapid intraoperative consultation.

In this respect, there is an unmet need for enhanced intrasurgical imaging techniques that ensure clear margins directly at the operating table. Techniques such as intraoperative cytologic (touch-prep cytology) or pathologic (frozen-section) analysis can address some of the weaknesses of conventional histopathologic analysis. However, they are time-consuming, require special training, or have intrinsic variability in subjective interpretation. Several studies have indicated that optical techniques have the potential to overcome these limitations because they probe tissue non-destructively. Moreover, they provide information about physiological, chemical, and morphological changes associated with cancer. Recent studies have addressed breast cancer diagnostics using Raman spectroscopy [4,5], optical coherence tomography [6,7], photoacoustic tomography [8,9], fluorescence lifetime imaging [10,11], diffuse reflectance spectroscopy [12,13], and micro-elastography [14].

Although some of these techniques have achieved high accuracy, none has been widely adopted in routine clinical practice. All methods have their pitfalls, such as reduced sensitivity, insufficient acquisition speed to quickly cover a larger tissue area, the need for expert skills, or destructive characteristics [15]. Another important requirement for acceptance by the wider clinical community is real-time capability combined with an intuitive visualization of conclusive diagnostic information. A recent approach combined near-infrared (NIR) fluorescence with augmented real-time imaging and navigation to assess breast tumor margins in real time [16]. NIR fluorescence image-guided surgery has demonstrated great potential for rapid intraoperative visualization of tumors. However, this technique requires injection of a contrast agent, which exposes patients to the risk of allergic reactions, and the timing of the surgery needs to account for contrast agent delivery and uptake.

Label-free fluorescence lifetime imaging (FLIm) allows for rapid acquisition and processing of tissue diagnostic data derived from breast tumor specimens. Recent studies have demonstrated that FLIm images can be acquired either during surgery [17–19] or on excised specimens [10] to characterize biochemical features and associated pathologies. In particular, FLIm has been demonstrated to identify breast tumor regions [10,20], glioma tumors [21], oropharyngeal cancer [22], and atherosclerotic lesions [18]. In a recent study, the ability to identify breast tumors was demonstrated for small regions correlated visually with histology slides [10]. However, that study did not provide real-time visualization of the diagnostic information. Validation of classification algorithms and imaging technology demands a precise match between the optical measurements and the histopathology, which is considered the gold standard for the evaluation of surgical margins. In this paper, we pursue the next steps toward a practical implementation, focusing on (1) demonstrating real-time tissue diagnosis based on parameters derived from the fluorescence decay and (2) intuitive visualization of tissue type. Because fluorescence signatures may vary between patients, we transform the random forest classifier output into a probability distribution over classes and finally into a simple color scheme representing the tissue classes tumor, adipose, and fibrous. The visualization is based on the probabilistic classification output, encoding both the tissue type and the certainty of the prediction. Thus, regions that are identified with insufficient certainty are marked as such and can be considered by the surgeon. In addition, (3) we show an iterative imaging refinement that allows a surgeon to switch between rapid scans with a low spatial resolution and slower scans with enhanced resolution in suspicious regions where more detail is required. The supervised learning scheme and its evaluation were based on a well-characterized set of samples (N = 18 patients), leveraging a previously developed registration technique between autofluorescence imaging data and cross-sectional histology slides [23].

2. Materials and methods

2.1. Breast specimens

Eighteen tissue specimens were obtained from eighteen patients who underwent lumpectomy (n = 6) or mastectomy (n = 12) at the University of California Davis Health System (UCDHS) and were imaged within an hour of resection. All patients provided informed consent. For each patient, one piece of tissue with a diameter of 15 to 30 mm that was assumed to contain tumor was studied. Prior to imaging, a 405 nm continuous-wave (CW) laser diode was used to generate 4 to 8 clearly observable landmarks (fiducial points) on the tissue block to enable accurate registration between the video images and the corresponding histology slides [23]. Histology confirmed the diagnosis of invasive cancer in 14 specimens and of ductal carcinoma in situ (DCIS) in 4 specimens. Note that the specimens used in a recent image registration study [23] were also used in this study.

2.2. Instrumentation and imaging setup

A prototype time-domain multispectral time-resolved fluorescence spectroscopy (ms-TRFS) system [24] with an integrated aiming beam (Fig. 1(a)) was used in this study. The ms-TRFS system consisted of a fluorescence excitation source, a wavelength-selection module, and a fast-response fluorescence detector. A pulsed 355 nm laser (Teem Photonics, France) excited the fluorescence through a fiber (pulse duration: 650 ps; energy per pulse: 1.2 µJ; repetition rate: 120 Hz). The autofluorescence was spectrally resolved into four spectral bands (channels) using dichroic mirrors and bandpass filters: 390/40 nm (Channel 1), 470/28 nm (Channel 2), 542/28 nm (Channel 3), and 629/53 nm (Channel 4). These four bands were chosen to resolve the emission of collagen, NADH, FAD, and porphyrins, respectively. Each channel coupled the autofluorescence into an optical delay fiber, with the fiber length increasing from channel 1 to channel 4. This arrangement enabled temporal multiplexing of the spectral channels, so that the decay waveforms arrived sequentially, at distinct time points, at a single detector (microchannel plate photomultiplier tube, MCP-PMT, R3809U-50, Hamamatsu, 45 ps FWHM). The signal was then amplified (RF amplifier, AM-1607-3000, 3 GHz bandwidth, Miteq) and digitized (PXIe-5185, National Instruments, 12.5 GS/s sampling rate).
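Because of the temporal multiplexing, each digitized record for one laser pulse contains the four channel decays one after another. The minimal Python sketch below illustrates the demultiplexing step under stated assumptions: the per-channel start indices and the window length are hypothetical placeholders (in the instrument they follow from the delay-fiber lengths, which are not given here); only the 12.5 GS/s sampling rate is taken from the text.

```python
SAMPLE_RATE_HZ = 12.5e9                      # digitizer rate stated in the text
SAMPLE_PERIOD_PS = 1e12 / SAMPLE_RATE_HZ     # 80 ps between samples


def demultiplex(record, channel_offsets, window_len):
    """Cut one digitized pulse record into per-channel decay windows.

    record          -- samples of a single laser pulse from the detector chain
    channel_offsets -- hypothetical start index of each channel's decay
    window_len      -- hypothetical number of samples per decay window
    """
    windows = []
    for start in channel_offsets:
        stop = start + window_len
        if start < 0 or stop > len(record):
            raise ValueError("channel window lies outside the record")
        windows.append(record[start:stop])
    return windows


def window_start_times_ps(channel_offsets):
    """Arrival time (ps) of each channel window relative to the record start."""
    return [offset * SAMPLE_PERIOD_PS for offset in channel_offsets]


# Illustrative usage with made-up offsets (not the instrument's values).
record = list(range(400))
channels = demultiplex(record, [0, 100, 200, 300], 100)
print(len(channels), channels[2][0], window_start_times_ps([0, 100]))
```

Fixed windows are the simplest choice; a real implementation would also need to handle baseline subtraction and pulse-to-pulse timing jitter, which this sketch ignores.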

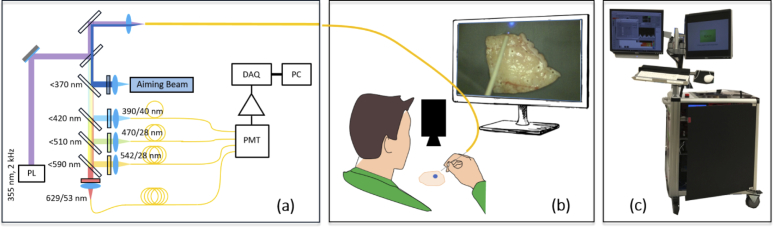

Fig. 1.

(a) Schematic of the ms-TRFS instrumentation used for imaging purposes. A single fiber is used for excitation and autofluorescence collection. PL: Pulsed Laser, DAQ: Data Acquisition, PMT: Photomultiplier. (b) Imaging setup. A hand-guided scan was performed for each specimen. An aiming beam is integrated into the optical path and serves as a marker to overlay fluorescence data on the video where the measurement was taken. (c) FLIm system and computers assembled on a cart equipped with two screens that was used to image the breast specimens.

Digitizing and deconvolution (see Sect. 2.6) on the one hand, and image processing, classification, and visualization on the other, were performed on two separate computers communicating via TCP/IP. Digitizing and deconvolution were performed on an Intel Celeron dual-core T3100 CPU with 3 GB RAM using LabVIEW (National Instruments). Image processing, classification, and visualization were implemented in C++ with OpenCV, running on an Intel Core i7-3632QM CPU (4 cores) with 16 GB of RAM. The whole system was assembled on a cart (Fig. 1(c)) for mobility. Matlab (MathWorks, Inc.) was used to register the autofluorescence imaging data with the cross-sectional histology slides [23].

2.3. Imaging protocol

For all specimens, the fiber probe was hand-guided during imaging (Fig. 1(b)). During the scan, the distance between the probe and the sample was kept at a few millimeters. If the fiber tip moved too far from the sample, the computer detected the diminished signal amplitude and triggered an acoustic alarm reminding the operator to move closer to the sample. If the fiber tip accidentally touched the sample, a reference measurement was performed after the scan; if deviations were detected, the fiber tip was cleaned and the scan was repeated. Scans were also repeated if the sample was accidentally moved. The scanning time per sample ranged from 2 to 6 minutes to cover the entire specimen, corresponding to a scanning time per area of approximately 0.4 to 0.5 s/mm². After imaging, specimens were placed in formalin and processed routinely for histologic analysis.

2.4. Aiming beam principle

An external camera (Point Grey Chameleon3 1.3 MP Color USB3 Vision with a Fujinon HF9HA-1B 2/3", 9 mm lens) captured the specimen during the scanning procedure. A 445 nm laser diode (TECBL-50G-440-USB, World Star Tech, Canada) was integrated into the optical path (Fig. 1(a)), delivering light to the measured area through the same fiber-optic probe. The incident power of the aiming beam was approximately 0.35 mW. The blue aiming beam served as an optical marker on the sample, highlighting the point where the current measurement was being carried out (Fig. 1(b)). It was tracked in the video image by transforming the image into the HSV color space and thresholding the hue and saturation channels [20]. Information obtained from the fluorescence measurements was successively overlaid at the tracked marker positions during the scan, creating an artificial color overlay on the sample.
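The aiming-beam tracking can be illustrated with a small pure-Python sketch: each pixel is converted to HSV and kept if its hue lies in a blue window and its saturation is high, and the centroid of the kept pixels is taken as the beam position. The hue window, the saturation threshold, and the centroid step are illustrative assumptions, not the values or steps of the original C++/OpenCV implementation.

```python
import colorsys


def track_aiming_beam(image, hue_range=(0.55, 0.75), min_saturation=0.5):
    """Estimate the aiming-beam position in an RGB frame.

    image -- 2-D list of (r, g, b) tuples with components in 0..255.
    Pixels whose hue (0..1 scale) lies in hue_range and whose saturation is at
    least min_saturation are treated as beam pixels; both thresholds are
    illustrative assumptions. Returns the (row, col) centroid or None.
    """
    row_sum = col_sum = count = 0
    for r_idx, row in enumerate(image):
        for c_idx, (r, g, b) in enumerate(row):
            h, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            if hue_range[0] <= h <= hue_range[1] and s >= min_saturation:
                row_sum += r_idx
                col_sum += c_idx
                count += 1
    if count == 0:
        return None  # no pixel passed the thresholds (beam not visible)
    return row_sum / count, col_sum / count


# Gray 5x5 frame with a short vertical blue streak in column 2.
frame = [[(128, 128, 128)] * 5 for _ in range(5)]
for r in (1, 2, 3):
    frame[r][2] = (0, 0, 255)
print(track_aiming_beam(frame))
```

Thresholding on saturation rejects gray and white tissue regions, where the hue is undefined or meaningless, so only strongly colored blue pixels contribute to the position estimate.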

2.5. Histological preparation and registration

After imaging, histology sections were cut parallel to the imaging plane at 4 μm thickness using a microtome. The first continuous large section covering the whole area of the specimen was stained with hematoxylin and eosin (H&E) and scanned with an Aperio Digital Pathology Slide Scanner (Leica Biosystems). A pathologist assessed the slides and delineated regions of fibrous tissue, normal ducts and lobules, fat, invasive carcinoma, and ductal carcinoma in situ using Aperio ImageScope (Leica Biosystems). The pathologist’s annotations (delineations and tissue labels) were automatically exported and further processed with a custom-made tool that registers the fluorescence data with the histology annotations. This allowed histological labels to be assigned to the fluorescence signatures, yielding a well-annotated training set for the classifier and a basis for validating its performance.

The registration tool relies on a previously presented method for registering data acquired with a point-scanning spectroscopic imaging technique from fresh surgical tissue specimen blocks with the corresponding histological sections [23]. The laser marks, generated with a 405 nm CW laser diode, served as fiducial markers and were visible in both the camera image and the histology slide. The registration pipeline was built as a two-stage process. First, a rough alignment was obtained from a rigid registration that minimizes the distances between corresponding laser marks in the histology and camera images. Second, piecewise shape matching was used to match the outer shape of the specimen in the camera image and the histology section and to refine the initial rigid registration [23].
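The first, rigid stage of the registration can be sketched as a least-squares fit of a rotation and translation that maps the laser-mark coordinates in the histology image onto those in the camera image. The closed-form 2-D solution below is a standard technique used here for illustration; it is an assumption that [23] solves the rigid stage this way, and the piecewise shape-matching refinement is not covered.

```python
import math


def rigid_register_2d(src, dst):
    """Least-squares rigid (rotation + translation) fit mapping src -> dst.

    src, dst -- equal-length lists of (x, y) landmark coordinates, e.g. laser
    marks in the histology image (src) and in the camera image (dst).
    Returns (theta, tx, ty) such that dst ~= R(theta) @ src + (tx, ty).
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two corresponding landmarks")
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Cross- and dot-product sums of the centered point sets give the optimal angle.
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        num += px * qy - py * qx
        den += px * qx + py * qy
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


def apply_rigid(theta, tx, ty, point):
    """Apply the rigid transform to a single (x, y) point."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return c * x - s * y + tx, s * x + c * y + ty


src = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0), (7.0, 3.0)]   # hypothetical laser marks
dst = [apply_rigid(0.3, 2.0, -1.0, p) for p in src]       # same marks, moved
print(rigid_register_2d(src, dst))
```

With noisy, real landmark positions the fit no longer reproduces the marks exactly; the residual distances then give a rough indication of the rigid-stage error that the shape-matching stage has to correct.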

2.6. Deconvolution and parameter extraction

Mathematically, the measured autofluorescence response $y$ of tissue to an excitation laser pulse can be modeled as the convolution of the fluorescence impulse response function (fIRF, $h$) with the instrument impulse response function (iIRF, $I$), which stems from delay components and modal dispersion. Thus,

$$y(t_k) = \sum_{i=0}^{k} I(t_{k-i})\, h(t_i) + \varepsilon(t_k), \tag{1}$$

where $t_k = k\,\delta t$ with $k = 0, \dots, N-1$ are discrete time points for $N$ uniform sampling intervals $\delta t$, and $\varepsilon$ is an additive white noise component. To estimate $h$, a constrained Laguerre model was used [25],

$$h(t_k) = \sum_{l=0}^{L-1} c_l\, b_l(t_k), \tag{2}$$

where $\{b_l\}_{l=0}^{L-1}$ is an ordered set of Laguerre basis functions with maximum order $L$ and expansion coefficients $c_l$, with the constraints that $h$ is strictly convex, positive, and monotonically decreasing for … . In this study, we used $L = 12$ and … .
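Eqs. (1) and (2) can be illustrated with a small, unconstrained least-squares Laguerre deconvolution in pure Python. This is a simplified sketch: it omits the positivity, monotonicity, and convexity constraints of the constrained method [25], and the scale parameter `alpha`, the basis size, and the synthetic decay and iIRF in the example are illustrative assumptions. The discrete Laguerre functions use the standard closed-form expression for orthonormal discrete Laguerre functions with 0 < alpha < 1.

```python
import math


def laguerre_basis(alpha, order, n_samples):
    """Orthonormal discrete Laguerre functions b_l(k), l < order, k < n_samples."""
    basis = []
    for l in range(order):
        b = []
        for k in range(n_samples):
            acc = sum((-1) ** i * math.comb(k, i) * math.comb(l, i)
                      * alpha ** (l - i) * (1 - alpha) ** i
                      for i in range(min(k, l) + 1))
            b.append(alpha ** ((k - l) / 2) * math.sqrt(1 - alpha) * acc)
        basis.append(b)
    return basis


def convolve_causal(iirf, h):
    """Eq. (1) without noise: y(t_k) = sum_{i<=k} I(t_{k-i}) h(t_i)."""
    return [sum(iirf[k - i] * h[i] for i in range(k + 1) if k - i < len(iirf))
            for k in range(len(h))]


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def laguerre_deconvolve(y, iirf, alpha, order):
    """Unconstrained least-squares fit of the Laguerre coefficients c_l (Eq. (2))."""
    basis = laguerre_basis(alpha, order, len(y))
    cols = [convolve_causal(iirf, b) for b in basis]          # I * b_l
    gram = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    rhs = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    coeffs = solve_linear(gram, rhs)                          # normal equations
    h = [sum(c * b[k] for c, b in zip(coeffs, basis)) for k in range(len(y))]
    return coeffs, h


# Synthetic check: a single-exponential decay equals b_0 / sqrt(1 - alpha)
# when alpha = exp(-2 / tau), so the fit should recover it.
tau = 10.0
alpha = math.exp(-2.0 / tau)
h_true = [math.exp(-k / tau) for k in range(100)]
iirf = [math.exp(-((k - 3) / 1.5) ** 2) for k in range(8)]
y = convolve_causal(iirf, h_true)
coeffs, h_fit = laguerre_deconvolve(y, iirf, alpha, order=4)
print(max(abs(a - b) for a, b in zip(h_fit, h_true)))
```

Expanding h in a small Laguerre basis turns the ill-posed deconvolution into a well-conditioned fit of a few coefficients; the constraints in [25] additionally keep the estimated decay physically plausible when the data are noisy.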

From the decay profile $h$, we extract the average lifetime $\tau_{\mathrm{avg}}$ and the intensity $F$ for each of the four spectral channels,

$$\tau_{\mathrm{avg}} = \frac{\sum_{k=0}^{N-1} t_k\, h(t_k)}{\sum_{k=0}^{N-1} h(t_k)} \tag{3}$$

and

$$F = \sum_{k=0}^{N-1} h(t_k). \tag{4}$$

To make the intensity parameter insensitive to extrinsic factors, the intensity ratios

$$F_{\mathrm{r},j} = \frac{F_j}{\sum_{i=1}^{4} F_i} \tag{5}$$

were used, where $j = 1, \dots, 4$ specifies the spectral channel. The feature space serving as input for the classifier had 56 dimensions: 12 Laguerre coefficients for each of the 4 spectral channels (48 features), plus the 4 average lifetimes and the 4 intensity ratios.
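Eqs. (3) to (5) and the assembly of the 56-dimensional feature vector can be sketched as follows, assuming each channel's deconvolved decay is sampled with a uniform step `dt` and comes with its 12 Laguerre coefficients. The ordering of the features within the vector is an illustrative assumption, and the example input is made up.

```python
def average_lifetime(h, dt):
    """Eq. (3): tau_avg = sum(t_k h(t_k)) / sum(h(t_k)), with t_k = k * dt."""
    return sum(k * dt * v for k, v in enumerate(h)) / sum(h)


def intensity(h):
    """Eq. (4): discrete intensity, the sum of h(t_k)."""
    return sum(h)


def feature_vector(channels, dt):
    """Assemble the 56-dimensional feature vector for one point measurement.

    channels -- four (laguerre_coeffs, h) pairs, one per spectral channel,
                each with 12 Laguerre coefficients (assumed layout).
    """
    if len(channels) != 4:
        raise ValueError("expected four spectral channels")
    coeffs = [c for laguerre, _ in channels for c in laguerre]
    lifetimes = [average_lifetime(h, dt) for _, h in channels]
    intensities = [intensity(h) for _, h in channels]
    total = sum(intensities)
    ratios = [f / total for f in intensities]   # Eq. (5)
    features = coeffs + lifetimes + ratios
    if len(features) != 56:
        raise ValueError("expected 12 Laguerre coefficients per channel")
    return features


# Illustrative input: flat two-sample decays with channel intensities 2, 4, 6, 8.
channels = [([0.0] * 12, [float(j + 1)] * 2) for j in range(4)]
features = feature_vector(channels, dt=1.0)
print(len(features), features[48:52], features[52:])
```

Normalizing by the summed intensity in Eq. (5) cancels any factor that scales all four channels equally, such as excitation power or probe-to-sample distance, which is why ratios rather than absolute intensities enter the feature vector.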

2.7. Classifier setup and training

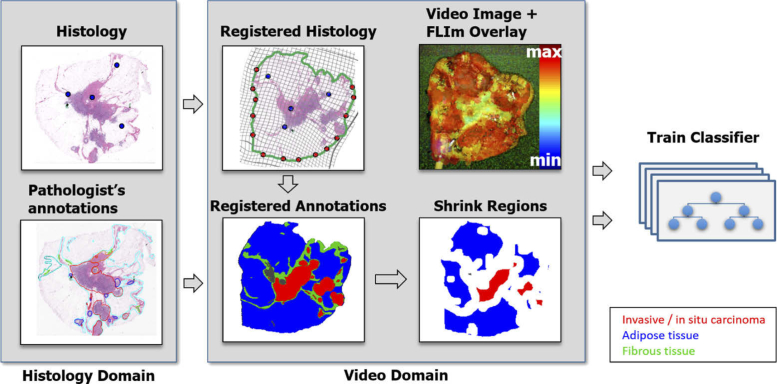

The training pipeline is illustrated in Fig. 2. The semiautomatic histology registration procedure was used to map the histology slide and the pathologist’s annotations onto the video image, serving as ground truth for training. Because of potential registration errors [23], the delineated regions of tumor, fibrous, and adipose tissue were shrunk by morphological erosion, and the laser markers were excluded from the training set. The numbers of pixels before and after morphological erosion are given in Table 1. If the markers are evenly distributed, possible registration errors do not exceed the 0.5 mm erosion distance and therefore do not impact the quality of the training data [23]. A random forest classifier was trained with the 56-dimensional feature vectors of the point measurements located within the eroded regions.

Fig. 2.

The supervised training pipeline involves registration of cross-sectional histology with the video image using a hybrid registration method [23]. Pathologist tracings from the histology are mapped to the video domain. In order to account for possible registration errors, regions are narrowed by 0.5 mm. Fluorescence parameters from the resulting regions are fed into a random forest classifier.
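The erosion step that shrinks each annotated region can be sketched with a disk-shaped structuring element. The conversion from the 0.5 mm margin to pixels depends on the video magnification, which is not stated; `MM_PER_PIXEL` below is a hypothetical value, and pixels outside the image are treated as background.

```python
MM_PER_PIXEL = 0.1                                # hypothetical video pixel size
EROSION_RADIUS_PX = round(0.5 / MM_PER_PIXEL)     # 0.5 mm margin from Fig. 2


def erode(mask, radius_px):
    """Binary erosion with a disk-shaped structuring element.

    mask      -- 2-D list of 0/1 labels (1 = pixel belongs to the tissue class)
    radius_px -- erosion radius in pixels; pixels outside the image count as 0
    """
    rows, cols = len(mask), len(mask[0])
    offsets = [(dr, dc)
               for dr in range(-radius_px, radius_px + 1)
               for dc in range(-radius_px, radius_px + 1)
               if dr * dr + dc * dc <= radius_px * radius_px]
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Keep the pixel only if the whole disk around it lies inside the region.
            out[r][c] = int(all(
                0 <= r + dr < rows and 0 <= c + dc < cols and mask[r + dr][c + dc]
                for dr, dc in offsets))
    return out


region = [[1] * 7 for _ in range(7)]
print(sum(map(sum, erode(region, 1))), sum(map(sum, erode(region, 2))))  # 25 9
```

A pixel survives only if every pixel within the erosion radius carries the same label, so any point measurement that a registration error of up to that radius could have shifted across a region boundary is discarded from training.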

Table 1. Number of pixels per tissue type obtained from the registered histology in the video domain, after and before morphological erosion.

| Case no. | Tumor (after erosion) | Fibrous (after erosion) | Adipose (after erosion) | Tumor (before erosion) | Fibrous (before erosion) | Adipose (before erosion) |
|---|---|---|---|---|---|---|
| 1 | 15866 | 621 | 2127 | 21715 | 3975 | 8023 |
| 2 | 32604 | 0 | 0 | 37836 | 697 | 614 |
| 3 | 16759 | 7486 | 1863 | 22057 | 16205 | 5578 |
| 4 | 1161 | 1406 | 4864 | 3120 | 9610 | 12444 |
| 5 | 3045 | 26 | 19436 | 6862 | 6192 | 32247 |
| 6 | 1085 | 79 | 12207 | 2285 | 4181 | 23233 |
| 7 | 3817 | 2469 | 5790 | 5429 | 13632 | 12905 |
| 8 | 2215 | 506 | 4969 | 3402 | 1262 | 7923 |
| 9 | 1805 | 2915 | 1454 | 3624 | 7175 | 3490 |
| 10 | 10996 | 231 | 1843 | 13273 | 1056 | 5104 |
| 11 | 18434 | 7 | 1172 | 20172 | 436 | 2332 |
| 12 | 0 | 5520 | 12570 | 0 | 10726 | 18342 |
| 13 | 11272 | 214 | 10323 | 13149 | 2480 | 14621 |
| 14 | 3356 | 841 | 11049 | 5574 | 3178 | 14080 |
| 15 | 11440 | 1810 | 9844 | 14710 | 5698 | 13234 |
| 16 | 5079 | 2587 | 11277 | 6156 | 6232 | 15411 |
| 17 | 6068 | 1300 | 8438 | 8321 | 5421 | 11796 |
| 18 | 0 | 1216 | 29564 | 0 | 6678 | 40977 |

Random forest [26] is an ensemble of classification or regression trees, each induced from a bootstrap sample of the training data and using a random subset of features at each candidate split. Predictions are derived by averaging (regression) or majority voting (classification) over all individual trees, which makes random forests intrinsically suited for multi-class problems. Decorrelating the trees through randomized node optimization and bagging has been shown to reduce variance and sensitivity to overfitting. The number of trees and the maximum tree depth were set to 100 and 10, respectively, to limit the size and complexity of the forest. At each split, … features were considered, where N denotes the total number of features. The classes were balanced according to their sample sizes.
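One common way to balance classes according to their sample size is to weight each class inversely to its number of training samples, w_c = n / (K n_c) for K classes and n samples in total. Whether the original OpenCV-based implementation used exactly this weighting is not stated, so the sketch below is an assumption, and the counts in the example are made up.

```python
def balanced_class_weights(counts):
    """Weight each class inversely to its sample count: w_c = n / (K * n_c).

    With these weights every class contributes the same total weight n / K.
    """
    if any(n_c <= 0 for n_c in counts.values()):
        raise ValueError("every class needs at least one sample")
    n = sum(counts.values())
    k = len(counts)
    return {cls: n / (k * n_c) for cls, n_c in counts.items()}


weights = balanced_class_weights({"tumor": 500, "fibrous": 100, "adipose": 400})
print(weights)
```

Without such weighting, the abundant adipose points in many specimens would dominate the impurity-based split criterion, and the forest would be biased toward the majority class.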

For training and validation, a leave-one-subject-out strategy was pursued: data from a single patient were left out of the training set, and the classifier was then tested on that held-out specimen. This procedure was repeated for all specimens. For each FLIm point measurement, the output of the random forest was compared against the registered histology mapping. Because the classes (tumor, fibrous, and adipose tissue) are imbalanced, the Matthews correlation coefficient (MCC) [27] and receiver operating characteristic (ROC) curves were evaluated over all point measurements to assess the predictive power of the classifier.
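The leave-one-subject-out loop and the per-class (one-vs-rest) Matthews correlation coefficient can be sketched as follows. The training and prediction steps are left out, and reading the per-class MCC values reported in Sect. 3.2 as one-vs-rest scores is an assumption; the patient IDs and labels in the example are hypothetical.

```python
import math


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per patient."""
    for subject in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != subject]
        test = [i for i, s in enumerate(subject_ids) if s == subject]
        yield subject, train, test


def mcc_one_vs_rest(y_true, y_pred, positive):
    """Binary MCC treating `positive` as the positive class and all others as negative."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


# Example: point measurements from three hypothetical patients.
ids = [1, 1, 2, 3, 3]
for subject, train_idx, test_idx in leave_one_subject_out(ids):
    print(subject, train_idx, test_idx)
print(mcc_one_vs_rest(["tumor", "adipose", "tumor", "fibrous"],
                      ["tumor", "adipose", "fibrous", "fibrous"], "tumor"))
```

Splitting by patient rather than by point measurement keeps all points of the tested specimen out of training, so the evaluation reflects performance on an unseen patient.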

2.8. Visualization and overlay refinement

The output of the random forest classifier was transformed into a simple color scheme representing the probability that the point interrogated by the fiber optic was adipose, fibrous, or tumor tissue. Unlike multilayer perceptrons and other variants of artificial neural networks, random forests do not inherently provide posterior class probability estimates. However, their outputs can be transformed directly into a probability distribution over classes by averaging the unweighted class votes of the trees in the forest, where each tree votes for a single class [28]. This is simpler than for Support Vector Machines, whose outputs have to be calibrated into a probability distribution over classes, e.g., using Platt scaling [29]. The visualization scheme encodes tissue types in different colors: tumor in red, fibrous in green, and adipose in blue. The interrogated position was colored according to

$$(R,\ G,\ B) = \left(\delta_{t}\, p_{t},\ \delta_{f}\, p_{f},\ \delta_{a}\, p_{a}\right) \tag{6}$$

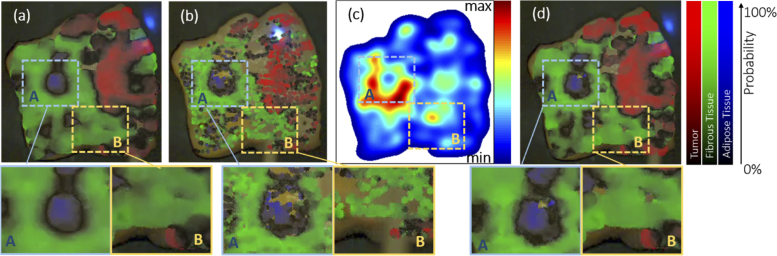

where denote the posterior class probabilities of tumor, fibrous and adipose tissue types and , if the majority of trees voted for class i and in any other case. Thus, the output color encodes the type of tissue in color and the certainty of the probabilistic output in saturation (see Fig. 3 ). If the output is close to black , the feature vector is close to decision borders within the feature space. The visible aiming beam, delivered through the optical probe, enables creating the color overlay scheme as described in Eq. (6) on the video in real-time. However, inhomogeneous tissue properties and different wavelengths of the excitation light and the aiming beam result in a difference between the aiming beam size and the area over which fluorescence is measured [20,30]. In a recent study [20], our group described a strategy where the size of the element is linearly scaled with the scanning speed providing an optimal coverage but at the cost of reduced spatial resolution. The size of region that is colored per point measurement according to the FLIm measurement constitutes a trade-off between resolution and scanning speed. If the size is chosen too large (Fig. 3(a)), the sample can be covered quickly but the overlay becomes blurred and delineation might become imprecise. Contrarily, if the size is chosen small, scanning takes a longer time (Fig. 3(b)).

Fig. 3.

Augmented classification overlay with a fixed large (a) and a fixed small (b) element size. If the element is too large, the overlay becomes blurred and imprecise. Conversely, a small diameter leads to poor coverage of the sample. The adaptive refinement (d) is based on the sample accumulation (Eq. (7)), which quantifies the local sampling density shown in (c). It acquires a high level of detail in densely sampled regions (such as area A) and maintains good coverage in sparsely sampled regions (such as area B).
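The conversion from tree votes to posterior probabilities and then to an overlay color can be sketched as follows. Averaging unweighted votes follows the description in Sect. 2.8; the 8-bit color mapping, which scales the channel of the majority class by its posterior probability so that less certain outputs appear darker, is an assumed reading of Eq. (6) and may differ from the original rendering.

```python
CLASS_COLORS = {"tumor": (255, 0, 0), "fibrous": (0, 255, 0), "adipose": (0, 0, 255)}


def vote_probabilities(tree_votes, classes=tuple(CLASS_COLORS)):
    """Posterior estimate per class: fraction of trees voting for that class."""
    n = len(tree_votes)
    return {c: sum(1 for v in tree_votes if v == c) / n for c in classes}


def overlay_color(probs):
    """Color of the majority class, dimmed by its posterior probability (assumed mapping)."""
    winner = max(probs, key=probs.get)          # class with the most votes
    return tuple(round(probs[winner] * channel) for channel in CLASS_COLORS[winner])


votes = ["tumor"] * 80 + ["fibrous"] * 20        # e.g. votes of 100 trees
probs = vote_probabilities(votes)
print(probs, overlay_color(probs))
```

Because only the winning class contributes a color channel, a confident prediction appears as a bright pure color, whereas a split vote near a decision border yields a dim, dark color.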

Here, we pursue a different strategy aimed at a local refinement of resolution in areas of interest. The diameter $d$ of the circular element is adjusted according to the local sampling density. The scan starts with $d = s_{\max} d_c$, where $d_c$ is the fiber core diameter and $s_{\max}$ the maximum scaling factor; the choice of $s_{\max}$ thus defines the initial size of the element. If the fiber probes an area of interest for a longer time period, $d$ is reduced automatically to increase the spatial resolution locally. Accumulating the sampling points

| (7) |

approximates the number of sampling points in the local neighborhood of the current probing position $\mathbf{x}$, where $\mathbf{x}_i$ are the sampling points that have already been scanned with the probe, and

| (8) |

The diameter

| (9) |

thus depends on the local sampling density where

| (10) |

is the local refinement level. The sampling step size specifies the number of samples in the local neighborhood required to reach the next refinement level. This refinement strategy enables an initial rough scan of the sample. In inhomogeneous regions, the color scheme produces a black output indicating a mixed tissue composition. Rescanning such an ambiguous region triggers a local refinement and increases the local spatial resolution. This principle is illustrated in Fig. 3: based on the local sampling point density shown in Fig. 3(c), the refinement (Fig. 3(d)) provides complete coverage of the sample while also exhibiting local detail in regions with a high sampling density.
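The density-driven refinement can be sketched as follows. Because the exact forms of Eqs. (7) to (10) are not reproduced here, the kernel (a hard count of earlier samples within a fixed radius), the level rule (one level per `step` samples), and the linear decrease of the scaling factor down to 1 are all assumptions made for illustration, as are the example values.

```python
import math


def local_sample_count(x, previous_points, radius):
    """Approximate local sampling density: earlier samples within `radius` of x."""
    return sum(1 for p in previous_points if math.dist(x, p) <= radius)


def element_diameter(x, previous_points, d_core, s_max, step, radius):
    """Hypothetical density-to-diameter rule for the overlay element.

    level = count // step; the scaling factor starts at s_max and decreases by
    one per level until the element equals the fiber core diameter d_core.
    """
    level = local_sample_count(x, previous_points, radius) // step
    scale = max(1, s_max - level)
    return scale * d_core


# Values chosen only for illustration: d_core = 0.4 mm, s_max = 6, step = 10, radius = 1 mm.
scanned = [(0.0, 0.0)] * 25 + [(5.0, 5.0)] * 3
for pos in [(10.0, 10.0), (5.0, 5.0), (0.0, 0.0)]:
    print(pos, element_diameter(pos, scanned, 0.4, 6, 10, 1.0))
```

Sparsely visited positions keep the large initial element for fast coverage, whereas repeatedly rescanned positions shrink toward the fiber core diameter, which is the behavior shown in Fig. 3(c) and (d).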

3. Results

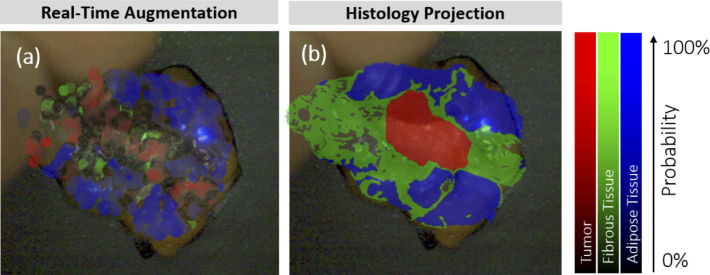

3.1. Visualization

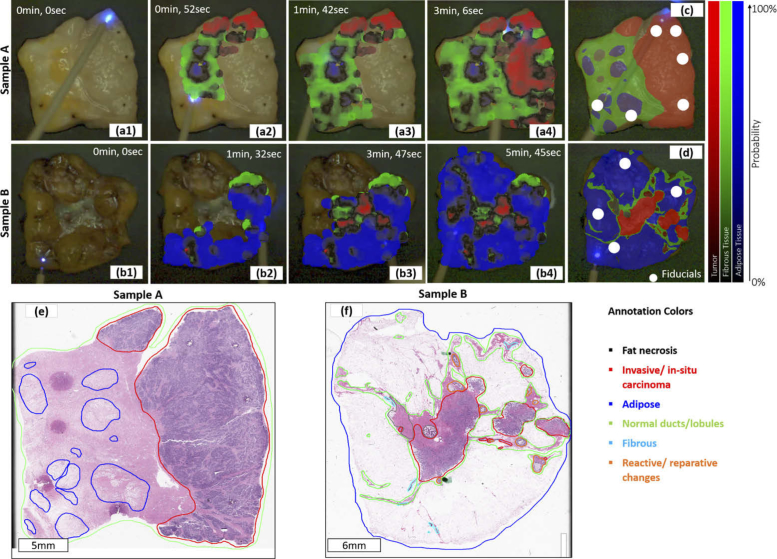

Figure 4 depicts the scanning process for two specimens, including the color overlay at different time points, the histology slide with delineated regions, and the histology projection registered to the video domain. The final overlay shows a high level of agreement with the registered histology findings. Note that the fiducial markers (marked with white circles in the histology projection) were generated with a 405 nm CW laser diode as clearly observable landmarks on the tissue block to enable registration with the video image [23]. As previously reported, the autofluorescence signatures of cauterized or burnt tissue can be considerably altered [31–33]. Thus, the regions of the laser markers used as fiducials for registration (white circles in Fig. 4(c) and (e)) were excluded from the training and validation sets. The tissue composition revealed by the color scheme is confirmed by the pathologist’s registered histology annotations, the ‘gold standard’ for the evaluation of surgical margins. The color scheme shows lower probabilities at borders between two different tissue types. This is due to a mixed tissue composition within the area over which fluorescence is measured, which pushes the feature vector toward the decision boundaries. The refinement was started with a maximum scaling factor of …, which corresponds to a diameter of … (Eq. (9)), thus covering 36 times the area of the finest level. The system ran at approximately 20 frames per second, with image processing as the clear bottleneck. Beam tracking and image preprocessing were the most demanding steps and took ≈70% of the time, while classification and visualization accounted for only 9% and 7%, respectively. The remaining ≈14% was spent on TCP/IP communication and image acquisition.
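As a rough worked example, and assuming the reported shares refer to the total processing time per frame at 20 frames per second, the per-frame budget breaks down as:

$$
\begin{aligned}
t_{\text{frame}} &\approx \frac{1}{20\ \text{s}^{-1}} = 50\ \text{ms},\\
t_{\text{tracking + preprocessing}} &\approx 0.70 \times 50\ \text{ms} = 35\ \text{ms},\\
t_{\text{classification}} &\approx 0.09 \times 50\ \text{ms} = 4.5\ \text{ms},\\
t_{\text{visualization}} &\approx 0.07 \times 50\ \text{ms} = 3.5\ \text{ms},\\
t_{\text{TCP/IP + acquisition}} &\approx 0.14 \times 50\ \text{ms} = 7\ \text{ms}.
\end{aligned}
$$

Under this assumption, speeding up beam tracking would raise the frame rate far more than optimizing the classifier.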

Fig. 4.

Two examples (invasive carcinoma) of the augmented real-time overlay for different scanning times (sample A: a1-a4 and sample B: b1-b4, see supplemental videos Visualization 1 (7.4MB, mp4) and Visualization 2 (11MB, mp4) ), corresponding H&E histology slides with the pathologist’s annotations (sample A: e and sample B: f) and the corresponding registered annotations, mapped to the video domain (sample A: c and sample B: d) and providing the ground truth obtained from histology overlaid onto the video image.

3.2. Classification accuracy

For the three-class problem tumor vs. adipose vs. fibrous based on the Random Forest classifier applied to 18 samples, the Matthews correlation coefficient was 0.86 for identifying tumor, 0.81 for adipose, and 0.70 for fibrous tissue.

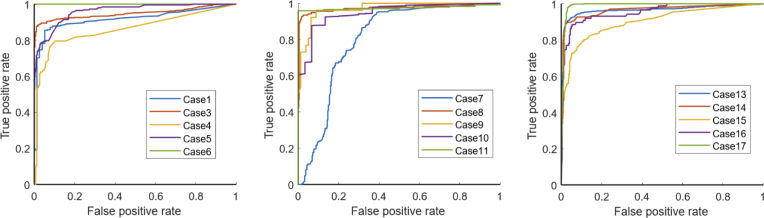

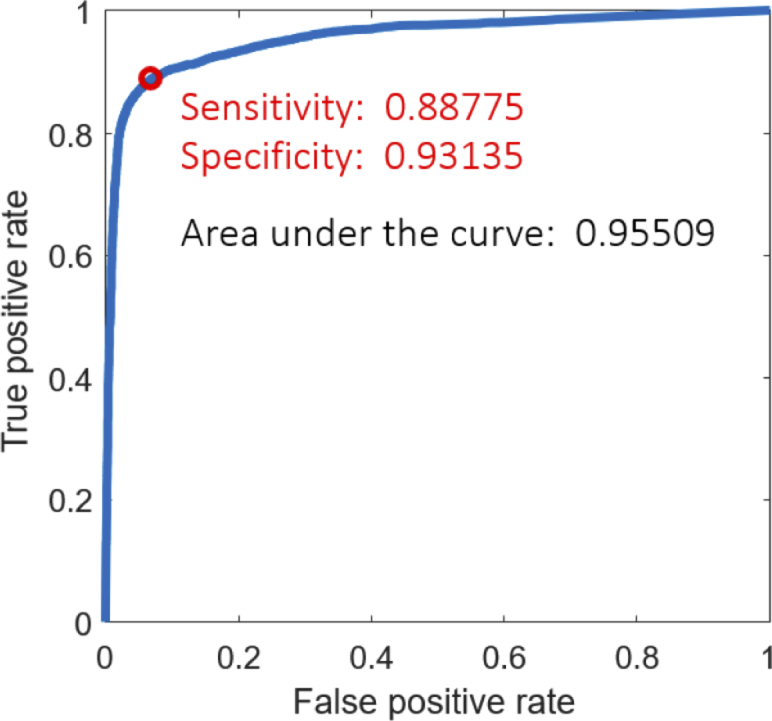

Figure 5 depicts the receiver operating characteristic (ROC) curve for the two-class problem (tumor vs. no tumor). The area under the ROC curve was 0.96 and the Matthews correlation coefficient was 0.86, corroborating a well-fitted classification model. The ROC curve shows the performance as a trade-off between sensitivity and specificity. Assuming equal costs for false negative and false positive predictions, the classifier yields 88.78% sensitivity and 93.14% specificity. A case-wise analysis of the classifier performance is given in Fig. 6. Samples 2, 12, and 18 were homogeneous tissue samples (tumor only or no tumor only) that were classified correctly; they were excluded from the ROC curve analysis. A high consistency can be seen across the samples, indicating stable classifier performance. However, a performance loss was observed for one case (case 7), whose ROC curve clearly stands out with a shift toward an increased false positive rate. The augmented overlay and the corresponding histology projection of case 7 are depicted in Fig. 7. On the left side of the specimen, fibrous tissue is erroneously classified as tumor, reaching an a posteriori probability of up to 70%.

Fig. 5.

ROC curve for the two-class problem tumor vs. no tumor for all specimens.

Fig. 6.

ROC curves for each individual case. Three cases in which histology revealed tumor only or no tumor only were excluded from the analysis.

Fig. 7.

Augmented overlay and histologic ground truth of case 7 (invasive carcinoma), which showed a considerable deterioration of prediction accuracy in Fig. 6. The overlay exhibits an erroneous classification of fibrous tissue as tumor.
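The ROC quantities reported in Sect. 3.2 can be computed from per-point tumor scores with a short sketch: the AUC as the Mann–Whitney probability that a tumor point outscores a non-tumor point, and an operating point chosen by maximizing Youden’s index (sensitivity + specificity − 1). Youden’s index is one common choice of an equal-cost operating point; whether the authors used exactly this criterion is an assumption, and the scores in the example are made up.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as P(random positive outscores random negative); ties count 1/2."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def youden_threshold(scores_pos, scores_neg):
    """Threshold maximizing sensitivity + specificity - 1 (tumor if score >= threshold)."""
    best = None
    for thr in sorted(set(scores_pos) | set(scores_neg)):
        sens = sum(s >= thr for s in scores_pos) / len(scores_pos)
        spec = sum(s < thr for s in scores_neg) / len(scores_neg)
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, thr, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical tumor probabilities for tumor (pos) and non-tumor (neg) points.
pos = [0.9, 0.8, 0.7, 0.4]
neg = [0.6, 0.3, 0.2, 0.1]
print(roc_auc(pos, neg), youden_threshold(pos, neg))
```

The pairwise AUC is quadratic in the number of points; for tens of thousands of point measurements, a rank-based formulation of the same statistic would be preferable.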

4. Discussion

Fluorescence lifetime imaging (FLIm) is a label-free modality for in situ characterization of biochemical alterations in tissue. Here we demonstrated the potential of FLIm to distinguish in real-time between adipose, fibrous, and cancerous regions in breast specimens from women undergoing lumpectomies and mastectomies, and to augment the diagnostic information on the surgical specimen. Specifically, we developed and tested a supervised classification and visualization approach that allows for automated display of diagnostic information and intuitive visualization of such information with adaptive spatial resolution.

Cytology and frozen sections are among the most common techniques for delineating breast tumor margins, but they require significant time and cost. To overcome these limitations, a variety of optical imaging techniques have been combined with machine learning algorithms. Examples include deep neural networks applied to optical coherence tomography (OCT) images [34], a modified deep convolutional U-Net architecture for hyperspectral imaging data [35], and ultrasound combined with a random forest classifier [36], reporting sensitivities and specificities of 91.7% and 96.3%, 80–98% and 93–99%, and 75.3% and 82.0%, respectively. Although a direct comparison of these modalities remains difficult due to varying datasets and metrics, ultrasound will probably not fulfill the requirements for solving the margin status problem [15]. OCT has been applied ex vivo to image excised breast specimens [7] and in vivo to scan the cavity after resection [6], with high overall accuracy. However, OCT still has a limited ability to distinguish between cancerous and fibrous breast tissue because these tissue types can have similar structural features [7]. Hyperspectral imaging has also demonstrated high accuracy for margin assessment and fast scanning, but its transition to in vivo imaging requires complicated instrumentation due to the complex imaging geometry of a surgical cavity [37] and possible specular reflections from liquid on the tissue surface [38]. Diffuse reflectance spectroscopy has shown promising results for discriminating healthy breast tissue from tumor tissue [39,40]. A recent study combined diffuse reflectance spectroscopy with a support vector machine for in vivo detection of breast cancer [40], reporting an accuracy of 0.93 and a Matthews correlation coefficient of 0.87. Drawbacks are a relatively slow acquisition speed and the influence of ambient light.

FLIm allows for rapid, label-free imaging of tissue diagnostic data derived from breast tumor specimens and has already been demonstrated for in vivo use [17–19]. FLIm-based discrimination between breast tissue types relies on the endogenous fluorescence of collagen fibers, NADH, and FAD. Consistent with our recent findings [10], we observed longer lifetimes in spectral channels 2, 3, and 4 for adipose tissue. Fibrous tissue showed shorter lifetimes than adipose tissue, but longer lifetimes than carcinoma. A recent study demonstrated the feasibility of FLIm to distinguish between adipose, fibrous, and cancerous regions. In this study, we developed a pipeline that combines FLIm with machine learning and advanced visualization techniques, providing immediate feedback on the interrogated location along with an intuitive visualization of the classifier output. The visualization uses a simple color scheme that displays the tissue type (the classification result) as well as the probability of the prediction. A coarse scan without refinement provides a good overview. A low probabilistic output (rendered in dark or black colors) indicates either an inhomogeneous tissue composition or a general uncertainty of the classifier. Rescanning the suspicious region increases the local resolution to uncover details that cannot be resolved in the initial coarse scan. The adaptive resolution principle used in this study thus concentrates data collection on the regions of interest identified in the initial scan. As a result, the scanning time, a crucial factor in breast-conserving therapy [15], can be considerably reduced, since breast tissue predominantly consists of fat.

The results of this study demonstrate that spectroscopic FLIm features derived from a large set of point measurements (1,000–10,000 per sample) can delineate the cancerous regions in breast specimens with high accuracy (89% sensitivity and 93% specificity). The classifier performed well on all samples except one. Classification accuracy can be influenced by multiple factors. For example, tissue sections were cut parallel to the imaging plane, so the section used for histological staining might lie a few micrometers from the imaged plane. To investigate the impact of such an offset, multiple sections were cut within the imaged volume in two cases. As no substantial differences were observed, the remainder of the study was performed with a single section. Another possible cause is that the small number of patients available for training does not capture the full inter-patient variability. To mitigate these limitations, future studies will require multiple histology sections per specimen and a more extensive database from a larger number of patients. A larger cohort will also be necessary to investigate whether FLIm can distinguish between invasive cancer and DCIS.
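Because performance on an individual patient can deviate from pooled figures, one common way to expose inter-patient variability is to hold out all measurements from one patient at a time and to report metrics per patient. The sketch below shows such a leave-one-patient-out split and a per-patient tumor sensitivity in plain Python. It is an illustrative assumption, not necessarily the validation protocol used in this study; the function names and the toy labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence, Tuple


def leave_one_patient_out(
        patient_ids: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held-out patient, train indices, test indices) so that no patient
    contributes point measurements to both training and testing."""
    for held_out in sorted(set(patient_ids)):
        train = [i for i, p in enumerate(patient_ids) if p != held_out]
        test = [i for i, p in enumerate(patient_ids) if p == held_out]
        yield held_out, train, test


def per_patient_tumor_sensitivity(patient_ids: Sequence[str],
                                  y_true: Sequence[str],
                                  y_pred: Sequence[str],
                                  tumor: str = "tumor") -> Dict[str, float]:
    """Fraction of tumor-labeled points predicted as tumor, per patient.
    Patients without any tumor-labeled point are omitted."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for p, t, y in zip(patient_ids, y_true, y_pred):
        if t == tumor:
            totals[p] += 1
            hits[p] += int(y == tumor)
    return {p: hits[p] / totals[p] for p in sorted(totals)}
```

Each fold's training indices would be passed to the classifier (for example, a random forest [26]) and the test indices used for evaluation; a patient whose sensitivity falls well below the others would flag the kind of outlier sample discussed above.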

Because mechanically flexible specimens are manipulated during the standard histopathological protocol, the difference in shape between the pathology slide and the acquired imaging data is one of the major challenges in training any machine learning technique [37]. Unlike in our previous study [10], here we register the histology slide with the fluorescence measurements using a method [23] that accounts for possible tissue deformation and loss resulting from histological preparation. This approach allowed for better registration of FLIm maps with the histopathological sections and pathologist annotations. Using a registration that accounts for tissue deformation yields a larger number of labeled samples of higher quality. In particular, including FLIm data acquired close to the margins, where correct labeling is most critical, leads to more accurate classification of FLIm signatures. Although the registration provides a reliable training set, small focal tumors with a diameter are not evaluated in the statistics, as registration accuracy cannot be guaranteed to yield a correct labeling of these measurements.
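One simple way to encode the caution about registration accuracy is to label a point measurement only when the registered pathologist annotation under its footprint is nearly homogeneous, and to exclude it otherwise. The sketch below is a hypothetical illustration of that idea; it is not the registration method of [23], and the circular footprint, its radius in pixels, the `min_purity` threshold of 0.9, and the function name `label_point` are all assumptions.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def label_point(annotation: Sequence[Sequence[Optional[str]]],
                center: Tuple[int, int],
                radius: int,
                min_purity: float = 0.9) -> Optional[str]:
    """Assign a tissue label to one FLIm point from a registered annotation map.

    `annotation` is a row-major grid of class names (None = not annotated).
    Annotated pixels inside a circle of `radius` pixels around `center` are
    tallied; the majority class is returned only if it covers at least
    `min_purity` of them, otherwise None (point excluded from training).
    """
    r0, c0 = center
    counts: Counter = Counter()
    for r in range(r0 - radius, r0 + radius + 1):
        for c in range(c0 - radius, c0 + radius + 1):
            inside = (r - r0) ** 2 + (c - c0) ** 2 <= radius ** 2
            # Bounds are checked before indexing thanks to short-circuit `and`.
            if inside and 0 <= r < len(annotation) and 0 <= c < len(annotation[r]):
                if annotation[r][c] is not None:
                    counts[annotation[r][c]] += 1
    if not counts:
        return None
    label, n = counts.most_common(1)[0]
    return label if n / sum(counts.values()) >= min_purity else None
```

With this rule, small or thin annotated structures that occupy only part of a footprint yield `None` and are excluded rather than assigned a possibly wrong label.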

While this study focused on excised specimens, the long-term goal is to establish FLIm as an intraoperative tool for scanning the surgical bed with a fiber-optic probe. Recent studies from our group demonstrated the applicability of FLIm in vivo [17,22,41]. The transition from ex vivo to in vivo settings will require a few adaptations of the procedure. To capture the volumetric information necessary for accurate surgical resection, a stereo camera setup can be used to collect depth information during the scan [42]. The adaptive refinement will then require re-triangulation for each measurement to build up a color-coded 3D profile of the scanned region. Moreover, parameters and will be redefined for in vivo scans, as they define a trade-off between resolution and scanning speed. Real-time segmentation of the aiming beam will also be more challenging, as it has to cope with a variety of geometries, specular reflections, and illumination conditions. To account for these scenarios, we are considering a more robust tracking procedure [42] in which the aiming beam is pulsed at 50 Hz, so that the beam position can be easily identified by subtracting consecutive images acquired with the aiming beam on and off. Finally, we expect altered fluorescence signatures in vivo due to cauterized tissue and altered metabolism, which makes supervised training of an in vivo classifier a highly challenging task. Standard classifiers cannot effectively account for changes in data distribution between the training and test phases. Generative adversarial networks (GANs) have been used to adapt synthetic input data from a simulator to a set of unlabeled real data (domain adaptation) [43,44]. Domain adaptation methods could similarly adapt well-labeled ex vivo training data to the in vivo domain.
Due to its simple color-coding scheme and real-time capability, such a method holds great potential to guide surgeons intraoperatively when scanning the surgical bed to determine whether additional tissue needs to be excised.
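The frame-subtraction approach for tracking the pulsed aiming beam can be sketched as follows. The sketch assumes that the camera delivers pairs of consecutive frames, one with the beam on and one with it off (for example, a camera synchronized to the 50 Hz beam modulation), and that the scene moves little between the two frames. The function name, the plain nested-list image representation, and the fixed `threshold` are hypothetical choices for illustration.

```python
from typing import Optional, Sequence, Tuple

Image = Sequence[Sequence[float]]  # grayscale frame, row-major


def locate_aiming_beam(frame_on: Image, frame_off: Image,
                       threshold: float) -> Optional[Tuple[float, float]]:
    """Estimate the aiming-beam position (row, col) from two consecutive frames,
    one acquired with the pulsed beam on and one with it off.

    Subtracting the 'off' frame cancels static content present in both frames,
    such as tissue texture and specular highlights; the beam position is the
    intensity-weighted centroid of pixels whose difference exceeds `threshold`.
    """
    total = sum_r = sum_c = 0.0
    for r, (row_on, row_off) in enumerate(zip(frame_on, frame_off)):
        for c, (a, b) in enumerate(zip(row_on, row_off)):
            d = a - b
            if d > threshold:
                total += d
                sum_r += d * r
                sum_c += d * c
    if total == 0.0:
        return None  # beam not found, e.g. occluded or frames out of phase
    return (sum_r / total, sum_c / total)
```

A specular highlight that appears in both frames cancels in the difference, which is why subtraction is expected to be more robust than segmenting the beam in a single frame; tissue motion between the two frames would, however, leave residual differences that a practical implementation would need to handle.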

5. Conclusions

This study demonstrates the potential of FLIm, combined with machine learning and real-time visualization, for margin assessment of resected breast lumpectomy specimens. The presented method offers many desirable features, including high prediction accuracy, rapid acquisition that enables fast scanning of large areas, spatial refinement of suspicious regions, and nondestructive probing. The method is easy to use, as it provides an intuitive visualization of tissue characteristics, and it achieves high tumor classification sensitivity (89%) and specificity (93%). The current method provides a path toward future in vivo applications for identifying tumor infiltration in the surgical bed. Such an application could provide the surgeon with additional information that reflects the histopathology of the tissue at the resection margin.

Funding

National Institutes of Health (R01 CA187427, R03 EB026819; Funder ID 10.13039/100000002).

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References

1. Torre L. A., Bray F., Siegel R. L., Ferlay J., Lortet-Tieulent J., Jemal A., “Global cancer statistics, 2012,” Ca-Cancer J. Clin. 65(2), 87–108 (2015). 10.3322/caac.21262

2. Mandpe A. H., Mikulec A., Jackler R. K., Pitts L. H., Yingling C. D., “Comparison of response amplitude versus stimulation threshold in predicting early postoperative facial nerve function after acoustic neuroma resection,” Am. J. Otol. 19(1), 112–117 (1998).

3. Gage I., Schnitt S. J., Nixon A. J., Silver B., Recht A., Troyan S. L., Eberlein T., Love S. M., Gelman R., Harris J. R., Connolly J. L., “Pathologic margin involvement and the risk of recurrence in patients treated with breast-conserving therapy,” Cancer 78(9), 1921–1928 (1996).

4. Keller M. D., Vargis E., Mahadevan-Jansen A., de Matos Granja N., Wilson R. H., Mycek M., Kelley M. C., “Development of a spatially offset raman spectroscopy probe for breast tumor surgical margin evaluation,” J. Biomed. Opt. 16(7), 077006 (2011). 10.1117/1.3600708

5. Thomas G., Nguyen T.-Q., Pence I. J., Caldwell B., O’Connor M. E., Giltnane J., Sanders M. E., Grau A., Meszoely I., Hooks M., Kelley M. C., Mahadevan-Jansen A., “Evaluating feasibility of an automated 3-dimensional scanner using raman spectroscopy for intraoperative breast margin assessment,” Sci. Rep. 7(1), 13548 (2017). 10.1038/s41598-017-13237-y

6. Erickson-Bhatt S. J., Nolan R. M., Shemonski N. D., Adie S. G., Putney J., Darga D., McCormick D. T., Cittadine A. J., Zysk A. M., Marjanovic M., Chaney E. J., Monroy G. L., South F. A., Cradock K., Liu Z. G., Sundaram M., Ray P. S., Boppart S., “Real-time imaging of the resection bed using a handheld probe to reduce incidence of microscopic positive margins in cancer surgery,” Cancer Res. 75(18), 3706–3712 (2015). 10.1158/0008-5472.CAN-15-0464

7. Nguyen F. T., Zysk A. M., Chaney E. J., Kotynek J. G., Oliphant U. J., Bellafiore F. J., Rowland K. M., Johnson P. A., Boppart S. A., “Intraoperative evaluation of breast tumor margins with optical coherence tomography,” Cancer Res. 69(22), 8790–8796 (2009). 10.1158/0008-5472.CAN-08-4340

8. Piras D., Xia W., Steenbergen W., van Leeuwen T. G., Manohar S., “Photoacoustic imaging of the breast using the twente photoacoustic mammoscope: present status and future perspectives,” IEEE J. Sel. Top. Quantum Electron. 16(4), 730–739 (2010). 10.1109/JSTQE.2009.2034870

9. Li R., Wang P., Lan L., Lloyd F. P., Goergen C. J., Chen S., Cheng J., “Assessing breast tumor margin by multispectral photoacoustic tomography,” Biomed. Opt. Express 6(4), 1273–1281 (2015). 10.1364/BOE.6.001273

10. Phipps J. E., Gorpas D., Unger J., Darrow M., Bold R. J., Marcu L., “Automated detection of breast cancer in resected specimens with fluorescence lifetime,” Phys. Med. Biol. 63(1), 015003 (2017). 10.1088/1361-6560/aa983a

11. Sharma V., Shivalingaiah S., Peng Y., Euhus D., Gryczynski Z., Liu H., “Auto-fluorescence lifetime and light reflectance spectroscopy for breast cancer diagnosis: potential tools for intraoperative margin detection,” Biomed. Opt. Express 3(8), 1825–1840 (2012). 10.1364/BOE.3.001825

12. Keller M. D., Majumder S. K., Kelley M. C., Meszoely I. M., Boulos F. I., Olivares G. M., Mahadevan-Jansen A., “Autofluorescence and diffuse reflectance spectroscopy and spectral imaging for breast surgical margin analysis,” Lasers Surg. Med. 42(1), 15–23 (2010). 10.1002/lsm.20865

13. Nichols B. S., Schindler C. E., Brown J. Q., Wilke L. G., Mulvey C. S., Krieger M. S., Gallagher J., Geradts J., Greenup R. A., Von Windheim J. A., Ramanujam N., “A quantitative diffuse reflectance imaging (qdri) system for comprehensive surveillance of the morphological landscape in breast tumor margins,” PLoS One 10(6), e0127525–25 (2015). 10.1371/journal.pone.0127525

14. Kennedy K. M., Chin L., McLaughlin R. A., Latham B., Saunders C. M., Sampson D. D., Kennedy B. F., “Quantitative micro-elastography: imaging of tissue elasticity using compression optical coherence elastography,” Sci. Rep. 5(1), 15538 (2015). 10.1038/srep15538

15. Maloney B., McClatchy D., Pogue B., Paulsen K., Wells W., Barth R., “Review of methods for intraoperative margin detection for breast conserving surgery,” J. Biomed. Opt. 23(10), 1–19 (2018). 10.1117/1.JBO.23.10.100901

16. Mondal B., Gao S., Zhu N., Sudlow G., Liang K., Som A., Akers W., Fields R., Margenthaler J., Liang R., Gruev V., Achilefu S., “Binocular goggle augmented imaging and navigation system provides real-time fluorescence image guidance for tumor resection and sentinel lymph node mapping,” Sci. Rep. 5(1), 12117 (2015). 10.1038/srep12117

17. Gorpas D., Phipps J., Bec J., Ma D., Dochow S., Yankelevich D., Sorger J., Popp J., Bewley A., Gandour-Edwards R., Marcu L., Farwell D. G., “Autofluorescence lifetime augmented reality as a means for real-time robotic surgery guidance in human patients,” Sci. Rep. 9(1), 1187 (2019). 10.1038/s41598-018-37237-8

18. Bec J., Phipps J. E., Gorpas D., Ma D., Fatakdawala H., Margulies K. B., Southard J. A., Marcu L., “In vivo label-free structural and biochemical imaging of coronary arteries using an integrated ultrasound and multispectral fluorescence lifetime catheter system,” Sci. Rep. 7(1), 8960 (2017). 10.1038/s41598-017-08056-0

19. Butte P. V., Mamelak A. N., Nuno M., Bannykh S. I., Black K. L., Marcu L., “Fluorescence lifetime spectroscopy for guided therapy of brain tumors,” NeuroImage 54, S125–S135 (2011). 10.1016/j.neuroimage.2010.11.001

20. Gorpas D., Ma D., Bec J., Yankelevich D. R., Marcu L., “Real-time visualization of tissue surface biochemical features derived from fluorescence lifetime measurements,” IEEE Trans. Med. Imag. 35(8), 1802–1811 (2016). 10.1109/TMI.2016.2530621

21. Alfonso-Garcia A., Bec J., Sridharan Weaver S., Hartl B., Unger J., Bobinski M., Lechpammer M., Girgis F., Boggan J., Marcu L., “Real-time augmented reality for delineation of surgical margins during neurosurgery using autofluorescence lifetime contrast,” J. Biophotonics 13(1), e201900108 (2020). 10.1002/jbio.201900108

22. Weyers B. W., Marsden M., Sun T., Bec J., Bewley A. F., Gandour-Edwards R. F., Moore M. G., Farwell D. G., Marcu L., “Fluorescence lifetime imaging for intraoperative cancer delineation in transoral robotic surgery,” Trans. Biophotonics 1(1-2), e201900017 (2019). 10.1002/tbio.201900017

23. Unger J., Sun T., Chen Y., Phipps J. E., Bold R. J., Darrow M. A., Ma K., Marcu L., “Method for accurate registration of tissue autofluorescence imaging data with corresponding histology: a means for enhanced tumor margin assessment,” J. Biomed. Opt. 23(1), 1–11 (2018). 10.1117/1.JBO.23.1.015001

24. Yankelevich D. R., Ma D., Liu J., Sun Y., Sun Y., Bec J., Elson D. S., Marcu L., “Design and evaluation of a device for fast multispectral time-resolved fluorescence spectroscopy and imaging,” Rev. Sci. Instrum. 85(3), 034303 (2014). 10.1063/1.4869037

25. Liu J., Sun Y., Qi J., Marcu L., “A novel method for fast and robust estimation of fluorescence decay dynamics using constrained least-squares deconvolution with laguerre expansion,” Phys. Med. Biol. 57(4), 843–865 (2012). 10.1088/0031-9155/57/4/843

26. Breiman L., “Random forests,” Mach. Learn. 45(1), 5–32 (2001). 10.1023/A:1010933404324

27. Matthews B. W., “Comparison of the predicted and observed secondary structure of t4 phage lysozyme,” Biochim. Biophys. Acta 405(2), 442–451 (1975). 10.1016/0005-2795(75)90109-9

28. Boström H., “Calibrating random forests,” in 2008 Seventh International Conference on Machine Learning and Applications, (2008), pp. 121–126.

29. Platt J. C., “Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods,” in Advances in large margin classifiers, (MIT Press, 1999), pp. 61–74.

30. Ma D., Bec J., Gorpas D., Yankelevich D. R., Marcu L., “Technique for real-time tissue characterization based on scanning multispectral fluorescence lifetime spectroscopy (ms-trfs),” Biomed. Opt. Express 6(3), 987–1002 (2015). 10.1364/BOE.6.000987

31. Lagarto J. L., Phipps J. E., Faller L., Ma D., Unger J., Bec J., Griffey S., Sorger J., Farwell D. G., Marcu L., “Electrocautery effects on fluorescence lifetime measurements: An in vivo study in the oral cavity,” J. Photochem. Photobiol., B 185, 90–99 (2018). 10.1016/j.jphotobiol.2018.05.025

32. Lin M.-G., Yang T.-L., Chiang C.-T., Kao H.-C., Lee J.-N., Lo W., Jee S.-H., Chen Y.-F., Dong C.-Y., Lin S.-J., “Evaluation of dermal thermal damage by multiphoton autofluorescence and second-harmonic-generation microscopy,” J. Biomed. Opt. 11(6), 064006 (2006). 10.1117/1.2405347

33. Kaiser M., Yafi A., Cinat M., Choi B., Durkin A., “Noninvasive assessment of burn wound severity using optical technology: a review of current and future modalities,” Burns 37(3), 377–386 (2011). 10.1016/j.burns.2010.11.012

34. Triki A. R., Blaschko M. B., Jung Y. M., Song S., Han H. J., Kim S. I., Joo C., “Intraoperative margin assessment of human breast tissue in optical coherence tomography images using deep neural networks,” Comput. Med. Imag. Grap. 69, 21–32 (2018). 10.1016/j.compmedimag.2018.06.002

35. Kho E., Dashtbozorg B., de Boer L. L., de Vijver K. K. V., M. Sterenborg H. J. C., Ruers T. J. M., “Broadband hyperspectral imaging for breast tumor detection using spectral and spatial information,” Biomed. Opt. Express 10(9), 4496–4515 (2019). 10.1364/BOE.10.004496

36. Shan J., Alam S. K., Garra B., Zhang Y., Ahmed T., “Computer-aided diagnosis for breast ultrasound using computerized bi-rads features and machine learning methods,” Ultrasound Med. Biol. 42(4), 980–988 (2016). 10.1016/j.ultrasmedbio.2015.11.016

37. Boppart S. A., Brown J. Q., Farah C. S., Kho E., Marcu L., Saunders C. M., M. Sterenborg H. J. C., “Label-free optical imaging technologies for rapid translation and use during intraoperative surgical and tumor margin assessment,” J. Biomed. Opt. 23(2), 1–10 (2017). 10.1117/1.JBO.23.2.021104

38. Lu G., Wang D., Qin X., Halig L., Muller S., Zhang H., Chen A., Pogue B. W., Chen Z. G., Fei B., “Framework for hyperspectral image processing and quantification for cancer detection during animal tumor surgery,” J. Biomed. Opt. 20(12), 126012 (2015). 10.1117/1.JBO.20.12.126012

39. de Boer L. L., Molenkamp B. G., Bydlon T. M., Hendriks B. H. W., Wesseling J., M. Sterenborg H. J. C., Ruers T. J. M., “Fat/water ratios measured with diffuse reflectance spectroscopy to detect breast tumor boundaries,” Breast Cancer Res. Treat. 152(3), 509–518 (2015). 10.1007/s10549-015-3487-z

40. de Boer L., Bydlon T., van Duijnhoven F., F. D. Vranken-Peeters M.-J. T., Loo C. E., Winter-Warnars G. A. O., Sanders J., M. Sterenborg H. J. C., Hendriks B. H. W., Ruers T. J. M., “Towards the use of diffuse reflectance spectroscopy for real-time in vivo detection of breast cancer during surgery,” J. Transl. Med. 16(1), 367 (2018). 10.1186/s12967-018-1747-5

41. Phipps J. E., Unger J., Gandour-Edwards R., Moore M. G., Bewley A., Farwell G., Marcu L., “Head and neck cancer evaluation via transoral robotic surgery with augmented fluorescence lifetime imaging,” in Biophotonics Congress: Biomedical Optics Congress 2018, (Optical Society of America, 2018), p. CTu2B.3.

42. Unger J., Lagarto J., Phipps J., Ma D., Bec J., Sorger J., Farwell G., Bold R., Marcu L., “Three-dimensional online surface reconstruction of augmented fluorescence lifetime maps using photometric stereo,” in Advanced Biomedical and Clinical Diagnostic and Surgical Guidance Systems XV, vol. 10054 International Society for Optics and Photonics (SPIE, 2017), p. 65.

43. Shrivastava A., Pfister T., Tuzel O., Susskind J., Wang W., Webb R., “Learning from simulated and unsupervised images through adversarial training,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (2017).

44. Ben-David S., Blitzer J., Crammer K., Kulesza A., Pereira F., Vaughan J. W., “A theory of learning from different domains,” Mach. Learn. 79(1-2), 151–175 (2010). 10.1007/s10994-009-5152-4