Abstract

Background

In 2003, Project ECHO (Extension for Community Healthcare Outcomes) began using technology-enabled collaborative models of care to help general practitioners in rural settings manage hepatitis C. Today, ECHO and ECHO-like models (EELM) have been applied to a variety of settings and health conditions, but the evidence base underlying EELM is thin, despite widespread enthusiasm for the model.

Methods

In April 2018, a technical expert panel (TEP) meeting was convened to assess the current evidence base for EELM and identify ways to strengthen it.

Results

TEP members identified four strategies for future implementors and evaluators of EELM to address key challenges to conducting rigorous evaluations: (1) develop a clear understanding of EELM and what they are intended to accomplish; (2) emphasize rigorous reporting of EELM program characteristics; (3) use a wider variety of study designs to fill key knowledge gaps about EELM; and (4) address structural barriers through capacity building and stakeholder engagement.

Conclusions

Building a strong evidence base will help leverage the innovative aspects of EELM by better understanding how, why, and in what contexts EELM improve care access, quality, and delivery, while also improving provider satisfaction and capacity.

Electronic supplementary material

The online version of this article (10.1007/s11606-019-05599-y) contains supplementary material, which is available to authorized users.

KEY WORDS: telemedicine, telehealth, Project ECHO, evaluation, evidence base, distance learning, continuing medical education, capacity building

INTRODUCTION

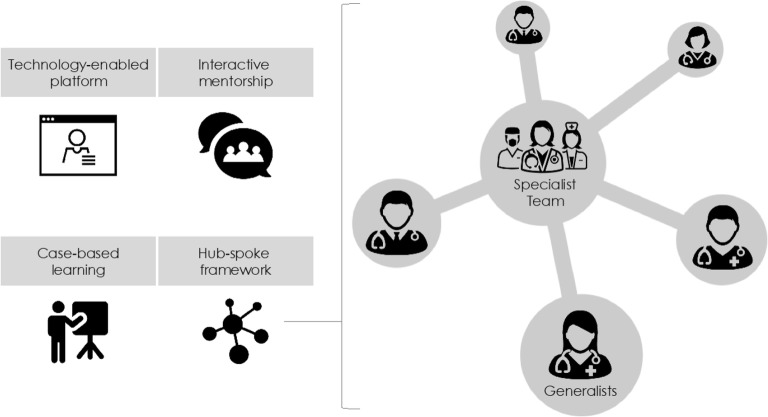

A growing number of health care organizations and primary care practitioners across the U.S. and the world have implemented models of care delivery using technology-enabled collaborative learning to build provider capacity. Such models are designed to help clinicians deliver care to patients with conditions that are within their scope of practice but that they feel less prepared to handle. The first known example, Project ECHO (Extension for Community Healthcare Outcomes), is a hub-and-spoke model connecting generalists in one or more locations (“spokes”) with specialists at a different location (the “hub”) through videoconferencing sessions, which typically include a didactic component and deidentified case presentations and discussions (Fig. 1). Project ECHO launched in 2003 in New Mexico with a focus on supporting hepatitis C virus (HCV) management by primary care physicians in rural regions of the state. Since then, adaptations of this model have been implemented in many different locations and settings, addressing a wide range of conditions and topics, including tuberculosis, addiction, and antibiotic stewardship. We refer to these technology-enabled collaborative learning models of care delivery as ECHO and ECHO-like models (EELM).

Figure 1.

Key components of EELM (Sources for graphics: Noun Project/MRFA; danishicon; Samy Menai; Gregor Cresnar; Creative Stall).

A 2011 study describing the impact of Project ECHO on HCV outcomes in New Mexico [1] generated enthusiasm for EELM, which led to a rapid increase in both the number of EELM programs and the number of clinical topics they address. Despite this momentum, the evidence base for their impact on key patient and provider outcomes remains limited. In part, this may be because many implementation efforts have focused on diffusing variations on the model rather than systematically evaluating them.

In December 2016, Congress passed the Expanding Capacity for Health Outcomes Act (“ECHO Act”), requiring an evaluation of the evidence base for technology-enabled collaborative models of care [2]. As part of this evaluation, the Office of the Assistant Secretary for Planning and Evaluation (ASPE) in the U.S. Department of Health and Human Services contracted with the RAND Corporation to facilitate a technical expert panel (TEP) meeting, which occurred on April 9, 2018, in Washington, DC. Participants are listed in Online Appendix A. The goal of the meeting was to assess the evidence base for EELM and identify opportunities to expand it. TEP members were asked to consider the following questions: (1) Given what is currently known about the effectiveness of EELM, what are the knowledge gaps to be addressed? (2) Why do these gaps exist? (3) How could those gaps best be filled? In preparation for the TEP meeting, RAND researchers developed an inventory of EELM, conducted a systematic review of published evaluations of EELM, and conducted semi-structured discussions with key informants implementing EELM, selected to ensure diversity by geography and health conditions addressed, which were synthesized into nine case studies. These materials were shared with the TEP before the meeting as a basis for discussion.

This article summarizes the meeting’s findings, beginning with a description of the knowledge gaps around EELM. It then describes the challenges to conducting rigorous evaluations that TEP members identified, which help explain why the knowledge gaps exist. These challenges are grouped into four categories, and for each category, options to overcome these challenges are presented.

NUMEROUS KNOWLEDGE GAPS AROUND EELM BUT ENTHUSIASM FOR ITS PROMISE

TEP members agreed that the central question about EELM is whether “it works,” but that other important knowledge gaps also exist. TEP members generated numerous potential research questions related to, for instance, implementation and dissemination; impacts on patient, provider, and system outcomes; and impacts on population health and health equity (Online Appendix B). The TEP distilled these potential research areas down to four overarching questions to guide future evaluation efforts (Table 1).

Table 1.

Overarching Questions About EELM

| Question |
|---|
| What is the evidence for the impact of EELM on patient health? |
| Across which conditions are EELM most effective in improving patient health? |
| For which conditions do EELM provide the most value (improved outcomes relative to cost)? |
| To what degree do EELM achieve their intended purpose(s)? |

TEP members noted that most existing evidence around EELM concerns provider satisfaction with participating in an EELM program and the extent to which knowledge and self-efficacy improved. Future evaluations that focus on measuring patient outcomes, expanded definitions of provider satisfaction and engagement, and care processes that could affect system-level improvements (e.g., decreased wait times, lower costs) would significantly strengthen the evidence base for EELM, and the four priority questions shown in Table 1 offer a starting point.

Finally, while they recognized that the evidence base needs strengthening, the TEP stressed the importance of acknowledging providers’ enthusiasm for the promise of EELM. The implementation of these models in various contexts indicates that there is significant demand for this approach, and better understanding what is driving this demand would help guide the design of future evaluations. Thus, TEP members cautioned against interpreting the relatively weak evidence base as evidence that EELM are ineffective.

WHY THESE KNOWLEDGE GAPS EXIST: CHALLENGES AND POTENTIAL OPTIONS TO ADDRESS THEM

The TEP discussion generated four key categories of challenges to strengthening the evidence base for EELM as well as potential options to address each of these challenges (Table 2). TEP members noted that the options require coordination and ongoing engagement among multiple stakeholders, including implementors and evaluators of EELM, funders, and policymakers.

1. Diversity of intended purposes of EELM: TEP members commented that, as EELM have expanded to encompass a range of conditions beyond HCV, it has become clear that they can serve a variety of purposes. Two of these include improving access to care often provided by specialists and improving the quality of care for more common conditions such as depression. In short, a challenge to evaluating whether EELM “work” is a lack of consensus among stakeholders on the intended objectives of these programs and how achievement of these objectives can best be evaluated. Given the diversity of intended purposes of EELM, it will be important for future evaluators to devise both a core set of outcomes to measure across types of EELM (e.g., retention of physician-participants), as well as context-specific metrics that are appropriate to the intended purpose(s) of the program being studied (e.g., processes and outcomes for a particular condition).

2. Variable implementation of the model: EELM have been implemented in diverse ways, varying in their design with respect to the original Project ECHO model and intended for a wide range of clinical conditions and populations. Thus, it is important that evaluations document both characteristics of the intervention itself as well as its implementation (i.e., fidelity to the model versus adaptation). Without collecting data on how EELM are implemented in different contexts (e.g., number of intended and actual telementoring sessions conducted and duration, types of providers participating, retention rate), it is difficult to assess which aspects of the model contribute to success and how to generalize evaluation findings.

3. Limited use of a variety of possible study designs for rigorous evaluation, leading to less confidence in evaluations: Most existing studies of EELM have used a pre-post design without a comparator, which could lead to conclusions that EELM are effective for many situations in which a different intervention might have been more effective. The panel suggested potential comparators that could be used in different contexts. For example, programs focusing on supporting generalists in managing complex cases of a common condition might be compared with a continuing medical education webinar. A program seeking to expand access to care for a rarer condition typically managed by specialists might compare EELM with telemedicine.

Furthermore, existing evaluations have been conducted over short time frames; little is known about whether EELM continue to be effective beyond the study period or whether provider knowledge gained through EELM “decays” over time. Evaluators may want to consider additional assessments after the intervention ends to capture whether and when this decay occurs, and how to prevent it. In addition, the extent to which knowledge gained through case presentations better equips providers to treat patients who were not discussed during EELM sessions is not known.

Table 2.

Challenges to Conducting Rigorous Evaluations of EELM and Potential Options to Address Them

| Challenge | Potential options to address the challenge | Summary actions |
|---|---|---|
| 1: Diversity of intended purposes of EELM | a: Build consensus around the definition and purposes of EELM | Develop a clear understanding of EELM |
| | b: Evaluation outcomes should reflect intended purposes of EELM | |
| 2: Variable implementation of the model | a: Programs should document details of model implementation | Emphasize rigorous reporting of program characteristics and implementation |
| 3: Limited use of variety of study designs | a: Consider strategies to facilitate randomization | Use a wider variety of study designs |
| | b: Leverage non-randomized study designs that have advantages over study designs that have been used to date | |
| | c: Choose meaningful comparators | |
| | d: Document persistence or waning of effects of EELM over time, and spillover into other patients that were not presented as cases | |
| 4: Structural barriers to high-quality evaluation | a: Support sustainable funding streams for EELM evaluations | Provide technical assistance and build capacity; engage with policymakers and funders |
| | b: Enlist champions to support evaluations of EELM | |
| | c: Provide technical assistance and build capacity to conduct evaluations of EELM | |
| | d: Support data-sharing and interoperability | |

Thus far, evaluations have been unable to definitively show that EELM improve patient, provider, and system outcomes or, put more simply, that “it works.” Using the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) framework, the RAND team assessed the quality of existing EELM evaluations as “low” or “very low” for the outcomes of interest, and TEP members concurred. While this level of evidence is not unusual for innovations in health care delivery, there are opportunities to increase the rigor of EELM evaluations. Specifically, alternatives to traditional randomized controlled trials, which can be challenging and expensive to implement in real-world settings, include other experimental and quasi-experimental designs, such as crossover, non-placebo-controlled parallel, or stepped-wedge designs, as well as non-randomized designs such as pre-post intervention with a control group. Mixed-method evaluations with a qualitative component should also be considered. For example, in-depth interviews could provide context for measured outcomes and elicit perspectives on the importance of charismatic leaders, characteristics of high-functioning teams, and quality and relevance of specialists’ recommendations.

4. Structural barriers to high-quality evaluation: As with evaluations of other innovations in health care delivery, there are several structural barriers to high-quality evaluation of EELM. These include a focus on implementation and a lack of resources devoted to evaluation, lack of internal expertise to conduct rigorous evaluations using the wider variety of study designs noted above, limited access to high-quality data, and lack of clarity on appropriate outcome measures and how they can be operationalized. Addressing these barriers requires garnering the technical and financial support needed to carry out higher quality studies. Efforts could include creating sustainable funding streams with resources for rigorous evaluation; leveraging efforts to improve data interoperability and exchange at various levels (hubs, local, state, and federal); harnessing the enthusiasm of EELM champions among administrative and clinical leaders so that EELM activities and evaluations receive appropriate prioritization and funding; and establishing resource centers to provide tools and technical assistance to EELM programs in order to conduct evaluations.

CONCLUSIONS

TEP members assessed EELM’s evidence base and identified multiple ways to better understand EELM’s benefits and limitations. To address four key challenges to conducting rigorous evaluations of these promising models, the following overarching strategies were viewed as critical to developing EELM’s evidence base:

1. Develop a clear understanding of EELM, what they are intended to accomplish, and the critical components of EELM that are necessary to meet their goals. Evaluators must recognize the diversity of EELM and how they vary in their design with respect to the original Project ECHO model, sometimes reflecting the particular health conditions and objectives addressed by a given EELM. They should also attempt to identify the core desired outcomes of EELM and how to measure them through evaluations.

2. Emphasize rigorous reporting of EELM program characteristics. Reporting on a broader set of EELM program characteristics would elucidate variations of the model in practice and what “ingredients” may lead to better outcomes for various conditions and program objectives.

3. Focus on the four overarching questions identified above, and use a wider variety of study designs to fill the numerous knowledge gaps about EELM. There are many potential research questions about EELM, and the TEP identified a small number of broad questions to guide future evaluation efforts. To rigorously answer these questions, there are opportunities to employ a variety of study designs, more clearly define the patient-, provider-, and system-level outcomes of interest, include appropriate comparators, and extend the time frames of evaluations.

4. To address structural barriers to rigorous evaluations of EELM, provide technical assistance and build evaluation capacity where additional expertise would be beneficial. In addition, engage with policymakers, funders, and other stakeholders to gain support and funding for conducting evaluations. Implementors and evaluators need to explore mutually beneficial mechanisms for supporting rigorous evaluation to expand the evidence base for EELM.

The TEP members’ assessment of the evidence base for EELM and suggestions for strengthening it informed the development of a public report [3] submitted to Congress by ASPE, responding to the ECHO Act.

Project ECHO celebrated its 15th anniversary in 2018, and EELM continue to be implemented in various settings. Well-designed evaluations can help policymakers, researchers, and clinicians understand how EELM can be effective and in what contexts, thereby identifying to what extent these models can contribute to expanding access to care, improving quality, building provider capacity, and enhancing health care delivery to underserved populations.

Electronic Supplementary Material

(DOCX 17.8 kb)

Acknowledgments

The authors gratefully acknowledge the following contributors: the funders of this project at the U.S. Department of Health and Human Services Office of the Assistant Secretary for Planning and Evaluation (ASPE), including Nancy De Lew, Caryn Marks, and Rose Chu for their support. Among our colleagues at the RAND Corporation, we are grateful to Jessica Sousa, Ryan McBain, Tricia Soto, Lisa Turner, Justin Timbie, Lori Uscher-Pines, Christine Eibner, and Paul Koegel.

Funding Information

This work was supported by the Office of the Assistant Secretary for Planning and Evaluation, U.S. Department of Health and Human Services, under master contract, Building Analytic Capacity for Monitoring and Evaluating the Implementation of the ACA, HHSP23320095649WC. Dr. Faherty, Dr. Rose, Dr. Fischer, and Ms. Martineau received support through this contract with ASPE.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Arora S, Thornton K, Murata G, et al. Outcomes of treatment for hepatitis C virus infection by primary care providers. N Engl J Med. 2011;364(23):2199–2207. doi: 10.1056/NEJMoa1009370.

- 2. Expanding Capacity for Health Outcomes Act (ECHO Act). Public Law No: 114–270. Washington, DC: 114th Congress (2015–2016); 2016.

- 3. Office of the Assistant Secretary for Planning and Evaluation. Report to Congress: Current State of Technology-Enabled Collaborative Learning and Capacity Building Models. Available at: https://aspe.hhs.gov/pdf-report/report-congress-current-state-technology-enabled-collaborative-learning-and-capacity-building-models. 03/01/2019. Accessed 21 October 2019.
