Abstract

Humans and other animals use spatial hearing to rapidly localize events in the environment. However, neural encoding of sound location is a complex process involving the computation and integration of multiple spatial cues that are not represented directly in the sensory organ (the cochlea). Our understanding of these mechanisms has increased enormously in the past few years. Current research is focused on the contribution of animal models for understanding human spatial audition, the effects of behavioral demands on neural sound location encoding, the emergence of a cue-independent location representation in the auditory cortex, and the relationship between single-source and concurrent location encoding in complex auditory scenes. Furthermore, computational modeling seeks to unravel how neural representations of sound source locations are derived from the complex binaural waveforms of real-life sounds. In this article, we review and integrate the latest insights from neurophysiological, neuroimaging, and computational modeling studies of mammalian spatial hearing. We propose that the cortical representation of sound location emerges from recurrent processing taking place in a dynamic, adaptive network of early (primary) and higher-order (posterior-dorsal and dorsolateral prefrontal) auditory regions. This cortical network accommodates changing behavioral requirements, and is especially relevant for processing the location of real-life, complex sounds and complex auditory scenes.

Introduction

The position of a sound source reveals vital information about relevant events in the environment, especially for events taking place out of sight (for example, in a crowded visual scene). However, as sound location is not mapped directly onto the sensory epithelium in the cochlea, spatial hearing poses a computational challenge for the auditory system. The computational complexity is increased by the need to integrate information across multiple location cues and sound frequency ranges. For decades, auditory neuroscientists have examined the neuronal mechanisms underlying spatial hearing. This research shows that spatial cues are extracted and processed to a large extent in subcortical structures, but also underscores a crucial role for the auditory cortex in neural sound location encoding (as shown, in particular, by lesion studies1-7).

This Review brings together the latest insights into the cortical encoding of sound location in the horizontal plane (although of great interest, sound localization in the vertical plane is outside the scope of the present Review). Focusing on the specific contributions of the cortex to spatial hearing (that is, over and above subcortical processing), we discuss the empirical and theoretical work in the context of emergent perceptual representations of sound location. In particular, we describe the growing evidence for the relevance of cortical mechanisms and networks for goal-oriented sound localization, for spatial processing of real-life sounds, and for spatial hearing in complex auditory scenes.

Mammalian spatial hearing

The anatomy of the head, torso and pinna (the part of the ear residing outside of the head) introduces disparities in the time and intensity of sound waves emitted by a sound source when these arrive at the two ears. For mammals, these binaural disparities – the interaural time difference (ITD) and interaural level difference (ILD) – provide information on the spatial position of a sound source in the horizontal plane (FIG. 1A). In the case of periodic sounds (for example, pure tones consisting of a single sine wave), the delay between the sound waves arriving at each ear can also be expressed as the interaural phase difference (IPD, Box 1) instead of as a time difference. A further set of cues consists of the monaural, spectral cues. These cues are introduced by the shape of the pinnae and contribute to both horizontal and vertical sound localization8, and to resolving front–back ambiguities in the horizontal plane9.

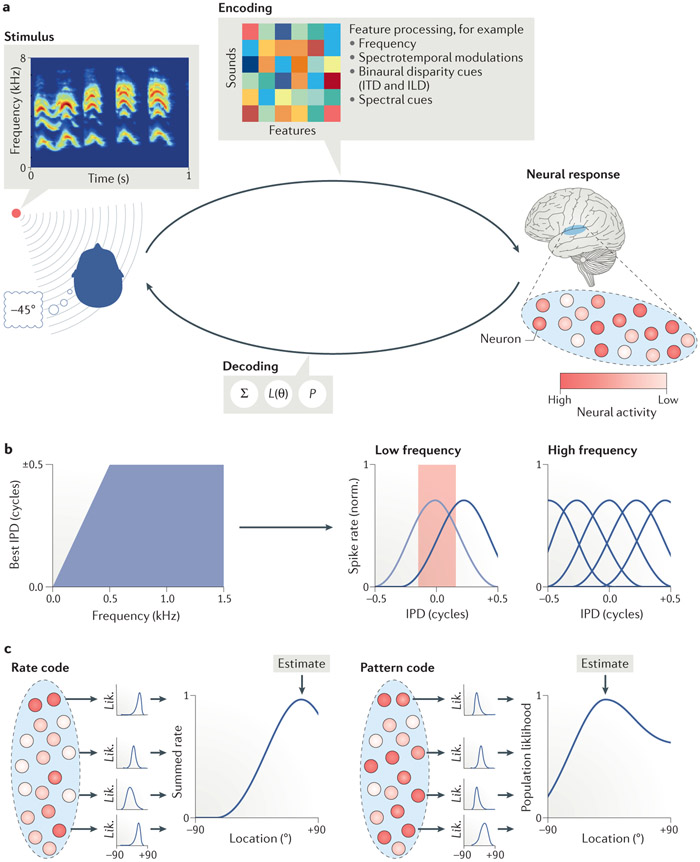

Figure 1. Sound localization in humans.

(A) Schematic representation of binaural disparity cues: Interaural time and level differences (ITD and ILD, respectively). A sound wave emitted by a source at a lateral position is delayed and attenuated in one ear compared to the other ear as a result of the head being in between. (B) Human localization acuity in the horizontal plane. Acuity is best at frontal locations around the interaural midline (at 0°) and deteriorates towards the periphery and back, indicated here by the green to red color gradient.

Box 1: The physiology of sound perception and the encoding of interaural delays.

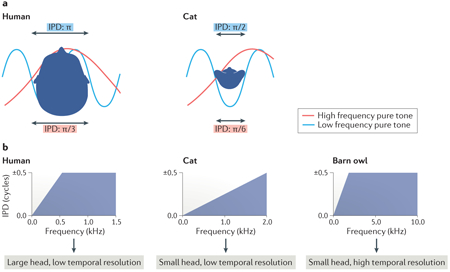

The delay between sound waves arriving at the two ears can be described in terms of timing, i.e. interaural time differences (ITDs). The physiological range of ITDs is determined by head size: for instance, the maximum ITD is ~300 μs in cats and ~600 μs in humans. However, for periodic tones, time differences of up to half the period of the tone can also be expressed as phase shifts, i.e. interaural phase differences (IPDs); larger time differences result in phase ambiguities because it is unclear which ear is leading and which is lagging. Cochlear filtering breaks broadband sounds into their frequency components, so the delay between the two ears is processed not (only) for the overall sound wave, but for the individual frequency components. These frequency components (essentially pure tones) are periodic, and therefore the delay between the ears can also be expressed in terms of IPDs. As with ITDs, the physiological range of IPDs is determined by head size. Additionally, the maximum IPD depends on sound frequency: low-frequency tones have smaller maximal IPDs than high-frequency tones (see figure, part a). Finally, the highest frequency at which IPDs can be encoded is limited by the temporal resolution of the auditory system (that is, the highest frequency to which auditory nerve fibers and neurons at subsequent stages of the subcortical auditory pathway can phase lock). Taken together, the physiological range of IPDs is a function of sound frequency, head size, and the temporal resolution of the auditory system across mammalian species (see figure, part b, and the main text for further details). Part b is adapted with permission from REF.75, Springer Nature Limited.
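The dependence of ITD and IPD on head size and sound frequency described in Box 1 can be illustrated numerically. The Python sketch below is not taken from this Review: it assumes a rigid spherical head (Woodworth's classic approximation, ITD = (r/c)(θ + sin θ)), a hypothetical head radius of 8.75 cm and a speed of sound of 343 m/s. The function names are illustrative only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at room temperature


def woodworth_itd(azimuth_deg, head_radius_m=0.0875):
    """ITD (s) of a distant source for a rigid spherical head.

    Azimuth 0 deg = straight ahead, 90 deg = fully lateral (valid up to 90 deg).
    The default radius is a hypothetical, roughly human-sized value.
    """
    theta = math.radians(azimuth_deg)
    return head_radius_m / SPEED_OF_SOUND * (theta + math.sin(theta))


def ipd(itd_s, freq_hz):
    """Interaural phase difference (rad) that a given ITD produces for a pure tone."""
    return 2 * math.pi * freq_hz * itd_s


def ipd_is_ambiguous(itd_s, freq_hz):
    """True if the ITD exceeds half the tone's period, so the leading ear is ambiguous."""
    return abs(ipd(itd_s, freq_hz)) > math.pi


max_itd = woodworth_itd(90)
print(f"max ITD ~ {max_itd * 1e6:.0f} us")
for f in (250, 500, 1000, 1500):
    print(f, "Hz: max IPD =", round(ipd(max_itd, f), 2), "rad; ambiguous:",
          ipd_is_ambiguous(max_itd, f))
```

With these assumed values the maximal ITD is roughly 656 μs, of the same order as the ~600 μs quoted above, and the IPD of a fully lateral source exceeds half a cycle (and so becomes ambiguous) above roughly 760 Hz. Real heads are not spheres, so the numbers are only indicative.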

According to the duplex theory of spatial hearing, the contribution of each binaural cue to sound localization is dependent on sound frequency. Specifically, ITDs are considered most relevant for localization of low-frequency sounds (<1.5 kHz), and ILDs for localization of high-frequency sounds (>1.5 kHz)10. Several psychoacoustic studies support this apparent cue dichotomy for sound localization11-15; however, this theory has also been challenged by other studies showing that each type of binaural cue contributes to sound localization in a wide range of frequencies. For instance, ITDs conveyed by the envelope of high-frequency sounds, as well as the ILDs present in low-frequency sounds, can be used for localization16-18 (especially in reverberant listening settings19). In addition, no clear relationship exists between neural tuning to sound frequency and to ITDs or ILDs. That is, ITDs modulate not only the firing rate of neurons that are tuned to low frequencies, but also those that are tuned to high frequencies20. Neurons in the inferior colliculus of the guinea pig that are tuned to low frequencies even respond maximally to ITDs outside of the physiological range21. Similarly, neural encoding of ILDs in the chinchilla midbrain is frequency invariant22. Thus, binaural cues seem to be relevant for a wider range of frequencies than predicted by the duplex theory, and neurons encode both types of binaural cue irrespective of their frequency tuning.

In terms of localization acuity, psychoacoustic studies show that the resolution of spatial hearing in the horizontal plane is highest around the interaural midline and deteriorates towards the acoustic periphery, and especially the back9,23,24 (FIG. 1B). Furthermore, localization acuity is higher for broadband than for narrowband sounds, mostly because of the presence of monaural, spectral cues in broadband sounds9,25-27. However, most sound localization studies use artificial stimuli that listeners do not encounter regularly in daily life, such as tones, clicks and noise bursts9,11,14,23,25,28-30. Therefore, little is known about localization of complex, meaningful sounds. A study addressing this gap in psychoacoustic research showed that, in addition to the acoustic features described above, higher-order sound characteristics such as behavioral relevance and sound category modulate the localization acuity of complex, meaningful sounds31. These results highlight the need for realistic experimental set-ups using real-life sounds in ecologically valid listening settings (for example, with reverberation) in order to develop a more complete understanding of the complex mechanisms involved in mammalian spatial hearing.

Cortical spatial tuning properties

To understand the cortical mechanisms involved in spatial hearing, auditory neuroscientists have first sought to characterize neural spatial tuning properties in the network of densely interconnected primary and higher-order areas that together make up the mammalian auditory cortex32-35. In nonhuman primates, neurophysiological measurements have revealed that cortical spatial tuning is generally broad and predominantly contralateral (that is, the majority of neurons respond maximally to sound locations in the contralateral space)36-38. However, studies comparing spatial tuning between primary and higher-order auditory regions in response to complex, behaviorally meaningful sounds, such as conspecific calls, showed that neurons in higher-order caudal belt areas (especially the caudolateral belt area39) have markedly higher spatial selectivity than those in the primary auditory cortex (PAC)38-41. By contrast, neural responses in rostral belt fields are less selective for space39,40.

Similar spatial tuning properties have been observed in the auditory cortex of cats and ferrets. That is, the majority of neurons in the PAC are sensitive to sound location, their tuning is largely contralateral, and they typically have broad spatial receptive fields42-47. Moreover, under anesthesia, tuning in the majority of neurons in the PAC is level-dependent such that spatial receptive fields broaden further with increasing sound level42,44,45 (but this was not confirmed in alert cats48). Further, similar to the findings in non-human primates, spatial selectivity in cat PAC was lower than in several higher-order areas, especially the dorsal zone and posterior auditory field43,46,47.

A comparable cortical organization for spatial processing has been found in humans. Functional MRI (fMRI) research showed that sound location processing activates in particular the posterior auditory cortex, that is, the planum temporale49-52, and the inferior parietal cortex53. Additionally, spatial tuning is broad and mostly contralateral54-56, and spatial selectivity is higher in the posterior, higher-order planum temporale than in the PAC57.

Taken together, several neuronal spatial tuning properties seem to be consistent across mammalian species: broad spatial receptive fields, an oversampling of contralateral space, and relatively higher spatial selectivity in posterior-dorsal regions. However, novel empirical work has revealed that understanding of these properties requires further refinement. For instance, the behavioral state of a listener has been shown to affect neuronal spatial selectivity. In alert and behaving cats and humans, spatial tuning sharpened during goal-oriented sound localization, especially in the PAC57,58. This finding emerged only recently because, until then, most studies measured neuronal spatial tuning properties during passive listening37,40,49-52,54 or even under anesthesia42-47. Importantly, these findings emphasize that measurements in alert and behaving subjects are needed to reveal modulatory influences of task performance and attention on neural sound location encoding.

In addition, findings from the past few years emphasize that some caution is required when generalizing neuronal spatial tuning properties across mammalian species, owing to differences in head morphology and size and their effects on neuronal spatial tuning. Specifically, in species with a relatively large head size (such as humans and other primates), the distribution of IPD tuning is not uniformly contralateral but dependent on sound frequency59. For instance, in macaque monkeys, the bimodal distribution of IPD preference consisting of two populations of laterally tuned neurons (as described in the previous paragraphs) is only observed for low sound frequencies (<1,000 Hz). For high sound frequencies (>1,000 Hz), the distribution of IPD tuning across the neural population is homogeneous and spans the entire azimuth. Only in mammals with a small head size does spatial tuning consistently resemble the bimodal distribution of lateral best IPDs across low and high sound frequencies (as measured in subcortical structures)21,59. Thus, these findings challenge the notion that neuronal spatial tuning is predominantly contralateral for all mammalian species, and highlight differences between mammals with small heads and mammals with relatively large heads.

Neural coding of sound location

Given the properties of cortical spatial tuning, the question arises as to how sound location is processed in the auditory cortex. Specifically, what is the neural representation of sound location? Two complementary approaches have been used to address this question (FIG. 2). Most attention has been given to ‘decoding’ approaches, which examine which aspects of the neural responses are most informative of sound location, and how this information can be read out by downstream (that is, frontal) regions. Less attention has been given to the complementary ‘encoding’ approach, which aims to provide a mechanistic explanation of the transformation from the binaural sound wave into the neural response. Both approaches provide important insights into cortical spatial auditory processing, which we describe here.

Figure 2. From binaural real-life sound to location percept: Encoding and decoding.

(A) The complex sound wave of a real-life sound at a lateral position arrives at the two ears, which generates different binaural disparity cues as a function of location and ultimately the listener’s perception of sound location. Sound location (and the perception thereof) is coded by the response patterns of neuronal populations in the auditory cortex. Circles represent neurons and the intensity of red reflects degree of activity (that is, higher intensity corresponds to higher activity). Encoding refers to the computations required to transform the binaural presentation of a real-life sound into a neural representation of location. Computational models are used to examine the processing mechanisms and understand how relevant features are analyzed and combined. Decoding refers to the read-out of (perceived) sound source location from the neuronal population signal. Possible decoding strategies include a summed rate code (∑), maximum likelihood estimation (L(θ)), and others (P). (B) Illustration of the optimal coding model75 in humans. The left panel shows the physiological range of IPDs for binaural sound as a function of sound frequency. The right panel shows examples of the optimal distribution of neural tuning to IPD (given the physiological range of IPDs displayed on the left) for encoding a low frequency sound (middle) and a high frequency sound (right). Each curve represents the IPD tuning curve of one neuron. Specifically, the plot illustrates that, given the narrow range of IPDs for low frequencies (indicated by the red rectangle in the middle panel), neurons with peak responses to small IPDs (grey curve) would be modulated little by small changes in IPD, while neurons with peak responses outside of the physiological range (black curve) would be modulated maximally by small changes in IPD. In contrast, given that for high frequencies the physiological range of IPDs encompasses the entire phase cycle (blue rectangle in the panel on the right), a homogeneous distribution of best IPDs leads to more accurate encoding of all possible IPDs. (C) Schematic representations of a maximum likelihood population pattern code for sound location. The left and right panels each represent the transformation of a population’s responses to one sound location into a likelihood function (Lik.). Specifically, the activity of each neuron (indicated by the intensity of red) in the population is multiplied (that is, weighted) by the logarithm of the neuron’s tuning curve. For each neuron, the resulting likelihood function (Lik.) reflects how likely it is that the observed neural response was elicited by a sound at each location (the graphs in the middle display likelihood functions for a few neurons in the population). The likelihood functions of all neurons are subsequently pooled to arrive at a population likelihood function. The peak of the population likelihood function reflects the estimated location (approximately 80° and −10° in the left and right panel, respectively). ILD, interaural level difference; ITD, interaural time difference; norm., normalized. Part B adapted from REF.75, Springer Nature Limited.

Encoding models of binaural sound

Computational models of the transformation from stimulus to neural representation provide valuable insights into sensory processing. For example, a model based on interdependent spectro-temporal modulation encoding60 accurately captures the transformation from real-life sound to its neural representation in the auditory cortex of humans61,62 and macaques63. For sound localization, the place code proposed by Jeffress64 was a first – and influential – step towards an encoding model describing the transformation from binaural sound to neural response. The place code posits that ITDs are encoded through ipsilateral and contralateral axonal delay lines of varying length, each projecting to coincidence detectors at the next stage in the auditory hierarchy64. These coincidence detectors are thought to be tuned to a specific ITD to which they respond with their maximum firing rate64. Additionally, the coincidence detectors presumably have relatively sharp, level-invariant tuning curves that match the resolution of spatial hearing in behavioral reports of sound localization acuity, sample the azimuth homogeneously and are organized topographically (reviewed elsewhere65). Thus, according to the place code, sound location is encoded by activating distinct clusters of neurons in a topographic manner through a system of delay lines and coincidence detectors.
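The delay-line logic of the place code can be sketched in a few lines. This is an idealization rather than a biophysical model: each candidate lag stands for one delay line feeding one coincidence detector, and the detector's output is approximated by the cross-correlation of the two ear signals at that lag (a common abstraction, assumed here). The function name and signal parameters are hypothetical.

```python
import random


def jeffress_itd(left, right, fs, max_lag):
    """Estimate the ITD (s) with an idealized Jeffress-style delay-line array.

    Each lag in [-max_lag, max_lag] samples plays the role of one delay line and
    its coincidence detector; the detector output is approximated by the dot
    product of the two ear signals at that relative delay (cross-correlation).
    The 'place' (lag) of the most active detector is the ITD estimate.
    A positive result means the right-ear signal lags the left-ear signal.
    """
    n_start, n_stop = max_lag, len(left) - max_lag
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[n] * right[n + lag] for n in range(n_start, n_stop))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs


# Hypothetical test signal: broadband noise that reaches the right ear 5 samples later.
fs, delay = 44100, 5
rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(1505)]
left = source[delay:delay + 1500]  # left[n] = source[n + delay]
right = source[:1500]              # right[n] = left[n - delay]
print(round(jeffress_itd(left, right, fs, max_lag=30) * 1e6, 1), "us")
```

Here `max_lag=30` samples at 44.1 kHz covers about ±680 μs, roughly the human ITD range; finer lag steps would correspond to a denser array of delay lines.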

The advantage of encoding models such as the place code is that they generate predictions about the neural response (in this case to sound location) that can be compared to actual neural data. For the place code, single-unit recordings confirmed the existence of azimuthal ITD maps in the brain stem of barn owls66-68 and in multi-sensory structures in the brain stem of mammals69 (Box 2). However, the broad spatial receptive fields with an oversampling of contralateral locations that are found in subcortical and cortical auditory regions in mammalian species are not in agreement with the topographic, homogeneous neuronal sampling of the azimuth predicted by the place code. Moreover, the existence of ITD maps in barn owls can also be explained by other neural coding strategies59 (see Box 1 and the next section).

Box 2: Spatial processing in the subcortical auditory pathway.

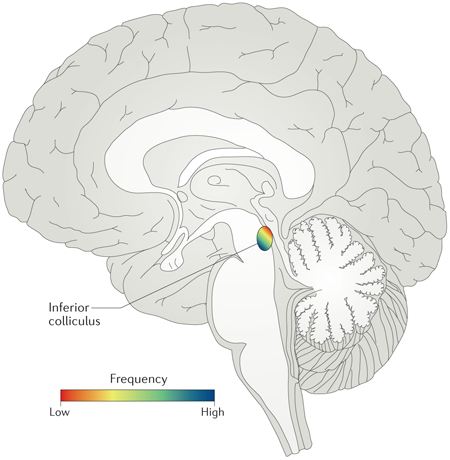

Binaural spatial cues (ITDs and ILDs) are processed to a large extent, or even completely, before arriving at the auditory cortex (reviewed elsewhere65). In brief, sound waves are transduced into action potentials by the hair cells in the cochlea and projected through the auditory nerve fibers to the cochlear nucleus. Subsequently, processing splits into distinct pathways for each type of binaural cue, continuing either into the medial or into the lateral nucleus of the superior olive161 (MSO and LSO, respectively). In MSO, encoding of ITDs relies on excitatory-excitatory (EE) neurons with Gaussian-shaped tuning curves65,190-193, while ILDs are encoded by excitatory-inhibitory (EI) neurons in LSO, resulting in sigmoidal tuning curves194,195. Although research in avian species established the existence of ‘auditory space maps’ in subcortical structures66-68, in mammals neither best ILDs nor best ITDs seem to be organized topographically within subcortical structures such as the MSO, LSO, and inferior colliculus65. Only multisensory nuclei such as the deep layers of the superior colliculus contain topographic maps of auditory space as well as maps of visual space69.

Until recently, our knowledge of subcortical auditory spatial processing was based solely on single-unit recordings in non-human animals. Owing to the small size of subcortical structures in the human auditory pathway (e.g. the human inferior colliculus has an average width of ~7 mm196) and the limited spatial resolution of conventional fMRI at 3 Tesla, it has heretofore been impossible to measure these processes in humans. However, new developments in ultra-high field MRI (at magnetic field strengths of 7 Tesla or higher) now enable fMRI measurements at sub-millimeter resolution, opening up the functional organization of the human subcortical auditory pathway to non-invasive research. The potential of these technological advances in neuroimaging is demonstrated by studies revealing, for the first time, the tonotopic organization of the human inferior colliculus (IC)197 (consisting of a dorso-lateral to ventro-medial low to high frequency tuning gradient, see figure) and medial geniculate body (MGB)198, the joint encoding of frequency and sound location in MGB, and the use of tonotopic mapping in MGB to distinguish its subnuclei in vivo (that is, the dorsal [MGd] and ventral [MGv] divisions)198.
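The two tuning-curve shapes mentioned in Box 2 (Gaussian ITD tuning of EE neurons in MSO and sigmoidal ILD tuning of EI neurons in LSO) can be written down as simple functions. The sketch below is purely illustrative: the function names, best ITD, widths, slopes and maximal rates are hypothetical values, not fits to any data set.

```python
import math


def ee_itd_rate(itd_us, best_itd_us=100.0, width_us=150.0, r_max=100.0):
    """Gaussian ITD tuning of a hypothetical EE (MSO-like) neuron, in spikes/s."""
    return r_max * math.exp(-((itd_us - best_itd_us) ** 2) / (2 * width_us ** 2))


def ei_ild_rate(excitatory_db, inhibitory_db, midpoint_db=0.0, slope_db=3.0, r_max=100.0):
    """Sigmoidal ILD tuning of a hypothetical EI (LSO-like) neuron, in spikes/s.

    Excitation driven by one ear is opposed by inhibition driven by the other
    ear, so in this sketch the rate depends only on their level difference (ILD).
    """
    ild = excitatory_db - inhibitory_db
    return r_max / (1 + math.exp(-(ild - midpoint_db) / slope_db))


for itd in (-300, 0, 100, 300):
    print("ITD", itd, "us ->", round(ee_itd_rate(itd), 1), "spikes/s")
for ild in (-10, 0, 10):
    print("ILD", ild, "dB ->", round(ei_ild_rate(60 + ild, 60), 1), "spikes/s")
```

The Gaussian has a single preferred ITD, whereas the sigmoid saturates for large ILDs favoring the excitatory ear and is most sensitive to ILD changes around its midpoint.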

Despite these apparent shortcomings of the place code, only a few other encoding models have been proposed. Młynarski70 described a model that uses statistical regularities of real-life sounds as the driving force in the transformation from binaural real-life sounds to neural representations. This model is based on the theory of efficient coding for neural processing71, which argues that neural stimulus representations are derived in a hierarchical, sparse manner from statistical regularities in the environment72,73. Founded on these principles, Młynarski describes a two-stage model that transforms a binaural sound into a neural representation of spectrotemporal and interaural features. In the first stage, phase and amplitude information in each ear are extracted and separated with complex-valued, sparse coding that mimics cochlear filtering processes. In this stage, binaural phase information is also transformed into IPDs (reflecting medial superior olive processing). In the second stage, the model uses sparse coding to jointly encode monaural amplitude information from each ear and the IPDs, resulting in a spectrotemporal representation of the sound combined with spatial cues at the output stage (presumably the cortex). Given binaural real-life sound as input, this model predicts a cortical representation of sound location consisting of two neuronal subpopulations with broad receptive fields, tuned to lateral locations in opposing hemifields.
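The first stage of this model separates each ear's signal into amplitude and phase and derives IPDs from the phase information. The sketch below illustrates only that separation and swaps in a much simpler technique: instead of learned, sparse, complex-valued filters, it uses one fixed complex exponential per ear (a single discrete Fourier transform bin). Function names and signal parameters are hypothetical.

```python
import cmath
import math


def complex_channel(signal, fs, freq_hz):
    """Complex output of one hypothetical narrowband channel.

    Projects the signal onto a complex exponential at freq_hz (one DFT bin),
    a crude stand-in for a single complex-valued cochlear-like filter.
    """
    n_samples = len(signal)
    return sum(x * cmath.exp(-2j * math.pi * freq_hz * n / fs)
               for n, x in enumerate(signal)) / n_samples


def amplitudes_and_ipd(left, right, fs, freq_hz):
    """Split each ear's channel output into amplitude and phase; return both amplitudes and the IPD."""
    z_left = complex_channel(left, fs, freq_hz)
    z_right = complex_channel(right, fs, freq_hz)
    ipd = cmath.phase(z_left * z_right.conjugate())  # phase difference in [-pi, pi]
    return abs(z_left), abs(z_right), ipd


fs, f = 16000, 500
samples = range(320)  # exactly 10 cycles of the 500-Hz tone
left = [math.sin(2 * math.pi * f * k / fs + 0.5) for k in samples]  # left ear leads by 0.5 rad
right = [math.sin(2 * math.pi * f * k / fs) for k in samples]
print(amplitudes_and_ipd(left, right, fs, f))
```

Taking the phase of the product of one channel with the conjugate of the other gives the interaural phase difference directly, while the magnitudes carry the monaural amplitude information that the model's second stage combines with it.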

These model predictions are in close alignment with observations of neuronal spatial tuning in cat auditory cortex46,70, indicating that the model describes the transformation from binaural sound to neural representation relatively well (at least for cats). This confirmation of the model’s predictions also suggests that cortical spatial receptive fields in cats indeed reflect statistical regularities in binaural sound70. A similar relationship between statistical regularities in sound and neural encoding has been shown for other sound attributes. For example, cortical spectrotemporal sensitivity61,63 reflects the statistical regularities of spectrotemporal modulations in real-life sounds74.

Along the same lines, Harper and McAlpine75 introduced the notion of ‘optimal coding’. Although this model is a conceptual rather than a formal encoding model (that is, it does not provide a mechanistic explanation for how binaural sound is transformed into the predicted neural representation), it is conceptually congruent with Młynarski’s encoding model70, hence we discuss it here. Harper and McAlpine75 posit that the neuronal representation of IPDs is organized such that the range of IPDs present in real-life binaural sounds is encoded as accurately as possible. In other words, the neural representation is considered to be a consequence of the sensory input that it receives, similar to the sensory processing theories underlying Młynarski’s model.

More concretely, because the range of IPDs in real-life sounds is dependent on head size and sound frequency (Box 1), the optimal coding model predicts that the neural coding strategy across different mammalian species is also dependent on these factors75. That is, for animals with a relatively large head size (such as macaques and humans), the optimal coding model predicts a mixture of neural IPD tuning: two subpopulations with lateral best IPDs (outside of the physiological range) in opposing hemifields for low frequency tones, and a homogeneous distribution of best IPDs spanning the entire azimuth for high frequency tones (FIG. 2B). For animals with a relatively small head size (such as gerbils), the optimal coding model predicts two subpopulations with lateral IPD tuning even at higher frequencies. As described above (see Cortical spatial tuning properties), these predictions are in line with measurements of mammalian neural spatial tuning.
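The logic of the optimal coding argument can be illustrated with a toy comparison. The sketch below is not Harper and McAlpine's own formulation: it assumes cosine-shaped IPD tuning, Poisson firing, Fisher information as the measure of encoding accuracy, and the worst case over the physiological IPD range as the criterion. It compares two hypothetical populations of 16 neurons (two lateral channels versus evenly spread best IPDs) for a maximal ITD of ~600 μs, the human value quoted in Box 1.

```python
import math


def ipd_range(freq_hz, max_itd_s):
    """Largest physiological IPD (rad) for a tone, capped at pi (half a cycle)."""
    return min(math.pi, 2 * math.pi * freq_hz * max_itd_s)


def fisher_information(ipd, best_ipds, r_max=1.0, baseline=0.1):
    """Population Fisher information at one IPD for cosine-tuned Poisson neurons."""
    total = 0.0
    for best in best_ipds:
        x = ipd - best
        rate = baseline + r_max * (1 + math.cos(x)) / 2
        slope = -r_max * math.sin(x) / 2
        total += slope ** 2 / rate  # Poisson Fisher information: f'(x)^2 / f(x)
    return total


def worst_case_information(best_ipds, ipd_max, n_grid=201):
    """Minimum Fisher information over IPDs in [-ipd_max, ipd_max]."""
    grid = [-ipd_max + 2 * ipd_max * k / (n_grid - 1) for k in range(n_grid)]
    return min(fisher_information(p, best_ipds) for p in grid)


n = 16
two_channel = [math.pi / 2] * (n // 2) + [-math.pi / 2] * (n // 2)  # lateral best IPDs
homogeneous = [-math.pi + 2 * math.pi * k / n for k in range(n)]   # evenly spread best IPDs

max_itd = 600e-6  # seconds
for f in (200, 1500):
    ipd_max = ipd_range(f, max_itd)
    print(f, "Hz:",
          "two-channel", round(worst_case_information(two_channel, ipd_max), 2),
          "homogeneous", round(worst_case_information(homogeneous, ipd_max), 2))
```

Under these assumptions, the two lateral channels (best IPDs of ±π/2, outside the physiological range at 200 Hz) give the higher worst-case information at 200 Hz. At 1,500 Hz, where the physiological range spans the whole phase cycle, the evenly spread population does better because the two-channel population carries almost no information at IPDs of ±π/2.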

Interestingly, even though barn owls are considered distinct from mammals in terms of spatial auditory processing65, the optimal coding model also explains the neural spatial tuning observed in this species. That is, neural encoding of IPDs in barn owls fits within the optimal coding framework once differences in the temporal resolution of the auditory system across species are taken into account (Box 1): auditory nerve fibers in barn owls can phase lock to frequencies up to 10 kHz76,77, enabling the extraction of accurate phase information at much higher sound frequencies than in mammals (for example, in humans, phase locking occurs up to a maximum of 1.5 kHz78). Consequently, even though barn owls have a small head, the optimal coding model predicts that for tones >3 kHz the most accurate IPD encoding requires a homogeneous distribution of IPDs across auditory neurons, in line with single-unit measurements of neural spatial tuning in barn owls66. Whether this homogeneous IPD distribution (either in barn owls or in mammals with a relatively large head size) arises from a delay line organization as proposed by Jeffress64 is another matter that is not addressed by the optimal coding model.

In summary, the first steps in computational modeling of sound location encoding have been taken, but further developments are needed. The validity of existing models needs to be tested empirically, and comparisons across different models are required to better understand which computational mechanisms best explain the transformation from real-life binaural sound to neural representation. Such comparisons can also be made with fMRI in humans, using model-based analyses62,79,80 or representational similarity analysis81. These methods evaluate models in terms of their ability to predict or account for measured fMRI response patterns, and have been used to investigate the neural representations of sensory features in vision79-81 as well as in audition61,62,82. For example, a comparative study that measured fMRI responses to naturalistic sounds and tested how accurately various computational models of cortical sound encoding predicted them showed that the cortex contains interdependent, multi-resolution representations of sound spectrograms61. Similar fMRI encoding studies can provide important insights into the cortical representational mechanisms of sound location. Importantly, the submillimeter spatial resolution enabled by high-field fMRI (7 Tesla and higher) may be crucial to link the results in humans with those obtained in animal models83.
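Representational similarity analysis compares a model and measured data through their dissimilarity structure, so model features and voxels need not correspond one to one. A minimal sketch follows, assuming 1 minus the Pearson correlation as the dissimilarity measure and a Spearman (rank) correlation to compare the resulting matrices; these are common choices but assumptions of this sketch, and all numbers are made up for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def rdm(patterns):
    """Upper triangle of a representational dissimilarity matrix (1 - Pearson r between conditions)."""
    n = len(patterns)
    return [1 - pearson(patterns[i], patterns[j]) for i in range(n) for j in range(i + 1, n)]


def ranks(values):
    """Rank transform (ties broken by position, a simplification of this sketch)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0.0] * len(values)
    for rank, idx in enumerate(order):
        result[idx] = float(rank)
    return result


def rdm_similarity(model_rdm, data_rdm):
    """Spearman correlation between a model RDM and a data RDM."""
    return pearson(ranks(model_rdm), ranks(data_rdm))


# Hypothetical example: 4 sound locations; 3 model features vs. 4 'voxels' per location.
model_features = [[1.0, 0.0, 0.2], [0.8, 0.3, 0.1], [0.1, 0.9, 0.5], [0.0, 1.0, 0.9]]
voxel_patterns = [[2.1, 0.3, 0.9, 0.2], [1.8, 0.6, 0.7, 0.4],
                  [0.4, 1.9, 1.2, 1.5], [0.2, 2.2, 1.6, 1.8]]
print(round(rdm_similarity(rdm(model_features), rdm(voxel_patterns)), 3))
```

Competing models of sound location encoding would each yield their own model RDM, and the one whose RDM correlates best with the measured RDM would be preferred.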

Encoding of multiple sound attributes

The spatial position of a sound source is just one of the many attributes of sound. However, sound location can be considered somewhat distinct from other perceptual attributes that have a role in object recognition and categorization (for example, pitch) because it is used most directly for guiding sensorimotor behavior. Nevertheless, questions arise about when and how acoustic, perceptual, and location features are integrated to give rise to a unified neural representation of a localized sound object (reviewed in detail elsewhere84).

Interdependent encoding of multiple sound attributes may provide a (partial) answer to these questions. For instance, the encoding model70 described in the previous section argues for joint encoding of spatial and spectrotemporal sound features. Single-cell recordings in ferrets also support this idea: neurons in the primary and higher-order auditory cortex of ferrets are not only sensitive to sound location but are also co-modulated by perceptual features such as pitch and timbre85. In mammals with intermediate or large head sizes, such as macaques, cortical tuning to IPD is a function of sound frequency59. Further, a relationship between frequency tuning and ITD tuning has also been reported at the subcortical level21,86. However, research directly testing the principles of interdependent encoding for binaural real-life sound is scarce, and more extensive studies are required to develop a better understanding of the relationship between the encoding of spatial position and other sound attributes84.

Decoding models: reading the code

In the previous sections, we described computational models of the physiological mechanisms underlying the transformation from binaural real-life sound into neural response (encoding models). A complementary question is how this information is subsequently read out by downstream areas (such as the frontal cortex) to give rise to a perception of sound location. That is, which information in the neural response is most informative about location, and how is it read out by higher-order, non-sensory regions? To answer these questions, researchers have used decoding approaches that reconstruct (decode) the position of a sound source as accurately as possible from measured neural activity. In the next paragraphs, we discuss insights derived from these studies.

A first, much debated concept that has been investigated with decoding approaches is whether the neural representation of sound location is based on opponent coding mechanisms. Theories of opponent coding build on the observation that the majority of spatially sensitive neurons exhibit the greatest response modulation at locations around the midline (both in subcortical structures and in the cortex)21,37,46,54,87. At a computational level, integrating information across neuronal populations with such tuning properties leads to the highest spatial acuity in the region around the midline – as evidenced by psychoacoustic studies9,23,24 (see the section on mammalian spatial hearing). In addition, opponent coding has been considered a possible means to resolve the problem of level-invariance46,54. For accurate spatial hearing, the neural representation of sound location needs to be robust to changes in sound level. This can be achieved by an opponent coding mechanism in which the activity in two neuronal populations tuned to opposite hemifields is compared.

On a neural level, opponent coding mechanisms can take different forms: comparing the difference in mean or summed activity between two contralaterally tuned channels across hemispheres21,46, or between an ipsilateral and a contralateral channel within a single hemisphere46,70. Other studies have even suggested that a third, frontally tuned channel is involved88,89. Decoding studies in cats46, humans54 and rabbits87 confirm that such opponent coding strategies convey information on sound location. Other empirical work, such as neural adaptation experiments and psychoacoustic studies, further supports the validity of an opponent population rate code in the human auditory cortex90-93.

The opponent coding mechanisms described above rely on population firing rates; however, whether population sums or averages capture the full richness of information available in the population response has been a matter of debate. Researchers have therefore also explored biologically plausible ways in which the brain might decode sound location from the pattern of population activity, focusing on approaches such as population vectors94,95 and maximum likelihood estimation (MLE)96. Although population vector codes based on the activity of broadly direction-sensitive neurons accurately represent the direction of arm movement in the motor cortex, they have been less successful when applied to the representation of sound location87,97.
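A population vector read-out can be sketched for two hypothetical populations. With cosine tuning and preferred directions that tile the full circle (the idealized situation in which population vectors work well), the read-out recovers the true azimuth; with two broad hemifield channels whose preferred directions are ±90°, it can only point left or right. Both populations and all parameter values are assumptions chosen for illustration; this is not a reproduction of the analyses in the studies cited above.

```python
import numpy as np


def population_vector(rates, preferred_deg):
    """Angle (deg) of the rate-weighted sum of each neuron's preferred-direction unit vector."""
    theta = np.deg2rad(preferred_deg)
    x = np.sum(rates * np.cos(theta))
    y = np.sum(rates * np.sin(theta))
    return np.rad2deg(np.arctan2(y, x))


# Case 1 (hypothetical): cosine tuning, preferred azimuths evenly tiling 360 deg.
cosine_preferred = np.arange(0.0, 360.0, 15.0)


def cosine_rates(azimuth_deg, baseline=10.0, depth=8.0):
    return baseline + depth * np.cos(np.deg2rad(azimuth_deg - cosine_preferred))


# Case 2 (hypothetical): two broad hemifield channels, preferring -90 and +90 deg.
hemifield_preferred = np.array([-90.0, 90.0])


def hemifield_rates(azimuth_deg, gain=20.0, slope=0.05):
    right = gain / (1.0 + np.exp(-slope * azimuth_deg))
    return np.array([gain - right, right])  # left channel is the mirror image


for true_az in (-60.0, -10.0, 30.0):
    pv_cos = population_vector(cosine_rates(true_az), cosine_preferred)
    pv_hemi = population_vector(hemifield_rates(true_az), hemifield_preferred)
    print(f"true {true_az:6.1f}  cosine PV {pv_cos:6.1f}  hemifield PV {pv_hemi:6.1f}")
```

With hemifield-type tuning, the estimate snaps to ±90° regardless of the true source position, because the vector sum only reflects which channel is more active.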

More promising are the results of maximum likelihood models, which have yielded sound location estimates matching behavioral acuity from spike-rate patterns in the caudolateral area of macaque auditory cortex97 and in rabbit inferior colliculus87. Likelihood estimation is a form of template matching in which the observed neural response across a population is compared to templates (that is, the idealized response patterns) derived from the tuning curves of neurons96,98. Because the likelihood depends on how strongly each neuron's expected response changes with location, the neurons that contribute most to the location estimate are those for which the estimate lies in the region of largest modulation (that is, on the slope of the tuning curve), not the neurons that are most active98. MLE models are therefore well suited to extracting information from the broad, slope-dominated spatial tuning of cortical neurons. Furthermore, although these MLE models were initially developed for neural spike rates, they can be adapted to other types of data. For instance, a 2018 study successfully applied a modified version of the MLE model to fMRI activity patterns to decode sound location from the human auditory cortex57. Moreover, likelihood estimation does not require an explicit definition of opponent coding as a subtraction mechanism, and can even accommodate a mixed coding strategy in which spatial tuning depends on sound frequency and head size60. Thus, likelihood estimation is a good candidate model for how higher-order regions read out sound location from population activity patterns.
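The template-matching logic of MLE can be sketched for a hypothetical population of Poisson-spiking neurons with sigmoidal (hemifield-type) spatial tuning. This is a generic textbook form of the decoder, not the specific model of any study cited here; the population size, tuning parameters and Poisson noise are assumptions. The last lines compare the most active neuron with the neuron carrying the most Fisher information, which is large where a tuning curve is steep.

```python
import numpy as np

rng = np.random.default_rng(0)
grid = np.arange(-90.0, 90.5, 1.0)  # candidate azimuths (deg), 1-deg steps

# Hypothetical population: alternating right- and left-preferring neurons with
# sigmoidal tuning whose midpoints and slopes vary (all values are assumptions).
n_neurons = 40
sign = np.where(np.arange(n_neurons) % 2 == 0, 1.0, -1.0)
midpoints = rng.uniform(-60.0, 60.0, n_neurons)
slopes = rng.uniform(0.04, 0.12, n_neurons)
base_rate, max_rate = 1.0, 30.0  # expected spike counts per trial


def tuning(theta):
    """Expected spike counts, shape (n_neurons, n_theta): the decoder's templates."""
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    z = sign[:, None] * slopes[:, None] * (theta[None, :] - midpoints[:, None])
    return base_rate + max_rate / (1.0 + np.exp(-z))


templates = tuning(grid)


def mle_decode(counts):
    """Azimuth whose template gives the highest Poisson log-likelihood."""
    # log L(theta) = sum_i [n_i log f_i(theta) - f_i(theta)] + const
    loglik = counts @ np.log(templates) - templates.sum(axis=0)
    return grid[np.argmax(loglik)]


def fisher_per_neuron(theta):
    """Poisson Fisher information f_i'(theta)^2 / f_i(theta) of each neuron at theta."""
    s = 1.0 / (1.0 + np.exp(-sign * slopes * (theta - midpoints)))
    slope_of_curve = max_rate * s * (1.0 - s) * sign * slopes
    return slope_of_curve ** 2 / (base_rate + max_rate * s)


true_az = 20.0
expected = tuning(true_az)[:, 0]
counts = rng.poisson(expected)
info = fisher_per_neuron(true_az)
print("MLE estimate:", mle_decode(counts))
print("most active neuron:", np.argmax(expected), "most informative neuron:", np.argmax(info))
```

The two indices need not coincide: a neuron firing near its saturated maximum contributes little Fisher information, because its rate barely changes with location.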

Taken together, evidence is growing that, in mammals, population pattern representations are more informative about sound location than rate codes or population vector representations. Additionally, most empirical findings described here indicate that the read-out of sound location from neural responses benefits from the inclusion of opponent mechanisms, although the precise form of such opponent coding remains a matter of debate. Furthermore, where this read-out of spatial information takes place is still poorly understood. In the next paragraphs, we therefore examine sound location processing on a larger scale, that is, within the cortical auditory processing network.

The cortical spatial auditory network

Hierarchical, specialized processing.

A prominent model of auditory processing is the dual-stream model, which posits that auditory processing takes place in two functionally specialized pathways99,100: a ventral ‘what’ pathway dedicated to processing sound object identity, and a dorsal ‘where’ pathway dedicated to spatial processing (analogous to the dual-stream model for the visual system101,102). That is, this framework of functionally specialized processing views cortical auditory processing as a hierarchical series of feed-forward analysis stages, from sensory (acoustic) processing in the PAC to specialized processing of higher-order sound attributes (such as sound location) in higher-level areas. Thus, in this view, sound location is a higher-order sound attribute.

Evidence for this dual hierarchical organization of auditory processing comes from animal as well as human studies. Single-cell recordings first identified functionally specialized ‘what’ and ‘where’ pathways in rhesus monkeys39,99,100. Anatomical studies of corticocortical connections in non-human primates provided further support, showing a dorsal stream that originates in caudal belt fields, projects to the posterior parietal cortex (PPC), and eventually ends in the dorsolateral prefrontal cortex (dlPFC)103. In humans, neuroimaging studies revealed a similar functional dissociation between spatial and object identity processing104-106. Anatomically, the human homologue of the dorsal spatial processing pathway projects from the planum temporale to the inferior parietal lobule (IPL), premotor cortex (PMC), and finally the dlPFC107-110, or its equivalent in the inferior frontal cortex (IFC)110-112. Finally, lesion studies provide causal evidence for the dissociation between the ventral and dorsal auditory streams in humans113,114 and other mammals115.

In the past few years, functions associated with the dorsal ‘where’ stream have been extended beyond spatial auditory processing to include auditory motion processing116-118, temporal processing119 and sensorimotor functions (reviewed elsewhere110). These functions are closely related to spatial processing and, altogether, robust evidence across mammalian species indicates the involvement of posterior-dorsal regions in spatial auditory processing. However, more recent empirical work suggests that a strictly hierarchical, feed-forward notion of spatial auditory processing may be incomplete. Below, we review these findings and discuss their implications for the cortical processing of sound location.

A recurrent model.

Psychophysical studies show that active spatial listening is dynamic. For instance, attention to specific locations leads to more rapid processing of auditory targets at these locations120-122, and spatial attention facilitates the understanding of speech in the presence of competing but spatially separated speech streams123. According to the hierarchical view of auditory processing, the neural mechanisms supporting such dynamic spatial listening would be expected in the posterior-dorsal auditory areas that are functionally specialized for sound location processing. However, recent research suggests that the PAC also contributes to dynamic spatial listening57,58 (see the section on cortical spatial tuning properties).

Specifically, single-cell recordings in alert, behaving cats revealed that spatial tuning in the PAC sharpens during task performance58. Although this effect was also present in the ‘spatial’ areas of the dorsal zone and posterior auditory field, it was stronger in the PAC124. Similar sharpening of spatial tuning in the PAC during active, goal-oriented sound localization was recently demonstrated with fMRI in humans57. Notably, although such effects might have been expected in the planum temporale (the human ‘spatial’ auditory area), task performance did not modulate spatial tuning in this region. Another fMRI study in humans did not observe similar changes in ILD and ITD selectivity with task performance55. However, this study considered average response functions across the entire auditory cortex, which may have diluted localized effects within the PAC55.

Thus, the combined evidence from animal and human studies provides a first indication that spatial sensitivity in the PAC is flexible and dependent on behavioral demands, and that the PAC is involved in sound location processing during active, goal-oriented localization. These findings tap into a long-standing debate on the functional role of the PAC in the transformation from acoustic processing to the representation of higher-order ‘abstract’ sound properties that takes place in the auditory system84. Even though comparable modulations of neuronal tuning in the PAC by behavioral demands have been reported for other acoustic attributes, these effects typically concerned low-level (acoustic) features. For example, attending to a particular reference sound can induce adaptive changes in spectrotemporal tuning that facilitate target detection125-127 (reviewed elsewhere128).

In the context of spatial processing, it may be argued that neurons in the PAC are selective for elementary spatial cues, such as ITDs and ILDs, rather than for location per se129-131 (see the section on cue integration). In that case, sharpening of neural tuning in the PAC would reflect sharpening of responses at a processing level comparable to that of the attention-related sharpening of spectrotemporal tuning. Alternatively, if sound location is regarded as a higher-order attribute, sharpening of spatial receptive fields in the PAC during active sound localization seems to call into question the proposed strictly hierarchical nature of cortical spatial processing.

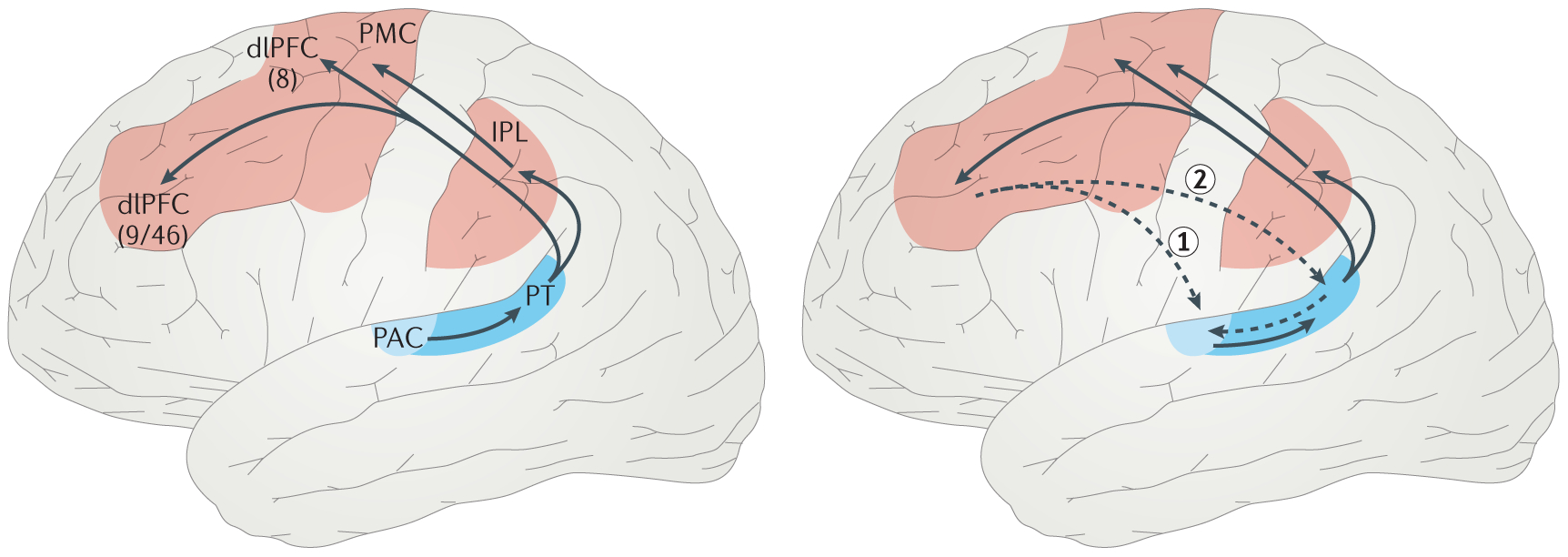

The observed effects in the PAC might be a consequence of feedback projections from higher-order regions that are initiated or strengthened by behavioral or cognitive demands during goal-oriented sound localization (FIG. 3). Such feedback connections have also been incorporated in models of visual processing (for example, recurrent132 and integrated133 models, and the ‘reverse hierarchy’ model for visual and auditory sensory learning134). For auditory spatial processing, the dlPFC is a likely source of these top-down modulations135,136. This region is thought to translate sensory representations into task-based representations137.

Figure 3. Cortical network of sound location processing.

Schematic representation of the different stages of sound location processing in the cortical auditory pathway during passive sound localization (left), and active, goal-oriented localization (right). Blue areas indicate auditory cortical regions, with darker blue representing increased spatial sensitivity. The red areas are sensorimotor regions of the auditory dorsal stream. Black arrows signal functional cortico-cortical (feedforward) connections. The dashed arrows indicate two potential routes for feedback connections to explain the sharpening of spatial tuning in the primary auditory cortex (PAC) during active sound localization. Arrow 1 reflects a direct feedback route for top-down modulations of activity in PAC by dorsolateral prefrontal cortex (dlPFC). Arrow 2 reflects an indirect feedback route in which top-down modulations of PAC activity by dlPFC are mediated by the planum temporale (PT), the area traditionally implicated in spatial processing. The numbers 8, 9, and 46 refer to Brodmann areas.

A more intriguing question, however, is which regions the frontal feedback projections target. Possibly, these feedback projections are reciprocal to the feedforward projections within the auditory dorsal stream. In this scenario, feedback projections reach the PAC either directly (although no evidence exists for an anatomical connection between the PAC and PFC) or indirectly, through the connection between posterior-dorsal regions (that is, the caudal belt in non-human primates and the planum temporale in humans) and the PAC32,34. An alternative scenario involves corticofugal projections from the PFC to subcortical structures, which in turn affect PAC processing58. For example, corticofugal projections from the PFC can modulate activity in the thalamic reticular nucleus, as has been demonstrated for visual attention138. These effects could then propagate as inhibitory modulations to the medial geniculate body139 and subsequently change neural responses in the PAC140.

In summary, empirical work indicates a stronger involvement of the PAC in spatial auditory processing during active, goal-oriented localization than assumed within the hierarchical framework of cortical spatial auditory processing. This finding suggests that the hierarchical framework needs to be extended by incorporating recurrent connections to accommodate task-dependent modulations of spatial tuning in primary regions. Which form this dynamic cortical auditory network takes is presently not clear, although several hypotheses can be formulated. To test the validity of these hypotheses, functional connectivity studies in humans and non-human primates are needed to measure the task-dependent modulations of interactions between cortical (and subcortical) regions during sound localization. On a smaller scale, laminar electrophysiology141-143 and laminar fMRI144-146 can elucidate the cortical micro-circuitry of feedforward and feedback connections involved in dynamic spatial receptive fields and attentional effects.

Is one hemisphere enough?

Another important debate concerning the cortical network for sound location processing is whether both hemispheres are required for accurate sound localization, or only one. Lesion studies typically report contralesional localization impairments following unilateral lesions3,4,114,147,148, suggesting that one hemisphere is sufficient to localize sounds accurately in contralateral space. However, location decoding studies typically report more accurate location estimates when information is combined across hemispheres21,37,46,57, suggesting that combining information from both auditory cortices is optimal. Possibly, these divergent findings reflect differences in the behavioral state of the listener between the experimental paradigms: localization impairments in lesion studies are measured during active, goal-oriented sound localization, whereas location decoding is most often performed on neural activity acquired during passive listening.

Specifically, in previous sections we described empirical work showing that the behavioral state of a listener modulates spatial tuning within the auditory cortex (see the section on cortical spatial tuning properties). Similarly, behavioral demands (that is, engaging in active sound localization) might conceivably strengthen the functional connection between the auditory cortices of the two hemispheres, which in turn would affect the neural representation of sound location within each hemisphere. In this scenario, the interhemispheric connection is weak during passive listening, and each hemisphere contains an isolated, sub-optimal representation of predominantly contralateral sound locations. A post-hoc combination of the neural activity in each hemisphere by a location decoding approach then integrates unique spatial information, resulting in more accurate location estimates. This ‘bilateral gain’ has been demonstrated in population coding studies that measured neural responses during passive listening46,54.

By contrast, during active, goal-oriented sound localization, the neural representation of sound location becomes more precise within each hemisphere (that is, spatial tuning sharpens)57,58, resulting in accurate contralateral sound localization (in line with lesion studies3,4,114,147,148). In addition, the stronger functional connection between the two auditory cortices during active localization would allow interhemispheric information exchange, resulting in comparable spatial representations in both hemispheres. A post-hoc combination of neural activity across hemispheres in a decoding approach will therefore not necessarily lead to better location estimates. Importantly, the results of a decoding study in humans are consistent with this pattern: during a non-localization task, each hemisphere contained complementary information, whereas during an active sound localization task the information was redundant57.
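The logic of this argument (complementary information yields a bilateral gain, redundant information does not) can be illustrated with a deliberately abstract sketch. Each hemisphere's location read-out is treated as the true azimuth plus Gaussian noise, and the degree of interhemispheric exchange is represented, by assumption, as the fraction of that noise shared between hemispheres. The sketch ignores the contralateral bias of each hemisphere and is not a model of the cited decoding studies.

```python
import numpy as np


def hemispheric_rmse(shared_fraction, noise_sd=10.0, n_trials=20000, seed=0):
    """Return (one-hemisphere RMSE, two-hemisphere RMSE) in degrees.

    Each hemisphere's read-out is modelled as true azimuth plus Gaussian noise.
    `shared_fraction` is the part of the noise variance common to both
    hemispheres: 0 means complementary (independent) information, 1 means fully
    redundant read-outs. This noise model is an assumption of the sketch.
    """
    rng = np.random.default_rng(seed)
    azimuth = rng.uniform(-90.0, 90.0, n_trials)
    shared = rng.normal(0.0, noise_sd * np.sqrt(shared_fraction), n_trials)
    private_sd = noise_sd * np.sqrt(1.0 - shared_fraction)
    left = azimuth + shared + rng.normal(0.0, private_sd, n_trials)
    right = azimuth + shared + rng.normal(0.0, private_sd, n_trials)
    combined = 0.5 * (left + right)  # post-hoc combination across hemispheres

    def rmse(estimate):
        return np.sqrt(np.mean((estimate - azimuth) ** 2))

    return rmse(left), rmse(combined)


for fraction in (0.0, 0.5, 1.0):
    single, both = hemispheric_rmse(fraction)
    print(f"shared noise {fraction:.1f}: one hemisphere {single:.2f} deg, both {both:.2f} deg")
```

In this model the two-hemisphere error variance is noise_sd² (1 + shared_fraction) / 2, so the bilateral gain shrinks from a factor of √2 in RMSE (independent hemispheres) to none (fully shared information).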

Thus, the behavioral state of a listener appears to affect the cortical network of auditory spatial processing in multiple ways: in terms of feedback connections from frontal regions to cortical auditory regions, and in terms of interhemispheric connections. We are only beginning to unravel the nature of these dynamic mechanisms in the cortical network for sound location processing, and more research on the functional connections within this network – in varying behavioral conditions – is required.

Adaptive mechanisms

The neural processing of sound location is shaped not only by the behavioral state of the listener, but also by the input to the auditory system. This effect is demonstrated clearly by research into sound localization in unilateral hearing loss (reviewed in detail elsewhere149). Specifically, monaural deprivation can have a detrimental effect on spatial hearing150-152, but behavioral data show that adaptive mechanisms can mediate the effects of a change in input and partly restore spatial hearing149. These adaptive mechanisms include the reweighting of spatial cues (resulting in an increased reliance on spectral cues for horizontal localization153-157) and the remapping of binaural cues to correct for the altered input to the two ears in the case of asymmetric hearing loss (that is, with some remaining input to the deprived ear)157,158.

Single-cell recordings in ferrets156-158 and rats159 indicate that these compensatory mechanisms are the result of neural plasticity in the PAC (although adaptive changes at other sites may also be relevant160). Interestingly, both compensatory mechanisms are represented in the PAC: one subpopulation of neurons exhibits enhanced sensitivity to spectral cues156, while a distinct subpopulation exhibits remapping of ILD sensitivity157,158. Importantly, the latter finding suggests that neural tuning to binaural spatial cues depends on the input to the auditory system (hence the shift in sensitivity after monaural deprivation), but that the underlying neural coding principles for binaural sound localization (that is, opponent coding) are robust to changes in input.
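Binaural cue remapping can be sketched in its simplest form as a listener learning the constant ILD offset introduced by a unilateral attenuation. The sinusoidal ILD-azimuth function, the 15 dB maximum ILD and the 5 dB attenuation are hypothetical values chosen for illustration; real adaptation is likely to be gradual and frequency dependent rather than a single subtraction. The remapping only shifts the read-out while leaving its form unchanged.

```python
import numpy as np

MAX_ILD_DB = 15.0  # hypothetical ILD at +/-90 deg; real ILDs vary strongly with frequency


def ild_db(azimuth_deg, left_attenuation_db=0.0):
    """Toy ILD (right-ear minus left-ear level, dB); positive azimuth = right.

    Attenuating the left ear (e.g. an earplug) adds a constant to every ILD.
    """
    return MAX_ILD_DB * np.sin(np.deg2rad(azimuth_deg)) + left_attenuation_db


def azimuth_from_ild(ild, learned_offset_db=0.0):
    """Invert the toy ILD function after subtracting a learned offset (remapping)."""
    x = np.clip((ild - learned_offset_db) / MAX_ILD_DB, -1.0, 1.0)
    return np.rad2deg(np.arcsin(x))


azimuths = np.array([-60.0, -20.0, 0.0, 20.0, 60.0])
plugged = ild_db(azimuths, left_attenuation_db=5.0)  # 5 dB loss in the left ear
before = azimuth_from_ild(plugged)                    # no adaptation yet
after = azimuth_from_ild(plugged, learned_offset_db=5.0)
print("true:    ", azimuths)
print("before:  ", np.round(before, 1))
print("remapped:", np.round(after, 1))
```

Before adaptation, every estimate is displaced towards the open (right) ear; subtracting the learned offset restores the original mapping.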

Cue integration

Until now, we have described cortical mechanisms for spatial hearing mostly in terms of processing of ‘sound location’. However, to arrive at a perception of sound location, the auditory system has to combine information from different types of spatial cues: ITDs, ILDs and spectral cues (see the section on mammalian spatial hearing). Given that ITDs and ILDs are processed in anatomically distinct pathways in the brainstem, at least up to the level of the inferior colliculus161 (Box 2), such cue integration might conceivably start either at the inferior colliculus or in the cortex. In fact, cue integration might be one of the most important contributions of the auditory cortex to spatial auditory processing. Whereas most of the studies described so far employed stimuli that contained a mixture of spatial cues, other lines of research have focused on unraveling cortical cue integration with artificially spatialized stimuli in which ITDs and ILDs can be manipulated in isolation.

The results of such approaches are equivocal. Some studies report that ITDs and ILDs are processed in overlapping cortical regions55,56,162, which is indicative of an abstract, cue-independent representation of sound location in the cortex. Further evidence for integrated processing comes from magnetoencephalography measurements demonstrating location-dependent neural adaptation irrespective of the type of spatial cue of the probe and adaptor sounds163. Moreover, cortical fMRI activity patterns in response to ITDs are similar to those in response to ILDs, even to the extent that a classifier can decode location across cues55. However, other studies have shown distinct topographies and time-courses for each binaural cue129-131, suggesting that ITDs and ILDs are processed in parallel (possibly interacting) cortical networks. The findings of a lesion study in humans also contradict the idea of fully integrated processing: differential patterns of localization errors were observed for sounds spatialized with ITDs or ILDs after brain damage (although the extent and onset of lesions varied considerably between participants)5.
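The logic of cross-cue decoding can be sketched with simulated data. Here, hypothetical voxel patterns for left and right sounds are a weighted mix of a cue-independent location pattern and a cue-specific pattern; a nearest-centroid classifier (chosen for brevity, not the classifier of the cited study) is trained on ITD trials and tested on ILD trials. The voxel count, noise level and mixing weights are assumptions.

```python
import numpy as np

N_VOXELS = 50


def simulate(location_patterns, cue_patterns, shared_weight, n_trials, rng):
    """Hypothetical voxel patterns for left (label 0) and right (label 1) sounds.

    Each pattern mixes a cue-independent location pattern with a pattern specific
    to the cue (ITD or ILD), plus unit-variance Gaussian noise.
    """
    labels = rng.integers(0, 2, n_trials)
    signal = (shared_weight * location_patterns[labels]
              + (1.0 - shared_weight) * cue_patterns[labels])
    return signal + rng.normal(0.0, 1.0, (n_trials, N_VOXELS)), labels


def cross_cue_accuracy(shared_weight, seed=0, n_trials=400):
    """Train a nearest-centroid classifier on ITD trials and test it on ILD trials."""
    rng = np.random.default_rng(seed)
    location = rng.normal(0.0, 1.0, (2, N_VOXELS))  # cue-independent code
    itd_specific = rng.normal(0.0, 1.0, (2, N_VOXELS))
    ild_specific = rng.normal(0.0, 1.0, (2, N_VOXELS))
    x_train, y_train = simulate(location, itd_specific, shared_weight, n_trials, rng)
    x_test, y_test = simulate(location, ild_specific, shared_weight, n_trials, rng)
    centroids = np.stack([x_train[y_train == k].mean(axis=0) for k in (0, 1)])
    dists = ((x_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return np.mean(np.argmin(dists, axis=1) == y_test)


print("cue-independent code:", cross_cue_accuracy(1.0))
print("cue-specific code (mean over 40 simulations):",
      np.mean([cross_cue_accuracy(0.0, seed=s) for s in range(40)]))
```

Transfer across cues requires a shared component; when the two cues evoke unrelated patterns, accuracy averages out at chance. Because a partial overlap between patterns can also support transfer, above-chance cross-cue decoding is consistent with, but does not prove, a fully cue-independent code.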

How can these discrepancies in experimental results be reconciled? Possibly, neural encoding of sound location is a two-stage process that starts from lower-order representations of individual binaural cues, which later converge into a generalized representation of sound location. Similar frameworks have been proposed for the encoding of other sound attributes. For example, the auditory system has been proposed to extract pitch information via two different mechanisms (a temporal and a spectral mechanism) that may converge later in a cortical ‘pitch center’164,165 to arrive at a generalized representation166 (although the notion of a pitch center is debated, reviewed elsewhere167). Even if this two-stage account of sound location encoding is correct, however, where and how ITD and ILD processing converge in the cortex remains unclear. Thus, the cortical contribution to cue integration and the emergence of an abstract perception of sound location are important areas for future research.

Sound localization in complex scenes

In this review, we have – so far – discussed cortical mechanisms for the encoding of absolute sound location. However, in daily life, listeners are typically presented with complex auditory scenes consisting of multiple, spatially separated sound sources (FIG. 4). Such listening environments introduce additional difficulties for absolute sound localization, but simultaneously provide new information to the auditory system in terms of relative sound location. In this final section, we review empirical work addressing these topics concerning spatial hearing in complex auditory scenes.

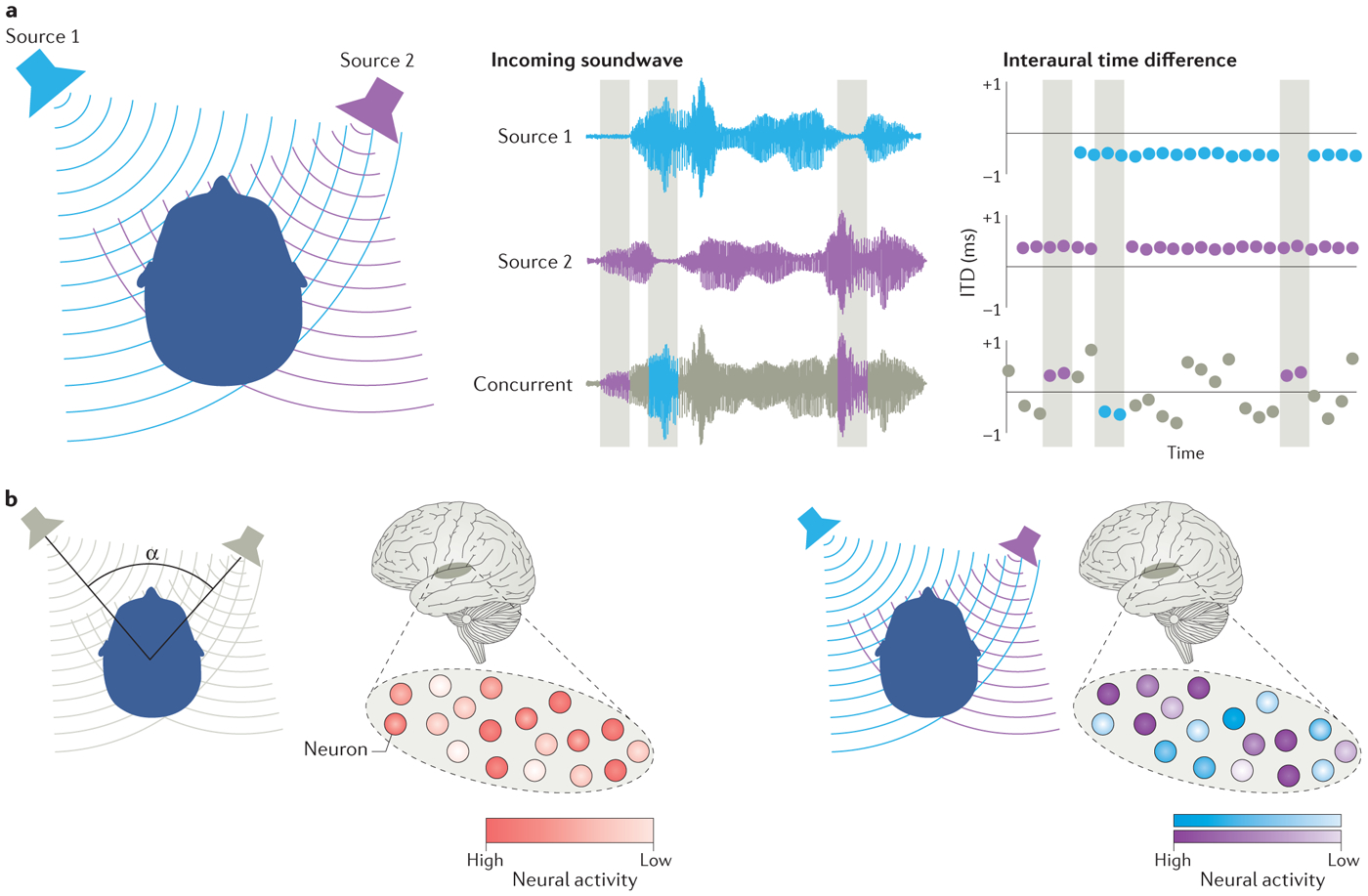

Figure 4. Sound localization in complex auditory scenes.

(A) The distortion of interaural time differences (ITDs) in complex (multi-source) listening scenes. Left panel: the spatial layout of a complex auditory scene with two sound sources. Middle panel: the sound wave of the listening scene if source 1 is present in isolation (top row), if source 2 is present in isolation (middle row) or if sources 1 and 2 are concurrent (bottom row); owing to the sparse character of natural sounds, concurrent sound streams contain time instants in which only one sound source is present (indicated by the gray rectangles). Right panel: a schematic representation of the ITDs to be expected if source 1 is present in isolation (top row), if source 2 is present in isolation (middle row), and if sources 1 and 2 are concurrent (bottom row). ITDs for concurrent sounds are distorted and fluctuate over time (bottom row), but accurately reflect the position of a single source in time instants when only this sound source is present (gray rectangles). The auditory system is hypothesized to use the ITDs in these time instants for sound localization. (B) Two potential neural codes for spatial separation. The left panel illustrates a neural representation of relative sound location that is independent of the absolute location of the individual sound sources. That is, the pattern of neural activity in the population is determined by the angle of spatial separation between the individual sound sources (α). Importantly, an identical perceptual spatial separation (that is, identical α) will result in the same pattern of neural activity even when the locations of the sound sources differ from those depicted here. By contrast, the right panel shows a neural representation of spatial separation that emerges from the absolute location of individual sound sources. Specifically, the pattern of activity in the neuronal population is determined by the location of the individual sound sources. Thus, an identical angle of spatial separation (α) will result in a different pattern of neural activity when the two sound sources are at locations different from those depicted here.

Interfering sounds.

Multi-source listening environments result in distorted and fluctuating binaural cues20. Nevertheless, humans are often still able to localize a target accurately168-171 (although one study showed that the presence of a distractor shifts the perceived location of the target towards the distractor172). Although cortical top-down mechanisms such as spatial selective attention can help to reduce the confusion between a target and interfering sound sources173, this mechanism does not solve the inherent ambiguity of the binaural cues. Accordingly, the presentation of an interfering, spatially separated sound distorts azimuth response functions in the auditory pathway of cats174 and rabbits20. Given this ambiguity in the neural response, how does the auditory system derive the spatial position of a target?

One possible strategy, given the relatively sparse temporal and spectral character of real-life sounds, is to localize the target in the short periods in which one of the sources is present in relative isolation20,175 (FIG. 4A). This approach effectively removes the binaural ambiguity and reduces the computation to that of single-source localization. However, how the brain determines whether one or more sound sources are present at a given moment is not clear. The auditory system might use the degree of interaural decorrelation (for example, interaural coherence) as a criterion175,176. Interaural coherence decreases in the presence of multiple, spatially separated sound sources, and ITD-sensitive neurons in the inferior colliculus and auditory cortex of alert rabbits typically exhibit sensitivity to interaural coherence as well87,177. However, no direct evidence yet supports this hypothesis, and the mechanisms underlying localization in the presence of an interfering, spatially separated sound source are not well understood.
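The glimpsing idea can be sketched on synthetic signals. In this sketch, which rests entirely on assumptions, two broadband noise "sources" with different ITDs (expressed in samples) overlap only partly in time. ITD is estimated in short windows as the lag of the peak normalized interaural cross-correlation, and the height of that peak serves as a measure of interaural coherence. Windows are accepted only if coherence exceeds a hypothetical threshold of 0.9; as noted above, whether the auditory system uses such a criterion remains untested.

```python
import numpy as np


def binaural_scene(n=16000, itd1=8, itd2=-5, seed=0):
    """Hypothetical two-source scene; ITDs in samples (right ear lags by itd).

    Source 1 is on during samples [0, 10000), source 2 during [6000, n), so the
    scene contains 'glimpses' of each source alone plus an overlap period.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    s1 = rng.standard_normal(n) * (t < 10000)
    s2 = rng.standard_normal(n) * (t >= 6000)
    left = s1 + s2
    right = np.roll(s1, itd1) + np.roll(s2, itd2)  # per-source interaural delay
    return left, right


def windowed_itd(left, right, win=800, max_lag=20):
    """Per-window ITD (lag of the cross-correlation peak) and interaural coherence."""
    lags = np.arange(-max_lag, max_lag + 1)
    starts = np.arange(max_lag, len(left) - win - max_lag + 1, win)
    itds, coherence = [], []
    for w in starts:
        l = left[w:w + win]
        corr = []
        for lag in lags:
            r = right[w + lag:w + lag + win]
            corr.append(l @ r / np.sqrt((l @ l) * (r @ r)))
        corr = np.array(corr)
        itds.append(lags[np.argmax(corr)])
        coherence.append(corr.max())
    return np.array(itds), np.array(coherence)


left, right = binaural_scene()
itds, coherence = windowed_itd(left, right)
keep = coherence > 0.9  # hypothetical criterion for "one source dominates"
print("ITDs in all windows:     ", itds)
print("ITDs in coherent windows:", itds[keep])
```

Windows containing a single source yield a coherence close to 1 and that source's ITD, whereas windows in which both sources overlap yield coherences near 0.5 and are discarded, so the accepted ITDs cluster at the two source values.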

Spatial processing for scene analysis.

A related topic is the neural encoding of relative sound location, which contains useful information for the auditory system. For instance, spatial separation can contribute to the grouping of incoming soundwaves into coherent auditory objects, a process known as auditory scene analysis178. In this context, spatial cues seem to be especially relevant for grouping (streaming) of interleaved sound sequences over time173,179 (although experimental evidence is equivocal180,181).

Neurophysiological measurements show that auditory stream segregation on the basis of acoustic properties such as sound frequency involves the PAC182-185. However, research into spatial stream segregation is very limited. A study in cats showed that spatial stream segregation for sequential, interleaved streams can be predicted from neural activity in the PAC using a model of neural spatial sensitivity to isolated sound sources, in combination with an attenuation factor representing the observed decrease in neuronal response in the presence of a competing sound source186. Thus, the neural representation of spatial stream segregation in cat PAC appeared to be contingent on the representation of absolute sound location (FIG. 4).
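The idea of predicting spatial stream segregation from single-source tuning can be sketched for one hypothetical neuron. This is a simplified stand-in, not a reproduction of the model used in the cat study: the sigmoidal tuning, the single multiplicative attenuation factor of 0.6 and the Poisson-based d' are all assumptions. Because the prediction depends only on the absolute location of each source, the same 40° separation gives very different predicted segregation depending on where the two sources are, as in the absolute-location code of FIG. 4B (right panel).

```python
import numpy as np


def spatial_tuning(azimuth_deg, slope=0.06, max_rate=40.0):
    """Hypothetical single-source tuning of a right-hemifield-preferring neuron."""
    return max_rate / (1.0 + np.exp(-slope * azimuth_deg))


def stream_dprime(az_a, az_b, attenuation=0.6):
    """Predicted discriminability of interleaved A and B streams for one neuron.

    Responses are taken from the single-source tuning curve and scaled by a
    common attenuation factor for the competing source (value assumed here).
    d' assumes Poisson variability, i.e. variance equal to the mean count.
    """
    r_a = attenuation * spatial_tuning(az_a)
    r_b = attenuation * spatial_tuning(az_b)
    return abs(r_a - r_b) / np.sqrt(0.5 * (r_a + r_b))


# The same 40-degree separation at two different absolute positions
for az_a, az_b in [(-20.0, 20.0), (40.0, 80.0)]:
    print(f"A at {az_a:5.1f}, B at {az_b:5.1f}: d' = {stream_dprime(az_a, az_b):.2f}")
```

Separation around the midline, where the tuning curve is steep, is predicted to be far more discriminable than the same separation in the lateral hemifield, where the curve saturates.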

By contrast, psychophysical results in humans point in the direction of distinct location and separation processing187. These diverging results might – once more – be traced to differences in the behavioral state of the listener: the human listeners were alert and performing a stream segregation task, whereas the single-cell recordings were made in anesthetized cats. Possibly, the representation of spatial separation is more distinct from absolute location processing during active spatial streaming than during passive listening. To test this hypothesis, neurophysiological research examining the relationship between absolute and relative sound location processing in different behavioral conditions is required. Such research would also advance our understanding of the relative contribution of primary and higher-order auditory regions (especially the ‘computational hub’, that is, the planum temporale188,189) to spatial segregation, a question that has not been addressed directly by existing studies.

Conclusions

Novel research paradigms using alert and responsive subjects, real-life sounds, and advanced computational modeling approaches have contributed significantly to our understanding of the complex computational mechanisms underlying spatial hearing. Evidence is growing that the hierarchical model of location processing might need to be extended to include recurrent (and possibly even interhemispheric) connections to accommodate goal-oriented sound localization. Furthermore, the notion of a neural representation of sound location as a population pattern code is gaining momentum, even if the transformation from binaural sound to neural response is still poorly understood. Additionally, insights into the influence of head size on IPD encoding in mammals have raised an important note of caution for cross-species comparisons. In terms of the role of the auditory cortex in spatial hearing, the research discussed in this Review highlights that the auditory cortical network is especially relevant for spatial processing in the context of behavioral goals, for spatial processing of complex, real-life sounds, and in multi-source auditory scenes.

Amid these advances, several important questions remain unresolved. For example, a mechanistic understanding of the integration of different spatial cues, or of the localization of sounds in multi-source settings, is still lacking. Knowledge of the interaction between the neural processing of sound location and that of other sound attributes is similarly limited. For future research, the work discussed here emphasizes that experimental set-ups using real-life, complex sounds in ecologically valid listening scenes are required to capture the full complexity of cortical sound location processing. Combining computational modeling strategies with neurophysiological measurements will greatly support these research efforts.

ACKNOWLEDGEMENTS

K.v.d.H. was partially supported by the Erasmus Mundus Auditory Cognitive Neuroscience Network. E.F. was partially supported by The Netherlands Organisation for Scientific Research (VICI grant number 453–12-002) and the Dutch Province of Limburg (Maastricht Centre for Systems Biology). J.P.R. was partially supported by the U.S. National Science Foundation (PIRE grant number OISE-0730255), the National Institutes of Health (grant numbers R01EY018923 and R01DC014989), and the Technische Universität München Institute for Advanced Study, funded by the German Excellence Initiative and the European Union Seventh Framework Programme Grant (grant number 291763). B.d.G. was partially supported by the European Union Seventh Framework Programme for Research (grant number 295673), and the European Union’s Horizon 2020 Research and Innovation Programme (grant number 645553).

GLOSSARY

- Front-back ambiguities:

Humans can have difficulty distinguishing whether a sound source is located in front of or behind them, because interaural time and level differences are identical (or nearly so) for a source in front and its mirror-image position behind the listener, that is, for sources at the same angular position with respect to the interaural midline

- Coincidence detectors:

A neuron whose firing rate depends on the relative arrival times of inputs from two lower-level neurons, such that it responds maximally when the inputs arrive simultaneously

- Sparse coding:

A neural coding strategy in which single neurons encode sensory stimuli efficiently by representing the maximal amount of information possible (thereby saving computational resources), and neuronal populations consist of neurons that encode unique information (that is, neural responses are independent)

- Opponent coding:

A neural representational mechanism in which sensory stimuli are represented by the integrated activity of two neuronal populations tuned to opposite values of the stimulus dimension under consideration (for sound location: the integrated activity of a population tuned to the left hemifield and a population tuned to the right hemifield).

- Relative sound location:

In multi-source listening environments, the relative sound location refers to the locations of the individual sound sources with respect to each other, that is, their spatial separation.

- Spatial selective attention:

A listener's focusing of attention on a particular location, and on the sounds presented at that location, while ignoring sounds at other locations.

- Auditory stream segregation:

The segregation and grouping of concurrent or interleaved sounds in multi-source listening environments into streams corresponding to their respective sound sources.
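The front-back ambiguity described in the glossary can be made concrete with a deliberately simplified head model, used here only for illustration and not taken from the studies reviewed: two point receivers separated by an assumed distance d = 0.18 m, no acoustic shadow from the head, a distant source, and a speed of sound c = 343 m/s. Under these assumptions the ITD is (d/c) sin θ, with azimuth θ measured clockwise from straight ahead, so a source at θ and its mirror image at 180° − θ yield exactly the same ITD. The constants and function names below are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, in air at roughly 20 degrees C
EAR_DISTANCE = 0.18     # m, hypothetical inter-ear distance (assumption)


def itd_free_field(azimuth_deg: float, d: float = EAR_DISTANCE,
                   c: float = SPEED_OF_SOUND) -> float:
    """ITD (s) for a distant source in a toy model that ignores the head.

    Azimuth is measured clockwise from straight ahead (0 = front,
    90 = right, 180 = behind, negative = left). A positive ITD means
    the sound reaches the right ear first.
    """
    return (d / c) * math.sin(math.radians(azimuth_deg))


def front_back_mirror(azimuth_deg: float) -> float:
    """Reflect a position about the frontal plane (front <-> back)."""
    return (180.0 - azimuth_deg) % 360.0


for az in (30.0, 60.0, -45.0):
    mirror = front_back_mirror(az)
    print(f"{az:6.1f} deg -> {itd_free_field(az) * 1e6:7.1f} us | "
          f"{mirror:6.1f} deg -> {itd_free_field(mirror) * 1e6:7.1f} us")
```

Each printed pair shows identical ITDs for a frontal position and its rear mirror image. Real heads and pinnae add direction-dependent spectral filtering, which is one reason listeners can often, though not always, resolve such mirror positions.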
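The coincidence-detector entry in the glossary can be illustrated with the classic Jeffress-style formulation. This is a sketch under stated assumptions, not a model used in the reviewed work. It uses a bank of idealized detectors, each applying a different internal delay to the left-ear input, and approximates "coincidence" by multiplying the two inputs and summing over time (that is, an interaural cross-correlation). The detector whose internal delay cancels the external ITD receives simultaneous input and responds most strongly. The sampling rate, the ±0.8 ms delay range and the function name `coincidence_array` are assumptions for illustration. Whether mammals actually read out ITDs from such a place code is debated (reference 21).

```python
import numpy as np

FS = 48_000                  # Hz, assumed sampling rate
MAX_INTERNAL_DELAY = 0.8e-3  # s, assumed range of internal delays (about the human ITD range)


def coincidence_array(left, right, fs=FS, max_delay=MAX_INTERNAL_DELAY):
    """Responses of a bank of idealized coincidence detectors (hypothetical helper).

    The detector with internal delay `lag` delays the left-ear input by `lag`
    samples and sums its product with the right-ear input over time. The
    response peaks when the delayed left input and the right input line up,
    i.e., arrive simultaneously. Returns internal delays (s) and responses.
    """
    n = len(left)
    max_lag = int(round(max_delay * fs))
    lags = np.arange(-max_lag, max_lag + 1)
    responses = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            responses[i] = np.dot(left[: n - lag], right[lag:])
        else:
            responses[i] = np.dot(left[-lag:], right[: n + lag])
    return lags / fs, responses


# Demo: broadband noise that reaches the left ear 12 samples (250 us) earlier.
rng = np.random.default_rng(0)
true_lag = 12
source = rng.standard_normal(FS // 10 + true_lag)  # 100 ms of white noise
left = source[true_lag:]    # left[t] = source[t + 12]
right = source[:-true_lag]  # right[t] = left[t - 12], i.e., the right ear lags

delays, resp = coincidence_array(left, right)
best = delays[np.argmax(resp)]
print(f"most active detector: internal delay = {best * 1e6:.0f} us")
```

A noise token is used because its broadband autocorrelation yields a single sharp peak. With a pure tone, the detector responses would be periodic in the internal delay, and several detectors could respond almost equally well.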
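The opponent-coding entry in the glossary can be sketched as two broadly tuned populations with mirror-image sigmoidal tuning to azimuth, one preferring the left and one the right hemifield. Their activity is combined into a normalized difference and then mapped back to azimuth. The sigmoid shape, the 20° slope parameter, the normalized-difference read-out and all names below are illustrative assumptions, not the read-out used in the cited studies.

```python
import numpy as np

SLOPE_DEG = 20.0  # hypothetical tuning-curve slope parameter, in degrees


def hemifield_responses(azimuth_deg, gain=1.0, slope=SLOPE_DEG):
    """Activity of two opponent populations (toy model).

    Azimuth runs from -90 (far left) to +90 (far right) degrees. The
    'right' population rises sigmoidally toward the right hemifield and
    the 'left' population is its mirror image. `gain` scales both equally.
    """
    az = np.asarray(azimuth_deg, dtype=float)
    right = gain / (1.0 + np.exp(-az / slope))
    left = gain / (1.0 + np.exp(az / slope))
    return left, right


def opponent_index(left, right):
    """Normalized difference between the two channels, in [-1, 1]."""
    return (right - left) / (right + left)


# Look-up table for inverting the monotonic index-versus-azimuth curve.
AZ_GRID = np.linspace(-90.0, 90.0, 721)
INDEX_GRID = opponent_index(*hemifield_responses(AZ_GRID))


def decode_azimuth(left, right):
    """Estimate azimuth (deg) from the two population responses."""
    return float(np.interp(opponent_index(left, right), INDEX_GRID, AZ_GRID))


for true_az in (-60.0, -15.0, 0.0, 40.0):
    for gain in (1.0, 3.0):  # same location, different overall response level
        left, right = hemifield_responses(true_az, gain=gain)
        print(f"true {true_az:6.1f} deg, gain {gain:.1f}: "
              f"decoded {decode_azimuth(left, right):6.1f} deg")
```

The read-out divides by the summed activity, so in this sketch, scaling both channels by the same gain (a crude stand-in for a change in sound level) leaves the decoded azimuth unchanged. Real level effects on cortical responses are not a pure common gain, so this property should not be read as a claim about the data.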

REFERENCES

- 1. Heffner H & Masterton B. Contribution of auditory cortex to sound localization in the monkey (Macaca mulatta). Journal of Neurophysiology 38, 1340–1358 (1975).

- 2. Heffner HE & Heffner RS. Effect of bilateral auditory cortex lesions on sound localization in Japanese macaques. Journal of Neurophysiology 64, 915–931 (1990).

- 3. Malhotra S, Hall AJ & Lomber SG. Cortical control of sound localization in the cat: unilateral cooling deactivation of 19 cerebral areas. Journal of Neurophysiology 92, 1625–1643 (2004).

- 4. Malhotra S, Stecker GC, Middlebrooks JC & Lomber SG. Sound localization deficits during reversible deactivation of primary auditory cortex and/or the dorsal zone. Journal of Neurophysiology 99, 1628–1642 (2008).

- 5. Spierer L, Bellmann-Thiran A, Maeder P, Murray MM & Clarke S. Hemispheric competence for auditory spatial representation. Brain 132, 1953–1966 (2009).

- 6. Zatorre RJ & Penhune VB. Spatial localization after excision of human auditory cortex. Journal of Neuroscience 21, 6321–6328 (2001).

- 7. Zündorf IC, Karnath H-O & Lewald J. The effect of brain lesions on sound localization in complex acoustic environments. Brain 137, 1410–1418 (2014).

- 8. Hofman PM, Van Riswick JG & Van Opstal AJ. Relearning sound localization with new ears. Nature Neuroscience 1, 417 (1998).

- 9. Oldfield SR & Parker SP. Acuity of sound localisation: a topography of auditory space. I. Normal hearing conditions. Perception 13, 581–600 (1984).

- 10. Rayleigh Lord. On our perception of sound direction. The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science 13, 214–232 (1907).

- 11. Blauert J. Sound localization in the median plane. Acta Acustica united with Acustica 22, 205–213 (1969).

- 12. Mills AW. Lateralization of high-frequency tones. The Journal of the Acoustical Society of America 32, 132–134 (1960).

- 13. Sandel T, Teas D, Feddersen W & Jeffress L. Localization of sound from single and paired sources. The Journal of the Acoustical Society of America 27, 842–852 (1955).

- 14. Stevens SS & Newman EB. The localization of actual sources of sound. The American Journal of Psychology 48, 297–306 (1936).

- 15. Wightman FL & Kistler DJ. The dominant role of low-frequency interaural time differences in sound localization. The Journal of the Acoustical Society of America 91, 1648–1661 (1992).

- 16. Bernstein LR & Trahiotis C. Lateralization of sinusoidally amplitude-modulated tones: Effects of spectral locus and temporal variation. The Journal of the Acoustical Society of America 78, 514–523 (1985).

- 17. Hartmann WM, Rakerd B, Crawford ZD & Zhang PX. Transaural experiments and a revised duplex theory for the localization of low-frequency tones. The Journal of the Acoustical Society of America 139, 968–985 (2016).

- 18. Henning GB. Detectability of interaural delay in high-frequency complex waveforms. The Journal of the Acoustical Society of America 55, 84–90 (1974).

- 19. Rakerd B & Hartmann W. Localization of sound in rooms, II: The effects of a single reflecting surface. The Journal of the Acoustical Society of America 78, 524–533 (1985).

- 20. Day ML, Koka K & Delgutte B. Neural encoding of sound source location in the presence of a concurrent, spatially separated source. Journal of Neurophysiology 108, 2612–2628 (2012).

- 21. McAlpine D, Jiang D & Palmer AR. A neural code for low-frequency sound localization in mammals. Nature Neuroscience 4, 396–401 (2001).

- 22. Jones HG, Brown AD, Koka K, Thornton JL & Tollin DJ. Sound frequency-invariant neural coding of a frequency-dependent cue to sound source location. Journal of Neurophysiology 114, 531–539 (2015).

- 23. Makous JC & Middlebrooks JC. Two-dimensional sound localization by human listeners. The Journal of the Acoustical Society of America 87, 2188–2200 (1990).

- 24. Heffner RS & Heffner HE. Sound localization acuity in the cat: effect of azimuth, signal duration, and test procedure. Hearing Research 36, 221–232 (1988).

- 25. Butler RA. The bandwidth effect on monaural and binaural localization. Hearing Research 21, 67–73 (1986).

- 26. Carlile S, Delaney S & Corderoy A. The localisation of spectrally restricted sounds by human listeners. Hearing Research 128, 175–189 (1999).

- 27. Tollin DJ, Ruhland JL & Yin TC. The role of spectral composition of sounds on the localization of sound sources by cats. Journal of Neurophysiology 109, 1658–1668 (2012).

- 28. Musicant AD & Butler RA. Influence of monaural spectral cues on binaural localization. The Journal of the Acoustical Society of America 77, 202–208 (1985).

- 29. Van Wanrooij MM & Van Opstal AJ. Sound localization under perturbed binaural hearing. Journal of Neurophysiology 97, 715–726 (2007).

- 30. Voss P, Tabry V & Zatorre RJ. Trade-off in the sound localization abilities of early blind individuals between the horizontal and vertical planes. Journal of Neuroscience 35, 6051–6056 (2015).

- 31. Derey K, Rauschecker JP, Formisano E, Valente G & de Gelder B. Localization of complex sounds is modulated by behavioral relevance and sound category. The Journal of the Acoustical Society of America 142, 1757–1773 (2017).

- 32. Hackett TA et al. Feedforward and feedback projections of caudal belt and parabelt areas of auditory cortex: refining the hierarchical model. Frontiers in Neuroscience 8, 72 (2014).

- 33. Kaas JH & Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proceedings of the National Academy of Sciences 97, 11793–11799 (2000).

- 34. Moerel M, De Martino F & Formisano E. An anatomical and functional topography of human auditory cortical areas. Frontiers in Neuroscience 8, 225 (2014).

- 35. Rauschecker JP, Tian B & Hauser M. Processing of complex sounds in the macaque nonprimary auditory cortex. Science 268, 111–114 (1995).

- 36. Benson D, Hienz R & Goldstein M Jr. Single-unit activity in the auditory cortex of monkeys actively localizing sound sources: spatial tuning and behavioral dependency. Brain Research 219, 249–267 (1981).

- 37. Ortiz-Rios M et al. Widespread and Opponent fMRI Signals Represent Sound Location in Macaque Auditory Cortex. Neuron 93, 971–983.e4 (2017). A macaque neuroimaging study providing evidence for an opponent coding mechanism for the neural representation of sound location.

- 38. Recanzone GH, Guard DC, Phan ML & Su T-IK. Correlation between the activity of single auditory cortical neurons and sound-localization behavior in the macaque monkey. Journal of Neurophysiology 83, 2723–2739 (2000).

- 39. Tian B, Reser D, Durham A, Kustov A & Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science 292, 290–293 (2001).

- 40. Woods TM, Lopez SE, Long JH, Rahman JE & Recanzone GH. Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. Journal of Neurophysiology 96, 3323–3337 (2006).

- 41. Kuśmierek P & Rauschecker JP. Selectivity for space and time in early areas of the auditory dorsal stream in the rhesus monkey. Journal of Neurophysiology 111, 1671–1685 (2014).

- 42. Brugge JF, Reale RA & Hind JE. The structure of spatial receptive fields of neurons in primary auditory cortex of the cat. Journal of Neuroscience 16, 4420–4437 (1996).

- 43. Harrington IA, Stecker GC, Macpherson EA & Middlebrooks JC. Spatial sensitivity of neurons in the anterior, posterior, and primary fields of cat auditory cortex. Hearing Research 240, 22–41 (2008).

- 44. Mrsic-Flogel TD, King AJ & Schnupp JW. Encoding of virtual acoustic space stimuli by neurons in ferret primary auditory cortex. Journal of Neurophysiology 93, 3489–3503 (2005).

- 45. Rajan R, Aitkin L, Irvine D & McKay J. Azimuthal sensitivity of neurons in primary auditory cortex of cats. I. Types of sensitivity and the effects of variations in stimulus parameters. Journal of Neurophysiology 64, 872–887 (1990).

- 46. Stecker GC, Harrington IA & Middlebrooks JC. Location coding by opponent neural populations in the auditory cortex. PLoS Biology 3, e78 (2005). Extracellular recordings in cat auditory cortical regions demonstrating the validity of opponent population coding for the neural representation of sound location.

- 47. Stecker GC & Middlebrooks JC. Distributed coding of sound locations in the auditory cortex. Biological Cybernetics 89, 341–349 (2003).

- 48. Mickey BJ & Middlebrooks JC. Representation of auditory space by cortical neurons in awake cats. Journal of Neuroscience 23, 8649–8663 (2003).

- 49. Brunetti M et al. Human brain activation during passive listening to sounds from different locations: an fMRI and MEG study. Human Brain Mapping 26, 251–261 (2005).

- 50. Deouell LY, Heller AS, Malach R, D’Esposito M & Knight RT. Cerebral responses to change in spatial location of unattended sounds. Neuron 55, 985–996 (2007).

- 51. Van der Zwaag W, Gentile G, Gruetter R, Spierer L & Clarke S. Where sound position influences sound object representations: a 7-T fMRI study. NeuroImage 54, 1803–1811 (2011).

- 52. Warren JD & Griffiths TD. Distinct mechanisms for processing spatial sequences and pitch sequences in the human auditory brain. Journal of Neuroscience 23, 5799–5804 (2003).

- 53. Krumbholz K et al. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cerebral Cortex 15, 317–324 (2004).

- 54. Derey K, Valente G, de Gelder B & Formisano E. Opponent Coding of Sound Location (Azimuth) in Planum Temporale is Robust to Sound-Level Variations. Cerebral Cortex 26, 450–464 (2015). A neuroimaging study in humans demonstrating the validity of an opponent coding mechanism for sound location encoding in planum temporale.

- 55. Higgins NC, McLaughlin SA, Rinne T & Stecker GC. Evidence for cue-independent spatial representation in the human auditory cortex during active listening. Proceedings of the National Academy of Sciences 114, E7602–E7611 (2017). An fMRI study with an active task paradigm and a multivariate pattern analysis approach, showing that human auditory cortex represents sound location independently of the type of binaural cue (that is, ILD or ITD).

- 56. McLaughlin SA, Higgins NC & Stecker GC. Tuning to binaural cues in human auditory cortex. Journal of the Association for Research in Otolaryngology 17, 37–53 (2016).

- 57. van der Heijden K, Rauschecker JP, Formisano E, Valente G & de Gelder B. Active sound localization sharpens spatial tuning in human primary auditory cortex. Journal of Neuroscience 38, 0587–0518 (2018). An fMRI study measuring spatial selectivity in human auditory cortex in varying behavioral conditions, demonstrating sharpening of spatial tuning during active sound localization and accurate decoding of sound location using a maximum likelihood approach.

- 58. Lee C-C & Middlebrooks JC. Auditory cortex spatial sensitivity sharpens during task performance. Nature Neuroscience 14, 108 (2011). Single- and multi-unit recordings in primary auditory cortex of awake, behaving cats show that neural spatial sensitivity sharpens during task performance.

- 59. Harper NS, Scott BH, Semple MN & McAlpine D. The neural code for auditory space depends on sound frequency and head size in an optimal manner. PLoS ONE 9, e108154 (2014). Empirical evidence from neurophysiological data in various species supporting the optimal coding model for sound location based on head size and sound frequency.

- 60. Chi T, Ru P & Shamma SA. Multiresolution spectrotemporal analysis of complex sounds. The Journal of the Acoustical Society of America 118, 887–906 (2005).

- 61. Santoro R et al. Encoding of natural sounds at multiple spectral and temporal resolutions in the human auditory cortex. PLoS Computational Biology 10, e1003412 (2014).

- 62. Santoro R et al. Reconstructing the spectrotemporal modulations of real-life sounds from fMRI response patterns. Proceedings of the National Academy of Sciences 114, 4799–4804 (2017).