Abstract

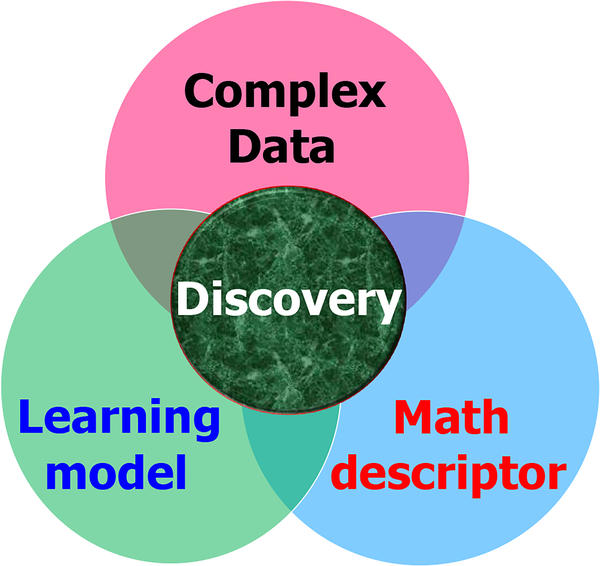

Recently, machine learning (ML) has established itself in various worldwide benchmarking competitions in computational biology, including the Critical Assessment of Structure Prediction (CASP) and the Drug Design Data Resource (D3R) Grand Challenges. However, the intricate structural complexity and high ML dimensionality of biomolecular datasets obstruct the efficient application of ML algorithms in the field. In addition to data and algorithms, an efficient ML machinery for biomolecular predictions must include structural representation as an indispensable component. Mathematical representations that simplify biomolecular structural complexity and reduce ML dimensionality have emerged as prime winners in the D3R Grand Challenges. This review is devoted to recent advances in developing low-dimensional and scalable mathematical representations of biomolecules in our laboratory. We discuss three classes of mathematical approaches: algebraic topology, differential geometry, and graph theory. We elucidate how physical and biological challenges have guided the evolution and development of these mathematical apparatuses for massive and diverse biomolecular data. We focus the performance analysis in this review on protein-ligand binding predictions, although these methods have had tremendous success in many other applications, such as protein classification, virtual screening, and the prediction of solubility, solvation free energy, toxicity, partition coefficient, and protein folding stability changes upon mutation.

Keywords: Machine learning, deep learning, data representations, binding data, algebraic topology, differential geometry, graph theory

I. Introduction

Recently, Google’s DeepMind took the world’s breath away by winning the 13th Critical Assessment of Structure Prediction (CASP13) competition with its latest artificial intelligence (AI) system, AlphaFold1. The goal of CASP is to develop and recognize state-of-the-art technology for constructing protein three-dimensional (3D) structures from protein sequences, which are abundantly available nowadays. While many people were surprised by the power of AI when AlphaGo beat human champions for the first time at the strategically complex game of Go a few years ago, it was not clear whether AI could tackle scientific challenges. Since CASP has been regarded as one of the most important challenges in computational biophysics, AlphaFold’s dominant win of 25 out of 43 contests ushers in a new era of scientific discovery.

The algorithms underpinning AlphaFold’s AI system are machine learning (ML) methods, including deep learning (DL). Indeed, ML is one of the most transformative technologies in history. The combination of big data and ML has been referred to as both the “fourth industrial revolution”2 and the “fourth paradigm of science”3. However, this two-element combination may not work well for biological science, particularly for biomolecular systems, because of the intricate structural complexity and intrinsic high dimensionality of biomolecular datasets4. For example, a typical human protein-drug complex has so many possible configurations that even if a computer enumerated one configuration per second, it would take longer than the age of the universe to reach the right one. The chemical and pharmacological spaces of drugs are so large that even all the world’s computers put together lack the power for automated de novo drug design, owing to additional requirements on solubility, partition coefficient, permeability, clearance, toxicity, pharmacokinetics, pharmacodynamics, etc.

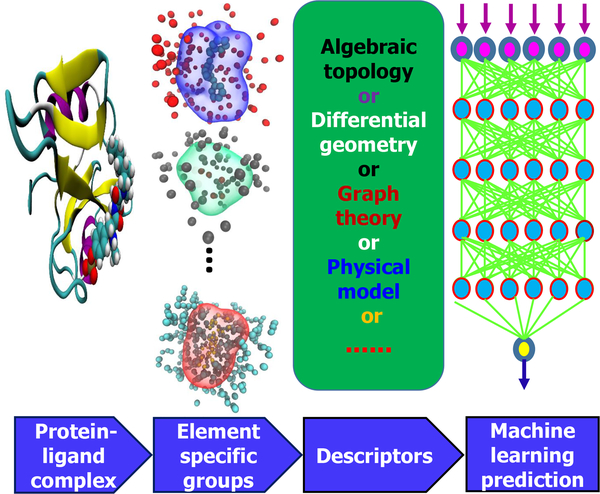

An appropriate low-dimensional representation of biomolecular structures is required4–9 to translate the complex structural information into machine learning feature vectors or mathematical representations as shown in Fig. 2. As a result, various machine learning algorithms, particularly relatively simple ones without complex internal structures, can work efficiently and robustly with biomolecular data.

Figure 2:

Illustration of descriptor-based learning processes.

Descriptors or fingerprints are indispensable even for small molecules: they play a fundamental role in quantitative structure-activity relationship (QSAR) and quantitative structure-property relationship (QSPR) analysis, virtual screening, similarity-based compound search, target molecule ranking, prediction of drug absorption, distribution, metabolism, and excretion (ADME), and other drug discovery processes. Molecular descriptors are property profiles of a molecule, usually in the form of vectors, with each vector component indicating the existence, degree, or frequency of a certain structural feature10–12. Various descriptors have been developed in the past few decades13–15. Most of them are 2D descriptors that can be extracted from simplified molecular-input line-entry system (SMILES) strings without 3D structural information. Higher-dimensional descriptors have also been developed to utilize 3D molecular structures and other chemical and physical information16. There are four main categories of 2D descriptors: 1) substructure key-based fingerprints, 2) topological or path-based fingerprints, 3) circular fingerprints, and 4) pharmacophore fingerprints. Substructure key-based fingerprints, such as the molecular access system (MACCS)17, are bit strings representing the presence of certain substructures or fragments from a given list of structural keys in a molecule. Topological or path-based descriptors, e.g., FP218, Daylight19 and electro-topological state (Estate)20, are designed to analyze all the fragments of a molecule following a (usually linear) path up to a certain number of bonds, and then hash every one of these paths to create fingerprints. Circular fingerprints, such as the extended-connectivity fingerprint (ECFP)13, are also hashed topological fingerprints, but rather than looking for paths in a molecule, they record the environment of each atom up to a pre-defined radius. Pharmacophore fingerprints include the relevant features and interactions needed for a molecule to be active against a given target; examples include 2D-pharmacophore21, 3D-pharmacophore21 and extended reduced graph (ERG)22 fingerprints.
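To make the circular-fingerprint idea concrete, the following minimal Python sketch iteratively hashes each atom's neighborhood on a heavy-atom graph. It is a simplified illustration, not ECFP itself: the atom invariants (element label plus degree), the SHA-1-based hash, and the names `stable_hash` and `circular_fingerprint` are assumptions chosen for readability, and bond orders, hydrogens, and duplicate-environment removal are ignored.

```python
import hashlib


def stable_hash(obj) -> int:
    """Deterministic 32-bit hash; Python's built-in hash() of str is salted per process."""
    digest = hashlib.sha1(repr(obj).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "little")


def circular_fingerprint(atoms, bonds, radius=2, n_bits=1024):
    """Simplified ECFP-like bit vector for a molecular graph (illustrative only).

    atoms: atom labels (e.g. element symbols) indexed 0..n-1
    bonds: (i, j) index pairs; bond orders are ignored in this sketch
    """
    neighbors = [[] for _ in atoms]
    for i, j in bonds:
        neighbors[i].append(j)
        neighbors[j].append(i)
    # Radius 0: identifier from the atom's own label and its heavy-atom degree.
    ids = [stable_hash((atoms[i], len(neighbors[i]))) for i in range(len(atoms))]
    on_bits = {x % n_bits for x in ids}
    for _ in range(radius):
        # Each round folds in the neighbors' identifiers, growing the environment by one bond.
        ids = [
            stable_hash((ids[i], tuple(sorted(ids[j] for j in neighbors[i]))))
            for i in range(len(atoms))
        ]
        on_bits.update(x % n_bits for x in ids)
    fingerprint = [0] * n_bits
    for b in on_bits:
        fingerprint[b] = 1
    return fingerprint


ethanol = circular_fingerprint(["C", "C", "O"], [(0, 1), (1, 2)])
print(sum(ethanol))  # number of bits set
```

Because identifiers depend only on connectivity and sorted neighbor identifiers, relabeling the atoms leaves the fingerprint unchanged; toolkits such as RDKit implement the full Morgan/ECFP algorithm.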

However, because they are typically designed for 2D SMILES strings, the aforementioned small-molecule descriptors do not work well for macromolecules that have complex 3D structures. The complexity of biomolecular structure, function, and dynamics often makes the structural representation inconclusive, inadequate, inefficient and sometimes intractable. These challenges call for innovative design strategies for the representation of macromolecules.

Popular molecular mechanics models use bonded terms for describing covalent bond interactions and non-bonded terms for representing long-range electrostatic and van der Waals effects. Accordingly, early efforts focused on exploring related physical descriptors to account for hydrogen bonds, electrostatic effects, van der Waals interactions, hydrophilicity, and hydrophobicity. These descriptors have been applied to many macromolecular systems, such as protein-protein interaction hot spots6,7,23,24. We applied similar physical descriptors, in terms of van der Waals interactions, Coulomb interactions, electrostatic potential, electrostatic binding free energy, reaction field energy, surface areas, volumes, etc., to the prediction of protein-ligand binding affinity25 and solvation free energy26,27. However, the major limitation of physical descriptors is that they depend heavily on force-field parameters, such as radii, partial charges, polarizability, dielectric constant, and van der Waals well depth, and thus can inherit errors from upstream physical models. As a result, these descriptors are often not as competitive as state-of-the-art force-field-free models based on advanced mathematics9,28.

Topology analyzes space, connectivity, dimension, and transformation. Topology offers the highest level of abstraction and thus could provide an efficient tool for tackling high-dimensional biological data30–32. However, topology typically oversimplifies geometric information. Persistent homology is a new branch of algebraic topology that is able to bridge geometry and topology31,33,34. This approach has been applied to macromolecular analysis35–45. Nonetheless, it neglects critical chemical/biological information when it is directly applied to complex biomolecular structures. Recently, we have introduced element-specific persistent homology to retain critical biological information during the topological abstraction, rendering a potentially revolutionary representation for biomolecular data46–49.

Graph theory studies the modeling of pairwise relations between vertices or nodes50. Geometric graphs admit geometric objects as graph nodes, while algebraic graphs utilize algebraic techniques to study the relations between nodes. Both geometric graph theory and algebraic graph theory have been widely applied to biomolecular systems8,51–53. For example, spectral graph theory has been used to represent protein Cα atoms as an elastic mass-and-spring network in the Gaussian network model (GNM)54 and the anisotropic network model (ANM)55. Extremal graph theory concerns unavoidable patterns and structures in graphs with a given density or distribution; it has potential applications to chromosome packing and Hi-C data. However, most graph theory methods neglect critical biological information and non-covalent interactions, and sometimes use inappropriate distance metrics for biomolecular interactions. In the past few years, we have developed weighted graphs56–62, multiscale graphs60,63, and colored graphs64,65 for modeling biomolecular systems. These new graph theory methods have been found to be some of the most powerful representations of macromolecules64–66.
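As an illustration of the spectral-graph idea behind GNM, the sketch below builds the Kirchhoff (graph Laplacian) matrix from Cα coordinates with a distance cutoff and reads relative fluctuations off the diagonal of its pseudo-inverse. The 7 Å default cutoff and the name `gnm_bfactors` are assumptions for illustration; absolute B-factors would additionally require a scaling constant fitted to experiment.

```python
import numpy as np


def gnm_bfactors(coords, cutoff=7.0):
    """Relative fluctuations from a Gaussian network model on C-alpha coordinates.

    Returns the diagonal of the Kirchhoff pseudo-inverse, proportional to B-factors.
    """
    x = np.asarray(coords, dtype=float)
    dist = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    # Kirchhoff matrix: -1 for every pair in contact (within the cutoff) ...
    kirchhoff = -(dist <= cutoff).astype(float)
    np.fill_diagonal(kirchhoff, 0.0)
    # ... and the node degree on the diagonal, so each row sums to zero.
    np.fill_diagonal(kirchhoff, -kirchhoff.sum(axis=1))
    # The matrix is singular (one zero mode per connected component), hence pinv.
    return np.diag(np.linalg.pinv(kirchhoff))


# Toy 5-residue straight chain with 3.8 A spacing: only consecutive residues are in contact.
chain = [[3.8 * i, 0.0, 0.0] for i in range(5)]
print(gnm_bfactors(chain, cutoff=4.0))  # the chain ends fluctuate more than the middle
```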

How biomolecules assume complex structures and intricate shapes, and why biomolecular complexes admit convoluted interfaces between different parts, can be naturally described by differential geometry, a mathematical subject drawing on differential calculus, integral calculus, algebra, and differential equations to study problems in geometry or on differentiable manifolds. Einstein used this approach to formulate his general theory of relativity. Curve and curvature analysis has been applied to the shape analysis of molecular surfaces67 and protein folding trajectories68,69. In the past two decades, we have developed a variety of differential geometry models for biomolecular surface analysis70–75, solvation modeling76–85, ion-channel study80–82,86,87, protein binding pocket detection88, and protein-ligand binding affinity prediction89. Differential geometry-based representations are able to offer a high-level abstraction of macromolecular structures89.

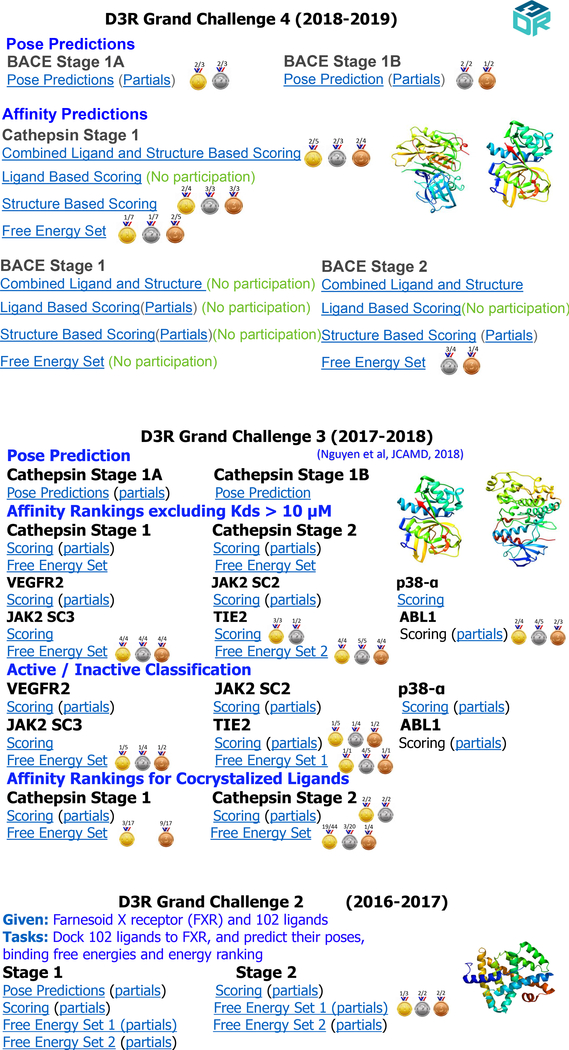

We have pursued differential geometry, algebraic topology, graph theory, and other mathematical methods, such as de Rham-Hodge theory90,91, for the modeling, analysis, and characterization of biomolecular systems for nearly two decades. Using these representations, we have studied a number of biomolecular systems and problems, including macromolecular electrostatics, implicit solvent models, ion channels, protein flexibility, geometric analysis, surface modeling, and multiscale analysis. Our mathematical representations have evolved and improved over time. In 2015, we proposed one of the first integrations of persistent homology and machine learning and applied this new approach to protein classification. Since then, we have demonstrated the superiority of our mathematical representations over other existing methods in a wide variety of applications, including the prediction of protein thermal fluctuations59,60,63,65, toxicity92, protein-ligand binding affinity25,47,64,66,89, mutation-induced protein stability changes46,48, solvation26,27,79,93, solubility94, partition coefficient94, and virtual screening49. As shown in Fig. 3, these mathematical approaches have enabled us to win many contests in the D3R Grand Challenges, a worldwide competition series in computer-aided drug design28.

Figure 3:

Wei team’s performance in D3R Grand Challenges 2, 3 and 428,29, a community-wide competition series in computer-aided drug design, with components addressing blind predictions of poses, affinity rankings, and binding free energies. Gold, silver, and bronze medals label the contests in which our prediction was ranked 1st, 2nd, and 3rd, respectively. The numbers (a/b) beside each medal indicate, for a gold medal for example, that a of our predictions were ranked 1st and that a total of b submissions shared the first position. “No Participation” marks the contests in which we unintentionally did not participate due to inconsistent announcements from the D3R organizers.

Due to the abstract nature of mathematical representations and the fact that our results are scattered over a large number of subjects and topics, it is difficult for researchers without formal training in mathematics to use these methods. Therefore, there is a pressing need to elucidate these methods in physical terms, provide simplified representations, and interpret their working principles. To this end, we provide a review of our mathematical representations. Our goal is to offer a coherent description of these methods for protein-ligand binding interactions so that the reader can better understand how to use advanced mathematics to describe macromolecules and their interaction complexes.

Like small-molecule descriptors, macromolecular representations, once designed, can in principle be applied to different tasks. However, many types of applications require specially designed macromolecular representations. For example, protein B-factor prediction deals with an atomic property, while the prediction of protein stability changes upon mutation, solubility, etc. concerns molecular properties. Additionally, protein-ligand binding affinity prediction deals with a property of protein-ligand complexes. Therefore, different mathematical representations are required to tackle atomic, molecular, and molecular-complex properties. Another complication arises from differences between systems. For example, representations for the binding affinity of protein-ligand interactions should differ from those for protein-protein or protein-nucleic acid interactions. A further hindrance arises from specific tasks. For example, in protein classification, one is concerned with secondary structures and needs to design macromolecular representations that capture secondary-structural differences. In general, macromolecules and their interactive complexes are inherently multiscale, multiphysics, multi-dynamic, and multifunctional. Their descriptions can vary from case to case, and we cannot cover all possible situations in this review.

Biologically, protein-ligand binding interactions are tremendously important for living organisms. Ligand-receptor agonist binding is known to initiate a vast variety of molecular and/or cellular processes, from transmitter-mediated signal transduction, hormone- or growth factor-regulated metabolic pathways, and stimulus-initiated gene expression to enzyme production and cell secretion. Therefore, understanding protein-ligand binding interactions is a central issue in the biological sciences, including drug design and discovery. Despite much past research, the molecular mechanism of protein-ligand binding is still elusive. A prevalent view is that protein-ligand binding is initiated through molecular recognition, synergistic cooperation, and conformational changes. Computationally, the prediction of protein-ligand binding affinity remains challenging. Consequently, we focus on mathematical representations for protein-ligand binding affinity prediction to illustrate their design and application in the present review.

II. Methods

In this section, we briefly review three classes of mathematical representations, i.e., representations constructed from algebraic topology, graph theory, and differential geometry.

II.A. Algebraic topology-based methods

II.A.1. Background

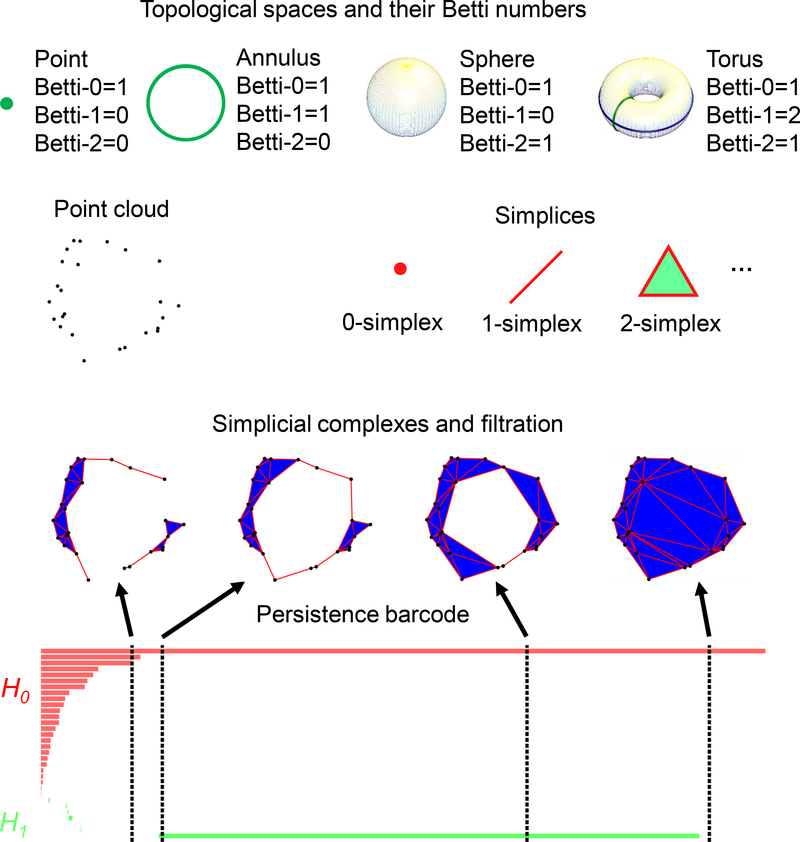

Topology dramatically simplifies geometric complexity23,30–32,95–98. The study of topology deals with the connectivity of different components in space and characterizes independent entities, rings, and higher-dimensional faces within the space99. For example, simplicial homology, a type of algebraic topology, concerns the identification of topological invariants from a set of discrete node coordinates, such as the atomic coordinates of a protein. For a given (protein) configuration, independent components, rings, and cavities are topological invariants, and their numbers are called Betti-0, Betti-1, and Betti-2, respectively (see Fig. 4). To study topological invariants in a discrete dataset, simplicial complexes are constructed by gluing simplices under various settings, such as the Vietoris-Rips (VR) complex, Čech complex or alpha complex. Specifically, a 0-simplex is a vertex, a 1-simplex an edge, a 2-simplex a triangle, and a 3-simplex a tetrahedron, as illustrated in Fig. 4. Algebraic groups built on these simplicial complexes are used in simplicial homology to systematically compute the various Betti numbers. There are also cubical complexes99 built upon volumetric data, including data from biomolecules44.

Figure 4:

Topological representation of point clouds via persistent homology. Top panel: The Betti numbers for some objects. Middle panel: Many datasets are represented as a point cloud and the simplices are the building blocks for constructing a simplicial complex to topologically characterize the point cloud. Bottom panel: the persistence barcode of the point cloud and some example simplicial complexes at different stages of the filtration.

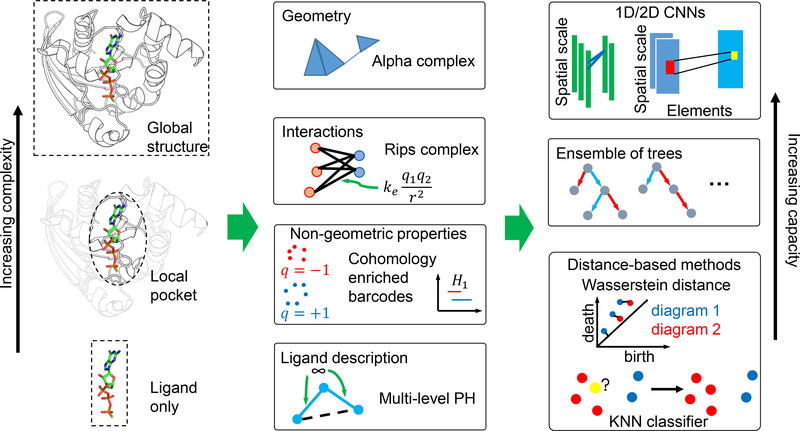

However, conventional topology or homology is truly free of metrics or coordinates, and thus retains too little geometric information to be practically useful. Persistent homology is a relatively new branch of algebraic topology that embeds multiscale geometric information into topological invariants to achieve a topological description of geometric details31,33. It creates a sequence of topological spaces of a given object by varying a filtration parameter, such as the radius of a ball or the level set of a surface function as shown in Fig. 4. As a result, persistent homology can capture topological structures continuously over a range of spatial scales. Unlike commonly used computational homology which results in truly metric free representations, persistent homology embeds essential geometric information in topological invariants, e.g., topological representations or barcodes100 shown in Fig. 4, so that “birth” and “death” of isolated components, circles, rings, voids or cavities can be monitored at all geometric scales by topological measurements. A schematic illustration of our persistent homology-based machine learning predictions is given in Fig. 6. Key concepts are briefly discussed below. More mathematical detail can be found in the literature31, including ours37,38.

Figure 6:

Workflow of topology-based protein-ligand binding affinity prediction. In multi-level persistent homology, the distances between covalently bonded atoms are set to ∞ to prevent covalent bonds from disturbing the topological representation of non-covalent interactions.

Simplicial complex

A simplicial complex is a topological space consisting of vertices (points), edges (line segments), triangles, and their higher-dimensional counterparts. Based on the simplicial complex, simplicial homology can be defined and used to analyze topological invariants. The essential building blocks of a geometry-induced simplicial complex are simplices. Specifically, let v0, v1, v2, … , vk be k+1 affinely independent points; a (geometric) k-simplex σk = {v0, v1, v2, … , vk} is the convex hull of these points in $\mathbb{R}^N$, and can be expressed as

$$\sigma^k = \left\{ \lambda_0 v_0 + \lambda_1 v_1 + \cdots + \lambda_k v_k \;\middle|\; \sum_{i=0}^{k} \lambda_i = 1,\ \lambda_i \ge 0,\ i = 0, 1, \ldots, k \right\}.$$

An i-dimensional face of σk is defined as the convex hull formed by a subset of i+1 vertices of σk (k ≥ i). Geometrically, a 0-, 1-, 2-, and 3-simplex corresponds to a vertex, an edge, a triangle, and a tetrahedron, respectively. A simplicial complex K is a finite set of simplices such that any face of a simplex from K is also in K, and the intersection of any two simplices in K is either empty or a face of both. The underlying space |K| is the union of all the simplices of K, i.e., $|K| = \bigcup_{\sigma^k \in K} \sigma^k$.
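The face condition in this definition can be checked mechanically on an abstract complex, where each simplex is a tuple of vertex labels. The sketch below (hypothetical helper names `faces`, `closure`, `is_simplicial_complex`) generates the smallest complex containing a list of simplices and verifies closure under taking faces; the intersection condition concerns a geometric embedding and is not tested here.

```python
from itertools import combinations


def faces(simplex):
    """All nonempty faces of a simplex, each as a sorted tuple of vertex labels."""
    s = tuple(sorted(simplex))
    return {f for r in range(1, len(s) + 1) for f in combinations(s, r)}


def closure(simplices):
    """Smallest simplicial complex containing the given simplices."""
    complex_ = set()
    for s in simplices:
        complex_ |= faces(s)
    return complex_


def is_simplicial_complex(simplices):
    """Face condition: every face of every member is itself a member."""
    members = {tuple(sorted(s)) for s in simplices}
    return all(faces(s) <= members for s in members)


K = closure([(0, 1, 2), (2, 3)])  # a triangle with a dangling edge
print(len(K), is_simplicial_complex(K))            # 9 True
print(is_simplicial_complex([(0, 1, 2), (0, 1)]))  # False: faces such as (1, 2) are missing
```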

Homology

The basic algebraic structures, chain groups, are defined for simplicial complexes so that homology can be characterized. A k-chain [σk] is a formal sum of k-simplices, $\sum_{i} \alpha_i \sigma_i^k$. The coefficients αi are often chosen in an algebraic field (typically, $\mathbb{Z}_2$). The set of all k-chains of the simplicial complex K together with the addition operation forms an abelian group $C_k(K, \mathbb{Z}_2)$. The homology of a topological space is also represented by a series of abelian groups, constructed from these chain groups connected by boundary operators. The boundary operators on chains, ∂k : Ck → Ck−1, are defined by linear extension from the boundary operators on simplices. The boundary of a k-simplex σk = {v0, v1, v2, … , vk} is defined as the alternating sum of its codimension-1 faces, $\partial_k \sigma^k = \sum_{i=0}^{k} (-1)^i \{v_0, \ldots, \hat{v}_i, \ldots, v_k\}$, where $\{v_0, \ldots, \hat{v}_i, \ldots, v_k\}$ is the (k−1)-simplex excluding vi from the vertex set. A key property of the boundary operator is that ∂k−1∂k = 0 and ∂0 = 0. The k-cycle group Zk and the k-boundary group Bk are the subgroups of Ck defined as $Z_k = \ker \partial_k = \{c \in C_k \mid \partial_k c = 0\}$ and $B_k = \operatorname{im} \partial_{k+1} = \{c \in C_k \mid \exists\, d \in C_{k+1} : c = \partial_{k+1} d\}$.
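A minimal numerical check of the boundary operator: the sketch below assembles the matrix of ∂k over the real numbers, using the alternating-sign rule above with vertices stored in increasing order, and confirms that ∂k−1∂k = 0 on a tetrahedron. The name `boundary_matrix` is hypothetical; with $\mathbb{Z}_2$ coefficients the signs would simply be dropped.

```python
import numpy as np
from itertools import combinations


def boundary_matrix(k_simplices, faces):
    """Matrix of the boundary operator from k-chains to (k-1)-chains over the reals.

    Simplices are tuples of vertices in increasing order; column j holds the
    alternating sum of the codimension-1 faces of k_simplices[j].
    """
    row = {f: r for r, f in enumerate(faces)}
    D = np.zeros((len(faces), len(k_simplices)))
    for col, s in enumerate(k_simplices):
        for i in range(len(s)):
            face = s[:i] + s[i + 1:]  # drop vertex v_i
            D[row[face], col] = (-1) ** i
    return D


# All faces of a tetrahedron, grouped by dimension (vertices are 1-tuples).
tetra = [list(combinations(range(4), k + 1)) for k in range(4)]
d1 = boundary_matrix(tetra[1], tetra[0])
d2 = boundary_matrix(tetra[2], tetra[1])
d3 = boundary_matrix(tetra[3], tetra[2])
print(np.abs(d1 @ d2).max(), np.abs(d2 @ d3).max())  # 0.0 0.0: a boundary has no boundary
```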

An element of the k-th cycle group Zk (or the k-th boundary group Bk) is called a k-cycle (or a k-boundary, respectively). As the boundary of a boundary is always zero, ∂k−1∂k = 0, one has Bk ⊆ Zk ⊆ Ck. Topologically, a k-cycle is a union of k-dimensional loops (or closed membranes). The k-th homology group Hk is the quotient of the k-cycle group Zk by the k-boundary group Bk: Hk = Zk/Bk. Two k-cycles are called homologous if they differ by a k-boundary. By the fundamental theorem of finitely generated abelian groups, the k-th homology group Hk (with integer coefficients) can be expressed as a direct sum, $H_k \cong \mathbb{Z}^{\beta_k} \oplus \mathbb{Z}_{p_1} \oplus \cdots \oplus \mathbb{Z}_{p_n}$, where βk, the rank of the free subgroup, is the k-th Betti number. Here $\mathbb{Z}_{p_1} \oplus \cdots \oplus \mathbb{Z}_{p_n}$ is the torsion subgroup with torsion coefficients {pi|i = 1, 2, … , n}, which are powers of prime numbers. The Betti number can be simply calculated by βk = rank Hk = rank Zk − rank Bk. The geometric interpretation of Betti numbers in $\mathbb{R}^3$ is as follows: β0 represents the number of isolated components, β1 is the number of independent one-dimensional loops (or circles), and β2 describes the number of independent two-dimensional voids (or cavities). Together, the Betti numbers {β0, β1, β2, …} describe the intrinsic topological properties of a system.
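The rank formula βk = rank Zk − rank Bk can be evaluated directly from boundary matrices, since rank Zk = dim Ck − rank ∂k and rank Bk = rank ∂k+1. The following sketch computes Betti numbers over the real numbers, which ignores torsion; the helper names are hypothetical, and the dense-matrix approach is only meant for small examples.

```python
import numpy as np
from itertools import combinations


def boundary_matrix(k_simplices, faces):
    """Signed boundary matrix over the reals; simplices are increasing vertex tuples."""
    row = {f: r for r, f in enumerate(faces)}
    D = np.zeros((len(faces), len(k_simplices)))
    for col, s in enumerate(k_simplices):
        for i in range(len(s)):
            D[row[s[:i] + s[i + 1:]], col] = (-1) ** i
    return D


def betti_numbers(maximal_simplices):
    """Betti numbers over the reals of the complex generated by the given simplices."""
    all_faces = set()
    for s in maximal_simplices:
        s = tuple(sorted(s))
        for r in range(1, len(s) + 1):
            all_faces.update(combinations(s, r))
    top = max(len(f) for f in all_faces) - 1
    by_dim = [sorted(f for f in all_faces if len(f) == k + 1) for k in range(top + 1)]

    def rank_boundary(k):
        # The boundary maps below dimension 1 and above the top dimension are zero.
        if k < 1 or k > top:
            return 0
        return int(np.linalg.matrix_rank(boundary_matrix(by_dim[k], by_dim[k - 1])))

    # beta_k = rank Z_k - rank B_k = (dim C_k - rank d_k) - rank d_{k+1}
    return [len(by_dim[k]) - rank_boundary(k) - rank_boundary(k + 1) for k in range(top + 1)]


print(betti_numbers([(0, 1), (1, 2), (0, 2)]))   # hollow triangle: [1, 1]
print(betti_numbers([(0, 1, 2)]))                # filled triangle: [1, 0, 0]
print(betti_numbers(combinations(range(4), 3)))  # hollow tetrahedron: [1, 0, 1]
```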

Persistent homology

For a simplicial complex K, a filtration is defined as a nested sequence of subcomplexes, Ø = K0 ⊆ K1 ⊆ … ⊆ Km = K. Abstract simplicial complexes generated from a filtration give a multiscale topological representation of the original space, from which the related homology groups can be evaluated to reveal topological features. The introduction of filtration is of essential importance and directly led to the invention of persistent homology. Specifically, passing the sequence above to homology yields a sequence of vector spaces connected by homomorphisms: H*(K0) → H*(K1) → … → H*(Km). Along this sequence, new homology classes are sometimes created (i.e., classes in H*(Ki+1) without a pre-image under the map H*(Ki) → H*(Ki+1)), and certain homology classes are sometimes destroyed (i.e., they have trivial image under H*(Kj) → H*(Kj+1)). The concept of persistence is introduced to measure the “lifetime” of such homological features. The results can be summarized in persistence barcodes (or, equivalently, persistence diagrams), consisting of a set of intervals [x, y) whose beginning and ending values represent the birth and death of homology classes. Persistence was originally introduced to identify long-lasting topological features; however, we have shown that short-lived topological features are also important for biomolecular systems37. The p-persistent k-th homology group of Ki is

$$H_k^{i,p} = Z_k(K_i) \big/ \left( B_k(K_{i+p}) \cap Z_k(K_i) \right). \qquad (1)$$

Through the study of the persistence pattern of these topological features, the so-called persistent homology is capable of capturing the intrinsic properties of the underlying space solely from a discrete point set.
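For 0-dimensional homology, the persistence computation reduces to tracking connected components, which the sketch below does with a union-find structure while processing edges in order of increasing length (Kruskal's algorithm). It assumes a Vietoris-Rips filtration parameterized by pairwise distance (a ball-radius parameterization would halve every value); `h0_barcode` is a hypothetical name, and higher-dimensional barcodes require a full persistence algorithm such as those in GUDHI or Ripser.

```python
import numpy as np


def h0_barcode(points):
    """0-dimensional persistence barcode of a Vietoris-Rips filtration.

    Every point is born at 0; when an edge of length d first joins two
    components, one of them dies at d. One component survives forever.
    """
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    parent = list(range(n))

    def find(i):
        # Root of i's component, with path halving to keep trees shallow.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted(
        (float(np.linalg.norm(pts[i] - pts[j])), i, j)
        for i in range(n) for j in range(i + 1, n)
    )
    bars = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:  # this edge merges two components
            parent[root_i] = root_j
            bars.append((0.0, length))
    bars.append((0.0, float("inf")))
    return bars


print(h0_barcode([[0.0, 0.0], [1.0, 0.0], [5.0, 0.0]]))
# [(0.0, 1.0), (0.0, 4.0), (0.0, inf)]
```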

Filtration

Given a set of discrete sample points, there are different ways to construct simplicial complexes. Typical constructions are based on the intersection patterns of the set of expanding balls centered at the sample points, such as Čech complex, (Vietoris-)Rips complex and alpha complex101,102. The corresponding topological invariants, e.g., the Betti numbers, could be different depending on the choice of simplicial complexes. A common filtration for a set of atomistic data of a macromolecule is constructed by enlarging a common atomic radius r from 0. As the value of r increases, the solid balls will grow and new simplices can be defined through the overlaps among the set of balls. In Figure 4, we illustrate this process by a set of points. In Fig. 5, we demonstrate the persistent homology analysis of different aspects of a protein-ligand complex using the barcode representation.
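The Vietoris-Rips construction needs only pairwise distances: a simplex enters the filtration at the largest distance among its vertices. The sketch below enumerates all simplices up to dimension 2 for a small point set; `rips_filtration` is a hypothetical name, values are pairwise distances rather than ball radii, and the brute-force enumeration grows combinatorially, so real molecules call for a dedicated library.

```python
import numpy as np
from itertools import combinations


def rips_filtration(points, max_dim=2, max_scale=np.inf):
    """(filtration value, simplex) pairs of a Vietoris-Rips filtration.

    A simplex enters at the largest pairwise distance among its vertices;
    vertices enter at 0.
    """
    pts = np.asarray(points, dtype=float)
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    n = len(pts)
    filtration = []
    for k in range(max_dim + 1):
        for s in combinations(range(n), k + 1):
            value = max((d[i, j] for i, j in combinations(s, 2)), default=0.0)
            if value <= max_scale:
                filtration.append((float(value), s))
    # Sort by value, then by dimension, so every face precedes its cofaces.
    filtration.sort(key=lambda item: (item[0], len(item[1])))
    return filtration


square = [[0, 0], [1, 0], [1, 1], [0, 1]]
filt = rips_filtration(square)
print(len(filt))  # 14 simplices: 4 vertices, 6 edges, 4 triangles
```

At a scale of 1 the square's four sides form a loop (a Betti-1 feature), which is filled once the diagonals and triangles enter at √2.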

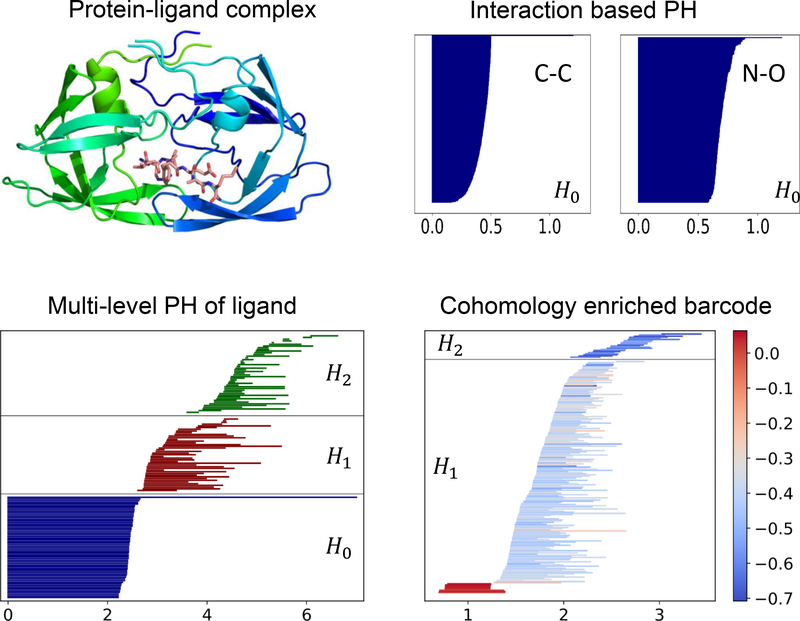

Figure 5:

Topological fingerprints addressing different aspects of the protein-ligand complex. (a) The example protein-ligand complex (PDB:1A94). (b) The H0 barcodes from Rips filtration based on the Coulomb potential for carbon-carbon and nitrogen-oxygen interactions between protein and ligand. (c) The multi-level persistent homology characterization of the ligand revealing the non-covalent intramolecular interaction network. (d) The enriched barcode via persistent cohomology for atomic partial charges as the non-geometric information.

II.A.2. Challenge

Conventional topology and homology are independent of metrics or coordinates and thus retain too little geometric information to be practically useful for most biomolecular systems. While persistent homology incorporates more geometric information, it typically treats all atoms in a macromolecule indiscriminately and thus fails to recognize detailed chemical, physical, and biological information35,36. We introduced persistent homology as a quantitative tool for analyzing biomolecular systems37–42,44,45. In particular, we introduced one of the first topology-based machine learning algorithms for protein classification in 201543. We further introduced element-specific persistent homology, i.e., element-induced topology, to deal with massive and diverse biomolecular datasets43,45–48. Moreover, we introduced multi-level persistent homology to extract non-covalent-bond interactions49. Furthermore, physics-embedded persistent homology was proposed to incorporate physical laws into topological invariants49. These new topological tools are potentially revolutionary for complex biomolecular data analysis9.

II.A.3. Element specific persistent homology

Many types of interactions exist in a protein-ligand complex, for example, hydrophobic effects, hydrogen bonds, and electrostatics. Because of their different physical mechanisms, these interactions act over different distance ranges. Persistent homology, when applied to all the atoms, will mostly capture interactions among nearest neighbors and hinder the detection of long-range interactions. Additionally, it does not distinguish between element types and their combinations and thus neglects important chemistry and biology. Element-specific persistent homology provides a simple yet effective solution to these issues. Instead of computing persistent homology once for the whole molecule, we perform persistent homology computations on a collection of subsets of atoms. For example, persistent homology on carbon atoms alone characterizes the hydrophobic interaction network, while hydrogen bond interactions can be described by persistent homology on the set of nitrogen and oxygen atoms. Although different types of interactions have different characteristics, they may also influence each other. This motivates iterating over all combinations of atom types, which may incur a large computational cost. Fortunately, as the Vietoris-Rips filtration is often used to characterize interaction networks, we only need to generate the filtered simplicial complex once for all atoms and then perform persistent homology computations on subcomplexes of this filtered simplicial complex.
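The reuse of a single filtration can be sketched for 0-dimensional homology: the distance matrix is computed once for all atoms, and each element-specific barcode is obtained from the submatrix induced by the selected atoms, which is exactly the Rips subcomplex on that vertex subset. The function names and example element groupings are illustrative assumptions; protein-ligand versions would additionally restrict attention to cross-component pairs.

```python
import numpy as np


def h0_deaths(dist):
    """Finite H0 death values of the Rips filtration on a distance matrix (union-find)."""
    n = dist.shape[0]
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    deaths = []
    for d, i, j in sorted((dist[a, b], a, b) for a in range(n) for b in range(a + 1, n)):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            deaths.append(float(d))
    return deaths  # already sorted; the single infinite bar is implicit


def element_specific_h0(elements, coords, groups):
    """H0 death values for each group of element types, from one shared filtration."""
    coords = np.asarray(coords, dtype=float)
    # One distance matrix (hence one filtration) for all atoms.
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    features = {}
    for group in groups:
        idx = [k for k, e in enumerate(elements) if e in group]
        # The induced submatrix is the Rips subcomplex on the selected atoms.
        features["".join(sorted(group))] = h0_deaths(dist[np.ix_(idx, idx)])
    return features


feats = element_specific_h0(
    ["C", "C", "N", "O", "C"],
    [[0, 0, 0], [1, 0, 0], [2, 0, 0], [3, 0, 0], [10, 0, 0]],
    [{"C"}, {"N", "O"}],
)
print(feats)  # {'C': [1.0, 9.0], 'NO': [1.0]}
```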

II.A.4. Multi-level persistent homology

The Vietoris-Rips complex, based only on pairwise distances, is a widely used realization of filtration. When the Euclidean distances between atoms are fed directly into the Rips complex construction, interactions of interest, such as electrostatic interactions, can be overshadowed by covalent bonds, which usually have shorter lengths. This motivates a simple yet effective strategy to recover these important interactions by masking the original Euclidean distance matrix. Specifically, we keep only the entries corresponding to the interaction of interest and set every other entry of the distance matrix to infinity. For example, we set the distances between atoms from the same component (protein or ligand) to infinity to focus on the interactions between the protein and the ligand. This strategy was found especially useful when dealing with ligands alone, which often have much simpler structures than proteins or protein-ligand complexes. For small molecules, we call this approach multi-level persistent homology of level n: we set the distance between two atoms to infinity if the shortest path between them through the covalent bond network has a length of at most n bonds. This treatment has led to powerful predictive tools for tasks explicitly involving only small molecules49,92.
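The masking rule for multi-level persistent homology can be sketched as follows: breadth-first search gives the number of covalent bonds on the shortest path between every pair of atoms, and every pair within n bonds has its distance replaced by infinity before the matrix is passed to a Rips construction. The function names are hypothetical, and the bond list is assumed to be given.

```python
import numpy as np
from collections import deque


def bond_path_lengths(n_atoms, bonds):
    """Number of covalent bonds on the shortest path between every pair of atoms (BFS)."""
    adj = [[] for _ in range(n_atoms)]
    for i, j in bonds:
        adj[i].append(j)
        adj[j].append(i)
    hops = np.full((n_atoms, n_atoms), np.inf)  # inf = not connected through bonds
    for s in range(n_atoms):
        hops[s, s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if hops[s, v] == np.inf:
                    hops[s, v] = hops[s, u] + 1
                    queue.append(v)
    return hops


def multilevel_distance(coords, bonds, level):
    """Euclidean distances with pairs at most `level` bonds apart set to infinity."""
    coords = np.asarray(coords, dtype=float)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    hops = bond_path_lengths(len(coords), bonds)
    # Keep the zero diagonal; hide covalently close pairs so that the Rips
    # filtration only sees through-space (non-covalent) contacts.
    mask = (hops <= level) & ~np.eye(len(coords), dtype=bool)
    masked = dist.copy()
    masked[mask] = np.inf
    return masked


chain = [[0, 0, 0], [1.5, 0, 0], [1.5, 1.5, 0], [0, 1.5, 0]]  # four atoms bonded in a row
print(multilevel_distance(chain, [(0, 1), (1, 2), (2, 3)], level=2))
```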

II.A.5. Physics-embedded persistent homology

All the topological methods discussed above are force-field-free approaches. In other words, they depend only on atomic coordinates and types without the need for molecular force field information. However, despite being insufficient, non-unique, and subject to errors, many biophysical models offer important approximations to the ground truth of biological science and reflect some of our best understandings of the biological world. Therefore, it is crucial to develop the so-called “physics-embedded” topology which incorporates physical models into topological invariants.

We are particularly interested in physical models that quantify interaction strengths and directions. To characterize electrostatic interactions, we can construct a Rips filtration based on the Coulomb potential,

| (2) |

where the filtration value Fele(i, j) for the edge between atoms i and j depends on their partial charges qi and qj and their geometric distance dij 49. The Coulomb term in Eq. (2) can be substituted by other models, such as the van der Waals potential. We can also use cubical persistent homology103 to characterize the charge density as volumetric data, for example, one estimated from point charges,

| (3) |

where ri is the position of atom i and ηi is a characteristic bond-length parameter.
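The two physics-embedded constructions can be sketched under explicitly assumed functional forms, which are illustrative stand-ins rather than the exact Eqs. (2) and (3). The sketch maps the Coulomb term $q_iq_j/d_{ij}$ through a logistic function so that strongly attractive pairs receive small filtration values and enter a Rips filtration early, and it evaluates a Gaussian-smoothed charge density on grid points that could feed a cubical complex. The logistic form, the constant `c = 100.0`, the Gaussian kernel, and the function names are all assumptions.

```python
import numpy as np


def electrostatic_filtration(charges, coords, c=100.0):
    """Edge filtration values from an ASSUMED logistic transform of q_i q_j / d_ij.

    Assumed form (illustrative stand-in, not necessarily Eq. (2)):
        F(i, j) = 1 / (1 + exp(-c * q_i * q_j / d_ij))
    Attractive (opposite-sign) pairs get values near 0 and enter a Rips
    filtration early; repulsive pairs get values near 1.
    """
    q = np.asarray(charges, dtype=float)
    x = np.asarray(coords, dtype=float)
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # no self-interaction; avoids division by zero
    z = np.clip(c * np.outer(q, q) / d, -500.0, 500.0)  # clip to keep exp finite
    F = 1.0 / (1.0 + np.exp(-z))
    np.fill_diagonal(F, 0.0)  # vertices enter the filtration at 0
    return F


def charge_density(grid_points, charges, coords, eta):
    """ASSUMED Gaussian smoothing of point charges into volumetric data:
        rho(r) = sum_i q_i * exp(-|r - r_i|^2 / eta_i^2)
    """
    g = np.asarray(grid_points, dtype=float)
    x = np.asarray(coords, dtype=float)
    sq_dist = ((g[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)  # shape (grid, atoms)
    weights = np.exp(-sq_dist / np.asarray(eta, dtype=float) ** 2)
    return (np.asarray(charges, dtype=float) * weights).sum(axis=-1)


F = electrostatic_filtration([0.5, -0.5, 0.5], [[0, 0, 0], [3, 0, 0], [0, 3, 0]])
print(np.round(F, 4))  # the attractive pair (0, 1) has the smallest off-diagonal value
```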

In a more general setting, properties are often available on the simplices of the simplicial complex representing the protein-ligand complex. The interaction strength characterized by physical models, as in Eq. (2), is indeed a property defined on the 1-simplices (edges). There are also various properties given on the 0-simplices (nodes/atoms), including atomic weight, atomic radius, and partial charge. Another way of incorporating these properties into the topological representation is to attach additional attributes to the persistence barcodes obtained through a geometric filtration. We developed a method called the enriched barcode based on cohomology theory104. The use of cohomology has led to efficient algorithms105 as well as richer representations106. We are unable to elaborate on the details of cohomology here, and the interested reader is referred to the aforementioned references.

Consider a persistence barcode {[bi, di)}i ∈ I of dimension k obtained by a geometry-based filtration of the molecular system, for example, the Vietoris-Rips filtration built upon the Euclidean distance between atoms. Let K(x; k) be the set of k-simplices of the simplicial complex in the corresponding filtration at filtration parameter x. Our goal is to annotate each persistence pair [bi, di) in the barcode with the non-geometric information provided by a function f defined on these k-simplices. We proposed to embed such non-geometric information via cohomology104. Specifically, for x ∈ [bi, di), let ωi,x be a real k-cocycle lifted from the representative cocycle obtained in the persistent (co)homology computation106. A smoothed cocycle can be obtained by solving the following problem,

| (4) |

where the optimization variable is a real (k−1)-cochain on K(x), d is the coboundary operator, and the remaining operator is a Laplacian operator. This smoothed representative k-cocycle annotates the simplices with weights, which can be used to describe the non-geometric information carried by this persistence pair,

| (5) |

Intuitively, this function describes the average value of f near the k-dimensional hole associated with the persistence pair [bi, di). We call this object the enriched barcode104. In practice, we compute it only at a few filtration values in [bi, di), or even at a single one, such as the midpoint of each persistence pair.
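The sketch below illustrates the idea for k = 1 on a small graph. It substitutes the plain least-squares (harmonic) smoothing used for circular coordinates in persistent cohomology, minimizing ‖ω + dα‖ over 0-cochains α, for the Laplacian-weighted problem of Eq. (4), and it assumes that the enriched value is the |ω̄|-weighted average of f. Both choices, the helper names, and the edge attribute values are illustrative assumptions, not the published implementation.

```python
import numpy as np


def coboundary_0(edges, n_vertices):
    """Matrix of d: C^0 -> C^1, (d alpha)([u, v]) = alpha(v) - alpha(u)."""
    D = np.zeros((len(edges), n_vertices))
    for e, (u, v) in enumerate(edges):
        D[e, u], D[e, v] = -1.0, 1.0
    return D


def smooth_cocycle(omega, edges, n_vertices):
    """omega_bar = omega + d alpha, with alpha minimizing ||omega + d alpha|| (same class)."""
    omega = np.asarray(omega, dtype=float)
    D = coboundary_0(edges, n_vertices)
    alpha, *_ = np.linalg.lstsq(D, -omega, rcond=None)
    return omega + D @ alpha


def enriched_value(omega_bar, f):
    """Average of the edge attribute f weighted by |omega_bar| (assumed weighting)."""
    w = np.abs(omega_bar)
    return float(w @ np.asarray(f, dtype=float) / w.sum())


# A square loop 0-1-2-3-0; persistent cohomology often returns a cocycle on one edge.
edges = [(0, 1), (1, 2), (2, 3), (0, 3)]
omega = np.array([0.0, 0.0, 0.0, 1.0])
omega_bar = smooth_cocycle(omega, edges, n_vertices=4)   # spread evenly over the loop
f = np.array([1.0, 2.0, 3.0, 4.0])                        # hypothetical per-edge attribute
value = enriched_value(omega_bar, f)
```

Smoothing spreads the cocycle over every edge of the loop while keeping its cohomology class, so the weighted average reflects the whole hole rather than the single edge picked by the persistence algorithm.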

II.A.6. From topological invariants to machine learning algorithms

While persistent homology already significantly reduces the complexity of the molecular system description, feeding it directly to machine learning algorithms can lead to too many model parameters relative to the moderate size of the data available in this field. Moreover, the outputs of persistent homology resemble unstructured point clouds. Additional processing is therefore needed to integrate the persistent homology characterization with machine learning models.

In biomolecular structure description, prior knowledge is available on the approximate distance ranges of different interactions. Therefore, we first divide the interval [0, D], where D is the longest range among the interactions of interest, into bins. We then count the number of events in each bin, namely, 1) births of persistence pairs, 2) deaths of persistence pairs, and 3) overlaps of bars with the bin. This results in a one-dimensional, image-like feature tensor with three channels, which can be fed into a one-dimensional convolutional neural network or any other machine learning model that accepts structured features. Prior knowledge of the spatial ranges of different types of interactions can guide the choice of bin endpoints, although we have also found similar performance with uniform partitioning. Another way of vectorization is to describe the unstructured persistence barcodes statistically, for example, by the mean and standard deviation of births, deaths, and bar lengths.
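Both vectorizations can be sketched in a few lines of NumPy. This is an illustration under stated assumptions: uniform bins on [0, D], infinite bars contributing a birth and overlaps but no death, and summary statistics computed on finite bars only. `bin_barcode` and `barcode_statistics` are hypothetical names.

```python
import numpy as np


def bin_barcode(bars, D, n_bins):
    """Three-channel (births, deaths, overlaps) histogram of one barcode on [0, D]."""
    bars = np.asarray(bars, dtype=float).reshape(-1, 2)
    edges = np.linspace(0.0, D, n_bins + 1)
    births = np.histogram(bars[:, 0], bins=edges)[0]
    finite = np.isfinite(bars[:, 1])                      # infinite bars have no death event
    deaths = np.histogram(bars[finite, 1], bins=edges)[0]
    lo, hi = edges[:-1], edges[1:]
    # bar [b, d) overlaps bin [lo, hi) when b < hi and d > lo
    overlaps = ((bars[:, [0]] < hi) & (bars[:, [1]] > lo)).sum(axis=0)
    return np.stack([births, deaths, overlaps])           # shape (3, n_bins)


def barcode_statistics(bars):
    """Mean and standard deviation of births, deaths, and lengths of finite bars."""
    bars = np.asarray(bars, dtype=float).reshape(-1, 2)
    bars = bars[np.isfinite(bars[:, 1])]
    if len(bars) == 0:
        return np.zeros(6)
    b, d = bars[:, 0], bars[:, 1]
    length = d - b
    return np.array([b.mean(), b.std(), d.mean(), d.std(), length.mean(), length.std()])


bars = [[0.0, 1.5], [0.5, 3.2], [2.0, np.inf]]
features = bin_barcode(bars, D=4.0, n_bins=4)
stats = barcode_statistics(bars)
```

Stacking `features` from several element-specific barcodes along the channel axis gives the structured input expected by a 1D convolutional network.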

The Wasserstein distance between persistence barcodes also works well with distance-based methods, such as k-nearest-neighbor regression and classification or k-means clustering. This approach was found to be especially effective when the objects are moderately complex, and it has been successfully applied to ligand-based tasks49.
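A minimal sketch of this pipeline, assuming the standard p-Wasserstein distance between finite barcodes with an L∞ ground metric, computed exactly by an assignment problem in which every bar may also be matched to the diagonal. It uses `scipy.optimize.linear_sum_assignment` and scikit-learn's `KNeighborsRegressor` with `metric="precomputed"`; the toy barcodes and targets are made up for illustration.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from sklearn.neighbors import KNeighborsRegressor


def wasserstein(A, B, p=2.0):
    """p-Wasserstein distance between two finite barcodes (L-infinity ground metric)."""
    A = np.asarray(A, dtype=float).reshape(-1, 2)
    B = np.asarray(B, dtype=float).reshape(-1, 2)
    A, B = A[np.isfinite(A).all(axis=1)], B[np.isfinite(B).all(axis=1)]  # drop infinite bars
    m, n = len(A), len(B)
    if m + n == 0:
        return 0.0
    # Each bar is matched either to a bar of the other barcode or to the diagonal.
    C = np.zeros((m + n, m + n))
    C[:m, :n] = np.abs(A[:, None, :] - B[None, :, :]).max(axis=-1) ** p
    C[:m, n:] = (((A[:, 1] - A[:, 0]) / 2.0) ** p)[:, None]   # A bar -> diagonal
    C[m:, :n] = (((B[:, 1] - B[:, 0]) / 2.0) ** p)[None, :]   # diagonal -> B bar
    rows, cols = linear_sum_assignment(C)                     # diagonal-to-diagonal costs 0
    return float(C[rows, cols].sum() ** (1.0 / p))


def distance_matrix(barcodes_a, barcodes_b, p=2.0):
    return np.array([[wasserstein(a, b, p) for b in barcodes_b] for a in barcodes_a])


train = [[[0.0, 1.0]], [[0.0, 3.0]], [[0.0, 5.0]]]
y = np.array([1.0, 3.0, 5.0])
knn = KNeighborsRegressor(n_neighbors=1, metric="precomputed")
knn.fit(distance_matrix(train, train), y)
pred = knn.predict(distance_matrix([[[0.0, 3.1]]], train))
```

With a precomputed metric, `fit` takes the square train-by-train distance matrix and `predict` takes a query-by-train matrix, so any barcode distance can be plugged in without vectorizing the barcodes.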

In general applications that integrate persistent homology with machine learning, the persistence barcodes can be sparse, and the available domain knowledge might be insufficient to guide the vectorization. In this case, a neural network layer in which each neuron learns a kernel function can vectorize the barcodes automatically. Specifically, one neuron in such a layer is a function that takes the persistence barcode and outputs a number,

$$f\left(\{[b_i, d_i)\}_{i \in I}\right) = \sum_{i \in I} \phi\left((b_i, d_i); (\mu_b, \mu_d), \Theta\right), \tag{6}$$

where ϕ is a distance-based kernel function with learnable parameters Θ and center (μb, μd). This layer can be the first layer of a neural network for supervised learning. It can also serve as the first layer of an autoencoder that reconstructs the persistence barcodes, with the reconstruction error measured by the Wasserstein metric. Alternatively, kernel density estimators with a fixed number of kernels can be used as a vectorization tool. Specifically, a kernel density estimator with nk kernels, each of which has np parameters to optimize, can turn a persistence barcode into a feature vector of size nk × np. Treatments such as truncated kernels might be needed because persistence points lie only in the upper-left part of the first quadrant (every death exceeds its birth).
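The forward pass of such a layer is easy to write down. The sketch below assumes a Gaussian kernel ϕ, whose only parameter Θ per neuron is a width; in practice the centers and widths would be trained with an automatic-differentiation framework, which is omitted here. `kernel_layer` is a hypothetical name.

```python
import numpy as np


def kernel_layer(bars, centers, widths):
    """Forward pass of a barcode-vectorization layer with Gaussian kernel neurons.

    bars:    (n, 2) array of (birth, death) pairs; n may differ between samples
    centers: (m, 2) learnable neuron centers (mu_b, mu_d)
    widths:  (m,)   learnable kernel widths (the parameters Theta in this sketch)
    Returns an (m,) feature vector whose length does not depend on n.
    """
    bars = np.asarray(bars, dtype=float).reshape(-1, 2)
    sq = ((bars[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)   # (n, m)
    return np.exp(-sq / widths[None, :] ** 2).sum(axis=0)


bars = np.array([[0.0, 1.0], [0.5, 2.0]])
centers = np.array([[0.0, 1.0], [3.0, 3.0]])
widths = np.array([1.0, 0.5])
v = kernel_layer(bars, centers, widths)
```

Because each neuron sums over bars, the output is invariant to the order of the bars and has a fixed length, which is what lets barcodes of different sizes share one downstream network.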

II.B. Differential geometry-based methods

II.B.1. Background

Differential geometry has a long history in mathematics and has been studied consistently since the 18th century. Today, it comprises many branches, ranging from Riemannian geometry and differential topology to Lie groups. As a result, differential geometry has been used in various interdisciplinary fields, including physics, chemistry, economics, and computer vision. In 2005, we introduced a curvature-based model to generate biomolecular surfaces70. In the following years, we formulated the Laplace-Beltrami operator-based minimal molecular surface (MMS) for macromolecular systems71,72,107. This approach has been applied to multiscale solvation modeling, in which the molecular surfaces are described via the differential geometry of surfaces. Specifically, the solute molecule is described in microscopic detail, while the solvent is treated as a macroscopic continuum to reduce the large number of degrees of freedom76–79,83,84. Differential geometry-based multiscale models incorporate molecular dynamics, elasticity, and fluid flow to further couple the discrete macromolecular and continuum solvent domains80–82,86,87. In the past few years, we have improved the computational efficiency of the geometric modeling by implementing the differential geometry-based multiscale paradigms in Lagrangian73,74 and Eulerian representations75,108.

Differential geometry-based multiscale models have been used for solvation free energy prediction79,93 and ion channel transport analysis80–82,87,109, demonstrating their efficiency in comparison with atomistic-scale models.

Another application of differential geometry to biomolecular systems is to use curvatures to characterize the macromolecular surface landscape and thereby infer chemical and biological properties. For example, the minimum and maximum curvatures have been combined with the surface electrostatic potential to detect both positively and negatively charged protein binding sites75,108.

A third application of differential geometry in molecular science is curvature-based solvation free energy prediction85. In this approach, the total Gaussian, mean, minimum, and maximum curvatures of a molecule are computed and correlated with its solvation free energy.

II.B.2. Challenge

Differential geometry-based multiscale models bridge the discrete and continuum descriptions and enable the physical interpretation of molecular mechanisms. Curvature-based modeling of biomolecular binding sites and solvation free energy reveals macromolecular interactive landscapes. These methods are designed as physical models to enhance our understanding of biomolecular systems. However, they have limited capability in predicting massive and diverse datasets, either because they depend on physical models such as the Poisson-Boltzmann or Poisson-Nernst-Planck equation, or because they reduce geometric shape information excessively, e.g., to a molecular-level average of local curvatures. Indeed, physical models depend on force field parameters, which are subject to errors. Meanwhile, molecular-level descriptions are too coarse-grained for large datasets. In contrast, atomistic descriptions not only involve too much detail but also do not scale across molecules of different sizes in a large dataset. As a result, machine learning algorithms cannot be effectively implemented.

To overcome these obstacles, we have designed new differential geometry-based models that extract element-level geometric information, which automatically leads to scalable machine learning representations. Additionally, these models encode intermolecular and intramolecular non-covalent interactions. Therefore, they can readily be applied to diverse molecular and biomolecular datasets, including protein-ligand binding analysis and prediction.

II.B.3. Multiscale discrete-to-continuum mapping

Biomolecular datasets provide atomic coordinate and type information. To facilitate differential geometry modeling, this discrete representation is transformed into a continuum one by the so-called discrete-to-continuum mapping. For a given biomolecule or molecule with N atoms, denote by $\mathbf{r}_j \in \mathbb{R}^3$ and qj the position and the partial charge of the jth atom, respectively. For any point r in three-dimensional space, a discrete-to-continuum mapping56,59,62 defines the molecular number/charge density as

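$$\rho(\mathbf{r}, \{\eta_j\}, \{w_j\}) = \sum_{j=1}^{N} w_j\, \Phi(\|\mathbf{r} - \mathbf{r}_j\|; \eta_j). \tag{7}$$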

In particular, the density ρ is the molecular number density when wj = 1 and the molecular charge density when wj = qj. In addition, ηj describes characteristic distances, ∥ · ∥ is the Euclidean (second) norm, and Φ is a C2 function satisfying the following admissibility conditions

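$$\Phi(\|\mathbf{r} - \mathbf{r}_j\|; \eta_j) = 1, \quad \text{as } \|\mathbf{r} - \mathbf{r}_j\| \to 0, \tag{8}$$

$$\Phi(\|\mathbf{r} - \mathbf{r}_j\|; \eta_j) = 0, \quad \text{as } \|\mathbf{r} - \mathbf{r}_j\| \to \infty. \tag{9}$$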

In principle, the density function can accept any radial basis function (RBF), as well as the C2 delta sequences of the positive type examined in earlier work110. In practice, the generalized exponential functions

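$$\Phi_E(\|\mathbf{r} - \mathbf{r}_j\|; \eta_j) = e^{-(\|\mathbf{r} - \mathbf{r}_j\|/\eta_j)^{\kappa}}, \quad \kappa > 0, \tag{10}$$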

and generalized Lorentz functions

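$$\Phi_L(\|\mathbf{r} - \mathbf{r}_j\|; \eta_j) = \frac{1}{1 + (\|\mathbf{r} - \mathbf{r}_j\|/\eta_j)^{\nu}}, \quad \nu > 0, \tag{11}$$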

appear to be the best choices for biomolecular datasets56,59. Here, the power parameters κ and ν vary across datasets and are selected systematically.

To generate a multiscale representation from ρ(r, {ηj}, {wj}), one can use several values of the scale parameters {ηj}. Published work42 has shown that the molecular number density in Eq. (7) is an efficient representation of molecular surfaces. Unfortunately, such a molecular-level description plays little role in predictive models for massive data.
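The generalized exponential and Lorentz kernels and the density ρ can be sketched directly; evaluating the same density at two scales gives a minimal multiscale representation. The coordinates, scales, and powers below are illustrative values, and the function names are hypothetical.

```python
import numpy as np


def phi_exp(r, eta, kappa=2.0):
    """Generalized exponential kernel exp(-(r/eta)^kappa)."""
    return np.exp(-(r / eta) ** kappa)


def phi_lorentz(r, eta, nu=3.0):
    """Generalized Lorentz kernel 1 / (1 + (r/eta)^nu)."""
    return 1.0 / (1.0 + (r / eta) ** nu)


def density(points, coords, weights, eta, kernel=phi_exp, **params):
    """rho(r) = sum_j w_j Phi(||r - r_j||; eta_j) at each query point."""
    points = np.atleast_2d(np.asarray(points, dtype=float))
    coords = np.asarray(coords, dtype=float)
    r = np.linalg.norm(points[:, None, :] - coords[None, :, :], axis=-1)   # (P, N)
    return (np.asarray(weights, dtype=float) * kernel(r, eta, **params)).sum(axis=1)


coords = np.array([[0.0, 0.0, 0.0], [1.4, 0.0, 0.0]])
ones = np.ones(len(coords))                 # w_j = 1: number density
query = np.array([[0.7, 0.0, 0.0]])
multiscale = np.array([density(query, coords, ones, eta) for eta in (0.5, 2.0)])
```

Both kernels satisfy the admissibility conditions: they equal 1 at zero distance and decay to 0 at large distance. A small η resolves individual atoms, while a large η smears them into a smoother molecular-scale density.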

II.B.4. Element interactive densities

To handle diverse molecular and biomolecular datasets, we have upgraded the differential geometry representations with an emphasis on non-covalent intramolecular interactions within a molecule and intermolecular interactions in complexes, such as protein-ligand, protein-nucleic acid, and protein-protein complexes. Moreover, our differential geometry features characterize geometric information at the level of element-specific interactions and are scalable across a wide range of molecular sizes.

To accurately encode physical and biological information in the differential geometry representations, we describe the molecular interactions systematically at the element level. For instance, in protein-ligand datasets, the intermolecular interactions are decomposed into element-level descriptions based on the commonly occurring element types in proteins and ligands. Protein structures usually consist of H, C, N, O, and S, while ligand structures often include H, C, N, O, S, P, F, Cl, Br, and I, which results in 50 element-level intermolecular descriptions. In practice, hydrogen atoms are missing for proteins in most Protein Data Bank (PDB) entries. Therefore, we exclude hydrogen either from the protein only or from both the protein and the ligand, which leaves 40 or 36 element-specific groups, respectively, to express the intermolecular interactions in protein-ligand complexes. This element-specific approach can be carried out straightforwardly for other interactive systems in chemistry, biology, and materials science. For example, for protein-protein interactions, one similarly arrives at a total of 16 element-level descriptions for practical use.
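The group counts quoted above follow from simple Cartesian products of element lists, as the short sketch below checks. `element_pairs` is a hypothetical helper; for protein-protein interactions it assumes the heavy-atom set {C, N, O, S} on both sides.

```python
from itertools import product

PROTEIN_ELEMENTS = ["H", "C", "N", "O", "S"]
LIGAND_ELEMENTS = ["H", "C", "N", "O", "S", "P", "F", "Cl", "Br", "I"]


def element_pairs(protein, ligand, drop_h_protein=False, drop_h_ligand=False):
    """Ordered (protein element, ligand element) groups for intermolecular features."""
    prot = [e for e in protein if not (drop_h_protein and e == "H")]
    lig = [e for e in ligand if not (drop_h_ligand and e == "H")]
    return list(product(prot, lig))


n_all = len(element_pairs(PROTEIN_ELEMENTS, LIGAND_ELEMENTS))                 # 50
n_no_protein_h = len(element_pairs(PROTEIN_ELEMENTS, LIGAND_ELEMENTS, True))  # 40
n_no_h = len(element_pairs(PROTEIN_ELEMENTS, LIGAND_ELEMENTS, True, True))     # 36
n_protein_protein = len(list(product(["C", "N", "O", "S"], repeat=2)))        # 16
```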

In a given molecule, we collect the N atoms whose element types belong to the set of most frequently appearing element types, 𝒞 = {C1, C2, …}. Each jth atom in this collection is labeled as (rj, αj, qj), where αj is the element type of the jth atom, and Ck denotes the kth element type in 𝒞.

Before defining the element interactive density, we have to specify the non-covalent interactions between two element types Ck and Ck′. Such interactions can be represented by a correlation kernel Φ,

$$\Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}), \quad \alpha_i = C_k,\ \alpha_j = C_{k'},\ \|\mathbf{r}_i - \mathbf{r}_j\| > r_i + r_j + \sigma, \tag{12}$$

where ri and rj are the atomic radii of the ith and jth atoms, respectively, ηkk′ is a characteristic distance, and σ is the mean value of the standard deviations of all ri and rj in the dataset. The inequality constraint ∥ri − rj∥ > ri + rj + σ excludes covalent interactions.

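Given a point r in ℝ3, we define the element interactive density induced by the pairwise interaction between two chemical element types Ck and Ck′ as

$$\rho_{kk'}(\mathbf{r}, \eta_{kk'}) = \sum_{j} w_j\, \Phi(\|\mathbf{r} - \mathbf{r}_j\|; \eta_{kk'}), \quad \mathbf{r} \in D_k,\ \alpha_j = C_{k'}, \tag{13}$$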

where Dk is the so-called atomic-radius-parametrized van der Waals domain, given by the union of all balls centered at the Ck atomic positions with the corresponding atomic radii. In other words, if B(ri, ri) denotes a ball with center ri and radius ri, Dk can be expressed as

$$D_k = \bigcup_{i:\, \alpha_i = C_k} B(\mathbf{r}_i, r_i). \tag{14}$$

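Note that the element interactive density in Eq. (13) is suitable only for k ≠ k′. When the density is calculated from interactions between atoms of the same element type, i.e., k = k′, each Ck atom belongs both to the atomic-radius-parametrized van der Waals domain and to the sum defining the density, so it would interact with itself. To avoid this, we define the density for k = k′ as

$$\rho_{kk}(\mathbf{r}, \eta_{kk}) = \sum_{j} w_j\, \Phi(\|\mathbf{r} - \mathbf{r}_j\|; \eta_{kk}), \quad \mathbf{r} \in B(\mathbf{r}_i, r_i),\ \alpha_i = \alpha_j = C_k,\ \|\mathbf{r}_i - \mathbf{r}_j\| > r_i + r_j + \sigma, \tag{15}$$

in which the domain is a single ball B(ri, ri), and the density function ρkk is evaluated for every atom i with αi = Ck.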
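A minimal sketch of evaluating an element interactive density at the atoms of one element type, assuming weights wj = 1, a generalized exponential kernel, and covalent exclusion through the test d > ri + rj + σ. With that test, the k = k′ case needs no special handling, because an atom's distance to itself (zero) never passes it. The radii and coordinates are illustrative, not tabulated values, and the function name is hypothetical.

```python
import numpy as np


def interactive_density_at_atoms(coords, elements, radii, k, kp, eta, sigma, kappa=2.0):
    """rho_kk' evaluated at every C_k atom, summing kernels of non-covalent C_k' atoms.

    For k == k' the self term (distance 0) fails d > r_i + r_j + sigma and is dropped.
    Assumes w_j = 1 (number density) and a generalized exponential kernel.
    """
    coords = np.asarray(coords, dtype=float)
    radii = np.asarray(radii, dtype=float)
    elements = np.asarray(elements)
    I = np.flatnonzero(elements == k)
    J = np.flatnonzero(elements == kp)
    d = np.linalg.norm(coords[I, None, :] - coords[None, J, :], axis=-1)
    noncovalent = d > radii[I, None] + radii[None, J] + sigma
    return (np.exp(-(d / eta) ** kappa) * noncovalent).sum(axis=1)


coords = [[0.0, 0.0, 0.0], [1.2, 0.0, 0.0], [4.0, 0.0, 0.0]]
elements = ["C", "O", "O"]
radii = [0.7, 0.6, 0.6]        # illustrative radii only
rho_CO = interactive_density_at_atoms(coords, elements, radii, "C", "O", eta=3.0, sigma=0.1)
rho_OO = interactive_density_at_atoms(coords, elements, radii, "O", "O", eta=3.0, sigma=0.1)
```

In this toy input the oxygen 1.2 Å from the carbon is treated as covalently bonded and contributes nothing to ρCO, so only the distant oxygen is counted.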

The element interactive density ρkk′ is a linear combination of correlation kernels Φ over pairs of atoms of the two element types. Consequently, ρkk′ has the same smoothness as Φ. Moreover, by varying a level constant c, one obtains a family of element interactive manifolds,

$$\mathcal{M}_c = \{\mathbf{r} \mid \rho_{kk'}(\mathbf{r}, \eta_{kk'}) = c\}. \tag{16}$$

Figure 7 illustrates a few element interactive manifolds.

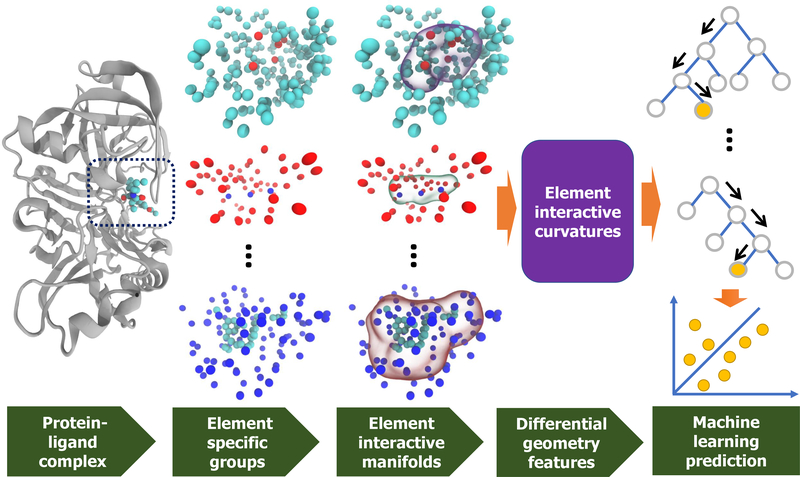

Figure 7:

Illustration of the DG-GL strategy for the complex with PDB ID 5QCT (first column). The second column presents different element-specific groups, namely OC, CN, and CH from top to bottom. The third column depicts the element interactive manifolds of the corresponding element-specific groups. A predictive model in the last column integrates the differential geometry features (fourth column) with the machine learning algorithm.

II.B.5. Element interactive curvatures

Differential geometry of differentiable manifolds

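We now describe how geometric information is computed on a differentiable manifold. Let U be an open subset of $\mathbb{R}^n$ whose closure is compact72,86,111, and consider a C2 immersion $f: U \to \mathbb{R}^{n+1}$. Given a vector u = (u1, u2, … , un) ∈ U, the Jacobian matrix with respect to u is

$$\mathrm{X}(\mathbf{u}) = \left(\mathrm{X}_1(\mathbf{u}), \mathrm{X}_2(\mathbf{u}), \ldots, \mathrm{X}_n(\mathbf{u})\right), \qquad \mathrm{X}_i(\mathbf{u}) = \frac{\partial f}{\partial u_i}(\mathbf{u}). \tag{17}$$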

The first fundamental form is the metric tensor with coefficients gij = 〈Xi, Xj〉, where 〈·, ·〉 is the Euclidean inner product in $\mathbb{R}^{n+1}$.

We define the unit normal vector via the Gauss map

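$$N: U \to \mathbb{S}^{n}, \tag{18}$$

$$N(\mathbf{u}) = \frac{\mathrm{X}_1 \times \mathrm{X}_2 \times \cdots \times \mathrm{X}_n}{\|\mathrm{X}_1 \times \mathrm{X}_2 \times \cdots \times \mathrm{X}_n\|}, \tag{19}$$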

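where × denotes the cross product. Denoting by ⊥uf the normal space of f at the point X = f(u), we have N(u) ∈ ⊥uf. In addition, the second fundamental form can be constructed from the normal vector N and the tangent vectors Xi:

$$\mathrm{II}(\mathrm{X}_i, \mathrm{X}_j) = (h_{ij})_{i,j=1,\ldots,n}, \qquad h_{ij} = \left\langle \frac{\partial^2 f}{\partial u_i \partial u_j}, N \right\rangle = -\left\langle \frac{\partial N}{\partial u_i}, \mathrm{X}_j \right\rangle. \tag{20}$$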

Then, the Gaussian curvature K and the mean curvature H are given by

$$K = \frac{\det(h_{ij})}{\det(g_{ij})}, \qquad H = \frac{1}{n} h_{ij} g^{ij}. \tag{21}$$

The Einstein summation convention is used in the curvature expressions, and $(g^{ij}) = (g_{ij})^{-1}$.
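For a 2D surface in ℝ3 (n = 2), the formulas of Eq. (21) reduce to a few 2×2 matrix operations. The sketch below computes K and H from the first and second derivatives of a parametrization and checks them on a sphere of radius R, where K = 1/R² and |H| = 1/R. The helper names are hypothetical, and the sign of H depends on the orientation chosen for N.

```python
import numpy as np


def curvatures_from_forms(Xu, Xv, Xuu, Xuv, Xvv):
    """Gaussian curvature K and mean curvature H of a parametric surface f(u, v) in R^3."""
    N = np.cross(Xu, Xv)
    N = N / np.linalg.norm(N)                                 # unit normal (Gauss map)
    g = np.array([[Xu @ Xu, Xu @ Xv], [Xv @ Xu, Xv @ Xv]])    # first fundamental form g_ij
    h = np.array([[N @ Xuu, N @ Xuv], [N @ Xuv, N @ Xvv]])    # second fundamental form h_ij
    K = np.linalg.det(h) / np.linalg.det(g)
    H = 0.5 * np.trace(np.linalg.solve(g, h))                 # (1/n) h_ij g^ij with n = 2
    return K, H


def sphere_derivatives(u, v, R):
    """Analytic derivatives of f(u, v) = R (sin v cos u, sin v sin u, cos v)."""
    su, cu, sv, cv = np.sin(u), np.cos(u), np.sin(v), np.cos(v)
    Xu = R * np.array([-sv * su, sv * cu, 0.0])
    Xv = R * np.array([cv * cu, cv * su, -sv])
    Xuu = R * np.array([-sv * cu, -sv * su, 0.0])
    Xuv = R * np.array([-cv * su, cv * cu, 0.0])
    Xvv = R * np.array([-sv * cu, -sv * su, -cv])
    return Xu, Xv, Xuu, Xuv, Xvv


R = 2.0
K, H = curvatures_from_forms(*sphere_derivatives(0.4, np.pi / 3, R))
```

Using `np.linalg.solve(g, h)` forms g⁻¹h without an explicit inverse; its trace equals h_ij g^ij because both matrices are symmetric.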

Element interactive curvatures

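With an element interactive manifold defined via the element interactive density ρ(r) in (16) and the expressions in (21), the Gaussian curvature (K) and the mean curvature (H) can be written as75,112

$$K = \frac{1}{g^2}\Big[2\rho_x\rho_y\rho_{xz}\rho_{yz} + 2\rho_x\rho_z\rho_{xy}\rho_{yz} + 2\rho_y\rho_z\rho_{xy}\rho_{xz} - 2\rho_x\rho_z\rho_{xz}\rho_{yy} - 2\rho_y\rho_z\rho_{xx}\rho_{yz} - 2\rho_x\rho_y\rho_{xy}\rho_{zz} + \rho_z^2\rho_{xx}\rho_{yy} + \rho_x^2\rho_{yy}\rho_{zz} + \rho_y^2\rho_{xx}\rho_{zz} - \rho_x^2\rho_{yz}^2 - \rho_y^2\rho_{xz}^2 - \rho_z^2\rho_{xy}^2\Big] \tag{22}$$

and

$$H = \frac{1}{2g^{3/2}}\Big[2\rho_x\rho_y\rho_{xy} + 2\rho_x\rho_z\rho_{xz} + 2\rho_y\rho_z\rho_{yz} - (\rho_y^2 + \rho_z^2)\rho_{xx} - (\rho_x^2 + \rho_z^2)\rho_{yy} - (\rho_x^2 + \rho_y^2)\rho_{zz}\Big], \tag{23}$$

where $g = \rho_x^2 + \rho_y^2 + \rho_z^2$.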

In addition, the minimum curvature (κmin) and the maximum curvature (κmax) can be evaluated from the Gaussian and mean curvatures,

$$\kappa_{\min} = H - \sqrt{H^2 - K}, \qquad \kappa_{\max} = H + \sqrt{H^2 - K}. \tag{24}$$

Note that in the curvature representations (22), (23), and (24), the derivatives of the density function can be calculated analytically. For convenience, we denote the curvatures associated with the density function ρkk′ as $K_{kk'}$, $H_{kk'}$, $\kappa^{\min}_{kk'}$, and $\kappa^{\max}_{kk'}$. In practice, the element interactive curvatures are evaluated only at the atomic positions of a given molecular or biomolecular structure. Because biomolecular structures vary in size, the number of atoms at which the curvatures are evaluated varies as well. To obtain element-level geometric information, we propose the element interactive mean curvature as

| (25) |

and

| (26) |

The element-level interactive Gaussian, minimum, and maximum curvatures are defined in a similar manner.
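The pieces can be combined in a short sketch: analytic derivatives of a Gaussian density (κ = 2, wj = 1), the standard implicit-surface formulas for K and H of a level set ρ = const, the principal curvatures from K and H, and an element-level summary. Several simplifications are assumptions of this sketch: covalent exclusion is omitted, it targets k ≠ k′, and the element-level value is taken as a plain sum over Ck atoms, which may differ from the published element interactive curvature definitions. With these formulas, the level set around a single Gaussian center at distance a has K = 1/a² and H = 1/a.

```python
import numpy as np


def gaussian_density_derivs(r, centers, eta):
    """Value, gradient and Hessian of rho(x) = sum_j exp(-||x - r_j||^2 / eta^2) at x = r."""
    d = np.asarray(r, dtype=float)[None, :] - np.asarray(centers, dtype=float).reshape(-1, 3)
    phi = np.exp(-(d ** 2).sum(axis=1) / eta**2)
    grad = -2.0 / eta**2 * (phi[:, None] * d).sum(axis=0)
    hess = (4.0 / eta**4) * np.einsum("m,mi,mj->ij", phi, d, d) - (2.0 / eta**2) * phi.sum() * np.eye(3)
    return phi.sum(), grad, hess


def implicit_curvatures(grad, hess):
    """K, H, kappa_min, kappa_max of the level surface rho = const through the point."""
    px, py, pz = grad
    (xx, xy, xz), (_, yy, yz), (_, _, zz) = hess
    g = px**2 + py**2 + pz**2                       # must be nonzero (regular point)
    K = (2*px*py*xz*yz + 2*px*pz*xy*yz + 2*py*pz*xy*xz
         - 2*px*pz*xz*yy - 2*py*pz*xx*yz - 2*px*py*xy*zz
         + pz**2*xx*yy + px**2*yy*zz + py**2*xx*zz
         - px**2*yz**2 - py**2*xz**2 - pz**2*xy**2) / g**2
    H = (2*px*py*xy + 2*px*pz*xz + 2*py*pz*yz
         - (py**2 + pz**2)*xx - (px**2 + pz**2)*yy - (px**2 + py**2)*zz) / (2.0 * g**1.5)
    root = np.sqrt(max(H**2 - K, 0.0))              # clip tiny negative round-off
    return K, H, H - root, H + root


def element_interactive_curvatures(coords, elements, k, kp, eta):
    """Rows (K, H, kappa_min, kappa_max) at each C_k atom from the density of C_k' atoms."""
    coords = np.asarray(coords, dtype=float)
    elements = np.asarray(elements)
    centers = coords[elements == kp]
    rows = [implicit_curvatures(*gaussian_density_derivs(ri, centers, eta)[1:])
            for ri in coords[elements == k]]
    return np.array(rows, dtype=float).reshape(-1, 4)


coords = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0]]
atomic = element_interactive_curvatures(coords, ["C", "O"], "O", "C", eta=2.0)
element_level = atomic.sum(axis=0)                  # one possible element-level summary
```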

II.B.6. Differential geometry based geometric learning (DG-GL)

Geometric learning

In our differential geometry-based geometric learning (DG-GL) model, we combine geometric representations, such as element-level interactive curvatures, with advanced machine learning algorithms to form powerful predictive models. Consider a training set $\{\mathcal{X}_i\}_{i \in I}$, in which $\mathcal{X}_i$ is the input data for the ith molecule and I is the set of molecular indices in the training data. We denote by $\mathcal{F}(\mathcal{X}_i; \zeta)$ a differential geometric function that encodes the input structures into the aforementioned DG descriptions via a given hyperparameter set ζ. Our DG-GL model learns the training set by minimizing the following loss function

$$\min_{\zeta, \theta} \sum_{i \in I} \mathcal{L}\left(y_i, \mathcal{F}(\mathcal{X}_i; \zeta); \theta\right), \tag{27}$$

in which $\mathcal{L}$ is the loss function, yi is the target label of molecule $\mathcal{X}_i$, and θ is the set of parameters of the selected machine learning algorithm. It is worth noting that the DG representation encoded in $\mathcal{F}$ does not depend on the type of learning task. Therefore, our DG-GL models can be combined with any regressor or classifier, such as linear regression, support vector machines, random forests, gradient boosting trees, artificial neural networks, and convolutional neural networks. Besides the machine learning hyperparameters, the kernel parameters in the encoding DG function $\mathcal{F}$ need to be optimized for a specific learning algorithm and a particular training set.

In the validation, we use only gradient boosting trees (GBTs), although other advanced machine learning models, including convolutional neural networks, can be incorporated with minimal effort. The general framework of the DG-GL model is depicted in Figure 7. The GBTs in the DG-GL score are implemented with the gradient boosting regression module of the scikit-learn v0.19.1 package, using the following hyperparameters for all experiments: n_estimators=10000, max_depth=7, min_samples_split=3, learning_rate=0.01, loss='ls', subsample=0.3, and max_features='sqrt'.

Model parametrization

In our differential geometry-based approach, we calculate the element interactive curvatures (EICs) of type C based on kernel α with parameters (δ, τ). We denote such a model by $\mathrm{EIC}^{C}_{\alpha,\delta,\tau}$. Here, C ∈ {K, H, κmin, κmax}, and α = E and α = L indicate the generalized exponential and generalized Lorentz kernels, respectively. In addition, δ is the kernel order, i.e., κ if α = E or ν if α = L. The other kernel parameter, τ, is defined by the relationship

$$\eta_{kk'} = \tau\,(\bar{r}_k + \bar{r}_{k'}), \tag{28}$$

where $\bar{r}_k$ and $\bar{r}_{k'}$ stand for the van der Waals radii of element types k and k′, respectively. These kernel parameters are selected via 5-fold cross-validation on a specific training set, with τ and δ ranging from 0.5 to 6 in increments of 0.5. We also consider high power orders, δ ∈ {10, 15, 20}, which approximate the ideal low-pass filter (ILF)63. These parameter ranges are listed in Table 1.

Table 1:

The ranges of DG-GL hyperparameters for 5-fold cross-validations

| Parameter | Domain |
|---|---|
| τ | {0.5, 1.0, …, 6} |
| δ | {0.5, 1.0, …, 6} ∪ {10, 15, 20} |
| C | {K, H, κmin, κmax} |
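A sketch of the search space in Table 1 and of cross-validated selection. It encodes the grid, the relation ηkk′ = τ(r̄k + r̄k′), and a generic 5-fold selection loop using scikit-learn's `cross_val_score`. The featurizer passed to `select_by_cv` is a hypothetical stand-in (a real one would compute EIC features), and the van der Waals radii in the test are example values.

```python
import itertools
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

TAU = np.arange(0.5, 6.0 + 1e-9, 0.5)                  # {0.5, 1.0, ..., 6}
DELTA = np.concatenate([TAU, [10.0, 15.0, 20.0]])       # kernel order kappa or nu
CURVATURES = ["K", "H", "kmin", "kmax"]
KERNELS = ["E", "L"]                                    # generalized exponential / Lorentz
GRID = list(itertools.product(KERNELS, CURVATURES, DELTA, TAU))


def eta_kk(tau, vdw_k, vdw_kp):
    """Characteristic distance eta_kk' = tau * (r_k + r_k') from van der Waals radii."""
    return tau * (vdw_k + vdw_kp)


def select_by_cv(featurize, data, y, model, grid, cv=5):
    """Return the grid point with the best mean cross-validated score (R^2 for regressors)."""
    scores = {p: cross_val_score(model, featurize(data, *p), y, cv=cv).mean() for p in grid}
    return max(scores, key=scores.get), scores


def toy_featurize(data, kernel, curvature, delta, tau):
    # Hypothetical stand-in for an EIC featurizer: raises inputs to the power delta.
    return (data ** delta)[:, None]


x = np.linspace(0.0, 1.0, 50)
best, _ = select_by_cv(toy_featurize, x, x ** 2, LinearRegression(),
                       [("E", "K", d, 1.0) for d in (1.0, 2.0, 3.0)])
```

The full grid has 2 × 4 × 15 × 12 = 1440 points, which is why the text restricts cross-validation to a single learning algorithm at a time.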

To enable multiscale descriptions in the differential geometry representation, we employ multiple kernels to evaluate the EICs. For instance, if two kernels with parameters (α1, δ1, τ1) and (α2, δ2, τ2) are used, our EIC model can be written as $\mathrm{EIC}^{C}_{\alpha_1,\delta_1,\tau_1;\,\alpha_2,\delta_2,\tau_2}$.

In a protein-ligand complex, we consider 4 commonly occurring protein atom types {C, N, O, S} and 10 commonly occurring ligand atom types {H, C, N, O, F, P, S, Cl, Br, I}, which results in a total of 40 different combinations. From each set of calculated atomic pairwise curvatures, we construct 10 statistical features, namely the sum, the sum of absolute values, the minimum, the minimum of absolute values, the maximum, the maximum of absolute values, the mean, the mean of absolute values, the standard deviation, and the standard deviation of absolute values. In total, we obtain 400 features (40 element pairs × 10 statistics) for the current differential geometry-based models.
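The 40 × 10 layout can be sketched as follows, assuming the atomic curvature values for each element pair have already been computed. Two choices here are assumptions: an absent element pair contributes ten zeros, and the standard deviation uses NumPy's default population form (ddof = 0). The function names are hypothetical.

```python
from itertools import product
import numpy as np

PAIRS = list(product(["C", "N", "O", "S"],
                     ["H", "C", "N", "O", "F", "P", "S", "Cl", "Br", "I"]))   # 40 pairs


def ten_stats(values):
    """Sum, min, max, mean, std of the values and of their absolute values (10 numbers)."""
    v = np.asarray(values, dtype=float)
    if v.size == 0:
        return np.zeros(10)            # assumption: absent element pairs contribute zeros
    a = np.abs(v)
    return np.array([v.sum(), a.sum(), v.min(), a.min(), v.max(), a.max(),
                     v.mean(), a.mean(), v.std(), a.std()])


def feature_vector(curvatures_by_pair):
    """Concatenate the 10 statistics for every (protein, ligand) element pair: 400 features."""
    return np.concatenate([ten_stats(curvatures_by_pair.get(p, [])) for p in PAIRS])


feats = feature_vector({("C", "O"): [-1.0, 2.0]})
```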

II.C. Graph theory-based methods

II.C.1. Background

Graph theory is one of the most popular subjects in discrete mathematics. In graph theory, information is represented by graph structures formed by vertices connected by edges and/or higher-dimensional simplices. Different ways of interpreting graphs result in different graph theories, such as geometric graph theory, algebraic graph theory, and topological graph theory. In geometric graph theory, graph information is extracted from geometric objects drawn in the Euclidean plane113. When algebraic methods are used to process graph structures, the approach belongs to algebraic graph theory. There are two common approaches in this branch. The first uses linear algebra to study the spectra of various matrices representing a graph, including the adjacency matrix and the Laplacian matrix114. The second relies on group theory, especially automorphism groups115 and geometric group theory116, to study graphs. Unlike the aforementioned graph theories, topological graph theory considers graphs as topological spaces by associating them with different types of simplicial complexes, such as the abstract simplicial complex117 and the Whitney complex118.

Because graphs naturally represent structured information, graph theory has found enormous applications in various fields, including computer science, linguistics, physics, chemistry, biology, and the social sciences. In chemical and biological studies in particular, graph theory is commonly used, since molecular structures map naturally onto graphs whose vertices represent atoms and whose edges represent bonds. Indeed, graph-based approaches have been used to describe chemical datasets119–124 as well as biomolecular datasets54,125–130. In addition, graph representations can uncover the connectivity of different components of a molecule through measures such as centrality131–133, contact maps54,134, and topological indices123,135. Moreover, graph-derived representations can be employed in chemical analysis52,120,121 and biomolecular modeling136. In particular, several research groups have used graph-based representations to model protein flexibility and long-time dynamics, e.g., normal-mode analysis (NMA)137–140 and the elastic network model (ENM)54,55,141–144.

II.C.2. Challenge

Owing to their rich geometric interpretations, graph theory-based approaches have proven efficient in qualitative and descriptive models. However, oversimplified representations and the lack of detailed physical and biological information may render graph theory-based approaches less attractive for quantitative analysis. For instance, in the Gaussian network model (GNM)54,142,145, the spectrum of the Laplacian matrix is quite efficient for decomposing proteins into flexible and rigid regions and domains, but its predictions of Cα atom fluctuations were not reliable, with Pearson correlation coefficients as low as 0.6 on three datasets146. For predicting the effects of mutations in proteins, the graph-based mCSM method was not as competitive as physics- and knowledge-based or topological fingerprint-based models46,147.

In our view, the poor performance of the aforementioned graph theory-based models on quantitative tasks stems from the lack of three main components. First, these graph structures do not provide information at the chemical element level. Consequently, these models treat all element types equally, which results in inadequately encoded information from the original structures. Second, many graph edges overlook non-covalent interactions between atoms, which leads to unphysical representations of most molecular and biomolecular data. Finally, in many graph-based models, the edges express the connectivity between a pair of atoms by the number of covalent bonds between them, which inaccurately describes the many interactions that depend on the Euclidean distance.

To address these issues in graph-based modeling, we developed weighted graphs, termed the flexibility-rigidity index (FRI), to predict the B-factors of protein atoms. In our FRI model, the graph edges are formulated with radial basis functions (RBFs)58–60,62, which properly describe the interaction strengths between two atoms in equilibrium structures. The original FRI was upgraded to the multiscale FRI60,63 to capture multiscale interactions in biological structures. Specifically, the graph in the multiscale FRI model is allowed to have multiple edges formed by RBFs with carefully selected scale and power parameters. Although our FRI models outperformed the GNM in B-factor prediction, they provide only coarse-grained, molecular-level descriptions. To overcome this limitation, we proposed graph coloring-based methods in which vertices are colored according to their element types. Consequently, we obtained various element-specific subgraphs that account for different types of physical interactions, such as hydrophilic and hydrophobic interactions and hydrogen bonds64,65. As a result, the accuracy of protein B-factor predictions by our multiscale weighted colored graphs is over 40% higher than that of GNM65. The success of multiscale weighted colored graph models in B-factor prediction encouraged us to design graph-based scoring functions to predict protein-ligand binding affinities. The protein-ligand binding mechanism is more complex than protein B-factors and therefore requires sophisticated graph-based models that accurately encode physical and biological properties to unveil its molecular mechanism. The development of such graphs is described in the following sections.

II.C.3. Multiscale weighted colored geometric subgraphs

In this section, we discuss general graph representations of a molecule or biomolecule. Graph-based representations are systematic, scalable, and straightforward to apply, not only to the prediction of protein-ligand binding affinity but also to various bioactivities, such as toxicity, solvation, solubility, partition coefficient, mutation-induced protein folding stability change, and protein-nucleic acid interactions. For a given molecule or biomolecule in a dataset, we denote by $\mathcal{G}(\mathcal{V}, \mathcal{E})$ a graph representing a subset of its N atoms. Its vertex set consists of the coordinates and chemical element types of the atoms,

$$\mathcal{V} = \{(\mathbf{r}_j, \alpha_j) \mid \mathbf{r}_j \in \mathbb{R}^3;\ \alpha_j \in \mathcal{C};\ j = 1, 2, \ldots, N\}, \tag{29}$$

where rj is the 3D position of the jth atom, and αj is its element type, which belongs to the predefined set 𝒞 of commonly occurring chemical element types introduced in Section II.B.4. For the graph to encode meaningful physical and biological information, its edges must express non-covalent interactions. To describe the interactions between atoms of element type Ck and atoms of element type Ck′, we consider the set of graph edges represented by RBFs,

$$\mathcal{E} = \{\Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}) \mid \alpha_i = C_k,\ \alpha_j = C_{k'};\ i, j = 1, 2, \ldots, N;\ \|\mathbf{r}_i - \mathbf{r}_j\| > r_i + r_j + \sigma\}, \tag{30}$$

where ∥ri − rj∥ is the Euclidean distance between the ith and jth atoms, and ri and rj are the atomic radii of the ith and jth atoms, respectively. Moreover, σ is the mean value of the standard deviations of all atomic radii of element types Ck and Ck′ in the dataset. The inequality ∥ri − rj∥ > ri + rj + σ excludes covalent interactions. Φ is a predefined RBF representing a graph weight, with the following properties56,59

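$$\Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}) = 1, \quad \text{as } \|\mathbf{r}_i - \mathbf{r}_j\| \to 0, \tag{31}$$

$$\Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}) = 0, \quad \text{as } \|\mathbf{r}_i - \mathbf{r}_j\| \to \infty, \tag{32}$$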

where ηkk′ is a characteristic distance between the atoms. This defines the weighted colored subgraph (WCS) $\mathcal{G}(\mathcal{V}, \mathcal{E})$, denoted $\mathcal{G}_{kk'}$ for short.

In principle, our WCS can adopt any RBF. In practice, the generalized exponential functions (10) and generalized Lorentz functions (11) appear to be the best choices for biomolecular datasets56,59. Here, the power parameters κ and ν vary across datasets and are selected systematically. To illustrate the WCS of a given molecule, we use the uracil compound (C4H4N2O2) as an example. Figure 8 depicts the WCS for nitrogen and oxygen atoms, $\mathcal{G}_{NO}$. To elicit the geometric invariants of the WCS formed by element types Ck and Ck′, we propose a collective representation at the element level,

$$\mu^{\mathcal{G}}(\eta_{kk'}) = \sum_{i} \mu_i^{\mathcal{G}}(\eta_{kk'}) = \sum_{i} \sum_{j} \Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}), \quad \alpha_i = C_k,\ \alpha_j = C_{k'},\ \|\mathbf{r}_i - \mathbf{r}_j\| > r_i + r_j + \sigma, \tag{33}$$

where $\mu_i^{\mathcal{G}}(\eta_{kk'}) = \sum_j \Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'})$ is a geometric subgraph centrality of the ith atom, developed in our previous work on protein B-factor prediction65. The summation over $\mu_i^{\mathcal{G}}$ in Eq. (33) gives the WCS rigidity between element types Ck and Ck′. In fact, $\mu^{\mathcal{G}}$ is the generalized form of our successful rigidity index model for protein-ligand binding affinity prediction in previous work64. Note that the WCS for a protein-ligand system is bipartite, since each of its edges represents the interaction between an atom in the protein and an atom in the ligand. With this design, a variety of physical and biological properties, such as electrostatics, van der Waals interactions, hydrogen bonds, polarization, hydrophilicity, and hydrophobicity, can be encoded in our WCS representations.
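A minimal sketch of bipartite protein-ligand rigidity features at several scales (MWCS), assuming per-element coordinate and radius arrays are already available. Each feature is the sum of non-covalent kernel weights between protein atoms of element k and ligand atoms of element k′, i.e., the sum of the atomic centralities; each (kernel, power, η) triple acts as one scale. The names, radii, and coordinates are hypothetical.

```python
import numpy as np


def rigidity(xyz_a, r_a, xyz_b, r_b, eta, sigma, kernel="E", power=2.0):
    """Sum of non-covalent kernel weights between group-a and group-b atoms (bipartite WCS)."""
    d = np.linalg.norm(xyz_a[:, None, :] - xyz_b[None, :, :], axis=-1)
    if kernel == "E":
        phi = np.exp(-(d / eta) ** power)           # generalized exponential
    else:
        phi = 1.0 / (1.0 + (d / eta) ** power)      # generalized Lorentz
    phi = np.where(d > r_a[:, None] + r_b[None, :] + sigma, phi, 0.0)
    return phi.sum()                                # = sum over i of the atomic centralities


def mwcs_features(protein, ligand, pairs, scales, sigma):
    """protein/ligand: dict element -> (xyz (n, 3), radii (n,)); scales: (kernel, power, eta)."""
    feats = []
    for k, kp in pairs:
        for kernel, power, eta in scales:
            if k in protein and kp in ligand:
                feats.append(rigidity(*protein[k], *ligand[kp], eta, sigma, kernel, power))
            else:
                feats.append(0.0)                   # element pair absent from this complex
    return np.array(feats)


protein = {"C": (np.array([[0.0, 0.0, 0.0]]), np.array([0.7]))}
ligand = {"O": (np.array([[3.0, 0.0, 0.0]]), np.array([0.6]))}
scales = [("E", 2.0, 3.0), ("L", 3.0, 3.0)]         # two kernels = two scales
x = mwcs_features(protein, ligand, [("C", "O"), ("N", "O")], scales, sigma=0.1)
```

Because every edge joins a protein atom to a ligand atom, the feature length depends only on the number of element pairs and scales, not on the size of the complex.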

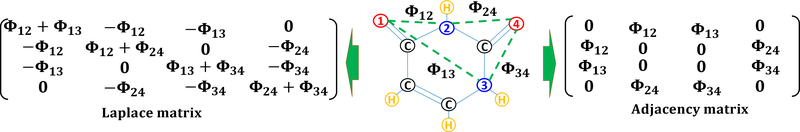

Figure 8:

Illustration of the weighted colored subgraph $\mathcal{G}_{NO}$ (left), its Laplacian matrix (middle), and its adjacency matrix (right) for the uracil molecule (C4H4N2O2). Graph vertices, namely oxygen (atoms 1 and 4) and nitrogen (atoms 2 and 3), are labeled in red and blue, respectively. Graph edges (i.e., Φij), drawn as green dashed lines, are distance-weighted edges rather than covalent bonds. Note that there are 9 other nontrivial subgraphs for this molecule (i.e., $\mathcal{G}_{CC}$, $\mathcal{G}_{CH}$, $\mathcal{G}_{CN}$, $\mathcal{G}_{CO}$, $\mathcal{G}_{HH}$, $\mathcal{G}_{HN}$, $\mathcal{G}_{HO}$, $\mathcal{G}_{NN}$, and $\mathcal{G}_{OO}$).

To capture both intermolecular and intramolecular properties, one can vary the characteristic distance ηkk′ to set up multiscale weighted colored subgraphs (MWCS). To obtain multiscale graph-based molecular and biomolecular representations in a systematic, collective, and scalable manner, one selects appropriate groups of pairwise element interactions (k, k′), the subgraph weights Φ, and their parameters.

II.C.4. Multiscale weighted colored algebraic subgraphs

In this section, we present another approach to extracting meaningful representations of biomolecules from their WCSs. This scheme relies on algebraic (spectral) graph formulations. Since geometric and algebraic approaches handle graph information differently, the two kinds of subgraph representations are expected to encode physical and biological information from different perspectives. In algebraic graph theory, matrices are used to represent a given subgraph; two of the most common are the Laplacian matrix and the adjacency matrix.

Multiscale weighted colored Laplacian matrix

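Considering a weighted colored subgraph defined by Eqs. (29) and (30), we construct the following weighted colored Laplacian matrix $L(\mathcal{G}; \eta_{kk'})$, which describes the interactions between element types Ck and Ck′:

$$L_{ij}(\mathcal{G}; \eta_{kk'}) = \begin{cases} -\Phi(\|\mathbf{r}_i - \mathbf{r}_j\|; \eta_{kk'}), & \text{if } i \neq j,\ \{\alpha_i, \alpha_j\} = \{C_k, C_{k'}\},\ \|\mathbf{r}_i - \mathbf{r}_j\| > r_i + r_j + \sigma, \\ -\sum_{j \neq i} L_{ij}(\mathcal{G}; \eta_{kk'}), & \text{if } i = j, \\ 0, & \text{otherwise}. \end{cases} \tag{34}$$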

For illustration, we explicitly formulate the Laplacian matrix of the WCS for the uracil molecule (C4H4N2O2) in Figure 8. All eigenvalues of our element-level WCS Laplacian matrix are nonnegative, because the matrix is symmetric and diagonally dominant with a nonnegative diagonal, and hence positive semidefinite. Moreover, every row sum and column sum of the Laplacian matrix is zero, so its smallest eigenvalue is 0. The second smallest eigenvalue is the so-called algebraic connectivity (also known as the Fiedler value), which approximates the sparsest cut of a graph. For a given WCS, one can see that the geometric invariant proposed in Eq. (33) is recovered from the trace of its Laplacian matrix,

| (35) |

where Tr is the trace.
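The Laplacian properties stated above can be checked numerically. The sketch below assumes the standard convention that off-diagonal entries are −Φij and each diagonal entry is the weighted degree, and builds the Laplacian of a bipartite WCS from a made-up block of kernel weights; `bipartite_laplacian` is a hypothetical helper name.

```python
import numpy as np


def bipartite_laplacian(W):
    """Weighted Laplacian of a bipartite WCS from its (n_k, n_kp) weight block.

    Off-diagonal entries are -Phi_ij; each diagonal entry is the weighted degree.
    """
    W = np.asarray(W, dtype=float)
    nk, nkp = W.shape
    A = np.zeros((nk + nkp, nk + nkp))
    A[:nk, nk:] = W          # edges run only between type k and type k'
    A[nk:, :nk] = W.T
    return np.diag(A.sum(axis=1)) - A


W = np.array([[0.9, 0.2],
              [0.3, 0.7]])           # made-up kernel weights
L = bipartite_laplacian(W)
eigvals = np.linalg.eigvalsh(L)      # ascending order for a symmetric matrix
fiedler = eigvals[1]                 # algebraic connectivity
```

Here every row and column of `L` sums to zero, the smallest eigenvalue is 0 up to round-off, and the trace equals the sum of all edge weights counted once from each endpoint.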

In the algebraic graph approach, we use eigenvalue and eigenvector information to extract graph invariants. To this end, we consider the eigenvalues and the corresponding eigenvectors of the WCS Laplacian matrix. The element-level molecular representation of the Laplacian matrix is proposed as follows

| (36) |

which is built from the so-called atomic representation of the ith atom

| (37) |

where T denotes the transpose. This atomic representation is that of the generalized GNM54,63, so it can be used directly to capture atomic properties such as protein B-factors. Moreover, the element-level invariant of the Laplacian matrix can be enriched with statistics of these atomic values, namely their sum, mean, maximum, minimum, and standard deviation.
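In the GNM, the atomic value of site i is the ith diagonal element of the pseudo-inverse of the Laplacian, i.e., a sum over the nonzero modes l of u_li²/λ_l. Assuming Eq. (37) takes this GNM form, a minimal sketch of the atomic values and the five statistics listed above looks as follows; the small weighted graph is made up, and `atomic_representation` and `summary_stats` are hypothetical names.

```python
import numpy as np


def atomic_representation(L, tol=1e-10):
    """GNM-style atomic values: sum over nonzero modes of u_li^2 / lambda_l."""
    lam, U = np.linalg.eigh(L)
    keep = lam > tol                      # drop the trivial zero mode(s)
    return (U[:, keep] ** 2 / lam[keep]).sum(axis=1)


def summary_stats(values):
    """Statistics used to enrich the element-level invariant."""
    v = np.asarray(values, dtype=float)
    return {"sum": v.sum(), "mean": v.mean(), "max": v.max(),
            "min": v.min(), "std": v.std()}


# Laplacian of a small made-up weighted graph (row sums are zero).
W = np.array([[0.0, 0.9, 0.2],
              [0.9, 0.0, 0.5],
              [0.2, 0.5, 0.0]])
L = np.diag(W.sum(axis=1)) - W
mu = atomic_representation(L)
features = summary_stats(mu)
```

Discarding eigenvalues below a small tolerance is what turns the inverse into a pseudo-inverse; without it the zero mode of the Laplacian would make the sum diverge.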

Another way to extract invariant representations from the WCS Laplacian matrix is to use its nontrivial (nonzero) eigenvalues directly. Statistics of these eigenvalues can also be incorporated into a feature vector that characterizes the element-level information of molecules and biomolecules.

Multiscale weighted colored adjacency matrix

Setting all diagonal entries of the Laplacian matrix to 0 (and taking the off-diagonal entries as the positive edge weights Φij) yields an adjacency matrix, which has a simpler form but still preserves the essential properties of the original molecular structure. For a given WCS, the adjacency matrix is given as

| (38) |

Since the graph underlying the adjacency matrix in Eq. (38) is undirected, the matrix is symmetric, and all its eigenvalues are real. Moreover, because the WCS is bipartite (its edges connect only atoms of element type k to atoms of element type k′), for each eigenvalue λ, its opposite −λ is also an eigenvalue. Consequently, only positive eigenvalues are used in the molecular representation. For illustration, Figure 8 shows the adjacency matrix of the weighted colored subgraph GNO of the uracil molecule (C4H4N2O2). By the Perron-Frobenius theorem, the spectral radius of the adjacency matrix, denoted ρ(A), lies between the smallest and the largest diagonal elements (weighted vertex degrees) of the corresponding Laplacian matrix
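The two spectral facts above, the ±λ symmetry of a bipartite graph and the degree bound on ρ(A), can be illustrated with a minimal sketch. The 2×3 block of kernel weights is made up, and the positive-eigenvalue threshold is an assumed numerical tolerance.

```python
import numpy as np

# Made-up 2x3 block of kernel weights between atoms of types k and k'.
W = np.array([[0.9, 0.1, 0.4],
              [0.2, 0.8, 0.3]])
nk, nkp = W.shape
A = np.zeros((nk + nkp, nk + nkp))
A[:nk, nk:] = W          # edges only run between the two element types,
A[nk:, :nk] = W.T        # so the WCS is bipartite and A is symmetric

eig = np.linalg.eigvalsh(A)          # real, since A is symmetric
positive = eig[eig > 1e-12]          # keep only the positive eigenvalues
degrees = A.sum(axis=1)              # diagonal of the matching Laplacian
rho = np.abs(eig).max()              # spectral radius rho(A)
```

For a bipartite adjacency matrix the nonzero eigenvalues are ± the singular values of the weight block `W`, which is why the negative half of the spectrum carries no extra information.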

| (39) |

All kernel weights Φij, i.e., the off-diagonal entries of the adjacency matrix (equivalently, the negated off-diagonal entries of the Laplacian matrix), lie in [0,1] and depend on the scale parameter ηkk′. At a characteristic scale suited to hydrogen bonds or van der Waals interactions, these matrices have many zeros. However, the scale parameter ηkk′ can be very large for electrostatic and hydrophobic interactions47, which makes many of these weights nearly 1. In that situation, since each weighted degree is at most n − 1, the spectral radius of the adjacency matrix A(ηkk′) is bounded by n − 1, where n is the number of atoms in the WCS.

As with the Laplacian matrix, all positive eigenvalues of the adjacency matrix and their statistics, such as sum, mean, maximum, minimum, and standard deviation, are included in the element-level molecular representations. Using the eigenvectors corresponding to these eigenvalues, the atomic representations can be obtained as

| (40) |

where the matrix Q is composed of n linearly independent eigenvectors of A(ηkk′) and is therefore invertible, and Λ is a diagonal matrix whose diagonal element Λii is the ith eigenvalue. Unfortunately, the formulation in Eq. (40) is computationally expensive because it involves a matrix inversion.

In general, methods based on eigenvalue and eigenvector analysis make efficient computation challenging. Fortunately, the construction of the WCS enables a less expensive computational model for two reasons. First, the protein-ligand binding site involves only a small region of the whole complex structure. Second, a WCS admits only specific element types in the matrix construction, which further reduces the size of the matrices whose eigenvalues and eigenvectors must be computed. Together, these facts yield an efficient spectral graph-based model for protein-ligand binding affinity analysis.
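The size reduction described above can be sketched as two masks: one keeping protein atoms near the ligand, and one keeping a single element type. The 12 Å cutoff, the synthetic coordinates, and the helper name `binding_site_mask` are assumptions for illustration only.

```python
import numpy as np


def binding_site_mask(protein_xyz, ligand_xyz, cutoff=12.0):
    """Mask of protein atoms within `cutoff` angstroms of any ligand atom.

    The default cutoff is a hypothetical choice for illustration.
    """
    P = np.asarray(protein_xyz, dtype=float)
    Lg = np.asarray(ligand_xyz, dtype=float)
    d = np.linalg.norm(P[:, None, :] - Lg[None, :, :], axis=-1)
    return (d <= cutoff).any(axis=1)


rng = np.random.default_rng(0)
protein = rng.uniform(-30, 30, size=(2000, 3))    # synthetic protein atoms
protein_elem = rng.choice(["C", "N", "O", "S"], size=2000)
ligand = rng.uniform(-2, 2, size=(30, 3))         # synthetic ligand near origin

mask = binding_site_mask(protein, ligand)
# A WCS for the pair (C, ligand type) only needs the binding-site carbons.
site_carbons = mask & (protein_elem == "C")
```

Each eigenproblem then scales with the number of binding-site atoms of the two selected element types rather than with the size of the whole complex.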

II.C.5. Graph-based learning models

Graph learning

The eigenvalue-related information obtained from the algebraic graph approach is combined with machine learning algorithms to build predictive models for molecular and biomolecular properties. Depending on the nature of each learning task, regression or classification algorithms are used. To illustrate the learning process, consider the ith structure in the training data and a function representing the graph information of that sample with respect to the kernel parameters ζ. During training, a machine learning model generally minimizes the following loss

| (41) |

where the loss function measures the discrepancy between the model predictions and the training labels yi, and θ denotes the machine learning parameters. In principle, θ is optimized for a specific training set and choice of machine learning algorithm. With the current graph representations, one can use advanced machine learning models such as random forest (RF), gradient boosting trees (GBTs), and deep neural networks to minimize the loss function. To illustrate the performance of our graph-based model, we employ GBTs as a balance between accuracy and complexity. The flow chart of the proposed model is illustrated in Figure 9.

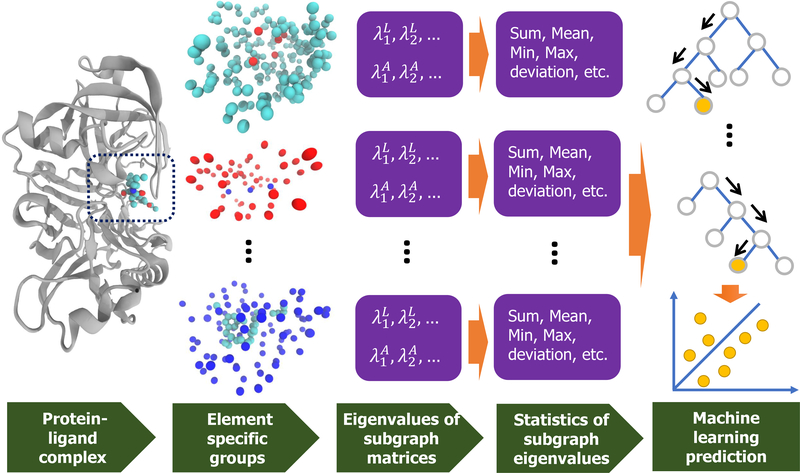

Figure 9:

A paradigm of the graph-based approach. The first column is the input complex (PDB ID 5QCT). The second column illustrates the element-specific groups in the binding site. The third column presents the eigenvalues of the weighted colored graph Laplacian and adjacency matrices of the groups in the second column. The fourth column computes statistics of these eigenvalues. The final column builds a gradient boosting trees model from these statistics.

All experiments in this graph learning task use the GradientBoostingRegressor module implemented in scikit-learn v0.19.1, with parameters n_estimators=10000, max_depth=7, min_samples_split=3, learning_rate=0.01, loss='ls' (least squares), subsample=0.3, and max_features='sqrt'. This parameter setting is nearly optimal and is used for all calculations.

Model parametrization

For brevity, the AGL-Score features are denoted by a compact notation that encodes the interaction matrix type along with the kernel type Ω and the kernel parameters δ and τ. The three matrix types are the adjacency matrix, the Laplacian matrix, and the pseudo-inverse of the Laplacian matrix (Adj, Lap, and Inv in Table 2). For the kernel type, Ω = E and Ω = L indicate the generalized exponential kernel and the generalized Lorentz kernel, respectively. Since the kernel order depends on the kernel type, we write δ = κ if Ω = E and δ = ν if Ω = L. Lastly, the scale factor τ implicitly defines the kernel scale ηkk′ = τ(r̄k + r̄k′), in which r̄k and r̄k′ are the van der Waals radii of element types k and k′, respectively.
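The kernel parametrization can be sketched as a single function of (Ω, δ, τ). This assumes the generalized exponential and Lorentz kernels take the forms exp(−(r/η)^κ) and 1/(1 + (r/η)^ν) with η = τ(r̄k + r̄k′); the van der Waals radii in `VDW` are illustrative values rather than the radii used by the authors, and `kernel` is a hypothetical name.

```python
import numpy as np

# Illustrative van der Waals radii in angstroms (assumed values).
VDW = {"C": 1.7, "N": 1.55, "O": 1.52, "S": 1.8}


def kernel(r, k, kp, omega="E", delta=2.0, tau=1.0):
    """Kernel Phi(r; eta) with eta = tau * (r_k + r_k').

    omega="E": generalized exponential, exp(-(r/eta)**kappa), delta = kappa
    omega="L": generalized Lorentz, 1 / (1 + (r/eta)**nu), delta = nu
    """
    eta = tau * (VDW[k] + VDW[kp])
    x = (np.asarray(r, dtype=float) / eta) ** delta
    if omega == "E":
        return np.exp(-x)
    if omega == "L":
        return 1.0 / (1.0 + x)
    raise ValueError("omega must be 'E' or 'L'")
```

Tying η to the sum of the two radii lets one dimensionless τ grid serve every element pair, so the same τ probes a comparable relative distance for C–N and for S–O contacts.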

The multiscale AGL-Score representation naturally extends the single-scale notation. At most two different kernels are used in a multiscale AGL-Score model.

To select the kernel parameters of the AGL-Score optimally, we perform 5-fold cross-validation (CV) on the training data of the benchmark. Ideally, the machine learning model would be re-tuned for each problem setting. To demonstrate the robustness of our graph-based features, however, we tune the AGL-Score's parameters only on the CASF-2007 benchmark, whose training set contains 1105 complexes. As in our previous work, the hyperparameter ranges of the graph-based model are listed in Table 2; the ranges of the AGL kernel parameters are chosen similarly to those of the DG-GL models discussed in Section II.B.6. For the CASF benchmark datasets, we consider 4 atom types in the protein, namely {C, N, O, S}, and 10 atom types in the ligand, namely {H, C, N, O, F, P, S, Cl, Br, I}, which results in 40 different atom-pairwise combinations. Because the eigenvalues of the adjacency matrix come in ± pairs, we consider only its positive eigenvalues. We then collect statistics of these eigenvalues: sum, minimum (i.e., the Fiedler value for Laplacian matrices or the half band gap for adjacency matrices), maximum, mean, median, standard deviation, and variance. In addition, we count the number of distinct eigenvalues and compute the sum of their squares. These 9 statistics for each of the 40 element pairs form a representation vector of 9 × 40 = 360 features.
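The 360-dimensional vector can be assembled as in the minimal sketch below. Assumptions: eigenvalues below a small tolerance are treated as zero, "distinct" means distinct after rounding to 8 decimals, and an element pair with no eigenvalues contributes nine zeros. `eig_stats` and `agl_feature_vector` are hypothetical names.

```python
import numpy as np

PROTEIN = ["C", "N", "O", "S"]
LIGAND = ["H", "C", "N", "O", "F", "P", "S", "Cl", "Br", "I"]


def eig_stats(eigs, tol=1e-8):
    """Nine statistics of the positive eigenvalues of one WCS matrix."""
    v = np.asarray(eigs, dtype=float)
    v = v[v > tol]
    if v.size == 0:                       # empty subgraph: all-zero block
        return np.zeros(9)
    return np.array([
        v.sum(), v.min(), v.max(), v.mean(), np.median(v),
        v.std(), v.var(),
        np.unique(np.round(v, 8)).size,   # number of distinct eigenvalues
        (v ** 2).sum(),                   # sum of squared eigenvalues
    ])


def agl_feature_vector(eigs_by_pair):
    """Concatenate the statistics over all 4 x 10 protein-ligand pairs."""
    return np.concatenate([
        eig_stats(eigs_by_pair.get((p, lig), []))
        for p in PROTEIN for lig in LIGAND
    ])


# Example: only the protein C / ligand O subgraph is nonempty.
vec = agl_feature_vector({("C", "O"): [0.0, 0.5, 1.5, -1.5, -0.5]})
```

Fixing the pair order (protein type outer, ligand type inner) keeps each feature at the same position for every complex, which tree ensembles require.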

Table 2:

The ranges of AGL hyperparameters for 5-fold cross-validations

| Parameter | Domain |
|---|---|
| τ | {0.5, 1.0, …, 6} |
| δ | {0.5, 1.0, …, 6} ∪ {10, 15, 20} |
| Matrix type | {Adj, Lap, Inv} |
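The search space in Table 2 can be enumerated as a minimal sketch. The kernel type Ω is not part of the table, so the grid below covers a single kernel type; the random 5-fold split and the helper name `five_fold_indices` are assumptions, and the scoring of each candidate is left out.

```python
import itertools

import numpy as np

taus = np.arange(0.5, 6.01, 0.5)                      # {0.5, 1.0, ..., 6}
deltas = np.concatenate([np.arange(0.5, 6.01, 0.5), [10, 15, 20]])
matrix_types = ["Adj", "Lap", "Inv"]

# Every (matrix type, tau, delta) candidate for one kernel type.
grid = list(itertools.product(matrix_types, taus, deltas))


def five_fold_indices(n, seed=0):
    """Randomly partition n training samples into 5 CV folds."""
    idx = np.random.default_rng(seed).permutation(n)
    return np.array_split(idx, 5)


folds = five_fold_indices(1105)                       # CASF-2007 training size
```

With 12 values of τ, 15 values of δ, and 3 matrix types, each kernel type yields 540 candidates, each of which would be scored by its mean 5-fold CV performance.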

II.D. Machine learning algorithms

In general, our mathematical representations can be paired with any machine learning model. However, the devil is in the details: different machine learning algorithms respond differently to data size, representation dimension, representation noise, representation correlation, representation amplitude, and representation distribution. Therefore, it is useful to design mathematical representations adapted to the learning model.

In the past few years, we have integrated various mathematical representations with a variety of machine learning algorithms, namely k-nearest neighbors (KNNs)26,49, learning to rank (LR)25,27, support vector machine (SVM)43, gradient boosted decision trees (GBDT)46,47, random forest (RF)64,92,94, extra-trees (ET)49, deep artificial neural network (ANN)92,94, deep convolutional neural network (CNN)48,49, multitask ANN92,94, multitask CNN48, and generative networks148.

Because of the wide variety of possible biological tasks, machine learning algorithms, and data conditions, it is very challenging to provide an exhaustive list of fully optimized representations for every combination of biological task, learning algorithm, and dataset. Nevertheless, one can explore near-optimal representations for each potential combination and select appropriate mathematical representations with suitable parameters. Using topological representations as an example, we outline a few topological learning strategies. In general, kNNs are very simple and are used to facilitate optimal transport approaches, such as Wasserstein metrics; however, their results might not be optimal49. LR algorithms can be quite accurate25,27, but their training is time-consuming. Ensemble methods, such as RF, GBDT, and ET, are relatively accurate and efficient49,64,92,94. In particular, RF should be the method of choice for a new problem because it has fewer parameters and is robust. Owing to its accuracy and robustness, the RF method is also often used to rank feature importance. With a few more parameters, GBDT can typically improve on RF's predictions after a more intensive parameter search.
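Ranking feature importance with RF can be sketched using scikit-learn's impurity-based `feature_importances_`. The synthetic data, in which only the first two features carry signal, and the forest size are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 20))                           # synthetic features
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + 0.1 * rng.normal(size=300)

rf = RandomForestRegressor(n_estimators=200, random_state=0).fit(X, y)
ranking = np.argsort(rf.feature_importances_)[::-1]      # most important first
```

Impurity-based importances are fast to obtain but can favor high-variance features; permutation importance on held-out data is a common cross-check.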

Ensemble methods and deep CNNs can be very accurate and robust against the overfitting caused by high machine learning dimensions, thanks to shrinkage and dropout techniques, respectively46,47. Therefore, they can be used to examine a large number of representations. It is worth noting that none of these methods works well when the statistics of the test set differ substantially from those of the training set. When training datasets are sufficiently large, deep learning methods can be more accurate, but their training can be very expensive because of the multiple layers of neurons48,49,92,94. Transfer learning or multitask learning can improve predictions on small datasets when they are coupled to a large dataset that shares similar statistics and the same representation structure48,92,94.

Intrinsically low-dimensional representations based on advanced mathematics can be constructed for complex learning models involving multiple neural networks, such as domain adaptation, active learning, recurrent neural networks, long short-term memory, autoencoders, generative adversarial networks, and various reinforcement learning algorithms.

III. Datasets and evaluation metrics

III.A. Datasets

In this review, we evaluate our models on three commonly used drug-discovery benchmark datasets, namely CASF-2007149, CASF-2013150, and CASF-2016151. These benchmarks are drawn from the PDBbind database and have been used to evaluate the general performance of scoring functions on diverse sets of protein-ligand complexes.

Note that for the docking power and screening power assessments, additional data are provided for CASF-2007149 and CASF-2013150,152, as described in the next section.

III.B. Evaluation metrics

In drug-design benchmarks, a scoring function (SF) is often validated with four commonly used metrics, namely scoring power, ranking power, docking power, and screening power149,152. The following sections briefly introduce these metrics and the associated datasets.

III.B.1. Scoring power

This metric measures how well a scoring function predicts affinities that correlate linearly with the experimental data. To this end, the standard Pearson correlation coefficient (Rp) is employed

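$$R_p = \frac{\sum_{i}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i}(x_i-\bar{x})^2}\sqrt{\sum_{i}(y_i-\bar{y})^2}} \qquad (42)$$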

where xi and yi are, respectively, the predicted and experimental binding affinities of the ith complex, and x̄ and ȳ denote the averages of all predicted and all experimental values, respectively. All three benchmark datasets, CASF-2007, CASF-2013, and CASF-2016, were used to evaluate the scoring power of our models.
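The scoring-power metric is a direct transcription of the Pearson formula, as in the minimal sketch below. The affinity values are made up, and `pearson_rp` is a hypothetical name; `np.corrcoef` serves as an independent check.

```python
import numpy as np


def pearson_rp(x, y):
    """Pearson correlation between predicted (x) and experimental (y) values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()
    dy = y - y.mean()
    return (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())


pred = [5.1, 6.3, 7.8, 4.2, 9.0]   # made-up predicted affinities
expt = [5.0, 6.0, 8.1, 4.5, 8.7]   # made-up experimental affinities
rp = pearson_rp(pred, expt)
```

Because Rp only measures linear agreement, a model whose predictions are systematically shifted or scaled can still score highly; benchmarks that use it often report an RMSE alongside.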

III.B.2. Ranking power

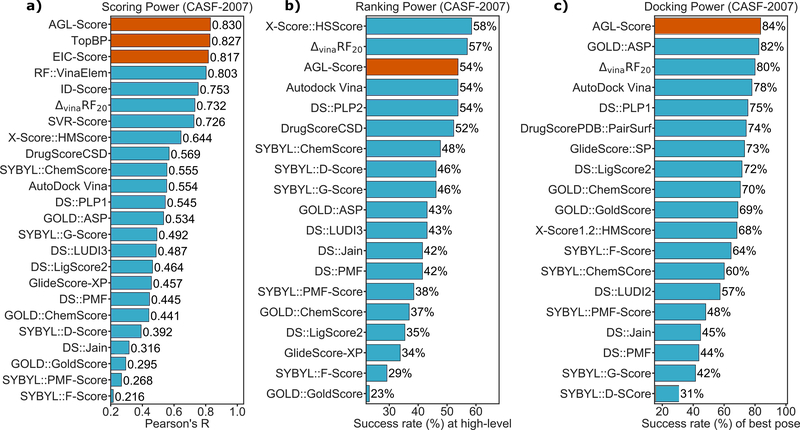

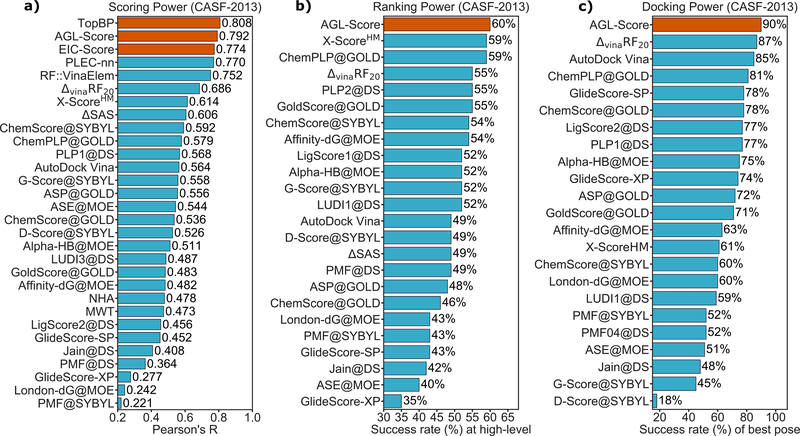

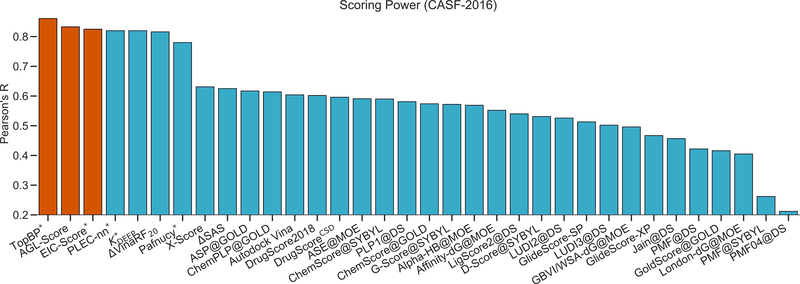

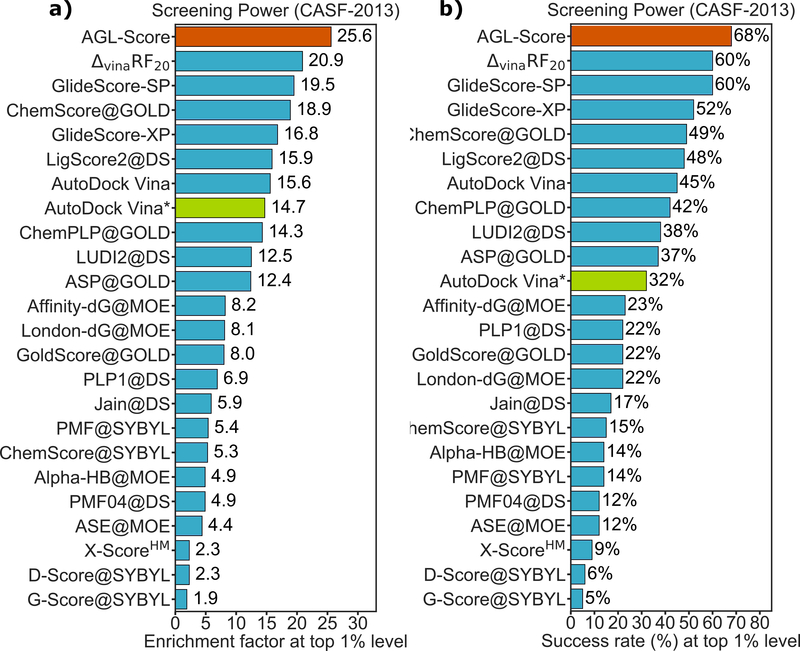

This assessment emphasizes the ability to rank the binding affinities of complexes within the same cluster149,152. Two benchmarks, CASF-2007 and CASF-2013, were used to test the ranking power of our AGL-Score. Each of these datasets has 65 different protein targets, and each protein binds three distinct ligands. There are two levels of assessment. The high-level success measurement tests whether the affinities of all three ligands in each cluster are correctly ranked. The low-level success measurement determines whether a scoring function can identify the ligand with the highest binding affinity in its cluster. The score in each assessment is the percentage of clusters ranked successfully in a given benchmark.
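The two success rates can be computed as in the minimal sketch below. It assumes there are no ties among the affinities within a cluster, and the three clusters of made-up values are for illustration only; `ranking_success` is a hypothetical name.

```python
import numpy as np


def ranking_success(clusters):
    """High- and low-level success rates (percent) over clusters.

    Each cluster is a (predicted, experimental) pair of equal-length sequences.
    High level: the predicted order of all ligands matches experiment.
    Low level: the top predicted ligand is the strongest experimental binder.
    Ties are not handled.
    """
    high = low = 0
    for pred, expt in clusters:
        pred = np.asarray(pred, dtype=float)
        expt = np.asarray(expt, dtype=float)
        if np.array_equal(np.argsort(pred), np.argsort(expt)):
            high += 1
        if np.argmax(pred) == np.argmax(expt):
            low += 1
    n = len(clusters)
    return 100.0 * high / n, 100.0 * low / n


clusters = [
    ([7.1, 5.2, 6.0], [7.5, 4.8, 6.1]),   # fully correct ranking
    ([5.0, 6.5, 6.0], [6.9, 6.2, 4.4]),   # wrong order and wrong top ligand
    ([4.0, 6.0, 8.0], [6.0, 5.0, 7.0]),   # wrong order, correct top ligand
]
high_rate, low_rate = ranking_success(clusters)
```

In this toy set one cluster passes the high-level test and two pass the low-level test, showing that the low-level criterion is the weaker of the two.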