Abstract

Medical data is one of the most rewarding and yet most complicated data to analyze. How can healthcare providers use modern data analytics tools and technologies to analyze and create value from complex data? Data analytics, with its promise to efficiently discover valuable pattern by analyzing large amount of unstructured, heterogeneous, non-standard and incomplete healthcare data. It does not only forecast but also helps in decision making and is increasingly noticed as breakthrough in ongoing advancement with the goal is to improve the quality of patient care and reduces the healthcare cost. The aim of this study is to provide a comprehensive and structured overview of extensive research on the advancement of data analytics methods for disease prevention. This review first introduces disease prevention and its challenges followed by traditional prevention methodologies. We summarize state-of-the-art data analytics algorithms used for classification of disease, clustering (unusually high incidence of a particular disease), anomalies detection (detection of disease) and association as well as their respective advantages, drawbacks and guidelines for selection of specific model followed by discussion on recent development and successful application of disease prevention methods. The article concludes with open research challenges and recommendations.

Keywords: Disease prevention, Data analytics, Healthcare, Knowledge discovery, Prevention methodologies

Introduction

Due to the rise of healthcare expenditures, early disease prevention has never been important as it is today. This is particularly due to the increased threats of new disease variants, bio-terrorism as well as recent improvement development in data collection and computing technology. Increase amount of healthcare data increases the demand to develop an efficient, sensitive and cost-effective solution for disease prevention. Traditional preventive measures mainly focus on promotion of healthcare benefits and have lack of methods to process huge amount of data. Using IT to promote healthcare quality can serve to improve health promotion and disease prevention. It is true inter-disciplinary challenge that requires number of types of expertise in different research areas and really big data. It raises some fundamental questions.

How do we reduce the increasing number of patients through effective disease prevention?

How do we cure or slow down the disease progression.

How do we reduce the healthcare cost by providing quality care?

How do we maximize the role of IT in identifying and curing the risk at early stage?

Clear answer to these question is the use of intelligent data analytics methods to find information from glut of healthcare data. Data analytics researchers are poised to come up with huge beneficial advancement in patient care. There is vast potential for data analytics applications in healthcare sector. Currently, data analytics, machine learning and data mining made it possible for early disease identification and treatment. Early monitoring and detection of disease being in practice in many countries, i.e., BioSense (USA), CDPAC (Canada), SAMSS, AIHW (Australia), SentiWeb (France) etc.

This paper discusses the IT-based methods for disease prevention. We chose to focus on data-mining-based prevention methodologies because recent development in data mining approaches led the researcher to develop number of prevention systems. Tremendous progress has been made for early disease identification and its complication management.

What is data mining and data analytics

Exponential time increase in data made tough to get useful information form that data. Traditional methods showed much performance; however, their predictive power is limited as traditional analysis deals only with primary analysis, whereas data analytics deals with secondary analysis. Data mining is the digging or mining of data from many dimensions or perspectives through data analysis tools to find prior unknown pattern and relationship in data that may be used as valid information; moreover, it makes the use of this extracted information to build predictive model. It has been used intensively and extensively by many organizations especially in healthcare sector.

Data mining is not a magic wand but in fact a big giant tool that does not discover solutions without guidance. Data mining is useful for the following purposes:

Exploratory analysis: Examining the data to summarize its main characteristics.

Descriptive modeling: Partitioning of the data into subgroups based on its properties.

Predictive modeling: Forecasting information form existing data.

Discovering pattern: Discover pattern that occur frequently.

Retrieval by content: Discovering hidden patterns

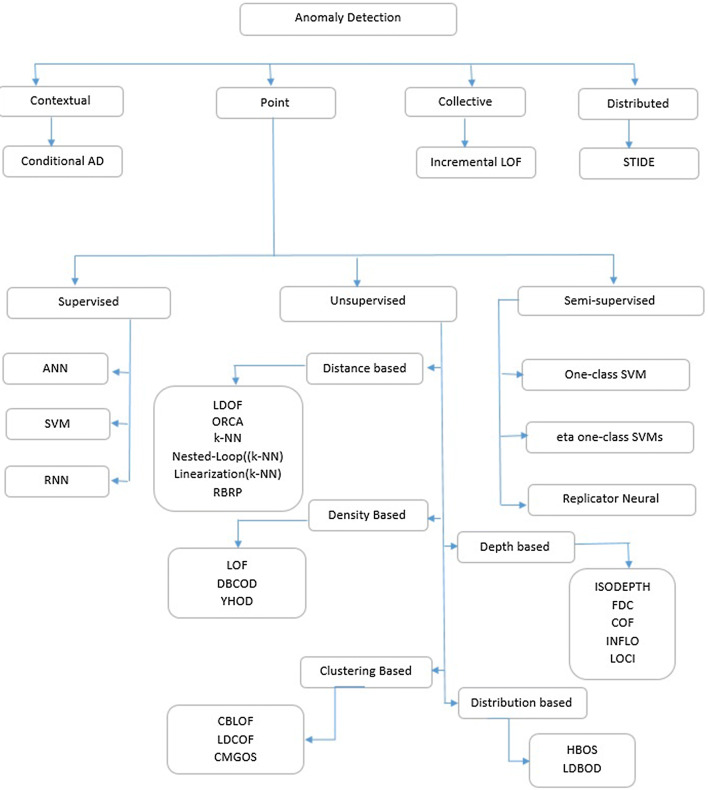

Big data and machine learning holds great potential for Healthcare providers to systematically use data and analytics to discover interesting pattern that are previously unknown and uncover the inefficiencies from vast data stores in order to build predictive models for best practices that improve quality of healthcare as well as reduces the cost. EHR system are producing huge amount of data on daily basis which is a rich source of information that can be used by healthcare organization to explore the interesting fact and findings that can help to improve patient care. Figure 1 shows the data analytics generic architecture for healthcare applications.

Fig. 1.

Architecture of health care data analytics

As health sector data is moving toward really big data, thus better tools and techniques are required as compared to traditional data analytics tools. Traditional analytics tools are user friendly and transparent as compared to big data analytics tools that are complex and programming intensive and required variety of skills. Some famous big data analytics tools are summarized in Table 1.

Table 1.

Big data analytics tools

| Platforms and tools | Description |

|---|---|

| Advanced data visualization | ADV can reduce quality problems which can occur when retrieving medical data for extra analysis |

| Presto | Distributed SQL query engine used to analyze huge amount of data that collected every single day |

| The Hadoop Distributed File System (HDFS) | HDFS enables the underlying storage for the Hadoop cluster and enhances healthcare data analytics system by dividing large amount of data into smaller one and distributed it across various servers/nodes |

| MapReduce | Breaks task into subtasks and gathering its outputs and efficient for large amount of data |

| Mahout | An apache project, goal is to generate free applications of distributed and scalable ML algorithms that supports healthcare data analytics on Hadoop systems |

| Jaql | Functional, declarative query language, aim to process large datasets. It facilitates parallel processing by converting high-level queries into low-level ones |

| PIG and PIG Latin | Configured to assimilate all types of data (structured/unstructured, etc.) |

| Avro | Facilitates data encoding and serialization that improves data structure by specifying data types, meaning and scheme |

| Zookeeper | Allows a centralized infrastructure with various services, providing synchronization across a cluster of servers |

| Hive | Hive is a run-time Hadoop support architecture that permits to develop Hive Query Language (HQL) statements akin to typical SQL statements |

What is disease prevention and its challenges

Every year millions of people die of preventable death [1]. In 2012, about 56 million people died worldwide and two-thirds of these deaths were due to non-communicable disease including diabetes, cardiovascular and cancer. Moreover, 5.9 million children died in 2015 before reaching the fifth year of their life and most of these death were due to infection (i.e., diarrhea, malaria, birth asphyxia, pneumonia etc.); however, this number can be reduced to half at least by treating or preventing through the access to simple affordable interventions [2]. Core problem in healthcare sector is to overcome the huge number of causalities as well as reduce the cost. The goal is to reduce the prevalence of disease, help people to live longer and healthier life as well as reduce the cost. One of the main interests in disease prevention is driven by the need to reduce the cost. The lifetime medical expenditures are increased from $ 14k to $ 83k per person and this increase is up to 160K after the age of 65.

Thus, the proportion of average world GDP devoted to healthcare sector is increased from 5.28% in 1995 to 5.99% in 2014 and is expected to increase in future (i.e., from 17.1% in 2014 to 19.9 of US GDP by 2022%) [3, 4]. This increase in medical expenditures is mainly due to the aging and growing populations, the rising prevalence of chronic diseases as well as for infrastructure improvement. Thus, the cost-saving and cost-effective preventive solutions are required to reduce the burden on economy. Traditional preventive measures mainly focus on promotion of healthcare benefits. The cost-effectiveness ratio is said to be unfavorable when intervention incremental cost are larges relative to the healthcare benefits. USA spent 90% of budget on disease treatment and their complication rather than prevention (only 2–3%) whereas many of these diseases can be prevented at first stage [5, 6]. Spending more on health does not guarantee of health system efficiency. The investment on prevention can help to reduce the cost as well as improve the health quality and efficiency. Health industry is facing considerable challenges in the promotion and protection of health at a time when there is huge pressure due to the considerable budgets constraints and resources in many countries. Early detection and prevention of disease plays a very important role in reducing deaths as well as healthcare cost. Thus, the core question is: How data can help to reduce the patients or disease effect in the population?

Concept and traditional methodology

Disease prevention focuses on prevention strategies to minimize the future hazards to health by early detection and prevention of disease. An effective disease management strategy reduces the risks from disease, slow down its progression and reduces symptoms. It is the most efficient and affordable way to reduce the risk of disease. Preventive measures strategies are divided into different stages, e.g., primary, secondary and tertiary. Disease prevention can be applied at any prevention level along with the disease history, with the goal of preventing its progression further. Primary It seeks to reduce the occurrence of new cases, e.g., stress management, exercises, smoking cessation to prevent lung cancer and immunization against communicable diseases. Thus, it is most applicable at the suspected stage of a patient. Strategies of primary prevention include risk factor reduction, general health promotion and other protective measure. This can be done by bringing up the healthier lifestyles and environmental health approaches through health education and promotion program. Secondary Purpose of secondary prevention is to either cure the disease, slow down its progression, or reduce its impact and is the most appropriate for those in the stage of early-stage or pre-symptomatic disease. It attempts to reduce the number of cases through early detection of the disease and reducing or halting its progression, e.g., detection of coronary heart patient after their first heart attack, blood tests for lead exposure, eye tests for glaucoma, lifestyle and dietary modification. Common approach to secondary prevention includes procedure to detect and treat preclinical pathological changes early through screening for disease, e.g., mammography for early-stage breast cancer detection. Tertiary The key aim of tertiary disease prevention is to enhance life quality of patient. Once the disease is firmly established and has been treated in its acute clinical phase, it seeks to soften the impact of disease on the patient through therapy and rehabilitation, e.g., tight control of type-1 diabetes, assisting a cardiac patient to lose weight and improving the functioning of stroke patient through rehabilitation program.

Effective primary prevention to avert new cases, secondary prevention for early detection and treatment and tertiary prevention for better diseases management are not only to improve the quality of life but also helps to reduce unnecessary healthcare initialization. Extensive medicine knowledge and clinical expertise are required to predict the probability of patient that are contracting disease (Table 2).

Table 2.

Prevention level

| Leavell’s levels of prevention | ||

|---|---|---|

| Stage of disease | Prevention level | Type of response |

| Pre-disease | Primary prevention | Specific protection and Health promotion |

| Latent disease | Secondary prevention | Pre-symptomatic diagnosis and treatment |

| Symptomatic disease | Tertiary prevention | Disability limitation for early symptomatic disease |

Challenges

Un-automated analysis of huge and complex volumes of data is expensive as well as impractical. Data mining provides great benefits for the disease identification and treatment; however, there are several limitations and challenges involved in adapting DM analysis techniques. Successful prevention depends upon knowledge of disease causation, transmission dynamics, risk factor and group identification, early detection and treatment methods, implementation of these methods and continuous evaluation and development of prevention and treatment methods. Additionally, data accessibility (data integration) and constraints(missing, unstructured, corrupted, non-standardized data and noisy) add more challenges. Due to the huge number of patients, it is impossible to consider all those parameters to develop cost-effective and cost-efficient prevention system. The expansion of medical records databases and increased linkage between physician, patient and health record led the researcher to develop efficient prevention system.

Healthcare applications generate mound of complex data. To transform this data into information for decision making, traditional approaches are lagging behind, and they barely adopt advanced information technologies, such as data mining, data analytics, big data etc. Tremendous advancement in hardware, software and communication technologies opens up opportunities for innovative prevention by provided cost-saving and cost-effective solution by improving the health outcomes, properly analyzing the risk and overcoming the duplicate efforts. Barriers to develop such system include non-standard (interoperability), heterogeneous, unstructured, missing or incomplete, noisy or incorrect data.

Disease prevention mainly depends on the data interchange across different healthcare system thus interoperability plays major role in success of prevention system, whereas healthcare sector is still on the way. ISO/TC 215 includes standards for disease prevention and promotion. Standards are a critical component, whereas it is not yet mature in healthcare sector. Many stakeholders (HL7, ISO and IHTSDO (organization that maintain SNOMED CT) with aim to have common data representation are working to address semantic interoperability. Healthcare data is diverse and have different format. Moreover, with the rapid use of wearable sensors in healthcare results in tremendous increase in the size of heterogeneous data. For effective prevention methods, integration of data is required. For years, documentation of clinical data has trained clinician to record data in most convenient way irrespective, how this data could be aggregated and analyzed. Electronic health record systems attempt to standardize the data collection but clinician are reluctant to adopt for documentation.

Accuracy of data analysis depends significantly on the correctness and completeness of database. It is a big challenge to find problems in data and even harder to correct the data, moreover data is missing. Using incorrect data will defiantly provide incorrect result. Whereas ignoring the incorrect data, or issue of missing data introduce bias into analysis that leads to inaccurate conclusion. For the extraction of useful knowledge from large volume of complex data that consist missing data and incorrect values, we need sophisticated methods for data analysis and association. Moreover, data privacy and liability, capital cost, technical issue are other factors. Data privacy is another major hurdle in development of prevention system. Most of the healthcare organizations have HIPAA certification; however, it does not guarantee the privacy and security of data as HIPAA is considering security and policy rather than implementation [7]. With the increase popularity of wearable devices, mobiles and online availability of healthcare data put it on emerging threat. In addition to that, it may increase racial and ethnic disparate because these may not be equally available due to economic barrier.

Rest of the paper is organized as: Sect. 2 describes the existing prevention methodologies and is categorized into three subsections nutrients, policies and HIT. Section 3 presents the data mining development for disease prevention followed by data analytics-based disease prevention application in Sect. 4. Finally, some openly available medical datasets are discussed in Sect. 5 followed by open issues and research challenges are presented in Sect. 6.

Existing disease prevention methodologies

Although chronic diseases are among the most common and costly health problems, however, these are the most preventable. Early identification and prevention is the most effective, affordable way to reduce morbidity and mortality as well as helps to improve the life quality [8]. Not only data mining, several other prevention methods are being to reduce the risk factor.

Nutrients, foods, and medicine

Diet acts as medical intervention, to maintain, prevent, and treat disease. It is major lifestyle factor that contributes extensively for disease prevention such as diabetes, cancer, cardiovascular disease, metabolic syndrome and obesity etc. Poor diet and inactive lifestyle are lethal combination. Joint WHO/FAO expert consultation on diet, nutrition and the prevention of chronic diseases states that chronic diseases are preventable and developing countries are facing consequences of nutritionally compromised diet [9]. Individual has power to reduce the risk of chronic disease by making positive changes in lifestyle and diet. Use of Tobacco, unhealthy diet, and lack physical activity are associated with many chronic conditions. Evidence shows that healthy diet and physical activity does not only influence present health but also helps to decrease morbidity and mortality. Specific diet and lifestyle changes and their benefits are summarized in Table 3. Food and nutrition interventions can be effective at any prevention stage. In primary stage, food and nutrition therapy could be used to prevent the occurrence of disease such as obesity. What if disease is already identified and how diet can help to reduce the effect of disease? Recent studies shows, potential of food and nutrition interventions as secondary and tertiary prevention is also effective preventive strategy that reduce the risk factor and slow down the progression or mitigate the symptoms and complications. Thus, at secondary, it could be used to reduce the impact of a disease and at tertiary stage, it helps to reduce the complications, i.e., stomach ulcer. Dietitian plays critical role in disease prevention, i.e., change in lifestyle can help to delay or prevent type II diabetes.

Table 3.

Convincing and probable relationships between dietary and lifestyle factors and chronic diseases [10]

| Factors | CVD | Type-2 diabetes | Cancer | Dental disease | Fracture | Cataract | Birth defect | Obesity | Metabolic syndrome | Depression | Sexual dysfunction |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Life style | |||||||||||

| Avoid smoking | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↑ | ↓ | |||

| Physical activity | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | |||

| Avoiding overweight | ↓ | ↓ | ↓ | ↑ | ↓ | ↓ | ↓ | ||||

| Diet | |||||||||||

| Using healthy fat | ↓ | ↓ | ↓ | ↓ | |||||||

| Fruits and veggetable | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | |||||

| Using whole grains | ↓ | ↓ | ↓ | ↓ | |||||||

| Reducing sugar | ↓ | ↓ | ↓ | ↓ | ↓ | ||||||

| Reducing calories | ↓ | ↓ | |||||||||

| Reduction in sodium | ↓ | ||||||||||

↓ Decrease risk, ↑ increase risk

Please add the following required packages to your document preamble:

Policy, systems and environmental change

After many year focus on individual; policies, systems and environmental changes are new way of thinking to improve the quality of healthcare sector. It affects large segments of the world population simultaneously. Disease prevention is much easy if we develop such environment that can help community to adopt health lifestyle, proper nutrition and medications etc. Developed nations are promoting social, environmental, policy, and systems approaches to support healthy life such as low fat diet at restaurants, smoking restricted areas, increase in prices of tobacco items and urban, healthy food restriction for all students, infrastructure design that leads to lifestyle change, i.e., increase in physical activity. United nations economic commission for Europe (UNECE) member states have committed to implement health policies to ensure the increase in longevity by quality of life [11]. Some good examples are 5 A DAY program to increase healthy nutrition in the UK, WHO: Age-friendly cities, Lifetime homes in the UK, Support program for dementia patients living alone in Germany [12].

Policy change includes new rules and regulation at the legislative or organizational level, i.e., tax on tobacco item and soft drinks, provide time off during office hours for physical activity, changing community park laws to allow fruit trees. System change includes change in systems strategies within organization such as improving school infrastructure, transportation systems. Environmental change includes the changes in physical environment such as incorporating sidewalks and recreation areas in community areas, healthy food in restaurants.

Information technologies

Health Information Technology (HIT) strategy is to put information technology to work in healthcare sector in order to reduce healthcare cost and increase efficiency. HIT makes it possible to get maximum benefits for patient, healthcare organizations and government through intelligent patient information processing. Happy marriage of healthcare and information technology includes variety of electronic approaches that are used not only to manage information but to improves the quality of clinical and other preventive services, i.e., disease prevention, early disease detection, risk factor reduction and complication management. Healthcare transaction generates huge amount of data. This expansion of medical record databases and increased linkage between physician, patient and health record led the researcher to develop efficient disease prevention systems. Thus, there is a need to transform health data to information through the use of innovative, collaborative and cost-effective informatics and information technology. IT provides strategic value to achieve health impact and health quality by transforming these mounds of data into information. Computer-based disease control and prevention is an ongoing area of interest to the healthcare community. Disparities in access to health information can affect preventive services. Increased access to the technology (i.e., handled devices and fast communication) and availability of online health information reduced the disease risk. It makes the user to be able to access health information and make good decision. Increased use of HIT tools (i.e., reminders, virtual reality applications and decision support system) helps to reduce the risk factor.

Effective disease prevention requires identifying and treating individuals at risk. Several preventive measures are being used to improve therapy adherence. Relevant information, such as blood pressure, cholesterol measurement, fasting plasma glucose etc., is recorded electronically, and it makes automatic risk prediction possible. Moreover, the rapid growth of cellular networks provides opportunity to be in touch with patient [13]. Reminder-based preventive services involves continuous risk assessment of several life-threatening disease (cardiac disease [14–17], diabetes management [18–21] through vital sciences [14, 15, 18–20] or reminder of due for specific preventive services such as vaccination, follow-up appointment, weight loss [22–25] or reminder for medicine [22, 23, 26]. This type of communication could by any electronic medium, i.e., call, SMS or emails. Studies found that automated reminder services are very effective in boosting patient adherence [27, 28]. Healthcare databases contain cardiac disease data, but practical methods are required to identify patient at risk and continuous monitoring of those patient through electronic vita science data, i.e., Blood pressure, cholesterol and glucose etc. Several studies have been performed to reduce risk through automated reminder using collected primary care data [14, 15]. Text message-based intervention for supporting diabetic patient helps to improve self-management behaviors and achieve better control over glycemic [18–20]. Research studies show that reminders (through emails, SMS, calls, social media and wearable devices etc.) based individual data leveraged to reduce risk. Integration of wearable sensors, EHR and expert system with automatic reminder will end up at efficient disease prevention system.

The wish of efficient healthcare is coming within the reach after the advent of smart wearable technology. These sensors are reliable and efficient for real-time data collection and analysis [29–31] thus are really good source of preventative methods for several threatening disease, i.e., cardiovascular [32–34], cardiopulmonary [35, 36], diabetes [37, 38] and neurological function [39]. Several companies (i.e., Medtronic, BottomLine, Medisafe, ImPACT, Actismile. Allianz and AiQ) are providing low cost wearable solution like concussion management, cardiovascular, monitoring, reminders, diabetes, overweight, metabolism etc. Recent development in intelligent data analysis methods nano-electronics, communication and sensor technology made possible the development of small wearable devices for healthcare monitoring. Wearable sensors are able to collect most of the useful medical data required for early identification and prevention, but it is still costly and most of the healthcare system are not yet able to process this data. In near future, wearable devices are getting cheaper (few dollars), smaller (almost invisible, i.e., sensor-clad smart garments, implanted devices), accurate (no approximation of data) and powerful (low battery consumption, high communication and processing power). Moreover, due to the recent development high processing sensors, research is shifting form simple reasoning to high-level data processing by implementing pattern recognition, data mining methodologies to provide much valuable information, i.e., fall detection, heart attack detection via data processing and sending message to emergency services or family member for immediate action.

Algorithms and methods

Biomedical data is more complicated and is getting larger day by day. Efficient analysis of this huge amount of data provides large amount of useful information that could be used for health promotion and prevention. Traditional methods deal with primary data and fail to analyze big data. Thus question arises: how to analyze it efficiently? Answer is: we need much smarter algorithms to analyze this data. The major objective of data analytics is to find hidden pattern and interesting relations in the data. The significance of such approaches is to provide timely identification with less number of clinical attributes [40]. This secondary data is used for important decision making.

Healthcare data analytics is multidisciplinary area of data mining, data analytics, big data, machine learning and pattern recognition [41]. Intelligent disease control and prevention is an ongoing area of interest to the healthcare community. The basic goal of data analytics-based disease prevention is to take real-world patient data and to help to reduce the patient at risk. Recent development in machine learning and data analytics for handling complex data opens new opportunities for cost-effective and efficient prevention methods that can handle really big data. Data mining provides the methodology and technology to transform these mounds of data into useful information for decision making by exploring and modeling big data [42]. This section is divided into four subsection based on data mining functionalities in healthcare, i.e., classification of disease based on symptoms. In this section, our goal does not go much into detail but shows the basic concept and difference between different algorithms.

Classification is the process of finding set of variables to classify data into various types, i.e., disease identification, or medication etc.

Clustering: data grouping or data distinguishing used for decision making, i.e., finding pattern in EHR, prediction of readmission.

Associate analysis: discover interesting, hidden relation between medical data, i.e., frequently occurrence disease.

Anomaly detection: is the identification of abnormality that does not follow any specific pattern.

Classification

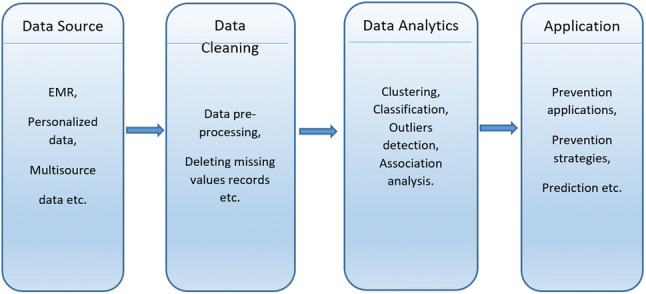

Success of disease prevention is based on early detection of disease. Accurate disease identification is necessary to help the physicians to deal it with proper medication. The goal of classification is to accurately predict target case dataset from unseen data. Classification is the process of assigning given object to one of the predefined class. There has always been a debate on the selection of best classification algorithm. Several statistical and machine learning-based contributions were made for disease prediction and wide range of classifiers (shown in Fig. 2) has been used, i.e., decision tree [43–46], SVM [47–49], Naive Bayes [43, 50], neural network [51] and k-nearest neighbor [43, 52], PCA [53, 54] for disease classification.

Fig. 2.

Classification methodologies

Decision tree

Decision tree is used as prediction model that maps observation sequence to classified items. Classification method uses tree-like graph and is based on sorting of feature values. Each node represents the feature in an instance to be classified, whereas each branch in decision tree represents the value that the node can assume. In decision tree, nodes may have two or more child nodes; internal nodes are denoted by rectangle and ladled with input features; leaf nodes are denoted by oval and ladled with class or probability distribution over class; classification rules are represented by path from root to the leaf and internal nodes. The decision tree are of two types: regression tree analysis (predicted outcome is real value number) and classification tree analysis (predicted outcome is class). Several popular decision tree algorithms used for data mining purpose are ID3 (C4.5, SPRINT), AID (CHAID), CART and MARS.

ID3 or Iterative Dichotomiser-3 is used to generate decision tree from dataset and one of the simplest learning method [55]. C4.5 is the extension of ID3 and uses gain ratio as splitting criteria whereas ID3 uses binary splitting [56]. Unlike ID3, it can handle data with missing values, attributes with differing costs and handle both discrete and continuous attributes. It became quite popular and was ranked at No. 1 in the list of top 10 algorithms in data mining. C5.0 is the extension of C4.5 and introduces new features such as misclassification cost (weight on classification error based on its importance), case weight attribute (quantification the importance of each case). Misclassification cost boosting leads to improvement in predictive accuracy [57]. It also supports cross-validation and sampling. Several new data types are included especially not application if data values are missing.

ID3 and its descendant construct flowchart-like tree structure and are based on the recursive and divide-and-conquer algorithm.

If set S is small or all examples of S belong to same class

return a new leaf and label it with class C in S.

Otherwise

Run a test based on single attribute with two or more outcome;

Make this test as a root of the tree with one branch for each outcome.

Partition S into subset S1, S2 and apply same procedure recursively.

Stop if all its instances have the same class.

Support vector machine

It is supervised learning approach that is based on statistical learning theory [58]. It is one of the most simple, robust, accurate and successful classification method that provided high performance in solving complex classification problems especially in healthcare. Due to its discriminative power especially for small data and large set of variables, data driven and modal free property, it is has been widely used for disease identification and classification problems. Unlike logistic regression that depends on pre-determined modal for prediction of binary event through fitting of data onto logistic curve, SVM produces binary classifier that discriminates between two classes by separating the hyperplanes through nonlinear mapping of data into feature space that is high dimensional. Unlike regression-based methods, SVM allows more input features than samples, so it is particularly suitable in classifying high-dimensional data. Moreover, some kernels even allow SVMs to be considered as a dimensionality reduction technique.

SVM hyperplanes are the decision boundaries between two different sets of classes. SVM uses support vectors to find hyperplane with maximum margin form set of possible hyperplane. In ideal case, two classes can be separated by a linear hyperplane; however, in real world, data is not that simple. Due to the limitation of SVM such as binary classification, memory requirement and computational complexity, several other variations of SVM are presented such as GVSM, RSVM, LSVM [59], TWSVMs (twin support vector) VaR-SVM (value-at-risk support vector machine), SMM [60], SSMM [61], RSMM [62, 63], cooperative evolution SVM [64]. Using Kernel functions (polynomial, linear, sigmoid, and radial) to add more dimensions to low dimensional space, two class problem could be separable in high-dimensional space. As compared to other classification methods, SVM training is extremely slow and computational complex. However, it does always rank well among the list of best classifier.

Machine learning

Machine learning have emerged as advanced data analytics tools and recently have been successfully applied in a variety of applications including medical diagnosis assistance to the physician. Why machine learning is important for data analytics even though state-of-the-art data analytics algorithms are available? The essence of the argument is that in some cases where other analytics methods may not produce satisfiable predictive result, machine learning can help to improve the generalization ability of the systems by training on the data annotated by human experts. Mostly, the predictive accuracy obtained using machine learning is slightly higher than other analytics methods or human experts [65] and it is high affordable to the noise data. However, despite these fact, neural networks was not preferable choice for data analytics due to facts: NN is quite slow in training and classification making it impractical for large data and trained neural networks are usually not comprehensible [66]. However, due to the recent advancement in computation power, neural network is increasingly favored for the development of data analytics applications. Important neural network algorithms are backpropagation neural network (BPNN), associative memory, and the recurrent network.

Deep machine learning also known as hierarchical learning or deep learning is a branch of machine learning based on a set of algorithms which have one or more hidden layers that automatically extract high-level and complex abstractions as data representation [67]. Data is passed through multiple layers in hierarchical manner and each layer applies nonlinear transformation on to data. With its amazing empirical result over past couple of years, it is one of the best predictive algorithms for big data. Main advantage of deep learning is automatic analysis and learning of huge amount of unlabeled data typically learning data representations in a greedy layer-wise fashion [67, 68]. Several attempt has been made for disease prevention using deep learning methods [69, 70]. An important question is whether to use deep learning for medical data analytics or not. As most of the healthcare data is unstructured and unlabeled, due to the automatic high-level data representation property of deep learning, it could be used in effective way for prediction problems, but it needs huge volume of data to be trained.

Bayesian networks

Bayesian network, Bayes network or Bayes model is probabilistic directed acyclic graph that encodes probabilistic relationships among variables of interest. Nodes represent set of random variable and their conditional dependencies (represented by edges) via directed acyclic graph. Unconnected nodes represents conditionally independent variables, for example, probabilistic relationships between diseases and its symptoms. Nodes are associated with probability distribution function that takes set of values as input and output the probability of variable represented by node. Advantages of Bayes networks are model interpretability, efficient for complex data, requiring small training dataset. For example, Bayes classifier to diagnose correct disease based on patient observed symptoms. Important algorithms of Bayesian networks are Naive Bayes, semi Naive Bayes, selective Naive Bayes, one-dependence Bayesian classifiers, unrestricted Bayesian classifiers, and Bayesian multinets, Bayesian network-augmented Naive Bayes and k-dependence Bayesian classifiers etc.

Naive Bayes has proved itself a powerful supervised learning algorithm for solving classification problems and has been extensively used for healthcare data analysis specially for disease prevention. It is built upon strong assumption that features are independent with each other (so that classifier could be simple and fast) and it assumes that the effect of variable value on the given class is independent of other variable values. Mostly, Naive Bayes uses maximum likelihood for parameter estimation and classification is done by taking highest posterior of classified values. Based on given set of variables that belong to known class, the aim is to construct rules for classification of unknown data based. Limitation of Naive Bayes is sensitivity to correlated features. Selective Naive Bayes uses only subset of given attributes in making prediction [71]. It reduces the strong bias of Naive independence assumptions owing to variable selection [72]. The objective is to find among all variables, the best classifier, compliant with the Naive Bayes assumption. Several selection methods have been presented so far [71–74]. Selective Naive approach is good for datasets with a reasonable number of variables; however, it does not scale for large datasets with large number variables and instances [72].

k-nearest neighbor

The k-nearest neighbor or k-NN algorithm, a non-parametric classification and regression method is based on nearest neighbor algorithms and is one of the top 10 data mining algorithms [75]. The aim is to find output with a class membership. kNN finds a group of k objects in the training dataset that are closest to the test object and decides the assignment of a label on the predominance of a particular class in this neighborhood. k-nearest neighbors are identified by computing the distance of the object to labeled object. The calculated distance is used to assigner class. The main advantages of kNN are simple in implementation, robust with regard to search space, and online updation of classifier and few parameters to tune.

There are a lot of different improvements in the traditional KNN algorithm, such as the wavelet based k-nearest neighbor partial distance search, equal average nearest neighbor search, equal average equal-norm nearest neighbor code word search, equal-average equal-variance equal-norm nearest neighbor search and several other variations.

Guidelines The complexity in classification of data arises due to the uncertainty and the high dimensionality of medical data. Studies [76–78] show that there is a large amount of redundancy, missing values as well as irrelevant variables in healthcare data that can effect the accuracy of disease prediction. Conventional wisdom is that larger corpora yield better predicting accuracy however data redundancy and irrelevancy introduces bias rather than benefits that distorts learned models. Thus, before applying any prediction model, dataset needs to be processed carefully by removing the redundant data (e.g., age, DOB, redundant lab reports) as well irrelevant data (e.g., gender attribute in case of gestational diabetes). However, little is known about the redundancy that exists in the data as well as what type of redundancy is beneficial as opposed to harmful. Statistical methods (correlation analysis etc) could be used to identify irrelevant variables, other alternative is feature selection based on the importance of specific variable. To deal with missing values, it is recommended to use data analysis methods that are robust to missingness that are good to use when we are confident that mild to moderate violations of the technique’s key assumptions will produce little or may be no biasness in the resultant conclusions. As healthcare data is highly sensitive, one drawback of these method for missing or irrelevant information may lead to distortion of important relationship between set of dependent and independent variables. For example, smoking, drinking and helicobacter pylori individually might not affect stomach cancer significantly whereas all together could effect significantly [41]. Thus, efficient approaches are required for data preprocessing to avoid ignorance of that type associated data as deletion of such depended variable will significantly effect prediction power.

As discussed above, no generic classifier works for all type of data and it varies form data to data and nature of the problem. For example, small and labeled data, classifier with high bias (Naive Bayes) is best choice. However, if the data is quite large, classifier doesn’t really matter so much, so the selection of classifier is based on its scalability and run-time efficiency. Classifier performance can be significantly improved through effective features selection. In case of big data, deep learning is good choice as it learns features automatically. For better prediction, different feature sets and a couple of classifiers should be selected and tested. The best classifier that beats all other classifiers could be selected for prediction activities.

Clustering

Clustering is a descriptive data analysis task that partitions the data into homogeneous groups based on the information found in data that best describes the data and its relationship, i.e., classifying patients into groups. Organization of data into clusters shows the internal structure of the data. The goal is to group the individuals or objects that resemble each other for the purposes of improved understanding. The greater the dissimilarity between groups and the greater the similarity within group provides better clustering, i.e., a new observation is assigned to the cluster with closest match and it is assumed to have similar properties to others in same cluster. It is unsupervised learning that occurs by observing only independent variable. Unlike classification, it does not have training stage and does not use “class”.

The heart of clustering analysis is the selection of the clustering algorithm. Selection of proper clustering method is important because different methods tend to find different type of cluster structure and is based on the type of data structure. A number of clustering methods have been proposed and has been widely used in several disciplines, i.e., model fitting, data exploration, data reduction, grouping similar entities, prediction based on groups etc. It is categorized into partitional(unnested), hierarchical(nested), grid-based, density-based, subspace-clustering and some other clustering algorithms fuzzy, conceptual clustering as shown in Table 4.

Table 4.

Clustering algorithms

| Category | Algorithm |

|---|---|

| Partition | k-mean, k-medoids, weighted k-mean, CLARA |

| CLARANS PAM etc. | |

| Hierarchy | Agglomerative algorithms (CURE, CHAMELEON, ROCK), |

| Divisive algorithms (average link divisive, PDDP etc) | |

| Distribution | DBCLASD, GMM etc. |

| Density | DBSCAN, OPTICS, mean-shift etc. |

| Fuzzy | FCS, FCM, MM etc. |

| Grid | STING, CLIQUE etc. |

| Graph | CLICK, MST etc. |

| Fractal | FC etc. |

| Model | GMM, SOM, ART, genetic algorithms etc. |

Partitional clustering requires a preset number of clusters and it decomposes a set of N observations into a set of k disjoint clusters by moving them from one cluster to another, starting from an initial partitioning. It classifies the data into k cluster based on requirements: each observation belongs to exactly one cluster and each cluster contains at least one observation. An observation may belong to more than one cluster in fuzzy partitioning [79]. One of the most important issues in clustering is the selection of number of clusters and it is complicated if no prior information exist. Another issue is the selection of proper parameter and proper clustering algorithm. Inappropriate choice of clustering algorithm or wrong choice of the parameters, clustering may not reflect the desired data partition [79]. Not all methods are applicable to all type of problem, so selection of algorithm is based on the type of problem. Several contributions have been made to address this issue [79–84]. One of the most common partitional algorithms is k-means. It uses an iterative refinement technique and attempts to minimize the dissimilarity between each element and the center of its cluster. k-mean partition the data into k cluster represent by their center. Cluster center is computed as mean of all instance belong to that cluster. k-medoids, k-medians, k-means++, Minkowski weighted k-means, bisecting k-means, spherical k-means etc are some variations of k-mean.

Hierarchical clustering also called hierarchical cluster analysis or HCA is a clustering method that seeks to build a hierarchy of clusters (permit clusters to have sub-clusters) and can be viewed as sequence of partitional clustering. Based on pairwise distance between sets of observations, HCA successively merges (hierarchically group) most similar observations until a termination condition holds. Unlike partition, it does not assume a particular value of k. First step in hierarchical clustering is to look the most similar/closet pair which are then joined to make clustering tree. Division or merging of cluster is performed on the similarity measure that is based on optimal criteria. Strategies for hierarchical clustering generally fall into two types agglomerative and divisive also known as bottom-up and top-down respectively. Divisive clustering starts with single cluster containing all observation and consider all possible way to split into appropriate sub-clusters recursively, whereas agglomerative starts with one point cluster and recursively merges two or more clusters into a new cluster in bottom-up fashion. Main disadvantages of the hierarchical methods are inability to scale and no back-tracking capability. Standard hierarchical approaches suffer from high computational complexity. Typical hierarchical clustering includes BIRCH, CURE and ROCK etc. To improve the performance, several approaches have been presented. In fuzzy based clustering also called soft clustering, where each data point can belong to more than one cluster. It is continuous interval [0, 1] of belonging label, 0, 1 is being used instead of discrete value in order to describe relationship more reasonably. Most widely used fuzzy clustering algorithms are the fuzzy C-means (FCM), fuzzy C-shells and mountain method (MM) algorithm. Fuzzy C-Means get membership of each data point to every cluster by optimizing the object function. Distribution-based clustering is iterative, fast and natural clustering of large dataset. It automatically determines the number of clusters and produces complex models for clusters that captures the dependences and correlation of data attributes. Data generated from the same distribution belongs to the same cluster if there exists several distributions in the original data [85]. GMM using expectation maximization and DBCLASD are of the most prominent method. Density-based clustering identifies distinctive clusters in the data based on high-density region (contagious region of high-density area separated from other clusters having low density region). Typical methods of density-based clustering includes DBSCAN, OPTICS and Mean-shift. DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is the most well-known density-based clustering algorithm and does not require the number of clusters as a parameter. Further detail on clustering algorithms can be found at [85].

Guidelines Clustering is normally an option when there is little or no data available. Moreover, they do not concentrate on all requirements simultaneously which makes the result uncertain. Clustering efficiency could be affected by outliers, missing attributes, skewness of data, high dimensionality, distributed data and selecting of proper clustering method with respect to application context. Missing and outlier can significantly effect the measurements of similarity and the computational complexity. Thus, before going to clustering of data, it is recommended to eliminate the outliers. Grouping the data into cluster provides signification information about the object. Without prior knowledge of number of clusters or any other composition information of clusters, cluster analysis cannot be performed. Clustering (distance-based clustering) performance is also effected by distance function used. High dimensionally is another issue of data clustering. Number of features are very high and may even exceed the number of samples. Fixed number of data points become increasingly sparse as the dimensionality increase. Moreover, the data is so skewed that it cannot be safely modeled by normal distributions. In that case, data standardization and model based clustering or density-based clustering could perform better.

Selection of clustering algorithms for particular problem is quite difficult. Different algorithms may produce different results. In case when no data or little data is available, then hierarchical clustering could be good option as they do not requires predetermination of number of clusters. However, hierarchical clustering methods are computationally expensive and un-scalable thus not good option for large data. When the number of sample are high, algorithms have to be very conscious of scaling. Generally, successful clustering methods scale liner or log-liner. Quadratic and cubic scaling is also fine but with linear behavior. Generally, healthcare data is not totally numerical, thus conversion is required to make it useful. Conversion into numerical value could distort the data that could affect the accuracy, for example there attributes values A, B, C are converted to numerical values 1, 2 and 3. Here, there could be distortion conversion from attribute value to numerical, i.e., if distance of A and B is 2 whereas B and C is 1.

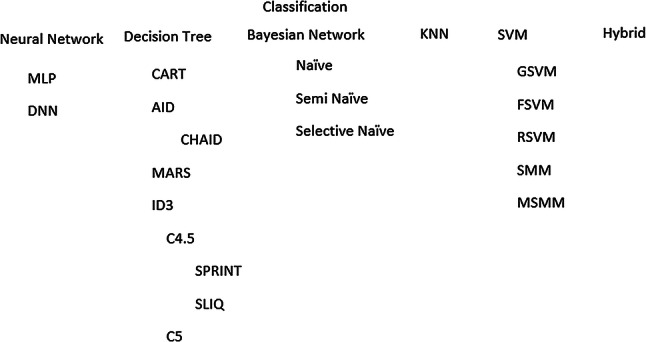

Association analysis

It is a highly unsupervised approach for discovering of hidden and interesting relations between variables in large datasets (Fig. 3). It is intended to identify strong rules that will predict the occurrence of an item based on occurrence of other item. For example, which drug combinations are associated with adverse events?. Association rule is an implication of the form where .

Fig. 3.

Association mining methodologies

Strength of association rule is the measure in term of its support and confidence (rules are required to satisfy user-specified minimum support and confidence at the same time). Support is an indication of how frequently a rule is applied to a give dataset and confidence is an indication of how frequently the rule has been found to be true. Uncovered relations could be represented using association rules or set of frequent items. The following rules shows that there is strong relationship between diabetic patient and retinopathy or heart-block can leads to hypertension. Association rules interestingness is measure through support and confidence. Support of 6% means that pregnancy and gestational diabetes occurred together in 6% of all the transactions in the database and Confidence of 90% means that the patients who are pregnant, 90% of the times, they also suffer gestational diabetes.

Support and confidence are commonly used to extract association rules through measuring interestingness of rule. Support of 6% means that pregnancy and diabetes occurred together in 6% of all the transactions contained in the database. While confidence of 75% means that, the woman who are pregnant, 75% of the times, suffer type-II diabetes as well. However, it is well known that even the rules with a strong support and confidence could be uninteresting.

In healthcare, this kind of association rules could be used to assist physician to cure patient or to find the relationships between various diseases and drugs or occurrence of other associated disease as it is revealed that occurrence of one disease can lead to several other diseases. Association rules are created by analyzing data for frequent if/then patterns and using the criteria support and confidence to identify the most important relationships. Thus, the problem of association rules is divided into two phases: generation of frequent itemset (find all the itemset: frequent pattern that satisfy the minsup threshold) and rule generation (extract the all high confidence rules form frequent patterns). Computation requirement of frequent-pattern generation are more expensive than rule generation that is straightforward. Finding of all frequent item-sets involves searching all possible item-sets in the database thus is difficult and computational expensive that can be reduced by reducing the number of candidate (a priori method) or reducing the number of comparison (FP-Growth, tree generation etc).

To design efficient algorithms for association rules computation, several efforts were presented over time, i.e., Sequence (Apriori, AprioriDP, tree based partition), Parallel (FP-growth, partition based, i.e., Par-CSP [86] and hybrid (Eclat). The most common algorithm for association rule is the Apriori algorithm. It is an algorithm for frequent item set mining and association rule learning over transactional databases. It uses breadth-first search and hash tree strategy to count the support of itemsets and uses a candidate generation function which exploits the downward closure property of support. AprioriDP is extension of Apriori that utilizes Dynamic Programming in frequent itemset mining. Finding of frequent sets requires several passes thus performance depends upon the size of the data. Processing of high density in primary memory is not feasible. Accesses to secondary memory could be reduce by effective partitioning. Tree based partitioning approach organizes the data into tree structures that can be processed independently [87]. To relieve the sequential bottlenecks, parallel frequent-pattern discovery algorithms exploit parallel and distributed computing resources. FP-growth counts item occurrence and stores into ’header table’ followed by construction of FP-tree structure by inserting instances. Core of GP-growth is the use of frequent-pattern tree (FP-tree), a special data structure to retain the itemset association information. FP-tree is a compact structure that store quantitative information about frequent pattern. Further detail on association algorithms can be found at [30, 88].

Guidelines Traditionally, association analysis was considered an unsupervised problem and has been applied in knowledge discovery. Recent studies showed that it has been applied for prediction in classification problem. Generating association rules algorithms must be tailored to the particularities of the prediction to build effective classifiers. Computational cost could be reduced by sampling database, adding extra constraints, parallelization and reducing the number of passes over the database. To expedite multilevel association rules searching as well as avoiding the excessive computation in the meantime, much progress has been made during last few years. Other issues involved in association mining are selection of non-relevant rules, huge number of rules and selection of proper algorithm. A small number of rules are used by domain expert whereas all rules are used by classification system. For good accuracy, it would be better, if the non-relevant rules are eliminated by domain experts (e.g., gestational diabetes females) and to readily organize raw association rules using a concept hierarchy.

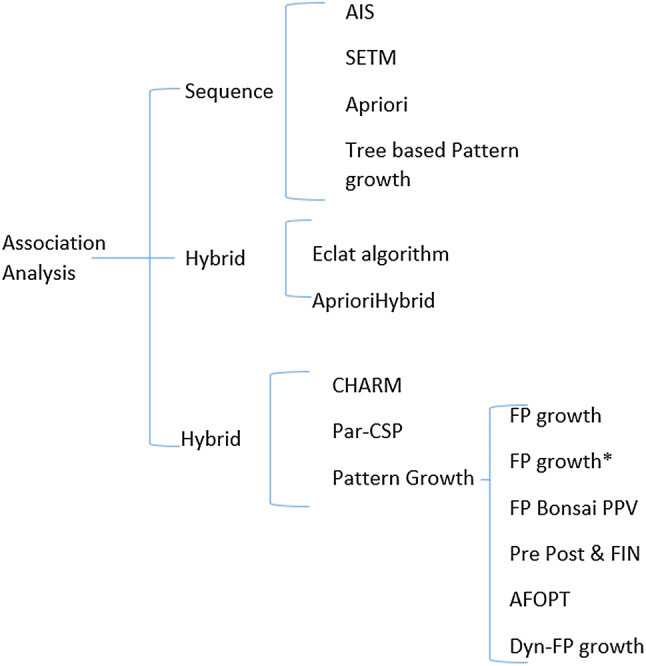

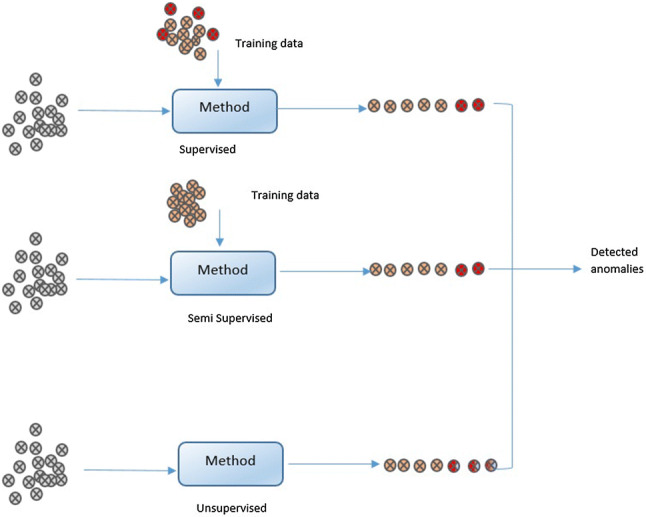

Anomaly detection

Anomaly detection has been widely researched areas and provides useful and actionable information in a variety of real-world scenarios such as timely detection of an epidemic that can helps to save human life or ECG signals or other body sensors for critical patient monitoring to detect critical, possibly life-threatening situations [89]. It is quite challenging to develop generic framework for anomaly detection due to unavailability of labeled data and domain specific normality; thus, most of the anomaly detection methods have been developed for certain domain [90]. It is an important problem and has a significant impact on the efficiency of any data mining system. The importance of its detection is due to the fact that its presence in data can compromise data quality and reduce the effectiveness of learning algorithm. Thus, it is a very critical problem and requires high degree of accuracy. Anomaly detection is the detection of items, events or observations in the data that do not conform to an expected pattern [91]. Broadly speaking, based on their nature, anomalies can be classified into four categories are point (individual data instance is considered as anomalous with respect to the rest of data), contextual (data instance is anomalous in a specific context), collective anomalies (collection of related data instances is anomalous with respect to the rest of dataset).

Based on the available data labels, three broad categories of anomaly detection techniques exist: unsupervised, supervised and semi-supervised anomaly detection. As availability of labeled dataset for anomaly detection is not easily possible; thus, most of the approaches are based on unsupervised or semi-supervised learning methods where the purpose is to find the abnormal behavior. Supervised learning-based methods uses fully labeled data, semi-supervised anomaly detection uses anomaly-free dataset for training and find anomaly if it deviated from trained dataset whereas unsupervised detection method uses intrinsic information to detect anomalous values based on the deviation form majority of data. Why the supervised learning methods is not that successful for anomaly detection? First of all, not all supervised classification method suits for this task, i.e., C4.5 cannot be applied to unbalanced data [92]. Secondly, anomalies are abnormal behavior and they might not have known prior. Semi-supervised is one-class (normal data)-based method that is trained on normal data without anomalies and after that deviations from that data are considered anomalies. Unsupervised is the commonly used method for anomalies detection and it does not require data labeling. Distance or densities are used to estimates the abnormalities based on intrinsic properties of the dataset. Clustering is the simplest unsupervised methods to identify anomaly in data (Fig. 4).

Fig. 4.

Anomaly detection methods based on dataset

The performance of detection method depends on dataset and parameter selection. Several methods for anomaly detection have been presented in literature, some of most popular anomaly detection are SVM, Replicator neural networks, correlation-based detection, density-based techniques (k-nearest neighbor, local outlier factor), deviations from association rules and ensemble techniques (using feature bagging or score normalization). Output of anomaly detection system could be score or label [93]. Score (commonly used for unsupervised or semi-supervised) is a ranked list of anomalies that are assigned to each instance depending on the degree to which instance is considered anomaly whereas labeling (used for supervised) are binary output (anomaly or not) (Fig. 5).

Fig. 5.

Anomaly detection methods

k-NN (not k-nearest neighbor classification)-based unsupervised anomaly detection is a straightforward way for anomaly detection. It is not able to detect local anomalies and only detect global anomalies. Anomaly score is computed on k-nearest-neighbors distance (computed either single (known as kth-NN) [94] or average (known as k-NN) [95]). Outlier Factor Local outlier factor is well know method for finding anomalous data points by measuring the local deviation of a given data point with respect to its neighboring point. LOF works in three steps: k-NN is applied to all data points; estimation of local density using local readability density; and finally computation of LOF score through LRD comparison with its neighbor LRD. In fact LOF is the ratio of local density. Connectivity-Based Outlier Factor (COF) is based on the spherical density computation rather than k-NN-based selection. Unlike LOF and COF, Cluster-Based Local Outlier Factor (CBLOF) performs density estimation for clusters. First, clustering is performed to find clusters and then anomaly score is calculated based on the distance of each data point with cluster center. LDCOF (local density cluster-based outlier factor) is the extension of CBLOF that considers the density of local cluster as well by segmenting into small and large clusters. LDCOF (local density cluster-based outlier factor) is the extension of LDLOF to overcome the shortcoming of CBLOF and unweighted-CBLOF by estimating the clusters densities assuming a spherical distribution of the cluster members. LDCOF is local score as it is based on distance of instance to its cluster center. LOF and COF output are scores, but they do not determine the threshold. Outlier Probability resolves this issue. Clustering-based Multivariate Gaussian Outlier Score (CMGOS) is another extension of cluster-based anomaly detection. First, k-means clustering is performed followed by computation of covariance matrix. Local density estimation is calculated by estimating a multivariate Gaussian model and Mahalanobis distance is used for distance computation. Histogram-based Outlier Score (HBOS) is unsupervised, anomaly detection method [96]. It is fast than multivariate approach due to independence of features. For each feature, histogram is computed followed by multiplication of inverse height of the bins it resides for each instance. Due to the feature independence, HBOS can process a dataset under a minute, whereas other approaches may take hours.

One-class SVM [97] is a commonly used semi-supervised anomaly detection method that is trained on anomalous free data [92]. SVM is trained on anomaly-free data whereas normal data is used for classification and once-class SVM classifies into normal or anomaly. In one-class SVM-based supervised anomaly detection scenario, it is trained on dataset and for anomaly detection, each instance in data is scored by normalized distance [98]. Conditional anomaly detection is different from standard anomaly detection method [99]. The goal is to detect unusual pattern relating input attributes X an output attributed y in an example a whereas standard anomaly detection identify data that deviate from other data. Amer presented eta one-class SVM to deal with the sensitivity of one-class SVM for outlier data [98]. An improved one-class SVM “OC-SVM” that exploits the inner-class structure of the training set via minimizing the within-class scatter of the training data [100].

Guidelines Not only generalized healthcare data, automated anomaly detection, even for a specific disease like thyroid disease, is not easy to solve. Successful approaches need to use a range of techniques to deal with real-world problem especially when data is quite large and complicated. During recent years, a large number of methods have been presented for anomaly detection, selection of method depends upon the type of anomaly and data itself. Before selecting a method, it is important to know which anomaly detection technique is best suited for a given anomaly detection problem and data. When dimensions are high in number, NN and clustering-based methods are not good option as the distance measures in high number of dimensions are not able to differentiate between anomalous and normal data. To deal with high-dimensional data, spectral techniques could be used as they explicitly address high dimensionality issue through high to low dimensional projection mapping. If fully labeled or partially-labeled data is available then supervised- or semi-supervised-based method could be used even in case of high-dimensional data. Semi-supervised method are preferable over supervised methods as supervised methods are poor to deal distribution imbalance of labels (normal vs specific anomaly) thus data need to be preprocessed to overcome this biasness issue. Due to the sensitivity of healthcare finding, one main challenge is to find best feature vector that provide maximum discrimination power and avoid false positive to minimum and has high accuracy. Thus, it is required to find efficient feature vector that characterizes the anomalous occurrence and its location causing agent in time or frequency domain using some domain transformation or wavelets. Another issue that should be considered is computational complexity as anomaly detection methods have high computational complexity especially when dealing with real-time data, i.e., data generated by ICU monitoring sensors. Most of the anomaly detection methods are unsupervised or semi-supervised and these techniques require expensive testing phases which can limit it in real setting. To overcome this issue, one should consider model size and its complexity. Another big challenge that effects the performance of anomaly detection method is data itself. Data should be accurate, complete and consistent. In healthcare sector, data contains noise which tends to be similar as anomalies or effect detection, Thus before applying anomaly detection, data should be cleaned carefully.

Applications

Today, abundant data collected in medical sciences that could be utilized by healthcare organization to get wide range of benefits, i.e., descriptive analytics (What has happened based on symptoms), predictive analytics (What will happen based on current situation) and prescriptive analytics (how to deal with this situation based on best practice and the most effective treatments. As discussed earlier, traditional methods are not to process mounds of data. These days, much of the focus in recent development has been on early detection and prevention of diseases and data mining and data analytics interventions in health sector are increasingly being used for this purpose. By extracting hidden information form medical data using data analytics and machine learning techniques, intelligent system can be designed that besides the physician knowledge and be used as expert system for early diagnose and treatment. To promote early detection and prevention of disease, several intelligent approaches have been presented. Based on the types of applications, we have divided the following discussion into four subsections.

Examples/case studies

Despite the extensive need of data analytics applications in healthcare, although the work done is not that efficient; however, data analytics is driving vast improvements in healthcare sector and in future, we will see the rapid, widespread implementation across the healthcare organizations. Here are some case studies for disease prevention in past few years.

ScienceSoft, Truven, Artelnies, TechVantage, Xerox research centre India (XRCI), are some of solution provider companies that are applying data analytics for clinical data efficiently. ScienceSoft provides specific data analysis services to help healthcare providers make efficient and timely decisions and better understand activities. Truven health Analytics provide solution for healthcare data analytics. Artelnies applied artificial intelligence methods to analyze clinical data and provide solutions like medical diagnosis, medical prognosis, microarray analysis and protein design and designed several application for early diagnose and disease prevention, i.e., breast cancer hazard assessment (predict if a patient could have a recurrence of cancer or not), diagnosing heart disease (diagnose a heart problem) and early prognostic in patients with liver disorders (investigating early-stage liver disorder before it becomes serious). XRCI is performing predictive analytics to identify high-risk patients in hospitals and delivering several clinical decision support systems, i.e., ICU admission prediction, complication prediction in ICUs, ICU mortality prediction and stroke severity and outcome prediction.

Kaiser Permanente is one of the first medical organizations to implement an EHR system and is the largest integrated health system serving mainly the western U.S. Kaiser primarily uses SAS analytics tools and SAP’s Business Objects business intelligence software to support data analytics activities against the EHR system. Uncertainty of clinical decisions often mislead clinicians to deal with patients (i.e., treatment of newborns using antibiotics: 0.05% newborns having the infection confirmed by blood culture [101] and only 11% of those newborn received antibiotics). Kaiser Permanente system allows clinicians to steer high-cost interventions to deal such issues. System would be able to predict accurately which antibiotics are required for the baby based on the mothers clinical data and the baby's condition immediately after birth that result in cost reduction as well as reduces side effect among newborns. Optum Labs, USA collected EHRs of more than 30 million patients to create database for predictive analytics in order to improve the quality of care. Goal is to use predictive analytics to help physicians to improve patient care by aiding decision power especially for the patients with complex medical histories and suffering from multiple conditions. Healthcare Analytics by Mckinsey & Company combines strategy big data and advanced analytics, and implementation processes.

Prediction of Zika virus has been challenging for public health officials. NASA is assisting public health officials, scientists and communities to limit the spread of Zika virus and identify its causes. Researcher developed computation model based on historical patterns of mosquito-borne diseases (i.e., chikungunya and dengue) to predict the spread of virus and characterized by slow growth and high spatial and seasonal heterogeneity, attributable to the dynamics of the mosquito vector and to the characteristics and mobility of the human populations [102]. Researchers used temperature, rainfall and socioeconomic factors and identified the Zika transmission and predict the number of cases for next year by combining real-world data on population, human mobility and climate.

Harvard Medical School and Harvard Pilgrim Health Care applied analytical methods to EHR data to identify patient with diabetes and classify them into groups (Type 1 and Type II diabetes). Four years worth of data based on numerous indicators from multiple sources have been analyzed. Patient could be grouped into high-risk disease groups and risk could be minimized by preventive care, i.e., new preventive treatment protocols could be introduced among patient groups with high cholesterol. Rizzoli Orthopaedic Institute, Italy, is using advanced analytics to gain more granular understanding of dynamics of clinical variability within families for improving care and reducing treatment costs for hereditary bone diseases. Result showed significant improvement in healthcare efficiency, i.e., 30% reductions in annual hospitalizations and over 60% reductions in the number of imaging tests. Hospital is planning to gain more benefits by insights into the complex interplay of genetic factors and identify cures. Columbia University Medical Center is using analytical technique to analyze brain injured patients to detect complications earlier. To deal with complications proactively rather than re-actively, physiologic data form the patient who have suffered form bleeding stroke from a ruptured brain aneurysm is analyzed to provide critical information to medical professionals. Analytical techniques are applied on real-time data as well as persistent data to detect hidden pattern that indicate occurrence of complications. North York general hospital, implemented a scalable, real-time analytics solution to improve patient outcomes and develop deeper understanding of the operational factors driving its business. System provides analytical finding to physicians and administrators to improve the patient outcome.

Intensive Care Unit (ICU) is one of the main section where analytics method could be applied on real-time data for prediction to improve quality care. A number of organizations are working on integration data analytics methods with body sensors and other medical devices to detect plummeting vital signs hours before humans have a clue. SickKids hospital, Toronto, is largest center working on advancing children's health and improving outcomes for infants susceptible to life-threatening nosocomial infections. Hospital applied analytical methods to vital science and other data from real-time monitoring (captured from monitoring devices up to 1000 times per second) to improve the child health. Potential signs of an infection is predicted before it happens (24 h earlier than with previous methods). Researchers believe that, in future, it will be significantly beneficial for other medical diagnose. University of California, Davis applied analytical methods to EHR to identify sepsis early. QPID analytical system is used by Massachusetts general hospital to predict surgical risk, to help patients with the right course of actions and to ensure that they don't miss critical patient data during admission and treatment.

Even though some of the advanced health organizations have implemented data analytics technologies, however, it still has enough room to grow on analytics.

Classification

Most important and common use of data analytics and data mining in healthcare probably involves predictive modeling. One key problem is the selection of effective classifier. One general classifier will not work for all types of problems, selection of classifier based on the type of problem and data itself. In the literature, several classification methods is being employed for different disease prediction.

Cardiovascular disease (CVD) can lead to a heart attack, chest pain or stroke due to the blockage of blood vessels. It is one of the leading causes of death (31.5%), whereas 90 % of Cardiovascular diseases are preventable [103]. Lot of research is going on for the early identification and treatment of CVD using data mining tools. Jonnagaddala et al. presented a system for heart risk factor identification and progression for diabetic patients unstructured EHR data [104]. System consist of three modules core NLP, risk factor recognition and attribute assignment module. NLP assigns POS-tags and identifies noun phrases and forwards to risk factor recognition phase that identifies medications, disease disorder mentions, family history, smoking history and heart risk factors. Identified risk factors are then forwards to attribute assignment module assign indicator and time attributes to risk factors. In another study, Jonnagaddala et al. presented rule-based system to extract risk factors using clinical text mining [105]. Risk factor is extracted from unstructured electronic health records. Rules were developed to remove records, which do not contain age and gender information. Alneamy and Alnaish used hybrid teaching learning-based optimization (TLBO) and fuzzy wavelet neural network (FWNN) for identification of heart disease [106]. TLBO is used for training parameter updation used for training FWNN. Training data consists of 13 attributes are forward to FWNN and mean square error is computed that is used for weight updation using TBLO. TLBO-FWNN-based system provided 90.29% accuracy Cleveland heart disease dataset.

Rajeswari et al. [107] presented feature selection using feed forward neural network for ischemic heart disease identification. The feature set is reduced to 12 most discriminant features form 17 that increased the accuracy from 82.2 to 89.4%. In another study, Arslan et al. [108] compared SVM, stochastic gradient boosting (SGB) and penalized logistic regression (PLR) for ischemic stroke identification. SVM provide slightly higher accuracy as compared to SGB and PLR. Anooj [109] used weighted fuzzy rule-based system based on Mamdani fuzzy inference for the diagnosis of heart disease. Attribute selection and attribute weighted method is used for the development of fuzzy rules. Mining procedure is applied to generate appropriate attributes which are use to develop fuzzy rules. Weighted based on frequency is added to attributes in the learning process for effective learning.