Abstract

In this paper, we present a formal quantification of uncertainty induced by numerical solutions of ordinary and partial differential equation models. Numerical solutions of differential equations contain inherent uncertainties due to the finite-dimensional approximation of an unknown and implicitly defined function. When statistically analysing models based on differential equations describing physical, or other naturally occurring, phenomena, it can be important to explicitly account for the uncertainty introduced by the numerical method. Doing so enables objective determination of this source of uncertainty, relative to other uncertainties, such as those caused by data contaminated with noise or model error induced by missing physical or inadequate descriptors. As ever larger scale mathematical models are being used in the sciences, often sacrificing complete resolution of the differential equation on the grids used, formally accounting for the uncertainty in the numerical method is becoming increasingly more important. This paper provides the formal means to incorporate this uncertainty in a statistical model and its subsequent analysis. We show that a wide variety of existing solvers can be randomised, inducing a probability measure over the solutions of such differential equations. These measures exhibit contraction to a Dirac measure around the true unknown solution, where the rates of convergence are consistent with the underlying deterministic numerical method. Furthermore, we employ the method of modified equations to demonstrate enhanced rates of convergence to stochastic perturbations of the original deterministic problem. Ordinary differential equations and elliptic partial differential equations are used to illustrate the approach to quantify uncertainty in both the statistical analysis of the forward and inverse problems.

Electronic supplementary material

The online version of this article (doi:10.1007/s11222-016-9671-0) contains supplementary material, which is available to authorized users.

Keywords: Numerical analysis, Probabilistic numerics, Inverse problems, Uncertainty quantification

Introduction

Motivation

The numerical analysis literature has developed a large range of efficient algorithms for solving ordinary and partial differential equations, which are typically designed to solve a single problem as efficiently as possible (Hairer et al. 1993; Eriksson 1996). When classical numerical methods are placed within statistical analysis, however, we argue that significant difficulties can arise as a result of errors in the computed approximate solutions. While the distributions of interest commonly do converge asymptotically as the solver mesh becomes dense [e.g. in statistical inverse problems (Dashti and Stuart 2016)], we argue that at a finite resolution, the statistical analyses may be vastly overconfident as a result of these unmodelled errors.

The purpose of this paper is to address these issues by the construction and rigorous analysis of novel probabilistic integration methods for both ordinary and partial differential equations. The approach in both cases is similar: we identify the key discretisation assumptions and introduce a local random field, in particular a Gaussian field, to reflect our uncertainty in those assumptions. The probabilistic solver may then be sampled repeatedly to interrogate the uncertainty in the solution. For a wide variety of commonly used numerical methods, our construction is straightforward to apply and provably preserves the order of convergence of the original method.

Furthermore, we demonstrate the value of these probabilistic solvers in statistical inference settings. Analytic and numerical examples show that using a classical non-probabilistic solver with inadequate discretisation when performing inference can lead to inappropriate and misleading posterior concentration in a Bayesian setting. In contrast, the probabilistic solver reveals the structure of uncertainty in the solution, naturally limiting posterior concentration as appropriate.

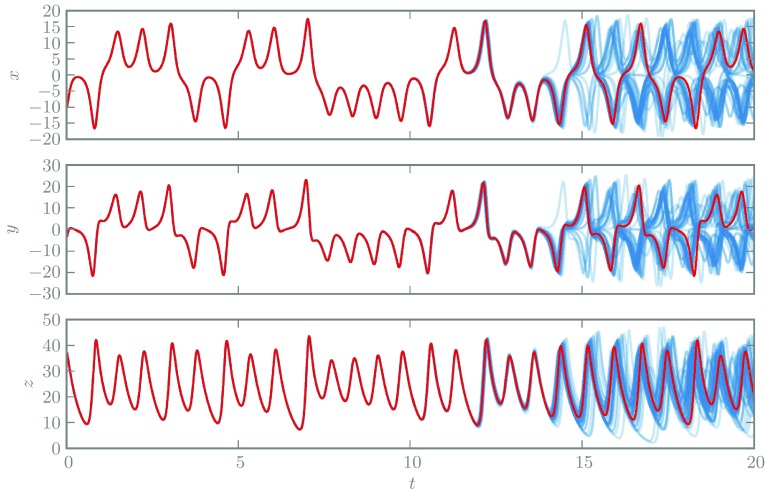

As a motivating example, consider the solution of the Lorenz’63 system. Since the problem is chaotic, any typical fixed-step numerical methods will become increasingly inaccurate for long integration times. Figure 1 depicts a deterministic solution for this problem, computed with a fixed-step, fourth-order, Runge–Kutta integrator. Although the solver becomes completely inaccurate by the end of the depicted interval given the step-size selected, the solver provides no obvious characterisation of its error at late times. Compare this with a sample of randomised solutions based on the same integrator and the same step-size; it is obvious that early-time solutions are accurate and that they diverge at late times, reflecting instability of the solver. Every curve drawn has the same theoretical accuracy as the original classical method, but the randomised integrator provides a detailed and practical approach for revealing the sensitivity of the solution to numerical errors. The method used requires only a straightforward modification of the standard Runge–Kutta integrator and is explained in Sect. 2.3.

Fig. 1.

A comparison of solutions to the Lorenz’63 system using deterministic (red) and randomised (blue) integrators based on a fourth-order Runge–Kutta integrator

We summarise the contributions of this work as follows:

Construct randomised solvers of ODEs and PDEs using natural modification of popular, existing solvers.

Prove the convergence of the randomised methods and study their behaviour by showing a close link between randomised ODE solvers and stochastic differential equations (SDEs).

Demonstrate that these randomised solvers can be used to perform statistical analyses that appropriately consider solver uncertainty.

Review of existing work

The statistical analysis of models based on ordinary and partial differential equations is growing in importance and a number of recent papers in the statistics literature have sought to address certain aspects specific to such models, e.g. parameter estimation (Liang and Wu 2008; Xue et al. 2010; Xun et al. 2013; Brunel et al. 2014) and surrogate construction (Chakraborty et al. 2013). However, the statistical implications of the reliance on a numerical approximation to the actual solution of the differential equation have not been addressed in the statistics literature to date and this is the open problem comprehensively addressed in this paper. Earlier work in the literature including randomisation in the approximate integration of ordinary differential equations (ODEs) includes (Coulibaly and Lécot 1999; Stengle 1995). Our strategy fits within the emerging field known as Probabilistic Numerics (Hennig et al. 2015), a perspective on computational methods pioneered by Diaconis (1988), and subsequently (Skilling 1992). This framework recasts solving differential equations as a statistical inference problem, yielding a probability measure over functions that satisfy the constraints imposed by the specific differential equation. This measure formally quantifies the uncertainty in candidate solution(s) of the differential equation, allowing its use in uncertainty quantification (Sullivan 2016) or Bayesian inverse problems (Dashti and Stuart 2016).

A recent Probabilistic Numerics methodology for ODEs (Chkrebtii et al. 2013) [explored in parallel in Hennig and Hauberg (2014)] has two important shortcomings. First, it is impractical, only supporting first-order accurate schemes with a rapidly growing computational cost caused by the growing difference stencil [although Schober et al. (2014) extends to Runge–Kutta methods]. Secondly, this method does not clearly articulate the relationship between their probabilistic structure and the problem being solved. These methods construct a Gaussian process whose mean coincides with an existing deterministic integrator. While they claim that the posterior variance is useful, by the conjugacy inherent in linear Gaussian models, it is actually just an a priori estimate of the rate of convergence of the integrator, independent of the actual forcing or initial condition of the problem being solved. These works also describe a procedure for randomising the construction of the mean process, which bears similarity to our approach, but it is not formally studied. In contrast, we formally link each draw from our measure to the analytic solution.

Our motivation for enhancing inference problems with models of discretisation error is similar to the more general concept of model error, as developed by Kennedy and O’Hagan (2001). Although more general types of model error, including uncertainty in the underlying physics, are important in many applications, our focus on errors arising from the discretisation of differential equations leads to more specialised methods. Future work may be able to translate insights from our study of the restricted problem to the more general case. Existing strategies for discretisation error include empirically fitted Gaussian models for PDE errors (Kaipio and Somersalo 2007) and randomly perturbed ODEs (Arnold et al. 2013); the latter partially coincides with our construction, but our motivation and analysis are distinct. Recent work (Capistrán et al. 2013) uses Bayes factors to analyse the impact of discretisation error on posterior approximation quality. Probabilistic models have also been used to study error propagation due to rounding error; see Hairer et al. (2008).

Organisation

The remainder of the paper has the following structure: Sect. 2 introduces and formally analyses the proposed probabilistic solvers for ODEs. Section 3 explores the characteristics of random solvers employed in the statistical analysis of both forward and inverse problems. Then, we turn to elliptic PDEs in Sect. 4, where several key steps of the construction of probabilistic solvers and their analysis have intuitive analogues in the ODE context. Finally, an illustrative example of an elliptic PDE inference problem is presented in Sect. 5.1

Probability measures via probabilistic time integrators

Consider the following ordinary differential equation (ODE):

| 1 |

where is a continuous function taking values in .2 We let denote the flow map for Eq. (1), so that . The conditions ensuring that this solution exists will be formalised in Assumption 2, below.

Deterministic numerical methods for the integration of this equation on time interval [0, T] will produce an approximation to the equation on a mesh of points , with , (for simplicity we assume a fixed mesh). Let denote the exact solution of (1) on the mesh and denote the approximation computed using finite evaluations of f. Typically, these methods output a single discrete solution , often augmented with some type of error indicator, but do not statistically quantify the uncertainty remaining in the path.

Let denote the Banach space . The exact solution of (1) on the time interval [0, T] may be viewed as a Dirac measure on at the element u that solves the ODE. We will construct a probability measure on , that is straightforward to sample from both on and off the mesh, for which h quantifies the size of the discretisation step employed, and whose distribution reflects the uncertainty resulting from the solution of the ODE. Convergence of the numerical method is then related to the contraction of to .

We briefly summarise the construction of the numerical method. Let denote a classical deterministic one-step numerical integrator over time-step h, a class including all Runge–Kutta methods and Taylor methods for ODE numerical integration (Hairer et al. 1993). Our numerical methods will have the property that, on the mesh, they take the form

| 2 |

where are suitably scaled, i.i.d. Gaussian random variables. That is, the random solution iteratively takes the standard step, , followed by perturbation with a random draw, , modelling uncertainty that accumulates between mesh points. The discrete path is straightforward to sample and in general is not a Gaussian process. Furthermore, the discrete trajectory can be extended into a continuous time approximation of the ODE, which we define as a draw from the measure .

The remainder of this section develops these solvers in detail and proves strong convergence of the random solutions to the exact solution, implying that in an appropriate sense. Finally, we establish a close relationship between our random solver and a stochastic differential equation (SDE) with small mesh-dependent noise. Intuitively, adding Gaussian noise to an ODE suggests a link to SDEs. Additionally, note that the mesh-restricted version of our algorithm, given by (2), has the same structure as a first-order Ito–Taylor expansion of the SDE

| 3 |

for some choice of . We make this link precise by performing a backwards error analysis, which connects the behaviour of our solver to an associated SDE.

Probabilistic time integrators: general formulation

The integral form of Eq. (1) is

| 4 |

The solutions on the mesh satisfy

| 5 |

and may be interpolated between mesh points by means of the expression

| 6 |

We may then write

| 7 |

where is an unknown function of time. In the algorithmic setting, we have approximate knowledge about g(s) through an underlying numerical method. A variety of traditional numerical algorithms may be derived based on approximation of g(s) by various simple deterministic functions . The simplest such numerical method arises from invoking the Euler approximation that

| 8 |

In particular, if we take and apply this method inductively the corresponding numerical scheme arising from making such an approximation to g(s) in (7) is Now consider the more general one-step numerical method This may be derived by approximating g(s) in (7) by

| 9 |

We note that all consistent (in the sense of numerical analysis) one-step methods will satisfy

The approach based on the approximation (9) leads to a deterministic numerical method which is defined as a continuous function of time. Specifically, we have Consider again the Euler approximation, for which , and whose continuous time interpolant is then given by Note that this produces a continuous function, namely an element of , when extended to The preceding development of a numerical integrator does not acknowledge the uncertainty that arises from lack of knowledge about g(s) in the interval We propose to approximate g stochastically in order to represent this uncertainty, taking

where the form an i.i.d. sequence of Gaussian random functions defined on [0, h] with .3

We will choose to shrink to zero with h at a prescribed rate (see Assumption 1), and also to ensure that almost surely. The functions represent our uncertainty about the function g. The corresponding numerical scheme arising from such an approximation is given by

| 10 |

where the i.i.d. sequence of functions lies in and is given by

| 11 |

Note that the numerical solution is now naturally defined between grid points, via the expression

| 12 |

When it is necessary to evaluate a solution at multiple points in an interval, , the perturbations must be drawn jointly, which is facilitated by their Gaussian structure. Although most users will only need the formulation on mesh points, we must consider off-mesh behaviour to rigorously analyse higher order methods, as is also required for the deterministic variants of these methods.

In the case of the Euler method, for example, we have

| 13 |

and, between grid points,

| 14 |

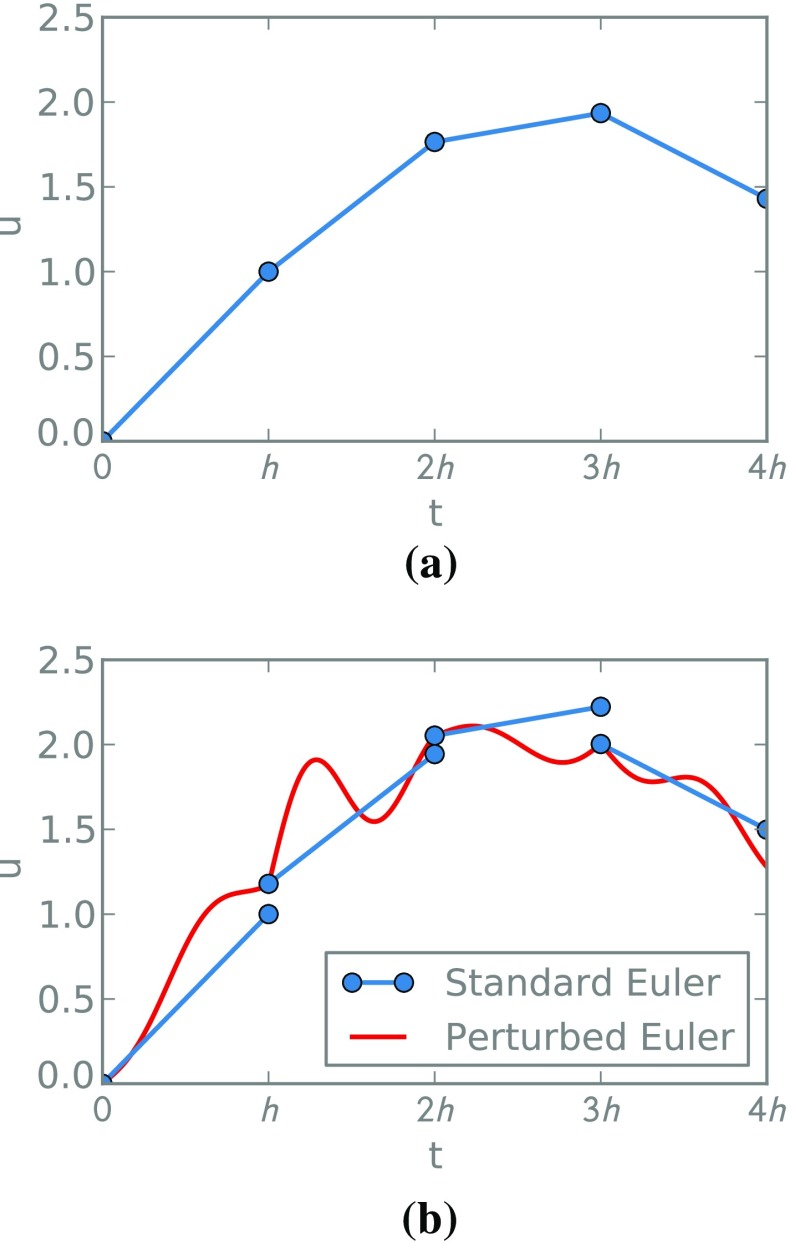

This method is illustrated in Fig. 2. Observe that Eq. (13) has the same form as an Euler–Maryama method for an associated SDE (3) where depends on the step-size h. In particular, in the simple one-dimensional case, would be given by . Section 2.4 develops a more sophisticated connection that extends to higher order methods and off the mesh.

Fig. 2.

An illustration of deterministic Euler steps and randomised variations. The random integrator in (b) outputs the path in red; we overlay the standard Euler step constructed at each step, before it is perturbed (blue)

While we argue that the choice of modelling local uncertainty in the flow map as a Gaussian process is natural and analytically favourable, it is not unique. It is possible to construct examples where the Gaussian assumption is invalid; for example, when a highly inadequate time-step is used, a systemic bias may be introduced. However, in regimes where the underlying deterministic method performs well, the centred Gaussian assumption is a reasonable prior.

Strong convergence result

To prove the strong convergence of our probabilistic numerical solver, we first need two assumptions quantifying properties of the random noise and of the underlying deterministic integrator, respectively. In what follows we use and to denote the Euclidean inner product and norm on . We denote the Frobenius norm on by , and denotes expectation with respect to the i.i.d. sequence .

Assumption 1

Let with Then there exists such that, for all , in particular Furthermore, we assume the existence of matrix Q, independent of h, such that

Here, and in the sequel, K is a constant independent of h, but possibly changing from line to line. Note that the covariance kernel is constrained, but not uniquely defined. We will assume the form of the constant matrix is , and we discuss one possible strategy for choosing in Sect. 3.1. Section 2.4 uses a weak convergence analysis to argue that once Q is selected, the exact choice of has little practical impact.

Assumption 2

The function f and a sufficient number of its derivatives are bounded uniformly in in order to ensure that f is globally Lipschitz and that the numerical flow map has uniform local truncation error of order :

Remark 2.1

We assume globally Lipschitz f, and bounded derivatives, in order to highlight the key probabilistic ideas, whilst simplifying the numerical analysis. Future work will address the non-trivial issue of extending of analyses to weaken these assumptions. In this paper, we provide numerical results indicating that a weakening of the assumptions is indeed possible.

Theorem 2.2

Under Assumptions 1, 2 it follows that there is such that

Furthermore,

This theorem implies that every probabilistic solution is a good approximation of the exact solution in both a discrete and continuous sense. Choosing is natural if we want to preserve the strong order of accuracy of the underlying deterministic integrator; we proceed with the choice , introducing the maximum amount of noise consistent with this constraint.

Examples of probabilistic time integrators

The canonical illustration of a probabilistic time integrator is the probabilistic Euler method already described.4 Another useful example is the classical Runge–Kutta method which defines a one-step numerical integrator as follows:

where

The method has local truncation error in the form of Assumption 2 with It may be used as the basis of a probabilistic numerical method (12), and hence (10) at the grid points. Thus, provided that we choose to perturb this integrator with a random process satisfying Assumption 1 with ,5 Theorem 2.2 shows that the error between the probabilistic integrator based on the classical Runge–Kutta method is, in the mean square sense, of the same order of accuracy as the deterministic classical Runge–Kutta integrator.

Backward error analysis

Backwards error analyses are useful tool for numerical analysis; the idea is to characterise the method by identifying a modified equation (dependent upon h) which is solved by the numerical method either exactly, or at least to a higher degree of accuracy than the numerical method solves the original equation. For our random ODE solvers, we will show that the modified equation is a stochastic differential equation (SDE) in which only the matrix Q from Assumption 1 enters; the details of the random processes used in our construction do not enter the modified equation. This universality property underpins the methodology we introduce as it shows that many different choices of random processes all lead to the same effective behaviour of the numerical method.

We introduce the operators and defined so that, for all ,

| 15 |

Thus and is the kernel for the Markov chain generated by the probabilistic integrator (2). In fact we never need to work with itself in what follows, only with , so that questions involving the operator logarithm do not need to be discussed.

We now introduce a modified ODE and a modified SDE which will be needed in the analysis that follows. The modified ODE is

| 16 |

whilst the modified SDE has the form

| 17 |

The precise choice of is detailed below. Letting denote expectation with respect to W, we introduce the operators and so that, for all ,

| 18 |

| 19 |

Thus,

| 20 |

where : denotes the inner product on which induces the Frobenius norm, that is, A:B .

The fact that the deterministic numerical integrator has uniform local truncation error of order (Assumption 2) implies that, since ,

| 21 |

The theory of modified equations for classical one-step numerical integration schemes for ODEs (Hairer et al. 1993) establishes that it is possible to find in the form

| 22 |

such that

| 23 |

We work with this choice of in what follows.

Now for our stochastic numerical method we have

Furthermore, the last term has mean of size . From Assumption 1 we know that Thus

| 24 |

From this it follows that

| 25 |

Finally we note that (20) implies that

Thus we have

| 26 |

Now using (23), (25), and (26) we obtain

| 27 |

Balancing these terms, in what follows we make the choice . If we adopt the convention that the drift is simply f. With this choice of q we obtain

| 28 |

This demonstrates that the error between the Markov kernel of one-step of the SDE (17) and the Markov kernel of the numerical method (2) is of order . Some straightforward stability considerations show that the weak error over an time interval is . We make assumptions giving this stability and then state a theorem comparing the weak error with respect to the modified Eq. (17), and the original Eq. (1).

Assumption 3

The function f is in and all its derivatives are uniformly bounded on . Furthermore, f is such that the operators and satisfy, for all and some ,

Remark 2.3

If in what follows (our recommended choice) then the weak order of the method coincides with the strong order; however, measured relative to the modified equation, the weak order is then one plus twice the strong order. In this case, the second part of Theorem 2.2 gives us the first weak order result in Theorem 2.4. Additionally, Assumption 3 is stronger than we need, but allows us to highlight probabilistic ideas whilst keeping overly technical aspects of the numerical analysis to a minimum. More sophisticated, but structurally similar, analysis would be required for weaker assumptions on f. Similar considerations apply to the assumptions on .

Theorem 2.4

Consider the numerical method (10) and assume that Assumptions 1 and 3 are satisfied. Then, for function with all derivatives bounded uniformly on , we have that

and

Example 2.5

Consider the probabilistic integrator derived from the Euler method in dimension . We thus have , and we hence set . The results in Hairer et al. (2006) allow us to calculate with . The preceding theory then leads to strong order of convergence 1, measured relative to the true ODE (1), and weak order 3 relative to the SDE

These results allow us to constrain the behaviour of the randomised method using limited information about the covariance structure, . The randomised solution converges weakly, at a high rate, to a solution that only depends on Q. Hence, we conclude that the practical behaviour of the solution is only dependent upon Q, and otherwise, may be any convenient kernel. With these results now available, the following section provides an empirical study of our probabilistic integrators.

Statistical inference and numerics

This section explores applications of the randomised ODE solvers developed in Sect. 2 to forward and inverse problems. Throughout this section, we use the FitzHugh–Nagumo model to illustrate ideas (Ramsay et al. 2007). This is a two-state non-linear oscillator, with states (V, R) and parameters (a, b, c), governed by the equations

| 29 |

This particular example does not satisfy the stringent Assumptions 2 and 3 and the numerical results shown demonstrate that, as indicated in Remarks 2.1 and 2.3, our theory will extend to weaker assumptions on f, something we will address in future work.

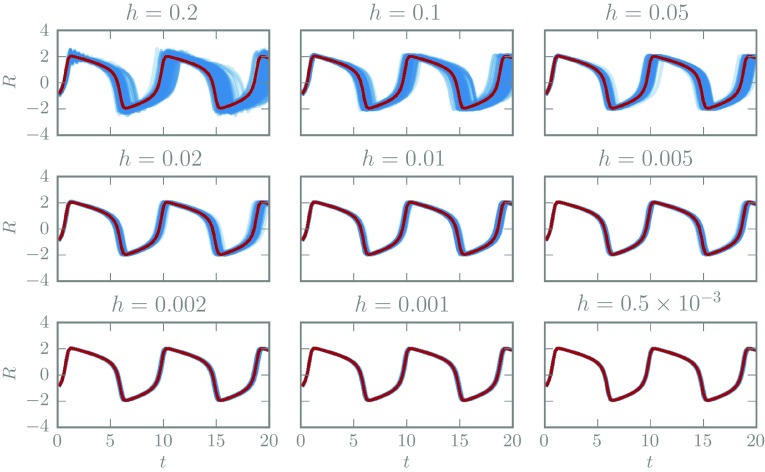

Calibrating forward uncertainty propagation

Consider Eq. (29) with fixed initial conditions , and parameter values (.2, .2, 3). Figure 3 shows draws of the V species trajectories from the measure associated with the probabilistic Euler solver with , for various values of the step-size and fixed . The random draws exhibit non-Gaussian structure at large step-size and clearly contract towards the true solution.

Fig. 3.

The true trajectory of the V species of the FitzHugh–Nagumo model (red) and one hundred realisations from a probabilistic Euler ODE solver with various step-sizes and noise scale (blue)

Although the rate of contraction is governed by the underlying deterministic method, the scale parameter, , completely controls the apparent uncertainty in the solver.6 This tuning problem exists in general, since is problem dependent and cannot obviously be computed analytically.

Therefore, we propose to calibrate to replicate the amount of error suggested by classical error indicators. In the following discussion, we often explicitly denote the dependence on h and with superscripts, hence the probabilistic solver is and the corresponding deterministic solver is . Define the deterministic error as . Then we assume there is some computable error indicator , defining . The simplest error indicators might compare differing step-sizes, , or differing order methods, as in a Runge–Kutta 4–5 scheme.

We proceed by constructing a probability distribution that is maximised when the desired matching occurs. We estimate this scale matching by comparing: (i) a Gaussian approximation of our random solver at each step k, and (ii) the natural Gaussian measure from the deterministic solver, , and the available error indicator, , We construct by penalising the distance between these two normal distributions at every step: . We find that the Bhattacharyya distance (closely related to the Hellinger metric) works well (Kailath 1967), since it diverges quickly if either the mean or variance differs. The density can be easily estimated using Monte Carlo. If the ODE state is a vector, we take the product of the univariate Bhattacharyya distances. Note that this calibration depends on the initial conditions and any parameters of the ODE.

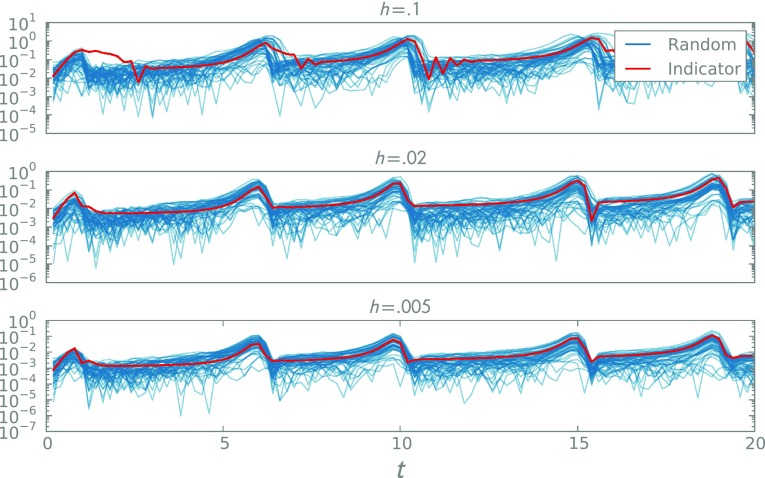

Returning to the FitzHugh–Nagumo model, sampling from yields strongly peaked, uni-modal posteriors, hence we proceed using . We examine the quality of the scale matching by plotting the magnitudes of the random variation against the error indicator in Fig. 4, observing good agreement of the marginal variances. Note that our measure still reveals non-Gaussian structure and correlations in time not revealed by the deterministic analysis. As described, this procedure requires fixed inputs to the ODE, but it is straightforward to marginalise out a prior distribution over input parameters.

Fig. 4.

A comparison of the error indicator for the V species of the FitzHugh–Nagumo model (blue) and the observed variation in the calibrated probabilistic solver. The red curves depict 50 samples of the magnitude of the difference between a standard Euler solver for several step-sizes and the equivalent randomised variant, using , maximising

Bayesian posterior inference problems

Given the calibrated probabilistic ODE solvers described above, let us consider how to incorporate them into inference problems.

Assume we are interested in inferring parameters of the ODE given noisy observations of the state. Specifically, we wish to infer parameters for the differential equation , with fixed initial conditions (a straightforward modification may include inference on initial conditions). Assume we are provided with data , at some collection of times , corrupted by i.i.d. noise, . If we have prior , the posterior we wish to explore is, where density compactly summarises this likelihood model.

The standard computational strategy is to simply replace the unavailable trajectory u with a numerical approximation, inducing approximate posterior Informally, this approximation will be accurate when the error in the numerical solver is small compared to and often converges formally to as (Dashti and Stuart 2016). However, highly correlated errors at finite h can have substantial impact.

In this work, we are concerned about the undue optimism in the predicted variance, that is, when the posterior concentrates around an arbitrary parameter value even though the deterministic solver is inaccurate and is merely able to reproduce the data by coincidence. The conventional concern is that any error in the solver will be transferred into posterior bias. Practitioners commonly alleviate both concerns by tuning the solver to be nearly perfect, however, we note that this may be computationally prohibitive in many contemporary statistical applications.

We can construct a different posterior that includes the uncertainty in the solver by taking an expectation over random solutions to the ODE

| 30 |

where is a draw from the randomised solver given parameters and random draw . Intuitively, this construction favours parameters that exhibit agreement with the entire family of uncertain trajectories. The typical effect of this expectation is to increase the posterior uncertainty on , preventing the inappropriate posterior collapse we are concerned about. Indeed, if the integrator cannot resolve the underlying dynamics, will be large. Then is independent of , hence the prior is recovered, .

Notice that as , both the measures and typically collapse to the analytic posterior, , hence both methods are correct. We do not expect the bias of to be improved, since all of the averaged trajectories are of the same quality as the deterministic solver in . We now construct an analytic inference problem demonstrating these behaviours.

Example 3.1

Consider inferring the initial condition, , of the scalar linear differential equation, with We apply a numerical method to produce the approximation . We observe the state at some times , with additive noise : . If we use a deterministic Euler solver, the model predicts These model predictions coincide with the slightly perturbed problem

hence error increases with time. However, the assumed observational model does not allow for this, as the observation variance is at all times.

In contrast, our proposed probabilistic Euler solver predicts

where we have made the natural choice , where is the problem-dependent scaling of the noise and the are i.i.d. For a single observation, and every are independent, so we may rearrange the equation to consider the perturbation as part of the observation operator. Hence, a single observation at k has effective variance

Thus, late-time observations are modelled as being increasingly inaccurate.

Consider inferring , given a single observation at time k. If a Gaussian prior is specified for , then the posterior is , where

The observation precision is scaled by because late-time data contain increasing information. Assume that the data are , for some given true initial condition and noise realisation Consider now the asymptotic regime, where h is fixed and . For the standard Euler method, where , we see that , whilst . Thus the inference scheme becomes increasingly certain of the wrong answer: the variance tends to zero and the mean tends to infinity.

In contrast, with a randomised integrator, the fixed h, large k asymptotics are

Thus, the mean blows up at a modified rate, but the variance remains positive.

We take an empirical Bayes approach to choosing , that is, using a constant, fixed value , chosen before the data are observed. Joint inference of the parameters and the noise scale suffer from well-known MCMC mixing issues in Bayesian hierarchic models. To handle the unknown parameter , we can marginalise it out using the prior distribution, or in simple problems, it may be reasonable to choose a fixed representative value.

We now return to the FitzHugh–Nagumo model; given fixed initial conditions, we attempt to recover parameters from observations of both species at times . The priors are log-normal, centred on the true value with unit variance, and with observational noise . The data are generated from a high-quality solution, and we perform inference using Euler integrators with various step-sizes, , spanning a range of accurate and inaccurate integrators.

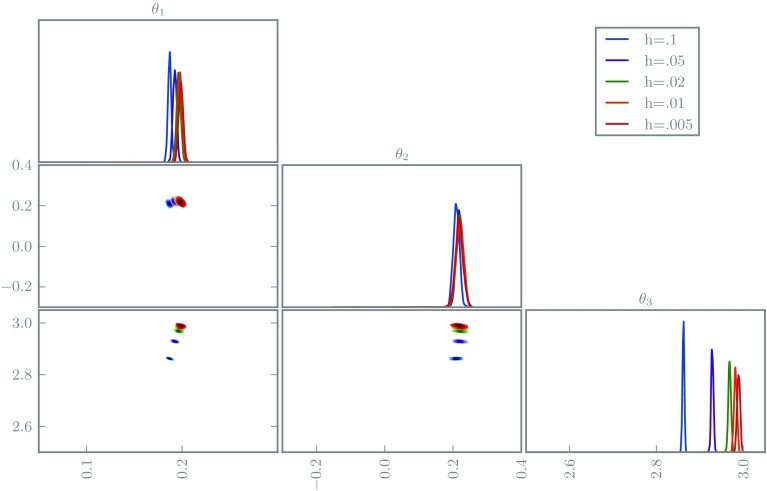

We first perform the inferences with naive use of deterministic Euler integrators. We simulate from each posterior using delayed rejection MCMC (Haario et al. 2006), shown in Fig. 5. Observe the undesirable concentration of every posterior, even those with poor solvers; the posteriors are almost mutually singular, hence clearly the posterior widths are meaningless.

Fig. 5.

The posterior marginals of the FitzHugh–Nagumo inference problem using deterministic integrators with various step-sizes

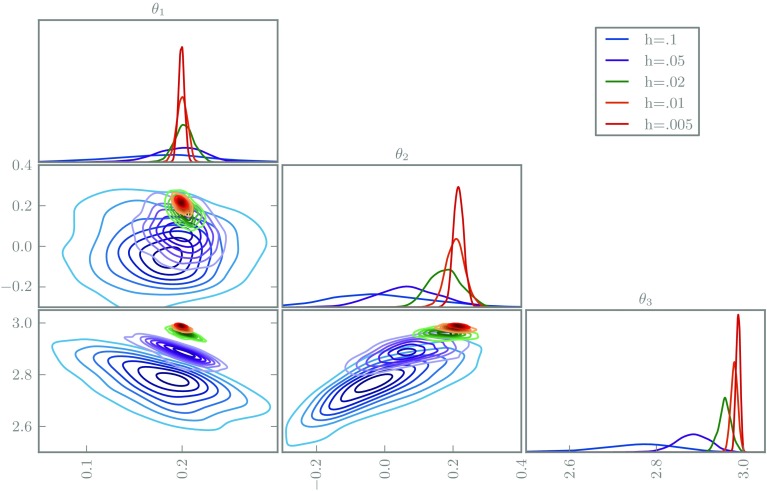

Secondly, we repeat the experiment using our probabilistic Euler integrators, with results shown in Fig. 6. We use a noisy pseudomarginal MCMC method, whose fast mixing is helpful for these initial experiments (Medina-Aguayo et al. 2015). These posteriors are significantly improved, exhibiting greater mutual agreement and obvious increasing concentration with improving solver quality. The posteriors are not perfectly nested, possible evidence that our choice of scale parameter is imperfect, or that the assumption of locally Gaussian error deteriorates for large step-sizes. Note that the bias of is essentially unchanged with the randomised integrator, but the posterior for broadens and is correlated to , hence introduces a bias in the posterior mode; without randomisation, only the inappropriate certainty about allowed the marginal for to exhibit little bias.

Fig. 6.

The posterior marginals of the FitzHugh–Nagumo inference problem using probabilistic integrators with various step-sizes

Probabilistic solvers for partial differential equations

We now turn to present a framework for probabilistic solutions to partial differential equations, working within the finite element setting. Our discussion closely resembles the ODE case, except that now we randomly perturb the finite element basis functions.

Probabilistic finite element method for variational problems

Let be a Hilbert space of real-valued functions defined on a bounded polygonal domain . Consider a weak formulation of a linear PDE specified via a symmetric bilinear form , and a linear form to give the problem of finding This problem can be approximated by specifying a finite-dimensional subspace and seeking a solution in instead. This leads to a finite-dimensional problem to be solved for the approximation U:

| 31 |

This is known as the Galerkin method.

We will work in the setting of finite element methods, assuming that , where is locally supported on a grid of points The parameter h is introduced to measure the diameter of the finite elements. We will also assume that

| 32 |

Any element can then be written as

| 33 |

from which it follows that The Galerkin method then gives , for , , and

In order to account for uncertainty introduced by the numerical method, we will assume that each basis function can be split into the sum of a systematic part and random part , where both and satisfy the nodal property (32), hence . Furthermore, we assume that each shares the same compact support as the corresponding , preserving the sparsity structure of the underlying deterministic method.

Strong convergence result

As in the ODE case, we begin our convergence analysis with assumptions constraining the random perturbations and the underlying deterministic approximation. The bilinear form is assumed to induce an inner product, and then norm via furthermore, we assume that this norm is equivalent to the norm on . Throughout, denotes expectation with respect to the random basis functions.

Assumption 4

The collection of random basis functions are independent, zero-mean, Gaussian random fields, each of which satisfies and shares the same support as the corresponding systematic basis function For all j, the number of basis functions with index k which share the support of the basis functions with index j is bounded independently of J, the total number of basis functions. Furthermore, the basis functions are scaled so that

Assumption 5

The true solution u of problem (4.1) is in Furthermore, the standard deterministic interpolant of the true solution, defined by satisfies

Theorem 4.1

Under Assumptions 4 and 5 it follows that the approximation U, given by (31), satisfies

As for ODEs, the solver accuracy is limited by either the amount of noise injected or the convergence rate of the underlying deterministic method, making the natural choice.

Poisson solver in two dimensions

Consider a Poisson equation with Dirichlet boundary conditions in dimension , namely

We set and H to be the space with inner product and resulting norm The weak formulation of the problem has the form (4.1) with

Now consider piecewise linear finite elements satisfying the assumptions of Sect. 4.2 in Johnson (2012) and take these to comprise the set Then h measures the width of the triangulation of the finite element mesh. Assuming that it follows that and that

| 34 |

Thus . We choose random basis members so that Assumption 4 hold with . Theorem 4.1 then shows that, for , We note that that in the deterministic case, we expect an improved rate of convergence in the function space H. Such a result can be shown to hold in our setting, following the usual arguments for the Aubin–Nitsche trick Johnson (2012), which is available in the supplementary materials.

PDE inference and numerics

We now perform numerical experiments using probabilistic solvers for elliptic PDEs. Specifically, we perform inference in a 1D elliptic PDE, for , given boundary conditions . We represent as piecewise constant over ten equal-sized intervals; the first, on is fixed to be one to avoid non-identifiability issues, and the other nine are given a prior . Observations of the field u are provided at , with i.i.d. Gaussian error, ; the simulated observations were generated using a fine grid and quadratic finite elements, then perturbed with error from this distribution.

Again we investigate the posterior produced at various grid sizes, using both deterministic and randomised solvers. The randomised basis functions are draws from a Brownian bridge conditioned to be zero at the nodal points, implemented in practice with a truncated Karhunen–Loève expansion. The covariance operator may be viewed as a fractional Laplacian, as discussed in Lindgren et al. (2011). The scaling is again determined by maximising the distribution described in Sect. 3.1, where the error indicator compares linear to quadratic basis functions, and we marginalise out the prior over the values.

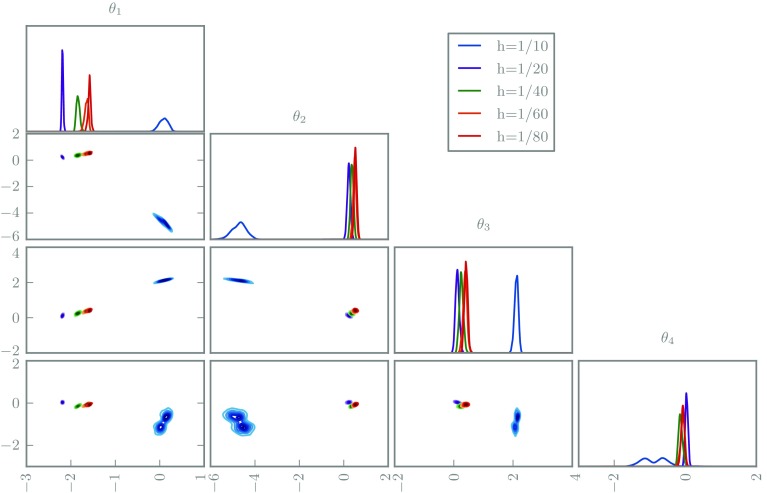

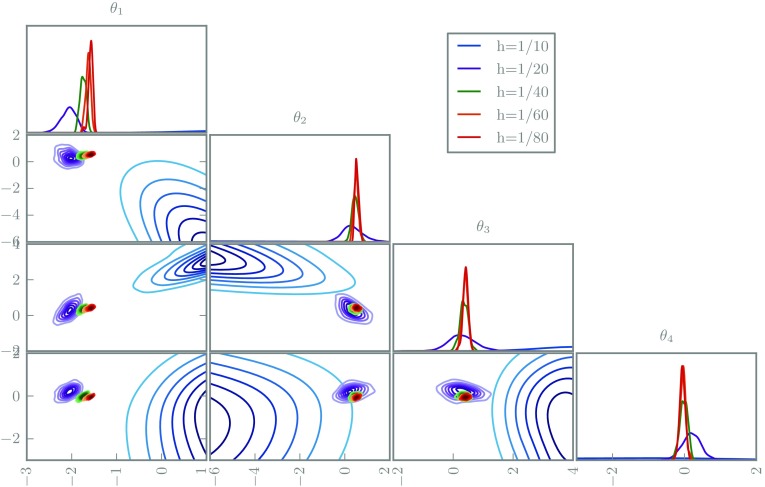

The posteriors are depicted in Figs. 7 and 8. As in the ODE examples, the deterministic solvers lead to incompatible posteriors for varying grid sizes. In contrast, the randomised solvers suggest increasing confidence as the grid is refined, as desired. The coarsest grid size uses an obviously inadequate ten elements, but this is only apparent in the randomised posterior.

Fig. 7.

The marginal posterior distributions for the first four coefficients in 1D elliptic inverse problem using a classic deterministic solver with various grid sizes

Fig. 8.

The marginal posterior distributions for the first four coefficients in 1D elliptic inverse problem using a randomised solver with various grid sizes

Conclusions

We have presented a computational methodology, backed by rigorous analysis, which enables quantification of the uncertainty arising from the finite-dimensional approximation of solutions of differential equations. These methods play a natural role in statistical inference problems as they allow for the uncertainty from discretisation to be incorporated alongside other sources of uncertainty such as observational noise. We provide theoretical analyses of the probabilistic integrators which form the backbone of our methodology. Furthermore we demonstrate empirically that they induce more coherent inference in a number of illustrative examples. There are a variety of areas in the sciences and engineering which have the potential to draw on the methodology introduced including climatology, computational chemistry, and systems biology.

Our key strategy is to make assumptions about the local behaviour of solver error, which we have assumed to be Gaussian, and to draw samples from the global distribution of uncertainty over solutions that results. Section 2.4 describes a universality result, simplifying task of choosing covariance kernels in practice, within the family of Gaussian processes. However, assumptions of Gaussian error, even locally, may not be appropriate in some cases, or may neglect important domain knowledge. Our framework can be extended in future work to consider alternate priors on the error, for example, multiplicative or non-negative errors.

Our study highlights difficult decisions practitioners face, regarding how to expend computational resources. While standard techniques perform well when the solver is highly converged, our results show standard techniques can be disastrously wrong when the solver is not converged. As the measure of convergence is not a standard numerical analysis one, but a statistical one, we have argued that it can be surprisingly difficult to determine in advance which regime a particular problem resides in. Therefore, our practical recommendation is that the lower cost of the standard approach makes it preferable when it is certain that the numerical method is strongly converged with respect to the statistical measure of interest. Otherwise, the randomised method we propose provides a robust and consistent approach to address the error introduced into the statistical task by numerical solver error. In difficult problem domains, such as numerical weather prediction, the focus has typically been on reducing the numerical error in each solver run; techniques such as these may allow a difference balance between numerical and statistical computing effort in the future.

The prevailing approach to model error described in Kennedy and O’Hagan (2001) is based on a non-intrusive methodology where the effect of model discrepancy is allowed for in observation space. Our intrusive randomisation of deterministic methods for differential equations can be viewed as a highly specialised discrepancy model, designed using our intimate knowledge of the structure and properties of numerical methods. In this vein, we intend to extend this work to other types of model error, where modifying the internal structure of the models can produce computationally and analytically tractable measures of uncertainty which perform better than non-intrusive methods. Our future work will continue to study the computational challenges and opportunities presented by these techniques.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Acknowledgments

The authors gratefully acknowledge support from EPSRC Grant CRiSM EP/D002060/1, EPSRC Established Career Research Fellowship EP/J016934/2, EPSRC Programme Grant EQUIP EP/K034154/1, and Academy of Finland Research Fellowship 266940. Konstantinos Zygalakis was partially supported by a grant from the Simons Foundation. Part of this work was done during the author’s stay at the Newton Institute for the program “Stochastic Dynamical Systems in Biology: Numerical Methods and Applications.;”

A Numerical analysis details and proofs

Proof

(Theorem 2.2) We first derive the convergence result on the grid, and then in continuous time. From (10) we have

| 35 |

whilst we know that

| 36 |

Define the truncation error and note that

| 37 |

Subtracting Eq. (37) from (36) and defining , we get

Taking the Euclidean norm and expectations give, using Assumption 1 and the independence of the ,

where the constant in the term is uniform in Assumption 2 implies that again uniformly in Noting that is globally Lipschitz with constant bounded by under Assumption 2, we then obtain

Using Cauchy–Schwarz on the inner product, and the fact that is Lipschitz with constant bounded independently of h, we get

Application of the Gronwall inequality gives the desired result.

Now we turn to continuous time. We note that, for ,

Let denote the -algebra of events generated by the up to time t. Subtracting we obtain, using Assumptions 1 and 2 and the fact that has Lipschitz constant of the form ,

Now taking expectations we obtain

Using the on-grid error bound gives the desired result, after noting that the constants appearing are uniform in

Proof

(Theorem 2.4) We prove the second bound first. Let and Then let It follows from the Markov property that

Using (28) and Assumption 3 we obtain

Iterating and employing the Gronwall inequality gives the second error bound.

Now we turn to the first error bound, comparing with the solution u of the original Eq. (1). From (25) and then (21) we see that

This gives the first weak error estimate, after using the stability estimate on from Assumption 3.

Proof (Theorem 4.1) Recall the Galerkin orthogonality property which follows from subtracting the approximate variational principle from the true variational principle: it states that, for ,

| 38 |

From this it follows that

| 39 |

To see this note that, for any , the orthogonality property (38) gives

| 40 |

Thus, by Cauchy–Schwarz, implying (39). We now set, for ,

By the mean-zero and independence properties of the random basis functions we deduce that

Ornstein–Uhlenbeck integrator

An additional example of a randomised integrator is an integrated Ornstein–Uhlenbeck process, derived as follows. Define, on the interval , the pair of equations

| 41a |

| 41b |

Here W is a standard Brownian motion and and are invertible matrices, possibly depending on h. The approximating function is thus defined by V(s), an Ornstein–Uhlenbeck process.

Integrating (41b) we obtain

| 42 |

where and the form an i.i.d. sequence of Gaussian random functions defined on [0, h] with

Note that the h-dependence of comes through the time interval on which is defined, and through and .

Integrating (41a), using (42), we obtain

| 43 |

where , and, for ,

| 44 |

The numerical method (43) may be written in the form (12), and hence (10) at the grid points, with the definition

This integrator is first-order accurate and satisfies Assumption 2 with . Choosing to scale with h so that in Assumption 1 leads to convergence of the numerical method with order 1.

Had we carried out the above analysis in the case we would have obtained the probabilistic Euler method (14), and hence (13) at grid points, used as our canonical example in the earlier developments.

Convergence rate of convergence

When considering the Poisson problem in two dimensions, as discussed in Sect. 4, we expect an improved rate of convergence in the function space H. We now show that such a result also holds in our random setting.

Note that, under Assumption 4, if we introduce that is 1 when two basis functions have overlapping support, and 0 otherwise, then is symmetric and there is constant C, independent of j and J, such that Now let solve the equation Then We define and in analogy with the definitions of and . Following the usual arguments for application of the Aubin–Nitsche trick Johnson (2012), we have Thus

| 45 |

We note that where, by Sobolev embedding ( here), satisfies Note, however, that the are random and correlated with all of the random basis functions. Using this, together with (34), in (45), we obtain

We see that

From this and the symmetry of , we obtain

Taking expectations, using that , we find, using Assumption 4, that as desired. Thus we recover the extra order of convergence over the rate 1 in the norm (although the improved rate is in whilst the lower rate of convergence is in ).

Footnotes

Supplementary materials and code are available online: http://www2.warwick.ac.uk/pints.

To simplify our discussion we assume that the ODE is autonomous, that is, f(u) is independent of time. Analogous theory can be developed for time-dependent forcing.

We use to denote a zero-mean Gaussian process defined on [0, h] with a covariance kernel .

An additional example of a probabilistic integrator, based on a Ornstein–Uhlenbeck process, is available in the supplementary materials.

Implementing Eq. 10 is trivial, since it simply adds an appropriately scaled Gaussian random number after each classical Runge–Kutta step.

Recall that throughout we assume that, within the context of Assumption 1, . More generally it is possible to calibrate an arbitrary positive semi-definite Q.

References

- Arnold A, Calvetti D, Somersalo E. Linear multistep methods, particle filtering and sequential Monte Carlo. Inverse Probl. 2013;29(8):085,007. doi: 10.1088/0266-5611/29/8/085007. [DOI] [Google Scholar]

- Brunel NJB, Clairon Q, dAlch Buc F. Parametric estimation of ordinary differential equations with orthogonality conditions. J. Am. Stat. Assoc. 2014;109(505):173–185. doi: 10.1080/01621459.2013.841583. [DOI] [Google Scholar]

- Capistrán, M., Christen, J.A., Donnet, S.: Bayesian analysis of ODE’s: solver optimal accuracy and Bayes factors (2013). arXiv:1311.2281

- Chakraborty A, Mallick BK, Mcclarren RG, Kuranz CC, Bingham D, Grosskopf MJ, Rutter EM, Stripling HF, Drake RP. Spline-based emulators for radiative shock experiments with measurement error. J. Am. Stat. Assoc. 2013;108(502):411–428. doi: 10.1080/01621459.2013.770688. [DOI] [Google Scholar]

- Chkrebtii, O.A., Campbell, D.A., Girolami, M.A., Calderhead, B.: Bayesian uncertainty quantification for differential equations (2013). arXiv:1306.2365

- Coulibaly I, Lécot C. A quasi-randomized Runge–Kutta method. Math. Comput. Am. Math. Soc. 1999;68(226):651–659. doi: 10.1090/S0025-5718-99-01056-X. [DOI] [Google Scholar]

- Dashti M, Stuart A. The Bayesian approach to inverse problems. In: Ghanem R, Higdon D, Owhadi H, editors. Handbook of Uncertainty Quantification. New York: Springer; 2016. [Google Scholar]

- Diaconis P. Bayesian numerical analysis. Stat. Decision Theory Relat. Top. IV. 1988;1:163–175. doi: 10.1007/978-1-4613-8768-8_20. [DOI] [Google Scholar]

- Eriksson, K.: Computational Differential Equations, vol. 1. Cambridge University Press, Cambridge (1996). https://books.google.co.uk/books?id=gbK2cUxVhDQC

- Haario H, Laine M, Mira A, Saksman E. DRAM: efficient adaptive MCMC. Stat. Comput. 2006;16(4):339–354. doi: 10.1007/s11222-006-9438-0. [DOI] [Google Scholar]

- Hairer, E., Nørsett, S., Wanner, G.: Solving Ordinary Differential Equations I: Nonstiff Problems. Solving Ordinary Differential Equations, Springer, New York (1993). https://books.google.co.uk/books?id=F93u7VcSRyYC

- Hairer E, Lubich C, Wanner G. Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations. New York: Springer; 2006. [Google Scholar]

- Hairer E, McLachlan RI, Razakarivony A. Achieving Brouwer’s law with implicit Runge–Kutta methods. BIT Numer. Math. 2008;48(2):231–243. doi: 10.1007/s10543-008-0170-3. [DOI] [Google Scholar]

- Hennig, P., Hauberg, S.: Probabilistic solutions to differential equations and their application to Riemannian statistics. In: Proceedings of the 17th International Conference on Artificial Intelligence and Statistics (AISTATS), vol. 33 (2014)

- Hennig, P., Osborne, M.A., Girolami, M.: Probabilistic numerics and uncertainty in computations. Proceedings of the Royal Society A (2015) (in press) [DOI] [PMC free article] [PubMed]

- Johnson, C.: Numerical Solution of Partial Differential Equations by the Finite Element Method. Dover Books on Mathematics Series, Dover Publications, New York (2012). Incorporated, https://books.google.co.uk/books?id=PYXjyoqy5qMC

- Kailath T. The divergence and Bhattacharyya distance measures in signal selection. IEEE Trans. Commun. Technol. 1967;15(1):52–60. doi: 10.1109/TCOM.1967.1089532. [DOI] [Google Scholar]

- Kaipio J, Somersalo E. Statistical inverse problems: discretization, model reduction and inverse crimes. J. Comput. Appl. Math. 2007;198(2):493–504. doi: 10.1016/j.cam.2005.09.027. [DOI] [Google Scholar]

- Kennedy MC, O’Hagan A. Bayesian calibration of computer models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2001;63(3):425–464. doi: 10.1111/1467-9868.00294. [DOI] [Google Scholar]

- Liang H, Wu H. Parameter estimation for differential equation models using a framework of measurement error in regression models. J. Am. Stat. Assoc. 2008;103(484):1570–1583. doi: 10.1198/016214508000000797. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindgren F, Rue H, Lindström J. An explicit link between Gaussian fields and Gaussian Markov random fields: the stochastic partial differential equation approach. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2011;73(4):423–498. doi: 10.1111/j.1467-9868.2011.00777.x. [DOI] [Google Scholar]

- Medina-Aguayo, F.J., Lee, A., Roberts, G.O.: Stability of Noisy Metropolis-Hastings (2015). arxiv:1503.07066 [DOI] [PMC free article] [PubMed]

- Ramsay JO, Hooker G, Campbell D, Cao J. Parameter estimation for differential equations: a generalized smoothing approach. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2007;69(5):741–796. doi: 10.1111/j.1467-9868.2007.00610.x. [DOI] [Google Scholar]

- Schober, M., Duvenaud, D.K., Hennig, P.: Probabilistic ODE solvers with Runge–Kutta means. In: Advances in Neural Information Processing Systems, pp. 739–747 (2014)

- Skilling, J.: Bayesian solution of ordinary differential equations. In: Maximum Entropy and Bayesian Methods, pp 23–37. Springer, New York (1992)

- Stengle G. Error analysis of a randomized numerical method. Numer. Math. 1995;70(1):119–128. doi: 10.1007/s002110050113. [DOI] [Google Scholar]

- Sullivan T. Uncertainty Quantification. New York: Springer; 2016. [Google Scholar]

- Xue, H., Miao, H., Wu, H.: Sieve estimation of constant and time-varying coefficients in nonlinear ordinary differential equation models by considering both numerical error and measurement error. Ann. Stat. 38(4), 2351–2387 (2010) [DOI] [PMC free article] [PubMed]

- Xun X, Cao J, Mallick B, Maity A, Carroll RJ. Parameter estimation of partial differential equation models. J. Am. Stat. Assoc. 2013;108(503):1009–1020. doi: 10.1080/01621459.2013.794730. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.